Abstract

Background

Health care providers are often called on to respond to in-flight medical emergencies, yet many lack familiarity with the expected supplies, interventions, and ground medical control support.

Objective

The objective of this study was to determine whether a mobile phone app (airRx) improves responses to simulated in-flight medical emergencies.

Methods

This was a randomized study of volunteer, non-emergency medicine resident physicians who managed simulated in-flight medical emergencies with or without the app. Simulations took place in a mock-up aircraft cabin in the simulation center. Standardized participants played the patient, family member, and flight attendant roles. Raters scored performance live and were not blinded; video review was used occasionally to clarify data. Each participant managed two simulated in-flight medical emergencies (shortness of breath and syncope) and was evaluated with checklists and global rating scales (GRS). Checklist item completion rates, times to key critical actions, GRS scores, and pre-post simulation confidence in managing in-flight medical emergencies were compared between groups.

Results

There were 29 participants in each arm of the study (app vs control; N=58). Mean percentages of completed checklist items for the app versus control groups were 56.1 (SD 10.3) versus 49.4 (SD 7.4) for shortness of breath (P=.007) and 58.0 (SD 8.1) versus 49.8 (SD 7.0) for syncope (P<.001). GRS scores were higher with the app for the syncope case (mean 3.14, SD 0.89 versus control mean 2.6, SD 0.97; P=.003) but not for the shortness of breath case (mean 2.90, SD 0.97 versus control mean 2.81, SD 0.80; P=.43). For timed checklist items, the app group contacted ground medical control faster in both cases, but the control group was faster to obtain vitals and complete the basic exam. Both groups reported higher confidence on their postsimulation surveys, but the increase was greater in the app group.

Conclusions

Use of the airRx app prompted some actions, but delayed others. Simulated performance and feedback suggest the app is a useful adjunct for managing in-flight medical emergencies.

Keywords: in-flight medical emergencies, ground medical control, commercial aviation, simulation

Introduction

Data from a ground-based medical support system suggest that medical emergencies occur on 1 of every 604 flights [1]. This is likely an underestimate because no mandatory reporting system exists and uncomplicated events often go unreported [2]. Air travel is increasing, with 895.5 million passengers flying on US-based airlines in 2015 [3], which is likely to increase the frequency of in-flight medical emergencies. In one study, 42% of 418 surveyed health care providers reported having been called on to give aid during an in-flight medical emergency [4].

The Federal Aviation Administration mandates that US-based airlines carry basic first aid kits stocked with bandages and splints, and at least one automated external defibrillator must be available [5]. Beyond the basic kit, no national or international standards exist, although there have been recent calls for consistency [6,7].

Health care personnel are also unlikely to be familiar with medical kit contents, flight crew communication, and medical emergency protocols [4]. Clinicians’ expertise typically consists of their specialty training and life support courses. Emergency response training is often limited because emergency medicine is not a mandatory rotation in medical education [8]. Although helpful, ground-based medical consultation services (ground medical control) still depend on volunteers to be their “eyes and ears” [1,9]. The assumption is that volunteers will find and report the clinical information relevant to the presenting medical emergency [1,10].

Comfort attending to an in-flight medical emergency is likely to vary substantially across provider backgrounds. Thus, there is a need for education about the environment and scenario-based basic in-flight medical emergency response training. In recent months, the aviation and health care industries have recognized this and called for education in emergency stabilization and flight medicine at both graduate and undergraduate levels [11,12].

Although several authors have discussed the management of in-flight emergencies [13-18], little real-time decision support exists outside of ground medical control. General emergency response mobile phone apps or cognitive aids may not take the aircraft environment into account. In response to this perceived need, emergency medicine, aerospace medicine, and radiology physicians designed a mobile phone app (airRx) [19] to assist licensed health care personnel in managing the most common in-flight medical emergencies. The app offers complaint-specific recommended actions, care algorithms, and in-the-moment information about the medications likely to be available. In addition to serving as a real-time decision support reference, the app provides a method of just-in-time training (JITT) [20]. The JITT approach has been successful in on-the-job training of first responders in unfamiliar situations [21], and studies have shown that mobile phone-based cognitive aids promote adherence to protocols in both real and simulated clinical scenarios [22-24]. Therefore, a JITT-based mobile phone cognitive aid is a reasonable approach to delivering focused learning during an in-flight medical emergency. The primary objective of this study was to determine the usefulness of the airRx mobile phone app in responding to simulated in-flight medical emergencies. Our secondary objective was to examine whether access to the airRx app would increase confidence to respond to an in-flight medical emergency.

Methods

Design

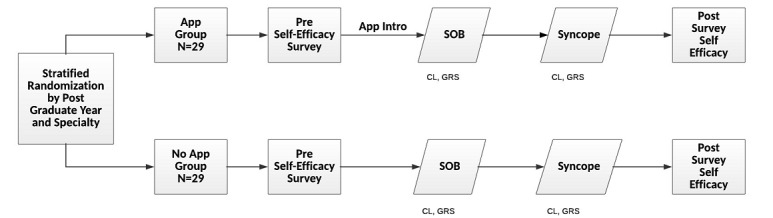

This was a prospective randomized controlled trial. Fifty-eight participants were block randomized by postgraduate year and specialty area to manage simulated in-flight medical emergencies with or without access to a smart device app. Although block randomization was used to assign participants to groups, the order of the two cases (shortness of breath and syncope) was not randomized. Because we anticipated a case order effect, all simulations were run in the same sequence, depicted in Figure 1 (overall study flow), with the shortness of breath case preceding the syncope case. This study design was approved by the University of Illinois–Peoria, Peoria, IL, Institutional Review Board.

Figure 1.

Study design. Participants were debriefed after the postsurvey. CL: checklist; GRS: Global Rating Scale; SOB: shortness of breath; SYN: syncope.

Participants

Participants were recruited from non-emergency medicine residency programs, including diagnostic radiology, family medicine, internal medicine, pediatrics, psychiatry, combined medicine-pediatrics, and obstetrics and gynecology. Emergency medicine residents were excluded because of their expertise and training in the management of emergencies. Participants’ performances were kept confidential. Participants received a US $25 gift card and a free copy of the airRx app as compensation. They were instructed to keep the scenarios confidential to minimize the relay of scenario information to future participants.

Intervention

The intervention tested is an app known as airRx (Figure 2). It was initially funded by a nonprofit organization and is now freely available on both the iOS and Android mobile phone platforms. It was created by the authors (RRB, MDS, CJC) and nonauthors (Joshua Timpe, MD; Claude Thibeault, MD; Paulo Alves; and John Vozenilek, MD) and designed to help non-critical care, non-emergency health care professionals manage common in-flight medical emergencies. The app version (airRx version 1.2.1, 2016) [19] was kept constant during the study. The app has a section on “universal starters” for users to consider for any in-flight medical emergency. There are also sections on medications and equipment to expect on most US major airlines, a complaint-based set of algorithms for management, and medicolegal information.

Figure 2.

Screenshot of the airRx app.

Case Development

Syncope and shortness of breath were chosen as our in-flight medical emergency scenarios because they are the two most common in-flight medical emergencies reported in the literature [1,12]. Case development followed standard simulation case creation guidelines [22]. Real flight attendants (United Airlines, Chicago, Illinois) also participated in scenario design and pilot testing to ensure content validity. Cases are available in Multimedia Appendix 1.

Simulation Environment

The study took place at a university hospital-affiliated simulation center. Space and movement limits that mimicked the floor distances of a Boeing 737 aircraft were created within a simulation laboratory with audiovisual recording capability.

The simulation center has a cadre of standardized participants who undergo general and scenario-specific orientation. The standardized participants went through dry runs of each scenario, received feedback on their performance, and were given earbuds for prompts in real time. In each scenario, there was one standardized participant passenger who became ill and one standardized participant passenger bystander who had relevant information if asked. Stable actor cohorts played these roles. Pathologic physical exam findings were given on cue through prewritten cards from the bystander standardized participant because healthy patient standardized participants could not mimic symptoms such as wheezing.

For each case there were also two standardized participant flight attendants who communicated with the investigators in the simulation control room (“pilot” and “ground medical support”) and relayed responses to the participants. Real flight attendants trained standardized participants to portray flight attendant roles through direct observations of their performance in pilot simulations, video review, and discussion of planned responses to questions. To isolate participant performances, we instructed the flight attendants to be helpful and follow directions but wait to inform ground medical control until instructed. Thus, we controlled for variable airline protocols, flight attendant training, and individual responses expected in real life.

All participants were prebriefed on the general premise of the simulation, safe space principles, and learner contracts, and given the opportunity to ask questions via a standardized script by the same personnel. Both the control and intervention groups were allowed to use any other phone apps they had on their personal smart device that would be accessible during airplane mode. The app group had up to 15 minutes to familiarize themselves with the app.

Scenarios began with the participants seated in the simulated cabin; after a brief pause, flight attendants announced the in-flight medical emergency and called for assistance. Each scenario ran for 8 minutes, a length chosen because, in pilot simulations, participants completed most critical actions within 8 minutes on average. At the end of the simulation, participants were debriefed by comparing their performance with the action checklist.

Main Measures

The main measures assessed were subject checklist completion rates, global rating scales (GRS), time to critical actions, and pre-post simulation confidence surveys.

Instrument Development

There are no established performance expectations for in-flight medical emergency responders that could serve as an external validity check. However, after a literature review, consensus discussions among flight attendant (MC), aviation (MDS, CT, and PA), and emergency medicine (NN, WB) experts led to the development of optimal performance expectations, reflected in scenario-specific rating forms that included both checklists and GRS. Other team members (MDS, RB) cross-checked the checklist items for content validity. Items covered history gathering, physical examination, basic management choices, and communication actions.

The 4-point GRS (1=needs further instruction, 2=competent but with close supervision, 3=competent with minimal supervision, and 4=competent to perform independently) measures competence in managing the scenario and is similar to the entrustable professional activities scale used in undergraduate medical education [23]. We ran four pilot simulations per case with a sample group of resident physician participants. This allowed us to train the standardized participants and refine our simulation cases and checklist items. Some items were reworded for clarity, and several were dropped.

We also created pre-post simulation surveys for participants to self-assess their readiness for in-flight medical emergencies, knowledge of resources, medicolegal concerns, crew integration, in-flight medical emergency communication processes, and willingness to respond. The surveys were pilot-tested for clarity and administered immediately before and after the simulations. Usability questions (app group only) were derived from a previously developed technology usability survey [24].

Observation, Rating Method, and Data Collection

Raters were physicians and nurses with research expertise who were trained on the scenario checklists. Raters had no conflicts of interest. Because participants in the app group often had the app in hand, the raters could not be blinded. Primary and secondary raters observed from behind two-way glass in the control room. Because of scheduling logistics, secondary rating was occasionally performed using the audiovisual recording; because this occurred in less than 5% of cases, we did not test interrater reliability between live and video ratings. All observations were recorded on paper, transferred into survey software (Qualtrics), and then exported to a spreadsheet program (Excel 2013, Microsoft Corp). Checklist items were marked as either “observed” or “not observed.” An item could be marked “not observed” if it was not done, time ran out, the standardized participant patient prevented the action, or the observer missed the action. Five actions per case were timed; the number of timed items was limited to reduce rater burden. The times recorded by the two raters were averaged for statistical analysis.

Results

Sample size estimation was difficult because performance expectations, standard deviations, and effect sizes were unknown. However, we prospectively estimated a required sample size of 74 in total (37 per group) for an 80% chance (power=0.80) of detecting a 20-percentage-point improvement in overall checklist performance, assuming a standard deviation of 30 percentage points. The study was stopped after an interim analysis (29 participants per arm) because of resource constraints.
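The paper does not state which formula produced the estimate of 37 per group. The sketch below assumes the common normal-approximation formula for comparing two means, with Guenther's small-sample correction term, and reads the "20%" target as an absolute difference of 20 percentage points against an SD of 30 points (a standardized effect of about 0.67). Under those assumptions it reproduces the stated 37 per group (74 in total); the function name `n_per_group` is hypothetical.

```python
import math
from statistics import NormalDist


def n_per_group(delta: float, sd: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate per-group n for a two-sided, two-sample t test (assumed method).

    Normal-approximation formula 2(z_{1-alpha/2} + z_{power})^2 / d^2 plus
    Guenther's correction z_{1-alpha/2}^2 / 4, rounded up to a whole participant.
    """
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = .05
    z_beta = z.inv_cdf(power)  # about 0.84 for power = .80
    d = delta / sd  # standardized effect size
    n = 2 * (z_alpha + z_beta) ** 2 / d**2 + z_alpha**2 / 4
    return math.ceil(n)


# Assumed inputs: 20-point checklist difference, SD of 30 points.
per_group = n_per_group(delta=20, sd=30)
print(per_group, 2 * per_group)  # 37 74
```

Because the per-group size scales with 1/d², a smaller assumed SD or a larger target difference shrinks the requirement quickly; this is one reason the estimate was sensitive to the unknown SD.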

All statistical tests were two-sided, with a significance level of 5% (α=.05), and were performed using R (version 3.2.5 or later). Interrater reliability was calculated using Gwet’s AC1 (agreement coefficient 1), which can handle more than two raters and more than two response categories. The proportions of participants completing each action (treated as binary variables) were compared using chi-square or Fisher exact tests, as appropriate. In addition, the percentage of applicable completed actions was averaged between raters and compared between groups using independent samples t tests. The Likert-type global competency ratings and the response times for the timed critical actions were not normally distributed, so both were compared using nonparametric Wilcoxon rank sum tests. Demographics were compared between groups using chi-square or Fisher exact tests, as appropriate. Mean ratings on the pre- and postsimulation surveys were analyzed using a linear mixed model, with a log transformation applied as needed to meet model assumptions.
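Gwet's AC1 corrects observed agreement for chance, as kappa does, but its chance term stays small when nearly all ratings fall in one category, which is common for checklist items that almost everyone completes. A minimal two-rater sketch for illustration (the study's own R code is not given, and the function name `gwet_ac1` is hypothetical): with Q categories and π_k the share of all ratings in category k, chance agreement is Σ π_k(1 − π_k)/(Q − 1).

```python
from collections import Counter
from typing import Hashable, Optional, Sequence


def gwet_ac1(
    rater_a: Sequence[Hashable],
    rater_b: Sequence[Hashable],
    categories: Optional[Sequence[Hashable]] = None,
) -> float:
    """Gwet's AC1 for two raters scoring the same items (illustrative sketch)."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same non-empty set of items")
    n = len(rater_a)
    # Rating categories: given explicitly, or inferred from the ratings themselves.
    cats = list(categories) if categories is not None else list(set(rater_a) | set(rater_b))
    q = len(cats)
    if q < 2:
        raise ValueError("AC1 needs at least two rating categories")
    # Observed agreement: share of items on which both raters gave the same rating.
    p_a = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # pi_k: share of all 2n ratings that fall in category k.
    counts = Counter(rater_a) + Counter(rater_b)
    pis = [counts[k] / (2 * n) for k in cats]
    # Chance agreement under Gwet's model.
    p_e = sum(pi * (1 - pi) for pi in pis) / (q - 1)
    return (p_a - p_e) / (1 - p_e)


# Hypothetical ratings of five checklist items by a primary and a secondary rater.
primary = ["observed", "observed", "not observed", "observed", "not observed"]
secondary = ["observed", "observed", "not observed", "not observed", "not observed"]
print(round(gwet_ac1(primary, secondary, ["observed", "not observed"]), 3))  # 0.6
```

Passing `categories` explicitly matters when a checklist item happens to receive only one rating value across all participants; otherwise the inferred category count would be too small.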

Participant demographics did not differ between the two groups in specialty, level of training, or experience with in-flight medical emergencies (Table 1), although the average number of flights per year did differ (P=.04). The mean interrater reliability (Gwet’s AC1) across all checklist items was 0.90 for the syncope case and 0.94 for the shortness of breath case.
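The Table 1 P values come from Fisher exact tests. The standard-library sketch below handles only 2×2 tables (R's `fisher.test` also handles larger tables such as the specialty breakdown); the function name `fisher_exact_2x2` is hypothetical. It sums the hypergeometric probabilities of every table with the observed margins that is no more likely than the observed one, using a small relative tolerance for ties as R does. Applied to the "Experienced call for medical help" rows of Table 1 (once: 1 app vs 3 control; 2-3 times: 5 vs 1), it gives P≈.19, matching the table.

```python
from math import comb


def fisher_exact_2x2(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher exact test for the 2x2 table [[a, b], [c, d]]."""
    row1, col1, n = a + b, a + c, a + b + c + d
    denom = comb(n, row1)

    def prob(x: int) -> float:
        # Hypergeometric probability that the top-left cell equals x, margins fixed.
        return comb(col1, x) * comb(n - col1, row1 - x) / denom

    lo, hi = max(0, row1 + col1 - n), min(row1, col1)
    p_obs = prob(a)
    # Sum all tables at least as extreme (no more probable) than the observed one.
    total = sum(p for p in map(prob, range(lo, hi + 1)) if p <= p_obs * (1 + 1e-7))
    return min(1.0, total)


# Table 1, "Experienced call for medical help": rows = once / 2-3 times, cols = app / control.
print(round(fisher_exact_2x2(1, 3, 5, 1), 2))  # 0.19
```

With cell counts this small, the chi-square approximation would be unreliable, which is why an exact test is the appropriate choice for these demographic comparisons.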

Table 1.

Participant demographics (N=58)

| Category | App (n=29), n (%) | Control (n=29), n (%) | P value^a |
|---|---|---|---|
| Specialty | | | .75 |
| Internal medicine | 8 (28) | 8 (28) | |
| Medicine-pediatrics | 5 (17) | 9 (31) | |
| Pediatrics | 4 (14) | 3 (10) | |
| Family medicine | 1 (3) | 2 (7) | |
| Obstetrics and gynecology | 2 (7) | 2 (7) | |
| Other (radiology and psychiatry) | 9 (31) | 5 (17) | |
| Training level | | | .84 |
| Postgraduate year 1 | 11 (38) | 10 (35) | |
| Postgraduate year 2 | 9 (31) | 8 (28) | |
| Postgraduate year 3 | 5 (17) | 8 (28) | |
| Postgraduate year 4 | 4 (14) | 3 (10) | |
| Direct high acuity care | | | .54 |
| Rarely, if ever | 2 (7) | 4 (14) | |
| Infrequently | 6 (21) | 6 (21) | |
| Regularly, but not frequently | 10 (35) | 13 (45) | |
| Frequently | 8 (28) | 3 (10) | |
| Very frequently | 3 (10) | 3 (10) | |
| Announcement for medical professionals | | | .49 |
| Yes | 6 (21) | 4 (14) | |
| No | 23 (79) | 25 (86) | |
| Average number of flights per year | | | .04 |
| None | 0 (0) | 2 (7) | |
| 1 to 2 | 11 (38) | 14 (48) | |
| 3 to 5 | 15 (52) | 6 (21) | |
| 6 to 10 | 3 (10) | 7 (24) | |
| Experienced call for medical help | | | .19 |
| Once | 1 (17) | 3 (75) | |
| 2-3 times | 5 (83) | 1 (25) | |
| Responded to call for medical help | | | .99 |
| Never | 3 (50) | 2 (50) | |
| Once | 1 (17) | 1 (25) | |
| 2-3 times | 2 (33) | 1 (25) | |
| Actively provided care | | | .99 |
| No, other provided care | 1 (33) | 0 (0) | |
| Yes, I actively provided care | 2 (67) | 2 (100) | |

^a Fisher exact test was used for P values.

The app group had a significantly higher mean percentage of total completed checklist items (mean 58.0, SD 8.1) compared with the control group (mean 49.8, SD 7.0) for the syncope scenario (t56=4.15, P<.001) and the shortness of breath scenario (mean 56.1, SD 10.3 versus mean 49.4, SD 7.4 for control; t56=2.82, P=.007).
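Because t has 56 degrees of freedom (29 + 29 - 2), these comparisons appear to be pooled-variance (Student) t tests. The sketch below recomputes t from the published summary statistics; it is a consistency check, not the authors' code, and `pooled_t` is a hypothetical name. It yields about 4.12 and 2.84 rather than the reported 4.15 and 2.82, a gap consistent with rounding of the published means and SDs (the test was presumably run on unrounded per-participant percentages).

```python
import math


def pooled_t(m1: float, s1: float, n1: int, m2: float, s2: float, n2: int) -> tuple[float, int]:
    """Student (pooled-variance) two-sample t statistic from summary data."""
    df = n1 + n2 - 2
    # Pooled variance weights each group's variance by its degrees of freedom.
    pooled_var = ((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df


# Reported means and SDs (app vs control, 29 participants per group).
for case, args in {
    "syncope": (58.0, 8.1, 29, 49.8, 7.0, 29),
    "shortness of breath": (56.1, 10.3, 29, 49.4, 7.4, 29),
}.items():
    t, df = pooled_t(*args)
    print(f"{case}: t{df} = {t:.2f}")
```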

In both cases, the app group more often requested ground medical control and flight attendant assistance and informed or updated the cabin crew. In the shortness of breath case, the app group also more often administered steroids and high flow oxygen; however, the control group more often auscultated the heart and lungs and repeated vitals. In the syncope case, the app group more often asked about dyspnea and palpitations, positioned the patient supine, and administered oxygen (Table 2).
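To show how a Table 2 P value can be checked, here is a minimal Pearson chi-square test for a 2×2 table using only the standard library (with 1 degree of freedom, the chi-square upper tail equals erfc(√(x/2))). As stated assumptions, it treats each group's 58 ratings (29 participants × 2 raters, inferred from entries such as 58 (100)) as the denominator and applies no continuity correction; note that R's `chisq.test` applies Yates's correction to 2×2 tables by default. For the shortness of breath item "Requests ground medical control consult" (26 vs 11 of 58), the uncorrected statistic gives a P value that rounds to the reported .003. The function name is hypothetical.

```python
import math


def chi_square_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Pearson chi-square test, no continuity correction, for [[a, b], [c, d]].

    Returns (statistic, p value); with 1 df the upper tail is erfc(sqrt(x / 2)).
    """
    n = a + b + c + d
    rows, cols = (a + b, c + d), (a + c, b + d)
    observed = ((a, b), (c, d))
    # Sum of (observed - expected)^2 / expected over the four cells.
    stat = sum(
        (observed[i][j] - rows[i] * cols[j] / n) ** 2 / (rows[i] * cols[j] / n)
        for i in range(2)
        for j in range(2)
    )
    return stat, math.erfc(math.sqrt(stat / 2))


# Rows: app, control; columns: completed, not completed (assumed 58 ratings per group).
stat, p = chi_square_2x2(26, 58 - 26, 11, 58 - 11)
print(f"chi2 = {stat:.2f}, P = {p:.3f}")
```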

Table 2.

Counts and proportions of completed checklist items (both raters).

| Checklist item | App (n=29), n (%) | Control (n=29), n (%) | P value |
|---|---|---|---|
| Shortness of breath checklist items | | | |
| Introduces self and role | 47 (81) | 43 (74) | .37 |
| Acknowledges patient by name and identifies family members | 45 (78) | 18 (31) | <.001 |
| Asks patient or bystander for insight | 58 (100) | 57 (98) | .99 |
| Requests flight attendant assistance | 35 (60) | 19 (33) | .003 |
| Informs/updates the cabin crew | 17 (31) | 5 (11) | .01 |
| Asks patient basic history | 55 (95) | 57 (98) | .62 |
| Asks patient about allergies | 17 (30) | 17 (29) | .99 |
| Asks patient about home oxygen use | 10 (17) | 12 (21) | .64 |
| Elicits COPD^a/asthma history | 12 (21) | 12 (21) | .99 |
| Examines heart and lungs through auscultation | 46 (79) | 54 (93) | .03 |
| Examines neck | 2 (3) | 2 (3) | .99 |
| Examines for pedal edema | 6 (10) | 8 (14) | .57 |
| Obtains vitals (BP^b, HR^c) | 13 (22) | 13 (22) | .99 |
| Reassesses patient | 56 (97) | 58 (100) | .50 |
| Administers steroids | 18 (32) | 4 (7) | .001 |
| Administers albuterol treatment | 56 (97) | 56 (97) | .99 |
| Requests emergency medical kit | 58 (100) | 58 (100) | .99 |
| Requests ground medical control consult | 26 (45) | 11 (19) | .003 |
| Repeats vitals | 38 (66) | 52 (88) | .002 |
| Administers high flow oxygen | 49 (85) | 40 (69) | .048 |
| Syncope checklist items | | | |
| Introduces self and role | 45 (78) | 44 (79) | .90 |
| Acknowledges patient by name and identifies family members | 27 (47) | 18 (32) | .10 |
| Asks patient or bystander for insight | 57 (98) | 53 (91) | .21 |
| Requests emergency medical kit | 58 (100) | 58 (100) | .99 |
| Informs the cabin crew | 39 (71) | 18 (40) | .002 |
| Requests ground medical control | 29 (50) | 16 (28) | .02 |
| Asks about patient’s allergies | 9 (15) | 14 (25) | .23 |
| Asks about patient’s symptoms | 54 (93) | 57 (100) | .12 |
| Asks about palpitations | 11 (19) | 2 (4) | .01 |
| Asks about chest pain | 33 (57) | 27 (47) | .31 |
| Asks about dyspnea | 35 (60) | 22 (39) | .02 |
| Asks about arrhythmia history | 15 (26) | 11 (20) | .40 |
| Asks about gastrointestinal bleeding history | 0 (0) | 3 (5) | .12 |
| Asks patient basic history | 58 (100) | 54 (95) | .12 |
| Requests flight attendant assistance | 24 (41) | 5 (9) | <.001 |
| Auscultates heart and lungs | 42 (72) | 43 (74) | .83 |
| Examines abdomen | 2 (3) | 2 (3) | .99 |
| Examines patient’s neck | 2 (3) | 3 (5) | .99 |
| Requests the AED^d | 20 (35) | 12 (21) | .12 |
| Obtains vitals | 51 (88) | 51 (88) | .99 |
| Repeats vitals | 17 (29) | 24 (41) | .17 |
| Reassesses patient | 56 (98) | 52 (90) | .11 |
| Positions the patient | 48 (84) | 32 (56) | .001 |
| Administers oxygen | 28 (52) | 8 (14) | <.001 |
| Administers fluids | 54 (93) | 56 (97) | .68 |

^a COPD: chronic obstructive pulmonary disease.

^b BP: blood pressure.

^c HR: heart rate/pulse.

^d AED: automated external defibrillator.

For timed actions, the app group alerted ground medical support significantly faster than the control group in both cases (shortness of breath, P=.01; syncope, P=.02; Table 3). However, in the shortness of breath case, the control group obtained vitals significantly faster (P=.006; Table 3).
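Table 3 summarizes the times as means and SDs, but the group comparisons used Wilcoxon rank sum tests, which compare ranks rather than means. The sketch below shows the normal-approximation version with a tie-corrected variance and a continuity correction, written to mirror the formulas R's `wilcox.test` uses when it does not compute an exact P value; R computes an exact P value by default for small samples without ties, and the paper does not say which variant applied. Per-participant times were not published, so the function names and any example data are hypothetical.

```python
import math
from collections import Counter
from statistics import NormalDist


def midranks(values):
    """1-based ranks in which tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def wilcoxon_rank_sum(x, y, correct=True):
    """Two-sided Wilcoxon rank sum test using the normal approximation.

    Returns (W, p): W is the rank sum of x minus n_x(n_x + 1)/2. The variance
    is corrected for ties; an optional continuity correction is applied.
    """
    nx, ny = len(x), len(y)
    n = nx + ny
    pooled = list(x) + list(y)
    ranks = midranks(pooled)
    w = sum(ranks[:nx]) - nx * (nx + 1) / 2
    mu = nx * ny / 2
    ties = sum(t**3 - t for t in Counter(pooled).values())
    var = nx * ny / 12 * ((n + 1) - ties / (n * (n - 1)))
    if var == 0:
        return w, 1.0  # every value tied: no evidence of a difference
    diff = w - mu
    if correct and diff != 0:
        diff -= math.copysign(0.5, diff)
    z = diff / math.sqrt(var)
    return w, 2 * NormalDist().cdf(-abs(z))


# Hypothetical times (seconds) to alert ground medical support; not study data.
w, p = wilcoxon_rank_sum([300, 320, 350, 410, 280], [430, 450, 400, 470, 460])
print(w, round(p, 3))
```

Averaging two raters' times, as described in the methods, can produce half-second values and ties, which is one situation in which the tie-corrected normal approximation would apply.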

Table 3.

Timed critical actions for shortness of breath and syncope cases (seconds).

| Timed critical action | App (n=29), mean (SD) | Control (n=29), mean (SD) | P value |
|---|---|---|---|
| Shortness of breath | | | |
| Albuterol | 264.6 (104.4) | 239.9 (95.2) | .35 |
| Oxygen | 268.0 (127.0) | 294.0 (157.7) | .59 |
| Ground medical crew | 369.8 (143.4) | 452.2 (78.2) | .01 |
| Emergency medical kit | 106.1 (35.5) | 108.5 (36.0)^a | .74 |
| Vitals | 336.5 (125.9) | 249.0 (113.6)^a | .006 |
| Syncope | | | |
| Emergency medical kit | 101.7 (40.5) | 82.7 (33.5) | .05 |
| Ground medical crew | 352.4 (155.0) | 441.5 (87.0) | .02 |
| Vitals | 265.2 (107.7) | 228.2 (122.9) | .05 |
| Supine position | 282.8 (139.5) | 321.4 (167.8)^a | .32 |
| Give fluids | 314.1 (107.4) | 260.3 (83.3) | .05 |

^a n=28.

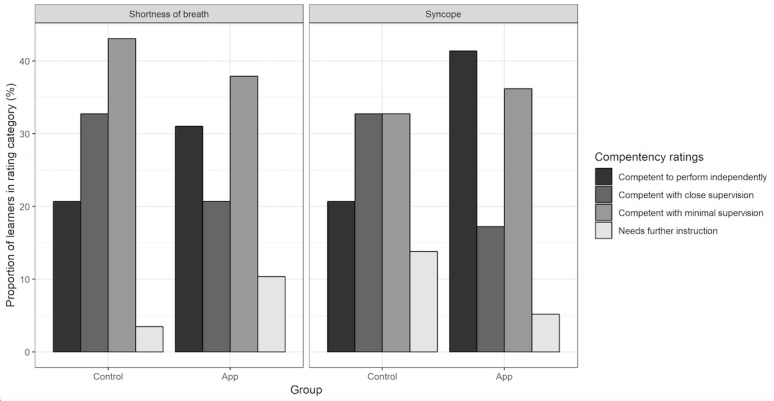

There was no significant difference between the groups in GRS scores for the shortness of breath case; however, the app group was rated significantly higher for the syncope case (mean 3.14, SD 0.89) than the control group (mean 2.6, SD 0.97; P=.003; Figure 3). Although not statistically significant, there appeared to be a trend toward better performance among participants in higher postgraduate years and in some specialties (eg, internal medicine) compared with others (eg, radiology).

Figure 3.

Global ratings for shortness of breath and syncope. IFME: in-flight medical emergency; SOB: shortness of breath; SYN: syncope.

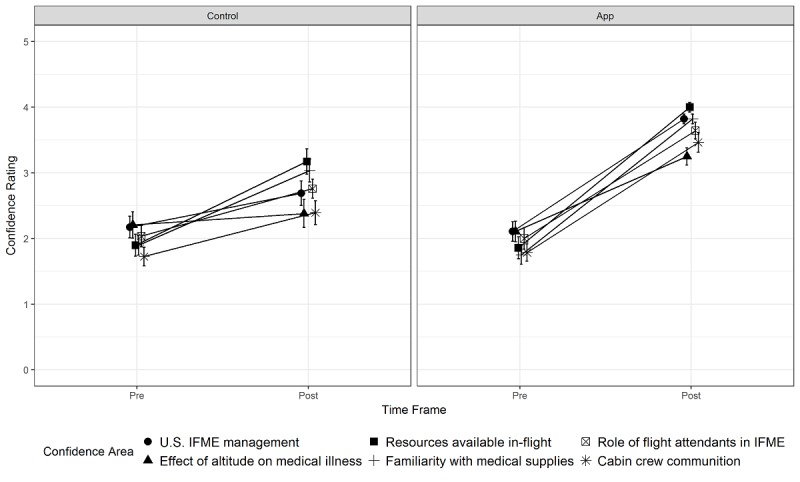

Both the app and control groups rated their confidence higher on the postsimulation survey than on the presimulation survey (Figure 4); however, the app group showed a greater increase in confidence than the control group.

Figure 4.

Pre- and postsimulation learner confidence in managing in-flight medical emergencies (IFME).

Discussion

Principal Findings

Availability of medical care during an in-flight medical emergency is a passenger safety priority. To address this need, most US airlines mandate first aid and basic life support training for flight crew, as well as contact with ground-based medical consultation services, which are generally staffed by emergency medicine physicians who provide protocol-driven treatment recommendations and help make decisions about flight diversion. Health care professionals are not obligated to volunteer, and factors influencing their willingness to respond include specialty, ancillary training (eg, combat medic, paramedic), years of practice, and medicolegal concerns [25]. Our survey showed that confidence increased after simulation training in this unfamiliar environment and that confidence to respond increased more in the app group. This increase in confidence should not be taken as evidence of improved performance, but it may make users more likely to respond.

We successfully simulated in-flight medical emergencies by recreating the space constraints, communication barriers, and equipment limitations present on an aircraft in flight. Performance improved for actions in which the app encouraged communication with the flight crew and ground medical support. The app prompts users to ask relevant questions of patients, which may yield more productive conversations with ground medical control and improve the treatments administered. Effective communication with the cabin crew and ground medical control is crucial so that ground medical control can advise the pilots on the need to divert for care. Such decisions are costly and affect passengers on many levels [26].

The app serves as a cognitive aid similar to an Advanced Cardiac Life Support card while also introducing an additional source of cognitive load [27,28]. We believe that certain actions, or the time to complete them, could be positively or negatively affected by the app. For example, vitals or certain history or physical exam items might be delayed while the learner reads the app. Although some of these differences reached statistical significance (auscultation favored the control group in the shortness of breath case), there was no clear preponderance favoring the control group for these types of actions. The app makes clear that high flow oxygen is indicated because of the altitude, and high flow oxygen was administered more often in the app group. Similarly, supine positioning, which the app prompts, was more frequent in the app group in the syncope case. Overall, the app group completed a higher percentage of checklist items than the control group in both cases. However, we caution that the app could delay basic physical assessment, including vital signs.

The literature has shown that checklists and GRS can be complementary [29]. GRS are sometimes better able than checklists to capture subtle signs of expertise, whereas checklists give raters concrete items to observe and thus may improve interrater reliability. In our study, GRS showed improved performance with the app in the syncope case but not in the shortness of breath case. Possible reasons include rating effects, a practice effect with the app (the syncope case was always second), and additional preparation with the app during the approximately 10-minute break between cases.

Limitations

Our limitations include the short time learners had to interact with the app before using it. We gave participants 15 minutes to familiarize themselves with the app, a duration chosen to approximate the average taxi-out time of 16.2 minutes [30]. Our simulation scenarios were relatively short at 8 minutes each, and extra time might have allowed either group to complete missed checklist items. We did not control for the confounding effect of other app use; although we did not formally track this, we observed very little use of other health care apps. It is difficult to know how performance would translate to real-world events, but our gestalt was that the environment, cases, and witnessed performance were quite credible. Our method of relaying communication with ground medical support through the cabin crew was held constant and mimicked that used by many airlines, but there is no standard expectation, and we expect that changing technology and situational urgency will alter the method of communication. We also did not analyze standardized participant effects, but nearly the same cohort participated throughout the project. We could not blind the raters because app use was visible; in hindsight, we could have given both groups the same device to create partial blinding. We anticipated a case order effect and kept the case order constant for this reason. We did not take a generalizability theory (G-theory) approach to examining variability due to case, case order, standardized participants, and raters, in part because the required sample size would have been prohibitively large. Our results are also likely subject to volunteer bias, in that residents who were better trained, more able, and more confident were more likely to volunteer for our study. Finally, our study focused on resident physicians; however, the app is also applicable to allied health professionals and to physicians some years out of residency training, which would make for an interesting future study.

Conclusion

We found that the use of a mobile phone app modestly improved performance of nonemergency resident physician participants during simulated in-flight medical emergencies. We caution that app use may delay or distract from basic physical assessments. The app improved participants’ confidence in in-flight medical emergency response more than simulation practice alone.

Future Directions

Future studies are needed to examine whether the app is used during real in-flight medical emergencies. It will also be interesting to examine the effect of introducing this electronic app to other health care professionals as well as attending physicians. Finally, we maintained the contents of our airline emergency kits as per Federal Aviation Administration guidelines; however, international flights may have considerable variations in medical kit contents. It might be useful to investigate the effects of different medical kits on the management of simulated in-flight medical emergencies.

Acknowledgments

The authors would like to acknowledge Dr Paulo Alves, Dr Claude Thibeault, and United Airlines personnel Maria Theresa Cook for their insight and feedback on the simulation cases, checklists, and standardized patient training, and Chase Salazar, Blair Engelmann, and Kimberly Cooley for administrative research support. We would also like to acknowledge United Airlines for allowing five of its flight attendants to assist in the initial simulations and in teaching the flight attendant role.

Abbreviations

- AED: automated external defibrillator
- BP: blood pressure
- COPD: chronic obstructive pulmonary disease
- GRS: global rating scale
- HR: heart rate/pulse
- JITT: just-in-time training

Overview of the airRx app.

CONSORT‐EHEALTH checklist (V 1.6.1).

Footnotes

Conflicts of Interest: This work was conducted at Jump Simulation, a collaboration of OSF HealthCare and the University of Illinois College of Medicine at Peoria. AirRx, an Illinois corporation, is a nonprofit foundation with 501(c)(3) status. AirRx owns the airRx app and supported the research by providing standardized patient meals and gift cards to participants. AirRx also received funds during the period when it sold the app, which partially defrayed the costs of app development. The airRx app has since become free on both the Android and iOS platforms.

References

1. Peterson DC, Martin-Gill C, Guyette FX, Tobias AZ, McCarthy CE, Harrington ST, Delbridge TR, Yealy DM. Outcomes of medical emergencies on commercial airline flights. N Engl J Med. 2013 May 30;368(22):2075–2083. doi: 10.1056/NEJMoa1212052. http://europepmc.org/abstract/MED/23718164

2. Goodwin T. In-flight medical emergencies: an overview. BMJ. 2000 Nov 25;321(7272):1338–1341. doi: 10.1136/bmj.321.7272.1338. http://europepmc.org/abstract/MED/11090521

3. Smallen D. Bureau of Transportation Statistics. 2016. [2019-05-13]. 2015 US-based airline traffic data. https://www.bts.gov/newsroom/2015-us-based-airline-traffic-data

4. Chatfield E, Bond WF, McCay B, Thibeault C, Alves PM, Squillante M, Timpe J, Cook CJ, Bertino RE. Cross-sectional survey of physicians on providing volunteer care for in-flight medical events. Aerosp Med Hum Perform. 2017 Sep 01;88(9):876–879. doi: 10.3357/AMHP.4865.2017.

5. Nable JV, Tupe CL, Gehle BD, Brady WJ. In-flight medical emergencies during commercial travel. N Engl J Med. 2015 Sep 03;373(10):939–945. doi: 10.1056/NEJMra1409213.

6. Thibeault C, Evans AD, Pettyjohn FS, Alves PM; Aerospace Medical Association. AsMA medical guidelines for air travel: in-flight medical care. Aerosp Med Hum Perform. 2015 Jun;86(6):572–573. doi: 10.3357/AMHP.4221.2015.

7. FAA proposed rule for AEDs and inflight medical kits. Aviat Space Environ Med. 2000 Oct;71(10):1072.

8. Khandelwal S, Way DP, Wald DA, Fisher J, Ander DS, Thibodeau L, Manthey DE. State of undergraduate education in emergency medicine: a national survey of clerkship directors. Acad Emerg Med. 2014 Jan;21(1):92–95. doi: 10.1111/acem.12290.

9. Alves P, MacFarlane H. The Challenges of Medical Events in Flight. Phoenix, AZ: MedAire; 2011. [2019-05-13]. https://www.medaire.com/docs/default-document-library/the-challenges-of-medical-events-in-flight.pdf?sfvrsn=0

10. Donner HJ. Is there a doctor onboard? Medical emergencies at 40,000 feet. Emerg Med Clin North Am. 2017 May;35(2):443–463. doi: 10.1016/j.emc.2017.01.005.

11. American Medical Association. 2018. Air Travel Safety H-45.979. https://policysearch.ama-assn.org/policyfinder/detail/In%20flight%20emergencies?uri=%2FAMADoc%2FHOD.xml-0-4034.xml

12. Stone EG. In-flight emergency. Ann Emerg Med. 2017 Apr;69(4):520–521. doi: 10.1016/j.annemergmed.2016.11.013.

13. Hinkelbein J, Neuhaus C, Wetsch WA, Spelten O, Picker S, Böttiger BW, Gathof BS. Emergency medical equipment on board German airliners. J Travel Med. 2014;21(5):318–323. doi: 10.1111/jtm.12138. http://onlinelibrary.wiley.com/resolve/openurl?genre=article&sid=nlm:pubmed&issn=1195-1982&date=2014&volume=21&issue=5&spage=318

14. Hon KL, Leung KK. Review of issues and challenges of practicing emergency medicine above 30,000-feet altitude: 2 anonymized cases. Air Med J. 2017;36(2):67–70. doi: 10.1016/j.amj.2016.12.006.

15. Silverman D, Gendreau M. Medical issues associated with commercial flights. Lancet. 2009 Jun 13;373(9680):2067–2077. doi: 10.1016/S0140-6736(09)60209-9.

16. Rayman RB, Zanick D, Korsgard T. Resources for inflight medical care. Aviat Space Environ Med. 2004 Mar;75(3):278–280.

17. Sand M, Bechara F, Sand D, Mann B. In-flight medical emergencies. Lancet. 2009 Sep 26;374(9695):1062–1063; author reply 1062. doi: 10.1016/S0140-6736(09)61697-4.

18. Donaldson E, Pearn J. First aid in the air. Aust N Z J Surg. 1996 Jul;66(7):431–434. doi: 10.1111/j.1445-2197.1996.tb00777.x.

19. Google Play. airRX. https://play.google.com/store/apps/details?id=org.osfhealthcare.airrx&

20. Knutson A, Park ND, Smith D, Tracy K, Reed DJ, Olsen SL. Just-in-time training: a novel approach to quality improvement education. Neonatal Netw. 2015;34(1):6–9. doi: 10.1891/0730-0832.34.1.6.

21. Motola I, Burns WA, Brotons AA, Withum KF, Rodriguez RD, Hernandez S, Rivera HF, Issenberg SB, Schulman CI. Just-in-time learning is effective in helping first responders manage weapons of mass destruction events. J Trauma Acute Care Surg. 2015 Oct;79(4 Suppl 2):S152–S156. doi: 10.1097/TA.0000000000000570.

22. Bond WF, Spillane L. The use of simulation for emergency medicine resident assessment. Acad Emerg Med. 2002 Nov;9(11):1295–1299. doi: 10.1197/aemj.9.11.1295. http://onlinelibrary.wiley.com/resolve/openurl?genre=article&sid=nlm:pubmed&issn=1069-6563&date=2002&volume=9&issue=11&spage=1295

23. Ten Cate O, Chen HC, Hoff RG, Peters H, Bok H, van der Schaaf M. Curriculum development for the workplace using Entrustable Professional Activities (EPAs): AMEE Guide No. 99. Med Teach. 2015;37(11):983–1002. doi: 10.3109/0142159X.2015.1060308.

24. Lewis JR. IBM computer usability satisfaction questionnaires: psychometric evaluation and instructions for use. Int J Human Computer Interact. 1995 Jan;7(1):57–78. doi: 10.1080/10447319509526110.

25. Stewart PH, Agin WS, Douglas SP. What does the law say to Good Samaritans? A review of Good Samaritan statutes in 50 states and on US airlines. Chest. 2013 Jun;143(6):1774–1783. doi: 10.1378/chest.12-2161.

26. Ho SF, Thirumoorthy T, Ng BB. What to do during inflight medical emergencies? Practice pointers from a medical ethicist and an aviation medicine specialist. Singapore Med J. 2017 Jan;58(1):14–17. doi: 10.11622/smedj.2016145.

27. Sonnenfeld N, Keebler J. A quantitative model for unifying human factors with cognitive load theory. Proc Hum Factors Ergon Soc Annual Meeting; 2016 Human Factors and Ergonomics Society Annual Meeting; Sep 19-23, 2016; Washington, DC. 2016 Sep 23. pp. 403–407.

28. Fraser KL, Ayres P, Sweller J. Cognitive load theory for the design of medical simulations. Simul Healthc. 2015 Oct;10(5):295–307. doi: 10.1097/SIH.0000000000000097.

29. Ilgen JS, Ma IW, Hatala R, Cook DA. A systematic review of validity evidence for checklists versus global rating scales in simulation-based assessment. Med Educ. 2015 Feb;49(2):161–173. doi: 10.1111/medu.12621.

30. Goldberg B, Chesser D. Bureau of Transportation Statistics. 2008. [2019-05-28]. Sitting on the Runway: Current Aircraft Taxi Times Now Exceed Pre-9/11 Experience. https://www.bts.gov/archive/publications/special_reports_and_issue_briefs/special_report/2008_008/entire
