Abstract

Technological advances in multi-articulated prosthetic hands have outpaced the development of methods to intuitively control these devices. In fact, prosthetic users often cite "difficulty of use" as a key contributing factor for abandoning their prostheses. To overcome the limitations of the currently pervasive myoelectric control strategies, namely unintuitive proportional control of multiple degrees-of-freedom, we propose a novel approach: proprioceptive sonomyographic control. Unlike myoelectric control strategies, which measure electrical activation of muscles and use the extracted signals to determine the velocity of an end-effector, our sonomyography-based strategy measures mechanical muscle deformation directly with ultrasound and uses the extracted signals to proportionally control the position of an end-effector. Therefore, our sonomyography-based control is congruent with a prosthetic user’s innate proprioception of muscle deformation in the residual limb. In this work, we evaluated proprioceptive sonomyographic control with 5 prosthetic users and 5 able-bodied participants in a virtual target achievement and holding task for 5 different hand motions. We observed that with limited training, the performance of prosthetic users was comparable to that of able-bodied participants and thus conclude that proprioceptive sonomyographic control is a robust and intuitive prosthetic control strategy.

Subject terms: Motor control, Biomedical engineering

Introduction

Currently, there are approximately 50,000 individuals living with upper limb loss in the US1. Upper extremity amputations most commonly occur in working age adults as a result of trauma1,2 and frequently affect the dominant extremity3. This significantly impacts activities of daily living, and contributes to overuse injuries to the sound limb4. The most common upper extremity amputation occurs at the transradial level (57%)3. A large proportion of individuals with upper limb loss discontinue the use of their prosthesis5–7. Prosthetic non-wear has been reported in 20% of individuals with upper limb loss and rejection rates for upper limb prosthetic users range from 35%–45% for myoelectric and cable-controlled systems5. Currently, commercially-available advanced prosthetic hands, while capable of dexterous grips, lack intuitive control. Dissatisfaction with prosthesis technology is highly associated with rejection5. Most non-users (88%) reported the systems as being “too difficult or tiring” to use8. However, 74% of those who have abandoned their upper limb prostheses stated that they would reconsider prosthetic use if technological advancements were made to improve their functionality and usability8. Therefore, there is a significant unmet need for better technological solutions to benefit individuals with upper limb loss.

Despite enormous investment of resources in the development of new multi-articulated upper limb prostheses, one of the limiting factors remains how to accurately and intuitively control these advanced hand and wrist systems. For the past 50 years, electromyography has been the predominant method for sensing muscle activation, primarily utilizing surface electromyography (sEMG) electrodes. Decades of research have gone into improving myoelectric control technology for upper extremity prostheses. Yet, a recent study found that inertial measurement units on the foot are more effective in controlling the state-of-the-art multi-articulated DEKA arm compared to the most sophisticated myoelectric control method9.

A fundamental challenge with sEMG signals is their poor amplitude resolution and low signal-to-noise ratio, especially with the dry electrodes typically used in prosthetic applications10–12, which makes it challenging for users to accurately achieve multiple graded levels of sEMG amplitude. Thus, in the most commonly used myoelectric control strategy, called direct control (DC), sEMG amplitude is thresholded to drive joint velocity instead of joint position. Velocity control limits the ability to achieve dexterous manipulation of the terminal device. As a result, DC users grip objects with excessive force and velocity compared to able-bodied individuals13. The artificial velocity control signal is not congruent with proprioceptive feedback from residual muscles and is therefore unintuitive. Consequently, many users prefer body-powered hooks over myoelectric systems, as the cable tension provides congruent sensory feedback for terminal device position and thus is more intuitive8,14. Even with more sophisticated grasp decoding algorithms using pattern recognition with multiple electrodes15–21, the ability to obtain graded proportional control is limited by the fundamental challenges with sEMG amplitude resolution. Invasive strategies, such as implantable myoelectric systems22–24, targeted muscle reinnervation25 and peripheral implant26,27 strategies, can produce more robust graded signals. However, implanted devices and surgical procedures are associated with risk and side effects. While there is interest in these invasive technologies, users still prefer noninvasive methods28. Thus, there continues to be a need for a robust noninvasive strategy that can provide intuitive real-time proprioceptive control over multiple degrees-of-freedom to enable prosthetic users to make full use of advanced commercial hands.

In recent years, sonomyography, or ultrasound-based sensing of mechanical muscle contractions, has been actively pursued as an alternative to myoelectric control29. Ultrasound imaging provides a non-invasive sensing modality that can spatially resolve individual muscles, including those deep inside the tissue, and detect dynamic activity within different functional compartments in real-time. In fact, sonomyography has been shown to be useful for detecting individual finger positions30,31, along with other complex muscle deformation patterns32. Previous research has also shown that ultrasound techniques could be used for real-time classification of hand movements by predicting forearm muscle deformation patterns in able-bodied individuals29,31 as well as individuals with transradial amputation33.

In this paper, we propose a new sonomyographic control strategy to classify volitional motion intent and further extend our paradigm to enable intuitive, position-based proportional control over multiple degrees-of-freedom. Since our strategy relies on mechanical muscle contractions and relaxations that are congruent with underlying proprioceptive feedback in the residual limb, we refer to our strategy as proprioceptive sonomyographic control. We validated our techniques on able-bodied participants and applied them to five individuals with upper-extremity limb loss, one of whom had a congenital limb deficiency. We asked the participants to perform predefined hand motions while ultrasound images of their forearm muscle movements were captured using a portable, commercial ultrasound system. We extracted representative ultrasound images from the collected ultrasound data to build a training dictionary, and performed leave-one-out validation to quantify prediction accuracy using a supervised k-nearest-neighbors classifier. Participants were then asked to perform the same hand motions in real-time while being shown an on-screen cursor that moved up or down in proportion to their muscle contraction and relaxation, respectively. A series of targets was presented at different levels of motion completion, and the participant’s ability to perform graded control to reach these targets using forearm muscle deformation was measured. The goals of this work were 1) to determine the ability of individuals with upper-extremity limb loss to perform different hand motions using our proprioceptive sonomyographic control strategy with minimal training, and 2) to determine the accuracy and stability with which individuals with limb loss can perform these motions in a graded manner to different levels of motion completion.

Methods

Subjects and experimental setup

We recruited four individuals with unilateral and one individual with bilateral upper-extremity amputation at the MedStar National Rehabilitation Hospital (Washington DC, USA) and George Mason University (Fairfax VA, USA). At the time of the study, all of the individuals with limb loss were using electrically powered, myoelectric prostheses with varying levels of proficiency. Subject-specific amputation details and demographics are available in Table 1. Five able-bodied participants were also recruited and served as a control group for this study. Demographics for able-bodied participants are listed in Table 2. All experiments described in this work were approved by the George Mason University Institutional Review Board and MedStar National Rehabilitation Hospital Institutional Review Board and performed in accordance with relevant guidelines and regulations. All participants provided written, informed consent prior to participating in the study, and were also compensated for their participation.

Table 1.

Demographics and amputation details of prosthetic users.

| Subject ID | Sex | Age | Years since amputation | Amputation type | Amputation level and side | Phantom limb | Phantom pain |
|---|---|---|---|---|---|---|---|
| Am1 | M | 68 | 50 | Traumatic | Transradial (L*) | Yes | No |
| Am2 | M | — | — | Congenital | Transradial (L*) | No | No |
| Am3 | M | 56 | 46 | Traumatic | Transradial (R*) | No | No |
| Am4 | M | 30 | 7.5 | Traumatic | Wrist disarticulation (L*), Transradial (R) | Yes (L*), Yes (R) | Yes (L*), No (R) |
| Am5 | M | 38 | 1.5 | Traumatic | Transradial (R*) | No | No |

(L) and (R) indicate amputation of left or right arm respectively and *indicates arm used for this study.

Table 2.

Demographics of able-bodied participants.

| Subject ID | Age | Sex | Dominant arm |
|---|---|---|---|
| Ab1 | 29 | M | Right |
| Ab2 | 26 | M | Right |
| Ab3 | 28 | M | Right |
| Ab4 | 25 | F | Left |
| Ab5 | 24 | M | Right |

For the entire course of this study, all participants were seated upright with their forearm comfortably supported and their elbow below the shoulder to minimize fatigue. Subjects were instrumented with a clinical ultrasound system (Terason uSmart 3200T, Terason) connected to a low-profile, high-frequency, linear, 16HL7 transducer as shown in Fig. 1. The imaging depth was set to 4 cm without focusing. For individuals with limb loss, the transducer was positioned on the residuum below the elbow (see Fig. 1), such that all individual phantom finger movements resulted in considerable movement in the field-of-view. For able-bodied participants, the transducer was manually positioned on the volar aspect of the dominant forearm, approximately 4 cm–5 cm from the olecranon process in order to image both the deep and superficial flexor muscle compartments. Additionally, able-bodied participants were asked to place their forearm inside an opaque enclosure that prevented direct observation of arm and hand movements below the elbow. This ensured that able-bodied participants relied on their kinesthetic sense to perform all motion control tasks. The transducer was then secured in a custom-designed probe holder and held in place with a stretchable cuff as shown in Fig. 1 (inset). Ultrasound image sequences from the clinical ultrasound system were acquired and transferred to a PC (Intel Core i7-7700HQ, 16GB RAM, 4GB NVIDIA GeForce GTX 1040Ti) in real-time using a USB based video grabber (DVI2USB 3.0, Epiphan Systems, Inc.). The captured screen was then downscaled and cropped to (100 × 140 pixels) to include only the relevant ultrasound image. We typically obtained frame rates higher than 15 fps with this setup. The acquired image frame was then processed in MATLAB (The MathWorks, Inc.) using custom-developed algorithms as described in the following section.

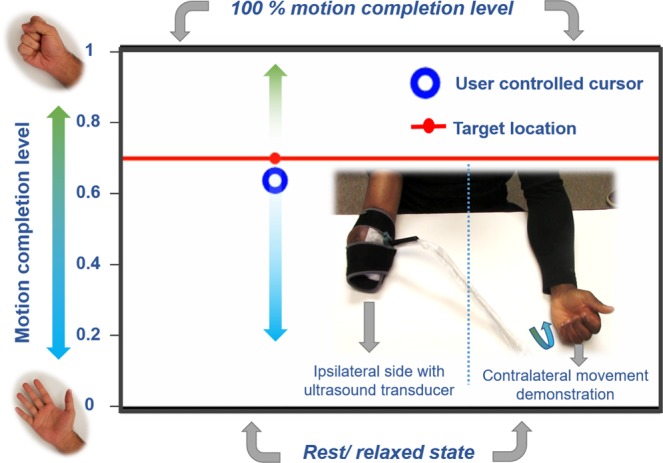

Figure 1.

Photograph of the experimental setup showing an individual with transradial amputation instrumented with an ultrasound transducer on the residuum (inset). The interface for the target holding motion control task described in experiment 2 shows the target position, movement bounds and the cursor position, which is controlled by muscle deformations in the subject’s residuum. Participants with unilateral amputation were asked to demonstrate the perceived motion using their contralateral intact limb.

Control algorithms

Following probe placement, the participants underwent an initial training phase during which they performed repeated iterations of a set of motions (power grasp, wrist pronation, thumb flexion, index flexion, key grasp, tripod, point), one motion at a time. Movements were timed to a metronome such that the participant first transitioned from rest to the end state of the selected motion within one second, held that position for one second, transitioned from the end state back to rest within one second, and then held rest for one second. This process was repeated five times in sequence for each motion. For each ultrasound image frame in the motion sequence, we computed its distance from the first frame in the sequence. Since the first frame in the sequence corresponds to rest, the computed signal represents a deviation from rest and movement into a motion. The distance metric was calculated in real-time as $1 - P$, where $P$ is Pearson’s correlation coefficient between the two frames. A visualization of the signal was also provided to participants in real-time. Because frames with low similarity to rest (high muscle deformation) appeared as peaks in the signal and frames with high similarity to rest (low muscle deformation) appeared as valleys, the participants were able to track the extent to which their muscles were contracted and whether this contraction was consistent over time. Furthermore, using the same signal, we could extract the ultrasound frames corresponding to a motion end state, and the ultrasound frames corresponding to rest, by identifying plateaus in the signal, as these occur only where the participant was holding the motion or holding at rest. The ultrasound frames at each plateau were averaged into a single representative image and added to a training database with a corresponding motion or rest label. The process was repeated until training images corresponding to each movement had been added to the database. The quality of the training database was then determined by performing leave-one-out cross-validation with a 1-nearest-neighbor classifier using Pearson’s correlation coefficient as a similarity measure.
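The training signal and the database quality check described above can be illustrated with a minimal sketch. It is written in plain Python rather than the MATLAB used in the study, and it is not the authors' implementation: the function names (`pearson`, `deviation_from_rest`, `loocv_accuracy`) and the four-pixel toy "images" are hypothetical, and real frames would be flattened 100 × 140 pixel arrays.

```python
import math


def pearson(a, b):
    """Pearson correlation coefficient between two equal-length sequences.

    Assumes neither sequence is constant (non-zero variance).
    """
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def deviation_from_rest(frames):
    """Distance 1 - P of every frame from the first (rest) frame."""
    rest = frames[0]
    return [1.0 - pearson(frame, rest) for frame in frames]


def loocv_accuracy(images, labels):
    """Leave-one-out accuracy of a 1-nearest-neighbor classifier that
    uses Pearson correlation as the similarity measure."""
    correct = 0
    for i, held_out in enumerate(images):
        best_label, best_sim = None, -math.inf
        for j, candidate in enumerate(images):
            if j == i:
                continue  # the held-out image may not vote for itself
            sim = pearson(held_out, candidate)
            if sim > best_sim:
                best_label, best_sim = labels[j], sim
        correct += best_label == labels[i]
    return correct / len(images)


# Hypothetical toy database: two "rest" and two "power grasp" images.
images = [
    [1.0, 2.0, 3.0, 4.0],
    [1.1, 2.1, 2.9, 4.2],
    [4.0, 3.0, 2.0, 1.0],
    [4.2, 2.9, 2.1, 1.1],
]
labels = ["rest", "rest", "power grasp", "power grasp"]

signal = deviation_from_rest(images)
accuracy = loocv_accuracy(images, labels)
```

In this toy case the deviation signal is 0 for the rest frame itself and rises toward 2 for the perfectly anti-correlated frame, which mirrors the peaks (motion) and valleys (rest) described in the text.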

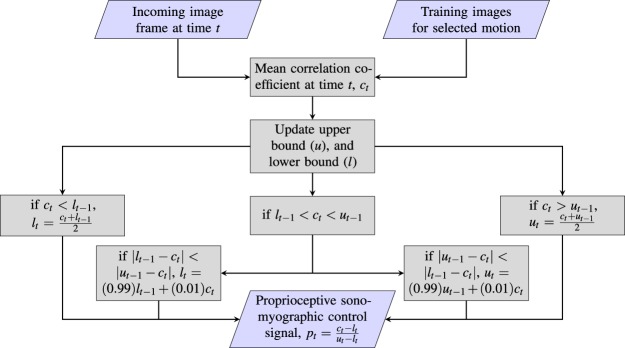

After training, the participants were asked to perform a specific motion at varying levels of completion while the proportional proprioceptive sonomyographic control signal was computed for each incoming frame in real-time. First, all training frames corresponding to the preselected motion were selected from the training database. The mean correlation value, $c_t$, of the incoming image frame at time point $t$ versus all frames of the selected motion in the training database was computed. This value was then mapped to a motion completion value between ‘0’ and ‘1’ based on an upper bound, $u$, and a lower bound, $l$, that were initialized previously during a calibration process. The calibration process was structured in the same way as the training process, except that instead of computing the control signal as $1 - P$, where $P$ is Pearson’s correlation coefficient of the incoming frame to the first frame, the mean correlation value to the trained motion frames, $c_t$, was used. The upper bound, $u$, was simply the average signal value at the motion plateaus, while the lower bound, $l$, was the average signal value at the rest plateaus. Since it is highly unlikely that a participant reaches the exact same motion end state and rest for every single iteration of a given motion, both bounds were dynamically updated as a weighted sum of the instantaneous correlation signal, $c_t$, and the closest bound. This process is described in detail in Fig. 2, but the general approach to the bound update procedure is as follows: if a correlation signal higher than the expected upper bound was observed, the upper bound was increased; likewise, if a correlation signal lower than the expected lower bound was observed, the lower bound was decreased; over time, both of the expected bounds were relaxed very slowly, i.e. the expected upper bound was lowered and the expected lower bound was increased, under the assumption that the bounds are uncertain estimates. The effect of the dynamic nature of these updates and the uncertainty of the estimates is a system that is more responsive if we underestimate the initial bounds than if we overestimate them. An underestimation would result in the participant-controlled end-effector being nudged towards 100% completion, or towards rest, earlier than expected, whereas an overestimation of the bounds would result in the participant being unable to fully reach 0% completion (rest) or 100% completion (motion end state). Although we could use fixed bounds instead of updating them dynamically, we would risk overestimation and other problems such as variations in the participant’s muscle activation strength due to fatigue, variations in signal amplitude due to sensor movements, and environmental noise34. Although heuristic, dynamic bound adjustment attempts to mitigate the effect of these factors.
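A minimal Python sketch of the proportional mapping and the heuristic bound update may make the procedure easier to follow. It is an illustration under stated assumptions, not the pipeline of Fig. 2: the weights `alpha` and `relax` are hypothetical values chosen for readability, and the choice to relax the bounds only on frames that fall between them is likewise an assumption.

```python
def update_bounds(c_t, upper, lower, alpha=0.1, relax=0.001):
    """One heuristic bound update for an incoming mean correlation c_t.

    alpha (weight of the new sample) and relax (per-frame relaxation
    rate) are hypothetical values, not taken from the paper.
    """
    if c_t > upper:
        # Signal above the expected upper bound: raise the bound as a
        # weighted sum of the old bound and the new sample.
        upper = (1 - alpha) * upper + alpha * c_t
    elif c_t < lower:
        # Signal below the expected lower bound: lower the bound.
        lower = (1 - alpha) * lower + alpha * c_t
    else:
        # Otherwise relax both bounds slowly toward each other, since
        # they are treated as uncertain estimates.
        span = upper - lower
        upper -= relax * span
        lower += relax * span
    return upper, lower


def motion_completion(c_t, upper, lower):
    """Map c_t linearly onto [0, 1] between the lower and upper bounds."""
    if upper <= lower:
        return 0.0  # degenerate bounds: report rest
    value = (c_t - lower) / (upper - lower)
    return min(1.0, max(0.0, value))


# Hypothetical stream of mean correlation values for one motion.
upper, lower = 0.8, 0.2
completions = []
for c_t in [0.2, 0.5, 0.9, 0.85, 0.1]:
    upper, lower = update_bounds(c_t, upper, lower)
    completions.append(motion_completion(c_t, upper, lower))
```

Because the bounds expand quickly when exceeded but contract only slowly, an underestimated initial bound is corrected within a few frames, which is consistent with the asymmetry described above.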

Figure 2.

Image analysis pipeline for computing the proportional control signal for an incoming, pre-annotated image frame in real-time. Upper bound ($u$) and lower bound ($l$) are initialized as the average calibration signal values at the motion plateaus and rest plateaus, respectively.

Experimental protocols

Experiment 1 - motion discriminability task

The aim of this experiment was to determine the extent to which our system can distinguish between multiple motions performed by able-bodied subjects and prosthetic users. Participants were asked to perform repetitions of a pre-selected motion, interleaved with rest phases between each repetition, in response to audible metronome cues. During the course of the experiment, participants were provided with a view of ultrasound images acquired from their residuum (or intact limb for able-bodied participants) in conjunction with the real-time signal of the current image frame’s deviation from rest as described in the previous section (see also Supplementary Video M1).

The study involved blocks of trials, each consisting of five repetitions of a predefined set of motions. Trials were repeated until cross-validation (CV) accuracy exceeded 85% and participants reported that they were comfortable performing the motions. All of the participants listed in Table 1 participated in this experiment. Subject-specific motion sets and the number of iterations performed by each prosthetic user are listed in Table 3 and motions are pictorially depicted in Fig. 3. All able-bodied participants performed five iterations each of power grasp, wrist pronation, tripod, key grasp, and point. Outcome measures for this experiment were leave-one-out cross-validation accuracies for the first trial block, the best trial block, and the average accuracy over all trial blocks.

Table 3.

User-intended motions and number of iterations of each motion performed by prosthetic users.

| Subject ID | Motions performed | Number of iterations per motion |
|---|---|---|
| Am1 | PG, WP, Tr, KG, Po | 20 |
| Am2 | PG, WP, In, Th | 5 |
| Am3 | PG, WP, Tr, KG, Po | 25 |
| Am4 | PG, WP, Tr, KG, Po | 20 |
| Am5* | PG, WP, Tr, KG, Po | 15 (S1), 25 (S2) |

PG = power grasp, WP = wrist pronation, Tr = tripod, KG = key grasp, Po = point, In = index flexion, Th = thumb flexion.

*Motions performed over two different sessions, where S1 = session 1 and S2 = session 2.

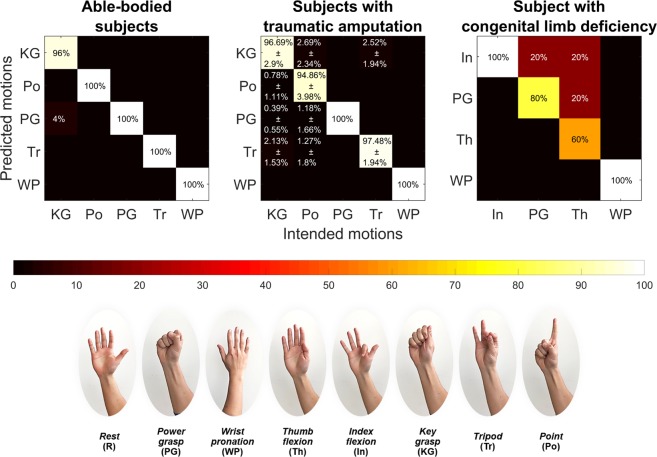

Figure 3.

Aggregate confusion matrices showing post-training motion discriminability for able-bodied subjects, traumatic and congenital prosthetic users.

Experiment 2 - proportional graded control target holding task

The aim of this experiment was to quantify proprioceptive sonomyographic control performance of prosthetic users and able-bodied participants at graded muscle activation levels for multiple motions. A motor control task was implemented in which the participant controlled an on-screen cursor that could move up or down in proportion to the degree of muscle activation in the forearm, as shown in Fig. 1. The cursor on the computer screen could move up towards a normalized bound of ‘1’ in proportion to the performed completion level of a selected motion, reaching exactly ‘1’ when the motion was at 100% completion. Similarly, the cursor could move down towards the normalized bound of ‘0’ as the user returned from motion completion towards rest, reaching ‘0’ when the user was completely at rest (see Supplementary Video M2).

After an initial calibration step to initialize the bounds, the control interface presented the user with a target position randomly chosen from a predefined set of quantized, equidistant positions between the normalized upper and lower bounds. The target remained fixed at that position for a set hold time and then moved to the next position until all points were exhausted. For each target position, the participant was prompted to move the cursor to the target by contracting or relaxing their muscles and to hold the cursor position until the hold period expired. Participants with unilateral limb loss were also asked to demonstrate the imagined motion and the extent of flexion using their intact, contralateral arm. Figure 1 shows a screen-shot of the control interface with the target and the user-controlled cursor.

We first conducted a pilot study with Am2 and Am3, using five quantized positions and a fixed hold time, to validate our control algorithms. For the pilot study, the hold period commenced when the cursor first entered the current target’s quantization bounds, defined as a fixed fraction of the motion range around the target. If the user failed to enter the quantization bounds within a timeout period, the target automatically moved to the next set-point.

Following the pilot study, all able-bodied participants and prosthetic users Am3, Am4 and Am5 were recruited to perform the same motion control task; Am1 and Am2 were not available. The extended study was performed with eleven graded levels. Each target was presented for a fixed amount of time (10 s) regardless of whether the participant entered the quantization bounds or not. However, the metrics were still calculated from the first time the quantization bounds were entered, to avoid including any time that may have been spent transitioning from one target to another. At the end of the 10 s, the target moved to the next target location, randomly chosen from the set of eleven target positions, without replacement. All participants performed three trials of each target level, for a total of 33 target positions.
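The target presentation order for the extended study can be sketched in a few lines of Python. This is only an illustration: it assumes that the eleven levels span the normalized range from 0 to 1 inclusive and that each of the three trials is one shuffled pass through all levels, neither of which is stated explicitly in the text. The function name `target_sequence` is hypothetical.

```python
import random


def target_sequence(n_levels=11, n_trials=3, seed=None):
    """Return a presentation order of normalized target positions.

    Assumptions (not confirmed by the paper): levels are equidistant on
    [0, 1] including both ends, and every trial is one random pass
    through all levels, drawn without replacement.
    """
    rng = random.Random(seed)
    levels = [i / (n_levels - 1) for i in range(n_levels)]
    sequence = []
    for _ in range(n_trials):
        # random.sample with k == len(levels) yields a shuffled copy,
        # i.e. sampling without replacement.
        sequence.extend(rng.sample(levels, k=len(levels)))
    return sequence


targets = target_sequence(seed=0)
```

With the default arguments the sequence contains 33 targets, with each of the eleven levels appearing exactly three times.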

Position error, stability error, task completion rate, and movement time served as evaluation metrics for proprioceptive sonomyographic control performance. Position error was computed as the mean of the difference between the cursor position and the target position, while stability error was calculated as the standard deviation of the difference between the cursor position and the target position. Both position and stability errors were computed for the period from when the cursor first entered the quantization bounds until the hold time expired. Task completion rate was defined as the percentage of targets, out of the total target locations presented, for which the participant was able to successfully reach within the quantization bounds. It is important to note, however, that the user was not required to stay within the quantization bounds for successful target acquisition. Finally, movement time was calculated as the time from target presentation to when the cursor first entered the quantization bounds. We performed a Fitts’s law analysis35–37 of movement times at various indices of difficulty ($ID$). For experiment 2, $ID$ was defined as in Eq. 1.

$$ID = \log_2\left(\frac{D}{W} + 1\right) \tag{1}$$

where $D$ is the distance between subsequent targets and $W$ is the target’s quantization bound.
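The outcome metrics can be sketched as follows in Python. Several details are assumptions made for illustration: the quantization bound is treated as a half-width around the target, the standard deviation is the population form, the cursor trace is a list of equally spaced samples, and the index of difficulty uses the Shannon formulation of Fitts's law, $\log_2(D/W + 1)$. The function names are hypothetical.

```python
import math
import statistics


def holding_metrics(cursor, target, bound, sample_period=1.0):
    """Position error, stability error and movement time for one target.

    cursor: cursor positions sampled every sample_period seconds from
    target presentation until the hold time expires.
    Returns None if the cursor never enters the quantization bounds
    (the target counts as not acquired).
    """
    for start, position in enumerate(cursor):
        if abs(position - target) <= bound:
            break
    else:
        return None
    # Errors are evaluated from first entry until the end of the trace.
    errors = [p - target for p in cursor[start:]]
    position_error = statistics.fmean(errors)    # signed mean error
    stability_error = statistics.pstdev(errors)  # spread around the mean
    movement_time = start * sample_period
    return position_error, stability_error, movement_time


def index_of_difficulty(distance, width):
    """Shannon formulation of the Fitts's law index of difficulty."""
    return math.log2(distance / width + 1)


def completion_rate(results):
    """Percentage of presented targets that were acquired."""
    acquired = sum(r is not None for r in results)
    return 100.0 * acquired / len(results)


# Hypothetical cursor trace for a target at 0.5 with bound 0.1.
trace = [0.0, 0.2, 0.45, 0.5, 0.55]
metrics = holding_metrics(trace, target=0.5, bound=0.1)
missed = holding_metrics([0.0, 0.1, 0.2], target=0.9, bound=0.1)
```

For this trace the cursor first enters the bounds at the third sample, so the errors are averaged over the last three samples only. Because the target need not be held within the bounds to count as acquired, `completion_rate` checks only whether the bounds were ever entered.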

Statistical analysis

Due to the extremely small sample size in the study and the difficulty of checking the normality assumption required in a MANOVA analysis, we used the one-sided exact Wilcoxon-Mann-Whitney test38 to compare the able-bodied group with the prosthetic user group. For the position and stability errors, the alternative hypothesis was that the distribution in the able-bodied group is shifted to the left of the distribution in the prosthetic user group. For task completion rate, the alternative hypothesis was that the distribution in the able-bodied group is shifted to the right of the distribution in the prosthetic user group.
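With groups this small, an exact one-sided Wilcoxon-Mann-Whitney p-value can be obtained by enumerating every possible assignment of ranks to the two groups. The Python sketch below illustrates that idea; it is not the software used in the study, and the function names are hypothetical. The example data are the wrist pronation stability errors listed in Table 5.

```python
from itertools import combinations


def midranks(values):
    """1-based ranks of values, with ties given their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average = (i + j) / 2 + 1  # mean of ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = average
        i = j + 1
    return ranks


def exact_wmw_less(x, y):
    """Exact one-sided p-value for the alternative that the
    distribution of x is shifted to the left of that of y."""
    ranks = midranks(list(x) + list(y))
    observed = sum(ranks[:len(x)])  # rank sum of group x
    count = total = 0
    # Enumerate every way of giving len(x) of the pooled ranks to x.
    for subset in combinations(ranks, len(x)):
        total += 1
        if sum(subset) <= observed + 1e-9:
            count += 1
    return count / total


# Wrist pronation stability errors (%) from Table 5.
able_bodied = [1.88, 3.97, 1.66, 1.62, 4.39]
prosthetic = [4.61, 5.79, 3.92]
p_value = exact_wmw_less(able_bodied, prosthetic)  # 4/56, about 0.071
```

Only 4 of the 56 possible rank assignments give the able-bodied group a rank sum as small as the observed one, so the p-value is 4/56, which agrees with the 0.07 reported for this endpoint in Table 5. For a right-shift alternative, as used for task completion rate, the two arguments can simply be swapped.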

To compare the effects of the index of difficulty on the movement time between the able-bodied group and the prosthetic user group for the Fitts’s law analysis, we fit the following linear mixed model39 including quadratic terms of the index of difficulty ($ID$). For the $i$th subject, let $ID_{ij}$ denote the $j$th index of difficulty, $MT_{ij}$ the corresponding movement time, and $G_i = 1$ if subject $i$ is in the able-bodied group and $G_i = 0$ otherwise.

$$MT_{ij} = \beta_0 + \beta_1 ID_{ij} + \beta_2 ID_{ij}^2 + \beta_3 G_i + \beta_4 G_i ID_{ij} + \beta_5 G_i ID_{ij}^2 + b_{0i} + b_{1i} ID_{ij} + \epsilon_{ij} \tag{2}$$

where $\boldsymbol{\beta} = (\beta_0, \ldots, \beta_5)^\top$ denotes the vector of the unknown fixed effects parameters, $b_{0i}$ is a random intercept, $b_{1i}$ is a random slope, and $\epsilon_{ij}$ is the residual error. Here the two random effects are assumed to follow a bivariate normal distribution with mean 0 and an unknown variance-covariance matrix. The residual errors are assumed to be i.i.d. normally distributed with mean 0. Under the null hypothesis that there is no difference between the able-bodied group and the prosthetic user group, we have $\beta_3 = \beta_4 = \beta_5 = 0$.

Results

Experiment 1

Our training and prediction strategy was first validated with able-bodied participants. Four out of the five able-bodied participants achieved cross-validation accuracies of 100% within five repetitions (or one trial) across all five motions. Figure 3 shows the aggregate confusion matrix for all able-bodied participants. It shows that four out of five motions were predicted with 100% accuracy and key grasp was incorrectly predicted as power grasp in just one out of 25 motion instances (5 participants performing 5 motions each).

The same evaluation was then performed with the prosthetic user group. All of the prosthetic users, including the individual with congenital limb deficiency (Am2), were able to successfully complete the task, with an average validation accuracy of 96.8 ± 5.4% for at least 4 motions. Figure 3 shows the aggregate confusion matrices for all subjects with traumatic amputation and for the participant with congenital limb deficiency, Am2. For subjects with traumatic amputation, the average cross-validation accuracy was 96.76% for five motions, with key grasp and point having the lowest prediction accuracies. In contrast, Am2 achieved a cross-validation accuracy of 85% for four motions, with tripod having the lowest prediction accuracy at 60%. Having been born without a hand, the participant reported not having clear digit or grasp-based movement context such as the phantom sensation of a thumb movement. This lack of feeling in the residual limb could have negatively affected motion performance and thus also impacted the ability of our system to tell certain motions apart. In fact, the participant had to be guided to attempt to recreate 4 different patterns of movement while looking at the ultrasound for context, instead of being told to imagine his fingers in a specific position as we had done with the other participants. Nonetheless, all participants typically completed the training phase in an hour or less, with relatively high motion discriminability.

We also analyzed the influence of treating rest as a separate motion class on the prediction accuracies, instead of treating it simply as the absence of motion (low similarity to motion frames). When rest was excluded from cross-validation, motion discriminability improved for all participants. The mean cross-validation accuracy for all trials increased by 7.8% to 96.8% when rest was excluded as shown in Table 4. Table 4 also shows cross-validation accuracies with and without rest for the initial and best set of trials for prosthetic users. In both cases, the mean cross-validation accuracy for the initial trial was comparable to best accuracy figures showing that the classifier was able to consistently predict motions when presented with representative image frames repeated across several trials.

Table 4.

Comparison of cross-validation accuracies for prosthetic users with and without rest as a motion class.

| Subject ID | Including rest, first trial (%) | Including rest, best trial (%) | Including rest, average across all trials (%) | Excluding rest, first trial (%) | Excluding rest, best trial (%) | Excluding rest, average across all trials (%) |
|---|---|---|---|---|---|---|
| Am1 | 88.33 | 90.83 | 87.71 | 100.00 | 100.00 | 99.00 |
| Am2* | 80.50 | 80.50 | 80.50 | 85.00 | 85.00 | 85.00 |
| Am3 | 82.50 | 95.00 | 86.33 | 96.00 | 100.00 | 96.80 |
| Am4 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 |
| Am5 (S1) | 86.67 | 86.67 | 85.00 | 100.00 | 100.00 | 100.00 |
| Am5 (S2) | 92.50 | 93.33 | 89.33 | 96.00 | 100.00 | 96.80 |
| Mean ± SD | 89.23 ± 6.82 | 91.49 ± 6.32 | 89.00 ± 6.38 | 96.71 ± 5.50 | 97.86 ± 5.67 | 96.80 ± 5.40 |

*Subject performed one trial of five repetitions. Refer to Table 3.

Experiment 2

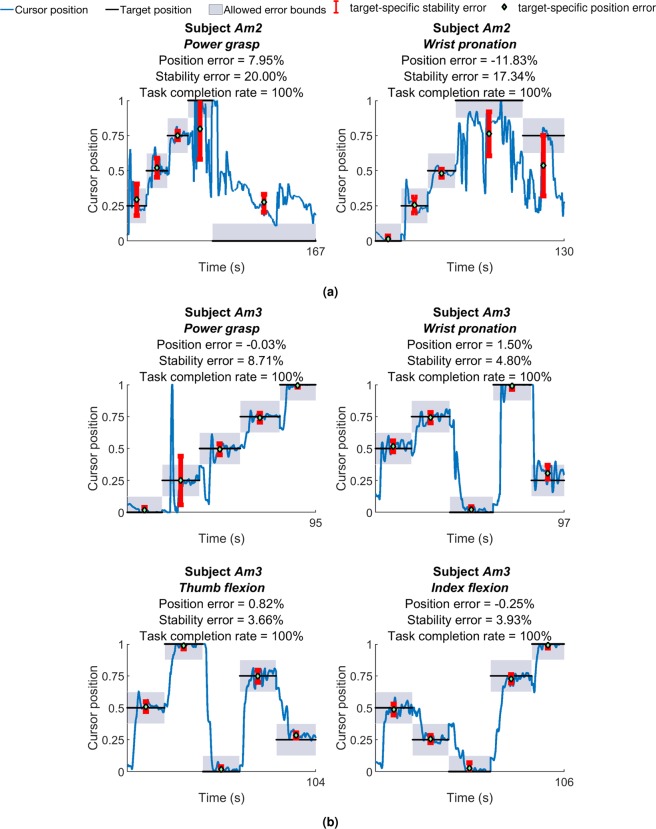

Figure 4a,b show results of the pilot target holding study of experiment 2 for prosthetic users Am2 and Am3, respectively. The quantized target trajectory, user-controlled cursor position and associated quantization bounds are plotted against time. Both participants were able to successfully reach all of the targets presented in the pilot study. Am2 performed power grasp with position and stability errors of 7.95% and 20%, respectively. For wrist pronation, position error was 11.83% whereas stability error was 17.83%. Participant Am3’s position errors ranged from −0.03% to 1.50% while stability errors ranged from 3.66% to 8.71% across four motions. Power grasp had the lowest position error whereas thumb flexion had the lowest stability error.

Figure 4.

Plots of user-controlled cursor position against target position for the pilot target holding task for (a) Am2 and (b) Am3. The target randomly moved between five quantized target levels within normalized bounds of rest (‘0’) and motion completion (‘1’). Position and stability errors for individual target position segments are also shown.

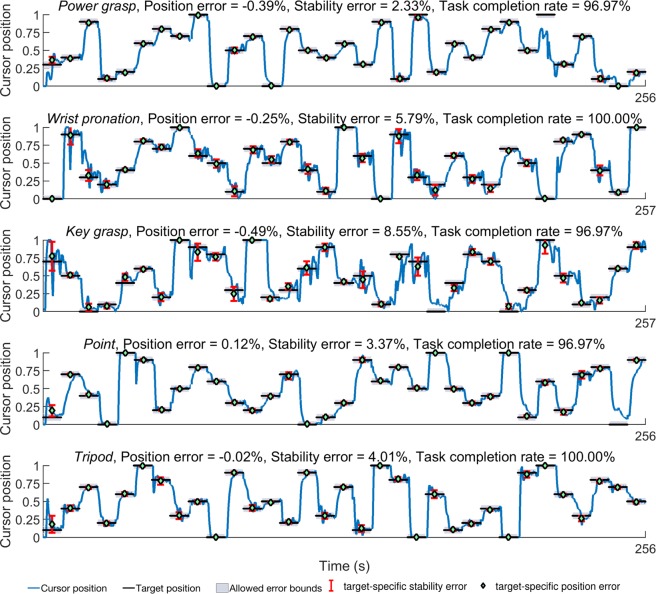

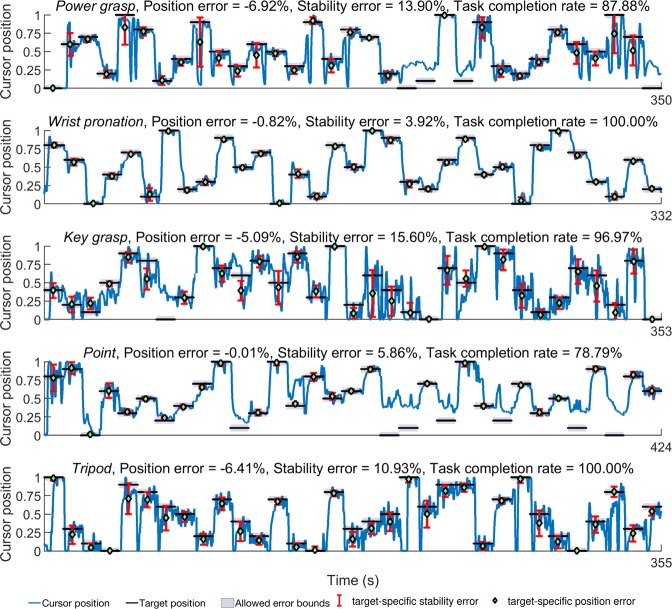

Table 5 shows outcome metrics for the subsequent, extended target holding task performed at eleven graded target levels for able-bodied participants and prosthetic users. All of the able-bodied participants and prosthetic users Am4 and Am5 performed five motions, while participant Am3 performed 2 motions. Am3 also performed key grasp; however, those data were excluded from analysis due to an error during the bound calibration stage. The p-values of the exact Wilcoxon-Mann-Whitney test are presented in Table 5. Even without multiple comparisons adjustment, no significant difference at the significance level of 0.05 was detected between the two groups for any endpoint of interest, with the smallest p-value at 0.07. With any procedure for multiple comparisons adjustment, a more stringent significance level is needed; therefore, the conclusion of no significant results remains the same.

Table 5.

Outcome metrics for target holding task (experiment 2) for able-bodied and prosthetic users.

| Subject ID | PE: PG | PE: WP | PE: Po | PE: KG | PE: Tr | SE: PG | SE: WP | SE: Po | SE: KG | SE: Tr | TCR: PG | TCR: WP | TCR: Po | TCR: KG | TCR: Tr |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Ab1 | −0.74 | 0.47 | 0.67 | 0.63 | −0.41 | 3.66 | 1.88 | 4.02 | 8.45 | 5.55 | 93.94 | 100.0 | 100.0 | 87.88 | 96.97 |
| Ab2 | 1.17 | 0.41 | −0.44 | 1.21 | −0.58 | 4.90 | 3.97 | 1.03 | 8.87 | 7.92 | 90.91 | 100.0 | 96.97 | 96.97 | 100.0 |
| Ab3 | −0.66 | 0.04 | 0.22 | −0.69 | −0.38 | 2.98 | 1.66 | 3.52 | 5.18 | 9.17 | 93.94 | 100.0 | 81.82 | 100.0 | 100.0 |
| Ab4 | −0.92 | −0.25 | 0.16 | 1.43 | −0.93 | 3.62 | 1.62 | 5.63 | 9.63 | 5.97 | 96.97 | 100.0 | 75.76 | 96.97 | 81.82 |
| Ab5 | −1.73 | −0.42 | 0.09 | −2.23 | −0.57 | 7.30 | 4.39 | 6.78 | 11.8 | 7.38 | 100.0 | 84.85 | 96.97 | 93.94 | 93.94 |
| Average (able-bodied) | 1.04 | 0.32 | 0.32 | 1.24 | 0.57 | 4.49 | 2.70 | 4.20 | 8.79 | 7.20 | 95.15 | 96.97 | 90.30 | 95.15 | 94.55 |
| Am3 | 2.37 | −0.23 | — | — | — | 10.19 | 4.61 | — | — | — | 69.70 | 100.0 | — | — | — |
| Am4 | −0.39 | −0.25 | 0.12 | −0.49 | −0.02 | 2.33 | 5.79 | 3.37 | 8.55 | 4.00 | 96.97 | 100.0 | 96.97 | 96.97 | 100.0 |
| Am5 | −6.91 | −0.82 | −0.01 | −5.09 | −6.41 | 13.89 | 3.92 | 5.86 | 15.60 | 10.90 | 87.88 | 100.0 | 78.79 | 96.97 | 100.0 |
| Average (prosthetic users) | 3.22 | 0.43 | 0.07 | 2.79 | 3.22 | 8.80 | 4.77 | 4.62 | 12.08 | 7.45 | 84.85 | 100.0 | 87.88 | 96.97 | 100.0 |
| Wilcoxon p-value | 0.29 | 0.52 | 0.96 | 0.57 | 0.57 | 0.29 | 0.07 | 0.57 | 0.29 | 0.57 | 0.91 | 0.63 | 0.71 | 0.52 | 0.29 |

PE = position error (%), SE = stability error (%), TCR = task completion rate (%). PG = power grasp, WP = wrist pronation, Po = point, KG = key grasp, Tr = tripod. Ab1–Ab5 are able-bodied participants and Am3–Am5 are prosthetic users.

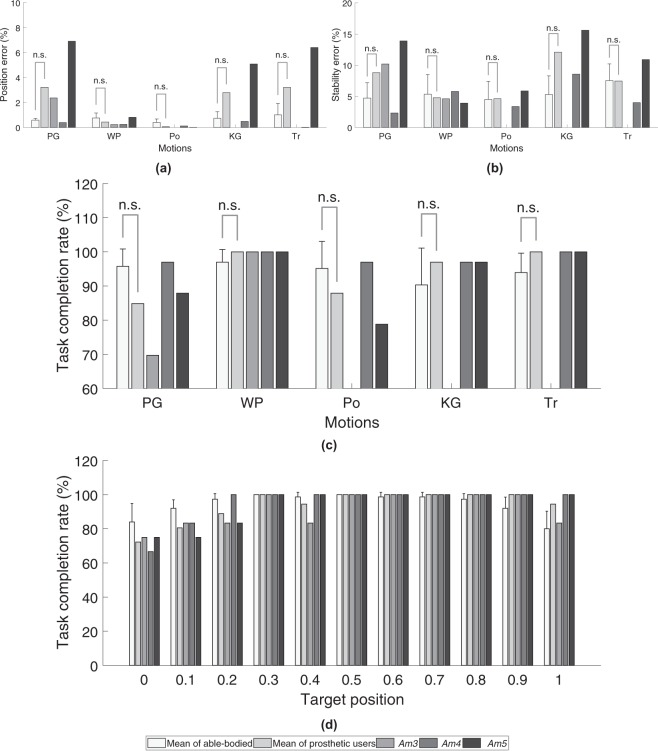

The average outcome metrics for the target holding task are also shown in Fig. 5. For the targets that were successfully acquired, Fig. 5a,b show that able-bodied participants performed all motions except point with lower position and stability errors than prosthetic users. Although mean stability errors were lower for able-bodied participants than for prosthetic users, motion-specific stability errors appear to correspond across the two groups: motions with high stability errors in able-bodied participants also have high stability errors in prosthetic users.

Figure 5.

Aggregate outcome metrics for the target holding task (experiment 2) for able-bodied participants and prosthetic users. (a) Position error for all motions, (b) stability error for all motions, (c) task completion rate for all motions and (d) task completion rate at different target positions. Motion legend: PG = power grasp, WP = wrist pronation, Po = point, KG = key grasp, Tr = tripod.

The type of motion also seems to influence position errors and stability errors for all participants, i.e. motions with higher position error also have high stability error and vice versa. For example, as shown in Fig. 5a,b, for able-bodied participants, key grasp followed by power grasp and tripod have the highest stability and position errors. For prosthetic users, a similar trend is observed wherein key grasp, power grasp and tripod exhibited the highest mean stability and mean position errors out of the 5 motions that were performed.

For the extended target holding task, point had the lowest average absolute position and stability errors for prosthetic users, followed closely by wrist pronation. However, the average task completion rate (Fig. 5c) for point was 87.88%, while that of wrist pronation was 100% across all trials. Similarly, power grasp and tripod had comparable position and stability errors, although the average task completion rate was 84.85% for power grasp and 100% for tripod. Figure 5d also shows small decreases in task completion rates in the lowest quarter (0 to 0.25) and the uppermost tenth (0.9 to 1.0) of the total motion completion range for prosthetic users as well as able-bodied participants. Timeseries plots of the cursor and target positions shown in Figs 6 and 7 also indicate that the unachieved targets were mostly presented in the latter half of the task.

Figure 6.

Timeseries plots showing prosthetic user Am4’s performance in the target holding task. The user-controlled cursor position and target locations for five motions along with position and stability errors are shown. Motion legend: PG = power grasp, WP = wrist pronation, Po = point, KG = key grasp, Tr = tripod.

Figure 7.

Timeseries plots showing prosthetic user Am5’s performance in the target holding task. The user-controlled cursor position and target locations for five motions along with position and stability errors are shown. Motion legend: PG = power grasp, WP = wrist pronation, Po = point, KG = key grasp, Tr = tripod.

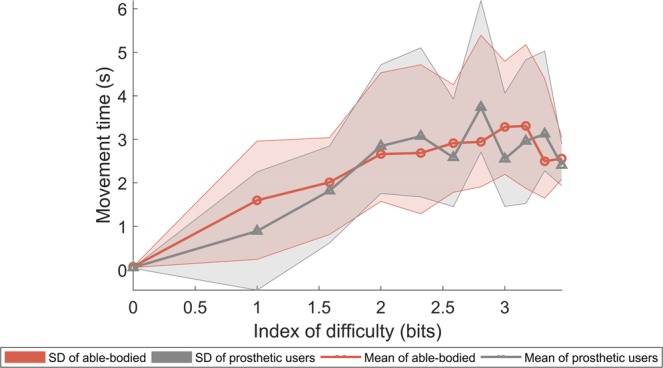

Finally, a Fitts’ law analysis of the movement time required to achieve each target against its corresponding index of difficulty was performed, and the results are shown in Fig. 8. For both able-bodied participants and prosthetic users, mean movement time increases with increasing task difficulty across all motions, as is expected in a human-computer interaction task. A regression analysis of the mean movement times versus index of difficulty was performed for both able-bodied participants (R² = 0.79) and prosthetic users (R² = 0.72). Throughput was slightly higher for able-bodied participants, at 1.35 bits/s, compared to 1.19 bits/s for prosthetic users. The movement time intercept (y-axis intercept) was also higher for able-bodied participants, at 0.72 s, compared to 0.44 s for prosthetic users. For the linear mixed model described in Eq. 2, the likelihood ratio test statistic takes the value of 1.315, leading to a p-value of 0.726 when compared to the null χ² distribution with 3 degrees of freedom. Therefore, there is no evidence of a significant difference between the two groups. The main effects of the index of difficulty and the quadratic term are estimated at and ,.
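The step from the likelihood ratio statistic to its p-value can be checked with a short calculation. The sketch below assumes the reference distribution is a χ² distribution with 3 degrees of freedom, for which the upper-tail probability has a closed form. The function name `chi2_sf_3dof` is hypothetical and is not part of the original analysis.

```python
from math import erfc, exp, pi, sqrt


def chi2_sf_3dof(x):
    """Upper-tail probability P(X > x) for a chi-square variable with 3 dof.

    Closed form: erfc(sqrt(x / 2)) + sqrt(2 * x / pi) * exp(-x / 2).
    """
    return erfc(sqrt(x / 2.0)) + sqrt(2.0 * x / pi) * exp(-x / 2.0)


lrt_statistic = 1.315  # likelihood ratio test statistic reported in the text
p_value = chi2_sf_3dof(lrt_statistic)
print(f"p-value: {p_value:.4f}")
```

Under this assumption the result agrees with the reported p-value of 0.726 to within rounding.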

Figure 8.

Fitts’ law analysis for the target holding task (experiment 2) for able-bodied participants and prosthetic users.

Discussion

In this paper, we evaluated an ultrasound-based approach for sensing volitional user movement intent and extracting proportional control signals in response to muscle deformations in the forearm. Our proposed proprioceptive sonomyographic control strategy allows spatially resolved muscle activity detection in deep seated muscle compartments and extraction of proportional signals from individual functional muscle compartments. Position control based on the extent of muscle deformation is more directly congruent to natural motor control and has the potential to enable robust and intuitive dexterous manipulation that is currently not possible with myoelectric strategies.

Sonomyography offers position control capability with high number of graded levels

Conventional myoelectric control based on dry surface electrodes suffers from limited amplitude resolution, and hence achieving multiple graded levels of amplitude continues to be a challenge10–12. As a result, myoelectric prostheses employing direct control (DC) use simple thresholding of sEMG signals to drive joint velocity as opposed to position. Since the velocity control signal is incongruent with proprioceptive feedback from residual musculature, the ability to intuitively manipulate the terminal device is limited13.

In contrast, ultrasound-derived signals have a signal-to-noise ratio >50 dB29,33 and can achieve multiple graded levels of amplitude. In previous work, we have shown that ultrasound signals can be proportionally mapped to joint angle29. In this study, we evaluated the ability of individuals to achieve true positional control of end-effectors using sonomyography.

Our results show that able-bodied individuals and amputees can use sonomyography signals to control the position of a one degree-of-freedom virtual cursor with 1.10 ± 1.63% position error and 6.11 ± 3.46% stability error on average. All subjects were able to achieve targets at multiple graded levels for a number of different motion classes with an average completion rate of 94.98 ± 7.30%.

In this study, we showed that able-bodied individuals and amputees can use sonomyography signals to control the position of a one degree-of-freedom virtual cursor by appropriately flexing their forearm muscles. All subjects were able to achieve multiple graded levels when targets at these levels were presented to them. Due to its one-to-one mapping with ultrasound images of deep-seated forearm muscles, the sonomyography signal is a position signal, as opposed to a simple ON/OFF signal like those of conventional direct control strategies. In the case of velocity control, if an individual wanted the prosthetic hand to acquire a certain partial grasp, they would have to flex their muscles to make the hand move, hold the contraction until the hand acquired the required pose, and then rest to make the hand stop moving. In the case of position control, the individual would just have to imagine that partial grasp, and the hand would go to that position. This makes position control highly intuitive for users.
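The contrast between the two control schemes can be made concrete with a toy simulation. The sketch below is a deliberately simplified illustration and not the controller used in this study: the functions `position_control` and `velocity_control`, the threshold, the fixed speed and the input sequences are all hypothetical, and the velocity controller only closes the hand (a real direct-control system would typically use a second signal to open it).

```python
def position_control(signal, lo=0.0, hi=1.0):
    """Map a normalized deformation signal directly to end-effector position."""
    return [min(hi, max(lo, s)) for s in signal]


def velocity_control(signal, threshold=0.5, speed=0.1, lo=0.0, hi=1.0):
    """Threshold the signal (ON/OFF) and move at a fixed speed while it is ON."""
    position, trajectory = lo, []
    for s in signal:
        if s > threshold:
            position = min(hi, position + speed)
        trajectory.append(position)
    return trajectory


# Goal in both cases: reach and hold a partial grasp at 40% of full closure.

# Position control: the user deforms the muscle to 40% and holds it there.
deformation = [0.0, 0.2, 0.4, 0.4, 0.4]
print(position_control(deformation))

# Velocity control: the user contracts for exactly four time steps and must
# then relax at the right moment so that the hand stops at 40%.
activation = [1.0, 1.0, 1.0, 1.0, 0.0, 0.0]
print(velocity_control(activation))
```

In the position-controlled case the end-effector state mirrors the muscle state at every instant, whereas in the velocity-controlled case the final pose depends on how long the contraction was held.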

Short training time for high number of separable motions

In this study, users were asked to imagine performing different grasps based on their intuition and any phantom limb sensations. We demonstrated that a simple 1-nearest neighbor classifier with a correlation-based distance metric was able to classify user-intended motions for both able-bodied individuals and individuals with upper-extremity limb loss. This image analysis pipeline makes our system agnostic to anatomical landmarks and removes the need for computationally expensive tracking algorithms30. However, due to the nature of our ultrasound-based image analysis pipeline, we must treat rest differently from other motions. The state of muscle deformation at perceived rest positions was found to be quite variable. For able-bodied subjects, rest sometimes meant a neutral position with the fingers partially flexed, whereas at other times they extended the digits maximally when asked to rest. For individuals with limb loss, it was even more challenging to standardize a rest position. Fortunately, rest can be classified as the complement of the set of defined motion classes. When rest is eliminated as a potential class for our system to classify, our approach achieves motion discriminability that is comparable to current myoelectric pattern recognition (PR) systems performing similar motions40,41. Our sonomyography-based approach is able to achieve this classification accuracy with less than an hour of training, compared to the several weeks of training needed for PR to achieve comparable classification accuracy40.
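A 1-nearest neighbor classifier with a correlation-based distance can be sketched in a few lines. The example below is a minimal illustration under stated assumptions, not the study's actual pipeline: the distance is taken to be one minus the Pearson correlation, each ultrasound frame is represented as a flattened list of pixel intensities, and the function names, training vectors and query values are hypothetical.

```python
from math import sqrt


def correlation_distance(a, b):
    """Return 1 - Pearson correlation between two equal-length vectors.

    Assumes neither vector is constant (non-zero variance).
    """
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return 1.0 - cov / sqrt(var_a * var_b)


def classify_1nn(frame, training_set):
    """Return the label of the training frame closest to `frame`."""
    nearest = min(training_set, key=lambda item: correlation_distance(frame, item[0]))
    return nearest[1]


# Hypothetical flattened "images" standing in for labeled training frames.
training_set = [
    ([0, 1, 2, 3, 4, 5], "power grasp"),
    ([5, 4, 3, 2, 1, 0], "point"),
    ([0, 3, 0, 3, 0, 3], "tripod"),
]

query = [1, 2, 3, 4, 5, 7]
print(classify_1nn(query, training_set))
```

Because the correlation is insensitive to overall brightness and gain, a distance of this kind compares the spatial pattern of the frames rather than their absolute intensity, which fits the landmark-agnostic character described above.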

Nonetheless, it is interesting to note that participants Am1, Am3 and Am4 are long-time myoelectric prosthetic users and reported having an advanced level of control over their residual musculature owing to daily use of their prostheses. This prior experience and repeated practice may have contributed to their initially high cross-validation accuracies, as seen in Table 4. This suggests that sonomyography can leverage existing motor skills in current, experienced myoelectric prosthetic users without the need for extensive retraining. Along the same lines, participant Am5’s lower initial accuracy of 86.67% in the first session (S1) (see Table 4) may have been a result of limited exposure to a powered prosthesis or any other motion performance training. However, in the following session (S2), Am5 demonstrated a higher initial (92.5%) as well as average accuracy (89.33%) compared to his first session, indicating that the effect of training on our system may have been retained. Additionally, Am5 later reported an improvement in his ability to control his existing myoelectric prosthesis, which he attributed to a clearer understanding of his residual muscle deformation in the context of phantom digit movements gained from the ultrasound image feedback provided during motion training. Likewise, due to the ultrasound image feedback, the congenital participant Am2 was able to achieve high motion discriminability for four motions within approximately 30 minutes. This suggests that sonomyography can provide an intuitive control paradigm for individuals with traumatic amputation as well as congenital limb deficiency, who may either lack phantom limb sensations altogether or have limited context of muscular proprioception in their residuum. This is corroborated by contralateral arm demonstrations by subjects with unilateral amputation (see Supplementary Video M2) showing that the movements perceived in their phantom limb closely resemble the intended motion.

Sensorimotor congruency between limb movements and sonomyography

The effect of sensorimotor congruence extends to proportional control task performance as well. We show that participants with traumatic amputation and congenital limb deficiency, with no prior experience of using a sonomyography-based interface, are able to demonstrate fine graded control of an end-effector controlled by muscle activity in the forearm. Position errors for prosthetic users and able-bodied participants were below 3.5%, with an average task completion rate higher than 94% for 11 graded targets. Furthermore, despite overall position errors for prosthetic users (1.9%) being higher than those of able-bodied participants (0.7%), there was no appreciable difference in Fitts’ law throughput between prosthetic users and able-bodied subjects.

We believe that this natural alignment between the behavior of prosthetic users and able-bodied individuals in the use of our sonomyographic control paradigm is due to the direct mapping between the extent of muscle deformation in the forearm and the derived position-based proportional signal, and is evidence of the instinctive nature of our strategy. We also demonstrate that the proprioceptive sonomyographic control signal can be used to control a commercial prosthetic device (see Supplementary Video M3). Subjects Am2 and Am5 demonstrate that our paradigm provides robust positional control of the prosthetic hand over the entire dynamic range of the motion. Additionally, both subjects clearly show, using their contralateral intact limb, that there is a close correspondence between the perceived motion and the motion executed by the prosthetic hand.

Prior studies42–44 have shown that individuals using robotic manipulators or prosthetic arms can embody the end-effector into their body schema. Sato et al.42 demonstrated that congruence between visual and somatosensory inputs can induce a sense of ownership as well as agency in individuals with amputation when using a myoelectric prosthesis. As shown in Supplementary Video M3, with our sonomyographic control paradigm there is a one-to-one mapping between the individual’s muscle flexion and the position of the terminal device. While we did not directly attempt to quantify prosthetic embodiment in this study, we believe that proprioceptive sonomyographic control may elicit embodiment of the terminal device in prosthetic users similar to that observed by Sato et al.42.

Limitations of the study

While the results of the current study demonstrate the potential of sonomyography, some limitations should be acknowledged. We did not evaluate simultaneous proportional control of multiple degrees of freedom. While our subjects were able to start using sonomyographic control within a few minutes of training, we did not systematically evaluate the effect of learning and retention over multiple days or weeks. Sonomyography is based on assessment of muscle deformation, which is directly related to the proprioceptive afferents in muscle spindles; however, we did not have any direct measures of proprioception. The subjects received visual feedback of the cursor position and the target position throughout the study, and we did not try to separate the effects of visual and proprioceptive feedback. We did not directly compare sEMG and sonomyography, since position control using sEMG is not practical. We did not include measures of cognitive load in this study. In future studies, we plan to address these limitations.

Translating sonomyography to practical prototypes

Our current study relied on clinical ultrasound devices for data collection from the participants’ forearms. For sonomyography-based approaches to be viable for prosthetic control, the size of the sensing and signal processing systems must be significantly reduced. Although clinical devices continue to be miniaturized and hand-held ultrasound systems are commercially available, the current form factor of commercial systems is still too large to be readily integrated with commercial prosthetic arms. To overcome this, we are currently developing custom miniaturized and low-power ultrasound imaging instrumentation45 that can be directly integrated into a prosthetic shell for continuous, in-vivo monitoring of muscle activity and control of associated prosthetic devices. Another concern with sonomyography is the movement of the transducer relative to the anatomy during use or donning and doffing. Earlier studies with sEMG electrodes integrated into prosthetic sockets have shown that electrode shift, donning/doffing, and arm position can have an adverse effect on long-term control reliability46–50. Considering the short training regime required for our approach (typically a few minutes), a re-calibration routine may be a viable solution to retrain the system and overcome sensor shifts. We have also shown in previous studies that the effect of arm position on classification accuracy for able-bodied participants can be minimized by introducing some variation in training, for example by adding training samples at different arm positions29. Although major changes in transducer position will likely severely degrade both classification accuracy and proportional control performance, minor variations in transducer location and orientation due to movement of the residuum inside a prosthetic shell can be mitigated in real time by appropriate filtering and wavelet-based machine learning techniques51. This work establishes the feasibility of an ultrasound-based, noninvasive muscle activity sensing modality for real-time graded control of multi-articulated prosthetic arms by individuals with upper-extremity amputation after limited training. Our novel proprioceptive sonomyographic control approach provides a means to achieve intuitive proportional control in future prosthetic devices and could significantly lower the rate of device rejection.

Supplementary information

Demonstration of ultrasound probe placement and sonomyographic training routine for prosthetic users.

Virtual cursor control task demonstration of experiment 2.

Demonstration of positional control of commercial prosthetic arm using proprioceptive sonomyographic control by Am2 and Am5.

Acknowledgements

This work is supported by multiple grants from: United States Department of Defense, Award Number: W81XWH-16-1-0722 and National Science Foundation, Grant Number: 1329829. Some of the authors also participated as subjects in the study.

Author Contributions

A.S.D., B.M., S.P., N.A., M.H.-L., W.M.J. and S.S. conceived the experiment(s). A.S.D., B.M., S.P. and R.H. conducted the experiment(s), A.S.D., B.M. and S.P. analyzed the results. G.D. performed statistical analysis. A.S.D., B.M., S.P., G.L. and S.S. wrote the manuscript. All authors reviewed the manuscript.

Data Availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

Competing Interests

The authors declare no competing interests.

Footnotes

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Ananya S. Dhawan, Biswarup Mukherjee and Shriniwas Patwardhan contributed equally.

Supplementary information

Supplementary information accompanies this paper at 10.1038/s41598-019-45459-7.

References

- 1. Ziegler-Graham K, MacKenzie EJ, Ephraim PL, Travison TG, Brookmeyer R. Estimating the prevalence of limb loss in the united states: 2005 to 2050. Arch. Phys. Medicine Rehabil. 2008;89:422–429. doi: 10.1016/j.apmr.2007.11.005.

- 2. Dillingham TR, Pezzin LE, MacKenzie EJ. Incidence, acute care length of stay, and discharge to rehabilitation of traumatic amputee patients: An epidemiologic study. Arch. Phys. Medicine Rehabil. 1998;79:279–287. doi: 10.1016/S0003-9993(98)90007-7.

- 3. Esquenazi A, Meier R. Rehabilitation in limb deficiency. 4. Limb amputation. Arch. physical medicine rehabilitation. 1996;77:S18–S28. doi: 10.1016/S0003-9993(96)90239-7.

- 4. Østlie K, Franklin RJ, Skjeldal OH, Skrondal A, Magnus P. Musculoskeletal pain and overuse syndromes in adult acquired major upper-limb amputees. Arch. Phys. Medicine Rehabil. 2011;92:1967–1973.e1. doi: 10.1016/j.apmr.2011.06.026.

- 5. Biddiss E, Chau T. Upper limb prosthesis use and abandonment: A survey of the last 25 years. Prosthetics Orthot. Int. 2007;31:236–257. doi: 10.1080/03093640600994581.

- 6. Østlie K, et al. Prosthesis rejection in acquired major upper-limb amputees: A population-based survey. Disabil. Rehabil. Assist. Technol. 2012;7:294–303. doi: 10.3109/17483107.2011.635405.

- 7. McFarland LV, Winkler SLH, Heinemann AW, Jones M, Esquenazi A. Unilateral upper-limb loss: Satisfaction and prosthetic-device use in veterans and servicemembers from Vietnam and OIF/OEF conflicts. The J. Rehabil. Res. Dev. 2010;47:299. doi: 10.1682/JRRD.2009.03.0027.

- 8. Biddiss E, Chau T. Upper-limb prosthetics: Critical factors in device abandonment. Am. J. Phys. Medicine Rehabil. 2007;86:977–987. doi: 10.1097/PHM.0b013e3181587f6c.

- 9. Resnik L, Klinger SL, Etter K. The deka arm: Its features, functionality, and evolution during the veterans affairs study to optimize the deka arm. Prosthetics orthotics international. 2014;38:492–504. doi: 10.1177/0309364613506913.

- 10. Clancy EA, Morin EL, Merletti R. Sampling, noise-reduction and amplitude estimation issues in surface electromyography. J. Electromyogr. Kinesiol. 2002;12:1–16. doi: 10.1016/S1050-6411(01)00033-5.

- 11. Daley H, Englehart K, Hargrove L, Kuruganti U. High density electromyography data of normally limbed and transradial amputee subjects for multifunction prosthetic control. J. Electromyogr. Kinesiol. 2012;22:478–484. doi: 10.1016/j.jelekin.2011.12.012.

- 12. Fillauer CE, Pritham CH, Fillauer KD. Evolution and Development of the Silicone Suction Socket (3S) for Below-Knee Prostheses. J. Prosthetics & Orthot. 1989;1:92–103. doi: 10.1097/00008526-198901000-00007.

- 13. Van Dijk L, Van Der Sluis CK, Van Dijk HW, Bongers RM. Task-Oriented Gaming for Transfer to Prosthesis Use. IEEE Transactions on Neural Syst. Rehabil. Eng. 2016;24:1384–1394. doi: 10.1109/TNSRE.2015.2502424.

- 14. Carey SL, Lura DJ, Highsmith MJ. Differences in myoelectric and body-powered upper-limb prostheses: Systematic literature review. J. Rehabil. Res. Dev. 2015;52:247–262. doi: 10.1682/JRRD.2014.08.0192.

- 15. Tenore F, et al. Decoding of individuated finger movements using surface Electromyography. Biomed. Eng. IEEE Transactions on. 2009;56:1427–1434. doi: 10.1109/TBME.2008.2005485.

- 16. Cipriani C, et al. Online myoelectric control of a dexterous hand prosthesis by transradial amputees. IEEE Transactions on Neural Syst. Rehabil. Eng. 2011;19:260–270. doi: 10.1109/TNSRE.2011.2108667.

- 17. Khushaba RN, Kodagoda S, Takruri M, Dissanayake G. Toward improved control of prosthetic fingers using surface electromyogram (EMG) signals. Expert. Syst. with Appl. 2012;39:10731–10738. doi: 10.1016/j.eswa.2012.02.192.

- 18. Al-Timemy AH, Bugmann G, Escudero J, Outram N. Classification of finger movements for the dexterous hand prosthesis control with surface electromyography. IEEE J. Biomed. Heal. Informatics. 2013;17:608–618. doi: 10.1109/JBHI.2013.2249590.

- 19. Ortiz-Catalan M, Håkansson B, Brånemark R. Real-time and simultaneous control of artificial limbs based on pattern recognition algorithms. IEEE Transactions on Neural Syst. Rehabil. Eng. 2014;22:756–764. doi: 10.1109/TNSRE.2014.2305097.

- 20. Wurth S, Hargrove L. A real-time comparison between direct control, sequential pattern recognition control and simultaneous pattern recognition control using a Fitts’ law style assessment procedure. J. NeuroEngineering Rehabil. 2014;11:91. doi: 10.1186/1743-0003-11-91.

- 21. Young AJ, Smith LH, Rouse EJ, Hargrove LJ. A comparison of the real-time controllability of pattern recognition to conventional myoelectric control for discrete and simultaneous movements. J. NeuroEngineering Rehabil. 2014;11:1–10. doi: 10.1186/1743-0003-11-5.

- 22. Pasquina PF, et al. First-in-man demonstration of a fully implanted myoelectric sensors system to control an advanced electromechanical prosthetic hand. J. Neurosci. Methods. 2015;244:85–93. doi: 10.1016/j.jneumeth.2014.07.016.

- 23. Baker JJ, Scheme E, Englehart K, Hutchinson DT, Greger B. Continuous detection and decoding of dexterous finger flexions with implantable myoelectric sensors. IEEE Transactions on Neural Syst. Rehabil. Eng. 2010;18:424–432. doi: 10.1109/TNSRE.2010.2047590.

- 24. Weir RF, Troyk PR, DeMichele GA, Kerns DA, Schorsch JF. Implantable Myoelectric Sensors for Intramuscular EMG Recording. IEEE Transactions on Biomed. Eng. 2009;56:2009. doi: 10.1109/TBME.2008.2005942.

- 25. Kuiken TA, et al. Targeted muscle reinnervation for real-time myoelectric control of multifunction artificial arms. Jama. 2009;301:619–628. doi: 10.1001/jama.2009.116.

- 26. Micera S, Navarro X, Yoshida K. Interfacing with the peripheral nervous system to develop innovative neuroprostheses. IEEE Transactions on Neural Syst. Rehabil. Eng. 2009;17:417–419. doi: 10.1109/TNSRE.2009.2033426.

- 27. Navarro X, et al. A critical review of interfaces with the peripheral nervous system for the control of neuroprostheses and hybrid bionic systems. J. Peripher. Nerv. Syst. 2005;10:229–258. doi: 10.1111/j.1085-9489.2005.10303.x.

- 28. Engdahl SM, et al. Surveying the interest of individuals with upper limb loss in novel prosthetic control techniques. J. NeuroEngineering Rehabil. 2015;12:1–11. doi: 10.1186/s12984-015-0044-2.

- 29. Akhlaghi N, et al. Real-time classification of hand motions using ultrasound imaging of forearm muscles. IEEE Transactions on Biomed. Eng. 2016;63:1687–1698. doi: 10.1109/TBME.2015.2498124.

- 30. Castellini C, Passig G, Zarka E. Using ultrasound images of the forearm to predict finger positions. IEEE Transactions on Neural Syst. Rehabil. Eng. 2012;20:788–797. doi: 10.1109/TNSRE.2012.2207916.

- 31. Sikdar S, et al. Novel method for predicting dexterous individual finger movements by imaging muscle activity using a wearable ultrasonic system. IEEE Transactions on Neural Syst. Rehabil. Eng. 2014;22:69–76. doi: 10.1109/TNSRE.2013.2274657.

- 32. Hodges P, Pengel L, Herbert R, Gandevia S. Measurement of muscle contraction with ultrasound imaging. Muscle & nerve. 2003;27:682–692. doi: 10.1002/mus.10375.

- 33. Baker, C. A., Akhlaghi, N., Rangwala, H., Kosecka, J. & Sikdar, S. Real-time, ultrasound-based control of a virtual hand by a trans-radial amputee. In 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), 3219–3222 (2016).

- 34. Johnson RE, Kording KP, Hargrove LJ, Sensinger JW. Adaptation to random and systematic errors: Comparison of amputee and non-amputee control interfaces with varying levels of process noise. PLoS ONE. 2017;12:1–19. doi: 10.1371/journal.pone.0170473.

- 35. Fitts PM. The information capacity of the human motor system in controlling the amplitude of movement. J. Exp. Psychol. 1954;47:381–391. doi: 10.1037/h0055392.

- 36. MacKenzie IS. Fitts’ law as a research and design tool in human-computer interaction. Human-Computer Interact. 1992;7:91–139. doi: 10.1207/s15327051hci0701_3.

- 37. Scheme E, et al. Motion normalized proportional control for improved pattern recognition-based myoelectric control. IEEE Transactions on Neural Syst. Rehabil. Eng. 2014;22:149–157. doi: 10.1109/TNSRE.2013.2247421.

- 38. Hollander, M. & Wolfe, D. A. Nonparametric Statistical Methods, Second Edition (John Wiley & Sons, New York, 1999).

- 39. Laird NM, Ware JH. Random-effects models for longitudinal data. Biom. 1982;38:963–974. doi: 10.2307/2529876.

- 40. Powell MA, Kaliki RR, Thakor NV. User training for pattern recognition-based myoelectric prostheses: Improving phantom limb movement consistency and distinguishability. IEEE Transactions on Neural Syst. Rehabil. Eng. 2014;22:522–532. doi: 10.1109/TNSRE.2013.2279737.

- 41. Resnik L, et al. Evaluation of EMG pattern recognition for upper limb prosthesis control: a case study in comparison with direct myoelectric control. J. NeuroEngineering Rehabil. 2018;15:1–13. doi: 10.1186/s12984-018-0361-3.

- 42. Sato Y, Kawase T, Takano K, Spence C, Kansaku K. Body ownership and agency altered by an electromyographically controlled robotic arm. Royal Soc. open science. 2018;5:172170. doi: 10.1098/rsos.172170.

- 43. Romano D, Caffa E, Hernandez-Arieta A, Brugger P, Maravita A. The robot hand illusion: Inducing proprioceptive drift through visuo-motor congruency. Neuropsychol. 2015;70:414–420. doi: 10.1016/j.neuropsychologia.2014.10.033.

- 44. Rosén B, et al. Referral of sensation to an advanced humanoid robotic hand prosthesis. Scand. J. Plast. Reconstr. Surg. Hand Surg. 2009;43:260–266. doi: 10.3109/02844310903113107.

- 45. Tarbox, E. et al. Low-power ultrasound imaging systems using time delay spectrometry. In 2017 IEEE International Ultrasonics Symposium (IUS), 1–4 (2017).

- 46. Young AJ, Hargrove LJ, Kuiken TA. Improving myoelectric pattern recognition robustness to electrode shift by changing interelectrode distance and electrode configuration. IEEE Transactions on Biomed. Eng. 2012;59:645–652. doi: 10.1109/TBME.2011.2177662.

- 47. Hwang HJ, Hahne JM, Müller KR. Real-time robustness evaluation of regression based myoelectric control against arm position change and donning/doffing. PLoS ONE. 2017;12:1–22. doi: 10.1371/journal.pone.0186318.

- 48. Jiang N, Muceli S, Graimann B, Farina D. Effect of arm position on the prediction of kinematics from emg in amputees. Med. & biological engineering & computing. 2013;51:143–151. doi: 10.1007/s11517-012-0979-4.

- 49. Geng Y, Zhou P, Li G. Toward attenuating the impact of arm positions on electromyography pattern-recognition based motion classification in transradial amputees. J. NeuroEngineering Rehabil. 2012;9:1–11. doi: 10.1186/1743-0003-9-74.

- 50. Young AJ, Hargrove LJ, Kuiken TA. The effects of electrode size and orientation on the sensitivity of myoelectric pattern recognition systems to electrode shift. IEEE Transactions on Biomed. Eng. 2011;58:2537–2544. doi: 10.1109/TBME.2011.2159216.

- 51. Khan, A. A., Dhawan, A., Akhlaghi, N., Majdi, J. A. & Sikdar, S. Application of wavelet scattering networks in classification of ultrasound image sequences. In 2017 IEEE International Ultrasonics Symposium (IUS), 1–4 (2017).
