Abstract

The ability to identify and localize our own limbs is crucial for survival. Indeed, the majority of our interactions with objects occur within the space surrounding the hands. In non-human primates, neurons in the posterior parietal and premotor cortices dynamically represent the space near the upper limbs in hand-centered coordinates. Neuronal populations selective for the space near the hand also exist in humans. It is unclear whether these remap the peri-hand representation as the arm is moved in space. Furthermore, no combined neuronal and behavioral data are available about the possible involvement of peri-hand neurons in the perception of the upper limbs in any species. We used fMRI adaptation to demonstrate dynamic hand-centered encoding of space by reporting response suppression in human premotor and posterior parietal cortices to repeated presentations of an object near the hand for different arm postures. Furthermore, we show that such spatial representation is related to changes in body perception, being remapped onto a prosthetic hand if perceived as one's own during an illusion. Interestingly, our results further suggest that peri-hand space remapping in the premotor cortex is most tightly linked to the subjective feeling of ownership of the seen limb, whereas remapping in the posterior parietal cortex closely reflects changes in the position sense of the arm. These findings identify the neural bases for dynamic hand-centered encoding of peripersonal space in humans and provide hitherto missing evidence for the link between the peri-hand representation of space and the perceived self-attribution and position of the upper limb.

Introduction

In everyday life, we do not explicitly perceive a boundary between the space near the body and more distant space. Nevertheless, psychologists have long theorized that we are surrounded by an “invisible bubble” of space (Hall, 1966), and behavioral experiments in humans have since provided evidence for the existence of a representation of the space near the body (“peripersonal space”; Halligan and Marshall, 1991). The space surrounding the hands is particularly important, primarily because we use them to interact with objects. Previous behavioral studies showed that sensory stimuli near the hands are processed in a reference frame centered on the upper limb (“peri-hand space”; Spence et al., 2004; Farnè et al., 2005; Brozzoli et al., 2011a).

Single-cell recordings in the posterior parietal and premotor cortices of macaques have identified neurons with both tactile and visual receptive fields. The latter are restricted to the space extending 30–40 cm from the location of the tactile receptive field (Hyvärinen and Poranen, 1974; Rizzolatti et al., 1981a,b; Graziano et al., 1994; Duhamel et al., 1998). The spatial alignment of visual and somatosensory receptive fields allows the construction of a body-part-centered representation of the peripersonal space (Graziano and Gross, 1993; Fogassi et al., 1996). Indeed, the responses of peri-hand neurons to an object approaching the limb are “anchored” to the hand itself, so that when the arm moves the visual receptive fields are updated accordingly (Graziano et al., 1997).

To remap their visual receptive fields onto the current hand position, the peri-hand neurons must integrate proprioceptive and visual information about the position of the arm (Salinas and Abbott, 1996; Andersen 1997; Graziano, 1999; Graziano et al., 2000). However, neuroimaging evidence for peri-hand space remapping is still missing in humans. Moreover, the possible involvement of peri-hand neurons in the perception of the upper limbs can only be speculated upon on the basis of the receptive field properties (Lloyd et al., 2003; Ehrsson et al., 2004; Makin et al., 2008).

Using fMRI adaptation, we suggested previously the existence of neurons with visual receptive fields restricted to peri-hand space (Brozzoli et al., 2011b). However, this experiment did not test the hypothesis that the responses of these neurons are anchored to the hand, nor did it relate their activity to the sense of ownership or the perceived position of the hand.

Here, in two neuroimaging experiments on healthy humans, we used fMRI adaptation to reveal selectivity in the intraparietal and premotor cortices for visual stimuli near the hand that is anchored to the hand as it is moved in space. Moreover, we provide evidence that the remapping of peri-hand space is directly related to the perception of the hand by showing that it can also occur to a prosthetic hand but only when perceived as one's own during an illusion (the “rubber hand illusion”; Botvinick and Cohen, 1998). Our results establish that human premotor and intraparietal areas dynamically encode peri-hand space in hand-centered coordinates and reveal that such a mechanism is related to the perceived location and self-attribution of the limb.

Materials and Methods

Participants

A total of 42 right-handed participants took part in the study. Twenty-six participants (22–52 years old; mean ± SD age, 29 ± 6 years; 22 males) took part in experiment 1. Sixteen participants (20–52 years old; mean ± SD age, 31 ± 8 years; 11 males) were recruited for experiment 2 (nine of whom had taken part in experiment 1). The study was approved by the local ethical committee at the Karolinska Institute. None of the participants had any history of neurological or sensory disorders. Informed consent was obtained from all participants.

Experimental setup

During 3 T fMRI scanning using the echo planar imaging protocol, the participants lay with the head tilted ∼30° forward to allow a direct view of an MR-compatible table (42 × 35 cm, with an adjustable slope), mounted on the bed above the waist and adjusted to allow comfortable positioning of the right hand on its surface. In both experiments, the visual stimulus consisted of a red ball (3 cm diameter) mounted on the tip of a wooden stick (50 cm long). The researchers listened to audio instructions regarding the onset and location of the stimuli and to a metronome at 80 beats per minute, which ensured that the pace of visual stimulation was controlled. An MR-compatible camera (MRC Systems) was used to monitor eye movements. All participants successfully maintained fixation throughout all scanning sessions.

Experiment 1: hand-centered encoding of space

Experimental setup and design.

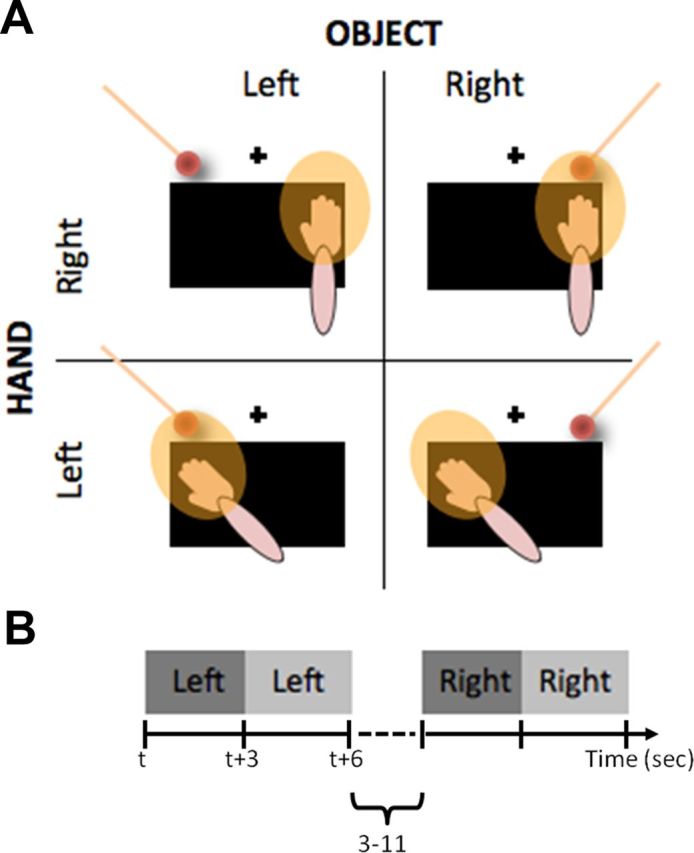

The participant's right hand was placed in one of two possible locations on the table, either on the right side [hand on the right (HR)] or the left side [hand on the left (HL)], relative to the body midline. The arm was moved between scan sessions to avoid possible movement-related artifacts. The distance between the two positions was 25 cm, as measured from the middle finger of the right hand. Halfway between the two positions, a spherical object (2 cm diameter) serving as the fixation point was mounted at the edge of the table.

Two trained experimenters moved the object for 6 s at a distance of 2 cm from the participant's fingers in either of the two possible locations. The positions of the visual stimuli were denoted as OR (object on the right) and OL (object on the left). Each 6 s period was divided into a pair of 3 s events, indicated by ORfirst and ORsecond for the object on the right and OLfirst and OLsecond for the object on the left, respectively. For each of the two hand positions, 26 pairs of OR and OL stimuli were presented in a fully randomized event-related design, separated by a jittered intertrial interval (7 ± 4 s) with no stimulation. Importantly, the object was always presented in the same two locations according to eye-centered coordinates. To monitor the alertness of the participants, catch trials were presented in an unpredictable way during each run. These involved stopping the object for 3 s either in the first (two ORfirst and two OLfirst per run) or the second (two ORsecond and two OLsecond per run) period of each trial. Participants were instructed to press a button with the left hand as soon as they noticed this (100% accuracy). Catch trials were modeled as a regressor of no interest.

Data analyses.

In the first-level analysis, we defined separate regressors for the first and second part of each 6 s visual stimulus (resulting in four regressors denoted as ORfirst, ORsecond, OLfirst, and OLsecond, respectively, containing all 3 s repetitions of the corresponding stimulus) in the same way for sessions performed with HR and HL. The results of this analysis were used as contrast estimates for each condition for each subject (contrast images). To accommodate intersubject variability, we entered the contrast images into a random-effects group analysis (second-level analysis). To account for the problem of multiple comparisons, we report peaks of activation surviving a significance threshold of p < 0.05, Bonferroni corrected using the familywise error rate or, given the strong a priori hypotheses, using small-volume corrections centered around relevant peak coordinates from previous studies (Ehrsson et al., 2005; Brozzoli et al., 2011b; Gentile et al., 2011; Petkova et al., 2011). For each peak, the coordinates in MNI space and the t and the p values are reported. The term “uncorrected” follows the p value in those cases in which we mention activations that did not survive correction for multiple comparisons but are nevertheless worth reporting descriptively with respect to our hypotheses.

The most important contrast for directly testing our hypothesis is the one-tailed interaction contrast defined as {[(ORfirst vs ORsecond)HR + (OLfirst vs OLsecond)HL] vs [(ORfirst vs ORsecond)HL + (OLfirst vs OLsecond)HR]} (see Fig. 2, Table 1). This contrast reveals voxels displaying a significant interaction between the BOLD adaptation for the visual stimulus and the position of the hand. We predicted a larger BOLD-adaptation effect for the two “near” conditions (ORHR and OLHL) as opposed to the two “far” conditions (ORHL and OLHR). Importantly, this interaction contrast allowed us to identify such brain regions while rigorously controlling for all properties of the stimuli other than an increased adaptation effect to an object close to the hand.
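As an illustration only, the interaction contrast above can be written as a vector of weights over the eight event regressors (four per hand position). The following is a minimal sketch; the condition names, their ordering, and the helper functions `adaptation` and `combine` are assumptions introduced here and are not taken from the analysis software used in the study.

```python
# Hypothetical regressor order, chosen for this illustration only.
CONDITIONS = [
    "ORfirst_HR", "ORsecond_HR", "OLfirst_HR", "OLsecond_HR",
    "ORfirst_HL", "ORsecond_HL", "OLfirst_HL", "OLsecond_HL",
]


def adaptation(obj, hand):
    """Weights for (first - second) at one object location and hand position."""
    weights = dict.fromkeys(CONDITIONS, 0)
    weights[f"{obj}first_{hand}"] = 1
    weights[f"{obj}second_{hand}"] = -1
    return weights


def combine(terms):
    """Sum signed weight dictionaries into one contrast vector."""
    total = dict.fromkeys(CONDITIONS, 0)
    for sign, weights in terms:
        for name, value in weights.items():
            total[name] += sign * value
    return [total[name] for name in CONDITIONS]


# Near conditions (ORHR, OLHL) minus far conditions (ORHL, OLHR).
interaction = combine([
    (+1, adaptation("OR", "HR")),
    (+1, adaptation("OL", "HL")),
    (-1, adaptation("OR", "HL")),
    (-1, adaptation("OL", "HR")),
])

for name, weight in zip(CONDITIONS, interaction):
    print(f"{name:12s} {weight:+d}")
```

Because the weights sum to zero and every object location and hand position appears equally often with positive and negative sign, any effect that does not depend on the object being near the hand cancels out in this sketch.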

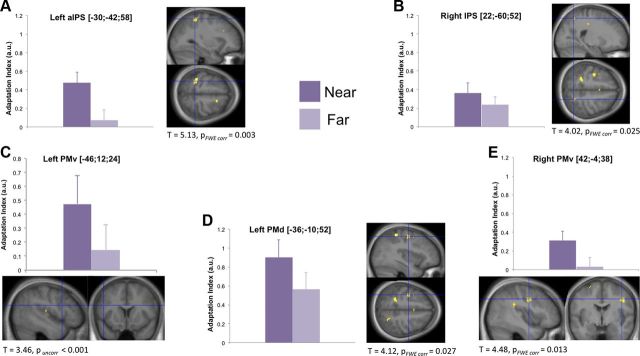

Figure 2.

Selective BOLD adaptation in response to an object presented near the hand for different hand positions. The IPS bilaterally (A, B), left PMd (D), and bilateral PMv (C, E) showed significantly stronger adaptation to the object presented near rather than far from the hand (p < 0.05 corrected; contrast: {[(ORfirst vs ORsecond)HR + (OLfirst vs OLsecond)HL] vs [(ORfirst vs ORsecond)HL + (OLfirst vs OLsecond)HR]}; see Materials and Methods). The bar graphs report the average adaptation index, calculated as the difference in contrast estimates between the first and the second presentation of the object, for near and far conditions (dark and light color, respectively; error bars represent SEM). The activation maps are thresholded at p < 0.001 uncorrected for display purposes and superimposed onto the average anatomical high-resolution T1-weighted MRI image of the participants' brains. The detailed statistics after correction for multiple comparisons are reported for each key area. aIPS, Anterior IPS; FWE, familywise error.

Table 1.

Experiment 1: hand-centered encoding of space

| Anatomical location | MNI x | MNI y | MNI z | Peak t value | Peak p value |
|---|---|---|---|---|---|
| L. anterior part of IPS | −30 | −42 | 58 | 5.13 | 0.003 |
| R. IPS | 22 | −60 | 52 | 4.02 | 0.025 |
| L. precentral gyrus (PMd) | −36 | −10 | 52 | 4.12 | 0.027 |
| R. precentral gyrus (PMv) | 42 | −4 | 38 | 4.48 | 0.013 |
| L. precentral sulcus (PMv) | −46 | 12 | 24 | 3.46 | <0.001* |
| R. lateral parietal operculum | 44 | −34 | 24 | 4.49 | <0.001* |
| R. putamen | 20 | 2 | 14 | 3.31 | 0.001* |

*Uncorrected for multiple comparisons. Interaction contrast: {[(ORfirst vs ORsecond)HR + (OLfirst vs OLsecond)HL] vs [(ORfirst vs ORsecond)HL + (OLfirst vs OLsecond)HR]}.

We also defined and inspected two contrasts to reveal the main effects of the position of the visual stimulation: {[(ORfirst vs ORsecond)HR + (ORfirst vs ORsecond)HL] vs [(OLfirst vs OLsecond)HR + (OLfirst vs OLsecond)HL]} for the object to the right; and {[(OLfirst vs OLsecond)HR + (OLfirst vs OLsecond)HL] vs [(ORfirst vs ORsecond)HR + (ORfirst vs ORsecond)HL]} for the object to the left. These contrasts identify voxels displaying significant BOLD adaptation in response to the object in one of the two locations, regardless of its position relative to the hand.
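For illustration, the two main-effect contrasts can be expressed over the same eight regressors and compared with the interaction contrast. This is a sketch under an assumed regressor order (stated in the code); it only shows that the weight vectors are orthogonal, which does not by itself guarantee that the estimated effects are statistically independent in a given design.

```python
# Hypothetical regressor order, chosen for this illustration only:
# ORfirst_HR, ORsecond_HR, OLfirst_HR, OLsecond_HR,
# ORfirst_HL, ORsecond_HL, OLfirst_HL, OLsecond_HL

# Adaptation (first - second) for the object on the right, summed over both
# hand positions, minus the same quantity for the object on the left.
object_right = [1, -1, -1, 1, 1, -1, -1, 1]

# The main effect for the object on the left is the mirror image.
object_left = [-w for w in object_right]

# Interaction: near (ORHR, OLHL) minus far (ORHL, OLHR) adaptation.
interaction = [1, -1, -1, 1, -1, 1, 1, -1]


def dot(a, b):
    """Inner product of two equally long weight vectors."""
    return sum(x * y for x, y in zip(a, b))


print("object_right . interaction =", dot(object_right, interaction))
print("object_left  . interaction =", dot(object_left, interaction))
```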

Experiment 2: remapping of hand-centered space onto a rubber hand that feels like one's own

Behavioral experiment.

Before the scanning sessions, a subset of participants took part in a behavioral experiment aimed at obtaining subjective and objective measures of the illusion in the same setup that would later be used in the scanner. The participants lay supine with their right hand placed on the right side of the table, in the same position (HR) as that used in experiment 1 (see above). A white cloth was used to hide the hand from the participants' sight. A gender-matched, realistic-looking rubber hand was placed on the table in a position equivalent to location HL in experiment 1 (see above). The distance between the middle fingers of the two hands was 22 cm. The rubber hand was rotated ∼45° with respect to the real hand (matching the rotation of the arm when the hand was placed on the left in experiment 1). Thus, the rubber hand was placed in an anatomically plausible position, which is necessary to induce the rubber hand illusion (Ehrsson et al., 2004; Tsakiris and Haggard, 2005). This setup allowed the experimenter to apply iso-directional strokes to the rubber hand and real hand as defined in an arm-centered spatial reference frame because this is known to maximize the illusion (Costantini and Haggard, 2007). Importantly, the rubber hand was placed in the same location as the real hand had been placed in experiment 1 (in the left postural condition), meaning that we could test our hypothesis about peri-hand space remapping onto the rubber hand in the same part of space for which we had data available regarding the real hand. The participants were instructed to look at the rubber hand where the strokes were being applied. A trained experimenter used two paintbrushes to deliver synchronous or asynchronous visuotactile stimulation during 1 min intervals, stroking all the fingers at ∼1 Hz. The participants rated four statements on a scale from −3 (“completely disagree”) to +3 (“agree completely”) after 1 min of synchronous or asynchronous stimulation. 
Statement 1 (“It seemed as if I were feeling the touch of the paintbrush at the point at which I saw the rubber hand being touched”) and statement 2 (“I felt as if the rubber hand were my hand”) were meant to probe the subjective strength of the illusion, whereas statement 3 (“It felt as if my real hand were turning rubbery”) and statement 4 (“It appeared visually as if the rubber hand were drifting toward the right”) served as control questions. The order of the stimulation periods was counterbalanced across participants. The questionnaire was only given to 13 of 16 participants because of the limitation in booked scanning time. Immediately after the questionnaire, we obtained objective behavioral evidence of the illusion by using an intermanual pointing task that probed changes in the perceived location of the hidden real hand (in a total of eight participants). Participants were exposed to six 1-min intervals of stimulation, divided into three synchronous and three asynchronous blocks, the order of which was randomized. Immediately before (“pre”) and after (“post”) each stimulation interval, the participants closed their eyes and had their left index finger at a fixed starting position on a panel aligned vertically over the location of the real right hand and the rubber hand. After a go signal, they performed a swift sliding movement with their left index finger stopping at the perceived location of the right index finger. A ruler, invisible to the participant, was used to record the end position of each movement. For each trial, the difference between post- and pre-measurements was interpreted as follows: a value <0 represented a drift toward the location of the rubber hand, whereas a value >0 corresponded to an overshoot beyond the location of the real hand.
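The scoring of the pointing task can be sketched as follows. The ruler readings are hypothetical values invented for this example, and the sketch assumes that readings increase toward the participant's right, so that a negative post minus pre difference indicates a shift toward the rubber hand.

```python
from statistics import mean


def drift(pre_cm, post_cm):
    """Post minus pre pointing position for one stimulation interval."""
    return post_cm - pre_cm


def interpret(value_cm):
    """Label the sign of a drift value as described in the text."""
    if value_cm < 0:
        return "drift toward the rubber hand"
    if value_cm > 0:
        return "overshoot beyond the real hand"
    return "no change"


# Hypothetical (pre, post) readings in cm for three synchronous intervals.
synchronous_trials = [(10.0, 6.0), (10.5, 5.5), (9.5, 6.0)]

drifts = [drift(pre, post) for pre, post in synchronous_trials]
mean_drift = mean(drifts)

print(drifts, interpret(mean_drift))
```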

Behavioral data analyses.

All data acquired in the behavioral assessments were tested for normality using the Kolmogorov–Smirnov test. The data obtained from the questionnaires did not pass the test; hence, nonparametric statistics were used. Comparisons were made for each of the four statement judgments between the two conditions (synchronous and asynchronous) using Wilcoxon's signed-rank tests. In contrast, the data obtained from the pointing localization task did pass the test for normality. Comparisons were made between the two conditions using two-tailed paired-sample t tests. Each post–pre difference was also contrasted against 0.
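The parametric comparisons on the pointing data amount to t statistics on paired differences. The sketch below computes only the t statistic with the Python standard library; the data are hypothetical, and the normality test, the Wilcoxon signed-rank test, and the p values would in practice come from a statistics package, which is not shown here.

```python
from math import sqrt
from statistics import mean, stdev


def one_sample_t(values, mu=0.0):
    """t statistic for testing whether the mean of `values` differs from `mu`."""
    n = len(values)
    return (mean(values) - mu) / (stdev(values) / sqrt(n))


def paired_t(a, b):
    """Paired-sample t statistic: a one-sample test on the differences a - b."""
    return one_sample_t([x - y for x, y in zip(a, b)])


# Hypothetical per-participant mean drifts in cm (not the study data).
synchronous = [-4.0, -6.0, -2.0, -5.0]
asynchronous = [0.0, 1.0, -1.0, 0.5]

print("synchronous vs asynchronous:", paired_t(synchronous, asynchronous))
print("synchronous vs 0:", one_sample_t(synchronous))
```

Both statistics have n − 1 degrees of freedom, where n is the number of participants.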

fMRI setup and design.

We used the same experimental setup for fMRI acquisition as in the behavioral experiments, with a few minor modifications as described below. An MR-compatible light-emitting diode was attached to the edge of the table at a distance of 12 cm from the middle finger of the real hand and at a distance of 10 cm from the middle finger of the rubber hand. The diode served as the fixation point, and the participants were instructed to maintain their gaze on this point. A trained experimenter used the same paintbrushes to deliver synchronous or asynchronous stimulation. All visual stimuli close to the rubber hand were delivered by a second experimenter using the same object as in experiment 1.

A mixed block- and event-related design was used in the fMRI experiment. A trial started with a period of visuotactile stimulation during which the experimenter applied brushstrokes to corresponding locations on the fingers of the real hand and the rubber hand, either synchronously or asynchronously at 1 Hz (with iso-directional strokes as in the behavioral experiment described above). To help the experimenter apply the same number of brushstrokes in the different conditions, he listened to an auditory metronome at 1 Hz over earphones. In the case of synchronous stimulation, the participant was asked to report the onset of the illusion (Ehrsson et al., 2004) by pressing a button with the left hand, which was placed in a resting position underneath the tilted support. In the case of asynchronous trials, out-of-synch brushstrokes were delivered to the real and the rubber hands for a period of time (AsynchPRE) identical in duration to one of the preceding synchronous trials (SynchPRE). This ensured that the duration and amount of visuotactile stimulation were perfectly balanced across synchronous and asynchronous trials. To match the key response, after an interval identical to one of the preceding onset times for synchronous trials, a 500 ms flash was emitted from the diode during the asynchronous trials. Participants were instructed to press a button with their left hand as soon as they noticed this. The maximum duration of the induction period (for both SynchPRE and AsynchPRE) was set to 24 s; trials in which the participant did not press the button within this period were aborted and modeled as conditions of no interest. After the participant's response, synchronous (or asynchronous) stimulation continued for a period ranging from 13 to 17 s (SynchPOST or AsynchPOST). One second after the end of this stimulation period, the ball was presented 2 cm from the tip of the index finger of the rubber hand (corresponding to the OLHL stimulation in experiment 1). 
Finally, a 7 s baseline interval separated consecutive trials. The order of synchronous and asynchronous trials was randomized. All participants performed three experimental sessions each containing 24 trials, equally divided into synchronous and asynchronous trials.

Data analyses.

Regressors modeling the instances of visual stimulation close to the rubber hand were defined according to the protocols developed for experiment 1. Specifically, each 6 s stimulus was divided into two 3-s events, leading to the definition of four different regressors. Synchfirst and Asynchfirst modeled the first 3 s of each visual stimulus after the synchronous and asynchronous stimulation periods, respectively, and Synchsecond and Asynchsecond modeled the last 3 s of object presentation in each condition.
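The division of each 6 s stimulus into two 3 s events can be sketched as a simple transformation of stimulus onsets. The function name `split_events` and the onset times are hypothetical and serve only to illustrate the bookkeeping.

```python
def split_events(onsets_s, total_s=6.0):
    """Split each stimulus into a first-half and a second-half event.

    Returns two lists of (onset, duration) pairs in seconds.
    """
    half = total_s / 2
    first = [(onset, half) for onset in onsets_s]
    second = [(onset + half, half) for onset in onsets_s]
    return first, second


# Hypothetical onsets (s) of object presentations after synchronous stimulation.
synch_first, synch_second = split_events([10.0, 30.0])
print(synch_first)
print(synch_second)
```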

To identify all voxels displaying a stronger adaptation effect for the visual stimulation after the induction of the illusion than the control, we defined the following contrast: [(Synchfirst vs Synchsecond) vs (Asynchfirst vs Asynchsecond)]. This contrast is fully balanced in terms of all sensory properties of the stimuli, including the position of the visual stimulation and the rubber hand.
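To make the contrast concrete, the sketch below applies it to a set of hypothetical response estimates for a single voxel. The numerical values are invented for illustration and are not results from the study.

```python
# Contrast weights for [(Synchfirst vs Synchsecond) vs (Asynchfirst vs Asynchsecond)].
WEIGHTS = {
    "Synchfirst": 1,
    "Synchsecond": -1,
    "Asynchfirst": -1,
    "Asynchsecond": 1,
}

# Hypothetical response estimates at one voxel (arbitrary units).
estimates = {
    "Synchfirst": 2.0,
    "Synchsecond": 0.5,
    "Asynchfirst": 1.5,
    "Asynchsecond": 1.25,
}

synch_adaptation = estimates["Synchfirst"] - estimates["Synchsecond"]
asynch_adaptation = estimates["Asynchfirst"] - estimates["Asynchsecond"]

# Weighted sum over regressors; equals the difference of the two adaptation indices.
effect = sum(WEIGHTS[name] * estimates[name] for name in WEIGHTS)

print(synch_adaptation, asynch_adaptation, effect)
```

A positive value in this sketch corresponds to stronger adaptation after synchronous than after asynchronous stimulation.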

Regression analyses.

We also investigated the relationship between the fMRI data and the behavioral measurements collected before scanning. Therefore, we ran two independent whole-brain regression analyses. For the proprioceptive drift, we computed individual scores by taking the difference of the average drifts between the synchronous and asynchronous conditions. The individual values were entered as a covariate in a regression analysis to identify significant positive correlations between the proprioceptive drift and the differential adaptation to visual stimuli after synchronous or asynchronous conditions. For the questionnaire, we computed the difference in subjective ratings between synchronous and asynchronous blocks for statement 1 (“referral of touch onto the rubber hand”) and statement 2 (“sense that the rubber hand is one's own hand”) separately. The individual scores were entered as covariates in separate regression analyses with the same adaptation effect described above. These regression analyses are independent because they assessed the correlation between BOLD adaptation and the two behavioral measures separately. They are unbiased because they tested the correlation between the behavioral measures and the BOLD adaptation at every voxel in the whole brain. Thus, this approach allows statistical inferences to be made, avoids any circularity in the statistics, and does not suffer from the inherent selection bias of region-of-interest-based approaches.
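The construction of the drift covariate and its relation to the adaptation effect at one voxel can be sketched as below. All values are hypothetical. The sign flip that makes larger covariate values mean stronger drift toward the rubber hand is an assumption of this sketch, because the text does not specify the sign convention used for the covariate.

```python
from statistics import mean


def drift_score(sync_drifts_cm, async_drifts_cm):
    """Difference of the average drifts: synchronous minus asynchronous."""
    return mean(sync_drifts_cm) - mean(async_drifts_cm)


def ols_slope_intercept(x, y):
    """Least-squares line y = intercept + slope * x for one predictor."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx


# One hypothetical participant: three drifts (cm) per condition.
example_score = drift_score([-4.0, -5.0, -3.0], [0.0, 1.0, -1.0])

# Hypothetical group data: drift scores (cm) and adaptation effects at one voxel.
scores = [-1.0, -2.0, -3.0, -4.0]
adaptation_effect = [0.5, 1.0, 1.5, 2.0]

# Assumption: flip the sign so that larger values mean more drift toward the rubber hand.
covariate = [-s for s in scores]

slope, intercept = ols_slope_intercept(covariate, adaptation_effect)
print(example_score, slope, intercept)
```

In a whole-brain analysis the same regression is evaluated at every voxel, which is what keeps the test free of voxel-selection bias.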

Results

Experiment 1: hand-centered encoding of space

We first probed the cortical mechanisms underlying the encoding of visually presented objects in coordinates centered on the upper limb. To this end, BOLD adaptation was assessed when an object was presented visually either near or far from the participant's right hand for two different arm postures. We could thus test the hypothesis that parietal and premotor areas remap peri-hand space along with the hand as it is moved to a different location. Such a finding would constitute compelling evidence for the dynamic encoding of space in hand-centered coordinates in the human brain.

The participants' right hand was placed fully visible on a table, either to the right or to the left of a central fixation point. We measured BOLD-adaptation responses to an object that was presented either on the right or the left side (Fig. 1A,B), i.e., near or far from the hand depending on the arm's position. The strength of this 2 × 2 factorial design is that the interaction term corresponds to adaptation responses specifically related to visual stimuli near the hand. This contrast effectively rules out all possible effects related to the absolute position of the visual stimuli in external space, proprioceptive and visual feedback from the arm in different postures, and low-level visual processing associated with the moving object.

Figure 1.

Setup for experiment 1. A, The participant's right hand was placed on a tilted table in front of them, on either the left or the right of a central fixation point (black cross). A three-dimensional object was presented to either the left or the right of the fixation point. The resulting 2 × 2 factorial design allowed direct testing for the selective encoding of the object within the peri-hand space (yellow halo around the hand). B, In each trial, the object appeared in one of the two locations for 6 s, with a jittered intertrial interval (7 ± 4 s) with no stimulation.

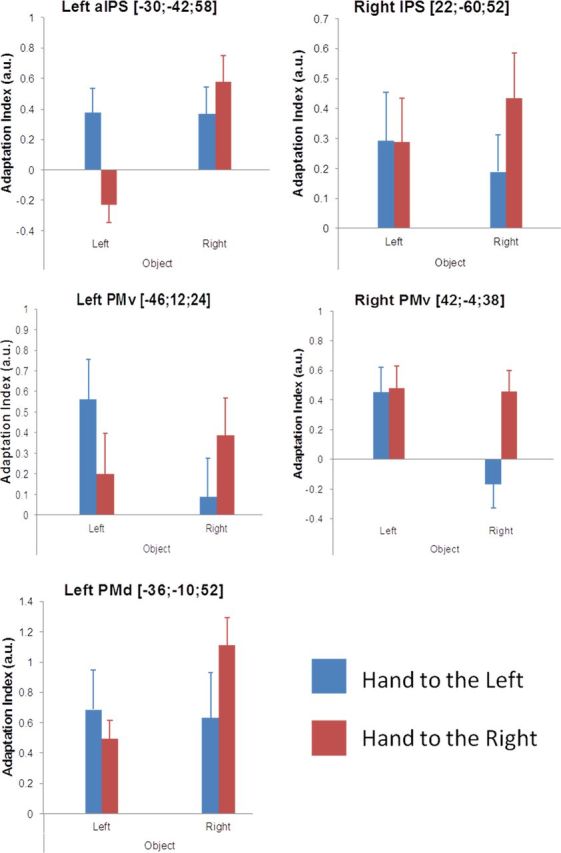

The interaction showed that the bilateral cortices lining the intraparietal sulcus (IPS; Fig. 2A,B), the bilateral ventral premotor cortices (PMv; Fig. 2C,E), and the left dorsal premotor cortex (PMd; Fig. 2D) exhibited adaptation specific to the encoding of visual stimuli in hand-centered coordinates. At a lower threshold (p = 0.001 uncorrected), a similar modulation was observed in the right putamen, which is worth reporting descriptively because this structure is thought to contain peripersonal space neurons in human (Brozzoli et al., 2011b) and non-human (Graziano et al., 1993) primates. These areas showed greater adaptation when the visual stimulus was presented near the hand compared with far away across the two arm positions (Table 1), a pattern of responses that is also evident from the effect size plots in Figure 2.

Additional post hoc analyses confirmed near-hand adaptation responses in all key areas during both conditions in which the object was presented near the hand (all p < 0.001 uncorrected, for [(ORfirst vs ORsecond)HR] and [(OLfirst vs OLsecond)HL] contrasts). This is also evident in Figure 3 in which we descriptively plot the effect size of the BOLD adaptation for the individual conditions. Furthermore, in the bilateral PMv and right IPS, we observed a similar degree of near-hand adaptation in the two arm postures (no significant differences for either the [(ORfirst vs ORsecond)HR] vs [(OLfirst vs OLsecond)HL] or the [(OLfirst vs OLsecond)HL] vs [(ORfirst vs ORsecond)HR] contrast), but in the left PMd and the left anterior IPS, the near-hand adaptation effect was greater when the arm was placed to the right (p < 0.001 uncorrected for [(ORfirst vs ORsecond)HR] vs [(OLfirst vs OLsecond)HL] contrast; Fig. 3). We speculate that the latter could reflect a greater representation of space surrounding the right hand in these left-lateralized areas when the right hand is placed in the right hemifield as opposed to the left one. It is important to point out that one should interpret the plotted adaptation responses for the individual conditions with great caution because they are not controlled for a number of factors (for example, arm posture, visual stimulation in different hemifields, encoding in head- or body-midline centered coordinates).

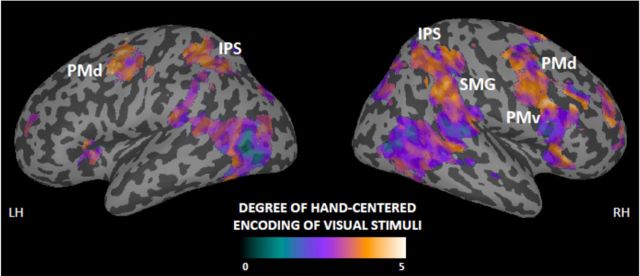

Figure 3.

Effect size of BOLD adaptation for individual conditions. The bar graphs report the adaptation index, calculated as the difference in contrast estimates between the first and the second presentation of the object, for each of the four conditions used to define the interaction contrast in the factorial design (blue bars refer to the conditions with the hand to the left and red bars to the conditions with the hand to the right; error bars represent SEM; the corresponding activation map is shown in Fig. 2). aIPS, Anterior IPS.

The experimental design also allowed us to test for spatial encoding of the visual stimuli regardless of the position of the hand (main effects of object position). As expected, these contrasts produced significant adaptation mainly in early visual areas, consistent with the repeated activation of retinotopically organized visual representations of the object. The anatomical distinction between these areas showing absence of hand-centered processing and the frontoparietal areas exhibiting hand-centered encoding is further illustrated descriptively in Figure 4. In this figure, we display the effect sizes of the interaction contrast (hand-centered encoding; yellow) and main effect contrast (absence of hand-centered encoding; blue–purple) as a single color-coded map for all voxels in the whole brain.

Figure 4.

Descriptive mapping of contrast estimates for the spatial encoding of visual stimuli. The figure displays areas showing BOLD adaptation to the visual stimulus using a gradient indexing the degree of hand-centered encoding (0 represents absence of hand-centered responses, whereas larger values represent stronger hand-centered encoding). Although early visual areas adapted to the object independently of its position relative to the hand, the posterior parietal and premotor cortices showed a high degree of hand-centered encoding. The anatomical labels correspond to the regions in which the hand-centered responses were statistically significant (interaction contrast; shown in Fig. 2). The map was derived as follows. We computed the difference between the contrast estimates for the interaction and each of the main effects of visual stimulation on the left or right side (see Materials and Methods). We then selected the minimum value of the two differences and assigned that to the corresponding voxel. The values were then rescaled into a color map starting from zero and overlaid onto the inflated cortical surfaces of the standard brain. We restricted this analysis to voxels showing a basic response to the presentation of the visual stimuli (by using the inclusive mask from the contrast “all visual stimuli vs baseline,” thresholded at p < 0.001 uncorrected). SMG, Supramarginal gyrus.
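The map derivation described in the Figure 4 legend can be sketched per voxel as follows. The input values are hypothetical, and the final rescaling step is interpreted here as clipping negative values to zero, which is an assumption: the legend states only that the values were rescaled into a color map starting from zero.

```python
def hand_centered_index(interaction, main_right, main_left):
    """Per-voxel index of hand-centered encoding.

    For each voxel, take the difference between the interaction estimate and
    each main-effect estimate, keep the smaller of the two differences, and
    clip at zero (assumed rescaling; see lead-in).
    """
    index = []
    for inter, right, left in zip(interaction, main_right, main_left):
        smallest = min(inter - right, inter - left)
        index.append(max(smallest, 0.0))
    return index


# Hypothetical contrast estimates for two voxels.
interaction_est = [2.0, 0.5]   # voxel 0: hand-centered; voxel 1: not
main_right_est = [0.5, 1.0]
main_left_est = [1.0, 0.25]

print(hand_centered_index(interaction_est, main_right_est, main_left_est))
```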

In summary, the results of the first experiment clearly demonstrate adaptation responses to visual stimuli in the premotor and parietal cortices and the putamen that are restricted to the space near the hand and that are anchored to the hand as it is moved in space. In other words, these areas display responses distinctly characteristic of neuronal encoding of objects in hand-centered coordinates.

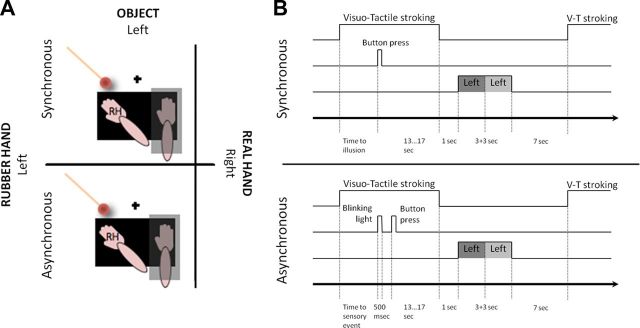

Experiment 2: remapping of hand-centered space onto a rubber hand that feels like one's own

We moved on to test the hypothesis that the remapping of hand-centered space is centrally mediated and directly related to the subjective perception of one's hand. To this end, we used the rubber hand illusion (Botvinick and Cohen, 1998) to experimentally manipulate the perceived ownership and localization of the hand. In this illusion, participants experience ownership over a prosthetic hand in direct view after a period of synchronous brushstrokes applied to the rubber and real hands, with the latter being hidden from view. Brushstrokes applied to the two hands asynchronously do not elicit the illusion and serve as a control for otherwise equivalent conditions. In the present experiment, we induced the illusion and then directly examined the dynamic remapping of hand-centered space onto the prosthetic hand immediately after the induction period (Fig. 5A,B). Importantly, the position of the prosthetic hand in the left hemifield and the position of the hidden real right hand matched the two hand positions used in experiment 1 (Fig. 5A; see Materials and Methods). Finally, because the illusion involves both a subjective feeling of owning the prosthetic hand and a drift in the perceived location of the hand (Botvinick and Cohen, 1998), we investigated how these two key percepts related to the neural hand-centered remapping responses.

Figure 5.

Setup for experiment 2. A, The participant's right hand was placed on the same tilted support as used in experiment 1. A rubber hand was placed to the left of the fixation point, in a position equivalent to the left position of the real right hand in experiment 1. A light-emitting diode served as the fixation point (black cross). Two paintbrushes were used to deliver synchronous or asynchronous visuotactile stimulation (top and bottom part, respectively). The spherical object (identical to that in experiment 1) was presented close to the rubber hand. B, A mixed block- and event-related design was used (see Materials and Methods). V-T, Visuotactile.

Behavioral and BOLD evidence confirming the illusion

To confirm the successful induction of the rubber hand illusion in the scanner environment, we conducted a questionnaire and an intermanual pointing experiment directly before the scanning session in a subset of participants (see Materials and Methods).

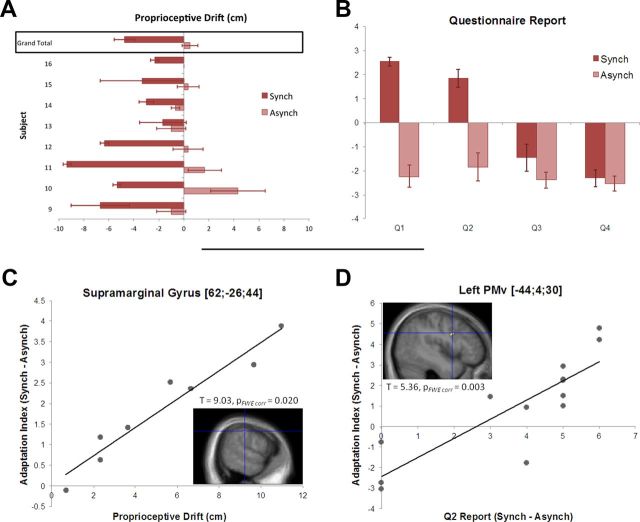

The results of the questionnaire showed that the participants experienced a significantly stronger sense of ownership over the prosthetic hand after the synchronous condition than the asynchronous one (Fig. 6B, Q2; Wilcoxon's signed-rank test, n = 13, Z = 2.80, p = 0.005). The participants also affirmed the illusory referral of touch to the rubber hand and, again, more strongly so after the synchronous condition compared with the asynchronous one (Fig. 6B, Q1; Wilcoxon's signed-rank test, n = 13, Z = 3.18, p = 0.002). Finally, the synchronous condition was accompanied by a significantly greater drift in the perceived location of the right hand toward the left side (i.e., toward the prosthetic hand) than the asynchronous condition (Fig. 6A; two-tailed t test, n = 8, t = 4.03, p = 0.005).
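As an illustration of the drift comparison reported above, the following minimal sketch computes a paired-samples t statistic in plain Python. It assumes that the synchronous and asynchronous drift measurements were compared within participants (the text reports a two-tailed t test but does not state the pairing explicitly). The function name `paired_t` and all numerical values are hypothetical and are not the data of the study.

```python
import math
import statistics


def paired_t(a, b):
    """Return (t, df) for a paired-samples t test on two equal-length samples."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with n >= 2")
    # Work on the within-participant differences.
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    mean_d = statistics.fmean(diffs)
    sd_d = statistics.stdev(diffs)  # sample SD (n - 1 denominator)
    if sd_d == 0:
        raise ValueError("differences have zero variance; t is undefined")
    t = mean_d / (sd_d / math.sqrt(n))
    return t, n - 1


# Hypothetical drift values in cm (negative = toward the rubber hand).
# These numbers are made up for illustration; they are NOT the study's data.
drift_sync = [-6.0, -4.0, -5.0, -3.0]
drift_async = [-1.0, 0.0, 1.0, 0.0]

t_stat, df = paired_t(drift_sync, drift_async)
print(f"t({df}) = {t_stat:.2f}")
```

A negative t value here would indicate a larger leftward drift after synchronous than after asynchronous stimulation; the p value would then be read from a t distribution with `df` degrees of freedom.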

Figure 6.

Correlations between behavioral percepts of the illusion and neural hand-centered remapping. A, Individual proprioceptive drift and group average. The bars represent the difference between post- and pre-measurements (negative values more to the left, positive values more to the right), in the synchronous and the asynchronous condition (average of 3 trials; error bars represent SEM). The drift was significantly larger in the synchronous than in the asynchronous condition (drift in the synchronous condition: −4.75 ± 2.6 cm, 2-tailed t test against 0, t = 4.53, p = 0.001; drift in the asynchronous condition: 0.5 ± 1.78 cm, 2-tailed t test against 0, t = 0.12, p = 0.45). B, Participants were asked to rate four statements on a scale from −3 (“completely disagree”) to +3 (“agree completely”) after 1 min of synchronous or asynchronous stimulation. They reported stronger illusory referral of touch to the rubber hand after the synchronous condition compared with the asynchronous one (Q1, Wilcoxon's signed-rank test, n = 13, Z = 3.18, p = 0.002). They also experienced a significantly stronger sense of ownership over the prosthetic hand after the synchronous than the asynchronous condition (Q2, Wilcoxon's signed-rank test, n = 13, Z = 2.80, p = 0.005). C, A whole-brain second-level regression model revealed a significant linear relationship (p < 0.05 corrected) between the proprioceptive drift toward the rubber hand and the effect size of the BOLD-adaptation response indexing hand-centered remapping to the rubber hand across individuals (contrast described in Fig. 7 and Materials and Methods). D, Significant linear regression (p < 0.05 corrected) between the subjectively rated strength of ownership (questionnaire data) and the BOLD-adaptation response indexing hand-centered remapping to the rubber hand across individuals. FWE, Familywise error.

Next, we sought fMRI evidence for the successful induction of the illusion. Direct comparison between the synchronous visuotactile stimulation period and the asynchronous control revealed significant activation in the bilateral ventral premotor and right intraparietal cortices and additional activation in the bilateral inferior parietal cortices and right anterior insular cortex. Previous fMRI studies revealed that the rubber hand illusion is associated with increased BOLD activation in these areas (Ehrsson et al., 2004). Therefore, the combination of the behavioral and imaging data presented above confirms that the participants were experiencing the rubber hand illusion during the scan sessions.

Adaptation responses indicate remapping of peri-hand space onto the rubber hand

We predicted that adaptation responses to the visual stimulus near the rubber hand would be significantly stronger after periods of synchronous rather than asynchronous stimulation. Such findings would be compatible with remapping of the peri-hand space representation onto the location of the rubber hand only when the latter is perceived as one's own, in analogy with the dynamic remapping we observed in experiment 1 when the participant's own hand was moved to the same location.

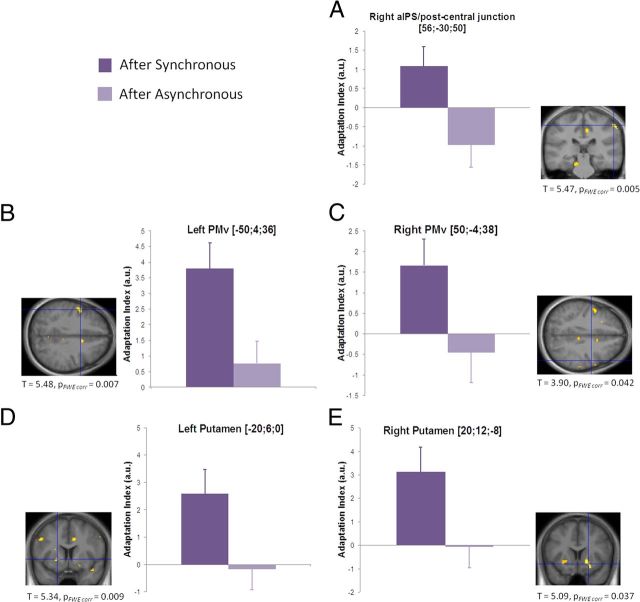

As predicted, the presentation of the object near the prosthetic hand led to stronger BOLD adaptation in the premotor, posterior parietal, and putaminal regions after the synchronous compared with the asynchronous stimulation periods (for all significant peaks, see Fig. 7, Table 2). It is noteworthy that this contrast was matched in terms of the visual input from the rubber hand and all low-level features of the visual stimulus. The peak of activation in the right posterior parietal cortex was centered on the most superior part of the supramarginal gyrus, with the cluster extending into the cortices lining the junction of the intraparietal and the postcentral sulci (Fig. 7A). In the premotor cortices, significant adaptation responses were found in bilateral PMv (Fig. 7B,C). We also observed bilateral adaptation in the putamen (Fig. 7D,E).

Figure 7.

Differential BOLD adaptation to an object presented near the prosthetic hand after the synchronous and asynchronous induction periods. The right IPS (A), PMv bilaterally (B, C), and the putamen bilaterally (D, E) exhibited significantly stronger BOLD adaptation to the repeated presentation of the ball near the prosthetic hand after the synchronous compared with the asynchronous condition. The bar graphs plot the effect size of the adaptation, for the synchronous and asynchronous conditions, separately (dark and light color, respectively; error bars represent SEM). aIPS, Anterior IPS; FWE, familywise error.

Table 2.

Experiment 2: remapping of hand-centered space onto the owned rubber hand

| Anatomical location | MNI x | MNI y | MNI z | Peak t value | Peak p value |
|---|---|---|---|---|---|
| R. anterior IPS/postcentral junction | 56 | −30 | 50 | 5.47 | 0.005 |
| L. precentral gyrus (PMv) | −50 | 4 | 36 | 5.48 | 0.007 |
| R. precentral gyrus (PMv) | 50 | −4 | 38 | 3.90 | 0.042 |
| L. putamen | −20 | 6 | 0 | 5.34 | 0.009 |
| R. putamen | 20 | 12 | −8 | 5.09 | 0.037 |
| R. lateral parietal operculum | 48 | −10 | 24 | 5.40 | <0.001* |
| L. IPS | −28 | −74 | 50 | 3.90 | <0.001* |
| L. supramarginal gyrus | −60 | −28 | 34 | 3.73 | 0.001* |

*Uncorrected for multiple comparisons. Contrast: [(Synch_first vs Synch_second) vs (Asynch_first vs Asynch_second)].

In summary, the pattern of frontoparietal adaptation responses observed is fully compatible with the encoding of the object in a spatial reference frame remapped onto the rubber hand, only when participants experience it as their own hand.
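The contrast given beneath Table 2 can be read as an interaction between presentation order and stimulation condition. The sketch below shows that arithmetic under two assumptions that the text does not spell out: that each "vs" denotes a subtraction of response estimates, and that adaptation is expressed as the first presentation minus the second (so that response suppression yields a positive value). The function names, the weight vector, and the numbers are hypothetical and serve only as an illustration.

```python
def adaptation(first, second):
    """Adaptation effect: response to the first presentation minus the second."""
    return first - second


def remapping_contrast(sync_first, sync_second, async_first, async_second):
    """(Synch first - Synch second) - (Asynch first - Asynch second)."""
    return adaptation(sync_first, sync_second) - adaptation(async_first, async_second)


# The same interaction written as a weight vector over the four regressors,
# in the order: Synch first, Synch second, Asynch first, Asynch second.
CONTRAST_WEIGHTS = (1, -1, -1, 1)

# Hypothetical response estimates in arbitrary units; NOT values from the study.
estimates = (1.5, 0.5, 1.25, 1.0)

effect = remapping_contrast(*estimates)
effect_from_weights = sum(w * e for w, e in zip(CONTRAST_WEIGHTS, estimates))
print(effect, effect_from_weights)
```

Under these assumptions, a positive value means stronger adaptation after synchronous than after asynchronous stimulation, which is the pattern described for the regions in Table 2.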

Contribution of hand-centered space remapping to sense of position and ownership of the hand

Next, we looked for areas displaying a systematic relationship between the degree of neural hand-centered spatial remapping and the degree of perceptual changes experienced during the illusion. To this end, we ran two independent whole-brain linear-regression analyses in experiment 2 in which we looked for the following: (1) correlations between the subjectively rated strength of ownership (questionnaire data) and the effect size of the BOLD-adaptation response indicative of hand-centered remapping of space onto the rubber hand; and (2) correlations between the proprioceptive drift toward the rubber hand and the same BOLD-adaptation effect size (for details, see Materials and Methods).

The results showed that the more individual participants mislocalized the location of their right hand toward the location of the rubber hand, the stronger the adaptation responses indicative of hand-centered remapping of space in the right posterior parietal cortex, with the peak centered on the superior segment of the supramarginal gyrus (Fig. 6C). The cluster extended toward the anterior part of the IPS, and the location of the peak in MNI space corresponded well with the adaptation response observed in the main analysis of experiment 2 (compare with Fig. 7A). In contrast, we found that the stronger the participants rated the feeling of ownership over the rubber hand, the stronger the rubber hand-centered adaptation in the left PMv (Fig. 6D). Again, the location of this peak corresponded well with the adaptation responses observed in this area in the main analysis of experiment 2 (compare with Fig. 7B).
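The across-participant relationships described above are linear regressions of a neural effect size on a behavioral score. As a rough illustration of what is estimated at a single location, the sketch below fits an ordinary least-squares line and computes Pearson's r in plain Python. It is not the whole-brain second-level model used in the study; the function name `simple_regression` and every value are hypothetical.

```python
import math


def simple_regression(x, y):
    """Return (slope, intercept, r) for a least-squares fit of y on x."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two equal-length samples with n >= 3")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sums of squares and cross-products around the means.
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    if sxx == 0 or syy == 0:
        raise ValueError("both variables must vary across participants")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r = sxy / math.sqrt(sxx * syy)
    return slope, intercept, r


# Hypothetical per-participant values; NOT the study's data.
# Drift is given as magnitude (cm) toward the rubber hand.
drift_cm = [1.0, 2.0, 3.0, 4.0, 5.0]
adaptation_effect = [0.3, 0.5, 0.6, 0.9, 1.2]

slope, intercept, r = simple_regression(drift_cm, adaptation_effect)
print(f"slope = {slope:.2f}, intercept = {intercept:.2f}, r = {r:.3f}")
```

In a whole-brain analysis, a fit of this kind is evaluated at every voxel and the resulting statistics are corrected for multiple comparisons, as indicated in the caption of Figure 6.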

Discussion

This study has two main findings. First, we showed that neuronal populations in the human intraparietal, premotor, and inferior parietal cortices and in the putamen construct a dynamic representation of peri-hand space in coordinates centered on the upper limb. Second, we revealed the link between the hand-centered encoding of space and the perception of the hand with respect to its location and identity. These findings are relevant because they associate the encoding of peripersonal space with the perceived ownership and localization of limbs, which has an important bearing on models of bodily self-perception (Graziano and Botvinick, 2002; Botvinick, 2004; Makin et al., 2008; Tsakiris, 2010; Ehrsson, 2012).

Dynamic remapping of the hand-centered representation of space

A set of premotor–parietal–putaminal regions showed selective BOLD adaptation to visual stimuli presented near the participant's hand across different postures. Such adaptation responses are more closely related to the receptive field properties of neurons than are the responses obtained with a traditional fMRI analysis (Grill-Spector et al., 2006; Weigelt et al., 2008; Doeller et al., 2010; Malach, 2012). Crucially, we can rule out general effects related to the arm crossing the body midline (Lloyd et al., 2003) and encoding of visual stimuli in reference frames not centered on the upper limb, e.g., head centered (Fischer et al., 2012), eye centered (Bernier and Grafton, 2010), or allocentric. Furthermore, the object was always presented within reaching space. Consequently, our findings are specifically related to peri-hand space rather than to a general representation of reaching space, providing compelling evidence for encoding in hand- or arm-centered coordinates.

In experiment 1, the peri-hand space remapping across the two arm positions was mediated by the integration of afferent visual and proprioceptive signals. The ventral premotor and intraparietal cortices receive such afferent sensory information via projections from somatosensory and visual areas (Rizzolatti et al., 1998; Graziano and Botvinick, 2002), forming with the putamen a visual–somesthetic network that processes the space on and near the body surface (Graziano et al., 1997). In contrast, during the rubber hand illusion, the peri-hand space remapping onto the rubber hand occurred as a result of a central process. Here, an initial conflict between the seen and felt positions of the hand is resolved by the recalibration of the peri-hand space representation so that tactile, visual, and proprioceptive signals fuse perceptually. Crucially, specific peri-hand space BOLD adaptation was detected in the premotor and posterior parietal cortices when both the real hand was physically moved and the arm was perceived to have moved by means of the illusion. This suggests that peri-hand space remapping arises from the dynamic integration of visual and proprioceptive signals at the level of multimodal frontoparietal areas.

Our results also shed light on the neural mechanism underlying behavioral and neurophysiological findings reporting selective processing of peri-hand visual stimuli (di Pellegrino et al., 1997; Farnè et al., 2000; Pavani and Castiello, 2004; Spence et al., 2004; Makin et al., 2009). An attentional account has been proposed as a possible interpretation for part of these behavioral findings (Spence et al., 2000, 2004; Kennett et al., 2001), in line with the notion that peripersonal space and cross-modal spatial attention might share common mechanisms (Maravita et al., 2003; Driver and Noesselt, 2008). The hand-centered spatial remapping allows the encoding of visual stimuli within the same reference frame as somatosensory information, leading to more robust multisensory interactions that can facilitate behavior.

Peri-hand space and sense of position

Our findings clarify that information about arm position is present in the bilateral ventral premotor, the right anterior intraparietal, and the bilateral inferior parietal cortices (Young et al., 2004; Naito et al., 2005), providing more direct evidence for the link between the arm position sense and the representation of peri-hand space than previous studies. In previous research, visual stimuli have been presented near the hand placed in a single position (Makin et al., 2007; Brozzoli et al., 2011b). Other studies have manipulated the posture of the arm but without assessing the selective encoding of peri-hand space (Lloyd et al., 2003). Here, we revealed remapping of peri-hand space by testing for selective encoding in combination with a postural manipulation.

The strongest support for a link to the perceived hand position was found in the posterior parietal cortex, in which peri-hand space remapping onto the rubber hand correlated significantly with the proprioceptive drift toward the rubber hand. This is in keeping with the known neurophysiological functions of the posterior parietal cortex and its role in supporting the body-schema representation (Head and Holmes, 1911; Kammers et al., 2009) and the planning of manual actions (Culham and Valyear, 2006; Gallivan et al., 2011). Neurons in area 5 of the macaque brain encode the hand position by integrating visual and proprioceptive signals (Graziano et al., 2000; Graziano and Botvinick, 2002). Similarly, the human intraparietal cortex integrates visual and proprioceptive information about the upper limb (Lloyd et al., 2003; Ehrsson et al., 2004). Here, we provide evidence for the role of this area in constructing a “proprioceptive skeleton” for the representation of peripersonal space, onto which selective visual responses can be grounded (Cardinali et al., 2009).

These findings concur with a hierarchical view on proprioception whereby afferent signals from muscles, skin, and joints first reach their cortical targets in primary somatosensory (Iwamura et al., 1983; Pons et al., 1992; Naito et al., 2005) and motor (Lemon and Porter, 1976; Naito et al., 2002) cortices. The information is then transferred via direct connections to areas in the intraparietal (Cavada and Goldman-Rakic, 1989; Lewis and Van Essen, 2000) and premotor (Luppino et al., 1999; Rizzolatti and Luppino, 2001) cortices in which proprioceptive signals are integrated with visual, auditory, and vestibular signals in a common reference frame. The result is a representation of the arm position encoded in the same coordinates used for nearby objects, facilitating object-directed actions (Jeannerod et al., 1995; Tunik et al., 2005; Makin et al., 2012). This is consistent with an involvement of parietal and premotor regions in action planning and execution (Fogassi and Luppino, 2005; Culham and Valyear, 2006; Bernier and Grafton, 2010).

Peri-hand space and limb ownership

Our data suggest that, during the rubber hand illusion, the central representation of peri-hand space is remapped onto the owned model hand. Interestingly, we found the strongest association between the feeling of limb ownership and the coding of hand-centered space in the PMv. In this area, the degree of hand-centered spatial encoding correlated with the subjective sense of hand ownership. This is consistent with previous studies that related ventral premotor activity to the subjective level of ownership (Ehrsson et al., 2004, 2005; Petkova et al., 2011). This sheds light on the nature of the multisensory mechanisms mediating body ownership. In fact, despite the current consensus that we come to experience limbs (Botvinick and Cohen, 1998; Ehrsson et al., 2004; Ehrsson, 2007) and whole bodies as ours (Lenggenhager et al., 2007; Ionta et al., 2011; Petkova et al., 2011; Schmalzl and Ehrsson, 2011) as a result of interactions between vision, touch, and proprioception, the precise mechanisms have remained unclear. Based on changes in visual sensitivity of peri-hand neurons when objects were presented close to a visible fake arm, Graziano speculated that remapping of peri-hand space could support the embodiment of prosthetic limbs (Graziano, 1999; Graziano et al., 2000), although he could not test this. Ehrsson et al. (2004) theorized that the premotor activity associated with the feeling of limb ownership in humans might reflect multisensory integration in hand-centered coordinates. The present results speak to these hypotheses because they inform us about the body-part-centered reference frame used in the neural computations supporting self-attribution of limbs. 
This is an important conclusion because it constrains models of body ownership (Makin et al., 2008; Tsakiris, 2010; Ehrsson, 2012), explains the spatial limits described in behavioral studies (Tsakiris and Haggard, 2005; Costantini and Haggard, 2007; Lloyd, 2007; Tsakiris et al., 2007; Folegatti et al., 2012), and provides a framework within which to study the peripersonal space as a crucial boundary zone between self and non-self.

Concluding remarks

Previous studies investigated the representation of the peripersonal space by examining behavioral responses in patients with brain lesions (Farnè et al., 2000, 2005), probing reaction times during presentation of cross-modal stimuli near the body (Spence et al., 2004), and registering changes in the excitability of the primary motor cortex to the presentation of objects near the hands (Makin et al., 2009). Here, we have taken a more direct approach and measured the BOLD-adaptation signatures of hand-centered encoding of space in the human brain. Unlike previous fMRI studies (Lloyd et al., 2003; Sereno and Huang, 2006; Makin et al., 2007; Macaluso and Maravita, 2010; Brozzoli et al., 2011b), we directly tested for hand-centered encoding by manipulating the position of the hand in view. Furthermore, we provided evidence that such encoding is directly related to changes in body perception. Thus, the present data bridge a gap between neurophysiological studies on non-human primates and behavioral and neuropsychological observations in humans and extend our knowledge of the brain mechanisms involved in the representation of the peripersonal space.

Notes

Supplemental material for this article is available at http://130.237.111.254/ehrsson/pdfs/Brozzoli&Gentile_et_al._SI.pdf. This material has not been peer reviewed.

Footnotes

This study was funded by the European Research Council, the Swedish Foundation for Strategic Research, the Human Frontier Science Program, the James S. McDonnell Foundation, the Swedish Research Council, and Söderberska Stiftelsen. C.B. was supported by Marie Curie Actions, and G.G. is supported by Karolinska Institute. The fMRI scans were conducted at the MR-Centre at the Karolinska University Hospital Huddinge. We thank Tamar R. Makin for comments on a previous version of this manuscript and Alexander Skoglund for technical support.

The authors declare no competing financial interests.

References

- Andersen RA. Multimodal integration for the representation of space in the posterior parietal cortex. Philos Trans R Soc Lond B Biol Sci. 1997;352:1421–1428. doi: 10.1098/rstb.1997.0128.

- Bernier PM, Grafton ST. Human posterior parietal cortex flexibly determines reference frames for reaching based on sensory context. Neuron. 2010;68:776–788. doi: 10.1016/j.neuron.2010.11.002.

- Botvinick M. Neuroscience. Probing the neural basis of body ownership. Science. 2004;305:782–783. doi: 10.1126/science.1101836.

- Botvinick M, Cohen J. Rubber hands “feel” touch that eyes see. Nature. 1998;391:756. doi: 10.1038/35784.

- Brozzoli C, Makin T, Cardinali L, Holmes N, Farnè A. Peripersonal space: A multisensory interface for body-object interactions. In: Murray MM, Wallace MT, editors. Frontiers in the neural basis of multisensory processes. London: Taylor and Francis; 2011a.

- Brozzoli C, Gentile G, Petkova VI, Ehrsson HH. fMRI-adaptation reveals a cortical mechanism for the coding of space near the hand. J Neurosci. 2011b;31:9023–9031. doi: 10.1523/JNEUROSCI.1172-11.2011.

- Cardinali L, Brozzoli C, Farnè A. Peripersonal space and body schema: two labels for the same concept? Brain Topogr. 2009;21:252–260. doi: 10.1007/s10548-009-0092-7.

- Cavada C, Goldman-Rakic PS. Posterior parietal cortex in rhesus monkey: I. Parcellation of areas based on distinctive limbic and sensory corticocortical connections. J Comp Neurol. 1989;287:393–421. doi: 10.1002/cne.902870402.

- Costantini M, Haggard P. The rubber hand illusion: sensitivity and reference frame for body ownership. Conscious Cogn. 2007;16:229–240. doi: 10.1016/j.concog.2007.01.001.

- Culham JC, Valyear KF. Human parietal cortex in action. Curr Opin Neurobiol. 2006;16:205–212. doi: 10.1016/j.conb.2006.03.005.

- di Pellegrino G, Làdavas E, Farnè A. Seeing where your hands are. Nature. 1997;388:730. doi: 10.1038/41921.

- Doeller CF, Barry C, Burgess N. Evidence for grid cells in a human memory network. Nature. 2010;463:657–661. doi: 10.1038/nature08704.

- Driver J, Noesselt T. Multisensory interplay reveals crossmodal influences on “sensory-specific” brain regions, neural responses, and judgments. Neuron. 2008;57:11–23. doi: 10.1016/j.neuron.2007.12.013.

- Duhamel JR, Colby CL, Goldberg ME. Ventral intraparietal area of the macaque: congruent visual and somatic response properties. J Neurophysiol. 1998;79:126–136. doi: 10.1152/jn.1998.79.1.126.

- Ehrsson HH. The experimental induction of out-of-body experiences. Science. 2007;317:1048. doi: 10.1126/science.1142175.

- Ehrsson HH. The concept of body ownership and its relation to multisensory integration. In: Stein BE, editor. The new handbook of multisensory processes. Cambridge, MA: Massachusetts Institute of Technology; 2012. in press.

- Ehrsson HH, Spence C, Passingham RE. That's my hand! Activity in premotor cortex reflects feeling of ownership of a limb. Science. 2004;305:875–877. doi: 10.1126/science.1097011.

- Ehrsson HH, Kito T, Sadato N, Passingham RE, Naito E. Neural substrate of body size: illusory feeling of shrinking of the waist. PLoS Biol. 2005;3:e412. doi: 10.1371/journal.pbio.0030412.

- Farnè A, Pavani F, Meneghello F, Làdavas E. Left tactile extinction following visual stimulation of a rubber hand. Brain. 2000;123:2350–2360. doi: 10.1093/brain/123.11.2350.

- Farnè A, Demattè ML, Làdavas E. Neuropsychological evidence of modular organization of the near peripersonal space. Neurology. 2005;65:1754–1758. doi: 10.1212/01.wnl.0000187121.30480.09.

- Fischer E, Bülthoff HH, Logothetis NK, Bartels A. Human areas V3A and V6 compensate for self-induced planar visual motion. Neuron. 2012;73:1228–1240. doi: 10.1016/j.neuron.2012.01.022.

- Fogassi L, Luppino G. Motor functions of the parietal lobe. Curr Opin Neurobiol. 2005;15:626–631. doi: 10.1016/j.conb.2005.10.015.

- Fogassi L, Gallese V, Fadiga L, Luppino G, Matelli M, Rizzolatti G. Coding of peripersonal space in inferior premotor cortex (area F4). J Neurophysiol. 1996;76:141–157. doi: 10.1152/jn.1996.76.1.141.

- Folegatti A, Farnè A, Salemme R, de Vignemont F. The rubber hand illusion: two's a company, but three's a crowd. Conscious Cogn. 2012;21:799–812. doi: 10.1016/j.concog.2012.02.008.

- Gallivan JP, McLean DA, Smith FW, Culham JC. Decoding effector-dependent and effector-independent movement intentions from human parieto-frontal brain activity. J Neurosci. 2011;31:17149–17168. doi: 10.1523/JNEUROSCI.1058-11.2011.

- Gentile G, Petkova VI, Ehrsson HH. Integration of visual and tactile signals from the hand in the human brain: an fMRI study. J Neurophysiol. 2011;105:910–922. doi: 10.1152/jn.00840.2010.

- Graziano MS. Where is my arm? The relative role of vision and proprioception in the neuronal representation of limb position. Proc Natl Acad Sci U S A. 1999;96:10418–10421. doi: 10.1073/pnas.96.18.10418.

- Graziano MS, Gross CG. A bimodal map of space: somatosensory receptive fields in the macaque putamen with corresponding visual receptive fields. Exp Brain Res. 1993;97:96–109. doi: 10.1007/BF00228820.

- Graziano MS, Yap GS, Gross CG. Coding of visual space by premotor neurons. Science. 1994;266:1054–1057. doi: 10.1126/science.7973661.

- Graziano MS, Hu XT, Gross CG. Visuospatial properties of ventral premotor cortex. J Neurophysiol. 1997;77:2268–2292. doi: 10.1152/jn.1997.77.5.2268.

- Graziano MS, Cooke DF, Taylor CS. Coding the location of the arm by sight. Science. 2000;290:1782–1786. doi: 10.1126/science.290.5497.1782.

- Graziano MS, Botvinick MM. How the brain represents the body: insights from neurophysiology and psychology. In: Prinz W, Hommel B, editors. Common mechanisms in perception and action: attention and performance XIX. Oxford: Oxford UP; 2002. pp. 136–157.

- Grill-Spector K, Henson R, Martin A. Repetition and the brain: neural models of stimulus-specific effects. Trends Cogn Sci. 2006;10:14–23. doi: 10.1016/j.tics.2005.11.006.

- Hall ET. The hidden dimension. Garden City, NY: Anchor Books; 1966.

- Halligan PW, Marshall JC. Left neglect for near but not far space in man. Nature. 1991;350:498–500. doi: 10.1038/350498a0.

- Head H, Holmes G. Sensory disturbances from cerebral lesions. Brain. 1911–1912;34:102–254.

- Hyvärinen J, Poranen A. Function of the parietal associative area 7 as revealed from cellular discharges in alert monkeys. Brain. 1974;97:673–692. doi: 10.1093/brain/97.1.673.

- Ionta S, Heydrich L, Lenggenhager B, Mouthon M, Fornari E, Chapuis D, Gassert R, Blanke O. Multisensory mechanisms in temporo-parietal cortex support self-location and first-person perspective. Neuron. 2011;70:363–374. doi: 10.1016/j.neuron.2011.03.009.

- Iwamura Y, Tanaka M, Sakamoto M, Hikosaka O. Functional subdivisions representing different finger regions in area 3 of the first somatosensory cortex of the conscious monkey. Exp Brain Res. 1983;51:315–326.

- Jeannerod M, Arbib MA, Rizzolatti G, Sakata H. Grasping objects: the cortical mechanisms of visuomotor transformation. Trends Neurosci. 1995;18:314–320.

- Kammers MP, Verhagen L, Dijkerman HC, Hogendoorn H, De Vignemont F, Schutter DJ. Is this hand for real? Attenuation of the rubber hand illusion by transcranial magnetic stimulation over the inferior parietal lobule. J Cogn Neurosci. 2009;21:1311–1320. doi: 10.1162/jocn.2009.21095.

- Kennett S, Eimer M, Spence C, Driver J. Tactile-visual links in exogenous spatial attention under different postures: convergent evidence from psychophysics and ERPs. J Cogn Neurosci. 2001;13:462–478. doi: 10.1162/08989290152001899.

- Lemon RN, Porter R. Afferent input to movement-related precentral neurones in conscious monkeys. Proc R Soc Lond B Biol Sci. 1976;194:313–339. doi: 10.1098/rspb.1976.0082.

- Lenggenhager B, Tadi T, Metzinger T, Blanke O. Video ergo sum: manipulating bodily self-consciousness. Science. 2007;317:1096–1099. doi: 10.1126/science.1143439.

- Lewis JW, Van Essen DC. Corticocortical connections of visual, sensorimotor, and multimodal processing areas in the parietal lobe of the macaque monkey. J Comp Neurol. 2000;428:112–137. doi: 10.1002/1096-9861(20001204)428:1<112::aid-cne8>3.0.co;2-9.

- Lloyd DM. Spatial limits on referred touch to an alien limb may reflect boundaries of visuo-tactile peripersonal space surrounding the hand. Brain Cogn. 2007;64:104–109. doi: 10.1016/j.bandc.2006.09.013.

- Lloyd DM, Shore DI, Spence C, Calvert GA. Multisensory representation of limb position in human premotor cortex. Nat Neurosci. 2003;6:17–18. doi: 10.1038/nn991.

- Luppino G, Murata A, Govoni P, Matelli M. Largely segregated parietofrontal connections linking rostral intraparietal cortex (areas AIP and VIP) and the ventral premotor cortex (areas F5 and F4) Exp Brain Res. 1999;128:181–187. doi: 10.1007/s002210050833. [DOI] [PubMed] [Google Scholar]

- Macaluso E, Maravita A. The representation of space near the body through touch and vision. Neuropsychologia. 2010;48:782–795. doi: 10.1016/j.neuropsychologia.2009.10.010. [DOI] [PubMed] [Google Scholar]

- Makin TR, Holmes NP, Zohary E. Is that near my hand? Multisensory representation of peripersonal space in human intraparietal sulcus. J Neurosci. 2007;27:731–740. doi: 10.1523/JNEUROSCI.3653-06.2007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Makin TR, Holmes NP, Ehrsson HH. On the other hand: dummy hands and peripersonal space. Behav Brain Res. 2008;191:1–10. doi: 10.1016/j.bbr.2008.02.041. [DOI] [PubMed] [Google Scholar]

- Makin TR, Holmes NP, Brozzoli C, Rossetti Y, Farnè A. Coding of visual space during motor preparation: approaching objects rapidly modulate corticospinal excitability in hand-centered coordinates. J Neurosci. 2009;29:11841–11851. doi: 10.1523/JNEUROSCI.2955-09.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Makin TR, Holmes NP, Brozzoli C, Farnè A. Keeping the world at hand: rapid visuomotor processing for hand-object interactions. Exp Brain Res. 2012;219:421–428. doi: 10.1007/s00221-012-3089-5. [DOI] [PubMed] [Google Scholar]

- Malach R. Targeting the functional properties of cortical neurons using fMR-adaptation. Neuroimage. 2012;62:1163–1169. doi: 10.1016/j.neuroimage.2012.01.002. [DOI] [PubMed] [Google Scholar]

- Maravita A, Spence C, Driver J. Multisensory integration and the body schema: close to hand and within reach. Curr Biol. 2003;13:R531–R539. doi: 10.1016/s0960-9822(03)00449-4. [DOI] [PubMed] [Google Scholar]

- Naito E, Roland PE, Ehrsson HH. “I feel my hand moving”: a new role of the primary motor cortex in somatic perception of limb movement. Neuron. 2002;36:979–988. doi: 10.1016/s0896-6273(02)00980-7. [DOI] [PubMed] [Google Scholar]

- Naito E, Roland PE, Grefkes C, Choi HJ, Eickhoff S, Geyer S, Zilles K, Ehrsson HH. Dominance of the right hemisphere and role of area 2 in human kinesthesia. J Neurophysiol. 2005;93:1020–1034. doi: 10.1152/jn.00637.2004. [DOI] [PubMed] [Google Scholar]

- Pavani F, Castiello U. Binding personal and extrapersonal space through body shadows. Nat Neurosci. 2004;7:14–16. doi: 10.1038/nn1167. [DOI] [PubMed] [Google Scholar]

- Petkova VI, Björnsdotter M, Gentile G, Jonsson T, Li TQ, Ehrsson HH. From part- to whole-body ownership in the multisensory brain. Curr Biol. 2011;21:1118–1122. doi: 10.1016/j.cub.2011.05.022.

- Pons TP, Garraghty PE, Mishkin M. Serial and parallel processing of tactual information in somatosensory cortex of rhesus monkeys. J Neurophysiol. 1992;68:518–527. doi: 10.1152/jn.1992.68.2.518.

- Rizzolatti G, Luppino G. The cortical motor system. Neuron. 2001;31:889–901. doi: 10.1016/s0896-6273(01)00423-8.

- Rizzolatti G, Scandolara C, Matelli M, Gentilucci M. Afferent properties of periarcuate neurons in macaque monkeys. I. Somatosensory responses. Behav Brain Res. 1981a;2:125–146. doi: 10.1016/0166-4328(81)90052-8.

- Rizzolatti G, Scandolara C, Matelli M, Gentilucci M. Afferent properties of periarcuate neurons in macaque monkeys. II. Visual responses. Behav Brain Res. 1981b;2:147–163. doi: 10.1016/0166-4328(81)90053-x.

- Rizzolatti G, Luppino G, Matelli M. The organization of the cortical motor system: new concepts. Electroencephalogr Clin Neurophysiol. 1998;106:283–296. doi: 10.1016/s0013-4694(98)00022-4.

- Salinas E, Abbott LF. A model of multiplicative neural responses in parietal cortex. Proc Natl Acad Sci U S A. 1996;93:11956–11961. doi: 10.1073/pnas.93.21.11956.

- Schmalzl L, Ehrsson HH. Experimental induction of a perceived “telescoped” limb using a full-body illusion. Front Hum Neurosci. 2011;5:34. doi: 10.3389/fnhum.2011.00034.

- Sereno MI, Huang RS. A human parietal face area contains aligned head-centered visual and tactile maps. Nat Neurosci. 2006;9:1337–1343. doi: 10.1038/nn1777.

- Spence C, Pavani F, Driver J. Crossmodal links between vision and touch in covert endogenous spatial attention. J Exp Psychol Hum Percept Perform. 2000;26:1298–1319. doi: 10.1037//0096-1523.26.4.1298.

- Spence C, Pavani F, Driver J. Spatial constraints on visual-tactile cross-modal distractor congruency effects. Cogn Affect Behav Neurosci. 2004;4:148–169. doi: 10.3758/cabn.4.2.148.

- Tsakiris M. My body in the brain: a neurocognitive model of body ownership. Neuropsychologia. 2010;48:703–712. doi: 10.1016/j.neuropsychologia.2009.09.034.

- Tsakiris M, Haggard P. The rubber hand illusion revisited: visuotactile integration and self-attribution. J Exp Psychol Hum Percept Perform. 2005;31:80–91. doi: 10.1037/0096-1523.31.1.80.

- Tsakiris M, Hesse MD, Boy C, Haggard P, Fink GR. Neural signatures of body ownership: a sensory network for bodily self-consciousness. Cereb Cortex. 2007;17:2235–2244. doi: 10.1093/cercor/bhl131.

- Tunik E, Frey SH, Grafton ST. Virtual lesions of the anterior intraparietal area disrupt goal-dependent on-line adjustments of grasp. Nat Neurosci. 2005;8:505–511. doi: 10.1038/nn1430.

- Weigelt S, Muckli L, Kohler A. Functional magnetic resonance adaptation in visual neuroscience. Rev Neurosci. 2008;19:363–380. doi: 10.1515/revneuro.2008.19.4-5.363.

- Young JP, Herath P, Eickhoff S, Choi J, Grefkes C, Zilles K, Roland PE. Somatotopy and attentional modulation of the human parietal and opercular regions. J Neurosci. 2004;24:5391–5399. doi: 10.1523/JNEUROSCI.4030-03.2004.