Significance

We show evidence of a division of labor in sensory coding and behavior between neurons recorded within the superficial and deep layers of visual cortex. Neurons within the superficial layers of extrastriate area V4 outperformed their deep layer counterparts in coding the orientation of visual stimuli, in both latency and accuracy. In contrast, neurons within the deep layers outperformed their superficial layer counterparts in coding selective attention and the planning of eye movements, particularly the latter. The results suggest that a similar laminar division of labor may exist across sensory neocortex.

Keywords: attention, neural coding, visual cortex

Abstract

Neurons in sensory areas of the neocortex are known to represent information about both sensory stimuli and behavioral state, but how these 2 disparate signals are integrated across cortical layers is poorly understood. To study this issue, we measured the coding of visual stimulus orientation and of behavioral state by neurons within superficial and deep layers of area V4 in monkeys while they covertly attended or prepared eye movements to visual stimuli. We show that whereas single neurons and neuronal populations in the superficial layers conveyed more information about the orientation of visual stimuli than neurons in deep layers, the opposite was true of information about the behavioral relevance of those stimuli. In particular, deep layer neurons encoded more information about the direction of planned eye movements than superficial neurons. These results suggest a division of labor between cortical layers in the coding of visual input and visually guided behavior.

Extrastriate area V4 comprises an intermediate processing stage in the primate visual hierarchy (1, 2). V4 neurons exhibit selectivity for color (3, 4), orientation (5, 6), and contour (7, 8), and appear to be segregated according to some of these properties across the cortical surface (9). Beyond their purely sensory properties, V4 neurons are also known to encode information about behavioral and cognitive factors, particularly covert attention (10), but also reward value (11) and the direction of planned saccadic eye movements (12–14). As in other neocortical areas, V4 has a characteristic laminar structure, in which granular layer IV neurons receive feedforward sensory input from hierarchically “lower” visual cortical areas, namely areas V1 and V2 (1, 15–17). Projections from area V4 to hierarchically “higher” visual areas, such as the temporal-occipital area (TEO) and posterior inferotemporal cortex, originate largely from layers II–III (18), whereas layer V neurons project back to V1 and V2 and subcortically to the superior colliculus (18–20).

Recent studies have found laminar differences in attention-related modulation of neural activity. Buffalo et al. (21) observed that changes in local field potential power due to the deployment of covert attention differed between superficial and deep layers: gamma-band increases were found in superficial layers and alpha-band decreases in deep layers. Increases in firing rate with attention were similar in both laminar divisions. Nandy et al. (22) compared attention-driven changes in spiking activity across 3 laminar compartments of V4 and observed significant firing rate modulation in superficial, granular, and deep layers. In addition, they observed subtle but reliable differences across layers in other aspects of activity (e.g., spike count correlations). However, no previous study has compared stimulus tuning properties or looked for differences in other types of behavioral modulation across layers.

To investigate the layer dependence of stimulus and behavioral modulation in area V4, we measured the selectivity of V4 neurons to both factors in monkeys performing an attention-demanding task that dissociated covert attention from eye movement preparation. We then compared the orientation tuning and behavioral modulation of neurons and neuronal populations recorded in superficial and deep layers.

Results

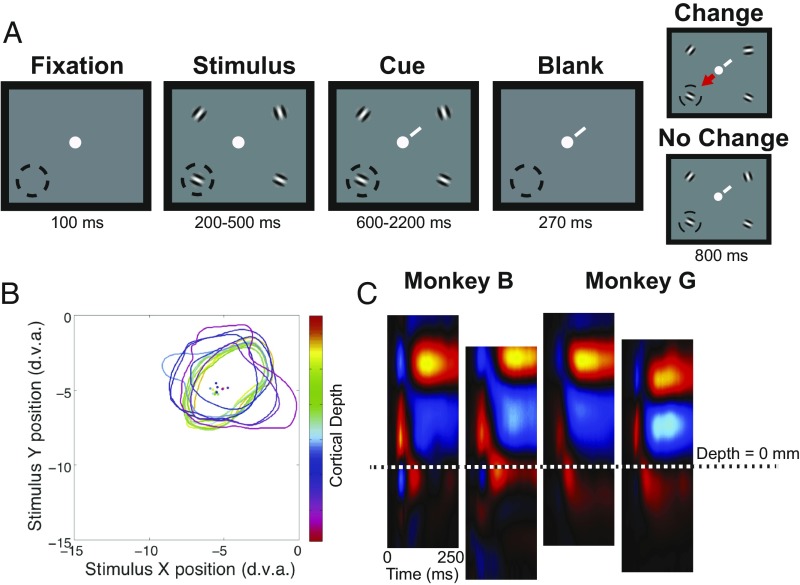

Two monkeys (G and B) were trained to perform an attention-demanding task (23) that required them to detect orientation changes in one of 4 peripheral oriented grating stimulus patches while maintaining central fixation (Fig. 1A and Methods) (12). Upon detecting a change, monkeys were rewarded for making a saccadic eye movement to the patch opposite the orientation change. This task allowed us to dissociate behavioral conditions in which the monkey covertly attended, prepared a saccade to, or ignored a receptive field (RF) target. Both monkeys performed well above chance (69% and 67% correct for monkeys G and B, respectively; Methods). We recorded the activity of 698 units (277 single units and 421 multiunits) at 421 sites using 16-channel linear array electrodes while monkeys performed the task. Electrodes were inserted perpendicular, or nearly perpendicular, to the cortical surface, guided by magnetic resonance imaging and confirmed by RF alignment across channels (Fig. 1B). In each recording session, the 16 electrode channels were assigned laminar depths relative to a common current source density (CSD) marker (Fig. 1C and Methods). RF sizes in superficial and deep layers were statistically indistinguishable (unpaired t test, P > 0.05) (SI Appendix, Fig. S1).
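The laminar assignment step can be illustrated with a minimal sketch. It uses the standard second-spatial-difference CSD estimate on a linear array and then expresses each channel's depth relative to a marker channel. The 16 channels and the 150-µm spacing come from the Methods. Everything else is an assumption. The marker rule shown here (the channel with the strongest sink) is a hypothetical stand-in for the alignment feature used in the study. Channels are also assumed to be ordered from superficial to deep.

```python
# Sketch: second-spatial-difference CSD on a 16-channel linear array, plus depths
# expressed relative to a marker channel. The marker rule (strongest sink) is a
# hypothetical stand-in for the CSD alignment feature used in the study.

N_CHANNELS = 16
SPACING_UM = 150.0  # contact spacing reported in the Methods
ARRAY_SPAN_UM = (N_CHANNELS - 1) * SPACING_UM  # 2250 um = 2.25 mm, first to last contact


def csd_second_difference(lfp, spacing_um=SPACING_UM, conductivity=1.0):
    """CSD(z) = -sigma * (phi(z - h) - 2 * phi(z) + phi(z + h)) / h**2.

    lfp: per-channel LFP values at one time point, ordered superficial to deep.
    Returns len(lfp) - 2 values; element j belongs to channel j + 1 because the
    two edge channels lack a neighbor on one side. Sinks come out negative.
    """
    h_mm = spacing_um / 1000.0
    return [
        -conductivity * (lfp[i - 1] - 2.0 * lfp[i] + lfp[i + 1]) / (h_mm * h_mm)
        for i in range(1, len(lfp) - 1)
    ]


def marker_channel(lfp):
    """Hypothetical marker: the channel with the most negative CSD (strongest sink)."""
    csd = csd_second_difference(lfp)
    j = min(range(len(csd)), key=lambda k: csd[k])
    return j + 1  # shift back from CSD index to channel index


def relative_depths(marker, n_channels=N_CHANNELS, spacing_um=SPACING_UM):
    """Depth of each channel in um relative to the marker (positive = deeper)."""
    return [(ch - marker) * spacing_um for ch in range(n_channels)]
```

Consider a snapshot with a focal negativity on channel 6, such as `[0.0] * 6 + [-1.0] + [0.0] * 9`. For this input, `marker_channel` returns 6, and `relative_depths(6)` runs from -900.0 µm at the top channel to 1350.0 µm at the bottom. In practice the CSD would be computed on trial-averaged LFP over time, and the marker would be chosen by whatever feature the study's Methods specify.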

Fig. 1.

Behavioral task and perpendicular recordings in area V4. (A) Panels depict phases of the attention task; the lower left dashed circle denotes the RF positions of recorded neurons. Each trial began with fixation of a central fixation point. Following fixation, randomly oriented Gabor gratings appeared at 4 positions. After an additional period, a cue (white diagonal line) appeared near the fixation point and indicated which grating was the target. A blank period followed in which the gratings disappeared, and then the stimuli reappeared with the target presented either at the same orientation or at a new orientation. Monkeys were rewarded for making saccadic eye movements to the stimulus opposite the changed target (arrow) or for maintaining fixation when the orientation did not change. (B) Colored contours and corresponding dots show the RF borders and RF centers, respectively, mapped at electrode channels across different cortical depths for an example V4 recording. (C) Example CSD with alignment feature for each of the 2 monkeys. The dotted line indicates the boundary between superficial and deep layers. d.v.a., degrees of visual angle.

Orientation Selectivity.

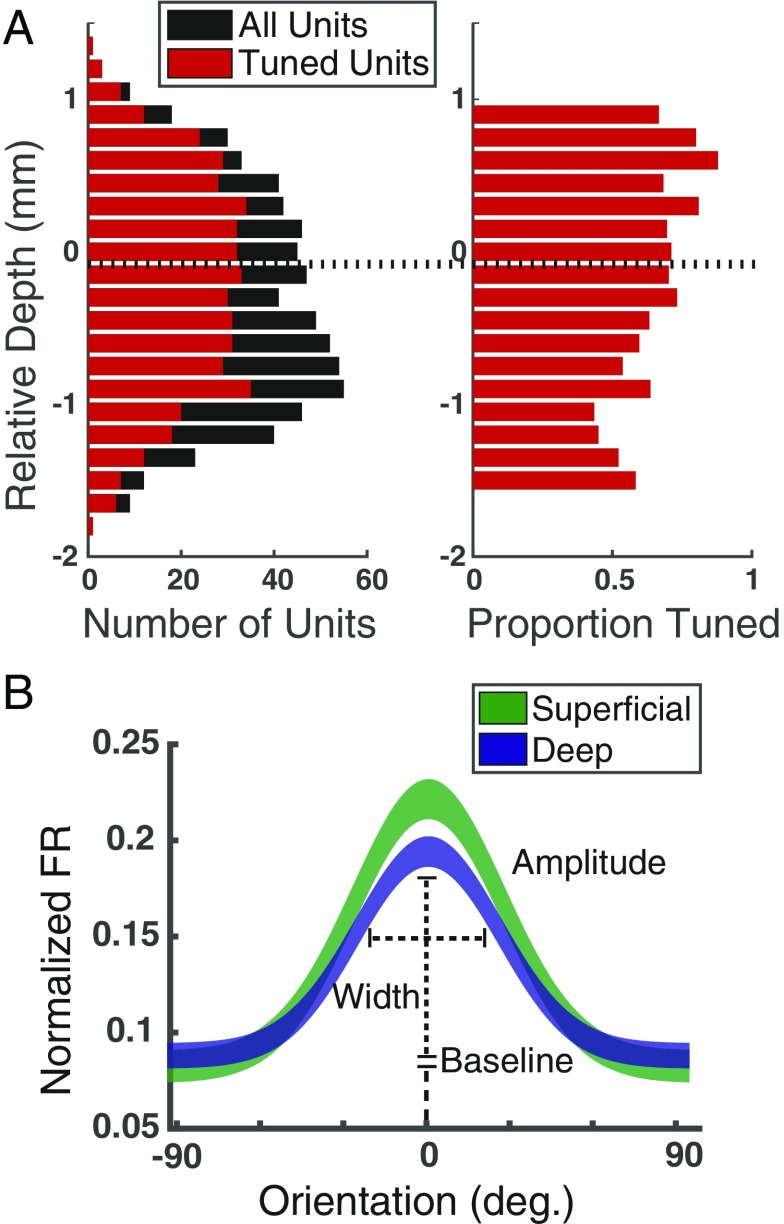

We first examined the proportion of units exhibiting significant orientation tuning and compared that proportion across layers (Methods). Overall, 69.05% (482/698; P < 0.005) of units were significantly tuned for orientation (Fig. 2A). A higher proportion of superficial units (79.4%) than deep units (62.7%) were tuned. This difference was significant (χ² test, P < 10⁻⁵) and evident in both monkeys (monkey G, 74.8% superficial vs. 65.5% deep; monkey B, 84.7% superficial vs. 60.7% deep). Next, we fit Gaussian functions to the normalized mean firing rates elicited by the 8 orientations for each of the 698 units (Fig. 2B and Methods). Across superficial and deep layers, 35.5% (n = 248) of units were well fit by a Gaussian (R² > 0.7). Among the fit parameters, width and baseline did not differ between superficial and deep layers (width, superficial = 0.84, deep = 0.67, P > 0.05; baseline, superficial = 0.10, deep = 0.10, P > 0.05). However, the mean amplitude of superficial layer units exceeded that of deep layer units by ~30% (Fig. 2B; superficial = 0.17, deep = 0.13; P = 0.018).
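The Gaussian tuning fit and the R² criterion can be sketched as follows. Several details are assumptions, since the study's exact parameterization is in its Methods:

- Orientation is treated as circular with a 180° period.
- Amplitude is constrained to be non-negative.
- Width is expressed in degrees. The units of the width values reported above are not stated here.
- The grid ranges are arbitrary choices.

Because baseline and amplitude enter the model linearly, they are solved exactly by least squares at each candidate preferred orientation and width. R² is then 1 − SSE/SST, the quantity compared against the 0.7 threshold.

```python
import math

ORIENTATIONS = [i * 22.5 for i in range(8)]  # 8 orientations spanning 180 degrees


def circ_diff(a, b, period=180.0):
    """Smallest signed difference between two orientations, in degrees."""
    d = (a - b) % period
    return d - period if d > period / 2 else d


def fit_gaussian_tuning(rates, thetas=ORIENTATIONS):
    """Fit r = baseline + amplitude * exp(-d**2 / (2 * width**2)) by grid search
    over preferred orientation (1-deg steps) and width (5-90 deg); baseline and
    amplitude are solved exactly by linear least squares at each grid point."""
    n = len(rates)
    mean_r = sum(rates) / n
    sst = sum((r - mean_r) ** 2 for r in rates)
    best = None
    for mu in range(180):
        for sigma in range(5, 91):
            g = [math.exp(-circ_diff(t, mu) ** 2 / (2.0 * sigma ** 2)) for t in thetas]
            mean_g = sum(g) / n
            var_g = sum((x - mean_g) ** 2 for x in g)
            if var_g == 0.0:
                continue
            amp = sum((x - mean_g) * (r - mean_r) for x, r in zip(g, rates)) / var_g
            if amp < 0.0:  # keep only peaked (upward) tuning curves
                continue
            base = mean_r - amp * mean_g
            sse = sum((r - (base + amp * x)) ** 2 for x, r in zip(g, rates))
            if best is None or sse < best[0]:
                best = (sse, mu, sigma, amp, base)
    if best is None:
        return None
    sse, mu, sigma, amp, base = best
    r2 = 1.0 - sse / sst if sst > 0 else 0.0
    return {"preferred": mu, "width": sigma, "amplitude": amp,
            "baseline": base, "r2": r2}
```

A unit would count as well fit under the criterion in the text if `fit["r2"] > 0.7`. The profile approach searches only 2 nonlinear parameters, which keeps the example dependency-free. A gradient-based fitter would be the usual choice with larger parameter sets.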

Fig. 2.

Orientation tuning in superficial and deep layers of area V4. (A, Left) Distribution of tuned units (red) among total units recorded (black) across cortical depth, relative to the superficial/deep CSD border. (A, Right) The same data plotted as a proportion. (B) Average Gaussian tuning fits, and definitions of fit parameters, for superficial (green) and deep (blue) neurons. Line thicknesses denote ±SEM.

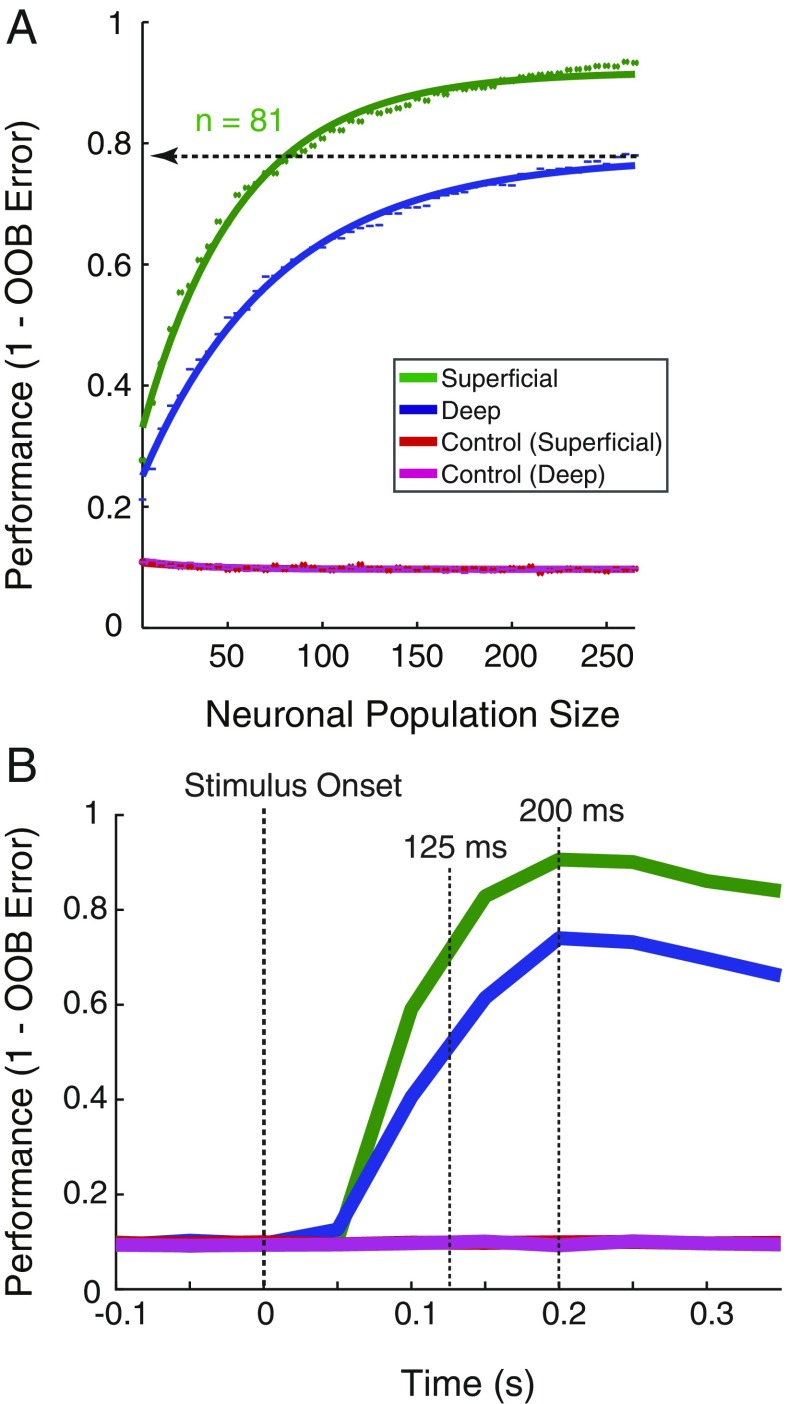

Measurements of orientation tuning in individual units suggest that superficial layer units in our dataset were better tuned to stimulus orientation than their deep layer counterparts. However, such measurements are unlikely to capture all of the information that neurons convey about orientation. We therefore took a population decoding approach (24) to measure the information about orientation available in the activity of all units within superficial or deep layers (Methods). We used a random forest decoder because of its robustness to feature noise and low trial counts, its ability to draw nonlinear decision boundaries, and its interpretability. Differences in decoder performance resulted from statistically distinguishable distributions of feature importance between superficial and deep layers, rather than from population outliers (SI Appendix, Fig. S2) (25, 26). To quantify the difference in decoding between layers, we then computed decoder performance as a function of neuronal population size (Fig. 3A). We fit “neuron-dropping” curves (NDCs) (27) to the performance values and compared the confidence intervals of the fit parameters for slope (b) and asymptote (c) between superficial and deep populations. Both superficial and deep populations performed significantly above chance for all population sizes greater than zero. The NDC for superficial populations had a significantly greater slope than the deep NDC (superficial b = 0.019, 95% CI: 0.019, 0.020; deep b = 0.014, 95% CI: 0.013, 0.014). Asymptotic performance was also significantly higher for superficial than for deep units (superficial, c = 0.918, 95% CI: 0.915, 0.921; deep, c = 0.778, 95% CI: 0.773, 0.783). To express these differences more intuitively, we compared the number of neurons each population needed to achieve equal performance. The deep layer population reached an asymptotic performance of 0.78 (78%) with 265 units. A superficial population of only 81 units, less than one-third that size, achieved equivalent performance. Last, we performed the same comparison on the subset of well-isolated single units (n = 277). This analysis yielded the same result as for the overall population: asymptotic decoding performance was significantly greater for superficial layer units than for deep layer units (superficial, c = 0.843, 95% CI: 0.821, 0.865; deep, c = 0.554, 95% CI: 0.534, 0.574) (SI Appendix, Fig. S3).
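The equal-performance comparison can be made concrete with a small sketch. The functional form of the NDC fit (27) is not given in this section. This sketch assumes an exponential approach from chance (p0) to the asymptote (c) at rate b. With 8 balanced orientation classes, chance would be 1/8. Because the form is an assumption, plugging the reported b and c values into it need not reproduce the 81-unit figure exactly.

```python
import math


def ndc(n, b, c, p0):
    """Hypothetical saturating neuron-dropping curve: equals chance p0 at n = 0
    and rises toward the asymptote c at a rate set by b (per unit)."""
    return c - (c - p0) * math.exp(-b * n)


def units_needed(target, b, c, p0):
    """Invert ndc(): real-valued population size at which performance reaches
    `target`. Returns math.inf if the target is at or above the asymptote."""
    if target >= c:
        return math.inf
    if target <= p0:
        return 0.0
    return -math.log((c - target) / (c - p0)) / b


def equivalent_population(n_ref, ref_params, other_params):
    """Units the `other` population needs to match the fitted performance of the
    `ref` population at n_ref units. Params are (b, c, p0) tuples."""
    return units_needed(ndc(n_ref, *ref_params), *other_params)
```

Suppose `deep_fit` and `superficial_fit` are hypothetical (b, c, p0) tuples from one's own fits. Then `equivalent_population(265, deep_fit, superficial_fit)` gives the number of superficial units needed to match the deep population's fitted performance at 265 units.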

Fig. 3.

Orientation coding in superficial and deep layers of area V4. (A) Performance of a random forest classifier at decoding stimulus orientation across different population sizes of superficial (green) and deep (blue) neurons, along with shuffled controls for both (red and purple). Points indicate mean values over the 100 decoder cycles at each size. Solid lines indicate the fitted saturating function. The horizontal line indicates the asymptotic performance of the deep layer population and the number of superficial layer units (n) needed to reach equivalent performance. (B) Time course of population mean performance at decoding stimulus orientation. Data are aligned to stimulus onset, and performance is computed throughout the precue period using 150-ms bins advanced in 25-ms steps. Performance is plotted at the leading edge of each bin. Population sizes for both laminar compartments were set to 269 units. The first vertical dotted line indicates stimulus onset. The second indicates the time at which superficial layer populations reach the peak performance level of deep layer populations (125 ms). The third indicates the time of peak performance for both superficial and deep populations (200 ms).

The large differences in orientation decoding between superficial and deep layers suggested that the pattern of orientation coding might differ in other ways as well. We therefore also compared the temporal pattern of orientation decoding between the 2 laminar compartments using the same decoder. To do this, we measured decoding performance in sequential 150-ms bins of population activity, decoded separately from 100 ms before to 600 ms after stimulus onset. This analysis revealed striking differences in the time course of superficial and deep orientation decoding (Fig. 3B). Both populations reached peak performance at 200 ms, with the superficial population achieving 90% and the deep population 74%. This performance difference persisted throughout the precue stimulus period (>300 ms). Moreover, orientation was decoded faster from the superficial population. For example, the superficial population reached the peak performance of the deep population (74%) at only 125 ms, 75 ms earlier than the deep population. Thus, as suggested by the single-unit analysis, populations of superficial layer neurons encoded stimulus orientation both more accurately and faster than populations of deep layer neurons.
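The time-course analysis rests on a simple binning scheme, sketched below. The window width (150 ms) and step (25 ms) come from the text. Two details are assumptions: "leading edge" is read as the later edge of each window, and the first and last edges are taken as -100 and 600 ms. The decoder that turns per-window counts into accuracy is not shown. `first_time_at_or_above` then extracts latencies of the kind reported above.

```python
def sliding_windows(first_edge=-100, last_edge=600, width=150, step=25):
    """(start, end) windows in ms relative to stimulus onset, labeled by their
    leading (later) edge; each window covers start < t <= end."""
    return [(edge - width, edge) for edge in range(first_edge, last_edge + 1, step)]


def binned_counts(spike_times_ms, windows):
    """Spike count of one unit on one trial in each window."""
    return [sum(1 for t in spike_times_ms if start < t <= end) for start, end in windows]


def first_time_at_or_above(times_ms, performance, threshold):
    """Earliest leading edge at which a decoding time course reaches `threshold`,
    or None if it never does."""
    for t, p in zip(times_ms, performance):
        if p >= threshold:
            return t
    return None
```

Applied to each population's time course with the deep peak (74%) as the threshold, the latency difference for orientation is 200 − 125 = 75 ms, as stated above. Overlapping windows smooth the time course, so a reported latency reflects activity up to 150 ms before the plotted edge.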

Coding of Eye Movement Preparation and Covert Attention.

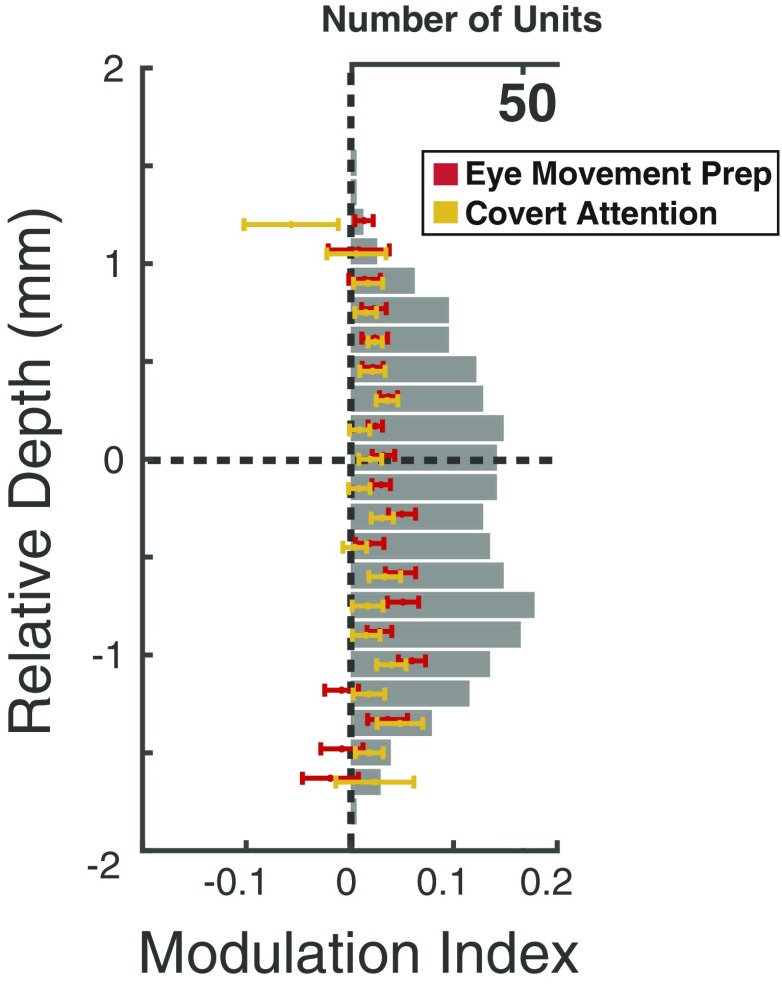

We next examined activity across superficial and deep layers when monkeys covertly attended the visual stimulus, prepared a saccade to that stimulus, or ignored it. We first compared the average modulation of individual units recorded at varying laminar depths, aligned to the superficial/deep boundary (Fig. 4). Modulation was calculated as the deviation in mean RF activity during a behavior of interest relative to the control condition (Methods). Overall, modulation across depth was significantly greater during eye movement preparation than during covert attention (P = 0.0024), a result we reported previously (12). However, we observed neither a significant main effect of depth (P > 0.05) nor a significant interaction between attention type and depth (P > 0.05). Nonetheless, movement-related modulation appeared to peak within the deep layers. This suggested that the difference between the 2 types of modulation was driven by greater eye movement modulation in those layers. We therefore directly compared the magnitude of modulation for the 2 attention types, collapsed within superficial or within deep layers. Modulation did not differ significantly between the 2 types in superficial layers (P > 0.05). In deep layers, however, modulation was significantly greater during saccade preparation than during covert attention (P = 0.0041).
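This section does not give the modulation formula (it is in the Methods), so the sketch below assumes a common contrast-style index, (R_condition − R_control) / (R_condition + R_control), computed from mean RF firing rates. Treat that index as hypothetical. The depth summary follows the Fig. 4 caption: median and SEM per depth, dropping depths with fewer than 5 units.

```python
import statistics
from collections import defaultdict


def modulation_index(rate_condition, rate_control):
    """Hypothetical contrast-style index in [-1, 1]; 0 means no modulation."""
    total = rate_condition + rate_control
    return 0.0 if total == 0 else (rate_condition - rate_control) / total


def depth_profile(units, min_units=5):
    """units: iterable of (depth_um, rate_condition, rate_control) per unit.
    Returns {depth: (median index, SEM, n)} for depths with >= min_units units."""
    by_depth = defaultdict(list)
    for depth, r_cond, r_ctrl in units:
        by_depth[depth].append(modulation_index(r_cond, r_ctrl))
    profile = {}
    for depth, mis in sorted(by_depth.items()):
        if len(mis) < min_units:
            continue  # too few units at this depth to summarize
        sem = statistics.stdev(mis) / len(mis) ** 0.5
        profile[depth] = (statistics.median(mis), sem, len(mis))
    return profile
```

Running `depth_profile` separately for covert attention and saccade preparation, each against the same control rates, gives the 2 curves plotted in Fig. 4. The depth comparison itself would then be a statistical test on the per-unit indices, not on these summaries.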

Fig. 4.

Modulation indices across cortical depth. Medians and SEMs are plotted at each depth for covert attention (yellow) and saccade preparation (red), along with the total number of units recorded (gray). Depths with fewer than 5 neurons were omitted from the plot.

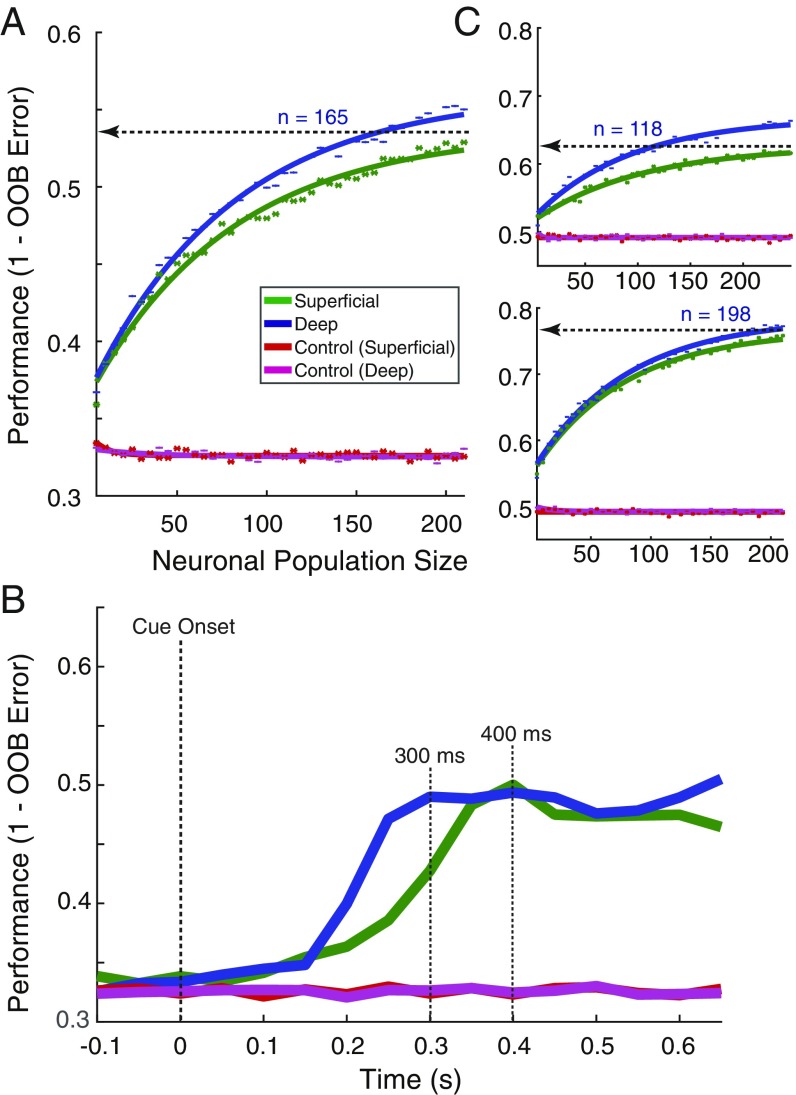

Next, as with stimulus orientation, we decoded the behavioral condition (covert attention, saccade preparation, or control) from the population activity of superficial (n = 247) or deep (n = 378) units (Fig. 5). Again, the differences we observed resulted from statistically distinguishable distributions of feature importance between superficial and deep layers, rather than from population outliers (SI Appendix, Fig. S4). As with orientation decoding, we computed decoder performance as a function of neuronal population size and fit the resulting NDCs (Fig. 5A). Both superficial and deep populations exceeded chance performance (33.3%) beginning with the smallest population size of 5 units. However, they differed in how well their activity could be used to decode the postcue behavioral condition. The slopes of the NDCs did not differ significantly (superficial b = 0.013, 95% CI: 0.012, 0.014; deep b = 0.013, 95% CI: 0.012, 0.014). However, the fitted asymptotic performance for deep units significantly exceeded that of superficial units (superficial, c = 0.536, 95% CI: 0.531, 0.541; deep, c = 0.561, 95% CI: 0.556, 0.566). As with orientation decoding, we compared the number of neurons each population needed to achieve equal performance. A full population of 210 superficial units achieved a fitted asymptotic performance of only 54%, whereas deep layer populations achieved the same performance with only 165 units. Results were similar in analyses run separately for the 2 monkeys (SI Appendix, Fig. S5).
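The logic of the decoding comparison can be sketched without the study's decoder. Random forests are not in the Python standard library, so this sketch swaps in a leave-one-out nearest-centroid classifier. That classifier is not the method used in the study, and its accuracy will generally differ. What it does illustrate is shared by both approaches:

- With 3 balanced conditions, chance is 1/3 (the 33.3% above).
- A label-shuffled control, as in Fig. 3A, estimates chance empirically.
- Averaging accuracy over random subsets of k units gives the raw points to which an NDC is fit.

The demo data are synthetic.

```python
import random


def nearest_centroid_loo(X, y):
    """Leave-one-out accuracy of a Euclidean nearest-centroid classifier.
    X: trials x units firing rates; y: condition label per trial.
    Stand-in for the study's random forest, chosen only to avoid dependencies."""
    labels = sorted(set(y))
    correct = 0
    for i, x_test in enumerate(X):
        centroids = {}
        for lab in labels:
            rows = [X[j] for j in range(len(X)) if j != i and y[j] == lab]
            if rows:
                centroids[lab] = [sum(col) / len(rows) for col in zip(*rows)]
        pred = min(centroids, key=lambda lab: sum(
            (a - b) ** 2 for a, b in zip(x_test, centroids[lab])))
        correct += pred == y[i]
    return correct / len(X)


def shuffled_control(X, y, n_shuffles=20, seed=0):
    """Mean accuracy after permuting condition labels (empirical chance)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_shuffles):
        y_perm = list(y)
        rng.shuffle(y_perm)
        total += nearest_centroid_loo(X, y_perm)
    return total / n_shuffles


def accuracy_vs_population(X, y, sizes, n_draws=10, seed=0):
    """Mean accuracy over random subsets of k units (raw points of an NDC)."""
    rng = random.Random(seed)
    n_units = len(X[0])
    curve = {}
    for k in sizes:
        accs = []
        for _ in range(n_draws):
            cols = rng.sample(range(n_units), k)
            accs.append(nearest_centroid_loo([[row[c] for c in cols] for row in X], y))
        curve[k] = sum(accs) / n_draws
    return curve


def make_demo_data(n_trials=10, n_units=4, seed=1):
    """Synthetic data for 3 conditions (0 = control, 1 = covert attention,
    2 = saccade preparation): unit c fires more in condition c. Illustrative only."""
    rng = random.Random(seed)
    X, y = [], []
    for cond in range(3):
        for _ in range(n_trials):
            X.append([(10.0 if u == cond else 0.0) + rng.gauss(0.0, 1.0)
                      for u in range(n_units)])
            y.append(cond)
    return X, y
```

For example, `accuracy_vs_population(*make_demo_data(), sizes=[1, 2, 4])` returns mean accuracy at each subset size, and `shuffled_control(*make_demo_data())` gives the corresponding empirical chance level. Swapping in a random forest from a machine-learning library would change only `nearest_centroid_loo`.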

Fig. 5.

Behavioral modulation in superficial and deep layers of V4. (A) Performance of the random forest decoder at distinguishing among the 3 behavioral conditions (covert attention, saccade preparation, or control) from superficial and deep units, as a function of population size. The horizontal line denotes the asymptotic performance of the superficial layer population and the number of deep layer units (n) needed to reach equivalent performance. (B) Mean decoder performance in distinguishing the 3 behavioral conditions over time (as in Fig. 3B). Data are aligned to the onset of the attention cue, and performance is computed throughout the postcue period. The first vertical dotted line indicates cue onset. The second indicates when the deep unit population first reaches peak performance. The third indicates the same for the superficial unit population. (C) Performance of the decoder at distinguishing between pairs of conditions: (Top) saccade preparation from control; (Bottom) covert attention from control.

More striking than the overall performance difference between the 2 laminar compartments was the difference in the time course of population decoding. As with orientation, we used sequential 150-ms bins of firing rates to quantify changes in decoder performance over time (Fig. 5B). During the postcue period, decoding performance for the superficial and deep populations peaked at roughly the same level (∼49%), well above chance. However, the behavioral condition was decoded much faster from the deep layer population. Whereas the superficial layer population reached its peak performance of 49% at 400 ms, the deep layer population reached the same peak within 300 ms. Thus, the behavioral condition following the cue was decoded ∼100 ms earlier from deep layers than from superficial layers. This result also indicates that the relatively small difference in overall performance seen in the NDCs is much larger during the initial postcue period. In sum, orientation was decoded better from superficial layers. In contrast, the behavioral condition in the same dataset was decoded more accurately and faster from deep layers.

To investigate which conditions drove decoder performance, we next decoded each attentional condition (covert attention and saccade preparation) against the control condition (Fig. 5C). As before, feature importance histograms indicated normally distributed values. The mean value for deep populations exceeded that for superficial populations in all condition pairs (SI Appendix, Fig. S4 B and C). When decoding covert attention versus control, the NDC slopes did not differ significantly (superficial b = 0.013, 95% CI: 0.012, 0.014; deep b = 0.013, 95% CI: 0.012, 0.014). The difference in asymptotic performance was significant but small (superficial, c = 0.766, 95% CI: 0.761, 0.771; deep, c = 0.785, 95% CI: 0.779, 0.790). The results were more dramatic for decoding saccade preparation versus control. As with the previous NDCs, the slopes did not differ significantly (superficial b = 0.010, 95% CI: 0.008, 0.011; deep b = 0.011, 95% CI: 0.010, 0.012). However, asymptotic performance was significantly greater for deep units (superficial, c = 0.627, 95% CI: 0.621, 0.633; deep, c = 0.668, 95% CI: 0.663, 0.673), which made decoding more efficient for the deep layer population. A full population of 245 superficial units achieved a performance of only 62.7%. Deep layer populations achieved the same performance with less than one-half the population size (n = 118). Thus, the behavioral condition was decoded best from units in the deep layers, where saccade preparation was most robustly encoded.

Discussion

We observed significantly greater orientation selectivity among units within the superficial layers of V4 using both tuning measures in single neurons and decoding of population activity. In contrast, using both unit and population activity, we observed that deeper layers conveyed more information about the behavioral relevance of visual stimuli. In particular, we found that neurons within deep layers conveyed more information than superficial neurons about the planning of saccadic eye movements. These results suggest a division of labor between superficial and deep layer neurons in the feedforward processing of stimulus features and the application of sensory information to behavior. Below, we discuss both the potential limitations of our observations and the extent to which they may generalize beyond extrastriate area V4.

First, the robust differences in orientation selectivity we observed between superficial and deep layer units raise the question of whether those differences simply reflect the known compartmentalization of orientation and color tuning across V4 (9), combined with a potential bias in our sampling of the cortical surface. However, even if our recordings had oversampled one compartment or the other (e.g., color compartments), doing so would not be expected to introduce an overall bias between upper and lower layers. It is also worth noting that the primary evidence for feature-specific compartments in V4 comes from optical imaging, in which much of the signal derives from superficial layers (28). Those compartments may therefore be less well defined within infragranular layers. Indeed, anatomical evidence indicates that intrinsic horizontal connections in V4, which appear to reciprocally connect columns across millimeters of cortex, exist predominantly in superficial layers, as at earlier (e.g., V1, V2) and later stages of visual cortex (29).

Second, our results raise the question of how far they generalize within the orientation domain and to other features, such as color or contour. We observed clear differences in the coding of orientation between superficial and deep layers. However, we did not exhaustively test the influence of other parameters known to interact with orientation tuning (e.g., contrast, size, spatial frequency, chromatic vs. achromatic stimuli, bars vs. gratings). These parameters could conceivably have diminished, or even reversed, the laminar differences we observed. Thus, it is important to emphasize that our results demonstrate greater coding in superficial layers within only a limited regime of stimulus feature space. Furthermore, substantial previous evidence suggests that V4 neurons are distinguished by their computation of stimulus contour rather than orientation. Contour selectivity is thought to derive from the orientation-specific input V4 neurons receive from V1 and V2 (7, 8, 30, 31). If so, our observations on orientation selectivity might not generalize to all other types of selectivity, and might instead generalize only to features computed at earlier stages. Nonetheless, our results demonstrate the importance of assessing the laminar dependence of stimulus selectivity across visual cortex.

Our observation of more robust attention and eye movement signals in deep layers may be the most important of our findings. Visually driven activity is clearly affected by impending eye movements at many stages of the primate visual system (32–35). Yet few studies have examined the influence of motor preparation on the responses of neurons in visual cortex. Moreover, we have shown both previously (12) and in the present study that movement-related modulation of V4 activity is not only dissociable from modulation by covert attention but also more reliable. Those findings are consistent with the hypothesis that visual cortical areas contribute directly to visually guided saccades, particularly to the refinement of saccadic plans according to features coded by particular visual areas (e.g., shape in area V4) (36–38). Our observation of stronger eye movement-related modulation in deep layers is also consistent with the fact that projections to the superior colliculus emanate principally from layer V pyramidal neurons throughout extrastriate visual cortex (39). In addition, deep layer neurons are a major source of feedback projections (1). The relative robustness of behavioral signals within deep layers may therefore reflect the transmission of those signals to earlier stages of visual processing. Consistent with this notion, a previous study of attentional effects in areas V1, V2, and V4 found evidence of a “backward” progression of modulation that begins in V4 and proceeds to V1 (21). Thus, the unique contributions of deep layer neurons to oculomotor output and to top–down influences may account for their superior coding of behavioral variables.

Methods

Subjects, Behavioral Task, Visual Stimuli, and Neuronal Recordings.

Details of the subjects, task, stimuli, and recording techniques are described in Steinmetz and Moore (12). All experimental procedures were in accordance with the National Institutes of Health Guide for the Care and Use of Laboratory Animals (40), the Society for Neuroscience Guidelines and Policies, and the Stanford University Animal Care and Use Committee. In brief, 2 male rhesus macaques were surgically implanted with recording chambers. Monkeys were trained on an attention task that dissociated covert attention from saccade preparation. Trials were initiated when the monkey fixated a central point. After 100 ms of central fixation, a 300- to 500-ms “stimulus epoch” began, in which 4 oriented Gabor patches appeared at 4 locations equidistant from the fixation point. This was followed by the “cue epoch,” lasting 600 to 2,200 ms. During this epoch, a line appeared near the central fixation point, directed toward one of the Gabor patches and indicating that it might change orientation. After a variable interval, the array of stimuli disappeared briefly (270 ms) and then reappeared. Monkeys were trained to detect changes in orientation (45° to 90°) of any of the 4 stimuli upon reappearance. To dissociate the direction of covert attention from that of saccade preparation, monkeys were rewarded for responding to an orientation change with a saccade to the stimulus opposite the changed stimulus (i.e., an antisaccade). If no change occurred at the cued location (50% of trials), the monkey was rewarded for maintaining fixation. Monkey G responded correctly on 69% of trials (77%, change trials; 62%, catch trials), and monkey B responded correctly on 67% of trials (62%, change trials; 70%, catch trials).
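The reward contingency and trial timeline can be summarized in a short sketch. The epoch durations, the orientation-change range, and the 50% no-change rate come from the text above. Several details are assumptions:

- Patches are indexed 0-3 in order around fixation, so "opposite" is index + 2 (mod 4).
- Only changes at the cued location are modeled. How uncued changes were handled is not described here.
- Durations are drawn uniformly within the reported ranges.

All function names are hypothetical.

```python
import random

N_LOCATIONS = 4  # Gabor patches equidistant from fixation


def opposite(location):
    """Index of the diametrically opposite patch, assuming indices 0-3 run in
    order around the fixation point."""
    return (location + N_LOCATIONS // 2) % N_LOCATIONS


def rewarded_response(cued_location, cued_changed):
    """Antisaccade rule: a change at the cued patch calls for a saccade to the
    opposite patch; no change calls for maintained fixation."""
    if cued_changed:
        return ("saccade", opposite(cued_location))
    return ("fixate", None)


def sample_trial(rng):
    """One hypothetical trial using the epoch durations given in the Methods.
    Uniform sampling within each reported range is an assumption."""
    cued = rng.randrange(N_LOCATIONS)
    changed = rng.random() < 0.5  # no change at the cued location on 50% of trials
    return {
        "fixation_ms": 100,
        "stimulus_epoch_ms": rng.uniform(300, 500),
        "cue_epoch_ms": rng.uniform(600, 2200),
        "blank_ms": 270,
        "cued_location": cued,
        "orientation_change_deg": rng.uniform(45, 90) if changed else 0.0,
        "rewarded_response": rewarded_response(cued, changed),
    }
```

The sketch makes explicit why the task separates attention from saccade planning. On change trials, the attended (cued) location and the saccade target are always on opposite sides of fixation.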

Electrophysiological recordings were made from area V4 on the surface of the prelunate gyrus with 16-channel linear array U-Probes (Plexon). Electrodes were cylindrical (180 µm diameter), with a row of 16 circular platinum/iridium electrical contacts (15 µm diameter) at 150-µm center-to-center spacing (total length of array = 2.25 mm). Recordings in both monkeys were made at eccentricities between 5° and 8°. Recorded neuronal waveforms were classified as either “single neurons” (n = 277) or multineuron clusters (n = 421). We use “units” to refer to activity of both types. Single-unit sorting was initially performed manually using Offline Sorter (Plexon) by identifying clusters of waveforms with similar shapes. The initial sorting was refined in 3 steps (41). First, we computed the Fisher linear discriminant between the clustered waveforms and all other waveforms on the same channel. Second, we projected the waveforms along this dimension. Third, we reclassified waveforms according to their value on this axis. We also estimated the false-positive rate for waveforms in each cluster (41). This calculation uses the spike rate, the duration of the experiment, and the number of waveforms too close together in time to plausibly arise from a single neuron. From these, it estimates the percentage of the total spike count that arose from neuron(s) other than the one in question. Details of the columnar recordings and data analyses are provided in the SI Appendix.
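The false-positive estimate described above can be sketched with one widely used model of refractory-period violations. The model assumes a fraction p of a cluster's N spikes are contaminants arriving independently of the sorted neuron. Each of the N(1 − p) true spikes then sees contaminants at rate Np/T. A violation occurs whenever a contaminant falls within ±(τR − τC) of a true spike, where τR is the refractory period and τC a censored period. This section does not state whether reference 41 uses exactly this expression, or which refractory and censored periods were applied. The 2-ms refractory default below is an assumption.

```python
import math


def refractory_false_positive_rate(n_spikes, n_violations, duration_s,
                                   refractory_s=0.002, censored_s=0.0):
    """Estimated fraction of a cluster's spikes that came from other neurons.

    Assumes contaminating spikes are Poisson and independent of the sorted
    neuron, so the expected number of refractory violations is
        n_violations = 2 * (refractory_s - censored_s) * n_spikes**2 * p * (1 - p) / duration_s
    and solves this quadratic for its smaller root p. Returns math.nan when the
    observed violation count is too high for a real solution (p * (1 - p) > 0.25).
    """
    window = refractory_s - censored_s
    if window <= 0 or n_spikes <= 0 or duration_s <= 0:
        raise ValueError("need refractory_s > censored_s and positive counts/duration")
    k = n_violations * duration_s / (2.0 * window * n_spikes ** 2)
    if k > 0.25:
        return math.nan
    return (1.0 - math.sqrt(1.0 - 4.0 * k)) / 2.0
```

For example, `refractory_false_positive_rate(1000, 4, 100.0)` gives roughly 0.11. That is, 4 violations among 1,000 spikes recorded over 100 s imply about 11% contamination under these assumptions. Taking the smaller root reflects the usual assumption that the sorted neuron contributes most of the cluster's spikes.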

Supplementary Material

Acknowledgments

This work was supported by National Eye Institute grant EY014924 to T.M., a National Science Foundation graduate fellowship to N.A.S., and a Howard Hughes Medical Institute medical research fellowship to W.W.P. We thank S. Hyde for valuable assistance with animal care and B. Schneeveis for designing and building the 3D electrode angler.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1819398116/-/DCSupplemental.

References

- 1. Felleman D. J., Van Essen D. C., Distributed hierarchical processing in the primate cerebral cortex. Cereb. Cortex 1, 1–47 (1991).

- 2. Hilgetag C. C., O’Neill M. A., Young M. P., Indeterminate organization of the visual system. Science 271, 776–777 (1996).

- 3. Zeki S., The distribution of wavelength and orientation selective cells in different areas of monkey visual cortex. Proc. R. Soc. Lond. B Biol. Sci. 217, 449–470 (1983).

- 4. Chang M., Xian S., Rubin J., Moore T., Latency of chromatic information in area V4. J. Physiol. Paris 108, 11–17 (2014).

- 5. Desimone R., Schein S. J., Visual properties of neurons in area V4 of the macaque: Sensitivity to stimulus form. J. Neurophysiol. 57, 835–868 (1987).

- 6. Roe A. W., et al., Toward a unified theory of visual area V4. Neuron 74, 12–29 (2012).

- 7. Pasupathy A., Connor C. E., Responses to contour features in macaque area V4. J. Neurophysiol. 82, 2490–2502 (1999).

- 8. Yau J. M., Pasupathy A., Brincat S. L., Connor C. E., Curvature processing dynamics in macaque area V4. Cereb. Cortex 23, 198–209 (2013).

- 9. Tanigawa H., Lu H. D., Roe A. W., Functional organization for color and orientation in macaque V4. Nat. Neurosci. 13, 1542–1548 (2010).

- 10. Motter B. C., Focal attention produces spatially selective processing in visual cortical areas V1, V2, and V4 in the presence of competing stimuli. J. Neurophysiol. 70, 909–919 (1993).

- 11. Baruni J. K., Lau B., Salzman C. D., Reward expectation differentially modulates attentional behavior and activity in visual area V4. Nat. Neurosci. 18, 1656–1663 (2015).

- 12. Steinmetz N. A., Moore T., Eye movement preparation modulates neuronal responses in area V4 when dissociated from attentional demands. Neuron 83, 496–506 (2014).

- 13. Tolias A. S., et al., Eye movements modulate visual receptive fields of V4 neurons. Neuron 29, 757–767 (2001).

- 14. Bichot N. P., Rossi A. F., Desimone R., Parallel and serial neural mechanisms for visual search in macaque area V4. Science 308, 529–534 (2005).

- 15. Ungerleider L. G., Galkin T. W., Desimone R., Gattass R., Cortical connections of area V4 in the macaque. Cereb. Cortex 18, 477–499 (2008).

- 16. Yukie M., Iwai E., Laminar origin of direct projection from cortex area V1 to V4 in the rhesus monkey. Brain Res. 346, 383–386 (1985).

- 17. Nakamura H., Gattass R., Desimone R., Ungerleider L. G., The modular organization of projections from areas V1 and V2 to areas V4 and TEO in macaques. J. Neurosci. 13, 3681–3691 (1993).

- 18. Markov N. T., et al., Anatomy of hierarchy: Feedforward and feedback pathways in macaque visual cortex. J. Comp. Neurol. 522, 225–259 (2014).

- 19. Leichnetz G. R., Spencer R. F., Hardy S. G. P., Astruc J., The prefrontal corticotectal projection in the monkey; an anterograde and retrograde horseradish peroxidase study. Neuroscience 6, 1023–1041 (1981).

- 20. Gilbert C. D., Wiesel T. N., Functional Organization of the Visual Cortex, Changeux J.-P., Glowinski J., Imbert M., Bloom F. E., Eds. (Progress in Brain Research, Elsevier, 1983), pp. 209–218.

- 21. Buffalo E. A., Fries P., Landman R., Buschman T. J., Desimone R., Laminar differences in gamma and alpha coherence in the ventral stream. Proc. Natl. Acad. Sci. U.S.A. 108, 11262–11267 (2011).

- 22. Nandy A. S., Nassi J. J., Reynolds J. H., Laminar organization of attentional modulation in macaque visual area V4. Neuron 93, 235–246 (2017).

- 23. Simons D. J., Rensink R. A., Change blindness: Past, present, and future. Trends Cogn. Sci. 9, 16–20 (2005).

- 24. Nandy A. S., Mitchell J. F., Jadi M. P., Reynolds J. H., Neurons in macaque area V4 are tuned for complex spatio-temporal patterns. Neuron 91, 920–930 (2016).

- 25.Strobl C., Malley J., Tutz G., An introduction to recursive partitioning: Rationale, application, and characteristics of classification and regression trees, bagging, and random forests. Psychol. Methods 14, 323–348 (2009). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Ho T. K., A data complexity analysis of comparative advantages of decision forest constructors. Pattern Anal. Appl. 5, 102–112 (2002). [Google Scholar]

- 27.Lebedev M. A., How to read neuron-dropping curves? Front. Syst. Neurosci. 8, 102 (2014). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Bonhoeffer T., Grinvald A., The layout of iso-orientation domains in area 18 of cat visual cortex: Optical imaging reveals a pinwheel-like organization. J. Neurosci. 13, 4157–4180 (1993). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Fujita I., Fujita T., Intrinsic connections in the macaque inferior temporal cortex. J. Comp. Neurol. 368, 467–486 (1996). [DOI] [PubMed] [Google Scholar]

- 30.Pasupathy A., Connor C. E., Shape representation in area V4: Position-specific tuning for boundary conformation. J. Neurophysiol. 86, 2505–2519 (2001). [DOI] [PubMed] [Google Scholar]

- 31.Kosai Y., El-Shamayleh Y., Fyall A. M., Pasupathy A., The role of visual area V4 in the discrimination of partially occluded shapes. J. Neurosci. 34, 8570–8584 (2014). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Moore T., Tolias A. S., Schiller P. H., Visual representations during saccadic eye movements. Proc. Natl. Acad. Sci. U.S.A. 95, 8981–8984 (1998). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Sheinberg D. L., Logothetis N. K., Noticing familiar objects in real world scenes: The role of temporal cortical neurons in natural vision. J. Neurosci. 21, 1340–1350 (2001). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Reppas J. B., Usrey W. M., Reid R. C., Saccadic eye movements modulate visual responses in the lateral geniculate nucleus. Neuron 35, 961–974 (2002). [DOI] [PubMed] [Google Scholar]

- 35.Bosman C. A., Womelsdorf T., Desimone R., Fries P., A microsaccadic rhythm modulates gamma-band synchronization and behavior. J. Neurosci. 29, 9471–9480 (2009). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Moore T., Armstrong K. M., Fallah M., Visuomotor origins of covert spatial attention. Neuron 40, 671–683 (2003). [DOI] [PubMed] [Google Scholar]

- 37.Moore T., Shape representations and visual guidance of saccadic eye movements. Science 285, 1914–1917 (1999). [DOI] [PubMed] [Google Scholar]

- 38.Schafer R. J., Moore T., Attention governs action in the primate frontal eye field. Neuron 56, 541–551 (2007). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Fries W., Cortical projections to the superior colliculus in the macaque monkey: A retrograde study using horseradish peroxidase. J. Comp. Neurol. 230, 55–76 (1984). [DOI] [PubMed] [Google Scholar]

- 40.National Research Council , Guide for the Care and Use of Laboratory Animals (National Academies Press, Washington, DC, ed. 8, 2011). [Google Scholar]

- 41.Hill D. N., Mehta S. B., Kleinfeld D., Quality metrics to accompany spike sorting of extracellular signals. J. Neurosci. 31, 8699–8705 (2011). [DOI] [PMC free article] [PubMed] [Google Scholar]