Summary

Navigation engages many cortical areas, including visual, parietal, and retrosplenial cortices. These regions have been mapped anatomically and with sensory stimuli, and studied individually during behavior. Here, we investigated how behaviorally-driven neural activity is distributed and combined across these regions. We performed dense sampling of single-neuron activity across the mouse posterior cortex and developed unbiased methods to relate neural activity to behavior and anatomical space. Most parts of posterior cortex encoded most behavior-related features. However, the relative strength with which features were encoded varied across space. Therefore, posterior cortex could be divided into discriminable areas based solely on behaviorally-relevant neural activity, revealing functional structure in association regions. Multimodal representations combining sensory and movement signals were strongest in posterior parietal cortex, where gradients of single-feature representations spatially overlapped. We propose that encoding of behavioral features is not constrained by retinotopic borders and instead varies smoothly over space within association regions.

INTRODUCTION

During visually guided navigation, sensory information is processed and transformed into motor plans to achieve rewarding outcomes. This processing involves the dorsal posterior part of cortex, which includes visual, parietal, and retrosplenial areas. These areas represent visual stimuli (Glickfeld and Olsen, 2017), combine visual and locomotion information for predictive coding (Keller et al., 2012; Saleem et al., 2013), transform sensory cues into motor plans (Goard et al., 2016; Harvey et al., 2012; Licata et al., 2017; Pho et al., 2018), and maintain navigation-related signals such as heading direction and route progression (Alexander and Nitz, 2015; Nitz, 2006). Despite progress in understanding these processes in individual areas, a high resolution spatial map of navigation-related features across posterior cortex has not been established. Here we investigated how the representations that support visually guided navigation are distributed and combined across cortical space. To what degree is information localized or distributed? How do representations relate to known area boundaries? Where are visual and movement information combined to inform task-relevant behaviors?

Pioneering studies have mapped and segmented mouse posterior cortex using anatomical features and retinotopy. Tract tracing studies have identified a number of visual areas (V1, PM, AM, AL, LM) (Glickfeld and Olsen, 2017; Wang and Burkhalter, 2007). These areas represent retinotopic copies of the visual space, and functional boundaries between them can be defined based on reversal of the visual field sign (Garrett et al., 2014; Marshel et al., 2011). Although retinotopic copies suggest discrete areas, anatomical studies using cyto- and chemoarchitecture have, in some cases, identified transitions shifted relative to retinotopic boundaries (Zhuang et al., 2017), or have found smooth anatomical gradients at sharp retinotopic borders (Allen Institute, 2017; Gămănuţ et al., 2018). Also, the organization within parietal and retrosplenial areas has been challenging to identify because these areas are not well driven by simple visual stimuli (Zhuang et al., 2017) and lack internal anatomical boundaries (Gămănuţ et al., 2018).

It is therefore not fully understood how the organization of posterior cortex may impact the encoding of behavioral variables and the emergence of multimodal representations for navigation. In particular, it is unclear if posterior cortex, especially higher association regions, encodes behavioral information in functionally discrete areas, as suggested by retinotopic mapping. Alternatively, secondary and higher regions could form a smooth continuum of functional properties, as suggested by anatomical studies. Much work on the function of secondary visual areas has emphasized the differences between their responses to simple visual stimuli such as drifting gratings (Andermann et al., 2011; Glickfeld et al., 2013; Juavinett and Callaway, 2015; Marshel et al., 2011). However, some of these studies also provided evidence that nearby regions of cortex tend to be functionally more similar than distant ones (Andermann et al., 2011; Marshel et al., 2011). This is consistent with recent work that has revealed that areas in mouse posterior cortex are highly interconnected. Retrograde tracing has found nearly all-to-all inter-area connectivity (Gămănuţ et al., 2018). Also, most V1 neurons broadcast information to multiple areas via branching axon collaterals (Han et al., 2018). Theoretical models have proposed that these connectivity patterns are consistent with an organization in which the probability of connectivity between cortical locations decays smoothly with distance, such that similarity in encoding decreases gradually over space (Song et al., 2014). A smooth functional organization could explain apparent discrepancies in area boundaries and suggest spatial proximity as a simple principle for the emergence of multimodal representations.

Here, we mapped behavior-related cortical activity in mice during a visually guided navigation task using dense sampling with single-neuron resolution. We developed analyses to relate neural activity to behavior and cortical space in an unbiased manner. Representations of individual task-related features were highly distributed across posterior cortex, with each area encoding most features of the task, consistent with reports in anterior brain regions (Allen et al., 2017; Chen et al., 2017; Makino et al., 2017). Except for sharp transitions between primary visual, parietal, and retrosplenial areas, representations were spatially organized as gradients of encoding similarity. As a result, despite the lack of sharp boundaries, posterior cortex could be divided into discriminable areas based on quantitative differences in the encoding of task features. Multimodal sensory-motor representations emerged where gradients for the encoding of optic flow and locomotion overlapped, specifically near posterior parietal cortex (PPC). Our results add a map of encoding of task-related information to existing maps based on anatomical or simple functional properties and improve our understanding of distinctions and divisions between association areas.

RESULTS

A visually guided locomotion task that engages posterior cortex

We developed a task for mice with the goal to engage the visual and navigation-related networks in the dorsal posterior cortex. Mice interacted with a visual virtual reality environment comprised of dots displayed on a screen at randomized locations in 3D space (Methods; Figure 1A and Supplemental Video). The running velocity of the mouse on a spherical treadmill (ball), measured as the pitch and roll velocity of the ball, controlled linear and angular movement in the virtual environment (Harvey et al., 2009).

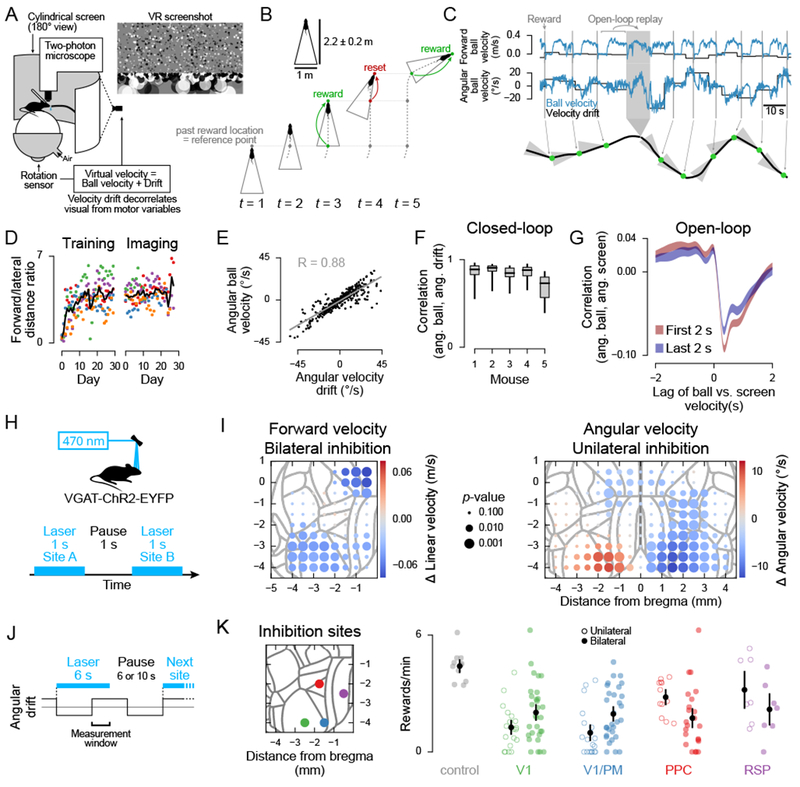

Figure 1. A visually guided locomotion task that engages posterior cortex.

(A) Experimental setup and screenshot of the virtual reality environment.

(B) Schematic of the reward condition in the task (top-down view). Dashed lines, path taken by the mouse. Solid gray triangle, invisible boundaries used to determine reward delivery (Methods).

(C) Top: Example velocity traces. Bottom: Corresponding top-down view of the path taken by the mouse. Green dots indicate reward times.

(D) Task performance during training and imaging. The ratio between the forward distance to the lateral distance covered by the mouse was used to assess the efficiency of the mouse’s behavior. Each dot is one session; colors indicate different mice. Black line, mean across mice (n = 5).

(E) Correlation between angular ball velocity and velocity offset for one session. Each dot shows the mean velocity during a single epoch with a single drift value. Only closed-loop segments are included. Gray line, least squares fit; R, Pearson correlation coefficient. (n = 386 drift epochs)

(F) Boxplot of the correlation between angular velocity and drift as in (E), for all sessions for each mouse. Line, median; box, first to third quartile; whiskers, range (23.6 ± 5.99 sessions per mouse, mean ± s.d).

(G) Cross-correlation between angular screen and ball velocity during open-loop segments. Red line, first 2 s of segment; blue line, last 2 s of segment; shading, mean ± s.e.m. across sessions (n = 118).

(H) Schematic of optogenetics setup during the task, with the drift velocity set to zero.

(I) Effect of targeted inhibition on locomotion velocity. Each dot represents an inhibition site that was randomly targeted. Dot color represents the difference between pre- and post-inhibition velocity, and dot size represents significance based on hierarchical bootstrap (Methods). Effects were smoothed with a 1 mm square window. Gray outlines, area parcellation from the Allen Mouse CCF (22,384 unilateral and 10,661 bilateral trials; 158.87 ± 21.14 per location, mean ± s.d.; 19 sessions; 3 mice).

(J) Protocol for targeted inhibitions during the full task (with velocity drift).

(K) Left: Map of targeted sites. Right: Each dot represents the average reward rate during inhibition after a drift switch (see “measurement window” in (J)) for a single session. In the control condition, the laser was on, but targeted to a site on the metal headplate. Black dots, mean; error bars, 5th and 95th percentile of a bootstrap distribution of the mean. Conditions vs. control: p < 10−4, except for RSP, p = 0.056. Unilateral vs. bilateral: p < 0.01 for V1, V1/PM, PPC, p = 0.21 for RSP. The difference between unilateral and bilateral mean effects was significantly different for V1 and PPC (p = 0.0016), V1/PM and PPC (p = 0.001), V1 and RSP (p = 0.038), and V1/PM and RSP (p = 0.023), but not for V1 and V1/PM (p = 0.717), and PPC and RSP (p = 0.939). Two-tailed p-values based on 104 resampling iterations.

See also Supplemental Video.

Mice were trained to run approximately two meters straight forward, in virtual world coordinates, from an invisible reference point to obtain a reward. The reference point was reset to the current position of the mouse after a reward or if the mouse did not maintain a straight path (Methods; Figure 1B–C). A random offset (drift) velocity was added to the linear and angular movement of the dots on the screen and changed every 6-12 seconds, independently of reward times. To obtain rewards, mice therefore continuously needed to adjust their running to compensate for the drift and run straight in the virtual world. This task required training, and mice reached steady state performance after about two weeks (Figure 1D–F).

In addition, we periodically switched to open-loop playback of a visual stimulus that was identical to a visual stimulus previously generated by the mouse’s behavior (Figure 1C). During open-loop segments, as expected, the instantaneous correlation between the angular ball and screen velocities was low. However, there was a strong cross-correlation, with the ball velocity lagging the screen velocity by about 360 ms (Figure 1G). Mice thus remained engaged and attempted to correct angular motion even during the open-loop playback.

A key feature of this task was the use of virtual reality to decorrelate optic flow and locomotion using drift and open-loop periods (Chen et al., 2013; Keller et al., 2012; Minderer et al., 2016). The same locomotor actions resulted in distinct optic flow patterns and vice versa. This made it possible to dissociate neural encoding of visual signals, locomotion signals, and visual-motor interactions.

To test whether activity in dorsal posterior cortex had a causal relationship with the behavior required for the task, we inhibited small volumes of cortex by optogenetically activating GABAergic interneurons (Guo et al., 2014; Zhao et al., 2011). We first tested the effect of inhibition on locomotion by setting the drift velocity to zero, thus fixing visual-motor relationships (Figure 1H). We mapped inhibition across a grid on each hemisphere. Cortical inhibition affected both forward and angular running velocity. Forward velocity was reduced when inhibiting either V1 or posterior motor cortex bilaterally (Figure 1I, left). Unilateral inactivation of medial V1 and PM caused an ipsiversive increase in angular velocity (Figure 1I, right). This effect was consistent with a blinding in the contralateral visual hemifield. Such blinding would create a net ipsiversive optic flow during forward running and an expected compensatory ipsiversive locomotor shift.

We also tested the effect of cortical inhibition in the full task, which included velocity drift, to understand if cortical regions were required to adapt to changing visual-motor mappings (Figure 1J). Around the times of drift switches, we inhibited four locations individually that showed different effects in the no-drift case: central V1, medial V1/PM, PPC, and retrosplenial cortex (RSP) (Figure 1K, left). As expected from the no-drift experiments, both unilateral and bilateral inhibition of V1 or PM reduced the reward rate. Also, although inhibition of either PPC or RSP had little effect on locomotion in the absence of drift, inhibition of these areas decreased the reward rate in the presence of drift (Figure 1I, 1K, right). Unilateral inhibition had a stronger effect than bilateral inhibition for V1 and PM, whereas bilateral effects were stronger for PPC and RSP. Together with the no-drift experiments and prior work on PPC and RSP (Buneo and Andersen, 2006; Cho and Sharp, 2001), these findings suggest that, in our task, V1/PM activity might be compared across hemispheres to determine locomotion direction and that PPC and RSP might be involved in adjusting behavior for changing sensorimotor mappings.

Unbiased recordings of cortical activity

While mice performed the task, we imaged the activity of cortical neurons (either layer 2/3 or layer 5) in transgenic mice that expressed GCaMP6s in a subset of excitatory neurons (Dana et al., 2014). In each session, we imaged a 650 μm by 650 μm field-of-view (Figure 2A). Over multiple sessions, we systematically tiled fields-of-view to cover dorsal posterior cortex, including areas identified in the Allen Institute Mouse Common Coordinate Framework (CCF) v.3: V1 (VisP), secondary visual areas (AL, AM, PM), parietal areas (RL, A), retrosplenial areas (RSPagl/MM, RSPd) and trunk somatosensory cortex (SSPd-tr) (Allen Institute, 2017) (Figure 2B–D).

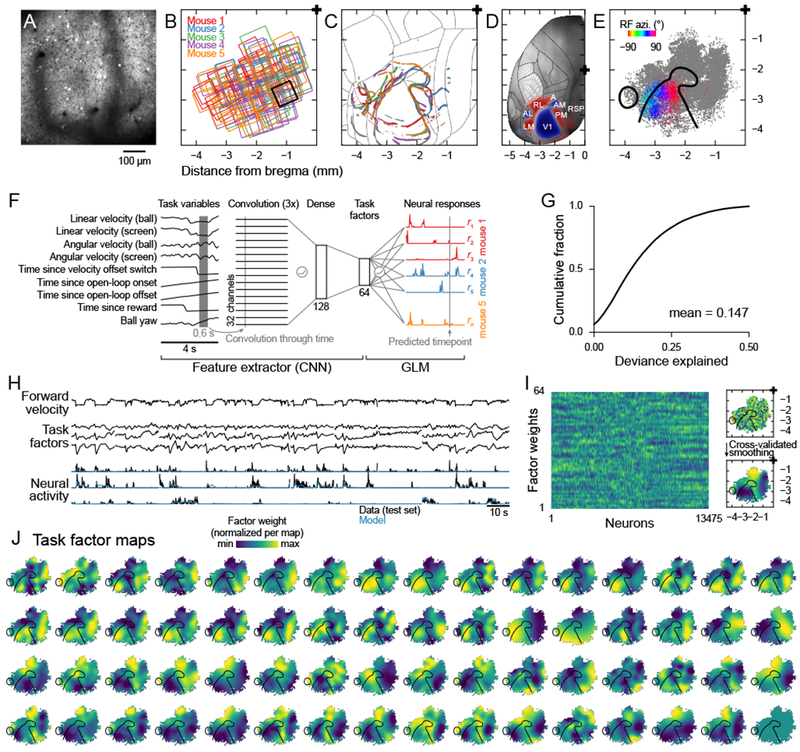

Figure 2. Relating neural activity, behavior, and cortical anatomy using a deep neural network model.

(A) Mean fluorescence image from one session.

(B) Overview of all fields-of-view. Each square represents one session, colors represent mice. Black square, example session in (A). 118 sessions from 5 mice, 23.6 ± 5.99 sessions per mouse, mean ± s.d.

(C) Field sign reversals (Methods) for all five mice, based on widefield epifluorescence imaging. Thin gray lines, area parcellation from the Allen Mouse CCF.

(D) Overlay of the brain template, area parcellation, and average field sign maps (across 79 mice), all obtained from the Allen Institute.

(E) All 23,213 detected neurons, registered with the Allen Mouse CCF. Gray dots, neuron location; colored dots, sources with significant visual receptive field fits; color indicates azimuth of the receptive field in the visual field; black lines, field sign reversals (zero field sign) based on map in (C).

(F) Schematic of the encoding model consisting of three convolutional layers (each with 32 channels), followed by two fully connected layers (128 units and 64 units), all connected through rectification nonlinearities. The response of each neuron was modeled as a linear combination of the final layer activations, passed through an output nonlinearity (the exponential function).

(G) Cumulative histogram of the fit quality (fraction of Poisson deviance explained) of all 23,213 neurons.

(H) Example traces for timepoints that were not used to fit the model. Top: Example task input variable (forward running velocity). Middle: Three example task factors, i.e. activations of the “Task factors” layer in (F). Bottom: Black lines, neural activity (deconvolved fluorescence); Blue lines, model prediction. Traces are discontinuous because data was split into 20 s long chunks and every fifth chunk was held out for testing.

(I) Left: Factor weights for all well-fit sources (fraction of deviance explained > 0.1). Sources were ordered to maximize the weight correlation between neighbors. Right: Schematic of smoothing procedure. Weight values at the location of the corresponding source were smoothed with a Gaussian filter whose width was determined by cross-validation across mice to maximize the prediction performance for the map of the held out mouse.

(J) Optimally smoothed maps for all 64 task factors. Black outlines indicate the field sign reversals based on map in (C).

See also Figure S1.

Posterior cortex was sampled without regard to anatomical boundaries to allow an unbiased analysis of activity distributions across cortical space. Also, we sampled densely at single-neuron resolution to uncover functionally distinct, but spatially overlapping, single-neuron properties and potential heterogeneities that might be obscured with spatial averaging, such as with wide-field imaging.

We identified time-varying fluorescence sources automatically (Pnevmatikakis et al., 2016) and then deconvolved the fluorescence traces (Friedrich et al., 2017) (Methods; Figure S1H–M). We included putative apical dendrites (Figure S1K), which have been shown to follow the activity of L5 somata (Peron et al., 2015). Our dataset comprised 18,127 somata (layer 2/3: 14,377; layer 5: 3,750) and 5,086 putative apical dendrites (Figure 2E). We refer to both somata and putative apical dendrites as “neurons”.

In each mouse, under the same imaging window, we also mapped retinotopy with wide-field calcium imaging. Visual field sign maps were aligned to the two-photon data using blood vessels (Figure S1A–D). Each mouse was registered to the Allen Institute CCF by aligning the field sign map to a CCF-aligned reference field sign map (www.brain-map.org) (Figure 2C–2D, S1E). This registration identified the position of each neuron within the CCF. We estimated the error of our alignment procedure using Allen Institute field sign maps that contained ground-truth CCF coordinates. Our procedure resulted in errors less than 130 μm (Figure 2C, S1E–F), which was smaller than the smallest posterior brain regions and less than the length scales for most of our analyses (see Methods for details on alignment).

Relating neural activity and complex behavior with a deep neural network model

We wanted to understand how the relationship between neural activity and task information varied across cortical space. To relate neural activity to behavior, we considered common approaches to model a neuron’s activity as a function of measured task variables (Peron et al., 2015; Pillow et al., 2008; Runyan et al., 2017). In these approaches, the experimenter defines and evaluates a small set of potential relationships between neural activity and task features. A benefit of these approaches is that they allow the experimenter to focus on specific encoding relationships.

However, our aim was to compare encoding across diverse cortical regions. We therefore considered it important to use an approach that did not rely on strong prior assumptions about the relationships between neural activity and task features. For example, in parietal and retrosplenial areas, there is little agreed-upon expectation for these relationships. Because these regions are far removed from the sensory periphery, encoding could include complex temporal patterns and nonlinear transformations of task variables. Further, to reveal the organization of encoding across cortical space, we considered it initially not critical to understand which aspects of the task were encoded in particular regions; this question is considered later. We initially asked how, rather than which, encoding properties changed across cortex.

We built an automated feature extractor that identified neural-activity-relevant features of task variables in a way that required minimal assumptions about encoding relationships (Figure 2F). The task feature extractor took the form of a convolutional neural network (CNN) that received as input 4 s long temporal snippets of each measured task variable. The convolutions were applied in the temporal dimension. The input task variables were the linear and angular locomotion (ball) velocity, the linear and angular optic flow (screen) velocity, the time since the last drift switch, the times since the last open-loop onset and offset, the time since the last reward, and rotation of the ball in yaw (yaw was not used to control the virtual environment).

The feature extractor was trained to reduce the input snippet to a set of 64 “task factors” that optimally describe neural activity. Each task factor was not constrained to represent an individual task variable and could represent a nonlinear combination of task variables, including complex temporal dependencies. The task factors were then used as the predictors in a regression model (Poisson-distributed generalized linear model, GLM) to explain the activity of each neuron (a linear visual receptive field estimate was added as an additional predictor, see Methods). The task factors were shared across and optimized for all neurons, but separate GLM weights were fit for each neuron. The GLMs predicted the deconvolved fluorescence activity of the given neuron at a time point 3 s into the 4 s input snippet. A neuron’s encoding could thus be described by its set of GLM weights across the task factors.

Importantly, the CNN and the GLMs were trained jointly on the entire dataset. Therefore, the predictors in our model were identified by the CNN based on an objective optimization procedure, rather than being manually specified. Each task factor corresponded to some aspect of the task that was relevant for neural activity. We chose 64 task factors because this number maximized the prediction quality on held-out data. We designed the model to ensure that each factor represented a different aspect of the task (Methods). Consequently, correlations between factors were low (Figure S1N), and factors were not well described by a lower-dimensional linear subspace (Figure S1O).

Our model captured a substantial amount of the neural activity variance (mean fraction of Poisson deviance explained: 0.147, Figure 2G). 78.1% of activity sources had fit qualities that were significantly higher than chance (false discovery rate of 0.001 (Benjamini and Hochberg, 1995)). Several versions of more traditional GLMs performed worse (e.g. Figure S8B).

Encoding maps revealed functional relationships between areas

We created encoding maps for each of the 64 task factors obtained from our model. In these maps, at each neuron’s anatomical location, we plotted that neuron’s weight for the given factor. The map for a given factor showed the spatial distribution of a factor’s weights and thus the importance across space of a particular aspect of the task for neural activity. We smoothed the maps using a Gaussian kernel chosen with cross-validation to give the best prediction performance across mice (Figure 2I).

The encoding of most task factors (63 of 64 factors) had structure across cortical space, indicating that task features were not uniformly represented across all areas (Figure 2J). This initial visualization indicated that we were able to capture functional relationships between cortical areas based solely on task-related neural activity. We note that the identity of the individual factors shown in Figure 2J depended on the initialization of the CNN (Methods, section Factor non-identifiability controls). However, the high dimensional encoding structure described by all factors collectively was unaffected by the initialization (Figure S2). We used this high dimensional structure for all analyses.

Cortical regions could be finely discriminated based solely on their task tuning

To analyze how the encoding of task features changed across cortical space, we examined the average spatial rate of change of the encoding maps (Figure 3A). The rate-of-change map showed sharp transitions in the encoding properties that divided the analyzed region into three areas: V1, RSP, and a secondary visual/parietal area. These boundaries did not align precisely with the retinotopic field sign borders and were better aligned with the boundaries defined by the Allen Institute, which are based on cyto- and chemoarchitecture (Figure 3A, S3) (Allen Institute, 2017). In particular, the peak rate of change in encoding at the lateral side of V1 was displaced laterally from the visual field sign border (Figure 3A, S3A–D) (Zhuang et al., 2017). Further, the rate of change of encoding did not reveal sharp transitions within the secondary visual/parietal area. For example, the anterior boundary of AM in the field sign map did not correspond to a peak in the rate of change of encoding properties of neurons (Figure S3E–F).

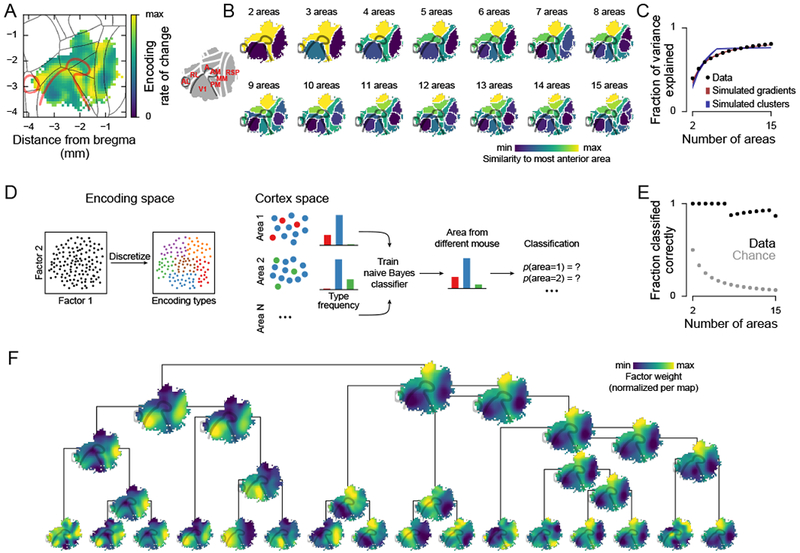

Figure 3. Cortical regions were discriminable based on task-related encoding properties.

(A) Average spatial rate of change of the encoding maps in Figure 2J. The rate of change (magnitude of central differences between neighboring points) was computed for each factor map and normalized by its maximum before averaging across factors.

(B) k-means clustering of factor maps from Figure 2J. Colors are based on a one-dimensional embedding of the cluster centroids.

(C) Black dots show fraction of map variance explained by the clusterings in (B). Shaded areas show variance explained ± s.d. across 100 simulations for two simulated datasets: random gradients (red) and maps with consistent clusters (blue; gradient model fits better than clustered model, p < 10−4; see Methods).

(D) Schematic of the area classifier. Neurons were grouped into 100 encoding types by k-means clustering in the factor weight space. To test area discriminability at a given number of areas, the frequency of each encoding type in each area was used to train a naïve Bayes classifier on four mice. The classifier was then used to compute the area probabilities based on the type frequencies in the fifth mouse. The process was repeated for all mice and the probabilities were averaged before maximum-likelihood classification.

(E) Fraction of areas classified correctly (n = 5 mice).

(F) Agglomerative hierarchical clustering of the 64 factors (only the top four levels are shown). The clustering metric was the correlation of the weights between the factors. The map of each cluster is the average of the maps in Figure 2J for all factors within the cluster.

See also Figures S2, S3 and S4.

To test whether the three main divisions contained sub-regions with different encoding properties, we grouped regions based on encoding similarity by applying k-means clustering to the encoding maps (Figure 3B). The fraction of variance explained increased smoothly with the number of clusters and was more similar to simulations of smooth maps than to simulations of clustered maps (Figure 3C). This suggested that there was no optimal number of clusters beyond the three main divisions and that clustering likely subdivided a continuum.

However, despite the lack of sharp boundaries, sub-regions within the three main divisions were statistically discriminable based on their encoding properties. To show this, we grouped neurons into encoding types based on their weights for task factors. Each spatial cluster (“areas” in Figure 3B) could then be characterized by the distribution of encoding types it contained. We trained a naïve Bayes classifier on this distribution from four mice and, in a fifth mouse, predicted the area identities based on the encoding type distributions (Figure 3D). For up to seven areas, each area in a map was discriminable from each other area. Even for 15 areas, only two confusions occurred (Figure 3E). This discriminability suggested that encoding properties varied consistently across mice within the three main areas apparent in the map of the rate of change of encoding (Figure 3A).

The order in which subdivisions emerged at increasingly finer map resolutions provided insights into the functional relationships between areas (Figure 3B). To highlight these relationships, we performed hierarchical clustering on the encoding maps (Figure 3F), and we overlaid the areas identified from clustering with the areas defined by the Allen Institute (Figure S4). Multiple interesting observations emerged from the k-means and hierarchical clustering. First, parietal regions clustered with medial secondary visual areas. Parietal regions could be subdivided into: a medial strip including area A, part of area AM, and area PM; a lateral region overlapping with RL; and, an anterior region close to somatosensory cortex. Second, RSP could be divided into separate clusters along the anterior-posterior axis, indicating that it may not be a functionally uniform region (see left branch of clustering hierarchy in Figure 3F). Third, AL was functionally more similar to the AM/MM clusters than to RL, which was consistent with connectivity (Gămănuţ et al., 2018) but differed from tuning preferences for simple visual stimuli (Marshel et al., 2011). Fourth, even at fine clustering resolution (15 clusters), we did not observe a cluster aligned with the retinotopically defined part of area AM. Finally, the most consistent boundaries in all maps were at the divisions between primary visual, parietal, and retrosplenial areas, but the boundaries within these main divisions differed substantially across maps.

Cortical areas were therefore statistically discriminable based on their functional relationship with task features at a resolution comparable to anatomical and retinotopic maps. However, except for the boundaries between V1, parietal, and retrosplenial areas, encoding properties varied gradually.

Neurons within an area had diverse encoding properties

Given that posterior cortex could be divided into discriminable areas, we wanted to understand the underlying structure of the discriminability at the resolution of single neurons. We initially hypothesized that the discriminability of cortical areas was due to a spatial compartmentalization of encoding properties across areas, such that each area would be characterized by neurons with encoding properties that were unique to that area.

We analyzed how neurons in each area were distributed across the space of encoding properties. The encoding properties of a neuron were defined as the neuron’s weights for the task factors. For visualization, we reduced the 64-dimensional weights into two dimensions with t-distributed stochastic neighbor embedding (t-SNE) (Figure 4A). For the following analyses, we used the parcellation into five areas for ease of display (Figure 4B), but similar results were found with ten areas or with the areas defined by the Allen Institute (Figure S5). If each area had unique encoding properties, neurons in encoding space would segregate by cortical area. Instead, each of the five cortical areas contained neurons in most parts of encoding space (Figure 4C).

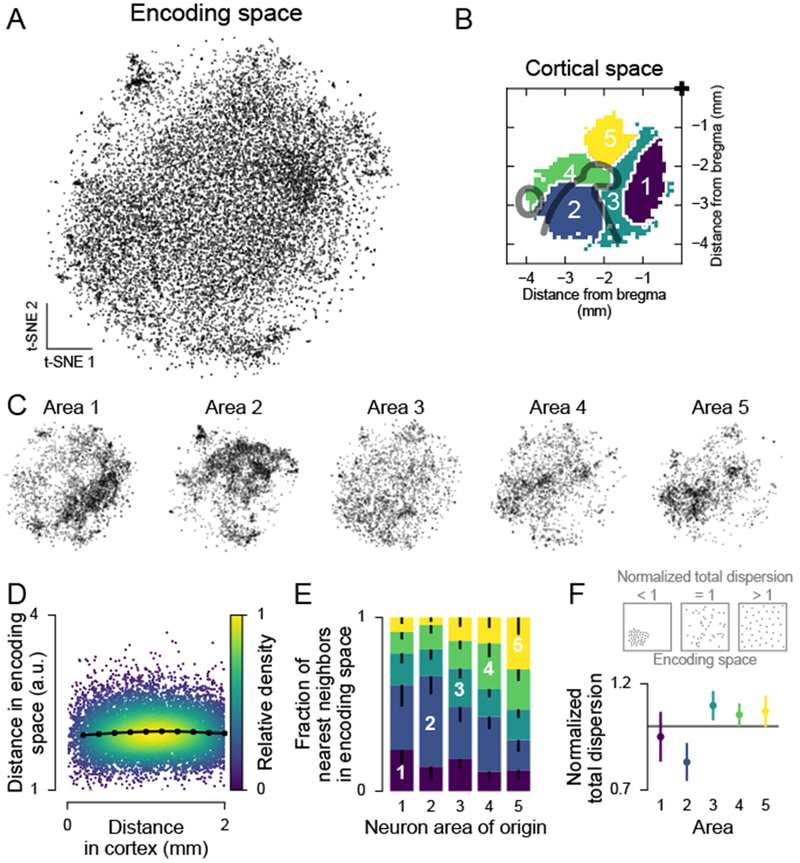

Figure 4. Anatomically defined populations showed diverse encoding properties.

(A) t-SNE of the factor weights for all neurons.

(B) Parcellation of cortex used in (C). From Figure 3B.

(C) Same embedding as in (A), but split by areas in (B).

(D) Relationship between anatomical and encoding-space distance. Each dot is one neuron pair. Black line, mean (computed in 200 μm bins). Pearson correlation for 0-500 μm: 0.032 (greater than zero, p < 10−323, n = 10,977,961 neuron pairs).

(E) Anatomical location of nearest-encoding-space-neighbors for neurons in all areas in (B). Neurons were randomly subsampled to match neuron numbers across areas. Colors as in (B). Error bars (only lower tail is drawn), fifth percentile of hierarchical bootstrap of the mean.

(F) Top: Schematic of the normalized total dispersion statistic. A normalized total dispersion value less (greater) than one means less (more) dispersion in weight space than the overall population. Bottom: Normalized total dispersion for the areas in (B). Error bars, 5th and 95th percentile of hierarchical bootstrap of the mean. The mean is different from 1 with: area 1, p = 0.256, n = 3518; area 2, p < 10−3, n = 3407; area 3, p = 0.018, n = 2389; area 4, p = 0.059, n = 2065; area 5, p = 0.075, n = 1959 neurons.

See also Figure S5.

We quantified these observations by analyzing the distribution of the full 64-dimensional encoding weights. The relationship between cell-cell anatomical distance and cell-cell distance in encoding space was weakly positive (Pearson correlation 0.031 over 0-500 μm cortical distance, p < 10−323, Figure 4D). Anatomically nearby neurons had a wide range of similarity in encoding, similar to anatomically far apart neurons. Consequently, a neuron in one cortical area often had a nearest neighbor in encoding space that was located in another area, indicating that areas overlapped in their encoding properties (Figure 4E). This overlap was extensive: for all areas except V1, most nearest-encoding neighbors were located in different areas. We also quantified the dispersion of the encoding weights of the neurons in an area by summing the variances of each of the 64 encoding dimensions (“total dispersion”). We then normalized each area’s total dispersion by the total dispersion across all areas. If areas had specialized single-neuron encoding properties, each area was expected to have a normalized total dispersion less than one. This was the case only for V1 (Figure 4F). In contrast, the area comprising parietal and secondary visual regions (PM, AM, A, MM) had a normalized total dispersion greater than one and thus tiled encoding space more evenly than the overall population (Figure 4F).

Therefore, each cortical region had diverse encoding properties that overlapped with those from different regions, indicating that encoding properties in our task were not spatially compartmentalized. The even tiling of encoding space by parietal and secondary visual areas indicated a diversity of encoding that was consistent with a role of these areas in the integration of information across the surrounding regions.

Neurons with similar encoding properties were distributed across cortex

Our results so far indicated that cortical areas were discriminable based on their encoding properties, but also that encoding properties were distributed across areas. Therefore, we considered that encoding properties could be distributed without regard to area boundaries, but non-randomly, such that areas were discriminable based on their characteristic profile of encoding properties. We tested whether distributed encoding properties were more likely than area-specific properties to be informative about the identity of a cortical area. We identified neurons with similar encoding properties by discretizing the encoding weight space into encoding types (Methods; Figure 3D). An inspection of the distribution of each of the encoding types across cortical space revealed that neurons from a single encoding type were typically highly distributed and found in many regions (Figure S6C).

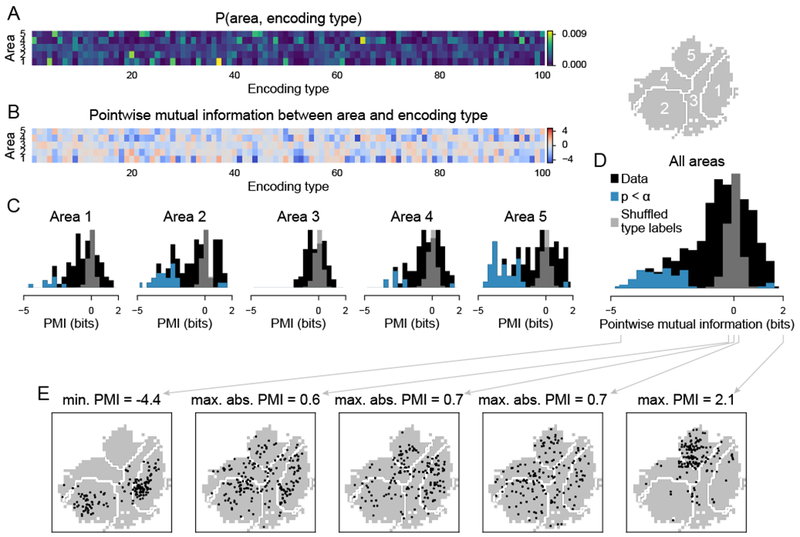

We quantified to what degree particular encoding types were under- or over-represented in different areas. We used the joint probability distribution of cortical areas and encoding types to compute the pointwise mutual information (PMI) between each area and encoding type (Figure 5A–B). The PMI measured how much the probability of observing a particular encoding type in a particular area deviated from statistical independence, that is, from a situation in which the encoding type was uninformative about the cortical area. The PMI was positive if the encoding type was enriched in the area and negative if it was selectively excluded from the area.

Figure 5. Neurons with similar encoding properties were distributed across cortex.

Encoding types were defined by applying k-means clustering to the weight space, as in Figure 3. Areas were defined based on the five-area parcellation shown in Figures 3B.

(A) Joint probability distribution of all areas and encoding types.

(B) Pointwise mutual information between area and encoding type (), based on the frequency of neurons of each type in each area.

(C) Histograms of the data in (B). Each histogram shows the PMI values of all encoding types with respect to one area. Gray, control distribution (shuffled type labels); blue, PMI values that were significantly different from zero; black, non-significant datapoints. Significance based on bootstrap estimate of PMI (hierarchically resampled data 104 times and re-computed probabilities). The significance threshold α was corrected for multiple comparisons by controlling the false discovery rate at 0.01. n = 500 encoding type/area pairs.

(D) Histogram of PMI values for all encoding type/area pairs. Colors as in (C). 64 pairs are significantly less than zero, 5 are significantly greater.

(E) Distribution across cortex of five example encoding types with strongest exclusion from one area (left), least informative about cortical location (middle), strongest specificity to one area (right).

See also Figure S6.

Few encoding types were significantly specific to a single cortical area. Only 5 of 100 encoding types had a PMI significantly greater than zero for any area (Figure 5B–D). Instead, neurons of the same encoding type were typically distributed across several areas. Most pairs of encoding type and area had PMI values near zero, indicating that the presence of an encoding type in a given area provided little information about the identity of the area (Figure 5E, middle three panels). However, a fair number of pairs of encoding type and cortical area (64 of 500) had PMI values that were significantly less than zero (Figure 5C–D). Neurons of these encoding types were significantly excluded from certain areas (Figure 5E, left). Consequently, these encoding types were informative about their cortical location without being specifically localized to a certain area. Similar results were obtained when using the Allen Institute-defined areas (Figure S6A).

Therefore, cortical areas were distinct because they had different relative profiles of encoding properties and not because of encoding types that were specific to individual areas. Areas were separable at the population scale but with many similarities at the level of individual neurons. For a given neuron with known encoding properties, it was difficult to identify in which area it resided (Figure 5E and S6). Similarly, for a given area, it was difficult to predict the encoding properties of a neuron chosen from that area (Figure 4C,E).

Encoding of measured task variables

Above, we analyzed encoding relationships using unsupervised methods to gain insights into the functional organization of cortex without relying on the interpretability of the encoded task features. Next, we considered how the encoding of explicitly measured task variables varied across cortical space.

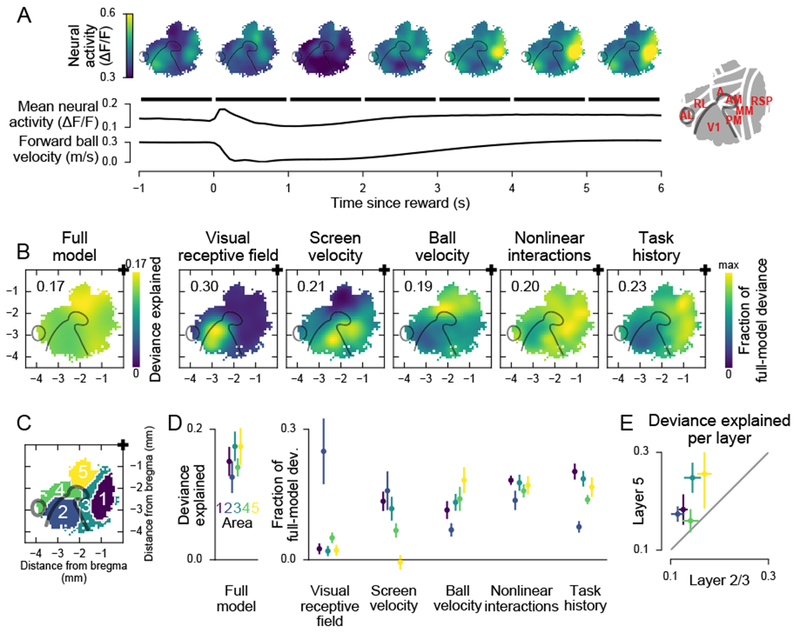

First, to visualize the large-scale involvement of cortex in the task, we considered how activity changed around the time of the reward (Figure 6A). In the visual-retrosplenial regions, activity was high during ongoing behavioral actions, including fast running prior to a reward, and activity was low when the mouse stopped following a reward (Figure 6A). The pattern was reversed in the parietal region. Parietal region activity was high during periods of acceleration and deceleration (Figure 6A). These patterns were consistent with a role for the visual-retrosplenial group in the maintenance of a straight locomotion trajectory. The activity in the parietal group was consistent with an involvement in the adjustment of behavior in response to task events (Buneo and Andersen, 2006).

Figure 6. Encoding of task variables.

(A) Mean neural activity, aligned to the reward. Top: Smoothed activity maps for 1 s bins. Bottom: Mean activity trace and forward ball velocity trace. Black bars indicate extent of map bins.

(B) Maps of encoding strength. Left: Map of full model fit quality across cortex. Right: Maps of unique contributions of different features to the full model fit. Fraction of full-model deviance was computed, separately for each neuron, as 1 − (Dnull − Dreduced)/(Dnull − Dfull), where Dreduced was the deviance of a model lacking the indicated feature, Dfull was the deviance of the full model, and Dnull was the deviance of a null model (Methods). All maps are scaled from zero to the value indicated in the top left corner.

(C) Parcellation of cortex used in (D). From Figure 3B.

(D) Data from (B), binned by areas in (C). Colors as in (C). Error bars, 5th and 95th percentile of hierarchical bootstrap of the mean. Number of neurons: area 1, 6318; area 2, 6256; area 3, 3820; area 4, 3708; area 5, 2882. Mean deviance explained is greater than zero in all areas with p < 10-3. Mean fraction of full-model deviance is greater than zero for all areas and conditions with p < 0.009 except for area 5, screen velocity (p = 0.610).

(E) Mean full-model deviance explained, split by layer. Error bars, 5th and 95th percentile of hierarchical bootstrap of the mean. Number of neurons: layer 2/3, 11639, layer 5, 3717.

See also Figure S7.

We next used our encoding model (Figure 2) to understand how encoding of specific task variables varied across cortex (Figures 6 and S7). For each neuron, we quantified how well the full model, with all task variables included, could explain the neuron’s activity (measured as deviance explained). From the full-model deviance, we subtracted the deviance explained by a model that included all variables except the variable of interest (Figure 6B,D). The difference in the performance of these models provided a conservative estimate of a variable’s contribution to a neuron’s activity (Methods).

The primary variables relevant for the task were locomotion velocity (ball velocity) and movement velocity through the virtual environment (screen velocity), which were decorrelated by adding drift and open-loop periods. Encoding of these variables varied mainly along the anterior-posterior axis (Figure 6B,D). Screen velocity encoding was strongest in medial V1 and PM, which correspond to the temporal visual field, where optic flow is strongest during forward locomotion. In contrast, locomotion velocity encoding was strongest in a region corresponding to the Allen CCF area VisA. As expected, linear visual receptive fields were restricted to V1 (Figure 6B,D).

In addition, we tested for the encoding of more complex features, including nonlinear combinations of task variables and task history (Methods). Nonlinear interactions between task variables were encoded almost equally strongly in all areas (Figure 6B,D). To test for encoding of task history, we compared models that had access to either 3 s or 0.3 s of past task variables. We found that task history was encoded most strongly in RSP and in the parietal areas (areas 3 and 5, Figure 6D). This finding is consistent with work showing long timescales and integration of navigationally-relevant variables in RSP and parietal areas (Alexander and Nitz, 2015; Morcos and Harvey, 2016; Runyan et al., 2017).

We also compared encoding in layer 2/3 and layer 5. The types of task variables encoded were similar across layers (Figure S7D). Also, the differences in encoded features between areas were consistent for both layers. However, encoding was generally stronger in layer 5 than in layer 2/3 (Figure 6E).

Although encoding of task features was not uniform across cortical space, it was striking that at least some neural activity in most areas could be explained by any given feature. Except for screen velocity in the most anterior area, the fraction of explainable deviance for any task feature in any of the five areas was significantly greater than zero (Figure 6D). Thus, if an experiment focused solely on a single area, it would likely find encoding of most task parameters of interest. Here, by analyzing a large portion of cortical space in a single study, we were able to find that areas differed not in terms of which features they encoded, but rather in the relative strength with which they encoded those features.

Distributedness of information across cortex depended on encoded features

Our maps of encoding properties suggested that the distribution of information may be related to the type of feature that is encoded. It appeared that simpler features, such as visual receptive fields, screen velocity, and ball velocity, were more spatially confined than more complex features, such as nonlinear feature interactions and task history. To test this idea, we grouped neurons by their encoding properties into encoding types (as in Figure 3D). We then asked how the features encoded by the neurons of a given type related to the spread of those neurons in cortex, quantified as the area of an ellipse fit to the cortical locations of all neurons of that type (Figure 7A).

Figure 7. Distributedness of information across cortex depended on encoded feature.

(A) Schematic of distributedness analysis. Encoding types were defined as in Figures 3 and 5. The spread in cortex (distributedness) of each encoding type was defined as the area of a 1-standard-deviation-contour of a Gaussian distribution fit to the cortical locations of the neurons with this type. The fraction of full-model deviance was defined as in Figure 6B and averaged for each neuron type.

(B) Distributedness versus fit quality for the full model. The three encoding types with highest fraction of full-model deviance are colored. Error bars, 5th and 95th percentile of hierarchical bootstrap of the mean. Error bars were only plotted for the colored types, to maintain visibility. Gray line, 5th and 95th percentile of hierarchical bootstrap of a linear regression fit. The regression slope was not different from zero (p = 0.14).

(C) Cortical locations of the neurons belonging to each of the colored types in (B).

(D) As in (B), but for the unique contributions of different features to the full model fit. Gray dots represent encoding types that were not significantly different from zero by bootstrap. The three types with the highest deviance explained for each feature are colored. The regression slopes were significantly different from zero (p < 10−4) for screen velocity, ball velocity, and nonlinear interactions, but not for task events (p = 0.058) or task history (p = 0.995). The slopes for both task history and task events were significantly different from the other features (p < 0.001). All p-values were determined by bootstrap (104 repeats).

(E) As in (C), but for the types colored in (D).

Encoding types with the strongest encoding of either visual or locomotion velocity had some of the smallest spreads in cortex (Figure 7D–E, see colored encoding types). For the encoding of nonlinear interactions and task history, the types with the strongest encoding had intermediate spreads (Figure 7D–E, see colored encoding types). We also tested specific encoding of task events, such as rewards, open-loop onset and offset, and drift switches. The few encoding types that had significant event-specific encoding were highly distributed across cortex (Figure 7D–E, see colored encoding types).

Therefore, encoding types associated with relatively simple stimuli appeared more localized, which is consistent with area specialization for simple visual stimuli (Andermann et al., 2011; Glickfeld et al., 2013; Marshel et al., 2011). However, most encoding types showed a wide spread across cortical space (Figure S6C). Neurons that were task-related in more complex, but nevertheless reliable, ways, such as nonlinear combinations of sensory, movement, and task features, tended to be highly distributed across cortex.

Multimodal representations emerged where encoding maps overlapped

We wanted to understand where task-relevant information, in particular the relationship between visual and locomotion information, was represented in a format that could be best decoded by a downstream region to solve the task. We focused on decoding of linear combinations of signals because theory suggests that linear representations allow optimal inference in the context of multimodal integration (Ma et al., 2006; Pitkow and Angelaki, 2017). We developed a simplified version of our encoding model in which task variables did not interact nonlinearly and therefore remained invariant to each other (Figure 8A; Methods). As above, the model took the form of a GLM, but rather than using the CNN-based feature extractor, we specified manually which task variable combinations and transformations to relate to neural activity.

Figure 8. Population decoding reveals that multimodal representations emerge where modalities overlap.

(A) Schematic of the encoding model and decoding. A separate encoding model was fit for each neuron. Population decoding was performed on local groups of ~50 neurons. A prior model was used to account for correlations between the decoded variable and other task variables (Methods).

(B) Maps of decoding accuracy for the modalities that were explicitly decorrelated in this study: angular and linear screen and ball velocity. Left and center-left: Decoding maps for the single-modality (screen and ball) features. Center-right: to illustrate where decoding accuracy of the screen and ball single-modality maps overlapped, the screen and ball maps were multiplied pixel-wise. Right: maps for the multi-modal feature (drift).

(C) Mean decoding performance (), split by layer. Error bars, 5th and 95th percentile of hierarchical bootstrap of the mean. Number of neurons: layer 2/3, 11639, layer 5, 3717. Colors indicate area as in legend below.

(D) Maps as in (B), but showing decoding accuracy for open-loop timepoints using an encoding model fit on closed-loop timepoints. Plots next to maps show mean by area. For these plots, closed-loop accuracy was computed using only the timepoints that were later replayed during open-loop segments. Error bars, 5th and 95th percentile of hierarchical bootstrap of the mean.

(E) Maps of decoding accuracy for long-timescale integration features.

See also Figure S8.

We used Bayes’ rule to invert the GLM and to measure how well individual features could be decoded from the neural activity of small local populations (~50 neurons; Methods). To assess decoding accuracy, we isolated the portion of task variance explained that was due to the neural activity and not due to correlations between the decoded variable and other task variables (Methods). Since features only interacted linearly in the GLM, a high decoding accuracy meant that the neural activity encoded the feature of interest in a manner invariant to other features.

The main reward-relevant task variables were the angular optic flow (screen) velocity and angular locomotion (ball) velocity. Optic flow and locomotion velocity were related by the drift velocity. Screen, ball, and drift velocity therefore formed a set of visual, motor, and visual-motor-integration variables. The visual component (angular screen velocity) could be decoded predominantly from the MM region medial to area PM (Figure 8B). The motor component (angular ball velocity) could be decoded from a mediolateral strip that overlapped with the parietal region A (Figure 8B). The visual-motor-integration component (angular drift) could be decoded best in a location that was anterior and medial of the retinotopically defined area AM. This location corresponded to the zone of maximal overlap between the visual and locomotion velocity decoding regions (Figure 8B). Similarly, linear velocity drift could be decoded best where linear visual and locomotion velocity decoding overlapped.

These results indicate two significant points. First, the best angular drift decoding was in a location that matched what our previous work has studied as PPC (Driscoll et al., 2017; Harvey et al., 2012; Morcos and Harvey, 2016; Runyan et al., 2017). Second, our data suggested that representations formed overlapping maps and that multimodal information could be decoded where the representations of component features overlapped.

To test how the combination of multimodal information depended on coherence between sensory and motor signals, we compared decoding performance during the task to decoding performance in the open-loop segments, during which the animal was not in control of the virtual environment (Figure 8D). Using an encoding model fit to closed-loop time points, we decoded task variables during open-loop times. If representations depended on behavioral control, the decoding performance of the closed-loop model was expected to be low during open-loop times. Decoding of angular velocity, the most task-relevant variable, was reduced during open-loop segments for both visual and motor components (Figure 8D, top). Decoding performance of linear visual and drift velocity was reduced in secondary and higher areas, but improved in primary visual cortex (Figure 8D, bottom). This suggests a disengagement of secondary and higher areas, and reduction in multimodal representations, when the animal was not in control of the environment. There was no decrease in mean activity in any area during open-loop segments (Figure S8E). Together with our optogenetic inhibition results (Figure 1I,K), our findings suggest that V1 represents visual-motor mismatch in a task-independent way, whereas higher areas such as PPC and RSP integrate sensory, motor and task information in a way that depends on coherent interactions with the environment.

We further found that the best decoding of higher order task features was broadly present in association areas (Figure 8E). All of the temporally or spatially integrated features that we considered were best decoded from RSP, consistent with a role for this region in integration of visual information during navigation (Alexander and Nitz, 2015; Cho and Sharp, 2001). Interestingly, however, decoding was not uniform across RSP. Rather, decoding of heading since the last reward was specific to posterior portions of RSP. RSP may therefore have important functional subdivisions.

The patterns of decoding across cortical space were broadly consistent for neurons in layer 2/3 and layer 5. However, decoding performance for nearly all features of the task was superior in layer 5 populations (Figure 8C, S8D).

DISCUSSION

Unbiased and unsupervised comparison of encoding properties during navigation

Both our experimental design and analysis approach were developed to address the challenges of comparing activity in a wide range of cortical areas. The use of a navigation task likely engages posterior cortex more strongly than passive viewing of stimuli (Andermann et al., 2011; Marshel et al., 2011). Our task was self-guided and continuous, potentially allowing more natural cooperation between brain areas than in rigid trial-based tasks. Also, the space of task variables was sampled densely, rather than at a few pre-defined states, which could help understand the distribution of encoding properties.

We developed a neural-network-based feature extractor and combined it with a linear encoding model (Batty et al., 2017; McIntosh et al., 2016) to relate neural activity to behavior in a way that requires few prior assumptions about the considered encoding relationships. Instead of relating activity directly to explicitly measured task variables, we started with unsupervised analyses. This approach allowed us to consider relationships that were based on hard-to-interpret features, such as nonlinear interactions or long temporal dependencies. These methodological advances helped us to compare task-related activity across widely varying cortical areas within a single experimental paradigm.

The spatial organization of behaviorally relevant information in cortex

In the context of our visually guided navigation task, encoding properties formed smooth gradients across large parts of posterior cortex. The only functional discontinuities in posterior cortex were found at the major anatomical borders between V1, parietal, and retrosplenial areas. Despite the lack of further discontinuities, different regions could be distinguished based on population-level differences in encoding profiles. On a cellular level, however, encoding properties were strikingly distributed, and most task features were encoded in most areas.

Our data appear consistent with a distributed architecture without sharp distinctions between local and long-range connectivity, in which functional similarity is related smoothly to cortical distance. A distributed architecture, in this sense, has been suggested by theoretical work. Modeling has shown that the inter-area connectivity of both primate and rodent cortex can be explained by an organizational rule that acts on the level of single axons (Song et al., 2014). According to this rule, the probability of a neuron to send an axon into any part of cortex decays smoothly (exponentially) with wiring distance. Networks based on this rule thus form a spatial continuum and lack discrete areas (Song et al., 2014). Such networks may appear modular in larger brains, such as those of primates, and distributed in smaller brains, such as from rodents, even with the same underlying principle.

Emergence of multimodal information between primary areas

These models make predictions for the formation and location of multimodal representations. Due to the rule of connectivity decay with distance, multimodal representations are expected to emerge at cortical locations between the peaks of encoding for individual features. We found that multimodal representations were common across posterior cortex, with most areas having significant encoding of both visual and locomotion signals. This finding is consistent with work showing movement-related signals in primary sensory cortices (Keller et al., 2012; Saleem et al., 2013). However, in our data, multimodal representations emerged to the greatest extent at locations where visual and movement gradients overlapped, most saliently in the location we have studied in depth as PPC, which is anterior and medial to the boundaries of retinotopic area AM (Driscoll et al., 2017; Harvey et al., 2012; Morcos and Harvey, 2016; Runyan et al., 2017). At this location, we found an overlap of angular screen and ball velocity encoding and the highest decoding accuracy for the most action-relevant task variable, angular drift.

More generally, our study shows that task-related activity can be used to identify functional structure in association areas that have been challenging to map with sensory stimuli. While angular drift could be decoded best in the location we have called PPC, other multimodal signals were present in a large group of areas including PM, MM, A, AM, and RL (as defined in the Allen CCF). This group was active during behavioral change-points (Figure 6) and encoded visual-motor integration features (Figures 6 and 8). A sub-region extending along the anterior-posterior direction (area 3) had the most diverse encoding of all regions (Figure 4) and strongly encoded nonlinear interactions of visual and locomotion features (Figure 6D). We hypothesize that functions typically ascribed to parietal cortex extend throughout this region, potentially in a posterior to anterior gradient from visual-motor integration to learned visually-guided motor planning. In addition, our results indicate that area PM has an important role in navigation (Andermann et al., 2011; Roth et al., 2012). Furthermore, RSP had prominent functional subdivisions along the anterior-posterior axis.

Beyond multimodal mixing, redundant and recurrently connected neural populations are thought to be requirements for other features of cortical processing. The prevalence of complex nonlinear stimulus transformations and their distributed representation in our data may be a signature of processing for probabilistic inference (Pitkow and Angelaki, 2017). Also, the widespread integration signals we found across secondary and association areas are consistent with the sharing of information between neural populations at different stages of a processing stream, as needed for predictive coding (Keller et al., 2012; Marques et al., 2018).

Implications for the future study of posterior dorsal cortex during behavior

The areas we identified based on the encoding of task information did not line up exactly with retinotopic boundaries. In the secondary visual regions, we found gradients of task encoding, in contrast to the sharp boundaries suggested by retinotopic mapping. Our results also suggested that the spatial structure of encoding may depend on the features considered. Future studies comparing cortical areas should consider the caveats associated with sampling and binning neural activity based on area boundaries defined by specific features, such as retinotopy.

Despite the distributed representations we observed, it is possible that computations in cortex could be localized. For example, computations to generate or transform representations could occur selectively in a specialized area and then this information could be broadcast widely. It will thus be of interest to develop experimental approaches that extend beyond encoding to test if computation is localized or distributed.

STAR METHODS

CONTACT FOR REAGENT AND RESOURCE SHARING

Further information and requests for resources and reagents should be directed to and will be fulfilled by the Lead Contact, Christopher Harvey (harvey@hms.harvard.edu).

EXPERIMENTAL MODEL AND SUBJECT DETAILS

Mice

All experimental procedures were approved by the Harvard Medical School Institutional Animal Care and Use Committee and were performed in compliance with the Guide for the Care and Use of Laboratory Animals. All imaging data were obtained from five male C57BL/6J-Tg(Thy1-GCaMP6s) GP4.12Dkim/J mice. For the optogenetic inactivation experiments, three male VGAT-ChR2-YFP mice were used (The Jackson Laboratory). All mice were 8-10 weeks old at the start of behavioral training, 12-24 weeks old during imaging and optogenetic experiments, and had not undergone previous procedures. Mice were kept on a reversed 12 h dark/light cycle and housed in groups of up to four littermates per cage. Mouse health was checked daily.

METHOD DETAILS

Behavior

Virtual reality system

The virtual reality system was programmed in MATLAB using the Psychophysics Toolbox 3. A PicoPro laser projector (Celluon Inc.) back-projected the virtual environment onto a half-cylindrical screen (24 inch diameter) at a framerate of 60 Hz. The luminance of the image was gamma-corrected and adjusted to account for the curvature of the screen.

The virtual environment was updated in response to the mouse’s locomotion on a custom-made open-cell Styrofoam ball (8 inch diameter, ~135 g). Two optical sensors (ADNS-9800, Avago Technologies) positioned 45° below the equator of the ball and separated by 90° in azimuth were connected to a Teensy-3.2 microcontroller (PJRC.COM) to measure the 3-dimensional rotation velocity of the ball. The pitch and roll velocity components controlled the linear and angular velocity in the virtual environment. The velocity gain was adjusted such that the distance travelled in the virtual environment equaled the distance traveled on the surface of the ball.

The virtual environment consisted of 6,000 dots placed uniformly at random in a 4 m by 4 m wide and 5 m high virtual arena. Movement of the mouse on the ball caused corresponding translation of the dots through the arena. The arena had continuous boundary conditions such that dots leaving the bounds on one side reappeared on the other. The viewpoint of the mouse was located in the center of the arena. Only dots within a radius of 2 m from the viewpoint of the mouse were visible. All dots had a diameter of 63 mm in the virtual world, corresponding to 1.8° of visual space at a distance of 2 m. Dots closer than 1 m from the mouse were collapsed in height onto the floor of the arena. The dots were either black or white, on a gray background. Each dot had a lifetime of 1 s, after which it vanished and a new dot was generated at a random location.

Task description

Mice were trained to run along a straight path for a fixed distance (“goal distance”) away from an invisible reference point to obtain a reward (4 μl of 10% sweetened condensed milk in water). The goal distance was adjusted daily to maintain the reward rate between 2 and 5 rewards per minute and was 2.21 ± 0.23 m (mean ± s.d.) for the imaging sessions.

To detect deviation from a straight path, we continuously measured the position of the reference point in relation to a triangular reward detection zone (Figure 1B). The reward detection zone was an isosceles triangle whose apex was at the mouse’s current position and whose base was behind the mouse and orthogonal to the mouse’s current heading direction. The goal distance defined the height of the triangle and the base had a fixed length of 1 m. When the reference point crossed any edge of the triangle due to the mouse’s locomotion, the point was reset to the current position of the mouse. If the point left the triangle by crossing its base, a reward was delivered. This mechanism rewarded the mouse for moving away from the current reference point in a straight path. The triangular reward detection zone ensured that more recent path segments were weighted more heavily than segments further in the past in determining the straightness of the mouse’s path.

To decorrelate the locomotion velocity from the optic flow on the screen, we added a drift to the movement of the mouse through the virtual environment. The drift velocity remained constant for 6-12 seconds (interval chosen uniformly at random). Then, new values were randomly drawn from a normal distribution, independently for the forward and angular directions. The standard deviation of the angular drift component was 18°/s. For comparison, the standard deviation of the angular running velocity during the task was 15.6°/s (after subtracting the drift). The standard deviation of the forward drift component was 0.06 m/s (s.d. of forward running velocity after subtracting drift, 0.17 m/s). To obtain data where locomotion and optic flow were completely decorrelated, we included a segment of open-loop playback after every ninth drift segment. During open-loop playback, we displayed a visual stimulus that was identical to the preceding 6-12 seconds of closed-loop behavior. No rewards were delivered during the open-loop segments.

Behavioral training

Before behavioral training, a titanium headplate was affixed to the skull of each mouse using dental cement (Metabond, Parkell). The center of the headplate was positioned over left posterior cortex (2.25 mm lateral and 2.50 mm posterior from bregma). To allow for an imaging plane parallel to the surface of cortex, the headplate was tilted by 16° from horizontal around the anterior-posterior axis. While performing the task, mice were head-restrained so that the headplate was tilted at 16° and the skull was level.

Starting three days before the first training session, mice were put on a water restriction schedule which limited their total consumption to 1 ml per day. The weight of each mouse was monitored daily and additional water was given if the weight fell below 80% of the pre-training weight. Mice were trained daily for 45-60 min at approximately the same time each day. Initially, the drift velocity was set to zero and the goal distance was set to a low value such that any movement on the ball would result in a reward. The goal distance was increased automatically after each reward within a session, and the initial and maximal goal distance were adjusted between sessions to maintain the reward rate between 2 and 5 rewards per minute. Once mice reached a goal distance of 2 m, drift was gradually introduced over several sessions. Training took approximately 2 weeks.

Optogenetic inactivation experiments

Three male VGAT-ChR2-YFP mice were used for the optogenetic inactivation experiments (The Jackson Laboratory). For these experiments, we followed the procedures described in (Guo et al., 2014).

Clear skull cap

The mouse was anaesthetized with isoflurane (1-2% in air). The scalp was resected to expose the entire dorsal surface of the skull. The periosteum was removed but the bone was left intact. A thin layer of cyanoacrylate glue was applied to the bone (Insta-Cure, Bob Smith Industries), followed by a layer of transparent dental acrylic (Jet Repair Acrylic, Lang Dental, P/N 1223-clear). A bar-shaped head plate was affixed to the skull posterior to the lambdoid suture using dental cement (Metabond, Parkell). Mice were then trained to perform the task.

Once mice reached steady state performance, they were anesthetized again and their skull cap was polished with a polishing drill (Model 6100, Vogue Professional) using denture polishing bits (HP0412, AZDENT). After polishing, clear nail polish was applied (Electron Microscopy Sciences, 72180). Fiducial marks were made on the skull cap to aid in laser alignment. An aluminum ring was then affixed to the skull using dental cement mixed with India ink to prevent stimulation light from reaching the mouse’s eyes.

Photostimulation

Light from a 470 nm collimated diode laser (LRD-0470-PFR-00200, Laserglow Technologies) was coupled into a pair of galvanometric scan mirrors (6210H, Cambridge Technology) and focused onto the skull by an achromatic doublet lens (f=300 mm, AC508-300-A-ML, Thorlabs). The laser (analog power modulation, off to 95% power rise time, 50 μs) and mirrors (<5 ms step time for steps up to 20 mm) allowed simultaneous stimulation of several sites by rapidly moving the beam between them. The beam of the diode laser had a top-hat profile with a diameter at the focus of approximately 200 μm.

For the grid-based inhibition mapping, stimulation trials were performed continuously throughout the behavioral session. For each stimulation trial, we randomly selected which hemisphere to stimulate (left, right or both), and then randomly selected one of 72 stimulation sites (per hemisphere) (Figure 1I). All sites were stimulated once before the first site was stimulated again. Sites were spaced in a regular grid at 500 μm intervals. During these experiments, we set the drift velocity in the task to zero.

For the inhibitions during the full task (with drift), two drift values were used (18°/s and −18°/s) and were switched every four seconds. The laser onset was aligned to the drift switch and the laser was on for six seconds. Inhibition trials were performed randomly at every second or third drift switch. The coordinates of the targeted sites, in millimeters lateral and posterior from bregma, were 2.5, −4 (V1), 1.5, −4 (V1/PM), 1.75, −2 (PPC), and 0.5, −2.5 (RSP) (Figure 1K). For control trials, the laser was targeted at a location on the metal headplate. Unilateral and bilateral trials were randomly interleaved.

Following Guo et al., the laser power at each stimulation site had a near-sinusoidal temporal profile (40 Hz) and time average of 5.7 mW. To ensure identical stimulus properties and mirror sounds, for unilateral stimulations, we still moved the laser between two sites, but the second site targeted the head-plate so that the light did not reach the brain.

Chronic cranial windows for imaging

After mice reached steady task performance, the cranial window surgery was performed. Twelve hours before the surgery, mice received a dose of dexamethasone (2 μg per g body weight). For the surgery, mice were anesthetized with isoflurane (1-2% in air). The dental cement covering the skull was removed and a circular craniotomy with a diameter slightly greater than 4 mm was made over the left hemisphere (centered 2.25 mm lateral and 2.50 mm posterior from bregma). The dura was removed. A glass plug consisting of two 4 mm diameter coverslips and one 5 mm diameter coverslip (#1 thickness, CS-4R and CS-5R, Warner Instruments), glued together with optical adhesive (NOA68, Norland), was inserted into the craniotomy and fixed in place with dental cement. India ink was mixed into the dental cement to prevent light contamination from the visual stimulus. An aluminum ring was then affixed to the head plate with dental cement. During imaging, this ring interfaced with light shielding around the microscope objective to prevent light contamination.

Widefield retinotopic mapping

Retinotopic maps were collected for the mice used for calcium imaging. Mapping was performed as described before (Driscoll et al., 2017). Mice were lightly anaesthetized with isoflurane (0.5 – 1.0% in air). GCaMP fluorescence (excitation, 455 nm; emission, 469 nm) was collected with a tandem-lens macroscope. A periodic visual stimulus consisting of a spherically corrected black and white checkered moving bar (Marshel et al., 2011) was presented on a 27 inch IPS LCD monitor (MG279Q, Asus). The monitor was positioned in front of the right eye at an angle of 30 degrees from the mouse’s midline.

Retinotopic maps were computed by taking the temporal Fourier transform of the imaging data at each pixel and extracting the phase of the signal at the stimulus frequency (Kalatsky and Stryker, 2003). The phase images were smoothed using a Gaussian filter (100 μm s.d.) and the field sign was computed (Sereno et al., 1994).

Two-photon imaging

Two-photon microscope design

Data were collected using a custom-build rotating two-photon microscope. The entire scan head of the microscope was mounted on a gantry that could be translated along two axes (dorsal-ventral and medial-lateral with respect to the mouse). The objective and collection optics were attached to the scan head assembly and could be rotated around the third axis, such that the objective could be positioned freely within the coronal plane of the mouse. Additionally, the spherical treadmill was mounted on a three-axis translation stage (Dover Motion) to position the mouse with respect to the objective. We positioned the objective such that the image plane was parallel to the left hemisphere of dorsal posterior cortex (approximately 16 degrees from horizontal). The head of the mouse remained in its natural position.

Excitation light (920 nm) from a titanium sapphire laser (Chameleon Ultra II, Coherent) was coupled into the scan head via periscopes aligned with the gantry. The scan head consisted of a resonant and a galvanometric scan mirror, separated by a scan lens-based relay telescope, and allowed for fast scanning. Average power at the sample was 60–70 mW. The collection optics were housed in an aluminum box to block light from the visual display. Emitted light was filtered (525/50 nm, Semrock) and collected by a GaAsP photomultiplier tube. The microscope was controlled by ScanImage 2016 (Vidrio Technologies).

Image acquisition

Images were acquired at 30 Hz at a resolution of 512 × 512 pixels (650 μm × 650 μm). The imaging depth below the dura was either between 120 μm and 170 μm (layer 2/3) or between 300 μm and 500 μm (layer 5). Imaging was continuous for the duration of the behavioral session, lasting between 55 and 70 min. We found that there was slow axial drift of approximately 6 μm/h, possibly due to physiological changes as the animal consumed liquid rewards. To compensate for the drift, the stage was moved continuously at a similar rate. The rate was adjusted between sessions based on residual drift observed between the beginning and end of each session. To synchronize imaging and behavioral data, the imaging frame clock and projector frame clock were recorded.

Registration to the Allen CCF

Before each two-photon imaging session, an image of the surface vasculature was recorded directly above the coordinates of the imaging session. To align each two-photon session to the retinotopic map for each mouse, matching features in the vasculature were identified manually between the two-photon surface vasculature image and an image of the vasculature obtained during retinotopic mapping. Using the features as control points, a similarity transformation (translation, rotation and scaling) was computed to align the two-photon imaging data to the retinotopic map (Figure S1A–D).

To combine data across mice, we aligned the field sign map of each mouse to a CCF-aligned reference field sign map available from the Allen Institute (www.brain-map.org) (Figure 2D and S1E). For each mouse, we first obtained outlines of V1 and AM, which were defined as pixels in the field sign map with an absolute value less than 0.06 (the field sign ranges from −1.0 to 1.0). We then manually determined the rotation and translation that best aligned the outlines of V1 and area AM to the CCF-aligned reference map (Figure S1E). This registration provided a position for each neuron in coordinates from the CCF.

Since two-photon imaging and widefield-imaging for retinotopic mapping were performed through the same imaging window, the alignment error between two-photon sessions within each mouse was expected to be very small. However, greater errors could be introduced during the registration to the CCF. We therefore estimated the potential error in identifying a neuron’s position due to the alignment procedure by using data from the Allen Institute that included, for individual mice, field sign maps with ground-truth CCF coordinates. To mimic our alignment procedure, we registered a field sign map from a single Allen Institute mouse to the average field sign map from their other mice. We estimated the CCF coordinates from the alignment and compared these coordinates to the real positions known for that mouse. This procedure was identical to the methods we used to align data across mice and to register our data to the CCF. This alignment introduced errors in the range of 100 to 130 μm (Figure S1F), which is smaller than the smallest posterior brain regions and less than the relevant length scales for most of our analyses.

Pre-processing of imaging data