Abstract

Objective

To systematically synthesize the literature on information visualizations of symptoms included as National Institute of Nursing Research common data elements and designed for use by patients and/or healthcare providers.

Methods

We searched CINAHL, Engineering Village, PsycINFO, PubMed, ACM Digital Library, and IEEE Xplore Digital Library to identify peer-reviewed studies published between 2007 and 2017. We evaluated the studies using the Mixed Methods Appraisal Tool (MMAT) and a visualization quality score, and organized evaluation findings according to the Health Information Technology Usability Evaluation Model.

Results

Eighteen studies met inclusion criteria. Ten of these addressed all MMAT items; 13 addressed all visualization quality items. Symptom visualizations focused on pain, fatigue, and sleep and were represented as graphs (n = 14), icons (n = 4), and virtual body maps (n = 2). Studies evaluated perceived ease of use (n = 13), perceived usefulness (n = 12), efficiency (n = 9), effectiveness (n = 5), preference (n = 6), and intent to use (n = 3). Few studies reported race/ethnicity or education level.

Conclusion

The small number of studies for each type of information visualization limits generalizable conclusions about optimal visualization approaches. User-centered participatory approaches for information visualization design and more sophisticated evaluation designs are needed to assess which visualization elements work best for which populations in which contexts.

Keywords: visualization, symptom science, communication

INTRODUCTION

Information visualization is a key aspect of informatics and highly relevant to health care.1 Information visualizations (ie, techniques that support the understanding of abstract data2) have been used to present rich, complex information in ways that facilitate patient understanding of information and patient-provider communication.3 Information visualizations also have been shown to reduce information overload and improve recall.4,5

To date, information visualizations for patients and providers have been most widely studied in health risk communication.6–8 Two recent literature reviews of visualizations for health risk communication noted that icons, graphs (eg, line, bar), and other types of visualizations (eg, pie charts, maps, photographs) have been used for communication.6,8 They reported that pictographs/icon arrays and bar graphs hold some promise for improving comprehension among users.6,8 Similarly, Garcia-Retamero and Cokely conducted a systematic review to evaluate the benefits of visual aids in risk communication. They reported that 87% of the studies reviewed showed that static visual aids tend to be beneficial. In addition, 75% of the studies that investigated the effect of static icon arrays found that they tend to be particularly helpful in improving accuracy of risk understanding and recall.7

A less frequently studied area is using information visualizations to return individual research results to participants.9 However, the All of Us precision medicine initiative is a key driver for escalating research in this area, given the intent to return a broad variety of information about genes, environment, and lifestyle (including symptom status) to All of Us participants.9 Research by multiple authors has addressed this context. For example, in a series of studies, Arcia and colleagues have reported the application of participatory design and other informatics methods to create and return individual research results through information visualizations to Latinos who participated in a large community survey.3,10–12 In the domain of returning laboratory results to patients, Zikmund-Fisher and colleagues have developed and tested a range of information visualizations, including icon arrays and color scales, to communicate risk to patients.13–15

The use of information visualizations has increased as the technologies used to create them have become more widely available and easier to use. These include modules integrated into electronic health records such as reporting software used for clinical dashboards,16,17 standalone specialized modules such as the Electronic Tailored Infographics for Community Education, Engagement, and Empowerment (EnTICE3) program,10,11 visualization software (eg, Tableau, R), and interactive websites such as Visualizing Health (vizhealth.org), Icon Array Generator (iconarray.com), or Chart Chooser (labs.juiceanalytics.com/chartchooser/index.html). A recently released National Academies report on return of individual biomarker results to research participants highlighted the importance of strategies that facilitate understanding of the meaning and limitations of the results and recommended leveraging new and existing health information technology to enable tailored, layered, and large-scale communications.18

The use of information visualizations to communicate symptom status to patients and/or providers is understudied, yet important given the prevalence of symptoms. Symptoms are burdensome and difficult to manage.19 Management of symptoms relies heavily on effective communication between providers and patients. In the area of pain, evidence suggests that ineffective patient-provider communication contributes to poor pain management and may play a role in the opioid crisis.20–22 More generally, patient-rated physician communication quality has been positively associated with patient-rated symptom management quality; patients who rated their physician’s communication as high were more likely to report their symptom management needs as being met.23

A substantial body of literature has documented disparities in assessment and treatment of symptoms.24–28 Research has shown that healthcare providers fail to recognize patient symptoms 50% to 80% of the time during visits.29–33 In addition, studies have shown that assessment and treatment of symptoms vary based on patient race and ethnicity. Racial/ethnic minorities, including Hispanic and black patients, are more likely to report having unmet symptom management needs compared to non-Hispanic white patients.34,35 Overall, targeted interventions are needed to improve communication of symptoms between providers and patients in order to reduce disparities in symptom management.

Given the priority of symptom science in its strategic plan, the National Institute of Nursing Research (NINR) has designated a set of symptoms as common data elements (CDEs) and recommended measures for those symptoms: pain, fatigue, sleep, affective mood, affective anxiety, and cognitive function.36 As interest in symptom and information visualization research has increased,19 a better understanding of how the use of information visualizations can improve symptom assessment and reporting for self-management and communication between patients and providers is an essential foundation for improved symptom management. However, gaps in the literature remain. For example: Which symptoms have been studied using information visualizations? What populations (both patient and provider) have been the target of information visualizations and what are their characteristics? What types of information visualizations have been studied? How have the information visualizations been evaluated? To our knowledge, there has been no systematic review of the research in this area.

Therefore, the purpose of this systematic review is to examine and synthesize the research literature on information visualizations of symptoms included as NINR CDE symptoms for use by patients and/or providers.

METHODS

The Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement was used to guide our reporting.37

Search strategy

We searched for publications within CINAHL, Engineering Village, PsycINFO, PubMed, ACM Digital Library, and IEEE Xplore Digital Library between 2007 and 2017. We used a set of search terms tailored to the query options of each database that included NINR CDE symptom terms (eg, “pain,” “fatigue,” “sleep disturbance,” “cognitive function,” “anxiety,” “depression,” “depression symptom,” “affective symptom,” or “mood”) and information visualization terms (eg, “visualization*,” “visual*,” “graph,” or “infographic”) (Supplementary Table S1). As a means to focus and narrow the symptom content of this review, we selected symptoms designated as NINR CDEs. Search yields were uploaded to Covidence, a web-based software platform that facilitates systematic reviews (covidence.org). Additional articles were identified using the reference lists of articles selected for inclusion based on database queries.

Inclusion criteria

Studies were eligible if they: (1) were available in full text, (2) were peer reviewed, (3) were written in English, (4) included information visualizations, (5) focused on 1 or more NINR CDE symptoms for use by patients and/or providers, and (6) used qualitative or quantitative methods to assess the information visualizations. Studies were excluded if the visualizations focused on radiologic imaging, presentation of research findings, or data collection or assessment tools. Studies were independently reviewed for inclusion by 2 of the authors (ML, TAK). Conflicts were resolved through discussion.

Quality assessment of study methodology

We used the Mixed Methods Appraisal Tool (MMAT) to evaluate the methodological quality of the studies reviewed.38 MMAT is designed to allow researchers to concurrently appraise quantitative, qualitative, and mixed-methods research and produce comparable scores across study designs. The study quality appraisal score is determined by dividing the number of criteria met by the total criteria in each applicable domain. Application of the MMAT has demonstrated high reliability, with intraclass correlation coefficients ranging from 0.84 to 0.94.38–41 Two reviewers (ML, TAK) independently reviewed and calculated scores for each study. Discrepancies between the reviewers were resolved by discussion and by reviewing the studies again.
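To make the scoring rule above concrete, the following Python sketch computes a per-domain MMAT score as the percentage of criteria met and lists the items on which 2 independent reviewers disagree. It is an illustration under stated assumptions, not the appraisal procedure used in this review: the data layout, the domain label, and the treatment of an unclear rating as "not met" are all hypothetical choices.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical layout: each applicable MMAT domain maps to a list of item
# ratings, True if the criterion was met and False otherwise. An unclear
# ("can't tell") rating is assumed here to count as not met.
Appraisal = Dict[str, List[bool]]


def domain_scores(appraisal: Appraisal) -> Dict[str, float]:
    """Percentage of criteria met in each applicable domain."""
    scores: Dict[str, float] = {}
    for domain, items in appraisal.items():
        if not items:
            raise ValueError(f"domain {domain!r} has no criteria")
        scores[domain] = 100.0 * sum(items) / len(items)
    return scores


def disagreements(a: Appraisal, b: Appraisal) -> List[Tuple[str, Optional[int]]]:
    """(domain, item index) pairs where two reviewers' ratings differ.

    An index of None means the reviewers rated different sets of items
    for that domain, so the whole domain needs discussion.
    """
    diffs: List[Tuple[str, Optional[int]]] = []
    for domain in sorted(set(a) | set(b)):
        items_a, items_b = a.get(domain, []), b.get(domain, [])
        if len(items_a) != len(items_b):
            diffs.append((domain, None))
            continue
        diffs.extend(
            (domain, i)
            for i, (x, y) in enumerate(zip(items_a, items_b))
            if x != y
        )
    return diffs


reviewer_1 = {"quantitative descriptive": [True, True, False, True]}
reviewer_2 = {"quantitative descriptive": [True, True, True, True]}
print(domain_scores(reviewer_1))              # {'quantitative descriptive': 75.0}
print(disagreements(reviewer_1, reviewer_2))  # [('quantitative descriptive', 2)]
```

Items returned by the disagreement check are the ones the reviewers would revisit in discussion before settling on a final score.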

Quality assessment of visualizations

We characterized the information visualizations by assessing whether or not the study included: (1) an image(s) of the visualization, (2) a description of how the visualization was designed, (3) a description of how the visualization was presented to patients and/or providers, and (4) a description of how the visualization was evaluated. A point was awarded for each criterion that was met, and the total score was converted to a percentage.
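A minimal Python sketch of the 4-point visualization quality score described above, assuming each criterion is recorded as a yes/no judgment per study. The class and field names are hypothetical labels for criteria (1) to (4), not part of the review's data extraction form.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass
class VisualizationQuality:
    """Hypothetical record of the 4 criteria; True if the study met it."""
    includes_image: bool          # (1) image(s) of the visualization
    describes_design: bool        # (2) how the visualization was designed
    describes_presentation: bool  # (3) how it was presented to patients/providers
    describes_evaluation: bool    # (4) how it was evaluated

    def score_percent(self) -> float:
        # One point per criterion met, expressed as a percentage of 4 points.
        met = [getattr(self, f.name) for f in fields(self)]
        return 100.0 * sum(met) / len(met)

    def missing(self) -> List[str]:
        # Criteria the study did not meet.
        return [f.name for f in fields(self) if not getattr(self, f.name)]


# Example: a study that described everything but did not show an image.
study = VisualizationQuality(False, True, True, True)
print(study.score_percent())  # 75.0
print(study.missing())        # ['includes_image']
```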

Data extraction

We extracted the following information from each study: (1) methods (eg, design, sample); (2) visualization type (eg, graph, icon) and delivery medium (eg, web-based, paper); (3) visualization evaluation methods; and (4) study findings. The findings were organized according to the subjective (ie, perceived usefulness, perceived ease of use) and objective categories (ie, efficiency, effectiveness) of the Health Information Technology Usability Evaluation Model (Health-ITUEM).42 Findings that could not be classified into Health-ITUEM categories were initially classified as “other” and subsequently categorized as subjective preference or intent to use.
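The 2-pass coding of findings described above can be sketched as a small lookup in Python. This is a hypothetical illustration, not the extraction instrument used in this review: it assumes each finding has already been labeled with a measure name, includes only the categories named in this section, and flags anything else for manual review.

```python
from typing import Tuple

# Health-ITUEM concepts used in this review, grouped as subjective or objective.
HEALTH_ITUEM = {
    "perceived usefulness": "subjective",
    "perceived ease of use": "subjective",
    "efficiency": "objective",
    "effectiveness": "objective",
}

# Second-pass categories for findings first coded as "other".
OTHER_CATEGORIES = {"preference", "intent to use"}


def classify_finding(measure: str) -> Tuple[str, str]:
    """Return (group, category) for a finding's measure label."""
    key = measure.strip().lower()
    if key in HEALTH_ITUEM:
        return HEALTH_ITUEM[key], key
    if key in OTHER_CATEGORIES:
        return "other", key
    # Anything else stays "other" and is flagged for the reviewers to code by hand.
    return "other", "uncategorized"


print(classify_finding("Efficiency"))     # ('objective', 'efficiency')
print(classify_finding("Intent to use"))  # ('other', 'intent to use')
```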

RESULTS

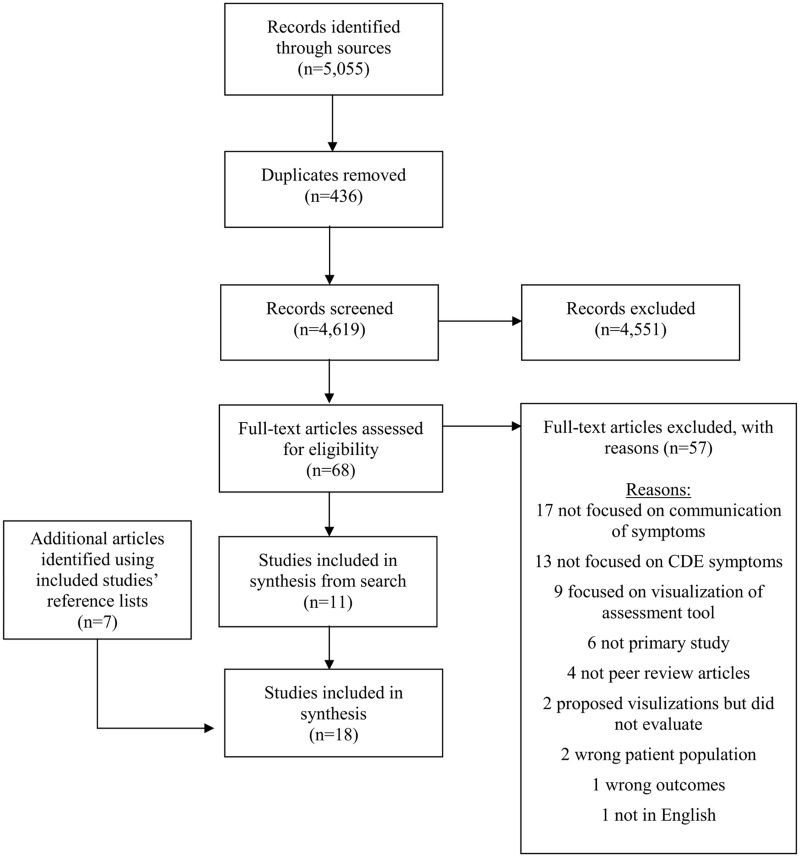

Of 18 studies that met our inclusion criteria (Figure 1), 9 used mixed methods,43–51 7 used quantitative descriptive methods,52–58 1 used qualitative methods,59 and 1 used a quantitative randomized controlled design60 (Table 1). Ten of 18 studies met all MMAT criteria (Supplementary Table S2).

Figure 1.

PRISMA flow diagram.

Table 1.

Characteristics of studies reviewed

| Study | Design | Population | Sample size (n) | Age (years) | CDE symptom: Fatigue | CDE symptom: Pain | CDE symptom: Sleep/sleep disturbances |
|---|---|---|---|---|---|---|---|
| Ameringer et al. 201543 | Mixed methods | Patients (oncology) | 76 Patients | x̄=18.5±4.2 | ✓ | ✓ | |
| Brundage et al. 201744 | Mixed methods | Providers (physicians, nurse practitioners, physician assistants) | 233 Providers | Not reported | ✓ | ✓ | |
| Davis et al. 200852 | Quantitative descriptive | Patients (oncology) | 64 Patients | Patient: x̄=64±12.4 | ✓ | | |
| | | Providers (physicians, nurses, pharmacists) | 22 Providers | Provider: Not reported | | | |
| Duke et al. 201060 | Quantitative randomized controlled trial | Providers (physicians) | 24 Providers | Not reported | ✓ | | |
| Hochstenbach et al. 201653 | Quantitative descriptive | Patients (oncology) | 11 Patients | Patient: x̄=53±15 | | ✓ | |
| | | Providers (nurses) | 3 Providers | Providers: x̄=52±2 | | | |
| Ismail et al. 201654 | Quantitative descriptive | Patients (women experiencing menopausal symptoms) | 30 Patients | Range 40-60 | ✓ | ✓ | ✓ |
| Jamison et al. 201755 | Quantitative descriptive | Physicians (pain) | 90 Providers | x̄=47.1±13.5 | | ✓ | |
| Kourtis et al. 201156 | Quantitative descriptive | Patients (asthma) | 84 Patients | Not reported | ✓ | | |
| Kuijpers et al. 201657 | Quantitative descriptive | Patients (oncology) | 548 Patients | Patient: x̄=60.6±12.3 | ✓ | ✓ | ✓ |
| | | Providers (nurses, physicians, paramedical professionals) | 227 Providers | Providers: x̄=45.2±10.8 | | | |
| Lalloo and Henry 201145 | Mixed methods | Patients (pain) | 23 Patients | Not reported | | ✓ | |
| Lalloo et al. 201446 | Mixed methods | Patients (pain) | 50 Patients | x̄=50±14 | | ✓ | |
| Lalloo et al. 201447 | Mixed methods | Patients (pain) | 17 Patients | Patient: x̄=15.4±1.7 | | ✓ | |
| | | Providers (clinic administrative coordinator, anesthesiologist, advanced practice nurse, physiotherapist, psychologist, psychiatrist) | 8 Providers | Provider: x̄=46.7±9.6 | | | |
| Lin et al. 201748 | Mixed methods | Patients (oncology) | 106 Patients | Range 3-9 | | ✓ | |
| Loth et al. 201649 | Mixed methods | Patients (brain tumor) | 40 Patients | x̄=52.7±13.7 | ✓ | ✓ | |
| Macpherson et al. 201458 | Quantitative descriptive | Patients (oncology) | 72 Patients | Range 13-29 | ✓ | ✓ | ✓ |
| Schroeder et al. 201759 | Qualitative | Patients (irritable bowel syndrome) | 10 Patients | Patient: x̄=33 | | ✓ | |
| | | Providers | 10 Providers | Provider: Not reported | | | |
| Wallace et al. 201750 | Mixed methods | Providers (clinician/SleepCoacher mobile application users) | 3 Providers | Not reported | | | ✓ |
| Woods et al. 201551 | Mixed methods | Patients (women experiencing menopausal symptoms) | 30 Patients | Range 40-60 | ✓ | ✓ | ✓ |

Study and symptom characteristics

Study participants included patients (n = 9),43,45,46,48,49,51,54,56,58 providers (n = 4),44,50,55,60 or both (n = 5)47,52,53,57,59 (Table 1). Sample sizes were 10 to 548 patients and 3 to 233 providers. The age range was 3 to 76 for patients and 54 to 61 for providers. In the 12 studies that reported gender, the majority of the participants were female.43,44,47–49,52–59 Of the 6 studies that reported patient education levels, the majority of the participants had a college education.47,49,51,52,54,57 In the 3 studies that reported race or ethnicity, the majority of the participants were white.44,52,58

While 2 studies related to depression, anxiety, and cognitive function were reviewed in the full-text stage, they did not meet inclusion criteria; thus, no studies focused on the symptoms of affective mood, affective anxiety, or cognitive function were included in our review. The 3 remaining NINR CDE symptoms (pain, fatigue, and sleep) were identified in the 18 studies (Table 1) with 7 studies focused on more than 1 symptom.43,44,49,51,54,57,58 Pain was the most studied NINR CDE symptom and was featured in all but 4 studies.50,52,56,60

Visualizations

The types of visualizations in the 18 studies included (Table 2): graphs (n = 14),43,44,49–60 icons (n = 4),45–48 and virtual body maps (n = 2).46,47 Studies featured both simple (eg, line and bar graphs) and complex graphs. Complex graphs included radial heat maps, radial bar charts, and symptom relationship graphs. The radial heat map was used by Wallace et al.50 as a visualization of a 24-hour clock; it has 5 distinct rings that display an average data value at 15-minute intervals for noise anomalies, movement anomalies, sleep start time, actual wake time, and scheduled wake time. The radial bar chart has rings that reflect a 2-day segment with 15-minute intervals for current sleep state including normal sleep, oversleep, nap, noise anomaly, movement anomaly, noise and movement anomaly, aware, and early awakening.50 To create symptom relationship graphs, participants selected and clustered symptoms, drew causal relationships among the symptoms, and characterized the bothersomeness of the symptom cluster.43,51,54,58 Other studies used icons to symbolize symptoms. Lalloo et al.45–47 used icons as visual metaphors of 5 pain qualities including a flame on a matchstick (burning pain), an ice cube (freezing pain), a vice (squeezing pain), a knife (lacerating pain), and an anvil (aching pain). Virtual body maps are interactive diagrams of the anterior and posterior aspects of a body and were used in multiple studies of pain.46,47 The information visualizations were predominantly presented in an electronic format (n = 16);43–47,49–55,57–60 9 were interactive.43,45–47,50,51,54,58,60
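To illustrate the 15-minute aggregation behind a 24-hour radial heat map such as the one described by Wallace et al.,50 the following Python sketch pools timestamped readings for a single ring (eg, noise anomalies) into 96 time-of-day slots, averages each slot, and converts a slot index into its starting angle on the clock face. It is a hypothetical sketch, not the authors' implementation; the function names and the choice of midnight at the top of the clock are assumptions.

```python
from collections import defaultdict
from datetime import datetime
from typing import Dict, Iterable, Tuple

SLOT_MINUTES = 15
SLOTS_PER_DAY = 24 * 60 // SLOT_MINUTES  # 96 slots around the clock face


def slot_of(ts: datetime) -> int:
    """Index (0-95) of the 15-minute slot containing the time of day."""
    return (ts.hour * 60 + ts.minute) // SLOT_MINUTES


def ring_averages(readings: Iterable[Tuple[datetime, float]]) -> Dict[int, float]:
    """Average the values in each time-of-day slot, pooling across days."""
    totals: Dict[int, float] = defaultdict(float)
    counts: Dict[int, int] = defaultdict(int)
    for ts, value in readings:
        s = slot_of(ts)
        totals[s] += value
        counts[s] += 1
    return {s: totals[s] / counts[s] for s in sorted(totals)}


def slot_angle_degrees(slot: int) -> float:
    """Clockwise angle from midnight (assumed at the top) where a slot starts."""
    return 360.0 * slot / SLOTS_PER_DAY


readings = [
    (datetime(2017, 1, 1, 23, 5), 2.0),
    (datetime(2017, 1, 2, 23, 10), 4.0),
    (datetime(2017, 1, 3, 0, 14), 1.0),
]
print(ring_averages(readings))  # {0: 1.0, 92: 3.0}
print(slot_angle_degrees(92))   # 345.0
```

Because readings from different days fall into the same slot, each wedge shows a typical value for that time of day rather than a single night's value.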

Table 2.

Characteristics of visualizations

| Study | Type: Graph | Type: Icons | Type: Virtual body map | Type description | Delivery: Electronic | Delivery: Paper | Delivery: Unclear | Delivery: Interactive | Delivery description |
|---|---|---|---|---|---|---|---|---|---|
| Ameringer et al. 201543 | ✓ | | | Symptom relationship graphs | ✓ | | | ✓ | A native iPad application |
| Brundage et al. 201744 | ✓ | | | Line graph, Pie chart, Bar graph | ✓ | | | | Web-based |
| Davis et al. 200852 | ✓ | | | Bar graph, Line graph | ✓ | | | | Laptop with touch screen |
| Duke et al. 201060 | ✓ | | | Bar graph | ✓ | | | ✓ | Web-based |
| Hochstenbach et al. 201653 | ✓ | | | Line graph | ✓ | | | | Web-based application accessed via iPad |
| Ismail et al. 201654 | ✓ | | | Symptom relationship graphs | ✓ | | | ✓ | iPad application |
| Jamison et al. 201755 | ✓ | | | Line graph | ✓ | | | | Smartphone application |
| Kourtis et al. 201156 | ✓ | | | Modular line graph | | | ✓ | | Not reported |
| Kuijpers et al. 201657 | ✓ | | | Bar chart, Heat map | ✓ | | | | Web-based |
| Lalloo and Henry 201145 | | ✓ | | | ✓ | | | ✓ | Web-based |
| Lalloo et al. 201446 | | ✓ | ✓ | | ✓ | | | ✓ | Laptop computer |
| Lalloo et al. 201447 | | ✓ | ✓ | | ✓ | | | ✓ | Laptop computer |
| Lin et al. 201748 | | ✓ | | Frequency images | | ✓ | | | Image card |
| Loth et al. 201649 | ✓ | | | Bar graph | ✓ | | | | Tablet personal computer |
| Macpherson et al. 201458 | ✓ | | | Symptom relationship graphs | ✓ | | | ✓ | A native iPad application |
| Schroeder et al. 201759 | ✓ | | | Bubble graph, Bar graph, Parallel coordinates plot | ✓ | | | | Web-based |
| Wallace et al. 201750 | ✓ | | | Time series area chart, Radial heat map, Stacked radial bar chart with/without radial spiral design | ✓ | | | ✓ | Native application |
| Woods et al. 201551 | ✓ | | | Symptom relationship graphs | ✓ | | | ✓ | iPad application |

Many studies had more than 1 goal for the visualization, including reporting, monitoring, understanding, making decisions, and/or communicating (Table 3). The most frequent goal (n = 13) was understanding symptoms,49,50,52,53,55,57 including understanding relationships of symptoms to disease processes and other symptoms43,51,54,58,59 or to treatments.44,60

Table 3.

Goals of visualization

| Study | Reporting symptom(s)a | Monitoring symptom(s)b | Understanding symptom(s) and/or its relationships/trends | Decision making about treatment | Communicating with healthcare providers or patients |
|---|---|---|---|---|---|
| Ameringer et al. 201543 | ✓ | ✓ | | | |
| Brundage et al. 201744 | ✓ | ✓ | | | |
| Davis et al. 200852 | ✓ | | | | |
| Duke et al. 201060 | ✓ | ✓ | | | |
| Hochstenbach et al. 201653 | ✓ | ✓ | ✓ | ✓ | |
| Ismail et al. 201654 | ✓ | ✓ | | | |
| Jamison et al. 201755 | ✓ | ✓ | ✓ | ✓ | |
| Kourtis et al. 201156 | ✓ | | | | |
| Kuijpers et al. 201657 | ✓ | | | | |
| Lalloo and Henry 201145 | ✓ | ✓ | | | |
| Lalloo et al. 201446 | ✓ | ✓ | | | |
| Lalloo et al. 201447 | ✓ | ✓ | | | |
| Lin et al. 201748 | ✓ | | | | |
| Loth et al. 201649 | ✓ | | | | |
| Macpherson et al. 201458 | ✓ | ✓ | | | |
| Schroeder et al. 201759 | ✓ | ✓ | | | |
| Wallace et al. 201750 | ✓ | ✓ | | | |
| Woods et al. 201551 | ✓ | ✓ | | | |

a Reporting symptoms refers to visualizations that are used to help patients report their symptoms (eg, pain) to their providers.

b Monitoring symptoms refers to visualizations of patient data used to help either patients or providers monitor patient symptoms.

Visualization assessment and findings

Thirteen studies met all visualization quality assessment criteria43–47,49–51,53,54,58–60 (Supplementary Table S3). Five studies did not describe how the visualization was designed (n = 1), presented to participants (n = 3), or evaluated (n = 1), and/or did not include an image of the visualization (n = 2).

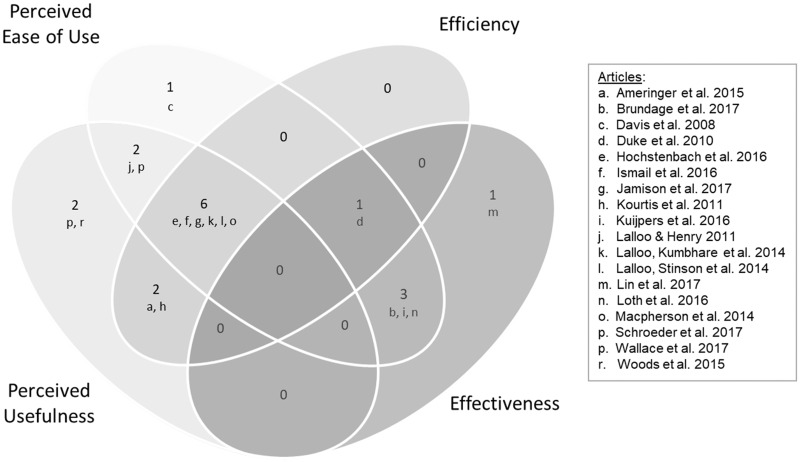

Health-ITUEM classification and other findings

All studies reported at least 1 assessment that could be classified into the Health-ITUEM subjective and objective categories (Figure 2). Nine studies included subjective assessments (ie, preference and intent to use) not included in the Health-ITUEM categories. Study findings are summarized in Supplementary Table S4.

Figure 2.

Study Findings Based on the Health Information Technology Usability Evaluation Model (Health-ITUEM)*

*The numbers in the figure represent the number of articles.

Subjective findings related to visualizations

Thirteen studies reported findings related to perceived ease of use44–47,49,52–55,57–60 and 12 reported perceived usefulness.43,45–47,50,51,53–56,58,59 Patients and/or providers perceived bar49,52 and/or line graphs44,57 as “(very) easy” to understand. Likewise, icons (ie, Iconic Pain Assessment tool) and/or virtual body maps (ie, Pain-QuILT) used to facilitate self-report of pain were rated as “(very) easy” to use45,47 by patients and providers or to have “greater ease of use” by patients.46 In some instances, the ease of use ratings were more generally applied to an application that included a graph. For example, in the study by Jamison et al.55, the majority of patients who used the pain application, which featured a line graph, rated the application as “easy to use.” Similarly, patients who used the Computerized Symptom Capture Assessment Tool (C-SCAT) to visualize symptom relationship graphs described the tool as “easy to use”54 and rated the instructions as “(very) easy.”58 Providers reported having a good or excellent experience using the RXplore tool, which displays horizontal bar graphs of a drug’s most common side effects.60 In contrast, half of providers reported being worried that bar and line graphs of food symptom triggers (eg, pain) were too complicated for patients to interpret.59 Loth et al.49 found that patients had difficulty detecting differences in pain bar graphs displaying changes over time.

Patients perceived line graphs,53,56 icons,45 and the combination of icons and virtual body maps46,47 for pain as useful. This included use of the Pain-QuILT for initiating and promoting clear communication with the health team.46 Providers rated sleep visualizations using radial heat maps as useful.50 Schroeder et al.59 reported that line graphs and bar charts of patient nutrients and symptoms elicited patient and provider questions about the data collection and requests for comparative population data. Patients reported that symptom relationship graphs accurately depicted their symptoms,51,58 and they were able to use an interactive digital interface (ie, C-SCAT) to report, cluster, name, and articulate relationships among symptoms.43,51 One study highlighted ways that visualizations could be changed to improve usefulness: nurses in the study by Hochstenbach et al.53 stated that the graphs needed to display more information to show pain trends.

Objective findings related to visualizations

Nine studies reported efficiency43,46,47,53–56,58,60 and 5 reported effectiveness.44,48,49,57,60 In regard to efficiency, studies reported completion time43,54,56,58 and compared completion rates to other clinical assessment tools.46,47,60 Patient completion rates were reported for line graphs as part of a pain application,55 a pain diary,53 and symptom relationship graphs.42,53,57 One study focused on clinicians: Kourtis et al.56 reported the time required for the clinician to enter 1 month of patient diary-card information into the computer system to generate a line graph for patients. For comparison to other tools, patients who used a method that incorporated icons and virtual body maps (ie, Pain-QuILT) for self-reporting pain had similar completion times to traditional pain assessment methods, including verbal pain interview,46 McGill Pain Questionnaire, and Brief Pain Inventory.47 In addition, clinicians using a tool for visualizing drug side effects (ie, RXplore) answered questions faster compared to using an online evidence-based resource with no visualizations (ie, UpToDate).60

Five studies evaluated effectiveness of graphs44,49,57,60 and icons,48 3 of them by the percentage of correct answers to objective understanding questions.49,57,60 The percentage of correct answers ranged from 59%57 to 80%49 in patients and 74%57 to 78%57,60 in providers. Brundage et al. found that clinician interpretation accuracy depended on both the type of graph and how the information was displayed within the graph. Clinicians had more accurate responses for line graphs in which higher scores corresponded to better symptom outcomes.44 In addition, Lin et al. evaluated the effectiveness of pain frequency images by assessing comprehension. They found that children were unable to understand the meanings conveyed by the symbols represented in the pain frequency images.48

Other findings: preference and intent to use

Nine studies44,46–48,50,52,57,59,60 reported findings that we classified as preference (n = 6) or intent to use (n = 3). Providers preferred bar charts44,52 and heat maps57 for symptom visualization over other graphical formats (eg, pie charts, line graphs). Three studies evaluated patient preferences for pain visualizations.46–48 Lalloo et al.47 found that patients preferred the method that incorporated icons and virtual body maps (ie, Pain-QuILT) for self-reporting pain compared to traditional clinical pain assessment methods (ie, McGill Pain Questionnaire and Brief Pain Inventory). In addition, Lin et al.48 reported that children preferred “spikey” pain frequency images compared to other visual representations such as icons.

Three studies reported on intent to use the visualizations in practice.50,59,60 Providers reported that they were likely to use graphical tools for patient communication about sleep,50 identifying food symptom triggers in patients with irritable bowel syndrome,59 and visualizing drug side effects (ie, RXplore).60

DISCUSSION

To the best of our knowledge, this study represents the first systematic review related to information visualizations of NINR CDE symptoms for patients and/or providers. We found that information visualizations have been used to represent symptom information about pain, fatigue, and sleep with pain being the most studied symptom. Although we did review the full text of 2 articles that focused on the remaining NINR CDE symptoms, ie, cognitive function, depression, and anxiety, these studies were ultimately not included in the review, as they only proposed and did not evaluate the visualizations.61,62

Furthermore, the majority of studies focused on pain in the context of cancer. This finding is not surprising, as cancer- and cancer treatment-related symptoms represent the most widely studied area of symptomatology research.19 The limited use of information visualizations in other clinical contexts suggests a need for further research on symptom information visualizations for other symptoms and in common chronic and rare diseases.63,64

Only a few studies reported race, ethnicity, and education, and no studies explicitly addressed health literacy, numeracy, graph literacy, or limited English proficiency. Thus, it is not possible to assess the generalizability of findings to other populations. More research on symptom information visualizations is needed in populations at high risk for health disparities. This work is especially important, as research has consistently shown that there are disparities in symptom management among racial/ethnic minorities.34,35,65 Another population at risk for health disparities that has not been well studied, and that is growing in the United States, is individuals with limited English proficiency,66 for whom communication is challenging.67,68 The use of symptom information visualizations should be explored as a potential solution.3,12

Consistent with the health risk communication literature,6,7 the majority of studies in our review used simple graphs (eg, line graph, pie chart, and bar graph). This is likely due to the wider availability of tools to support the creation of such graphs (eg, commonly used spreadsheet and statistical software) or developers’ familiarity with the creation of graphs. Such graphs are appropriate and may be preferred for visualization of some measures. In other instances, information-rich designs that provide additional context are preferred.3 Five studies in this review used information-rich designs—radial sleep graphs50 and symptom relationship graphs43,51,54,58—to visualize multiple dimensions (eg, time of day or relationships among symptoms). The goals of the information visualizations for symptoms were multi-faceted and included reporting, monitoring, understanding, treatment decision making, as well as patient-provider communication. However, the review did not reveal a pattern of matching between goal and type of visualization. Although guidance exists about the match between data type/attributes and information visualization in general (eg, Ware, 201269) and, in a more limited manner, for patient-reported outcomes in specific populations,10–12,67 this review suggests that there is a substantial knowledge gap regarding information visualizations related to patient symptoms.

The majority of studies evaluated visualizations using both subjective and objective measures. Subjective measures are an important foundational step in evaluation of information visualizations, and all studies included a subjective assessment. Only 7 studies compared preferences for 2 or more types of information visualizations, so no firm conclusions can be drawn. In terms of objective measures, 8 studies measured only efficiency and 4 measured only effectiveness; 1 study measured both. Such outcomes should be studied in combination in order for the visualizations to be relevant for clinical purposes, as multiple factors can influence implementation, including provider time70,71 and patient health literacy.72 Additionally, no studies in our review evaluated the actual impact of use of symptom information visualizations on patient outcomes and symptom management, indicating a significant knowledge gap. A randomized clinical trial published after the time period covered in our review begins to address this gap. Kroenke et al.73 assessed the effectiveness of providing Patient-Reported Outcomes Measurement Information System (PROMIS) symptom scores to clinicians using graphs and found that use of graphs did not significantly contribute to symptom improvement at 3-month follow-up.

The majority of studies included in this review used mixed methods and descriptive quantitative designs. Only 1 study involved users in the development of the information visualization.50 According to Chen, 3 of the 10 unsolved problems that hinder the growth of information visualization relate to the lack of user-centered perspectives.74 User-centered participatory design in the development of information visualizations can address this gap,3 which may be particularly important for symptoms because they are inherently patient perceptions.

Limitations

Several limitations affect the interpretation and generalizability of the review findings. First, our review was limited to articles focused on visualizations of NINR CDE symptoms. Second, our search terms did not include nonspecific symptom concepts, such as “energy” in place of “fatigue,” that have been used in some studies (eg, Arcia et al.3). Third, we restricted our searches to published English-language literature and did not include gray literature. Consequently, our review may have missed some relevant studies. Moreover, the small number of studies for each type of information visualization limits generalizable conclusions about optimal visualization approaches.

CONCLUSION

A variety of visualizations have been developed to represent symptom information for patients and/or providers. The increasing availability of tools for the design and dissemination of information visualizations provides the opportunity to move beyond the visualizations that can be created in statistical programs (eg, bar graphs, line graphs). User-centered participatory approaches for information visualization development and more sophisticated evaluation designs are needed to assess which visualization elements work best for which populations in which contexts. While studies in this review focused on subjective perceptions (ease of use, usefulness, preference, and intent to use) and objective measures (efficiency and effectiveness), rigorous studies are also needed to test the impact of visualizations on symptom management and patient outcomes.

FUNDING

This work is supported by the Reducing Health Disparities Through Informatics training grant (T32NR007969) and the Precision in Symptom Self-Management (PriSSM) Center (P30NR016587). Dr Koleck is also supported by K99NR017651.

CONTRIBUTORS

Study conceptualization (SB, ML, TAK), search and retrieval of relevant articles (ML, TAK), analysis (ML, TAK), manuscript drafting and substantive edits (SB, ML, TAK).

SUPPLEMENTARY MATERIAL

Supplementary material is available at Journal of the American Medical Informatics Association online.

Conflict of interest statement. None declared.


REFERENCES

- 1. Shneiderman B, Plaisant C, Hesse BW. Improving healthcare with interactive visualization. Computer 2013; 46(5): 58–66.

- 2. Cairo A. Functional Art: An Introduction to Information Graphics and Visualizations. San Francisco, CA: New Riders; 2012.

- 3. Arcia A, Suero-Tejeda N, Bales ME. Sometimes more is more: iterative participatory design of infographics for engagement of community members with varying levels of health literacy. J Am Med Inform Assoc 2016; 23(1): 174–83.

- 4. Houts PS, Doak CC, Doak LG, et al. The role of pictures in improving health communication: a review of research on attention, comprehension, recall, and adherence. Patient Educ Couns 2006; 61(2): 173–90.

- 5. Houts PS, Bachrach R, Witmer JT, et al. Using pictographs to enhance recall of spoken medical instructions. Patient Educ Couns 1998; 35(2): 83–8.

- 6. Meloncon L, Warner E. Data visualizations: a literature review and opportunities for technical and professional communication. In: 2017 IEEE International Professional Communication Conference (ProComm). 2017: 1–9. doi: 10.1109/IPCC.2017.8013960.

- 7. Garcia-Retamero R, Okan Y, Cokely ET. Using visual aids to improve communication of risks about health: a review. Sci World J 2012; 2012: 1.

- 8. Ancker JS, Senathirajah Y, Kukafka R, et al. Design features of graphs in health risk communication: a systematic review. J Am Med Inform Assoc 2006; 13(6): 608–18.

- 9. U.S. Department of Health & Human Services. The Future of Health Begins with You. 2018. https://allofus.nih.gov/. Accessed 15 May 2018.

- 10. Arcia A, Woollen J, Bakken SR. A systematic method for exploring data attributes in preparation for designing tailored infographics of patient reported outcomes. eGEMs 2018; 6: 2.

- 11. Arcia A, Merrill JA, Bakken S. Consumer engagement and empowerment through visualization of consumer-generated health data. In: Edmunds M, Hass C, Holve E, eds. Consumer Informatics and Digital Health: Solutions for Health and Health Care. New York, NY: Springer Publishing; in press.

- 12. Arcia A, Velez M, Bakken S. Style guide: an interdisciplinary communication tool to support the process of generating tailored infographics from electronic health data using EnTICE3. eGEMs 2015; 3. doi: 10.13063/2327-9214.1120.

- 13. Zikmund-Fisher BJ, Witteman HO, Dickson M, et al. Blocks, ovals, or people? Icon type affects risk perceptions and recall of pictographs. Med Decis Making 2014; 34(4): 443–53.

- 14. Zikmund-Fisher BJ, Scherer AM, Witteman HO, et al. Effect of harm anchors in visual displays of test results on patient perceptions of urgency about near-normal values: experimental study. J Med Internet Res 2018; 20(3): e98.

- 15. Zikmund-Fisher BJ, Witteman HO, Fuhrel-Forbis A, et al. Animated graphics for comparing two risks: a cautionary tale. J Med Internet Res 2012; 14(4): e106.

- 16. Dowding D, Randell R, Gardner P, et al. Dashboards for improving patient care: review of the literature. Int J Med Inform 2015; 84(2): 87–100.

- 17. Dowding D, Merrill JA, Onorato N, et al. The impact of home care nurses’ numeracy and graph literacy on comprehension of visual display information: implications for dashboard design. J Am Med Inform Assoc 2018; 25(2): 175–82.

- 18. National Academies of Sciences, Engineering, and Medicine. Returning Individual Research Results to Participants: Guidance for a New Research Paradigm. Washington, DC: The National Academies Press; 2018. doi: 10.17226/25094.

- 19. Johansen MA, Henriksen E, Berntsen G, et al. Electronic symptom reporting by patients: a literature review. Stud Health Technol Inform 2011; 169: 13–7.

- 20. Matthias MS, Krebs EE, Collins LA, et al. “I’m not abusing or anything”: patient-physician communication about opioid treatment in chronic pain. Patient Educ Couns 2013; 93(2): 197–202.

- 21. Matthias MS, Parpart AL, Nyland KA, et al. The patient-provider relationship in chronic pain care: providers’ perspectives. Pain Med 2010; 11(11): 1688–97.

- 22. Hughes HK, Korthuis PT, Saha S, et al. A mixed methods study of patient-provider communication about opioid analgesics. Patient Educ Couns 2015; 98(4): 453–61.

- 23. Walling AM, Keating NL, Kahn KL, et al. Lower patient ratings of physician communication are associated with unmet need for symptom management in patients with lung and colorectal cancer. J Oncol Pract 2016; 12(6): e654–69.

- 24. Basch E, Iasonos A, McDonough T, et al. Patient versus clinician symptom reporting using the National Cancer Institute Common Terminology Criteria for Adverse Events: results of a questionnaire-based study. Lancet Oncol 2006; 7(11): 903–9.

- 25. Atkinson TM, Ryan SJ, Bennett AV, et al. The association between clinician-based common terminology criteria for adverse events (CTCAE) and patient-reported outcomes (PRO): a systematic review. Support Care Cancer 2016; 24(8): 3669–76.

- 26. Atkinson TM, Li Y, Coffey CW, et al. Reliability of adverse symptom event reporting by clinicians. Qual Life Res 2012; 21(7): 1159–64.

- 27. Check DK, Chawla N, Kwan ML, et al. Understanding racial/ethnic differences in breast cancer-related physical well-being: the role of patient-provider interactions. Breast Cancer Res Treat. doi: 10.1007/s10549-018-4776-0.

- 28. Siefert ML, Hong F, Valcarce B, et al. Patient and clinician communication of self-reported insomnia during ambulatory cancer care clinic visits. Cancer Nurs 2014; 37(2): E51–9.

- 29. Newell S, Sanson-Fisher RW, Girgis A, et al. How well do medical oncologists’ perceptions reflect their patients’ reported physical and psychosocial problems? Data from a survey of five oncologists. Cancer 1998; 83(8): 1640–51.

- 30. Bruera E, Sweeney C, Calder K, et al. Patient preferences versus physician perceptions of treatment decisions in cancer care. J Clin Oncol 2001; 19(11): 2883–5.

- 31. Wenrich MD, Curtis JR, Ambrozy DA, et al. Dying patients’ need for emotional support and personalized care from physicians: perspectives of patients with terminal illness, families, and health care providers. J Pain Symptom Manage 2003; 25(3): 236–46.

- 32. Chang VT, Hwang SS, Feuerman M, et al. Symptom and quality of life survey of medical oncology patients at a Veterans Affairs medical center. Cancer 2000; 88(5): 1175–83.

- 33. Butow PN, Goldstein D, Bell ML, et al. Interpretation in consultations with immigrant patients with cancer: how accurate is it? J Clin Oncol 2011; 29(20): 2801–7.

- 34. John DA, Kawachi I, Lathan CS, et al. Disparities in perceived unmet need for supportive services among patients with lung cancer in the Cancer Care Outcomes Research and Surveillance Consortium. Cancer 2014; 120(20): 3178–91.

- 35. Martinez KA, Snyder CF, Malin JL, et al. Is race/ethnicity related to the presence or severity of pain in colorectal and lung cancer? J Pain Symptom Manage 2014; 48(6): 1050–9.

- 36. National Institute of Nursing Research. Common Data Elements at NINR. n.d. https://www.ninr.nih.gov/site-structure/cde-portal. Accessed 15 May 2018.

- 37. Moher D, Liberati A, Tetzlaff J, et al. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med 2009; 6(7): e1000097.

- 38. Pluye P, Gagnon M-P, Griffiths F, et al. A scoring system for appraising mixed methods research, and concomitantly appraising qualitative, quantitative and mixed methods primary studies in mixed studies reviews. Int J Nurs Stud 2009; 46(4): 529–46.

- 39. Johnston A, Abraham L, Greenslade J, et al. Review article: staff perception of the emergency department working environment: integrative review of the literature. Emerg Med Australas 2016; 28(1): 7–26.

- 40. Tretteteig S, Vatne S, Rokstad AMM. The influence of day care centres for people with dementia on family caregivers: an integrative review of the literature. Aging Ment Health 2016; 20(5): 450–62.

- 41. Mey A, Plummer D, Dukie S, et al. Motivations and barriers to treatment uptake and adherence among people living with HIV in Australia: a mixed-methods systematic review. AIDS Behav 2017; 21(2): 352–85.

- 42. Brown W, Yen P-Y, Rojas M, et al. Assessment of the Health IT Usability Evaluation Model (Health-ITUEM) for evaluating mobile health (mHealth) technology. J Biomed Inform 2013; 46(6): 1080–7.

- 43. Ameringer S, Erickson JM, Macpherson CF, et al. Symptoms and symptom clusters identified by adolescents and young adults with cancer using a symptom heuristics app. Res Nurs Health 2015; 38(6): 436–48.

- 44. Brundage M, Blackford A, Tolbert E, et al. Presenting comparative study PRO results to clinicians and researchers: beyond the eye of the beholder. Qual Life Res 2018; 27(1): 75–90.

- 45. Lalloo C, Henry JL. Evaluation of the iconic pain assessment tool by a heterogeneous group of people in pain. Pain Res Manag 2011. doi: 10.1155/2011/463407.

- 46. Lalloo C, Kumbhare D, Stinson JN, et al. Pain-QuILT: clinical feasibility of a web-based visual pain assessment tool in adults with chronic pain. J Med Internet Res 2014; 16. doi: 10.2196/jmir.3292.

- 47. Lalloo C, Stinson JN, Brown SC, et al. Pain-QuILT: assessing clinical feasibility of a web-based tool for the visual self-report of pain in an interdisciplinary pediatric chronic pain clinic. Clin J Pain 2014; 30(11): 934.

- 48. Lin FS, Lin CY, Lee CY. Children’s understanding of the graphs of pain frequency. In: 2017 International Conference on Applied System Innovation (ICASI). 2017: 369–72. doi: 10.1109/ICASI.2017.7988615.

- 49. Loth FL, Holzner B, Sztankay M, et al. Cancer patients’ understanding of longitudinal EORTC QLQ-C30 scores presented as bar charts. Patient Educ Couns 2016; 99(12): 2012–7.

- 50. Wallace S, Guo H, Sasson D. Visualizing self-tracked mobile sensor and self-reflection data to help sleep clinicians infer patterns. In: Proceedings of the 2017 CHI Conference Extended Abstracts on Human Factors in Computing Systems. New York, NY: ACM; 2017: 2194–200. doi: 10.1145/3027063.3053138.

- 51. Woods NF, Ismail R, Linder LA, et al. Midlife women’s symptom cluster heuristics: evaluation of an iPad application for data collection. Menopause 2015; 22: 1058–66.

- 52. Davis KM, Lai J-S, Hahn EA, et al. Conducting routine fatigue assessments for use in clinical oncology practice: patient and provider perspectives. Support Care Cancer 2008; 16(4): 379–86.

- 53. Hochstenbach LMJ, Zwakhalen SMG, Courtens AM, et al. Feasibility of a mobile and web-based intervention to support self-management in outpatients with cancer pain. Eur J Oncol Nurs 2016; 23: 97–105.

- 54. Ismail R, Linder LA, MacPherson CF, et al. Feasibility of an iPad application for studying menopause-related symptom clusters and women’s heuristics. Inform Health Soc Care 2015; 41: 1–66.

- 55. Jamison RN, Jurcik DC, Edwards RR, et al. A pilot comparison of a smartphone app with or without 2-way messaging among chronic pain patients. Clin J Pain 2017; 33(8): 676–86.

- 56. Kourtis G, Caiaffa M-F, Forte C, et al. Retrospective monitoring in the management of persistent asthma. Respir Care 2011; 56(5): 633–43.

- 57. Kuijpers W, Giesinger JM, Zabernigg A, et al. Patients’ and health professionals’ understanding of and preferences for graphical presentation styles for individual-level EORTC QLQ-C30 scores. Qual Life Res 2016; 25(3): 595–604.

- 58. Macpherson CF, Linder LA, Ameringer S, et al. Feasibility and acceptability of an iPad application to explore symptom clusters in adolescents and young adults with cancer. Pediatr Blood Cancer 2014; 61(11): 1996–2003.

- 59. Schroeder J, Hoffswell J, Chung C-F, et al. Supporting patient-provider collaboration to identify individual triggers using food and symptom journals. CSCW Conf Comput Support Coop Work 2017; 2017: 1726–39.

- 60. Duke JD, Li X, Grannis SJ. Data visualization speeds review of potential adverse drug events in patients on multiple medications. J Biomed Inform 2010; 43(2): 326–31.

- 61. Ford CEL, Malley D, Bateman A, et al. Selection and visualisation of outcome measures for complex post-acute acquired brain injury rehabilitation interventions. NeuroRehabilitation 2016; 39(1): 65–79.

- 62. Zorluoglu G, Kamasak ME, Tavacioglu L, et al. A mobile application for cognitive screening of dementia. Comput Methods Programs Biomed 2015; 118(2): 252–62.

- 63. Writing Group Members, Lloyd-Jones D, Adams RJ, et al. Heart disease and stroke statistics—2010 update: a report from the American Heart Association. Circulation 2010; 121: e46–215.

- 64. Schneider KM, O’Donnell BE, Dean D. Prevalence of multiple chronic conditions in the United States’ Medicare population. Health Qual Life Outcomes 2009; 7(1): 82.

- 65. Anderson KO, Green CR, Payne R. Racial and ethnic disparities in pain: causes and consequences of unequal care. J Pain 2009; 10(12): 1187–204.

- 66. Whatley M, Batalova J. Limited English Proficient Population of the United States. migrationpolicy.org. 2013. http://www.migrationpolicy.org/article/limited-english-proficient-population-united-states. Accessed 26 March 2014.

- 67. Lor M, Xiong P, Schwei RJ, et al. Limited English proficient Hmong- and Spanish-speaking patients’ perceptions of the quality of interpreter services. Int J Nurs Stud 2016; 54: 75–83.

- 68. Jacobs E, Chen AH, Karliner LS, et al. The need for more research on language barriers in health care: a proposed research agenda. Milbank Q 2006; 84(1): 111–33.

- 69. Ware C. Information Visualization: Perception for Design. Waltham, MA: Morgan Kaufmann; 2012.

- 70. Hemsley B, Balandin S, Worrall L. Nursing the patient with complex communication needs: time as a barrier and a facilitator to successful communication in hospital. J Adv Nurs 2012; 68(1): 116–26.

- 71. Kimberlin C, Brushwood D, Allen W, et al. Cancer patient and caregiver experiences: communication and pain management issues. J Pain Symptom Manage 2004; 28(6): 566–78.

- 72. Paasche-Orlow MK, Wolf MS. Promoting health literacy research to reduce health disparities. J Health Commun 2010; 15(Suppl 2): 34–41.

- 73. Kroenke K, Talib TL, Stump TE, et al. Incorporating PROMIS symptom measures into primary care practice—a randomized clinical trial. J Gen Intern Med 2018; 33(8): 1245–52.

- 74. Chen C. Top 10 unsolved information visualization problems. IEEE Comput Graph Appl 2005; 25(4): 12–6.
