Abstract

Statistical decision theory suggests that choosing an ideal action requires taking several factors into account: (1) prior knowledge of the probability of various world states, (2) sensory information concerning the world state, (3) the probability of outcomes given a choice of action, and (4) the loss or gain associated with those outcomes. In previous work, we found that, in many circumstances, humans act like ideal decision makers in planning a reaching movement. They select a movement aim point that maximizes expected gain, thus taking into account outcome uncertainty (motor noise) and the consequences of their actions. Here, we ask whether humans can optimally combine prior knowledge and uncertain sensory information in planning a reach. Subjects rapidly pointed at unseen targets, indicated with dots drawn from a distribution centered on the invisible target location. Target location had a prior distribution, the form of which was known to the subject. We varied the number of dots and hence target spatial uncertainty. An analysis of the sources of uncertainty impacting performance in this task indicated that the optimal strategy was to aim between the mean of the prior (the screen center) and the mean stimulus location (centroid of the dot cloud). With increased target location uncertainty, the aim point should have moved closer to the prior. Subjects used near-optimal strategies, combining stimulus uncertainty and prior information appropriately. Observer behavior was well modeled as having three additional sources of inefficiency originating in the motor system, calculation of centroid location, and calculation of aim points.

Keywords: movement planning, motor control, statistical decision theory, decision making, reaching, optimality

Introduction

Statistical decision theory delineates four factors that should be taken into account in choosing an action: (1) prior knowledge, (2) sensory data, (3) uncertainty of the outcomes of planned actions, and (4) costs and benefits of the actual outcomes of actions (Blackwell and Girshick, 1954; Ferguson, 1967; Berger, 1985).

Because sensory data are corrupted by noise, estimation of world properties is necessarily uncertain. An observer may choose to combine several available sources of sensory information to achieve an estimate of a world property having minimum variance or maximum likelihood (ML). However, given additional prior information concerning the probability of various world states, one can combine prior and sensory information using Bayesian methods and generate estimates of possible world states having maximum a posteriori probability (MAP). Bayesian methods are currently popular as a model of perception (Knill and Richards, 1996; Mamassian et al., 2002; Kersten et al., 2004), using such priors as a preference for slower speeds (Weiss et al., 2002), illumination from above (Mamassian and Landy, 1998; Sun and Perona, 1998), convexity, and view from above (Mamassian and Landy, 2001).

Decisions or actions may result in variable outcomes. For example, Trommershäuser et al. (2003a,b) asked subjects to point rapidly at targets while avoiding nearby penalty regions. Because movements were rapid, they were also noisy. Subjects' movements were nearly optimal in the sense of choosing an aim point that maximized expected gain (MEG). Subjects also modified aim points appropriately when fingertip location feedback was artificially altered, suggesting they estimated their own movement outcome uncertainty (Trommershäuser et al., 2005).

ML or MAP estimation may appear ad hoc. However, because actions have costly or beneficial consequences, estimation methods can be described as optimal only with respect to a particular loss function. If one wishes to maximize the percentage of correct estimates, then ML and MAP are ideal methods (Maloney, 2002). If the costs and benefits of various outcomes are more complicated, the ideal method is MEG. Importantly, without a known loss function, the optimal course of action is undefined.

The work cited above suggests that observers combine sensory and prior information, but there has been very little work to determine whether humans can combine uncertain sensory and prior information optimally. Previously, Körding and Wolpert (2004a) concluded that humans behaved in a manner that was qualitatively consistent with a Bayesian computation. Subjects relied more on prior information as sensory uncertainty increased. However, sensory uncertainty was not measured; hence, it could not be established whether subjects behaved optimally.

Here, subjects performed a rapid pointing movement at an uncertain target location, with additional information concerning the prior probability distribution of target locations. We consider the combination of prior information with stimulus uncertainty and also other sources of error that affect optimal behavior as well as those that only affect overall performance. By measuring each source of error in our task and analyzing their impact on the outcome of each movement, we quantitatively demonstrate that human performance results from a nearly optimal combination of sensory and prior information.

Materials and Methods

Task

Subjects performed rapid pointing movements to a screen, trying to hit targets. The task was described to subjects using the following allegory. They were told that a coin had been inadvertently tossed into a fountain, and they were to attempt to retrieve it. Because the water was deep, they had to reach the object quickly before it sank. Subjects did not see the target itself, but only saw the resulting splash. They were also told that the person who threw the coin was aiming, albeit imperfectly, at the center of the fountain. Thus, they were effectively told to use both uncertain sensory information (the splash) and prior information (the aim and accuracy of the thrower). Subjects were given information about the prior distribution and were trained on the timing requirements of the task. Subjects were paid a bonus for good performance in the task.

Apparatus

Subjects were seated in a dimly lit room with the head positioned in a chin and forehead rest in front of a transparent polycarbonate screen mounted vertically just in front of a 21 inch computer monitor [Sony (Tokyo, Japan) Multiscan G500, 1920 × 1440 pixels, 85 Hz]. The viewing distance was 42.5 cm. An Optotrak (Northern Digital, Waterloo, Ontario, Canada) three-dimensional motion capture system (with two three-camera heads) was used to measure the position of the subject's right index finger. The camera heads were located above and to the left and right of the subject. Three infrared emitting diodes (IREDs) were located on a small (0.75 × 7 cm) wing, bent 20° at the center, attached to a ring that was slid to the distal joint of the right index finger. Position data for each IRED were recorded at 200 Hz. The cameras were spatially calibrated before each experimental run, providing root-mean-squared accuracy of 0.1 mm within the volume immediately surrounding the subject and monitor apparatus (∼2 m³). A custom aluminum table secured the monitor and polycarbonate screen. The screen was machined to accurately locate four IRED markers. A calibration procedure was repeated before each experimental run to ensure that the monitor display was in register with the Optotrak system, based on the calibration screen and an additional IRED located at the front edge of the table. The experiment was run using the Psychophysics Toolbox software (Brainard, 1997; Pelli, 1997) and the Northern Digital software library (for controlling the Optotrak) on a Pentium III Dell Precision workstation.

Stimuli

In an initial training session, target locations were chosen randomly and uniformly from a 16 × 10 cm rectangle centered on the screen. In the experimental session, target locations were chosen from an isotropic two-dimensional Gaussian distribution (SD of this prior probability distribution for target location was σp = 2 cm) centered on the screen (the prior distribution). Also in the experimental session, a white bivariate Gaussian blob (σp = 2 cm) with crosshairs at its center was displayed centered on the screen to provide a visual representation of the prior distribution. Next, in both training and experimental sessions, the locations of N sample dots were chosen randomly and independently from an isotropic two-dimensional Gaussian distribution centered on the target location (with SD σd = 4 cm). Sample dots were displayed as black dots (0.3 cm diameter) on a gray background (Fig. 1). The target itself was not displayed but was a 1.3 cm radius circle to which subjects attempted to point.

Figure 1.

The sequence of events in a single experimental trial. a, A gray screen was displayed until the subject moved his or her fingertip to the start position, which triggered the display of the prior distribution (b). After 1000 ms, the target dots were displayed (c), followed 500 ms later by a brief tone indicating that the subject could begin the reach. d, Starting at the onset of the movement, the subject had 700 ms to complete the reach to the screen. e, After the reach, auditory feedback was provided.

Procedure

Training session.

Subjects viewed an animation in which the target appeared (2.6 cm diameter, colored green), followed by N = 32 sample dots. They were told the allegory of a coin being tossed into a fountain, with the dots representing the resulting splash. The animation was identical to the stimuli used in the training session except for the display of the target itself. Subjects viewed the target and sample dots five times. They were then told “more or less water might splash up,” and watched 15 additional examples where N = 2, 4, 8, 16, or 32, chosen randomly. After watching the animation, subjects were instructed: “In the experiment, you'll see the splash, and your job will be to point quickly at where the coin landed.”

The timing of each training trial was as follows. First, sample dots were displayed, followed 500 ms later by a brief tone (50 ms) indicating that the subject could begin the reach. Movement time was defined as the time elapsed from when the fingertip passed through the start plane (located at the front edge of the table, parallel to the screen) until it reached the screen. If the movement time was longer than 700 ms, a timeout penalty was imposed. During the reach, a light gray disk (0.2 cm diameter) indicated the position of the fingertip projected orthogonally onto the screen. After completing the reach, feedback was given: the target was displayed (green, as in the animation), and a red dot (0.2 cm diameter) indicated the end point of the reach (for display purposes only; end points themselves had no size). Auditory feedback indicated whether or not the target was hit, and whether the subject had timed out. The next trial was triggered when the subject moved his/her pointing finger behind the start plane. Training included 30 trials each of N = 2, 4, 8, 16, and 32 sample dots in random order. These 150 trials took ∼15 min to complete.

Experimental sessions.

The analogy of tossing a coin into a fountain was maintained along with new information that the coin was now being tossed into the center of the fountain but with imperfect aim. Subjects viewed an animation of 20 sample coin tosses: the target was displayed on top of the white prior representation, and target location was chosen from the two-dimensional Gaussian prior distribution of target location (see above, Stimuli).

Following the animation, subjects were told again to attempt to touch the target (unseen green coin) but with the added knowledge that it would tend to be near the center of the white image (thrown inexpertly at the center of the screen). A trial began when the subject's pointing finger moved behind the table edge, which triggered the appearance of the prior image, followed 1000 ms later by the sample dots. Trials were identical to those of the training session, except that feedback was only given by auditory indications of hit, miss, or timeout. No indication of target location or finger position was given. Both the sample dots and white Gaussian prior distribution display remained onscreen for the duration of each trial. In addition to a base pay of $10.00/hour, subjects earned five cents for each hit and lost 25 cents for each timeout. A running tally of money earned was displayed after each trial. The sequence of events in a single experimental trial is shown in Figure 1. Subjects ran six experimental blocks. In each block, subjects completed 30 trials in each of the five conditions (2, 4, 8, 16, and 32 sample dots). Experimental blocks lasted ∼15 min.

Motor noise control session.

Each subject participated in an additional control experiment designed to measure uncertainty of motor outcome. On a second day, subjects made 150 reaches at 0.75-cm-long crosshairs; the location of the crosshairs on each trial was chosen from a 16 × 10 cm uniform distribution centered on the screen. The timing was identical to the training session. Subjects were instructed to touch the center of the crosshairs as accurately as possible. There was no feedback or extra pay for accuracy in this session, but timeouts were indicated by a beep. Data from trials resulting in a timeout were omitted from the analysis.

Centroid uncertainty control session.

Following the motor control session, additional trials were run to estimate subjects' accuracy in pointing at the centroid of a set of dots. Subjects participated in five blocks identical to the earlier training session but without feedback concerning the finger and target positions. Thus, there were 150 reaches for each of the five values of N. Subjects were instructed to aim at the centroid of the set of dots. Target and sample dot locations were chosen randomly as in the training session. No feedback was given other than to indicate timeouts. Again, data from trials resulting in a timeout were omitted from the analysis.

Subjects

Three subjects participated in the experiment. All participants had normal or corrected-to-normal vision and were members of the Department of Psychology or Center for Neural Science at New York University. Subjects gave informed consent before testing and were paid for their participation. Ages ranged from 21 to 45, and all subjects were naive to the purposes of the experiment.

Data analysis

Before each experimental session, subjects (fitted with IREDs) placed their pointing finger at a calibration location on the screen while the Optotrak recorded the location of the three IREDs on the finger 150 times. For each set of measurements, we computed the vectors from the central IRED to the two others, the cross product of those vectors (thus defining a coordinate system centered on the central IRED), and the vector from the central IRED to the known calibration location. We determined the best linear transformation that converted the three vectors defining the coordinate frame into the vector indicating fingertip location. On each trial, we recorded the three-dimensional positions of the IREDs at a rate of 200 Hz and converted them into fingertip location using this transformation. Trials in which the subject failed to reach the screen within 700 ms of movement onset were excluded (8 of 2700 experimental trials). The focus of our analysis was the finger landing point on the screen relative to the actual target location. Thus, end point data were transformed from Optotrak to screen coordinates. Data were analyzed separately for each subject.
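The text does not give the exact form of the "best linear transformation." The sketch below is minimal and rests on several assumptions:

- It uses NumPy.
- It assumes an IRED ordering of (left, central, right).
- It adopts one plausible reading: the fingertip offset is a fixed least-squares combination of the two IRED difference vectors and their cross product.

All function names are hypothetical.

```python
import numpy as np


def frame_vectors(ireds):
    """ireds: (n, 3, 3) IRED positions per sample, rows ordered [left, central, right] (assumed)."""
    central = ireds[:, 1, :]
    v1 = ireds[:, 0, :] - central
    v2 = ireds[:, 2, :] - central
    v3 = np.cross(v1, v2)  # completes a coordinate frame attached to the central IRED
    return central, np.stack([v1, v2, v3], axis=2)  # basis[i, :, k] is vector k of sample i


def fit_fingertip_weights(ireds, tip):
    """Least-squares weights w such that tip - central ~= w[0]*v1 + w[1]*v2 + w[2]*v3."""
    central, basis = frame_vectors(np.asarray(ireds, float))
    A = basis.reshape(-1, 3)  # stack the n (3 x 3) systems into one (3n x 3) system
    b = (np.asarray(tip, float)[None, :] - central).reshape(-1)
    w, *_ = np.linalg.lstsq(A, b, rcond=None)
    return w


def fingertip_location(ireds, w):
    """Apply the calibrated weights to IRED samples recorded during a trial."""
    central, basis = frame_vectors(np.asarray(ireds, float))
    return central + basis @ w
```

Because the weights are expressed in the finger's own frame, they remain valid as the finger rotates and translates during a reach. A proper rotation maps v1 × v2 to the cross product of the rotated vectors.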

Results

We begin by defining the ideal aiming strategy that maximizes gain in our task. We next discuss our subjects' aim points as a function of the number of sample dots, N, compared with the ideal strategy. Next, we analyze several aspects of subjects' performance including suboptimal aiming strategy, motor noise, sensory uncertainty, and errors in determining the aim point. We found that a simulated subject with these characteristics achieves an efficiency (i.e., number of targets hit) indistinguishable from that of our subjects. We conclude with an analysis of possible learning effects during the experimental trials.

Model and conditions

In the experiment, the number of target dots, N, was varied. If the observer perfectly determined the centroid of the set of dots, then uncertainty of target location (based on the dot cloud) would be given by the width of the centroid distribution (σd/√N). The optimal movement strategy was to aim along the line connecting the center of the screen (i.e., the best estimate of target location before observing the sample dots) and the centroid of the dots (the ML estimate of target location from the sample dots alone). If little was known about the target location from the data (i.e., if N was small), the estimate should have been closer to the center of the prior distribution. As N increased, the centroid of the dots became a more reliable estimate of target location, and the best estimate of target location approached the centroid of the dots.

We determined the probability of hitting the target for various choices of aim point by simulating our task. Five million trials were simulated for each value of N. In each trial, we determined the outcome of each of 100 aim points positioned evenly along the line from the center of the prior to the centroid of the dots. Target and dot locations were determined as in the experiment. We repeated this simulation for varying levels of noise added to the aim point, effectively adding simulated isotropic Gaussian motor noise with SD σm. We also varied the width of the prior distribution (σp) and the target dot distribution (σd). Let Dd be the distance between the center of the prior distribution and the centroid of the dots. An aim point will be described as the proportion α of the way from the center of the prior to the centroid of the dots at which the simulated subject aimed. For each value of N, σp, σd, and Dd, we determined the optimal proportion αopt that led to the maximum probability of hitting the target.
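Below is a minimal Monte Carlo sketch of this simulation. It assumes NumPy and a 1.3 cm target radius, and it uses far fewer trials than the five million described above. The function name and its defaults are illustrative. For each shift proportion α, it estimates the fraction of simulated reaches that land inside the unseen target.

```python
import numpy as np


def simulate_hit_probability(n_dots, alphas, sigma_p=2.0, sigma_d=4.0,
                             sigma_m=0.0, target_radius=1.3,
                             n_trials=100_000, seed=0):
    """Monte Carlo estimate of P(hit) for each shift proportion alpha (units: cm).

    The prior is centered at the origin (screen center); alpha = 0 aims at the
    prior center and alpha = 1 aims at the centroid of the sample dots.
    """
    rng = np.random.default_rng(seed)
    # Unseen target locations drawn from the isotropic Gaussian prior.
    target = rng.normal(0.0, sigma_p, size=(n_trials, 2))
    # n_dots sample dots per trial, isotropic Gaussian around the target.
    dots = target[:, None, :] + rng.normal(0.0, sigma_d, size=(n_trials, n_dots, 2))
    centroid = dots.mean(axis=1)
    alphas = np.asarray(alphas, dtype=float)
    # Aim points a proportion alpha of the way from the prior center to the centroid.
    aim = alphas[:, None, None] * centroid[None, :, :]
    # Independent isotropic Gaussian motor noise on every simulated reach.
    landing = aim + rng.normal(0.0, sigma_m, size=aim.shape)
    miss_distance = np.linalg.norm(landing - target[None, :, :], axis=2)
    return (miss_distance <= target_radius).mean(axis=1)
```

To estimate the ridge, sweep alphas = np.linspace(0, 1, 101) and take the argmax for each N. With these defaults the estimate is noisier than a five-million-trial run.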

The results show that αopt is independent of Dd (results binned by Dd are identical). Furthermore, αopt is determined by the ratio of the SDs of the sampling distribution of the centroid and the prior [i.e., as a function of (σd/√N)/σp]. Figure 2 shows hit probability as a function of α and N for the values of σp and σd used in the experiment. The filled circles and curve along the ridge indicate the aim points resulting in maximum hit probability and hence, in our task, maximum expected gain. That is, they represent the optimal aim points αopt as a function of N for the likelihood and prior probability distributions used in the experiment. We repeated these simulations with a large range of motor noise (variance from 0 to 16 cm²) and found that increasing motor noise σm flattened the probability surface but did not change the location of the ridge. Also, we found negligible effects of target size on the aiming strategies for sizes close to our target size. In other words, target size affects the probability of a hit but does not change the aiming strategy that maximizes that probability. The values of σp and σd were chosen because they produced a set of values of αopt with a reasonably large range.
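The derivation below is a cross-check on the simulation, not the authors' own derivation. If the prior and dot distributions are exactly Gaussian and the target is a disk, the ridge has a closed form. Two facts give it:

- The posterior for the target is an isotropic Gaussian centered a fixed proportion α* of the way from the prior center to the centroid.
- An isotropic Gaussian places the most probability in a disk centered on its mean.

Under these idealized assumptions, the derivation reproduces the independence from σm, Dd, and target size described above:

```latex
% Prior center at the origin; c = dot centroid, so |c| = D_d.
\mathbf{T}\mid\mathbf{c} \sim \mathcal{N}\!\left(\alpha^{*}\mathbf{c},\ \sigma_{\text{post}}^{2}\mathbf{I}\right),
\qquad
\alpha^{*} = \frac{\sigma_p^{2}}{\sigma_p^{2} + \sigma_d^{2}/N}
           = \frac{1}{1 + \left[(\sigma_d/\sqrt{N})/\sigma_p\right]^{2}},
\qquad
\sigma_{\text{post}}^{2} = \left(\frac{1}{\sigma_p^{2}} + \frac{N}{\sigma_d^{2}}\right)^{-1}

% Aiming at a and adding motor noise m ~ N(0, sigma_m^2 I):
\mathbf{T} - (\mathbf{a} + \mathbf{m}) \mid \mathbf{c}
  \sim \mathcal{N}\!\left(\alpha^{*}\mathbf{c} - \mathbf{a},\ (\sigma_{\text{post}}^{2} + \sigma_m^{2})\mathbf{I}\right)

% P(hit) = P(|T - (a + m)| <= r) is largest when this mean is zero, i.e. a = alpha* c,
% for any r, sigma_m and D_d. With sigma_p = 2 cm and sigma_d = 4 cm:
\alpha^{*} = \frac{N}{N + 4}:\quad
\tfrac{1}{3},\ \tfrac{1}{2},\ \tfrac{2}{3},\ \tfrac{4}{5},\ \tfrac{8}{9}
\quad\text{for } N = 2, 4, 8, 16, 32
```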

Figure 2.

Simulation results. The height of the surface represents the probability of a target hit as a function of the number of target dots and the aim point from a simulation of the task. The optimal aim strategy that maximizes the number of hits (for a given number of sample dots) is indicated by the solid curve.

Aiming strategy

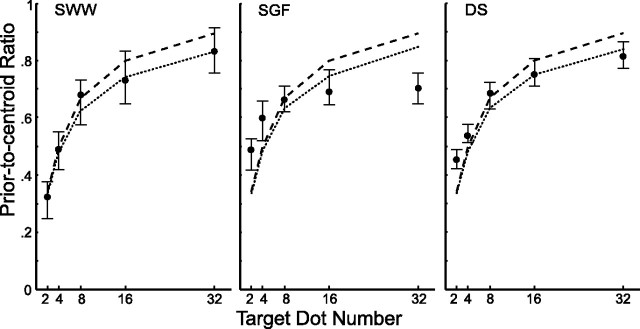

The simulation results discussed above (see Model and conditions) suggest that the optimal aiming strategy (defined by the ridge of the surface in Fig. 2) is independent of motor noise and of the distance between the center of the prior distribution and the centroid of the dots; motor noise simply decreases the maximum expected gain. To maximize the probability of hitting the target, the aim point should move closer to the centroid of the sample dots as the number of sample dots increases. Figure 3 shows the median normalized aim point as a function of N for three subjects. The end point of the reach on each trial was projected onto the line joining the center of the prior distribution and the centroid of the sample dots. This location was expressed as a proportion α of the distance Dd from the center of the prior distribution to the centroid of the sample dots, and the median proportion over all trials with a given value of N was determined. A value of zero indicates that a subject aimed at the center of the prior distribution; a value of one indicates the subject aimed at the centroid of the sample dots.
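The normalization can be sketched as a projection followed by a median. This minimal version assumes NumPy arrays of end points and dot centroids in screen coordinates, and the helper names are hypothetical. Trials with Dd close to zero make α unstable, and the text does not say how such trials were handled.

```python
import numpy as np


def normalized_aim(endpoints, centroids, prior_center=(0.0, 0.0)):
    """Project each end point onto the prior-center -> centroid line.

    Returns alpha per trial: 0 = prior center, 1 = dot centroid.
    """
    p0 = np.asarray(prior_center, dtype=float)
    d = np.asarray(centroids, float) - p0   # vector of length Dd toward the centroid
    e = np.asarray(endpoints, float) - p0   # end point relative to the prior center
    return np.einsum("ij,ij->i", e, d) / np.einsum("ij,ij->i", d, d)


def median_alpha_by_n(alpha, n_dots):
    """Median normalized aim point for each number of sample dots N."""
    alpha, n_dots = np.asarray(alpha, float), np.asarray(n_dots)
    return {int(n): float(np.median(alpha[n_dots == n])) for n in np.unique(n_dots)}
```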

Figure 3.

Results of the main experiment. Filled circles indicate subjects' median normalized landing point as a function of the number of target dots. An ordinate value of zero indicates the subject aimed at the center of the prior; a value of 1 indicates a median value at the centroid of the sample dots. Error bars represent the 95% confidence interval of the median. The dashed line corresponds to the optimal aiming strategy for an ideal model that calculates the centroid of the dots perfectly. The dotted line corresponds to the optimal aiming strategy for each subject based on an estimate of that subject's variability in estimating the centroid of the dots added to the sampling variability of the centroid as an estimate of the target location.

It is clear from Figure 3 that subjects changed their aim point as a function of N. The dashed lines indicate the ideal aiming strategy based on our simulations and the value σd/σp = 2 used to generate the stimuli. One subject (SWW) used an aiming strategy (set of α values) that was indistinguishable from optimal. The other two subjects' reaches were in qualitative agreement with the predictions but compressed in range so that reaches were too close to the centroid for small N and too close to the center of the prior distribution for large N.

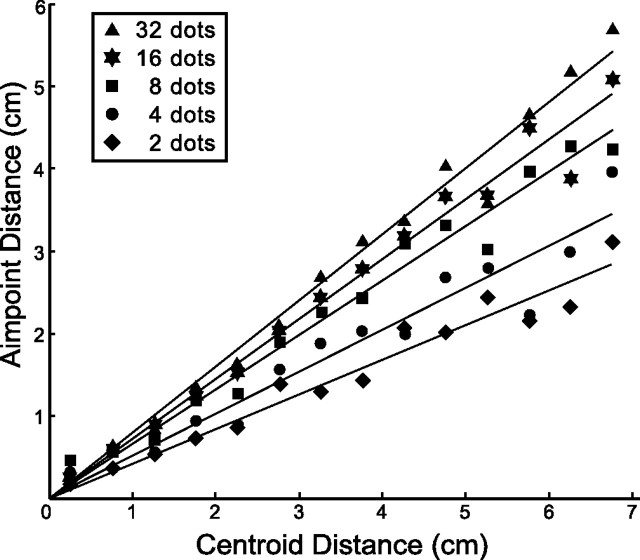

We determined above that the optimal strategy can be summarized as a proportional shift α along the line between prior and sample dot centroid. For the fixed value of σd/σp used here, α is a function of N but is independent of the distance Dd from the center of the prior to the sample dot centroid (see above, Model and conditions). To test whether subjects used a shift α that was independent of Dd, we computed an estimate of the distance Da from the center of the prior to the aim point for different values of Dd (binned at 5 mm intervals separately for each value of N). For this purpose, the movement end points were plotted in a rotated coordinate system (with the center of the prior at the origin, and the centroid of the dots on the positive x-axis). Da was estimated as the distance from the center of the prior to the centroid of the movement end points in each bin. Figure 4 shows Da as a function of Dd along with least-squares fitted lines constrained to pass through the origin. Straight-line fits to the combined data shown in Figure 4 account well for aim point as a function of Dd (all r² > 0.92), indicating that α was indeed independent of Dd. This analysis was repeated for individual subjects; r² never fell below 0.90 for three subjects and five values of N.
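The rotate, bin, and fit steps are sketched minimally below, assuming NumPy. Two choices are assumptions not stated in the text:

- The abscissa for each bin is the mean Dd of its trials.
- The r² is computed about the mean of Da. Definitions of r² for fits forced through the origin vary.

Function names are hypothetical.

```python
import numpy as np


def rotate_to_centroid_axis(endpoints, centroids):
    """Rotate each trial so its dot centroid lies on the +x axis (prior center at origin)."""
    c = np.asarray(centroids, float)
    e = np.asarray(endpoints, float)
    dd = np.hypot(c[:, 0], c[:, 1])
    cos_t, sin_t = c[:, 0] / dd, c[:, 1] / dd
    x = cos_t * e[:, 0] + sin_t * e[:, 1]
    y = -sin_t * e[:, 0] + cos_t * e[:, 1]
    return dd, np.column_stack([x, y])


def binned_da(dd, rotated, bin_width=0.5):
    """Da per Dd bin: distance from the origin to the centroid of that bin's end points."""
    bins = np.floor(dd / bin_width).astype(int)
    x, da = [], []
    for b in np.unique(bins):
        sel = bins == b
        x.append(dd[sel].mean())  # mean Dd in the bin (assumed abscissa)
        da.append(np.hypot(*rotated[sel].mean(axis=0)))
    return np.array(x), np.array(da)


def fit_through_origin(x, y):
    """Least-squares slope of y = alpha * x and a centered r^2 for that fit."""
    slope = np.dot(x, y) / np.dot(x, x)
    ss_res = np.sum((y - slope * x) ** 2)
    ss_tot = np.sum((y - y.mean()) ** 2)
    return slope, 1.0 - ss_res / ss_tot
```

The fitted slope is the estimate of α for that N. A constant α appears as a straight line through the origin.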

Figure 4.

Median aim point distance Da (the distance from the center of the prior to the aim point) as a function of the distance Dd from the center of the prior to the centroid of the dots. The data are well fit by a model in which subjects maintained a constant shift proportion α independent of Dd. We first performed a linear fit of the data and found that no y-intercepts were significantly different from zero. Thus, we performed a second linear fit of the data constrained to pass through the origin. Resulting r² values are negligibly smaller than those associated with the unconstrained fit and never fell below 0.92 for any value of N. The data allowed for a range of Dd from 0 to 7 cm (bin width, 0.5 cm). Data were averaged over subjects. The variability of the data contributing to this plot is displayed in Figure 6 (dashed line).

Motor noise

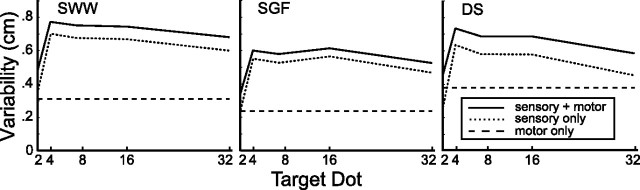

Movements are variable and increasingly so for faster movements [speed-accuracy tradeoff (Meyer et al., 1982)]. We measured motor noise in a session separate from the main experiment. Subjects pointed at crosshairs under the same time constraints as in the experimental sessions (movements were self-initiated, and movement time was limited to 700 ms). Under these conditions, motor noise was far larger than the uncertainty in localizing the crosshairs. This motor noise included the neural noise in motor commands controlling the arm as well as uncertainty in converting from retinal to exocentric coordinates. For each subject, we measured the variability of end point locations (measured relative to the location of the crosshairs in each trial) in the horizontal and vertical directions (σx and σy). In previous investigations (Trommershäuser et al., 2003a,b, 2005) using tasks similar to the one used in the current study, variability in reach end points was isotropic over a wide range of conditions and did not vary as a function of screen location or experimental condition. Here, Levene (1960) tests indicated no significant difference between σx and σy (p > 0.05). Therefore, for each subject, we calculated a pooled motor SD σm, represented in Figure 5 by the bottom dashed line.
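A minimal sketch of the isotropy check and pooling follows, assuming NumPy and SciPy. `scipy.stats.levene` is called with `center="mean"`, which is Levene's original form; SciPy's default is `"median"`. The helper name is hypothetical. With equal trial counts on both axes, the pooled SD is taken as the root mean of the two variances.

```python
import numpy as np
from scipy import stats


def pooled_endpoint_sd(endpoints, aim_targets, alpha=0.05):
    """Per-axis SDs of end-point error, Levene test for sigma_x vs sigma_y, and pooled SD.

    Returns (sigma_x, sigma_y, sigma_pooled, isotropic), where isotropic is True
    when Levene's test does not reject equal variances at level alpha.
    """
    err = np.asarray(endpoints, float) - np.asarray(aim_targets, float)
    sx = err[:, 0].std(ddof=1)
    sy = err[:, 1].std(ddof=1)
    # center="mean" is Levene's original form; SciPy's default ("median") is the Brown-Forsythe variant.
    result = stats.levene(err[:, 0], err[:, 1], center="mean")
    isotropic = bool(result.pvalue > alpha)
    # Equal sample sizes on both axes, so pooling is the root mean of the two variances.
    pooled = np.sqrt((sx ** 2 + sy ** 2) / 2.0)
    return sx, sy, pooled, isotropic
```

The same helper could be reused in the centroid control session below. There it would be applied once per N, with end points measured relative to the actual centroid and `alpha=0.05 / 5` to mirror a Bonferroni correction over five tests.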

Figure 5.

Sensory and motor uncertainty. The dashed line indicates estimates of motor noise σm from the motor control experiment. The solid line indicates the uncertainty, σs+m, in rapid pointing movements at the centroid of sets of dots. The dotted line indicates the uncertainty in sensory-based calculations of the centroid σs, determined as the portion of σs+m not accounted for by σm.

Centroid localization noise

An ideal observer can calculate the location of the centroid of the sample dots perfectly; such an observer will mislocalize the true target position only because of uncertainty in the prior (σp), variability of dot locations (σd), or mislocalization of the dots themselves. However, our subjects may have miscalculated sample dot centroids as well.

In a second separate session, we asked subjects to point at the centroids of sets of random dots. The conditions were the same as in the training and experimental sessions in terms of the number of dots, the distribution from which they were drawn, and the timing/speed constraints. However, subjects were required to point at the centroid, not at an unseen target, and there was no reference to an underlying prior distribution of targets. For each number of sample dots, N, we calculated the SD of end point locations (relative to the actual centroid) in the x- and y-directions. Levene tests indicated no significant differences between σx and σy (Bonferroni correction, five tests per subject). Accordingly, we calculated a pooled sensorimotor SD σs+m(N) for each subject, plotted as the solid line in Figure 5.

Note the sharp increase in variability from the two-dot condition to conditions with greater numbers of dots. Pointing at the centroid in the two-dot condition is essentially a two-dimensional spatial alignment task. Performance in the constituent one-dimensional tasks (bisection and three-point alignment) is extremely precise (Klein and Levi, 1985). Jiang and Levi (1991) found that performance in a two-dimensional alignment task was almost as good as for these one-dimensional tasks. Thus, it is not surprising that performance in the two-dot condition was more precise than in conditions with a greater number of dots, in which subjects must localize the centroid based on multiple dots. This nonmonotonic performance as a function of the number of dots has also been found for an alignment task (Hess et al., 1994).

Performance in this control task combined sensory uncertainty in estimating the centroid location with motor noise from performing the speeded reach. We assume that these noise sources were independent. Thus, we estimated the sensory uncertainty of observers' estimates of centroid location as σs(N) = √([σs+m(N)]² − σm²) (Fig. 5, dotted line).

Unlike the other noise sources, such as motor noise, uncertainty in calculating the sample dot centroid location impacts the optimal aim point. Variability in the calculation of the centroid compounds the uncertainty from the sampling variability of the dots themselves. Thus, observers with large values of σs(N) should rely more on prior information (and hence use a smaller value of α). We recomputed each subject's optimal strategy based on their total uncertainty in estimating target location, √([σs(N)]² + σd²/N). This strategy is shown in Figure 3 as the dotted line; it differs little from the strategy that ignores errors in centroid location calculations and changes none of our conclusions. The original ideal strategy (Fig. 3, dashed line) only takes into account the uncertainty produced by the stimulus at the screen. This revised strategy (Fig. 3, dotted line) also takes into account an additional source of error in the observer. This is analogous to the sequential ideal observer analysis of Geisler (1989).
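The two steps above are sketched below, assuming NumPy: subtract motor noise in quadrature, then fold σs(N) into the target uncertainty. The authors recomputed the optimum by simulation. The sketch instead substitutes the Gaussian closed form σp²/(σp² + total variance), which matches the simulation only under the idealized Gaussian assumptions. Both helper names are hypothetical.

```python
import numpy as np


def sensory_sd(sigma_sm, sigma_m):
    """sigma_s = sqrt(sigma_{s+m}^2 - sigma_m^2), assuming independent sensory and motor noise."""
    diff = np.asarray(sigma_sm, float) ** 2 - float(sigma_m) ** 2
    # Clip at zero: sampling error can make the measured sigma_{s+m} fall below sigma_m.
    return np.sqrt(np.clip(diff, 0.0, None))


def revised_alpha(n_dots, sigma_s, sigma_p=2.0, sigma_d=4.0):
    """Gaussian closed-form shift using total target uncertainty sigma_s^2 + sigma_d^2 / N."""
    total_var = np.asarray(sigma_s, float) ** 2 + sigma_d ** 2 / np.asarray(n_dots, float)
    return sigma_p ** 2 / (sigma_p ** 2 + total_var)
```

Any σs > 0 lowers α for a given N, consistent with the statement that observers with noisier centroid estimates should lean more on the prior.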

Aim point shift error

After localizing the center of the prior distribution and the centroid of the sample dots, the aim point can be calculated from α and Dd. The optimal strategy is to shift a fraction of the way from the center of the prior toward the sample dot centroid, Da = αDd, where the fraction α is a function of N (Fig. 2). The center of the prior was indicated by crosshairs, and its localization should have been quite precise. However, aim point computation may have involved additional error. The amount of error might have depended on the magnitude of the shift, as would be predicted by Weber's law for position (Klein and Levi, 1985; Wilson, 1986; Whitaker et al., 2002). For example, if the strategy called for α = 0.5, the subject's uncertainty in locating this point on the screen should increase with Dd. Having already determined the median shift amounts for each subject and number of target dots (Fig. 3, filled circles), we next analyzed the variability of end point locations around the median shift values as a function of Dd.

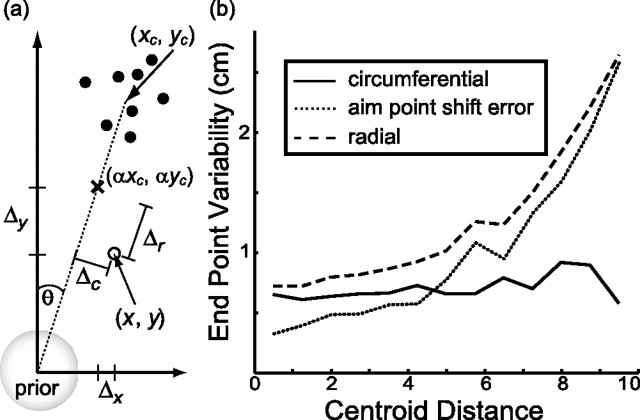

The analysis was performed as follows. We set the origin of a coordinate system at the center of the prior distribution. On a given trial (Fig. 6a), the centroid of the sample dots was at location (xc, yc) = (Dd cosθ, Dd sinθ). For a given value of N, the data of Figure 3 determine the shift strategy α, resulting in aim point (αxc, αyc). The landing point on that trial, (x, y), differed from the aim point by Δ = (Δx, Δy) = (x, y) − (αxc, αyc). We define radial error (Δr) as the component of Δ projected onto the direction from the center of the prior to the centroid of the dots, and circumferential error (Δc) as the remaining orthogonal component of Δ (Fig. 6a). The values of Δr and Δc were pooled over trials in which Dd fell in a given range (bin width, 0.75 cm). In each pool, the spread of the landing points around the aim point was then estimated, resulting in the values σr(Dd) (radial variability) and σc(Dd) (circumferential variability). Pooling across our subjects' collective 2700 trials, this bin width resulted in at least 100 trials in each of the first nine bins, including over 300 trials in bins 2–5. Results should be considered with caution beyond 8.25 cm (11th bin), because only 28 data points fell within this centroid distance range. There were too few data points to analyze centroid distances beyond Dd = 9.75 cm.
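The decomposition can be sketched as follows, assuming NumPy, with hypothetical helper names. Two points are assumptions:

- The "spread around the aim point" is taken as the RMS deviation from the aim point, rather than the SD about the bin mean.
- The quadrature step that defines σa later in this section is included for completeness, clipped at zero.

```python
import numpy as np


def radial_circumferential(endpoints, centroids, alpha):
    """Split each landing error into radial and circumferential components.

    alpha may be a scalar or one value per trial (e.g. the median shift for that trial's N).
    """
    c = np.asarray(centroids, float)
    aim = np.asarray(alpha, float).reshape(-1, 1) * c       # aim point (alpha*xc, alpha*yc)
    delta = np.asarray(endpoints, float) - aim              # Delta = (x, y) - aim point
    dd = np.hypot(c[:, 0], c[:, 1])                          # distance Dd to the dot centroid
    u = c / dd[:, None]                                      # unit vector toward the centroid
    d_r = np.einsum("ij,ij->i", delta, u)                    # radial error
    d_c = u[:, 0] * delta[:, 1] - u[:, 1] * delta[:, 0]      # signed circumferential error
    return dd, d_r, d_c


def binned_spread(dd, d_r, d_c, bin_width=0.75, min_trials=20):
    """Per Dd bin: (bin center, sigma_r, sigma_c, sigma_a), spread taken as RMS about the aim point."""
    bins = np.floor(dd / bin_width).astype(int)
    rows = []
    for b in np.unique(bins):
        sel = bins == b
        if sel.sum() < min_trials:
            continue  # too few trials for a stable estimate
        s_r = np.sqrt(np.mean(d_r[sel] ** 2))
        s_c = np.sqrt(np.mean(d_c[sel] ** 2))
        s_a = np.sqrt(max(s_r ** 2 - s_c ** 2, 0.0))        # sigma_a = sqrt(sigma_r^2 - sigma_c^2)
        rows.append(((b + 0.5) * bin_width, s_r, s_c, s_a))
    return np.array(rows)
```

The `min_trials` cutoff is an illustrative parameter that mimics the caution about sparsely populated bins at large Dd.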

Figure 6.

Aim point shift error. a, For each trial, we determined the radial and circumferential components of the deviation of each movement end point from the aim point (as defined by the results in Fig. 3), Δr and Δc. b, The variability of these deviations, σr and σc, was calculated as a function of the distance Dd between the center of the prior distribution and the centroid of the sample dots in bins of width 0.75 cm, pooled over subjects. As Dd increased, σr increased whereas σc remained relatively constant. We estimated the aim point shift error σa (dotted line) as the portion of σr not accounted for by σc.

Figure 6b shows that σc changed little, whereas σr increased dramatically with increasing Dd. The value of σc was comparable with sensorimotor noise, σs+m. The results in Figure 6b were pooled across subjects. We repeated the analysis within subjects (∼900 experimental trials each) with bin size set to 1.5 cm to maintain similar numbers of trials per bin. The results showed the same trends.

We interpret the additional variability in the radial direction, relative to the circumferential direction, as error in the calculation of the aim point. That calculation required the subject to determine the location along the radial direction corresponding to the proportion α. We assume that sensorimotor variability was independent of error in aim point calculation. Thus, for each value of Dd, we estimated the SD of the error in locating the screen position corresponding to the aim point as $\sigma_a(D_d) = \sqrt{\sigma_r^2(D_d) - \sigma_c^2(D_d)}$. This was done separately for each subject using the 1.5 cm bin size, and the resulting estimates were used in subsequent analyses. Figure 6b (dotted line) shows σa pooled over subjects.
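The subtraction of variances can be written as a one-line helper. This sketch assumes, as the text does, that aim point error and sensorimotor noise are independent. The name aim_point_sd is hypothetical. Sample estimates of σr can fall below σc in sparse bins. In that case the helper returns NaN rather than an imaginary SD; this is a handling choice of ours, not something the text specifies.

```python
import numpy as np


def aim_point_sd(sigma_r, sigma_c):
    """sigma_a = sqrt(sigma_r**2 - sigma_c**2), assuming independent noise sources.

    Where sigma_r < sigma_c the difference is negative; we return NaN there
    (our handling choice) instead of a meaningless SD.
    """
    sigma_r = np.asarray(sigma_r, dtype=float)
    sigma_c = np.asarray(sigma_c, dtype=float)
    diff = sigma_r**2 - sigma_c**2
    return np.where(diff >= 0, np.sqrt(np.clip(diff, 0.0, None)), np.nan)
```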

Subjects' imprecision σa in locating the aim point increased with increasing Dd. This result parallels Weber's law for spatial judgments such as bisection (Levi et al., 1988; Levi and Klein, 1990). Even so, it is unlikely that this error was attributable primarily to errors in sensory coding, because the errors were too large. To test this possibility, we had one subject perform a spatial bisection between pairs of points whose orientation varied randomly from trial to trial. Distance between the points ranged from 2 to 10 cm. The subject adjusted a third point in two dimensions until it appeared to lie halfway between the two points along the line joining them. Setting variability increased linearly with separation, from 0.022 cm for a 2 cm separation to 0.105 cm for a 10 cm separation. These values are nearly an order of magnitude smaller than the estimated values of σa. We suggest that the large values of σa, compared with those just described, are attributable to differences between our task and two-point bisection. Our subjects were not simply bisecting two points when they chose aim points: aiming strategies varied from ∼30 to 80% of the distance between the prior and the centroid. Aim points also had to undergo a change of coordinate system in our task (unlike visual bisection judgments), because the aim point was used to direct a reaching movement.

It is important to point out that, like the motor error discussed above, the aim point variability σa reduces overall expected gain but does not change the optimal aim point. Thus, in our modeling, σa represents a noise source that limits overall performance but does not change our estimate of the behavior (aim points) that constitutes the best possible movement plan.

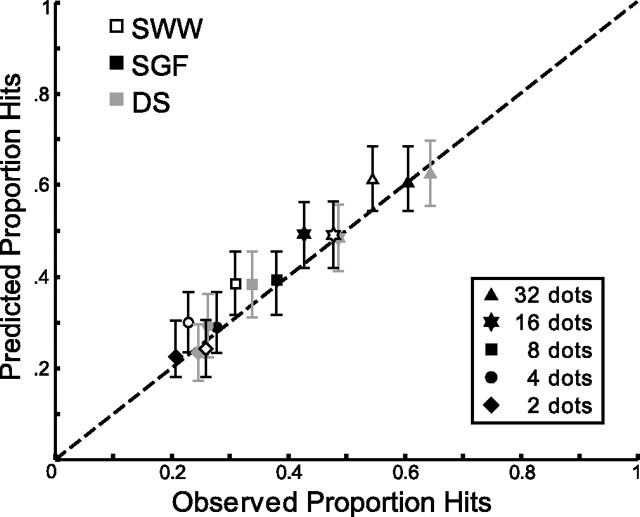

Ideal observer comparison

The results shown in Figure 2 were based on simulations of an ideal movement planner that was hampered only by the sampling variability in the target dots. As we have seen, our subjects differed from the ideal subject in several ways. Their choice of aim point was both suboptimal (Fig. 3) and imprecise (σa), and there was added uncertainty because of sensory miscalculation of the centroid location (σs) and errors in motor control (σm). We simulated the experiment using each subject's aiming strategy, hampered by these three additional sources of noise estimated as described above. For each subject and number of dots, 150 trials were simulated. In each trial, the centroid of the set of dots was calculated, and this location was perturbed by an amount chosen from an isotropic, two-dimensional Gaussian of width σs(N). Next, an aim point was determined based on the number of target dots and the subject's median aim point in that condition from Figure 3. This location was perturbed along the line joining the center of the prior and the noisy estimate of the centroid by a draw from a one-dimensional Gaussian of width σa(Dd). The resulting location was perturbed again by a draw from a two-dimensional Gaussian of width σm. For each condition, we computed the proportion of times this simulated movement end point landed within the target region. Figure 7 shows subjects' performance in each condition versus the performance from the simulations. The simulations predicted subjects' performance accurately, suggesting that performance is well characterized by these four sources of inaccuracy and imprecision.
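A compact Monte Carlo version of this simulation is sketched below for one subject and one value of N. Several quantities are not given in this section. They are placeholders chosen only for illustration: the dot spread sigma_d = 4 cm, the circular target region with target_radius = 1 cm, and the example values of alpha, sigma_s, sigma_m, and the σa(Dd) function in the usage line. The prior SD sigma_p = 2 cm follows the value stated in the Subideal observers section. We also assume that σa is evaluated at the distance of the noisy centroid estimate from the prior center. The function name is hypothetical.

```python
import numpy as np


def simulate_hits(alpha, n_dots, sigma_s, sigma_a_fn, sigma_m,
                  sigma_p=2.0, sigma_d=4.0, target_radius=1.0,
                  n_trials=150, rng=None):
    """Proportion of simulated end points landing in the target region.

    sigma_d = 4 cm and target_radius = 1 cm are placeholders, not values
    from this section; sigma_a_fn maps an array of Dd values to SDs.
    """
    rng = np.random.default_rng(rng)
    # Invisible target location drawn from the isotropic Gaussian prior.
    target = rng.normal(0.0, sigma_p, size=(n_trials, 2))
    # N sample dots around the target; their centroid is the visual cue.
    dots = target[:, None, :] + rng.normal(0.0, sigma_d, size=(n_trials, n_dots, 2))
    centroid = dots.mean(axis=1)
    # Sensory error in locating the centroid: isotropic 2-D Gaussian, SD sigma_s.
    centroid_est = centroid + rng.normal(0.0, sigma_s, size=(n_trials, 2))
    # Aim point: a fraction alpha of the way from the prior center to the estimate.
    d_d = np.linalg.norm(centroid_est, axis=1)
    u_r = centroid_est / np.maximum(d_d, 1e-12)[:, None]
    aim = alpha * centroid_est
    # Aim-point error along the prior-to-centroid line: 1-D Gaussian, SD sigma_a(Dd).
    aim = aim + u_r * (rng.normal(0.0, 1.0, size=n_trials) * sigma_a_fn(d_d))[:, None]
    # Motor error: isotropic 2-D Gaussian, SD sigma_m.
    end = aim + rng.normal(0.0, sigma_m, size=(n_trials, 2))
    # A hit is an end point within target_radius of the true target (assumed shape).
    return float(np.mean(np.linalg.norm(end - target, axis=1) <= target_radius))
```

For example, `simulate_hits(alpha=0.6, n_dots=8, sigma_s=0.3, sigma_a_fn=lambda d: 0.1 + 0.05 * d, sigma_m=0.4, rng=0)` returns the simulated hit proportion for 150 trials under these made-up parameters.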

Figure 7.

Model predictions. Performance of each subject in each condition is plotted versus simulated performance using estimates of each subject's aiming strategy (Fig. 3) and sensory, motor, and aiming imprecision (Figs. 5, 6). The dashed line represents a perfect correspondence between data and simulation. Error bars represent 95% confidence intervals determined from 1000 bootstrap replications of the simulated experiments.

We define efficiency as the fraction of ideal performance achieved by our subjects. Efficiency values (Table 1) were high but less than 100%. We simulated ideal task performance in much the same way as in Figure 7, but using the ideal aiming strategy (Fig. 2) and setting σa, σs, and σm to zero. By repeatedly simulating the ideal for the same number of trials as our subjects completed, we were able to estimate the distribution of possible performance (gains) generated by the ideal model. All efficiency values in Table 1 are significantly suboptimal (i.e., they fall below the fifth percentile of the frequency distribution of gains obtained from simulations of the ideal performance model).
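The efficiency calculation and the fifth-percentile test can be sketched as follows. This assumes `ideal_hits_samples` holds the hit counts from many repeated simulations of the ideal model, each with the same number of trials the subject completed. The function name is hypothetical. Efficiency is returned in percent to match Table 1.

```python
import numpy as np


def efficiency_test(subject_hits, ideal_hits_samples, pct=5.0):
    """Efficiency (%) and whether the subject falls below the ideal's pct-th percentile.

    ideal_hits_samples: hit counts from repeated simulations of the ideal model,
    each with the same number of trials as the subject (assumed precomputed).
    """
    ideal = np.asarray(ideal_hits_samples, dtype=float)
    efficiency = 100.0 * subject_hits / ideal.mean()
    cutoff = np.percentile(ideal, pct)
    return float(efficiency), bool(subject_hits < cutoff)
```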

Table 1.

Efficiency (%) as a function of the number of dots for three subjects

| Subject | 2 dots | 4 dots | 8 dots | 16 dots | 32 dots |
|---|---|---|---|---|---|
| SWW | 88.21 | 86.75 | 81.89 | 75.16 | 72.18 |
| SGF | 82.36 | 83.96 | 83.37 | 75.41 | 71.41 |
| DS | 85.45 | 84.81 | 81.23 | 74.27 | 73.51 |

Efficiency is defined as the number of target hits achieved by the subject divided by the number that would be scored, on average, by an ideal performance model that used the optimal strategy and was hampered only by the widths of the prior probability distribution and the normalized likelihood function. All efficiency values are significantly less than 100%.

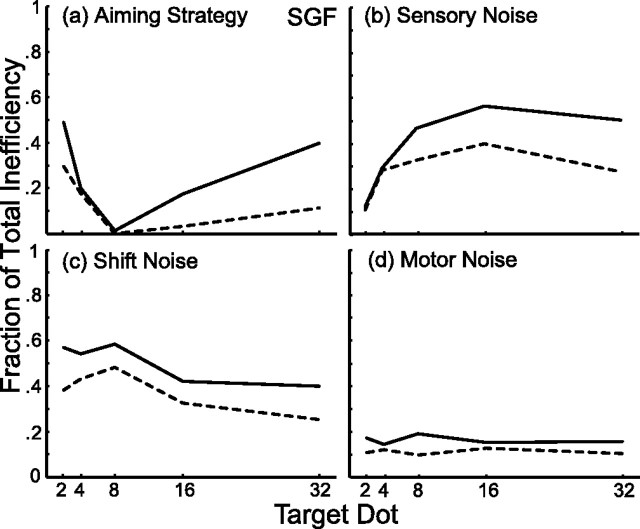

There are four factors that contribute to subjects' inefficiency (1 − efficiency): suboptimal choice of aim point (Fig. 3) and the three noise sources (σa, σs, and σm). We analyzed subjects' inefficiency in two ways. First, we determined how much inefficiency resulted from each factor alone compared with the ideal performance model. Second, we determined the amount by which inefficiency was reduced by eliminating each factor while retaining the other three, compared with that resulting from all four together. In each case, the change in inefficiency was computed as a fraction of the total inefficiency for that subject in each condition.
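Both ways of apportioning inefficiency reduce to simple ratios. The sketch below assumes efficiencies are expressed as fractions of ideal, so the ideal model has efficiency 1. It also assumes the efficiencies of the reduced models have already been obtained by simulation. The function and argument names are hypothetical.

```python
def inefficiency_fractions(eff_all, eff_without, eff_only):
    """Apportion inefficiency (1 - efficiency) among factors.

    eff_all: efficiency of the full model (all four factors), as a fraction.
    eff_without[k]: efficiency with factor k removed and the other three kept.
    eff_only[k]: efficiency of the ideal model with only factor k added.
    Both results are fractions of the full model's total inefficiency.
    """
    total = 1.0 - eff_all
    removed = {k: (e - eff_all) / total for k, e in eff_without.items()}
    alone = {k: (1.0 - e) / total for k, e in eff_only.items()}
    return removed, alone
```

Consider hypothetical efficiencies of 0.80 for the full model, 0.82 with motor noise removed, and 0.95 for the ideal model plus motor noise alone. With these values, motor noise accounts for 0.10 of the inefficiency by the first measure and 0.25 by the second. This illustrates how the two measures can disagree.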

The results of this analysis are shown for subject SGF in Figure 8. The dashed lines indicate the proportion of inefficiency that was eliminated by removing each factor (leaving the other three factors intact). A value of zero indicates that inefficiency was not reduced by eliminating the factor, and a value of 1 indicates that inefficiency was completely accounted for by that factor. For example, changing SGF's aiming strategy to the optimal strategy accounted for ∼30% of the total inefficiency in the two-dot condition (Fig. 8a). Inefficiency in the eight-dot condition was not reduced by adopting the optimal strategy, because SGF's aiming strategy in this condition was nearly optimal (Fig. 3). Similarly, sensory noise had almost no effect on inefficiency in the two-dot condition (Fig. 8b), because this noise was small (Fig. 5, dotted line). In the other conditions, sensory noise accounted for a substantial fraction of overall inefficiency. In Figure 8c, removing shift noise from the simulations accounts for a slightly greater fraction of SGF's inefficiency in the two-, four-, and eight-dot conditions than in the 16- and 32-dot conditions. This is because centroid distances tend to be farther from the prior when the number of target dots is low (a consequence of the random sampling of dots around the true target location), and shift noise σa grows with Dd. Motor noise was small and independent of dot number and hence accounted for a small and relatively constant fraction of inefficiency (Fig. 8d).
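Why centroid distances are larger for small N can be made concrete under the Gaussian assumptions of the task. Suppose the target is drawn from an isotropic Gaussian prior with SD σp, and each of the N dots is drawn around it with SD σd. Then the centroid is isotropic Gaussian about the prior center with per-axis variance σp² + σd²/N. It follows that Dd has a Rayleigh distribution whose mean is that SD times √(π/2). The sketch below checks this by simulation. σp = 2 cm is taken from the Subideal observers section; σd = 4 cm is a placeholder value for illustration, and the function names are hypothetical.

```python
import math

import numpy as np


def mean_centroid_distance(n_dots, sigma_p=2.0, sigma_d=4.0):
    """Expected Dd: Rayleigh mean with scale sqrt(sigma_p**2 + sigma_d**2 / N).

    sigma_d = 4 cm is a placeholder value.
    """
    scale = math.sqrt(sigma_p**2 + sigma_d**2 / n_dots)
    return scale * math.sqrt(math.pi / 2)


def simulated_centroid_distance(n_dots, sigma_p=2.0, sigma_d=4.0,
                                n_trials=20_000, seed=0):
    """Monte Carlo check: average distance of the dot centroid from the prior center."""
    rng = np.random.default_rng(seed)
    target = rng.normal(0.0, sigma_p, size=(n_trials, 2))
    dots = target[:, None, :] + rng.normal(0.0, sigma_d, size=(n_trials, n_dots, 2))
    return float(np.linalg.norm(dots.mean(axis=1), axis=1).mean())
```

With these placeholder values, mean_centroid_distance(2) is about 4.3 cm and mean_centroid_distance(32) is about 2.7 cm.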

Figure 8.

Causes of inefficiency (subject SGF). The dashed lines indicate the fraction of inefficiency accounted for by adopting the ideal aim strategy (a) or by removing sensory (b), shift (c), or motor (d) noise. A value of zero indicates that the factor had no effect on efficiency. Solid lines indicate the fraction of total inefficiency as calculated by adding each factor to the ideal performance model.

The solid lines in Figure 8 represent the increase in inefficiency (from ideal toward subject SGF's) when single sources of error (noise or suboptimal aiming strategy) were introduced into the simulations. It is not surprising that the solid and dashed lines do not overlap. The effect of added noise is greater when it is the only factor than when added to other noise sources. However, the solid line is also above the dashed line in Figure 8a where the factor is aiming strategy rather than added variability. As stated in the Introduction, adding noise to our simulations resulted in a flattening of the gain surface (relative to that shown in Fig. 2). For this reason, switching to a suboptimal aiming strategy has a greater effect when there is no noise (Fig. 2, solid line) than when all noise factors are present (Fig. 2, dashed line).
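The flattening argument can be illustrated with a generic example. The code computes the probability of landing inside a circular target as a function of how far the aim point is displaced from the best aim point. It does this for a small and a large total (isotropic Gaussian) end point SD. The target radius, displacement, and SDs below are illustrative values, not parameters from the experiment, and the function names are hypothetical. The same random draws are reused for both aim points (common random numbers), so the small difference in the noisy case is not swamped by Monte Carlo error.

```python
import numpy as np


def hit_prob(offset, sigma, radius=1.0, n=200_000, seed=0):
    """P(end point lands in a disc of given radius) when the aim is displaced by offset.

    End points are isotropic Gaussian with SD sigma about the aim point; a fixed
    seed means every call reuses the same draws (common random numbers).
    """
    rng = np.random.default_rng(seed)
    pts = rng.normal(0.0, sigma, size=(n, 2))
    pts[:, 0] += offset
    return float(np.mean(np.hypot(pts[:, 0], pts[:, 1]) <= radius))


def relative_loss(offset, sigma, radius=1.0):
    """Fractional drop in hit probability caused by an aiming error of size offset."""
    best = hit_prob(0.0, sigma, radius)
    return (best - hit_prob(offset, sigma, radius)) / best
```

With these values, `relative_loss(0.5, 0.5)` is several times larger than `relative_loss(0.5, 2.0)`. The same aiming error therefore costs proportionally more when noise is low, which is the pattern described above for the solid versus dashed lines in Figure 8a.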

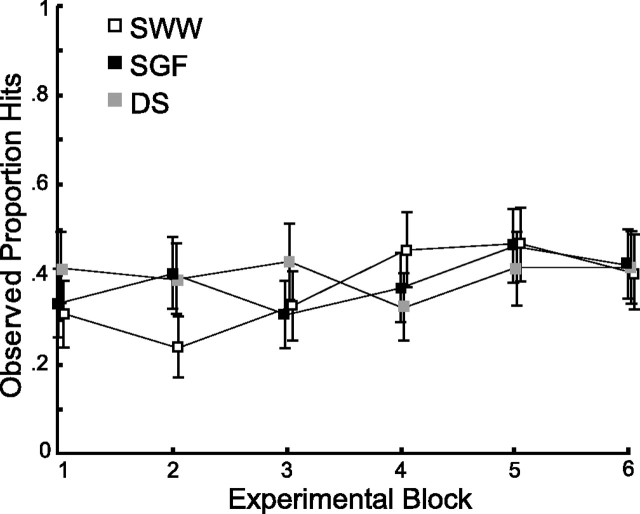

Tests for learning

In our previous work, we have seen very little evidence for learning in speeded reaching tasks with payoff and penalty regions, other than learning to deal with the time constraints of the task (Trommershäuser et al., 2003a,b). Figure 9 shows the proportion of hits on the target as a function of experimental session. Only one of the three subjects showed a significant effect of experimental session (one-way ANOVA, subject SWW; p < 0.01). A planned linear contrast found small but statistically significant linear trends for two subjects (SGF, p = 0.04; SWW, p < 0.01). Feedback about the target location was not given during the experimental session; subjects only received hit or miss information (and the overall hit rate was under 40%). Although we found significant increasing trends for two of three subjects, we suggest that the learning trend was small, and that learning was not required for subjects to attain the near-optimal integration of sensory and prior location information found in our task.

Figure 9.

Proportion of hits scored by each subject over the course of the experiment. Evidence for learning across the six blocks is weak.

Subideal observers

The ideal observer we used assumes, consistent with the instructions given to our subjects, that the target dot distribution width (σd) was constant over the duration of the experiment. This assumption resulted in an ideal aiming strategy that depended only on the ratio of the width of the likelihood, σd/√N, to the width of the prior, σp [i.e., (σd/√N)/σp]. We cannot determine from our results whether subjects maintained a constant estimate of σd over the course of the experiments. However, the close quantitative fit of our model to the observed data suggests that a single estimate was learned in the training trials and that subjects maintained that estimate into the experimental sessions.
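Under the stated Gaussian assumptions, this dependence can be written explicitly. The sketch below assumes that, for a circular target and an isotropic Gaussian posterior, the MEG aim point coincides with the posterior mean. For a Gaussian prior and Gaussian likelihood, that mean lies a fraction α = σp²/(σp² + σd²/N) of the way from the prior center to the centroid. σp = 2 cm is the value used in this section. σd = 4 cm is a placeholder for illustration, and the function name is hypothetical.

```python
def optimal_alpha(n_dots, sigma_p=2.0, sigma_d=4.0):
    """Shift fraction for the posterior mean, Gaussian prior and Gaussian dots.

    Assumes the MEG aim point equals the posterior mean; sigma_d is a placeholder.
    """
    lik_sd = sigma_d / n_dots ** 0.5  # SD of the dot centroid about the target
    ratio = lik_sd / sigma_p          # (sigma_d / sqrt(N)) / sigma_p
    return 1.0 / (1.0 + ratio**2)     # equals sigma_p^2 / (sigma_p^2 + sigma_d^2 / N)
```

With these placeholder values, α rises from 1/3 at N = 2 to 8/9 at N = 32. Note that α depends on σd, σp, and N only through the ratio (σd/√N)/σp.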

However, consider a second, subideal observer who assumes nothing about σd and instead estimates target dot uncertainty trial by trial. We simulated this subideal observer in the following way. First, we calculated the optimal aim point for σp = 2 cm and σd ranging from 0 to 12 cm for each value of N. Next, we repeated our ideal observer simulations, except that on each trial the simulated observer used the optimal shift strategy α for that trial's value of N and the sample variance of that trial's dots. We included centroid calculation noise when calculating the sample variance. That is, the estimate of dot variance was based on distances of the stimulus dots from an estimated dot centroid corrupted by centroid calculation noise, rather than from the precise centroid of the sample dots. The average aiming strategy of this model was nearly identical to the optimal aiming strategy for N = 16 and 32. The model used slightly higher α values for N = 2, 4, and 8 (i.e., average shifts were closer to the centroid than the MEG aim points). More importantly, the variability of this model's aim point was highly dependent on N. Because the sample estimate of dot variance was highly uncertain for N = 2, the model's estimates of α varied between 0 and 0.925 (mean ± 2 SD), an almost uniform distribution of shifts between the prior and the centroid. For N = 4, α varied between 0.253 and 0.806, also spanning almost the entire range of possible shifts. As N increased, the range of α narrowed until it was almost indistinguishable from that of an ideal observer using a perfect estimate of σd (for N = 32, α varied between 0.855 and 0.922). Variability in the aiming strategies of our subjects showed no such dependency on N. We conclude that subjects followed our instructions and used a stable estimate of σd.
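The subideal observer can be sketched by replacing σd with a per-trial sample estimate. This sketch makes several assumptions that are ours, not the authors':

- the per-axis variance is pooled over x and y with 2(N − 1) degrees of freedom;
- the estimated SD is capped at 12 cm, to mirror the 0 to 12 cm range described above;
- α is computed with the Gaussian posterior-mean formula rather than a lookup table of simulated MEG aim points;
- σd = 4 cm is a placeholder.

The function name is hypothetical, and N must be at least 2.

```python
import numpy as np


def subideal_alphas(n_dots, sigma_p=2.0, sigma_d=4.0, sigma_s=0.0,
                    sd_cap=12.0, n_trials=10_000, seed=0):
    """Per-trial shift fractions when sigma_d is re-estimated from each trial's dots.

    Assumptions (ours): pooled per-axis variance with 2*(N - 1) degrees of
    freedom, SD capped at sd_cap, Gaussian posterior-mean formula for alpha.
    """
    rng = np.random.default_rng(seed)
    # Dots around a target placed at the origin; alpha here depends only on
    # the estimated spread of the dots, not on where the target is.
    dots = rng.normal(0.0, sigma_d, size=(n_trials, n_dots, 2))
    # Centroid estimate corrupted by centroid-calculation noise (SD sigma_s).
    centroid_est = dots.mean(axis=1) + rng.normal(0.0, sigma_s, size=(n_trials, 2))
    # Per-axis variance of the dots about the noisy centroid estimate.
    dev = dots - centroid_est[:, None, :]
    var_hat = np.sum(dev**2, axis=(1, 2)) / (2 * (n_dots - 1))
    var_hat = np.minimum(var_hat, sd_cap**2)  # hypothetical cap on the estimated SD
    return sigma_p**2 / (sigma_p**2 + var_hat / n_dots)
```

To see the strong dependence on N described above, compare the spread of the returned α values, for example `subideal_alphas(2).std()` against `subideal_alphas(32).std()`.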

Another possible source of subideal performance would be for subjects to use an incorrect value of σp, or a value that varies from trial to trial. If subjects had used a fixed value of σp different from the true value of 2 cm, all predicted shifts would have been affected similarly. For example, a reduced value of σp would have resulted in smaller predicted shifts for all values of N in Figure 3. Such a model would have fit the data no better than the ideal Bayesian model. Similarly, a model that used a value of σp that varied from trial to trial would have produced variability over and above that predicted from sensory and motor uncertainty. We conclude that observers learned the width of the prior during the demonstration before the experiment. They could easily estimate the center of the prior, because it was fixed and indicated by the crosshairs. We thus have no reason to adopt a more complicated model with additional parameters that we cannot estimate with our experimental design.

Discussion

We presented data from a rapid pointing task in which subjects showed clear evidence of integrating uncertain stimulus information with prior knowledge of the distribution of possible target locations. Subjects modified aim points in a near-optimal manner as stimulus uncertainty was varied. The ideal observer was hampered only by the inherent location uncertainty of the target stimulus. Human subjects were further hampered by suboptimal shift strategies and by uncertainty in three places: estimation of the centroid of the target dots, determination of the aim point, and motor outcome. Motor noise made the smallest contribution to observer inefficiency in the task. Additionally, the effects of these factors were not additive. For example, the suboptimal aiming strategy had a large effect on inefficiency if it was the only factor, but a far smaller effect if it was added on top of the three variability factors. Finally, there was a small but significant trend of improvement across the course of the experiment (for two of three subjects). Even so, subjects did not require feedback-based learning to attain their near-optimal shift strategy in this task. In light of our subjects' nearly constant performance across blocks, it is unlikely that subjects learned any cognitive strategies during the experiment, although we cannot rule that out.

Two other studies have compared human performance with a Bayesian solution combining sensory and prior probability information (Körding and Wolpert, 2004a; Miyazaki et al., 2005). In these studies, it was impossible to determine whether subjects adopted the optimal Bayesian strategy that accounts for their specific motor and sensory uncertainty, rather than a strategy consistent with the optimal strategy for some observer. For example, Körding and Wolpert (2004a) asked subjects to point at a target with the hand blocked from view. Midway through the movement, a brief indication of finger position was displayed, perturbed laterally from the actual finger position. The perturbation was random, and the prior distribution of perturbations was a Gaussian centered on a perturbation of 1 cm. The task was to point at (i.e., have the visual cursor land on) the target, so subjects had to counteract the perturbation. The visual reliability of the brief positional feedback was varied (using a blurry, noisy cursor), and feedback as to final cursor position was provided only in the high-reliability condition. Consistent with a Bayesian calculation, they found that subjects relied more on the prior information (and less on the brief cursor display) as the cursor display was made less reliable. However, there was no independent measure of visual and other, extraretinal sources of uncertainty about the shift. The investigators therefore had no independent way to determine the optimal strategy against which to compare subjects' performance. Instead, they assumed the strategy was optimal Bayesian and used the measured shifts in movement end points to estimate subjects' uncertainties. Then, taking these uncertainty estimates as true and fixed, and assuming an optimal Bayesian calculation, they used the details of subjects' end point shifts to estimate the precise form of the prior distribution subjects could have used. In summary, they showed that performance was qualitatively consistent with the use of prior information, but not necessarily with the optimal Bayesian calculation.

Optimality was also not defined within these experiments, because no explicit loss function was imposed. Rather, they effectively assumed a quadratic loss function. In another study, Körding and Wolpert (2004b) sought to estimate the loss function used by their subjects in an aiming task by using an experimenter-imposed skewed error distribution. Again, the fitting procedure assumed optimality (in this case, MEG) rather than demonstrating that subjects were, indeed, behaving optimally. In contrast to their assumption in Körding and Wolpert (2004a), they concluded that a quadratic loss function provided a poor description of their data, because subjects penalized large errors less severely than a quadratic loss would predict. In the experiment presented here, as in our previous work (Trommershäuser et al., 2003a,b, 2005), we avoid the problem of inferring an intrinsic loss function by imposing a specific loss function through monetary payoffs and penalties for different outcomes. Here, we obtained independent estimates of each source of uncertainty that would affect the definition of the optimal strategy for a given subject. We then determined the extent to which subjects were, in fact, optimal, given both the inherent limits of their own sensory and motor systems and the imposed loss function.

It has recently become popular to compare human performance in perceptual and motor tasks to an ideal observer (e.g., ML, MAP, or MEG). Such work suggests that probability theory provides a suitable framework for understanding the sensory or motor system under study. This approach has been followed on both theoretical (Maloney, 2002; Kersten and Yuille, 2003; Kersten et al., 2004; Knill and Pouget, 2004) and empirical (Geisler, 1989; Mamassian and Landy, 2001; Lee, 2002; Battaglia et al., 2003; van Ee et al., 2003) grounds.

However, since the work of Sherrington (1918), it has been recognized that the pattern of errors (i.e., suboptimal behavior) can provide information concerning the underlying information sources and computations performed by sensory and motor systems. In modeling this task, we have identified four sources of error that lead to suboptimal behavior. Three of these factors added noise to movement end points; the fourth was a suboptimal choice of aim point.

One of these factors is σs(N), the uncertainty in locating the centroid of a cloud of N dots. Although we have described this as a sensory error (in contrast to motor noise σm), it may have several causes. It could arise from poor localization of the individual dots, although this seems unlikely, because observers are quite accurate in such localization tasks. It could be an additional fixed source of noise arising from the calculation of the centroid from multiple dots, but that would not explain the dependence of σs on N. It could also arise because observers base their centroid estimate on a subset of the dots. Such subsampling lowers calculation efficiency (Pelli and Farell, 1999). The degree of subsampling can be estimated by investigating performance as a function of added noise. For example, Dakin (2001) used this technique to analyze an observer's ability to determine the mean orientation of a collection of oriented texture elements. However, the nonmonotonic behavior of σs(N) (Fig. 5) argues against attributing this uncertainty solely to subsampling.

Uncertainty in determining the centroid of the dot cloud combines with the sampling variance of the dots themselves as a cue to target location. As a result, the optimal strategy for our observers differs slightly from that of an ideal performance model that is not hampered by this source of uncertainty. Including this noise in the determination of the optimal aim point (Fig. 3) results in an optimal prediction that better matches subjects' performance in some cases, but not in others.
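Suppose σs(N) is treated as independent Gaussian noise added to the sample centroid. Then the cue's total per-axis variance becomes σd²/N + σs²(N). Under the same Gaussian posterior-mean assumption used for the ideal strategy, the optimal shift fraction shrinks accordingly. This is a sketch of that idea only. It is not necessarily how the optimal prediction in Figure 3 was computed. The function name is hypothetical, σd = 4 cm is a placeholder, and σp = 2 cm follows the Subideal observers section.

```python
def optimal_alpha_with_centroid_noise(n_dots, sigma_s, sigma_p=2.0, sigma_d=4.0):
    """Posterior-mean shift fraction when centroid-localization noise adds to the cue.

    Assumes sigma_s is independent Gaussian noise on the centroid; sigma_d is a placeholder.
    """
    cue_var = sigma_d**2 / n_dots + sigma_s**2  # sampling variance + localization noise
    return sigma_p**2 / (sigma_p**2 + cue_var)
```

Setting sigma_s = 0 recovers the ideal fraction. Any positive sigma_s pulls the aim point further toward the prior center.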

Aim point noise σa might be attributable to a sensory problem (determining the location corresponding to a shift value α) or could be an error in computation (noise in the determination of α itself). The nonlinearity of σa as a function of Dd is a clue to the source of this noise, but our experiments were not designed to uncover this source.

The work described here fits within a growing literature that views sensory and sensorimotor behavior within the context of statistical decision theory. As has been pointed out previously (Maloney, 2002; Kersten et al., 2004), perceptual tasks are formally identical to hypothesis testing or statistical estimation. There is a large literature on perceptual estimation as an optimal combination of sensory cues, as if observers use ML estimation (Landy et al., 1995; Landy and Kojima, 2001; Ernst and Banks, 2002; Gepshtein and Banks, 2003; Knill and Saunders, 2003; Alais and Burr, 2004; Hillis et al., 2004). Other studies also include a prior probability distribution and hence use MAP estimation as the model of performance. Some of these studies fit the MAP model to behavioral data by adjusting parameters of a model of (1) a prior distribution of possible world states (Mamassian and Landy, 1998, 2001; van Ee et al., 2003), (2) a prior distribution of the observer's sensory reliability (Battaglia et al., 2003), or (3) a model of sensory reliability (Körding and Wolpert, 2004a; Miyazaki et al., 2005). Another approach has been to measure the frequency of various events in the world from a sample of images or scenes and then adopt that distribution as a prior distribution to account for behavior (Geisler et al., 2001; Elder and Goldberg, 2002). The work presented here is different in that the prior distribution is neither fit to the data nor estimated from a sample of the environment. Rather, we have imposed a known prior and measured the sources of uncertainty in the task. Hence, we were able to predict performance with no additional free parameters. In this sense, we have been able to determine, for our task, how close performance is to optimal and the ways in which it falls short of that ideal.

Footnotes

This work was supported by National Institutes of Health Grant EY08266.

References

- Alais D, Burr D. The ventriloquist effect results from near-optimal bimodal integration. Curr Biol. 2004;14:257–262. doi: 10.1016/j.cub.2004.01.029.

- Battaglia PW, Jacobs RA, Aslin RN. Bayesian integration of visual and auditory signals for spatial localization. J Opt Soc Am A. 2003;20:1391–1397. doi: 10.1364/josaa.20.001391.

- Berger JO. Statistical decision theory and Bayesian analysis, Ed 2. New York: Springer; 1985.

- Blackwell D, Girshick MA. Theory of games and statistical decisions. New York: Wiley; 1954.

- Brainard DH. The psychophysics toolbox. Spat Vis. 1997;10:433–436.

- Dakin SC. Information limit on the spatial integration of local orientation signals. J Opt Soc Am A. 2001;18:1016–1026. doi: 10.1364/josaa.18.001016.

- Elder JH, Goldberg RM. Ecological statistics of Gestalt laws for the perceptual organization of contours. J Vis. 2002;2:324–353. doi: 10.1167/2.4.5.

- Ernst MO, Banks MS. Humans integrate visual and haptic information in a statistically optimal fashion. Nature. 2002;415:429–433. doi: 10.1038/415429a.

- Ferguson TS. Mathematical statistics: a decision theoretic approach. New York: Academic; 1967.

- Geisler WS. Sequential ideal-observer analysis of visual discrimination. Psychol Rev. 1989;96:267–314. doi: 10.1037/0033-295x.96.2.267.

- Geisler WS, Perry JS, Super BJ, Gallogly DP. Edge co-occurrence in natural images predicts contour grouping performance. Vis Res. 2001;41:711–724. doi: 10.1016/s0042-6989(00)00277-7.

- Gepshtein S, Banks MS. Viewing geometry determines how vision and haptics combine in size perception. Curr Biol. 2003;13:483–488. doi: 10.1016/s0960-9822(03)00133-7.

- Hess RF, Dakin SRG, Badcock D. Localization of element clusters by the human visual system. Vis Res. 1994;34:2439–2451. doi: 10.1016/0042-6989(94)90288-7.

- Hillis JM, Watt SJ, Landy MS, Banks MS. Slant from texture and disparity cues: optimal cue combination. J Vis. 2004;4:967–992. doi: 10.1167/4.12.1.

- Jiang B, Levi DM. Spatial-interval discrimination in two-dimensions. Vis Res. 1991;31:1931–1937. doi: 10.1016/0042-6989(91)90188-b.

- Kersten D, Yuille A. Bayesian models of object perception. Curr Opin Neurobiol. 2003;13:150–158. doi: 10.1016/s0959-4388(03)00042-4.

- Kersten D, Mamassian P, Yuille A. Object perception as Bayesian inference. Annu Rev Psychol. 2004;55:271–304. doi: 10.1146/annurev.psych.55.090902.142005.

- Klein SA, Levi DM. Hyperacuity thresholds of 1 sec: theoretical predictions and empirical validation. J Opt Soc Am A. 1985;2:1170–1190. doi: 10.1364/josaa.2.001170.

- Knill DC, Pouget A. The Bayesian brain: the role of uncertainty in neural coding and computation. Trends Neurosci. 2004;27:712–719. doi: 10.1016/j.tins.2004.10.007.

- Knill DC, Richards W. Perception as Bayesian inference. Cambridge, UK: Cambridge UP; 1996.

- Knill DC, Saunders JA. Do humans optimally integrate stereo and texture information for judgments of surface slant? Vis Res. 2003;43:2539–2558. doi: 10.1016/s0042-6989(03)00458-9.

- Körding KP, Wolpert DM. Bayesian integration in sensorimotor learning. Nature. 2004a;427:244–247. doi: 10.1038/nature02169.

- Körding KP, Wolpert DM. The loss function of sensorimotor learning. Proc Natl Acad Sci USA. 2004b;101:9839–9842. doi: 10.1073/pnas.0308394101.

- Landy MS, Kojima H. Ideal cue combination for localizing texture-defined edges. J Opt Soc Am A. 2001;18:2307–2320. doi: 10.1364/josaa.18.002307.

- Landy MS, Maloney LT, Johnston EB, Young MJ. Measurement and modeling of depth cue combination: in defense of weak fusion. Vis Res. 1995;35:389–412. doi: 10.1016/0042-6989(94)00176-m.

- Lee TS. Top-down influence in early visual processing: a Bayesian perspective. Physiol Behav. 2002;77:645–650. doi: 10.1016/s0031-9384(02)00903-4.

- Levene H. Contributions to probability and statistics. Stanford, CA: Stanford UP; 1960.

- Levi DM, Klein SA. The role of separation and eccentricity in encoding position. Vis Res. 1990;30:557–585. doi: 10.1016/0042-6989(90)90068-v.

- Levi DM, Klein SA, Yap YL. “Weber's Law” for position: unconfounding the role of separation and eccentricity. Vis Res. 1988;28:597–603. doi: 10.1016/0042-6989(88)90109-5.

- Maloney LT. Statistical decision theory and biological vision. In: Heyer D, Mausfeld R, editors. Perception and the physical world. New York: Wiley; 2002. pp. 145–189.

- Mamassian P, Landy MS. Observer biases in the 3D interpretation of line drawings. Vis Res. 1998;38:2817–2832. doi: 10.1016/s0042-6989(97)00438-0.

- Mamassian P, Landy MS. Interaction of visual prior constraints. Vis Res. 2001;41:2653–2688. doi: 10.1016/s0042-6989(01)00147-x.

- Mamassian P, Landy MS, Maloney LT. Bayesian modeling of visual perception. In: Rao RP, Olshausen BA, Lewicki MS, editors. Probabilistic models of the brain: perception and neural function. Cambridge, MA: MIT; 2002. pp. 13–36.

- Meyer DE, Smith JK, Wright CE. Models for the speed and accuracy of aimed movements. Psychol Rev. 1982;89:449–482.

- Miyazaki M, Nozaki D, Nakajima Y. Testing Bayesian models of human coincidence timing. J Neurophysiol. 2005;94:395–399. doi: 10.1152/jn.01168.2004.

- Pelli DG. The VideoToolbox software for visual psychophysics: transforming numbers into movies. Spat Vis. 1997;10:437–442.

- Pelli DG, Farell B. Why use noise? J Opt Soc Am A. 1999;16:647–653. doi: 10.1364/josaa.16.000647.

- Sherrington CS. Observations on the sensual role of the proprioceptive nerve supply of the extrinsic eye muscles. Brain. 1918;41:332–343.

- Sun J, Perona P. Where is the sun? Nat Neurosci. 1998;1:183–184. doi: 10.1038/630.

- Trommershäuser J, Maloney LT, Landy MS. Statistical decision theory and trade-offs in the control of motor response. Spat Vis. 2003a;16:255–275. doi: 10.1163/156856803322467527.

- Trommershäuser J, Maloney LT, Landy MS. Statistical decision theory and the selection of rapid, goal-directed movements. J Opt Soc Am A. 2003b;20:1419–1433. doi: 10.1364/josaa.20.001419.

- Trommershäuser J, Gepshtein S, Maloney LT, Landy MS, Banks MS. Optimal compensation for changes in task-relevant movement variability. J Neurosci. 2005;25:7169–7178. doi: 10.1523/JNEUROSCI.1906-05.2005.

- van Ee R, Adams WJ, Mamassian P. Bayesian modeling of cue interaction: bistability in stereoscopic slant perception. J Opt Soc Am A. 2003;20:1398–1406. doi: 10.1364/josaa.20.001398.

- Weiss Y, Simoncelli EP, Adelson EH. Motion illusions as optimal percepts. Nat Neurosci. 2002;5:598–604. doi: 10.1038/nn0602-858.

- Whitaker D, Bradley A, Barrett BT, McGraw PV. Isolation of stimulus characteristics contributing to Weber's law for position. Vis Res. 2002;42:1137–1148. doi: 10.1016/s0042-6989(02)00030-5.

- Wilson HR. Responses of spatial mechanisms can explain hyperacuity. Vis Res. 1986;26:453–469. doi: 10.1016/0042-6989(86)90188-4.