Abstract

Treatment rules based on individual patient characteristics that are easy to interpret and disseminate are important in clinical practice. Properly planned and conducted randomized clinical trials are used to construct individualized treatment rules. However, it is often a concern that trial participants lack representativeness, so it limits the applicability of the derived rules to a target population. In this work, we use data from a single trial study to propose a two-stage procedure to derive a robust and parsimonious rule to maximize the benefit in the target population. The procedure allows a wide range of possible covariate distributions in the target population, with minimal assumptions on the first two moments of the covariate distribution. The practical utility and favorable performance of the methodology are demonstrated using extensive simulations and a real data application.

Keywords: Minimax linear decision, Biased sample, Classification, Individualized treatment rules, Personalized medicine

1. Introduction

In the new era of personalized medicine, it has been advocated that treatments should be recommended according to individual patient characteristics to account for considerable heterogeneity among patients’ responses to different treatments (Hayes et al., 2007; Hamburg and Collins, 2010). Randomized clinical trials (RCTs) are ideal for constructing such rules, since they provide internal validity by ensuring consistency, positivity and no unmeasured confounders (Greenland, 1990; Hernán and Robins, 2006) that may be violated in observational studies.

Sophisticated statistical methods have been developed to estimate optimal individualized treatment rules using data from randomized trials. Regression-based methods estimate outcome as a function of patient covariates and treatment, and then select the treatment that maximizes the predicted outcome for each individual (Brinkley et al., 2010; Qian and Murphy, 2011; Kang et al., 2014). Some recent developments directly search for the individualized treatment rules that maximize the benefit for future patients (Zhang et al., 2012; Zhao et al., 2012). When the primary outcome of interest is survival time subject to right censoring, some methods have been proposed in this regard using either regression-based methods Goldberg and Kosorok (2012); Huang et al. (2014) or direct search methods Zhao et al. (2015).

The derived optimal rules sometimes are complex and nonlinear, so they are highly variable and may not be practically useful. To better inform clinical practice, it is more desirable that a recommended treatment rule be easy to interpret and disseminate. As suggested in Orellana et al. (2010), the class of practically enforceable candidate regimes is significantly smaller than the class of arbitrary functions of covariates. Usually these regimes are comprised of functions that only depend on a small set of covariates, and are indexed by a set of finite dimensional parameters. Statistically, Qian and Murphy (2011) used a rich linear basis for better modeling the outcome, with a sparsity penalty imposed to preserve the parsimoniousness of the resulting rule, while most works considered and recommended rules from a linear functional class.

Unfortunately, it has been well known that the RCT may have limited generalizability when the distribution of treatment effect modifiers in trial participants differs from the one in a target population (Buchanan et al., 2016). A parsimonious treatment rule constructed from trial data using current methods cannot be directly carried over to a target population, when the population characteristics are different between the two. Some attempts have been made to adjust population difference using inverse probability-of-selection weight method (Cole and Stuart, 2010). This, however, requires complete knowledge of population selection mechanism, and it is yet to be extended to the context of developing optimal treatment rules.

In this paper, we aim to robustify the treatment rule from a trial so that the robustified rule is (1) parsimonious (linear in patient features in particular); (2) still maximizes a general benefit criterion when applied to the target population. We provide a new framework, called minimax linear decision (MiLD), to robustify the treatment rule. MiLD enables the construction of a linear rule that optimizes the general benefit function in the target population, allowing differences between the target and the trial populations in the means and covariances in treatment effect modifiers. It can be further extended to construct nonlinear rules using the ‘kernel trick’, which avoids the explicit feature mapping but only relies on a kernel function to learn a nonlinear decision boundary. Regardless, MiLD requires no further assumptions beyond the first two moments of the covariate distributions. Moreover, our developed framework is applicable to all types of outcomes.

The remainder of the paper is organized as follows. In Section 2, we formulate the problem of finding a robust and parsimonious treatment rule using data from a clinical trial. The proposed method, MiLD, is then developed to optimize a general benefit function in the target population, allowing the covariate distribution to be different between the two populations. Consistency and convergence rate results are established for the proposed method. We present simulation studies to evaluate performances of the proposed method in Section 4. We further illustrate the method using a data example from a US NIH funded SWOG trial on castration-resistant prostate cancer patients in Section 5. The proofs of theoretical results are given in the Appendix.

2. Methodology

2.1. Background and the optimal treatment rule

Let T denote the outcome of interest, A ∈ {−1, 1} denote a binary treatment, and X = (X1, …,Xm)T ∈ ℝm denote patient covariates. We assume the dimension is small to moderate, and fixed. The outcome could be a continuous, discrete, or time-to-event outcome subject to censoring. A treatment regime is a function mapping from the space of X to the space of treatments. A future patient with X = x is then assigned with treatment d(x). Let T(a) denote the potential outcome under treatment a ∈ {−1, 1} (Rubin, 1978), and T(d) = T{d(X)} be the potential outcome under the rule d for the given covariate X. The quality of d can be measured by the marginal mean outcome E{T(d)}, which represents the trial population mean outcome were all patients in the trial population to receive treatment according to d. We denote this quantity as , which is also called the value function of d. The optimal treatment regime d* maximizes over all possible d. We assume that in the trial,

-

(A1)

P(A = a|X) > 0 with probability one for a ∈ {−1, 1};

-

(A2)

{T(−1),T(1)} are independent of A conditional X;

-

(A3)

consistency so that .

Since , where the outer expectation EX(·) is taken with respect to the marginal distribution of X in the trial population, the optimal treatment for any patient with characteristics x is d*(x) = argmaxa∈{−1,1}E{T(a)|X = x} when there is no restriction of the functional form of the rule. These assumptions are typically standard and satisfied in randomized clinical trials (Robins et al., 2000), because application of the intervention to any individual is under the control of the investigator. This is however, not guaranteed in observational studies. For example, consistency assumption can be violated given that there could be variations of treatment, and each of them may have a different causal effect on the outcome. In addition, noncompliance can be severe in observational studies, where some subjects may never take what they are asked to take. If (A1)-(A3) are satisfied, the optimal treatment rule is

To assess the overall benefit of the obtained rule when applied to the target population, we let pX(x) denote the distribution of X in the trial population, and qX(x) denote the distribution of X in the target population, which is not necessarily the same as pX(x). Furthermore, we assume

-

(A4)

the support of qX(x) is contained in the support of pX(x), i.e., there is no under-coverage in the trial study;

-

(A5)

the potential outcome mean given X is the same between the trial population and the target population. Thus, the treatment works the same in both populations.

Thus, the only difference between the two populations is due to the covariate distributions. Let Pq denote the distribution of X in the target population and Pp denote the distribution of X in the trial population. Let denote the expectation is taken with respect to the distribution of X in the target population. Under (A4) and (A5), it is easy to observe that the expected outcome of rule d(X) for the target population is

where EX[·] denotes the expectation under X ~ pX(x). Consequently, the optimal rule obtained from the trial population, d*, is also the optimal for the target population if there is not any restriction to the formulation in d*.

2.2. A General Quality Value of Treatment Rules for the Target Population

We propose a general criterion assessing the quality of a decision rule for the target population, which includes both the value function and the correct allocation rate to the optimal rule as special cases. For a given rule d(X), the proposed quality assessment in the target population is defined as

where W(X) is a non-negative function, essentially a reward if the treatment rule d(x) is the optimal.

The quality value in the definition has a different interpretation depending on the choice of W(x). For example, let W(x) = W1(x) = E{T|A = d*(x),X = x}−E{T|A ≠ d*(x),X = x} = |f*(x)|. That is, for subject with covariate X = x, W(x) is the gain if he/she follows treatment rule d*. achieves optimal if d = d*. This is because . Hence, maximizing is equivalent to maximizing the value function in the target population. If we set W(X) = W2(X) ≡ 1, then and the criterion corresponds to the correct allocation rate of the optimal treatment. Additionally, in practice, W(X) could allow more general trade off between the benefit of optimal treatment and the relative cost of giving optimal treatment to patients instead of standard care. In this paper, we will focus on the choice of W = W1 and W2.

2.3. Learning Robust Linear Rules for the Target Population

In many practices, since the set of enforceable treatment rules are usually restrictive and cannot be arbitrary, a parsimonious and interpretable decision rule is preferred. In particular, we focus on a linear decision rule, i.e., d(x) = sign{f(x)} where f(x) = xTβ1 +β0. A patient x is assigned to treatment 1 if xTβ1 + β0 ≥ 0 and treatment −1 otherwise. For example, let the potential outcome model be . The optimal rule d*(x) = 1 if 3x/4 + sin(x)/4 − 1 ≤ 0 or 3x/4 + sin(x)/4 − 3 > 0, and d*(x) = −1 otherwise. Consider the space of linear decision rules with Therefore d*(x) is highly nonlinear, and Assume that X ~ N(2, 1) and X ~ N(4, 1) in the trial and target population, respectively. Then the optimal linear rule is using the trial data. However, sign(−X +0.28) should be the optimal linear rule in the target population, which lead to a benefit of 0.997, versus a benefit of −0.387 using the optimal rule derived from the trial data. Hence, the optimal linear rules could be substantially different between the two populations.

We should note that unless the true optimal rule is linear itself, once restricted to such a class of linear rules, the optimal rule within this class may no longer be the optimal for the target population, since both pX(x) and qX(x) can be very different. It is thus desirable to guarantee that is not small, regardless of X and which treatment is optimal; and ideally, the larger the better. To this end, we propose the following method, namely, Minimax Linear Decisions (MiLD). Note that

We introduce a lower bound α on , which represents the expected benefit that would have been obtained if the patients were to receive treatment j, whose optimal treatments would indeed be j in the target population. Hence, α controls the worst case overall benefits for each group, and we set the same lower bound for both quantities for simplicity. We then consider the following optimization problem:

| (1) |

where f1 and f−1 are the density of X in patients whose optimal treatment are 1 or −1 respectively. That is, we want to guarantee the quality of being given optimal treatment as large as possible among those who should indeed be treated with the same treatment.

Let denote the density of X for patients with d*(X) = j, j = ±1 in the target population. Then

where the density of X† is proportional to Hence, (1) is equivalent to

| (2) |

where and are the density of X† in patients with optimal treatment being 1 or −1 respectively.

However, and could be very different, and difficult to characterize based on the trial data. We propose to quantify the difference between the target population and the trial population in terms of the first two moment conditions, without making any specific distributional assumptions for the two populations. In other words, the covariate moments of X† in patients with d*(x) = j, j = ±1 in the target population could be different from that of the trial population to some degree.

Suppose that the means of covariate X† are , and intraclass covariance matrices are for patients with d*(X) = j in the target population, and and , j = ±1 respectively, in the trial population under the new density. We assume that the quantities in the target population belong to

| (3) |

Here, ν ≥ 0 and ρj ≥ 0 are known constants, and ∥ · ∥F is the Frobenius norm defined as . Such conditions define the closeness of the target population to the trial population. suggests that the mean in the target population belongs to an elliptical region around with shape determined by the covariance , j = ±1. The covariance matrix , centered around , can also vary to certain degrees. Clearly, the larger ν or ρj, the more different the two populations are. (2) is consequently written as

| (4) |

This yields a linear decision rule that safeguards against the possible difference of the distribution of X between the trial and the target populations. Such robustness of the treatment regime estimation is mainly due to the minimum requirements on the means and covariances. The above objective function has a similar form to the minimax probability machine techniques developed in Lanckriet et al. (2003), and their techniques for deriving the optimal linear rule can be employed if , were known. The key step is to recognize that the condition holds if and only if

| (5) |

by applying the generalized Chebychev inequality (Marshall and Olkin, 1960), where (Lemma 1, Lanckriet and others (2003)). The constraint (5) is imposed on the distance with respect to the mean of the covariates within the class, taking into account the effect of the covariance matrices, which could be representable of the class. In other words, we search a line such that the normalized margin between the means of classes is as large as possible. Consequently, (4) is equivalent to

As shown in the Appendix, a further simplified objective function can be obtained as

| (6) |

subject to . We can eliminate the equality constraint in (6) by letting β1 = β10 + Fu, where u ∈ ℝp−1, is a p × (p − 1) matrix. Let h denote the position of the maximum element in . F is constructed from a (p − 1) × (p − 1) identity matrix, with inserted into the hth row. We can see that F is an orthogonal matrix whose columns span the subspace of vectors orthogonal to . Hence, the optimization problem can be written as

The lower bound on the worst case allocation rate α* = (κ* − ν)2/{1 + (κ* − ν)2}, and ν should not exceed κ*. Here, and ν is defined in (3). More details can be found in the Appendix.

Remark 1 Although the MiLD is proposed to identify a robust linear rule, it can be easily generalized using nonlinear kernel functions. We seek a decision rule in the form of f(x) = φ(x)Tβ1 + β0, where φ(x) is a set of basis functions. The kernelization of the proposed approach is possible because the objective function (6) can be expressed in terms of inner products between different X′s. Hence, the objective and constraint can be expressed in terms of inner products of φ(X). Subsequently, we can compute the robust nonlinear rule by slightly modifying the algorithm.

2.4. Estimating robust rules using empirical data

To solve for the minimax linear decisions given the observed data from a clinical trial, we first need to estimate , which depends on both the optimal treatment rule d*(x) and the weight W(X). A three-step procedure is outlined below.

Step 1. Estimate d*(x) using a nonparametric method with the trial data, denoted by .

Step 2. Estimate using the initial estimate , denoted by .

Step 3. Implement MiLD based on the estimated .

In Step 1, there are several ways to estimate d*. Indirect estimation methods model the response as a function of X and A, and select treatment, which maximizes the predicted mean outcome (Qian and Murphy, 2011). Since a consistent estimator is required to guarantee the performance, a flexible nonparametric method in Step 1 is preferred. For example, support vector machine and support vector regression can be used to estimate E(T|X,A) for binary and continuous outcomes respectively. Alternatively, outcome weighted learning proposed in Zhao et al. (2012) circumvents the two-step procedure by directly optimizing the value function. We can employ a kernel function to induce nonlinearity in obtaining the initial estimator .

In a cancer clinical trial, it is common that the primary endpoint is survival time that subjects to censoring. Given that d*(x) is invariant over the covariate distribution, we suggest utilizing flexible nonparametric machine learning methods. In particular, we will use the random forest survival tree method (Ishwaran et al., 2008) to estimate E(T|X,A), an ensemble tree method that extended random forest method (Breiman, 2001) for analysis of right-censored survival data. It uses independent bootstrap samples to grow trees by randomly selecting a subset of variables at each node and splitting the node using a survival criterion adjusting for censoring status. The ensemble estimated cumulative hazard function is the average of the Nelson-Aalen estimator for each case’s terminal node. Easy-to-use software is available on R CRAN (https://cran.r-project.org). We can estimate E(T|X,A) correspondingly via . Then , where .

To estimate with a general weight W(x), we treat it as a multiplicative adjustment to X where we now sample from a density proportional to pX(x)W(x) instead of pX(x). This motivates us to employ techniques from importance sampling to estimate . In particular, can be estimated by

and can be estimated by

In our case, we choose

or W(X) = W2(X) ≡ 1.

Provided with the estimated , we solve for the robust linear treatment regimes in Step 3 using the procedure outlined in Section 2.3. In particular, estimates will be plugged in for and and related quantities. We obtain and accordingly.

2.5. Choices of (ν, ρj)

Sometimes pilot data from the target population or the general patient population data are available. We can use this information to choose ρj and ν. First we obtain as an initial estimate of d*(x) using the trial data. Based on this preliminary decision boundary , we can estimate and , denoted as and , for a target population using the pilot data. Then we can set , and , where b is a constant characterizing the potential differences between two population means, as well as the norm of the covariance matrix. If relevant data is not available, we can conduct sensitivity analyses on different combinations of (ν, ρj), and assess how the changes in (ν, ρj) will influence the resulting decision rules and the worst-case allocation rates.

3. Theoretical results

In this section, we will establish some theoretical properties of the proposed MiLD method. Assume that (Xi,Ai, Ti), i = 1, …, n, are i.i.d observations from the trial. Additionally, we assume that

Assumption 1

All covariates are bounded such that |X| ≤ M.

Assumption 2

Let be the unique solution to (1). lies in the interior of a compact set B.

We assume the following condition on f*(x) = E(T|X = x,A = 1) − E(T|X = x,A = −1):

Assumption 3

Margin condition: there exist K1, γ > 0 such that for all t > 0

Assumption 4

converges to fm, which could be different from f*. The convergence rate of estimated to fm satisfies , where ∥f∥2 = E{f(X)2}1/2 and the expectation is taken with respect to the distribution in the trial data.

Assumption 5

The difference of the covariates is uniformly bounded

for some constant CX > 0.

Assumption 6

Let be the estimate of f*(x) using the Step 1 data except that the ith observation (xi, ai, ti) is replaced by an independent observation . We assume that

for some constant ρ.

Assumption 3 is an analogue of the well-known margin condition (Tsybakov, 2004), which is commonly used to characterize the noise around the decision boundary in a binary classification problem. Here, the assumption describes the distribution of f*(X) when X is near the boundary {x : f*(x) = 0} in the target population, which usually contains more noise, and γ controls the size of these regions. Particularly, larger values of γ mean that the two treatment effects are less likely to be similar, and it is easier to distinguish patients who would have benefited from one treatment from those who would have benefited from the other. Usually, γ ∈ [0,m] for a smooth contrast function f*(x), unless f*(x) does not cross 0 at any point, i.e., all the patients benefit from one treatment (Audibert et al., 2007). For example, if X ~ Uniform[−1, 1], P(A = 1|X = P(A = −1|X) = 1/2) and E(T|X,A) = XA, then γ = 1. The bounded covariate assumption, Assumption 5, is satisfied in most real applications. Assumption 6 indicates that small changes in the data will only lead to small changes in the estimates. For example, if we use Cox regression with nonlinear basis functions, or nonparametric kernel estimators, this condition will be satisfied (Devroye et al., 2013). The following results are proved in the supplementary materials.

Theorem 1

Under the Assumptions 1-6, it holds that .

The first term reflects the approximation error due to the initial estimator . If is a consistent estimator of f*, where f* = fm, then the first term will disappear. The other terms bound the stochastic error, which arises from the variability inherent in a finite sample size, which captures the efficiency loss due to the first stage estimation. As an example, if we use random forest to estimate the required quantities, rn = n−θ with under some mild assumptions, where S denotes the number of strong features used in the estimating process (Biau, 2012). The theorem indicates that the convergence rate of the estimated linear treatment rules depends on the the initial treatment rule, and the behavior of f*(x) in the neighborhood of the boundary. For example, if a kernel estimator is used, the optimal rate of rn would be n−2/(m+4), and the conclusion rate is n−4γ/(m+4)(γ+2).

4. Simulation Studies

We conduct extensive simulations to evaluate the proposed methods. In all scenarios, the dimension of the covariate space is 10. Binary treatments A are generated from {−1, 1} with equal probability. Three different scenarios are presented, with outcomes generated as follows.

Scenario 1. We simulate the first half of patients from X1,X2 ~ N(1, 1),X3, …, X10 ~ N(0, 1) and the second half of patients with X1, …, X10 from N(0, 1). The survival time is the minimum of τ = 0.5 and , where is generated with

Here, c(X) = 1 for the first half of patients and c(X) = −1 for the other half, and is generated from an exponential distribution with mean 1. Censoring time C is generated from Uniform[0,1]. The censoring percentage is around 24%. The optimal decision boundary is d*(X) = sign(X1 + X2 − 1).

Scenario 2. X1, …, X10 are generated from N(0, 1). The survival time is the minimum of τ = 4 and , where is generated with the hazard rate function

Censoring time C is generated from Uniform[0, 5]. The censoring percentage is around 42%. The optimal decision boundary is d*(X) = −sign{2X1 + 3(X2 + 1)2 − 2}.

Scenario 3. X1, …, X10 are generated from N(0, 1). The survival time is the minimum of τ = 4 and , which is generated with

Censoring time C is generated from

The censoring percentage is around 51%. The optimal decision boundary is .

We also consider settings where there is a mismatch between the trial participants and the target population, and we will denote these mismatch scenarios as Scenarios 1’, 2’, and 3’ respectively. In Scenario 1, half of the patients will gain benefits from treatment 1 in both populations. We modify it in Scenario 1’ such that the proportion of patients with d*(x) = 1 is 1/3 in the trial population, and 1/2 in the target population. In Scenario 2’, we let the covariate distribution in the trial data with X1 ~ N(−0.25, 1.5) and other covariates following N(0, 1.5), which are different from the distribution in the target population with all covariates generated from N(0, 1). Eligibility criteria are usually applied for the trial recruitment, and thus trials might selectively enroll patients from the target population. Instead of randomly choosing patients for participation in the trial, patients are selectively enrolled with certain probability, denoted by π(X), into the trial data. In Scenario 3’, the model for the enrollment is , where logit(t) = log{t/(1−t)}. Hence, covariates predictive of participation in the trial could be predictive of treatment effects, where patients with d*(x) = 1 are less likely to participate in the trial. Subsequently, the covariate distributions in trial data and the target population are not the same.

We hope to discover a simple linear decision rule of high quality even if the true treatment effect might be nonlinear. MiLD will be evaluated for this purpose. In this paper, we utilize random survival forest to obtain the initial estimate of d*(x), but other methods can also be applied. Then we apply both and W2(x) ≡ 1 in MiLD, targeted to optimize the value and the allocation rate to the optimal rule, respectively. We denote them as MiLD-V and MiLD-P. The results might be affected with possible deviations between the target and the trial populations. When we do not have prior information on the target population, we can vary (ν, ρj) to evaluate the resulting changes. As suggested in Section 2.5, we set , . Here, is the Frobenius norm of and b is a constant that reflects the deviations relative to the covariance matrix norms and the means, in the trial populations. We consider two cases with b = 0 and b = 0.1 for both MiLD-V and MiLD-P.

We compare the proposed methods to the following two approaches.

OWL (outcome weighted learning): we find the best linear decision rule by directly targeting maximizing the overall expected outcome (Zhao et al., 2012).

COX: we find the linear decision rule based on a Cox regression model, where interactions between X and A are included.

All methods are performed by deriving the best linear treatment rules using trial data. We then calculate the misclassification rate under the estimated rules via Monte Carlo methods. Specifically, a large testing dataset of 10,000 from the target population is generated. Training datasets representing the trial population are repeatedly simulated, and each time the rules yielded by various methods are evaluated on this testing set. Different training data sample sizes n = 250,500 and 1000 are considered. We report the average misallocation to non-optimal treatments over 500 replicates. We also report the expected overall survival of the estimated rules using the derived treatment rules by different methods in the supplementary materials.

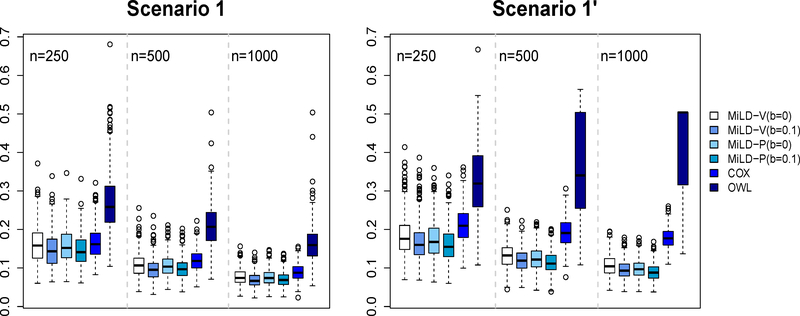

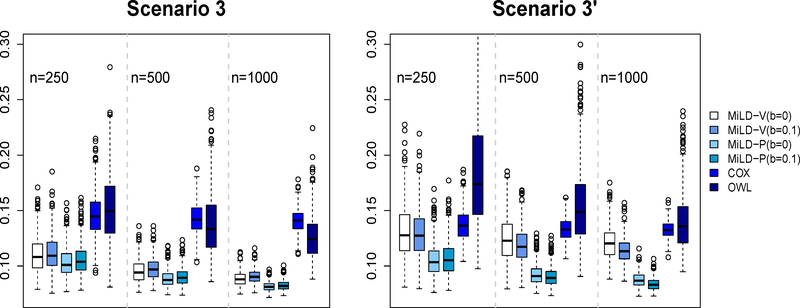

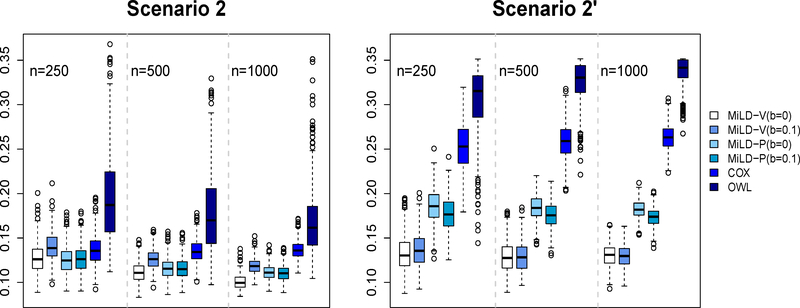

The results in Figures 1–3 present evidence that the proposed methods perform well compared with the other methods. The Cox regression method relies on model fitting, and thus many subjects are recommended the wrong treatments by the resulting linear decision rule, labeled as misallocation rates in Figures 1 - 3. Even in Scenarios 1 and 1’ where the optimal treatment rule is linear, the non-proportional hazards model negatively impacts the performance of Cox regression. The OWL method in general leads to a larger variability. On the other hand, both MiLD-V and MiLD-P perform well throughout, and the results are insensitive to the value of b. The limits of misallocation rates that MiLD-V and MiLD-P converge to are the lowest among all competitors. It can be seen that when the trial population is not representative as illustrated in Scenarios 1’, 2’ and 3’, MiLD-V and MiLD-P are robust to the bias, and they yield favorable results. We note that the results in MiLD with b = 0.1, which allows a larger deviation between the trial and the target population, give a slightly better result most of the time in these cases. We also illustrate the efficiency loss due to the first stage estimation of f*(x) numerically. Details can be found in supplementary material.

Figure 1:

Misallocation rates in Scenarios 1 and 1’ using trial data with sample sizes varying from 250 to 1000. The misallocation rate is evaluated by using 500 Monte Carlo repetitions.

Figure 3:

Misallocation rates in Scenarios 3 and 3’ using trial data with sample sizes varying from 250 to 1000. The misallocation rate is evaluated by using 500 Monte Carlo repetitions.

5. Data Analysis

Prior studies suggest that elevated markers of bone turnover are prognostic for poor survival in castration-resistant prostate cancer, but their predictive value for the bone-targeted therapy has not been fully investigated. We illustrate the proposed methods using data from SWOG 0421 study, a North American Intergroup phase III trial (participants: SWOG, Eastern Cooperative Oncology Group, Cancer and Leukemia Group B/Alliance) for men with metastatic castration-resistant prostate cancer. They were randomly assigned in a blinded fashion in a 1:1 ratio to docetaxel administered every 21 days at a dose of 75 mg/m2 with or without the bone-targeted oral agent atrasentan taken daily for up to 12 cycles (Quinn et al., 2013; Lara et al., 2014). S0421 enrolled 1038 eligible patients; of these, 855 submitted serum for the bone biomarkers and 778 patients had usable specimens at baseline. After removing missing observations, the sample size for our analysis is 751, where 371 patients are in the docetaxel + atrasentan arm and 380 patients are in the docetaxel + placebo arm.

Co-primary endpoints in this study were progression-free survival and overall survival. In our analysis, we use overall survival as our outcome, which is truncated at the end of the study, and could be censored due to loss of follow-up. We use 10 baseline covariates to inform the optimal treatment rule, including age (range 40–92), serum prostate-specific antigen (PSA, range 0.1–10414.1), indicator of bisphosphonate usage (61%), indicator of metastatic disease beyond the bones (55%), indicator of pain at baseline (60%), indicator of performance status (2–3 versus 0–1, 56% 2–3), and bone marker levels. Four bone markers are measured, including Bone alkaline phosphatase (BAP, range 1.9–1761.0 u/L), C-terminal of type 1 collagen (CICP, range 1.4–273.6 ng/mL), N-telopeptides of type 1 collagen (NTx, range 1.4–480.0 nM) and pyridinoline (PYD, range 0.3–15.0 nmol/L). The distribution of bone marker concentrations and serum PSA were skewed with a wide range; therefore, we use log transformation for these variables. All covariates are then standardized for analysis. We intend to find the optimal linear treatment rule that is robust to a potential difference between the trial population and the future population.

We compare the proposed methods with Cox regression and OWL method. Practitioners often directly generalize the results from a clinical trial to the general patient population. However, It is not uncommon that the participants in clinical trials are in general more healthier than the patient population, due to the restrictions on patient eligibility. Hence, in our data analysis, we mimic this phenomenon by changing the distribution of healthier patients in the trial population and the target population. We categorize patients to healthier patients whose serum PSA is below the median level and sicker patients whose serum PSA is above the median level. We employ a cross-validated type analysis. At each run, we partition the whole data set into 5 pieces, where 2 parts of the data are used as training data to estimate the optimal rules, and the remaining part as the validation set for evaluating the estimated rules. Specifically, each training data set consists of 2/3 healthier patients and 1/3 sicker patients; on the other hand, each validation set contains 1/3 healthier patients and 2/3 sicker patients. Thus, there is a substantial discrepancy between the training set and the validation set, which represents the trial population and the target population, respectively. The cross-validated values are obtained by averaging the empirical value on all 3 validation subsets. To adjust for censoring when calculating the empirical values, we use inverse probability of censoring weighting techniques, where the empirical value for a treatment decision rule d is calculated by with equaling to We use a kernel estimator developed in Li et al. (1999) to obtain which does not require a model assumption. The procedure is repeated 200 times. The averages and standard errors of these values are reported in Table 1, where a larger value corresponds to a longer expected survival time. The results show better performances of both MiLD-V and MiLD-P procedures compared to other methods. MiLD-V performs the best perhaps because it targets to optimize the value directly.

Table 1:

Mean (s.e.) cross-validated values (days)

| COX | OWL | MiLD-V | MiLD-P | ||

|---|---|---|---|---|---|

| b = 0 | b = 0.1 | b = 0 | b = 0.1 | ||

| 749.2 (69.8) | 711.1 (71.2) | 764.6 (70.2) | 765.0 (68.9) | 753.5 (69.4) | 753.7 (69.4) |

“s.e.” denotes standard errors.

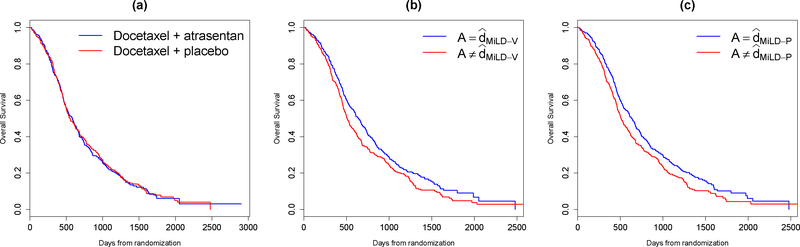

We then apply the proposed methods to the whole data set. The coefficients in the treatment decision rule recommended by MiLD-V and MiLD-P are presented in Table 2. The results yielded by MiLD-V and MiLD-P are close, where 80% of the patients receive the same treatment recommendation. In Figure 4(a), we compare the Kaplan–Meier curves for overall survival between two treatment arms. There does not appear to be separation. However, when comparing the group whose treatment assignments were in accordance with the treatments recommended by MiLD-V or MiLD-P with the other group, the Kaplan–Meier survival curves show a clear separation, as shown in Figures 4(b) and (c).

Table 2:

Coefficients for the estimated linear decision rules by MiLD-V and MiLD-P using the SWOG0421 data

| MiLD-V | MiLD-P | |

|---|---|---|

| Intercept | 0.021 | 0.037 |

| Age | 0.538 | 0.150 |

| Baseline serum PSA | −0.510 | −0.734 |

| Bisphosphonate usage (YES = 1) | 0.787 | 1.235 |

| Metastatic disease beyond the bones (YES = 1) | −0.301 | 0.008 |

| Pain (YES = 1) | −0.272 | 0.050 |

| Performance Status (‘2–3’ = 1) | 0.515 | 0.796 |

| BAP | 0.382 | 0.972 |

| CICP | −0.023 | 0.302 |

| NTx | 0.137 | 0.657 |

| PYD | −0.339 | −0.326 |

Figure 4:

Kaplan–Meier survival curves of overall survival in castration-resistant prostate cancer patients: (a) by treatment received; (b) by accordance between treatment recommended by MiLD-V and treatment received; (c) by accordance between treatment recommended by MiLD-P and treatment received.

6. Discussion

In practice, it is preferable to use interpretable decision rules when communicating with clinical practitioners about treatment recommendation. A canonical example is linear decision rule, which is attractive because it can be easily understood, and thus can be used to guide future research. Our present work shows that it is possible to identify high-quality linear decision rule that leads to a greater overall benefit, even if the truth may be nonlinear. Furthermore, the proposed method is robust across future populations, taking into account the fact that the study sample may not be representative.

We consider survival time outcomes in this paper. Such endpoints are critical in many settings especially in oncology. However, MiLD can be readily applied for binary or continuous outcomes, provided that we can use existing nonparametric methods to obtain preliminary estimates of the decision boundaries. Popular methods for binary outcomes include support vector machine and boosting (Hastie et al., 2009), and for continuous outcomes, we can apply random forest (Breiman, 2001) and support vector regression (Vapnik et al., 1997). Our current proposal suggests an L2 penalty for handling high-dimensional covariates. However, this does not provide sparse solutions. In certain circumstances, several important variables characterize the optimal treatment rules, where the means and covariance matrices of those unimportant variables do not matter. It would be interesting to develop robust methods that conduct variable selection simultaneously, which would eliminate the unimportant variables and further improve the ease of interpretation. Another interesting extension of the current work is to consider settings involving more than two treatments. While it is straightforward to conduct a series of pairwise comparisons, further development is required to identify the best rule among all treatments. It will also be interesting to investigate extensions to Boolean combination of linear rules, i.e., rules of the form {X1β11 + β01 ≥ 0} ∩ {X2β21 + β02 ≥ 0}.

Supplementary Material

Figure 2:

Misallocation rates in Scenarios 2 and 2’ using trial data with sample sizes varying from 250 to 1000. The misallocation rate is evaluated by using 500 Monte Carlo repetitions.

7. Appendix

Solving minimax linear decisions

It follows from the generalized Chebychev inequality (Marshall and Olkin, 1960) that (2) is equivalent to

where As shown in Lanckriet et al. (2003), and where Ip is the identified matrix. Recall that , We have , and We thus further rewrite the optimization problem to

We will assume that otherwise, the above problem is not identifiable. We also assume that are both positive definite. Since κ(α) is a monotone increasing function of α, we can rewrite our problem as

and these inequalities will become equalities at the optimum. We can eliminate the equality constraint by letting β1 = β10 + Fu, where u ∈ ℝp−1, and F ∈ ℝp×(p−1) is an orthogonal matrix whose columns span the subspace of vectors orthogonal to Hence, the optimization problem can be written as in (2). The optimal

where κ* is the optimal value of κ with The lower bound on the worst case allocation rate α* = (κ* − ν)2/{1 + (κ* − ν)2}, and ν should not exceed κ*.

Proof of Theorem 1

We first show that and where and For the convergence rate in means,

We will use McDiarmid’s inequality to bound (I).

where Mρ depends on M,CX and ρ. The last inequality follows from Assumptions 1, 5 and 6.

By McDiarmid’s inequality,

Then with probability ≥ 1 − δ,

| (7) |

For (II), by Cauchy-Schwaz inequality and the boundedness of X,

where the last step follows from Assumption 4. For (III),

For (IV),

where ∥f∥2 = E{f(x)2}1/2. Choosing the optimal we have that

| (8) |

Combining the above results, we obtain that with probability ≥ 1 − δ,

Therefore, is .

We then consider the bound on . Let and and . With probability ≥ 1 − δ,

Now we prove Theorem 1’s result. Define

and

where we use the estimated and in M(u). Since u belongs to a compact set, and in probability. Given that M(u) has a unique maximizer and u is in a compact set, we have Hence the conditions of Theorem 5.7 of van der Vaart (1998) are satisfied, and it follows that in probability, and subsequently in probability.

We now show the convergence rate of Given that u ↦ M(u) is twice differentiable at u*, we have M(u) − M(u*) ≲ −c∥u − u*∥2 for all u in the neighborhood of u* and some c > 0, where u* is the unique maximizer of M(u). Since and ,

Thus,

When is decreasing for any η ∈ (1, 2). In addition, in probability, and maximize Mn(u). The conditions of Theorem 14.4 in Kosorok (2008) are satisfied with Hence, and the desired result follows.

References

- Audibert J-Y, Tsybakov AB, et al. “Fast learning rates for plug-in classifiers.” The Annals of statistics, 35(2):608–633 (2007). [Google Scholar]

- Biau G “Analysis of a random forests model.” Journal of Machine Learning Research, 13(Apr):1063–1095 (2012). [Google Scholar]

- Breiman L “Random forests.” Machine learning, 45(1):5–32 (2001). [Google Scholar]

- Brinkley J, Tsiatis A, and Anstrom KJ “A generalized estimator of the attributable benefit of an optimal treatment regime.” Biometrics, 66(2):512–522 (2010). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Buchanan AL, Hudgens MG, Cole SR, Mollan K, Sax PE, Daar E, Adimora AA, Eron J, and Mugavero M “Generalizing evidence from randomized trials using inverse probability of sampling weights.” Pharmacy Practice Faculty Publications, Paper 77 (2016). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cole SR and Stuart EA “Generalizing Evidence From Randomized Clinical Trials to Target Populations The ACTG 320 Trial.” American journal of epidemiology, 172(1):107–115 (2010). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Devroye L, Györfi L, and Lugosi G A probabilistic theory of pattern recognition, volume 31 Springer Science & Business Media; (2013). [Google Scholar]

- Goldberg Y and Kosorok MR “Q-learning with censored data.” Annals of statistics, 40(1):529 (2012). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Greenland S “Randomization, statistics, and causal inference.” Epidemiology, 1(6):421–429 (1990). [DOI] [PubMed] [Google Scholar]

- Hamburg MA and Collins FS “The path to personalized medicine.” New England Journal of Medicine, 363(4):301–304 (2010). [DOI] [PubMed] [Google Scholar]

- Hastie T, Tibshirani R, and Friedman JH The Elements of Statistical Learning. New York: Springer-Verlag New York, Inc., second edition (2009). [Google Scholar]

- Hayes DF, Thor AD, Dressler LG, Weaver D, Edgerton S, Cowan D, Broadwater G, Goldstein LJ, Martino S, Ingle JN, et al. “HER2 and response to paclitaxel in node-positive breast cancer.” New England Journal of Medicine, 357(15):1496–1506 (2007). [DOI] [PubMed] [Google Scholar]

- Hernán MA and Robins JM “Estimating causal effects from epidemiological data.” Journal of epidemiology and community health, 60(7):578–586 (2006). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Huang X, Ning J, and Wahed AS “Optimization of individualized dynamic treatment regimes for recurrent diseases.” Statistics in medicine, 33(14):2363–2378 (2014). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ishwaran H, Kogalur UB, Blackstone EH, and Lauer MS “Random survival forests.” The annals of applied statistics, 2:841–860 (2008). [Google Scholar]

- Kang C, Janes H, and Huang Y “Combining biomarkers to optimize patient treatment recommendations.” Biometrics, 70(3):695–707 (2014). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kosorok MR Introduction to empirical processes and semiparametric inference. New York: Springer-Verlag; (2008). [Google Scholar]

- Lanckriet GR, Ghaoui LE, Bhattacharyya C, and Jordan MI “A robust minimax approach to classification.” The Journal of Machine Learning Research, 3:555–582 (2003). [Google Scholar]

- Lara PN, Ely B, Quinn DI, Mack PC, Tangen C, Gertz E, Twardowski PW, Goldkorn A, Hussain M, Vogelzang NJ, et al. “Serum biomarkers of bone metabolism in castration-resistant prostate cancer patients with skeletal metastases: results from SWOG 0421.” JNCI: Journal of the National Cancer Institute, 106(4) (2014). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Li K-C, Wang J-L, Chen C-H, et al. “Dimension reduction for censored regression data.” The Annals of Statistics, 27(1):1–23 (1999). [Google Scholar]

- Marshall AW and Olkin I “Multivariate chebyshev inequalities.” The Annals of Mathematical Statistics, 31(4):1001–1014 (1960). [Google Scholar]

- Orellana L, Rotnitzky A, and Robins JM “Dynamic regime marginal structural mean models for estimation of optimal dynamic treatment regimes, part I: main content.” The International Journal of Biostatistics, 6(2) (2010). [PubMed] [Google Scholar]

- Qian M and Murphy SA “Performance Guarantees for Individualized Treatment Rules.” The Annals of Statistics, 39:1180–1210 (2011). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Quinn DI, Tangen CM, Hussain M, Lara PN, Goldkorn A, Moinpour CM, Garzotto MG, Mack PC, Carducci MA, Monk JP, et al. “Docetaxel and atrasentan versus docetaxel and placebo for men with advanced castration-resistant prostate cancer (SWOG S0421): a randomised phase 3 trial.” The Lancet Oncology, 14(9):893–900 (2013). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Robins JM, Hernán MÁ, and Brumback B “Marginal structural models and causal inference in epidemiology.” Epidemiology, 11(5):550–560 (2000). [DOI] [PubMed] [Google Scholar]

- Rubin DB “Bayesian Inference for Causal Effects: The Role of Randomization.” The Annals of Statistics, 6:34–58 (1978). [Google Scholar]

- Tsybakov AB “Optimal aggregation of classifiers in statistical learning.” Annals of Statistics, 32:135–166 (2004). [Google Scholar]

- van der Vaart A Asymptotic Statistics. New York: Cambridge University Press; (1998). [Google Scholar]

- Vapnik V, Golowich SE, and Smola A “Support vector method for function approximation, regression estimation, and signal processing.” Advances in neural information processing systems, 281–287 (1997). [Google Scholar]

- Zhang B, Tsiatis AA, Laber EB, and Davidian M “A robust method for estimating optimal treatment regimes.” Biometrics, 68(4):1010–1018 (2012). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Zhao Y-Q, Zeng D, Laber EB, Song R, Yuan M, and Kosorok MR “Doubly robust learning for estimating individualized treatment with censored data.” Biometrika, 102(1):151–168 (2015). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Zhao YQ, Zeng D, Rush AJ, and Kosorok MR “Estimating Individualized Treatment Rules using Outcome Weighted Learning.” Journal of American Statistical Association, 107:1106–1118 (2012). [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.