Abstract

The goal of this paper is to give confidence regions for the excursion set of a spatial function above a given threshold from repeated noisy observations on a fine grid of fixed locations. Given an asymptotically Gaussian estimator of the target function, a pair of data-dependent nested excursion sets is constructed that are sub- and super-sets of the true excursion set, respectively, with a desired confidence. Asymptotic coverage probabilities are determined via a multiplier bootstrap method, which requires neither Gaussianity of the original data nor stationarity or smoothness of the limiting Gaussian field. The method is used to determine regions in North America where the mean summer and winter temperatures are expected to increase by more than 2 degrees Celsius by the mid-21st century.

1. Introduction

Our motivation comes from the following problem. Faced with a global change in temperature within the next century, it is important to assess which geographical regions are particularly at risk of extreme temperature change. The data used here, obtained from the North American Regional Climate Change Assessment Program (NARCCAP) project (Mearns et al., 2009, 2012, 2013), consists of two sets of 29 spatially registered arrays of mean seasonal temperatures for summer (June-August) and winter (December-February) evaluated at a fine grid of fixed locations 0.5 degrees apart in geographic longitude and latitude over North America over two time periods: late 20th century (1971–1999) and mid 21st century (2041–2069). Specifically, the data was produced by the WRFG climate model (Michalakes et al., 2004) using boundary conditions from the CGCM3 global model (Flato, 2005). We would like to determine the regions whose difference in mean summer or winter temperature between the two periods is greater than the 2 °C benchmark (Rogelj et al., 2009, Anderson and Bows, 2011). However, the observed differences may be confounded by the natural year-to-year temperature variability. Can we set confidence bounds on such regions that reflect the year-to-year variability in the data?

In mathematical terms, suppose that for some unknown target function μ : S → ℝ on a spatial domain S ⊂ ℝ^d (in our case the difference in mean temperature between the two time periods), we are interested in the excursion set of μ(s) above a fixed threshold c, defined as Ac := Ac(μ) := {s ∈ S : μ(s) ≥ c}. More specifically, based on an estimate μ̂n(s) of μ(s) we wish to obtain confidence regions Âc⁺ and Âc⁻ for which the probability that

$$\hat{A}_c^{+} \subset A_c \subset \hat{A}_c^{-} \tag{1}$$

holds is asymptotically above a desired level, say 90%.
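On a finite grid, the excursion set defined above is simply the collection of grid points at which the function is at least c. The following minimal sketch illustrates the definition; the function `mu`, the grid and the threshold are hypothetical choices made only for this example.

```python
def excursion_set(mu, grid, c):
    """Return the grid points s with mu(s) >= c."""
    return [s for s in grid if mu(s) >= c]


def mu(s):
    # Hypothetical 1-D target function on [0, 1] with a single bump at s = 0.5.
    return 4.0 * s * (1.0 - s)


grid = [i / 10 for i in range(11)]
A_c = excursion_set(mu, grid, c=0.75)
print(A_c)
```

Since 4s(1 − s) ≥ 0.75 exactly when 0.25 ≤ s ≤ 0.75, only the grid points from 0.3 to 0.7 belong to the excursion set in this toy case.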

We obtain such sets as excursion sets of the estimate and we call them Coverage Probability Excursion (CoPE) sets. Assuming that μ(s) is continuous in the proximity of the level set ∂Ac = {s ∈ S : μ(s) = c} and that the estimator satisfies a functional central limit theorem (CLT), we show that the probability that the inclusions (1) hold is given asymptotically by the distribution of the supremum of the limiting Gaussian random field on the level set ∂Ac.

To apply this general framework to our data, we model the yearly average temperature fields Yi(s), i = 1,...,n, as realizations of a general linear model indexed by s ∈ S,

$$Y(s) = X b(s) + \epsilon(s), \tag{2}$$

with X indicating the two time periods. Setting a simple contrast vector w, the target function μ(s) = wᵀb(s) represents the difference of means to be estimated. For the general model (2) with arbitrary X and w, we show that under proper conditions on the design and the error fields ϵ(s), a least-squares fit at each location s produces a consistent and asymptotically Gaussian estimator, thereby allowing the construction of CoPE sets as described above.

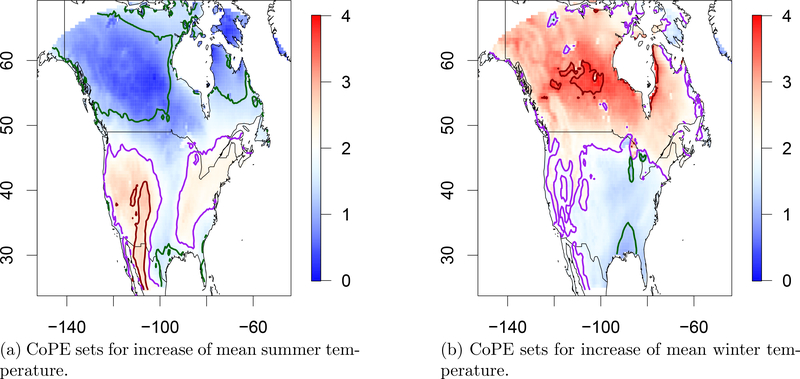

For illustration, Figure 1 shows CoPE sets for the temperature data. We target the region where the mean temperature in the future period (2041–2069) exceeds the mean temperature in the past (1971–1999) by 2 °C or more. With simultaneous approximate probability of 90%, this region is no smaller than the region given by the red boundary and no larger than the region indicated by the green boundary.

Figure 1:

Output of our method for the increase of the mean summer (June-August) and winter (December-February) temperatures. Shown are heat maps of the estimate of the difference. The uncertainty in the excursion set estimate Âc (purple boundary) for c = 2 °C is captured by the inner CoPE set Âc⁺ (red boundary) and the outer CoPE set Âc⁻ (green boundary). The threshold a was obtained according to Theorem 1 to guarantee inclusion with confidence 1−α = 0.9. The horizontal and vertical axes are indexed in degrees longitude and latitude, respectively.

Climate model output is a new high-dimensional type of geostatistical data. With the increasing interest in climate modeling, there is a large amount of data of this type, e.g. from NARCCAP and its European counterpart ENSEMBLES (van der Linden and Mitchell, 2009). Unlike the traditional data setup of spatial statistics, climate model output data is produced at all locations (in practice, on a fine grid) without measurement error (Bukovsky, 2012). In this sense, our setup is more similar to that of population studies in brain imaging, where a difference map between two conditions is estimated from repeated co-located image observations at a fine spatial grid under those conditions (see e.g. Worsley et al. (1996), Genovese et al. (2002), Taylor and Worsley (2007), Schwartzman et al. (2010)). In the discussion we suggest an approach to apply our ideas in the setting of dense discrete observed locations and in the presence of measurement error, inspired by functional data analysis.

The problem of finding the threshold for our CoPE sets involves computation of the quantiles of the supremum of a limiting Gaussian random field. While the general theory is valid whenever a functional CLT can be established for the estimator μ̂n(s), we offer a practical procedure in the case of a linear model. In French (2014) a similar task was performed by Monte Carlo simulation assuming a parametric class for the covariance structure of the field. More generally, for an unknown and typically non-stationary covariance function, as we attempt here, we propose a simple and efficient multiplier bootstrap procedure (Wu, 1986, Härdle and Mammen, 1993, Mammen, 1992, 1993) that does not require estimating the unknown correlation function. The validity of this procedure for very high-dimensional data has been shown by Chernozhukov et al. (2013).

This problem has also been addressed and elegantly solved by Taylor and Worsley (2007) using the Gaussian kinematic formula. However, this method requires that the observations themselves be Gaussian and requires the field to be differentiable. The multiplier bootstrap allows us to avoid both these assumptions while being extremely fast to compute. We compare the finite sample performance of the Gaussian kinematic formula method and the multiplier bootstrap in a simulation.

Related Work

The problem of finding confidence regions for spatial excursion sets (exceedance regions) or level sets (contours), has been studied in other contexts. In the geostatistics literature, the target function is itself a Gaussian field, partially observed at relatively few spatial locations. The limited amount of information available is typically compensated by assuming that the field is stationary, which we do not. The uncertainty on the estimation of the random level contours and excursion sets has been addressed from a frequentist perspective for the former (Lindgren and Rychlik, 1995, Wameling, 2003, French and Sain, 2013) and the latter (French and Sain, 2013), as well as from a Bayesian perspective for both (Bolin and Lindgren, 2014). Incidentally, our techniques share some similarities with French (2014), although we will show that distinguishing between level contours and excursion sets is important.

In non-parametric density estimation and regression the target function is a probability density or regression function, estimated from realizations of a random vector or from observations at a discrete set of locations, respectively. While the estimation of both level sets and contours has been well studied (Tsybakov, 1997, Cavalier, 1997, Cuevas et al., 2006, Willett and Nowak, 2007, Singh et al., 2009, Rigollet and Vert, 2009), there is less literature on confidence statements. Mason and Polonik (2009) showed asymptotic normality of plug-in level set estimates with respect to the measure of symmetric set difference. Mammen and Polonik (2013) proposed a bootstrapping scheme to obtain confidence regions analogous to our CoPE sets from vector-valued samples.

Other settings involving excursions over thresholds (albeit not necessarily excursion sets in our sense) include the following. Extreme value theory studies the occurrence and size of exceedances over high thresholds (Davison and Smith, 1990). The spatial cumulative distribution function (SCDF) of a random field measures the proportion of the area where the field takes values below a certain threshold (Majure et al., 1995, Lahiri et al., 1999, Zhu et al., 2002). In a Bayesian framework, special loss functions have been proposed to obtain estimates of maxima or near-maxima or exceedance regions from the posterior (Wright et al., 2003, Zhang et al., 2008). A related approach is Bayesian hotspot detection (De Oliveira and Ecker, 2002). Scan statistics are widely used to detect hotspots or clusters (Patil and Taillie, 2004, Tango and Takahashi, 2005, Duczmal et al., 2006).

Software

All required functions for computation and visualization of CoPE sets developed in this paper are available in the R-package cope (Sommerfeld, 2015) in R (R Core Team, 2015).

2. Error control for excursion sets - CoPE sets

2.1. Setup

The domain S ⊂ ℝ^N, on which all our functions and processes are defined, is assumed to be a compact (but not necessarily connected) subset of Euclidean space.

Assumption 1. We assume that

(a) every open ball around a point in the contour or level set ∂Ac = {s ∈ S : μ(s) = c} has non-empty intersection with {s : μ(s) > c} and with {s : μ(s) < c}. Further, there exists η0 > 0 such that the target function μ(s) is continuous on the set U = {s ∈ S : |μ(s) − c| ≤ η0}.

(b) the estimators μ̂n(s) are continuous on U for all n ∈ ℕ large enough and there exist a sequence of numbers τn → 0 and a continuous function σ : U → ℝ+ bounded from above and below such that

$$\frac{\hat{\mu}_n(s) - \mu(s)}{\tau_n\, \sigma(s)} \to G(s) \tag{3}$$

weakly in C(U) as n → ∞. Here, G is a Gaussian field on U with mean zero, unit variance and continuous sample paths with probability one.

(c) there is a constant C > 0 such that for every s ∉ U we have

with Zn(s) a sequence of stochastic processes on S such that .(4)

Remark 1. The above assumptions need some explanation.

(a) The first part of Assumption 1 (a) ensures that μ(s) is not flat at the level c, so that ∂Ac is in fact of lower dimension than N, and excludes pathological cases where c is an isolated point in the image of μ(s), so that U = ∂Ac for positive η0. The second part guarantees that μ(s) is continuous wherever it takes values close to c.

(b) Part (b) says that μ̂n(s) needs to be a good estimator of μ(s) around the level c in the sense that it satisfies a functional central limit theorem.

(c) The last assumption concerns the quality of μ̂n(s) away from the level c. It need not be a good estimator there, not even consistent. We merely require that whenever μ(s) is smaller/larger than c, μ̂n(s) is also (eventually, with high probability) smaller/larger than c. This flexibility can be useful when the target function μ(s) is not continuous outside of U, since in this case it might not be possible to find an estimator that is consistent everywhere.

Remark 2.

(a) Assumptions 1 reflect the properties of the temperature data motivating the theory. Notably, the domain in question is disconnected (cf. Figure 1) because of islands, and discontinuities can appear on the boundaries of large lakes.

(b) While we require continuity of the target function around the level of interest c for our theory, simulations suggest (see the supplement) that this assumption is not essential to attain the desired coverage.

(c) In general, Assumptions 1 may include estimators based on an increasing number of repeated observations (as in our data example) or an increasing number of spatial sampling points (as in the spatial statistics and nonparametric regression problems).

Finite Locations

In practice, we might check inclusion only on a subset of locations Dn, e.g. a grid of points, which may change with n. That is, we may want to control the probability that The following quantities of the set Dn will be relevant. Define for every s ∈ S the projections

Note that are functions of the point s. Further, let

Assumption 2. We assume that the sequence of sets Dn ⊂ S is such that and as .

Remark 3.

-

(a)

The quantity controls how dense Dn is around the contour ∂Ac. For example, if Dn is a regular grid with spacing δn, then .

-

(b)

On the other hand, describes the regularity of the function μ around ∂Ac. If μ(s) is Lipschitz continuous with Lipschitz constant L in a neighborhood of ∂Ac then . Hence, in this case, Assumption 2 is satisfied if .

Combining both observations, Assumption 2 holds, for example, if μ(s) is continuously differentiable in a neighborhood of the contour and Dn is a regular grid with spacing .

2.2. CoPE Sets

We will obtain nested estimates by thresholding the surface (μ̂n(s) − c)/(τn σ(s)) as follows:

$$\hat{A}_c^{\pm} := \left\{ s \in S : \frac{\hat{\mu}_n(s) - c}{\tau_n\, \sigma(s)} \ge \pm a \right\}, \tag{5}$$

where a is an appropriate non-negative constant to be determined. Note that Âc⁺ and Âc⁻ are themselves excursion sets. Moreover, for any choice of a ≥ 0 we have the inclusions Âc⁺ ⊂ Âc ⊂ Âc⁻, where Âc = {s ∈ S : μ̂n(s) ≥ c} is the plug-in estimate, and hence the estimates obtained via (5) are nested. We refer to Âc⁺ and Âc⁻ as inner and outer confidence region, respectively. The function (μ̂n(s) − c)/(τn σ(s)) used to define the excursion sets is similar to the test statistic used in French and Sain (2013).
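The thresholding step can be illustrated on a small grid. The sketch below assumes that the thresholded surface is the standardized estimate (μ̂n(s) − c)/(τn σ(s)), which is this example's reading of (5); the function name `cope_sets` and all numerical values are hypothetical.

```python
def cope_sets(mu_hat, sigma, tau_n, c, a):
    """Threshold the standardized estimate at +a, 0 and -a.

    mu_hat and sigma are lists of values on a common grid.
    Returns the index sets (inner, plug_in, outer).
    """
    z = [(m - c) / (tau_n * s) for m, s in zip(mu_hat, sigma)]
    inner = {i for i, v in enumerate(z) if v >= a}
    plug_in = {i for i, v in enumerate(z) if v >= 0.0}
    outer = {i for i, v in enumerate(z) if v >= -a}
    return inner, plug_in, outer


# Hypothetical estimate on seven grid points with unit standard deviation.
mu_hat = [1.0, 1.8, 2.1, 2.6, 3.0, 2.2, 1.5]
sigma = [1.0] * 7
inner, plug_in, outer = cope_sets(mu_hat, sigma, tau_n=0.2, c=2.0, a=1.5)
print(sorted(inner), sorted(plug_in), sorted(outer))
```

Because a ≥ 0, any point passing the threshold +a also passes 0 and −a, so the three sets are nested by construction.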

The following main result shows how the constant a in (5) can be chosen such that the desired inclusions hold with a predefined probability.

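Theorem 1. If Assumptions 1 hold, then

(a)

$$\lim_{n \to \infty} P\left[\hat{A}_c^{+} \subset A_c \subset \hat{A}_c^{-}\right] = P\left[\sup_{s \in \partial A_c} |G(s)| \le a\right],$$

(b) for any subset D ⊂ S,

$$\liminf_{n \to \infty} P\left[\hat{A}_c^{+} \cap D \subset A_c \cap D \subset \hat{A}_c^{-} \cap D\right] \ge P\left[\sup_{s \in \partial A_c} |G(s)| \le a\right],$$

(c) for a sequence of sets Dn that additionally satisfy Assumption 2,

$$\lim_{n \to \infty} P\left[\hat{A}_c^{+} \cap D_n \subset A_c \cap D_n \subset \hat{A}_c^{-} \cap D_n\right] = P\left[\sup_{s \in \partial A_c} |G(s)| \le a\right].$$

A direct consequence of Theorem 1 is that Âc⁺ ⊂ Ac ⊂ Âc⁻ holds with asymptotic probability at least 1−α if we choose a such that P[sup_{s∈∂Ac} |G(s)| ≤ a] ≥ 1 − α. The determination of a poses a computational challenge since the distribution of the supremum of |G(s)| and the set ∂Ac are unknown. In Section 3.2 we propose an easy and fast way to approximate this distribution by a multiplier bootstrap.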

The second and third part of Theorem 1 address situations in which the fields are only observed on a, usually finite, set of locations D, e.g. a finite grid. In this case, the theorem asserts that, independent of the set D, the desired inclusions hold at all observed locations D asymptotically with probability at least 1 − α. This is in agreement with the intuition that the desired inclusion is easier to obtain if one only considers a small set of locations. The third part of the Theorem asserts that for a sequence of sets Dn that become dense around the contour sufficiently fast, the inclusion even holds with probability equal to 1−α. We address this issue further in the simulations section and in the discussion.

Confidence regions for the excursion set Ac yield confidence sets for the contour ∂Ac.

Corollary 1. Under the assumptions of Theorem 1, we have

where cl denotes the topological closure.

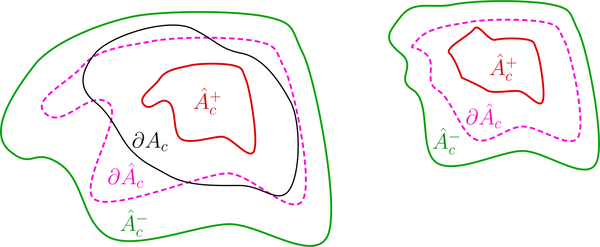

Note that, conversely, confidence sets for the contour do not automatically give confidence regions for the excursion set. In Figure 2 we show a simple schematic example of a pair of nested sets for which holds but does not. In fact, excluding these cases is the more laborious part of the proof of Theorem 1. The key is to divide the region S into a close-range zone where μ(s) is close to c, given by the inflated boundary and a long-range zone. Then, the strategy of the proof is to let the parameter η go to zero at an appropriate rate as n → ∞ such that, eventually, the probability of a part of falsely appearing in the long-range zone (as shown in Figure 2) vanishes. The probability of making an error remains in the close-range zone, and is asymptotically given by

Figure 2:

A simple example of nested sets that bound the contour ∂Ac but do not satisfy the inclusion

3. CoPE sets for general linear models

For concreteness and later application to the climate data, we here present how CoPE sets are obtained, in theory and in practice, when the target function is a parameter function in a general linear model and n is the number of repeated observations.

3.1. Asymptotic coverage probabilities

We consider the model

| (6) |

where Y(s) is an n × 1 vector of observations, X is a known n × p design matrix, b(s) = (b1(s),...,bp(s)) is an unknown p × 1 vector of parameters and ϵ(s) = (ϵ 1(s),..., ϵ n(s)) an unknown stochastic process. CLTs of the kind presented here are known in the case of independent errors (see e.g. Eicker (1963)). We show and prove versions tailored to our specific purpose for coherence and convenience. Specifically, for the noise ϵ, we assume that there exists a positive definite function with (s,s) = 1 for all s ∈ S, and a spatially varying positive definite matrix Ω(s) ∈ ℝn×n such that

The correlation function (·,·) describes the (non-stationary) spatial correlation structure of the error field, while Ω(s) describes the dependence structure between errors in different observations at each location. Note that it will not be necessary to estimate this function.

To estimate b(s) we use the generalized least squares (GLS) estimator (Rao and Toutenburg, 1995)

$$\hat{b}(s) = \left(X^{T} \Omega^{-1}(s) X\right)^{-1} X^{T} \Omega^{-1}(s)\, Y(s).$$

The matrix Ω(s) is unknown in general but can be replaced by a consistent estimate when a sufficiently simple model is assumed. We will use an autoregressive model for our data application in Section 5.

In the notation of Section 2, the target function μ(s) is now a linear combination wᵀb(s) for some vector of coefficients w ∈ ℝ^p. Naturally, wᵀb̂(s) now plays the role of the estimator μ̂n(s).

Next, we establish a CLT for the parameter estimators in our setting, generalizing well-known CLTs in the case of independent errors (see e.g. Eicker (1963)).

Additional notation

Recall that for 1 ≤ p ≤ ∞, the p-norm ||A||p of a matrix A is defined to be ||A||p = sup_{||x||p = 1} ||Ax||p. Hence, by definition ||Ax||p ≤ ||A||p ||x||p for all x. When p = ∞ the matrix norm ||A||∞ is the maximum absolute row sum of A, i.e.

$$\|A\|_{\infty} = \max_{i} \sum_{j} |a_{ij}|.$$

Definition 1. We denote the Lebesgue measure of a set A ⊂ S by |A|. For vectors s, t ∈ ℝ^N define the block (s, t] = (s1,t1] × ··· × (sN,tN] ⊂ ℝ^N, and for a stochastic process ϵ(s) with index set containing (s, t] define the increment of ϵ(s) around (s, t] (cf. Bickel and Wichura (1971)) as

$$\epsilon((s,t]) = \sum_{\kappa \in \{0,1\}^{N}} (-1)^{N - \sum_{i} \kappa_i}\, \epsilon\big(s_1 + \kappa_1 (t_1 - s_1), \ldots, s_N + \kappa_N (t_N - s_N)\big).$$

In dimension N = 1 the definition of an increment yields ϵ((s1,t1]) = ϵ(t1) − ϵ(s1), while in dimension N = 2, ϵ((s, t]) = ϵ(t1, t2) − ϵ(s1, t2) − ϵ(t1, s2) + ϵ(s1, s2).
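The increment around a block is an alternating sum over the 2^N corners of the block, with sign determined by how many coordinates are taken from the lower corner. A minimal sketch of this inclusion-exclusion sum follows; the function name `increment` and the test functions are illustrative choices only.

```python
from itertools import product


def increment(f, s, t):
    """Increment of f around the block (s, t] by inclusion-exclusion."""
    n_dim = len(s)
    total = 0.0
    for corner in product((0, 1), repeat=n_dim):
        # corner[k] == 1 picks the upper coordinate t[k], 0 picks s[k].
        point = [t[k] if corner[k] else s[k] for k in range(n_dim)]
        sign = (-1) ** (n_dim - sum(corner))
        total += sign * f(*point)
    return total


# N = 1: f(t1) - f(s1).
inc_1d = increment(lambda x: x ** 2, (1,), (3,))
# N = 2: f(t1, t2) - f(s1, t2) - f(t1, s2) + f(s1, s2).
inc_2d = increment(lambda x, y: x * y, (1, 2), (3, 5))
print(inc_1d, inc_2d)
```

For the product function f(x, y) = xy the increment equals the area of the block, (t1 − s1)(t2 − s2), which gives a quick sanity check of the signs.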

Assumption 3. Write Z(s) = Ω−1/2(s)X(XT Ω−1(s)X)−1/2 for brevity. Assume that

-

(a)

the noise field ϵ(s) has continuous sample paths with probability one. Moreover, a centered unit variance Gaussian field with the same correlation function c(s1,s2) as the noise field ϵ(s) also has continuous sample paths with probability one.

-

(b)

the map s ↦ Ω(s) is continuous as a function S → ℝ^(n×n).

-

(c)

the de-correlated error field has independent components.

-

(d)

there exists δ > 0 and a constant Kδ > 0 such that independent of j and n and such that as n → ∞.

-

(e)

there exist γ ≥ 0 and β > 0 such that for a constant K(β, γ) > 0 independent of n and s we have .

-

(f)

the function satisfies vn(s)/‖vn(s)‖2 → v0(s) uniformly in s as n → ∞ for some continuous function v0 : S → ℝ.

Remark 4. These assumptions will ensure that wᵀb̂(s) enjoys a functional CLT as required in Assumptions 1. Let us give some explanation.

(a) Conditions (a) and (b) ensure the continuity of the relevant fields, such that convergence can be considered in the space of continuous functions on S.

(b) Condition (c) is, for example, satisfied for autoregressive moving average (ARMA) models, which we will employ in our data application. However, we note that condition (c) could be replaced by an appropriate mixing condition.

(c) Conditions (d) and (e) are assumptions on the moments and increments of the error field, coupled with conditions on the design matrix X and the correlation structure Ω(s). In the simple case of independent noise and a first order linear model with equally spaced design points these conditions decouple and reduce to standard conditions on moments and increments of the noise field (see Remark 6).

Theorem 2 (CLT for general linear model). Under Assumption 3 (a)-(d) the weak convergence

holds as n → ∞, where G⊗p is an ℝp-valued mean zero, unit variance Gaussian random field with correlation function .

If additionally part (e) of Assumptions 3 holds, then we have

weakly as n → ∞, where G is a mean zero, unit variance Gaussian field on S with correlation function cov .

The statement of Theorem 2 guarantees that parts (b) and (c) of Assumptions 1 hold. Therefore, we can obtain CoPE sets for wᵀb(s) as follows.

Corollary 2 (CoPE sets for general linear model). If the function μ(s) = wᵀb(s) satisfies part (a) of Assumptions 1 and if Assumptions 2 and 3 hold, then

| (7) |

are CoPE sets for Ac = {s ∈ S : wᵀb(s) ≥ c}, that is,

Remark 5. Note that Theorem 2 and hence also Corollary 2 will (by Slutsky’s Theorem) continue to hold if Ω(s) is replaced by a consistent estimate Ω̂(s). This is what one will typically obtain from a GLS estimate with a fixed correlation structure, e.g. an ARMA process with fixed orders but unknown coefficients.

3.2. Approximating the tail probabilities of G

3.2.1. Multiplier bootstrap

In practice, the distribution of G in Corollary 2 is unknown, because it depends on the unknown (non-stationary) covariance function. In our motivating application the only information we have about G is contained in the de-correlated residuals

of the linear regression.

A way of approximating the distribution of the limiting Gaussian field G is given by the multiplier or wild bootstrap first introduced by Wu (1986) and later studied by Mammen (1992, 1993), Härdle and Mammen (1993). Let g1,...,gn be i.i.d. standard Gaussian random variables independent of the data. Then, conditional on the residuals , the field is Gaussian and has covariance

| (8) |

the sample covariance. The idea is to approximate , needed in Theorem 1 by . The latter can be efficiently computed by generating a large number M of i.i.d. copies , conditional on and evaluating

The sample covariance (8) itself is not a good estimator of the true covariance function in our high dimensional setting, where the number of locations is much higher than the sample size n (about ten thousand grid points vs. 58 field realizations in the climate data). However, the claim of the approximation is about the distribution of the supremum of the process instead. For a discrete set of locations, the supremum sups∈∂Ac |G(s)| has the distribution of the maximum of a high-dimensional Gaussian random vector. Extending the results of Mammen (1993) in the high dimensional setting, Chernozhukov et al. (2013) show that the distribution of the maximum can be well approximated by the Gaussian multiplier bootstrap using realizations of a not necessarily Gaussian random vector with the same covariance matrix. In this sense, the multiplier bootstrap is valid in our setting. This is confirmed by simulations in Section 4 below.
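The bootstrap approximation described in this section can be sketched in a few lines. This is a minimal illustration in plain Python, not the implementation in the cope package: the function names, the toy residuals and the assumption that the residual fields have already been standardized to unit variance at each location are all choices of this sketch.

```python
import math
import random


def bootstrap_suprema(residuals, boundary_idx, n_boot, rng):
    """Draw n_boot realizations of sup |n^{-1/2} sum_j g_j r_j(s)| over boundary_idx.

    residuals[j][k] is residual field j at grid point k (assumed standardized).
    """
    n = len(residuals)
    sups = []
    for _ in range(n_boot):
        # Fresh i.i.d. standard Gaussian multipliers, independent of the data.
        g = [rng.gauss(0.0, 1.0) for _ in range(n)]
        sup = 0.0
        for k in boundary_idx:
            val = sum(g[j] * residuals[j][k] for j in range(n)) / math.sqrt(n)
            sup = max(sup, abs(val))
        sups.append(sup)
    return sups


def threshold(sups, alpha):
    """Empirical (1 - alpha) quantile of the bootstrap suprema."""
    ordered = sorted(sups)
    idx = min(len(ordered) - 1, math.ceil((1.0 - alpha) * len(ordered)) - 1)
    return ordered[idx]


# Toy input: 30 white-noise residual fields on 20 grid points (hypothetical).
rng = random.Random(0)
n, size = 30, 20
residuals = [[rng.gauss(0.0, 1.0) for _ in range(size)] for _ in range(n)]
sups = bootstrap_suprema(residuals, boundary_idx=[5, 14], n_boot=1000, rng=rng)
a = threshold(sups, alpha=0.1)
```

Only the values of the residual fields at the boundary locations enter the computation, so the cost per bootstrap draw is proportional to the sample size times the number of boundary points, not to the size of the full grid.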

3.2.2. An alternative method for smooth Gaussian fields

If the limiting field is twice differentiable and the error field ∊(s) in (6) is Gaussian itself then the Gaussian Kinematic Formula (GKF) (e.g. Taylor (2006), Taylor et al. (2005), Taylor and Worsley (2007)) offers an elegant and accurate way of computing tail probabilities of Gaussian fields that does not require simulations.

The GKF is based on two properties of smooth Gaussian fields. First, for such fields the exceedance probability for high thresholds can be approximated by the expected Euler characteristic of the excursion set: for any set B ⊂ S with smooth boundary,

$$P\left[\sup_{s \in B} G(s) \ge a\right] \approx E\left[\chi\left(\{s \in B : G(s) \ge a\}\right)\right], \tag{9}$$

where χ is the Euler characteristic (see e.g. Adler and Taylor (2007)). Second, the expected Euler characteristic in (9) has the closed formula

$$E\left[\chi\left(\{s \in B : G(s) \ge a\}\right)\right] = \sum_{d=0}^{N} \mathcal{L}_d(B)\, \rho_d(a), \tag{10}$$

with the d-th order Lipschitz-Killing curvature (LKC) ℒd(B) (Taylor, 2006) dependent on the covariance Λ(s) of the partial derivatives of G, and known functions ρd(a). The GKF shows that, at least for smooth fields, the tail probability of the supremum is intrinsically low-dimensional, as it depends only (up to an exponential error term) on N + 1 parameters. This gives an additional justification for the ability of the multiplier bootstrap method to estimate the tail probability despite the high dimensionality of the field.
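Once the LKCs are available, the expected Euler characteristic is a short weighted sum. The sketch below assumes the standard Euler characteristic densities for unit-variance Gaussian fields in the convention of Adler and Taylor (2007), namely ρ0(a) = 1 − Φ(a) and ρd(a) = (2π)^(−(d+1)/2) He_{d−1}(a) exp(−a²/2) for d ≥ 1, with He the probabilists' Hermite polynomials. The text above only calls the ρd "known functions", so this choice of convention, the function names and the LKC values in the example are assumptions of this illustration.

```python
import math


def ec_density(d, a):
    """Euler characteristic density rho_d(a) of a unit-variance Gaussian field."""
    if d == 0:
        # Upper tail probability of a standard normal variable.
        return 0.5 * math.erfc(a / math.sqrt(2.0))
    # Hermite polynomial He_{d-1}(a) via He_{k+1} = a * He_k - k * He_{k-1}.
    h_prev, h = 0.0, 1.0
    for k in range(d - 1):
        h_prev, h = h, a * h - k * h_prev
    return (2.0 * math.pi) ** (-(d + 1) / 2.0) * h * math.exp(-0.5 * a * a)


def expected_ec(lkcs, a):
    """Sum over d of L_d * rho_d(a), with lkcs = [L_0, L_1, ..., L_N]."""
    return sum(l_d * ec_density(d, a) for d, l_d in enumerate(lkcs))


# Hypothetical one-dimensional set with L_0 = 1 and L_1 = 10.
approx_tail = expected_ec([1.0, 10.0], a=2.0)
```

In one dimension this reduces to the familiar sum of a Gaussian tail probability and an expected number of upcrossings, which is one way to check the normalization.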

We can also use the GKF to obtain CoPE sets: if Assumptions 1 are satisfied, then Theorem 1 implies, in conjunction with (9) and (10), that

| (11) |

To use (11), the problem amounts to estimating the LKCs of the boundary ∂Ac. Taylor and Worsley (2007) propose a method to estimate LKCs based on a finite number of realizations of the Gaussian field G and a triangulation of a plug-in estimate ∂Âc of the boundary ∂Ac. In our application, however, we only have realizations of a generally non-Gaussian field (the residuals of the linear model, cf. Section 5) with asymptotically the same covariance as G. For completeness, we compare this method to the multiplier bootstrap method in the simulations section below.

3.3. Algorithm

Combining the results of the previous sections we can give the exact procedure for obtaining CoPE sets for the parameters of the linear model Y(s) = Xb(s) + ∊(s).

Algorithm 1. Given a design matrix X and observations Y(s) following the linear model (6). If the assumptions of Corollary 2 hold, the following yields CoPE sets for wᵀb(s).

-

(a)

Compute the estimate and the corresponding de-correlated residuals with the empirical covariance matrix determined according to the model used for Ω(s).

-

(b)

Determine a such that approximately For example, use the multiplier bootstrap procedure with the residuals to generate i.i.d. copies of a Gaussian field with covariance structure given by the sample covariance. Then, use these and the plug-in estimate for the boundary ∂Ac to determine a such that .

-

(c)Obtain the nested CoPE sets defined by

with .
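For the simplest special case of the linear model, a one-sample mean with X a column of ones, w = 1 and i.i.d. errors, the three steps of the algorithm might look as follows. This is a hedged sketch rather than the procedure used for the data: it takes τn = n^(−1/2), thresholds the statistic √n (μ̂n(s) − c)/σ̂(s), and approximates the plug-in boundary by grid points adjacent to a sign change of μ̂n − c. The function name and the toy data are hypothetical.

```python
import math
import random


def cope_one_sample(fields, c, alpha=0.1, n_boot=500, seed=0):
    """CoPE sets for the pointwise mean of i.i.d. fields on a 1-D grid."""
    rng = random.Random(seed)
    n, size = len(fields), len(fields[0])
    # (a) Pointwise estimate, residuals and standardized residuals.
    mu_hat = [sum(f[k] for f in fields) / n for k in range(size)]
    resid = [[f[k] - mu_hat[k] for k in range(size)] for f in fields]
    sd = [math.sqrt(sum(r[k] ** 2 for r in resid) / (n - 1)) for k in range(size)]
    std_resid = [[r[k] / sd[k] for k in range(size)] for r in resid]
    # (b) Plug-in boundary (grid points next to a sign change) and bootstrap.
    above = [m >= c for m in mu_hat]
    boundary = sorted({i for k in range(size - 1) if above[k] != above[k + 1]
                       for i in (k, k + 1)}) or list(range(size))
    sups = []
    for _ in range(n_boot):
        g = [rng.gauss(0.0, 1.0) for _ in range(n)]
        sups.append(max(abs(sum(g[j] * std_resid[j][k] for j in range(n)))
                        / math.sqrt(n) for k in boundary))
    sups.sort()
    a = sups[min(n_boot - 1, math.ceil((1.0 - alpha) * n_boot) - 1)]
    # (c) Threshold the standardized estimate at +a and -a.
    z = [(mu_hat[k] - c) * math.sqrt(n) / sd[k] for k in range(size)]
    inner = {k for k in range(size) if z[k] >= a}
    outer = {k for k in range(size) if z[k] >= -a}
    return inner, outer, a


# Toy data: linear signal 4s on [0, 1] plus unit-variance white noise.
data_rng = random.Random(1)
grid = [k / 49 for k in range(50)]
fields = [[4.0 * s + data_rng.gauss(0.0, 1.0) for s in grid] for _ in range(40)]
inner, outer, a = cope_one_sample(fields, c=2.0)
```

With correlated errors or a general design, step (a) would be replaced by the GLS fit and de-correlated residuals described in the algorithm.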

3.4. Model for the climate data

In our application we have a total of n = n(a) +n(b) observations, the first n(a) observations are the ’past’, the last n(b) are the ’future’. Within each period we model the change in mean temperature linearly in time. Model (6) takes the form

| (12) |

Without loss of generality, we may assume that and Our goal is to give CoPE sets for the excursion sets of the difference T(b)(s)−T(a)(s). Therefore, we define w = (−1,1,0,0)T. The next statement is a direct application of Corollary 2.
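To make the role of the contrast w = (−1,1,0,0)ᵀ concrete, the sketch below builds a design matrix for two periods with a separate intercept and slope each. It assumes that the parameter vector is ordered as b(s) = (T(a)(s), T(b)(s), m(a)(s), m(b)(s)), with m denoting the slopes, and that the time points are centered within each period; both are assumptions of this example, suggested by the contrast but not spelled out above. Ordinary least squares is used for simplicity instead of GLS.

```python
def centered_times(n):
    """Equally spaced time points with mean zero (hypothetical unit spacing)."""
    mid = (n - 1) / 2.0
    return [j - mid for j in range(n)]


def design_matrix(n_a, n_b):
    """Rows (1_a, 1_b, t_a, t_b): intercept and slope columns per period."""
    rows = [[1.0, 0.0, t, 0.0] for t in centered_times(n_a)]
    rows += [[0.0, 1.0, 0.0, t] for t in centered_times(n_b)]
    return rows


def fit_period(y, t):
    """Least squares for y_j = T + m * t_j when the t_j sum to zero."""
    t_hat = sum(y) / len(y)
    m_hat = sum(tj * yj for tj, yj in zip(t, y)) / sum(tj * tj for tj in t)
    return t_hat, m_hat


n_a = n_b = 29
X = design_matrix(n_a, n_b)
b = [10.0, 12.5, 0.1, 0.2]          # hypothetical true parameters at one location
w = [-1.0, 1.0, 0.0, 0.0]
Y = [sum(x * bi for x, bi in zip(row, b)) for row in X]   # noise-free data
T_a, m_a = fit_period(Y[:n_a], centered_times(n_a))
T_b, m_b = fit_period(Y[n_a:], centered_times(n_b))
b_hat = [T_a, T_b, m_a, m_b]
difference = sum(wi * bi for wi, bi in zip(w, b_hat))
```

With centered times the four columns of X are mutually orthogonal, so the joint least-squares fit separates into one simple regression per period, and the contrast picks out the difference of the two period means.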

Proposition 1. If the design and the noise field in model (12) satisfy Assumptions 3 then Algorithm 1 yields asymptotically valid CoPE sets for T(b)(s) − T(a)(s).

Remark 6. In the case of independent noise fields ϵ1(s),...,ϵn(s) ~ ϵ(s) we can replace parts (d)-(f) of Assumptions 3 by the following simpler conditions. A proof is given in the appendix.

There exist numbers δ, > 0 and γ ≥ 0 and constants Kδ > 0 and K(𝛽,γ) > 0 such that sups∈S and for every block B ℝD.

n(a) = n(b) = and both sets of design points and are equally spaced (possibly with different spacing for the periods (a) and (b)).

4. Simulations

The simulations in this section show that the proposed methods provide approximately the right coverage in practical non-asymptotic situations with non-smooth, non-stationary and non-Gaussian noise. Our objective in the design of the error fields with these properties is not to imitate the data but to introduce non-stationarity and non-Gaussianity in a transparent and reproducible way, showing the full potential of the method. In fact, the error field that we encounter in the data (cf. Section 5) is better behaved as far as smoothness and stationarity are concerned than the artificial fields we investigate here.

4.1. Setup

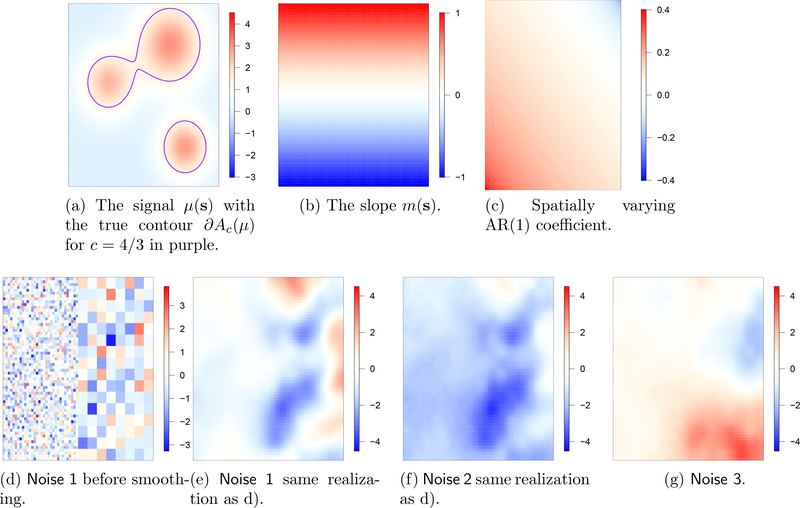

We consider a one-group version of model (12) (n = n(a), n(b) = 0) on tj = 0, 1/(n − 1), 2/(n − 1), ..., 1, with non-stationary and non-Gaussian noise over a square region S = [0,1]² sampled on a uniform 64 × 64 grid. The signal μ = T(a) is a linear combination of three Gaussians and the slope field m(s) is linear in the y-coordinate and constant in the x-coordinate (Figure 3a, 3b). We consider i.i.d. and AR(1) processes (ϵ1(s),...,ϵn(s)) (according to the spatial pattern in Figure 3c) for three different base fields:

Figure 3:

The spatial signal, temporal slope, temporal AR coefficient, and one realization of each of the noise fields in the toy example.

Noise 1

Independent standard normal random variables are assigned to each pixel in the left half of S and to blocks of 4-by-4 pixels in the right half (Figure 3d). The entire picture is convolved with a Gaussian kernel with bandwidth 0.1 and all values are multiplied by a scaling factor of 50 (Figure 3e).

Noise 2

Identical to Noise 1 except the image is smoothed by a Laplace kernel with bandwidth 0.1 instead of a Gaussian and the scaling factor is 100 (Figure 3f).

Noise 3

Each pixel in the upper half is assigned a Laplace random variable with mean zero and variance two. In the lower half, pixels are assigned independent Student t-distributed random variables with 10 degrees of freedom. The entire picture is convolved with a Gaussian kernel of bandwidth 0.1 and scaled with a factor of 25 (Figure 3g).

The noise fields Noise 1–3 are intentionally designed to have non-homogeneous variance and scaling factors are chosen ad-hoc such that all three fields can be conveniently displayed on a common scale (Figure 3 d-f).
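As an illustration of how such a base field can be generated, the following sketch follows the verbal description of Noise 1. Several details are assumptions of this example and are not specified above: the bandwidth is interpreted as the standard deviation of the Gaussian kernel in units of the unit square, the kernel is truncated at three standard deviations, and values beyond the image edge are taken from the nearest pixel.

```python
import math
import random


def noise1(size=64, block=4, bandwidth=0.1, scale=50.0, rng=None):
    """One realization of a Noise-1-type field on a size x size grid."""
    rng = rng or random.Random()
    half = size // 2
    # White noise: one value per pixel on the left, one per block on the right.
    field = [[0.0] * size for _ in range(size)]
    for row in range(size):
        for col in range(half):
            field[row][col] = rng.gauss(0.0, 1.0)
    for r0 in range(0, size, block):
        for c0 in range(half, size, block):
            value = rng.gauss(0.0, 1.0)
            for row in range(r0, r0 + block):
                for col in range(c0, c0 + block):
                    field[row][col] = value
    # Normalized, truncated Gaussian kernel (standard deviation in pixels).
    sd = bandwidth * size
    radius = int(3 * sd)
    kernel = [math.exp(-0.5 * (k / sd) ** 2) for k in range(-radius, radius + 1)]
    total = sum(kernel)
    kernel = [wgt / total for wgt in kernel]

    def smooth_rows(img):
        # 1-D convolution along each row with nearest-pixel edge handling.
        return [[sum(wgt * line[min(max(i + k - radius, 0), size - 1)]
                     for k, wgt in enumerate(kernel))
                 for i in range(size)] for line in img]

    def transpose(img):
        return [list(col) for col in zip(*img)]

    # Separable 2-D smoothing: rows first, then columns.
    smoothed = transpose(smooth_rows(transpose(smooth_rows(field))))
    return [[scale * v for v in line] for line in smoothed]


field = noise1(rng=random.Random(0))
```

The blockwise half starts from coarser white noise than the pixelwise half, so the smoothed field inherits a different variance and correlation length on each side, which is the source of the non-stationarity.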

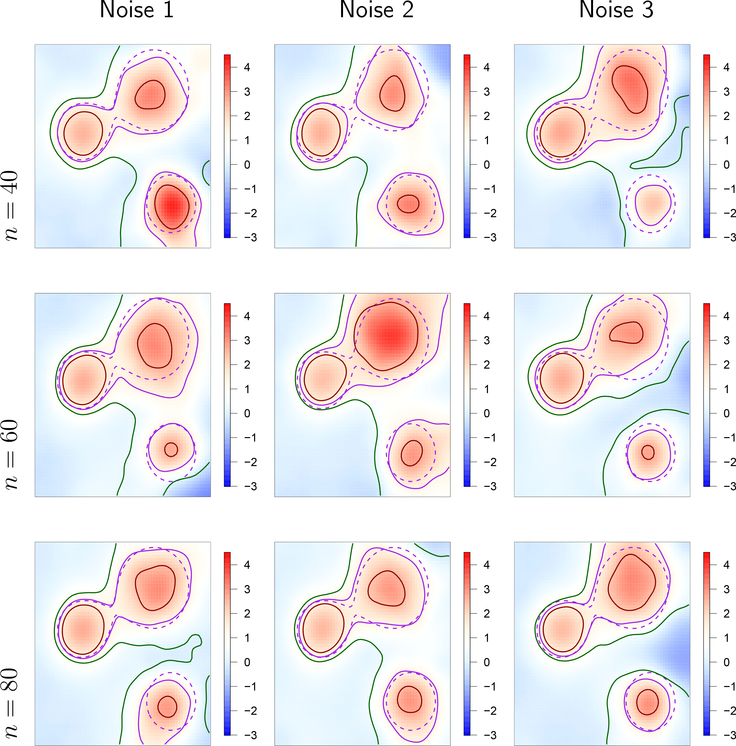

Our target is the excursion set of the mean function μ(s) at level c = 4/3. Note that the spatially varying AR parameter was not assumed to be known but was rather estimated in each trial from the data. We controlled the probability of coverage at the level 1 − α = 0.9 using Corollary 2. The threshold a was computed using the multiplier bootstrap procedure proposed in Section 3.2.1 using either the true boundary ∂Ac or the plug-in estimate ∂Âc. These boundaries were determined as piece-wise linear splines with the R-function contourLines, where values of fields at non-grid locations are determined via linear interpolation. Results of a single run using ∂Âc for each of the three noise fields and sample sizes n = 40, 60, 80 are shown in Figure 4.

Figure 4:

Single realizations of the output of our method at confidence level 90% for the three noise fields (rows) and for sample sizes n = 40, 60, 80 (columns) with the target function μ(s) from Figure 3a. In all pictures we show a heat map of the estimator μ̂n(s), the boundary of Ac(μ) (solid purple) and of the plug-in estimate Âc (dashed purple), and the boundaries of the inner set Âc⁺ (red) and the outer set Âc⁻ (green). The threshold a was obtained using the multiplier bootstrap and the plug-in estimate of the boundary ∂Ac.

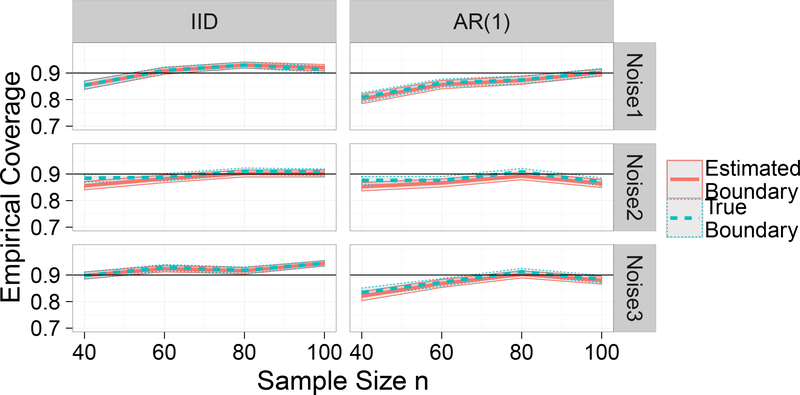

4.2. Performance of CoPE sets

We analyzed the performance of our method on 5000 runs of the toy examples in Section 4.1. Figure 5 shows the percentage of trials in which coverage was achieved, if either the true boundary ∂Ac or the plug-in estimator ∂Âc was used to determine the threshold. The empirical coverage is seen to approach the nominal level of 90% as the sample size increases, suggesting asymptotic unbiasedness. Comparing the coverage for the true and estimated boundaries, we see that the non-asymptotic bias is not caused by the lack of knowledge of the true boundary. It may be a consequence of the bootstrap procedure instead.
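The bookkeeping behind such a coverage study is simple: a trial counts as a success only if both inclusions hold. The sketch below shows this count together with the binomial Monte Carlo standard error of an estimated coverage; the function names and the four hand-made trials are hypothetical and serve only as an illustration.

```python
import math


def coverage_rate(trials):
    """Fraction of trials with inner <= truth <= outer (sets of grid indices)."""
    hits = sum(1 for inner, truth, outer in trials if inner <= truth <= outer)
    return hits / len(trials)


def mc_standard_error(p, runs):
    """Binomial standard error of an empirical coverage estimate."""
    return math.sqrt(p * (1.0 - p) / runs)


truth = {3, 4, 5}
trials = [
    ({4}, truth, {2, 3, 4, 5, 6}),       # both inclusions hold
    ({3, 4}, truth, {3, 4, 5}),          # both inclusions hold
    ({4, 6}, truth, {2, 3, 4, 5, 6}),    # inner set leaks outside the truth
    ({4}, truth, {4, 5}),                # outer set misses part of the truth
]
rate = coverage_rate(trials)
se = mc_standard_error(0.9, 5000)
```

With 5000 runs and a nominal level of 90%, the Monte Carlo standard error is about 0.4 percentage points, which gives a rough yardstick for judging deviations of the empirical coverage from the nominal level.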

Figure 5:

Percentage of trials in which Âc⁺ ⊂ Ac ⊂ Âc⁻. The nominal coverage probability is 90%.

We conducted several additional experiments with different choices of parameters for the noise fields and obtained results very similar to those reported here.
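The coverage event counted in these simulations can be made concrete with a short Python sketch. It assumes that the CoPE sets are obtained by thresholding the estimator at c ± a·τ·σ(s), with τ a normalizing factor such as n^(−1/2); this form, the function names and the flattened-grid input are assumptions of the example rather than a transcription of the definitions given earlier in the paper.

```python
def cope_sets(mu_hat, sigma, c, a, tau):
    """Return the (inner, outer) sets of grid indices.

    Assumed form: inner = {mu_hat >= c + a*tau*sigma},
                  outer = {mu_hat >= c - a*tau*sigma}.
    """
    inner = {i for i, (m, s) in enumerate(zip(mu_hat, sigma))
             if m >= c + a * tau * s}
    outer = {i for i, (m, s) in enumerate(zip(mu_hat, sigma))
             if m >= c - a * tau * s}
    return inner, outer


def covers(mu, mu_hat, sigma, c, a, tau):
    """True if inner set <= true excursion set <= outer set."""
    truth = {i for i, m in enumerate(mu) if m >= c}
    inner, outer = cope_sets(mu_hat, sigma, c, a, tau)
    return inner <= truth <= outer


def empirical_coverage(trials, c, a, tau):
    """Fraction of trials (mu, mu_hat, sigma) in which coverage holds."""
    hits = [covers(mu, mu_hat, sigma, c, a, tau)
            for mu, mu_hat, sigma in trials]
    return sum(hits) / len(hits)
```

In a simulation study such as the one above, each trial would supply a freshly simulated estimate and its own bootstrap threshold; the sketch keeps a single threshold a for brevity.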

Computational performance

As already noted in Section 3.2.1, the multiplier bootstrap allows for a very fast computation of CoPE sets. In the simulations, the CoPE sets for a sample of size n = 120, each on a grid of 64×64 = 4096 locations, could be computed in less than two minutes on a standard laptop for all noise models. We found that only a negligible fraction of the total runtime of the method is spent on the multiplier bootstrap, with the pointwise generalized least squares estimation taking far longer.

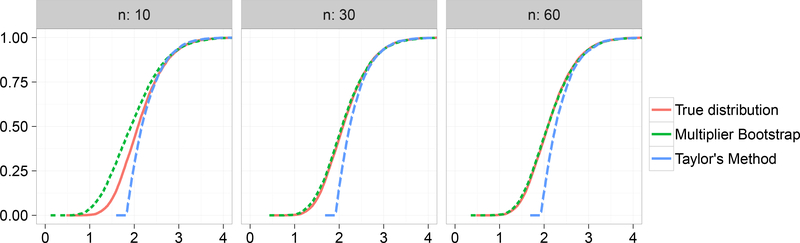

4.3. Estimation of exceedance probabilities

In this section we compare the multiplier bootstrap with the method proposed by Taylor and Worsley (2007), as described in Section 3.2.2, for approximating the distribution of the supremum of |ϵ(s)|/σ(s) over ∂Ac. Here ϵ(s) is distributed according to Noise 1 (see Section 4.1 above), σ2(s) = var[ϵ(s)], and ∂Ac is the boundary of Ac(μ) for the function μ(s) shown in Figure 3a at level c = 4/3. The true cumulative distribution function (cdf) of this supremum and its empirical approximations based on both methods are shown in Figure 6. The empirical cdfs are each based on a single i.i.d. sample ϵ1(s), ..., ϵn(s) for n = 10, 30 and 60. For the multiplier bootstrap we generated 5,000 bootstrap realizations. The true cdf was calculated empirically using 10,000 i.i.d. samples of ϵ(s).

Figure 6: The cdf of the supremum of |ϵ(s)|/σ(s) over ∂Ac (the horizontal axis shows a) and approximations of it via the multiplier bootstrap or Taylor’s method based on a sample of size n. Here, the error field ϵ(s) has the distribution described in Noise 1 and Ac = Ac(μ) for the function μ shown in Figure 3a.

Both methods give a remarkably good approximation of the true distribution of the supremum, particularly for sample sizes of n = 30 and higher. However, while Taylor’s method only gives a valid approximation in the tail of the distribution, the multiplier bootstrap approximates all parts of the cdf.
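An empirical cdf of the kind compared here is simple to compute from a sample of simulated suprema. The Python sketch below is a minimal illustration; the function name and the idea of evaluating the cdf on a grid of thresholds a are assumptions of the example, not a description of the code used for Figure 6.

```python
from bisect import bisect_right


def ecdf(sample):
    """Return a function a -> fraction of sample values <= a."""
    xs = sorted(sample)
    n = len(xs)

    def cdf(a):
        # bisect_right counts how many sorted values are <= a.
        return bisect_right(xs, a) / n

    return cdf


# Usage: evaluate the empirical cdf of some simulated suprema on a grid of a.
suprema = [0.5, 1.0, 1.5, 2.0]
F = ecdf(suprema)
curve = [(a, F(a)) for a in (0.0, 1.0, 2.0, 3.0)]
```

Applying the same function to the bootstrap suprema and to the reference simulations gives two curves that can be plotted against a common grid of thresholds.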

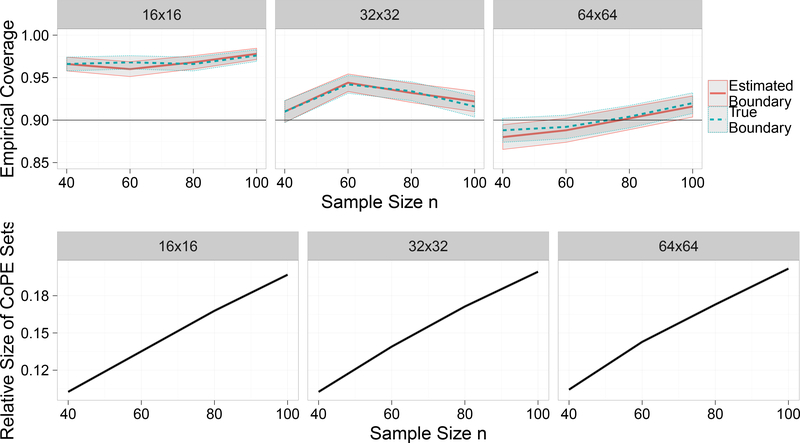

4.4. Effect of Grid Coarseness

In order to assess the influence of the coarseness of the grid we repeated the experiment of Section 4.1 on grids of sizes 16×16, 32×32 and 64×64, in the same setting as in the top left panel of Figure 5, with 500 trials.

The results are shown in Figure 7 (top row). For the lower resolutions 16 × 16 and 32 × 32, coverage is above the nominal level of 90%, but it approaches the nominal level as the resolution increases. This is consistent with parts b) and c) of Theorem 1 stating that the procedure may produce over-coverage if Dn is small but coverage will approach the nominal level for increasing grid size.

Figure 7: Empirical coverage for CoPE sets (top row) and relative size of inner and outer CoPE sets for different grid sizes (bottom row).

In order to check whether over-coverage for lower resolutions is due to the CoPE sets being overly conservative, Figure 7 (bottom row) shows the ratio of the number of grid points contained in the inner CoPE set to the number of grid points contained in the outer CoPE set. We see that there is hardly any difference between the resolutions. We conclude that CoPE sets do not become more conservative as the resolution decreases.
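The size ratio used in this comparison can be computed by counting grid points, as in the following Python sketch. It again assumes thresholds of the form c ± a·τ·σ(s) for the inner and outer sets; the function name and the row-by-row grid layout are hypothetical.

```python
def cope_size_ratio(mu_hat, sigma, c, a, tau):
    """Ratio of grid points in the inner set to grid points in the outer set.

    mu_hat, sigma: 2D grids given as lists of rows (assumed layout).
    """
    inner = 0
    outer = 0
    for row_m, row_s in zip(mu_hat, sigma):
        for m, s in zip(row_m, row_s):
            if m >= c + a * tau * s:
                inner += 1
            if m >= c - a * tau * s:
                outer += 1
    # The ratio is undefined when the outer set is empty.
    return inner / outer if outer else float("nan")
```

A ratio close to one indicates that the two sets nearly coincide, whereas a small ratio indicates a wide band between the inner and outer boundaries.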

5. Application to the climate data

Data processing

Since the climate model used to generate the data does not give meaningful values over water, we applied a mask to include only temperatures over land. This also has the benefit that the data show no apparent discontinuities over land, in agreement with Assumptions 1.

For step (a) of Algorithm 1 we used the R-package nlme to obtain estimates and assuming an AR(1) correlation within past and future time periods and no correlation between the two periods. For step (b) we used the multiplier bootstrap to obtain the threshold a. The entire analysis for either the summer or winter data including the pointwise GLS and the multiplier bootstrap to obtain the CoPE sets was performed in under seven minutes on a regular laptop.

Results

The results for the climate data, shown in Figure 1, correspond to CoPE sets for T(b)(s) − T(a)(s). The target level is c = 2◦C and nominal coverage probability is fixed at 1 − α = 0.9.

For the mean summer temperature, it may be stated with 90% confidence that the Rocky Mountains and the Sierra Madre Occidental mountains of Mexico are at risk of exhibiting a warming of 2◦C or more in the given time period, while the Florida Peninsula, parts of the Mexican Gulf, large parts of the Canadian Northwest and the northern part of the Labrador Peninsula are not at risk.

For the mean winter temperature, some regions around the Hudson Bay and in the Canadian Shield are identified as being at high risk, while a comparatively small region north of the Mexican Gulf is considered not at risk of extreme warming.

In the supplement to this paper we show CoPE sets when the domain is restricted to the contiguous USA. These sets are only marginally different from the ones shown here.

6. Discussion

Discrete Locations and Measurement Errors

Consistent with our data, we have assumed that we have repeated observations of the entire fields contaminated with a continuous noise field and without nugget effect. Our theory for CoPE sets may be extended following Degras (2011) who considered a model of the form

$$Y_{ij} = \mu(x_j) + \epsilon_i(x_j) + \eta_{ij} \tag{13}$$

with locations xj in the domain of interest, the ϵi independent copies of an unknown random process, and the ηij i.i.d. Gaussian noise (nugget effect). Degras (2011) shows that (in dimension 1 or 2), under suitable assumptions on the target function and the model, a local linear estimate of μ, depending on a smoothing parameter, satisfies a functional CLT; that is, it converges weakly to a Gaussian random field with the same covariance as ϵ. The functional CLT gives the major part of our Assumptions 1 needed to obtain CoPE sets. However, it still depends on the design locations xj becoming dense quickly. It is an interesting question for further research how the threshold a may be obtained in this setting and how it may be affected by the choice of the smoothing parameter.

More Efficient Estimators

In view of techniques from geostatistics, it is natural to ask whether more efficient estimators than pixel-wise regression can be used to estimate the parameter functions in the model (6). There is a trade-off in the feasibility of such estimators. While local pooling may increase the accuracy, optimal bandwidth will vary spatially and depend on the unknown covariance structure of the field. Obtaining an estimator in this fashion is feasible if one makes strong parametric assumptions on the covariance structure, but not so if one only assumes non-stationarity and general moment and increment conditions (as we do here).

A Proofs

Proof of Theorem 1. In part I of the proof, we show that

| (14) |

Note that (14) immediately gives the second part of the theorem since

In part II we show that for a sequence of sets Dn that satisfy Assumption 2 we have

| (15) |

This and part b) of the Theorem immediately give part c). Part a) also follows from (14) and (15) since Dn ≡ S trivially satisfies Assumption 2.

Part I

For η > 0 define the inflated boundary . Points outside of become irrelevant in the limit n → ∞ since their values are far from c and, if η goes to zero at an appropriate rate, we finally end up with the boundary ∂Ac.

Assume η < η0 and let C′ = infs∈U σ(s). We first show that

implies that . To see this, assume the above conditions hold and consider s ∈ Ac. If we have that

and hence . If then

and therefore again . If finally s ∈ Ac \ U we have

and hence as well. Therefore we have shown that the above properties imply . An analogous argument works for the inclusion .

Now, let {ηn}n∊ℕ be a sequence of positive numbers such that ηn → 0 and .

With what we showed above, we can write

| (16) |

First, note that the last two probabilities in this expression go to one since and are tight and .

To prove convergence of the first term we need the following

Lemma 1. Under Assumptions 1 part (a) if ηn → 0 then the Hausdorff distance δn := dH .

Proof. Define the set (∂Ac)ε := {s ∊ S : d(s, ∂Ac) < ε}. We prove the assertion by showing that for any ε > 0 there exists an η > 0 such that . To this end, assume the contrary. Then, there exists ε > 0 such that for any η > 0 we find with d(sη,∂Ac) ≥ ε. The sequence (sη)η↓0 is contained in the compact set S and hence has a convergent subsequence with limit s*, say. By construction and continuity of μ on for η small enough, we have s* . On the other hand, 0 = d(s*, ∂Ac) =limη→0 d(sη, ∂Ac) ≥ ε, a contradiction.

Recall that for a function f : S → ℝ and some number δ > 0 the modulus of continuity is defined as

$$\omega(f, \delta) := \sup_{s, t \in S,\; d(s,t) \le \delta} |f(s) - f(t)|. \tag{17}$$

Since is weakly convergent, we have

| (18) |

for all positive ζ (Khoshnevisan, 2002, Prop. 2.4.1 and Exc. 3.3.1). Together with Lemma 1 this implies that for n large enough

in probability. Since converges in distribution to this yields

in distribution. In view of (16) this completes the proof of (14).

Part II

To show (15) we first prove that the inclusion does not hold if for some δ > 0 we have and .

To this end, assume the latter holds and let s0 ∈ ∂Ac such that . Then, with (17) and the abbreviation

we have for large enough n for the projection of s0 that

This shows . But by definition and hence does not hold.

Since an analogous calculation works for the inclusion we obtain the bound

By assumptions 1 and because and in probability by (18) the right hand side of this bound converges to . Since δ > 0 was arbitrary and has a continuous distribution the claim follows.

Proof of Corollary 1. For any pair of nested sets . we have that implies . On the other hand, the latter will certainly fail to hold if . Combining these two observations yields

Taking the limit n → ∞ of this inequality and using Theorem 1 gives the assertion.

Proof of Theorem 2. Define A = (X^T Ω^{−1}(s) X)^{−1} X^T Ω^{−1/2}(s), giving . To prove weak convergence of the process we first show convergence of the finite-dimensional distributions and then tightness of the sequence (Khoshnevisan, 2002, Prop. 3.3.1).

For the former, let s1,...,sK ∈ S be arbitrary. We need to show that with

(here, ‘⊗’ denotes the Kronecker product of two matrices) we have convergence U → in distribution. We readily see that E [U] = 0 and for the covariance we compute

We employ the Cramér-Wold device to show convergence of U. Indeed, let and (α1,...,αK) ∈ ℝK×p be some fixed arbitrary vector and compute

By interchanging the sums and defining we have, by Assumption 3 part (c), managed to write ⟨U, α⟩ as a sum of independent random variables, ⟨U, α⟩ = Σj Wj. The goal is now to apply the CLT in Lyapunov's form to the random variables Wj. To this end, compute var[⟨U, α⟩] = α^T cov[U] α and note that, since we have already shown U to have the right covariance, the claimed convergence will follow once we establish the Lyapunov condition. For this purpose let δ be as in Assumption 3 to give

as n → ∞. This concludes the proof of convergence for the finite dimensional distributions.
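For reference, the Lyapunov condition invoked in this argument can be stated in its standard textbook form as follows. The symbol s_n is notation introduced for this statement only, and the W_j are the independent, mean-zero summands from the proof.

```latex
% Lyapunov central limit theorem (standard form).
% Assumption: W_1, ..., W_n are independent with E[W_j] = 0 and finite variances.
\[
  s_n^2 := \sum_{j=1}^{n} \operatorname{var}[W_j],
  \qquad
  \lim_{n \to \infty} \frac{1}{s_n^{2+\delta}}
  \sum_{j=1}^{n} \mathbb{E}\,|W_j|^{2+\delta} = 0
  \quad \text{for some } \delta > 0
\]
\[
  \Longrightarrow \qquad
  \frac{1}{s_n} \sum_{j=1}^{n} W_j \;\xrightarrow{\;d\;}\; N(0, 1).
\]
```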

Tightness of the sequence follows from Assumption 3 (e) and Bickel and Wichura (1971, Thm. 3).

The second statement follows from the fact that

where the weak convergence from the previous part is used.

Proof of Corollary 2. This is an application of Theorem 1. Part (a) of Assumption 3 is tantamount to part (a) of Assumption 1. Theorem 2 gives part (b) of Assumption 1. Part (c) of the Assumptions follows from

where the supremum of the absolute value of the second summand is by part (b) of the Theorem. To conclude the proof, note that for .

Proof of Remark 6. We compute

It follows that

With this we obtain

Now we note that since the design points and are equally spaced by assumption we have and , and the same is true for the (b)-counterparts. This shows that and therefore Assumptions 3 d) -f) are valid.

Moreover, in the notation of Theorem 2, we have so that . This finally gives .

Footnotes

M.S. acknowledges support by the “Studienstiftung des Deutschen Volkes” and the SAMSI 2013–2014 program on Low-dimensional Structure in High-dimensional Systems. A.S. and S.S. were partially supported by NIH grant R01 CA157528. S.S. began working on this research while he was a Scientist with the Institute for Mathematics Applied to the Geosciences, National Center for Atmospheric Research, Boulder, CO. All authors wish to thank the North American Regional Climate Change Assessment Program (NARCCAP) for providing the data used in this paper. NARCCAP is funded by the National Science Foundation (NSF), the U.S. Department of Energy (DoE), the National Oceanic and Atmospheric Administration (NOAA), and the U.S. Environmental Protection Agency Office of Research and Development (EPA).

SUPPLEMENTARY MATERIAL

Supplementary material: Additional simulations and data analysis.

R-package narccapdata: R-package containing the climate data analyzed in the article.

Contributor Information

Max Sommerfeld, FBMS, Universität Göttingen.

Stephan Sain, The Climate Corporation.

Armin Schwartzman, Division of Biostatistics, University of California, San Diego.

References

- Adler RJ and Taylor JE (2007). Random fields and geometry. Springer, New York.

- Anderson K and Bows A (2011). Beyond ‘dangerous’ climate change: emission scenarios for a new world. Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences, 369(1934):20–44.

- Bickel PJ and Wichura MJ (1971). Convergence Criteria for Multiparameter Stochastic Processes and Some Applications. The Annals of Mathematical Statistics, 42(5):1656–1670.

- Bolin D and Lindgren F (2014). Excursion and contour uncertainty regions for latent Gaussian models. Journal of the Royal Statistical Society: Series B (Statistical Methodology), pages n/a–n/a.

- Bukovsky MS (2012). Temperature Trends in the NARCCAP Regional Climate Models. Journal of Climate, 25(11):3985–3991.

- Cavalier L (1997). Nonparametric Estimation of Regression Level Sets. Statistics, 29(2):131–160.

- Chernozhukov V, Chetverikov D, and Kato K (2013). Gaussian approximations and multiplier bootstrap for maxima of sums of high-dimensional random vectors. The Annals of Statistics, 41(6):2786–2819.

- Cuevas A, González-Manteiga W, and Rodríguez-Casal A (2006). Plug-in Estimation of General Level Sets. Australian & New Zealand Journal of Statistics, 48(1):7–19.

- Davison AC and Smith RL (1990). Models for Exceedances over High Thresholds. Journal of the Royal Statistical Society. Series B (Methodological), 52(3):393–442.

- De Oliveira V and Ecker MD (2002). Bayesian hot spot detection in the presence of a spatial trend: application to total nitrogen concentration in Chesapeake Bay. Environmetrics, 13(1):85–101.

- Degras DA (2011). Simultaneous confidence bands for nonparametric regression with functional data. Statistica Sinica, 21(4).

- Duczmal L, Kulldorff M, and Huang L (2006). Evaluation of Spatial Scan Statistics for Irregularly Shaped Clusters. Journal of Computational and Graphical Statistics, 15(2):428–442.

- Eicker F (1963). Asymptotic Normality and Consistency of the Least Squares Estimators for Families of Linear Regressions. The Annals of Mathematical Statistics, 34(2):447–456.

- Flato GM (2005). The third generation coupled global climate model (CGCM3). Available online at http://www.cccma.bc.ec.gc.ca/models/cgcm3.shtml.

- French JP (2014). Confidence regions for the level curves of spatial data. Environmetrics, 25(7):498–512.

- French JP and Sain SR (2013). Spatio-temporal exceedance locations and confidence regions. The Annals of Applied Statistics, 7(3):1421–1449.

- Genovese CR, Lazar NA, and Nichols T (2002). Thresholding of Statistical Maps in Functional Neuroimaging Using the False Discovery Rate. NeuroImage, 15(4):870–878.

- Hardle W and Mammen E (1993). Comparing Nonparametric Versus Parametric Regression Fits. The Annals of Statistics, 21(4):1926–1947.

- Khoshnevisan D (2002). Multiparameter Processes: an introduction to random fields. Springer.

- Lahiri SN, Kaiser MS, Cressie N, and Hsu N-J (1999). Prediction of Spatial Cumulative Distribution Functions Using Subsampling. Journal of the American Statistical Association, 94(445):86–97.

- Lindgren G and Rychlik I (1995). How reliable are contour curves? Confidence sets for level contours. Bernoulli, 1(4):301–319.

- Majure JJ, Cook D, Cressie N, Kaiser MS, Lahiri SN, and Symanzik J (1995). Spatial CDF Estimation and Visualization with Applications to Forest Health Monitoring. Iowa State University. Department of Statistics. Statistical Laboratory.

- Mammen E (1992). Bootstrap, wild bootstrap, and asymptotic normality. Probability Theory and Related Fields, 93(4):439–455.

- Mammen E (1993). Bootstrap and Wild Bootstrap for High Dimensional Linear Models. The Annals of Statistics, 21(1):255–285.

- Mammen E and Polonik W (2013). Confidence regions for level sets. Journal of Multivariate Analysis, 122:202–214.

- Mason DM and Polonik W (2009). Asymptotic normality of plug-in level set estimates. The Annals of Applied Probability, 19(3):1108–1142.

- Mearns LO, Arritt R, Biner S, Bukovsky MS, McGinnis S, Sain S, Caya D, Correia J, Flory D, Gutowski W, Takle ES, Jones R, Leung R, Moufouma-Okia W, McDaniel L, Nunes AMB, Qian Y, Roads J, Sloan L, and Snyder M (2012). The North American Regional Climate Change Assessment Program: Overview of Phase I Results. Bulletin of the American Meteorological Society, 93(9):1337–1362.

- Mearns LO, Gutowski W, Jones R, Leung R, McGinnis S, Nunes A, and Qian Y (2009). A Regional Climate Change Assessment Program for North America. Eos, Transactions American Geophysical Union, 90(36):311–311.

- Mearns LO, Sain S, Leung LR, Bukovsky MS, McGinnis S, Biner S, Caya D, Arritt RW, Gutowski W, Takle E, Snyder M, Jones RG, Nunes AMB, Tucker S, Herzmann D, McDaniel L, and Sloan L (2013). Climate change projections of the North American Regional Climate Change Assessment Program (NARCCAP). Climatic Change, 120(4):965–975.

- Michalakes J, Dudhia J, Gill D, Henderson T, Klemp J, Skamarock W, and Wang W (2004). The weather research and forecast model: software architecture and performance. In Proceedings of the 11th ECMWF Workshop on the Use of High Performance Computing In Meteorology, volume 25, page 29. World Scientific.

- Patil GP and Taillie C (2004). Upper level set scan statistic for detecting arbitrarily shaped hotspots. Environmental and Ecological Statistics, 11(2):183–197.

- R Core Team (2015). R: A language and environment for statistical computing.

- Rao CR and Toutenburg H (1995). Linear models. Springer.

- Rigollet P and Vert R (2009). Optimal rates for plug-in estimators of density level sets. Bernoulli, 15(4):1154–1178.

- Rogelj J, Hare B, Nabel J, Macey K, Schaeffer M, Markmann K, and Meinshausen M (2009). Halfway to Copenhagen, no way to 2 °C. Nature Reports Climate Change, (0907):81–83.

- Schwartzman A, Dougherty RF, and Taylor JE (2010). Group Comparison of Eigenvalues and Eigenvectors of Diffusion Tensors. Journal of the American Statistical Association, 105(490):588–599.

- Singh A, Scott C, and Nowak R (2009). Adaptive Hausdorff estimation of density level sets. The Annals of Statistics, 37(5B):2760–2782.

- Sommerfeld M (2015). R-Package cope: Coverage Probability Excursion (CoPE) Sets.

- Tango T and Takahashi K (2005). A flexibly shaped spatial scan statistic for detecting clusters. International Journal of Health Geographics, 4:11.

- Taylor J, Takemura A, and Adler RJ (2005). Validity of the expected Euler characteristic heuristic. The Annals of Probability, 33(4):1362–1396.

- Taylor JE (2006). A Gaussian kinematic formula. The Annals of Probability, 34(1):122–158.

- Taylor JE and Worsley KJ (2007). Detecting Sparse Signals in Random Fields, with an Application to Brain Mapping. Journal of the American Statistical Association, 102(479):913–928.

- Tsybakov AB (1997). On nonparametric estimation of density level sets. The Annals of Statistics, 25(3):948–969.

- van der Linden P and Mitchell JF, editors (2009). ENSEMBLES: climate change and its impacts: summary of research and results from the ENSEMBLES project. Met Office Hadley Centre, Exeter, UK.

- Wameling A (2003). Accuracy of geostatistical prediction of yearly precipitation in Lower Saxony. Environmetrics, 14(7):699–709.

- Willett R and Nowak R (2007). Minimax Optimal Level-Set Estimation. IEEE Transactions on Image Processing, 16(12):2965–2979.

- Worsley KJ, Marrett S, Neelin P, Vandal AC, Friston KJ, Evans AC, and others (1996). A unified statistical approach for determining significant signals in images of cerebral activation. Human brain mapping, 4(1):58–73.

- Wright DL, Stern HS, and Cressie N (2003). Loss functions for estimation of extrema with an application to disease mapping. Canadian Journal of Statistics, 31(3):251–266.

- Wu CFJ (1986). Jackknife, Bootstrap and Other Resampling Methods in Regression Analysis. The Annals of Statistics, 14(4):1261–1295.

- Zhang J, Craigmile PF, and Cressie N (2008). Loss Function Approaches to Predict a Spatial Quantile and Its Exceedance Region. Technometrics, 50(2):216–227.

- Zhu J, Lahiri SN, and Cressie N (2002). Asymptotic inference for spatial CDFs over time. Statistica Sinica, 12(3):843–861.
