Abstract

In this paper, we develop an algorithm-based approach to the problem of stability of salient performance variables during motor actions. This problem is reformulated as stabilizing subspaces within high-dimensional spaces of elemental variables. Our main idea is that the central nervous system does not solve such problems precisely, but uses simple rules that achieve success with sufficiently high probability. Such rules can be applied even if the central nervous system has no knowledge of the mapping between small changes in elemental variables and changes in performance. We start with the rule “Act on the most nimble” (the AMN-rule), in which changes in the local feedback-based loops occur first for the most unstable variable. This rule is implemented in a task-specific coordinate system that facilitates local control. Further, we develop and supplement the AMN-rule to improve the success rate. Predictions of implementation of such algorithms are compared with the results of experiments performed on the human hand with both visual and mechanical perturbations. We conclude that physical, including neural, processes associated with everyday motor actions can be adequately represented with a set of simple algorithms leading to sloppy, but satisfactory, solutions. Finally, we discuss implications of this scheme for motor learning and motor disorders.

Key words: algorithm, stability, reference frame, human movement, finger action

Introduction

Many functions of the central nervous system (CNS) can be described as combining numerous elements (we will refer to their output as elemental variables) into relatively low-dimensional sets related to such functions as cognition, perception, and action. The existence of such low-dimensional sets ensures stability of percepts, thoughts, and actions despite the variable contributions from the elements (sensory receptors, neurons, motor units, etc.) and changes in the environment (reviewed in Latash, 2017, 2018). Here, we try to offer a mathematical description of processes that could bring about such stability using, as an example, the production of voluntary movements by redundant (Bernstein, 1967) (or abundant, Gelfand and Latash, 1998; Latash, 2012) sets of elements. Our approach is based on an intuitive, simple, algorithmic principle.

Consider the following example as an illustration of our goal and approach. Imagine a walking person who suddenly steps on a slippery surface. The slip is typically followed by a very complex pattern of movements of all body parts resulting in restoring balance in a large percentage of cases. Each time a slip occurs the movement pattern looks unique. We assume here that such highly variable patterns emerge as a result of a relatively simple algorithm applied to cases with varying initial conditions at the slip.

Our approach may be seen as an extension of the idea that biological systems are reasonably sloppy (Latash, 2008; Loeb, 2012; Maszczyk, 2018). This means that biological systems do not solve problems exactly, but rather use simple rules that produce solutions that are good enough (e.g., successful most of the time). We start with a requirement that the algorithm should rely, as much as possible, on local actions, that is, on actions that change a neural variable xi based on the actual and previous values xi (t < tactual) of this very variable, whereas other variables do not affect the decision. If such a purely local algorithm is incapable of providing good stability properties, our second principle is that the number of nonlocal interventions should be kept at a minimum. In this study, we start with the rule “Act on the most nimble” (the AMN-rule), in which changes in the local variables occur first for the most unstable variable.

In the simplest mathematical setting, the equilibrium control problem can be seen as a linear requirement imposed on N elemental variables xi (with i running from 1 to N) describing states of a redundant set of effectors; the desired state of the object is defined by Yj (with j running from 1 to M). The task is to find a proper feedback matrix βi,j, which via the equation

| (1) |

links the time derivatives ẋi to the deviations δyj = (yj − Yj) of the instantaneous coordinates yj of the controlled object from their desired values Yj. The goal is to construct a simple algorithm producing such a feedback matrix βi,j for an arbitrary task matrix αi,j. Formally, this implies that the spectrum {ωm} of the eigenvalues of the matrix ωj,j′ = Σi αj,i βi,j′ ultimately becomes stable (all eigenvalues have negative real parts) even when the task matrix αi,j experiences perturbations δαj,i.

Related issues have been addressed in the fields of control theory, in particular, optimal control (e.g., Diedrichsen et al., 2010; Todorov and Jordan, 2002). We would like to emphasize a few major differences:

First, our approach is not based on computing a value of a cost function, but on a simple rule;

Second, we focus on stability of action rather than on purposeful transitions to another state as in most earlier studies; and

Third, we expect the CNS to solve unknown motor tasks with unknown random Jacobian (J) matrices mapping elemental variables onto performance variables. This is in contrast to more traditional approaches where the CNS is assumed to know J. This is arguably the most important difference from earlier approaches. It is intuitively attractive: when we learn to use new tools, from a tennis racket to a car, we develop sets of rules leading to success without trying to compute all the new transformations from muscle action to motion of the racket or the car in space.

At this stage, we try to introduce and develop concepts and functional principles that may be realized by the CNS without an attempt to map them on specific neural processes. We assume, however, that these principles reflect physical (including physiological) processes within the body, and the mathematical descriptions developed in the paper are reflections of those processes. We do not assume that any of such computations actually take place within the body.

The paper takes the reader through a sequence of steps from simple versions of control to a version able to solve the problem of stabilizing subspaces in spaces of any dimensionality. We start by discussing the mathematical complexity of the problem and the specific requirements for solving this problem by an analog computer, which we see as a continuous dynamical system subject to a specific control and which serves as a model for the CNS at this stage. At this step, we introduce local algorithms and a new basis of neural variables where such local algorithms can be implemented. We present these algorithms in order, showing that putting some structure in the matrix β improves the success rate. We start with the simplest algorithms and then refine them and explore how much improvement in the success rate is gained.

We show that a random choice of the feedback has extremely low chances of yielding the required stabilization. We next show that using the AMN-rule can increase the chances of stabilization considerably. The results are further improved when the feedback matrix is not random, but is tailored in a specific way with elemental variables organized into “generations” including variables with velocities of the same order of magnitude. Further, we introduce the possibility of non-local actions, where each next generation is allowed to inhibit activity of previous generations. This is followed by analysis of the case where nimbleness of each generation is a tunable parameter. This allows finding a solution for a system of any dimensionality.

The next topic we discuss is the relations between noise and stability, since experimentally observed noise covariation allows revealing the structure of eigenvectors and their change in search for stability. For the purposes of the current study, we use “noise” to imply spontaneous variance in physiological signals that is not related to the explicit task and is not induced by an identifiable external perturbation. We understand that this definition may attribute to noise physiologically meaningful processes. We conclude the theoretical part by considering a realization of the control algorithm in a hierarchical system, showing the importance of the feedback damping, and proposing a model of self-adjusting damping, which yields time dependencies of the state variables resembling ones observed experimentally. At the end, we present results of two experiments illustrating feasibility of our approach.

Control Complexity and Reference Frames

Finding a solution of M linear equations for N ≥ M variables is a problem of polynomial complexity M². It is sufficient to take the first M columns of the M × N matrix αi,j and invert the resulting M × M square matrix, provided it is not degenerate. Solutions of the problem for the first M variables {x1 … xM} form an M-dimensional subspace SM in the entire N-dimensional space, parametrized by the remaining (N–M) variables {xM+1 … xN}. Construction of the inverse matrix by sequentially finding its row vectors, each orthonormalized against those found earlier, indeed requires a number of operations on the order of M².
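The construction above can be sketched numerically. The following is a minimal illustration, not the authors' code: the function name `solve_with_first_columns` and the random test matrices are hypothetical, and the first M columns of the task matrix are assumed to form a non-degenerate block.

```python
import numpy as np

def solve_with_first_columns(alpha, Y, x_free):
    """Return x of length N with alpha @ x == Y.

    alpha  : (M, N) task matrix, N >= M
    Y      : (M,) desired values
    x_free : (N - M,) values chosen freely for the last N - M variables
    """
    M, N = alpha.shape
    a_lead = alpha[:, :M]   # M x M block built from the first M columns
    a_rest = alpha[:, M:]   # block multiplying the free variables
    # Solve the square system rather than forming the inverse explicitly.
    x_lead = np.linalg.solve(a_lead, Y - a_rest @ x_free)
    return np.concatenate([x_lead, x_free])

# Illustrative random example (assumed sizes, not taken from the paper).
rng = np.random.default_rng(0)
M, N = 3, 7
alpha = rng.standard_normal((M, N))
Y = rng.standard_normal(M)
x = solve_with_first_columns(alpha, Y, rng.standard_normal(N - M))
```

Each choice of the free variables gives a different solution, which is how the remaining (N–M) variables parametrize the solution set.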

To solve this problem, one should construct and implement a dynamic process that has the M-dimensional subspace SM as a stable stationary manifold, as suggested by Eq. (1). Here, we propose several strategies for constructing heuristic algorithms that result in the dynamic stabilization of subspaces defined by systems of M equations for N ≥ M variables.

The most general, Kolmogorov, complexity relies on the number of operations required to reach an objective. However, sometimes, e.g. in quantum informatics, different basic operations may have different complexity and, therefore, they can be ranked in a certain way. For example, a local operation, which involves just one variable, and produces a change in the contribution of the variable to the overall dynamics based only on the history of evolution of that very variable, is considered as the simplest one, while non-local operations producing a change in a variable depending on the history of other variables, are considered as complex. Our notion of simplicity implies locality, although at a later stage one can construct a corresponding cost function, if needed.

We will use the terms “local” and “bi-local” for situations in which a prescribed action on one chosen element depends only on the state of that element or only on the state of another chosen element, respectively. Nonlinearity of the analog computer generated by such a local and/or bi-local algorithm may not result in universal stability of the dynamics. In other words, for some initial conditions the system may reach a stationary solution, while for other initial conditions it becomes unstable. In such situations, we will use the success rate R(N, M) of the algorithm as its quality characteristic.

Within this paper, we distinguish three types of bases. First, there is a measurement basis formed by experimentally accessible variables, for example positions, forces, or muscle activations. Second, there is another, task-dependent, basis formed by so-called modes, that are linear combinations of the former variables independently fluctuating under the action of noise and coinciding with the eigenvectors of the aforementioned matrix ωj,j′ (cf. Danion et al., 2003). The third basis is the reference system relying on the variables xi responsible for the control over the body state. To our knowledge, this coordinate system has not been defined previously. Each of the coordinates of the third system represents combinations of modes that are task specific and relatively quickly adjustable to changes in the external conditions of task execution, for example to changes in stability requirements (e.g. Asaka et al., 2008). This basis comprises the variables that experience local control.

The Control Structure

In the first approximation, we formulate the problem in terms of linear algebra: to solve the problem of subspace stabilization, one takes an arbitrary M × N task matrix that imposes the conditions of Eq. (1) with Yi = 0, defining the required subspace SM = {yi = 0}. The task is to find an N × M linear feedback matrix that prescribes modifications of {xi} leading towards SM by determining the derivatives

| (2) |

The dynamics of the system is described by the equation

| (3) |

and a stable M-dimensional subspace SM exists if all the non-zero eigenvalues of the degenerate square N × N matrix of rank M entering this equation have negative real parts. The probability of satisfying this requirement is low when either or both of the task and feedback matrices are chosen randomly. To stabilize SM with a high enough success rate R(N,M) for a chosen task matrix, a randomly or systematically chosen linear feedback matrix may therefore need to be modified. A sequence of such modifications can be continued according to a certain algorithm until stability is achieved.

Each elementary act of the modification algorithm relies on three main factors: (i) the variable xi; (ii) the history of its dynamics, which is described by a nonlinear functional zi({xi (t)}) that governs the action on the target variable xi(t) and/or another variable xf(t); and (iii) the type of the action itself, implementing the modification specified by a matrix. When the source and the target variables coincide, we encounter a so-called local algorithm, in which this matrix becomes diagonal.

Note a major difference between the proposed procedure for the stability search and typical approaches from control theory toward stabilization of object dynamics. Successful control over an object implies that a random deviation of its trajectory in the configuration space from the prescribed one is corrected by feedbacks based on a properly constructed monodromy matrix governing the dynamics in the vicinity of the trajectory; the motion is stable once all eigenvalues of this matrix have negative real parts. Our stability search algorithm does not require that all eigenvalues of the monodromy matrix, which in our case is the N × N matrix entering Eq. (3), have negative real parts from the start; the matrix may acquire this property as a result of sequential changes of the feedback. Moreover, in contrast to well-designed control over an object, the proposed stability search algorithm does not always succeed in finding the required feedback.

No algorithm

For the sake of simplicity of presentation, assume that the desired subspace SM corresponds to zero values of all the variables yi∈{1,M}. Non-zero values of these variables are then used as inputs to the feedback loop given by a rectangular N × M matrix βi,j, such that Eq. (2) holds, while Eq. (3) describes the system dynamics. For a generic randomly chosen rectangular matrix αi,j and a randomly chosen feedback matrix βi,k, the success rate R(N,M) found numerically scales approximately as 2^(−M). This is consistent with the assumption that each non-zero eigenvalue of the matrix governing Eq. (3) has a negative or positive real part with equal probability and that, roughly speaking, different eigenvalues are statistically independent of one another.
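The scaling quoted above can be probed with a small Monte Carlo experiment. This is a hedged sketch rather than the simulation used in the paper: it assumes the sign convention dx/dt = β y with y = α x, so that the deviations obey dy/dt = (α β) y, and the function name `random_success_rate`, the trial count, and the seed are illustrative choices.

```python
import numpy as np

def random_success_rate(N, M, trials=2000, seed=0):
    """Fraction of random (alpha, beta) pairs for which y = 0 is stable."""
    rng = np.random.default_rng(seed)
    stable = 0
    for _ in range(trials):
        alpha = rng.standard_normal((M, N))  # task matrix, unit-variance entries
        beta = rng.standard_normal((N, M))   # random feedback matrix
        # Assumed convention: dx/dt = beta @ y, y = alpha @ x,
        # hence dy/dt = (alpha @ beta) @ y.
        eigenvalues = np.linalg.eigvals(alpha @ beta)
        stable += int(np.all(eigenvalues.real < 0))
    return stable / trials

if __name__ == "__main__":
    for N, M in [(10, 1), (20, 2), (30, 3)]:
        print(N, M, random_success_rate(N, M))
```

Under these assumptions the estimates can be compared with the Rrandom row of Table 1.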

Local algorithm for random feedback

We begin with the simplest case of a local algorithm assuming that the feedback sign for a given xi changes when a positive quantity

| (4) |

exceeds a threshold value Z. This represents an example of the AMN control where, as a first guess, we consider the integral of the rates squared as a reflection of nimbleness. Further, we consider other strictly positive functions (see Eqs. 27 and 28). Formally, local control implies that Eq. (3) is modified by the presence of a sign function:

| (5) |

| (6) |

This strategy yields a 100% success rate for the case of M = 1, that is, the case when the task matrix has rank 1. In this case the dynamic equation

| (7) |

for the variable y1 determines the time dependencies of all the other variables. Indeed, if the quantity ω is positive, y1 increases exponentially in time. This means that the variable xi corresponding to the largest |βi,1| produces the highest zi(t), and at the moment when this quantity exceeds the threshold value Z, the sign of sign(Z – zi(t))βi,1 changes. For a positive α1,i, this results in a decrease of ω and a slowing-down of the instability. For a random matrix, however, the matrix element α1,i can equally be negative or positive; when it is negative, the change of the sign speeds the instability up. In that case, the exponential growth of the variables xi continues, and after a while the next largest |βi,1| leads to a change of the corresponding feedback sign. Changes of the signs continue until ω becomes negative and the dynamic process becomes stable. This will definitely occur for a matrix of rank 1, but not necessarily for higher ranks. Numerical search shows that the success rate Rlocal drops with increasing rank M. The results of the numerical simulation are shown in Table 1. Compared to the random feedback (Rrandom), the success rate is higher for all M and N. It drops rather quickly with M while being relatively less sensitive to N (compare the R values for M = 4 and N = 15, 40, and 60). In the simulation, each element of αk,j was taken as independently distributed with zero mean and variance equal to unity. The βi,k matrix had the same statistics of its elements. We also checked that a shift of the mean of the Gaussian distribution for the elements of βi,k did not have a significant effect as long as it was on the order of unity. The number of trials varied with the dimensionality of the dynamical system such that the statistical error was less than 5%.
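The argument for M = 1 can be condensed into a discrete caricature. The sketch below is an illustration under stated assumptions, not the continuous dynamics itself: it assumes that thresholds are crossed one at a time in order of decreasing |βi,1|, that ω = Σi α1,i βi,1, and that no further crossing occurs once ω is negative. The function name `flip_until_stable` is hypothetical.

```python
def flip_until_stable(alpha, beta):
    """Flip feedback signs in order of decreasing |beta_i| until omega < 0.

    alpha, beta : sequences of equal length (the M = 1 task and feedback)
    Returns (signs, omega, flips), where omega = sum(alpha_i * signs_i * beta_i).
    """
    signs = [1] * len(beta)
    omega = sum(a * b for a, b in zip(alpha, beta))
    # The most nimble variable is the one with the largest |beta_i|.
    order = sorted(range(len(beta)), key=lambda i: -abs(beta[i]))
    flips = 0
    for i in order:
        if omega < 0:            # stable: no further threshold is reached
            break
        signs[i] = -1            # sign change of the feedback for x_i
        omega -= 2 * alpha[i] * beta[i]
        flips += 1
    return signs, omega, flips

signs, omega, flips = flip_until_stable([1.0, 1.0], [3.0, 1.0])
```

In this example ω starts at 4, the first flip acts on the element with |β| = 3, and ω becomes −2. If every sign were flipped, ω would equal minus its initial value, which is why the procedure always terminates with a negative ω when it starts from a positive one.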

Table 1.

The success rate of various algorithms stabilizing the M-dimensional subspace of the N-dimensional space.

| M | 1 | 2 | 3 | 4 | 4 | 4 | 5 |
|---|---|---|---|---|---|---|---|
| N | 10 | 20 | 30 | 15 | 40 | 60 | 30 |
| Rrandom | 0.50 | 0.24 | 0.14 | – | 0.06 | – | – |
| Rlocal | 1 | 0.77 | 0.46 | 0.17 | 0.160 | 0.154 | 0.06 |
| Rtailored | 1 | 0.85 | 0.62 | 0.32/0.28 | 0.36 | 0.31 | 0.25/0.21 |
| Rgenerations | 1 | 0.9 | 0.68 | 0.42/0.46 | 0.66 | – | 0.45/0.31 |
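For readers who wish to experiment with the local rule, a continuous-time version can be sketched with explicit Euler integration. The forms used here are assumptions inferred from the text: zi accumulates the squared rate of xi, and the feedback for xi is multiplied by sign(Z − zi). The function name `simulate_amn`, the step size, the duration, and the two-variable example are illustrative and not taken from the paper.

```python
import numpy as np

def simulate_amn(alpha, beta, x0, Z=1.0, dt=1e-3, T=30.0, blowup=1e6):
    """Euler integration of the assumed local AMN rule.

    alpha : (M, N) task matrix, beta : (N, M) feedback matrix, x0 : (N,) start.
    Returns (norm of the final deviation y, array of final feedback signs).
    """
    x = np.array(x0, dtype=float)
    z = np.zeros_like(x)                    # nimbleness functionals z_i
    for _ in range(int(T / dt)):
        y = alpha @ x                       # deviation from the subspace y = 0
        if np.linalg.norm(y) > blowup:      # treat runaway growth as failure
            break
        s = np.where(z < Z, 1.0, -1.0)      # sign(Z - z_i)
        dx = s * (beta @ y)                 # assumed form of the local rule
        x += dt * dx
        z += dt * dx**2                     # integral of the squared rate
    return np.linalg.norm(alpha @ x), np.where(z < Z, 1, -1)

# Two elemental variables, one task variable: omega starts at 3 + 1 = 4 > 0.
alpha = np.array([[1.0, 1.0]])
beta = np.array([[3.0], [1.0]])
final_norm, final_signs = simulate_amn(alpha, beta, x0=[1e-3, 0.0])
```

In this example the deviation first grows, the variable with the larger |β| crosses the threshold and has its feedback sign reversed, and the deviation then decays. For larger M the same function can be called repeatedly with random matrices to estimate a success rate, with the outcome depending on the assumed Z, dt, and T.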

Tailored linear feedback in the local algorithm

Within the general case of Eq. (5), we replace the random feedback matrix βi,j with another one, constructed to augment the success rate for higher M:

| (8) |

where each block is a column vector whose size is the ratio N/M, which we assume to be an integer. Here q is a small parameter, which suggests that at each time scale one has to deal with a subspace of rank 1 using the same algorithm as for the case M = 1; the subspaces corresponding to larger matrix elements are assumed to have been stabilized earlier.
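A feedback matrix with this kind of structure can be built as follows. The layout is an assumption based on the description of generations in the text and in the caption of Figure 1: block k couples the k-th task variable to its own group of N/M elemental variables and is scaled by q raised to the power k, with zeros elsewhere. The function name `tailored_feedback` and the Gaussian entries are illustrative.

```python
import numpy as np

def tailored_feedback(N, M, q, seed=0):
    """Return an (N, M) block-structured feedback matrix.

    Rows k*N/M ... (k+1)*N/M - 1 of column k hold q**k times a random
    column vector; all other entries are zero (assumed layout).
    """
    if N % M != 0:
        raise ValueError("N must be an integer multiple of M")
    rng = np.random.default_rng(seed)
    block = N // M
    beta = np.zeros((N, M))
    for k in range(M):
        rows = slice(k * block, (k + 1) * block)
        beta[rows, k] = q**k * rng.standard_normal(block)
    return beta

beta = tailored_feedback(N=40, M=4, q=0.4)
```

With q < 1 the later blocks are weaker, so the corresponding variables evolve on slower time scales than those of the earlier blocks.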

Tailored linear feedback, generations, and non-local algorithm

The idea of bi-local control allows excluding undesired changes in the feedback sign determined at an earlier stage of control that may be induced at a later stage. In the feedback matrix of Eq. (8), we identify parts that belong to different generations, corresponding to different orders of the parameter q. The first generation corresponds to q^0, and the last, the most recent, generation to q^(M−1). Each generation accounts for the feedback at the corresponding time scale. The idea of the control algorithm is that changing the sign of a variable belonging to a generation blocks changes of the signs of the variables belonging to all former generations. The term generation means that some of the variables are faster than others, such that, at each time scale, the control occurs mainly in a subspace of a smaller dimension (close to one).

Formally, the bi-local control implies that the set of Eqs. (4, 5) is modified by the presence of step factors Θ(Z − zi) at the derivatives for the variables zi corresponding to “generations” that happened after that of i, and reads

| (9) |

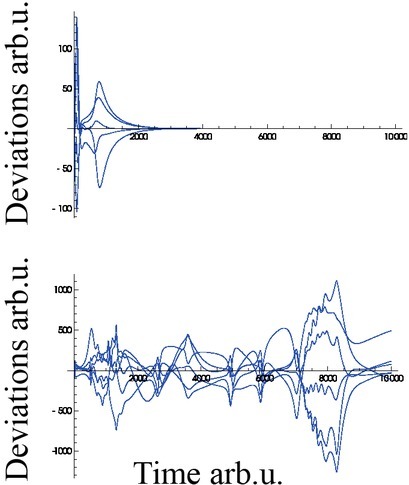

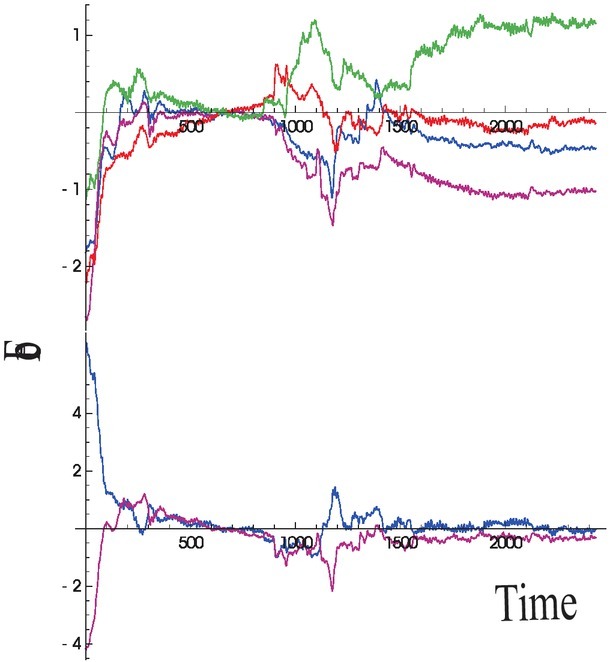

where Θ(x) is the step function. The first equation shows that the functionals zi(t) defining the feedback signs are no longer local, since the dynamic equations ruling these quantities depend not only on the corresponding local squared velocities, but also on the values of the functionals for other variables. This construction further improves the success rate. The results of the numerical simulations for the success rate Rgenerations are shown in the last line of Table 1 for q = 0.07–0.1. Figure 1 presents an example of the time-dependent deviations y1…4(t) for successful control with N = 40, M = 4, and q = 0.4, where the abrupt changes of the dependencies reflect changes of the feedback sign.

Figure 1.

An example of stabilizing a four-dimensional subspace in a 40-dimensional space. Time dependencies of the variables y1…4 that determine the subspace for yi = 0 are shown. The first variable, y1 (the blue solid line), corresponds to the first generation of the variables x1…x10 having the strongest coupling. The second variable, y2 (the red dashed line), is coupled to the variables x11…x20 with weaker coupling constants, scaled by the factor q = 0.4 with respect to the first variable. The third and the fourth generations (the dash-dot and the dotted lines, green and black, respectively) have couplings scaled by the factors q² and q³, respectively. Note seven sequential discontinuities of the derivatives of the dependencies related to the sign changes at the most nimble variables xi, which first occur in the first generation, then in the second, etc. The changes in each of the generations manifest themselves in all dependencies via the matrix. The scales of y(t) and t are arbitrary.

Feedback strength exploring algorithm

Simple local algorithms discussed above solve the problem with some probability, which is less than unity for more than one dimension. We have identified an algorithm, though of higher complexity, which solves the multi-dimensional problem with probability one. The bi-local algorithm can be modified to gain a 100% success rate if, instead of the fixed power factors q^n entering Eq. (8), one allows sequential choosing of these feedback strength parameters for each next generation. In a sense, this algorithm implies learning, that is, given a task, it modifies the feedback matrix once the sign-changing algorithm does not lead to subspace stabilization. More specifically, given q < 1, similar to Eq. (8), in

| (10) |

the power parameters p1 ≤ p2 ≤ … ≤ pM−1 have to be chosen such that the subspace {yi = 0} is stable.

One begins with all pi = ∞, that is, with a one-dimensional subspace, which can always be made stable by a proper choice of signs. Next, one sets p1 to zero and starts to implement the sign-changing algorithm in the subspace of the second diagonal cell of Eq. (10). If this algorithm does not lead to a stable subspace of dimension 2, one increases p1 by unity and implements the sign-changing algorithm for the second cell once again. Repeating this procedure leads to finding p1 such that the two-dimensional space becomes stable. Next, one turns to the third cell of the matrix in Eq. (10), sets p2 = p1, and implements the sign-changing algorithm complemented by augmentation of p2 by unity, if needed, until the third dimension becomes stable. The procedure is sequentially applied to all the cells of Eq. (10) and yields a feedback matrix stabilizing the required subspace of dimension M for the chosen task matrix.

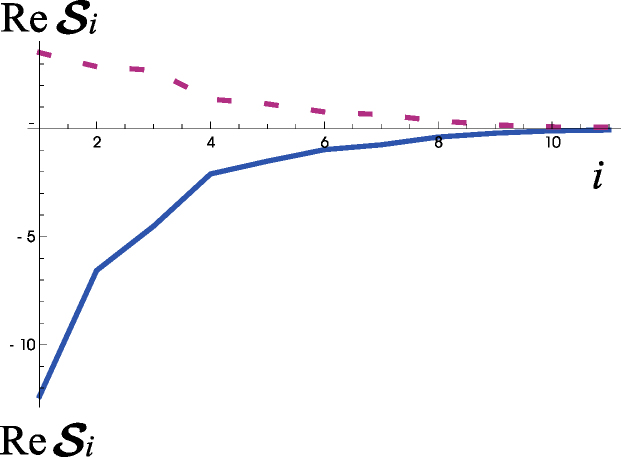

Figure 2 shows the average eigenvalues and their mean absolute value deviation from the average calculated with the help of this algorithm. Both these quantities are exponentially decreasing with the eigenvalue number. These results are not shown in Table 1, since all the entries are unities.

Figure 2.

The average sorted eigenvalues (solid line) and their average mean absolute value deviation (dotted line) obtained for the case N = 143, M = 11 with the help of the feedback strength-exploring algorithm. The average has been taken over 25 casts of the random matrix. The scales are arbitrary since the plotted quantities have no dimension, and 1 ≤ i ≤ M.

This result means that, theoretically, the local control based on changing the feedback sign for the most nimble variable (the AMN-rule) combined with a simple non-local control solves the feedback search problem for any dimension M. We think, however, that this solution may be too complex. Besides, based on everyday experience that shows failures at motor tasks, we believe that algorithms used for stabilization of actions are imperfect (leading to success in less than 100% of cases), similar to the ones described above based on the AMN-rule.

Feedback Structure and Susceptibility to Noise

We now address the question: what happens in the presence of noise with the convergence towards the stabilized subspace ensured by the feedback matrix

| (11) |

Here, the feedback matrix is the one obtained after successful implementation of the control algorithm. As a reminder, in this context, “noise” implies external task-independent actions that can be of various origin. More specifically, the question is: how far from the average positions xi(t) satisfying the dynamic equations can the actual variables Xi(t) = xi(t) + δxi(t) deviate in the presence of a time-dependent noise ƒi(t)? This question can be immediately answered on the basis of the eigenvectors of the matrix Ωi,j of Eq. (11).

Consider the dynamic equations

| (12) |

for the deviations δxi, in which the rates are set by the eigenvalues of Ωi,j. The solution

| (13) |

of these equations in the Fourier representation

| (14) |

suggests that the spectral noise intensity of the coefficients xi(t) is related to the spectral noise intensity of ƒi(t) via the relation

| (15) |

which corresponds to the susceptibility

The simplest model includes random static forces ƒi acting on the relevant variables during a time interval T. In successive time intervals, these forces show random values. This yields:

| (16) |

Noise analysis may serve as a powerful tool for revealing the eigenvectors of the matrix Ωi,j in Eq. (11), which, via Eqs. (15, 16), also allows estimating the magnitudes of the corresponding eigenvalues. One needs to calculate the noise covariance matrix Cij from the experimentally observed deviations of the time-varying measured values from their average values in the stationary regime. Eigenvectors of this matrix should coincide with those of Ωi,j. Assume that physical/physiological processes can be adequately expressed with an algorithm similar to the one described in the paper. In that case, the noise analysis may connect those physical/physiological processes with the processes modeled by the algorithm. Note that for N > M, the non-zero eigenvalues of the N × N matrix (the feedback matrix multiplied by the task matrix) coincide with the M eigenvalues of the M × M matrix (the task matrix multiplied by the feedback matrix). The remaining (N – M) eigenvalues are zeros, unless an additional requirement is imposed on the matrix.
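The link between the noise covariance and the eigenvectors of the dynamics can be checked in a simple special case. The sketch below makes assumptions that go beyond the text: the matrix Ω is taken to be symmetric and stable, and the noise is white and isotropic rather than the piecewise-static forces of Eq. (16). Under these assumptions the stationary covariance C solves the Lyapunov equation Ω C + C Ωᵀ + D = 0. The function name `stationary_covariance` and the chosen rates are illustrative.

```python
import numpy as np

def stationary_covariance(Omega, D):
    """Solve Omega @ C + C @ Omega.T + D = 0 for C using Kronecker products."""
    n = Omega.shape[0]
    eye = np.eye(n)
    # Row-major vectorization: vec(Omega C) = kron(Omega, I) vec(C),
    # vec(C Omega^T) = kron(I, Omega) vec(C).
    L = np.kron(Omega, eye) + np.kron(eye, Omega)
    return np.linalg.solve(L, -D.reshape(-1)).reshape(n, n)

# Assumed example: symmetric stable Omega with known eigenvectors Q.
rng = np.random.default_rng(0)
Q, _ = np.linalg.qr(rng.standard_normal((4, 4)))
rates = np.array([0.5, 1.0, 2.0, 4.0])      # decay rates, eigenvalues are -rates
Omega = Q @ np.diag(-rates) @ Q.T
C = stationary_covariance(Omega, np.eye(4))  # unit isotropic noise intensity

# C and Omega commute, so they share eigenvectors; variances are 1 / (2 * rate).
variances = np.linalg.eigvalsh((C + C.T) / 2)
```

In this special case the slowest mode carries the largest variance. For a non-symmetric Ω, or for anisotropic noise, the eigenvectors of C generally differ from those of Ω, so the sketch illustrates the idea rather than the general statement.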

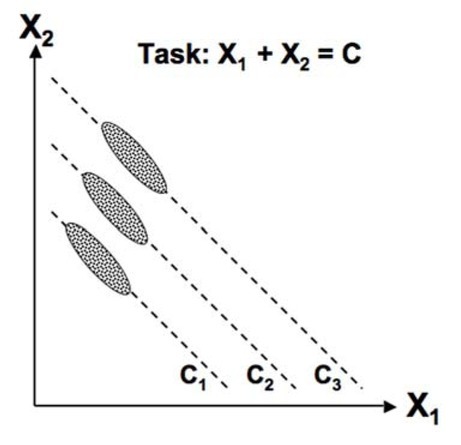

Given a task matrix and a feedback matrix, there exist two subspaces in the space of the variables xi, the so-called uncontrolled manifold (UCM) (Scholz and Schöner, 1999) and its orthogonal complement (ORT). The ORT is the task-specific subspace expected to show high stability, which implies that variance in the ORT is expected to be small. In the UCM, variance is generally expected to be large, unless there are other factors, outside the explicit task formulation, that keep it within a certain range. Not all possible combinations of the involved elemental variables are used across repetitive attempts. Such self-imposed additional constraints may be addressed as perfectionism; they may reflect optimization with respect to a cost function (e.g., Terekhov et al., 2010). Figure 3 illustrates the task x1 + x2 = C for different values of C. While all points on the slanted dashed lines correspond to perfect task performance, actual behavior shows much more constrained clouds of solutions that show larger deviations along the solution space (the UCM) compared to deviations along the ORT. Note that if the task is learned for a particular value of C, the solutions show robustness for other values of C.

Figure 3.

Consider a task of producing a constant sum of two variables, e.g., pressing with two fingers and producing a constant total force level. The dashed lines show solution spaces (uncontrolled manifolds, UCMs) for three different force levels, C1, C2, and C3. Across repetitive trials, clouds of data points form ellipses elongated along the corresponding UCM. This shape reflects lower stability along the UCM as compared to the orthogonal direction relevant to the task-imposed constraints. Note that the three data clouds are centered not randomly along the UCMs but reflect a certain preferred sharing of the task between the two effectors. This preference may reflect an optimization principle.
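The decomposition of variance for the two-finger task can be sketched as follows. The data here are synthetic and generated only for illustration; they are not experimental results. The function names `ucm_ort_variance` and `synthetic_trials`, the sharing pattern, and the standard deviations are hypothetical.

```python
import numpy as np

E_UCM = np.array([1.0, -1.0]) / np.sqrt(2)  # direction keeping x1 + x2 unchanged
E_ORT = np.array([1.0, 1.0]) / np.sqrt(2)   # direction changing x1 + x2

def ucm_ort_variance(X):
    """Variance across trials along the UCM and along ORT.

    X : (trials, 2) array of elemental variables [x1, x2] for x1 + x2 = C.
    """
    dev = X - X.mean(axis=0)                # deviations from the mean sharing
    return np.var(dev @ E_UCM), np.var(dev @ E_ORT)

def synthetic_trials(n, mean, sd_ucm, sd_ort, seed=0):
    """Illustrative data: independent Gaussian scatter along UCM and ORT."""
    rng = np.random.default_rng(seed)
    a = rng.normal(0.0, sd_ucm, n)
    b = rng.normal(0.0, sd_ort, n)
    return np.asarray(mean) + np.outer(a, E_UCM) + np.outer(b, E_ORT)

X = synthetic_trials(500, mean=[6.0, 4.0], sd_ucm=2.0, sd_ort=0.5)
v_ucm, v_ort = ucm_ort_variance(X)
```

With two elemental variables and one constraint, each subspace is one-dimensional, so the two variances can be compared directly without normalization per dimension.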

More formally, after a feedback matrix stabilizing an M-dimensional subspace is found, one may impose additional constraints that can either be in the form of explicit equations or follow minimization of a cost function. Then a subspace is stabilized of a dimension M+ exceeding the dimensionality M of the initial task subspace. Such perfectionism may be viewed as a secondary task decreasing variance in some directions of the UCM.

Recall the task-specific basis of variables along which the control is local. This basis is a conceptually new feature affording a simple structure of the control algorithm. The relation between the basis of modes and the control basis may change during the process of stability search: application of the local control leads to a change of the feedback matrix, that is, of the way the task requirement is mapped onto the feedback action. Thereby it may affect both the mode basis and the magnitude of the corresponding mode susceptibilities. There exists a case in which the mode susceptibilities vary without changing the corresponding eigenvectors. Then, the two linear combinations of the laboratory basis variables that form the modes remain statistically independent, and only the variance of one mode starts to exceed the variance of the other. One can call this regime mode crossing, similarly to the phenomenon of term crossing in quantum mechanics (Akulin, 2014). In the general case, when, along with a change of the susceptibilities, the local changes also result in the emergence of an appreciable covariance between the modes, one encounters the phenomenon of so-called avoided crossing: the formerly larger mode susceptibility, though approaching the other one in magnitude, always remains larger, while both eigenvectors corresponding to these modes rotate.

Algorithm for Hierarchical Feedback

Earlier, we showed that “tailored” feedback improves the ability of the system to find stability with the help of the AMN-rule as compared to random feedback. How can such a structure of the feedback matrix appear in nature? One possible answer is a hierarchical architecture of the feedback channels, which we discuss in this section. Hierarchical control gives an example of a stabilization search algorithm different from that considered earlier. We demonstrate that such an algorithm requires only local control, whereas the role of nonlocal control can be played by switching to another randomly chosen linear feedback matrix once the current one does not yield stability. The idea of hierarchical control in the human body is very old. A comprehensive scheme of control with referent configurations has been suggested recently to be built on a hierarchical principle, starting with referent coordinates for a few task-specific, salient variables, and resulting in referent length values for numerous involved muscles (Latash, 2010; Feldman, 2015). We also explore another modification: the most nimble xi experiences not a step change of the sign of its contribution, but a smooth change of the feedback gain as the cosine of the corresponding functional zi.

Intrinsic instability of the hierarchical control

In mathematical terms, the time derivative of a vector of variables at the n-th step depends on the variables of the previous step, while the spatial dimension Nn of each step varies. At each step, local control may be implemented. For example, the corresponding set of the local control equations for a three-level hierarchy has the form

| (17) |

based on the diagonal matrix elements of the local control operators. To illustrate the universality of the AMN-rule, the cosine function is used instead of the sign function. If, at each level n of the hierarchy, we also include diagonal damping matrices of the dimension given by the number Nn of the variables at this level, the set of equations (17) can be written as a single matrix equation:

| (18) |

Alternatively, the local control operation may be applied not to susceptibility of a given variable to external factors, but to efficiency with which this variable acts on the variables of the next generation. In such a case, two operators on the left-hand side of Eq. (18) have to be interchanged:

| (19) |

Though offering a simple way to address many variables at once, hierarchical control may add instability. Therefore, the intermediate steps have to be damped in contrast to the one-step control of Eq. (9). In particular, note that for zero damping, even the simplest two-level control becomes unstable, and this is always the case for a higher number of the control levels. The origin of this instability is rather simple and can be illustrated with an example of a three-level control scheme, with just one variable at each level, when Eq. (18) takes the form:

| (20) |

corresponding to the characteristic equation

| (21) |

It is evident that, for any non-zero complex number on the right-hand side of this equation, the phase factors of the three roots of the cubic equation are equally distributed on the unit circle in the complex plane such that at least one of them has a positive real part. The same structure of the root distribution persists in a higher-dimensional case with l-level control, since the characteristic equation in this case has the form

| (22) |

and hence the roots of the characteristic polynomial, given by the l-th roots of the eigenvalues of the matrix, are also uniformly distributed on the corresponding circles in the complex plane, with radii determined by the moduli of the eigenvalues.
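The root-distribution argument can be checked numerically. The following is a minimal sketch, assuming that the characteristic equation of an undamped l-level chain reduces to the form λ^l = c with a non-zero complex c; the function names `lth_roots` and `max_growth_rate` are ours and serve only this illustration.

```python
import cmath
import math

def lth_roots(c, l):
    """Return all l solutions of lambda**l = c for a non-zero complex c."""
    radius = abs(c) ** (1.0 / l)
    phase = cmath.phase(c)
    # The roots share one radius and are spaced by 2*pi/l in phase.
    return [cmath.rect(radius, (phase + 2.0 * math.pi * k) / l) for k in range(l)]

def max_growth_rate(c, l):
    """Largest real part among the roots; a positive value signals instability."""
    return max(root.real for root in lth_roots(c, l))

# Scan the phase of a unit-modulus right-hand side for a three-level chain.
# The least unstable case is the smallest of the largest real parts.
worst_case = min(
    max_growth_rate(cmath.rect(1.0, 2.0 * math.pi * j / 360.0), 3)
    for j in range(360)
)
```

In this scan the largest real part never falls below about one half of the common radius, so for l = 3 at least one root lies in the right half-plane whatever the phase of c.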

We thus come to the conclusion that damping is indispensable for stable hierarchical control. At the same time, non-zero damping at all levels implies a trivial asymptotic situation of completely damped motion at all levels for all tasks. Therefore, in order to have a reasonable model, we have to assume that the damping rates vanish at exactly one hierarchical level of control. Presumably, this level should have the maximum dimensionality Nn.

Action of feedback loops

Stability can be improved by introducing intermediate feedback loops to the net feedback loop as shown in Figure 4. Adjusting gain in the feedback loops controls stability at each hierarchical level. This effect can be modeled when the damping parameters γi are taken depending on the local parameters zi, increasing, for instance, as

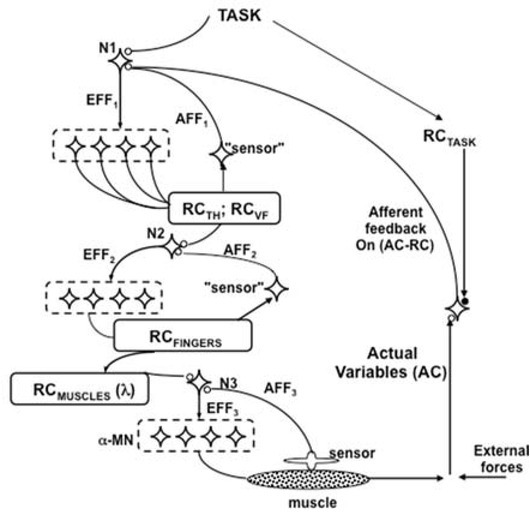

Figure 4.

A scheme of hierarchical control of the hand within the idea of control with referent configurations (RCs) of the body. At the top level, a low-dimensional set of referent values for salient, task-specific variables is reflected in the RC. A sequence of few-to-many transformations results in higher-dimensional RCs at the digit level and muscle level. Local feedback loops ensure stability with respect to the variables specified by the input. The global feedback loop ensures that the actual body configuration moves towards one of the solutions compatible with the task RC. At each level, inputs to a neuronal pool (N1, N2, and N3) are combined with afferent feedback (AFF) to produce the output (efferent signals, EFF). At the lowest level, elements are alpha-motoneurons and their referent coordinates correspond to the thresholds of the tonic stretch reflex (lambda).

| (23) |

for the variables at each damped level. The parameters γi are positive for all, but one, hierarchical levels, where they may remain zero, in order to avoid the trivial case of complete damping of all variables. For the local control of the damping, the dependence zi(t) can be given by a differential equation relating the positive rate of increase with an even power ƒ of either the corresponding variable

| (24) |

or the corresponding variable velocity,

| (25) |

where ε < 1 is a numerical parameter, which may depend on the hierarchy level number and the variable number. Eq. (25) illustrates that the AMN-rule does not require a specific functional form of the action on the most nimble variable, but describes the choice of such an action.

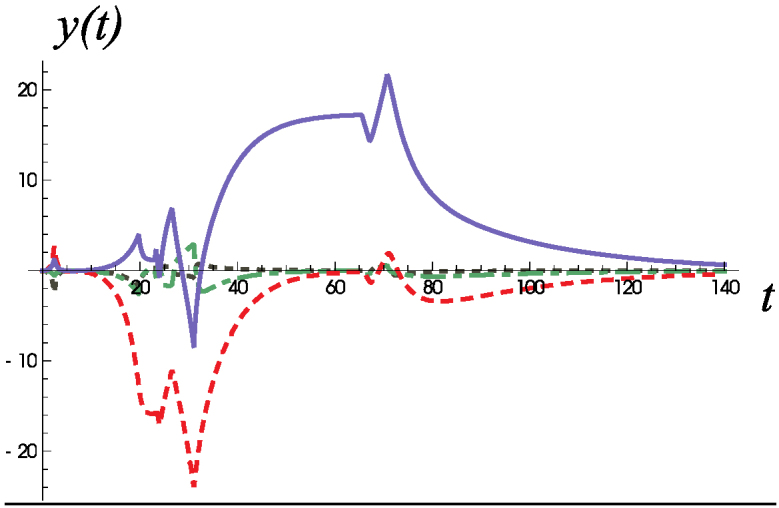

A typical time dependence for the successfully stabilized subspace is shown in Figure 5 (the top plot). Due to smoothness of the local feedback control, cos(0.1zi) instead of sign(10π − zi), the curves do not show cusp-like changes of the derivatives, typical of those in Figure 1. An example of unsuccessful control is shown in the bottom plot in Figure 5.

Figure 5.

Time dependencies for the successfully controlled five-dimensional subspace in the hierarchical cascade setting with N1/N2/N3 = 5/50/200 (upper plot). An example of unsuccessful control (lower plot). The scales are arbitrary since the evolution equations have no dimension.

The rather high success rate of hierarchical local control suggests a strategy, which can replace non-local control. Once the current random cast of the feedback matrices does not yield stabilization during a run, one may take another random cast in the course of the same run, and in case of failure, repeat the attempt again and again. For example, the probability 0.3 implies that just a few (4 − 5) such casts during a run are required in order to stabilize a 5-dimensional subspace. Changing the linear feedback matrices when local control turns out to be inefficient may be considered as a replacement for non-local control discussed in the previous section. Compare this strategy with a typical sequence of corrective actions by a person slipping on ice.
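The estimate of a few casts per run can be made explicit with elementary probability. The sketch below assumes, as a simplification not stated in the text, that successive random casts succeed independently with the same probability; the function names are ours.

```python
import math

def success_after_casts(p_single, casts):
    """Probability that at least one of `casts` independent attempts succeeds."""
    return 1.0 - (1.0 - p_single) ** casts

def casts_needed(p_single, target):
    """Smallest number of independent casts reaching the target probability."""
    return math.ceil(math.log(1.0 - target) / math.log(1.0 - p_single))

p = 0.3  # single-cast success probability quoted in the text
# Cumulative success probability after 1..5 casts, rounded for display.
table = {k: round(success_after_casts(p, k), 3) for k in range(1, 6)}
```

Under this independence assumption, four casts give a cumulative success probability of about 0.76 and five casts about 0.83, in line with the 4 to 5 casts mentioned above.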

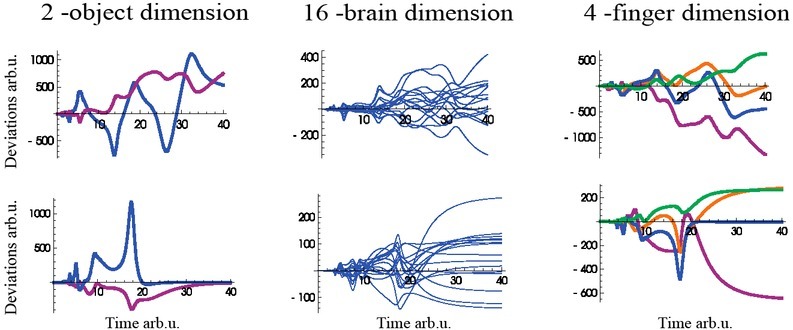

Note that the dimensionality at each step of the hierarchy does not need to be larger than the dimensionality at the previous step. We illustrate this with an example of control over a two-dimensional space y exerted by a three-level hierarchical feedback with locally changing parameters. The first-level variables belong to a space of the dimension N1 = 4; here the damping increases according to Eqs. (23, 25) with ε = 0.2, ƒ = 2, and μi = 0.1. The next level of the hierarchy comprises the variables that belong to a space of the dimension N2 = 16 without damping. The last level comprises variables in a four-dimensional space N3 = 4 with strong constant damping Γi = 5. The success rate for these parameters was R ≃ 0.8. Examples of unsuccessful and successful search of stability are shown in Figure 6 for the components of the vectors y, and Note that, in cases of successful control, the components of the high-dimensional vectors tend to asymptote with time; in contrast, in cases of unsuccessful control, they keep changing.

Figure 6.

Examples of successful (lower panels) and unsuccessful (upper panels) search for the new equilibrium control. We show coordinate variables as functions of time in arbitrary units. First column: deviations of two controlled coordinates from their prescribed value – zero. The second column: dynamics of the hypothetical elemental variables tend to some asymptotic values for successful control. Third column: dynamics of motor variables. One sees the dynamics of transition from the old equilibrium position to the new one. The scales are arbitrary since the evolution equations have no dimension.

Experiments with the Human Hand

Two experiments were performed to illustrate one of the central ideas of the suggested scheme and check some of its predictions. We used the task of accurate force (F) and moment-of-force (M) production by the four fingers of the dominant hand. Two types of perturbations were used. First, we used mechanical perturbations applied to a finger that led to actual changes in F and M. Second, we modified the visual feedback leading to changes in the mapping between the finger forces and the feedback shown on the monitor. The experimental procedures were approved by the Office for Research Protections at the Pennsylvania State University.

Experiments with mechanical perturbations

The main goal of the first experiment was to provide support for the principle “act on the most nimble one” (AMN). Mechanical perturbations (lifting and lowering a finger) were applied during the performance of an accurate multi-finger steady-state task. According to our scheme, quick reactions to these perturbations are based on the AMN-rule. We checked this prediction by comparing the directions of changes in the finger force space produced by unexpected perturbations of the steady-state force patterns (described as a perturbation vector) with the first identifiable correction produced by the subjects (a correction vector). We expected the angle between the perturbation and correction vectors to be small, smaller than the angle between the perturbation vector and the finger mode vector defined by enslaving.

Methods

The “inverse piano” setup

Eight young, healthy subjects took part in the experiment (four males). They were right-handed, had no specialized hand training (such as playing musical instruments) and no injury to the hand. An “inverse piano” apparatus was used to record finger forces and produce perturbations. The apparatus had four force sensors placed on posts powered by linear motors, which could induce motion of the sensors along their vertical axes (for details see Martin et al., 2011). Force data were collected using PCB model 208C01 single-axis piezoelectric force transducers (PCB Piezotronics, Depew, NY). The signals from the transducers were sent to individual PCB 484B11 signal conditioners, one conditioner per sensor, and then digitized at 1 kHz using a 16-bit National Instruments PCI-6052E analog-to-digital card (National Instruments Corp., Austin, TX). Each sensor was mounted on a Linmot PS01-23x80 linear actuator (Linmot, Spreitenbach, Switzerland). Each actuator could be moved independently of the others by means of a Linmot E400-AT four-channel servo drive. Data collection, visual feedback to the subject, and actuator control were all managed using a single program running in a National Instruments LabView environment. Visual feedback was provided by means of a 19-inch monitor placed 0.8 m from the subject. The feedback cursor (a white dot) represented F along the vertical axis and M along the horizontal axis; M was computed in a frontal plane with respect to a horizontal axis passing through the midpoint between the middle and ring fingers. Pronation efforts led to leftward deviation of the cursor. An initial target (a white circle) was placed on the screen, corresponding to a total force of 10 N and zero total moment.

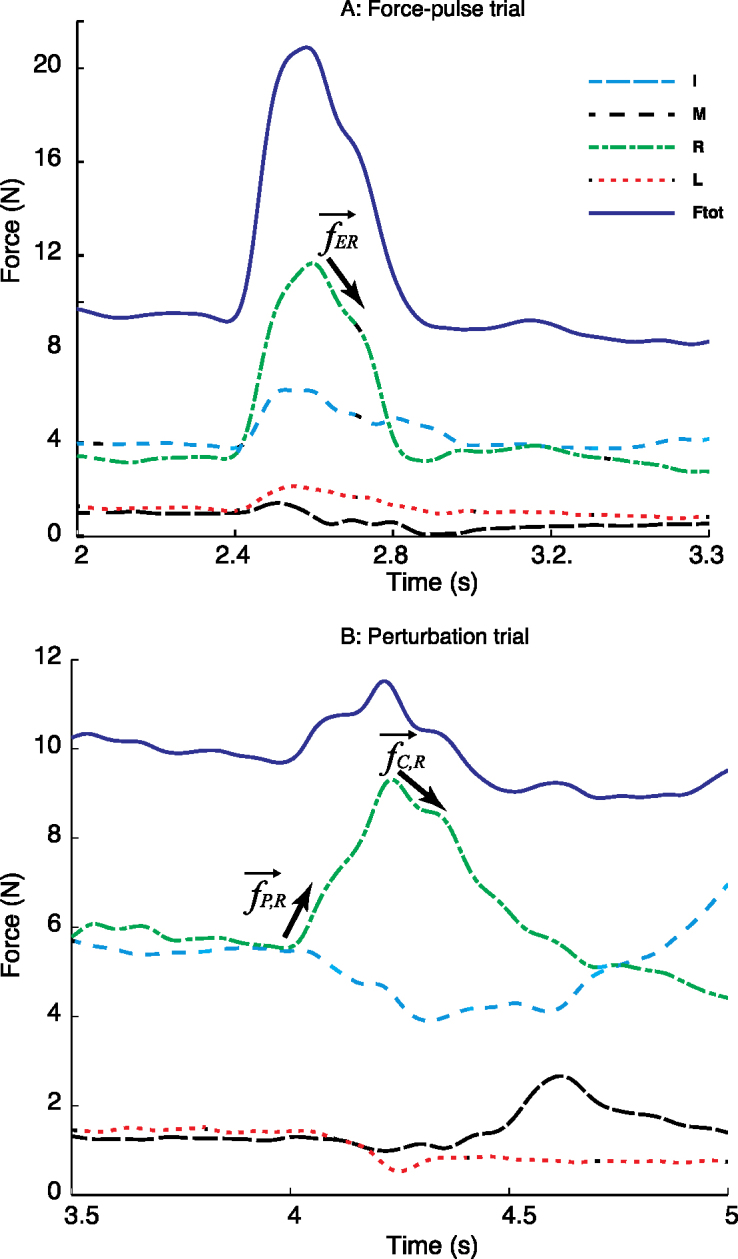

The experiment involved two parts: voluntary force-pulse production and reacting to unexpected perturbations. Prior to each trial, the subjects placed the fingers on the centers of the sensors and relaxed; the sensor readings were set to zero. As a result, the sensors measured only active pressing forces. Then, a verbal command was given to the subject and data acquisition started. The subject was given 2 s to place the cursor over the initial target. During the force-pulse trials, the subjects were asked to produce a force pulse from the initial target in less than 1 s by an instructed finger (Figure 7A). Each finger performed three pulse trials in random order. In perturbation trials, one of the sensors unexpectedly moved up by 1 cm over 0.5 s. This led to an increase in total force (Figure 7B), while changes in the moment of force depended on which finger was perturbed. The subject was instructed to return to the target position as quickly as possible. Each finger was perturbed once per trial, with 10-s rest periods between each of the three repetitions. Perturbation conditions were block-randomized between fingers with 1-min rest periods between blocks.

Figure 7.

Typical time profiles of the force-pulse trial (A, top panel) and perturbation trial (B, bottom panel) performed by a representative subject. The total force profile is shown with the solid trace, and individual finger forces are shown with different dashed traces. The intervals used to compute the eigenvectors in the force space are shown with the arrows.

From the force-pulse trials we identified the onsets of the F decrease phase (Figure 7A). From the perturbation trials we identified the onsets of two time intervals: one corresponding to the perturbation-induced F change and the other corresponding to the earliest corrective action by the subjects. Each time interval contained 200 ms of the four-dimensional finger force (I, M, R, and L) data. Further, for each time interval, principal component analysis (PCA) based on the covariance matrix was used to compute the first eigenvector in the finger force space, accounting for most of the variance across the time samples, for each subject and each trial separately.

Therefore, for each perturbation trial we obtained two eigenvectors in the finger force space. We will refer to these vectors as the perturbation vector (force vector during the perturbation applied to the i-th finger) and the correction vector (force vector during the earliest correction in trials with perturbations applied to the i-th finger). For each finger, from the three force-pulse trials we computed an average enslaving vector (force vector during the downward phase of force change in the force-pulse task by the i-th finger). Note that this vector reflected the unintentional force production by non-task fingers of the hand (enslaving; Zatsiorsky et al., 2000).

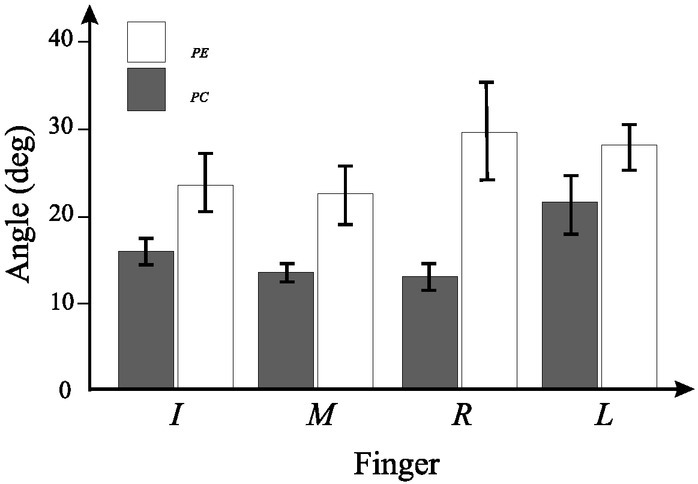

Finally, we computed the angles αPE, between the perturbation vector and the enslaving vector, and αPC, between the perturbation vector and the correction vector, for each finger and each subject separately (averaged across repetitions). The Harrison-Kanji test, which is an analog of two-factor ANOVA for circular data, was used with FINGER (4 levels: I, M, R, L) and ANGLE (2 levels, αPE and αPC) as factors. All data analyses were performed in Matlab (MathWorks, Inc.) software.
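The two analysis steps, extraction of the first principal component and computation of the angle between two such directions, can be sketched as follows. This is an illustration on synthetic data, not the Matlab code used in the study; the function names, the synthetic force directions, and the use of the absolute value of the cosine (because the sign of an eigenvector is arbitrary) are our assumptions.

```python
import numpy as np

def first_pc(forces):
    """Unit eigenvector of the covariance matrix with the largest eigenvalue.

    forces: array of shape (samples, 4) with I, M, R, L finger forces.
    """
    cov = np.cov(forces, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(cov)  # eigenvalues in ascending order
    return eigvecs[:, -1]

def angle_deg(u, v):
    """Angle between two directions in degrees, ignoring eigenvector sign."""
    c = abs(np.dot(u, v)) / (np.linalg.norm(u) * np.linalg.norm(v))
    return float(np.degrees(np.arccos(np.clip(c, 0.0, 1.0))))

# Synthetic 200-sample intervals (200 ms at 1 kHz); the directions are invented.
rng = np.random.default_rng(0)
t = np.linspace(0.0, 1.0, 200)[:, None]
perturb_dir = np.array([1.0, -0.3, -0.2, -0.1])  # perturbed finger up, others down
enslave_dir = np.array([1.0, 0.3, 0.2, 0.1])     # all loadings of the same sign
forces_P = t * perturb_dir + 0.01 * rng.normal(size=(200, 4))
forces_E = t * enslave_dir + 0.01 * rng.normal(size=(200, 4))
alpha_PE = angle_deg(first_pc(forces_P), first_pc(forces_E))
```

With real data, `forces_P`, `forces_E`, and the corresponding correction interval would be replaced by the measured 200-ms segments, and the angles would be averaged across repetitions before statistical testing.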

Results

Acting along the most nimble direction

During the force–pulse trials, forces of all four fingers changed in parallel (Figure 7A). There was a larger change in the force produced by the instructed finger and smaller changes in the other finger forces. These patterns are typical of enslaving reported in earlier studies (Danion et al., 2003; Zatsiorsky et al., 2000). PCA applied to the finger force changes produced similar results over the phase of force increase and the phase of force drop. The first PC accounted for over 95% of the total variance in the finger force space in all subjects and for each finger as the instructed finger. The loading factors at individual finger forces were of the same sign.

In trials with perturbations, lifting a force sensor produced a complex pattern of changes in the forces produced by all four fingers (as in Martin et al., 2011). Typically, the force of the perturbed finger increased, while the forces produced by the three other fingers dropped (Figure 7B). The total force increased. The first PC accounted for over 95% of the total variance in the finger force space in all subjects and for each finger as the perturbed finger. The loading factors at different fingers were of different signs; most commonly, the perturbed finger loading was of a different sign as compared to the loading of the three other fingers.

Overall, the angle between the vectors of perturbation and correction (αPC) was consistently lower than the one between the vectors of perturbation and voluntary force drop (αPE). Figure 8 shows averaged (across subjects) values of the two angles with standard error bars. The gross average of αPC was 15.9 ± 6.6°, while it was 25.9 ± 10.9° for αPE. The Harrison-Kanji test confirmed the main effect of angle (F[1,56] = 19.17; p < 0.0001) without other effects.

Overall, these results confirm one of the predictions of the AMN-rule. Indeed, the first reactions to perturbations in the four-dimensional finger force space showed relatively small angles with the vector reflecting the effects of the perturbation on finger forces. We would like to emphasize that the first reaction was not along the direction of finger force (mode) that was most perturbed, but along a multi-dimensional eigenvector in the four-dimensional space that showed the largest instability. While sensory information may inform on changes in individual degrees of freedom (finger forces), there is no obvious source of such information on any particular eigenvector in the finger force space. Hence, this observation is non-trivial and we are unaware of feasible alternative interpretations.

Experiments with visual feedback perturbations

Four 6-axis force/moment sensors (Nano-17, ATI Industrial Automation, USA) mounted on the table were used to measure normal forces produced by the tips of the index (I), middle (M), ring (R), and little (L) fingers. To increase friction between the digits and the sensors, 320-grit sandpaper was placed on the contact surfaces of the sensors. The centers of the sensors were evenly spaced at 30 mm. The output signals from the sensors were digitized with 16-bit resolution (PCI-6225, National Instruments) at 100 Hz. A LabVIEW program was used to provide visual feedback and store the data on the computer. Offline processing and analysis were done in Matlab.

At the beginning, the four-dimensional space of the finger forces was mapped onto the two-dimensional space of the screen according to a very natural rule: the vertical coordinate showed changes in the net resultant finger force (F), while the horizontal displacement showed the net moment of the finger forces (M). The subject was first requested to place the cursor into a position in the middle of the screen and, second, to keep it in this position at all times. Once this task was accomplished, the law according to which the deviation of the finger forces from the steady-state finger force values was mapped to the deviation of the point from the center of the screen was changed without the subject’s knowledge. The new law relating the four-dimensional space of the force deviations with the two-dimensional space of the cursor deviations from the center point was given by a new, randomly chosen, 2 × 4 Jacobian matrix, J. The subjects were always encouraged to keep the cursor in the target position in the center of the screen.
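The change of the feedback law can be illustrated with a short sketch. It assumes that the cursor deviation is the product of a 2 × 4 matrix and the vector of force deviations; the lever arms (taken from the 30-mm sensor spacing with the moment axis midway between the middle and ring fingers), the Gaussian distribution of the random entries, and all names are our assumptions, since the text does not specify how J was drawn.

```python
import numpy as np

# Assumed lever arms in meters for I, M, R, L relative to the M-R midpoint.
LEVER_ARMS_M = np.array([0.045, 0.015, -0.015, -0.045])

# "Natural" mapping: first row gives total force F, second row the moment M.
J_natural = np.vstack([np.ones(4), LEVER_ARMS_M])

def random_jacobian(rng):
    """Hypothetical random 2 x 4 mapping with standard normal entries."""
    return rng.normal(size=(2, 4))

def null_space_basis(J, tol=1e-10):
    """Orthonormal basis of force deviations that leave the cursor unchanged."""
    _, s, vt = np.linalg.svd(J)
    rank = int(np.sum(s > tol))
    return vt[rank:].T

rng = np.random.default_rng(1)
J_new = random_jacobian(rng)
N = null_space_basis(J_new)  # columns span the two-dimensional null-space
```

Any force deviation within the span of the columns of `N` leaves the cursor in place, which is why only a two-dimensional subspace of the finger force space is relevant to the task after the change of the mapping.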

Manifestations of the feedback changes

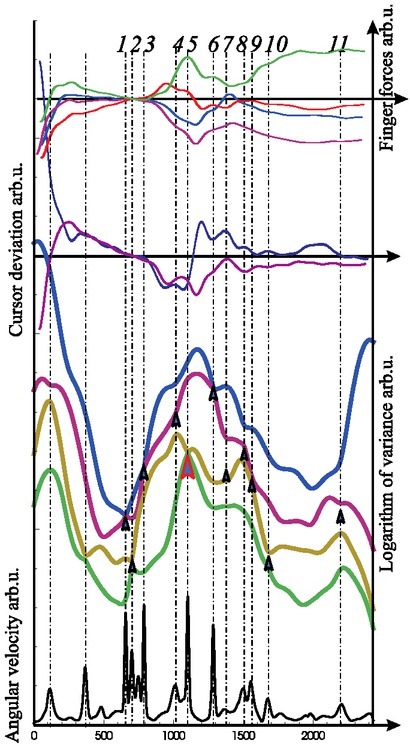

In some trials, the subjects were unable to bring the cursor into the target within 30 s (trial duration), while in other trials they succeeded at this task. Figure 9 presents an example of the time profiles of the finger forces (upper panel) and the coordinates of the point on the screen (lower panel) recorded during a successful trial. The overall stabilization success rate was R ≃ 0.55. Note a qualitative similarity between the dependencies depicted in Figure 9 and the corresponding calculated dependencies illustrated in Figure 6.

Figure 8.

The angles between the force vector produced by a quick perturbation applied to a finger and the force vector during the downward phase of the force-pulse trial by the same finger (αPE) and between the first vector and the vector of the corrective action (αPC). Data averaged across subjects are shown with standard error bars. Note that for each finger as the target finger αPC < αPE.

The sharp spikes and jumps on the experimental curves show approximately the moments of time when correcting actions took place, similarly to the simulated curve in Figure 1. Though spikes in the simulated curves may be associated with the correcting actions of the feedback sign change, there is no formal rule that allows identifying such moments in the experimental data, and one can speak only about intuitive similarity between the dependencies. Since we were exploring the regime of searching for equilibrium in new, formerly unknown, conditions, we could not use across-trial statistical analysis, since each new trial corresponded to new initial and task conditions. Note that multiple sequential repetitions of the same experimental setting for the same subject would likely involve processes of learning and adaptation that are beyond the scope of questions addressed here. Information about the moments of changes of the feedback matrix can be extracted from the analysis of noise, since, as discussed in previous sections, the principal axes of the tensor susceptibility to noise (so-called principal components) coincide with eigenvectors of the dynamic matrix and when directions of these axes change, the matrix changes as well, thus implying a change of the feedback matrix. Moreover, the larger the eigenvalue Ci of the smaller the susceptibility of the corresponding direction to noise, in accordance with Eq. (16).

Analysis of the principal components of the noise covariance reveals feedback changes

Human fingers are not independent force generators: when a person tries to press with one finger, other fingers of the hand show unintentional force increases (Kilbreath and Gandevia, 1994; Li et al., 1998), addressed as enslaving or lack of individuation (Schieber and Santello, 2004; Zatsiorsky et al., 2000). Enslaving patterns are person-specific and relatively robust; changes in enslaving have been reported with specialized practice (Slobounov et al., 2002). These patterns may be described as eigenvectors where i = {I−index; M−middle, R−ring, and L−little} stands for an instructed finger. Directions of may be viewed as preferred directions in the space of finger forces when the person is trying to press with individual fingers. They are related as:

| (26) |

to the forces Xi of the individual fingers i by an orthogonal matrix representing rotation in the four-dimensional space. We assume that these directions may change during the search for stability and they manifest statistically independent fluctuations thus being the eigenvectors of the deviation covariance matrix.

Since the task was formulated in a two-dimensional space, there should be only a two-dimensional sub-space in the space of the finger forces that governs the dynamics of the point on the screen. This implies that only two out of four eigenvalues of the matrix differ from zero, and the other two vanish, unless additional constraints are imposed upon the system, as mentioned in previous sections. In the latter case, all the eigenvalues differ from zero, but they are decreasing exponentially, as it was the case for the hierarchy of nimbleness shown in Figure 2. This has an important consequence: the directions that belong to the two-dimensional subspace relevant to feedback are less susceptible to noise, whereas the noisy directions correspond to the null-space and do not contribute significantly to the feedback.

The dependence Xi(n) of the finger forces was captured at the sequential time points t = nτ separated by time intervals of τ = 10⁻² s. The covariance matrix was extracted from the data in several steps. First, for each Xi(n) an average time dependence xi(n) = xi(t) was calculated numerically as:

| (27) |

where τ is chosen as a time unit, while the averaging is performed over a time window ∼Yτ with the width Y. Next, the covariance of the finger forces

| (28) |

where was found numerically with the same Gaussian width Y.
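The two processing steps, a running average over a Gaussian time window and a covariance computed with the same window, can be sketched as follows. The kernel exp(−(n − m)²/Y²) normalized to unit sum is our assumption about the window shape, and the function names are ours; the sketch is not the Matlab code used for the data.

```python
import numpy as np

def gaussian_weights(n_samples, center, width):
    """Normalized Gaussian weights centered at sample `center` (assumed kernel)."""
    m = np.arange(n_samples)
    w = np.exp(-((m - center) ** 2) / width ** 2)
    return w / w.sum()

def windowed_mean(X, width):
    """Running average x_i(n) of the forces X_i(n); X has shape (samples, fingers)."""
    n = X.shape[0]
    return np.stack([gaussian_weights(n, c, width) @ X for c in range(n)])

def windowed_covariance(X, width):
    """Time-resolved covariance C_ij(n) of deviations from the running average."""
    n = X.shape[0]
    dev = X - windowed_mean(X, width)
    covs = []
    for c in range(n):
        w = gaussian_weights(n, c, width)
        # Weighted sum of outer products of the deviation vectors.
        covs.append((dev * w[:, None]).T @ dev)
    return np.stack(covs)
```

For a recording `X` of shape (samples, 4), `windowed_covariance(X, width=Y)` returns one symmetric, positive semi-definite 4 × 4 matrix per time sample. The double loop scales quadratically with the number of samples, which is acceptable for a 30-s trial sampled at 100 Hz.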

The eigenvectors of the covariance C (Eq. 28) must coincide with the eigenvectors of the feedback matrix Ω (Eq. 11), and when the latter changes as a result of the equilibrium search algorithm, C must change as well. As a result of such a change, the eigenvectors corresponding to non-zero eigenvalues rotate. Note that the rotation rate of an orthogonal matrix (known as the angular velocity vector in the 3D space) is an antisymmetric n × n real matrix in an n−dimensional space, given by the logarithmic derivative of the orthogonal matrix. Square root of the trace of the square of this matrix gives the absolute value of the angular velocity, and the eigenvalues correspond to the rotation rates along the directions given by the corresponding eigenvectors.

Indeed, being a real and symmetric matrix, Ci,j can be set in a diagonal form by an orthogonal transformation given by a rotation matrix Ui,j(t) and its inverse matrix such that

| (29) |

The eigenvalues Ck(t) provide the principal components of noise in the orthogonal directions of statistically independent modes, while the matrix Ui,k(t) can be viewed as a row of column eigenvectors relating these modes to the individual finger forces. All these quantities were found numerically from the data obtained for Ci,j(t). Note that the obtained orthogonal matrix Ui,k(t) experiences a time evolution corresponding to rotation in the 4-dimensional space of the finger forces, while the angular velocity of this rotation can be found as the eigenvalues of the left logarithmic derivative of Ui,k(t) defined as

| (30) |

The eigenvalues Ri of are real and have pairwise opposite signs, such that only two real numbers characterize rotation in the four-dimensional space. We calculated these quantities by replacing the derivative in Eq. (30) with the finite difference between two neighboring integer time points. In order to eliminate the so-called shot noise, which is an error-inducing influence of such a replacement, the calculation must be followed by averaging over a time interval shorter than Y. Figure 10 shows the results of such processing of the data presented in Figure 9.
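The finite-difference estimate of the rotation rates can be illustrated on a synthetic rotation with known rates. In this sketch the logarithmic derivative is taken as (dU/dt)U⁻¹ and multiplied by the imaginary unit to obtain a Hermitian matrix with real eigenvalues; this ordering convention, the antisymmetrization step, and the function names are our assumptions.

```python
import numpy as np

def plane_rotation_4d(angle_12, angle_34):
    """Orthogonal 4 x 4 matrix rotating the (1,2) and (3,4) planes independently."""
    U = np.zeros((4, 4))
    for block, a in ((0, angle_12), (2, angle_34)):
        c, s = np.cos(a), np.sin(a)
        U[block:block + 2, block:block + 2] = [[c, -s], [s, c]]
    return U

def rotation_rates(U_now, U_next, dt):
    """Real eigenvalues, in +/- pairs, of i times the logarithmic derivative of U."""
    # Finite-difference version of (dU/dt) U^{-1}; U^{-1} = U^T for orthogonal U.
    log_deriv = (U_next - U_now) @ U_now.T / dt
    # Keep the antisymmetric part; the symmetric remainder is a discretization error.
    A = 0.5 * (log_deriv - log_deriv.T)
    return np.linalg.eigvalsh(1j * A)

dt = 0.01  # sampling interval tau in seconds
U0 = plane_rotation_4d(0.3, 1.1)
U1 = plane_rotation_4d(0.3 + 2.0 * dt, 1.1 + 5.0 * dt)
rates = rotation_rates(U0, U1, dt)  # approximately -5, -2, 2, 5 rad/s
```

With eigenvector matrices obtained from measured covariances, the columns of U would first have to be matched in order and sign between neighboring time points, since an eigendecomposition fixes neither; otherwise spurious jumps appear in the estimated rates.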

Figure 9.

Finger forces in N as functions of time (in 10⁻² s, upper panel) and the coordinates of the point position on the screen (lower panel). The law relating the forces to the position of the point was changed at t = 7 s, and the values of the finger forces at that time were chosen as zero.

One can identify eleven rotations of the covariance matrix basis presumably associated with changes of the feedback matrix. Note that the highest rotation velocity does not necessarily produce a strong effect on the finger forces, since the relevant quantity corresponds to the spike area, representing the rotation angle. The situation has much in common with dynamics of so-called adiabatic and diabatic molecular term crossings, well-known in Quantum Mechanics (Akulin, 2014). The maximum rotation velocity corresponds to the time moments when two or several eigenvalues of the matrix have a tendency to coincide, thus eliminating the difference between the large noise typical of the null-space and small noise typical of the orthogonal subspace in a stationary regime.

Discussion

The Stability Search Algorithm and Motor Control Hypotheses

The most important axiom in our approach is the assumption of task-specific coordinate systems organized to allow effective local control. A particular implementation has been introduced as the “act on the most nimble” (AMN) rule. We have shown that this method can solve problems better than control with random matrices, but loses efficacy with an increase in the task dimensionality, and less so with an increase in the system dimensionality. Further, we considered a number of additional rules that improved the outcome. One of them is: if local control does not work, change the coordinate system. More specific rules that all improve the R(N,M) index include the following: (1) deal with one dimension at a time and do not return to any of the previously involved dimensions; (2) organize elemental variables into generations by their nimbleness, i.e., characteristic rate of change; and (3) allow bi-local control to improve the performance.

Systems of coordinates in motor control

One of the important features of the suggested scheme is the identification of three systems of coordinates that can be used to describe processes associated with the neural control of movement. Most commonly, movement studies operate with variables directly measured by the available systems, for example kinematic, kinetic, or electromyographic variables. Some of these variables describe overall performance, for example fingertip coordinates during pointing. Other variables reflect processes in elements that contribute to the task-related performance (e.g., joint rotations, digit forces and moments, muscle activation, etc.).

One of the dominant ideas originating from the classical studies by Bernstein (1967) has been that elements are united by the CNS into relatively stable groups to reduce the number of variables manipulated at task-related neural levels. Such groups have been addressed as ”synergies” (D’Avella et al., 2003; Ivanenko et al., 2005; Ting and Mcpherson, 2005) or ”modes” (Krishnamoorthy et al., 2003). Some studies emphasized the relative invariance of the modes across tasks (Ivanenko et al., 2005; Torres-Oviedo and Ting, 2007), while other studies showed that the modes could change with changes in the stability requirements (Asaka et al., 2008; Danna-dos-Santos et al., 2008).

Within our scheme, measured variables produced by elements (e.g., digit forces, joint rotations, and muscle activations) are united into modes that are relatively stable across task variations. These modes may reflect preferred changes in referent body configuration (cf. Feldman, 2015) based on the person’s experience with everyday tasks. Mode composition is reflected in the structure of response to a noisy external input and can be reconstructed using matrix factorization techniques such as principal component analysis, factor analysis, and non-negative matrix factorization (reviewed in Tresch et al., 2006). Unlike many earlier studies, we do not assume that the number of modes (the dimensionality of the space where the control process takes place, xi in our notation) is smaller than the number of measured variables. It may be larger. For example, in our experiment, forces of four fingers were measured. The dimensionality of xi may be higher corresponding, for example, to the number of muscles or muscle compartments involved in the task.

According to our main assumption, there is another coordinate system that allows ensuring stability of performance using local control. We suggest using a term ”control coordinates” for this system. Unlike modes, control coordinates are sensitive to task changes, particularly to changes in conditions that affect stability of performance. When a person encounters a novel task, he/she searches for an adequate set of control coordinates that would allow implementing local control.

Our experiment showed that a quick reaction to an unexpected perturbation acted along directions in the finger force space that were close to the directions of finger force deviations produced by the perturbations. In contrast, these reactions formed larger angles with vectors reflecting finger modes, eigenvectors in the space of finger forces that reflected finger force changes when a person tried to act with one finger at a time. This result corroborates the idea that quick corrective actions are organized not along mode directions, but along axes of another coordinate system, close to the ones along which the system shows the quickest deviation in response to the perturbation.

Relations to the uncontrolled manifold and referent configuration hypotheses

Figure 4 offers a block diagram related to the control of the hand based on a few levels. At the upper level, the task is shared between the actions of the thumb and the opposing fingers represented as a single digit (virtual finger, Arbib et al., 1985) with the same mechanical effect as the four fingers combined. Further, the virtual finger action is shared among the actual fingers (our experiments analyzed four-finger coordination at that level). Even further, action of a finger is shared among a redundant set of muscles contributing to that finger’s action. At the bottom level, each muscle represents a set of motor units united by the tonic stretch reflex feedback to stabilize equilibrium states of the system ”muscle + reflexes + external load”. Only the last level may be viewed as based on relatively well-known neural mechanisms with the threshold of the tonic stretch reflex being the control variable for each muscle (Feldman, 1986).

While the scheme in Figure 4 ensures some stability of the action, changes in the overall action organization (e.g., changes in the RC trajectories) may be needed if the task changes or there is a major change in the external force field. The general principles suggested in this paper offer a solution for the problem of stabilizing action in such conditions. A few recent studies have shown that, when a major change in the external conditions of task execution takes place, corrective actions are seen in both range (ORT) and self-motion (UCM) spaces with respect to salient performance variables (Mattos et al., 2011, 2015). Moreover, self-motion (addressed as "motor equivalent" motion) commonly dominates, while, by definition, it is unable to correct the action. These observations suggest that no single economy principle can form the foundation for such corrections. They allow interpretation within the set of principles suggested in this paper. Any perturbation is expected to induce large effects in less stable directions (those that span the UCM) as compared to more stable directions (ORT). According to the AMN-rule, corrective action is organized along the most nimble of the control coordinates, which projects primarily on the UCM. Hence, one can expect the corrective action to be primarily directed along the UCM as well.

Reasonably sloppy control may be good enough

Several recent publications have presented arguments in favor of the general idea that the CNS may not solve typical problems perfectly, but rather use a set of simple rules that lead to acceptable solutions for most problems (Latash, 2008; Loeb, 2012). Such rules may fail to solve specific problems and then healthy people make mistakes, fall, mishandle objects, spill coffee, etc. We presented a particular instantiation of such a set of rules (based on the AMN-rule) and showed that these rules were able to stabilize action with high probability. The experimental demonstration of relatively small angles between the vectors of perturbation-induced force changes and corrective changes in finger forces supports the feasibility of the AMN-rule.

Selecting a particular point (range) within the solution space has been discussed as resulting from optimizing the action with respect to some cost function (Prilutsky and Zatsiorsky, 2002). Note that only one point on the solution hyper-surface is optimal with respect to any given cost function. Other points within the UCM violate the optimality principle even though they lead to seemingly perfect performance. In a sense, large variance within the UCM combined with low variance within the ORT implies that the person is accurate, but sloppy. In the course of practice, when the person becomes as accurate as one can possibly be with respect to the explicit task, further practice may stabilize directions within the UCM to ensure that performance remains as close as possible to optimal with respect to a selected criterion. This is what we call "perfectionism". Note that perfectionism is never absolute, but the degree of sloppiness can be reduced.
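The "accurate, but sloppy" pattern can be made concrete with a small sketch of variance partitioning. It rests on several assumptions that are not taken from the experiments: a single performance variable (total force) with the Jacobian [1, 1, 1, 1], a made-up set of five trials, normalization by the number of trials, and the hypothetical function name ucm_ort_variance.

```python
def ucm_ort_variance(trials, jacobian):
    """Variance per dimension within the UCM and the ORT for one performance variable."""
    n = len(jacobian)
    count = len(trials)
    mean = [sum(t[i] for t in trials) / count for i in range(n)]
    jj = sum(j * j for j in jacobian)
    ss_ucm = 0.0
    ss_ort = 0.0
    for t in trials:
        dev = [t[i] - mean[i] for i in range(n)]
        # ORT component: projection of the deviation onto the Jacobian direction.
        scale = sum(d * j for d, j in zip(dev, jacobian)) / jj
        ort = [scale * j for j in jacobian]
        # UCM component: the remainder, which leaves the performance variable unchanged.
        ucm = [d - o for d, o in zip(dev, ort)]
        ss_ort += sum(o * o for o in ort)
        ss_ucm += sum(u * u for u in ucm)
    # The UCM has n - 1 dimensions and the ORT has 1 (assumed normalization).
    return ss_ucm / ((n - 1) * count), ss_ort / count


# Hypothetical finger forces (N): sharing varies across trials, total force is always 20.
trials = [
    [5.0, 5.0, 5.0, 5.0],
    [6.0, 4.0, 5.0, 5.0],
    [4.0, 6.0, 5.0, 5.0],
    [5.0, 5.0, 6.0, 4.0],
    [5.0, 5.0, 4.0, 6.0],
]
v_ucm, v_ort = ucm_ort_variance(trials, [1.0, 1.0, 1.0, 1.0])
```

In this made-up data set all inter-trial variance lies within the UCM and none within the ORT: total force is reproduced exactly while its sharing among the fingers varies.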

Limitations and future directions

At the current stage, our approach may be seen as very much simplified, ignoring some of the well-established features of the system for the production of movements. These include, in particular, the complex, non-linear properties of muscles and time delays in feedback loops from sensory receptors. In our experiments, we used a rather simple linear system of multi-finger force/moment production. Application of this approach to more natural, complex tasks is definitely non-trivial. In such tasks, the system under consideration is so complex that currently neither our approach nor any other approach (to our knowledge) is able to offer a viable solution that would take into account all the features of the involved elements. We think, however, that the conceptual framework developed in our paper may be applicable across tasks and systems resulting in “good enough” solutions able to solve typical problems with acceptable probability.

At this stage, we only try to offer a conceptual solution for the problem of stabilizing relevant sub-spaces within high-dimensional spaces of elements involved in all natural actions. We view the basic idea as applicable to different plants, but this is something that currently remains outside the scope of the paper. That is why we compared some of the predictions to experiments with isometric multi-finger pressing tasks, when muscle length changes were minimal. It would require a major effort to translate the conceptual lessons from this work to the control of actual multi-joint actions. We hope to merge our approach with the framework of the equilibrium-point hypothesis and the ideas of hierarchical control with referent spatial coordinates.

Concluding comments

Implications for motor learning and motor disorders

We view, as the main message of our study, the suggestion that ensuring stability of action by a multi-element system does not require solving complex equations. Instead, it can be based on a relatively simple algorithm. Unlike most control schemes in the literature, the suggested algorithm, the "act on the most nimble" (AMN) rule, does not require knowledge by the controller about the relations between small changes in elemental variables and changes in a salient performance variable, e.g., as represented by the Jacobian of the system.
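As a toy illustration of how a rule of this kind can operate without a Jacobian, the sketch below simulates four decoupled local loops, each with its own feedback gain. Everything in it is an assumption made for illustration: the function names most_nimble and simulate, the gain values, the Euler time step, the checking interval, and the choice of flipping the sign of a local loop as the corrective action. It is not the algorithm analyzed in the earlier sections, only a minimal instance of the idea that the controller acts on the fastest-changing variable using nothing but observed changes in the elemental variables.

```python
def most_nimble(prev, curr):
    """Index of the coordinate that changed most between two successive samples."""
    rates = [abs(c - p) for p, c in zip(prev, curr)]
    return max(range(len(rates)), key=rates.__getitem__)


def simulate(gains, x0, dt=0.01, steps=2000, check_every=50):
    """Euler simulation of decoupled loops dx_i/dt = g_i * x_i with a toy AMN-like rule."""
    gains = list(gains)
    x = list(x0)
    for step in range(1, steps + 1):
        prev = x
        x = [xi + dt * g * xi for xi, g in zip(x, gains)]
        if step % check_every == 0:
            k = most_nimble(prev, x)
            # Act only on the most nimble coordinate, and only if it is
            # moving away from its target value (zero in this toy example).
            if abs(x[k]) > abs(prev[k]):
                gains[k] = -gains[k]
    return gains, x


# Hypothetical initial gains: two loops are unstable (positive gain).
final_gains, final_x = simulate([0.5, -1.0, 2.0, -0.3], [1.0, 1.0, 1.0, 1.0])
```

In this toy example the rule first flips the fastest-diverging loop, later flips the remaining unstable one, and then leaves all loops untouched while the deviations decay.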

The theoretical part of our paper shows that adding relatively minor modifications to the main algorithm can significantly improve the ability of the system to find stable solutions (see previous sections). In the experiments, we observed how subjects searched for an appropriate implementation of the AMN-rule in an artificial task resembling a computer game, in which natural proprioceptive feedback could be in conflict with the visual feedback. These studies illustrate the process of searching for adequate control variables, sometimes successful and sometimes not (e.g., Figures 6, 9, and 10).

Figure 10.

The angular velocity R1(t) of rotation of the basis of the covariance matrix eigenvectors as a function of time (bottom, solid line, arbitrary units). Logarithms of the eigenvalues Ck(t) of the covariance matrix (four bold curves above the velocity). The covariance matrix was computed as an average over Y = 100 sequential time points with an interval of 10⁻² s between the points. The switching of the feedback eventually took place in the domains of the "avoid crossings" discussed in the text, marked here by arrows and corresponding to the maxima of the angular velocity. At the top of the plots, the finger forces and the cursor coordinates, corresponding to the dependencies in Figure 8 averaged over the same time intervals (Y = 100), are shown for comparison. The "avoid crossing" of the three eigenvalues occurs for the 4th, 7th, and 8th intersections. The strongest contribution comes from the 5th crossing, which presumably is relevant to a change of direction in the two-dimensional orthogonal subspace.

The AMN-rule can be discovered, refined, and optimized in the process of practice. The process of discovering acceptable solutions to the task of stabilizing a performance variable requires, as the first step, finding a set of adequate control coordinates, i.e., a set of variables that allow implementing local control (see previous sections). This process may be crucial for the development of high-level athletic skills, which require high stability of salient performance variables during frequent and unexpected changes in the external forces acting on the body. Typical examples include downhill skiing, snowboarding, figure skating, gymnastics, and other sports characterized by large, quickly changing forces between the athlete's body and the environment. Our current knowledge of what the central nervous system of the athletes learns during training is all but non-existent. It is feasible that the central nervous system discovers adequate sets of control coordinates, which likely have to switch at different phases of the movement. Discovering precise switching times may represent another step of learning a complex skill.

The ability to discover adequate sets of control coordinates to be used in the main algorithm and the ability to switch from one set of control coordinates to another can be impaired in various motor disorders. These processes may suffer from inadequate sensory input, for example, in patients with large-fiber peripheral neuropathy (so-called "deafferented persons"; Sainburg et al., 1993; Yousif et al., 2015). This condition may not allow forming adequate sets of control coordinates and providing sensory information on changes in elemental variables to be used in implementation of the AMN-rule. It may also impair the ability to time changes in the control coordinates appropriately. The mentioned mechanisms may also suffer from an injury to the neural structures involved, in particular components of neural loops via the basal ganglia and the cerebellum to the cortex. Note that loss of movement stability and of the ability to learn stable performance in novel conditions is a sign of neurological disorders involving the cerebellum and the basal ganglia (reviewed in Celnik, 2015; Latash and Huang, 2015; Llinas and Welsh, 1993).

Our current knowledge of neural substrates that might be involved in the suggested scheme is virtually non-existent. Future studies, in particular those involving persons with exceptional skills (such as high-level athletes) and persons with significantly impaired stability of actions (such as neurological patients), may provide better insights into the role of specific physiological structures in ensuring action stability across the repertoire of both functional and highly specialized movements.

Acknowledgements

Preparation of this manuscript was supported in part by grant NS035032 from the National Institutes of Health, USA. We are grateful to Yen-Hsun Wu and Sasha Reschechtko for their help with the experiments.

References

- Akulin VM. Dynamics of Complex Quantum Systems. Second Edition. Springer; Dordrecht, Heidelberg, New York, London: 2014. pp. 195–260.

- Arbib MA, Iberall T, Lyons D. Coordinated control programs for movements of the hand. In: Goodwin AW, Darian-Smith I, editors. Hand Function and the Neocortex. Springer Verlag; Berlin: 1985. pp. 111-129.

- Asaka T, Wang Y, Fukushima J, Latash ML. Learning effects on muscle modes and multi-mode synergies. Exp Brain Res. 2008;184:323. doi: 10.1007/s00221-007-1101-2.

- Bernstein NA. A new method of mirror cyclographie and its application towards the study of labor movements during work on a workbench. Hygiene, Safety and Pathology of Labor. 1930. # 5, p. 3-9, and # 6, p. 3-11. (in Russian)

- Bernstein NA. The Co-ordination and Regulation of Movements. Pergamon Press; Oxford: 1967.

- Celnik P. Understanding and modulating motor learning with cerebellar stimulation. Cerebellum. 2015;14:171. doi: 10.1007/s12311-014-0607-y.

- Danion F, Schöner G, Latash ML, Li S, Scholz JP, Zatsiorsky VM. A force mode hypothesis for finger interaction during multi-finger force production tasks. Biol Cybern. 2003;88:91. doi: 10.1007/s00422-002-0336-z.

- Danna-Dos-Santos A, Degani AM, Latash ML. Flexible muscle modes and synergies in challenging whole-body tasks. Exp Brain Res. 2008;189:171. doi: 10.1007/s00221-008-1413-x.

- d’Avella A, Saltiel P, Bizzi E. Combinations of muscle synergies in the construction of a natural motor behavior. Nat Neurosci. 2003;6:300. doi: 10.1038/nn1010.

- Diedrichsen J, Shadmehr R, Ivry RB. The coordination of movement: optimal feedback control and beyond. Trends Cogn Sci. 2010;14:31. doi: 10.1016/j.tics.2009.11.004.

- Feldman AG. Once more on the equilibrium-point hypothesis (λ-model) for motor control. J Mot Behav. 1986;18:17. doi: 10.1080/00222895.1986.10735369.

- Feldman AG. Referent control of action and perception: Challenging conventional theories in behavioral science. Springer, NY: 2015.

- Gelfand IM, Latash ML. On the problem of adequate language in movement science. Motor Control. 1998;2:306. doi: 10.1123/mcj.2.4.306.

- Ivanenko YP, Cappellini G, Dominici N, Poppele RE, Lacquaniti F. Coordination of locomotion with voluntary movements in humans. J Neurosci. 2005;25:7238. doi: 10.1523/JNEUROSCI.1327-05.2005.

- Kilbreath SL, Gandevia SC. Limited independent flexion of the thumb and fingers in human subjects. J Physiol. 1994;479:487. doi: 10.1113/jphysiol.1994.sp020312.

- Krishnamoorthy V, Goodman SR, Latash ML, Zatsiorsky VM. Muscle synergies during shifts of the center of pressure by standing persons: Identification of muscle modes. Biol Cybern. 2003;89:152. doi: 10.1007/s00422-003-0419-5.

- Latash ML. Synergy. Oxford University Press: New York; 2008.

- Latash ML. Motor synergies and the equilibrium-point hypothesis. Motor Control. 2010;14:294. doi: 10.1123/mcj.14.3.294.

- Latash ML. The bliss (not the problem) of motor abundance (not redundancy). Exp Brain Res. 2012;217:1. doi: 10.1007/s00221-012-3000-4.

- Latash ML. Biological movement and laws of physics. Motor Control. 2017;21:327. doi: 10.1123/mc.2016-0016.

- Latash ML. Stability of kinesthetic perception in efferent-afferent spaces: The concept of iso-perceptual manifold. Neurosci. 2018;372:97. doi: 10.1016/j.neuroscience.2017.12.018.

- Latash ML, Huang X. Neural control of movement stability: Lessons from studies of neurological patients. Neurosci. 2015;301:39. doi: 10.1016/j.neuroscience.2015.05.075.

- Li ZM, Latash ML, Zatsiorsky VM. Force sharing among fingers as a model of the redundancy problem. Exp Brain Res. 1998;119:276. doi: 10.1007/s002210050343.

- Llinás R, Welsh JP. On the cerebellum and motor learning. Curr Opin Neurobiol. 1993;3:958. doi: 10.1016/0959-4388(93)90168-x.