Abstract

History-dependent characteristic time scales in dynamics have been observed at several levels of organization in neural systems. Such dynamics can provide powerful means for computation and memory. At the level of the single neuron, several microscopic mechanisms, including ion channel kinetics, can support multiple-time-scale dynamics. How the temporally complex channel kinetics gives rise to dynamical properties of the neuron is not well understood. Here, we construct a model that captures some features of the connection between these two levels of organization. The model neuron exhibits history-dependent multiple-time-scale dynamics in several effects: first, after stimulation, the recovery time scale is related to the stimulation duration by a power-law scaling; second, temporal patterns of neural activity in response to ongoing stimulation are modulated over time; finally, the characteristic time scale for adaptation after a step change in stimulus depends on the duration of the preceding stimulus. All these effects have been observed experimentally and are not explained by current single-neuron models. The model neuron here presented is composed of an ensemble of ion channels that can wander in a large pool of degenerate inactive states and thus exhibits multiple-time-scale dynamics at the molecular level. Channel inactivation rate depends on recent neural activity, which in turn depends through modulations of the neural response function on the fraction of active channels. This construction produces a model that robustly exhibits nonexponential history-dependent dynamics, in qualitative agreement with experimental results.

Keywords: adaptation, multiple time scales, single-neuron model, nonexponential relaxation, short-term cellular memory, ion channel diffusive dynamics

Introduction

A ubiquitous feature of neuronal systems, at all levels of organization, is the appearance of a wide range of history-dependent time scales; not only the responses but also their characteristic time scales themselves are history and context dependent.

At the level of an ensemble of ionic channels, history-dependent characteristic time scales have been measured directly (Toib et al., 1998). This work showed the existence of a scaling relationship between stimulation duration and recovery time scale in Na+ channels. These results could not be understood by the simple picture of channel kinetics with a few well defined slow inactivation time scales. It was suggested that the observed scaling relationship reflects the existence of multiple inactive states of the sodium channel. This is consistent with other known multiple-time-scale behaviors of ionic channels that reflect their complex internal structure (Bassingthwaighte et al., 1994).

At the level of the single neuron, Tal et al. (2001) showed that temporal patterns of neural activity are strongly dependent on cumulative history of stimulation and activity. Indirect evidence for history dependent dynamics comes from scale-free fluctuations in the activity of neurons (Teich, 1989), heart cells (Soen and Braun, 2000), and synapses (Lowen et al., 1997). At a still higher level of organization, in vivo measurements on flies have shown that the time scales characteristic of adaptation in a visual interneuron depend on the conditioning stimulus parameters (de Ruyter van Steveninck et al., 1986; Fairhall et al., 2001). Thus, history-dependent multiple-time-scale dynamics appear repeatedly in experimental on excitable systems at several levels of organization. It is not clear, however, how multiple-time-scale dynamics at one level gives rise to multiple-time-scale dynamics at the next higher level. Here, we approach the question by focusing on the interaction between the levels of ionic channels and single-neuron activity.

Experiments have shown that probing neurons with complex stimuli and on long time scales results in temporally complex responses. These are often understood in terms of context- and history-dependent slow modulations of neural response parameters. Several underlying molecular mechanisms support these modulations in an activity-dependent manner (LeMasson et al., 1993; van Ooyen, 1994; Marom, 1998; Wang, 1998; Carr et al., 2003). Such mechanisms are usually understood to operate by adding uniquely defined slow time scales (Marom and Abbot, 1994; Fleidervish et al., 1996; Kim and Rieke, 2003) and cannot capture multiple-time-scale dynamics.

In this work, we construct a model that aims to describe modulations in neural response in connection with multiple-time-scale dynamics of ion channels. The ensemble of ion channels making up the neuron is assumed to have a space of inactive states modeled by a simple scheme (Millhauser et al., 1988). We start by investigating this model in the context of experiments on a membrane patch, using the same protocol of Toib et al. (1998) and finding a similar scaling relationship as that observed in the experiment. We then use the concept of neuronal excitability, the sensitivity of a neuron to inputs, to define a variable that scales up these kinetics to the level of the neuron. This idea is inspired by experiments suggesting that neuronal excitability is well defined locally in time and can be modulated by activity and stimulation over long time scales (Marder et al., 1996; Desai et al., 1999). Justification for the use of a single variable to describe neuronal excitability is derived from properties of the Hodgkin-Huxley neural response function, and construction of the neuron model is presented in detail. We then proceed to investigate some properties of the neuron model under different stimulation protocols. The model is shown to robustly reproduce several effects of multiple-time-scale dynamics that have been observed in experiment and are not explained by standard or biophysical models.

Materials and Methods

Membrane patch model. The kinetics of ion channels is described by a model based on the one proposed and studies by Millhauser et al. (1988), in which channels can occupy a linear chain of N inactive states; pairs of neighboring states are coupled by the same transition rate β. An additional rate constant, α0, characterizes the transition from active to inactive states under depolarization. Following the suggestion of Toib et al. (1998), we extend this model to include a voltage-dependent inactivation rate (see Results, scheme in Eq. 6). Rather than specifying this dependence in detail, because our stimulation protocol consists of fixed-level voltage pulses, we assume that the inactivation rate is zero in the absence of voltage and equal to a constant α0 in the presence of the applied voltage. Thus, in dimensionless time τ = tβ, the model behavior is determined by a single dimensionless parameter, the ratio α0/β. Because the values of the rate constants among inactive states are not known, we choose values that correspond to typical transition times of 10-100 ms. Choosing other values for β will scale the time axis accordingly, whereas different values of α0/β will modify the shape of the curves.

Neuron model. We start from a model of an ensemble of ion channels as described above and seek to connect it to neuronal dynamics. Denoting by μj(t) the population of the state j at time t, we define the dynamical variable of neuronal excitability X as the fraction of channels in the active (zeroth) state: X = μ0(t). The neural response is described by a continuous firing rate, which is assumed to be averaged over a time scale of many spikes (i.e., details of spikes and spike times are intentionally neglected), because we are interested in long-term modulations of neural activity. The rate is characterized by a sigmoid function of the input stimulus s, depicted in Figure 3a, and defined by the following:

|

(1) |

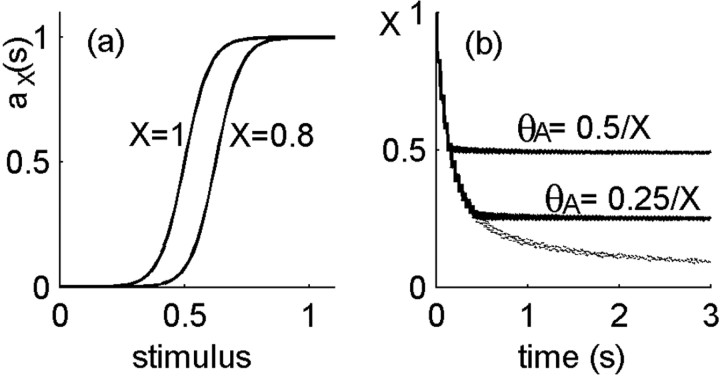

Figure 3.

Closing the loop between neural activity and channel kinetics. a, Neural activity is characterized by a sigmoid rate function with a fixed width and a threshold that is modulated by neuronal excitability X. As excitability increases from 0 toward 1, the activity threshold decreases as θA = cA/X. b, The effect of closing the loop on the dynamics of excitability: fraction of available ion channels as a function of time during stimulation in the neuron model (solid lines) compared with the model of a membrane patch (dashed, bottom line). In the neuron model, this fraction, the neural excitability, fluctuates around a steady-state value, X ≅ cA. In the membrane patch, it continuously decreases with time as more and more channels are driven into inactivation.

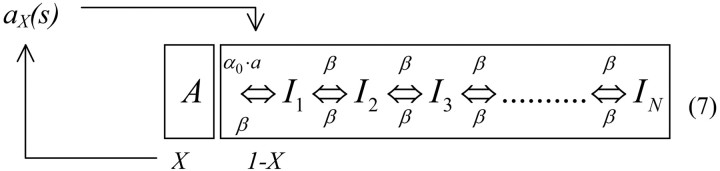

where the subscript X indicates that this function is parametrically dependent on neuronal excitability X. Here, s is the (dimensionless) stimulus, which can in principle be any stimulus that directly or indirectly activates the neuron; most conveniently, one may think of s as a current injected into the cell body. θA is the activity threshold and σ the sigmoid width. Activity is normalized to be dimensionless (i.e., the maximal activity is 1), whereas the dimensionless stimulus and sigmoid parameters θA and σ are measured in units of the typical range of stimulus. Excitability modulates the response function (Eq. 1) through the activity threshold θA(X) = cA/X. This form is consistent with the behavior of the Hodgkin-Huxley model (see Fig. 2), where X is the ratio between Na+ and K+ maximal conductance. If these maximal conductances can be slowly modulated, then X can be thought of as a slow dynamic variable. The value of the constant cA determines the sigmoid threshold when all channels are available (X = 1); it affects the steady-state value of the excitability under stimulation. Neural activity is coupled back to a channel model by setting the inactivation rate at time t equal to be α0 × αX(s(t)); the higher the neural activity, the higher is the rate to inactivate, and α0 is the maximal inactivation rate achieved when the activity of the neuron is maximal (=1).

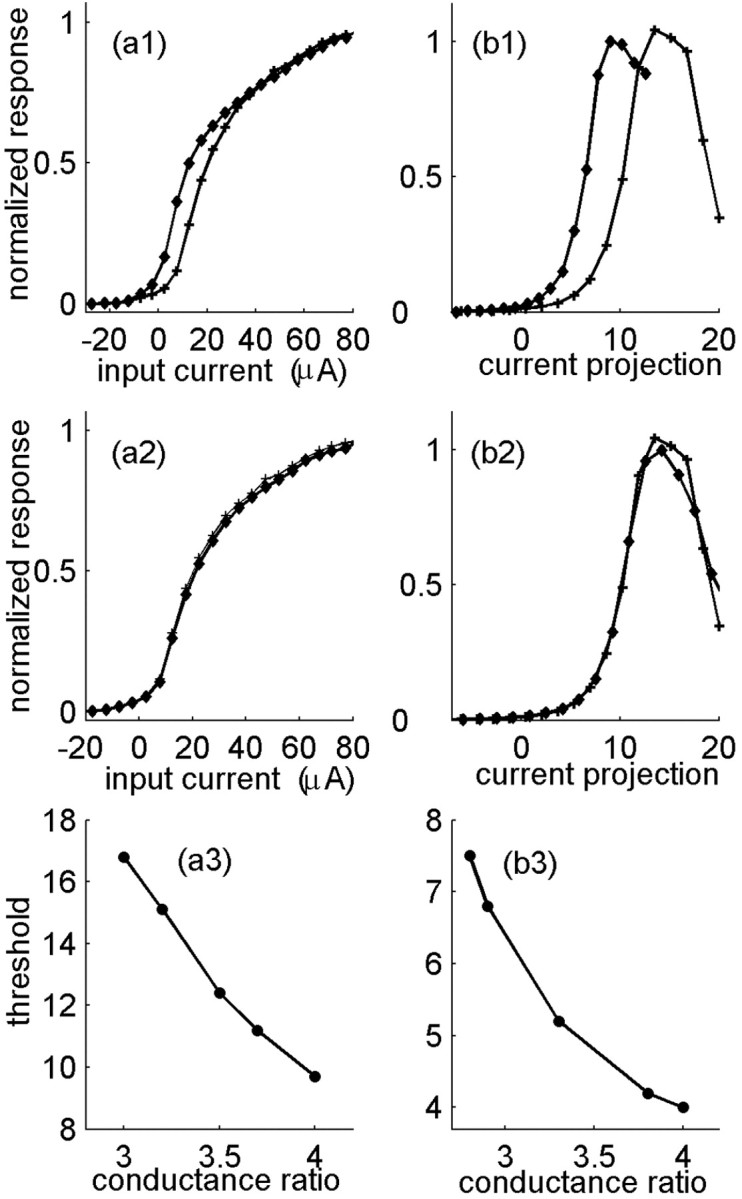

Figure 2.

Motivation for defining neuronal excitability. The normalized output firing rate is shown as a function of input current for the Hodgkin-Huxley model, with various values of the maximal conductances. Stimulus is a Gaussian noise with a correlation time of 500 ms (a) or 1 ms (b). Different values of the maximal conductances generally result in a different input/output function (a1, b1). In both curves, gk = 30 μS/cm2; gNa is changed between 90 μS/cm2 (+) and 120 μS/cm2 (diamonds). Changing the maximal conductance while keeping their ratio fixed (here 3) results in the same input/output function (a2, b2). gk = 30 μS/cm2 (+); gk = 40 μS/cm2 (diamonds). a3, b3, Dependence of the response threshold, defined as the input at half-maximal output, on the ratio of sodium to potassium maximal conductance.

Numerical simulation. The kinetics of the channels among their possible states was simulated by Markov matrices, which represent the discrete-time approximation of the rate equations describing the time evolution of the channel distribution. Neural activity is assumed to be instantaneously responsive to the stimulus and thus was updated continuously over time. A more detailed calculation, by Monte Carlo simulations of the individual channels, was also performed but does not add to the results that are presented here in terms of distributions. In the simulations presented here, unless stated otherwise, we used a number of channel inactive states N = 100 and rates in the range of 10-20 s-1, cA = 1/2, and σ = 0.1. The time step for the discrete time evolution was taken to be 0.02 times the shortest time scale in the problem (inverse transition rate) and was verified to be in the range in which discretization does not affect the results. The basic properties of the neural model were found to be robust with respect to these parameters.

Diffusion approximation. The continuous diffusion equation on a half space in one dimension is a useful tool to gain intuition about the model. Defining a coordinate z along the chain of channel states, we approximate the discrete states by a continuum and the random walk among the states by a diffusion process in z. This approximation has been used successfully in previous studies of ion channel dynamics (Millhauser et al., 1988; Frauenfelder et al., 1991; Goychuk and Hanggi, 2002). We assume that the coordinate z extends to infinity; this will be a good approximation as long as the stimulation time is short enough that the channels do not populate significantly the states farthest from the active state (t ≪ (N/ π)2/β, where N is the number of inactive states and β the typical rate of transition among them).

We use the diffusion approximation to estimate the recovery of the fraction of active channels μ0(t) (excitability in the neuron model) after stimulation of duration tS. During recovery, the problem is that of diffusion with an absorbing boundary, and thus, given the initial value μ0(tS) and the distribution of inactive channels μj(tS) (j > 0), one can in principle calculate this recovery time course exactly.

As explained in detail in Results, the neuron model under constant stimulation displays a stable value of excitability, X(tS) = μ0(t); therefore, at the end of a stimulation period X is approximately independent of stimulation time tS. In the membrane patch model, on the other hand, channels continue to inactivate as long as stimulation persists, and therefore the population of the active state decreases with stimulation time (see Fig. 3b). This empirical observation is then used as an initial condition to calculate the recovery curve in each case. To estimate the distribution of inactive channels, we use the solution of the diffusion equation with a reflecting boundary, representing the fact that during stimulation, channels are constantly being pushed back into the inactive pool. This solution reads as follows, after time tS:

|

(2) |

In the neuron model, we treat D an effective diffusion constant, which takes into account deviations from simple diffusion near the boundary. It can be empirically derived from a fit to the mean versus time of the simulation data (see Fig. 8). The approximation in Equation 2 captures well the decay of the channel distribution at large z and the behavior of the mean as a function of time but not the details of the distribution near the origin. For calculating the recovery to high thresholds, our results indicate that it is a sufficiently good approximation.

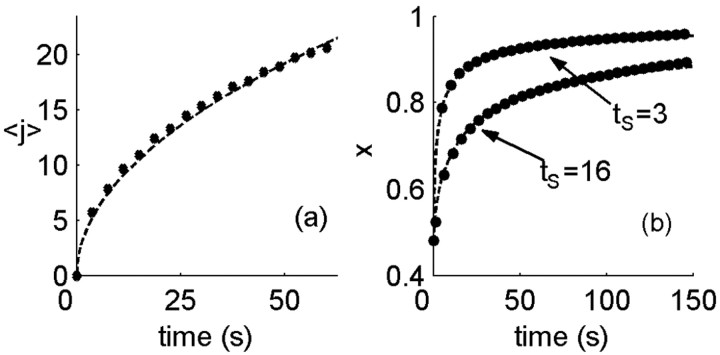

Figure 8.

Diffusion approximation to channel dynamics. a, Mean of channel distribution among states, 〈j〉, as a function of stimulation time (circles). Although channel kinetics deviate from simple diffusion near the active state, the mean follows closely a diffusion-like behavior,  , where tS is the stimulation time (dashed line). b, Excitability as a function of time in the recovery phase, starting from the end of stimulation. Shown for comparison are the results of the numerical simulation (circles) and an analytic estimate based on the diffusion equation (dashed line; see Materials and Methods for details). Parameters: β = α0 = 10 Hz; σ = 0.01; cA = 1/2.

, where tS is the stimulation time (dashed line). b, Excitability as a function of time in the recovery phase, starting from the end of stimulation. Shown for comparison are the results of the numerical simulation (circles) and an analytic estimate based on the diffusion equation (dashed line; see Materials and Methods for details). Parameters: β = α0 = 10 Hz; σ = 0.01; cA = 1/2.

The relationship between the channel distribution at the end of the stimulation period and the dynamics in the recovery phase is established by considering the recovery from a single point on the z-axis. Starting the entire distribution at z, the fraction of channels at the origin after time t is equal to the probability to return to the origin up to this time, as follows:

|

(3) |

This expression enables to calculate the recovery after time t from any given (normalized) distribution P(tS, z) as follows:

|

(4) |

Using the distribution of Equation 2, one finds the following:

|

(5) |

The argument tS here denotes the dependence of this solution on the history of stimulation duration. The scaling of the recovery time tR as defined by crossing a threshold θR, with the stimulation time tS is found from the implicit equation X(tS; tR) = θR. This leads to the linear scaling described in Results.

We use the diffusion approximation also to explain the dynamics of neural activity under strong stimulation. It is easiest to gain intuition into this problem in the limit of a hard threshold neural response (σ ≪ 1); here, one is looking for the time it takes the excitability variable to overcome the threshold for activity. In contrast to the dynamics of recovery to a high threshold, which are dominated by the tails of the channel distribution at the end of stimulation, here, the time to threshold is short and depends on the channel distribution in the vicinity of the active state. For strong inactivation, the frequency of activity is small and activity dominated by the dynamics of inactivation rather than that of the stimulus (see Fig. 9). Therefore, the boundary is absorbing most of the time, and only once in a while, a portion of the channels are returned to the state I1. In this limit, one can approximate the distribution of channels immediately after activity by the solution of the diffusion equation with an absorbing boundary and an additional δ function near the boundary resulting from the recently inactivated channels. Then, the time to the next threshold crossing behaves approximately as  , where tS is the stimulation duration. The activity itself then decays as

, where tS is the stimulation duration. The activity itself then decays as  , in agreement with the simulation result in Figure 10.

, in agreement with the simulation result in Figure 10.

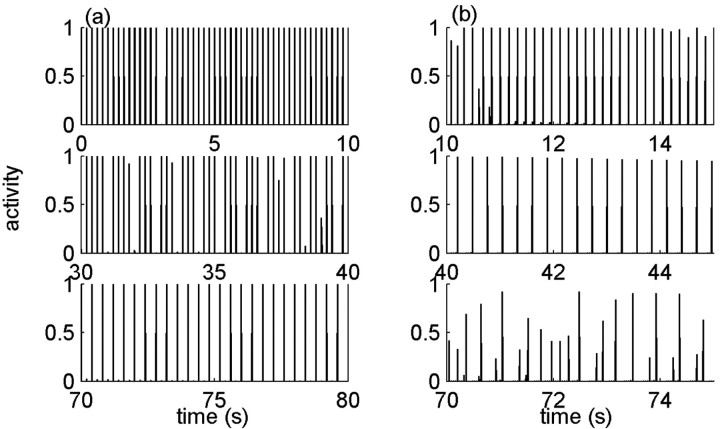

Figure 9.

Example of history dependence in the temporal pattern of response to stimuli. A periodic train of stimulus pulses is ongoing for a long time, and windows of response are chosen along the way for illustration. Model parameters here are: β = 1 Hz; α0 = 30 Hz; σ = 0.001; cA = 1/5. In this regimen, inactivation is strong and diffusive motion in the space of inactive states is very slow; the neural response is characterized by a hard threshold. a, Response to low frequency (5 Hz) starts by a one-to-one response (top), goes through an intermediate disordered response (middle), and then locks on a 1:2 response (bottom). b, In response to a high frequency of 25 Hz, the neuron responds only once every several pulses (top). The response frequency decreases at later times (middle) until the periodicity of the response breaks down completely (bottom).

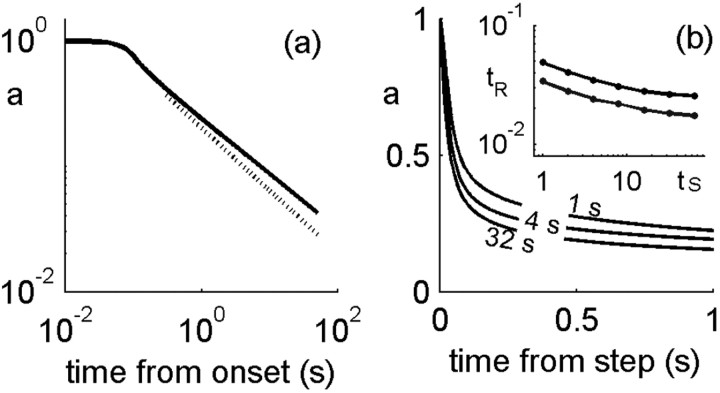

Figure 10.

History-dependent multiple time scales in adaptation. a, Activity in the model neuron is plotted as a function of time from stimulus onset. Two regimens are seen; the tail decays approximately as t-0.45 ( shown for comparison by a dashed line). b, Activity as a function of time since an abrupt change in stimulus strength from 0.6 to 1. For each line plotted, this time point in time was preceded by a different duration of the conditioning stimulus (1, 4, 32 s). At the switch to a higher stimulus, t = 0, the neuron responds with an increase in activity and then adapts nonexponentially with parameters that depend on the history. Inset, Time scale of decay, measured by threshold crossing for two values of the threshold, as a function of preceding stimulus duration. Here, β = α0 = 10 Hz; σ = 0. 1; cA = 1/2.

shown for comparison by a dashed line). b, Activity as a function of time since an abrupt change in stimulus strength from 0.6 to 1. For each line plotted, this time point in time was preceded by a different duration of the conditioning stimulus (1, 4, 32 s). At the switch to a higher stimulus, t = 0, the neuron responds with an increase in activity and then adapts nonexponentially with parameters that depend on the history. Inset, Time scale of decay, measured by threshold crossing for two values of the threshold, as a function of preceding stimulus duration. Here, β = α0 = 10 Hz; σ = 0. 1; cA = 1/2.

Results

Multiple-time-scale dynamics in a model of a membrane patch

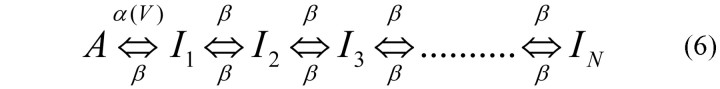

We first consider the dynamics of an ensemble of ion channels in a membrane patch, following the experiments of Toib et al. (1998), who showed that the characteristic time scale of recovery from inactivation exhibits a power law relationship with the duration of conditioning stimulus. These authors suggested that such behavior may arise from a multiplicity of inactive channel states, with a transition from active to inactive state that depends on the voltage: during the conditioning period, channels are pushed further into the inactive subspace, such that recovery becomes dependent on the distribution of channels among inactive states. Assuming that this basic phenomenon is independent of the specific configuration of inactive states and values of transition rates, the simplest form of a linear chain of inactive states was suggested, as shown in the following scheme:

Here, A is the active state, and {I1... IN} are states in which the channels are unavailable to respond to voltage. We refer to these states in what follows as inactive. Transitions among the inactive states are voltage independent and take place with a rate β, whereas the transition rate for inactivation depends on the applied voltage according to α(V). This scheme is a modification of the one introduced and studied by Millhauser et al. (1988); in their model, the transition rate between active and inactive was constant, and the multiplicity of inactive states was shown to result in a power law distribution of residence times.

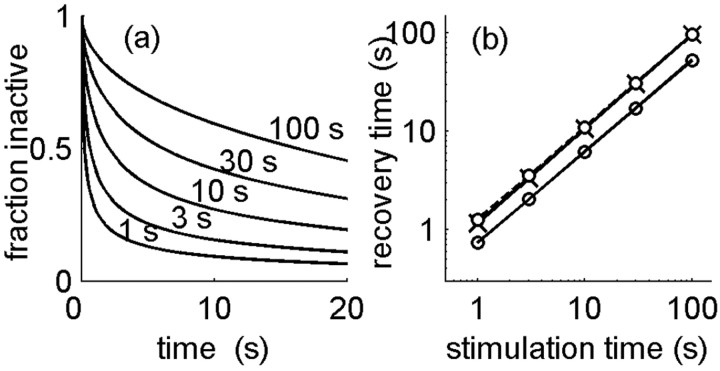

We use this model to carry out the experimental protocol of Toib et al. (1998), applying a fixed voltage stimulus V to the membrane patch for varying durations of time. The inactivation rate is taken to be constant in the presence of voltage, α(V) = α0, whereas in the absence of voltage, there is no inactivation, α(V = 0) = 0. (We do not specify the precise functional dependence of α(V); it is not important because we use only two values of voltage in the stimulation protocol.) Figure 1a shows the dynamics of recovery from inactivation presented as the fraction of inactive channels relative to the fraction at the end of the stimulation time. It is seen that the characteristic time course of these curves is nonexponential and depends on the history of the stimulation (i.e., on stimulation duration). In the experiments on ion channels, the recovery curves were approximately biexponential, and characteristic time scales could be extracted by fitting. Here, we define the characteristic time scale by a threshold crossing of the relative active fraction or equivalently, the time required to recover back a constant fraction of the missing channels. Figure 1b shows that this time scales with the stimulation duration according to a power law, tS = ctγR, similar to the experimental observation (Toib et al., 1998); moreover, the value of the scaling power γ is close to 1, which is similar to the one observed in the experiment (0.5-0.8, depending on the type of sodium channel).

Figure 1.

Multiple time scales in a model of a membrane patch. a, Fraction of inactivated channels after a depolarizing voltage applied for various durations, plotted as a function of time from end of depolarization. Model parameters: β = 1 Hz; α0 = 0.8 Hz. b, Characteristic time scale for recovery, defined by the decrease of inactivated fraction to a given threshold, plotted as a function of stimulation duration. These times obey a power-law scaling relationship with a power of ∼1. This scaling is weakly dependent on the choice of threshold for recovery (0.5 for top lines, crosses, and circles and 0.6 for bottom line). The same scaling is observed under cumulative inactivation; the dashed line with crosses shows the results for a periodic pulse train stimulus (25 Hz).

The model represented by the scheme above is characterized by one dimensionless parameter, the ratio of the two transition rates α0/β. The scaling exponent was found to be essentially independent of this parameter and gave a value between 0.9 and 1 for a ratio extending over two orders of magnitude, 0.1-10. Relating once again to the experiments, it was tested whether the scaling holds also for cumulative inactivation, namely in the presence of periodic voltage pulses extending over various durations instead of a constant voltage (Toib et al., 1998). Keeping the total integrated strength of the depolarizing voltage fixed, the scaling exponent was found to be the same as that for a constant voltage, again in the range of 0.9-1.

The scaling law of recovery in the model can be derived, at least in some limit, from the diffusion approximation to the channel dynamics. In this approximation, the channels are assumed to undergo continuous diffusion in one dimension, in which the origin represents the active state, and the rest of the space represents the degenerate inactive states. For large inactivation rates, the barrier for activation of channels is very high and can be approximated by a reflecting boundary at the origin. During the recovery time, in which no voltage is applied, the origin acts as an absorbing boundary. In both these cases, the dynamics of the channel distribution is exactly solvable; in relation to the experimental protocol, one solves the diffusion equation with reflecting boundary for a duration tS, thus obtaining the channel distribution at the end of the stimulation phase. This distribution then serves as an initial condition for the recovery phase, in which the boundary is absorbing. This computation results in the scaling law tS = ctR, namely a scaling power of γ = 1 (see Materials and Methods for details).

Defining the neuronal excitability variable

To connect the history-dependent time scales of the ensemble of ion channels with the dynamics of a neuron, we assume that the slow inactivation and recovery of channels affect the sensitivity of the neuron to inputs. Experiments have shown that slow inactivation of sodium channels induces slow modulations in the responsiveness of neurons to inputs (Fleidervish et al., 1996; Kim and Rieke, 2003). In general, excitability depends on many variables, for example, the availability of many different ion channel types or the level of calcium. In this section, we provide justification for a simplified description of excitability by one variable, which changes over typical time scales much slower than those of the action potential.

We motivate our description by examining the excitability of the Hodgkin-Huxley model, as reflected by its input/output function in a dynamic stimulus environment. Figure 2 shows this function computed for random slowly varying (a1,a2) or rapidly varying (b1,b2) stimuli. For a slowly varying stimulus, the input/output function is computed by counting spikes in an interval and plotting the local rate as a function of the mean stimulus in the interval. For a rapidly varying stimulus, one may use white-noise analysis techniques and characterize the response to different components of the stimulus (Rieke et al., 1999; Simoncelli et al., 2004). The simplest of these components is the projection of the stimulus onto the spike-triggered average, and this is the component used here, which defines the x-axis of the input/output response. The nonlinear input/output function to this stimulus component is then extracted from the data by statistical analysis (Brenner et al., 2000). Figure 2, b1 and b2, presents such an input/output function for the Hodgkin-Huxley model stimulated by a random signal that is drawn independently from a distribution each millisecond.

Slow channel inactivation can be represented in the Hodgkin-Huxley model by changes in maximal conductances: a significant fraction of inactivated sodium channels is equivalent to a corresponding fractional decrease in the maximal sodium conductance. As a quasi-static approximation, some insight can be gained by varying the maximal conductances “by hand” and observing the effect of this variation on the model behavior. Figure 2, a1 and b1, depicts the input/output functions for different values of the maximal sodium and potassium conductances in the two regimens of stimulus: in general, changing these values modifies the effective response threshold. As the sodium maximal conductance is increased, or as the potassium maximal conductance is decreased, the input/output function increases its sensitivity (lowers its threshold). Figure 2, a2 and b2, shows that the normalized input/output functions overlap if the maximal conductances are changed such that their ratio is kept constant (the maximal value of response in physical units changes by 10-20%). Thus, the response sensitivity depends strongly on the ratio of sodium to potassium maximal conductance and not on their individual values, at least in a limited range of parameters in which excitability is retained.

This result suggests that the ratio of available sodium to potassium channels can serve as an effective variable that controls the excitability or responsiveness to stimuli of the neuron. Characterizing the response function by a threshold, the input value at half-maximal response, Figure 2, a3 and b3, shows the dependence of this threshold on the ratio between the two types of conductance for the slow and rapid stimuli, respectively.

Closing the loop: activity-dependent inactivation and excitability-dependent activity

Following the motivation and justifications of the previous sections, we now specify the detailed structure of our model. The neuron is composed of an ensemble of sodium channels that can populate a large pool of inactive states, thus exhibiting a scaling behavior as discussed above, and an ensemble of potassium channels without such dynamics. This is consistent with the experimental findings of Toib et al. (1998), who reported that potassium channels of type ShakerB did not show a scaling behavior. Although other types of potassium channels may have complex temporal behavior, we here neglect this for sake of simplicity and attribute the modulations in excitability only to the sodium channels. Thus, in the model neuron, we follow the dynamics of one type of channel, the excitatory force, and assume that the restoring force remains of constant maximal value. The distribution of these channels among their different states is represented by a vector of length N + 1, μj(t), where j = 1:N are the inactive states and j = 0 is the active (available) state. Neural excitability X is defined as the fraction of sodium channels in the active (available) state, X(t) = μ0(t).

Neural activity a is described by a rate function, a coarse-grained, time-averaged representative of firing; temporal details at the resolution of action potentials are neglected. The time scale for averaging should be larger than the instantaneous interspike time. For typical firing rates measured in sensory neurons of up to 100 Hz, this implies an averaging over at least 10 ms. Activity is assumed to be evoked instantaneously by the external stimulus s according to a sigmoid input/output function aX(s) (Fig. 3a, Eq. 1). This assumption is consistent with a stimulus that varies over time scales also longer than the typical interspike time. The stimulus represents sensory or direct electrical input that drives spiking in the neuron; the precise type of input and its units will depend on the system and the experimental setup. For example, one may imagine a current directly injected into an isolated cell body in a slice experiment. Both stimulus s and neural activity a are defined to be dimensionless variables. In particular, for protocols in which the input is bounded, both variables have a maximal value of 1. Modulations in neural excitability are modeled by the parametric dependence of this input/output function on the internal excitability variable X. Drawing from the results for the Hodgkin-Huxley model shown in Figure 2, we take the input/output function to have a threshold that depends inversely on excitability as follows: θA = cA/X, where cA is a constant.

Neural excitability X is a dynamic variable representing the fraction of inactive channels. The rate of ion channel inactivation at a given time generally depends on neural activity: the higher the activity, the longer the membrane will be depolarized and the more channels will inactivate. We represent this property by making the inactivation rate proportional to neuronal activity in the model aα0, where a is the (normalized) neural activity and α0 is a constant inactivation rate. Once inactivated, the Na+ channels are allowed to move among the inactive states, I1... IN, connected by transition rates of similar magnitude, as in the model of the ensemble of channels described above. The scheme of transition rates and their connection with neural activity is depicted in Equation 7, as follows:

We note that closing the loop between activity and inactivation has a stabilizing effect on the fraction of channels that populate the available state A. This effect is illustrated in Figure 3b. Whereas in a collection of ion channels continuous stimulation results in a continuous depletion of channels from the active state, in the neuron model, if too many channels inactivate, there is little activity evoked, which in turn slows down inactivation and allows for some recovery. This implies that at the neuron level excitability may fluctuate around some value but will not continuously drift away, and thus neural excitability will be kept stable for a long time. Still, the fraction of inactivated channels changes its properties considerably through its distribution among the inactive state, and this property will affect the typical time scales that will arise in neural dynamics, as will be shown below.

The closing of the loop between neural activity and channel kinetics is a key ingredient of our model: the fraction of available channels determines neuronal excitability X, which parametrically modulates the neuronal input/output function; this function determines the responsiveness of the neuron to stimuli and hence its activity; neural activity, in turn, affects the rate of channel inactivation. The dynamical variable of excitability plays a central role here; it is a phenomenological variable at the neuronal level, which carries information about dynamics of the channel level. In simpler models of inactivation, a rate of transition out of a single inactivated state is defined, which determines the time scale of relaxation in the long-term behavior of the neuron (Zeevi and Bruckstein, 1981; Tabak et al., 2000; Kim and Rieke, 2003). Here, we have incorporated the degeneracy of inactive ion channel states into the neuron model, allowing to address directly the question of how the multiple time scales on the molecular level scale up to the neuron level and reflect in neural activity and response (Lowen et al., 1999).

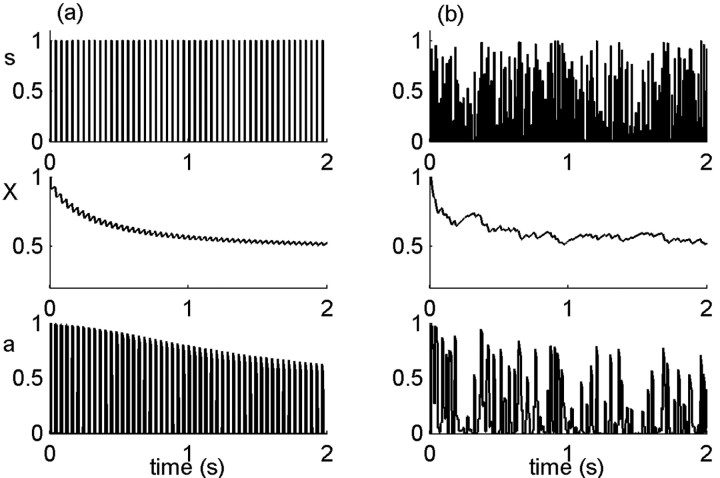

Figure 4 illustrates the results of typical model simulations. The variables stimulus, excitability and activity, are shown as a function of time for two stimulus types, a periodic pulse train (Fig. 4a) and a random stimulus (Fig. 4b). After neural activity, channels tend to inactivate and thereafter undergo voltage-independent transitions among the pool of inactive states. However, because activity depends on a sufficient degree of excitability, if too many channels have inactivated, there is no activity even in the presence of stimulus, and some channels will recover. This is seen in the behavior of the dynamic variables with time during the stimulation period: after a first strong decrease, excitability (Fig. 4, middle) fluctuates around the activity threshold. This stability of the excitability variable induces an effective separation of time scales in the neural response. On short time scales, neural activity (Fig. 4, bottom) is determined by the nonlinear input/output function with the steady-state value of excitability. Over long time scales, the cumulative effect of past history is in fact registered in a hidden degree of freedom, the distribution of channels among their inactive states, which is manifested only on long time dynamics. In the following sections, we explore some dynamic properties of this neuron model under different stimulation protocols.

Figure 4.

Stimulus, excitability, and neural response. A stimulus (s; top) is delivered to the model neuron. Activity (a; bottom) is elicited in response to the stimulus, according to a nonlinear response function (see Fig. 3a), which depends on the internal excitability variable (X; middle). a, Periodic unit pulses of width 10 ms and period 40 ms. b, Random stimulus, uniformly distributed in [0,1]. Parameters: α0 = β = 20 Hz; σ = 0.1; cA = 1/2.

History-dependent recovery: scaling relationship

We now address the question of how neuronal excitability recovers back to its maximal value after different stimulations; in particular, we would like to characterize the time course of this recovery. In analogy to the experimental protocols on the ensemble of ions in a membrane patch (Toib et al., 1998), we simulate a period of stimulation, then stop the stimulation and follow the dynamics of relaxation.

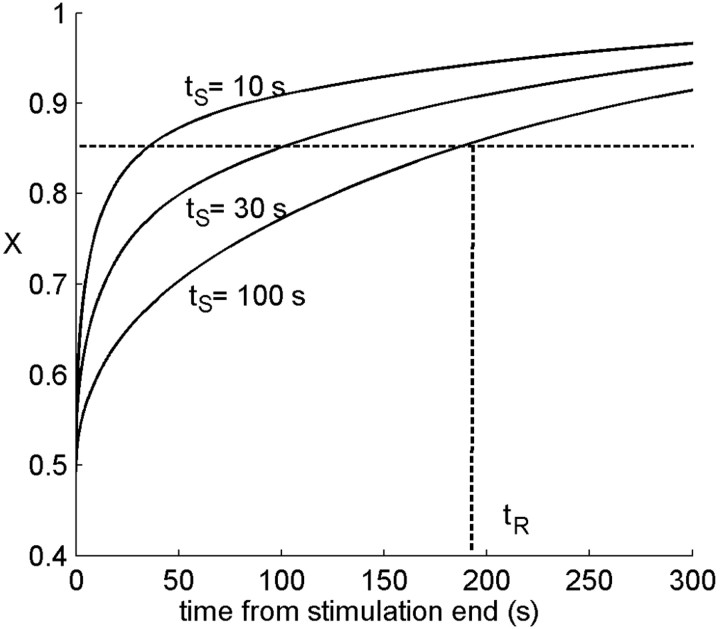

After stimulation has stopped, there is no force driving the channels to inactivation, the active state becomes absorbing, and excitability increases monotonically with a time course displayed in Figure 5, which is clearly nonexponential. The same simulation is repeated for various durations of the stimulating period, with all other stimulus properties unchanged. The resulting recovery curves, starting from the time at which stimulation ends, are depicted in Figure 5. We quantify these dynamics by an arbitrary recovery threshold (θR) on the excitability, defining a recovery time tR. Figure 5 shows that the recovery time depends on the history of stimulation and activity, although the excitability starts from the same steady-state value at the end of stimulation. Thus, the characteristic time scale for recovery (not just a recovery time) is history dependent. This implies that observing neuronal excitability alone, it may appear there is no Markov property to the system: the future dynamics is not determined solely by the current value of excitability. In fact, the hidden degrees of freedom of channel inactivation induce this apparent history dependence.

Figure 5.

Recovery of excitability after a stimulation period. After stopping the stimulation, neuronal excitability slowly recovers back to its maximal value. Recovery is shown after stimulation of duration 10, 30, and 100 s. Note that recovery is nonexponential, and that although excitability starts from the same value, the time course is different depending on the history. Defining a typical time for recovery by a fixed threshold, this time tR depends on history of stimulation and activity. Parameters: α0 [infi] = β = 20 Hz; σ = 0.1; cA = 1/2; stimulus frequency, 30 Hz.

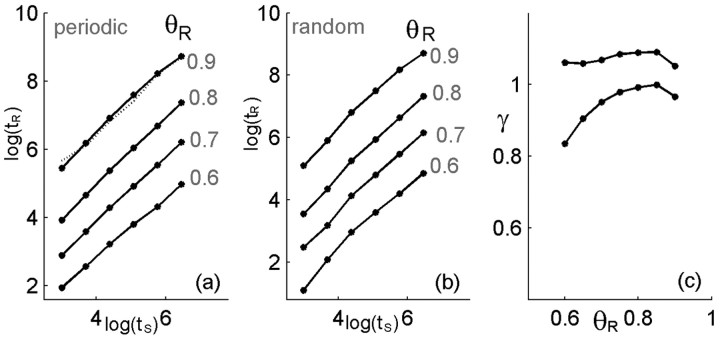

In their experiments on ion channels, Toib et al. (1998) have shown that the characteristic time for recovery of channels from inactivation scales as a power law with the duration of stimulation. Following these authors' suggestion, we have shown that this property is captured by the structure of ion channel states at the level of channel recovery (Millhauser et al., 1988; Toib et al., 1998). Figure 6 demonstrates that our model neuron displays a similar scaling behavior in the relaxation of its excitability after stimulation. Both for a periodic stimulus (Fig. 6a) and for a random stimulus (Fig. 6b), the time scale of recovery from inactivation tR shows a power-law scaling with stimulation duration (ts), which can be expressed as follows:

|

(8) |

Figure 6.

Scaling of recovery time with stimulation duration. Following the definitions of Figure 5, the time scale of recovery from inactivation shows a power-law scaling with stimulation duration tR∼(tS)γ. Results for different choices of the recovery threshold are shown for a periodic train of stimulus pulses (a) and for a random Poisson pulse train with the same mean rate (b) averaged over realizations. The best fit for γ in the scaling function is shown for the two stimulus types in c and is between 0.8 and 1.05. Parameters: α0 = β = 10 Hz; σ = 0.001; cA = 1/2.

where c and γ are constants. Because an arbitrary threshold was involved in the definition of the recovery time, the value of this time depends on the choice of threshold, as shown by the different curves in Figure 6, a and b. However, the functional behavior is seen to be similar, and the choice of recovery threshold affects the constant c and only weakly the scaling power γ, which is found to be between 0.75 and 1.05, as shown in Figure 6c. Changing the inactivation constant in the model, α0, and the stimulus frequency result in a similar scaling behavior with a scaling power γ depending only weakly on these parameters. Direct experimental measurements of recovery time scales at the level of the single neurons and their history dependence have not been performed. Therefore, these values can be compared with those measured for ion channels in a membrane patch (Toib et al., 1998) and are found to be of similar magnitude. We note that the main nontrivial result here is the existence of the scaling behavior between stimulus duration and recovery time; standard models of activity dependent neurons have a well defined relaxation time, which does not depend on the history of the system. It is not expected that a simple model as that presented here should predict precisely the power or account for the fine differences between different ion channel types. On the other hand, as discussed below, the simplicity of the model suggests that the appearance of activity-dependent multiple time scales in recovery will be rather general.

History-dependent recovery: underlying channel dynamics

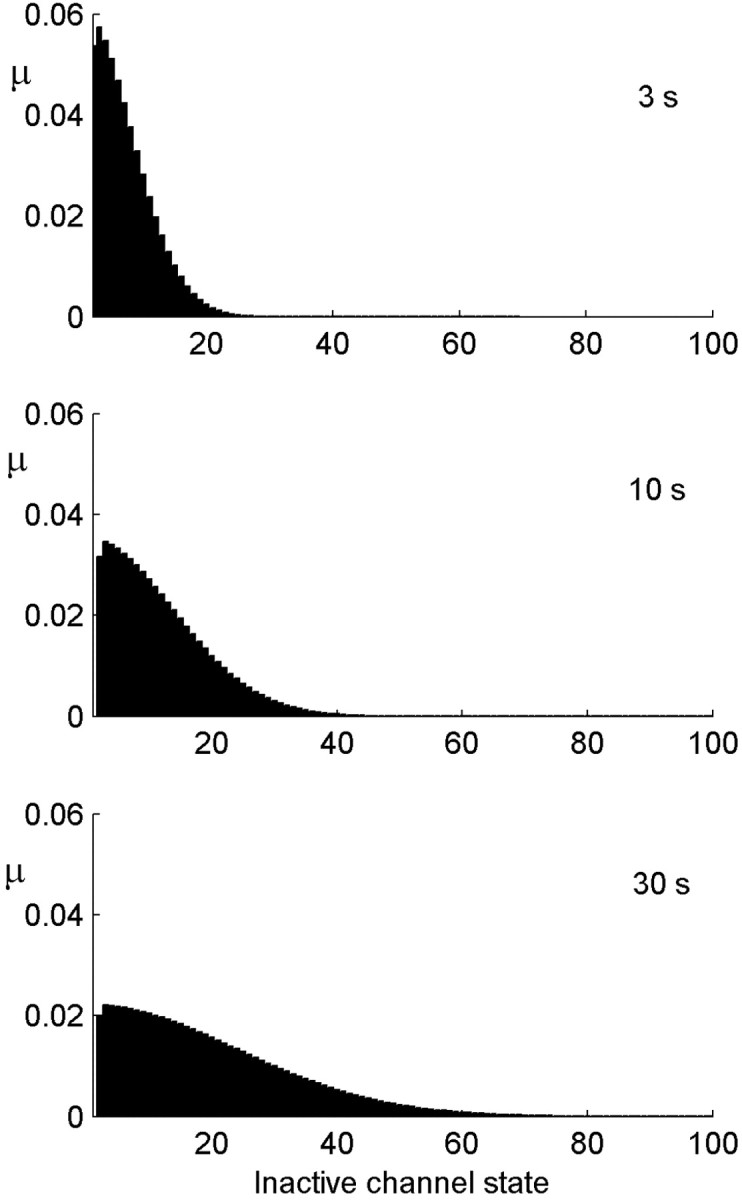

The dynamics of the ion channels within their degenerate inactive state space underlie the history-dependent multiple time scales that emerge in the dynamics of the neural model. We now consider these dynamics in some detail. Figure 7 shows the time evolution of the channel distribution during stimulation for various lengths of the stimulus history. As stimulation duration increases, the channel distribution broadens and develops a tail that decays as a Gaussian with the distance from the active state. The mean of the distribution of channels among states, 〈j〉, increases monotonically with time (Fig. 8a). It is important to recall that, although the inactive channels drift away from the active state in the mean, the portion of channels in the active state remains around the activity threshold and fluctuates in its vicinity.

Figure 7.

Distribution of the channels among the inactive states after different stimulus durations. The distribution becomes broader as the stimulation duration increases. The population at the origin itself is stable at a value of ∼0.5 (data not shown). Parameters:α0 =β = 20 Hz; σ = 0.1; cA = 1/2.

We turn once again to the diffusion approximation to explain the dynamics of the channel distribution. In the neuron model, deviations from diffusive dynamics occur near the boundary (the active state), where the transition rates change as a function of time depending on activity. At times when there is no stimulus, or when excitability is too low to generate activity, the active state acts as an absorbing boundary. At times after activity, only a fraction of the active channels are returned back into the inactive pool. The boundary switches between these two types of behavior according to the distribution of channels itself, which makes the problem nonlinear. Solutions of the diffusion equation on the half-line that start near the origin generally have a mean that grows as the square root of time. Figure 8a shows the mean calculated in the model, together with a fit of the form  . Allowing for an arbitrary fitting parameter C1, this semiquantitative prediction of diffusive dynamics captures well this property of the distribution among channel states during stimulation.

. Allowing for an arbitrary fitting parameter C1, this semiquantitative prediction of diffusive dynamics captures well this property of the distribution among channel states during stimulation.

Consider now the behavior of the model neuron in the recovery period, after stimulation has ended. Intuitively, it is clear that the longer was the stimulation period, the more channels have been pushed toward the deeply inactive states, and then the longer it will take for the system to recover. In fact, one can view the distribution of channels at the end of stimulation as a representation of past activity, which will determine the dynamics from here on; past temporal information has been transformed to information in the space of channel states. Because of the markovian nature of the model, this information determines completely the dynamics of recovery after the end of stimulation. In the absence of stimulation, the active state is absorbing at all times, and so the problem reduces to a diffusion equation with an absorbing boundary condition, which is exactly solvable in terms of the distribution at the end of the stimulation period. Neuronal excitability X(t) is equal to the cumulative probability to return to the active state over time. Figure 8b shows the analytic approximation derived in this way (solid lines) together with the model simulation (circles). For this analytic expression, the distribution at the end of stimulation was approximated by the solution of the diffusion equation with a reflecting boundary condition, allowing for an effective diffusion coefficient determined by the empirical measurement of  (Fig. 8a). From this approximation, one can find the scaling of the recovery time with the stimulation time; given a fixed recovery threshold (θR), excitability reaches the threshold at recovery time tR determined by the following equation:

(Fig. 8a). From this approximation, one can find the scaling of the recovery time with the stimulation time; given a fixed recovery threshold (θR), excitability reaches the threshold at recovery time tR determined by the following equation:

|

(9) |

This calculation results in the same linear scaling as found for the ensemble of channels, namely tS = ctR, where c depends on the effective diffusion coefficient and on the initial value of excitability (see Materials and Methods for additional details and formulas).

History-dependent temporal patterns in responsiveness

We now consider the manifestation of multiple-time-scale dynamics in neural responsiveness. Neurons are often categorized by the temporal activity patterns they produce in response to stimulation: tonic, bursting, etc. These responses reflect the interplay between external stimulation and internal neuronal characteristic time scales determined, for example, by ion channel content. Modeling studies have shown that internal neuronal mechanisms on different time scales can generate a wide range of responsiveness patterns (LeMasson et al., 1993; Mainen and Sejnowski, 1996). If the neuron has potentially a wide range of internal time scales that are evoked as a function of history, then a rich repertoire of activity patterns can be expected, which will also emerge as a function of history. Indeed, experiments reveal features such as the modulation of typical response frequency over long durations of stimulation (Tal et al., 2001). To illustrate similar effects in our model, we compare the response patterns of the neuron to the same stimulation but with different histories, see Figure 9. One finds, in some parameter regimens, mode locking and modulations of the typical response time over long durations of stimulation. The temporal activity patterns are illustrated in the regimen of strong inactivation and slow channel diffusion. Figure 9a shows the response to a periodic stimulus of low frequency; neural activity starts by a one-to-one response, then after some time starts to “miss” every fourth or fifth pulse as a result of slow cumulative inactivation, and finally settles on an ordered 1:2 response, namely, activity is evoked only every second stimulus pulse. Figure 9b shows the response to a higher frequency of stimulation; here, the neuron is able to respond only once every several pulses from the start. This frequency of response slowly decreases with time, although it is slow enough such that over the observed window the frequency seems constant. At even longer times, the periodicity of the response breaks down, and the neuron responds at random times and with variable response amplitude.

The different patterns of activity that emerge during persistent stimulation are related to slow changes in the balance between active and inactive channels and the distribution of the latter in their state space. Because this change is very slow, and because excitability is locally stable, observing the response in an intermediate-sized time window one may characterize the response by local properties that appear to be temporally stable. We next consider the functional form of this slow nonstationarity of response, which is especially significant in the case of adaptation to stimulus onset.

History-dependent adaptation to a step stimulus

Spike frequency adaptation is a widespread phenomenon in neural systems, which may be a tool in modulating the neural response and allow complex temporal processing. Nonexponential adaptation and relaxation have been measured in several systems (Thorson and Biederman-Thorson, 1974; Xu et al., 1996); it has also been reported in the dynamics of synapses (Lowen et al., 1997) as well as in memory functions at the cognitive level (Wixted and Ebbesen, 1991; Anderson, 1995). At the level of the neuron, channel inactivation plays a role in spike frequency adaptation to step stimuli (Fleidervish et al., 1996), and in this context, we would like to understand how the model presented here, which is based on channel inactivation to modulate neural excitability, responds to the classic step stimulus experiment. There are two issues to consider here: the functional form of the decay in activity and its dependence on history.

Figure 10a shows the response of the model neuron to a constant stimulus, starting from stimulus onset over a long time. There are two distinct decay regimens; the tail of the adaptation curve in this case follows approximately a power law of  (dashed line; best-fit power is -0.455).

(dashed line; best-fit power is -0.455).

Although nonexponential relaxation indicates the possibility of memory, it does not necessarily imply it. For example, it can arise from the superposition of several spatially separated exponential relaxation processes (Thorson and Biederman-Thorson, 1974). To explicitly demonstrate activity-dependent or memory effects in dynamics, a dependence of the time course of relaxation on history should be shown. We found that our model neuron exhibits a dependence of the adaptation time scale on the duration of the conditioning stimulus. Conditioning stimuli of strength 0.6 and different durations were applied to the model neuron, after which the stimulus strength was abruptly stepped up to 1. The neuron responded with a rise in activity followed by a slow adaptation, similar to the one described above for stimulus onset. Figure 10b shows different adaptation traces superimposed as a function of time from the step stimulus, corresponding to different length of conditioning stimulus. The time course of adaptation differs according to history: the initial transient decay becomes faster as the conditioning stimulus becomes longer, while the slower tails follow approximately the same power law.

Defining the typical time scale for relaxation at a threshold, the inset to Figure 10b shows the dependence of this time scale on the duration of the conditioning stimulus for two different threshold values. The dependence is weak and can be reasonably fit with a power of between -0.15 and -0.2, although the curves are not strictly power law.

The comparison of these results to experiment is not straightforward, because experiments of this protocol, which directly probe the single-neuron level, have not been performed as far as we know. In an experiment on the fly visual system in vivo, it was demonstrated that the time scale of adaptation to a step stimulus is proportional to the duration of the conditioning stimulus preceding the step (Fairhall et al., 2001). However it is not known whether this is a single-cell or a network property. Previous work on the same system had found a dependence of decay times on conditioning stimulus parameters in a slightly different protocol (de Ruyter van Steveninck et al., 1986). These authors could not localize the effect to a particular area in the visual system (Zaagman et al., 1983), leaving as a possibility that it is a collective network effect. Our results here show that, in principle, there could be a contribution from the single-cell level to such behavior. Additional experimental work is required to compare different stimulus protocols and to tease apart the mechanisms at work on different levels; most likely, several of those contribute simultaneously to the effect observed in vivo.

Discussion

In this work, we have constructed a model of neuronal responsiveness and activity, which has an underlying molecular structure in the spirit of ion channel kinetics. Rather than taking a detailed modeling approach that includes the structure of states for a specific channel, we have used an abstracted version that captures the internal degeneracy of the inactive voltage-independent part of this space (Millhauser et al., 1988). Because of their complex structure and multiplicity of conformations, a large space of internal nearly degenerate states are expected to be a rather general property of ion channels (Frauenfelder et al., 1991). A key ingredient in the model is closing the loop between neuronal activity and channel kinetics. The concept of neuronal excitability, a phenomenological dynamic variable at the neuronal level that reflects underlying channel dynamics, was used to close this loop: the fraction of available channels determines neuronal excitability, which determines neuronal activity; activity, in turn, affects the availability of channels by affecting the probability of inactivation. This step enables scaling up the diffusion-like kinetics of the ion channels to the level of a neuron. Several versions of the quantitative dependencies among these variables were tested. For example, excitability can enter through the threshold of the neuronal input/output function or through the maximal level of activity; inactivation can be a continuous or discrete function of activity. The results, presented in this work for one version of the model, are robust as long as the qualitative relationships between these variables are maintained.

Several interesting properties of the model neuron were found using simulations of different experimental protocols. First, after a period of stimulation, the internal excitability recovers back to its maximal value with a nonexponential relaxation curve, the characteristic time scale of which depends on the duration of the preceding stimulation. This history-dependent recovery dynamics results from the registering of the history in the distribution of channels among their multitude of degenerate states, providing an effective internal memory device. Second, during exposure to long persistent inputs, the response decays nonexponentially with power-law tails. This decay is so slow that over intermediate stretches of time, the response seems stationary; however, the local response properties vary slowly in their temporal patterns in response to the same stimulus. Thus, observing the neuron over intermediate time stretches, one finds a variety of temporal patterns that are determined by history of stimulation and activity. Finally, adaptation to an abrupt change in stimulus properties is also nonexponential, with a time course that depends on the duration of the preceding (conditioning) stimulus. These properties connect to the central experimental results in the literature reporting activity-dependent multiple-time-scale dynamics on several levels of organization (de Ruyter van Steveninck et al., 1986; Toib et al., 1998; Ellerkmann et al., 2001; Fairhall et al., 2001; Tal et al., 2001).

It is noted that in the same model neuron, different quantitative forms of multiple-time-scale dynamics emerge under different stimulation protocols. In particular, recovery after stimulation depends strongly on the duration of the conditioning stimulus, with a power of ∼1 (Fig. 6), whereas the time scale typical of adaptation to a step change in stimulus depends only weakly on the duration of the conditioning stimulus (Fig. 10). Intuitively, this is because the recovery of neuronal excitability reflects the recovery of channels form the multiple inactive states, whereas adaptation reflects the entry of channels into inactivation and is only indirectly related to the previous distribution of channels among the inactive states. This suggests the possibility that for experiments on neurons, different scaling behaviors of history dependence may be found for different experimental protocols. More detailed experiments at the level of the single neuron are required to test these predictions in detail.

Our model shows that the degeneracy of the functionally inactive state of ion channels is enough to endow the neuron model with multiple-time-scale, history-dependent behavior in responsiveness, adaptation, and recovery; no specific combination of rate constants is required. Models with specific configurations of transition rates among degenerate channel states were shown to result in power-law distributions of dwell time (Liebovitch et al., 1987). However, it is theoretically a robust result that escape from a cluster of states follows a nonexponential distribution of dwell times, independent of the detailed structure of state space and to some degree even of dimensionality (Nadler and Stein, 1996; Nadler et al., 1996). In the general case, a combination of power laws, stretched exponentials, or other nonexponential relaxation functions may appear. Such behaviors may be difficult to distinguish from a pure power law over a finite range of measurements, and moreover, a pure power law is not required for history dependence or for multiple time scales in response and recovery. It is intuitive that the dynamic properties of the neuron model presented here are related to the nonexponential dwell time distributions of the channels in the presence of internal degeneracy. Thus, we conjecture that history-dependent multiple-time-scale dynamics will be a general property of neurons with a similar internal degeneracy, although the nonexponential functional forms of relaxation may vary depending on the details.

In this work, we have described neuronal excitability by a single variable, representing the ratio between exciting and restoring types of channels in the Hodgkin-Huxley tradition. The underlying kinetics was attributed to the sodium channels, the excitatory force. Experiments have shown that the scaling of recovery with stimulation is not a universal property of ion channels, and that if present, the quantitative properties of this scaling depends on channel type (Toib et al., 1998). It is a challenging theoretical task to understand how a combination of excitatory and restoring forces, each with its own temporal scaling behavior, affects the properties of the dynamical system that describes a neuron.

Although this work has focused on models of a single neuron, it is acknowledged that multiple-time-scale dynamics are abundant at other levels of neural systems, for example, in single synapses (Lowen et al., 1997; Drew and Abbott, 2003; Fusi et al., 2005) or in networks of connected neurons. Computational or learning abilities will be significantly affected by the combination of all these levels in a way that is currently not understood. This places an important role for subsequent theoretical studies in anticipating these abilities. Models such as the one presented here can be further investigated in terms of their computational, adaptive, and learning properties. Several such model neurons can be integrated into a network, with or without multiple-time-scale behavior at the synapses. A comparison between systems with multiple time scales and those with a limited range of time scales, and an understanding of the functional role of these phenomena, is expected to stem out of such theoretical studies.

Footnotes

This work was supported in part by the United States-Israel Binational Science Foundation. We thank Shimon Marom for many illuminating discussions and Erez Braun for critical reading of this manuscript.

Correspondence should be addressed to Naama Brenner, Department of Chemical Engineering, Technion-Israel Institute of Technology, Haifa 32000, Israel. E-mail: nbrenner@tx.technion.ac.il.

Copyright © 2005 Society for Neuroscience 0270-6474/05/256479-11$15.00/0

References

- Anderson JR (1995) Cognitive psychology and its implications. New York: Freeman.

- Bassingthwaighte JB, Liebovitch LS, West BJ (1994) Fractal physiology. New York: Oxford UP.

- Brenner N, de Ruyter van Steveninck RR, Bialek W (2000) Adaptive rescaling maximizes information transmission. Neuron 29: 695-702. [DOI] [PubMed] [Google Scholar]

- Carr DB, Day M, Cantrell AR, Held J, Scheuer T, Catterall WA, Surmeier DJ (2003) Transmitter modulation of slow, activity-dependent alterations in sodium channel availability endows neurons with a novel form of cellular plasticity. Neuron 39: 793-806. [DOI] [PubMed] [Google Scholar]

- de Ruyter van Steveninck RR, Zaagman WH, Masterbroek HAK (1986) Adaptation of transient responses of a movement-sensitive neuron in the visual system of the blowfly C. erythrocephala Biol Cybern 54: 223-236. [Google Scholar]

- Desai NS, Rutherford LS, Turrigiano GG (1999) Plasticity in the intrinsic excitability of cortical pyramidal neurons. Nat Neurosci 2: 515-520. [DOI] [PubMed] [Google Scholar]

- Drew PJ, Abbott LF (2003) Scale-invariant synaptic dynamics in a computational model of recognition memory. Soc Neurosci Abstr 29: 89.10. [Google Scholar]

- Ellerkmann RK, Riazanski V, Elger CE, Urban BW, Beck H (2001) Slow recovery from inactivation regulates the availability of voltage-dependent Na(+) channels in hippocampal granule cells, hilar neurons and basket cells. J Physiol (Lond) 532: 385-397. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fairhall AL, Lewen GD, Bialek W, de Ruyter van Steveninck RR (2001) Efficiency and ambiguity in an adaptive neural code. Nature 412: 787-792. [DOI] [PubMed] [Google Scholar]

- Fleidervish IA, Friedman A, Gutnick MJ (1996) Slow inactivation of Na+ current and slow cumulative spike adaptation in mouse and guinea-pig neocortical neurons in slices. J Physiol (Lond) 493: 83-97. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Frauenfelder H, Sligar SG, Wolynes PG (1991) The energy landscapes and motions of proteins. Science 254: 1598-1603. [DOI] [PubMed] [Google Scholar]

- Fusi S, Drew PJ, Abbott LF (2005) Cascade models of synaptically stored memories. Neuron 45: 599-611. [DOI] [PubMed] [Google Scholar]

- Goychuk I, Hanggi P (2002) Ion channel gating: a first-passage time analysis of the Kramers type. Proc Natl Acad Sci USA 99: 3552-3556. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kim KJ, Rieke F (2003) Slow Na+ inactivation and variance adaptation in salamander retinal cells. J Neurosci 23: 1506-1516. [DOI] [PMC free article] [PubMed] [Google Scholar]

- LeMasson G, Marder E, Abbott LF (1993) Activity dependent regulation of conductances in model neurons. Science 259: 1915-1917. [DOI] [PubMed] [Google Scholar]

- Liebovitch LS, Fischbarg J, Koniarek JP (1987) Ion channel kinetics: a model based on fractal scaling rather than multistate Markov processes. Math Biosci 84: 37-68. [Google Scholar]

- Lowen SB, Cash SS, Poo MM, Teich MC (1997) Quantal neurotransmitter secretion rate exhibits fractal behavior. J Neurosci 17: 5666-5677. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lowen SB, Liebovitch LS, White JA (1999) Fractal ion channel behavior generates fractal firing patterns in neuronal models. Phys Rev E Stat Phys Plasmas Fluids Relat Interdiscip Topics 59: 5970-5980. [DOI] [PubMed] [Google Scholar]

- Mainen ZF, Sejnowski TJ (1996) Influence of dendritic structure on firing pattern in model neocortical neurons. Nature 382: 363-366. [DOI] [PubMed] [Google Scholar]

- Marder E, Abbott LF, Turrigiano GG, Liu Z, Golowasch J (1996) Memory from the dynamics of intrinsic membrane currents. Proc Natl Acad Sci USA 93: 13481-13486. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Marom S (1998) Slow changes in the availability of voltage-gated ion channels: effects on the dynamics of excitable membranes. J Membr Biol 161: 105-113. [DOI] [PubMed] [Google Scholar]

- Marom S, Abbot LF (1994) Modeling state-dependent inactivation of membrane currents. Biophys J 67: 515-520. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Millhauser GL, Salpeter EE, Oswald E (1988) Diffusion models for ion-channel gating and the origin of power-law distributions for single-channel recording. Proc Natl Acad Sci USA 85: 1503-1507. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Nadler W, Stein DL (1996) Reaction-diffusion description of biological transport processes in general dimension. J Chem Phys 104: 1918-1936. [Google Scholar]

- Nadler W, Huang T, Stein DL (1996) Random walk on random partitions in one dimension. Phys Rev E Stat Phys Plasmas Fluids Relat Interdiscip Topics 54: 4037-4047. [DOI] [PubMed] [Google Scholar]

- Rieke F, Warland D, de Ruyter van Steveninck RR, Bialek W (1999) Spikes: exploring the neural code. Cambridge, MA: MIT.

- Simoncelli EP, Paninski L, Pillow JW, Schwartz O (2004) Characterization of neural responses with stochastic stimuli. In: The new cognitive neurosciences (Gazzaniga M, ed). Cambridge, MA: MIT.

- Soen Y, Braun E (2000) Scale-invariant fluctuations at different levels of organization in developing heart cell networks. Phys Rev E Stat Phys Plasmas Fluids Relat Interdiscip Topics 61: R2216-R2219. [DOI] [PubMed] [Google Scholar]

- Tabak J, Senn W, O'Donovan MJ, Rinzel J (2000) Modeling of spontaneous activity in developing spinal cord using activity-dependent depression in an excitatory network. J Neurosci 20: 3041-3056. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Tal D, Jacobson E, Lyakhov V, Marom S (2001) Frequency tuning of input-output relation in a rat cortical neuron in vitro. Neurosci Lett 300: 21-24. [DOI] [PubMed] [Google Scholar]

- Teich MC (1989) Fractal character of the auditory neural spike train. IEEE Trans Biomed Eng 36: 150-160. [DOI] [PubMed] [Google Scholar]

- Thorson J, Biederman-Thorson M (1974) Distributed relaxation processes in sensory adaptation. Science 183: 161-172. [DOI] [PubMed] [Google Scholar]

- Toib A, Lyakhov V, Marom S (1998) Interaction between duration of activity and time course of recovery from slow inactivation in mammalian brain Na+ channels. J Neurosci 18: 1893-1903. [DOI] [PMC free article] [PubMed] [Google Scholar]

- van Ooyen A (1994) Activity-dependent neural network development. Network 5: 401-423. [DOI] [PubMed] [Google Scholar]

- Wang XJ (1998) Calcium coding and adaptive temporal computation in cortical pyramidal neurons. J Neurophysiol 79: 1549-1566. [DOI] [PubMed] [Google Scholar]

- Wixted JT, Ebbesen EB (1991) On the form of forgetting. Psychol Sci 2: 409-415. [Google Scholar]

- Xu Z, Payne JR, Nelson ME (1996) Logarithmic time course of sensory adaptation in electrosensory afferent nerve fibers in a weakly electric fish. J Neurophysiol 76: 2020-2032. [DOI] [PubMed] [Google Scholar]

- Zaagman WH, Masterbroek HAK, de Ruyter van Steveninck RR (1983) Adaptive strategies in fly vision: on tier image-processing qualities. IEEE Trans Syst Man Cybern 13: 900-906. [Google Scholar]

- Zeevi YY, Bruckstein AM (1981) Adaptive neural encoder with self inhibition and threshold control. Biol Cybern 40: 79-92. [DOI] [PubMed] [Google Scholar]