Abstract

In what they described as “a tactile analog of blindsight,” Paillard et al. (1983) reported the case of a woman with damage to the left parietal cortex who was profoundly impaired in detecting tactile stimuli but could nonetheless correctly identify their location (also Rossetti et al., 1995). This stands in direct contrast to reports of neurological patients who were unable to accurately locate stimuli that they could successfully detect (Head and Holmes, 1911; Halligan et al., 1995; Rapp et al., 2002). The combination of these findings suggests that detecting and locating tactile stimuli are doubly dissociable processes, presumably mediated by different neural structures. We conducted four psychophysical experiments seeking evidence for such a double dissociation in neurologically intact subjects. We compared people's accuracy in detecting versus locating a tactile stimulus presented to one of four fingers and followed by a vibrotactile mask presented to all four fingers. Accuracy scores for both the yes–no detection and four-alternative forced-choice location judgments were converted to a bias-free measure (d′), which revealed that subjects were better at detecting than locating the stimulus. Detection was also more sensitive than localization to manipulations involving the mask: detection accuracy increased more steeply than localization accuracy as the target-mask interval increased, and detection, but not localization, was affected by changes in the mask frequency. By comparing these results with simulated data generated by computational models, we conclude that detection and localization are not mutually independent as previous neurological studies might suggest, but rather localization is subsequent to detection in a serially organized sensory processing hierarchy.

Keywords: somatosensory, touch, blindsight, masking, vibrotactile, signal detection theory

Introduction

“Blindsight” is a striking neuropsychological syndrome in which a patient with damage to visual cortical areas is able to identify attributes of an object (e.g., its location) despite reporting that they cannot “see” the object (Weiskrantz et al., 1974; Azzopardi and Cowey, 1997; Stoerig and Cowey, 1997). Descriptions of this syndrome have had an enormous impact in psychology, neurology, and philosophy. Evidence for an equivalent dissociation between conscious perception of a stimulus and knowledge of its spatial properties is comparatively rare among sensory modalities other than vision, but one area in which such evidence exists is touch. Paillard et al. (1983) reported the case of a woman with damage to the left parietal cortex who was profoundly insensitive to tactile stimuli applied to her right hand and lower right arm. Despite this, she showed above-chance accuracy in identifying the location of stimuli that she failed to detect. A similar somatosensory deficit (what might be called “numb touch”) was reported by Rossetti et al. (1995) in their study of a man with a left thalamic lesion. The ability of these patients to locate tactile stimuli in the absence of conscious detection contrasts with classic reports (Head and Holmes, 1911) and more recent demonstrations (Halligan et al., 1995; Rapp et al., 2002) of patients who were unable to accurately locate stimuli that they could successfully detect. The combination of these findings indicates that detecting and locating tactile stimuli are doubly dissociable processes, presumably performed by different neural structures.

Although neurologically induced dissociations are an extremely important source of evidence regarding the functioning of perceptual systems, one must exercise caution when using the behavior of patients with brain damage to make inferences about normal functioning. Moreover, such neurological evidence is often based on a small number of cases for which relevant premorbid data are almost never available. In this regard, evidence for equivalent, but less dramatic, process dissociations in intact “normal” subjects is invaluable. Not surprisingly, there have been attempts to demonstrate blindsight in normal subjects. In one study, people made correct forced-choice judgments about the location of near-threshold visual stimuli that they reported not detecting (Meeres and Graves, 1990). However, such demonstrations, and indeed most clinical reports of blindsight, suffer a basic confound arising from the fact that yes–no detection decisions are subject to response bias, whereas forced-choice judgments are not (Campion et al., 1983). Thus, such dissociations may reflect differences in response bias rather than changes in perceptual processes (but see Azzopardi and Cowey, 1997). There has been one report of blindsight among normal observers that was not subject to this confound (Kolb and Braun, 1995); however, this particular study has met with controversy on other grounds (Morgan et al., 1997; Robichaud and Stelmach, 2003).

Given the problems that have afflicted the search for blindsight-like dissociations of visual perception, the objective of the present study was to examine this issue in a different sensory modality: touch. As mentioned earlier, studies of neurological patients have revealed a double dissociation between localization and detection of tactile stimuli, including evidence for blindsight-like localization of undetected stimuli. Thus, our psychophysical study has sought to obtain evidence for a similar dissociation in neurologically intact subjects.

Materials and Methods

The objective of the present experiments was to compare people's accuracy in detecting versus locating tactile stimuli. Under normal conditions, these tasks are trivially easy, and thus ceiling effects would preclude the possibility of observing any dissociation. Therefore, in these experiments, we used a backward masking procedure to reduce performance, permitting us to identify a possible separation between accuracy in tactile detection versus localization.

In each experiment, subjects were presented with a brief tactile stimulus (the target) on one of four fingers of their right hand (excluding the thumb). This was followed shortly afterward by a vibrotactile stimulus (the mask) presented simultaneously to all four fingers. The subjects were asked to report on the presence versus absence of the target stimulus and to identify its location (which finger). The temporal interval between the target and mask was systematically varied to document changes in detection and localization judgments as the target became more salient (with increasing separation between target and mask). Different stimulus onset asynchronies (SOAs) between the target and vibration were used in each experiment. Typically, these varied between 40 and 100 msec, with the exception of a very short SOA (20 msec) included in experiment 4 and a wider range of SOAs (30–180 msec) in experiment 2. Experiment 4 introduced a second manipulation of the mask. In addition to varying the target–mask SOA, the experiment examined the effect of changing the frequency of the vibrotactile stimulus used as the mask. We anticipated that differences in mask frequency would affect the amount of masking.

In experiments 1, 3, and 4, subjects gave a yes–no response to report whether they detected the target stimulus or not and a four-alternative forced-choice (4AFC) response to name the finger on which the target was presented. To avoid the confound arising from differences in sensitivity to response bias between yes–no and forced-choice responses, all response-accuracy data were converted to a bias-free score (d′). As another means to avoid this confound, experiment 2 investigated differences in detection versus localization accuracy by using the same response measure (2AFC) for both judgments. On each trial of this experiment, the subjects felt two masks, separated by 1 sec, both of which were presented to two fingers (index and middle) simultaneously. One of the two masks was preceded by the target stimulus presented to one of the two fingers. Thus, for the detection judgment, the subjects had to report when the target occurred (before the first or second mask); for the localization judgment, they had to report which finger received the target (index or middle).

In experiments 1 and 2, the detection judgment and the location judgment were made in separate blocks of trials. This provided independent measures of the subjects' accuracy on each task (i.e., so that the subjects' location judgment was not contaminated by their detection response or vice versa). In experiments 3 and 4, the subjects were required to make both detection and localization judgments on every trial. This enabled us to examine the subjects' localization accuracy conditional on their detection response (i.e., compare their accuracy in reporting the location of stimuli they detected vs stimuli they missed).

Finally, we compared the experimental findings to simulated data generated using different computational models. Specifically, we tested three models in which yes–no (detection) and forced-choice (localization) judgments were based on (1) the output of a single sensory process, (2) two different sensory processes arranged serially (the second process was performed on the output of the first process), or (3) the product of two independent sensory processes (operating in parallel). A summary of the different experiments is presented in Table 1.

Table 1.

Summary of experimental designs

| | Tasks | Mask (Hz) | SOAs (msec) |
|---|---|---|---|
| Experiment 1 | Detect (yes–no) or locate (4AFC) | 50 | 40, 60, 80, 100 |
| Experiment 2 | Detect (2AFC) or locate (2AFC) | 50 | 30, 60, 90, 120, 150, 180 |
| Experiment 3 | Detect (yes–no) and locate (4AFC) | 50 | 40, 60, 80 |
| Experiment 4 | Detect (yes–no) and locate (4AFC) | 25 or 50 | 20, 40, 60, 80, 100 |

Experimental subjects. Forty-one first-year psychology students, aged between 17 and 25 years, participated in the experiments to obtain course credit. Of the total number, 24 were female, and 38 were right handed. The recruitment of subjects and the experimental procedures had been approved by the institutional ethics committee.

Experimental apparatus. The target stimulus and vibration mask were produced using nickel bimorph wafers (38 × 19 × 0.5 mm, length × width × thickness; Morgan Matroc, Bedford, OH) individually driven by electric pulses from custom-built amplifiers controlled by a computer running Labview (National Instruments, Austin, TX). The wafers were individually mounted on plastic blocks, aligned side-by-side and spaced 25 mm apart (center-to-center), housed inside a custom-built plastic case. Small plastic rods (15 mm tall, 3 mm diameter) were glued to the top face at the end of each wafer. These rods stood vertically with their end projecting through a small hole drilled into the top face of the case. The subjects placed their fingers directly onto the ends of these rods (buttons) so that the vertical deflections generated by the wafers were transmitted directly to their fingertips. The target was a single upward square-wave deflection lasting 5 msec. The amplitude of the target was 100 μm in experiment 1 but was increased to 150 μm in experiments 2, 3, and 4 (because of poor performance of many subjects in a pilot version of experiment 2). The mask was a sequence of square waves, alternating from the down position to the up position, holding each for 5 msec, in which the two positions differed in height by 150 μm (for illustration of stimulus sequence, see Fig. 1). In experiments 1, 3, and 4, the vibration lasted a total of 500 msec but was shortened to 250 msec in experiment 2 because two vibrations were presented on each trial. The frequency of the vibration was 50 Hz (i.e., the onset of each square wave was separated by 20 msec) or 25 Hz for half of the trials in experiment 4 (the onset of each square wave was separated by 40 msec).
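The relationship between mask frequency, cycle time, and the number of square waves can be made concrete with a short calculation. The following is a minimal sketch only: the function name `mask_onsets` and its arguments are hypothetical and are not taken from the Labview control software, and it assumes that the first square wave begins exactly one SOA after target onset.

```python
def mask_onsets(soa_ms, freq_hz, duration_ms):
    """Onset times (msec, relative to target onset) of each square wave in the mask.

    Assumption: the first square wave starts soa_ms after target onset and
    onsets then repeat every 1000 / freq_hz msec until duration_ms has elapsed.
    """
    period_ms = 1000.0 / freq_hz              # 20 msec at 50 Hz, 40 msec at 25 Hz
    n_pulses = int(duration_ms // period_ms)  # whole cycles that fit in the mask
    return [soa_ms + k * period_ms for k in range(n_pulses)]


# A 500 msec mask at each frequency, starting 40 msec after the target.
onsets_50 = mask_onsets(soa_ms=40, freq_hz=50, duration_ms=500)
onsets_25 = mask_onsets(soa_ms=40, freq_hz=25, duration_ms=500)
```

Under these assumptions, the 50 Hz mask contains twice as many square-wave onsets per unit time as the 25 Hz mask, which is the property exploited by the frequency manipulation in experiment 4.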

Figure 1.

Top, Illustration of the stimulus sequence for experiment 1, in which a 5 msec target stimulus was presented to one finger and was followed, after a variable SOA, by a 50 Hz vibrotactile mask to all four fingers. A, B, Results of experiment 1. A, Average percentage correct for detection and localization of the target across different SOAs between the target and mask. For detection, this corresponds to the proportion of hits (positive responses on trials with the target present); for localization, this is the proportion correct in a four-alternative forced choice (chance performance, 25% correct). The false alarm rate is the proportion of catch trials (with no target) on which the subject reported detecting the target. B, Detection and localization accuracy converted into bias-free d′ scores. Error bars represent within-subject SEMs (Loftus and Masson, 1994).

Experimental procedure. The subjects sat with the four fingertips of their right hand (excluding the thumb) resting lightly on the buttons of the apparatus. The task was explained to them, and they were familiarized with both the target stimulus (presented on each finger without the mask) and the mask (presented on all fingers). The subjects then commenced a block comprising trials with different target–mask SOAs or different mask frequencies (experiment 4), all randomly intermixed (different random orders were generated for different subjects). On any trial, the target had equal probability of occurring on any of the four fingers (or two fingers, in experiment 2). Experiments 1, 3, and 4 also contained catch trials randomly intermixed among the experimental trials. On catch trials, there was no target stimulus; thus, this condition was used to determine each subject's false alarm rate for detection.

In experiments 1 and 2, subjects were asked to report whether they felt the target was present or absent (respond either “yes” or “no”) on half of the trials and to report to which finger the target had been presented on the remaining trials. Half of the subjects made the detection decision during the first half of the experiment and the localization decision during the second half; the remaining subjects were tested in the reverse order. In experiments 3 and 4, subjects made both detection and localization judgments (in that order) on all trials.

Computer simulations. To simulate performance in detection and localization tasks, we cast the specific decision procedures within the formal framework of Signal Detection Theory (Green and Swets, 1966). Briefly, when the target stimulus is present, the sensory signal elicited by the stimulus is given a numerical value greater than zero (the size of the number is varied systematically to represent changes in the strength of the signal). This value (s) is added to a random number (n) from a Gaussian distribution with a mean of zero and specified SD. The random number represents the “noise” inherent in the transduction and processing of the sensory signal, and the amount of noise present is specified by SD. Thus, the sensory input equals s + n. When the target stimulus is absent, the sensory input is just a random number from the noise distribution (= n). For detection, a positive response is given on any trial when the sensory input is greater than a threshold value, c (the decision criterion for detection). Clearly, the probability of correct detection (a “hit”) depends on where c is set, making detection vulnerable to response bias. However, because the probability of a false alarm (a positive detection response on a trial with no target) is also affected by the decision criterion, a bias-free measure of detection sensitivity can be calculated as the difference in the normalized probabilities (as z-scores) of a hit and a false alarm. This value, d′, equals the distance (in SD units) between the means of the s + n distribution and the n distribution (i.e., d′= s/SD).
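The single-channel case described above can be sketched in a few lines. This is an illustration under the equal-variance Gaussian assumption stated in the text; the function names are hypothetical, and the parameter values are arbitrary rather than taken from the experiments.

```python
from statistics import NormalDist


def predicted_rates(s, sd, c):
    """Hit and false-alarm probabilities for one channel with criterion c.

    Sensory input is s + n on target trials and n on catch trials,
    where n is Gaussian noise with mean 0 and standard deviation sd.
    """
    signal = NormalDist(mu=s, sigma=sd)
    noise = NormalDist(mu=0.0, sigma=sd)
    hit = 1.0 - signal.cdf(c)          # P(s + n > c)
    false_alarm = 1.0 - noise.cdf(c)   # P(n > c)
    return hit, false_alarm


def dprime_yes_no(hit, false_alarm):
    """d' as the difference between the z-scores of the hit and false-alarm rates."""
    z = NormalDist().inv_cdf
    return z(hit) - z(false_alarm)


# Two observers with the same signal strength but different criteria.
liberal = predicted_rates(s=1.5, sd=1.0, c=0.5)
conservative = predicted_rates(s=1.5, sd=1.0, c=1.2)
d_liberal = dprime_yes_no(*liberal)
d_conservative = dprime_yes_no(*conservative)
```

The two observers differ in their hit rates, yet both yield the same d′ (equal to s/SD), which is the sense in which d′ is free of response bias.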

Our computational models involve four separate sensory channels (representing four fingers). On trials with the target present, one channel contains the stimulus signal (i.e., the sensory input = s + n), and the other three contain only noise (three independently generated values of n). On trials without the target, all four channels contain only noise. To model detection performance, on trials when the target is present (i.e., one of the four channels contains the signal), a hit is computed whenever input on any of the four channels is >c; a false alarm is computed on catch trials (without the target) when any channel is >c. Thus, d′ is approximately equal to the distance between the s + n distribution and the maximum-of-four noise distribution (i.e., the distribution of the maxima of four randomly generated values).
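The four-channel detection rule can be illustrated by a small Monte Carlo simulation. This is a sketch of the rule as described in the text, not the original simulation code; the function names, the number of trials, and the values of s, SD, and c are assumptions chosen for illustration.

```python
import random
from statistics import NormalDist


def simulate_detection(s, sd=1.0, c=1.5, n_trials=20000, seed=0):
    """Return (hit rate, false-alarm rate) for the any-channel-above-c rule."""
    rng = random.Random(seed)
    hits = 0
    false_alarms = 0
    for _ in range(n_trials):
        # Target-present trial: one channel holds s + n, three hold noise only.
        present = [s + rng.gauss(0.0, sd)] + [rng.gauss(0.0, sd) for _ in range(3)]
        hits += max(present) > c
        # Catch trial: all four channels hold noise only.
        absent = [rng.gauss(0.0, sd) for _ in range(4)]
        false_alarms += max(absent) > c
    return hits / n_trials, false_alarms / n_trials


def dprime(hit_rate, fa_rate):
    """z(hit) - z(false alarm); undefined if either rate is exactly 0 or 1."""
    z = NormalDist().inv_cdf
    return z(hit_rate) - z(fa_rate)


hit_rate, fa_rate = simulate_detection(s=2.0)
d_est = dprime(hit_rate, fa_rate)
```

With these illustrative values, the estimated d′ falls below s/SD = 2, because the false alarm rate is governed by the maximum of four noise values rather than by a single noise value.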

In some models that we tested, the detection procedure (comparing the sensory input to a decision criterion) has also been used to model localization performance. In these cases, separate detection decisions are made on each of the four channels, and correct localization occurs when only the channel with the signal is above threshold (i.e., s + n > c, and all three n values <c). Localization accuracy is reduced to 50, 33, or 25% when one, two, or all three of the noise channels, respectively, are also >c (the localization response being thus determined by a two-, three-, or four-way guess). Localization accuracy is also 25% when no channel has a value >c, and it equals 0% when s + n < c while one or more noise channels are >c. In other models, rather than making binary detection decisions on each channel, localization is determined by ranking the four different sensory inputs and locating the target on the channel with the largest input. This localization is correct when the maximum input is on the s + n channel and is incorrect when it is on one of the three other channels. Here, d′ equals the distance between the s + n distribution and the maximum-of-three noise distribution.
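The two localization rules can be compared directly in simulation. The sketch below implements them as described in the text; the function names, trial count, and parameter values are hypothetical and serve only to illustrate the procedure.

```python
import random


def localize_by_threshold(inputs, c, rng):
    """Guess among channels above c; guess among all channels if none exceeds c."""
    above = [i for i, x in enumerate(inputs) if x > c]
    candidates = above if above else list(range(len(inputs)))
    return rng.choice(candidates)


def localize_by_max(inputs):
    """Locate the target on the channel with the largest sensory input."""
    return max(range(len(inputs)), key=lambda i: inputs[i])


def simulate_localization(s, sd=1.0, c=1.5, n_trials=20000, seed=0):
    """Return proportion correct under the (threshold rule, maximum rule)."""
    rng = random.Random(seed)
    correct_threshold = 0
    correct_max = 0
    for _ in range(n_trials):
        # Channel 0 carries the signal; channels 1-3 carry noise only.
        inputs = [s + rng.gauss(0.0, sd)] + [rng.gauss(0.0, sd) for _ in range(3)]
        correct_threshold += localize_by_threshold(inputs, c, rng) == 0
        correct_max += localize_by_max(inputs) == 0
    return correct_threshold / n_trials, correct_max / n_trials


acc_threshold, acc_max = simulate_localization(s=2.0)
chance_threshold, chance_max = simulate_localization(s=0.0)
```

With no signal (s = 0), both rules perform at the 25% chance level. With a signal present, the maximum rule is the more accurate of the two for these parameter values, because it uses the rank order of all four inputs rather than a binary decision on each.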

Results

Experiment 1

Subjects (n = 10) made detection and localization judgments on 160 trials each (32 trials at each SOA plus 32 catch trials). The mean accuracy (as percentage correct) for both detection and localization judgments across each of the four SOAs is shown in Figure 1A. Clearly, accuracy for the two judgments is similar and increases as the SOA increases. Indeed, these data could be taken as showing that subjects are more accurate at locating than detecting targets at the shortest SOA but more accurate at detecting than locating targets at longer SOAs. However, direct comparison between the two judgments would be seriously confounded because of the very different decision processes underlying each. Specifically, the fact that the subjects must adopt an implicit decision criterion to give yes–no judgments for detection makes this task very sensitive to response bias, whereas the 4AFC task used for the location judgment requires no decision criterion and thus is not sensitive to such bias.

To equate the two measures, both were converted to d′ scores (a bias-free measure of sensitivity) using the tables of Elliott (1964). For the detection task, d′ was calculated using the false alarm rate. In this experiment, the mean false alarm rate was 14%, measured as the proportion of positive detection responses on catch trials. The mean d′ scores for both detection and localization are shown in Figure 1B. Inspection of the figure shows that sensitivity in the detection task was higher overall than in the localization task and that accuracy on both judgments increased as the SOA increased. Finally, it appears that the increase in accuracy across SOAs was steeper for detection than localization. A repeated measures ANOVA confirmed these observations: there was a significant difference between the detection and localization tasks (F(1,9) = 6.91; p = 0.027), there was a significant linear trend overall across SOA (F(1,9) = 32.41; p < 0.001), and there was a significant interaction between linear trend and task (F(1,9) = 7.25; p = 0.025).
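For readers without access to published tables, the conversion from forced-choice proportion correct to d′ can be approximated numerically. The sketch below is a substitute for table lookup, not the procedure used here: it assumes equal-variance Gaussian noise and a maximum rule, under which the proportion correct in an m-alternative task is the integral of φ(x − d′)Φ(x)^(m−1) over x. The function names and integration settings are hypothetical.

```python
from statistics import NormalDist

_STD = NormalDist()  # standard normal: pdf is phi, cdf is Phi


def pc_mafc(d, m=4, lo=-8.0, hi=8.0, steps=2000):
    """P(correct) in an m-alternative forced choice for sensitivity d.

    Midpoint-rule integration over u = x - d of phi(u) * Phi(u + d) ** (m - 1).
    """
    width = (hi - lo) / steps
    total = 0.0
    for k in range(steps):
        u = lo + (k + 0.5) * width
        total += _STD.pdf(u) * _STD.cdf(u + d) ** (m - 1)
    return total * width


def dprime_from_pc(pc, m=4, tol=1e-6):
    """Invert pc_mafc by bisection (pc_mafc increases monotonically with d)."""
    lo, hi = -4.0, 8.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if pc_mafc(mid, m) < pc:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


chance_4afc = pc_mafc(0.0, m=4)          # d' = 0 gives chance performance
d_example = dprime_from_pc(0.60, m=4)    # illustrative value, not an observed score
```

A d′ of zero corresponds to 25% correct in the four-alternative task, so scores near chance map onto d′ values near zero regardless of the number of alternatives.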

This experiment has identified two differences between detection and localization of tactile stimuli. First, subjects were more accurate at reporting the presence (vs absence) of a target stimulus than at reporting the location of the stimulus. Thus, detection of a tactile stimulus does not necessarily imply the ability to localize that stimulus. Second, although both detection and localization accuracy improved as the SOA between the target and mask increased, they improved at different rates. At the shortest SOA (40 msec), sensitivity for detection and localization was equivalent, but, as the SOA increased, the subjects became increasingly more sensitive at detecting than localizing the stimulus. These two differences argue against a model of tactile processing in which a single mechanism is responsible for detecting when a stimulus occurs and locating where it occurs. Such a model would, in its simplest form, predict equal sensitivity for detection and localization judgments, which the present experiment shows to be false.

Experiment 2

Rather than dealing with the difference between yes–no detection decisions and 4AFC localization judgments by converting both into a common d′ score, experiment 2 used the same response measure (2AFC) for both judgments. On each trial, subjects felt two consecutive masks, both of which were presented to two fingers simultaneously. One of the two masks was preceded by the target stimulus presented to one of the two fingers. For the detection judgment, the subjects had to report when the target occurred (before the first or second mask); for the localization judgment, they had to report which finger received the target (index or middle). Subjects (n = 12) made these judgments on 32 trials at each of the six target–mask SOAs.

The mean accuracy (as percentage correct) for both when (detection) and where (location) judgments across each of the six SOAs is shown in Figure 2A. As in experiment 1, the subjects were more accurate in judging when the target occurred than where it occurred. This was confirmed by a repeated measures ANOVA, which revealed a significant main effect of judgment type (when vs where; F(1,11) = 11.44; p = 0.006). Furthermore, although accuracy for both when and where judgments increased as the SOA between target and mask increased, the rate at which accuracy improved across SOAs was greater for judgments about when the target occurred than where it occurred. Because of ceiling effects on performance, it was not appropriate to use linear trends to analyze the functions relating SOA to accuracy in either judgment. To compare the two sets of data, Weibull functions were fitted to each (Fig. 2B). A lapse rate of 0.18 was incorporated into the curve fit because the raw scores indicated that accuracy flattened off at ∼91%. The values of the parameters α and β, which correspond to the position and slope of the function, respectively, were calculated for both functions, and SDs of these values were estimated. The values of the position parameter α ± SD were 48.08 ± 1.52 for when judgments and 77.15 ± 2.67 for where judgments, confirming the difference in accuracy for the two judgments. The values of the slope parameter β ± SD were 2.268 ± 0.20 for when judgments and 1.22 ± 0.08 for where judgments, confirming the steeper improvement in accuracy across SOA for detection versus localization. The two functions differed significantly on both parameters (t(9) = 9.47, p < 0.001 for the difference in α; and t(9) = 4.92, p < 0.001 for β).
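The exact form of the fitted Weibull function is not stated, so the sketch below adopts one common 2AFC parameterization as an assumption: P(SOA) = 0.5 + 0.5(1 − λ)(1 − exp(−(SOA/α)^β)). This form is chosen only because a lapse rate λ of 0.18 then gives a ceiling of 91%, in line with the value reported above; the function name is hypothetical.

```python
import math


def weibull_2afc(soa, alpha, beta, lapse=0.18):
    """Assumed 2AFC Weibull: rises from 0.5 toward a ceiling of 1 - lapse / 2."""
    growth = 1.0 - math.exp(-((soa / alpha) ** beta))
    return 0.5 + 0.5 * (1.0 - lapse) * growth


soas = [30, 60, 90, 120, 150, 180]
# Reported parameter estimates for "when" (detection) and "where" (localization).
when_curve = [weibull_2afc(x, alpha=48.08, beta=2.268) for x in soas]
where_curve = [weibull_2afc(x, alpha=77.15, beta=1.22) for x in soas]
ceiling = weibull_2afc(1e6, alpha=48.08, beta=2.268)
```

Under this assumed form, α is the SOA at which accuracy has covered about 63% of the distance from chance to ceiling, so the smaller α for when judgments places that function to the left of the function for where judgments.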

Figure 2.

Accuracy in detection and localization in experiment 2. Both judgments were made as two-alternative forced-choice decisions (subjects reported which of 2 temporal intervals contained the target or on which of 2 fingers the target was presented). A, Percentage correct for detection and localization responses across different SOAs between the target and mask. Error bars represent within-subject SEMs (Loftus and Masson, 1994). B, The same data as in A but with Weibull functions fitted to each set of data.

Experiment 2 has confirmed the dissociation between detection and localization of tactile stimuli. This experiment used a 2AFC task to record detection sensitivity so that these responses were formally equivalent to the 2AFC decision used for the localization judgment. Under these conditions, subjects continued to show greater accuracy for detection (judging when the stimulus occurred) than localization (judging where it occurred). Moreover, the shape of the psychometric function was different for the two judgments: as the SOA between target and mask increased, the improvement in accuracy was steeper for detection than localization.

Experiment 3

Experiments 1 and 2 demonstrated that detection and localization of tactile stimuli are dissociable and, more specifically, that localization must involve some additional (or separate) processing from detection. Experiment 3 sought to investigate whether localization was nonetheless contingent on detection or whether the two could be mediated by mutually independent processes. This was done by requiring subjects (n = 10) to make both detection (yes–no) and localization (4AFC) judgments on every trial so that we could examine their localization accuracy conditional on their detection response. Only three SOAs were used (40, 60, and 80 msec), and the number of trials at each SOA was increased to 100. This ensured a sufficient number of trials on which the target was not detected (“misses”) to obtain a reliable measure of localization accuracy in the absence of detection. Forty catch trials were included.

The false alarm rate for detection was low (mean ± SD, 8 ± 7.3%). The mean sensitivity (as d′) for both detection and localization judgments across each of the three SOAs is shown in Figure 3A. As in experiment 1, subjects were more sensitive for detection than localization, and accuracy for the two judgments showed different rates of improvement across the SOAs. A repeated measures ANOVA confirmed that there was a significant difference between the detection and location accuracy (F(1,9) = 13.92; p = 0.005), a significant linear trend overall across SOA (F(1,9) = 20.91; p = 0.001), and a significant interaction between linear trend and task (F(1,9) = 14.42; p = 0.004).

Figure 3.

Results of experiment 3. A, Detection and localization accuracy (as d′) across different SOAs between the target and mask. B, Localization accuracy (percentage correct) of targets that had been detected versus targets that were not detected (missed). Error bars represent within-subject SEMs (Loftus and Masson, 1994).

Accuracy for the location judgment was then split, separating those trials on which the subjects had detected the target from the trials when the target was not detected. The results are shown in Figure 3B. Clearly, the detected targets were much more accurately located than the undetected targets. An ANOVA confirmed that this difference was significant (F(1,9) = 74.35; p < 0.001). There was no significant overall linear trend for location accuracy across SOA (F(1,9) = 2.79; p = 0.129), nor was there an interaction between linear trend across SOA and target type (detected vs undetected; F < 1). Despite the difference in location accuracy between detected and undetected targets, location accuracy for the undetected targets was high (mean ± SD, 48.5 ± 13.8%) and was significantly above chance (25%; t(9) = 5.40; p < 0.001).
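The comparison against chance is a one-sample t test, which can be approximately reproduced from the summary statistics alone. The sketch below uses only the rounded mean, SD, and sample size given in the text; the function name is hypothetical.

```python
import math


def one_sample_t(mean, sd, n, mu0):
    """t statistic for a sample mean tested against a fixed value mu0 (df = n - 1)."""
    standard_error = sd / math.sqrt(n)
    return (mean - mu0) / standard_error


# Localization of undetected targets: 48.5 +/- 13.8% correct, n = 10, chance = 25%.
t_undetected = one_sample_t(mean=48.5, sd=13.8, n=10, mu0=25.0)
```

The result agrees with the reported t(9) = 5.40 to within the precision expected from rounded summary values.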

This experiment confirmed the findings of experiments 1 and 2, that subjects are better at detecting a masked tactile stimulus than locating it and that their detection sensitivity shows a steeper improvement across SOA than does their localization performance. By requiring the subjects to make both detection and localization judgments on each trial, this experiment was able to examine the relationship between the two. The results showed that the judgments are correlated, because subjects were much more accurate at locating targets they had detected than targets they had missed. This correlation could imply that the location judgment is contingent on detection: that subjects must first detect the tactile stimulus to locate it. However, the correlation could equally reflect a common source of variance in the processes underlying the two judgments. For example, the final processes responsible for detection and location may be mutually distinct, but, because each depends on a common source of input (through somatosensory channels), variations in detection and localization should be correlated to the extent that their common input is itself a source of variation in signal strength and noise.

The correlation between the two judgments was not perfect: not all detected targets were accurately located (accuracy was ∼80%), and undetected targets were located with above chance accuracy (48%, with chance being 25% for the 4AFC task). The fact that some detected targets were not correctly located indicates that detection is not sufficient for correct localization. The above-chance accuracy in locating missed targets is potentially more interesting, because it suggests that detection is not necessary for localization. Indeed, this observation could be interpreted as akin to the tactile analog of blindsight reported in neurological patients by Paillard et al. (1983) and Rossetti et al. (1995). However, one must be cautious when drawing such comparisons between responses made in a yes–no task with responses in a forced-choice task because the former, but not the latter, is sensitive to response bias. It is possible, for example, that the subjects set a conservative decision criterion for the yes–no detection judgment and consequently responded “no” on trials in which there was sensory information available to make the forced-choice location judgment. Therefore, the observed accuracy in locating undetected targets is consistent with the suggestion that explicit detection is not necessary for localization, but it remains possible that localization is dependent on the processes that permit detection.
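The response-bias argument can be illustrated with a simple simulation in which both judgments are read from the same four sensory inputs. This is a sketch for illustration only, not one of the models fitted in this study: detection is a "yes" whenever any input exceeds a criterion, localization selects the largest input, and all names and parameter values are assumptions.

```python
import random


def conditional_localization(s=1.0, sd=1.0, c=1.5, n_trials=20000, seed=0):
    """Localization accuracy split by the yes-no detection response."""
    rng = random.Random(seed)
    counts = {"detected": [0, 0], "missed": [0, 0]}  # [correct, total]
    for _ in range(n_trials):
        # Channel 0 carries the signal; channels 1-3 carry noise only.
        inputs = [s + rng.gauss(0.0, sd)] + [rng.gauss(0.0, sd) for _ in range(3)]
        response = "detected" if max(inputs) > c else "missed"
        located = max(range(4), key=lambda i: inputs[i])
        counts[response][0] += located == 0
        counts[response][1] += 1
    return {k: correct / total for k, (correct, total) in counts.items()}


accuracy = conditional_localization()
```

With a relatively conservative criterion, missed targets are still located well above the 25% chance level in this simulation, even though a single set of sensory inputs drives both responses. Above-chance localization of missed targets therefore does not, on its own, show that localization is independent of the processes that permit detection.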

An unexpected feature of these data is the remarkably flat function relating localization accuracy conditional on detection to SOA. This is at first surprising given the consistent increase in localization accuracy across SOA in general (i.e., not separating detected and undetected trials). The fact that localization accuracy conditional on detection is so flat represents additional evidence for the correlation between detection and localization. That is, the increase in localization accuracy normally seen across SOA must be attributable to an increase in the number of targets being detected: once the differential effect of detection is partitioned out (by separating detected from undetected targets), accuracy in locating targets changes little across SOA.

Experiment 4

Up to this point, we varied the salience of the target solely by manipulating the SOA between the target and mask. Experiment 4 introduced a second manipulation. In addition to varying the target–mask SOA, the experiment examined the effect of changing the frequency of the vibrotactile stimulus used as the mask. We anticipated that differences in mask frequency would affect the task by altering the amount of integration and/or interruption masking. Interruption masking refers to the notion that a stimulus presented after a target masks the perception of the target by prematurely terminating its sensory processing. Integration masking, on the other hand, attributes the failure to perceive the target to a failure in separating the two stimuli perceptually: that is, the target is perceived as part of the mask rather than being distinguishable from it (Kahneman, 1968). First, because the rate of firing in somatosensory neurons in response to a vibration varies as a function of its frequency (Mountcastle et al., 1990; Romo et al., 1998), we hypothesized that vibrations of different frequency would produce different amounts of interruption masking. Second, because the vibration is essentially a sequence of mechanical deflections, each very similar to the target itself, integration of the target into the percept of the mask should vary as a function of the mask frequency. For example, a 50 Hz mask (as used in the previous experiments) would produce maximal integration masking at an SOA of 20 msec (because this matches the cycle time of the vibration) and less masking at longer SOAs, whereas a 25 Hz mask would produce maximal integration masking at an SOA of 40 msec (which matches the cycle time of this vibration). Thus, the 50 Hz mask should produce more masking than the 25 Hz mask at an SOA of 20 msec, but the reverse relationship should hold at SOAs of 40 msec or longer.
Experiment 4 examined this very issue by asking subjects (n = 9) to detect and locate a tactile stimulus when followed by a 25 or 50 Hz vibration mask with an SOA of 20, 40, 60, 80, or 100 msec. There were 32 trials for each of the 10 experimental conditions plus 64 catch trials: 32 with the 50 Hz mask and 32 with the 25 Hz mask.
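The integration-masking prediction rests on simple arithmetic: the cycle time of a vibration is 1/frequency, and masking is assumed to be greatest when the SOA matches that cycle time. The following minimal sketch in Python illustrates the arithmetic only. The function names are hypothetical, and the rule "the mask whose cycle time lies closest to the SOA produces more integration masking" is a simplified reading of the hypothesis, not a fitted model.

```python
def cycle_time_ms(frequency_hz):
    """Duration of one vibration cycle in msec (1 / frequency)."""
    return 1000.0 / frequency_hz


def predicted_stronger_mask(soa_ms, frequencies_hz=(25, 50)):
    """Return the mask frequency whose cycle time lies closest to the SOA.

    Assumption: integration masking is greatest when the target-mask SOA
    matches the cycle time of the mask.
    """
    return min(frequencies_hz, key=lambda f: abs(soa_ms - cycle_time_ms(f)))


# The five SOAs used in experiment 4.
for soa_ms in (20, 40, 60, 80, 100):
    print(soa_ms, "msec SOA ->", predicted_stronger_mask(soa_ms), "Hz mask")
```

Under this rule, the 50 Hz mask is selected only at the 20 msec SOA and the 25 Hz mask at every longer SOA, which is the crossover pattern predicted in the text.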

The mean sensitivity (as d′) for both detection and location judgments across each of the five SOAs is shown in Figure 4, A and B. For clarity of exposition, we separated detection scores (A) and localization scores (B) in the figure. Nonetheless, subjects were clearly more sensitive at detection than at location judgments, as in the previous experiments. Of particular interest for this experiment, it appears that detection accuracy was affected by the mask frequency. Specifically, the subjects were generally more sensitive in detecting targets followed by the 50 Hz mask than targets followed by the 25 Hz mask. However, this was reversed for trials with an SOA of 20 msec: on these trials, detection sensitivity was lower with the 50 Hz mask than the 25 Hz mask. This result is in line with our predictions regarding the effect of integration masking. According to these predictions, the 25 Hz mask would produce greater integration masking than the 50 Hz mask across the longer SOAs because the cycle time of the 25 Hz mask is longer and thus closer to the target–mask SOA. Moreover, we predicted that the 50 Hz mask would produce greater integration masking than would the 25 Hz mask at the shortest SOA (20 msec) because this SOA matches exactly the cycle duration of the 50 Hz mask.

Figure 4.

Results of experiment 4. In addition to varying the SOA between the target and mask, this experiment varied the frequency of the vibrotactile mask (25 vs 50 Hz). For clarity, detection (A) and localization (B) are shown separately. C, Accuracy (as percentage correct) in locating targets that had been detected versus targets that were not detected (missed). Error bars represent within-subject SEMs (Loftus and Masson, 1994).

In contrast to its effect on detection, mask frequency had no discernible effect on localization accuracy. This is important because it shows that variations in detection accuracy do not necessarily carry over into localization accuracy and thus could be taken as evidence for a further dissociation between detection and localization.

An ANOVA confirmed the observations made above. There was a significant difference overall between detection and localization accuracy (F(1,8) = 5.60; p = 0.046). There was no overall effect of mask frequency (F(1,8) = 1.64; p = 0.24), but there was an overall effect of SOA (F(1,8) = 20.24; p = 0.002). There were no significant two-way interactions between any of the three factors (F values ≤1.01), but there was a significant three-way interaction (F(1,8) = 6.824; p = 0.031). This three-way interaction confirms that the two masks differed in their effects on accuracy across SOA for the detection judgment (i.e., that detection was flatter with the 25 Hz mask than the 50 Hz mask) but had equivalent effects for the localization judgment. This interpretation of the three-way interaction was confirmed by separate ANOVAs run on the detection scores and localization scores. For detection, there was no main effect of mask frequency (F(1,8) = 1.09; p = 0.327), but there was a significant main effect of SOA (F(1,8) = 13.45; p = 0.006) and a significant interaction between mask frequency and SOA (F(1,8) = 6.10; p = 0.039). For localization, there was no main effect of mask frequency (F < 1), there was a significant main effect of SOA (F(1,8) = 24.30; p = 0.001), but there was no interaction between mask frequency and SOA (F(1,8) = 1.04; p = 0.337).

As in experiment 3, accuracy for the location judgment was split, separating those trials on which the subjects had detected the target from the trials when the target was not detected. The results are shown in Figure 4C. As in experiment 3, the detected targets were much more accurately located than the undetected targets. An ANOVA confirmed that this difference was significant (F(1,8) = 106.96; p < 0.001). There was a significant effect of SOA (F(1,8) = 6.74; p = 0.032) but no main effect of mask frequency (F < 1) or any significant interactions between these three factors (all F values <1). These results constitute evidence for the strong correlation between detection and location judgments.

Again, as in experiment 3, subjects performed better than chance (25%) in locating targets that they had failed to detect. A single-sample t test was conducted on the overall score across the five SOAs. This confirmed that subjects were significantly above chance in locating undetected targets, both when followed by the 25 Hz mask (mean ± SD, 41.66 ± 15%; t(8) = 3.34; p = 0.01) and the 50 Hz mask (mean ± SD, 43.95 ± 11%; t(8) = 5.17; p = 0.001). There was, however, no difference in accuracy between these sets of scores (t < 1). Interpretation of this above-chance performance is confounded by the fact that the detection judgment required subjects to adopt a decision threshold, whereas the forced-choice localization judgment did not. Thus, the forced-choice decision should be sensitive to the signal carried by the missed targets (although their signal was below decision threshold for detection).
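The single-sample t statistics can be checked against the reported summary values. The sketch below assumes the standard formula t = (mean − chance) / (SD / √n) with n = 9 subjects. The function name is hypothetical, and the inputs are the rounded means and SDs given in the text, so small rounding differences from the reported statistics are expected.

```python
import math


def one_sample_t(mean, sd, n, chance):
    """t statistic for a sample mean tested against a fixed chance level."""
    standard_error = sd / math.sqrt(n)
    return (mean - chance) / standard_error


# Rounded summary values from the text: percent correct, 9 subjects, chance = 25%.
t_25hz = one_sample_t(mean=41.66, sd=15.0, n=9, chance=25.0)
t_50hz = one_sample_t(mean=43.95, sd=11.0, n=9, chance=25.0)
print(round(t_25hz, 2), round(t_50hz, 2))
```

Both values land close to the reported t(8) = 3.34 and t(8) = 5.17.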

An additional interesting result was obtained in this experiment. As in experiments 1 and 3, the false alarm rate on trials with the 50 Hz mask was relatively low (15%). However, the false alarm rate on trials with the 25 Hz mask was higher (26%). This difference is statistically significant (t(8) = 3.04; p = 0.016), and we observed the same effect in other unpublished experiments. The higher false alarm rate might indicate that the 25 Hz mask created more noise in the sensory processes that lead to detection. Such an increase in noise would also explain why detection sensitivity was lower on these trials and, in particular, why the function relating detection to SOA was flatter than for trials with the 50 Hz mask.
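The link between false alarm rate and sensitivity can be illustrated with the usual yes–no formula d′ = z(hit rate) − z(false alarm rate). In the sketch below, the two false alarm rates are those reported above, but the hit rate of 0.80 is a purely hypothetical value chosen for illustration, and the function name is likewise an assumption.

```python
from statistics import NormalDist


def yes_no_d_prime(hit_rate, false_alarm_rate):
    """Equal-variance Gaussian d' for a yes-no detection task."""
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(hit_rate) - z(false_alarm_rate)


HYPOTHETICAL_HIT_RATE = 0.80  # illustrative only, not a reported value

for label, false_alarm_rate in (("50 Hz mask", 0.15), ("25 Hz mask", 0.26)):
    d_prime = yes_no_d_prime(HYPOTHETICAL_HIT_RATE, false_alarm_rate)
    print(label, round(d_prime, 2))
```

With the hit rate held constant, the higher false alarm rate necessarily yields the lower d′, which is the direction of the effect described for the 25 Hz mask.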

Computer simulations

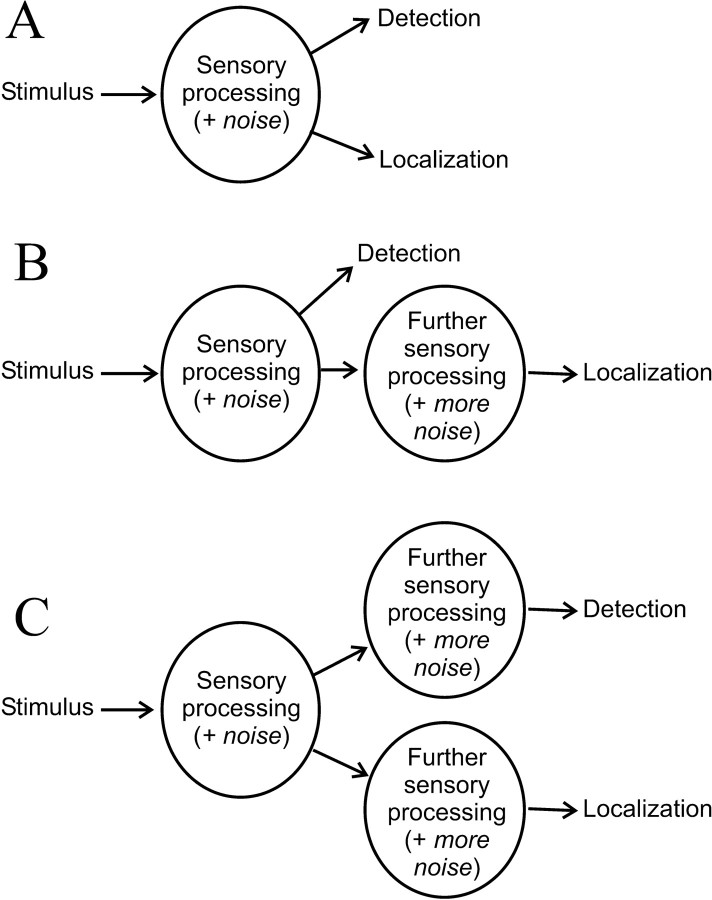

The present experiments have documented several dissociations between detection and localization of tactile stimuli. This may mean that a tactile stimulus undergoes two different sensory processes, one leading to detection and the other to localization. Alternatively, differences in detection sensitivity and localization accuracy may occur if the two judgments are based on different decision procedures even when they are applied to the same sensory information (i.e., the output of a common sensory process). To examine these possibilities, we created computational models of the different processes and compared the simulated data they produce with the experimental data reported above. We considered three different ways that the sensory processes might be organized (for illustration, see Fig. 5). In the first type of model, detection and localization are based on the same sensory processes, and any dissociation is attributable to differences in the decision procedures used to detect versus localize the stimulus. In the second type of model, detection and localization are based on different processes that are arranged in series (i.e., output of the first process is fed into the second process). Here, the initial process leads to detection, and the subsequent process leads to localization. The third type of model has detection and localization based on different processes arranged in parallel (i.e., they are mutually independent). In each case, we made plots of the data generated by the models and have selected that range of values corresponding to the accuracy (d′ or the proportion correct) typically observed in the above experiments.

Figure 5.

Illustrations of three different models of the relationship between the sensory processes leading to detection and those leading to localization of a tactile stimulus. A, Single-process model. The tactile stimulus undergoes sensory processing, the output of which is used for both detection and localization of the stimulus. Noise, inherent in the sensory processing, reduces accuracy for both detection and localization. B, Serial-processing model. The tactile stimulus undergoes “early” processing, which is used directly for detection, and then is subjected to additional processing required for stimulus localization. This additional processing adds more noise and thus reduces localization accuracy. C, Parallel-processing model. The tactile stimulus undergoes early sensory processing, after which separate processing mechanisms are engaged to generate detection and localization of the stimulus.

Detection and localization using a single source of sensory information

The simplest model of the sensory processes leading to detection and localization is one in which both judgments are made on the output of a single sensory process (or a common set of processes). We will first consider the case in which localization involves four separate detection judgments. This model incorrectly predicts that detection and localization accuracy are very similar (Fig. 6A). With some parameters (certain values of noise and decision criterion), detection and localization performance appear to dissociate in a similar manner to that observed in the present experiments. This occurs when localization accuracy plateaus (although detection sensitivity continues to increase) because the probability of a false alarm on one of the nontarget channels remains constant across increases in signal strength, thus preventing localization accuracy ever reaching 100% correct. However, this dissociation is only obtained with large amounts of noise that produce an unrealistically high false alarm rate (>50%). Furthermore, regardless of parameters, this model incorrectly predicts that localization accuracy should be at chance for undetected targets (Fig. 6B). This is because, for a target to be undetected, it must have been below detection threshold on each individual channel, in which case the localization response reduces to a four-way guess. This is obviously discrepant with the results of experiments 3 and 4 in which subjects could localize targets even when they reported that they did not detect them. Clearly, then, this simple model cannot accommodate the experimental findings.
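The prediction of chance-level localization for missed targets can be reproduced with a short Monte Carlo sketch. This is not the simulation code used for Figure 6: the function name, signal strength, criterion, noise level, and trial count are all illustrative assumptions. Each finger carries Gaussian noise, the target finger carries signal plus noise, detection is a "yes" if any channel exceeds the criterion, and localization is a guess among the suprathreshold channels (or among all four if none exceeds the criterion).

```python
import random


def simulate_threshold_model(signal, criterion=1.5, noise_sd=1.0,
                             n_trials=20000, seed=0):
    """Single-process model with one threshold decision per finger.

    Returns localization accuracy for detected and for missed targets.
    """
    rng = random.Random(seed)
    counts = {"detected": [0, 0], "missed": [0, 0]}  # [correct, total]
    for _ in range(n_trials):
        target = rng.randrange(4)
        channels = [rng.gauss(signal if finger == target else 0.0, noise_sd)
                    for finger in range(4)]
        above = [finger for finger, x in enumerate(channels) if x > criterion]
        # Detection: "yes" if at least one channel exceeds the criterion.
        outcome = "detected" if above else "missed"
        # Localization: guess among suprathreshold channels, or among all
        # four fingers when no channel exceeds the criterion.
        response = rng.choice(above) if above else rng.randrange(4)
        counts[outcome][0] += response == target
        counts[outcome][1] += 1
    return {key: (correct / total if total else float("nan"))
            for key, (correct, total) in counts.items()}


result = simulate_threshold_model(signal=1.5)
print(result)
```

Because a missed trial carries no suprathreshold channel, the response on those trials is a pure four-way guess, so accuracy for missed targets stays near 25% whatever the signal strength.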

Figure 6.

Simulated data generated using single-process models (see Fig. 5A). In one version of this model (A, B), detection involves comparing the sensory input on each channel (finger) with a decision threshold and giving a positive response if one or more channels are greater than the threshold. Localization involves locating the target on the suprathreshold channel. If no channels, or multiple channels, are above threshold, the localization response is reduced to a two-, three-, or four-way guess. In a different version of this model (C, D), detection involves the same process as described above, but localization is based on the channel with the strongest signal.

As an alternative to the above model, subjects may make four separate signal detection decisions to locate the target but make a single “omnibus” decision (using sensory information combined across channels) for detection. However, such an omnibus detection judgment would have much lower sensitivity than that performed on each channel separately because the noise (variance) between channels would be additive (i.e., SD would double across the four channels). Therefore, detection would always be worse than localization (in fact, d′ for detection would be exactly half of that for localization), a prediction that is not supported by the experimental findings.
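The halving of d′ follows from the additivity of variance: summing four independent channels, each with noise SD σ, gives a summed SD of √4 · σ = 2σ, while the signal (present on one channel only) is unchanged. A minimal sketch, with hypothetical function names and an illustrative signal strength, checks this both analytically and by simulation.

```python
import math
import random
from statistics import pstdev


def omnibus_d_prime(signal, noise_sd=1.0, n_channels=4):
    """d' when the decision variable is the sum across all channels."""
    return signal / (math.sqrt(n_channels) * noise_sd)


def simulated_sum_sd(noise_sd=1.0, n_channels=4, n_trials=20000, seed=0):
    """Empirical SD of the summed noise across independent channels."""
    rng = random.Random(seed)
    sums = [sum(rng.gauss(0.0, noise_sd) for _ in range(n_channels))
            for _ in range(n_trials)]
    return pstdev(sums)


signal = 2.0  # illustrative signal strength in units of single-channel SD
print("single-channel d':", signal / 1.0)
print("omnibus d':", omnibus_d_prime(signal))
print("simulated SD of summed noise:", round(simulated_sum_sd(), 2))
```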

Rather than conducting detection judgments concurrently on four fingers to locate the target stimulus, subjects may compare the signal strength between fingers and locate the stimulus on the finger with the strongest sensory signal. When this process is modeled computationally, it incorrectly predicts that localization is more accurate than detection (Fig. 6C). This is because the d′ for detection approximately equals the distance between the signal-plus-noise (s + n) distribution and the maximum-of-four noise distribution, whereas the d′ for localization equals the distance between the s + n and the maximum-of-three noise distributions. Because the maximum-of-four distribution is higher than the maximum-of-three distribution, the d′ for detection is smaller than that for localization. The model also predicts unrealistically high localization accuracy for missed targets (Fig. 6D).
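The strongest-signal version of the single-process model can be sketched in the same way. The code below is an illustration under stated assumptions, not the simulation behind Figure 6: detection is taken to be a "yes" whenever the largest channel exceeds a criterion, localization picks the largest channel, and the four-alternative proportion correct is converted to d′ by numerically inverting the standard equal-variance forced-choice integral (a stand-in for published d′ tables). All names and parameter values are hypothetical.

```python
import random
from statistics import NormalDist

STD_NORMAL = NormalDist()


def pc_from_d_prime(d_prime, n_alternatives=4, step=0.01):
    """Proportion correct in an m-alternative forced choice.

    Numerically integrates pdf(x - d') * cdf(x)**(m - 1) over x
    (equal-variance Gaussian model, rectangle rule).
    """
    n_steps = int((16.0 + d_prime) / step)
    total = 0.0
    for k in range(n_steps):
        x = -8.0 + k * step
        total += (STD_NORMAL.pdf(x - d_prime)
                  * STD_NORMAL.cdf(x) ** (n_alternatives - 1) * step)
    return total


def d_prime_from_pc(pc, n_alternatives=4):
    """Invert pc_from_d_prime by bisection on the interval [0, 6]."""
    low, high = 0.0, 6.0
    for _ in range(30):
        mid = (low + high) / 2.0
        if pc_from_d_prime(mid, n_alternatives) < pc:
            low = mid
        else:
            high = mid
    return (low + high) / 2.0


def simulate_max_rule(signal=2.0, criterion=1.5, n_trials=20000, seed=0):
    rng = random.Random(seed)
    hits = false_alarms = correct = missed = correct_missed = 0
    for _ in range(n_trials):
        # Target trial: finger 0 carries the signal (fingers are symmetric).
        channels = [rng.gauss(signal, 1.0)] + [rng.gauss(0.0, 1.0)
                                               for _ in range(3)]
        detected = max(channels) > criterion
        located = channels.index(max(channels)) == 0
        hits += detected
        correct += located
        if not detected:
            missed += 1
            correct_missed += located
        # Catch trial: noise alone on all four fingers.
        false_alarms += max(rng.gauss(0.0, 1.0) for _ in range(4)) > criterion
    z = STD_NORMAL.inv_cdf
    return {
        "detection_d_prime": z(hits / n_trials) - z(false_alarms / n_trials),
        "localization_d_prime": d_prime_from_pc(correct / n_trials),
        "pc_missed": correct_missed / missed if missed else float("nan"),
    }


print(simulate_max_rule())
```

With these illustrative parameters, the localization d′ exceeds the detection d′ and missed targets are located well above chance, which is the pattern the text identifies as inconsistent with the experimental data.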

This completes our survey of models in which a single source of sensory information is used for detection and localization judgments. Clearly, none accommodates the experimental data, confirming that, whatever combination of decision procedures is used, detection and localization judgments cannot be based on output from the same sensory process. Below we consider two possible ways in which different sensory processes for detection and localization might be organized. In one, the processes leading to detection and localization are arranged serially, and, in the other, these judgments arise from mutually independent (parallel) sensory processes.

Detection and localization using two serially arranged sources of sensory information

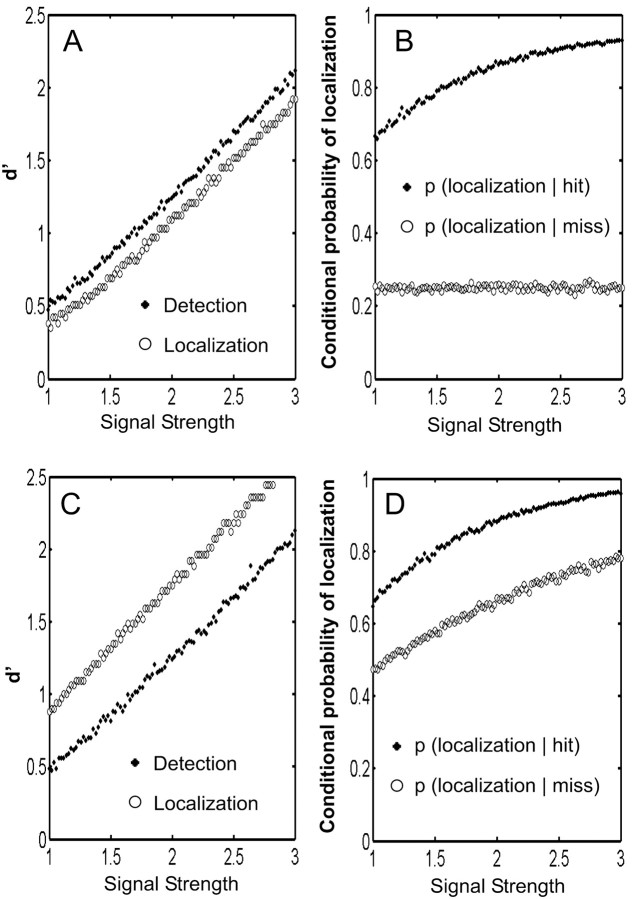

If detection and localization are not based on a single source of sensory information, they may be mediated by two sensory processes that are arranged in series. That is, the output of the sensory process leading to one judgment is fed into a second process that gives rise to the other judgment (Fig. 5B). Because detection sensitivity is consistently better than localization accuracy, we will only consider the case in which the early process leads to detection and the later process leads to localization. The logic of these models is that each sensory process adds its own noise, and therefore the additional processing for localization means that it is based on noisier data than that used for detection. As for the single process models, we considered two decision operations for localization, one in which the signal strengths are rank-ordered across fingers and the other involving separate detection judgments that are made on each finger. However, in the models we tested (described below), the outcome for localization was essentially identical regardless of the decision procedure used. Therefore, we will only present the simulated data from the more plausible model in which the target is located on the finger with the strongest signal.

For each model tested, the localization operation was performed on data with a greater amount of noise than the data on which the overall detection decision is made (for detection, SD = 1; for localization, an additional 2× SD of noise was added). Given this, it is not surprising that the serial models correctly predict that detection sensitivity is greater than localization accuracy and that localization accuracy improves at a slower rate than detection sensitivity as the signal strength increases (Fig. 7A). This conforms to the experimental findings that detection and localization show different rates of improvement across increases in SOA. If the amount of noise present in the detection process is increased, detection sensitivity is decreased and the function relating d′ to signal strength (SOA) is flattened (Fig. 7C). The false alarm rate is also increased. All of these are simple and unsurprising products of the model, but, importantly, they resemble the impact of lowering the mask frequency in experiment 4. Of perhaps greater importance, adding extra noise to the detection process has much less effect on localization accuracy, which is again consistent with the impact of changing mask frequency in experiment 4. This is presumably because the noise added at the detection stage is diluted by the amount of noise already present at the localization stage.

Figure 7.

Simulated data generated using a serial-process model (see Fig. 5B). If noise is present in the localization process, in addition to the noise present in the detection process, then localization accuracy will be lower than detection accuracy, and detection will improve more rapidly than localization across increases in signal strength (A). Localization accuracy is better for detected than missed targets and, for missed targets, is almost flat across increases in signal strength (B). If the amount of noise in the early process is increased, this reduces detection sensitivity but has little effect on localization accuracy (C) and has no impact on localization accuracy for detected versus missed targets (D).

These models also make a number of correct predictions about localization performance conditional on detection (Fig. 7B,D). First, they correctly predict much better localization accuracy for detected targets than undetected targets. This is because the localization process is dependent on the output of the detection process, even when that process did not lead to explicit detection. The models also correctly predict that localization accuracy is greater than chance for undetected targets. This is an inevitable consequence of making performance on a forced-choice task conditional on the outcome of a yes–no decision. On missed trials, the signal strength on all four channels must be less than the decision threshold. Nonetheless, the average signal strength on the channel with the target (s + n) will still be greater than the average signal strength on each of the other three channels. Finally, the models accurately predict that localization accuracy conditional on detection has a very shallow gradient across SOA. This is because the increase in localization accuracy with increasing signal strength is accompanied by an increase in detection. Thus, localization accuracy changes little, especially for undetected targets.
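The behavior of the serial model can be illustrated with a minimal Monte Carlo sketch. It is not the simulation code behind Figure 7. It assumes that detection is a "yes" whenever the strongest early channel exceeds a criterion, that the later process adds independent Gaussian noise with twice the SD of the early noise, and that the target is located on the finger with the strongest late signal. The function name, criterion, signal strength, and trial count are hypothetical.

```python
import random
from statistics import NormalDist


def simulate_serial_model(signal=2.0, early_sd=1.0, late_sd=2.0,
                          criterion=1.5, n_trials=20000, seed=0):
    rng = random.Random(seed)
    z = NormalDist().inv_cdf
    hits = false_alarms = 0
    correct = {True: 0, False: 0}  # keyed by "was the target detected?"
    total = {True: 0, False: 0}
    for _ in range(n_trials):
        # Early process: signal on finger 0 plus noise on every finger.
        early = [rng.gauss(signal if f == 0 else 0.0, early_sd)
                 for f in range(4)]
        detected = max(early) > criterion
        # Later process: inherits the early output and adds its own noise.
        late = [x + rng.gauss(0.0, late_sd) for x in early]
        located = late.index(max(late)) == 0
        hits += detected
        total[detected] += 1
        correct[detected] += located
        # Catch trial (no target) to estimate the false alarm rate.
        false_alarms += max(rng.gauss(0.0, early_sd)
                            for _ in range(4)) > criterion
    return {
        "false_alarm_rate": false_alarms / n_trials,
        "detection_d_prime": z(hits / n_trials) - z(false_alarms / n_trials),
        "pc_overall": (correct[True] + correct[False]) / n_trials,
        # max(..., 1) avoids division by zero if a category is empty.
        "pc_detected": correct[True] / max(total[True], 1),
        "pc_missed": correct[False] / max(total[False], 1),
    }


baseline = simulate_serial_model()
noisier_detection = simulate_serial_model(early_sd=1.5)
print(baseline)
print(noisier_detection)
```

Comparing the two runs shows the qualitative pattern described above: detected targets are located more accurately than missed ones, missed targets are still located above chance, and raising the early noise lowers detection d′ and raises the false alarm rate while changing overall localization accuracy comparatively little.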

Detection and localization using two independent sources of sensory information

Thus far, we explored the predictions generated by models in which detection and localization are based on the same sensory process or different serially organized sensory processes. The final type of model is one in which detection and localization are based on the outputs of mutually independent (parallel) sensory processes. Because these processes are mutually independent, it is a trivial matter for such a model to predict different levels of performance for detection and localization, as well as different rates of improvement across increases in signal strength (corresponding to increases in SOA in the experiments). Such a model is also untroubled in predicting a change in detection sensitivity without a concurrent change in localization accuracy (as was observed in experiment 4 as the result of a change in mask frequency). However, if localization and detection were based on entirely independent sensory information, one would expect the two sets of responses to be completely uncorrelated. Therefore, localization accuracy should be equal for detected and undetected stimuli. The strong correlation we found between detection and localization (i.e., subjects were much better at localizing detected targets than undetected ones) identifies a dependency between these judgments. A solution to this is to assume that the sensory information used in each judgment has a common source at some level. Such an assumption is very reasonable because it is highly likely that the early sensory processes are common to both judgments. Thus, the parallel model that we examined in detail divides sensory processes into three parts: a common early process and two subsequent processes, independent of each other, that separately lead to detection and localization (Fig. 5C).

As before, the model predicts better detection sensitivity than localization accuracy, as well as different slopes for the two judgments, as long as the amount of noise present in the localization process is sufficiently greater than that in the detection process. It predicts accurate localization of undetected targets because noise added in the detection process would reduce detection sensitivity without affecting localization accuracy. It also readily allows for a change in detection sensitivity, without any change in localization accuracy, by increasing the noise present in the detection process. Finally, the model can predict a large separation of localization accuracy between detected and undetected targets and can even predict that localization accuracy for detected and undetected targets will be mostly flat across increases in signal strength. However, the model is seriously challenged when trying to account for all of these results simultaneously. In particular, it can account for the differences between detection sensitivity and localization accuracy and it can account for the pattern of localization accuracy for detected versus undetected targets, but it cannot adequately account for both using the same set of parameters (levels of noise). This is because the addition of independent noise to the detection and localization processes is necessary to produce differences in the levels of accuracy but dramatically reduces the correlation between those processes. Thus, adding enough noise to localization to separate it from detection brings localization of detected and undetected targets together (Fig. 8). One can obtain simulated results that approximate the full set of experimental data but only by adding very little noise to the detection process. Of course, this diminishes the very premise on which the parallel model was distinguished from the serial one. 
Furthermore, as soon as one adds more noise to the detection process (to simulate the change in detection, but not localization, when the mask frequency is changed), the localization accuracies for detected and undetected targets converge, something that was not observed in the results of experiment 4.
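The convergence problem for the parallel model can be shown with a sketch along the same lines. It assumes a common early process followed by two branches that each add independent Gaussian noise, with detection based on whether the strongest detection-branch channel exceeds a criterion and localization based on the strongest localization-branch channel. This is an illustration only, not the code behind Figure 8, and all names and parameter values are hypothetical.

```python
import random


def simulate_parallel_model(signal=2.0, early_sd=1.0, detection_sd=0.2,
                            localization_sd=2.0, criterion=1.5,
                            n_trials=20000, seed=0):
    rng = random.Random(seed)
    correct = {True: 0, False: 0}  # keyed by "was the target detected?"
    total = {True: 0, False: 0}
    for _ in range(n_trials):
        # Common early process: signal on finger 0 plus shared noise.
        early = [rng.gauss(signal if f == 0 else 0.0, early_sd)
                 for f in range(4)]
        # Independent branches, each adding its own noise.
        detection = [x + rng.gauss(0.0, detection_sd) for x in early]
        localization = [x + rng.gauss(0.0, localization_sd) for x in early]
        detected = max(detection) > criterion
        located = localization.index(max(localization)) == 0
        total[detected] += 1
        correct[detected] += located
    # max(..., 1) avoids division by zero if a category is empty.
    pc_detected = correct[True] / max(total[True], 1)
    pc_missed = correct[False] / max(total[False], 1)
    return {"pc_detected": pc_detected, "pc_missed": pc_missed,
            "gap": pc_detected - pc_missed}


little_detection_noise = simulate_parallel_model(detection_sd=0.2)
much_detection_noise = simulate_parallel_model(detection_sd=3.0)
print(little_detection_noise)
print(much_detection_noise)
```

With little independent noise in the detection branch, the model behaves much like the serial model and detected targets are located far better than missed ones. As the detection noise grows, the detection outcome says less about the shared early signal and the gap between the two conditional accuracies shrinks toward zero.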

Figure 8.

Simulated data generated using a parallel-process model (see Fig. 5C). More noise must be present in the localization process than the detection process so that localization accuracy is lower than detection accuracy and detection improves more rapidly than localization across increases in signal strength (A). Localization accuracy is marginally better for detected than missed targets, and localization of both types of target improves across increases in signal strength (B).

Discussion

The present series of experiments has sought to investigate the relationship between the processes underlying the simple detection of tactile stimulation and the ability to locate that stimulation. This was done by asking subjects to detect and locate a brief mechanical stimulus, applied to one of four fingers, followed by a vibrotactile mask applied to all four fingers. In all experiments, the subjects were consistently more sensitive at detecting than locating the target. This dissociation was evidenced by two additional findings: (1) increments in the interval between the target and mask led to greater improvements in detection accuracy than localization accuracy; and (2) a change in the frequency of the mask significantly affected detection accuracy but had no effect on localization accuracy.

The greater sensitivity in detecting versus locating a tactile stimulus indicates that detection is not sufficient for accurate localization and that localization is not necessary for detection. The fact that a drop in detection sensitivity (produced by a decrease in mask frequency) was not accompanied by a decline in localization accuracy suggests that detection is not necessary for localization, and localization is not sufficient for detection. Additional evidence that localization is not contingent on detection came from experiments 3 and 4, which revealed that subjects performed significantly better than chance at locating stimuli that they failed to detect. However, as noted previously, this finding on its own cannot be taken as evidence of a dissociation between detection and localization because the yes–no decision used to report detection requires that subjects adopt a decision threshold, whereas the forced-choice decision used to index localization does not. Therefore, the forced-choice judgment will still be sensitive to the signal carried by missed target stimuli, although the signal strength was below the decision threshold for detection. Nonetheless, the observation of better-than-chance accuracy in locating undetected stimuli is a necessary (if not sufficient) result to support the conclusion that localization is not contingent on detection. Altogether, the present findings could be taken as demonstrating a double dissociation between tactile detection and localization and are therefore consistent with neuropsychological reports of a double dissociation between these same processes (Paillard et al., 1983; Halligan et al., 1995; Rossetti et al., 1995; Rapp et al., 2002).

A double dissociation between detection and localization implies that these judgments are based on mutually distinct processes (presumably identifiable with different neural mechanisms). To test this interpretation, we constructed computational models in which detection and localization decisions were based on the same sensory process or different sensory processes arranged either in series or in parallel. When the simulated data generated by the different models were compared with the experimental data reported here, only the serial-process model correctly reproduced all of the present results. Both of the dual-process models correctly predicted the dissociations between detection and localization: (1) that detection accuracy was higher and improved more rapidly with increased signal strength (SOA) than localization accuracy; (2) that detection accuracy could be reduced with little or no change in localization accuracy (as seen in experiment 4 when mask frequency was changed); and (3) that localization accuracy could be greater than chance for undetected targets. However, the serial-process model, but not the parallel-process model, correctly predicted the large difference in localization accuracy between detected and undetected targets, as well as the fact that localization accuracy of detected and undetected targets changed little across increased signal strength. These particular experimental findings identify a strong correlation between the detection and localization responses, a feature that is captured by the serial model (because the localization process is dependent on the output of the detection process) but not the parallel model (because localization and detection are independent).

The present evidence for a dissociation between detection and localization derives from the differential effect of the vibrotactile mask in disrupting performance. The mask had a more disruptive effect on localization than on detection, especially at longer SOAs, whereas the frequency of the mask affected detection but not localization. What might these findings tell us about the processes involved? Typically, backward masking is thought to arise from one (or both) of two mechanisms: the mask may interrupt ongoing sensory processing of the target stimulus, or the target may be integrated into the percept of the mask (Kahneman, 1968) (for recent discussions about the mechanisms underlying masking, see Enns and Di Lollo, 2000; Keysers and Perrett, 2002). We attempted to dissect the contributions of these processes by examining the interaction between mask frequency and target–mask SOA. We hypothesized that the extent to which the target would be integrated into the mask would be a function of how similar the SOA is to the cycle duration (1/frequency) of the mask. For example, a 50 Hz mask, with a cycle duration of 20 msec, would produce maximal integration masking at an SOA of 20 msec. In contrast, a 25 Hz mask, having a cycle duration of 40 msec, would produce maximal integration masking at an SOA of 40 msec. Although both masks would produce progressively less masking at longer SOAs, the 25 Hz mask should always produce greater integration masking than the 50 Hz mask, except at an SOA of 20 msec. These predictions were borne out by the data of experiment 4: the 25 Hz mask disrupted detection more than did the 50 Hz mask at SOAs of 40, 60, 80, and 100 msec, but the reverse was true when the SOA was 20 msec. Although this result concurs with our predictions based on integration masking, there is no reason to predict this interaction between frequency and SOA as a result of interruption masking.
Interruption masking will decrease with increasing SOA and may be affected by the frequency of the mask (although it is not clear in which direction), but SOA and mask frequency should have independent, and thus additive, effects on interruption masking.

In experiment 4, a change in mask frequency did not produce an observable effect on localization accuracy, although it affected detection. We already noted that this apparent dissociation is nonetheless consistent with localization being contingent on the processes leading to detection. If this is the case, however, it implies that changes in mask frequency have no additional impact on localization (beyond their primary effect on detection). That is, integration masking affects the early sensory processes leading to detection but not the subsequent processes leading to localization. This differential susceptibility to integration masking may reveal something about the content of the processes leading to detection versus localization. For example, conscious detection of a target relies on the ability to individuate it as a temporally discrete event (separate from the mask) and is thus vulnerable to temporal integration of the target and mask. Localization, on the other hand, may arise from a spatial analysis, such as a comparison of the strength or duration of tactile stimulation across fingers, or may require attention to be focused on spatially restricted locations. In either case, the spatial nature of the task would make it immune to temporal integration masking. Nonetheless, if localization is subsequent to detection in a sensory processing hierarchy, then the spatial analysis for localization must depend on previous temporal analysis. For example, it might be necessary to identify the precise moment at which the target occurred (resulting in detection) and then restrict the spatial analysis to sensory input at that time point.

Conclusions

The present experiments have documented dissociations between detection and localization of tactile stimuli in normal subjects. By comparing the experimental findings with simulated data generated by different computational models, we conclude that detection and localization are subserved by different sensory processes arranged in series. Specifically, we argue that the processes that underlie localization of a tactile stimulus are subsequent to, and dependent on, the processes responsible for detection. In this regard, our findings are not consistent with previous reports of a blindsight-like syndrome in neurological patients who could accurately report the location of tactile stimuli that they failed to detect (Paillard et al., 1983; Rossetti et al., 1995). We suggest that the evidence provided by these neurological cases may be subject to the same confound that affects most reports of true (visual) blindsight (Campion et al., 1983), namely that the patient's ability to correctly identify the location of a stimulus that they reported not detecting may be an artifact of the difference in the psychometric properties of the yes–no and forced-choice decisions used to index detection and localization, respectively (for the exception to this in a study of visual blindsight, see Azzopardi and Cowey, 1997).

Footnotes

This work was supported by Australian Research Council Grant DP0343552 and the James S. McDonnell Foundation. We thank Irina Harris for comments.

Correspondence should be addressed to Justin Harris, School of Psychology, University of Sydney, Sydney 2052, Australia. E-mail: justinh@psych.usyd.edu.au.

DOI:10.1523/JNEUROSCI.0134-04.2004

Copyright © 2004 Society for Neuroscience 0270-6474/04/243683-11$15.00/0

References

- Azzopardi P, Cowey A (1997) Is blindsight like normal, near-threshold vision? Proc Natl Acad Sci USA 94: 14190–14194.

- Campion J, Latto R, Smith YM (1983) Is blindsight an effect of scattered light, spared cortex and near-threshold vision? Behav Brain Sci 6: 423–486.

- Elliott PB (1964) Appendix 1: Tables of d′. In: Signal detection and recognition by human observers: contemporary readings (Swets JA, ed), pp 651–684. New York: Wiley.

- Enns JT, Di Lollo V (2000) What's new in visual masking? Trends Cogn Sci 4: 345–352.

- Green DM, Swets JA (1966) Signal detection theory and psychophysics. New York: Wiley.

- Halligan PW, Hunt M, Marshall JC, Wade DT (1995) Sensory detection without localization. Neurocase 1: 259–266.

- Head H, Holmes G (1911) Sensory disturbances from cerebral lesions. Brain 34: 102–254.

- Kahneman D (1968) Methods, findings, and theory in studies of visual masking. Psychol Bull 70: 404–425.

- Keysers C, Perrett DI (2002) Visual masking and RSVP reveal neural competition. Trends Cogn Sci 6: 120–125.

- Kolb CF, Braun J (1995) Blindsight in normal observers. Nature 377: 336–338.

- Loftus GR, Masson MEJ (1994) Using confidence intervals in within-subject designs. Psychon Bull Rev 1: 476–490.

- Meeres SL, Graves RE (1990) Localization of unseen visual stimuli by humans with normal vision. Neuropsychologia 28: 1231–1237.

- Morgan MJ, Mason AJS, Solomon JA (1997) Blindsight in normal subjects? Nature 385: 401–402.

- Mountcastle VB, Steinmetz MA, Romo R (1990) Frequency discrimination in the sense of flutter: psychophysical measurements correlated with post-central events in behaving monkeys. J Neurosci 10: 3032–3044.

- Paillard J, Michel F, Stelmach G (1983) Localization without content. A tactile analogue of “blind sight.” Arch Neurol 40: 548–551.

- Rapp B, Hendel SK, Medina J (2002) Remodelling of somatosensory hand representations following cerebral lesions in humans. NeuroReport 13: 207–211.

- Robichaud L, Stelmach LB (2003) Inducing blindsight in normal observers. Psychon Bull Rev 10: 206–209.

- Romo R, Hernández A, Zainos A, Salinas E (1998) Somatosensory discrimination based on cortical microstimulation. Nature 392: 387–390.

- Rossetti Y, Rode G, Boisson D (1995) Implicit processing of somaesthetic information: a dissociation between where and how? NeuroReport 6: 506–510.

- Stoerig P, Cowey A (1997) Blindsight in man and monkey. Brain 120: 535–559.

- Weiskrantz L, Warrington EK, Sanders MD, Marshall JC (1974) Visual capacity in the hemianopic field following a restricted occipital ablation. Brain 97: 709–728.