Abstract

Background Hospital emergency departments (EDs) are dynamic environments, involving coordination and shared decision making by staff who care for multiple patients simultaneously. While computerized information systems have been widely adopted in such clinical environments, serious issues have been raised related to their usability and effectiveness. In particular, there is a need to support clinicians to communicate and maintain awareness of a patient's health status, and progress through the ED plan of care.

Objective This study used work-centered usability methods to evaluate an integrated patient-focused status display designed to support ED clinicians' communication and situation awareness regarding a patient's health status and progress through their ED plan of care. The display design was informed by previous studies we conducted examining the information and cognitive support requirements of ED providers and nurses.

Methods ED nurse and provider participants were presented various scenarios requiring patient-prioritization and care-planning tasks to be performed using the prototype display. Participants rated the display in terms of its cognitive support, usability, and usefulness. Participants' performance on the various tasks, and their feedback on the display design and utility, was analyzed.

Results Participants provided ratings for usability and usefulness for the display sections using a work-centered usability questionnaire—mean scores for nurses and providers were 7.56 and 6.6 (1 being lowest and 9 being highest), respectively. General usability scores, based on the System Usability Scale tool, were rated as acceptable or marginally acceptable. Similarly, participants also rated the display highly in terms of support for specific cognitive objectives.

Conclusion A novel patient-focused status display for emergency medicine was evaluated via a simulation-based study in terms of work-centered usability and usefulness. Participants' subjective ratings of usability, usefulness, and support for cognitive objectives were encouraging. These findings, including participants' qualitative feedback, provided insights for improving the design of the display.

Keywords: Emergency Medicine, clinical decision support, interfaces and usability, human–computer interaction, cognitive systems engineering

Background and Significance

Hospital emergency departments (EDs) are dynamic, high-risk, and fast-paced environments. Frequent interruptions and rapid task switching lead to high cognitive demands. Information systems are needed to support complex tasks related to communication and care coordination. 1 2 3 4 The implementation of computerized health information systems in emergency medicine (EM) has led to new opportunities for task support but has also created new challenges, in part due to shortcomings in design which impact the usability and usefulness of these systems. 5 6 7 Health information technology (IT) has improved legibility, access to historical information, and data sharing capabilities. However, often due to suboptimal user interface design, these advances have also led to serious unintended consequences, such as wrong patient orders, increased cognitive workload, and decreased shared awareness. 6 7 8 9 In response to documented usability issues, the Office of the National Coordinator for Health Information Technology recently released new health IT certification criteria requiring vendors to adopt user-centered design processes before distributing final products. 10 Despite this requirement, vendors may not always comply, resulting in the release of products that still do not support workflow and that put patient safety at risk. 8 11 12 13 Many health care organizations have expressed dissatisfaction with their current electronic health record (EHR) system, particularly its failure to decrease workload and increase productivity. Accordingly, the percentage of these organizations switching EHR vendors quadrupled from 2013 to 2014. 14 15

Previous work has investigated aspects of the design and usability of electronic patient tracking systems, which replaced the manual whiteboards used to track ED patient information, 16 17 18 19 as well as the usability of other aspects of EHR systems used in the ED, such as graphical user interfaces in software for tablets 20 and clinical decision support tools. 21 Previous work by this research team used methods from cognitive systems engineering to design a suite of patient tracking and ED management-related displays and evaluated those displays in laboratory and clinical simulation settings. 22 23 24 There has not, however, been a focus on designing an integrated, patient-focused view that helps clinicians on the ED care team develop, communicate about, and maintain awareness regarding a patient's health status and progress through the plan of care.

Therefore, this research focused on the development of a status display intended to facilitate care coordination and shared awareness about individual patients among ED providers and nurses on the patient care team. The display design was informed by a previous series of studies we conducted examining the information and cognitive support requirements of ED providers and nurses. We conducted an observational study to document communication patterns between nurses and providers in multiple EDs. 25 This was followed by a series of focus groups with ED providers and nurses that identified the information that ED providers desired from nurses and vice versa and the obstacles that exist to obtaining this information in today's environment (Hettinger AZ, Benda NC, Roth EM, et al. Ten best practices for improving emergency medicine provider-nurse communication. J Emerg Med, submitted for publication). 26

The studies collectively pointed to a need for a coherent patient-focused view of each patient that brings together information needed by all ED clinicians. Notable needs include (1) the ability for the nurse and physician to maintain awareness of what is new, what has changed, and what is pending relative to a given patient (e.g., a new test was ordered, results were returned, any negative changes in vital signs); (2) the ability of nurses and providers to have a shared view of the plan of care and disposition; and (3) the ability to see critical information on a timeline so as to be able to follow patient progress (e.g., patient was assigned to a room; patient was seen by physician), to anticipate future scheduled events (e.g., patient to be seen by consultant, patient to go to surgery), and to identify relationships between events and patient responses (e.g., the time course between administering a medication and changes in patient pain symptoms or vital signs).

Objectives

The purpose of this study was to evaluate a status display for EM, employing work-centered usability methods. 24 27 Key evaluation metrics included participants' performance on realistic tasks across multiple clinical scenarios, subjective ratings of the display's cognitive support for ED tasks, and ratings of the usability and usefulness of the display. The findings from this study can be used to improve the current design and also provide implications for improving health IT design in the ED on a broader scale.

Methods

Participants

A total of 20 clinicians currently working in an ED, comprising 10 nurses and 10 attending physicians, residents, or physician assistants (labeled as providers below), were recruited for this study by email distributed across the ED staff lists of three urban Mid-Atlantic hospitals within a single hospital system. The not-for-profit hospitals were a 912-bed academic medical center, a 223-bed acute care teaching hospital, and a 609-bed acute care teaching and research hospital.

Materials

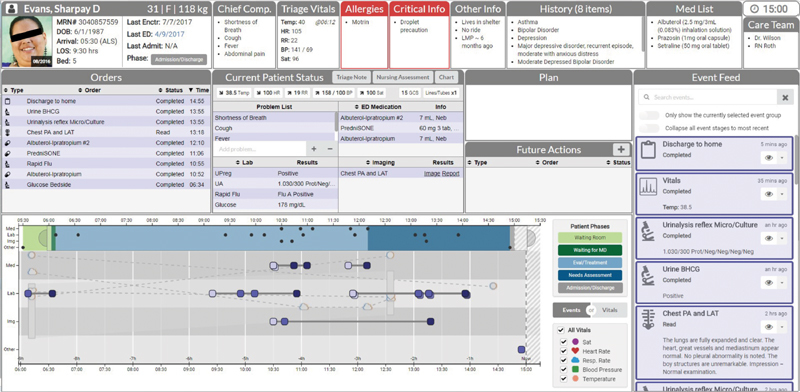

Participants interacted with a patient-focused status display, which was developed using an iterative user-centered design approach. 28 The design process began with a full-day concept design meeting that involved all members of the interdisciplinary research team, including three ED providers (A.Z.H., S.J.P., R.J.F.), an ED nurse (E.S.F.), two experts in human factors, cognitive engineering, and display design (A.M.B. and E.M.R.), as well as human interface and software design specialists (D.L., N.B., T.C.K., D.J.H.). Based on the results of our prior studies of ED nurse and physician information needs, the design team identified the cognitive support requirements and information needs that the patient-focused status display needed to satisfy. We then identified major elements the display needed to include to satisfy the cognitive support requirements, and developed initial sketches of these display areas. Then, prototypes of increasing levels of fidelity (hand and computer-drawn sketches) were developed and refined based on research team feedback. Finally, a high-fidelity prototype was developed on a Web-based platform. This prototype display was presented for feedback to two ED nurses and a physician who were not involved in the research project and had not previously seen the display. Feedback was generally positive, and a final design iteration was conducted to address their suggestions for improvement. The resulting patient-focused interface tested in the user evaluation reported here consists of seven distinct sections, or windows, for each patient profile ( Fig. 1 ). Users are able to navigate between patient profiles by clicking a dropdown menu at the top left-hand corner and selecting the patient they wish to view.

Fig. 1.

Image of the patient-focused display prototype with different sections for a given emergency department (ED) patient profile (For the purpose of the study, the patient’s name, image, and demographics were replaced with fictitious information).

Top Banner : The top banner contains patient demographic and critical information available at intake. The first section contains a picture of the patient taken during their first ED visit, along with identifying information such as their medical record number, date of birth, age, gender, and weight, as well as the mode of arrival, bed number, date of their last encounter with the health system, date of their last ED visit, a link to records from their last hospital admission, and their current phase of care. The adjacent sections contain information on the patient's chief complaint, triage vitals, allergies and critical information (shown in red), other information that would be useful in the delivery of care, medical history, home medication list, care team members, and the current time.

Orders : This window provides a list of the orders entered for the patient since their arrival along with timestamped status updates for each order (e.g., an imaging order could have one of the following statuses: ordered, taken, read). The order type, name, status, and time can be sorted by alphabetical or chronological order.

Current Patient Status : This window summarizes the most up-to-date information available regarding the patient while receiving care in the ED. The top section displays a list of the patient's most recent vital signs, their Glasgow Coma Score, and the lines or tubes such as intravenous or urinary catheters that have been placed. Links to the patient's triage note, nursing assessment, and patient's chart are provided (though for purposes of this research, these linked to “placeholder” windows rather than actual records). The bottom section of the current patient status window includes four subsections showing the problem list (populated at triage), laboratory results, ED medications administered (including dosage and route of administration), and imaging orders (with image and radiologist's report as available). The completed medications, laboratories, and imaging orders can be sorted in alphabetical order.

Plan : This window provides information about the clinical plan for the patient. Members of the care team could use this section to communicate about what is happening next for the patient with the option to add and/or delete information in free-text.

Future Actions : The future actions window contains information about upcoming tasks and shows information about any order, scheduled action, or recurring event that has not yet been completed. Users are able to add custom to-do actions that should occur at a scheduled time, such as obtaining vital signs, or actions without a set time, such as a reminder to go talk to the patient's family.

Event Feed : The event feed provides a chronological display of each activity associated with a patient, in a standardized format. Activity type, time, relevant medical details, and status updates are provided. In addition to orders and test results, the event feed includes activities such as changes in care providers and discharge orders. Additionally, the event feed offers functional features, including a search bar, two toggle buttons that allow the filtering of information within the event feed, and a feature that will show the name(s) of the care team member who has or has not viewed or acknowledged the update, with the option to “push” the item to others on the care team.

Timeline : Finally, the timeline is a graphical display that provides a bird's-eye view of all the events that have occurred with the patient throughout the course of their stay on both a small and larger scale. Providers are able to see clusters of events throughout the patient's ED stay, what phase of care the patient was in during each event, and the phase of an order. A total of five phases of care are shown in the timeline: in the waiting room, waiting for a provider, receiving evaluation and/or treatment, needing assessment from a provider, and waiting for admission or discharge after a disposition order was placed. Events and orders are shown as colored squares that indicate what stage of progress an order is in. For example, a light purple color indicates that an event “was ordered,” medium purple indicates that an event is “in progress” toward completion (e.g., collected, in-laboratory), and dark purple indicates that it is completed. Users are able to view event details, such as the name or description, specific phase, and time it occurred, by hovering over a colored square. Future scheduled events can also be seen in this section.

The timeline also displays patient vital signs in the background as icons, such as hearts and clouds, with a legend showing the representation for the different types. A toggle button allows users to alternate between displaying events or vital signs more prominently. These markers allow users to see how different actions, or events, may have affected vital signs.
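To make the timeline description concrete, the following is a minimal sketch of one way the five phases of care and the three order stages could be represented. It is not the prototype's actual data model: the class names, the `STAGE_SHADE` mapping, and the tooltip format are all hypothetical and serve only to illustrate the stage-to-shade encoding described above.

```python
from dataclasses import dataclass
from enum import Enum


class PhaseOfCare(Enum):
    # The five phases of care listed in the timeline description.
    WAITING_ROOM = "in the waiting room"
    WAITING_FOR_PROVIDER = "waiting for a provider"
    EVALUATION_TREATMENT = "receiving evaluation and/or treatment"
    NEEDS_ASSESSMENT = "needing assessment from a provider"
    AWAITING_DISPOSITION = "waiting for admission or discharge"


class OrderStage(Enum):
    ORDERED = "ordered"
    IN_PROGRESS = "in progress"
    COMPLETED = "completed"


# Hypothetical lookup reproducing the shades described in the text.
STAGE_SHADE = {
    OrderStage.ORDERED: "light purple",
    OrderStage.IN_PROGRESS: "medium purple",
    OrderStage.COMPLETED: "dark purple",
}


@dataclass(frozen=True)
class TimelineEvent:
    name: str
    time: str  # clock time as text, e.g. "14:05" (illustrative format)
    phase: PhaseOfCare
    stage: OrderStage

    def shade(self) -> str:
        """Color of the square drawn for this event."""
        return STAGE_SHADE[self.stage]

    def tooltip(self) -> str:
        """Details shown on hover: name, stage, and time."""
        return f"{self.name} | {self.stage.value} | {self.time}"


# Hypothetical event, not study data.
event = TimelineEvent("Blood culture", "14:05",
                      PhaseOfCare.EVALUATION_TREATMENT, OrderStage.IN_PROGRESS)
print(event.shade(), "-", event.tooltip())
```

Keeping the stage-to-shade mapping in a single table means the color legend and the squares cannot drift apart, which is one plausible reason to structure it this way.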

Experimental Procedure

The study was conducted in a laboratory and facilitated by a trained researcher using a moderator's guide. Each participant provided verbal consent and then completed a demographic survey to ensure they had previous experience working with an EHR. Participants viewed the patient-focused display and study prompts on two 24″ liquid crystal display monitors and used a mouse and keyboard to navigate and interact with its various components. The patient-focused display contained deidentified data extracted from seven actual patient visits, representing a range of medical conditions and complexities. For the purpose of the study, the patient's name, image, and demographics were replaced with fictitious information.

Participants were provided with a notepad and pen for note taking or tracking purposes throughout the study session. The free-text notes were not included in the data. The video recording software Morae Recorder Version 3.3.4 (TechSmith) was used to capture participant mouse interactions with the display as well as audio recordings. The entire session took 2 hours or less.

The study was conducted in the following three phases:

Phase 1: Familiarization

In the first phase, participants familiarized themselves with the overall functionality of the interface by watching a 12-minute training video developed by the research team, freely interacting with the prototype for 5 to 10 minutes, and completing a 16-item multiple choice questionnaire testing their understanding of its seven components. The questionnaire required participants to look through two patient profiles and answer questions such as “Which of the following is not a medication that she is currently taking?” and “When was the ibuprofen order completed?”

Following the interface-familiarization phase, participants were provided with audio recordings of either an emergency physician or nurse (depending on the participant's role), describing each of the patient cases they would encounter in the next task performance phase of the study. The recordings were scripted by EM domain experts, and comprised information that would typically be presented to ED staff members during sign-over.

Phase 2: Task Performance

During the second phase, participants were given different patient care-related scenarios. A scenario involved one or more patient cases at a particular point in time. Descriptions of each patient's case are presented in Table 1 . Participants completed each scenario by performing tasks that required them to interact with the prototype display and make clinical decisions about the patients. Patient cases could be relevant to more than one scenario and could reflect changes over time between scenarios. For example, one patient presented with a skin infection in one scenario, and then developed a blood infection in a later scenario. The scenarios and their order of presentation were consistent within each category of participants, as their purpose was to create meaningful interactions with the display rather than to serve as a controlled independent variable. Providers and nurses completed different scenarios and different sets of tasks. Nurses completed four scenarios and providers completed five. Provider scenarios included reassessing patients after returning from a code and making disposition decisions. Nurse scenarios involved prioritization of care between different patients, and actions related to covering patients assigned to other nurses who may be temporarily unavailable (e.g., responding to a code). Tasks included ranking patients in the order of severity of illness, reassessing them at a later time, and indicating what they would do next for the care plan. These tasks are consistent with decisions that are made by EM providers. Participants were given approximately 10 minutes per scenario, which was sufficient to allow them to complete the tasks.

Table 1. Description of cases for patients in the task-scenarios.

| Patient name | Case description |
|---|---|
| Sharpay Evans | 31-year-old female patient with shortness of breath, fever and cough, and abdominal pain, with influenza and possible preeclampsia. Later, she is ready to be discharged. She has been given discharge instruction for influenza, a prescription for Tamiflu and close follow-up with her primary care doctor as well as return instructions |
| Theodore Gusterson | 87-year-old male presenting with shortness of breath and lower extremity edema. He presented with a congestive heart failure exacerbation, requiring treatment, and admission to the hospital |
| Bartholomew Milankovic | Russian only speaking 64-year-old male with difficulty breathing, diagnosed with pneumonia and sepsis. He is admitted to the ICU |
| Rani Kapoor | Ms. Kapoor is a 61-year-old female with cellulitis of her leg with a history of diabetes. She is stable and admitted to the floor under the hospitalists. Later tests indicate that the patient has developed necrotizing fasciitis |
| Penelope Drake | 21-year-old female presenting with sore throat, fever, and feeling unwell. She is diagnosed with mononucleosis that improves with medical management |
| Charles Klause | 25-year-old male presenting with intentional medication overdose and cardiac arrest. Requires critical stabilization intubation and admission to ICU |

Abbreviation: ICU, intensive care unit.

Phase 3: Subjective Assessments

The final phase of the study consisted of participants rating the usability, usefulness, and frequency of use of the interface with two questionnaires. The System Usability Scale (SUS) questionnaire measured the general usability of the display with 10 questions on a 5-point Likert scale ranging from “strongly disagree” to “strongly agree.” 29
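For readers unfamiliar with the instrument, the sketch below shows the conventional SUS scoring rule: odd-numbered (positively worded) items contribute their rating minus 1, even-numbered (negatively worded) items contribute 5 minus their rating, and the sum is multiplied by 2.5 to give a score from 0 to 100. The text does not spell out the scoring procedure used, so this is an assumption that the standard rule was applied; the function name and the example responses are hypothetical.

```python
def sus_score(responses):
    """Return the 0-100 SUS score for one participant.

    `responses` holds the ten item ratings in questionnaire order,
    each an integer from 1 (strongly disagree) to 5 (strongly agree).
    """
    if len(responses) != 10:
        raise ValueError("SUS expects exactly 10 item responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")

    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += rating - 1   # odd items: positively worded
        else:
            total += 5 - rating   # even items: negatively worded
    return total * 2.5


# Hypothetical participant, not study data.
example = [4, 2, 4, 2, 4, 2, 4, 2, 4, 2]
print(sus_score(example))
```

Because of the alternating item polarity, a participant who answers “neutral” (3) on every item receives a score of 50, not 0.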

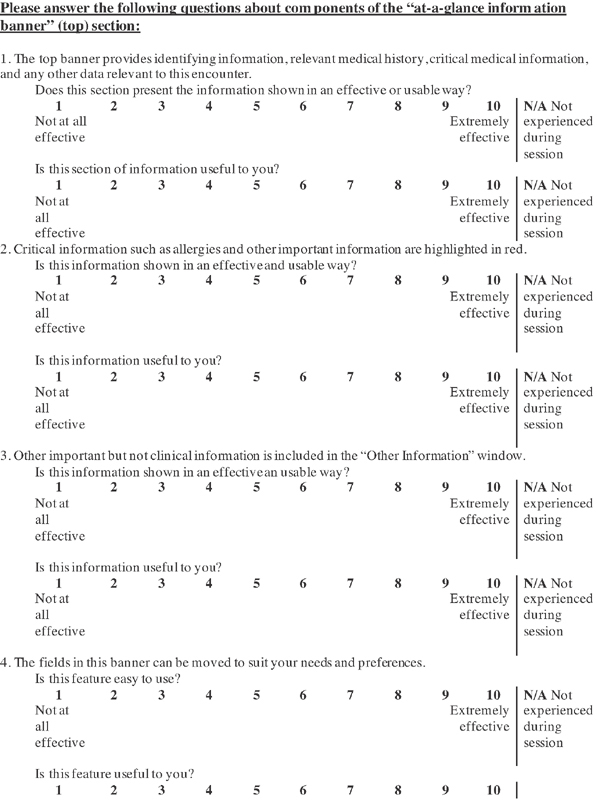

The second was the Specific Usability Questionnaire, developed by the research team, which asked participants to rate the usefulness and usability of each section of the interface and its specific components using a 9-point scale ranging from “not at all effective” or “not at all useful” (score of 1) to “extremely effective” or “extremely useful” (score of 9). Participants also had the option to indicate “N/A not experienced during session” for these questions. In addition to the overall usability and usefulness ratings for each section, the questionnaire included ratings for specific features in the five of the seven screen-sections that had more complex functionality (the plan and future actions sections were omitted for feature-related questions). Further, the Specific Usability Questionnaire measured how frequently a provider would use the information provided in each section, offering five options from 1 (never) to 5 (more than four times per shift) plus an added option of “don't know/not experienced during session.” It also asked participants to list, in free-text format, a few tasks (no more than three) that they would use that section's information to complete. Example questions for the top banner section can be found in Fig. 2 .

Fig. 2.

Example of Specific Usability Questions for the top banner section.

Finally, based on their experiences in the different scenarios, participants were asked to rate how effectively the display supported 18 cognitive support objectives (CSOs) ( Fig. 2 , modeled after [author]) using a 9-point scale ranging from “not at all effective” (score of 1) to “extremely effective” (score of 9). 22 Participants were able to refer to the interface throughout all subjective assessments.

If the participant asked the moderator questions about any of the phases (e.g., if the participant was confused about interface functionality), the moderator would reiterate information that had been provided in the training video and the previously provided instructions but did not provide additional details or assistance.

Data Analysis

For the familiarization and task performance questions, the percentages of correct responses were computed. Answers were scored by subject matter experts (SMEs) based on their consensus as to the correct answers. Unanswered questions were counted as incorrect. Provider questions were all multiple choice, and correct answers were identified by EM physicians on the research team. To assess the nurse questions related to priority of care, two SMEs (both senior registered nurses) separately identified four high priorities of care. The SMEs agreed to a list of synonyms for these responses (e.g., check vital signs = assess = check blood pressure or temperature). Responses that included a response expected by the SMEs were coded as correct. Responses to ranking questions were coded as correct only if the entire ranking order was correct; otherwise, they were coded as incorrect.
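The scoring rules described above can be sketched as follows. This is an illustration only: in the study the coding was performed by the SMEs, whereas the substring matching below is a simplifying assumption, and the function names, the answer key, and the example responses are all hypothetical.

```python
def score_ranking(response, answer_key):
    """All-or-nothing: correct only if the entire order matches the key."""
    if response is None:  # unanswered questions count as incorrect
        return False
    return list(response) == list(answer_key)


def score_free_text(response, accepted_terms):
    """Correct if the response mentions any term the SMEs agreed on."""
    if not response:  # unanswered questions count as incorrect
        return False
    text = response.lower()
    return any(term in text for term in accepted_terms)


def percent_correct(scores):
    """Percentage of responses coded as correct."""
    return 100.0 * sum(scores) / len(scores)


# Hypothetical answer key and responses, not study data.
answer_key = ["B", "A", "C"]
rankings = [["B", "A", "C"], ["A", "B", "C"], None, ["B", "A", "C"]]
ranking_scores = [score_ranking(r, answer_key) for r in rankings]
print(percent_correct(ranking_scores))

# Synonym list modeled on the example given in the text.
accepted = ["check vital signs", "assess", "check blood pressure", "check temperature"]
print(score_free_text("Reassess the patient and recheck BP", accepted))
print(score_free_text("Call pharmacy", accepted))
```

Note that all-or-nothing scoring treats a ranking with two patients swapped the same as a fully reversed ranking, which makes ranking tasks stricter than the multiple choice tasks.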

For the general usability questions, SUS scores were calculated for each participant. Mean scores were calculated for the CSO, usability, and usefulness questions. Median scores were calculated for the frequency questions. Inspection of the data and residual plots for mean general usability, CSO, specific usability, and usefulness scores did not indicate any serious violation of the assumptions of normality, independence, and equality of variance. Therefore, three analysis of variance (ANOVA) models were developed to identify significant main effects and interactions between the variables. Ratings for the general usability questions (SUS) were analyzed using a t -test comparing the mean SUS scores between nurses and providers. A two-way ANOVA model was developed for the ratings of CSO questions, with role as a between-subject variable. A repeated-measure analysis was employed for the objectives, as an average across all the questions would not allow differentiation across the components of the display. For the ratings of usability and usefulness of specific elements, a separate mixed ANOVA model was developed: role was again included as a between-subject variable, and measure type (usability and usefulness) and screen-section were included as within-subject variables. All analyses were performed using R 3.5.0.
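As an illustration of the between-group comparison, the sketch below computes an equal-variance (pooled) two-sample t statistic. With 10 participants per group this test has 10 + 10 - 2 = 18 degrees of freedom, which is consistent with the t (18) reported in the Results, although the text does not state which variant of the t -test was run. The authors performed their analyses in R; this Python version, its function name, and the scores in it are hypothetical and are not study data.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t_test(group_a, group_b):
    """Return (t, df) for an equal-variance two-sample t-test."""
    n_a, n_b = len(group_a), len(group_b)
    df = n_a + n_b - 2
    # Pooled variance: sample variances weighted by their degrees of freedom.
    pooled_var = ((n_a - 1) * variance(group_a)
                  + (n_b - 1) * variance(group_b)) / df
    std_err = sqrt(pooled_var * (1 / n_a + 1 / n_b))
    t = (mean(group_a) - mean(group_b)) / std_err
    return t, df


# Hypothetical SUS scores for two groups of 10 (not the study's data).
nurses = [80.0, 72.5, 65.0, 90.0, 70.0, 77.5, 60.0, 85.0, 67.5, 75.0]
providers = [70.0, 62.5, 55.0, 82.5, 60.0, 72.5, 50.0, 77.5, 57.5, 67.5]
t_stat, dof = pooled_t_test(nurses, providers)
print(f"t({dof}) = {t_stat:.3f}")
```

The p value would then be read from a t distribution with `dof` degrees of freedom, for example via a statistics package; that step is omitted here to keep the sketch dependency-free.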

Finally, comments made by participants regarding the usability and usefulness of interface features, and their responses to the specific question regarding tasks and interface components, were collated and categorized. Specifically, verbal feedback was categorized by general and specific component responses and then further divided into positive feedback, critiques, and suggestions. Responses to the question “List a few tasks (no more than 3) you would use this section of information to complete” were analyzed by a single coder, who consolidated responses into thematic codes. The most frequently occurring themes for each screen-section by providers and nurses were identified. A second researcher reviewed the themes and constituent codes to check for consistency of labeling responses across participant type and screen-section. Additionally, two clinician researchers reviewed the themes to ensure accuracy and completeness of thematic codes from a clinical perspective.

Results

Participants

Ten nurses and 10 physician or physician assistant providers were recruited ( Table 2 ). All participants had familiarity with at least one health IT system, and 19 of the participants reported being expert or proficient in at least two systems, including certified commercially available EHR systems or “home-grown” health IT systems.

Table 2. Participants' demographic information.

| Role | Current position | Years in position | Gender | Age (years) |
|---|---|---|---|---|
| Nurse | 10 nurses, 2 with charge nurse position | Range 1–15, median 4.25 | 9 female, 1 male | Range 24–54, mean 33.2 |
| Provider | 5 attending, 4 resident, 1 physician assistant | Range 1–14, median 3 | 8 female, 2 male | Range 28–41, mean 33.7 |

Familiarization

Two of the 16 familiarization questions were eliminated from the analysis because they were found to be ambiguous. Of the remaining 14 questions, 10 questions received 20 correct responses (100% of participants), 3 questions had 19 correct responses (95%), and 1 question had 18 correct responses (90%). This indicates a good level of understanding of the display functionality across the participants.
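The per-question percentages above follow directly from the counts, and the same counts give a pooled accuracy across the 14 retained questions. The short check below is a convenience calculation, not a figure reported by the authors; the variable names are illustrative.

```python
participants = 20

# Correct responses per question -> number of questions with that count,
# taken from the counts reported in the text.
questions_by_correct_count = {20: 10, 19: 3, 18: 1}

for correct, n_questions in questions_by_correct_count.items():
    pct = 100 * correct / participants
    print(f"{n_questions} question(s): {correct}/{participants} correct ({pct:.0f}%)")

total_correct = sum(c * n for c, n in questions_by_correct_count.items())
total_responses = sum(questions_by_correct_count.values()) * participants
overall_pct = 100 * total_correct / total_responses
print(f"Pooled: {total_correct}/{total_responses} correct ({overall_pct:.1f}%)")
```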

Task Performance Questions

Nurses and providers completed different tasks (designed based on their roles in the ED). As shown in Table 3 , performance of nurses was generally high across all tasks.

Table 3. No. of nurses who completed each task correctly.

| Scenario | Nurse tasks | No. of correct responses (10 nurse participants) |
|---|---|---|
| Scenario 1: Listen to sign-over from outgoing nurse regarding 2 patients, Mr. Gusterson and Mr. Milankovic, and answer questions about each | Task 1a: Which of the following tasks need to be completed before giving report to the ICU on Mr. Gusterson? | 10 |
| | Task 1b: Which of the following medications does Mr. Milankovic still need to receive? | 10 |
| Scenario 2: Review and plan next tasks for each of their own patients | Task 2: Please rank your three patients in order of severity of illness (Who do you need to go see first?) | 8 |
| Scenario 3: A nurse on your team has asked you to watch over her patients as she's pulled away to an emergency. Review these patients' charts and answer the ranking question | Task 3: Please rank the three patients you are watching in order of severity of illness (Who do you need to go see first?) | 9 |
| Scenario 4: Other nurse is back. Review Mr. Milankovic's chart and answer the prioritization question | Task 4 (qualitative): Identify a priority of nursing care for Mr. Milankovic. (What needs to be done next?) | 10 |

Abbreviation: ICU, intensive care unit.

For providers, there was wide variability in the correct response rate, from 0 to 100%, with all 10 participants responding correctly to only 1 question (see Table 4 ). Task 1b required the user to rank the acuity of three patients, whereas tasks 1a and 2 to 5 required users to select the best option from 4 choices given the status of the patient and current results.

Table 4. Number of providers who answered each question (task) correctly.

| Scenario | Provider tasks | No. of correct responses (10 provider participants) |
|---|---|---|
| Scenario 1: Listen to sign-over from outgoing physician regarding 3 patients, Mr. Gusterson, Mr. Milankovic, and Ms. Rani Kapoor, and answer questions about each | Task 1a: For Mr. Gusterson, indicate what you would do next | 0 |
| | Task 1b: Rank the three patients that were signed out to you in order of severity of illness from most (1) to least (3) severe (Who do you need to go see first?) | 8 |
| Scenario 2: The shift is busy and patients are waiting to be seen. Review Ms. Evans' information in preparation for going to complete their history and physical | Task 2: Please choose the most appropriate next step in the patient's (Ms. Evans) visit | 3 |
| Scenario 3: You have just returned from a code. Assess Mr. Milankovic | Task 3: Please choose the most appropriate next step in the patient's (Mr. Milankovic) visit | 4 |
| Scenario 4: Ms. Evans is ready to be discharged. She has been given discharge instruction for influenza, a prescription for Tamiflu and close follow up with her primary care doctor and return instructions | Task 4: Please evaluate the patient's chart (Ms. Evans) and choose the next best action | 7 |
| Scenario 5: It's the end of your shift. Assess the patient, Ms. Rani Kapoor, who has just been admitted with leg cellulitis | Task 5: Please choose the phrase that best describes this patient's (Ms. Kapoor's) current status | 10 |

General Usability Questions

The mean SUS score of all participants was 69.6. Mean SUS scores of nurses and providers were 73.45 and 65.75, respectively. These two scores fall into the overall categories of “acceptable” and “marginally acceptable,” respectively. 30 There was no significant difference between nurses' and providers' SUS scores, t (18) = 0.999, p = 0.331.
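The relationship between the group means and the overall mean, and the mapping from score to category, can be checked with the short sketch below. The category thresholds are an assumption based on commonly cited SUS acceptability ranges (above 70 acceptable, 50 to 70 marginal, below 50 not acceptable); the text itself gives only the category labels, and the function name is hypothetical.

```python
def acceptability_band(sus):
    """Map a SUS score to an acceptability label (assumed cutoffs)."""
    if sus > 70:
        return "acceptable"
    if sus >= 50:
        return "marginally acceptable"
    return "not acceptable"


nurse_mean, provider_mean = 73.45, 65.75  # group means reported in the text
n_nurses = n_providers = 10

# With equal group sizes the overall mean is the simple average of the two.
overall_mean = round(
    (n_nurses * nurse_mean + n_providers * provider_mean)
    / (n_nurses + n_providers),
    2,
)

print(overall_mean)
print(acceptability_band(nurse_mean), "/", acceptability_band(provider_mean))
```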

Cognitive Support Objectives

All 18 CSOs received mean scores across all participants that were higher than neutral (5.00) on the 9-point Likert scale ( Table 5 ). Eighty-three percent (15 out of 18) had mean scores higher than 7, which indicates a high level of support for these objectives. The highest rating was for supporting providers in checking critical medical information about the patients, while the lowest rating was for supporting providers in noticing significant changes in a patient's clinical condition during the ED stay.

Table 5. Cognitive support objective questions with means and standard deviations of scores across all participants.

| Cognitive support objective questions. Rate how effective the interface was/would be in supporting the following tasks/goals: | All | Provider | Nurse |
|---|---|---|---|
| 1. Know critical medical information about the patient (e.g., allergies, current medications, precautions)? | 8.50 | 8.40 | 8.60 |
| 2. Know where the patient is in the care process (e.g., waiting for laboratory results, waiting for admission) | 8.05 | 7.40 | 8.70 |
| 3. Know what the plan is for the patient's care and likely disposition? | 8.00 | 7.70 | 8.33 |
| 4. Know important nonmedical information about the patient that may impact their care (e.g., patient does not have a ride home; patient speaks a language other than English; patient uses a walker)? | 7.85 | 7.40 | 8.30 |
| 5. Communicate about the status of orders and the patient's progress through the ED with others on the care team? | 7.80 | 7.20 | 8.40 |
| 6. Provide support for the sign-over process (e.g., at beginning or end of a shift)? | 7.75 | 7.50 | 8.00 |
| 7. Quickly assess the patient's current clinical condition (e.g., symptoms, laboratory results, vital signs)? | 7.70 | 7.20 | 8.20 |
| 8. Understand the status of orders for the patient (in process, waiting for results, completed)? | 7.65 | 7.20 | 8.10 |
| 9. Communicate about the patient's clinical condition with others on the care team? | 7.55 | 7.20 | 7.90 |
| 10. Support effective planning for the patient's care? | 7.50 | 7.20 | 7.80 |
| 11. Coordinate aspects of a patient's care with others on the care team (e.g., insuring tasks are complete, planning task assignments, asking for help) | 7.50 | 7.20 | 7.80 |
| 12. Allow you to understand what others on the care team know about the patient (e.g., that a physician has been notified about laboratory results; or that a nurse has seen a medication order)? | 7.40 | 6.90 | 7.90 |
| 13. Be alerted to/understand hold-ups in the patient's care/progress through the ED? | 7.20 | 6.50 | 7.90 |
| 14. Support an overall shared understanding of the patients' clinical condition and visit progress across the care team? | 7.20 | 6.50 | 7.90 |
| 15. Understand that the patient is waiting for you to complete an order/follow up (i.e., that you are the hold-up)? | 7.15 | 6.20 | 8.10 |
| 16. Provide support for documenting the patient visit (e.g., in the chart or nursing note)? | 6.55 | 6.10 | 7.00 |
| 17. Provide support for prioritizing your tasks across the multiple patients you might be caring for? | 5.80 | 4.80 | 6.80 |
| 18. Be alerted to/understand if there has been a significant change in the patient's clinical condition during the ED stay? | 5.75 | 5.10 | 6.40 |

Abbreviation: ED, emergency department.

The two-way ANOVA showed that there were significant main effects of role and objectives ( p < 0.001) on CSO score. Nurses gave a mean score of 1.02 points higher than providers (mean scores were 7.89 and 6.87, respectively). Tukey's honest significant difference (HSD) test was conducted to test for differences between the CSOs, and showed that mean scores for objectives 1 through 15 were similar (no significant differences). However, the mean scores for objectives 17 and 18 were significantly lower than objectives 1 through 12, and objective 16 was significantly lower than objective 1.
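The descriptive figures quoted for Table 5 can be recomputed from the table itself. The sketch below copies the item means from Table 5 and takes an unweighted average per role; this is an assumption, since the study's means were presumably computed from individual responses, so small rounding differences are expected.

```python
# (all, provider, nurse) mean scores for CSOs 1-18, copied from Table 5
TABLE5 = [
    (8.50, 8.40, 8.60), (8.05, 7.40, 8.70), (8.00, 7.70, 8.33),
    (7.85, 7.40, 8.30), (7.80, 7.20, 8.40), (7.75, 7.50, 8.00),
    (7.70, 7.20, 8.20), (7.65, 7.20, 8.10), (7.55, 7.20, 7.90),
    (7.50, 7.20, 7.80), (7.50, 7.20, 7.80), (7.40, 6.90, 7.90),
    (7.20, 6.50, 7.90), (7.20, 6.50, 7.90), (7.15, 6.20, 8.10),
    (6.55, 6.10, 7.00), (5.80, 4.80, 6.80), (5.75, 5.10, 6.40),
]

n_items = len(TABLE5)

# Number and share of objectives whose overall mean exceeds 7
n_above_7 = sum(1 for all_mean, _, _ in TABLE5 if all_mean > 7)
share_above_7 = n_above_7 / n_items

# Unweighted mean of the item means for each role (an approximation)
provider_mean = sum(row[1] for row in TABLE5) / n_items
nurse_mean = sum(row[2] for row in TABLE5) / n_items
role_gap = nurse_mean - provider_mean

print(n_above_7, round(share_above_7 * 100))
print(round(provider_mean, 2), round(nurse_mean, 2), round(role_gap, 2))
```

The recomputed nurse mean can differ from the reported value in the second decimal place, which would be consistent with averaging rounded item means rather than raw responses.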

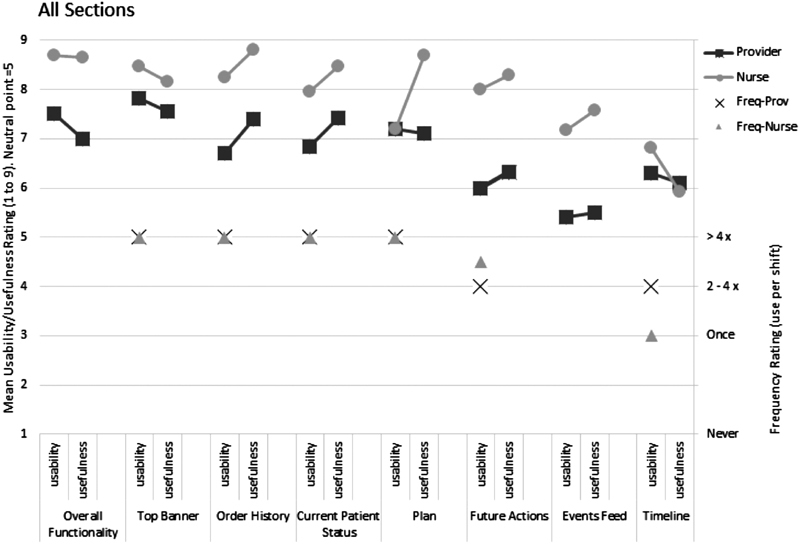

Usability, Usefulness, and Frequency of Use

Fig. 3 shows the specific usability and usefulness scores averaged over all questions for each section and the shared features (left axis scale), along with median frequency of use scores (right axis scale). One question regarding the event feed was removed from the analysis because it was included in the incorrect subsection. Among the 27 features, 23 (85%) had ratings higher than 6. Features with mean ratings below 6 all belonged to the timeline section.

Fig. 3. Average usability, usefulness, and median frequency of use scores for each display section and for shared features.

Median scores were high for frequency of use, with responses of either two to four times per shift or more than four times per shift, except that nurses' median frequency of use for the timeline section was once per shift. For four of six sections, providers and nurses responded similarly. Frequency of use was lowest for the timeline section, as were usability and usefulness. Note that the frequency question for the event feed was inadvertently left out of data collection, and so could not be analyzed.

After nonsignificant interactions were removed, the final mixed ANOVA model had two significant main effects, two significant interactions, and one significant nested variable. Role was again significant ( p < 0.001), with nurses' mean scores higher than providers' (mean scores were 7.56 and 6.68, respectively). Section was also significant ( p < 0.001). Tukey's HSD test showed that mean scores for the events feed and timeline sections were similar to each other, but both significantly lower than the other sections. The interaction between role and section was significant ( p < 0.001). Nurses gave higher mean ratings than providers on all sections, but the role effect was greater on some sections. For example, the gap between nurse and provider scores was larger for the events feed than for the timeline. The interaction between measure and section was also significant ( p = 0.02). Usability was rated higher than usefulness for the top banner and timeline sections and for shared features, while usefulness was rated higher than usability in the remaining sections. The nested variable, feature, was also significant ( p < 0.001), indicating that the mean ratings for different features varied.

Participants' Comments on Screen Use—Tasks

Both nurse and provider participants provided free-text responses about tasks they would complete using each screen-section. Prominent themes identified via the qualitative analysis are shown in Table 6 below.

Table 6. Prominent response themes representing tasks for which participants indicated they would use each screen-section.

| Screen section | Nurse | Provider |

|---|---|---|

| Top Banner | • Medication management: reconciliation/administration • Initial assessment/reassessment • Verifying patient information • Giving/calling report | • Review/look-up: patient medical history, medication list, and doses • Initial assessment/reassessment • Prioritizing patient • Verifying patient information |

| Order History | • Checking what needs to be done - medication/tests/laboratories • Delegating/completing task • Checking status of task • Prioritization tasks | • Checking status of orders • Identifying: issues/delays/pending orders |

| Current Patient Status | • Review/look-up: laboratory/imaging results, medication orders • Medication management: reconciliation/administration • Giving/calling report • Communication: discussing with MD and planning | • Review/look-up: laboratory/imaging results • Review/look-up: medication list and doses • Decision: when to reassess the patient |

| Plan | • Communication: update/notify MD: disposition and laboratories | • Communication: plan with team • Tracking patient progress |

| Future Actions | • Collecting laboratories • Planning • Prioritization tasks | • Identifying: issues/delays/pending orders |

| Events Feed | • Communication: update/notify MD: labs • Decision: when to update MD | • Shared awareness: seeing what team members have seen: orders/updates • Review/look-up: orders/recent updates |

| Timeline | • Review/look-up: vital trends • Tracking patient progress • Tracking infusion and medication • Identifying: issues/delays/pending orders | • Review/look-up: vital trends • Tracking patient progress • Identifying: issues/delays/pending orders |

Abbreviation: MD, Doctor of Medicine.

Additionally, participants made informal comments about the screen sections, including positive feedback, critiques, and suggestions. These are summarized below:

Top banner : Readily available information related to phases of care is very useful. Suggestions included adding free-text and updating capability, and additional information, such as patient code status.

Orders : Useful and preferred over event feed.

Current patient status : Nurses found this a very helpful window and indicated that it offers easy access to imaging (which otherwise requires more clicks). A feature suggested by both nurses and physicians was the ability to click laboratory values in the hover box and open them as a pop-up. Physicians offered additional suggestions, including timestamps for results and the ability to compare with previous results.

Plan : Nurses found this very helpful, and physicians concurred that it would be helpful to the nurses. The nurses' concern was that the plan presented was not up to date. Both participant groups suggested automating the name and timestamp information for a faster entry process.

Future actions : The free-text field was appreciated by a nurse. No further feedback was provided by participants on this section.

Event feed : Both nurses and physicians found this section useful for training purposes (junior nurses or residents). Nurses found some features confusing, and mentioned needing more time to familiarize themselves with the toggle buttons and push notifications.

Timeline : A participant suggested that this section would be more useful as part of a quality improvement process after the patient encounter. Physicians found the vital toggles useful. However, most participants found this section very complex. Participants suggested a feature to enable minimizing the window.

Other suggestions included providing more free-text fields in sections which lacked this, changes in format of information presented, such as laboratory values in a matrix form, and the ability to minimize windows.

Discussion

Overall, ratings regarding usability, usefulness, and cognitive support indicated a positive reaction to the display. Both nurses and providers gave positive ratings of usability and usefulness, with all 7 sections and a majority of features (23 out of 27) receiving scores greater than 6 out of 9 when averaged across nurses and providers. Similarly, ratings regarding support for cognitive objectives, averaged across providers and nurses, were greater than 7 out of 9 for 15 out of 18 objectives. Participants also indicated high frequency of use for most display components. This finding is similar to that of Simpao et al, whose participants reported increased access to and use of clinical information when it was presented in electronic graphical displays. 30 Participants also achieved high scores on the familiarization questions. Qualitative responses regarding the top potential uses for each of the sections align with the CSOs (for instance, knowing where the patient is in the care process, or reviewing critical medical information about the patient). Taken together, these results indicate that participants were familiar with the various sections and features of the interface, and overall assessed the interface positively.

The analysis did, however, point to opportunities for improvement. Mean scores of general usability, generated through the SUS tool, were 73.45 among nurses and 65.75 among providers. These scores fall in acceptable and marginally acceptable categories, respectively. 31 These scores are comparable to the SUS ratings reported by Kim et al for expert nurses using a standard ED information display, and higher than ratings by novice nurses using the same display in that study. 32 Also, while nurses were generally successful in completing the scenario-based tasks with the interface, the performance of providers was more variable. This may indicate that the interface provided less support for some aspects of physician work.

In considering task performance, it is important to note that the primary purpose of the tasks was to force “realistic” interaction with the interface, rather than to serve as a comprehensive assessment of how the interface would support all tasks. That said, by examining the tasks, we can identify some useful points for redesign. For instance, in providers' task 1, in which participants were asked to review information about a patient while reviewing a (recorded) verbal sign-over, none of the participants correctly identified a critical laboratory result that had been missed by the previous physician, perhaps because critical values were not highlighted on the display. Provider participants also did not consistently choose the best course of treatment for a patient with sepsis (task 2), although the treatments they tended to select are consistent with common protocols (e.g., overtreating with fluids and antibiotics for sepsis). This points to a possible need to integrate more clinical decision support into displays of health information. In contrast, the interface did provide support for providers in ranking which patients were most critically ill (task 1b) and in identifying new information that changed a previous diagnosis (tasks 3 and 4), perhaps because, compared with many current EHR systems, information was presented in an integrated view rather than on separately accessed screens. In general, the preference for more integrated information on electronic displays is consistent with findings of previous studies. For instance, Hester et al have demonstrated that providing integrated visual information for care of patients with bronchiolitis in the ED led to improvement in certain workflow metrics such as reduced length of stay. 33

A design issue highlighted by the analysis was that the timeline view did not appropriately signal changes in vital signs when values were outside of the plot boundaries (e.g., were too high or too low to fit on the screen). Off screen values were shown at the boundary (top or bottom) and were animated (flashed), but were connected to previous values with dashed lines and therefore did not indicate the degree of change using the salient feature of slope. For one of the familiarization questions, “How does the most recent heart rate reading compare to the last reading?,” the correct answer was “Lower” to reflect a change from 171 to 139, but three providers answered that it stayed the same. After looking back at video screen recordings, we realized that the three participants who gave this answer used the vital signs shown in the timeline (via vitals toggle button) instead of the dropdown heart rate feature in the current patient status window. Because all heart rate values higher than 140 display at the top of the timeline plot, the line connecting the 171 value to the 139 value was horizontal, signaling no change. Two out of 7 participants who answered correctly also referred to the vitals shown in the timeline (via vitals toggle button) but hovered over each heart rate result before answering, and the rest of the participants (5) referred to the dropdown heart rate feature in the current patient status window. Interestingly, usability issues related to time-series data lying outside the visual boundaries were previously raised by Lin et al. 34 In a time-series visual display of patient physiological functions, participants assumed they had found the start of patient data, when it was actually the end of a gap in the timeline which extended outside the boundary of the plot. These findings indicate a need to address usability issues specific to continuous longitudinal data.
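The clipping problem described above can be illustrated with a minimal sketch. The upper plot bound of 140 is taken from the text; the lower bound, the `PlottedPoint` type, and the `plot_positions` helper are hypothetical names introduced only for illustration and do not describe the prototype's actual implementation.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class PlottedPoint:
    value: float    # true reading
    plotted: float  # y-position actually drawn on the timeline
    clipped: bool   # True when the reading fell outside the plot range

def plot_positions(readings: List[float], y_min: float, y_max: float) -> List[PlottedPoint]:
    """Pin out-of-range readings to the nearest plot boundary."""
    points = []
    for v in readings:
        plotted = min(max(v, y_min), y_max)
        points.append(PlottedPoint(v, plotted, plotted != v))
    return points

Y_MIN, Y_MAX = 40, 140  # Y_MAX from the text; Y_MIN is an assumed value

points = plot_positions([171, 139], Y_MIN, Y_MAX)
true_change = points[1].value - points[0].value       # actual drop in heart rate
drawn_change = points[1].plotted - points[0].plotted  # drop implied by the drawn line

print(true_change, drawn_change)  # -32 -1
```

With the first reading pinned to the boundary, a 32-beat drop is drawn as a 1-unit drop, so the connecting line is nearly horizontal. One possible remedy, offered here only as a design suggestion, would be to annotate clipped points with their true values rather than relying on slope alone.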

Additionally, some portions of the interface were rated significantly less useful or usable than others. In particular, the timeline and event feed windows had lower ratings of usability, usefulness, and frequency of use. Because these were novel features, it may be that participants were not sure of how these display elements could be used and integrated into their work with patients. These display elements were intended to be most useful as information dynamically changed: either in showing change and workflow over time (the timeline) or as new or changed information was updated (the event feed). The event feed also included specific features that supported team communication. Because the scenarios and tasks did not unfold over time, and because they did not incorporate team members, it is possible that this evaluation did not allow adequate assessment of these features.

Also, some CSOs were rated significantly lower than others. Participants gave lower ratings for support regarding being alerted to significant changes, and also noted this drawback in their verbal comments. This is an important critique, consistent with some of the task performance outlined above, and may be due to the fact that we did not include any highlighting or color coding of significant laboratory values or test results, and also that some critical changes were masked by the timeline design. Additionally, participants did not believe the display provided support for prioritizing tasks across multiple patients, likely because the display only provided information about a single patient at a time.

There were differences in ratings provided by nurse and provider participants. Overall, nurses gave higher scores for usability and usefulness. This difference did not occur across all display components, however, suggesting that the result is not simply due to a difference in how the two groups reacted to the questions. For example, nurses and providers had similar scores related to the timeline, and had more pronounced differences for the event feed and future actions sections. This may be due to different cognitive work needs across the two roles. Explicit display of future actions and the updates provided by the event feed are consistent with nursing practice requirements to check on tasks to be done and outstanding orders or tests. It is also common for nurses to take on care tasks for patients assigned to other nurses (i.e., to help with workload, to cover breaks), so they would perceive value in having easy access to what events have occurred, and what tasks are to be completed. Nurses also gave the plan component a higher usefulness rating, perhaps reflecting their need for better communication regarding the physician-generated plan of care.

Participants generally gave display components higher ratings for usefulness compared with usability. This may be due to the fact that not all of the interactive features were fully functional in the prototype used for testing: some participant feedback indicated that they noticed a lack of some expected interactivity (e.g., in the current patient status section, the ability to click on laboratory results from the hover-box and access the same as a pop-up). Lower usability scores also point to design opportunities, for instance improving the visibility of health changes, while the higher scores for usefulness suggest that the correct information and features were present.

Based on general verbal feedback received throughout the study sessions, participants found the interface to be complex but not too cumbersome. A feature that was particularly appreciated was one that aided in tracking an event. Upon clicking a single item, associated information would be highlighted across other windows throughout the display, specifically in the order, event feed, and timeline windows. Whether the item was “completed” or “in progress” would then determine whether it appeared in the current patient status or future order window. In terms of general critiques, participants stated that the interface lacked an alert system for outstanding orders, critical laboratory results, or abnormal values, and that they preferred the ability to view multiple patients at the same time rather than having to switch between their profiles, which is consistent with the low CSO response scores for questions 17 and 18. Future work could elicit more extensive feedback about the display features from potential users, to allow for a more detailed qualitative analysis. Such findings could be used to support as well as enhance the current suggestions for improvement.

In summary, the patient-focused prototype interface proved useful for both nurses and providers with good performance across a range of cognitive support needs. The work-centered evaluation methodology identified specific usability problems which can be addressed as part of the ongoing iterative design of this prototype.

Limitations

Limitations of this usability assessment include the fact that the prototype evaluated was not fully functional. The prototype lacked certain aspects of interactivity (e.g., windows could not be resized or closed) and historical health records regarding the patients were not available. Additionally, the scenarios were not dynamic (i.e., did not unfold over time) and were not team based. Therefore, some of the potential benefits and functionality, such as being alerted to incoming events, or sharing information with team members, could not be evaluated. As a laboratory-based evaluation, the scenarios were limited in terms of their immersive nature. Additional evaluations could include more immersive, dynamic tasks completed with a team of participants, similar to methods used previously by this research team. 23

Additionally, the scenarios were necessarily limited to a small number of ED patients and tasks. While both the patients and tasks were carefully selected to provide realistic and challenging interaction with the prototype, it is possible that including patients with different health concerns, or developing different tasks, could have changed the results. Also, nurses and providers did not complete the same tasks: instead, tasks were chosen to best represent the work tasks that the different groups would be likely to complete. However, the task differences may have contributed to the differences in scores across the groups. Furthermore, the study did not compare the prototype to a control interface, as the purpose was simply to assess the usability of the prototype. Future research could explore comparisons with existing ED displays or generic control interfaces. More objective evaluations, including screen capture-based measurements of interaction, could also be considered.

Finally, the sample size was limited to 20 participants who all worked in the same hospital system. This sample size is consistent with recommendations for usability studies. 35 Participants did express a wide range of experiences with different EHR systems. Furthermore, the current sample size did allow us to identify statistically significant differences that highlighted both useful aspects of the prototype as well as needs for redesign, and which were consistent with qualitative feedback provided by the participants.

Conclusion

A new display for EM was assessed in a laboratory-based simulation study, using work-centered usability methods. Participants rated the display and its components high (above the mid-point) on scales for work-centered measures of usability and usefulness. Similarly, participants generally gave high scores for questions related to the CSOs of the display. General usability scores, based on the SUS tool, were rated as acceptable or marginally acceptable. Performance of clinicians on scenario-based tasks using the display, however, was more varied, depending on the type of task or question. Overall, these results demonstrate the value of work-centered usability testing of an electronic system that has been produced using an intensive user-centered design process, and provide a scope for improving the design of the display. Analysis of qualitative feedback collected from the participants during the study also generated several insights for improvement. The findings from this study will be used to improve the current design, with implications for improving health IT design in the ED on a broader scale.

Clinical Relevance Statement

The study represents an innovative approach to information visualization in emergency medicine, with a focus on patient-focused information and user-centered design. This research demonstrates the value of employing a true user-centered design, an important step in overcoming the current design issues with health IT, with implications for team communication, shared awareness, and safer care in health care. While findings indicate scope for improvement, they also serve as evidence that such a display would be useful in clinical practice.

Multiple Choice Questions

1. Mean usability and usefulness scores for the 7 sections of the patient-focused status display were:

a. All higher than neutral.

b. All less than neutral.

c. Usability scores were higher than neutral, but usefulness scores varied between less and more than neutral.

d. Usefulness scores were higher than neutral, but usability scores varied between less and more than neutral.

Correct Answer: The correct answer is option a, all higher than neutral.

2. Which of the following statements is supported by the findings of this study?

a. Both providers and nurses provided similar ratings for usability and usefulness of the display sections.

b. There were no differences in how providers and nurses said they would use the various display sections.

c. The patient-focused display was found to support the cognitive objective of knowing critical medical information about the patient.

d. Providers performed better on their tasks using the display than nurses did on theirs.

Correct Answer: The correct answer is option c, the patient-focused display was found to support the cognitive objective of knowing critical medical information about the patient.

Acknowledgments

The authors are grateful to all participants for their time, as well as staff at MedStar Health for helping facilitate the study. They are also grateful to the late Dr. Robert L. Wears, who contributed to the conception and design of the study.

Funding Statement

Funding This study was funded by the Agency for Healthcare Research and Quality's (AHRQ) R01 grant (R01HS022542).

Conflict of Interest None declared.

Protection of Human and Animal Subjects

This study protocol was reviewed and approved by the lead author's Institutional Review Board (IRB), which also served as the IRB of Record for coauthors belonging to other institutions. Participants provided verbal consent to participate in the study.

References

- 1.Fong A, Ratwani R M. Understanding emergency medicine physicians multitasking behaviors around interruptions. Acad Emerg Med. 2018;25(10):1164–1168. doi: 10.1111/acem.13496. [DOI] [PubMed] [Google Scholar]

- 2.Kellogg K M, Wang E, Fairbanks R J, Ratwani R. 286 Sources of interruptions of emergency physicians: A pilot study. Ann Emerg Med. 2016;68(04):S111–S112. [Google Scholar]

- 3.Laxmisan A, Hakimzada F, Sayan O R, Green R A, Zhang J, Patel V L.The multitasking clinician: decision-making and cognitive demand during and after team handoffs in emergency care Int J Med Inform 200776(11-12):801–811. [DOI] [PubMed] [Google Scholar]

- 4.Fairbanks R J, Bisantz A M, Sunm M. Emergency department communication links and patterns. Ann Emerg Med. 2007;50(04):396–406. doi: 10.1016/j.annemergmed.2007.03.005. [DOI] [PubMed] [Google Scholar]

- 5.Bisantz A, Pennathur R, Fairbanks R et al. Emergency department status boards: a case study in information systems transition. J Cogn Eng Decis Mak. 2010;4(01):39–68. [Google Scholar]

- 6.Taylor S P, Ledford R, Palmer V, Abel E. We need to talk: an observational study of the impact of electronic medical record implementation on hospital communication. BMJ Qual Saf. 2014;23(07):584–588. doi: 10.1136/bmjqs-2013-002436. [DOI] [PubMed] [Google Scholar]

- 7.Farley H L, Baumlin K M, Hamedani A G et al. Quality and safety implications of emergency department information systems. Ann Emerg Med. 2013;62(04):399–407. doi: 10.1016/j.annemergmed.2013.05.019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Benda N C, Meadors M L, Hettinger A Z, Fong A, Ratwani R M.Electronic health records and interruptions: the need for new interruption management strategiesProceedings of the International Symposium on Human Factors and Ergonomics in Health Care: Improving the Outcomes;2015;4(1):70

- 9.Friedberg M W, Van Busum K, Wexler R, Bowen M, Schneider E C. A demonstration of shared decision making in primary care highlights barriers to adoption and potential remedies. Health Aff (Millwood) 2013;32(02):268–275. doi: 10.1377/hlthaff.2012.1084. [DOI] [PubMed] [Google Scholar]

- 10.Office of the National Coordinator for Health Information Technology (ONC), Department of Health and Human Services 2015. Edition Health Information Technology (Health IT) Certification Criteria, 2015 Edition Base Electronic Health Record (EHR) Definition, and ONC Health IT Certification Program Modifications. Available at:https://www.federalregister.gov/documents/2015/10/16/2015-25597/2015-edition-healthinformation-technology-health-it-certification-criteria-2015-edition-base. Accessed October 30, 2018 [PubMed]

- 11.Howe J L, Adams K T, Hettinger A Z, Ratwani R M. Electronic health record usability issues and potential contribution to patient harm. JAMA. 2018;319(12):1276–1278. doi: 10.1001/jama.2018.1171. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Ratwani R M, Benda N C, Hettinger A Z, Fairbanks R J. Electronic health record vendor adherence to usability certification requirements and testing standards. JAMA. 2015;314(10):1070–1071. doi: 10.1001/jama.2015.8372. [DOI] [PubMed] [Google Scholar]

- 13.Ratwani R M, Fairbanks R J, Hettinger A Z, Benda N C. Electronic health record usability: analysis of the user-centered design processes of eleven electronic health record vendors. J Am Med Inform Assoc. 2015;22(06):1179–1182. doi: 10.1093/jamia/ocv050. [DOI] [PubMed] [Google Scholar]

- 14.Cohen J K.The top 3 reasons hospitals switch EHR vendors. Becker Hospital Review Web site. April 15, 2018Available at:https://www.beckershospitalreview.com/ehrs/the-top-3-reasons-hospitals-switch-ehrvendors.html. Accessed October 30, 2018

- 15.Slabodkin G.ONC reports 4x spike in providers switching EHR vendors. Health Data Management websiteSeptember 10, 2015. Available at:https://www.healthdatamanagement.com/news/onc-reports-4x-spike-inproviders-switching-ehr-vendors. Accessed October 30, 2018

- 16.Aronsky D, Jones I, Lanaghan K, Slovis C M.Supporting patient care in the emergency department with a computerized whiteboard system J Am Med Inform Assoc 20081502184–194.. Doi: 10.1197/jamia.M2489 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Pennathur P R, Bisantz A M, Fairbanks R J, Perry S J, Zwemer F, Wears R L. Assessing the impact of computerization on work practice: information technology in emergency departments. Proc Hum Factors Ergon Soc Annu Meet. 2007;51(04):377381. [Google Scholar]

- 18.Wears R L, Perry S J. Status boards in accident & emergency departments: support for shared cognition. Theor Issues Ergon Sci. 2007;8(05):371–380. [Google Scholar]

- 19.Xiao Y, Schenkel S, Faraj S, Mackenzie C F, Moss J. What whiteboards in a trauma center operating suite can teach us about emergency department communication. Ann Emerg Med. 2007;50(04):387–395. doi: 10.1016/j.annemergmed.2007.03.027. [DOI] [PubMed] [Google Scholar]

- 20.Karahoca A, Bayraktar E, Tatoglu E, Karahoca D. Information system design for a hospital emergency department: a usability analysis of software prototypes. J Biomed Inform. 2010;43(02):224–232. doi: 10.1016/j.jbi.2009.09.002. [DOI] [PubMed] [Google Scholar]

- 21.Press A, McCullagh L, Khan S, Schachter A, Pardo S, McGinn T. Usability testing of a complex clinical decision support tool in the emergency department: lessons learned. JMIR Human Factors. 2015;2(02):e14. doi: 10.2196/humanfactors.4537. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Guarrera T K, McGeorge N M, Clark L N . Boca Raton: CRC Press, Taylor & Francis; Cognitive engineering design of an emergency department information system. [Google Scholar]

- 23.McGeorge N, Hegde S, Berg R L et al. Assessment of innovative emergency department information displays in a clinical simulation center. J Cogn Eng Decis Mak. 2015;9(04):329–346. doi: 10.1177/1555343415613723. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Clark L N, Benda N C, Hegde S et al. Usability evaluation of an emergency department information system prototype designed using cognitive systems engineering techniques. Appl Ergon. 2017;60:356–365. doi: 10.1016/j.apergo.2016.12.018. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25. Benda N C, Hettinger A Z, Bisantz A M et al. Communication in the electronic age: an analysis of face-to-face physician-nurse communication in the emergency department. J Healthc Inform Res. 2017;1(02):218–230. doi: 10.1007/s41666-017-0008-3.

- 26. Kim T C, Bisantz A M, Benda N C et al. Assessing the usability of a prototype emergency medicine patient-centered electronic health record display. Proceedings of the 2018 Sixth IEEE International Conference on Healthcare Informatics (ICHI); 2018. doi: 10.1109/ICHI.2018.00083.

- 27. Roth E, Stilson M, Scott R et al. Work-centered design and evaluation of a C2 visualization aid. Proc Hum Factors Ergon Soc Annu Meet. 2006;50(03):255–259.

- 28. Hettinger A Z, Roth E M, Bisantz A M. Cognitive engineering and health informatics: applications and intersections. J Biomed Inform. 2017;67:21–33. doi: 10.1016/j.jbi.2017.01.010.

- 29. Sauro J. Denver, CO: Measuring Usability LLC; 2011. A Practical Guide to the System Usability Scale: Background, Benchmarks, & Best Practices.

- 30. Simpao A F, Ahumada L M, Larru Martinez B et al. Design and implementation of a visual analytics electronic antibiogram within an electronic health record system at a tertiary pediatric hospital. Appl Clin Inform. 2018;9(01):37–45. doi: 10.1055/s-0037-1615787.

- 31. Bangor A, Kortum P T, Miller J T. An empirical evaluation of the System Usability Scale. Int J Hum Comput Interact. 2008;24(06):574–594.

- 32. Kim M S, Shapiro J S, Genes N et al. A pilot study on usability analysis of emergency department information system by nurses. Appl Clin Inform. 2012;3(01):135–153. doi: 10.4338/ACI-2011-11-RA-0065.

- 33. Hester G, Lang T, Madsen L, Tambyraja R, Zenker P. Timely data for targeted quality improvement interventions: use of a visual analytics dashboard for bronchiolitis. Appl Clin Inform. 2019;10(01):168–174. doi: 10.1055/s-0039-1679868.

- 34. Lin Y L, Guerguerian A M, Tomasi J, Laussen P, Trbovich P. Usability of data integration and visualization software for multidisciplinary pediatric intensive care: a human factors approach to assessing technology. BMC Med Inform Decis Mak. 2017;17(01):122. doi: 10.1186/s12911-017-0520-7.

- 35. Faulkner L. Beyond the five-user assumption: benefits of increased sample sizes in usability testing. Behav Res Methods Instrum Comput. 2003;35(03):379–383. doi: 10.3758/bf03195514.