Abstract

AIM

To evaluate the reliability of the Motor Learning Strategies Rating Instrument (MLSRI-20) in gait-based, video-recorded physiotherapy interventions for children with cerebral palsy (CP).

METHOD

Thirty videos of 18 children with CP, aged 6 to 17 years, participating in either traditional or Lokomat-based physiotherapy interventions were rated using the MLSRI-20. Physiotherapist raters provided general and item-specific feedback after rating each video, which was used when interpreting reliability results.

RESULTS

Both interrater and intrarater reliability of the MLSRI-20 total score were good. The interrater reliability intraclass correlation coefficient (ICC) was 0.78 with a 95% confidence interval (CI) of 0.53–0.89 and a coefficient of variation (CV) of 11.8%. The intrarater reliability ICC was 0.89 with a 95% CI of 0.76–0.95 and CV of 7.8%. Rater feedback identified task delineation and interpretation of therapist verbalizations as sources of interrater reliability-related scoring challenges.

INTERPRETATION

The MLSRI-20 is a reliable tool for measuring the extent to which a physiotherapist uses motor learning strategies during a video-recorded intervention. These results have clinical and research implications for documenting and analyzing the motor learning content of physiotherapy interventions for children with CP.

Physiotherapy interventions for children with cerebral palsy (CP) often focus on activity-based goals targeting motor skill attainment and/or refinement.1 When transferred to a child’s daily routine, newly acquired skills can support enhanced participation in meaningful activities.1 Motor learning is the acquisition and retention of a motor skill2 and can be promoted by using motor learning strategies (MLS). MLS are specific actions of the physiotherapist involving the selection and manipulation of motor learning variables based on child-specific and task-specific factors.3 Implementation of MLS within physiotherapy is beneficial, as structuring sessions to support motor learning enhances the experience-dependent neuroplasticity that underlies how the brain encodes new information.4–6

It is important to understand the underlying content and focus of the intervention.7 Physiotherapists play an integral role in encouraging motor learning, even in technology-based interventions where they facilitate how the technology is implemented.8 Integrating technology, such as Nintendo Wii, WalkAide, and the Lokomat robotic-assisted gait trainer, within physiotherapy interventions may enhance motor learning opportunities.9–12 However, studies evaluating the efficacy of these technologies rarely detail the motor learning content within their study protocols,10,11 which prevents physiotherapists from understanding and replicating the intervention in clinical settings. Studies evaluating the benefits of the Lokomat in children with CP emphasize its repetitive properties and ability to adjust gait parameters, based on a child’s progress. However, they fail to elaborate on other important MLS used within these interventions, including how the physiotherapist communicates with the child, promotes problem solving, or links the tasks within the Lokomat to daily activities.11–13 Additionally, without a common language to specify the type of MLS used, it is difficult to elucidate an intervention’s ‘active ingredients’ to understand which components contribute to its success.7 Documenting the motor learning content of physiotherapy interventions using standardized terminology would allow comparisons and create an opportunity to understand the relationship between MLS use and each child’s needs.

The Motor Learning Strategies Rating Instrument (MLSRI-20) was developed to systematically document the type and extent of MLS used in motor learning-focused physiotherapy interventions.3 Initial validation work for this 33-item measure demonstrated excellent intrarater and moderate interrater reliability among physiotherapist student raters evaluating physiotherapy interventions for children with acquired brain injury.14 Revision and revalidation were recommended before using the instrument in clinical or research settings.14 Subsequent modifications by Levac and several motor learning colleagues15 resulted in the MLSRI-20, a 20-item assessment divided into three categories of MLS: (1) ‘What the therapist says’; (2) ‘What the therapist does’; and (3) ‘How the practice is organized’. Physiotherapist MLS use is rated on a 5-point scale based on the frequency and extent to which each MLS is observed (0=‘very little’ or 0%–5% of the time; 1=‘somewhat’ or 6%–24% of the time; 2=‘often’ or 25%–49% of the time; 3=‘very often’ or 50%–75% of the time; 4=‘mostly’ or 76%–100% of the time). Individual items are not tallied to a total MLSRI-20 score given the lack of practical value associated with its magnitude (i.e. a higher score does not indicate a superior motor learning session). Given the proportional nature of rating, total scores greater than 50 out of 80 possible points are unlikely. The value of the MLSRI-20 lies in its ability to score the distribution of the individual items, which creates a profile of MLS use (see Appendix S1, online supporting information).
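The percentage bands of the rating scale can be written as a simple lookup. The Python sketch below is illustrative only: the names `SCORE_BANDS` and `item_score` are hypothetical, the handling of fractional percentages that fall between two bands (e.g. 5.5%) is an assumption, and in practice raters apply the Instruction Manual and clinical judgment rather than a timer.

```python
# Upper bound (percent of session time), item score, and label for each band,
# taken from the 5-point scale described in the text.
SCORE_BANDS = [
    (5, 0, "very little"),   # 0%-5%
    (24, 1, "somewhat"),     # 6%-24%
    (49, 2, "often"),        # 25%-49%
    (75, 3, "very often"),   # 50%-75%
    (100, 4, "mostly"),      # 76%-100%
]


def item_score(percent_of_time):
    """Map the percentage of time an MLS was observed to a 0-4 item score.

    Assumption: a fractional value lying between two bands (e.g. 5.5)
    is assigned to the higher band.
    """
    if not 0 <= percent_of_time <= 100:
        raise ValueError("percent_of_time must be between 0 and 100")
    for upper_bound, score, _label in SCORE_BANDS:
        if percent_of_time <= upper_bound:
            return score
    raise AssertionError("unreachable: 100 is the last upper bound")


# Hypothetical observations for three items (not study data).
observed = {"item_a": 3, "item_b": 30, "item_c": 80}
profile = {item: item_score(pct) for item, pct in observed.items()}
print(profile)  # {'item_a': 0, 'item_b': 2, 'item_c': 4}
```

Keeping the scores as a per-item profile, rather than summing them, mirrors the point made above that the instrument's value lies in the distribution of item scores.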

The primary objective of this study was to evaluate the interrater and intrarater reliability of the MLSRI-20 in physiotherapy interventions for children with CP. Given the variety of treatment approaches used in CP, it is important to understand the application of MLS across intervention approaches. To enhance the generalizability of the reliability results, videos of traditional gait-based and technology-based (i.e. Lokomat) intervention were rated. Aspects of MLSRI-20 utility based on ease and confidence of rating were evaluated via physiotherapist rater feedback.

METHOD

Participants

Participants were 18 children with CP in Gross Motor Function Classification System16 levels I to IV (age range 6–17y) who participated in one of three Lokomat clinical trials at Holland Bloorview Kids Rehabilitation Hospital in Toronto, Canada. Across studies, children completed at least one 8-week block of twice weekly traditional gait-based and/or Lokomat-based physiotherapy.17,18 Treating physiotherapists within the Lokomat studies were encouraged to use a motor learning approach and received MLS training. Videos of at least two 30- to 60-minute treatment sessions were recorded for each child to permit documentation of the intervention components within the Lokomat studies. These videos were eligible for use in the reliability study. The research ethics boards at Holland Bloorview Kids Rehabilitation Hospital and the University of Toronto approved the reliability study protocol. Written consent was obtained from the parent, child, and treating physiotherapist to use their Lokomat videos in the reliability study.

Physiotherapist rater recruitment and training

Four physiotherapists were randomly selected from eight interested and eligible physiotherapists employed at Holland Bloorview Kids Rehabilitation Hospital. Physiotherapists were excluded if they worked as a treating physiotherapist in the Lokomat studies to avoid raters scoring videos of themselves. All raters underwent two classroom-based training sessions to learn how to administer the MLSRI-20. Training was supported by the MLSRI-20 Instruction Manual15 and the MLS Online Training Program19 developed by the authors. After passing an MLSRI-20 criterion test, raters were randomly paired by a research assistant. They were not aware of their pairing and were asked not to speak about the videos with the other raters to avoid influencing MLSRI-20 scores.

Video selection and rating process

Videos were screened by the research assistant using a screening checklist that: (1) evaluated the audio and visual quality of the videos; (2) indicated the treatment approach; and (3) provided deidentified codes for the treating physiotherapist and child. This process ensured that there was no selection bias towards the children or treating physiotherapists. The first author then determined which videos were eligible for the reliability study, based on the checklist. The main inclusion criteria were audio and video quality (i.e. physiotherapist and child were heard and seen), with a secondary consideration of having a balanced number of traditional and Lokomat interventions and a variety of treating physiotherapists and children.

A sample of 30 videos adequately powers a reliability study.20 Thus, 15 videos were randomly assigned to each rater pair, for a total of 30 unique videos to evaluate interrater reliability. For intrarater reliability, the research assistant assigned one rater within the pair to rate the same video a second time (i.e. seven to eight videos per rater). Raters did not have access to previous MLSRI-20 rating forms when completing their second rating. The minimum time permitted between the first and repeat video ratings was 1 week, with at least two other videos rated in between.
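The allocation described above can be sketched in a few lines of Python. This is a hedged illustration, not the procedure the research assistant followed: the pair labels, the pairing of raters A/B and C/D, the fixed seed, and the alternating rule for choosing the repeat rater are all assumptions made for the example.

```python
import random

# Hypothetical pairing of the four raters (the source does not state who was paired).
RATER_PAIRS = {"pair_1": ("A", "B"), "pair_2": ("C", "D")}


def assign_videos(video_ids, seed=0):
    """Randomly split videos between two rater pairs and pick a repeat rater.

    Returns (videos_by_pair, repeat_rater_by_video).
    Assumption: repeat ratings alternate between the two raters in a pair,
    which yields eight and seven repeat videos per rater for 15 videos.
    """
    rng = random.Random(seed)       # seeded so the allocation is reproducible
    shuffled = list(video_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    videos_by_pair = {"pair_1": shuffled[:half], "pair_2": shuffled[half:]}

    repeat_rater_by_video = {}
    for pair, videos in videos_by_pair.items():
        raters = RATER_PAIRS[pair]
        for index, video in enumerate(videos):
            repeat_rater_by_video[video] = raters[index % 2]
    return videos_by_pair, repeat_rater_by_video


videos_by_pair, repeat_rater_by_video = assign_videos(range(1, 31))
```

With 30 videos, each pair receives 15 unique videos, and each rater is assigned seven or eight repeat ratings, consistent with the numbers reported in the text.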

The rater watched the video-recorded intervention, stopping the video to record observations on the MLSRI-20 Worksheet.3 Observations included documenting each MLS observed and their frequency of use, tasks observed, task order, and task variations. After completing the video, the rater translated worksheet observations into item scores on the MLSRI-20 Score Form. The rater also completed a feedback form indicating the amount of time spent rating the video, and their ease and confidence in scoring each of the three MLSRI-20 categories on a 10cm visual analog scale, where 0cm was ‘very difficult’ and 10cm was ‘no trouble at all’. Each category contained a comment section where raters could elaborate on challenges.

Statistical analysis

Analysis was conducted using MedCalc Statistical Software (version 12.3.0.0; MedCalc Software, Ostend, Belgium). Descriptive statistics were calculated and distribution analyses visually evaluated. While individual item scores are not tallied for a total score when using the MLSRI-20, the score for one item often influences the score of another. As such, analyzing the individual items alone would not capture the overall reliability of the MLSRI-20. The overall consistency of rating the collective group of MLS is required for the MLSRI-20’s clinical and research use. Therefore, interrater and intrarater reliability were determined for the total score as the primary indicators of rating consistency. However, reliability was also estimated for individual item scores to identify items that might require changes in rating procedure or item description.

Intraclass correlation coefficients (ICCs)21 were the primary reliability statistic, supplemented by Bland–Altman plots22 and coefficients of variation.23 Together, these provided estimates of the association and agreement between ratings, evaluated the influence of the magnitude of the scores on the agreement, and assessed the relative and absolute variation between ratings.24 The ICC (2,1) assumes that the same set of raters, randomly selected from a larger group of raters, rates each video, and was selected with the goal of generalizing results beyond this study.24 The associated 95% confidence intervals (CI) were calculated. An ICC of 0.90 or greater indicates excellent reliability, 0.75 to 0.90 signifies good reliability, 0.50 to 0.75 signifies moderate reliability, and less than 0.50 indicates poor reliability.21 For the MLSRI-20 to be reliable in clinical and research-based settings, the following a priori targets were set as indicators of acceptable reliability: a minimum total score ICC of 0.75 with a minimum 95% CI lower bound of 0.60, and a coefficient of variation of less than 10%.25 Secondary analysis involved evaluating individual item ICCs.
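For readers unfamiliar with the ICC (2,1), the sketch below computes it from the mean squares of a two-way analysis of variance (two-way random effects, absolute agreement, single rater). It is a minimal illustration and not the MedCalc procedure used in the study; the function name `icc_2_1` and the small data set are assumptions made for the example and are not study data.

```python
def icc_2_1(ratings):
    """ICC(2,1): two-way random effects, absolute agreement, single rater.

    ratings: list of rows, one row per rated video, one column per rater.
    """
    n = len(ratings)       # number of videos
    k = len(ratings[0])    # number of raters
    grand_mean = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Sums of squares for videos (rows), raters (columns), and residual error.
    ss_rows = k * sum((m - grand_mean) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand_mean) ** 2 for m in col_means)
    ss_total = sum((x - grand_mean) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Illustrative data: six videos (rows) scored by four raters (columns).
example = [
    [9, 2, 5, 8],
    [6, 1, 3, 2],
    [8, 4, 6, 8],
    [7, 1, 2, 6],
    [10, 5, 6, 9],
    [6, 2, 4, 7],
]
print(round(icc_2_1(example), 2))  # 0.29
```

Because the rater mean square enters the denominator, systematic differences between raters lower the ICC (2,1), which is why it reflects absolute agreement rather than consistency alone.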

Descriptive statistics evaluated the utility of the MLSRI-20. Interrater and intrarater differences in physiotherapist rater ‘confidence’ and ‘ease’ of rating the MLSRI-20 were analyzed using paired t-tests. Pearson correlation coefficients evaluated associations between MLSRI-20 category scores and ease and confidence when rating each category, and assessed associations between MLSRI-20 total scores and time to rate each video. Rater feedback was grouped according to MLSRI-20 category and scrutinized alongside individual item ICCs to elucidate specific rating challenges.

RESULTS

Thirty videos of 18 children were rated. Twelve children had two treatment session videos included in the study. Sixteen videos were of traditional physiotherapy interventions, while 14 were of Lokomat-based interventions. There were 11 treating physiotherapists in the 30 videos. Physiotherapist raters had 3 to 18 years (mean 13y) of clinical experience in inpatient and outpatient pediatric settings.

The MLSRI-20 total mean (SD) scores for interrater data were 27.00 (6.47) for the first rater pair and 27.17 (3.98) for the second rater pair, out of 80 possible points (range 17–40). The MLSRI-20 total mean (SD) scores for intrarater data were 29.71 (4.94), 27.25 (2.82), 25.50 (5.81), and 26.86 (4.50) for raters A through D respectively (range 16–38). While the histograms (not shown) of the total scores were normally distributed, histograms of the individual items were not. There was no difference in MLSRI-20 mean total scores between traditional physiotherapy and Lokomat interventions.

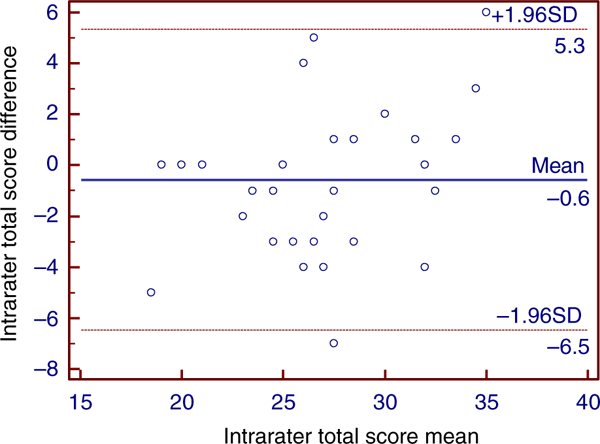

The ICC for interrater reliability of the MLSRI-20 total score was 0.78 with a 95% CI of 0.53 to 0.89, while the ICC for intrarater reliability was 0.89 with a 95% CI of 0.76 to 0.95. Tables SI and SII (online supporting information) outline interrater and intrarater reliability for the individual items. The total score coefficients of variation were 11.8% and 7.8% for the interrater and intrarater scenarios respectively. The Bland-Altman plot for interrater reliability did not demonstrate a scoring bias for MLSRI-20 total scores. The plot for intrarater reliability suggested a slight pattern toward greater differences in ratings as MLS use increased (higher scores; Fig. 1). For individual items, interrater ICCs were less than 0.50 for six of 10 items in ‘What the therapist says’ and three of five items in ‘How the practice is organized’ (Table SI), while intrarater ICCs (Table SII) did not identify any issues in ‘How the practice is organized’ and issues in only three items in ‘What the therapist says’.

Figure 1: Bland–Altman plot of intrarater Motor Learning Strategies Rating Instrument-20 total scores.
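The quantities behind a Bland–Altman plot can be sketched as follows. This is an illustration under stated assumptions rather than the analysis run in MedCalc: the function name `bland_altman` and the example scores are hypothetical, and the limits of agreement use the conventional mean difference ± 1.96 standard deviations.

```python
from statistics import mean, stdev


def bland_altman(first_ratings, second_ratings):
    """Return the points and summary lines of a Bland-Altman plot.

    Each point is (mean of the two ratings, difference between them).
    The bias is the mean difference; the 95% limits of agreement are
    bias +/- 1.96 * SD of the differences.
    """
    differences = [a - b for a, b in zip(first_ratings, second_ratings)]
    pair_means = [(a + b) / 2 for a, b in zip(first_ratings, second_ratings)]
    bias = mean(differences)
    sd_diff = stdev(differences)
    return {
        "points": list(zip(pair_means, differences)),
        "bias": bias,
        "lower_limit": bias - 1.96 * sd_diff,
        "upper_limit": bias + 1.96 * sd_diff,
    }


# Hypothetical first and repeat total scores for four videos (not study data).
first = [20, 25, 30, 35]
repeat = [22, 24, 31, 33]
summary = bland_altman(first, repeat)
print(summary["bias"], summary["lower_limit"], summary["upper_limit"])
```

Plotting the differences against the pair means is what allows a pattern such as wider differences at higher total scores to be seen.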

Visual analogue scale results and physiotherapist rater comments from the feedback forms are summarized in Table I. There were no interrater differences in rating ease (p=0.91) or confidence (p=0.53), and no intrarater differences in rating ease (p=0.83) or confidence (p=0.13). The mean session video length was 34.0 minutes (SD 6.8; range 21.0–53.0). The mean time to rate a video was 77.1 minutes (SD 27.3; range 40–160). There was no within-rater difference when comparing time to rate a video the first and second time (mean difference −4.3min, p=0.28) or time to rate the same video between raters (mean difference −2.5min, p=0.77). There was a significant relationship (r=0.97, p<0.01) between the ease and confidence in rating videos. While there was no association between time to rate a video and the MLSRI-20 total score (r=0.16, p=0.12), there was a weak inverse relationship between time to rate a video and rating ease or confidence (maximum r=−0.35, p<0.01).

Table I: Overview of Motor Learning Strategies Rating Instrument-20 utility based on physiotherapist rater feedback

| MLSRI-20 category | Rating easeᵃ, mean (SD) | Rating easeᵃ, range | Rating confidenceᵃ, mean (SD) | Rating confidenceᵃ, range | Category scoreᵇ, mean (SD) | Category scoreᵇ, range | Comments, videos (n=30) | Comments, most frequently reported challenges |
|---|---|---|---|---|---|---|---|---|
| ‘What the therapist says’ | 6.5 (2.1) | 1.3–10.0 | 6.3 (2.3) | 0.9–10.0 | 9.5 (3.2) | 3–14 | 20 | Hearing what the physiotherapist said (nine comments); distinguishing between instructions and feedback (six comments) |
| ‘What the therapist does’ | 7.5 (1.7) | 1.9–10.0 | 7.8 (1.6) | 3.0–10.0 | 5.5 (1.6) | 3–9 | 11 | Scoring ‘provides environment where errors are a part of learning’ (five comments); determining amount of ‘physical guidance’ provided by Lokomat (three comments) |
| ‘How the practice is organized’ | 7.4 (2.4) | 0.7–10.0 | 7.0 (2.6) | 0.7–10.0 | 12.7 (2.4) | 7–19 | 9 | Defining the tasks within a session (four comments) |

ᵃVisual analogue scale scores out of 10.

ᵇCategory score out of 40 for ‘What the therapist says’, 20 for ‘What the therapist does’, and 20 for ‘How the practice is organized’.

There was no association between ‘What the therapist says’ category scores and rating ease or confidence (maximum r=0.06, minimum p<0.76). There was a weak inverse relationship between ‘What the therapist does’ or ‘How the practice is organized’ category scores and rating ease or confidence (maximum r=−0.36, minimum p<0.05). Rater feedback highlighted two types of rating concerns: video-related and measure-related challenges. Video-related challenges included difficulties distinguishing between physiotherapist, physiotherapist assistant, and/or caregiver verbalizations, interference of background noise, and difficulties determining how much physical guidance the Lokomat provided. Measure-related challenges included difficulty in categorizing verbalizations (e.g. instruction vs feedback), delineating the tasks that comprised the intervention, and establishing if the physiotherapist provided opportunities for error.

DISCUSSION

The MLSRI-20 takes the complex MLS construct and, through a combination of observations and clinical judgment, enables a rater to convert independent observations into objective measurements. The MLSRI-20 demonstrates potential to fill a notable void in documenting and understanding motor learning-based physiotherapy interventions. It can be used by trained physiotherapist raters to reliably measure MLS use in physiotherapy interventions for children with CP. While the ICCs for interrater and intrarater reliability of MLSRI-20 total scores fall within acceptable limits, item scores should be interpreted with the knowledge that the CI lower bound and coefficients of variation were slightly outside of a priori targets for interrater scoring. However, because the MLSRI-20 is an assessment and not an outcome measure (i.e. not designed to measure small increments of change in use of MLS) the extent of interrater scoring variation is acceptable. As expected, interrater and intrarater reliability of individual items was lower than for the total scores. This information, in combination with rater feedback, will be used to augment MLSRI-20 training with the goal of improving reliability.
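The comparison between the reported total score results and the a priori targets can be made explicit with a short check. The Python sketch below uses the ICC, CI lower bound, and coefficient of variation values reported in the Results; the class and function names are hypothetical and exist only for this illustration.

```python
from dataclasses import dataclass


@dataclass
class ReliabilityResult:
    icc: float
    ci_lower: float      # lower bound of the 95% confidence interval
    cv_percent: float    # coefficient of variation, in percent


# A priori targets stated in the statistical analysis section.
MIN_ICC = 0.75
MIN_CI_LOWER = 0.60
MAX_CV_PERCENT = 10.0


def check_targets(result):
    """Report which a priori targets a reliability result meets."""
    return {
        "icc": result.icc >= MIN_ICC,
        "ci_lower": result.ci_lower >= MIN_CI_LOWER,
        "cv": result.cv_percent < MAX_CV_PERCENT,
    }


# Total score values reported in the Results section.
interrater = ReliabilityResult(icc=0.78, ci_lower=0.53, cv_percent=11.8)
intrarater = ReliabilityResult(icc=0.89, ci_lower=0.76, cv_percent=7.8)

print(check_targets(interrater))  # {'icc': True, 'ci_lower': False, 'cv': False}
print(check_targets(intrarater))  # {'icc': True, 'ci_lower': True, 'cv': True}
```

The interrater ICC meets its target while the CI lower bound and coefficient of variation do not, which is the pattern described in the paragraph above; the intrarater results meet all three targets.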

Video assessment can be used to evaluate clinical interactions in pediatric rehabilitation,26,27 and while it can be time-intensive and resource-intensive, it allows the rater to ‘revisit’ and confirm their observations. The MLSRI-20 Instruction Manual provides consistent scoring rules for rating each item. However, given the variability of clinical interactions and decreased awareness of the treating physiotherapist’s intentions, raters must make inferences regarding the situational context of observed actions, which introduces an element of interpretation to the scoring process. Physiotherapist raters reported the most difficulty and least confidence in rating ‘What the therapist says’ (mean scores 6.5 and 6.3 out of 10 respectively), and indicated greater ease of scoring interventions with fewer verbalizations. Ratings became increasingly difficult when multiple people were present because raters had to distinguish between physiotherapist verbalizations and comments made by others. Additionally, verbalizations can be rated under several items within the MLSRI-20. Therefore, the physiotherapist rater had to unpack the content of each verbalization (e.g. ‘You are not straightening your knee enough’ is rated as ‘telling’ rather than ‘asking’, feedback related to ‘movement performance’ and ‘what was done poorly’), which may have led to oversights or errors in rating.

Distinguishing between instruction and feedback was challenging when verbalizations occurred during a task. While the MLSRI-20 Instruction Manual addresses this distinction, there is opportunity to clarify existing instructions to improve understanding of the scoring rules. Video scoring examples within the MLS Online Training Program could address potential oversights or rating challenges by guiding the rater through the observation and scoring process in a systematic manner and clarifying scenarios that are difficult to capture in written format.

Rater feedback on the ‘How the practice is organized’ items focused on difficulty defining the tasks within the intervention. The Instruction Manual states that a task is ‘a therapy-based activity that has a beginning, middle, and end’ and can consist of ‘a number of motor skills’ that are ‘functional’ or ‘therapeutic’ in nature.15 The rater considers the context and focus of the therapist’s verbalizations when deciding if a change in task is a task variation or an entirely new task. Thus, the rater’s clinical judgement and experience can affect how tasks are defined. While raters did not identify task delineation as a challenge when rating other categories, it should affect how all individual items are scored. There is opportunity to eliminate rater subjectivity by having the treating physiotherapist outline the tasks, task variations, and task order with an intervention log for each recorded session.

LIMITATIONS

Estimates of reliability only pertain to the study sample and treatment setting.24 However, the investigative team aimed to create generalizable results by using two physiotherapist rater pairs and two treatment approaches. There is no clinical value associated with the MLSRI-20 total score, which limits the ability to interpret reliability solely based on total score. While total score ICCs allow evaluation of the measure as a whole, analysis is not complete without examining individual ICCs and rater feedback. Screening for video quality did not eliminate difficulties hearing physiotherapist verbalizations. However, video rating is required as observation and scoring are too complicated to be completed in real time.3 Occasional video quality issues are part of scoring, particularly in clinical settings where there is less control over video recording. Since audio challenges presumably affect all physiotherapist raters equally, their impact on reliability should be minimal. While the MLS Online Training Program allowed raters to review MLS definitions/video examples, the MLSRI-20 Instruction Manual was only available in print. Given the clinical nuances associated with scoring, access to an online module with video scoring examples might have improved MLSRI-20 rating accuracy and consistency.

FUTURE IMPLICATIONS

The MLSRI-20 can be used to identify and compare MLS in intervention-based studies for children with CP. Exploration of optimal MLSRI-20 scores and the upper limits of the total score in children with CP is required. Current results will inform the development of an MLSRI-20 module within the MLS Online Training Program, ensuring all users receive comprehensive, standardized training. Because the MLSRI-20 was also designed for self-reflection, future research comparing its reliability when used for self-reflection with independent raters may provide insight into the relationship between observed MLS and intentional MLS use. Exploring physiotherapists’ perspectives and clinical decision-making when using MLS will further clarify treating physiotherapists’ intentions. Finally, evaluating MLSRI-20 reliability and validity in other motor skills-based interventions (e.g. occupational and speech therapy) may support multidisciplinary use of the assessment.

Supplementary Material

Table SI: Motor Learning Strategies Rating Instrument-20 interrater reliability by individual item within categories

Table SII: Motor Learning Strategies Rating Instrument-20 intrarater reliability by individual item within categories

Appendix S1: The Motor Learning Strategies Rating Instrument-20 Score Form creates a profile of motor learning strategies use for a treatment session.

What this paper adds.

The Motor Learning Strategies Rating Instrument (MLSRI-20) is reliable for use by trained physiotherapist raters.

Measuring motor learning strategies can identify active ‘ingredients’ in physiotherapy interventions for children with cerebral palsy.

The MLSRI-20 promotes a common language in motor learning.

ACKNOWLEDGEMENTS

This study was funded by the Holland Bloorview Children’s Foundation Chair in Pediatric Rehabilitation. The authors would like to thank research support staff, Gloria Lee and Emily Brewer, and the physiotherapist raters for their contributions.

ABBREVIATIONS

- ICC: Intraclass correlation coefficient

- MLS: Motor learning strategies

- MLSRI-20: Motor Learning Strategies Rating Instrument

REFERENCES

1. Valvano J. Activity-focused motor interventions for children with neurological conditions. Phys Occup Ther Pediatr 2004; 24: 79–107.

2. Schmidt RA, Wrisberg CA. Motor Learning and Performance. Windsor: Human Kinetics, 2004.

3. Levac D, Missiuna C, Wishart L, DeMatteo C, Wright V. Documenting the content of physical therapy for children with acquired brain injury: Development and validation of the Motor Learning Strategy Rating Instrument. Phys Ther 2011; 91: 689–99.

4. Diaz Heijtz R, Forssberg H. Translational studies exploring neuroplasticity associated with motor skill learning and the regulatory role of the dopamine system. Dev Med Child Neurol 2015; 57(Suppl. 2): 10–14.

5. Dayan E, Cohen LG. Neuroplasticity subserving motor skill learning. Neuron 2011; 72: 443–54.

6. Krakauer JW. Motor learning: its relevance to stroke recovery and neurorehabilitation. Curr Opin Neurol 2006; 19: 84–90.

7. Whyte J, Hart T. It’s more than a black box; it’s a Russian doll: defining rehabilitation treatments. Am J Phys Med Rehabil 2003; 82: 639–52.

8. Beveridge B, Feltracco D, Struyf J, et al. “You gotta try it all”: Parents’ experiences with robotic gait training for their children with cerebral palsy. Phys Occup Ther Pediatr 2015; 35: 327–41.

9. Winkels DG, Kottink AI, Temmink RA, Nijlant JM, Buurke JH. Wii™-habilitation of upper extremity function in children with cerebral palsy. An explorative study. Dev Neurorehabil 2013; 16: 44–51.

10. Pool D, Blackmore AM, Bear N, Valentine J. Effects of short-term daily community walk aide use on children with unilateral spastic cerebral palsy. Pediatr Phys Ther 2014; 26: 308–17.

11. Meyer-Heim A, Ammann-Reiffer C, Schmartz A, et al. Improvement of walking abilities after robotic-assisted locomotion training in children with cerebral palsy. Arch Dis Child 2009; 94: 615–20.

12. Borggraefe I, Schaefer JS, Klaiber M, et al. Robotic-assisted treadmill therapy improves walking and standing performance in children and adolescents with cerebral palsy. Eur J Paediatr Neurol 2010; 14: 496–502.

13. Ammann-Reiffer C, Bastiaenen C, Meyer-Heim A, van Hedel HJ. Effectiveness of robot-assisted gait training in children with cerebral palsy: a bicenter, pragmatic, randomized, cross-over trial (PeLoGAIT). BMC Pediatr 2017; 17: 64.

14. Kamath T, Pfeifer M, Banerjee-Guenette P, et al. Reliability of the Motor Learning Strategy Rating Instrument for children and youth with acquired brain injury. Phys Occup Ther Pediatr 2012; 32: 288–305.

15. Ryan JL, Wright FV, Levac DE. Motor Learning Strategies Rating Instrument Instruction Manual. Toronto: Holland Bloorview Kids Rehabilitation Hospital, 2016.

16. Palisano R, Rosenbaum P, Walter S, Russell D, Wood E, Galuppi B. Development and reliability of a system to classify gross motor function in children with cerebral palsy. Dev Med Child Neurol 1997; 39: 214–23.

17. Hilderley AJ, Fehlings D, Lee GW, Wright FV. Comparison of a robotic-assisted gait training program with a program of functional gait training for children with cerebral palsy: design and methods of a two group randomized controlled cross-over trial. Springerplus 2016; 5: 1886.

18. Wiart L, Rosychuk RJ, Wright FV. Evaluation of the effectiveness of robotic gait training and gait-focused physical therapy programs for children and youth with cerebral palsy: a mixed methods RCT. BMC Neurol 2016; 16: 86.

19. Ryan JL, Wright FV, Levac DE. Motor Learning Strategies Online Training Program. Toronto: Holland Bloorview Kids Rehabilitation Hospital, 2017.

20. Donner A, Eliasziw M. Sample size requirements for reliability studies. Stat Med 1987; 6: 441–8.

21. Koo TK, Li MY. A guideline of selecting and reporting intraclass correlation coefficients for reliability research. J Chiropr Med 2016; 15: 155–63.

22. Bland JM, Altman DG. Measuring agreement in method comparison studies. Stat Methods Med Res 1999; 8: 135–60.

23. Thompson P, Beath T, Bell J, et al. Test retest reliability of the 10-metre fast walk test and 6-minute walk test in ambulatory school-aged children with cerebral palsy. Dev Med Child Neurol 2008; 50: 370–6.

24. Portney LG, Watkins MP. Foundations of Clinical Research: Applications to Practice. Philadelphia: FA Davis Company, 2015.

25. Atkinson G, Nevill AM. Statistical methods for assessing measurement error (reliability) in variables relevant to sports medicine. Sports Med 1998; 26: 217–38.

26. Di Rezze B, Law M, Eva K, Pollock N, Gorter JW. Development of a generic fidelity measure for rehabilitation intervention research for children with physical disabilities. Dev Med Child Neurol 2013; 55: 737–44.

27. King G, Chiarello LA, Thompson L, et al. Development of an observational measure of therapy engagement for pediatric rehabilitation. Disabil Rehabil 2017: 10.1080/09638288.2017.1375031. [Epub ahead of print]
