Abstract

Course-based undergraduate research experiences (CUREs) provide students with opportunities to engage in research within a course. Aspects of CURE design, such as providing students opportunities to make discoveries, collaborate, engage in relevant work, and iterate to solve problems, are thought to contribute to outcome achievement in CUREs. Yet how each of these elements contributes to specific outcomes is largely unexplored. This gap is problematic because, when designing or teaching our courses, we may unintentionally underemphasize aspects of CURE design that allow students to achieve highly valued outcomes. In this work, we take a qualitative approach and leverage unique circumstances in two offerings of a CURE to investigate how these design elements influence outcome achievement. In one offering, students experienced many research challenges that increased their engagement in iteration; this level of research challenge ultimately prevented achievement of predefined research goals. In the other offering, students experienced fewer research challenges and ultimately achieved predefined research goals. Our results suggest that, when students encounter research challenges and engage in iteration, they have the potential to increase their ability to navigate scientific obstacles. In addition, our results suggest roles for collaboration and autonomy, or directing one’s own work, in outcome achievement.

INTRODUCTION

Course-based undergraduate research experiences (CUREs) are championed as a scalable way to expose students to research and provide opportunities for students to achieve a variety of beneficial outcomes. Documented outcomes of CURE participation include increases in students’ science self-efficacy, research skills, and content knowledge, as well as increased intent to persist in science careers (reviewed in Corwin et al., 2015a; e.g., Rodenbusch et al., 2016). Other proposed outcomes of CUREs that have been documented in a few studies, yet merit further investigation, include increased collaboration skills, project ownership, science identity, access to faculty and mentoring functions, ability to deal with scientific obstacles, and understanding of the nature of science (NOS; reviewed in Corwin et al., 2015a).

Arguments for why and how CUREs facilitate outcome achievement highlight the role of five design features of CUREs: discovery, in which students have opportunities to discover something unknown to themselves and the instructor during the course; relevance, in which the research is relevant to a community outside the classroom; iteration, in which students have opportunities to evaluate what went wrong or what could be improved when they encounter obstacles and to troubleshoot, revise, and repeat their work to fix mistakes or address problems; collaboration, in which students work together with classmates and instructors toward a common scientific research goal; and use of science practices, in which students engage in the practices and processes that scientists use when conducting their work (e.g., asking questions, proposing hypotheses, selecting methods, gathering and analyzing data, and developing and critiquing interpretations and arguments). Each design feature is predicted to act both independently and through interactions with other features to contribute to achievement of CURE outcomes (Auchincloss et al., 2014). The Laboratory Course Assessment Survey (LCAS) measures students’ opportunities to pursue relevant scientific discoveries that are novel and meaningful to a community outside the classroom, to iterate to solve problems and improve their work, and to collaborate to achieve a common scientific goal (Corwin et al., 2015b). Using this survey, Corwin and colleagues (2015b) found that CUREs offer students more opportunities to make relevant discoveries and to engage in iteration than traditional laboratory courses do. Furthermore, work from this group indicates that opportunities for relevant discovery, iteration, and collaboration each contribute to students’ development of project ownership, further supporting the argument that these features play a role in achieving CURE outcomes (Corwin et al., 2018).

Until recently, the CURE community has primarily focused CURE design on involving students in making relevant discoveries, as this was seen as the most beneficial aspect of CURE involvement. Several groups have also put this forward as the defining feature of CUREs (Brownell and Kloser, 2015; Corwin et al., 2015b; Rowland et al., 2016). Indeed, making relevant discoveries has been posited to increase students’ project ownership and potentially their identity as scientists, ultimately contributing to increased persistence in science, technology, engineering, and mathematics (STEM; Hanauer and Dolan, 2014; Corwin et al., 2018). With this focus on relevant discoveries, we, as instructors, may be tempted to ensure that students succeed in discovering something novel by scaffolding our CUREs so that students are assured of obtaining meaningful findings related to a scientific question. To do this, we may select technically simple projects with a higher probability of student progress (as recommended by Hatfull et al., 2006). We may also troubleshoot anticipated issues before the course, or independently of students during the course, to help students progress toward a research goal. This practice may indeed result in positive outcomes, such as advancing the research in question and promoting a sense of achievement and self-efficacy among students via mastery experiences (Schunk and Pajares, 2009). However, safeguarding students’ success by scaffolding CUREs to ensure scientific progress could come at the expense of other design features and their related outcomes. For example, designing a CURE to avoid obstacles or alleviate challenges may eliminate opportunities for iteration, in which students engage in their own processes of troubleshooting and reflecting on their work after encountering a scientific obstacle.

Iteration is important in science because it not only involves scientists in the challenging metacognitive process of troubleshooting and evaluating next steps when things do not go as planned but also creates space for scientists to engage deeply with a project, investing time, energy, thought, and effort in considering how to advance project goals and deepening their commitment to the project. Lost opportunities for iteration may compromise achievement of outcomes that result from engagement in iterative processes. Indeed, iteration contributed to project ownership above and beyond other CURE design features in a national sample of laboratory courses (Corwin et al., 2018). Furthermore, engaging students directly with unexpected setbacks or results during iteration may improve their ability to troubleshoot and “navigate scientific obstacles” (Thiry et al., 2012). Having the patience to deal with setbacks and failures in research and to navigate scientific obstacles is broadly recognized as a valuable skill and even a necessary disposition among scientists (Lopatto et al., 2008; Laursen et al., 2010; Harsh et al., 2011; Thiry et al., 2012; Andrews and Lemons, 2015). In addition, the ability to navigate obstacles may be a key ingredient in the persistence of early-career scientists. Harsh and colleagues (2011) describe how students underrepresented in STEM who learned to accept failure as part of the normal scientific process during undergraduate research experiences (UREs) were more likely to persist. This evidence suggests that we may need to allow students to experience challenges and “scientific failures” and give them time and space to iterate in order to develop the ability to navigate scientific obstacles. Yet, even in many CUREs, students are often not given time and space for iteration because of the time constraints and schedule of the course. Without these opportunities, they may not improve their troubleshooting skills or increase their ability to navigate scientific obstacles.

Engaging students in iteration may also help students achieve another desired outcome: development of greater knowledge of the NOS, a highly valued outcome for majors and nonmajors alike (Patel et al., 2009; Thiry et al., 2012; Brownell and Kloser, 2015). Specifically, iteration may provide direct experience with the fact that the scientific method is much more mutable, cyclic, and iterative than often presented. This may help students to better understand the empirical NOS and to see the “myth” of the scientific method, described by Lederman and colleagues (2002, p. 501) as the misconception that there is a “recipe-like stepwise procedure that all scientists follow when doing science.” Iteration may also better engage students in the creative and imaginative aspects of science (Lederman et al., 2002, 2013; Osborne et al., 2003; Thiry et al., 2012; Brownell and Kloser, 2015). Despite these predictions, the links between students’ encounters with scientific obstacles, opportunities for iteration, and student outcomes have not been empirically described.

We used a qualitative approach to address the following two questions:

What are the experiences and outcomes of students who encounter many scientific obstacles and ultimately do not achieve instructor-defined research goals (i.e., fail to make relevant discoveries) within a CURE?

What are the reported relationships between encountering scientific obstacles, proposed CURE design elements, and student outcomes in a CURE setting?

We addressed these questions within the context of a single CURE, where we leveraged naturally arising circumstances present in two offerings. In the first offering, which we call the “high-challenge” offering, students experienced a high incidence of scientific obstacles, iterated continually, and only 11% (two out of 18) of students achieved predefined research goals set for the course (i.e., a majority did not make novel scientific discoveries that could advance their understanding of their scientific research questions). This contrasted with the second offering, which we call the “low-challenge” offering, in which students encountered fewer obstacles, did fewer iterations, and all students (16 out of 16) achieved predefined research goals (i.e., they did make novel scientific discoveries in direct service of their questions). This circumstance allowed us to examine the role of iteration in a CURE for students who experienced success relatively quickly and for students who did not achieve predefined research goals (i.e., did not achieve the “discovery” goals set out by instructors, namely, the majority of students in the high-challenge offering).

For each offering, we used the LCAS to examine students’ perceptions of course design, and we used open-ended survey questions and focus groups to characterize the challenges students encountered and their outcomes from course participation. We targeted the two outcomes discussed earlier, the ability to navigate scientific obstacles and understanding of the NOS, because we hypothesized that these would be affected by students’ experience with obstacles and engagement in iteration. Our results suggest roles for iteration in outcome achievement and call into question whether designing courses to ensure students’ scientific success is necessary to achieve positive outcomes.

METHODS

This study was conducted with approval from the Institutional Review Board for Human Subjects at the institution where this work was conducted (#16-1110).

Course Description and Study Participants

Seafood Forensics is a semester-long (15-week) CURE taught at a large, public, doctorate-granting (R1) southeastern university. The course is a lower-division elective that attracts first-, second-, and third-year students and enrolls between 15 and 20 students per offering; the offerings in this study attracted primarily second-year students (Table 1). It meets once per week for 4 hours in a teaching laboratory containing all the materials and equipment students need to perform their research. The course is highly structured. Instruction begins outside class with relevant reading assignments and online tutorials, which introduce students to the techniques they will use in the laboratory and to the relevance of their research. The instructors hold students accountable for reading assignments using online preclass quizzes (see the Supplemental Material for example class materials). In class, students engage in a variety of activities, including 1) leading discussions of primary literature relevant to their research projects, 2) conducting literature searches and reading information relevant to their studies, 3) working collaboratively on their study questions and designs, 4) actively conducting research relevant to their studies, 5) gathering materials for their studies (e.g., field trips to obtain seafood samples from local venues), and 6) constructing final papers or posters on their results. The relative time allocated to each activity is flexible and depends on what is needed to advance the research, but the vast majority of class time is dedicated to actively conducting experiments.

TABLE 1.

Participant demographicsᵃ

| | High-challenge offering (18 students): survey participants, n (%) | High-challenge offering: FG participants, n (%) | Low-challenge offering (16 students): survey participants, n (%) | Low-challenge offering: FG participants, n (%) | Biology UG | University UG |
|---|---|---|---|---|---|---|
| Participants | 17 | 8 | 15 | 15 | | |
| Race | | | | | | |
| White | 10 (59) | 5 (63) | 8 (53) | 8 (53) | 58.0% | 63% |
| Asian | 2 (12) | 1 (12) | 2 (13) | 2 (13) | 18.3% | 10% |
| Black | 5 (29) | 2 (25) | 2 (13) | 2 (13) | 6.4% | 8% |
| Multiracial | 0 (0) | 0 (0) | 2 (13) | 2 (13) | 4.1% | 4% |
| Unknown | 0 (0) | 0 (0) | 1 (7) | 1 (7) | 4.0% | 15% |
| Ethnicity | | | | | | |
| Not Hispanic | 17 (100) | 8 (100) | 14 (93) | 14 (93) | 91.4% | 92.5% |
| Hispanic | 0 (0) | 0 (0) | 1 (7) | 1 (7) | 8.6% | 7.5% |
| Gender | | | | | | |
| Female | 12 (71) | 6 (75) | 11 (73) | 11 (73) | 57% | 58% |
| Male | 5 (29) | 2 (25) | 3 (20) | 3 (20) | 43% | 42% |
| Genderqueer | 0 (0) | 0 (0) | 1 (7) | 1 (7) | n/a | n/a |
| Academic status | | | | | | |
| First year | 4 (24) | 1 (13) | 2 (13) | 2 (13) | n/a | n/a |
| Second year | 7 (41) | 5 (62) | 10 (67) | 10 (67) | n/a | n/a |
| Third year | 6 (35) | 2 (25) | 1 (7) | 1 (7) | n/a | n/a |
| Fourth year | 0 (0) | 0 (0) | 2 (13) | 2 (13) | n/a | n/a |

ᵃRace percentages do not sum to 100 because Hispanic was included as a race, not an ethnicity. UG, undergraduate population; FG, focus group; n/a, not available.

All work in the course is aimed at addressing the central research question: What is the frequency of seafood mislabeling in the local region? This question is relevant to the scientific community, as it addresses ecological questions related to the effects of harvesting on fish populations and the sustainability of the seafood industry. It is also relevant to the local community: seafood mislabeling may result in fish with higher levels of mercury being stocked in place of fish with lower levels of mercury (a health concern), and mislabeling can disguise where fish come from, leaving consumers unaware that they may be purchasing fish harvested via socially irresponsible means (Marko et al., 2004, 2014). Students in the class work to discover the species identity of samples collected from local venues through DNA barcoding (Willette et al., 2017): they extract DNA from their samples, amplify the cytochrome c oxidase gene, submit the amplified DNA to the local sequencing facility, and analyze the resulting chromatograms to identify their fish. Students work collaboratively as a class to collect and barcode their samples and to characterize the incidence of seafood mislabeling. Although class time is structured, students have flexibility to direct their own work and, when necessary, to iterate (revise and repeat their work) to correct errors or elucidate unexpected results. For example, if a DNA extraction fails, students have class time to troubleshoot and repeat the extraction until they succeed. Thus, the course offers opportunities for students to engage in all of the CURE design elements described earlier. However, due to the nature of research, students may engage in each element to varying degrees. For example, if all of a student’s DNA extractions work the first time, that student may not need to iterate to make progress. Conversely, if extractions do not work, students may engage in a continual process of iteration.
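The analysis step described above, identifying each sample and tallying how often the identity disagrees with its label, can be illustrated with a toy sketch. It is not the course’s pipeline: it assumes query and reference sequences that are already aligned to equal length, scores them by simple percent identity, and accepts a match only above a hypothetical 98% threshold. The species keys, the made-up 10-base sequences, and all function names are illustrative placeholders; real barcoding analyses typically search curated reference databases (e.g., BOLD or GenBank) with alignment tools.

```python
from typing import List, Optional, Tuple

# Hypothetical reference library: made-up, pre-aligned 10-base sequences.
# Real barcodes are far longer and are matched against curated databases.
REFERENCE_BARCODES = {
    "yellowfin tuna": "ACGTTAGCCA",
    "tilapia": "ACGATAGCTA",
    "red snapper": "TCGTTAGGCA",
}


def percent_identity(query: str, reference: str) -> float:
    """Percent of matching positions between two pre-aligned, equal-length sequences."""
    if len(query) != len(reference) or not query:
        raise ValueError("sequences must be non-empty and aligned to equal length")
    matches = sum(q == r for q, r in zip(query, reference))
    return 100.0 * matches / len(query)


def identify(query: str, threshold: float = 98.0) -> Optional[str]:
    """Return the best-matching reference species, or None if no match reaches threshold."""
    best_species: Optional[str] = None
    best_score = 0.0
    for species, reference in REFERENCE_BARCODES.items():
        score = percent_identity(query, reference)
        if score > best_score:
            best_species, best_score = species, score
    return best_species if best_score >= threshold else None


def mislabeling_rate(samples: List[Tuple[str, str]]) -> float:
    """Fraction of identified samples whose species differs from the label.

    Each sample is a (label, sequence) pair; samples that cannot be identified
    (e.g., failed extractions or poor reads) are excluded from the denominator.
    """
    identified = []
    for label, sequence in samples:
        species = identify(sequence)
        if species is not None:
            identified.append((label, species))
    if not identified:
        raise ValueError("no samples could be identified")
    mislabeled = sum(label != species for label, species in identified)
    return mislabeled / len(identified)


if __name__ == "__main__":
    samples = [
        ("red snapper", "ACGATAGCTA"),     # best match is tilapia: mislabeled
        ("yellowfin tuna", "ACGTTAGCCA"),  # matches its label
        ("yellowfin tuna", "GGGGGGGGGG"),  # no match above threshold: excluded
    ]
    print(mislabeling_rate(samples))  # 0.5
```

Excluding unidentified samples from the denominator is one design choice among several; it mirrors the situation in which a failed extraction or PCR yields no species identity at all.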

Instructional Philosophy and Deliberate Actions Regarding Research Challenges.

The instructors’ (B.S. and J.B.) philosophy regarding course challenges stems from their desire to allow students to experience science as an iterative process that involves failure. Much of their approach to destigmatizing failure depends on strong student–instructor relationships. The instructors believe that if they can break down the social barriers that often exist in the classroom between students and instructors (i.e., if they can increase instructor immediacy), their students will see the class as a collaborative team of investigators (including the instructors) who are all working toward a shared goal. They believe that this will help students feel comfortable and safe when trying new things and experiencing challenges in the laboratory. During the course, both instructors deliberately share stories about their experiences as scientists and the challenges they faced, and they even talk with students about their interests outside science. The instructors repeatedly give students permission and encouragement to direct their own work, often reminding them that they trust them and their ability to make their own decisions. While the instructors give guidance and provide feedback, they view their role as facilitators of learning rather than as sources of knowledge and instruction. Thus, students are rarely given detailed answers to questions or step-by-step instructions on how to deal with obstacles; instead, they are encouraged to find answers in the primary literature. Because students have access to journal articles containing PCR methods and know how to navigate scientific databases, the instructors feel that this approach facilitates students’ independent learning and use of scientific resources. Importantly, the instructors emphasize iteration as an exciting challenge and the key ingredient that makes science fun and results in learning. The instructors also share stories about, and lead discussions of, other aspects of science (e.g., funding, peer review, interactions with other scientists). Finally, the instructors express interest in the students and their professional goals, for example, by asking what they want to do professionally and what prior experiences they have had with science. The instructors have applied this philosophy in every offering of the course, including the two offerings described in this work.

Importantly, when students experienced research challenges and obstacles in the two offerings described here (which were far more frequent in the first offering), the instructors talked with them informally (individually, in groups, or as a class) about the value of failure in science. These discussions focused on coping with, and indeed embracing, failure and on how failure is a daily part of science that creates opportunities to learn and advance. The instructors also modeled positive responses to failure, for example, by remaining optimistic when working through potential explanations and solutions and by discussing and demonstrating how they would troubleshoot research challenges. Students were frequently asked to reflect in their lab notebooks when a particular barcoding step did not work and to review the literature for potential solutions. Finally, the instructors often told stories about their own experiences with scientific failure, both as students and as scientists. The instructors predicted that these actions would help shape students’ views of scientific challenges and “failures” as opportunities to learn and grow. Outcomes of these actions are reflected in the Instructor Actions section of the Results.

Course Offerings and Participants.

Students in the Spring 2016 (high-challenge) and Spring 2017 (low-challenge) offerings of Seafood Forensics participated in this study. The class is a biology majors’ elective open to any biology major who has completed introductory biology. Both offerings were cotaught by the same two instructors, who taught together throughout each offering, and the offerings were similarly formatted. The only notable difference between the two offerings was that students in the low-challenge offering were provided with a different PCR protocol than students in the high-challenge offering. The three main changes regarding the protocol were as follows:

1. A published primer cocktail was used in the PCRs in the low-challenge offering (Willette et al., 2017).

2. Students in the high-challenge offering selected primarily cooked fish for their work, whereas students in the low-challenge offering sampled from sushi restaurants and grocery stores, so their samples were not cooked before DNA extraction.

3. The reagents were changed from the high- to the low-challenge offering. In the high-challenge offering, students made their own “master mix,” which required them to add each PCR reagent individually (nucleotides, Taq polymerase, buffer, etc.). In the low-challenge offering, students were provided with small beads that contained the PCR reagents, leaving less room for pipetting error (Willette et al., 2017).

At the start of both offerings, students were told that they were expected to collect seafood samples, extract DNA from the samples, amplify the DNA using PCR, and submit the amplified DNA for sequencing to obtain the identity of their fish. Although these expectations for how students would contribute to the research were explicit in both offerings, students were not graded on successful completion of these tasks but rather on the quality of effort they put forth in their research, keeping a detailed scientific notebook, actively engaging in the science each week, and learning about content related to their research by reading scientific articles with topics and methods relevant to their work. Grades in the class were based on participation in lab exercises (35%), quizzes (10%), a midterm that included questions on the content students needed to know to conduct their research (20%), a final paper detailing the results and findings of their research (20%), and a final presentation on their findings (15%).
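The grading scheme above is a weighted average, which can be sketched as follows. This assumes each component is scored on a 0 to 100 scale (the article does not state the scale); the dictionary keys and the example scores are hypothetical.

```python
import math
from typing import Dict

# Weights from the grading scheme described above (they sum to 100%).
WEIGHTS: Dict[str, float] = {
    "lab_participation": 0.35,
    "quizzes": 0.10,
    "midterm": 0.20,
    "final_paper": 0.20,
    "final_presentation": 0.15,
}


def final_grade(scores: Dict[str, float]) -> float:
    """Weighted course grade from component scores, each assumed to be on a 0-100 scale."""
    missing = set(WEIGHTS) - set(scores)
    if missing:
        raise KeyError(f"missing component scores: {sorted(missing)}")
    return sum(weight * scores[name] for name, weight in WEIGHTS.items())


if __name__ == "__main__":
    assert math.isclose(sum(WEIGHTS.values()), 1.0)
    example = {  # hypothetical student
        "lab_participation": 90,
        "quizzes": 80,
        "midterm": 70,
        "final_paper": 85,
        "final_presentation": 95,
    }
    print(round(final_grade(example), 2))  # 84.75
```

Because none of the components depends on sequencing success, a student whose extractions never worked could still earn a high grade under this scheme, provided their effort, notebook, and engagement were strong.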

Students in the two offerings could elect whether to participate in a survey component, a focus group (FG) component, or both components of this study. Overall, 91% of the students completed the surveys and 66% participated in the FGs (Table 1). All students who participated in FGs also participated in the survey. Demographics of student participants largely reflected those at the university and were roughly similar between offerings, with the exception that there were several more first- and third-years in the high-challenge offering than in the low-challenge offering (Table 1). This study slightly overrepresents females in comparison with the university’s demographics. There were no major demographic differences between the two offerings or between FG respondents and survey respondents.

Study Components

We took a mixed-methods approach, using FGs and surveys consisting of both Likert-like and open-ended questions. All data were collected after the course was complete. Surveys were conducted before FGs to avoid biasing students’ individual responses with FG discussions. Data were collected by individuals not associated with course instruction to avoid bias resulting from students’ desire to please instructors. We measured the research productivity of each offering as the number of students who successfully identified a fish sample.

Surveys.

We used the LCAS (Corwin et al., 2015b; Supplemental Table S1) to assess students’ perceptions of three course design features: 1) opportunities to make relevant discoveries (a combination of relevance and discovery as explained in the introduction), 2) opportunities to iterate, and 3) opportunities to collaborate. This survey has been validated for populations of undergraduates in CURE courses, and we considered it valid for our measurement purposes. We also wrote open-ended survey questions to elicit descriptions of the course design, challenges encountered, and student outcomes (Supplemental Table S2). Particular emphasis was placed on students’ ability to navigate scientific obstacles (questions OE2 and OE3) and understand the NOS (question OE4; Supplemental Table S2). Cognitive interviews were conducted with three upper-division biology undergraduates to examine face validity of questions before survey administration, and questions were refined before distribution. Students completed surveys on the last day of class via Qualtrics survey software. In both courses, nearly all students completed the survey and gave consent for their information to be included in the study (Table 1).

Focus Groups.

FGs allow individuals with a common experience to share their experiences and build upon one another’s responses, and thus have an advantage over individual open-ended responses (Krueger and Casey, 2014). Like the open-ended questions, FG protocols and questions elicited descriptions of course design, the challenges encountered, and student outcomes. Several questions asked in the FGs were similar to those in the survey in order to allow students to build upon one another’s experiences and provide detail in targeted areas. Cognitive interviews were conducted with three upper-division biology undergraduates (as described earlier), and questions were refined before FG administration (Supplemental Table S3). Two FGs per course were conducted with 44 and 93% of the students in the high-challenge and low-challenge offerings participating, respectively. Each FG began with a reminder that participation was voluntary and that data would be kept confidential. FGs were semistructured and thus allowed for follow-up questions to elicit more detailed responses. FGs were recorded, transcribed in their entirety, and all identifying information was removed before analysis.

Data Analysis

Qualitative Data Analysis.

Qualitative analysis was conducted in a similar manner for both FG and open-ended survey question responses. Two authors (L.A.C. and L.E.G.) used deductive open coding to assign codes (Corbin et al., 2014). Open coding is a process of textual analysis in which researchers assign codes (descriptors used to identify meaningful patterns in textual data) to segments of text that each express a single complete thought (a unit of meaning). A new code is assigned each time a new unit of meaning is encountered (i.e., each time a speaker or writer introduces a new thought). The two authors began analyses using a set of a priori codes in four categories: 1) challenges students encountered, 2) course design features, 3) instructor actions, and 4) outcomes. All codes, definitions, and example quotes can be found in Supplemental Table S4. Challenge codes reflect challenges or obstacles students could have encountered during the course. Course Design Feature codes were derived from the work of Auchincloss and colleagues (2014) and describe how course structure and activities were designed. Instructor Actions codes reflect productive, counterproductive, and neutral instructor actions during the course, as described by students. Outcomes codes were derived from a review of the CURE literature (Corwin et al., 2015a) and describe various beneficial outcomes that may result from students’ participation in CUREs. The two authors began by reading the material, independently designating units of meaning (student quotes from the transcripts that represent a single complete thought and were coded with a single code), and coding the data using HyperRESEARCH software. They then came to consensus on units of meaning and a final codebook. The final codebook included one inductive code, autonomy as a course design feature, which the authors noted as a strong pattern while reading the data (Supplemental Table S4). Coders were unable to reach consensus on what did and did not constitute a science practice, and this code was conflated with many other codes; therefore, this a priori code was removed from the final codebook. Likewise, two a priori Outcome codes (accessing mentoring functions and development of self-authorship) were removed due to their complexity and conflation with other codes. The two coders then worked collaboratively to code all of the data, coming to consensus on units of meaning and code assignments for the entire data set.

Across categories (i.e., challenges, course design features, instructor actions, and outcomes), code assignments were allowed to overlap, because students often spoke about challenges or instructor actions in concert with course design, or about course design in concert with their outcomes. For example, one unit of meaning may have been assigned two codes, one for a course design feature and one for an outcome, or one for a challenge and one for a course design feature. Within categories, codes were not allowed to overlap (i.e., a unit of meaning could not be coded with two different course design codes). Because this coding design separated the assignment of code categories, it was appropriate to calculate interrater reliability separately for each of the four categories. A third author (A.A.R.) independently coded a sample of 30% of the data, and interrater reliability was calculated for each code category: challenges codes, 0.77; course design feature codes, 0.74; instructor actions codes, 0.82; and outcomes codes, 0.86. All inconsistencies were resolved through discussion. These reliability values are considered “good” by the qualitative research community (Stemler, 2001).
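The reliability statistic is not named in the text. Cohen’s kappa is one common chance-corrected measure of agreement between two coders, and it fits the within-category rule that each unit of meaning receives exactly one code, so the sketch below assumes kappa computed separately per category. The code labels are hypothetical.

```python
from collections import Counter
from typing import Sequence


def cohens_kappa(coder_a: Sequence[str], coder_b: Sequence[str]) -> float:
    """Cohen's kappa for two coders who each assigned one code per unit of meaning."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must code the same, non-empty set of units")
    n = len(coder_a)
    # Observed agreement: share of units given the same code by both coders.
    p_observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement: product of the coders' marginal proportions, summed over codes.
    counts_a, counts_b = Counter(coder_a), Counter(coder_b)
    all_codes = counts_a.keys() | counts_b.keys()
    p_chance = sum(counts_a[c] * counts_b[c] for c in all_codes) / n ** 2
    if p_chance == 1.0:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_observed - p_chance) / (1.0 - p_chance)


if __name__ == "__main__":
    # Hypothetical challenge codes for four units of meaning within one category.
    lead_coder = ["PCR failure", "PCR failure", "time", "time"]
    third_coder = ["PCR failure", "time", "time", "time"]
    print(cohens_kappa(lead_coder, third_coder))  # 0.5
```

In this example, raw agreement is 75%, but half of that agreement would be expected by chance, so kappa is 0.5. The gap between raw and chance-corrected agreement is why a chance-corrected statistic is usually preferred.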

After final coding, we identified broad themes in our data based on the frequency and patterns of code appearance; the more frequent codes that often co-occurred with other codes constitute the themes we discuss in our results. We quantified the total instances of each code in each data set (open-ended questions or FGs for the high-challenge or low-challenge offering; Supplemental Table S5). We also calculated the number and percent of students who reported each code within the open-ended survey responses. We used only the open-ended response data sets in this calculation because they represent views from the majority of each class. Unlike the FG data, which included responses from 44 and 93% of students in the high- and low-challenge offerings, respectively, the open-ended responses allowed us to assess the relative emphasis each offering placed on each code, because participation was high in both offerings (94 and 93%, respectively). This analysis also allowed us to determine the proportion of students who reported each code independent of their peers (i.e., before FG participation; Supplemental Table S5). Thus, open-ended code frequency helped us identify the strongest themes within our data. For each code discussed below, we report the percent of student respondents who reported that code in the open-ended responses (see Qualitative Analyses and Figures 1–4). After identifying themes, we chose representative quotes for each code (Supplemental Table S4 and Results) and lightly edited them for confidentiality, clarity, and brevity before inclusion in the paper. For example, we replaced names with pronouns in brackets. After editing, we checked that the quotes retained their original meaning.
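The paragraph above distinguishes two counts: total instances of a code and the percent of respondents who reported it at least once. The sketch below computes both from a list of coded units, assuming each unit is stored as a hypothetical (student ID, code) pair. The denominator is passed in separately so that respondents who received no codes still count toward it.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Set, Tuple


def code_summary(
    coded_units: Iterable[Tuple[str, str]], n_respondents: int
) -> Dict[str, Tuple[int, float]]:
    """Per code: (total instances, percent of respondents reporting it at least once).

    coded_units holds one (student_id, code) pair per coded unit of meaning.
    n_respondents counts every student who answered, including those with no codes.
    """
    if n_respondents <= 0:
        raise ValueError("n_respondents must be positive")
    instances: Counter = Counter()
    students: Dict[str, Set[str]] = defaultdict(set)
    for student_id, code in coded_units:
        instances[code] += 1
        students[code].add(student_id)  # a student counts once per code
    return {
        code: (instances[code], 100.0 * len(students[code]) / n_respondents)
        for code in instances
    }


if __name__ == "__main__":
    units = [("s1", "Iteration"), ("s1", "Iteration"),
             ("s2", "Iteration"), ("s2", "Collaboration")]
    print(code_summary(units, n_respondents=4))
    # {'Iteration': (3, 50.0), 'Collaboration': (1, 25.0)}
```

A student who mentions iteration twice adds two instances but is still one reporting student. This is why instance counts and percent-reporting values can rank codes differently.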

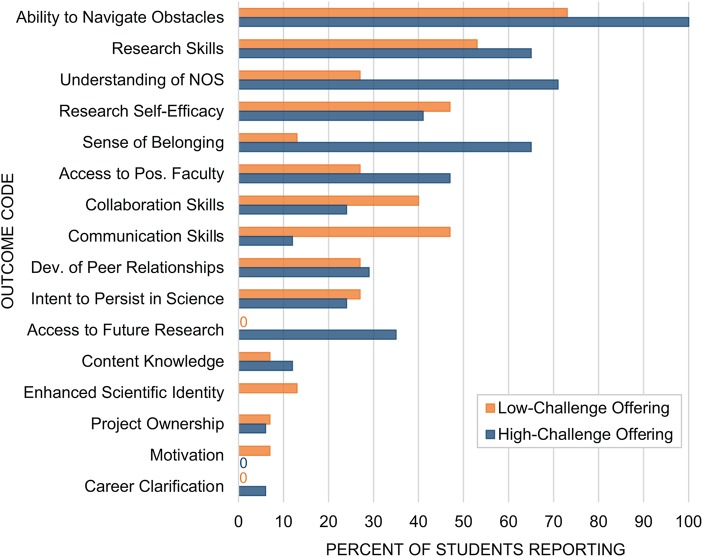

FIGURE 1.

Percent of students reporting different challenges in open-ended survey question responses.

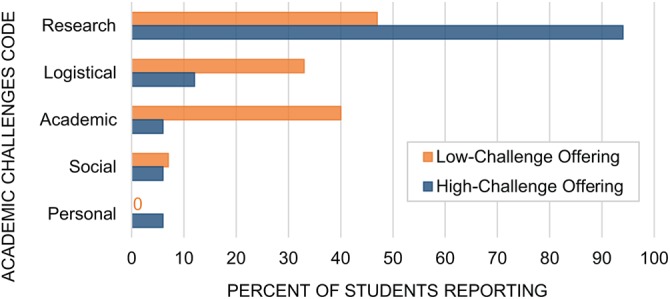

FIGURE 2.

Percent of students reporting different course design features in open-ended survey question responses.

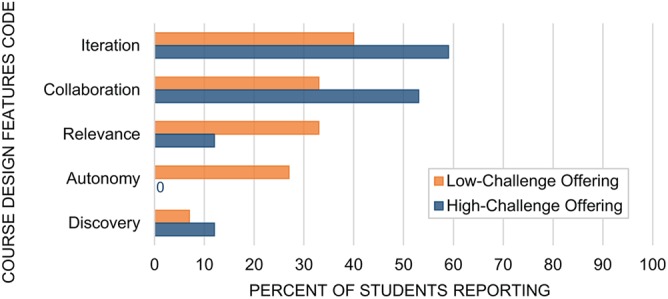

FIGURE 3.

Percent of students reporting different instructor actions in open-ended survey question responses.

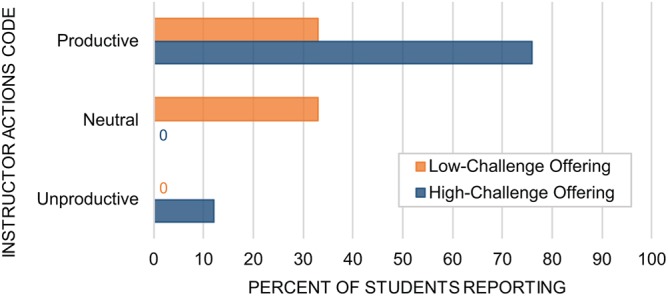

FIGURE 4.

Percent of students reporting different outcomes in open-ended survey question responses. Access to Pos. Faculty indicates access to positive faculty interaction.

Quantitative Data Analysis.

Because we report on only two offerings of one course and therefore lack replication in our experimental design, we calculated and report only descriptive statistics: the means, standard deviations, and standard errors of our measurements.
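The standard errors reported alongside the means follow the usual relation SE = SD / √n. A minimal sketch, assuming each student contributes one score per LCAS scale and that the SD is the sample (n - 1) SD; the text specifies neither, and `describe` is a hypothetical helper with made-up scores.

```python
import math
import statistics


def describe(scores: list[float]) -> tuple[float, float, float]:
    """Mean, sample SD, and standard error of the mean for one scale."""
    n = len(scores)
    if n < 2:
        raise ValueError("a sample SD needs at least two scores")
    mean = statistics.mean(scores)
    sd = statistics.stdev(scores)   # sample SD, n - 1 denominator (assumed)
    se = sd / math.sqrt(n)          # SE = SD / sqrt(n)
    return mean, sd, se


# Hypothetical per-student scale scores on a six-point scale.
mean, sd, se = describe([5.0, 6.0, 5.0, 6.0])
print(f"M = {mean:.2f}, SD = {sd:.2f}, SE = {se:.2f}")  # M = 5.50, SD = 0.58, SE = 0.29
```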

RESULTS

Quantitative Analyses

Data from the LCAS survey allowed us to assess whether students had opportunities to make relevant discoveries, iterate, and collaborate during each course offering (note that the LCAS measures relevance and discovery together in one scale). Students in both offerings reported many opportunities to engage in each design feature, with high ratings in both offerings. Mean ratings for relevant discovery in the high-challenge and low-challenge offerings were 5.22 (SD = 0.51, SE = 0.12) and 5.16 (SD = 0.62, SE = 0.16), respectively, on a six-point scale. Mean ratings for iteration were 5.21 (SD = 0.57, SE = 0.13) and 5.3 (SD = 0.58, SE = 0.15), respectively, on a six-point scale. Mean ratings for collaboration were 3.93 (SD = 0.11, SE = 0.02) and 3.85 (SD = 0.15, SE = 0.04), respectively, on a four-point scale. These values are higher than those obtained for a national sample of other CURE courses (Corwin et al., 2015b). Thus, the two offerings appear comparable and high in opportunities for students to make novel relevant discoveries, iterate, and engage in collaboration, at levels we would expect in CURE courses.

Research productivity differed drastically between the two offerings. Eleven percent (two out of 18) of the students in the high-challenge offering successfully identified at least one fish sample by the end of the course. In the low-challenge offering, all students (16 out of 16) successfully identified at least one fish sample by the end of the course. Table 2 shows how many samples each student identified. In general, students in the low-challenge offering identified their first sample in week 2 of the semester, whereas students in the high-challenge offering identified their first sample in week 14 (the second-to-last week).

TABLE 2.

Number of seafood samples sequenced

| Student (high-challenge offering) | No. | Student (low-challenge offering) | No. |
|---|---|---|---|
| H1 | 0 | L1 | 6 |
| H2 | 0 | L2 | 6 |
| H3 | 0 | L3 | 1 |
| H4 | 0 | L4 | 7 |
| H5 | 0 | L5 | 9 |
| H6 | 1 | L6 | 13 |
| H7 | 0 | L7 | 6 |
| H8 | 0 | L8 | 7 |
| H9 | 0 | L9 | 9 |
| H10 | 0 | L10 | 3 |
| H11 | 0 | L11 | 5 |
| H12 | 2 | L12 | 4 |
| H13 | 0 | L13 | 5 |
| H14 | 0 | L14 | 6 |
| H15 | 0 | L15 | 8 |
| H16 | 0 | | |
| H17 | 0 | | |
| Average | 0.2 | Average | 6.3 |
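The Average row of Table 2 can be checked directly from the per-student counts. The sketch below transcribes the table's values and computes, over the students listed in the table, the mean number of samples per student and how many students have at least one sample; `productivity` is a hypothetical helper.

```python
# Samples per student, transcribed from Table 2.
high_challenge = [0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 2, 0, 0, 0, 0, 0]  # H1 to H17
low_challenge = [6, 6, 1, 7, 9, 13, 6, 7, 9, 3, 5, 4, 5, 6, 8]       # L1 to L15


def productivity(counts: list[int]) -> tuple[float, int]:
    """Average samples per listed student (1 decimal) and students with >= 1 sample."""
    average = round(sum(counts) / len(counts), 1)
    successful = sum(1 for c in counts if c >= 1)
    return average, successful


print(productivity(high_challenge))  # (0.2, 2)
print(productivity(low_challenge))   # (6.3, 15)
```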

Qualitative Analyses

Students in both offerings discussed the challenges they encountered, the various course design features, and a variety of outcomes they experienced. Yet, because the offerings differed in course challenges, the frequency and intensity with which students emphasized different design features and outcomes also differed. We start by characterizing the challenges present, as these influenced students’ experience of the course design, which in turn influenced outcomes.

Course Challenges.

Challenges constituted difficulties, setbacks, or obstacles that the students experienced during the course that affected their overall course experience. We discuss logistical challenges, arising from course timing and organization, and academic challenges, associated with performing well on summative academic tasks, in the Supplemental Material. Though students discussed these challenges, they were not linked to course design features or outcomes and thus are not the focus of this work. We also coded for personal and social challenges having to do with personal experiences or social situations that affected students’ experience. Because of their low frequency (one student in each offering), we do not discuss these codes in this paper. In the next section, we focus on student research challenges that were frequently discussed and often linked with design features and outcomes in student descriptions.

Research Challenges.

Research challenges are associated with executing scientific research in the context of the course (e.g., technical challenges, challenges in analyzing data). Students discussed research challenges in both offerings; not surprisingly, more students in the high-challenge offering discussed research challenges (94% of respondents) than did students in the low-challenge offering (47% of respondents; Figure 1). Students in the high-challenge offering regularly described the frequency and ubiquity of challenges in the classroom, as represented by the following quote.

We all [went] through the same experiences of like “Oh none of us got a result today” and that, in terms of what problems we were addressing, it was more or less the whole class.—Student H8, high-challenge offering

Students’ language conveyed both the frequency and the intensity of research challenges: they described having “experienced many challenges, many challenges. As in a plethora of challenges” (Student H15, high-challenge offering) and used words like “lots,” “oftentimes,” “countless times,” and “week after week.” Students in the high-challenge offering put extensive time and effort into their work as a result of research challenges.

By the second half of the semester, the instructors were keeping the lab open an extra 3 hours (so that it was 7 hours in total) if anyone wanted to stay and keep working. Several of us did and the entire time we were just trying to successfully get results of any type.—Student H3, high-challenge offering

Like the high-challenge offering students, several students in the low-challenge offering experienced research challenges, stating that it “took a long time to actually get results” (Student L1, low-challenge offering). Yet unlike in the high-challenge offering, these challenges were temporary or fewer in number, and students used words like “some challenges” to express this. For most of these students, the challenges were overcome early in the course. Thus, though both classes experienced similar kinds of challenges with PCR success or DNA extraction, their descriptions demonstrate that the challenges were more frequent and intense in the high-challenge offering than in the low-challenge offering.

Course Design Features.

Students’ responses indicated the presence of all four CURE design features included in the codebook (note that we did not code for science practices due to lack of consensus on the meaning of the code). They discussed iteration and collaboration frequently in both offerings, usually in conjunction with specific challenges or outcomes. Students also discussed discovery and relevance, but less frequently. It is important to note that discovery and relevance were coded as two separate constructs, even though they are measured together by one scale in the LCAS, because students’ discussions of these constructs differentiated between them. In other words, students did not always discuss how their pursuit of discoveries was relevant to an outside community and did not always discuss discovery when they were discussing the relevance of the work. Interestingly, students in the low-challenge offering frequently discussed an emergent design feature, Autonomy, defined as opportunities to direct one’s own work and independence in decision making. We discuss all course design features in the following sections, as they help to describe the course, and each relates to outcomes discussed in the subsequent sections.

Iteration.

Iteration was discussed frequently by students in both offerings. Yet, like research challenges, iteration codes were more prevalent in the high-challenge than in the low-challenge offering (59% of respondents vs. 40%, respectively; Figure 2). Students in both offerings described the repetitive nature of iteration, often highlighting that it had value.

Yeah and kind of the scientific method, if you see a problem and you ask a question. In our case it ended up being applying the scientific method to our actual research methods rather than seafood mislabeling. But we would go through and change one thing and see if it had a positive result, if we were able to get more sequencing results from that. If that didn’t work, we would go back and try to change another thing and just like going through each week and trying to figure out some way to get something. I think that was very valuable.—Student H6, high-challenge offering

Students also described how the instructors encouraged them to iterate and framed iteration as a way to deal with the struggles they encountered, which students saw as different from other classes. According to students, this encouragement and expectation of iteration removed the pressure to work quickly and prompted them to work hard to do the science well.

[Other courses] put a lot of pressure on students, especially the first time around to get it perfectly right, and then you’re more likely to make mistakes or conceal errors, and it’s just not ultimately beneficial for your learning. So, the fact that they don’t force you to have something completed by a time and just really encourage you to do it right, again, within your own time, I think is more beneficial to your learning.—Student L10, low-challenge offering

In the high-challenge offering, iteration was a continual and constant process throughout the semester; students stated that they were “constantly trying to fix this or that in some kind of way” (Student H12, high-challenge offering). Their effort was reflected in their investment of “lots of hours in the lab just trying to figure out what was going wrong” (Student H17, high-challenge offering). Though a few students expressed that this was “frustrating” or “disappointing,” many others in the high-challenge offering expressed that it was “fun” or “rewarding.” Students in the low-challenge offering expressed fewer emotions in connection with iteration. As in the high-challenge offering, students in the low-challenge offering would “troubleshoot” and “modify [the] protocol” until they obtained results. However, students in the low-challenge offering more frequently reported obtaining results after several iterations.

Collaboration.

Collaboration came through as a strong course design feature in both offerings and again was discussed more frequently in the high-challenge than in the low-challenge offering (53% of respondents vs. 33%, respectively; Figure 2). Students described the collaborative atmosphere as giving rise to overall positive affect, using words like “rewarding,” “fun,” “friendly,” “relaxed,” and “comfortable” to describe the collaborative experience in both offerings. Students in both offerings also described specific aspects of how collaboration occurred. Commonly, students would “help each other out” by “setting up solutions” and “pouring gels.” In addition to this technical assistance, students appreciated the collaborative and noncompetitive nature of the course, which facilitated teamwork on experimental design and problem-solving.

Unlike other science courses, which are more competitive and not conducive to discussion, I had ample opportunity to discuss the course content with my peers and problem solve with them.—Student H4, high-challenge offering

Students expressed an awareness that this was an intentional and explicit design feature of the course implemented by the instructors.

[The course] was a collaborative environment, which is very unlike other classes at [the university]. The instructors are to thank for that. The first day, they made sure to emphasize the completion of a class agreement which highlighted the expectations of us and our interactions with each other.—Student L2, low-challenge offering

However, students also noted that they, not the instructors, drove discussion during collaborative work.

[The class] would spend time—not like the professors leading the discussion—but it was like the team, the class working together and talking about what went wrong and what we wanted to study.—Student H8, high-challenge offering

Relevance.

Relevance was expressed less frequently, but was mentioned more often in the low-challenge offering than in the high-challenge offering (33% of respondents vs. 12%, respectively; Figure 2). Students expressed relevance by discussing the importance of their work beyond the classroom, relating it to science and society broadly or to their local community.

We looked at all the social impacts and we looked at the ways it would make, like, in public health and in social justice and stuff like that and I think a lot of times, sciences courses are focused on only the science, right, and you don’t actually see what the realistic implications are.—Student L5, low-challenge offering

In discussing relevance, students expressed that this was a motivating factor during the course.

We learned about, like, the slavery that was going on in Thailand and, like, other factors that were affecting mislabeling. That knowledge in and of itself, was like, I don’t know about anyone else, but I want to know more about how that’s affecting us in this area. So that was even more inspiring within our research ‘cause it’s like, this is happening here … we need to figure out what’s going on [in the local region].—Student L8, low-challenge offering

Although students discussed relevance as a motivating factor in both offerings, they did not emphasize it to the same extent as collaboration or iteration. This could reflect the specific questions we asked, or it could indicate that some students perceived relevance as less important than other elements. When discussing the broad implications of the research, one student in the high-challenge offering contrasted relevance with other factors that motivated her engagement.

I’m not sure if I was super-convinced that, like, the specific research we were doing was going to be very game-changing. So, I think a lot of my motivation came from more personal reasons, like I just really wanted to get results, because I’ve been spending a lot of time doing this, and it’ll be cool, and I want this course to continue. So like we talked a lot about the broader implications of our research and I agree that it was very important. But when it came down to do it, I think it was much more personal.—Student H4, high-challenge offering

This student implied that, while she found the relevant context of the work important, it was not a primary motivator, in part because she did not feel that her particular results would be impactful. This could partly explain why relevance was discussed less frequently in the high-challenge offering.

Discovery.

Discovery was discussed in both offerings, albeit infrequently (12% of respondents in the high-challenge offering vs. 7% in the low-challenge offering; Figure 2). When discussing discovery, students expressed that this course was different from other courses in which they “always knew what the research was ‘supposed’ to result in and if [they] didn’t get the expected result [they] were wrong” (Student L8, low-challenge offering), recognizing the unexpected nature of discovery.

I think that’s the difference between approaching a novel problem and being in another class where it’s very content-based because someone can just tell you the answer if you don’t figure it out in another class. But no one can find out the answer besides you in a research study.—Student H12, high-challenge offering

The students in the high-challenge offering recognized that the unknown elements in the course contributed to the technical problems they had, citing that, because of the unknown elements, “something can go wrong,” increasing the necessity of iteration.

For most lectures … you just copy and paste it into Google and chances are someone’s already asked it on Yahoo. Someone else has already answered it, too, but no one’s ever been so like, Seafood mislabeling: What is the rate of it in [our state]? No one’s actually asked that question before so we had to keep going, keep trying [to get results]. We really didn’t have any other choice.—Student H6, high-challenge offering

Autonomy.

Autonomy was an emergent course design feature in both courses, but was reported more frequently by students in the low-challenge course than in the high-challenge course (27% of respondents vs. 0%, respectively; Figure 2). (Note that the instances of autonomy for the high-challenge offering were only reported in FGs, which are not quantified in these parenthetical statements. See Supplemental Table S5 for this quantification.) Autonomy consisted of expressions indicating that students felt they were able to direct their own decision making about their actions, stating that “never before have I had the opportunity to make so many of my own decisions about an experiment” (Student L1, low-challenge offering). In the low-challenge offering, students expressed that this autonomy arose because of the way the instructors approached directing lab activities.

The instructors never told us how to get the answer. They were never, like, oh you should do this. They were like, okay, let’s open up the floor. Let’s have a lab meeting.—Student L8, low-challenge offering

This approach increased this student’s interest, resulting in her investment of effort in the research.

Maybe if we were being told exactly what to do we wouldn’t have been quite as invested in it, but it was like our own choice to be taking risks in certain ways and coming in and, like, finding answers, which I think for me made the course all the more valuable.—Student L8, low-challenge offering

The students also emphasized that, though they had independence, they were also working collaboratively and that this balance created a rewarding experience.

And it was just—it was really exciting to be able to do that independently. And you always had the option to be independent, but you were never alone ‘cause everyone else was going through like, the same stuff and so if you were, like “Oh my gosh, how did you get that to work?,” then everyone in the room would swarm and be, like, I did this. Let me help you. I wanna help. And it was just—it was such a rewarding experience because it taught you how to be independent but you were never, like, alone.—Student L1, low-challenge offering

Instructor Actions.

We coded all instances in which students described specific actions their instructors took during the course, to characterize the course climate beyond course design. We anticipated that instructor–student interactions were another important component that could influence students’ responses to research challenges and obstacles. Students described a variety of instructor actions. Actions were coded as “productive,” meaning that the student described the action as having a positive cognitive, behavioral, social, or emotional outcome for the student; “counterproductive,” meaning that the action was described as having a negative impact on an outcome; or “neutral,” meaning that the action was not described as having a positive or negative outcome. This additional coding allows us to explore, from the students’ perspective, which actions may be important in affecting success. The vast majority of action codes were productive, with few instances of counterproductive or neutral actions (Figure 3; for examples, see Supplemental Table S4). Thus, we discuss only productive actions below.

Productive Actions.

A commonly reported productive action that the instructors took was to deliberately share information about themselves, including their own stories of challenges and struggles in science. This resulted in students feeling as though they could connect with their instructors, and through their instructors, they often connected with science.

I appreciated how open [my instructors] were about their personal backgrounds and how they came to what they were doing … And I know that [my instructor] was very honest with us about [their] experience in grad school and how it wasn’t everything that [they] had kind of expected. And so their kind of honesty with the place that they’re coming from but then why they’re still excited about what they’re doing made science seem like a bit more of an open field than it had to me before. I could see a lot of myself in them, and that made me feel much more comfortable with the fact that I was in this department.—Student H9, high-challenge offering

In conjunction with these descriptions, students used phrases such as “they really care” or “they genuinely cared” to convey that they perceived their instructors as truly “caring” about them as individuals and about the science they were doing in the course. One student mentioned the approachable nature of the instructors, noting that they were “not, like, ‘we’re at the front of the classroom, you can’t approach us.’ They’re like ‘[we’re] people.’” This student felt that they could “go up and sit with them and talk to them” (Student L3, low-challenge offering). In describing how the instructors “cared,” students also mentioned specific actions the instructors took that demonstrated care both for the students and for the research questions they were pursuing. The following statement demonstrates how the instructors conveyed motivation and enthusiasm for the research even when the class was experiencing challenges.

We spent a lot of time correcting for the actual technical problems we were having. It wasn’t for a lack of enthusiasm on the part of the professors. [My instructor] would send out emails with news articles we weren’t required to read or anything but [they’d] be like, ‘Look at this cool article!’ [They] still do!—Student H13, high-challenge offering

This student spoke of the instructors’ unfailing enthusiasm and hard work as being a motivator to keep engaged with the class. Seeing their instructors maintain their own motivation and act to solve problems helped students to do the same.

And also seeing [the instructors] approach the problems, you know, they really didn’t know the answers either and so it was cool seeing them being so motivated to solve them because I knew they were coming from really kind of a similar place to us.—Student H9, high-challenge offering

Students also spoke about actions that contributed to the specific design features mentioned earlier. When research challenges arose, instructors encouraged students to explore the literature and investigate why an experiment had not worked, without telling students exactly what to do. Students stated that this gave them autonomy and made the class more interesting (see section on Autonomy). The instructors also took specific actions that fostered a sense of collaboration among the students, such as having the students complete a mutual class agreement on the first day of class, as reflected in the Collaboration section. Overall, students’ descriptions suggest that these actions, including sharing personal stories, demonstrating care and interest, and modeling responses to challenges, contributed to the efficacy of this course approach, perhaps moderating the effects of the course design elements described earlier.

Course Outcomes and Connections to Challenges and Design.

With the exception of one outcome that was not coded in either offering (external validation from the scientific community), we found at least two instances of each outcome in the data for both course offerings (Figure 4). This indicates that students in both offerings had the potential to experience a broad range of outcomes. Notably, students in the high-challenge offering, despite not accomplishing the designated scientific goals, discussed the same number of outcomes as students in the low-challenge offering. Definitions of all outcomes can be found in Supplemental Table S4.

Outcomes reported by at least 60% of respondents to the open-ended questions in at least one offering (Figure 4) include increases in ability to navigate scientific obstacles, understanding of the NOS, sense of belonging, and research skills. We chose the 60% cutoff because it corresponds to more than half of the students in an offering (11 out of 17 in the high-challenge offering and nine out of 15 in the low-challenge offering). In addition, research self-efficacy was reported by a relatively high percent of students in both offerings (41 and 47% in the high- and low-challenge offerings, respectively); although it falls below the cutoff, we include it because students in both offerings described it relatively frequently. In the following sections, we describe these five outcomes and discuss how students related them to research challenges and design features. Themes reported by nearly half of the students in at least one offering (access to positive faculty interaction and communication skills) are discussed in the Supplemental Results. Although the other outcomes in Figure 4 are also of high importance in CURE research, they were not a priori foci of this study and did not emerge as central and frequent topics of discussion in either offering; thus, they do not constitute central themes of this study.
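The respondent counts quoted for the 60% cutoff follow from rounding 60% of each offering's respondents up to a whole student (60% of 17 is 10.2, so 11 students; 60% of 15 is exactly 9). A minimal sketch with a hypothetical helper, using integer ceiling division so that floating-point rounding cannot shift the threshold:

```python
def min_count_for_cutoff(n_respondents: int, cutoff_percent: int = 60) -> int:
    """Fewest respondents whose share reaches `cutoff_percent` of `n_respondents`.

    Hypothetical helper; ceiling division in integer arithmetic.
    """
    return -(-cutoff_percent * n_respondents // 100)


print(min_count_for_cutoff(17))  # 11 (high-challenge respondents)
print(min_count_for_cutoff(15))  # 9 (low-challenge respondents)
```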

Ability to Navigate Scientific Obstacles.

Ability to navigate scientific obstacles was coded when students stated that they had successfully worked through a scientific obstacle to arrive at a solution or resolution (either cognitive or emotional) or when they expressed feeling better equipped (cognitively or emotionally) to work through a future scientific obstacle. This code was independently expressed by more students in the high-challenge offering than in the low-challenge offering (100% of respondents vs. 73%, respectively; Figure 4). However, there were no substantial differences in the ways students discussed their improvements in obstacle navigation. Students in both the high- and low-challenge offerings expressed what they learned about failure and how they would behave when facing future obstacles.

[When encountering a future research challenge,] I would try to figure out what part is not right or not working the way I want it to and to focus in on that specific area. If that doesn’t work, I would look at the process as a whole and see if there is some other factor that is affecting my results and see if there are any big changes I can make.—Student H16, high-challenge offering

This reflects the intention of the students to engage in careful and methodical troubleshooting behaviors when they encounter future obstacles. Students in both offerings also expressed changes in their ability to deal emotionally with future problems by reframing obstacles as opportunities for learning and growth.

Seafood Forensics has taught me not to give up when I encounter a problem. I have learned that it’s normal. Failures are just a part of the ride and help us to learn. Overcoming challenges in science makes the success so much sweeter. I am now not afraid to ask for help when I need it. It doesn’t mean I am unintelligent, but simply that there is a chance for growth.—Student L7, low-challenge offering

Such reframing may have been influenced in part by the instructors encouraging the students to engage in troubleshooting and discussing how failure can be dealt with through iteration (see Iteration). Finally, similar to the student above who expressed that they were not afraid to ask for help, a few students in each offering demonstrated their increased ability to navigate challenges by describing how they would leverage the knowledge of their community.

[Failure] is a normal thing that people go through and you just have to apply that the rest of your life too. And also not being scared to ask for help when you need it. ‘Cause sometimes you’re like, oh, I don’t want people to think I’m dumb, I don’t wanna ask for help but, like because of this class, it was just, like, so open. So now I just feel like, I am not stupid if I need help. This is like a normal thing.—Student H10, high-challenge offering

Increased ability to navigate scientific obstacles was frequently coded in combination with the codes for research challenges and iteration in both courses. When research challenges occurred, students iterated, and they stated that this improved their ability to deal with the scientific obstacles.

I had a lot of failures when trying to isolate the DNA [research challenge]. Over many trials and asking other people, I got to improve each time and finally graduate with real results [iteration]. I think that learning how to alter and persist with a procedure, seeking other help and keeping well detailed notes to find what works and what doesn’t was an important aspect for me to learn [increased ability to navigate obstacles].—Student L15, low-challenge offering

Understanding of the NOS.

Understanding of the NOS was reported in both offerings, but many more students independently reported this outcome in the high-challenge offering as compared with the low-challenge offering (71% of respondents vs. 27%, respectively; Figure 4). Within this code, the most common realization that students discussed was that the scientific process is not “straightforward” and often involves failure, debunking the “myth” of the scientific method.

I learned you’re not always going to get the result you want and science isn’t a series of consecutive steps to a[n] X-marked spot but a bunch of missteps and trip-ups, and backtracking and stumbling on a path you don’t know before making it to an end you weren’t aware you were headed to. That to me is what this class taught.—Student H16, high-challenge offering

Such quotes were often situated near discussions of research challenges and iteration in the classroom, and some students attributed their understanding of the NOS to these processes.

I was actually worried that our problem would not be very interesting and we would just kind of get similar results to other people. That turned out not to be the case. Nothing was cut and dry [sic]. And so, in the end, I think troubleshooting all of the problems that we had [iteration and research challenges] turned out to be a lot more interesting, in fact, than just having immediate success with the techniques. I learned a lot more about the techniques themselves and also, I guess, the nature of research.—Student H4, high-challenge offering

Students also described a shift in their understanding of science as a social, collaborative endeavor that is situated within and affected by society.

In professional research you often have issues of funding, and you have to convince your supervisors/others in the lab that what you are doing is valuable, and there are a lot of other logistical issues. We were fortunate enough to have almost unlimited supplies.—Student L14, low-challenge offering

Sense of Belonging.

Sense of belonging was expressed in both offerings, but more frequently in the high-challenge offering than in the low-challenge offering (65% of respondents vs. 13%, respectively; Figure 4). When expressing belonging, students described feelings of comfort and camaraderie, making statements such as “It’s like a community, like, the small little classroom is like a community” (Student L9, low-challenge offering) and “We are not a class, we are a research team” (Student H15, high-challenge offering). Students in both offerings discussed how their belonging created a sense of importance and responsibility in contributing to the research outcomes.

I remember this one time I missed class and I felt so bad because I realized, like, maybe it was just me overthinking things. I do that a lot. But I feel like it’s the fact that I feel as part of a team although I am an individual. So by not being there, maybe other samples would not have been [done] … I think the class makes you feel so involved.—Student L6, low-challenge offering

In both offerings, students commonly described how the course design element of collaboration contributed to their sense of belonging and community. Students in the high-challenge offering in particular spoke about how the collaborative atmosphere, as opposed to a competitive atmosphere, fostered this feeling.

I feel like over the semester we all got closer as students and peers because we’re all doing the same work and contributing to the same project. We all faced the same issues and worked with each other to overcome those challenges [collaboration]. There really wasn’t any pressure to get the best test score or write the best paper, and I felt that because there was a feeling of camaraderie [sense of belonging], we all got along so well and with the professors because we were all working to the same goal.—Student H16, high-challenge offering

As illustrated in the quote above, students in the high-challenge offering felt camaraderie not only because they were collaborating toward the same goal but also because of their shared experience of struggle.

Research Skills.

Gains in research skills were described by many students in both offerings (65 and 53% of survey respondents in the high- and low-challenge offerings, respectively). This frequency may partly reflect the fact that question OE5 (Supplemental Table S2) targeted this outcome. However, although this question asked about both skills and knowledge, students focused primarily on skills. Notably, students in the high-challenge offering, who did not experience the same level of research success as students in the low-challenge offering, still felt they had gained specific research skills in DNA extraction, PCR, and gel electrophoresis. When discussing gains in research skills, a few students highlighted the role of iteration in helping them hone their skills.

I knew what PCR was from class in theory, but knowing actually how to set one up and run one is a very different thing. Same thing with gels, it’s always better to get actual experience. And unlike most labs where you try out gels one day and then move on to something else the next week, you can really hone your skills at some of the most basic lab techniques by doing them repeatedly until you are successful.—Student L14, low-challenge offering

Research Self-Efficacy.

Research self-efficacy, or students’ confidence in their ability to do research, was also discussed relatively frequently in both offerings (41 and 47% of survey respondents in the high- and low-challenge offerings, respectively). In both classes, students experienced increased research self-efficacy when they successfully performed a research task, although there were fewer of these expressions in the high-challenge offering than in the low-challenge offering.

Getting my first successful barcoding result was pretty neat because I realized that I could do science-y things.—Student H6, high-challenge offering

Students in the low-challenge offering often discussed the role of the design feature of autonomy when describing gains in confidence.

By just being allowed to independently go through the scientific process with little restriction [autonomy], I have gained a great deal of confidence in my ability to do research [research self-efficacy].—Student L3, low-challenge offering

Although autonomy played an important role in developing some students’ confidence, students in both offerings also discussed how the social environment helped them develop confidence, highlighting that a supportive social environment and an environment that supports independence in research are not mutually exclusive.

I think this class is a really good kind of confidence booster, not necessarily just in science, but just in general because, yeah, we all started out kind of at this baseline, and even though I had some prior experience, I still didn’t really know exactly what we were doing, and just being able to deal with failures and having that network of people where you can go to and realize that everyone is in the same boat, and so it kind of made me feel a little more competent than I maybe thought I was previously.—Student L1, low-challenge offering

The above quote highlights how dealing with struggles together in a social environment helped students develop confidence. This sentiment was present in quotes from both offerings.

DISCUSSION

Students Who Fail to Achieve Predefined Research Goals May Still Experience Numerous Outcomes as a Result of CURE Participation

We used primarily qualitative data to gain insight into the experiences of students who fail to achieve instructor-defined research goals (i.e., fail to make discoveries in service of their research question) within the context of a single CURE. Our results show that students in the high-challenge offering, who largely did not achieve predefined research goals, still reported many positive outcomes as a result of CURE participation. Compared with students in the low-challenge offering, all of whom achieved predefined research goals and generated novel results, students in the high-challenge offering reported the same total number of outcomes (Supplemental Table S5). Furthermore, students in the high-challenge offering reported certain outcomes more frequently than did students in the low-challenge offering. This work provides preliminary support for the hypothesis that students do not have to achieve predefined research goals to achieve positive outcomes in a CURE. This is not to say that we should disregard research difficulty or goals for research progress when we design CUREs. Indeed, accomplishing a predefined research goal has its own positive outcomes, which were not the focus of this work. Additionally, this work is limited in scope: these students, who were primarily sophomores and juniors, may have had a different capacity to deal with challenges than students earlier in their academic development. Thus, we still advocate for CURE instructors to involve students in research tasks of appropriate difficulty. Yet our results suggest that we do not have to shield our students from difficulty in order for them to achieve desirable CURE outcomes. We may choose, instead, to focus on how we can support our students when research challenges and obstacles arise, because working through these obstacles may lead to positive outcomes.

CURE Course Design Features Contribute to a Variety of Outcomes

Our results support roles for multiple CURE design features in outcome achievement. We discuss these relationships in an order that reflects their prevalence and strength in the data.

Encountering Scientific Challenges and Iterating to Find Solutions Influenced Students’ Ability to Deal with Scientific Obstacles and Understand Aspects of the NOS.

In both courses, students perceived ample opportunities for iteration, as indicated by the LCAS survey results and by their qualitative descriptions of specific instances in which they iterated (Figure 2). However, iteration was more frequent and intense for students in the high-challenge offering. This is not surprising, given that students reported that encountering research challenges led them to iterate more. More notably, the combined processes of encountering challenges and iterating, while receiving support from instructors, resulted in students’ achievement of valued outcomes, including an increased ability to deal with scientific obstacles and a better understanding of the NOS.

Consistent and strong patterns emerged in students’ reported increases in their ability to deal with obstacles in connection with research challenges and iteration. Students often cited direct links among research challenges, iteration, and their ability to deal with obstacles. Notably, students in both classes highlighted three strategies that they would use when encountering future challenges: 1) problem solving, which involves engaging in strategic planning, making decisions, and acting to solve a problem; 2) support seeking, which involves seeking comfort, help, and instrumental support; and 3) cognitive restructuring, which involves reframing a problem from negative to positive and engaging in self-encouragement. These categories are consistent with years of research describing patterns in how individuals cope with challenges and obstacles (reviewed in Skinner et al., 2003). Furthermore, each of these mechanisms is associated with adaptive coping, in which individuals make progress on solving a problem and support their well-being, as opposed to maladaptive coping, which is associated with lack of progress and decreases in well-being (e.g., Alimoglu et al., 2011; Shin et al., 2014; Struthers et al., 2000). Individuals who practice adaptive coping mechanisms are generally more successful and satisfied in their work than those who engage in maladaptive strategies (Alimoglu et al., 2011).

These results suggest that students in this CURE improved their ability to adaptively cope with scientific obstacles through their exposure to obstacles and their opportunities to iterate. Literature on coping has found that adaptive coping mechanisms are used more frequently when individuals view problems or stressors as challenges, rather than as threats, to their well-being (Skinner et al., 2003). According to self-determination theory, well-being results from relatedness, or having meaningful relationships with others; competence, or feeling capable and experiencing mastery; and autonomy, or being the causal agent of one’s own actions (Deci and Ryan, 2000). In this CURE, efforts were made to grade students not on their successful completion of specific tasks but on their active engagement in the processes of science, their knowledge, and their clear communication about their scientific process and results, which may have alleviated threats to competence. Instructors also specifically addressed the topic of failure, framing it as something that everyone in science experiences, normalizing it, and discussing how iteration can be used to overcome failures. These discussions may have further alleviated threats to competence when failures occurred. Instructors also actively built relationships with students, using language and actions that made students feel that the instructors were approachable, cared, and were invested in the students’ success and the success of the project. Instructors emphasized collaboration, and students reported experiencing a collaborative, as opposed to competitive, atmosphere. Students recognized struggles as opportunities to empathize and build camaraderie. These actions and experiences supported relatedness. This is particularly notable, as students in individually mentored UREs, which involve fewer people, may not have the same opportunities to receive social support from peers and instructional staff or to engage in shared struggle. CUREs may therefore present a unique social context in which relatedness during research can be well supported through interactions with instructors and peers. Finally, students reported experiencing autonomy, though to a lesser degree in the high-challenge offering. Notably, students often mentioned specific instructor actions that supported autonomy, such as modeling how to solve a problem instead of providing direct answers and “tell[ing] the students what to do.” Thus, students in this course may have had the opportunity to practice adaptive coping in part because they perceived scientific obstacles as challenges rather than threats to their well-being and were supported in dealing with obstacles. Future research could further leverage self-determination theory and other motivational theories to examine how students deal with scientific obstacles during and after CURE or URE participation and how instructors’ actions and roles can support students’ views of obstacles as challenges, not threats.

Students also demonstrated an increased understanding of some aspects of the NOS as they encountered challenges and engaged in iteration. Specifically, students came to understand that the “scientific process” is not linear but circular and repetitive, with no set series of steps to follow, contrary to the “myth of the scientific process” (Lederman et al., 2013). This suggests that engaging students in the scientific process, with the potential to encounter real struggles and iterate, could help students better understand how research functions. However, more explicit instruction may be needed to elucidate other aspects of the NOS that students in this course did not report. This is in line with previous work that highlighted a role for inquiry in supporting NOS learning but also found that explicit instruction is necessary for comprehensive NOS understanding (Schwartz et al., 2004; Sadler et al., 2010; Russell and Weaver, 2011).

Collaboration Facilitated a Sense of Belonging in the Classroom.