Abstract

We are currently in the midst of Earth's sixth extinction event, and measuring biodiversity trends in space and time is essential for prioritizing limited conservation resources. At the same time, the scale of the biodiversity monitoring required far exceeds the funding available for professional scientific monitoring. In response, scientists are increasingly using citizen science data to monitor biodiversity. But citizen science data are ‘noisy’, with redundancies and gaps arising from unstructured human behaviours in space and time. We ask whether the information content of these data can be maximized for the express purpose of trend estimation. We develop and apply a novel framework that assigns every citizen science sampling event a marginal value, derived from the importance of that observation to our understanding of overall population trends. We then make this framework predictive, estimating the expected marginal value of future biodiversity observations. We find that past observations are useful in forecasting where high-value observations will occur in the future. Interestingly, we find high value in both ‘hotspots’, which are frequently sampled locations, and ‘coldspots’, which are areas far from recent sampling, suggesting that an optimal sampling regime balances ‘hotspot’ sampling with a spread across the landscape.

Keywords: citizen science, biodiversity, spatial and temporal sampling, dynamic models, predictive modelling

1. Introduction

Assessing biodiversity trends in space and time is essential for conservation [1–5]. Reliable biodiversity trend estimates, at multiple spatial scales [6], allow us to track our global progress in curbing biodiversity loss while managing our scarce conservation resources [1]. Unsurprisingly, reliable trend estimates are best derived from long-term [7,8], well-designed surveys carried out over wide spatial and temporal scales [1,9,10]. But scientific funding for long-term ecological and conservation research is failing to keep pace with conservation needs [11,12]. Increasingly, government agencies, scientific researchers and conservationists are turning to citizen science data to help assess the state of biodiversity at local [13–16], regional [17,18] and global scales [19–21].

Citizen science, the cooperation between scientific experts and non-experts, is an incredibly diverse and rapidly expanding field [22–24]. Projects generally fall along a continuum based on their level of structure [25,26], ranging from unstructured (e.g. opportunistic or incidental projects which require little to no training; iNaturalist) to structured (e.g. projects with specific objectives, rigorous protocols and survey design; UK Butterfly Monitoring Scheme). The level of structure, in turn, influences the degree of redundancies and gaps in the data, as well as the overall data quality of a particular project. For instance, observer skill [27], the number of participants in a group and the technological capabilities of a participant may influence the data collected by some, but not necessarily all, citizen science projects. Common to most citizen science projects, however, are redundancies and gaps (i.e. spatial and temporal biases) [28,29]. Observers submitting observations on weekends [30], sampling near roads and human settlements [31], and visiting known ‘hotspots’ for biodiversity [32] are all examples of how unstructured human behaviour leads to redundancies and gaps in citizen science data [33]. These biases are not restricted to citizen science projects. Indeed, our historical understanding of biodiversity is also biased by variation in sampling effort, as is evident from natural history collections [28,34]. Many methods have been proposed to optimize biodiversity sampling by professional scientists [35–39], with the optimal design frequently depending on spatial scale [40]. While structured citizen science projects often adopt some aspects of optimal sampling in their methods (e.g. stratified sampling), little attention has been given to optimal sampling in unstructured citizen science projects [1].

One reason that optimal sampling has been largely ignored in unstructured citizen science projects is that redundancies and gaps in the data are seen as a ‘necessary hurdle’ [41]. Also, for broad-scale biodiversity datasets collected in large volumes, the biases can generally be accounted for statistically [42,43]; for instance, by filtering or subsetting data [44], pooling multiple data sources [45], or using machine learning and hierarchical clustering techniques [31,46]. Indeed, despite known biases, citizen science data have advanced species distribution modelling [47,48] and increased our knowledge of niche breadth [49], biodiversity [20,50], phenology [51,52], invasive species detection [53,54] and phylogeography [55,56].

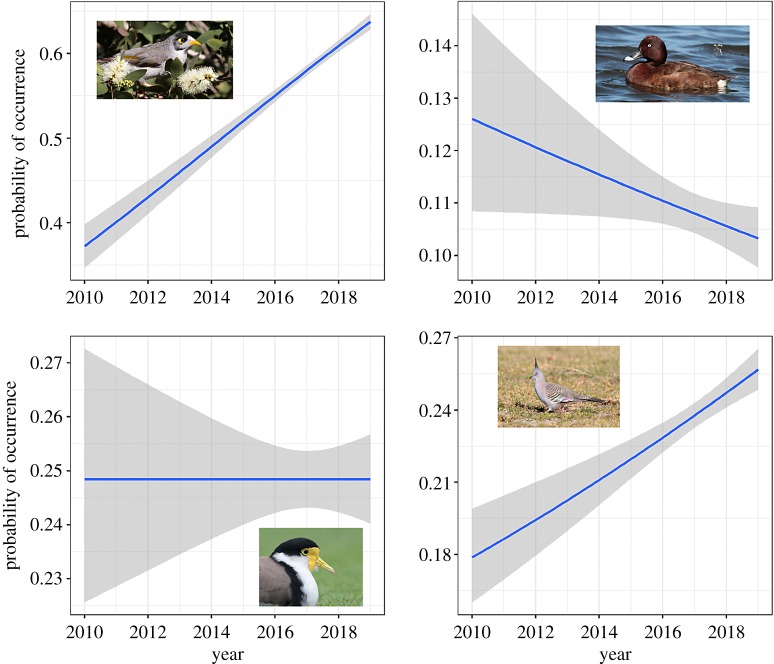

Of the many potential uses of citizen science data, estimating biodiversity trends is perhaps the most important, given the current need to monitor biodiversity efficiently and effectively [1,4,5]. From a conservation perspective, the goal is relatively straightforward: provide robust measures of species' trends through time, a critical component of the IUCN Red List index [57]. Trends are best estimated with data from structured citizen science projects, which have fewer biases to account for and generally yield greater certainty [18]. But unstructured and semistructured projects are increasingly harnessed for trend detection [10,58–62]. The robustness of these trend estimates is critical, and the goal should be to continuously decrease the uncertainty surrounding them (e.g. figure 1). Unsurprisingly, this uncertainty generally depends on the number of observations and on how appropriately they are distributed through time (e.g. https://github.com/coreytcallaghan/optimizing-citizen-science-sampling/blob/master/Figures/Noisy_miner_gif.gif).

Figure 1.

The ultimate goal in understanding population trends is to minimize the uncertainty of a population trend model, providing more robust measures of population trends. Shown here are four example population trend models, based on eBird data between 2010 and 2018, for noisy miner (top left), hardhead (top right), masked lapwing (bottom left) and crested pigeon (bottom right), in the Greater Sydney Region, Australia. Each model incorporates approximately 26 000 biodiversity sampling events. (Online version in colour.)

The number of citizen science projects focused on ecological and environmental monitoring is increasing [14,24], highlighting the potential that citizen science holds for the future of ecology, conservation and natural resource management [20,63–65]. But a major obstacle to the future use of citizen science data remains understanding how best to extract information from ‘noisy’ citizen science datasets [41]. As noted above, this noise [29] can sometimes be alleviated using ‘big data’ statistical approaches [31], but these are most applicable to data from large, successful citizen science projects with abundant data, and even then they are not always sufficient. But what about projects that are just beginning? Or projects focusing on taxa that are less popular with the general public [66,67]? Are there optimal strategies for sampling in space and time for estimating biodiversity trends?

Here, we investigate these questions with a specific objective: to assess how spatial and temporal sampling by citizen scientists influences the detection of biodiversity trends. Our approach is dynamic: we are interested in the parameters that influence the value of a given citizen science sampling event in both time and space. To do this, we (i) used 25 995 eBird citizen science sampling events, (ii) analysed linear trends for 235 species, (iii) calculated a measure of statistical leverage (i.e. marginal value), the influence a given observation has on the population trend model of a species, for every checklist for each species, (iv) summed the leverages on a given checklist to provide a measure of marginal value for every checklist (i.e. the cumulative value of a sampling event to our total knowledge of species' trends, across many species), (v) tested specific predictions (appendix 1 in the electronic supplementary material) about factors which may influence the marginal value of a citizen science sampling event [33], and (vi) used these associations to predict the expected marginal value on a daily basis.

2. Methods

We tested our predictions throughout the Greater Sydney Region (approx. 12 400 km2), delineating grids of varying cell size (5, 10, 25 and 50 km2) across the region, where each grid cell represented a ‘site’. We used the R statistical environment [68] to carry out all analyses, relying heavily on the tidyverse [69], ggplot2 [70] and sf [71] packages.
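Assigning each checklist to a site amounts to mapping its coordinates onto a square grid. The sketch below is a minimal illustration, not the authors' code (which used R and the sf package): it assumes coordinates have already been projected to a metric coordinate reference system, treats each grain size as the side length in kilometres of a square cell anchored at a chosen origin, and the function name `grid_cell_id` is hypothetical.

```python
from __future__ import annotations

import math


def grid_cell_id(x_m: float, y_m: float,
                 origin_x_m: float, origin_y_m: float,
                 cell_size_km: float) -> tuple[int, int]:
    """Return (column, row) of the square cell containing a projected point.

    Coordinates are in metres; cell_size_km is treated as the side length
    of a cell (an assumption of this sketch).
    """
    size_m = cell_size_km * 1000.0
    # floor() keeps points west/south of the origin in negative indices
    col = math.floor((x_m - origin_x_m) / size_m)
    row = math.floor((y_m - origin_y_m) / size_m)
    return col, row


# The same point falls into different sites at different grain sizes:
# 5 -> (6, 2), 10 -> (3, 1), 25 -> (1, 0), 50 -> (0, 0)
for grain in (5, 10, 25, 50):
    print(grain, grid_cell_id(31_200.0, 12_800.0, 0.0, 0.0, grain))
```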

In order to test our predictions, we used the eBird basic dataset (version ebd_relDec-2018; available at https://ebird.org/data/download), subsetting the data to the period from 1 January 2010 to 31 December 2018. eBird is a successful citizen science project with more than 600 million observations contributed by more than 400 000 participants globally [15,72,73]. eBird relies on volunteer birdwatchers who collect data in the form of ‘checklists’: lists of all species identified (audibly or visually) at given spatio-temporal coordinates. To ensure data quality [72], eBird relies on an extensive network of regional reviewers who are local experts on the avifauna [74].

(a). Trend detection model

We first filtered the eBird basic dataset [51,75,76] using the following criteria: (i) we only included complete checklists, (ii) we only included terrestrial bird species, (iii) we removed any nocturnal checklists, (iv) we only included checklists which were longer than 5 min and shorter than 240 min in duration, (v) we only included checklists which travelled less than 5 km or covered less than 500 ha, and (vi) we only included checklists with more than four species, as checklists with fewer species were likely to be targeted searches for particular species [58,77].
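The six filtering criteria can be expressed as a single pass over the checklists. The sketch below is illustrative only: the `Checklist` fields, the `terrestrial` species set and the function name are hypothetical; it assumes the species-count criterion is applied after non-terrestrial species have been removed; and it reads criterion (v) as applying the 5 km limit to travelling counts and the 500 ha limit to area counts, so stationary counts pass.

```python
from __future__ import annotations

from dataclasses import dataclass, replace
from typing import Iterable, Optional


@dataclass(frozen=True)
class Checklist:
    checklist_id: str
    complete: bool                # observer reported every species detected
    nocturnal: bool
    duration_min: float
    distance_km: Optional[float]  # travelling counts only; None otherwise
    area_ha: Optional[float]      # area counts only; None otherwise
    species: frozenset[str]


def filter_checklists(checklists: Iterable[Checklist],
                      terrestrial: set[str]) -> list[Checklist]:
    kept = []
    for c in checklists:
        if not c.complete or c.nocturnal:                      # (i), (iii)
            continue
        if not 5 < c.duration_min < 240:                       # (iv)
            continue
        if c.distance_km is not None and c.distance_km >= 5:   # (v) travelling
            continue
        if c.area_ha is not None and c.area_ha >= 500:         # (v) area
            continue
        land_birds = frozenset(c.species & terrestrial)        # (ii)
        if len(land_birds) <= 4:                               # (vi) targeted search
            continue
        kept.append(replace(c, species=land_birds))
    return kept
```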

For every species with more than 50 observations (n = 235), we fitted a generalized linear model using the ‘glm’ function in R, based on presence/absence with a binomial family distribution [58,60]. The models consisted of a continuous term for day, beginning 1 January 2010, and a categorical term for county, providing a spatial component to the models (e.g. figure 1). We also included an offset term for the number of species recorded on a given eBird checklist, accounting for the temporal and spatial effort of that checklist [77]. This specific linear model may not be suitable for species whose detection probabilities vary throughout the full annual cycle, but a large suite of models can be used within our framework. A total of 25 995 sampling events (i.e. eBird checklists) was used to fit each model. For the top 50 species in our analysis, we further investigated the robustness of these trend estimates with respect to sample size (appendix 2 in the electronic supplementary material).
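To make the core of the trend model concrete, the following sketch fits logit(p) = b0 + b1 × day + offset by iteratively reweighted least squares (IRLS), the algorithm R's `glm` uses for binomial models. It is a simplification, not the authors' model: it omits the categorical county term to stay short and dependency-free, it takes the offset as a precomputed per-checklist input (the exact transformation of species count is treated as an assumption), and the function name `fit_logistic_trend` is hypothetical.

```python
import math
from typing import Optional, Sequence, Tuple


def fit_logistic_trend(day: Sequence[float], present: Sequence[int],
                       offset: Optional[Sequence[float]] = None,
                       max_iter: int = 50,
                       tol: float = 1e-10) -> Tuple[float, float]:
    """Fit logit(p_i) = b0 + b1 * day_i + offset_i by IRLS; return (b0, b1)."""
    if offset is None:
        offset = [0.0] * len(day)
    b0 = b1 = 0.0
    for _ in range(max_iter):
        # Accumulate X'WX (2x2, symmetric) and X'Wz for the working response z.
        s00 = s01 = s11 = t0 = t1 = 0.0
        for x, y, o in zip(day, present, offset):
            eta = b0 + b1 * x + o
            mu = 1.0 / (1.0 + math.exp(-eta))
            w = mu * (1.0 - mu)              # binomial variance = IRLS weight
            z = (eta - o) + (y - mu) / w     # working response, offset removed
            s00 += w
            s01 += w * x
            s11 += w * x * x
            t0 += w * z
            t1 += w * x * z
        # Solve the 2x2 weighted normal equations directly.
        det = s00 * s11 - s01 * s01
        new_b0 = (s11 * t0 - s01 * t1) / det
        new_b1 = (s00 * t1 - s01 * t0) / det
        step = abs(new_b0 - b0) + abs(new_b1 - b1)
        b0, b1 = new_b0, new_b1
        if step < tol:
            break
    return b0, b1
```

A positive `b1` indicates an increasing probability of recording the species over time. With real data spanning thousands of days, rescaling day (e.g. to years since 2010) keeps the linear predictor small and avoids overflow in `math.exp`.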

(b). Statistical leverage

Statistical leverage measures the influence of a particular observation on the predicted relationship between the dependent and independent variables [78]. In other words, it is a measure of how much a given observation affects the statistical model. In our instance, as is likely to be the case for all trend detection models, we had multiple predictor variables. Because trend detection concerns one specific model parameter, the temporal component, dfBeta is more appropriate than Cook's distance, which summarizes an observation's influence on all parameters jointly [79]. dfBeta measures the change in one model parameter after omitting the ith observation from the dataset [79,80]. It follows the formula

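$$\mathrm{dfBeta}_i = \frac{(X^{\top}X)^{-1}\,x_i^{\top}\,r_i}{1 - h_i},$$

where $X$ is the predictor variable matrix, $r_i$ the $i$th element of the residual vector $r$, $h_i$ the $i$th diagonal element of the hat matrix $H = X(X^{\top}X)^{-1}X^{\top}$, and $x_i$ the $i$th row of matrix $X$. The value of dfBeta tends to decline as the number of observations increases, because the trend becomes well understood.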
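The dfBeta formula can be checked numerically. The sketch below is a minimal example for an ordinary least-squares line with an intercept and one slope, a simplification of the paper's binomial GLM (for GLMs, influence measures are typically computed with an IRLS-weighted analogue of this formula); the function names are hypothetical. It computes dfBeta for every observation from the closed form, using the convention dfBeta_i = b − b_(i), and the test compares each value with the change obtained by actually refitting without that observation.

```python
from typing import List, Sequence, Tuple


def ols_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Least-squares intercept and slope."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b1 = sxy / sxx
    return my - b1 * mx, b1


def dfbeta_line(xs: Sequence[float],
                ys: Sequence[float]) -> List[Tuple[float, float]]:
    """dfBeta_i = (X'X)^{-1} x_i' r_i / (1 - h_i) for X = [1, x].

    Returns (change in intercept, change in slope) per observation,
    using the convention b_full - b_without_i.
    """
    n = len(xs)
    b0, b1 = ols_line(xs, ys)
    sx, sxx = sum(xs), sum(x * x for x in xs)
    det = n * sxx - sx * sx
    # (X'X)^{-1} for the symmetric 2x2 matrix [[n, sx], [sx, sxx]]
    i00, i01, i11 = sxx / det, -sx / det, n / det
    out = []
    for x, y in zip(xs, ys):
        r = y - (b0 + b1 * x)          # residual r_i
        g0 = i00 + i01 * x             # (X'X)^{-1} x_i', intercept component
        g1 = i01 + i11 * x             # (X'X)^{-1} x_i', slope component
        h = g0 + g1 * x                # leverage h_i = x_i (X'X)^{-1} x_i'
        out.append((g0 * r / (1 - h), g1 * r / (1 - h)))
    return out
```

Observations far from the mean of x have a larger h_i, so a given residual moves the slope more; this is why isolated samples in time can carry a large dfBeta for the temporal term.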

In our case, every sampling event received a dfBeta value for each species (i.e. each species received 25 995 dfBeta values), calculated using the ‘dfbetas’ function in R [68]. The statistical leverage of a given checklist was then the sum of the absolute dfBeta values across all 235 species. This measure thus represented a checklist's influence on our understanding of cumulative species' trends throughout the Greater Sydney Region, and accordingly the marginal value of that particular checklist. Note that a checklist on which a species was not recorded still contributes a dfBeta for that species, and such absences can be important in detecting a species' decline.
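Summing absolute dfBeta values across species is a simple aggregation. The sketch below assumes that the dfBeta for the day term has already been computed for each species and stored in a nested dictionary keyed by species and then by checklist identifier; this data layout, the toy values and the function name are illustrative assumptions.

```python
from collections import defaultdict
from typing import Dict


def checklist_marginal_value(
        dfbeta_by_species: Dict[str, Dict[str, float]]) -> Dict[str, float]:
    """Sum |dfBeta| for the day term over species, per checklist."""
    value: Dict[str, float] = defaultdict(float)
    for per_checklist in dfbeta_by_species.values():
        for checklist_id, d in per_checklist.items():
            # absences (species not recorded) contribute as well
            value[checklist_id] += abs(d)
    return dict(value)


# Toy values for two species on two checklists (not real data).
example = {
    "noisy miner": {"S1": 0.20, "S2": -0.10},
    "hardhead": {"S1": -0.05, "S2": 0.30},
}
print(checklist_marginal_value(example))
```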

(c). Parameter calculation

After fitting the trend models to the 2010–2018 data, we calculated the parameters of interest for each day in 2018 (n = 365). For each grid cell, at each grain size, we dynamically calculated the following parameters, related to our predictions (appendix 1 in the electronic supplementary material): (i) whether the grid cell had ever been sampled, (ii) the distance to the nearest sampled grid cell, (iii) the median sampling interval of the grid cell, (iv) the median sampling interval of the nearest sampled grid cell, (v) the number of days since the last sample in the grid cell, (vi) the duration of sampling in the grid cell (most recent sample date minus earliest sample date), and (vii) the number of unique sampling days within the grid cell. Note that these parameters depend only on sampling in the days before a given observation and do not consider sampling in subsequent days.
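The temporal parameters for a single grid cell, and the distance to the nearest sampled cell, can be computed from sampling dates and cell indices as sketched below. This is a hypothetical helper, not the authors' implementation: days are assumed to be integers counted from 1 January 2010, only samples strictly before the focal day are used (matching the note above), the median sampling interval is assumed to be the median gap between consecutive unique sampling days, and distances are measured between cell centroids on a square grid.

```python
import math
from statistics import median
from typing import Dict, Iterable, Optional, Set, Tuple

Cell = Tuple[int, int]   # (column, row) on a square grid


def cell_parameters(sample_days: Iterable[int], today: int) -> Dict[str, object]:
    """Sampling-history parameters for one cell, using only days before `today`."""
    past = sorted({d for d in sample_days if d < today})
    if not past:
        return {"ever_sampled": False}
    gaps = [later - earlier for earlier, later in zip(past, past[1:])]
    return {
        "ever_sampled": True,
        "days_since_last": today - past[-1],
        # undefined until the cell has been sampled on at least two days
        "median_interval": median(gaps) if gaps else None,
        "n_unique_days": len(past),
        "duration": past[-1] - past[0],
    }


def nearest_sampled_distance(cell: Cell, sampled: Set[Cell],
                             cell_size_km: float) -> Optional[float]:
    """Centroid distance (km) to the nearest other sampled cell."""
    others = [c for c in sampled if c != cell]
    if not others:
        return None
    return cell_size_km * min(math.dist(cell, c) for c in others)
```

The nearest neighbour's median sampling interval, parameter (iv), can then be read from `cell_parameters` evaluated for that neighbour.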

We then matched the leverage of each checklist submitted in 2018 (see above) to the parameters of the grid cell in which it was sampled, as calculated for that day. We ran a separate linear regression for each grain size to investigate which parameters could forecast checklist influence. Duration was highly correlated with median sampling interval at most grain sizes and was therefore excluded prior to modelling. Because the correlations among parameters varied among grain sizes (appendix 3 in the electronic supplementary material), we aimed for a simple, robust model. All predictor variables were log-transformed and then standardized prior to modelling, so that their effect sizes were directly comparable. The response variable, dfBeta (i.e. marginal value), was log-transformed prior to modelling to meet model assumptions. Thus, the final model regressed log-transformed dfBeta against the log-transformed, standardized median sampling interval, number of days sampled, days since the last sample, distance to the nearest sampled neighbour, and the neighbour's median sampling interval.
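The transformation and regression steps can be sketched in pure Python as follows. This is illustrative only: the paper fitted its models in R, the helper names are hypothetical, and ordinary least squares is solved here through the normal equations with Gauss-Jordan elimination, which is adequate for a handful of predictors but less numerically robust than the QR decomposition used by standard statistical software. Predictors must be strictly positive before log-transformation.

```python
import math
from statistics import mean, stdev
from typing import Dict, List, Sequence


def log_standardize(values: Sequence[float]) -> List[float]:
    """Log-transform, then centre on the mean and scale by the sample SD."""
    logged = [math.log(v) for v in values]   # values must be > 0
    m, s = mean(logged), stdev(logged)
    return [(v - m) / s for v in logged]


def _solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= f * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_lm(predictors: Dict[str, Sequence[float]],
           y: Sequence[float]) -> Dict[str, float]:
    """OLS of y on an intercept plus each named predictor (normal equations)."""
    names = list(predictors)
    rows = [[1.0] + [predictors[k][i] for k in names] for i in range(len(y))]
    p = len(names) + 1
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(p)]
    return dict(zip(["(Intercept)"] + names, _solve(xtx, xty)))
```

In use, the response would be `[math.log(v) for v in marginal_values]` and each predictor column would pass through `log_standardize`, so every coefficient is the change in log marginal value per standard deviation of the log-transformed predictor.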

After fitting this model, we used the ‘augment’ function from the broom package [81] to predict the expected leverage for every grid cell in the Greater Sydney Region, for every day. Unsampled grid cells were assigned the mean of the sampled grid cells, because we lacked evidence that unsampled cells were significantly more valuable than sampled cells. Where one grid cell had multiple predicted leverages (i.e. where it had more than one checklist on a day), we randomly selected one of these expected leverages. This prediction process was repeated for every day of 2018.
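The daily prediction step, with mean imputation for unsampled cells and random selection where a cell has several predictions, might look like the sketch below. It assumes the per-checklist predictions for one day are already available as (cell, value) pairs, and that the imputed mean is taken across sampled cells after one value per cell has been selected; both this ordering and the function name `daily_expected_value` are assumptions.

```python
import random
from statistics import mean
from typing import Dict, Hashable, Iterable, List, Tuple


def daily_expected_value(predictions: Iterable[Tuple[Hashable, float]],
                         all_cells: Iterable[Hashable],
                         rng: random.Random) -> Dict[Hashable, float]:
    """Expected marginal value for every cell on one day.

    predictions: (cell, predicted value) for each checklist that day; a cell
    may appear several times. At least one prediction is required.
    Unsampled cells receive the mean of the sampled cells' selected values.
    """
    by_cell: Dict[Hashable, List[float]] = {}
    for cell, value in predictions:
        by_cell.setdefault(cell, []).append(value)
    # keep one randomly chosen prediction per sampled cell
    chosen = {cell: rng.choice(values) for cell, values in by_cell.items()}
    fill = mean(list(chosen.values()))
    return {cell: chosen.get(cell, fill) for cell in all_cells}
```

Passing an explicit `random.Random` instance makes the random selection reproducible across runs.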

3. Results and discussion

(a). Tests of predictions

We found weak evidence that visiting an unsampled site was more valuable than visiting an already sampled site, although the importance of sampling unsampled sites increased with grain size. There was no statistical clarity for the 5 km (p = 0.669; effect size = −0.25 ± 0.58) and 10 km (p = 0.093; effect size = 1.27 ± 0.76) grain sizes, but there was for the 25 km (p = 0.035; effect size = 2.98 ± 1.42) grain size. At the 50 km grain size, this test was not possible because all sites had been sampled. These results suggest that stratified sampling, an approach which aims for equal sampling among sites [38,57], is not necessarily the most appropriate approach for detecting trends using citizen science data. In other words, citizen scientists are likely already sampling biodiversity sufficiently in space: they appropriately identify and sample the ‘hotspots’ that should receive the most sampling attention. However, citizen scientists' focus on ‘popular’ locations (e.g. spots known for their bird diversity) could preclude the discovery of other potential ‘hotspots’ in the same region.

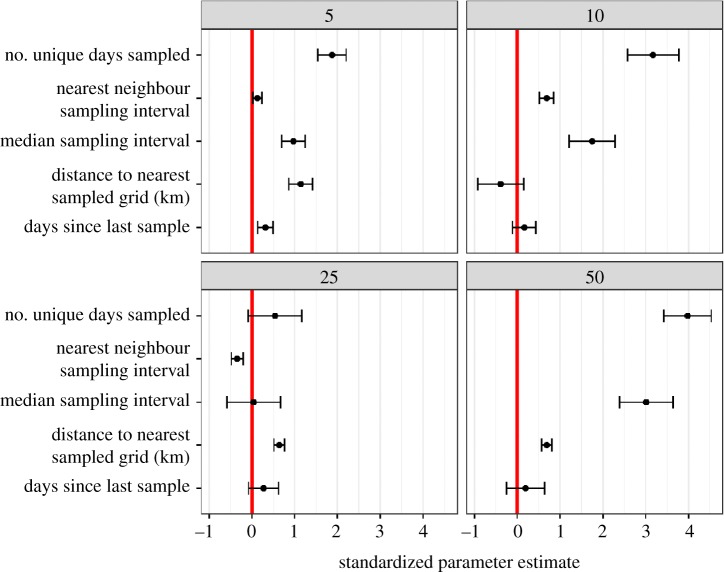

For sampled sites, however, we found a generally positive relationship between several of our predictor parameters (detailed predictions can be found in appendix 1 in the electronic supplementary material) and the marginal value of a sampling event (figure 2). Full summary statistics for each predictor can be found in appendix 4 in the electronic supplementary material; the range, median and interquartile range, respectively, at each grain size are given in parentheses after each parameter below. The number of unique days sampled (5 km: 1–1222, 25, 228; 10 km: 1–1894, 79, 424; 25 km: 1–2946, 1103, 2071.5; 50 km: 11–3004, 2284, 808), which probably reflects known ‘hotspots’ identified by citizen scientists, had the strongest positive effect size, and this was robust across grain sizes. The median sampling interval (5 km: 1–2450, 31, 124; 10 km: 1–1401, 11, 57; 25 km: 1–821, 1, 5; 50 km: 1–193, 1, 0) was also strongly associated with high-value samples, except at the 25 km grain size. Distance to the nearest sampled site (5 km: 1.9–19.7, 5, 2.1; 10 km: 0–23.1, 10, 0.7; 25 km: 14.8–25, 22, 4.3; 50 km: 32.3–45.1, 37.5, 3.6) and the nearest-neighbour sampling interval (5 km: 1–2450, 43.5, 170; 10 km: 1–1401, 14, 54; 25 km: 1–821, 2, 5; 50 km: 1–1, 1, 0) influenced the value of a sampling event less than the other parameters. Surprisingly, the number of days since the last sample (5 km: 1–2935, 39, 228; 10 km: 1–1645, 10, 86; 25 km: 1–693, 1, 4; 50 km: 1–316, 1, 0), while positively associated, had less influence than the other parameters. See figure 2 for standardized parameter estimates (i.e. effect sizes). The fact that days since the last sample had a lower effect size than both the median sampling interval and the number of unique days sampled highlights the value of ‘revisiting’ a site (i.e. a ‘hotspot’) in order to extract the maximum amount of information.

Figure 2.

The parameter estimates (and 95% confidence intervals) for four separate linear models (i.e. at each of the respective grain sizes), showing the relative strength of the results and effect sizes for each of the predictors. Full summary statistics for each of the predictors can be found in appendix 4 in the electronic supplementary material. Variables were log-transformed and then standardized, allowing for direct comparison among effect sizes. (Online version in colour.)

The ‘history’ of a site is particularly important when considering whether to sample that site: the number of unique days sampled was the strongest predictor at all grain sizes except 25 km (where it was the second strongest), suggesting that observations from sites with a long time series are relatively more valuable. Because larger median sampling intervals were positively associated with the marginal value of a citizen science observation, a secondary goal could be to decrease the median sampling interval of sites (i.e. left-shift its distribution) by targeting sites with the largest median sampling intervals, providing some structure to unstructured citizen science projects.

Our results were generally consistent among grain sizes, albeit with variation in individual parameters: no predictor was significant at every grain size. Nevertheless, our findings appear to be robust to spatial scale, at least at a regional level. It is critical to track biodiversity trends at multiple spatial scales [6], as biodiversity estimates sometimes change with spatial scale [40]. Compared with other regions in Australia, the distribution of sampled grids in Sydney is generally similar: many grids are unsampled or sampled only a few times, with large variation among the remaining grids (appendix 5 in the electronic supplementary material). Different regions thus share the same underlying ‘starting point’ in the current sampling regime, suggesting that our results are generalizable among regions. However, this may only apply at a regional scale, and future work should investigate these patterns at large, continental scales, where the grain size is proportional to the spatial scale of the study. For example, across all of Australia, unsampled regions are likely to be significantly more important because there are many ‘gaps’ in the data, and effort could thus be redirected from well-sampled regions to unsampled regions.

(b). Applications of our predictions

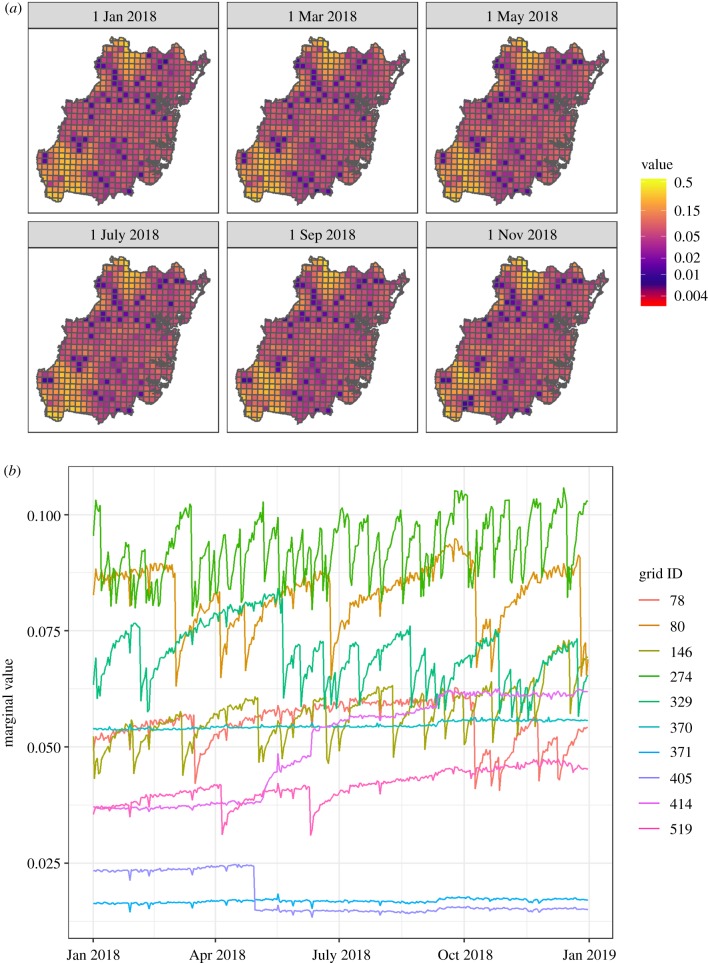

Providing dynamic feedback to citizen science participants has proved successful for many citizen science projects [44,82,83]. This feedback is generally in the form of leaderboards, presenting the number of submissions or number of unique species someone has contributed [84]. But leaderboards tend to focus on outputs, incentivizing finding rather than looking, which can lead to perverse outcomes that exacerbate the redundancies and gaps in citizen science data. We sought to develop an outcome-based incentive by using our fitted models to predict the expected value of a given citizen science observation, dynamically, for any given day (e.g. figure 3; https://github.com/coreytcallaghan/optimizing-citizen-science-sampling/blob/master/Figures/dynamic_map.gif). This approach required us first to analyse past data: we fitted a model to all observations from 2018, using statistical leverage calculated from the 2010–2018 trend models, in order to predict the expected marginal value for any given day. We envision a dynamic approach (https://github.com/coreytcallaghan/optimizing-citizen-science-sampling/blob/master/Figures/dynamic_map.gif) in future citizen science projects, which would ultimately guide participants to the sites that should be sampled on any given day, or in a given week, month or year. Leaderboards would then move beyond numbers of species or submissions and could instead be based on a participant's cumulative value to the citizen science dataset. Instead of preferentially chasing specific species, participants would be guided to the sites with the highest expected marginal value for the biodiversity dataset. For example, we imagine visitor centres at national parks or urban greenspaces around the world providing visitors with a daily localized map showing which trail to visit to contribute the greatest value to that park's biodiversity knowledge through citizen science.
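A value-based leaderboard of the kind envisioned here could be as simple as summing each participant's checklist values. The sketch below is hypothetical: it assumes each checklist has already been scored (for example with the summed absolute dfBeta described in the Methods, or with its expected marginal value) and tagged with an observer identifier.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def value_leaderboard(
        scored: Iterable[Tuple[str, float]]) -> List[Tuple[str, float]]:
    """Rank observers by the summed marginal value of their checklists."""
    totals: Dict[str, float] = defaultdict(float)
    for observer, value in scored:
        totals[observer] += value
    # highest cumulative value first; ties keep first-seen order (stable sort)
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)
```

Unlike a species-count leaderboard, a participant climbs this ranking mainly by submitting checklists from sites and days with high marginal value, which is the incentive the framework aims to create.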
The global pull of ecotourism [85] is increasing exponentially, creating the potential for people to contribute to local biodiversity knowledge in areas that are traditionally undersampled, and with this framework, the collective effort of citizen scientists can be maximized.

Figure 3.

(a) A map of predicted expected marginal value for six different days in 2018, throughout the Greater Sydney Region, showing the highest-valued sites that would optimize the collective knowledge on biodiversity trends throughout the region. This prediction step is dynamic: predictions are updated as new observations are submitted to the citizen science database. Expected marginal value maps will need frequent updates in parts of the world where sampling rates are high, but can be updated less often where sampling is infrequent; the appropriate update rate will also vary among projects. (b) The changes in expected marginal value during 2018 for 10 randomly chosen grid cells at the 5 km2 grid size. Some sites' expected marginal values remain relatively constant through time (e.g. grid 371), others are highly variable (e.g. grid 274), and others undergo distinct step-changes (e.g. grid 405) depending on whether that grid was recently sampled. This is a dynamic approach, showing how the expected marginal value of a grid changes based on our parametrized model results (https://github.com/coreytcallaghan/optimizing-citizen-science-sampling/blob/master/Figures/dynamic_map.gif). (Online version in colour.)

Another critical component of efficiently directing and maximizing the collective effort of citizen scientists is understanding the thresholds necessary for reliable trend estimates. If the minimum number of sampling events for a region is understood, then citizen science effort could be directed to areas where these thresholds are not yet met. Our preliminary analysis of the top 50 species found that approximately 11 700 checklists were necessary for a 50% reduction/convergence in the slope estimate based on our model (appendix 2 in the electronic supplementary material). This result is comparable with a study in the USA which found that approximately 10 000 eBird checklists were necessary to provide reliable trend estimates [60]. Future work should investigate critical thresholds for biodiversity analyses and how these interact with efficiently directing citizen science effort.

We focused our framework on a specific statistical outcome: trend detection. Many other ecological outcomes arise from citizen science datasets, including species distribution models [47,48], phylogeographical research [55,56], invasive species detection [53,54] and phenological research [51,52]. Each potential outcome will have different optimal sampling strategies in space and time, probably with nuanced trade-offs between outcomes. For example, a species distribution model would be likely to place greater value on observations from unsampled sites [86] than would trend detection. But these different outcomes can still be quantified in the framework we introduce here, which could be applied to a wide suite of statistical models, including models for different taxa and more complicated trend analyses that account for detection probabilities varying within a year. The key requirement is some measure of statistical leverage that can be calculated from the chosen statistical model.

(c). Conclusions

Since eBird's inception in 2002, citizen scientists have collectively contributed more than 30 million effort hours. And this is only one citizen science project, focused on birds. Our approach should be tested for other taxonomic groups to ensure generalizability. Clearly, the cumulative effort put forth by citizen scientists is immense; arguably, citizen science will continue to shape the future of ecology and conservation, as it has substantially for the past couple of centuries [63], with an increasingly critical role in monitoring of biodiversity [20,65]. But we need to look towards the future. Are there mechanisms we can put in place now which will increase our collective knowledge gleaned from citizen science datasets for biodiversity in the future? We highlight general rules which could help guide citizen science participants to better sampling in space and time: both the number of unique days sampled and the median sampling interval of a site are positively correlated with the marginal value of a citizen science observation. Moreover, we demonstrate a framework which citizen science projects can implement to better optimize their sampling designs, and which can be tailored to specific project goals.

Supplementary Material

Acknowledgements

We thank the countless eBird contributors who are continuously making open-access bird observation data available, and the eBird team at the Cornell Lab for curating this valuable dataset. We also thank two anonymous reviewers who helped improve the manuscript.

Data accessibility

All eBird data are freely available for download (https://ebird.org/data/download), but the necessary portion of the eBird basic dataset (i.e. for the Greater Sydney Region), along with spatial data and code to reproduce our analyses, are available at: https://doi.org/10.5281/zenodo.3402307.

Authors' contributions

C.T.C., W.K.C., A.G.B.P., J.J.L.R. and R.E.M. conceived the study. C.T.C. and W.K.C. carried out the analysis and wrote the first draft of the manuscript. All authors contributed to editing and revising the manuscript.

Competing interests

We declare we have no competing interests.

Funding

We received no funding for this study.

References

- 1.Harrison PJ, Buckland ST, Yuan Y, Elston DA, Brewer MJ, Johnston A, Pearce-Higgins JW. 2014. Assessing trends in biodiversity over space and time using the example of British breeding birds. J. Appl. Ecol. 51, 1650–1660. ( 10.1111/1365-2664.12316) [DOI] [Google Scholar]

- 2.Wilson CD, Roberts D. 2011. Modelling distributional trends to inform conservation strategies for an endangered species. Divers. Distrib. 17, 182–189. ( 10.1111/j.1472-4642.2010.00723.x) [DOI] [Google Scholar]

- 3.McMahon SM, et al. 2011. Improving assessment and modelling of climate change impacts on global terrestrial biodiversity. Trends Ecol. Evol. 26, 249–259. ( 10.1016/j.tree.2011.02.012) [DOI] [PubMed] [Google Scholar]

- 4.Honrado JP, Pereira HM, Guisan A. 2016. Fostering integration between biodiversity monitoring and modelling. J. Appl. Ecol. 53, 1299–1304. ( 10.1111/1365-2664.12777) [DOI] [Google Scholar]

- 5.Yoccoz NG, Nichols JD, Boulinier T. 2001. Monitoring of biological diversity in space and time. Trends Ecol. Evol. 16, 446–453. ( 10.1016/S0169-5347(01)02205-4) [DOI] [Google Scholar]

- 6. Soberón J, Jiménez R, Golubov J, Koleff P. 2007. Assessing completeness of biodiversity databases at different spatial scales. Ecography 30, 152–160. (doi:10.1111/j.0906-7590.2007.04627.x)

- 7. Lindenmayer DB, et al. 2012. Value of long-term ecological studies. Austral Ecol. 37, 745–757. (doi:10.1111/j.1442-9993.2011.02351.x)

- 8. Magurran AE, Baillie SR, Buckland ST, Dick JM, Elston DA, Scott EM, Smith RI, Somerfield PJ, Watt AD. 2010. Long-term datasets in biodiversity research and monitoring: assessing change in ecological communities through time. Trends Ecol. Evol. 25, 574–582. (doi:10.1016/j.tree.2010.06.016)

- 9. Vellend M, et al. 2017. Estimates of local biodiversity change over time stand up to scrutiny. Ecology 98, 583–590. (doi:10.1002/ecy.1660)

- 10. Kery M, Dorazio RM, Soldaat L, Van Strien A, Zuiderwijk A, Royle JA. 2009. Trend estimation in populations with imperfect detection. J. Appl. Ecol. 46, 1163–1172. (doi:10.1111/j.1365-2664.2009.01724.x)

- 11. Bakker VJ, Baum JK, Brodie JF, Salomon AK, Dickson BG, Gibbs HK, Jensen OP, McIntyre PB. 2010. The changing landscape of conservation science funding in the United States. Conserv. Lett. 3, 435–444. (doi:10.1111/j.1755-263X.2010.00125.x)

- 12. Ríos-Saldaña CA, Delibes-Mateos M, Ferreira CC. 2018. Are fieldwork studies being relegated to second place in conservation science? Glob. Ecol. Conserv. 14, e00389. (doi:10.1016/j.gecco.2018.e00389)

- 13. Callaghan CT, Gawlik DE. 2015. Efficacy of eBird data as an aid in conservation planning and monitoring. J. Field Ornithol. 86, 298–304. (doi:10.1111/jofo.12121)

- 14. Theobald EJ, et al. 2015. Global change and local solutions: tapping the unrealized potential of citizen science for biodiversity research. Biol. Conserv. 181, 236–244. (doi:10.1016/j.biocon.2014.10.021)

- 15. Sullivan BL, et al. 2017. Using open access observational data for conservation action: a case study for birds. Biol. Conserv. 208, 5–14. (doi:10.1016/j.biocon.2016.04.031)

- 16. Loss SR, Loss SS, Will T, Marra PP. 2015. Linking place-based citizen science with large-scale conservation research: a case study of bird-building collisions and the role of professional scientists. Biol. Conserv. 184, 439–445. (doi:10.1016/j.biocon.2015.02.023)

- 17. Barlow K, Briggs P, Haysom K, Hutson A, Lechiara N, Racey P, Walsh AL, Langton SD. 2015. Citizen science reveals trends in bat populations: the National Bat Monitoring Programme in Great Britain. Biol. Conserv. 182, 14–26. (doi:10.1016/j.biocon.2014.11.022)

- 18. Fox R, Warren MS, Brereton TM, Roy DB, Robinson A. 2011. A new Red List of British butterflies. Insect Conserv. Divers. 4, 159–172. (doi:10.1111/j.1752-4598.2010.00117.x)

- 19. Chandler M, et al. 2017. Contribution of citizen science towards international biodiversity monitoring. Biol. Conserv. 213, 280–294. (doi:10.1016/j.biocon.2016.09.004)

- 20. Pocock MJ, et al. 2018. A vision for global biodiversity monitoring with citizen science. Adv. Ecol. Res. 59, 169–223. (doi:10.1016/bs.aecr.2018.06.003)

- 21. Cooper CB, Shirk J, Zuckerberg B. 2014. The invisible prevalence of citizen science in global research: migratory birds and climate change. PLoS ONE 9, e106508. (doi:10.1371/journal.pone.0106508)

- 22. Jordan R, Crall A, Gray S, Phillips T, Mellor D. 2015. Citizen science as a distinct field of inquiry. Bioscience 65, 208–211. (doi:10.1093/biosci/biu217)

- 23. Newman G, Wiggins A, Crall A, Graham E, Newman S, Crowston K. 2012. The future of citizen science: emerging technologies and shifting paradigms. Front. Ecol. Environ. 10, 298–304. (doi:10.1890/110294)

- 24. Pocock MJ, Tweddle JC, Savage J, Robinson LD, Roy HE. 2017. The diversity and evolution of ecological and environmental citizen science. PLoS ONE 12, e0172579. (doi:10.1371/journal.pone.0172579)

- 25. Kelling S, et al. 2019. Using semistructured surveys to improve citizen science data for monitoring biodiversity. Bioscience 69, 170–179. (doi:10.1093/biosci/biz010)

- 26. Welvaert M, Caley P. 2016. Citizen surveillance for environmental monitoring: combining the efforts of citizen science and crowdsourcing in a quantitative data framework. Springerplus 5, 1890. (doi:10.1186/s40064-016-3583-5)

- 27. Kelling S, et al. 2015. Can observation skills of citizen scientists be estimated using species accumulation curves? PLoS ONE 10, e0139600. (doi:10.1371/journal.pone.0139600)

- 28. Boakes EH, McGowan PJ, Fuller RA, Chang-qing D, Clark NE, O'Connor K, Mace GM. 2010. Distorted views of biodiversity: spatial and temporal bias in species occurrence data. PLoS Biol. 8, e1000385. (doi:10.1371/journal.pbio.1000385)

- 29. Bird TJ, et al. 2014. Statistical solutions for error and bias in global citizen science datasets. Biol. Conserv. 173, 144–154. (doi:10.1016/j.biocon.2013.07.037)

- 30. Courter JR, Johnson RJ, Stuyck CM, Lang BA, Kaiser EW. 2013. Weekend bias in citizen science data reporting: implications for phenology studies. Int. J. Biometeorol. 57, 715–720. (doi:10.1007/s00484-012-0598-7)

- 31. Kelling S, Fink D, La Sorte FA, Johnston A, Bruns NE, Hochachka WM. 2015. Taking a ‘big data’ approach to data quality in a citizen science project. Ambio 44, 601–611. (doi:10.1007/s13280-015-0710-4)

- 32. Geldmann J, Heilmann-Clausen J, Holm TE, Levinsky I, Markussen B, Olsen K, Rahbek C, Tøttrup AP. 2016. What determines spatial bias in citizen science? Exploring four recording schemes with different proficiency requirements. Divers. Distrib. 22, 1139–1149. (doi:10.1111/ddi.12477)

- 33. Callaghan CT, Rowley JJL, Cornwell WK, Poore AG, Major RE. 2019. Improving big citizen science data: moving beyond haphazard sampling. PLoS Biol. 17, e3000357. (doi:10.1371/journal.pbio.3000357)

- 34. Pyke GH, Ehrlich PR. 2010. Biological collections and ecological/environmental research: a review, some observations and a look to the future. Biol. Rev. 85, 247–266. (doi:10.1111/j.1469-185X.2009.00098.x)

- 35. Etienne RS. 2005. A new sampling formula for neutral biodiversity. Ecol. Lett. 8, 253–260. (doi:10.1111/j.1461-0248.2004.00717.x)

- 36. Moreno CE, Halffter G. 2000. Assessing the completeness of bat biodiversity inventories using species accumulation curves. J. Appl. Ecol. 37, 149–158. (doi:10.1046/j.1365-2664.2000.00483.x)

- 37. Colwell RK, Coddington JA. 1994. Estimating terrestrial biodiversity through extrapolation. Phil. Trans. R. Soc. Lond. B 345, 101–118. (doi:10.1098/rstb.1994.0091)

- 38. Longino JT, Colwell RK. 1997. Biodiversity assessment using structured inventory: capturing the ant fauna of a tropical rain forest. Ecol. Appl. 7, 1263–1277. (doi:10.1890/1051-0761(1997)007[1263:BAUSIC]2.0.CO;2)

- 39. Ferrarini A. 2012. Biodiversity optimal sampling: an algorithmic solution. Proc. Int. Acad. Ecol. Environ. Sci. 2, 50.

- 40. Chase JM, Knight TM. 2013. Scale-dependent effect sizes of ecological drivers on biodiversity: why standardised sampling is not enough. Ecol. Lett. 16, 17–26. (doi:10.1111/ele.12112)

- 41. Parrish JK, Burgess H, Weltzin JF, Fortson L, Wiggins A, Simmons B. 2018. Exposing the science in citizen science: fitness to purpose and intentional design. Integr. Comp. Biol. 58, 150–160. (doi:10.1093/icb/icy032)

- 42. Isaac NJ, van Strien AJ, August TA, de Zeeuw MP, Roy DB. 2014. Statistics for citizen science: extracting signals of change from noisy ecological data. Methods Ecol. Evol. 5, 1052–1060. (doi:10.1111/2041-210X.12254)

- 43. Robinson OJ, Ruiz-Gutierrez V, Fink D. 2018. Correcting for bias in distribution modelling for rare species using citizen science data. Divers. Distrib. 24, 460–472. (doi:10.1111/ddi.12698)

- 44. Wiggins A, Crowston K. 2011. From conservation to crowdsourcing: a typology of citizen science. In 2011 44th Hawaii International Conference on System Sciences, Kauai, HI, pp. 1–10. Piscataway, NJ: IEEE.

- 45. Fithian W, Elith J, Hastie T, Keith DA. 2015. Bias correction in species distribution models: pooling survey and collection data for multiple species. Methods Ecol. Evol. 6, 424–438. (doi:10.1111/2041-210X.12242)

- 46. Hochachka WM, Fink D, Hutchinson RA, Sheldon D, Wong WK, Kelling S. 2012. Data-intensive science applied to broad-scale citizen science. Trends Ecol. Evol. 27, 130–137. (doi:10.1016/j.tree.2011.11.006)

- 47. Bradsworth N, White JG, Isaac B, Cooke R. 2017. Species distribution models derived from citizen science data predict the fine scale movements of owls in an urbanizing landscape. Biol. Conserv. 213, 27–35. (doi:10.1016/j.biocon.2017.06.039)

- 48. van Strien AJ, van Swaay CA, Termaat T. 2013. Opportunistic citizen science data of animal species produce reliable estimates of distribution trends if analysed with occupancy models. J. Appl. Ecol. 50, 1450–1458. (doi:10.1111/1365-2664.12158)

- 49. Tiago P, Pereira HM, Capinha C. 2017. Using citizen science data to estimate climatic niches and species distributions. Basic Appl. Ecol. 20, 75–85. (doi:10.1016/j.baae.2017.04.001)

- 50. Stuart-Smith RD, et al. 2017. Assessing national biodiversity trends for rocky and coral reefs through the integration of citizen science and scientific monitoring programs. Bioscience 67, 134–146. (doi:10.1093/biosci/biw180)

- 51. La Sorte FA, Tingley MW, Hurlbert AH. 2014. The role of urban and agricultural areas during avian migration: an assessment of within-year temporal turnover. Glob. Ecol. Biogeogr. 23, 1225–1234. (doi:10.1111/geb.12199)

- 52. Supp S, La Sorte FA, Cormier TA, Lim MC, Powers DR, Wethington SM, Goetz S, Graham CH. 2015. Citizen-science data provides new insight into annual and seasonal variation in migration patterns. Ecosphere 6, 1–19. (doi:10.1890/ES14-00174.1)

- 53. Pocock MJ, Roy HE, Fox R, Ellis WN, Botham M. 2017. Citizen science and invasive alien species: predicting the detection of the oak processionary moth Thaumetopoea processionea by moth recorders. Biol. Conserv. 208, 146–154. (doi:10.1890/es14-00290.1)

- 54. Grason EW, McDonald PS, Adams J, Litle K, Apple JK, Pleus A. 2018. Citizen science program detects range expansion of the globally invasive European green crab in Washington State (USA). Manag. Biol. Invasion. 9, 39–47. (doi:10.3391/mbi.2018.9.1.04)

- 55. Bahls LL. 2014. New diatoms from the American West contribute to citizen science. Proc. Acad. Nat. Sci. Philadelphia 163, 61–85. (doi:10.1635/053.163.0109)

- 56. Drury JP, Barnes M, Finneran AE, Harris M, Grether GF. 2019. Continent-scale phenotype mapping using citizen scientists’ photographs. Ecography 42, 1436–1445. (doi:10.1111/ecog.04469)

- 57. Baillie JE, et al. 2008. Toward monitoring global biodiversity. Conserv. Lett. 1, 18–26. (doi:10.1111/j.1755-263X.2008.00009.x)

- 58. Walker J, Taylor P. 2017. Using eBird data to model population change of migratory bird species. Avian Conserv. Ecol. 12, 4.

- 59. Kery M, Royle JA, Schmid H, Schaub M, Volet B, Haefliger G, Zbinden N. 2010. Site-occupancy distribution modeling to correct population-trend estimates derived from opportunistic observations. Conserv. Biol. 24, 1388–1397. (doi:10.1111/j.1523-1739.2010.01479.x)

- 60. Horns JJ, Adler FR, Şekercioğlu ÇH. 2018. Using opportunistic citizen science data to estimate avian population trends. Biol. Conserv. 221, 151–159. (doi:10.1016/j.biocon.2018.02.027)

- 61. van Strien AJ, et al. 2013. Occupancy modelling as a new approach to assess supranational trends using opportunistic data: a pilot study for the damselfly Calopteryx splendens. Biodivers. Conserv. 22, 673–686. (doi:10.1007/s10531-013-0436-1)

- 62. Pagel J, Anderson BJ, O'Hara RB, Cramer W, Fox R, Jeltsch F, Roy DB, Thomas CD, Schurr FM. 2014. Quantifying range-wide variation in population trends from local abundance surveys and widespread opportunistic occurrence records. Methods Ecol. Evol. 5, 751–760. (doi:10.1111/2041-210X.12221)

- 63. Silvertown J. 2009. A new dawn for citizen science. Trends Ecol. Evol. 24, 467–471. (doi:10.1016/j.tree.2009.03.017)

- 64. Soroye P, Ahmed N, Kerr JT. 2018. Opportunistic citizen science data transform understanding of species distributions, phenology, and diversity gradients for global change research. Glob. Change Biol. 24, 5281–5291. (doi:10.1111/gcb.14358)

- 65. McKinley DC, et al. 2017. Citizen science can improve conservation science, natural resource management, and environmental protection. Biol. Conserv. 208, 15–28. (doi:10.1016/j.biocon.2016.05.015)

- 66. Mair L, Ruete A. 2016. Explaining spatial variation in the recording effort of citizen science data across multiple taxa. PLoS ONE 11, e0147796. (doi:10.1371/journal.pone.0147796)

- 67. Ward DF. 2014. Understanding sampling and taxonomic biases recorded by citizen scientists. J. Insect Conserv. 18, 753–756. (doi:10.1007/s10841-014-9676-y)

- 68. R Core Team. 2018. R: a language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing. See https://www.R-project.org/.

- 69. Wickham H. 2017. tidyverse: easily install and load the ‘Tidyverse’. R package version 1.2.1. See https://CRAN.R-project.org/package=tidyverse.

- 70. Wickham H. 2016. ggplot2: elegant graphics for data analysis. New York, NY: Springer. See http://ggplot2.org.

- 71. Pebesma E. 2018. sf: simple features for R. R package version 0.6-3. See https://CRAN.R-project.org/package=sf.

- 72. Sullivan BL, Wood CL, Iliff MJ, Bonney RE, Fink D, Kelling S. 2009. eBird: a citizen-based bird observation network in the biological sciences. Biol. Conserv. 142, 2282–2292. (doi:10.1016/j.biocon.2009.05.006)

- 73. Sullivan BL, et al. 2014. The eBird enterprise: an integrated approach to development and application of citizen science. Biol. Conserv. 169, 31–40. (doi:10.1016/j.biocon.2013.11.003)

- 74. Gilfedder M, Robinson CJ, Watson JE, Campbell TG, Sullivan BL, Possingham HP. 2019. Brokering trust in citizen science. Soc. Nat. Resour. 32, 292–302. (doi:10.1080/08941920.2018.1518507)

- 75. Callaghan C, Lyons M, Martin J, Major R, Kingsford R. 2017. Assessing the reliability of avian biodiversity measures of urban greenspaces using eBird citizen science data. Avian Conserv. Ecol. 12, 12. (doi:10.5751/ACE-01104-120212)

- 76. Johnston A, Fink D, Hochachka WM, Kelling S. 2018. Estimates of observer expertise improve species distributions from citizen science data. Methods Ecol. Evol. 9, 88–97. (doi:10.1111/2041-210X.12838)

- 77. Szabo JK, Vesk PA, Baxter PW, Possingham HP. 2010. Regional avian species declines estimated from volunteer-collected long-term data using List Length Analysis. Ecol. Appl. 20, 2157–2169. (doi:10.1890/09-0877.1)

- 78. Cook RD. 1977. Detection of influential observation in linear regression. Technometrics 19, 15–18. (doi:10.2307/1268249)

- 79. Belsley DA, Kuh E, Welsch RE. 1980. Regression diagnostics: identifying influential data and sources of collinearity. New York, NY: John Wiley & Sons.

- 80. Bollinger G. 1981. Regression diagnostics: identifying influential data and sources of collinearity. Los Angeles, CA: Sage Publications.

- 81. Robinson D. 2018. broom: convert statistical analysis objects into tidy data frames. R package version 0.4.4. See https://CRAN.R-project.org/package=broom.

- 82. Rowley JJL, Callaghan CT, Cutajar T, Portway C, Potter K, Mahony S. 2019. FrogID: citizen scientists provide validated biodiversity data on Australia's frogs. Herpetol. Conserv. Biol. 14, 155–170.

- 83. Xue Y, Davies I, Fink D, Wood C, Gomes CP. 2016. Avicaching: a two stage game for bias reduction in citizen science. In Proceedings of the 2016 International Conference on Autonomous Agents & Multiagent Systems, pp. 776–785. International Foundation for Autonomous Agents and Multiagent Systems. See http://ifaamas.org/Proceedings/aamas2016.

- 84. Wood C, Sullivan B, Iliff M, Fink D, Kelling S. 2011. eBird: engaging birders in science and conservation. PLoS Biol. 9, e1001220. (doi:10.1371/journal.pbio.1001220)

- 85. Sharpley R. 2006. Ecotourism: a consumption perspective. J. Ecotourism 5, 7–22. (doi:10.1080/14724040608668444)

- 86. Crawley M, Harral J. 2001. Scale dependence in plant biodiversity. Science 291, 864–868. (doi:10.1126/science.291.5505.864)

Data Availability Statement

All eBird data are freely available for download (https://ebird.org/data/download). The portion of the eBird Basic Dataset needed for this study (i.e. for the Greater Sydney Region), along with the spatial data and code to reproduce our analyses, is available at: https://doi.org/10.5281/zenodo.3402307.