Abstract

It has been debated whether the link between amygdala activity and subsequent memory is equally strong for positive and negative information. Moreover, it has been unclear whether amygdala activity at encoding corresponds with enhanced memory for all contextual aspects of the presentation of an emotional item, or whether amygdala activity primarily enhances memory for the emotional item itself. In the present functional magnetic resonance imaging study, participants encoded positive and negative stimuli while performing one of two tasks (judgment of animacy or commonness). Amygdala activity at encoding was related to subsequent memory for the positive and negative items but not to subsequent memory for the task performed. Amygdala activity showed no relationship to subsequent-memory performance for the neutral items. Regardless of the emotional content of the items, activity in the entorhinal cortex corresponded with subsequent memory for the item but not with memory for the task performed, whereas hippocampal activity corresponded with subsequent memory for the task performed. These results are the first to demonstrate that the amygdala can be equally engaged during the successful encoding of positive and negative items but that its activity does not facilitate the encoding of all contextual elements present during an encoding episode. The results further suggest that dissociations within the medial temporal lobe sometimes noted for nonemotional information (i.e., activity in the hippocampus proper leading to later memory for context, and activity in the entorhinal cortex leading to later memory for an item but not its context) also hold for emotional information.

Keywords: amygdala, emotion, encoding, entorhinal cortex, fMRI, hippocampus

Introduction

Emotional information often is remembered more accurately and persistently than nonemotional information. Animal research has demonstrated that this emotional memory boost is mediated, at least in part, by amygdalar modulation of hippocampal consolidation (Cahill and McGaugh, 1998; Phelps and LeDoux, 2005). Neuroimaging and patient studies have provided additional evidence that amygdala–hippocampal interactions can underlie humans’ enhanced memory for emotional information (Phelps, 2004).

Amygdala activity corresponds not only with the likelihood of remembering an emotional item but also with the feeling that it is remembered vividly (Dolcos et al., 2004; Kensinger and Corkin, 2004; Sharot et al., 2004). There currently is debate, however, about the role of the amygdala in encoding event details (Kensinger, 2004; Adolphs et al., 2005). Although amygdala activity corresponds with memory for some details (whether an item was seen or imagined) (Kensinger and Schacter, 2005a,b), the generality of this finding is unclear. At a behavioral level, emotion can enhance memory for some details, while having no effect, or a detrimental one, on memory for other details (Adolphs et al., 2001). Moreover, studies of patients with amygdalar damage have suggested that the region plays a role in memory for gist rather than for the details of the presentation of an item (Adolphs et al., 2005).

In the present study, participants viewed positive, negative, and neutral items and judged whether they were (1) animate or (2) commonly encountered. We investigated the neural processes leading to successful “item-and-source” memory (i.e., memory for both the item and the judgment) and those leading to “item-not-source” memory. A central goal was to examine whether amygdala activity would correspond with subsequent item-and-source memory. The design also allowed investigation of the role of other medial temporal-lobe regions in the successful encoding of emotional items. For nonemotional information, hippocampal activity corresponds with the mnemonic binding of an item to its context (item-and-source memory), whereas activity in the entorhinal cortex is related to the establishment of memory for an item but not for its context (item-not-source memory) (Davachi and Wagner, 2002; Davachi et al., 2003; Ranganath et al., 2004). The present study examined whether these dissociations within the medial temporal lobe would hold for emotional information.

Another goal of this study was to examine whether the relationship of the amygdala to successful encoding would be comparable for positive and negative stimuli. At a behavioral level, individuals often indicate that they remember negative information more vividly than positive information (Ochsner, 2000), and the trade-off between memory for gist and memory for detail can be more pronounced for negative than for positive events (Denburg et al., 2003). Thus, it is plausible that the amygdala has disparate influences on memory for positive and negative information. Although extensive research in animals and humans has suggested a critical role for the amygdala in processing reward-related stimuli (Hamann, 2001; McGaugh, 2004), neuroimaging studies examining the link of the amygdala to subsequent memory have primarily focused on negative stimuli. This study investigated whether the relationship of the amygdala to memory for items and their contexts would differ based on the valence of the item.

Materials and Methods

Participants.

Participants were 21 right-handed native English speakers (11 men) between the ages of 18 and 35 and with no history of psychiatric or neurological disorders. No participant was taking any medication that would affect the CNS, and no participant was depressed. Informed consent was obtained from all participants in a manner approved by the Harvard University and Massachusetts General Hospital Institutional Review Boards.

Materials.

Stimuli included 360 words (120 positive and arousing, 120 negative and arousing, 120 neutral) and 360 pictures (120 positive and arousing, 120 negative and arousing, 120 neutral). Words were selected from the Affective Norms for English Words (ANEW) database (Bradley and Lang, 1999) and were supplemented with additional neutral words. Pictures were selected from the IAPS (International Affective Picture System) database (Lang et al., 1997) and supplemented with additional neutral pictures. Words and pictures were chosen so that positive and negative words and positive and negative pictures were equated in arousal and in absolute valence (i.e., distance from neutral valence).
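To make the matching criterion concrete, "absolute valence" can be computed as the distance of an item's mean valence rating from the neutral point of the rating scale. The sketch below assumes the 1 to 9 rating scale used for the ANEW and IAPS norms, with 5 treated as neutral. The ratings are hypothetical illustrative values, not stimuli from this study, and the helper names are hypothetical.

```python
from statistics import mean

NEUTRAL_VALENCE = 5.0  # assumed midpoint of a 1-9 valence scale


def absolute_valence(valence: float, neutral: float = NEUTRAL_VALENCE) -> float:
    """Distance of a mean valence rating from the neutral point."""
    return abs(valence - neutral)


def set_summary(ratings: list[tuple[float, float]]) -> dict[str, float]:
    """Mean arousal and mean absolute valence for (valence, arousal) pairs."""
    return {
        "arousal": mean(a for _, a in ratings),
        "abs_valence": mean(absolute_valence(v) for v, _ in ratings),
    }


# Hypothetical (valence, arousal) norms for two tiny stimulus sets.
positive = [(7.8, 6.1), (8.0, 6.4), (7.6, 5.9)]
negative = [(2.2, 6.3), (2.0, 6.0), (2.4, 6.1)]

print("positive:", set_summary(positive))
print("negative:", set_summary(negative))
```

Sets that are matched in this sense have similar mean arousal and similar mean distance from neutral, even though their raw valence means lie on opposite sides of the scale.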

Positive, negative, and neutral words were matched in frequency, familiarity, imageability, and word length, as determined by normative data in the ANEW database (Bradley and Lang, 1999) and in the MRC psycholinguistic database (Coltheart, 1981). Positive, negative, and neutral pictures were matched for visual complexity [as rated by five young adults who did not participate in the magnetic resonance imaging (MRI) study], brightness [as assessed via Adobe Photoshop (Adobe Systems, San Jose, CA)], and the number of stimuli that included people, animals, or buildings and landscapes. In addition, because participants were asked to determine whether words and pictures described or depicted something (1) animate or (2) common (see below), stimuli were selected such that approximately half of the stimuli from each emotion category received a “yes” response to each question.

Procedure.

Participants were scanned as they viewed 180 words and 180 pictures (60 from each emotion category). Each word or picture was presented for 2500 ms. Half of the stimuli were preceded by the prompt “Animate?” and the remaining stimuli by the prompt “Common?” The prompt was presented for 500 ms and indicated the question that participants should answer as they viewed the upcoming stimulus. Participants were instructed to respond “yes” to the “Animate?” prompt if a picture included an animate element or if a word described something animate [either the name of something animate (e.g., snake) or a word that would generally be used in reference to something animate (e.g., laugh)] and otherwise to respond “no.” Participants were asked to respond “yes” to the “Common?” prompt if the stimulus depicted or described something that they would encounter in a typical month. [The patterns of activity were comparable for items encoded with the two tasks (judgments of animacy versus commonness), so all reported results collapse across the two tasks.] Words and pictures from the different emotion categories and prompt conditions were pseudorandomly intermixed, with interstimulus intervals ranging from 4 to 14 s, to create jitter (Dale, 1999).
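The trial structure described above can be sketched as a shuffled, jittered sequence in which every combination of item type, emotion, and prompt appears equally often (2 × 3 × 2 cells × 30 trials = 360 trials). The 500 ms prompt and 2500 ms stimulus durations come from the text; the rest are assumptions of this sketch: gaps are whole seconds drawn uniformly from 4 to 14 s, the gap is measured from stimulus offset to the next prompt, and the session is treated as one continuous run. `Trial` and `build_sequence` are hypothetical names.

```python
import random
from dataclasses import dataclass
from itertools import product

PROMPT_S = 0.5    # prompt duration, from the text
STIMULUS_S = 2.5  # word/picture duration, from the text


@dataclass
class Trial:
    item_type: str
    emotion: str
    prompt: str
    onset_s: float  # prompt onset relative to the start of the run


def build_sequence(per_cell: int = 30, isi_range: tuple[int, int] = (4, 14),
                   seed: int = 0) -> list[Trial]:
    """Hypothetical helper: shuffled, jittered trial list.

    Assumes whole-second gaps drawn uniformly (inclusive) from isi_range
    and a single continuous run.
    """
    rng = random.Random(seed)
    cells = list(product(["word", "picture"],
                         ["positive", "negative", "neutral"],
                         ["Animate?", "Common?"]))
    conditions = [cell for cell in cells for _ in range(per_cell)]
    rng.shuffle(conditions)

    trials: list[Trial] = []
    t = 0.0
    for item_type, emotion, prompt in conditions:
        trials.append(Trial(item_type, emotion, prompt, t))
        # Next prompt starts after this prompt + stimulus and a jittered gap.
        t += PROMPT_S + STIMULUS_S + rng.randint(*isi_range)
    return trials


seq = build_sequence()
print(len(seq), seq[0])
```

Jittering the gaps in this way varies the overlap between successive hemodynamic responses, which is what allows the responses to individual event types to be estimated separately in the event-related analysis.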

Outside of the scanner, after an ∼30 min delay, participants performed a surprise recognition task (debriefing indicated that no participant expected their memory to be tested). They were presented with 360 words and 360 pictures (120 from each emotion category), shown one at a time on a computer screen. Items that had been presented while the participant was in the scanner were intermixed with new items that had not been studied; half of the items from each emotion category were unstudied. The assignment of items to studied versus unstudied (foil) status was counterbalanced across participants. Care was taken to ensure that the studied and unstudied items in each emotion category were of comparable valence and arousal. In addition, studied and unstudied words were chosen so that they did not differ in frequency, familiarity, imageability, or word length, and studied and unstudied pictures were selected to be of comparable visual complexity, brightness, and general content (those with animals, people, or buildings/landscapes). For each item, participants indicated whether it was (1) an item they had seen in the scanner and judged for animacy, (2) an item they had seen in the scanner and judged for commonness, or (3) a new item that they had not seen in the scanner. Studied items assigned to the correct task were scored as item-and-source responses; studied items assigned to the incorrect task were scored as item-not-source responses; and studied items given a “new” response were scored as “misses.”
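The scoring rules above amount to a small decision procedure over the encoding task and the participant's answer. A minimal sketch follows; `Answer`, `score_trial`, and `proportions` are hypothetical names. The rules for studied items follow the text; treating any "studied" answer to a new item as a false alarm is an assumption, chosen to match the two new-item columns of Table 1.

```python
from enum import Enum
from typing import Optional


class Answer(Enum):
    """The three recognition responses described in the text."""
    ANIMACY = "studied, judged for animacy"
    COMMONNESS = "studied, judged for commonness"
    NEW = "new"


def score_trial(encoding_task: Optional[Answer], answer: Answer) -> str:
    """Hypothetical scorer for one recognition trial.

    encoding_task is Answer.ANIMACY or Answer.COMMONNESS for studied items,
    or None for unstudied foils.
    """
    if encoding_task is None:
        # Assumption: any "studied" answer to a foil counts as a false alarm.
        return "correct rejection" if answer is Answer.NEW else "false alarm"
    if answer is Answer.NEW:
        return "miss"
    if answer is encoding_task:
        return "item-and-source"
    return "item-not-source"


def proportions(labels: list[str]) -> dict[str, float]:
    """Proportion of each outcome label among the given trials."""
    return {label: labels.count(label) / len(labels) for label in set(labels)}
```

Applying `proportions` to the scored studied items of one emotion category yields the three presented-item proportions reported in Table 1 for that category.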

Image acquisition and data analysis.

Images were acquired on a 1.5 tesla Siemens (Erlangen, Germany) Sonata MRI scanner. Stimuli were back-projected onto a screen in the scanner bore, and participants viewed the items through an angled mirror attached to the head coil. Detailed anatomic images were acquired using a multiplanar rapidly acquired gradient echo sequence. Functional images were acquired using a T2*-weighted echo planar imaging sequence (repetition time, 3000 ms; echo time, 40 ms; field of view, 200 mm; flip angle, 90°). Twenty-nine axial-oblique slices (3.12 mm thickness; 0.6 mm skip between slices) were acquired in an interleaved manner.
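Slice-timing correction, described in the preprocessing paragraph, requires the acquisition time of each slice within the 3000 ms TR. The sketch below assumes an odd-then-even interleaved order (slices 1, 3, ..., 29, then 2, 4, ..., 28) with acquisitions spread evenly across the TR. The text states only that acquisition was interleaved, so the actual scanner order may differ. The sketch also computes the slab coverage implied by the slice parameters: 29 × 3.12 mm + 28 × 0.6 mm = 107.28 mm. The function names are hypothetical.

```python
TR_S = 3.0
N_SLICES = 29
THICKNESS_MM = 3.12
GAP_MM = 0.6


def interleaved_order(n: int) -> list[int]:
    """1-based slice numbers in acquisition order (assumed odd slices first)."""
    return list(range(1, n + 1, 2)) + list(range(2, n + 1, 2))


def slice_times(n: int = N_SLICES, tr: float = TR_S) -> dict[int, float]:
    """Acquisition time (s from volume start) of each slice, evenly spaced over the TR."""
    dt = tr / n
    return {s: i * dt for i, s in enumerate(interleaved_order(n))}


# Total extent of the slab: 29 slices plus the 28 gaps between them.
coverage_mm = N_SLICES * THICKNESS_MM + (N_SLICES - 1) * GAP_MM
print(round(coverage_mm, 2))
```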

All preprocessing and data analyses were conducted within SPM99 (Statistical Parametric Mapping; Wellcome Department of Cognitive Neurology, London, UK). Standard preprocessing was performed on the functional data, including slice-timing correction, rigid body motion correction, normalization to the Montreal Neurological Institute template (resampling at 3 mm cubic voxels), and spatial smoothing (using an 8 mm full-width at half-maximum isotropic Gaussian kernel).
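The 8 mm FWHM kernel corresponds to a Gaussian standard deviation of σ = FWHM / (2√(2 ln 2)) ≈ 3.40 mm, or about 1.13 voxels at the 3 mm resampled resolution. Because an isotropic Gaussian is separable, 3-D smoothing can be carried out as three 1-D passes (along x, y, and z). The sketch below builds one such 1-D kernel. It is a generic illustration, not SPM99's implementation. Truncating the kernel at three standard deviations is an assumption of this sketch, and the function names are hypothetical.

```python
import math


def fwhm_to_sigma(fwhm: float) -> float:
    """Standard deviation of a Gaussian with the given full width at half maximum."""
    return fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


def gaussian_kernel_1d(fwhm_mm: float, voxel_mm: float,
                       radius_sd: float = 3.0) -> list[float]:
    """Unit-sum 1-D Gaussian sampled at voxel centres, truncated at radius_sd sigmas."""
    sigma_vox = fwhm_to_sigma(fwhm_mm) / voxel_mm
    r = math.ceil(radius_sd * sigma_vox)
    weights = [math.exp(-0.5 * (i / sigma_vox) ** 2) for i in range(-r, r + 1)]
    total = sum(weights)
    return [w / total for w in weights]


sigma_mm = fwhm_to_sigma(8.0)          # about 3.40 mm
kernel = gaussian_kernel_1d(8.0, 3.0)  # 3 mm voxels after normalization
```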

For each participant, and on a voxel-by-voxel basis, an event-related analysis was first conducted in which all instances of a particular event type were modeled through convolution with a canonical hemodynamic response function. The following events were modeled for each emotion category (positive, negative, neutral) and for each item type (words, pictures): subsequent item-and-source memory, subsequent item-not-source memory, subsequent misses. Effects for each of these event types were estimated using a subject-specific, fixed-effects model. These data were then entered into a second-order, random-effects group analysis. Contrast analyses were performed to examine subsequent-memory effects (e.g., item-and-source vs item-not-source) for pictures and words of a particular emotion type. Conjunction analyses, using the masking function in SPM, examined the regions shared between two contrasts. The individual contrasts included in the conjunction analysis were analyzed at a threshold of p < 0.01 and with a voxel extent of 5 voxels [such that the conjoint probability of the conjunction analysis, using Fisher’s estimate (Fisher, 1950; Lazar et al., 2002), was p < 0.001].
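The modeling and thresholding steps above can be illustrated with a generic sketch that does not reproduce SPM99's code. It assumes a double-gamma HRF with SPM's default shape parameters (gamma shapes 6 and 16 with unit scale, undershoot-to-response ratio 1/6). It builds an event regressor by convolving stick functions at the trial onsets with that HRF and sampling the result once per 3 s TR. It also implements Fisher's method for combining independent p values: under the joint null, −2 Σ ln p follows a χ² distribution with 2k degrees of freedom, whose survival function has a closed form. All function names are hypothetical, and the voxel-extent criterion is not modeled.

```python
import math


def canonical_hrf(t: float) -> float:
    """Double-gamma HRF (gamma shapes 6 and 16, unit scale, undershoot ratio 1/6).

    Unnormalized; SPM additionally rescales its sampled HRF to unit sum.
    """
    if t <= 0.0:
        return 0.0

    def gamma_pdf(x: float, shape: float) -> float:
        return x ** (shape - 1) * math.exp(-x) / math.gamma(shape)

    return gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0


def event_regressor(onsets_s: list[float], n_scans: int, tr: float = 3.0,
                    dt: float = 0.1, kernel_s: float = 32.0) -> list[float]:
    """Convolve unit sticks at the onsets with the HRF and sample once per TR."""
    n_fine = int(round(n_scans * tr / dt))
    sticks = [0.0] * n_fine
    for onset in onsets_s:
        idx = int(round(onset / dt))
        if 0 <= idx < n_fine:
            sticks[idx] += 1.0
    kernel = [canonical_hrf(j * dt) for j in range(int(round(kernel_s / dt)))]
    conv = [sum(kernel[j] * sticks[i - j] for j in range(min(i + 1, len(kernel))))
            for i in range(n_fine)]
    step = int(round(tr / dt))
    return conv[::step]


def fisher_combined_p(p_values: list[float]) -> float:
    """Fisher's method: -2 * sum(ln p) ~ chi-square with 2k df under the joint null."""
    x = -2.0 * sum(math.log(p) for p in p_values)
    half = x / 2.0
    # Closed-form chi-square survival function for even degrees of freedom (2k).
    return math.exp(-half) * sum(half ** i / math.factorial(i)
                                 for i in range(len(p_values)))
```

For two contrasts each thresholded at p = 0.01, `fisher_combined_p([0.01, 0.01])` evaluates to about 0.00102, which is on the order of the conjoint threshold reported above.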

To further explore the correlates of subsequent memory, region-of-interest (ROI) analyses were performed, using the ROI toolbox implemented in SPM (written by R. Poldrack, University of California, Los Angeles, Los Angeles, CA). Spherical ROIs included all significant voxels within an 8 mm radius of each chosen maximum voxel identified in the group statistical map. Within each of these ROIs, a hemodynamic response function was calculated for each individual subject and for each condition type (relative to fixation baseline) as a function of peristimulus time (0–21 s). Statistics were performed on the maximum (peak) percentage signal change reached within peristimulus times 3–15 s. These peak signal change values are displayed in the figures.
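The two steps of this ROI procedure can be sketched under stated assumptions. The sphere is defined on the 3 mm grid produced by normalization. Each condition's time course is assumed to be available as a signal value per 3 s TR, with 0 to 21 s peristimulus time spanning eight samples, together with a fixation-baseline value. The functions are hypothetical and do not reproduce the ROI toolbox. In the procedure described above, the sphere is additionally restricted to significant voxels, and that step is not shown here.

```python
def sphere_offsets(radius_mm: float = 8.0,
                   voxel_mm: float = 3.0) -> list[tuple[int, int, int]]:
    """Voxel offsets (i, j, k) whose centres lie within radius_mm of the peak voxel."""
    r = int(radius_mm // voxel_mm)
    return [(i, j, k)
            for i in range(-r, r + 1)
            for j in range(-r, r + 1)
            for k in range(-r, r + 1)
            if (i * i + j * j + k * k) * voxel_mm ** 2 <= radius_mm ** 2]


def percent_signal_change(timecourse: list[float], baseline: float) -> list[float]:
    """Express a time course as percentage change from the baseline value."""
    return [100.0 * (y - baseline) / baseline for y in timecourse]


def peak_in_window(psc: list[float], tr: float = 3.0,
                   window: tuple[float, float] = (3.0, 15.0)) -> float:
    """Maximum value among samples whose peristimulus time lies in the window."""
    lo, hi = window
    return max(v for i, v in enumerate(psc) if lo <= i * tr <= hi)


roi = sphere_offsets()  # 81 offsets for an 8 mm radius on a 3 mm grid
raw = [100.0, 100.1, 100.3, 100.4, 100.2, 100.0, 99.9, 100.0]  # hypothetical, 0-21 s
peak = peak_in_window(percent_signal_change(raw, baseline=100.0))
```

Restricting the peak search to 3 to 15 s excludes the first sample (stimulus onset) and the late samples in which the response has typically returned toward baseline.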

All activations are displayed according to the neurological convention (i.e., the right hemisphere appears on the right side of the brain images). Coordinates are reported in Talairach space (Talairach and Tournoux, 1988) and reflect the most significant voxel within each cluster of activation.
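The data were normalized to the MNI template, but coordinates are reported in Talairach space, and the text does not state how one was converted to the other. One widely used option in the SPM99 era was Matthew Brett's piecewise linear "mni2tal" approximation. It applies a 0.05 rad pitch and zooms of (0.99, 0.97, 0.92) above the AC plane (z ≥ 0), and the same pitch with zooms of (0.99, 0.97, 0.84) below it. The sketch below reimplements that transform as an assumption. It is not the authors' documented procedure, and the function names are hypothetical.

```python
import math


def _brett_matrix(z_zoom: float) -> list[list[float]]:
    """3x3 linear part of Brett's transform: pitch 0.05 rad, zooms (0.99, 0.97, z_zoom)."""
    c, s = math.cos(0.05), math.sin(0.05)
    return [[0.99, 0.0, 0.0],
            [0.0, 0.97 * c, z_zoom * s],
            [0.0, -0.97 * s, z_zoom * c]]


UPPER = _brett_matrix(0.92)  # applied where MNI z >= 0
LOWER = _brett_matrix(0.84)  # applied where MNI z < 0


def mni_to_talairach(x: float, y: float, z: float) -> tuple[float, float, float]:
    """Approximate Talairach coordinates for one MNI coordinate (in mm)."""
    m = UPPER if z >= 0 else LOWER
    v = (x, y, z)
    return tuple(sum(m[r][c] * v[c] for c in range(3)) for r in range(3))
```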

Results

Behavioral results

An ANOVA conducted on the responses to the studied items with emotion type (positive, negative, neutral), memory type (item-and-source, item-not-source, miss), and item type (picture, word) as within-subject factors revealed a significant effect of memory type (item-and-source > item-not-source > miss; F(2,19) = 42.7; p < 0.001; partial η-squared = 0.82), an interaction between memory type and item type (item-and-source memory greater for pictures than for words; misses more likely for words; F(2,19) = 8.47; p < 0.01; partial η-squared = 0.47), and an interaction between emotion type and memory type (F(4,17) = 38.3; p < 0.001; partial η-squared = 0.9). The interaction between emotion type and memory type reflected the fact that although all three types of memory were comparable for the positive and negative items (all p > 0.2), item-not-source memory was significantly more likely to occur for the positive (27% for pictures, 29% for words) and negative (30% for pictures, 28% for words) items than for the neutral items (17% for pictures, 18% for words; t(20) > 3.6; p < 0.01), whereas misses were significantly less likely to occur for the positive (13% for pictures, 20% for words) and negative (9% for pictures, 21% for words) items than for the neutral items (18% for pictures, 25% for words; t(20) > 2.5; p < 0.05). The likelihood of item-and-source memory was not affected by emotion type (57% for positive items, 57% for neutral items, 56% for negative items). Thus, positive and negative items were more likely than neutral items to be identified as studied stimuli, but emotion did not enhance the likelihood that participants remembered the task that they had performed with an item (Table 1).
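As a consistency check on the effect sizes above, partial η² can be recovered from an F ratio and its degrees of freedom with the standard identity ηp² = F·df_effect / (F·df_effect + df_error). The sketch applies this identity to the three F tests reported in the text. The helper name is hypothetical.

```python
def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from an F statistic: F*df1 / (F*df1 + df2)."""
    return (f * df_effect) / (f * df_effect + df_error)


# (F, df_effect, df_error, reported partial eta squared), taken from the text.
reported = {
    "memory type": (42.7, 2, 19, 0.82),
    "memory type x item type": (8.47, 2, 19, 0.47),
    "emotion type x memory type": (38.3, 4, 17, 0.90),
}

for name, (f, d1, d2, eta) in reported.items():
    print(f"{name}: computed {partial_eta_squared(f, d1, d2):.2f}, reported {eta:.2f}")
```

For all three tests, the recomputed values round to the reported 0.82, 0.47, and 0.90.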

Table 1.

Mean (SE) retrieval responses as a function of emotion

| | Presented items: item-and-source | Presented items: item-not-source | Presented items: miss | New items: correct rejections | New items: false alarms |
|---|---|---|---|---|---|
| Pictures | | | | | |
| Negative | 0.61 (0.04) | 0.30 (0.04) | 0.09 (0.04) | 0.93 (0.01) | 0.04 (0.01) |
| Positive | 0.61 (0.04) | 0.27 (0.04) | 0.13 (0.02) | 0.92 (0.02) | 0.04 (0.01) |
| Neutral | 0.62 (0.04) | 0.17 (0.02) | 0.18 (0.02) | 0.94 (0.02) | 0.03 (0.01) |
| Words | | | | | |
| Negative | 0.52 (0.03) | 0.28 (0.03) | 0.21 (0.02) | 0.79 (0.04) | 0.09 (0.02) |
| Positive | 0.54 (0.04) | 0.29 (0.02) | 0.20 (0.03) | 0.83 (0.04) | 0.08 (0.02) |
| Neutral | 0.52 (0.04) | 0.18 (0.03) | 0.25 (0.02) | 0.88 (0.03) | 0.06 (0.02) |
| All items | | | | | |
| Negative | 0.56 (0.03) | 0.30 (0.02) | 0.15 (0.02) | 0.87 (0.03) | 0.06 (0.01) |
| Positive | 0.57 (0.03) | 0.28 (0.02) | 0.16 (0.02) | 0.88 (0.03) | 0.06 (0.01) |
| Neutral | 0.57 (0.04) | 0.17 (0.02) | 0.22 (0.02) | 0.91 (0.02) | 0.04 (0.01) |

Subsequent-memory functional MRI results

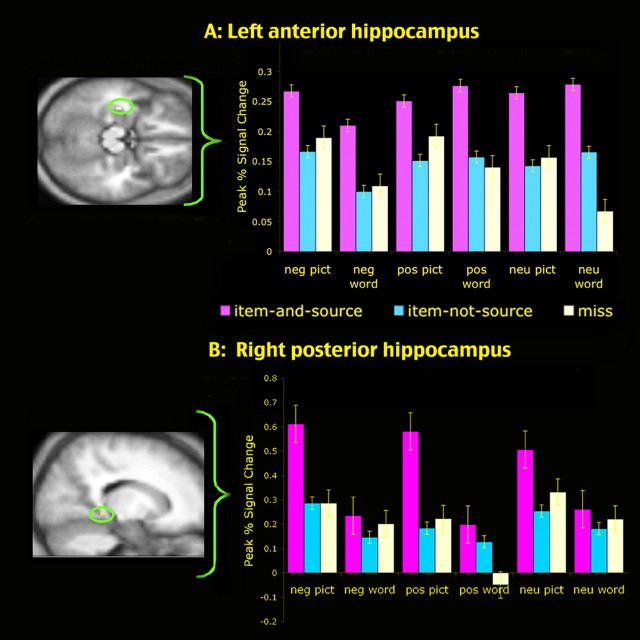

To examine the regions in which activity was associated with source memory for both the positive and the negative items, a conjunction analysis was performed to identify the regions that were active in both of the contrasts positive item-and-source > positive item-not-source and negative item-and-source > negative item-not-source (Table 2). This analysis was conducted separately for pictures and words and also for all stimuli (combining data from pictures and words). Of most interest, these analyses revealed that activity in the left anterior hippocampus showed this relationship to subsequent source memory regardless of stimulus type (picture or word). Time courses extracted from this left anterior hippocampal region revealed that its activity was related to item-and-source memory for the neutral items as well as for the positive and negative items (Fig. 1A). This finding is consistent with previous studies demonstrating that the hippocampus plays a critical role in encoding the contextual or relational information present during an encoding episode (Sperling et al., 2001; Davachi and Wagner, 2002; Davachi et al., 2003; Prince et al., 2005), and it further indicates that this region is involved in the contextual encoding of emotional as well as neutral stimuli. A second region of the medial temporal lobe, spanning the right posterior hippocampus and parahippocampal gyrus, also showed a correspondence to subsequent item-and-source memory. This region, however, was related to subsequent item-and-source memory only for pictures and not for words (Table 2, Fig. 1B). Given the role of the right hippocampus and parahippocampal gyrus in processing visuospatial information (Burgess et al., 2002; Henson, 2005; Sommer et al., 2005), it is not surprising that this region showed a selective correspondence to subsequent memory for pictures but not for words.

Table 2.

Regions in which activity corresponded with subsequent item-and-source memory for both the positive and the negative stimuli

| Region | Hemisphere | Talairach coordinates (x, y, z) | Approximate Brodmann area |
|---|---|---|---|
| Pictures | | | |
| Middle frontal gyrus | L | −30, 11, 51 | 6 |
| Orbital frontal gyrus | R | 45, 46, −12 | 10/11 |
| | L | −24, 26, −11 | 11 |
| Superior temporal gyrus | R | 41, 15, −23 | 38 |
| Middle temporal gyrus | R | 45, −9, −9 | 21 |
| Hippocampus | L | −30, −12, −12 | |
| Hippocampus/parahippocampal gyrus | R | 39, −21, −12 | |
| | R | 45, −24, −19 | |
| Thalamus | R | 6, −17, 12 | |
| Words | | | |
| Superior parietal lobule | R | 22, −47, 60 | 5 |
| Superior temporal gyrus | R | 46, 3, 4 | 22 |
| | R | 32, 15, −21 | 38 |
| Inferior/middle temporal gyrus | L | −52, −35, −3 | 20/21 |
| Hippocampus | L | −30, −12, −15 | |
| All items | | | |
| Superior frontal gyrus | L | −9, 10, 61 | 6 |
| Superior parietal lobule | R | 24, −47, 55 | 5 |
| Superior temporal gyrus | R | 39, 13, −28 | 38 |
| Hippocampus | L | −36, −15, −14 | |
| Hippocampus/parahippocampal gyrus | R | 15, −35, −6 | |
| Striatum | R | −18, −6, −5 | |

L, Left; R, right.

Figure 1.

Activity in the anterior (A) and posterior (B) hippocampus was related to the successful encoding of item-and-source information for the negative, positive, and neutral items. In these hippocampal regions, encoding activity was equally low for items remembered without their source (item-not-source) and items forgotten (miss). Error bars indicate SEM. neg, Negative; pos, positive; neu, neutral; pict, picture.

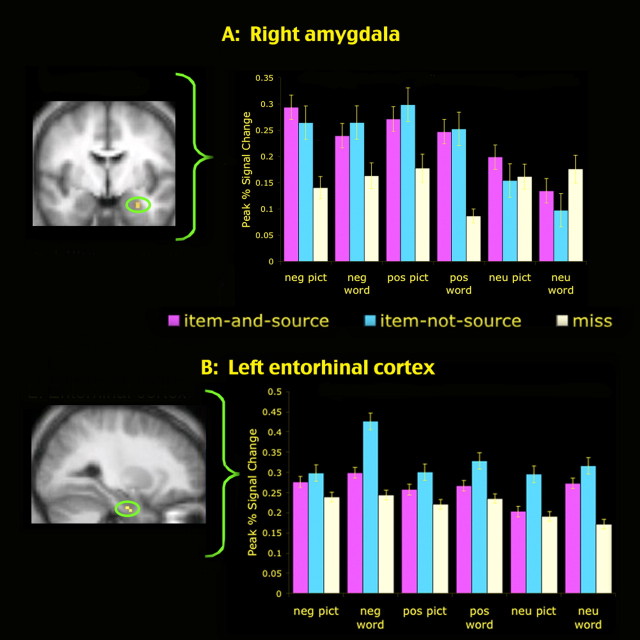

To examine the regions that were related to encoding of item information for both the positive and the negative items, a conjunction analysis was computed to identify the regions that were active in both of the contrasts positive item-not-source > positive miss and negative item-not-source > negative miss. These conjunction analyses were conducted separately for pictures and words and also for all stimuli (collapsing data from pictures and words). These analyses revealed a network of regions (Table 3) consistent with those previously implicated in subsequent-memory performance (Wagner et al., 1999; Paller and Wagner, 2002). Of note, for both pictures and words, the amygdala and entorhinal cortex showed a relationship to subsequent item-not-source memory. Time course analyses from these regions revealed that the amygdala showed a comparable relationship to subsequent-memory responses for the positive and the negative stimuli. For both valences of emotional stimuli, amygdala activity corresponded with memory for the item but not with memory for the source information: amygdala activity was equally high for all subsequently remembered positive and negative items, regardless of whether the task was remembered. This relationship to item-not-source memory in the right amygdala was consistent for both men and women [see Cahill (2003) and Hamann (2005) for discussion of sex effects with regard to amygdala laterality], with no sex-related differences in laterality or in the magnitude of the effect within the right amygdala. [Although this null effect must be interpreted cautiously, and could stem in part from the relatively small sample available for the group comparison (11 men and 10 women), it also is plausible that sex-related laterality effects are larger when tasks encourage the monitoring of emotional responses and are less pronounced with encoding tasks, such as those used here, that do not require attention to be focused on the emotion elicited by the stimuli (for further discussion, see Kensinger and Schacter, 2006a).] The amygdala showed no memory-related activity for the neutral items (Fig. 2A). In contrast, the entorhinal cortex showed a relationship to item memory for the neutral items as well as for the positive and negative items: for all item types, activity was greater for items remembered without their source than for items later forgotten (the response to items remembered with their source fell numerically between these two responses, but these differences were not significant) (Fig. 2B).

Table 3.

Regions in which activity corresponded with subsequent item-not-source memory for both the positive and the negative stimuli

| Region | Hemisphere | Talairach coordinates (x, y, z) | Approximate Brodmann area |
|---|---|---|---|
| Pictures | | | |
| Superior frontal gyrus | L | −15, 48, 31 | 8/9 |
| Inferior frontal gyrus | L | −48, 37, −13 | 47 |
| Inferior temporal gyrus | L | −35, −65, 7 | 37 |
| Fusiform gyrus | R | 42, −65, −8 | 18 |
| | R | 30, −73, −11 | 18 |
| Parahippocampal gyrus (entorhinal cortex) | L | −21, −10, −23 | |
| Amygdala | R | 28, −1, −23 | |
| Middle occipital gyrus | L | −40, −81, 8 | 19 |
| Words | | | |
| Orbital frontal gyrus | R | 33, 44, −2 | 10/11 |
| Superior frontal gyrus | R | 17, −4, 39 | 6 |
| Anterior cingulate gyrus | R | 2, 18, 17 | 24 |
| Fusiform gyrus | L | −36, −62, −20 | 19/37 |
| | R | 35, −76, −10 | 18 |
| Parahippocampal gyrus (entorhinal cortex) | L | −37, −12, −26 | |
| Amygdala | R | 30, 3, −20 | |
| Cerebellum | R | 35, −75, −20 | |
| All items | | | |
| Superior frontal gyrus | L | −3, 20, 43 | 8 |
| Middle frontal gyrus | L | −42, 31, 29 | 9, 46 |
| Inferior frontal gyrus | R | 33, 35, 1 | 45 |
| Inferior parietal lobule | L | −27, −63, 28 | 7 |
| Inferior temporal gyrus | R | 45, −67, 6 | 37 |
| Fusiform gyrus | R | 24, −79, −9 | 18, 19 |
| | | 36, −76, −11 | 18, 19 |
| | | 42, −64, −2 | 19 |
| | L | −27, −73, −1 | 18, 19 |
| | | −30, −82, −6 | 18, 19 |
| | | −36, −62, −17 | 19, 37 |
| Parahippocampal gyrus (entorhinal cortex) | L | −37, −10, −25 | |
| Amygdala | R | 30, −4, −23 | |
| Inferior/middle occipital gyrus | L | −42, −78, 12 | 19 |
| Inferior/middle occipital gyrus | R | 42, −78, 7 | 19 |
| Lingual gyrus | R | 15, −79, −6 | 18 |
| Orbital gyrus | R | 18, −98, 10 | 18 |
| Cerebellum | L | −6, −51, −25 | |

L, Left; R, right.

Figure 2.

Activity in the amygdala (A) and entorhinal cortex (B) corresponded to subsequent memory for item but not source information. In the amygdala, activity was equally high for negative and positive items that were remembered, regardless of whether the source was subsequently remembered or forgotten (i.e., item-and-source = item-not-source > miss). The amygdala showed no relationship to subsequent memory for the neutral items. In the entorhinal cortex, activity was greater for items remembered without their source than for items subsequently forgotten (i.e., item-not-source > miss), and this pattern held for the negative, positive, and neutral items. Error bars indicate SEM. neg, Negative; pos, positive; neu, neutral; pict, picture.

Comparison of activity in the amygdala, entorhinal cortex, and hippocampus proper

To further examine the robustness of the dissociations noted above, we defined regions of the medial temporal lobe in an unbiased manner as those regions that were more active during the encoding of pictures or words than during the baseline (fixation) task. Thus, any regions identified in this analysis were defined without regard to their relationship (or lack thereof) to subsequent-memory performance or to the emotion type of the stimuli (positive, negative, neutral). From this contrast, we defined regions of the right amygdala (Talairach coordinates: 28, −2, −20), left entorhinal cortex (−20, −10, −23), left anterior hippocampus (−35, −16, −33), and right posterior hippocampus/parahippocampal gyrus (24, −26, −3). We extracted the signal change time courses from these regions, computed the maximum signal change (compared with the baseline) that occurred within 3–15 s peristimulus time, and performed an ANOVA on these peak signal change values with item type (picture, word), emotion type (positive, negative, neutral), memory type (item-and-source, item-not-source, miss), and region (anterior hippocampus, posterior hippocampus/parahippocampal gyrus, entorhinal cortex, amygdala) as within-subjects factors. Critically, this ANOVA revealed an interaction between region and memory type (p < 0.05; partial η-squared = 0.41; with activity in the hippocampal regions relating to item-and-source memory and activity in the entorhinal cortex and amygdala relating to item-not-source memory) and a three-way interaction between region, emotion type, and memory type (p < 0.01; partial η-squared = 0.62; with activity in the amygdala relating to subsequent memory for positive and negative but not for neutral items and activity in all other regions showing comparable associations with subsequent-memory performance for positive, negative, and neutral items). 
When only the left anterior hippocampus, right posterior hippocampus/parahippocampal gyrus, and entorhinal cortex were included as regions in the ANOVA (excluding the amygdala), the analysis again showed an interaction between region and memory type (highlighting the distinct patterns of activity in the hippocampus proper compared with the entorhinal cortex) but no three-way interaction between emotion type, memory type, and region (indicating that, in all regions other than the amygdala, emotion type did not affect the subsequent-memory relationship). Thus, these analyses replicated the results of the contrast analyses discussed above (depicted in Figs. 1 and 2).
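The full analysis above is a four-factor repeated-measures ANOVA, which is not reproduced here. As a simplified illustration, one single-degree-of-freedom slice of the region × memory-type interaction (for example, hippocampus versus entorhinal cortex × item-and-source versus item-not-source) can be tested by computing each participant's difference of differences in peak signal change and running a one-sample t test on those scores. For a 1-df within-subject effect, F(1, n − 1) equals t². The peak values below are hypothetical, and the function names are hypothetical.

```python
import math
from statistics import mean, stdev


def interaction_contrast(hc_src: list[float], hc_item: list[float],
                         erc_src: list[float], erc_item: list[float]) -> list[float]:
    """Per-subject (hippocampus: source - item) minus (entorhinal: source - item)."""
    return [(a - b) - (c - d)
            for a, b, c, d in zip(hc_src, hc_item, erc_src, erc_item)]


def one_sample_t(scores: list[float]) -> tuple[float, int]:
    """t statistic and df for H0: mean contrast score = 0."""
    n = len(scores)
    return mean(scores) / (stdev(scores) / math.sqrt(n)), n - 1


# Hypothetical peak % signal change for three participants.
d = interaction_contrast(hc_src=[0.4, 0.5, 0.6], hc_item=[0.1, 0.1, 0.1],
                         erc_src=[0.2, 0.2, 0.2], erc_item=[0.3, 0.3, 0.3])
t, df = one_sample_t(d)
f_equivalent = t ** 2  # F(1, df) for the same 1-df interaction
```

In this toy example, the hippocampal response favors item-and-source memory and the entorhinal response favors item-not-source memory, so every contrast score is positive and the interaction is large.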

Discussion

The present results suggest three main conclusions. First, activity in the amygdala shows a comparable relationship to the successful encoding of positive and negative information. There was no evidence that the amygdala was differentially or disproportionately related to memory for negative items. These results are consistent with recent studies that have demonstrated that the amygdala can respond to positive stimuli as well as negative stimuli (Hamann et al., 1999; Small et al., 2003; Anders et al., 2004) and, together with other research conducted in humans (Cahill et al., 1996; Kensinger and Corkin, 2004) and in animals (McGaugh et al., 1996; Pelletier et al., 2005), converge on the conclusion that the amygdala likely plays a role in modulating memory for high-arousal stimuli, regardless of their valence.

Second, although amygdala activity was related to the ability to remember that a particular emotional item had been studied, it did not correspond with memory for the task performed with either positive or negative items. These results contrast with a previous set of studies demonstrating that amygdala activity can relate to the likelihood that some types of event details (whether an item was imagined or perceived) are remembered (Kensinger and Schacter, 2005a,b). Thus, it appears that although the amygdala can relate to memory for some event details, there are conditions in which the amygdala relates principally to memory for the item itself. These differences in neural activity correspond with a different behavioral pattern of performance in the two experiments: the ability to distinguish seen from imagined items was significantly greater for emotional items than for neutral ones (Kensinger and Schacter, 2005a,b, 2006b), whereas in the present study, memory for the task performed was not enhanced by emotion. These results highlight the fact that the presence of emotion does not enhance memory for all aspects of an encoding episode and that amygdala engagement at encoding does not ensure that all encoding details will be accurately remembered.

This conclusion is broadly supported by studies of Adolphs and colleagues (Adolphs et al., 2001; Denburg et al., 2003), who have demonstrated that emotion enhances an individual’s ability to remember information essential to a scene or story but reduces memory for less relevant scene or story details. They also have provided evidence that this emotion-related effect on memory may be mediated by the amygdala, because the effect is absent in individuals with amygdala damage (Adolphs et al., 2005). The item-and-source memory assessments in the present study are quite different from the assessments of gist and event detail used by Adolphs et al. (2005), which often relied on multiple-choice questions about the studied stories. Nevertheless, the results of the present study converge with those of Adolphs et al. (2005) in suggesting that amygdala engagement at encoding can boost memory for some, but not all, aspects of the presentation of an item. Because the present study did not include separate assessments of memory for item gist, it is not possible to determine the extent to which the amygdala-mediated enhancement in item-not-source memory demonstrated here corresponds with the amygdala-mediated enhancement in gist memory suggested by the studies of Adolphs et al. (2005). Although it is plausible that in the present experiment participants were able to make accurate item recognition decisions based only on memory for gist, it is equally possible that participants recognized the items because of their memory for at least some of the items’ details. Future studies will be required to adjudicate between these alternatives.

More generally, additional research will be needed to better understand the circumstances in which amygdala activity does, and does not, relate to the encoding of event details. Given the present data, it seems plausible that amygdala activity relates to the encoding of details that are intrinsically linked to the emotional item itself (e.g., its physical appearance or its gist) but not to elements that are more peripheral or extrinsic to the item [e.g., the task performed with the item; also see Mather et al. (2006) for evidence that the neural processes supporting memory for spatial location are disrupted by high arousal]. Thus, the role of the amygdala during encoding of event details may depend on the particular type of detail that is assessed.

The third conclusion to emerge from this study is that the medial temporal lobe regions previously shown to relate to the encoding of item-and-source or item-not-source information for neutral items show the same relationships to subsequent memory for positive and negative items. In particular, encoding activity in the hippocampus proper and posterior hippocampus/parahippocampal gyrus was related to later memory for the task, whereas activity in the entorhinal cortex was related to the ability to remember the item but not its context. These results are consistent with previous studies suggesting that the hippocampus proper and posterior parahippocampal gyrus bind the contextual elements of an encoding episode into a stable memory trace, whereas the entorhinal cortex supports the formation of familiarity-based memory traces (Davachi and Wagner, 2002; Davachi et al., 2003; Ranganath et al., 2004; Prince et al., 2005). Note, however, that other data have revealed conditions in which encoding-related activity in multiple regions within the medial temporal lobe is associated with subsequent item-and-source memory (Gold et al., 2005).

In summary, the results of the present study suggest that amygdala activity at encoding influences the likelihood that an emotional item is remembered and further demonstrate that the relationship of the amygdala to subsequent memory can be equally strong for positive and negative items. The current results also emphasize that although amygdala activity can enhance the likelihood that information is remembered vividly (Dolcos et al., 2004; Kensinger and Corkin, 2004) and with some additional detail (Kensinger and Schacter, 2005a), amygdala activity does not lead to enhanced memory for all contextual details of an encoding episode. It may be that the amygdala primarily enhances memory for details directly linked to an emotional item and plays less of a role in modulating memory for contextual elements that are tangential to the item itself.

Footnotes

This work was supported by National Institutes of Health Grants MH60941 (D.L.S.) and MH070199 (E.A.K.). The Martinos Center was supported by the National Center for Research Resources (Grant P41RR14075) and by the MIND Institute. We acknowledge Coren Apicella and Mickey Muldoon for assistance with data management and analysis.

References

- Adolphs R, Denburg NL, Tranel D (2001). The amygdala’s role in long-term declarative memory for gist and detail. Behav Neurosci 115:983–992.

- Adolphs R, Tranel D, Buchanan TW (2005). Amygdala damage impairs emotional memory for gist but not details of complex stimuli. Nat Neurosci 8:512–518.

- Anders S, Lotze M, Erb M, Grodd W, Birbaumer N (2004). Brain activity underlying emotional valence and arousal: a response-related fMRI study. Hum Brain Mapp 23:200–209.

- Bradley MM, Lang PJ (1999). Affective Norms for English Words [CD-ROM]. Gainesville, FL: The NIMH Center for the Study of Emotion and Attention, University of Florida.

- Burgess N, Maguire EA, O’Keefe J (2002). The human hippocampus and spatial and episodic memory. Neuron 35:625–641.

- Cahill L (2003). Sex- and hemisphere-related influences on the neurobiology of emotionally influenced memory. Prog Neuropsychopharmacol Biol Psychiatry 27:1235–1241.

- Cahill L, McGaugh JL (1998). Mechanisms of emotional arousal and lasting declarative memory. Trends Neurosci 21:294–299.

- Cahill L, Haier RJ, Fallon J, Alkire MT, Tang C, Keator D, Wu J, McGaugh JL (1996). Amygdala activity at encoding correlated with long-term, free recall of emotional information. Proc Natl Acad Sci USA 93:8016–8021.

- Coltheart M (1981). The MRC psycholinguistic database. Q J Exp Psychol 33A:497–505.

- Dale AM (1999). Optimal experimental design for event-related fMRI. Hum Brain Mapp 8:109–114.

- Davachi L, Wagner AD (2002). Hippocampal contributions to episodic encoding: insights from relational and item-based learning. J Neurophysiol 88:982–990.

- Davachi L, Mitchell JP, Wagner AD (2003). Multiple routes to memory: distinct medial temporal lobe processes build item and source memories. Proc Natl Acad Sci USA 100:2157–2162.

- Denburg NL, Buchanan TW, Tranel D, Adolphs R (2003). Evidence for preserved emotional memory in normal older persons. Emotion 3:239–253.

- Dolcos F, LaBar KS, Cabeza R (2004). Interaction between the amygdala and the medial temporal lobe memory system predicts better memory for emotional events. Neuron 42:855–863.

- Fisher RA (1950). Statistical methods for research workers. London: Oliver and Boyd.

- Gold JJ, Smith CN, Bayley PJ, Squire LR (2005). Activity throughout the medial temporal lobe predicts subsequent accuracy of item and source memory judgments. Soc Neurosci Abstr 31:315.8.

- Hamann S (2001). Cognitive and neural mechanisms of emotional memory. Trends Cogn Sci 5:394–400.

- Hamann S (2005). Sex differences in the responses of the human amygdala. Neuroscientist 11:288–293.

- Hamann SB, Ely TD, Grafton ST, Kilts CD (1999). Amygdala activity related to enhanced memory for pleasant and aversive stimuli. Nat Neurosci 2:289–293.

- Henson R (2005). A mini-review of fMRI studies of human medial temporal lobe activity associated with recognition memory. Q J Exp Psychol B 58:340–360.

- Kensinger EA (2004). Remembering emotional experiences: the contribution of valence and arousal. Rev Neurosci 15:241–251.

- Kensinger EA, Corkin S (2004). Two routes to emotional memory: Distinct neural processes for valence and arousal. Proc Natl Acad Sci USA 101:3310–3315.

- Kensinger EA, Schacter DL (2005a). Emotional content and reality-monitoring ability: fMRI evidence for the influence of encoding processes. Neuropsychologia 43:1429–1443.

- Kensinger EA, Schacter DL (2005b). Retrieving accurate and distorted memories: neuroimaging evidence for effects of emotion. NeuroImage 27:167–177.

- Kensinger EA, Schacter DL (2006a). Processing emotional pictures and words: effects of valence and arousal. Cogn Affect Behav Neurosci, in press.

- Kensinger EA, Schacter DL (2006b). Reality monitoring and memory distortion: effects of negative, arousing content. Mem Cognit, in press.

- Lang PJ, Bradley MM, Cuthbert BN (1997). International affective picture system (IAPS): technical manual and affective ratings. Gainesville, FL: The Center for Research in Psychophysiology, University of Florida.

- Lazar NA, Luna B, Sweeney JA, Eddy WF (2002). Combining brains: a survey of methods for statistical pooling of information. NeuroImage 16:538–550.

- Mather M, Mitchell KJ, Raye CL, Novak DL, Greene EJ, Johnson MK (2006). Emotional arousal can impair feature binding in working memory. J Cogn Neurosci.

- McGaugh JL (2004). The amygdala modulates the consolidation of memories of emotionally arousing experiences. Annu Rev Neurosci 27:1–28.

- McGaugh JL, Cahill L, Roozendaal B (1996). Involvement of the amygdala in memory storage: interaction with other brain systems. Proc Natl Acad Sci USA 93:13508–13514.

- Ochsner KN (2000). Are affective events richly “remembered” or simply familiar? The experience and process of recognizing feelings past. J Exp Psychol Gen 129:242–261.

- Paller KA, Wagner AD (2002). Observing the transformation of experience into memory. Trends Cogn Sci 6:93–102.

- Pelletier JG, Likhtik E, Filali M, Pare D (2005). Lasting increases in basolateral amygdala activity after emotional arousal: implications for facilitated consolidation of emotional memories. Learn Mem 12:96–102.

- Phelps EA (2004). Human emotion and memory: interactions of the amygdala and hippocampal complex. Curr Opin Neurobiol 14:198–202.

- Phelps EA, LeDoux JE (2005). Contributions of the amygdala to emotion processing: from animal models to human behavior. Neuron 48:175–187.

- Prince SE, Daselaar SM, Cabeza R (2005). Neural correlates of relational memory: successful encoding and retrieval of semantic and perceptual associations. J Neurosci 25:1203–1210.

- Ranganath C, Yonelinas AP, Cohen MX, Dy CJ, Tom SM, D’Esposito M (2004). Dissociable correlates of recollection and familiarity within the medial temporal lobes. Neuropsychologia 42:2–13.

- Sharot T, Delgado MR, Phelps EA (2004). How emotion enhances the feeling of remembering. Nat Neurosci 7:1376–1380.

- Small DM, Gregory MD, Mak YE, Gitelman D, Mesulam MM, Parrish T (2003). Dissociation of neural representation of intensity and affective valuation in human gustation. Neuron 39:701–711.

- Sommer T, Rose M, Weiller C, Buchel C (2005). Contributions of occipital, parietal and parahippocampal cortex to encoding of object-location associations. Neuropsychologia 43:732–743.

- Sperling RA, Bates JF, Cocchiarella AJ, Schacter DL, Rosen BR, Albert MS (2001). Encoding novel face-name associations: a functional MRI study. Hum Brain Mapp 14:129–139.

- Talairach J, Tournoux P (1988). Co-planar stereotaxic atlas of the human brain. New York: Thieme Medical.

- Wagner AD, Koutstaal W, Schacter DL (1999). When encoding yields remembering: insights from event-related neuroimaging. Philos Trans R Soc Lond B Biol Sci 354:1307–1324.