Abstract

Background

This paper presents the protocol for the National Institute of Mental Health (NIMH)–funded University of Washington’s ALACRITY (Advanced Laboratories for Accelerating the Reach and Impact of Treatments for Youth and Adults with Mental Illness) Center (UWAC), which uses human-centered design (HCD) methods to improve the implementation of evidence-based psychosocial interventions (EBPIs). We propose that usability—the degree to which interventions and implementation strategies can be used with ease, efficiency, effectiveness, and satisfaction—is a fundamental, yet poorly understood determinant of implementation.

Objective

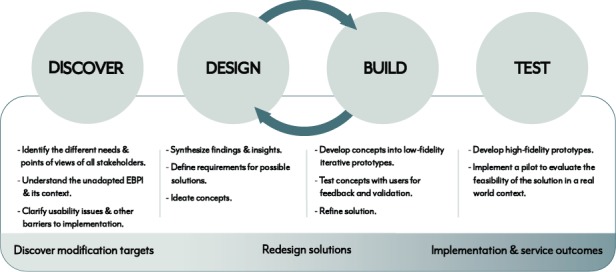

We present a novel Discover, Design/Build, and Test (DDBT) framework to study usability as an implementation determinant. DDBT will be applied across Center projects to develop scalable and efficient implementation strategies (eg, training tools), modify existing EBPIs to enhance usability, and create usable and nonburdensome decision support tools for quality delivery of EBPIs.

Methods

Stakeholder participants will be implementation practitioners/intermediaries, mental health clinicians, and patients with mental illness in nonspecialty mental health settings in underresourced communities. Three preplanned projects and 12 pilot studies will employ the DDBT model to (1) identify usability challenges in implementing EBPIs in underresourced settings; (2) iteratively design solutions to overcome these challenges; and (3) compare the solution to the original version of the EBPI or implementation strategy on usability, quality of care, and patient-reported outcomes. The final products from the center will be a streamlined modification and redesign model that will improve the usability of EBPIs and implementation strategies (eg, tools to support EBPI education and decision making); a matrix of modification targets (ie, usability issues) that are both common and unique to EBPIs, strategies, settings, and patient populations; and a compilation of redesign strategies and the relative effectiveness of the redesigned solution compared to the original EBPI or strategy.

Results

The UWAC received institutional review board approval for the three separate studies in March 2018 and was funded in May 2018.

Conclusions

The outcomes from this center will inform the implementation of EBPIs by identifying cross-cutting features of EBPIs and implementation strategies that influence the use and acceptability of these interventions, actively involving stakeholder clinicians and implementation practitioners in the design of the EBPI modification or implementation strategy solution and identifying the impact of HCD-informed modifications and solutions on intervention effectiveness and quality.

Trial Registration

ClinicalTrials.gov NCT03515226 (https://clinicaltrials.gov/ct2/show/NCT03515226), NCT03514394 (https://clinicaltrials.gov/ct2/show/NCT03514394), and NCT03516513 (https://clinicaltrials.gov/ct2/show/NCT03516513).

International Registered Report Identifier (IRRID)

DERR1-10.2196/14990

Keywords: implementation science, human-centered design, evidence-based psychosocial interventions

Introduction

Background

Psychosocial interventions (eg, psychotherapy, counseling, and case management) are a preferred mode of treatment by most people seeking care for mental health problems, particularly among low-income, minority, geriatric, and rural populations [1-7]. Despite numerous studies demonstrating the effectiveness of evidence-based psychosocial interventions (EBPIs), they are rarely available in community service settings [8-10]. A landmark report by the United States’ Institute of Medicine [11] noted that EBPI availability is limited by clinicians’ abilities to effectively learn and adopt new practices (ie, capacity), intervention complexity, and limited support to sustain quality delivery. EBPI implementation is particularly challenging because most people receive treatment for mental illness in nontraditional or integrated settings such as primary care [12] and schools [13,14]. EBPIs were typically not developed for these settings, resulting in poor contextual fit and low adoption [15,16]. To address EBPI implementation barriers, decades of research have focused on provider, patient, setting, and policy barriers [17], yet actionable and cost-efficient solutions remain elusive [18]. Numerous implementation strategies have been developed to facilitate EBPI delivery [19-21], but the interventions and their accompanying implementation strategies are complex processes that are often difficult to deliver [22,23]. As a result, the science-to-service gap for EBPIs remains significant.

Usability as a Key Implementation Factor

The usability of EBPIs and implementation strategies is a major challenge to successful implementation, and one that has been largely overlooked by health care researchers who focus on promoting the implementation of evidence-based practices in routine service settings. Usability is defined as the degree to which a program can be used easily, efficiently, and with satisfaction/low user burden by a particular stakeholder [24]. Although the concept of usability has most traditionally been applied to digital products, usability metrics and assessment procedures are much more broadly relevant. Indeed, with regard to EBPIs and implementation strategies, usability has also been identified as a key determinant of implementation outcomes (eg, intervention adoption, service quality, and cost) and clinical outcomes (eg, symptoms and functioning) [25].

Evidence-Based Psychosocial Interventions Usability

EBPIs are complex psychosocial interventions involving interpersonal or informational activities, techniques, or strategies with the aim of reducing symptoms and improving functioning or wellbeing [11]. Usability standards sit in striking contrast to the current state of EBPIs, which are generally difficult to learn, requiring several months of training and supervision [26-28]; impose a high degree of user burden or cognitive load [22]; and do not fit well into typical provider and patient workflows [29]. Most implementation research focuses on creating “hospitable soil” (ie, modifying individual or organizational contexts to fit the EBPI) rather than “better seeds” (improving the EBPI to fit the context) [25]. Indeed, recent reviews of implementation measurement instruments [30] and implementation strategies [31] indicate that attention to intervention-level determinants has been sparse.

Implementation Strategy Usability

Implementation strategies can be defined as methods or techniques used to enhance the adoption, implementation, and sustainment of a clinical program or practice [23]. Most implementation strategies are complex psychosocial interventions that share many of the same usability pitfalls as EBPIs [23]. The development of numerous multicomponent implementation strategies has the potential to further decrease their usability in real-world contexts, inadvertently contributing to the emerging gap between implementation research and implementation practice [32]. A critical step in improving EBPI implementation is to redesign both EBPIs and their implementation supports to improve usability as well as implementation and service outcomes while retaining the effective components of each.

Human-Centered Design

Human-centered design (HCD, also known as user-centered design) has the potential to improve EBPI and implementation strategy usability. HCD is a field of science that has produced methods to develop compelling, intuitive, and easily adopted products and tools [22,33]. HCD and implementation science share the common goal of improving the use of innovative and effective practices in real-world contexts. Whereas implementation science focuses on individuals and systems to effect change, HCD focuses on developing more usable innovations by systematically collecting stakeholder input and improving innovation-stakeholder and innovation-context fit. Although HCD is traditionally discussed in the context of digital technologies [33], HCD approaches are not restricted to technology-based solutions. Recent work has applied HCD to EBPIs to enhance usability, decrease burden, and increase contextual appropriateness [22,34,35]. Furthermore, HCD approaches may be applied to the evaluation and redesign of implementation strategies [36,37], where the pool of potential users may also be expanded to individuals functioning in intermediary, purveyor, or other facilitative roles [38]. Overall, HCD serves as a design framework to guide the development of solutions to community-identified problems.

Study Purpose

Center Aims and Structure

The mission of the University of Washington’s ALACRITY (Advanced Laboratories for Accelerating the Reach and Impact of Treatments for Youth and Adults with Mental Illness) Center (UWAC) is to study the utility of HCD as a methodological approach to improve the implementation of EBPIs in highly accessible service settings (eg, rural, urban, low-income, nonspecialty mental health). The research team reflects a diverse set of research and practice experts in mental health services, implementation science, and user-centered design. To support this mission, we created the Discover, Design and Build, and Test (DDBT) framework, a multiphase process that draws from established HCD frameworks [39] and applies HCD methods to modify EBPIs and implementation strategies (described below). The UWAC Methods Core will oversee, collect, and integrate data collected using the DDBT framework across all projects funded by the center. These include three primary research projects (described below), each of which will focus on the redesign of an EBPI, an implementation strategy, or both. Twelve pilot projects to be funded by the UWAC via a competitive process will also employ the DDBT framework, but because these projects are not yet funded, their specifics are not discussed here. Through data collection and integration, we will address the following Methods Core aims.

Identification of Evidence-Based Psychosocial Interventions and Implementation Strategy Modification Targets to Improve Learnability, Usability, and Sustained Quality of Care

During the early phases of each study, stakeholders will provide information concerning the main challenges in the use of EBPI or implementation strategy components. Components may include content elements [40] (ie, discrete tasks used to bring about intended outcomes during an intervention or implementation process) and structures [41] (ie, dynamic processes that guide the selection, organization, and delivery of content elements) that need modification as well as multilevel barriers and facilitators of EBPI implementation. Identified issues may be those that affect learnability (ie, the extent to which users can rapidly build understanding in or facilitate the use of an innovation), usability (defined previously), and quality of care (ie, delivery with fidelity and impact on target outcomes). To accomplish this aim, the UWAC Methods Core will build on the established methods of evaluating the usability of complex psychosocial interventions [34].

Developing Design Solutions to Address Modification Targets

This aim will aggregate a typology of design solutions to improve mutable EBPI and implementation strategy modification targets based on the research projects. Early work has already begun on the identification of EBPI modification types [42], and the UWAC Methods Core will extend this work to implementation strategies to create a matrix of design solutions mapped to specific modification targets.

Effect of Design Solutions on Learnability, Usability, and Sustained Quality of Care Through Changes in Modification Targets

As per the Institute of Medicine report on psychosocial interventions, the three preplanned projects we specified in the center protocol will focus on developing support tools for the efficient training of frontline clinicians in EBPI elements (learnability), modifying and combining EBPI elements so that frontline clinicians serving rural and minority patients can use them effectively and with ease (usability), and developing decision support tools to assist frontline clinicians in the faithful delivery of EBPI elements without the need of expert intervention or supervision (sustained quality). A key goal of our proposed work is to determine the effects of design solutions on implementation outcomes [43] such as time to skill acquisition, sustained adherence to treatment protocol, and clinician/patient adoption of EBPI strategies. We hypothesize that core elements of the EBPI or implementation strategy can be maintained while streamlining these innovations to improve implementation and enhance real-world effectiveness. Testing this hypothesis during the funded research projects will be the center’s major contribution to the field of implementation science.

Methods

Overview

The UWAC Methods Core will achieve its aims by integrating data collected across a series of center-supported research studies. These include three studies articulated at the time of the grant submission (Table 1) as well as 12 pilot studies that will be competitively awarded over the course of the award. Table 2 shows the timeline of study activities and Center products. Multimedia Appendix 1 presents example Consolidated Standards of Reporting Trials (CONSORT) diagrams for practitioner and patient participants. The University of Washington’s Institutional Review Board has approved and regulates the ethical execution of this research. Approval for the three separate studies was given on March 12, 2018 (trial number NCT03514394); March 21, 2018 (trial number NCT03515226); and March 23, 2018 (trial number NCT03516513). All participants will provide informed consent. The consent form will describe the University of Washington’s policy to preserve, protect, and share research data in accordance with academic, scientific, and legal norms.

Table 1.

Center project objectives and outcomes.

| Project | Redesign objective | Outcomes |
| --- | --- | --- |
| Project 1: Clinician Training in Rural Primary Care Medicine | Learnability | |
| Project 2: Usability of EBPI for Depression in Rural, Native American Communities | Intervention usability | |
| Project 3: Quality/Decision Support for EBPIs in Primary Care Settings | Quality of care | |

aEBPI: evidence-based psychosocial interventions.

Table 2.

Center timeline.

| Milestones | Year 1 (2018-2019) | | | | Year 2 (2019-2020) | | | | Year 3 (2020-2021) | | | | Year 4 (2021-2022) | | | |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| | Qa 01 | Q 02 | Q 03 | Q 04 | Q 01 | Q 02 | Q 03 | Q 04 | Q 01 | Q 02 | Q 03 | Q 04 | Q 01 | Q 02 | Q 03 | Q 04 |
| Study #1: Learnability | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | | | | |
| Discover | ✓ | ✓ | | | | | | | | | | | | | | |
| Design/Build | | | ✓ | ✓ | ✓ | | | | | | | | | | | |
| Test | | | | | | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | | | | |
| Study #2: Usability | | | | | | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | | | |
| Discover | | | | | | ✓ | | | | | | | | | | |
| Design/Build | | | | | | | ✓ | ✓ | | | | | | | | |
| Test | | | | | | | | | ✓ | ✓ | ✓ | ✓ | ✓ | | | |
| Study #3: Quality of Care | | | | | | | | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |
| Discover | | | | | | | | ✓ | ✓ | | | | | | | |
| Design/Build | | | | | | | | | | ✓ | ✓ | | | | | |
| Test | | | | | | | | | | | | ✓ | ✓ | ✓ | ✓ | |
| Center Products | | | | | | | | | | | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Typology of EBPIb targets | | | | | | | | | | | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Matrix of targeted modifications | | | | | | | | | | | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |

aQ: quarter.

bEBPI: evidence-based psychosocial interventions.

Project Descriptions of the University of Washington’s Advanced Laboratories for Accelerating the Reach and Impact of Treatments for Youth and Adults With Mental Illness Center Research

Each of the projects will focus on a different aspect of EBPI implementation and include the redesign of a patient-facing intervention, an implementation strategy, or both. In addition to their project-specific data needs, all projects will collect a common core set of data that will be integrated by the Methods Core for collective analyses. Study 1 focuses on improving learnability by implementing a novel EBPI training program to support the delivery of a manualized telephone-based cognitive behavioral therapy (tCBT) [44] by bachelor degree–level social work students who manage health care for migrant farm workers in central Washington State. Study 2 focuses on the usability of problem-solving therapy (PST) [45] and cognitive processing therapy (CPT) [46] interventions for the management of depression and anxiety by master’s degree–level social workers from seven federally qualified health centers serving Native American and frontier communities in Eastern Montana. Study 3 will address the quality of care and shared decision making by master’s degree–level care managers to treat depression in urban primary care clinics in the Seattle, Washington metro area. All three studies will employ the DDBT framework. Across projects, selected EBPIs were chosen based on input from community partners and because they address aspects of common problems seen in low-income communities (especially depression and trauma).

University of Washington’s Advanced Laboratories for Accelerating the Reach and Impact of Treatments for Youth and Adults With Mental Illness Center Procedures: Discover, Design/Build, and Test Framework

The three-phase DDBT framework (Figure 1) is intended to gather the requisite information to drive iterative redesign of existing EBPIs or implementation strategies to improve usability and implementation outcomes (eg, contextual appropriateness and adoption) while retaining an intervention’s core components. As indicated above, DDBT is rooted in traditional HCD frameworks [39], but applies them in novel ways to EBPIs and implementation strategies. Target stakeholders (eg, clinicians, patients, and trainers) are engaged in each phase to ensure that the implementation solution meets the needs of its stakeholders (ie, it is useful) and is easy to use and understand (ie, it is usable). Table 3 displays the planned data collection approaches across projects and DDBT phases. Additional human subjects and data protection details can be found in Multimedia Appendix 2.

Figure 1.

Discover, design/build, and test (DDBT) framework. EBPI: evidence-based psychosocial interventions.

Table 3.

Planned data collection across projects and DDBT (Discover, Design/Build, and Test) phases.

| Projects and phases | Think aloud | Semistructured interviews | Remote survey | Contextual observation | Iterative design | As-is analysis | Cognitive walkthrough | Quantitative instruments |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Project 1 (Learnability) | | | | | | | | |
| Discover | | ✓ | ✓ | | | ✓ | | ✓ |
| Design/Build | ✓ | | ✓ | | ✓ | | ✓ | ✓ |
| Test | ✓ | | | ✓ | | | | ✓ |
| Project 2 (Usability) | | | | | | | | |
| Discover | ✓ | ✓ | | | | ✓ | | ✓ |
| Design/Build | | | | | ✓ | | ✓ | ✓ |
| Test | | | | | | | | ✓ |
| Project 3 (Quality of Care) | | | | | | | | |
| Discover | | ✓ | | | | ✓ | | ✓ |
| Design/Build | ✓ | | | | ✓ | | | ✓ |

Discover Phase

Overview

The first step of our framework leverages important aspects of HCD [39,47,48], including identification of current and potential stakeholders, their needs, influential aspects of the target setting(s), and understanding what facilitates and inhibits the usability of current tools and workflows. A design that is usable in one context, for one person, may not be usable for another person or in a different context, necessitating an understanding of the use setting in redesign efforts [49-52]. Thus, projects must use the Discover phase to gather two sets of information: the context of deployment (eg, individuals, their needs, and work settings) and information about the innovation itself (eg, usability issues), both of which are discussed below. Even if investigators enter into the Discover phase with a particular solution in mind (eg, expecting that a digital tool will be a useful approach to improve implementation), the appropriateness of those solutions must be continually reassessed with user input. Methodological procedures used across projects in this phase are below.

Identification of Stakeholders

HCD, and hence, the DDBT framework, emphasizes explicitly identifying the range of target stakeholders to ensure new products effectively meet their needs [53,54]. Consistent with the established methods [34,55,56], each project will engage in an explicit user-identification process that includes brainstorming an overly inclusive list of potential users, articulating the subset of user characteristics that are most relevant and then describing, prioritizing, and selecting main user groups. Although we anticipate that a variety of stakeholders may be identified in each project, DDBT is most focused on gathering input from primary users [53], defined as those whose activities are most proximal to the innovation and whose needs and constraints redesign solutions must be prepared to address first. For EBPIs, primary users are most often clinicians and service recipients [22]. For implementation strategies, primary users tend to be implementation practitioners or intermediaries [57] as well as the targets of the strategy. Secondary users—who may have additional needs that can be accommodated without compromising a product’s ability to meet the primary user(s) needs—may include system administrators (who often make adoption decisions) and families/caregivers among others [58]. Although primary users will be prioritized, some projects will also collect information from a subset of secondary users. Across projects, characteristics of stakeholders that might influence their experiences with EBPIs or implementation strategies (eg, previous training or treatment experiences) will be tracked to identify possible confounds.

In the learnability study (Study 1), the pool of stakeholder participants will include 15 bachelor degree-level social work students (ie, targets of the training strategy) and educators who are in the position to train these future clinicians (ie, deliverers of the training strategy). In the intervention usability study (Study 2), users will be master’s degree–level clinicians who deliver behavioral health care in clinics serving Native American and frontier community members as well as representative patients from these communities. The quality of care and decision-making study (Study 3) will target both EBPI-experienced and EBPI-naive care managers in integrated primary care settings. Across studies, recruited representative users will include both those with experience in the identified intervention or strategy as well as novices to ensure broad applicability across stakeholders.

Identification of Stakeholder Needs: Semistructured Interviews, Focus Groups, and Remote Survey

All stakeholders will be observed and interviewed to identify key challenges they face in the use of EBPIs and implementation strategies. In Study 1, we will collect information from students and educators through focus groups and individual interviews about topics such as their experiences with distance learning and computerized training (implementation strategies), how they see these technologies fitting into their daily lives, and perceived utility of this form of training in addressing key issues they have experienced as novice users of EBPIs. To achieve convergence within a mixed-methods paradigm [59], we will also conduct remote, national surveys of educators and trainees to confirm the information we obtain. In Study 2, we will conduct focus groups and interviews with clinicians about the challenges they face in implementing EBPIs in rural settings, modifications they have made to EBPIs to accommodate those challenges, and reasons for EBPI de-adoption. This includes role-play observations of their modifications and the strategies they currently feel are helpful but not present in the original EBPIs. In Study 3, we will observe and interview care managers about the challenges they see with existing quality control methods and at which points in their workflows, or with which patient types, they need the most assistance. Given the importance of value alignment as a component of contextual appropriateness [60], all interviews and focus groups will also cover what the end user values about their work as service providers or implementation practitioners and suggestions for how it could be improved, given their service context and patient population.

Contextual Observation of Stakeholders in Their Service Settings

Although qualitative interviews that clarify perceived challenges in applying EBPIs and implementation strategies are important, they are rarely a substitute for direct observation of professional behavior. Each of the primary research projects will engage in structured observations. In Study 2, for example, watching videotapes of clinician sessions and having the clinician explain their thought processes and the challenges they faced during implementation can offer suggestions for improvement in the design of an EBPI or implementation strategy. In Study 2, the research team will also shadow representative social workers from clinics serving indigenous peoples to capture existing workflows and identify bottlenecks for implementing EBPIs. In Study 3, the research team will observe clinicians in urban primary care clinics to identify opportunities to introduce decision-support strategies.

Usability Testing via Direct Interactions With the Original Evidence-Based Psychosocial Interventions or Implementation Strategy

We will also conduct usability evaluations of each EBPI or strategy. A variety of usability testing techniques exist, which may be applied to either interventions or implementation strategies, including quantitative instruments, cognitive walkthroughs, and lab-based user testing [31,34,36,49,50]. Cognitive walkthroughs, for example, may be applied to psychosocial implementation strategies by first conducting a task analysis [61] to identify their subcomponents, prioritizing those tasks, developing scenarios to embed the tasks, walking through the tasks with users individually or in a group format, asking for ratings of the likelihood of successful completion, and soliciting “failure or success stories” that describe the assumptions underlying the ratings [62]. Another method, the “think-aloud” protocol [63,64], is a commonly employed technique in which the stakeholders verbalize their experiences and thought processes as they use a tool to complete a task during a usability testing session. For example, in Study 3, before the intervention and implementation tools are designed, the investigators must watch how key stakeholders interact with existing decision supports, including the potential use of electronic health records. In this phase, the investigators remain open to the possibility that electronic health records are the wrong design solution to provide decision support. Depending on the project, usability testing will occur with clinicians, patients, other stakeholders, or a combination.

Design and Build Phase

Overview

Based on the information gleaned from the Discover phase, the Design and Build phase is intended to iteratively and systematically develop, evaluate, and refine prototypes of interventions and implementation strategies using the techniques below. Prototyping and rapid iteration involve a process of making new ideas sufficiently tangible to quickly test their value [65]. Consistent with the HCD literature, early prototype testing based on data from the Discover phase is conducted with small samples (for instance, N=5) to answer design questions using paper versions of redesign solutions or storyboards to represent more complex psychosocial processes (eg, exposure procedures to treat anxiety and interactions between instructors and learners during training activities) [66,67]. This reduces waste by deferring investment in “high-fidelity” prototypes until as late in the process as possible.

Usability Testing

The Design and Build phase employs the same usability testing techniques described above in the Discover phase (eg, using a think aloud protocol). However, across the iterative prototyping process, this testing is increasingly summative (versus formative in the Discover phase) and intended to determine whether identified usability problems and contextual constraints have been addressed [49]. Therefore, usability data resulting from the Design and Build phase is most informative when it is compared to similar data collected previously. If intervention or implementation strategy usability has not improved over successive iterations, then additional redesign solutions may need to be explored.

Test Phase

Overview

The Test phase involves small-scale testing of the intervention or strategy in a form that fully functions as it is intended, with a larger number of users, and in their actual milieu. The emphasis of this testing phase is on user experience, satisfaction with the end design, reported benefit over alternative or existing processes, and implementation outcomes [43]. This includes a structured review of how the solution would fit into existing workflows within the clinical settings in which the solution is to be deployed (ie, appropriateness). To learn where the DDBT framework is helpful—or potentially harmful (changing the EBPI or implementation strategy so much that it is no longer effective)—each study funded by the UWAC will also collect the same measures in the Test phase, including quantitative usability evaluation instruments [34,36]. The three preplanned projects will compare the redesigned solution to the original, often in the context of small-scale hybrid effectiveness-implementation trials [68]. Across the three projects, hybrid type 1, which emphasizes effectiveness over implementation (Study 2); type 2, which equally emphasizes the two (Study 3); and type 3, which emphasizes implementation (Study 1), are represented. All patients seen in the Test phase of these studies will be new to the clinicians. In Study 1 (learnability), 12 students over two successive cohorts will be randomized to tCBT training as usual (original strategy) or a redesigned tCBT training that incorporates an adaptive intelligent tutoring system [69]. The intelligent tutoring system is an adaptive learning tool that bases the presentation of material on trainees’ learning needs. It reflects a standardized method that can help mitigate trainee drift. Each student will see five patients (N=60 patients). Patient participants in this study will receive eight weekly sessions of the tCBT intervention. 
In Study 2 (usability), six clinicians will be randomized to either PST (original intervention; 10 weekly sessions) or the new, redesigned solution. Each clinician will see five patients (N=30). In Study 3 (quality of care), six clinicians will be randomized to the usual care delivery model (intervention and strategy) or care supported by the decision-making tool. Each clinician will be assigned five patients (N=30) to deliver up to 10 sessions of PST.
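The planned Test phase numbers above can be checked with a short sketch. The provider and patient counts come from the text; the allocation procedure is not specified in this protocol, so the 1:1 balanced assignment, the arm labels, the function names, and the seed below are illustrative assumptions only.

```python
import random

# Planned Test-phase numbers as stated in the protocol text.
PLANNED = {
    "Study 1 (learnability)": {"providers": 12, "patients_per_provider": 5},
    "Study 2 (usability)": {"providers": 6, "patients_per_provider": 5},
    "Study 3 (quality of care)": {"providers": 6, "patients_per_provider": 5},
}


def planned_patients(study):
    """Return the planned number of patients for one study."""
    cfg = PLANNED[study]
    return cfg["providers"] * cfg["patients_per_provider"]


def randomize(provider_ids, arms=("original", "redesigned"), seed=0):
    """Assign providers to arms in equal numbers.

    ASSUMPTION: a simple shuffled 1:1 allocation; the protocol does not
    state the allocation method, so this is a hypothetical illustration.
    """
    rng = random.Random(seed)
    shuffled = list(provider_ids)
    rng.shuffle(shuffled)
    return {pid: arms[i % len(arms)] for i, pid in enumerate(shuffled)}


if __name__ == "__main__":
    for study in PLANNED:
        print(study, planned_patients(study))
    print(randomize([f"clinician_{i}" for i in range(1, 7)]))
```

Under these assumptions, the six clinicians in Study 2 or Study 3 would be split three per arm, and the patient totals match the N values reported above.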

Test Phase Measures

Data collected during the Test phase of the studies will inform future hypotheses about potential targets for EBPI and strategy modification. Because the UWAC studies will be small-scale randomized trials with stakeholders who were not involved in the Discover and Design and Build phases, we will have an opportunity for unbiased comparison of the identified redesign solutions. Additionally, the studies will use a common set of outcome measures in Aim 3 analyses. Because all solutions developed in this Center are aimed at enhancing usability; promoting perceptual implementation outcomes (ie, those that tend to be measured from the perspectives of critical stakeholders rather than behaviorally) such as acceptability, feasibility, and appropriateness [25] and behavioral implementation outcomes such as integrity; and containing costs, all studies will use the same usability and implementation measures. In addition, we will collect common patient-reported outcomes (eg, depression and functioning) across studies. We will also determine the need to conduct additional qualitative interviews with patients, clinicians, and implementation practitioners for the purpose of quantitative data explanation and elaboration [59] for each project.

First, three different versions of the well-established System Usability Scale [70] will be used across studies depending on whether EBPIs, implementation strategies, or traditional digital technologies are being assessed. The System Usability Scale, a widely used brief measure of the usability of a digital product, is often considered the industry standard for measuring usability. Adaptations of the System Usability Scale that will also be used across studies include the Intervention Usability Scale [34] and the Implementation Strategy Usability Scale [36] (presented in Multimedia Appendices 3 and 4, respectively).
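
For readers unfamiliar with the instrument, the conventional scoring of the original 10-item System Usability Scale can be sketched as follows. The function name `sus_score` is introduced here for illustration. Whether the Intervention Usability Scale and the Implementation Strategy Usability Scale are scored identically is an assumption; the appendices should be consulted for the actual items and scoring.

```python
def sus_score(responses):
    """Score one completed System Usability Scale form on a 0-100 scale.

    `responses` holds the 10 item ratings in administration order,
    each an integer from 1 (strongly disagree) to 5 (strongly agree).
    """
    if len(responses) != 10:
        raise ValueError("the standard SUS has 10 items")
    if any(rating not in (1, 2, 3, 4, 5) for rating in responses):
        raise ValueError("each rating must be an integer from 1 to 5")
    total = 0
    for position, rating in enumerate(responses, start=1):
        # Odd-numbered items are positively worded, even-numbered items negatively worded.
        total += (rating - 1) if position % 2 == 1 else (5 - rating)
    # Each item contributes 0-4 points; 2.5 rescales the 0-40 sum to 0-100.
    return total * 2.5
```

A respondent who answers 3 on every item receives a score of 50, the midpoint of the scale.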

Second, clinician and implementation practitioner perceptual implementation outcomes (ie, acceptability, appropriateness, and feasibility) will be evaluated via three recently validated instruments [71]. These include (1) the Acceptability of Intervention Measure, (2) the Intervention Appropriateness Measure, and (3) the Feasibility of Intervention Measure. These are brief, pragmatic measures that can be tailored for application to either interventions or implementation strategies and have strong internal reliability, test-retest reliability, and sensitivity to change [71].
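
As a rough illustration of how such brief measures are typically summarized, the sketch below averages the item ratings of one completed form. It assumes four items per measure rated on a 5-point agreement scale, which is our understanding of the published instruments rather than something stated in this protocol; the helper name `scale_score` and its defaults are hypothetical.

```python
from statistics import mean


def scale_score(ratings, n_items=4, low=1, high=5):
    """Return the mean item rating for one completed form.

    Assumed format (not stated in the protocol): `n_items` items,
    each rated from `low` to `high`; higher means are more favorable.
    """
    if len(ratings) != n_items:
        raise ValueError(f"expected {n_items} item ratings")
    if any(not low <= rating <= high for rating in ratings):
        raise ValueError(f"ratings must lie between {low} and {high}")
    return mean(ratings)
```

The same helper could be applied separately to acceptability, appropriateness, and feasibility ratings so that each construct keeps its own score.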

Third, each study will collect data on the number of training hours needed before reaching adequate integrity in the EBPI or modified solution as well as the degree of sustained integrity (or integrity drift) over time. Sustained integrity in Study 1 will be measured using Cognitive Therapy Scales [72]. The PST Therapist Adherence Scale will be used to review audio sessions for Studies 2 and 3 by raters blinded to the condition. The CPT Adherence and Competence Protocol (P Nishith et al, unpublished data, 1994) will assess CPT fidelity in Study 2. Time to reach adequate integrity and sustained integrity over time will be compared between the original EBPI or strategy and the redesigned solution and combined with the cost information (below).

Fourth, implementation costs in each study will include inputs such as the time clinicians devote to learning a new technique, time experts spend delivering training, and costs of any ongoing supervision needed to mitigate skill drift [73]. These costs are relevant to both EBPI and implementation strategy redesign, as better-designed EBPIs are expected to require fewer resources to implement and better designed implementation strategies should be able to more efficiently support the learnability of EBPIs.

Finally, a variety of patient-reported outcomes will evaluate the effectiveness of redesigned EBPIs or implementation strategies. The Sheehan Disability Scale [74,75], a brief analog scale measuring functioning in work, social, and health domains, will be administered to patient participants in the Test phase across projects. The 9-item Patient Health Questionnaire (PHQ-9) [76] measures the presence of depression symptoms over the last 2 weeks.
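
The conventional PHQ-9 scoring can be sketched as below: nine items rated 0 to 3 are summed to a 0-27 total, which is commonly grouped into severity bands. The function names are introduced here for illustration, and the protocol does not state whether the UWAC analyses will use total scores, severity bands, or both.

```python
# Commonly used PHQ-9 severity bands: (lowest total, highest total, label).
PHQ9_BANDS = (
    (0, 4, "minimal"),
    (5, 9, "mild"),
    (10, 14, "moderate"),
    (15, 19, "moderately severe"),
    (20, 27, "severe"),
)


def phq9_total(items):
    """Sum the nine PHQ-9 item ratings (each 0-3) into a 0-27 total."""
    if len(items) != 9:
        raise ValueError("the PHQ-9 has 9 items")
    if any(item not in (0, 1, 2, 3) for item in items):
        raise ValueError("each item must be an integer from 0 to 3")
    return sum(items)


def phq9_severity(total):
    """Map a PHQ-9 total score to its conventional severity label."""
    for low, high, label in PHQ9_BANDS:
        if low <= total <= high:
            return label
    raise ValueError("total must lie between 0 and 27")
```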

University of Washington’s Advanced Laboratories for Accelerating the Reach and Impact of Treatments for Youth and Adults With Mental Illness Data, Analyses, and Intended Outcomes

The UWAC is designed to allow for integration of data across projects to identify common EBPI and implementation strategy modification targets, develop a matrix of redesign solutions for each target, and determine if these redesigned innovations address the goals of the UWAC.

Aim 1: Identify Evidence-Based Psychosocial Interventions and Implementation Strategy Modification Targets to Improve Learnability, Usability, and Quality of Care (Discover Phase)

Discover Phase Overview

The field lacks methods for understanding usability problems of EBPIs, strategies to support these EBPIs, and methods to intentionally link EBPI usability problems to redesign targets. Aside from a few notable examples of team-based implementation approaches [77,78], there has been little systematic work explicitly involving stakeholders in the identification of EBPI modification targets, and none that use HCD methods. Similarly, researchers increasingly acknowledge the need for deliberate selection and tailoring of multifaceted implementation strategies [79] but few methods exist. During the Discover phase of each UWAC project, the Methods Core will employ the techniques described above (eg, qualitative interviews, focus groups, contextual observation, and user testing methods) to identify modification targets and improve strategy usability. Exploratory qualitative analysis, informed by the Consolidated Framework for Implementation Research (CFIR) [80], will allow us to identify and categorize the most critical multilevel determinants (ie, barriers and facilitators) that emerge from interviews, focus groups, and contextual evaluations that may impact implementation. Discrete usability issues—defined as aspects of the intervention or strategy that make it unpleasant, inefficient, onerous, or impossible for the user to achieve their goals in typical usage situations [81]—will be identified for all projects and categorized within the User Action Framework (UAF) [82,83], which details how usability problems can impact the stakeholder experience at any stage of the user interaction cycle (ie, planning, translation, action, and feedback). 
Although the three studies have different foci, interviews across all projects will address a core set of questions guided by the CFIR and a previous framework for reporting adaptations and modifications to evidence-based interventions (FRAME) [84] (eg, “What content elements or organizing structures of EBPIs and implementation strategies are commonly identified by clinicians as needing modification?”). In addition to reporting raw data to the UWAC Methods Core, each study will report its own analyses and synthesis of usability issues and the evidence for them.

Data Analysis

Sample sizes for Aims 1 and 2 were informed by estimates from the user-centered design literature, which recommends a sample size of 5-10 to capture critical design information [66]. There is debate in the HCD literature about the appropriate sample size for user testing and growing agreement that sample size depends on the goals of the test, the complexity of its elements, and the number of groups or strata being compared [67,85]. One objective of the UWAC is therefore to pool data across projects to provide guidance for the necessary sample sizes to reach saturation for interventions and implementation strategies. We anticipate that the 12 pilot studies to be funded will propose, and ultimately work with, different sample sizes, allowing us to evaluate what sample sizes are sufficient.
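
One model widely used in the usability literature to reason about such sample sizes is the cumulative problem-discovery curve, in which the expected share of problems found by n participants is 1 - (1 - p)^n, where p is the chance that a single participant encounters a given problem. The sketch below is illustrative only: the protocol does not commit to this model, the function names are hypothetical, and p must be assumed or estimated for each intervention or strategy.

```python
import math


def proportion_discovered(p, n):
    """Expected share of usability problems found by n participants.

    `p` is the assumed probability that one participant reveals a given problem.
    """
    return 1.0 - (1.0 - p) ** n


def participants_needed(p, target):
    """Smallest n whose expected discovery share reaches `target` (0 < target < 1)."""
    if not (0.0 < p < 1.0 and 0.0 < target < 1.0):
        raise ValueError("p and target must lie strictly between 0 and 1")
    return math.ceil(math.log(1.0 - target) / math.log(1.0 - p))
```

With p = 0.31, a value often quoted from early usability studies, five participants would be expected to surface roughly 84% of problems. More complex interventions or multiple user groups would lower p and raise the required n, which is consistent with the debate summarized above.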

Qualitative analysis will be used to identify and categorize modification targets/usability problems. Data will be organized for coding using qualitative data analysis software. Transcripts, field notes, and notes from the think-aloud sessions will be coded by two assistants. As indicated above, the CFIR [80] will guide initial analysis of contextual evaluation data and the UAF [83] will be used to categorize identified usability problems. Projects will also employ a revised version of a previous [82] augmented UAF, which includes severity ratings. In this approach, severity ratings will be given to each identified usability problem based on likelihood of a user experiencing the problem; impact on a user, if encountered; and potential for the problem to interfere with an EBPI’s or implementation strategy’s impact on its target outcomes. Data will be coded using an integrated deductive and inductive approach [86]. We will use existing CFIR codes, codes identified during interview development (ie, deductive approach), and codes developed through a close reading of an initial subset of transcripts (ie, inductive approach). These codes will result in themes that will provide a way of identifying and understanding the most salient strategies, structures, barriers, and facilitators within which design solutions can be developed. After a stable set of codes and themes is developed, a consensus coding process will be used [87,88]. The analysis will also assess and explore key themes that do not follow the established frameworks (eg, UAF and CFIR), looking for other guiding literature and frameworks as appropriate.
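
To make the severity-rating step concrete, a coded usability problem could be represented as in the sketch below. The three rating dimensions and the four UAF stages come from the text; the 1-3 rating scale, the unweighted mean used as a composite, and all class and function names are assumptions made purely for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class UAFStage(Enum):
    """Stages of the user interaction cycle named in the UAF."""
    PLANNING = "planning"
    TRANSLATION = "translation"
    ACTION = "action"
    FEEDBACK = "feedback"


@dataclass(frozen=True)
class UsabilityProblem:
    description: str
    stage: UAFStage
    likelihood: int    # chance a user experiences the problem (assumed 1-3 scale)
    impact: int        # impact on the user if encountered (assumed 1-3 scale)
    interference: int  # potential to undermine target outcomes (assumed 1-3 scale)

    def __post_init__(self):
        for name in ("likelihood", "impact", "interference"):
            if not 1 <= getattr(self, name) <= 3:
                raise ValueError(f"{name} must be between 1 and 3")

    @property
    def severity(self):
        """Composite severity; an unweighted mean is an assumption, not the protocol's rule."""
        return (self.likelihood + self.impact + self.interference) / 3


def rank_by_severity(problems):
    """Return problems ordered from most to least severe."""
    return sorted(problems, key=lambda problem: problem.severity, reverse=True)
```

Ranking problems this way would let a design team start with the issues most likely to interfere with outcomes, although any real weighting scheme should follow the augmented UAF procedures cited above.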

Outcomes

Integrated data analysis across projects for this aim will result in the identification of EBPI modification targets to be used in future research. An initial version of this typology will be deployed for review and additions from the field. As we discover new targets, these will be added to the matrix and made available via an online resource.

Aim 2: Develop Design Solutions to Address Modification Targets (Design and Build Phase)

Design and Build Phase Overview

Aim 2 will build on Aim 1 and aggregate a set of design solutions the Center team identifies as effective in improving modification targets across UWAC projects. In tracking all modification approaches, we will identify both the redesign solutions that advance into the final design based on iterative prototyping as well as those that are not selected. We will also explicitly assess differences in the modification targets identified for EBPIs and implementation strategies. Through this, the UWAC will be able to rapidly identify the most successful (based on user testing) design solution types for specific targets and steadily aggregate these across projects into a database to be shared with the research and practice communities.

Data Analysis

To facilitate aggregation of redesign solutions across studies, we will use FRAME [84] as a starting point for coding interventions and implementation strategies. This coding scheme identifies both content and contextual factors modified as part of the EBPI implementation, with strong interrater agreement. Using the same qualitative coding process described in Aim 1, trained raters will code design modifications according to this protocol, which will be compiled into a continuously updated database of design solutions including information about EBPI/strategy type, setting, professional characteristics (eg, clinicians and implementation practitioners), and patient characteristics. We anticipate that the database will include discrete usability problems with corresponding indicators of problem severity; aspects of the innovations and contexts for which they were identified; redesign solutions attempted; and, when available, the outcomes from the application of those solutions.
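
A single entry in such a database might look like the record sketched below, with a small filter for retrieving entries by innovation type, setting, and severity. The field list mirrors the information named in the paragraph above, but the schema, field names, and the `find_records` helper are hypothetical and do not describe the UWAC's actual database.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class RedesignRecord:
    problem: str                  # discrete usability problem
    severity: float               # indicator of problem severity
    innovation_type: str          # "EBPI" or "implementation strategy"
    setting: str                  # eg, "primary care"
    professional_role: str        # eg, "clinician" or "implementation practitioner"
    patient_population: str
    solutions_attempted: List[str] = field(default_factory=list)
    selected_solution: Optional[str] = None  # None if no solution advanced
    outcome_note: Optional[str] = None       # filled in when outcomes are available


def find_records(records, innovation_type=None, setting=None, min_severity=0.0):
    """Return matching records, most severe first; None means 'do not filter'."""
    matches = []
    for record in records:
        if innovation_type is not None and record.innovation_type != innovation_type:
            continue
        if setting is not None and record.setting != setting:
            continue
        if record.severity < min_severity:
            continue
        matches.append(record)
    return sorted(matches, key=lambda record: record.severity, reverse=True)
```

Keeping the solutions that were attempted but not selected alongside the selected one reflects the stated intent to share information about less preferred options as well.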

Outcomes

Aim 2 will generate the warehouse of modification targets and redesign solutions organized by modification target type and will share information about less preferred or less usable solutions (as determined by testing with stakeholders). We will make information about these design patterns available in a way that supports both searching for solutions to specific problems and browsing for design inspiration [89].

Aim 3: Determine if Design Solutions Affect Learnability, Usability, and Sustained Quality of Care Through Changes in Modification Targets (Test Phase)

Test Phase Overview

The final overarching aim of the UWAC is to test whether EBPIs and strategies modified through the DDBT Framework result in improved learnability for clinicians and service systems, EBPI and implementation strategy usability, and sustained quality of care to deliver EBPIs in low-resource community settings. Although the principle of adapting modifiable aspects of an intervention or strategy to the needs of a setting is a central tenet of implementation science, systematic testing of adaptation mechanisms of change is new. Very little research has examined the mediating factors that are most proximal to implementation success [18]. A key goal of our work is to determine the effects of design solutions on implementation and clinical outcomes, which is critical to understanding why these solutions work or fail in real-world settings. We hypothesize that core elements and functions of EBPIs and strategies can be maintained while streamlining those innovations to improve implementation and enhance real-world effectiveness. Testing this hypothesis during the proposed and future research studies will be the UWAC’s major contribution to the field of implementation science, as it will offer a test of adaptation to local context while providing a framework for understanding the limits of such adaptation. The Test phase of each study is focused on gathering information (feasibility, recruitment and retention rates, response and attrition rates, etc) for future larger-scale grant applications to test the effectiveness of the adapted solutions. Thus, sample sizes were set primarily for practical reasons and driven by estimated effect sizes rather than hypothesis testing.

Data Analysis

Primary analyses focus on comparison of the key DDBT and implementation outcomes (learnability, EBPI/strategy usability, and sustained quality of care) for the original, unadapted EBPI/strategy as compared to the modified EBPI/strategy. Each project and each outcome can be summarized as an effect size (Cohen d) and corresponding 95% CI. There will be no subgroup or adjusted analyses. Missing data will be reported with regard to attrition and related feasibility data. Full information maximum likelihood imputation will be used for statistical analyses to include missing data, where applicable. The UWAC Methods Core will aggregate data across projects to facilitate a series of Bayesian meta-analyses. These meta-analyses will summarize the effectiveness of using HCD approaches to improve EBPIs and strategies on each implementation outcome. The Bayesian approach will allow us to adjust our inferential probability assumptions about the effects of HCD approaches on modifications. Bayesian statistics are useful even when studies are conducted in varied settings with varied scientists using varied measures, as each subsequent study represents a form of conceptual replication. Each additional study will improve our ability to draw inference (eg, “power”) from the collection of studies while simultaneously preventing false alarms in individual studies resulting from random error.
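
The two computations described above can be sketched in Python: a standardized mean difference with an approximate 95% CI, and a simple Bayesian pooling of effect sizes across studies. The protocol does not specify its meta-analytic model, so the large-sample standard error, the common-effect normal model with a normal prior, and the function names are all assumptions chosen for brevity.

```python
import math


def cohens_d(mean_new, sd_new, n_new, mean_old, sd_old, n_old, z=1.96):
    """Cohen d for redesigned vs original, with a large-sample approximate CI."""
    pooled_var = ((n_new - 1) * sd_new ** 2 + (n_old - 1) * sd_old ** 2) / (n_new + n_old - 2)
    d = (mean_new - mean_old) / math.sqrt(pooled_var)
    # Common large-sample approximation to the standard error of d.
    se = math.sqrt((n_new + n_old) / (n_new * n_old) + d ** 2 / (2 * (n_new + n_old)))
    return d, se, (d - z * se, d + z * se)


def pool_normal(effects, variances, prior_mean=0.0, prior_var=1.0):
    """Posterior mean and variance of a common effect under a normal prior.

    Assumes each study's effect is normally distributed around one shared
    true effect with known sampling variance (a simplifying assumption).
    """
    precision = 1.0 / prior_var + sum(1.0 / v for v in variances)
    weighted = prior_mean / prior_var + sum(d / v for d, v in zip(effects, variances))
    return weighted / precision, 1.0 / precision
```

In this simplified model, each added study increases the posterior precision, which is the sense in which later studies sharpen inference. A hierarchical (random-effects) model would be a natural extension when settings and measures vary across projects.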

Over time, we will use meta-regressions to examine the effectiveness of adaptations for particular outcomes (eg, on average, does modifying EBPI content or modifying an implementation strategy workflow have a greater impact on clinician integrity drift?), where these meta-regressions can also control for site characteristics (eg, rural vs urban). Similarly, we may be able to examine the effectiveness of specific HCD approaches (eg, the “think aloud” approach) for impacting specific implementation outcomes. The ultimate question is one of mediation: Does a DDBT-modified EBPI or strategy lead to better implementation outcomes, which in turn lead to better (larger, more rapid, or more widespread) patient outcomes? Although the initial center studies are not likely to yield large enough sample sizes to meaningfully test such an implementation mechanism question, the UWAC Methods Core will steadily aggregate data, allowing us to eventually test a range of mediation-focused hypotheses via multivariate network meta-analyses.

Outcomes

Aim 3 is centered on clinician- and patient-reported outcomes to ascertain the difference between modified EBPIs and strategies and treatment as usual. These pilot randomized controlled trials will generate preliminary data on learnability, usability, and quality of care to inform subsequent tests of these adaptations on a larger scale in low-resource community settings.

Results

The UWAC received institutional review board approval for the three separate studies in March 2018 and was funded in May 2018. Approvals for the 12 pilot studies are being obtained as the studies are identified and funded. At the time of publication, data collection for Studies 1 and 2 had been initiated.

Discussion

Innovation

We acknowledge that learnability, usability, and sustained quality of care are not the only important variables in the implementation of EBPIs and that intervention characteristics feature highly in implementation frameworks. To date, little work has been done to directly address EBPI or implementation strategy complexity and even less work has actively involved end users in the actual design of modifications, from beginning to end. Moreover, while the field is aware that “there is no implementation without adaptation” [25] and that EBPI characteristics drive adoption potential, there is no single resource or compilation of the targets needing modification or of the redesign solutions that are most usable by clinicians. An explicit focus on modification targets for implementation strategies is almost fully absent. Although tailoring interventions and strategies is frequently advocated in the implementation literature, specific methods of tailoring are sorely needed [79]. Very few implementation approaches attend to mechanisms of action [18,90] and even fewer attend to usability [25]. Because all UWAC studies use the DDBT framework and collect common core outcomes, we will be able to contribute substantially to the literature by (1) providing preliminary evidence of how robust the DDBT framework is in developing EBPI and implementation strategy modifications and solutions; (2) creating a typology of modification targets that will be disseminated publicly to facilitate future research by uncovering cross-cutting and context-specific modification needs; and (3) creating a matrix of redesign solutions matched to modification targets that will further fuel mechanistic science in implementation research.

Conclusion and Impact

Incorporation of HCD methods into implementation science has strong potential to improve the degree to which innovations are compelling, learnable, and ultimately implementable. Both fields share the common objective of facilitating the use of innovative products [22]. An extensive implementation science literature supports the importance of organizations, individuals, and contexts to the adoption and sustainment of EBPIs [91,92]. Despite a wealth of over 60 frameworks such as the CFIR [80], implementation research often assumes a relatively static innovation or implementation strategy, the high-integrity delivery of which tends to be a cornerstone of most studies. In contrast, HCD focuses primarily on the product itself, based on the assumption that a well-designed and compelling innovation is much more likely to be adopted, well-used, and sustained. The current, integrated DDBT framework draws on the strengths of each of these traditions to develop streamlined and effective EBPIs and implementation strategies. In the pursuit of integrated methods, we anticipate that the work of the UWAC will also identify a variety of barriers to integration that can be addressed as collaborative work between HCD and implementation evolves. To this end, we will build on related ongoing work to integrate these fields, such as a concept mapping study comparing HCD and implementation strategies and identifying pathways for collaboration [93]. As the science of adaptation continues to advance [94], findings yielded from the current Methods Core aims are intended to contribute significantly to both the implementation and HCD literature.

Acknowledgments

This paper was supported by the National Institute of Mental Health (grants P50MH115837, R01MH102304, R34MH109605, and R34MH109605). The funding body had no role in the study design, writing of the manuscript, or decision to submit the article for publication.

Abbreviations

- ALACRITY: Advanced Laboratories for Accelerating the Reach and Impact of Treatments for Youth and Adults with Mental Illness
- CFIR: Consolidated Framework for Implementation Research
- CPT: cognitive processing therapy
- DDBT: Discover, Design and Build, and Test
- EBPI: evidence-based psychosocial interventions
- FRAME: Framework for Reporting Adaptations and Modifications to Evidence-based Interventions
- HCD: human-centered design
- PST: problem-solving therapy
- SUS: System Usability Scale
- tCBT: telephone-based cognitive behavioral therapy
- UAF: User Action Framework
- UWAC: University of Washington’s Advanced Laboratories for Accelerating the Reach and Impact of Treatments for Youth and Adults with Mental Illness Center

Appendix

Multimedia Appendix 1: Example CONSORT (Consolidated Standards of Reporting Trials) diagrams for provider and patient participants in the Test phase of University of Washington’s Advanced Laboratories for Accelerating the Reach and Impact of Treatments for Youth and Adults with Mental Illness Center studies.

Multimedia Appendix 2: Human subjects and data protection.

Multimedia Appendix 3: Intervention Usability Scale.

Multimedia Appendix 4: Implementation Strategy Usability Scale.

Footnotes

Authors' Contributions: All authors contributed to study concept and design. PAA is the center director, led the center and design of all studies, and obtained funding. ARL, SAM, and DCA created the protocol for the Methods Core. ARL and SAM codirect the Methods Core. DCA and MDP assisted with statistical analysis planning. SAM and EF provided essential input on the methodology. ARL developed the initial manuscript outline and ARL, BNR, and PAA drafted the manuscript with contributions from all authors. All authors are contributing to the conduct of the studies and have read and approved the final manuscript for publication.

Conflicts of Interest: DCA is a cofounder with equity stake in a technology company, Lyssn.io, focused on tools to support training, supervision, and quality assurance of psychotherapy and counseling.

References

- 1. Raue PJ, Weinberger MI, Sirey JA, Meyers BS, Bruce ML. Preferences for depression treatment among elderly home health care patients. Psychiatr Serv. 2011 May;62(5):532–7. doi: 10.1176/ps.62.5.pss6205_0532. http://europepmc.org/abstract/MED/21532080.

- 2. Arean PA, Raue PJ, Sirey JA, Snowden M. Implementing evidence-based psychotherapies in settings serving older adults: challenges and solutions. Psychiatr Serv. 2012 Jun;63(6):605–7. doi: 10.1176/appi.ps.201100078. http://europepmc.org/abstract/MED/22638006.

- 3. Quiñones AR, Thielke SM, Beaver KA, Trivedi RB, Williams EC, Fan VS. Racial and ethnic differences in receipt of antidepressants and psychotherapy by veterans with chronic depression. Psychiatr Serv. 2014 Feb 01;65(2):193–200. doi: 10.1176/appi.ps.201300057.

- 4. Fernandez Y Garcia E, Franks P, Jerant A, Bell RA, Kravitz RL. Depression treatment preferences of Hispanic individuals: exploring the influence of ethnicity, language, and explanatory models. J Am Board Fam Med. 2011 Jan 05;24(1):39–50. doi: 10.3122/jabfm.2011.01.100118. http://www.jabfm.org/cgi/pmidlookup?view=long&pmid=21209343.

- 5. McHugh RK, Whitton SW, Peckham AD, Welge JA, Otto MW. Patient preference for psychological vs pharmacologic treatment of psychiatric disorders. J Clin Psychiatry. 2013 Jun 15;74(06):595–602. doi: 10.4088/jcp.12r07757.

- 6. Houle J, Villaggi B, Beaulieu M, Lespérance F, Rondeau G, Lambert J. Treatment preferences in patients with first episode depression. J Affect Disord. 2013 May;147(1-3):94–100. doi: 10.1016/j.jad.2012.10.016.

- 7. Dwight Johnson M, Apesoa-Varano C, Hay J, Unutzer J, Hinton L. Depression treatment preferences of older white and Mexican origin men. Gen Hosp Psychiatry. 2013 Jan;35(1):59–65. doi: 10.1016/j.genhosppsych.2012.08.003. http://europepmc.org/abstract/MED/23141027.

- 8. Linden M, Golier J. Challenges in the implementation of manualized psychotherapy in combat-related PTSD. CNS Spectr. 2008 Oct 07;13(10):872–80. doi: 10.1017/s1092852900016977.

- 9. Chambers DA. Advancing the science of implementation: a workshop summary. Adm Policy Ment Health. USA: Springer; 2008 Mar 27. pp. 3–10.

- 10. Goldman HH, Azrin ST. Public policy and evidence-based practice. Psychiatric Clinics of North America. 2003 Dec;26(4):899–917. doi: 10.1016/s0193-953x(03)00068-6.

- 11. Institute of Medicine. Psychosocial Interventions For Mental And Substance Use Disorders: A Framework For Establishing Evidence-based Standards. Washington, DC: National Academies Press; 2015.

- 12. Olfson M, Kroenke K, Wang S, Blanco C. Trends in office-based mental health care provided by psychiatrists and primary care physicians. J Clin Psychiatry. 2014 Mar 15;75(03):247–253. doi: 10.4088/jcp.13m08834.

- 13. Farmer EMZ, Burns BJ, Phillips SD, Angold A, Costello EJ. Pathways into and through mental health services for children and adolescents. Psychiatr Serv. 2003 Jan;54(1):60–6. doi: 10.1176/appi.ps.54.1.60.

- 14. Merikangas KR, He J, Burstein M, Swendsen J, Avenevoli S, Case B, Georgiades K, Heaton L, Swanson S, Olfson M. Service utilization for lifetime mental disorders in U.S. adolescents: results of the National Comorbidity Survey-Adolescent Supplement (NCS-A). J Am Acad Child Adolesc Psychiatry. 2011 Jan;50(1):32–45. doi: 10.1016/j.jaac.2010.10.006. http://europepmc.org/abstract/MED/21156268.

- 15. Lyon AR, Ludwig K, Romano E, Koltracht J, Vander Stoep A, McCauley E. Using modular psychotherapy in school mental health: provider perspectives on intervention-setting fit. J Clin Child Adolesc Psychol. 2014 Oct 17;43(6):890–901. doi: 10.1080/15374416.2013.843460. http://europepmc.org/abstract/MED/24134063.

- 16. Stewart RE, Chambless DL, Stirman SW. Decision making and the use of evidence-based practice: Is the three-legged stool balanced? Practice Innovations. 2018 Mar;3(1):56–67. doi: 10.1037/pri0000063.

- 17. Flottorp SA, Oxman AD, Krause J, Musila NR, Wensing M, Godycki-Cwirko M, Baker R, Eccles MP. A checklist for identifying determinants of practice: a systematic review and synthesis of frameworks and taxonomies of factors that prevent or enable improvements in healthcare professional practice. Implement Sci. 2013 Mar 23;8(1):35. doi: 10.1186/1748-5908-8-35. https://implementationscience.biomedcentral.com/articles/10.1186/1748-5908-8-35.

- 18. Lewis CC, Klasnja P, Powell BJ, Lyon AR, Tuzzio L, Jones S, Walsh-Bailey C, Weiner B. From classification to causality: Advancing understanding of mechanisms of change in implementation science. Front Public Health. 2018 May 7;6:136. doi: 10.3389/fpubh.2018.00136.

- 19. Cook CR, Lyon AR, Locke J, Waltz T, Powell BJ. Adapting a compilation of implementation strategies to advance school-based implementation research and practice. Prev Sci. 2019 Aug 31;20(6):914–935. doi: 10.1007/s11121-019-01017-1.

- 20. Leeman J, Birken SA, Powell BJ, Rohweder C, Shea CM. Beyond "implementation strategies": classifying the full range of strategies used in implementation science and practice. Implement Sci. 2017 Nov 03;12(1):125. doi: 10.1186/s13012-017-0657-x. https://implementationscience.biomedcentral.com/articles/10.1186/s13012-017-0657-x.

- 21. Powell BJ, Waltz TJ, Chinman MJ, Damschroder LJ, Smith JL, Matthieu MM, Proctor EK, Kirchner JE. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implement Sci. 2015 Feb 12;10(1):21. doi: 10.1186/s13012-015-0209-1. https://implementationscience.biomedcentral.com/articles/10.1186/s13012-015-0209-1.

- 22. Lyon AR, Koerner K. User-centered design for psychosocial intervention development and implementation. Clin Psychol Sci Pract. 2016 Jun 17;23(2):180–200. doi: 10.1111/cpsp.12154.

- 23. Proctor EK, Powell BJ, McMillen JC. Implementation strategies: recommendations for specifying and reporting. Implement Sci. 2013 Dec 1;8(1). doi: 10.1186/1748-5908-8-139.

- 24. International Organization for Standardization. ISO 9241-420:2011 - Ergonomics of human-system interaction — Part 420: Selection of physical input devices. [2019-09-10]. https://www.iso.org/standard/52938.html.

- 25. Lyon AR, Bruns EJ. User-centered redesign of evidence-based psychosocial interventions to enhance implementation—hospitable soil or better seeds? JAMA Psychiatry. 2019 Jan 01;76(1):3. doi: 10.1001/jamapsychiatry.2018.3060.

- 26. Alexopoulos GS, Raue PJ, Kiosses DN, Seirup JK, Banerjee S, Arean PA. Comparing engage with PST in late-life major depression: a preliminary report. Am J Geriatr Psychiatry. 2015 May;23(5):506–13. doi: 10.1016/j.jagp.2014.06.008. http://europepmc.org/abstract/MED/25081818.

- 27. Sburlati ES, Schniering CA, Lyneham HJ, Rapee RM. A model of therapist competencies for the empirically supported cognitive behavioral treatment of child and adolescent anxiety and depressive disorders. Clin Child Fam Psychol Rev. 2011 Mar 4;14(1):89–109. doi: 10.1007/s10567-011-0083-6.

- 28. Gehart D. The core competencies and MFT education: practical aspects of transitioning to a learning-centered, outcome-based pedagogy. J Marital Fam Ther. 2011 Jul;37(3):344–54. doi: 10.1111/j.1752-0606.2010.00205.x.

- 29. Crabb R, Areán PA, Hegel M. Sustained adoption of an evidence-based treatment: a survey of clinicians certified in problem-solving therapy. Depress Res Treat. 2012;2012:986547. doi: 10.1155/2012/986547.

- 30. Lewis CC, Fischer S, Weiner BJ, Stanick C, Kim M, Martinez RG. Outcomes for implementation science: an enhanced systematic review of instruments using evidence-based rating criteria. Implement Sci. 2015 Nov 4;10(1). doi: 10.1186/s13012-015-0342-x.

- 31.Dopp A, Parisi K, Munson S, Lyon A. A glossary of user-centered design strategies for implementation experts. Transl Behav Med. 2018 Dec 07; doi: 10.1093/tbm/iby119. [DOI] [PubMed] [Google Scholar]

- 32.Lyon AR, Comtois KA, Kerns SEU, Landes SJ, Lewis CC. Closing the science-practice gap in implementation before it opens. In: Mildon R, Albers B, Shlonsky A, editors. Effective Implementation Science. New York: Springer International Publishing; 2020. [Google Scholar]

- 33.Norman D, Draper S. User centered system design: new perspectives on human-computer interaction. Hillsdale, N.J. Hillsdale, NJ: L Erlbaum Associates; 1986. [Google Scholar]

- 34.Lyon AR, Koerner K, Chung J. How implementable is that evidence-based practice? A methodology for assessing complex innovation usability. Proceedings from the 11th Annual Conference on the Science of Dissemination and Implementation. Washington, DC, USA. Implementation Science. 2019 Mar 25;14(1):878. doi: 10.1186/s13012-019-0878-2. [DOI] [Google Scholar]

- 35.Alexopoulos GS, Raue PJ, Gunning F, Kiosses DN, Kanellopoulos D, Pollari C, Banerjee S, Arean PA. "Engage" therapy: Behavioral activation and improvement of late-life major depression. Am J Geriatr Psychiatry. 2016 Apr;24(4):320–6. doi: 10.1016/j.jagp.2015.11.006. http://europepmc.org/abstract/MED/26905044. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36. Lyon A, Coifman J, Cook H, Liu F, Ludwig K, Dorsey S, Koerner K, Munson S, McCauley E. The Cognitive Walk-Through for Implementation Strategies (CWIS): A pragmatic methodology for assessing strategy usability. 11th Annual Conference on the Science of Dissemination and Implementation; 2018; Washington, DC. 2018. p. A.

- 37. Mohr DC, Lyon AR, Lattie EG, Reddy M, Schueller SM. Accelerating digital mental health research from early design and creation to successful implementation and sustainment. J Med Internet Res. 2017 May 10;19(5):e153. doi: 10.2196/jmir.7725. https://www.jmir.org/2017/5/e153/

- 38. Proctor E, Hooley C, Morse A, McCrary S, Kim H, Kohl PL. Intermediary/purveyor organizations for evidence-based interventions in the US child mental health: characteristics and implementation strategies. Implementation Sci. 2019 Jan 14;14(1) doi: 10.1186/s13012-018-0845-3.

- 39. ISO: International Standards Organization. 2019. [2019-09-19]. ISO 9241-210:2019 Ergonomics of human-system interaction — Part 210: Human-centred design for interactive systems. https://www.iso.org/standard/77520.html.

- 40. Chorpita BF, Becker KD, Daleiden EL. Understanding the common elements of evidence-based practice: misconceptions and clinical examples. J Am Acad Child Adolesc Psychiatry. 2007 May;46(5):647–52. doi: 10.1097/chi.0b013e318033ff71.

- 41. Lyon AR, Stanick C, Pullmann MD. Toward high-fidelity treatment as usual: Evidence-based intervention structures to improve usual care psychotherapy. Clin Psychol Sci Pract. 2018 Dec 12;25(4):e12265. doi: 10.1111/cpsp.12265.

- 42. Meza RD, Jungbluth N, Sedlar G, Martin P, Berliner L, Wiltsey-Stirman S, Dorsey S. Clinician-reported modification to a CBT approach in children’s mental health. Journal of Emotional and Behavioral Disorders. 2019 Feb 13:106342661982836. doi: 10.1177/1063426619828369.

- 43. Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, Griffey R, Hensley M. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health. 2011 Mar 19;38(2):65–76. doi: 10.1007/s10488-010-0319-7. http://europepmc.org/abstract/MED/20957426.

- 44. Dwight-Johnson M, Aisenberg E, Golinelli D, Hong S, O'Brien M, Ludman E. Telephone-based cognitive-behavioral therapy for Latino patients living in rural areas: A randomized pilot study. Psychiatric Services. 2011 Aug 01;62(8) doi: 10.1176/appi.ps.62.8.936.

- 45. Nezu AM. Efficacy of a social problem-solving therapy approach for unipolar depression. Journal of Consulting and Clinical Psychology. 1986;54(2):196–202. doi: 10.1037//0022-006x.54.2.196.

- 46. Resick PA, Schnicke MK. Cognitive processing therapy for sexual assault victims. Journal of Consulting and Clinical Psychology. 1992;60(5):748–756. doi: 10.1037//0022-006x.60.5.748.

- 47. Holtzblatt KW, Wood S. Rapid Contextual Design: A How-to Guide to Key Techniques for User-Centered Design. San Francisco, CA: Morgan Kaufmann; 2004.

- 48. Maguire M. Methods to support human-centred design. International Journal of Human-Computer Studies. 2001 Oct;55(4):587–634. doi: 10.1006/ijhc.2001.0503.

- 49. Rubin J, Chisnell D. Handbook of usability testing: how to plan, design, and conduct effective tests. 2nd ed. Indianapolis, IN: Wiley; 2008.

- 50. Albert W, Tullis T. Measuring the User Experience: Collecting, Analyzing, and Presenting Usability Metrics (Interactive Technologies). Waltham, MA: Morgan Kaufmann; 2019.

- 51. Beyer H, Holtzblatt K. Contextual Design: Defining Customer-Centered Systems (Interactive Technologies). Waltham, MA: Morgan Kaufmann; 1998.

- 52. Gould JD, Lewis C. Designing for usability: key principles and what designers think. Commun ACM. 1985;28(3):300–311. doi: 10.1145/3166.3170.

- 53. Cooper A, Reimann R, Cronin D. About Face 3: The Essentials of Interaction Design. Indianapolis, IN: Wiley; 2007.

- 54. Grudin J, Pruitt J. Personas, Participatory Design and Product Development: An Infrastructure for Engagement. Proceedings of Participation and Design Conference; 2002; Sweden. 2002. https://pdfs.semanticscholar.org/eb77/6eaa198047962dabe619e9cdd3de4433a44e.pdf.

- 55. Kujala S, Mäntylä M. How effective are user studies? In: McDonald S, Waern Y, Cockton G, editors. People and computers XIV - usability or else! London: Springer-Verlag; 2000. pp. 61–71.

- 56. Kujala S, Kauppinen M. Identifying and Selecting Users for User-Centered Design. Nordic Conference on Computer Human Interaction; 2004; Tampere, Finland. 2004. https://pdfs.semanticscholar.org/23e1/079142e2651c85ef28b23a4798d09da4b172.pdf.

- 57. Franks RP, Bory CT. Who supports the successful implementation and sustainability of evidence-based practices? Defining and understanding the roles of intermediary and purveyor organizations. New Dir Child Adolesc Dev. 2015 Sep 16;2015(149):41–56. doi: 10.1002/cad.20112.

- 58. Cooper A, Reimann R, Cronin D. About face 3: The essentials of interaction design. Indianapolis, IN: Wiley Pub; 2007.

- 59. Palinkas LA, Aarons GA, Horwitz S, Chamberlain P, Hurlburt M, Landsverk J. Mixed method designs in implementation research. Adm Policy Ment Health. 2010 Oct 22;38(1):44–53. doi: 10.1007/s10488-010-0314-z.

- 60. Tornatzky LG, Klein KJ. Innovation characteristics and innovation adoption-implementation: A meta-analysis of findings. IEEE Trans Eng Manage. 1982 Feb;EM-29(1):28–45. doi: 10.1109/tem.1982.6447463.

- 61. Wei J, Salvendy G. The cognitive task analysis methods for job and task design: review and reappraisal. Behaviour & Information Technology. 2004 Jul;23(4):273–299. doi: 10.1080/01449290410001673036.

- 62. Bligård L, Osvalder A. Enhanced cognitive walkthrough: Development of the cognitive walkthrough method to better predict, identify, and present usability problems. Advances in Human-Computer Interaction. 2013;2013:1–17. doi: 10.1155/2013/931698.

- 63. Boren T, Ramey J. Thinking aloud: reconciling theory and practice. IEEE Trans Profess Commun. 2000;43(3):261–278. doi: 10.1109/47.867942.

- 64. Jääskeläinen R. Think aloud protocol. In: Gambier Y, Doorslaer L, editors. Handbook of Translation Studies: Volume 1. Amsterdam, Netherlands: John Benjamins Publishing Company; 2010. pp. 371–373.

- 65. Wilson J, Rosenberg D. Rapid prototyping for user interface design. In: Helander M, editor. Handbook of Human-Computer Interaction. Amsterdam, Netherlands: North-Holland; 1988. pp. 859–875.

- 66. Nielsen J, Landauer T. A mathematical model of the finding of usability problems. Proceedings of the INTERACT '93 and CHI '93 Conference on Human Factors in Computing Systems; 1993; Amsterdam, Netherlands. 1993. pp. 206–213. https://dl.acm.org/citation.cfm?id=169166.

- 67. Hwang W, Salvendy G. Number of people required for usability evaluation. Commun ACM. 2010 May 01;53(5):130. doi: 10.1145/1735223.1735255.

- 68. Curran GM, Bauer M, Mittman B, Pyne JM, Stetler C. Effectiveness-implementation hybrid designs. Medical Care. 2012;50(3):217–226. doi: 10.1097/mlr.0b013e3182408812.

- 69. Craig SD, Graesser AC, Perez RS. Advances from the Office of Naval Research STEM Grand Challenge: expanding the boundaries of intelligent tutoring systems. IJ STEM Ed. 2018 Apr 12;5(1) doi: 10.1186/s40594-018-0111-x.

- 70. Brooke J. SUS: A quick and dirty usability scale. In: Jordan PW, Thomas B, Weerdmeester BA, McClelland AL, editors. Usability evaluation in industry. London: Taylor and Francis; 1996.

- 71. Weiner BJ, Lewis CC, Stanick C, Powell BJ, Dorsey CN, Clary AS, Boynton MH, Halko H. Psychometric assessment of three newly developed implementation outcome measures. Implementation Sci. 2017 Aug 29;12(1) doi: 10.1186/s13012-017-0635-3.

- 72. Vallis TM, Shaw BF, Dobson KS. The Cognitive Therapy Scale: Psychometric properties. Journal of Consulting and Clinical Psychology. 1986;54(3):381–385. doi: 10.1037//0022-006x.54.3.381.

- 73. Raghavan R. The role of economic evaluation in dissemination and implementation research. In: Brownson RC, Colditz GA, Proctor EK, editors. Dissemination and implementation research in health: Translating science to practice. New York: Oxford Scholarship; 2012. pp. 94–113.

- 74. Sheehan DV, Harnett-Sheehan K, Raj BA. The measurement of disability. International Clinical Psychopharmacology. 1996;11(Supplement 3):89–95. doi: 10.1097/00004850-199606003-00015.

- 75. Leon AC, Olfson M, Portera L, Farber L, Sheehan DV. Assessing psychiatric impairment in primary care with the Sheehan Disability Scale. Int J Psychiatry Med. 1997 Jul;27(2):93–105. doi: 10.2190/t8em-c8yh-373n-1uwd.

- 76. Kroenke K, Spitzer RL, Williams JBW. The PHQ-9: validity of a brief depression severity measure. J Gen Intern Med. 2001 Sep;16(9):606–13. doi: 10.1046/j.1525-1497.2001.016009606.x. https://onlinelibrary.wiley.com/resolve/openurl?genre=article&sid=nlm:pubmed&issn=0884-8734&date=2001&volume=16&issue=9&spage=606.

- 77. Aarons GA, Fettes DL, Hurlburt MS, Palinkas LA, Gunderson L, Willging CE, Chaffin MJ. Collaboration, negotiation, and coalescence for interagency-collaborative teams to scale-up evidence-based practice. J Clin Child Adolesc Psychol. 2014 Mar 10;43(6):915–28. doi: 10.1080/15374416.2013.876642. http://europepmc.org/abstract/MED/24611580.

- 78. Hurlburt M, Aarons GA, Fettes D, Willging C, Gunderson L, Chaffin MJ. Interagency collaborative team model for capacity building to scale-up evidence-based practice. Child Youth Serv Rev. 2014 Apr;39:160–168. doi: 10.1016/j.childyouth.2013.10.005. http://europepmc.org/abstract/MED/27512239.

- 79. Powell BJ, Beidas RS, Lewis CC, Aarons GA, McMillen JC, Proctor EK, Mandell DS. Methods to improve the selection and tailoring of implementation strategies. J Behav Health Serv Res. 2015 Aug 21;44(2):177–194. doi: 10.1007/s11414-015-9475-6.

- 80. Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implementation Sci. 2009 Aug 07;4(1) doi: 10.1186/1748-5908-4-50.

- 81. Lavery D, Cockton G, Atkinson MP. Comparison of evaluation methods using structured usability problem reports. Behaviour & Information Technology. 1997 Jan;16(4-5):246–266. doi: 10.1080/014492997119824.

- 82. Khajouei R, Peute L, Hasman A, Jaspers M. Classification and prioritization of usability problems using an augmented classification scheme. J Biomed Inform. 2011 Dec;44(6):948–57. doi: 10.1016/j.jbi.2011.07.002. https://linkinghub.elsevier.com/retrieve/pii/S1532-0464(11)00120-1.