Abstract

Heterogeneity is an unwanted variation when analyzing aggregated datasets from multiple sources. Though different methods have been proposed for heterogeneity adjustment, no systematic theory exists to justify these methods. In this work, we propose a generic framework named ALPHA (short for Adaptive Low-rank Principal Heterogeneity Adjustment) to model, estimate, and adjust heterogeneity from the original data. Once the heterogeneity is adjusted, we are able to remove the batch effects and to enhance the inferential power by aggregating the homogeneous residuals from multiple sources. Under a pervasiveness assumption that the latent heterogeneity factors simultaneously affect a non-vanishing fraction of the observed variables, we provide a rigorous theory to justify the proposed framework. Our framework also allows the incorporation of informative covariates and appeals to the 'blessing of dimensionality'. As an illustrative application of this generic framework, we consider the problem of estimating a high-dimensional precision matrix for graphical model inference based on multiple datasets. We also provide thorough numerical studies on both synthetic datasets and a brain imaging dataset to demonstrate the efficacy of the developed theory and methods.

Keywords: Multiple sourcing, batch effect, semiparametric factor model, principal component analysis, brain image network

1. Introduction

Aggregating and analyzing heterogeneous data is one of the most fundamental challenges in scientific data analysis. In particular, the intrinsic heterogeneity across multiple data sources violates the ideal 'independent and identically distributed' sampling assumption and may produce misleading results if it is ignored. For example, in genomics, data heterogeneity is ubiquitous and is referred to as either the 'batch effect' or the 'lab effect'. As characterized in [29], microarray gene expression data obtained from different labs on different processing dates may contain systematic variability. Furthermore, [30] pointed out that heterogeneity across multiple data sources may be caused by unobserved factors that have confounding effects on the variables of interest, generating spurious signals. In finance, it is also known that asset returns are driven by varying market regimes and economic conditions, which can be regarded as a temporal batch effect. Therefore, to properly analyze data aggregated from multiple sources, we need to carefully model and adjust for the unwanted variations.

Modeling and estimating the heterogeneity effect is challenging for two reasons. (i) Typically, we can only access a limited number of samples from an individual group, given the high cost of biological experiments, technological constraints or rapid switching of economic regimes. (ii) The dimensionality can be much larger than the total aggregated number of samples. The past decade has witnessed the development of many methods for adjusting batch effects in high-throughput genomics data. See, for example, [43], [2], [30], and [25]. Though progress has been made, most of the aforementioned papers focus on the practical side, and none of them has a systematic theoretical justification. In fact, most of these methods are developed case by case and are only applicable to certain problem domains. Thus, a gap still exists between practice and theory.

To bridge this gap, we propose a generic theoretical framework to model, estimate, and adjust heterogeneity across multiple datasets. Formally, we assume the data come from m different sources: the ith data source contributes $n_i$ samples, each having p measurements such as gene expressions of an individual or stock returns of a day. To explicitly model heterogeneity, we assume that the batch-specific latent factors $\mathbf{f}_t^i \in \mathbb{R}^{K_i}$ influence the observed data $X_{jt}^i$ in batch i (j indexes variables; t indexes samples) as in the approximate factor model:

$$X_{jt}^i = (\boldsymbol{\lambda}_j^i)'\mathbf{f}_t^i + u_{jt}^i, \qquad j \le p,\ t \le n_i, \tag{1.1}$$

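where $\boldsymbol{\lambda}_j^i$ is an unknown factor loading for variable j and $u_{jt}^i$ is a true uncorrupted signal. We consider a random loading $\boldsymbol{\lambda}_j^i$. The linear term $(\boldsymbol{\lambda}_j^i)'\mathbf{f}_t^i$ models the heterogeneity effect. We assume that $\mathbf{u}_t^i = (u_{1t}^i,\ldots,u_{pt}^i)'$ is independent of $\mathbf{f}_t^i$ and shares the same distribution, with mean $\mathbf{0}$ and covariance $\boldsymbol{\Sigma}_{p\times p}$, across all data sources. In matrix form, (1.1) can be written as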

$$\mathbf{X}^i = \boldsymbol{\Lambda}^i(\mathbf{F}^i)' + \mathbf{U}^i, \tag{1.2}$$

where $\mathbf{X}^i$ is a $p\times n_i$ data matrix in the ith batch, $\boldsymbol{\Lambda}^i$ is a $p\times K_i$ factor loading matrix with $(\boldsymbol{\lambda}_j^i)'$ in the jth row, $\mathbf{F}^i$ is an $n_i\times K_i$ factor matrix and $\mathbf{U}^i$ is a signal matrix of dimension $p\times n_i$. We allow the number of latent factors $K_i$ to depend on batch i. We emphasize that within one batch, our model is homogeneous. Heterogeneity in this paper refers to the fact that the batch-effect terms $\boldsymbol{\Lambda}^i(\mathbf{F}^i)'$ differ across groups $i = 1,\ldots,m$; these are the unwanted variations in our study.

To see more clearly how model (1.2) characterizes the heterogeneity, note that for the tth sample $\mathbf{x}_t^i$, which is the tth column of $\mathbf{X}^i$, treating the loadings as given,

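$$\mathbf{x}_t^i = \boldsymbol{\Lambda}^i\mathbf{f}_t^i + \mathbf{u}_t^i, \qquad \operatorname{Cov}(\mathbf{x}_t^i) = \boldsymbol{\Lambda}^i\operatorname{Cov}(\mathbf{f}_t^i)(\boldsymbol{\Lambda}^i)' + \boldsymbol{\Sigma}. \tag{1.3}$$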

Therefore, the heterogeneity is carried by the low-rank component $\boldsymbol{\Lambda}^i\operatorname{Cov}(\mathbf{f}_t^i)(\boldsymbol{\Lambda}^i)'$ of the population covariance matrix of $\mathbf{x}_t^i$. Since we assume that both $\mathbf{F}^i$ and $\mathbf{U}^i$ have mean zero, the heterogeneity considered in this paper concerns the covariance structure shown above rather than the mean structure. In addition, our model differs from the random/mixed effect regression models studied in the literature [45, 23, 11]: ours are factor models in which no factors are observed, whereas a mixed/random effect model is a regression model that requires covariate matrices to estimate the batch-specific term.
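As a small numerical illustration of (1.3), the sketch below builds the population covariance of three hypothetical batches with $K_i = 1, 2, 3$ factors. It assumes $\operatorname{Cov}(\mathbf{f}_t^i) = \mathbf{I}_{K_i}$ and a diagonal Σ chosen only for illustration, and checks that each batch covariance deviates from the common Σ by a matrix of rank $K_i$.

```python
import numpy as np

rng = np.random.default_rng(0)
p = 30
Sigma = np.diag(rng.uniform(0.5, 1.5, p))     # common covariance of u_t^i (illustrative choice)
K_by_batch = {1: 1, 2: 2, 3: 3}               # hypothetical batch-specific numbers of factors K_i

cov = {}
for i, K_i in K_by_batch.items():
    Lam = rng.standard_normal((p, K_i))       # loadings Lambda^i, treated as given
    cov[i] = Lam @ Lam.T + Sigma              # (1.3) with Cov(f_t^i) = I_{K_i} (assumption)

for i, C in cov.items():
    # The batch effect C - Sigma is a rank-K_i perturbation of the common Sigma.
    print(i, np.linalg.matrix_rank(C - Sigma))
```

The batches share Σ but not their covariances, which is exactly why naively pooling the raw data would mix several different low-rank perturbations into the estimate of Σ.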

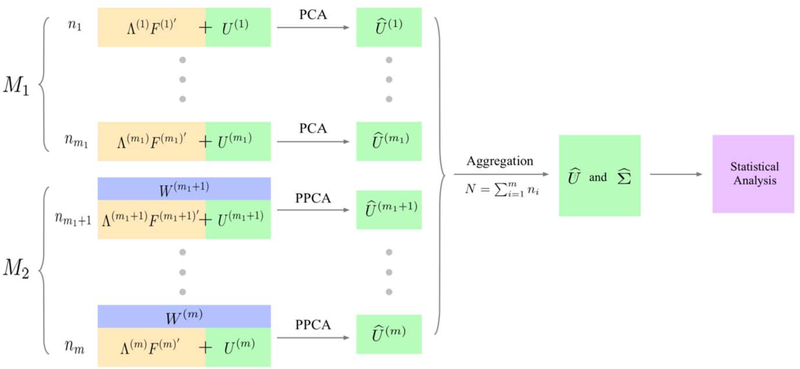

Under a pervasiveness assumption, the heterogeneity component can be estimated by directly applying principal component analysis (PCA) or Projected-PCA (PPCA), the latter being more accurate when sufficiently informative covariates $\mathbf{W}^i$ are available [18]. Let $\widehat{\boldsymbol{\Lambda}}^i(\widehat{\mathbf{F}}^i)'$ be the estimated heterogeneity component and $\widehat{\mathbf{U}}^i = \mathbf{X}^i - \widehat{\boldsymbol{\Lambda}}^i(\widehat{\mathbf{F}}^i)'$ the heterogeneity-adjusted signal, which can be treated as homogeneous across datasets and thus combined for downstream statistical analysis. This whole framework of heterogeneity adjustment is termed ALPHA (short for Adaptive Low-rank Principal Heterogeneity Adjustment) and is schematically shown in Figure 1.

Fig 1.

Schematic illustration of ALPHA: depending on whether we can find sufficiently informative covariates W, we implement principal component analysis (PCA) or Projected-PCA (PPCA) (labeled M1 and M2, respectively) to remove the heterogeneity effects ΛF′ for each batch of data. This choice is made adaptively by a heuristic rule. After the unwanted variations are removed, the homogeneous data are aggregated for further analysis.

The proposed ALPHA framework is fully generic and applicable to almost all kinds of multivariate analysis of the combined, heterogeneity-adjusted datasets. As an illustrative example, in this paper we focus on the problem of Gaussian graphical model inference based on multiple datasets. The Gaussian graphical model is a powerful tool for exploring complex dependence structure among variables X = (X1,…,Xp)′. The sparsity pattern of the precision matrix Ω = Σ−1 encodes an undirected graph G = (V,E), where V consists of p vertices corresponding to the p variables in X and E describes their dependence relationships. To be specific, Vi and Vj are linked by an edge if and only if $\Omega_{ij} \neq 0$ (the (i,j)th element of Ω), meaning that Xi and Xj are dependent conditional on the rest of the variables. For heterogeneous data across m data sources, we need to first adjust for heterogeneity using the ALPHA framework. Covariate-adjusted precision matrix estimation has been studied by [7], but they assumed observed factors and no heterogeneity, i.e., m = 1.
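To make the link between the sparsity of Ω and the graph concrete, here is a minimal NumPy sketch for a hypothetical four-variable chain graph: the edge set is read off the nonzero off-diagonal entries of Ω, while the covariance Σ = Ω⁻¹ is dense. The helper name `graph_edges` and its tolerance are illustrative choices.

```python
import numpy as np

def graph_edges(Omega, tol=1e-10):
    """Edge set {(i, j): i < j, |Omega_ij| > tol} of the Gaussian graphical model."""
    p = Omega.shape[0]
    return [(i, j) for i in range(p) for j in range(i + 1, p) if abs(Omega[i, j]) > tol]

# Hypothetical chain X1 - X2 - X3 - X4 encoded by a positive definite tridiagonal precision matrix.
p = 4
Omega = 2.0 * np.eye(p) - 0.8 * (np.eye(p, k=1) + np.eye(p, k=-1))
Sigma = np.linalg.inv(Omega)

print(graph_edges(Omega))    # [(0, 1), (1, 2), (2, 3)]: only neighbours are conditionally dependent
print(abs(Sigma[0, 2]) > 0)  # X1 and X3 are nevertheless marginally correlated
```

The contrast between the sparse Ω and the dense Σ is why the graph is read from the precision matrix rather than from the covariance.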

A substantial literature has focused on estimating the precision matrix Ω of graphical models for homogeneous data. [49] and [20] developed the graphical Lasso method using the L1 penalty, and [27] and [42] used non-convex penalties. Furthermore, [40] and [33] studied the theoretical properties under different assumptions. Estimating Ω can be equivalently reformulated as a set of node-wise sparse linear regressions that use the Lasso or the Dantzig selector for each node [35, 48]. To relax the Gaussian assumption, [32] and [31] extended the graphical model to the semiparametric Gaussian copula and the transelliptical family. Via the ALPHA framework, we can combine the adjusted data to construct an estimator of Ω by the above methods. Recent works also focus on joint estimation of multiple Gaussian or discrete graphical models that share some common structure [22, 15, 47, 8, 21]. They are concerned with both the commonality and the individual uniqueness of the graphs. In comparison, ALPHA focuses on heterogeneity-adjusted aggregation for one single graph.

The rest of the paper is organized as follows. Section 2 lays out a basic problem setup and necessary assumptions. We model the heterogeneity by a semiparametric factor model. Section 3 introduces the ALPHA methodology for heterogeneity adjustment. Two main methods PCA and PPCA will be introduced for adjusting the factor effects under different regimes. A guiding rule of thumb is also proposed to determine which method is more appropriate. The heterogeneity-adjusted data will be combined to provide valid graph estimation in Section 4. The CLIME method of [9] is applied for precision matrix estimation. Synthetic and real data analyses are carried out to demonstrate the proposed framework in Section 5. Section 6 contains further discussions and all the proofs are relegated to the appendix.

2. Problem setup

To use the external covariate information more efficiently in removing the heterogeneity effect, we first present a semiparametric factor model. Then, based on whether the collected external covariates have explanatory power on the factor loadings, we discuss two regimes in which PCA or PPCA, respectively, should be used. We state the conditions under which these methods can be formally justified.

2.1. Semiparametric factor model

We assume that for subgroup i, we have d external covariates $\mathbf{W}_j^i \in \mathbb{R}^d$ for variable j. In stock returns, these can be attributes of a firm; in brain imaging, these can be the physical locations of voxels. We assume that these covariates have some explanatory power on the loading parameters $\boldsymbol{\lambda}_j^i$ in (1.1), so that the loadings can be further modeled as $\boldsymbol{\lambda}_j^i = \mathbf{g}^i(\mathbf{W}_j^i) + \boldsymbol{\gamma}_j^i$, where $\mathbf{g}^i(\cdot)$ is the effect of the external covariates on $\boldsymbol{\lambda}_j^i$ and $\boldsymbol{\gamma}_j^i$ is the part that cannot be explained by the covariates. Thus, model (1.1) can be written as

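$$X_{jt}^i = \{\mathbf{g}^i(\mathbf{W}_j^i) + \boldsymbol{\gamma}_j^i\}'\mathbf{f}_t^i + u_{jt}^i. \tag{2.1}$$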

Model (2.1) imposes little restriction. If $\mathbf{W}_j^i$ is not informative at all, i.e., $\mathbf{g}^i(\cdot) = \mathbf{0}$, the model reduces to a regular factor model. In matrix form, model (2.1) can be written as

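$$\mathbf{X}^i = \{\mathbf{G}^i(\mathbf{W}^i) + \boldsymbol{\Gamma}^i\}(\mathbf{F}^i)' + \mathbf{U}^i. \tag{2.2}$$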

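In (2.2), $\mathbf{G}^i(\mathbf{W}^i)$ and $\boldsymbol{\Gamma}^i$ are $p\times K_i$ matrices. More specifically, $g_k^i(\mathbf{W}_j^i)$ and $\gamma_{jk}^i$ are the (j,k)th elements of $\mathbf{G}^i(\mathbf{W}^i)$ and $\boldsymbol{\Gamma}^i$, respectively. Expression (2.2) suggests that the observed data can be decomposed into a low-rank heterogeneity term $\{\mathbf{G}^i(\mathbf{W}^i) + \boldsymbol{\Gamma}^i\}(\mathbf{F}^i)'$ and a homogeneous signal term $\mathbf{U}^i$. Letting $\mathbf{u}_t^i$ be the tth column of $\mathbf{U}^i$, we assume that all $\mathbf{u}_t^i$ share the same distribution for any $t\le n_i$ and all subgroups $i\le m$, with $\mathrm{E}\,\mathbf{u}_t^i = \mathbf{0}$ and $\operatorname{Cov}(\mathbf{u}_t^i) = \boldsymbol{\Sigma}$.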

There is a large literature on factor models in econometrics [3, 5, 17, 44], machine learning [10, 36] and random matrix theory [26, 38, 46]. We refer interested readers to those papers and the references therein. However, none of these models incorporates external covariate information. The semiparametric factor model (2.1) was first proposed by [14] and further investigated by [13] and [18]. Using sufficiently informative external covariates, we are able to estimate the factors and loadings more accurately, and hence obtain a better adjustment for heterogeneity.

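Here we collect the notation for eigenvalues and matrix norms used in the paper. For a matrix M, we use λmax(M), λmin(M) and λi(M) to denote the maximum eigenvalue, the minimum eigenvalue and the ith eigenvalue of M, respectively. We define $\|\mathbf{M}\|_{\max} = \max_{i,j}|M_{ij}|$, $\|\mathbf{M}\|_2 = \lambda_{\max}^{1/2}(\mathbf{M}'\mathbf{M})$, $\|\mathbf{M}\|_F = (\sum_{i,j}M_{ij}^2)^{1/2}$, $\|\mathbf{M}\|_1 = \max_j\sum_i|M_{ij}|$ and $|\mathbf{M}|_1 = \sum_{i,j}|M_{ij}|$ to be its entry-wise maximum, spectral, Frobenius, induced ℓ1 and element-wise ℓ1 norms, respectively.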

2.2. Modeling assumptions and general methodology

In this subsection, we explicitly list all the required modeling assumptions. We start with an introduction of the data generating processes.

Assumption 2.1 (Data generating process). (i) .

(ii) $\{\mathbf{u}_t^i\}_{t\le n_i,\, i\le m}$ are independently and identically sub-Gaussian distributed with mean zero and covariance Σ within and between subgroups, and are independent of $\{\mathbf{f}_t^i\}$. Let .

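(iii) $\{\mathbf{f}_t^i\}_{t\le n_i}$ is a stationary process with arbitrary temporal dependence. The tail of the factors is sub-Gaussian, i.e., there exist C1, C2 > 0 such that $\mathrm{P}(|\boldsymbol{\alpha}'\mathbf{f}_t^i| > s) \le C_1\exp(-C_2 s^2/\|\boldsymbol{\alpha}\|_2^2)$ for any $\boldsymbol{\alpha}\in\mathbb{R}^{K_i}\setminus\{\mathbf{0}\}$ and $s > 0$.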

The above set of assumptions is commonly used in the literature; see [5] and [18]. We omit detailed discussions here.

Based on whether the external covariates are informative, we specify two regimes, each of which requires some additional technical conditions.

2.2.1. Regime 1: External covariates are not informative

For the case that the external covariates do not have enough explanatory power on the factor loadings Λi, we ignore the semiparametric structure and model (2.2) reduces to the traditional factor model, extensively studied in econometrics [3, 44, 37]. PCA will be employed in Section 3.1 to estimate the heterogeneous effect. It requires the following assumptions.

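Assumption 2.2. (i) (Pervasiveness) There are two positive constants cmin, cmax > 0 so that
$$c_{\min} < \lambda_{\min}\big(p^{-1}(\boldsymbol{\Lambda}^i)'\boldsymbol{\Lambda}^i\big) \le \lambda_{\max}\big(p^{-1}(\boldsymbol{\Lambda}^i)'\boldsymbol{\Lambda}^i\big) < c_{\max}.$$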

(ii) .

The first condition is common and essential in the factor model literature (e.g., [44]). It requires the factors to be strong enough that the covariance matrix has spiked eigenvalues. We emphasize that this condition is not as stringent as it looks. Consider a single-factor model Yit = bift + uit, i = 1,…,p, t = 1,…,T. The pervasiveness assumption then implies that $c_{\min} < p^{-1}\sum_{i=1}^p b_i^2 < c_{\max}$. Since cmin can be a small constant, our pervasiveness assumption just says that the factors have a non-negligible effect on a non-vanishing proportion of outcomes. In addition, this condition is trivially true if the $\boldsymbol{\lambda}_j^i$'s can be regarded as random samples from a population with a non-degenerate covariance matrix [17]. Practically, in fMRI data analysis for instance, the lab environment (temperature, air pressure, etc.) or the mental status of the subject being scanned may cause the BOLD (Blood-Oxygen-Level Dependent) level to be uniformly higher at a certain time t. This means the brain heterogeneity is globally driven by the factors $\mathbf{f}_t^i$. If the batch effect is limited to a small number of dimensions, we think it is more appropriate to assume sparsity instead of pervasiveness of the top eigenvectors, which is quite different from our problem setup and thus beyond the scope of our paper. The second condition holds if the population has a sub-Gaussian tail.
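The single-factor example can be checked numerically. Assuming unit factor and noise variances (an illustrative choice), $\operatorname{Cov}(Y_t) = \mathbf{b}\mathbf{b}' + \mathbf{I}_p$ has one spiked eigenvalue $\|\mathbf{b}\|_2^2 + 1$, which grows linearly in p when $p^{-1}\sum_i b_i^2$ stays bounded away from zero, while all other eigenvalues equal 1. A loading vector supported on only a few variables violates pervasiveness.

```python
import numpy as np

# Single-factor model Y_it = b_i f_t + u_it with Var(f_t) = Var(u_it) = 1 (illustrative),
# so that Cov(Y_t) = b b' + I_p.
rng = np.random.default_rng(1)
p = 400
b = rng.standard_normal(p)             # loadings spread over all p variables
cov = np.outer(b, b) + np.eye(p)

eigvals = np.linalg.eigvalsh(cov)      # ascending order
print(b @ b / p)                       # p^{-1} sum_i b_i^2, close to E b_i^2 = 1
print(eigvals[-1], b @ b + 1)          # the spike equals ||b||^2 + 1, i.e. it is of order p
print(eigvals[:-1].max())              # all remaining eigenvalues equal 1

b_sparse = np.zeros(p)
b_sparse[:5] = 1.0                     # batch effect confined to 5 variables
print(b_sparse @ b_sparse / p)         # equals 5 / p, which vanishes as p grows
```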

2.2.2. Regime 2: External covariates are informative

When covariates are informative, we will employ the PPCA [18] to estimate the heterogeneous effect. It requires the following assumptions.

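Assumption 2.3. (i) (Pervasiveness) There are two positive constants cmin and cmax so that
$$c_{\min} < \lambda_{\min}\big(p^{-1}\mathbf{G}^i(\mathbf{W}^i)'\mathbf{G}^i(\mathbf{W}^i)\big) \le \lambda_{\max}\big(p^{-1}\mathbf{G}^i(\mathbf{W}^i)'\mathbf{G}^i(\mathbf{W}^i)\big) < c_{\max}.$$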

(ii) .

This assumption is parallel to Assumption 2.2 (i). Pervasiveness is trivially satisfied if $\{\mathbf{W}_j^i\}_{j\le p}$ are independent and $\mathbf{G}^i$ is sufficiently smooth.

Assumption 2.4. (i) .

(ii) Write . We assume are independent of .

(iii) Define . We assume

Condition (i) is parallel to Assumption 2.2 (ii), whereas Condition (ii) is natural since Γi cannot be explained by Wi. Condition (iii) imposes cross-sectional weak dependence of $\{\boldsymbol{\gamma}_j^i\}_{j\le p}$, which is much weaker than assuming that the $\boldsymbol{\gamma}_j^i$'s are independent and identically distributed. This condition is mild as the main serial dependency has been taken care of by the gk(·)'s.

3. The ALPHA framework

We introduce the ALPHA framework for heterogeneity adjustment. Methodologically, for each sub-dataset we aim to estimate the heterogeneity component and subtract it from the raw data. Theoretically, we aim to obtain the explicit rates of convergence for both the corrected homogeneous signal and its sample covariance matrix. Those rates will be useful when aggregating the homogeneous residuals from multiple sources.

This section covers the details of heterogeneity adjustment under the above two regimes, which correspond to estimating Ui by either PCA or PPCA. From now on, we drop the superscript i whenever there is no confusion, as we focus on the ith data source. We use the notation $\widetilde{\mathbf{F}}$ if F is estimated by PCA and $\widehat{\mathbf{F}}$ if it is estimated by PPCA. This convention applies to other related quantities such as $\widetilde{\boldsymbol{\Lambda}}$ and $\widetilde{\mathbf{U}}$, the heterogeneity-adjusted estimator. In addition, we use notation such as $\check{\mathbf{F}}$ and $\check{\mathbf{U}}$ to denote the final estimators, which are $\widetilde{\mathbf{F}}$ and $\widetilde{\mathbf{U}}$ if PCA is used, and $\widehat{\mathbf{F}}$ and $\widehat{\mathbf{U}}$ if PPCA is used.

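The estimators of the latent factors under regimes 1 and 2 satisfy $\check{\mathbf{F}}'\check{\mathbf{F}}/n = \mathbf{I}_K$, which corresponds to the normalization in Assumption 2.1 (i). By the principle of least squares, the loading estimator is $\check{\boldsymbol{\Lambda}} = n^{-1}\mathbf{X}\check{\mathbf{F}}$, and the residual estimator of U then admits the form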

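$$\check{\mathbf{U}} = \mathbf{X} - \frac{1}{n}\mathbf{X}\check{\mathbf{F}}\check{\mathbf{F}}' = \mathbf{X}\Big(\mathbf{I}_n - \frac{1}{n}\check{\mathbf{F}}\check{\mathbf{F}}'\Big). \tag{3.1}$$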

3.1. Estimating factors by PCA

In regime 1, we directly use PCA to adjust data heterogeneity. PCA estimates F by $\widetilde{\mathbf{F}}$, where the kth column of $\widetilde{\mathbf{F}}/\sqrt{n}$ is the eigenvector of the $n\times n$ matrix $\mathbf{X}'\mathbf{X}$ corresponding to its kth largest eigenvalue. We have the following theoretical results.
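The PCA step and the least-squares residual (3.1) can be sketched in a few lines of NumPy. The data-generating choices (Gaussian loadings, factors and signal with Σ = I_p) and the function names `pca_factors` and `adjust` are assumptions made for illustration only.

```python
import numpy as np

def pca_factors(X, K):
    """PCA estimate of F: sqrt(n) times the top-K eigenvectors of the n x n matrix X'X."""
    n = X.shape[1]
    eigvals, eigvecs = np.linalg.eigh(X.T @ X)            # eigenvalues in ascending order
    top = np.argsort(eigvals)[::-1][:K]
    return np.sqrt(n) * eigvecs[:, top]                   # normalized so that F'F / n = I_K

def adjust(X, F):
    """Residual (3.1): X - Lambda F' with least-squares loadings Lambda = X F / n."""
    n = X.shape[1]
    Lam = X @ F / n
    return X - Lam @ F.T

# Hypothetical single batch: Gaussian loadings, factors and signal with Sigma = I_p.
rng = np.random.default_rng(2)
p, n, K = 200, 30, 2
F_true = rng.standard_normal((n, K))
U = rng.standard_normal((p, n))
X = rng.standard_normal((p, K)) @ F_true.T + U

F_tilde = pca_factors(X, K)
U_tilde = adjust(X, F_tilde)
print(np.linalg.norm(X - U), np.linalg.norm(U_tilde - U))  # Frobenius error before vs after adjustment
```

Because $\widetilde{\mathbf{F}}'\widetilde{\mathbf{F}}/n = \mathbf{I}_K$, the least-squares loadings reduce to $\mathbf{X}\widetilde{\mathbf{F}}/n$, so no matrix inversion is needed.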

Theorem 3.1. Under Assumptions 2.1 and 2.2, we have

where and .

Note that we do not explicitly assume bounded . In some applications it might be natural to assume a sparse covariance so that all terms involving can be eliminated, while in other applications such as the graphical model, it is more natural to impose sparsity structure on the precision matrix. In this case, one may want to keep track of the effect of as it can be as large as as .

3.2. Estimating factors by Projected-PCA

In regime 2, we incorporate the external covariates using the Projected-PCA (PPCA) method proposed by [18]. The method applies PCA to the projected data; through the projection, the covariate information is leveraged to reduce dimensionality. We now briefly introduce the method.

For simplicity, we only consider d = 1, that is, a single covariate. The general case can be found in [18]. To model the unknown function gk(Wj), we adopt a sieve-based approach, which approximates gk(·) by a linear combination of basis functions (e.g., B-splines, Fourier series, polynomial series, wavelets). Then

$$g_k(W_j) = \sum_{\nu=1}^{J} b_{\nu,k}\,\phi_\nu(W_j) + R_k(W_j), \qquad k\le K,\ j\le p. \tag{3.2}$$

Here $\{b_{\nu,k}\}_{\nu=1}^J$ are the sieve coefficients of gk(Wj), corresponding to the kth factor loading; Rk is the remainder function representing the approximation error; J denotes the number of sieve bases, which may grow slowly as p diverges. We take the same basis functions in (3.2) for all k, though they can be different.
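To illustrate the sieve approximation (3.2), the following sketch fits a hypothetical smooth loading curve with the first J Legendre polynomials (one choice of polynomial basis) by least squares and reports the sup-norm of the remainder $R_k$ on a grid. The curve, the grid and the function name are illustrative assumptions; for a smooth curve the error shrinks quickly as J grows.

```python
import numpy as np
from numpy.polynomial import legendre

def sieve_sup_error(g, J, grid):
    """Least-squares fit of g on the first J Legendre polynomials; return max |remainder| on grid."""
    t = 2 * grid - 1                                   # map the support [0, 1] onto [-1, 1]
    Phi = legendre.legvander(t, J - 1)                 # columns phi_1, ..., phi_J (degrees 0..J-1)
    b, *_ = np.linalg.lstsq(Phi, g(grid), rcond=None)  # sieve coefficients b_{nu,k}
    return np.max(np.abs(g(grid) - Phi @ b))           # sup-norm of R_k on the grid

def g(w):
    return np.sin(2 * np.pi * w) + w ** 2              # a hypothetical smooth loading curve

grid = np.linspace(0, 1, 501)
errors = [sieve_sup_error(g, J, grid) for J in (2, 4, 8, 12)]
print(errors)
```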

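Define $\mathbf{b}_k = (b_{1,k},\ldots,b_{J,k})'$ for each k ≤ K, and correspondingly the $J\times K$ matrix $\mathbf{B} = (\mathbf{b}_1,\ldots,\mathbf{b}_K)$. Writing $\boldsymbol{\phi}(W_j) = (\phi_1(W_j),\ldots,\phi_J(W_j))'$, we can then express (3.2) as $g_k(W_j) = \boldsymbol{\phi}(W_j)'\mathbf{b}_k + R_k(W_j)$.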

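Let $\boldsymbol{\Phi}(\mathbf{W})$ be the $p\times J$ matrix whose (j,ν)th element is $\phi_\nu(W_j)$, and let Rk(Wj) be the (j,k)th element of R(W)p×K. The matrix form (2.2) can then be written as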

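$$\mathbf{X} = \boldsymbol{\Phi}(\mathbf{W})\mathbf{B}\mathbf{F}' + \mathbf{R}(\mathbf{W})\mathbf{F}' + \boldsymbol{\Gamma}\mathbf{F}' + \mathbf{U}, \tag{3.3}$$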

recalling that the data index i is dropped. Thus the residual contains three parts: the sieve approximation error $\mathbf{R}(\mathbf{W})\mathbf{F}'$, the unexplained loading part $\boldsymbol{\Gamma}\mathbf{F}'$ and the true signal U.

The idea of PPCA is simple: since the factor loadings are a function of the covariates in (3.3) and U and Γ are independent of W, if we project (smooth) the observed data onto the space of W, the effect of U and Γ will be significantly reduced and the problem becomes nearly a noiseless one, given that the approximation error R(W) is small.

Define P as the projection onto the space spanned by the basis functions of W:

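$$\mathbf{P} = \boldsymbol{\Phi}(\mathbf{W})\{\boldsymbol{\Phi}(\mathbf{W})'\boldsymbol{\Phi}(\mathbf{W})\}^{-1}\boldsymbol{\Phi}(\mathbf{W})'. \tag{3.4}$$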

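By (3.3), $\mathbf{P}\mathbf{X} \approx \boldsymbol{\Phi}(\mathbf{W})\mathbf{B}\mathbf{F}'$, because $\mathbf{P}\boldsymbol{\Phi}(\mathbf{W}) = \boldsymbol{\Phi}(\mathbf{W})$ while $\mathbf{P}\mathbf{R}(\mathbf{W})$, $\mathbf{P}\boldsymbol{\Gamma}$ and $\mathbf{P}\mathbf{U}$ are small. Thus, F can be estimated from the 'noiseless projected data' PX using conventional PCA. Let the columns of $\widehat{\mathbf{F}}/\sqrt{n}$ be the eigenvectors corresponding to the top K eigenvalues of the n × n matrix $\mathbf{X}'\mathbf{P}\mathbf{X}$, which is the sample covariance of the projected data. Then $\widehat{\mathbf{F}}$ is the PPCA estimator of F. It differs from PCA only in that we use the smoothed or projected data PX.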
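A minimal NumPy sketch of PPCA for a single covariate (d = 1) follows. It assumes a Fourier sieve basis, loadings G(W) built from sin(2πw) and cos(2πw) so that the sieve error R(W) is zero, a small unexplained part Γ, and Σ = I_p; the helpers `fourier_basis`, `projection` and `ppca_factors` are hypothetical names, not code from [18]. The last lines measure how well the column space of the estimate matches that of F through the cosines of the principal angles.

```python
import numpy as np

def fourier_basis(w, J):
    """Hypothetical sieve basis on [0, 1]: 1, sin(2 pi w), cos(2 pi w), sin(4 pi w), ... (J columns)."""
    cols, freq = [np.ones_like(w)], 1
    while len(cols) < J:
        cols.append(np.sin(2 * np.pi * freq * w))
        if len(cols) < J:
            cols.append(np.cos(2 * np.pi * freq * w))
        freq += 1
    return np.column_stack(cols)

def projection(Phi):
    """P = Phi (Phi' Phi)^{-1} Phi', the p x p projection (3.4)."""
    return Phi @ np.linalg.solve(Phi.T @ Phi, Phi.T)

def ppca_factors(X, P, K):
    """PPCA estimate of F: sqrt(n) times the top-K eigenvectors of the n x n matrix X'PX."""
    n = X.shape[1]
    eigvals, eigvecs = np.linalg.eigh(X.T @ P @ X)
    top = np.argsort(eigvals)[::-1][:K]
    return np.sqrt(n) * eigvecs[:, top]

rng = np.random.default_rng(3)
p, n, K, J = 300, 30, 2, 5
w = rng.uniform(0, 1, p)                                   # one covariate per variable (d = 1)
G = 2 * np.column_stack([np.sin(2 * np.pi * w), np.cos(2 * np.pi * w)])  # G(W), inside the sieve span
Gamma = 0.1 * rng.standard_normal((p, K))                  # loading part not explained by W
F = rng.standard_normal((n, K))
X = (G + Gamma) @ F.T + rng.standard_normal((p, n))        # model (2.2) with Sigma = I_p

P = projection(fourier_basis(w, J))
F_hat = ppca_factors(X, P, K)

# Cosines of the principal angles between span(F_hat) and span(F); values near 1 mean good recovery.
Q, _ = np.linalg.qr(F)
cosines = np.linalg.svd(Q.T @ (F_hat / np.sqrt(n)), compute_uv=False)
print(cosines)
```

Since P is symmetric and idempotent, $\mathbf{X}'\mathbf{P}\mathbf{X} = (\mathbf{P}\mathbf{X})'(\mathbf{P}\mathbf{X})$, so the only change from the PCA sketch is the matrix whose eigenvectors are taken.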

We need the following conditions for basis functions and accuracy of sieve approximation.

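Assumption 3.1. (i) There are dmin, dmax > 0 such that
$$d_{\min} < \lambda_{\min}\big(p^{-1}\boldsymbol{\Phi}(\mathbf{W})'\boldsymbol{\Phi}(\mathbf{W})\big) \le \lambda_{\max}\big(p^{-1}\boldsymbol{\Phi}(\mathbf{W})'\boldsymbol{\Phi}(\mathbf{W})\big) < d_{\max}$$
almost surely, and $\max_{\nu\le J,\,j\le p}\mathrm{E}\,\phi_\nu(W_j)^2 < \infty$.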

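(ii) There exists κ ≥ 4 such that $\max_{k\le K}\sup_{x\in\mathcal{X}}\big|g_k(x) - \sum_{\nu=1}^J b_{\nu,k}\phi_\nu(x)\big|^2 = O(J^{-\kappa})$ as $J\to\infty$, where $\mathcal{X}$ is the support of Wj, and $\max_{\nu,k}|b_{\nu,k}| < \infty$.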

Condition (ii) is mild; for instance, when {φν} is a polynomial basis or B-splines, it is implied by the condition that each smooth curve gk(·) belongs to a Hölder class $\mathcal{G} = \{g: |g^{(r)}(s) - g^{(r)}(t)| \le L|s-t|^{\alpha}\}$ for some L > 0, with κ = 2(r + α) [34, 12].

Recalling the definition of νp in Assumption 2.4 (iii), we have the following results.

Theorem 3.2. Choose and assume where . Under Assumptions 2.1, 2.3, 2.4 and 3.1,

where and if there exists C such that νp > C/n.

3.3. A guiding rule for estimating the number of factors, the number of basis functions and determining regimes

We now address the problem of estimating the number of factors in the two regimes. An extensive literature has studied this problem in regime 1, i.e., for the regular factor model [4, 1, 28]. [28] and [1] proposed to use the ratio of adjacent eigenvalues of $\mathbf{X}'\mathbf{X}$ to infer the number of factors, i.e., $\widetilde{K} = \arg\max_{k\le K_{\max}}\lambda_k(\mathbf{X}'\mathbf{X})/\lambda_{k+1}(\mathbf{X}'\mathbf{X})$. They showed that this estimator correctly identifies K with probability tending to 1, as long as Kmax ≥ K and Kmax = O(ni ∧ p).

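For the semiparametric factor model, [18] proposed
$$\widehat{K} = \arg\max_{k\le K_{\max}}\frac{\lambda_k(\mathbf{X}'\mathbf{P}\mathbf{X})}{\lambda_{k+1}(\mathbf{X}'\mathbf{P}\mathbf{X})}.$$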

Here Kmax is of the same order as Jd. It was shown that $\mathrm{P}(\widehat{K} = K)\to 1$ under regularity assumptions, which we omit here. When we have genuine and pervasive covariates, $\widehat{K}$ typically outperforms $\widetilde{K}$. More details can be found in [18].

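Once we use $\widetilde{K}$ and $\widehat{K}$ to estimate the number of factors under the regular factor model and the semiparametric factor model, respectively, we naturally obtain an adaptive rule to decide whether the covariates W are informative enough to use PPCA instead of PCA. We compare two eigen-ratios:
$$\max_{k\le K_{\max}}\frac{\lambda_k(\mathbf{X}'\mathbf{X})}{\lambda_{k+1}(\mathbf{X}'\mathbf{X})} \qquad\text{and}\qquad \max_{k\le K_{\max}}\frac{\lambda_k(\mathbf{X}'\mathbf{P}\mathbf{X})}{\lambda_{k+1}(\mathbf{X}'\mathbf{P}\mathbf{X})}.$$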

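If the former is larger, we identify the dataset as regime 1 and apply regular PCA to obtain $\widetilde{\mathbf{U}}$; otherwise it is regime 2 and PPCA is used to obtain $\widehat{\mathbf{U}}$. The intuition behind this comparison is that the maximal eigen-ratios can be viewed as signal-to-noise ratios for estimating the spiky heterogeneity term. Given that $\operatorname{Cov}(\mathbf{x}_t) = \boldsymbol{\Lambda}\operatorname{Cov}(\mathbf{f}_t)\boldsymbol{\Lambda}' + \boldsymbol{\Sigma}$ and $\operatorname{Cov}(\mathbf{P}\mathbf{x}_t) = \mathbf{P}\boldsymbol{\Lambda}\operatorname{Cov}(\mathbf{f}_t)\boldsymbol{\Lambda}'\mathbf{P} + \mathbf{P}\boldsymbol{\Sigma}\mathbf{P}$, the first ratio measures the eigen-gap between $\boldsymbol{\Lambda}\operatorname{Cov}(\mathbf{f}_t)\boldsymbol{\Lambda}'$ and Σ, and the second ratio measures the eigen-gap between $\mathbf{P}\boldsymbol{\Lambda}\operatorname{Cov}(\mathbf{f}_t)\boldsymbol{\Lambda}'\mathbf{P}$ and PΣP. If G(W) is much more important than Γ in explaining the loading structure, projection preserves the signal and reduces the noise, which improves the eigen-gap. Conversely, if W provides little useful information, projection reduces both noise and signal. Therefore, if projection enlarges the maximum eigen-gap, we prefer PPCA over PCA to estimate the spiky heterogeneity part. In short, the guiding rule checks whether projection further separates the spiky and non-spiky parts of the covariance.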
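The eigen-ratio estimators and the guiding rule can be sketched as follows. This is an illustration under assumptions: the polynomial basis, the simulated loadings (exact polynomials in w, i.e. informative covariates) and the function names are hypothetical. Note that $\mathbf{X}'\mathbf{P}\mathbf{X}$ has rank at most J, so $K_{\max} + 1$ must not exceed J for the ratios to be well defined.

```python
import numpy as np

def max_eigen_ratio(M, k_max):
    """Return (max over k <= k_max of lambda_k / lambda_{k+1}, maximizing k) for a symmetric PSD M."""
    lam = np.sort(np.linalg.eigvalsh(M))[::-1]               # eigenvalues in descending order
    ratios = lam[:k_max] / lam[1:k_max + 1]
    k = int(np.argmax(ratios)) + 1
    return float(ratios[k - 1]), k

def guiding_rule(X, P, k_max):
    """Pick regime 1 (PCA) if the X'X ratio is larger, otherwise regime 2 (PPCA)."""
    r_pca, k_pca = max_eigen_ratio(X.T @ X, k_max)
    r_ppca, k_ppca = max_eigen_ratio(X.T @ P @ X, k_max)      # requires k_max + 1 <= rank(P) = J
    if r_pca > r_ppca:
        return "PCA", k_pca, r_pca, r_ppca
    return "PPCA", k_ppca, r_pca, r_ppca

# Hypothetical batch with informative covariates: loadings are exact polynomials in w.
rng = np.random.default_rng(7)
p, n, J, k_max = 300, 30, 5, 3
w = rng.uniform(0, 1, p)
G = 1.5 * np.column_stack([np.sqrt(3) * (2 * w - 1),
                           np.sqrt(5) * (6 * w**2 - 6 * w + 1)])  # shifted Legendre polynomials
F = rng.standard_normal((n, 2))
X = (G + 0.1 * rng.standard_normal((p, 2))) @ F.T + rng.standard_normal((p, n))

Phi = np.vander(w, J, increasing=True)                        # polynomial sieve basis 1, w, ..., w^4
P = Phi @ np.linalg.solve(Phi.T @ Phi, Phi.T)
method, K_est, r_pca, r_ppca = guiding_rule(X, P, k_max)
print(method, K_est, round(r_pca, 1), round(r_ppca, 1))
```

Here projection removes most of the noise while keeping the loading signal, so the PPCA eigen-ratio is much larger and the rule selects regime 2.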

The above signal-to-noise ratio comparison can be extended to choose the number of basis functions. Notice that we can regard regular PCA as PPCA with J = p basis functions and hence P = I. Along this line, we can index P by J and maximize $\max_{k\le K_{\max}}\lambda_k(\mathbf{X}'\mathbf{P}_J\mathbf{X})/\lambda_{k+1}(\mathbf{X}'\mathbf{P}_J\mathbf{X})$ over J, where J = p corresponds to PCA. Here we write $\mathbf{P}_J$ and index related quantities by J to exhibit their dependence on J. We implement this guiding rule in the real data analysis.

In practice, there is still a chance that ALPHA misspecifies the true number of factors K. One might ask how this affects the performance of ALPHA and the subsequent statistical analysis. To clarify this issue, we conduct a sensitivity analysis on the number of factors in Section G.3 in the appendix. The take-home message is that overestimating K does not hurt, while underestimating K might mislead subsequent statistical inference.

3.4. Summary of ALPHA

We now summarize the final procedure and the convergence rates. We first divide the m subgroups into two classes, corresponding to regimes 1 and 2, based on whether the collected covariates have a significant influence on the loadings.

ALPHA consists of the following three steps.

Step 1: (Preprocessing) For data source i, determine whether it belongs to regime 1 or regime 2 according to the guiding rule given in Section 3.3, and correspondingly estimate K by Ǩ, which equals $\widetilde{K}$ or $\widehat{K}$ (and choose J if necessary).

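Step 2: (Adjustment) If data source i belongs to regime 2, apply Projected-PCA to estimate $\mathbf{F}^i$; otherwise use PCA. Remove the heterogeneity via (3.1), resulting in the adjusted data $\check{\mathbf{U}}^i$, which is either $\widehat{\mathbf{U}}^i$ (PPCA) or $\widetilde{\mathbf{U}}^i$ (PCA).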

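Step 3: (Aggregation) Combine the adjusted data to conduct further statistical analysis. For example, estimate the covariance by $\widehat{\boldsymbol{\Sigma}} = N^{-1}\sum_{i=1}^m\check{\mathbf{U}}^i(\check{\mathbf{U}}^i)'$, where $N = \sum_{i=1}^m n_i$ is the aggregated sample size, or estimate the sparse precision matrix Ω by existing graphical model methods.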
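Steps 2 and 3 can be sketched end to end for the simplest case, in which every batch is in regime 1 and $K_i$ is known. The sketch assumes Σ = I_p and Gaussian loadings, factors and signals, and compares the max-norm error of the naive pooled covariance of the raw data with that of the aggregated residuals. `pca_adjust` and `aggregate_cov` are hypothetical names; replacing $\mathbf{X}'\mathbf{X}$ by $\mathbf{X}'\mathbf{P}\mathbf{X}$ inside `pca_adjust` would give the PPCA variant.

```python
import numpy as np

def pca_adjust(X, K):
    """Regime-1 adjustment with known K: PCA factors, then the residual (3.1)."""
    n = X.shape[1]
    eigvals, eigvecs = np.linalg.eigh(X.T @ X)
    F = np.sqrt(n) * eigvecs[:, np.argsort(eigvals)[::-1][:K]]   # F'F / n = I_K
    return X - X @ F @ F.T / n

def aggregate_cov(U_list):
    """Step 3: pooled covariance N^{-1} sum_i U_i U_i' with N = sum_i n_i."""
    N = sum(U.shape[1] for U in U_list)
    return sum(U @ U.T for U in U_list) / N

# Hypothetical data: m batches, each with its own K_i in {1, 2, 3}, and Sigma = I_p.
rng = np.random.default_rng(8)
m, n, p = 100, 20, 200
X_list, K_list = [], []
for _ in range(m):
    K_i = int(rng.integers(1, 4))
    Lam = rng.standard_normal((p, K_i))
    F = rng.standard_normal((n, K_i))
    X_list.append(Lam @ F.T + rng.standard_normal((p, n)))
    K_list.append(K_i)

Sigma = np.eye(p)
err_naive = np.abs(aggregate_cov(X_list) - Sigma).max()     # raw data pooled: heterogeneity ignored
U_check = [pca_adjust(X, K) for X, K in zip(X_list, K_list)]
err_alpha = np.abs(aggregate_cov(U_check) - Sigma).max()    # adjusted residuals pooled
print(err_naive, err_alpha)
```

Each residual matrix has rank at most $n_i - K_i$; the sketch nevertheless keeps the plain 1/N normalization of Step 3.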

We summarize the ALPHA procedure in Algorithm 1 given in Section A. We also summarize the convergence of and below. To ease presentation, we consider a typical regime in practice: for some constant C. We focus on the situation of sufficiently smooth curves k = ∞ so that J diverges very slowly (say with rate ) and bounded ϕmax and νp (defined respectively in Theorem 3.2 and Assumption 2.4). Based on discussions of the previous subsections, for estimation of U in , we have

Therefore, PPCA dominates PCA as long as effective covariates are provided. However, dominates all the remaining terms so that

In addition, for estimation of , we have

| (3.5) |

where , depending on . If we consider a very sparse covariance matrix so that is bounded, we can simply drop the term δ in both regimes. Then, regime 1 achieves better rate if but regime 2 outperforms otherwise.

4. Post-ALPHA inference

We summarized the order of the biases caused by adjusting heterogeneity for each data source in Section 3.4. Now we combine the adjusted data for further statistical analysis. As an example, we study estimation of the Gaussian graphical model. Assume further that $\mathbf{u}_t^i \sim N(\mathbf{0}, \boldsymbol{\Sigma})$ and consider the following class of precision matrices:

| (4.1) |

To simplify the analysis, we assume R is fixed, but all the analysis can be easily extended to include growing R.

To estimate Ω = Σ−1 via CLIME, we need a covariance estimator as the input. For ease of discussion, we assume here that the number of factors is known, i.e., we ignore the exceptional event that Ki is not correctly recovered. Such an estimator is naturally given by

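$$\widehat{\boldsymbol{\Sigma}} = \frac{1}{N}\sum_{i=1}^m \check{\mathbf{U}}^i(\check{\mathbf{U}}^i)', \qquad N = \sum_{i=1}^m n_i. \tag{4.2}$$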

Since the number of data sources is huge, we focus on the case of diverging N and p.

4.1. Covariance estimation

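Let ΣN be the oracle sample covariance matrix, i.e., $\boldsymbol{\Sigma}_N = N^{-1}\sum_{i=1}^m\mathbf{U}^i(\mathbf{U}^i)'$. In this subsection, we consider the difference between our proposed estimator $\widehat{\boldsymbol{\Sigma}}$ and $\boldsymbol{\Sigma}_N$. The oracle estimator attains the rate $\|\boldsymbol{\Sigma}_N - \boldsymbol{\Sigma}\|_{\max} = O_P(\sqrt{\log p/N})$.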

Let where is the kth column of Fi. It is not hard to verify that is Gaussian distributed with mean zero and variance Σ. Note that are i.i.d. with respect to k and i, using the assumption . By the standard concentration bound (e.g. Lemma 4.2 of [19]),

where . Therefore, by (3.5), we have

| (4.3) |

where and .

We now examine the difference between the ALPHA estimator and the oracle estimator in two specific cases. In the first case, we apply PCA to all data sources, i.e., all data sources belong to regime 1, and Ki is bounded. We then have am,N,p = m log p/N. This rate is dominated by the oracle error rate $\sqrt{\log p/N}$ if and only if $m = O(\sqrt{N/\log p})$. This means that traditional PCA performs optimally for adjusting heterogeneity as long as the number of subgroups grows more slowly than the order of $\sqrt{N/\log p}$.
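For completeness, the equivalence used above follows by rearranging the two rates, with C a generic constant; the second line is an added special case with equal batch sizes $n_i \equiv n$, so that $N = mn$.

$$
\begin{aligned}
\frac{m\log p}{N} \le C\sqrt{\frac{\log p}{N}}
&\iff m \le C\,\frac{N}{\log p}\sqrt{\frac{\log p}{N}} = C\sqrt{\frac{N}{\log p}},\\
N = mn:\quad m \le C\sqrt{\frac{mn}{\log p}}
&\iff m \le C^2\,\frac{n}{\log p}.
\end{aligned}
$$

In words, with equal batch sizes, PCA-based adjustment loses nothing relative to the oracle as long as the number of batches is at most of order $n/\log p$.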

If we apply PPCA to all data sources, i.e., all data sources belong to regime 2, and Ki is bounded, then . This rate is of smaller order than rate if p/log p > CN for some constant C > 0. The advantage of using PPCA is that even when ni is bounded so that , we can still achieve the optimal rate of convergence as long as the dimensionality is large enough, at least of the order N.

4.2. Precision matrix estimation

In order to obtain an estimator of the sparse precision matrix from $\widehat{\boldsymbol{\Sigma}}$, we apply the CLIME estimator proposed by [9]. For a given $\widehat{\boldsymbol{\Sigma}}$, CLIME solves the following optimization problem:

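$$\widehat{\boldsymbol{\Omega}}_1 = \arg\min_{\boldsymbol{\Omega}\in\mathbb{R}^{p\times p}} |\boldsymbol{\Omega}|_1 \quad\text{subject to}\quad \|\widehat{\boldsymbol{\Sigma}}\boldsymbol{\Omega} - \mathbf{I}_p\|_{\max} \le \lambda, \tag{4.4}$$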

where $|\boldsymbol{\Omega}|_1 = \sum_{i,j}|\omega_{ij}|$ and λ is a tuning parameter. Note that (4.4) can be solved column by column via linear programming. However, CLIME does not necessarily produce a symmetric matrix. We can simply symmetrize it by taking, for each pair (i,j), whichever of the (i,j)th and (j,i)th entries of the CLIME solution has the smaller magnitude. The resulting matrix after symmetrization, still denoted by $\widehat{\boldsymbol{\Omega}}$ with a slight abuse of notation, also attains a good rate of convergence. In particular, we consider the sparse precision matrix class in (4.1). The following theorem guarantees recovery of any sparse matrix in this class.
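The column-wise linear program and the symmetrization step can be sketched with SciPy's `linprog` (HiGHS solver). Each column solves $\min\|\boldsymbol\beta\|_1$ subject to $\|\widehat{\boldsymbol{\Sigma}}\boldsymbol\beta - \mathbf{e}_j\|_\infty \le \lambda$ by splitting β into nonnegative positive and negative parts. This is a plain illustrative implementation, not the reference CLIME code of [9]; the helper names and the chain-graph test matrix are hypothetical.

```python
import numpy as np
from scipy.optimize import linprog

def clime_column(Sigma_hat, j, lam):
    """min ||beta||_1 s.t. ||Sigma_hat beta - e_j||_inf <= lam, with beta = beta_plus - beta_minus."""
    p = Sigma_hat.shape[0]
    e = np.zeros(p)
    e[j] = 1.0
    A = np.hstack([Sigma_hat, -Sigma_hat])            # Sigma_hat (beta_plus - beta_minus)
    A_ub = np.vstack([A, -A])                         # two-sided box constraint
    b_ub = np.concatenate([lam + e, lam - e])
    res = linprog(np.ones(2 * p), A_ub=A_ub, b_ub=b_ub, bounds=(0, None), method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    return res.x[:p] - res.x[p:]

def clime(Sigma_hat, lam):
    """Column-wise CLIME, then keep the entry of smaller magnitude in each symmetric pair."""
    p = Sigma_hat.shape[0]
    Omega1 = np.column_stack([clime_column(Sigma_hat, j, lam) for j in range(p)])
    keep = np.abs(Omega1) <= np.abs(Omega1.T)
    S = np.where(keep, Omega1, Omega1.T)
    return np.triu(S) + np.triu(S, 1).T               # mirror the upper triangle to break exact ties

# Hypothetical check: feed the exact covariance of a chain-graph precision matrix.
p = 5
Omega = 2.0 * np.eye(p) - 0.8 * (np.eye(p, k=1) + np.eye(p, k=-1))
Omega_hat = clime(np.linalg.inv(Omega), lam=1e-6)
print(np.round(Omega_hat, 3))
```

With the exact Σ and a tiny λ, the output reproduces the tridiagonal Ω up to solver tolerance; with an estimated covariance such as (4.2), λ would instead be chosen of the order of its max-norm error.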

Theorem 4.1. Suppose and let . Choosing , we have

Furthermore, and .

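Here we stress that we choose CLIME for precision matrix estimation because it relies only on a guarantee for the max-norm error $\|\widehat{\boldsymbol{\Sigma}} - \boldsymbol{\Sigma}\|_{\max}$. The intuition is that for any true Ω with bounded $\|\boldsymbol{\Omega}\|_1 = \max_j\sum_i|\omega_{ij}|$,
$$\|\widehat{\boldsymbol{\Sigma}}\boldsymbol{\Omega} - \mathbf{I}_p\|_{\max} = \|(\widehat{\boldsymbol{\Sigma}} - \boldsymbol{\Sigma})\boldsymbol{\Omega}\|_{\max} \le \|\widehat{\boldsymbol{\Sigma}} - \boldsymbol{\Sigma}\|_{\max}\,\|\boldsymbol{\Omega}\|_1.$$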

One can see from the above that fast convergence of $\|\widehat{\boldsymbol{\Sigma}} - \boldsymbol{\Sigma}\|_{\max}$ makes the true Ω feasible for (4.4) once λ is at least of the order of the right-hand side, which is a necessary step for establishing consistency of the resulting M-estimator. Interested readers can refer to the proof of Theorem 4.1 for more details. Other possible methods for precision matrix recovery (e.g., the graphical Lasso in [20], the graphical Dantzig selector in [48] and graphical neighborhood selection in [35]) can be considered for post-ALPHA inference as well, but their convergence rates need to be studied case by case.
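The feasibility argument can be checked numerically. The sketch below uses a hypothetical chain-graph Ω and Gaussian samples, and compares the constraint violation of the true Ω under the sample covariance with the bound $\|\widehat{\boldsymbol{\Sigma}} - \boldsymbol{\Sigma}\|_{\max}\|\boldsymbol{\Omega}\|_1$, where the induced ℓ1 norm is computed as the maximum absolute column sum.

```python
import numpy as np

rng = np.random.default_rng(4)
p, N = 20, 200
Omega = 2.0 * np.eye(p) - 0.8 * (np.eye(p, k=1) + np.eye(p, k=-1))   # sparse truth (hypothetical)
Sigma = np.linalg.inv(Omega)
Z = rng.multivariate_normal(np.zeros(p), Sigma, size=N)
Sigma_hat = Z.T @ Z / N                                              # mean-zero sample covariance

lhs = np.max(np.abs(Sigma_hat @ Omega - np.eye(p)))                  # constraint violation of the true Omega
l1_norm = np.abs(Omega).sum(axis=0).max()                            # ||Omega||_1 (max column sum)
rhs = np.max(np.abs(Sigma_hat - Sigma)) * l1_norm                    # ||Sigma_hat - Sigma||_max ||Omega||_1
print(lhs, rhs)
```

Any λ at least as large as `rhs` therefore keeps the true Ω inside the CLIME feasible set.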

Theorem 4.1 shows that CLIME has strong theoretical guarantee of convergence under different matrix norms. The rate of convergence has two parts, one corresponding to the minimax optimal rate [48] while the other is due to the error caused by estimating the unknown factors under various situations. The discussions at the end of Section 4.1 suggest that the latter error is often negligible.

In addition, we numerically investigate how misspecification of the number of factors K will affect the precision matrix estimation in Section G.3 in the appendix.

5. Numerical studies

In this section, we first validate the established theoretical results through Monte Carlo simulations. Our purpose is to show that, after heterogeneity adjustment, the proposed aggregated covariance estimator approximates the oracle sample covariance ΣN well, thereby leading to accurate estimation of the true covariance matrix Σ and precision matrix Ω. We also compare the performance of PPCA and regular PCA for heterogeneity adjustment under different settings.

In addition, we analyze a real brain imaging dataset using the proposed procedure. The dataset is the ADHD-200 data [6]. It consists of rs-fMRI images of 608 subjects, of whom 465 are healthy and 143 are diagnosed with ADHD. We dropped subjects with missing values in our analysis. Following [39], we divided the whole brain into 264 regions of interest (ROI, p = 264), which are regarded as nodes in our graphical model. Each brain was scanned multiple times, with sample sizes ranging from 76 to 261 (76 ≤ ni ≤ 261). In each scan, we acquired the blood-oxygen-level dependent (BOLD) signal within each ROI. Note that subjects differ in age, gender, etc., which results in heterogeneity in the covariance structure of the data. We need to remove this unwanted heterogeneity; otherwise it will dilute or corrupt the true biological signal, i.e., the difference in brain functional networks between healthy people and patients due to ADHD.

5.1. Preliminary analysis

To apply our ALPHA framework, we first need to argue that the pervasiveness condition (Assumption 2.2) holds for the real dataset considered. This is done in Section G.2, together with further discussions on pervasiveness. We also collect the physical locations of the 264 regions as the external covariates. Ideally, we hope that these covariates are pervasive in explaining the batch effect (Assumption 2.3) while bearing no association with the graph structure of ut. This is reasonable because the level of the batch effect is non-uniform over different locations of the brain when scanned in fMRI machines; furthermore, it has been widely acknowledged in biological studies that spatial adjacency does not necessarily imply brain functional connectivity.

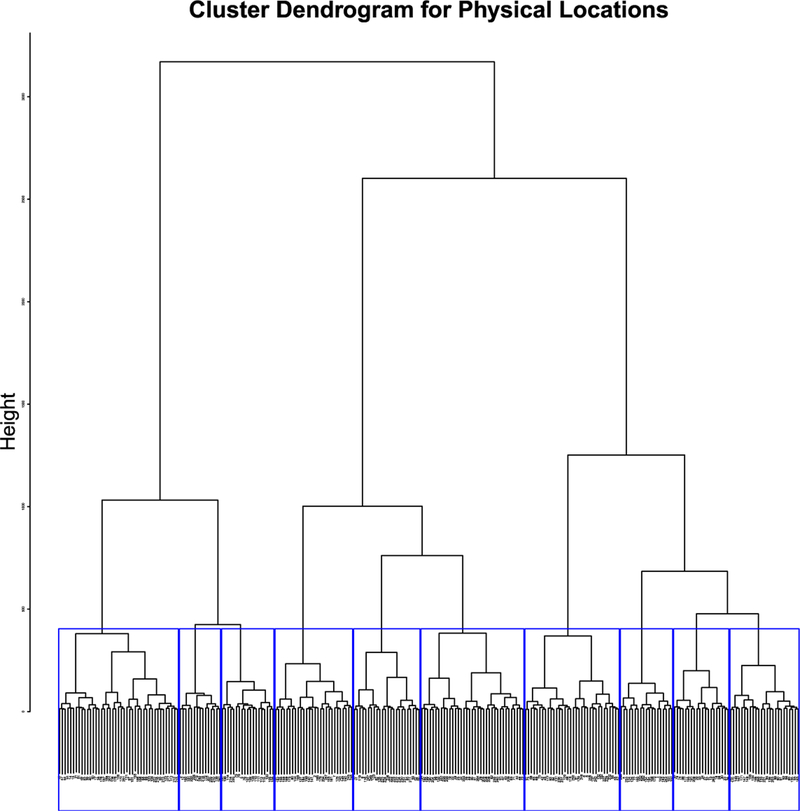

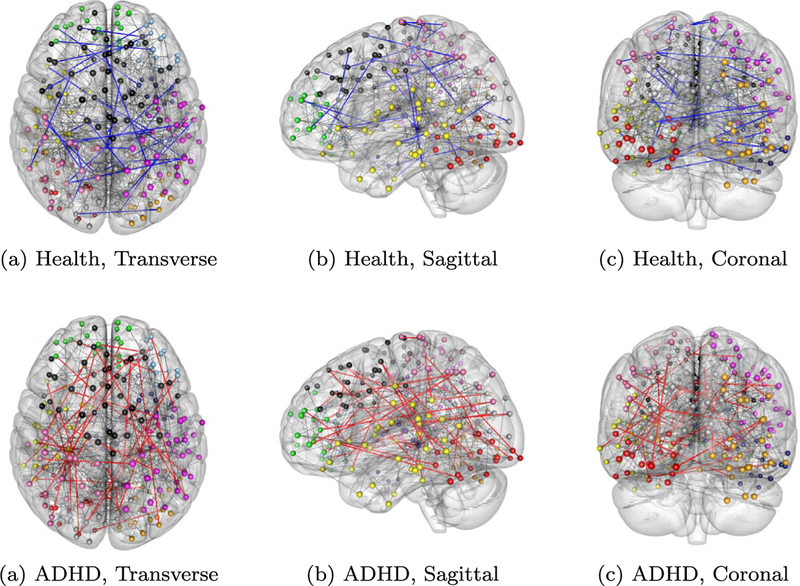

To construct the covariates W from the physical locations, we simply split the 264 regions into 10 clusters (J = 10) by hierarchical clustering (Ward's minimum variance method) and use the cluster indices as the covariates of the nodes. The clustering result is shown in Figure 2, and the spatial locations of the 264 regions are shown in Figure 6 in 10 different colors. Black (middle), green (left) and blue (right) roughly cover the frontal lobe; gray (middle), pink (left) and magenta (right) occupy the parietal lobe; red (left) and orange (right) are in the occipital lobe; finally, yellow (left) and navy (right) cover the temporal lobe.
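The clustering step can be reproduced with SciPy's hierarchical clustering routines. Since the ROI coordinates are not part of this text, the sketch uses random placeholder coordinates; only the calls (`linkage` with `method="ward"` and `fcluster` with `criterion="maxclust"`) mirror the procedure described above.

```python
import numpy as np
from scipy.cluster.hierarchy import linkage, fcluster

rng = np.random.default_rng(5)
coords = rng.uniform(-70, 70, size=(264, 3))       # placeholder 3-D coordinates, NOT the real ROI locations

Z = linkage(coords, method="ward")                 # Ward's minimum variance agglomeration
labels = fcluster(Z, t=10, criterion="maxclust")   # cut the dendrogram into J = 10 clusters
print(np.bincount(labels)[1:])                     # cluster sizes; labels run from 1 to 10
```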

Fig 2.

Cluster Dendrogram for physical locations with J = 10.

Fig 6.

Estimated brain functional connectivity networks using physical locations as covariates to correct heterogeneity. The 10 region clusters are labeled in 10 colors. Black, blue and red edges represent, respectively, edges common to both groups, edges present only in the healthy group, and edges present only in the ADHD group.

Here J = 10 is only used to calibrate our synthetic model in the next subsection. In the real data analysis, we will choose J adaptively according to our heuristic guiding rule of the maximal eigen-gap discussed in Section 3.3. Note that here since the covariate W is one-dimensional (d = 1) and discrete, the sieve basis functions are just indicator functions 𝟙(w − 0.5 ≤ W < w + 0.5) for w = 1,…,10. We use the same external covariates for all subjects in both healthy and diseased groups.

The next question is how to divide the subjects into two classes, one handled by PPCA and one by PCA, based on whether the selected covariates explain the loadings effectively. We implemented the method given in Section 3.3 and found that 398 healthy (85.6%) and 126 diseased subjects (88.1%) prefer PPCA over PCA, meaning that the physical locations indeed have explanatory power for the factor loadings of most subjects. We assigned these subjects to the PPCA class and the remaining subjects to the PCA class. Based on the class labels, we employed the corresponding method to estimate the number of factors and adjust the heterogeneity, using Kmax = 3. The distribution of the estimated number of factors for the two groups is summarized in Table 1.

Table 1.

Distribution of estimated number of factors for healthy and ADHD groups

| Group | 1 | 2 | 3 |
|---|---|---|---|
| Healthy | 253 | 148 | 64 |
| ADHD | 78 | 40 | 25 |
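Because W is discrete here, projecting onto the indicator sieve basis amounts to averaging within clusters, and the PCA-versus-PPCA decision can be phrased as a comparison of eigenvalue ratios (the intuition is stated in Section G.3). The Python/NumPy sketch below illustrates this under our own assumptions: the function names are hypothetical, each Yi is stored as a p × ni array, and the number of factors is taken as the maximizer of λk/λk+1 over k ≤ Kmax, in the spirit of the eigenvalue ratio test [1]. The exact rule of Section 3.3 may differ.

```python
import numpy as np


def indicator_projection(labels):
    """P = Phi (Phi^T Phi)^{-1} Phi^T for the indicator sieve basis 1(W_j = w).

    For a discrete covariate this replaces each row by its cluster average.
    """
    labels = np.asarray(labels)
    levels = np.unique(labels)
    phi = (labels[:, None] == levels[None, :]).astype(float)  # p x J design
    return phi @ np.linalg.solve(phi.T @ phi, phi.T)


def max_eigen_ratio(M, k_max):
    """Largest ratio lambda_k / lambda_{k+1} over k <= k_max, and its argmax k."""
    eig = np.sort(np.linalg.eigvalsh(M))[::-1]  # descending eigenvalues
    ratios = eig[:k_max] / eig[1:k_max + 1]
    k = int(np.argmax(ratios)) + 1
    return ratios[k - 1], k


def choose_method(Y, labels, k_max=3):
    """Y is p x n. Compare eigen-ratios of Y^T Y (PCA) and Y^T P Y (PPCA).

    Assumes k_max < number of clusters, so that Y^T P Y (rank <= J) still has
    a nonzero (k_max + 1)-th eigenvalue.
    """
    P = indicator_projection(labels)
    r_pca, k_pca = max_eigen_ratio(Y.T @ Y, k_max)
    r_ppca, k_ppca = max_eigen_ratio(Y.T @ P @ Y, k_max)
    return ("PPCA", k_ppca) if r_ppca > r_pca else ("PCA", k_pca)
```

With the 10 cluster labels and Kmax = 3, a subject whose loadings are well explained by location should yield a pair such as ("PPCA", 2); the projection is formed explicitly only for clarity, since for indicator bases a cluster-mean computation would suffice.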

5.2. Synthetic datasets

In this simulation study, for stability, we use the first 15 subjects in the healthy group to calibrate the simulation models. We specify four asymptotic settings for our simulation studies:

m = 500, ni = 10 for i = 1,…,m, p = 100, 200,…,600 and G(W) ≠ 0;

m = 100, 200,…,1000, ni = 10 for i = 1,…,m, p = 264 and G(W) ≠ 0;

m = 100, ni = 10, 20,…,100 for i = 1,…,m, p = 264 and G(W) ≠ 0;

m = 20, 40,…,200, ni = 20, 40,…,200 for i = 1,…,m, p = 264 and G(W) = 0.

Here the last setting represents regime 1, where we expect PCA to work well when the number of subjects is of the order of the square root of the total sample size, i.e., $m \asymp \sqrt{N}$ with $N = \sum_{i=1}^m n_i$. The first three settings represent regime 2 with informative covariates; they give asymptotics with growing p, m and ni, respectively. The details of model calibration and data generation can be found in Section G.1.

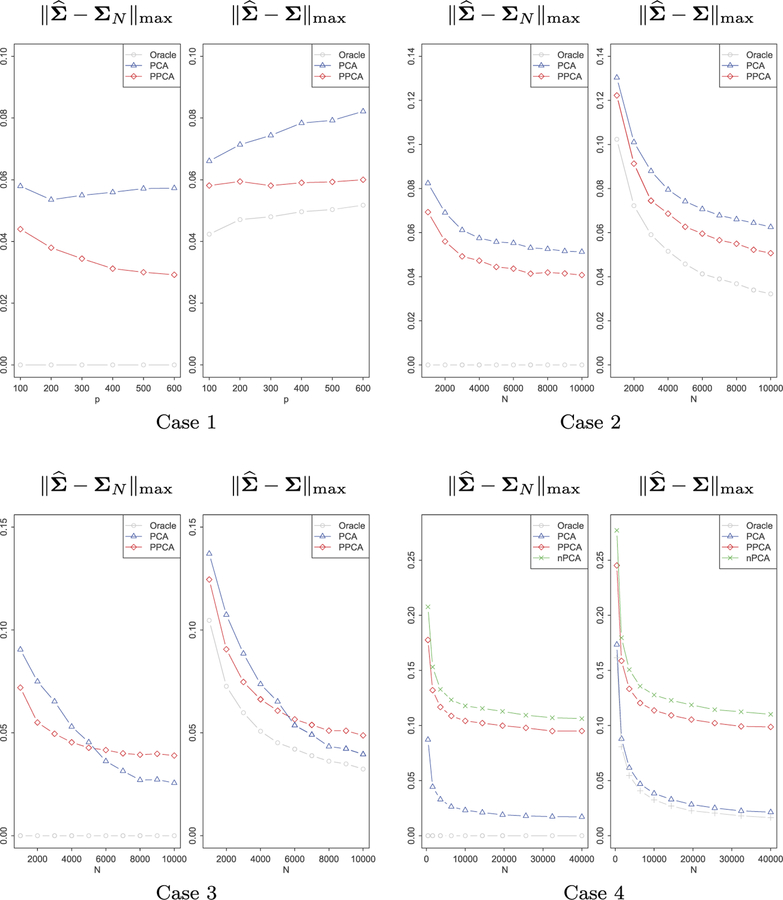

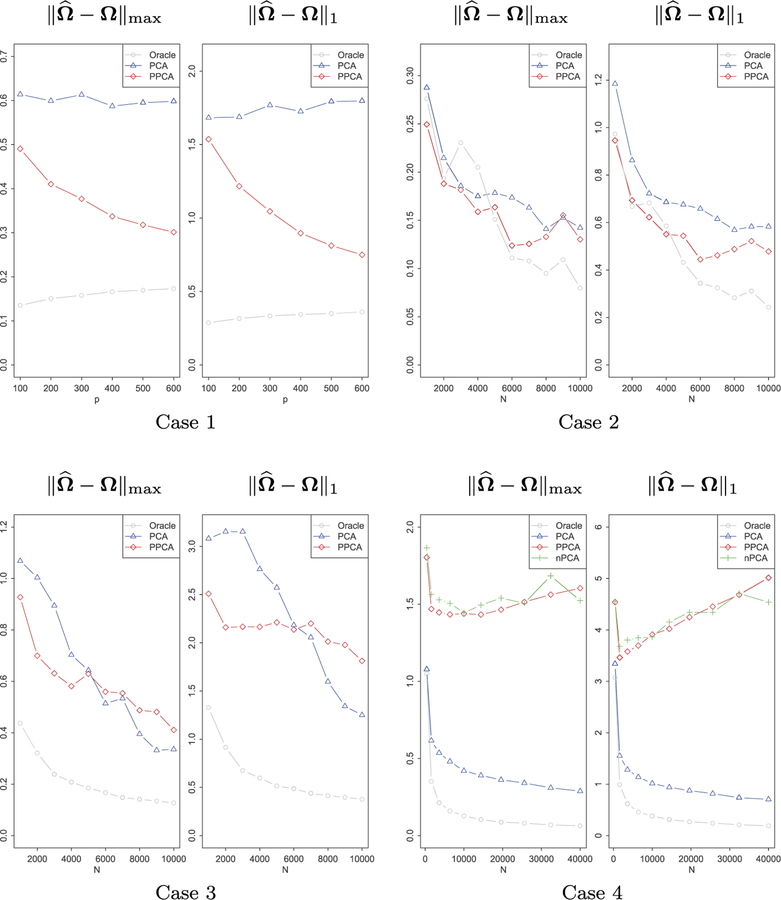

We first investigate the max-norm errors of estimating the covariance of ut after applying PPCA or PCA for heterogeneity adjustment. We also compare them with the estimation errors obtained by naively pooling all the data together without any heterogeneity adjustment. In the first three cases, however, the error of the naively pooled sample covariance is too large to fit in the plots, so we omit it. Denote the oracle sample covariance of ut by ΣN as before. The estimation errors, based on 100 simulations, under the four settings are presented in Figure 3.

Fig 3.

Estimation of Σ by PCA, PPCA and the oracle sample covariance matrix for 4 different settings. Case 1: m and ni are fixed while the dimension p increases; case 2: ni and p are fixed while m increases; case 3: m and p are fixed while ni increases; case 4: p is fixed, and both m and ni increase and conditions for PPCA are violated.

In Case 1, m and ni are fixed while the dimension p increases. This setting highlights the advantages of Projected-PCA over regular PCA. From the left panel, we observe that increasing the dimensionality improves the performance of Projected-PCA, which is consistent with the rate derived in our theory. In Case 2, ni and p are fixed while m increases. Both PPCA and PCA benefit from an increasing number of subjects; however, since ni is small, PPCA again outperforms. In Case 3, m and p are fixed while ni increases. Both methods achieve better estimation as ni increases, but, more importantly, regular PCA outperforms PPCA when ni is large enough. This is again consistent with our theory: as shown in Section 4.1, when m is fixed, the convergence rate attained by PCA is faster than the rate achieved by PPCA whenever p/log p = o(N). In Case 4, p is fixed, and both m and ni increase. Here the covariates have no explanatory power at all, i.e., the pervasiveness condition of Assumption 2.3 does not hold, so PPCA is not applicable. Adjusting by PCA behaves much better, and PPCA is sometimes as bad as ‘nPCA’, which corresponds to no heterogeneity adjustment. This is not unexpected, as the external covariates are uninformative in this setting.

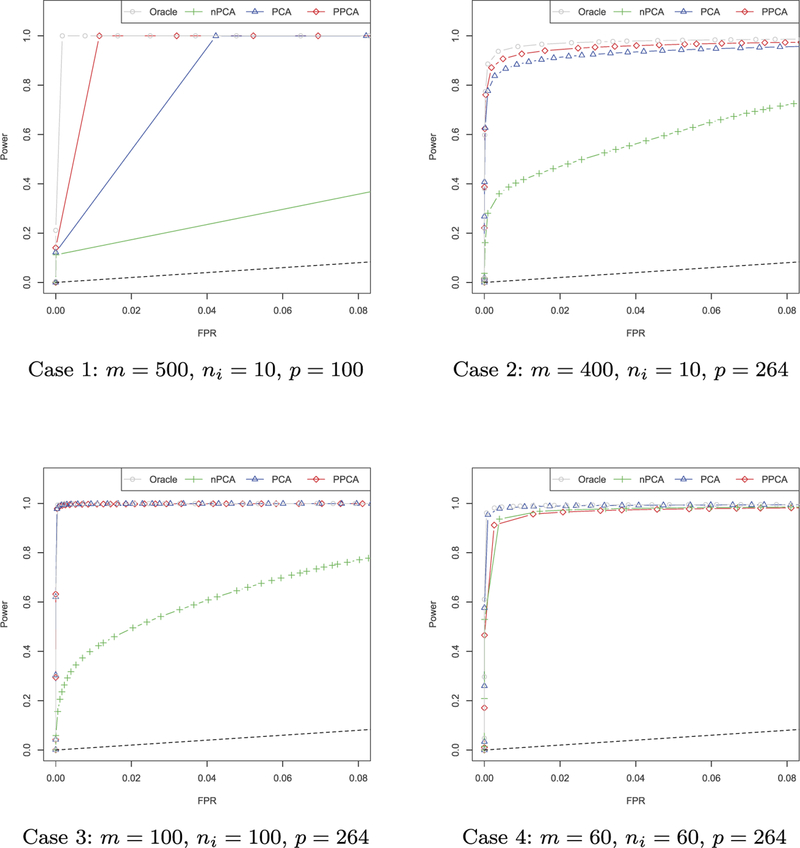

Now we focus on the estimation error of the precision matrix. We plug the covariance estimator $\hat{\Sigma}$, obtained from the data after adjusting for heterogeneity, into CLIME to obtain an estimator $\hat{\Omega}$ of $\Omega$. In Figure 4, $\|\hat{\Omega} - \Omega\|_{\max}$ and $\|\hat{\Omega} - \Omega\|_{L_1}$ are depicted under the four asymptotic settings. The plots show that both errors behave similarly to $\|\hat{\Sigma} - \Sigma\|_{\max}$ in Figure 3: in Case 1, ni is small, so it is advantageous to use PPCA, and PPCA performs better as the dimension increases; in Case 2, both PPCA and PCA benefit from an increasing number of subjects and PPCA outperforms PCA; in Case 3, PCA outperforms PPCA when ni is large enough since m is fixed; in Case 4, the covariates have no explanatory power at all, so PPCA does not make sense. In the first three cases, if we do not adjust for data heterogeneity, both errors are too large to fit in the current scale.

Fig 4.

Estimation of Ω. Presented are the estimation errors in max-norm and L1-norm for 4 different settings. In Case 4, nPCA refers to no PCA, i.e., we do not adjust heterogeneity.
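CLIME [9] solves, for each column j, min ‖ω‖₁ subject to ‖Σ̂ω − ej‖∞ ≤ λ, which is a linear program. A minimal sketch using SciPy's `linprog` with the HiGHS backend is below; it splits ω = u − v with u, v ≥ 0 and then applies CLIME's symmetrization step, keeping the entry of smaller magnitude. It is an illustration only, not the solver used for the reported results, and the dense LP scales poorly beyond a few hundred variables.

```python
import numpy as np
from scipy.optimize import linprog


def clime(sigma, lam):
    """CLIME sketch: column-wise LP, then symmetrization.

    Column j solves  min ||w||_1  s.t.  ||sigma @ w - e_j||_inf <= lam,
    written with w = u - v, u >= 0, v >= 0.
    """
    p = sigma.shape[0]
    cost = np.ones(2 * p)                              # sum(u) + sum(v) = ||w||_1
    A_ub = np.block([[sigma, -sigma], [-sigma, sigma]])
    omega = np.zeros((p, p))
    for j in range(p):
        e_j = np.zeros(p)
        e_j[j] = 1.0
        b_ub = np.concatenate([lam + e_j, lam - e_j])  # both sides of the inf-norm
        res = linprog(cost, A_ub=A_ub, b_ub=b_ub, bounds=(0, None), method="highs")
        if not res.success:
            raise RuntimeError(f"column {j}: {res.message}")
        omega[:, j] = res.x[:p] - res.x[p:]
    # Keep whichever of omega_ij, omega_ji has the smaller magnitude.
    keep = np.abs(omega) <= np.abs(omega.T)
    return np.where(keep, omega, omega.T)
```

For example, `clime(np.eye(3), 0.1)` returns 0.9 times the identity, since shrinking each diagonal entry by λ is the cheapest feasible point.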

We also present the ROC curves of our proposed methods in Figure 5, which are of interest to readers concerned with sparsity pattern recovery. The black dashed line is the 45-degree line, representing the performance of random guessing. The plots show that heterogeneity adjustment substantially improves sparsity recovery of the precision matrix. When the sample size of each subject is small, genuinely pervasive covariates increase the power of PPCA, whereas if the sample size is relatively large, PCA is sufficiently good at recovering graph structures. Also note that in all cases the naive method without heterogeneity adjustment still achieves a certain amount of power, but correcting the batch effects improves the performance dramatically.

Fig 5.

ROC curves for sparsity recovery of Ω for 4 different settings.
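Each ROC point compares the estimated off-diagonal support of Ω̂ with that of the true Ω for one value of the tuning parameter. A sketch of that bookkeeping follows, assuming that entries with magnitude above a small tolerance count as edges; the tolerance and the function names are hypothetical.

```python
import numpy as np


def support_tpr_fpr(omega_true, omega_hat, tol=1e-8):
    """True and false positive rates for the off-diagonal support of omega_hat."""
    p = omega_true.shape[0]
    off = ~np.eye(p, dtype=bool)
    truth = (np.abs(omega_true) > tol) & off
    null = ~truth & off                      # off-diagonal non-edges
    est = (np.abs(omega_hat) > tol) & off
    tpr = (truth & est).sum() / max(truth.sum(), 1)
    fpr = (null & est).sum() / max(null.sum(), 1)
    return tpr, fpr


def roc_points(omega_true, omega_path, tol=1e-8):
    """One (FPR, TPR) point per estimate, e.g. CLIME fits over a grid of lambda."""
    points = []
    for omega_hat in omega_path:
        tpr, fpr = support_tpr_fpr(omega_true, omega_hat, tol)
        points.append((fpr, tpr))
    return sorted(points)
```

An empty graph sits at (0, 0) and a complete graph at (1, 1); the 45-degree line in Figure 5 joins these two extremes.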

5.3. Brain image network data

In this subsection, we report the estimated graphs for both the healthy group and the ADHD group, with batch effects removed using our ALPHA framework. We took various sparsity levels of the networks from 1% to 5% (corresponding to the same set of λ’s for the two groups) and depicted the edges common to all these levels, which are stable with respect to tuning.
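Selecting edges that are stable across the sparsity levels can be sketched as an intersection of the estimated supports along the λ path, with the sparsity level measured as edge density. The helper names and the zero tolerance below are assumptions for illustration.

```python
import numpy as np


def stable_edges(omega_path, tol=1e-8):
    """Boolean adjacency of edges present in every estimate along the path."""
    supports = [np.abs(om) > tol for om in omega_path]
    common = np.logical_and.reduce(supports)
    np.fill_diagonal(common, False)       # no self-loops
    return common


def edge_density(adj):
    """Fraction of the p(p-1)/2 possible edges present (the 'sparsity level')."""
    p = adj.shape[0]
    return np.triu(adj, k=1).sum() / (p * (p - 1) / 2)
```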

The brain networks produced by our proposed method are presented in Figure 6. The two networks share 90.7% of their edges. However, if we ignore heterogeneity and naively pool the data from all subjects together, 10.2% of the edges are unshared, roughly 1% more than with ALPHA. Therefore, after heterogeneity adjustment, we find less difference in brain functional networks between ADHD patients and healthy people. In addition, we investigate how the unshared edges are distributed across the 10 clusters. Table 2 summarizes, for each cluster, the total degree of the vertices of unshared edges. As we can see, the left occipital lobe (red) and the left parietal lobe (pink) show significant differences in functional connectivity structure between healthy people and patients, although in general the difference is weak. These are signs that ADHD is a complex disease that affects many regions of the brain. The general methodology we provide here could be valuable for further understanding the mechanism of the disease.

Table 2.

The degree of unshared edge vertices for each cluster

| Group | red | orange | blue | green | yellow | navy | pink | black | magenta | gray |
|---|---|---|---|---|---|---|---|---|---|---|
| Healthy | 3 | 4 | 3 | 2 | 7 | 6 | 10 | 12 | 11 | 6 |
| ADHD | 9 | 6 | 7 | 5 | 12 | 5 | 6 | 15 | 9 | 10 |
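The quantities behind the shared-edge percentage and Table 2 can be computed from the two estimated adjacency matrices as in the sketch below. Two choices here are our assumptions rather than statements from the text: the shared percentage is taken relative to the union of the two edge sets, and a vertex contributes to its cluster its degree in the graph of edges unique to that group.

```python
import numpy as np


def compare_networks(adj_a, adj_b, clusters):
    """Compare two symmetric boolean adjacency matrices without self-loops.

    Returns (shared fraction, unshared degree per cluster for a, same for b).
    """
    clusters = np.asarray(clusters)
    upper = np.triu(np.ones(adj_a.shape, dtype=bool), k=1)
    union = ((adj_a | adj_b) & upper).sum()
    shared = ((adj_a & adj_b) & upper).sum()
    only_a = adj_a & ~adj_b               # edges unique to group a
    only_b = adj_b & ~adj_a               # edges unique to group b
    deg_a = {c: int(only_a[clusters == c].sum()) for c in np.unique(clusters)}
    deg_b = {c: int(only_b[clusters == c].sum()) for c in np.unique(clusters)}
    return shared / max(union, 1), deg_a, deg_b
```

Passing the healthy and ADHD adjacency matrices together with the 10 color labels would give one dictionary per row of Table 2.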

6. Discussions

The presence of heterogeneity is usually suggested by domain knowledge about the dataset. In particular, heterogeneity is likely when the data come from different sources or subgroups. In the brain imaging dataset used in our numerical study, heterogeneity across patients can stem from differences in age, gender, etc. When it is less clear whether heterogeneity exists, we can calculate multiple summary statistics for all the subgroups and check whether they differ significantly. In the case of pervasive heterogeneity, we can detect it through the magnitude of the dominant eigenvalues of the covariance matrix in each subgroup. A systematic test for heterogeneity is important, and we leave it as a topic for future research. Note that even if all the subgroups are actually homogeneous, ALPHA does not hurt statistical efficiency under appropriate scaling assumptions. Specifically, for the PCA-based ALPHA, we showed in Section 4.1 that as long as the number of subgroups m satisfies the growth condition given there, the resulting covariance estimator enjoys the oracle max-norm rate. This means that, given homogeneous data, when the number of data splits is not large, ALPHA yields the same statistical rate as the full-sample oracle estimator. For the PPCA-based ALPHA, the covariance estimator likewise enjoys the oracle rate under the corresponding condition in Section 4.1.

As we have seen, ALPHA is adaptive to factor structures and is flexible enough to include external information. However, this advantage of PPCA comes with more assumptions and with the practical issue of selecting proper basis functions, and their number, in the sieve approximation. One contribution of the paper lies in the seamless integration of PCA and PPCA, which leverages effective external covariates. If no informative covariates exist and the sample size is relatively large for each data source, we have shown that conventional PCA is still an effective tool.

Note that our framework is compatible with any statistical procedure that only requires an accurate covariance estimator as input, such as CLIME, which we illustrate in this work. The ALPHA procedure provides theoretical guarantees for the estimated residuals and the resulting covariance estimator, which serve as foundations for establishing the statistical properties of the subsequent procedure. ALPHA also has potential applications in regression analysis. If the residual terms are the true predictors of a response of interest, we can first apply ALPHA to extract the residuals before running the regression. For example, the residual BOLD signals obtained by ALPHA in the brain functional network analysis (Section 5.3) are potentially useful for predicting whether a person has ADHD, which is a typical logistic regression problem based on ALPHA adjustment. We leave a detailed study of combining ALPHA with regression models for future investigation. One recent work [16] has adopted a method similar to ALPHA that extracts residuals for model selection in high-dimensional regression.

Finally, we point out two current limitations of ALPHA. The first lies in its pervasiveness assumption on the heterogeneity terms. More specifically, for each subgroup i, ALPHA requires the signal strength of the heterogeneous part to overwhelm that of the homogeneous residual part Ui, so that PCA or PPCA can accurately estimate and remove it. This requirement can be violated in practice when the heterogeneous term has similar signal strength to the homogeneous term. Second, statistical methods that require more than the max-norm error guarantee, say in the general non-sparse situation, may not yet be appropriate for post-ALPHA inference.

Acknowledgments

This project was supported by National Science Foundation grants DMS-1206464, DMS-1406266 and 2R01-GM072611-12.

Appendix A: Algorithm for ALPHA

The pseudo code for the algorithm ALPHA is shown as follows.
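As a rough illustration only, and not a reproduction of the authors' pseudo code, the heterogeneity-removal and pooling steps of ALPHA can be sketched in Python as follows. The sketch assumes that each Yi is a centered p × ni array, that the number of factors Ki and the choice between PCA and PPCA (through an optional projection matrix Pi) have already been made for each source, that F̂i is normalized so that F̂iᵀF̂i = ni I, and that the residuals are Ûi = Yi − YiF̂iF̂iᵀ/ni in both cases. The pooled Σ̂ would then be passed to CLIME.

```python
import numpy as np


def estimate_factors(Y, K, P=None):
    """F_hat (n x K), normalized so F_hat^T F_hat = n I.

    Columns are sqrt(n) times the top-K eigenvectors of Y^T Y (PCA), or of
    Y^T P Y (projected PCA) when a p x p sieve projection P is supplied.
    """
    n = Y.shape[1]
    M = Y.T @ Y if P is None else Y.T @ P @ Y
    _, vecs = np.linalg.eigh(M)            # ascending eigenvalues
    return np.sqrt(n) * vecs[:, ::-1][:, :K]


def remove_heterogeneity(Y, K, P=None):
    """U_hat = Y - Lambda_hat F_hat^T with Lambda_hat = Y F_hat / n (assumed)."""
    n = Y.shape[1]
    F_hat = estimate_factors(Y, K, P)
    return Y - (Y @ F_hat / n) @ F_hat.T


def pooled_covariance(Y_list, K_list, P_list):
    """Sigma_hat = sum_i U_hat_i U_hat_i^T / N with N = sum_i n_i."""
    residuals = [remove_heterogeneity(Y, K, P) for Y, K, P in zip(Y_list, K_list, P_list)]
    N = sum(U.shape[1] for U in residuals)
    return sum(U @ U.T for U in residuals) / N
```

Setting Ki = 0 leaves a source unadjusted, which corresponds to the ‘nPCA’ baseline in Section 5.2.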

Appendix B: A key lemma

Recall that we defined

| (B.1) |

where we used notations such as and to denote the final estimators, which are and if PCA is used, and and if PPCA is used.

The following lemma holds for no matter whether PCA or PPCA is applied.

Lemma B.1. For any K by K matrix H such that if log p = O(n),

where ; and furthermore

where .

The above lemma states that the error of estimating U by (or estimating by ) is decomposed into two parts. The first part is inevitable even when the factor matrix F in (3.1) is known in advance. The second part is caused by the uncertainty from estimating F. Since the true F is identifiable up to an orthonormal transformation H, we need to carefully choose H to bound the error Π (or Δ). We will provide explicit rates of convergence for those terms in the following two sections.

Proof. By definition of , . We first look at the convergence of . Obviously where

Since we have

Similarly , so

According to Lemma F.4 (i), ||UF/n ||max = OP (1) and noting both ||F||max and are , we conclude the result for easily.

Now we consider in the following.

So and it suffices to bound the two terms.

Decompose J1 by . Therefore,

since . Similar to J1, we decompose J2 only replacing with . According to Lemma F.4 (i), , hence . We then conclude that .

Now let us take a look at IV. where

By assumption, ||H||max ≤ ||H|| = OP(1). A simple decomposition of D1 gives

Since , we have

It is also not hard to show Under both Theorems C.1 and D.1 (replacing by for regime 1 and for regime 2), we can check the following relationship holds:

Therefore we have

□

Appendix C: Proof of Theorem 3.1

Recall that PCA estimates F by where the kth column of is the eigenvector of corresponding to the kth largest eigenvalue. By the definition of , we have

where K is a K by K diagonal matrix with top K eigenvalues of in descending order as diagonal elements. Define a K by K matrix H as in [17]:

It has been shown that are all OP(1).

The following lemma provides all the rates of convergence that are needed for the downstream analysis.

Lemma C.1. Under Assumptions 2.1 and 2.2, we have and

(i) and ;

(ii) ;

(iii) ;

(iv) .

Combining the above results with Lemma B.1, we have

where and additionally

where . Thus we complete the proof for Theorem 3.1. We are left to check Lemma C.1, which is done in the following three subsections.

C.1. Convergence of factors

Recall . Substituting , we have,

| (C.1) |

To bound , note that there is a constant C > 0, so that

Hence we need to bound since . The following lemma gives the stochastic bounds for each individual term.

Lemma C.2. (i) ,

(ii) ,

Proof. (i) Obviously according to Lemma F.1. attains the same rate. In addition, again according to Lemma F.1. So combining the three terms, we have We now refine the bound for Then the refined rate of is

(ii) Since by Lemma F.1,

is bounded by while is bounded by

which based on results of Lemma F.2 and (i) is □

The final rates of convergence for and are summarized as follows.

Proposition C.1.

| (C.2) |

Proof. The results follow from Lemma C.2. □

C.2. Rates of and

Note first that the two matrices under consideration are both K by K, so we lose nothing in the rates by bounding them by their Frobenius norms.

Let us find the rate for . Basically, we need to bound for i = 1, 2, 3. Firstly

Since and by Lemma F.1, we have Secondly,

Finally,

So combining three terms we have

Now we bound . Since , we have

Therefore has the same rate since . So

C.3. Rate of

In order to study the rate of , we essentially need to bound for i = 1, 2, 3. We handle each term separately.

By Lemma F.5, Therefore,

From bounding , the last term has rate

So combining three terms, we conclude

Appendix D: Proof of Theorem 3.2

Recall that by the definition of , we have

where K is a K × K diagonal matrix with the first K largest eigenvalues of in descending order as its diagonal elements. Define the K by K matrix H as in [18]:

It has been shown that , and , are all OP(1). We remind the reader that although H and K differ from those defined for regime 1 in the previous section, they play essentially the same roles (hence the same notation).

The following lemma provides all the rates of convergence that are needed for the downstream analysis.

Lemma D.1. Choose and assume where Under Assumptions 2.1, 2.3, 2.4 and 3.1, we have and

(i) and

(ii)

(iii)

(iv)

Combining the above lemma with Lemma B.1, we obtain

where and

where if there exists C such that νp > C/n. We keep all terms here, although this makes the presentation of the rate lengthy.

Thus we complete the proof for Theorem 3.2. We are left to check Lemma D.1, which is done in the following three subsections.

D.1. Convergence of factors

Recall . Substituting , we have,

| (D.1) |

where Ai,i ≤ 3 has nothing to do with R(W) and Γ:

Ai, 4 ≤ i ≤ 8, take care of terms involving R(W):

the remaining are terms involving Γ:

To bound , as in Theorem C.1 we only need to bound for i = 1,…,15 since again we have The following lemma gives the rate for each term.

Lemma D.2. (i) ,

(ii) ,

(iii) and ,

(iv) and ,

(v) and ,

(vi)

Proof. (i) Because , By Lemmas F.3 and F.4, and

Hence

(ii) We have By Lemmas F.3 and F.4, and By Assumption 3.1, Note the fact that for matrix , , ,. So

(iii) Note that , and Hence we have Thus

Similarly, attains the same rate of convergence.

In addition, notice A9, A10 have similar representation as A4, A5. The only difference is to replace R by Γ. It is not hard to see Therefore .

(iv) Note that

and Hence

A11 has similar representation as A6. Since

we have

(v) According to Lemma F.4, Thus

since The rate of convergence for A8 can be bounded in the same way. So do A12 and A13. Given that we have .

(vi) Obviously, and . We conclude . Same bound holds for A15. □

The final rates of convergence for and are summarized as follows.

Proposition D.1. Choose and assume and νp = O(1),

| (D.2) |

Proof. The max norm result follows from Lemmas D.2 and (D.1), while the Frobenius norm result has been shown in [18]. □

D.2. Rates of and

Note first that the two matrices under consideration are both K by K, so we lose nothing in the rates by bounding them by their Frobenius norms.

It has been proved in [18] that . By the choice of J, the last term vanishes. So

[18] also showed that . Since and are both , we easily show since

D.3. Rate of

By (D.1), in order to bound we essentially need to bound for We do not repeat the details for each term as in Lemma D.2, but we point out the difference here. All Ai are separated into two types: those starting with F and those starting with U.

If a term Ai starts with F, say Ai = FQ, in Lemma D.2, we bound in using . Now we use the bound so that we obtain all related rates by simply changing the rate to .

Terms starting with U include Ai, i = 2, 3, 8, 13. In Lemma D.2, we bound , i = 3, 8, 13 using while we bound using . Correspondingly, we now need to control and separately to update the rates. The derivation is relegated to Lemma F.5. We have and .

So we replace the corresponding terms in Lemma D.2. It is not hard to see the dominating term is . Therefore, has the same rate.

Appendix E: Proof of Theorem 4.1

Proof. Denote the oracle empirical covariance matrix as

As in [9] the upper bound on is obtained by proving

| (E.1) |

Once the two bounds are established, we proceed by observing

and then it readily follows that if ,

where the first term of the last inequality uses the constraint of (4.4) while the optimality condition of (4.4) is applied to bound by . So it remains to find in (E.1). Since ,, so we just need to bound and . Obviously,

We have shown in (4.3) that given by (4.2) attains the rate . Thus . Similar proof as in [9] can also reach error bounds under and , which we omit. The proof is now complete. □

Appendix F: Technical lemmas

Lemma F.1. (i) ,

(ii) ,

(iii)

Proof. We simply apply Markov's inequality to get the rates.

since

□

Lemma F.2. (i) .

(ii) ,

(iii)

Proof. (i) where λk is the kth column of Λ. Since is mean zero sub-Gaussian with variance proxy , we have .

(ii) . We need to bound each term separately. The second term is bounded by the upper tail bound of the Hanson-Wright inequality for sub-Gaussian vectors [24, 41], i.e.,

Choosing s = log n and applying the union bound, we have . Then we deal with the first term. By the Chernoff bound,

where . [24] showed that

For , the right-hand side is less than exp(3tr(Σ)η/2) ≤ exp(Cpη). Choosing η = Cθ², we have

Minimizing the right-hand side by choosing θ = s/(2Cp), it is easy to check that . So we conclude that .

(iii) Let be the kth column of F. where are U and with the tth column and element removed, respectively. From (ii) we know the second term is of order . Define the sub-Gaussian , which is independent of ut. Thus

where . Similar to (ii), we choose η = Cθ²n here. It is not hard to see . Thus . □

Lemma F.3.(i) .

(ii) .

(iii) .

Proof. These results can be found in Fan, Liao and Wang (2014), but the conditions used there are slightly different from ours. In particular, we assume no time (sample) dependence and only require bounded instead of . By Markov's inequality, it suffices to show that the expected value of each term attains the corresponding rate of convergence.

and are both O(np) following the same proof as above. Thus the proof is complete. □

Lemma F.4. (i)

(ii) .

(iii) .

Proof. (i) It is not hard to see . The detailed proof by Chernoff bound is given in the following. By union bound and Chernoff bound, we have

The expectation is calculated by first conditioning on F,

where the second equality uses the sub-Gaussianity of ujt and the last inequality is from and . Therefore, choosing , we have

Thus .

(ii) , where . Consider the tail probability conditional on W:

The right hand side can be further bounded by

Choosing θ to minimize the upper bound and taking expectation with respect to W, we obtain

Finally, choosing t ≍ , the tail probability can be made arbitrarily small with a proper constant. So . The second part of the results follows similarly. Note and the first term dominates. So the same derivation gives

where since the eigenvalues of are assumed to be bounded almost surely. Hence, .

(iii) . Using Chernoff bound again, we get

Since , the right hand side is easy to bound by first conditioning on F.

Therefore, choosing , we have

So we conclude . By similar derivation as in (ii), we also have and are both of order . □

Lemma F.5. (i) ,

(ii) and .

Proof. (i) . The second term is . So it suffices to focus on the first term. Let and so that Var(vt) = I. Write , so we have . Also denote . Thus and .

| (F.1) |

where and are two unit vectors of dimension p. We will bound the right hand side with arbitrary unit vectors and .

Note that and . By Bernstein's inequality, we have for constant C > 0,

Choosing in (F.1), we can easily show that the exceptional probability is small as long as C is large enough. Therefore, noting ,. Finally .

(ii) The rates of and can be similarly derived as (i). Denote , so

Denote the kth column of Φ(W)B by (ΦB)k, we have

where we use max . □

Appendix G: More details on synthetic data analysis

G.1. Model calibration and data generation

We calibrate (estimate) the 264 by 264 covariance matrix of ut by applying our proposed method to the data in the healthy group. Plugging it into the CLIME solver delivers a sparse precision matrix Ω, which is taken as the truth in the simulation. Note that after regularization in CLIME, Ω−1 is not the same as , and we set the true covariance Σ = Ω−1. To obtain the covariance matrix used in setting 1, we also calibrate, using the same method, a sub-model that involves only the first 100 regions. We then copy this 100 × 100 matrix multiple times to form a p × p block diagonal matrix and use it in setting 1. We describe how we calibrate these ‘true models’ and generate data from them as follows.

- (External covariates) For each j ≤ p, generate the external covariate Wj i.i.d. from the multinomial distribution with ℙ(Wj = s) = ws, s ≤ 10, where the ws are calibrated with the hierarchical clustering results of the real data.

- (Calibration) For the first 15 healthy subjects, obtain estimators for F, B and Γ by PPCA, resulting in and according to [18]. Use the rows of the estimated factors to fit a stationary VAR model ft = Aft−1 + εt, where εt ∼ N(0, Σε), and obtain the estimators and .

- (Simulation) For each subject i ≤ m, pick one of the 15 calibrated models and its associated parameters from above at random and do the following.

  (a) Generate (entries of Γi) i.i.d. from where is the sample variance of all entries of . For the first three settings, compute the ‘true’ loading matrix . For the last setting, set Λi = Γi since G(W) = 0.

  (b) Generate factors from the VAR model with where the parameters and are taken from the fitted values in the calibration step.

  (c) Finally, generate the observed data , where each column of Ui is randomly sampled from N(0, Ω−1), with Ω calibrated by the CLIME solver as described at the beginning of this subsection.
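The simulation step above can be sketched for a single subject as follows. This is a minimal NumPy version under assumptions of our own: the calibrated quantities (cluster-level values of G, the VAR matrix A, the innovation covariance, the standard deviation of the Γi entries, and Ω) are passed in as arrays; G(Wj) is looked up by cluster label; the Γi entries are drawn as i.i.d. normals; and the VAR(1) factors start at zero with a short burn-in rather than being drawn from the stationary distribution. All names are hypothetical.

```python
import numpy as np


def simulate_subject(rng, labels, G, A, sigma_eps, gamma_sd, omega, n, burn_in=50):
    """Draw one subject's p x n data matrix Y_i = Lambda_i F_i^T + U_i.

    labels: cluster index W_j in {0, ..., J-1} for each of the p nodes;
    G: J x K values of G(w) (use zeros for setting 4, so Lambda_i = Gamma_i);
    A, sigma_eps: VAR(1) coefficient and innovation covariance (K x K);
    gamma_sd: standard deviation of the i.i.d. normal entries of Gamma_i;
    omega: p x p true precision matrix of u_t.
    """
    p, K = len(labels), G.shape[1]
    Lam = G[labels] + rng.normal(0.0, gamma_sd, size=(p, K))
    f_t = np.zeros(K)
    F = np.empty((n, K))
    for t in range(burn_in + n):            # f_t = A f_{t-1} + eps_t
        f_t = A @ f_t + rng.multivariate_normal(np.zeros(K), sigma_eps)
        if t >= burn_in:
            F[t - burn_in] = f_t
    U = rng.multivariate_normal(np.zeros(p), np.linalg.inv(omega), size=n).T
    return Lam @ F.T + U
```

The labels themselves can be drawn as `rng.choice(10, size=p, p=w)` for a vector `w` of calibrated cluster probabilities, matching the external-covariate step.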

G.2. More on pervasiveness

In this subsection, we discuss the pervasiveness assumption, which requires the spikes to grow with order p, and present the numerical performance of ALPHA for different levels of cmin and cmax (defined in Assumption 2.3). This gives readers a rough idea of how the spikiness (or the constant in front of the rate) affects the performance. We particularly consider the cases where cmax is small or cmin is large. As a preliminary matter, we verify that the real data are consistent with the pervasiveness assumption.

Denote the maximum and minimum eigenvalues of the matrix by λmax and λmin respectively, and denote the maximum eigenvalue of the matrix by . We first investigate the magnitudes of λmin, λmax and derived from the real data. Following exactly the same data generation procedure as in the original simulation study, we randomly generate 1,000 subjects. We find that λmax has mean 15.352 and standard deviation 4.918, λmin has mean 10.069 and standard deviation 5.416, and has mean 1.317 and standard deviation 0.119. We also investigate the signal-to-noise ratio , which has mean 7.711 and standard deviation 4.230. Therefore, the data exhibit a spiked covariance structure, although the spikes are not extreme.

We then modify the data generation process in two ways. One multiplies the original loading matrix Λ by 3, called Modified (a); the other divides Λ by 3, called Modified (b). Note that in Modified (b), λmin becomes 1/9 of the original λmin and is thus smaller than , so there is no clear eigen-gap in this case. Table 3 compares the performance of recovering the precision matrix Ω under the original and modified settings when ni = 100.
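The factor 1/9 follows because these eigenvalues are quadratic in Λ: scaling Λ by c scales the eigenvalues of ΛᵀΛ by c². A quick numerical check is below, assuming for illustration that λmin and λmax are eigenvalues of ΛᵀΛ/p (this normalization is our assumption), together with the arithmetic behind the missing eigen-gap.

```python
import numpy as np


def spike_eigenvalues(Lam):
    """Eigenvalues of Lam^T Lam / p (this normalization is assumed)."""
    return np.linalg.eigvalsh(Lam.T @ Lam / Lam.shape[0])


rng = np.random.default_rng(0)
Lam = rng.normal(size=(264, 3))
base = spike_eigenvalues(Lam)
modified_a = spike_eigenvalues(3 * Lam)   # Modified (a): Lambda multiplied by 3
modified_b = spike_eigenvalues(Lam / 3)   # Modified (b): Lambda divided by 3
print(np.allclose(modified_a, 9 * base), np.allclose(modified_b, base / 9))  # True True
print(round(10.069 / 9, 3))  # 1.119, below the reported mean 1.317
```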

Table 3.

Gaussian Graphical Model Analysis

| Setting | | | |
|---|---|---|---|
| Original | 0.564 | 3.445 | 1.188 |
| Modified (a) | 0.524 | 3.052 | 1.066 |
| Modified (b) | 0.749 | 4.914 | 1.719 |

We can see from the table above that ALPHA performs slightly better in Modified (a) than in the original case. Increasing cmin makes the heterogeneity part more spiky, which allows PCA or PPCA to distinguish the heterogeneity term more easily. In contrast, decreasing cmax makes the originally spiky heterogeneity term harder to detect, and some heterogeneity factors tend to be missed when extracting them. Therefore, in Modified (b), the estimation error becomes significantly larger than in the original case.

G.3. Sensitivity analysis on the number of factors

In this subsection, we study through simulations how the estimated number of factors affects the recovery of the Gaussian graphical model. The specification of the number of factors is critical to the validity of ALPHA, which motivates us to first assess the performance of and on our simulated datasets. Recall that

where P is the projection operator defined in (3.5) in the main text. The final estimator of the number of factors, denoted by Ǩ, comes from the heuristic strategy we developed for choosing between PCA and PPCA. We choose PCA if and choose PPCA otherwise. The intuition is that we favor the method that yields the larger eigen-ratio between the spiked and non-spiked parts of the covariance.

Analogously to the simulation study in the main text, we generate BOLD data for m = 1,000 subjects from the calibrated “true” model. We investigate the accuracy of the PCA-based and PPCA-based estimators and of Ǩ for two cases, (i) ni = 20, p = 264 and (ii) ni = 100, p = 264, as presented in Table 4. As the table shows, when ni is small, the PPCA-based estimator outperforms the PCA-based one, and when ni is large, the PCA-based estimator is better. Note also that our heuristic estimator Ǩ performs well for both small and large ni.

Table 4.

Accuracy of , and Ǩ

| Estimator | TotErr (ni = 20) | OverEst (ni = 20) | UnderEst (ni = 20) | TotErr (ni = 100) | OverEst (ni = 100) | UnderEst (ni = 100) |
|---|---|---|---|---|---|---|
| PCA-based | 38.7% | 0% | 38.7% | 0.7% | 0% | 0.7% |
| PPCA-based | 29.7% | 6.8% | 22.9% | 4.7% | 2.7% | 2.0% |
| Ǩ | 29.7% | 6.8% | 22.9% | 3.5% | 2.3% | 1.2% |

Given the performance of our proposed estimators of the number of factors, we now artificially enlarge this estimation error and see how it affects the Gaussian graphical model analysis. Let η be a random perturbation with P(η = 0) = 1/2, P(η = 1) = 1/3 and P(η = 2) = 1/6. Define K+ := K + η and K− := max(K − η, 0), where K is the true number of factors. As the notation indicates, K+ overestimates the number of factors while K− underestimates it. Since P(η ≠ 0) = 1/2, their estimation accuracy is only 50%, worse than that of the estimators in Table 4. We use K+ and K− as estimators of the number of factors to recover the precision matrix of U and compare their performance with that of Ǩ. The results are presented in Table 5.
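The perturbation scheme, and the error rates used in Table 4, can be reproduced in a few lines. In this sketch the function names are hypothetical, and TotErr, OverEst and UnderEst are taken to be the fractions of subjects whose estimate is wrong, too large and too small, respectively, which is consistent with TotErr = OverEst + UnderEst in Table 4.

```python
import numpy as np


def perturbed_numbers(K_true, rng):
    """K+ = K + eta and K- = max(K - eta, 0), eta in {0, 1, 2} w.p. 1/2, 1/3, 1/6."""
    K_true = np.asarray(K_true)
    eta = rng.choice([0, 1, 2], size=K_true.shape, p=[1 / 2, 1 / 3, 1 / 6])
    return K_true + eta, np.maximum(K_true - eta, 0)


def error_rates(K_hat, K_true):
    """(TotErr, OverEst, UnderEst) as fractions of subjects, as in Table 4."""
    K_hat, K_true = np.asarray(K_hat), np.asarray(K_true)
    over = np.mean(K_hat > K_true)
    under = np.mean(K_hat < K_true)
    return over + under, over, under
```

For true factor numbers K ≥ 1, both perturbed estimators have a total error rate close to 50%, all of it overestimation for K+ and all of it underestimation for K−.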

Table 5.

Gaussian Graphical Model Analysis

| Method | ni = 20 | | | ni = 100 | | |
|---|---|---|---|---|---|---|
| Oracle | 0.687 | 4.131 | 1.311 | 0.335 | 2.018 | 0.695 |
| Ko | 0.873 | 2.824 | 1.351 | 0.536 | 2.006 | 2.017 |
| Ǩ | 1.156 | 8.581 | 2.950 | 0.564 | 3.445 | 1.188 |
| K+ | 0.771 | 3.27 | 1.49 | 0.586 | 2.154 | 1.074 |
| K− | 1.618 | 11.384 | 4.062 | 1.84 | 15.133 | 4.941 |

“Oracle” means that we directly use the generated noise U to calculate its sample covariance and plug it into CLIME to recover the precision matrix. Ko means that we know the true number of pervasive factors and use PCA or Projected-PCA (choosing the method that yields the larger eigen-ratio) to adjust for the factors. As the table shows, K+ is nearly as good as Ko, which means that overestimating the number of factors does not substantially hurt the recovery accuracy. In contrast, underestimating the number of factors seriously increases the estimation error of Ω, as shown by K−, because the unadjusted pervasive factors heavily corrupt the covariance of U. Note that both K+ and K− use partial information about the true number of factors. In comparison, our procedure Ǩ, without any prior knowledge about the number of factors, performs well in recovering Ω.

Contributor Information

Jianqing Fan, Department of Operations Research and Financial Engineering, Princeton University, Princeton, NJ 08544, USA, jqfan@princeton.edu.

Han Liu, Department of Electrical Engineering and Computer Science, Northwestern University, Evanston, IL 60208, USA, hanliu@northwestern.edu.

Weichen Wang, Department of Operations Research and Financial Engineering, Princeton University, Princeton, NJ 08544, USA, nickweichwang@gmail.com.

Ziwei Zhu, Department of Operations Research and Financial Engineering, Princeton University, Princeton, NJ 08544, USA, zzw9348ustc@gmail.com.

References

- [1]. Ahn SC and Horenstein AR (2013). Eigenvalue ratio test for the number of factors. Econometrica 81 1203–1227. MR3064065

- [2]. Alter O, Brown PO and Botstein D (2000). Singular value decomposition for genome-wide expression data processing and modeling. Proceedings of the National Academy of Sciences 97 10101–10106.

- [3]. Bai J (2003). Inferential theory for factor models of large dimensions. Econometrica 71 135–171. MR1956857

- [4]. Bai J and Ng S (2002). Determining the number of factors in approximate factor models. Econometrica 70 191–221. MR1926259

- [5]. Bai J and Ng S (2013). Principal components estimation and identification of static factors. Journal of Econometrics 176 18–29. MR3067022

- [6]. Biswal BB, Mennes M, Zuo X-N, Gohel S, Kelly C, Smith SM, Beckmann CF, Adelstein JS, Buckner RL and Colcombe S (2010). Toward discovery science of human brain function. Proceedings of the National Academy of Sciences 107 4734–4739.

- [7]. Cai TT, Li H, Liu W and Xie J (2012). Covariate-adjusted precision matrix estimation with an application in genetical genomics. Biometrika ass058. MR3034329

- [8]. Cai TT, Li H, Liu W and Xie J (2015). Joint estimation of multiple high-dimensional precision matrices. The Annals of Statistics 38 2118–2144. MR3497754

- [9].Cai TT, Liu W and Luo X (2011). A constrained 1 minimization approach to sparse precision matrix estimation. Journal of the American Statistical Association 106 594–607. MR2847973 [Google Scholar]

- [10] Cai TT, Ma Z and Wu Y (2013). Sparse PCA: Optimal rates and adaptive estimation. The Annals of Statistics 41 3074–3110. MR3161458

- [11] Chen C, Grennan K, Badner J, Zhang D, Gershon E, Jin L and Liu C (2011). Removing batch effects in analysis of expression microarray data: an evaluation of six batch adjustment methods. PLoS ONE 6 e17238.

- [12] Chen X (2007). Large sample sieve estimation of semi-nonparametric models. Handbook of Econometrics 6 5549–5632.

- [13] Connor G, Hagmann M and Linton O (2012). Efficient semiparametric estimation of the Fama–French model and extensions. Econometrica 80 713–754. MR2951947

- [14] Connor G and Linton O (2007). Semiparametric estimation of a characteristic-based factor model of common stock returns. Journal of Empirical Finance 14 694–717.

- [15] Danaher P, Wang P and Witten DM (2014). The joint graphical lasso for inverse covariance estimation across multiple classes. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 76 373–397. MR3164871

- [16] Fan J, Ke Y and Wang K (2016). Decorrelation of covariates for high dimensional sparse regression. arXiv preprint arXiv:1612.08490.

- [17] Fan J, Liao Y and Mincheva M (2013). Large covariance estimation by thresholding principal orthogonal complements. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 75 603–680. MR3091653

- [18] Fan J, Liao Y and Wang W (2016). Projected principal component analysis in factor models. The Annals of Statistics 44 219–254. MR3449767

- [19] Fan J, Rigollet P and Wang W (2015). Estimation of functionals of sparse covariance matrices. The Annals of Statistics 43 2706. MR3405609

- [20] Friedman J, Hastie T and Tibshirani R (2008). Sparse inverse covariance estimation with the graphical lasso. Biostatistics 9 432–441.

- [21] Guo J, Cheng J, Levina E, Michailidis G and Zhu J (2015). Estimating heterogeneous graphical models for discrete data with an application to roll call voting. The Annals of Applied Statistics 9 821. MR3371337

- [22] Guo J, Levina E, Michailidis G and Zhu J (2011). Joint estimation of multiple graphical models. Biometrika asq060. MR2804206

- [23] Higgins J, Thompson SG and Spiegelhalter DJ (2009). A re-evaluation of random-effects meta-analysis. Journal of the Royal Statistical Society: Series A (Statistics in Society) 172 137–159. MR2655609

- [24] Hsu D, Kakade SM and Zhang T (2012). A tail inequality for quadratic forms of subgaussian random vectors. Electron. Commun. Probab. 17. MR2994877

- [25] Johnson WE, Li C and Rabinovic A (2007). Adjusting batch effects in microarray expression data using empirical Bayes methods. Biostatistics 8 118–127.

- [26] Johnstone IM and Lu AY (2009). On consistency and sparsity for principal components analysis in high dimensions. Journal of the American Statistical Association 104 682–693. MR2751448

- [27] Lam C and Fan J (2009). Sparsistency and rates of convergence in large covariance matrix estimation. The Annals of Statistics 37 4254. MR2572459

- [28] Lam C and Yao Q (2012). Factor modeling for high-dimensional time series: inference for the number of factors. The Annals of Statistics 40 694–726. MR2933663

- [29] Leek JT, Scharpf RB, Bravo HC, Simcha D, Langmead B, Johnson WE, Geman D, Baggerly K and Irizarry RA (2010). Tackling the widespread and critical impact of batch effects in high-throughput data. Nature Reviews Genetics 11 733–739.

- [30] Leek JT and Storey JD (2007). Capturing heterogeneity in gene expression studies by surrogate variable analysis. PLoS Genet 3 1724–1735.

- [31] Liu H, Han F and Zhang C-H (2012). Transelliptical graphical models. In Advances in Neural Information Processing Systems.

- [32] Liu H, Lafferty J and Wasserman L (2009). The nonparanormal: Semiparametric estimation of high dimensional undirected graphs. The Journal of Machine Learning Research 10 2295–2328. MR2563983

- [33] Loh P-L and Wainwright MJ (2013). Structure estimation for discrete graphical models: Generalized covariance matrices and their inverses. The Annals of Statistics 41 3022–3049. MR3161456

- [34] Lorentz GG (2005). Approximation of Functions, vol. 322. American Mathematical Soc. MR0213785

- [35] Meinshausen N and Bühlmann P (2006). High-dimensional graphs and variable selection with the lasso. The Annals of Statistics 1436–1462. MR2278363

- [36] Negahban S and Wainwright MJ (2011). Estimation of (near) low-rank matrices with noise and high-dimensional scaling. The Annals of Statistics 1069–1097. MR2816348

- [37] Onatski A (2012). Asymptotics of the principal components estimator of large factor models with weakly influential factors. Journal of Econometrics 168 244–258. MR2923766

- [38] Paul D (2007). Asymptotics of sample eigenstructure for a large dimensional spiked covariance model. Statistica Sinica 17 1617. MR2399865

- [39] Power JD, Cohen AL, Nelson SM, Wig GS, Barnes KA, Church JA, Vogel AC, Laumann TO, Miezin FM and Schlaggar BL (2011). Functional network organization of the human brain. Neuron 72 665–678.

- [40] Ravikumar P, Wainwright MJ, Raskutti G and Yu B (2011). High-dimensional covariance estimation by minimizing ℓ1-penalized log-determinant divergence. Electronic Journal of Statistics 5 935–980. MR2836766

- [41] Rudelson M and Vershynin R (2013). Hanson–Wright inequality and sub-gaussian concentration. Electron. Commun. Probab. 18. MR3125258

- [42] Shen X, Pan W and Zhu Y (2012). Likelihood-based selection and sharp parameter estimation. Journal of the American Statistical Association 107 223–232. MR2949354

- [43] Sims AH, Smethurst GJ, Hey Y, Okoniewski MJ, Pepper SD, Howell A, Miller CJ and Clarke RB (2008). The removal of multiplicative, systematic bias allows integration of breast cancer gene expression datasets – improving meta-analysis and prediction of prognosis. BMC Medical Genomics 1 42.

- [44] Stock JH and Watson MW (2002). Forecasting using principal components from a large number of predictors. Journal of the American Statistical Association 97 1167–1179. MR1951271

- [45] Verbeke G and Lesaffre E (1996). A linear mixed-effects model with heterogeneity in the random-effects population. Journal of the American Statistical Association 91 217–221.

- [46] Wang W and Fan J (2017). Asymptotics of empirical eigenstructure for high dimensional spiked covariance. The Annals of Statistics 45 1342–1374. MR3662457

- [47] Yang S, Lu Z, Shen X, Wonka P and Ye J (2015). Fused multiple graphical lasso. SIAM Journal on Optimization 25 916–943. MR3343365

- [48] Yuan M (2010). High dimensional inverse covariance matrix estimation via linear programming. The Journal of Machine Learning Research 11 2261–2286. MR2719856

- [49] Yuan M and Lin Y (2007). Model selection and estimation in the Gaussian graphical model. Biometrika 94 19–35. MR2367824