Abstract

Background

Clinical predictive tools quantify contributions of relevant patient characteristics to derive likelihood of diseases or predict clinical outcomes. When selecting predictive tools for implementation at clinical practice or for recommendation in clinical guidelines, clinicians are challenged with an overwhelming and ever-growing number of tools, most of which have never been implemented or assessed for comparative effectiveness. To overcome this challenge, we have developed a conceptual framework to Grade and Assess Predictive tools (GRASP) that can provide clinicians with a standardised, evidence-based system to support their search for and selection of efficient tools.

Methods

A focused review of the literature was conducted to extract criteria along which tools should be evaluated. An initial framework was designed and applied to assess and grade five tools: LACE Index, Centor Score, Well’s Criteria, Modified Early Warning Score, and Ottawa knee rule. After peer review, by six expert clinicians and healthcare researchers, the framework and the grading of the tools were updated.

Results

GRASP framework grades predictive tools based on published evidence across three dimensions: 1) Phase of evaluation; 2) Level of evidence; and 3) Direction of evidence. The final grade of a tool is based on the highest phase of evaluation, supported by the highest level of positive evidence, or mixed evidence that supports a positive conclusion. Ottawa knee rule had the highest grade since it has demonstrated positive post-implementation impact on healthcare. LACE Index had the lowest grade, having demonstrated only pre-implementation positive predictive performance.

Conclusion

GRASP framework builds on widely accepted concepts to provide standardised assessment and evidence-based grading of predictive tools. Unlike other methods, GRASP is based on the critical appraisal of published evidence reporting the tools’ predictive performance before implementation, potential effect and usability during implementation, and their post-implementation impact. Implementing the GRASP framework as an online platform can enable clinicians and guideline developers to access standardised and structured reported evidence of existing predictive tools. However, keeping GRASP reports up-to-date would require updating tools’ assessments and grades when new evidence becomes available, which can only be done efficiently by employing semi-automated methods for searching and processing the incoming information.

Keywords: Predictive analytics, Clinical prediction, Clinical decision support, Evidence-based medicine

Background

Modern healthcare is building upon information technology to improve the effectiveness, efficiency, and safety of healthcare and clinical processes [1–7]. In particular, clinical decision support (CDS) systems operate in three levels [8, 9]: 1) Managing information, through facilitating the reach to clinical knowledge, the search for, and the retrieval of relevant information needed to make clinical decisions, 2) Focusing users’ attention, through flagging abnormal results, providing lists of possible explanations for such results, or generating clinical and drug interaction alerts, and 3) Recommending specific actions and decisions, tailored for the clinical condition of the patient, which is often supported by clinical models and smart clinical guidelines.

Clinical predictive tools (here referred to simply as predictive tools) belong to the third level of CDS and include various applications ranging from the simplest manually applied clinical prediction rules to the most sophisticated machine learning algorithms [10, 11]. Through the processing of relevant clinical variables, clinical predictive tools derive the likelihood of diseases and predict their possible outcomes in order to provide patient specific diagnostic, prognostic, or therapeutic decision support [12, 13].

Why do we need grading and assessment of predictive tools?

Traditionally, the selection of predictive tools for implementation in clinical practice has been conducted based on subjective evaluation of and exposure to particular tools [14, 15]. This is not optimal since many clinicians lack the required time and knowledge to evaluate predictive tools, especially as their number and complexity have increased tremendously in recent years.

More recently, there has been an increase in the mention and recommendation of selected predictive algorithms in clinical guidelines. However, this represents only a small proportion of the amount and variety of proposed clinical predictive tools, which have been designed for various clinical contexts, target many different patient populations and comprise a wide range of clinical inputs and techniques [16–18]. Unlike in the case of treatments, clinicians generating guidleines have no available methods to objectively summarise or interpret the evidence behind clinical predictive tools. This is made worse by the complex nature of the evaluation process itself and the variability in the quality of the published evidence [19–22].

Although most reported tools have been internally validated, only some have been externally validated and very few have been implemented and studied for their post-implementation impact on healthcare [23, 24]. Various studies and reports indicate that there is an unfortunate practice of developing new tools instead of externally validating or updating existing ones [13, 25–28]. More importantly, while a few pre-implementation studies compare similar predictive tools along some predictive performance measures, comparative studies for post-implementation impact or cost-effectiveness are very rare [29–38]. As a result, there is lack of a reference against which predictive tools can be compared or benchmarked [12, 39, 40].

In addition, decision makers need to consider the usability of a tool, which depends on the specific healthcare and IT settings in which it is embedded, and on users’ priorities and perspectives [41]. The usability of tools is consistently improved when the outputs are actionable or directive [42–45]. Moreover, clinicians are keen to know if a tool has been endorsed by certain professional organisations they follow, or recommended by specific clinical guidelines they know [26].

Current methods for appraising predictive tools

Several methods have been proposed to evaluate predictive tools [13, 24, 46–60]. However, most of these methods are not based on the critical appraisal of the existing evidence. Two exceptions are the TRIPOD statement [61, 62], which provides a set of recommendations for the reporting of studies developing, validating, or updating predictive tools; and the CHARMS checklist [63], which provides guidance on critical appraisal and data extraction for systematic reviews of predictive tools. Both of these methods examine only the pre-implementation predictive performance of the tools, ignoring their usability and post-implementation impact. In addition, none of the currently available methods provide a grading system to allow for benchmarking and comparative effectiveness of tools.

On the other hand, looking beyond predictive tools, the GRADE framework, grades the quality of published scientific evidence and strength of clinical recommendations, in terms of their post-implementation impact. GRADE has gained a growing consensus, as an objective and consistent method to support the development and evaluation of clinical guidelines, and has increasingly been adopted worldwide [64, 65]. According to GRADE, information based on randomised controlled trials (RCTs) is considered the highest level of evidence. However, the level of evidence could be downgraded due to study limitations, inconsistency of results, indirectness of evidence, imprecision, or reporting bias [65–67]. The strength of a recommendation indicates the extent to which one can be confident that adherence to the recommendation will do more good than harm, it also requires a balance between simplicity and clarity [64, 68].

The aim of this study is to develop a conceptual framework for evidence-based grading and assessment of predictive tools. This framework is based on the critical appraisal of information provided in the published evidence reporting the evaluation of predictive tools. The framework should provide clinicians with standardised objective information on predictive tools to support their search for and selection of effective tools for their intended tasks. It should support clinicians’ informed decision making, whether they are implementing predictive tools at their clinical practices or recommending such tools in clinical practice guidelines to be used by other clinicians.

Methods

Guided by the work of Friedman and Wyatt, and their suggested three phases approach, which became an internationally acknowledged standard for evaluating health informatics technologies [20, 21, 41, 69], we aimed to extract the main criteria along which predictive tools can be similarly evaluated before, during and after their implementation.

We started with a focused review of the literature in order to examine and collect the evaluation criteria, and measures proposed for the appraisal of predictive tools along these three phases. The concepts used in the search included “clinical prediction”, “tools”, “rules”, “models”, “algorithms”, “evaluation”, and “methods”. The search was conducted using four databases; MEDLINE, EMBASE, CINAHL and Google Scholar, with no specific timeframe. This literature review was then extended to include studies describing methods evaluating CDS systems and more generally, health information systems and technology. Following the general concepts of the PRISMA guidelines [70], the duplicates of the retrieved studies, from the four databases, were first removed. Studies were then screened, based on their titles and abstracts, for relevance, then the full text articles were assessed for eligibility and only the eligible studies were included in the review. We included three types of studies evaluating predictive tools and other CDS systems; 1) studies describing the methods or processes of the evaluation, 2) studies describing the phases of the evaluation, and 3) studies describing the criteria and measures used in the evaluation. The first author manually extracted the methods of evaluation described in each type of study, and this was then revised and confirmed by the second and last authors. Additional file 1: Figure S1 shows the process of study selection for inclusion in the focused review of the literature.

Using the extracted information, we designed an initial version of the framework and applied it to asses and grade five predictive tools. We reviewed the complete list of 426 tools published by the MDCalc medical reference website for decision support tools and applications and calculators (https://www.mdcalc.com) [71]. We excluded tools which are not clinical - their output is not related to providing individual patient care, such as scores of ED crowding and calculators of waiting times. We also excluded tools which are not predictive - their output is not the result of statistically generating new information but rather the result of a deterministic equation, such as calculators deriving a number from laboratory results. The five example tools were then randomly selected, using a random number generator [72], from a shorter list of 107 eligible predictive tools, after being alphabetically sorted and numbered.

A comprehensive and systematic search for the published evidence, on each of the five predictive tools, was conducted, using MEDLINE, EMBASE, CINAHL and Google Scholar, and refined in four steps. 1) The primary studies, describing the development of the tools, were first identified and retrieved. 2) All secondary studies that cited the primary studies or that referred to the tools’ names or to any of their authors, anywhere in the text, were retrieved. 3) All tertiary studies that cited the secondary studies or that were used as references by the secondary studies were retrieved. 4) Secondary and tertiary studies were examined to exclude non-relevant studies or those not reporting the validation, implementation or evaluation of the tools. After the four steps, eligible evidence was examined and grades were assigned to the predictive tools. Basic information about the tool, such as year of publication, intended use, target population, target outcome, and source and type of input data were extracted, from the primary studies, to inform the first “Tool Information” section of the framework. Eligible studies were then examined in detail for the reported evaluations of the predictive tools. Additional file 1: Figure S2 shows the process of searching the literature for the published evidence on the predictive tools.

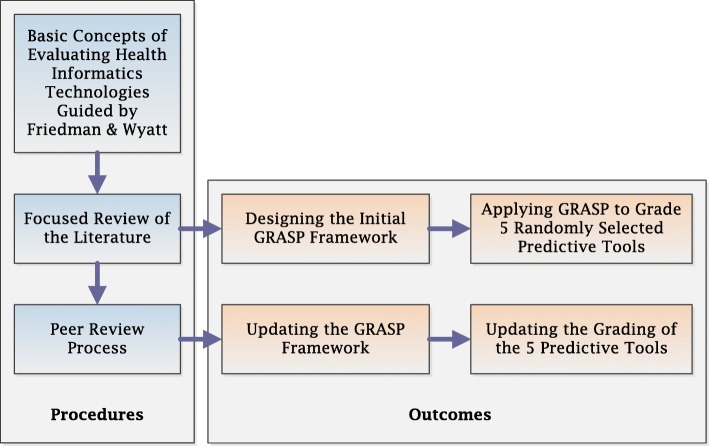

The framework and its application to the selected five predictive tools were then peer reviewed by six expert healthcare professionals. Three of these professionals are clinicians, who work in hospitals and have over 10 years of experience using CDS systems, while the other three are healthcare researchers, who work in research organisations and have over 20 years of experience in developing, implementing or evaluating CDS systems. The reviewers were provided with the framework’s concept design and its detailed report template. They were also provided with the summarised and detailed grading of the five predictive tools, as well as the justification and published evidence underpinning the grade assignment. After a brief orientation session, reviewers were asked to feedback on how much they agreed with each of the framework’s dimensions and corresponding evaluation criteria, the ‘Tool information’ section as well as the grading of the five exemplar predictive tools. The framework was then refined and the grading of the five predictive tools was updated based on the reviewers’ feedback. Figure 1 shows the flowchart of the GRASP framework overall development process.

Fig. 1.

The GRASP Framework Development Flowchart

Results

The focused review of literature

The search in the four databases, after removing the duplicates, identified a total of 831 studies. After screening the titles and abstracts, 647 studies were found not relevant to the topic. The full text of the remaining 184 studies were then examined to exclude non-eligible studies, which were 134 studies, based on the inclusion criteria. Only 50 studies were identified as eligible. Twenty three of the 50 studies described methods for the evaluation of predictive tools [13, 17, 24, 40, 44, 46–61, 63, 73], ten studies described the evaluation of CDS systems [2, 74–82], and 11 studies described the evaluation of hospital information systems and technology [83–93]. One study described the NASSS framework, a guideline to help predict and evaluate the success of healthcare technologies [94]; and five studies described the GRADE framework for evaluating clinical guidelines and protocols [64–68]. The following three subsections describe the methods used to evaluate predictive tools as described in the focussed literature review. A summary of the evaluation criteria and examples of corresponding measures for each phase can be found in Additional file 1: Table S1.

Before implementation – predictive performance

During the development phase, the internal validation of the predictive performance of a tool is the first step to make sure that the tool is doing what it is intended to do [54, 55]. Predictive performance is defined as the ability of the tool to utilise clinical and other relevant patient variables to produce an outcome that can be used to supports diagnostic, prognostic or therapeutic decisions made by clinicians and other healthcare professionals [12, 13]. The predictive performance of a tool is evaluated using measures of discrimination and calibration [53]. Discrimination refers to the ability of the tool to distinguish between patients with and without the outcome under consideration. This can be quantified with measures such as sensitivity, specificity, and the area under the receiver operating characteristic curve – AUC (or concordance statistic, c). The D-statistic is a measure of discrimination for time-to-event outcomes, which is commonly used in validating the predictive performance of prognostic models using survival data [95]. The log-rank test, or sometimes referred to as the Mantel-Cox test, is used to establish if the survival distributions of two samples of patients are statistically different. They are commonly used to validate the discrimination power of clinical prognostic models [96]. On the other hand, calibration refers to the accuracy of prediction, and indicates the extent to which expected and observed outcomes agree [48, 56]. Calibration is measured by plotting the observed outcome rates against their corresponding predicted probabilities. This is usually presented graphically with a calibration plot that shows a calibration line, which can be described with a slope and an intercept [97]. It is sometimes summarised using the Hosmer-Lemeshow test or the Brier score [98]. To avoid over-fitting, tools’ predictive performance must always be assessed out-of-sample, either via cross-validation or bootstrapping [56]. Of more interest than the internal validity is the external validity (reliability or generalisability), where the predictive performance of a tool is estimated in independent validation samples of patients from different populations [52].

During implementation – potential effect & usability

Before wide implementation, it is important to learn about the estimated potential effect of a predictive tool, when used in the clinical practice, on three main categories of measures: 1) Clinical effectiveness, such as improving patient outcomes, estimated through clinical effectiveness studies, 2) healthcare efficiency, including saving costs and resources, estimated through feasibility and cost-effectiveness studies, and 3) patient safety, including minimising complications, side effects, and medical errors. These categories are defined by the Institute of Medicine as objectives for improving healthcare performance and outcomes, and are differently prioritised by clinicians, healthcare professionals and health administrators [99, 100]. The potential effect, of a predictive tool, is defined as the expected, estimated or calculated impact of using the tool on different healthcare aspects, processes or outcomes, assuming the tool has been successfully implemented and is used in the clinical practice, as designed by its developers [41, 101]. A few predictive tools have been studied for their potential to enhance clinical effectiveness and improve patient outcomes. For example, the spinal manipulation clinical prediction rule was tested, before implementation, on a small sample of patients to identify those with low back pain most likely to benefit from spinal manipulation [102]. Other tools have been studied for their potential to improve healthcare efficiency and save costs. For example, using a decision analysis model, and assuming all eligible children with minor blunt head trauma were managed using the CHALICE rule (Children’s Head Injury Algorithm for the Prediction of Important Clinical Events), it was estimated that CHALICE would reduce unnecessary expensive head computed tomography (CT) scans, by 20%, without risking patients’ health [103–105]. Similarly, the use of the PECARN (Paediatric Emergency Care Applied Research Network) head injury rule was estimated to potentially improve patient safety through minimising the exposure of children to ionising radiation resulting in fewer radiation-induced cancers and lower net quality adjusted life years loss [106, 107].

In addition, it is important to learn about the usability of predictive tools. Usability is defined as the extent to which a system can be used by the specified users to achieve specified and quantifiable objectives in a specified context of use [108, 109]. There are several methods to make a system more usable and many definitions have been developed, based on the perspective of what usability is and how it can be evaluated, such as the mental effort needed and the user attitude or the user interaction, represented in the easiness of use and acceptability of systems [110, 111]. Usability can be evaluated through measuring the effectiveness of task management with accuracy and completeness, measuring efficiency of utilising resources in completing tasks and measuring users’ satisfaction, comfort with, and positive attitudes towards, the use of the tools [112, 113]. More advanced techniques, such as think aloud protocols and near live simulations, are recently used to evaluate usability [114]. Think aloud protocols are a major method in usability testing, since they produce a larger set of information and a richer content. They are conducted either retrospectively or concurrently, where each method has its own way of detecting usability problems [115]. The near live simulations provide users, during testing, with an opportunity to go through different clinical scenarios while the system captures interaction challenges and usability problems [116, 117]. Some researchers add learnability, memorability and freedom of errors to the measures of usability. Learnability is an important aspect of usability and a major concern in the design of complex systems. It is the capability of a system to enable the users to learn how to use it. Memorability, on the other hand, is the capability of a system to enable the users to remember how to use it, when they return back. Learnability and memorability are measured through subjective survey methods, asking users about their experience after using systems, and can also be measured by monitoring users’ competence and learning curves over successive sessions of system usage [118, 119].

After implementation – post-implementation impact

Some predictive tools have been implemented and used in the clinical practice for years, such as the PECARN head injury rule or the Ottawa knee and ankle rules [120–122]. In such cases, clinicians might be interested to learn about their post-implementation impact. The post-implementation impact of predictive tools is defined as the achieved change or influence, of a predictive tool, on different healthcare aspects, processes or outcomes, after the tool has been successfully implemented and used in the clinical practice, as designed by its developers [2, 42]. Similar to the measures of potential effect, post-implementation impact is reported along three main categories of measures: 1) Clinical effectiveness, such as improving patient outcomes, 2) Healthcare efficiency, such as saving costs and resources, and 3) Patient safety, such as minimising complications, side effects, and medical errors. These three categories of post-implementation impact measures are differently prioritised by clinicians, healthcare professionals and health administrators. In this phase of evaluation, we follow the main concepts of the GRADE framework, where the level of evidence for a given outcome is firstly determined by the study design [64, 65, 68]. High quality experimental studies, such as randomised and nonrandomised controlled trials, and the systematic reviews of their findings, come on top of the evidence levels followed by observational well-designed cohort or case-control studies and lastly subjective studies, opinions of respected authorities, and reported of expert committees or panels [65–67]. For simplicity, we did not include GRADE’s detailed criteria for higher and lower quality of studies. However, effect sizes and potential biases are reported as part of the framework, so that consistency of findings, trade-offs between benefits and harms, and other considerations can also be assessed.

Developing the GRASP framework

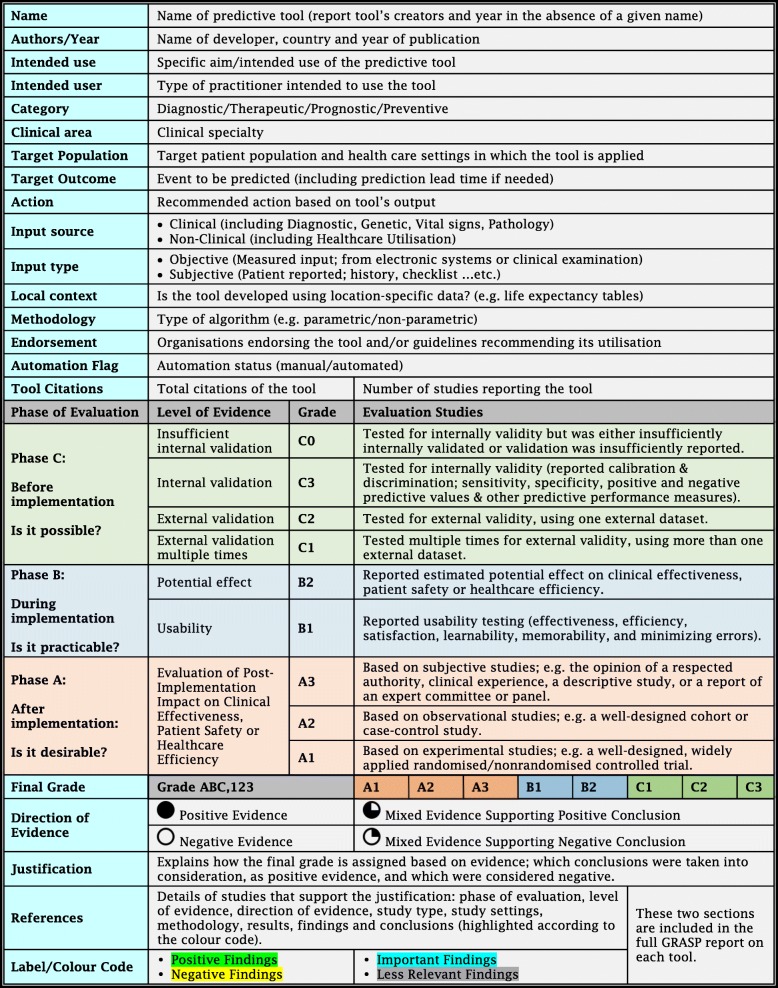

Our suggested GRASP framework (abbreviated from Grading and Assessment of Predictive Tools) is illustrated in Table 1. Based on published evidence, the GRASP framework uses three dimensions to grade predictive tools: Phase of Evaluation, Level of Evidence and Direction of Evidence.

Table 1.

The GRASP Framework Detailed Report

Phase of evaluation

Assigns a letter A, B and/or C based on the highest phase of evaluation reported in the published literature. A tool is assigned the lowest phase, C, if its predictive performance has been tested and reported for validity; phase B if its usability and/or potential impact have been validated; and the highest phase, A, if it has been implemented in clinical practice and its post-implementation impact has been reported.

Level of evidence

Assigns a numerical score within each phase of evaluation based on the level of evidence associated with the evaluation process. Tools in phase C of evaluation can be assigned three levels. A tool is assigned the lowest level, C3, if it has been tested for internal validity; C2 if it has been tested for external validity once; and C1 if it has been tested for external validity multiple times. Similarly, tools in phase A of evaluation can be assigned the lowest level of evidence, A3, if their post-implementation impact has been evaluated only through subjective or descriptive studies; A2 if it has been evaluated via observational studies; and A1 if post-implementation impact has been measured using experimental evidence. Tools in phase B of evaluation are assigned grade B2 if they have been tested for potential impact and B1 if they have been tested for usability. Effect sizes for each outcome of interest together with study type, clinical settings and patient populations are also reported.

Direction of evidence

Due to the large heterogeneity in study design, outcome measures and patient subpopulations contained in the studies, synthesising measures of predictive performance, usability, potential effect or post-implementation impact into one quantitative value is not possible. Furthermore, acceptable values of predictive performance or post-implementation impact measures depend on the clinical context and the task at hand. For example, tools like Ottawa knee rule [122] and Wells’ criteria [123, 124] are considered effective only when their sensitivity is very close to 100%, since their task is to identify patients with fractures or pulmonary embolism before sending them home. On the other hand, tools like LACE Index [125] and Centor score [126] are accepted to show sensitivities of around 70%, since their tasks, to predict 30 days readmission risk or identify that pharyngitis is bacterial, aim to screen patients who may benefit from further interventions. Therefore, for each phase and level of evidence, we assign a direction of evidence, based on the conclusions reported in the studies, and provide the user with the option to look at the summary of the findings for further information.

Positive evidence is assigned when all studies reported positive conclusions while negative evidence is assigned when all studies reported negative or equivocal conclusions. In the presence of mixed evidence, studies are farther ranked according to their quality as well as their degree of matching with the orginal tool specifications (namely target population, target outcome and settings). Mixed evidence is then classified considering this ranking as supporting an overall positive conclusion or supporting an overall negative conclusion. Details and illustration of this protocol can be found in Additional file 1: Table S2 and Figure S3.

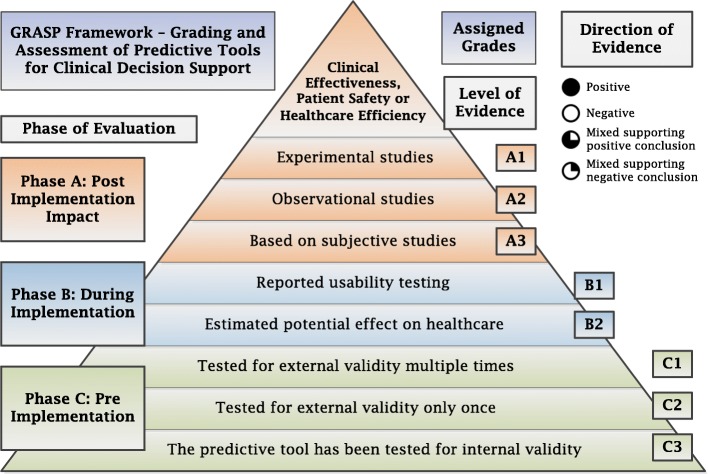

The final grade of a predictive tool is based on the highest phase of evaluation, supported by the highest level of positive evidence, or mixed evidence that supports an overall positive conclusion. Figure 2 shows the GRASP framework concept; a visual presentation of the framework three dimensions, phase of evaluation, level of evidence, and direction of evidence, explaining how each tool is assigned the final grade. Table 1 shows the GRASP framework detailed report.

Fig. 2.

The GRASP Framework Concept

Applying the GRASP framework to grade five predictive tools

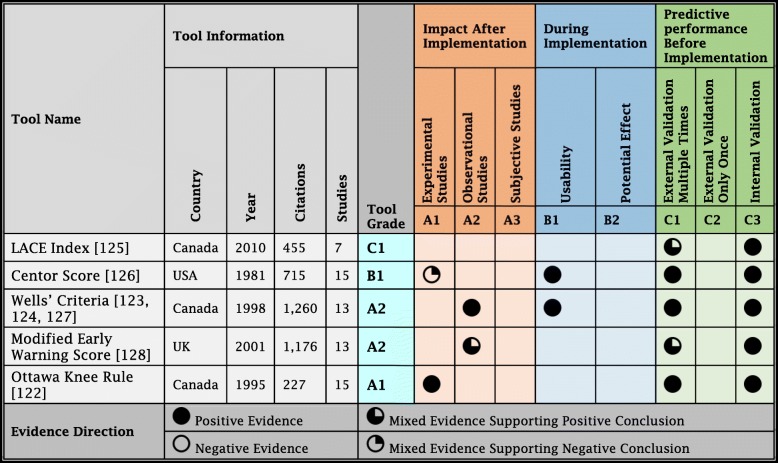

In order to show how GRASP works, we applied it to grade five randomly selected predictive tools; LACE Index for Readmission [125], Centor Score for Streptococcal Pharyngitis [126], Wells’ Criteria for Pulmonary Embolism [123, 124, 127], The Modified Early Warning Score (MEWS) for Clinical Deterioration [128] and Ottawa Knee Rule [122]. In addition to these seven primary studies, describing the development of the five predictive tools, our systematic search for the published evidence revealed a total of 56 studies; validating, implementing, and evaluating the five predictive tools. The LACE Index was evaluated and reported in six studies, the Centor Score in 14 studies, the Wells’ Criteria in ten studies, the MEWS in 12 studies, and the Ottawa Knee Rule in 14 studies. To apply the GRASP framework and assign a grade to each predictive tool, the following steps were conducted; 1) The primary study or studies were first examined for the basic information about the tool and the reported details of development and validation. 2) Other studies were examined for their phases of evaluation, levels of evidence and direction of evidence. 3) Mixed evidence was sorted into positive or negative. 4) The final grade was assigned and supported by the detailed justification. A summary of grading the five tools is shown in Table 2 and a detailed GRASP report on each tool is provided in the Additional file 1: Tables S3-S7.

Table 2.

Summary of Grading the Five Predictive Tools

LACE Index is a prognostic tool designed to predict 30 days readmission or death of patients after discharge from hospitals. It uses multivariable logistic regression analysis of four administrative data elements; length of stay, admission acuity, comorbidity (Charlson Comorbidity Index) and emergency department (ED) visits in the last 6 months, to produce a risk score [125]. The tool has been tested for external validity twice; using a sample of 26,045 patients from six hospitals in Toronto and a sample of 59,652 patients from all hospitals in Alberta, Canada. In both studies, the LACE Index showed positive external validity and superior predictive performance to the previous similar tools endorsed by the Centres for Medicare and Medicaid Services in the United States [129, 130].

Two studies examined the predictive performance of LACE Index on small sub-population samples; 507 geriatric patients in the United Kingdom and 253 congestive heart failure patients in the United States, and found that the index performed poorly [131, 132]. Two more studies reported that the LACE Index performed well but not better that their own developed tools [133, 134]. Using the mixed evidence protocol, the mixed evidence here supports external validity, since the two negative conclusion studies have been conducted on very small samples of patients and on different subpopulations than the one the LACE Index was developed for. There was no published evidence on the usability, potential effect or post-implementation impact of the LACE Index. Accordingly, the LACE Index has been assigned Grade C1.

Centor Score is a diagnostic tool that uses a rule-based algorithm on clinical data to estimate the probability that pharyngitis is streptococcal in adults who present to the ED complaining of sore throat [126]. The score has been tested for external validity multiple times and all the studies reported positive conclusions [135–142]. This qualifies Centor score for Grade C1. One study conducted a multicentre cluster RCT usability testing of the integration of Centor score into electronic health records. The study used “Think Aloud” testing with ten primary care providers, post interaction surveys in addition to screen captures and audio recordings to evaluate usability. Within the same study, another “Near Live” testing, with eight primary care providers, was conducted. Conclusions reported positive usability of the tool and positive feedback of users on the easiness of use and usefulness [143]. This qualifies Centor score for Grade B1.

Evidence of the post-implementation impact of Centor score is mixed. One RCT conducted in Canada reported a clinically important 22% reduction in overall antibiotic prescribing [144]. Four other studies, three of which were RCTs, reported that implementing Centor score did not reduce antibiotic prescribing in clinical practice [145–148]. Using the mixed evidence protocol, we found that the mixed evidence does not support positive post-implementation impact of Centor score. Therefore, Centor score has been assigned Grade of B1.

Wells’ Criteria is a diagnostic tool used in the ED to estimate pre-test probability of pulmonary embolism [123, 124]. Using a rule-based algorithm on clinical data, the tool calculates a score that excludes pulmonary embolism without diagnostic imaging [127]. The tool was tested for external validity multiple times [149–153] and its predictive performance has been also compared to other predictive tools [154–156]. In all studies, Wells’ criteria was reported externally valid, which qualifies it for Grade C1. One study conducted usability testing for the integration of the tool into the electronic health record system of a tertiary care centre’s ED. The study identified a strong desire for the tool and received positive feedback on the usefulness of the tool itself. Subjects responded that they felt the tool was helpful, organized, and did not compromise clinical judgment [157]. This qualifies Wells’ criteria for Grade B1. The post-implementation impact of Well’s Criteria on efficiency of computed tomography pulmonary angiography (CTPA) utilisation has been evaluated through an observational before-and-after intervention study. It was found that the Well’s Criteria significantly increased the efficiency of CTPA utilisation and decreased the proportion of inappropriate scans [158]. Therefore, Well’s Criteria has been assigned Grade A2.

The Modified Early Warning Score (MEWS) is a prognostic tool for early detection of inpatients’ clinical deterioration and potential need for higher levels of care. The tool uses a rule-based algorithm on clinical data to calculate a risk score [128]. The MEWS has been tested for external validity multiple times in different clinical areas, settings and populations [159–165]. All studies reported that the tool is externally valid. However, one study reported MEWS poorly predicted the in-hospital mortality risk of patients with sepsis [166]. Using the mixed evidence protocol, the mixed evidence supports external validity, qualifying MEWS for Grade C1. No literature has been found regarding its usability or potential effect.

The MEWS has been implemented in different healthcare settings. One observational before-and-after intervention study failed to prove positive post-implementation impact of the MEWS on patient safety in acute medical admissions [167]. However, three more recent observational before-and-after intervention studies reported positive post-implementation impact of the MEWS on patient safety. One study reported significant increase in frequency of patient observation and decrease in serious adverse events after intensive care unit (ICU) discharge [168]. The second reported significant increase in frequency of vital signs recording, 24 h post-ICU discharge and 24 h preceding unplanned ICU admission [169]. The third, an 8 years study, reported that the post-implementation 4 years showed significant reductions in the incidence of cardiac arrests, the proportion of patients admitted to ICU and their in-hospital mortality [170]. Using the mixed evidence protocol, the mixed evidence supports positive post-implementation impact. The MEWS has been assigned Grade A2.

Ottawa Knee Rule is a diagnostic tool used to exclude the need for an X-ray for possible bone fracture in patients presenting to the ED, using a simple five items manual check list [122]. It is one of the oldest, most accepted and successfully used rules in CDS. The tool has been tested for external validity multiple times. One systematic review identified 11 studies, 6 of them involved 4249 adult patients and were appropriate for pooled analysis, showing high sensitivity and specificity predictive performance [171]. Furthermore, two studies discussed the post-implementation impact of Ottawa knee rule on healthcare efficiency. One nonrandomised controlled trial with before-after and concurrent controls included a total of 3907 patients seen during two 12-month periods before and after the intervention. The study reported that the rule decreased the use of knee radiography without patient dissatisfaction or missed fractures and was associated with reduced waiting times and costs per patient [172]. Another nonrandomised controlled trial reported that the proportion of ED patients referred for knee radiography was reduced. The study also reported that the practice based on the rule was associated with significant cost savings [173]. Accordingly, the Ottawa knee rule has been assigned Grade A1.

In the Additional file 1, a summary of the predictive performance of the five tools is shown in Additional file 1: Table S8. The c-statistics of LACE Index, Centor Score, Wells’ Criteria and MEWS are reported in Additional file 1: Figure S4. The usability of Centor Score and Wells Criteria are reported in Additional file 1: Table S9 and post-implementation impact of Wells Criteria, MEWS and Ottawa knee rule is reported in Additional file 1: Table S10.

Peer review of the GRASP framework

On peer-review, experts found the GRASP framework logical, helpful and easy to use. The reviewers strongly agreed to all criteria used for evaluation. The reviewers suggested adding more specific information about each tool, such as the author’s name, the intended user of the tool and the recommended action based on the tool’s findings. The reviewers showed a clear demand for knowledge regarding the applicability of tools to their local context. Two aspects were identified and discussed with the reviewers. Firstly, the operational aspect of how easy it would be to implement a particular tool and if the data required to use the tool is readily available in their clinical setting. Secondly, the validation aspect of adopting a tool developed using local predictors, such as life expectancy (which is location specific) or information based on billing codes (which is hospital specific). Following this discussion, elements related to data sources and context were added to the information section of the framework. One reviewer suggested assigning grade C0 to the reported predictive tools that did not meet C3 criteria, i.e. those tools which were tested for internally validity but were either insufficiently internally validated or the internal validation was insufficiently reported in the study, in order to differentiate them from those tools for which neither predictive performance nor post-implementation impact have been reported in the literature.

Discussion

Brief summary

It is challenging for clinicians to critically evaluate the growing number of predictive tools proposed to them by colleagues, administrators and commercial entities. Although most of these tools have been assessed for predictive performance, only a few have been implemented or evaluated for comparative predictive performance or post-implementation impact. In this manuscript, we present GRASP, a framework that provides clinicians with an objective, evidence-based, standardised method to use in their search for, and selection of tools. GRASP builds on widely accepted concepts, such as Friedman and Wyatt’s evaluation approach [20, 21, 41, 69] and the GRADE system [64–68].

The GRASP framework is composed of two parts; 1) the GRASP framework concept, which shows the grades assigned to predictive tools based on the three dimensions: Phase of Evaluation, Level of Evidence and Direction of Evidence, and 2) the GRASP framework detailed report, which shows the detailed quantitative information on each predictive tool and justifies how the grade was assigned. The GRASP framework is designed for two levels of users: 1) Expert users, who will use the framework to assign grades to predictive tools and report their details, through the critical appraisal of published evidence about these tools. This step is essential to provide decision making clinicians with grades and detailed reports on predictive tools. Expert users include healthcare researchers who specialise in evidence-based methods and have experience in developing, implementing or evaluating predictive tools. 2) End users, who will use the GRASP framework detailed report of tools and their final grades, produced by expert users, to compare existing predictive tools and select the most suitable tool(s) for their predictive tasks, target objectives, and desired outcomes. End users include clinicians and other healthcare professionals involved in the decision making and selection of predictive tools for implementation at their clinical practice or for recommendation in clinical practice guidelines to be used by other clinicians and healthcare professionals. The two described processes; the grading of predictive tools by expert users and the selection decisions made by end users, should occur before the recommended tools are made available for use in the hands of the practicing clinicians.

Comparison with previous literature

Previous approaches to the appraisal of predictive tools from the published literature, namely the TRIPOD statement [61, 62] and the CHARMS checklist [63], examine only their predictive performance, ignoring their usability and post-implementation impact. On the other hand, the GRADE framework appraises the published literature in order to evaluate clinical recommendations based on their post-implementation impact [64–68]. More broadly, methods for the evaluation of health information systems and technology focus on the integration of systems into tasks, workflows and organisations [84, 91]. The GRASP framework takes into account all phases of the development and translation of a predictive algorithm: predictive performance before implementation, using similar concepts as those utilised in TRIPOD and CHARMS; usability and potential effect during implementation, and post-implementation impact on patient outcomes and processes of care after implementation, using similar concepts as those utilised in the GRADE system. The GRASP grade is not the result of combining and synthesising selected measures of predictive performance (e.g. AUC), potential effect (e.g. potential saved money), usability (e.g. user satisfaction) or post-implementation impact (e.g. increased efficacy) from the existing literature, like in a meta-analysis; but rather the result of combining and synthesising the reported qualitative conclusions.

Walker and Habboushe, at the MDCalc website; classified and reported the most commonly used medical calculators and other clinical decision support applications. However, the website does not provide users with a structured grading system or an evidence-based method for the assessment of the presented tools. Therefore, we believe that our proposed framework can be adopted and used by MDCalc, and similar clinical decision support resources, to grade their tools.

Quality of evidence and conflicting conclusions

One of the main challenges in assessing and comparing clinical predictive tools is dealing with the large variability in the quality and type of studies in the published literature. This heterogeneity makes it impractical to quantitatively synthesise measures of predictive performance, usability, potential effect or post-implementation impact into single numbers. Furthermore, as discussed earlier, a particular value of a predictive performance metric that is considered good for some tasks and clinical settings may be considered insufficient for others. In order to avoid complex decisions regarding the quality and strength of reported measures, we chose to assign a direction of evidence, based on positive or negative conclusions as reported in the studies under consideration, since synthesizing qualitative conclusions is the only available option which adds some value. We then provide the end user with the option to look at a summary of the reported measures, in the GRASP detailed report, for further details.

It is not uncommon to encounter conflicting conclusions when a tool has been validated in different patient subpopulations. For example, LACE Index for readmission showed positive external validity when tested in adult medical inpatients [129, 130], but showed poor predictive performance when tested in a geriatric subpopulation [131]. Similarly, the MEWS for clinical deterioration demonstrated positive external validity when tested in emergency patients [159, 161, 165], medical inpatients [160, 164], surgical inpatients [162], and trauma patients [163], but not when tested in a subpopulation of patients with acute sepsis [166]. Part of these disagreements could be explained by changes in the distributions of important predictors, which affect the discriminatory power of the algorithms. For example, sepsis patients have similarly disturbed physiological measures such as those used to generate MEWS. In addition, conflicting conclusions may be encountered when a study examines the validity of a proposed tool in a healthcare setting or outcome different from those the tool was primarily developed for.

Integration and socio-technical context

As is the case with other healthcare interventions, examining the post-implementation impact of predictive tools is challenging, since it is confounded by co-occurrent socio-technical factors [174–176]. This is complicated further by the fact that predictive tools are often integrated into electronic health record systems, since this facilitates their use, and are influenced by their usability [42, 177]. The usability, therefore, is an essential and major contributing factor in the wide acceptance and successful implementation of predictive tools and other CDS systems [42, 178]. It is clearly essential to involve user clinicians in the design and usability evaluations of predictive tools before their implementation. This should eliminate their concerns that integrating predictive tools into their workflow would increase their workload, consultation times, or decrease their efficiency and productivity [179].

Likewise, well designed post-implementation impact evaluation studies are required in order to explore the influence of organisational factors and local differences on the success or failure of predictive tools [180, 181]. Data availability, IT systems capabilities, and other human knowledge and organisational regulatory factors are crucial for the adoption, acceptance, and successful implementation of predictive tools. These factors and differences need to be included in the tools assessments, as they are important when making decisions about selecting predictive tools, in order to estimate the feasibility and resources needed to implement the tools. We have to acknowledge that it is not possible to include such wide range of variables in deciding or presenting the grades assigned by the framework to the predictive tools, which remain simply at a high-level. However, all the necessary information, technical specifications, and requirements of the tools, as reported in the published evidence, should be fully accessible to the users, through the framework's detailed reports on the predictive tools. Users can compare such information, of one or more tools, to what they have at their healthcare settings, then make selection and implementation decisions.

Local data

There is a challenging trade-off between the generalisability and the customisation of a given predictive tool. Some algorithms are developed using local data. For example, Bouvy’s prognostic model, for mortality risk in patients with heart failure, uses life quality and expectancy scores from the Netherlands [182]. Similarly, Fine’s prediction rule identifies low-risk patients with community-acquired pneumonia based on national rates of acquired infections in the United States [183]. This necessitates adjustment of the algorithm to the local context, therefore producing a new version of the tool, which requires re-evaluation.

Other considerations

GRASP evaluates predictive tools based on the critical appraisal of the existing published evidence. Therefore, it is subject to publication bias, since statistically positive results are more likely to be published than negative or null results [184, 185]. The usability and potential effect of predictive tools are less studied and hence the published evidence needed to support level B of the grading system is often lacking. We have nevertheless chosen to keep this element in GRASP since it is an essential part of the safety evaluation of any healthcare technology. It also allows for early redesign and better workflow integration, which leads to higher utilisation rates [114, 157, 186]. By keeping it, we hope to encourage tool developers and evaluators to increase their execution and reporting of these type of studies.

The grade assigned to a tool provides relevant evidence-based information to guide the selection of predictive tools for clinical decision support, but it is not prescriptive. An A1 tool is not always better than an A2 tool. A user may prefer an A2 tool showing improved patient safety in two observational studies rather than an A1 tool showing reduced cost in one experimental study. The grade is a code (not an ordinal quantity) that provides information on three relevant dimensions: phase of evaluation, level of evidence, and direction of evidence as reported in the literature.

Study limitations and future work

One of the limitations of the GRASP framework is that the Direction of Evidence dimension is based on the conclusions of the considered studies on each predictive tool, which confers some subjectivity to this dimension. However, the end-user clinicians are provided with the full details of all available studies on each tool, through the GRASP detailed report, where they can access the required objective information to support their decisions. In addition, we applied the GRASP framework to only five predictive tools and consulted a small number of healthcare experts for their feedback. This could have limited the conclusions about the framework’s coverage and/or validity. Although GRASP framework is not a predictive tool, it could be thought of as a technology of Grade C3, since it has only been internally validated after development. However, conducting a large-scale validation study of the framework, extending the application of the framework to a larger number of predictive tools, and studying its effect on end-users’ decisions is out of the scope of this study and is left for future work.

To validate, update, and evaluate the GRASP framework, the authors are currently working on three more studies. The first study should validate the design and content of the framework, through seeking the feedback of a wider international group of healthcare experts, who have published work on developing, implementing or evaluating predictive tools. This study should help to update the criteria used, by the framework, to grade predictive tools and improve the details provided, by the framework, to the end users. The second study should evaluate the impact of using the framework on improving the decisions made by clinicians, regarding evaluating and selecting predictive tools. The experiment should compare the performance and outcomes of clinicians’ decisions with and without using the framework. Finally, the third study aims to apply the framework to a larger consistent group of predictive tools, used for the same clinical task. This study should show how the framework provides clinicians with an evidence-based method to compare, evaluate and select predictive tools, through reporting and grading tools based on the critical appraisal of published evidence.

Conclusion

The GRASP framework builds on widely accepted concepts to provide standardised assessment and evidence-based grading of predictive tools. Unlike other methods, GRASP is based on the critical appraisal of published evidence reporting the tools’ predictive performance before implementation, potential effect and usability during implementation, and their post-implementation impact. Implementing the GRASP framework as an online platform can enable clinicians and guideline developers to access standardised and structured reported evidence of existing predictive tools. However, keeping the GRASP framework reports up-to-date would require updating tools’ assessments and grades when new evidence becomes available. Therefore, a sustainable GRASP system would require employing automated or semi-automated methods for searching and processing the incoming published evidence. Finally, we recommend that GRASP framework be applied to predictive tools by working groups of professional organisations, in order to provide consistent results and increase reliability and credibility for end users. These professional organisations should also be responsible for making their associates aware of the availability of such evidence-based information on predictive tools, in a similar way of announcing and disseminating clinical practice guidelines.

Supplementary information

Additional file 1: Table S1. Phases, Criteria, and Measures of Evaluating Predictive Tools. Table S2. Evaluating Evidence Direction Based on the Conclusions of Studies. Table S3. LACE Index for Readmission – Grade C1. Table S4. Centor Score for Streptococcal Pharyngitis – Grade B1. Table S5. Wells’ Criteria for Pulmonary Embolism – Grade A2. Table S6. Modified Early Warning Score (MEWS) – Grade A2. Table S7. Ottawa Knee Rule – Grade A1. Table S8. Predictive Performance of the Five Tools – Before Implementation. Table S9. Usability of Two Predictive Tools – During Implementation. Table S10. Post-Implementation Impact of Three Predictive Tools. Figure S1. Study Selection for the Focused Review of Literature. Figure S2. Searching the Literature for Published Evidence on Predictive Tools. Figure S3. The Mixed Evidence Protocol. Figure S4. Reported C-Statistic of LACE Index, Centor Score, Wells Criteria and MEWS [187–192].

Acknowledgments

We would like to thank Professor William Runciman, Professor of Patient Safety, Dr. Megan Sands, Senior Staff Specialist in Palliative Care, Dr. Thilo Schuler, Radiation Oncologist, Dr. Louise Wiles, Patient Safety Research Project Manager, and research fellows Dr. Virginia Mumford and Dr. Liliana Laranjo, for their contribution in testing the GRASP framework and for their valuable feedback and suggestions.

Abbreviations

- AUC

Area under the curve

- CDS

Clinical decision support

- CHALICE

Children’s Head Injury Algorithm for the Prediction of Important Clinical Events

- CHARMS

Critical appraisal and data extraction for systematic reviews of prediction modelling studies

- CT

Computed tomography

- CTPA

Computed tomography pulmonary angiography

- E.g.

For example

- ED

Emergency department

- GRADE

The Grading of Recommendations Assessment, Development and Evaluation

- GRASP

Grading and assessment of predictive tools

- I.e.

In other words or more precisely

- ICU

Intensive care unit

- IT

Information technology

- LACE

Length of Stay, Admission Acuity, Comorbidity and Emergency Department Visits

- MDCalc

Medical calculators

- MEWS

Modified Early Warning Score

- NASSS

Nonadoption, abandonment, and challenges to the scale-up, spread, and sustainability

- PECARN

Paediatric Emergency Care Applied Research Network

- RCTs

Randomised controlled trials

- ROC

Receiver operating characteristic curve

- TRIPOD

Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis

- UK

United Kingdom

- US

United States

Authors’ contributions

MK contributed to the detailed design of the framework and led its execution and implementation. BG contributed to the conception of the framework and was responsible for the overall supervision of this work. FM provided advice on the enhancement and utility of the methodology. All the authors have been involved in drafting the manuscript and revising it. Finally, all the authors gave approval of the manuscript to be published and agreed to be accountable for all aspects of the work.

Funding

This work was financially supported by the Commonwealth Government Funded Research Training Program, Australia, to help the authors to carry out the study. The funding body played no role in the design of the study and collection, analysis, and interpretation of the data and in writing the manuscript.

Availability of data and materials

Data sharing is not applicable to this article as no datasets were generated or analysed during the current study.

Ethics approval and consent to participate

No ethics approval was required for any element of this study.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Mohamed Khalifa, Email: mohamed.khalifa@mq.edu.au.

Farah Magrabi, Email: farah.magrabi@mq.edu.au.

Blanca Gallego, Email: b.gallego@unsw.edu.au.

Supplementary information

Supplementary information accompanies this paper at 10.1186/s12911-019-0940-7.

References

- 1.Middleton B, et al. Enhancing patient safety and quality of care by improving the usability of electronic health record systems: recommendations from AMIA. J Am Med Inform Assoc. 2013;20(e1):e2–e8. doi: 10.1136/amiajnl-2012-001458. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Kawamoto K, et al. Improving clinical practice using clinical decision support systems: a systematic review of trials to identify features critical to success. BMJ. 2005;330(7494):765. doi: 10.1136/bmj.38398.500764.8F. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Osheroff JA. Improving outcomes with clinical decision support: an implementer’s guide. New York: Imprint HIMSS Publishing; 2012.

- 4.Osheroff JA, et al. A roadmap for national action on clinical decision support. J Am Med Inform Assoc. 2007;14(2):141–145. doi: 10.1197/jamia.M2334. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Øvretveit J, et al. Improving quality through effective implementation of information technology in healthcare. Int J Qual Health Care. 2007;19(5):259–266. doi: 10.1093/intqhc/mzm031. [DOI] [PubMed] [Google Scholar]

- 6.Castaneda C, et al. Clinical decision support systems for improving diagnostic accuracy and achieving precision medicine. J Clin Bioinforma. 2015;5(1):4. doi: 10.1186/s13336-015-0019-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Capobianco E. Data-driven clinical decision processes: it’s time: BioMed Central; 2019. [DOI] [PMC free article] [PubMed]

- 8.Musen MA, Middleton B, Greenes RA. Clinical decision-support systems. In: Biomedical informatics: Springer; 2014. p. 643–74.

- 9.Shortliffe EH, Cimino JJ. Biomedical informatics: computer applications in health care and biomedicine: Springer Science & Business Media; 2013.

- 10.Adams ST, Leveson SH. Clinical prediction rules. BMJ. 2012;344:d8312. doi: 10.1136/bmj.d8312. [DOI] [PubMed] [Google Scholar]

- 11.Wasson JH, et al. Clinical prediction rules: applications and methodological standards. N Engl J Med. 1985;313(13):793–799. doi: 10.1056/NEJM198509263131306. [DOI] [PubMed] [Google Scholar]

- 12.Beattie P, Nelson R. Clinical prediction rules: what are they and what do they tell us? Aust J Physiother. 2006;52(3):157–163. doi: 10.1016/S0004-9514(06)70024-1. [DOI] [PubMed] [Google Scholar]

- 13.Steyerberg EW. Clinical prediction models: a practical approach to development, validation, and updating: Springer Science & Business Media; 2008.

- 14.Ansari S, Rashidian A. Guidelines for guidelines: are they up to the task? A comparative assessment of clinical practice guideline development handbooks. PLoS One. 2012;7(11):e49864. doi: 10.1371/journal.pone.0049864. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Kish MA. Guide to development of practice guidelines. Clin Infect Dis. 2001;32(6):851–854. doi: 10.1086/319366. [DOI] [PubMed] [Google Scholar]

- 16.Ebell MH. Evidence-based diagnosis: a handbook of clinical prediction rules, vol. 1: Springer Science & Business Media; 2001.

- 17.Kappen T, et al. Prediction models and decision support. 2015. General discussion I: evaluating the impact of the use of prediction models in clinical practice: challenges and recommendations; p. 89. [Google Scholar]

- 18.Taljaard M, et al. Cardiovascular disease population risk tool (CVDPoRT): predictive algorithm for assessing CVD risk in the community setting. A study protocol. BMJ Open. 2014;4(10):e006701. doi: 10.1136/bmjopen-2014-006701. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Berner ES. Clinical decision support systems, vol. 233: Springer; 2007.

- 20.Friedman CP, Wyatt J. Evaluation methods in biomedical informatics: Springer Science & Business Media; 2005.

- 21.Friedman CP, Wyatt JC. Evaluation methods in biomedical informatics. 2006. Challenges of evaluation in biomedical informatics; pp. 1–20. [Google Scholar]

- 22.Lobach DF. Evaluation of clinical decision support. In: Clinical decision support systems: Springer; 2016. p. 147–61.

- 23.Plüddemann A, et al. Clinical prediction rules in practice: review of clinical guidelines and survey of GPs. Br J Gen Pract. 2014;64(621):e233–e242. doi: 10.3399/bjgp14X677860. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Wallace E, et al. Impact analysis studies of clinical prediction rules relevant to primary care: a systematic review. BMJ Open. 2016;6(3):e009957. doi: 10.1136/bmjopen-2015-009957. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Altman DG, et al. Prognosis and prognostic research: validating a prognostic model. BMJ. 2009;338:b605. doi: 10.1136/bmj.b605. [DOI] [PubMed] [Google Scholar]

- 26.Bouwmeester W, et al. Reporting and methods in clinical prediction research: a systematic review. PLoS Med. 2012;9(5):e1001221. doi: 10.1371/journal.pmed.1001221. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Hendriksen J, et al. Diagnostic and prognostic prediction models. J Thromb Haemost. 2013;11(s1):129–141. doi: 10.1111/jth.12262. [DOI] [PubMed] [Google Scholar]

- 28.Moons KG, et al. Prognosis and prognostic research: what, why, and how? BMJ. 2009;338:b375. doi: 10.1136/bmj.b375. [DOI] [PubMed] [Google Scholar]

- 29.Christensen S, et al. Comparison of Charlson comorbidity index with SAPS and APACHE scores for prediction of mortality following intensive care. Clin Epidemiol. 2011;3:203. doi: 10.2147/CLEP.S20247. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Das K, et al. Comparison of APACHE II, P-POSSUM and SAPS II scoring systems in patients underwent planned laparotomies due to secondary peritonitis. Ann Ital Chir. 2014;85(1):16–21. [PubMed] [Google Scholar]

- 31.Desautels T, et al. Prediction of sepsis in the intensive care unit with minimal electronic health record data: a machine learning approach. JMIR Med Inform. 2016;4(3):e28. doi: 10.2196/medinform.5909. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Faruq MO, et al. A comparison of severity systems APACHE II and SAPS II in critically ill patients. Bangladesh Crit Care J. 2013;1(1):27–32. doi: 10.3329/bccj.v1i1.14362. [DOI] [Google Scholar]

- 33.Hosein FS, et al. A systematic review of tools for predicting severe adverse events following patient discharge from intensive care units. Crit Care. 2013;17(3):R102. doi: 10.1186/cc12747. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Kim YH, et al. Performance assessment of the SOFA, APACHE II scoring system, and SAPS II in intensive care unit organophosphate poisoned patients. J Korean Med Sci. 2013;28(12):1822–1826. doi: 10.3346/jkms.2013.28.12.1822. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Köksal Ö, et al. The comparison of modified early warning score and Glasgow coma scale-age-systolic blood pressure scores in the assessment of nontraumatic critical patients in emergency department. Niger J Clin Pract. 2016;19(6):761–765. doi: 10.4103/1119-3077.178944. [DOI] [PubMed] [Google Scholar]

- 36.Moseson EM, et al. Intensive care unit scoring systems outperform emergency department scoring systems for mortality prediction in critically ill patients: a prospective cohort study. J Intensive Care. 2014;2(1):40. doi: 10.1186/2052-0492-2-40. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Reini K, Fredrikson M, Oscarsson A. The prognostic value of the modified early warning score in critically ill patients: a prospective, observational study. Eur J Anaesthesiol. 2012;29(3):152–157. doi: 10.1097/EJA.0b013e32835032d8. [DOI] [PubMed] [Google Scholar]

- 38.Yu S, et al. Comparison of risk prediction scoring systems for ward patients: a retrospective nested case-control study. Crit Care. 2014;18(3):R132. doi: 10.1186/cc13947. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Laupacis A, Sekar N. Clinical prediction rules: a review and suggested modifications of methodological standards. JAMA. 1997;277(6):488–494. doi: 10.1001/jama.1997.03540300056034. [DOI] [PubMed] [Google Scholar]

- 40.Moons KG, et al. Risk prediction models: II. External validation, model updating, and impact assessment. Heart. 2012. 10.1136/heartjnl-2011-301247. [DOI] [PubMed]

- 41.Friedman CP, Wyatt JC. Evaluation of biomedical and health information resources. In: Biomedical informatics: Springer; 2014. p. 355–87.

- 42.Bates DW, et al. Ten commandments for effective clinical decision support: making the practice of evidence-based medicine a reality. J Am Med Inform Assoc. 2003;10(6):523–530. doi: 10.1197/jamia.M1370. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Gong Y, Kang H. Usability and clinical decision support. In: Clinical decision support systems: Springer; 2016. p. 69–86.

- 44.Kappen TH, et al. Barriers and facilitators perceived by physicians when using prediction models in practice. J Clin Epidemiol. 2016;70:136–145. doi: 10.1016/j.jclinepi.2015.09.008. [DOI] [PubMed] [Google Scholar]

- 45.Sittig DF, et al. A survey of factors affecting clinician acceptance of clinical decision support. BMC Med Inform Decis Mak. 2006;6(1):6. doi: 10.1186/1472-6947-6-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Collins GS, et al. External validation of multivariable prediction models: a systematic review of methodological conduct and reporting. BMC Med Res Methodol. 2014;14(1):40. doi: 10.1186/1471-2288-14-40. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Debray T, et al. A framework for developing, implementing, and evaluating clinical prediction models in an individual participant data meta-analysis. Stat Med. 2013;32(18):3158–3180. doi: 10.1002/sim.5732. [DOI] [PubMed] [Google Scholar]

- 48.Debray TP, et al. A guide to systematic review and meta-analysis of prediction model performance. BMJ. 2017;356:i6460. doi: 10.1136/bmj.i6460. [DOI] [PubMed] [Google Scholar]

- 49.Debray TP, et al. A new framework to enhance the interpretation of external validation studies of clinical prediction models. J Clin Epidemiol. 2015;68(3):279–289. doi: 10.1016/j.jclinepi.2014.06.018. [DOI] [PubMed] [Google Scholar]

- 50.Harris AH. Path from predictive analytics to improved patient outcomes: a framework to guide use, implementation, and evaluation of accurate surgical predictive models. Ann Surg. 2017;265(3):461–463. doi: 10.1097/SLA.0000000000002023. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.Reps JM, Schuemie MJ, Suchard MA, Ryan PB, Rijnbeek PR. Design and implementation of a standardized framework to generate and evaluate patient-level prediction models using observational healthcare data. J Am Med Inform Assoc. 2018;25(8):969–75. [DOI] [PMC free article] [PubMed]

- 52.Steyerberg EW, et al. Internal and external validation of predictive models: a simulation study of bias and precision in small samples. J Clin Epidemiol. 2003;56(5):441–447. doi: 10.1016/S0895-4356(03)00047-7. [DOI] [PubMed] [Google Scholar]

- 53.Steyerberg EW, et al. Internal validation of predictive models: efficiency of some procedures for logistic regression analysis. J Clin Epidemiol. 2001;54(8):774–781. doi: 10.1016/S0895-4356(01)00341-9. [DOI] [PubMed] [Google Scholar]

- 54.Steyerberg EW, Harrell FE., Jr Prediction models need appropriate internal, internal-external, and external validation. J Clin Epidemiol. 2016;69:245. doi: 10.1016/j.jclinepi.2015.04.005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 55.Steyerberg EW, Vergouwe Y. Towards better clinical prediction models: seven steps for development and an ABCD for validation. Eur Heart J. 2014;35(29):1925–1931. doi: 10.1093/eurheartj/ehu207. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 56.Steyerberg EW, et al. Assessing the performance of prediction models: a framework for some traditional and novel measures. Epidemiology (Cambridge, Mass.) 2010;21(1):128. doi: 10.1097/EDE.0b013e3181c30fb2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 57.Toll D, et al. Validation, updating and impact of clinical prediction rules: a review. J Clin Epidemiol. 2008;61(11):1085–1094. doi: 10.1016/j.jclinepi.2008.04.008. [DOI] [PubMed] [Google Scholar]

- 58.Vickers AJ, Cronin AM. Traditional statistical methods for evaluating prediction models are uninformative as to clinical value: towards a decision analytic framework. In: Seminars in oncology: Elsevier; 2010. [DOI] [PMC free article] [PubMed]

- 59.Vickers AJ, Cronin AM. Everything you always wanted to know about evaluating prediction models (but were too afraid to ask) Urology. 2010;76(6):1298–1301. doi: 10.1016/j.urology.2010.06.019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 60.Wallace E, et al. Framework for the impact analysis and implementation of clinical prediction rules (CPRs) BMC Med Inform Decis Mak. 2011;11(1):62. doi: 10.1186/1472-6947-11-62. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 61.Collins GS, et al. Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD): the TRIPOD statement. BMC Med. 2015;13(1):1. doi: 10.1186/s12916-014-0241-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.Moons KG, et al. Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD): explanation and elaboration. Ann Intern Med. 2015;162(1):W1–W73. doi: 10.7326/M14-0698. [DOI] [PubMed] [Google Scholar]

- 63.Moons KG, et al. Critical appraisal and data extraction for systematic reviews of prediction modelling studies: the CHARMS checklist. PLoS Med. 2014;11(10):e1001744. doi: 10.1371/journal.pmed.1001744. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 64.Atkins D, et al. Grading quality of evidence and strength of recommendations. BMJ (Clinical research ed) 2004;328(7454):1490. doi: 10.1136/bmj.328.7454.1490. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 65.Guyatt GH, et al. Rating quality of evidence and strength of recommendations: GRADE: an emerging consensus on rating quality of evidence and strength of recommendations. BMJ. 2008;336(7650):924. doi: 10.1136/bmj.39489.470347.AD. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 66.Guyatt G, et al. GRADE guidelines: 1. Introduction—GRADE evidence profiles and summary of findings tables. J Clin Epidemiol. 2011;64(4):383–394. doi: 10.1016/j.jclinepi.2010.04.026. [DOI] [PubMed] [Google Scholar]

- 67.Guyatt GH, et al. GRADE guidelines: a new series of articles in the journal of clinical epidemiology. J Clin Epidemiol. 2011;64(4):380–382. doi: 10.1016/j.jclinepi.2010.09.011. [DOI] [PubMed] [Google Scholar]

- 68.Atkins D, et al. Systems for grading the quality of evidence and the strength of recommendations I: critical appraisal of existing approaches the GRADE working group. BMC Health Serv Res. 2004;4(1):38. doi: 10.1186/1472-6963-4-38. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 69.Friedman CP, Wyatt JC. Challenges of evaluation in medical informatics. In: Evaluation methods in medical informatics: Springer; 1997. p. 1–15.

- 70.Moher D, et al. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Ann Intern Med. 2009;151(4):264–269. doi: 10.7326/0003-4819-151-4-200908180-00135. [DOI] [PubMed] [Google Scholar]

- 71.Walker G, Habboushe J. MD+Calc (Medical reference for clinical decision tools and content) 2018. [Google Scholar]

- 72.Ltd, R.a.I.S. Random.org. 2019. Available from: https://www.random.org/. Cited 1 Jan 2018.

- 73.Moons KG, et al. Prognosis and prognostic research: application and impact of prognostic models in clinical practice. BMJ. 2009;338:b606. doi: 10.1136/bmj.b606. [DOI] [PubMed] [Google Scholar]

- 74.Bright TJ, et al. Effect of clinical decision-support systemsa systematic review. Ann Intern Med. 2012;157(1):29–43. doi: 10.7326/0003-4819-157-1-201207030-00450. [DOI] [PubMed] [Google Scholar]

- 75.Garg AX, et al. Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: a systematic review. JAMA. 2005;293(10):1223–1238. doi: 10.1001/jama.293.10.1223. [DOI] [PubMed] [Google Scholar]

- 76.Hunt DL, et al. Effects of computer-based clinical decision support systems on physician performance and patient outcomes: a systematic review. JAMA. 1998;280(15):1339–1346. doi: 10.1001/jama.280.15.1339. [DOI] [PubMed] [Google Scholar]

- 77.Johnston ME, et al. Effects of computer-based clinical decision support systems on clinician performance and patient outcome: a critical appraisal of research. Ann Intern Med. 1994;120(2):135–142. doi: 10.7326/0003-4819-120-2-199401150-00007. [DOI] [PubMed] [Google Scholar]

- 78.Kaplan B. Evaluating informatics applications—clinical decision support systems literature review. Int J Med Inform. 2001;64(1):15–37. doi: 10.1016/S1386-5056(01)00183-6. [DOI] [PubMed] [Google Scholar]

- 79.Kaushal R, Shojania KG, Bates DW. Effects of computerized physician order entry and clinical decision support systems on medication safety: a systematic review. Arch Intern Med. 2003;163(12):1409–1416. doi: 10.1001/archinte.163.12.1409. [DOI] [PubMed] [Google Scholar]

- 80.McCoy AB, et al. A framework for evaluating the appropriateness of clinical decision support alerts and responses. J Am Med Inform Assoc. 2011;19(3):346–352. doi: 10.1136/amiajnl-2011-000185. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 81.Pearson S-A, et al. Do computerised clinical decision support systems for prescribing change practice? A systematic review of the literature (1990-2007) BMC Health Serv Res. 2009;9(1):154. doi: 10.1186/1472-6963-9-154. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 82.Wright A, Sittig DF. A framework and model for evaluating clinical decision support architectures. J Biomed Inform. 2008;41(6):982–990. doi: 10.1016/j.jbi.2008.03.009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 83.Ammenwerth E, et al. Evaluation of health information systems—problems and challenges. Int J Med Inform. 2003;71(2):125–135. doi: 10.1016/S1386-5056(03)00131-X. [DOI] [PubMed] [Google Scholar]

- 84.Ammenwerth E, Iller C, Mahler C. IT-adoption and the interaction of task, technology and individuals: a fit framework and a case study. BMC Med Inform Decis Mak. 2006;6(1):3. doi: 10.1186/1472-6947-6-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 85.Aqil A, Lippeveld T, Hozumi D. PRISM framework: a paradigm shift for designing, strengthening and evaluating routine health information systems. Health Policy Plan. 2009;24(3):217–228. doi: 10.1093/heapol/czp010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 86.Chaudhry B, et al. Systematic review: impact of health information technology on quality, efficiency, and costs of medical care. Ann Intern Med. 2006;144(10):742–752. doi: 10.7326/0003-4819-144-10-200605160-00125. [DOI] [PubMed] [Google Scholar]

- 87.Hersh WR, Hickam DH. How well do physicians use electronic information retrieval systems?: a framework for investigation and systematic review. JAMA. 1998;280(15):1347–1352. doi: 10.1001/jama.280.15.1347. [DOI] [PubMed] [Google Scholar]

- 88.Kaufman D, et al. Applying an evaluation framework for health information system design, development, and implementation. Nurs Res. 2006;55(2):S37–S42. doi: 10.1097/00006199-200603001-00007. [DOI] [PubMed] [Google Scholar]

- 89.Kazanjian A, Green CJ. Beyond effectiveness: the evaluation of information systems using a comprehensive health technology assessment framework. Comput Biol Med. 2002;32(3):165–177. doi: 10.1016/S0010-4825(02)00013-6. [DOI] [PubMed] [Google Scholar]

- 90.Lau F, Hagens S, Muttitt S. A proposed benefits evaluation framework for health information systems in Canada. Health Q (Toronto, Ont.) 2007;10(1):112–116. [PubMed] [Google Scholar]

- 91.Yusof MM, et al. An evaluation framework for health information systems: human, organization and technology-fit factors (HOT-fit) Int J Med Inform. 2008;77(6):386–398. doi: 10.1016/j.ijmedinf.2007.08.011. [DOI] [PubMed] [Google Scholar]

- 92.Yusof MM, et al. Investigating evaluation frameworks for health information systems. Int J Med Inform. 2008;77(6):377–385. doi: 10.1016/j.ijmedinf.2007.08.004. [DOI] [PubMed] [Google Scholar]

- 93.Yusof MM, Paul RJ, Stergioulas LK. Towards a framework for health information systems evaluation. In: System sciences, 2006. HICSS’06. Proceedings of the 39th annual Hawaii international conference on: IEEE; 2006.

- 94.Greenhalgh T, et al. Beyond adoption: a new framework for theorizing and evaluating nonadoption, abandonment, and challenges to the scale-up, spread, and sustainability of health and care technologies. J Med Internet Res. 2017;19(11):e367. doi: 10.2196/jmir.8775. [DOI] [PMC free article] [PubMed] [Google Scholar]