Abstract

Cross frequency coupling (CFC) is emerging as a fundamental feature of brain activity, correlated with brain function and dysfunction. Many different types of CFC have been identified through application of numerous data analysis methods, each developed to characterize a specific CFC type. Choosing an inappropriate method weakens statistical power and introduces opportunities for confounding effects. To address this, we propose a statistical modeling framework to estimate high frequency amplitude as a function of both the low frequency amplitude and low frequency phase; the result is a measure of phase-amplitude coupling that accounts for changes in the low frequency amplitude. We show in simulations that the proposed method successfully detects CFC between the low frequency phase or amplitude and the high frequency amplitude, and outperforms an existing method in biologically-motivated examples. Applying the method to in vivo data, we illustrate examples of CFC during a seizure and in response to electrical stimuli.

Research organism: Human, Rat

Introduction

Brain rhythms, as recorded in the local field potential (LFP) or scalp electroencephalogram (EEG), are believed to play a critical role in coordinating brain networks. By modulating neural excitability, these rhythmic fluctuations provide an effective means to control the timing of neuronal firing (Engel et al., 2001; Buzsáki and Draguhn, 2004). Oscillatory rhythms have been categorized into different frequency bands (e.g., theta [4–10 Hz], gamma [30–80 Hz]) and associated with many functions: the theta band with memory, plasticity, and navigation (Engel et al., 2001); the gamma band with local coupling and competition (Kopell et al., 2000; Börgers et al., 2008). In addition, gamma and high-gamma (80–200 Hz) activity have been identified as surrogate markers of neuronal firing (Rasch et al., 2008; Mukamel et al., 2005; Fries et al., 2001; Pesaran et al., 2002; Whittingstall and Logothetis, 2009; Ray and Maunsell, 2011), observable in the EEG and LFP.

In general, lower frequency rhythms engage larger brain areas and modulate spatially localized fast activity (Bragin et al., 1995; Chrobak and Buzsáki, 1998; von Stein and Sarnthein, 2000; Lakatos et al., 2005; Lakatos et al., 2008). For example, the phase of low frequency rhythms has been shown to modulate and coordinate neural spiking (Vinck et al., 2010; Hyafil et al., 2015b; Fries et al., 2007) via local circuit mechanisms that provide discrete windows of increased excitability. This interaction, in which fast activity is coupled to slower rhythms, is a common type of cross-frequency coupling (CFC). This particular type of CFC has been shown to carry behaviorally relevant information (e.g., related to position [Jensen and Lisman, 2000; Agarwal et al., 2014], memory [Siegel et al., 2009], decision making and coordination [Dean et al., 2012; Pesaran et al., 2008; Wong et al., 2016; Hawellek et al., 2016]). More generally, CFC has been observed in many brain areas (Bragin et al., 1995; Chrobak and Buzsáki, 1998; Csicsvari et al., 2003; Tort et al., 2008; Mormann et al., 2005; Canolty et al., 2006), and linked to specific circuit and dynamical mechanisms (Hyafil et al., 2015b). The degree of CFC in those areas has been linked to working memory, neuronal computation, communication, learning and emotion (Tort et al., 2009; Jensen et al., 2016; Canolty and Knight, 2010; Dejean et al., 2016; Karalis et al., 2016; Likhtik et al., 2014; Jones and Wilson, 2005; Lisman, 2005; Sirota et al., 2008), and clinical disorders (Gordon, 2016; Widge et al., 2017; Voytek and Knight, 2015; Başar et al., 2016; Mathalon and Sohal, 2015), including epilepsy (Weiss et al., 2015). Although the cellular mechanisms giving rise to some neural rhythms are relatively well understood (e.g., gamma [Whittington et al., 2000; Whittington et al., 2011; Mann and Mody, 2010]), the neuronal substrate of CFC itself remains obscure.

Analysis of CFC focuses on relationships between the amplitude, phase, and frequency of two rhythms from different frequency bands. The notion of CFC, therefore, subsumes more specific types of coupling, including: phase-phase coupling (PPC), phase-amplitude coupling (PAC), and amplitude-amplitude coupling (AAC) (Hyafil et al., 2015b). PAC has been observed in rodent striatum and hippocampus (Tort et al., 2008) and human cortex (Canolty et al., 2006); AAC has been observed between the alpha and gamma rhythms in dorsal and ventral cortices (Popov et al., 2018) and between theta and gamma rhythms during spatial navigation (Shirvalkar et al., 2010); and both PAC and AAC have been observed between alpha and gamma rhythms (Osipova et al., 2008). Many quantitative measures exist to characterize different types of CFC, including: mean vector length or modulation index (Canolty et al., 2006; Tort et al., 2010), phase-locking value (Mormann et al., 2005; Lachaux et al., 1999; Vanhatalo et al., 2004), envelope-to-signal correlation (Bruns and Eckhorn, 2004), analysis of amplitude spectra (Cohen, 2008), coherence between amplitude and signal (Colgin et al., 2009), coherence between the time course of power and signal (Osipova et al., 2008), and eigendecomposition of multichannel covariance matrices (Cohen, 2017). Overall, these measures were developed from different principles and for different purposes, as shown in comparative studies (Tort et al., 2010; Cohen, 2008; Penny et al., 2008; Onslow et al., 2011).

Despite the richness of this methodological toolbox, it has limitations. For example, because each method focuses on one type of CFC, the choice of method restricts the type of CFC detectable in data. Applying a method to detect PAC in data with both PAC and AAC may: (i) falsely report no PAC in the data, or (ii) miss the presence of significant AAC in the same data. Changes in the low frequency power can also affect measures of PAC; increases in low frequency power can increase the signal-to-noise ratio of phase and amplitude variables, increasing the measure of PAC even when the phase-amplitude coupling remains constant (Aru et al., 2015; van Wijk et al., 2015; Jensen et al., 2016). Furthermore, many experimental or clinical factors (e.g., stimulation parameters, age or sex of subject) can impact CFC in ways that are difficult to characterize with existing methods (Cole and Voytek, 2017). These observations suggest that an accurate measure of PAC should control for confounding variables, including the power of low frequency oscillations.

To that end, we propose here a generalized linear model (GLM) framework to assess CFC between the high-frequency amplitude and, simultaneously, the low frequency phase and amplitude. This formal statistical inference framework builds upon previous work (Kramer and Eden, 2013; Penny et al., 2008; Voytek et al., 2013; van Wijk et al., 2015) to address the limitations of existing CFC measures. In what follows, we show that this framework successfully detects CFC in simulated signals. We compare this method to the modulation index, and show that in signals with CFC dependent on the low-frequency amplitude, the proposed method more accurately detects PAC than the modulation index. We apply this framework to in vivo recordings from human and rodent cortex to show examples of PAC and AAC detected in real data, and how to incorporate new covariates directly into the model framework.

Materials and methods

Estimation of the phase and amplitude envelope

To study CFC we estimate three quantities: the phase of the low frequency signal, $\phi_{\text{low}}$; the amplitude envelope of the high frequency signal, $A_{\text{high}}$; and the amplitude envelope of the low frequency signal, $A_{\text{low}}$. To do so, we first bandpass filter the data into low frequency (4–7 Hz) and high frequency (100–140 Hz) signals, $V_{\text{low}}$ and $V_{\text{high}}$, respectively, using a least-squares linear-phase FIR filter of order 375 for the high frequency signal, and order 50 for the low frequency signal. Here we choose specific high and low frequency ranges of interest, motivated by previous in vivo observations (Canolty et al., 2006; Tort et al., 2008; Scheffer-Teixeira et al., 2013). However, we note that this method is flexible and not dependent on this choice. We select a wide high frequency band consistent with recommendations from the literature (Aru et al., 2015) and the mechanistic explanation that extracellular spikes produce this broadband high frequency activity (Scheffer-Teixeira et al., 2013). We use the Hilbert transform to compute the analytic signals of $V_{\text{low}}$ and $V_{\text{high}}$, and from these compute the phase and amplitude of the low frequency signal ($\phi_{\text{low}}$ and $A_{\text{low}}$) and the amplitude of the high frequency signal ($A_{\text{high}}$).
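The Hilbert-transform step can be illustrated with a minimal sketch that builds the analytic signal in the frequency domain and reads off phase and amplitude envelope. It assumes the input has already been bandpass filtered (the FIR filtering step is omitted), and it uses a naive O(N²) DFT so that only the Python standard library is needed; the function names are hypothetical and this is not the authors' implementation.

```python
import cmath
import math


def dft(x, sign=-1):
    """Naive O(N^2) discrete Fourier transform; sign=+1 gives the unnormalized inverse."""
    n = len(x)
    return [sum(x[t] * cmath.exp(sign * 2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def analytic_signal(x):
    """Analytic signal x + i*H[x], built in the frequency domain: keep the DC
    (and, for even N, Nyquist) bins, double positive frequencies, zero negative ones."""
    n = len(x)
    spectrum = dft(x)
    gain = [0.0] * n
    gain[0] = 1.0
    if n % 2 == 0:
        gain[n // 2] = 1.0
    for k in range(1, (n + 1) // 2):
        gain[k] = 2.0
    return [v / n for v in dft([s * g for s, g in zip(spectrum, gain)], sign=+1)]


def phase_and_amplitude(band_limited):
    """Phase (radians, in [-pi, pi]) and amplitude envelope of a bandpass filtered signal."""
    z = analytic_signal(band_limited)
    return [cmath.phase(v) for v in z], [abs(v) for v in z]
```

Applied to `V_low` this would give $\phi_{\text{low}}$ and $A_{\text{low}}$, and applied to `V_high` it would give $A_{\text{high}}$. In practice, `scipy.signal.hilbert` computes the same analytic signal with an FFT, and `numpy.angle` and `numpy.abs` then return phase and envelope.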

Modeling framework to assess CFC

Generalized linear models (GLMs) provide a principled framework to assess CFC (Penny et al., 2008; Kramer and Eden, 2013; van Wijk et al., 2015). Here, we present three models to analyze different types of CFC. The fundamental logic behind this approach is to model the distribution of $A_{\text{high}}$ as a function of different predictors. In existing measures of PAC, the distribution of $A_{\text{high}}$ versus $\phi_{\text{low}}$ is assessed using a variety of different metrics (e.g., Tort et al., 2010). Here, we estimate statistical models to fit $A_{\text{high}}$ as a function of $\phi_{\text{low}}$, $A_{\text{low}}$, and their combinations. If these models fit the data sufficiently well, then we estimate distances between the modeled surfaces to measure the impact of each predictor.

The $\phi_{\text{low}}$ model

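The $\phi_{\text{low}}$ model relates $A_{\text{high}}$, the response variable, to a linear combination of $\phi_{\text{low}}$, the predictor variable, expressed in a spline basis:

$$\log \mu = \sum_{k=1}^{n} \beta_k f_k(\phi_{\text{low}}), \qquad A_{\text{high}} \mid \phi_{\text{low}} \sim \text{Gamma}[\mu, \nu] \tag{1}$$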

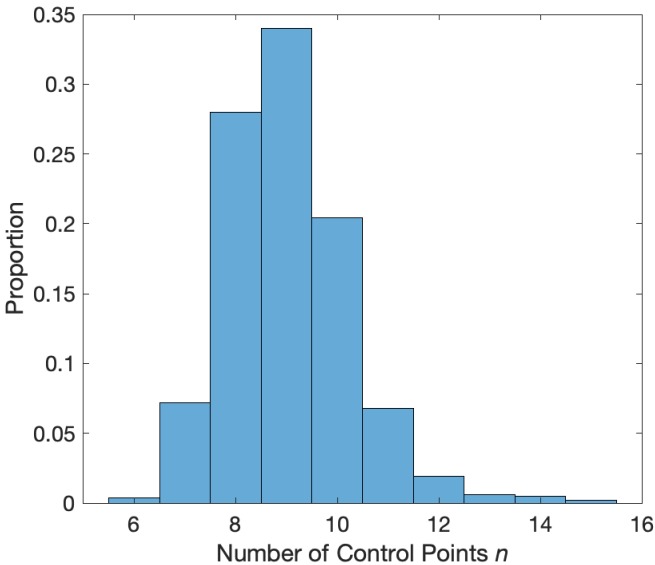

where the conditional distribution of $A_{\text{high}}$ given $\phi_{\text{low}}$ is modeled as a Gamma random variable with mean parameter $\mu$ and shape parameter $\nu$, and $\beta_k$ are undetermined coefficients, which we refer to collectively as $\beta$. We choose this distribution because it guarantees real, positive amplitude values; it also provides an acceptable fit to the example human data analyzed here (Figure 1). The functions $f_k$ are spline basis functions, with $n$ control points equally spaced between 0 and $2\pi$, used to approximate $A_{\text{high}}$ as a function of $\phi_{\text{low}}$. Because the spline functions sum to 1, we omit a constant offset term. We use a tension parameter of 0.5, which controls the smoothness of the splines. Because the link function of the conditional mean of the response variable varies linearly with the model coefficients $\beta$, the model is a GLM, though the spline basis functions situate the model in the larger class of Generalized Additive Models (GAMs). Here we fix $n = 10$, which is a reasonable choice for smooth PAC with one or two broad peaks (Kramer and Eden, 2013). To support this choice, we apply an AIC-based selection procedure to 1000 simulated instances of signals of duration 20 s with phase-amplitude coupling and amplitude-amplitude coupling (see Materials and methods: Synthetic Time Series with PAC and Synthetic Time Series with AAC, below, for simulation details). For each simulated signal, we fit the model in Equation 1 for 27 different values of $n$ and record the value of $n$ that minimizes the AIC, defined as

$$\text{AIC} = \text{Dev} + 2n,$$

where $\text{Dev}$ is the deviance of the model in Equation 1. The values of $n$ that minimize the AIC tend to lie between $n = 7$ and $n = 12$ (Figure 2). These simulations support the choice of $n = 10$ as a sufficient number of splines.

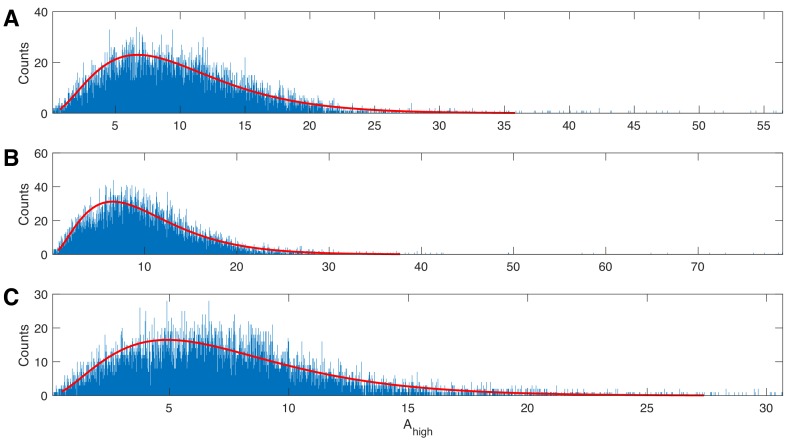

Figure 1. The gamma distribution provides a good fit to example human data.

Three examples of 20 s duration recorded from a single electrode during a human seizure. In each case, the gamma fit (red curve) provides an acceptable fit to the empirical distributions of the high frequency amplitude.

Figure 2. Distribution of the number of control points that minimize the AIC.

Values of $n$ between 7 and 12 minimize the AIC in a simulation with phase-amplitude coupling and amplitude-amplitude coupling.

For a more detailed discussion and simulation examples of the PAC model, see Kramer and Eden (2013). We note that the choices of distribution and link function differ from those in Penny et al. (2008) and van Wijk et al. (2015), where the normal distribution and identity link are used instead.
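To make the spline basis $f_k(\phi_{\text{low}})$ concrete, here is a minimal sketch of one periodic cardinal spline with a tension parameter. The text specifies only $n$ equally spaced control points on $[0, 2\pi)$ and a tension of 0.5; the particular cubic cardinal construction below is an assumption and may differ in detail from the basis used by the authors. It does reproduce the property the text relies on: at every phase the basis values sum to 1, so a constant offset would be redundant.

```python
import math


def cardinal_spline_basis(phi, n=10, tension=0.5):
    """Values of n periodic cardinal-spline basis functions at phase phi (radians).

    Control points are equally spaced on [0, 2*pi); each phase value is a weighted
    combination of the four surrounding control points, and the weights sum to 1."""
    s = tension
    x = (phi % (2 * math.pi)) / (2 * math.pi / n)  # position in control-point units
    j = int(math.floor(x)) % n                        # segment index (wraps around)
    u = x - math.floor(x)                             # fractional position within segment
    weights = (
        -s * u**3 + 2 * s * u**2 - s * u,             # control point j - 1
        (2 - s) * u**3 + (s - 3) * u**2 + 1,          # control point j
        (s - 2) * u**3 + (3 - 2 * s) * u**2 + s * u,  # control point j + 1
        s * u**3 - s * u**2,                          # control point j + 2
    )
    basis = [0.0] * n
    for offset, w in zip((-1, 0, 1, 2), weights):
        basis[(j + offset) % n] += w
    return basis


def phi_model_mean(phi, beta, tension=0.5):
    """Conditional mean of A_high under Equation 1: exp(sum_k beta_k f_k(phi))."""
    f = cardinal_spline_basis(phi, len(beta), tension)
    return math.exp(sum(b * fk for b, fk in zip(beta, f)))
```

Because the weights sum to 1, setting every $\beta_k$ to the same value $c$ yields a flat mean $e^{c}$, which plays the role an intercept would otherwise play.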

The $A_{\text{low}}$ model

The model relates the high frequency amplitude to the low frequency amplitude:

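$$\log \mu = \beta_1 + \beta_2 A_{\text{low}}, \qquad A_{\text{high}} \mid A_{\text{low}} \sim \text{Gamma}[\mu, \nu] \tag{2}$$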

where the conditional distribution of $A_{\text{high}}$ given $A_{\text{low}}$ is modeled as a Gamma random variable with mean parameter $\mu$ and shape parameter $\nu$. The predictor consists of a single variable and a constant, so the coefficient vector $\beta$ has length 2.
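The text estimates the models with MATLAB's `fitglm`. As a standard-library illustration of what such a fit does for the two-parameter $A_{\text{low}}$ model, the sketch below uses iteratively reweighted least squares (IRLS), a standard GLM fitting algorithm. For the Gamma family with a log link, the IRLS weights $(d\mu/d\eta)^2 / V(\mu) = \mu^2/\mu^2$ are all equal, so each step is an ordinary least-squares fit of the working response. This sketch estimates only the mean coefficients (not the dispersion), and the function name is hypothetical.

```python
import math


def fit_alow_model(a_low, a_high, iterations=50):
    """Fit log(mu) = b1 + b2 * a_low for a Gamma GLM with log link by IRLS.

    For the Gamma family with log link the IRLS weights are all 1, so each
    step is an ordinary least-squares fit of the working response z on a_low."""
    b1 = math.log(sum(a_high) / len(a_high))  # start at the log of the mean response
    b2 = 0.0
    mean_x = sum(a_low) / len(a_low)
    sxx = sum((x - mean_x) ** 2 for x in a_low)
    for _ in range(iterations):
        eta = [b1 + b2 * x for x in a_low]
        # Working response: eta + (y - mu) * d(eta)/d(mu), with mu = exp(eta).
        z = [e + (y - math.exp(e)) / math.exp(e) for e, y in zip(eta, a_high)]
        mean_z = sum(z) / len(z)
        b2 = sum((x - mean_x) * (zz - mean_z) for x, zz in zip(a_low, z)) / sxx
        b1 = mean_z - b2 * mean_x
    return b1, b2
```

With noise-free data generated from $\log\mu = 0.5 + 0.8\,A_{\text{low}}$, the iteration recovers the coefficients (0.5, 0.8); with real data, a library GLM routine would also report standard errors and the dispersion.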

The $\phi_{\text{low}}, A_{\text{low}}$ model

The $\phi_{\text{low}}, A_{\text{low}}$ model extends the $\phi_{\text{low}}$ model in Equation 1 by including three additional predictors in the GLM: $A_{\text{low}}$, the low frequency amplitude; and the interaction terms between the low frequency amplitude and the low frequency phase, $A_{\text{low}} \sin(\phi_{\text{low}})$ and $A_{\text{low}} \cos(\phi_{\text{low}})$. These new terms allow assessment of phase-amplitude coupling while accounting for a linear amplitude-amplitude dependence and for more complicated phase-dependent relationships with the low frequency amplitude, without introducing many more parameters. Compared to the original model in Equation 1, these new terms increase the number of variables to $n + 3$ and the length of the coefficient vector $\beta$ to $n + 3$. These changes result in the following model:

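$$\log \mu = \sum_{k=1}^{n} \beta_k f_k(\phi_{\text{low}}) + \beta_{n+1} A_{\text{low}} + \beta_{n+2} A_{\text{low}} \sin(\phi_{\text{low}}) + \beta_{n+3} A_{\text{low}} \cos(\phi_{\text{low}}), \qquad A_{\text{high}} \mid \phi_{\text{low}}, A_{\text{low}} \sim \text{Gamma}[\mu, \nu] \tag{3}$$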

Here, the conditional distribution of $A_{\text{high}}$ given $\phi_{\text{low}}$ and $A_{\text{low}}$ is modeled as a Gamma random variable with mean parameter $\mu$ and shape parameter $\nu$, and $\beta_k$, $k = 1, \ldots, n+3$, are undetermined coefficients. We include only these two interaction terms, rather than interactions between $A_{\text{low}}$ and each spline basis function of phase, to limit the number of parameters in the model.
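The structure of Equation 3 is easiest to see in the predictor vector for a single sample. In the sketch below, `spline_values` is a hypothetical stand-in for the $n$ basis values $f_k(\phi_{\text{low}})$, produced by whatever periodic spline basis is used; the three extra entries are $A_{\text{low}}$ and its two interactions with phase, for $n + 3$ entries in total.

```python
import math


def phi_alow_predictors(spline_values, a_low, phi_low):
    """Predictor vector for Equation 3: the n spline values f_k(phi_low), then
    A_low, A_low*sin(phi_low) and A_low*cos(phi_low), for n + 3 entries in total."""
    return list(spline_values) + [a_low,
                                  a_low * math.sin(phi_low),
                                  a_low * math.cos(phi_low)]


def phi_alow_mean(beta, spline_values, a_low, phi_low):
    """Conditional mean mu = exp(x . beta) of A_high under the log link."""
    x = phi_alow_predictors(spline_values, a_low, phi_low)
    if len(beta) != len(x):
        raise ValueError("beta must have n + 3 entries")
    return math.exp(sum(b * xi for b, xi in zip(beta, x)))
```

Only three columns are added regardless of $n$, which is the design choice the text describes: interacting $A_{\text{low}}$ with all $n$ spline functions would instead add $n$ columns.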

The statistics $R_{\text{PAC}}$ and $R_{\text{AAC}}$

We compute two measures of CFC, $R_{\text{PAC}}$ and $R_{\text{AAC}}$, which use the three models defined in the previous section. We evaluate each model in the three-dimensional space ($\phi_{\text{low}}$, $A_{\text{low}}$, $A_{\text{high}}$) and calculate the statistics $R_{\text{PAC}}$ and $R_{\text{AAC}}$. We use the MATLAB function fitglm to estimate the models; we note that this procedure estimates the dispersion directly for the gamma distribution. In what follows, we first discuss the three model surfaces estimated from the data, and then how we use these surfaces to compute the statistics $R_{\text{PAC}}$ and $R_{\text{AAC}}$.

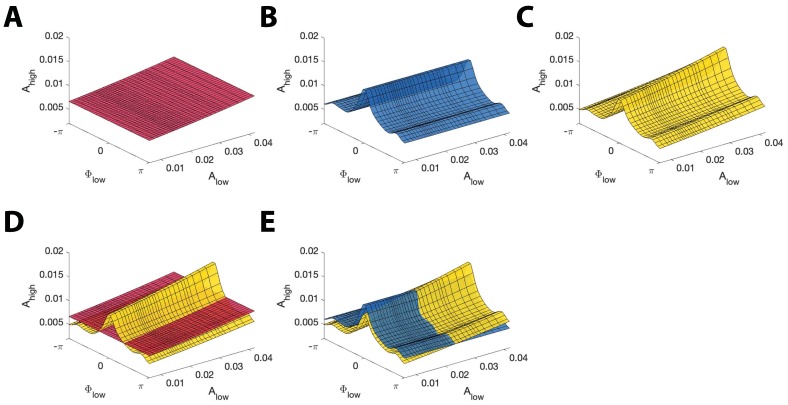

To create the surface $S_{\phi A}$, which fits the $\phi_{\text{low}}, A_{\text{low}}$ model in the three-dimensional ($\phi_{\text{low}}$, $A_{\text{low}}$, $A_{\text{high}}$) space, we first compute estimates of the parameters in Equation 3. We then estimate $S_{\phi A}$ by fixing $A_{\text{low}}$ at one of 640 evenly spaced values between the 5th and 95th quantiles of the observed $A_{\text{low}}$; we choose these quantiles to avoid extremely small or large values of $A_{\text{low}}$. Finally, at the fixed $A_{\text{low}}$, we compute the high frequency amplitude values from the model over 100 evenly spaced values of $\phi_{\text{low}}$ between $-\pi$ and $\pi$. This results in a curve in the two-dimensional ($\phi_{\text{low}}$, $A_{\text{high}}$) space with fixed $A_{\text{low}}$. We repeat this procedure for all 640 values of $A_{\text{low}}$ to create a surface in the three-dimensional space ($\phi_{\text{low}}$, $A_{\text{low}}$, $A_{\text{high}}$) (Figure 3C).

To create the surface $S_A$, which fits the $A_{\text{low}}$ model in the three-dimensional ($\phi_{\text{low}}$, $A_{\text{low}}$, $A_{\text{high}}$) space, we estimate the coefficient vector $\beta$ for the model in Equation 2. We then estimate the high frequency amplitude over 640 evenly spaced values of $A_{\text{low}}$ between the 5th and 95th quantiles of the observed $A_{\text{low}}$, again to avoid extremely small or large values of $A_{\text{low}}$. This creates a mean response function, which appears as a curve in the two-dimensional ($A_{\text{low}}$, $A_{\text{high}}$) space. We extend this two-dimensional curve to a three-dimensional surface by extending it along the $\phi_{\text{low}}$ dimension (Figure 3A).

Figure 3. Example model surfaces used to determine $R_{\text{PAC}}$ and $R_{\text{AAC}}$.

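(A,B,C) Three example surfaces (A) $S_A$, (B) $S_{\phi}$, and (C) $S_{\phi A}$ in the three-dimensional space ($\phi_{\text{low}}$, $A_{\text{low}}$, $A_{\text{high}}$). (D) The maximal distance between the surfaces $S_A$ (red) and $S_{\phi A}$ (yellow) is used to compute $R_{\text{PAC}}$. (E) The maximal distance between the surfaces $S_{\phi}$ (blue) and $S_{\phi A}$ (yellow) is used to compute $R_{\text{AAC}}$.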

To create the surface $S_{\phi}$, which fits the $\phi_{\text{low}}$ model in the three-dimensional ($\phi_{\text{low}}$, $A_{\text{low}}$, $A_{\text{high}}$) space, we first estimate the coefficients for the model in Equation 1. From this, we then compute estimates for the high frequency amplitude using the model with 100 evenly spaced values of $\phi_{\text{low}}$ between $-\pi$ and $\pi$. This results in the mean response function of the $\phi_{\text{low}}$ model. We extend this curve in the $A_{\text{low}}$ dimension to create a surface in the three-dimensional ($\phi_{\text{low}}$, $A_{\text{low}}$, $A_{\text{high}}$) space. The surface $S_{\phi}$ has the same structure as the curve in the ($\phi_{\text{low}}$, $A_{\text{high}}$) space, and remains constant along the $A_{\text{low}}$ dimension (Figure 3B).
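The grid construction for the three surfaces can be sketched as follows. The fitted mean functions are passed in as callables (`mean_phi`, `mean_alow`, `mean_joint` are hypothetical names); the grid uses 100 phase values on $[-\pi, \pi]$ and 640 $A_{\text{low}}$ values between the 5th and 95th quantiles, as described above. The quantile rule (linear interpolation between order statistics) is an assumption, since the text does not state which rule is used.

```python
import math


def linspace(start, stop, num):
    """num evenly spaced values from start to stop, inclusive."""
    step = (stop - start) / (num - 1)
    return [start + i * step for i in range(num)]


def quantile(values, q):
    """Quantile with linear interpolation between order statistics."""
    ordered = sorted(values)
    pos = q * (len(ordered) - 1)
    lo = int(math.floor(pos))
    hi = min(lo + 1, len(ordered) - 1)
    return ordered[lo] + (pos - lo) * (ordered[hi] - ordered[lo])


def build_surfaces(mean_phi, mean_alow, mean_joint, a_low_observed,
                   n_phi=100, n_alow=640):
    """Evaluate fitted mean functions on an (A_low, phi_low) grid.

    Returns the grid axes and three surfaces as lists of rows (one row per A_low value):
    S_phi is constant along A_low, and S_A is constant along phi_low."""
    phis = linspace(-math.pi, math.pi, n_phi)
    alows = linspace(quantile(a_low_observed, 0.05),
                     quantile(a_low_observed, 0.95), n_alow)
    phi_curve = [mean_phi(p) for p in phis]
    s_phi = [list(phi_curve) for _ in alows]
    s_a = [[mean_alow(a)] * n_phi for a in alows]
    s_phia = [[mean_joint(p, a) for p in phis] for a in alows]
    return phis, alows, s_phi, s_a, s_phia
```

Storing all three surfaces on the same grid means they can be compared point by point, which is what the $R$ statistics require.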

The statistic $R_{\text{PAC}}$ measures the effect of low frequency phase on high frequency amplitude, while accounting for fluctuations in the low frequency amplitude. To compute this statistic, we note that the model in Equation 3 measures the combined effect of $\phi_{\text{low}}$ and $A_{\text{low}}$ on $A_{\text{high}}$, while the model in Equation 2 measures only the effect of $A_{\text{low}}$ on $A_{\text{high}}$. Hence, to isolate the effect of $\phi_{\text{low}}$ on $A_{\text{high}}$ while accounting for $A_{\text{low}}$, we compare the difference in fits between the models in Equations 2 and 3. We fit the mean response functions of the models in Equations 2 and 3, and calculate $R_{\text{PAC}}$ as the maximum absolute fractional difference between the resulting surfaces $S_A$ and $S_{\phi A}$ (Figure 3D):

$$R_{\text{PAC}} = \max \left| 1 - \frac{S_A}{S_{\phi A}} \right| \tag{4}$$

That is, we measure the largest distance between the $A_{\text{low}}$ and the $\phi_{\text{low}}, A_{\text{low}}$ models. We expect fluctuations in $S_{\phi A}$ not present in $S_A$ to be the result of $\phi_{\text{low}}$, that is, PAC. In the absence of PAC, we expect the surfaces $S_A$ and $S_{\phi A}$ to be very close, resulting in a small value of $R_{\text{PAC}}$. However, in the presence of PAC, we expect $S_{\phi A}$ to deviate from $S_A$, resulting in a large value of $R_{\text{PAC}}$. We note that this measure, unlike $R^2$ metrics for linear regression, is not meant to measure the goodness-of-fit of these models to the data, but rather the difference in fits between the two models. We also note that $R_{\text{PAC}}$ is an unbounded measure: it equals the maximum absolute fractional difference between two surfaces, which may exceed 1.

To compute the statistic $R_{\text{AAC}}$, which measures the effect of low frequency amplitude on high frequency amplitude while accounting for fluctuations in the low frequency phase, we compare the fit of the model in Equation 3 with the fit of the model in Equation 1. We note that the model in Equation 3 predicts $A_{\text{high}}$ as a function of $\phi_{\text{low}}$ and $A_{\text{low}}$, while the model in Equation 1 predicts $A_{\text{high}}$ as a function of $\phi_{\text{low}}$ only. Therefore, we expect a difference in fits between the models in Equations 1 and 3 to result from the effect of $A_{\text{low}}$ on $A_{\text{high}}$. We fit the mean response functions of the models in Equations 1 and 3 in the three-dimensional ($\phi_{\text{low}}$, $A_{\text{low}}$, $A_{\text{high}}$) space, and calculate $R_{\text{AAC}}$ as the maximum absolute fractional difference between the resulting surfaces $S_{\phi}$ and $S_{\phi A}$ (Figure 3E):

$$R_{\text{AAC}} = \max \left| 1 - \frac{S_{\phi}}{S_{\phi A}} \right| \tag{5}$$

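That is, we measure the largest distance between the $\phi_{\text{low}}$ and the $\phi_{\text{low}}, A_{\text{low}}$ models. We expect fluctuations in $S_{\phi A}$ not present in $S_{\phi}$ to be the result of $A_{\text{low}}$, that is, AAC. In the absence of AAC, we expect the surfaces $S_{\phi}$ and $S_{\phi A}$ to be very close, resulting in a small value of $R_{\text{AAC}}$. Alternatively, in the presence of AAC, we expect $S_{\phi A}$ to deviate from $S_{\phi}$, resulting in a large value of $R_{\text{AAC}}$.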
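Given the three surfaces sampled on a common grid, both statistics are a single maximum over grid points. The sketch below implements the maximum absolute fractional difference $\max |1 - S_{\text{reduced}} / S_{\phi A}|$, taking the full $\phi_{\text{low}}, A_{\text{low}}$ surface as the denominator; this reading of "fractional difference" is an assumption of the sketch. Surfaces are lists of rows, as in a grid-evaluation step, and the function names are hypothetical.

```python
def max_abs_fractional_difference(reduced, full):
    """max over all grid points of |1 - reduced / full| for surfaces stored as lists of rows."""
    return max(abs(1.0 - r / f)
               for row_r, row_f in zip(reduced, full)
               for r, f in zip(row_r, row_f))


def r_pac(s_a, s_phia):
    """R_PAC (Equation 4): compares the A_low surface with the phi_low, A_low surface."""
    return max_abs_fractional_difference(s_a, s_phia)


def r_aac(s_phi, s_phia):
    """R_AAC (Equation 5): compares the phi_low surface with the phi_low, A_low surface."""
    return max_abs_fractional_difference(s_phi, s_phia)


# Toy surfaces: the joint surface doubles at one phase, so R_PAC = |1 - 2/4| = 0.5.
print(r_pac([[2.0, 2.0]], [[2.0, 4.0]]))  # 0.5
```

A surface that is three times the joint surface at some grid point gives a value of 2, illustrating why the statistics are unbounded above.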

Estimating 95% confidence intervals for $R_{\text{PAC}}$ and $R_{\text{AAC}}$

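We compute 95% confidence intervals for $R_{\text{PAC}}$ and $R_{\text{AAC}}$ via a parametric bootstrap method (Kramer and Eden, 2013). Given the vector of estimated coefficients $\hat{\beta}$ for a model, we use its estimated covariance $\hat{\Sigma}$ and estimated mean $\hat{\beta}$ to generate 10,000 normally distributed coefficient sample vectors $\beta^j$, $j = 1, \ldots, 10000$. For each $\beta^j$, we then compute the high frequency amplitude values from the $\phi_{\text{low}}$, $A_{\text{low}}$, or $\phi_{\text{low}}, A_{\text{low}}$ model, yielding the surfaces $S^j_{\phi}$, $S^j_{A}$, or $S^j_{\phi A}$. Finally, we compute the statistics $R^j_{\text{PAC}}$ and $R^j_{\text{AAC}}$ for each $j$ as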

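$$R^j_{\text{PAC}} = \max \left| 1 - \frac{S^j_A}{S^j_{\phi A}} \right| \tag{6}$$

$$R^j_{\text{AAC}} = \max \left| 1 - \frac{S^j_{\phi}}{S^j_{\phi A}} \right| \tag{7}$$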

The 95% confidence intervals for the statistics are the values of $R^j_{\text{PAC}}$ and $R^j_{\text{AAC}}$ at the 0.025 and 0.975 quantiles (Kramer and Eden, 2013).
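The parametric bootstrap can be sketched generically: draw coefficient vectors from a multivariate normal with the estimated mean and covariance (via a Cholesky factor), evaluate a statistic on each draw, and read off the 0.025 and 0.975 sample quantiles. Here `statistic` is a hypothetical callable; in the procedure described above it would rebuild the model surfaces from a sampled coefficient vector and return $R^j_{\text{PAC}}$ or $R^j_{\text{AAC}}$. The simple index-based quantile rule is an assumption of this sketch.

```python
import math
import random


def cholesky(cov):
    """Lower-triangular L with L L^T = cov (cov symmetric positive semi-definite)."""
    n = len(cov)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(max(cov[i][i] - s, 0.0))
            elif L[j][j] > 0.0:
                L[i][j] = (cov[i][j] - s) / L[j][j]
    return L


def sample_coefficients(beta_hat, L, rng):
    """One draw beta^j ~ Normal(beta_hat, L L^T)."""
    z = [rng.gauss(0.0, 1.0) for _ in beta_hat]
    return [m + sum(L[i][k] * z[k] for k in range(i + 1)) for i, m in enumerate(beta_hat)]


def parametric_bootstrap_ci(beta_hat, cov, statistic, n_samples=10000, seed=0):
    """95% interval of statistic(beta^j) over n_samples normal coefficient draws."""
    rng = random.Random(seed)
    L = cholesky(cov)
    values = sorted(statistic(sample_coefficients(beta_hat, L, rng))
                    for _ in range(n_samples))
    return values[int(0.025 * (n_samples - 1))], values[int(0.975 * (n_samples - 1))]
```

As a sanity check, a single standard-normal coefficient with the identity statistic yields an interval close to (-1.96, 1.96).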

Assessing significance of AAC and PAC with bootstrap p-values

To assess whether evidence exists for significant PAC or AAC, we implement a bootstrap procedure to compute p-values as follows. Given two signals $V_{\text{low}}$ and $V_{\text{high}}$, and the resulting estimated statistics $R_{\text{PAC}}$ and $R_{\text{AAC}}$, we apply the Amplitude Adjusted Fourier Transform (AAFT) algorithm (Theiler et al., 1992) to $V_{\text{high}}$ to generate a surrogate signal $V_{\text{high},i}$. In the AAFT algorithm, we first create a random Gaussian signal $W$ and reorder its values to match the rank order of $V_{\text{high}}$. For example, if the highest value of $V_{\text{high}}$ occurs at index $j$, then the highest value of $W$ is reordered to occur at index $j$. Next, we apply the Fourier transform (FT) to the reordered $W$ and randomize the phases of the frequency domain signal. This signal is then inverse Fourier transformed and rescaled to have the same amplitude distribution as the original signal $V_{\text{high}}$, by assigning the sorted values of $V_{\text{high}}$ in the rank order of the transformed signal. In this way, the algorithm produces a permutation of $V_{\text{high}}$ that preserves the amplitude distribution of the original signal exactly and its power spectrum approximately.
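A minimal standard-library sketch of one AAFT surrogate follows the three steps of Theiler et al. (1992): Gaussianize by rank, randomize Fourier phases while keeping conjugate symmetry so that the result stays real, then map the original values back onto the new rank order. It uses a naive DFT for self-containment, so it is only practical for short signals; the function names are hypothetical.

```python
import cmath
import math
import random


def dft(x, sign=-1):
    """Naive O(N^2) DFT; sign=+1 gives the unnormalized inverse transform."""
    n = len(x)
    return [sum(x[t] * cmath.exp(sign * 2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def aaft_surrogate(x, rng):
    """One Amplitude Adjusted Fourier Transform surrogate of the sequence x."""
    n = len(x)
    # Step 1: a Gaussian signal W whose values follow the rank order of x.
    gaussian = sorted(rng.gauss(0.0, 1.0) for _ in range(n))
    w = [0.0] * n
    for rank, t in enumerate(sorted(range(n), key=lambda t: x[t])):
        w[t] = gaussian[rank]
    # Step 2: randomize Fourier phases of W, keeping conjugate symmetry (real output).
    spectrum = dft(w)
    for k in range(1, (n + 1) // 2):
        spectrum[k] = abs(spectrum[k]) * cmath.exp(1j * rng.uniform(0.0, 2.0 * math.pi))
        spectrum[n - k] = spectrum[k].conjugate()
    randomized = [v.real / n for v in dft(spectrum, sign=+1)]
    # Step 3: put the values of x into the rank order of the phase-randomized signal.
    sorted_x = sorted(x)
    surrogate = [0.0] * n
    for rank, t in enumerate(sorted(range(n), key=lambda t: randomized[t])):
        surrogate[t] = sorted_x[rank]
    return surrogate
```

The DC bin (and, for even lengths, the Nyquist bin) is left untouched, and step 3 guarantees that the surrogate is an exact permutation of the input. For long recordings, an FFT-based implementation would replace the naive DFT.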

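We create 1000 such surrogate signals $V_{\text{high},i}$, $i = 1, \ldots, 1000$, and calculate $R_{\text{PAC},i}$ and $R_{\text{AAC},i}$ between $V_{\text{low}}$ and each $V_{\text{high},i}$. We define the p-values $p_{\text{PAC}}$ and $p_{\text{AAC}}$ as the proportion of values in $\{R_{\text{PAC},i}\}$ and $\{R_{\text{AAC},i}\}$ greater than the estimated statistics $R_{\text{PAC}}$ and $R_{\text{AAC}}$, respectively. If the proportion is zero, we set the corresponding p-value to 0.001.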
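The surrogate p-value is the fraction of surrogate statistics that exceed the observed statistic. In the sketch below, a zero proportion is replaced by one over the number of surrogates (0.001 for 1000 surrogates), the smallest value this resampling can resolve; that floor is stated here as an assumption of the sketch, and the function name is hypothetical.

```python
def surrogate_p_value(observed, surrogate_values):
    """Proportion of surrogate statistics greater than the observed one;
    a proportion of zero is replaced by 1 / (number of surrogates)."""
    n = len(surrogate_values)
    p = sum(1 for v in surrogate_values if v > observed) / n
    return p if p > 0 else 1.0 / n
```

For example, an observed $R_{\text{PAC}} = 0.5$ against surrogate values `[0.1, 0.6, 0.2, 0.7]` gives a p-value of 0.5.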

We calculate p-values for the modulation index in the same way. The modulation index calculates the distribution of high frequency amplitudes versus low frequency phases and measures the distance from this distribution to a uniform distribution of amplitudes. Given the signals $V_{\text{low}}$ and $V_{\text{high}}$, and the resulting modulation index MI between them, we calculate the modulation index between $V_{\text{low}}$ and 1000 surrogate permutations of $V_{\text{high}}$ generated with the AAFT algorithm. We set $p_{\text{MI}}$ to be the proportion of these resulting values greater than the MI value estimated from the original signals.
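For comparison, the modulation index of Tort et al. (2010) can be sketched in a few lines: bin the low frequency phase, average the high frequency amplitude within each bin, normalize those means to a distribution $P$, and report the Kullback-Leibler divergence of $P$ from the uniform distribution, divided by the log of the number of bins. The default of 18 bins follows Tort et al. (2010) and is an assumption here, since the text does not state the bin count.

```python
import math


def modulation_index(phase, amplitude, n_bins=18):
    """Modulation index of Tort et al. (2010): KL divergence between the phase-binned
    mean-amplitude distribution and a uniform distribution, divided by log(n_bins)."""
    sums = [0.0] * n_bins
    counts = [0] * n_bins
    for p, a in zip(phase, amplitude):
        b = int((p + math.pi) / (2.0 * math.pi) * n_bins) % n_bins  # p in [-pi, pi]
        sums[b] += a
        counts[b] += 1
    means = [s / c if c else 0.0 for s, c in zip(sums, counts)]
    total = sum(means)
    probs = [m / total for m in means]
    entropy = -sum(q * math.log(q) for q in probs if q > 0.0)
    return (math.log(n_bins) - entropy) / math.log(n_bins)
```

The index is 0 when mean amplitude is identical in every phase bin and 1 when all amplitude falls in a single bin. Unlike the GLM approach, it conditions only on phase, so changes in $A_{\text{low}}$ are not accounted for.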

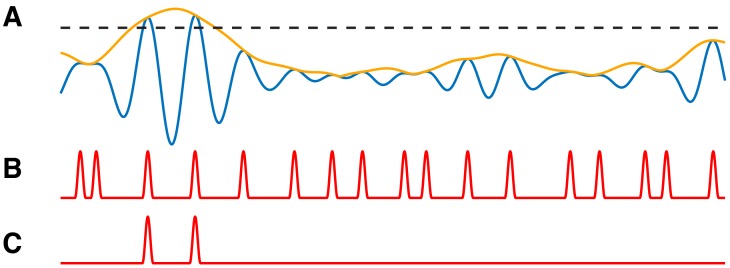

Synthetic time series with PAC

We construct synthetic time series to examine the performance of the proposed method as follows. First, we simulate 20 s of pink noise data such that the power spectrum scales as $1/f$. We then filter these data into low (4–7 Hz) and high (100–140 Hz) frequency bands, as described in Materials and methods: Estimation of the phase and amplitude envelope, creating signals $V_{\text{low}}$ and $V_{\text{high}}$. Next, we couple the amplitude of the high frequency signal to the phase of the low frequency signal. To do so, we first locate the peaks of $V_{\text{low}}$ and determine the times $t_k$, $k = 1, \ldots, K$, of these relative maxima. We note that these times correspond approximately to $\phi_{\text{low}} = 0$. We then create a smooth modulation signal $M$ which consists of a 42 ms Hanning window of height $1 + I_{\text{PAC}}$ centered at each $t_k$, and a value of 1 at all other times (Figure 4A). The intensity parameter $I_{\text{PAC}}$ in the modulation signal corresponds to the strength of PAC: $I_{\text{PAC}} = 0$ corresponds to no PAC, while $I_{\text{PAC}} = 1$ results in a 100% increase in the high frequency amplitude at each $t_k$, creating strong PAC. We create a new signal $V^*_{\text{high}}$ with the same phase as $V_{\text{high}}$, but with amplitude dependent on the phase of $V_{\text{low}}$, by setting

$$V^*_{\text{high}} = M \, V_{\text{high}}.$$

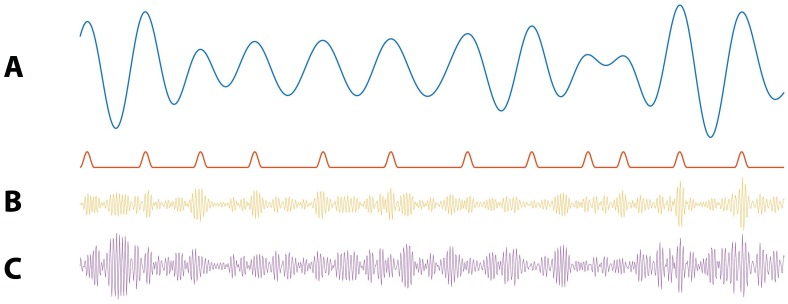

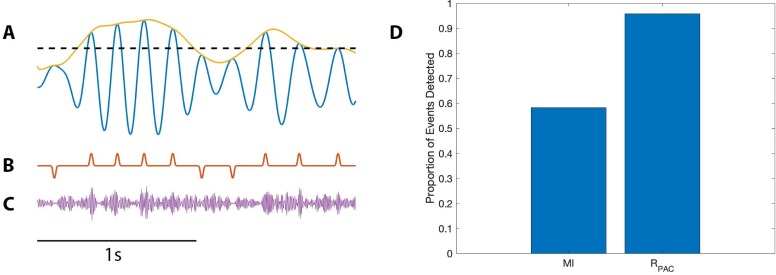

Figure 4. Illustration of synthetic time series with PAC and AAC.

(A) Example simulation of $V_{\text{low}}$ (blue) and modulation signal $M$ (red). When the phase of $V_{\text{low}}$ is near 0 radians, $M$ increases. (B) Example simulation of PAC. When the phase of $V_{\text{low}}$ is approximately 0 radians, the high frequency amplitude (yellow) increases. (C) Example simulation of AAC. When the amplitude of $V_{\text{low}}$ is large, so is the amplitude of the high frequency signal (purple).

We create the final voltage trace as

$$V = V_{\text{low}} + V^*_{\text{high}} + c\,\xi,$$

where $\xi$ is a new instance of pink noise multiplied by a small constant $c$. In the signal $V$, brief increases of the high frequency activity occur at a specific phase (0 radians) of the low frequency signal (Figure 4B).

Synthetic time series with AAC

To generate synthetic time series with dependence on the low frequency amplitude, we follow the procedure in the preceding section to generate $V_{\text{low}}$, $V_{\text{high}}$, and the low frequency amplitude envelope $A_{\text{low}}$. We then induce amplitude-amplitude coupling between the low and high frequency components by creating a new signal $V^*_{\text{high}}$ whose amplitude is scaled by $A_{\text{low}}$, with the strength of amplitude-amplitude coupling set by the intensity parameter $I_{\text{AAC}}$. We define the final voltage trace as

$$V = V_{\text{low}} + V^*_{\text{high}} + c\,\xi,$$

where $\xi$ is a new instance of pink noise multiplied by a small constant $c$ (Figure 4C).

Human subject data

A patient (male, age 32 years) with medically intractable focal epilepsy underwent clinically indicated intracranial cortical recordings for epilepsy monitoring. In addition to clinical electrode implantation, the patient was also implanted with a 10 × 10 (4 mm × 4 mm) NeuroPort microelectrode array (MEA; Blackrock Microsystems, Utah) in a neocortical area of the temporal gyrus that was expected, with high probability, to be resected. The MEA consists of 96 platinum-tipped silicon probes with a length of 1.5 mm, corresponding to neocortical layer III as confirmed by histology after resection. Signals from the MEA were acquired continuously at 30 kHz per channel. Seizure onset times were determined by an experienced electroencephalographer (S.S.C.) through inspection of the macroelectrode recordings, referral to the clinical report, and clinical manifestations recorded on video. For a detailed clinical summary, see patient P2 of Wagner et al. (2015). For these data, we analyze the 100–140 Hz and 4–7 Hz frequency bands to illustrate the proposed method; a more rigorous study of CFC in these data may require a more principled choice of high frequency band. All patients were enrolled after informed consent and consent to publish were obtained, and approval was granted by local Institutional Review Boards at Massachusetts General Hospital and Brigham and Women's Hospital (Partners Human Research Committee), and at Boston University according to National Institutes of Health guidelines.

Code availability

The code to perform this analysis is available for reuse and further development at https://github.com/Eden-Kramer-Lab/GLM-CFC (Nadalin and Kramer, 2019; copy archived at https://github.com/elifesciences-publications/GLM-CFC).

Results

We first examine the performance of the CFC measure through simulation examples. In doing so, we show that the statistics $R_{\text{PAC}}$ and $R_{\text{AAC}}$ accurately detect different types of cross-frequency coupling, increase with the intensity of coupling, and detect weak PAC coupled to the low frequency amplitude. We show that the proposed method is less sensitive to changes in low frequency power, and outperforms an existing PAC measure that lacks dependence on the low frequency amplitude. We conclude with example applications to human and rodent in vivo recordings, and show how to extend the modeling framework to include a new covariate.

The absence of CFC produces no significant detections of coupling

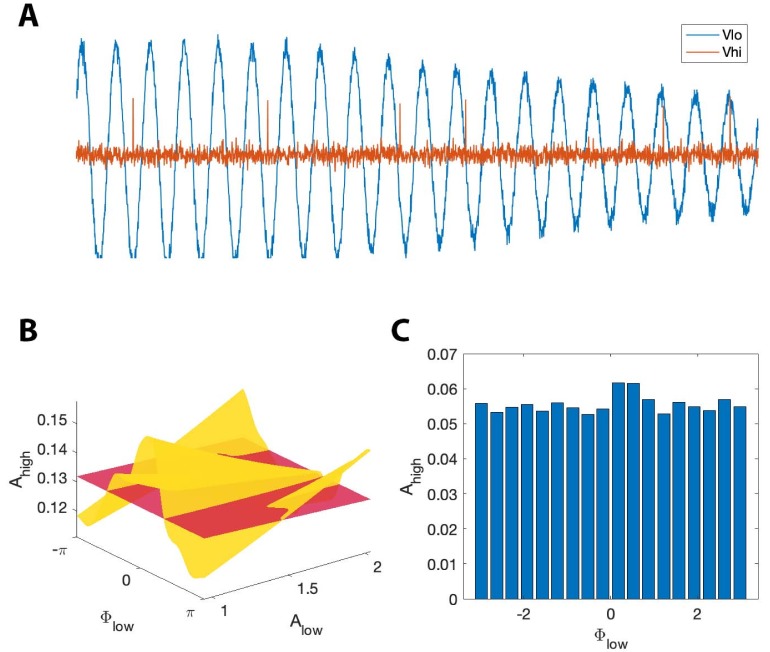

We first consider simulated signals without CFC. To create these signals, we follow the procedure in Materials and methods: Synthetic Time Series with PAC with the modulation intensity set to zero ($I_{\text{PAC}} = 0$). In the resulting signals, $A_{\text{high}}$ is approximately constant and does not depend on $\phi_{\text{low}}$ or $A_{\text{low}}$ (Figure 5A). We estimate the $\phi_{\text{low}}$ model, the $A_{\text{low}}$ model, and the $\phi_{\text{low}}, A_{\text{low}}$ model from these data; we show example fits of the model surfaces in Figure 5B. We observe that the models exhibit small modulations in the estimated high frequency amplitude envelope as a function of the low frequency phase and amplitude.

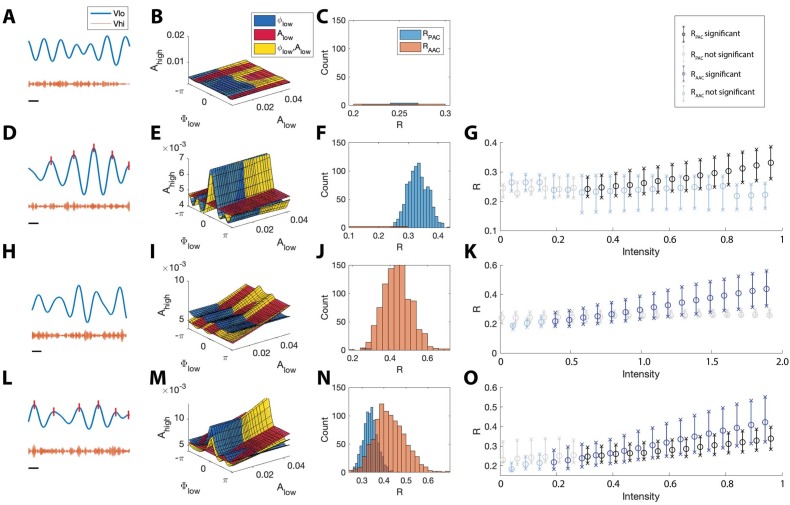

Figure 5. The statistical modeling framework successfully detects different types of cross-frequency coupling.

(A–C) Simulations with no CFC. (A) When no CFC occurs, the low frequency signal $V_{\text{low}}$ (blue) and high frequency signal $V_{\text{high}}$ (orange) evolve independently. (B) The surfaces $S_{\phi}$, $S_A$, and $S_{\phi A}$ suggest no dependence of $A_{\text{high}}$ on $\phi_{\text{low}}$ or $A_{\text{low}}$. (C) Significant (p < 0.05) values of $R_{\text{PAC}}$ and $R_{\text{AAC}}$ from 1000 simulations. Very few significant values of the statistics R are detected. (D–G) Simulations with PAC only. (D) When the phase of the low frequency signal is near 0 radians (red tick marks), the amplitude of the high frequency signal increases. (E) The surfaces $S_{\phi}$, $S_A$, and $S_{\phi A}$ suggest dependence of $A_{\text{high}}$ on $\phi_{\text{low}}$. (F) In 1000 simulations, significant values of $R_{\text{PAC}}$ frequently appear, while significant values of $R_{\text{AAC}}$ rarely appear. (G) As the intensity of PAC increases, so do the significant values of $R_{\text{PAC}}$ (black), while any significant values of $R_{\text{AAC}}$ remain small. (H–K) Simulations with AAC only. (H) The amplitudes of the high frequency signal and low frequency signal are positively correlated. (I) The surfaces $S_{\phi}$, $S_A$, and $S_{\phi A}$ suggest dependence of $A_{\text{high}}$ on $A_{\text{low}}$. (J) In 1000 simulations, significant values of $R_{\text{AAC}}$ frequently appear. (K) As the intensity of AAC increases, so do the significant values of $R_{\text{AAC}}$ (blue), while any significant values of $R_{\text{PAC}}$ remain small. (L–O) Simulations with PAC and AAC. (L) The amplitude of the high frequency signal increases when the phase of the low frequency signal is near 0 radians and the amplitude of the low frequency signal is large. (M) The surfaces $S_{\phi}$, $S_A$, and $S_{\phi A}$ suggest dependence of $A_{\text{high}}$ on $\phi_{\text{low}}$ and $A_{\text{low}}$. (N) In 1000 simulations, significant values of $R_{\text{PAC}}$ and $R_{\text{AAC}}$ frequently appear. (O) As the intensity of PAC and AAC increases, so do the significant values of $R_{\text{PAC}}$ and $R_{\text{AAC}}$. In (G,K,O), circles indicate the median, and x's the 5th and 95th quantiles.

To assess the distribution of significant R values in the case of no cross-frequency coupling, we simulate 1000 instances of the pink noise signals (each of 20 s), compute $R_{\text{PAC}}$ and $R_{\text{AAC}}$ for each instance, and plot the significant values in Figure 5C. Across the 1000 instances, $p_{\text{PAC}}$ and $p_{\text{AAC}}$ are less than 0.05 in only 0.6% and 0.2% of the simulations, respectively, indicating no significant evidence of PAC or AAC, as expected.

We also used these simulated signals to assess the performance of two standard model comparison procedures for GLMs. Simulating 1000 instances of pink noise signals (each of 20 s) with no induced PAC or AAC, we performed a chi-squared test for nested models (Kramer and Eden, 2016) between the $A_{\text{low}}$ and $\phi_{\text{low}}, A_{\text{low}}$ models, and detected significant PAC (p < 0.05) in 59.7% of simulations. Similarly, performing a chi-squared test for nested models between the $\phi_{\text{low}}$ and $\phi_{\text{low}}, A_{\text{low}}$ models, we detected significant AAC (p < 0.05) in 41.5% of simulations. Using an AIC-based model comparison, we found a decrease in AIC from the $A_{\text{low}}$ model to the $\phi_{\text{low}}, A_{\text{low}}$ model (consistent with significant PAC) in 98.6% of simulations, and a decrease in AIC from the $\phi_{\text{low}}$ model to the $\phi_{\text{low}}, A_{\text{low}}$ model (consistent with significant AAC) in 87.2% of simulations. By contrast, using the two statistics $R_{\text{PAC}}$ and $R_{\text{AAC}}$ implemented here, we rarely detect significant PAC (0.6% of simulations) or AAC (0.2% of simulations) in the pink noise signals. We conclude that, in this modeling regime, these two deviance-based model comparison procedures produce many false detections and are less robust measures of significant PAC and AAC than $R_{\text{PAC}}$ and $R_{\text{AAC}}$.
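For reference, one common form of the chi-squared test for nested GLMs compares the drop in deviance, scaled by the estimated dispersion, with a chi-squared distribution whose degrees of freedom equal the number of added parameters. Whether this exact scaling matches the procedure used above is an assumption. With $n = 10$, the PAC comparison ($A_{\text{low}}$ model, 2 coefficients, versus the $\phi_{\text{low}}, A_{\text{low}}$ model, $n + 3 = 13$ coefficients) has 11 degrees of freedom, and the AAC comparison ($\phi_{\text{low}}$ model, 10 coefficients, versus 13) has 3. The survival function below uses the closed forms for integer degrees of freedom, and the function names are hypothetical.

```python
import math


def chi2_sf(x, k):
    """P(X > x) for a chi-squared variable with integer k degrees of freedom."""
    if x <= 0:
        return 1.0
    if k % 2 == 0:
        # Even k: exp(-x/2) * sum_{j=0}^{k/2-1} (x/2)^j / j!
        term, total = 1.0, 1.0
        for j in range(1, k // 2):
            term *= (x / 2) / j
            total += term
        return math.exp(-x / 2) * total
    # Odd k: erfc(sqrt(x/2)) + sqrt(2x/pi) exp(-x/2) sum_{j=1}^{(k-1)/2} x^(j-1) / (1*3*...*(2j-1))
    total, term = 0.0, math.sqrt(2 * x / math.pi) * math.exp(-x / 2)
    for j in range(1, (k + 1) // 2):
        total += term
        term *= x / (2 * j + 1)
    return math.erfc(math.sqrt(x / 2)) + total


def nested_deviance_test(dev_reduced, dev_full, extra_params, dispersion):
    """p-value for (D_reduced - D_full) / dispersion ~ chi2(extra_params) under the reduced model."""
    return chi2_sf((dev_reduced - dev_full) / dispersion, extra_params)
```

Because the Gamma dispersion must itself be estimated, an F-test is a common alternative; either way, these tests ask whether the richer model improves the fit, which is a different question from the surrogate-based tests of $R_{\text{PAC}}$ and $R_{\text{AAC}}$.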

The proposed method accurately detects PAC

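We next consider signals that possess phase-amplitude coupling, but lack amplitude-amplitude coupling. To do so, we simulate a 20 s signal with $A_{\text{high}}$ modulated by $\phi_{\text{low}}$ (Figure 5D); more specifically, $A_{\text{high}}$ increases when $\phi_{\text{low}}$ is near 0 radians (see Materials and methods: Synthetic Time Series with PAC). We then estimate the $\phi_{\text{low}}$ model, the $A_{\text{low}}$ model, and the $\phi_{\text{low}}, A_{\text{low}}$ model from these data; we show example fits in Figure 5E. We find that $A_{\text{high}}$ in the $\phi_{\text{low}}$ model is higher when $\phi_{\text{low}}$ is close to 0 radians, and the $\phi_{\text{low}}, A_{\text{low}}$ model follows this trend. We note that, because the data do not depend on the low frequency amplitude ($I_{\text{AAC}} = 0$), the $\phi_{\text{low}}$ and $\phi_{\text{low}}, A_{\text{low}}$ models have very similar shapes in the ($\phi_{\text{low}}$, $A_{\text{low}}$, $A_{\text{high}}$) space, and the $A_{\text{low}}$ model is nearly flat.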

Simulating 1000 instances of these 20 s signals with induced phase-amplitude coupling, we find $p_{\text{AAC}} < 0.05$ for only 0.6% of the simulations, while $p_{\text{PAC}} < 0.05$ for 96.5% of the simulations. We find that the significant values of $R_{\text{PAC}}$ lie well above 0 (Figure 5F), and that as the intensity of the simulated phase-amplitude coupling increases, so does the statistic $R_{\text{PAC}}$ (Figure 5G). We conclude that the proposed method accurately detects the presence of phase-amplitude coupling in these simulated data.

The proposed method accurately detects AAC

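We next consider signals with amplitude-amplitude coupling, but without phase-amplitude coupling. We simulate a 20 s signal such that $A_{\text{high}}$ is modulated by $A_{\text{low}}$ (see Materials and methods: Synthetic Time Series with AAC); when $A_{\text{low}}$ is large, so is $A_{\text{high}}$ (Figure 5H). We then estimate the $\phi_{\text{low}}$ model, the $A_{\text{low}}$ model, and the $\phi_{\text{low}}, A_{\text{low}}$ model (example fits in Figure 5I). We find that the $A_{\text{low}}$ model increases along the $A_{\text{low}}$ axis, and that the $\phi_{\text{low}}, A_{\text{low}}$ model closely follows this trend, while the $\phi_{\text{low}}$ model remains mostly flat, as expected.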

Simulating 1000 instances of these signals, we find that $p_{\text{AAC}} < 0.05$ for 97.9% of simulations, while $p_{\text{PAC}} < 0.05$ for 0.3% of simulations. The significant values of $R_{\text{AAC}}$ lie above 0 (Figure 5J), and increases in the intensity of AAC produce increases in $R_{\text{AAC}}$ (Figure 5K). We conclude that the proposed method accurately detects the presence of amplitude-amplitude coupling.

The proposed method accurately detects the simultaneous occurrence of PAC and AAC

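We now consider signals that possess both phase-amplitude coupling and amplitude-amplitude coupling. To do so, we simulate time series data with both AAC and PAC (Figure 5L). In this case, $A_{\text{high}}$ increases when $\phi_{\text{low}}$ is near 0 radians and when $A_{\text{low}}$ is large (see Materials and methods: Synthetic Time Series with PAC and Synthetic Time Series with AAC). We then estimate the $\phi_{\text{low}}$ model, the $A_{\text{low}}$ model, and the $\phi_{\text{low}}, A_{\text{low}}$ model from the data and visualize the results (Figure 5M). We find that the $\phi_{\text{low}}$ model increases near $\phi_{\text{low}} = 0$, and that the $A_{\text{low}}$ model increases with $A_{\text{low}}$. The $\phi_{\text{low}}, A_{\text{low}}$ model exhibits both of these behaviors, increasing at $\phi_{\text{low}} = 0$ and as $A_{\text{low}}$ increases.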

Simulating 1000 instances of signals with both AAC and PAC present, we find that $p_{\text{PAC}}$ and $p_{\text{AAC}}$ each fall below 0.05 in the large majority of simulations (96.7% and 98.1% of simulations for the two tests). The distributions of significant $R_{\text{PAC}}$ and $R_{\text{AAC}}$ values lie above 0, consistent with the presence of both PAC and AAC (Figure 5N), and as the intensity of PAC and AAC increases, so do the values of $R_{\text{PAC}}$ and $R_{\text{AAC}}$ (Figure 5O). We conclude that the CFC model successfully detects the concurrent presence of PAC and AAC.

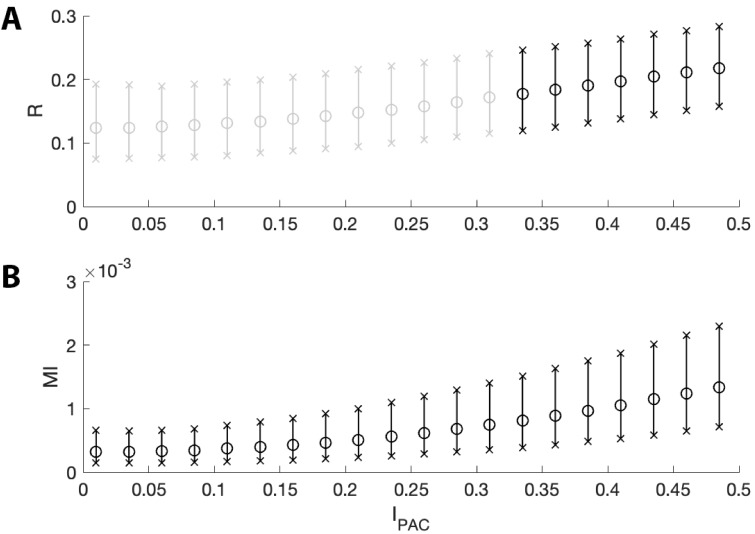

$R_{\text{PAC}}$ and the modulation index are both sensitive to weak modulations

To investigate the ability of the proposed method and the modulation index to detect weak coupling between the low frequency phase and high frequency amplitude, we perform the following simulations. For each intensity value between 0 and 0.5 (in steps of 0.025), we simulate 1000 signals (see Materials and methods) and compute $R_{\text{PAC}}$ and a measure of PAC in common use, the modulation index MI (Tort et al., 2010) (Figure 6). We find that both MI and $R_{\text{PAC}}$, while small, increase with intensity; in this way, both measures are sensitive to small coupling intensities. However, $R_{\text{PAC}}$ is not significant for the smallest intensity values, while MI is significant at these small intensities. Significant $R_{\text{PAC}}$ appears once MI exceeds $0.7 \times 10^{-3}$, a value below the range of MI values detected in many existing studies (Tort et al., 2008; Zhong et al., 2017; Jackson et al., 2019; Axmacher et al., 2010; Tort et al., 2018). We conclude that, while the modulation index may be more sensitive than $R_{\text{PAC}}$ to very weak phase-amplitude coupling, $R_{\text{PAC}}$ can detect phase-amplitude coupling at MI values consistent with those observed in the literature.
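For comparison, the modulation index can be sketched in a few lines. This follows the published definition of Tort et al. (2010): bin the low frequency phase (18 bins here), average the high frequency amplitude in each bin, normalize to a distribution, and divide its Kullback-Leibler divergence from the uniform distribution by the log of the number of bins. The function name and the toy inputs are illustrative, and the sketch assumes every phase bin contains samples.

```python
import numpy as np

def modulation_index(phase, amp, n_bins=18):
    """Modulation index of Tort et al. (2010).

    Bins the phase, takes the mean amplitude per bin, normalizes to a
    distribution P, and returns KL(P || uniform) / log(n_bins).
    Assumes every phase bin contains at least one sample.
    """
    phase = np.asarray(phase, dtype=float)
    amp = np.asarray(amp, dtype=float)
    edges = np.linspace(-np.pi, np.pi, n_bins + 1)
    idx = np.clip(np.digitize(phase, edges) - 1, 0, n_bins - 1)
    mean_amp = np.array([amp[idx == k].mean() for k in range(n_bins)])
    p = mean_amp / mean_amp.sum()
    return float((np.log(n_bins) + np.sum(p * np.log(p))) / np.log(n_bins))

phase = np.linspace(-np.pi, np.pi, 18000, endpoint=False)
mi_flat = modulation_index(phase, np.ones_like(phase))             # no coupling
mi_mod = modulation_index(phase, 1.0 + 0.5 * np.cos(phase))        # phase-dependent amplitude
print(mi_flat, mi_mod)
```

Note that the measure uses only the phase-binned mean of the high frequency amplitude; nothing in it conditions on the low frequency amplitude.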

Figure 6. The two measures of PAC increase with intensities near zero.

The mean (circles) and 5th to 95th quantiles (x's) of (A) $R_{\text{PAC}}$ and (B) MI for intensity values between 0 and 0.5. Black bars indicate that the p-value of $R_{\text{PAC}}$ (A) or MI (B) is below 0.05 for ≥95% of simulations; gray bars indicate that it is not. While both measures increase with intensity, MI detects more instances of significant PAC than does $R_{\text{PAC}}$ for very small intensities.

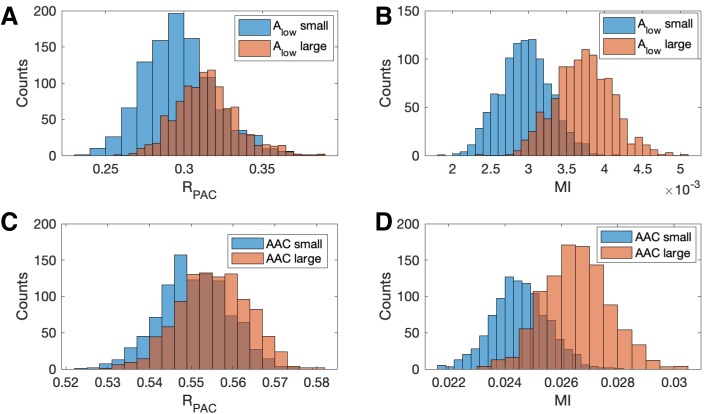

The proposed method is less affected by fluctuations in low-frequency amplitude and AAC

Increases in low frequency power can increase measures of phase-amplitude coupling, although the underlying PAC remains unchanged (Aru et al., 2015; Cole and Voytek, 2017). Characterizing the impact of this confounding effect is important both to understand measure performance and to produce accurate interpretations of analyzed data. To examine this phenomenon, we perform the following simulation. First, we simulate a signal with fixed PAC (see Materials and methods). Second, we filter this signal into its low and high frequency components. Then, we create a new signal as follows:

| (8) |

where the additional term is pink noise (see Materials and methods). We note that we alter only the low frequency component of the original signal and do not alter the PAC. To analyze the PAC in this new signal, we compute $R_{\text{PAC}}$ and MI.
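Why rescaling the low frequency component leaves the imposed PAC unchanged can be checked numerically: multiplying a signal by a positive constant scales the amplitude of its analytic signal but leaves its phase unchanged. A minimal sketch; the FFT construction of the analytic signal mirrors what `scipy.signal.hilbert` computes, while the 6 Hz test rhythm, the scale factor of 10, and the omission of the pink noise term are assumptions for illustration.

```python
import numpy as np

def analytic_signal(x):
    """Analytic signal via the FFT: keep DC (and Nyquist), double positive frequencies."""
    n = len(x)
    h = np.zeros(n)
    if n % 2 == 0:
        h[0] = h[n // 2] = 1.0
        h[1:n // 2] = 2.0
    else:
        h[0] = 1.0
        h[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(np.fft.fft(x) * h)

fs = 1000.0
t = np.arange(0.0, 2.0, 1.0 / fs)
v_low = np.cos(2 * np.pi * 6.0 * t)

z1 = analytic_signal(v_low)
z10 = analytic_signal(10.0 * v_low)          # low frequency component scaled by 10

phase_shift = np.angle(z10 * np.conj(z1))    # wrapped phase difference between the two
amp_ratio = np.abs(z10) / np.abs(z1)         # ratio of amplitude envelopes
print(np.max(np.abs(phase_shift)), amp_ratio.min(), amp_ratio.max())
```

Because $\phi_{\text{low}}$ is unchanged, the timing of high frequency bursts relative to the low frequency phase is preserved, while $A_{\text{low}}$ grows by the scale factor.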

We show in Figure 7 population results (1000 realizations each of the original and modified simulated signals) for the $R_{\text{PAC}}$ and MI values. We observe that increases in the amplitude of the low frequency component produce increases in both MI and $R_{\text{PAC}}$. However, this increase is more dramatic for MI than for $R_{\text{PAC}}$: the distributions of $R_{\text{PAC}}$ almost completely overlap (Figure 7A), while the distribution of MI shifts to larger values when the low frequency amplitude increases (Figure 7B). We conclude that the $R_{\text{PAC}}$ statistic, which includes the low frequency amplitude as a predictor in the GLM, is more robust to increases in low frequency power than a method that includes only the low frequency phase.

Figure 7. Increases in the amplitude of the low frequency signal and in amplitude-amplitude coupling (AAC) increase the modulation index more than $R_{\text{PAC}}$.

(A,B) Distributions of (A) $R_{\text{PAC}}$ and (B) MI when the low frequency amplitude is small (blue) and when it is large (red). (C,D) Distributions of (C) $R_{\text{PAC}}$ and (D) MI when AAC is small (blue) and when AAC is large (red).

We also investigate the effect of increases in amplitude-amplitude coupling (AAC) on the two measures of PAC. As before, we simulate a signal with fixed PAC and no AAC. We then simulate a second signal with the same fixed PAC and with additional AAC. We simulate 1000 realizations of each signal and compute the corresponding $R_{\text{PAC}}$ and MI values. We observe that the increase in AAC produces a small increase in the distribution of $R_{\text{PAC}}$ values (Figure 7C), but a large increase in the distribution of MI values (Figure 7D). We conclude that the $R_{\text{PAC}}$ statistic is more robust to increases in AAC than MI.

These simulations show that, at a fixed non-zero PAC, the modulation index increases with increased $A_{\text{low}}$ and AAC. We now consider the scenario of increased $A_{\text{low}}$ and AAC in the absence of PAC. To do so, we simulate 1000 signals of 200 s duration with no PAC. For each signal, at time 100 s (i.e., the midpoint of the simulation) we increase the low frequency amplitude by a factor of 10 (consistent with observations from an experiment in rodent cortex, as described below) and increase the AAC between the low and high frequency signals. We find that, in the absence of PAC, $R_{\text{PAC}}$ detects significant PAC (p<0.05) in 0.4% of the simulated signals, while MI detects significant PAC in 34.3% of simulated signals. We conclude that in the presence of increased low frequency amplitude and amplitude-amplitude coupling, MI may detect PAC where none exists, while $R_{\text{PAC}}$, which accounts for fluctuations in low frequency amplitude, does not.

Sparse PAC is detected when coupled to the low frequency amplitude

While the modulation index has been successfully applied in many contexts (Canolty and Knight, 2010; Hyafil et al., 2015b), instances may exist where this measure is not optimal. Because the modulation index was not designed to account for the low frequency amplitude, it may fail to detect PAC when $A_{\text{high}}$ depends not only on $\phi_{\text{low}}$ but also on $A_{\text{low}}$. In particular, since the modulation index considers the distribution of $A_{\text{high}}$ at all observed values of $\phi_{\text{low}}$, it may fail to detect coupling events that occur sparsely, at only a subset of the occurrences of the preferred low frequency phase. $R_{\text{PAC}}$, on the other hand, may detect these sparse events if they are coupled to $A_{\text{low}}$, because $R_{\text{PAC}}$ accounts for fluctuations in low frequency amplitude. To illustrate this, we consider a simulation scenario in which PAC occurs sparsely in time.

We create a signal with PAC and a corresponding modulation signal M (see Materials and methods, Figure 8A–B). We then modify this signal to reduce the number of PAC events in a way that depends on $A_{\text{low}}$. To do so, we preserve PAC at the peaks of the low frequency signal, but only when these peaks are large; more specifically, when they fall in the top 5% of peak values.

Figure 8. PAC events restricted to a subset of occurrences are still detectable.

(A) The low frequency signal (blue), its amplitude envelope (yellow), and the threshold (black dashed). (B–C) The modulation signal increases (B) at every occurrence of the preferred low frequency phase, or (C) only when the amplitude envelope exceeds the threshold at the preferred phase.

We define a threshold value $T$ as the 95th quantile of the peak values and modify the modulation signal M as follows. When M exceeds 1 (i.e., near the preferred phase of $\phi_{\text{low}}$) and the low frequency amplitude exceeds the threshold ($A_{\text{low}} > T$), we make no change to M. Alternatively, when M exceeds 1 and the low frequency amplitude lies below the threshold ($A_{\text{low}} < T$), we decrease M to 1 (Figure 8C). In this way, we create a modified modulation signal such that, in the resulting signal, $A_{\text{high}}$ increases near the preferred phase when $A_{\text{low}}$ is large enough, and is unchanged near the preferred phase when $A_{\text{low}}$ is not large enough. This signal therefore has fewer phase-amplitude coupling events than occurrences of the preferred phase.
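The thresholding rule can be sketched directly. A minimal sketch, assuming the modulation signal, the low frequency amplitude envelope, and the sample indices of the low frequency peaks are already available as arrays; the function name `restrict_modulation` and its parameters are hypothetical. The `floor` parameter covers both variants described in the text: 1 removes a coupling event (as in Figure 8), and 0 suppresses the high frequency amplitude (as in Figure 9).

```python
import numpy as np

def restrict_modulation(m, a_low, peak_idx, quantile=95.0, floor=1.0):
    """Keep increases in the modulation signal only at high-amplitude peaks.

    m        : modulation signal (m > 1 where A_high is increased)
    a_low    : low frequency amplitude envelope, same length as m
    peak_idx : indices of the low frequency peaks (hypothetical input)
    Where m > 1 but a_low is below the threshold, m is set to `floor`.
    """
    m = np.asarray(m, dtype=float)
    a_low = np.asarray(a_low, dtype=float)
    threshold = np.percentile(a_low[peak_idx], quantile)
    m_mod = m.copy()
    m_mod[(m > 1.0) & (a_low < threshold)] = floor
    return m_mod, threshold

# Toy example: two peaks (indices 1 and 3); only the first has large A_low.
m = np.array([1.0, 2.0, 1.0, 2.0, 1.0])
a_low = np.array([1.0, 3.0, 1.0, 1.0, 1.0])
peaks = np.array([1, 3])
m_sparse, t_sparse = restrict_modulation(m, a_low, peaks, quantile=95.0)
m_bidir, t_bidir = restrict_modulation(m, a_low, peaks, quantile=50.0, floor=0.0)
print(m_sparse, t_sparse)
print(m_bidir, t_bidir)
```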

We generate 1000 realizations of this simulated signal and compute $R_{\text{PAC}}$ and MI. We find that MI detects significant PAC in only 37% of simulations, while $R_{\text{PAC}}$ detects significant PAC in 72% of simulations. Although the PAC occurs infrequently, these occurrences are coupled to $A_{\text{low}}$, and $R_{\text{PAC}}$, which accounts for changes in $A_{\text{low}}$, detects these events much more frequently. We conclude that when the PAC depends on $A_{\text{low}}$, $R_{\text{PAC}}$ more accurately detects these sparse coupling events.

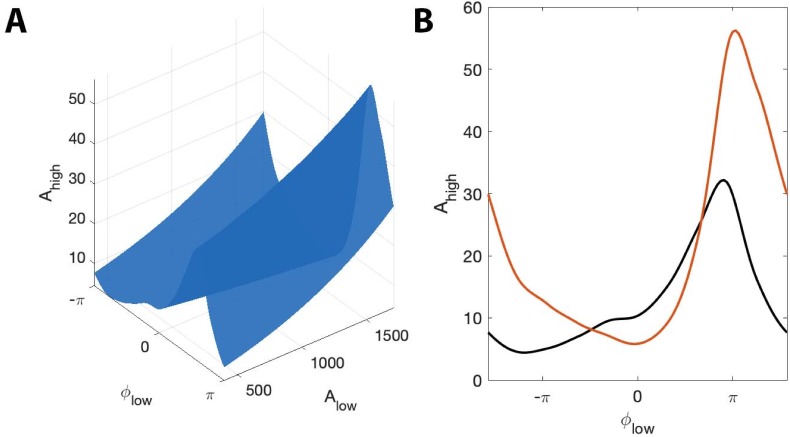

The CFC model detects simultaneous PAC and AAC missed in an existing method

To further illustrate the utility of the proposed method, we consider another scenario in which $A_{\text{low}}$ impacts the occurrence of PAC. More specifically, we consider a case in which $A_{\text{high}}$ increases at a fixed low frequency phase for high values of $A_{\text{low}}$, and decreases at the same phase for small values of $A_{\text{low}}$. In this case, we expect that the modulation index may fail to detect the coupling because the distribution of $A_{\text{high}}$ over $\phi_{\text{low}}$ would appear uniform when averaged over all values of $A_{\text{low}}$; the dependence of $A_{\text{high}}$ on $\phi_{\text{low}}$ would only become apparent after accounting for $A_{\text{low}}$.

To implement this scenario, we consider the modulation signal M (see Materials and methods) with a fixed intensity value. We consider all peaks of the low frequency signal and set the threshold $T$ to be the 50th quantile of the peak values (Figure 9A). We then modify M as follows. When M exceeds 1 and the low frequency amplitude exceeds the threshold ($A_{\text{low}} > T$), we make no change to M. Alternatively, when M exceeds 1 and the low frequency amplitude lies below the threshold ($A_{\text{low}} < T$), we decrease M to 0 (Figure 9B). In this way, we create a modified modulation signal M such that, near the preferred phase, $A_{\text{high}}$ is increased when $A_{\text{low}}$ is large enough and decreased when $A_{\text{low}}$ is small enough (Figure 9C).
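A short worked example shows why a phase-only measure can miss this coupling. Assume, hypothetically, that the unmodified modulation signal reaches M = 2 at each peak, and that the median rule sends half of the peaks to M = 0. Pooling over all values of $A_{\text{low}}$, the average multiplier at the preferred phase is then (2 + 0)/2 = 1, the same as the baseline M = 1 at every other phase, so the phase-binned amplitude looks flat. The peak amplitudes below are simulated for illustration only.

```python
import numpy as np

# Hypothetical illustration: M at the preferred phase, one value per low frequency peak.
rng = np.random.default_rng(1)
a_low_peaks = rng.uniform(1.0, 2.0, 10000)               # assumed A_low at each peak
threshold = np.percentile(a_low_peaks, 50)              # 50th quantile, as in the text
m_peak = np.where(a_low_peaks >= threshold, 2.0, 0.0)   # assumes unmodified peak M = 2

pooled = m_peak.mean()                                  # phase-only view: averaged over A_low
large = m_peak[a_low_peaks >= threshold].mean()         # conditioned on large A_low
small = m_peak[a_low_peaks < threshold].mean()          # conditioned on small A_low
print(pooled, large, small)
```

Conditioning on $A_{\text{low}}$ recovers the opposite effects (2 versus 0) that cancel in the pooled average.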

Figure 9. PAC with AAC is accurately detected with the proposed method, but not with the modulation index.

(A) The low frequency signal (blue), amplitude envelope (yellow), and threshold (black dashed). (B) The modulation signal (red) increases near the preferred phase when the low frequency amplitude exceeds the threshold, and decreases near the preferred phase when the low frequency amplitude lies below the threshold. (C) The modulated signal (purple) increases and decreases with the modulation signal. (D) The proportion of significant detections (out of 1000) for MI and $R_{\text{PAC}}$.

Using this method, we simulate 1000 realizations of this signal and calculate MI and $R_{\text{PAC}}$ for each (Figure 9D). We find that $R_{\text{PAC}}$ detects significant PAC in nearly all (96%) of the simulations, while MI detects significant PAC in only 58% of the simulations. We conclude that, in this simulation, $R_{\text{PAC}}$ more accurately detects PAC coupled to low frequency amplitude.

A simple stochastic spiking neural model illustrates the utility of the proposed method

In the previous simulations, we created synthetic data without a biophysically principled generative model. Here we consider an alternative simulation strategy with a more direct connection to neural dynamics. While many biophysically motivated models of cross-frequency coupling exist (Sase et al., 2017; Chehelcheraghi et al., 2017; Sotero, 2016; Hyafil et al., 2015a; Lepage and Vijayan, 2015; Onslow et al., 2014; Fontolan et al., 2013; Malerba and Kopell, 2013; Jirsa and Müller, 2013; Spaak et al., 2012; Wulff et al., 2009; Tort et al., 2007), we consider here a relatively simple stochastic spiking neuron model (Aljadeff et al., 2016). In this stochastic model, we generate a spike train in which an externally imposed low frequency signal modulates the probability of spiking as a function of $\phi_{\text{low}}$ and $A_{\text{low}}$. We note that high frequency activity is thought to represent the aggregate spiking activity of local neural populations (Ray and Maunsell, 2011; Buzsáki and Wang, 2012; Ray et al., 2008a; Jia and Kohn, 2011); while here we simulate the activity of a single neuron, the spike train still produces temporally focal events of high frequency activity. In this framework, we allow the target phase modulating $A_{\text{high}}$ to change as a function of $A_{\text{low}}$, so that the phase at which the probability of spiking is highest differs between large and small values of $A_{\text{low}}$. More precisely, we define the target phase as

where $A_{\text{low}}$ is a sinusoid oscillating between 1 and 2 with a frequency of 0.1 Hz (i.e., a period of 10 s). We define the spiking probability as

where , is a triangle wave, and we choose so that the maximum value of is 2. We note that the spiking probability is zero except near times when the phase of the low frequency signal is near the target phase. We then define the simulated voltage signal as:

where the spike train is the binary sequence generated by the stochastic spiking neuron model, and the noise term is Gaussian with mean zero and standard deviation 0.1. In this scenario, the distribution of $A_{\text{high}}$ over $\phi_{\text{low}}$ appears uniform when averaged over all values of $A_{\text{low}}$. We therefore expect the modulation index to remain small, despite the presence of PAC whose preferred phase depends on $A_{\text{low}}$. However, we expect that $R_{\text{PAC}}$, which accounts for fluctuations in low frequency amplitude, will detect this PAC. We show an example signal from this simulation in Figure 10A. As expected, we find that $R_{\text{PAC}}$ detects significant PAC; the $\phi_{\text{low}}, A_{\text{low}}$ surface exhibits a single peak whose phase differs between small and large values of $A_{\text{low}}$ (Figure 10B). This surface deviates significantly from the $A_{\text{low}}$ surface, resulting in a large $R_{\text{PAC}}$ value. However, the non-uniform shape of the surface is lost when we fail to account for $A_{\text{low}}$: the distribution of $A_{\text{high}}$ over $\phi_{\text{low}}$ then appears uniform, resulting in a low MI value (Figure 10C).
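The structure of this simulation can be sketched as a Bernoulli spike generator whose preferred phase drifts with $A_{\text{low}}$. Only the $A_{\text{low}}$ sinusoid (between 1 and 2, at 0.1 Hz) and the Gaussian noise (standard deviation 0.1) come from the text; the linear map from $A_{\text{low}}$ to the target phase, the triangle window of half-width π/4, the peak spike probability of 0.2 per sample, the 6 Hz low frequency rhythm, and the 1 kHz sampling rate are hypothetical choices, not the equations of the original model.

```python
import numpy as np

def simulate_spiking(duration=100.0, fs=1000.0, f_low=6.0, p_max=0.2,
                     width=np.pi / 4, seed=0):
    """Hypothetical spiking sketch: preferred spike phase depends on A_low."""
    rng = np.random.default_rng(seed)
    t = np.arange(0.0, duration, 1.0 / fs)
    a_low = 1.5 + 0.5 * np.sin(2 * np.pi * 0.1 * t)       # between 1 and 2, 0.1 Hz
    phi_low = np.angle(np.exp(2j * np.pi * f_low * t))    # low frequency phase in (-pi, pi]
    v_low = a_low * np.cos(phi_low)
    phi_target = np.pi * (a_low - 1.0)                    # assumed: 0 at A_low = 1, pi at A_low = 2
    d = np.angle(np.exp(1j * (phi_low - phi_target)))     # wrapped distance to target phase
    p_spike = p_max * np.clip(1.0 - np.abs(d) / width, 0.0, None)  # triangle window
    spikes = (rng.random(t.size) < p_spike).astype(float)            # Bernoulli spikes
    v = v_low + spikes + rng.normal(0.0, 0.1, t.size)
    return t, v, spikes, phi_low, a_low

t, v, spikes, phi_low, a_low = simulate_spiking()
s = spikes.astype(bool)
big = s & (a_low > 1.8)      # spikes when A_low is large
small = s & (a_low < 1.2)    # spikes when A_low is small
print(np.mean(np.cos(phi_low[big])), np.mean(np.cos(phi_low[small])))
```

Spikes cluster near opposite phases for large and small $A_{\text{low}}$, so pooling across amplitudes spreads them over the cycle, which is the situation in which a phase-only measure remains small.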

Figure 10. $R_{\text{PAC}}$, but not MI, detects phase-amplitude coupling in a simple stochastic spiking neuron model.

(A) The phase and amplitude of the low frequency signal (blue) modulate the probability of a high frequency spike (orange). (B) The $\phi_{\text{low}}, A_{\text{low}}$ surface (red) and the $A_{\text{low}}$ surface (yellow). The phase of maximal modulation depends on $A_{\text{low}}$. (C) The modulation index fails to detect this type of PAC.

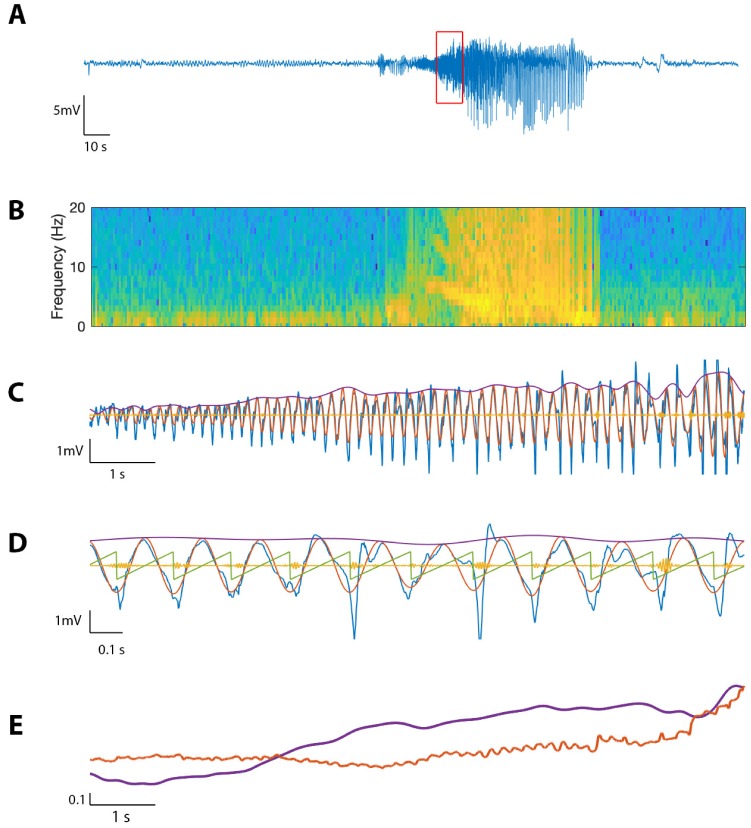

Application to in vivo human seizure data

To evaluate the performance of the proposed method on in vivo data, we first consider an example recording from human cortex during a seizure (see Materials and methods: Human subject data). Visual inspection of the LFP data (Figure 11A) reveals the emergence of large amplitude voltage fluctuations during the approximately 80 s seizure. We compute the spectrogram over the entire seizure, using windows of width 0.8 s with 0.002 s overlap, and identify a distinct 10 s interval of increased power in the 4–7 Hz band (Figure 11B). We analyze this section of the voltage trace $V$, filtering it into $V_{\text{high}}$ (100–140 Hz) and $V_{\text{low}}$ (4–7 Hz), and extracting $A_{\text{high}}$, $\phi_{\text{low}}$, and $A_{\text{low}}$ as in Materials and methods (Figure 11C). Visual inspection reveals the occurrence of large amplitude, low frequency oscillations and small amplitude, high frequency oscillations.
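The feature extraction step can be sketched as band-pass filtering followed by the analytic signal. A minimal sketch, assuming a brick-wall FFT band-pass in place of the filter specified in Materials and methods, and using the band edges from this example (4–7 Hz and 100–140 Hz); the function names are hypothetical, and the synthetic test signal places both components on exact FFT bins so the envelopes are recovered exactly.

```python
import numpy as np

def bandpass_fft(x, fs, lo, hi):
    """Brick-wall band-pass via the FFT (a simple stand-in for a proper FIR filter)."""
    f = np.fft.fftfreq(len(x), d=1.0 / fs)
    X = np.fft.fft(x)
    X[(np.abs(f) < lo) | (np.abs(f) > hi)] = 0.0
    return np.fft.ifft(X).real

def analytic_signal(x):
    """Analytic signal via the FFT: keep DC (and Nyquist), double positive frequencies."""
    n = len(x)
    h = np.zeros(n)
    if n % 2 == 0:
        h[0] = h[n // 2] = 1.0
        h[1:n // 2] = 2.0
    else:
        h[0] = 1.0
        h[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(np.fft.fft(x) * h)

def extract_cfc_features(v, fs, low_band=(4.0, 7.0), high_band=(100.0, 140.0)):
    """Return A_high, phi_low, A_low from a voltage trace v sampled at fs."""
    z_low = analytic_signal(bandpass_fft(v, fs, *low_band))
    a_high = np.abs(analytic_signal(bandpass_fft(v, fs, *high_band)))
    return a_high, np.angle(z_low), np.abs(z_low)

fs = 1000.0
t = np.arange(0.0, 2.0, 1.0 / fs)
v = np.cos(2 * np.pi * 5.0 * t) + 0.2 * np.cos(2 * np.pi * 120.0 * t)
a_high, phi_low, a_low = extract_cfc_features(v, fs)
print(a_low.mean(), a_high.mean())
```

On real recordings a brick-wall filter rings at band edges, which is one reason a tapered FIR design is usually preferred.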

Figure 11. The proposed method detects cross-frequency coupling in an in vivo human recording.

(A,B) Voltage recording (A) and spectrogram (B) from one MEA electrode over the course of a seizure; PAC and AAC were computed for the time segment outlined in red. (C) The 10 s voltage trace (blue) corresponding to the outlined segment in (A), and (red), (yellow), and (purple). (D) A 2 s subinterval of the voltage trace (blue), (red), (yellow), (purple), and (green). (E) (purple) and (red) for the 10 s segment in (C), normalized and smoothed.

During this interval, we find significant phase-amplitude coupling using both $R_{\text{PAC}}$ (Figure 12) and the modulation index. To examine the phase-amplitude coupling in more detail, we isolate a 2 s segment (Figure 11D) and display the signal $V$, the high frequency signal $V_{\text{high}}$, the low frequency phase $\phi_{\text{low}}$, and the low frequency amplitude $A_{\text{low}}$. We observe that when $\phi_{\text{low}}$ is near a particular phase, the amplitude of $V_{\text{high}}$ tends to increase, consistent with the presence of PAC and with significant values of $R_{\text{PAC}}$ and MI.

Figure 12. The $\phi_{\text{low}}, A_{\text{low}}$ model surface shows how PAC changes with the low frequency amplitude and phase during an interval of human seizure.

(A) The full model surface (blue) in the ($\phi_{\text{low}}$, $A_{\text{low}}$, $A_{\text{high}}$) space, and components of that surface when (B) $A_{\text{low}}$ is small (black) and $A_{\text{low}}$ is large (red).

We also find significant amplitude-amplitude coupling using $R_{\text{AAC}}$. Comparing $A_{\text{high}}$ and $A_{\text{low}}$ over the 10 s interval (each smoothed using a 1 s moving average filter and normalized), we observe that both steadily increase over the duration of the interval (Figure 11E).

Application to in vivo rodent data

As a second example to illustrate the performance of the new method, we consider LFP recordings from the infralimbic cortex (IL) and basolateral amygdala (BLA) of an outbred Long-Evans rat before and after the delivery of an experimental electrical stimulation intervention described in Blackwood et al. (2018). Eight microwires in each region, referenced as bipolar pairs, sampled the LFP at 30 kHz, and electrical stimulation was delivered to change inter-regional coupling (see Blackwood et al., 2018 for a detailed description of the experiment). Here we examine how cross-frequency coupling between low frequency (5–8 Hz) IL signals and high frequency (70–110 Hz) BLA signals changes from the pre-stimulation to the post-stimulation condition. To do so, we filter the data into low and high frequency signals (see Materials and methods) and compute MI, $R_{\text{PAC}}$, and $R_{\text{AAC}}$ for each possible BLA-IL pairing, sixteen in total.

We find three BLA-IL pairings for which $R_{\text{PAC}}$ reports no significant PAC either pre- or post-stimulation, but MI reports significant coupling post-stimulation. Investigating further, we note that in all three cases the amplitude of the low frequency IL signal increases from pre- to post-stimulation, and $R_{\text{AAC}}$, the measure of amplitude-amplitude coupling, also increases from pre- to post-stimulation. These observations are consistent with the simulations in Results (The proposed method is less affected by fluctuations in low-frequency amplitude and AAC), in which increases in the low frequency amplitude and AAC produced increases in MI although the PAC remained fixed. We therefore propose that, consistent with these simulation results, the increase in MI observed in these data may result from changes in the low frequency amplitude and AAC, not in PAC.

Using the flexibility of GLMs to improve detection of phase-amplitude coupling in vivo

One advantage of the proposed framework is its flexibility: covariates are easily added to the generalized linear model and tested for significance. For example, we could include covariates for trial, sex, and stimulus parameters and explore their effects on PAC, AAC, or both.

Here, we illustrate this flexibility through continued analysis of the rodent data. We select a single electrode recording from these data and hypothesize that the condition, either pre-stimulation or post-stimulation, affects the coupling. To incorporate this new covariate into the framework, we concatenate the voltage recordings from the pre-stimulation and post-stimulation conditions into a single signal.

From this concatenated signal, we obtain the corresponding high and low frequency signals, and subsequently the high frequency amplitude $A_{\text{high}}$, low frequency phase $\phi_{\text{low}}$, and low frequency amplitude $A_{\text{low}}$. We use these data to generate two new models:

| (9) |

| (10) |

where $P$ is an indicator function specifying whether the signal is in the pre-stimulation ($P = 0$) or post-stimulation ($P = 1$) condition. Including $P$ adds the effect of stimulus condition on the high frequency amplitude, so the models in Equations 9 and 10 include the effects of low frequency amplitude, low frequency phase, and condition on high frequency amplitude. To determine whether the condition affects PAC, we test whether the interaction term between $P$ and $\phi_{\text{low}}$ in Equation 9 is significant, that is, whether there is a significant difference between the models in Equations 9 and 10. If the difference between the two models is very small, including the interaction between $P$ and $\phi_{\text{low}}$ does not improve the model. In that case, the impact of $\phi_{\text{low}}$ on $A_{\text{high}}$ can be modeled without considering stimulus condition $P$; that is, the impact of stimulus condition on PAC is negligible.
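Because Equations 9 and 10 differ only by the interaction terms, the comparison amounts to fitting two nested design matrices. A minimal sketch of how such matrices could be built from the concatenated data; the Fourier phase basis (standing in for the spline basis), the particular amplitude terms, and the names `phase_basis` and `condition_designs` are assumptions, not the exact form of Equations 9 and 10.

```python
import numpy as np

def phase_basis(phi, n_harmonics=2):
    """Intercept plus cos/sin harmonics of phi (assumed stand-in for a spline basis)."""
    cols = [np.ones_like(phi)]
    for k in range(1, n_harmonics + 1):
        cols += [np.cos(k * phi), np.sin(k * phi)]
    return np.column_stack(cols)

def condition_designs(phi_low, a_low, p, n_harmonics=2):
    """Design matrices for hypothetical analogues of Equation 9 (full) and 10 (reduced)."""
    base = np.column_stack([
        phase_basis(phi_low, n_harmonics),                          # phase terms with intercept
        a_low, a_low * np.cos(phi_low), a_low * np.sin(phi_low),    # amplitude terms
        p,                                                          # condition main effect
    ])
    interaction = p[:, None] * phase_basis(phi_low, n_harmonics)[:, 1:]  # P x phase terms
    return np.column_stack([base, interaction]), base

# Concatenated pre-stimulation (P = 0) and post-stimulation (P = 1) samples.
rng = np.random.default_rng(2)
n_pre, n_post = 500, 700
phi_low = rng.uniform(-np.pi, np.pi, n_pre + n_post)
a_low = rng.uniform(1.0, 2.0, n_pre + n_post)
p = np.concatenate([np.zeros(n_pre), np.ones(n_post)])
X_full, X_reduced = condition_designs(phi_low, a_low, p)
print(X_full.shape, X_reduced.shape)
```

Either matrix could then be passed to a gamma log-link GLM fitter; since the reduced matrix is a column subset of the full one, any difference between the fitted surfaces is attributable to the condition-by-phase interaction.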

To measure the difference between the models in Equations 9 and 10, we construct a surface from each model in the ($\phi_{\text{low}}$, $A_{\text{low}}$, $A_{\text{high}}$, $P$) space, evaluating both models at a fixed value of $P$. We compute a statistic that measures the impact of stimulus condition on PAC as:

| (11) |

For the example rodent data, we find a value of 0.3608 for this statistic, with a p-value of 0.0005. Hence, we find evidence for a significant effect of stimulus condition on PAC.

To further explore this assessment of stimulus condition on PAC, we simulate 1000 instances of a 40 s signal divided into two conditions: no PAC for the first 20 s and non-zero PAC for the final 20 s. We design this simulation to mimic an increase in PAC from pre-stimulation to post-stimulation (Figure 13A). Using the models in Equations 9 and 10 and computing the statistic, we find p < 0.05 for 100% of simulated signals. We also simulate 1000 instances of a 40 s signal with no PAC for the entire 40 s, so that PAC does not change from pre-stimulation to post-stimulation (Figure 13B), and in this case find p < 0.05 for only 4.6% of simulations. Finally, we simulate 1000 instances of a 40 s signal with fixed PAC and a doubling of the low frequency amplitude at 20 s (i.e., the low frequency amplitude is 1 pre-stimulation and 2 post-stimulation). We find p < 0.05 for only 3.6% of simulations. We conclude that this method effectively determines whether stimulation condition significantly changes PAC.

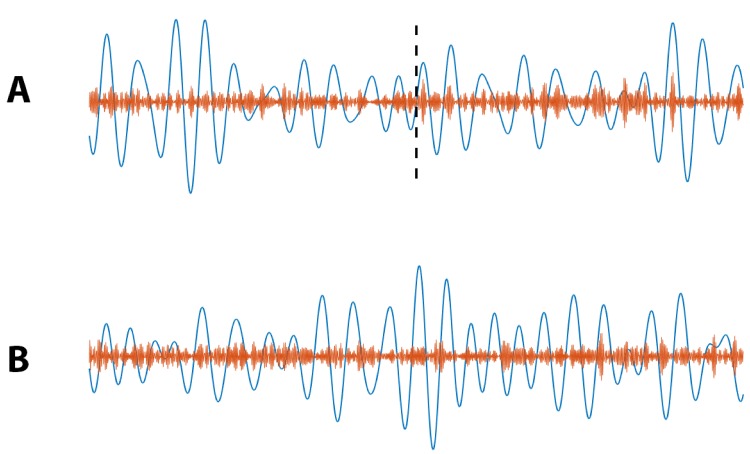

Figure 13. Example simulated (blue) and (orange) signals for which (A) PAC increases at 20 s (indicated by black dashed line), and (B) no increase in PAC occurs.

This example illustrates the flexibility of the statistical modeling framework. Extending this framework is straightforward, and new extensions allow a common principled approach to test the impact of new predictors. Here we considered an indicator function that divides the data into two states (pre- and post-stimulation). We note that the models are easily extended to account for multiple discrete predictors such as gender and participation in a drug trial, or for continuous predictors such as age and time since stimulus.

Discussion

In this paper, we proposed a new method for measuring cross-frequency coupling that accounts for both phase-amplitude coupling and amplitude-amplitude coupling, along with a principled statistical modeling framework to assess the significance of this coupling. We have shown that this method effectively detects CFC, both as PAC and AAC, and is more sensitive to weak PAC obscured by or coupled to low-frequency amplitude fluctuations. Compared to an existing method, the modulation index (Tort et al., 2010), the newly proposed method more accurately detects scenarios in which PAC is coupled to the low-frequency amplitude. Finally, we applied this method to in vivo data to illustrate examples of PAC and AAC in real systems, and showed how to extend the modeling framework to include a new covariate.

One of the most important features of the new method is an increased ability to detect weak PAC coupled to AAC. For example, when sparse PAC events occur only when the low frequency amplitude ($A_{\text{low}}$) is large, the proposed method detects this coupling while another method not accounting for $A_{\text{low}}$ misses it. While PAC often occurs in neural data, and has been associated with numerous neurological functions (Canolty and Knight, 2010; Hyafil et al., 2015b), the simultaneous occurrence of PAC and AAC is less well studied (Osipova et al., 2008). Here, we showed examples of simultaneous PAC and AAC recorded from human cortex during a seizure, and we note that this phenomenon has been simulated in other works (Mazzoni et al., 2010).

While the exact mechanisms that support CFC are not well understood (Hyafil et al., 2015b), the general mechanisms of low and high frequency rhythms have been proposed. Low frequency rhythms are associated with the aggregate activity of large neural populations and modulations of neuronal excitability (Engel et al., 2001; Varela et al., 2001; Buzsáki and Draguhn, 2004), while high frequency rhythms provide a surrogate measure of neuronal spiking (Rasch et al., 2008; Mukamel et al., 2005; Fries et al., 2001; Pesaran et al., 2002; Whittingstall and Logothetis, 2009; Ray and Maunsell, 2011; Ray et al., 2008b). These two observations provide a physical interpretation for PAC: when a low frequency rhythm modulates the excitability of a neural population, we expect spiking to occur (i.e., an increase in $A_{\text{high}}$) at a particular phase of the low frequency rhythm ($\phi_{\text{low}}$) when excitation is maximal. These notions also provide a physical interpretation for AAC: increases in $A_{\text{low}}$ produce larger modulations in neural excitability, and therefore increased intervals of neuronal spiking (i.e., increases in $A_{\text{high}}$). Conversely, decreases in $A_{\text{low}}$ reduce excitability and neuronal spiking (i.e., decreases in $A_{\text{high}}$).

The function of concurrent PAC and AAC, both for healthy brain function and during a seizure as illustrated here, is not well understood. As PAC occurs normally in healthy brain signals, for example during working memory, neuronal computation, communication, learning and emotion (Tort et al., 2009; Jensen et al., 2016; Canolty and Knight, 2010; Dejean et al., 2016; Karalis et al., 2016; Likhtik et al., 2014; Jones and Wilson, 2005; Lisman, 2005; Sirota et al., 2008), these preliminary results may suggest a pathological aspect of strong AAC occurring concurrently with PAC.

Proposed functions of PAC include multi-item encoding, long-distance communication, and sensory parsing (Hyafil et al., 2015b). Each of these functions takes advantage of the low frequency phase, encoding different objects or pieces of information in distinct phase intervals of $\phi_{\text{low}}$. PAC can be interpreted as a type of focused attention; modulation occurring only in a particular interval of $\phi_{\text{low}}$ organizes neural activity (and presumably information) into discrete packets of time. Similarly, a proposed function of AAC is to encode the number of represented items, or the amount of information encoded in the modulated signal (Hyafil et al., 2015b). A pathological increase in AAC may support the transmission of more information than is needed, overloading the communication of relevant information with irrelevant noise. The attention-based function of PAC, that is, having reduced high frequency amplitude at phases not containing the targeted information, may be lost if the amplitude of the high frequency oscillation is increased across wide intervals of low frequency phase.

Like all measures of CFC, the proposed method possesses specific limitations. We discuss five limitations here. First, the choice of spline basis to represent the low frequency phase may be inaccurate, for example if the PAC changes rapidly with $\phi_{\text{low}}$. Second, the value of $R_{\text{AAC}}$ depends on the range of $A_{\text{low}}$ observed. This is due to the linear relationship between $A_{\text{low}}$ and $A_{\text{high}}$ in the model, which causes the maximum distance between the $\phi_{\text{low}}$ and $\phi_{\text{low}}, A_{\text{low}}$ model surfaces to occur at the largest or smallest value of $A_{\text{low}}$. To mitigate the impact of extreme values on $R_{\text{AAC}}$, we evaluate these surfaces over the 5th to 95th quantiles of $A_{\text{low}}$. We note that an alternative metric of AAC could instead evaluate the slope of the fitted surface along $A_{\text{low}}$; to maintain consistency of the PAC and AAC measures, we chose not to implement this alternative measure here. Third, the frequency bands for $V_{\text{high}}$ and $V_{\text{low}}$ must be established before R values are calculated. Hence, if the wrong frequency bands are chosen, coupling may be missed. It is possible, though computationally expensive, to scan over all reasonable frequency bands for both $V_{\text{high}}$ and $V_{\text{low}}$, calculating R values for each frequency band pair. Fourth, the proposed modeling framework assumes the data contain approximately sinusoidal signals, which have been appropriately isolated for analysis. In general, CFC measures are sensitive to non-sinusoidal signals, which may confound interpretation of cross-frequency analyses (Cole and Voytek, 2017; Kramer et al., 2008; Aru et al., 2015). While the modeling framework proposed here does not directly account for the confounds introduced by non-sinusoidal signals, the inclusion of additional predictors (e.g., detections of sharp changes in the unfiltered data) in the model may help mitigate these effects. Fifth, we simulate time series with known PAC and AAC, and then test whether the proposed analysis framework detects this coupling. The simulated relationships between $A_{\text{high}}$ and ($\phi_{\text{low}}$, $A_{\text{low}}$) may result in time series with simpler structure than those observed in vivo. For example, a latent signal may drive both the low and high frequency activity, and in this way establish nonlinear relationships between the two observed signals. If this were the case, the latent signal could also be incorporated in the statistical modeling framework (Yousefi et al., 2019).

We chose the statistics $R_{\text{PAC}}$ and $R_{\text{AAC}}$ for two reasons. First, we found that two common methods of model comparison for GLMs provide less robust measures of significance than $R_{\text{PAC}}$ and $R_{\text{AAC}}$. Although these statistics are less powerful than standard model comparison tests, the large amount of data typically assessed in CFC analysis may compensate for this loss, and we showed that they performed well in simulations. Second, these statistics are directly interpretable. While many model comparison methods exist, and another method may provide specific advantages, we found that the framework implemented here is sufficiently powerful, interpretable, and robust for real-world neural data analysis.

The proposed method can easily be extended by inclusion of additional predictors in the GLM. Polynomial predictors, rather than the current linear predictors, may better capture the relationship between $A_{\text{low}}$ and $A_{\text{high}}$. One could also include different types of covariates, for example classes of drugs administered to a patient, or time since an administered stimulus during an experiment. To capture more complex relationships between the predictors ($\phi_{\text{low}}$, $A_{\text{low}}$) and $A_{\text{high}}$, the GLM could be replaced by a Generalized Additive Model (GAM). Choosing GAMs would remove the restriction that the conditional mean must be linear in each of the model parameters (which would allow us to estimate knot locations directly from the data, for example), at the cost of greater computational time to estimate these parameters. The code developed to implement the method is flexible and modular, which facilitates modifications and extensions motivated by the particular data analysis scenario. This modular code, available at https://github.com/Eden-Kramer-Lab/GLM-CFC, also allows the user to change underlying assumptions, such as the choice of frequency bands and filtering method. The code is freely available for reuse and further development.

Rhythms, and particularly the interactions of different frequency rhythms, are an important component for a complete understanding of neural activity. While the mechanisms and functions of some rhythms are well understood, how and why rhythms interact remains uncertain. A first step in addressing these uncertainties is the application of appropriate data analysis tools. Here we provide a new tool to measure coupling between different brain rhythms: the method utilizes a statistical modeling framework that is flexible and captures subtle differences in cross-frequency coupling. We hope that this method will better enable practicing neuroscientists to measure and relate brain rhythms, and ultimately better understand brain function and interactions.

Acknowledgements

This work was supported in part by the National Science Foundation Award #1451384, in part by R01 EB026938, in part by R21 MH109722, and in part by the National Science Foundation (NSF) under a Graduate Research Fellowship.

Funding Statement

The funders had no role in study design, data collection and interpretation, or the decision to submit the work for publication.

Contributor Information

Mark A Kramer, Email: mak@bu.edu.

Frances K Skinner, Krembil Research Institute, University Health Network, Canada.

Laura L Colgin, University of Texas at Austin, United States.

Funding Information

This paper was supported by the following grants:

National Science Foundation NSF DMS #1451384 to Jessica K Nadalin, Mark A Kramer.

National Science Foundation GRFP to Jessica K Nadalin.

National Institutes of Health R21 MH109722 to Alik S Widge.

National Institutes of Health R01 EB026938 to Alik S Widge, Uri T Eden, Mark A Kramer.

Additional information

Competing interests

No competing interests declared.

Author contributions

Conceptualization, Software, Formal analysis, Validation, Investigation, Visualization, Methodology, Writing—original draft, Writing—review and editing.

Data curation, Writing—review and editing.

Resources, Data curation, Software.

Resources, Data curation, Software.

Resources, Investigation, Writing—review and editing.

Investigation, Writing—review and editing.

Software, Methodology, Writing—review and editing.

Conceptualization, Software, Supervision, Methodology, Writing—review and editing.

Ethics

Human subjects: All patients were enrolled after informed consent and consent to publish were obtained, and approval was granted by local Institutional Review Boards at Massachusetts General Hospital and Brigham and Women's Hospital (Partners Human Research Committee), and at Boston University, according to National Institutes of Health guidelines (IRB Protocol # 1558X).

Animal experimentation: The animal experimentation received IACUC approval from the University of Minnesota (IACUC Protocol # 1806-36024A).

Data availability

In vivo human data available at https://github.com/Eden-Kramer-Lab/GLM-CFC (copy archived at https://github.com/elifesciences-publications/GLM-CFC). In vivo rat data available at https://github.com/tne-lab/cl-example-data (copy archived at https://github.com/elifesciences-publications/cl-example-data).

References

- Agarwal G, Stevenson IH, Berényi A, Mizuseki K, Buzsáki G, Sommer FT. Spatially distributed local fields in the hippocampus encode rat position. Science. 2014;344:626–630. doi: 10.1126/science.1250444.

- Aljadeff J, Lansdell BJ, Fairhall AL, Kleinfeld D. Analysis of neuronal spike trains, deconstructed. Neuron. 2016;91:221–259. doi: 10.1016/j.neuron.2016.05.039.

- Aru J, Aru J, Priesemann V, Wibral M, Lana L, Pipa G, Singer W, Vicente R. Untangling cross-frequency coupling in neuroscience. Current Opinion in Neurobiology. 2015;31:51–61. doi: 10.1016/j.conb.2014.08.002.

- Axmacher N, Henseler MM, Jensen O, Weinreich I, Elger CE, Fell J. Cross-frequency coupling supports multi-item working memory in the human hippocampus. PNAS. 2010;107:3228–3233. doi: 10.1073/pnas.0911531107.

- Başar E, Schmiedt-Fehr C, Mathes B, Femir B, Emek-Savaş DD, Tülay E, Tan D, Düzgün A, Güntekin B, Özerdem A, Yener G, Başar-Eroğlu C. What does the broken brain say to the neuroscientist? Oscillations and connectivity in schizophrenia, Alzheimer's disease, and bipolar disorder. International Journal of Psychophysiology. 2016;103:135–148. doi: 10.1016/j.ijpsycho.2015.02.004.

- Blackwood E, Lo M, Widge AS. Continuous phase estimation for phase-locked neural stimulation using an autoregressive model for signal prediction. Conference of the IEEE Engineering in Medicine and Biology Society. 2018;2018:4736–4739. doi: 10.1109/EMBC.2018.8513232.

- Börgers C, Epstein S, Kopell NJ. Gamma oscillations mediate stimulus competition and attentional selection in a cortical network model. PNAS. 2008;105:18023–18028. doi: 10.1073/pnas.0809511105.

- Bragin A, Jandó G, Nádasdy Z, Hetke J, Wise K, Buzsáki G. Gamma (40–100 Hz) oscillation in the hippocampus of the behaving rat. The Journal of Neuroscience. 1995;15:47–60. doi: 10.1523/JNEUROSCI.15-01-00047.1995.

- Bruns A, Eckhorn R. Task-related coupling from high- to low-frequency signals among visual cortical areas in human subdural recordings. International Journal of Psychophysiology. 2004;51:97–116. doi: 10.1016/j.ijpsycho.2003.07.001.

- Buzsáki G, Draguhn A. Neuronal oscillations in cortical networks. Science. 2004;304:1926–1929. doi: 10.1126/science.1099745.

- Buzsáki G, Wang XJ. Mechanisms of gamma oscillations. Annual Review of Neuroscience. 2012;35:203–225. doi: 10.1146/annurev-neuro-062111-150444.

- Canolty RT, Edwards E, Dalal SS, Soltani M, Nagarajan SS, Kirsch HE, Berger MS, Barbaro NM, Knight RT. High gamma power is phase-locked to theta oscillations in human neocortex. Science. 2006;313:1626–1628. doi: 10.1126/science.1128115.

- Canolty RT, Knight RT. The functional role of cross-frequency coupling. Trends in Cognitive Sciences. 2010;14:506–515. doi: 10.1016/j.tics.2010.09.001.

- Chehelcheraghi M, van Leeuwen C, Steur E, Nakatani C. A neural mass model of cross frequency coupling. PLOS ONE. 2017;12:e0173776. doi: 10.1371/journal.pone.0173776.

- Chrobak JJ, Buzsáki G. Gamma oscillations in the entorhinal cortex of the freely behaving rat. The Journal of Neuroscience. 1998;18:388–398. doi: 10.1523/JNEUROSCI.18-01-00388.1998.

- Cohen MX. Assessing transient cross-frequency coupling in EEG data. Journal of Neuroscience Methods. 2008;168:494–499. doi: 10.1016/j.jneumeth.2007.10.012.

- Cohen MX. Multivariate cross-frequency coupling via generalized eigendecomposition. eLife. 2017;6:e21792. doi: 10.7554/eLife.21792.

- Cole SR, Voytek B. Brain oscillations and the importance of waveform shape. Trends in Cognitive Sciences. 2017;21:137–149. doi: 10.1016/j.tics.2016.12.008.

- Colgin LL, Denninger T, Fyhn M, Hafting T, Bonnevie T, Jensen O, Moser MB, Moser EI. Frequency of gamma oscillations routes flow of information in the hippocampus. Nature. 2009;462:353–357. doi: 10.1038/nature08573.

- Csicsvari J, Jamieson B, Wise KD, Buzsáki G. Mechanisms of gamma oscillations in the hippocampus of the behaving rat. Neuron. 2003;37:311–322. doi: 10.1016/S0896-6273(02)01169-8.

- Dean HL, Hagan MA, Pesaran B. Only coherent spiking in posterior parietal cortex coordinates looking and reaching. Neuron. 2012;73:829–841. doi: 10.1016/j.neuron.2011.12.035.

- Dejean C, Courtin J, Karalis N, Chaudun F, Wurtz H, Bienvenu TC, Herry C. Prefrontal neuronal assemblies temporally control fear behaviour. Nature. 2016;535:420–424. doi: 10.1038/nature18630.

- Engel AK, Fries P, Singer W. Dynamic predictions: oscillations and synchrony in top-down processing. Nature Reviews Neuroscience. 2001;2:704–716. doi: 10.1038/35094565.

- Fontolan L, Krupa M, Hyafil A, Gutkin B. Analytical insights on theta-gamma coupled neural oscillators. The Journal of Mathematical Neuroscience. 2013;3:16. doi: 10.1186/2190-8567-3-16.

- Fries P, Reynolds JH, Rorie AE, Desimone R. Modulation of oscillatory neuronal synchronization by selective visual attention. Science. 2001;291:1560–1563. doi: 10.1126/science.1055465.

- Fries P, Nikolić D, Singer W. The gamma cycle. Trends in Neurosciences. 2007;30:309–316. doi: 10.1016/j.tins.2007.05.005.

- Gordon JA. On being a circuit psychiatrist. Nature Neuroscience. 2016;19:1385–1386. doi: 10.1038/nn.4419.

- Hawellek DJ, Wong YT, Pesaran B. Temporal coding of reward-guided choice in the posterior parietal cortex. PNAS. 2016;113:13492–13497. doi: 10.1073/pnas.1606479113.

- Hyafil A, Fontolan L, Kabdebon C, Gutkin B, Giraud AL. Speech encoding by coupled cortical theta and gamma oscillations. eLife. 2015a;4:e06213. doi: 10.7554/eLife.06213.

- Hyafil A, Giraud AL, Fontolan L, Gutkin B. Neural cross-frequency coupling: connecting architectures, mechanisms, and functions. Trends in Neurosciences. 2015b;38:725–740. doi: 10.1016/j.tins.2015.09.001.

- Jackson N, Cole SR, Voytek B, Swann NC. Characteristics of waveform shape in Parkinson's disease detected with scalp electroencephalography. eNeuro. 2019;6:ENEURO.0151-19.2019. doi: 10.1523/ENEURO.0151-19.2019.

- Jensen O, Spaak E, Park H. Discriminating valid from spurious indices of phase-amplitude coupling. eNeuro. 2016;3:ENEURO.0334-16.2016. doi: 10.1523/ENEURO.0334-16.2016.

- Jensen O, Lisman JE. Position reconstruction from an ensemble of hippocampal place cells: contribution of theta phase coding. Journal of Neurophysiology. 2000;83:2602–2609. doi: 10.1152/jn.2000.83.5.2602.

- Jia X, Kohn A. Gamma rhythms in the brain. PLOS Biology. 2011;9:e1001045. doi: 10.1371/journal.pbio.1001045.

- Jirsa V, Müller V. Cross-frequency coupling in real and virtual brain networks. Frontiers in Computational Neuroscience. 2013;7:78. doi: 10.3389/fncom.2013.00078.

- Jones MW, Wilson MA. Theta rhythms coordinate hippocampal–prefrontal interactions in a spatial memory task. PLOS Biology. 2005;3:e402. doi: 10.1371/journal.pbio.0030402.

- Karalis N, Dejean C, Chaudun F, Khoder S, Rozeske RR, Wurtz H, Bagur S, Benchenane K, Sirota A, Courtin J, Herry C. 4-Hz oscillations synchronize prefrontal-amygdala circuits during fear behavior. Nature Neuroscience. 2016;19:605–612. doi: 10.1038/nn.4251.

- Kopell N, Ermentrout GB, Whittington MA, Traub RD. Gamma rhythms and beta rhythms have different synchronization properties. PNAS. 2000;97:1867–1872. doi: 10.1073/pnas.97.4.1867.

- Kramer MA, Tort AB, Kopell NJ. Sharp edge artifacts and spurious coupling in EEG frequency comodulation measures. Journal of Neuroscience Methods. 2008;170:352–357. doi: 10.1016/j.jneumeth.2008.01.020.

- Kramer MA, Eden UT. Assessment of cross-frequency coupling with confidence using generalized linear models. Journal of Neuroscience Methods. 2013;220:64–74. doi: 10.1016/j.jneumeth.2013.08.006.

- Kramer MA, Eden UT. Case Studies in Neural Data Analysis: A Guide for the Practicing Neuroscientist. The MIT Press; 2016.

- Lachaux JP, Rodriguez E, Martinerie J, Varela FJ. Measuring phase synchrony in brain signals. Human Brain Mapping. 1999;8:194–208. doi: 10.1002/(SICI)1097-0193(1999)8:4<194::AID-HBM4>3.0.CO;2-C.

- Lakatos P, Shah AS, Knuth KH, Ulbert I, Karmos G, Schroeder CE. An oscillatory hierarchy controlling neuronal excitability and stimulus processing in the auditory cortex. Journal of Neurophysiology. 2005;94:1904–1911. doi: 10.1152/jn.00263.2005.

- Lakatos P, Karmos G, Mehta AD, Ulbert I, Schroeder CE. Entrainment of neuronal oscillations as a mechanism of attentional selection. Science. 2008;320:110–113. doi: 10.1126/science.1154735.

- Lepage KQ, Vijayan S. A time-series model of phase amplitude cross frequency coupling and comparison of spectral characteristics with neural data. BioMed Research International. 2015;2015:1–8. doi: 10.1155/2015/140837.

- Likhtik E, Stujenske JM, Topiwala MA, Harris AZ, Gordon JA. Prefrontal entrainment of amygdala activity signals safety in learned fear and innate anxiety. Nature Neuroscience. 2014;17:106–113. doi: 10.1038/nn.3582.

- Lisman J. The theta/gamma discrete phase code occuring during the hippocampal phase precession may be a more general brain coding scheme. Hippocampus. 2005;15:913–922. doi: 10.1002/hipo.20121.

- Malerba P, Kopell N. Phase resetting reduces theta-gamma rhythmic interaction to a one-dimensional map. Journal of Mathematical Biology. 2013;66:1361–1386. doi: 10.1007/s00285-012-0534-9.

- Mann EO, Mody I. Control of hippocampal gamma oscillation frequency by tonic inhibition and excitation of interneurons. Nature Neuroscience. 2010;13:205–212. doi: 10.1038/nn.2464.

- Mathalon DH, Sohal VS. Neural oscillations and synchrony in brain dysfunction and neuropsychiatric disorders: it's about time. JAMA Psychiatry. 2015;72:840. doi: 10.1001/jamapsychiatry.2015.0483.

- Mazzoni A, Whittingstall K, Brunel N, Logothetis NK, Panzeri S. Understanding the relationships between spike rate and delta/gamma frequency bands of LFPs and EEGs using a local cortical network model. NeuroImage. 2010;52:956–972. doi: 10.1016/j.neuroimage.2009.12.040.

- Mormann F, Fell J, Axmacher N, Weber B, Lehnertz K, Elger CE, Fernández G. Phase/amplitude reset and theta–gamma interaction in the human medial temporal lobe during a continuous word recognition memory task. Hippocampus. 2005;15:890–900. doi: 10.1002/hipo.20117.

- Mukamel R, Gelbard H, Arieli A, Hasson U, Fried I, Malach R. Coupling between neuronal firing, field potentials, and fMRI in human auditory cortex. Science. 2005;309:951–954. doi: 10.1126/science.1110913.

- Nadalin J, Kramer M. GitHub; 2019. https://github.com/Eden-Kramer-Lab/GLM-CFC

- Onslow AC, Bogacz R, Jones MW. Quantifying phase-amplitude coupling in neuronal network oscillations. Progress in Biophysics and Molecular Biology. 2011;105:49–57. doi: 10.1016/j.pbiomolbio.2010.09.007.

- Onslow AC, Jones MW, Bogacz R. A canonical circuit for generating phase-amplitude coupling. PLOS ONE. 2014;9:e102591. doi: 10.1371/journal.pone.0102591.

- Osipova D, Hermes D, Jensen O. Gamma power is phase-locked to posterior alpha activity. PLOS ONE. 2008;3:e3990. doi: 10.1371/journal.pone.0003990.