Abstract

Background

The automation of data analysis in the form of scientific workflows has become a widely adopted practice in many fields of research. Computationally driven data-intensive experiments using workflows enable automation, scaling, adaptation, and provenance support. However, there are still several challenges associated with the effective sharing, publication, and reproducibility of such workflows due to the incomplete capture of provenance and lack of interoperability between different technical (software) platforms.

Results

Based on best-practice recommendations identified from the literature on workflow design, sharing, and publishing, we define a hierarchical provenance framework to achieve uniformity in provenance and support comprehensive and fully re-executable workflows equipped with domain-specific information. To realize this framework, we present CWLProv, a standard-based format to represent any workflow-based computational analysis to produce workflow output artefacts that satisfy the various levels of provenance. We use open source community-driven standards, interoperable workflow definitions in Common Workflow Language (CWL), structured provenance representation using the W3C PROV model, and resource aggregation and sharing as workflow-centric research objects generated along with the final outputs of a given workflow enactment. We demonstrate the utility of this approach through a practical implementation of CWLProv and evaluation using real-life genomic workflows developed by independent groups.

Conclusions

The underlying principles of the standards utilized by CWLProv enable semantically rich and executable research objects that capture computational workflows with retrospective provenance such that any platform supporting CWL will be able to understand the analysis, reuse the methods for partial reruns, or reproduce the analysis to validate the published findings.

Keywords: provenance, Common Workflow Language, CWL, Research Object, RO, BagIt, interoperability, scientific workflows, containers

Introduction

Amongst the many big data domains, genomics is considered the most demanding with respect to all stages of the data lifecycle, including acquisition, storage, distribution, and analysis [1]. Because genomic data are growing at an unprecedented rate due to improved sequencing technologies and reduced cost, it is currently challenging to analyse the data at a rate matching their production. With data growing exponentially in size and volume, the practice of performing computational analyses using workflows has overtaken more traditional research methods using ad hoc scripts, which were the typical modus operandi over the past few decades [2,3]. Scientific workflow design and management has become an essential part of many computationally driven data-intensive analyses enabling automation, scaling, adaptation, and provenance support [4]. Increased use of workflows has driven rapid growth in the number of computational data analysis workflow management systems (WMSs), with hundreds of heterogeneous approaches now existing for workflow specification and execution [5]. There is an urgent need for a common format and standard to define workflows and enable sharing of analysis results using a given workflow environment.

Common Workflow Language (CWL) [6] has emerged as a workflow definition standard designed to enable portability, interoperability, and reproducibility of analyses between workflow platforms. CWL has been widely adopted by >20 organizations, providing an interoperable bridge overcoming the heterogeneity of workflow environments. Whilst a common standard for workflow definition is an important step towards interoperable solutions for workflow specifications, sharing and publishing the results of these workflow enactments in a common format is equally important. Transparent and comprehensive sharing of experimental designs is critical to establish trust and ensure authenticity, quality, and reproducibility of any workflow-based research result. Currently there is no common format defined and agreed upon for interoperable workflow archiving or sharing [7].

In this paper, we utilize open source standards such as CWL together with related efforts such as research objects (ROs) [8], BagIt [9], and PROV [10] to define CWLProv, a format for the interoperable representation of a CWL workflow enactment. We focus on production of a workflow-centric executable RO as the final result of a given CWL workflow enactment. This RO is equipped with the artefacts used in a given execution including the workflow inputs, outputs, and, most importantly, the retrospective provenance. This approach enables the complete sharing of a computational analysis such that any future CWL-based workflow can be rerun given the best practices discussed later for software environment provision are followed.

The concept of workflow-centric ROs has been previously considered [8, 11,12] for structuring the analysis methods and aggregating digital resources used in a given analysis. The ROs generated in these studies typically aggregate data objects, example inputs, workflow specifications, attribution details, and details about the execution environment amongst various other elements. These previous efforts were largely tied to a single platform or WMS. CWLProv aims to provide a platform-independent solution for workflow sharing, enactment, and publication. All the standards and vocabularies used to design CWLProv have an overarching goal to support a domain-neutral and interoperable solution (detailed in Section Applied Standards and Vocabularies).

The remainder of this paper is structured as follows. In Section Background we discuss the key concepts and related work, followed by a summary of the published best practices and recommendations for workflow representation and sharing in Section Levels of Provenance and Resource Sharing. This section also details the hierarchical provenance framework that we define to provide a principled approach for provenance capture and method sharing. Section CWLProv 0.6.0 and Utilized Standards introduces CWLProv and outlines its format, structure, and the details of the standards and ontologies it utilizes. Section Practical Realization of CWLProv presents the implementation details of CWLProv using cwltool [13], and Section CWLProv Evaluation with Bioinformatics Workflows demonstrates and evaluates the implemented module for 3 existing workflow case studies. We discuss the challenges of interoperable workflow sharing and the limitations of the proposed solution, listing several possible future research directions, in Section Discussion and Future Directions before finally drawing conclusions in SectionConclusion.

Background

This work draws upon a range of topics such as “provenance" and “interoperability." We define these here to provide better context for the reader.

Provenance

A number of studies have advocated for complete provenance tracking of scientific workflows to ensure transparency, reproducibility, analytic validity, quality assurance, and attribution of (published) research results [14]. The term “provenance" is defined by the World Wide Web Consortium (W3C) [15] as:

“Provenance is information about entities, activities, and people involved in producing a piece of data or thing, which can be used to form assessments about its quality, reliability, or trustworthiness."

Provenance for workflows is commonly divided into the following 3 categories: retrospective provenance, prospective provenance, and workflow evolution. “Retrospective provenance" refers to the detailed record of the implementation of a computational task including the details of every executed process together with comprehensive information about the execution environment used to derive a specific product. “Prospective provenance" refers to the “recipes" used to capture a set of computational tasks and their order, e.g., the workflow specification [16]. This is typically given as an abstract representation of the steps (tools/data analysis steps) that are necessary to create a particular research output, e.g., a data artefact. “Workflow evolution" refers to tracking of any alteration in the existing workflow resulting in another version of the workflow that may produce either the same or different resultant data artefacts [17]. In this work, our focus is mainly on improving representation and capture of retrospective provenance.

Interoperability

The concept of interoperability varies in different domains. Here we focus on computational interoperability, defined as:

“The ability of 2 or more components or systems to exchange information and to use the information that has been exchanged [18]."

The focus of this study is to propose and devise methods to achieve syntactic, semantic, and pragmatic interoperability as defined in the Levels of Conceptual Interoperability Model [19]. Syntactic interoperability is achieved when a common data format for information exchange is unambiguously defined. The next level of interoperability, referred to as semantic interoperability, is reached when the content of the actual information exchanged is unambiguously defined. Once there is agreement about the format and content of the information, pragmatic interoperability is achieved when the context, application, and use of the shared information and data exchanged are also unambiguously defined. In the section Evaluation Results, we relate these general definitions to specific workflow applications with respect to workflow-centric ROs and describe to what extent these interoperability requirements are addressed.

Related work

We focus on relevant studies and efforts trying to resolve the issue of availability of required resources used in a given computational analysis. In addition, we cover efforts directed towards provenance capture of workflow enactments. We restrict our attention to scientific workflows and studies related to the bioinformatics domain.

Workflow software environment capture

Freezing and packaging the runtime environment to encompass all the software components and their dependencies used in an analysis is a recommended and widely adopted practice [20] especially after use of cloud computing resources where images and snapshots of the cloud instances are created and shared with fellow researchers [21]. Nowadays, preservation and sharing of the software environment, e.g., in open access repositories, is becoming a regular practice in the workflow domain as well. Leading platforms managing infrastructure and providing cloud computing services and configuration on demand include DigitalOcean [22], Amazon Elastic Compute Cloud [23], Google Cloud Platform [24], and Microsoft Azure [25]. The instances launched on these platforms can be saved as snapshots and published with an analysis study to later recreate an instance representing the computing state at analysis time.

Using “system-wide packaging" for data-driven analyses, although simplest on the part of the workflow developers and researchers, has its own caveats. One notable issue is the size of the snapshot as it captures everything in an instance at a given time; hence, the size can range from a few gigabytes to many terabytes. To distribute research software and share execution environments, various lightweight and container-based virtualization and package managers are emerging, including Docker, Singularity, Debian Med, and Bioconda.

Docker [26] is a lightweight container-based virtualization technology that facilitates the automation of application development by archiving software systems and environment to improve portability of the applications on many common platforms including Linux, Microsoft Windows, Mac OS X, and cloud instances. Singularity [27] is also a cross-platform open source container engine specifically supporting high-performance computing resources. An existing Docker format software image can be imported and used by the Singularity container engine. Debian Med [28] contributes packages of medical practice and biomedical research to the Debian Linux distribution, lately also including workflows. Bioconda [29] packages, based on the open source package manager Conda [30], are available for Mac OS X and Linux environments, directing towards availability and portability of software used in the life sciences domain.

Data/method preservation, aggregation, and sharing

Preserving and sharing only the software environment is not enough to verify results of any computational analysis or reuse the methods (e.g., workflows) with a different dataset. It is also necessary to share other details including data (example or the original), scripts, workflow files, input configuration settings, the hypothesis of the experiment, and any/all trace/logging information related to “what happened," i.e., the retrospective provenance of the actual workflow enactment. The publishing of resources to improve the state of scholarly publications is now supported by various online repositories, including Zenodo [31], GitHub [32], myExperiment [33], and Figshare [34]. These repositories facilitate collaborative research, in addition to public sharing of source code and the results of a given analysis. There is however no standard format that must be followed when someone shares artefacts associated with an analysis. As a result, the quality of the shared resources can range from a highly annotated, properly documented and complete set of artefacts to raw data with undocumented code and incomplete information about the analysis as a whole. Individual organizations or groups might provide a set of “recommended practices," e.g., in readme files, to attempt to maintain the quality of shared resources. The initiative Code as a Research Object [35] is a joint project between Figshare, GitHub, and Mozilla Science Lab [36] and aims to archive any GitHub code repository to Figshare and produce a DOI to improve the discovery of resources (for the source code that supports this work we have used a similar publishing feature with Zenodo).

ReproZip [37] aims to resolve portability issues by identifying and packaging all dependencies in a self-contained package that when unpacked and executed on another system (with ReproZip installed) should reproduce the methods and results of the analysis. Each package also contains a human-readable configuration file containing provenance information obtained by tracing system calls during system execution. The corresponding provenance trace is however not formatted using existing open standards established by the community. Several platform-dependent studies have been targeted towards extensions to existing standards by implementing the RO model and improving aggregation of resources. Belhajjame et al. [8] proposed the application of ROs to develop workflow-centric ROs containing data and metadata to support the understandability of the utilized methods (in this case workflow specifications). They explored 5 essential requirements to workflow preservation and identified data and metadata that could be stored to satisfy the said requirements. These requirements include providing example data, preserving workflows with provenance traces, annotating workflows, tracking the evolution in workflows, and packaging the auxiliary data and information with workflows. They proposed extensions to existing ontologies such as Object Reuse and Exchange (ORE), the Annotation Ontology (AO), and PROV-O, with 4 additional ontologies to represent workflow-specific information. However, as they state, the scope of the proposed model at that time was not focused on interoperability of heterogeneous workflows because it was demonstrated for a workflow specific to Taverna WMS using myExperiment, which makes it quite platform-dependent.

A domain-specific solution was proposed by Gomez-Perez et al. [38] by extending the RO model to equip workflow-centric ROs with information catering to the specific needs of the earth science community, resulting in enhanced discovery and reusability by experts. They demonstrated that the principles of ROs can support extensions to generate aggregated resources leveraging domain-specific knowledge. Hettne et al. [11] used 3 genomic workflow case studies to demonstrate the use of ROs to capture methods and data supporting querying and useful extraction of information about the scientific investigation under observation. The solution was tightly coupled with the Taverna WMS and hence, if shared, would not be reproducible outside of the Taverna environment. Other notable efforts to use ROs for workflow preservation and method aggregation have been undertaken in systems biology [39], in clinical settings [40], and in precision medicine [41].

Provenance capture and standardization

A range of standards for provenance representation have been proposed. Many studies have emphasized the need for provenance focusing on aspects such as scalability, granularity, security, authenticity, modelling, and annotation [14]. They identify the need to support standardized dialogues to make provenance interoperable. Many of these were used as inputs to initial attempts at creating a standard Provenance Model to tackle the often inconsistent and disjointed terminology related to provenance concepts. This ultimately resulted in the specification of the Open Provenance Model (OPM) [42] together with an open source model for the governance of OPM [43]. Working towards similar goals of interoperability and standardization of provenance for web technologies, the W3C Provenance Incubator Group [44] and the authors of OPM together set the fourth provenance challenge at the International Provenance and Annotation Workshop, 2010 (IPAW’10), that later resulted in PROV, a family of documents serving as the conceptual model for provenance capture and its representation, sharing, and exchange over the Web [45] regardless of the domain or platform. Since then, a number of studies have proposed extensions to this domain-neutral standard. The model is general enough to be adapted to any field and flexible enough to allow extensions for specialized cases.

Michaelides et al. [46] presented a domain-specific PROV-based solution for retrospective provenance to support portability and reproducibility of a statistical software suite. They captured the essential elements from the log of a workflow enactment and represented them using an intermediate notation. This representation was later translated to PROV-N and used as the basis for the PROV Template System. A Linux-specific system provenance approach was proposed by Pasquier et al. [47], who demonstrated retrospective provenance capture at the system level. Another project, UniProv, is working to extract information from Unicore middleware and transform it into a PROV-O representation to facilitate the backtracking of workflow enactments [48]. Other notable domain-specific efforts leveraging the established standards to record provenance and context information are PROV-man [49], PoeM [50], and micropublications [51]. Platforms such as VisTrails and Taverna have built in retrospective provenance support. Taverna [39] implements an extensive provenance capture system, TavernaProv [52], using both PROV ontologies as well as ROs aggregating the resources used in an analysis. VisTrails [53] is an open source project supporting platform-dependent provenance capture, visualization, and querying for extraction of required information about a workflow enactment. Chirigati et al. [37] provide an overview of PROV terms and how they can be translated from the VisTrails schema and serialized to PROV-XML. WINGS [54] can report fine-grained workflow execution provenance as Linked Data using the Open Provenance Model for Workflows ontology [55], which builds on both PROV-O and OPM.

All these efforts are fairly recent and use a standardized approach to provenance capture and hence are relevant to our work on the capture of retrospective provenance. However, our aim is a domain-neutral and platform-independent solution that can be easily adapted for any domain and shared across different platforms and operating systems.

As evident from the literature, there are efforts in progress to resolve the issues associated with effective and complete sharing of computational analysis including both the results and provenance information. These studies range from highly domain-specific solutions and platform-dependent objects to open source flexible interoperable standards. CWL has widespread adoption as a workflow definition standard and hence is an ideal candidate for portable workflow definitions. The next section investigates existing studies focused on workflow-centric science and summarizes best-practice recommendations put forward in these studies. From this we define a hierarchical provenance and resource-sharing framework.

Levels of Provenance and Resource Sharing

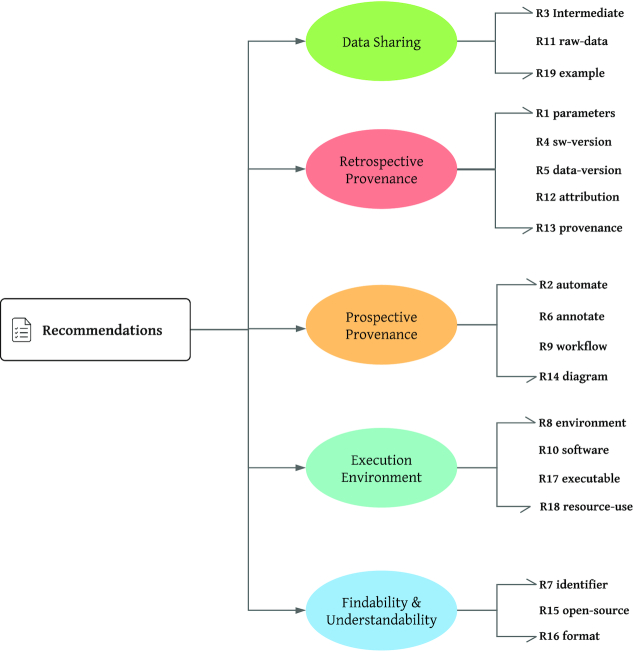

Various studies have empirically investigated the role of automated computational methods in the form of workflows and published best-practice recommendations to support workflow design, preservation, understandability, and reuse. We summarize a number of these recommendations and their justifications in Table 1, where each recommendation addresses a specific requirement of workflow design and sharing. These recommendations can be clustered into broad themes as shown in Fig. 1. This classification can be made in >1 way, e.g., according to how these recommendations are supporting each FAIR dimension (Findable, Accessible, Interoperable, and Reusable) [56]. In this study, we have focused on categories with respect to workflow design, prospective provenance, data sharing, retrospective provenance, the computational environment required/used for an analysis, and better findability and understandability of all shared resources.

Table 1.

Summarized recommendations and justifications from the literature covering best practices on reproducibility, accessibility, interoperability, and portability of workflows

| R No. | Recommendations | Justifications |

|---|---|---|

| R1-parameters | Save and share all parameters used for each software executed in a given workflow (including default values of parameters used) [55, 57–59]. | Affects reproducibility of results because different inputs and configurations of the software can produce different results. Different versions of a tool might upgrade the default values of the parameters. |

| R2-automate | Avoid manual processing of data, and if using shims [60], then make these part of the workflow to fully automate the computational process [57, 59]. | This ensures the complete capture of the computational process without broken links so that the analysis can be executed without the need for performing manual steps. |

| R3-intermediate | Include intermediate results where possible when publishing an analysis [55, 58, 59]. | Intermediate data products can be used to inspect and understand shared analysis when re-enactment is not possible. |

| R4-sw-version | Record the exact software versions used [57, 59]. | This is necessary for reproducibility of results because different software versions can produce different results. |

| R5-data-version | If using public data (reference data, variant databases), then it is necessary to store and share the actual data versions used [3, 61, 57, 59]. | This is needed because different versions of data, e.g., human reference genome or variant databases, can result in slightly different results for the same workflow. |

| R6-annotation | Workflows should be well-described, annotated, and offer associated metadata. Annotations such as user-contributed tags and versions should be assigned to workflows and shared when publishing the workflows and associated results [8, 12, 55, 62, 63]. | Metadata and annotations improve the understandability of the workflow, facilitate independent reuse by someone skilled in the field, make workflows more accessible, and hence promote the longevity of the workflows. |

| R7-identifier | Use and store stable identifiers for all artefacts including the workflow, the datasets, and the software components [62, 63]. | Identifiers play an important role in the discovery, citation, and accessibility of resources made available in open access repositories. |

| R8-environment | Share the details of the computational environment [8, 61, 63]. | Such details support analysis of requirements before any re-enactment or reproducibility is attempted. |

| R9-workflow | Share workflow specifications/descriptions used in the analysis [8, 55, 58, 63, 64]. | The same workflow specifications can be used with different datasets, thereby supporting reusability. |

| R10-software | Aggregate the software with the analysis and share this when publishing a given analysis [61, 63, 64]. | Making software available reduces dependence on third-party resources and as a result minimizes “workflow decay" [65]. |

| R11-raw-data | Share raw data used in the analysis [8, 55, 58, 63, 64]. | When someone wants to validate published results, availability of data supports verification of claims and hence establishes trust in the published analysis. |

| R12-attribution | Store all attributions related to data resources and software systems used [55,64]. | Accreditation supports proper citation of resources used. |

| R13-provenance | Workflows should be preserved along with the provenance trace of the data and results [8, 12, 55, 59, 64]. | A provenance trace provides a historical view of the workflow enactment, enabling end users to better understand the analysis retrospectively. |

| R14-diagram | Data flow diagrams of the computational analysis using workflows should be provided [58, 61]. | These diagrams are easy to understand and provide a human-readable view of the workflow. |

| R15-open-source | Open source licensing for methods, software, code, workflows, and data should be adopted instead of proprietary resources [58, 59, 63, 64, 66]. | This improves availability and legal reuse of the resources used in the original analysis, while restricted licenses would hinder reproducibility. |

| R16-format | Data, code, and all workflow steps should be shared in a format that others can easily understand, preferably in a system-neutral language [8, 58, 66]. | System-neutral languages help achieve interoperability and make an analysis understandable. |

| R17-executable | Promote easy execution of workflows without making significant changes to the underlying environment [3]. | In addition to helping reproducibility, this enables adapting the analysis methods to other infrastructures and improves workflow portability. |

| R18-resource-use | Information about compute and storage resources should be stored and shared as part of the workflow [61]. | Such information can assist users in estimating the resources needed for an analysis and thereby reduce the amount of failed executions. |

| R19-example | Example input and sample output data should be preserved and published along with the workflow-based analysis [8, 65]. | This information enables more efficient test runs of an analysis to verify and understand the methods used. |

This list is not exhaustive; other studies have identified separate issues (e.g., laboratory work provenance and data security) that are beyond the scope of this work.

Figure 1:

Recommendations from Table 1 classified into these categories.

Sharing “all artefacts” from a computational experiment (following all recommendations and best practices) is a demanding task without any informed guidance. It requires consolidated understanding of the impact of the many different artefacts involved in that analysis. This places extra efforts on workflow designers, (re)-users, authors, and reviewers and expectations on the community as a whole. Given the numerous WMSs and differences in how each system deals with provenance documentation, representation, and sharing of these artefacts, the granularity of provenance information preserved will vary for each workflow definition approach. Hence, devising 1 universal but technology-specific solution for provenance capture and the related resource sharing is impossible. Instead we propose a generic framework of provenance in Fig. 2 that all WMSs can benefit from and conform to with minimum technical overhead.

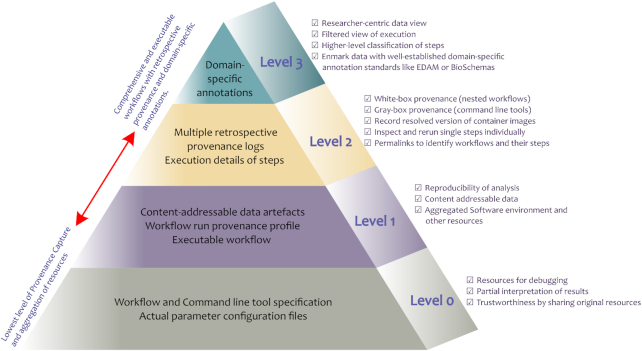

Figure 2:

Levels of provenance and resource sharing and their applications.

The recommendations in Table 1 aid in our understanding to define this framework by classifying the granularity of the provenance and related artefacts where the uppermost level exhibits comprehensive, reproducible, understandable, and provenance-rich computational experiment sharing. The purpose of this framework is 3-fold. First, because of its generic nature it provides uniformity in the provenance granularity across various WMSs belonging to different workflow definition approaches. Second, it provides comprehensive and well-defined guidelines that can be used by researchers to conduct principled analysis of the provenance of any published study. Third, due to its hierarchical nature, the framework can be leveraged by the workflow authors to progress incrementally towards the most transparent workflow-centric analysis. Overall, this framework will help achieve a uniform level of provenance and resource sharing with a given workflow-centric analysis guaranteed to fulfill the respective provenance applications.

Our proposed provenance levels are ordered from general to specific. In brief, Level 0 is unstructured information about the overall workflow enactment; Level 1 adds structured retrospective provenance, access to primary data, and executable workflows; Level 2 enhances the white-box provenance for individual steps; and Level 3 adds domain-specific annotations for improved understanding. These levels are described in the following sub-sections and mapped to the requirements in Table 1 that these levels aim to satisfy.

Level 0

To achieve this level, researchers should share the workflow specifications, input parameters used for a given workflow enactment, raw logs, and output data preferably through an open access repository. This is the least information that could be shared without exerting any extra effort to support seamless reuse or understandability of a given analysis. The artefacts shared at this level would only require uploading of the associated resources to a repository without necessarily providing any supporting metadata or provenance information. Information captured at Level 0 is the bare minimum that can be used for result interpretation.

Workflow definitions based on Level 0 can also potentially be repurposed for other analyses. As argued by Ludäscher, a well-written scientific workflow and its graphical representation is itself a source of prospective provenance, giving the user an idea of the steps taken and data produced [67]. Therefore, a well-described workflow specification indirectly provides prospective provenance without aiming for it. In addition to the textual workflow specification, its graphical representation should also be shared, if available, for better understandability, fulfilling R14-diagram. At this level, reproducing the workflow would only be possible if the end user devotes extra efforts to understand the shared artefacts and carefully recreate the execution environment. As open access journals frequently require availability of methods and data, many published studies now share workflow specifications and optionally the outputs, thereby achieving Level 0 and specifically satisfying R1-parameters and R9-workflow (Table 1). In addition, the resources shared should have open licence starting from Level 0, and this practice proposed by R15-open-source should be adopted at each higher level.

Level 1

At Level 1, R4-sw-version,R5-data-version, R12-attribution, and R13-provenance should be satisfied by providing retrospective provenance of the workflow enactment, i.e., a structured representation of machine-readable provenance that can answer questions such as “what happened," “when it happened," “what was executed," “what was used," “who did this,” and “what was produced." Seamless re-enactment of the workflow should be supported at this level. This is only possible when along with provenance information, R8-environment and R10-software are satisfied by potentially packaging the software environment for analysis sharing or there is enough information about the software environment to guide the user to reliably re-enact the workflow. Hence, R17-executable should be satisfied, making it possible for the end users to re-enact the shared analyses without making major changes to the underlying software environment.

In addition to the software availability and retrospective provenance, access to input data should also be provided, fulfilling R11-raw-data. These data can be used to re-enact the published methods or utilized in a different analysis, e.g., for performance comparison of methods. At Level 1, it is preferable to provide content-addressable data artefacts such as input, output, and intermediate files, avoiding local paths and file names to make a given workflow executable outside its local environment. The intermediate data artefacts should also be provided to facilitate inspection of all step results, hence satisfying R3-intermediate. All resources, including workflow specifications and provenance, should be shared in a format that is understandable across platforms, preferably in a technology-neutral language as proposed by R16-format.

While software and data can be digitally captured, the hardware and infrastructure requirements also need to be captured to fulfill R18-resource-use. This kind of information can naturally vary widely with runtime environments, architectures, and data sizes [68], as well as rapidly becoming outdated as hardware and cloud offerings evolve. Nevertheless a snapshot of the workflow’s overall execution resource usage for an actual run can be beneficial to give a broad overview of the requirements and can facilitate cost-efficient re-computation by taking advantage of spot-pricing for cloud resources [69].

Level 2

It is a common practice in scientific workflows to modularize the workflow specifications by separating the related tasks into “sub-workflows” or “nested workflows” [20] to be incorporated and used in other workflows or be assigned to compute and storage resources in case of distributed computing [70]. These modular solutions promote understanding and reusability of the workflows as researchers are inclined to use these modules instead of workflow as a whole for their own computational experiments. An example of a sub-workflow is the mandatory “preprocessing” [71] needed for the Genome Analysis ToolKit (GATK) best-practice pipelines used for genomic variant calling. These steps can be separated into a sub-workflow to be used before any variant calling pipeline, be it somatic or germline.

At Level 1, retrospective provenance is coarse grained, and as such, there is no distinction between workflows and their sub-workflows. Ludäscher [67] distinguishes between workflow provenance as black-box and database provenance as white-box. The reasoning behind this distinction is that often the steps in a workflow, especially those based on GUI-based platforms, provide levels of abstraction/obscurity to the actual tasks being implemented. In our previous work we used an empirical case study to demonstrate that declarative approaches to workflow definition resulted in transparent workflows with the fewest assumptions [61]. This resolves the black-box/white-box issue to some extent, but to further support research transparency, we propose to share retrospective provenance logs for each nested/sub-workflow, making the details of a workflow enactment as explicit as possible and moving a step closer to white-box provenance. These provenance logs will support the inspection and automatic re-enactment of targeted components of a workflow such as a single step or a sub-workflow individually without necessarily having to re-enact the full analysis. Some existing make-like systems such as Snakemake support partial re-enactments but typically rely on fixed file paths for input data and require manual intervention to provide the specific directory structure. With detailed provenance logs and the corresponding content-addressable data artefacts, the partial reruns can be achieved with automatic generation of input configuration setting.

In addition, we propose to include permalinks at Level 2 to identify the workflows and their individual steps, which facilitates the inspection of each step and aims to improve the longevity of the shared resources, hence supporting R7-identifier. Improving R18-resource-use for Level 2 would include resource usage per task execution. Along with execution times this can be useful information to identify bottlenecks in a workflow and for more complex calculations in cost optimization models [72]. At this provenance level resource usage data will however also become more noisy and highly variant on scheduling decisions by the workflow engine, e.g., sensitivity to cloud instance reuse or co-use for multiple tasks, or variation in data transfers between tasks on different instances. Thus, Level 2 resource usage information should be further processed with statistical models for it to be meaningful for a user keen to estimate the resource requirement for re-enactment of a given analysis.

Level 3

Levels 0–2 are generic and domain-neutral and can apply to any scientific workflow. However, domain-specific information/metadata about data and processes plays an important role in better understanding of the analysis and exploitation of provenance information, e.g., for meaningful queries to extract information to the domain under consideration [73,74]. The addition of domain-specific metadata, e.g., file formats, user-defined tags, and other annotations to generic retrospective provenance can improve the white-boxness by providing domain context to the analysis as described in R6-annotations. Annotations can range from adding textual description and tags to marking data with more systematic and well-defined domain-specific ontologies such as EDAM [75] and BioSchemas [76] in the case of bioinformatic workflows. Some studies also propose to provide example or test data sets, which eventually helps in analyzing the methods shared and verifying their results (as described in R19-example).

At Level 3, the information from previous levels, combined with specific metadata about data artefacts, facilitates higher level classification of workflow steps into motifs [77] such as data retrieval, preprocessing, analysis, and visualization. This level of provenance, resource aggregation, and sharing can provide a researcher-centric view of data and enable users to re-enact a set of steps or full workflow by providing a filtered and annotated view of the execution. This can be non-trivial to achieve with mainstream methods of workflow definition and sharing because it requires guided user annotations with controlled vocabularies, but this can be simplified by reusing related tooling from existing efforts such as BioCompute Objects [41] and DataCrate [78].

Communicating resource requirements (R18-resource-use) at Level 3 would involve domain-specific models for hardware use and cost prediction, as suggested for dynamic cloud costing [79] in BioSimSpace [80], or predicting assembler and memory settings through machine learning of variables such as source biome, sequencing platform, file size, read count, and base count in the European Bionformatics Institute (EBI) Metagenomics pipeline [81]. For robustness such models typically need to be derived from resource usage across multiple workflow runs with varied inputs, e.g., by a multi-user workflow platform. Taking advantage of Level 3 resource usage models might require preprocessing workflow inputs and calculations in an environment like R or Python, so we recommend that models be provided with separate sidecar workflows for interoperable execution before the main workflow.

By explicit enumeration of the levels of provenance, it should be possible to quantify and directly assess the effort required to reuse a workflow and reproduce experiments directly. The similar effort 5-star Open Data [82] strongly advocates open-licensed structured representation, use of stable identifiers for data sharing, and following Linked Data principles to cross-relate data. One challenge in achieving the Open Data stars is that it necessitates tool support during data processing. In our framework we proposed systematic workflow-centric resource sharing using structured Linked Data representation, including recording of the executed data operations. Hence, our effort compliments the already proposed 5-star Open Data principles and contributes to further understanding by sharing the computational method following the same principles.

Requiring researchers to achieve the above-defined levels individually is unrealistic without guidance and direct technical support. Ideally, the conceptual meaning of these levels would be translated into a practical solution utilizing the available resources. However, given the heterogeneity of workflow definition approaches, it is expected that the proposed framework, when translated into practical solutions, will result in varying workflow-centric solutions tied to specific WMSs. To support interoperability of the workflow-centric analysis achieving the provenance levels, we propose CWLProv, a format for annotating resource aggregations equipped with retrospective provenance. The next section describes CWLProv and the associated standards that are applied in this process.

CWLProv 0.6.0 and Utilized Standards

Here we present CWLProv, a format for the methodical representation of workflow enactment, associated artefacts, and capturing and using retrospective provenance information. Keeping in view the recommendations from Table 1, e.g.,R15-open-source and R16-format, we leverage open source, domain-independent, system-neutral, interoperable, and most importantly community-driven standards as the basis for the design and formatting of reproducible and interoperable workflow-based ROs. The profile description in this section corresponds to CWLProv 0.6.0 [83] (see [84] for the latest profile).

Applied standards and vocabularies

We follow the recommendation “Reuse vocabularies, preferably standardized ones” [85] from best practices associated with data sharing, representation, and publication on the Web to achieve consensus and interoperability of workflow-based analyses. Specifically we integrate CWL for workflow definition, ROs for resource aggregation, and the PROV-Data Model (PROV-DM) to support the retrospective provenance associated with workflow enactment. The key properties and principles of these standards are described below.

Common Workflow Language

CWL [6] provides declarative constructs for workflow structure and command line tool interface definition. It makes minimal assumptions about base software dependencies, configuration settings, software versions, parameter settings, or indeed the execution environment more generally [61]. The CWL object model supports comprehensive recording and capture of information for workflow design and execution. This can subsequently be published as structured information alongside any resultant analysis using that workflow.

CWL is a community-driven standard effort that has been widely adopted by many workflow design and execution platforms, supporting interoperability across a set of diverse platforms. Current adopters include Toil, Arvados, Rabix [86], Cromwell [87], REANA, and Bcbio [88], with implementations for Galaxy, Apache Taverna, and AWE currently in progress.

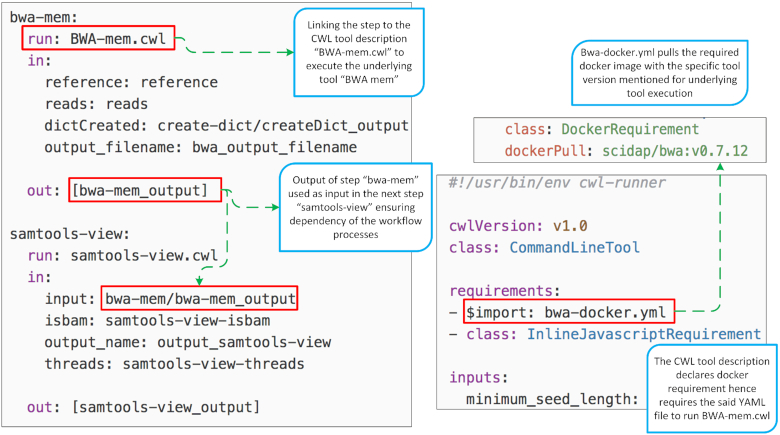

A workflow in CWL is composed of “steps,” where each step refers either to a command line tool (also specified using CWL) or another workflow specification incorporating the concept of “sub-workflows." Each “step” is associated with “inputs” that are composed of any data artefact required for the execution of that step (Fig. 3). As a result of the execution of each step, “outputs” are produced that can become (part of) “inputs” for the next steps, making the execution data-flow oriented. CWL is not tied to a specific operating system or platform, which makes it an ideal approach for interoperable workflow definitions.

Figure 3:

Left: A snapshot of part of a GATK workflow described using CWL. Two steps named as “bwa-mem" and “samtools-view" are shown, where the former links to the tool description executing the underlying tool (BWA-mem for alignment) and provides the output used as input for samtools. Right: Snapshot of BWA-mem.cwl and the associated Docker requirements for the exact tool version used in the workflow execution.

Research Object

An RO encapsulates all of the digital artefacts associated with a given computational analysis contributing towards preservation of the analysis [89], together with their metadata, provenance, and identifiers.

The aggregated resources can include but are not limited to input and output data for analysis results validation, computational methods such as command line tools and workflow specifications to facilitate workflow re-enactment, attribution details regarding users, retrospective as well as prospective provenance for better understanding of workflow requirements, and machine-readable annotations related to the artefacts and the relationships between them. The goal of ROs is to make any published scientific investigation and the produced artefacts “interoperable, reusable, citable, shareable, and portable."

The 3 core principles [90] of the RO approach are to support “identity," “aggregation," and “annotation” of research artefacts. They look to enable accessibility of tightly coupled, interrelated, and well-understood aggregated resources involved in a computational analysis as identifiable objects, e.g., using unique (persistent) identifiers such as DOIs and/or ORCIDs. The RO approach is well aligned with the idea of interoperable and platform-independent solutions for provenance capture of workflows because of its domain-neutral and platform-independent nature.

While ROs can be serialized in several different ways, in this work we have reused the BDBag approach based on BagIt (see box), which has been shown to support large-scale workflow data [91]. This approach is also compatible with data-archiving efforts from the NIH Data Commons, Library of Congress, and the Research Data Alliance. The specialized workflow-centric RO in this study encompasses the components mentioned in the previous paragraph annotated with various targeted tools and a PROV-based workflow provenance profile to capture the detailed retrospective provenance of the CWL workflow enactment.

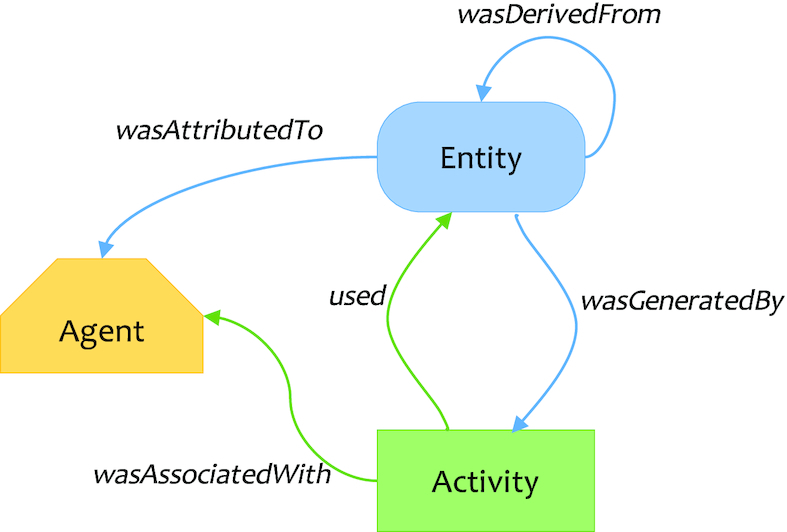

PROV Data Model

The W3C developed PROV, a suite of specifications for unified/interoperable representation and publication of provenance information on the Web. The underlying conceptual PROV-DM [15] provides a domain-agnostic model designed to capture fundamental features of provenance with support for extensions to integrate domain-specific information (Fig. 4).

Figure 4:

Core concepts of the PROV Data Model. Adapted from W3C PROV Model Primer [92].

We use mainly 2 serializations of PROV for this study, PROV-Notation (PROV-N) [93] and PROV-JSON [94]. PROV-N is designed to achieve serialization of PROV-DM instances by formally representing the information using a simplified textual syntax to improve human readability. PROV-JSON is a lightweight interoperable representation of PROV assertions using JavaScript constructs and data types. The key design and implementation principles of these 2 serializations of PROV are in compliance with the goals of this study, i.e., understandable and interoperable, and hence are a natural choice to support the design of an adaptable provenance profile. For completeness we also explored serializing the provenance graph as PROV-XML [95], as well as PROV-O [96], which provides a mapping to Linked Data and ontologies, with potential for rich queries and further integration using a triple store. One challenge here is the wide variety of OWL and RDF formats; we opted for Turtle, N-Triples, and JavaScript Object Notation for Linked Data (JSON-LD) but concluded that requiring all of these PROV and RDF serializations would be an unnecessary burden for other implementations of CWLProv.

CWLProv research object

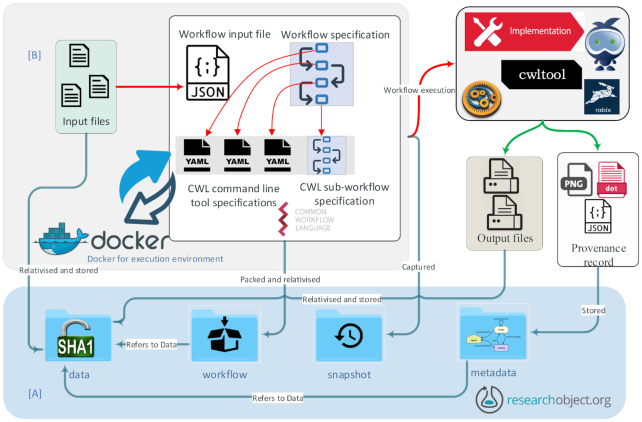

The provenance framework defined in the previous section can be satisfied by using a structured approach to share the identified resources. In this section, we define the representation of data and metadata to be shared for a given workflow enactment, stored as multiple files in their native formats. The folder structure of the CWLProv RO complies with theBagIt [9] format such that its content and completeness can be verified with any BagIt tool or library (see box What is BagIt?). The files used and generated by the workflow are here considered the data payload; the remaining directories include metadata of how the workflow results were created. We systematized the aggregated resources into various collections for better understanding and accessibility for a CWL workflow execution (Fig. 5).

Figure 5:

Schematic representation of the aggregation and links between the components of a given workflow enactment. Layers of execution are separated for clarity. The workflow specification and command line tool specifications are described using CWL. Each individual command line tool specification can optionally interact with Docker to satisfy software dependencies. [A] The RO layer shows the structure of the RO including its content and interactions with different components in the RO and [B] the CWL layer.

data/

data/ is the payload collection of the RO; in CWLProv this contains all input and output files used in a given workflow enactment. Each data file should be labelled and identified based on a hashed checksum rather than derived from its file path during workflow execution. This use of content-addressable reference and storage [97] simplifies identifier generation for data and helps to avoid local dependencies, e.g., hard-coded file names. However, the workflow execution engine might use other unique identifiers for file objects. It is advised to reuse such identifiers to avoid redundancy and to comply with the system/platform used to run the workflow.

workflow/

CWLProv ROs must include a system-independent executable version of the workflow under the workflow/ folder. When using CWL, this sub-folder must contain the complete executable workflow specification file, an input file object with parameter settings used to enact the workflow and an output file object generated as a result of workflow enactment. The latter contains details of the workflow outputs such as data files produced by the workflow but may exclude intermediate outputs.

To ensure RO portability, these file objects may not exactly match the file names at enactment time, as the absolute paths of the inputs are recommended to be replaced with relativized content-addressed paths within the RO; e.g., /home/alice/exp15/sequence.fa is replaced with ../data/b1/b1946ac92492d2347c6235b4d2611184. The input file object should also capture any dependencies of the input data files, such as .bam.bai indexes neighbouring .bam files. Any folder objects should be expanded to list contained files and their file names at time of enactment.

In the case of a CWL workflow, cwltool can aggregate the CWL description and any referenced external descriptions (such as sub-workflows or command line tool descriptions) into a single workflow file using cwltool -pack. This feature is used in our implementation (details in section Practical Realizationof CWLProv) to rewrite the workflow files, making them re-executable without depending on workflow or command line descriptions on the file system outside the RO. Other workflow definition approaches, WMS, or CWL executors should apply similar features to ensure that workflow definitions are executable outside their original file system location.

What is BagIt?

BagIt is an Internet Engineering Task Force Internet Standard (RFC8493) [9] that defines a structured file hierarchy for the purpose of digital preservation of data files. BagIt was initiated by the US Library of Congress and the California Digital Library and is now used by libraries and archives to ensure safe transmission and storage of datasets using “bags."

A bag is indicated by the presence of bagit.txt and a payload of digital content stored as files and sub-folders in the data/ folder. Other files are considered tag files to further describe the payload. All the payload files are listed in a manifest with checksums of their byte content, e.g.,manifest-sha256.txt and equivalent for tag files in tagmanifest-sha256.txt. Basic metadata can be provided in bag-info.txt as key-value pairs.

A bag can be verified to be complete if all the files listed in the manifests exist and is also considered valid if the manifest matches the checksum of each file, ensuring that they have been correctly transferred.

BDBag (Big Data bag) [91] is a profile of BagIt that adds an RO [98] metadata/manifest.json in JSON-LD [99] format to contain richer Linked Data annotations that may not fit well in bag-info.txt, e.g., authors of an individual file. BDBags can include a fetch.txt to reference external resources using ARK MinIDs or HTTP URLs, allowing bags that contain large files without necessarily transferring their bytes.

snapshot/

snapshot/ comprises copies of the workflow and tool specifications files “as-is” at enactment time, without any rewrites, packing, or relativizing as described above.

It is recommended to use snapshot resources only for validity-checking results and for understanding the workflow enactment because these files might contain absolute paths or be host-specific and thus may not be possible to re-enact elsewhere. Preserving these files untouched may nevertheless retain information that could otherwise get lost, e.g., commented-out workflow code, or identifiers baked into file names.

A challenge in capturing snapshot files is that they typically live within a file system hierarchy, which can difficult to replicate accurately, and may have internal references to other files. In our implementation we utilize cwltool -print-depsto find indirectly referenced files and store their snapshots in a flat folder.

metadata/

Each CWLProv RO must contain an RO manifest file metadata/manifest.json and 2 sub-directories metadata/logs and metadata/provenance. The RO manifest, part of the BDBag [91] profile, follows the JSON-LD structure defined for Research Object Bundles [98] and can provide structured Linked Data for each file in the RO, such as file type and creation date. Further detail about the manifest file contents is documented on GitHub as CWLProv specification [83].

Any raw log information from the workflow enactment should be made available in metadata/logs. This typically includes the actual commands executed for each step. Similar to the snapshot files, log files may however be difficult to process outside the original enactment system. An example of such processing is CWL-metrics [100], which post-processes cwltool log files to capture runtime metrics of individual Docker containers.

Capturing the details of a workflow execution requires rich metadata in provenance files (see section Retrospective Provenance Profile). These should exist in the sub-folder metadata/provenance. It is recommended to make the availability of a primary provenance file mandatory, which should conform with the PROV-N [93] format. This file describes the top-level workflow execution. As described in Level 2 (Section Levels of Provenance and Resource Sharing), it is quite possible to have nested workflows. In that case, a separate provenance file for each nested workflow execution should be included in this folder. If there are additional formats of provenance files such as PROV-JSON [94], PROV-XML [95], PROV-O [96], etc., then these should be included in the said folder, with a declaration using conformsTo to declare their formats in the RO manifest being mandatory. The nested workflow profile should be named such that there is a link between the respective step in the primary workflow and the nested workflow, preferably using unique identifiers.

Because the PROV-DM has a generalized structure, there might be some provenance aspects specific to particular workflows that are hard to capture by only using PROV-N; hence, ontologies such as wfdesc [101] can be used to describe the abstract representation of the workflow and its steps. Use of wfprov [102] to capture some workflow provenance aspects is also encouraged. Alternative extensions such as ProvOne [103] can also be utilized if the WMS or workflow executor is using these extensions already.

CWLProv reuses Linked Data standards like JSON-LD [99], W3C PROV [15], and Research Object [11]. A challenge with Linked Data in distributed and desktop computing is how to make identifiers that are absolute Uniform Resource Identifiers (URIs) and hence globally unique. For example, for CWLProv a workflow may be executed by an engine that does not know where its workflow provenance will be stored, published, or finally integrated. To this end CWLProv generators should use the proposed arcp [104] URI scheme to map local file paths within the RO BagIt folder structure to absolute URIs for use within the RO manifest and associated PROV traces. Consumers of CWLProv ROs that do not contain an arcp-based External-Identifier should generate a temporary arcp base to safely resolve any relative URI references not present in the CWLProv folder. Implementations processing a CWLProv RO may convert arcp URIs to local file:/// or http:// URIs depending on how and where the CWLProv RO was saved, e.g., using the “arcp.py” library [105].

Retrospective provenance profile

As stated earlier, the primary provenance file should conform to the PROV-N [93] serialization of the PROV data model and may optionally use ontologies specific to the workflow execution. The key features used in the structure of the retrospective provenance profile for a CWL workflow enactment in CWLProv are listed in Table 2. These features are not tied to any platform or workflow definition approach and hence can be used to document retrospective provenance of any workflow irrespective of the workflow definition approach.

Table 2.

Fulfilling recommendations with the CWLProv profile of W3C PROV, extended with RO Model’s wfdesc (prospective provenance) and wfprov (retrospective provenance)

| PROV type | Subtype | Relation | Range | Recommendation |

|---|---|---|---|---|

| Plan | wfdesc:Workflow | wfdesc:hasSubProcess | wfdesc:Process | R9-workflow |

| wfdesc:Process | ||||

| Activity | wfprov:WorkflowRun | wasAssociatedWith | wfprov:WorkflowEngine | R8-environment |

| ↳ hadPlan | wfdesc:Workflow | R9-workflow, R17-executable | ||

| wasStartedBy | wfprov:WorkflowEngine | R8-environment | ||

| ↳ atTime | ISO8601 timestamp | R13-provenance | ||

| wasStartedBy | wfprov:WorkflowRun | R9-workflow | ||

| wasEndedBy | wfprov:WorkflowEngine | R8-environment | ||

| ↳ atTime | ISO8601 timestamp | R13-provenance | ||

| wfprov:ProcessRun | wasStartedBy | wfprov:WorkflowRun | R10-software | |

| ↳ atTime | ISO8601 timestamp | R14-provenance | ||

| used | wfprov:Artifact | R11-raw-data | ||

| ↳ role | wfdesc:InputParameter | R1-parameters | ||

| wasAssociatedWith | wfprov:WorkflowRun | R9-workflow | ||

| ↳ hadPlan | wfdesc:Process | R17-executable, R16-format | ||

| wasEndedBy | wfprov:WorkflowRun | R13-provenance | ||

| ↳ atTime | ISO8601 timestamp | R13-provenance | ||

| SoftwareAgent | wasAssociatedWith | wfprov:ProcessRun | R8-environment | |

| ↳ cwlprov:image | docker image id | R4-sw-version | ||

| SoftwareAgent | wfprov:WorkFlowEngine | wasStartedBy | Person ORCID | R12-attribution |

| label | cwltool -version | R4-sw-version | ||

| Entity | wfprov:Artefact | wasGeneratedBy | wfprov:Processrun | R3-intermediate, R7-identifier |

| ↳ role | wfdesc:OutputParameter | R1-parameters | ||

| Collection | wfprov:Artefact | hadMember | wfprov:Artefact | R3-intermediate |

| Dictionary | hadDictionaryMember | wfprov:Artefact | ||

| ↳ pairKey | filename | R7-identifier |

Indentation with ↳ indicates n-ary relationships, which are expressed differently depending on PROV syntax. Namespaces: http://www.w3.org/ns/prov# (default), http://purl.org/wf4ever/wfdesc# (wfdesc), http://purl.org/wf4ever/wfprov# (wfprov), https://w3id.org/cwl/prov# (cwlprov).

The core mapping is following the PROV data model as in Fig. 4: the PROV Activity represents the duration of a workflow run, as well as individual step executions, which used file and data (Entity), which again may be wasGeneratedBy previous step activities. The workflow engine (e.g., cwltool) is the Agent controlling these activities according to the workflow definition (Plan).

PROV is a general standard not specific to workflows, and it lacks features to relate a plan (i.e., a workflow description) with sub-plans and workflow-centric retrospective provenance elements, e.g., specific workflow enactment and its related steps enactment. We have used wfdesc and wfprov to represent a few elements of prospective and retrospective provenance, respectively. In addition, the provenance profile documented details of all the uniquely identified activities, e.g., workflow enactment and related command line tool invocations, and their associated entities (e.g., input and output data artefacts, input configuration files, workflows, and command line tool specifications). The profile also documents the relationship between activities such as which activity (workflow enactment) was responsible for starting and ending another activity (command line tool invocation).

As described in Section Levels of Provenance and Resource Sharing, to achieve maximum white-box provenance, the inner workings of a nested workflow should also be included in the provenance trace. If a step represents a nested workflow, a separate provenance profile is included in the RO. Moreover, in the parent workflow trace, this relationship is recorded using “has_provenance" as an attribute of the Activity step, which refers to the profile of the nested workflow.

Practical Realization of CWLProv

CWLProv [83] provides a format that can be adopted by any workflow executor or platform, provided that the underlying workflow definition approach is at least as declarative as CWL; i.e., it captures the necessary components described in Section Applied Standards and Vocabularies. In the case of CWL, as long as the conceptual constructs are common amongst the available implementations and executors, a workflow enactment can be represented in CWLProv format. To demonstrate the practical realization of the proposed model we consider a Python-based reference implementation of CWL cwltool.

cwltool is a feature-complete reference implementation of CWL. It provides extensive validation of CWL files, as well as offering a comprehensive set of test cases to validate new modules introduced as extensions to the existing implementation. Thus it provides the ideal choice for implementing CWLProv for provenance support and resource aggregation. The existing classes and methods of the implementation were utilized to achieve various tasks such as packaging of the workflow and all associated tool specifications together. In addition, the existing Python library prov [106] was used to create a provenance document instance and populate it with the required artefacts generated as the workflow enactment proceeds.

It should be noted that we elected to implement CWLProv in the reference implementation cwltool instead of the more scalable and production-friendly CWL implementations like Toil [107], Arvados [108], Rabix [86], CWL-Airflow [109], or Cromwell [87]. An updated list of implementations is available at the CWL [110]. Compared to cwltool these generally have extensive scheduler and cloud compute support, and extensions for large data transfer and storage, and should therefore be considered for any adopters of the CWL. In this study we have however focused on cwltool because its code base was found to be easy to adapt for rich provenance capture without having to modify subsystems for distributed execution or data management, and as a reference implementation better informing us on how to model CWLProv for the general case rather than being tied into execution details of the more sophisticated CWL workflow engines.

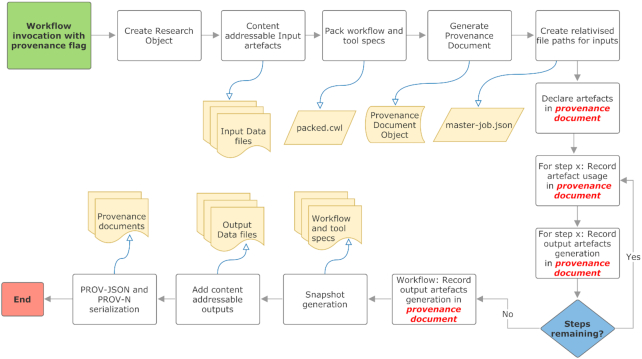

CWLProv support for cwltool is built as an optional module, which, when invoked as “cwltool-provenance ro/ workflow.cwl job.json," will automatically generate an RO with the given folder name “ro/" without requiring any additional information from the user. Each input file is assigned a hash value and placed in the folder “ro/data," making it content-addressable to avoid local dependencies (Fig. 6).

Figure 6:

High-level process flow representation of retrospective provenance capture.

To avoid including information about attribution without consent of the user, we introduce an additional flag “ -enable-user-provenance."If a user provides the options -orcid and -full-name, this information will be included in the provenance profile related to user attribution. Enabling “ -enable-user-provenance” and not providing the full name or ORCID will store user account details from the local machine for attribution, i.e., the details of the agent that enacted the workflow.

The workflow and command line tool specifications are aggregated in 1 file to create an executable workflow and placed in folder “ro/workflow." This folder also contains transformed input job objects containing the input parameters with references to artefacts in the ro/data based on relativizing the paths present in the input object. These 2 files are sufficient to re-enact the workflow, provided the other required artefacts are also included in the RO and comply with the CWLProv format. The cwltool control flow [111] indicates the points when the execution of the workflow and command line tools involved in the workflow enactment start, end, and how the output is reported back. This information and the artefacts are captured and stored in the RO.

When the execution of a workflow begins, CWLProv extensions to cwltool generate a provenance document (using the prov library), which includes default namespaces for the workflow enactment “activity." The attribution details as an agent are also added at this stage if user provenance capture is enabled, e.g., to answer “who ran the workflow?" Each step of the workflow can correspond to either a command line tool or another nested workflow referred to as a sub-workflow in the CWL documentation. For each nested workflow, a separate provenance profile is initialized recursively to achieve a white-box finer-grained provenance view as explained in Section Levels of Provenance and Resource Sharing. This profile is continually updated throughout the nested workflow enactment. Each step is identified by a unique identifier and recorded as an activity in the parent workflow provenance profile, i.e., the “primary profile." The nested workflow is recorded as a step in the primary profile using the same identifier as the “nested workflow enactment activity” identifier in the respective provenance profile. For each step in the activity, the start time and association with the workflow activity are created and stored as part of the overall provenance to answer the question “when did it happen?"

The data used as input by these steps are either provided by the user or produced as an intermediate result from the previous steps. In both cases, the Usage is recorded in the respective provenance profile using checksums as identifiers to answer the question “what was used?" The non-file input parameters such as strings and integers are stored “as-is” using an additional optional argument, prov:value. Upon completion, each step typically generates some data. The provenance profile records the generation of outputs at the step level to record “what was produced?” and “which process produced it?" Once all steps complete, the workflow outputs are collected and the generation of these outputs at the workflow level is recorded in the provenance profile. Moreover, by using the checksum of these files generated by the cwltool, content-addressable copies are saved in the folder ro/data. The provenance profile refers to these files using the same checksum such that they are traceable or can be used for further analysis if required. The workflow specification, command line tool specifications, and JSON job file are archived in the ro/snapshot folder to preserve the actual workflow history.

This prototype implementation provides a model and guidance for workflow platforms and executors to identify their respective features that can be utilized in devising their own implementation of CWLProv.

Achieving recommendations with provenance levels

Table 3 maps the best practices and recommendations from Table 1 to the Levels of Provenance (Fig. 2). The shown methods and implementation readiness indicate to which extent the recommendations are addressed by the implementation of CWLProv (detailed in this section).

Table 3.

Recommendations and provenance levels implemented in CWLProv

| Recommendation | Level 0 | Level 1 | Level 2 | Level 3 | Methods |

|---|---|---|---|---|---|

| R1-parameters | • | • | CWL, BP | ||

| R2-automate | • | CWL, Docker | |||

| R3-intermediate | • | PROV, RO | |||

| R4-sw-version | • | • | CWL, Docker, PROV | ||

| R5-data-version | • | • | CWL, BP | ||

| R6-annotation | • | * | CWL, RO, BP | ||

| R7-described | • | CWL, RO | |||

| R7-identifier | • | • | • | RO, CWLProv | |

| R8-environment | * | * | GFD.204 | ||

| R9-workflow | • | • | • | CWL, wfdesc | |

| R10-software | • | • | CWL, Docker | ||

| R11-raw-data | • | • | CWLProv, BP | ||

| R12-attribution | • | RO, CWL, BP | |||

| R13-provenance | • | • | PROV, RO | ||

| R14-diagram | ▓ | * | CWL, RO | ||

| R15-open-source | • | CWL, BP | |||

| R16-format | • | • | CWL, BP | ||

| R17-executable | ▓ | • | CWL, Docker | ||

| R18-resource-use | * | * | CWL, GFD.204 | ||

| R19-example | * | ▓ | RO, BP |

BP: best practices need to be followed manually; CWL: Common Workflow Language and embedded annotations; CWLProv: additional attributes in PROV; PROV: W3C Provenance model; RO: RO model and BagIt; wfdesc: prospective provenance in PROV.

• Implemented.

▓ Partially implemented.

*Implementation planned/ongoing.

Note that other approaches may solve this mapping differently. For instance, Nextflow [112] may fulfill R18-resource-use at Provenance Level 2 because it can produce trace reports with hardware resource usage per task execution [113], but not for the overall workflow. While a Nextflow trace report is a separate CSV file with implementation-specific columns, our planned R18-resource-use approach for CWL is to combine CWL-metrics [114], permalinks, and the standard GFD.204 [115] to further relate resource use with Level 1 and Level 2 provenance within the CWLProv RO.

In addition to following the recommendations from Table 1 through computational methods, the workflow authors are also required to exercise best practices for workflow design and authoring. For instance, to achieve R1-parameters the workflow must be written in such a way that parameters are exposed and documented at the workflow level, rather than hard-coded within an underlying Python script. Similarly, while the CWL format supports rich details of user annotations that can fulfill R6-annotation, for these to survive into an RO at execution time, such annotation capabilities must actually be used by workflow authors instead of unstructured text files.

It should be a goal of a scientific WMS to guide users towards achieving the required level of the provenance framework through automation where possible. For instance, a user may in the workflow have specified a Docker container image without preserving the version, but the provenance log could still record the specific container version used at execution time, achieving R4-sw-version retrospectively by computation rather than relying on a prospective declaration in the workflow definition.

CWLProv Evaluation with Bioinformatics Workflows

CWLProv as a standard supports syntactic, semantic, and pragmatic interoperability (defined in Section Interoperability) of a given workflow and its associated results. We have defined a “common data format” for workflow sharing and publication such that any executor or WMS with CWL support can interpret this information and make use of it. This ensures the syntactic interoperability between the workflow executors on different computing platforms. Similarly the “content” of the shared aggregation artefact as a workflow-centric RO is unambiguously defined, thus ensuring uniform representation of the workflow and its associated results across different platforms and executors, hence supporting semantic interoperability. With Level 3 provenance satisfied providing domain-specific information along with level 0–2 provenance tracking, we posit that CWLProv would be able to accomplish pragmatic interoperability by providing unambiguous information about the “context," “application,” and “use” of the shared/published workflow-centric ROs. Hence, extension of the current implementation (described in section Practical Realization of CWLProv) in future to include domain-rich information in the provenance traces and the CWLProv RO will result in pragmatic interoperability.

To demonstrate the interoperability and portability of the proposed solution, we evaluate CWLProv and its reference implementation using open source bioinformatics workflows available on GitHub from different research initiatives and from different developers. Conceptually, these workflows are selected for evaluation owing to their extensive use in real-life data analyses and variety of the input data. Alignment workflow is included in the evaluation because it is one of the most time-consuming yet mandatory steps in any variant calling workflow. Practically, choosing the workflows by these particular groups out of numerous existing implementations is justified in each section below.

RNA-seq analysis workflow

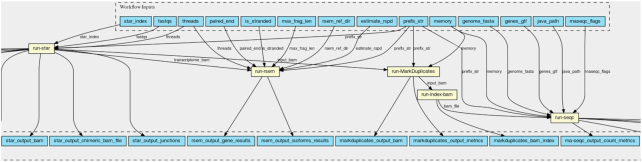

RNA sequencing (RNA-seq) data generated by next-generation sequencing platforms is composed of short sequence reads that can be aligned to a reference genome, where the alignment results form the basis of various analyses such as quantitating transcript expression and identifying novel splice junctions and isoforms and differential gene expression [116]. RNA-seq experiments can link phenotype to gene expression and are widely applied in multi-centric cancer studies [20]. Computational analysis of RNA-seq data is performed by different techniques depending on the research goals and the organism under study [117]. The workflow [118] included in this case study has been defined in CWL by one of the teams [119] participating in the NIH Data Commons initiative [120], a large research infrastructure program aiming to make digital objects (such as data generated during biomedical research and software/tools required to utilize such data) shareable and accessible and hence aligned with the FAIR principles [56].

This workflow (Fig. 7), designed for the pilot phase of the NIH Data Commons initiative [121], adapts the approach and parameter settings of Trans-Omics for Precision Medicine (TOPMed) [122]. The RNA-seq pipeline originated from the Broad Institute [123]. There are 5 steps in the workflow: (i) Read alignment using STAR [124] produces aligned BAM files including the genome BAM and transcriptome BAM. (ii) The genome BAM file is processed using Picard MarkDuplicates [125], producing an updated BAM file containing information on duplicate reads (such reads can indicate biased interpretation). (iii) SAMtools index [126] is then used to generate an index for the BAM file, in preparation for the next step. (iv) The indexed BAM file is processed further with RNA-SeQC [127], which takes the BAM file, human genome reference sequence, and Gene Transfer Format (GTF) file as inputs to generate transcriptome-level expression quantifications and standard quality control metrics. (v) In parallel with transcript quantification, isoform expression levels are quantified by RSEM [128]. This step depends only on the output of the STAR tool, and additional RSEM reference sequences.

Figure 7:

Portion of an RNA-seq workflow generated by CWL viewer [129].

For testing and analysis, the workflow author provided example data created by down-sampling the read files of a TOPMed public access data set [130]. Chromosome 12 was extracted from the Homo Sapien Assembly 38 reference sequence and provided by the workflow authors. The required GTF and RSEM reference data files are also provided. The workflow is well documented, with a detailed set of instructions for the steps performed to down-sample the data also provided for transparency. The availability of example input data, use of containerization for underlying software, and detailed documentation are important factors in choosing this specific CWL workflow for CWLProv evaluation.

Alignment workflow