Abstract

The field of brain-computer interfaces is poised to advance from the traditional goal of controlling prosthetic devices using brain signals to combining neural decoding and encoding within a single neuroprosthetic device. Such a device acts as a “co-processor” for the brain, with applications ranging from inducing Hebbian plasticity for rehabilitation after brain injury to reanimating paralyzed limbs and enhancing memory. We review recent progress in simultaneous decoding and encoding for closed-loop control and plasticity induction. To address the challenge of multi-channel decoding and encoding, we introduce a unifying framework for developing brain co-processors based on artificial neural networks and deep learning. These “neural co-processors” can be used to jointly optimize cost functions with the nervous system to achieve desired behaviors ranging from targeted neuro-rehabilitation to augmentation of brain function.

Introduction

A brain-computer interface (BCI) [1,2,3,4] is a device that can (a) allow signals from the brain to be used to control devices such as prosthetics, cursors or robots, and (b) allow external signals to be delivered to the brain through neural stimulation. The field of BCIs has made enormous strides in the past two decades. The genesis of the field can be traced to early efforts in the 1960s by neuroscientists such as Eb Fetz [4] who studied operant conditioning in monkeys by training them to control the movement of a needle in an analog meter by modulating the firing rate of a neuron in their motor cortex. Others such as Delgado and Vidal explored techniques for neural decoding and stimulation in early versions of neural interfaces [6,7]. After a promising start, there was a surprising lull in the field until the 1990s when, spurred by the advent of multi-electrode recordings as well as fast and cheap computers, the field saw a resurgence under the banner of brain-computer interfaces (BCIs; also known as brain-machine interfaces and neural interfaces) [1,2].

A major factor in the rise of BCIs has been the application of increasingly sophisticated machine learning techniques for decoding neural activity for controlling prosthetic arms [8,9,10], cursors [11,12,13,14,15,16], spellers [17,18] and robots [19,20,21,22]. Simultaneously, researchers have explored how information can be biomimetically or artificially encoded and delivered via stimulation to neuronal networks in the brain and other regions of the nervous system for auditory [23], visual [24], proprioceptive [25], and tactile [26,27,28,29,30] perception.

Building on these advances in neural decoding and encoding, researchers have begun to explore bi-directional BCIs (BBCIs) which integrate decoding and encoding in a single system. In this article, we review how BBCIs can be used for closed-loop control of prosthetic devices, reanimation of paralyzed limbs, restoration of sensorimotor and cognitive function, neuro-rehabilitation, enhancement of memory, and brain augmentation.

Motivated by this recent progress, we propose a new unifying framework for combining decoding and encoding based on “neural co-processors” which rely on artificial neural networks and deep learning. We show that these “neural co-processors” can be used to jointly optimize cost functions with the nervous system to achieve goals such as targeted rehabilitation and augmentation of brain function, besides providing a new tool for testing computational models and understanding brain function [31].

Simultaneous Decoding and Encoding in BBCIs

Closed-Loop Prosthetic Control

Consider the problem of controlling a prosthetic hand using brain signals. This involves (1) using recorded neural responses to control the hand, (2) stimulating somatosensory neurons to provide tactile and proprioceptive feedback, and (3) ensuring that stimulation artifacts do not corrupt the recorded signals being used to control the hand. Several artifact reduction methods have been proposed for (3) – we refer the reader to [32,33,34]. We focus here on combining (1) decoding with (2) encoding.

Most state-of-the-art decoding algorithms for intracortical BCIs are based on a linear decoder such as the Kalman filter. Typically, the state vector $x$ for the Kalman filter is chosen to be a vector of kinematic quantities to be estimated, such as hand position, velocity, and acceleration. The likelihood (or measurement) model for the Kalman filter specifies how the kinematic vector $x_t$ at time $t$ relates linearly (via a matrix $B$) to the measured neural activity vector $y_t$:

$$y_t = B x_t + n_t$$

while a dynamics model specifies how $x_t$ linearly changes (via matrix $A$) over time:

$$x_t = A x_{t-1} + m_t$$

Here $n_t$ and $m_t$ are zero-mean Gaussian noise processes. Under these linear-Gaussian assumptions, the Kalman filter computes the optimal estimate of the kinematics $x_t$ (both mean and covariance) given the current and all past neural measurements.
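The decoder described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the implementation used in any of the cited studies. It assumes the measurement model $y_t = B x_t + n_t$ with $n_t \sim \mathcal{N}(0, R)$ and the dynamics model $x_t = A x_{t-1} + m_t$ with $m_t \sim \mathcal{N}(0, Q)$; the covariance names `Q` and `R`, the toy dimensions and the synthetic data are all hypothetical. In practice, $A$, $B$, $Q$ and $R$ are typically estimated from training data in which kinematics and neural activity are recorded together.

```python
import numpy as np


def kalman_decode(Y, A, B, Q, R, x0, P0):
    """Kalman-filter decoding of kinematics x_t from neural activity y_t.

    Assumed model: y_t = B x_t + n_t,      n_t ~ N(0, R)  (measurement)
                   x_t = A x_{t-1} + m_t,  m_t ~ N(0, Q)  (dynamics)
    Y: (T, n_channels) neural activity; x0, P0: initial state mean/covariance.
    Returns posterior means (T, d) and covariances (T, d, d).
    """
    x, P = x0, P0
    I = np.eye(len(x0))
    means, covs = [], []
    for y in Y:
        # Predict: push the previous estimate through the dynamics model.
        x = A @ x
        P = A @ P @ A.T + Q
        # Update: correct the prediction with the new neural measurement.
        S = B @ P @ B.T + R                  # innovation covariance
        K = P @ B.T @ np.linalg.inv(S)       # Kalman gain
        x = x + K @ (y - B @ x)
        P = (I - K @ B) @ P
        means.append(x)
        covs.append(P)
    return np.array(means), np.array(covs)


# Toy usage on synthetic data (all sizes and parameters are hypothetical).
rng = np.random.default_rng(0)
T, d, n = 200, 2, 20                         # time steps, [position, velocity], channels
A = np.array([[1.0, 0.1], [0.0, 0.95]])
B = rng.normal(size=(n, d))
Q, R = 0.01 * np.eye(d), 0.5 * np.eye(n)
x_true = np.zeros((T, d))
state = np.zeros(d)
for t in range(T):
    state = A @ state + rng.multivariate_normal(np.zeros(d), Q)
    x_true[t] = state
Y = x_true @ B.T + rng.normal(scale=np.sqrt(0.5), size=(T, n))
means, covs = kalman_decode(Y, A, B, Q, R, np.zeros(d), np.eye(d))
mse = np.mean((means - x_true) ** 2)
```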

One of the first studies to combine decoding and encoding was by O’Doherty, Nicolelis, and colleagues [35], who showed that stimulation of somatosensory cortex could be used to instruct a rhesus monkey which of two targets to move a cursor toward; the cursor was subsequently controlled using a BCI based on linear decoding to predict the X- and Y-coordinates of the cursor. A later study by the same group [36] demonstrated true closed-loop control. Monkeys used a BCI based on primary motor cortex (M1) recordings and Kalman-filter-based decoding to actively explore virtual objects on a screen with artificial tactile properties. The monkeys were rewarded if they found the object with particular artificial tactile properties. During brain-controlled exploration of an object, the associated tactile information was delivered to somatosensory cortex (S1) via intracortical stimulation. Tactile information was encoded as a high-frequency biphasic pulse train (200 Hz for the rewarded object, 400 Hz for the others) presented in packets at a lower frequency (10 Hz for rewarded, 5 Hz for unrewarded objects). Because stimulation artifacts masked neural activity for 5–10 ms after each pulse, an interleaved scheme of alternating 50 ms recording and 50 ms stimulation windows was used. The monkeys were able to select the desired target object within a second or less based only on its tactile properties as conveyed through stimulation.
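The timing of this tactile code can be made concrete with a short sketch that lists pulse onset times. It assumes, beyond what is stated above, that each packet fills one 50 ms stimulation window of a 100 ms stimulate/record cycle and that packets begin at the start of a window; the function name and this alignment are hypothetical.

```python
def packet_pulse_times_us(pulse_hz, packet_hz, duration_s, window_ms=50):
    """Onset times (microseconds) of biphasic pulses delivered in packets.

    Assumption: every packet starts at the beginning of a 50 ms stimulation
    window and lasts for that window; recording happens in the other 50 ms.
    """
    pulse_period = round(1_000_000 / pulse_hz)     # e.g. 200 Hz -> 5000 us
    packet_period = round(1_000_000 / packet_hz)   # e.g. 10 Hz -> 100000 us
    window = window_ms * 1000
    times = []
    for packet_start in range(0, round(duration_s * 1_000_000), packet_period):
        # Pulses at the high carrier rate, confined to the stimulation window.
        times.extend(range(packet_start, packet_start + window, pulse_period))
    return times


rewarded = packet_pulse_times_us(pulse_hz=200, packet_hz=10, duration_s=1.0)
unrewarded = packet_pulse_times_us(pulse_hz=400, packet_hz=5, duration_s=1.0)
```

Under these assumptions both codes deliver 100 pulses per second (10 packets of 10 pulses versus 5 packets of 20), so they differ in temporal structure rather than total pulse count, and no pulse falls inside a recording window.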

Klaes, Andersen and colleagues [37] have also demonstrated that a monkey can utilize intracortical stimulation in S1 to perform a match-to-sample task where the goal is to move a virtual arm and find a target object that delivers stimulation similar to a control object. In their experiment, the monkey controlled a virtual arm using a Kalman-filter-based decoding scheme where the Kalman filter’s state was defined as the virtual hand’s position, velocity and acceleration in three dimensions. The encoding algorithm involved stimulating S1 via three closely located electrodes using a 300 Hz biphasic pulse train for up to 1 second while the virtual hand held the object. After training, the monkey was able to move the virtual hand to the correct target with success rates between 70% and more than 90% over the course of 8 days (chance level was 50%).
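For a state made of three-dimensional position, velocity and acceleration, the Kalman filter's dynamics matrix $A$ is 9 × 9. A sketch of one simple choice, a constant-acceleration kinematic model, is shown below. This is an illustrative assumption, since BCI studies often fit $A$ to training data instead, and the time step `dt` is hypothetical.

```python
import numpy as np


def constant_acceleration_dynamics(dt, n_dims=3):
    """Dynamics matrix A for the state [position(3), velocity(3), acceleration(3)].

    Hypothetical constant-acceleration model: position += velocity*dt +
    0.5*acceleration*dt^2, velocity += acceleration*dt, acceleration unchanged
    (changes in acceleration enter only through the noise term).
    """
    I = np.eye(n_dims)
    Z = np.zeros((n_dims, n_dims))
    return np.block([
        [I, dt * I, 0.5 * dt ** 2 * I],
        [Z, I, dt * I],
        [Z, Z, I],
    ])


A = constant_acceleration_dynamics(dt=0.05)
x = np.array([0, 0, 0, 1.0, 0, 0, 0, 0, 0])      # moving at 1 unit/s along the first axis
x_next = A @ x
x_acc = np.array([0, 0, 0, 0, 0, 0, 2.0, 0, 0])  # starting from rest with acceleration 2
x_acc_next = A @ x_acc
```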

Finally, Flesher and colleagues [38] have recently shown that a paralyzed patient can use a bidirectional BCI for closed-loop control of a prosthetic hand in a continuous force matching task. Control signals were decoded from multi-electrode recordings in M1 using a linear decoder that mapped M1 firing rates to movement velocities of the robotic arm. Initial training data for the linear decoder were obtained by asking the subject to observe the robotic hand performing hand shaping tasks such as “pinch” (thumb/index/middle flexion/extension), “scoop” (ring/pinky flexion/extension) or “grasp” (all finger flexion) while M1 firing rates were recorded, followed by a second training phase involving computer-assisted control to fine-tune the decoder weights. The subject then performed a 2D force matching task with the robotic hand, using the trained decoder to pinch, scoop or grasp a foam object either gently or firmly while receiving feedback on the applied force via stimulation of S1. The encoding algorithm linearly mapped torque sensor data from the robotic hand’s finger motors to the pulse train amplitude of those stimulating electrodes that had previously elicited percepts on the corresponding fingers of the subject. The researchers showed that the subject was able to continuously control the flexion/extension of the pinch and scoop dimensions while evaluating the applied torque based on force feedback from S1 stimulation. The success rate (for pinch, scoop or grasp with gentle or firm forces) was significantly higher with stimulation feedback than with feedback from vision alone.
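Both halves of this bidirectional loop are linear maps, as the sketch below illustrates: a decoder from firing rates to velocities fit by ridge-regularized least squares, and an encoder from finger torque to stimulation amplitude, clipped to a safe range. The regularization, the gain, the amplitude limit and the synthetic data are hypothetical choices, not the parameters of the cited study.

```python
import numpy as np


def fit_linear_decoder(rates, velocities, ridge=1.0):
    """Ridge-regularized least squares: velocities ~ [rates, 1] @ W."""
    X = np.hstack([rates, np.ones((len(rates), 1))])   # append a bias column
    penalty = ridge * np.eye(X.shape[1])
    penalty[-1, -1] = 0.0                               # leave the bias unpenalized
    return np.linalg.solve(X.T @ X + penalty, X.T @ velocities)


def decode_velocity(W, rates):
    return np.hstack([rates, np.ones((len(rates), 1))]) @ W


def torque_to_amplitude(torque, gain, max_amp=100.0):
    """Linear torque-to-amplitude encoder, clipped to [0, max_amp] (hypothetical limit)."""
    return np.clip(gain * np.asarray(torque, dtype=float), 0.0, max_amp)


# Hypothetical training data: 30 M1 units, 2-D hand velocity.
rng = np.random.default_rng(1)
rates = rng.poisson(10, size=(500, 30)).astype(float)
W_true = rng.normal(scale=0.05, size=(31, 2))
vel = np.hstack([rates, np.ones((500, 1))]) @ W_true
W = fit_linear_decoder(rates, vel, ridge=1e-3)
amps = torque_to_amplitude([0.0, 0.2, 5.0], gain=200.0)
```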

Reanimating Paralyzed Limbs

Rather than controlling a prosthetic limb, BBCIs can also be used to control electrical stimulation of muscles to restore movement in a paralyzed limb. Moritz, Perlmutter, and Fetz [39] demonstrated this approach in two monkeys by translating the activity of single motor cortical neurons into electrical stimulation of wrist muscles to move a cursor on a computer screen. The decoding scheme relied on operant conditioning: the monkeys first learned to volitionally control the activity of a motor cortical neuron to move a cursor into a target. After training, the activity of the motor cortical neuron was converted into electrical stimuli that were delivered to the monkey’s temporarily paralyzed wrist muscles (this type of stimulation is called functional electrical stimulation, or FES). Flexor FES current was proportional to the amount by which the firing rate exceeded a threshold (0.8 × [firing rate − 24], with a maximum of 10 mA), and extensor FES current was proportional to the amount by which the rate fell below a second threshold (0.6 × [12 − firing rate], with a maximum of 10 mA). Both monkeys were able to modulate the activity of cortical neurons to control their paralyzed wrist muscles and move a manipulandum to acquire five targets. Ethier et al. [40] extended these results to grasping and moving objects using a linear decoder with a static nonlinearity applied to about 100 neural signals from M1.
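The FES mapping described above is simple enough to write out directly. The sketch uses the thresholds, slopes and 10 mA ceiling quoted in the text and assumes that the firing rate is expressed in spikes per second; the function name is hypothetical.

```python
def fes_currents(rate_hz):
    """Map a neuron's firing rate to (flexor, extensor) FES currents in mA."""
    # Flexor current grows with the excess of the rate above 24 spikes/s.
    flexor = min(max(0.8 * (rate_hz - 24.0), 0.0), 10.0)
    # Extensor current grows as the rate falls below 12 spikes/s.
    extensor = min(max(0.6 * (12.0 - rate_hz), 0.0), 10.0)
    return flexor, extensor
```

Between the two thresholds (12 to 24 spikes/s in this sketch) neither muscle group is stimulated.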

Extending the approach to humans, Bouton et al. [41] showed that a quadriplegic man with a 96-electrode array implanted in the hand area of the motor cortex could use cortical signals to electrically stimulate muscles in his paralyzed forearm and produce six different wrist and hand motions. For decoding, six separate support vector machines were applied to mean wavelet power features extracted from multiunit activity to select one of these six motions. The encoding scheme activated the movement associated with the highest decoder output using an electrode stimulation pattern previously calibrated to evoke that movement. Surface electrical stimulation was delivered as monophasic rectangular pulses at a 50 Hz pulse rate and 500 μs pulse width, with stimulation intensity set to a piecewise linear function of decoder output. These results were extended to multi-joint reaching and grasping movements by Ajiboye et al. [42]: a linear decoder similar to a Kalman filter was used to map neuronal firing rates and high-frequency power at electrodes in the hand area of the motor cortex to percent activation of stimulation patterns associated with elbow, wrist or hand movements. The researchers showed that a tetraplegic subject could perform multi-joint arm movements for point-to-point target acquisitions with 80–100% accuracy and could volitionally reach for and drink from a mug of coffee.
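The decoding and encoding steps can be sketched as follows: select the motion whose classifier output is highest, then scale that motion's calibrated stimulation pattern by a piecewise linear function of the classifier output. The breakpoints, the one-electrode-per-motion patterns and the example scores are hypothetical; only the pulse rate and pulse width come from the text.

```python
import numpy as np

PULSE_RATE_HZ = 50     # from the text
PULSE_WIDTH_US = 500   # from the text


def select_motion(scores):
    """Index of the motion whose one-vs-rest SVM output is highest."""
    return int(np.argmax(scores))


def intensity(score, breakpoints=(0.0, 0.5, 1.0), levels=(0.0, 0.6, 1.0)):
    """Hypothetical piecewise linear map from decoder output to a 0-1 intensity.

    np.interp clamps outside the breakpoints, so scores <= 0 give no stimulation.
    """
    return float(np.interp(score, breakpoints, levels))


def encode(scores, patterns):
    """Scale the calibrated electrode pattern of the winning motion."""
    k = select_motion(scores)
    return k, intensity(scores[k]) * patterns[k]


scores = np.array([-0.3, 0.2, 0.75, -1.0, 0.1, 0.0])   # hypothetical SVM outputs
patterns = np.eye(6)                                    # hypothetical calibrated patterns
k, stim = encode(scores, patterns)
```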

One shortcoming of the above approaches is that continued electrical stimulation of muscles results in muscle fatigue, rendering the technique impractical for day-long use. An alternative approach to reanimation is to use brain signals to stimulate the spinal cord. Spinal stimulation may simplify encoding and control because it activates functional synergies, reflex circuits, and endogenous pattern generators. Capogrosso, Courtine, and colleagues [43] demonstrated the efficacy of brain-controlled spinal stimulation for hindlimb reanimation for locomotion in paralyzed monkeys. They used a decoder based on linear discriminant analysis to predict foot-strike and foot-off events during locomotion. The encoder used this prediction to activate extensor and flexor “hotspots” in the lumbar spinal cord via epidural electrical stimulation to produce the appropriate extension and flexion of the impaired leg.
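A sketch of this two-stage scheme follows: a linear discriminant analysis classifier (written out in NumPy rather than taken from a library) labels each time bin as no event, foot strike or foot off, and an encoder maps each detected event to a spinal hotspot. The class labels, the hotspot names, the assignment of foot strike to the extensor hotspot and foot off to the flexor hotspot, and the synthetic features are assumptions made for illustration.

```python
import numpy as np


class SharedCovLDA:
    """Linear discriminant analysis with one covariance matrix shared by all classes."""

    def fit(self, X, y):
        self.classes = np.unique(y)
        self.means = np.array([X[y == c].mean(axis=0) for c in self.classes])
        resid = X - self.means[np.searchsorted(self.classes, y)]
        cov = resid.T @ resid / (len(X) - len(self.classes)) + 1e-6 * np.eye(X.shape[1])
        priors = np.array([np.mean(y == c) for c in self.classes])
        inv_cov = np.linalg.inv(cov)
        # Discriminant: delta_k(x) = x' S^-1 mu_k - 0.5 mu_k' S^-1 mu_k + log pi_k
        self.W = self.means @ inv_cov
        self.b = -0.5 * np.sum(self.W * self.means, axis=1) + np.log(priors)
        return self

    def predict(self, X):
        return self.classes[np.argmax(X @ self.W.T + self.b, axis=1)]


NO_EVENT, FOOT_STRIKE, FOOT_OFF = 0, 1, 2
# Hypothetical encoder: foot strike -> extensor hotspot, foot off -> flexor hotspot.
HOTSPOT_FOR_EVENT = {FOOT_STRIKE: "extensor_hotspot", FOOT_OFF: "flexor_hotspot"}


def encode(events):
    """Hotspot to stimulate for each decoded event (None = no stimulation)."""
    return [HOTSPOT_FOR_EVENT.get(int(e)) for e in events]


# Synthetic multiunit features for the three classes (hypothetical data).
rng = np.random.default_rng(2)
class_means = rng.normal(scale=3.0, size=(3, 10))
labels = rng.integers(0, 3, size=600)
features = class_means[labels] + rng.normal(size=(600, 10))
lda = SharedCovLDA().fit(features, labels)
accuracy = np.mean(lda.predict(features) == labels)
```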

Restoring Motor and Cognitive Function

One of the early pioneers exploring bidirectional BCIs for restoration of brain function was Jose Delgado [6] who designed an implantable BBCI called the stimoceiver that could communicate with a computer via radio. Delgado was the first to combine decoding with encoding to shape behavior: his decoding algorithm detected spindles in the amygdala of a monkey and for each detection, triggered stimulation in the reticular formation, which is associated with negative reinforcement. After six days, spindle activity was reduced to 1 percent of normal levels, making the monkey quiet and withdrawn. Unfortunately, efforts to extend this approach to humans to treat depression and other disorders yielded inconsistent results.

Delgado’s work did eventually inspire commercial brain implants such as Neuropace’s RNS system, which detects the onset of seizures using time- and frequency-based methods applied to brain surface recordings (ECoG) and stimulates the region where the seizure originates. Also inspired by Delgado’s work is the technique of deep brain stimulation (DBS), a widely prescribed form of neurostimulation for reducing tremors and restoring motor function in Parkinson’s patients. Current DBS systems are open-loop, but Herron et al. [44] have recently demonstrated closed-loop DBS triggered by movement intention, which was decoded as a reduction in ECoG power in the low-frequency (“mu”) band over motor cortex.
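A closed-loop trigger of this kind can be sketched by estimating mu-band power in a short ECoG window and switching stimulation on when that power drops below a fraction of a resting baseline. The 8 to 12 Hz band edges, the 50% threshold, the sampling rate and the synthetic signals are hypothetical, not the parameters used by Herron et al.

```python
import numpy as np


def band_power(signal, fs, lo=8.0, hi=12.0):
    """Mean power in [lo, hi] Hz from a Hann-windowed periodogram."""
    spectrum = np.abs(np.fft.rfft(signal * np.hanning(len(signal)))) ** 2
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    in_band = (freqs >= lo) & (freqs <= hi)
    return spectrum[in_band].mean()


def dbs_should_be_on(window, baseline_power, fs, drop_fraction=0.5):
    """Trigger DBS when mu power falls below a fraction of the resting baseline."""
    return band_power(window, fs) < drop_fraction * baseline_power


fs = 1000.0                                   # hypothetical sampling rate (Hz)
t = np.arange(1000) / fs                      # one 1 s analysis window
rng = np.random.default_rng(0)
rest = np.sin(2 * np.pi * 10 * t) + 0.1 * rng.normal(size=t.size)          # strong mu rhythm
intend = 0.2 * np.sin(2 * np.pi * 10 * t) + 0.1 * rng.normal(size=t.size)  # mu suppression
baseline = band_power(rest, fs)
```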

Enhancing Memory and Augmenting Brain Function

Besides restoring lost function, BBCIs can also be used to augment brain function. Berger, Deadwyler and colleagues [45,46] have demonstrated that BBCIs implanted in the hippocampus of monkeys and rats can be used to enhance memory in delayed match-to-sample (DMS) and nonmatch-to-sample tasks. They first fit a multi-input/multi-output (MIMO) nonlinear filtering model to simultaneously recorded spiking data from hippocampal CA3 and CA1 during successful trials, with CA3 as input to the model and CA1 as output. The trained MIMO model was later used to decode CA3 activity and predict the corresponding CA1 activity, which was then delivered to CA1 as patterns of biphasic electrical pulses. Deadwyler et al. [46] showed that in the four monkeys tested, performance in the DMS task was enhanced in the difficult trials, which had more distractor objects or required information to be held in memory for longer durations. However, it is unclear how the approach could be used when the brain is not healthy, such as in Alzheimer’s patients [47], where simultaneous recordings from areas such as CA3 and CA1 during successful trials will not be available for training the model.
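The decode-then-stimulate structure of this approach can be sketched with a much simpler model than the one used in the cited studies: each CA1 output's firing probability is a logistic function of a linear filter over recent CA3 spikes, fit by gradient descent on the log loss, and a pulse is scheduled wherever the predicted probability exceeds a threshold. The lag count, threshold and synthetic spike trains are hypothetical.

```python
import numpy as np


def lagged_design(spikes, n_lags):
    """Stack the current and previous n_lags-1 bins of every input: (T, n_in*n_lags)."""
    T, n_in = spikes.shape
    padded = np.vstack([np.zeros((n_lags - 1, n_in)), spikes])
    return np.hstack([padded[n_lags - 1 - k: n_lags - 1 - k + T] for k in range(n_lags)])


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def fit_mimo(ca3, ca1, n_lags=3, lr=0.5, iters=1000):
    """Fit one logistic output per CA1 unit by gradient descent on the log loss."""
    X = lagged_design(ca3, n_lags)
    K = np.zeros((X.shape[1], ca1.shape[1]))
    b = np.zeros(ca1.shape[1])
    for _ in range(iters):
        G = (sigmoid(X @ K + b) - ca1) / len(X)   # gradient of the mean log loss
        K -= lr * X.T @ G
        b -= lr * G.sum(axis=0)
    return K, b


def log_loss(ca3, ca1, K, b, n_lags=3):
    p = np.clip(sigmoid(lagged_design(ca3, n_lags) @ K + b), 1e-12, 1 - 1e-12)
    return -np.mean(ca1 * np.log(p) + (1 - ca1) * np.log(1 - p))


def stimulation_pattern(ca3, K, b, n_lags=3, threshold=0.5):
    """1 = deliver a biphasic pulse on that CA1 channel in that time bin."""
    return (sigmoid(lagged_design(ca3, n_lags) @ K + b) > threshold).astype(int)


# Synthetic binned spikes (hypothetical): 4 CA3 inputs drive 2 CA1 outputs.
rng = np.random.default_rng(3)
ca3 = (rng.random((2000, 4)) < 0.2).astype(float)
K_true = rng.normal(scale=2.0, size=(12, 2))
ca1 = (rng.random((2000, 2)) < sigmoid(lagged_design(ca3, 3) @ K_true - 1.0)).astype(float)
K, b = fit_mimo(ca3, ca1)
stim = stimulation_pattern(ca3, K, b)
```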

Nicolelis suggested several brain augmentation schemes based on BBCIs in his book [48], including direct brain-to-brain communication. He and his colleagues subsequently showed how rats can use brain-to-brain interfaces (BBIs) to solve sensorimotor tasks [49]: an “encoder” rat identified a stimulus and pressed one of two levers while its M1 cortex activity was transmitted to the M1 cortex of a “decoder” rat. The stimulation pattern was based on a Z score computed from the difference in the number of spikes between the current trial and a template trial. If the decoder rat made the same choice as the encoder rat, both rats were rewarded for the successful transfer of information between their two brains. Rao, Stocco and colleagues utilized noninvasive technologies to demonstrate the first human brain-to-brain interface [50,51,52]. The intention of a “Sender” who could perceive but not act was decoded from motor or visual cortex using EEG; this information was delivered via transcranial magnetic stimulation (TMS) to the motor or visual cortex of a “Receiver” who could act but not perceive. The researchers showed that tasks such as a video game [50] or “20 questions” [52] could be completed successfully through direct brain-to-brain collaboration (see [53,54] for other examples). More recently, brain-to-brain interfaces have been used to create a network of brains or “BrainNet” allowing groups of humans [55] or rats [56] to solve tasks collaboratively.
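The Z-score step of the rat brain-to-brain interface can be sketched as below. The text specifies only that stimulation was based on a Z score of the spike-count difference relative to a template; the template statistics, the linear mapping to a pulse count and the cap of 20 pulses are hypothetical.

```python
def z_score(spike_count, template_mean, template_sd):
    """Z score of the current trial's spike count relative to template trials."""
    return (spike_count - template_mean) / template_sd


def pulses_from_z(z, max_pulses=20, z_max=3.0):
    """Hypothetical encoder: more pulses for larger positive deviations, capped."""
    frac = min(max(z, 0.0), z_max) / z_max
    return int(round(frac * max_pulses))


n_pulses = pulses_from_z(z_score(spike_count=30, template_mean=20, template_sd=5))
```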

Inducing Plasticity and Rewiring the Brain

Hebb’s principle for plasticity states that connections from a group A of neurons to a group B are strengthened if A consistently fires before B, thereby reinforcing the causal relationship from A to B. Jackson, Mavoori and Fetz [57] demonstrated that such plasticity can be artificially induced in the motor cortex of freely behaving primates by triggering stimulation at a site B a few milliseconds after a spike was detected at site A. After two days of continuous spike-triggered stimulation, the output generated by site A shifted to resemble the output from B, consistent with a strengthening of any weak synaptic connections that may have existed from neurons in A to neurons in B. Such an approach could potentially be useful for neurorehabilitation: rewiring the brain to restore motor function after traumatic brain injury or stroke, or to treat neuropsychiatric disorders such as depression and PTSD. Along these lines, Guggenmos, Nudo, and colleagues [58] have shown that the approach can be used to improve reaching and grasping functions in a rat after traumatic brain injury to the rat’s primary motor cortex (caudal forelimb area). Their approach creates an artificial connection between the rat’s premotor cortex (rostral forelimb area or RFA) and somatosensory cortex S1: for each spike detected by an electrode in RFA, an electric pulse is delivered to S1 after 7.5 milliseconds. All of these prior approaches have relied on one-to-one spike-to-stimulation-pulse protocols, leaving open the question of how the approach can be generalized to goal-directed plasticity induction across multiple electrodes.
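The one-to-one protocols discussed above reduce to a simple scheduling rule: each spike detected at site A schedules one stimulus at site B after a fixed delay (7.5 ms in the Guggenmos et al. study). The sketch below implements that rule; the optional lockout after each trigger is a hypothetical addition, not a parameter reported here.

```python
def spike_triggered_stim(spike_times_ms, delay_ms=7.5, dead_time_ms=0.0):
    """Schedule one stimulus at site B for each spike detected at site A."""
    stim, last_trigger = [], float("-inf")
    for t in sorted(spike_times_ms):
        # Hypothetical lockout: ignore spikes too soon after the previous trigger.
        if t - last_trigger >= dead_time_ms:
            stim.append(t + delay_ms)
            last_trigger = t
    return stim
```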

From Proof-of-Concept to Real-World Applications

Most of the BBCIs reviewed above (see Table 1) involved proof-of-concept demonstrations. An important question is how close we are to real-world applications of BCIs. While a small number of BCIs, such as deep brain stimulators and Neuropace’s RNS epilepsy control system, are already being used for medical applications, the vast majority of BCIs are still in the “laboratory testing” phase. Consider, for example, the most commonly cited BCI application: communication using brain signals alone. The maximum bit rate achieved by a human using an invasive BCI is currently about 3.7 bits/sec, or 39.2 correct characters per minute [16]. This is roughly four to five times lower than average human typing speeds of about 150–200 characters per minute. In noninvasive EEG-based BCIs, the highest bit rate has been achieved using steady-state visually evoked potentials (SSVEP): each choice on a menu is associated with a flickering visual stimulus (e.g., an LED) flashing at a specific known frequency. SSVEP BCIs have achieved bit rates as high as 5.3 bits/sec, or 60 characters per minute [59], which is still only about 30–40% of manual typing speeds, with the added drawback of visual fatigue. BBCIs are at an even earlier stage. For example, the noninvasive brain-to-brain interfaces in humans cited above have bit rates of less than 1 bit/sec, partly due to safety considerations of transcranial magnetic stimulation.
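Bit rates such as these are often computed with the Wolpaw information-transfer formula, which gives the bits conveyed by one selection among N equally likely choices at accuracy P, assuming errors are spread evenly over the remaining choices. The sketch below implements that formula; it is offered as one standard measure and is not necessarily how the figures cited above were computed.

```python
import math


def wolpaw_bits_per_selection(n_choices, accuracy):
    """B = log2 N + P log2 P + (1 - P) log2((1 - P) / (N - 1))."""
    N, P = n_choices, accuracy
    bits = math.log2(N)
    if P > 0:
        bits += P * math.log2(P)
    if P < 1:
        bits += (1 - P) * math.log2((1 - P) / (N - 1))
    return bits


def bits_per_second(n_choices, accuracy, selections_per_minute):
    return wolpaw_bits_per_selection(n_choices, accuracy) * selections_per_minute / 60.0
```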

Table 1.

Summary and comparison of some notable BBCIs built so far.

| BBCI | Input & Output | Decoding & Encoding | Achievements | Limitations |
|---|---|---|---|---|
| O’Doherty et al., 2011 | Spikes from monkey M1 cortex & intracortical microstimulation in S1 cortex | Unscented Kalman filter & biphasic pulse trains of different frequencies | Simultaneous brain-controlled cursor and artificial tactile feedback in monkeys | Artificial tactile feedback limited to reward/no reward information |
| Flesher et al., 2016 | Spikes from human M1 cortex & intracortical microstimulation in S1 cortex | Velocity-based linear decoder & linear encoding of torque to pulse train amplitude | Simultaneous brain-controlled prosthetic hand & force feedback in humans | Simple force matching task with only two levels (gentle or firm) |
| Moritz et al., 2008 | Spikes from monkey M1 cortex & functional electrical stimulation of muscles | Volitional control of firing rate of single neuron & linear encoder | Direct brain control of paralyzed muscles to restore wrist movement in monkeys | Simple flexion and extension movements of the wrist only, muscle fatigue with prolonged use |
| Bouton et al., 2016 | Multiunit activity from hand area of human M1 cortex & functional electrical stimulation of paralyzed forearm muscles | Support vector machines for classifying 1 of 6 wrist/hand motions & previously calibrated stimulation for each motion | Direct brain control of forearm muscles for hand/wrist control in paralyzed human | Decoder based on classification of 6 fixed motions, muscle fatigue with prolonged use |
| Ajiboye et al., 2017 | Spikes and high-frequency power in hand area of human M1 cortex & functional electrical stimulation of paralyzed arm muscles | Linear decoder & percent activation of stimulation patterns associated with hand, wrist or elbow movements | Direct brain control of arm muscles for multi-joint movements and point-to-point target acquisitions in paralyzed human | Percent activation of fixed stimulation patterns, muscle fatigue with prolonged use |
| Capogrosso et al., 2016 | Multiunit activity from leg area of monkey M1 cortex & epidural electrical stimulation of the lumbar spinal cord | Linear discriminant analysis to predict foot strike/foot off & activation of spinal hotspots for extension/flexion | Direct brain control of the spinal cord for restoring locomotion in paralyzed monkeys | Simple two-state decoder and encoder models, viability for restoration of bipedal walking in humans yet to be demonstrated |
| Delgado, 1969 | Local field potentials in monkey amygdala & electrical stimulation in the reticular formation | Decoder algorithm for detecting fast “spindle” waves & an electrical stimulation for each detection | First BBCI to control behavior and induce neuroplasticity in animals | Results not consistent from subject to subject, did not generalize to treating depression in humans |
| Deadwyler et al., 2017 | Spikes from area CA3 in monkey hippocampus & electrical microstimulation in area CA1 in hippocampus | Multi-input/multi-output (MIMO) nonlinear filtering model to decode CA3 activity & encode predicted CA1 activity as biphasic electrical pulses | First demonstration of memory enhancement in a short-term memory task in a monkey | Applicability to memory restoration in Alzheimer’s or other patients unclear due to MIMO model training requirement |
| Jackson et al., 2006 | Spikes from a region of monkey M1 cortex & intracortical microstimulation of a different region of M1 | Single spike detection & biphasic electrical pulse for each spike detection | First demonstration of Hebbian plasticity induction using a BBCI in a freely behaving monkey | Single-input/single-output protocol, not designed for multi-input/multi-output goal-directed plasticity induction |
| Guggenmos et al., 2013 | Spikes from rat premotor cortex & intracortical microstimulation of S1 somatosensory cortex | Single spike detection in premotor cortex & electrical pulse stimulation in S1 after 7.5 ms | First demonstration of improved motor function after traumatic brain injury in a rat using a BBCI for plasticity induction | Single-input/single-output protocol, plasticity induction not geared toward optimizing behavioral or rehabilitation metrics |

Towards A Unifying Framework: Neural Co-Processors based on Deep Learning

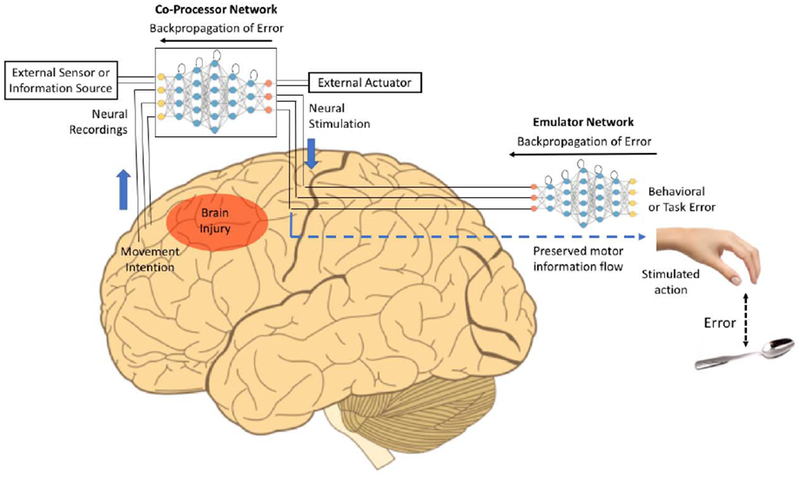

A major limitation of current BBCIs is that they treat decoding and encoding as separate processes, and they do not co-adapt and jointly optimize a cost function with the nervous system. We propose that these limitations may be addressed using a “neural co-processor” as shown in Figure 1. A neural co-processor uses two artificial neural networks, a co-processor network (CPN) and an emulator network (EN), combined with a new type of deep learning that approximates backpropagation through both biological and artificial networks.

Figure 1: Neural Co-Processor for the Brain for Restoring and Augmenting Function.

A deep recurrent artificial network is used to map input neural activity patterns in one set of regions to output stimulation patterns in other regions (“Co-Processor Network” or CPN). The CPN’s weights are optimized to minimize brain-activity-based error (between stimulation patterns and target neural activity patterns when known), or more generally, to minimize behavioral error or task error using another network, an emulator network. The emulator network is also a deep recurrent network that is pre-trained through backpropagation to learn the biological transformation from stimulation or neural activity patterns at the stimulation site to the resulting output behaviors. During CPN training, errors are backpropagated through the emulator network to the CPN to adapt the CPN’s weights but not the emulator network’s weights. The trained CPN thus produces optimal stimulation patterns that minimize behavioral error, thereby creating a goal-directed artificial information processing pathway between the input and output regions. The CPN also promotes neuroplasticity between weakly connected regions, leading to neural augmentation or targeted rehabilitation. External information from artificial sensors or other information sources can be integrated into the CPN’s information processing as additional inputs to the neural network and outputs can be computed for external actuators as well. The example here shows the CPN creating a new information processing pathway between prefrontal cortex and motor cortex, bypassing an intermediate area affected by brain injury or stroke. The CPN is trained to transform movement intentions in the prefrontal cortex to appropriate movement-related stimulation patterns in the motor cortex for restoration of movement and rehabilitation.

Suppose the goal is to restore movement in a stroke or spinal cord injury (SCI) patient, e.g., to enable the hand to reach a target object (see Figure 1). The CPN is a multilayered recurrent neural network that maps neural activity patterns from a large number of electrodes in areas A1, A2, etc. (e.g., movement intention areas spared by the stroke) to appropriate stimulation patterns in areas B1, B2, etc. (e.g., intact movement execution areas in the cortex or spinal cord). When the subject forms the intention to move the hand to a target (e.g., during a rehabilitation session), the CPN maps the resulting neural activity pattern to a stimulation pattern. Unfortunately, to train the CPN, we do not have a set of “target stimulation patterns” that produce the intended movements. However, for any stimulation pattern, we can compute the error between the resulting hand movement and the target. How can this behavioral error be translated and backpropagated through the CPN to generate better stimulation patterns?

We propose the use of an emulator network (EN) that emulates the biological transformation between stimulation patterns and behavioral output. The EN is a deep recurrent neural network whose weights can be learned using standard backpropagation from a dataset consisting of a large variety of stimulation (or neural activity) patterns in areas B1, B2, etc. and the resulting movements or behavior. After training, the EN acts as a surrogate for the biological networks mediating the transformation between inputs in B1, B2, etc. and output behavior.
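Training the EN is ordinary supervised learning. The sketch below fits a small emulator by full-batch backpropagation to pairs of stimulation patterns and evoked movements. For brevity it uses a one-hidden-layer feedforward network rather than the deep recurrent network described in the text, and the synthetic data, with a tanh stand-in for the biological transformation, is hypothetical.

```python
import numpy as np


def init_mlp(n_in, n_hidden, n_out, rng):
    return {
        "W1": rng.normal(scale=1 / np.sqrt(n_in), size=(n_hidden, n_in)),
        "b1": np.zeros(n_hidden),
        "W2": rng.normal(scale=1 / np.sqrt(n_hidden), size=(n_out, n_hidden)),
        "b2": np.zeros(n_out),
    }


def mlp_forward(p, S):
    H = np.tanh(S @ p["W1"].T + p["b1"])
    return H @ p["W2"].T + p["b2"], H


def train_emulator(S, Z, n_hidden=32, lr=0.05, epochs=3000, seed=0):
    """Fit EN(S) ~ Z by full-batch backpropagation on 0.5 * mean squared error."""
    p = init_mlp(S.shape[1], n_hidden, Z.shape[1], np.random.default_rng(seed))
    for _ in range(epochs):
        Z_hat, H = mlp_forward(p, S)
        dZ = (Z_hat - Z) / len(S)              # dLoss/dZ_hat
        dH = (dZ @ p["W2"]) * (1 - H ** 2)     # backpropagate through the tanh layer
        p["W2"] -= lr * dZ.T @ H
        p["b2"] -= lr * dZ.sum(axis=0)
        p["W1"] -= lr * dH.T @ S
        p["b1"] -= lr * dH.sum(axis=0)
    return p


# Hypothetical dataset: 8-channel stimulation patterns and the 2-D movements they evoke.
rng = np.random.default_rng(1)
stim = rng.uniform(-1, 1, size=(500, 8))
M = rng.normal(scale=0.5, size=(2, 8))
movement = np.tanh(stim @ M.T)                 # stand-in for the unknown biology
en = train_emulator(stim, movement)
mse = np.mean((mlp_forward(en, stim)[0] - movement) ** 2)
baseline = np.mean((movement - movement.mean(axis=0)) ** 2)
```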

With the help of a trained EN, we can train the weights of the CPN to produce the optimal stimulation patterns for minimizing behavioral error (e.g., error between current hand position and a target location). For each neural input pattern X (e.g., movement intention) that the subject produces in areas A1, A2 etc., the CPN produces an output stimulation pattern Y in areas B1, B2 etc., which results in a behavior or movement Z.

The error E between actual movement Z and the intended movement target Z’ is first backpropagated through the EN but without modifying its weights. We continue to backpropagate the error through the CPN, this time modifying the CPN’s weights. In other words, the behavioral error is backpropagated through a concatenated CPN-EN network but only the CPN’s weights are changed. This allows the CPN to progressively generate better stimulation patterns that enable the brain to better achieve the target behavior, thereby resulting in a co-adaptive BBCI.
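The key step, backpropagating behavioral error through a frozen EN so that only the CPN changes, can be sketched with hand-written gradients. This is a minimal illustration under several assumptions: both networks are small feedforward networks rather than deep recurrent ones, random weights stand in for a pre-trained EN, and the intended behaviors are generated by a hypothetical "teacher" CPN so that they are reachable.

```python
import numpy as np

rng = np.random.default_rng(0)
n_x, n_y, n_h, n_z = 6, 8, 16, 2   # hypothetical: input channels, stim channels, EN hidden units, behavior dims

# Frozen emulator network z = W2 tanh(W1 y); random weights stand in for a pre-trained EN.
W1 = rng.normal(scale=1 / np.sqrt(n_y), size=(n_h, n_y))
W2 = rng.normal(scale=1 / np.sqrt(n_h), size=(n_z, n_h))


def emulator(Y):
    H = np.tanh(Y @ W1.T)
    return H @ W2.T, H


def cpn(V, X):
    """Co-processor network: stimulation pattern y = tanh(V x), bounded in (-1, 1)."""
    return np.tanh(X @ V.T)


def loss_and_grad(V, X, Z_target):
    """Behavioral error E and dE/dV, backpropagated through the frozen EN into the CPN."""
    Y = cpn(V, X)
    Z, H = emulator(Y)
    E = 0.5 * np.mean(np.sum((Z - Z_target) ** 2, axis=1))
    dZ = (Z - Z_target) / len(X)        # dE/dZ
    dH = (dZ @ W2) * (1 - H ** 2)       # through the EN's output layer and tanh
    dY = dH @ W1                        # error signal arriving at the stimulation pattern
    dU = dY * (1 - Y ** 2)              # through the CPN's tanh
    return E, dU.T @ X                  # gradient for V only; W1 and W2 are never updated


# Hypothetical intentions X and intended behaviors Z*; a teacher CPN makes Z* reachable.
X = rng.normal(size=(400, n_x))
Z_target = emulator(cpn(rng.normal(scale=0.5, size=(n_y, n_x)), X))[0]

V = np.zeros((n_y, n_x))
W1_frozen, W2_frozen = W1.copy(), W2.copy()
E_initial, _ = loss_and_grad(V, X, Z_target)
for _ in range(2000):
    _, grad_V = loss_and_grad(V, X, Z_target)
    V -= 0.2 * grad_V
E_final, grad_V = loss_and_grad(V, X, Z_target)
```

Because `loss_and_grad` returns a gradient only for `V`, the emulator's weights are never modified, matching the procedure described above; a finite-difference check on one element of `V` is a quick way to confirm that the hand-written backward pass is correct.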

Furthermore, by repeatedly pairing patterns of neural inputs with patterns of output stimulation, the CPN promotes neuroplasticity between connected brain regions via Hebbian plasticity. Note that unlike previous plasticity induction methods [57,58], the induced plasticity spans multiple electrodes and is goal-directed, since the CPN is trained to minimize behavioral errors. After a sufficient amount of coupling between the input regions (A1, A2, etc.) and the output regions (B1, B2, etc.), neurons in the input regions can be expected to automatically recruit neurons in the output regions to achieve a desired response (such as a particular hand movement). As a result, in some cases, the neural co-processor may eventually no longer be required and may be removed once function is restored or augmented to a satisfactory level.
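A toy model makes the Hebbian argument concrete. Repeatedly pairing an input-region activity pattern $x$ with a stimulation pattern $y$ under the plain Hebbian rule $\Delta W = \eta\, y x^\top$ drives the response $W x$ toward $y$. The network sizes, learning rate and patterns are hypothetical, and plain Hebbian growth is unbounded, so this only illustrates the direction of change; it is not a model of cortical plasticity.

```python
import numpy as np

rng = np.random.default_rng(0)
n_a, n_b = 20, 20
W = rng.normal(scale=0.01, size=(n_b, n_a))   # weak initial input -> output connections (toy)
x = rng.normal(size=n_a)                      # recurring input-region activity pattern
y = rng.normal(size=n_b)                      # stimulation pattern the CPN pairs with it


def cosine(u, v):
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))


before = cosine(W @ x, y)
eta = 0.01
for _ in range(200):
    W += eta * np.outer(y, x)   # Hebbian update: co-active pre (x) and post (y) units strengthen
after = cosine(W @ x, y)
```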

To illustrate the generality of the neural co-processor framework beyond restoring motor function, consider a co-processor for emotional well-being (e.g., to combat trauma, depression, or stress). The emulator network could first be trained by stimulating one or more emotion-regulating areas of the brain and noting the effect of stimulation on the subject’s emotional state, as captured, for example, by a mood score based on a questionnaire answered by the subject [60]. The CPN could then be trained to map emotional intentions or other brain states to appropriate stimulation patterns that lead to a desired emotional state (e.g., less traumatic, stressful or depressed state).

Another example is using a neural co-processor to create a sensory prosthesis that converts sensory stimuli from an external sensor (Figure 1), such as a camera, microphone, or even infrared or ultrasonic sensor, into stimulation patterns that take into account the ongoing dynamics of the brain. In this case, the CPN in Figure 1 takes as input both external sensor information and current neural activity to generate an appropriate stimulation pattern in the context of the current state of the brain. The emulator network could be trained based on the subject’s reports of perceptual states generated by a variety of stimulation patterns.

More generally, the external input to the CPN could be from any information source, even the internet, allowing the brain to request information via the external actuator component in Figure 1. The resulting information is conveyed via the CPN’s input channels and processed in the context of current brain activity. The emulator network in this case would be trained in a manner similar to the sensory prosthesis example above to allow the CPN to convert abstract information (such as text) to appropriate stimulation patterns that the user can understand.

Finally, we note that neural co-processors can be useful tools for testing new computational models of brain and nervous system function [31]. Rather than using traditional artificial neural networks in the CPN in Figure 1, one could use more realistic cortical models such as networks of integrate-and-fire or Hodgkin-Huxley neurons, along with biological learning rules such as spike-timing dependent plasticity rather than backpropagation. A critical test for putative computational models of the nervous system would then be: can the model successfully interact with its neurobiological counterpart and be eventually integrated within the nervous system’s information processing loops?
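As an example of such a biological learning rule, a pair-based spike-timing dependent plasticity rule can be written in a few lines: a presynaptic spike shortly before a postsynaptic spike strengthens the synapse, and the reverse order weakens it, with exponentially decaying timing windows. The amplitudes and time constants are hypothetical placeholders.

```python
import math


def stdp_dw(t_pre_ms, t_post_ms, a_plus=0.01, a_minus=0.012, tau_plus=20.0, tau_minus=20.0):
    """Pair-based STDP weight change for one presynaptic/postsynaptic spike pair."""
    dt = t_post_ms - t_pre_ms
    if dt > 0:   # pre before post: potentiation
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:   # post before pre: depression
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0
```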

Challenges

A first challenge in realizing the above vision for neural co-processors is obtaining an error signal for training the two networks. In the simplest case, the error may simply be a neural error signal: the goal is to drive neural activity in areas B1, B2, etc. towards known target activity patterns, so the CPN can be trained directly to produce these patterns without an EN. However, we expect such scenarios to be rare. In the more realistic case of restoring motor behavior, for example in stroke rehabilitation where the goal might be to reach towards a target, a computer vision system could quantify the error between the hand and the target, or a sensor worn on the hand could measure the error in the applied force. Similarly, in speech rehabilitation after stroke, a speech analysis system could quantify the error between the generated speech and the target speech pattern.
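The two kinds of error signal can be sketched in a few lines. The first function covers the simplest case, training a linear CPN directly on the difference between known target activity patterns and its output, under the assumption that the CPN output can be compared with target activity directly; the second computes a behavioral reach error of the kind a computer vision system might supply. All inputs, targets, and positions are hypothetical.

```python
import math


def train_cpn_on_neural_error(inputs, targets, lr=0.1, epochs=500):
    """Simplest case: the target activity pattern for each input is known, so a
    linear CPN (output y = W x) is trained directly on the neural error
    (target - output) by gradient descent, with no emulator network."""
    n_out, n_in = len(targets[0]), len(inputs[0])
    W = [[0.0] * n_in for _ in range(n_out)]
    for _ in range(epochs):
        for x, t in zip(inputs, targets):
            y = [sum(w * xi for w, xi in zip(row, x)) for row in W]
            err = [ti - yi for ti, yi in zip(t, y)]  # neural error, one entry per channel
            for k in range(n_out):
                W[k] = [w + lr * err[k] * xi for w, xi in zip(W[k], x)]
    return W


def reach_error(hand_xy, target_xy):
    """Behavioral error for a reaching task: the vector from hand to target and
    its length, e.g. from positions estimated by a computer vision system."""
    dx, dy = target_xy[0] - hand_xy[0], target_xy[1] - hand_xy[1]
    return (dx, dy), math.hypot(dx, dy)


# Hypothetical recorded inputs and target activity patterns in two areas (B1, B2).
inputs = [[1.0, 0.0], [0.0, 1.0]]
targets = [[0.5, 1.0], [-0.5, 0.2]]
W_direct = train_cpn_on_neural_error(inputs, targets)

# Hypothetical hand and target positions (cm) from one reaching trial.
error_vec, distance = reach_error((10.0, 4.0), (13.0, 8.0))
print(W_direct, error_vec, distance)
```

In the behavioral case the reach error alone does not say how each stimulation channel should change, which is why an emulator network is needed to carry that error back to the CPN.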

A bigger challenge is training an EN to be a sufficiently accurate model of the transformation from stimulation patterns to behavioral output. It may be difficult to obtain enough data containing examples of how stimulation affects behavior. One possible solution is to record neural activity in regions that are causally related to or correlated with the observed behavior and use these data to train the EN, under the assumption that stimulation patterns will approximate the recorded neural activity patterns. Another possible approach is to build the EN in a modular fashion, starting from the biological structures closest to the target behavior and moving up the hierarchy, e.g., first learning to emulate the transformation from muscle activity to limb movements, then from spinal activity to muscle activity, and so on. Finally, one could combine these ideas with transfer learning, using networks trained on similar neural regions or even on other subjects, and incorporate prior knowledge from computational neuroscience models of the biological system being emulated. Regardless of the training method, we expect that the EN (and the CPN) will need to be updated regularly with new neural data as the brain adapts to having the CPN as part of its information processing loops.
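The need for regular updates can be illustrated with an online least-mean-squares (LMS) update of a linear emulator. In this hypothetical sketch, the stimulation-to-behavior mapping drifts after the emulator was fitted (standing in for the brain adapting to the CPN), and a stream of new, slightly noisy trials pulls the emulator toward the new mapping. The linear model, the drift, and the learning rate are assumptions chosen for clarity; LMS is only a simple stand-in for whatever update rule a real system would use.

```python
import random


def predict(w, stim):
    """Linear emulator: predicted behavioral output for a stimulation pattern."""
    return sum(wi * si for wi, si in zip(w, stim))


def online_update(w, stim, observed, lr=0.05):
    """One LMS step toward a newly recorded (stimulation, behavior) pair."""
    err = observed - predict(w, stim)
    return [wi + lr * err * si for wi, si in zip(w, stim)]


def mean_abs_error(w, mapping, rng, n=200):
    """Average emulator error against a given stimulation-to-behavior mapping."""
    total = 0.0
    for _ in range(n):
        s = [rng.uniform(0.0, 1.0) for _ in mapping]
        total += abs(predict(mapping, s) - predict(w, s))
    return total / n


rng = random.Random(1)
w = [1.0, -0.5, 0.3]        # emulator fitted before adaptation (hypothetical)
drifted = [1.2, -0.2, 0.1]  # hypothetical mapping after the brain adapts to the CPN

before = mean_abs_error(w, drifted, rng)
for _ in range(2000):       # stream of new stimulation trials
    s = [rng.uniform(0.0, 1.0) for _ in drifted]
    observed = predict(drifted, s) + rng.gauss(0.0, 0.01)  # noisy measured behavior
    w = online_update(w, s, observed)
after = mean_abs_error(w, drifted, rng)
print(round(before, 3), round(after, 3))
```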

Conclusions

Traditionally, much of BCI research has focused on the problem of decoding, specifically: how can movement intention be extracted from noisy brain signals to control prosthetic devices? More recently, there has been growing interest in “closing the loop” using bidirectional BCIs (BBCIs), which incorporate sensory feedback, e.g., from artificial tactile sensors, via stimulation. The ability to simultaneously decode neural activity from one region and encode information to deliver via stimulation to another region confers on BBCIs tremendous versatility. In this article, we have reviewed how BBCIs can be used not only to control prosthetic arms with sensory feedback but also to (1) control paralyzed limbs using motor intention signals from the brain, (2) restore and augment cognitive function and memory, and (3) induce neuroplasticity for rehabilitation. Promising proof-of-concept results have been obtained in animal models and, in some cases, humans, but mostly under laboratory conditions.

To transition to real-world conditions, BBCIs must co-adapt with the nervous system and jointly optimize behavioral cost functions. We introduced the concept of a neural co-processor, which uses artificial neural networks to jointly optimize behavioral error functions with biological neural networks. A trained emulator network is used as a surrogate for the biological network producing the behavioral output. Behavioral errors are backpropagated through the emulator network to the co-processor network, which adapts its weights to minimize errors and delivers optimal stimulation patterns for specific neural input patterns. We illustrated how a neural co-processor could be used to improve motor function in a stroke or spinal cord injury patient. Such co-processors have not yet been validated in animal models or humans, but if successful, they could potentially be applied to modalities other than movement, such as:

Mapping inputs from one memory-related area to another to facilitate or restore access to particular memories (e.g., in memory loss) or to unlearn traumatic memories (e.g., in PTSD),

Mapping inputs from novel external sensors or one sensory area to another to restore or augment sensation and perception,

Connecting areas involved in emotion processing to augment or rehabilitate emotional function, and

Augmenting the brain’s knowledge, skills, information processing, and learning capabilities with deep artificial neural networks.
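The training scheme summarized above, in which behavioral errors are backpropagated through a frozen emulator network into the co-processor network, can be sketched as follows. This is a minimal illustration, not the method of any cited study: the EN is a hand-specified two-layer network (`U`, `V`) standing in for a trained emulator, the CPN is a single linear layer `W`, and the input patterns and behavioral targets are hypothetical. Only `W` is updated; the EN supplies gradients but its weights never change.

```python
import math

# Frozen emulator network (EN): a hypothetical stand-in for a trained model that
# maps a 3-channel stimulation pattern s to a 2-D behavioral output y:
#   h = tanh(U s),  y = V h
U = [[1.0, 0.5, 0.0], [0.0, 1.0, -0.5], [0.3, 0.0, 1.0]]
V = [[1.0, 0.0, 0.5], [0.0, 1.0, -0.5]]


def matvec(M, v):
    """Return M v for a list-of-rows matrix M."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def mat_t_vec(M, v):
    """Return M^T v, used to send errors backward through a layer."""
    return [sum(M[i][j] * v[i] for i in range(len(M))) for j in range(len(M[0]))]


def emulator(s):
    """Forward pass of the frozen EN; returns hidden activity and behavior."""
    h = [math.tanh(a) for a in matvec(U, s)]
    return h, matvec(V, h)


def loss(W, data):
    """Total squared behavioral error of CPN weights W on (input, target) pairs."""
    total = 0.0
    for x, y_target in data:
        _, y = emulator(matvec(W, x))
        total += 0.5 * sum((yi - ti) ** 2 for yi, ti in zip(y, y_target))
    return total


def train_cpn(data, lr=0.05, epochs=500):
    """Train a linear CPN (s = W x) by backpropagating behavioral errors
    through the frozen EN; U and V are never modified."""
    W = [[0.0, 0.0] for _ in range(3)]
    for _ in range(epochs):
        for x, y_target in data:
            h, y = emulator(matvec(W, x))
            d_y = [yi - ti for yi, ti in zip(y, y_target)]                      # dL/dy
            d_a = [g * (1.0 - hi * hi) for g, hi in zip(mat_t_vec(V, d_y), h)]  # through tanh
            d_s = mat_t_vec(U, d_a)                                             # dL/ds
            for i in range(3):                                                  # dL/dW = d_s x^T
                W[i] = [w - lr * d_s[i] * xj for w, xj in zip(W[i], x)]
    return W


# Two hypothetical neural input patterns (e.g. decoded intentions); targets are
# generated by the EN from an "ideal" stimulation map so that they are reachable.
W_ideal = [[0.5, 0.0], [0.0, 0.5], [0.2, -0.2]]
inputs = [[1.0, 0.5], [-0.5, 1.0]]
data = [(x, emulator(matvec(W_ideal, x))[1]) for x in inputs]

W0 = [[0.0, 0.0] for _ in range(3)]
W_trained = train_cpn(data)
initial_loss, final_loss = loss(W0, data), loss(W_trained, data)
print(initial_loss, final_loss)
```

The targets are generated from the EN itself so that a zero-error solution exists; with real data, the achievable error would depend on how faithfully the EN captures the biological network it replaces.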

Highlights

Bidirectional brain-computer interfaces (BBCIs) combine neural decoding and encoding within a single neuroprosthetic device.

BBCIs have been used to control prosthetic limbs, induce plasticity for rehabilitation, reanimate paralyzed limbs and enhance memory.

Neural co-processors for the brain rely on artificial neural networks and deep learning to jointly optimize cost functions with the nervous system.

Neural co-processors can be used to achieve functions ranging from targeted neurorehabilitation to augmentation of brain function.

Acknowledgments

This work was supported by NSF grants EEC-1028725 and 1630178, CRCNS/NIMH grant no. 1R01MH112166-01, and a grant from the W. M. Keck Foundation. The author would like to thank Eb Fetz, Chet Moritz, Andrea Stocco, Steve Perlmutter, Dimi Gklezakos, Jon Mishler, Richy Yun, and James Wu for discussions related to topics covered in this article.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Conflict of interest statement

Nothing declared.

References and recommended reading

Papers of particular interest have been highlighted as:

• of special interest

•• of outstanding interest

1. Rao RPN. Brain-Computer Interfacing: An Introduction. New York, NY: Cambridge University Press; 2013.

2. Wolpaw J, Wolpaw EW, editors. Brain-Computer Interfaces: Principles and Practice. Oxford University Press; 2012.

3. Moritz CT, Ruther P, Goering S, Stett A, Ball T, Burgard W, Chudler EH, Rao RP. New Perspectives on Neuroengineering and Neurotechnologies: NSF-DFG Workshop Report. IEEE Trans Biomed Eng. 2016;63(7):1354–67.

4. Lebedev MA, Nicolelis MA. Brain-Machine Interfaces: From Basic Science to Neuroprostheses and Neurorehabilitation. Physiol Rev. 2017;97(2):767–837.

5. • Fetz EE. Operant conditioning of cortical unit activity. Science. 1969 Feb 28;163(870):955–58. This article was the first to demonstrate a brain-computer interface in a monkey. The author presents results showing that neurons in the motor cortex can be trained to control an artificial device through operant conditioning.

6. • Delgado J. Physical Control of the Mind: Toward a Psychocivilized Society. New York: Harper and Row; 1969. This book makes the case for bidirectional BCIs as therapeutic devices and describes the first results obtained from a bidirectional BCI, called the stimoceiver, which modulated neural activity in a monkey and thereby changed its behavior.

7. Vidal JJ. Toward direct brain-computer communication. Annu Rev Biophys Bioeng. 1973;2:157–80.

8. Chapin JK, Moxon KA, Markowitz RS, Nicolelis MA. Real-time control of a robot arm using simultaneously recorded neurons in the motor cortex. Nat Neurosci. 1999;2(7):664–70.

9. Velliste M, Perel S, Spalding MC, Whitford AS, Schwartz AB. Cortical control of a prosthetic arm for self-feeding. Nature. 2008;453:1098–1101.

10. • Hochberg LR, Bacher D, Jarosiewicz B, Masse NY, Simeral JD, Vogel J, Haddadin S, Liu J, Cash SS, van der Smagt P, Donoghue JP. Reach and grasp by people with tetraplegia using a neurally controlled robotic arm. Nature. 2012;485(7398):372–75. The authors demonstrate that two people with tetraplegia can use an invasive brain-computer interface based on motor cortex activity to control a robotic arm to perform three-dimensional reach and grasp movements, including drinking coffee from a bottle.

11. Wolpaw JR, McFarland DJ, Neat GW, Forneris CA. An EEG-based brain-computer interface for cursor control. Electroencephalogr Clin Neurophysiol. 1991 Mar;78(3):252–59.

12. Serruya MD, Hatsopoulos NG, Paninski L, Fellows MR, Donoghue JP. Instant neural control of a movement signal. Nature. 2002 Mar 14;416(6877):141–42.

13. • Wolpaw JR, McFarland DJ. Control of a two-dimensional movement signal by a noninvasive brain-computer interface in humans. Proc Natl Acad Sci U S A. 2004 Dec 21;101(51):17849–54. The authors demonstrate how a noninvasive BCI based on scalp-recorded electroencephalographic (EEG) signals, using an adaptive linear decoding scheme based on mu- and beta-rhythm frequency bands, can achieve performance in a 2D cursor control task that is competitive with certain invasive BCIs.

14. Li Z, O’Doherty JE, Hanson TL, Lebedev MA, Henriquez CS, Nicolelis MA. Unscented Kalman filter for brain-machine interfaces. PLoS One. 2009 Jul 15;4(7):e6243.

15. Gilja V, Pandarinath C, Blabe CH, Nuyujukian P, Simeral JD, Sarma AA, Sorice BL, Perge JA, Jarosiewicz B, Hochberg LR, Shenoy KV, Henderson JM. Clinical translation of a high-performance neural prosthesis. Nat Med. 2015;21(10):1142–5.

16. • Pandarinath C, Nuyujukian P, Blabe CH, Sorice BL, Saab J, Willett FR, Hochberg LR, Shenoy KV, Henderson JM. High performance communication by people with paralysis using an intracortical brain-computer interface. eLife. 2017 Feb 21;6:e18554. Combining a specially designed Kalman filter known as the recalibrated feedback intention-trained Kalman filter (ReFIT-KF) for cursor control and a hidden Markov model for clicking, the authors demonstrate the highest information rate to date for communication via an invasive brain-computer interface.

17. Farwell LA, Donchin E. Talking off the top of your head: toward a mental prosthesis utilizing event-related brain potentials. Electroencephalogr Clin Neurophysiol. 1988 Dec;70(6):510–23.

18. Sellers EW, Kübler A, Donchin E. Brain-computer interface research at the University of South Florida Cognitive Psychophysiology Laboratory: the P300 Speller. IEEE Trans Neural Syst Rehabil Eng. 2006 Jun;14(2):221–24.

19. Bell CJ, Shenoy P, Chalodhorn R, Rao RPN. Control of a humanoid robot by a noninvasive brain-computer interface in humans. J Neural Eng. 2008 Jun;5(2):214–20.

20. Galán F, Nuttin M, Lew E, Ferrez PW, Vanacker G, Philips J, Millán J del R. A brain-actuated wheelchair: asynchronous and non-invasive brain-computer interfaces for continuous control of robots. Clin Neurophysiol. 2008;119(9):2159–69.

21. Millán JJ del R, Galán F, Vanhooydonck D, Lew E, Philips J, Nuttin M. Asynchronous non-invasive brain-actuated control of an intelligent wheelchair. Conf Proc IEEE Eng Med Biol Soc. 2009;3361–64.

22. Bryan M, Nicoll G, Thomas V, Chung M, Smith JR, Rao RPN. Automatic extraction of command hierarchies for adaptive brain-robot interfacing. Proceedings of ICRA 2012; 2012 May 5–12.

23. Niparko J, editor. Cochlear implants: Principles and practices. 2nd ed. Philadelphia: Lippincott; 2009.

24. Weiland JD, Liu W, Humayun MS. Retinal prosthesis. Annu Rev Biomed Eng. 2005;7:361–401.

25. Tomlinson T, Miller LE. Toward a Proprioceptive Neural Interface That Mimics Natural Cortical Activity. Adv Exp Med Biol. 2016;957:367–388.

26. Tabot GA, Dammann JF, Berg JA, Tenore FV, Boback JL, Vogelstein RJ, Bensmaia SJ. Restoring the sense of touch with a prosthetic hand through a brain interface. Proc Natl Acad Sci U S A. 2013 Nov 5;110(45):18279–84.

27. Tyler DJ. Neural interfaces for somatosensory feedback: bringing life to a prosthesis. Curr Opin Neurol. 2015;28(6):574–581.

28. • Dadarlat MC, O’Doherty JE, Sabes PN. A learning-based approach to artificial sensory feedback leads to optimal integration. Nat Neurosci. 2015 Jan;18(1):138–44. The authors present a new learning-based approach to sensory encoding for artificial sensory feedback in which two monkeys learned to extract hand position information relative to an unseen target from intracortical microstimulation patterns delivered via 7–8 electrodes implanted in primary somatosensory cortex.

29. Flesher SN, Collinger JL, Foldes ST, Weiss JM, Downey JE, Tyler-Kabara EC, Bensmaia SJ, Schwartz AB, Boninger ML, Gaunt RA. Intracortical microstimulation of human somatosensory cortex. Sci Transl Med. 2016 Oct 19;8(361):361ra141.

30. Cronin JA, Wu J, Collins KL, Sarma D, Rao RP, Ojemann JG, Olson JD. Task-Specific Somatosensory Feedback via Cortical Stimulation in Humans. IEEE Trans Haptics. 2016 Oct-Dec;9(4):515–522.

31. Wander JD, Rao RPN. Brain-computer interfaces: a powerful tool for scientific inquiry. Curr Opin Neurobiol. 2014 Apr;25:70–5.

32. Mena GE, Grosberg LE, Madugula S, Hottowy P, Litke A, Cunningham J, Chichilnisky EJ, Paninski L. Electrical stimulus artifact cancellation and neural spike detection on large multi-electrode arrays. PLoS Comput Biol. 2017 Nov 13;13(11):e1005842.

33. O’Shea DJ, Shenoy KV. ERAASR: an algorithm for removing electrical stimulation artifacts from multielectrode array recordings. J Neural Eng. 2018 Apr;15(2):026020.

34. Zhou A, Johnson BC, Muller R. Toward true closed-loop neuromodulation: artifact-free recording during stimulation. Curr Opin Neurobiol. 2018 Jun;50:119–127.

35. O’Doherty JE, Lebedev MA, Hanson TL, Fitzsimmons NA, Nicolelis MA. A brain-machine interface instructed by direct intracortical microstimulation. Front Integr Neurosci. 2009;3:20.

36. •• O’Doherty JE, Lebedev MA, Ifft PJ, Zhuang KZ, Shokur S, Bleuler H, Nicolelis MA. Active tactile exploration using a brain-machine-brain interface. Nature. 2011;479(7372):228–31. This article presents results from two monkeys that were able to perform tactile exploration of virtual objects with different artificial tactile properties using a virtual arm controlled by motor cortex activity, while receiving feedback via intracortical microstimulation in the somatosensory cortex.

37. Klaes C, Shi Y, Kellis S, Minxha J, Revechkis B, Andersen RA. A cognitive neuroprosthetic that uses cortical stimulation for somatosensory feedback. J Neural Eng. 2014 Oct;11(5):056024.

38. •• Flesher S, et al. Intracortical Microstimulation as a Feedback Source for Brain-Computer Interface Users. Brain-Computer Interface Research, pp. 43–54, 2017. The article presents the first results from patients who were able to use artificial sensory feedback, provided through intracortical microstimulation in S1 cortex, to solve a force-feedback task involving a prosthetic hand controlled by M1 cortex activity.

39. •• Moritz CT, Perlmutter SI, Fetz EE. Direct control of paralysed muscles by cortical neurons. Nature. 2008;456:639–42. The authors show that monkeys can be operantly conditioned to modulate the firing rates of motor cortical neurons to control the electrical stimulation of paralyzed muscles to move the wrist and solve a motor task.

40. Ethier C, Oby ER, Bauman MJ, Miller LE. Restoration of grasp following paralysis through brain-controlled stimulation of muscles. Nature. 2012 May 17;485(7398):368–71.

41. •• Bouton CE, Shaikhouni A, Annetta NV, Bockbrader MA, Friedenberg DA, Nielson DM, Sharma G, Sederberg PB, Glenn BC, Mysiw WJ, Morgan AG, Deogaonkar M, Rezai AR. Restoring cortical control of functional movement in a human with quadriplegia. Nature. 2016 May 12;533(7602):247–50. This work extends prior work in monkeys on cortical control of paralyzed muscles to a human with quadriplegia. Multiunit activity from the hand area of M1 cortex was decoded into 6 wrist/hand motions, which were then evoked by functional electrical stimulation of paralyzed forearm muscles.

42. •• Ajiboye AB, Willett FR, Young DR, Memberg WD, Murphy BA, Miller JP, Walter BL, Sweet JA, Hoyen HA, Keith MW, Peckham PH, Simeral JD, Donoghue JP, Hochberg LR, Kirsch RF. Restoration of reaching and grasping movements through brain-controlled muscle stimulation in a person with tetraplegia: a proof-of-concept demonstration. Lancet. 2017 May 6;389(10081):1821–1830. Neural activity from the hand area of human M1 cortex was mapped to percent activation of functional electrical stimulation patterns applied to paralyzed arm muscles to elicit hand, wrist, or elbow movements and achieve point-to-point target acquisitions in a tetraplegic human.

43. Capogrosso M, Milekovic T, Borton D, Wagner F, Moraud EM, Mignardot JB, Buse N, Gandar J, Barraud Q, Xing D, Rey E, Duis S, Jianzhong Y, Ko WK, Li Q, Detemple P, Denison T, Micera S, Bezard E, Bloch J, Courtine G. A brain-spine interface alleviating gait deficits after spinal cord injury in primates. Nature. 2016 Nov 10;539(7628):284–288.

44. Herron JA, Thompson MC, Brown T, Chizeck HJ, Ojemann JG, Ko AL. Cortical Brain-Computer Interface for Closed-Loop Deep Brain Stimulation. IEEE Trans Neural Syst Rehabil Eng. 2017 Nov;25(11):2180–2187.

45. •• Berger T, Hampson R, Song D, Goonawardena A, Marmarelis V, Deadwyler S. A cortical neural prosthesis for restoring and enhancing memory. J Neural Eng. 2011;8(4):046017. This paper shows that a bidirectional BCI can use a nonlinear model to map multi-neuronal activity in area CA3 of the hippocampus to stimulation patterns in area CA1, enhancing the performance of a rat in a memory task.

46. Deadwyler SA, Hampson RE, Song D, Opris I, Gerhardt GA, Marmarelis VZ, Berger TW. A cognitive prosthesis for memory facilitation by closed-loop functional ensemble stimulation of hippocampal neurons in primate brain. Exp Neurol. 2017 Jan;287(Pt 4):452–460.

47. Senova S, Chaillet A, Lozano AM. Fornical Closed-Loop Stimulation for Alzheimer’s Disease. Trends Neurosci. 2018 Jul;41(7):418–428.

48. Nicolelis MAL. Beyond Boundaries. Macmillan; 2011.

49. • Pais-Vieira M, Lebedev M, Kunicki C, Wang J, Nicolelis MA. A brain-to-brain interface for real-time sharing of sensorimotor information. Sci Rep. 2013;3:1319. The article describes the first brain-to-brain interface in animals, in which neural activity in M1 cortex of an “encoder” rat was converted to intracortical microstimulation in M1 cortex of a “decoder” rat, allowing the rats to achieve a common behavioral goal.

50. • Rao RP, Stocco A, Bryan M, Sarma D, Youngquist TM, Wu J, Prat CS. A direct brain-to-brain interface in humans. PLoS One. 2014 Nov 5;9(11):e111332. The article presents the first noninvasive brain-to-brain interface in humans. Motor imagery was decoded from EEG signals recorded from the motor cortex of one subject and used to deliver information to the hand area of the motor cortex of another subject through transcranial magnetic stimulation (TMS), allowing the two subjects to collaborate and solve a visual-motor task.

51. Rao RPN, Stocco A. When two brains connect. Sci Am Mind. 2014;25:36–39.

52. Stocco A, Prat CS, Losey DM, Cronin JA, Wu J, Abernethy JA, Rao RPN. Playing 20 Questions with the Mind: Collaborative Problem Solving by Humans Using a Brain-to-Brain Interface. PLoS One. 2015 Sep 23;10(9):e0137303.

53. Grau C, Ginhoux R, Riera A, Nguyen TL, Chauvat H, Berg M, Amengual JL, Pascual-Leone A, Ruffini G. Conscious brain-to-brain communication in humans using non-invasive technologies. PLoS One. 2014 Aug 19;9(8):e105225.

54. Lee W, Kim S, Kim B, Lee C, Chung YA, Kim L, Yoo SS. Non-invasive transmission of sensorimotor information in humans using an EEG/focused ultrasound brain-to-brain interface. PLoS One. 2017 Jun 9;12(6):e0178476.

55. • Jiang L, Stocco A, Losey DM, Abernethy JA, Prat CS, Rao RPN. BrainNet: A Multi-Person Brain-to-Brain Interface for Direct Collaboration Between Brains. arXiv:1809.08632; Nature Scientific Reports (to appear), 2019. The article presents results from a noninvasive brain-to-brain interface that decodes EEG from the visual cortices of two subjects and transmits the information via TMS to the visual cortex of a third subject. The authors show that this “BrainNet” interface allows more than two brains to collaboratively solve a task that none of the individual brains could solve on their own.

56. • Pais-Vieira M, Chiuffa G, Lebedev M, Yadav A, Nicolelis MA. Building an organic computing device with multiple interconnected brains. Sci Rep. 2015 Jul 9;5:11869. The article presents the first demonstration of a “Brainet” of four interconnected rat brains with electrode arrays implanted bilaterally in S1 cortex for recording and stimulation. The Brainet functioned as an organic computing device that could be used to solve problems involving discrete classification, sequential and parallel computations, and memory storage/retrieval.

57. •• Jackson A, Mavoori J, Fetz EE. Long-term motor cortex plasticity induced by an electronic neural implant. Nature. 2006;444(7115):56–60. The authors present the first demonstration of cortical plasticity induction in M1 cortex based on activity-dependent electrical stimulation in freely behaving monkeys using a bidirectional brain-computer interface.

58. • Guggenmos DJ, Azin M, Barbay S, Mahnken JD, Dunham C, Mohseni P, Nudo RJ. Restoration of function after brain damage using a neural prosthesis. Proc Natl Acad Sci U S A. 2013 Dec 24;110(52):21177–82. The authors show that activity-dependent stimulation using a bidirectional BCI can induce cortical plasticity that improves reaching and grasping functions in a rat after traumatic brain injury to the rat’s primary motor cortex (caudal forelimb area). Their approach involves creating an artificial connection between the rat’s premotor cortex (rostral forelimb area or RFA) and somatosensory cortex S1 and, for each spike detected by an electrode in RFA, delivering an electric pulse to S1 after 7.5 milliseconds.

59. Chen X, Wang Y, Nakanishi M, Gao X, Jung TP, Gao S. High-speed spelling with a noninvasive brain-computer interface. Proc Natl Acad Sci U S A. 2015;112(44):E6058–E6067.

60. Sani OG, Yang Y, Lee MB, Dawes HE, Chang EF, Shanechi MM. Mood variations decoded from multi-site intracranial human brain activity. Nat Biotechnol. 2018 Nov;36(10):954–961.