Abstract.

Tissue window filtering has been widely used in deep learning for computed tomography (CT) image analyses to improve training performance (e.g., soft tissue windows for abdominal CT). However, the effectiveness of tissue window normalization is questionable since the generalizability of the trained model might be harmed, especially when such models are applied to new cohorts with different CT reconstruction kernels, contrast mechanisms, dynamic variations in the acquisition, and physiological changes. We evaluate the effectiveness of training with and without soft tissue window normalization on multisite CT cohorts. Moreover, we propose a stochastic tissue window normalization (SWN) method to improve the generalizability of tissue window normalization. Unlike purely random sampling, the SWN method centers the randomization around the soft tissue window to maintain the specificity for abdominal organs. To evaluate the different strategies, 80 training scans and 453 validation and testing scans from six datasets are used to perform multiorgan segmentation with a standard 2D U-Net. The six datasets cover scenarios in which the training and testing scans are from (1) the same scanner and same population, (2) the same CT contrast but different pathology, and (3) different CT contrast and pathology. The traditional soft tissue window and nonwindowed approaches achieved better performance on (1). The proposed SWN achieved generally superior performance on (2) and (3), supported by statistical analyses, and therefore offers better generalizability for a trained model.

Keywords: tissue window, computed tomography, deep learning, segmentation

1. Introduction

Computed tomography (CT) is a quantitative imaging technique that produces imaging intensities normalized in Hounsfield units (HU) (e.g., air as −1000 HU and water as 0 HU). The quantitative meaning of intensity units allows clinical practitioners to define typical window ranges (i.e., the range of intensities to display) that enhance the visual contrast of particular tissues or organs.1 A tissue window is an intensity band-pass filter, which keeps only the intensities within the band and clips the intensities beyond the minimal/maximal values. The band is commonly chosen according to the HU range of the target organ. For instance, a lung window () is typically applied to investigate lung images, and a soft tissue window () is commonly employed to enhance the image contrast for abdominal organs.1 Tissue windows not only improve the image contrast for human visualization2 but also filter out texture/noise in unrelated tissues, organs, and background.
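The band-pass behavior of a tissue window can be illustrated with a minimal sketch. The function name `apply_window` and the numeric level and half-width below are illustrative assumptions, not the window values used in this study.

```python
import numpy as np

def apply_window(hu, level, half_width):
    """Clip HU intensities to the band [level - half_width, level + half_width]."""
    lo, hi = level - half_width, level + half_width
    return np.clip(hu, lo, hi)

# Illustrative intensities and window parameters (assumed, not from the study).
hu = np.array([-1000.0, -100.0, 0.0, 60.0, 400.0])
windowed = apply_window(hu, level=50.0, half_width=200.0)
```

Intensities inside the band pass through unchanged, while air-like and very dense voxels collapse onto the band limits, which is what removes contrast from unrelated tissues.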

In recent years, the tissue window filtering process has been widely adopted in deep learning methods for CT image analyses.3–6 The rationale for using tissue window normalization (preprocessing) is to remove unnecessary information before the machine learning stage, which enhances the specificity of the trained deep learning model. The “specificity” in this study refers to the performance of deploying a trained deep learning network on testing data with the same imaging acquisition as the training data. The underlying hypothesis is that HU values are standardized and homogeneous across different cohorts. However, this hypothesis might not always be valid for some imaging scenarios, including, but not limited to, (1) different CT hardware, (2) potential confounds of CT reconstruction kernels, (3) different contrast-enhanced CT imaging, (4) dynamic variations in acquisition, and (5) physiological changes. As a result, the generalizability of a model trained with a fixed tissue window might be degraded when it is applied to heterogeneous clinical CT scans (Fig. 1). The “generalizability” in this study is defined as the performance of deploying a trained deep learning network on testing data with a different imaging acquisition from the training data.

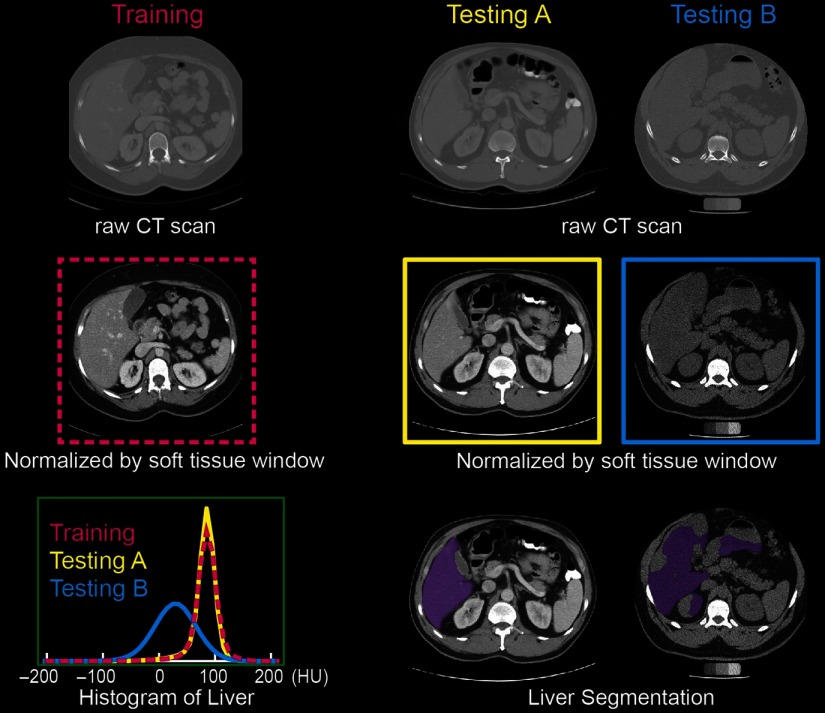

Fig. 1.

The soft tissue window normalization works well when the distribution of the testing scan (testing A) matches the training scan. However, the performance might be degraded on the testing scan (testing B) with different CT contrast. The mechanism of modifying the contrast is to apply a soft tissue window () on the raw CT scans.

In this paper, we investigate the effectiveness of standard soft tissue window normalization (STN) for a canonical multiorgan segmentation task, compared with using the whole intensity range (WIR, without tissue windows). Moreover, we propose a stochastic tissue window normalization (SWN) method to improve generalizability over STN. Unlike purely random windows, we constrain the window variations to be centered around the soft tissue window to preserve specificity.

Eighty noncontrast CT scans with healthy organs are used to train a standard 2D U-Net from Ref. 7. Then, 20 scans from the same cohort and 433 scans from different cohorts are used to evaluate the effectiveness of STN, WIR, and SWN, covering scenarios in which the training and testing scans are from (1) the same scanner and same population, (2) the same CT contrast but different pathology, and (3) different CT contrast and pathology.

2. Method

2.1. Stochastic Tissue Window Normalization

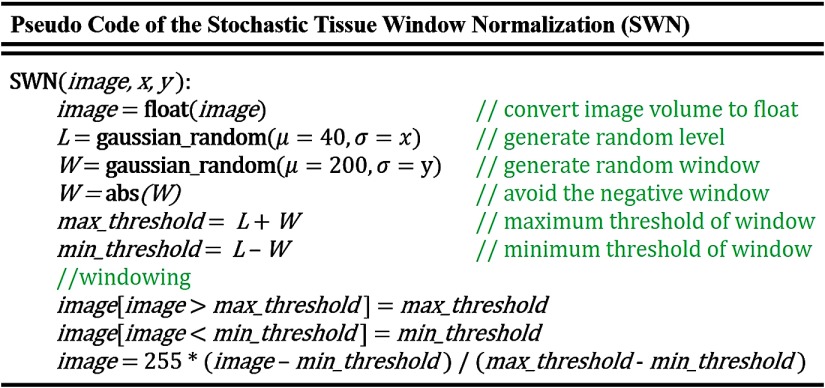

Figure 2 demonstrates the principle of training an organ segmentation network using SWN, which randomly samples the window size and location around the STN window. A tissue window is determined by two parameters: window level (center) and window size.1 Instead of pursuing generalizability alone by naively sampling random windows, we force the randomly sampled windows to be centered around the soft tissue window to maintain the specificity. To achieve that, we used the soft tissue window (window level , half-window size ) as the center of the random sampling. The pseudocode of the proposed SWN method is shown in Fig. 3. Briefly, we employed two Gaussian distributions to add variability to the soft tissue window. The new windows are randomly sampled from the following two Gaussian distributions:

| (1) |

| (2) |

where and are the two coefficients to control the variabilities of the random windows. In the study, we used the format “” to show the values of and for any experiments performed by SWN. During the training, a 2D training image slice is normalized by the sampled window with the following steps:

| (3) |

Note that the and are different for each input during training, which are randomly sampled from the aforementioned two Gaussian distributions. For WIR, the intensity within whole major intensity range () is normalized for training without applying any tissue windows. In the testing stage, we preprocess every testing scan using standard soft tissue window for STN and SWN while not using such window for WIR.
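The sampling and normalization steps described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the treatment of the two coefficients as standard deviations, the lower bound on the sampled half-window size, the rescaling to [0, 1], and all numeric values are hypothetical.

```python
import numpy as np

def sample_window(rng, level, half_width, sigma_level, sigma_width, min_half_width=1.0):
    """Draw a window level and half-width from two independent Gaussians.

    The Gaussians are centered on the base window; sigma_level and sigma_width
    are assumed here to be standard deviations. min_half_width is an added
    guard (an assumption) so that a sampled window never collapses.
    """
    new_level = rng.normal(level, sigma_level)
    new_half = max(rng.normal(half_width, sigma_width), min_half_width)
    return new_level, new_half

def normalize_with_window(hu, level, half_width):
    """Clip to the window and rescale linearly to [0, 1] (assumed output range)."""
    lo, hi = level - half_width, level + half_width
    return (np.clip(hu, lo, hi) - lo) / (hi - lo)

rng = np.random.default_rng(0)
slice_hu = rng.uniform(-1000.0, 1000.0, size=(4, 4))  # toy 2D slice in HU

# A new window is drawn for every training input; base values are illustrative.
lvl, half = sample_window(rng, level=50.0, half_width=200.0,
                          sigma_level=50.0, sigma_width=50.0)
out = normalize_with_window(slice_hu, lvl, half)
```

At test time the same `normalize_with_window` call would be made with the fixed base window, so only training sees the randomness.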

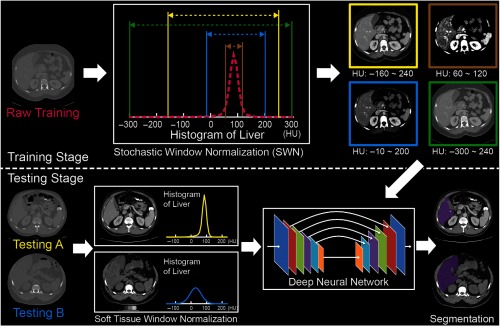

Fig. 2.

The workflow of deploying the proposed SWN to train a standard 2D U-Net segmentation network.

Fig. 3.

Pseudocode of the SWN. The terms are defined based on Eqs. (1)–(3).

2.2. Multiorgan Segmentation Network

To evaluate the effectiveness of using tissue window normalization, we keep the training network and processing standardized. The canonical 2D U-Net7 is employed as the base network. The same data augmentation stages (random cropping, padding, rotation, and translation) are performed to enhance the spatial generalizability. First, all input CT image voxels are converted to 32-bit floating-point numbers (“float”). Then, all the input 2D CT images (after windowing and preprocessing) are further normalized to the range 0 to 255 (“float”) with resolution . The number of input channels is one, and the number of output channels is eight (including background, spleen, right kidney, left kidney, liver, stomach, pancreas, and body mask). The Adam optimizer8 with learning rate 0.00001 is used with a batch size of six. Weighted cross-entropy is used as the loss function, with weights of [1, 10, 10, 10, 5, 10, 10, 1] for the eight channels. The models are trained for a maximum of 100 epochs. Within each training epoch, every image is windowed once, regardless of the windowing method. With the proposed SWN, the window level and window size are randomly sampled each time an image is windowed. Therefore, the windows are different, even for the same image across different epochs. During the testing stage, the standard soft tissue window (without randomness) is used for SWN to allow a fair comparison with the STN method. The learning rate, epoch number, and the weights were optimized from internal validation and were applied to all testing cohorts consistently. Notably, the same hyperparameters are used across all experiments except for the tissue window normalization.
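As an illustration of the loss described above, the following NumPy sketch computes a per-pixel weighted cross-entropy with the eight channel weights. The function name, the array layout (channels first), and the normalization by the sum of the per-pixel weights are assumptions; the text does not specify the reduction used during training.

```python
import numpy as np

# Channel weights as listed in the text.
CLASS_WEIGHTS = np.array([1, 10, 10, 10, 5, 10, 10, 1], dtype=np.float32)

def weighted_cross_entropy(logits, labels, weights=CLASS_WEIGHTS):
    """logits: (C, H, W) raw scores; labels: (H, W) integer class indices."""
    # Numerically stable log-softmax over the channel axis.
    shifted = logits - logits.max(axis=0, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=0, keepdims=True))
    # Log-probability of the true class at every pixel.
    picked = np.take_along_axis(log_probs, labels[None], axis=0)[0]
    w = weights[labels]
    # Weighted mean over pixels (assumed reduction).
    return float(-(w * picked).sum() / w.sum())

logits = np.zeros((8, 2, 2), dtype=np.float32)  # uniform predictions
labels = np.array([[0, 1], [4, 7]])
loss = weighted_cross_entropy(logits, labels)
```

With uniform predictions the loss equals log(8) whatever the weights are, which gives a quick sanity check; the weights only matter once the per-class errors differ.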

2.3. Training and Validation Data (Same Scanner and Population)

Multiatlas Labeling Beyond the Cranial Vault (MLBCV, multiorgan): 100 abdominal CT scans were obtained from the International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI) 2015 MLBCV challenge.9 The data were acquired from portal venous phase CT modality with variable volume sizes ( to ) and field-of-views (approximately to ). The in-plane resolution varies from to . Among 100 scans, 80 were used as training while the remaining 20 were used as validation. Six organs (spleen, right kidney, left kidney, liver, stomach, and pancreas) from MLBCV are used as training targets.

3. Data

3.1. Testing Data (Same CT Contrast but Different Pathology)

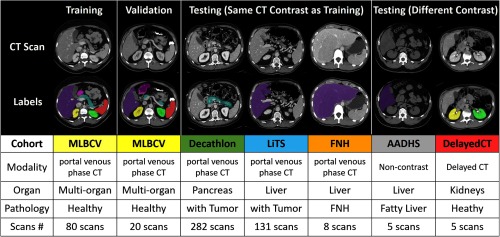

Figure 4 summarizes the six datasets used in this study. All datasets were acquired in deidentified form under Institutional Review Board approval.

Fig. 4.

Summary of training, validation, and testing cohorts.

3.1.1. Decathlon (pancreas)

Two hundred and eighty-two abdominal CT scans with manual pancreas segmentation were obtained from MICCAI 2018 Medical Segmentation Decathlon (pancreas tumor) dataset. The data were acquired from portal venous phase CT modality. The details of the data can be found at Ref. 10.

3.1.2. Liver tumor segmentation (liver)

One hundred and thirty-one abdominal CT scans with liver manual segmentation were obtained from liver tumor segmentation (LiTS) challenge. The data were acquired from portal venous phase CT modality. The details of the data can be found at Ref. 11.

3.1.3. Focal nodular hyperplasia (liver)

Eight abdominal CT scans with manual liver segmentation were internally acquired from patients with focal nodular hyperplasia (FNH) lesions. The data were acquired using contrast-enhanced CT in the portal venous phase with in-plane image size and resolution from 0.5 mm to 0.8 mm. The slice thickness is 5 mm.

3.2. Testing Data (Different CT Contrast and Pathology)

3.2.1. AA-DHS (liver)

Five abdominal CT scans with fatty liver diagnosis and manual liver segmentations were obtained from African American-Diabetes Heart Study (AA-DHS) dataset. The data were acquired from noncontrast CT modality with in-plane resolution . The details of the data can be found at Ref. 12.

3.2.2. Delayed (kidneys)

Five abdominal CT scans with manual left and right kidney segmentation were acquired internally with excretory phase sequences. The scans were performed in the prone position at an 8-min delay per institutional protocol with 3-mm axial reconstructions.

4. Simulation

4.1. Specificity and Generalizability Analysis

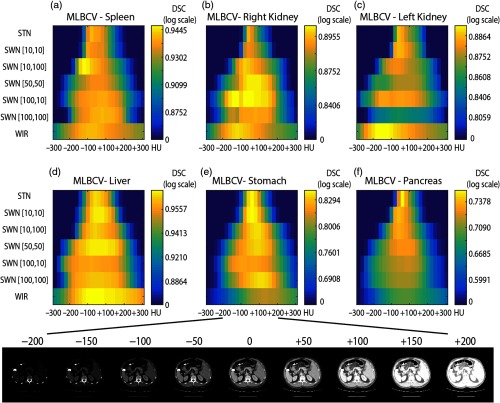

The 20 validation CT scans were used to evaluate the specificity and generalizability of STN, WIR, and SWN. To test the specificity and generalizability, we performed a simulation, which adds or subtracts constant values on 20 validation scans (from to in steps of 25 HU). That experiment simulates the intensity variations in testing data when applying the trained model. The 20 validation CT scans were used since the data were acquired from the same scanner as the training data. Therefore, the spatial effects will be minimized and the difference in performance is solely from the global variations on intensities. Figure 5 shows the variations of segmentation performance on six organs with the changes in raw intensities.
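The simulation can be sketched as a sweep over constant intensity offsets. Everything below is a hypothetical stand-in: `toy_model` replaces the trained network, `agreement` replaces the Dice-based evaluation, and the shift range is illustrative (only the 25 HU step size comes from the text).

```python
import numpy as np

def intensity_shift_sweep(scan_hu, reference, shifts, predict_fn, score_fn):
    """Score predict_fn on copies of scan_hu offset by each constant in shifts."""
    return {int(s): float(score_fn(predict_fn(scan_hu + s), reference))
            for s in shifts}

def toy_model(hu):
    # Hypothetical stand-in for a trained network: label voxels in a fixed HU band.
    return (hu > 0) & (hu < 100)

def agreement(pred, ref):
    # Fraction of voxels on which prediction and reference agree.
    return np.mean(pred == ref)

scan = np.array([[-50.0, 20.0], [60.0, 150.0]])
reference = toy_model(scan)  # segmentation of the unshifted scan

# Illustrative range; the step of 25 HU follows the text.
scores = intensity_shift_sweep(scan, reference, range(-50, 51, 25),
                               toy_model, agreement)
```

Because the anatomy is untouched and only a global offset is applied, any drop in score relative to the zero-shift entry reflects sensitivity to intensity alone.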

Fig. 5.

This figure shows the specificity and generalizability of STN, WIR, and SWN. To test the different tissue window normalization strategy, the testing scans have been added or subtracted constant values and fed into the same network. The color indicates the mean Dice values across 20 validation scans for each organ. The width of the yellow color range in each row shows the generalizability, and the brightness indicates the specificity. The proposed SWN has better generalizability compared with STN and better specificity compared with WIR. (a) MLBCV—spleen, (b) MLBCV—right kidney, (c) MLBCV—left kidney, (d) MLBCV—liver, (e) MLBCV—stomach, and (f) MLBCV—pancreas.

5. Empirical Validation

The 20 MLBCV scans are used to evaluate the performance of different window normalization strategies for the scenario in which the training and testing scans are from the “same scanner and population.” The Dice similarity coefficient (DSC) is used as the metric of segmentation accuracy.

The Decathlon, LiTS, and FNH cohorts are employed to evaluate the performance of different window normalization strategies for the scenarios that the training and testing scans are from “same CT contrast but different pathology.”

The AA-DHS and delayed cohorts are employed to evaluate the performance of different window normalization strategies for the scenarios that the training and testing scans are from “different CT contrast and pathology.”
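For reference, the DSC used as the metric throughout this section can be computed per organ label as in the sketch below. The function name and the convention of returning NaN when a label is absent from both segmentations are assumptions.

```python
import numpy as np

def dice_coefficient(pred, truth, label):
    """DSC = 2 |P ∩ T| / (|P| + |T|) for one organ label."""
    p = pred == label
    t = truth == label
    denom = p.sum() + t.sum()
    if denom == 0:
        return float("nan")  # label absent from both segmentations (assumed convention)
    return float(2.0 * np.logical_and(p, t).sum() / denom)

# Toy label maps: 0 = background, 1 and 2 = two organs.
pred = np.array([[1, 1], [0, 2]])
truth = np.array([[1, 0], [0, 2]])
dsc_organ1 = dice_coefficient(pred, truth, label=1)
dsc_organ2 = dice_coefficient(pred, truth, label=2)
```

Here organ 1 is oversegmented by one pixel (DSC of 2/3), while organ 2 matches exactly (DSC of 1).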

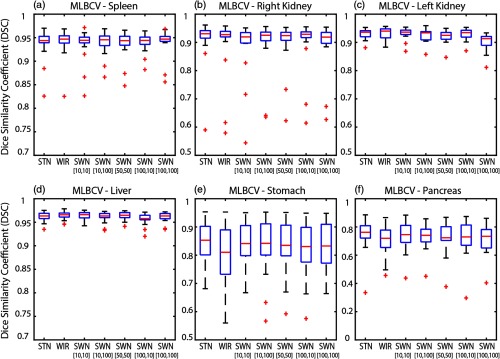

5.1. Internal Validation (MLBCV)

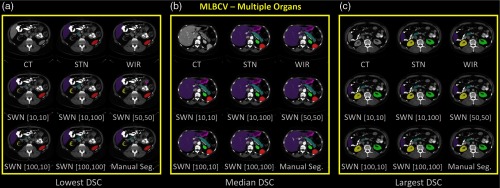

The qualitative and quantitative results of the 20 MLBCV validation scans are shown in Figs. 6 and 7, respectively. The detailed measurements of six labels are presented in Table 1. As the training and validation datasets are from the same cohort and the same scanner, the intensities of training scans and testing scans are homogeneous. Therefore, the canonical STN or WIR methods achieved superior performance in either median DSC or mean DSC for all six organs. In Table 1, the best DSC results are marked as bold. Briefly, a greater median and mean DSC indicate better segmentation performance with respect to the manual segmentations. A smaller standard deviation (STD) of DSC means that the segmentation performance varies less and is more consistent across cases. The symbol “—” indicates that the difference between the corresponding method and the reference method (“Ref.”) is not significant. The symbols “↑” and “↓” mean significantly higher and lower, respectively, using the Wilcoxon signed-rank test with . The symbol “*” means the false discovery rate (FDR) corrected value within the corresponding abdominal organ is , with 12 comparisons for each organ.
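The multiple-comparison step can be sketched as follows. The text does not name the FDR procedure, so the Benjamini-Hochberg adjustment used here is an assumption, and the p-values are made-up placeholders; in practice they would come from a paired test on per-scan DSC values, such as `scipy.stats.wilcoxon`.

```python
import numpy as np

def benjamini_hochberg(p_values):
    """Return Benjamini-Hochberg adjusted p-values in the original order."""
    p = np.asarray(p_values, dtype=float)
    m = p.size
    order = np.argsort(p)
    # Scale the i-th smallest p-value by m / i.
    ranked = p[order] * m / np.arange(1, m + 1)
    # Enforce monotonicity, starting from the largest p-value.
    ranked = np.minimum.accumulate(ranked[::-1])[::-1]
    adjusted = np.empty(m)
    adjusted[order] = np.clip(ranked, 0.0, 1.0)
    return adjusted

# Placeholder p-values for one organ (illustrative only).
raw_p = [0.01, 0.04, 0.03, 0.20]
adj_p = benjamini_hochberg(raw_p)
```

A comparison would carry the “*” marker only if its adjusted value also falls below the chosen significance threshold.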

Fig. 6.

The qualitative results of applying different intensity normalization strategies. The segmentation results of three scans with the (a) lowest DSC, (b) median DSC, and (c) highest DSC (in SWN [50, 50]) are presented for each experiment.

Fig. 7.

The quantitative results of applying different intensity normalization strategies to MLBCV dataset, which is from the “same scanner and same population” as training. (a) MLBCV—spleen, (b) MLBCV—right kidney, (c) MLBCV—left kidney, (d) MLBCV—liver, (e) MLBCV—stomach, and (f) MLBCV—pancreas.

Table 1.

Segmentation performance on MLBCV.

| | STN | WIR | SWN [10, 10] | SWN [10, 100] | SWN [50, 50] | SWN [100, 10] | SWN [100, 100] |
|---|---|---|---|---|---|---|---|
| MLBCV—spleen | | | | | | | |
| Median | 0.9438 | 0.9469 | 0.9444 | 0.9456 | 0.9437 | 0.9442 | 0.9469 |
| Mean | 0.9380 | 0.9407 | 0.9359 | 0.9391 | 0.9368 | 0.9399 | 0.9413 |
| STD | 0.0317 | 0.0294 | 0.0333 | 0.0247 | 0.0283 | 0.0194 | 0.0279 |
| | Ref. | — | ↓ | — | — | — | — |
| | — | Ref. | — | — | — | — | — |
| MLBCV—right kidney | | | | | | | |
| Median | 0.9307 | 0.9274 | 0.9194 | 0.9252 | 0.9240 | 0.9288 | 0.9187 |
| Mean | 0.8898 | 0.8942 | 0.8894 | 0.8948 | 0.8995 | 0.8999 | 0.8936 |
| STD | 0.1231 | 0.1046 | 0.0966 | 0.0894 | 0.0801 | 0.0887 | 0.0859 |
| | Ref. | — | * ↓ | — | — | — | — |
| | — | Ref. | * ↓ | — | — | — | — |
| MLBCV—left kidney | | | | | | | |
| Median | 0.9360 | 0.9400 | 0.9364 | 0.9337 | 0.9251 | 0.9346 | 0.9132 |
| Mean | 0.8840 | 0.8859 | 0.8855 | 0.8801 | 0.8762 | 0.8835 | 0.8571 |
| STD | 0.2087 | 0.2096 | 0.2093 | 0.2083 | 0.2074 | 0.2089 | 0.2040 |
| | Ref. | — | — | — | * ↓ | — | * ↓ |
| | — | Ref. | — | — | * ↓ | — | * ↓ |
| MLBCV—liver | | | | | | | |
| Median | 0.9633 | 0.9659 | 0.9662 | 0.9633 | 0.9648 | 0.9577 | 0.9634 |
| Mean | 0.9622 | 0.9646 | 0.9639 | 0.9613 | 0.9640 | 0.9575 | 0.9611 |
| STD | 0.0096 | 0.0086 | 0.0089 | 0.0110 | 0.0086 | 0.0127 | 0.0103 |
| | Ref. | * ↑ | * ↑ | — | — | * ↓ | — |
| | * ↓ | Ref. | — | * ↓ | — | * ↓ | * ↓ |
| MLBCV—stomach | | | | | | | |
| Median | 0.8528 | 0.8102 | 0.8412 | 0.8418 | 0.8348 | 0.8306 | 0.8325 |
| Mean | 0.8377 | 0.8029 | 0.8380 | 0.8327 | 0.8305 | 0.8234 | 0.8307 |
| STD | 0.0805 | 0.1052 | 0.0777 | 0.0995 | 0.0891 | 0.0944 | 0.0845 |
| | Ref. | ↓ | — | — | — | — | — |
| | ↑ | Ref. | ↑ | ↑ | — | — | — |
| MLBCV—pancreas | | | | | | | |
| Median | 0.7620 | 0.7196 | 0.7453 | 0.7407 | 0.7234 | 0.7294 | 0.7336 |
| Mean | 0.7483 | 0.7030 | 0.7357 | 0.7344 | 0.7313 | 0.7215 | 0.7167 |
| STD | 0.1149 | 0.1140 | 0.1038 | 0.0886 | 0.1091 | 0.1279 | 0.1046 |
| | Ref. | * ↓ | — | * ↓ | — | * ↓ | * ↓ |
| | * ↑ | Ref. | * ↑ | * ↑ | * ↑ | — | — |

Note: The best DSC results are marked as bold. The symbol “—” indicates that the difference between the corresponding method and the reference method (“Ref.”) is not significant. The symbols “↑” and “↓” mean significantly higher and lower, respectively, using the Wilcoxon signed-rank test with . “*” means the FDR corrected value is also .

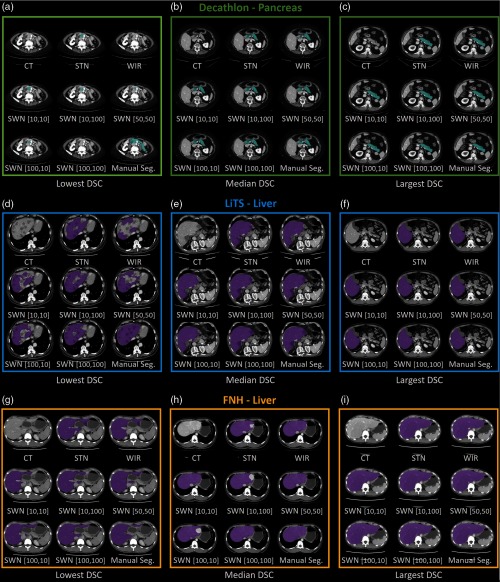

5.2. External Validation on Same Imaging Protocol

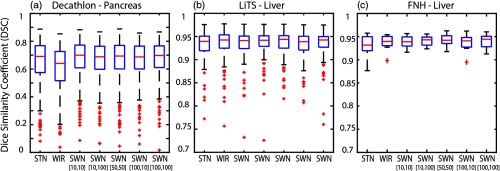

We group the results of Decathlon, LiTS, and FNH as the external validation results on the same CT modality since these datasets were acquired with the same imaging protocol (portal venous phase) as the training dataset but at different sites. The qualitative and quantitative results of different methods are shown in Figs. 8 and 9. The corresponding detailed measurements are provided in Table 2. When the trained model was applied to external validation datasets with the same imaging protocol but different sites and pathologies, the proposed SWN method achieved superior performance compared with the canonical STN and WIR methods.

Fig. 8.

The qualitative results of applying different intensity normalization strategies. The segmentation results of three scans with the lowest, median, and highest DSC (in SWN [50,50]) are presented for each experiment. (a)–(c) Decathlon—pancreas, (d)–(f) LiTS—liver, (g)–(i) FNH—liver.

Fig. 9.

The quantitative results of applying different intensity normalization strategies to (a) Decathlon, (b) LiTS, and (c) FNH, which are from “same CT contrast, different pathology.”

Table 2.

Performance on testing data (same CT contrast, different pathology).

| | STN | WIR | SWN [10, 10] | SWN [10, 100] | SWN [50, 50] | SWN [100, 10] | SWN [100, 100] |
|---|---|---|---|---|---|---|---|
| Decathlon—pancreas | | | | | | | |
| Median | 0.6908 | 0.6407 | 0.6996 | 0.6880 | 0.6933 | 0.6870 | 0.6972 |
| Mean | 0.6480 | 0.6009 | 0.6714 | 0.6612 | 0.6607 | 0.6590 | 0.6665 |
| STD | 0.1639 | 0.1645 | 0.1432 | 0.1403 | 0.1507 | 0.1467 | 0.1416 |
| | Ref. | * ↓ | * ↑ | — | * ↑ | — | * ↑ |
| | * ↑ | Ref. | * ↑ | * ↑ | * ↑ | * ↑ | * ↑ |
| LiTS—liver | | | | | | | |
| Median | 0.9396 | 0.9425 | 0.9414 | 0.9420 | 0.9439 | 0.9389 | 0.9425 |
| Mean | 0.9321 | 0.9294 | 0.9315 | 0.9351 | 0.9335 | 0.9288 | 0.9346 |
| STD | 0.0307 | 0.0472 | 0.0405 | 0.0300 | 0.0376 | 0.0398 | 0.0300 |
| | Ref. | — | — | * ↑ | * ↑ | * ↑ | * ↑ |
| | — | Ref. | — | — | — | * ↓ | — |
| FNH—liver | | | | | | | |
| Median | 0.9317 | 0.9395 | 0.9389 | 0.9430 | 0.9422 | 0.9408 | 0.9443 |
| Mean | 0.9295 | 0.9367 | 0.9386 | 0.9408 | 0.9423 | 0.9361 | 0.9399 |
| STD | 0.0264 | 0.0181 | 0.0138 | 0.0119 | 0.0139 | 0.0203 | 0.0166 |
| | Ref. | — | — | — | ↑ | — | ↑ |
| | — | Ref. | — | — | — | — | — |

Note: The best DSC results are marked as bold. The symbol “—” indicates that the difference between the corresponding method and the reference method (“Ref.”) is not significant. The symbols “↑” and “↓” mean significantly higher and lower, respectively, using the Wilcoxon signed-rank test with . “*” means the FDR corrected value is also .

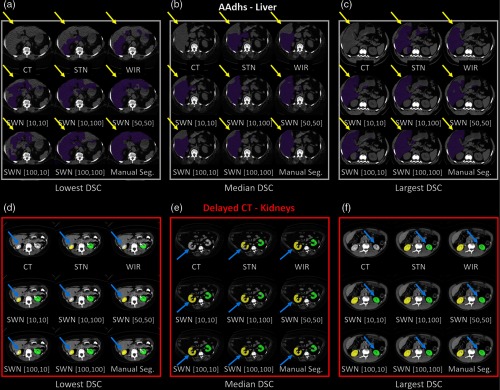

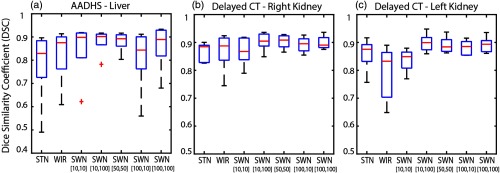

5.3. External Validation on Different Imaging Protocol

The model trained on portal venous phase CT scans is evaluated using the noncontrast CT scans (AADHS) and delayed phase CT scans (delayed). In this scenario, the HU intensities of livers in AADHS are systematically different from those in the training data. Likewise, the HU intensities of kidneys in delayed are systematically different from those in the training data. Therefore, the intensities of the target organs in the training and testing datasets are heterogeneous. The qualitative and quantitative results are shown in Figs. 10 and 11. The corresponding detailed measurements are provided in Table 3. From the results, the proposed SWN method achieved superior performance compared with the canonical STN and WIR methods.

Fig. 10.

The qualitative results of applying different intensity normalization strategies on (a)–(c) AADHS and (d)–(f) delayed datasets are provided. The segmentation results of three scans with the lowest, median, and highest DSC (in SWN [50, 50]) are presented. The yellow and blue arrows indicate the key observations among different methods.

Fig. 11.

The quantitative results of applying different intensity normalization strategies on the testing scans, which are from “different CT contrast and pathology” compared with training. (a) AADHS—liver, (b) Delayed—right kidney, and (c) Delayed—left kidney.

Table 3.

Performance on testing data (different CT contrast and pathology).

| | STN | WIR | SWN [10, 10] | SWN [10, 100] | SWN [50, 50] | SWN [100, 10] | SWN [100, 100] |
|---|---|---|---|---|---|---|---|
| AADHS—liver | | | | | | | |
| Median | 0.8290 | 0.8752 | 0.8983 | 0.9017 | 0.8924 | 0.8433 | 0.8892 |
| Mean | 0.7799 | 0.8214 | 0.8458 | 0.8811 | 0.8797 | 0.8084 | 0.8589 |
| STD | 0.1661 | 0.1248 | 0.1266 | 0.0559 | 0.0449 | 0.1433 | 0.1039 |
| | Ref. | — | ↑ | — | ↑ | ↑ | ↑ |
| | — | Ref. | ↑ | — | ↑ | — | ↑ |
| Delayed—right kidney | | | | | | | |
| Median | 0.8847 | 0.8875 | 0.8678 | 0.9048 | 0.9084 | 0.8954 | 0.8905 |
| Mean | 0.8652 | 0.8673 | 0.8690 | 0.9035 | 0.9031 | 0.8921 | 0.8995 |
| STD | 0.0352 | 0.0719 | 0.0526 | 0.0341 | 0.0256 | 0.0281 | 0.0245 |
| | Ref. | — | — | ↑ | ↑ | — | — |
| | — | Ref. | — | — | — | — | — |
| Delayed—left kidney | | | | | | | |
| Median | 0.8755 | 0.8328 | 0.8491 | 0.8994 | 0.8841 | 0.8853 | 0.8936 |
| Mean | 0.8580 | 0.7898 | 0.8359 | 0.8987 | 0.8910 | 0.8818 | 0.8913 |
| STD | 0.0605 | 0.1010 | 0.0427 | 0.0334 | 0.0298 | 0.0272 | 0.0293 |
| | Ref. | ↓ | — | — | — | — | — |
| | ↑ | Ref. | — | — | — | — | ↑ |

Note: The best DSC results are marked as bold. The symbol “—” indicates that the difference between the corresponding method and the reference method (“Ref.”) is not significant. The symbols “↑” and “↓” mean significantly higher and lower, respectively, using the Wilcoxon signed-rank test with .

6. Conclusion and Discussion

We evaluate the effectiveness of both tissue window normalization and nonwindowed methods for deep learning on CT organ segmentation tasks. The soft tissue window typically yields superior performance on segmenting smaller and more challenging organs (pancreas and stomach). Meanwhile, training without tissue window techniques achieved superior segmentation performance on larger and easier organs (liver and spleen).

From internal validation (training and testing data are from the same scanner and population), the STN and WIR achieved overall better segmentation performance (Fig. 7 and Table 1). We propose a new SWN method and evaluate the STN, WIR, and SWN methods using simulation (Fig. 5) of different external testing cohorts.

According to the absolute differences in Dice values (highlighted in bold), the proposed SWN method achieved generally better Dice scores when evaluated on testing scans acquired from different scanners but with the same contrast (Fig. 9 and Table 2). When evaluated on testing scans acquired with different modalities and different pathologies (Fig. 11 and Table 3), the proposed SWN method also achieved generally superior Dice values compared with STN and WIR. The proposed SWN provided better generalizability of a trained model while preserving the specificity compared with STN and WIR.

The standard Wilcoxon signed-rank test (highlighted with colors) is used for statistical analysis in the study. When the training and testing scans are restricted to the same scanner, protocol, and patient population (Table 1), the standard methods demonstrate improved benchmarks compared with the proposed method. This means that the simple standard intensity normalization methods are more suitable for internal validation. In practice, however, we typically would like to train a more generalizable deep learning model, which can be applied directly to different cohorts and populations (Tables 2 and 3). Under such external validation scenarios, the generalizability of the trained model is essential, especially since the number of available training cases is typically small for medical imaging applications. The proposed method achieves overall superior performance when the testing and training cohorts are more heterogeneous, which improves the segmentation performance of the trained models on different testing imaging protocols. As a stricter criterion, FDR correction is applied to correct the original -values for multiple comparisons (highlighted with “*”). After FDR correction, the differences for MLBCV-spleen, MLBCV-stomach (Table 1), FNH-liver (Table 2), AADHS-liver, delayed-left kidney, and delayed-right kidney (Table 3) are not significant. The nonsignificant comparisons in Tables 2 and 3 are due to the relatively small sizes of the available cohorts (i.e., five to eight patients).

The standard 2D U-Net is employed as the segmentation network to evaluate the performance of using tissue windows. While this combination is successful, we do not claim that the 2D U-Net is optimal. Achieving the best possible segmentation network is not the major aim of this work. In the future, it would also be interesting to have the organs from different contrasts labeled by different human experts. In that case, the inter-rater reliability could be calculated and used to evaluate the automatic segmentation against human variability.

The proposed method is validated on the soft tissue window. However, other types of tissue windows (e.g., lung, cardiac, liver window, etc.) have also been widely used in different applications. Theoretically, the stochastic tissue window would also improve the generalizability of deep network for such applications. Therefore, it would be useful to extend and validate the proposed method to such applications in the future. Another limitation of the proposed window-based normalization is that it sacrifices the physical information behind the HU standardization.

Acknowledgments

We thank Naiyun Zhou for helping organize part of the data. The authors of the paper are directly employed by the institutes or companies provided in this study. This research was supported by NSF CAREER 1452485 and NIH grants 5R21EY024036, R01EB017230, 1R21NS064534, 1R03EB012461, R01 DK113980, and 6R01 DK112262. Y. H., Y. T., R. G. A., and B. A. L. are supported by the Vanderbilt-12 Sigma Research Grant (Huo/Abramson/Landman). R. G. A. is also receiving partial support from 2U01CA142565 and P30 CA068485. J. J. C. and J. G. T. are in part supported by R01 DK113980, DK Locke (PI) (09/01/17-08/31/22) “CKD risk prediction among obese living kidney donors.” This project will evaluate biomarkers of risk as related to obese living kidney donors. J. J. C. and J. G. T. are coinvestigators 6R01 DK112262, NIDDK Koethe (PI) (02/01/17-01/31/22) “The role of adipose-resident T cells in HIV-associated glucose intolerance.”

Biographies

Yuankai Huo is a research assistant professor at Vanderbilt University. He received his BS degree in telecommunication engineering from Nanjing University of Posts and Telecommunications in 2008, his MS degree in information and telecommunication engineering and computer science from Southeast University and Columbia University in 2011 and 2014, respectively, and his PhD in electrical engineering from Vanderbilt University in 2018. He is the author of more than 50 journal and conference papers in medical image analysis. He is a member of SPIE.

Biographies of the other authors are not available.

Disclosures

No conflicts of interest, financial or otherwise, are declared by Yunqiang Chen, Dashan Gao, Shizhong Han, Shunxing Bao, and Smita De.

Contributor Information

Yuankai Huo, Email: yuankai.huo@vanderbilt.edu.

Yucheng Tang, Email: yucheng.tang@vanderbilt.edu.

Yunqiang Chen, Email: yunqiang@12sigma.ai.

Dashan Gao, Email: dgao@12sigma.ai.

Shizhong Han, Email: hanshizhong1105@gmail.com.

Shunxing Bao, Email: shunxing.bao@vanderbilt.edu.

Smita De, Email: smita.de@gmail.com.

James G. Terry, Email: james.g.terry@vumc.org.

Jeffrey J. Carr, Email: j.jeffrey.carr@vumc.org.

Richard G. Abramson, Email: rgabramson@gmail.com.

Bennett A. Landman, Email: bennett.landman@vanderbilt.edu.

References

- 1. Sahi K., et al., “The value of ‘liver windows’ settings in the detection of small renal cell carcinomas on unenhanced computed tomography,” Can. Assoc. Radiol. J. 65(1), 71–76 (2014). 10.1016/j.carj.2012.12.005

- 2. Pomerantz S. M., et al., “Liver and bone window settings for soft-copy interpretation of chest and abdominal CT,” Am. J. Roentgenol. 174(2), 311–314 (2000). 10.2214/ajr.174.2.1740311

- 3. Yan K., Lu L., Summers R. M., “Unsupervised body part regression via spatially self-ordering convolutional neural networks,” in 15th Int. Symp. Biomedical Imaging (ISBI 2018), IEEE, pp. 1022–1025 (2017).

- 4. Lee H., et al., “Pixel-level deep segmentation: artificial intelligence quantifies muscle on computed tomography for body morphometric analysis,” J. Digital Imaging 30(4), 487–498 (2017). 10.1007/s10278-017-9988-z

- 5. Dorn S., et al., “Organ-specific context-sensitive CT image reconstruction and display,” Proc. SPIE 10537, 1057326 (2018). 10.1117/12.2291897

- 6. Huo Y., et al., “Splenomegaly segmentation on multi-modal MRI using deep convolutional networks,” IEEE Trans. Med. Imaging 38, 1185–1196 (2018). 10.1109/TMI.42

- 7. Ronneberger O., Fischer P., Brox T., “U-net: convolutional networks for biomedical image segmentation,” in Int. Conf. Med. Image Comput. and Comput. Assisted Interv., pp. 234–241 (2015). 10.1007/978-3-319-24574-4_28

- 8. Kingma D. P., Ba J., “Adam: a method for stochastic optimization,” arXiv:1412.6980 (2014).

- 9. Landman B., et al., “Multi-atlas labeling beyond the cranial vault,” (2015).

- 10. Simpson A. L., et al., “A large annotated medical image dataset for the development and evaluation of segmentation algorithms,” arXiv:1902.09063 (2019).

- 11. Bilic P., et al., “The liver tumor segmentation benchmark (LiTS),” arXiv:1901.04056 (2019).

- 12. Bowden D. W., et al., “Review of the Diabetes Heart Study (DHS) family of studies: a comprehensively examined sample for genetic and epidemiological studies of type 2 diabetes and its complications,” Rev. Diabetic Stud. 7(3), 188 (2010). 10.1900/RDS.2010.7.188