Abstract

Decision‐making in the somatosensory domain has been intensively studied using vibrotactile frequency discrimination tasks. Results from human and monkey electrophysiological studies in this line of research suggest that perceptual choices are encoded within a sensorimotor network. These findings, however, rely on experimental settings in which perceptual choices are inextricably linked to the sensory and motor components of the task. Here, we devised a novel version of the vibrotactile frequency discrimination task with saccade responses, which has the crucial advantage of decoupling perceptual choices from sensory and motor processes. We recorded human fMRI data from 32 participants while they performed the task. Using a whole‐brain searchlight multivariate classification technique, we identified the left lateral prefrontal cortex and the oculomotor system, including the bilateral frontal eye fields (FEF) and intraparietal sulci, as representing vibrotactile choices. Moreover, we show that the decoding accuracy of choice information in the right FEF correlates with behavioral performance. These findings are in remarkable agreement with previous work and also provide novel fMRI evidence for choice coding in human oculomotor regions that is not limited to saccadic decisions but extends to contexts in which choices are made in a more abstract form.

Keywords: decision‐making, fMRI, multivariate pattern analysis, perceptual choice, vibrotactile frequency

1. INTRODUCTION

A perceptual decision comprises multiple stages that convert sensory input, via a categorical judgment about the perceived information, into an appropriate behavior. One of the main aims of perceptual decision‐making research has been to identify, characterize, and dissociate brain activity directly linked to the decision from other signals that accompany this chain of processes.

In the somatosensory domain, neural mechanisms underlying perceptual choices have been extensively studied with electrophysiology in monkeys using vibrotactile frequency discrimination tasks (Romo & de Lafuente, 2013). In these studies, monkeys compare two sequentially presented vibrotactile stimuli and indicate whether the frequency of the second stimulus (f2) is higher or lower than the first (f1) with a manual response. The findings suggest that the comparison process and the resulting perceptual choice are encoded within a sensorimotor network, including prefrontal, premotor, motor, and sensory cortices (Haegens et al., 2011; Hernández, Zainos, & Romo, 2002; Hernández et al., 2010; Romo, Hernández, & Zainos, 2004).

In humans, the initial attempts to identify neural correlates of vibrotactile decision‐making were conducted with fMRI (Pleger et al., 2006; Preuschhof, Heekeren, Taskin, Schubert, & Villringer, 2006). These authors revealed that multiple regions, particularly the dorsolateral prefrontal cortex and the insula, are involved in decision‐making (see also Kelly & O'Connell, 2015, for a review of fMRI studies in the broader field of visual decision‐making). However, due to the sluggish nature of the BOLD response, how the observed changes in BOLD amplitude relate to the different components of a decision process, for example, sensory‐, decision‐, and motor‐driven signals, remains a matter of debate (see Mulder, van Maanen, & Forstmann, 2014), which makes it difficult to situate these studies within a broader context. More recently, further human evidence has been reported in EEG studies. In line with research focusing on oscillatory activity in monkeys (Haegens et al., 2011), Herding and colleagues found that choices are encoded by differential power of upper beta band oscillations in premotor structures. Notably, the most likely source of the beta band modulation shifted with the response effector: the medial premotor cortex for manual responses (Herding, Spitzer, & Blankenburg, 2016) and the frontal eye field for saccades (Herding, Ludwig, & Blankenburg, 2017). Taken together, the electrophysiological findings across species suggest a pivotal role of sensorimotor regions, in particular the premotor regions, in computing and representing vibrotactile choice. Moreover, these findings align well with a large body of monkey studies in the visual domain suggesting that perceptual decisions are mainly formed in brain regions involved in preparing and selecting actions (Cisek & Kalaska, 2010; Gold & Shadlen, 2007).

The vibrotactile frequency discrimination task has been a powerful tool for exploring the neural underpinnings of somatosensory decision‐making. However, in the standard versions of this task, perceptual choices are inextricably linked to the sensory and motor components of the task. That is, f1 is typically set as the reference frequency against which f2 is compared. Thus, observers will typically decide “higher” if frequencies are presented in increasing order (f1 < f2) and “lower” if they are presented in decreasing order (f1 > f2), resulting in a correlation between stimulus order and perceptual choice that precludes a clear dissociation between sensory‐ and choice‐related signals. Furthermore, each perceptual choice is most often mapped directly onto a movement toward a specific spatial target, so that brain signals reflecting perceptual choice cannot be separated from those related to action selection. This raises the question of whether the previously reported premotor regions would still encode perceptual choices when choices are independent of action selection (cf. Huk, Katz, & Yates, 2017). This is particularly relevant in light of a growing body of evidence suggesting that abstract, motor‐independent choices are represented by brain regions that are not primarily associated with action selection (Filimon, Philiastides, Nelson, Kloosterman, & Heekeren, 2013; Hebart, Donner, & Haynes, 2012).

With the present fMRI study, we aimed to identify human brain regions that represent vibrotactile choice independent of the sensory and motor components of the task. We modified the vibrotactile frequency discrimination task so that the choice is disentangled from the preceding stimulus order and the succeeding saccade movement direction. Importantly, we employed a searchlight multivariate pattern analysis (Kriegeskorte, Goebel, & Bandettini, 2006), which allowed the isolation of choice‐related activity patterns from those associated with other task components across the whole brain without a priori assumptions about where to expect such a representational code.

2. MATERIALS AND METHODS

2.1. Participants

Thirty‐two healthy, right‐handed volunteers with normal or corrected‐to‐normal vision participated in the experiment. All participants gave written informed consent prior to the experiment. The experimental protocols were approved by the local ethics committee of the Freie Universität Berlin. Data from two participants were discarded due to excessive head motion (>8 mm), leaving 30 participants for further analyses (21 female, mean age = 27 years, age range = 22–39).

2.2. Experimental procedure and stimuli

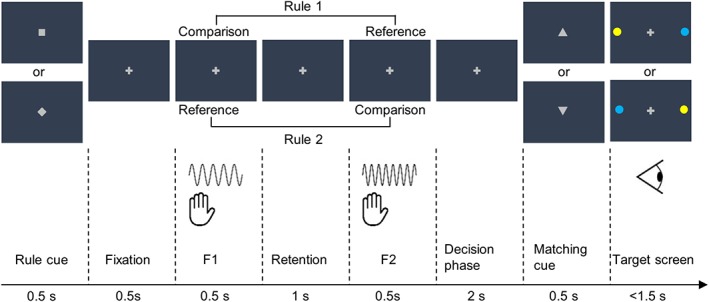

Participants compared the frequencies of two vibrotactile stimuli sequentially administered to the distal phalanx of the left index finger and decided whether the comparison frequency was higher or lower than the reference frequency by making a saccade toward a color‐coded target (Figure 1). To decouple perceptual choice (higher vs. lower) from stimulus order (f1 < f2 vs. f1 > f2), either f1 or f2 served as the comparison frequency, depending on the rule cue presented at the beginning of each trial. In half of the trials, participants indicated whether f1 was higher or lower than f2 (rule 1), and in the other half, whether f2 was higher or lower than f1 (rule 2). Furthermore, instead of pre‐assigning a choice to a specific spatial target or target color, participants reported whether their perceptual choice matched or mismatched the proposition indicated by a matching cue. Importantly, the matching cue and the following target screen were presented after the decision phase, so that participants could neither anticipate the target color nor prepare a saccade toward a spatial target.

Figure 1.

Experimental paradigm. A rule cue indicated which of the two rules applied (pseudo‐randomized across trials; the cue–rule assignment was counterbalanced across participants). Rule 1 indicated that participants had to compare f1 against f2, while rule 2 indicated a comparison in the reverse direction. This was followed by f1 and f2 presented to the participants' left index finger. After the decision phase, participants compared their perceptual choice with a visual matching cue (an upward‐pointing triangle indicated “higher,” while a downward‐pointing triangle indicated “lower”) and reported a match or mismatch with a saccade to either the blue or the yellow dot on the target screen. The spatial locations of the colored dots switched across target screens, and the color code was counterbalanced across participants. The matching cues and target screens were orthogonal to each other and pseudo‐randomly interleaved across trials, so that participants were not able to anticipate the appropriate saccade direction during the decision phase [Color figure can be viewed at http://wileyonlinelibrary.com]

Each trial began with a fixation period of variable duration (3, 4, 5, or 6 s). A rule cue (square or diamond) was then shown at the center of the screen for 500 ms, indicating which of the subsequently presented vibrotactile stimuli served as the comparison stimulus. The specific association between cue symbols and rules was counterbalanced across participants. The rule cue was followed by two vibrotactile stimuli with different frequencies (500 ms each), separated by a 1 s retention period. After a decision phase of 2 s, an equilateral triangle serving as a visual matching cue was presented centrally for 500 ms. An upward‐pointing triangle indicated a comparison stimulus of higher frequency, whereas a downward‐pointing triangle indicated a comparison stimulus of lower frequency. The matching cues were pseudo‐randomly interleaved across trials. Participants compared their perceptual choice with the matching cue and, after the matching cue offset, reported a match or mismatch by making a saccade to one of two color‐coded targets (blue vs. yellow dot) presented in the periphery along the horizontal meridian. The color code was counterbalanced across participants, and the locations of the blue and yellow dots on the target screen alternated pseudo‐randomly across trials. Participants were instructed to respond as fast as possible. Responses given later than 1.5 s after target screen onset were counted as missed trials.

Vibrotactile stimuli were delivered to the distal phalanx of the left index finger by a piezoelectric Braille display with 16 pins (4 × 4 square matrix, 2.5 mm spacing), controlled by a programmable stimulator (QuaeroSys Medical Devices, Schotten, Germany). The frequency of the first stimulus (f1) was 16, 20, 24, or 28 Hz. Each f1 was paired with an f2 that was either 4 Hz higher or lower, resulting in a total of eight stimulus pairs (16 vs. 12 Hz, 16 vs. 20 Hz, 20 vs. 16 Hz, 20 vs. 24 Hz, 24 vs. 20 Hz, 24 vs. 28 Hz, 28 vs. 24 Hz, and 28 vs. 32 Hz). All stimuli lay well within the flutter range (~5–50 Hz; Romo & Salinas, 2003).

A functional run consisted of 64 trials. Each of the eight stimulus pairs was presented eight times, each time with a different combination of rule cue (square vs. diamond), matching cue (upward‐ vs. downward‐pointing), and target screen (blue‐left/yellow‐right vs. blue‐right/yellow‐left; Figure 1). The variable durations of the fixation period were balanced across rules and stimulus pairs. Trials lasted 11.5 s on average and were presented in randomized order. A functional run lasted approximately 12.5 min, and participants were asked to complete six runs. During each run, they were instructed to maintain fixation at all times except when making saccadic responses.
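The run composition can be made concrete with a short Python sketch. It assumes hypothetical factor labels (`rule1`/`rule2`, `up`/`down`, `blue-left`/`blue-right`) and uses a plain shuffle in place of the study's pseudo‐randomization; it only checks that crossing the eight stimulus pairs with the three binary factors yields the 64 trials of one run.

```python
import random
from itertools import product

# Stimulus pairs from the text: each f1 is paired with an f2 that is 4 Hz lower or higher.
F1_VALUES = (16, 20, 24, 28)
PAIRS = [(f1, f1 + d) for f1 in F1_VALUES for d in (-4, 4)]

# Hypothetical labels for the three binary factors crossed within a run.
RULES = ("rule1", "rule2")                   # rule 1: judge f1 against f2; rule 2: f2 against f1
MATCH_CUES = ("up", "down")                  # upward ("higher") / downward ("lower") triangle
TARGET_SCREENS = ("blue-left", "blue-right")

# Fixed event durations (s): rule cue, f1, retention, f2, decision phase, matching cue.
FIXED_EVENTS_S = 0.5 + 0.5 + 1.0 + 0.5 + 2.0 + 0.5
FIXATIONS_S = (3, 4, 5, 6)

def build_run(seed=None):
    """Return one run's 64 trials (8 pairs x 2 rules x 2 cues x 2 screens), shuffled."""
    trials = [
        {"f1": f1, "f2": f2, "rule": rule, "cue": cue, "screen": screen}
        for (f1, f2), rule, cue, screen in product(PAIRS, RULES, MATCH_CUES, TARGET_SCREENS)
    ]
    # Plain shuffle; the study's pseudo-randomization constraints are not modeled.
    random.Random(seed).shuffle(trials)
    return trials

def mean_time_before_target():
    """Mean fixation duration plus fixed events, i.e., time from trial onset to the target screen."""
    return sum(FIXATIONS_S) / len(FIXATIONS_S) + FIXED_EVENTS_S
```

Under this accounting, about 9.5 s of each trial precede the target screen on average; the remainder of the reported 11.5 s average covers the response window (up to 1.5 s) and any further intervals not specified here.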

Prior to the fMRI session, participants completed a training session to become familiar with the experimental procedure. The training session consisted of 64–128 trials and lasted a maximum of 45 min.

Importantly, this balanced design enabled choice‐related signals to be decoupled from those related to stimulus order and to the preparation of a specific saccade direction without requiring temporal jittering of event onsets. Because of this balance, each choice was expected to be associated with approximately the same number of trials for each stimulus order and for each saccade direction (Hebart et al., 2012). This further ensured equal estimability of all conditions of interest and minimized the possibility of classifying choices on the basis of differences in the variability of the beta weight estimates (Hebart & Baker, 2017).
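To illustrate why the balanced design decouples choice from stimulus order and saccade direction, the following sketch enumerates one run and counts, for an idealized observer who is always correct, how often each choice co‐occurs with each stimulus order and each saccade direction. The color code (blue = match) and the helper names are assumptions for illustration only.

```python
from collections import Counter
from itertools import product

PAIRS = [(f1, f1 + d) for f1 in (16, 20, 24, 28) for d in (-4, 4)]

def correct_choice(f1, f2, rule):
    """Rule 1: is f1 higher or lower than f2? Rule 2: is f2 higher or lower than f1?"""
    comparison, reference = (f1, f2) if rule == 1 else (f2, f1)
    return "higher" if comparison > reference else "lower"

def saccade_direction(choice, cue, blue_side, match_color="blue"):
    """Side of the target an always-correct observer would look at (hypothetical color code)."""
    proposed = "higher" if cue == "up" else "lower"
    is_match = choice == proposed
    target_is_blue = is_match == (match_color == "blue")
    if target_is_blue:
        return blue_side
    return "right" if blue_side == "left" else "left"

def balance_tables():
    """Count (choice, stimulus order) and (choice, saccade direction) co-occurrences in one run."""
    by_order, by_direction = Counter(), Counter()
    for (f1, f2), rule, cue, blue_side in product(PAIRS, (1, 2), ("up", "down"), ("left", "right")):
        choice = correct_choice(f1, f2, rule)
        order = "f1<f2" if f1 < f2 else "f1>f2"
        by_order[(choice, order)] += 1
        by_direction[(choice, saccade_direction(choice, cue, blue_side))] += 1
    return by_order, by_direction
```

Every cell contains 16 of the 64 trials, so in the full design neither stimulus order nor saccade direction carries information about the choice; excluding error trials can only perturb this balance, which is what the chi‐square checks in Section 3.1 address.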

2.3. Data acquisition

Saccadic eye movements were recorded using an MRI‐compatible eye tracker with a sampling rate of 500 Hz (EyeLink 1000, SR Research Ltd, Mississauga, Ontario, Canada). MRI data were recorded with a 3 T Tim Trio scanner (Siemens, Erlangen) equipped with a 12‐channel head coil at the Center for Cognitive Neuroscience Berlin. For each participant, we collected 378 functional volumes per run (T2*‐weighted gradient‐echo echo‐planar images, TE: 30 ms, TR: 2,000 ms, flip angle: 90°, FOV: 192 mm, matrix size: 64 × 64, voxel size: 3 × 3 × 3 mm³, 0.6 mm gap, 37 slices, ascending sequence). In addition, anatomical images (T1‐weighted MPRAGE, TE: 2.52 ms, TR: 1,900 ms, flip angle: 9°, FOV: 256 mm, matrix size: 256 × 256, 176 slices, 1 × 1 × 1 mm³) were collected for co‐registration and spatial normalization purposes. Of the 30 participants whose data were analyzed, 28 completed six functional runs, while the remaining two completed five functional runs.

2.4. Data analyses

2.4.1. Preprocessing

fMRI data preprocessing and analyses based on general linear models (GLM) were performed using SPM12 (Wellcome Trust Centre for Neuroimaging, http://www.fil.ion.ucl.ac.uk/spm). Possible artifacts in individual slices of the functional volumes were corrected via an interpolation approach as implemented in the SPM ArtRepair toolbox (Mazaika, Hoeft, Glover, & Reiss, 2009). Preprocessing steps prior to multivariate pattern analysis (MVPA) included slice‐time correction and spatial realignment to the mean functional image. MVPA was performed using The Decoding Toolbox (Hebart, Goergen, & Haynes, 2015). We used the SPM Anatomy toolbox (Eickhoff et al., 2005) for cytoarchitectonic reference. In addition, we used probabilistic maps of visual topography in human cortex as reference to identify brain regions that can be classified as the frontal eye fields (FEF; Wang, Mruczek, Arcaro, & Kastner, 2015; http://www.princeton.edu/~napl/vtpm.htm).

2.4.2. Decoding perceptual choices

We used a searchlight decoding method that allowed us to identify, in a spatially unbiased fashion, brain regions that carry information about the perceptual choice during decision phases. Prior to the decoding analysis, we fit a GLM (high‐pass filter cutoff: 192 s) to each participant's preprocessed data to obtain run‐wise beta weights for each voxel. Each perceptual choice (higher vs. lower) was modeled as a stick regressor at the onsets of decision phases in correct trials and convolved with the canonical hemodynamic response function. Incorrect and missed trials were modeled with a separate regressor of no interest. Additionally, 10 principal components accounting for the variance in the white matter (WM) and cerebrospinal fluid (CSF) signal time courses (Behzadi, Restom, Liau, & Liu, 2007) were included in the GLM alongside six head motion parameters as nuisance regressors. Finally, a run‐specific constant term was included, resulting in 20 regressors per run (2 choice, 1 error/miss, 10 WM/CSF components, 6 motion parameters, and 1 constant). Note that we only included data from correct trials in the subsequent decoding analysis, reasoning that incorrect trials were likely accompanied by indecision during the time window of interest and would diminish the decodability of choice information.
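A minimal sketch of how such a run‐wise design matrix could be assembled in Python with NumPy, assuming onsets in seconds and a TR of 2 s. The double‐gamma HRF mimics the shape of SPM's canonical HRF but is not SPM's code, the WM/CSF components come from a plain principal component analysis in the spirit of aCompCor (Behzadi et al., 2007), and all function names are hypothetical.

```python
import math
import numpy as np

def canonical_hrf(dt, length_s=32.0):
    """Double-gamma HRF in the style of SPM's canonical HRF: a unit-scale gamma density
    with shape 6 minus one with shape 16 scaled by 1/6. Scaled to unit peak here for
    readability (SPM normalizes differently)."""
    t = np.arange(0.0, length_s, dt)

    def gamma_pdf(x, shape):
        return x ** (shape - 1) * np.exp(-x) / math.gamma(shape)

    h = gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0
    return h / h.max()

def stick_regressor(onsets_s, n_scans, tr, dt=0.125):
    """Unit sticks at event onsets on a fine time grid, convolved with the HRF,
    then sampled once per TR (at the start of each scan)."""
    n_fine = int(round(n_scans * tr / dt))
    sticks = np.zeros(n_fine)
    for onset in onsets_s:
        idx = int(round(onset / dt))
        if 0 <= idx < n_fine:
            sticks[idx] += 1.0
    conv = np.convolve(sticks, canonical_hrf(dt))[:n_fine]
    return conv[:: int(round(tr / dt))][:n_scans]

def compcor(noise_ts, n_components=10):
    """Principal components of WM/CSF voxel time courses (scans x voxels),
    after centering and variance-normalizing each voxel (no detrending here)."""
    x = noise_ts - noise_ts.mean(axis=0)
    x = x / (x.std(axis=0) + 1e-12)
    u, _, _ = np.linalg.svd(x, full_matrices=False)
    return u[:, :n_components]

def design_matrix(choice_onsets, error_onsets, noise_ts, motion, n_scans, tr=2.0):
    """One run: 2 choice + 1 error/miss + 10 WM/CSF + 6 motion + 1 constant = 20 columns.
    High-pass filtering (192 s cutoff) is not modeled in this sketch."""
    cols = [stick_regressor(choice_onsets["higher"], n_scans, tr),
            stick_regressor(choice_onsets["lower"], n_scans, tr),
            stick_regressor(error_onsets, n_scans, tr)]
    cols += list(compcor(noise_ts).T)
    cols += list(np.asarray(motion).T)
    cols.append(np.ones(n_scans))
    return np.column_stack(cols)
```

Fitting this matrix to each voxel's time course (e.g., by least squares) would give the run‐wise beta weights for the "higher" and "lower" columns that serve as decoding samples.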

For each participant, we employed a searchlight decoding analysis with linear support vector machine (SVM) classifiers as implemented in LIBSVM (c = 1; Chang & Lin, 2011) and a leave‐one‐run‐out cross‐validation scheme. Beta weights for the two choice regressors from each functional run were used as samples, yielding a total of 12 samples for participants who completed six runs and 10 samples for participants who completed five runs. Before classification, beta weights were normalized for each voxel by subtracting the mean and dividing by the standard deviation across samples.
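The cross‐validation logic can be sketched as follows. This is not LIBSVM: as a self‐contained stand‐in, the linear SVM is fit by subgradient descent on the soft‐margin objective with c = 1, and `toy_data` generates hypothetical beta weights (one sample per choice and run) only to exercise the code.

```python
import numpy as np

def zscore_per_voxel(betas):
    """Standardize each voxel (column) across all samples, as described above."""
    mu = betas.mean(axis=0)
    sd = betas.std(axis=0)
    sd[sd == 0] = 1.0  # avoid division by zero for constant voxels
    return (betas - mu) / sd

def train_linear_svm(x, y, c=1.0, n_iter=2000, lr=0.01):
    """Soft-margin linear SVM, 0.5*||w||^2 + c*sum(hinge), fit by subgradient descent.
    A simple stand-in for LIBSVM's solver; y must be in {-1, +1}."""
    w, b = np.zeros(x.shape[1]), 0.0
    for t in range(1, n_iter + 1):
        viol = y * (x @ w + b) < 1  # samples inside the margin or misclassified
        grad_w = w - c * (y[viol][:, None] * x[viol]).sum(axis=0)
        grad_b = -c * y[viol].sum()
        step = lr / np.sqrt(t)
        w, b = w - step * grad_w, b - step * grad_b
    return w, b

def loro_accuracy(betas, labels, runs, c=1.0):
    """Leave-one-run-out decoding accuracy, averaged over folds."""
    x = zscore_per_voxel(np.asarray(betas, dtype=float))
    y = np.where(np.asarray(labels) == "higher", 1.0, -1.0)
    runs = np.asarray(runs)
    accs = []
    for r in np.unique(runs):
        test = runs == r
        w, b = train_linear_svm(x[~test], y[~test], c=c)
        accs.append(np.mean(np.sign(x[test] @ w + b) == y[test]))
    return float(np.mean(accs))

def toy_data(seed=0, n_runs=6, n_vox=50):
    """Hypothetical betas: one 'higher' and one 'lower' sample per run."""
    rng = np.random.default_rng(seed)
    signal = np.zeros(n_vox)
    signal[:20] = 1.0
    betas, labels, runs = [], [], []
    for r in range(n_runs):
        for label, sign in (("higher", 1.0), ("lower", -1.0)):
            betas.append(sign * signal + 0.3 * rng.standard_normal(n_vox))
            labels.append(label)
            runs.append(r)
    return np.array(betas), labels, runs
```

With six runs, each fold trains on 10 samples and tests on 2, so per‐fold accuracies can only be 0, 50, or 100%.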

In each searchlight step, we extracted beta weights from all voxels within a sphere of 4‐voxel radius (at most 251 voxels) centered on a given location to create pattern vectors. An SVM classifier was trained to distinguish between the pattern vectors of the two choices using the data from all but one run and tested for generalization on the data from the remaining run. Classifier performance was measured as the decoding accuracy on the test run, that is, the percentage of correctly classified samples. This training–testing procedure was iterated until every run had served as test data once. We averaged decoding accuracies across all iterations and assigned the mean decoding accuracy to the center voxel of the searchlight. This procedure was repeated for every voxel in the brain, yielding a continuous whole‐brain map of mean decoding accuracies, which was taken to reflect the amount of information about a participant's choice at each location.
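The sphere geometry is the least obvious part of the searchlight. In the sketch below, the rule for which voxels belong to a 4‐voxel sphere is an assumption chosen because it reproduces the stated maximum of 251 voxels; the function names are hypothetical, and the toolbox's own implementation may differ.

```python
import numpy as np

def sphere_offsets(radius):
    """Integer voxel offsets of a searchlight sphere.
    Assumption: a voxel is kept if its squared distance is strictly below radius**2,
    which gives 251 voxels for radius 4 ('<=' would give 257).
    The center voxel is always kept, so radius 0 yields a single voxel."""
    r = int(np.ceil(radius))
    grid = np.arange(-r, r + 1)
    dx, dy, dz = np.meshgrid(grid, grid, grid, indexing="ij")
    d2 = dx**2 + dy**2 + dz**2
    keep = (d2 < radius**2) | (d2 == 0)
    return np.column_stack([dx[keep], dy[keep], dz[keep]])

def searchlight_patterns(betas, mask, center, offsets):
    """Pattern vectors (samples x voxels) for the in-brain voxels of one sphere.
    betas: (n_samples, X, Y, Z); mask: boolean array of shape (X, Y, Z)."""
    coords = offsets + np.asarray(center)
    inside = np.all((coords >= 0) & (coords < np.array(mask.shape)), axis=1)
    coords = coords[inside]                                          # drop voxels outside the volume
    coords = coords[mask[coords[:, 0], coords[:, 1], coords[:, 2]]]  # drop non-brain voxels
    return betas[:, coords[:, 0], coords[:, 1], coords[:, 2]]
```

Each returned pattern matrix would then be passed to the leave‐one‐run‐out classifier, and the mean accuracy written to the center voxel; spheres near the brain edge contain fewer than 251 voxels. The single‐voxel control analysis corresponds to radius 0.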

For the group inference, the decoding accuracy map of each participant was normalized to MNI space, resliced to 2 × 2 × 2 mm³ voxels, and smoothed with a Gaussian kernel of 5 mm full width at half maximum (FWHM). We computed a one‐tailed one‐sample t‐test to assess whether the observed decoding accuracies were significantly higher than chance level (50%) across the whole brain. Voxels showing significant decoding accuracies indicated that the local activity patterns carried information about perceptual choices. To assess whether decoding accuracies in the identified regions and behavioral performance were statistically dependent across participants, we computed a t‐contrast with decoding accuracy as the dependent variable and behavioral performance as a covariate.
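Two quantities in this step are easy to compute directly. The sketch below converts the 5 mm FWHM to the standard deviation of the Gaussian kernel and computes the voxel‐wise one‐sample t statistic of decoding accuracies (as proportions) against 0.5, with n − 1 degrees of freedom; p‐values and the cluster‐level correction are left out.

```python
import math
import numpy as np

def fwhm_to_sigma(fwhm):
    """Standard deviation of a Gaussian kernel with the given full width at half maximum."""
    return fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))

def one_sample_t(accuracies, chance=0.5):
    """t statistic of accuracies (subjects x voxels, or 1-D over subjects) against chance.
    Returns (t, degrees of freedom)."""
    a = np.asarray(accuracies, dtype=float)
    n = a.shape[0]
    se = a.std(axis=0, ddof=1) / math.sqrt(n)
    return (a.mean(axis=0) - chance) / se, n - 1
```

For a 5 mm FWHM, σ ≈ 2.12 mm, i.e., about 1.06 voxels at the 2 mm resolution used for group inference; with 30 participants, the t statistic has 29 degrees of freedom.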

2.4.3. Decoding task rule

We were also interested in whether any brain regions represent information about the task rule during the decision phases. To test this, we used a GLM with regressors modeling the task rules at the onsets of decision phases. Again, we modeled correct and incorrect/missed trials in separate regressors and included the WM/CSF signal and motion parameters as nuisance regressors. Furthermore, the analogous procedure for the searchlight decoding analysis and the group inference was applied to the resulting beta weights, with the difference that the pattern vectors corresponded to activity patterns evoked by the different task rules.

2.5. Control analyses

To verify that the informative brain regions detected in the choice decoding analysis were indeed driven by choice representation and not confounded by stimulus order or saccade direction, we conducted two further sets of decoding analyses. For the first set, we employed a GLM with regressors modeling participants' perceptual choices (higher vs. lower) separately for trials of each stimulus order (f1 < f2 vs. f1 > f2). Beta weights corresponding to the resulting four regressors were subjected to two searchlight decoding analyses, one for each stimulus order, using the same parameters as in the main analysis. In this way, the local activity patterns associated with “higher” and “lower” choices were classified within a single stimulus order and were therefore independent of it. The decoding accuracy maps from the two analyses were then averaged, yielding a decoding accuracy map for choices that controls for stimulus order. Using an analogous procedure, we obtained an averaged decoding accuracy map in which choice‐related activity patterns were classified separately for each saccade direction. Finally, participants' averaged decoding accuracy maps from these two sets of analyses were forwarded to group inference to identify regions carrying choice information. These analyses fully controlled for confounds related to stimulus order or saccade direction, at the cost of halving the number of trials entering each decoding analysis and, accordingly, reducing power. Nonetheless, if the informative activity patterns identified by the main choice decoding analysis were indeed driven by perceptual choice, we would expect similar results in the control analyses.

We further tested whether the observed choice‐selective regions could be accounted for by overall changes in the BOLD activation in single voxels. For this purpose, we ran an analogous searchlight decoding analysis, but reduced the number of voxels within the local searchlight to one. If the observed choice information was mainly represented in a multivariate code, this analysis based on a single voxel should not be able to detect choice‐related information.

3. RESULTS

3.1. Behavioral results

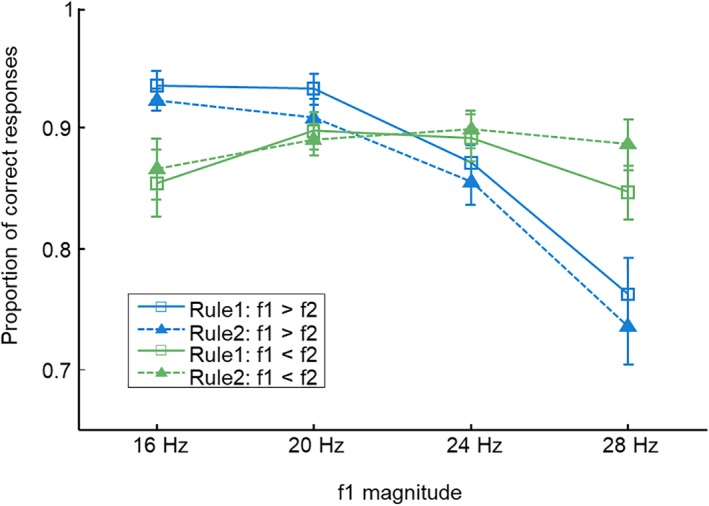

The average proportion of correct responses across the 30 participants was 0.877 (SD: ±0.057, range: 0.726–0.966). To assess effects of different task components on behavioral performance, we computed a three‐way ANOVA with task rule (rule 1 vs. rule 2), stimulus order (f1 > f2 vs. f1 < f2), and magnitude of f1 (16, 20, 24, and 28 Hz) as within‐subject factors. This analysis revealed no main effect of task rule (F(1, 29) = 0.256, p = 0.617) or stimulus order (F(1, 29) = 0.585, p = 0.451), indicating that cognitive demands were approximately equal across these factors. However, the analysis revealed a significant interaction between stimulus order and the magnitude of f1 (F(3, 87) = 17.046, p < 0.001, η²G = 0.083). For trials with f1 in the lower range, participants performed better when f2 was comparatively low (f1 > f2) than when f2 was comparatively high (f1 < f2). Conversely, for trials with f1 in the higher range, performance was better when f2 was higher than f1 than when it was lower (Figure 2). This behavioral pattern is frequently observed in studies using comparison tasks and is referred to as the time‐order effect or contraction bias (Ashourian & Loewenstein, 2011; Fassihi, Akrami, Esmaeili, & Diamond, 2014; Herding et al., 2016; Preuschhof, Schubert, Villringer, & Heekeren, 2009). It suggests that the perception or memory trace of f1 is biased toward the mean of the stimulus set. Importantly, this effect was stable across the task rules, as indicated by a nonsignificant three‐way interaction (F(3, 87) = 1.049, p = 0.375).

Figure 2.

The average behavioral performance across participants. The performance was modulated by the contraction bias (see text), irrespective of what rule was applied. Error bars represent the standard error of the mean [Color figure can be viewed at http://wileyonlinelibrary.com]
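One way to see how a biased memory trace of f1 produces this pattern is a toy observer model. The sketch assumes, purely for illustration, that the remembered f1 is a weighted average of the true f1 and the mean of all presented frequencies (22 Hz), with a hypothetical weight of 0.7 and Gaussian comparison noise of 3 Hz; these values are not fitted to the data.

```python
import math

PAIRS = [(f1, f1 + d) for f1 in (16, 20, 24, 28) for d in (-4, 4)]
# Mean of all presented frequencies (f1 and f2 values): 22 Hz for this stimulus set.
STIM_MEAN = sum(f for pair in PAIRS for f in pair) / (2 * len(PAIRS))

def remembered_f1(f1, weight=0.7, prior_mean=STIM_MEAN):
    """Hypothetical memory trace: f1 pulled toward the stimulus-set mean."""
    return weight * f1 + (1.0 - weight) * prior_mean

def p_correct(f1, f2, weight=0.7, noise_sd=3.0):
    """Probability of a correct judgment when f2 is compared with the remembered f1
    under Gaussian noise (a probit observer; all parameters are assumptions)."""
    true_sign = 1.0 if f2 > f1 else -1.0
    z = true_sign * (f2 - remembered_f1(f1, weight)) / noise_sd
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
```

With these assumptions, f1 = 16 Hz is remembered as 17.8 Hz, so the effective difference grows to 5.8 Hz when f2 = 12 Hz but shrinks to 2.2 Hz when f2 = 20 Hz; the pattern reverses for f1 = 28 Hz (remembered as 26.2 Hz). With a weight of 1 (no bias), both stimulus orders are equally easy.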

To address the concern that excluding incorrect and missed trials from the fMRI analyses might bias the distribution of stimulus orders (f1 > f2 vs. f1 < f2) and saccade directions (right vs. left) across choices and thereby confound the fMRI choice decoding results, we computed two Pearson chi‐square tests for each participant, one for each variable. No systematic association between choice behavior and either variable was found in any participant (stimulus order: all p > 0.1; saccade direction: all p > 0.1). We further explored whether participants' eye position during decision phases varied systematically across choices. Due to technical problems during data collection, we were only able to acquire eye position along both the x‐ and y‐axes for 20 participants, while for the remaining 10 participants only x‐axis data were available. For each participant, we extracted the average eye position along the x‐axis and, when possible, the y‐axis across the 2 s decision phase of each trial. We then computed two‐tailed two‐sample t‐tests to test for systematic differences between eye positions associated with different choices, separately for the x‐ and y‐axes. No systematic relationship with choice was revealed in any participant (all p > 0.05, Holm–Bonferroni corrected across axes for each participant).
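A minimal sketch of two statistics used in these checks, written without external statistics libraries: a Pearson chi‐square test on a 2 × 2 table (choice by stimulus order, or choice by saccade direction) without continuity correction, and the Holm–Bonferroni adjustment applied across the x‐ and y‐axis tests. Whether the original analysis used a continuity correction is not stated, so that choice is an assumption here.

```python
import math

def pearson_chi2_2x2(table):
    """Pearson chi-square (no continuity correction) for a 2 x 2 table [[a, b], [c, d]].
    Assumes all row and column totals are non-zero. Returns (chi2, p) with 1 df."""
    (a, b), (c, d) = table
    n = a + b + c + d
    rows, cols = (a + b, c + d), (a + c, b + d)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = rows[i] * cols[j] / n
            chi2 += (observed - expected) ** 2 / expected
    # Survival function of the chi-square distribution with 1 df: P(Z^2 > chi2).
    p = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p

def holm_bonferroni(pvals):
    """Holm step-down adjusted p-values, returned in the original order."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    adjusted = [0.0] * m
    running = 0.0
    for rank, i in enumerate(order):
        running = max(running, min(1.0, (m - rank) * pvals[i]))  # enforce monotonicity
        adjusted[i] = running
    return adjusted
```

For example, a participant whose retained "higher" and "lower" choices are split evenly across stimulus orders yields χ² = 0 and p = 1.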

Collectively, the behavioral results suggest that, in the trials entering the fMRI analyses, participants' choices were associated neither with stimulus order nor with saccade direction, and that eye position during the decision phase did not vary systematically with choice. Thus, it is unlikely that these factors influenced the choice decoding results reported below.

3.2. Neuroimaging results

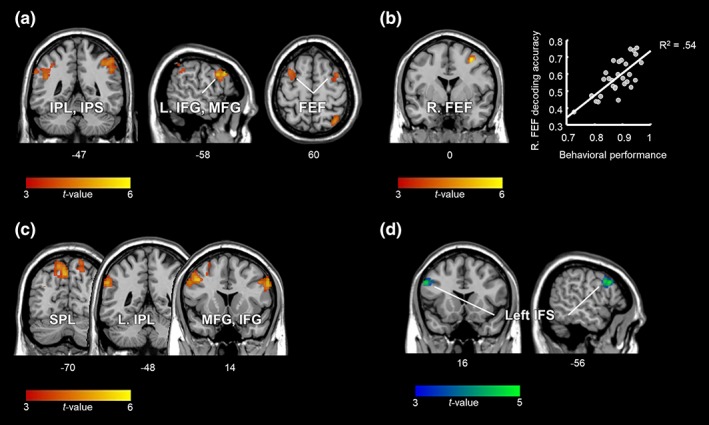

3.2.1. Choice‐selective brain regions

To identify brain regions that carry choice information independent of stimulus order and saccade selection during the decision phase, we applied a searchlight MVPA across the whole brain. The results are shown in Figure 3a and Table 1 (p < 0.05, false discovery rate [FDR] corrected for multiple comparisons at the cluster level, with a cluster‐defining voxel‐wise threshold of p < 0.001). As indicated by significantly above‐chance decoding accuracies, this analysis revealed multiple clusters with distinguishable activity patterns for different choices. These clusters were located within the bilateral posterior parietal cortices (PPC), including the intraparietal sulci (IPS) and the inferior parietal lobules (IPL); the left lateral prefrontal cortex (lPFC), including the inferior and middle frontal gyri (IFG, MFG); and the bilateral precentral gyri (PreCG), extending into the caudal‐most part of the superior frontal sulci (SFS), a region known as the FEF (identified with the probabilistic maps of Wang et al., 2015; cf. also Amiez, Kostopoulos, Champod, & Petrides, 2006; Kastner et al., 2007).

Figure 3.

Neuroimaging results. (a) Brain regions carrying choice information independent of the stimulus order and the direction of the ensuing saccade (cluster corrected at p FDR < 0.05). (b) A significant correlation between participants' behavioral performance and choice decoding accuracy was observed in the right FEF (p FWE < 0.05, small volume corrected within the brain regions shown in a). (c) Brain regions containing information about the task rule. (d) Results from the conjunction analysis showing brain regions that represent both the choice and the rule information (c and d cluster corrected at p FDR < 0.05). The unthresholded statistical maps are available at https://neurovault.org/collections/PTJKPIWY/ [Color figure can be viewed at http://wileyonlinelibrary.com]

Table 1.

Brain regions identified as containing information related to choice, task rule, and both

| Anatomical regions | Cluster size | MNI x | MNI y | MNI z | t‐value | Mean accuracy (%) |
|---|---|---|---|---|---|---|
| Choice | | | | | | |
| R. IPL (PFm), IPS (hIP2, hIP3), SPL | 953 | 32 | −64 | 50 | 5.28 | 59.28 |
| L. IFG, MFG, PreCG, SFS (FEF) | 1,137 | −58 | 18 | 32 | 5.28 | 53.52 |
| L. IPL (PF, PFm), IPS (hIP1, hIP2) | 661 | −52 | −42 | 36 | 4.66 | 56.92 |
| R. PreCG, SFS (FEF) | 249 | 34 | 4 | 52 | 4.58 | 57.95 |
| Task rule | | | | | | |
| L. MFG, PreCG | 1,001 | −52 | 8 | 38 | 5.25 | 58.97 |
| R. IFG, PreCG | 512 | 58 | 12 | 32 | 5.21 | 58.24 |
| L. SPL | 567 | −4 | −70 | 48 | 4.99 | 59.84 |
| L. IPL/supramarginal gyrus (PFm, PF, PGa, PGp) | 422 | −60 | −50 | 34 | 4.97 | 57.88 |
| R. SPL | 249 | 20 | −74 | 64 | 4.65 | 52.43 |
| Conjunction | | | | | | |
| L. IFG, IFS, MFG, PreCG | 377 | −56 | 16 | 34 | 4.54 | |

All results are reported at a cluster corrected statistical level of p FDR < 0.05 with an initial voxel‐wise threshold of p < 0.001. MNI coordinates, t‐values, and the mean accuracies refer to the peak voxel within each cluster.

Furthermore, an additional t‐contrast with the percentage of correct responses as a covariate revealed that, among the identified regions, behavioral performance was significantly correlated with decoding accuracy in the right FEF (Figure 3b: x = 34, y = 0, z = 52, Pearson correlation coefficient r = 0.736, t(28) = 5.73, p < 0.05, peak‐level family‐wise error (FWE) corrected for small volume within the detected choice‐selective regions). To verify that this correlation was not merely driven by the participant with the lowest values in both variables (see Figure 3b), we repeated the analysis without that participant's data. The correlation coefficient decreased slightly to r = 0.683 but remained statistically significant (t(27) = 4.86, p FWE < 0.05, small volume corrected).
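For a single covariate, the t statistic of the regression slope and the Pearson correlation are linked by t = r√(n − 2)/√(1 − r²) with n − 2 degrees of freedom. The sketch below checks that the reported values are mutually consistent; small deviations reflect rounding of r.

```python
import math

def t_from_r(r, n):
    """t statistic (df = n - 2) for a Pearson correlation r over n participants."""
    return r * math.sqrt(n - 2) / math.sqrt(1.0 - r * r)

def r_from_t(t, df):
    """Inverse mapping: correlation implied by a slope t statistic with df degrees of freedom."""
    return t / math.sqrt(t * t + df)
```

With the reported values, t_from_r(0.736, 30) ≈ 5.75 and t_from_r(0.683, 29) ≈ 4.86, in line with the reported t(28) = 5.73 and t(27) = 4.86.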

3.2.2. Rule‐selective brain regions

Next, we investigated whether information about the task rule was represented during the decision phase. Rule‐selective activity patterns were observed in prefrontal regions of both hemispheres, including the left MFG and bilateral IFG, in the PPC, including the bilateral superior parietal lobules (SPL), and in the left supramarginal gyrus (SMG) of the IPL. We further computed a “null” conjunction of the choice and rule contrasts and found that a cluster centered on the left inferior frontal sulcus (IFS) was the only brain region coding both choice and rule (voxel‐wise p < 0.001, cluster corrected at p FDR < 0.05; Figure 3c,d and Table 1).
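A “null” conjunction is commonly implemented as a minimum‐statistic test: a voxel is declared to code both variables only if the smaller of its two t values exceeds the threshold. The sketch below shows that voxel‐wise logic with a hypothetical threshold; the cluster‐level FDR correction applied in the study is not modeled.

```python
import numpy as np

def null_conjunction(t_choice, t_rule, t_threshold):
    """Boolean map of voxels where BOTH t maps exceed the threshold
    (minimum statistic compared against the conjunction null)."""
    return np.minimum(t_choice, t_rule) > t_threshold
```

Because the minimum statistic must pass the threshold on its own, a voxel that is strongly choice‐selective but only weakly rule‐selective is excluded.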

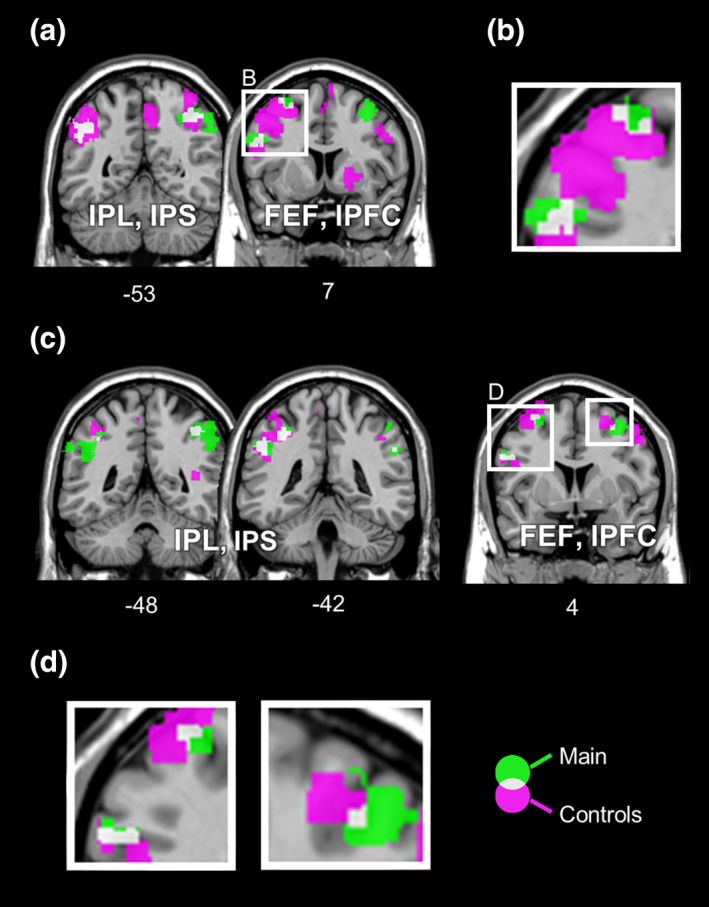

3.3. Control analyses

To further ensure that the results of the choice decoding analysis were mainly driven by choice‐related BOLD signals, we conducted two additional sets of decoding analyses, which controlled for effects related to stimulus order and saccade direction, respectively. The results are displayed in Figure 4 (p < 0.001, uncorrected at the voxel level owing to the substantial reduction in the amount of data). Importantly, both sets of analyses yielded decoding results similar to the main results, with overlapping clusters in the bilateral IPS, the left lPFC, and the left FEF, and, in the analysis controlling for saccade direction, also in the right FEF. These results indicate that our paradigm effectively disentangled choice representation from stimulus order and saccade selection and support the conclusion that the results of the main analysis are choice‐specific.

Figure 4.

Comparisons between choice‐selective regions identified in the main analysis and regions detected in the additional analyses controlling for effects related to stimulus order (a, b) and saccade selection (c, d). Results of the main analysis are displayed in green; results of the control analyses are depicted in magenta. (a) Except for the right FEF, all clusters found in the main analysis (the bilateral intraparietal regions, the left lPFC, and the left FEF) partially overlap with those from the analysis controlling for stimulus order. (b) Detail of the left prefrontal regions (cf. a) showing partial overlaps. (c) In addition to the overlapping regions shown in a, an overlap in the right FEF is also evident between the main analysis and the analysis controlling for saccade selection. (d) The left panel shows a detail of the overlaps in the left frontal regions; the right panel shows a detail of the right FEF (cf. c). Results are shown at a cluster corrected threshold of p FDR < 0.05 for the main analysis and at an uncorrected voxel‐wise threshold of p < 0.001 for the control analyses. The unthresholded statistical maps are available at https://neurovault.org/collections/PTJKPIWY/ [Color figure can be viewed at http://wileyonlinelibrary.com]

Finally, to assess whether overall changes in the activity level of single voxels within a cluster could account for the observed choice information, we ran a decoding analysis with a single‐voxel searchlight. This analysis did not reveal any significantly informative brain regions (voxel‐level p < 0.001, cluster‐corrected at p FDR < 0.05), indicating that choice‐related information was represented by locally distributed activity patterns rather than by a univariate code.
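A toy simulation can illustrate why a multivoxel searchlight may detect information that a single‐voxel searchlight misses. The sketch below assumes a hypothetical two‐voxel model, not fitted to the present data, in which a large choice‐unrelated fluctuation is shared by both voxels and the choice only shifts one voxel relative to the other. A threshold on either voxel alone stays close to chance, while a fixed linear read‐out of the pattern (voxel 2 minus voxel 1) separates the choices almost perfectly. The fixed weights stand in for a trained linear classifier such as an SVM; this substitution is a deliberate simplification.

```python
import random


def simulate(n_per_class=200, shift=1.0, noise=0.2, seed=0):
    """Toy two-voxel data (hypothetical model): a large shared fluctuation x
    drives both voxels, and the choice only shifts voxel 2 relative to voxel 1."""
    rng = random.Random(seed)
    data = []
    for label in (+1, -1):
        for _ in range(n_per_class):
            x = rng.uniform(-10, 10)  # shared, choice-unrelated signal
            v1 = x + rng.gauss(0, noise)
            v2 = x + label * shift + rng.gauss(0, noise)  # choice-related offset
            data.append(((v1, v2), label))
    return data


def best_threshold_accuracy(values, labels):
    """Highest accuracy of a one-dimensional threshold rule (either sign).
    Optimised on the same data, so this is an optimistic estimate."""
    best = 0.0
    for t in sorted(set(values)):
        hits = sum((v > t) == (y > 0) for v, y in zip(values, labels))
        acc = hits / len(values)
        best = max(best, acc, 1 - acc)
    return best


data = simulate()
labels = [y for _, y in data]
single_v1 = best_threshold_accuracy([v[0] for v, _ in data], labels)
single_v2 = best_threshold_accuracy([v[1] for v, _ in data], labels)
# Fixed linear read-out of the two-voxel pattern: weight +1 on voxel 2, -1 on voxel 1.
pattern = sum(((v2 - v1) > 0) == (y > 0) for (v1, v2), y in data) / len(data)
print(round(single_v1, 2), round(single_v2, 2), round(pattern, 2))
```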

4. DISCUSSION

In the present study, we employed a modified version of the vibrotactile frequency discrimination task to explore brain regions that carry information about perceptual choice independent of stimulus order and saccade selection. Using MVPA on human fMRI data, we found vibrotactile choice‐selective brain activity patterns in oculomotor regions, including bilateral FEF and intraparietal regions, as well as in the left lPFC. We thereby provide novel fMRI evidence for brain regions representing abstract choice in somatosensory decision‐making.

The identification of choice information distributed across effector‐specific premotor (FEF) and lateral prefrontal structures aligns well with previous electrophysiological studies in monkeys using the vibrotactile frequency discrimination task (Haegens et al., 2011; Hernández et al., 2002, 2010; Romo et al., 2004). Most interestingly, and not yet explored in the monkey literature on this task, we also observed vibrotactile choice‐selective activity patterns in intraparietal regions (IPS and IPL), which, together with the FEF and subcortical structures, constitute the oculomotor system. This finding is compatible with a large body of evidence from monkey studies using saccade responses in visual random‐dot motion (RDM) tasks, which suggests a major involvement of monkey FEF and LIP (homologous to human IPS) in the accumulation of sensory evidence toward a decision (Ding & Gold, 2012; Kim & Shadlen, 1999; Roitman & Shadlen, 2002; Shadlen & Newsome, 2001). Our results thus establish an important link between research from two influential perceptual decision‐making paradigms and support the notion of supramodal decision‐making mechanisms.
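The evidence accumulation referred to in these studies is commonly formalized as a bounded accumulator, for example the drift‐diffusion model. A minimal discrete‐time sketch follows, with arbitrary illustrative parameters that are neither fitted to any data nor a model used in the present study: noisy momentary evidence is summed into a decision variable until it reaches one of two bounds, so that stronger evidence (larger drift) yields more consistent choices in fewer steps.

```python
import random


def accumulate_to_bound(drift, bound=1.0, noise_sd=0.1, max_steps=10_000, rng=None):
    """Discrete-time bounded accumulator: a random walk with drift.

    Returns (choice, n_steps), where choice is +1 if the upper bound is reached
    first, -1 if the lower bound is reached first, and 0 if neither bound is
    reached within max_steps.
    """
    rng = rng or random.Random()
    dv = 0.0  # decision variable: running sum of noisy momentary evidence
    for step in range(1, max_steps + 1):
        dv += drift + rng.gauss(0.0, noise_sd)  # signal plus Gaussian noise
        if dv >= bound:
            return +1, step
        if dv <= -bound:
            return -1, step
    return 0, max_steps


# Hypothetical parameter values, chosen only to make the trend visible.
rng = random.Random(1)
summary = {}
for drift in (0.0, 0.01, 0.05):
    trials = [accumulate_to_bound(drift, rng=rng) for _ in range(2000)]
    p_upper = sum(choice == +1 for choice, _ in trials) / len(trials)
    mean_steps = sum(steps for _, steps in trials) / len(trials)
    summary[drift] = (p_upper, mean_steps)
    print(f"drift={drift:.2f}  P(upper)={p_upper:.2f}  mean steps={mean_steps:.0f}")
```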

Note, however, that previously reported decision‐related signals in the FEF and LIP were mainly observed in studies in which the perceptual choice was directly mapped onto a specific, predictable saccade direction. A substantial portion of these signals in the FEF or LIP disappeared when saccade directions were decorrelated from perceptual choices (Bennur & Gold, 2011; Gold & Shadlen, 2003; reviewed in Huk et al., 2017). Similarly, recent human fMRI studies failed to detect decision‐related signals in the FEF or IPS when there was no fixed mapping between choice and saccade direction (Filimon et al., 2013; Hebart et al., 2012; Li Hegner, Lindner, & Braun, 2015). From these results, one might conclude that oculomotor regions merely represent motor decisions. Crucially, several aspects of our study render this interpretation unlikely. First, the current experiment was designed so that choice‐related signals could be separated from the sensory and motor components of the task. Second, we validated the effectiveness of this experimental protocol with control analyses of the behavioral and fMRI data. We are therefore confident that the distinctive activity patterns observed in oculomotor regions were mainly driven by choice information. In light of this, our data provide novel evidence for choice selectivity in human oculomotor regions that is not confined to saccadic decisions but extends to contexts in which choices are made in a more abstract form.

One question that emerges from our findings is why oculomotor regions represent perceptual choice even though the choice is independent of the ensuing saccade direction. In fact, a growing body of literature shows that intraparietal regions are selective for various kinds of task‐relevant information during a decision process (reviewed in Huk et al., 2017). In particular, the observed choice selectivity in intraparietal regions is consistent with previous human fMRI studies that successfully decoded categorical choices about the identity of visual stimuli (Hebart, Schriever, Donner, & Haynes, 2014; Li, Mayhew, & Kourtzi, 2009). It also agrees with the well‐established role of the monkey PPC in representing abstract categorical information (Freedman & Assad, 2011, 2016); indeed, these authors have proposed a common neural mechanism underlying abstract decision‐making and categorization. Likewise, in line with our findings in the FEF, several studies have demonstrated that the functionality of premotor structures goes beyond the coding of motor‐related information and extends to sensory and task information (Ferrera, Yanike, & Cassanello, 2009; Mante, Sussillo, Shenoy, & Newsome, 2013; Nakayama, Yamagata, Tanji, & Hoshi, 2008; Siegel, Buschman, & Miller, 2015; Yamagata, Nakayama, Tanji, & Hoshi, 2009, 2012). Intriguingly, we show that the discriminability of choice representations in the right FEF is linked to participants' task performance: the higher the decoding accuracy, the better participants performed the task. Such a link between decoding accuracy and behavioral performance was, however, not evident in the other reported regions, which suggests that only the information in the right FEF may be read out by downstream systems controlling behavior (Williams, Dang, & Kanwisher, 2007; see also De‐Wit, Alexander, Ekroll, & Wagemans, 2016). It is not immediately apparent from our data what functional role this correlation may reflect. 
Nevertheless, compatible evidence comes from recent studies in rats suggesting that behavioral performance in a decision task is causally related to the ability of premotor structures to categorize accumulated evidence into discrete choices (Erlich, Brunton, Duan, Hanks, & Brody, 2015; Hanks et al., 2015). Accordingly, one possible interpretation is that decoding accuracies in the FEF index the quality of such categorization processes and are therefore predictive of behavioral performance. In concert with the implicated role of premotor structures in transforming abstract concepts into concrete motor commands (Nakayama et al., 2008; Yamagata et al., 2009, 2012), it is possible that choice information in the FEF is held in temporary storage until the additional information required to transform it into an appropriate saccade becomes available. This interpretation agrees with the growing body of evidence for a continuous flow of all task‐relevant information across a distributed brain network (Siegel et al., 2015). While this interpretation is appealing, further research is warranted to characterize the temporal evolution of the information transformation from sensory processing to abstract choice and, finally, to motor output.
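The brain‐behavior link described above is an across‐participant correlation between right‐FEF decoding accuracy and task performance. A minimal sketch of how such a relationship can be quantified, assuming one decoding accuracy and one performance score per participant: a Pearson correlation with a one‐sided permutation test that shuffles performance scores across participants. The function names are hypothetical, and the permutation test is an illustrative choice that may differ from the statistics reported in the Results.

```python
import math
import random


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def permutation_p(x, y, n_perm=5000, seed=0):
    """One-sided permutation test for a positive correlation.

    Shuffles y across participants to build a null distribution of r and
    returns (observed r, p-value), counting the observed value in the null.
    """
    rng = random.Random(seed)
    r_obs = pearson_r(x, y)
    y_perm = list(y)
    count = 0
    for _ in range(n_perm):
        rng.shuffle(y_perm)
        if pearson_r(x, y_perm) >= r_obs:
            count += 1
    return r_obs, (count + 1) / (n_perm + 1)
```

For example, `r, p = permutation_p(decoding_acc, performance)`, where `decoding_acc` and `performance` (hypothetical names) hold one value per participant in the same order.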

In addition to the FEF and IPS, we found choice information in the left lPFC. This is consistent with previous monkey research using the vibrotactile frequency discrimination task, which indicates that the lPFC computes perceptual choices (Hernández et al., 2010; Jun et al., 2010). An involvement of the lPFC is also compatible with previous human fMRI studies suggesting a role for the lPFC in encoding perceptual choices independent of motor preparation (Hebart et al., 2014) and in accumulating sensory evidence (Filimon et al., 2013; Heekeren, Marrett, Bandettini, & Ungerleider, 2004; Heekeren, Marrett, Ruff, Bandettini, & Ungerleider, 2006; Liu & Pleskac, 2011; Pleger et al., 2006). Notably, although the choice‐selective regions detected in the current study are compatible with those reported previously, the fMRI‐MVPA approach used here does not allow inferences about the origin of choice information or about where sensory evidence is accumulated. With respect to this question, Shadlen, Kiani, Hanks, and Churchland (2008) suggest that abstract decisions evolve via the accumulation of evidence toward the implementation of particular rules, and that prefrontal regions, given their central role in rule representation (Sakai, 2008), are the most likely regions to host such a process. Considering that the left IFS was found to carry both rule and choice information in the present study, it appears to be a promising candidate region for future studies scrutinizing the evolution of abstract vibrotactile decisions in humans. Indeed, a previous fMRI study in the visual domain highlighted a role for the left IFS in sensory evidence accumulation when choices are decoupled from specific motor commands (Filimon et al., 2013).

In addition to the left IFS, we suggest that, given their well‐established role in magnitude processing (Jacob, Vallentin, & Nieder, 2012; Nieder, 2016), intraparietal regions are another candidate structure for deliberating decisions about the relation between two analog quantities, such as vibrotactile frequencies. A greater focus on intraparietal regions, and on their interaction with other areas, in monkey electrophysiology may provide substantial complementary insight into the neural mechanisms underlying vibrotactile decisions.

In conclusion, our results suggest that the human lPFC and oculomotor regions represent vibrotactile choices independent of stimulus order and saccade selection. These results are highly consistent with previous findings from monkey electrophysiology and provide empirical support for a pivotal role of human oculomotor regions in decision‐making beyond the mere processing of saccadic movements.

CONFLICT OF INTEREST

The authors declare that they have no conflicts of interest.

ACKNOWLEDGMENTS

We thank J. Herding for valuable discussions, H. Heekeren for comments on a previous version of the manuscript and C. Kainz for technical support during fMRI data acquisition. This work was supported by the Berlin School of Mind and Brain and the German Research Foundation (DFG: GRK 1589/2).

Wu Y‐h, Velenosi LA, Schröder P, Ludwig S, Blankenburg F. Decoding vibrotactile choice independent of stimulus order and saccade selection during sequential comparisons. Hum Brain Mapp. 2019;40:1898–1907. 10.1002/hbm.24499

Funding information Berlin School of Mind and Brain; Deutsche Forschungsgemeinschaft, Grant/Award Number: GRK 1589/2

REFERENCES

- Amiez, C., Kostopoulos, P., Champod, A., & Petrides, M. (2006). Local morphology predicts functional organization of the dorsal premotor region in the human brain. The Journal of Neuroscience, 26(10), 2724–2731. 10.1523/JNEUROSCI.4739-05.2006

- Ashourian, P., & Loewenstein, Y. (2011). Bayesian inference underlies the contraction bias in delayed comparison tasks. PLoS One, 6(5), e19551. 10.1371/journal.pone.0019551

- Behzadi, Y., Restom, K., Liau, J., & Liu, T. T. (2007). A component based noise correction method (CompCor) for BOLD and perfusion based fMRI. NeuroImage, 37(1), 90–101. 10.1016/j.neuroimage.2007.04.042

- Bennur, S., & Gold, J. I. (2011). Distinct representations of a perceptual decision and the associated oculomotor plan in the monkey lateral intraparietal area. The Journal of Neuroscience, 31(3), 913–921. 10.1523/JNEUROSCI.4417-10.2011

- Chang, C.‐C., & Lin, C.‐J. (2011). LIBSVM: A library for support vector machines. ACM Transactions on Intelligent Systems and Technology, 2(3), 1–27. 10.1145/1961189.1961199

- Cisek, P., & Kalaska, J. F. (2010). Neural mechanisms for interacting with a world full of action choices. Annual Review of Neuroscience, 33, 269–298. 10.1146/annurev.neuro.051508.135409

- De‐Wit, L., Alexander, D., Ekroll, V., & Wagemans, J. (2016). Is neuroimaging measuring information in the brain? Psychonomic Bulletin & Review, 23(5), 1415–1458. 10.3758/s13423-016-1002-0

- Ding, L., & Gold, J. I. (2012). Neural correlates of perceptual decision making before, during, and after decision commitment in monkey frontal eye field. Cerebral Cortex, 22(5), 1052–1067. 10.1093/cercor/bhr178

- Eickhoff, S. B., Stephan, K. E., Mohlberg, H., Grefkes, C., Fink, G. R., Amunts, K., & Zilles, K. (2005). A new SPM toolbox for combining probabilistic cytoarchitectonic maps and functional imaging data. NeuroImage, 25(4), 1325–1335. 10.1016/j.neuroimage.2004.12.034

- Erlich, J. C., Brunton, B. W., Duan, C. A., Hanks, T. D., & Brody, C. D. (2015). Distinct effects of prefrontal and parietal cortex inactivations on an accumulation of evidence task in the rat. eLife, 4, 1–28. 10.7554/eLife.05457

- Fassihi, A., Akrami, A., Esmaeili, V., & Diamond, M. E. (2014). Tactile perception and working memory in rats and humans. Proceedings of the National Academy of Sciences of the United States of America, 111(6), 2331–2336. 10.1073/pnas.1315171111

- Ferrera, V. P., Yanike, M., & Cassanello, C. (2009). Frontal eye field neurons signal changes in decision criteria. Nature Neuroscience, 12(11), 1458–1462. 10.1038/nn.2434

- Filimon, F., Philiastides, M. G., Nelson, J. D., Kloosterman, N. A., & Heekeren, H. R. (2013). How embodied is perceptual decision making? Evidence for separate processing of perceptual and motor decisions. The Journal of Neuroscience, 33(5), 2121–2136. 10.1523/JNEUROSCI.2334-12.2013

- Freedman, D. J., & Assad, J. A. (2011). A proposed common neural mechanism for categorization and perceptual decisions. Nature Neuroscience, 14(2), 143–146. 10.1038/nn.2740

- Freedman, D. J., & Assad, J. A. (2016). Neuronal mechanisms of visual categorization: An abstract view on decision making. Annual Review of Neuroscience, 39(1), 129–147. 10.1146/annurev-neuro-071714-033919

- Gold, J. I., & Shadlen, M. N. (2003). The influence of behavioral context on the representation of a perceptual decision in developing oculomotor commands. The Journal of Neuroscience, 23(2), 632–651.

- Gold, J. I., & Shadlen, M. N. (2007). The neural basis of decision making. Annual Review of Neuroscience, 30(1), 535–574. 10.1146/annurev.neuro.29.051605.113038

- Haegens, S., Nácher, V., Hernández, A., Luna, R., Jensen, O., & Romo, R. (2011). Beta oscillations in the monkey sensorimotor network reflect somatosensory decision making. Proceedings of the National Academy of Sciences of the United States of America, 108(26), 10708–10713.

- Hanks, T. D., Kopec, C. D., Brunton, B. W., Duan, C. A., Erlich, J. C., & Brody, C. D. (2015). Distinct relationships of parietal and prefrontal cortices to evidence accumulation. Nature, 520(7546), 220–223. 10.1038/nature14066

- Hebart, M. N., & Baker, C. I. (2017). Deconstructing multivariate decoding for the study of brain function. NeuroImage, 180, 4–18.

- Hebart, M. N., Donner, T. H., & Haynes, J.‐D. (2012). Human visual and parietal cortex encode visual choices independent of motor plans. NeuroImage, 63(3), 1393–1403. 10.1016/j.neuroimage.2012.08.027

- Hebart, M. N., Goergen, K., & Haynes, J.‐D. (2015). The decoding toolbox (TDT): A versatile software package for multivariate analyses of functional imaging data. Frontiers in Neuroinformatics, 8(88), 1–18. 10.3389/fninf.2014.00088

- Hebart, M. N., Schriever, Y., Donner, T. H., & Haynes, J.‐D. (2014). The relationship between perceptual decision variables and confidence in the human brain. Cerebral Cortex, 26(1), 118–130.

- Heekeren, H. R., Marrett, S., Bandettini, P. A., & Ungerleider, L. G. (2004). A general mechanism for perceptual decision‐making in the human brain. Nature, 431(7010), 859–862. 10.1038/nature02966

- Heekeren, H. R., Marrett, S., Ruff, D. A., Bandettini, P. A., & Ungerleider, L. G. (2006). Involvement of human left dorsolateral prefrontal cortex in perceptual decision making is independent of response modality. Proceedings of the National Academy of Sciences of the United States of America, 103(26), 10023–10028. 10.1073/pnas.0603949103

- Herding, J., Ludwig, S., & Blankenburg, F. (2017). Response‐modality‐specific encoding of human choices in upper beta band oscillations during vibrotactile comparisons. Frontiers in Human Neuroscience, 11(118), 1–11. 10.3389/fnhum.2017.00118

- Herding, J., Spitzer, B., & Blankenburg, F. (2016). Upper beta band oscillations in human premotor cortex encode subjective choices in a vibrotactile comparison task. Journal of Cognitive Neuroscience, 28(5), 668–679. 10.1162/jocn_a_00932

- Hernández, A., Nácher, V., Luna, R., Zainos, A., Lemus, L., Alvarez, M., … Romo, R. (2010). Decoding a perceptual decision process across cortex. Neuron, 66(2), 300–314. 10.1016/j.neuron.2010.03.031

- Hernández, A., Zainos, A., & Romo, R. (2002). Temporal evolution of a decision‐making process in medial premotor cortex. Neuron, 33, 959–972.

- Huk, A. C., Katz, L. N., & Yates, J. L. (2017). The role of the lateral intraparietal area in (the study of) decision making. Annual Review of Neuroscience, 40(1), 349–372. 10.1146/annurev-neuro-072116-031508

- Jacob, S. N., Vallentin, D., & Nieder, A. (2012). Relating magnitudes: The brain's code for proportions. Trends in Cognitive Sciences, 16(3), 157–166. 10.1016/j.tics.2012.02.002

- Jun, J. K., Miller, P., Hernández, A., Zainos, A., Lemus, L., Brody, C. D., & Romo, R. (2010). Heterogenous population coding of a short‐term memory and decision task. The Journal of Neuroscience, 30(3), 916–929. 10.1523/JNEUROSCI.2062-09.2010

- Kastner, S., Desimone, K., Konen, C. S., Szczepanski, S. M., Weiner, K. S., & Schneider, K. A. (2007). Topographic maps in human frontal cortex revealed in memory‐guided saccade and spatial working‐memory tasks. Journal of Neurophysiology, 97(5), 3494–3507. 10.1152/jn.00010.2007

- Kelly, S. P., & O'Connell, R. G. (2015). The neural processes underlying perceptual decision making in humans: Recent progress and future directions. Journal of Physiology‐Paris, 109(1–3), 27–37. 10.1016/j.jphysparis.2014.08.003

- Kim, J.‐N., & Shadlen, M. N. (1999). Neural correlates of a decision in the dorsolateral prefrontal cortex of the macaque. Nature Neuroscience, 2(2), 176–185. 10.1038/5739

- Kriegeskorte, N., Goebel, R., & Bandettini, P. A. (2006). Information‐based functional brain mapping. Proceedings of the National Academy of Sciences of the United States of America, 103(10), 3863–3868. 10.1073/pnas.0600244103

- Li, S., Mayhew, S. D., & Kourtzi, Z. (2009). Learning shapes the representation of behavioral choice in the human brain. Neuron, 62, 441–452. 10.1016/j.neuron.2009.03.016

- Li Hegner, Y., Lindner, A., & Braun, C. (2015). Cortical correlates of perceptual decision making during tactile spatial pattern discrimination. Human Brain Mapping, 36, 3339–3350. 10.1002/hbm.22844

- Liu, T., & Pleskac, T. J. (2011). Neural correlates of evidence accumulation in a perceptual decision task. Journal of Neurophysiology, 106(5), 2383–2398. 10.1152/jn.00413.2011

- Mante, V., Sussillo, D., Shenoy, K. V., & Newsome, W. T. (2013). Context‐dependent computation by recurrent dynamics in prefrontal cortex. Nature, 503(7474), 78–84. 10.1038/nature12742

- Mazaika, P., Hoeft, F., Glover, G. H., & Reiss, A. L. (2009). Methods and software for fMRI analysis for clinical subjects. San Francisco, CA: Annual Meeting of the Organization for Human Brain Mapping.

- Mulder, M. J., van Maanen, L., & Forstmann, B. U. (2014). Perceptual decision neurosciences: A model‐based review. Neuroscience, 277, 872–884. 10.1016/j.neuroscience.2014.07.031

- Nakayama, Y., Yamagata, T., Tanji, J., & Hoshi, E. (2008). Transformation of a virtual action plan into a motor plan in the premotor cortex. The Journal of Neuroscience, 28(41), 10287–10297. 10.1523/JNEUROSCI.2372-08.2008

- Nieder, A. (2016). The neuronal code for number. Nature Reviews Neuroscience, 17(6), 366–382. 10.1038/nrn.2016.40

- Pleger, B., Ruff, C. C., Blankenburg, F., Bestmann, S., Wiech, K., Stephan, K. E., … Dolan, R. J. (2006). Neural coding of tactile decisions in the human prefrontal cortex. The Journal of Neuroscience, 26(48), 12596–12601. 10.1523/JNEUROSCI.4275-06.2006

- Preuschhof, C., Heekeren, H. R., Taskin, B., Schubert, T., & Villringer, A. (2006). Neural correlates of vibrotactile working memory in the human brain. The Journal of Neuroscience, 26(51), 13231–13239. 10.1523/JNEUROSCI.2767-06.2006

- Preuschhof, C., Schubert, T., Villringer, A., & Heekeren, H. R. (2009). Prior information biases stimulus representations during vibrotactile decision making. Journal of Cognitive Neuroscience, 22(5), 875–887. 10.1162/jocn.2009.21260

- Roitman, J. D., & Shadlen, M. N. (2002). Response of neurons in the lateral intraparietal area during a combined visual discrimination reaction time task. The Journal of Neuroscience, 22(21), 9475–9489. 10.1523/JNEUROSCI.22-21-09475.2002

- Romo, R., & de Lafuente, V. (2013). Conversion of sensory signals into perceptual decisions. Progress in Neurobiology, 103, 41–75. 10.1016/j.pneurobio.2012.03.007

- Romo, R., Hernández, A., & Zainos, A. (2004). Neuronal correlates of a perceptual decision in ventral premotor cortex. Neuron, 41(1), 165–173. 10.1016/S0896-6273(03)00817-1

- Romo, R., & Salinas, E. (2003). Flutter discrimination: Neural codes, perception, memory and decision making. Nature Reviews Neuroscience, 4(3), 203–218. 10.1038/nrn1058

- Sakai, K. (2008). Task set and prefrontal cortex. Annual Review of Neuroscience, 31(1), 219–245. 10.1146/annurev.neuro.31.060407.125642

- Shadlen, M. N., Kiani, R., Hanks, T. D., & Churchland, A. K. (2008). Neurobiology of decision making: An intentional framework. In C. Engel & W. Singer (Eds.), Better than conscious? Decision making, the human mind, and implications for institutions. Cambridge, MA: MIT Press.

- Shadlen, M. N., & Newsome, W. T. (2001). Neural basis of a perceptual decision in the parietal cortex (area LIP) of the rhesus monkey. Journal of Neurophysiology, 86(4), 1916–1936. 10.1152/jn.2001.86.4.1916

- Siegel, M., Buschman, T. J., & Miller, E. K. (2015). Cortical information flow during flexible sensorimotor decisions. Science, 348(6241), 1352–1355. 10.1126/science.aab0551

- Wang, L., Mruczek, R. E. B., Arcaro, M. J., & Kastner, S. (2015). Probabilistic maps of visual topography in human cortex. Cerebral Cortex, 25(10), 3911–3931. 10.1093/cercor/bhu277

- Williams, M. A., Dang, S., & Kanwisher, N. G. (2007). Only some spatial patterns of fMRI response are read out in task performance. Nature Neuroscience, 10(6), 685–686. 10.1038/nn1900

- Yamagata, T., Nakayama, Y., Tanji, J., & Hoshi, E. (2009). Processing of visual signals for direct specification of motor targets and for conceptual representation of action targets in the dorsal and ventral premotor cortex. Journal of Neurophysiology, 102(6), 3280–3294. 10.1152/jn.00452.2009

- Yamagata, T., Nakayama, Y., Tanji, J., & Hoshi, E. (2012). Distinct information representation and processing for goal‐directed behavior in the dorsolateral and ventrolateral prefrontal cortex and the dorsal premotor cortex. The Journal of Neuroscience, 32(37), 12934–12949. 10.1523/JNEUROSCI.2398-12.2012