Abstract

Recently, neurophysiological findings about social interaction have been investigated widely, and hardware has been developed that can measure multiple subjects' brain activities simultaneously. These hyperscanning studies have enabled us to discover new and important evidence of interbrain interactions. Yet, very little is known about verbal interaction without any visual input. Therefore, we conducted a new hyperscanning study based on verbal, interbrain turn‐taking interaction using simultaneous EEG/MEG, which measures rapidly changing brain activities. To establish turn‐taking verbal interactions between a pair of subjects, we set up two EEG/MEG systems (19 and 146 channels of EEG and MEG, respectively) located ∼100 miles apart. Subjects engaged in verbal communication via condenser microphones and magnetic‐compatible earphones, and a network time protocol synchronized the two systems. Ten subjects participated in this experiment and performed verbal interaction and noninteraction tasks separately. We found significant oscillations in EEG alpha and MEG alpha/gamma bands in several brain regions for all subjects. Furthermore, we estimated phase synchronization between two brains using the weighted phase lag index and found statistically significant synchronization in EEG and MEG data. Our novel paradigm and neurophysiological findings may foster a basic understanding of the functional mechanisms involved in human social interactions. Hum Brain Mapp 39:171–188, 2018. © 2017 Wiley Periodicals, Inc.

Keywords: social interaction, turn‐taking verbal interaction, phase synchronization, hyperscanning, simultaneous EEG/MEG

INTRODUCTION

Reciprocal social interaction has played an important role in establishing human relationships throughout our evolution. From a cognitive perspective, social interaction requires several mental actions, including perception, comprehension, information processing, representation, and anticipation, among others. Each person's personality, childhood background, academic ability, retrospective memory, and social status influence such interactions. Many previous studies in sociology and anthropology have attempted to investigate the characteristics of human social interactions [Chartrand and Bargh, 1999]. Recently, even neuroscientists have become interested in social interaction because several brain functions are attributed to social behaviors and interactions [Hari and Kujala, 2009]. Montague et al. [2002] first adopted a hyperscanning technique in a functional magnetic resonance imaging (fMRI) study to investigate neural substrates of human social interactions. They equipped two fMRI systems simultaneously and conducted a simple game task to determine whether it was possible to measure important biological substrates of human social interaction. Since then, fMRI hyperscanning studies have revealed intersubject correlations and synchronization of blood oxygen level‐dependent (BOLD) responses in trust games based on multiround economic exchanges [King‐Casas et al., 2005; Tomlin et al., 2006]. These studies have allowed brain activations to be estimated in both subjects based on multiple instances. Self‐response diminishment during the trust game on the part of people with autism spectrum disorder also has been reported [Chiu et al., 2008]. They commonly found significant changes in the anterior cingulate cortex (ACC), a brain region known well to be associated with decision‐making processes [Bush et al., 2002; Kennerley et al., 2006; Rushworth et al., 2007; Sanfey et al., 2003]. 
In addition to ACC, one study [Fliessbach et al., 2007] identified BOLD changes in the ventral striatum during monetary reward tasks, and demonstrated that social interaction affects activation levels in this region. Such cerebral hemodynamic changes also were observed in joint attention [Bilek et al., 2015; Koike et al., 2016; Saito et al., 2010], gestural [Schippers et al., 2010], facial [Anders et al., 2011], and verbal communication [Spiegelhalder et al., 2014].

Functional near‐infrared spectroscopy (fNIRS), an emerging portable imaging technique that measures hemodynamic changes in the brain, has been investigated widely in recent years because it is simple to set up the measurement in hyperscanning studies. To record two subjects simultaneously, a single fNIRS device is split into two; one half of the channels are attached to one subject, and the other half to another. Funane et al. [2011] first measured hemodynamic changes in two subjects simultaneously using prefrontal fNIRS recording during a cooperative task as a form of social process. They attempted to investigate the relationship between subjects' coinstantaneous brain activation using fNIRS hyperscanning and found significant spatiotemporal covariance. Brain activation in the frontal cortex has played a critical role in fNIRS hyperscanning, and neurophysiological evidence from it has been observed in joint n‐back [Dommer et al., 2012], button‐press tasks [Cui et al., 2012], and imitation tasks [Holper et al., 2012]. To establish a more naturalistic context, face‐to‐face verbal communication was conducted based on turn‐taking conversation [Jiang et al., 2012]. Researchers attached detectors and emitters to the left hemisphere, which plays a major role in language function and processing in mirror neuron systems (MNS) [Rizzolatti and Craighero, 2004; Stephens et al., 2010]. They showed significant neural synchronization in the left inferior frontal cortex during face‐to‐face dialogue compared to that in no interaction. Increased left frontal neural synchronization also was observed in cooperative singing by comparison to singing or humming alone [Osaka et al., 2015]. In contrast, one study revealed right frontal synchronization in turn‐taking cooperation without verbal communication [Liu et al., 2015]. 
Recently, several researchers have investigated interbrain neural synchronization during face‐to‐face economic exchanges [Tang et al., 2016], cooperative games [Liu et al., 2016], and more natural conversation [Nozawa et al., 2016], and gender effects [Cheng et al., 2015].

Measuring hemodynamic changes in the brain with fMRI and fNIRS enables researchers to investigate the way in which blood flow is synchronized between two brains; further, because of its high temporal resolution, electroencephalography (EEG) may yield neurophysiological findings related to oscillations and correlates of rapid interaction. The EEG technique is cost‐effective and portable; therefore, by comparison to other techniques, it has been the primary method of choice in hyperscanning studies [Babiloni and Astolfi, 2014; Koike et al., 2015]. Babiloni et al. [2007, 2006] first designed a hyperscanning EEG paradigm using an Italian card game based on the Prisoner's Dilemma. Reflecting this pioneering experimental design, other researchers have investigated interbrain synchronizations between two or more brains while playing guitar together [Lindenberger et al., 2009; Sänger et al., 2012, 2013], an iterative Prisoner's Dilemma game [Fallani et al., 2010], or an Italian card game [Astolfi et al., 2010, 2011]. By applying high‐resolution spectral analysis to EEG, a neuromarker referred to as the phi complex of human social coordination was discovered in the right centro‐parietal cortex during self‐paced rhythmic finger movements between with and without vision [Tognoli et al., 2007]. In this study, they found that a pair of oscillatory phi components (phi1 and phi2) could distinguish between independent and coordinated behaviors in the right centro‐parietal area in the 9.2–11.5 Hz spectral range. In addition to self‐movement, imitating a partner's hand movements was designed as a social interaction, and the phase synchronization between two subjects who played different roles (model and imitator) reflected a top–down modulation of the different roles [Dumas et al., 2010]. 
Furthermore, using spectral analysis, anatomical connectivity [Dumas et al., 2012a] and oscillatory distinctions between the two different roles [Dumas et al., 2012b; Delaherche et al., 2015] were observed in the same task.

In general, extracting common oscillations from both subjects is one of the key findings in hyperscanning studies. Therefore, several EEG hyperscanning studies have attempted to identify such oscillations. One major finding is that alpha suppression is observed commonly in interaction tasks, such as a turn‐taking game [Liao et al., 2015], the Rock–Paper–Scissors game [Perry et al., 2011], joint attention [Lachat et al., 2012], vision‐based motor tasks [Naeem et al., 2012a; Naeem et al., 2012b], finger tapping [Konvalinka et al., 2014], and action observation [Ménoret et al., 2014]. Previous EEG hyperscanning studies have focused on natural interactions, such as playing a card game and a guitar together, visual interactions, imitating a hand movement, and joint attention. However, these experimental paradigms did not focus on one sensory aspect of interaction. For example, playing a card game requires various mental processes, such as preparation, cognition, decision, and deception, and includes vision and hearing on the part of other persons. Although these experimental designs could resemble natural interactions in real‐world situations more closely, it is difficult to extract precise neural signatures from EEG. Furthermore, imitating a hand movement tends to focus largely on hand and finger movements, not on real interactions with a partner. Therefore, it is necessary to design an experimental paradigm for an interaction task that focuses on one sense with little or no distraction.

To the best of our knowledge, only two EEG hyperscanning studies to date have used verbal communication. One was performed using an audio‐visual stimulus recorded by speakers [Kuhlen et al., 2012]. In this study, they recorded speakers' stories and played them back to listeners thereafter. They found a correlation in the EEG data between speakers and listeners with a time delay of ∼12.5 s. The other study was designed such that subjects could see their partner's face [Kawasaki et al., 2013]. The authors designed a more interactive verbal interaction based on alternating speech tasks. Two subjects were instructed to alternate pronouncing the alphabet from “A” to “G,” and two types of tasks were designed: human‐to‐human and human‐to‐machine. The authors found oscillations in theta/alpha bands and significant correlations in the left temporal and right centro‐parietal areas during both tasks. This study provided considerable evidence of brain synchronization during verbal communication between individuals. However, the effects of viewing a scene during face‐to‐face conversation were not eliminated completely, and communication with recorded machine voices may generate weak communication. Subjects faced each other during verbal communication, so that responses may have been affected by the partner's emotion or behavior [Kashihara, 2014; Yun, 2013], while interaction with machines should be designed to represent a more human‐like situation.

More recently, an experimental hardware setup for dual magnetoencephalography (MEG) hyperscanning was developed that uses an online connection [Baess et al., 2012; Zhdanov et al., 2015]. Two MEG systems were installed in a single room [Hirata et al., 2014]. Neural signatures of hand movements were observed in this dual MEG setup by alpha and beta powers [Zhou et al., 2016], but neurophysiological findings in verbal interaction still were not investigated fully using MEG acquisition.

Therefore, in this work, we designed a turn‐taking verbal interaction based on number counting without any visual input, and used simultaneous EEG and MEG measurements to find common oscillations on the part of all subjects and phase synchronization between two subjects. EEG alpha synchronization may yield significant oscillatory changes and phase synchronization in interaction tasks [Konvalinka et al., 2014; Lachat et al., 2012; Liao et al., 2015; Perry et al., 2011; Ménoret et al., 2014]. Further, MEG gamma band activity may play a critical role in social interaction [Pavlova et al., 2010], speech processing [Palva et al., 2002], and working memory [Jensen et al., 2007; Jokisch and Jensen, 2007]. Simultaneous recording of EEG and MEG—multimodal analysis—may yield complementary information and MEG gamma band activity may provide significant findings that are not revealed using EEG [Ahn et al., 2013].

MATERIALS AND METHODS

Subjects

Nine males and one female (five pairs, aged 23.9 ± 3.3 years) participated in a turn‐taking verbal interaction. All subjects were native Koreans. None knew any personal information about the other subjects, such as their names, faces, ages, or personalities, before the experiment. Each was assigned to one of two different sites, either the Yonsei Severance Hospital or the Korea Research Institute of Standards and Science. The distance between the two sites is ∼100 miles by road. All subjects signed a consent form describing the detailed experimental procedure and received approximately $15 per hour for their participation. The purposes of and instructions for the experiment were explained clearly to all the subjects prior to recording. This study was approved by the Institutional Review Board at Gwangju Institute of Science and Technology (20150615‐HR‐18‐02‐03).

Recording and Synchronization

All subjects were engaged in the task in two shielded rooms. Magnetic‐compatible, custom‐built EEG electrodes were attached to the scalp. Brain Products and Biosemi EEG amplifiers were used to record electrical activities for each subject with a 1,024 Hz sampling rate. Nineteen EEG electrodes were attached over the entire scalp (Fp1, Fp2, F3, F4, C3, C4, P3, P4, O1, O2, F7, F8, T3, T4, T5, T6, Fz, Cz, Pz) based on the international 10–20 system. Common mode sense (CMS) and driven right leg (DRL) electrodes were used for reference and formed a feedback loop to drive the average potential of the subject. This is known as CMS‐DRL referencing (http://www.biosemi.com). Vertical and horizontal EOG electrodes were attached around the eyes, and two ECG electrodes were attached around the collarbone. Two MEG measurement systems were installed at the two sites, each using the 152‐sensor, whole‐head configuration at a 1,024 Hz sampling rate developed by the Korea Research Institute of Standards and Science [Lee et al., 2009]. The sensors were first‐order axial gradiometers with a baseline of 5 cm.

Within each site, EEG and MEG data were synchronized by a digital signal sent from the MEG system to the EEG acquisition software, and real‐time synchronization between the two sites was conducted with a network time protocol (NTP). Once the clocks of the two systems had been synchronized using a Windows NTP server, a clock timer initiated the experimental paradigm automatically at a specific time. This triggered the simultaneous display of instruction screens to the two tele‐subjects. A “ping” command in Windows was used to measure the latency of the network connection. Prompt responses with a delay of <1 ms were obtained in all experimental cases.
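The scheduled start described above can be illustrated with a short sketch. It assumes that both acquisition computers already run NTP‐disciplined clocks; the function names `next_start_time` and `wait_until`, the 60 s grid, and the polling interval are hypothetical choices for illustration and are not taken from the authors' software.

```python
import math
import time


def next_start_time(now, grid_s=60.0):
    """Return the next wall-clock time (epoch seconds) on a shared grid.

    Each site computes this independently; if the clocks agree, both sites
    obtain the same start time. The 60 s grid is an assumed example value.
    """
    return math.ceil(now / grid_s) * grid_s


def wait_until(start_epoch, poll_s=0.0005):
    """Block until the local clock reaches start_epoch.

    Returns the overshoot in seconds (how late the call returned).
    """
    while True:
        remaining = start_epoch - time.time()
        if remaining <= 0:
            return -remaining
        time.sleep(min(poll_s, remaining))


# Hypothetical usage at each site:
#   start = next_start_time(time.time())
#   wait_until(start)
#   ... display the instruction screen ...
```

With this design, the residual offset between the two sites is bounded by the clock synchronization error plus the polling overshoot, which is why the clock agreement has to be checked before each session.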

Experimental Procedures

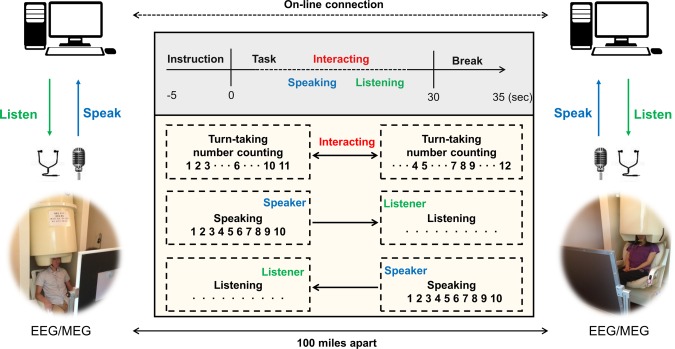

Each subject sat in a magnetically and electrically shielded room and visual instructions were displayed on a screen ∼100 cm from them. A trial lasted 40 s, including 5 s instruction and break periods before and after the task, as depicted in Figure 1 (top gray box). For the first 5 s, visual instructions appeared on the screen in yellow characters on a black background, and the subjects prepared to perform the given task. After the instruction period, the task period began with a blank black screen that lasted 30 s to eliminate any visual input. The experiment included three tasks: interacting, speaking and listening.

Figure 1.

Experimental setup in turn‐taking verbal interaction using simultaneous EEG/MEG. Verbal communication between subjects was conducted using a condenser microphone and earphones in an online environment. There are three types of tasks: interacting, speaking, and listening. Interacting: counting numbers in turn from 1 to time limit. Speaking: each subject speaks the numbers alone from 1 to time limit without any listening and visual input. Listening: each subject listens to his/her partner's number counting from 1 to time limit without any response. The distance between the two rooms was ∼100 miles. [Color figure can be viewed at http://wileyonlinelibrary.com]

Interacting task

Each subject was instructed to perform turn‐taking number counting beginning with the number 1; the pair counted the numbers consecutively and verbally for 30 s. One subject (initiator) began by saying the number 1, and the other subject (partner) then spoke the numbers that followed. The rules of the turn‐taking task were that each subject could count at most three consecutive numbers during one turn, and that the partner had to continue from the number immediately after the last one counted in the previous turn. In addition, the partner could not count the same quantity of numbers as had been counted in the previous turn. For example, if the initiator counted three consecutive numbers (1, 2, 3), then the partner had to count either 4 or 4, 5, but could not count 4, 5, 6. This rule was expected to keep subjects attentive, because they had to track the partner's previous turn continuously, and to encourage active interaction during turn‐taking. If they had been allowed to say the numbers arbitrarily without this rule, they might have said numbers instinctively without regard to what the partner had said in the previous turn. Finally, the role of initiator alternated between the two subjects from trial to trial.
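The turn‐taking rule can be written as a small validity check. This is a sketch of one reading of the rule (a turn holds one to three consecutive numbers, continues from the previous turn, and differs in length from the previous turn); the function name `is_valid_turn` and its arguments are hypothetical.

```python
def is_valid_turn(prev_turn, turn, max_len=3):
    """Check one turn of the counting task against the stated rules.

    prev_turn: list of numbers spoken in the previous turn ([] for the first turn).
    turn:      list of numbers spoken in the current turn.
    """
    # A turn contains between one and max_len numbers.
    if not 1 <= len(turn) <= max_len:
        return False
    # The turn must continue consecutively from the previous turn (or start at 1).
    expected_start = prev_turn[-1] + 1 if prev_turn else 1
    if list(turn) != list(range(expected_start, expected_start + len(turn))):
        return False
    # The speaker may not count as many numbers as were counted in the previous turn.
    if prev_turn and len(turn) == len(prev_turn):
        return False
    return True


# Example from the text: after 1, 2, 3 the partner may say 4 or 4, 5 but not 4, 5, 6.
print(is_valid_turn([1, 2, 3], [4]))        # True
print(is_valid_turn([1, 2, 3], [4, 5]))     # True
print(is_valid_turn([1, 2, 3], [4, 5, 6]))  # False
```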

Speaking task

Each subject counted numbers without stopping for 30 s, beginning with 1. Subjects could not listen to the voice of their partners and simply spoke alone during this period. This task was designed as a non‐interaction/control task. Each subject spoke numbers, but there was no interaction between the two.

Listening task

Each subject listened naturally to his/her partner's number counting without responding. Subjects were instructed to keep their eyes open while they listened.

Subjects performed the three different tasks repeatedly in a regular sequence. After the task period, there was a 5 s break, during which the subjects stopped counting and prepared for the next instructions. Each run consisted of 18 trials (6 trials for each of the 3 tasks), and 5 viable runs were conducted; thus, a total of 90 trials (30 trials per task) was obtained for each subject. If a subject made a mistake during the task, the partner was instructed to raise his/her hand, and the trial was stopped until the break period. We excluded such trials from the data analysis.

To deliver the subjects' voices to their partners at each site, we used a condenser microphone that was 31.7 mm high and 41.8 mm wide (ETM‐003, Edutige Corporation, Seoul, South Korea). It was located 100 cm from the subjects and recorded their voices clearly. Audition 3.0 (Adobe Systems, San Jose, CA) was used to record the voice with a 44 kHz sampling rate and it was delivered simultaneously to the partner through online communication software. Subjects could listen to the voices of their partners using a magnetic‐compatible earphone constructed of sponge and tube. All procedures for the experimental paradigms are shown in Figure 1.

Data Analysis

Among the three tasks (interacting, speaking, and listening), the primary comparison was between the interacting and speaking tasks. The demands of saying numbers are likely to be quite similar in these two tasks, whereas the listening task imposes notably different demands. Thus, the speaking task may be a good noninteracting control condition because subjects simply spoke numbers without interacting with their partners. In contrast, the listening task is not a suitable control condition because subjects simply listened, which involves no speech production. Therefore, trials from the listening task were used later to validate our results and are described in the Discussion.

We inspected all epochs in the EEG data visually and eliminated trials that contained abnormal spikes and those interrupted because of subjects' mistakes. The EEG data were downsampled to 512 Hz and band‐pass filtered from 1 to 50 Hz. Thereafter, logistic infomax independent component analysis (ICA) [Bell and Sejnowski, 1995] was used to remove muscle, eye‐related, and heartbeat artifacts. By applying ICA to the EEG data, we obtained independent components and identified those that were associated with artifacts by visual inspection of the topographical distribution and power spectral density of each component. These artifact components were then rejected, which allowed us to obtain artifact‐free EEG data. To calculate spectral band power, we computed the power spectral density (PSD) using Welch's method with a Hanning window. The PSD, which had a frequency resolution of 0.5 Hz, was calculated for each trial and averaged across trials. Furthermore, to reduce subject variability, it was normalized for each channel by a z‐score. All analyses were performed with EEGLAB [Delorme and Makeig, 2004], FieldTrip [Oostenveld et al., 2010], and custom‐built scripts in MATLAB (Mathworks, Natick, MA). Statistically significant features and channels were extracted with the Wilcoxon signed‐rank test, based on trials, with false discovery rate (FDR) correction for multiple testing [Benjamini and Hochberg, 1995]. Of the 300 trials per task, 292 and 294 trials were retained in the interacting and speaking tasks, respectively, after the interrupted trials were removed. To compute phase synchronization in EEG sensor space, we adopted a debiased weighted phase lag index (WPLI) to minimize the effects of volume conduction and noise from different regions of the scalp EEG data [Vinck et al., 2011]. This estimator is also debiased with respect to sample size. Phase synchronization between each pair of subjects was calculated and averaged across trials after this preprocessing.
We calculated the WPLI at each 0.5 Hz frequency bin and averaged these values within four spectral bands: theta (4–7 Hz), alpha (8–12 Hz), beta (13–30 Hz), and gamma (31–50 Hz). We constructed surrogate data to assess the statistical significance of functional connectivity. Because naive resampling of a time series destroys the dependence between successive values, we used circular block bootstrapping [Politis and Romano, 1992], which preserves the autocorrelation of the dependent EEG data. The block length for each calculation was selected by the automatic block‐length algorithm [Politis and White, 2004]. Each trial was resampled using circular block bootstrapping with the selected block length. After constructing 1,000 surrogate datasets in this way (n = 1,000), only statistically significant WPLI values were extracted (P < 0.05 with FDR correction). Thus, we obtained symmetric matrices of WPLI values and corresponding P values that represented phase synchronization between subjects. Thereafter, we calculated the mean WPLI over subjects and plotted it as a topography that describes seeds of functional connections. The WPLI ranged from 0 (no phase synchronization) to 1 (perfect phase synchronization).
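The phase synchronization analysis can be sketched in a few lines of NumPy. This is not the authors' MATLAB/FieldTrip code: it is a minimal illustration that assumes the debiased squared WPLI estimator of Vinck et al. [2011] computed from single‐trial FFT cross‐spectra, a fixed block length instead of automatic block‐length selection, and a null distribution built by resampling one subject's trials. All function names and the synthetic data are hypothetical.

```python
import numpy as np


def debiased_wpli_sq(x, y):
    """Debiased squared WPLI per frequency bin.

    x, y: arrays of shape (n_trials, n_samples), one channel from each subject.
    """
    win = np.hanning(x.shape[1])
    cross = np.fft.rfft(x * win, axis=1) * np.conj(np.fft.rfft(y * win, axis=1))
    im = cross.imag                       # imaginary part of the cross-spectrum
    sum_im = im.sum(axis=0)
    sum_abs = np.abs(im).sum(axis=0)
    sum_sq = (im ** 2).sum(axis=0)
    denom = sum_abs ** 2 - sum_sq
    out = np.zeros_like(denom)
    ok = denom > 0
    out[ok] = (sum_im[ok] ** 2 - sum_sq[ok]) / denom[ok]
    return out


def circular_block_bootstrap(trial, block_len, rng):
    """Resample a 1-D series from contiguous blocks that wrap around the end."""
    n = trial.shape[0]
    n_blocks = -(-n // block_len)         # ceiling division
    starts = rng.integers(0, n, size=n_blocks)
    idx = (starts[:, None] + np.arange(block_len)) % n
    return trial[idx.ravel()[:n]]


def surrogate_p_value(x, y, freq_bin, block_len, n_surr, rng):
    """Compare the observed WPLI with a block-bootstrap null (assumed scheme)."""
    observed = debiased_wpli_sq(x, y)[freq_bin]
    null = np.empty(n_surr)
    for i in range(n_surr):
        y_surr = np.stack([circular_block_bootstrap(t, block_len, rng) for t in y])
        null[i] = debiased_wpli_sq(x, y_surr)[freq_bin]
    p = (1 + np.sum(null >= observed)) / (1 + n_surr)
    return observed, p


# Synthetic demo: two 10 Hz signals with a fixed quarter-cycle lag in every trial.
rng = np.random.default_rng(0)
fs, n_samples, n_trials = 256, 512, 100
t = np.arange(n_samples) / fs
phase = rng.uniform(0, 2 * np.pi, size=(n_trials, 1))
x = np.sin(2 * np.pi * 10 * t + phase) + 0.1 * rng.standard_normal((n_trials, n_samples))
y = np.sin(2 * np.pi * 10 * t + phase - np.pi / 2) + 0.1 * rng.standard_normal((n_trials, n_samples))
bin_10hz = int(round(10 * n_samples / fs))
observed, p = surrogate_p_value(x, y, bin_10hz, block_len=64, n_surr=200, rng=rng)
```

Because the estimator uses only the imaginary part of the cross‐spectrum, zero‐lag coupling (the signature of volume conduction or common noise) contributes nothing to it, which is the property that motivates the WPLI in the text. The debiased estimator targets the squared WPLI and can be slightly negative for unsynchronized data.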

We inspected the entire MEG dataset visually and rejected bad channels. In the MEG acquisition, 6 of 152 sensors were excluded because of technical problems in the acquisition system and abnormal spikes during recording. Thus, we obtained MEG data from 146 sensors. Data from each sensor were transformed computationally from an axial to a planar gradient because, in the planar gradient representation, a dipole appears as a single local maximum in the sensor just above its location [Gross et al., 2013]. Data sampled at 1,024 Hz were downsampled to 512 Hz, and ICA was used to remove artifacts. Analyses of oscillations and phase synchronization in the MEG data followed the same procedure as in the EEG.

Speech Recording

Two condenser microphones were used to record each subject's voice, which was saved simultaneously by software. The number of peaks in each recording, estimated with a simple peak detection algorithm, indicates how many numbers each subject counted. The numbers of peaks extracted for all subjects and tasks are tabulated in Table 1. As the table shows, the mean count per subject in the interacting task was 11.79 ± 1.80, and that per pair of interacting subjects (the sum of the two subjects' counts) was 23.53 ± 1.81 during the 30 s task period. Similarly, subjects spoke an average of 24.23 ± 1.57 numbers alone in the speaking task. There was no significant difference between the two tasks (interacting and speaking) with respect to the quantity of numbers spoken (P > 0.05). Therefore, we inferred that there was no task‐related dependence in this experiment. The representative example depicted in Supporting Information, Figure S1 shows voice recordings of a pair of subjects interacting in verbal number counting and of a subject speaking alone.
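The text does not specify the peak detection algorithm, so the following is only a stand‐in: it counts spoken items by smoothing the rectified waveform into an amplitude envelope and counting upward crossings of a threshold, with a second, lower threshold (hysteresis) to avoid double counting. The function name `count_utterances` and all parameter values are assumptions.

```python
import numpy as np


def count_utterances(audio, fs, smooth_s=0.05, hi_frac=0.5, lo_frac=0.25):
    """Count bursts of speech energy in a mono recording.

    audio: 1-D array of samples; fs: sampling rate in Hz.
    A burst starts when the envelope exceeds hi_frac * max and ends when it
    falls below lo_frac * max (hysteresis thresholding).
    """
    k = max(1, int(smooth_s * fs))
    envelope = np.convolve(np.abs(audio), np.ones(k) / k, mode="same")
    peak = envelope.max()
    if peak == 0:
        return 0                      # silent recording
    hi, lo = hi_frac * peak, lo_frac * peak
    count, active = 0, False
    for value in envelope:
        if not active and value > hi:
            active = True             # a new burst begins
            count += 1
        elif active and value < lo:
            active = False            # the burst has ended
    return count
```

For long recordings at a 44 kHz sampling rate, the envelope could be downsampled before the loop; thresholds relative to the maximum also assume that loudness is roughly stable within a trial.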

Table 1.

Number of numbers counted by each subject

| Subjects | A1 | A2 | B3 | B4 | C5 | C6 | D7 | D8 | E9 | E10 | Mean |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Interacting | 11.81 (1.96) | 11.71 (1.93) | 12.01 (1.84) | 11.89 (1.98) | 11.54 (1.78) | 11.43 (1.92) | 12.11 (1.54) | 11.84 (1.48) | 11.76 (1.79) | 11.56 (1.84) | 11.79 (1.80) |
| Sum | 23.52 (1.94) | | 23.90 (1.91) | | 22.97 (1.85) | | 23.95 (1.51) | | 23.32 (1.82) | | 23.53 (1.81) |
| Speaking | 24.91 (1.25) | 23.69 (1.76) | 23.94 (1.45) | 24.15 (1.57) | 24.84 (1.76) | 24.54 (1.47) | 23.87 (1.68) | 24.14 (1.72) | 23.97 (1.48) | 24.28 (1.39) | 24.23 (1.57) |
| Avg | 24.30 (1.51) | | 24.05 (1.51) | | 24.69 (1.62) | | 24.01 (1.70) | | 24.13 (1.44) | | 24.23 (1.55) |

Mean and standard deviation (in parentheses) for all conditions and subjects are summarized. Sum: sum of the numbers counted by the two subjects of a pair in the interacting task; Avg: average of the numbers counted by the two subjects of a pair in the speaking task. Each Sum and Avg value applies to the pair and is listed under the first subject of that pair. Subjects with the same letter are paired subjects.

RESULTS

Oscillations

EEG alpha

To compare EEG oscillations between two tasks (interacting and speaking), we calculated the grand‐averaged PSD in the four different bands (theta: 4–7 Hz, alpha: 8–12 Hz, beta: 13–30 Hz, gamma: 31–50 Hz). Statistically significant oscillations among them occurred only in the alpha band. Detailed PSDs for all channels are depicted in Supporting Information, Figure S2.
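The band power computation can be sketched as follows. The sketch assumes a 512 Hz signal and 1,024‐sample Hann‐windowed segments with 50% overlap (which gives the 0.5 Hz bins mentioned in the Methods), and it takes the base‐10 logarithm of the mean PSD within each band; the overlap, the logarithm base, and the function names are assumptions rather than details from the authors' MATLAB scripts.

```python
import numpy as np

# Band edges in Hz, as defined in the text.
BANDS = {"theta": (4, 7), "alpha": (8, 12), "beta": (13, 30), "gamma": (31, 50)}


def welch_psd(x, fs, nperseg):
    """One-sided Welch PSD with Hann windows and 50% overlap (nperseg even)."""
    step = nperseg // 2
    win = np.hanning(nperseg)
    scale = fs * np.sum(win ** 2)
    starts = range(0, len(x) - nperseg + 1, step)
    segs = np.stack([x[s:s + nperseg] for s in starts])
    segs = segs - segs.mean(axis=1, keepdims=True)      # remove the mean per segment
    spec = np.abs(np.fft.rfft(segs * win, axis=1)) ** 2 / scale
    spec[:, 1:-1] *= 2                                  # fold negative frequencies
    freqs = np.fft.rfftfreq(nperseg, d=1.0 / fs)
    return freqs, spec.mean(axis=0)                     # average the periodograms


def band_log_power(x, fs=512, nperseg=1024):
    """Log10 of the mean PSD inside each of the four bands."""
    freqs, psd = welch_psd(x, fs, nperseg)
    return {
        name: float(np.log10(psd[(freqs >= lo) & (freqs <= hi)].mean()))
        for name, (lo, hi) in BANDS.items()
    }
```

In the paper's pipeline these values would additionally be z‐scored per channel and averaged over trials and subjects before the tasks are compared; that step is omitted here.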

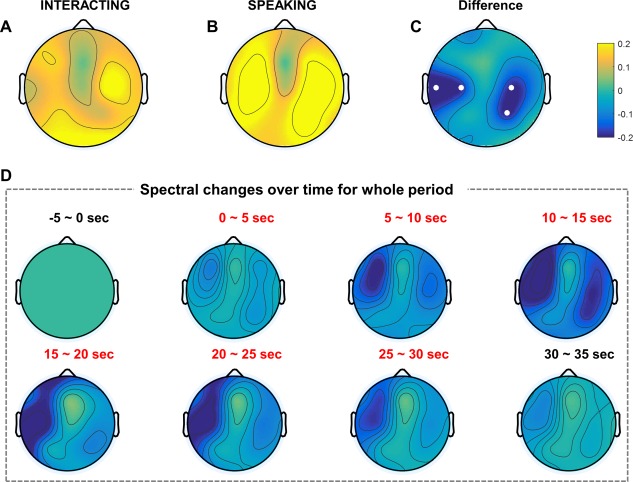

We obtained grand‐averaged normalized log power as topographical representation for each task (Fig. 2A,B). Topographies were obtained by subtracting the baseline for each trial (i.e., 5 s instruction period before the interacting or speaking tasks). Figure 2C indicates the difference in the grand‐averaged normalized log power between two tasks, and thus shows which brain regions' alpha oscillations increased or decreased during the turn‐taking verbal interaction compared to during the control task. As Fig. 2C shows, the left temporal and right centro‐parietal regions demonstrated suppression in the alpha band during the interaction. The small white dots represent electrodes that differed significantly (P < 0.05 with FDR correction).
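The FDR correction applied to the per‐electrode tests follows Benjamini and Hochberg [1995]. A minimal sketch of that step‐up procedure is given below, assuming one P value per electrode from the signed‐rank tests; the function name `fdr_bh` is hypothetical.

```python
import numpy as np


def fdr_bh(pvals, alpha=0.05):
    """Benjamini-Hochberg step-up procedure.

    Returns a boolean array marking which hypotheses are rejected at FDR level alpha.
    """
    p = np.asarray(pvals, dtype=float)
    m = p.size
    order = np.argsort(p)                          # indices of P values, ascending
    thresholds = alpha * np.arange(1, m + 1) / m   # alpha * k / m for rank k
    passed = p[order] <= thresholds
    reject = np.zeros(m, dtype=bool)
    if passed.any():
        k = np.max(np.nonzero(passed)[0])          # largest rank meeting its threshold
        reject[order[:k + 1]] = True               # reject it and all smaller P values
    return reject


# Example with five electrodes: the three smallest P values survive correction.
print(fdr_bh([0.5, 0.001, 0.045, 0.012, 0.02]))
```

Note that the procedure is step‐up: a P value that misses its own threshold is still rejected if a larger P value meets the threshold for its rank.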

Figure 2.

EEG grand‐averaged topography in the alpha band of two tasks. Each topography indicates the normalized log power difference in the interacting (A) and speaking (B) tasks compared to baseline. (C) Topography map shows the difference between the two tasks (interacting, speaking). Small white dots represent electrodes that exhibited statistically significant differences (P < 0.05) with FDR correction. (D) EEG grand‐averaged topography of normalized power differences in the alpha band (interacting–speaking) over time. Baseline: −5–0 s; task: 0–30 s; break: 30–35 s. [Color figure can be viewed at http://wileyonlinelibrary.com]

Furthermore, we investigated the grand‐averaged normalized log power corresponding to the time sequence. As mentioned in Materials and Methods section, the entire trial lasted 40 s with a 5 s baseline before tasks, and a 5 s break after the tasks. Figure 2D shows the differences in each 5 s time period (interacting–speaking) for all subjects. At the beginning of the 5 s baseline, subjects simply stared at the instructions and prepared for the turn‐taking verbal interaction. This period was calculated as a baseline for the power difference over time. After the 5 s baseline, normalized power differences in the task (interacting–speaking) and break were obtained by subtracting the baseline power. As Fig. 2D shows, the left temporal region became suppressed over time and recovered gradually as the end of the task approached. After the task period, alpha suppression nearly disappeared; thus, power behavior similar to that at 0–5 s was exhibited.

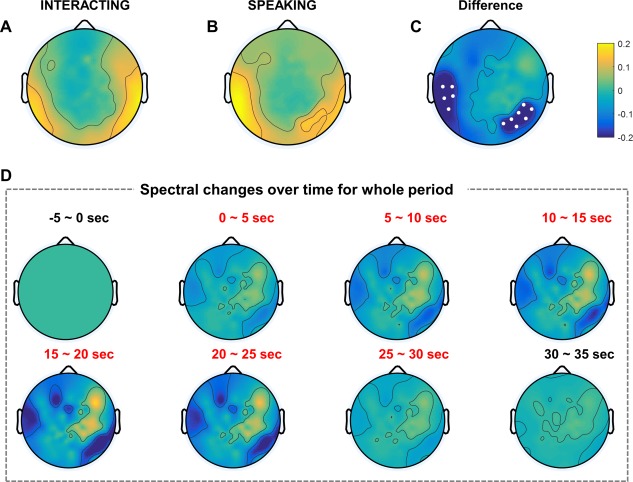

MEG alpha and gamma

We investigated MEG oscillations in the four different bands. We calculated the grand‐averaged log power after normalization with a z‐score for all 146 sensors after transformation to a planar gradient. Among oscillations in the four different bands, we found significant power changes in the alpha and gamma bands. Figure 3 shows the grand‐averaged normalized log power in the alpha band for each task. Fig. 3A,B represent the topography in the interacting and speaking tasks compared to the baseline period, respectively. As Fig. 3C shows, we found alpha suppression in the left temporal and right centro‐parietal regions similar to the EEG alpha suppression. Similarly, Fig. 3D indicates the grand‐averaged normalized log power corresponding to the time sequence in the alpha band. Only right centro‐parietal alpha suppression appeared until 15 s into the task period. From 15 to 25 s, clear suppression appeared in the left temporal lobe, which nearly disappeared after 25 s. Similar to the EEG alpha suppression, we also found MEG alpha suppression in the left temporal and right centro‐parietal regions.

Figure 3.

MEG grand‐averaged topography in the alpha band of two tasks. Each topography indicates the normalized log power difference in the interacting (A) and speaking (B) tasks compared to baseline. (C) The topography map shows the difference between the two tasks (interacting, speaking). Small white dots represent sensors that exhibited statistically significant differences (P < 0.05) with FDR correction. (D) MEG grand‐averaged topography of normalized power differences in the alpha band (interacting–speaking) over time. Baseline: −5–0 s; task: 0–30 s; break: 30–35 s. [Color figure can be viewed at http://wileyonlinelibrary.com]

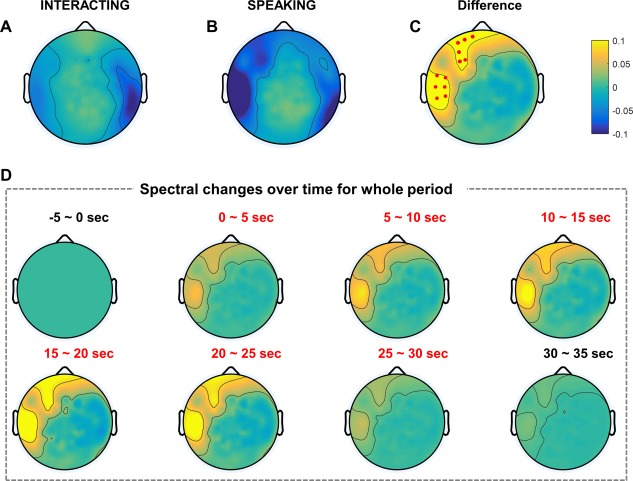

In addition to MEG alpha suppression, we observed clear MEG gamma power changes in the two tasks. Several studies have demonstrated that MEG gamma activity plays a crucial role in identifying the brain functions related to attention or working memory [Jensen et al., 2007; Kaiser et al., 2003]. Similarly, we found MEG gamma band activity in this study, particularly in the lower gamma band (31–50 Hz). Figure 4A,B represents the topography in the interacting and speaking tasks, respectively. In the interacting task, strong and weak gamma power suppressions were obtained in the right parietal and left temporal regions, respectively. In the speaking task, stronger gamma power suppression was observed in the left temporal and frontal regions compared to that in the interacting task. To determine the statistical difference between the two tasks, we evaluated the gamma power difference and its statistical significance at each sensor. As shown in Fig. 4C, gamma band activity increased in the left temporal and frontal regions compared to the control task. There was no statistically significant power difference in the EEG gamma band because of the large inherent artifacts and small amplitudes in the EEG data. However, MEG did detect gamma band activity because magnetic fields are distorted less by the skull and scalp than are the electric potentials measured by EEG [Flemming et al., 2005]. In addition, we obtained the MEG gamma log power in a time sequence, as Fig. 4D illustrates. During the first 5 s of the task period, gamma band activity increased slightly in the left temporal and frontal regions compared to the baseline. Thereafter, gamma band activity increased further and then decreased gradually near the end of the task period. After the task period, gamma band activity demonstrated patterns similar to those in the baseline.

Figure 4.

MEG grand‐averaged topography in the gamma band of two tasks. Each topography indicates the normalized log power differences in the interacting (A) and speaking (B) tasks compared to baseline. (C) The topography map shows the difference between the two tasks (interacting, speaking). Small red dots represent the sensors that exhibited statistically significant differences (P < 0.05) with FDR correction. (D) MEG grand‐averaged topography of normalized power differences in the gamma band (interacting, speaking) over time. Baseline: −5–0 s; task: 0–30 s; break: 30–35 s. [Color figure can be viewed at http://wileyonlinelibrary.com]

Interbrain Phase Synchronization

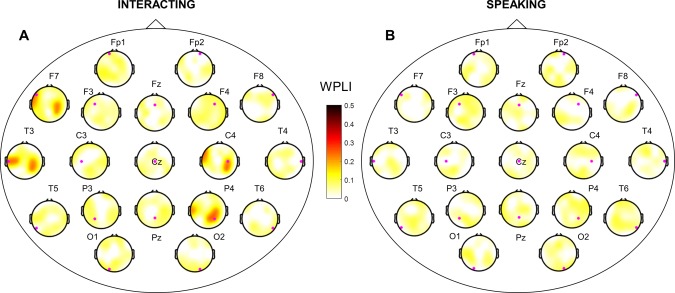

EEG alpha

The ultimate purpose of this study was to determine which brain regions are synchronized between two interacting subjects in specific frequency bands using the EEG/MEG hyperscanning technique. Therefore, we investigated phase synchronization between two brains using WPLI in four spectral bands (theta, alpha, beta, and gamma). Among these frequency bands, we obtained statistically significant phase synchronization only in the alpha band in the EEG data. Note that we do not discuss intrabrain (single brain) phase synchronization here because we observed no significant differences between the two tasks. We obtained grand‐averaged WPLI between two brains after statistical testing (1,000 surrogate data, P < 0.05 with FDR correction) in the two tasks (Fig. 5). Each topography within a large head circle indicates phase synchronization between two brains based on 19 EEG channels in the sensor space. The small magenta dot in each topography marks the seed channel of the initiator, and the topographical color map indicates the WPLI between that seed and each channel of the partner. The left fronto‐temporal and right centro‐parietal regions showed strong phase synchronization (WPLI > 0.3) between two brains in the interacting task for pair‐wise channels, especially at the T3, F7, C4, and P4 channels (Fig. 5A). Synchronization for pair‐wise channels indicates that the same brain regions were synchronized in phase between the two subjects. In addition to pair‐wise channels, we found strong phase synchronization between the left temporal (T3 and F7 channels) and the right centro‐parietal (C4 and P4 channels) regions (Fig. 5A): clear interbrain phase synchronization was observed in the left fronto‐temporal region (T3 and F7 channels) when the seed was in the right centro‐parietal region (C4 and P4 channels). Interbrain synchronization for non‐pair‐wise channels indicates that two different brain regions (channels) were synchronized.
In summary, we found strong interbrain phase synchronization (WPLI > 0.3) in the right centro‐parietal region (C4 and P4 channels) from seeds in the left fronto‐temporal region (T3 and F7 channels) in the interacting task. In contrast, we found relatively weak synchronization in the speaking task (Fig. 5B, WPLI < 0.1). These results suggest that the two brains were synchronized during turn‐taking interaction but not during the noninteracting task.
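
To make the distinction between pair‐wise and non‐pair‐wise channels concrete, the following minimal sketch computes WPLI for every combination of a seed channel from one subject and a channel from the partner, using the WPLI definition |mean Im(S)| / mean |Im(S)| over epochs, where S is the cross‐spectrum. Diagonal entries of the resulting matrix correspond to pair‐wise channels and off‐diagonal entries to non‐pair‐wise channels. The function names, the single‐frequency Fourier coefficient used in place of a full spectral estimate, and the synthetic two‐channel data are assumptions for illustration, not the pipeline used in this study.

```python
import cmath
import math
import random


def fourier_coeff(samples, fs, freq):
    """Complex Fourier coefficient of one epoch at a single frequency (Hz)."""
    return sum(x * cmath.exp(-2j * math.pi * freq * i / fs)
               for i, x in enumerate(samples))


def interbrain_wpli(epochs_a, epochs_b, fs, freq):
    """WPLI for every (channel of subject A, channel of subject B) pair.

    epochs_x[e][c] holds the samples of channel c in epoch e. Entry [i][j] of the
    result is |mean Im(S_ij)| / mean |Im(S_ij)| over epochs, where S_ij is the
    cross-spectrum between channel i of A and channel j of B.
    """
    coef_a = [[fourier_coeff(ch, fs, freq) for ch in ep] for ep in epochs_a]
    coef_b = [[fourier_coeff(ch, fs, freq) for ch in ep] for ep in epochs_b]
    n_a, n_b = len(coef_a[0]), len(coef_b[0])
    matrix = [[0.0] * n_b for _ in range(n_a)]
    for i in range(n_a):
        for j in range(n_b):
            imag = [(ea[i] * eb[j].conjugate()).imag
                    for ea, eb in zip(coef_a, coef_b)]
            denom = sum(abs(v) for v in imag)
            matrix[i][j] = abs(sum(imag)) / denom if denom > 0 else 0.0
    return matrix


# Synthetic data (hypothetical): channel 0 of A and channel 1 of B share a 10 Hz
# rhythm with a fixed phase lag; the remaining channels are independent noise.
rng = random.Random(0)
fs, n_samples, n_epochs = 100, 100, 40
t = [i / fs for i in range(n_samples)]
epochs_a, epochs_b = [], []
for _ in range(n_epochs):
    phase = rng.uniform(0, 2 * math.pi)
    coupled_a = [math.sin(2 * math.pi * 10 * s + phase) + rng.gauss(0, 0.1) for s in t]
    coupled_b = [math.sin(2 * math.pi * 10 * s + phase - math.pi / 3) + rng.gauss(0, 0.1)
                 for s in t]
    noise_a = [rng.gauss(0, 1) for _ in t]
    noise_b = [rng.gauss(0, 1) for _ in t]
    epochs_a.append([coupled_a, noise_a])  # A: channel 0 coupled, channel 1 noise
    epochs_b.append([noise_b, coupled_b])  # B: channel 0 noise, channel 1 coupled

wpli_matrix = interbrain_wpli(epochs_a, epochs_b, fs, 10)
for row in wpli_matrix:
    print([round(v, 2) for v in row])
```

In this toy example the only strongly synchronized entry is the off‐diagonal (non‐pair‐wise) one, which is the analogue of a seed in one region synchronizing with a different region in the partner.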

Figure 5.

Interbrain phase synchronization in EEG alpha band. WPLI for all pairs of synchronization in the two different tasks (interacting, speaking). WPLI across subjects was represented based on statistical testing (1,000 surrogate data, P < 0.05 with FDR correction). (A) and (B) are topographies of interbrain phase synchronization in the interacting and speaking tasks, respectively. Small magenta dots represent seeds from the initiator. [Color figure can be viewed at http://wileyonlinelibrary.com]

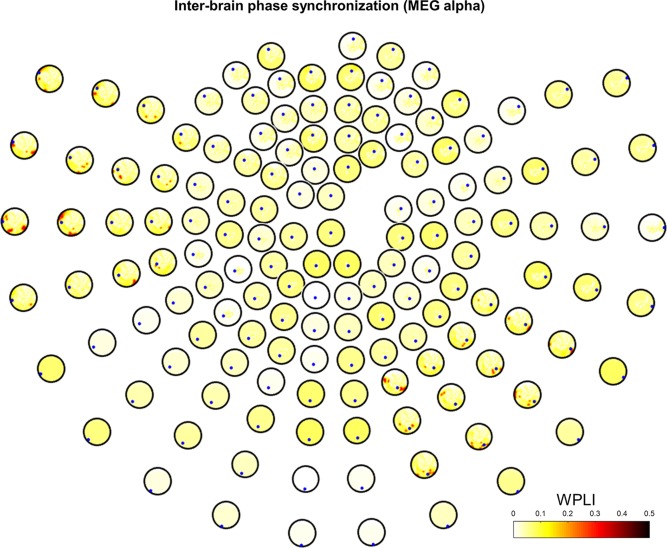

MEG alpha and gamma

As with the EEG data, we calculated WPLI between two brains in the four spectral bands (theta, alpha, beta, and gamma) in the MEG data, and extracted only the interbrain phase synchronization for all sensor pairs. As with EEG, intrabrain phase synchronization is not discussed here. In the MEG data, we found statistically significant phase synchronization in the alpha and gamma bands. We obtained grand‐averaged interbrain phase synchronization in the alpha band using WPLI in the interacting task (Fig. 6). As described in the Materials and Methods section, 6 of the 152 sensors were removed because of technical issues; thus, we plotted the WPLI topography on 146 sensors. Empty space in sensor space indicates the location of the sensors removed. We found that the left fronto‐temporal and right centro‐parietal regions have strong synchronization for pair‐wise sensors (Fig. 6). In addition, the left fronto‐temporal and right parietal regions were synchronized; several other sensors were synchronized weakly with the left parietal region. The right centro‐parietal region showed clear phase synchronization with the left fronto‐temporal region (WPLI > 0.3). On the other hand, we found relatively weak interbrain phase synchronization in the speaking task (WPLI < 0.1), as shown in Supporting Information, Figure S3.
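
The statistical testing cited throughout these results (1,000 surrogate data, P < 0.05 with FDR correction) can be sketched in two steps: a surrogate‐based p‐value for each channel or sensor pair, followed by the Benjamini–Hochberg procedure across pairs. How the surrogate data were generated is not restated in this section, so the sketch below takes the surrogate WPLI values as given; the function names and all numbers are hypothetical toy values, not results from this study.

```python
def surrogate_p_value(observed, surrogate_values):
    """One-sided p-value: share of surrogate statistics at least as large as the
    observed one, with the +1 correction so that p is never exactly zero."""
    exceed = sum(1 for s in surrogate_values if s >= observed)
    return (exceed + 1) / (len(surrogate_values) + 1)


def benjamini_hochberg(p_values, alpha=0.05):
    """Return one boolean per p-value: True where the test survives FDR control."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    cutoff_rank = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= alpha * rank / m:
            cutoff_rank = rank          # largest rank meeting the BH criterion
    significant = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= cutoff_rank:
            significant[idx] = True
    return significant


# Toy example: one observed WPLI tested against 1,000 surrogate WPLI values.
surrogates = [0.3 * i / 1000 for i in range(1000)]   # all below 0.3
p_single = surrogate_p_value(0.35, surrogates)       # 1/1001, no surrogate exceeds 0.35

# Toy p-values for ten channel pairs, in arbitrary order.
p_values = [0.041, 0.001, 0.205, 0.008, 0.039, 0.042, 0.060, 0.074, 0.212, 0.216]
mask = benjamini_hochberg(p_values, alpha=0.05)
print(p_single, mask)
```

In the toy example only the pairs with p = 0.001 and p = 0.008 survive correction, although five of the ten uncorrected p‐values are below 0.05; this is the effect of controlling the false discovery rate over many channel pairs.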

Figure 6.

Interbrain phase synchronization in MEG alpha band. Grand‐averaged WPLI is represented based on statistical testing (1,000 surrogate data, P < 0.05 with FDR correction) in the interacting task. Small blue dots represent seeds from the initiator. [Color figure can be viewed at http://wileyonlinelibrary.com]

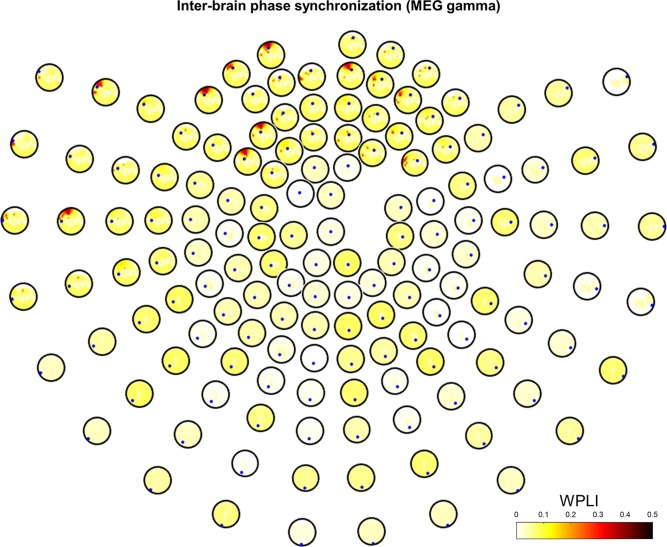

In the gamma band, we obtained statistically significant interbrain phase synchronization in the left temporal and frontal regions for pair‐wise channels during verbal interaction (Fig. 7). Particularly, left frontal phase synchronization (WPLI > 0.4) was much stronger than in any other regions. Phase synchronization in the left temporal region (WPLI > 0.3) was slightly weaker than that in the left frontal, but still maintained strong synchronization compared to other regions. Similar to the alpha band, we also obtained very weak interbrain synchronization in the speaking task (WPLI < 0.1), as shown in Supporting Information, Figure S4. This indicated that there was no interaction in the speaking task, although there may have been some intrabrain synchronization in a single subject. We calculated intrabrain (single brain) phase synchronization in both interacting and speaking tasks, and discovered some phase synchronization, but it was not notable.

Figure 7.

Interbrain phase synchronization in MEG gamma band. Grand‐averaged WPLI is represented based on statistical testing (1,000 surrogate data, P < 0.05 with FDR correction) in the interacting task. Small blue dots represent seeds from the initiator. [Color figure can be viewed at http://wileyonlinelibrary.com]

DISCUSSION

Effects of Subjects' Speaking

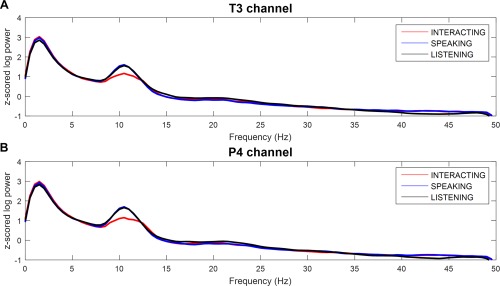

During the turn‐taking verbal interactions, subjects interacted verbally with their partners; jaw movement during speaking therefore contaminated the signal, causing muscle artifacts in the left and right temporal regions [Goncharova et al., 2003]. We adopted the ICA technique using multichannel EEG and MEG to remove these artifacts. After thorough visual inspection of each ICA component, unusual rapid increases in amplitude in the left and right temporal regions and in the frontal region were considered artifacts attributable to jaw movements and eye blinking, respectively, and these components were rejected. As described in Speech Recording, each subject spoke roughly half as many numbers in the interacting task as in the speaking task; this discrepancy might therefore influence the spectral power changes between the interacting and speaking tasks. To clarify this potential effect, we inserted a supplementary task, the listening task, as described in Experimental Procedures, and compared its spectral power to that of the other tasks. In the listening task, subjects were instructed simply to listen to their partner's voice without speaking. Figure 8 shows the grand‐averaged PSD for the left temporal (T3 channel, Fig. 8A) and right centro‐parietal (P4 channel, Fig. 8B) regions in the interacting, speaking, and listening tasks. As this figure illustrates, the PSD in the listening task had an amplitude similar to that in the speaking task, whereas the PSD in the interacting task was lower than in both the speaking and listening tasks. Thus, we believe that the difference in the amount of numbers spoken between the interacting and speaking tasks did not account for the spectral power differences. Furthermore, the differences in oscillations in the left temporal and right centro‐parietal regions provided clear neurophysiological evidence specific to the interacting task.

Figure 8.

Comparison of power spectral density for each condition. (A) Left temporal (T3 channel) and (B) right centro‐parietal (P4 channel) regions in the three different tasks (interacting, speaking, and listening). Power suppression around the alpha band (8–12 Hz) was obtained in the interacting compared to the speaking and listening tasks. [Color figure can be viewed at http://wileyonlinelibrary.com]

EEG Alpha Suppression

Many previous studies have proposed that EEG alpha suppression is a signature of MNS [Frenkel‐Toledo et al., 2014; Hobson and Bishop, 2016; Iacoboni and Dapretto, 2006; Oberman et al., 2007; Perry and Bentin, 2009]. Accordingly, several social interaction studies have referred to MNS because experimental paradigms for social interaction also are very similar to the behavior of MNS as coupled dynamics [Hasson and Frith, 2016]. In the field of social interaction in particular, the phi‐rhythm in the right centro‐parietal region, referred to as the phi complex, has been a neuromarker for visual social coordination [Tognoli et al., 2007]. Since then, many studies have found alpha suppression in the right centro‐parietal region [Dumas et al., 2012b, 2010; Naeem et al., 2012a, 2012b], but several others found that the brain regions suppressed varied according to the types of interaction paradigms. Some examples are left centro‐parietal alpha suppression in joint attention tasks [Lachat et al., 2012], frontal alpha suppression in finger‐tapping tasks [Konvalinka et al., 2014], and central alpha suppression in nonverbal hand movement tasks [Ménoret et al., 2014]. In this study, we also found alpha suppression in the centro‐parietal region, as shown in Figures 2 and 3. Further, we identified alpha suppression in the left temporal region, which is consistent with a previous social interaction study that used speech rhythms [Kawasaki et al., 2013]. Kawasaki and his colleagues attempted to identify brain synchronization between subjects in a human–human condition compared to a human–machine condition. They found theta and alpha synchronization and discussed the fact that these activities are related to short‐term working memory [Jensen et al., 2002; Kawasaki et al., 2010; Klimesch et al., 2008; Sauseng et al., 2009]. We concur with their findings because short‐term working memory plays a major role in turn‐taking verbal interactions. 
To perform the turn‐taking verbal interaction of number counting with partners perfectly, subjects must concentrate on the numbers their partners speak and remember them to repeat them correctly. Therefore, the alpha suppression demonstrated in this work is consistent with the evidence from previous studies of human social interaction.

Hyperscanning MEG Studies

Researchers rarely have used a hyperscanning study with MEG because it is difficult to set up the hardware and synchronize two MEG systems. Baess et al. [2012] first equipped two MEG systems located in two separate sites ∼5 km apart; this connection was synchronized via the internet, and the researchers thus demonstrated that real‐time auditory interaction between two persons is possible. Since then, their research group has succeeded in developing a real‐time audio‐visual link [Zhdanov et al., 2015] and found neural signatures of hand movements in different social roles [Zhou et al., 2016]. In their study, they found a stronger beta modulation in the occipital region among followers than leaders. This finding suggests that there is a specific role difference in the kinematics between leaders and followers. In addition, Hirata et al. [2014] developed a hyperscanning MEG system in a single room, rather than in two different places; this system allowed two subjects to see the visual scene in real‐time. This development has considerable potential in the investigation of interbrain synchronization between subjects, and offers an easier way to conduct various hyperscanning experiments compared to experiments performed in two different locations. These approaches can accelerate the study of hyperscanning MEG, the novel neurophysiological findings of which have played a significant role in neuroimaging studies of social interaction. In our work, we used two custom‐built MEG systems developed by the Korea Research Institute of Standards and Science (KRISS) that were located at Yonsei Severance Hospital and KRISS, respectively. Although there is a great distance between the two sites, we verified systemic synchronization carefully through many tests before the experiments were conducted. We expect that this configuration of a tele‐MEG system may serve as a stepping‐stone to accelerate studies in the field of social interaction.

MEG Gamma Roles in the Left Temporal and Frontal Regions

Many studies have focused on in‐depth investigations of gamma oscillations in humans. Human gamma oscillations could be a major key in understanding neuronal processes such as attention and memory [Jensen et al., 2007; Jokisch and Jensen, 2007]. The functional significance of the gamma band also has been found in the motor cortex with hand movement [Cheyne and Ferrari, 2013; Gaetz et al., 2013; Muthukumaraswamy, 2010], and in the somatosensory cortex with tactile stimulation [Bauer et al., 2006]. Among many other functional roles of the gamma band, speech and auditory processing, including that of language, is one of its dominant functions. Further, neurophysiological evidence of gamma band function has been found in MEG [Basirat et al., 2008; Kaiser et al., 2003; Kingyon et al., 2015; Palva et al., 2002] and ECoG studies [Crone et al., 2001a, 2001b; Pasley et al., 2012; Potes et al., 2014; Towle et al., 2008]. In our study, we found MEG low gamma band oscillations in the left temporal region. In general, the left temporal lobe, especially the left superior temporal gyrus (BA 22), functions typically to support speech perception [Hickok and Poeppel, 2007]. This region is related closely to the conventional Wernicke's area with respect to speech processing [Hickok and Poeppel, 2000, 2004], and left dominant activations were discovered clearly in neuroanatomical studies conducted several decades ago [Démonet et al., 1992; Price et al., 1996; Zatorre et al., 1992]. Many previous studies have found significant brain activations and correlations in the left temporal region, and we found gamma oscillations in the left temporal region in this study as well.

In addition to gamma oscillations in the left temporal region, we also discovered frontal gamma oscillations during the interactions. Frontal gamma oscillations reflect various types of attention, memory, and task performance [Ahn et al., 2013; Benchenane et al., 2011; Jensen et al., 2007], and functional correlations between the frontal and temporal regions have been found in speech motor control tasks using ECoG [Kingyon et al., 2015], although they appeared in the high gamma band (70–150 Hz). These findings, which converged on left‐hemisphere and left‐lateralization dominance in the primary auditory cortex, are referred to as functional asymmetry [Devlin et al., 2003] and are distinct features in auditory processing. We calculated interbrain synchronization using WPLI in the gamma band and found statistically significant differences between the left temporal and frontal regions. Because the turn‐taking verbal interaction performed in this work incorporated both speech processing and working memory, we found correlated brain activations in specific regions related to the task. Most studies in the field of hyperscanning have focused on EEG alpha oscillations [Babiloni and Astolfi, 2014; Koike et al., 2015] because high‐frequency brain activities, such as gamma band activity, are sometimes very difficult to extract from EEG data [Muthukumaraswamy, 2013]. In addition, hyperscanning studies using MEG have been undertaken rarely because of the difficulty of recording two MEG systems simultaneously. Therefore, attempting a hyperscanning study in this work using two MEG systems simultaneously was both unique and innovative. In addition, oscillations and synchronization in the MEG gamma band are novel findings that may improve our understanding of social interactions or turn‐taking verbal interactions.

Limitations and Future Work

In this study, we proposed a social interaction paradigm using verbal communication alone. Naturally, subjects' verbal output must be recorded for behavioral analysis after the experiment. Accordingly, we recorded the subjects' voices during all trials and analyzed them to count the numbers spoken. However, we could not obtain triggers for each spoken number because of the technical limitations of a simultaneous EEG/MEG system. We used custom‐built KRISS MEG and EEG systems for recording, and the experimental paradigm for verbal interaction was run by software that we also developed. This software was able to transmit triggers, including predefined triggers and button presses, to the MEG and EEG recording software. Unfortunately, it does not include any speech perception techniques for generating triggers from voices; therefore, we recorded voices alone to count the numbers spoken. To resolve this problem, we are now developing an algorithm for speech perception and a strategy to transmit its output to the recording systems as digital signals. An alternative method is to use software optimized for designing complicated experiments, such as Presentation® software (Neurobehavioral Systems, Inc., Berkeley, CA, http://www.neurobs.com). At present, we are testing whether this is feasible for more detailed analyses of verbal interaction.

Studies of social interaction have obtained several role‐dependent results, such as those of model vs imitator in hand movement tasks [Dumas et al., 2012a, 2012b, 2010], leader vs follower in finger‐tapping tasks [Konvalinka et al., 2014; Yun et al., 2012], and in natural discussion [Jiang et al., 2015]. In this work, however, we could not address the effects of role, although the subject who commenced each interaction task changed over trials. Because verbal interaction in our experiment was based on turn‐taking communication, no subject had a specific role. Therefore, we could not identify any directionality of interbrain synchronization in this work, and determined WPLI instead, which does not represent directionality between two brains. Future work must consider this in as much detail as possible, as role‐dependent findings can help us understand social interactions in real‐world situations.

Many previous studies have attempted to identify brain connectivity using EEG, MEG, or simultaneous MEG/EEG, and have proposed novel methods to estimate brain connectivity [Friston, 2011]. To identify appropriate approaches for our EEG and MEG data, we surveyed previous connectivity methods thoroughly and calculated functional connectivity using existing approaches. We believe that effective connectivity based on causal information flow is less meaningful in these tasks because it is difficult to identify when each bout of speaking occurred during the interaction task. Thus, we evaluated functional connectivity only. Among the various measures, we were interested in phase synchronization measures that are robust to the volume conduction effects in EEG and MEG. The phase‐locking value has been used commonly to evaluate the phase synchrony in brain signals [Lachaux et al., 1999]. The phase lag index (PLI) was then introduced to minimize robustly the effects of volume conduction and active reference electrodes in EEG [Stam et al., 2007]. More recently, the weighted PLI (WPLI), in which PLI is weighted by the magnitude of the imaginary component of the cross‐spectrum, was proposed to reduce the effects of uncorrelated noise sources and sample size bias [Vinck et al., 2011]. In this work, we evaluated the phase synchronization for our data based on those methods and found stronger and clearer phase synchronization between two brains using WPLI.
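
The practical difference between the three measures can be illustrated with a small simulation. In the sketch below, two signals are built from one shared source: with zero phase lag (as volume conduction or a common reference would produce) the phase‐locking value is close to 1, whereas PLI and WPLI stay low because they depend only on the sign and size of the imaginary part of the cross‐spectrum; with a genuine lag all three measures are high. The simulation works directly on per‐epoch complex Fourier coefficients, and the function names, noise level, and lag are assumptions chosen for the example.

```python
import cmath
import random


def plv(cross):
    """Phase-locking value: length of the mean unit phasor of the phase difference."""
    return abs(sum(s / abs(s) for s in cross)) / len(cross)


def pli(cross):
    """Phase lag index: |mean sign of Im(S)|; insensitive to zero-lag coupling."""
    signs = [(s.imag > 0) - (s.imag < 0) for s in cross]
    return abs(sum(signs)) / len(cross)


def wpli(cross):
    """Weighted PLI: |mean Im(S)| / mean |Im(S)|."""
    imag = [s.imag for s in cross]
    return abs(sum(imag)) / sum(abs(v) for v in imag)


def simulate_cross_spectra(lag, n_epochs=200, noise=0.1, seed=0):
    """Per-epoch cross-spectra of two noisy copies of one source, offset by `lag` rad."""
    rng = random.Random(seed)
    cross = []
    for _ in range(n_epochs):
        src = cmath.exp(1j * rng.uniform(0, 2 * cmath.pi))   # random source phase
        xa = src + complex(rng.gauss(0, noise), rng.gauss(0, noise))
        xb = src * cmath.exp(-1j * lag) + complex(rng.gauss(0, noise), rng.gauss(0, noise))
        cross.append(xa * xb.conjugate())
    return cross


zero_lag = simulate_cross_spectra(lag=0.0)            # mimics a shared, unlagged source
lagged = simulate_cross_spectra(lag=cmath.pi / 4)     # consistent non-zero lag

for name, cross in (("zero lag", zero_lag), ("lagged", lagged)):
    print(name, round(plv(cross), 2), round(pli(cross), 2), round(wpli(cross), 2))
```

The zero‐lag case is the one in which a measure based on phase differences alone can report spurious synchrony, which is the motivation given above for preferring PLI‐type measures.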

The other limitation is the number of participants required to derive strong conclusions based on the results of this study. Most EEG and MEG studies collect data from ∼15–20 participants and extract common oscillations; we collected EEG/MEG data from only 10 participants. However, we designed the verbal interaction paradigm first based on tele‐EEG/MEG systems, and it was therefore difficult to collect data from many participants because of limitations in technology, labor, and time. Further, we believe that our EEG/MEG data contain less noise compared to those in any other studies because we collected the data in a magnetically and electrically shielded room. Therefore, we believe it was appropriate to extract alpha and gamma oscillations from 10 participants to derive consistent results.

CONCLUSIONS

In this work, we designed a novel turn‐taking verbal interaction paradigm using hyperscanning EEG/MEG recording. To the best of our knowledge, our hyperscanning study using simultaneous EEG/MEG is the first to identify the oscillations and interbrain phase synchronization involved in turn‐taking verbal interactions. Further, most existing hyperscanning studies have not addressed interbrain synchronization in the MEG gamma band because of the difficulty in setting up the hardware of two MEG systems. Here we found statistically significant oscillations and interbrain phase synchronization between two subjects performing an interaction task compared to a control task. We also found gamma oscillations and phase synchronization in MEG data, which, to our knowledge, were observed here for the first time in turn‐taking verbal interaction. In conclusion, our proposed approach constitutes a promising tool for in‐depth investigations of social interactions in hyperscanning studies.

Supporting information

Supporting Information Figure 1

Supporting Information Figure 2

Supporting Information Figure 3

Supporting Information Figure 4

Supporting Information Figure

REFERENCES

- Ahn M, Ahn S, Hong JH, Cho H, Kim K, Kim BS, Chang JW, Jun SC (2013): Gamma band activity associated with BCI performance: Simultaneous MEG/EEG study. Front Hum Neurosci 7:848.

- Anders S, Heinzle J, Weiskopf N, Ethofer T, Haynes J‐D (2011): Flow of affective information between communicating brains. NeuroImage 54:439–446.

- Astolfi L, Toppi J, Fallani FDV, Vecchiato G, Salinari S, Mattia D, Cincotti F, Babiloni F (2010): Neuroelectrical hyperscanning measures simultaneous brain activity in humans. Brain Topogr 23:243–256.

- Astolfi L, Toppi J, De Vico Fallani F, Vecchiato G, Cincotti F, Wilke CT, Yuan H, Mattia D, Salinari S, He B, Babiloni F (2011): Imaging the social brain by simultaneous hyperscanning during subject interaction. IEEE Intell Syst 26:38–45.

- Babiloni F, Astolfi L (2014): Social neuroscience and hyperscanning techniques: Past, present and future. Neurosci Biobehav Rev 44:76–93.

- Babiloni F, Cincotti F, Mattia D, Fallani FDV, Tocci A, Bianchi L, Salinari S, Marciani M, Colosimo A, Astolfi L (2007): High Resolution EEG Hyperscanning During a Card Game. 29th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, pp 4957–4960.

- Babiloni F, Cincotti F, Mattia D, Mattiocco M, Fallani FDV, Tocci A, Bianchi L, Marciani MG, Astolfi L (2006): Hypermethods for EEG hyperscanning. 28th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, pp 3666–3669.

- Baess P, Zhdanov A, Mandel A, Parkkonen L, Hirvenkari L, Mäkelä JP, Jousmäki V, Hari R (2012): MEG dual scanning: A procedure to study real‐time auditory interaction between two persons. Front Hum Neurosci 6:83.

- Basirat A, Sato M, Schwartz J‐L, Kahane P, Lachaux J‐P (2008): Parieto‐frontal gamma band activity during the perceptual emergence of speech forms. NeuroImage 42:404–413.

- Bauer M, Oostenveld R, Peeters M, Fries P (2006): Tactile spatial attention enhances gamma‐band activity in somatosensory cortex and reduces low‐frequency activity in parieto‐occipital areas. J Neurosci 26:490–501.

- Bell AJ, Sejnowski TJ (1995): An information‐maximization approach to blind separation and blind deconvolution. Neural Comput 7:1129–1159.

- Benchenane K, Tiesinga PH, Battaglia FP (2011): Oscillations in the prefrontal cortex: A gateway to memory and attention. Curr Opin Neurobiol 21:475–485.

- Benjamini Y, Hochberg Y (1995): Controlling the false discovery rate: A practical and powerful approach to multiple testing. J R Stat Soc Ser B 57:289–300.

- Bilek E, Ruf M, Schäfer A, Akdeniz C, Calhoun VD, Schmahl C, Demanuele C, Tost H, Kirsch P, Meyer‐Lindenberg A (2015): Information flow between interacting human brains: Identification, validation, and relationship to social expertise. Proc Natl Acad Sci 112:5207–5212.

- Bush G, Vogt BA, Holmes J, Dale AM, Greve D, Jenike MA, Rosen BR (2002): Dorsal anterior cingulate cortex: A role in reward‐based decision making. Proc Natl Acad Sci 99:523–528.

- Chartrand TL, Bargh JA (1999): The chameleon effect: The perception–behavior link and social interaction. J Pers Soc Psychol 76:893–910.

- Cheng X, Li X, Hu Y (2015): Synchronous brain activity during cooperative exchange depends on gender of partner: A fNIRS‐based hyperscanning study. Hum Brain Mapp 36:2039–2048.

- Cheyne D, Ferrari P (2013): MEG studies of motor cortex gamma oscillations: Evidence for a gamma “fingerprint” in the brain? Front Hum Neurosci 7.

- Chiu PH, Kayali MA, Kishida KT, Tomlin D, Klinger LG, Klinger MR, Montague PR (2008): Self responses along cingulate cortex reveal quantitative neural phenotype for high‐functioning autism. Neuron 57:463–473.

- Crone NE, Hao L, Hart J, Boatman D, Lesser RP, Irizarry R, Gordon B (2001a): Electrocorticographic gamma activity during word production in spoken and sign language. Neurology 57:2045–2053.

- Crone NE, Boatman D, Gordon B, Hao L (2001b): Induced electrocorticographic gamma activity during auditory perception. Clin Neurophysiol 112:565–582.

- Cui X, Bryant DM, Reiss AL (2012): NIRS‐based hyperscanning reveals increased interpersonal coherence in superior frontal cortex during cooperation. NeuroImage 59:2430–2437.

- Delaherche E, Dumas G, Nadel J, Chetouani M (2015): Automatic measure of imitation during social interaction: A behavioral and hyperscanning‐EEG benchmark. Pattern Recognit Lett 66:118–126.

- Delorme A, Makeig S (2004): EEGLAB: An open source toolbox for analysis of single‐trial EEG dynamics including independent component analysis. J Neurosci Methods 134:9–21.

- Démonet J‐F, Chollet F, Ramsay S, Cardebat D, Nespoulous J‐L, Wise R, Rascol A, Frackowiak R (1992): The anatomy of phonological and semantic processing in normal subjects. Brain 115:1753–1768.

- Devlin JT, Raley J, Tunbridge E, Lanary K, Floyer‐Lea A, Narain C, Cohen I, Behrens T, Jezzard P, Matthews PM, Moore DR (2003): Functional asymmetry for auditory processing in human primary auditory cortex. J Neurosci 23:11516–11522.

- Dommer L, Jäger N, Scholkmann F, Wolf M, Holper L (2012): Between‐brain coherence during joint n‐back task performance: A two‐person functional near‐infrared spectroscopy study. Behav Brain Res 234:212–222.

- Dumas G, Chavez M, Nadel J, Martinerie J (2012a): Anatomical connectivity influences both intra‐ and inter‐brain synchronizations. PLoS One 7:e36414.

- Dumas G, Martinerie J, Soussignan R, Nadel J (2012b): Does the brain know who is at the origin of what in an imitative interaction? Front Hum Neurosci 6:128.

- Dumas G, Nadel J, Soussignan R, Martinerie J, Garnero L (2010): Inter‐brain synchronization during social interaction. PLoS One 5:e12166.

- Fallani FDV, Nicosia V, Sinatra R, Astolfi L, Cincotti F, Mattia D, Wilke C, Doud A, Latora V, He B, Babiloni F (2010): Defecting or not defecting: How to “read” human behavior during cooperative games by EEG measurements. PLoS One 5:e14187.

- Flemming L, Wang Y, Caprihan A, Eiselt M, Haueisen J, Okada Y (2005): Evaluation of the distortion of EEG signals caused by a hole in the skull mimicking the fontanel in the skull of human neonates. Clin Neurophysiol 116:1141–1152.

- Fliessbach K, Weber B, Trautner P, Dohmen T, Sunde U, Elger CE, Falk A (2007): Social comparison affects reward‐related brain activity in the human ventral striatum. Science 318:1305–1308.

- Frenkel‐Toledo S, Bentin S, Perry A, Liebermann DG, Soroker N (2014): Mirror‐neuron system recruitment by action observation: Effects of focal brain damage on mu suppression. NeuroImage 87:127–137.

- Friston KJ (2011): Functional and effective connectivity: A review. Brain Connect 1:13–36.

- Funane T, Kiguchi M, Atsumori H, Sato H, Kubota K, Koizumi H (2011): Synchronous activity of two people's prefrontal cortices during a cooperative task measured by simultaneous near‐infrared spectroscopy. J Biomed Opt 16:77010–77011.

- Gaetz W, Liu C, Zhu H, Bloy L, Roberts TPL (2013): Evidence for a motor gamma‐band network governing response interference. NeuroImage 74.

- Goncharova II, McFarland DJ, Vaughan TM, Wolpaw JR (2003): EMG contamination of EEG: Spectral and topographical characteristics. Clin Neurophysiol 114:1580–1593.

- Gross J, Baillet S, Barnes GR, Henson RN, Hillebrand A, Jensen O, Jerbi K, Litvak V, Maess B, Oostenveld R, Parkkonen L, Taylor JR, van Wassenhove V, Wibral M, Schoffelen J‐M (2013): Good practice for conducting and reporting MEG research. NeuroImage 65:349–363.

- Hari R, Kujala MV (2009): Brain basis of human social interaction: From concepts to brain imaging. Physiol Rev 89:453–479. [DOI] [PubMed] [Google Scholar]

- Hasson U, Frith CD (2016): Mirroring and beyond: Coupled dynamics as a generalized framework for modelling social interactions. Phil Trans R Soc B 371:20150366. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hickok G, Poeppel D (2000): Towards a functional neuroanatomy of speech perception. Trends Cogn Sci 4:131–138. [DOI] [PubMed] [Google Scholar]

- Hickok G, Poeppel D (2004): Dorsal and ventral streams: A framework for understanding aspects of the functional anatomy of language. Cognition 92:67–99. [DOI] [PubMed] [Google Scholar]

- Hickok G, Poeppel D (2007): The cortical organization of speech processing. Nat Rev Neurosci 8:393–402. [DOI] [PubMed] [Google Scholar]

- Hirata M, Ikeda T, Kikuchi M, Kimura T, Hiraishi H, Yoshimura Y, Asada M (2014): Hyperscanning MEG for understanding mother–child cerebral interactions. Front Hum Neurosci 8.

- Hobson HM, Bishop DVM (2016): Mu suppression – A good measure of the human mirror neuron system? Cortex 82:290–310.

- Holper L, Scholkmann F, Wolf M (2012): Between‐brain connectivity during imitation measured by fNIRS. NeuroImage 63:212–222.

- Iacoboni M, Dapretto M (2006): The mirror neuron system and the consequences of its dysfunction. Nat Rev Neurosci 7:942–951.

- Jensen O, Gelfand J, Kounios J, Lisman JE (2002): Oscillations in the alpha band (9–12 Hz) increase with memory load during retention in a short‐term memory task. Cereb Cortex 12:877–882.

- Jensen O, Kaiser J, Lachaux J‐P (2007): Human gamma‐frequency oscillations associated with attention and memory. Trends Neurosci 30:317–324.

- Jiang J, Chen C, Dai B, Shi G, Ding G, Liu L, Lu C (2015): Leader emergence through interpersonal neural synchronization. Proc Natl Acad Sci 112:4274–4279.

- Jiang J, Dai B, Peng D, Zhu C, Liu L, Lu C (2012): Neural synchronization during face‐to‐face communication. J Neurosci 32:16064–16069.

- Jokisch D, Jensen O (2007): Modulation of gamma and alpha activity during a working memory task engaging the dorsal or ventral stream. J Neurosci 27:3244–3251.

- Kaiser J, Ripper B, Birbaumer N, Lutzenberger W (2003): Dynamics of gamma‐band activity in human magnetoencephalogram during auditory pattern working memory. NeuroImage 20:816–827.

- Kashihara K (2014): A brain‐computer interface for potential non‐verbal facial communication based on EEG signals related to specific emotions. Neuroprosthetics 8:244.

- Kawasaki M, Kitajo K, Yamaguchi Y (2010): Dynamic links between theta executive functions and alpha storage buffers in auditory and visual working memory. Eur J Neurosci 31:1683–1689.

- Kawasaki M, Yamada Y, Ushiku Y, Miyauchi E, Yamaguchi Y (2013): Inter‐brain synchronization during coordination of speech rhythm in human‐to‐human social interaction. Sci Rep 3.

- Kennerley SW, Walton ME, Behrens TEJ, Buckley MJ, Rushworth MFS (2006): Optimal decision making and the anterior cingulate cortex. Nat Neurosci 9:940–947.

- King‐Casas B, Tomlin D, Anen C, Camerer CF, Quartz SR, Montague PR (2005): Getting to know you: Reputation and trust in a two‐person economic exchange. Science 308:78–83.

- Kingyon J, Behroozmand R, Kelley R, Oya H, Kawasaki H, Narayanan NS, Greenlee JDW (2015): High‐gamma band fronto‐temporal coherence as a measure of functional connectivity in speech motor control. Neuroscience 305:15–25.

- Klimesch W, Freunberger R, Sauseng P, Gruber W (2008): A short review of slow phase synchronization and memory: Evidence for control processes in different memory systems? Brain Res 1235:31–44.

- Koike T, Tanabe HC, Okazaki S, Nakagawa E, Sasaki AT, Shimada K, Sugawara SK, Takahashi HK, Yoshihara K, Bosch‐Bayard J, Sadato N (2016): Neural substrates of shared attention as social memory: A hyperscanning functional magnetic resonance imaging study. NeuroImage 125:401–412.

- Koike T, Tanabe HC, Sadato N (2015): Hyperscanning neuroimaging technique to reveal the “two‐in‐one” system in social interactions. Neurosci Res 90:25–32.

- Konvalinka I, Bauer M, Stahlhut C, Hansen LK, Roepstorff A, Frith CD (2014): Frontal alpha oscillations distinguish leaders from followers: Multivariate decoding of mutually interacting brains. NeuroImage 94:79–88.

- Kuhlen AK, Allefeld C, Haynes J‐D (2012): Content‐specific coordination of listeners' to speakers' EEG during communication. Front Hum Neurosci 6:266.

- Lachat F, Hugueville L, Lemaréchal J‐D, Conty L, George N (2012): Oscillatory brain correlates of live joint attention: A dual‐EEG study. Front Hum Neurosci 6:156.

- Lachaux J‐P, Rodriguez E, Martinerie J, Varela FJ (1999): Measuring phase synchrony in brain signals. Hum Brain Mapp 8:194–208.

- Lee YH, Yu KK, Kwon H, Kim JM, Kim K, Park YK, Yang HC, Chen KL, Yang SY, Horng HE (2009): A whole‐head magnetoencephalography system with compact axial gradiometer structure. Supercond Sci Technol 22:45023.

- Liao Y, Acar ZA, Makeig S, Deak G (2015): EEG imaging of toddlers during dyadic turn‐taking: Mu‐rhythm modulation while producing or observing social actions. NeuroImage 112:52–60.

- Lindenberger U, Li S‐C, Gruber W, Müller V (2009): Brains swinging in concert: Cortical phase synchronization while playing guitar. BMC Neurosci 10:22.

- Liu N, Mok C, Witt EE, Pradhan AH, Chen JE, Reiss AL (2016): NIRS‐based hyperscanning reveals inter‐brain neural synchronization during cooperative Jenga game with face‐to‐face communication. Front Hum Neurosci 82.

- Liu T, Saito H, Oi M (2015): Role of the right inferior frontal gyrus in turn‐based cooperation and competition: A near‐infrared spectroscopy study. Brain Cogn 99:17–23.

- Ménoret M, Varnet L, Fargier R, Cheylus A, Curie A, des Portes V, Nazir TA, Paulignan Y (2014): Neural correlates of non‐verbal social interactions: A dual‐EEG study. Neuropsychologia 55:85–97.

- Montague PR, Berns GS, Cohen JD, McClure SM, Pagnoni G, Dhamala M, Wiest MC, Karpov I, King RD, Apple N, Fisher RE (2002): Hyperscanning: Simultaneous fMRI during linked social interactions. NeuroImage 16:1159–1164.

- Muthukumaraswamy SD (2010): Functional properties of human primary motor cortex gamma oscillations. J Neurophysiol 104:2873–2885.

- Muthukumaraswamy SD (2013): High‐frequency brain activity and muscle artifacts in MEG/EEG: A review and recommendations. Front Hum Neurosci 7.

- Naeem M, Prasad G, Watson DR, Kelso JAS (2012a): Electrophysiological signatures of intentional social coordination in the 10–12 Hz range. NeuroImage 59:1795–1803.

- Naeem M, Prasad G, Watson DR, Kelso JAS (2012b): Functional dissociation of brain rhythms in social coordination. Clin Neurophysiol 123:1789–1797.

- Nozawa T, Sasaki Y, Sakaki K, Yokoyama R, Kawashima R (2016): Interpersonal frontopolar neural synchronization in group communication: An exploration toward fNIRS hyperscanning of natural interactions. NeuroImage 133:484–497.

- Oberman LM, Pineda JA, Ramachandran VS (2007): The human mirror neuron system: A link between action observation and social skills. Soc Cogn Affect Neurosci 2:62–66.

- Oostenveld R, Fries P, Maris E, Schoffelen J‐M (2010): FieldTrip: Open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Comput Intell Neurosci 2011:e156869.

- Osaka N, Minamoto T, Yaoi K, Azuma M, Shimada YM, Osaka M (2015): How two brains make one synchronized mind in the inferior frontal cortex: fNIRS‐based hyperscanning during cooperative singing. Percept Sci 1811.

- Palva S, Palva JM, Shtyrov Y, Kujala T, Ilmoniemi RJ, Kaila K, Näätänen R (2002): Distinct gamma‐band evoked responses to speech and non‐speech sounds in humans. J Neurosci 22:RC211.

- Pasley BN, David SV, Mesgarani N, Flinker A, Shamma SA, Crone NE, Knight RT, Chang EF (2012): Reconstructing speech from human auditory cortex. PLoS Biol 10:e1001251.

- Pavlova M, Guerreschi M, Lutzenberger W, Krägeloh‐Mann I (2010): Social interaction revealed by motion: Dynamics of neuromagnetic gamma activity. Cereb Cortex 20:2361–2367.

- Perry A, Bentin S (2009): Mirror activity in the human brain while observing hand movements: A comparison between EEG desynchronization in the mu‐range and previous fMRI results. Brain Res 1282:126–132.

- Perry A, Stein L, Bentin S (2011): Motor and attentional mechanisms involved in social interaction—Evidence from mu and alpha EEG suppression. NeuroImage 58:895–904.

- Politis DN, Romano JP (1992): A circular block‐resampling procedure for stationary data. In: LePage R, Billard L, editors. Exploring the Limits of Bootstrap. New York: John Wiley & Sons.

- Politis DN, White H (2004): Automatic block‐length selection for the dependent bootstrap. Econom Rev 23:53–70.

- Potes C, Brunner P, Gunduz A, Knight RT, Schalk G (2014): Spatial and temporal relationships of electrocorticographic alpha and gamma activity during auditory processing. NeuroImage 97:188–195.

- Price CJ, Wise RJS, Warburton EA, Moore CJ, Howard D, Patterson K, Frackowiak RSJ, Friston KJ (1996): Hearing and saying. Brain 119:919–931.

- Rizzolatti G, Craighero L (2004): The mirror‐neuron system. Annu Rev Neurosci 27:169–192.

- Rushworth MFS, Behrens TEJ, Rudebeck PH, Walton ME (2007): Contrasting roles for cingulate and orbitofrontal cortex in decisions and social behaviour. Trends Cogn Sci 11:168–176.

- Saito DN, Tanabe HC, Izuma K, Hayashi MJ, Morito Y, Komeda H, Uchiyama H, Kosaka H, Okazawa H, Fujibayashi Y, Sadato N (2010): “Stay tuned”: Inter‐individual neural synchronization during mutual gaze and joint attention. Front Integr Neurosci 4:127.

- Sanfey AG, Rilling JK, Aronson JA, Nystrom LE, Cohen JD (2003): The neural basis of economic decision‐making in the ultimatum game. Science 300:1755–1758.

- Sänger J, Müller V, Lindenberger U (2012): Intra‐ and interbrain synchronization and network properties when playing guitar in duets. Front Hum Neurosci 6:312.

- Sänger J, Müller V, Lindenberger U (2013): Directionality in hyperbrain networks discriminates between leaders and followers in guitar duets. Front Hum Neurosci 7:234.

- Sauseng P, Klimesch W, Heise KF, Gruber WR, Holz E, Karim AA, Glennon M, Gerloff C, Birbaumer N, Hummel FC (2009): Brain oscillatory substrates of visual short‐term memory capacity. Curr Biol 19:1846–1852.

- Schippers MB, Roebroeck A, Renken R, Nanetti L, Keysers C (2010): Mapping the information flow from one brain to another during gestural communication. Proc Natl Acad Sci 107:9388–9393.

- Spiegelhalder K, Ohlendorf S, Regen W, Feige B, Tebartz van Elst L, Weiller C, Hennig J, Berger M, Tüscher O (2014): Interindividual synchronization of brain activity during live verbal communication. Behav Brain Res 258:75–79.

- Stam CJ, Nolte G, Daffertshofer A (2007): Phase lag index: Assessment of functional connectivity from multi channel EEG and MEG with diminished bias from common sources. Hum Brain Mapp 28:1178–1193.

- Stephens GJ, Silbert LJ, Hasson U (2010): Speaker–listener neural coupling underlies successful communication. Proc Natl Acad Sci 107:14425–14430.

- Tang H, Mai X, Wang S, Zhu C, Krueger F, Liu C (2016): Interpersonal brain synchronization in the right temporo‐parietal junction during face‐to‐face economic exchange. Soc Cogn Affect Neurosci 11:23–32.

- Tognoli E, Lagarde J, DeGuzman GC, Kelso JAS (2007): The phi complex as a neuromarker of human social coordination. Proc Natl Acad Sci 104:8190–8195.

- Tomlin D, Kayali MA, King CB, Anen C, Camerer CF, Quartz SR, Montague PR (2006): Agent‐specific responses in the cingulate cortex during economic exchanges. Science 312:1047–1050.

- Towle VL, Yoon H‐A, Castelle M, Edgar JC, Biassou NM, Frim DM, Spire J‐P, Kohrman MH (2008): ECoG gamma activity during a language task: Differentiating expressive and receptive speech areas. Brain 131:2013–2027.

- Vinck M, Oostenveld R, van Wingerden M, Battaglia F, Pennartz CMA (2011): An improved index of phase‐synchronization for electrophysiological data in the presence of volume‐conduction, noise and sample‐size bias. NeuroImage 55:1548–1565.

- Yun K (2013): On the same wavelength: Face‐to‐face communication increases interpersonal neural synchronization. J Neurosci 33:5081–5082.