Abstract

Human brains spontaneously differentiate between various emotional and neutral stimuli, including written words whose emotional quality is symbolic. In the electroencephalogram (EEG), emotional–neutral processing differences are typically reflected in the early posterior negativity (EPN, 200–300 ms) and the late positive potential (LPP, 400–700 ms). These components are also enlarged by task‐driven visual attention, supporting the assumption that emotional content naturally drives attention. Still, the spatio‐temporal dynamics of interactions between emotional stimulus content and task‐driven attention remain to be specified. Here, we examine this issue in visual word processing. Participants attended to negative, neutral, or positive nouns while high‐density EEG was recorded. Emotional content and top‐down attention both amplified the EPN component in parallel. On the LPP, by contrast, emotion and attention interacted: Explicit attention to emotional words led to a substantially larger amplitude increase than did explicit attention to neutral words. Source analysis revealed early parallel effects of emotion and attention in bilateral visual cortex and a later interaction of both in right visual cortex. Distinct effects of attention were found in inferior, middle and superior frontal, paracentral, and parietal areas, as well as in the anterior cingulate cortex (ACC). Results specify separate and shared mechanisms of emotion and attention at distinct processing stages. Hum Brain Mapp 37:3575–3587, 2016. © 2016 Wiley Periodicals, Inc.

Keywords: EEG/ERP, emotion, attention, words, visual cortex, inferior frontal gyri, anterior cingulate cortex, language

INTRODUCTION

Love is just a word. Although it is a simple array of letters, it has emotional significance. Even though language is abstract and arbitrary, we learn to relate certain meanings to words, which can subsequently differ in their emotional quality. Electroencephalographic (EEG) studies show that our brains differentiate between emotional and neutral words, even when emotion is not directly relevant to the experiment [Kanske et al., 2011; Ortigue et al., 2004; Schacht and Sommer, 2009; Schindler et al., 2014]. Typically, these effects are reflected in an increased early posterior negativity (EPN) and late positive potential (LPP) for emotional words. The EPN is thought to be a highly automatic component that arises at about 200 ms. It has been related to lexical [Kissler and Herbert, 2013] and perceptual tagging [Kissler et al., 2007], as well as to early attention [Schupp et al., 2004a]. The LPP occurs from about 400 ms after word presentation and reflects later stages of attention, stimulus evaluation, and episodic memory encoding [Herbert et al., 2008; Hofmann et al., 2009; Kissler et al., 2006]. Similar EPN and LPP enhancements have been reported for various emotional stimuli, including pictures [Olofsson et al., 2008; Schupp et al., 2007], faces [Schupp et al., 2004b; Wieser et al., 2010], and gestures [Flaisch et al., 2011; Wieser et al., 2014].

Interestingly, there are strong neurophysiological similarities between the effects of emotional content and those of explicit attention instructions. Many EEG studies report a larger EPN both for attended, task‐relevant stimuli [Codispoti et al., 2006; Delorme et al., 2004] and for inherently emotional stimuli [Junghöfer et al., 2001; Schupp et al., 2003, 2006]. Likewise, increased LPP amplitudes have been found for explicitly attended [Azizian et al., 2006; Codispoti et al., 2006] as well as for emotionally significant stimuli [Flaisch et al., 2011; Schupp et al., 2006]. Accordingly, the model of motivated attention states that emotional stimuli guide visual attention, enhancing attention‐sensitive ERP components even during passive processing [Schupp et al., 2006].

Similarly, hemodynamic imaging studies report stronger responses in visual areas for attended compared to unattended stimuli [Coull and Nobre, 1998; Vuilleumier et al., 2001], as well as for emotional compared to neutral pictures [Bradley et al., 2003; Junghöfer et al., 2006; Lang et al., 1998], faces [e.g., Vuilleumier and Pourtois, 2007], and words [Beauregard et al., 1997; Compton, 2003; Herbert et al., 2009; Hoffmann et al., 2015; Isenberg et al., 1999]. In emotional word processing, additional inferior frontal activity has often been reported [Kuchinke et al., 2005; Nakic et al., 2006; Straube et al., 2011], probably reflecting the general involvement of these structures in semantic processing [Lindquist et al., 2012].

Larger visual responses to emotional stimuli could be driven by re‐entrant mechanisms from other brain structures, for instance via bi‐directional projections between the amygdala and the visual cortices [Vuilleumier, 2005]. Accordingly, amygdala activation has been reported to co‐vary with visual activation in the processing of various emotional stimuli such as pictures [Sabatinelli et al., 2005], faces [Vuilleumier et al., 2004], and words [Herbert et al., 2009]. Effects of task‐driven attention in visual cortex, in turn, are often thought to be orchestrated by prefrontal and parietal brain structures [Corbetta and Shulman, 2002; Petersen and Posner, 2012].

Thus, emotional content itself attracts visual attention due to its greater relevance for reproduction and survival [Lang et al., 1997], but basic effects can also be found for stimuli with purely symbolic emotional relevance, such as words. Regarding the electrophysiological dynamics of emotion–attention interactions in picture processing, separate effects of explicit attention and emotion have been found in the EPN time window [Ferrari et al., 2008; Schupp et al., 2007]. For the LPP, Schupp et al. [2007] further identified interactive effects of task‐driven and emotionally motivated attention: the processing of emotional stimuli, as reflected by LPP amplitude, benefited more from explicit attention than did the processing of neutral stimuli. By contrast, Ferrari et al. [2008] reported separate, parallel main effects of attention and emotion on the LPP, with larger LPPs for emotional than for neutral stimuli and attention benefitting emotional and neutral stimuli equally. In visual word processing, separate effects of emotion and attention on both EPN and LPP were found when participants counted either adjectives or nouns in a stream of adjectives and nouns that varied in emotional valence [Kissler et al., 2009].

Of note, the studies by Ferrari et al. [2008] and Kissler et al. [2009] manipulated attention to a class of stimuli (e.g., to pictures of humans or to adjectives/nouns), which itself consisted of emotional and neutral stimuli. Thus, emotional content was not directly task‐relevant in these studies. In contrast, Schupp et al. [2007] directly drew attention to emotional content by instructing participants to count negative, neutral, or positive stimuli. This difference in task relevance of emotional stimuli might explain the different ERP patterns.

Here, we investigated the effect of attention to emotion in visual word processing, separately manipulating the emotional content of nouns and the participants' attention toward these nouns. Crucially, we explicitly directed attention toward the emotional content itself. Participants were requested to count negative, neutral, or positive words, or to simply passively view them (closely resembling the task of Schupp et al.'s [2007] picture processing study). We further aimed to extend previous findings related to the neural basis of motivated attention, and investigated the cortical generators of early and late effects of attention, emotion, and their possible interaction. To this end, we estimated the sources of both EPN and LPP, expecting that emotional words, as well as attended words, would lead to more activity in visual cortex. Since the present task required participants to press a button in response to each detected target, we also expected more task‐related activity in motor areas. We further assumed that for attended words more activity would be observed in fronto‐parietal attention networks. Finally, we tested for other effects of emotion and attention in source space and were particularly interested in determining when and where emotion and attention might interact.

METHODS

Participants

Thirty participants were recruited at the University of Bielefeld. Participants gave written informed consent and received course credit or 10 Euros for participation. Because of large artifacts, five participants had to be excluded. The remaining 25 participants were on average 25.16 years of age (SD = 4.00); 17 of them were female. All participants were right‐handed native German speakers with no reported history of neurological or psychiatric disorders. Screenings with the Beck Depression Inventory (BDI; M = 3.16, SD = 3.48) and the State and Trait Anxiety Inventory (STAI; M = 33.60, SD = 5.98) revealed no clinically relevant depression or anxiety scores. The study protocol was approved by the Ethics Committee of the University of Bielefeld and was in accordance with the Declaration of Helsinki.

Stimuli

Nouns had previously been rated for valence and arousal by 20 other students using the Self‐Assessment Manikin [Bradley and Lang, 1994]. Two hundred and ten nouns (70 negative, 70 neutral, 70 positive) were selected. Negative and positive nouns were matched in arousal, and all three categories were matched in concreteness ratings and in other linguistic properties, such as word length, frequency, familiarity, regularity, and semantic relatedness (see Table 1). Linguistic parameters were assessed using the dlex database, a corpus of the German language that draws on a wide variety of sources and includes more than 100 million written words [Heister et al., 2011]. Semantic relatedness was estimated by the average number of co‐occurring words in the German Wikipedia [Gabrilovich and Markovitch, 2007]. Thus, negative and positive nouns differed only in rated valence, whereas neutral nouns were also lower in arousal than emotional nouns.

Table 1.

Comparisons of negative, neutral, and positive nouns

| Variable | Negative nouns (n = 70) | Neutral nouns (n = 70) | Positive nouns (n = 70) | F (2/147) |
|---|---|---|---|---|
| Valence | 2.86ᵃ (0.94) | 5.11ᵇ (0.44) | 7.06ᶜ (0.77) | 554.86*** |
| Arousal | 5.57ᵃ (1.16) | 2.60ᵇ (0.90) | 5.51ᵃ (1.24) | 163.34*** |
| Concreteness | 3.03 (0.59) | 3.00 (1.00) | 2.87 (0.76) | 0.75 |
| Word length | 7.00 (2.20) | 7.36 (2.34) | 7.16 (2.47) | 0.41 |
| Word frequency (per million) | 11.33 (24.42) | 12.92 (17.44) | 11.44 (15.69) | 0.15 |
| Semantic relatedness (ESA) | 0.18 (0.19) | 0.14 (0.12) | 0.14 (0.12) | 1.86 |
| Familiarity (absolute) | 9,726.31 (20,201.19) | 7,159.44 (13,394.58) | 11,235.01 (24,073.42) | 0.77 |
| Regularity (absolute) | 137.71 (308.78) | 116.29 (171.78) | 130.40 (253.68) | 0.13 |
| Neighbors Coltheart (absolute) | 9.37 (10.98) | 7.64 (11.08) | 10.29 (14.23) | 0.85 |
| Neighbors Levenshtein (absolute) | 14.36 (13.81) | 13.11 (14.39) | 15.89 (18.68) | 0.54 |

***P ≤ 0.001.

Standard deviations appear in parentheses next to the means; means in the same row sharing the same superscript letter do not differ significantly from one another at P ≤ 0.05; means that do not share superscripts differ at P ≤ 0.05 based on LSD test post‐hoc comparisons.

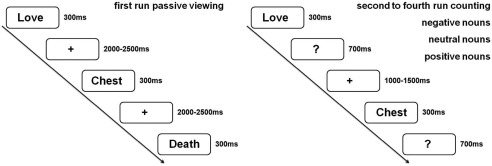

Procedure

Participants first completed the BDI, the STAI, and a demographic questionnaire. They then started with a passive viewing run, during which the negative, neutral, and positive nouns were presented for reading, but explicit attention was not drawn to emotional content (see Fig. 1). Participants were instructed to avoid eye movements and blinks while reading. Nouns were presented for 300 ms, followed by a fixation cross of variable duration (2,000–2,500 ms). After the initial passive viewing run, active runs followed, in which participants were instructed to count nouns of a given valence category, starting with either negative, neutral, or positive nouns. In each run, all 70 negative, 70 neutral, and 70 positive nouns were presented. The sequence of the counting conditions was counterbalanced across participants. In each counting run, all nouns were presented for 300 ms, followed by a question mark presented for 1 s, during which participants could respond. Participants were instructed to press a button in response to all nouns belonging to the given emotional target category. After the response, a fixation cross of variable duration (1,000–1,500 ms) was shown before the next trial started. Stimuli were created and presented using Presentation software (http://www.neurobehavioralsystems.com). The entire experiment took about 90 min. In the middle of each run and after each run, participants could take a self‐paced break.
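The run structure described above can be sketched as a small trial-list generator. This is a hypothetical illustration, not the Presentation script used in the study: the function names, the rule of cycling through the six possible counting orders by participant index, and the uniform sampling of the jittered fixation durations are all assumptions.

```python
import itertools
import random

# Counting-run target categories described in the Procedure.
CATEGORIES = ("negative", "neutral", "positive")
# All 3! = 6 possible orders of the three counting runs.
COUNTING_ORDERS = list(itertools.permutations(CATEGORIES))


def run_order(participant_index):
    """Passive viewing run first, then the three counting runs.

    Hypothetical counterbalancing: participants cycle through the six orders.
    """
    order = COUNTING_ORDERS[participant_index % len(COUNTING_ORDERS)]
    return ["passive"] + [f"count_{category}" for category in order]


def make_run(nouns_by_category, active, rng):
    """Return one shuffled run in which every noun appears exactly once.

    Durations (ms) follow the Procedure; uniform jitter is an assumption.
    """
    trials = [(word, category)
              for category, words in nouns_by_category.items()
              for word in words]
    rng.shuffle(trials)
    schedule = []
    for word, category in trials:
        trial = {"word": word, "category": category, "word_ms": 300}
        if active:
            trial["response_ms"] = 1000                     # question mark / response window
            trial["fixation_ms"] = rng.uniform(1000, 1500)  # jittered fixation, counting runs
        else:
            trial["fixation_ms"] = rng.uniform(2000, 2500)  # jittered fixation, passive run
        schedule.append(trial)
    return schedule


# Example with placeholder words (70 per category, as in the study).
rng = random.Random(0)
nouns = {c: [f"{c}_{i}" for i in range(70)] for c in CATEGORIES}
print(run_order(4))
print(len(make_run(nouns, active=True, rng=rng)))
```

Cycling through all permutations keeps each counting order approximately equally frequent; with 25 participants and six orders, one order necessarily occurs once more than the others.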

Figure 1.

Experimental design. In each run, all nouns were presented (70 negative, 70 neutral, 70 positive nouns). For the analyses, attended nouns were compared to all unattended nouns of a given emotion category (70 vs. 210).

EEG Recording and Pre‐processing

Continuous EEG was recorded from 128 BioSemi active electrodes using BioSemi's ActiView software (http://www.biosemi.com). Four additional electrodes measured horizontal and vertical eye movements. The recording sampling rate was 1,024 Hz. Preprocessing and statistical analyses were performed using SPM8 for EEG data (http://www.fil.ion.ucl.ac.uk/spm/) and EMEGS [http://www.emegs.org/; Peyk et al., 2011]. Offline, data were re‐referenced to the average reference, down‐sampled to 250 Hz, and band‐pass filtered from 0.166 to 30 Hz. Recorded eye movements were subtracted from the EEG data. Filtered data were segmented from 100 ms before to 1,000 ms after stimulus onset, and baseline correction used the 100 ms before stimulus onset. Automatic artifact detection rejected trials exceeding a threshold of 200 µV. Data were averaged using a robust averaging algorithm, which excludes possible further artifacts [Litvak et al., 2011]. Robust averaging down‐weights outliers for each channel and each time point; because artifacts usually corrupt only parts of a trial rather than the whole trial, this preserves more trials than outright rejection. We used the offset of the weighting function recommended by the program developers, which preserves ∼95% of the data points drawn from a random Gaussian distribution [Litvak et al., 2011]. Overall, 6.19% of all electrodes were interpolated, and on average 17.62% of all trials were rejected. Rejection rates did not differ between emotion conditions (F (2,48) = 0.40, P = 0.68, ηp² = 0.02), and there was no interaction between attention and emotion (F (1.57,37.57) = 0.38, P = 0.68, ηp² = 0.02). However, rejection rates differed between trials containing attended and unattended stimuli (F (1,24) = 28.19, P < 0.001, ηp² = 0.54): more trials from passive (20%) than from active runs (12%) had to be rejected due to blinks, alpha activity, or other artifacts.
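A minimal NumPy sketch of some of the steps above (average reference, epoching with baseline correction, threshold-based trial rejection, and a robust average). It is not the SPM8 or EMEGS implementation: filtering and eye-movement correction are omitted, the 200 µV criterion is assumed to apply to absolute amplitude, and the robust average uses a generic Tukey bisquare weighting with an assumed offset of k = 3 robust standard deviations rather than SPM8's exact weighting function.

```python
import numpy as np


def average_reference(data):
    """Re-reference continuous data (channels x samples) to the mean of all channels."""
    return data - data.mean(axis=0, keepdims=True)


def epoch_and_baseline(data, onsets, sfreq=250.0, tmin=-0.1, tmax=1.0):
    """Cut epochs from tmin to tmax (s) around each onset sample, then baseline-correct.

    Returns an array of shape trials x channels x times.
    """
    start = int(round(tmin * sfreq))  # -25 samples at 250 Hz
    stop = int(round(tmax * sfreq))   # 250 samples at 250 Hz
    epochs = np.stack([data[:, o + start:o + stop + 1] for o in onsets])
    n_pre = -start                    # number of pre-stimulus samples
    baseline = epochs[:, :, :n_pre].mean(axis=2, keepdims=True)
    return epochs - baseline


def reject_by_threshold(epochs, threshold_uv=200.0):
    """Drop trials in which any channel exceeds the threshold in absolute amplitude (µV)."""
    keep = np.abs(epochs).max(axis=(1, 2)) <= threshold_uv
    return epochs[keep], keep


def robust_average(epochs, k=3.0, n_iter=10):
    """Tukey-bisquare weighted mean over trials, separately per channel and time point.

    Generic robust estimator; k and n_iter are assumptions, not SPM8 defaults.
    """
    mean = np.median(epochs, axis=0)  # robust starting estimate
    for _ in range(n_iter):
        resid = epochs - mean
        # Robust scale per channel x time point: 1.4826 * median absolute residual.
        scale = 1.4826 * np.median(np.abs(resid), axis=0) + 1e-12
        u = resid / (k * scale)
        # Bisquare weights: 1 at zero residual, falling smoothly to 0 beyond k * scale.
        weights = np.where(np.abs(u) < 1.0, (1.0 - u ** 2) ** 2, 0.0)
        mean = (weights * epochs).sum(axis=0) / (weights.sum(axis=0) + 1e-12)
    return mean
```

Because weights are computed per channel and time point, a transient artifact only removes the affected samples from the average, which is the rationale given above for preferring robust averaging over rejecting whole trials.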

Behavioral Data

Data analysis was conducted using IBM SPSS 20 (http://www.ibm.com/spss). Repeated‐measures ANOVAs were calculated to compare hits and reaction times between negative, neutral, and positive target nouns.

EEG Data Analyses

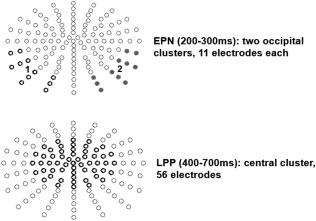

EEG scalp data were statistically analyzed with EMEGS. Two (attention: attended versus unattended) by three (content: positive, negative, neutral) repeated‐measures ANOVAs were set up to investigate main effects of emotion and attention, and their interaction, in time windows and electrode clusters of interest. To this end, the attended nouns of the three counting runs (counting negative, counting neutral, and counting positive) were compared to all unattended nouns of the respective emotion category (unattended nouns in the initial run and in each of the counting runs). To confirm that our results were not biased by the smaller number of attended than unattended nouns (70 vs. 210 each), confirmatory analyses compared attended nouns only with the initial passive viewing run (70 trials per condition); these led to similar results but showed even larger main effects of attention. Time windows of interest were chosen based on previous reports of ERP modulations by attention and emotion as well as on visually conspicuous differences in the ERPs. Partial eta‐squared (ηp²) was estimated to describe effect sizes, where ηp² = 0.02 describes a small, ηp² = 0.13 a medium, and ηp² = 0.26 a large effect [Cohen, 1988]. Time windows were set from 200 to 300 ms to investigate EPN effects [Kissler et al., 2007] and from 400 to 700 ms to investigate LPP effects [Kissler et al., 2009; Schindler et al., 2015a]. For the EPN, two symmetrical occipital clusters were examined. For the LPP, a large symmetrical central cluster was investigated (see Fig. 2).
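The dependent measure entering these ANOVAs is the mean amplitude within a time window and electrode cluster. The sketch below computes it for one condition average and also shows how partial eta‐squared follows from an F statistic and its degrees of freedom, ηp² = F·df_effect / (F·df_effect + df_error). The function names and the 4 ms sampling grid (250 Hz, −100 to 1,000 ms) are illustrative assumptions; the ANOVAs themselves were computed in EMEGS.

```python
import numpy as np


def window_mean(erp, times_ms, tmin_ms, tmax_ms, channel_idx):
    """Mean amplitude of an ERP (channels x times) in [tmin_ms, tmax_ms] over a channel cluster."""
    times_ms = np.asarray(times_ms)
    in_window = np.flatnonzero((times_ms >= tmin_ms) & (times_ms <= tmax_ms))
    return erp[np.ix_(channel_idx, in_window)].mean()


def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta-squared from an F statistic: F*df_effect / (F*df_effect + df_error)."""
    return f_value * df_effect / (f_value * df_effect + df_error)


# Sampling grid assumed here: 250 Hz, -100 to 1,000 ms (4 ms steps).
times = np.arange(-100, 1001, 4)
EPN_WINDOW = (200, 300)
LPP_WINDOW = (400, 700)

print(round(partial_eta_squared(40.58, 2, 48), 2))  # EPN, emotion main effect
print(round(partial_eta_squared(8.34, 2, 48), 2))   # LPP, emotion x attention
```

Applied to statistics reported in the Results, the formula reproduces the stated effect sizes, for example ηp² ≈ 0.63 for the EPN emotion effect (F (2,48) = 40.58) and ηp² ≈ 0.26 for the LPP interaction (F (2,48) = 8.34).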

Figure 2.

Examined electrode clusters for the EPN and LPP time window.

Source Estimations

Source reconstructions of the likely generators of significant ERP differences were calculated and statistically assessed with SPM8 for EEG [Litvak and Friston, 2008; Lopez et al., 2013], following the procedures recommended by Litvak et al. [2011]. First, a realistic boundary element head model (BEM) was derived from the template head model built into SPM, which is based on a Montreal Neurological Institute (MNI) brain. Electrode positions were then transformed to match the template head, an approach thought to generate reasonable results even when individual subjects' heads differ from the template [Litvak et al., 2011]. Average electrode positions as provided by BioSemi were co‐registered with the cortical mesh template for source reconstruction, and this cortical mesh was used to calculate the forward solution. The inverse solution was then calculated from −100 ms to 1,000 ms relative to word onset. A group inversion [Litvak and Friston, 2008] was computed using the multiple sparse priors algorithm implemented in SPM8. This method allows activated sources to vary in their degree of activity but restricts them to be the same in all subjects [Litvak and Friston, 2008]. Compared to single‐subject inversion, this yields more robust source estimates [Litvak and Friston, 2008; Sohoglu et al., 2012].
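SPM8's multiple sparse priors (MSP) group inversion is a hierarchical Bayesian scheme and is not reproduced here. To illustrate only what an inverse solution computes, namely source activity J that explains the sensor data Y through the forward model (lead field) L, the sketch below uses a different, simpler technique: a Tikhonov-regularized minimum-norm estimate, J = Lᵀ(LLᵀ + λI)⁻¹Y. The function name, the scaling of λ, and the random toy lead field are assumptions.

```python
import numpy as np


def minimum_norm_inverse(leadfield, data, lam=1e-2):
    """Tikhonov-regularized minimum-norm estimate J = L^T (L L^T + reg*I)^-1 Y.

    leadfield: sensors x sources forward model L; data: sensors x times Y.
    reg is lam scaled by the mean eigenvalue of L L^T (an assumed convention).
    Illustrates a classical minimum-norm inverse, not SPM8's MSP algorithm.
    """
    gram = leadfield @ leadfield.T
    n_sensors = gram.shape[0]
    reg = lam * np.trace(gram) / n_sensors
    return leadfield.T @ np.linalg.solve(gram + reg * np.eye(n_sensors), data)


# Toy example: 128 sensors, 2,000 candidate sources, two active sources.
rng = np.random.default_rng(0)
L = rng.standard_normal((128, 2000))
J_true = np.zeros((2000, 10))
J_true[[100, 1500], :] = 1.0
Y = L @ J_true
J_hat = minimum_norm_inverse(L, Y, lam=1e-6)
```

Because there are far more sources than sensors, infinitely many J reproduce Y; the minimum-norm estimate picks the one with the smallest overall power, which tends to spread activity. MSP instead selects among sets of candidate source patterns, and the group variant described above additionally assumes the same active sources in every participant.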

For each analyzed time window in scalp space, three‐dimensional source reconstructions were generated as NIfTI images (voxel size = 2 mm × 2 mm × 2 mm). These images were smoothed with an 8 mm full‐width at half‐maximum (FWHM) Gaussian kernel. Statistical comparisons in source space were performed only for effects that were significant on the scalp. For significant main and interaction effects, post hoc comparisons were calculated to determine the direction of the differences. In line with previous studies, we report differences in source activity for voxels significant at an uncorrected threshold of P < 0.005, with a minimum of 25 significant voxels per cluster [Schindler and Kissler, 2016; Schindler et al., 2015a,b]. Additionally, cluster sizes at an uncorrected threshold of P < 0.001 and at a family‐wise error (FWE) corrected threshold of P < 0.05 are given in all tables. Brain regions were identified using the LONI atlas [http://www.loni.usc.edu; Shattuck et al., 2008].
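The smoothing and cluster-extent criteria above can be sketched with SciPy. This is an illustration under stated assumptions, not SPM8's code: voxel-wise P values are assumed to be available as a 3-D array, the FWHM is converted to a Gaussian standard deviation via FWHM = 2√(2 ln 2)·σ, and clusters are formed with 18-connectivity (an assumption; other connectivity rules change cluster sizes).

```python
import numpy as np
from scipy import ndimage


def fwhm_to_sigma_vox(fwhm_mm=8.0, voxel_mm=2.0):
    """Convert a Gaussian FWHM in mm to a standard deviation in voxels."""
    return fwhm_mm / (2.0 * np.sqrt(2.0 * np.log(2.0))) / voxel_mm


def smooth(img, fwhm_mm=8.0, voxel_mm=2.0):
    """Isotropic Gaussian smoothing of a 3-D source image."""
    return ndimage.gaussian_filter(img, sigma=fwhm_to_sigma_vox(fwhm_mm, voxel_mm))


def cluster_extent_mask(p_map, p_thresh=0.005, min_voxels=25):
    """Keep voxels with p < p_thresh that belong to clusters of >= min_voxels voxels."""
    supra = p_map < p_thresh
    # 18-connectivity (shared faces or edges) is assumed here.
    structure = ndimage.generate_binary_structure(3, 2)
    labels, _ = ndimage.label(supra, structure=structure)
    sizes = np.bincount(labels.ravel())  # voxel count per label
    keep = sizes >= min_voxels
    keep[0] = False                      # label 0 is the sub-threshold background
    return keep[labels]
```

For the parameters reported here (8 mm FWHM, 2 mm voxels), the kernel standard deviation is about 1.7 voxels.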

RESULTS

Behavioral Data

Overall accuracy for counting negative (84%), neutral (88%), and positive nouns (83%) was good. Accuracy did not differ between negative, neutral, and positive target nouns, F (2,48) = 2.11, P = 0.13, ηp² = 0.08. Further, reaction times for counting negative (745 ms), neutral (761 ms), and positive nouns (735 ms) did not differ significantly from each other, F (2,48) = 1.76, P = 0.18, ηp² = 0.08.

ERP Scalp Data

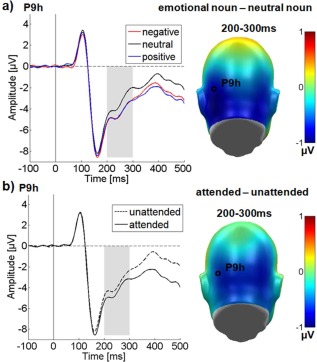

EPN

Over the occipital sensor clusters, significant main effects of attention, F (1,24) = 24.59, P < 0.05, ηp² = 0.51, and of emotional content, F (2,48) = 40.58, P < 0.001, ηp² = 0.63, were found. Post hoc comparisons showed that attended nouns elicited a significantly larger EPN than unattended nouns. Further, negative and positive nouns led to a larger EPN than neutral nouns (Ps < 0.001). The EPN tended to be more negative‐going for positive than for negative nouns (P = 0.07).

There was also a trend‐level effect of channel group, F (1,24) = 3.08, P = 0.09, ηp² = 0.11, with the EPN distribution being somewhat left‐lateralized (see Fig. 3a). Emotion and channel group interacted, F (2,48) = 3.59, P < 0.05, ηp² = 0.13: negative nouns elicited a larger EPN over the left than over the right electrode cluster (P < 0.05), whereas there were no significant laterality differences for neutral (P = 0.13) or positive nouns (P = 0.14). Importantly, there was no significant interaction between attention and emotion in the EPN time window, F (2,48) = 1.40, P = 0.26, ηp² = 0.06, nor any further interaction (Ps > 0.15).

Figure 3.

Results for the occipital electrode cluster in the EPN time window. Representative electrodes showing the time‐course are displayed on the left, difference topographies are displayed on the right. Blue color indicates more negativity and red color more positivity for the difference. (a) Main effect of emotional content. (b) Main effect of attention. [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]

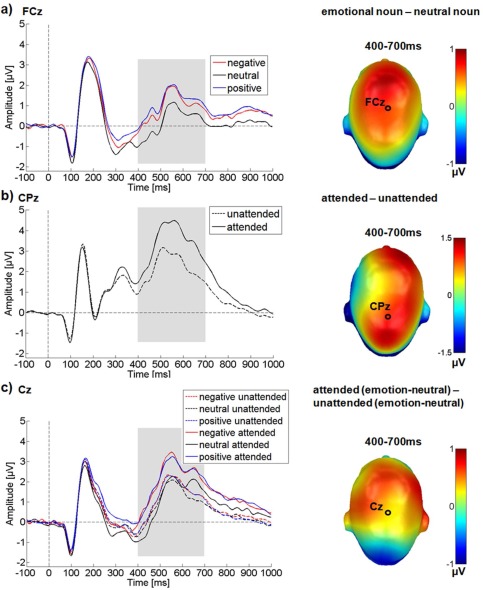

LPP

In the LPP time window, significant main effects of attention, F (1,24) = 19.27, P < 0.001, ηp² = 0.45, and of emotional content, F (2,48) = 37.35, P < 0.001, ηp² = 0.61, were observed over a large central sensor cluster (see Fig. 4).

Figure 4.

Results for the central electrode cluster in the LPP time window. ERP time‐course for selected electrodes is displayed on the left, difference topographies are displayed on the right. Blue color indicates more negativity and red color more positivity for the difference. (a) Main effect of emotional content. (b) Main effect of attention. (c) Interaction between emotion and attention. A selectively enlarged LPP can be observed for attended emotional nouns. [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]

Crucially, attention and emotion interacted significantly in the LPP time window, F (2,48) = 8.34, P = 0.001, ηp² = 0.26.

Post hoc comparisons showed that attended target words were associated with larger LPP amplitudes than unattended ones. For the emotion main effect, both negative and positive nouns elicited larger LPP amplitudes than neutral nouns (P < 0.001), while not differing from each other (P = 0.26). The significant interaction reflects a considerably larger LPP when attention was directed to emotional nouns. ERPs elicited by attended negative nouns (mean difference, attended negative − neutral = 0.58 µV, P < 0.001) and attended positive nouns (mean difference, attended positive − neutral = 0.74 µV, P < 0.001) were significantly larger than those elicited by attended neutral nouns. For unattended nouns, the differences between negative and neutral nouns (mean difference = 0.23 µV, P = 0.001) and between positive and neutral nouns (mean difference = 0.21 µV, P < 0.001) were also significant, but considerably smaller in magnitude than in the attended conditions (see also Fig. 4).
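The interaction described above is a difference of differences: how much larger the emotional–neutral LPP difference is for attended than for unattended nouns. The sketch below applies this arithmetic to the group-level mean differences reported here. It is descriptive only; the inferential test is the emotion × attention interaction in the ANOVA, which operates on per-participant values. The helper name is hypothetical.

```python
# Emotional-minus-neutral LPP mean differences (µV) reported in the text.
REPORTED_DIFFS = {
    "negative": {"attended": 0.58, "unattended": 0.23},
    "positive": {"attended": 0.74, "unattended": 0.21},
}


def attention_gain(attended_diff, unattended_diff):
    """Difference of differences: extra emotional-neutral LPP difference under attention (µV)."""
    return attended_diff - unattended_diff


for valence, diffs in REPORTED_DIFFS.items():
    gain = attention_gain(diffs["attended"], diffs["unattended"])
    ratio = diffs["attended"] / diffs["unattended"]
    print(f"{valence}: +{gain:.2f} µV with attention ({ratio:.1f}x the unattended difference)")
```

With these values, the emotional–neutral difference is roughly 2.5 times (negative) and 3.5 times (positive) larger for attended than for unattended nouns.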

Source Reconstruction

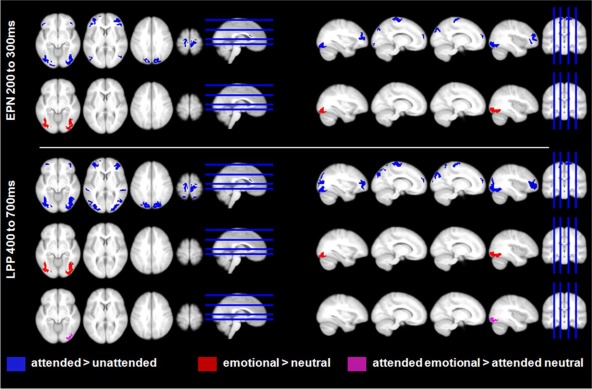

Significant differences in source space were found for both emotion and attention, in both the EPN and LPP time windows. Further, in the LPP time window, a significant interaction between emotion and attention mirrored the significant interaction on the scalp (see Fig. 5). In all comparisons, significant differences reflected larger activations for emotional than for neutral nouns and for attended than for unattended nouns. The reverse comparisons did not yield significant differences, even at a liberal uncorrected threshold of P < 0.01.

Figure 5.

Source estimations for the main effects of attention, emotion, and the interaction of attention with emotion displayed at P < 0.005. Attention (top row in both panels) led to enhanced activity in both the EPN and LPP time window in broad occipital, parietal, frontal, and motor areas, as well as in the ACC. Emotion main effects (second row in both panels) were found mostly in inferior occipital/fusiform regions. The significant interaction (third row, lower panel) was due to more activity for attended emotional nouns compared to attended neutral nouns in right inferior occipital regions. [Color figure can be viewed in the online issue, which is available at http://wileyonlinelibrary.com.]

In the EPN time window, attention led to enhanced activity in broad occipital areas, motor‐related areas, multiple frontal areas, the cuneus, and the anterior cingulate cortex (ACC). In frontal regions, more activation was found in the bilateral inferior, middle, and superior frontal gyri (see Fig. 5, Table 2).

Table 2.

Main effects of attention

| No. of sig. voxels (cluster level) | Peak t (1, 90) | Peak P (unc.) | MNI x (mm) | MNI y (mm) | MNI z (mm) | Area label (LONI) |
|---|---|---|---|---|---|---|
| EPN time window (200–300 ms) | | | | | | |
| 478 (430ᵃ, 207ᵇ) | 5.44 | <0.001 | 46 | 44 | 8 | Inf Frontal G R |
| 625 (563ᵃ, 76ᵇ) | 5.16 | <0.001 | 34 | −90 | −10 | Inf Occipital G R |
| 736 (614ᵃ, 46ᵇ) | 5.14 | <0.001 | −34 | −88 | −14 | Inf Occipital G L |
| 750 (642ᵃ, 94ᵇ) | 5.12 | <0.001 | −38 | 50 | 10 | Mid Frontal G L |
| 71 (58ᵃ) | 4.12 | <0.001 | 2 | 22 | 14 | Cingulate G R |
| 405 (306ᵃ) | 4.00 | <0.001 | 16 | −86 | 36 | Sup Occipital G R |
| 111 (74ᵃ) | 3.79 | <0.001 | −14 | 60 | 18 | Mid Frontal G R |
| 73 (50ᵃ) | 3.76 | <0.001 | 10 | 60 | 22 | Sup Frontal G R |
| 295 (240ᵃ) | 3.75 | <0.001 | −10 | −24 | 74 | Precentral G L |
| 228 (82ᵃ) | 3.74 | <0.001 | 40 | 40 | −4 | Inf Frontal G R |
| 304 (246ᵃ) | 3.71 | <0.001 | 12 | −24 | 70 | Precentral G R |
| 197 | 3.44 | <0.001 | −6 | −86 | 34 | Cuneus L |
| 163 | 3.15 | <0.001 | −44 | −80 | 14 | Mid Occipital G L |
| 69 | 3.02 | <0.001 | 44 | −68 | 12 | Mid Occipital G R |
| LPP time window (400–700 ms) | | | | | | |
| 1593 (1406ᵃ, 601ᵇ) | 7.56 | <0.001 | 38 | −62 | −12 | Fusiform G R |
| 2287 (1350ᵃ, 579ᵇ) | 7.52 | <0.001 | −40 | −64 | −10 | Fusiform G L |
| 784 (742ᵃ, 496ᵇ) | 7.15 | <0.001 | 22 | −84 | 40 | Sup Occipital G R |
| 105 (95ᵃ, 52ᵇ) | 5.59 | <0.001 | 2 | 22 | 14 | Cingulate G R |
| 385 (354ᵃ, 171ᵇ) | 5.33 | <0.001 | −10 | −24 | 74 | Precentral G L |
| 388 (356ᵃ, 174ᵇ) | 5.31 | <0.001 | 10 | −18 | 72 | Precentral G R |
| 92 (58ᵃ) | 4.40 | <0.001 | 16 | −56 | 68 | Sup Parietal G R |
| 49 (38ᵃ) | 4.37 | <0.001 | −16 | −54 | 68 | Sup Parietal G L |
| 650 (544ᵃ) | 4.31 | <0.001 | −32 | 52 | 12 | Mid Frontal G L |
| 719 (507ᵃ) | 4.18 | <0.001 | 44 | 50 | 4 | Inf Frontal G R |
| 95 (55ᵃ) | 3.54 | <0.001 | −62 | −32 | 10 | Sup Temporal G L |
| 101 (60ᵃ) | 3.50 | <0.001 | 58 | −34 | 8 | Sup Temporal G R |
| 25 | 3.42 | <0.001 | −60 | −4 | 16 | Postcentral G L |
| 117 (50ᵃ) | 3.40 | <0.001 | −16 | 58 | 20 | Mid Frontal G L |
| 97 (43ᵃ) | 3.36 | <0.001 | 14 | 62 | 18 | Mid Frontal G R |
| 48 | 3.14 | 0.001 | −56 | −34 | 22 | Supramarginal G L |
| 52 | 3.12 | 0.001 | 56 | −36 | 18 | Supramarginal G R |

Initial cluster sizes are given for P < 0.005 and a cluster extent of at least 25 voxels.

ᵃ Resulting cluster size for a P < 0.001 threshold.

ᵇ Resulting cluster size for an FWE‐corrected threshold of P < 0.05.

No. of sig. voxels = the number of voxels which differ significantly between both conditions. Peak coordinates (x, y, and z) are displayed in MNI space. A cluster may exhibit more than one peak, while only the largest peak is reported. Area = peak brain region as identified by the LONI atlas. R/L = laterality right or left.

G = Gyrus; Inf = inferior, Mid = middle, Sup = superior.

Within significant main effects of attention, post hoc differences were only found for the comparison attended > unattended.

In the LPP time window, stronger activations were additionally observed in the bilateral superior parietal and middle occipital gyri, as well as in the bilateral superior temporal gyri. The task‐related activity increase in pre‐ and postcentral regions most likely reflects the button press, as participants were required to give a motor response during the attention task; enhanced motor activity was therefore expected. By contrast, the stronger activations in broad visual, parietal, frontal, and ACC regions seem to reflect enhanced activity of large‐scale attention networks.

For the emotion main effect, significant differences were found only in visual areas. In the EPN time window, larger activity for emotional words was located in the bilateral inferior and middle occipital gyri, as well as in the bilateral fusiform gyri (see Fig. 5, Table 3). In the LPP time window, enhanced activity for emotional words remained in bilateral fusiform gyri.

Table 3.

Main effects of emotion

| No. of sig. voxels (cluster level) | Peak t (1, 90) | Peak P (unc.) | MNI x (mm) | MNI y (mm) | MNI z (mm) | Area label (LONI) |
|---|---|---|---|---|---|---|
| EPN time window (200–300 ms) | | | | | | |
| 675 (669ᵃ, 250ᵇ) | 5.29 | <0.001 | −24 | −86 | −16 | Inf Occipital G L |
| 601 (582ᵃ) | 4.70 | <0.001 | 36 | −90 | −8 | Mid Occipital G R |
| LPP time window (400–700 ms) | | | | | | |
| 694 (694ᵃ, 433ᵇ) | 6.29 | <0.001 | −40 | −64 | −10 | Fusiform G L |
| 734 (722ᵃ, 430ᵇ) | 6.05 | <0.001 | 38 | −62 | −12 | Fusiform G R |

Initial cluster sizes are given for P < 0.005 and a cluster extent of at least 25 voxels.

ᵃ Resulting cluster size for a P < 0.001 threshold.

ᵇ Resulting cluster size for an FWE‐corrected threshold of P < 0.05.

No. of sig. voxels = the number of voxels which differ significantly between both conditions. Peak coordinates (x, y, and z) are displayed in MNI space. A cluster may exhibit more than one peak, while only the largest peak is reported. Area = peak brain region as identified by the LONI atlas. R/L = laterality right or left.

G = Gyrus; Inf = inferior, Mid = middle, Sup = superior.

Within significant main effects of emotion, post hoc differences were only found for the comparison emotional nouns > neutral nouns.

For the significant interaction between emotion and attention in the LPP time window, interaction terms were also calculated in source space. Post hoc comparisons within this interaction revealed differences only between attended emotional and attended neutral nouns, located in the right inferior occipital gyrus (t (1,144) = 5.89, P < 0.001, number of significant voxels = 314 (FWE‐corrected: 313), MNI coordinates x = 30, y = −90, z = −10).

DISCUSSION

This study tested effects of emotion, attention, and their interaction in visual word processing, and localized the respective cortical sources. To this end, we manipulated the emotional content of words and participants' attentional focus on positive, negative, or neutral words. Words are perceptually similar across conditions and acquire their emotional significance exclusively via associative learning. Furthermore, they can be controlled for many parameters of no interest, making them well suited for neuroscience experiments.

Increased EPN and LPP amplitudes were found for both emotional and attended nouns. Crucially, on the LPP we also found an interaction: explicit attention to emotional words led to a substantially larger increase in LPP amplitude than explicit attention to neutral words. Source localization revealed bilateral visual cortex generators of emotion effects, visual and fronto‐parietal generators of attention effects, and right occipital cortex as the site of their interaction.

The ERP results closely resemble those of a similar study on affective picture processing, which reported separate main effects of attention and emotion on the EPN and an additional interaction between both on the LPP [Schupp et al., 2007]. They are less compatible with other findings of parallel, non‐interacting main effects of emotion and attention at both processing stages in word [Kissler et al., 2009] and picture processing [Ferrari et al., 2008]. However, these studies used tasks that directed participants' attention to target categories that implicitly varied in emotional content, but did not draw attention to the emotional content itself. Thus, a focus on emotional content might be necessary to potentiate the LPP, as found here and in the study by Schupp et al. [2007]. As in previous studies, no major differences between attention to positive and negative content were observed [Herbert et al., 2008; Kissler et al., 2009; Schindler et al., 2014].

The EPN was significantly more negative for emotional than for neutral words, as is frequently reported [Kissler and Herbert, 2013; Schacht and Sommer, 2009; Scott et al., 2009]. In source space, this effect resulted from increased visual activations for emotional compared to neutral words, largely confirming previous source reconstructions of emotion effects in word processing [Keuper et al., 2014; Kissler et al., 2007; Schindler et al., 2015a]. In line with previous word processing studies [Kissler et al., 2007], the EPN tended to be left‐lateralized, and there was also a trend for positive words to elicit a larger EPN than negative words. In the absence of a consistent pattern across studies or a significant difference here, the latter trend is hard to interpret. Tentatively, it might be related to a positivity offset in the motivational system [Cacioppo, 2004]: at relatively low levels of arousal, as occur in context‐free word processing studies, affective systems may respond more strongly to positive than to negative stimuli.

In parallel to the emotion effect, a clear attention effect was found: The EPN was larger for attended than for unattended stimuli. Other research has already demonstrated enhanced early posterior negativities for task‐relevant stimuli [Codispoti et al., 2006; Delorme et al., 2004; Holmes et al., 2003], but to the best of our knowledge no previous study has localized the generators of this feature‐based attention effect on the EPN. The present source reconstructions showed visual, paracentral, anterior cingulate (ACC), and frontal effects, as well as some activity in the cuneus. Visual cortex effects are in line with fMRI findings of enhanced visual processing of attended compared to unattended stimuli [Coull and Nobre, 1998; Vuilleumier et al., 2001]. Frontal EPN attention effects were localized in the inferior frontal gyrus (IFG) and superior frontal gyrus (SFG), indicating early prefrontal involvement in feature‐based attention. This is in line with the proposed fronto‐parietal attention networks [Corbetta and Shulman, 2002; Petersen and Posner, 2012]. In word processing in particular, larger activations in the left inferior frontal gyrus have also been found for semantic orienting toward words [Cristescu et al., 2006]. Thus, when participants actively focused on the words' valence, they probably also retrieved more information about their semantic meaning. A previous ERP study of attentional cueing toward negative words found a P2 effect for content cueing [Kanske et al., 2011], supporting effects of affective‐semantic orienting in the P2/EPN time window [see Trauer et al., 2015, for a discussion of the similarity of P2 and EPN effects in word processing].

Superior frontal sources could reflect the evaluative nature of the present task. They have generally been observed in evaluative judgments [Zysset et al., 2002], including evaluation of self‐relevance [Ochsner et al., 2005]. In line with the current localizations, a parallel fMRI study from our group on emotion–attention interactions in auditory word processing revealed practically identical SFG activations (Wegrzyn et al., in preparation).

Sustained ACC activity was also observed for attended words. The ACC is thought to be strongly engaged in attentional and effortful control mechanisms [Pessoa, 2009], and its activity in the present task may reflect participants keeping their focus on the target category throughout the experiment, in particular in the presence of emotional stimuli [see also Kanske et al., 2011]. Finally, since participants had to press a button when counting, we also found enhanced motor‐related activity for the attention task. Thus, already in the EPN window, source localization revealed widespread activity in frontal and visual structures, consistent with frontal structures regulating attentional influences on visual cortex. In parallel, occipital structures, including the fusiform gyri, responded to emotional content.

In the LPP window, larger amplitudes for emotional compared to neutral nouns were confirmed, in line with LPP enlargements for emotional stimuli in general [Flaisch et al., 2011; Kissler et al., 2006; Schindler et al., 2014; Schupp et al., 2006]. Again, stronger visual/occipital generators accounted for emotion main effects. This accords with the model of Motivated Attention, stating that emotional stimuli serve as natural targets due to their high motivational relevance [Lang et al., 1998]. Previous EEG [Sabatinelli et al., 2007] or MEG [Moratti et al., 2011] picture processing studies located generators of emotional LPP enhancements in occipito‐parietal [Moratti et al., 2011; Sabatinelli et al., 2007; Schupp et al., 2007] as well as right prefrontal structures [Moratti et al., 2011]. Here, as well as in our previous studies, localizations of LPP emotion word effects were restricted to occipital structures of the ventral stream, including fusiform regions [Schindler and Kissler, 2016; Schindler et al., 2015a]. While visual processing enhancement by emotion seems consistent across stimuli and is in line with previous fMRI studies [Beauregard et al., 1997; Compton, 2003; Herbert et al., 2009; Hoffmann et al., 2015], stimulus‐dependent effects may exist, with complex pictures activating more dorsal stream regions, whereas words and faces may activate more ventral stream regions [Sabatinelli et al., 2011]. Whether frontal differences are stimulus‐ or task‐dependent, reflect sensitivity differences between MEG and EEG, or differences between localization algorithms remains to be determined.

Attention effects also persisted in the LPP window. In line with other studies, attended nouns led to larger LPP amplitudes [Azizian et al., 2006; Codispoti et al., 2006; Holmes et al., 2003; Kissler et al., 2009]. Source estimations confirmed frontal attention main effects in anterior‐medial parts of the superior frontal gyri, as well as in precentral/supplementary motor areas and the ACC. Additionally, superior parietal sources were observed. Again, the pattern is well in line with activation of fronto‐parietal attention networks [Corbetta and Shulman, 2002; Petersen and Posner, 2012]. Brain stimulation studies have revealed differential involvement of frontal and parietal areas in visual attention [Ruff et al., 2008] and suggest that frontal areas might play a causal role in mediating early attentional processing for successful memory encoding [Zanto et al., 2011]. Further, single‐cell recordings show earlier responses to top‐down attention in frontal than in parietal regions [Buschman and Miller, 2007], and Granger‐causal influences are significantly stronger from frontal to parietal areas than vice versa [Bressler et al., 2008]. Together, these findings suggest that frontal regions precede parietal regions in top‐down attention tasks. In line with this, superior parietal sources were more prominent in the LPP window. Thus, the data indicate fast, task‐driven recruitment of frontal regions, but future studies should investigate these temporal dynamics more closely, comparing different types of attention tasks and using directional analyses.

Importantly, on the LPP, an interaction between emotion and attention was observed, with selectively enlarged amplitudes for attended emotional words. In source space, this interaction arose because, in the right inferior occipital gyrus including fusiform regions, attended emotional words elicited significantly more activity than attended neutral words, whose processing did not benefit equally from attention deployment. A mechanistic explanation of this interaction could be that, at late processing stages, both emotional salience feedback from the amygdala and top‐down signaling from fronto‐parietal attention networks synergistically increase processing in the visual cortex [Pourtois et al., 2013]. In line with this, amygdala responses to task demands seem to occur at later stages than emotional modulations [Pourtois et al., 2010]. This temporal offset might account for the potentiated influence of attention and emotion at late stages, in line with previous findings [Schupp et al., 2007]. Still, it is important to note that the present localization technique did not reveal amygdala activation. This may be due to shortcomings of the localization method or to a true absence of such activity. Source estimations are clearly less sensitive and less accurate than fMRI in localizing differences in subcortical structures [but for correlations of subcortical fMRI activations with LPP amplitude, see Sabatinelli et al., 2013]. Alternatively, local networks in visual cortex might be intrinsically emotion sensitive, with their reactivity being potentiated by attention.

Synergistic effects of emotion and attention were lateralized to right occipital areas. This may appear surprising. However, because of the left‐hemisphere dominance for language in general, the right hemisphere may benefit more from synergies of attention and emotion during word processing. In lateralized lexical decision tasks, the right hemisphere/left visual field shows a larger emotion advantage than the left one, although overall performance is best for the left hemisphere [Graves et al., 1981; Ortigue et al., 2004]. Further, emotional concepts can be selectively spared after left‐hemispheric lesions [Graves et al., 1981; Landis et al., 1982]. Overall, the right hemisphere's residual language capacities may benefit more from explicit attention deployment, whereas left‐hemisphere capacities may already operate close to their maximum.

The stimulus categories used here might limit the generalizability of the results: Following previous research within the dimensional emotion framework [e.g., Cuthbert et al., 2000; Ferrari et al., 2008; Schupp et al., 2007], positive and negative stimuli differed from neutral ones in both valence and arousal, reflecting the typical u‐shaped co‐variation of these two dimensions in subjective appraisals [Bradley and Lang, 1994; Lang et al., 1993]. Accordingly, we interpret our ERP emotion effects as reflecting effects of arousal that are equally inherent in both types of valenced stimuli but require a certain degree of activation in either motivational system. The LPP interaction suggests that attention amplifies these effects during late stages of cortical processing. However, without a full factorial manipulation of valence and arousal, including low‐ and high‐arousing positive, negative, and neutral stimuli, the unique contribution of either dimension cannot be determined conclusively. A recent EEG study on emotion word processing reports unique effects of valence and arousal on the LPP, which further varied with task [Delaney‐Busch et al., 2016], and other findings suggest LPP arousal modulations for valence‐neutral arousing stimuli [Bayer et al., 2012; Recio et al., 2014]. The current design precludes conclusions on these issues. However, it is debated whether all theoretically possible cells in the valence‐by‐arousal space represent meaningful natural categories of emotional stimuli, or whether some might arise from large variability in valence ratings.
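The u‐shaped co‐variation of valence and arousal can be made concrete by regressing arousal ratings on the squared distance of valence from the neutral scale midpoint; a positive slope means that words rated further from neutral in either direction are also rated as more arousing. The Python sketch below is a generic ordinary least‐squares illustration under stated assumptions, not the rating analysis used for the present stimuli: it assumes 9‐point SAM‐style ratings with a neutral midpoint of 5, and the function name `u_shape_fit` and the toy ratings are hypothetical.

```python
from statistics import mean


def u_shape_fit(valence, arousal, midpoint=5.0):
    """Ordinary least-squares fit of arousal = a + b * (valence - midpoint)**2.

    A positive slope b means arousal increases with distance from the
    neutral midpoint (the u-shape). midpoint=5.0 assumes a 9-point
    SAM-style valence scale (an assumption, not taken from the study).
    """
    x = [(v - midpoint) ** 2 for v in valence]  # squared distance from neutral
    mx, my = mean(x), mean(arousal)
    sxx = sum((xi - mx) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("valence ratings must vary in distance from the midpoint")
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, arousal))
    slope = sxy / sxx
    return my - slope * mx, slope  # (intercept a, slope b)


# Toy ratings, invented for illustration (not the present stimulus norms).
intercept, slope = u_shape_fit([1, 3, 5, 7, 9], [6, 3, 2, 3, 6])
print(intercept, slope)
```

The toy ratings were built from arousal = 2 + 0.25 × (valence − 5)², so the script prints `2.0 0.25`. Because positive and negative words both sit far from the midpoint, a fit like this shows why valence and arousal are confounded unless the design deliberately samples low‐arousing valenced or high‐arousing neutral items.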

CONCLUSION

To summarize, we detected separate main effects of emotion and attention for the EPN and LPP components, as well as an interaction between attention and emotion on the LPP, with the highest amplitudes for attended emotional nouns. The source of the interaction was located in right occipital visual areas. Mirroring the significant scalp interaction, this enhanced visual activity was found only for attended emotional nouns. Moreover, we showed an early, sustained enhancement of visual processing by both attention and emotion. Attention additionally increased activity in frontal and parietal areas, in line with the proposed fronto‐parietal attention networks. We conclude that emotion and attention act independently at early processing stages, whereas at later stages their synergy confers additional processing advantages on the organism. However, this interaction may be restricted to situations in which attention is directed to the emotional category.

ACKNOWLEDGMENTS

The authors declare that they have no conflict of interest with respect to their authorship or the publication of this article. Authors thank Liana Stritz, Maleen Fiddicke and Havva Mayadali for their help with data acquisition and all participants contributing to this study.

REFERENCES

- Azizian A, Freitas AL, Parvaz MA, Squires NK (2006): Beware misleading cues: Perceptual similarity modulates the N2/P3 complex. Psychophysiology 43:253–260. http://doi.org/10.1111/j.1469-8986.2006.00409.x

- Bayer M, Sommer W, Schacht A (2012): P1 and beyond: Functional separation of multiple emotion effects in word recognition. Psychophysiology 49:959–969.

- Beauregard M, Chertkow H, Bub D, Murtha S, Dixon R, Evans A (1997): The neural substrate for concrete, abstract, and emotional word lexica: A positron emission tomography study. J Cogn Neurosci 9:441–461. http://doi.org/10.1162/jocn.1997.9.4.441

- Bradley MM, Lang PJ (1994): Measuring emotion: The self‐assessment manikin and the semantic differential. J Behav Ther Exp Psychiatry 25:49–59.

- Bressler SL, Tang W, Sylvester CM, Shulman GL, Corbetta M (2008): Top‐down control of human visual cortex by frontal and parietal cortex in anticipatory visual spatial attention. J Neurosci 28:10056–10061. http://doi.org/10.1523/JNEUROSCI.1776-08.2008

- Buschman TJ, Miller EK (2007): Top‐down versus bottom‐up control of attention in the prefrontal and posterior parietal cortices. Science 315:1860–1862. http://doi.org/10.1126/science.1138071

- Cacioppo JT (2004): Asymmetries in affect‐laden information processing. In: Jost JT, Banaji MR, Prentice DA, editors. Perspectivism in Social Psychology: The Yin and Yang of Scientific Progress. Washington, DC: American Psychological Association. pp 85–95.

- Codispoti M, Ferrari V, Junghöfer M, Schupp HT (2006): The categorization of natural scenes: Brain attention networks revealed by dense sensor ERPs. NeuroImage 32:583–591. http://doi.org/10.1016/j.neuroimage.2006.04.180

- Compton RJ (2003): The interface between emotion and attention: A review of evidence from psychology and neuroscience. Behav Cogn Neurosci Rev 2:115–129. http://doi.org/10.1177/1534582303002002003

- Corbetta M, Shulman GL (2002): Control of goal‐directed and stimulus‐driven attention in the brain. Nat Rev Neurosci 3:201–215. http://doi.org/10.1038/nrn755

- Coull JT, Nobre AC (1998): Where and when to pay attention: The neural systems for directing attention to spatial locations and to time intervals as revealed by both PET and fMRI. J Neurosci 18:7426–7435.

- Cristescu TC, Devlin JT, Nobre AC (2006): Orienting attention to semantic categories. NeuroImage 33:1178–1187. http://doi.org/10.1016/j.neuroimage.2006.08.017

- Cuthbert BN, Schupp HT, Bradley MM, Birbaumer N, Lang PJ (2000): Brain potentials in affective picture processing: Covariation with autonomic arousal and affective report. Biol Psychol 52:95–111.

- Delaney‐Busch N, Wilkie G, Kuperberg G (2016): Vivid: How valence and arousal influence word processing under different task demands. Cogn Affect Behav Neurosci 1–18. [Epub ahead of print 2016 Jan 29]

- Delorme A, Rousselet GA, Macé MJM, Fabre‐Thorpe M (2004): Interaction of top‐down and bottom‐up processing in the fast visual analysis of natural scenes. Brain Res Cogn Brain Res 19:103–113. http://doi.org/10.1016/j.cogbrainres.2003.11.010

- Ferrari V, Codispoti M, Cardinale R, Bradley MM (2008): Directed and motivated attention during processing of natural scenes. J Cogn Neurosci 20:1753–1761. http://doi.org/10.1162/jocn.2008.20121

- Flaisch T, Häcker F, Renner B, Schupp HT (2011): Emotion and the processing of symbolic gestures: An event‐related brain potential study. Soc Cogn Affect Neurosci 6:109–118.

- Gabrilovich E, Markovitch S (2007): Computing semantic relatedness using Wikipedia‐based explicit semantic analysis. In: Proceedings of the 20th International Joint Conference on Artificial Intelligence. San Francisco, CA, USA: Morgan Kaufmann Publishers Inc. pp 1606–1611. Retrieved from http://dl.acm.org/citation.cfm?id=1625275.1625535

- Graves R, Landis T, Goodglass H (1981): Laterality and sex differences for visual recognition of emotional and non‐emotional words. Neuropsychologia 19:95–102.

- Herbert C, Junghöfer M, Kissler J (2008): Event related potentials to emotional adjectives during reading. Psychophysiology 45:487–498.

- Herbert C, Ethofer T, Anders S, Junghöfer M, Wildgruber D, Grodd W, Kissler J (2009): Amygdala activation during reading of emotional adjectives—An advantage for pleasant content. Soc Cogn Affect Neurosci 4:35–49.

- Hoffmann M, Mothes‐Lasch M, Miltner WHR, Straube T (2015): Brain activation to briefly presented emotional words: Effects of stimulus awareness. Hum Brain Mapp 36:655–665. http://doi.org/10.1002/hbm.22654

- Hofmann MJ, Kuchinke L, Tamm S, Võ MLH, Jacobs AM (2009): Affective processing within 1/10th of a second: High arousal is necessary for early facilitative processing of negative but not positive words. Cogn Affect Behav Neurosci 9:389–397. http://doi.org/10.3758/9.4.389

- Holmes A, Vuilleumier P, Eimer M (2003): The processing of emotional facial expression is gated by spatial attention: Evidence from event‐related brain potentials. Cogn Brain Res 16:174–184. http://doi.org/10.1016/S0926-6410(02)00268-9

- Isenberg N, Silbersweig D, Engelien A, Emmerich S, Malavade K, Beattie B, Leon AC, Stern E (1999): Linguistic threat activates the human amygdala. Proc Natl Acad Sci USA 96:10456–10459.

- Junghöfer M, Bradley MM, Elbert TR, Lang PJ (2001): Fleeting images: A new look at early emotion discrimination. Psychophysiology 38:175–178.

- Kanske P, Plitschka J, Kotz SA (2011): Attentional orienting towards emotion: P2 and N400 ERP effects. Neuropsychologia 49:3121–3129.

- Keuper K, Zwanzger P, Nordt M, Eden A, Laeger I, Zwitserlood P, Kissler J, Junghöfer M, Dobel C (2014): How “love” and “hate” differ from “sleep”: Using combined electro/magnetoencephalographic data to reveal the sources of early cortical responses to emotional words. Hum Brain Mapp 35:875–888.

- Kissler J, Herbert C (2013): Emotion, etmnooi, or emitoon?—Faster lexical access to emotional than to neutral words during reading. Biol Psychol 92:464–479.

- Kissler J, Assadollahi R, Herbert C (2006): Emotional and semantic networks in visual word processing: Insights from ERP studies. Prog Brain Res 156:147–183.

- Kissler J, Herbert C, Peyk P, Junghöfer M (2007): Buzzwords: Early cortical responses to emotional words during reading. Psychol Sci 18:475–480.

- Kissler J, Herbert C, Winkler I, Junghöfer M (2009): Emotion and attention in visual word processing—An ERP study. Biol Psychol 80:75–83.

- Kuchinke L, Jacobs AM, Grubich C, Võ MLH, Conrad M, Herrmann M (2005): Incidental effects of emotional valence in single word processing: An fMRI study. NeuroImage 28:1022–1032. http://doi.org/10.1016/j.neuroimage.2005.06.050

- Landis T, Graves R, Goodglass H (1982): Aphasic reading and writing: Possible evidence for right hemisphere participation. Cortex 18:105–112.

- Lang PJ, Greenwald MK, Bradley MM, Hamm AO (1993): Looking at pictures: Affective, facial, visceral, and behavioral reactions. Psychophysiology 30:261–273.

- Lindquist KA, Wager TD, Kober H, Bliss‐Moreau E, Barrett LF (2012): The brain basis of emotion: A meta‐analytic review. Behav Brain Sci 35:121–143. http://doi.org/10.1017/S0140525X11000446

- Moratti S, Saugar C, Strange BA (2011): Prefrontal‐occipitoparietal coupling underlies late latency human neuronal responses to emotion. J Neurosci 31:17278–17286.

- Nakic M, Smith BW, Busis S, Vythilingam M, Blair RJR (2006): The impact of affect and frequency on lexical decision: The role of the amygdala and inferior frontal cortex. NeuroImage 31:1752–1761. http://doi.org/10.1016/j.neuroimage.2006.02.022

- Ochsner KN, Beer JS, Robertson ER, Cooper JC, Gabrieli JDE, Kihlstrom JF, D'Esposito M (2005): The neural correlates of direct and reflected self‐knowledge. NeuroImage 28:797–814. http://doi.org/10.1016/j.neuroimage.2005.06.069

- Olofsson JK, Nordin S, Sequeira H, Polich J (2008): Affective picture processing: An integrative review of ERP findings. Biol Psychol 77:247–265. http://doi.org/10.1016/j.biopsycho.2007.11.006

- Ortigue S, Michel CM, Murray MM, Mohr C, Carbonnel S, Landis T (2004): Electrical neuroimaging reveals early generator modulation to emotional words. NeuroImage 21:1242–1251.

- Pessoa L (2009): How do emotion and motivation direct executive control? Trends Cogn Sci 13:160–166. http://doi.org/10.1016/j.tics.2009.01.006

- Petersen SE, Posner MI (2012): The attention system of the human brain: 20 years after. Annu Rev Neurosci 35:73–89. http://doi.org/10.1146/annurev-neuro-062111-150525

- Pourtois G, Spinelli L, Seeck M, Vuilleumier P (2010): Temporal precedence of emotion over attention modulations in the lateral amygdala: Intracranial ERP evidence from a patient with temporal lobe epilepsy. Cogn Affect Behav Neurosci 10:83–93. http://doi.org/10.3758/CABN.10.1.83

- Pourtois G, Schettino A, Vuilleumier P (2013): Brain mechanisms for emotional influences on perception and attention: What is magic and what is not. Biol Psychol 92:492–512. http://doi.org/10.1016/j.biopsycho.2012.02.007

- Recio G, Conrad M, Hansen LB, Jacobs AM (2014): On pleasure and thrill: The interplay between arousal and valence during visual word recognition. Brain Lang 134:34–43.

- Ruff CC, Bestmann S, Blankenburg F, Bjoertomt O, Josephs O, Weiskopf N, Deichmann R, Driver J (2008): Distinct causal influences of parietal versus frontal areas on human visual cortex: Evidence from concurrent TMS–fMRI. Cereb Cortex 18:817–827. http://doi.org/10.1093/cercor/bhm128

- Sabatinelli D, Bradley MM, Fitzsimmons JR, Lang PJ (2005): Parallel amygdala and inferotemporal activation reflect emotional intensity and fear relevance. NeuroImage 24:1265–1270. http://doi.org/10.1016/j.neuroimage.2004.12.015

- Sabatinelli D, Lang PJ, Keil A, Bradley MM (2007): Emotional perception: Correlation of functional MRI and event‐related potentials. Cereb Cortex 17:1085–1091.

- Sabatinelli D, Fortune EE, Li Q, Siddiqui A, Krafft C, Oliver WT, Beck S, Jeffries J (2011): Emotional perception: Meta‐analyses of face and natural scene processing. NeuroImage 54:2524–2533. http://doi.org/10.1016/j.neuroimage.2010.10.011

- Sabatinelli D, Keil A, Frank DW, Lang PJ (2013): Emotional perception: Correspondence of early and late event‐related potentials with cortical and subcortical functional MRI. Biol Psychol 92:513–519. http://doi.org/10.1016/j.biopsycho.2012.04.005

- Schacht A, Sommer W (2009): Time course and task dependence of emotion effects in word processing. Cogn Affect Behav Neurosci 9:28–43. http://doi.org/10.3758/CABN.9.1.28

- Schindler S, Kissler J (2016): People matter: Perceived sender identity modulates cerebral processing of socio‐emotional language feedback. NeuroImage 134:160–169. http://doi.org/10.1016/j.neuroimage.2016.03.052

- Schindler S, Wegrzyn M, Steppacher I, Kissler JM (2014): It's all in your head—How anticipating evaluation affects the processing of emotional trait adjectives. Front Psychol 5:1292. http://doi.org/10.3389/fpsyg.2014.01292

- Schindler S, Wegrzyn M, Steppacher I, Kissler J (2015a): Perceived communicative context and emotional content amplify visual word processing in the fusiform gyrus. J Neurosci 35:6010–6019. http://doi.org/10.1523/JNEUROSCI.3346-14.2015

- Schindler S, Wolff W, Kissler JM, Brand R (2015b): Cerebral correlates of faking: Evidence from a brief implicit association test on doping attitudes. Front Behav Neurosci 9:139. http://doi.org/10.3389/fnbeh.2015.00139

- Schupp HT, Junghöfer M, Weike AI, Hamm AO (2003): Emotional facilitation of sensory processing in the visual cortex. Psychol Sci 14:7–13.

- Schupp HT, Junghöfer M, Weike AI, Hamm AO (2004a): The selective processing of briefly presented affective pictures: An ERP analysis. Psychophysiology 41:441–449.

- Schupp HT, Öhman A, Junghöfer M, Weike AI, Stockburger J, Hamm AO (2004b): The facilitated processing of threatening faces: An ERP analysis. Emotion 4:189–200. http://doi.org/10.1037/1528-3542.4.2.189

- Schupp HT, Flaisch T, Stockburger J, Junghöfer M (2006): Emotion and attention: Event‐related brain potential studies. Prog Brain Res 156:31–51.

- Schupp HT, Stockburger J, Codispoti M, Junghöfer M, Weike AI, Hamm AO (2007): Selective visual attention to emotion. J Neurosci 27:1082–1089.

- Scott GG, O'Donnell PJ, Leuthold H, Sereno SC (2009): Early emotion word processing: Evidence from event‐related potentials. Biol Psychol 80:95–104. http://doi.org/10.1016/j.biopsycho.2008.03.010

- Straube T, Sauer A, Miltner WHR (2011): Brain activation during direct and indirect processing of positive and negative words. Behav Brain Res 222:66–72. http://doi.org/10.1016/j.bbr.2011.03.037

- Trauer SM, Kotz SA, Müller MM (2015): Emotional words facilitate lexical but not early visual processing. BMC Neurosci 16:89. http://doi.org/10.1186/s12868-015-0225-8

- Vuilleumier P, Pourtois G (2007): Distributed and interactive brain mechanisms during emotion face perception: Evidence from functional neuroimaging. Neuropsychologia 45:174–194.

- Vuilleumier P, Armony JL, Driver J, Dolan RJ (2001): Effects of attention and emotion on face processing in the human brain: An event‐related fMRI study. Neuron 30:829–841.

- Vuilleumier P, Richardson MP, Armony JL, Driver J, Dolan RJ (2004): Distant influences of amygdala lesion on visual cortical activation during emotional face processing. Nat Neurosci 7:1271–1278. http://doi.org/10.1038/nn1341

- Wieser MJ, Pauli P, Reicherts P, Mühlberger A (2010): Don't look at me in anger! Enhanced processing of angry faces in anticipation of public speaking. Psychophysiology 47:271–280.

- Wieser MJ, Flaisch T, Pauli P (2014): Raised middle‐finger: Electrocortical correlates of social conditioning with nonverbal affective gestures. PLoS One 9:e102937. http://doi.org/10.1371/journal.pone.0102937

- Zanto TP, Rubens MT, Thangavel A, Gazzaley A (2011): Causal role of the prefrontal cortex in top‐down modulation of visual processing and working memory. Nat Neurosci 14:656–661. http://doi.org/10.1038/nn.2773

- Zysset S, Huber O, Ferstl E, von Cramon DY (2002): The anterior frontomedian cortex and evaluative judgment: An fMRI study. NeuroImage 15:983–991. http://doi.org/10.1006/nimg.2001.1008