Abstract

The risky decision-making task (RDT) measures risk-taking in a rat model by assessing preference between a small, safe reward and a large reward with increasing risk of punishment (mild foot shock). It is well-established that dopaminergic drugs modulate risk-taking; however, little is known about how differences in baseline phasic dopamine signaling drive individual differences in risk preference. Here, we used in vivo fixed potential amperometry in male Long-Evans rats to test if phasic nucleus accumbens shell (NACs) dopamine dynamics are associated with risk-taking. We observed a positive correlation between medial forebrain bundle-evoked dopamine release in the NACs and risky decision-making, suggesting that risk-taking is associated with elevated dopamine sensitivity. Moreover, “risk-taking” subjects were found to demonstrate greater phasic dopamine release than “risk-averse” subjects. Risky decision-making also predicted enhanced sensitivity to the dopamine reuptake inhibitor nomifensine, and elevated autoreceptor function. Importantly, this hyperdopaminergic phenotype was selective for risky decision-making, as delay discounting performance was not predictive of phasic dopamine release or dopamine supply. These data identify phasic NACs dopamine release as a possible therapeutic target for alleviating the excessive risk-taking observed across multiple forms of psychopathology.

Subject terms: Neurotransmitters, Predictive markers, Brain

Introduction

Multiple factors contribute to transformation of reward value during economic decision-making. For example, some rewards are accompanied by risk of an aversive event, which “discounts” the value of the reward [1, 2]. Excessive risky decision-making is prevalent in substance use disorder (SUD) [3–6]. Therefore, understanding the neurobiological factors that drive individual differences in decision-making may have utility for precise medical treatment for vulnerable individuals.

The risky decision-making task (RDT) models risk-taking in rats by measuring preference for a small, safe reward or a large reward accompanied by the risk of foot shock [1]. Importantly, risk-taking in RDT is independent of general motivation and pain tolerance/shock sensitivity [7, 8]. Individual differences in this task predict several phenotypes associated with vulnerability to SUD, with risk-preferring rats demonstrating elevated cocaine self-administration, impulsive action, nicotine sensitivity, and sign-tracking [9–11]. Therefore, understanding the underpinnings of RDT may reveal biomarkers associated with several SUD endophenotypes.

Dopamine release in the nucleus accumbens (NAC) is a canonical mechanism involved in valuation of rewards and cues [12–14]. Manipulating dopamine transmission alters multiple rodent assessments of risky decision-making, including RDT. Systemic amphetamine administration reduces risky decision-making, whereas cocaine reduces sensitivity to changing risk levels [1, 7, 8]. Chronic exposure to dopaminergic drugs, which causes long-lasting changes in dopamine activity [15], shifts decision-making toward greater risky decision-making [9]. Furthermore, risk-taking in RDT predicts ex vivo dopamine receptor expression in NAC shell (NACs), but not core [7]. However, little is known about how individual differences in risk-taking are related to functional dopamine release dynamics in NACs.

Electrically stimulating projections from medial forebrain bundle (MFB) to NAC mimics biologically relevant phasic dopamine release, a critical component of motivated behavior [16, 17]. Fixed potential amperometry is an ideal neurochemical tool for assessing aspects of dopamine release in vivo, given its high temporal resolution of 10,000 samples/s [18]. Pharmacological studies in both mice and rats have confirmed the recorded current changes in the NAC to be dopamine dependent [19–21]. Here, we characterized rats in RDT, then assessed how individual differences in risk-taking predict evoked NACs dopamine release, supply, autoreceptor function, and sensitivity to the dopamine transporter inhibitor nomifensine. In addition, we compared NACs dopamine release dynamics to delay discounting (DD)/impulsive choice, another form of decision-making associated with SUD vulnerability [22–24].

Materials and methods

Subjects

Male Long-Evans rats aged approximately 90 days old (n = 20) were obtained from Envigo. Rats were pair-housed (unless fighting or food domination was observed) on a 12-h reverse light/dark cycle and food restricted to 90% free feeding weight to increase motivation in behavioral tasks. All experiments were approved by The University of Memphis Institutional Animal Care and Use Committee.

Behavioral apparatus

Behavior was measured in Med Associates modular rat chambers (29.53 × 24.84 × 18.67 cm) equipped with one retractable lever on each side of an illuminable food trough and pellet dispenser, as well as a shock-generating grating (Fairfax, VA). Food trough entries were detected with a 0.635 cm recessed photobeam.

Risky decision-making task

Rats were trained in both RDT and DD to determine individual preference for risky and delayed rewards, with task order counterbalanced across subjects. Shaping was adapted from past experiments [25]. Briefly, rats learned to associate the food trough with food delivery, initiate lever extension with a food trough entry, and press either lever for pellet delivery. Then, rats learned to discriminate between simultaneously presented levers that yielded either large (3 pellet) or small (1 pellet) rewards, with lever identity counterbalanced across subjects.

Upon demonstrating consistent preference for the large reward (>75% choice within a session), rats began training in RDT [see [26] for detailed task description]. Sessions consisted of five blocks of 18 trials, totaling 90 trials per session. All trials began with simultaneous illumination of house and trough lights. Rats had 10 s to initiate a trial via food trough entry, which extinguished the food trough light and extended either one lever (forced choice) or both levers (free choice). Requiring rats to enter the trough located between the levers prior to decision-making reduced the likelihood that an enduring side bias would affect decision-making. The two levers were classified as a safe lever, which resulted in a single food pellet, and a risky lever, which delivered three food pellets with the risk of an immediate 1 s foot shock. Shock probability escalated across blocks (0%, 25%, 50%, 75%, and 100%). Each block began with eight forced choice trials in which a single lever was extended, with four risky and four safe trials presented in pseudorandom order. These trials served to establish the new block/risk level. Forced choice trials were followed by 10 free choice trials, which offered choice between the safe and risky levers. Upon lever press and pellet delivery/shock, levers retracted and the food trough light illuminated. After food was collected or 10 s passed, the food trough and house lights were extinguished and an intertrial interval (ITI, 10 ± 4 s) preceded the next trial. Failure to initiate a trial or press a lever within 10 s of instrumental activation resulted in the trial being marked as an omission and proceeding to the ITI.
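
The block structure described above can be sketched in Python. This is a minimal illustration, not the task software used in the study: the names `build_session` and `risky_outcome` are hypothetical, and drawing each shock independently with the block's probability is an assumption, since the text does not state how shocks were scheduled within a block.

```python
import random

SHOCK_PROBABILITIES = [0.0, 0.25, 0.50, 0.75, 1.0]  # one per block, ascending
FORCED_PER_LEVER = 4  # four risky and four safe forced choice trials per block
FREE_TRIALS = 10      # free choice trials per block


def build_session(rng):
    """Return the 90 trials of one RDT session as a list of dicts."""
    trials = []
    for block, p_shock in enumerate(SHOCK_PROBABILITIES, start=1):
        forced = ["risky"] * FORCED_PER_LEVER + ["safe"] * FORCED_PER_LEVER
        rng.shuffle(forced)  # pseudorandom order of the forced choice trials
        for lever in forced:
            trials.append({"block": block, "type": "forced",
                           "lever": lever, "p_shock": p_shock})
        for _ in range(FREE_TRIALS):
            # lever is None because the rat chooses on free choice trials
            trials.append({"block": block, "type": "free",
                           "lever": None, "p_shock": p_shock})
    return trials


def risky_outcome(p_shock, rng):
    """Risky lever press: always 3 pellets, shock with probability p_shock (assumed independent draw)."""
    return {"pellets": 3, "shock": rng.random() < p_shock}


session = build_session(random.Random(0))
print(len(session))  # 5 blocks x 18 trials
```

Passing in a seeded `random.Random` keeps the pseudorandom forced choice order reproducible across runs, which is convenient when checking a schedule by hand.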

Initially, sessions did not include risk of shock, allowing rats to acquire magnitude discrimination (1 vs. 3 pellets). After subjects demonstrated a minimum of 75% preference for large reward within a session, risk of shock was added. Shock intensity was increased from 0.15 to 0.35 mA in 0.05 mA increments, as using 0.35 mA for the initial shock amplitude can induce an excessive number of omitted trials and a strong bias away from the large reward during acquisition [27]. After reaching 0.35 mA, rats continued training until stable performance was achieved over 5 days, defined as a lack of effect of day or day × block interaction via repeated measures ANOVA. The total percent choice of the risky reward across blocks averaged across the final five sessions served as a measure of risky decision-making.

Impulsive choice

Impulsive choice was assessed using a DD task (modified from [25]). Task shaping was identical to RDT, with rats learning to nose poke for lever extension, lever press for reward, and discriminate between levers producing a large (3 pellet) and small (1 pellet) reward. DD trials were almost identical to RDT trials, except that in the DD task there was no risk of punishment. Instead, the large reward was delivered after a delay that increased throughout the session (0, 4, 8, 16, and 32 s) over 5 blocks of 10 choice trials each. Each choice block was preceded by two forced choice trials with only one lever available to establish changes in delay. For both free and forced choice trials in which rats chose the small reward, a delay identical to that preceding the large reward was added to the ITI after each small reward choice to keep trial length consistent between trial types. Rats required 20 days of training to acquire DD. The total percent choice of the delayed reward over a final 5-day average served as a measure of impulsive choice, or willingness to wait for a larger reward. The sequence of behavioral tasks was counterbalanced across subjects to ensure previous training did not affect behavior. The locations of the large and small reward levers were kept the same between tasks.
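
The delay compensation that keeps trial length equal across choices can be illustrated with a short Python sketch. The function name `trial_timing` is hypothetical, and the 10 s base ITI is an assumption carried over from the RDT description; the text only states that DD trials were almost identical to RDT trials.

```python
DELAYS_S = [0, 4, 8, 16, 32]  # delay to the large reward in blocks 1-5


def trial_timing(block, choice, base_iti_s=10.0):
    """Return the pre-reward delay and ITI for one DD trial.

    A large reward choice waits out the block's delay before delivery.
    A small reward choice is delivered immediately, and the same delay
    is added to the ITI so both trial types last equally long.
    """
    delay = DELAYS_S[block - 1]
    if choice == "large":
        return {"pre_reward_delay_s": delay, "iti_s": base_iti_s}
    return {"pre_reward_delay_s": 0, "iti_s": base_iti_s + delay}


for block in range(1, 6):
    large = trial_timing(block, "large")
    small = trial_timing(block, "small")
    print(block, large, small)
```

Because total trial time is matched, choosing the small reward never shortens the session, so preference for it cannot be explained by a higher overall reward rate.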

Fixed potential amperometry

Phasic dopamine release in the NACs was measured using in vivo fixed potential amperometry. Each recording session lasted approximately 90 min, and all experiments began between noon and 3 PM during the dark cycle. Rats were anesthetized with urethane (1.5 g/kg, i.p.) and then fixed into a stereotaxic frame. Urethane has been shown to produce anesthesia without significantly altering dopamine release or uptake kinetics when compared to awake animals [28]. A concentric bipolar stimulating electrode was placed into the MFB (AP −4.2 mm from bregma, ML +1.8 mm from midline, and DV −7.8 mm from dura [29]) to evoke NAC dopamine release [30, 31]. A combination reference and auxiliary electrode was placed in cortical contact contralateral to the stimulating electrode. Finally, a carbon fiber recording microelectrode (active recording surface of 500 µm length × 7 µm o.d.) was placed in NACs (AP +1.6, ML +0.6, DV −6.6) and received a fixed +0.8 V via the auxiliary electrode. Stimulation parameters varied depending on the aspect of dopamine transmission being measured. Initially, the stimulation protocol consisted of 20 monophasic 0.5 ms duration pulses (800 μA) at 50 Hz every 30 s to establish a baseline response. This stimulation protocol is intended to emulate behaviorally relevant phasic dopamine levels [32]. Importantly, optogenetic studies have determined that a similar phasic activation was sufficient to drive behavioral conditioning, whereas lower frequency stimulation was not [33].

Dopamine autoreceptor sensitivity was assessed by applying a pair of test stimuli (T1 and T2, each 10 pulses at 50 Hz separated by 10 s) to the MFB every 30 s. Five sets of conditioning pulses (1, 5, 10, 20, and 40; 0.5 ms pulse duration at 15 Hz) were delivered prior to T2 with 0.3 s between the end of the conditioning pulse train and T2 [19, 20, 31]. Autoreceptor-mediated inhibition of evoked dopamine release was expressed in terms of change in the amplitude of T2 with respect to T1 for each set of conditioning pulses (i.e., higher autoreceptor sensitivity results in lower amplitude of T2 relative to T1).
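
As a rough sketch of this measure, T2 can be expressed as a percentage of T1 for each conditioning train. The function names and all amplitudes below are hypothetical illustration values, not data from the study, and the exact normalization used in the analysis is an assumption.

```python
CONDITIONING_PULSES = [1, 5, 10, 20, 40]  # pre-pulse counts delivered before T2


def t2_percent_of_t1(t1_amp, t2_amp):
    """Express the T2 response as a percentage of the T1 response."""
    if t1_amp <= 0:
        raise ValueError("T1 amplitude must be positive")
    return 100.0 * t2_amp / t1_amp


def percent_inhibition(t1_amp, t2_amp):
    """Autoreceptor-mediated inhibition: how far T2 falls below T1, in percent."""
    return 100.0 - t2_percent_of_t1(t1_amp, t2_amp)


# Hypothetical amplitudes (arbitrary units): T2 shrinks as pre-pulses increase.
t1 = 2.0
t2_by_pulses = {1: 1.9, 5: 1.7, 10: 1.5, 20: 1.2, 40: 0.9}
for n in CONDITIONING_PULSES:
    print(n, round(percent_inhibition(t1, t2_by_pulses[n]), 1))
```

Normalizing to T1 within each pair lets animals with different absolute release amplitudes be compared on the same scale.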

Upon completion of the autoreceptor sensitivity test, stimulation parameters were reset to 20 pulses at 50 Hz every 30 s. Phasic dopamine release (magnitude of the stimulation-evoked response) and dopamine synaptic half-life (the latency between peak dopamine release and restoration to 50% of baseline) were quantified. Specifically, dopamine half-life was calculated as (time of return to baseline current − time of peak dopamine response)/2 [20, 31]. Next, subjects were injected with the dopamine transporter (DAT) inhibitor nomifensine (10 mg/kg, i.p.) [34], while MFB stimulations continued every 30 s. We then measured dopamine half-life at 20, 40, and 60 min post-nomifensine. The percent change in dopamine half-life from pre- to post-nomifensine provided a measure of susceptibility to the presence of a dopamine reuptake inhibitor [20, 31]. The supply of available dopamine was measured by calculating total dopamine efflux during 3 min of continuous electrical stimulation (9000 pulses at 50 Hz) [19, 31]. This was not intended to approximate physiological levels, but was instead optimized to completely deplete dopamine release, providing a measure of the available dopamine neuronal reserve [19, 31].
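
The half-life and percent-change calculations can be sketched as follows. This is an illustrative reading of the description, assuming half-life is half of the interval from the peak to the first return to baseline; the function names, the tolerance parameter, and the example trace are hypothetical.

```python
def half_life_from_trace(times_s, current, baseline, tol=0.0):
    """Half of the interval between the peak and the first return to baseline.

    Returns None if the signal never returns to baseline after the peak.
    """
    peak_i = max(range(len(current)), key=lambda i: current[i])
    for i in range(peak_i + 1, len(current)):
        if current[i] <= baseline + tol:
            return (times_s[i] - times_s[peak_i]) / 2.0
    return None


def percent_change(pre, post):
    """Percent change from the pre-drug to the post-drug value."""
    return 100.0 * (post - pre) / pre


# Hypothetical evoked response sampled every 0.5 s (arbitrary current units).
times = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0]
trace = [0.0, 0.0, 4.0, 2.0, 1.0, 0.0, 0.0]
pre = half_life_from_trace(times, trace, baseline=0.0)
print(pre)                         # peak at 1.0 s, return at 2.5 s
print(percent_change(pre, 3.0))    # hypothetical post-drug half-life of 3.0 s

# Pulse count for the supply protocol: 50 Hz for 3 min.
print(50 * 3 * 60)
```

The final line reproduces the pulse count given in the text, since 50 pulses per second over 180 s yields 9000 pulses.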

Following each experiment, an iron deposit was created to mark the stimulating electrode site by sending direct anodic current (100 μA for 10 s) through the electrode. Rats were then euthanized with intracardial injection of urethane (0.345 g/mL). Brains were removed and stored in a 30% sucrose/10% formalin solution with 0.1% potassium ferricyanide, coronal sections were sliced via cryostat, and electrode placements were identified using a light microscope [29]. In vitro electrode calibration was accomplished by exposing each carbon fiber recording microelectrode to known solutions of dopamine (0.2–1.2 μM) via a flow injection system [35, 36].
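
One common way to use such calibration data is a least-squares line relating current to concentration, which is then inverted for recorded currents. The sketch below assumes a linear electrode response; the standard concentrations within the stated 0.2–1.2 μM range, the currents, and the function names are hypothetical and do not come from the study.

```python
def fit_line(x, y):
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def current_to_concentration(current_na, slope, intercept):
    """Invert the calibration line to get concentration (uM) from current (nA)."""
    return (current_na - intercept) / slope


# Hypothetical calibration standards (uM) and measured currents (nA).
standards_um = [0.2, 0.4, 0.6, 0.8, 1.0, 1.2]
currents_na = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0]

slope, intercept = fit_line(standards_um, currents_na)
print(round(slope, 3), round(intercept, 3))
print(round(current_to_concentration(1.55, slope, intercept), 3))
```

Calibrating each electrode separately accounts for differences in sensitivity between individual carbon fibers.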

Statistical analysis

Rats were separated into “Risk-Taking” and “Risk-Averse” groups as determined by a k-means cluster analysis on each subject’s percent choice of large risky reward in each block averaged across the final 5 days of training. This algorithm uses an iterative distance-from-center minimization technique to identify a user-specified number of clusters [37]. Rats were likewise divided into “High-Impulsivity” and “Low-Impulsivity” groups via a similar criterion. Performance in the RDT and in DD was investigated using 2 × 5 repeated measures ANOVAs with risk or impulsivity group as a between-subjects factor and percent chance of shock or delay duration as a within-subjects factor. Pearson correlations were used to test for linear relationships between risk preference and delay preference.
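
The iterative distance-from-center minimization can be illustrated with a small pure-Python k-means for two clusters. This is a sketch, not the software used in the study: the choice profiles are hypothetical, and seeding the centers with the lowest- and highest-mean profiles is an assumption made here to keep the example deterministic.

```python
def sq_dist(p, q):
    """Squared Euclidean distance between two equal-length profiles."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def mean_vec(members):
    """Component-wise mean of a non-empty list of profiles."""
    return [sum(col) / len(members) for col in zip(*members)]


def kmeans(points, centers, max_iter=100):
    """Lloyd's algorithm: assign to nearest center, recompute centers, repeat."""
    labels = []
    for _ in range(max_iter):
        labels = [min(range(len(centers)), key=lambda k: sq_dist(p, centers[k]))
                  for p in points]
        new_centers = []
        for k in range(len(centers)):
            members = [p for p, lab in zip(points, labels) if lab == k]
            new_centers.append(mean_vec(members) if members else centers[k])
        if new_centers == centers:  # assignments have stabilized
            break
        centers = new_centers
    return labels, centers


# Hypothetical percent risky choice in blocks 1-5 for six rats.
profiles = [
    [100, 90, 90, 80, 90], [100, 100, 90, 90, 80], [90, 80, 80, 90, 80],
    [80, 10, 10, 0, 0], [70, 20, 10, 10, 0], [90, 10, 0, 0, 0],
]
overall = lambda p: sum(p) / len(p)
init = [min(profiles, key=overall), max(profiles, key=overall)]
labels, centers = kmeans(profiles, init)
print(labels)  # label 0 = lower-risk cluster, label 1 = higher-risk cluster
```

Clustering on the full five-block profile, rather than on a single summary score, lets the shape of each rat's discounting curve contribute to group assignment.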

Regarding amperometric recordings, independent samples t-tests were used to compare baseline dopamine release, half-life, and supply between risk and impulsivity groups. Differences in autoreceptor-mediated dopamine inhibition were examined using repeated measures ANOVAs with risk group as a between-subjects factor and number of conditioning pulses (0, 1, 5, 10, 20, 40) as a within-subjects factor. Differences in post-drug dopamine half-life were examined using repeated measures ANOVAs with risk or impulsivity group as a between-subjects factor and time (0, 20, 40, 60 min) as a within-subjects factor. Pearson correlations were used to test for linear relationships between measures of dopamine transmission and either risk preference or impulsive choice.
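
For readers who want to check a correlation by hand, the Pearson coefficient and its associated t statistic (with n − 2 degrees of freedom) can be computed as below. The function names and example vectors are hypothetical; obtaining a p value from t would additionally require a t-distribution function from a statistics package, which this sketch omits.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_from_r(r, n):
    """t statistic for testing r against zero, with n - 2 degrees of freedom."""
    return r * math.sqrt(n - 2) / math.sqrt(1.0 - r * r)


# Hypothetical paired measurements for five subjects.
risk_preference = [10.0, 25.0, 40.0, 70.0, 90.0]
evoked_release = [0.30, 0.45, 0.40, 0.80, 0.95]
r = pearson_r(risk_preference, evoked_release)
print(round(r, 3), round(t_from_r(r, len(risk_preference)), 3))
```

The conversion from r to t shows why the same correlation coefficient is more convincing in a larger sample: t grows with the square root of n − 2.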

Results

Risky decision-making

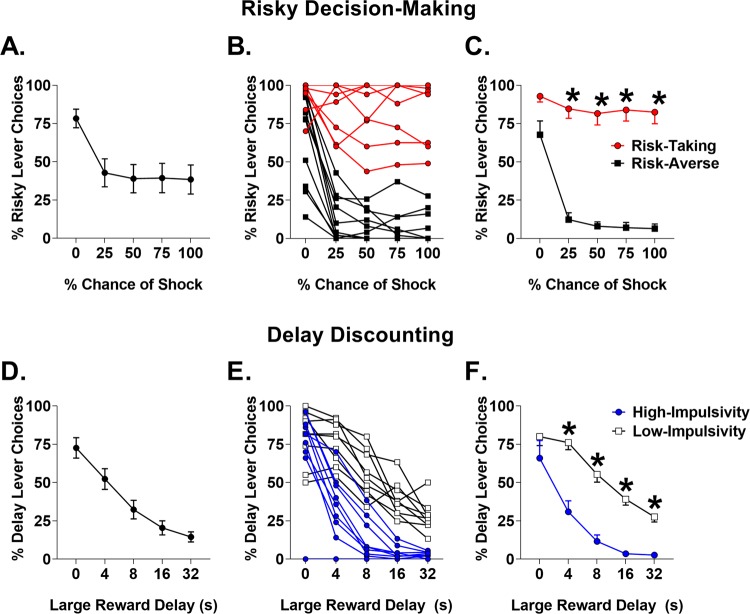

Analyses indicated a significant main effect of test block on the percentage of risky choices (F(4,72) = 19.14, p < 0.001, Fig. 1a), indicating reduced preference for the risky reward as risk level increased. A k-means clustering analysis was used to separate rats into groups based on risky decision-making (averaged across the final five sessions): “risk-taking” rats, which demonstrated preference for the risky choice (n = 8), and “risk-averse” rats, which preferred the safe option (n = 11, Fig. 1b). Percentage of risky choices was greater in risk-taking than risk-averse rats as indicated by a main effect of risk group (F(1,17) = 136.07, p < 0.001; Fig. 1c), and no risk group × block interaction was observed (F(3,51) = 0.27, p = 0.841). Risk-averse rats exhibited lower choice of the large reward in block 1 despite no risk of shock (t(17) = 2.262, p = 0.037). There was a significant effect of risk block, suggesting that rats discounted rewards based on risk level; however, there was no effect of block with block 1 removed (F(3,51) = 1.011, p = 0.395), suggesting limited discrimination between probabilities in blocks 2–5. There were no differences between risk groups in trial omissions or latency to select either reward (Table 1). Finally, RDT vs DD task order had no effect on risky decision-making (F(1,17) = 0.964, p = 0.34), suggesting that previous exposure to DD did not influence risk-taking.

Fig. 1.

Performance on risky decision-making and delay discounting tasks. a Mean percent choice of risky reward across all subjects. b Individual differences in risky decision-making. c Risk-taking vs risk-averse group means. d Mean percent choice of delayed reward. e Individual differences in delay discounting. f High-impulsivity vs low-impulsivity group means. Error bars represent ±SEM. *p < 0.05

Table 1.

No differences were observed between risk or delay groups in omissions or choice latency for large or small reward in either task

| | Risk-taking group comparison | Block × Risk-taking group interaction | Impulsivity group comparison | Block × Impulsivity group interaction |
|---|---|---|---|---|
| Omissions | t(17) = 0.142, p = 0.889 | F(4,68) = 1.040, p = 0.393 | t(17) = 0.862, p = 0.401 | F(4,68) = 1.279, p = 0.277 |
| Large reward choice latency | t(17) = 1.666, p = 0.114 | N/A | t(17) = 0.529, p = 0.604 | N/A |
| Small reward choice latency | t(17) = 1.470, p = 0.160 | N/A | t(17) = 0.082, p = 0.936 | N/A |

There were several blocks with choice of only one type of reward, which prevented assessment of group × block interactions for latency measures for large or small reward choice.

Impulsive choice

Rats shifted choice preference from large to small rewards as delay increased, indicative of DD (F(4,72) = 48.44, p < 0.001, Fig. 1d). A k-means cluster analysis was used to assign rats into low (n = 9) or high impulsive choice (n = 10) phenotypes (Fig. 1e). Percent choice of delayed reward during blocks 2–5 was compared between the high- and low-impulsivity groups, revealing that the high-impulsivity group selected large reward less with increasing delays than the low-impulsivity group (F(1,17) = 33.91, p < 0.001; interaction: F(3,51) = 3.94, p < 0.001; Fig. 1f). There were also no differences between impulsivity groups in latency to select either reward option or in choice omissions (Table 1). As with RDT, task order had no effect on DD (F(1,17) = 0.293, p = 0.595).

There was no correlation between impulsive choice and risk preference (r = 0.13, p = 0.578), suggesting that risk of punishment and delay produce different patterns of reward discounting. Moreover, we observed no difference between risk-taking and risk-averse rats in reward preference during DD (F(1,18) = 0.08, p = 0.775) and no difference between high- and low-impulsive rats in risk-taking on RDT (F(1,18) = 0.02, p = 0.888).

Risk-taking and phasic dopamine dynamics in the nucleus accumbens

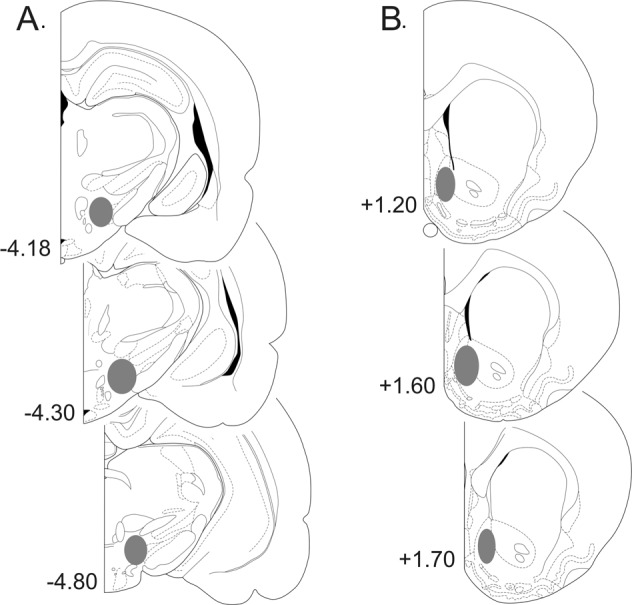

Fixed potential amperometry was used to characterize differences in dopamine release dynamics in the NACs of risk-taking and risk-averse rats. Electrodes were accurately positioned (Fig. 2), and MFB stimulation successfully induced dopamine efflux in all subjects. Additionally, the range of baseline dopamine release in the NACs (0.62 ± 0.08 µM) and the percent increase in intra-NAC dopamine release following nomifensine administration (322.53 ± 60.48%) were similar to previous studies [19, 38].

Fig. 2.

Representative coronal sections of the rat brain (adapted from the atlas of Paxinos and Watson [29]), with gray shaded areas indicating the placements of a stimulating electrodes in the medial forebrain bundle and b amperometric recording electrodes in the nucleus accumbens shell. Numbers correspond to mm from bregma

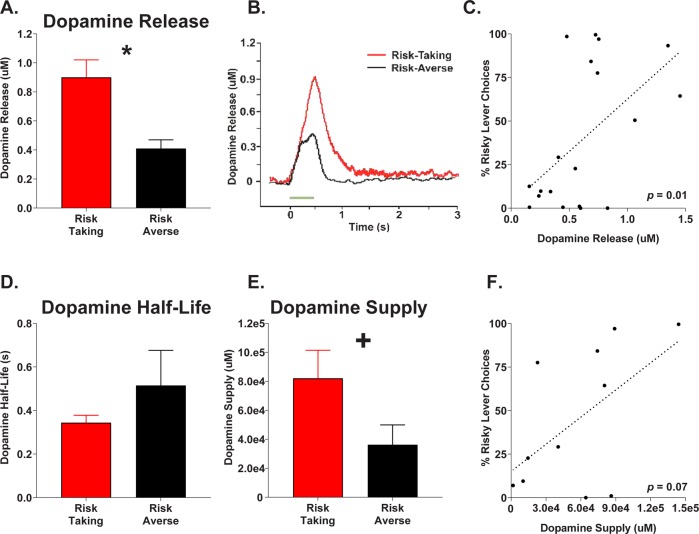

Analyses revealed significantly elevated dopamine release in risk-taking rats relative to risk-averse rats (t(17) = 3.85, p < 0.001; Fig. 3a, b). Pearson correlation analysis revealed that risk preference was positively associated with evoked dopamine release (r = 0.54, p = 0.01; Fig. 3c). On average, risk-taking and risk-averse rats demonstrated similar baseline durations of dopamine half-life (t(17) = −0.89, p = 0.387; Fig. 3d). Dopamine supply was determined by continuously stimulating at 50 Hz for 3 min to evoke the release of all available dopamine in the NAC. Analyses indicated a trend toward increased dopamine supply in risk-taking relative to risk-averse rats (t(9) = 1.99, p = 0.078; Fig. 3e), as well as a near significant positive correlation between risk-taking and dopamine supply (r = 0.56, p = 0.072, Fig. 3f).

Fig. 3.

Nucleus accumbens shell evoked dopamine release and dopamine supply in risk-taking and risk-averse subpopulations. a Mean evoked dopamine release. b Sample evoked dopamine waveforms in risk-taking and risk-averse rats. c Correlation between total risk preference and dopamine release across all subjects. d Mean dopamine half-life in risk subpopulations. e Mean dopamine supply in risk subpopulations. f Correlation between risk preference and dopamine supply. Error bars represent ±SEM. *p < 0.05; +p < 0.08

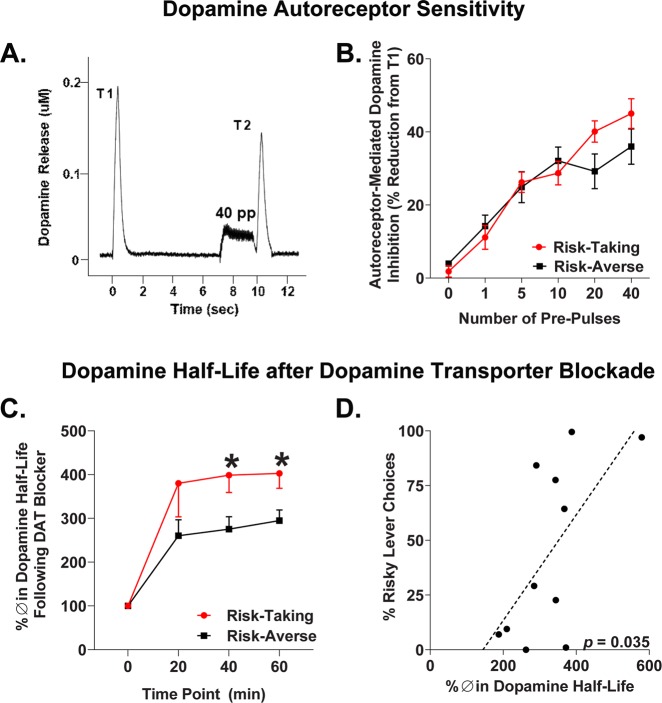

Autoreceptor-mediated inhibition of evoked dopamine release was expressed in terms of the percentage change between test stimulations (T1 and T2) for each set of conditioning pulses (or pre-pulses). With 0 pre-pulses, T2 was near 100% of T1 (no change between T1 and T2); a larger percent reduction of T2 relative to T1 indicates greater autoreceptor activity (Fig. 4b). As expected, there was a significant main effect of the number of pre-pulses on autoreceptor-mediated inhibition (F(5,85) = 48.80, p < 0.001), indicating that dopamine release is inhibited to a greater extent with more pre-pulses. Importantly, there was a significant risk group × pre-pulses interaction on autoreceptor-mediated inhibition (F(5,85) = 2.44, p = 0.041; Fig. 4b), such that risk-taking rats demonstrated greater autoreceptor sensitivity with higher pre-pulse stimulations compared to risk-averse rats.

Fig. 4.

Nucleus accumbens shell dopamine autoreceptor function and nomifensine sensitivity. a Representative response depicting autoreceptor-mediated inhibition of dopamine release in terms of change from test stimulation 1 (T1) to test stimulation 2 (T2). Autoreceptor inhibition of dopamine release is evoked by a train of pulses prior to T2 (shown: 40 pulses). b Risk-taking rats express increased autoreceptor sensitivity with higher stimulation parameters, confirmed by a significant risk group × pulse interaction. c Percent change in dopamine half-life following nomifensine treatment during 60 min amperometric recordings for risk-taking and -averse rats. d Correlation between risk preference and average percent change in dopamine half-life across the 20, 40, and 60 min time points. Error bars represent ±SEM. *p < 0.05

Finally, we assessed sensitivity to the DAT inhibitor nomifensine in risk-taking and risk-averse phenotypes by measuring % change in dopamine half-life at 20 min intervals after systemic nomifensine administration. There was a significant risk group × time interaction on percent change in dopamine half-life following nomifensine administration (F(3,27) = 2.99, p = 0.048; Fig. 4c). Subsequent analyses revealed that dopamine half-life was increased in risk-taking rats at the 40 (t(9) = 2.60, p = 0.029) and 60 min time points (t(9) = 2.65, p = 0.027) relative to risk-averse rats (Fig. 4c). Additionally, there was a significant positive correlation between risk preference and average percent change in dopamine half-life across the 20, 40, and 60 min time points (r = 0.63, p = 0.035; Fig. 4d). In summary, dopamine half-life is significantly elevated in risk-taking compared to risk-averse rats following exposure to a DAT inhibitor.

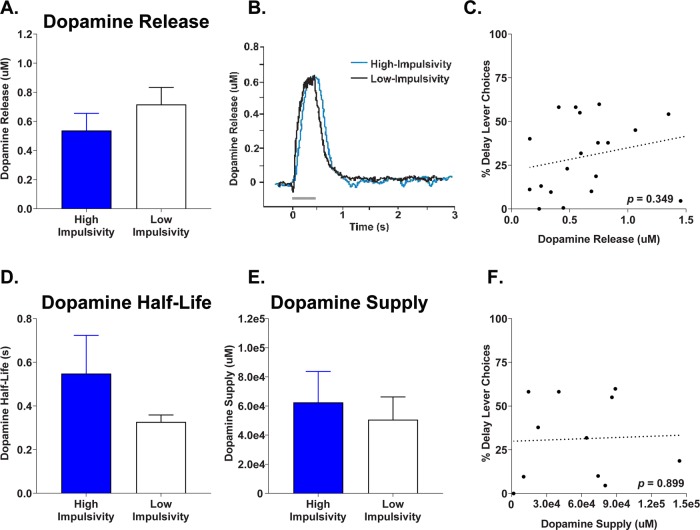

DD and phasic dopamine dynamics in the nucleus accumbens

Phasic dopamine release dynamics in the NACs were also compared to impulsive choice, with subjects divided into high- and low-impulsive responders. Analyses indicated no difference between groups in evoked dopamine release (t(17) = −1.03, p = 0.317; Fig. 5a, b), and no correlation between dopamine release in NACs and delayed reward choice (r = 0.23, p = 0.349, Fig. 5c). Dopaminergic half-lives were of similar durations between high-impulsivity and low-impulsivity rats (t(17) = 1.38, p = 0.186; Fig. 5d). Finally, there was no difference between groups in overall NACs dopamine supply (t(9) = 0.43, p = 0.670; Fig. 5e), and no correlation between supply and reward choice (r = 0.04, p = 0.899, Fig. 5f).

Fig. 5.

Nucleus accumbens shell evoked dopamine release and dopamine supply for impulsivity subpopulations. a Mean evoked dopamine release. b Sample evoked dopamine waveform in high- vs low-impulsive choice rats. c Correlation between baseline dopamine release and total delay preference. d Mean dopamine half-life in impulsivity subpopulations. e Mean dopamine supply in impulsivity phenotypes. f Correlation between dopamine supply and impulsive choice. Error bars represent ±SEM

Discussion

We utilized fixed potential amperometry to examine dopamine release in NACs of anesthetized rats previously characterized in both risky decision-making and DD. MFB stimulation-evoked dopamine release in NACs was both positively correlated with risk preference and enhanced in risk-taking rats. There was also a trend toward enhanced dopamine supply in risk-takers, and risk preference predicted sensitivity to the DAT blocker nomifensine. Finally, risk-takers demonstrated elevated NACs autoreceptor function compared to risk-averse subjects. Conversely, dopamine release and dopamine supply were not predicted by individual differences in impulsive choice.

Risky decision-making predicts phasic dopamine release in NAC

Risk-taking in the RDT provides an addiction-relevant model of punishment-driven risky decision-making that is independent of external factors such as pain tolerance and reward motivation [7], and remains consistent under either ascending or descending order of probabilities [1]. RDT has been associated with dopamine receptor expression in NACs ex vivo [7, 9], yet little is known about the relationship between risk-taking and biologically relevant dopamine signaling. The current observation of elevated NACs phasic dopamine release suggests that risk-taking rats may be more susceptible to the motivational effects of environmental cues, as phasic dopamine release mediates the attribution of motivational salience to reward-predictive stimuli [39]. This is consistent with risk-taking predicting enhanced salience attributed to reward-predictive cues, manifested as elevated sign-tracking [11]. These data also suggest that the previously observed abundance of D1 receptors in the NACs of risk-taking rats may be a response to enhanced dopamine activity in the region [7].

The magnitude of stimulation-evoked dopamine response is in part determined by the rate of dopamine reuptake. However, given that there were no differences between risk groups in the synaptic half-life of dopamine, the observed differences in dopamine release are likely not related to DAT functioning. Dopamine half-life is related to DAT sensitivity, with a shorter half-life being indicative of greater sensitivity [20, 40], suggesting that reuptake following dopamine release does not vary as a function of risk-taking phenotype. In addition, we observed a near-significant trend toward enhanced dopamine supply in risk-taking rats. This may be a compensatory mechanism resulting from elevated phasic dopamine release. Conversely, elevated dopamine supply may contribute to enhanced phasic dopamine release in risk-takers, although further experimentation is necessary to confirm the causal direction of this relationship.

It is possible that elevated phasic dopamine release reflects heightened sensitivity to reward rather than risk preference, as risk-taking rats preferred the large reward more than risk-averse rats in the punishment-free first block of trials. However, there was no difference in reward preference during task shaping, in which rats learned to choose between one and three pellets prior to the introduction of shock, suggesting that neither motivation nor reward magnitude discrimination differed between groups (data not shown). Furthermore, risk-takers did not demonstrate increased preference for the large reward in block 1 of DD, which was identical to block 1 of RDT, suggesting that risk-averse rats’ attenuated preference for the large reward in the absence of risk was driven by a generalized association between that reward lever and impending foot shock rather than gross motivational differences. Similar disparities in reward choice between risk groups in the risk-free block have been observed in prior investigations [7, 9, 10, 41].

It is also noteworthy that rats demonstrated markedly flat discounting curves after the punishment-free block, consistently selecting either the high- or low-risk lever regardless of probability. This overall lack of a clear discounting curve has been observed in other studies utilizing RDT [1, 41]. The lack of clear discrimination between risk probabilities raises the possibility that the dopaminergic distinctions between “risk-taking” and “risk-averse” rats are more generally reflective of punishment-insensitive vs punishment-avoidant strategies. However, performance in RDT is distinct from single-response reward/punishment conflict tasks in sensitivity to dopaminergic drugs (D2 activation reduces punished responding in RDT but increases it in the Vogel conflict task [7, 42]). Furthermore, conflict tasks are classically affected by anxiety level [43], whereas RDT has been shown to be independent of baseline anxiety and shock sensitivity [7]. Therefore, we propose that risk-taking in RDT is distinct from punished responding, and that the dopaminergic distinctions observed here are not solely determined by propensity to avoid or disregard punishment.

Risky decision-making is predictive of impulsive action [10], a facet of impulsivity characterized by ongoing reward seeking despite unfavorable outcomes [44, 45]. Like risk-taking, impulsive action is mediated through striatal dopamine activity [9, 46–49]. In addition, both impulsive action and risk-taking predict cocaine self-administration [9, 50, 51]. Thus, elevated phasic dopamine release in NACs may produce the co-expression of both impulsive and risk-taking phenotypes in addition to increased vulnerability to psychostimulant drugs of abuse. This cluster of addiction-relevant behavioral traits in conjunction with elevated dopamine sensitivity suggests that RDT may have utility for detecting both cognitive and neuropharmacological vulnerability to substance use.

Previous studies have examined the relationship between dopamine in NAC and probabilistic discounting, which quantifies decision-making governed by the risk of reward omission rather than punishment [52]. Tonic dopamine efflux in NAC has been shown via microdialysis to vary at the same rate as preference for increasingly probabilistic rewards [53]. In addition, dopamine neuron activity scales with probability of reward delivery [54] and influences behavioral shifts based on choice outcome [55]. While this provides evidence for dopamine’s role in behavioral flexibility in the face of uncertain rewards, it does not account for the relationship between individual differences in risky decision-making and phasic dopamine release dynamics.

The current study focused on NAC shell, as dopamine receptors in shell but not core were previously shown to correlate with risk-taking under punishment [7, 9]. This contrasts with investigations of risk-taking using a probabilistic discounting task, which suggest that general risk-attitude and trial-by-trial risk-taking recruits the NAC core rather than shell [56, 57]. A critical distinction between these and the current study is operationalization of risk. Probability-based risky decision-making defines risk as probability of omission of a large reward, whereas in RDT rewards are always delivered, but there is a risk of a foot shock punishment [52]. The presence of punishment during decision-making may cause selective recruitment of the shell over the core. Indeed, NAC shell dopamine release is evoked by shocks and shock-paired cues [58, 59], and shell rather than core is necessary for suppression of reward seeking due to punishment [60]. While further investigation is necessary to preclude a role of NAC core dopamine in punishment-based risk-taking, the relationship between NAC shell dopamine dynamics and RDT provides further evidence that punishment and reward-omission based risk-taking employ distinct mesolimbic mechanisms.

Fixed potential amperometry provides advantages over other neurochemical assessments due to its extremely high sampling rate, which allows precise assessment of evoked dopaminergic measures such as autoreceptor function, synaptic half-life, and dopamine supply [18, 19, 31]. However, this technique requires anesthetized subjects, which prevents direct comparison between behavioral assessment and dopamine release. Thus, it is difficult to disentangle whether hyper-sensitive dopamine dynamics in NAC shell cause a preference for risk-taking, or a history of risk-taking in RDT (associated with greater exposure to food pellet reinforcers and shocks) elevates measures of dopamine dynamics. We propose that NAC shell dopamine hypersensitivity drives risk preference for multiple reasons. First, previous exposure to RDT, regardless of risk preference, does not affect performance in several behavioral assays that involve NAC dopamine transmission, including locomotion, impulsive choice [10], and appetitive motivation [7]. Another cognitive phenotype that involves NAC shell dopamine, impulsive action [61], is correlated with risk-taking in RDT, but is not altered by previous RDT performance [10]. A previous study showed that a history of cued rewards during the rat gambling task increased sensitivity to cocaine; however, this pattern was not observed in rats that preferred a risk-taking strategy [62]. Additionally, the NAC shell has been causally implicated in punished reward seeking [60], a critical component of RDT, and phasic dopamine release in NAC has been shown to predict poor punishment avoidance [63], which further suggests that the elevated baseline phasic release we observed in risk-takers may cause elevated risky reward preference. Despite this evidence, further research that eliminates the temporal gap between risk-taking and assessment of phasic dopamine release is necessary to disentangle the direction of causality.

Risk-taking predicts autoreceptor sensitivity and effects of dopamine transporter blockade

Dopamine autoreceptors are located on the presynaptic axon terminal of dopamine neurons, and attenuate dopamine release upon activation. Autoreceptor sensitivity can be assessed in vivo by inducing dopamine overflow via rapid electrical stimulation immediately prior to measuring phasic dopamine efflux, with autoreceptor function indexed by the resulting inhibition of phasic dopamine release. Here, we observed that dopamine autoreceptors in NACs are more effective in risk-taking than in risk-averse rats, indicating that a risk-taking phenotype is associated with a more efficient capacity to reduce dopamine release via negative feedback. This may be compensation for the elevated phasic dopamine release and supply observed in risk-takers. NACs expression of the D2 receptor, which has an autoreceptor isoform, is comparable between risk-taking and risk-averse adults [7]. Therefore, it is likely that the enhanced autoreceptor sensitivity in risk-takers is not a result of increased receptor availability in NACs, but of increased effectiveness or sensitivity of receptors. Alternatively, elevated autoreceptor function in risk-takers may be a function of elevated NACs D3 rather than D2 autoreceptor availability.
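As an illustration only, the inhibition measure described above can be expressed as a percent reduction in the evoked dopamine peak when a conditioning stimulation precedes the test stimulation. The following is a minimal sketch, not the analysis code used in this study; the function name `percent_inhibition` and the example amplitudes are hypothetical.

```python
def percent_inhibition(baseline_peak: float, conditioned_peak: float) -> float:
    """Percent reduction of the evoked dopamine peak after a conditioning stimulation.

    baseline_peak: peak evoked response without conditioning (arbitrary units).
    conditioned_peak: peak evoked response measured right after conditioning.
    Larger positive values indicate stronger autoreceptor-mediated inhibition.
    """
    if baseline_peak <= 0:
        raise ValueError("baseline_peak must be positive")
    return 100.0 * (baseline_peak - conditioned_peak) / baseline_peak


# Hypothetical amplitudes chosen for illustration; these are not study data.
example_inhibition = percent_inhibition(2.0, 1.5)
```

Under this convention, a subject whose evoked peak falls further after conditioning receives a higher score, which corresponds to the more effective autoreceptor function described for risk-taking rats.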

Risk-taking rats demonstrated elevated sensitivity to the DAT inhibitor nomifensine, reflected as increased latency for dopamine to return to baseline concentration after electrical stimulation. Elevated sensitivity to nomifensine translates to increased sensitivity to dopaminergic drugs of abuse that affect DAT, which include cocaine and amphetamine [34, 64, 65]. Consistent with this finding, risk-taking rats have previously been shown to self-administer more cocaine than risk-averse rats [9]. A possible explanation for increased sensitivity to nomifensine is diminished DAT capacity [20]. However, this is unlikely given the similarity in dopamine half-life prior to nomifensine. Additionally, this elevated sensitivity cannot be attributed to reduced autoreceptor-mediated dopamine suppression, as we observed that autoreceptors are more effective in risk-takers. A more plausible explanation is that elevated post-nomifensine half-life results from elevated dopamine supply, which causes a greater concentration of dopamine to flood the synapse after stimulation and therefore requires extended time for clearance.
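To make the half-life measure concrete, the sketch below estimates the time for an evoked signal to decay from its peak to half of that peak. It is a hedged illustration rather than the procedure used in this study: it assumes a baseline-subtracted trace (baseline equal to zero), uses linear interpolation between samples, and the function name `half_life` and the example trace are hypothetical.

```python
from typing import Sequence


def half_life(times: Sequence[float], signal: Sequence[float]) -> float:
    """Time from the peak until the signal first falls to half of the peak.

    Assumes the trace is baseline-subtracted, so half of the peak is the
    halfway point back to baseline. Linear interpolation is used between
    the two samples that bracket the half-peak level.
    """
    if len(times) != len(signal) or len(signal) < 2:
        raise ValueError("times and signal must have equal length >= 2")
    peak_idx = max(range(len(signal)), key=lambda i: signal[i])
    peak = signal[peak_idx]
    if peak <= 0:
        raise ValueError("peak must be positive for a baseline-subtracted trace")
    half = peak / 2.0
    for i in range(peak_idx + 1, len(signal)):
        if signal[i] <= half:
            t0, t1 = times[i - 1], times[i]
            s0, s1 = signal[i - 1], signal[i]
            frac = (s0 - half) / (s0 - s1)  # fraction of the interval to the crossing
            return (t0 + frac * (t1 - t0)) - times[peak_idx]
    raise ValueError("signal never decays to half of its peak")


# Hypothetical trace (seconds, arbitrary units); not data from this study.
example_half_life = half_life([0, 1, 2, 3, 4], [0, 4, 3, 1, 0])
```

Computing this value before and after DAT blockade for each subject would give the kind of pre- versus post-nomifensine comparison discussed above, with a longer post-drug half-life indicating slower clearance.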

DD is not associated with phasic dopamine release

We found no relationship between phasic dopamine release and DD, a measure of preference for immediate gratification. This was somewhat surprising, as past research has found dopamine to modulate impulsive choice, with systemic dopamine receptor activation and optically evoked NAC core dopamine release affecting impulsive choice [17, 66, 67]. However, NAC dopamine depletion has no effect on DD [68], which is consistent with our observation that dopamine supply does not predict impulsive choice and further suggests dissociable roles of the NAC core and shell in impulsive choice. Furthermore, a recent report observed that associations between DD and dopamine receptors were restricted to clinical populations, with no relationship in healthy individuals [69]. Therefore, while NAC dopamine may play a modulatory role in DD on a trial-by-trial basis, phasic dopamine activity in shell at baseline is not a reliable correlate of individual differences in impulsive choice in non-pathological populations. Rather, elevated dopamine release in NAC shell appears to be specific to a preference for risky decision-making, and does not reflect a bias that generalizes across other forms of economic decision-making such as DD.

Conclusions

Punishment-driven risk-taking can be readily extrapolated to the risk-taking performed during SUDs, in which the reward (drug reinforcement) often differs from the consequences (risk of arrest or overdose). Therefore, understanding the neurobiology underlying biases in this form of risk-taking may have utility for early detection and treatment of vulnerable individuals. These data reveal multiple measures of a hypersensitive mesolimbic dopamine system in rats predisposed to risk-taking, while demonstrating a distinction in the dopaminergic correlates of risk-taking and DD. This suggests NACs phasic dopamine dynamics as a potential therapeutic target for pathological risk-taking, but not impulsive choice. This identification of enhanced dopamine sensitivity in a readily identifiable subpopulation of rats may provide further utility toward investigation of neuronal and genetic correlates of SUD vulnerability.

Funding and Disclosure

This research was supported by a Young Investigator Grant from the Brain and Behavior Research Foundation and a Faculty Research Grant from the University of Memphis (NWS).

Acknowledgements

We would like to thank Amber Woods, Haleigh Joyner, Samantha Morrison, Andrew Starnes, and Alan Rasheed for technical assistance.

Conflict of interest

None of the authors have any financial conflicts of interest to disclose.

Footnotes

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

These authors contributed equally: Timothy G. Freels, Daniel B. K. Gabriel

References

- 1. Simon NW, Gilbert RJ, Mayse JD, Bizon JL, Setlow B. Balancing risk and reward: a rat model of risky decision making. Neuropsychopharmacology. 2009;34:2208–2217. doi: 10.1038/npp.2009.48.

- 2. Negus SS. Effects of punishment on choice between cocaine and food in rhesus monkeys. Psychopharmacology (Berl) 2005;181:244–252. doi: 10.1007/s00213-005-2266-7.

- 3. Verdejo-Garcia A, Chong TT-J, Stout JC, Yücel M, London ED. Stages of dysfunctional decision-making in addiction. Pharmacol Biochem Behav. 2018;164:99–105. doi: 10.1016/j.pbb.2017.02.003.

- 4. Bechara A, Dolan S, Denburg N, Hindes A, Anderson SW, Nathan PE. Decision-making deficits, linked to a dysfunctional ventromedial prefrontal cortex, revealed in alcohol and stimulant abusers. Neuropsychologia. 2001;39:376–389. doi: 10.1016/s0028-3932(00)00136-6.

- 5. Brand M, Roth-Bauer M, Driessen M, Markowitsch HJ. Executive functions and risky decision-making in patients with opiate dependence. Drug Alcohol Depend. 2008;97:64–72. doi: 10.1016/j.drugalcdep.2008.03.017.

- 6. Brevers D, Bechara A, Cleeremans A, Kornreich C, Verbanck P, Noël X. Impaired decision-making under risk in individuals with alcohol dependence. Alcohol Clin Exp Res. 2014;38:1924–1931. doi: 10.1111/acer.12447.

- 7. Simon NW, Montgomery KS, Beas BS, Mitchell MR, LaSarge CL, Mendez IA, et al. Dopaminergic modulation of risky decision-making. J Neurosci. 2011;31:17460–17470. doi: 10.1523/JNEUROSCI.3772-11.2011.

- 8. Mitchell MR, Vokes CM, Blankenship AL, Simon NW, Setlow B. Effects of acute administration of nicotine, amphetamine, diazepam, morphine, and ethanol on risky decision-making in rats. Psychopharmacology (Berl) 2011;218:703–712. doi: 10.1007/s00213-011-2363-8.

- 9. Mitchell MR, Weiss VG, Beas BS, Morgan D, Bizon JL, Setlow B. Adolescent risk taking, cocaine self-administration, and striatal dopamine signaling. Neuropsychopharmacology. 2014;39:955–962. doi: 10.1038/npp.2013.295.

- 10. Gabriel DBK, Freels TG, Setlow B, Simon NW. Risky decision-making is associated with impulsive action and sensitivity to first-time nicotine exposure. Behav Brain Res. 2019;359:579–588. doi: 10.1016/j.bbr.2018.10.008.

- 11. Olshavsky ME, Shumake J, Rosenthal AA, Kaddour-Djebbar A, Gonzalez-Lima F, Setlow B, et al. Impulsivity, risk-taking, and distractibility in rats exhibiting robust conditioned orienting behaviors. J Exp Anal Behav. 2014;102:162–178. doi: 10.1002/jeab.104.

- 12. Floresco SB, West AR, Ash B, Moore H, Grace AA. Afferent modulation of dopamine neuron firing differentially regulates tonic and phasic dopamine transmission. Nat Neurosci. 2003;6:968–973. doi: 10.1038/nn1103.

- 13. Schultz W. Predictive reward signal of dopamine neurons. J Neurophysiol. 1998;80:1–27. doi: 10.1152/jn.1998.80.1.1.

- 14. Wise RA, Bozarth MA. Brain mechanisms of drug reward and euphoria. Psychiatr Med. 1985;3:445–460.

- 15. Robinson TE, Berridge KC. The neural basis of drug craving: an incentive salience theory of addiction. Brain Res Rev. 1993;18:247–291. doi: 10.1016/0165-0173(93)90013-p.

- 16. Ikemoto S, Panksepp J. The role of nucleus accumbens dopamine in motivated behavior: a unifying interpretation with special reference to reward-seeking. Brain Res Rev. 1999;31:6–41. doi: 10.1016/s0165-0173(99)00023-5.

- 17. Saddoris MP, Sugam JA, Stuber GD, Witten IB, Deisseroth K, Carelli RM. Mesolimbic dopamine dynamically tracks, and is causally linked to, discrete aspects of value-based decision making. Biol Psychiatry. 2015;77:903–911. doi: 10.1016/j.biopsych.2014.10.024.

- 18. Benoit-Marand M, Suaud-Chagny M-F, Gonon F. Presynaptic regulation of extracellular dopamine as studied by continuous amperometry in anesthetized animals. In: Michael AC, Borland LM, editors. Electrochemical Methods for Neuroscience. Chapter 3. CRC Press/Taylor & Francis; 2007.

- 19. Holloway ZR, Freels TG, Comstock JF, Nolen HG, Sable HJ, Lester DB. Comparing phasic dopamine dynamics in the striatum, nucleus accumbens, amygdala, and medial prefrontal cortex. Synapse. 2018;73:e22074. doi: 10.1002/syn.22074.

- 20. Mittleman G, Call SB, Cockroft JL, Goldowitz D, Matthews DB, Blaha CD. Dopamine dynamics associated with, and resulting from, schedule-induced alcohol self-administration: analyses in dopamine transporter knockout mice. Alcohol. 2011;45:325–339. doi: 10.1016/j.alcohol.2010.12.006.

- 21. Tye KM, Mirzabekov JJ, Warden MR, Ferenczi EA, Tsai C, Finkelstein J, et al. Dopamine neurons modulate neural encoding and expression of depression-related behaviour. Nature. 2013;493:537–541. doi: 10.1038/nature11740.

- 22. Garavan H, Hester R. The role of cognitive control in cocaine dependence. Neuropsychol Rev. 2007;17:337–345. doi: 10.1007/s11065-007-9034-x.

- 23. Perry JL, Carroll ME. The role of impulsive behavior in drug abuse. Psychopharmacology. 2008;200:1–26. doi: 10.1007/s00213-008-1173-0.

- 24. Bickel WK, Johnson MW, Koffarnus MN, MacKillop J, Murphy JG. The behavioral economics of substance use disorders: reinforcement pathologies and their repair. Annu Rev Clin Psychol. 2014;10:641–677. doi: 10.1146/annurev-clinpsy-032813-153724.

- 25. Simon NW, Mendez IA, Setlow B. Cocaine exposure causes long-term increases in impulsive choice. Behav Neurosci. 2007;121:543–549. doi: 10.1037/0735-7044.121.3.543.

- 26. Orsini CA, Blaes SL, Setlow B, Simon NW. Recent updates in modeling risky decision-making in rodents. Methods Mol Biol. 2019;2011:79–92.

- 27. Simon NW, Setlow B. Modeling risky decision making in rodents. Methods Mol Biol. 2012;829:165–175. doi: 10.1007/978-1-61779-458-2_10.

- 28. Sabeti J, Gerhardt GA, Zahniser NR. Chloral hydrate and ethanol, but not urethane, alter the clearance of exogenous dopamine recorded by chronoamperometry in striatum of unrestrained rats. Neurosci Lett. 2003;343:9–12. doi: 10.1016/s0304-3940(03)00301-x.

- 29. Paxinos G, Watson C. The rat brain in stereotaxic coordinates. 3rd ed. San Diego: Acad Press; 1997.

- 30. Dugast C, Suaud-Chagny MF, Gonon F. Continuous in vivo monitoring of evoked dopamine release in the rat nucleus accumbens by amperometry. Neuroscience. 1994;62:647–654. doi: 10.1016/0306-4522(94)90466-9.

- 31. Fielding JR, Rogers TD, Meyer AE, Miller MM, Nelms JL, Mittleman G, et al. Stimulation-evoked dopamine release in the nucleus accumbens following cocaine administration in rats perinatally exposed to polychlorinated biphenyls. Toxicol Sci. 2013;136:144–153. doi: 10.1093/toxsci/kft171.

- 32. Hyland BI, Reynolds JNJ, Hay J, Perk CG, Miller R. Firing modes of midbrain dopamine cells in the freely moving rat. Neuroscience. 2002;114:475–492. doi: 10.1016/s0306-4522(02)00267-1.

- 33. Tsai H-C, Zhang F, Adamantidis A, Stuber GD, Bonci A, de Lecea L, et al. Phasic firing in dopaminergic neurons is sufficient for behavioral conditioning. Science. 2009;324:1080–1084. doi: 10.1126/science.1168878.

- 34. Carboni E, Imperato A, Perezzani L, Di Chiara G. Amphetamine, cocaine, phencyclidine and nomifensine increase extracellular dopamine concentrations preferentially in the nucleus accumbens of freely moving rats. Neuroscience. 1989;28:653–661. doi: 10.1016/0306-4522(89)90012-2.

- 35. Prater WT, Swamy M, Beane MD, Lester DB. Examining the effects of common laboratory methods on the sensitivity of carbon fiber electrodes in amperometric recordings of dopamine. J Behav Brain Sci. 2018;8:117–125.

- 36. Michael DJ, Wightman RM. Electrochemical monitoring of biogenic amine neurotransmission in real time. J Pharm Biomed Anal. 1999;19:33–46.

- 37. MacQueen J. Some methods for classification and analysis of multivariate observations. In: Fifth Berkeley Symp. Math. Stat. Probab. Statistical Laboratory, University of California, Berkeley; 1967.

- 38. Freels TG, Lester DB, Cook MN. Arachidonoyl serotonin (AA-5-HT) modulates general fear-like behavior and inhibits mesolimbic dopamine release. Behav Brain Res. 2019;362:140–151. doi: 10.1016/j.bbr.2019.01.010.

- 39. Flagel SB, Clark JJ, Robinson TE, Mayo L, Czuj A, Willuhn I, et al. A selective role for dopamine in stimulus-reward learning. Nature. 2011;469:53–57. doi: 10.1038/nature09588.

- 40. Benoit-Marand M, Jaber M, Gonon F. Release and elimination of dopamine in vivo in mice lacking the dopamine transporter: functional consequences. Eur J Neurosci. 2000;12:2985–2992. doi: 10.1046/j.1460-9568.2000.00155.x.

- 41. Shimp KG, Mitchell MR, Beas BS, Bizon JL, Setlow B. Affective and cognitive mechanisms of risky decision making. Neurobiol Learn Mem. 2015;117:60–70. doi: 10.1016/j.nlm.2014.03.002.

- 42. Millan MJ, Brocco M, Papp M, Serres F, La Rochelle CD, Sharp T, et al. S32504, a novel naphtoxazine agonist at dopamine D3/D2 receptors: III. Actions in models of potential antidepressive and anxiolytic activity in comparison with ropinirole. J Pharm Exp Ther. 2004;309:936–950. doi: 10.1124/jpet.103.062463.

- 43. Bali A, Jaggi AS. Electric foot shock stress: a useful tool in neuropsychiatric studies. Rev Neurosci. 2015;26:655–677. doi: 10.1515/revneuro-2015-0015.

- 44. Everitt BJ, Robbins TW. Drug addiction: updating actions to habits to compulsions ten years on. Annu Rev Psychol. 2016;67:23–50. doi: 10.1146/annurev-psych-122414-033457.

- 45. Dalley JW, Robbins TW. Fractionating impulsivity: neuropsychiatric implications. Nat Rev Neurosci. 2017;18:158–171. doi: 10.1038/nrn.2017.8.

- 46. Robbins TW. The 5-choice serial reaction time task: behavioural pharmacology and functional neurochemistry. Psychopharmacology (Berl) 2002;163:362–380. doi: 10.1007/s00213-002-1154-7.

- 47. Zalocusky KA, Ramakrishnan C, Lerner TN, Davidson TJ, Knutson B, Deisseroth K. Nucleus accumbens D2R cells signal prior outcomes and control risky decision-making. Nature. 2016;531:642–646. doi: 10.1038/nature17400.

- 48. Pattij T, Janssen MCW, Vanderschuren LJMJ, Schoffelmeer ANM, van Gaalen MM. Involvement of dopamine D1 and D2 receptors in the nucleus accumbens core and shell in inhibitory response control. Psychopharmacology (Berl) 2007;191:587–598. doi: 10.1007/s00213-006-0533-x.

- 49. Besson M, Belin D, McNamara R, Theobald DEH, Castel A, Beckett VL, et al. Dissociable control of impulsivity in rats by dopamine D2/3 receptors in the core and shell subregions of the nucleus accumbens. Neuropsychopharmacology. 2010;35:560–569. doi: 10.1038/npp.2009.162.

- 50. Dalley JW, Fryer TD, Brichard L, Robinson ESJ, Theobald DEH, Laane K, et al. Nucleus accumbens D2/3 receptors predict trait impulsivity and cocaine reinforcement. Science. 2007;315:1267–1270. doi: 10.1126/science.1137073.

- 51. Belin D, Mar AC, Dalley JW, Robbins TW, Everitt BJ. High impulsivity predicts the switch to compulsive cocaine-taking. Science. 2008;320:1352–1356. doi: 10.1126/science.1158136.

- 52. Orsini CA, Moorman DE, Young JW, Setlow B, Floresco SB. Neural mechanisms regulating different forms of risk-related decision-making: Insights from animal models. Neurosci Biobehav Rev. 2015;58:147–167. doi: 10.1016/j.neubiorev.2015.04.009.

- 53. St. Onge JR, Ahn S, Phillips AG, Floresco SB. Dynamic fluctuations in dopamine efflux in the prefrontal cortex and nucleus accumbens during risk-based decision making. J Neurosci. 2012;32:16880–16891. doi: 10.1523/JNEUROSCI.3807-12.2012.

- 54. Fiorillo CD, Tobler PN, Schultz W. Discrete coding of reward probability and uncertainty by dopamine neurons. Science. 2003;299:1898–1902. doi: 10.1126/science.1077349.

- 55. Stopper CM, Tse MTL, Montes DR, Wiedman CR, Floresco SB. Overriding phasic dopamine signals redirects action selection during risk/reward decision making. Neuron. 2014;84:177–189. doi: 10.1016/j.neuron.2014.08.033.

- 56. Sugam JA, Saddoris MP, Carelli RM. Nucleus accumbens neurons track behavioral preferences and reward outcomes during risky decision making. Biol Psychiatry. 2014;75:807–816. doi: 10.1016/j.biopsych.2013.09.010.

- 57. Stopper CM, Floresco SB. Contributions of the nucleus accumbens and its subregions to different aspects of risk-based decision making. Cogn Affect Behav Neurosci. 2011;11:97–112. doi: 10.3758/s13415-010-0015-9.

- 58. de Jong JW, Afjei SA, Pollak Dorocic I, Peck JR, Liu C, Kim CK, et al. A neural circuit mechanism for encoding aversive stimuli in the mesolimbic dopamine system. Neuron. 2019;101:133–151.e7. doi: 10.1016/j.neuron.2018.11.005.

- 59. Badrinarayan A, Wescott SA, Vander Weele CM, Saunders BT, Couturier BE, Maren S, et al. Aversive stimuli differentially modulate real-time dopamine transmission dynamics within the nucleus accumbens core and shell. J Neurosci. 2012;32:15779–15790. doi: 10.1523/JNEUROSCI.3557-12.2012.

- 60. Piantadosi PT, Yeates DCM, Wilkins M, Floresco SB. Contributions of basolateral amygdala and nucleus accumbens subregions to mediating motivational conflict during punished reward-seeking. Neurobiol Learn Mem. 2017;140:92–105. doi: 10.1016/j.nlm.2017.02.017.

- 61. Simon NW, Beas BS, Montgomery KS, Haberman RP, Bizon JL, Setlow B. Prefrontal cortical–striatal dopamine receptor mRNA expression predicts distinct forms of impulsivity. Eur J Neurosci. 2013;37:1779–1788. doi: 10.1111/ejn.12191.

- 62. Ferland JN, Hynes TJ, Hounjet CD, Lindenbach D, Haar CV, Adams WK, et al. Prior exposure to salient win-paired cues in a rat gambling task increases sensitivity to cocaine self-administration and suppresses dopamine efflux in nucleus accumbens: support for the reward deficiency hypothesis of addiction. J Neurosci. 2019;39:1842–1854. doi: 10.1523/JNEUROSCI.3477-17.2018.

- 63. Gentry RN, Lee B, Roesch MR. Phasic dopamine release in the rat nucleus accumbens predicts approach and avoidance performance. Nat Commun. 2016;7:1–11. doi: 10.1038/ncomms13154.

- 64. Kuczenski R, Segal D. Concomitant characterization of behavioral and striatal neurotransmitter response to amphetamine using in vivo microdialysis. J Neurosci. 1989;9:2051–2065. doi: 10.1523/JNEUROSCI.09-06-02051.1989.

- 65. McElvain JS, Schenk JO. A multisubstrate mechanism of striatal dopamine uptake and its inhibition by cocaine. Biochem Pharm. 1992;43:2189–2199. doi: 10.1016/0006-2952(92)90178-l.

- 66. Wade TR, De Wit H, Richards JB. Effects of dopaminergic drugs on delayed reward as a measure of impulsive behavior in rats. Psychopharmacology (Berl) 2000;150:90–101. doi: 10.1007/s002130000402.

- 67. van Gaalen MM, van Koten R, Schoffelmeer ANM, Vanderschuren LJMJ. Critical involvement of dopaminergic neurotransmission in impulsive decision making. Biol Psychiatry. 2006;60:66–73. doi: 10.1016/j.biopsych.2005.06.005.

- 68. Winstanley CA, Theobald DEH, Dalley JW, Robbins TW. Interactions between serotonin and dopamine in the control of impulsive choice in rats: therapeutic implications for impulse control disorders. Neuropsychopharmacology. 2005;30:669–682. doi: 10.1038/sj.npp.1300610.

- 69. Castrellon JJ, Seaman KL, Crawford JL, Young JS, Smith CT, Dang LC, et al. Individual differences in dopamine are associated with reward discounting in clinical groups but not in healthy adults. J Neurosci. 2019;39:321–332. doi: 10.1523/JNEUROSCI.1984-18.2018.