Abstract

Implementation Science (IS) is the study of approaches designed to increase adoption and sustainability of research evidence in routine practice. This article provides an overview of IS and ideas for its integration with Nutrition Education and Behavior (NEB) practice and research. Applying IS in NEB practice can inform real-world implementation efforts. Research opportunities include advancing common approaches to implementation measurement. In addition, the article provides suggestions for future studies (e.g., comparative effectiveness trials comparing implementation strategies) to advance the knowledge base of both fields. An example from ongoing research is included to illustrate concepts and methods of IS.

INTRODUCTION

Currently, dietary behaviors in the United States fall short of recommendations.1–3 Yet a quality diet is important for reducing chronic disease risk.4–6 To address this problem, researchers and practitioners in the field of Nutrition Education and Behavior (NEB) employ multifaceted interventions in complex settings (e.g., schools, worksites, food banks) with the aim of improving health outcomes for target audiences. Despite continued gaps, improvements in the US diet in recent years (e.g., increased whole grain intake, decreased sugar-sweetened beverage consumption7) suggest that the implementation of NEB interventions produces valuable effects.8

Past publications in the Journal of Nutrition Education and Behavior (JNEB) illustrate attention to the process of implementing NEB interventions to produce desired effects. Specifically, the NEB field has used theory and formative evaluation work to assess participants’ perceived barriers and facilitators to change9–12 or to determine preferences of the target audience to inform design or improvement of intervention implementation.13–15 Process evaluation is also relatively common, with researchers documenting the activities of program implementation efforts.16–19 Further, involvement of stakeholders in the design of interventions reflects awareness of the importance of culturally relevant programs for implementation success.20–23 These are important strengths of the field that suggest a readiness for further integration of Implementation Science (IS) and NEB.

Implementation Science is “the scientific study and application of strategies to promote the systematic uptake of research findings and other evidence-based practices into routine use.”24 (See IS terms and definitions in Table 1). In other words, IS applies rigorous research methods to test and understand what approaches work to get individuals and systems to use evidence. An explicit interest in IS by the field of NEB began as early as 2012 when the need for application of IS approaches was noted by authors Allicock and colleagues.25 These authors tested the effects of a fruit and vegetable intervention in churches led by church coordinators without utilizing researcher support for conducting the intervention (i.e., no extra resources, limited technical support). This study concluded, “dissemination may not achieve public health impact unless support systems are strengthened for adequate implementation.” Since that time, interest in IS has continued to grow with application of IS theories26,27 and focused attention on implementation processes.28–30 This paper, after an introduction to IS, will present a continuum of expanded opportunities for engagement with IS for professionals in NEB.

Table 1.

Implementation Science Terms and Definitions

| Term | Definition |
|---|---|
| Adaptation | Process of changing an innovation to increase its suitability for a particular population or organization while keeping its core components; may happen deliberately or passively. |
| Dissemination | Targeted spread of information or interventions to a specific audience. |
| Context | The setting in which implementation takes place; features of the inner and outer setting that may affect implementation, including, but not limited to, culture, organizational structure, local policy, leadership, capacity, networks, and environmental (in)stability.82 |
| Hybrid Designs | Research designs with a dual focus on clinical effectiveness (i.e., health outcomes) and implementation outcomes. |
| Facilitation | A process whereby a designated person (facilitator) uses a set of implementation strategies differentially between sites in response to varying contextual needs and barriers; akin to the current use of the term “technical assistance” in nutrition education and behavior, a term that has a different meaning in IS. |
| Innovation | A program, practice, product, pill, policy, principle, or procedure that has been shown through outcomes evaluation to be effective, to some degree, in some contexts. |
| Implementation Strategy | The “how-to” of changing practitioner or organizational behavior toward the goal of improving implementation outcomes. |
| Implementation Research | The scientific study of implementation, focusing on how and why innovations succeed or fail in real-world settings; the goal is generalizable knowledge. |
| Readiness | Degree to which an individual or organization is prepared to implement change.58 |
| Scale Up | Broadening the delivery of an innovation through deliberate efforts to reach a wider audience and context similar to those in which the innovation was originally tested. |
| Stakeholders | Individuals or organizations affected by the implementation effort; can include community members or patients targeted by the effort and/or frontline practitioners delivering the innovation. |
| Technical Assistance | Use of local or centralized personnel (e.g., a call-in helpline) on an as-needed basis to address issues with implementation; one implementation strategy. |
| **Implementation Outcomes** | |
| Acceptability | Practitioner or stakeholder satisfaction with elements of the innovation (e.g., content, complexity). |
| Adoption | Initial implementation or uptake of the innovation by a practitioner or organization. |
| Appropriateness | Perceived fit, relevance, compatibility, or usefulness for a practitioner, stakeholder, or organization. |
| Costs | Organizational resources needed to deliver the innovation or implementation strategy(ies); cost-effectiveness or cost benefit to the system. |
| Feasibility | Suitability for everyday use by a practitioner or organization given available resources. |
| Fidelity | Program delivery quality by the practitioner; extent of delivery as intended. |
| Penetration | Degree of institutionalization and/or spread across the organization. |
| Sustainability | Organizational continuation of the innovation; maintained integration into the setting. |

Introduction to Implementation Science

Implementation Science recognizes that effective implementation of research evidence (e.g., programs, practices, products, pills, policies, principles, procedures)31 demands active and systematic methods rather than passive processes to establish widespread use. Terms for IS in other countries include knowledge translation, knowledge transfer, and research utilization.32 Promoting the adoption and sustainment of research evidence in everyday use is the primary goal of IS. IS concepts, methods, and theories are also useful for the simultaneous study of effectiveness and implementation.33 For example, IS can contribute to basic research while new research evidence is being created (to avoid investments in discoveries with low implementability) and to settings where real-world circumstances encourage the spread of practices that have not yet been thoroughly tested for effectiveness (e.g., policy mandates).

In IS, the research evidence being implemented is referred to as an “innovation.” Implementation Science pays attention to (a) the innovation being implemented, (b) by whom, (c) in what context, (d) at what interval, and (e) with what approach. Each of these is considered a factor for study and/or modification to understand and/or improve implementation. Unlike Intervention Science and Intervention Mapping techniques,34 which work to create sound interventions, Implementation Science is concerned with how interventions and other innovations are adopted, adapted, scaled, and sustained. This paper will focus on several key features of IS with immediate applicability to JNEB authors and readers and refer the reader to additional sources for a more comprehensive review of IS.35–38

Theories, Models, and Frameworks.

Implementation Science involves the application of theories, models, and frameworks as described by Nilsen and colleagues.32 Theories propose relationships among variables; models recommend the sequence of implementation efforts; and frameworks describe determinants of implementation outcomes. Together, theories, models, and frameworks can help researchers to understand implementation problems, design implementation studies to test mechanisms, and evaluate implementation efforts. At present, there are over 60 identified theories, models, and frameworks in IS.32,39,40 As application of theory is time-honored and necessary to the NEB field, IS theories, models, and frameworks may provide a fresh lens for NEB work.

A first step in using IS theories, models, and frameworks is distinguishing among their functions.32 Theories include both classical theories and implementation theories. Classical theories come from outside implementation science (e.g., Social Cognitive Theory41) and propose causes of behavior relevant to implementation. They are most useful for testing hypotheses about why an implementation effort worked or failed (e.g., an implementation strategy targeting self-efficacy for disease management). Implementation theories (e.g., Normalization Process Theory42) originate from implementation research and attempt to explain the causal pathways of implementation processes. Process models are prescriptive in nature and provide guidance for how an implementation process might progress (e.g., Replicating Effective Programs43). For example, adapting an existing diabetes intervention designed for one cultural group and deploying it with a new cultural group might benefit from the application of a process model. IS frameworks include both determinant and evaluation frameworks. A key feature of determinant frameworks (e.g., Consolidated Framework for Implementation Research42) is their emphasis on contextual and systems factors that may influence the adoption of NEB innovations. Nutrition education researchers and practitioners might use such frameworks to anticipate barriers and facilitators to implementation of a new weight management intervention or to identify existing barriers to an ongoing program addressing food insecurity among college students. Evaluation frameworks (e.g., RE-AIM44) guide decisions about how best to evaluate an implementation effort. Selection of a theory, model, or framework reflects stakeholder needs, goals of implementation, and the intended application.

Implementation Strategies

Implementation strategies are “methods or techniques used to enhance the adoption, implementation, and sustainability (p 2)” of research evidence.45 The Expert Recommendations for Implementing Change (ERIC) project provides definitions of 73 implementation strategies to promote common language and approaches for implementation processes.46 These strategies are conceptually grouped into 9 clusters (see Table 2).47 An implementation effort may select strategies within 1 cluster to meet implementation needs or may select multiple strategies across several clusters to best support implementation. Inputs for strategy selection include information on the barriers to and facilitators of implementing the innovation; the theory, model, or framework informing the study; the existing evidence about the effects of a given strategy or strategy combination; and the preferences of stakeholders.

Table 2.

Clusters and Examples of Implementation Strategies Drawn from Expert Recommendations for Implementing Change (ERIC) project

| Cluster of Strategies | Example Strategy |
|---|---|
| Engage Consumers | Use mass media; Prepare consumers to be active participants |
| Use Evaluative and Iterative Strategies | Audit and feedback; Develop a formal implementation blueprint |
| Change Infrastructure | Create or change credentialing and/or licensure standards; Change physical structure/equipment |
| Adapt and Tailor to the Context | Promote adaptability; Tailor strategies |
| Develop Stakeholder Interrelationships | Identify and prepare champions; Build a coalition |
| Utilize Financial Strategies | Develop disincentives; Use new payment schemes |
| Support Practitioners | Remind practitioners; Revise professional roles |
| Provide Interactive Assistance | Provide local technical assistance; Provide supervision |
| Train and Educate Stakeholders | Use train-the-trainer strategies; Develop educational materials |

Implementation strategies are distinct from the innovation being implemented. Prudent selection of implementation strategies balances resources (e.g., personnel, material costs), pragmatics, effectiveness, and tailoring to barriers and facilitators for the target population. Proctor and colleagues45 detail a process for reporting implementation strategies that includes naming, defining, and specifying each strategy. In full, a specification describes who deploys the strategy (the actor), the steps they take (the action), who receives the action (the action target), on what schedule (temporality), with what intensity (dose), to what end (the implementation outcome affected), and for what reason (justification). See Table 3 for an example. This matters because detailed a priori specification allows researchers to monitor delivery of the strategy relative to the design. Further, other researchers in the field can fully understand the implementation support design, replicate it, and consider the intensity of the strategy when interpreting study findings. Common language46 and parameters for specifying implementation strategies45 promote understanding of the actions taken in an implementation effort.

Table 3.

Example of Strategy Specification to Support Implementation of Motivational Interviewing (MI)

| Strategy | Strategy Cluster | Definition | Actors | Action | Temporality | Dose | Justification |
|---|---|---|---|---|---|---|---|
| Make training dynamic. | Train and Educate Stakeholders | Interactive opportunities to practice and reflect | Experienced MI trainers | One-time workshop | 1–2 weeks before start of MI intervention | 6 hours | Provide foundational skills in MI. |
| Send reminders. | Support Practitioners | Electronic reminders via email | Automated by MI staff | Send reminders of key training messages | Once per week for 6 months | Approximately 24 emails | Remind trainees by a commonly used mode of communication. |
| Provide audit and feedback. | Use Evaluative and Iterative Strategies | MI trainer watches a recorded session of the trainee and provides feedback. | MI trainers | Identify strengths and weaknesses among new trainees. | Twice within first 6 months | 1 hour of feedback and coaching on each occasion (total of 2 hours) | Provide tailored feedback in a supportive environment to encourage further MI skill development. |
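The specification dimensions described by Proctor and colleagues map naturally onto a structured record, which makes gaps in a specification easy to spot. The following is a minimal sketch, assuming a Python dataclass is an acceptable container; the class name `StrategySpec`, its field names, and the helper `missing_dimensions` are hypothetical illustrations, not an official schema from the reporting guidance. It encodes the "Send reminders" row of Table 3, leaving the action target and implementation outcome blank because the table does not report them.

```python
from dataclasses import dataclass, fields
from typing import List, Optional


@dataclass
class StrategySpec:
    """One implementation strategy, specified along the reporting dimensions.

    Hypothetical field names; not an official reporting schema.
    """
    name: str
    actor: Optional[str] = None          # who deploys the strategy
    action: Optional[str] = None         # the steps the actor takes
    action_target: Optional[str] = None  # who receives the action
    temporality: Optional[str] = None    # on what schedule
    dose: Optional[str] = None           # with what intensity
    outcome: Optional[str] = None        # implementation outcome affected
    justification: Optional[str] = None  # reason for choosing the strategy

    def missing_dimensions(self) -> List[str]:
        """Return the specification dimensions that are still blank."""
        return [f.name for f in fields(self)
                if f.name != "name" and not getattr(self, f.name)]


# "Send reminders" row from Table 3; action target and outcome are not
# reported in the table, so they are left blank here.
reminders = StrategySpec(
    name="Send reminders",
    actor="Automated by MI staff",
    action="Send reminders of key training messages",
    temporality="Once per week for 6 months",
    dose="Approximately 24 emails",
    justification="Remind trainees by commonly used mode of communication",
)

print(reminders.missing_dimensions())  # ['action_target', 'outcome']
```

Flagging blank dimensions before a study launches is one way to make the a priori specification auditable; the check says nothing about whether the filled-in values are well chosen.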

Implementation Research Designs

Implementation Science employs study designs constructed to determine the most effective and feasible approaches to implementing an innovation. Selection of a research design is driven by the implementation research question. Brown and colleagues31 detail study designs applicable to implementation research, illustrating the breadth of options for conducting implementation studies. In addition to more traditional designs (e.g., pre-post, between-site comparisons, comparative effectiveness, factorial designs), adaptive and hybrid designs are increasingly used in implementation research. Adaptive designs include the Multiphase Optimization Strategy (MOST)48,49 and the Sequential Multiple Assignment Randomized Trial (SMART).48 The MOST design employs an iterative process to identify the most effective components of an intervention or implementation approach. A SMART implementation design involves an initial randomization followed by re-randomization of non-responders in the treatment group to receive more implementation support, allowing for resource savings and the ability to characterize responders and non-responders at each level of randomization.
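The two-stage SMART logic can be sketched as two randomization steps. This is a minimal illustration under stated assumptions: the arm labels (`control`, `treatment`, `augment`, `continue`, `maintain`), the simple balanced "deal out arms after a shuffle" allocation, and the responder flags are all hypothetical. In a real trial, response would be defined in advance from an implementation outcome, and allocation would follow the trial's own randomization procedure.

```python
import random
from collections import Counter
from typing import Dict, List


def randomize(units: List[str], arms: List[str], rng: random.Random) -> Dict[str, str]:
    """Balanced allocation: shuffle the units, then deal the arms out in turn."""
    shuffled = list(units)
    rng.shuffle(shuffled)
    return {unit: arms[i % len(arms)] for i, unit in enumerate(shuffled)}


def second_stage(stage1: Dict[str, str], responded: Dict[str, bool],
                 rng: random.Random, rerandomized_arm: str = "treatment") -> Dict[str, str]:
    """Re-randomize non-responders in the treatment arm; all other sites keep their support."""
    eligible = [s for s in stage1 if stage1[s] == rerandomized_arm and not responded[s]]
    stage2 = randomize(eligible, ["augment", "continue"], rng)
    for site in stage1:
        stage2.setdefault(site, "maintain")  # not re-randomized
    return stage2


rng = random.Random(42)
sites = [f"site_{i}" for i in range(1, 9)]
stage1 = randomize(sites, ["control", "treatment"], rng)

# Hypothetical responder flags, as if observed after the first phase.
responded = {site: i % 3 == 0 for i, site in enumerate(sites)}
stage2 = second_stage(stage1, responded, rng)

print(Counter(stage1.values()), Counter(stage2.values()))
```

Only sites that both received the treatment and failed to respond receive a second-stage assignment, which is where the resource savings described above come from.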

Hybrid designs combine elements of traditional effectiveness research with implementation research across 3 types: Type I designs investigate effectiveness as well as implementation processes and outcomes during early stages; Type II designs concurrently focus on intervention effectiveness and an implementation strategy; and Type III designs primarily compare the effects of differing implementation strategies on implementation outcomes while secondarily collecting health outcome data. Hybrid designs recognize the variability in evidence strength for the innovations that researchers and practitioners are tasked with studying and deploying, and they suggest different approaches depending on the strength of the evidence base. For example, a mandated change (e.g., a Supplemental Nutrition Assistance Program benefit restructure) may be rolled out before a large-scale, randomized study is conducted; a Hybrid Type I design could be appropriate for studying both the effectiveness of the change and the ways of implementing it. This illustrates that IS is applicable not only after a strong evidence base has been established but also concurrently with the establishment of best practice. For more detail, the reader is referred to Curran et al.33

Measurement of implementation outcomes allows for determination of the reasons for success or failure of an implementation effort. Proctor and colleagues50 outline 8 key implementation outcomes (see Table 1). Although not all 8 need to be evaluated in every implementation effort, this list offers a common set of outcomes that can provide “a framework for evaluating implementation strategies.” Selection of implementation outcomes for measurement is informed by the stage of implementation and the interests of key stakeholders. Whereas many studies measure these constructs as process measures, IS treats them as primary outcomes, with research questions aimed at understanding their predictors, designing implementation strategies to improve them, and identifying mechanisms linking implementation strategies and implementation outcomes.

A frequent goal of IS is the selection and deployment of implementation strategies to improve implementation outcomes, because one combination of strategies may lead to better implementation outcomes than another. Testing associations between implementation outcomes and health outcomes advances understanding of how best to target selected implementation outcomes with effective implementation strategies. For example, even with high fidelity, low perceived feasibility may limit the long-term uptake and effectiveness of a program. Understanding these relationships guides practitioners and researchers in where to invest their efforts in implementation strategies for their population of interest. This primary focus on affecting process distinguishes IS from process evaluation alone, which documents implementation outcomes without necessarily seeking to understand their predictors and/or relationships with the targeted health outcomes. In other words, rather than recording process, IS seeks to rigorously test structured manipulations of it.

Focusing on common implementation outcomes in IS allows for important conclusions. Strong implementation outcomes without improved health outcomes suggest an ineffective innovation (i.e., innovation failure); poor implementation outcomes without improved health outcomes suggest a need for improved implementation (i.e., implementation failure).50 Adding measures of the characteristics of the innovation (e.g., complexity) and context (e.g., readiness, climate) can further facilitate comparisons of the effects of implementation strategies across disciplines and varied contexts. Over time, the combined evidence from such well-measured studies will contribute to an understanding of which types of strategies work best for innovations with particular features, in contexts with particular characteristics.50
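The two interpretive rules above can be written as a small decision function. This is a deliberately simplified sketch: it assumes a single fidelity proportion stands in for "implementation outcomes" and that a fixed cut-off (the hypothetical `FIDELITY_THRESHOLD = 0.8`) separates strong from poor implementation. Real studies would weigh several implementation outcomes and would not reduce them to one threshold.

```python
from typing import Optional

FIDELITY_THRESHOLD = 0.8  # hypothetical cut-off for "strong" implementation


def interpret(fidelity: float, health_improved: bool,
              threshold: float = FIDELITY_THRESHOLD) -> Optional[str]:
    """Label a result using the two rules described in the text.

    Only results without improved health outcomes are labeled; other
    combinations fall outside these two rules and return None.
    """
    if health_improved:
        return None
    if fidelity >= threshold:
        return "innovation failure"      # delivered as intended, still no effect
    return "implementation failure"      # poor delivery may have masked any effect


print(interpret(0.9, health_improved=False))  # innovation failure
print(interpret(0.4, health_improved=False))  # implementation failure
```

The value of the rule depends entirely on measuring implementation well: without a credible fidelity estimate, the two failure types cannot be told apart.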

DISCUSSION

A Spectrum of Implementation Science Opportunities for Nutrition Education & Behavior

Much of the existing knowledge base of IS has been drawn from work in healthcare settings.24,51 That is, IS researchers have often targeted health care providers and clinics to improve their use of evidence-based practice in clinical care by developing, testing, and comparing implementation strategies’ effects on implementation of care and patient outcomes. This has produced a robust understanding of factors that influence implementation in clinical settings and generated a repertoire of strategies to improve healthcare delivery. However, clinical settings sometimes have greater resources and practitioners with different training perspectives than many settings of NEB implementation (e.g., Supplemental Nutrition Assistance Programs, school-based interventions). These differences create key opportunities to determine which lessons from healthcare can translate to NEB improvements.

Apply Implementation Science to NEB Practice.

Frontline practitioners in NEB are vital to implementation efforts. A primary opportunity for NEB practitioners is to engage in the practice of implementation52 by using the IS knowledge base to improve the quality of everyday service delivery to targeted populations. Practitioners have critical expertise in the local context and in the needs and characteristics of the population they serve. In many projects, frontline practitioners (e.g., extension agents, Women, Infants, and Children (WIC) counselors, school-based registered dietitians) are the key stakeholders for implementation; in other cases, they provide invaluable relationships with the audience served by the innovation (i.e., clients, families, children). Regardless, knowledge of the context prepares practitioners to engage in successful implementation and/or to collaborate with researchers in selecting innovations for implementation and in designing and evaluating implementation efforts. Further, practitioners can use the knowledge base of IS to select innovations that are most likely to be implementable (i.e., appropriate complexity, flexibility, adaptability)42 or to drive appropriate adaptations of a program for their context.

Examples of the practice of implementation include using a model or framework to design an implementation process; intentionally selecting implementation strategies based on knowledge of the target population and context; and monitoring implementation outcomes to adjust implementation approaches when needed. More specifically, a practitioner may be asked to lead implementation, in a school setting, of a new nutrition education and physical activity program originally developed for youth camps. Using IS, the practitioner could select a process model (e.g., Replicating Effective Programs43) to guide the adaptation of, preparation for, and launch of the program. Knowing that school leadership may not be inclined to prioritize NEB relative to academics, for example, the practitioner might select implementation strategies to engage leadership (e.g., obtaining formal commitments46), monitor and report teacher perceptions of feasibility, and report to leadership on the link between student participation and behavioral and academic outcomes. Thus, IS provides practical support at multiple decision points in real-world implementation.

Integrate Implementation Science Approaches in NEB Research.

Implementation research goes beyond the practice and documentation of implementation processes and embraces the study of the most effective approaches for increasing adoption of research evidence. To date, evaluation approaches in the NEB field reflect a rich history of formative and process evaluation.26–28,53,54 Integration of additional IS approaches has the potential to strengthen NEB research by providing common terminology and by further advancing measurement processes. Implementation Science is also consistent with current strengths of NEB (e.g., stakeholder engagement and involvement in intervention development) and provides further structure for engaging stakeholders in implementation.

In IS, clear description of the innovation and the implementation strategy is essential to conducting implementation research. That is, studies and clinical trial registries require specification of interventions with enough detail to enable future studies to replicate them or bring them to scale. With a clear description of the innovation, context, and outcome measures in place, NEB researchers can then specify and describe the implementation strategies with the common language and recommendations of implementation science, thus further aligning the fields.45,46,55 Even when strategies are simple (e.g., training, reminders) and not manipulated in a study, clear description and full specification can facilitate transparency. These recommendations are consistent with the Standards for Reporting Implementation Studies Statement.56

A process-oriented and framework-driven measurement plan is another aspect of implementation research that NEB researchers can apply readily. Such plans have at least 2 elements beyond ensuring quality measurement of implementation outcomes. First, a plan to track the delivery and use of implementation strategies is essential.55 This allows the researcher to know whether the strategy was delivered as designed and whether the target audience used the support. For example, an audit and feedback report may be delivered to a school but not used; measuring both delivery and utilization would provide important information. The researcher can then determine whether variability in the delivery and use of implementation support needs to be addressed through changes to the implementation plan or stricter monitoring. Measuring the frequency and content of implementation strategy delivery and use also allows researchers to monitor the resources associated with an implementation effort. Without such measurement, researchers may reach inaccurate conclusions about the factors contributing to implementation outcomes and be under-prepared to improve future implementation efforts.45
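Tracking delivery and use separately can be as simple as two flags per planned contact. The sketch below assumes a hypothetical log format (fields `site`, `strategy`, `delivered`, `used`) and computes, per site, the share of planned contacts that were delivered and the share of delivered contacts that were used; the records mirror the audit and feedback example above but are invented for illustration.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical log: one record per planned strategy contact with a site.
# "delivered" = the support reached the site; "used" = the site reported using it.
log = [
    {"site": "school_1", "strategy": "audit_and_feedback", "delivered": True, "used": True},
    {"site": "school_1", "strategy": "audit_and_feedback", "delivered": True, "used": False},
    {"site": "school_2", "strategy": "audit_and_feedback", "delivered": True, "used": False},
    {"site": "school_2", "strategy": "audit_and_feedback", "delivered": False, "used": False},
]


def delivery_and_use(records: List[dict]) -> Dict[str, Tuple[float, float]]:
    """Per site: (delivered / planned, used / delivered)."""
    planned: Dict[str, int] = defaultdict(int)
    delivered: Dict[str, int] = defaultdict(int)
    used: Dict[str, int] = defaultdict(int)
    for r in records:
        planned[r["site"]] += 1
        if r["delivered"]:
            delivered[r["site"]] += 1
            if r["used"]:
                used[r["site"]] += 1
    return {
        site: (delivered[site] / planned[site],
               used[site] / delivered[site] if delivered[site] else 0.0)
        for site in planned
    }


print(delivery_and_use(log))
# {'school_1': (1.0, 0.5), 'school_2': (0.5, 0.0)}
```

Keeping the two rates apart distinguishes a delivery problem (school_2 received only half of its reports) from a uptake problem (school_1 received every report but used only half).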

Second, researchers and practitioners can use common measures of implementation outcomes and context when possible. Often, the selection of these measures is driven by an evaluation framework. Weiner and colleagues (2017) have validated measures of acceptability, appropriateness, and feasibility which can be adapted for use with most implementation efforts.57 Measures of organizational readiness,58,59 implementation climate, and leadership support60 are also adaptable to many contexts. Besides the time savings in item testing and psychometric validation of new measures for every study, common measures would facilitate comparison of implementation outcomes and influential factors on implementation across studies.
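Using a common measure also means using a common scoring rule. As we understand the published acceptability, appropriateness, and feasibility measures, each has 4 items on a 5-point agreement scale and is scored as the mean of its items; readers should confirm this against the original instrument. The sketch below assumes that scoring rule; the helper `scale_score` and the responses are hypothetical, and no item wording is reproduced.

```python
from typing import Dict, List

LIKERT_MIN, LIKERT_MAX = 1, 5  # assumed 5-point response scale


def scale_score(responses: List[int]) -> float:
    """Mean of one respondent's item responses on one scale."""
    if not responses:
        raise ValueError("no item responses")
    for r in responses:
        if not LIKERT_MIN <= r <= LIKERT_MAX:
            raise ValueError(f"response {r} outside {LIKERT_MIN}-{LIKERT_MAX}")
    return sum(responses) / len(responses)


# Hypothetical responses from one educator, four items per scale.
educator = {
    "acceptability": [4, 5, 4, 4],
    "appropriateness": [3, 4, 4, 3],
    "feasibility": [2, 3, 3, 2],
}

scores: Dict[str, float] = {name: scale_score(items) for name, items in educator.items()}
print(scores)  # {'acceptability': 4.25, 'appropriateness': 3.5, 'feasibility': 2.5}
```

Rejecting out-of-range responses rather than silently averaging them keeps data-entry errors from shifting scores that will later be compared across studies.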

Consistent with principles and existing work in NEB, community-engaged dissemination and implementation research (CEDI)61,62 structures the research process to engage stakeholders (e.g., implementers, end users, policy makers) in decision making and action planning. CEDI involves stakeholders in activities such as selection of an implementation framework, adapting an intervention, deciding health and implementation outcomes, choosing implementation strategies, and tailoring implementation strategies to their contexts. Long-term goals of CEDI are to improve implementation capacity among the stakeholders and to plan collaboratively for future projects and sustainability. Examples of CEDI include Evidence Based Quality Improvement (EBQI),63,64 concept mapping, group model building, and conjoint analysis.65

Aligning with Implementation Science to Contribute to the Knowledge Base.

At present, there is increasing overlap between contributors in the field of IS and researchers in the field of NEB. Several IS topics are ripe for study within the context of NEB. Research in these areas can simultaneously advance IS and NEB.

A key opportunity to advance both NEB and IS includes the testing of strategies with a strong evidence base from the clinical realm (e.g., audit and feedback,66 academic detailing67) in new, community-based contexts. Nuanced questions about which implementation strategies work, under which conditions, and why, are likely to provide practical value. However, studies comparing many implementation strategies to no implementation support (all or none approaches) will have limited significance. As of yet, the IS field has limited data on which strategies or combinations of strategies outperform other strategies and combinations in community settings. To address this gap in IS knowledge, Ivers and Grimshaw68 have advocated for embedding sequential comparisons of implementation strategies and strategy combinations in projects across disciplines to identify different levels of efficacy, feasibility, acceptability, and costs. These types of head-to-head comparisons have the potential to prevent future research and practice waste by providing valuable information to inform selection of strategies.

Another opportunity for NEB researchers is to contribute to common measures. In particular, there is a pressing need for common measures of fidelity and “technical assistance.” A recent review of family-based interventions to improve preschool diets documented that few studies (fewer than 20%) included implementation fidelity measures.69 There are likely common aspects of fidelity measurement in NEB interventions that could be validated and standardized across projects (e.g., adherence to lesson frequency), particularly among interventions in a similar content area (e.g., cooking skills, gardening). Schoenwald and colleagues70 provide guidance on the development of fidelity measures. Issues of frequency, source, and timing of fidelity data collection are complex and require intentional, justified decisions for each project.71 Applying this guidance to the development of common, adaptable fidelity measures has the potential to strengthen the field.
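Adherence to lesson frequency, one of the candidate common fidelity indicators mentioned above, reduces to a ratio once "planned" is fixed. A minimal sketch, assuming a hypothetical plan of 12 lessons and hypothetical classroom counts; the design choice to cap adherence at 1.0 (so extra lessons do not offset shortfalls elsewhere when averaging) is ours, not the source's.

```python
from typing import Dict

PLANNED_LESSONS = 12  # hypothetical lessons planned per classroom


def lesson_adherence(planned: int, delivered: int) -> float:
    """Share of planned lessons delivered, capped at 1.0."""
    if planned <= 0:
        raise ValueError("planned must be positive")
    return min(delivered, planned) / planned


# Hypothetical counts of lessons actually delivered per classroom.
delivered_by_room: Dict[str, int] = {"room_a": 12, "room_b": 9, "room_c": 14}

adherence = {room: lesson_adherence(PLANNED_LESSONS, n)
             for room, n in delivered_by_room.items()}
print(adherence)  # {'room_a': 1.0, 'room_b': 0.75, 'room_c': 1.0}
```

Frequency is only one dimension of fidelity; quality of delivery would need separate, typically observational, measurement.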

One key measurement issue is quantifying the activities that support implementation. For example, projects in nutrition education and behavior often use the term “technical assistance” to refer to site-level support for implementation provided by an outside expert. “Facilitation” is the term most often used to describe this type of support in the IS literature (see Table 1 for definitions of each). IS researchers have illustrated how to define facilitation, track the activities of facilitation, train facilitators in a standard fashion, and evaluate the effects of facilitation with precision.72 The field of NEB can apply this robust approach to tracking expert-level, on-the-ground support to understand its effects on implementation by measuring its frequency, content, target, and necessary associated resources.
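The four tracking dimensions named above (frequency, content, target, resources) suggest a simple contact log that can be summarized per site. This sketch assumes a hypothetical record type `FacilitationContact` with facilitator minutes as the resource proxy; it is not the tracking instrument used in the cited facilitation studies, and the entries are invented.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class FacilitationContact:
    site: str
    target: str    # who received the support (e.g., "teacher", "director")
    content: str   # topic category of the contact
    minutes: int   # facilitator time, used here as a proxy for resources


def summarize(contacts: List[FacilitationContact]) -> Dict[str, dict]:
    """Per site: number of contacts, total minutes, and counts by content and target."""
    summary: Dict[str, dict] = defaultdict(
        lambda: {"contacts": 0, "minutes": 0, "content": Counter(), "target": Counter()})
    for c in contacts:
        s = summary[c.site]
        s["contacts"] += 1
        s["minutes"] += c.minutes
        s["content"][c.content] += 1
        s["target"][c.target] += 1
    return dict(summary)


contacts = [
    FacilitationContact("center_1", "teacher", "problem solving", 30),
    FacilitationContact("center_1", "director", "leadership engagement", 20),
    FacilitationContact("center_2", "teacher", "problem solving", 45),
]
summary = summarize(contacts)
print(summary["center_1"]["contacts"], summary["center_1"]["minutes"])  # 2 50
```

Summaries like these allow the intensity of facilitation to be related to implementation outcomes at each site, rather than treating "received technical assistance" as a yes/no condition.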

A third opportunity is to advance the study of program and intervention adaptation. Often, NEB researchers and practitioners intentionally take an intervention known to be effective for one group and translate it for use and/or testing in another group. Frequently, the goal in this process is to ensure adherence to gold-standard fidelity, with the expectation that the intervention will work in a new setting if deployed exactly as it was when found to be effective. However, IS recognizes the unavoidable and even desirable reality of adaptation. Beyond deliberate adaptations, organizations trained and equipped to deliver nutrition interventions often make situational and responsive adaptations in the use or delivery of a program. In both cases, it is important to describe which aspects of the intervention or the implementation strategy are adapted and why. Application of the Wiltsey-Stirman framework73 can assist with describing adaptations and, in the long term, with identifying which adaptations contribute to the success and longevity of a program. Further, researchers can move IS forward by answering key research questions about adaptation.74,75 For example, it could be highly valuable to study decision-making about adaptations across different implementation contexts and to discover the drivers of those decisions. Better study of adaptation processes has the potential to improve the delivery of research evidence to under-served groups, who are often the recipients of adapted programs and interventions.
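Describing what was adapted and why becomes analyzable once adaptations are logged consistently. The fields below are a simplified, hypothetical subset inspired by the questions raised in this paragraph (which component changed, whether the change was deliberate or situational, why, and who decided); they are not the coding scheme of the Wiltsey-Stirman framework, which should be consulted directly. The log entries are invented.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Adaptation:
    site: str
    component: str   # "intervention" or "implementation strategy"
    change: str      # what was modified
    planned: bool    # deliberate (True) vs situational/responsive (False)
    reason: str      # why the change was made
    decided_by: str  # who made the decision


def reasons_by_type(log: List[Adaptation]) -> Counter:
    """Count (planned, reason) pairs to see what drives each kind of adaptation."""
    return Counter((a.planned, a.reason) for a in log)


log = [
    Adaptation("site_1", "intervention", "shortened lesson to 15 min", False,
               "schedule constraints", "teacher"),
    Adaptation("site_2", "intervention", "shortened lesson to 15 min", False,
               "schedule constraints", "teacher"),
    Adaptation("site_2", "implementation strategy", "monthly instead of weekly reminders",
               True, "staff burden", "site director"),
]

print(reasons_by_type(log).most_common(1))  # [((False, 'schedule constraints'), 2)]
```

Pooling such logs across sites is one route to the research question posed above: which contexts drive which adaptation decisions.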

Examining the Use of Implementation Science in NEB

In the authors’ work, a study is underway to design and test solutions to an implementation problem identified in an earlier research investigation. From 2011 to 2016, the research team developed, implemented, and evaluated the Together, We Inspire Smart Eating (WISE) curriculum.76 This evaluation documented gaps between the evidence-based practices in which WISE educators were trained and the practices they actually adopted and continued to use in their classrooms. For example, almost half of educators (46.7%) were proficient in the evidence-based WISE practice of role modeling by the end of the year; however, on average, only 26% of educators adopted the practice of using the curriculum mascot as trained.

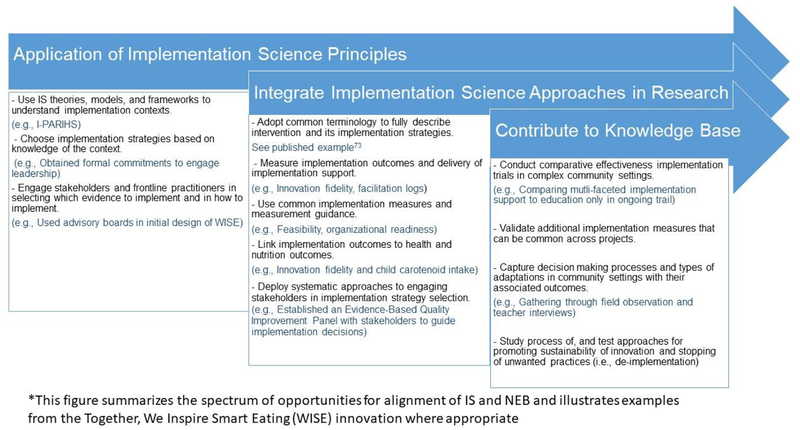

The subsequent IS study was designed to identify the barriers and facilitators to use of the evidence-based practices (i.e., developmental formative evaluation77), to prioritize and address this information with stakeholder input, and to develop and test strategies to improve implementation of the WISE practices. The full protocol is published elsewhere.78 In brief, positive deviance methods79–81 were applied to data from the previous study to identify educators at both ends of the implementation spectrum: those struggling with the practices and those succeeding at them. Understanding these failures and successes was the initial focus of the subsequent research and was accomplished through interviews with educators guided by the integrated Promoting Action on Research Implementation in Health Services (i-PARIHS) framework.82 Next, in a series of EBQI sessions, a stakeholder panel of educators, parents, and administrators prioritized the most important barriers and facilitators to address. The researchers then mapped these priorities to potential strategies and presented them to the EBQI panel at a subsequent session. Using concept mapping in real time, the panel rated the feasibility and importance of potential strategies aimed at the prioritized barriers and facilitators. Table 4 links examples of originally identified barriers to the selected strategies, proposed mechanisms for improvement, targeted implementation outcomes, and related theoretical constructs. This set of strategies is currently being tested in a cluster randomized hybrid type III trial33 and compared with a basic implementation approach of training and newsletter reminders. Data are being collected on delivery of implementation support (e.g., facilitator logs), utilization of implementation support (e.g., teacher report), implementation outcomes (e.g., observed fidelity), and child health outcomes (e.g., carotenoid intake).
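The case-selection step of a positive deviance design can be illustrated as sampling educators from both tails of an implementation score distribution. The sketch below is hypothetical: the educator IDs, the 0 to 1 score scale, the made-up scores, and the rule of taking the bottom and top `k` educators are assumptions for illustration and are not the selection criteria used in the WISE protocol.

```python
def select_extremes(scores, k):
    """Return (low, high) lists of educator IDs at both ends of the spectrum.

    scores: dict mapping a hypothetical educator ID to an implementation score.
    k: number of educators to sample from each tail (an assumed rule).
    """
    if 2 * k > len(scores):
        raise ValueError("k is too large for the number of educators")
    # Sort IDs by score, breaking ties by ID so the selection is reproducible.
    ranked = sorted(scores, key=lambda eid: (scores[eid], eid))
    low = ranked[:k]          # educators struggling with the practice
    high = ranked[-k:][::-1]  # educators succeeding, highest score first
    return low, high


if __name__ == "__main__":
    # Made-up proportions of observed mascot use; not study data.
    mascot_use = {"E1": 0.10, "E2": 0.85, "E3": 0.40, "E4": 0.95, "E5": 0.05}
    print(select_extremes(mascot_use, 2))
```

Interviewing both groups, rather than only the low scorers, is what distinguishes this approach: the high scorers show how the practice can succeed under the same constraints.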
Figure 1 summarizes the spectrum of opportunities to align NEB and IS and provides examples from WISE as applicable.

Table 4.

Example of Implementation Barriers Mapped to Targeted Strategies/Mechanisms and Implementation Outcomes

| Barrier | Strategies | Mechanism | Implementation Outcome | Measure(s) | Theoretical Construct |
|---|---|---|---|---|---|
| Lack of support of admin/director | Onboarding meetings, Signed commitment agreement, Implementation blueprint | Leadership increases buy-in and support; creates norms/expectations | Feasibility, Sustainability | Weiner measure57 of feasibility at baseline & follow up | i-PARIHS; leadership support; inner context, local level |
| Insufficient mechanisms for change (e.g., designated roles) | Champion training, Facilitator support | Center is given additional support to establish increased capacity for change | Feasibility, Sustainability, Costs | Organizational readiness59 at training & follow up; Weiner measure of feasibility at baseline & follow up | i-PARIHS; inner context, local level |
| Inconsistent Teacher Beliefs | Training, Educational materials, Champion Support, Facilitator support | Present counter evidence to challenge beliefs; social pressure to get on board | Adoption, Fidelity | Personal diet/knowledge at baseline & follow up; Role beliefs at baseline & follow up | i-PARIHS; recipient values and beliefs, recipient motivation |

Figure 1.

Spectrum of opportunities for Nutrition Education and Behavior in Implementation Science: The WISE Example

Exemplar work by other researchers in nutrition education and behavior illustrates the implementation of policies and programs in diverse settings. For example, Australian researchers (Nathan, Seward, Sutherland, Wolfenden, Wyse, Yoong et al.) have a robust collection of studies in childcare and school settings that promote implementation of policies to improve food offerings, physical activity opportunities, and adherence to healthy food guidelines.83–85 Their studies have targeted cooks, executive staff, and teachers as implementation agents. In a recent example from this group,86 the researchers applied Rogers’ Diffusion of Innovation theory to select eight implementation strategies (e.g., audit and feedback, leadership support) targeting key theoretical constructs, with the goal of promoting implementation of a healthy canteen (i.e., cafeteria) policy in Australian schools. Using RE-AIM to guide the evaluation, the team found significant increases in reach, adoption, and six-month maintenance of the policy. These studies illustrate the types of implementation challenges in NEB that IS can address.

IMPLICATIONS FOR RESEARCH AND PRACTICE

This paper was designed to provide practical opportunities for IS across the spectrum of NEB science and practice while illustrating the inherently pragmatic nature of IS and offering examples for improving implementation in the real world. Specifically, practitioners in nutrition education and behavior can contribute to meaningful advancement of the field by applying IS and participating in study designs. Adopting an IS lens can increase the engagement of front-line nutrition educators in developing more implementable innovations and can improve the deployment and sustainment of existing programs. For NEB research, IS suggests research questions for understanding the success or failure of implementation efforts, as well as new methods for determining effective approaches to implementation. Applying IS can help avoid discarding interventions with potential and can speed the translation and long-term sustainability of effective interventions. Finally, NEB researchers are well positioned to advance the knowledge base through application of IS. Determining the relative value of implementation strategies, alone and in combination, is a clear contribution the field can make, particularly because such comparisons are needed in complex community settings.66 Comparisons are needed not only for implementing innovations but also for sustaining them74 and for stopping practices known to be harmful (i.e., de-implementation).87 Ultimately, application of IS in NEB has the potential to accelerate the adoption, scale-up, and sustainability of innovations while contributing to exciting scientific discoveries that transcend disciplines.

Acknowledgements:

TS is supported by the National Institute of Diabetes and Digestive and Kidney Diseases (K01 DK110141 and R03 DK117197) and the National Institute of General Medical Sciences (5P20GM109096) of the National Institutes of Health, as well as by the Arkansas Biosciences Institute and the Lincoln Health Foundation. The content is solely the responsibility of the authors and does not necessarily represent the official views of the funding agencies. We would also like to thank Dr. Madeleine Sigman-Grant for her helpful review of the paper and Audra Staley for her assistance with reference formatting.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- 1. Dietary Guidelines Advisory Committee. Scientific Report of the 2015 Dietary Guidelines Advisory Committee: Advisory Report to the Secretary of Health and Human Services and the Secretary of Agriculture. Washington, DC: United States Department of Agriculture; 2015.

- 2. Moore LV, Thompson FE. Adults meeting fruit and vegetable intake recommendations - United States, 2013. MMWR Morb Mortal Wkly Rep. 2015;64:709–713.

- 3. Moore LV, Thompson FE, Demissie Z. Percentage of youth meeting federal fruit and vegetable intake recommendations, Youth Risk Behavior Surveillance System, United States and 33 States, 2013. J Acad Nutr Diet. 2017;117:545–553.e3.

- 4. Micha R, Peñalvo JL, Cudhea F, Imamura F, Rehm CD, Mozaffarian D. Association between dietary factors and mortality from heart disease, stroke, and type 2 diabetes in the United States. JAMA. 2017;317:912–924.

- 5. World Cancer Research Fund/American Institute for Cancer Research. Diet, Nutrition, Physical Activity and Cancer: a Global Perspective. Continuous Update Project Expert Report 2018.

- 6. Thomson CA, McCullough ML, Wertheim BC, et al. Nutrition and physical activity cancer prevention guidelines, cancer risk, and mortality in the women’s health initiative. Cancer Prev Res (Phila). 2014;7:42–53.

- 7. Rehm CD, Peñalvo JL, Afshin A, Mozaffarian D. Dietary intake among US adults, 1999–2012. JAMA. 2016;315:2542.

- 8. Jarpe-Ratner E, Folkens S, Sharma S, Daro D, Edens NK. An experiential cooking and nutrition education program increases cooking self-efficacy and vegetable consumption in children in grades 3–8. J Nutr Educ Behav. 2016;48:697–705.e1.

- 9. Parker S, Hunter T, Briley C, et al. Formative assessment using social marketing principles to identify health and nutrition perspectives of Native American women living within the Chickasaw Nation boundaries in Oklahoma. J Nutr Educ Behav. 2011;43:55–62.

- 10. Mayfield KE, Carolan M, Weatherspoon L, Chung KR, Hoerr SM. African American women’s perceptions on access to food and water in Flint, Michigan. J Nutr Educ Behav. 2017;49:519–524.e1.

- 11. Berger-Jenkins E, Jarpe-Ratner E, Giorgio M, Squillaro A, McCord M, Meyer D. Engaging caregivers in school-based obesity prevention initiatives in a predominantly Latino immigrant community: A qualitative analysis. J Nutr Educ Behav. 2017;49:53–59.e1.

- 12. Hammerschmidt P, Tackett W, Golzynski M, Golzynski D. Barriers to and facilitators of healthful eating and physical activity in low-income schools. J Nutr Educ Behav. 2011;43:63–68.

- 13. Swindle TM, Ward WL, Whiteside-Mansell L, Bokony P, Pettit D. Technology use and interest among low-income parents of young children: Differences by age group and ethnicity. J Nutr Educ Behav. 2014;46:484–490.

- 14. Haynes-Maslow L, Auvergne L, Mark B, Ammerman A, Weiner BJ. Low-income individuals’ perceptions about fruit and vegetable access programs: A qualitative study. J Nutr Educ Behav. 2015;47:317–324.e1.

- 15. Folta S, Koch-Weser S, Tanskey L, et al. Branding a school-based campaign combining healthy eating and eco-friendliness. J Nutr Educ Behav. 2017;50:180–189.

- 16. Joseph S, Stevens AM, Ledoux T, O’Connor TM, O’Connor DP, Thompson D. Rationale, design, and methods for process evaluation in the Childhood Obesity Research Demonstration Project. J Nutr Educ Behav. 2015;47:560–565.e1.

- 17. Torquati L, Kolbe-Alexander T, Pavey T, Leveritt M. Changing diet and physical activity in nurses: A pilot study and process evaluation highlighting challenges in workplace health promotion. J Nutr Educ Behav. 2018;50:1015–1025.

- 18. Poelman MP, Steenhuis IHM, de Vet E, Seidell JC. The development and evaluation of an internet-based intervention to increase awareness about food portion sizes: A randomized, controlled trial. J Nutr Educ Behav. 2013;45:701–707.

- 19. Greaney ML, Hardwick CK, Spadano-Gasbarro JL, et al. Implementing a multicomponent school-based obesity prevention intervention: A qualitative study. J Nutr Educ Behav. 2014;46:576–582.

- 20. Gellar L, Druker S, Osganian SK, Gapinski MA, LaPelle N, Pbert L. Exploratory research to design a school nurse-delivered intervention to treat adolescent overweight and obesity. J Nutr Educ Behav. 2012;44:46–54.

- 21. Oropeza S, Sadile MG, Phung CN, et al. STRIVE, San Diego! Methodology of a community-based participatory intervention to enhance healthy dining at Asian and Pacific Islander restaurants. J Nutr Educ Behav. 2018;50:297–306.e1.

- 22. White AH, Wilson JF, Burns A, et al. Use of qualitative research to inform development of nutrition messages for low-income mothers of preschool children. J Nutr Educ Behav. 2011;43:19–27.

- 23. Cousineau T, Houle B, Bromberg J, Fernandez KC, Kling WC. A pilot study of an online workplace nutrition program: The value of participant input in program development. J Nutr Educ Behav. 2008;40:160–167.

- 24. Eccles MP, Mittman BS. Welcome to Implementation Science. Implement Sci. 2006;1:1.

- 25. Allicock M, Campbell MK, Valle CG, Carr C, Resnicow K, Gizlice Z. Evaluating the dissemination of Body & Soul, an evidence-based fruit and vegetable intake intervention: Challenges for dissemination and implementation research. J Nutr Educ Behav. 2012;44:530–538.

- 26. Grady A, Seward K, Finch M, et al. Barriers and enablers to implementation of dietary guidelines in early childhood education centers in Australia: Application of the Theoretical Domains Framework. J Nutr Educ Behav. 2018;50:229–237.e1.

- 27. Jørgensen TS, Krølner R, Aarestrup AK, Tjørnhøj-Thomsen T, Due P, Rasmussen M. Barriers and facilitators for teachers’ implementation of the curricular component of the Boost Intervention targeting adolescents’ fruit and vegetable intake. J Nutr Educ Behav. 2014;46:e1–e8.

- 28. Hastmann TJ, Bopp M, Fallon EA, Rosenkranz RR, Dzewaltowski DA. Factors influencing the implementation of organized physical activity and fruit and vegetable snacks in the HOP’N after-school obesity prevention program. J Nutr Educ Behav. 2013;45:60–68.

- 29. Whittemore R, Chao A, Jang M, et al. Implementation of a school-based internet obesity prevention program for adolescents. J Nutr Educ Behav. 2013;45:586–594.

- 30. Diker A, Cunningham-Sabo L, Bachman K, Stacey JE, Walters LM, Wells L. Nutrition educator adoption and implementation of an experiential foods curriculum. J Nutr Educ Behav. 2013;45:499–509.

- 31. Brown CH, Curran G, Palinkas LA, et al. An overview of research and evaluation designs for dissemination and implementation. Annu Rev Public Health. 2017;38:1–22.

- 32. Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci. 2015;10:53.

- 33. Curran G, Bauer M, Mittman B, Pyne J, Stetler C. Effectiveness-implementation hybrid designs: Combining elements of clinical effectiveness and implementation research to enhance public health impact. Med Care. 2012;50:217–226.

- 34. Bartholomew LK, Parcel GS, Kok G. Intervention Mapping: A process for developing theory and evidence-based health education programs. Health Educ Behav. 1998;25:545–563.

- 35. Durlak JA, DuPre EP. Implementation Matters: A Review of research on the influence of implementation on program outcomes and the factors affecting implementation. Am J Community Psychol. 2008;41:327–350.

- 36. Kirchner JE, Woodward EN, Smith JL, et al. Implementation Science Supports Core Clinical Competencies: An Overview and Clinical Example. Prim Care Companion CNS Disord. 2016;18.

- 37. Ogden T, Fixsen DL. Implementation Science: A brief overview and look ahead. Z Psychol. 2014;222:4–11.

- 38. Bauer MS, Damschroder L, Hagedorn H, Smith J, Kilbourne AM. An introduction to Implementation Science for the non-specialist. BMC Psychol. 2015;3:32.

- 39. Tabak R, Khoong E, Chambers D, Brownson R. Models in Dissemination and Implementation research: Useful tools in public health services and systems research. Front Public Health Serv Syst Res. 2013;2.

- 40. Dissemination & Implementation Models in Health Research and Practice. http://dissemination-implementation.com/index.aspx. Accessed February 4, 2019.

- 41. Bandura A. Health promotion by social cognitive means. Health Educ Behav. 2004;31:143–164.

- 42. Kirk MA, Kelley C, Yankey N, Birken SA, Abadie B, Damschroder L. A systematic review of the use of the Consolidated Framework for Implementation Research. Implement Sci. 2016;11:72.

- 43. Kilbourne AM, Neumann MS, Pincus HA, Bauer MS, Stall R. Implementing evidence-based interventions in health care: Application of the Replicating Effective Programs framework. Implement Sci. 2007;2:42.

- 44. Glasgow R. What types of evidence are most needed to advance behavioral medicine? Ann Behav Med. 2008;35:19–25.

- 45. Proctor EK, Powell BJ, Mcmillen JC. Implementation strategies: recommendations for specifying and reporting. Implement Sci. 2013;8:139.

- 46. Powell B, Waltz T, Chinman M, et al. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implement Sci. 2015;10:21.

- 47. Waltz T, Powell B, Matthieu M, et al. Use of concept mapping to characterize relationships among implementation strategies and assess their feasibility and importance: results from the Expert Recommendations for Implementing Change (ERIC) study. Implement Sci. 2015;10:109.

- 48. Collins LM, Murphy SA, Strecher V. The Multiphase Optimization Strategy (MOST) and the Sequential Multiple Assignment Randomized Trial (SMART): New methods for more potent ehealth interventions. Am J Prev Med. 2007;32:S112–S118.

- 49. Collins LM. Conceptual Introduction to the Multiphase Optimization Strategy (MOST). In: Optimization of Behavioral, Biobehavioral, and Biomedical Interventions. Springer, Cham; 2018:1–34.

- 50. Proctor E, Silmere H, Raghavan R, et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health. 2011;38:65–76.

- 51. Eccles MP, Armstrong D, Baker R, et al. An implementation research agenda. Implement Sci. 2009;4:18.

- 52. Livet M, Haines ST, Curran GM, et al. Implementation Science to advance care delivery: A primer for pharmacists and other health professionals. Pharmacotherapy. 2018;38:490–502.

- 53. Quintanilha M, Downs S, Lieffers J, Berry T, Farmer A, McCargar LJ. Factors and barriers associated with early adoption of nutrition guidelines in Alberta, Canada. J Nutr Educ Behav. 2013;45:510–517.

- 54. Lyn R, Evers S, Davis J, Maalouf J, Griffin M. Barriers and supports to implementing a nutrition and physical activity intervention in child care: Directors’ perspectives. J Nutr Educ Behav. 2014;46:171–180.

- 55. Bunger A, Powell B. Tracking implementation strategies: A Description of a practical approach and early findings. Health Res Policy Syst. 2017;15:15.

- 56. Pinnock H, Barwick M, Carpenter CR, et al. Standards for Reporting Implementation Studies (StaRI) Statement. BMJ. 2017;356:i6795.

- 57. Weiner BJ, Lewis CC, Stanick C, et al. Psychometric assessment of three newly developed implementation outcome measures. Implement Sci. 2017;12:108.

- 58. Shea CM, Jacobs SR, Esserman DA, Bruce K, Weiner BJ. Organizational readiness for implementing change: A Psychometric assessment of a new measure. Implement Sci. 2014;9:7.

- 59. Helfrich C, Li Y, Sharp N, Sales A. Organizational readiness to change assessment (ORCA): Development of an instrument based on the Promoting Action on Research in Health Services (PARIHS) framework. Implement Sci. 2009;4:38.

- 60. Aarons GA, Ehrhart MG, Farahnak LR. The Implementation Leadership Scale (ILS): Development of a brief measure of unit level implementation leadership. Implement Sci. 2014;9:45.

- 61. Shea CM, Young TL, Powell BJ, et al. Researcher readiness for participating in community-engaged dissemination and implementation research: A Conceptual framework of core competencies. Transl Behav Med. 2017;7:1–12.

- 62. Holt CL, Chambers DA. Opportunities and challenges in conducting community-engaged dissemination/implementation research. Transl Behav Med. 2017;7:389–392.

- 63. Curran GM, Mukherjee S, Allee E, Owen RR. A process for developing an implementation intervention: QUERI Series. Implement Sci. 2008;3:17.

- 64. Rubenstein L, Stockdale S, Sapir N, Altman L. A Patient-centered primary care practice approach using evidence-based quality improvement: Rationale, methods, and early assessment of implementation. J Gen Intern Med. 2014;29:589–597.

- 65. Powell BJ, Beidas RS, Lewis CC, et al. Methods to improve the selection and tailoring of implementation strategies. J Behav Health Serv Res. 2017;44:177–194.

- 66. Ivers NM, Grimshaw JM, Jamtvedt G, et al. Growing literature, stagnant science? Systematic review, meta-regression and cumulative analysis of audit and feedback interventions in health care. J Gen Intern Med. 2014;29:1534–1541.

- 67. Anthierens S, Verhoeven V, Schmitz O, Coenen S. Academic detailers’ and general practitioners’ views and experiences of their academic detailing visits to improve the quality of analgesic use: Process evaluation alongside a pragmatic cluster randomized controlled trial. BMC Health Serv Res. 2017;17:841.

- 68. Ivers NM, Grimshaw JM. Reducing research waste with implementation laboratories. Lancet. 2016;388:547–548.

- 69. Bekelman TA, Bellows LL, Johnson SL. Are family routines modifiable determinants of preschool children’s eating, dietary intake, and growth? A Review of intervention studies. Curr Nutr Rep. 2017;6:171–189.

- 70. Schoenwald SK, Garland AF, Chapman JE, Frazier SL, Sheidow AJ, Southam-Gerow MA. Toward the effective and efficient measurement of implementation fidelity. Adm Policy Ment Health. 2011;38:32–43.

- 71. Swindle T, Selig J, Rutledge J, Whiteside-Mansell L, Curran G. Fidelity monitoring in complex interventions: A case study of the WISE intervention. Arch Public Health. 2018;76:53.

- 72. Ritchie MJ, Dollar KM, Miller CJ, Oliver KA, Smith JL, Lindsay JA, Kirchner JE. Using Implementation Facilitation to Improve Care in the Veterans Health Administration. Veterans Health Administration, Quality Enhancement Research Initiative (QUERI) for Team-Based Behavioral Health; 2017. https://www.queri.research.va.gov/tools/implementation/Facilitation-Manual.pdf.

- 73. Stirman SW, Miller CJ, Toder K, Calloway A. Development of a framework and coding system for modifications and adaptations of evidence-based interventions. Implement Sci. 2013;8:65.

- 74. Wiltsey Stirman S, Kimberly J, Cook N, Calloway A, Castro F, Charns M. The sustainability of new programs and innovations: a review of the empirical literature and recommendations for future research. Implement Sci. 2012;7:17.

- 75. Scheirer MA, Dearing JW. An Agenda for research on the sustainability of public health programs. Am J Public Health. 2011;101:2059–2067.

- 76. Whiteside-Mansell L, Swindle TM. Together We Inspire Smart Eating: A preschool curriculum for obesity prevention in low-income families. J Nutr Educ Behav. 2017;49:789–792.

- 77. Stetler CB, Legro MW, Wallace CM, et al. The role of formative evaluation in implementation research and the QUERI experience. J Gen Intern Med. 2006;21:S1–S8.

- 78. Swindle T, Johnson SL, Whiteside-Mansell L, Curran GM. A mixed methods protocol for developing and testing implementation strategies for evidence-based obesity prevention in childcare: a cluster randomized hybrid type III trial. Implement Sci. 2017;12:90.

- 79. Lawton R, Taylor N, Clay-Williams R. Positive deviance: a different approach to achieving patient safety. BMJ Qual Saf. 2014;23:880–883.

- 80. Marra A, Guastelli L, Araújo C. Positive deviance: a program for sustained improvement in hand hygiene compliance. Am J Infect Control. 2011;39:1–5.

- 81. Gabbay R, Friedberg M, Miller-Day M. A positive deviance approach to understanding key features to improving diabetes care in the medical home. Ann Fam Med. 2013;11:S99–S107.

- 82. Harvey G, Kitson A. Implementing Evidence-Based Practice in Healthcare: A Facilitation Guide. Abingdon, Oxon: Routledge; 2015.

- 83. Jones J, Wolfenden L, Wyse R, et al. A randomised controlled trial of an intervention to facilitate the implementation of healthy eating and physical activity policies and practices in childcare services. BMJ Open. 2014;4.

- 84. Wolfenden L, Nathan N, Williams CM, et al. A randomised controlled trial of an intervention to increase the implementation of a healthy canteen policy in Australian primary schools: Study protocol. Implement Sci. 2014;9:147.

- 85. Bell A, Davies L, Finch M, et al. An implementation intervention to encourage healthy eating in centre-based child-care services: Impact of the Good for Kids Good for Life programme. Public Health Nutr. 2015;18:1610–1619.

- 86. Reilly KL, Nathan N, Wiggers J, Yoong SL, Wolfenden L. Scale up of a multi-strategic intervention to increase implementation of a school healthy canteen policy: Findings of an intervention trial. BMC Public Health. 2018;18:860.

- 87. van Bodegom-Vos L, Davidoff F, Marang-van de Mheen PJ. Implementation and de-implementation: Two sides of the same coin? BMJ Qual Saf. 2016;26:495–501.