Abstract

Visual perception is heavily influenced by “top-down” factors, including goals, expectations, and prior knowledge about the environmental context. Recent research has demonstrated the beneficial role threat-related cues play in perceptual decision making; however, the psychological processes contributing to this differential effect remain unclear. Since visual imagery helps to create perceptual representations or “templates” based on prior knowledge (e.g., cues), the present study examines the role vividness of visual imagery plays in enhanced perceptual decision making following threatening cues. In a perceptual decision-making task, participants used threat-related and neutral cues to detect perceptually degraded fearful and neutral faces presented at predetermined perceptual thresholds. Participants’ vividness of imagery was measured by the Vividness of Visual Imagery Questionnaire-2 (VVIQ-2). Our results replicated prior work demonstrating that threat cues improve accuracy, perceptual sensitivity, and speed of perceptual decision making compared to neutral cues. Furthermore, better performance following threat and neutral cues was associated with higher VVIQ-2 scores. Importantly, more precise and rapid perceptual decision making following threatening cues was associated with greater VVIQ-2 scores, even after controlling for performance related to neutral cues. This association may be because greater imagery ability allows one to conjure more vivid threat-related templates, which facilitate subsequent perception. While the detection of threatening stimuli is well studied in the literature, our findings elucidate how threatening cues occurring prior to the stimulus aid in subsequent perception. Overall, these findings highlight the necessity of considering top-down threat-related factors in visual perceptual decision making.

Keywords: perception, mental templates, vividness of imagery, top-down processing

Making fast and accurate decisions about threats in our environment is critical for survival. In our everyday life, we use prior knowledge in a “top-down” manner to detect incoming potential threats. For example, a driver navigating roads during a blizzard likely utilizes prior knowledge of this experience in conjunction with warning signs indicating treacherous driving conditions to anticipate and avoid icy patches. It is unlikely that the driver in these conditions would wait to respond to icy roads until the sheen of the ice had been captured by “bottom-up” processes. Emerging research shows that top-down factors, such as threat-related cues and contexts, enhance the sensitivity and speed of subsequent perceptual decision making more than neutral cues and contexts (Sussman, Weinberg, Szekely, Hajcak, & Mohanty, 2017; Szekely, Rajaram, & Mohanty, 2017); however, the psychological processes facilitating this effect remain unclear. Prestimulus visual imagery may play an important role, as links have been well-established between visual imagery and perception in cognitive and affective neuroscience research (for reviews, see Albright, 2012; Kosslyn, Ganis, & Thompson, 2001; Lang, 1979; Pearson, Naselaris, Holmes, & Kosslyn, 2015). Hence, the current study aimed to examine whether threatening cues in our environment benefit perception due to the vividness with which we can mentally image threatening stimuli compared to neutral stimuli.

According to the predictive coding hypothesis, prior knowledge is instantiated in the form of a template of the anticipated stimulus to which subsequent incoming sensory information can then be matched (Dosher & Lu, 1999; Friston, 2005). Similarly, visual search models propose that prior knowledge regarding a target’s features contributes to the formation of a template that can be used to match against sensory evidence (Dosher & Lu, 1999; Schmidt & Zelinsky, 2009; Wolfe, Horowitz, Kenner, Hyle, & Vasan, 2004). The visual imagery process is thought to simulate perceptual representations on the basis of past experience and provides a perceptual template that can influence subsequent perception (Albright, 2012). For example, behavioral evidence shows that imagining a stimulus, such as a letter, prior to its arrival improves detection of that stimulus (Farah, 1985; Ishai & Sagi, 1995). Neurally, participants asked to maintain a mental image of specific stimuli show increased activity in stimulus-specific cortices and the patterns of activity during mental imagery are similar to patterns elicited by the actual stimulus, indicating overlapping neural mechanisms for imagery and perception of stimuli (Albright, 2012; Kosslyn, Thompson, Kim, & Alpert, 1995; Pearson et al., 2015). Similarly, prior work has demonstrated that overlapping neural networks for imagery and perception also exist for affective facial stimuli (Kim et al., 2007). While much of the literature on imagery involves the cuing of explicit imagery, prior work that has informed the current paradigm demonstrates that cues prompting implicit imagery evoke similar internal processes (Summerfield et al., 2006), including in the case of facial stimuli (Greening, Mitchell, & Smith, 2018).

Most research examining the role of templates and imagery has been conducted using relatively nonemotional stimuli. However, this research suggests that mental imagery could play a particularly important role in threat perception, as imagery of threatening stimuli is more vivid (Bywaters, Andrade, & Turpin, 2004) and produces stronger emotional arousal than neutral imagery (Lang, 1979). Additionally, literature in emotional face perception has demonstrated that anticipating the predicted emotionality of a face through visual imagery can influence the perceived affect of forthcoming facial stimuli (Diekhof et al., 2011). Furthermore, both vividness of imagery and emotional arousal aid perception (Bywaters et al., 2004; Sutherland & Mather, 2012). Here, we measured individual differences in vividness of imagery and examined their relationship with perceptual measures following threatening compared to neutral cues. To do so, we used a perceptual discrimination task in which participants used threatening and neutral face cues to detect perceptually degraded threatening or neutral faces presented at participants’ predetermined perceptual thresholds. Thus, the task encouraged participants to use two prestimulus templates, one to detect threatening and another to detect neutral perceptually degraded faces. We hypothesized that threatening cues will result in faster and more sensitive perceptual performance than neutral cues due to keener cue-related mental representations for threatening compared to neutral faces. These sharper threatening face templates will aid not only in the detection of subsequently presented fearful faces, but also in the correct identification of neutral faces. Additionally, we hypothesized the improvement in perceptual performance following threatening and neutral cues will be associated with greater vividness of mental imagery, which aids the formation of sharper mental templates. 
Finally, we predicted that improved perceptual performance following threatening cues (controlling for neutral cues) will be more strongly associated with mental imagery since it facilitates the formation of sharper mental templates for threatening compared to neutral faces.

Method

Participants

Data were collected from 131 (82 female, 49 male) undergraduate students (M = 21.0 years) at Stony Brook University who participated in this experiment for course credit. Since no direct investigations have examined correlations between vividness of imagery and perceptual sensitivity, we based our sample size on related studies measuring vividness of imagery and perceptual imagery manipulations (Hatakeyama, 1981; N = 120). One participant was excluded from analyses for being an outlier (greater than 2 standard deviations from the mean) on d-prime for fear cue trials. Participants consented to participation prior to engaging in any procedural tasks. This investigation was approved by the Stony Brook University Institutional Review Board.

Measure: The Vividness of Visual Imagery Questionnaire-2 (VVIQ-2)

The VVIQ-2 (Marks, 1995) is a 32-item measure that assesses vividness of mental imagery by asking participants to rate, on a scale from 1 (No image at all, you only “know” that you are thinking of an object) to 5 (Perfectly clear and as vivid as normal vision), how clearly they are able to conjure mental representations of various situations (e.g., “Think of the rising sun. Consider carefully the picture that comes before your mind’s eye … A rainbow appears”). The VVIQ-2 has been found to have high internal consistency reliability (α = .91) and construct validity (Campos, 2011).

Stimuli

The task utilized 32 face images (16 fearful, FF; 16 neutral, NF) from the NimStim set (Tottenham et al., 2009). These images were converted to grayscale (512 × 512 pixels) and equalized for luminance and spatial frequency using the SHINE (Spectrum, Histogram, and Intensity Normalization and Equalization) toolbox for MATLAB (Willenbockel et al., 2010). The SHINE toolbox aids in minimizing confounds due to low-level image properties (Fiset, Blais, Gosselin, Bub, & Tanaka, 2008). Stimulus presentations were followed by perceptual masks. The masks were created by first averaging four randomly selected faces (2 of each valence type). Then, the resulting image was divided into 100-pixel squares that were randomly reorganized into one mask. The masks were designed to have the same low-level image properties as the stimuli used in the experiment.
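The mask construction described above (average four faces, cut the average into squares, shuffle the squares) can be sketched roughly as follows. This is an illustrative sketch only, not the authors' code: the function names, the nested-list image representation, and the handling of tile size are assumptions. In particular, the text does not say how the squares were fitted to a 512 × 512 image, so the sketch simply crops the image to a whole number of tiles.

```python
import random

def average_images(images):
    """Pixel-wise mean of equally sized grayscale images (lists of rows)."""
    n = len(images)
    height, width = len(images[0]), len(images[0][0])
    return [[sum(img[r][c] for img in images) / n for c in range(width)]
            for r in range(height)]

def scramble_blocks(image, block, rng):
    """Cut the image into block x block tiles and shuffle tile positions.

    Assumption: the image is cropped to a whole number of tiles first.
    """
    height = len(image) - len(image) % block
    width = len(image[0]) - len(image[0]) % block
    origins = [(r, c) for r in range(0, height, block)
               for c in range(0, width, block)]
    sources = origins[:]
    rng.shuffle(sources)
    out = [[0.0] * width for _ in range(height)]
    # Copy each source tile into a (generally different) destination slot.
    for (dst_r, dst_c), (src_r, src_c) in zip(origins, sources):
        for dr in range(block):
            for dc in range(block):
                out[dst_r + dr][dst_c + dc] = image[src_r + dr][src_c + dc]
    return out
```

Because shuffling only relocates pixels, the mask keeps the same pixel-intensity histogram as the averaged face, which is in line with the stated goal of matching low-level image properties.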

Tasks

Threshold task.

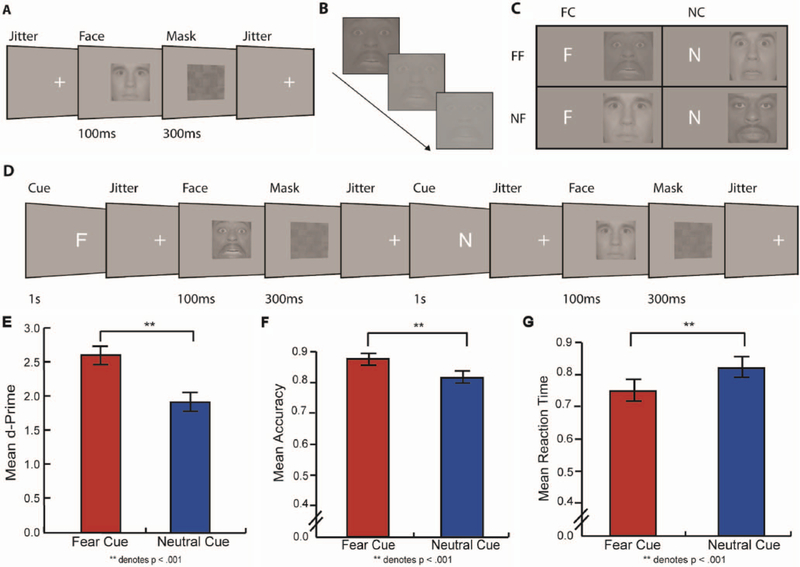

Each participant’s perceptual threshold (75% correct) was determined separately for fearful face and neutral face images using a perceptual discrimination task (Summerfield et al., 2006). Images were presented in 16 blocks of 16 trials each using PsychoPy software (Peirce, 2007), resulting in 128 fearful face trials and 128 neutral face trials. On each trial, an initial fixation cross was presented for 2–3 s, followed by a perceptually degraded face (fearful or neutral) for 100 ms and then a perceptual mask for 300 ms (Figure 1A). Participants identified the face as fearful or neutral by pressing one of two adjacent buttons on a keyboard. Perceptual degradation was achieved by manipulating the contrast of images on a scale ranging from 100% to 0%, such that 100% corresponded to no contrast degradation and 0% corresponded to complete removal of contrast, leaving the image as a gray square. Fearful face and neutral face images were initially presented at a reduced contrast level of 10%, making images visible but not easy to see. The level of contrast on subsequent trials was governed by adaptive staircasing until each participant’s individual threshold of discrimination (75% correct) for fearful faces and neutral faces was separately determined (Figure 1B).
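The text does not specify which staircase rule was used. As one illustration of how an adaptive procedure can converge on 75% correct, the sketch below uses a weighted up-down rule: contrast drops by one step after a correct response and rises by three steps after an error, so the two tendencies balance when the probability of a correct response is .75. The function name, step size, and the `respond` callback are hypothetical, and contrast is expressed as a proportion (0.10 for 10%).

```python
def weighted_staircase(respond, start=0.10, step=0.005, n_trials=128,
                       floor=0.0, ceiling=1.0):
    """Weighted up-down staircase; returns the contrast shown on each trial.

    respond(contrast) must return True for a correct response.
    Assumed rule (not from the source): down 1 step if correct, up 3 if not.
    """
    contrast = start
    history = []
    for _ in range(n_trials):
        history.append(contrast)
        if respond(contrast):
            contrast -= step          # correct: make the face harder to see
        else:
            contrast += 3 * step      # incorrect: make the face easier to see
        contrast = min(ceiling, max(floor, contrast))
    return history
```

Under this rule, a threshold estimate could be taken as the mean contrast over the final trials of the run; running one staircase for fearful faces and another for neutral faces would yield the two separate thresholds described above.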

Figure 1.

Upper panel: A. Timeline of the threshold task. Perceptual thresholds, 75% correct, were found for fearful and neutral faces. B. Adaptive staircases, which made images harder or easier to see based on subject responses, were used in the threshold task to find each participant’s threshold for fearful and neutral faces. C. Cue and stimulus pairs used in the cued task: fear cue/fearful face (FC/FF), neutral cue/fearful face (NC/FF), fear cue/neutral face (FC/NF), and neutral cue/neutral face (NC/NF). D. Timeline of the cue task. Participants used cues to respond to a perceptually degraded fearful or neutral face. Lower panel: Compared to neutral cues, threatening cues led to improvement in E. d-prime, F. accuracy, and G. reaction time. Faces used in this figure are from the NimStim Face Stimulus Set (Tottenham et al., 2009), a publicly available set of emotional face stimuli. The models pictured above have consented to having images of their faces published in scientific journals.

Cued discrimination task.

The cue task (see Figure 1D for the timeline) was designed to examine the impact of threat-related cues on subsequent perceptual decision making. The task began with the presentation of either the letter F (fear face cue; FC), N (neutral face cue; NC), or I (uninformative cue; UC). Fear cues indicated that participants would be making a “fearful or not” decision for subsequently presented faces, and neutral cues indicated that they would be making a “neutral or not” decision for subsequent faces. In the uninformative cue condition, cue-related information was withheld and participants were asked to simply respond to whether the faces were fearful or neutral. Participants were then shown fearful and neutral face images perceptually degraded to contrast levels ranging from 6% less than to 8% more than their previously determined perceptual threshold. This range provided a distribution from which presented contrast values were drawn (Summerfield et al., 2006) and helped to prevent practice effects (Adini, Wilkonsky, Haspel, Tsodyks, & Sagi, 2004). Participants responded by pressing adjacent “yes”/“no” keyboard buttons. The same fearful and neutral face stimuli were presented following all cue types. Thus, participants discriminated between fearful and neutral face stimuli by using either a fear cue-related “fearful face perceptual set”, a neutral cue-related “neutral face perceptual set”, or no perceptual set. The cue was indicative only of the upcoming decision and not of probability; fearful face and neutral face trials were distributed equally for all cue types. Participants were presented with eight blocks of 24 trials, resulting in 64 fear cue (32 fearful face and 32 neutral face), 64 neutral cue (32 fearful face and 32 neutral face), and 64 uninformative cue (32 fearful face and 32 neutral face) trials (Figure 1C). Responses and reaction time (RT) were recorded.
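The trial counts above (three cue types × two face types × 32 trials = 192 trials in eight blocks of 24) can be reproduced with a small sketch like the one below. Several details are assumptions rather than reported facts: that each block was itself balanced across the six cue/face pairings, that contrast offsets were drawn uniformly, and that "6% less than to 8% more than" refers to percentage points of contrast. All names are hypothetical.

```python
import random

CUES = ("FC", "NC", "UC")      # fear, neutral, uninformative cue
FACES = ("FF", "NF")           # fearful face, neutral face

def build_blocks(n_blocks=8, reps_per_block=4, seed=None):
    """Return n_blocks shuffled blocks of (cue, face) trials.

    Assumption: every block contains each cue/face pairing equally often.
    """
    rng = random.Random(seed)
    blocks = []
    for _ in range(n_blocks):
        block = [(cue, face) for cue in CUES for face in FACES
                 for _ in range(reps_per_block)]
        rng.shuffle(block)
        blocks.append(block)
    return blocks

def jittered_contrast(threshold, rng, low=-0.06, high=0.08):
    """Contrast near the participant's threshold, clipped to [0, 1].

    Assumption: uniform offsets in proportion units (-0.06 to +0.08).
    """
    return min(1.0, max(0.0, threshold + rng.uniform(low, high)))
```

With the defaults, each block holds 3 × 2 × 4 = 24 trials, and across eight blocks each cue type appears 64 times, split evenly between fearful and neutral faces.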

Results

From the initial thresholding task, the threshold for perception of fearful faces (M = 0.177, SD = 0.267) was slightly greater than the threshold for perception of neutral faces (M = 0.118, SD = 0.188), t(130) = 2.402, SE = .025, d = 0.184, p = .018. Since we hypothesized that fear cues will improve perceptual performance even after controlling for improvement from neutral cues, we first compared behavioral effects following each cue type. Across the whole study sample, fear cue trials (M = 0.880, SD = 0.071) compared to neutral cue trials (M = 0.811, SD = 0.097) led to greater accuracy, t(130) = 8.992, SE = 0.008, d = 0.964, p < .001. Fear cue trials (M = 2.655, SD = 0.765) compared to neutral cue trials (M = 1.985, SD = 0.787) also resulted in greater d-prime, t(130) = 9.205, SE = 0.073, d = 0.816, p < .001. Finally, fear cue trials (M = 0.741, SD = 0.172) compared to neutral cue trials (M = 0.834, SD = 0.164) led to faster RT, t(130) = −11.49, SE = 0.008, d = 0.984, p < .001 (Figure 1E–1G).
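For readers unfamiliar with d-prime, it is conventionally computed as the difference between the z-transformed hit rate and false-alarm rate. The sketch below shows that standard formula for a single cue condition; for fear cue trials, a hit would be a “yes” to a fearful face and a false alarm a “yes” to a neutral face. The function name and the log-linear correction for extreme rates are assumptions; the text does not state whether or how rates of 0 or 1 were corrected.

```python
from statistics import NormalDist

def d_prime(hits, misses, false_alarms, correct_rejections):
    """d' = z(hit rate) - z(false-alarm rate).

    Assumption: a log-linear correction (add 0.5 to each count, 1 to each
    total) keeps the rates away from 0 and 1, where z is undefined.
    """
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    z = NormalDist().inv_cdf   # inverse of the standard normal CDF
    return z(hit_rate) - z(fa_rate)
```

A participant who says “yes” equally often to both face types gets a d-prime of zero, and the value grows as hits increase and false alarms decrease, which is why it indexes perceptual sensitivity independently of response bias.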

Additionally, we aimed to investigate the effects of congruence between cue and stimulus pairs by conducting a 2 cue (fear and neutral) × 2 congruence (congruent cue–stimulus pair and incongruent cue–stimulus pair) ANOVA on both accuracy and RT. For accuracy, these analyses yielded a significant main effect of cue, F(1, 130) = 83.584, p < .001, such that fear cues (M = 0.880, SE = 0.006) led to improved accuracy compared to neutral cues (M = 0.811, SE = 0.009). There was a significant main effect of congruence, F(1, 130) = 5.352, p = .022, such that incongruent trials (M = 0.857, SE = 0.008) led to improved accuracy compared to congruent trials (M = 0.834, SE = 0.009). There was no interaction between cue and congruence for accuracy, F(1, 130) = 0.346, p = .557. For RT, these analyses yielded a significant main effect of cue, F(1, 130) = 130.910, p < .001, such that fear cues (M = 0.743, SE = 0.015) led to faster RT than neutral cues (M = 0.833, SE = 0.014). Additionally, the analyses demonstrated a main effect of congruence, F(1, 130) = 38.003, p < .001, such that congruent trials (M = 0.764, SE = 0.015) led to faster RT than incongruent trials (M = 0.812, SE = 0.015). Lastly, a significant interaction was found for cue and congruence, F(1, 130) = 13.154, p < .001, such that congruent faces were detected faster than incongruent faces more so for fear cues than for neutral cues.

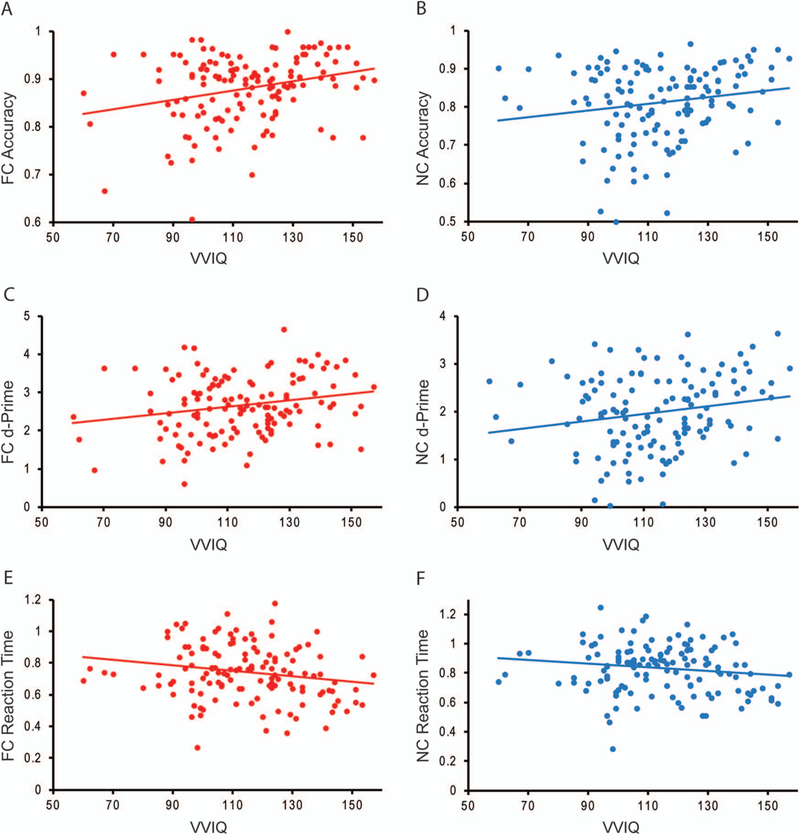

Next, we examined the hypothesis that improved perceptual performance following fearful and neutral cues that involve the formulation of specific perceptual sets would be associated with higher VVIQ-2 scores across subjects. Correlational analyses showed that higher VVIQ-2 scores were associated with (a) greater accuracy for fear cue trials, r(131) = 0.265, p = .002 and neutral cue trials, r(131) = 0.172, p = .049, (b) greater d-prime for fear cue trials, r(131) = 0.213, p = .015 and neutral cue trials, r(131) = 0.193, p = .027, as well as (c) faster RT for fear cue trials, r(131) = −0.194, p = .026 but not neutral cue trials, r(131) = −0.145, p = .099 (Figure 2A–F). We sought to confirm that this relationship between improved perceptual performance and higher VVIQ-2 scores did not exist in the absence of cue-related information. Correlational analyses did not demonstrate a relationship between VVIQ-2 scores and accuracy, r(131) =0.097, p = .269, d-prime, r(131) = 0.071, p = .419, or RT, r(131) = −0.140, p = .111 for uninformative cue trials. Regression analyses conducted with informative (fear and neutral) cue accuracy and uninformative cue accuracy predicting VVIQ-2 scores, R2 = 0.063, Adjusted R2 = 0.049, F(2, 128) = 4.316, p = .015, demonstrated a positive relationship between informative cue accuracy, b = 77.261, t(128) = 2.709, p = .008, 95% CI [20.825, 133.698] and VVIQ-2, which did not exist for uninformative cues, b = −24.324, t(128) = −0.727, p = .468, 95% CI [−90.493, 41.844]. Regression analyses with informative and uninformative cue d-prime predicting VVIQ-2 scores, R2 = 0.064, Adjusted R2 =0.049, F(2, 128) = 4.353, p = .015, yielded a similar pattern of results such that informative cue d-prime, b = 8.649, t(128) = 2.831, p = .005, 95% CI [2.603, 14.694], demonstrated a positive relationship with VVIQ-2 scores, whereas uninformative cues did not show this relationship, b = −2.600, t(128) = −0.887, p = .377, 95% CI [−8.400, 3.199]. 
Lastly, the regression analysis with informative and uninformative cue RT predicting VVIQ-2 scores was not significant, R2 = 0.031, Adjusted R2 = 0.016, F(2, 128) = 2.064, p = .131. Neither predictor contributed significantly to the model.

Figure 2.

Scatterplots displaying correlations between vividness of imagery (VVIQ-2) scores and behavioral performance measures. Greater VVIQ-2 scores were associated with A. increased accuracy for fear cues (FC), B. increased accuracy for neutral cues (NC), C. improved d-prime for FC, D. improved d-prime for NC, and E. faster reaction time (RT) for FC. F. shows a trending but nonsignificant negative relationship with RT for NC.

Subsequently, we examined the hypothesis that better perceptual performance following fear cues would be associated with VVIQ-2 scores over and above the relationship with neutral cues. The overall model with fear and neutral cue accuracy simultaneously predicting VVIQ-2 scores was significant, R2 = 0.072, Adjusted R2 = 0.058, F(2, 128) = 5.000, p = .008. Additionally, greater fear cue accuracy was associated with higher VVIQ-2 scores, b = 65.149, t(128) = 2.430, p = .017, 95% CI [12.090, 118.207] even after controlling for neutral cue accuracy, but neutral cue accuracy did not show the same relationship, b = 10.845, t(128) = 0.554, p = .580, 95% CI [−27.877, 49.568]. We further examined whether this relationship between greater fear cue accuracy and higher VVIQ-2 scores was driven by accuracy for congruent or incongruent faces following these cues. Regression analyses, R2 = 0.271, Adjusted R2 = 0.073, F(2, 128) = 5.073, p = .008, showed that accuracy for congruent faces (i.e., hits) was marginally associated, b = 30.212, t(128) = 1.759, p = .081, 95% CI [−3.769, 64.193] with VVIQ-2 scores, whereas accuracy for incongruent faces (i.e., correct rejections) was significantly associated, b = 42.431, t(128) = 2.646, p = .009, 95% CI [10.698,74.165] with VVIQ-2 scores. Next, we examined the relationship between d-prime for fear cue and neutral cues with VVIQ-2 scores. Regression analyses showed that the overall model was significant, R2 = 0.058, Adjusted R2 = 0.044, F(2, 128) = 3.966, p = .021, with higher fear cue d-prime showing a marginal association with higher VVIQ-2 scores, b = 4.056, t(128) = 1.689, p = .094, 95% CI [−0.697, 8.808] after controlling for neutral cue d-prime, which was not the case for neutral cue d-prime, b = 3.101, t(128) = 1.328, p = .187, 95% CI [−1.519, 7.721]. 
Finally, the regression analysis with fear cue and neutral cue RT predicting VVIQ-2 scores was marginally significant, R2 = 0.039, Adjusted R2 = 0.024, F(2, 128) = 2.626, p = .076; however, neither fear cue RT, b = 4.056, t(128) = 1.689, p = .094, 95% CI [−0.697, 8.808], nor neutral cue RT, b = 4.056, t(128) = 1.689, p = .094, 95% CI [−0.697, 8.808], contributed significantly over and above the other.
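The overall-model statistics reported for these two-predictor regressions are linked by standard identities, which can be useful when reading them side by side. The sketch below is a reader's aid rather than part of the authors' analysis; the function names are hypothetical, and small discrepancies from the reported values are expected because the published R2 values are rounded to three decimals.

```python
def f_from_r2(r2, n_predictors, df_residual):
    """Overall F statistic implied by R^2: (R^2 / k) / ((1 - R^2) / df_res)."""
    return (r2 / n_predictors) / ((1 - r2) / df_residual)

def adjusted_r2(r2, n_predictors, df_residual):
    """Adjusted R^2 = 1 - (1 - R^2) * (n - 1) / df_res, with n = df_res + k + 1."""
    n = df_residual + n_predictors + 1
    return 1 - (1 - r2) * (n - 1) / df_residual

# Fear and neutral cue accuracy model: R2 = 0.072, F(2, 128) reported as 5.000
print(round(f_from_r2(0.072, 2, 128), 3))    # 4.966
print(round(adjusted_r2(0.072, 2, 128), 3))  # 0.058 (rounded), as reported
```

Here the implied adjusted R2 matches the reported 0.058, and the implied F of about 4.97 is within rounding distance of the reported 5.000.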

Discussion

In everyday life, we frequently use prior knowledge to anticipate and detect upcoming stimuli, particularly potential threats. Empirical studies show that prior threat-related cues facilitate perceptual decision making; however, the psychological processes by which this facilitation occurs are unclear. In the present study, we aimed to examine the contributory role vividness of imagery may play in threat cue-related facilitation of perceptual decision making. Our results showed that, compared to neutral cues, threat-related cues improved the accuracy, perceptual sensitivity, and speed with which subsequently presented facial stimuli were detected. Additionally, this facilitating effect existed irrespective of whether the subsequent faces were congruent or incongruent with the preceding cue, indicating that threat-related cues helped in the detection of both threatening and neutral faces.

Next, we showed that greater self-reported vividness of visual imagery was associated with greater perceptual benefits for threatening and neutral cues, resulting in faster, more accurate, and more sensitive detection of subsequently presented faces. Importantly, we found that greater self-reported vividness of visual imagery was associated with greater perceptual benefits for threatening cues after controlling for neutral cues. Thus, prior to stimulus arrival, our ability to vividly image the forthcoming stimulus is associated with how well we use cues to detect the stimulus, especially in the case of threatening cues. This positive association between imagery ability and cue-related perceptual gains may be due in part to the clarity with which vivid imagers are able to conjure mental templates used to aid perception. It is hypothesized that prestimulus cues guide our perception and attention by generating preparatory representations of forthcoming stimuli, or perceptual templates, against which incoming sensory evidence is matched (Clark, 2013). While there is debate about whether these templates are pictorial or semantic in content, there is now substantial neural evidence supporting their pictorial nature, which includes representations of expected colors, features, locations, viewpoints, and so forth (for review, see Reeder, 2017). Considerable evidence shows that mental imagery of a simple visual object or feature, as well as a complex facial stimulus (Finke, 1986; Wu, Duan, Tian, Wang, & Zhang, 2012), prior to its arrival aids in its subsequent identification, possibly because better imagery aids in matching the prestimulus representations with the actual stimulus. Neurally, this relationship may be supported by evidence showing that individuals with greater imagery ability show more synchronous neural activity between mentally imaging and perceiving a stimulus (Pearson et al., 2015).

Our study provides additional evidence for the view that vivid imagery aids formulation of keener perceptual templates that subsequently aid perception. This study demonstrates that the relationship between better imagery and perceptual performance exists specifically in cued conditions in which participants can utilize perceptual sets or templates to help guide perception, as opposed to conditions in which the same stimuli are detected without any prior cues. Importantly, our findings extend the literature on imagery and visual perception by demonstrating a positive association between vividness of imagery and improved perceptual performance following threatening cues even after accounting for variance due to neutral cues. Because threatening images are more vividly represented than neutral images (Bywaters et al., 2004), and emotional faces, specifically, are better encoded and maintained in memory than neutral faces (Sergerie, Lepage, & Armony, 2005; Sessa, Luria, Gotler, Jolicœur, & Dell’Acqua, 2011), greater mental imagery ability may be especially helpful in generating well-defined prestimulus templates of threatening faces. Additionally, vivid imagery from threatening cues may elicit greater physiological arousal (Lang, 1979), which has been shown to benefit subsequent perception of not only threatening but also neutral stimuli (Kensinger, Garoff-Eaton, & Schacter, 2007; Mather & Sutherland, 2011). Furthermore, aversive conditioning, which pairs sensory stimuli with unconditioned aversive stimuli such as loud noises or shocks, has been shown to increase perceptual sensitivity in both visual (Åhs, Miller, Gordon, & Lundström, 2013; Parma, Ferraro, Miller, Åhs, & Lundström, 2015) and olfactory (Rhodes, Ruiz, Ríos, Nguyen, & Miskovic, 2018) domains. Future research that aims to disentangle the impact of imagery versus the impact of arousal on perception will aid in the mechanistic understanding of threat perception.

The present findings may have substantial translational value for understanding the mechanisms of anxiety and for strengthening treatments. The facilitating effect of cues on subsequent perceptual sensitivity has been shown to be specific to threatening but not happy cues (Sussman et al., 2016). What differentiates adaptive from maladaptive fear responding is the tendency for clinically anxious individuals to fabricate a level of predicted threat that is irrational or unreasonable given the reality of a situation (Grupe & Nitschke, 2013), indicating that anxious individuals will utilize threatening cues differently (Sussman et al., 2016). Additionally, imagery, in the form of vivid intrusive images of feared stimuli, has been shown to play a key role in most anxiety disorders, and treatments for anxious pathology have involved reducing and neutralizing vivid negative imagery (Hirsch & Holmes, 2007). Our findings provide preliminary evidence for the increased utility of threat cues in perceptual discrimination for individuals with stronger imagery ability. As both expectations of negative future outcomes and vivid imagery are exaggerated in anxiety, it is important to include these in ecologically valid models of anxious pathology. Future research could expand this line of work to investigate the role that mental imagery plays in treatment responsivity and outcome, particularly for individuals with anxious pathology.

Contributor Information

Gabriella Imbriano, Department of Psychology, Stony Brook University.

Tamara J. Sussman, Irving Medical Center, Columbia University.

Jingwen Jin, Department of Psychology, Stony Brook University.

Aprajita Mohanty, Department of Psychology, Stony Brook University.

References

- Adini Y, Wilkonsky A, Haspel R, Tsodyks M, & Sagi D (2004). Perceptual learning in contrast discrimination: The effect of contrast uncertainty. Journal of Vision, 4(12), 2 10.1167/4.12.2 [DOI] [PubMed] [Google Scholar]

- Åhs F, Miller SS, Gordon AR, & Lundström JN (2013). Aversive learning increases sensory detection sensitivity. Biological Psychology, 92, 135–141. 10.1016/j.biopsycho.2012.11.004 [DOI] [PubMed] [Google Scholar]

- Albright TD (2012). On the perception of probable things: Neural substrates of associative memory, imagery, and perception. Neuron, 74, 227–245. 10.1016/j.neuron.2012.04.001 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bywaters M, Andrade J, & Turpin G (2004). Determinants of the vividness of visual imagery: The effects of delayed recall, stimulus affect and individual differences. Memory, 12, 479–488. 10.1080/09658210444000160 [DOI] [PubMed] [Google Scholar]

- Campos A (2011). Internal consistency and construct validity of two versions of the Revised Vividness of Visual Imagery Questionnaire. Perceptual and Motor Skills, 113, 454–460. 10.2466/04.22.PMS.113.5.454-460 [DOI] [PubMed] [Google Scholar]

- Clark A (2013). Whatever next? Predictive brains, situated agents, and the future of cognitive science. Behavioral and Brain Sciences, 36, 181–204. 10.1017/S0140525X12000477 [DOI] [PubMed] [Google Scholar]

- Diekhof EK, Kipshagen HE, Falkai P, Dechent P, Baudewig J, & Gruber O (2011). The power of imagination—How anticipatory mental imagery alters perceptual processing of fearful facial expressions. NeuroImage, 54, 1703–1714. 10.1016/j.neuroimage.2010.08.034 [DOI] [PubMed] [Google Scholar]

- Dosher BA, & Lu Z-L (1999). Mechanisms of perceptual learning. Vision Research, 39, 3197–3221. 10.1016/S0042-6989(99)00059-0 [DOI] [PubMed] [Google Scholar]

- Farah MJ (1985). Psychophysical evidence for a shared representational medium for mental images and percepts. Journal of Experimental Psychology: General, 114, 91–103. 10.1037/0096-3445.114.1.91 [DOI] [PubMed] [Google Scholar]

- Finke RA (1986). Some consequences of visualization in pattern identification and detection. The American Journal of Psychology, 99, 257–274. 10.2307/1422278 [DOI] [PubMed] [Google Scholar]

- Fiset D, Blais C, Gosselin F, Bub D, & Tanaka J (2008). Potent features for the categorization of Caucasian, African American, and Asian faces in Caucasian observers. Journal of Vision, 8(6), 258 10.1167/8.6.258 [DOI] [Google Scholar]

- Friston K (2005). A theory of cortical responses. Philosophical Transactions of the Royal Society of London Series B: Biological Sciences, 360, 815–836. 10.1098/rstb.2005.1622 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Greening SG, Mitchell DGV, & Smith FW (2018). Spatially generalizable representations of facial expressions: Decoding across partial face samples. Cortex, 101, 31–43. 10.1016/j.cortex.2017.11.016 [DOI] [PubMed] [Google Scholar]

- Grupe DW, & Nitschke JB (2013). Uncertainty and anticipation in anxiety: An integrated neurobiological and psychological perspective. Nature Reviews Neuroscience, 14, 488–501. 10.1038/nrn3524 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hatakeyama T (1981). Individual differences in imagery ability and mental rotation. Tohoku Psychologica Folia, 40, 6–23. [Google Scholar]

- Hirsch CR, & Holmes EA (2007). Mental imagery in anxiety disorders. Psychiatry, 6, 161–165. 10.1016/j.mppsy.2007.01.005 [DOI] [Google Scholar]

- Ishai A, & Sagi D (1995). Common mechanisms of visual imagery and perception. Science, 268, 1772–1774. 10.1126/science.7792605 [DOI] [PubMed] [Google Scholar]

- Kensinger EA, Garoff-Eaton RJ, & Schacter DL (2007). Effects of emotion on memory specificity: Memory trade-offs elicited by negative visually arousing stimuli. Journal of Memory and Language, 56, 575–591. 10.1016/j.jml.2006.05.004 [DOI] [Google Scholar]

- Kim SE, Kim JW, Kim JJ, Jeong BS, Choi EA, Jeong YG, … Ki SW (2007). The neural mechanism of imagining facial affective expression. Brain Research, 1145, 128–137. 10.1016/j.brainres.2006.12.048 [DOI] [PubMed] [Google Scholar]

- Kosslyn SM, Ganis G, & Thompson WL (2001). Neural foundations of imagery. Nature Reviews Neuroscience, 2, 635–642. 10.1038/35090055 [DOI] [PubMed] [Google Scholar]

- Kosslyn SM, Thompson WL, Kim IJ, & Alpert NM (1995). Topographical representations of mental images in primary visual cortex. Nature, 378, 496–498. 10.1038/378496a0

- Lang PJ (1979). A bio-informational theory of emotional imagery. Psychophysiology, 16, 495–512. 10.1111/j.1469-8986.1979.tb01511.x

- Marks DF (1995). New directions for mental imagery research. Journal of Mental Imagery, 19, 153–167.

- Mather M, & Sutherland MR (2011). Arousal-biased competition in perception and memory. Perspectives on Psychological Science, 6, 114–133. 10.1177/1745691611400234

- Parma V, Ferraro S, Miller SS, Åhs F, & Lundström JN (2015). Enhancement of odor sensitivity following repeated odor and visual fear conditioning. Chemical Senses, 40, 497–506. 10.1093/chemse/bjv033

- Pearson J, Naselaris T, Holmes EA, & Kosslyn SM (2015). Mental imagery: Functional mechanisms and clinical applications. Trends in Cognitive Sciences, 19, 590–602. 10.1016/j.tics.2015.08.003

- Peirce JW (2007). PsychoPy—Psychophysics software in Python. Journal of Neuroscience Methods, 162, 8–13. 10.1016/j.jneumeth.2006.11.017

- Reeder RR (2017). Individual differences shape the content of visual representations. Vision Research, 141, 266–281. 10.1016/j.visres.2016.08.008

- Rhodes LJ, Ruiz A, Ríos M, Nguyen T, & Miskovic V (2018). Differential aversive learning enhances orientation discrimination. Cognition and Emotion, 32, 885–891. 10.1080/02699931.2017.1347084

- Schmidt J, & Zelinsky GJ (2009). Search guidance is proportional to the categorical specificity of a target cue. Quarterly Journal of Experimental Psychology, 62, 1904–1914. 10.1080/17470210902853530

- Sergerie K, Lepage M, & Armony JL (2005). A face to remember: Emotional expression modulates prefrontal activity during memory formation. NeuroImage, 24, 580–585. 10.1016/j.neuroimage.2004.08.051

- Sessa P, Luria R, Gotler A, Jolicœur P, & Dell’Acqua R (2011). Interhemispheric ERP asymmetries over inferior parietal cortex reveal differential visual working memory maintenance for fearful versus neutral facial identities. Psychophysiology, 48, 187–197. 10.1111/j.1469-8986.2010.01046.x

- Summerfield C, Egner T, Greene M, Koechlin E, Mangels J, & Hirsch J (2006). Predictive codes for forthcoming perception in the frontal cortex. Science, 314, 1311–1314. 10.1126/science.1132028

- Sussman TJ, Szekely A, Hajcak G, & Mohanty A (2016). It’s all in the anticipation: How perception of threat is enhanced in anxiety. Emotion, 16, 320–327. 10.1037/emo0000098

- Sussman TJ, Weinberg A, Szekely A, Hajcak G, & Mohanty A (2017). Here comes trouble: Prestimulus brain activity predicts enhanced perception of threat. Cerebral Cortex, 27, 2695–2707. 10.1093/cercor/bhw104

- Sutherland MR, & Mather M (2012). Negative arousal amplifies the effects of saliency in short-term memory. Emotion, 12, 1367–1372. 10.1037/a0027860

- Szekely A, Rajaram S, & Mohanty A (2017). Context learning for threat detection. Cognition and Emotion, 31, 1525–1542. 10.1080/02699931.2016.1237349

- Tottenham N, Tanaka JW, Leon AC, McCarry T, Nurse M, Hare TA, … Nelson C (2009). The NimStim set of facial expressions: Judgments from untrained research participants. Psychiatry Research, 168, 242–249. 10.1016/j.psychres.2008.05.006

- Willenbockel V, Sadr J, Fiset D, Horne GO, Gosselin F, & Tanaka JW (2010). Controlling low-level image properties: The SHINE toolbox. Behavior Research Methods, 42, 671–684. 10.3758/BRM.42.3.671

- Wolfe JM, Horowitz TS, Kenner N, Hyle M, & Vasan N (2004). How fast can you change your mind? The speed of top-down guidance in visual search. Vision Research, 44, 1411–1426. 10.1016/j.visres.2003.11.024

- Wu J, Duan H, Tian X, Wang P, & Zhang K (2012). The effects of visual imagery on face identification: An ERP study. Frontiers in Human Neuroscience, 6, 305. 10.3389/fnhum.2012.00305