Abstract

Background

Peer-reviewed research is paramount to the advancement of science. Ideally, the peer review process is an unbiased, fair assessment of the scientific merit and credibility of a study; however, well-documented biases arise in all methods of peer review. Systemic biases have been shown to directly impact the outcomes of peer review, yet little is known about the downstream impacts of unprofessional reviewer comments that are shared with authors.

Methods

In an anonymous survey of international participants in science, technology, engineering, and mathematics (STEM) fields, we investigated the pervasiveness of unprofessional comments and authors’ perceptions of the long-term implications of receiving them. Specifically, we assessed authors’ perceptions of scientific aptitude, productivity, and career trajectory after receiving an unprofessional peer review.

Results

We show that survey respondents across four intersecting categories of gender and race/ethnicity received unprofessional peer review comments equally. However, traditionally underrepresented groups in STEM fields were most likely to perceive negative impacts on scientific aptitude, productivity, and career advancement after receiving an unprofessional peer review.

Discussion

Studies show that a negative perception of aptitude leads to lowered self-confidence, short-term disruptions in success and productivity and delays in career advancement. Therefore, our results indicate that unprofessional reviews have likely perpetuated, and will continue to perpetuate, the gap in STEM fields for traditionally underrepresented groups in the sciences.

Keywords: Peer review, Underrepresented minorities, STEM, Intersectionality

Introduction

The peer review process is an essential step in protecting the quality and integrity of scientific publications, yet there are many issues that threaten the impartiality of peer review and undermine both the science and the scientists (Kaatz, Gutierrez & Carnes, 2014; Lee et al., 2013). A growing body of quantitative evidence shows violations of objectivity and bias in the peer review process for reasons based on author attributes (e.g., language, institutional affiliation, nationality), author identity (e.g., gender, sexuality) and reviewer perceptions of the field (e.g., territoriality within the field, personal gripes with authors, scientific dogma, discontent with or distrust of methodological advances) (Lee et al., 2013). The most influential demonstrations of systemic biases within the peer review system have relied on experimental manipulation of author identity or attributes (e.g., the classic “Joan” vs “John” study; Goldberg, 1968; Wennerås & Wold, 1997) or analyses of journal-reported metrics such as number of papers submitted, acceptance rates, length of time spent in review and reviewer scores (Fox, Burns & Meyer, 2016; Fox & Paine, 2019; Helmer et al., 2017; Lerback & Hanson, 2017). These studies have focused largely on the inequality of outcomes resulting from inequities in the peer review process. While these studies have been invaluable for uncovering trends and patterns and increasing awareness of existing biases, they do not specifically assess the content of the reviews (Resnik, Gutierrez-Ford & Peddada, 2008), the downstream effects that unfair, biased and ad hominem comments may have on authors or how these reviewer comments may perpetuate representation gaps in science, technology, engineering, and mathematics (STEM) fields.

In the traditional peer review process, the content, tone and thoroughness of a manuscript review is the sole responsibility of the reviewer (the identity of whom is often protected by anonymity), yet the contextualization and distribution of reviews to authors is performed by the assigned (handling) editor at the journal to which the paper was submitted. In this tiered system, journal editors are largely considered responsible for policing reviewer comments and are colloquially referred to as the “gatekeepers” of peer review. Both reviewers and editors are under considerable time pressures to move manuscripts through peer review, often lack compensation commensurate with time invested, experience heavy workloads and are subject to inherent biases of their own, which may translate into irrelevant and otherwise unprofessional comments being first written and then passed along to authors (Resnik & Elmore, 2016; Resnik, Gutierrez-Ford & Peddada, 2008).

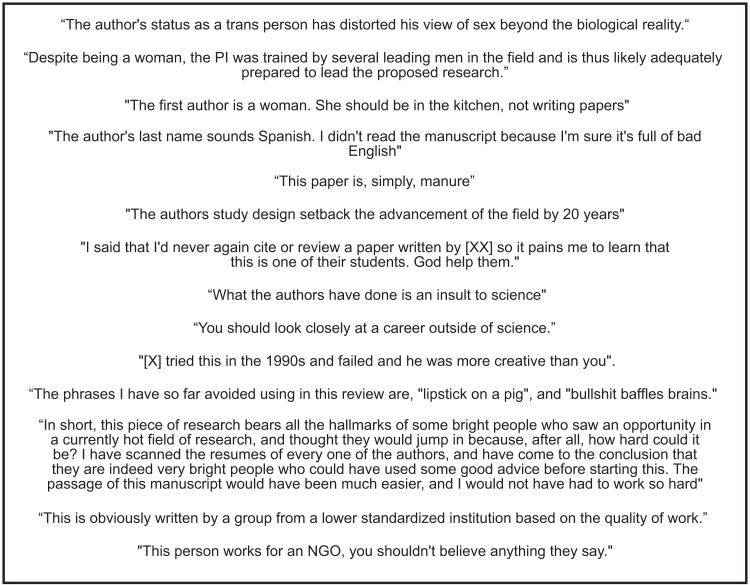

We surveyed STEM scientists who have submitted manuscripts to a peer-reviewed journal as first author to understand the impacts of receiving unprofessional peer review comments on the perception of scientific aptitude (confidence as a scientist), productivity (publications per year) and career advancement (ability to advance within the field). This study defined an unprofessional peer review comment as any statement that is unethical or irrelevant to the nature of the work; this includes comments that: (1) lack constructive criticism, (2) are directed at the author(s) rather than the nature or quality of the work, (3) use personal opinions of the author(s)/work rather than evidence-based criticism, or (4) are “mean-spirited” or cruel (for examples of comments received by survey respondents that fit these criteria, see Fig. 1). The above definition was provided as a guideline for survey respondents to separate frank, constructive and even harsh reviews from those that are blatantly inappropriate or irrelevant. Specifically, this study aimed to understand the content of the unprofessional peer reviews, the frequency at which they are received and the subsequent impacts on the recipient’s perception of their abilities. Given that psychological studies show that overly harsh criticisms can lead to diminished success (Baron, 1988), we tested for the effects of unprofessional peer review on the perception of scientific aptitude, productivity and career advancement of all respondents. Underrepresented groups in particular are vulnerable to stereotype threat, wherein negative societal stereotypes about performance abilities and aptitude are internalized and subsequently expressed (Leslie et al., 2015). Further, the combination of social categorizations may lead to amplified sources of oppression and stereotype threat (Crenshaw, 1991); therefore, it is necessary to assess the impacts of unprofessional peer review comments across intersectional gender and racial/ethnic groups.

Figure 1. Examples of unprofessional peer reviews from survey respondents.

Permission to publish these comments was explicitly given by respondents who certified the comments were reported accurately.

Materials and Methods

Survey methods and administration

The data for this study came from an anonymous survey of international members of the STEM community using Qualtrics survey software. Data were collected under institutional review board agreements and federalwide assurance at Occidental College (IRB00009103, FWA00005302) and California State University, Northridge (IRB00001788, FWA00001335). The survey was administered in English between 28 February 2019 and 10 May 2019 using the online platform and was open to anyone self-identifying as a member of the STEM community who had published their scholarly work in a peer-reviewed system. Participation in the survey was voluntary and no compensation or incentives were offered. All respondents had to certify that they were 18 years or older and had read the informed consent statement; participants were able to exit the survey at any point without consequence. Participants were recruited broadly through social media platforms, direct posting on scientific listservs and email invitations to colleagues, department chairs and organizations focused on diversity and inclusive practices in STEM fields (see Supplemental Files for distribution methods). Targeted emails were used to increase representation of respondents. Data on response rates from specific platforms were not collected.

The survey required participants to provide basic demographic information including gender identity, level of education, career stage, country of residence, field of expertise and racial and/or ethnic identities (see Supplemental Files for specific survey questions). Throughout the entire survey, all response fields included “I prefer not to say” as an opt-out choice. Once demographic information was collected from participants, the study’s full definition of an unprofessional peer review was presented and respondents self-identified whether they had ever received a peer review comment as first author that fit this definition. Survey respondents answering “no” or “I prefer not to say” were automatically redirected to the end of the survey, as no additional questions were necessary. Respondents answering “yes” were asked a series of follow-up questions designed to determine the nature of the unprofessional comments, the total number of scholarly publications to date and the number of independent times an unprofessional review was experienced.

The perceived impact of the unprofessional reviews on the scientific aptitude, productivity and career advancement of each respondent was assessed using the following questions: (1) To what degree did the unprofessional peer review(s) make you doubt your scientific aptitude? (1–5) 1 = not at all, 5 = I fully doubted my scientific abilities; (2) To what degree do you feel that receiving the unprofessional review(s) limited your overall productivity? Please rate this from 1 to 5. 1 = not at all, 5 = greatly limited number of publications per year; (3) To what degree do you feel that receiving the unprofessional review(s) delayed career advancement? Please rate this from 1 to 5. 1 = not at all, 5 = greatly impacted/delayed career advancement. Finally, respondents were invited to provide direct quotes of unprofessional reviews received (although the respondents were told they could remove any personal or identifying information such as field of study, pronouns, etc.). Participants choosing to share peer review quotes were able to specify whether they gave permission for the quote to be shared/distributed. Explicit permission from each respondent was received to use and distribute all quotes displayed in Fig. 1. At the end of the survey, all respondents were required to certify that all the information provided was true and accurate to the best of their abilities and that the information was shared willingly.

We recognize that there are limitations to our survey design. First, our survey was only administered in English. There also may have been non-response bias for individuals who did not experience negative comments during peer review even though any advertisement of this survey indicated that we sought input from anyone who has ever published a peer-reviewed study as first author. There could also be a temporal element, where authors who received comments more recently may have responded differently than those who received unprofessional reviews many years ago. Additionally, the order of questions was not randomized and participants were asked to complete demographic information before answering questions about their peer review experience, which may have primed respondents to select answers that were more in line with racial or gender-specific stereotypes (Steele & Ambady, 2006). In order to maintain the anonymity of our respondents, we did not ask for any bibliometric data from the authors. Given that our sample of respondents represented a diverse array of career stages, STEM fields, countries of residence and racial/ethnic identities, we do not believe that any of the above significantly limits the interpretation of our results.

Data analysis

We tested for the pervasiveness and downstream effects of unprofessional peer reviews on four intersecting gender and racial/ethnic groups: (1) women of color and non-binary people of color, (2) men of color, (3) white women and white non-binary people and (4) white men. Due to the small number of respondents identifying as non-binary (<1% of respondents), we statistically analyzed women and non-binary genders together in a single category as marginalized genders in the sciences. However, Table S1 provides a full breakdown of responses from each gender identity so that readers may assess patterns without the constraints conferred by statistical assumptions and analyses. To protect the anonymity of individuals who may be personally identified based on the combination of their race/ethnicity and gender, respondents who identified as a race or ethnicity other than white, including those who checked multiple racial and/or ethnic categories, were grouped together for the statistical analysis (Fig. S1). It is important to note that by grouping the respondents into four categories, the analysis captures only the broad patterns for intersectional groups and does not relay the unique experiences of each respondent, which should not be discounted.
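The grouping rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `intersectional_group`, the answer strings ("woman", "non-binary", "man", "white") and the handling of opt-outs are hypothetical and are not taken from the survey instrument or the authors' analysis code.

```python
from typing import Iterable, Optional

# Hypothetical answer labels; the real survey wording may differ.
OPT_OUT = "i prefer not to say"
MARGINALIZED_GENDERS = {"woman", "non-binary"}


def intersectional_group(gender: str, race_ethnicity: Iterable[str]) -> Optional[str]:
    """Assign a respondent to one of the four analysis groups, or None if not classifiable."""
    gender = gender.strip().lower()
    selections = {r.strip().lower() for r in race_ethnicity}

    # Respondents who opted out of either question cannot be placed in a group.
    if gender == OPT_OUT or OPT_OUT in selections or not selections:
        return None
    if gender != "man" and gender not in MARGINALIZED_GENDERS:
        return None

    # Only respondents who selected "white" and nothing else count as white;
    # any other selection, or multiple selections, is grouped as "of color".
    is_white_only = selections == {"white"}

    if gender == "man":
        return "white men" if is_white_only else "men of color"
    if is_white_only:
        return "white women and white non-binary people"
    return "women of color and non-binary people of color"
```

For example, under these assumed labels a respondent who selects "man" and checks two racial/ethnic categories is placed in "men of color", matching the rule that multiple selections are grouped with races or ethnicities other than white.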

Survey respondents (N = 1,106) were given the opportunity to opt out of any question; therefore, the sample sizes were different for each statistical analysis. We tested for differences in the probability of receiving an unprofessional peer review across four intersectional groups (N = 1,077) using a Bayesian logistic regression (family = Bernoulli, link = logit). Of the 642 people who indicated that they received an unprofessional peer review, 617, 620 and 618 answered the questions regarding perceived impacts to their scientific aptitude, productivity and career advancement, respectively. We ran individual Bayesian ordinal logistic regressions (family = cumulative, link = logit) for each of the three questions to test for differences in the probabilities of selecting each score (1–5) across the four groups. All models were run using the brms package (Bürkner & Others, 2017) in R v3.5.2, which uses the Hamiltonian Monte Carlo algorithm in Stan (Hoffman & Gelman, 2014; Stan Development Team, 2015). Each model was run with four chains, 4,000 post-warmup iterations and half Student-t distributions for all priors. Model convergence was assessed for all parameters using Gelman–Rubin diagnostics (Gelman & Rubin, 1992) and visual inspection of trace plots. Posterior predictive checks were visually inspected using the pp_check() function and all assumptions were met. Data are presented as medians and two-tailed 95% Bayesian credible intervals (BCI).
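To make the ordinal model concrete, the following Python sketch shows how a cumulative-logit model turns a set of ordered thresholds and a group-level linear predictor into probabilities for the five response scores. It is a minimal illustration of the model family named above, not the authors' code (which was written in R with brms); the threshold values and the group effect `eta` are hypothetical numbers chosen for demonstration, not fitted estimates.

```python
import math
from typing import List, Sequence


def inv_logit(x: float) -> float:
    """Logistic function, the inverse of the logit link."""
    return 1.0 / (1.0 + math.exp(-x))


def category_probabilities(thresholds: Sequence[float], eta: float = 0.0) -> List[float]:
    """Return P(Y = k) for k = 1..K under a cumulative-logit model.

    The model assumes P(Y <= k) = inv_logit(tau_k - eta), with K - 1
    increasing thresholds tau_k and a linear predictor eta.
    """
    cumulative = [inv_logit(tau - eta) for tau in thresholds] + [1.0]
    probs = []
    previous = 0.0
    for c in cumulative:
        probs.append(c - previous)  # P(Y = k) = P(Y <= k) - P(Y <= k - 1)
        previous = c
    return probs


# Hypothetical thresholds for a 1-5 response scale (illustrative only).
thresholds = [-1.0, 0.0, 1.0, 2.0]

baseline = category_probabilities(thresholds, eta=0.0)
shifted = category_probabilities(thresholds, eta=0.8)  # assumed positive group effect

for score, (p0, p1) in enumerate(zip(baseline, shifted), start=1):
    print(f"score {score}: baseline {p0:.2f}, shifted {p1:.2f}")
```

A positive `eta` moves probability mass from low scores toward high scores, which is the sense in which one group can be more likely than another to select a 5 and less likely to select a 1.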

Results

We received 1,106 responses from people in 46 different countries across >14 STEM disciplines (Fig. 2). Overall, 58% of all the respondents (N = 642) indicated that they had received an unprofessional review, with 70% of those individuals reporting multiple instances (3.5 ± 5.8 reviews, mean ± SD, across all participants). There were no significant differences in the likelihood of receiving an unprofessional review among the intersectional groups (Fig. S2); however, there were clear and consistent differences in downstream effects between groups in perceived impacts on self-confidence, productivity and career trajectories after receiving an unprofessional review.
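As a quick arithmetic check on the headline percentage, the sketch below recomputes it from the counts reported in the text. The variable names are illustrative, and reading 1,077 as an alternative denominator is an assumption made here for comparison, not something the paper does.

```python
total_responses = 1106          # all survey responses
received_unprofessional = 642   # respondents reporting an unprofessional review
group_analysis_n = 1077         # N used in the group-level logistic regression

# Share of all responses, which reproduces the reported 58%.
share_of_all = received_unprofessional / total_responses

# Share relative to the smaller regression sample (comparison only).
share_of_group_sample = received_unprofessional / group_analysis_n

print(f"share of all responses: {share_of_all:.1%}")
print(f"share of regression sample: {share_of_group_sample:.1%}")
```

The reported 58% corresponds to dividing by all 1,106 responses; dividing by the 1,077 respondents included in the group-level model would give a slightly higher figure.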

Figure 2. Survey demographics.

(A) Representative career stages (N = 11), (B) scientific disciplines (N = 14) and (C) countries (N = 46) from survey participants. Color in subset (C) represents number of surveys from each country where white is 0.

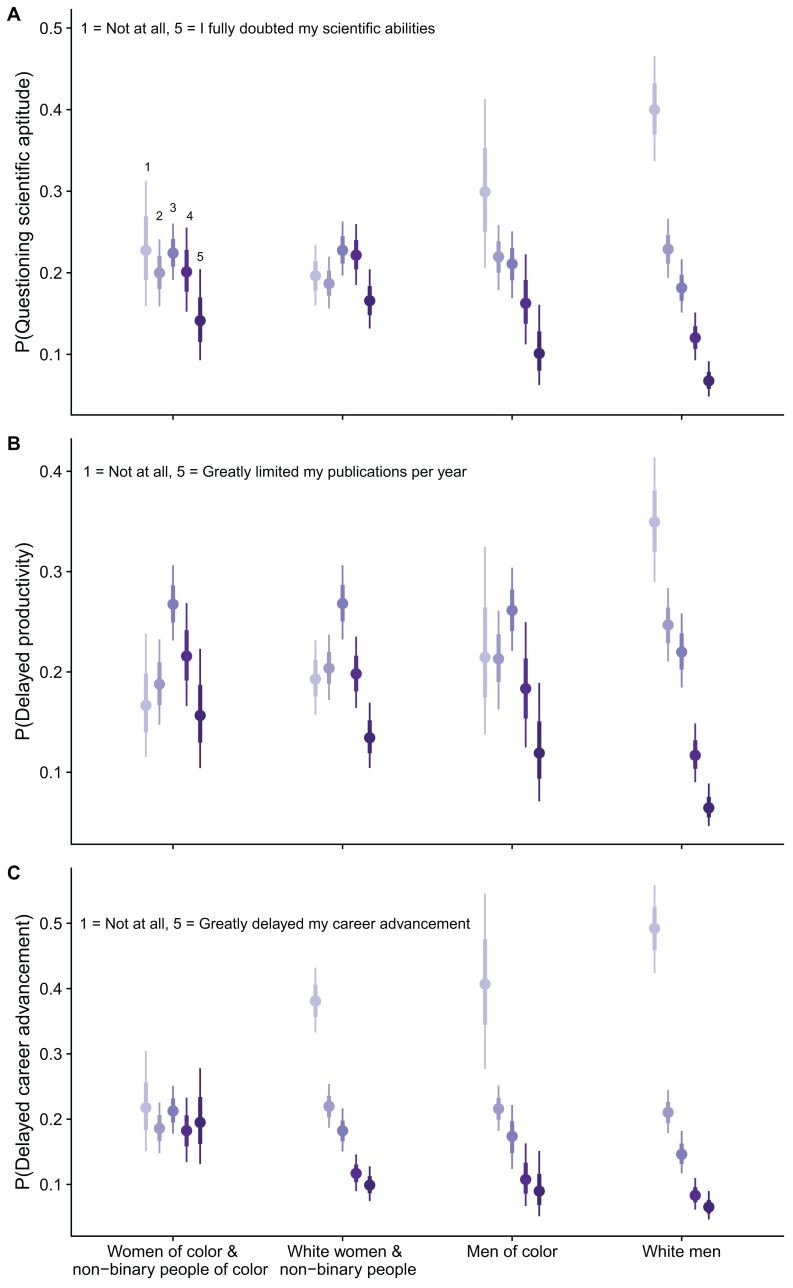

White men were most likely to report no impact to their scientific aptitude (score of 1) after receiving an unprofessional peer review (P[1] = 0.40, 95% BCI [0.34–0.47], where P[score] denotes the probability of selecting a particular score), with a 5.7 times higher probability of selecting a 1 than a 5 (fully doubted their scientific aptitude; P[5] = 0.07, 95% BCI [0.05–0.09]). Notably, white men were 1.3, 2.0 and 1.7 times more likely to indicate no resultant doubt of their scientific aptitude than men of color (P[1] = 0.30, 95% BCI [0.20–0.41]), white women and white non-binary people (P[1] = 0.20, 95% BCI [0.16–0.23]) and women of color and non-binary people of color (P[1] = 0.23, 95% BCI [0.16–0.31]), respectively (Fig. 3A). Together, these results indicate that receiving unprofessional peer reviews had less of an overall impact on the scientific aptitude of white men relative to the remaining three groups.
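The ratios quoted in this paragraph can be recovered from the reported posterior medians. The short Python check below does this by dividing medians directly; that is a simplification assumed here for illustration, since the authors may instead have derived the ratios from the posterior draws.

```python
# Posterior median probabilities of selecting a score of 1 (no doubt),
# as reported in the text for the scientific aptitude question.
p_no_doubt = {
    "white men": 0.40,
    "men of color": 0.30,
    "white women and white non-binary people": 0.20,
    "women of color and non-binary people of color": 0.23,
}
p_full_doubt_white_men = 0.07  # reported P[5] for white men

# White men: probability of a 1 relative to a 5.
ratio_1_vs_5 = round(p_no_doubt["white men"] / p_full_doubt_white_men, 1)

# White men relative to each other group for a score of 1.
ratios_vs_white_men = {
    group: round(p_no_doubt["white men"] / p, 1)
    for group, p in p_no_doubt.items()
    if group != "white men"
}

print(ratio_1_vs_5)
print(ratios_vs_white_men)
```

Under this simplification the values agree with the text: 5.7 for a score of 1 versus 5 among white men, and 1.3, 2.0 and 1.7 for white men relative to the other three groups.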

Figure 3. Results from Bayesian ordinal logistic regression.

Figure shows the probability of selecting a 1–5 for (A) doubting scientific aptitude (N = 617), (B) delayed productivity (N = 620) and (C) delayed career advancement (N = 618) across intersectional groups after receiving an unprofessional peer review. Data are medians and two-tailed 95% BCI. Colors represent level of impact with the lightest (1) as no perceived impact and the darkest (5) as the highest impact. Women and non-binary people were grouped for the statistical analysis to represent marginalized genders in STEM fields.

Similar patterns among intersectional groups emerged for reported impacts of unprofessional reviews on productivity (measured in number of publications per year). Specifically, women of color and non-binary people of color, white women and white non-binary people and men of color were most likely to select a 3 (moderate level of perceived negative impact on productivity), whereas white men were most likely to select a 1 (no perceived impact on their productivity; Fig. 3B). White men were also the least likely of all groups to indicate that receiving unprofessional reviews greatly limited their number of publications per year (P[5] = 0.06, 95% BCI [0.05–0.09]), which significantly differed from groups of women and non-binary people, but not men of color (Fig. 3B).

Women of color and non-binary people of color had the most distinct pattern in reported negative impacts on career advancement (Fig. 3C). Women of color and non-binary people of color had a nearly equal probability of reporting each level of impact (1–5); whereas, men of color, white women and white non-binary people and white men had a decreasing probability of selecting scores indicative of a higher negative impact on career advancement (Fig. 3C). Specifically, women of color and non-binary people of color were the most likely to select that they had significant delays in career advancement as a result of receiving an unprofessional review (P[5] = 0.20, 95% BCI [0.13–0.28]). Women of color and non-binary people of color were also the least likely of the groups to report no impact on career advancement as a result of unprofessional reviews (P[1] = 0.22, 95% BCI [0.15–0.31]).

Discussion

Our data show that unprofessional peer reviews are pervasive in STEM disciplines, regardless of race/ethnicity or gender, with over half of participants reporting that they had received unprofessional comments. Our study did not assess peer review outcomes of participants, but it is possible that unprofessional reviews could impact acceptance rates across groups differently because reviewer perception of competence is implicitly linked to gender regardless of content (Goldberg, 1968; Kaatz, Gutierrez & Carnes, 2014; Wennerås & Wold, 1997). Previous studies have demonstrated clear differences in acceptance/rejection rates between genders (Murray et al., 2019; Symonds et al., 2006; Fox & Paine, 2019) and future studies should test if receiving an unprofessional peer review leads to different acceptance outcomes depending on gender and/or race/ethnicity. While there were no statistical differences in the number of unprofessional reviews received among the four intersectional groups in our study, there were clear and consistent differences in the downstream impacts that the unprofessional reviews had among groups.

Overall, white men were less likely than any other group to question their scientific aptitude or to report delays in productivity or career advancement after receiving an unprofessional review. Groups that reported the highest self-doubt after unprofessional comments also reported the highest delays in productivity. This finding corroborates studies showing that destructive criticism leads to self-doubt (Baron, 1988) and vulnerability to stereotype threat (Leslie et al., 2015), which has quantifiable negative impacts on productivity (Kahn & Scott, 1997) and career advancement (Howe-Walsh & Turnbull, 2014). Conversely, high self-confidence is related to increased persistence after failure (Baumeister et al., 2003). Therefore, scientists with a higher evaluation of their own scientific aptitude after an unprofessional review may be less likely to have reduced productivity following a negative peer review experience.

Women and non-binary people were the most likely to report significant delays in productivity after receiving unprofessional reviews. It is well known that publication rates in STEM fields differ between genders (Symonds et al., 2006; Bird, 2011). Men have 40% more publications than women on average, with women taking up to 2.5 times as long to achieve the same output rate as men in some fields (Symonds et al., 2006). While our study cannot establish a causal link to diminished productivity, the results show that unprofessional reviews reinforce bias that is already being encountered by underrepresented groups on a daily basis. Other well-studied mechanisms leading to reduced productivity for women include (but are not limited to) the following: papers by women authors spend more time in review than papers by men (Hengel, 2017), men are significantly less likely to publish coauthored papers with women than with other men (Salerno et al., 2019), women receive less research funding than men in some countries (Witteman et al., 2019) and women spend more time doing service work than men at academic institutions (Guarino & Borden, 2017). Women are also underrepresented in the peer review process, leading to substantial biases in peer review (Goldberg, 1968; Kaatz, Gutierrez & Carnes, 2014). For example, studies have shown that women are underrepresented as editors, which leads to fewer refereed papers by women (Fox, Burns & Meyer, 2016; Lerback & Hanson, 2017; Helmer et al., 2017; Cho et al., 2014), and that authors of all genders are less likely to recommend women reviewers (Lerback & Hanson, 2017), which contributes to inequity in peer review outcomes (i.e., fewer accepted papers with women as first or last authors) (Murray et al., 2019). However, some strategies, such as double-blind reviewing and open peer review, have been shown to alleviate gender inequity in publishing (Darling, 2015; Budden et al., 2008; Groves, 2010), although there are notable exceptions (Webb, O’Hara & Freckleton, 2008). The difficulty in publishing and lack of productivity may contribute to the high attrition rate of women in academia (Cameron, Gray & White, 2013).

Assessment of intersectional groups has been generally overlooked in research on publication and peer review biases. Yet, traditionally underrepresented racial and ethnic groups experience substantial pressures and limitations to inclusion in STEM fields. Indeed, in our study there were significant differences, especially in perceived delays in career advancement, between white women and white non-binary people, and women of color and non-binary people of color (Fig. 3C). Had we focused on only gender or racial differences, the distinct experiences of women of color and non-binary people of color would have been obscured. Because both gender and racial biases lead to diminished recruitment and retention, as well as higher rates of attrition in the sciences (Xu, 2008; Alfred, Ray & Johnson, 2019), intersectionality cannot be ignored. Our results indicate that receiving unprofessional peer reviews is yet another barrier to equity in career trajectories for women of color and non-binary people of color, in addition to the quality of mentorship, intimidation and harassment, lack of representation and many others (Howe-Walsh & Turnbull, 2014; Zambrana et al., 2015).

Our study indicates that unprofessionalism in reviewer comments is pervasive in STEM fields. Although we found clear patterns indicating that unprofessional peer reviewer comments had a stronger negative impact on underrepresented intersectional groups in STEM, all groups had at least some members reporting the highest level of impact in every category. This unprofessional behavior often occurs under the cloak of anonymity and is being perpetuated by the scientific community upon its members. Comments like several received by participants in our study (see Fig. 1) have no place in the peer review process. Interestingly, fewer than 3% of our participants who received an unprofessional peer review stated that the review was from an open review journal, where the peer reviews and responses from authors are published with the final manuscript (Pulverer, 2010). While a recent laboratory study showed that open peer review practices led to higher cooperation and peer review accuracy (Leek, Taub & Pineda, 2011), less is known about how transparent review practices affect professionalism in peer review comments. Our data indicate that open reviews may help curtail unprofessional comments, but more research on this topic is needed.

Individual scientists have the power and responsibility to address the occurrence of unprofessional peer reviews directly and enact immediate change. We therefore recommend the following: (1) Make peer review mentorship an active part of student and peer learning. For example, departments and scientific agencies should hold workshops on peer review ethics. (2) Follow previously published best practices in peer review (Huh & Sun, 2008; Kaatz, Gutierrez & Carnes, 2014). (3) Practice self-awareness and interrogate whether comments are constructive and impartial (additionally, set aside enough time to review thoroughly, assess relevance and re-read any comments). (4) Encourage journals that do not already have explicit guidelines for the review process to create a guide, as well as implement a process to reprimand or remove reviewers that are acting in an unprofessional manner. For example, the journal could contact the reviewer’s department chair or senior associate if they submit an unprofessional review. (5) Societies should add acceptable peer review practices to their code of conduct and a structure that reprimands or removes society members that submit unprofessional peer reviews. (6) Editors should be vigilant in preventing unprofessional reviews from reaching authors directly and follow published best practices (D’Andrea & O’Dwyer, 2017; Resnik & Elmore, 2016). (7) When in doubt use the “golden rule” (review others as you wish to be reviewed).

Conclusions

Our study shows that unprofessional peer reviews are pervasive and that they disproportionately harm underrepresented groups in STEM. Specifically, underrepresented groups were most likely to report direct negative impacts on their scientific aptitude, productivity and career advancement after receiving an unprofessional peer review. While it was beyond the scope of this study, future investigations should also focus on the effect of unprofessional peer reviews on first-generation scientists, English as a second language, career stage, peer review in grants, and other factors that could lead to differences in downstream effects. Unprofessional peer reviews have no place in the scientific process and individual scientists have the power and responsibility to enact immediate change. However, we recognize and applaud those reviewers and editors (and there are many!) who spend a significant amount of time and effort writing thoughtful, constructive, and detailed criticisms that are integral to moving science forward.

Supplemental Information

Detailed information on survey distribution for this study.

Acknowledgments

We thank the hundreds of respondents who bravely shared some of their harshest, darkest critiques. We thank M. Siple, A. Mattheis, M. DeBiasse, J. Carroll, and the editor and two reviewers for comments and feedback on the manuscript. This is CSUN Marine Biology contribution # 296. This is a co-first authored paper with author order determined by ranking the most unprofessional peer reviews received by each author to date, and alphabetical order.

Funding Statement

The authors received no funding for this work.

Additional Information and Declarations

Competing Interests

The authors declare that they have no competing interests.

Author Contributions

Nyssa J. Silbiger conceived and designed the experiments, performed the experiments, analyzed the data, contributed reagents/materials/analysis tools, prepared figures and/or tables, authored or reviewed drafts of the paper, approved the final draft.

Amber D. Stubler conceived and designed the experiments, performed the experiments, contributed reagents/materials/analysis tools, prepared figures and/or tables, authored or reviewed drafts of the paper, approved the final draft.

Human Ethics

The following information was supplied relating to ethical approvals (i.e., approving body and any reference numbers):

Occidental College (IRB00009103, FWA00005302) and California State University, Northridge (IRB00001788, FWA00001335) granted ethical approval to carry out the study.

Data Availability

The following information was supplied regarding data availability:

Code is available at GitHub: https://github.com/njsilbiger/UnproReviewsInSTEM.

Code is also available at Zenodo: DOI 10.5281/zenodo.3533928.

The survey collected no unique personal identifying information beyond basic demographic information. However, respondent anonymity may be jeopardized through revealing specific combinations of the demographic information collected (e.g., field of study, gender, race/ethnicity). The data that support the findings of this study have been redacted to protect the anonymity of the respondents and are available as a Supplemental File.

References

- Alfred, Ray & Johnson (2019).Alfred MV, Ray SM, Johnson MA. Advancing women of color in STEM: an imperative for U.S. global competitiveness. Advances in Developing Human Resources. 2019;21(1):114–132. doi: 10.1177/1523422318814551. [DOI] [Google Scholar]

- Baron (1988).Baron RA. Negative effects of destructive criticism: impact on conflict, self-efficacy, and task performance. Journal of Applied Psychology. 1988;73(2):199–207. doi: 10.1037/0021-9010.73.2.199. [DOI] [PubMed] [Google Scholar]

- Baumeister et al. (2003).Baumeister RF, Campbell JD, Krueger JI, Vohs KD. Does high self-esteem cause better performance, interpersonal success, happiness, or healthier lifestyles? Psychological science in the public interest. A Journal of the American Psychological Society. 2003;4(1):1–44. doi: 10.1111/1529-1006.01431. [DOI] [PubMed] [Google Scholar]

- Bird (2011).Bird KS. Do women publish fewer journal articles than men? Sex differences in publication productivity in the social sciences. British Journal of Sociology of Education. 2011;32(6):921–937. doi: 10.1080/01425692.2011.596387. [DOI] [Google Scholar]

- Budden et al. (2008).Budden AE, Tregenza T, Aarssen LW, Koricheva J, Leimu R, Lortie CJ. Double-blind review favours increased representation of female authors. Trends in Ecology & Evolution. 2008;23(1):4–6. doi: 10.1016/j.tree.2007.07.008. [DOI] [PubMed] [Google Scholar]

- Bürkner & Others (2017).Bürkner P-C, Others Brms: an R package for Bayesian multilevel models using stan. Journal of Statistical Software. 2017;80(1):1–28. [Google Scholar]

- Cameron, Gray & White (2013).Cameron EZ, Gray ME, White AM. Is publication rate an equal opportunity metric? Trends in Ecology & Evolution. 2013;28(1):7–8. doi: 10.1016/j.tree.2012.10.014. [DOI] [PubMed] [Google Scholar]

- Cho et al. (2014).Cho AH, Johnson SA, Schuman CE, Adler JM, Gonzalez O, Graves SJ, Huebner JR, Marchant DB, Rifai SW, Skinner I, Bruna EM. Women are underrepresented on the editorial boards of journals in environmental biology and natural resource management. PeerJ. 2014;2:e542. doi: 10.7717/peerj.542. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Crenshaw (1991). Crenshaw K. Mapping the margins: intersectionality, identity politics, and violence against women of color. Stanford Law Review. 1991;43(6):1241–1299. doi: 10.2307/1229039.

- Darling (2015). Darling ES. Use of double-blind peer review to increase author diversity. Conservation Biology. 2015;29(1):297–299. doi: 10.1111/cobi.12333.

- D’Andrea & O’Dwyer (2017). D’Andrea R, O’Dwyer JP. Can editors save peer review from peer reviewers? PLOS ONE. 2017;12(10):e0186111. doi: 10.1371/journal.pone.0186111.

- Fox, Burns & Meyer (2016). Fox CW, Burns CS, Meyer JA. Editor and reviewer gender influence the peer review process but not peer review outcomes at an ecology journal. Functional Ecology. 2016;30(1):140–153.

- Fox & Paine (2019). Fox CW, Paine CET. Gender differences in peer review outcomes and manuscript impact at six journals of ecology and evolution. Ecology and Evolution. 2019;9(6):3599–3619. doi: 10.1002/ece3.4993.

- Gelman & Rubin (1992). Gelman A, Rubin DB. Inference from iterative simulation using multiple sequences. Statistical Science: A Review Journal of the Institute of Mathematical Statistics. 1992;7(4):457–472.

- Goldberg (1968). Goldberg P. Are women prejudiced against women? Trans-Action. 1968;5(5):28–30.

- Groves (2010). Groves T. Is open peer review the fairest system? Yes. BMJ. 2010;341:c6424. doi: 10.1136/bmj.c6424.

- Guarino & Borden (2017). Guarino CM, Borden VMH. Faculty service loads and gender: are women taking care of the academic family? Research in Higher Education. 2017;58(6):672–694. doi: 10.1007/s11162-017-9454-2.

- Helmer et al. (2017). Helmer M, Schottdorf M, Neef A, Battaglia D. Gender bias in scholarly peer review. eLife. 2017;6:e21718. doi: 10.7554/eLife.21718.

- Hengel (2017). Hengel E. Publishing while female: are women held to higher standards? Evidence from peer review. 2017. https://www.repository.cam.ac.uk/handle/1810/270621

- Hoffman & Gelman (2014). Hoffman MD, Gelman A. The No-U-Turn sampler: adaptively setting path lengths in Hamiltonian Monte Carlo. Journal of Machine Learning Research. 2014;15(1):1593–1623.

- Howe-Walsh & Turnbull (2014). Howe-Walsh L, Turnbull S. Barriers to women leaders in academia: tales from science and technology. Studies in Higher Education. 2014;41(3):415–428. doi: 10.1080/03075079.2014.929102.

- Huh & Sun (2008). Huh S. Peer review and manuscript management in scientific journals: guidelines for good practice. Journal of Educational Evaluation for Health Professions. 2008;5:5. doi: 10.3352/jeehp.2008.5.5.

- Kaatz, Gutierrez & Carnes (2014). Kaatz A, Gutierrez B, Carnes M. Threats to objectivity in peer review: the case of gender. Trends in Pharmacological Sciences. 2014;35(8):371–373. doi: 10.1016/j.tips.2014.06.005.

- Kahn & Scott (1997). Kahn JH, Scott NA. Predictors of research productivity and science-related career goals among counseling psychology doctoral students. Counseling Psychologist. 1997;25(1):38–67. doi: 10.1177/0011000097251005.

- Lee et al. (2013). Lee CJ, Sugimoto CR, Zhang G, Cronin B. Bias in peer review. Journal of the American Society for Information Science and Technology. 2013;64(1):2–17. doi: 10.1002/asi.22784.

- Leek, Taub & Pineda (2011). Leek JT, Taub MA, Pineda FJ. Cooperation between referees and authors increases peer review accuracy. PLOS ONE. 2011;6:e26895. doi: 10.1371/journal.pone.0026895.

- Lerback & Hanson (2017). Lerback J, Hanson B. Journals invite too few women to referee. Nature. 2017;541(7638):455–457. doi: 10.1038/541455a.

- Leslie et al. (2015). Leslie S-J, Cimpian A, Meyer M, Freeland E. Expectations of brilliance underlie gender distributions across academic disciplines. Science. 2015;347(6219):262–265. doi: 10.1126/science.1261375.

- Murray et al. (2019). Murray D, Siler K, Larivière V, Chan WM, Collings AM, Raymond J, Sugimoto CR. Author-reviewer homophily in peer review. bioRxiv. 2019;16(3):322. doi: 10.1101/400515.

- Pulverer (2010). Pulverer B. Transparency showcases strength of peer review. Nature. 2010;468:29–31. doi: 10.1038/468029a.

- Resnik & Elmore (2016). Resnik DB, Elmore SA. Ensuring the quality, fairness, and integrity of journal peer review: a possible role of editors. Science and Engineering Ethics. 2016;22(1):169–188. doi: 10.1007/s11948-015-9625-5.

- Resnik, Gutierrez-Ford & Peddada (2008). Resnik DB, Gutierrez-Ford C, Peddada S. Perceptions of ethical problems with scientific journal peer review: an exploratory study. Science and Engineering Ethics. 2008;14(3):305–310. doi: 10.1007/s11948-008-9059-4.

- Salerno et al. (2019). Salerno PE, Páez-Vacas M, Guayasamin JM, Stynoski JL. Male principal investigators (almost) don’t publish with women in ecology and zoology. PLOS ONE. 2019;14(6):e0218598. doi: 10.1371/journal.pone.0218598.

- Stan Development Team (2015). Stan Development Team. Stan: a C++ library for probability and sampling. Version 2.8.0. 2015.

- Steele & Ambady (2006). Steele JR, Ambady N. “Math is hard!” the effect of gender priming on women’s attitudes. Journal of Experimental Social Psychology. 2006;42(4):428–436. doi: 10.1016/j.jesp.2005.06.003.

- Symonds et al. (2006). Symonds MRE, Gemmell NJ, Braisher TL, Gorringe KL, Elgar MA, Tregenza T. Gender differences in publication output: towards an unbiased metric of research performance. PLOS ONE. 2006;1(1):e127. doi: 10.1371/journal.pone.0000127.

- Webb, O’Hara & Freckleton (2008). Webb TJ, O’Hara B, Freckleton RP. Does double-blind review benefit female authors? Trends in Ecology & Evolution. 2008;23(7):351–353. doi: 10.1016/j.tree.2008.03.003.

- Wennerås & Wold (1997). Wennerås C, Wold A. Nepotism and sexism in peer-review. Nature. 1997;387(6631):341–343. doi: 10.1038/387341a0.

- Witteman et al. (2019). Witteman HO, Hendricks M, Straus S, Tannenbaum C. Are gender gaps due to evaluations of the applicant or the science? A natural experiment at a national funding agency. Lancet. 2019;393(10171):531–540. doi: 10.1016/s0140-6736(18)32611-4.

- Xu (2008). Xu YJ. Gender disparity in STEM disciplines: a study of faculty attrition and turnover intentions. Research in Higher Education. 2008;49(7):607–624. doi: 10.1007/s11162-008-9097-4.

- Zambrana et al. (2015). Zambrana RE, Ray R, Espino MM, Castro C, Douthirt Cohen B, Eliason J. “Don’t leave us behind”: the importance of mentoring for underrepresented minority faculty. American Educational Research Journal. 2015;52(1):40–72. doi: 10.3102/0002831214563063.

Associated Data

Supplementary Materials

Detailed information on survey distribution for this study.

Data Availability Statement

The following information was supplied regarding data availability:

Code is available at GitHub: https://github.com/njsilbiger/UnproReviewsInSTEM.

Code is also available at Zenodo: DOI 10.5281/zenodo.3533928.

The survey collected no unique personal identifying information beyond basic demographic information. However, respondent anonymity may be jeopardized by revealing specific combinations of the demographic information collected (e.g., field of study, gender, race/ethnicity). The data that support the findings of this study have been redacted to protect the anonymity of the respondents and are available as a Supplemental File.