Abstract

Designing modern photonic devices often involves traversing a large parameter space via an optimization procedure, gradient based or otherwise, and typically results in the designer performing electromagnetic simulations of a large number of correlated devices. In this paper, we investigate the possibility of accelerating electromagnetic simulations using the data collected from such correlated simulations. In particular, we present an approach to accelerate the Generalized Minimal Residual (GMRES) algorithm for the solution of frequency-domain Maxwell’s equations using two machine learning models (principal component analysis and a convolutional neural network). These data-driven models are trained to predict a subspace within which the solution of the frequency-domain Maxwell’s equations approximately lies. This subspace is then used for augmenting the Krylov subspace generated during the GMRES iterations, thus effectively reducing the size of the Krylov subspace and hence the number of iterations needed for solving Maxwell’s equations. By training the proposed models on a dataset of wavelength-splitting gratings, we show an order of magnitude reduction (by a factor of ~10–50) in the number of GMRES iterations required for solving frequency-domain Maxwell’s equations.

Subject terms: Computational science, Nanophotonics and plasmonics

Introduction

Numerical algorithms for solving Maxwell’s equations are often required in a large number of design problems, ranging from integrated photonic devices to RF antennas and filters. Recent advances in large-scale computational capabilities have opened up the door for algorithmic design of electromagnetic devices for a number of applications [1–3]. These design algorithms typically explore very high dimensional parameter spaces using gradient-based optimization methods. A single device optimization requires ~500–1000 electromagnetic simulations, making them the primary computational bottleneck. However, during such a design process, the electromagnetic simulations being performed are on correlated permittivity distributions (e.g. permittivity distributions generated at different steps of a gradient-based design algorithm). The availability of such data opens up the possibility of using data-driven approaches for accelerating electromagnetic simulations.

Accelerating simulations in a gradient-based optimization algorithm has been previously investigated in structural engineering designs via Krylov subspace recycling [4,5]: the key idea in such approaches is to use the subspace spanned by simulations in an optimization trajectory to augment future simulations being performed in the same trajectory. However, these approaches become computationally infeasible if an attempt is made to exploit the full extent of the available simulation data (e.g. use simulations performed in a large number of correlated optimization trajectories) since the augmenting subspace becomes increasingly high-dimensional. Approaches that intelligently select a suitable low-dimensional subspace using machine learning models, like the one explored in this paper, are thus expected to be more efficient at exploiting the available simulation data in practical design settings. Data-driven methods for solving partial differential equations have only recently been investigated, with demonstrations of learning an ‘optimal’ finite-difference stencil [6] for time-domain simulations, or using neural networks for solving partial differential equations [7].

In this paper, we investigate the possibility of accelerating finite difference frequency domain (FDFD) simulation of Maxwell’s equations using data-driven models. Performing an FDFD simulation is equivalent to solving a large sparse system of linear equations, which is typically done using an iterative solver such as the Generalized Minimal Residual (GMRES) algorithm [8]. Here we develop an accelerated solver (data-driven GMRES) by interfacing a machine learning model with GMRES. The machine learning model is trained to predict a subspace that approximates the simulation result, and the subspace is used to augment the GMRES iterations. Since the simulation is still being performed with an iterative solver that runs until the residual falls below a user-defined threshold, the result of the simulation is accurate to that threshold; the performance of the machine learning model only affects how fast the solution is obtained. This is a major advantage of this approach over other data-driven attempts for solving Maxwell’s equations [9,10] that have been presented so far, in which a misprediction by the model would result in an inaccurate simulation. Using wavelength-splitting gratings as an example, we show an order of magnitude reduction in the number of GMRES iterations required for solving frequency-domain Maxwell’s equations.

This paper is organized as follows: the “Data-driven GMRES” section outlines the data-driven GMRES algorithm, and the “Results” section presents results of applying the data-driven GMRES algorithm using two machine learning models, principal component analysis and convolutional neural networks, to simulate wavelength-splitting gratings. We show that data-driven GMRES not only achieves an order of magnitude speedup against GMRES, but also outperforms a number of commonly used data-free preconditioning techniques.

Data-driven GMRES

In the frequency domain, Maxwell’s equations can be reduced to a partial differential equation relating the electric field E(x) to its source J(x):

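$$\left[\nabla \times \nabla \times {} - \omega^2 \varepsilon(\mathbf{x})\right] \mathbf{E}(\mathbf{x}) = -i\omega \mathbf{J}(\mathbf{x}) \tag{1}$$

where $\omega$ is the frequency of the simulation, and $\varepsilon(\mathbf{x})$ is the permittivity distribution as a function of space. The finite difference frequency domain method [11] is a popular approach for numerically solving this partial differential equation. It discretizes this equation on the Yee grid, with perfectly matched layers and periodic boundary conditions used for terminating the simulation domain, to obtain a system of linear equations, $Af = b$, where $A$ is a sparse matrix describing the operator $\nabla \times \nabla \times {} - \omega^2 \varepsilon(\mathbf{x})$, $f$ is the vector of electric field values on the Yee grid, and $b$ is a vector describing the source term on the Yee grid.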

For large-scale problems, this system of equations is typically solved via a Krylov subspace-based iterative method [8]. Krylov subspace methods have the advantage that they only access the matrix $A$ via matrix-vector products, which can be performed very efficiently since $A$ is a sparse matrix. The iterative algorithm that we focus on in this paper is GMRES [8]. In the $i$th iteration of standard GMRES, the solution to $Af = b$ is approximated by $f_i$, where:

$$f_i = \operatorname*{arg\,min}_{f \in \mathcal{K}_i(A, b)} \|Af - b\|^2 \tag{2}$$

where $\mathcal{K}_i(A, b) = \text{span}(b, Ab, A^2 b, \ldots, A^{i-1} b)$ is the Krylov subspace of dimension $i$ generated by $(A, b)$. The Krylov subspace can be generated iteratively, i.e. if an orthonormal basis for $\mathcal{K}_i(A, b)$ has been computed, then an orthonormal basis for $\mathcal{K}_{i+1}(A, b)$ can be efficiently computed with one additional matrix-vector product. In practical simulation settings, the GMRES iteration is performed until the Krylov subspace is large enough for the residual to be smaller than a user-defined threshold. We also note that GMRES is a completely data-free algorithm: it only requires knowledge of the source vector $b$ and the ability to multiply the matrix $A$ with an arbitrary vector.
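The iteration described above can be illustrated with a short NumPy sketch. It is not the solver used for the results in this paper: the function name `gmres_residuals`, the dense random test matrix and the breakdown tolerance are assumptions made for the example, and the reported quantity is assumed to be the relative residual $\|Af_i - b\| / \|b\|$. The Krylov basis is grown with the Arnoldi process, so each iteration costs one matrix-vector product.

```python
import numpy as np

def gmres_residuals(A, b, num_iters):
    """Relative residuals ||A f_i - b|| / ||b|| of plain GMRES, for i = 1, 2, ...

    f_i minimizes ||A f - b|| over span{b, A b, ..., A^(i-1) b}. The basis is
    built with the Arnoldi process (modified Gram-Schmidt).
    """
    n = b.shape[0]
    Q = np.zeros((n, num_iters + 1), dtype=complex)          # orthonormal Krylov basis
    H = np.zeros((num_iters + 1, num_iters), dtype=complex)  # upper Hessenberg matrix
    beta = np.linalg.norm(b)
    Q[:, 0] = b / beta
    residuals = []
    for i in range(num_iters):
        w = A @ Q[:, i]                      # the only place where A is accessed
        for j in range(i + 1):
            H[j, i] = np.vdot(Q[:, j], w)    # component of w along basis vector j
            w = w - H[j, i] * Q[:, j]
        h_next = np.linalg.norm(w)
        H[i + 1, i] = h_next
        # Small least-squares problem equivalent to min ||A f - b|| on the subspace.
        rhs = np.zeros(i + 2, dtype=complex)
        rhs[0] = beta
        Hi = H[: i + 2, : i + 1]
        y, *_ = np.linalg.lstsq(Hi, rhs, rcond=None)
        residuals.append(np.linalg.norm(rhs - Hi @ y) / beta)
        if h_next < 1e-14:                   # subspace is invariant, nothing left to add
            break
        Q[:, i + 1] = w / h_next
    return residuals

# Toy, well-conditioned system (an assumption; not an FDFD matrix).
rng = np.random.default_rng(0)
n = 40
A = 4.0 * np.eye(n) + rng.standard_normal((n, n)) / np.sqrt(n)
b = rng.standard_normal(n)
res = gmres_residuals(A, b, 25)
```

The residuals returned by this sketch never increase from one iteration to the next, because each Krylov subspace contains the previous one.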

The number of GMRES iterations can be significantly reduced if an estimate of the solution $f$ is known. To this end, we train a data-driven model on simulations of correlated structures to predict a (low-dimensional) subspace $\mathcal{U}$ within which $f$ is expected to lie. More specifically, the model predicts $N$ vectors, $u_1, u_2, \ldots, u_N$, such that $f \approx \sum_{n=1}^{N} \alpha_n u_n$ for some coefficients $\alpha_n$, i.e. $f$ approximately lies in $\mathcal{U} = \text{span}(u_1, u_2, \ldots, u_N)$. These vectors can then be used to augment the GMRES iterations (we refer to the augmented version of GMRES as data-driven GMRES throughout the paper). The $i$th iteration of data-driven GMRES can be formulated as:

$$f_i = \operatorname*{arg\,min}_{f \in \mathcal{K}_i(\hat{A}, \hat{b}) \oplus \mathcal{U}} \|Af - b\|^2 \tag{3}$$

where $\hat{A}$ and $\hat{b}$ are given by:

$$\hat{A} = P^\perp(A\mathcal{U}) A \tag{4}$$

$$\hat{b} = P^\perp(A\mathcal{U}) b \tag{5}$$

where $P^\perp(A\mathcal{U})$ is the operator projecting a vector out of the space spanned by $Au_1, Au_2, \ldots, Au_N$. Note that while in GMRES (Eq. 2) the generated Krylov subspace is responsible for estimating the entire solution $f$, in data-driven GMRES (Eq. 3) the generated Krylov subspace is only responsible for estimating the projection of $f$ perpendicular to the subspace $\mathcal{U}$. Therefore, a large speedup in the solution of $Af = b$ can be expected if $\mathcal{U}$ is a good estimate of a subspace within which $f$ lies. Moreover, as is shown in the supplement, an efficient update algorithm for data-driven GMRES can be formulated in a manner identical to that formulated for Generalized Conjugate Residual with inner Orthogonalization and outer Truncation (GCROT) [5,12].
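To make the augmented minimization concrete, the following NumPy sketch solves it directly with a dense least-squares problem. It is only an illustration under stated assumptions: the projection is taken to remove the component in the span of $Au_1, \ldots, Au_N$ (as in GCROT-type methods), the helper name `min_residual` and the toy matrix are hypothetical, and the "model output" is emulated by perturbing the true solution. The efficient update scheme mentioned above is not reproduced here.

```python
import numpy as np

def min_residual(A, b, num_iters, U=None):
    """Relative residual of min ||A f - b|| over span(U) + K_i(A_hat, b_hat).

    With U=None this reduces to plain GMRES after num_iters iterations.
    """
    n = b.shape[0]
    if U is None:
        U = np.zeros((n, 0))

        def project_out(v):
            return v
    else:
        Qc, _ = np.linalg.qr(A @ U)          # orthonormal basis of span(A u_1, ..., A u_N)

        def project_out(v):
            return v - Qc @ (Qc.conj().T @ v)

    krylov = []
    v = project_out(b)                       # b_hat
    for _ in range(num_iters):
        for q in krylov:                     # orthogonalize against earlier basis vectors
            v = v - np.vdot(q, v) * q
        norm = np.linalg.norm(v)
        if norm < 1e-12:
            break
        q = v / norm
        krylov.append(q)
        v = project_out(A @ q)               # A_hat applied to the newest basis vector
    F = np.column_stack([U] + krylov)        # basis of the augmented search space
    y, *_ = np.linalg.lstsq(A @ F, b, rcond=None)
    return np.linalg.norm(A @ F @ y - b) / np.linalg.norm(b)

rng = np.random.default_rng(1)
n = 60
A = 4.0 * np.eye(n) + rng.standard_normal((n, n)) / np.sqrt(n)   # toy matrix
f_true = rng.standard_normal(n)
b = A @ f_true
# Hypothetical model prediction: three vectors whose span nearly contains f_true.
U = rng.standard_normal((n, 3))
U[:, 0] = f_true + 1e-3 * rng.standard_normal(n)

r_plain = min_residual(A, b, 2)
r_aug = min_residual(A, b, 2, U)
```

In this toy setting `r_aug` is much smaller than `r_plain` after the same number of iterations, since the Krylov vectors only need to correct the small part of the solution that the supplied subspace misses.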

Results

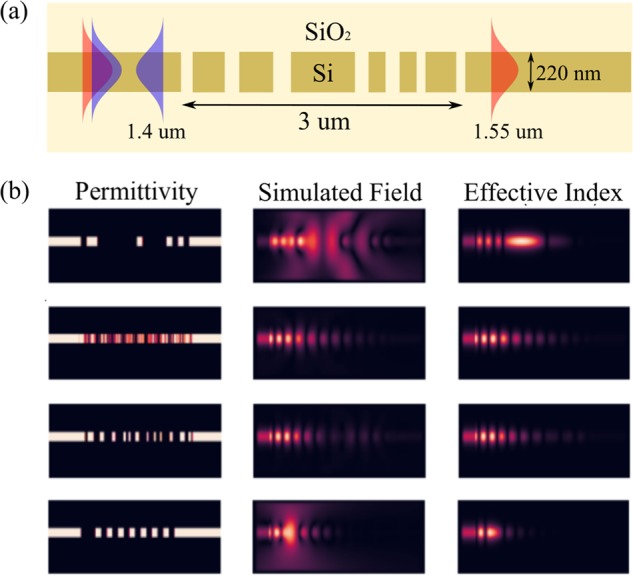

We investigate two data-driven models to predict the vectors $u_1, u_2, \ldots, u_N$: principal component analysis and a convolutional neural network. The dataset that we use for training and evaluating these models comprises a collection of 2D grating splitters [Fig. 1(a)] which reflect an incident waveguide mode at $\lambda = 1.4$ μm and transmit an incident waveguide mode at $\lambda = 1.55$ μm. Throughout this paper, we focus on accelerating simulations of the grating splitters at $\lambda = 1.4$ μm. To train our data-driven models, the dataset includes the full simulations of the electric fields in the grating splitter at 1.4 μm [Fig. 1(b)]. Additionally, we intuitively expect a well-designed data-driven model to perform better if supplied with an approximation to the simulated field as input for predicting the subspace $\mathcal{U}$. To this end, the dataset also includes effective index simulations [13] of the electric fields in the grating splitter [Fig. 1(b)]. The effective index simulations are very cheap to perform since they are equivalent to solving Maxwell’s equations in 1D, making them an attractive approximation to the simulated field that the data-driven model can exploit.

Figure 1.

(a) Schematic of the grating splitter device that comprises the dataset. All the gratings in the dataset are 3 μm long and are designed for a 220 nm silicon-on-insulator (SOI) platform with oxide cladding. We use a uniform spatial discretization of 20 nm while representing Eq. 1 as a system of linear equations. The resulting system of linear equations has 229 × 90 = 20,610 unknown complex numbers. (b) Visualizing samples from the dataset — shown are permittivity distribution, simulated electric fields and effective index fields for 4 randomly chosen samples. All fields are shown at a wavelength of 1.4 μm.

Principal component analysis

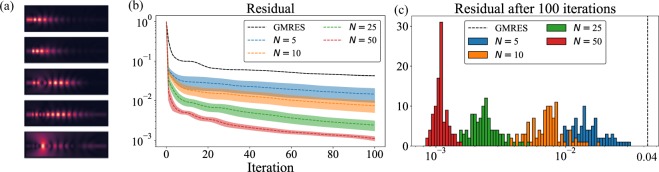

The first data-driven model that we consider for accelerating FDFD simulations uses the principal components [14] computed from the simulated fields in the training dataset as the vectors $u_1, u_2, \ldots, u_N$. The first 5 principal components of the training dataset are shown in Fig. 2(a). We computed these principal components by performing an incomplete singular value decomposition of a matrix formed with the electric field vectors of the training dataset as its columns. The first two principal components appear like fields “reflected” from the grating region, whereas the higher order principal components capture fields that are either transmitted or scattered away from the grating devices. Note that the principal components are not necessarily solutions to Maxwell’s equations for a grating structure, but provide an estimate of a basis on which the solutions of Maxwell’s equations for the grating structures can be accurately represented.
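The computation of such a basis can be sketched with NumPy as follows. The function name `principal_components` and the synthetic low-rank "fields" are assumptions made for illustration; a thin SVD is used here for simplicity, whereas a truncated solver (for example `scipy.sparse.linalg.svds`) would be the natural choice for a large dataset. Following the description above, the field matrix is decomposed directly, without subtracting a mean.

```python
import numpy as np

def principal_components(fields, num_components):
    """Leading left singular vectors of a matrix whose columns are field vectors.

    fields: complex array of shape (n_grid, n_samples).
    Returns (U, singular_values), with U of shape (n_grid, num_components).
    """
    left, sing, _ = np.linalg.svd(fields, full_matrices=False)
    return left[:, :num_components], sing

# Synthetic stand-in for simulated fields: rank-3 structure plus small noise.
rng = np.random.default_rng(0)
n_grid, n_samples, rank = 200, 50, 3
modes = rng.standard_normal((n_grid, rank)) + 1j * rng.standard_normal((n_grid, rank))
coeffs = rng.standard_normal((rank, n_samples))
fields = modes @ coeffs + 1e-3 * rng.standard_normal((n_grid, n_samples))

U, sing = principal_components(fields, rank)
# Fraction of the total squared norm of the dataset captured by span(U).
captured = np.linalg.norm(U.conj().T @ fields) ** 2 / np.linalg.norm(fields) ** 2
```

The columns of `U` are orthonormal, so they can be passed directly to an augmented solver as the vectors $u_1, \ldots, u_N$.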

Figure 2.

(a) First five principal components of the electric fields in the grating splitter dataset. (b) Performance of data-driven GMRES on the evaluation dataset when supplied with different numbers of principal components (~200 samples from the training set were used for computing the principal components). The dotted line shows the mean residual, and the solid colored background indicates the region within one standard deviation around the mean residual. (c) Histogram of the residual after 100 data-driven GMRES iterations for different $N$ computed over 100 randomly chosen samples from the evaluation dataset. The black vertical dashed line indicates the mean residual after 100 iterations of GMRES over the evaluation dataset.

Using principal components as the vectors $u_n$ in data-driven GMRES, Fig. 2(b) shows the residual as a function of the number of iterations $i$ and Fig. 2(c) shows the histogram of the residual after 100 iterations over the evaluation dataset. We clearly see an order of magnitude speed up in convergence rate for GMRES when supplemented with ≥5 principal components. Note that a typical trajectory of data-driven GMRES shows a significant reduction in the residual in the first iteration. This corresponds to GMRES finding the most suitable vector minimizing the residual within the space spanned by $b$ and the supplied principal components. Moreover, the residuals in data-driven GMRES decrease more rapidly than in GMRES. This acceleration can be attributed to the fact that the Krylov subspace generated corresponds to the matrix $\hat{A}$ defined in Eq. 4 instead of $A$. Since $A$ corresponds to a double derivative operator, its application is equivalent to convolving the electric field on the grid with a 3 × 3 filter. Therefore, $A$ generates a Krylov subspace almost on a pixel by pixel basis [15]. On the other hand, application of $\hat{A}$ to a vector is equivalent to application of $A$ (which is a 3 × 3 filter) followed by the projection operator (which is a fully dense operator). $\hat{A}$ can thus generate a Krylov subspace that spans the entire simulation region within the first few iterations, leading to a larger decrease in the residual $r_i$ in data-driven GMRES as compared to GMRES.
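The pixel-by-pixel growth of the Krylov subspace can be seen in a toy example. The sketch below uses a scalar 5-point Helmholtz-like stencil with zero padding as a stand-in for the FDFD operator (an assumption; the actual operator acts on vector fields on the Yee grid with perfectly matched layers) and tracks how far a point source spreads after each application of the stencil. The function name `apply_stencil` and the value of `k2` are hypothetical.

```python
import numpy as np

def apply_stencil(field, k2=1.0):
    """Apply a toy local operator (-Laplacian - k2) using a 5-point stencil."""
    padded = np.pad(field, 1)  # zero padding on all sides
    lap = (
        padded[:-2, 1:-1] + padded[2:, 1:-1]      # neighbors above and below
        + padded[1:-1, :-2] + padded[1:-1, 2:]    # neighbors left and right
        - 4.0 * field
    )
    return -lap - k2 * field

grid = np.zeros((41, 41))
grid[20, 20] = 1.0            # point source b at the center of the grid

widths = []                   # extent (in pixels) of b, A b, A^2 b, ...
v = grid
for _ in range(5):
    rows = np.nonzero(np.abs(v).sum(axis=1))[0]
    widths.append(int(rows.max() - rows.min() + 1))
    v = apply_stencil(v)
```

Each application of the local stencil widens the support by only one pixel on each side, so many iterations are needed before the Krylov vectors cover the whole simulation region. Composing the stencil with a dense projection removes this restriction.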

We also reemphasize the fact that the computation of principal components needs to be performed only once for a given training dataset. Additionally, we observed that we did not need to use the entire dataset (which had ~22,500 electric field vectors) for computing the principal components — using ~200 randomly chosen data samples already provided a good estimate of the dominant principal components. Consequently, the computational cost of data-driven GMRES is still dominated by the iterative solve which indicates that the residual obtained by data-driven GMRES when compared to GMRES is a good measure of the obtained speedup.

Convolutional neural network

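While using principal components achieves an order of magnitude speed up over GMRES, this approach predicts the same subspace $\mathcal{U}$ irrespective of the permittivity distribution being simulated. Intuitively, it might be expected that a data-driven model that specializes to the permittivity distribution being simulated would unlock an even greater speed up over GMRES. To this end, we train a convolutional neural network that takes as input the permittivity distribution of the grating device as well as the effective index electric field and predicts the vectors $u_1, u_2, \ldots, u_N$ [Fig. 3(a)] that can be used in data-driven GMRES to simulate the permittivity distribution under consideration. To train the convolutional neural network, we consider two different loss functions:

Projection loss function: For the $k$th training example, the projection loss $l^{(k)}_\text{proj}$ is defined by the square of the length of the component of the simulated field $f^{(k)}$ that is perpendicular to the space spanned by the vectors $u^{(k)}_1, u^{(k)}_2, \ldots, u^{(k)}_N$, relative to the square of the length of $f^{(k)}$:

$$l^{(k)}_\text{proj} = \min_{\alpha_1, \ldots, \alpha_N} \frac{\left\| f^{(k)} - \sum_{n=1}^{N} \alpha_n u^{(k)}_n \right\|^2}{\left\| f^{(k)} \right\|^2} \tag{6}$$

Note that $0 \le l^{(k)}_\text{proj} \le 1$, with $l^{(k)}_\text{proj} = 0$ indicating that $f^{(k)}$ lies in the subspace spanned by $u^{(k)}_1, \ldots, u^{(k)}_N$ and $l^{(k)}_\text{proj} = 1$ indicating that $f^{(k)}$ is orthogonal to it.

Residual loss function: The projection loss measures how well the predicted subspace captures the simulated field, whereas data-driven GMRES minimizes the residual of the linear system. The residual loss function can remedy this mismatch. For the $k$th training example, the residual loss is defined by:

$$l^{(k)}_\text{res} = \min_{\alpha_1, \ldots, \alpha_N} \frac{\left\| A^{(k)} \sum_{n=1}^{N} \alpha_n u^{(k)}_n - b^{(k)} \right\|^2}{\left\| b^{(k)} \right\|^2} \tag{7}$$

where $A^{(k)}$ is the sparse matrix corresponding to the operator in Eq. 1 for the $k$th training example and $b^{(k)}$ is the corresponding source vector. Similar to the projection loss, $0 \le l^{(k)}_\text{res} \le 1$, with $l^{(k)}_\text{res} = 0$ indicating that $f^{(k)}$ lies within the subspace spanned by $u^{(k)}_1, \ldots, u^{(k)}_N$ and $l^{(k)}_\text{res} = 1$ indicating that $f^{(k)}$ is orthogonal to the subspace with respect to the positive definite matrix $A^{(k)\dagger} A^{(k)}$ (i.e. $u^{(k)\dagger}_n A^{(k)\dagger} A^{(k)} f^{(k)} = 0$ for all $n$). However, unlike the projection loss function, the residual loss function is an unsupervised loss function, i.e. the simulated electric fields for the structure are not required for its computation.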
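Both loss functions amount to a least-squares projection, which the following NumPy sketch evaluates for a single example. It assumes the normalizations described above (by the squared norm of the simulated field for the projection loss and by the squared norm of the source vector for the residual loss). The function names and the toy matrix are hypothetical, and a training implementation would express the same operations in a differentiable framework.

```python
import numpy as np

def projection_loss(U, f):
    """Fraction of ||f||^2 lying outside span(U); needs the simulated field f."""
    Q, _ = np.linalg.qr(U)                   # orthonormal basis of span(U)
    perp = f - Q @ (Q.conj().T @ f)
    return np.linalg.norm(perp) ** 2 / np.linalg.norm(f) ** 2

def residual_loss(U, A, b):
    """min over coefficients of ||A U alpha - b||^2 / ||b||^2; needs only A and b."""
    Q, _ = np.linalg.qr(A @ U)               # orthonormal basis of span(A U)
    perp = b - Q @ (Q.conj().T @ b)
    return np.linalg.norm(perp) ** 2 / np.linalg.norm(b) ** 2

rng = np.random.default_rng(0)
n = 30
A = 4.0 * np.eye(n) + rng.standard_normal((n, n)) / np.sqrt(n)   # toy matrix
U = rng.standard_normal((n, 4))              # stand-in for the predicted vectors

f_inside = U @ rng.standard_normal(4)        # field lying exactly in span(U)
f_random = rng.standard_normal(n)            # generic field
Q, _ = np.linalg.qr(U)
f_orth = f_random - Q @ (Q.T @ f_random)     # field orthogonal to span(U)

loss_inside = projection_loss(U, f_inside)
loss_random = projection_loss(U, f_random)
loss_orth = projection_loss(U, f_orth)
res_inside = residual_loss(U, A, A @ f_inside)
```

Here `loss_inside` and `res_inside` vanish up to rounding, `loss_orth` equals one, and a generic field gives a value in between.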

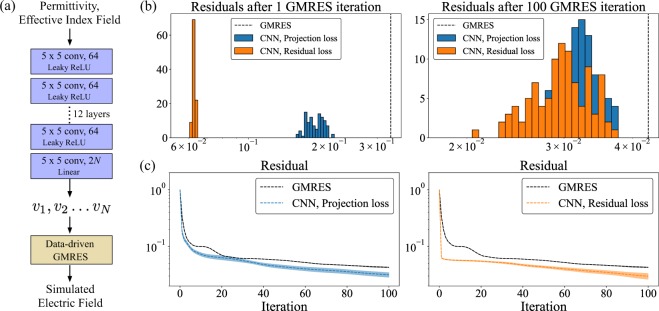

Figure 3.

(a) Schematic of the CNN-based data-driven GMRES: a convolutional neural network takes as input the permittivity and effective index field and produces as an output the vectors $u_1, u_2, \ldots, u_N$. These vectors are then supplied to the data-driven GMRES algorithm, which produces the full simulated field. (b) Histogram of the residual after 1 and 100 data-driven GMRES iterations evaluated over the evaluation dataset. We consider neural networks trained with both the projection loss function $l_\text{proj}$ and the residual loss function $l_\text{res}$. The vertical dashed lines indicate the mean residual after 1 and 100 iterations of GMRES over the evaluation dataset. (c) Performance of data-driven GMRES on the evaluation dataset when supplied with the vectors at the output of the convolutional neural networks trained with the projection loss function $l_\text{proj}$ and the residual loss function $l_\text{res}$. The dotted line shows the mean residual, and the solid colored background indicates the region within one standard deviation around the mean residual.

Since the residual loss function trains the neural network to minimize the residual directly, it accelerates data-driven GMRES significantly in the first few iterations as compared to the projection loss function. This can be clearly seen in Fig. 3(b), which shows histograms of the residual after 1 iteration and 100 iterations over the evaluation dataset. However, as is seen from the residual vs. iteration number plots in Fig. 3(c), data-driven GMRES with the convolutional neural network trained using the residual loss function slows down after the first few iterations. It is not clear why this slowdown happens; understanding it is part of ongoing research.

We also note that the use of fields obtained with an effective index simulation as an input to the convolutional neural network significantly improves its performance. Empirically, we observed that if the convolutional neural network was trained with the projection loss function but without the effective index fields as an input, the training loss would saturate at ~0.2, indicating that on average approximately 20% of the simulated field (measured by squared norm) lies outside the subspace $\mathcal{U}$. With a convolutional neural network that takes the effective index fields as an input in addition to the permittivity distribution, the training loss would saturate at a significantly lower value of ~0.05, indicating that approximately 95% of the simulated field lies in the subspace $\mathcal{U}$.

Finally, we point out that the convolutional neural network needs to be trained only once before using it to accelerate GMRES over all the simulations in the evaluation dataset. The results presented in this paper were obtained using a convolutional neural network that required ~10 hours to train, with the training distributed over ~8 GPUs. However, once the neural network is trained, computing the vectors $u_1, u_2, \ldots, u_N$ for a given structure (i.e. performing the feedforward computation for the neural network shown in Fig. 3(a)) is extremely fast, and the time taken for this computation is negligible when compared to the iterative solve. For full three-dimensional simulations, we expect the iterative solve to be even slower relative to the feedforward computation of most convolutional neural networks, and consequently believe that data-driven approaches can significantly accelerate electromagnetic simulations in practical settings.

Benchmarks against data-free preconditioning techniques

Accelerating the solution of linear systems of equations is a problem that has been studied by the scientific computing community via data-free approaches for several decades. For iterative solvers in particular, using various preconditioners [16] to improve the spectral properties of the system of linear equations has emerged as a common strategy to solve this problem. In order to gauge the performance of the data-driven approaches outlined in this paper, we benchmark their performance against three stationary preconditioning techniques (Jacobi preconditioner, Gauss-Seidel preconditioner, successive over-relaxation (SOR) preconditioner), an incomplete LU preconditioner and a preconditioner designed specifically for finite-difference frequency domain simulations of Maxwell’s equations [17] (see the supplement for residual vs. iteration plots of GMRES with different preconditioners on the evaluation dataset). Table 1 shows the results of these benchmarks:

Training time: While using data-driven GMRES augmented with principal components, the training time refers to the amount of time taken to compute the PCA vectors from the training data. While using data-driven GMRES augmented with the output of the CNN, training time refers to the amount of time taken to train the convolutional neural network by minimizing the projection loss function or the residual loss function over the training data. It can be noted that training is done only once, and the same PCA vectors or the same CNN are used for augmenting data-driven GMRES over all the structures in the evaluation dataset.

Setup time: The setup time refers to the amount of time taken to compute the augmenting vectors or the preconditioner for a given evaluation data sample. Note that there is no setup time while using principal components since they are computed once from the training data. The setup time for the convolutional neural network refers to the amount of time taken to perform the feedforward computation. The setup time for the preconditioners refers to the time taken for computing the preconditioners.

GMRES time: This refers to the amount of time taken to run GMRES iterations on the evaluation data sample to reduce the residual below a threshold $r_\text{th}$. The results in Table 1 are for a threshold $r_\text{th}$ chosen to be equal to the mean residual achieved by unpreconditioned GMRES after 100 iterations.

Total solve time: This is the amount of time taken for performing one simulation. It is calculated as a sum of the setup time (i.e. time required for calculating the augmenting vectors or the preconditioner) and the GMRES time. We do not include the training time in the solve time since the training is done exactly once for the entire evaluation dataset.

Number of iterations: This refers to the number of iterations for which GMRES needs to be performed to reduce the residual to below the threshold residual $r_\text{th}$.

Table 1.

Benchmarking data-driven GMRES against data-free preconditioning techniques.

| Method | Training time | Setup time | GMRES time | Total solve time | Number of iterations |
|---|---|---|---|---|---|
| GMRES | × | × | 23.34 s | 23.34 s | 115.7 |
| PCA: | 7.63 s | × | 0.87 s | 0.87 s | 6.1 |
| PCA: | 7.63 s | × | 0.59 s | 0.59 s | 3.5 |
| PCA: | 7.63 s | × | 0.62 s | 0.62 s | 2.1 |
| PCA: | 7.63 s | × | 0.98 s | 0.98 s | 2.0 |
| CNN: Projection loss | ~10 hr | 0.18 s | 13.46 s | 13.52 s | 64.5 |
| CNN: Residual loss | ~10 hr | 0.40 s | 13.07 s | 13.47 s | 63.3 |
| Jacobi | × | 0.57 ms | 39.30 s | 39.30 s | 153.8 |
| Gauss-Seidel | × | 17.59 ms | 136.82 s | 136.82 s | 107.3 |
| Ref. 17 | × | 5.80 ms | 24.87 s | 24.87 s | 118.7 |
| SOR: | × | 16.40 ms | 170.17 s | 170.17 s | 132.0 |
| SOR: | × | 17.55 ms | 152.21 s | 152.21 s | 120.2 |
| SOR: | × | 17.10 ms | 140.56 s | 140.56 s | 111.7 |
| SOR: | × | 18.33 ms | 138.67 s | 138.67 s | 107.5 |
| SOR: | × | 20.55 ms | 169.73 s | 169.73 s | 120.4 |
| SOR: | × | 21.37 ms | 349.27 s | 349.27 s | 235.9 |
| ILU: Drop tol. = $10^{-1}$ | × | 6.62 s | 13.90 s | 20.52 s | 66.2 |
| ILU: Drop tol. = $10^{-2}$ | × | 7.01 s | 4.59 s | 11.60 s | 28.6 |
| ILU: Drop tol. = $10^{-3}$ | × | 9.29 s | 0.74 s | 10.03 s | 3.0 |

The training time refers to the amount of time taken to compute the principal components or train the convolutional neural network using the training data. This is done only once, and the same principal components or trained neural network are used for augmenting GMRES over the evaluation data. The setup time is the time taken for computing the augmenting vectors or for computing the preconditioner for an evaluation data sample. The GMRES time is the time taken for running GMRES to reach a threshold residual of $r_\text{th}$ over the evaluation data. The total solve time is computed by summing the setup time and the GMRES time. The number of iterations shown are the number of GMRES iterations required to reduce the residual to below $r_\text{th}$. The setup time, GMRES time and number of iterations shown in the table are averaged values computed over 50 randomly chosen evaluation data samples. The evaluation times reported are for a machine with 16 CPU cores and 60 GB RAM.

The numbers presented for setup time, GMRES time and number of iterations are average values obtained by simulating 50 randomly chosen evaluation data samples. We see from Table 1 that PCA-based data-driven GMRES outperforms every preconditioning technique other than the incomplete LU preconditioner by at least an order of magnitude in terms of total solve time as well as the number of GMRES iterations performed; CNN-based data-driven GMRES also outperforms these preconditioners, by a smaller margin. The best-case performance of these preconditioners is only slightly better than unpreconditioned GMRES in iteration count (107.3 versus 115.7) and no better in total solve time. Preconditioning partial differential equations describing wave propagation is known to be a difficult problem due to the solution of the wave equations being delocalized over the entire simulation domain even when the source has compact spatial support [15]. We also note that while the incomplete LU preconditioner does provide a notable speedup over unpreconditioned GMRES, the PCA-based data-driven GMRES outperforms it in terms of the total solve time and CNN-based data-driven GMRES performs comparably to it. These benchmarks indicate that the data-driven approaches introduced in this paper have the potential to provide a scalable alternative to data-free preconditioning techniques for accelerating electromagnetic simulations.
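As a worked reading of Table 1, using only the tabulated averages and taking the PCA row with the lowest total solve time (0.59 s, 3.5 iterations) as the example, the ratios relative to unpreconditioned GMRES and to the best incomplete LU setting (drop tolerance $10^{-3}$) are approximately:

$$\frac{t_\text{GMRES}}{t_\text{PCA}} = \frac{23.34\ \text{s}}{0.59\ \text{s}} \approx 39.6, \qquad \frac{n_\text{GMRES}}{n_\text{PCA}} = \frac{115.7}{3.5} \approx 33.1, \qquad \frac{t_\text{ILU}}{t_\text{PCA}} = \frac{10.03\ \text{s}}{0.59\ \text{s}} = 17.0$$

The last ratio is dominated by the 9.29 s setup time of the incomplete LU factorization: its GMRES time alone (0.74 s) is close to that of the PCA-based solver.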

Conclusion

In conclusion, we present a framework for accelerating finite difference frequency domain (FDFD) simulations of Maxwell’s equations using data-driven models that can exploit simulations of correlated permittivity distributions. We analyze two data-driven models, based on principal component analysis and a convolutional neural network, to accelerate these simulations, and show that these models can unlock an order of magnitude acceleration over a data-free solver. Such data-driven methods would likely be important in scenarios where a large number of simulations with similar permittivity distributions are performed, e.g. during a gradient-based optimization of a photonic device.

Methods

Dataset

The grating splitters are designed to reflect an incident waveguide mode at λ = 1.4 μm and transmit an incident waveguide mode at λ = 1.55 μm using a gradient-based design technique similar to that used for grating couplers [3]. Different devices in the dataset are generated by seeding the optimization with a different initial structure. Note that all the devices generated at different stages of the optimization are part of the dataset. Consequently, the dataset not only has grating splitters that have a discrete permittivity distribution (i.e. have only two materials, silicon and silicon oxide), but also grating splitters that have a continuous distribution (i.e. permittivity of the grating splitter can assume any value between that of silicon oxide and silicon). Moreover, the dataset has poorly performing grating splitters (i.e. grating splitters generated at the initial steps of the optimization procedure) as well as well-performing grating splitters (i.e. grating splitters generated towards the end of the optimization procedure). Our dataset has a total of ~30,000 examples, which we split into a training dataset (75%) and an evaluation dataset (25%).

Implementation of data-driven models

Both the data-driven models (PCA and CNN) were implemented using the Python library TensorFlow [18]. Any complex inputs (e.g. effective index fields) to the CNN were fed as an image of depth 2 comprising the real and imaginary parts of the complex input. We use the ADAM optimizer [19] with a batch size of 30 for training the convolutional neural network; it required ~10,000 steps to train the network.
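The depth-2 encoding of complex inputs can be illustrated with a short NumPy sketch. The helper names and the channels-last layout are assumptions made for the example (channels-last is the default image layout in TensorFlow); the grid shape 229 × 90 is taken from Fig. 1.

```python
import numpy as np

def complex_to_channels(field):
    """Map a complex (H, W) field to a real (H, W, 2) image: [..., 0] = Re, [..., 1] = Im."""
    return np.stack([field.real, field.imag], axis=-1)

def channels_to_complex(image):
    """Inverse mapping: recombine the two real channels into a complex field."""
    return image[..., 0] + 1j * image[..., 1]

rng = np.random.default_rng(0)
# Random stand-in for an effective index field on the 229 x 90 grid.
field = rng.standard_normal((229, 90)) + 1j * rng.standard_normal((229, 90))
image = complex_to_channels(field)
recovered = channels_to_complex(image)
```

The mapping is lossless, so a network output in the same two-channel format can be converted back into complex vectors before being passed to the solver.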

Acknowledgements

R.T. acknowledges funding from the Kailath Graduate Fellowship. This work has been supported by Google Inc., grant number 134920.

Author contributions

R.T. and L.S. conceived the idea, R.T. and J.L. designed and implemented the numerical experiments, M.F.S. and J.V. supervised the project. All authors contributed to the discussion and analysis of results.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information is available for this paper at 10.1038/s41598-019-56212-5.

References

- 1. Piggott AY, et al. Inverse design and demonstration of a compact and broadband on-chip wavelength demultiplexer. Nature Photonics. 2015;9:374–377. doi: 10.1038/nphoton.2015.69.

- 2. Piggott AY, et al. Inverse design and implementation of a wavelength demultiplexing grating coupler. Scientific reports. 2014;4:7210. doi: 10.1038/srep07210.

- 3. Su L, et al. Fully-automated optimization of grating couplers. Optics express. 2018;26:4023–4034. doi: 10.1364/OE.26.004023.

- 4. Parks ML, De Sturler E, Mackey G, Johnson DD, Maiti S. Recycling krylov subspaces for sequences of linear systems. SIAM Journal on Scientific Computing. 2006;28:1651–1674. doi: 10.1137/040607277.

- 5. Wang S, Sturler ED, Paulino GH. Large-scale topology optimization using preconditioned krylov subspace methods with recycling. International journal for numerical methods in engineering. 2007;69:2441–2468. doi: 10.1002/nme.1798.

- 6. Bar-Sinai, Y., Hoyer, S., Hickey, J. & Brenner, M. P. Data-driven discretization: a method for systematic coarse graining of partial differential equations. arXiv preprint arXiv:1808.04930 (2018).

- 7. Han, J., Jentzen, A. & Weinan, E. Solving high-dimensional partial differential equations using deep learning. Proceedings of the National Academy of Sciences 201718942 (2018).

- 8. Golub, G. H. & Van Loan, C. F. Matrix computations, vol. 3 (JHU Press, 2012).

- 9. Peurifoy J, et al. Nanophotonic particle simulation and inverse design using artificial neural networks. Science advances. 2018;4:eaar4206. doi: 10.1126/sciadv.aar4206.

- 10. Liu D, Tan Y, Khoram E, Yu Z. Training deep neural networks for the inverse design of nanophotonic structures. ACS Photonics. 2018;5:1365–1369. doi: 10.1021/acsphotonics.7b01377.

- 11. Shin, W. 3D finite-difference frequency-domain method for plasmonics and nanophotonics. Ph.D. thesis, Stanford University (2013).

- 12. Hicken JE, Zingg DW. A simplified and flexible variant of gcrot for solving nonsymmetric linear systems. SIAM Journal on Scientific Computing. 2010;32:1672–1694. doi: 10.1137/090754674.

- 13. Hammer M, Ivanova OV. Effective index approximations of photonic crystal slabs: a 2-to-1-d assessment. Optical and quantum electronics. 2009;41:267–283. doi: 10.1007/s11082-009-9349-3.

- 14. Jolliffe, I. Principal component analysis. In International encyclopedia of statistical science, 1094–1096 (Springer, 2011).

- 15. Ernst, O. G. & Gander, M. J. Why it is difficult to solve helmholtz problems with classical iterative methods. In Numerical analysis of multiscale problems, 325–363 (Springer, 2012).

- 16. Saad, Y. Iterative methods for sparse linear systems, vol. 82 (SIAM, 2003).

- 17. Shin, W. & Fan, S. Simulation of phenomena characterized by partial differential equations (US patent, 2014).

- 18. Abadi, M. Tensorflow: learning functions at scale. In Acm Sigplan Notices, vol. 51, 1–1 (ACM, 2016).

- 19. Kingma, D. P. & Ba, J. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014).
