Abstract

Chaos, or exponential sensitivity to small perturbations, appears everywhere in nature. Moreover, chaos is predicted to play diverse functional roles in living systems. A method for detecting chaos from empirical measurements should therefore be a key component of the biologist’s toolkit. But, classic chaos-detection tools are highly sensitive to measurement noise and break down for common edge cases, making it difficult to detect chaos in domains, like biology, where measurements are noisy. However, newer tools promise to overcome these limitations. Here, we combine several such tools into an automated processing pipeline, and show that our pipeline can detect the presence (or absence) of chaos in noisy recordings, even for difficult edge cases. As a first-pass application of our pipeline, we show that heart rate variability is not chaotic as some have proposed, and instead reflects a stochastic process in both health and disease. Our tool is easy-to-use and freely available.

Subject terms: Computational biophysics, Data processing

Daniel Toker et al. develop the Chaos Decision Tree Algorithm, a method for automatically assessing the presence and degree of chaos from empirical measurements. This study suggests that researchers can use this code to detect stochasticity, periodicity, or chaos in noisy empirical measurements.

Introduction

A remarkable diversity of natural phenomena are thought to be chaotic. Formally, a system is chaotic if it is bounded (meaning that, like a planet circling a star, its dynamics stay inside an orbit rather than escaping off to infinity), and if it is deterministic (meaning that, with the exact same initial conditions, it will always evolve over time in the same way), and if tiny perturbations to the system get exponentially amplified (Glossary (Supplementary Information), Supplementary Figs. 1, 2). The meteorologist Edward Lorenz famously described this phenomenon as the butterfly effect: in a chaotic system, something as small as the flapping of a butterfly’s wings can cause an effect as big as a tornado. This conceptually simple phenomenon—i.e., extreme sensitivity to small perturbations—is thought to appear everywhere in nature, from cosmic inflation1, to the orbit of Hyperion2, to the Belousov–Zhabotinskii chemical reaction3, to the electrodynamics of the stimulated squid giant axon4. These are only a few examples of the many places in nature where chaos has been found.

It is relatively simple to determine if a simulated system is chaotic: just run the simulation a few times, with very slightly different initial conditions, and see how quickly the simulations diverge (Supplementary Fig. 1). But, if all that is available are measurements of how a real, non-simulated system evolves over time—for e.g., how a neuron’s membrane potential changes over time, or how the brightness of a star changes over time—how can it be determined if those observations come from a chaotic system? Or if they are just noise? Or if the system is in fact periodic (Glossary, Supplementary Figs. 1, 2), meaning that, like a clock, small perturbations do not appreciably influence its dynamics?

While a reliable method for detecting chaos using empirical recordings should be an essential part of any scientist’s toolbox, such a tool might be especially helpful to biologists, as chaos is predicted to play an important functional role in a wide variety of biological processes (that said, we note that real biological systems cannot be purely chaotic in the strict mathematical since, since they certainly contain some level of dynamic noise—see Glossary—but that researchers have long speculated that many biological processes are still predominantly deterministic, but chaotic5). For example, following early speculations about the presence of chaos in the electrodynamics of both cardiac6 and neural7 tissue, the science writer Robert Pool posited in 1989 that “chaos may provide a healthy flexibility for the heart, brain, and other parts of the body.”8 Though this point has been intensely debated since the 1980s5,9, a range of more specific possible biological functions for chaos have since been proposed, including potentially maximizing the information processing capacity of both neural systems10 and gene regulatory networks11, enabling multistable perception12, allowing neural systems to flexibly transition between different activity patterns13, and boosting cellular survival rates through the promotion of heterogeneous gene expression14. And there is good reason to expect chaos to exist in biological systems, as a large range of simulations of biological processes15, and in particular of neural systems9, show clear evidence of chaos. Moreover, unambiguous evidence of biological chaos has been found in a very small number of real cases that were amenable to comparison to good theoretical models; these include periodically stimulated squid giant axons4 and cardiac cells16, as well as the discharges of the Onchidium pacemaker neuron17 and the Nitella flexillis internodal cell18. But, beyond simulations and these select empirical cases, most attempts to test the presence or predicted functions of chaos in biology have fallen short due to high levels of measurement noise (Glossary) in biological recordings. For this reason, it has long been recognized that biologists need a noise-robust tool for detecting the presence (or absence) of chaos in their noisy empirical data9,15.

Researchers also need a tool that can detect varying degrees of chaos (Glossary) in noisy recordings. In strongly chaotic systems, initially similar system states diverge faster than they do in weakly chaotic systems. And such varying degrees of chaos are predicted to occur in biology, with functional consequences. For example, a model of white blood cell concentrations in chronic granulocytic leukemia can display varying levels of chaos, and knowing how chaotic those concentrations are in actual leukemia patients could have important implications for health outcomes19. As another example, models of the human cortex predict that macro-scale cortical electrodynamics should be weakly chaotic during waking states and should be strongly chaotic under propofol anesthesia20; if this prediction is true, then detecting changing levels of chaos in large-scale brain activity could be useful for monitoring depth of anesthesia and for basic anesthesia research. Thus, it is imperative to develop tools that can not only determine that an experimental system is chaotic, but also tools to assess changing levels of chaos in a system.

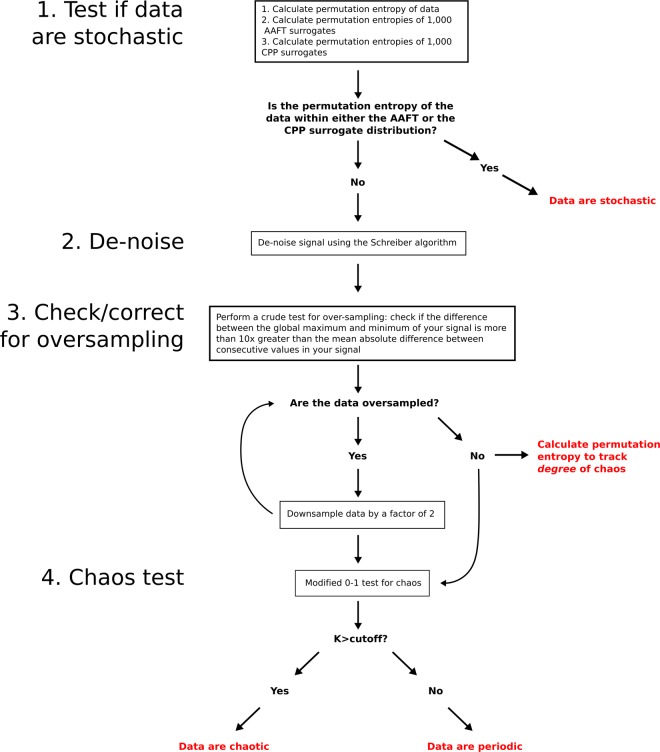

Although classic tools for detecting the presence and degree of chaos in data are slow, require large amounts of data, are highly sensitive to measurement noise, and break down for common edge cases, more recent mathematical research has provided new, more robust tools for detecting chaos or a lack thereof in noisy time-series recordings. Here, for the first time (to our knowledge), we combine several key mathematical tools into a single, fully automated Matlab processing pipeline, which we call the Chaos Decision Tree Algorithm21 (Fig. 1). The Chaos Decision Tree Algorithm takes a single time-series of any type—be it recordings of neural spikes, time-varying transcription levels of a particular gene, fluctuating oil prices, or recordings of stellar flux - and classifies those recorded data as coming from a system that is predominantly (or “operationally”22) stochastic, periodic, or chaotic. The algorithm requires no input from the user other than a time-series recording, though we have structured our code such that users can also select from among a number of alternative subroutines (see Methods section, Fig. 1).

Fig. 1. The Chaos Decision Tree Algorithm21.

The first step of the algorithm is to test if data are stochastic. The Chaos Decision Tree Algorithm uses a surrogate-based approach to test for stochasticity, by comparing the permutation entropy of the original time-series to the permutation entropies of random surrogates of that time series. If the user does not specify which surrogate algorithms to use, the Chaos Decision Tree Algorithm automatically picks a combination of Amplitude Adjusted Fourier Transform33 surrogates and Cyclic Phase Permutation35 surrogates—see Supplementary Tables 2, 3. If the permutation entropy of the original time-series falls within either surrogate distribution, the time-series is classified as stochastic; if the permutation entropy falls outside the surrogate distributions, then the algorithm proceeds to denoise the inputted signal. Several de-noising subroutines are available, but if the user does not specify a subroutine, the pipeline will use Schreiber’s noise-reduction algorithm36 (Supplementary Table 5). The pipeline then checks for signal oversampling; if data are oversampled, the pipeline iteratively downsamples the data until they are no longer oversampled (note that an alternative downsampling method proposed by Eyébé Fouda and colleagues68 may be selected instead—see Supplementary Table 6). Finally, the Chaos Decision Tree Algorithm performs the 0–1 chaos test on the input data, which has been modified from the original 0–1 test to be less sensitive to noise, suppress correlations arising from quasi-periodicity, and normalize the standard deviation of the test signal (see Methods section). The value for the parameter that suppresses signal correlations can be specified by the user, but is otherwise automatically chosen based on ROC analyses performed here (Supplementary Fig. 4). The modified 0–1 test provides a single statistic, K, which approaches 1 for chaotic systems and approaches 0 for periodic systems. Any cutoff for K may be inputted to the pipeline, and if no cutoff is provided, the pipeline will use a cutoff based on the length of the time-series (Supplementary Fig. 6). If K is greater than the cutoff, the data are classified as chaotic, and if they are less than or equal to the cutoff, they are classified as periodic.

In this paper, we show that the Chaos Decision Tree Algorithm performs with very high accuracy across a wide variety of both real and simulated systems, even in the presence of relatively high levels of measurement noise. Moreover, our pipeline can accurately track changing degrees of chaos (for e.g., transitions from weak to strong chaos). With an eye toward applications to biology, the simulated systems we tested included a high-dimensional mean-field model of cortical electrodynamics, a model of a spiking neuron, a model of white blood cell concentrations in chronic granulocytic leukemia, and a model of the transcription of the NF-κB protein complex. We also tested the algorithm on a wide variety of non-biological simulations, including several difficult edge cases; these included strange non-chaotic systems, quasi-periodic systems, colored noise, and nonlinear stochastic systems (see Glossary for definitions of these terms), which are all classically difficult to distinguish from chaotic systems23–26. We also tested the algorithm on a hyperchaotic system (Glossary), which can be difficult to distinguish from noise25, as well as on several non-stationary processes (Glossary) in order to test the robustness of the algorithm against non-stationarity. Finally, we tested the Chaos Decision Tree Algorithm on several empirical (i.e. non-simulated) datasets for which the ground-truth presence or absence of chaos has been previously established by other studies. These included an electronic circuit in periodic, strange non-chaotic, and chaotic states27, a chaotic laser28, the stellar flux of a strange non-chaotic star29, the linear/stochastic North Atlantic Oscillation index30, and nonlinear/stochastic Parkinson’s and essential tremors26. Overall, our pipeline performed with near-perfect accuracy in classifying these data as stochastic, periodic, or chaotic, as well as in tracking changing degrees of chaos in both real and simulated systems. Finally, we applied our algorithm to electrocardiogram recordings from healthy subjects, patients with congestive heart failure, and patients with atrial fibrillation31, and provide evidence that heart rate variability reflects a predominantly stochastic, rather than chaotic process.

We have made our Matlab code freely and publicly available at https://figshare.com/s/80891dfb34c6ee9c8b34.

Results

The Chaos Decision Tree Algorithm21 is depicted graphically in Fig. 1. The pipeline consists of four steps: (1) Determine if the data are stochastic using permutation entropy32 and a combination of Amplitude Adjusted Fourier transform surrogates33,34 and Cyclic Phase Permutation surrogates34,35 (Glossary), (2) De-noise the data using the Schreiber de-noising algorithm36 (Glossary), (3) Correct for possible signal oversampling, and (4) Test for chaos using a modified 0–1 test for chaos23,37–40 (Glossary). For each step of the processing pipeline, we compared the performance of different available tools (i.e., different surrogate-based tests for stochasticity, different de-noising methods, different downsampling methods, and different chaos-detection methods), and chose the tools with the highest classification performance (Supplementary Tables 1–14). Note that with user input, the Chaos Decision Tree Algorithm can use any of the alternative tools tested here, and that with no user input other than a time-series recording, the algorithm will automatically use the tools we found maximized its performance. All results reported in the main body of this paper are for this automated set of high-performing tools. See Supplementary Fig. 3 for example time-traces illustrating each step of the algorithm.

We tested the performance of the (automated) Chaos Decision Tree Algorithm in detecting the presence and degree of chaos in a wide range of simulated and empirical systems for which the ground-truth presence of chaos, periodicity, or stochasticity has already been established. Details about each dataset and how the ground-truth presence or absence of chaos in those systems was previously determined are included in the Methods. Note that some systems are labeled “SNA,” which is an abbreviation for “strange non-chaotic attractor” (Glossary). These are systems whose attractors in phase space (Glossary) are fractal (like chaotic systems), but which are periodic (i.e., non-chaotic). Among these, we included the only known non-artificial strange non-chaotic system, the stellar flux of the so-called golden star KIC 5520878, as recorded by the Kepler space telescope29. All simulated datasets consisted of 10,000 time-points, and all initial conditions were randomized. For systems with more than one variable, we here report results for linear combinations of those variables (see Methods section), under the assumption that in most real-life cases, empirical recordings will contain features of multiple components of the system of study; that said, we also confirmed that the Chaos Decision Tree Algorithm has very high performance for individual system variables (Supplementary Table 15).

Results for simulations of biological systems are reported in Table 1, and results for non-biological simulations are reported in Table 2. Note that no measurement noise was added to the colored noise signals in Table 2, as doing so would flatten their power spectra. Because the datasets in Tables 1 and 2 were used to choose between alternative methods for detecting stochasticity (Supplementary Tables 1–4), de-noising (Supplementary Table 5), downsampling (Supplementary Table 6), and alternative tests of chaos (Supplementary Tables 8–13), as well as to optimize the 0–1 test for chaos (Supplementary Figs. 4–6), we further tested the Chaos Decision Tree Algorithm on held out datasets, which were not used to adjudicate between alternative tools. These held out datasets included both simulated systems (Table 3) and recordings from real (non-simulated) systems (Table 4). Several of these held out datasets were of direct biological relevance: the periodically stimulated Poincaré oscillator in Table 3 is thought to be a good model of cardiac cell electrodynamics41, which, like the Poincaré oscillator, are chaotic when periodically stimulated with certain delays between stimulation pulses16; the integrated circuit in Table 4 is a physical implementation of equations that are based on the Hodgkin-Huxley neuron model42; and the tremor signals in Table 4 are direct recordings from patients. The Chaos Decision Tree Algorithm classified the systems in Tables 1–4 as stochastic, periodic, or chaotic with near-perfect accuracy even at high levels of measurement noise, with the exception of the noise-driven sine map (Table 2)—see Discussion section. Finally, we tested the performance of the Chaos Decision Tree Algorithm on sub-samples of all systems in Tables 1–3, and confirmed that it is still highly accurate for data with just 1000 time-points (Supplementary Table 16) or 5000 time-points (Supplementary Table 17), though we note that performance for some systems did go down with less data, which is to be expected40.

Table 1.

The Chaos Decision Tree Algorithm classified biological simulations as periodic or chaotic with near-perfect accuracy.

| Measurement noise level (% of std. dev.) | |||||

|---|---|---|---|---|---|

| System | 0% | 10% | 20% | 30% | 40% |

| Cortical model20 (chaotic) | 100/100 | 100/100 | 100/100 | 97/100 | 83/100 |

| Cortical model20 (periodic) | 100/100 | 100/100 | 100/100 | 100/100 | 100/100 |

| Spiking neuron49 (chaotic) | 100/100 | 100/100 | 100/100 | 100/100 | 98/100 |

| Granulocyte levels19 (chaotic) | 100/100 | 100/100 | 100/100 | 100/100 | 100/100 |

| Granulocyte levels19 (periodic) | 100/100 | 100/100 | 100/100 | 100/100 | 83/100 |

| NF-κB transcription14 (chaotic) | 99/100 | 99/100 | 100/100 | 100/100 | 100/100 |

| NF-κB transcription14 (periodic) | 100/100 | 100/100 | 97/100 | 100/100 | 100/100 |

Table 2.

The Chaos Decision Tree Algorithm classified non-biological simulations as stochastic, periodic, or chaotic with high accuracy. These simulated systems include strange non-chaotic attractors (SNAs), linear stochastic processes, and nonlinear stochastic processes, all of which are classically difficult to distinguish from chaos.

| Measurement noise level (% of std. dev.) | |||||

|---|---|---|---|---|---|

| System | 0% | 10% | 20% | 30% | 40% |

| Cubic map50 (chaotic) | 100/100 | 100/100 | 100/100 | 100/100 | 100/100 |

| Cubic map50 (periodic) | 100/100 | 100/100 | 100/100 | 100/100 | 100/100 |

| Cubic map50 (SNA HH) | 100/100 | 100/100 | 100/100 | 100/100 | 100/100 |

| Cubic map50 (SNA S3) | 100/100 | 100/100 | 100/100 | 100/100 | 0/100 |

| GOPY map51 (SNA) | 100/100 | 100/100 | 100/100 | 99/100 | 14/100 |

| Logistic map52 (chaotic) | 100/100 | 100/100 | 100/100 | 100/100 | 100/100 |

| Logistic map52 (periodic) | 100/100 | 100/100 | 100/100 | 100/100 | 100/100 |

| Lorenz system53 (chaotic) | 100/100 | 100/100 | 97/100 | 82/100 | 36/100 |

| Generalized Hénon map54 (hyperchaotic) | 100/100 | 100/100 | 100/100 | 100/100 | 93/100 |

| Freitas map55 (nonlinear stochastic) | 78/100 | 83/100 | 98/100 | 98/100 | 74/100 |

| Noise-driven sine map55 (nonlinear stochastic) | 55/100 | 3/100 | 22/100 | 5/100 | 78/100 |

| Bounded random walk56 (nonlinear stochastic) | 100/100 | 97/100 | 59/100 | 95/100 | 100/100 |

| Cyclostationary process57 (linear stochastic) | 99/100 | 100/100 | 99/100 | 100/100 | 100/100 |

| ARMA process (linear stochastic) | 85/100 | 98/100 | 99/100 | 99/100 | 100/100 |

| Trended random walk (linear stochastic) | 100/100 | 89/100 | 90/100 | 98/100 | 100/100 |

| Random walk (linear stochastic) | 100/100 | 98/100 | 100/100 | 100/100 | 100/100 |

| Violet noise58 (linear stochastic) | 99/100 | ||||

| Blue noise58 (linear stochastic) | 100/100 | ||||

| White noise58 (linear stochastic) | 100/100 | ||||

| Pink noise58 (linear stochastic) | 100/100 | ||||

| Red noise58 (linear stochastic) | 100/100 | ||||

Table 3.

Classification accuracy in held out simulated systems. While the datasets in Tables 1, 2 were used to optimize the Chaos Decision Tree Algorithm, these datasets were not. Performance was near-perfect.

| Measurement noise level (% of std. dev.) | |||||

|---|---|---|---|---|---|

| System | 0% | 10% | 20% | 30% | 40% |

| Rössler system59 (chaotic) | 70/100 | 90/100 | 96/100 | 100/100 | 100/100 |

| Ikeda map60 (chaotic) | 100/100 | 100/100 | 100/100 | 100/100 | 14/100 |

| Hénon map61 (periodic) | 100/100 | 100/100 | 100/100 | 100/100 | 100/100 |

| Cubic map61 (period-doubled) | 100/100 | 100/100 | 100/100 | 100/100 | 100/100 |

| Poincaré oscillator41 (periodic) | 100/100 | 100/100 | 100/100 | 100/100 | 57/100 |

| Poincaré oscillator41 (quasi-periodic) | 100/100 | 100/100 | 100/100 | 100/100 | 100/100 |

| Poincaré oscillator41 (chaotic) | 100/100 | 100/100 | 100/100 | 100/100 | 100/100 |

| Multivariate AR model (linear stochastic) | 100/100 | 100/100 | 100/100 | 100/100 | 100/100 |

Table 4.

Classification accuracy in empirical (non-simulated) data from stochastic, periodic, strange non-chaotic (SNA), and chaotic systems. As was the case for the data in Table 3, these datasets were not used to optimize algorithm performance, and so are also held out datasets. Algorithm performance was perfect.

| System | |

|---|---|

| Neuron integrated circuit27 (chaotic) | 10/10 |

| Neuron integrated circuit27 (SNA) | 10/10 |

| Neuron integrated circuit27 (periodic) | 10/10 |

| Laser28 (chaotic) | 1/1 |

| Stellar flux29 (SNA) | 1/1 |

| North Atlantic Oscillation index30 (linear stochastic) | 1/1 |

| Essential tremor26 (nonlinear stochastic) | 1/1 |

| Parkinson’s tremor26 (nonlinear stochastic) | 1/1 |

Table 5 reports the accuracy of the Chaos Decision Tree Algorithm in detecting degree of chaos. Formally, a system’s degree of chaos is quantified by the magnitude of its largest Lyapunov exponent (Glossary). Unfortunately, largest Lyapunov exponents are very difficult to estimate from finite, noisy time-series recordings. But, directly estimating largest Lyapunov exponents may not be necessary for tracking changing degrees of chaos in real systems: following prior observations of a strong correlation between a quick-to-compute and noise-robust measure called permutation entropy (Glossary) and the largest Lyapunov exponents of several systems32,43, the Chaos Decision Tree Algorithm approximates degree of chaos by calculating the permutation entropy of the inputted signal, after it has been de-noised and corrected for possible over-sampling. In agreement with prior findings, we found that permutation entropy tracked degree of chaos in the logistic map, the Hénon map, the Lorenz system, a high-dimensional mean-field model of the cortex, and an electronic circuit. See Methods for details on the parameters that were used to generate dynamics with different degrees of chaos in these systems, and for details on how ground-truth largest Lyapunov exponents were calculated. Note that without downsampling, the correlation between the largest Lyapunov exponents and permutation entropy breaks down in continuous systems (Supplementary Table 14), which is to be expected, as permutation entropy has only been analytically proven to track the degree of chaos in discrete-time systems32,44 (see Glossary).

Table 5.

The Chaos Decision Tree Algorithm uses permutation entropy, calculated from data that have been de-noised and downsampled (if oversampled), to track the degree of chaos in a system, which might change as the state of the system changes.

| Measurement noise level (% of std. dev.) | |||||

|---|---|---|---|---|---|

| System | 0% | 10% | 20% | 30% | 40% |

| Logistic map52 | 0.93*** | 0.80*** | 0.93*** | 0.93*** | 0.91*** |

| Hénon map61 | 0.92*** | 0.93*** | 0.94*** | 0.92*** | 0.88*** |

| Lorenz system53 | 0.81*** | 0.71*** | 0.73*** | 0.69*** | 0.62*** |

| Cortical model20 | 0.69*** | 0.55*** | 0.39*** | 0.33*** | 0.24*** |

| Neuron integrated circuit27 | 0.94*** | ||||

We here show Spearman correlations between permutation entropy and largest Lyapunov exponents, which measure degree of chaos but which are difficult to estimate from empirical data. Data include four simulated systems and recordings from an integrated circuit in different states. See Methods for how ground-truth largest Lyapunov exponents were calculated for these systems

***p < 0.001 (two-tailed) after Bonferroni-correcting for multiple comparisons to the same set of ground-truth largest Lyapunov exponents

Finally, as a first-pass implementation of our method, we applied the Chaos Decision Tree Algorithm to recordings of human heart rate variability, made available by Physionet31. There has been considerable debate over whether or not the irregularities of heart rate signals (in either health or disease) reflect a predominantly chaotic process. While many classic chaos-detection methods have identified heart rate variability as chaotic (see Glass5 for a review), other studies have argued that this is an erroneous classification, suggesting that heart rate variability is, in fact, a nonlinear stochastic process45,46, and that prior classifications of heart rate signals as chaotic simply reflect the shortcomings of classic chaos-detection methods. In agreement with this view, we here show that the Chaos Decision Tree Algorithm classified heart rate signals from healthy subjects, congestive heart failure patients, and atrial fibrillation patients as stochastic, rather than chaotic, with the exception of two congestive heart failure patients (Table 6).

Table 6.

The Chaos Decision Tree Algorithm consistently classifies heart rate recordings, across conditions, as stochastic.

| Classification | |||

|---|---|---|---|

| Condition | Stochastic | Periodic | Chaotic |

| Healthy controls31 | 5/5 | 0/5 | 0/5 |

| Congestive heart failure31 | 3/5 | 2/5 | 0/5 |

| Atrial fibrillation31 | 5/5 | 0/5 | 0/5 |

The only exceptions were the heart rate signals recorded from two patients with congestive heart failure, which were classified as periodic

Discussion

In this paper, we have introduced a processing pipeline, called the Chaos Decision Tree Algorithm21, that can accurately detect whether a time-series signal is generated by a predominantly stochastic, periodic, or chaotic system, and can also accurately track changing levels of chaos within a system using permutation entropy. The pipeline makes no assumptions about the input data. The Chaos Decision Tree Algorithm consists of four broad steps: (1) testing for stochasticity using surrogate data methods, (2) de-noising, (3) downsampling if data are oversampled, and (3) testing for chaos using the modified 0–1 test. We tested the performance of several different surrogate data generation algorithms, de-noising algorithms, downsampling algorithms, and parameters for the modified 0–1 test. Each alternative algorithm and parameter choice has its relative strengths and weaknesses, and we have structured our code such that a user can specify which algorithms and parameters to use for each step of the pipeline. If a user only inputs a time-series recording without specifying any sub-algorithms or parameters, then our pipeline will automatically use the methods and parameters we found yielded the most accurate results across a large and diverse set of data. All analyses reported in the main body of this paper are for this automated set of subroutines.

We tested the (automated) Chaos Decision Tree Algorithm on a diverse range of simulations of biological systems, non-biological simulations, and empirical (non-simulated) data recordings. Empirical data were recorded from an integrated circuit in a periodic, strange non-chaotic, and chaotic state, a chaotic laser, the stellar flux of a strange non-chaotic star, the North Atlantic Oscillation index, a Parkinson’s tremor, an essential tremor, and heart rate variability from congestive heart failure patients, atrial fibrillation patients, and healthy controls. In the cases for which the ground-truth was known (i.e., all datasets other than heart rate variability), the Chaos Decision Tree Algorithm performed at very high accuracy even at relatively high levels of measurement noise. For heart rate variability, our results support the hypothesis that cardiac rhythm variability is stochastic45,46. Overall, these findings make us confident that the Chaos Decision Tree Algorithm can be fruitfully applied to biological and non-biological signals contaminated by measurement noise.

We note a few limitations/shortcomings of our algorithm. First, the 0–1 test used in our pipeline might classify some very weakly chaotic systems (i.e., systems whose largest Lyapunov exponent is positive but very near zero) as periodic if the length of the time-series provided is short; but, with longer time-series, the test is guaranteed to provide accurate results40. We also note that the algorithm performed poorly for the noise-driven sine map, which was consistently mis-classified as chaotic (Table 2). It is possible that this system was not classified as stochastic because its level of intrinsic noise was very low; in support of this, we found that the Chaos Decision Tree Algorithm classified nonlinear dynamical systems with very low levels of intrinsic noise as deterministic, and that classifications of stochasticity became more frequent as the level of intrinsic noise was increased (Supplementary Table 18). It is also possible that this system is, in fact, an example of noise-induced chaos47. Finally, although the choice of system observables did not appreciably affect the performance of our method (Supplementary Table 15), we agree with Letellier and colleagues48 that some system observables are better representations of a system’s dynamics than others, and that this can have important consequences for the accuracy of nonlinear time-series analysis methods such as this one. In light of these potential limitations, it bears re-emphasizing that the absence, presence, and degree of chaos can only be determined with absolute certainty in a computer model that is free of measurement noise, by running multiple simulations and seeing how quickly initially similar states diverge. Thus, although the Chaos Decision Tree Algorithm pipeline performs at very high accuracy, it should, when possible, be used in conjunction with analyses of a computer model of the system at hand.

We hope that the Chaos Decision Tree Algorithm will help advance the decades-old effort to bring the insights of chaos theory to biology. While a diverse range of biological simulations and a small number of real biological cases have been shown to be chaotic, detecting the presence and degree of chaos in biological recordings has been difficult. The Chaos Decision Tree Algorithm overcomes the difficulties of prior tests, by being fast, highly robust to measurement noise, and, unless the user specifies specific alternative subroutines, fully automated. We welcome any efforts to identify edge cases for which our pipeline systematically breaks down; given that our pipeline is a modular decision tree, new subroutines can be added to accommodate such edge cases. We hope that our pipeline (and perhaps future iterations of it) will be useful to any of the domains of science—and in particular of biology—in which chaos has been invoked, but not tested.

Methods

The Chaos Decision Tree Algorithm

To understand the logic of the Chaos Decision Tree Algorithm21, we begin with the final test in the decision tree. The crux of the Chaos Decision Tree Algorithm is the 0–1 test for chaos. The 0–1 test for chaos was originally developed by Gottwald and Melbourne37, who later offered a slightly modified version of the test, which can cope with moderate amounts of measurement noise38. Several years later, Dawes and Freeland further modified the test, such that it could suppress correlations induced by quasi-periodic dynamics, and thus more effectively distinguish between chaotic and strange non-chaotic dynamics, which are difficult to distinguish given only a time-series recording23. The modified 0–1 test involves taking a one-dimensional time-series of interest , and using it to drive the following two-dimensional system:

| 1 |

where is a random value bounded between 0 and . For a given , the solution to Eq. (1) yields:

| 2 |

Gottwald and Melbourne show that if the inputted time-series is regular, the motion of p and q is bounded, while p and q display asymptotic Brownian motion if is chaotic. The time-averaged mean square displacement of p and q, plus the noise term proposed by Dawes and Freeland23, is

| 3 |

where is a uniformly distributed random variable between and is the noise level. Finally, the outputted -statistic of the 0–1 test uses a correlation coefficient to measure the growth rate of the mean squared displacement of the two-dimensional system in Eq. (1):

| 4 |

is computed for 100 different values of , randomly sampled between 0 and , and the final output of the test is the median across different values of . For chaotic systems, this median value will approach 1, and for periodic systems, will approach 023,37–40.

There are two parameters in this modified 0–1 test: the parameter that controls the level of added noise in Eq. (3), and the cutoff for what -statistic values are classified as indicating chaos or periodicity in a finite time-series. We performed ROC-curve analyses for different values of and found that maximized classification performance across systems and noise levels (Supplementary Fig. 4), and so our pipeline automatically sets to 0.5 if is not specified by the user. Note that for non-zero values of , approaches zero as the standard deviation of a test signal approaches zero (Supplementary Fig. 5), and so the Chaos Decision Tree Algorithm multiplies a test signal by a constant to fix its standard deviation at 0.5 before applying the 0–1 test. A cutoff for K can also be inputted to our Matlab script, such that data that yield a K value greater than that cutoff are classified as chaotic and data that yield a K value less than or equal to that cutoff are classified as periodic. If no cutoff is provided, a cutoff is chosen based on an analysis of optimal cutoffs as a function of time-series length (Supplementary Fig. 6). If the automatically selected cutoff is greater than 0.99, the cutoff is set to , as is upper-bounded by 1. We have confirmed that this automated cutoff selection yields highly accurate results for sub-samples of both test and held-out datasets (Supplementary Tables 16, 17).

The 0–1 test described above only yields accurate results for data that are deterministic24,40,62,63. A system is considered deterministic if, given the exact same initial conditions, it always evolves over time the same way, whereas a system is considered stochastic if there is appreciable randomness built in to its evolution over time (Glossary, Supplementary Fig. 1, 2). Not only are all chaotic systems (predominantly) deterministic—and thus the possibility of chaos can be automatically rejected if a system is found to be stochastic (though we note that a mathematically rigorous definition of chaos has recently been extended to the domain of stochastic systems, under the framework of the Supersymmetric Theory of Stochastics47)—but the 0–1 test is also known to incorrectly classify stochastic dynamics as chaotic24,62,63. Thus, the Chaos Decision Tree Algorithm first rules out the possibility that data are predominantly stochastic before applying the modified 0–1 test. To do so, it uses a noise-robust method recently developed by Zunino and Kulp64, which tests for determinism using surrogate statistics33, with permutation entropy32 as the test statistic. The calculation of permutation entropy relies on two parameters: permutation order and time-lag. We follow the recommendation from Bandt and Pompe32 and set the time-lag to 1, and found that a permutation order of 8 maximized stochasticity detection accuracy (Supplementary Tables 2, 3). Moreover, we use a combination of amplitude adjusted Fourier transform surrogates33 and Cyclic Phase Permutation surrogates35, unlike Zunino and Kulp, who used iterative amplitude adjusted Fourier transform33 surrogates, because we found that this combination led to far higher classification accuracy (Supplementary Tables 2, 3). The Chaos Decision Tree Algorithm classifies data as stochastic (and thus does not proceed to subsequent steps) if the permutation entropy of the original data falls within either surrogate distribution. The algorithm uses the Toolboxes for Complex Systems implementation of the permutation entropy algorithm, written by Andreas Müller65. Surrogates are generated using the Matlab toolbox recently released by Lancaster and colleagues34. Note that because Fourier-based surrogates are strictly stationary, surrogate-based tests that use only Fourier-based algorithms are only valid if the test time-series is also stationary34,57; that said, we found that non-stationarity did not affect the accuracy of a stochasticity test that uses a combination of amplitude adjusted Fourier transform and Cyclic Phase Permutation surrogates (Supplementary Tables 1–4). We also did not find that a normality transformation of the data improved the performance of our surrogate-based stochasticity test (Supplementary Table 2), counter to what has been suggested elsewhere22.

If data “pass” the stochasticity test described above and are deemed operationally deterministic, then the Chaos Decision Tree Algorithm automatically denoises the inputted signal. We compared three de-noising algorithms: a moving average filter (using Matlab’s smooth.m function), the Matlab Chaotic Systems Toolbox’s66 implementation of Schreiber’s noise-reduction algorithm36 (Glossary), and wavelet de-noising using an empirical Bayesian method with a Cauchy prior (using Matlab’s wdenoise.m function). Although it is considerably slower to run, Schreiber denoising markedly outperforms the other two approaches in recovering the deterministic component of signals contaminated by measurement noise (Supplementary Table 5), and markedly improves the performance of the modified 0–1 test (Supplementary Table 6, Supplementary Fig. 4). Thus, the Chaos Decision Tree Algorithm automatically uses Schreiber de-noising before testing for chaos, unless the user specifies one of the other two de-noising algorithms tested here to be used instead.

The final step of the Chaos Decision Tree Algorithm before applying the 0–1 test is to check if data are oversampled and to downsample them if they are. Gottwald and Melbourne have shown39 that the 0–1 test can give inaccurate results for continuous (i.e., non-discrete-time) systems sampled at a very high frequency, but that it can accurately differentiate between periodic dynamics and chaotic dynamics in continuous deterministic systems when data are properly downsampled. In light of this, the Chaos Decision Tree Algorithm utilizes the (crude) test for oversampling used by Matthews67, by calculating a measure , which is the difference between the global maximum and global minimum of the data divided by the mean absolute difference between consecutive time-points in the data. If , then the data are deemed to be oversampled, and the Chaos Decision Tree Algorithm iteratively downsamples the data by a factor of 2 until or until there are fewer than 100 time-points left in the signal. We compared this approach both to no downsampling and to an alternative method, suggested by Eyébé Fouda and colleagues68 to improve 0–1 test performance, which downsamples by taking just the local minima and maxima of oversampled signals. We found that downsampling after de-noising yields more accurate results than either alternative approach when oversampled signals are contaminated by measurement noise (Supplementary Table 6). We also note that recorded experimental data may be unlikely to be oversampled (Supplementary Table 7), and that this problem may be more likely to arise in simulated continuous systems. If the data are not oversampled, or if they have been downsampled, the Chaos Decision Tree Algorithm then applies the modified 0–1 test to the data, as described above.

Finally, the algorithm uses the permutation entropy of the inputted signal as a proxy for the degree of chaos in the system. Though the algorithm uses permutation entropy to establish whether or not a signal is predominantly deterministic (see above), permutation entropy has also been shown to tightly track the largest Lyapunov exponent (and therefore the degree of chaos) of the logistic map32, the tent map69, and the Duffing oscillator43. We should in general expect a close correspondence between permutation entropy and Lyapunov exponents, in light of the equivalence in discrete-time systems between permutation entropy and Kolmogorov-Sinai entropy44,70–72, which is upper-bounded by the sum of a system’s positive Lyapunov exponents—a relationship known as the “Pesin identity”73. When calculating permutation entropy to track degree of chaos (rather than for determinism testing as above), we follow Bandt and Pompe’s32 recommendation and simply set the time-lag to 1 and the permutation order to 5, which we showed tracks degree of chaos in all systems tested (Table 5). Because this equivalence is only known to hold for discrete-time systems44, permutation entropy is only calculated after the inputted signal has been de-noised and, if oversampled, downsampled; this considerably improves its ability to track degree of chaos in continuous systems (Table 5, Supplementary Table 14).

The full decision tree of our algorithm is depicted graphically in Fig. 1.

Data

Biological simulations

The following describes the simulations of biological systems analyzed in this paper. We only picked biological simulations for which the presence or absence of chaos has been established in prior work. Initial conditions were randomized in all simulations. We also tested the effect of measurement noise on the accuracy of the Chaos Decision Tree Algorithm in classifying systems, by adding white noise to our simulated data, the amplitude of which was up to 40 the standard deviation of the original data. For each simulated system and level of measurement noise, we created 100 datasets with 10,000 time points.

Chaotic mean-field cortical model. Steyn-Ross, Steyn-Ross, and Sleigh20 describe a mean-field model of the cortex based on the equations first introduced by Liley and colleagues74,75, which includes electrical gap-junction synapses in addition to the standard chemical synapses used in the earlier models. The model contains both inhibitory and excitatory neural populations communicating locally through gap junctions and chemical synapses and communicating over long ranges via myelinated axons. The dynamics of each neural population in the model are determined by two first-order and six second-order partial differential equations, which is equivalent to 14 first-order differential equations. The primary output of the model is the mean excitatory firing rates of 120 neural populations, which approximates the large-scale cortical signals that might be measured through electrocortigraphy, magnetoencephalography, or electroencephalography. Steyn-Ross, Steyn-Ross, and Sleigh20 show that just by varying the inhibitory gap-junction diffusive-coupling strength parameter in their model, they can produce dynamics ranging from periodicity to strong chaos. In their simulation of “waking” cortical dynamics, Turing (spatial) and Hopf (temporal) instabilities interact to produce chaotic, low-frequency spatiotemporal oscillations. For chaotic dynamics, we simulated 2,000,000 time-points of their “wake” simulation, with the inhibitory gap-junction diffusive-coupling strength parameter set to 0.4, and then downsampled the data to 10,000 time-points. We only applied our algorithm to the mean excitatory firing rate of one neural population, i.e. to just one out of 14 variables describing the dynamics of just one out of 120 interacting such 14-dimensional systems (though the variable is biologically well-defined). The Matlab code for the simulations is available in the Supplementary Material of Steyn-Ross, Steyn-Ross, and Sleigh20.

Periodic mean-field cortical model. Steyn-Ross, Steyn-Ross, and Sleigh show that their cortical mean-field model enters a periodic, seizure-like state dominated by a Hopf instability when the inhibitory gap-junction diffusive-coupling strength parameter is set to 0.1. Just as in the chaotic case, we simulated 2,000,000 time-points and then downsampled to 10,000 time-points. Note that Steyn-Ross, Steyn-Ross, and Sleigh estimate the largest Lyapunov exponent of their model to be around zero when the inhibitory gap-junction diffusive-coupling strength parameter is 0.1, whereas our own estimate (using an automated version of their same method—see below) placed the largest Lyapunov exponent more clearly in the periodic regime, at −2.1.

Chaotic spiking neuron. Izhikevich49,76 describes a simple neuron model that can display both spiking and bursting behavior. The model consists of a neuron’s membrane potential , a membrane recovery variable , an input current , and parameters , , , and :

| 5 |

with the auxiliary after-spike resetting:

| 6 |

When , , , , and , the neuron’s membrane potential (which is the variable we analyze) displays chaotic spikes49,76. We simulated the Izhikevich neuron using a first-order Euler method, with an integration step of 0.25 ms. We generated 50,000 time points, and dowsampled by a factor of 5 (to avoid over-sampling).

Periodic white blood cell concentration. Inspired by the finding that chronic granulocytic leukemia involves apparently aperiodic oscillations in the concentration of circulating white blood cells77, Mackey and Glass19 study mathematical models of oscillating physiological control systems. They describe a simple mathematical model of the concentration of circulating white blood cells:

| 7 |

where , , and . The parameter represents the delay between white blood cell production in bone marrow and the release of those cells into the blood stream. Since this cellular generation delay time is increased in some patients with chronic granulocytic leukemia, Mackey and Glass study the behavior of this system as a function of the delay time . They find that as increased, the resulting oscillations produced by this equation became aperiodic. Through formal analysis of Lyapunov exponents of this system, Farmer78 later confirmed that for , the oscillations of this system are periodic. We simulated 100,000 time-points of the periodic Mackey-Glass system using a first-order Euler method with an integration step of 1, and then downsampled by a factor of 10 (to avoid over-sampling).

Chaotic white blood cell concentration. For , Farmer confirmed78 that the Mackey-Glass equation (Eq. (7)) for the concentration of circulating white blood cells yields a chaotic oscillation. We simulated 100,000 time-points of the chaotic Mackey-Glass system using a first-order Euler method with an integration step of 1, and then downsampled by a factor of 10 (to avoid over-sampling).

Periodic NF-κB transcription. Heltberg and colleageus14 recently described a five-dimensional mathematical model of oscillating concentrations of the transcription factor NF-κB, which regulates several genes involved in immune responses and is widely studied in immunity and cancer research. They show that the dynamics of NF-κB concentration are coupled to varying levels of a cytokin-like tumor necrosis factor (TNF). They show that when TNF oscillations have a low amplitude, NF-B oscillations are periodic. We simulated periodic NF-B oscillations using Heltberg and colleagues’ Matlab code, available at https://github.com/Mathiasheltberg/ChaoticDynamicsInTranscriptionFactors.

Chaotic NF-κB transcription. Heltberg and colleagues14 show that by increasing the amplitude of the TNF signal, the oscillating number of NF-κB molecules in their model becomes chaotic. We simulated chaotic NF-κB oscillations using Heltberg and colleagues’ Matlab code, available at https://github.com/Mathiasheltberg/ChaoticDynamicsInTranscriptionFactors.

Non-biological simulations

Because there are only a limited number of biological simulations for which the presence of chaos has already been established, we also applied the Chaos Decision Tree Algorithm to a wide range of mathematical systems previously studied in the chaos theory and nonlinear time-series analysis literatures:

Chaotic cubic map. Venkatesan and Lakshmanan50 describe a quasiperiodically forced cubic map, which can exhibit a large diversity of periodic, chaotic, and strange non-chaotic dynamics. In particular, the map exhibits many different routes to chaos. Their system is described by the following:

| 8 |

where (the golden ratio). We set , , and , which Venkatesan and Lakshmanan have shown lead to chaotic dynamics50. For the results reported in Table 2, we followed Dawes and Freeland23 in taking a linear combination of x and : . Results for x individually are reported in Supplementary Table 15 (results for on its own are not informative, as is an independent, quasi-periodic process).

Periodic cubic map. Venkatesan and Lakshmanan50 show that the system in Eq. (8) exhibits periodic (one-frequency torus) dynamics when , , and . We picked these parameters for periodic dynamics. To get a time-series from the cubic map, we again took a linear combination of x and : . Results for x and individually are reported in Supplementary Table 15.

Strange non-chaotic cubic map (HH). We set , , and for one type of strange non-chaotic dynamics, which Venkatesan and Lakshmanan50 have shown bring the forced cubic map into a strange non-chaotic regime via the Heagy-Hammel route (i.e., collision of a period-doubled quasi-periodic torus with its unstable parent). Results for x individually are reported in Supplementary Table 15.

Strange non-chaotic cubic map (S3). We set , , and for a second type of strange non-chaotic dynamics, which Venkatesan and Lakshmanan50 have shown push the forced cubic map into a strange non-chaotic regime via Type-3 Intermittency (i.e., inverse period-doubling bifurcation). Results for x individually are reported in Supplementary Table 15.

Period-doubled cubic map. Venkatesan and Lakshmanan50 show that the system in Eq. (8) exhibits period-doubled dynamics when , , and . We picked these parameters for period-doubled dynamics. To get a time-series from the cubic map, we again took a linear combination of x and : . Results for x individually are reported in Supplementary Table 15.

Strange non-chaotic GOPY model. The first known strange non-chaotic system was described by Grebogi, Ott, Pelikan, and Yorke, commonly referred to as the GOPY model51. The GOPY model is described by the following:

| 9 |

where , , and (the golden ratio). To get a time-series from the GOPY model, we followed Dawes and Freeland23 in taking a linear combination of x and : . Results for x individually are reported in Supplementary Table 15 (as is the case for the cubic map, results for on its own are not informative, as is an independent, quasi-periodic process).

Chaotic logistic map. The logistic map is one of the simplest known systems that can exhibit both periodic and chaotic behavior. It was originally introduced by biologist Robert May52 as a discrete-time model of population growth. It is described by the following equation:

| 10 |

where represents the ratio of the population size at time to the maximum possible population size. For chaotic dynamics52, we set .

Periodic logistic map. For periodic dynamics52 in the logistic map, we set in Eq. (10).

Chaotic Lorenz system. Perhaps the most famous of all chaotic systems, the Lorenz model of atmospheric convection is described by the following system of equations53:

| 11 |

where x is the rate of convection, y is the horizontal temperature variation, and z is the vertical temperature variation. Though the equations were initially meant to model atmospheric convection, identical equations have been found in models of a wide variety of physical systems, including lasers79 and chemical reactions80. We set , , and , for which the Lorenz system exhibits chaos (determined by calculating the largest Lyapunov exponent of the system with these parameters, using Ramasubramanian’s algorithm81). We integrated the equations for the Lorenz system using the Fourth Order Runge-Kutta method with an integration step of 0.01. To get a single time-series from the Lorenz model, we took a linear combination of x and y: =x + y. Results for x, y, and z individually are reported in Supplementary Table 15.

Hyperchaotic generalized Henon map. Data from hyperchaotic systems, which contain more than one positive Lyapunov exponent, can be difficult to distinguish from noise25. As such, hyperchaotic systems present a challenge to tests of determinism from time-series data, which might mistake hyperchaos for stochasticity. To demonstrate the robustness of the Chaos Decision Tree Algorithm’s stochasticity test, we analyzed the Generalized Henon Map, which is described by the following equation:

| 12 |

We set and , for which the Generalized Henon Map produces hyperchaos54.

Noise-driven sine map. Freitas and colleagues55 describe a non-chaotic, randomly driven system:

| 13 |

where , is a random Bernoulli process that equals 1 with probability 0.01 and 0 with probability 0.99, and is a random variable uniformly distributed between −2 and 2. Freitas and colleagues show that a chaos-detection technique called “noise titration”82 incorrectly classifies this system as chaotic.

Freitas map. Freitas and colleagues55 also describe a nonlinear correlated noise process, which we here call the “Freitas map.” The Freitas map contains dynamic noise added to a nonlinear moving average filter:

| 14 |

where is a uniform random variable distributed between 0 and 1. Freitas and colleagues show that the noise titration technique also incorrectly classifies this system as chaotic.

Bounded random walk. Nicolau56 describes a bounded random walk (BRW), which is a globally stationary process with local unit roots (i.e. local non-stationarities):

| 15 |

where , , , , and are parameters, and is a white noise error term. Note that the bounded random walk can be decomposed into a random walk, , plus an adjustment function . The adjustment function serves to pull the random walk toward if the process deviates too far from . Though it is a stationary process (albeit with local non-stationarities), the bounded random walk is often mis-classified as non-stationary by stationarity tests83. Following Nicolau56 and Patterson83, we set , , , , and , which generates a random walk that remains roughly within the interval of 1005.

Cyclostationary process. A cyclostationary autoregressive process is essentially a combination of a noise-driven linear damped oscillator and linear relaxators. Cyclostationary systems are non-stationary because their probability distributions vary cyclically with time. Following Timmer57, we simulate a cyclostationary process described by the following:

| 16 |

where is a white noise error term and

| 17 |

is the relaxation time and is the oscillation period. We set and , which Timmer has shown leads to incorrect classification of this system as nonlinear or deterministic by surrogate tests that only use Fourier-based surrogates, which are strictly stationary.

ARMA process. A general autoregressive moving-average (ARMA) process is described by the following:

| 18 |

where is a constant, and are parameters, and are white noise error terms. An ARMA process with a lag of 1, or an ARMA(1) process, is:

| 19 |

When , the ARMA process is (weakly) stationary. When is close to but less than 1 and , ARMA processes, though stationary, are often mis-classified as non-stationary by stationarity tests84. All ARMA processes simulated in this paper were lag 1, and we set and . For the analyses in Supplementary Tables 1–4 and in Table 2, we drew from a random, normal distribution with mean and standard deviation for each simulation. We also tested ARMA(1) processes for values fixed at −0.5, 0, 0.5, and 0.9 (Supplementary Table 19).

Random walk. A random walk is modeled by an autoregressive process with a unit root:

| 20 |

where is a white noise error term with mean and standard deviation . Random walks are non-stationary.

Trended random walk. A trended random walk introduces a secondary non-stationarity, namely, a linear trend, to the random walk:

| 21 |

where is a white noise error term with mean and standard deviation and is the slope of the linear trend. For all trended random walks simulated in this paper, was randomly drawn from a Gaussian distribution with mean and standard deviation .

Colored noise. Colored noise refers to a stationary, stochastic process with a non-uniform power spectrum. White noise has a uniform power spectrum (meaning equal power at all frequencies); pink noise has a power spectral density proportional to , where is frequency; red noise has a power spectral density proportional to ; blue noise has a power spectral density proportional to ; and violet noise has a power spectral density proportional to . All colored noise signals were simulated using Zhivomirov’s algorithm58, available at https://www.mathworks.com/matlabcentral/fileexchange/42919-pink-red-blue-and-violet-noise-generation-with-matlab.

Rössler system. The Rössler system is described by the following system of differential equations59:

| 22 |

We set , , and , for which the Rössler system exhibits chaos59. controls the frequency of the system’s oscillations, and was set to 1. We integrated the equations for the Rössler system using the Fourth Order Runge-Kutta method with an integration step of 0.01. We generated 5,000,000 time-points, and then downsampled to 10,000 datapoints. To get a single time-series from the Rössler model, we took a linear combination of x and y: = x + y. Results for x, y, and z individually are reported in Supplementary Table 15.

Ikeda map. Ikeda and colleagues described a chaotic model of light passing through a nonlinear optical resonator85. The model can be simplified into a two-dimensional map86:

| 23 |

where is a parameter and

| 24 |

We set , for which the Ikeda map exhibits chaos86. Table 3 reports results for a linear combination of the two variables, = x + y. Results for x and y individually are reported in Supplementary Table 15.

Hénon map. The Hénon map61 is a two-dimensional system of equations:

| 25 |

We set and , for which the Hénon map is periodic87. Table 3 reports results for a linear combination of the two variables, = x + y. Results for x and y individually are reported in Supplementary Table 15.

Periodic Poincaré oscillator. The Poincaré oscillator has been widely studied as a model of biological oscillations, particularly as a model of the effect of periodic stimulation on the dynamics of biological oscillators88. The oscillator is described by the following equations:

| 26 |

where is a positive value that controls the oscillator’s relaxation rate. The phase of this system is described by its angular coordinate in a unit cycle. Periodic stimulation of the system is modeled as a perturbation of magnitude away from the unit cycle, which leads to an instantaneous resetting of the phase of the oscillator, as determined by the following phase resetting curve:

| 27 |

The period of the stimulation is determined by a parameter . For periodic dynamics, we analyze the time-varying phase of the Poincaré oscillator with and , which Guevara and Glass show leads to phase locking between the oscillator and the periodic perturbations41.

Quasi-periodic Poincaré oscillator. For quasi-periodic dynamics41 in the Poincaré oscillator, wet set in Eq. (20), with an inter-stimulus delay .

Chaotic Poincaré oscillator. For chaotic dynamics41 in the Poincaré oscillator, wet set and .

Stochastic Lorenz system. To study the effect of dynamic noise on our algorithm’s classification of stochastic chaotic systems, we took the Lorenz system described in Eq. (11), and added intrinsic/dynamic Gaussian noise to the x component of the system (we found that the system was far less sensitive to noise being injected into the y variable):

| 28 |

where is a normally distributed random variable with mean 0 and standard deviation 1, and is a parameter that controls the amplitude of the dynamic/intrinsic noise. As for the deterministic case, we set , , and . The stochastic Lorenz system was simulated using the Fourth Order Runge-Kutta method with an integration step of 0.01. Supplementary Table 18 reports results for different values of , both for all system variables individually and for the linear combination x + y.

Stochastic Rössler system. We also took the Rössler system described in Eq. (22), and added dynamical Gaussian noise to the x component of the system:

| 29 |

where is a normally distributed random variable with mean 0 and standard deviation 1, and is a parameter that controls the amplitude of the dynamic/intrinsic noise. As for the deterministic case, we set , , and , and simulated the model using the Fourth Order Runge-Kutta method with an integration step of 0.01. We generated 5,000,000 time-points, and then downsampled to 10,000 datapoints. Supplementary Table 18 reports results for different values of , both for all system variables individually and for the linear combination of x + y.

Multivariate AR model. We generated random multivariate autoregressive (AR) models using the Multivariate Granger Causality (MVGC) toolbox89. To create random regression matrices, we created random 5-node dense positive definite matrices using Matlab’s sprandsym.m function, with a graph density of 1. To ensure stationary dynamics, we used the MVGC toolbox’s var_specrad.m function to decay the coefficients of the random dense positive definite matrices so that their spectral radii were 0.8. To ensure uncorrelated noise in the resulting AR model, we created error matrices with diagonal elements set to 1 and off-diagonal elements set to 0. We then inputted these regression and error matrices into the MVGC toolbox’s var_to_tsdata.m function to create multivariate time-series with 5 nodes and 10,000 time-points. We then applied the Chaos Decision Tree Algorithm to just the univariate activity of the first node of the resulting multivariate signal.

Empirical data

We here describe the real-world data analyzed in this paper, and how these data were previously classified as stochastic, periodic, or chaotic:

A chaotic neuron integrated circuit. Data recorded from an integrated circuit were kindly sent to us by Seiji Uenohara and colleagues. The circuit is a physical implementation of a chaotic neuron model that is based on the Hudgkin-Huxley equations90. The equations governing the circuit’s behavior can be reduced to the following two-dimensional map:

| 30 |

where is a monotonically decreasing nonlinear output function, controls the amplitude of the quasi-periodic forcing, and (the golden ratio). The quasi-periodic external forcing was inputted to the circuit using an analog board PXI-6289, which was also used to record the circuit’s output. Varying the parameter can bring the circuit into periodic, strange non-chaotic, and chaotic states, which Uenohara and colleagues were able to classify by analyzing the consistency of the circuit’s response to an external input27. There are 10 datasets recorded from the circuit’s chaotic state.

A strange non-chaotic neuron integrated circuit. There are 10 datasets recorded from the strange non-chaotic state of Uenohara and colleagues’ circuit.

A periodic neuron integrated circuit. There are 10 datasets recorded from the periodic state of Uenohara and colleagues’ circuit.

Chaotic laser. Hübner and colleagues28 used phase portrait, correlation integral, and autocorrelation function analyses to detect chaos in the intensity pulsing of an unidirectional far-infrared NH3 ring laser. Laser data were downloaded from https://www.pdx.edu/biomedical-signal-processing-lab/chaotic-time-series.

Stellar flux of a strange non-chaotic star. We analyzed stellar flux from KIC 5520878, the only known non-artificial strange non-chaotic system29. Data were sent to us by John F. Lindner, who, together with colleagues, determined the status of KIC 5520878 as a strange non-chaotic system using a series of spectral scaling analyses29. Data were originally obtained from the Mikulski Archive for Space Telescopes. Because there are large shifts in the data due to the stellar flux being recorded in different pixels of the Kepler Space Telescope, we visually inspected the data to find a relatively stable period (i.e. a period in between large shifts) and then detrended the data. We thus exclusively analyzed time points 11,620 to 14,003 from the dataset analzed in Lindner and colleagues’ paper29.

North Atlantic Oscillation Index. We analyzed the monthly mean North Atlantic Oscillation (NAO) Index from January 1950 to December 2018. The NAO Index is the difference in atmospheric pressure at sea level between the Azores high and the Icelandic low, and has been shown by several groups of researchers, employing a range of techniques, to be stochastic25,91–96. Data were downloaded from the Climate Prediction Center website (http://www.cpc.ncep.noaa.gov/).

Parkinson’s tremor. We analyzed recordings of a Parkinson’s patient’s hand acceleration, measured for 30 s at a sampling rate of 1000 Hz by piezoresistive accelerometers. Through analyses of correlation integrals, Poincaré and return maps, Lyapunov exponents, and the - method, Timmer and colleageus57 showed that this Parkinson’s tremor was a nonlinear stochastic oscillator. Data were downloaded from http://jeti.uni-freiburg.de/path_tremor/readme.

Essential tremor. We analyzed recordings of hand acceleration from a patient with an essential tremor, also measured for 30 seconds at a sampling rate of 1000 Hz by piezoresistive accelerometers. As they did with the Parkinson’s tremor, Timmer and colleagues used correlation integrals, Poincaré and return maps, Lyapunov exponents, and the - method to show that this essential tremor was a nonlinear stochastic oscillator. Data were downloaded from http://jeti.uni-freiburg.de/path_tremor/readme.

Heart rate (healthy subjects). Five heart beat (RR-interval) time-series recordings from healthy subjects were downloaded from Physionet31: https://www.physionet.org/challenge/chaos/. The signals were recorded using continuous ambulatory (Holter) electrocardiograms, and are in sinus rhythm. Outliers were filtered out of the data using Physionet’s WFDB software package. Though a full 24 h of data were available for each subject, we only took the first 2.78 h of data, corresponding to 10,000 time-points. This was both to save on computation time and to be consistent with the length of other time-series analyzed in this paper.

Heart rate (congestive heart failure patients). Five heart beat (RR-interval) time-series recordings from patients with congestive heart failure were downloaded from Physionet31. Like the healthy rate signals, these data were recorded using continuous ambulatory (Holter) electrocardiograms, are in sinus rhythm, and were filtered for outliers. Though a full 24 h of data were available for each subject, we only took the first 2.78 h of data.

Heart rate (atrial fibrillation). Five heart beat (RR-interval) time-series recordings from patients with congestive heart failure were downloaded from Physionet31. Like the healthy rate signals, these data were recorded using continuous ambulatory (Holter) electrocardiograms and were filtered for outliers, but are not in sinus rhythm. We only took the first 2.78 h of data, corresponding to 10,000 time-points.

Parameters and largest Lyapunov exponents for data in Table 5

We here describe the methods used to generate data with different degrees of chaos for the analyses reported in Table 5, as well as the methods used to calculate largest Lyapunov exponents in these systems.

Logistic map. The logistic map has only a single parameter, (see above). Following Bandt and Pompe32, we varied between 3.5 and 4, in intervals of 0.001, to generate 501 10,000 time-point signals with different levels of chaos. Ground-truth largest Lyapunov exponents were calculated using the derivative method, which does not involve generating time-series data.

Hénon map. To generate different degrees of chaos in the Hénon map, we varied its parameter (see above) between 1 and 1.4, in intervals of 0.001, to generate 401 10,000 time-point signals with different degrees of chaos. Ground-truth largest Lyapunov exponents were calculated using code provided in Dynamical Systems with Applications using Matlab97, available at https://github.com/springer-math/Dynamical-Systems-with-Applications-using-MATLAB/.

Lorenz system. For the Lorenz system, we varied its parameter between 5.75 and 15, in intervals of 0.05, and generated 10,000 time-points per simulation. Within this parameter range, the Lorenz system is chaotic, but displays varying degrees of chaos. To calculate largest Lyapunov exponents for each parameter, we used the algorithm provided by Ramasubramanian81, which, like the algorithms used for the logistic and Hénon maps, does not involve generating time-series data.

Mean-field cortical model. Following Steyn-Ross, Steyn-Ross, and Sleigh20, different levels of chaos in their mean-field cortical model were generated by varying two parameters: postsynaptic inhibitory response and inhibitory diffusion. The postsynaptic inhibitory response parameter ( in their model) was varied between 0.98 and 1.018 in intervals of 0.001, and the inhibitory diffusion parameter ( in their model) was varied between 0.1 and 0.8 in intervals of 0.05, producing a total of 585 parameter configurations. We simulated 25,000 time-points in the model, with no downsampling, so we could again test the effect of the Chaos Decision Tree Algorithm’s automated downsampling on permutation entropy’ ability to track level of chaos in continuous systems. Unfortunately, there are no tools for analytically estimating the largest Lyapunov exponent of the mean-field cortical model, and so largest Lyapunov exponents were approximated by running two noise-free simulations of the model for each parameter configuration, with very slightly different initial conditions, and fitting a line to the rate of divergence between the two simulations from the beginning of the simulations to the point when their divergence rate saturates and flattens out. The slope of the fitted line is taken as the estimate of the largest Lyapunov exponent10. While Steyn-Ross, Steyn-Ross, and Sleigh’s code for estimating largest Lyapunov exponents using this method requires subjective evaluation of where to fit the line (i.e. finding the non-saturated part of the divergence rate plot), we automated this process by fitting a line from the beginning of the divergence rate plot to the point where its mean abruptly changes, reflecting saturation; this point was determined using Matlab’s findchangepts.m function. Simulations with approximated largest Lyapunov exponents less than −5 or greater than 5 were excluded from the analysis, as these are likely poor estimates; visual inspection confirmed that for these cases, there was often no clear point of saturation for the divergence rate between simulations, and so lines were often automatically fitted to particularly steep, short sub-segments of the plot. Visual inspection further confirmed that in most other cases, there was a clear, linear rate of divergence between simulations followed by saturation, and that the automatically fitted line was a good fit.

Neuron integrated circuit. The integrated circuit data analyzed in Table 5 are the same as those analyzed in Table 4. Because the circuit is a physical implementation of a known and simple two-dimensional system of equations, Uenuhara and colleagues used those equations to calculate the ground-truth largest Lyapunov exponents of the circuit in its three different states (periodic, strange non-chaotic, and chaotic), and report those largest Lyapunov exponents in their paper27.

Statistics and reproducibility

In reporting the performance of the Chaos Decision Tree Algorithm, we only made recourse to statistical tests in Table 5 and Supplementary Table 14, where we report the (two-tailed) p-values of Spearman correlations between largest Lyapunov exponents and permutation entropies. Because these correlations were calculated against the same set of ground-truth largest Lyapunov exponents for each system, p-values were Bonferroni-corrected for multiple comparisons. Elsewhere, we only report the fraction out of all datasets that were correctly classified as stochastic, periodic, or chaotic (with exact sample sizes provided in all tables). Within the Chaos Decision Tree Algorithm itself, statistical tests appear in two locations. First, if the user chooses to test for stationarity, then the pipeline will only proceed to test for stochasticity if the test signal passes a (two-tailed) stationarity test with = 0.05. Moreover, the pipeline uses surrogate statistics to test for determinism: if the permutation entropy of the inputted signal lies outside the distribution of permutation entropies of 1000 surrogates (which is equivalent to a two-tailed statistical test with = 9.99e−4), then the data are classified as being generated by a predominantly deterministic system. No sample size calculation was performed before analyzing the data presented, as our results simply report classification accuracy for a large number of datasets (and no statistical analyses were performed beyond reporting classification accuracy). No data were excluded from the analysis. Data in Tables 1, 2 were used to optimize our algorithm, which was then re-tested on datasets in Tables 3, 4. Finally, note that no p-value is associated with the -statistic outputted by the 0–1 test for chaos. To facilitate the reproducibility of our analyses, we have included code alongside our pipeline, as well as links to code in our Methods, for generating all simulated datasets tested in this paper. Links to most empirical datasets analyzed here have been provided in the Methods.

Reporting summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Supplementary information

Acknowledgements

We thank Seiji Uenohara and colleagues for kindly sending us data recorded from their chaotic neuron integrated circuit, and we thank John F. Lindner for sending us data on the stellar flux of KIC 5520878. We also thank Michael Jansson for his feedback on stationarity testing.

Author contributions

D.T. conceived of the project, wrote the code for the Chaos Decision Tree Algorithm, analyzed results, and wrote the manuscript. F.T.S. and M.D. contributed to discussing, editing, and revising the paper.

Data availability

Links to Matlab scripts for simulating the mean-field cortical model and NF-κB transcription are provided in the Methods. Matlab scripts for simulating all other systems described in this paper are provided along with the code for the Chaos Decision Tree Algorithm at https://figshare.com/s/80891dfb34c6ee9c8b34 (DOI: doi.org/10.6084/m9.figshare.7476362.v7). All empirical datasets except for the integrated circuit recordings are freely available online, and URLs to each data source are provided in the Methods.

Code availability

The code for the Chaos Decision Tree Algorithm is provided at https://figshare.com/s/80891dfb34c6ee9c8b34 (DOI: doi.org/10.6084/m9.figshare.7476362.v7).

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary information is available for this paper at 10.1038/s42003-019-0715-9.

References

- 1.Linde AD. Eternally existing self-reproducing chaotic inflanationary universe. Phys. Lett. B. 1986;175:395–400. doi: 10.1016/0370-2693(86)90611-8. [DOI] [Google Scholar]

- 2.Wisdom J, Peale SJ, Mignard F. The chaotic rotation of hyperion. Icarus. 1984;58:137–152. doi: 10.1016/0019-1035(84)90032-0. [DOI] [Google Scholar]

- 3.Hudson J, Mankin J. Chaos in the belousov-zhabotinskii reaction. J. Chem. Phys. 1981;74:6171–6177. doi: 10.1063/1.441007. [DOI] [Google Scholar]

- 4.Kaplan DT, et al. Subthreshold dynamics in periodically stimulated squid giant axons. Phys. Rev. Lett. 1996;76:4074. doi: 10.1103/PhysRevLett.76.4074. [DOI] [PubMed] [Google Scholar]

- 5.Glass L. Introduction to controversial topics in nonlinear science: Is the normal heart rate chaotic? Chaos. 2009;19:028501. doi: 10.1063/1.3156832. [DOI] [PubMed] [Google Scholar]

- 6.Goldberger AL, West BJ. Applications of nonlinear dynamics to clinical cardiology. Ann. N. Y. Acad. Sci. 1987;504:195–213. doi: 10.1111/j.1749-6632.1987.tb48733.x. [DOI] [PubMed] [Google Scholar]

- 7.Skarda CA, Freeman WJ. How brains make chaos in order to make sense of the world. Behav. brain Sci. 1987;10:161–173. doi: 10.1017/S0140525X00047336. [DOI] [Google Scholar]

- 8.Pool R. Is it healthy to be chaotic? Science. 1989;243:604–607. doi: 10.1126/science.2916117. [DOI] [PubMed] [Google Scholar]

- 9.Korn H, Faure P. Is there chaos in the brain? ii. experimental evidence and related models. C. R. Biol. 2003;326:787–840. doi: 10.1016/j.crvi.2003.09.011. [DOI] [PubMed] [Google Scholar]

- 10.Destexhe A. Oscillations, complex spatiotemporal behavior, and information transport in networks of excitatory and inhibitory neurons. Phys. Rev. E. 1994;50:1594. doi: 10.1103/PhysRevE.50.1594. [DOI] [PubMed] [Google Scholar]

- 11.Lizier, J.T., Prokopenko, M. & Zomaya, A.Y. The information dynamics of phase transitions in random boolean networks. In ALIFE, 374–381 (2008).

- 12.Nagao N, Nishimura H, Matsui N. A neural chaos model of multistable perception. Neural Process. Lett. 2000;12:267–276. doi: 10.1023/A:1026511124944. [DOI] [Google Scholar]