Abstract

Correlation coefficients are abundantly used in the life sciences. Their use can be limited to simple exploratory analysis or to construct association networks for visualization but they are also basic ingredients for sophisticated multivariate data analysis methods. It is therefore important to have reliable estimates for correlation coefficients. In modern life sciences, comprehensive measurement techniques are used to measure metabolites, proteins, gene-expressions and other types of data. All these measurement techniques have errors. Whereas in the old days, with simple measurements, the errors were also simple, that is not the case anymore. Errors are heterogeneous, non-constant and not independent. This hampers the quality of the estimated correlation coefficients seriously. We will discuss the different types of errors as present in modern comprehensive life science data and show with theory, simulations and real-life data how these affect the correlation coefficients. We will briefly discuss ways to improve the estimation of such coefficients.

Subject terms: Biochemical networks, Biomarkers, Statistics

Introduction

The concept of correlation and correlation coefficient dates back to Bravais1 and Galton2 and found its modern formulation in the work of Fisher and Pearson3,4, whose product moment correlation coefficient has become the most used measure to describe the linear dependence between two random variables. From the pioneering work of Galton on heredity, the use of correlation (or co-relation, as it was termed) spread to virtually all fields of research, and results based on it pervade the scientific literature.

Correlations are generally used to quantify, visualize and interpret bivariate (linear) relationships among measured variables. They are the building blocks of virtually all multivariate methods, such as Principal Component Analysis (PCA5–7), Partial Least Squares regression and Canonical Correlation Analysis (CCA8), which are used to reduce, analyze and interpret high-dimensional omics data sets, and they are often the starting point for the inference of biological networks such as metabolite-metabolite association networks9,10, gene regulatory networks11,12 and co-expression networks13,14.

Fundamentally, correlation and correlation analysis are pivotal for understanding biological systems and the physical world. With the increase of comprehensive measurements (liquid-chromatography mass-spectrometry, nuclear magnetic resonance (NMR), gas-chromatography mass-spectrometry (MS) in metabolomics and proteomics; RNA-sequencing in transcriptomics) in life sciences, correlations are used as a first tool for visualization and interpretation, possibly after selection of a threshold to filter the correlations. However, the complexity and the difficulty of estimating correlation coefficients are not fully acknowledged.

Measurement error is intrinsic to every experimental technique and measurement platform, be it a simple ruler, a gene sequencer or a complicated array of detectors in a high-energy physics experiment, and already in the early days of statistics it was known that measurement error can bias the estimation of correlations15. This bias was first called attenuation because it was found that under the error condition considered, the correlation was attenuated towards zero. The attenuation bias has been known and discussed in some research fields16–19 but it seems to be totally neglected in modern omics-based science. Moreover, contemporary comprehensive omics measurement techniques have far more complex measurement error structures than the simple ones considered in the past on which early results were based.

In this paper, we intend to show the impact of measurement errors on the quality of the calculated correlation coefficients and we do this for several reasons. First, to make the omics community aware of the problems. Secondly, to make the theory of correlation up to date with current omics measurements taking into account more realistic measurement error models in the calculation of the correlation coefficient and third, to propose ways to alleviate the problem of distortion in the estimation of correlation induced by measurement error. We will do this by deriving analytical expressions supported by simulations and simple illustrations. We will also use real-life metabolomics data to illustrate our findings.

Measurement Error Models

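We start with the simple case of two correlated biological entities $x_0$ and $y_0$ which vary randomly in a population. These may be, e.g., concentrations of two blood metabolites in a cohort of persons or gene-expressions of two genes in cancer tissues. We will assume that these variables are normally distributed

$$ (x_0, y_0)^T \sim N(\boldsymbol{\mu}_0, \boldsymbol{\Sigma}_0) \tag{1} $$

with underlying mean

$$ \boldsymbol{\mu}_0 = (\mu_{x_0}, \mu_{y_0})^T \tag{2} $$

and variance-covariance matrix

$$ \boldsymbol{\Sigma}_0 = \begin{pmatrix} \sigma^2_{x_0} & \sigma_{x_0 y_0} \\ \sigma_{x_0 y_0} & \sigma^2_{y_0} \end{pmatrix} \tag{3} $$

Under this model the variance components $\sigma^2_{x_0}$ and $\sigma^2_{y_0}$ describe the biological variability for $x_0$ and $y_0$, respectively. The correlation $\rho_0$ between $x_0$ and $y_0$ is given by

$$ \rho_0 = \frac{\sigma_{x_0 y_0}}{\sigma_{x_0}\,\sigma_{y_0}} \tag{4} $$

We refer to $\rho_0$ as the true correlation.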

Whatever the nature of the variables $x_0$ and $y_0$, and whatever the experimental technique used to measure them, there is always a random error component (also referred to as noise or uncertainty) associated with the measurement procedure. This random error is by its nature not reproducible (in contrast with systematic error, which is reproducible and can be corrected for) but can be modeled, i.e. described, in a statistical fashion. Such models have been developed and applied in virtually every area of science and technology and can be used to adjust for measurement errors or to describe the bias they introduce. The measured variables will be indicated by $x$ and $y$ to distinguish them from $x_0$ and $y_0$, which are their errorless counterparts.

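The correlation coefficient $\rho_0$ is sought to be estimated from these measured data. Assuming that $N$ samples are taken, the sample correlation $r$ is calculated as

$$ r = \frac{\sum_{i=1}^{N} (x_i - \bar{x})(y_i - \bar{y})}{(N-1)\, s_x s_y} \tag{5} $$

where $\bar{x}$ (and likewise $\bar{y}$) is the sample mean over the $N$ observations and $s_x$, $s_y$ are the usual sample standard deviation estimators. This sample correlation is used as a proxy of $\rho_0$. The population value of this sample correlation, written in terms of the covariance $\sigma_{xy}$ and the standard deviations $\sigma_x$, $\sigma_y$ of the measured variables, is

$$ \rho = \frac{\sigma_{xy}}{\sigma_x\,\sigma_y} \tag{6} $$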

and it also holds that

| 7 |

We will call $\rho$ the expected correlation. Ideally, $\rho = \rho_0$, but this is unfortunately not always the case. In plain words: certain measurement errors do not cancel out when the number of samples increases.

In the following sections we will introduce three error models and show, with both simulated and real data, how measurement error impacts the estimation of the Pearson correlation coefficient. We will focus mainly on $\rho_0$ and $\rho$.

Additive error

The simplest error model is the additive error model, where the measured entities $x$ and $y$ are modeled as

$$ x = x_0 + \varepsilon_{aux}, \qquad y = y_0 + \varepsilon_{auy} \tag{8} $$

where it is assumed that the error components $\varepsilon_{aux}$ and $\varepsilon_{auy}$ are independently normally distributed around zero with variances $\sigma^2_{aux}$ and $\sigma^2_{auy}$ and are also independent of $x_0$ and $y_0$. The subscripts $aux$ and $auy$ stand for additive uncorrelated error ($au$) on variables $x$ and $y$.

Variables $x$ and $y$ represent measured quantities accessible to the experimenter. This error model describes the case in which the measurement error causes within-sample variability, which means that measurement replicates of observation $x_i$ of variable $x$ will all have slightly different values due to the random fluctuation of the error component $\varepsilon_{aux}$; the extent of the variability among the replicates depends on the magnitude of the error variance $\sigma^2_{aux}$ (and similarly for the $y$ variable). This can be seen in Fig. 1A, where it is shown that in the presence of measurement error (i.e. $\sigma^2_{aux}, \sigma^2_{auy} > 0$) the two variables $x$ and $y$ are more dispersed. Due to the measurement error, the expected correlation coefficient is always biased downwards in magnitude, i.e. $|\rho| < |\rho_0|$ (for $\rho_0 \neq 0$), as already shown by Spearman15 (see Fig. 1B), who also provided an analytical expression for the attenuation of the expected correlation coefficient as a function of the error components (a modern treatment can be found in reference20):

$$ \rho = A\,\rho_0 \tag{9} $$

where

$$ A = \frac{1}{\sqrt{\left(1 + \dfrac{\sigma^2_{aux}}{\sigma^2_{x_0}}\right)\left(1 + \dfrac{\sigma^2_{auy}}{\sigma^2_{y_0}}\right)}} \tag{10} $$

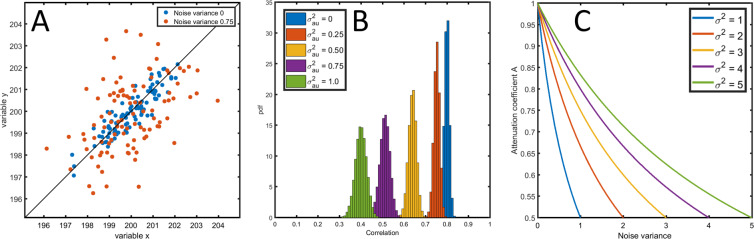

Figure 1.

(A) Correlation plot of two variables $x$ and $y$ generated without and with uncorrelated additive error, with underlying true correlation $\rho_0$ (model (8)). (B) Distribution of the sample correlation coefficient for different levels of measurement error for a given true correlation $\rho_0$. (C) The attenuation coefficient $A$ from Eq. (10) as a function of the measurement error for different levels of the variance of the variables $x_0$ and $y_0$. See Material and Methods section 6.5.1 for details on the simulations.

Equation (9) implies that in the presence of measurement error the expected correlation is different from the true correlation $\rho_0$ which is sought to be estimated. The attenuation $A$ is always strictly smaller than 1 and is a decreasing function of the size of the measurement error relative to the biological variation (see Fig. 1C), as can be seen from Eq. (10). The attenuation of the expected correlation, although known since 1904, has only sporadically resurfaced in the statistical literature of psychology, epidemiology and the behavioral sciences (where it is known as attenuation due to intra-person or intra-individual variability, see ref. 19 and references therein) and has been largely neglected in the life sciences, despite its relevance.
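The attenuation described above can be checked numerically. The following is a minimal sketch, not the simulation used for Fig. 1: it assumes unit biological variances, the same error variance on both variables, and Spearman's attenuation written as $A = 1/\sqrt{(1+\sigma^2_{aux}/\sigma^2_{x_0})(1+\sigma^2_{auy}/\sigma^2_{y_0})}$. The helper names (`pearson`, `attenuation`, `simulate_additive`) are illustrative only.

```python
import math
import random


def pearson(xs, ys):
    """Sample Pearson correlation of two equally long sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(xs, ys))
    sxx = sum((a - mx) ** 2 for a in xs)
    syy = sum((b - my) ** 2 for b in ys)
    return sxy / math.sqrt(sxx * syy)


def attenuation(var_x0, var_y0, var_err_x, var_err_y):
    """Attenuation coefficient for uncorrelated additive error."""
    return 1.0 / math.sqrt((1 + var_err_x / var_x0) * (1 + var_err_y / var_y0))


def simulate_additive(rho0, var_err, n, seed=0):
    """Sample correlation of n observations measured with additive error.

    Assumption: x0 and y0 are standard normal with correlation rho0.
    """
    rng = random.Random(seed)
    sd_err = math.sqrt(var_err)
    xs, ys = [], []
    for _ in range(n):
        x0 = rng.gauss(0.0, 1.0)
        y0 = rho0 * x0 + math.sqrt(1 - rho0 ** 2) * rng.gauss(0.0, 1.0)
        xs.append(x0 + rng.gauss(0.0, sd_err))
        ys.append(y0 + rng.gauss(0.0, sd_err))
    return pearson(xs, ys)


rho0, var_err = 0.8, 0.5
expected = attenuation(1.0, 1.0, var_err, var_err) * rho0
r_small = simulate_additive(rho0, var_err, n=200)
r_large = simulate_additive(rho0, var_err, n=50000)
print(f"true={rho0:.3f} expected={expected:.3f} "
      f"r(N=200)={r_small:.3f} r(N=50000)={r_large:.3f}")
```

With a large number of samples the sample correlation settles near the expected correlation rather than near the true one, which is the point made in plain words earlier: increasing the sample size does not remove this bias.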

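The error model (8) can be extended to include a correlated error term $\varepsilon_{ac}$

$$ x = x_0 + \varepsilon_{aux} + \varepsilon_{ac}, \qquad y = y_0 + \varepsilon_{auy} \pm \varepsilon_{ac} \tag{11} $$

with $\varepsilon_{ac}$ normally distributed around zero with variance $\sigma^2_{ac}$; the correlated error term takes on exactly the same value for $x$ and $y$ in a given sample. The ‘±’ models the sign of the error correlation. When $\varepsilon_{ac}$ has a positive sign in both $x$ and $y$ the error is positively correlated; if the sign is discordant the error is negatively correlated. The subscript $ac$ is used to indicate additive correlated error. The variance for $x$ is given by

$$ \sigma^2_x = \sigma^2_{x_0} + \sigma^2_{aux} + \sigma^2_{ac} \tag{12} $$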

and likewise for the variable y. In general, additive correlated error can have different causes depending on the type of instruments and measurement protocols used. For example, in transcriptomics, metabolomics and proteomics, usually samples have to be pretreated (sample work-up) prior to the actual instrumental analysis. Any error in a sample work-up step may affect all measured entities in a similar way21. Another example is the use of internal standards for quantification: any error in the amount of internal standard added may also affect all measured entities in a similar way. Hence, in both cases this leads to (positively) correlated measurement error. In some cases in metabolomics and proteomics the data are preprocessed using deconvolution tools. In that case two co-eluting peaks are mathematically separated and quantified. Since the total area under the curve is constant and (positive) error in one of the deconvoluted peaks is compensated by a (negative) error in the second peak, this may give rise to negatively correlated measurement error.
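A small simulation can illustrate how a shared error term changes both the variance and the correlation. This is a sketch under stated assumptions: standard normal biological signals, the shared term entering $y$ with a sign of +1 or −1, and the measured variance being the sum of the biological, uncorrelated and correlated variances. The function name `simulate_correlated_additive` and all parameter values are illustrative.

```python
import math
import random


def pearson(xs, ys):
    """Sample Pearson correlation of two equally long sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(xs, ys))
    sxx = sum((a - mx) ** 2 for a in xs)
    syy = sum((b - my) ** 2 for b in ys)
    return sxy / math.sqrt(sxx * syy)


def simulate_correlated_additive(rho0, var_au, var_ac, sign, n=50000, seed=1):
    """Return (sample variance of x, sample correlation of x and y).

    Assumed model: x = x0 + e_aux + e_ac and y = y0 + e_auy + sign * e_ac,
    where e_ac is a single draw shared by x and y within each sample.
    """
    rng = random.Random(seed)
    sd_au, sd_ac = math.sqrt(var_au), math.sqrt(var_ac)
    xs, ys = [], []
    for _ in range(n):
        x0 = rng.gauss(0.0, 1.0)
        y0 = rho0 * x0 + math.sqrt(1 - rho0 ** 2) * rng.gauss(0.0, 1.0)
        e_ac = rng.gauss(0.0, sd_ac)  # shared (correlated) error
        xs.append(x0 + rng.gauss(0.0, sd_au) + e_ac)
        ys.append(y0 + rng.gauss(0.0, sd_au) + sign * e_ac)
    mx = sum(xs) / n
    var_x = sum((a - mx) ** 2 for a in xs) / (n - 1)
    return var_x, pearson(xs, ys)


rho0, var_au, var_ac = 0.2, 0.1, 0.5
var_x_pos, r_pos = simulate_correlated_additive(rho0, var_au, var_ac, sign=+1)
var_x_neg, r_neg = simulate_correlated_additive(rho0, var_au, var_ac, sign=-1)
print(f"var(x)={var_x_pos:.3f} (sum of components: {1 + var_au + var_ac:.3f})")
print(f"true={rho0:.2f}  positively correlated error: r={r_pos:.3f}  "
      f"negatively correlated error: r={r_neg:.3f}")
```

In this setting a positively correlated error pushes the sample correlation above the true value, while a negatively correlated error of the same size is enough to flip its sign.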

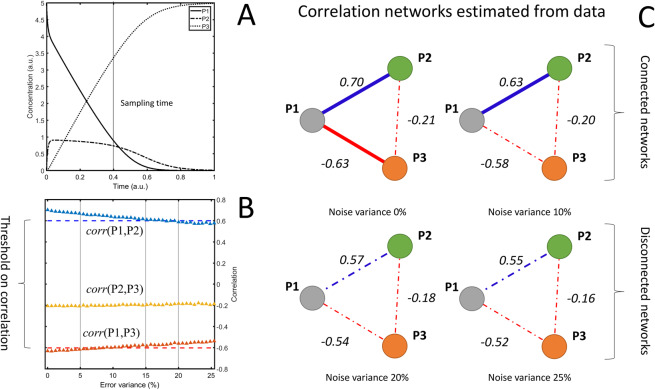

To show the effect of additive uncorrelated measurement error we consider the concentration profiles of three hypothetical metabolites P1, P2 and P3 simulated using a simple dynamic model (see Fig. 2A and Section 6.5.2) where additive uncorrelated measurement error is added before calculating the pairwise correlations among P1, P2 and P3: also in this case the magnitude of the correlation is attenuated, and the attenuation increases with the error variance (see Fig. 2B).

Figure 2.

Consequences of measurement error when using correlation in systems biology. (A) Time concentration profile of three metabolites P1, P2 and P3 generated through a simple enzymatic metabolic model; 100 profiles are generated by randomly varying the kinetic parameters defining the model and sampled at time 0.4 (a.u.). (B) Average pairwise correlation of P1, P2 and P3 as a function of the variance of the additive uncorrelated error. (C) Inference of a metabolite-metabolite correlation network: two metabolites are associated if their correlation is above 0.623 (see threshold in B). The increasing level of measurement error hampers the network inference (compare the different panels). See Material and Methods section 6.5.2 for details on the simulations.

This has serious repercussions when correlations are used for the definition of association networks, as commonly done in systems biology and functional genomics10,22: measurement error drives correlation towards zero and this impacts network reconstruction. If a threshold of 0.6 is imposed to discriminate between correlated and uncorrelated variables, as is usually done in metabolomics23, an error variance of around 15% of the biological variation (see Fig. 2B, point where the correlation crosses the threshold) will attenuate the correlation to the point that metabolites will be deemed not to be associated even if they are biologically correlated, leading to very different metabolite association networks (see Fig. 2C).
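The threshold effect can be made concrete with a short calculation. The sketch below is not the dynamic model behind Fig. 2; it assumes uncorrelated additive error with the same error-to-biological variance ratio on both variables, in which case Spearman's attenuation reduces to $1/(1 + \text{ratio})$. The function names are hypothetical.

```python
def expected_correlation(rho0, error_ratio):
    """Expected correlation when both variables share the same
    error-to-biological variance ratio (uncorrelated additive error)."""
    return rho0 / (1.0 + error_ratio)


def critical_error_ratio(rho0, threshold):
    """Error ratio at which the expected correlation drops to the threshold.

    Returns None when |rho0| is already below the threshold.
    """
    if abs(rho0) < threshold:
        return None
    return abs(rho0) / threshold - 1.0


threshold = 0.6
for rho0 in (0.65, 0.7, 0.8, 0.9):
    ratio = critical_error_ratio(rho0, threshold)
    print(f"true correlation {rho0:.2f}: edge lost once the error variance "
          f"exceeds {100 * ratio:.0f}% of the biological variance")
```

Under these assumptions, pairs whose true correlation lies only slightly above the threshold are lost at quite modest error levels, so the edges removed first from an inferred network are those closest to the cut-off.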

Multiplicative error

In many experimental situations it is observed that the measurement error is proportional to the magnitude of the measured signal; when this happens the measurement error is said to be multiplicative. The model for sampled variables in presence of multiplicative measurement error is

$$ x = x_0\,(1 + \varepsilon_{mux} + \varepsilon_{mc}), \qquad y = y_0\,(1 + \varepsilon_{muy} \pm \varepsilon_{mc}) \tag{13} $$

where $x_0$, $y_0$, $\varepsilon_{mux}$, $\varepsilon_{muy}$ and $\varepsilon_{mc}$ have the same distributional properties as before in the additive error case, and the last three terms represent the multiplicative uncorrelated errors in $x$ and $y$, respectively, and the multiplicative correlated error.

The characteristics of the multiplicative error and the variance of the measured entities depend on the level of the signal to be measured (for a derivation of Eq. (14) see Section 6.1.1):

$$ \sigma^2_x = \sigma^2_{x_0} + \left(\mu^2_{x_0} + \sigma^2_{x_0}\right)\left(\sigma^2_{mux} + \sigma^2_{mc}\right) \tag{14} $$

while in the additive case the standard deviation is similar for different concentrations and does not depend explicitly on the signal intensity, as shown in Eq. (12). A similar equation holds for the variable $y$.
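The dependence of the measured variance on the signal level can be illustrated with a short simulation. This sketch assumes a multiplicative model of the form $x = x_0(1 + \varepsilon_{mu} + \varepsilon_{mc})$ with independent zero-mean normal errors, for which the variance works out to $\sigma^2_{x_0} + (\mu^2_{x_0} + \sigma^2_{x_0})(\sigma^2_{mu} + \sigma^2_{mc})$. The function names and parameter values are illustrative assumptions.

```python
import math
import random


def closed_form_variance(mean_x0, var_x0, var_mu, var_mc):
    """Variance of x = x0 * (1 + e_mu + e_mc) for independent zero-mean errors."""
    return var_x0 + (mean_x0 ** 2 + var_x0) * (var_mu + var_mc)


def simulated_variance(mean_x0, var_x0, var_mu, var_mc, n=50000, seed=2):
    """Monte Carlo estimate of the same variance."""
    rng = random.Random(seed)
    xs = []
    for _ in range(n):
        x0 = rng.gauss(mean_x0, math.sqrt(var_x0))
        factor = (1.0
                  + rng.gauss(0.0, math.sqrt(var_mu))
                  + rng.gauss(0.0, math.sqrt(var_mc)))
        xs.append(x0 * factor)
    m = sum(xs) / n
    return sum((v - m) ** 2 for v in xs) / (n - 1)


levels = {}
for mean in (1.0, 10.0):
    theory = closed_form_variance(mean, 1.0, 0.02, 0.02)
    observed = simulated_variance(mean, 1.0, 0.02, 0.02)
    levels[mean] = (theory, observed)
    print(f"signal mean {mean:5.1f}: variance closed form {theory:.3f}, "
          f"simulated {observed:.3f}")
```

With identical error variances, the measured variance grows several-fold when the signal mean increases tenfold, whereas under a purely additive model it would not change with the mean.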

It has been observed that multiplicative errors often arise from the different procedural steps, such as sample aliquoting24: this is the case in deep sequencing experiments, where the multiplicative error is possibly introduced by pre-processing steps such as linker ligation and PCR amplification, which may vary from tag to tag and from sample to sample25. In other cases the multiplicative error arises from the distributional properties of the signal, as in experiments where the measurement comes down to counts, for example of RNA fragments in an RNA-seq experiment or of ions in a mass spectrometer, which are governed by Poisson distributions, for which the variance is equal to the mean. As another example, in NMR spectroscopy measured intensities are affected by the sample magnetization conditions: fluctuations in the external electromagnetic field or instability of the rf pulses affect the signal in a fashion that is proportional to the signal itself26.

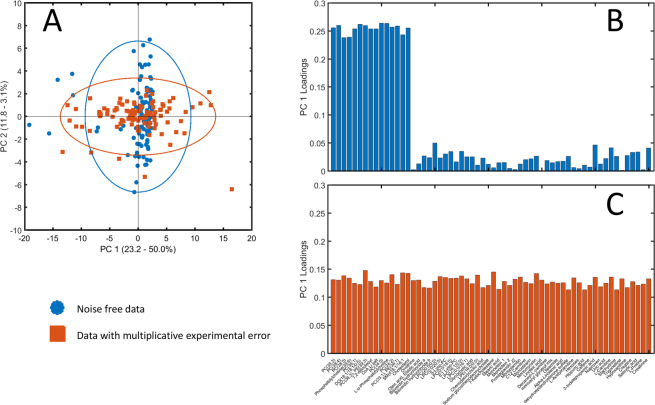

A multiplicative error distorts correlations and this affects the results of any data analysis approach which is based on correlations. To show the effect of multiplicative error we consider the analysis of a metabolomic data set simulated from real mass-spectrometry (MS) data, to which extra uncorrelated and correlated multiplicative measurement errors have been added. As can be seen in Fig. 3A, the addition of error affects the underlying data structure: in the error-free data only a subset of the measured variables contributes to explaining the pattern in a low-dimensional projection of the data, i.e. has PCA loadings substantially different from zero (Fig. 3B). The addition of extra multiplicative error perturbs the loading structure to the point that all variables contribute equally to the model (Fig. 3C), obscuring the real data structure and hampering the interpretation of the PCA model. This is not necessarily caused by the multiplicative nature of the error; it is caused by the correlated error part. Since the term $\varepsilon_{mc}$ is common to all variables, it introduces the same amount of correlation among all the variables, and this leads to all the variables contributing similarly to the latent vector (principal component). One may also observe that the variation explained by the first principal component increases when the correlated measurement error is added.

Figure 3.

Consequences of multiplicative (correlated and uncorrelated) measurement error for data analysis. (A) Scatter plot of the overlayed view of the first two components of two PCA models of simulated data sets; one without multiplicative error and one with multiplicative error. For visualization purposes, the scores are plotted in the same graph, but the subspaces spanned by the first two principal components for the two data sets are of course different. The labels on both axes also present the percentage explained variation for the two analyses. (B) Loading plot for the error free data. (C) Loading plot for the data with multiplicative error. See Material and Methods section 6.5.3 for details on the simulations.

Realistic error

The measurement process usually consists of different procedural steps and each step can be viewed as a different source of measurement error with its own characteristics, which sum to both additive and multiplicative error components as is the case of comprehensive omics measurements27. The model for this case is:

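$$ x = x_0\,(1 + \varepsilon_{mux} + \varepsilon_{mc}) + \varepsilon_{aux} + \varepsilon_{ac}, \qquad y = y_0\,(1 + \varepsilon_{muy} \pm \varepsilon_{mc}) + \varepsilon_{auy} \pm \varepsilon_{ac} \tag{15} $$

where all errors have been introduced before and are all assumed to be independent of each other and independent of the true (biological) signals ($x_0$ and $y_0$).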

This realistic error model has a multiplicative as well as an additive component and also accommodates correlated and uncorrelated error. It is an extension of a much-used error model for analytical chemical data which only contains uncorrelated error28. From model (15) it follows that the error changes not only quantitatively but also qualitatively with changing signal intensity: the importance of the multiplicative component increases when the signal intensity increases, whereas the relative contribution of the additive error component increases when the signal decreases.

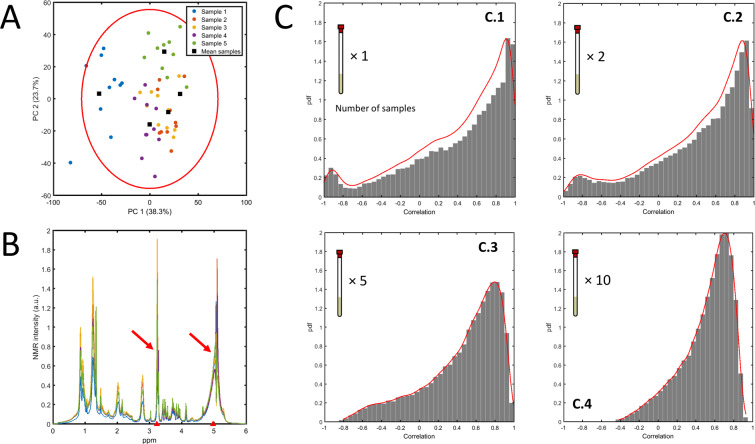

Since most of the measurements do not usually fall at the extremes of the dynamic range of the instruments used, the situation in which both additive and multiplicative error are important is realistic. For example, this is surely the case for comprehensive NMR and Mass Spectrometry measurements, where multiplicative errors are due to sample preparation and carry-over effects (in the case of MS) and the additive error is due to thermal error in the detectors29. To illustrate this we consider an NMR experiment where a different number of technical replicates are measured for five samples (Fig. 4A,B). We are interested in establishing the correlation patterns across the (binned) resonances. For the sake of simplicity we focus on two resonances, binned at 3.22 and 4.98 ppm. If one calculates the correlation using only one (randomly chosen) replicate per sample, the resulting correlation can be anywhere between −1 and 1 (see Fig. 4C.1). The variability reduces considerably if more replicates are taken and averaged before calculating the correlation (see Fig. 4C), but there is still a rather large variation, induced by the limited sample size. Averaging across the technical replicates reduces variability among the sample means; however, this is not accompanied by an equal reduction in the variability of the correlation estimate. This is because the error structure is not taken into account in the calculation of the correlation coefficient.
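The effect of averaging technical replicates with very few samples can be mimicked in a toy simulation. The sketch below does not use the NMR data of Fig. 4; it assumes five samples per experiment, purely additive uncorrelated error, standard normal biological signals and arbitrary illustrative parameter values. All function names are hypothetical.

```python
import math
import random
import statistics


def pearson(xs, ys):
    """Sample Pearson correlation of two equally long sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(xs, ys))
    sxx = sum((a - mx) ** 2 for a in xs)
    syy = sum((b - my) ** 2 for b in ys)
    return sxy / math.sqrt(sxx * syy)


def correlation_from_replicate_means(rho0, var_err, n_samples, n_rep, rng):
    """Correlation computed on per-sample averages of n_rep replicates."""
    sd = math.sqrt(var_err)
    xs, ys = [], []
    for _ in range(n_samples):
        x0 = rng.gauss(0.0, 1.0)
        y0 = rho0 * x0 + math.sqrt(1 - rho0 ** 2) * rng.gauss(0.0, 1.0)
        xs.append(sum(x0 + rng.gauss(0.0, sd) for _ in range(n_rep)) / n_rep)
        ys.append(sum(y0 + rng.gauss(0.0, sd) for _ in range(n_rep)) / n_rep)
    return pearson(xs, ys)


def summarize(rho0, var_err, n_samples, n_rep, n_experiments=2000, seed=3):
    """Mean and standard deviation of r over repeated small experiments."""
    rng = random.Random(seed)
    rs = [correlation_from_replicate_means(rho0, var_err, n_samples, n_rep, rng)
          for _ in range(n_experiments)]
    return statistics.mean(rs), statistics.stdev(rs)


mean_1, sd_1 = summarize(0.9, 4.0, n_samples=5, n_rep=1)
mean_10, sd_10 = summarize(0.9, 4.0, n_samples=5, n_rep=10)
print(f"1 replicate  : mean r = {mean_1:.2f}, sd = {sd_1:.2f}")
print(f"10 replicates: mean r = {mean_10:.2f}, sd = {sd_10:.2f}")
```

Averaging moves the typical value of the correlation closer to the true one, but with only five samples its spread across experiments remains large, in line with the behaviour described for Fig. 4C.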

Figure 4.

(A) PCA plot of 5 different samples of fish extract measured with technical replicates (10×) using NMR29. (B) Overlap of the average binned NMR spectra of the 5 samples: the two resonances whose correlation is investigated are highlighted (3.23 and 4.98 ppm). (C) Distribution of the correlation coefficient between the two resonances calculated, taking as input the average over different numbers of technical replicates (see inserts). See Material and Methods section 6.5.4 for more details on the estimation procedure.

Estimation of Pearson’s Correlation Coefficient in Presence of Measurement Error

In the ideal case of an error-free measurement, where the only variability is due to intrinsic biological variation, $\rho$ coincides with the true correlation $\rho_0$. If additive uncorrelated error is present, then $\rho$ is given by Eqs. (9) and (10), which explicitly take into account the error component; it holds that $|\rho| < |\rho_0|$ (for $\rho_0 \neq 0$).

In the next Section we will derive analytical expressions, akin to Eqs. (9) and (10), for the correlation $\rho$ for variables sampled with measurement error (additive, multiplicative and realistic) as introduced in Section 2.

Before moving on, we define more specifically the error components. The error terms in models (11), (13) and (15) are assumed to have the following distributional properties

| 16 |

with variance-covariance matrices

| 17 |

and

| 18 |

From definitions (16), (17) and (18) it follows that:

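- The expected value of the errors is zero; for every error term $\varepsilon_\alpha$ in models (11), (13) and (15):

$$ E[\varepsilon_\alpha] = 0 \tag{19} $$

- The covariance between $x_0$ ($y_0$) and the error terms is zero because $x_0$ ($y_0$) and the errors are independent:

$$ \mathrm{cov}(x_0, \varepsilon_\alpha) = \mathrm{cov}(y_0, \varepsilon_\alpha) = 0 \tag{20} $$

- The covariance between the different error components is zero because the errors are independent of each other:

$$ \mathrm{cov}(\varepsilon_\alpha, \varepsilon_\beta) = 0, \qquad \alpha \neq \beta \tag{21} $$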

The Pearson correlation in the presence of additive measurement error

We show here a detailed derivation of the correlation between two variables $x$ and $y$ sampled under the additive error model (11). The variance for variable $x$ (similar considerations hold for $y$) is given by

| 22 |

where

| 23 |

and

| 24 |

It follows that

| 25 |

The covariance of $x$ and $y$ is

| 26 |

with

| 27 |

Considering (20) and (21), Eq. (27) reduces to

| 28 |

with

| 29 |

and

| 30 |

with ± depending on the sign of the measurement error correlation. From Eqs. (23), (28), (29) and (30) it follows

| 31 |

Plugging (25) and (31) into (6) and defining the attenuation coefficient

| 32 |

where , , and ; the superscript in stands for additive.

The Pearson correlation in presence of additive measurement error is obtained as:

| 33 |

where the sign ± signifies positively and negatively correlated error.

The attenuation coefficient $A^a$ is a decreasing function of the measurement error ratios, that is, the ratios of the variances of the uncorrelated and the correlated error to the variance of the true signal. Compared to Eq. (9), formula (33) contains an extra additive term that expresses the impact of the correlated measurement error relative to the original variation. In the presence of only uncorrelated error (i.e. $\sigma^2_{ac} = 0$), Eq. (33) reduces to Spearman’s formula for the correlation attenuation given by (9) and (10). As previously discussed, in this case the correlation coefficient is always biased towards zero (attenuated).

Given the true correlation , the expected correlation coefficient (33) is completely determined by the measurement error ratios. Assuming the errors on and to be the same (, , an assumption not unrealistic if and are measured with the same instrument and under the same experimental conditions during an omics comprehensive experiment) and taking for simplicity , then and and Eq. (33) can be simplified to:

| 34 |

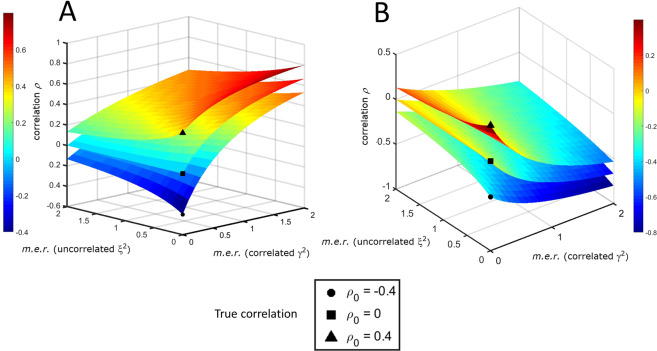

and can be visualized graphically as a function of the uncorrelated and correlated measurement error ratios and as shown in Fig. 5.

Figure 5.

The expected correlation coefficient in the presence of additive measurement error as a function of the uncorrelated () and correlated () measurement error ratios (m.e.r.) for different values of the true correlation . (A) Positively correlated error. (B) Negatively correlated error.

In the presence of positively correlated error, the correlation is attenuated towards 0 if the uncorrelated error increases and inflated if the additive correlated error increases (Fig. 5A, which refers to Eq. (34)) when $\rho_0 > 0$. If $\rho_0 < 0$ the distortion introduced by the correlated error can be so severe that the correlation can become positive. When the error is negatively correlated (Fig. 5B), the correlation is biased towards 0 when $\rho_0 > 0$ (and can change sign), while it can be attenuated or inflated if $\rho_0 < 0$.
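These regimes can be tabulated from a closed-form expression. The sketch below assumes unit biological variances and identical error variances on both variables, so that the expected correlation becomes $(\rho_0 \pm \theta_{ac}) / (1 + \theta_{au} + \theta_{ac})$, where $\theta_{au}$ and $\theta_{ac}$ denote the uncorrelated and correlated error variances relative to the biological variance. This expression is derived here directly from the additive model with a shared error term; the symbol and function names are our own and may differ from the notation of Eq. (34).

```python
def expected_corr_equal_errors(rho0, theta_au, theta_ac, sign=+1):
    """Expected correlation under additive error with equal error ratios.

    sign=+1: positively correlated error; sign=-1: negatively correlated error.
    Assumes unit biological variances for both variables.
    """
    return (rho0 + sign * theta_ac) / (1.0 + theta_au + theta_ac)


cases = [
    ("uncorrelated error only", 0.5, 1.0, 0.0, +1),
    ("positive correlated error", 0.5, 0.0, 1.0, +1),
    ("negative correlated error", 0.5, 0.0, 1.0, -1),
    ("negative rho0, positive error", -0.5, 0.0, 1.0, +1),
]
for label, rho0, theta_au, theta_ac, sign in cases:
    rho = expected_corr_equal_errors(rho0, theta_au, theta_ac, sign)
    print(f"{label:32s} rho0={rho0:+.2f} -> rho={rho:+.2f}")
```

The four cases reproduce the qualitative behaviour listed in the text: attenuation by uncorrelated error, inflation by positively correlated error, and a sign change when the correlated error works against the sign of the true correlation.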

A set of rules can be derived to describe quantitatively the bias of . For positively correlated measurement error (for negatively correlated measurement error see Section 6.2) if the true correlation is positive the correlation is always strictly positive: this is shown on Fig. 6A where the relationship between and is shown by means of Monte Carlo simulation (see Figure caption for more details). The magnitude of () depends on how (for readability in the following equations we will use ) and the additive term compensate each other. In particular when

| 35 |

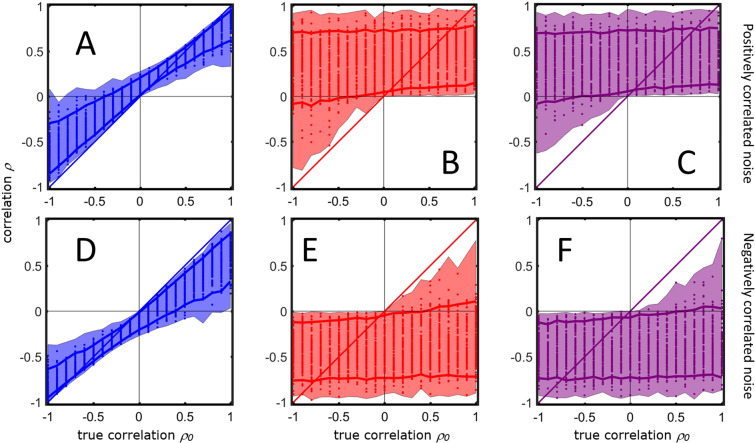

Figure 6.

Calculations of the correlation coefficient $\rho$ (40) as a function of the different realizations of the signal means and the size of the error components for different values of the true correlation $\rho_0$. The shadowed area encloses the maximum and the minimum of the values of $\rho$ calculated in the simulation using the different error models. The dots represent the realized values of $\rho$ (only 100 of $10^5$ Monte Carlo realizations for different values of the variances of the error components are shown). The solid lines represent the 5-th and the 95-th percentiles of the observed values. (A) Additive measurement error with positive correlated error. (B) Multiplicative measurement error with positive correlated error. (C) Realistic case with both additive and multiplicative measurement error with positive correlated error. (D) Additive measurement error with negative correlated error. (E) Multiplicative measurement error with negative correlated error. (F) Realistic case with both additive and multiplicative measurement error with negative correlated error. For more details on the simulations see Material and Methods section 6.5.5.

This means that is always a biased estimator of the true correlation , with the exception of the second case which happens only for specific values of and . This is unlikely to happen in practice.

If it holds that

| 36 |

The interpretation of Eq. (36) is similar to that of Eq. (35) but additionally, the correlation coefficient can even change sign. In particular, this happens when

| 37 |

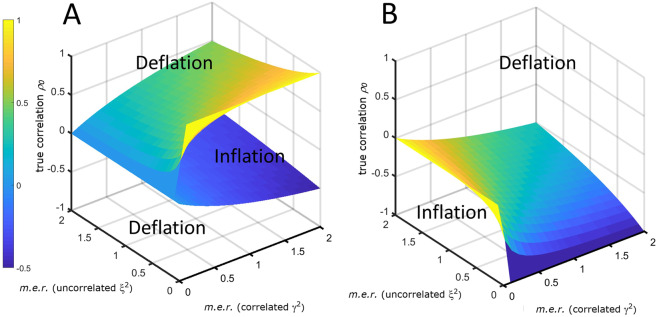

The terms and in Eqs. (35), (36), (71) and (72) describe limiting surfaces of values delineating the regions of attenuation and inflation of the correlation coefficient . As can be seen from Fig. 7, these surfaces are not symmetric with respect to zero correlation, indicating that the behavior of is not symmetric around 0 with respect to the sign of and of the correlated error.

Figure 7.

Limiting surfaces S for the inflation and deflation region of the correlation coefficient in presence of additive measurement error. The surfaces are a function of the uncorrelated () and correlated () measurement error ratios (m.e.r.). (A) S in the case of positively correlated error. (B) S for negatively correlated error. The plot refers to defined by Eq. (34) with and .

The Pearson correlation in presence of multiplicative measurement error

The correlation in the presence of multiplicative error can be derived using similar arguments and detailed calculations can be found in Section 6.1.1. Here we only state the main result:

| 38 |

with , (biological signal to biological variation ratios) and is the attenuation coefficient (the superscript stands for multiplicative):

| 39 |

In this case, the correlation coefficient depends explicitly on the means of the variables, as an effect of the multiplicative nature of the error component. Our simulations show that if the signal intensity is not too large, the correlation can change sign (as shown in Fig. 6B); if the signal intensity is very large, the multiplicative error has a very large effect: the expected correlation will be positive if the correlated error is positive, and negative if the errors are negatively correlated. Simulations, however, cannot be exhaustive.
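The dependence on the signal means can be explored with a closed form checked against simulation. The sketch below assumes the multiplicative model $x = x_0(1 + \varepsilon_{mux} + \varepsilon_{mc})$, $y = y_0(1 + \varepsilon_{muy} \pm \varepsilon_{mc})$ with unit biological variances and equal error variances on both variables; the expression in `expected_corr_multiplicative` is derived here from that assumed model and is not copied from Eq. (38). All names and parameter values are illustrative.

```python
import math
import random


def pearson(xs, ys):
    """Sample Pearson correlation of two equally long sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(xs, ys))
    sxx = sum((a - mx) ** 2 for a in xs)
    syy = sum((b - my) ** 2 for b in ys)
    return sxy / math.sqrt(sxx * syy)


def expected_corr_multiplicative(rho0, mean_x0, mean_y0, var_mu, var_mc, sign=+1):
    """Expected correlation under the assumed multiplicative model
    (unit biological variances, so the biological covariance equals rho0)."""
    cov = rho0 + sign * var_mc * (rho0 + mean_x0 * mean_y0)
    var_x = 1.0 + (mean_x0 ** 2 + 1.0) * (var_mu + var_mc)
    var_y = 1.0 + (mean_y0 ** 2 + 1.0) * (var_mu + var_mc)
    return cov / math.sqrt(var_x * var_y)


def simulate_multiplicative(rho0, mean, var_mu, var_mc, sign, n=50000, seed=4):
    """Sample correlation under the same model, both means equal to `mean`."""
    rng = random.Random(seed)
    sd_mu, sd_mc = math.sqrt(var_mu), math.sqrt(var_mc)
    xs, ys = [], []
    for _ in range(n):
        z = rng.gauss(0.0, 1.0)
        x0 = mean + z
        y0 = mean + rho0 * z + math.sqrt(1 - rho0 ** 2) * rng.gauss(0.0, 1.0)
        e_mc = rng.gauss(0.0, sd_mc)  # shared multiplicative error
        xs.append(x0 * (1.0 + rng.gauss(0.0, sd_mu) + e_mc))
        ys.append(y0 * (1.0 + rng.gauss(0.0, sd_mu) + sign * e_mc))
    return pearson(xs, ys)


for mean in (5.0, 20.0):
    for sign in (+1, -1):
        theory = expected_corr_multiplicative(0.5, mean, mean, 0.01, 0.01, sign)
        r = simulate_multiplicative(0.5, mean, 0.01, 0.01, sign)
        print(f"mean={mean:4.1f} sign={sign:+d}: closed form {theory:+.3f}, "
              f"simulated {r:+.3f}")
```

For the larger signal mean, a negatively correlated multiplicative error turns a true correlation of 0.5 into a negative expected correlation, while the same error size at the smaller mean only attenuates it.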

The Pearson correlation in presence of realistic measurement error

When both additive and multiplicative error are present, the correlation coefficient is a combination of formula (33) and (38) (see Section 6.1.2 for detailed derivation):

| 40 |

where the parameters have been previously defined for the additive and multiplicative cases. $A^r$ is the attenuation coefficient (the superscript $r$ stands for realistic):

| 41 |

General rules governing the sign of the numerator and denominator in Eq. (40) cannot be determined, since it depends on the interplay of the six error components, the true means and their product. Within the parameter settings of our simulations, the results presented in Fig. 6C show that the behavior of $\rho$ under error model (15) is qualitatively similar to that in the presence of only multiplicative error. However, different behavior could emerge with different parameter settings.

Generalized correlated error model

The error models presented in Eqs. (11), (13) and (15) assume a perfect correlation of the correlated errors, since the correlated error term $\varepsilon_{ac}$ appears simultaneously in both $x$ and $y$; the same holds true for $\varepsilon_{mc}$. A more general model that accounts for different degrees of correlation between the error components can be obtained by modifying model (15) (other cases are treated in Section 6.3) to

| 42 |

where the correlated error components , , and are distributed as

| 43 |

with variance-covariance matrices

| 44 |

where is the covariance between error term and and is the covariance between error term and .

It is possible to derive an expression for the correlation coefficient under the model (43), as shown in Section 3.1 and in Sections 6.1.1 and 6.1.2. The only difference is that under this model the variance terms of the correlated errors in Eqs. (27), (58), (65) and (66) are replaced by the corresponding covariances between the correlated error terms on $x$ and $y$.

From the definition of covariance it follows that

| 45 |

and

| 46 |

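where $\rho_a$ and $\rho_m$ are the correlations among the additive and the multiplicative correlated error terms, respectively, for which it holds $-1 \le \rho_a \le 1$ and $-1 \le \rho_m \le 1$. If $\rho_a$ and $\rho_m$ are negative the errors are negatively correlated. Equation (40) becomes now: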

| 47 |

with , , and

| 48 |

This model generalizes the correlation coefficient between $x$ and $y$ from Eq. (40) to account for different strengths of the correlation among the correlated error components. All considerations discussed in the previous sections also apply to this model. Expressions for $\rho$ in the case of additive and multiplicative error can be found in Sections 6.3.1 and 6.3.2.

By setting , , and (perfect correlation), model (40) is obtained, and similarly models (33) and (38).
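A simulation can make the generalized model concrete. The sketch below assumes a reading of model (42) in which each variable carries its own additive and multiplicative "correlated" error terms, with error correlations written here as `rho_a` and `rho_m`, unit biological variances, and equal error variances on both variables. The closed form is derived from this assumed model and is not copied from Eq. (47); all names and values are illustrative.

```python
import math
import random


def pearson(xs, ys):
    """Sample Pearson correlation of two equally long sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(xs, ys))
    sxx = sum((a - mx) ** 2 for a in xs)
    syy = sum((b - my) ** 2 for b in ys)
    return sxy / math.sqrt(sxx * syy)


def correlated_pair(rng, sd, rho):
    """Two zero-mean normal errors with standard deviation sd and correlation rho."""
    z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
    return sd * z1, sd * (rho * z1 + math.sqrt(1 - rho ** 2) * z2)


def expected_corr_general(rho0, mean, var_au, var_ac, var_mu, var_mc, rho_a, rho_m):
    """Closed form under the assumed model (equal means and error variances)."""
    cov = rho0 + rho_m * var_mc * (rho0 + mean * mean) + rho_a * var_ac
    var = 1.0 + (mean ** 2 + 1.0) * (var_mu + var_mc) + var_au + var_ac
    return cov / var


def simulate_general(rho0, mean, var_au, var_ac, var_mu, var_mc, rho_a, rho_m,
                     n=50000, seed=5):
    """Sample correlation under the same assumed model."""
    rng = random.Random(seed)
    xs, ys = [], []
    for _ in range(n):
        z = rng.gauss(0.0, 1.0)
        x0 = mean + z
        y0 = mean + rho0 * z + math.sqrt(1 - rho0 ** 2) * rng.gauss(0.0, 1.0)
        acx, acy = correlated_pair(rng, math.sqrt(var_ac), rho_a)
        mcx, mcy = correlated_pair(rng, math.sqrt(var_mc), rho_m)
        xs.append(x0 * (1 + rng.gauss(0.0, math.sqrt(var_mu)) + mcx)
                  + rng.gauss(0.0, math.sqrt(var_au)) + acx)
        ys.append(y0 * (1 + rng.gauss(0.0, math.sqrt(var_mu)) + mcy)
                  + rng.gauss(0.0, math.sqrt(var_au)) + acy)
    return pearson(xs, ys)


params = dict(rho0=0.6, mean=3.0, var_au=0.2, var_ac=0.3, var_mu=0.01, var_mc=0.02)
partial = expected_corr_general(rho_a=0.5, rho_m=-0.5, **params)
perfect = expected_corr_general(rho_a=1.0, rho_m=1.0, **params)
r_partial = simulate_general(rho_a=0.5, rho_m=-0.5, **params)
print(f"partial error correlation: closed form {partial:.3f}, simulated {r_partial:.3f}")
print(f"perfect error correlation: closed form {perfect:.3f}")
```

Setting both error correlations to 1 recovers the perfectly correlated case within this sketch, mirroring the way the generalized model reduces to the earlier ones.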

Correction for Correlation Bias

Because virtually all kinds of measurement are affected by measurement error, the correlation calculated from sampled data is distorted to some degree, depending on the level of the measurement error and on its nature. We have seen that experimental error can inflate or deflate the correlation and that $\rho$ (and hence its sample realization $r$) is almost always a biased estimate of the true correlation $\rho_0$. An estimator that gives a theoretically unbiased estimate of the correlation coefficient $\rho_0$ between two variables $x_0$ and $y_0$, taking into account the measurement error model, can be derived. For simple uncorrelated additive error this is given by Spearman’s formula (49): this is a known result which in the past has been presented and discussed in many different fields16–19. To obtain similar correction formulas for the error models considered here it is sufficient to solve for $\rho_0$ from the defining Eqs. (33), (38) and (40). The correction formulas are as follows (the ± indicates positively and negatively correlated error):

- Correction for simple additive error (only uncorrelated error):

| 49 |

- Correction for additive error:

| 50 |

- Correction for multiplicative error:

| 51 |

- Correction for realistic error:

| 52 |

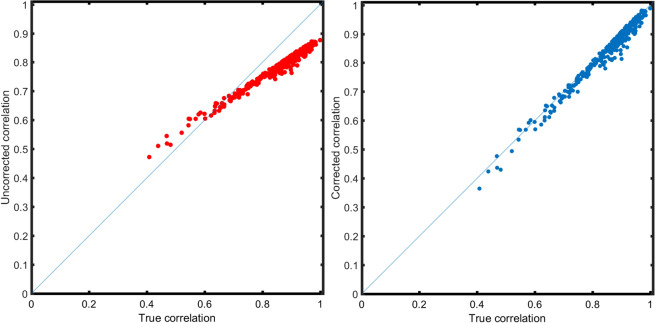

In practice, to obtain a corrected estimate of the correlation coefficient $\rho_0$, $\rho$ is substituted in (50), (51) and (52) by $r$, the sample correlation calculated from the data. The effect of the correction is shown, for the realistic error model (15), in Fig. 8, where the true, known error variance components have been used. It should be noted that the corrected correlation can fall outside the interval from −1 to 1. This phenomenon has already been observed and discussed16,30: it is due to the fact that the sampling error of a correlation coefficient corrected for distortion is greater than that of an uncorrected coefficient of the same size (at least for the uncorrelated additive error4,18,31). When this happens the corrected correlation can be rounded to ±1.0 (refs. 19, 31).
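As an illustration of the correction step, the sketch below treats the additive case with known variance components. It assumes that the expected correlation has the form $A^a(\rho_0 \pm \sigma^2_{ac}/(\sigma_{x_0}\sigma_{y_0}))$, obtained here from the additive model with a shared error term, and inverts it; the function names are hypothetical and the clipping to the interval from −1 to 1 follows the rounding convention mentioned in the text.

```python
import math


def attenuation_additive(var_x0, var_y0, var_au_x, var_au_y, var_ac):
    """Attenuation coefficient for additive error with a shared error term."""
    return 1.0 / math.sqrt((1 + (var_au_x + var_ac) / var_x0)
                           * (1 + (var_au_y + var_ac) / var_y0))


def expected_corr_additive(rho0, var_x0, var_y0, var_au_x, var_au_y, var_ac, sign=+1):
    """Expected correlation under the assumed additive model."""
    a = attenuation_additive(var_x0, var_y0, var_au_x, var_au_y, var_ac)
    return a * (rho0 + sign * var_ac / math.sqrt(var_x0 * var_y0))


def corrected_corr_additive(r, var_x0, var_y0, var_au_x, var_au_y, var_ac, sign=+1):
    """Invert the expression above for rho0 and clip the result to [-1, 1]."""
    a = attenuation_additive(var_x0, var_y0, var_au_x, var_au_y, var_ac)
    rho0_hat = r / a - sign * var_ac / math.sqrt(var_x0 * var_y0)
    return max(-1.0, min(1.0, rho0_hat))


components = dict(var_x0=1.0, var_y0=1.0, var_au_x=0.3, var_au_y=0.3, var_ac=0.2)
rho = expected_corr_additive(0.7, sign=+1, **components)
print(f"expected correlation: {rho:.3f}")
print(f"corrected back to   : {corrected_corr_additive(rho, sign=+1, **components):.3f}")

# A sample correlation that is large relative to the attenuation
# yields a corrected value above 1, which is clipped.
print(corrected_corr_additive(0.95, 1.0, 1.0, 0.5, 0.5, 0.0))
```

With the correlated variance set to zero this reduces to dividing the sample correlation by Spearman's attenuation coefficient.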

Figure 8.

Correction of the distortion induced by the realistic measurement error (see Eq. (15)). (A) Pairwise correlations among 25 metabolites calculated from simulated data with additive and multiplicative measurement error versus the true correlations. (B) Corrected correlation coefficients obtained with Eq. (52) using the known error variance components. See Section 6.5.6 for details on the data simulation.
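The simplest of the corrections above, the one for uncorrelated additive error, can be illustrated with a short simulation. The sketch below is not the authors' Matlab code: it assumes unit-variance, bivariate normal error-free signals, known error variances, and the classical form of the attenuation factor, A = 1/sqrt((1 + σ²εx/σ²x0)(1 + σ²εy/σ²y0)). The function names and parameter values are illustrative choices.

```python
import math
import random


def pearson(x, y):
    """Sample Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def correct_attenuation(r, var_x0, var_y0, var_ex, var_ey):
    """Spearman-type correction for uncorrelated additive error.

    Divides r by the attenuation factor
    A = 1 / sqrt((1 + var_ex / var_x0) * (1 + var_ey / var_y0))
    and clips the result to [-1, 1].
    """
    attenuation = 1.0 / math.sqrt((1 + var_ex / var_x0) * (1 + var_ey / var_y0))
    return max(-1.0, min(1.0, r / attenuation))


def simulate(rho0=0.8, var_e=0.5, n=20000, seed=1):
    """Return (observed r, corrected r) for unit-variance signals (assumed)."""
    rng = random.Random(seed)
    x, y = [], []
    for _ in range(n):
        x0 = rng.gauss(0, 1)
        # y0 has unit variance and correlation rho0 with x0
        y0 = rho0 * x0 + math.sqrt(1 - rho0 ** 2) * rng.gauss(0, 1)
        # uncorrelated additive error on both variables
        x.append(x0 + rng.gauss(0, math.sqrt(var_e)))
        y.append(y0 + rng.gauss(0, math.sqrt(var_e)))
    r = pearson(x, y)
    return r, correct_attenuation(r, 1.0, 1.0, var_e, var_e)


r_obs, r_corr = simulate()
print(f"observed r = {r_obs:.3f}, corrected r = {r_corr:.3f}")
```

With these settings the observed correlation should lie close to 0.8/1.5 and the corrected value close to the true 0.8. The clipping step mirrors the rounding to ±1.0 mentioned in the text.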

Estimation of the error variance components

Simulations shown in Fig. 8 have been performed using the known parameters for the error components used to generate the data. In practical applications the error components need to be estimated from the measured data, and the quality of the correction will depend on the accuracy of the error variance estimates.

The case of purely additive uncorrelated measurement error has been addressed in the past18,19,32: in this case the variance components of the two variables can be substituted with their sample estimates obtained from the measured data, while the error variance components can be estimated if an appropriate experimental design is implemented, i.e. if replicates are measured for each observation.
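For the purely additive uncorrelated case, a replicate design allows the error variance to be separated from the signal variance with a standard one-way variance decomposition. The sketch below is one possible way to do this and is not taken from the cited references: it assumes a balanced design (the same number of replicates J for every observation), and the function name and simulated values are hypothetical.

```python
import math
import random
from statistics import mean, variance


def variance_components(replicates):
    """Estimate (signal variance, error variance) from replicate measurements.

    `replicates` is a list of lists: one inner list of J >= 2 replicate
    values per observation (balanced design assumed).
    """
    j = len(replicates[0])
    # The pooled within-observation variance estimates the error variance.
    var_error = mean(variance(rep) for rep in replicates)
    # The variance of the replicate means estimates
    # signal variance + error variance / J.
    var_means = variance([mean(rep) for rep in replicates])
    var_signal = var_means - var_error / j
    return var_signal, var_error


# Hypothetical example: 2000 observations, 3 replicates each,
# signal variance 1.0 and error variance 0.5.
rng = random.Random(7)
data = []
for _ in range(2000):
    x0 = rng.gauss(10.0, 1.0)
    data.append([x0 + rng.gauss(0.0, math.sqrt(0.5)) for _ in range(3)])

var_signal_hat, var_error_hat = variance_components(data)
print(f"signal variance ~ {var_signal_hat:.2f}, error variance ~ {var_error_hat:.2f}")
```

The two estimates can then be used in place of the unknown variance components in the correction for uncorrelated additive error. With few observations or few replicates the signal variance estimate can become negative, which is one reason the quality of the correction depends on the design.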

Unfortunately, there is no simple and immediate approach to estimate the error component in the other cases when many variance components need to be estimated (6 error variances in the case of error model (15) and 8 in the case of the generalized model (42), to which the estimations of and must be added).

Different approaches can be foreseen to estimate the error components, which is not a trivial task, including the use of (generalized) linear mixed models33,34, error covariance matrix formulation29,35,36 or common factor analysis factorization37. None of these approaches is straightforward and all require extensive mathematical manipulation to be implemented; an accurate investigation of the estimation of the error components is outside the scope of this paper and will be presented in a future publication.

Discussion

Since measurement error cannot be avoided, correlation coefficients calculated from experimental data are distorted to a degree that is unknown. This distortion has been neglected in life science applications but can be expected to be considerable when comprehensive omics measurements are taken.

As previously discussed, the attenuation of the correlation coefficient in the presence of additive (uncorrelated) error has been known for more than a century. To the best of our knowledge, the analytical description of the distortion of the correlation coefficient in the presence of more complex measurement error structures (Eqs. (33), (38) and (40)) is presented here for the first time.

The inflation or attenuation of the correlation coefficient depends on the relationship between the value of the true correlation and the error components. In most practical cases, the sample correlation is a biased estimator of the true correlation. In the absence of correlated error there is always attenuation; in the presence of correlated error the correlation coefficient can also increase (in absolute value). This has also been observed in regression analysis applied to nutritional epidemiology, and it has been suggested that correlated error can, in principle, be used to compensate for the attenuation38. Moreover, the distortion of the correlation coefficient also has implications for hypothesis testing to assess the significance of the measured correlation.

To illustrate the counterintuitive consequences of correlated measurement error, consider the following. Suppose that the true correlation is null. In that case, Eqs. (33), (38) and (40) reduce to

| 53 |

| 54 |

and

| 55 |

which implies that the correlation coefficient is not zero. Moreover, in real-life situations sampling variability is superimposed on this, which may in the end result in estimated correlations of the size found in several omics applications (in metabolomics observed correlations are usually lower than 0.6, see refs. 10 and 23; similar patterns are also observed in transcriptomics39,40) while the true biological correlation is zero.
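The additive case of this argument is easy to check numerically. The sketch below assumes an additive correlated error model of the form x = x0 + e_c + e_x and y = y0 ± e_c + e_y, where e_c is an error term shared by both variables. This form, the variance values and the function names are assumptions made for illustration. Under this assumed model and a null true correlation, the expected correlation is ±σ²c / sqrt((σ²x0 + σ²c + σ²ex)(σ²y0 + σ²c + σ²ey)).

```python
import math
import random


def pearson(x, y):
    """Sample Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def expected_rho_zero_true(var_x0, var_y0, var_c, var_ux, var_uy, sign=1):
    """Expected correlation under the assumed model when the true one is zero."""
    return sign * var_c / math.sqrt(
        (var_x0 + var_c + var_ux) * (var_y0 + var_c + var_uy)
    )


def simulate_zero_true(var_c=0.5, var_u=0.1, n=20000, sign=1, seed=3):
    """Sample correlation of two independent signals sharing one error term."""
    rng = random.Random(seed)
    x, y = [], []
    for _ in range(n):
        x0 = rng.gauss(0, 1)  # independent signals, so the
        y0 = rng.gauss(0, 1)  # true correlation is zero
        e_c = rng.gauss(0, math.sqrt(var_c))  # error shared by x and y
        x.append(x0 + e_c + rng.gauss(0, math.sqrt(var_u)))
        y.append(y0 + sign * e_c + rng.gauss(0, math.sqrt(var_u)))
    return pearson(x, y)


rho_theory = expected_rho_zero_true(1.0, 1.0, 0.5, 0.1, 0.1)
r_sim = simulate_zero_true()
print(f"theory = {rho_theory:.4f}, simulated = {r_sim:.4f}")
```

Here a shared error variance equal to half the biological variance is enough to produce a correlation of roughly 0.3 between two variables that are biologically unrelated. Setting `sign=-1` gives a negative correlation of the same size.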

The correction equations presented need the input of estimated variances. Such estimates also carry uncertainty and the quality of these estimates will influence the quality of the corrections. This will be the topic of a follow-up paper. Prior information regarding the sizes of the variance components would be valuable and this points to new requirements for system suitability tests of comprehensive measurements. In metabolomics, for example, it would be worthwhile to characterize an analytical measurement platform in terms of such error variances including sizes of correlated error using advanced (and to be developed) measurement protocols.

Distortion of the correlation coefficient also has implications for experimental planning. In the case of additive uncorrelated error, the correction depends explicitly on the sample size used to calculate the correlation and on the number of replicates used to estimate the intraclass correlation (i.e. the error variance components). Since in real life the total sample size is fixed, there is a trade-off between the sample size and the number of replicates that can be measured, and the experimenter has to decide which of the two to increase.

The results presented here are derived under the assumption of normality of both the measurements and the measurement errors. If the error-free variables are normally distributed then, in the presence of additive measurement error, the measured variables will also be normally distributed, with variance given by (12). For multiplicative and realistic error the distribution of the measured variables will be far from normal, since it involves the distribution of the product of normally distributed quantities, which is usually not normal41. It is known that departure from normality can result in the inflation of the correlation coefficient42 and in distortion43 of its (sampling) distribution, and this will add to the corruption induced by the measurement error.
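The non-normality of a product of normal variables can be seen from its kurtosis. The following sketch compares the sample kurtosis of the sum and of the product of two independent standard normal variables. The choice of standard normals, the sample size and the helper name `kurtosis` are illustrative assumptions, not part of the original analysis. For a normal distribution the kurtosis is 3, while for the product of two independent standard normals it is 9.

```python
import random


def kurtosis(values):
    """Sample kurtosis m4 / m2**2 (equal to 3 for a normal distribution)."""
    n = len(values)
    m = sum(values) / n
    m2 = sum((v - m) ** 2 for v in values) / n
    m4 = sum((v - m) ** 4 for v in values) / n
    return m4 / m2 ** 2


rng = random.Random(11)
n = 50000
a = [rng.gauss(0, 1) for _ in range(n)]
b = [rng.gauss(0, 1) for _ in range(n)]

# The sum of two normal variables is normal: kurtosis close to 3.
kurt_sum = kurtosis([u + v for u, v in zip(a, b)])
# The product has much heavier tails: kurtosis well above 3.
kurt_product = kurtosis([u * v for u, v in zip(a, b)])

print(f"kurtosis of sum = {kurt_sum:.2f}, of product = {kurt_product:.2f}")
```

A multiplicative error term is a product of this kind, a signal multiplied by a random error, which is why the measured variables depart from normality under the multiplicative and realistic models.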

We think that, in general, correlation coefficients are taken too much at face value, and we hope that this paper has raised some doubts and pointed to useful precautions.

Material and Methods

Mathematical calculations

Derivation of ρ in presence of multiplicative measurement error

In presence of purely multiplicative error it holds

| 56 |

and

| 57 |

using (19)–(21) to calculate the expectation of the cross terms. For it holds

| 58 |

Because the signals and the error terms are independent, the expectation of every cross term is null except

| 59 |

where is given by Eq. (29). Plugging (56), (57) and (58) in (6), the expected correlation coefficient is

| 60 |

and it can be rewritten as (38) by setting and , and defining the attenuation coefficient (39).

Derivation of ρ in presence of realistic measurement error

To simplify calculations we set

| 61 |

and similarly we define and for variable . It holds

| 62 |

and

| 63 |

E is given by Eq. (57). Because error components are independent and with zero expectation (see Eqs. (19)–(21)) it holds

| 64 |

| 65 |

| 66 |

It follows that

| 67 |

| 68 |

and

| 69 |

Plugging (67), (68), and (69) into (6) one gets the expression for the correlation coefficient in presence of additive and multiplicative measurement error:

| 70 |

which can be rewritten as (40) by using the previously defined and and defining the attenuation coefficient (41).

Behavior of ρ in the case of additive negatively correlated error

For negatively correlated error, when the true correlation is positive

| 71 |

Since , is always smaller than the true correlation. When the true correlation is negative, the expected correlation is always negative, but it can be, in absolute value, smaller or larger than the true correlation:

| 72 |

Correlation coefficient under the generalized error model

Additive error

Under the generalized additive correlated error model

| 73 |

with and defined in Eq. (43), the correlation coefficient can be expressed as:

| 74 |

with , , and

| 75 |

Multiplicative error

Under the generalized multiplicative error model

| 76 |

with and defined in Eq. (43), the correlation coefficient can be expressed as:

| 77 |

with

| 78 |

General realistic error

Formulas for the correlation coefficient under the generalized realistic correlated error model are to be found in the main text in Eqs. (47) and (48).

Correction of the correlation coefficient under the generalized correlated error model

Additive error

Under the generalized additive correlated error model the corrected correlation coefficient is

| 79 |

Multiplicative error

Under the generalized multiplicative correlated error model the corrected correlation coefficient is

| 80 |

Realistic error

Under the generalized realistic correlated error model the corrected correlation coefficient is

| 81 |

Simulations

We provide here details on the simulations performed and shown in Figs. 1–4, 6 and 8.

Simulations in Figure 1

N = 100 realizations of two variables and were generated under model with additive uncorrelated measurement error (11), with , and . Error variance components were set to and to (Panel A).

Simulations in Figure 2

The concentration time profiles of three hypothetical metabolites P1, P2 and P3 are simulated using the following dynamic model

| 82 |

which is the model of an irreversible enzyme-catalyzed reaction described by Michaelis-Menten kinetics. Using this model, concentration time profiles for P1, P2 and P3 were generated by solving the system of differential equations after varying the kinetic parameters, which were sampled from a uniform distribution. For the realization of the jth concentration profile

| 83 |

with population values , and . Initial conditions were set to with and . All quantities are in arbitrary units. Time profiles were sampled at a.u. and collected in a data matrix of size 100 × 3. The variability in data matrix is given by biological variation. The concentration time profiles of P1, P2 and P3 shown in Panel A are obtained using the population values for the kinetic parameters and for the initial conditions.

Additive uncorrelated and correlated measurement error is added to the error-free data following model (11), where P1, P2 and P3 play the role of the two variables of the model and of an additional third variable which follows a similar model. The variance of the error component was varied in 50 steps between 0 and 25% of the sample variance calculated from the error-free data. The variance of the correlated error was set to in all simulations. Pairwise Pearson correlations were calculated for the error-free case and for data with measurement error added. For each error value, 100 error realizations were simulated; the average correlation across the 100 realizations is shown in Panel B.

The “mini” metabolite-metabolite association networks shown in Panel C are defined by first taking the Pearson correlation among P1, P2 and P3 and then imposing a threshold on the correlations to define the connectivity matrix

| 84 |

For more details see reference10.
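The thresholding step can be sketched as follows. The rule used here (an edge wherever the absolute correlation strictly exceeds the threshold, no self-connections) and the numerical values are assumptions made for illustration; the exact definition is the one given in Eq. (84) and in reference 10.

```python
def connectivity_matrix(corr, threshold):
    """Binary connectivity matrix from a correlation matrix.

    Entry (i, j) is 1 when |corr[i][j]| exceeds the threshold and i != j,
    and 0 otherwise (assumed rule, see the lead-in text).
    """
    n = len(corr)
    return [
        [1 if i != j and abs(corr[i][j]) > threshold else 0 for j in range(n)]
        for i in range(n)
    ]


# Hypothetical correlations among P1, P2 and P3.
corr = [
    [1.0, 0.9, -0.7],
    [0.9, 1.0, 0.2],
    [-0.7, 0.2, 1.0],
]
network = connectivity_matrix(corr, threshold=0.6)
print(network)  # [[0, 1, 1], [1, 0, 0], [1, 0, 0]]
```

Because the rule is a hard cut-off, a correlation that measurement error moves across the threshold adds or removes an edge, which is how the distortion of the correlation propagates to the inferred network.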

Simulations in Figure 3

Principal component analysis was performed on a 100 × 133 experimental metabolomic data set (see Section 6.6 for a description). The 15 variables with the highest loading (in absolute value) and the 45 variables with the smallest loading (in absolute value) on the first principal component were selected to form a 100 × 60 data set (we call this the error-free data, as if it only contained biological variation). On this subset a new principal component analysis was performed. Then multiplicative correlated and uncorrelated measurement error was added to the error-free data. The variance of the additive error was set with where is the variance calculated for the jth column of the error-free data, i.e., the biological variance. The variance of the correlated error was fixed to 5% of the average variance observed in the error-free data.

Simulations in Figure 4

Let and denote the intensities of the resonances measured at 3.23 and 4.98 in the randomly drawn replicate of sample Fi () and define the 5 × 1 vectors of means

| 85 |

The correlation is calculated for , and 10; for each J the replicates used to calculate and are randomly and independently sampled, for each sample separately, from the total set of the 12 to 15 replicates available per sample. The procedure is repeated $10^{5}$ times to construct the distributions of the correlation coefficient shown in Fig. 4C.
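The resampling procedure described above can be sketched as follows. The data are synthetic (five samples with 12 to 15 replicates each and two perfectly related signals with added noise), the replicates are drawn without replacement, and the number of repetitions is reduced for speed. All of these choices, as well as the function names, are assumptions made for illustration and do not reproduce the NMR data or the original Matlab code.

```python
import math
import random
from statistics import mean


def pearson(x, y):
    """Sample Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def resampled_correlations(reps_x, reps_y, j, n_repeats, rng):
    """Correlations between vectors of means over J randomly drawn replicates.

    reps_x[i] and reps_y[i] hold the replicate intensities of sample i for
    the two signals. In every repetition, J replicates are drawn at random,
    separately for each sample and independently for the two signals.
    """
    out = []
    for _ in range(n_repeats):
        mean_x = [mean(rng.sample(rep, j)) for rep in reps_x]
        mean_y = [mean(rng.sample(rep, j)) for rep in reps_y]
        out.append(pearson(mean_x, mean_y))
    return out


# Synthetic stand-in for the five samples (hypothetical values).
rng = random.Random(5)
true_levels = [1.0, 2.0, 3.0, 4.0, 5.0]
reps_x, reps_y = [], []
for level in true_levels:
    n_rep = rng.randint(12, 15)
    reps_x.append([level + rng.gauss(0, 1.5) for _ in range(n_rep)])
    reps_y.append([level + rng.gauss(0, 1.5) for _ in range(n_rep)])

mean_r = {}
for j in (1, 5, 10):
    rs = resampled_correlations(reps_x, reps_y, j, 1000, rng)
    mean_r[j] = mean(rs)
    print(f"J = {j:2d}: mean correlation = {mean_r[j]:.3f}")
```

Averaging over more replicates reduces the error variance of the means, so the distribution of the correlation is expected to shift toward the error-free value as J increases.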

Simulations in Figure 6

Simulation results presented in Fig. 6 show the results from calculations of the sample correlation coefficient as a function of the true correlation, of the true means and variances of the signals, and of the measurement error variances, as they appear in the definitions of ρ under the different error models (Eqs. (33), (38) and (40)). The calculations were done multiple times for varying values for and , which were randomly and independently sampled from a uniform distribution , where was set to be equal to 23.4, which was the maximum value observed in Data set 1 (see Section 6.6). Values for and were randomly and independently sampled from a uniform distribution , where was set to be equal to the average variance observed in the experimental Data set 1. The values of the variance of all error components are randomly and independently sampled from . The overall procedure was repeated $10^{4}$ times for each value of in the range in steps of 0.1.

Simulations in Figure 8

The first 25 variables from Data set 1 have been selected and used to compute the means μ0 and the correlation/covariance matrix used to generate error-free data of size $10^{4} \times 25$ on which additive and multiplicative measurement error (correlated and uncorrelated) is added (error model (15)) to obtain . All error variances are set to 0.1 which is approximately equal to 5% of the average variance observed in . Pairwise correlations among the 25 metabolites are calculated from . The correlations are corrected using Eq. (52) using the known distributional and error parameters used to generate the data. The data generation is repeated $10^{3}$ times and correlations (uncorrected and corrected) are averaged over the repetitions.

Data sets

Data set 1

A publicly available data set containing measurements of 133 blood metabolites from 2139 subjects was used as a basis for the simulations to obtain realistic distributional and correlation patterns among measured features. The data come from a designed case-cohort and a matched sub-cohort (controls) stratified on age and sex from the TwinGene project44. The first 100 observations were used in the simulation described in Section 6.5.3 and shown in Fig. 3.

Data were downloaded from the Metabolights public repository45 (www.ebi.ac.uk/metabolights) with accession number MTBLS93. For full details on the study protocol, sample collection, chromatography, GC-MS experiments and metabolites identification and quantification see the original publication46 and the Metabolights accession page.

Data set 2

This data set was acquired in the framework of a study aimed at the “Characterization of the measurement error structure in Nuclear Magnetic Resonance (NMR) data for metabolomic studies”29. Five biological replicates of fish extract, F1 to F5, were originally pretreated in replicates (12 to 15) and acquired using 1H NMR. The replicates account for variability in sample preparation and instrumental variability. For details on the sample preparation and NMR experiments we refer to the original publication.

Software

All calculations were performed in Matlab (version 2017a 9.2). Code to generate data under the measurement error models (11), (13) and (15) is available at systemsbiology.nl under the SOFTWARE tab.

Acknowledgements

This work has been partially funded by The Netherlands Organization for Health Research and Development (ZonMW) through the PERMIT project (Personalized Medicine in Infections: from Systems Biomedicine and Immunometabolism to Precision Diagnosis and Stratification Permitting Individualized Therapies, project contract number 456008002) under the PerMed Joint Transnational call JTC 2018 (Research projects on personalised medicine - smart combination of pre-clinical and clinical research with data and ICT solutions). The authors acknowledge Peter Wentzell (Halifax, Canada) for kindly making available the NMR data set.

Author contributions

E.S. and A.S. conceived the study and performed theoretical calculations. E.S., M.H. and A.S. analysed and interpreted the results. E.S. and M.H. performed simulations. E.S., M.H. and A.S. wrote, reviewed and approved the manuscript in its final form.

Competing interests

The authors declare no competing interests.

Footnotes

These authors contributed equally: Edoardo Saccenti and Age K. Smilde.

Change history

12/20/2023

A Correction to this paper has been published: 10.1038/s41598-023-46128-6

References

- 1. Bravais, A. Analyse mathématique sur les probabilités des erreurs de situation d’un point (Impr. Royale, 1844).

- 2. Galton F. Co-relations and their measurement, chiefly from anthropometric data. Proceedings of the Royal Society of London. 1889;45:135–145. doi: 10.1098/rspl.1888.0082.

- 3. Pearson K. Note on regression and inheritance in the case of two parents. Proceedings of the Royal Society of London. 1895;58:240–242. doi: 10.1098/rspl.1895.0041.

- 4. Spearman, C. Demonstration of formulae for true measurement of correlation. The American Journal of Psychology 161–169 (1907).

- 5. Pearson K. On lines and planes of closest fit to systems of points in space. The London, Edinburgh, and Dublin Philosophical Magazine and Journal of Science. 1901;2:559–572. doi: 10.1080/14786440109462720.

- 6. Hotelling H. Analysis of a complex of statistical variables into principal components. Journal of educational psychology. 1933;24:417. doi: 10.1037/h0071325.

- 7. Jolliffe, I. Principal component analysis (Springer, 2011).

- 8. Härdle, W. & Simar, L. Applied multivariate statistical analysis, vol. 22007 (Springer, 2007).

- 9. Müller-Linow M, Weckwerth W, Hütt M-T. Consistency analysis of metabolic correlation networks. BMC Systems Biology. 2007;1:44. doi: 10.1186/1752-0509-1-44.

- 10. Jahagirdar, S., Suarez-Diez, M. & Saccenti, E. Simulation and reconstruction of metabolite-metabolite association networks using a metabolic dynamic model and correlation based-algorithms. Journal of proteome research (2019).

- 11. Dunlop MJ, Cox RS, III., Levine JH, Murray RM, Elowitz MB. Regulatory activity revealed by dynamic correlations in gene expression noise. Nature genetics. 2008;40:1493. doi: 10.1038/ng.281.

- 12. Marbach D, et al. Wisdom of crowds for robust gene network inference. Nature Methods. 2012;9:796–804. doi: 10.1038/nmeth.2016.

- 13. Stuart JM, Segal E, Koller D, Kim SK. A gene-coexpression network for global discovery of conserved genetic modules. Science. 2003;302:249–255. doi: 10.1126/science.1087447.

- 14. Zhang, B. & Horvath, S. A general framework for weighted gene co-expression network analysis. Statistical applications in genetics and molecular biology 4 (2005).

- 15. Spearman C. The proof and measurement of association between two things. The American journal of psychology. 1904;15:72–101. doi: 10.2307/1412159.

- 16. Thouless RH. The effects of errors of measurement on correlation coefficients. British Journal of Psychology. 1939;29:383.

- 17. Beaton GH, et al. Sources of variance in 24-hour dietary recall data: implications for nutrition study design and interpretation. The American journal of clinical nutrition. 1979;32:2546–2559. doi: 10.1093/ajcn/32.12.2546.

- 18. Rosner B, Willett W. Interval estimates for correlation coefficients corrected for within-person variation: implications for study design and hypothesis testing. American journal of epidemiology. 1988;127:377–386. doi: 10.1093/oxfordjournals.aje.a114811.

- 19. Adolph SC, Hardin JS. Estimating phenotypic correlations: correcting for bias due to intraindividual variability. Functional Ecology. 2007;21:178–184. doi: 10.1111/j.1365-2435.2006.01209.x.

- 20. Fuller, W. A. Measurement error models, vol. 305 (John Wiley & Sons, 2009).

- 21. Moseley HN. Error analysis and propagation in metabolomics data analysis. Computational and structural biotechnology journal. 2013;4:e201301006. doi: 10.5936/csbj.201301006.

- 22. Rosato A, et al. From correlation to causation: analysis of metabolomics data using systems biology approaches. Metabolomics. 2018;14:37. doi: 10.1007/s11306-018-1335-y.

- 23. Camacho D, de la Fuente A, Mendes P. The origin of correlations in metabolomics data. Metabolomics. 2005;1:53–63. doi: 10.1007/s11306-005-1107-3.

- 24. Werner M, Brooks SH, Knott LB. Additive, multiplicative, and mixed analytical errors. Clinical chemistry. 1978;24:1895–1898. doi: 10.1093/clinchem/24.11.1895.

- 25. Balwierz PJ, et al. Methods for analyzing deep sequencing expression data: constructing the human and mouse promoterome with deepcage data. Genome biology. 2009;10:R79. doi: 10.1186/gb-2009-10-7-r79.

- 26. Mehlkopf A, Korbee D, Tiggelman T, Freeman R. Sources of t1 noise in two-dimensional nmr. Journal of Magnetic Resonance (1969) 1984;58:315–323. doi: 10.1016/0022-2364(84)90221-X.

- 27. Van Batenburg MF, Coulier L, van Eeuwijk F, Smilde AK, Westerhuis JA. New figures of merit for comprehensive functional genomics data: the metabolomics case. Analytical chemistry. 2011;83:3267–3274. doi: 10.1021/ac102374c.

- 28. Rocke DM, Lorenzato S. A two-component model for measurement error in analytical chemistry. Technometrics. 1995;37:176–184. doi: 10.1080/00401706.1995.10484302.

- 29. Karakach TK, Wentzell PD, Walter JA. Characterization of the measurement error structure in 1D 1H NMR data for metabolomics studies. Analytica Chimica Acta. 2009;636:163–174. doi: 10.1016/j.aca.2009.01.048.

- 30. Pearson K, Lee A. On the laws of inheritance in man: I. Inheritance of physical characters. Biometrika. 1903;2:357–462. doi: 10.2307/2331507.

- 31. Winne, P. H. & Belfry, M. J. Interpretive problems when correcting for attenuation. Journal of Educational Measurement 125–134 (1982).

- 32. Liu K, Stamler J, Dyer A, McKeever J, McKeever P. Statistical methods to assess and minimize the role of intra-individual variability in obscuring the relationship between dietary lipids and serum cholesterol. Journal of chronic diseases. 1978;31:399–418. doi: 10.1016/0021-9681(78)90004-8.

- 33. McCulloch, C. E. & Neuhaus, J. M. Generalized linear mixed models. Encyclopedia of biostatistics 4 (2005).

- 34. Verbeke, G. & Molenberghs, G. Linear mixed models for longitudinal data (Springer Science & Business Media, 2009).

- 35. Leger MN, Vega-Montoto L, Wentzell PD. Methods for systematic investigation of measurement error covariance matrices. Chemometrics and Intelligent Laboratory Systems. 2005;77:181–205. doi: 10.1016/j.chemolab.2004.09.017.

- 36. Wentzell PD, Cleary CS, Kompany-Zareh M. Improved modeling of multivariate measurement errors based on the wishart distribution. Analytica chimica acta. 2017;959:1–14. doi: 10.1016/j.aca.2016.12.009.

- 37. Comrey, A. L. & Lee, H. B. A first course in factor analysis (Psychology press, 2013).

- 38. Day N, et al. Correlated measurement error—implications for nutritional epidemiology. International Journal of Epidemiology. 2004;33:1373–1381. doi: 10.1093/ije/dyh138.

- 39. Pereira V, Waxman D, Eyre-Walker A. A problem with the correlation coefficient as a measure of gene expression divergence. Genetics. 2009;183:1597–1600. doi: 10.1534/genetics.109.110247.

- 40. Reynier F, et al. Importance of correlation between gene expression levels: application to the type i interferon signature in rheumatoid arthritis. PloS one. 2011;6:e24828. doi: 10.1371/journal.pone.0024828.

- 41. Springer, M. D. The algebra of random variables (Wiley and Sons, 1979).

- 42. Bishara AJ, Hittner JB. Reducing bias and error in the correlation coefficient due to nonnormality. Educational and psychological measurement. 2015;75:785–804. doi: 10.1177/0013164414557639.

- 43. Kowalski CJ. On the effects of non-normality on the distribution of the sample product-moment correlation coefficient. Journal of the Royal Statistical Society: Series C (Applied Statistics) 1972;21:1–12.

- 44. Magnusson PK, et al. The swedish twin registry: establishment of a biobank and other recent developments. Twin Research and Human Genetics. 2013;16:317–329. doi: 10.1017/thg.2012.104.

- 45. Haug K, et al. Metabolights—an open-access general-purpose repository for metabolomics studies and associated meta-data. Nucleic acids research. 2012;41:D781–D786. doi: 10.1093/nar/gks1004.

- 46. Ganna A, et al. Large-scale non-targeted metabolomic profiling in three human population-based studies. Metabolomics. 2016;12:4. doi: 10.1007/s11306-015-0893-5.