Abstract

Background

In 2009, the National Institute of Mental Health launched the Research Domain Criteria, an attempt to move beyond diagnostic categories and ground psychiatry within neurobiological constructs that combine different levels of measures (e.g., brain imaging and behavior). Statistical methods that can integrate such multimodal data, however, are often vulnerable to overfitting, poor generalization, and difficulties in interpreting the results.

Methods

We propose an innovative machine learning framework combining multiple holdouts and a stability criterion with regularized multivariate techniques, such as sparse partial least squares and kernel canonical correlation analysis, for identifying hidden dimensions of cross-modality relationships. To illustrate the approach, we investigated structural brain–behavior associations in an extensively phenotyped developmental sample of 345 participants (312 healthy and 33 with clinical depression). The brain data consisted of whole-brain voxel-based gray matter volumes, and the behavioral data included item-level self-report questionnaires and IQ and demographic measures.

Results

Both sparse partial least squares and kernel canonical correlation analysis captured two hidden dimensions of brain–behavior relationships: one related to age and drinking and the other one related to depression. The applied machine learning framework indicates that these results are stable and generalize well to new data. Indeed, the identified brain–behavior associations are in agreement with previous findings in the literature concerning age, alcohol use, and depression-related changes in brain volume.

Conclusions

Multivariate techniques (such as sparse partial least squares and kernel canonical correlation analysis) embedded in our novel framework are promising tools to link behavior and/or symptoms to neurobiology and thus have great potential to contribute to a biologically grounded definition of psychiatric disorders.

Keywords: Adolescence, Brain–behavior relationship, Depression, Framework, RDoC, SPLS

Psychiatric diagnoses [e.g., DSM-5 (1), ICD-10 (2)] lack neurobiological validity (3, 4, 5). To address this, the National Institute of Mental Health launched Research Domain Criteria (RDoC) (6) in 2009, a research framework that “integrates many levels of information (from genomics and circuits to behavior) in order to explore basic dimensions of functioning that span the full range of human behaviour from normal to abnormal” (https://www.nimh.nih.gov/research-priorities/rdoc/index.shtml). RDoC represents a paradigm shift in psychiatry and highlights the need to include measures of genes, brain, and behavior to understand psychopathology (4,6). RDoC is structured as a matrix with 4 dimensions: 1) domains of functioning (e.g., negative–positive valence systems) that are further divided into constructs (e.g., attention, perception), 2) units of analysis (e.g., genes, circuits, behavior), 3) developmental aspects, and 4) environmental aspects. Analyzing data containing multiple such modalities, however, poses statistical challenges. Here, we propose a novel framework that is robust to some typical problems arising from high-dimensional neurobiological data such as overfitting, poor generalization, and interpretability of the results.

Factor analysis and related methods (e.g., principal component analysis [PCA]) have long traditions in statistics and psychology (7,8). These techniques decompose a single set of measures (e.g., self-report questionnaires) into a parsimonious, latent dimensional representation of the data. Applications of these approaches include general intelligence [g factor (8)], the five-factor personality model (9), and many others (10, 11, 12, 13). However, factor analysis cannot integrate different sets of measures/modalities (e.g., investigate brain–behavior relationships).

A principled way to find latent dimensions of one modality (or data type) that are related to another modality (or data type) is to use partial least squares (PLS) (14) or the closely related canonical correlation analysis (CCA) (15). PLS was introduced to neuroimaging by McIntosh et al. (16) and has since been widely used (17, 18, 19, 20, 21, 22). Unfortunately, the high dimensionality of neuroimaging data makes PLS and CCA models prone to overfitting; moreover, the interpretation of the identified latent dimensions is usually difficult. Regularized versions of PLS and CCA algorithms address these issues (23, 24, 25); two popular choices are lasso (26) and elastic net (27) regularization, which constrain the optimization problem to select the most relevant variables.

Sparse CCA and sparse PLS (SPLS) were originally proposed in genetics (23,28, 29, 30) and have since been used in cognition (31, 32, 33, 34), working memory (35,36), dementia (37, 38, 39, 40, 41), psychopathology in adolescents (42), psychotic disorders (43, 44, 45, 46), and pharmacological interventions (47). However, most of these studies used approaches for selecting the regularization parameter (model selection) and inferring statistical significance of the identified relationships (model evaluation) that do not account for the generalizability and stability of the results.

Here, we propose an innovative framework combining stability and generalizability as optimization criteria in a multiple holdout framework (48) that is applicable to both regularized PLS and CCA approaches. Crucially, it increases the reproducibility and generalizability of these models by 1) applying stability/reproducibility for model selection and 2) using out-of-sample correlations of the data for model evaluation. To demonstrate this novel framework, we investigated associations between whole-brain voxel-based gray matter volumes and item-level measures of self-report questionnaires, IQ, and demographics in a sample of healthy adolescents and young adults (n = 312) and adolescents and young adults with depression (n = 33). We report the results from using SPLS in the main text, and for comparison we include results with another regularized approach, kernel CCA (KCCA), in the Supplement.

Methods and Materials

PLS/CCA and Other Latent Variable Models

Figure 1 illustrates how PLS/CCA models can be used to identify latent dimensions of brain–behavior relationships. PLS/CCA maximizes the association (covariation for PLS and correlation for CCA) between linear combinations of brain and behavioral variables. The model’s inputs are brain and behavioral variables for multiple subjects (e.g., voxel-level gray matter volumes and item-level questionnaires). Its outputs, for each brain–behavior relationship, are brain and behavioral weights, brain and behavioral scores, and a value denoting the strength of the correlation/covariation.

Figure 1.

Overview of the partial least squares/canonical correlation analysis (PLS/CCA) models. PLS/CCA models search for weight vectors that maximize the covariance (PLS) or correlation (CCA) between linear combinations of the brain and behavioral variables. Importantly, the sparsity constraints of sparse PLS set some of the brain and behavioral weights to zero. The linear combination (i.e., weighted sum) of brain and behavioral variables (columns of X and Y) with the respective weights (elements of u and v) results in brain and behavioral scores (Xu and Yv) for each individual subject. The brain and behavioral scores can be combined to create a brain–behavior latent space showing how the brain–behavior relationship (i.e., association) is expressed across the whole sample.

The brain and behavioral weights have the same dimensionality as their respective data and quantify each brain and behavioral variable’s contribution to the identified brain–behavior relationship or association. Once the weights are found, then brain and behavioral scores can be computed for each subject as a linear combination (i.e., weighted sum) of their brain and behavioral variables, respectively. The brain and behavioral scores can then be combined to create a latent space of brain–behavior relationships across the sample. Furthermore, each brain–behavior relationship can be removed from the data (by a process called deflation) and new relationships sought.
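As an illustrative sketch only (not the implementation used in this study), the weights, scores, and deflation step described above can be written for unregularized PLS in a few lines. The sketch assumes column-centered data matrices X and Y, obtains the first weight pair from the singular value decomposition of the cross-covariance matrix, and uses projection deflation, which is one of several possible deflation schemes. The function names and the synthetic data are hypothetical.

```python
import numpy as np

def pls_first_pair(X, Y):
    """Unit-norm weights (u, v) maximizing the covariance between X @ u and Y @ v."""
    # The leading singular vectors of X^T Y solve the unregularized PLS problem.
    U, s, Vt = np.linalg.svd(X.T @ Y, full_matrices=False)
    return U[:, 0], Vt[0, :], s[0]

def deflate(X, Y, u, v):
    """Projection deflation (an assumed choice): remove the directions u and v."""
    return X - np.outer(X @ u, u), Y - np.outer(Y @ v, v)

# Synthetic stand-ins for brain (X) and behavioral (Y) data sharing one latent factor.
rng = np.random.default_rng(0)
n = 100
latent = rng.standard_normal(n)
X = rng.standard_normal((n, 20))
Y = rng.standard_normal((n, 8))
X[:, 0] += 2 * latent
Y[:, 0] += 2 * latent
X -= X.mean(axis=0)
Y -= Y.mean(axis=0)

u, v, strength = pls_first_pair(X, Y)      # brain and behavioral weights
brain_scores, behav_scores = X @ u, Y @ v  # one score per subject
X2, Y2 = deflate(X, Y, u, v)               # data for seeking the next relationship
```

Plotting `brain_scores` against `behav_scores` gives the latent space of Figure 1; calling `pls_first_pair(X2, Y2)` would return the next relationship.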

Next, we present a brief overview of PLS/CCA and some other latent variable models to contextualize our modeling approaches. Essentially, all these models search for weight vectors or directions, such that the projection of the dataset(s) (e.g., the brain and/or behavior) onto the obtained weight vector(s) has maximal variance (PCA), correlation (CCA), or covariance (PLS) (49, 50, 51). Note that PCA is limited to finding latent dimensions in one dataset (e.g., behavior). Although its principal components can be used in a multiple regression (referred to as principal component regression), such as to predict brain variables, the directions of high variance identified by PCA might be uncorrelated with the brain variables, while a relatively low variance component might be a useful predictor. Therefore, CCA and PLS can be seen as extensions of principal component regression to find latent dimensions relating two sets of data to each other (50,52).
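For reference, the three objectives can be written side by side. This is a standard textbook formulation rather than a quotation from the Supplement; it assumes column-centered data matrices X (brain) and Y (behavior) and weight vectors u and v as in Figure 1.

```latex
\begin{align*}
\text{PCA:}\quad & \max_{u}\; u^{\top} X^{\top} X u
  && \text{s.t. } \|u\|_2 = 1 \\
\text{CCA:}\quad & \max_{u,v}\; \frac{u^{\top} X^{\top} Y v}
  {\sqrt{u^{\top} X^{\top} X u}\,\sqrt{v^{\top} Y^{\top} Y v}} \\
\text{PLS:}\quad & \max_{u,v}\; u^{\top} X^{\top} Y v
  && \text{s.t. } \|u\|_2 = \|v\|_2 = 1
\end{align*}
```

The CCA objective normalizes by the within-set variances of the scores, whereas the PLS objective does not, which is why PLS directions are also pulled toward high-variance combinations of variables.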

In the regularized versions of CCA/PLS, additional constraints (governed by regularization parameters) are added to the optimization problem to control the complexity of the CCA/PLS model and reduce overfitting. A regularized version of CCA was proposed by Hardoon et al. (53), in which two regularization parameters control a smooth transition between maximizing correlation (i.e., a CCA-like least-regularized solution) and maximizing covariance (i.e., a PLS-like most-regularized solution). Our KCCA implementation is an extension of this regularized CCA, where the kernel formulation makes the algorithm computationally more efficient (50).

A regularized sparse version of CCA was proposed by Witten et al. (30), which applies elastic net regularization to the weight vectors. Interestingly, because the variance matrices are assumed to be identity matrices in this optimization, their formulation becomes equivalent to our SPLS implementation. Elastic net regularization combines the L1 and L2 constraints of the lasso and ridge methods, respectively. The L1 constraint shrinks some weights and sets others to zero, leading to automatic variable selection (26); however, it has 3 main limitations: 1) selecting at most as many variables as the number of examples/samples in the data, 2) selecting only a few from correlated groups of variables, and 3) leading to worse prediction than ridge regression when the variables are highly correlated (27). The L2 constraint shrinks the weights but does not set them to zero, enabling correlated variables to have similar weights. Combining both the L1 and L2 constraints, elastic net regularization can simultaneously enforce sparse solutions and select correlated variables while enabling optimal prediction performance (27). For further details of PLS/CCA models, see the Supplement.
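To make the effect of the L1 constraint concrete, the following is a minimal sketch of a sparse PLS weight update by alternating soft-thresholding, in the spirit of the approach of Witten et al. (30). It is a simplification and not our implementation: the threshold is set as a fixed fraction of the largest absolute entry (the hypothetical parameters `frac_u` and `frac_v`) instead of being tuned to satisfy an explicit L1-norm bound, and all function names and data are illustrative.

```python
import numpy as np

def sparsify(a, frac):
    """Soft-threshold a at frac * max|a| (L1 step), then rescale to unit L2 norm."""
    lam = frac * np.max(np.abs(a))
    shrunk = np.sign(a) * np.maximum(np.abs(a) - lam, 0.0)
    norm = np.linalg.norm(shrunk)
    return shrunk / norm if norm > 0 else shrunk

def sparse_pls_pair(X, Y, frac_u, frac_v, n_iter=100, tol=1e-10):
    """Alternate sparse updates of u and v on the cross-covariance matrix X^T Y."""
    C = X.T @ Y
    v = np.linalg.svd(C, full_matrices=False)[2][0]  # dense starting point
    u = np.zeros(C.shape[0])
    for _ in range(n_iter):
        u_new = sparsify(C @ v, frac_u)
        v_new = sparsify(C.T @ u_new, frac_v)
        change = np.linalg.norm(u_new - u) + np.linalg.norm(v_new - v)
        u, v = u_new, v_new
        if change < tol:
            break
    return u, v

# Synthetic data: only the first 3 columns of X and first 2 of Y carry shared signal.
rng = np.random.default_rng(0)
n = 200
latent = rng.standard_normal(n)
X = rng.standard_normal((n, 50))
Y = rng.standard_normal((n, 10))
X[:, :3] += 2 * latent[:, None]
Y[:, :2] += 2 * latent[:, None]
X -= X.mean(axis=0)
Y -= Y.mean(axis=0)

u, v = sparse_pls_pair(X, Y, frac_u=0.5, frac_v=0.5)
selected_brain = np.flatnonzero(u)  # indices of variables with nonzero weight
selected_behav = np.flatnonzero(v)
```

Larger values of `frac_u` and `frac_v` give sparser weight vectors; a value of 0 recovers the dense PLS solution.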

Model Selection and Statistical Evaluation

To motivate our proposed framework, we briefly review two landmark SPLS applications and their methods of model selection (i.e., regularization parameter choice) and statistical inference.

In one of the most popular SPLS applications, Witten et al. (30) proposed 2 approaches: 1) fixing the regularization parameters of the data a priori and performing permutation testing for model evaluation and 2) using the same permutation test for both selecting the regularization parameters and evaluating the model. In the permutation test, the SPLS model is fitted to the original datasets and to the permuted datasets (i.e., after randomly shuffling one of the datasets); p values are calculated by comparing the SPLS model correlations from the original and the permuted (null) data. When this framework is also used for selecting the regularization parameters, the same procedure is repeated for each combination of regularization parameters (there is one regularization parameter for each dataset, e.g., brain and behavior), and the combination of values leading to the smallest p value is selected. Many other studies followed similar approaches either fixing the regularization parameters (23,32,38,41,54) or choosing them based on permutation tests (55,56). This framework might be preferable when the sample size is small; however, because it does not test whether the identified association generalizes to unseen or holdout data, this approach might overfit the data.
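The in-sample permutation test described above can be sketched as follows. For brevity, the sketch uses unregularized PLS as a stand-in for SPLS, and the function names, the number of permutations, and the synthetic data are assumptions made for illustration. Because the model is refitted to, and evaluated on, the same subjects, this test says nothing about performance on unseen data.

```python
import numpy as np

def first_pair_correlation(X, Y):
    """In-sample correlation between scores of the first (unregularized) PLS pair."""
    U, _, Vt = np.linalg.svd(X.T @ Y, full_matrices=False)
    return abs(np.corrcoef(X @ U[:, 0], Y @ Vt[0])[0, 1])

def permutation_p_value(X, Y, n_perm=200, seed=0):
    """Compare the observed correlation with a null built by shuffling the rows of Y."""
    rng = np.random.default_rng(seed)
    observed = first_pair_correlation(X, Y)
    null = np.array([
        first_pair_correlation(X, Y[rng.permutation(len(Y))])
        for _ in range(n_perm)
    ])
    # The +1 terms count the observed statistic as one member of the null set.
    return (1 + np.sum(null >= observed)) / (1 + n_perm)

# Synthetic data with one strong shared latent factor.
rng = np.random.default_rng(1)
n = 100
latent = rng.standard_normal(n)
X = rng.standard_normal((n, 20))
Y = rng.standard_normal((n, 8))
X[:, 0] += 3 * latent
Y[:, 0] += 3 * latent
X -= X.mean(axis=0)
Y -= Y.mean(axis=0)

p_value = permutation_p_value(X, Y)
```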

Monteiro et al. (48) proposed a multiple holdout framework to optimize the regularization parameters and test the generalizability of the optimized SPLS models (Figure 2). This framework fits the SPLS model on an optimization set (e.g., 80% of the data) and assesses the identified multivariate associations on a holdout set (e.g., 20% of the data). The regularization parameters are selected by further splitting the optimization set into training and validation sets and choosing the combination of parameters with better generalization performance (measured by the out-of-sample correlation) on the validation set. To further test the robustness of the SPLS model, the entire procedure is repeated 10 times. This framework goes beyond many other SPLS approaches, which split the data once (or use cross-validation) to select the regularization parameters but do not evaluate the model generalizability on an independent test or holdout set (28,33,42,57). Although this framework provides a good test of model generalizability, it does not account for stability of the models across the different data splits while selecting the regularization parameters.

Figure 2.

Multiple holdout framework. The original data are randomly split into an optimization set (80% of the data) and a holdout set (20% of the data). The optimization set is used to fit the regularized partial least squares/canonical correlation analysis model and to optimize the regularization parameters in 50 further training and validation splits. The best regularization parameter is used to fit the regularized partial least squares/canonical correlation analysis model on the whole optimization set, and the resulting model is evaluated on the holdout set using permutation testing. Finally, the entire procedure is repeated 10 times.
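The nesting of splits in the multiple holdout framework can be sketched as an index generator. The 80%/20% outer split, the 50 inner splits, and the 10 repetitions follow the description above; the inner validation fraction (`val_frac`) is not specified in the text and is an assumption here, as are the function and variable names.

```python
import numpy as np

def holdout_splits(n_subjects, n_outer=10, n_inner=50,
                   holdout_frac=0.2, val_frac=0.2, seed=0):
    """Yield (optimization, holdout, inner) subject indices for each outer repetition.

    inner is a list of (train, validation) index pairs drawn from the optimization set.
    """
    rng = np.random.default_rng(seed)
    n_hold = int(round(holdout_frac * n_subjects))
    for _ in range(n_outer):
        perm = rng.permutation(n_subjects)
        holdout, optimization = perm[:n_hold], perm[n_hold:]
        n_val = int(round(val_frac * len(optimization)))
        inner = []
        for _ in range(n_inner):
            shuffled = rng.permutation(optimization)
            inner.append((shuffled[n_val:], shuffled[:n_val]))  # (train, validation)
        yield optimization, holdout, inner

# Example with the sample size of this study (345 participants).
splits = list(holdout_splits(345))
optimization, holdout, inner = splits[0]
train, validation = inner[0]
```

In a full analysis, the regularization parameters would be chosen on the inner splits, the model refitted on `optimization`, and its out-of-sample correlation tested on `holdout`.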

Our proposed framework is similar to that of Monteiro et al. (48), but it performs regularization parameter selection using stability and generalizability as a joint optimization criterion, extending the work of Baldassarre et al. (58) to regularized PLS/CCA models (Supplemental Figure S1). We measure generalizability as the average out-of-sample correlation, computed on the validation sets for regularization parameter selection and on the holdout sets for model evaluation. Stability is measured by the average similarity of weights (corrected overlap for SPLS and absolute correlation for KCCA) across splits, that is, how often the models (trained on different subsets of the data) select similar brain and behavioral variables (see Supplement). This joint criterion for parameter selection should enable the identification of brain–behavior associations that are stable and can generalize well to new data.
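The two ingredients of the joint criterion can be illustrated with a short sketch. The exact definitions used in this study are given in the Supplement; the chance-corrected overlap below and the rule for combining stability with generalizability (distance to the ideal point where both equal 1) are assumptions made for illustration, and all function names are hypothetical.

```python
import numpy as np
from itertools import combinations

def out_of_sample_correlation(X_test, Y_test, u, v):
    """Generalizability: correlation of scores on data not used to fit u and v."""
    return np.corrcoef(X_test @ u, Y_test @ v)[0, 1]

def corrected_overlap(w_a, w_b):
    """Overlap of selected (nonzero) variables, corrected for chance overlap (assumed form)."""
    a, b = w_a != 0, w_b != 0
    expected = a.sum() * b.sum() / a.size  # overlap expected for random supports
    denom = max(a.sum(), b.sum())
    return 0.0 if denom == 0 else float((np.sum(a & b) - expected) / denom)

def stability(weight_list):
    """Average pairwise corrected overlap of weight vectors fitted on different splits."""
    return float(np.mean([corrected_overlap(a, b)
                          for a, b in combinations(weight_list, 2)]))

def joint_score(generalizability, stab):
    """Assumed combination rule: distance to the ideal point (1, 1); smaller is better."""
    return float(np.hypot(1 - generalizability, 1 - stab))

# Toy weight vectors from three hypothetical splits (10 variables each).
w1 = np.array([1.0, 0, 0, 2.0, 0, 0, 0, 0, 0, 0])
w2 = w1.copy()
w3 = np.array([0, 1.0, 1.0, 0, 0, 0, 0, 0, 0, 0])
stab = stability([w1, w2, w3])
```

Under this scheme, each candidate combination of regularization parameters would receive one `joint_score`, and the combination with the smallest score would be selected.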

Data

A total of 345 participants from the NeuroScience in Psychiatry Network (NSPN) project (59) were included in this study (312 healthy participants, mean age = 19.14 ± 2.93 years, 156 female; 33 participants with depression, mean age = 16.50 ± 1.23 years, 23 female). See the Supplement for the details of data acquisition and processing.

All participants completed an abbreviated IQ test and extensive self-report questionnaires assessing well-being, affective symptoms, anxiety, impulsivity and compulsivity, self-esteem, self-harm, antisocial and callous-unemotional characteristics, psychosis spectrum symptoms, substance use, relations with peers and family, and experience of trauma. We added 3 demographic variables (age, sex, and socioeconomic status index) to the items of these questionnaires, resulting in a total of 364 variables, which we call behavioral data for simplicity. Including these demographic variables explicitly in the SPLS model permits investigation of whether these variables interact with brain–behavior relationships. Structural imaging scans were acquired on identical 3T Siemens Magnetom Tim Trio systems (Siemens, Erlangen, Germany) across 3 sites. Only scans at the baseline study visit were included in the current analysis. Structural scans (∼19 minutes) were acquired using a quantitative multiparameter mapping protocol (60). Structural magnetic resonance imaging data preprocessing was performed using SPM12 (https://www.fil.ion.ucl.ac.uk/spm), including segmentation, normalization, downsampling, and smoothing (see Supplement). We then applied a mask selecting voxels with ≥10% probability of containing gray matter to all participants, resulting in a total of 219,079 voxels (brain data). Two confounds were removed (i.e., regressed out) from both datasets: total intracranial volume and data collection site (17,61).
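Confound removal by regression can be sketched as follows. This is a generic ordinary least squares illustration, not the study's preprocessing code: the dummy coding of site, the function name, and the synthetic values are assumptions.

```python
import numpy as np

def regress_out(data, confounds):
    """Return the residuals of data after least squares regression on the confounds."""
    design = np.column_stack([np.ones(len(confounds)), confounds])  # add intercept
    beta, *_ = np.linalg.lstsq(design, data, rcond=None)
    return data - design @ beta

# Synthetic example: 60 subjects, 5 variables, 3 acquisition sites.
rng = np.random.default_rng(0)
n = 60
tiv = rng.standard_normal(n)                 # standardized total intracranial volume
site = rng.integers(0, 3, size=n)            # site labels 0, 1, 2
site_dummies = (site[:, None] == np.arange(1, 3)).astype(float)  # site 0 is the reference
confounds = np.column_stack([tiv, site_dummies])

data = rng.standard_normal((n, 5)) + tiv[:, None] + 0.5 * site[:, None]
cleaned = regress_out(data, confounds)
```

The same function would be applied to both the brain and the behavioral matrices, so that neither retains variance that is linearly explained by the confounds.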

Results

SPLS identified 2 significant latent dimensions of brain–behavior associations in our sample. Because the proposed framework fits the model to different splits of the data, here we present the results for the split that presented the best combination of generalizability (measured by the out-of-sample correlation on the holdout set) and stability (measured by the similarity of weights across the optimization sets). Results for the other data splits are in Supplemental Tables S1–S3 and Supplemental Figures S2 and S3.

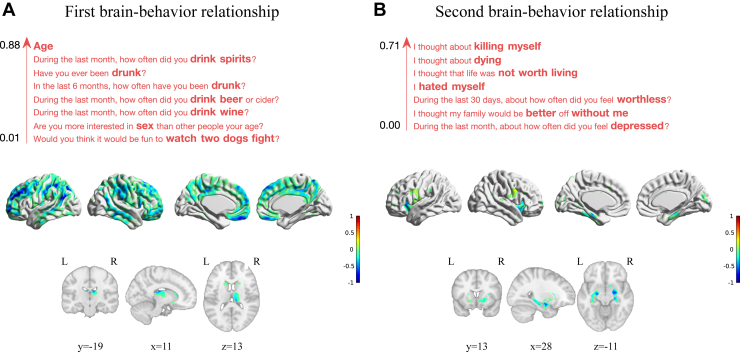

The first brain–behavior relationship (p = .001) captured an association between age and drinking habits and a widespread set of frontoparietotemporal cortical regions, including the medial wall (middle and posterior cingulate and medial orbital cortices), inferior parietal cortex, orbitofrontal cortex, dorsolateral prefrontal cortex, right inferior frontal gyrus, and middle temporal gyri (Figure 3). The brain weights are further summarized using an anatomical atlas in Supplemental Table S4. As expected, the SPLS weights were sparse, selecting 2% of the behavioral variables (1.73 ± 0.48% SEM across data splits) and 22% of the brain variables (36.75 ± 4.62% SEM across data splits).

Figure 3.

Brain and behavioral weights of the two significant associative brain–behavior relationships identified by sparse partial least squares. The brain voxels are color coded by weight, normalized for visualization purposes, and displayed on Montreal Neurological Institute 152 template separately for subcortical (including hippocampus) and cortical regions. The behavioral variables are ordered by weight and color coded with red for positive weights. (A) Brain and behavioral weights of the first brain–behavior relationship. (B) Brain and behavioral weights of the second brain–behavior relationship. L, left; R, right.

The second brain–behavior relationship (p = .014) captured an association between behavioral items related to depression, self-harm, and gray matter volume in a small set of regions, including the hippocampus, parahippocampal gyrus, insula, amygdala, pallidum, and putamen (Figure 3). The behavioral variables related to depression included items such as “feeling worthless,” “hated myself,” and “feeling depressed,” and the behavioral items related to self-harm included thinking about “killing myself” and “thought about dying.” The brain weights are further summarized using an anatomical atlas in Supplemental Table S4. Again, SPLS resulted in rather sparse weights, selecting 2% of the behavioral variables (3.35 ± 1.13% SEM across data splits) and 5% of the brain variables (11.85 ± 3.15% SEM across data splits).

Scatterplots of the brain and behavioral scores allow us to examine how the brain–behavior relationship is expressed across the whole sample (Figure 4). The first multivariate associative effect clearly maps to age, while the second multivariate effect captured a brain–behavior association that varied from healthy to depressed, with subjects with depression presenting higher brain and behavioral scores.

Figure 4.

Two significant brain–behavior latent spaces identified by sparse partial least squares. (A) Scatterplot of the brain and behavioral scores of the first brain–behavior relationship with subjects color coded by age. (B) Scatterplot of the brain and behavioral scores of the second brain–behavior relationship with subjects color coded by clinical diagnosis.

For comparison, we performed 2 additional analyses. First, we added age to the confounds in the SPLS analysis to discount any sampling bias given that the subjects with depression were younger. Here, we identified 1 significant brain–behavior relationship that was very similar to the second depression-related associative effect of the main analysis (p = .047) (Supplemental Figures S4 and S5 and Supplemental Tables S5 and S6). Second, we used KCCA to demonstrate the framework with an alternative regularized approach. Here, we identified 2 significant brain–behavior relationships that were very similar to those identified by SPLS (first associative effect: p = .001 [Supplemental Figures S6A and S7 and Supplemental Tables S7 and S8]; second associative effect: p = .006 [Supplemental Figures S6B and S8 and Supplemental Tables S7 and S8]). For a detailed description of these results, see the Supplemental Results.

Discussion

We presented a novel framework combining stability and generalizability as optimization criteria for regularized multivariate methods, such as SPLS and KCCA, which decreases their risk of detecting spurious associations, particularly in high-dimensional data. Furthermore, we demonstrated that this framework can identify brain–behavior relationships that capture developmental variation as well as variations from normal to abnormal functioning.

Our proposed framework coheres with the overarching intentions of RDoC (62,63). First, SPLS and KCCA link different levels of measures in a principled integrated analysis: here, the key levels are circuits and physiology (in brain imaging) and behavior and self-report. Indeed, RDoC views circuits as the key level anchoring and integrating the rest; however, without robust multivariate techniques, it is challenging to relate circuits to behavior in large-scale human datasets. Second, the latent dimensions identified by SPLS and KCCA may be fundamental axes of neurobiological variation spanning healthy to abnormal functioning. Application of this framework to sufficiently large clinical samples therefore might yield domains of mental (dys)function that are driven by data rather than chosen by experts (as in RDoC itself). Third, the SPLS and KCCA models output brain and behavioral scores for each individual subject in the identified latent dimensions; this is a crucial step toward using RDoC (or similar approaches) for clinical diagnosis.

The model selection and statistical inference in our framework differ from those of other SPLS approaches in the literature. (Note also that these methods are not limited to SPLS but are also relevant for any regularized PLS/CCA models, including KCCA.) For model selection, some suggest fixing the regularization parameters a priori (23,30,32,38,41,54) or choosing the regularization parameters based on the performance of the SPLS model (e.g., maximizing the correlation or the associated p value of the obtained model) (36,45,46,56). Our framework is similar to other data-driven approaches that split the data into training and validation sets and use the validation set to evaluate the SPLS model and select the optimal regularization parameters (28,43,57). For model evaluation, most studies use permutation testing to evaluate the SPLS model based on all available data (30,32,45,55,56); however, this approach does not assess the model’s generalization to new data. To perform statistical inference on how the SPLS model generalizes to unseen data, independent test data (or holdout set) are needed to evaluate it (e.g., Figure 2) [as used, for example, in (23,39)]. If a validation set is used to select the optimal regularization parameter, 3 divisions of data are required: training, validation, and test/holdout data (31,43,48).

There are 2 main approaches in the literature to address the stability and reliability of SPLS results. The first approach is based on stability selection, which involves subsampling the (training) data and fitting SPLS with given regularization parameters. After many repetitions of this procedure, the variables selected in all SPLS models (64) or in a proportion of the SPLS models (65) are kept as the relevant variables to describe the association. This procedure can be applied to selecting variables (43,66) and to guiding model selection (40,67); however, it is computationally expensive and depends on additional parameters (e.g., number of repetitions, subsample sizes) that might need to be further optimized (68). The second approach is useful only for model evaluation and involves resampling the (overall) data (e.g., via bootstrapping) to provide confidence intervals for the SPLS model. Thus, this procedure is a complement to permutation testing; permutation testing indicates whether the identified SPLS model is different from a model obtained by chance, while bootstrapping assesses the reliability of the SPLS model (69,70).
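The second, bootstrap-based approach can be sketched as follows. For brevity, the sketch again uses unregularized PLS as a stand-in for SPLS and reports percentile intervals for the brain weights; the function names, the number of resamples, and the sign-alignment step are illustrative assumptions.

```python
import numpy as np

def first_weights(X, Y):
    """Brain weights of the first (unregularized) PLS pair, after centering."""
    Xc, Yc = X - X.mean(axis=0), Y - Y.mean(axis=0)
    U, _, _ = np.linalg.svd(Xc.T @ Yc, full_matrices=False)
    return U[:, 0]

def bootstrap_weight_ci(X, Y, n_boot=200, alpha=0.05, seed=0):
    """Percentile confidence intervals for the weights, resampling subjects with replacement."""
    rng = np.random.default_rng(seed)
    u_ref = first_weights(X, Y)
    boots = np.empty((n_boot, X.shape[1]))
    for b in range(n_boot):
        idx = rng.integers(0, len(X), size=len(X))
        u = first_weights(X[idx], Y[idx])
        # Weights are defined only up to sign, so align each resample to the reference.
        boots[b] = u if u @ u_ref >= 0 else -u
    lo, hi = np.percentile(boots, [100 * alpha / 2, 100 * (1 - alpha / 2)], axis=0)
    return u_ref, lo, hi

# Synthetic data with one strong shared latent factor in the first columns.
rng = np.random.default_rng(2)
n = 100
latent = rng.standard_normal(n)
X = rng.standard_normal((n, 20))
Y = rng.standard_normal((n, 8))
X[:, 0] += 3 * latent
Y[:, 0] += 3 * latent

u_ref, lo, hi = bootstrap_weight_ci(X, Y)
reliable = (lo > 0) | (hi < 0)  # weights whose interval excludes zero
```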

Next, we discuss the 2 significant brain–behavior relationships identified in our dataset. The first associative effect in the main SPLS results captured a relationship between age (with the highest weight) and alcohol use and gray matter volume in the cingulate and association (frontoparietotemporal) cortices. The supplementary analysis with SPLS did not find a similar effect after regressing out age from the data, whereas the KCCA analysis included age and a mixture of other factors relating to anxiety, interpersonal difficulties, and externalizing (for details, see Supplemental Results). These results demonstrate that including demographic variables such as age in the SPLS/KCCA model enables identifying variables that covary/interact with demographic variables. Furthermore, the iterative deflation procedure can be seen as an alternative strategy to remove effects (e.g., age) from the data. The frontoparietotemporal areas show the biggest loss of gray matter during adolescence (71, 72, 73); accordingly, 2 recent studies using the same community sample showed that myelination is a key factor in cortical shrinkage in these regions (74,75). These areas also relate to alcohol use; landmark studies have shown that their structural attributes (especially in frontal cortex) can predict drinking behavior later in adolescence (76,77).

The second associative effect captured a relationship between depression-related items and mainly limbic regions. The main SPLS results included mainly items related to suicidality in this effect. The supplementary analyses with SPLS and KCCA selected additional items, including key depression symptoms (e.g., low mood, anhedonia, loss of energy and concentration) and core depressive beliefs (e.g., worthlessness, hopelessness, guilt, low self-esteem). Interestingly, some classic biological symptoms of depression (e.g., sleep, appetite, psychomotor disturbances) do not feature in the selected items, which are concentrated in the cognitive and behavioral aspects of depression. The brain regions with highest weights (in KCCA) or selected variables (in SPLS) were similar in all three analyses, comprising the amygdala, hippocampus, parahippocampal gyrus, putamen, vermis, and insula. Despite having only 33 subjects with depression in this sample, there is a remarkable degree of overlap between these areas and those associated with depression in much larger studies. A large meta-analysis of voxel-based morphometry studies (n = 4101 major depressive disorder [MDD] subjects) (78) also found gray matter differences in depression in insula, inferior frontal gyrus, hippocampal areas, caudate, and fusiform gyrus (and in vermis in bipolar disorder), all of which feature in this latent dimension. Whether volumes of other subcortical regions such as amygdala and putamen contribute to depression risk is more controversial; large univariate analyses have not found significant associations with depression (79,80).

Although the specificity of these findings for depression is unclear—similar hippocampal and subcortical volume associations are seen in posttraumatic stress disorder (81) and attention-deficit/hyperactivity disorder (82), respectively—even cross-disorder findings may be useful for predicting outcome and, in particular, treatment response. For example, in relatively small samples, insula volume has been shown to predict relapse in MDD (83), and a combination of amygdala, hippocampus, insula, and vermis (and 3 cortical areas) can predict treatment response to computerized cognitive behavioral therapy for MDD.

The identified latent dimensions also relate to existing RDoC domains, namely positive valence systems (i.e., reward anticipation and satiation that is excessive in the first [alcohol use] but impaired in the second [e.g., anhedonia]) and social processing (in KCCA only), in the attribution of negative and critical mental states to others. Another important element of the RDoC framework is the interaction of its domains with neurodevelopmental trajectories and environmental risk factors. Although our subjects were adolescents and young adults, our brain structure results were consilient with the adult depression literature (reviewed above). This is important because although some studies in children find hippocampal volume associations with both depression (84) and anxiety (85), a meta-analysis in adults with MDD concluded that hippocampal volume associations were absent at first episode (79). Indeed, our previous study of functional imaging data in this dataset revealed 2 latent dimensions of depression that had opposite relationships with age, one of which related to trauma (sexual abuse) (86).

This study has some limitations. The sample size is modest, especially for participants with depression (n = 33). This, combined with the likely heterogeneity of the disorder, makes the results for the depression-related modes somewhat unstable; that is, although the selected behavioral variables are similar, some of the out-of-sample correlations are close to zero. Validation of our SPLS/KCCA models in a larger dataset of healthy adolescents and young adults and adolescents and young adults with depression would further strengthen the generalizability of our findings. Furthermore, the inclusion of a broader selection of clinical disorders would reveal the specificity of these findings for depression rather than for psychological distress in general.

Finally, we suggest some key areas for future work. First, future studies should investigate other regularization strategies for CCA and PLS; for example, applying group sparsity can capture group structures in the data that might exist owing to either preprocessing (e.g., smoothing) or a biological mechanism (43). Second, nonlinear approaches [e.g., KCCA with nonlinear kernel (50)] could explore more complex relationships between brain and behavioral data. Third, regularized CCA and PLS approaches can be used to find associations across more than 2 types of data (56,87), which may enable a more complete description of latent neurobiological (and other) factors. Fourth, the obtained latent space could be embedded in a predictive model to enable predictions of future outcomes such as treatment response. Finally, further research should investigate how these latent dimensions relate to the currently used diagnostic categories.

In conclusion, we have shown that regularized multivariate methods, such as SPLS and KCCA, embedded in our novel framework yield stable results that generalize to holdout data. The identified multivariate brain–behavior relationships are in agreement with many established findings in the literature concerning age, alcohol use, and depression-related changes in brain volume. In particular, it is very encouraging that our depression-related results agree with a wider literature despite having only a small number of subjects with MDD. The depression-related dimension also contained largely cognitive and behavioral aspects of depression rather than its biological features. Altogether, we propose that SPLS/KCCA combined with our innovative framework provides a principled way to investigate basic dimensions of brain–behavior relationships and has great potential to contribute to a biologically grounded definition of psychiatric disorders.

Acknowledgments and Disclosures

This work was supported by a Wellcome Trust Strategic Award (No. 095844 [to IMG, RD, ETB, PBJ, and PF]) that provides core funding for the NSPN. Scanning at the Wellcome Centre for Human Neuroimaging was funded under Grant No. 203147/Z/16/Z. AM, MJR, and JM-M were funded by the Wellcome Trust under Grant No. WT102845/Z/13/Z. FSF was funded by a Ph.D. scholarship awarded by Fundação para a Ciência e a Tecnologia (No. SFRH/BD/120640/2016). MM received support from the University College London Hospitals (UCLH) National Institute for Health Research (NIHR) Biomedical Research Centre (BRC). RAA was supported by a Medical Research Council (MRC) Skills Development Fellowship (Grant No. MR/S007806/1). PF was in receipt of an NIHR Senior Investigator Award (Grant No. NF-SI-0514-10157) and was in part supported by the NIHR Collaboration for Leadership in Applied Health Research and Care (CLAHRC) North Thames at Barts Health NHS Trust. The views expressed are those of the authors and not necessarily those of the National Health Service, the NIHR, or the Department of Health.

ETB is employed half-time by the University of Cambridge and half-time by GlaxoSmithKline, and he holds stock in GlaxoSmithKline. The other authors report no biomedical financial interests or potential conflicts of interest.

Footnotes

NSPN Consortium staff members: Tobias Hauser, Sharon Neufeld, Rafael Romero-Garcia, Michelle St Clair, Petra E. Vértes, Kirstie Whitaker, Becky Inkster, Cinly Ooi, Umar Toseeb, Barry Widmer, Junaid Bhatti, Laura Villis, Ayesha Alrumaithi, Sarah Birt, Aislinn Bowler, Kalia Cleridou, Hina Dadabhoy, Emma Davies, Ashlyn Firkins, Sian Granville, Elizabeth Harding, Alexandra Hopkins, Daniel Isaacs, Janchai King, Danae Kokorikou, Christina Maurice, Cleo McIntosh, Jessica Memarzia, Harriet Mills, Ciara O’Donnell, Sara Pantaleone, and Jenny Scott. Affiliated scientists: Pasco Fearon, John Suckling, Anne-Laura van Harmelen, and Rogier Kievit.

Supplementary material cited in this article is available online at https://doi.org/10.1016/j.biopsych.2019.12.001.

Contributor Information

Agoston Mihalik, Email: a.mihalik@ucl.ac.uk.

References

- 1. American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders. 5th ed. American Psychiatric Publishing; Washington, DC: 2013.

- 2. World Health Organization. International Statistical Classification of Diseases and Related Health Problems. 10th rev. World Health Organization; Geneva, Switzerland: 1992.

- 3. Hyman S.E. Can neuroscience be integrated into the DSM-V? Nat Rev Neurosci. 2007;8:725–732. doi: 10.1038/nrn2218.

- 4. Cuthbert B.N., Insel T.R. Toward the future of psychiatric diagnosis: The seven pillars of RDoC. BMC Med. 2013;11:126. doi: 10.1186/1741-7015-11-126.

- 5. Craddock N., Owen M.J. The Kraepelinian dichotomy – Going, going … but still not gone. Br J Psychiatry. 2010;196:92–95. doi: 10.1192/bjp.bp.109.073429.

- 6. Insel T.R., Cuthbert B.N., Garvey M., Heinssen R., Pine D.S., Quinn K. Research domain criteria (RDoC): Toward a new classification framework for research on mental disorders. Am J Psychiatry. 2010;167:748–751. doi: 10.1176/appi.ajp.2010.09091379.

- 7. Pearson K. On lines and planes of closest fit to systems of points in space. Philos Mag. 1901;2:559–572.

- 8. Spearman C. “General intelligence,” objectively determined and measured. Am J Psychol. 1904;15:201–292.

- 9. Digman J.M. Personality structure: Emergence of the five-factor model. Annu Rev Psychol. 1990;41:417–440.

- 10. Hauser T.U., Allen M., Rees G., Dolan R.J., Bullmore E.T., Goodyer I. Metacognitive impairments extend perceptual decision making weaknesses in compulsivity. Sci Rep. 2017;7:6614. doi: 10.1038/s41598-017-06116-z.

- 11. Carragher N., Teesson M., Sunderland M., Newton N.C., Krueger R.F., Conrod P.J. The structure of adolescent psychopathology: A symptom-level analysis. Psychol Med. 2016;46:981–994. doi: 10.1017/S0033291715002470.

- 12. Cameron D.H., Streiner D.L., Summerfeldt L.J., Rowa K., McKinnon M.C., McCabe R.E. A comparison of cluster and factor analytic techniques for identifying symptom-based dimensions of obsessive-compulsive disorder. Psychiatry Res. 2019;278:86–96. doi: 10.1016/j.psychres.2019.05.040.

- 13. St Clair M.C., Neufeld S., Jones P.B., Fonagy P., Bullmore E.T., Dolan R.J. Characterising the latent structure and organisation of self-reported thoughts, feelings and behaviours in adolescents and young adults. PLoS One. 2017;12 doi: 10.1371/journal.pone.0175381.

- 14. Wold H. Partial least squares. In: Kotz S., Johnson N., editors. Encyclopedia of Statistics in Behavioral Science. Wiley Online Library; New York: 1985. pp. 581–591.

- 15. Hotelling H. Relations between two sets of variates. Biometrika. 1936;28:321–377.

- 16. McIntosh A.R., Bookstein F.L., Haxby J.V., Grady C.L. Spatial pattern analysis of functional brain images using partial least squares. NeuroImage. 1996;3:143–157. doi: 10.1006/nimg.1996.0016.

- 17. Ziegler G., Dahnke R., Winkler A.D., Gaser C. Partial least squares correlation of multivariate cognitive abilities and local brain structure in children and adolescents. NeuroImage. 2013;82:284–294. doi: 10.1016/j.neuroimage.2013.05.088.

- 18. Menzies L., Achard S., Chamberlain S.R., Fineberg N., Chen C.H., Del Campo N. Neurocognitive endophenotypes of obsessive-compulsive disorder. Brain. 2007;130:3223–3236. doi: 10.1093/brain/awm205.

- 19. Nestor P.G., O’Donnell B.F., McCarley R.W., Niznikiewicz M., Barnard J., Shen Z.J. A new statistical method for testing hypotheses of neuropsychological/MRI relationships in schizophrenia: Partial least squares analysis. Schizophr Res. 2002;53:57–66. doi: 10.1016/s0920-9964(00)00171-7.

- 20. Vértes P.E., Rittman T., Whitaker K.J., Romero-Garcia R., Váša F., Kitzbichler M.G. Gene transcription profiles associated with inter-modular hubs and connection distance in human functional magnetic resonance imaging networks. Philos Trans R Soc B. 2016;371:20150362. doi: 10.1098/rstb.2015.0362.

- 21. Romero-Garcia R., Warrier V., Bullmore E.T., Baron-Cohen S., Bethlehem R.A.I. Synaptic and transcriptionally downregulated genes are associated with cortical thickness differences in autism. Mol Psychiatry. 2019;24:1053–1064. doi: 10.1038/s41380-018-0023-7.

- 22. Price J.C., Ziolko S.K., Weissfeld L.A., Klunk W.E., Lu X., Hoge J.A. Quantitative and statistical analyses of PET imaging studies of amyloid deposition in humans. IEEE Symp Conf Rec Nucl Sci 2004. 2004;5:3161–3164.

- 23. Lê Cao K.-A., Rossouw D., Robert-Granié C., Besse P. A Sparse PLS for variable selection when integrating omics data. Stat Appl Genet Mol Biol. 2008;7:35. doi: 10.2202/1544-6115.1390.

- 24. Chun H., Keles S. Sparse partial least squares regression for simultaneous dimension reduction and variable selection. J R Stat Soc Ser B Stat Methodol. 2010;72:3–25. doi: 10.1111/j.1467-9868.2009.00723.x.

- 25. Uurtio V., Monteiro J.M., Kandola J., Shawe-Taylor J., Fernandez-Reyes D., Rousu J. A Tutorial on Canonical Correlation Methods. ACM Comput Surv. 2017;50:1–33.

- 26. Tibshirani R. Regression shrinkage and selection via the lasso. J R Stat Soc Ser B Methodol. 1996;58:267–288.

- 27. Zou H., Hastie T. Regularization and variable selection via the elastic net. J R Stat Soc Ser B Stat Methodol. 2005;67:301–320.

- 28. Waaijenborg S., Verselewel de Witt Hamer P.C., Zwinderman A.H. Quantifying the association between gene expressions and DNA-markers by penalized canonical correlation analysis. Stat Appl Genet Mol Biol. 2008;7:3. doi: 10.2202/1544-6115.1329.

- 29. Parkhomenko E., Tritchler D., Beyene J. Sparse canonical correlation analysis with application to genomic data integration. Stat Appl Genet Mol Biol. 2009;8:1. doi: 10.2202/1544-6115.1406.

- 30. Witten D.M., Tibshirani R., Hastie T. A penalized matrix decomposition, with applications to sparse principal components and canonical correlation analysis. Biostatistics. 2009;10:515–534. doi: 10.1093/biostatistics/kxp008.

- 31. Wang H.T., Bzdok D., Margulies D., Craddock C., Milham M., Jefferies E., Smallwood J. Patterns of thought: Population variation in the associations between large-scale network organisation and self-reported experiences at rest. NeuroImage. 2018;176:518–527. doi: 10.1016/j.neuroimage.2018.04.064.

- 32. Wang H.-T., Poerio G., Murphy C., Bzdok D., Jefferies E., Smallwood J. Dimensions of experience: Exploring the heterogeneity of the wandering mind. Psychol Sci. 2018;29:56–71. doi: 10.1177/0956797617728727.

- 33. Le Floch E., Guillemot V., Frouin V., Pinel P., Lalanne C., Trinchera L. Significant correlation between a set of genetic polymorphisms and a functional brain network revealed by feature selection and sparse partial least squares. NeuroImage. 2012;63:11–24. doi: 10.1016/j.neuroimage.2012.06.061.

- 34. Grellmann C., Bitzer S., Neumann J., Westlye L.T., Andreassen O.A., Villringer A., Horstmann A. Comparison of variants of canonical correlation analysis and partial least squares for combined analysis of MRI and genetic data. NeuroImage. 2015;107:289–310. doi: 10.1016/j.neuroimage.2014.12.025.

- 35. Moser D.A., Doucet G.E., Ing A., Dima D., Schumann G., Bilder R.M., Frangou S. An integrated brain–behavior model for working memory. Mol Psychiatry. 2018;23:1974–1980. doi: 10.1038/mp.2017.247.

- 36. Lee W.H., Moser D.A., Ing A., Doucet G.E., Frangou S. Behavioral and health correlates of resting-state metastability in the Human Connectome Project. Brain Topogr. 2019;32:80–86. doi: 10.1007/s10548-018-0672-5.

- 37. McMillan C.T., Toledo J.B., Avants B.B., Cook P.A., Wood E.M., Suh E. Genetic and neuroanatomic associations in sporadic frontotemporal lobar degeneration. Neurobiol Aging. 2014;35:1473–1482. doi: 10.1016/j.neurobiolaging.2013.11.029.

- 38. Avants B.B., Cook P.A., Ungar L., Gee J.C., Grossman M. Dementia induces correlated reductions in white matter integrity and cortical thickness: A multivariate neuroimaging study with sparse canonical correlation analysis. NeuroImage. 2010;50:1004–1016. doi: 10.1016/j.neuroimage.2010.01.041.

- 39. Avants B.B., Libon D.J., Rascovsky K., Boller A., McMillan C.T., Massimo L. Sparse canonical correlation analysis relates network-level atrophy to multivariate cognitive measures in a neurodegenerative population. NeuroImage. 2014;84:698–711. doi: 10.1016/j.neuroimage.2013.09.048.

- 40. Olson Hunt M.J., Weissfeld L., Boudreau R.M., Aizenstein H., Newman A.B., Simonsick E.M. A variant of sparse partial least squares for variable selection and data exploration. Front Neuroinform. 2014;8:18. doi: 10.3389/fninf.2014.00018.

- 41. Monteiro J.M., Rao A., Ashburner J., Shawe-Taylor J., Mourão-Miranda J. Leveraging clinical data to enhance localization of brain atrophy. In: Rish I., Langs G., Wehbe L., Cecchi G., Chang K., Murphy B., editors. Machine Learning and Interpretation in Neuroimaging. Springer International; Cham, Switzerland: 2014. pp. 60–68.

- 42. Xia C.H., Ma Z., Ciric R., Gu S., Betzel R.F., Kaczkurkin A.N. Linked dimensions of psychopathology and connectivity in functional brain networks. Nat Commun. 2018;9:3003. doi: 10.1038/s41467-018-05317-y.

- 43. Lin D., Calhoun V.D., Wang Y.P. Correspondence between fMRI and SNP data by group sparse canonical correlation analysis. Med Image Anal. 2014;18:891–902. doi: 10.1016/j.media.2013.10.010.

- 44. Sui X., Li S., Liu J., Zhang X., Yu C., Jiang T. Sparse canonical correlation analysis reveals correlated patterns of gray matter loss and white matter impairment in Alzheimer’s disease. In: 12th International Symposium on Biomedical Imaging. IEEE; New York: 2015. pp. 470–473.

- 45. Leonenko G., Di Florio A., Allardyce J., Forty L., Knott S., Jones L. A data-driven investigation of relationships between bipolar psychotic symptoms and schizophrenia genome-wide significant genetic loci. Am J Med Genet B Neuropsychiatr Genet. 2018;177:468–475. doi: 10.1002/ajmg.b.32635.

- 46. Moser D.A., Doucet G.E., Lee W.H., Rasgon A., Krinsky H., Leibu E. Multivariate associations among behavioral, clinical, and multimodal imaging phenotypes in patients with psychosis. JAMA Psychiatry. 2018;75:386–395. doi: 10.1001/jamapsychiatry.2017.4741.

- 47. Rosa M.J., Mehta M.A., Pich E.M., Risterucci C., Zelaya F., Reinders A.A.T.S. Estimating multivariate similarity between neuroimaging datasets with sparse canonical correlation analysis: An application to perfusion imaging. Front Neurosci. 2015;9:366. doi: 10.3389/fnins.2015.00366.

- 48. Monteiro J.M., Rao A., Shawe-Taylor J., Mourão-Miranda J. A multiple hold-out framework for sparse partial least squares. J Neurosci Methods. 2016;271:182–194. doi: 10.1016/j.jneumeth.2016.06.011.

- 49. Rosipal R., Krämer N. Overview and recent advances in partial least squares. In: Saunders C., Grobelnik M., Gunn S., Shawe-Taylor J., editors. Subspace, Latent Structure and Feature Selection. Springer; Berlin, Germany: 2006. pp. 34–51.

- 50. Shawe-Taylor J., Cristianini N. Kernel Methods for Pattern Analysis. Cambridge University Press; Cambridge, UK: 2004.

- 51. Corrochano E.B., De Bie T., Cristianini N., Rosipal R. Eigenproblems in Pattern Recognition. In: Corrochano E.B., editor. Handbook of Geometric Computing. Springer-Verlag; Berlin, Germany: 2005. pp. 129–167.

- 52. Abdi H. Partial least squares regression and projection on latent structure regression (PLS regression). Wiley Interdiscip Rev Comput Stat. 2010;2:97–106.

- 53. Hardoon D.R., Szedmak S., Shawe-Taylor J. Canonical correlation analysis: An overview with application to learning methods. Neural Comput. 2004;16:2639–2664. doi: 10.1162/0899766042321814.

- 54. Lê Cao K.A., Martin P.G.P., Robert-Granié C., Besse P. Sparse canonical methods for biological data integration: Application to a cross-platform study. BMC Bioinformatics. 2009;10:34. doi: 10.1186/1471-2105-10-34.

- 55. Monteiro J.M., Rao A., Ashburner J., Shawe-Taylor J., Mourão-Miranda J. Multivariate effect ranking via adaptive sparse PLS. In: International Workshop on Pattern Recognition in Neuroimaging. IEEE; New York: 2015. pp. 25–28.

- 56. Witten D.M., Tibshirani R.J. Extensions of sparse canonical correlation analysis with applications to genomic data. Stat Appl Genet Mol Biol. 2009;8:28. doi: 10.2202/1544-6115.1470.

- 57. Waaijenborg S., Zwinderman A.H. Sparse canonical correlation analysis for identifying, connecting and completing gene-expression networks. BMC Bioinformatics. 2009;10:315. doi: 10.1186/1471-2105-10-315.

- 58. Baldassarre L., Pontil M., Mourão-Miranda J. Sparsity is better with stability: Combining accuracy and stability for model selection in brain decoding. Front Neurosci. 2017;11:62. doi: 10.3389/fnins.2017.00062.

- 59. Kiddle B., Inkster B., Prabhu G., Moutoussis M., Whitaker K.J., Bullmore E.T. Cohort profile: The NSPN 2400 cohort: A developmental sample supporting the Wellcome Trust NeuroScience in Psychiatry Network. Int J Epidemiol. 2018;47:18g–19g. doi: 10.1093/ije/dyx117.

- 60. Weiskopf N., Suckling J., Williams G., Correia M.M., Inkster B., Tait R. Quantitative multi-parameter mapping of R1, PD(*), MT, and R2(*) at 3T: A multi-center validation. Front Neurosci. 2013;7:95. doi: 10.3389/fnins.2013.00095.

- 61. Smith S.M., Nichols T.E., Vidaurre D., Winkler A.M., Behrens T.E.J., Glasser M.F. A positive-negative mode of population covariation links brain connectivity, demographics and behavior. Nat Neurosci. 2015;18:1565–1567. doi: 10.1038/nn.4125.

- 62. Insel T.R., Cuthbert B.N. Brain disorders? Precisely. Science. 2015;348:499–500. doi: 10.1126/science.aab2358.

- 63. Cuthbert B.N. The RDoC framework: Facilitating transition from ICD/DSM to dimensional approaches that integrate neuroscience and psychopathology. World Psychiatry. 2014;13:28–35. doi: 10.1002/wps.20087.

- 64. Bach F. Model-consistent sparse estimation through the bootstrap [published online ahead of print Jan 21] arXiv. 2009.

- 65. Meinshausen N., Bühlmann P. Stability selection. J R Stat Soc Ser B Stat Methodol. 2010;72:417–473.

- 66. Labus J.S., Van Horn J.D., Gupta A., Alaverdyan M., Torgerson C., Ashe-McNalley C. Multivariate morphological brain signatures predict patients with chronic abdominal pain from healthy control subjects. Pain. 2015;156:1545–1554. doi: 10.1097/j.pain.0000000000000196.

- 67. Lê Cao K.-A., Boitard S., Besse P. Sparse PLS discriminant analysis: Biologically relevant feature selection and graphical displays for multiclass problems. BMC Bioinformatics. 2011;12:253. doi: 10.1186/1471-2105-12-253.

- 68. Rondina J.M., Hahn T., De Oliveira L., Marquand A.F., Dresler T., Leitner T. SCoRS – A method based on stability for feature selection and mapping in neuroimaging. IEEE Trans Med Imaging. 2014;33:85–98. doi: 10.1109/TMI.2013.2281398.

- 69. McIntosh A.R., Lobaugh N.J. Partial least squares analysis of neuroimaging data: Applications and advances. NeuroImage. 2004;23:250–263. doi: 10.1016/j.neuroimage.2004.07.020.

- 70. Krishnan A., Williams L.J., McIntosh A.R., Abdi H. Partial least squares (PLS) methods for neuroimaging: A tutorial and review. NeuroImage. 2011;56:455–475. doi: 10.1016/j.neuroimage.2010.07.034.

- 71. Tamnes C.K., Herting M.M., Goddings A.-L., Meuwese R., Blakemore S.-J., Dahl R.E. Development of the cerebral cortex across adolescence: A multisample study of inter-related longitudinal changes in cortical volume, surface area, and thickness. J Neurosci. 2017;37:3402–3412. doi: 10.1523/JNEUROSCI.3302-16.2017.

- 72. Giedd J.N., Blumenthal J., Jeffries N.O., Castellanos F.X., Liu H., Zijdenbos A. Brain development during childhood and adolescence: A longitudinal MRI study. Nat Neurosci. 1999;2:861–863. doi: 10.1038/13158.

- 73. Tau G.Z., Peterson B.S. Normal development of brain circuits. Neuropsychopharmacology. 2010;35:147–168. doi: 10.1038/npp.2009.115.

- 74. Whitaker K.J., Vértes P.E., Romero-Garcia R., Váša F., Moutoussis M., Prabhu G. Adolescence is associated with genomically patterned consolidation of the hubs of the human brain connectome. Proc Natl Acad Sci U S A. 2016;113:9105–9110. doi: 10.1073/pnas.1601745113.

- 75. Ziegler G., Hauser T.U., Moutoussis M., Bullmore E.T., Goodyer I.M., Fonagy P. Compulsivity and impulsivity traits linked to attenuated developmental frontostriatal myelination trajectories. Nat Neurosci. 2019;22:992–999. doi: 10.1038/s41593-019-0394-3.

- 76. Squeglia L.M., Ball T.M., Jacobus J., Brumback T., McKenna B.S., Nguyen-Louie T.T. Neural predictors of initiating alcohol use during adolescence. Am J Psychiatry. 2017;174:172–185. doi: 10.1176/appi.ajp.2016.15121587.

- 77. Whelan R., Watts R., Orr C.A., Althoff R.R., Artiges E., Banaschewski T. Neuropsychosocial profiles of current and future adolescent alcohol misusers. Nature. 2014;512:185–189. doi: 10.1038/nature13402.

- 78. Wise T., Radua J., Via E., Cardoner N., Abe O., Adams T.M. Common and distinct patterns of grey-matter volume alteration in major depression and bipolar disorder: Evidence from voxel-based meta-analysis. Mol Psychiatry. 2017;22:1455–1463. doi: 10.1038/mp.2016.72.

- 79. Schmaal L., Veltman D.J., van Erp T.G.M., Sämann P.G., Frodl T., Jahanshad N. Subcortical brain alterations in major depressive disorder: Findings from the ENIGMA Major Depressive Disorder working group. Mol Psychiatry. 2016;21:806–812. doi: 10.1038/mp.2015.69.

- 80. Shen X., Reus L.M., Cox S.R., Adams M.J., Liewald D.C., Bastin M.E. Subcortical volume and white matter integrity abnormalities in major depressive disorder: Findings from UK Biobank imaging data. Sci Rep. 2017;7:5547. doi: 10.1038/s41598-017-05507-6.

- 81. Logue M.W., van Rooij S.J.H., Dennis E.L., Davis S.L., Hayes J.P., Stevens J.S. Smaller hippocampal volume in posttraumatic stress disorder: A multisite ENIGMA-PGC study: Subcortical volumetry results from Posttraumatic Stress Disorder Consortia. Biol Psychiatry. 2018;83:244–253. doi: 10.1016/j.biopsych.2017.09.006.

- 82. Hoogman M., Bralten J., Hibar D.P., Mennes M., Zwiers M.P., Schweren L.S.J. Subcortical brain volume differences in participants with attention deficit hyperactivity disorder in children and adults: A cross-sectional mega-analysis. Lancet Psychiatry. 2017;4:310–319. doi: 10.1016/S2215-0366(17)30049-4.

- 83. Zaremba D., Dohm K., Redlich R., Grotegerd D., Strojny R., Meinert S. Association of brain cortical changes with relapse in patients with major depressive disorder. JAMA Psychiatry. 2018;75:484–492. doi: 10.1001/jamapsychiatry.2018.0123.

- 84. Barch D.M., Tillman R., Kelly D., Whalen D., Gilbert K., Luby J.L. Hippocampal volume and depression among young children. Psychiatry Res Neuroimaging. 2019;288:21–28. doi: 10.1016/j.pscychresns.2019.04.012.

- 85. Gold A.L., Steuber E.R., White L.K., Pacheco J., Sachs J.F., Pagliaccio D. Cortical thickness and subcortical gray matter volume in pediatric anxiety disorders. Neuropsychopharmacology. 2017;42:2423–2433. doi: 10.1038/npp.2017.83.

- 86. Mihalik A., Ferreira F.S., Rosa M.J., Moutoussis M., Ziegler G., Monteiro J.M. Brain-behaviour modes of covariation in healthy and clinically depressed young people. Sci Rep. 2019;9:11536. doi: 10.1038/s41598-019-47277-3.

- 87. Li Y., Wu F.-X., Ngom A. A review on machine learning principles for multi-view biological data integration. Brief Bioinform. 2018;19:325–340. doi: 10.1093/bib/bbw113.