Abstract

Persistent homology (PH) is a method used in topological data analysis (TDA) to study qualitative features of data that persist across multiple scales. It is robust to perturbations of input data, independent of dimensions and coordinates, and provides a compact representation of the qualitative features of the input. The computation of PH is an open area with numerous important and fascinating challenges. The field of PH computation is evolving rapidly, and new algorithms and software implementations are being updated and released at a rapid pace. The purposes of our article are to (1) introduce theory and computational methods for PH to a broad range of computational scientists and (2) provide benchmarks of state-of-the-art implementations for the computation of PH. We give a friendly introduction to PH, navigate the pipeline for the computation of PH with an eye towards applications, and use a range of synthetic and real-world data sets to evaluate currently available open-source implementations for the computation of PH. Based on our benchmarking, we indicate which algorithms and implementations are best suited to different types of data sets. In an accompanying tutorial, we provide guidelines for the computation of PH. We make publicly available all scripts that we wrote for the tutorial, and we make available the processed version of the data sets used in the benchmarking.

Electronic Supplementary Material

The online version of this article (doi:10.1140/epjds/s13688-017-0109-5) contains supplementary material.

Keywords: persistent homology, topological data analysis, point-cloud data, networks

Introduction

The amount of available data has increased dramatically in recent years, and this situation — which will only become more extreme — necessitates the development of innovative and efficient data-processing methods. Making sense of the vast amount of data is difficult: on one hand, the sheer size of the data poses challenges; on the other hand, the complexity of the data, which includes situations in which data is noisy, high-dimensional, and/or incomplete, is perhaps an even more significant challenge. The use of clustering techniques and other ideas from areas such as computer science, machine learning, and uncertainty quantification — along with mathematical and statistical models — are often very useful for data analysis (see, e.g., [1–4] and many other references). However, recent mathematical developments are shedding new light on such ‘traditional’ ideas, forging new approaches of their own, and helping people to better decipher increasingly complicated structure in data.

Techniques from the relatively new subject of ‘topological data analysis’ (TDA) have provided a wealth of new insights in the study of data in an increasingly diverse set of applications — including sensor-network coverage [5], proteins [6–9], 3-dimensional structure of DNA [10], development of cells [11], stability of fullerene molecules [12], robotics [13–15], signals in images [16, 17], periodicity in time series [18], cancer [19–22], phylogenetics [23–25], natural images [26], the spread of contagions [27, 28], self-similarity in geometry [29], materials science [30–33], financial networks [34, 35], diverse applications in neuroscience [36–43], classification of weighted networks [44], collaboration networks [45, 46], analysis of mobile phone data [47], collective behavior in biology [48], time-series output of dynamical systems [49], natural-language analysis [50], and more. There are numerous others, and new applications of TDA appear in journals and preprint servers increasingly frequently. There are also interesting computational efforts, such as [51].

TDA is a field that lies at the intersection of data analysis, algebraic topology, computational geometry, computer science, statistics, and other related areas. The main goal of TDA is to use ideas and results from geometry and topology to develop tools for studying qualitative features of data. To achieve this goal, one needs precise definitions of qualitative features, tools to compute them in practice, and some guarantee about the robustness of those features. One way to address all three points is a method in TDA called persistent homology (PH). This method is appealing for applications because it is based on algebraic topology, which gives a well-understood theoretical framework to study qualitative features of data with complex structure, is computable via linear algebra, and is robust with respect to small perturbations in input data.

Types of data sets that can be studied with PH include finite metric spaces, digital images, level sets of real-valued functions, and networks (see Section 5.1). In the next two paragraphs, we give some motivation for the main ideas of persistent homology by discussing two examples of such data sets.

Finite metric spaces are also called point-cloud data sets in the TDA literature. From a topological point of view, finite metric spaces do not contain any interesting information. One thus considers a thickening of a point cloud at different scales of resolution and then analyzes the evolution of the resulting shape across the different resolution scales. The qualitative features are given by topological invariants, and one can represent the variation of such invariants across the different resolution scales in a compact way to summarize the ‘shape’ of the data.

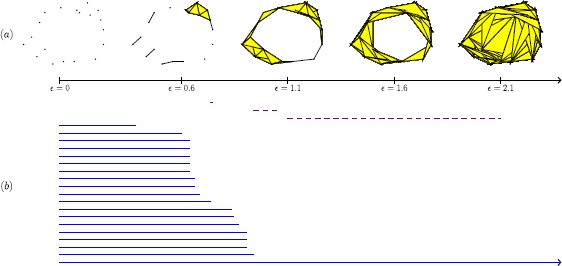

As an illustration, consider the set of points in that we show in Figure 1. Let ϵ, which we interpret as a distance parameter, be a nonnegative real number (so gives the set of points). For different values of ϵ, we construct a space composed of vertices, edges, triangles, and higher-dimensional polytopes according to the following rule: We include an edge between two points i and j if and only if the Euclidean distance between them is no larger than ϵ; we include a triangle if and only if all of its edges are in ; we include a tetrahedron if and only if all of its face triangles are in ; and so on. For , it then follows that the space is contained in the space . This yields a nested sequence of spaces, as we illustrate in Figure 1(a). Our construction of nested spaces gives an example of a ‘filtered Vietoris–Rips complex,’ which we define and discuss in Section 5.2.

Figure 1.

Example of persistent homology for a point cloud. (a) A finite set of points in (for ) and a nested sequence of spaces obtained from it (from to ). (b) Barcode for the nested sequence of spaces illustrated in (a). Solid lines represent the lifetime of components, and dashed lines represent the lifetime of holes.

By using homology, a tool in algebraic topology, one can measure several features of the spaces — including the numbers of components, holes, and voids (higher-dimensional versions of holes). One can then represent the lifetime of such features using a finite collection of intervals known as a ‘barcode.’ Roughly, the left endpoint of an interval represents the birth of a feature, and its right endpoint represents the death of the same feature. In Figure 1(b), we reproduce such intervals for the number of components (blue solid lines) and the number of holes (violet dashed lines). In Figure 1(b), we observe a dashed line that is significantly longer than the other dashed lines. This indicates that the data set has a long-lived hole. By contrast, in this example one can potentially construe the shorter dashed lines as noise. (However, note that while widespread, such an intepretation is not correct in general; for applications in which one considers some short and medium-sized intervals as features rather than noise, see [52, 53].) When a feature is still ‘alive’ at the largest value of ϵ that we consider, the lifetime interval is an infinite interval, which we indicate by putting an arrowhead at the right endpoint of the interval. In Figure 1(b), we see that there is exactly one solid line that lives up to . One can use information about shorter solid lines to extract information about how data is clustered in a similar way as with linkage-clustering methods [3].

One of the most challenging parts of using PH is statistical interpretation of results. From a statistical point of view, a barcode like the one in Figure 1(b) is an unknown quantity that one is trying to estimate; one therefore needs methods for quantitatively assessing the quality of the barcodes that one obtains with computations. The challenge is twofold. On one hand, there is a cultural obstacle: practitioners of TDA often have backgrounds in pure topology and are not well-versed in statistical approaches to data analysis [54]. On the other hand, the space of barcodes lacks geometric properties that would make it easy to define basic concepts such as mean, median, and so on. Current research is focused both on studying geometric properties of this space and on studying methods that map this space to spaces that have better geometric properties for statistics. In Section 5.4, we give a brief overview of the challenges and current approaches for statistical interpretation of barcodes. This is an active area of research and an important endeavor, as few statistical tools are currently available for interpreting results in applications of PH.

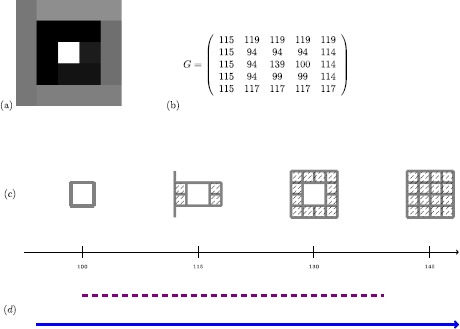

We now discuss a second example related to digital images. (For an illustration, see Figure 2(a).) Digital images have a cubical structure, given by the pixels (for 2-dimensional digital images) or voxels (for 3-dimensional images). Therefore, one approach to study digital images uses combinatorial structures called ‘cubical complexes.’ (For a different approach to the study of digital images, see Section 5.1.) Roughly, cubical complexes are topological spaces built from a union of vertices, edges, squares, cubes, and higher-dimensional hypercubes. An efficient way [55] to build a cubical complex from a 2-dimensional digital image consists of assigning a vertex to every pixel, then joining vertices corresponding to adjacent pixels by an edge, and filling in the resulting squares. One proceeds in a similar way for 3-dimensional images. One then labels every vertex with an integer that corresponds to the gray value of the pixel, and one labels edges (respectively, squares) with the maximum of the values of the adjacent vertices (respectively, edges). One can then construct a nested sequence of cubical complexes , where for each , the cubical complex contains all vertices, edges, squares, and cubes that are labeled by a number less than or equal to i. (See Figure 2(c) for an example.) Such a sequence of cubical complexes is also called a ‘filtered cubical complex.’ Similar to the previous example, one can use homology to measure several features of the spaces (see Figure 2(d)).

Figure 2.

Example of persistent homology for a gray-scale digital image. (a) A gray-scale image, (b) the matrix of gray values, (c) the filtered cubical complex associated to the digital image, and (d) the barcode for the nested sequence of spaces in panel (c). A solid line represents the lifetime of a component, and a dashed line represents the lifetime of a hole.

In the present article, we focus on persistent homology, but there are also other methods in TDA — including the Mapper algorithm [56], Euler calculus (see [57] for an introduction with an eye towards applications), cellular sheaves [57, 58], and many more. We refer readers who wish to learn more about the foundations of TDA to the article [59], which discusses why topology and functoriality are essential for data analysis. We point to several introductory papers, books, and two videos on PH at the end of Section 4.

The first algorithm for the computation of PH was introduced for computation over (the field with two elements) in [60] and over general fields in [61]. Since then, several algorithms and optimization techniques have been presented, and there are now various powerful implementations of PH [62–68]. Those wishing to try PH for computations may find it difficult to discern which implementations and algorithms are best suited for a given task. The field of PH is evolving continually, and new software implementations and updates are released at a rapid pace. Not all of them are well-documented, and (as is well-known in the TDA community), the computation of PH for large data sets is computationally very expensive.

To our knowledge, there exists neither an overview of the various computational methods for PH nor a comprehensive benchmarking of the state-of-the-art implementations for the computation of persistent homology. In the present article, we close this gap: we introduce computation of PH to a general audience of applied mathematicians and computational scientists, offer guidelines for the computation of PH, and test the existing open-source published libraries for the computation of PH.

The rest of our paper is organized as follows. In Section 2, we discuss related work. We then introduce homology in Section 3 and introduce PH in Section 4. We discuss the various steps of the pipeline for the computation of PH in Section 5, and we briefly examine algorithms for generalized persistence in Section 6. In Section 7, we give an overview of software libraries, discuss our benchmarking of a collection of them, and provide guidelines for which software or algorithm is better suited to which data set. (We provide specific guidelines for the computation of PH with the different libraries in the Tutorial in Additional file 2 of the Supplementary Information (SI).) In Section 8, we discuss future directions for the computation of PH.

Related work

In our work, we introduce PH to non-experts with an eye towards applications, and we benchmark state-of-the-art libraries for the computation of PH. In this section, we discuss related work for both of these points.

There are several excellent introductions to the theory of PH (see the references at the end of Section 4.1), but none of them emphasizes the actual computation of PH by providing specific guidelines for people who want to do computations. In the present paper, we navigate the theory of PH with an eye towards applications, and we provide guidelines for the computation of PH using the open-source libraries javaPlex, Perseus, Dionysus, DIPHA, Gudhi, and Ripser. We include a tutorial (see Additional file 2 of the SI) that gives specific instructions for how to use the different functionalities that are implemented in these libraries. Much of this information is scattered throughout numerous different papers, websites, and even source code of implementations, and we believe that it is beneficial to the applied mathematics community (especially people who seek an entry point into PH) to find all of this information in one place. The functionalities that we cover include plots of barcodes and persistence diagrams and the computation of PH with Vietoris–Rips complexes, alpha complexes, Čech complexes, witness complexes, cubical complexes for image data. We also discuss the computation of the bottleneck and Wasserstein distances. We thus believe that our paper closes a gap in introducing PH to people interested in applications, while our tutorial complements existing tutorials (see, e.g. [69–71]).

We believe that there is a need for a thorough benchmarking of the state-of-the-art libraries. In our work, we use twelve different data sets to test and compare the libraries javaPlex, Perseus, Dionysus, DIPHA, Gudhi, and Ripser. There are several benchmarkings in the PH literature; we are aware of the following ones: the benchmarking in [72] compares the implementations of standard and dual algorithms in Dionysus; the one in [73] compares the Morse-theoretic reduction algorithm with the standard algorithm; the one in [62] compares all of the data structures and algorithms implemented in PHAT; the benchmarking in [74] compares PHAT and its spin-off DIPHA; and the benchmarking in C. Maria’s doctoral thesis [75] is to our knowledge the only existing benchmarking that compares packages from different authors. However, Maria compares only up to three different implementations at one time, and he used the package jPlex (which is no longer maintained) instead of the javaPlex library (its successor). Additionally, the widely used library Perseus (e.g., it was used in [22, 27, 30, 31]) does not appear in Maria’s benchmarking.

Homology

Assume that one is given data that lies in a metric space, such as a subset of Euclidean space with an inherited distance function. In many situations, one is not interested in the precise geometry of these spaces, but instead seeks to understand some basic characteristics, such as the number of components or the existence of holes and voids. Algebraic topology captures these basic characteristics either by counting them or by associating vector spaces or more sophisticated algebraic structures to them. Here we are interested in homology, which associates one vector space to a space X for each natural number . The dimension of counts the number of path components in X, the dimension of is a count of the number of holes, and the dimension of is a count of the number of voids. An important property of these algebraic structures is that they are robust, as they do not change when the underlying space is transformed by bending, stretching, or other deformations. In technical terms, they are homotopy invariant.1

It can be very difficult to compute the homology of arbitrary topological spaces. We thus approximate our spaces by combinatorial structures called ‘simplicial complexes,’ for which homology can be easily computed algorithmically. Indeed, often one is not even given the space X, but instead possesses only a discrete sample set S from which to build a simplicial complex following one of the recipes described in Sections 3.2 and 5.2.

Simplicial complexes and their homology

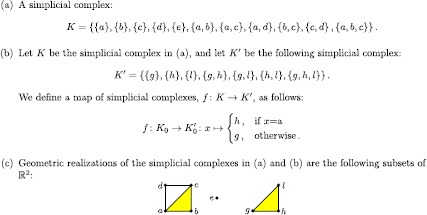

We begin by giving the definitions of simplicial complexes and of the maps between them. Roughly, a simplicial complex is a space that is built from a union of points, edges, triangles, tetrahedra, and higher-dimensional polytopes. We illustrate the main definitions given in this section with the example in Figure 3. As we pointed out in Section 1, ‘cubical complexes’ give another way to associate a combinatorial structure to a topological space. In TDA, cubical complexes have been used primarily to study image data sets. One can compute PH for a nested sequence of cubical complexes in a similar way as for simplicial complexes, but the theory of PH for simplicial complexes is richer, and we therefore examine only simplicial homology and complexes in our discussions. See [76] for a treatment of cubical complexes and their homology.

Figure 3.

A simple example. (a) A simplicial complex, (b) a map of simplicial complexes, and (c) a geometric realization of the simplicial complex in (a).

Definition 1

A simplicial complex 2 is a collection K of non-empty subsets of a set such that for all , and and guarantees that . The elements of are called vertices of K, and the elements of K are called simplices. Additionally, we say that a simplex has dimension p or is a p-simplex if it has a cardinality of . We use to denote the collection of p-simplices. The k-skeleton of K is the union of the sets for all . If τ and σ are simplices such that , then we call τ a face of σ, and we say that τ is a face of σ of codimension if the dimensions of τ and σ differ by . The dimension of K is defined as the maximum of the dimensions of its simplices. A map of simplicial complexes, , is a map such that for all .

We give an example of a simplicial complex in Figure 3(a) and an example of a map of simplicial complexes in Figure 3(b). Definition 1 is rather abstract, but one can always interpret a finite simplicial complex K geometrically as a subset of for sufficiently large N; such a subset is called a ‘geometric realization,’ and it is unique up to a canonical piecewise-linear homeomorphism. For example, the simplicial complex in Figure 3(a) has a geometric realization given by the subset of in Figure 3(c).

We now define homology for simplicial complexes. Let denote the field with two elements. Given a simplicial complex K, let denote the -vector space with basis given by the p-simplices of K. For any , we define the linear map (on the basis elements)

For , we define to be the zero map. In words, maps each p-simplex to its boundary, the sum of its faces of codimension 1. Because the boundary of a boundary is always empty, the linear maps have the property that composing any two consecutive maps yields the zero map: for all , we have . Consequently, the image of is contained in the kernel of , so we can take the quotient of by . We can thus make the following definition.

Definition 2

For any , the pth homology of a simplicial complex K is the quotient vector space

Its dimension

is called the pth Betti number of K. Elements in the image of are called p-boundaries, and elements in the kernel of are called p-cycles.

Intuitively, the p-cycles that are not boundaries represent p-dimensional holes. Therefore, the pth Betti number ‘counts’ the number of p-holes. Additionally, if K is a simplicial complex of dimension n, then for all , we have that , as is empty and hence . We therefore obtain the following sequence of vector spaces and linear maps:

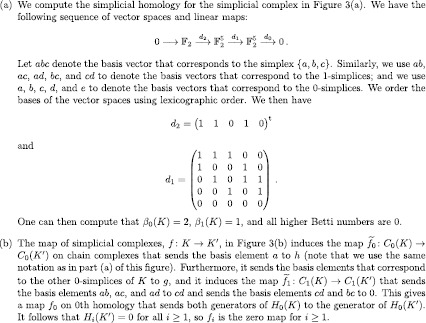

We give an example of such a sequence in Figure 4(a), for which we also report the Betti numbers.

Figure 4.

Examples to illustrate simplicial homology. (a) Computation of simplicial homology for the simplicial complex in Figure 3(a) and (b) induced map in 0th homology for the map of simplicial complexes in Figure 3(b).

One of the most important properties of simplicial homology is ‘functoriality.’ Any map of simplicial complexes induces the following -linear map:

where . Additionally, , and the map therefore induces the following linear map between homology vector spaces:

(We give an example of such a map in Figure 4(b).) Consequently, to any map of simplicial complexes, we can assign a map for any . This assignment has the important property that given a pair of composable maps of simplicial complexes, and , the map is equal to the composition of the maps induced by f and g. That is, . The fact that a map of simplicial complexes induces a map on homology that is compatible with composition is called functoriality, and it is crucial for the definition of persistent homology (see Section 4.1).

When working with simplicial complexes, one can modify a simplicial complex by removing or adding a pair of simplices , where τ is a face of σ of codimension 1 and σ is the only simplex that has τ as a face. The resulting simplicial complex has the same homology as the one with which we started. In Figure 3(a), we can remove the pair and then the pair without changing the Betti numbers. Such a move is called an elementary simplicial collapse [77]. In Section 5.2.6, we will see an application of this for the computation of PH.

In this section, we have defined simplicial homology over the field — i.e., ‘with coefficients in .’ One can be more general and instead define simplicial homology with coefficients in any field (or even in the integers). However, when , one needs to take more care when defining the boundary maps to ensure that remains the zero map. Consequently, the definition is more involved. For the purposes of the present paper, it suffices to consider homology with coefficients in the field . Indeed, we will see in Section 4 that to obtain topological summaries in the form of barcodes, we need to compute homology with coefficients in a field. Furthermore, as we summarize in Table 2 (in Section 7), most of the implementations for the computation of PH work with .

Table 2.

Overview of existing software for the computation of PH that have an accompanying peer-reviewed publication (and also

[

68

], because of its performance)

[

68

], because of its performance)

| Software | javaPlex | Perseus | jHoles | Dionysus | PHAT | DIPHA | Gudhi | SimpPers | Ripser |

|---|---|---|---|---|---|---|---|---|---|

| (a) Language | Java | C++ | Java | C++ | C++ | C++ | C++ | C++ | C++ |

| (b) Algorithms for PH | standard, dual, zigzag | Morse reductions, standard | standard (uses javaPlex) | standard, dual, zigzag | standard, dual, twist, chunk, spectral sequence | twist, dual, distributed | dual, multifield | simplicial map | twist, dual |

| (c) Coefficient field | , | (standard, zigzag), (dual) | |||||||

| (d) Homology | simplicial, cellular | simplicial, cubical | simplicial | simplicial | simplicial, cubical | simplicial, cubical | simplicial, cubical | simplicial | simplicial |

| (e) Filtrations computed | VR, W, | VR, lower star of cubical complex | WRCF | VR, α, C̆ | - | VR, lower star of cubical complex | VR, α, W, lower star of cubical complex | - | VR |

| (f) Filtrations as input | simplicial complex, zigzag, CW | simplicial complex, cubical complex | - | simplicial complex, zigzag | boundary matrix of simplicial complex | boundary matrix of simplicial complex | - | map of simplicial complexes | - |

| (g) Additional features | Computes some homological algebra constructions, homology generators | weighted points for VR | - | vineyards, circle-valued functions, homology generators | - | - | - | - | - |

| (h) Visualization | barcodes | persistence diagram | - | - | - | persistence diagram | - | - | - |

The symbol ‘-’ signifies that the associated feature is not implemented. For each software package, we indicate the following items. (a) The language in which it is implemented. (b) The implemented algorithms for the computation of barcodes from the boundary matrix. (c) The coefficient fields for which PH is computed, where the letter p denotes any prime number in the coefficient field . (d) The type of homology computed. (e) The filtered complexes that are computed, where VR stands for Vietoris–Rips complex, W stands for the weak witness complex, stands for parametrized witness complexes, WRCF stands for the weight rank clique filtration, α stands for the alpha complex, and Č for the Čech complex. Perseus, DIPHA, and Gudhi implement the computation of the lower-star filtration [160] of a weighted cubical complex; one inputs data in the form of a d-dimensional array; the data is then interpreted as a d-dimensional cubical complex, and its lower-star filtration is computed. (See the Tutorial in Additional file 2 of the SI, for more details.) Note that DIPHA and Gudhi use the efficient representation of cubical complexes presented in [55], so the size of the cubical complex that is computed by these libraries is smaller than the size of the resulting complex with Perseus. (f) The filtered complexes that one can give as input. javaPlex supports the input of a filtered CW complex for the computation of cellular homology [78]; in contrast with simplicial complexes, there do not currently exist algorithms to assign a cell complex to point-cloud data. (g) Additional features implemented by the library. javaPlex supports the computation of some constructions from homological algebra (see [66] for details), and Perseus implements the computation of PH with the VR for points with different ‘birth times’ (see Section 5.1.3). The library Dionysus implements the computation of vineyards [155] and circle-valued functions [127]. Both javaPlex and Dionysus support the output of representatives of homology classes for the intervals in a barcode. (h) Whether visualization of the output is provided

We conclude this section with a warning: changing the coefficient field can affect the Betti numbers. For example, if one computes the homology of the Klein bottle (see Section 7.1.1) with coefficients in the field with p elements, where p is a prime, then for all primes p. However, and if , but and for all other primes p. The fact that for arises from the nonorientability of the Klein bottle. The treatment of different coefficient fields is beyond the scope of our article, but interested readers can peruse [78] for an introduction to homology and [76] for an overview of computational homology.

Building simplicial complexes

As we discussed in Section 3.1, computing the homology of finite simplicial complexes boils down to linear algebra. The same is not true for the homology of an arbitrary space X, and one therefore tries to find simplicial complexes whose homology approximates the homology of the space in an appropriate sense.

An important tool is the Čech (Č) complex. Let be a cover of X — i.e., a collection of subsets of X such that the union of the subsets is X. The k-simplices of the Čech complex are the non-empty intersections of sets in the cover . More precisely, we define the nerve of a collection of sets as follows.

Definition 3

Let be a non-empty collection of sets. The nerve of is the simplicial complex with set of vertices given by I and k-simplices given by if and only if .

If the cover of the sets is sufficiently ‘nice,’ then the Nerve Theorem implies that the nerve of the cover and the space X have the same homology [79, 80]. For example, suppose that we have a finite set of points S in a metric space X. We then can define, for every , the space as the union , where denotes the closed ball with radius ϵ centered at x. It follows that is a cover of , and the nerve of this cover is the Čech complex on S at scale ϵ. We denote this complex by . If the space X is Euclidean space, then the Nerve Theorem guarantees that the simplicial complex recovers the homology of .

From a computational point of view, the Čech complex is expensive because one has to check for large numbers of intersections. Additionally, in the worst case, the Čech complex can have dimension , and it therefore can have many simplices in dimensions higher than the dimension of the underlying space. Ideally, it is desirable to construct simplicial complexes that approximate the homology of a space but are easy to compute and have ‘few’ simplices, especially in high dimensions. This is a subject of ongoing research: In Section 5.2, we give an overview of state-of-the-art methods to associate complexes to point-cloud data in a way that addresses one or both of these desiderata. See [80, 81] for more details on the Čech complex, and see [79, 80] for a precise statement of the Nerve Theorem.

Persistent homology

Assume that we are given experimental data in the form of a finite metric space S; there are points or vectors that represent measurements along with some distance function (e.g., given by a correlation or a measure of dissimilarity) on the set of points or vectors. Whether or not the set S is a sample from some underlying topological space, it is useful to think of it in those terms. Our goal is to recover the properties of such an underlying space in a way that is robust to small perturbations in the data S. In a broad sense, this is the subject of topological inference. (See [82] for an overview.) If S is a subset of Euclidean space, one can consider a ‘thickening’ of S given by the union of balls of a certain fixed radius ϵ around its points and then compute the Čech complex. One can thus try to compute qualitative features of the data set S by constructing the Čech complex for a chosen value ϵ and then computing its simplicial homology. The problem with this approach is that there is a priori no clear choice for the value of the parameter ϵ. The key insight of PH is the following: To extract qualitative information from data, one considers several (or even all) possible values of the parameter ϵ. As the value of ϵ increases, simplices are added to the complexes. Persistent homology then captures how the homology of the complexes changes as the parameter value increases, and it detects which features ‘persist’ across changes in the parameter value. We give an example of persistent homology in Figure 5.

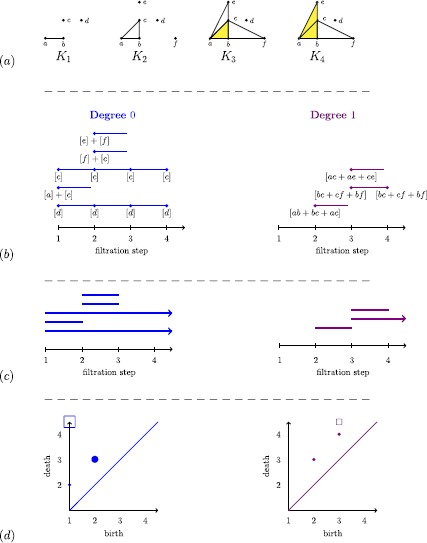

Figure 5.

Example of persistent homology for a finite filtered simplicial complex. (a) We start with a finite filtered simplicial complex. (b) At each filtration step i, we draw as many vertices as the dimension of (left column) and (right column) . We label the vertices by basis elements, the existence of which is guaranteed by the Fundamental Theorem of Persistent Homology, and we draw an edge between two vertices to represent the maps , as explained in the main text. We thus obtain a well-defined collection of disjoint half-open intervals called a ‘barcode.’ We interpret each interval in degree p as representing the lifetime of a p-homology class across the filtration. (c) We rewrite the diagram in (b) in the conventional way. We represent classes that are born but do not die at the final filtration step using arrows that start at the birth of that feature and point to the right. (d) An alternative graphical way to represent barcodes (which gives exactly the same information) is to use persistence diagrams, in which an interval is represented by the point in the extended plane , where . Therefore, a persistence diagram is a finite multiset of points in . We use squares to signify the classes that do not die at the final step of a filtration, and the size of dots or squares is directly proportional to the number of points being represented. For technical reasons, which we discuss briefly in Section 5.4, one also adds points on the diagonal to the persistence diagrams. (Each of the points on the diagonal has infinite multiplicity.)

Filtered complexes and homology

Let K be a finite simplicial complex, and let be a finite sequence of nested subcomplexes of K. The simplicial complex K with such a sequence of subcomplexes is called a filtered simplicial complex. See Figure 5(a) for an example of filtered simplicial complex. We can apply homology to each of the subcomplexes. For all p, the inclusion maps induce -linear maps for all with . By functoriality (see Section 3.1), it follows that

| 1 |

We therefore give the following definition.3

Definition 4

Let be a filtered simplicial complex. The pth persistent homology of K is the pair

where for all with , the linear maps are the maps induced by the inclusion maps .

The pth persistent homology of a filtered simplicial complex gives more refined information than just the homology of the single subcomplexes. We can visualize the information given by the vector spaces together with the linear maps by drawing the following diagram: at filtration step i, we draw as many bullets as the dimension of the vector space . We then connect the bullets as follows: we draw an interval between bullet u at filtration step i and bullet v at filtration step if the generator of that corresponds to u is sent to the generator of that corresponds to v. If the generator corresponding to a bullet u at filtration step i is sent to 0 by , we draw an interval starting at u and ending at . (See Figure 5(b) for an example.) Such a diagram clearly depends on a choice of basis for the vector spaces , and a poor choice can lead to complicated and unreadable clutter. Fortunately, by the Fundamental Theorem of Persistent Homology [61], there is a choice of basis vectors of for each such that one can construct the diagram as a well-defined and unique collection of disjoint half-open intervals, collectively called a barcode.4 We give an example of a barcode in Figure 5(c). Note that the Fundamental Theorem of PH, and hence the existence of a barcode, relies on the fact that we are using homology with field coefficients. (See [61] for more details.)

There is a useful interpretation of barcodes in terms of births and deaths of generators. Considering the maps written in the basis given by the Fundamental Theorem of Persistent Homology, we say that (with ) is born in if it is not in the image of (i.e., ). For (with ), we say that x dies in if is the smallest index for which . The lifetime of x is represented by the half-open interval . If for all j such that , we say that x lives forever, and its lifetime is represented by the interval .

Remark 5

Note that some references (e.g., [80]) introduce persistent homology by defining the birth and death of generators without using the existence of a choice of compatible bases, as given by the Fundamental Theorem of Persistent Homology. The definition of birth coincides with the definition that we have given, but the definition of death is different. One says that (with ) dies in if is the smallest index for which either or there exists with such that . In words, this means that x and y merge at filtration step j, and the class that was born earlier is the one that survives. In the literature, this is called the elder rule. We do not adopt this definition, because the elder rule is not well-defined when two classes are born at the same time, as there is no way to choose which class will survive. For example, in Figure 5, there are two classes in that are born at the same stage in . These two classes merge in , but neither dies. The class that dies is .

There are numerous excellent introductions to PH, such as the books [57, 80, 82, 83] and the papers [59, 84–88]. For a brief and friendly introduction to PH and some of its applications, see the video https://www.youtube.com/watch?v=h0bnG1Wavag. For a brief introduction to some of the ideas in TDA, see the video https://www.youtube.com/watch?v=XfWibrh6stw.

Computation of PH for data

We summarize the pipeline for the computation of PH from data in Figure 6. In the following subsections, we describe each step of this pipeline and state-of-the-art algorithms for the computation of PH. The two features that make PH appealing for applications are that it is computable via linear algebra and that it is stable with respect to perturbations in the measurement of data. In Section 5.5, we give a brief overview of stability results.

Figure 6.

PH pipeline.

Data

As we mentioned in Section 1, types of data sets that one can study with PH include finite metric spaces, digital images, and networks. We now give a brief overview of how one can study these types of data sets using PH.

Networks

One can construe an undirected network as a 1-dimensional simplicial complex. If the network is weighted, then filtering by increasing or decreasing weight yields a filtered 1-dimensional simplicial complex. To obtain more refined information about the network, it is desirable to construct higher-dimensional simplices. There are various methods to do this. The simplest method, called a weight rank clique filtration (WRCF), consists of building a clique complex on each subnetwork. (See Section 5.2.1 for the definition of ‘clique complex.’) See [89] for an application of this method. Another method to study networks with PH consists of mapping the nodes of the network to points of a finite metric space. There are several ways to compute distances between nodes of a network; the method that we use in our benchmarking in Section 7 consists of computing a shortest path between nodes. For such a distance to be well-defined, note that one needs the network to be connected (although conventionally one takes the distance between nodes in different components to be infinity). There are many methods to associate an unfiltered simplicial complex to both undirected and directed networks. See the book [90] for an overview of such methods, and see the paper [91] for an overview of PH for networks.

Digital images

As we mentioned in Section 1, digital images have a natural cubical structure: 2-dimensional digital images are made of pixels, and 3-dimensional images are made of voxels. Therefore, to study digital images, cubical complexes are more appropriate than simplicial complexes. Roughly, cubical complexes are spaces built from a union of vertices, edges, squares, cubes, and so on. One can compute PH for cubical complexes in a similar way as for simplicial complexes, and we will therefore not discuss this further in this paper. See [76] for a treatment of computational homology with cubical complexes rather than simplicial complexes and for a discussion of the relationship between simplicial and cubical homology. See [55] for an efficient algorithm and data structure for the computation of PH for cubical data, and [92] for an algorithm that computes PH for cubical data in an approximate way. For an application of PH and cubical complexes to movies, see [73].

Other approaches for studying digital images are also useful. In general, given a digital image that consists of N pixels or voxels, one can consider this image as a point in a -dimensional space, with each coordinate storing a vector of length c representing the color of a pixel or voxel. Defining an appropriate distance function on such a space allows one to consider a collection of images (each of which has N pixels or voxels) as a finite metric space. A version of this approach was used in [26], in which the local structure of natural images was studied by selecting patches of pixels of the images.

Finite metric spaces

As we mentioned in the previous two subsections, both undirected networks and image data can be construed as finite metric spaces. Therefore, methods to study finite metric spaces with PH apply to the study of networks and image data sets.

In some applications, points of a metric space have associated ‘weights.’ For instance, in the study of molecules, one can represent a molecule as a union of balls in Euclidean space [93, 94]. For such data sets, one would therefore also consider a minimum filtration value (see Section 5.2 for the description of such filtration values) at which the point enters the filtration. In Table 2(g), we indicate which software libraries implement this feature.

Filtered simplicial complexes

In Section 3.2, we introduced the Čech complex, a classical simplicial complex from algebraic topology. However, there are many other simplicial complexes that are better suited for studying data from applications. We discuss them in this section.

To be a useful tool for the study of data, a simplicial complex has to satisfy some theoretical properties dictated by topological inference; roughly, if we build the simplicial complex on a set of points sampled from a space, then the homology of the simplicial complex has to approximate the homology of the space. For the Čech complex, these properties are guaranteed by the Nerve Theorem. Some of the complexes that we discuss in this subsection are motivated by a ‘sparsification paradigm’: they approximate the PH of known simplicial complexes but have fewer simplices than them. Others, like the Vietoris–Rips complex, are appealing because they can be computed efficiently. In this subsection, we also review reduction techniques, which are heuristics that reduce the size of complexes without changing the PH. In Table 1, we summarize the simplicial complexes that we discuss in this subsection.

Table 1.

We summarize several types of complexes that are used for PH

| Complex K | Size of K | Theoretical guarantee |

|---|---|---|

| Čech | Nerve theorem | |

| Vietoris–Rips (VR) | Approximates Čech complex | |

| Alpha | (N points in ) | Nerve theorem |

| Witness | For curves and surfaces in Euclidean space | |

| Graph-induced complex | Approximates VR complex | |

| Sparsified Čech | Approximates Čech complex | |

| Sparsified VR | Approximates VR complex |

We indicate the theoretical guarantees and the worst-case sizes of the complexes as functions of the cardinality N of the vertex set. For the witness complexes (see Section 5.2.4), L denotes the set of landmark points, while Q denotes the subsample set for the graph-induced complex (see Section 5.2.5).

For the rest of this subsection denotes a metric space, and S is a subset of X, which becomes a metric space with the induced metric. In applications, S is the collection of measurements together with a notion of distance, and we assume that S lies in the (unknown) metric space X. Our goal is then to compute persistent homology for a sequence of nested spaces , where each space gives a ‘thickening’ of S in X.

Vietoris–Rips complex

We have seen that one of the disadvantages of the Čech complex is that one has to check for a large number of intersections. To circumvent this issue, one can instead consider the Vietoris–Rips (VR) complex, which approximates the Čech complex. For a non-negative real number ϵ, the Vietoris–Rips complex at scale ϵ is defined as

The sense in which the VR complex approximates the Čech complex is that, when S is a subset of Euclidean space, we have . Deciding whether a subset is in is equivalent to deciding if the maximal pairwise distance between any two vertices in σ is at most 2ϵ. Therefore, one can construct the VR complex in two steps. One first computes the ϵ-neighborhood graph of S. This is the graph whose vertices are all points in S and whose edges are

Second, one obtains the VR complex by computing the clique complex of the ϵ-neighborhood graph. The clique complex of a graph is a simplicial complex that is defined as follows: The subset is a k-simplex if and only if every pair of vertices in is connected by an edge. Such a collection of vertices is called a clique. This construction makes it very easy to compute the VR complex, because to construct the clique complex one has only to check for pairwise distances — for this reason, clique complexes are also called ‘lazy’ in the literature. Unfortunately, the VR complex has the same worst-case complexity as the Čech complex. In the worst case, it can have up to simplices and dimension .

In applications, one therefore usually only computes the VR complex up to some dimension . In our benchmarking, we often choose and .

The paper [95] overviews different algorithms to perform both of the steps for the construction of the VR complex, and it introduces fast algorithms to construct the clique complex. For more details on the VR complex, see [80, 96]. For a proof of the approximation of the Čech complex by the VR complex, see [80]; see [97] for a generalization of this result.

The Delaunay complex

To avoid the computational problems of the Čech and VR complexes, we need a way to limit the number of simplices in high dimensions. The Delaunay complex gives a geometric tool to accomplish this task, and most of the new simplicial complexes that have been introduced for the study of data are based on variations of the Delaunay complex. The Delaunay complex and its dual, the Voronoi diagram, are central objects of study in computational geometry because they have many useful properties.

For the Delaunay complex, one usually considers , so we also make this assumption. We subdivide the space into regions of points that are closest to any of the points in S. More precisely, for any , we define

The collection of sets is a cover for that is called the Voronoi decomposition of with respect to S, and the nerve of this cover is called the Delaunay complex of S and is denoted by . In general, the Delaunay complex does not have a geometric realization in . However, if the points S are ‘in general position’5 then the Delaunay complex has a geometric realization in that gives a triangulation of the convex hull of S. In this case, the Delaunay complex is also called the Delaunay triangulation.

The complexity of the Delaunay complex depends on the dimension d of the space. For , the best algorithms have complexity , where N is the cardinality of S. For , they have complexity . The construction of the Delaunay complex is therefore costly in high dimensions, although there are efficient algorithms for the computation of the Delaunay complex for and . Developing efficient algorithms for the construction of the Delaunay complex in higher dimensions is a subject of ongoing research. See [98] for a discussion of progress in this direction, and see [99] for more details on the Delaunay complex and the Voronoi diagram.

Alpha complex

We continue to assume that S is a finite set of points in . Using the Voronoi decomposition, one can define a simplicial complex that is similar to the Čech complex, but which has the desired property that (if the points S are in general position) its dimension is at most that of the space. Let , and let denote the union . For every , consider the intersection . The collection of these sets forms a cover of , and the nerve complex of this cover is called the alpha (α) complex of S at scale ϵ and is denoted by . The Nerve Theorem applies, and it therefore follows that has the same homology as .

Furthermore, is the Delaunay complex; and for , the alpha complex is a subcomplex of the Delaunay complex. The alpha complex was introduced for points in the plane in [100], in 3-dimensional Euclidean space in [101], and for Euclidean spaces of arbitrary dimension in [102]. For points in the plane, there is a well-known speed-up for the alpha complex that uses a duality between 0-dimensional and 1-dimensional persistence for alpha complexes [86]. (See [103] for the algorithm, and see [104] for an implementation.)

Witness complexes

Witness complexes are very useful for analyzing large data sets, because they make it possible to construct a simplicial complex on a significantly smaller subset of points that are called ‘landmark’ points. Meanwhile, because one uses information about all points in S to construct the simplicial complex, the points in S are called ‘witnesses.’ Witness complexes can be construed as a ‘weak version’ of Delaunay complexes. (See the characterization of the Delaunay complex in [105].)

Definition 6

Let be a metric space, and let be a finite subset. Suppose that σ is a non-empty subset of L. We then say that is a weak witness for σ with respect to L if and only if for all and for all . The weak Delaunay complex of S with respect to L has vertex set given by the points in L, and a subset σ of L is in if and only if it has a weak witness in S.

To obtain nested complexes, one can extend the definition of witnesses to ϵ-witnesses.

Definition 7

A point is a weak ϵ-witness for σ with respect to L if and only if for all and for all .

Now we can define the weak Delaunay complex at scale ϵ to be the simplicial complex with vertex set L, and such that a subset is in if and only if it has a weak ϵ-witness in S. By considering different values for the parameter ϵ, we thereby obtain nested simplicial complexes. The weak Delaunay complex is also called the ‘weak witness complex’ or just the ‘witness complex’ in the literature.

There is a modification of the witness complex called the lazy witness complex . It is a clique complex, and it can therefore be computed more efficiently than the witness complex. The lazy witness complex has the same 1-skeleton as , and one adds a simplex σ to whenever its edges are in . Another type of modification of the witness complex yields parametrized witness complexes. Let and for all define to be the distance to the νth closest landmark point. Furthermore, define for all . Let be the simplicial complex whose vertex set is L and such that a 1-simplex is in if and only if there exists s in S for which

A simplex σ is in if and only if all of its edges belong to . For , note that . For , we have that approximates the VR complex . That is,

Note that parametrized witness complexes are often called ‘lazy witness complexes’ in the literature, because they are clique complexes.

The weak Delaunay complex was introduced in [105], and parametrized witness complexes were introduced in [106]. Witness complexes can be rather useful for applications. Because their complexity depends on the number of landmark points, one can reduce the complexity by computing simplicial complexes using a smaller number of vertices. However, there are theoretical guarantees for the witness complex only when S is the metric space associated to a low-dimensional Euclidean submanifold. It has been shown that witness complexes can be used to recover the topology of curves and surfaces in Euclidean space [107, 108], but they can fail to recover topology for submanifolds of Euclidean space of three or more dimensions [109]. Consequently, there have been studies of simplicial complexes that are similar to the witness complexes but with better theoretical guarantees (see Section 5.2.5).

Additional complexes

Many more complexes have been introduced for the fast computation of PH for large data sets. These include the graph-induced complex [110], which is a simplicial complex constructed on a subsample Q, and has better theoretical guarantees than the witness complex (see [111] for the companion software); an approximation of the VR complex that has a worst-case size that is linear in the number of data points [112]; an approximation of the Čech complex [97] whose worst-case size also scales linearly in the data; and an approximation of the VR complex via simplicial collapses [113]. We do not discuss such complexes in detail, because thus far (at the time of writing) none of them have been implemented in publicly-available libraries for the computation of PH. (See Table 2 in Section 7 for information about which complexes have been implemented.)

Reduction techniques

Thus far, we have discussed techniques to build simplicial complexes with possibly ‘few’ simplices. One can also take an alternative approach to speed up the computation of PH. For example, one can use a heuristic (i.e., a method without theoretical guarantees on the speed-up) to reduce the size of a filtered complex while leaving the PH unchanged.

For simplicial complexes, one such method is based on discrete Morse theory [114], which was adapted to filtrations of simplicial complexes in [115]. The basic idea of the algorithm developed in [115] is that one can compute a partial matching of the simplices in a filtered simplicial complex so that (i) pairs occur only between simplices that enter the filtration at the same step, (ii) unpaired simplices determine the homology, and (iii) one can remove paired simplices from the filtered complex without altering the PH. Such deletions are examples of the elementary simplicial collapses that we mentioned in Section 3.1. Unfortunately, the problem of finding an optimal partial matching was shown to be NP complete [116], and one thus relies on heuristics to find partial matchings to reduce the size of the complex.

One particular family of elementary collapses, called strong collapses, was introduced in [117]. Strong collapses preserve cycles of shortest length in the representative class of a generator of a hole [118]; this feature makes strong collapses useful for finding holes in networks [118]. A distributed version of the algorithm proposed in [118] was presented in [119] and adapted for the computation of PH in [120].

A method for the reduction of the size of a complex for clique complexes, such as the VR complex, was proposed in [121] and is called the tidy-set method. Using maximal cliques, this method extracts a minimal representation of the graph that determines the clique complex. Although the tidy-set method cannot be extended to filtered complexes, it can be used for the computation of zigzag PH (see Section 6) [122]. The tidy-set method is a heuristic, because it does not give a guarantee to minimize the size of the output complex.

From a filtered simplicial complex to barcodes

To compute the PH of a filtered simplicial complex K and obtain a barcode like the one illustrated in Figure 5(c), we need to associate to it a matrix — the so-called boundary matrix — that stores information about the faces of every simplex. To do this, we place a total ordering on the simplices of the complex that is compatible with the filtration in the following sense:

a face of a simplex precedes the simplex;

a simplex in the ith complex precedes simplices in for , which are not in .

Let n denote the total number of simplices in the complex, and let denote the simplices with respect to this ordering. We construct a square matrix δ of dimension by storing a 1 in if the simplex is a face of simplex of codimension 1; otherwise, we store a 0 in .

Once one has constructed the boundary matrix, one has to reduce it using Gaussian elimination.6 In the following subsections, we discuss several algorithms for reducing the boundary matrix.

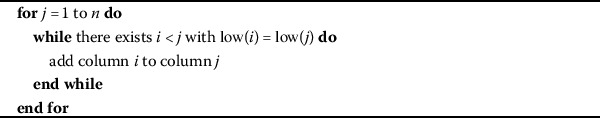

Standard algorithm

The so-called standard algorithm for the computation of PH was introduced for the field in [60] and for general fields in [61]. For every , we define to be the largest index value i such that is different from 0.7 If column j only contains 0 entries, then the value of is undefined. We say that the boundary matrix is reduced if the map low is injective on its domain of definition. In Algorithm 1, we illustrate the standard algorithm for reducing the boundary matrix. Because this algorithm operates on columns of the matrix from left to right, it is also sometimes called the ‘column algorithm.’ In the worst case, the complexity of the standard algorithm is cubic in the number of simplices.

Algorithm 1.

The standard algorithm for the reduction of the boundary matrix to barcodes

Reading off the intervals

Once the boundary matrix is reduced, one can read off the intervals of the barcode by pairing the simplices in the following way:

If , then the simplex is paired with , and the entrance of in the filtration causes the birth of a feature that dies with the entrance of .

If is undefined, then the entrance of the simplex in the filtration causes the birth of a feature. It there exists k such that , then is paired with the simplex , whose entrance in the filtration causes the death of the feature. If no such k exists, then is unpaired.

A pair gives the half-open interval in the barcode, where for a simplex we define to be the smallest number l such that . An unpaired simplex gives the infinite interval . We give an example of PH computation in Figure 7.

Figure 7.

Example of PH computation with the standard algorithm (see Algorithm 1).

Other algorithms

After the introduction of the standard algorithm, several new algorithms were developed. Each of these algorithms gives the same output for the computation of PH, so we only give a brief overview and references to these algorithms, as one does not need to know them to compute PH with one of the publicly-available software packages. In Section 7.2, we indicate which implementation of these libraries is best suited to which data set.

As we mentioned in Section 5.3.1, in the worst case, the standard algorithm has cubic complexity in the number of simplices. This bound is sharp, as Morozov gave an example of a complex with cubic complexity in [123]. Note that in cases such as when matrices are sparse, complexity is less than cubic. Milosavljević, Morozov, and Skraba [124] introduced an algorithm for the reduction of the boundary matrix in , where ω is the matrix-multiplication coefficient (i.e., is the complexity of the multiplication of two square matrices of size n). At present, the best bound for ω is 2.376 [125]. Many other algorithms have been proposed for the reduction of the boundary matrix. These algorithms give a heuristic speed-up for many data sets and complexes (see the benchmarkings in the forthcoming references), but they still have cubic complexity in the number of simplices. Sequential algorithms include the twist algorithm [126] and the dual algorithm [72, 127]. (Note that the dual algorithm is known to give a speed-up when one computes PH with the VR complex, but not necessarily for other types of complexes (see also the results of our benchmarking for the vertebra data set in Additional file 1 of the SI).) Parallel algorithms in a shared setting include the spectral-sequence algorithm (see Section VII.4 of [80]) and the chunk algorithm [128]; parallel algorithms in a distributed setting include the distributed algorithm [74]. The multifield algorithm is a sequential algorithm that allows the simultaneous computation of PH over several fields [129].

Statistical interpretation of topological summaries

Once one has obtained barcodes, one needs to interpret the results of computations. In applications, one often wants to compare the output of a computation for a certain data set with the output for a null model. Alternatively, one may be studying data sets from the output of a generative model (e.g., many realizations from a model of random networks), and it is then necessary to average results over multiple realizations. In the first instance, one needs both a way to compare the two different outputs and a way to evaluate the significance of the result for the original data set. In the second case, one needs a way to calculate appropriate averages (e.g., summary statistics) of the result of the computations.

From a statistical perspective, one can interpret a barcode as an unknown quantity that one tries to estimate by computing PH. If one wants to use PH in applications, one thus needs a reliable way to apply statistical methods to the output of the computation of PH. To our knowledge, statistical methods for PH were addressed for the first time in the paper [130]. Roughly speaking, there are three current approaches to the problem of statistical analysis of barcodes. In the first approach, researchers study topological properties of random simplicial complexes (see, e.g., [131, 132]) and the review papers [133, 134]. One can view random simplicial complexes as null models to compare with empirical data when studying PH. In the second approach, one studies properties of a metric space whose points are persistence diagrams. In the third approach, one studies ‘features’ of persistence diagrams. We will provide a bit more detail about the second and third approaches.

In the second approach, one considers an appropriately defined ‘space of persistence diagrams,’ defines a distance function on it, studies geometric properties of this space, and does standard statistical calculations (means, medians, statistical tests, and so on). Recall that a persistence diagram (see Figure 5 for an example) is a multiset of points in and that it gives the same information as a barcode. We now give the following precise definition of a persistence diagram.

Definition 8

A persistence diagram is a multiset that is the union of a finite multiset of points in with the multiset of points on the diagonal , where each point on the diagonal has infinite multiplicity.

In this definition, we include all of the points on the diagonal in with infinite multiplicity for technical reasons. Roughly, it is desirable to be able to compare persistence diagrams by studying bijections between their elements, and persistence diagrams must thus be sets with the same cardinality.

Given two persistence diagrams X and Y, we consider the following general definition of distance between X and Y.

Definition 9

Let . The pth Wasserstein distance between X and Y is defined as

for and as

for , where d is a metric on and ϕ ranges over all bijections from X to Y.

Usually, one takes for . One of the most commonly employed distance functions is the bottleneck distance .

The development of statistical analysis on the space of persistence diagrams is an area of ongoing research, and presently there are few tools that can be used in applications. See [135–137] for research in this direction. Until recently, the library Dionysus [64] was the only library to implement computation of the bottleneck and Wasserstein distances (for ); the library hera [138] implements a new algorithm [139] for the computation of the bottleneck and Wasserstein distances that significantly outperforms the implementation in Dionysus. The library TDA Package [140] (see [69] for the accompanying tutorial) implements the computation of confidence sets for persistence diagrams that was developed in [141], distance functions that are robust to noise and outliers [142], and many more tools for interpreting barcodes.

The third approach for the development of statistical tools for PH consists of mapping the space of persistence diagrams to spaces (e.g., Banach spaces) that are amenable to statistical analysis and machine-learning techniques. Such methods include persistence landscapes [143], using the space of algebraic functions [144], persistence images [145], and kernelization techniques [146–149]. See the papers [6, 52] for applications of persistence landscapes. The package Persistence Landscape Toolbox [150] (see [70] for the accompanying tutorial) implements the computation of persistence landscapes, as well as many statistical tools that one can apply to persistence landscapes, such as mean, ANOVA, hypothesis tests, and many more.

Stability

As we mentioned in Section 1, PH is useful for applications because it is stable with respect to small perturbations in the input data.

The first stability theorem for PH, proven in [151], asserts that, under favorable conditions, step (2) in the pipeline in Figure 6 is 1-Lipschitz with respect to suitable distance functions on filtered complexes and the bottleneck distance for barcodes (see Section 5.4). This result was generalized in the papers [152–154]. Stability for PH is an active area of research; for an overview of stability results, their history and recent developments, see [82], Chapter 3.

Excursus: generalized persistence

One can use the algorithms that we described in Section 5 to compute PH when one has a sequence of complexes with inclusion maps that are all going in the same direction, as in the following diagram:

An algorithm, called the zigzag algorithm, for the computation of PH for inclusion maps that do not all go in the same direction, as, e.g., in the diagram

was introduced in [155]. In the more general setting in which maps are not inclusions, one can still compute PH using the simplicial map algorithm [156].

One may also wish to vary two or more parameters instead of one. This yields multi-filtered simplicial complexes, as, e.g., in the following diagram:

In this case, one speaks of multi-parameter persistent homology. Unfortunately, the Fundamental Theorem of Persistent Homology is no longer valid if one filters with more than one parameter, and there is no such thing as a ‘generalized interval.’ The topic of multi-parameter persistence is under active research, and several approaches are being studied to extract topological information from multi-filtered simplicial complexes. See [82, 157] for the theory of multi-parameter persistent homology, and see [158] (and [159] for its companion paper) for upcoming software for the visualization of invariants for 2-parameter persistent homology.

Software

There are several publicly-available implementations for the computation of PH. We give an overview of the libraries with accompanying peer-reviewed publication and summarize their properties in Table 2.

The software package javaPlex [66], which was developed by the computational topology group at Stanford University, is based on the Plex library [161], which to our knowledge is the first piece of software to implement the computation of PH. Perseus [65] was developed to implement Morse-theoretic reductions [115] (see Section 5.2.6). jHoles [162] is a Java library for computing the weight rank clique filtration for weighted undirected networks [89]. Dionysus [64] is the first software package to implement the dual algorithm [72, 127]. PHAT [62] is a library that implements several algorithms and data structures for the fast computation of barcodes, takes a boundary matrix as input, and is the first software to implement a matrix-reduction algorithm that can be executed in parallel. DIPHA [63], a spin-off of PHAT, implements a distributed computation of the matrix-reduction algorithm. Gudhi [67] implements new data structures for simplicial complexes and the boundary matrix. It also implements the multi-field algorithm, which allows simultaneous computation of PH over several fields [129]. This library is currently under intense development, and a Python interface was just released in the most recent version of the library (namely, Version 2.0.0, whereas the version that we study in our tests is Version 1.3.1). The library ripser [68], the most recently developed software of the set that we examine, uses several optimizations and shortcuts to speed up the computation of PH with the VR complex. This library does not have an accompanying peer-reviewed publication. However, because it is currently the best-performing (both in terms of memory usage and in terms of wall-time seconds8) library for the computation of PH with the VR complex, we include it in our study. The library SimpPers [163] implements the simplicial map algorithm. Libraries that implement techniques for the statistical interpretation of barcodes include the TDA Package [140] and the Persistence Landscape Toolbox [150]. (See Section 5.4 for additional libraries for the interpretation of barcodes.) RIVET, a package for visualizing 2-parameter persistent homology, is slated to be released soon [158]. We summarize the properties of the libraries for the computation of PH that we mentioned in this paragraph in Table 2, and we discuss the performance for a selection of them in Section 7.1.3 and in Additional file 1 of the SI. For a list of programs, see https://github.com/n-otter/PH-roadmap.

Benchmarking

We benchmark a subset of the currently available open-source libraries with peer-reviewed publication for the computation of PH. To our knowledge, the published open-source libraries are jHoles, javaPlex, Perseus, Dionysus, PHAT, DIPHA, SimpPers, and Gudhi. To these, we add the library ripser, which is currently the best-performing library to compute PH with the VR complex. To study the performance of the packages, we restrict our attention to the algorithms that are implemented by the largest number of libraries. These are the VR complex and the standard and dual algorithms for the reduction of the boundary matrix. PHAT only takes a boundary matrix as input, so it is not possible to conduct a direct comparison of it with the other implementations. However, the fast data structures and algorithms implemented in PHAT are also implemented in its spin-off software DIPHA, which we include in the benchmarking. The software jHoles computes PH using the WRCF for weighted undirected networks, and SimpPers takes a map of simplicial complexes as input, so these two libraries cannot be compared directly to the other libraries. In Additional file 1 of the SI, we report benchmarking of some additional features that are implemented by some of the six libraries (i.e., javaPlex, Perseus, Dionysus, DIPHA, Gudhi, and Ripser) that we test. Specifically, we report results for the computation of PH with cubical complexes for image data sets and the computation of PH with witness, alpha, and Čech complexes.

We study the software packages javaPlex, Perseus, Dionysus, DIPHA, Gudhi, and Ripser using both synthetic and real-world data from three different perspectives:

Performance measured in CPU seconds and wall-time (i.e., elapsed time) seconds.

Memory required by the process.

Maximum size of simplicial complex allowed by the software.

Data sets

In this subsection, we describe the data sets that we use for our benchmarking. We use data sets from a variety of different mathematical and scientific areas and applications. In each case, when possible, we use data sets that have already been studied using PH. Our list of data sets is far from complete; we view this list as an initial step towards building a comprehensive collection of benchmarking data sets for PH.

Data sets (1)–(4) are synthetic; they arise from topology (1), stochastic topology (2), dynamical systems (3), and from an area at the intersection of network theory and fractal geometry (4). (As we discuss below, data set (4) was used originally to study connection patterns in the cerebral cortex.) Data sets (5)–(12) are from empirical experiments and measurements: they arise from phylogenetics (5)–(6), image analysis (7), genomics (9), neuroscience (8), medical imaging (10), political science (11), and scientometrics (12).

In each case, these data sets are of one of the following three types: point clouds, weighted undirected networks, and gray-scale digital images. To obtain a point cloud from a real-world weighted undirected network, we compute shortest paths using the inverse of the nonzero weights of edges as distances between nodes (except for the US Congress networks and the human genome network; see below). For the synthetic networks, the values assigned to edges are interpreted as distances between nodes, and we therefore use these values to compute shortest paths. We make all processed versions of the data sets that we use in the benchmarking available at https://github.com/n-otter/PH-roadmap/tree/master/data_sets. We provide the scripts that we used to produce the synthetic data sets at https://github.com/n-otter/PH-roadmap/tree/master/matlab/synthetic_data_sets_scripts.

We now describe all data sets in detail:

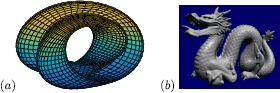

Klein bottle. The Klein bottle is a one-sided nonorientable surface (see Figure 8). We linearly sample points from the Klein bottle using its ‘figure-8’ immersion in and size sample of 400 points. We denote this data set by Klein. Note that the image of the immersion of the Klein bottle does not have the same homotopy type as the original Klein bottle, but it does have the same singular homology9 with coefficients in . We have , , and , where B denotes the Klein bottle and is the ith singular homology group with coefficients in .

Random VR complexes (uniform distribution) [165]. The parameters for this model are positive integers N and d; the random VR complex for parameters N and d is the VR complex , where X is a set of N points sampled from . (Equivalently, the random VR complex is the clique complex on the random geometric graph [166].) We sample N points uniformly at random from . We choose and we denote this data set by random. The homology of random VR complexes was studied in [165].

Vicsek biological aggregation model. This model was first introduced in [167] and was studied using PH in [48]. We implement the model in the form in which it appears in [48]. The model describes the motion of a collection of particles that interact in a square with periodic boundary conditions. The parameters for the model are the length l of the side of the square, the initial angle , the (constant) absolute value for the velocity, the number N of particles, a noise parameter η, and the number T of time steps. The output of the model is a point cloud in 3-dimensional Euclidean space in which each point is specified by its position in the 2-dimensional box and its velocity angle (‘heading’). We run three simulations of the model using the parameter values from [48]. For each simulation, we choose two point clouds that correspond to two different time frames. See [48] for further details. We denote this data set by Vicsek.

-

Fractal networks. These are self-similar networks introduced in [168] to investigate whether connection patterns of the cerebral cortex are arranged in self-similar patterns. The parameters for this model are natural numbers b, k, and n. To generate a fractal network, one starts with a fully-connected network with nodes. Two copies of this network are connected to each other so that the ‘connection density’ between them is , where the connection density is the number of edges between the two copies divided by the number of total possible edges between them. Two copies of the resulting network are connected with connection density . One repeats this type of connection process until the network has size , but with a decrease in the connection density by a factor of at each step.

We define distances between nodes in two different ways: (1) uniformly at random, and (2) with linear weight-degree correlations. In the latter, the distance between nodes i and j is distributed as , where is the degree of node i and X is a random variable uniformly distributed on the unit interval. We use the parameters , , and ; and we compute PH for the weighted network and for the network in which all adjacent nodes have distance 1. We denote this data set by fract and distinguish between the two ways of defining distances between weights using the abbreviations ‘r’ for random, and ‘l’ for linear.

Genomic sequences of the HIV virus. We construct a finite metric space using the independent and concatenated sequences of the three largest genes — gag, pol, and env — of the HIV genome. We take different genomic sequences and compute distances between them by using the Hamming distance. We use the aligned sequences studied using PH in [23]. (The authors of that paper retrieved the sequences from [169].) We denote this data set by HIV.

Genomic sequences of H3N2. This data set consists of different genomic sequences of H3N2 influenza. We compute the Hamming distance between sequences. We use the aligned sequences studied using PH in [23]. We denote this data set by H3N2.

Stanford Dragon graphic. We sample points uniformly at random from 3-dimensional scans of the dragon [164], whose reconstruction we show in Figure 8. The sample sizes include and points. We denote these data sets by drag 1 and drag 2, respectively.

C. elegans neuronal network. This is a weighted, undirected network in which each node is a neuron and edges represent synapses or gap junctions. We use the network studied using PH in [89]. (The authors of the paper used the data set studied in [170], which first appeared in [171].) Recall that for this example, and also for the other real-world weighted networks (except for the human genome network and the US Congress networks), we convert each nonzero edge weight to a distance by taking its inverse. We denote this data set by eleg.

Human genome. This is a weighted, undirected network representing a sample of the human genome. We use the network studied using PH in [89]. (The authors of that paper created the sample using data retrieved from [172].) Each node represents a gene, and two nodes are adjacent if there is a nonzero correlation between the expression levels of the corresponding genes. We define the weight of an edge as the inverse of the correlation.10 We denote this data set by genome.