Abstract

Methods for developing guideline recommendations dedicate most effort to organising discovery and corroboration knowledge following the evidence-based medicine (EBM) framework. Guidelines typically use a single dimension of information, and generally discard contextual evidence, formal expert knowledge and consumers' experiences in the process. In recognition of the limitations of guidelines in complex cases, complex interventions and systems research, there has been significant effort to develop new tools, guides, resources and structures to use alongside EBM methods of guideline development. In addition to these advances, a new framework based on the philosophy of science is required. Guidelines should be defined as implementation decision support tools for improving the decision-making process in real-world practice and not only as a procedure to optimise the knowledge base of scientific discovery and corroboration. A shift from the model of the EBM pyramid of corroboration of evidence to the use of a broader multi-domain perspective, graphically depicted as a ‘Greek temple’, could be considered. This model takes into account the different stages of scientific knowledge (discovery, corroboration and implementation); the sources of knowledge relevant to guideline development (experimental, observational, contextual, expert-based and experiential); their underlying inference mechanisms (deduction, induction, abduction, means-end inferences); and a more precise definition of evidence and related terms. The applicability of this broader approach is presented for the development of the Canadian Consensus Guidelines for the Primary Care of People with Developmental Disabilities.

Key words: Evidence-based psychiatry, framing of scientific knowledge, complexity, implementation, clinical guidelines, quality assessment, intellectual developmental disorders

Introduction

Guidelines are implementation tools which evolved within the framework of evidence-based medicine (EBM). The EBM structures used to develop a guideline include the formulation of a clinical question (population, intervention, comparison, outcome, commonly referred to as PICO) (Guyatt et al. 2008; World Health Organization WHO, 2012); standards and a protocol for systematic searching of empirical research evidence on this question; and quality appraisal of the research followed by use of an analytic framework to formulate and grade the recommendations from the body of research evidence. The principle underpinning guidelines is to guide health care practice and optimise patient care by reducing practice variability, improving quality and maximising resource utilisation (World Health Organization WHO, 2012; National Institute for Health and Care Excellence, 2016), the premise being that quality peer-reviewed published scientific research is the best evidence of effectiveness (which interventions work and which do not work in real practice).

Over the past 25 years, guideline development has become standard practice. In August 2016, the Library on the Guideline International Network (GIN) website listed 48 guidelines on depression, 35 on schizophrenia and 47 on anxiety in different languages (Guidelines International Network GIN, 2016). Yet the translation of research evidence and the implementation of guideline recommendations in health care practice face significant challenges (Grimshaw et al. 2004; Grol & Buchan, 2006), particularly in highly complex areas such as mental health, where guidelines must be developed under conditions of high ambiguity, uncertainty and variability (Salvador-Carulla et al. 2013). A recent systematic review of studies on the implementation of mental health guidelines concluded that guideline implementation does not appear to have an impact on provider performance, although it may influence patient outcomes (Girlanda et al. 2016).

What are the mainstream solutions to improve guideline development?

One of the earliest concerns was the disparate methods and procedures used to develop guidelines and their recommendations in the 1990–2000s. These concerns led to the development of standards for guideline development (Institute of Medicine, 2011; National Health and Medical Research Council, 2011; Qaseem et al. 2012; National Institute for Health and Care Excellence, 2016); the AGREE guideline appraisal tool (Brouwers et al. 2010); and grading systems and tools such as GRADE (Guyatt et al. 2008; Goldet & Howick, 2013).

Aligned to this methodological problem were the inconsistencies in the strength applied to recommendations where the same research evidence was used (Guyatt et al. 2008); the cost of duplication of guidelines and the subsequent appeals to adapt guidelines to different contexts rather than develop guidelines de novo (Fervers et al. 2006; The ADAPTE Collaboration, 2009). Other concerns referred to the trustworthiness of guidelines due to potential conflicts of interest of experts involved in their development (Minhas, 2007; Mitchell et al. 2016; Shnier et al. 2016) which led to new standards for reporting and managing financial and intellectual conflicts (Boyd & Bero, 2006; Guyatt, 2010).

The overall usability and applicability of guideline recommendations to different contexts and for different targets have also raised concerns, specifically around the limited attention to variability in the real world and to local environmental factors. In addition, there are difficulties in accommodating person-centred health care principles of shared decision making, patient values, preferences, choice and control to the recommendations (Charles et al. 2011; Rosner, 2012; Salvador-Carulla et al. 2013; Greenhalgh et al. 2014, 2015; Fernandez et al. 2015; Bingeman, 2016). A series of biases related to this challenge have been enumerated (Charles et al. 2011; Rosner, 2012; Salvador-Carulla et al. 2013; Greenhalgh et al. 2014, 2015; Fernandez et al. 2015; Bingeman, 2016). Increasing emphasis has been placed on the involvement of consumers in the development of guidelines (Schunemann et al. 2006; Nilsen et al. 2010; Guideline International Network (GIN), 2015). Other authors have called for improving implementation strategies and developing new tools and frameworks to support use of guidelines in the real world (Shiffman et al. 2005; Doherty, 2006; Francke et al. 2008; Hakkennes & Dodd, 2008; Gagliardi et al. 2011; Brouwers et al. 2015; Fleuren et al. 2015); and for incorporating the analysis of the context and the impacts of guideline implementation on individual and population outcomes (Damschroder et al. 2009; Kirk et al. 2016).

Undoubtedly these efforts to amend existing problems in guideline development have had a very positive impact, but questions arise as to whether amendments to the EBM model will suffice to meet the current challenges or a conceptual change is required.

Should the EBM model be changed for improving guideline development?

In the past few decades there has been substantial questioning of the applicability and practicality of the EBM framework and a ‘one size fits all’ approach to clinical practice (Charles et al. 2011; Rosner, 2012; Salvador-Carulla et al. 2013; Greenhalgh et al. 2014, 2015; Fernandez et al. 2015; Bingeman, 2016). A number of new guideline development methods that follow different approaches have emerged in recent years. For example, Yelovich has advocated an alternative perspective of the patient–physician relationship as a meeting of the professional expert and ‘experiencer’, with authentic recognition and value of the patient's tacit knowledge (Yelovich, 2016). Lukersmith et al. have proposed an alternative method to develop recommendations using framing with the International Classification of Functioning, Disability and Health to ‘pack’ different sources of scientific and context knowledge (Lukersmith et al. 2016). Ivers and Grimshaw have suggested the setup of ‘implementation laboratories’ to utilise and apply learning, context and delivery knowledge as evidence to inform health system policy (Ivers & Grimshaw, 2016). Ostuzzi and colleagues have used a ‘bottom-up’ approach of expert knowledge to develop recommendations on the complexity of prescribing psychotropic medications (Ostuzzi et al. 2013). There have been calls for guidelines to adopt the biopsychosocial framework of health and include patient-reported outcome measures (van Dulmen et al. 2015), and to incorporate a context knowledge base on service use and costs for people with intellectual disability to support evidence-informed policies (Salvador-Carulla & Symonds, 2016).

The need for a philosophy of science approach and an extended model of scientific knowledge to guideline development

From our perspective the traditional model of EBM is necessary but not sufficient for developing practical recommendations for guiding decision making under conditions of complexity. It requires a critical revision of its foundation that draws on three major challenges for the success of guidelines as implementation tools:

The difficulties of adapting the EBM framework to a systems thinking and complexity approach

Systems thinking and complexity approaches are essential in health systems research, guidelines for complex interventions (e.g., community-based care), complex target health conditions (e.g., mental and developmental disorders, multi-morbidity) and complex practice contexts where decisions are made under conditions of high uncertainty such as in primary care and mental health care (Sturmberg et al. 2010; Salvador-Carulla et al. 2013; Huckel Schneider et al. 2016 (in press)). Complexity in clinical situations requires reasoning and judgment that may rely on broader sources of knowledge than the unidimensional guideline recommendation. In addition, a systems thinking approach is not intended to generate a single and definite solution to a PICO question, but to add new information to the knowledge base to improve decision making from a formative/summative perspective to organisational learning (Hargreaves, 2010; Snilstveit et al. 2012).

The lack of a philosophy of science approach to guideline development

We have reviewed this problem in a series of prior papers where we advocate for the extended multi-domain approach to scientific knowledge (Salvador-Carulla et al. 2014; Fernandez et al. 2015; Lukersmith et al. 2016). The definition of a series of key terms related to the development of this model is provided in Table 1. First, it is necessary to consider the differences between the three stages of scientific knowledge: discovery, corroboration and implementation (Schurz, 2014) and their contribution to guideline development. The traditional approach to EBM implicitly assumed that the analysis and ordering of the existing knowledge base of discovery and corroboration could be automatically translated, implemented and applied in practice. However, knowledge translation and implementation research is emerging in response to the need to identify the determinants, processes and outcomes of translating (corroboration) research into practice (Rabin et al. 2008; Peters et al. 2013).

Table 1.

Concepts and definitions of an extended multi-domain approach to scientific knowledge

| Basic concepts | Definition |
|---|---|
| Scientific knowledge | A fluid mix of framed evidence and expertise acquired by means of standardised methods of research following the principles of commensurability (definitions of units of analysis that can be compared like-with-like), transparency for corroboration (including replicability and falsifiability) and transferability (including generalisability to broader contexts) |
| Evidence | The part of scientific knowledge based on contextualised information from facts and data, and which is analysed using quantitative approaches alone or combined with qualitative methods to generate inferences using mainly deductive reasoning, but also non-deductive logical reasoning (induction and abduction) |
| Expertise | The part of scientific knowledge based on expert know-how, understanding, experience and insight and on the perceived experience of a phenomenon. Experiential knowledge is here considered part of this category together with professional (expert) knowledge |
| Stages of scientific knowledge | |
| Discovery | Generation of new and relevant scientific evidence mainly using experimental approaches and deductive inference. Discovery can also be generated by consilience using inductive inference |
| Corroboration | Justification of the new scientific evidence by determining the degree of confirmation given the acceptability of experimental and observational data using logical induction. It requires transparency of prior information and uses quantitative techniques for ordering available evidence (e.g., meta-analysis), and qualitative techniques for reaching expert consensus (inter-subjectivity) |
| Implementation | Factual application of corroborated scientific knowledge to real-life practice and policy and to controversial cases. Under conditions of uncertainty and non-monotonicity it requires expert knowledge and incorporates abductive and means-end reasoning in the decision-making process |
| Types of logical reasoning | |
| Inference | Process of deriving logical conclusions from premises known or assumed to be true |
| Deductive | Inferences from general instances to a specific conclusion. It is the main necessary inference, i.e. the certainty of the explanation can be derived from the certainty of the premises |
| Inductive | Inferences from specific instances to a general conclusion or explanation. General statements are made based on specific observations |
| Abductive | Inference to the best explanation. It needs a prior knowledge base to select the best or the most plausible explanation |
| Means-end inferences | Relate fundamental norms to the means to achieve a pre-determined end. This requires experts to decide which is the best or optimal means from a set of alternatives to achieve the final goal |
| Domains of scientific knowledge | |
| Experimental evidence | Information acquired by conducting an experiment to test a formal hypothesis using deductive reasoning (e.g., randomised controlled trials) |
| Observational evidence | Information acquired by the standard recording of phenomena under natural conditions and analysed mainly using inductive reasoning (e.g., cohort studies, surveys). Ecological evidence refers to observations of the phenomenon gathered in a clearly defined area and environment |
| Contextual evidence and knowledge | Context refers to the totality of circumstances that comprise the milieu of a given phenomenon. In health care it includes all sources of evidence of the local system: geography, social and demographic factors, other environmental factors, service availability, capacity, use and costs. It also includes legislation and expertise on the milieu (e.g., the historical account of the current state of the art) |
| Expert knowledge | A set of formalised know-how, understanding, experience and insight in a defined area of knowledge which is informed, contextualised, stable, consistent and connected. It is elicited using qualitative approaches alone or combined with quantitative methods to generate means-end inferences and non-inferential knowledge to complement evidence |
| Experiential knowledge | The knowledge and understanding of the health condition, intervention and context derived from exposure as a patient, consumer or carer |
| New types of scientific research relevant for guideline development | |
| Framing of scientific knowledge (FSK) | A group of studies of ‘expert knowledge’ specifically aimed at generating formal scientific frames. FSK studies contribute to the formulation of research questions, to understand and to represent complex phenomena and to guide decision making under conditions of uncertainty and insufficient evidence. FSK studies generate formal scientific frames that could be used to analyse and to interpret complexity in health sciences, particularly in public and health systems research. FSK includes scientific declarations and charts, conceptual maps, classifications and recommendations of clinical guidelines |

Second, it is important to provide operational definitions of the different domains of scientific knowledge (experimental evidence, observational evidence, contextual evidence, expert knowledge and experiential knowledge); of the areas of scientific research relevant to understanding recommendations (e.g., framing of scientific knowledge as a new research area of expert knowledge) (Salvador-Carulla et al. 2014); and of the logical inferences other than deduction and induction that are highly relevant to the development of recommendations, such as abduction and means-end inferences (Table 1).

Alternatives to the EBM pyramid for ranking the hierarchy of research evidence

The traditional EBM hierarchy (Howick et al. 2011), graphically portrayed as the EBM pyramid, places a higher value on deductive inference from experimental evidence, although systematic reviews rely heavily on induction. In the EBM model, randomised controlled trials are considered the highest quality, whilst observational evidence is devalued, and context, expert ‘opinion’ and consumers’ experience are in practice excluded as relevant sources of knowledge in the systematic reviews and the subsequent recommendations (Rosner, 2012). Expertise is important not only as a source of knowledge but also for generating recommendations. The ‘gold standard’ for guideline methods requires the inclusion of expert clinicians and consumer (‘experiencer’) groups in the guideline development team. Yet their contribution to the recommendations is typically not made transparent. Paradoxically, the recent attempts to refine and improve the pyramid model (e.g., EBHC pyramid 5.0) (Alper & Haynes, 2016) insist on analysing the quality of evidence based on its internal validity and not on its external validity, which is critical in complex, context-dependent questions (Fernandez et al. 2015).

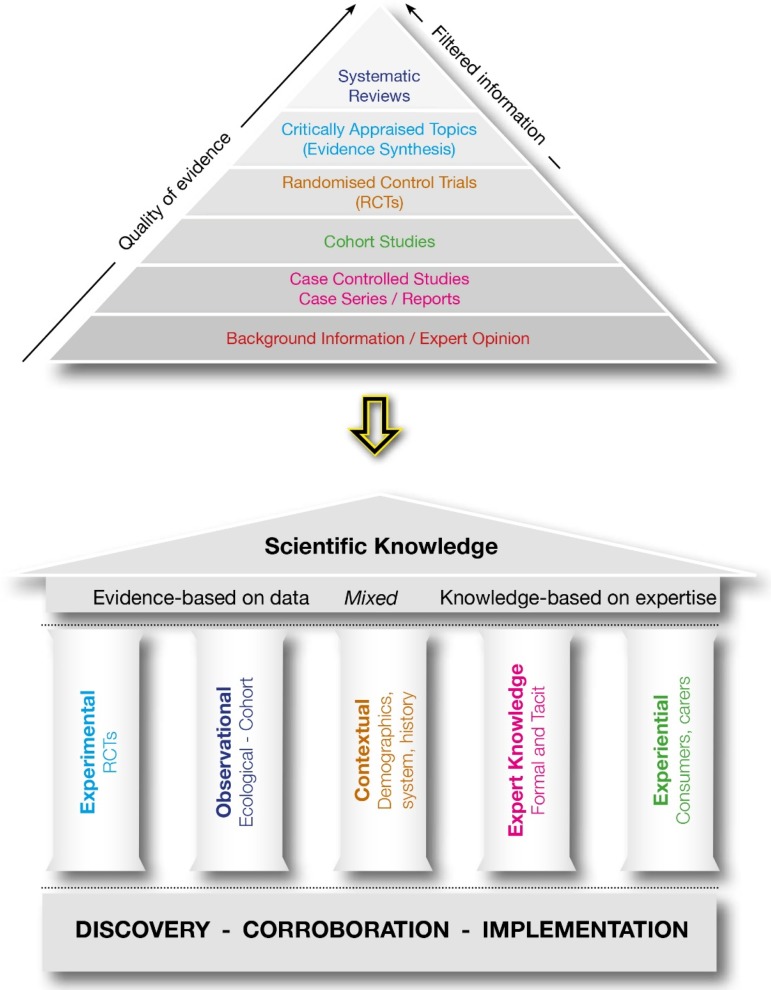

It is clear that the methods to formulate guideline recommendations need to accommodate the complexity, uncertainty and variability of health care practice. In our opinion, there needs to be a new framework for the development of guidelines if they are to be ‘fit for purpose’, feasible, useful and assimilated into practice. The framework should incorporate all the advances in the application of the knowledge base of discovery to clinical practice made by EBM, but it also requires repeatable and explicit methods to integrate the other types of scientific knowledge described in Table 1. As guidelines are implementation tools, a new framework must consider scientific knowledge derived from implementation research (context and impact analysis). We have taken the first step and developed a preliminary model to frame an extended multi-domain approach to scientific knowledge that is graphically represented as a ‘Greek temple’. Figure 1 illustrates the movement from the unidimensional approach to a broader, extended multi-domain model of scientific knowledge to generate clinical guidelines and recommendations.

Fig. 1.

Moving from the pyramid model of scientific knowledge to the General framework: Greek temple model of scientific knowledge.

The five columns of scientific knowledge used across discovery, corroboration and implementation research represent the domains of scientific knowledge described in Table 1. We consider that although experimental evidence derived from discovery and corroboration is a basic pillar for developing guidelines, other domains should be considered in guideline development and they cannot be merged together in a unidimensional ranking. Separate analyses should be provided to summarise the experimental knowledge and the observational knowledge using the criteria already available (e.g., EBHC pyramid 5.0) (Alper & Haynes, 2016), and to provide a scientific framing together with information on context analysis, expert knowledge on appropriateness/adequacy, and experiential knowledge on acceptability. For example, electroconvulsive therapy may show high efficacy and cost-effectiveness for major depressive disorder (Milev et al. 2016), and at the same time large differences in local availability (context), and in the judgment by expert professionals (appropriateness) and by consumers (acceptability). It is extremely difficult to display this complexity in a single dimension. This case may be better represented by a multi-dimensional profile than by a single statement followed by the strength of the recommendation. However, much more work needs to be done to develop and test transparent, rigorous and repeatable guideline development methods to integrate and package all scientific knowledge on the topic of concern in an acceptable, applicable and practical way.

Case example: Canadian consensus guidelines for the primary care of people with intellectual and developmental disabilities (IDD)

The ‘Canadian Consensus Guidelines for the primary care of people with developmental disabilities’ were developed in 2006 (Sullivan et al. 2006), revised in 2011 (Sullivan et al. 2011) and are currently being revised in 2016. We describe the complexities of the health condition and practice context; a problem intervention; and an outline of the guideline methods. The case example is from the 3rd version of the Canadian Consensus Guidelines on the primary care of adults with developmental disabilities, 2016 updates. The IDD Canadian Guidelines for primary care providers propose preventative health care recommendations that they can and should implement during regular health care assessments of adults with IDD. However, these guidelines illustrate the challenges associated with identifying scientifically robust recommendations using an EBM approach for a population with often complex health conditions, such as adults with IDD.

Health condition and practice context:

‘Intellectual developmental disorder’ or ‘IDD’ shows unresolved differences in its terminology, standard diagnostic criteria and assessment (Bertelli et al. 2016a, b); as well as in its care delivery system, planning and policy (Salvador-Carulla & Symonds, 2016). Adults with IDD often have health conditions that differ from those of the rest of the population, but there are few PICO-type studies for this group of patients. Most of the scientific knowledge regarding this population is rated as low-quality expert opinion within the EBM framework. Even when the same health conditions are present in the IDD population as in the general population, evidence-based recommendations are not necessarily inclusive of adults with IDD because of the wide variability from person to person of factors such as level of disability (i.e., mild to profound) and aetiology, which could affect the outcome of interventions. Moreover, many adults with IDD experience co-morbid physical, behavioural and mental health conditions (for which separate evidence-based guidelines might exist but do not include the IDD population). The practical usability of guidelines for adults with IDD is highly limited due to conflicting recommendations and the risk of engaging in multiple recommended interventions (e.g., the use of medications in comorbid and complex cases).

A clear example is provided by the use of antipsychotic medications for problem behaviours in persons with IDD. Antipsychotics are often prescribed by family physicians in Ontario (Cobigo et al. 2013). Available studies provide insufficient evidence to support such off-label prescribing practices (Deb et al. 2015). In addition, EBM neither elucidates the factors that influence clinical decisions to start or continue such prescriptions nor assists clinicians or consumers in determining whether the benefits of prescribing antipsychotic medications outweigh their burdens and risks (e.g., sedation and metabolic syndrome).

Problems in the implementation of the IDD Canadian guidelines

Authors updating the IDD Canadian guidelines recognised several methodological problems in the former version (2011). First, most of the recommendations were based on expert consensus only, which is regarded as the lowest quality of evidence in EBM. Second, the strength of a recommendation was assumed to be the same as the quality of the best supporting research evidence. For instance, this assumption was not conducive to judging that level I evidence (of the highest quality in EBM) could ever warrant a weak recommendation for action, because of factors relating to the context of care or patient or caregiver preferences and values, for example, just as level III evidence (of the lowest quality) might sometimes warrant a strong recommendation. As a result, there was very little in the 2011 Guidelines that could be proposed as a strongly recommended action. Third, for the level III evidence on which most of the 2011 Guidelines were based, the authors did not consider whether or not the experts had based their views on experiences from comparable medical contexts or had taken into account the complexities of caring for a heterogeneous patient population among adults with IDD with varying health needs, experiences, preferences and values. Fourth, the link between recommendations and framing values, such as a commitment to patient-centred care, adaptations to practices to accommodate the specific needs of people with IDD, and a commitment to supported decision-making, was not required by an EBM approach to developing the guidelines.

The implementation rate of any regular preventive care assessment of adults with IDD in primary care settings in Ontario, Canada, was found to be low (Ouellette-Kuntz et al. 2013). In a subsequent study regarding implementing health checks for people with IDD in different primary care settings, many system and other factors that influence their successful implementation were identified (Durbin et al. 2016). The EBM-knowledge on which prior versions of the IDD guideline recommendations were based was silent on these contextual factors, and the authors sought to explicitly consider other types of knowledge and clinical context in the updated version of the guidelines.

Outline of the methods for the development of the 3rd version of the IDD Canadian Guidelines

Given these and other limitations of a strictly EBM approach to guideline development, several alternatives were undertaken. First, the authors supplemented the knowledge base for guidelines by incorporating other sources of knowledge related to context, expert knowledge, and consumer and carer knowledge, as described above. These sources of knowledge were especially useful for deciding which guidelines to revise, delete or add based on the cumulative experience of Canadian primary care providers who had implemented the 2011 Guidelines. By making these additional categories of knowledge explicit, the authors allowed for some recommendations for action for which there was no ‘evidence’ according to the EBM model. It is important to note that the addition of context knowledge was made possible by the previous work of the Healthcare Access Research and Developmental Disabilities (H-CARDD) programme. This is a partnership of scientists, policymakers, and clinicians which uses administrative data to provide information on the health of a cohort of adults with IDD relative to other adults in Ontario. The key findings of H-CARDD have been published in the Atlas of the Primary care of Developmental Disabilities in Ontario (Lunsky et al. 2013; Salvador-Carulla & Symonds, 2016).

Second, the authors ranked quality according to different criteria for each of the types of knowledge profiled. For example, empirical studies were ranked ‘level I’ if they were randomised controlled trials or systematic reviews and ‘level II’ for other types of studies, whereas expert knowledge was classified as ‘level I’ if it had been generated by an explicit consensus process and ‘level II’ if it had not. By profiling the different domains of knowledge considered (rather than simply ranking the quality of knowledge as an aggregate), the authors enabled clinicians to make their own judgments regarding recommended actions based on what they deemed most relevant to particular patients and their context of care.
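The domain-specific ranking described above can be illustrated with a minimal sketch. It is not the guideline authors' instrument: the class `KnowledgeItem`, the functions `quality_level` and `profile`, and the design labels such as `"rct"` are hypothetical names introduced here for illustration only. The sketch covers just the two domains for which the text states ranking criteria, and it keeps the levels separate by domain instead of collapsing them into one aggregate rank.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KnowledgeItem:
    """One source supporting a recommendation (hypothetical structure)."""
    domain: str                       # "empirical" or "expert"
    design: str = ""                  # e.g. "rct", "systematic_review", "cohort"
    explicit_consensus: bool = False  # only meaningful for expert knowledge


def quality_level(item: KnowledgeItem) -> str:
    """Rank an item using the criteria of its own domain."""
    if item.domain == "empirical":
        # Level I: randomised controlled trials or systematic reviews.
        return "I" if item.design in {"rct", "systematic_review"} else "II"
    if item.domain == "expert":
        # Level I: generated by an explicit consensus process.
        return "I" if item.explicit_consensus else "II"
    raise ValueError(f"no ranking criteria stated for domain {item.domain!r}")


def profile(items: list[KnowledgeItem]) -> dict[str, list[str]]:
    """Report levels per domain rather than as a single aggregate rank."""
    result: dict[str, list[str]] = {}
    for item in items:
        result.setdefault(item.domain, []).append(quality_level(item))
    return result


# Invented example sources, for illustration only.
sources = [
    KnowledgeItem("empirical", design="cohort"),
    KnowledgeItem("expert", explicit_consensus=True),
]
print(profile(sources))
```

In this sketch a recommendation supported by a cohort study and an explicit expert consensus is reported with both levels side by side, which leaves the weighting of each domain to the clinician.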

Third, the authors took a complexity approach to their proposed action for each guideline by providing ‘advice’ or guidance that is relevant for individual patient care rather than being based abstractly on generalised ‘evidence’. Three different levels of recommendation have been established for every domain:

(1) ‘Recommend’: always apply. The primary care provider should regard the guideline as a basic standard of care in Canada.

(2) ‘Consider’: indicated to primary care providers that it should be applied at the physician's discretion. The professional would need to take into account factors specific to each patient and context before deciding to implement the guideline.

(3) ‘Aspire’: authors endorsed the action as an ideal/future standard of care that should be applied if possible but acknowledged that advocacy and changes to contexts and systems of care might be necessary for clinicians to have the capacity and resources to apply such a guideline routinely.

By distinguishing among these types of advice, the authors were able to reduce the number of guideline recommendations as basic standards of care. They were also able to add new guidelines that could be regarded as aspirational but were excluded from the 2011 Guidelines (which focused only on what was judged to be attainable at that time within the Canadian health care system). The authors were also able to flag those guidelines for which additional particular factors in varying contexts of care and in different patients would play a significant role in clinical decision making.
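As a rough illustration of how the three types of advice separate basic standards from discretionary and aspirational guidance, the following sketch encodes them as an enumeration. The names `Advice`, `by_advice` and `basic_standards` are assumptions made here, and the guideline titles are invented placeholders rather than actual recommendations from the Canadian guidelines.

```python
from enum import Enum


class Advice(Enum):
    """The three types of advice described in the text."""
    RECOMMEND = "always apply; basic standard of care"
    CONSIDER = "apply at the physician's discretion, given patient and context"
    ASPIRE = "ideal/future standard; may need advocacy and system change"


# Placeholder entries, invented purely for illustration.
guidelines = [
    ("example guideline A", Advice.RECOMMEND),
    ("example guideline B", Advice.CONSIDER),
    ("example guideline C", Advice.ASPIRE),
    ("example guideline D", Advice.CONSIDER),
]


def by_advice(entries):
    """Group guideline titles under each type of advice."""
    grouped = {level: [] for level in Advice}
    for title, level in entries:
        grouped[level].append(title)
    return grouped


def basic_standards(entries):
    """Only 'Recommend' entries count as basic standards of care."""
    return [title for title, level in entries if level is Advice.RECOMMEND]


for level, titles in by_advice(guidelines).items():
    print(f"{level.name}: {titles}")
```

Keeping the type of advice as an explicit field makes it straightforward to list the reduced set of basic standards separately from the guidelines that depend on patient-specific or system-level factors.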

Finally, framing values were incorporated into the guidelines themselves by adding to the General Issues section of the 2011 Guidelines some guidance regarding approaches to care. In addition, the authors revised each guideline with the view of ensuring consistency with these framing values. They also sought to promote good concrete clinical decisions by acknowledging the importance of considering additional particular factors for each patient prior to implementing the action they were to consider, such as the patient's strengths and vulnerabilities in decision making, and regard for patient and caregiver input and participation in decisions concerning the proportion of benefit to harm for the patient of the intervention being considered.

Conclusions and practical implications

Clinical guidelines are implementation tools aimed at supporting decision making in the real world and in different contexts to enhance health care quality, maximise resources and innovation. Adopting a systems thinking approach may lead to the development of new methods and a different arrangement and integration of scientific knowledge that may reframe and broaden the approach provided to guideline development in the past two decades.

Guidelines should incorporate information from all five domains of scientific knowledge (observational, experimental, contextual, expert and experiential knowledge) in their systematic review section. The recommendations section is actually a separate type of knowledge based on expertise (framing of scientific knowledge) that should be generated following a transparent qualitative method. Following the example of the IDD Canadian guidelines, recommendations could be provided as a profile of domains, with a ranking that is not based only on the strength of the recommendation. Guidelines updated over time should be considered in a higher-order category of clinical guidelines and they should incorporate information on their implementation impact during the previous phase.

Financial Support

This research received no funding.

Conflict of Interest

The authors declare no conflict of interest.

Author Contributions

Luis Salvador-Carulla and Sue Lukersmith contributed equally to the concepts and drafts of the manuscript. William Sullivan contributed the case example and manuscript revisions.

References

- Alper BS, Haynes RB (2016). EBHC Pyramid 5.0 for accessing preappraised evidence and guidance. Evidence based Medicine 21, 123–125.

- Bertelli MO, Munir K, Harris J, Salvador-Carulla L (2016a). Intellectual developmental disorders: reflections on the international consensus document for redefining mental retardation-intellectual disability in ICD-11. Advances in Mental Health and Intellectual Disability 10(1), 36–58.

- Bertelli MO, Munir K, Salvador-Carulla L (2016b). Fair is foul, and foul is fair: reframing neurodevelopmental disorders in the neurodevelopmental perspective. Acta Psychiatrica Scandinavica. August 1 [Epub ahead of print].

- Bingeman E (2016). Evaluating normative epistemic frameworks in medicine: EBM and casuistic medicine. Journal of Evaluation in Clinical Practice 22(4), 490–495.

- Boyd EA and Bero LA (2006). Improving the use of research evidence in guideline development: 4. Managing conflicts of interests. Health Research Policy and Systems 4, 16.

- Brouwers M, Kho ME, Browman GP, Burgers JS, Cluzeau F, Feder G, Fervers B, Graham ID, Grimshaw J, Hanna S, Littlejohns P, Makarski J, Zitzelsberger L, for the AGREE Next Steps Consortium (2010). AGREE II: advancing guideline development, reporting and evaluation in healthcare. Canadian Medical Association Journal. Retrieved October 2016 from http://www.agreetrust.org/

- Brouwers MC, Makarski J, Kastner M, Hayden L, Bhattacharyya O and GUIDE-M Research Team (2015). The Guideline Implementability Decision Excellence Model (GUIDE-M): a mixed methods approach to create an international resource to advance the practice guideline field. Implementation Science 10, 36.

- Charles C, Gafni A, Freeman E (2011). The evidence-based medicine model of clinical practice: scientific teaching or belief-based preaching? Journal of Evaluation in Clinical Practice 17, 597–605.

- Cobigo V, Ouellette-Kuntz HMJ, Lake JK, Wilton AS (2013). Chapter 6: medication use. In Atlas on the Primary Care of Adults with Developmental Disabilities in Ontario (ed. Lunsky Y, Klein-Geltink JE and Yates EA), pp. 117–136. Health Care Access Research and Developmental Disabilities (HCARDD). Institute for Clinical Evaluative Sciences and Centre for Addiction and Mental Health: Toronto, Ontario.

- Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC (2009). Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implementation Science 4, 50.

- Deb S, Unwin G, Deb T (2015). Characteristics and the trajectory of psychotropic medication use in general and antipsychotics in particular among adults with an intellectual disability who exhibit aggressive behaviour. Journal of Intellectual Disability Research 59, 11–25.

- Doherty S (2006). Evidence-based implementation of evidence-based guidelines. International Journal of Health Care Quality Assurance 19(1), 32–41.

- Durbin J, Selick A, Casson I, Green L, Spassiani N, Perry A, Lunsky Y (2016). Evaluating the implementation of health checks for adults with intellectual and developmental disabilities in primary care: the importance of organizational context. Intellectual and Developmental Disabilities 154, 136–150.

- Fernandez A, Sturmberg J, Lukersmith S, Madden R, Torkfar G, Colagiuri R, Salvador-Carulla L (2015). Evidence-based medicine: is it a bridge too far? Health Research Policy and Systems 13(1), 66.

- Fervers B, Burgers JS, Haugh M, Latreille J, Mlika-Cabanne N, Paquet L, Coulombe M, Poirier M and Burnand B (2006). Adaptation of clinical guidelines: literature review and proposition for a framework and procedure. International Journal for Quality in Health Care 18(3), 167–176.

- Fleuren MA, van Dommelen P, Dunnink T (2015). A systematic approach to implementing and evaluating clinical guidelines: the results of fifteen years of Preventive Child Health Care guidelines in the Netherlands. Social Science & Medicine 136–137, 35–43.

- Francke AL, Smit MC, de Veer AJ, Mistiaen P (2008). Factors influencing the implementation of clinical guidelines for health care professionals: a systematic meta-review. BMC Medical Informatics and Decision Making 8, 38.

- Gagliardi AR, Brouwers MC, Palda VA, Lemieux-Charles L, Grimshaw JM (2011). How can we improve guideline use? A conceptual framework of implementability. Implementation Science 6, 26.

- Girlanda F, Fiedler I, Becker T, Barbui C, Koesters M (2016). The evidence-practice gap in specialist mental healthcare: systematic review and meta-analysis of guideline implementation studies. British Journal of Psychiatry, July 21 [Epub ahead of print].

- Goldet G, Howick J (2013). Understanding GRADE: an introduction. Journal of Evidence-Based Medicine 6(1), 50–54.

- Greenhalgh T, Howick J, Maskrey N and Evidence Based Medicine Renaissance Group (2014). Evidence based medicine: a movement in crisis? British Medical Journal 348, g3725.

- Greenhalgh T, Snow R, Ryan S, Rees S, Salisbury H (2015). Six ‘biases’ against patients and carers in evidence-based medicine. BMC Medicine 13, 200.

- Grimshaw J, Thomas R, Maclennan G, Fraser C, Ramsay C, Vale L, Whitty P, Eccles M, Matowe L, Shirran L, Wensing M, Dijkstra R, Donaldson C (2004). Effectiveness and efficiency of guideline dissemination and implementation strategies. Health Technology Assessment 8(6), iii–iv, 1–72.

- Grol R, Buchan H (2006). Clinical guidelines: what can we do to increase their use? Medical Journal of Australia 185(6), 301–302.

- Guidelines International Network GIN (2015). GIN Public Toolkit: Patient and Public Involvement in Guidelines.

- Guidelines International Network GIN (2016). International Guideline Library. Retrieved 29 August 2016 from http://www.g-i-n.net/library/international-guidelines-library

- Guyatt G (2010). The vexing problem of guidelines and conflicts of interest: a potential solution. Annals of Internal Medicine 152, 738–741.

- Guyatt GH, Oxman AD, Vist GE, Kunz R, Falck-Ytter Y, Alonso-Coello P, Schunemann HJ, GRADE Working Group (2008). GRADE: an emerging consensus on rating quality of evidence and strength of recommendations. BMJ 336(7650), 924–926.

- Hakkennes S, Dodd K (2008). Guideline implementation in allied health professions: a systematic review of the literature. Quality & Safety in Health Care 17(4), 296–300.

- Hargreaves MB (2010). Evaluating System Change: A Planning Guide. Methods Brief. Mathematica [Internet]. Retrieved October 2016 from http://www.mathematica-mpr.com/~/media/publications/PDFs/health/eval_system_change_methodbr.pdf.

- Howick J, Chalmers I, Glasziou P, Greenhalgh T, Heneghan C, Liberati A, Moschetti I, Phillips B, Thornton H (2011). The 2011 Oxford CEBM Levels of Evidence (introductory document). [Internet]. Retrieved October 2016 from http://www.cebm.net/index.aspx?o=5653

- Huckel Schneider C, Sturmberg J, Gillespie J, Wilson A, Lukersmith S, Salvador-Carulla L (2016) (in press). Applying complex adaptive systems thinking to Australian healthcare; an expert commentary brokered by the Sax Institute for the Australian Commission on Safety and Quality in Health Care.

- Institute of Medicine (2011). Clinical Practice Guidelines We Can Trust. National Academies Press: Washington, DC.

- Ivers NM, Grimshaw JM (2016). Reducing research waste with implementation laboratories. The Lancet 388(August 6), 547–548.

- Kirk M, Kelley C, Yankey N, Birken S, Abadie B, Damschroder LJ (2016). A systematic review of the use of the Consolidated Framework for Implementation Research. Implementation Science 11, 72.

- Lukersmith S, Hopman K, Vine K, Krahe L, McColl A (2016). A new framing approach in guideline development to manage different sources of knowledge. Journal of Evaluation in Clinical Practice. May 19 [Epub ahead of print].

- Lunsky Y, Klein-Geltink JE, Yates EA, Eds. (2013). Atlas on the Primary Care of Adults with Developmental Disabilities in Ontario. Institute for Clinical Evaluative Sciences and Centre for Addiction and Mental Health: Toronto, Ontario.

- Milev RV, Giacobbe P, Kennedy SH, Blumberger DM, Daskalakis ZJ, Downar J, Modirrousta M, Patry S, Vila-Rodriguez F, Lam RW, MacQueen GM, Parikh SV, Ravindran AV and CANMAT Depression Work Group. Canadian Network for Mood and Anxiety Treatments (CANMAT) (2016). Clinical guidelines for the management of adults with major depressive disorder: section 4. Neurostimulation treatments. Canadian Journal of Psychiatry 61(9), 561–575.

- Minhas R (2007). Eminence-based guidelines: a quality assessment of the second Joint British Societies’ guidelines on the prevention of cardiovascular disease. International Journal of Clinical Practice 61, 1137–1144.

- Mitchell AP, Basch E, Dusetzina SJO (2016). Financial relationships with industry among National Comprehensive Cancer Network guideline authors. JAMA Oncology. August 25 [Epub ahead of print].

- National Health and Medical Research Council (2011). Procedures and requirements to meet the 2011 NHMRC standard for clinical practice guidelines. Retrieved July 2011 from http://www.nhmrc.gov.au/_files_nhmrc/publications/attachments/cp133_nhmrc_procedures_requirements_guidelines_v1.1_120125.pdf

- National Institute for Health and Care Excellence (2016). Developing NICE guidelines: the manual. Retrieved 26 August 2016 from https://www.nice.org.uk/process/pmg20/chapter/introduction-and-overview

- Nilsen E, Myrhaug H, Johansen M, Oliver S, Oxman A (2010). Methods of consumer involvement in developing healthcare policy and research, clinical practice guidelines and patient information material (Review). Cochrane Database of Systematic Reviews 3(Art. No.: CD004563).

- Ostuzzi G, Bighelli I, Carrara B, Dusi N, Imperadore G, Lintas C, Nifosi F, Nose M, Piazza C, Purgato M, Rizzo R, Barbui C (2013). Making the use of psychotropic drugs more rational through the development of GRADE recommendations in specialist mental healthcare. International Journal of Mental Health Systems 7, 14.

- Ouellette-Kuntz HMJ, Cobigo V, Balogh R, Wilton AS, Lunsky Y (2013). Chapter 4: Secondary prevention. In Atlas on the Primary Care of Adults with Developmental Disabilities in Ontario (ed. Lunsky Y, Klein-Geltink JE and Yates EA), pp. 65–91. Institute for Clinical Evaluative Sciences and Centre for Addiction and Mental Health: Toronto, Ontario.

- Peters D, Adam T, Alonge O, Akua Agyepong I, Tran N (2013). Implementation research: what it is and how to do it. British Medical Journal 347, f6753.

- Qaseem A, Forland F, Macbeth F, Ollenschlager G, Phillips S, van der Wees P (2012). Guidelines International Network: towards international standards for clinical practice guidelines. Annals of Internal Medicine 156, 525–531.

- Rabin B, Brownson R, Haire-Joshu D, Kreuter M, Weaver N (2008). A glossary for dissemination and implementation research in health. Journal of Public Health Management and Practice 14(2), 117–123.

- Rosner AL (2012). Evidence-based medicine: revisiting the pyramid of priorities. Journal of Bodywork and Movement Therapies 16(1), 42–49.

- Salvador-Carulla L, Symonds S (2016). Health services use and costs in people with intellectual disability: building a context knowledge base for evidence-informed policy. Current Opinion in Psychiatry 29(2), 89–94.

- Salvador-Carulla L, Garcia-Alonso C, Gibert K, Vazquez-Bourgon J (2013). Incorporating local information and prior expert knowledge to evidence-informed mental health system research. In Improving Mental Health Care: The Global Challenge (ed. G Thornicroft, M Ruggeri and D Goldberg), pp. 211–228. John Wiley & Sons: London.

- Salvador-Carulla L, Fernandez A, Madden R, Lukersmith S, Colagiuri R, Torkfar G, Sturmberg J (2014). Framing of scientific knowledge as a new category of health care research. Journal of Evaluation in Clinical Practice 20(6), 1045–1055.

- Schunemann HJ, Fretheim A, Oxman AD (2006). Improving the use of research evidence in guideline development: 10. Integrating values and consumer involvement. Health Research Policy and Systems 4, 22.

- Schurz G (2014). Philosophy of Science. Taylor & Francis: New York.

- Shiffman R, Dixon J, Brandt C, Essaihi A, Hsiao A, Michel G, O'Connell R (2005). The GuideLine Implementability APpraisal (GLIA): development of an instrument to identify obstacles to guide implementation. BMC Medical Informatics and Decision Making 5, 23.

- Shnier A, Lexchin J, Romero M, Brown K (2016). Reporting of financial conflicts of interest in clinical practice guidelines: a case study analysis of guidelines from the Canadian Medical Association Infobase. BMC Health Services Research 16(383).

- Snilstveit B, Oliver S, Vojtkova M (2012). Narrative approaches to systematic review and synthesis of evidence for international development policy and practice. Journal of Development Effectiveness 4(3), 409–429.

- Sturmberg J, O'Halloran DM, Martin C (2010). People at the centre of complex adaptive health systems reform. Medical Journal of Australia 193(8), 474–478.

- Sullivan W, Heng J, Cameron D, Lunsky Y, Cheetham T, Hennen B, Bradley E, Berg J, Korossy M, Forster-Gibson C, Gitta M, Stavrakaki C, McCreary B, Swift I (2006). Consensus guidelines for primary health care of adults with developmental disabilities. Canadian Family Physician 52, 1410–1418.

- Sullivan W, Berg J, Bradley E, Cheetham T, Denton R, Heng J, Hennen B, Joyce D, Kelly M, Korossy M, Lunsky Y, McMillan S (2011). Primary care of adults with developmental disabilities: Canadian Consensus Guidelines. Canadian Family Physician 57, 541–553.

- The ADAPTE Collaboration (2009). The ADAPTE Process: Resource Toolkit for Guideline Adaptation. [Internet]. Retrieved October 2016 from http://www.g-i-n.net/

- van Dulmen SA, Lukersmith S, Muxlow J, Santa Mina E, Nijhuis-van der Sanden MW, van der Wees PJ, GINAHS Group (2015). Supporting a person-centred approach in clinical guidelines. A position paper of the Allied Health Community – Guidelines International Network (G-I-N). Health Expectations 18(5), 1543–1558.

- World Health Organization WHO (2012). WHO Handbook for Guideline Development. Guidelines Review Committee. WHO: Geneva.

- Yelovich MC (2016). The patient-physician interaction as a meeting of experts: one solution to the problem of patient non-adherence. Journal of Evaluation in Clinical Practice 22(4), 558–564.