Abstract

Introduction

Massive open online courses (MOOCs) offer a flexible approach to online and distance learning and are growing in popularity. Several MOOCs are now available to help learners build their knowledge of a range of healthcare topics. More research is needed to determine the effectiveness of MOOCs as an online education tool and to explore their long-term impact on learners’ professional practice. We present a protocol describing the design of a comprehensive, mixed-methods evaluation of a MOOC, ‘Quality Improvement (QI) in Healthcare: the Case for Change’, which aims to improve learners’ knowledge and understanding of QI approaches in healthcare and to increase their confidence in participating in, and possibly leading, QI projects.

Methods and analysis

A pre-post study design using quantitative and qualitative methods will be used to evaluate the QI MOOC. Selected elements of the RE-AIM (reach, effectiveness and maintenance) and Kirkpatrick (reaction, learning and behaviour) models will guide the evaluation. All learners who register for the course will be invited to participate in the QI MOOC evaluation study. Those who consent will be asked to complete a presurvey assessing baseline QI knowledge (self-report and objective) and perceived confidence in engaging in QI activities. On completion of the course, participants will complete a postsurvey that measures knowledge and perceived confidence again and collects feedback on the course content and how it could be improved. A subset of participants will be invited to take part in a follow-up qualitative interview 3 months after taking the course, to explore in depth how the MOOC affected their behaviour in practice.

Ethics and dissemination

The study has been approved by the University of Bath Human Research Ethics Committee (reference: 2958). Study findings will be published in peer-reviewed journals and disseminated at conferences and departmental presentations and, more widely, through social media, microblogging sites and periodicals aimed at healthcare professionals.

Keywords: MOOC, massive open online course, quality improvement, healthcare, evaluation, Kirkpatrick model, RE-AIM, education

Strengths and limitations of this study.

Application of the RE-AIM and Kirkpatrick models to capture the impact of the first UK-based quality improvement (QI) massive open online course (MOOC) on participants’ knowledge and perceived confidence in participating in QI projects.

Use of mixed methods to conduct a comprehensive evaluation of the QI MOOC and contribute to evidence on MOOC effectiveness in healthcare settings.

Participants self-select into the study, which limits control over recruitment and retention and may introduce selection bias.

Those who self-select to participate in the study may respond differently from learners who choose not to participate.

The study does not measure any patient or system-related outcomes that may be influenced by learners’ participation in the MOOC.

Introduction

In an era of online education and distance learning, massive open online courses (MOOCs) provide a platform to disseminate information on a large scale and reach a global audience with different disciplinary and cultural backgrounds.1 MOOCs are generally offered free of charge and developed by academics working in higher education institutions, in collaboration with professional and commercial organisations that host the MOOCs on their online platforms.2 They have predominantly been created in high-income countries such as Australia, the USA and the UK, although their potential in low-income and middle-income countries is increasingly recognised.3 4 Most MOOCs use a variety of learning formats, such as video lectures, online discussion, articles, recommended reading lists and self-assessments/quizzes, to engage learners in a global virtual classroom.5

Despite MOOCs growing in popularity over the past decade, more research is needed to determine whether MOOCs are successful in engaging learners and delivering education effectively to achieve learning outcomes. A better understanding of the role and impact of MOOCs as an online learning tool compared with more traditional methods of teaching and learning is also required, as well as identifying what particular formats and materials appeal to particular learners.6 7 In addition, very little is known about the long-term impact that MOOCs might achieve with regard to learners bringing about changes in their professional and clinical practice through the acquisition of new knowledge after taking the course.8

The number of MOOCs delivering healthcare and continuing medical education is steadily increasing.9–11 MOOCs have been developed to train physiotherapists to manage spinal cord injuries,12 13 improve people’s understanding of dementia,14 teach anatomy to medical students,15 educate healthcare professionals on antimicrobial stewardship in low-income and middle-income countries,16 raise awareness of real-world data science methods in medicine17 18 and teach students skills for interacting with patients using virtual patients.19 Previous studies have evaluated the impact of medical MOOCs on learners’ knowledge, confidence and perceptions of how the course influenced their clinical practice. Results from these evaluation studies are generally promising in terms of MOOCs increasing public engagement with a particular topic,14 15 facilitating collaborative learning13 and enabling learners to apply new knowledge in clinical practice.16 19 For example, an evaluation of a MOOC designed to help healthcare professionals communicate better with patients, using interactive virtual patient scenarios on stress and sleep problems, found that 90% of participants thought the virtual exercise was useful to their learning; qualitative results showed that participants felt more confident in using the methods learnt on the course in everyday interactions with patients, friends and family.19 An evaluation of another MOOC, designed to empower healthcare professionals to ensure safe, high-quality antibiotic use (antimicrobial stewardship), found that nearly half of participants (49%) reported at 6-month follow-up that they had started to implement interventions in their own setting.16 A randomised trial found that a MOOC teaching physiotherapy students about spinal cord injuries was as effective as an online learning module in improving knowledge, confidence and satisfaction; it also gave learners the opportunity to interact with other students from around the world.13

Given the increasing number of medical and healthcare MOOCs available, it is important that they are evaluated properly to determine whether they achieve their short-term and long-term learning aims and objectives. This in turn will help to ensure that their quality is upheld and that areas for improvement are identified for future learners.20 21 There is also a lack of qualitative work exploring why learners decide to take a course, whether it meets their expectations and how it influences their everyday practice; such work would help course developers to improve their courses and enhance their sustainability. Research into the quality of MOOCs has focused on their instructional design and has proposed various principles considered important for quality assurance purposes.20 22 23 A recent study assessing the instructional design of medical MOOCs found that application, authentic resources, problem-centeredness and goal-setting were present in many courses; however, activation, collective knowledge, differentiation and demonstration were present in less than half of the courses, and integration, collaboration and expert feedback were found in only <15% of the MOOCs.20 According to Hood and Littlejohn (2016), a MOOC’s quality depends on the MOOC’s goals and the learner’s perspective. This suggests that a MOOC may be perceived as high quality if the learner achieved or learnt what they wanted to, and that MOOC completion rates may not necessarily be an appropriate indicator of quality.20 21 To build on the MOOC evaluation literature, we present an evaluation framework that draws on two commonly used approaches to evaluating the success of training courses, the RE-AIM24 25 and Kirkpatrick models,26 to create a bespoke framework designed to identify whether the MOOC achieved its key aims and learning objectives, and the impact of the course on learners’ knowledge and behaviour in their professional or work practice.

The current study focuses on the impact of a 6-week MOOC entitled ‘Quality Improvement in Healthcare: the Case for Change’, which is primarily designed to train people who work in, or have an interest in, health and social care organisations (eg, clinicians, allied health professionals, nurses, managers, administrators, caterers, porters, patients, carers) in QI methods and to build their confidence in participating in, initiating and perhaps leading QI projects. Broadly speaking, QI seeks to improve the delivery of healthcare by enhancing patients’ experience and safety of care.27 QI involves applying a systematic approach that uses specific techniques or methods to improve quality.28 29 QI is widely endorsed by professional bodies around the world30–32 and has become an important part of medical education curricula.33 34

The QI MOOC was developed by academics and clinicians/consultants with expertise and leadership roles in QI and systems modelling in healthcare, based at or affiliated with the Bath Centre for Healthcare Innovation and Improvement (CHI2), School of Management, University of Bath, in collaboration with the West of England Academic Health Science Network. It is hosted on the FutureLearn© platform. Between September 2016 and April 2019, across eight runs, there were 17 416 joiners (people who register for a course), 10 662 learners (joiners who view at least one step in a course), 7749 active learners (learners who mark at least one step as complete) and 2869 social learners (learners who leave at least one comment).35 Although participant feedback routinely collected by the delivery platform has been largely positive, a more rigorous evaluation is needed of the MOOC’s impact on learners’ knowledge and of how learners apply their new knowledge in the workplace or in professional practice after completing the course.
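The FutureLearn figures reported for the QI MOOC can be turned into simple engagement ratios. The sketch below is illustrative only: it assumes that learners are a subset of joiners and that active and social learners are both subsets of learners (as the definitions suggest), and the names `COUNTS`, `BASE` and `engagement_rates` are hypothetical.

```python
# Cumulative FutureLearn figures for the QI MOOC, September 2016 to April 2019 (eight runs).
COUNTS = {
    "joiners": 17416,         # registered for the course
    "learners": 10662,        # viewed at least one step
    "active_learners": 7749,  # marked at least one step as complete
    "social_learners": 2869,  # left at least one comment
}

# Assumed denominator for each metric: learners are a subset of joiners;
# active and social learners are both subsets of learners.
BASE = {
    "learners": "joiners",
    "active_learners": "learners",
    "social_learners": "learners",
}


def engagement_rates(counts, base):
    """Return each metric as a proportion of its assumed denominator."""
    return {metric: counts[metric] / counts[denom] for metric, denom in base.items()}


if __name__ == "__main__":
    for metric, rate in engagement_rates(COUNTS, BASE).items():
        print(f"{metric}: {rate:.1%} of {BASE[metric]}")
```

On these figures, roughly 61% of joiners became learners, about 73% of learners became active learners and about 27% of learners became social learners.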

Training healthcare professionals in QI using team-based learning has been shown to be an effective way to influence knowledge and behaviour (Armstrong et al,36 Jones et al37). For example, a project-based training programme that mentored and supported learners in designing and delivering their own QI initiatives found that participants had higher levels of knowledge after completing the programme and felt more confident in leading QI initiatives; 6 months after the programme, 62% had led QI projects.38 Compared with existing training programmes, MOOCs offer, at least in principle, an inexpensive and flexible way to train healthcare professionals in QI. This work will contribute further evidence on whether large and diverse online learning environments are an effective way to teach people about QI and to equip them with the knowledge and confidence to participate in QI initiatives.

The study was designed to be a comprehensive evaluation of the MOOC. The MOOC’s aims and corresponding learning objectives (listed in table 1), as well as the methodological approaches proposed by the RE-AIM and Kirkpatrick models (commonly used to evaluate training courses and interventions) informed the primary and secondary research questions and the bespoke evaluation framework developed for this study. A mixed-methods approach, comprising pre-MOOC and post-MOOC surveys and a follow-up semi-structured interview, was chosen to better understand the immediate and long-term impact of the MOOC on a number of different outcomes.

Table 1.

Core topics, related content and learning objectives of the MOOC

| Week of course | Topic | Content | Learning objectives |
|---|---|---|---|
| 1 | Introduction to quality improvement (QI) | Quality improvement as a concept, historical context of QI in healthcare, underlying principles of quality improvement, challenges in healthcare settings. | Be able to identify what quality and process improvement entails, especially in a health and social care setting. |
| 2 | Quality improvement approaches | Examples of QI approaches (eg, Plan, Do, Study, Act (PDSA) cycles within the model for improvement, Lean methodology, Six Sigma), implementation of QI initiatives, microsystems to improve care for patients and reduce delays. | Be able to discuss how QI can help you deal with complexity in organisational systems and identify how to improve key areas without worsening others. |
| 3 | Putting patients at the heart of quality improvement and safety | What is person-centred care? Importance of patient experience, putting person-centred care into practice and patient safety. | Be able to explain how QI can lead to better outcomes for staff and organisations, including customers and/or patients. |
| 4 | Evaluating quality improvement | The system of profound knowledge, measurement for improvement. | Be able to understand how to evaluate QI projects. |
| 5 | Systems modelling in quality improvement | What is systems modelling and how can it help? Modelling demand and capacity, computer simulation for improvement. | Be able to explore how systems modelling and analytics techniques support QI initiatives. |
| 6 | Making the case for quality improvement | Mobilising system leadership, sustainability, next steps on the improvement journey. | Be able to gain confidence to start and lead a QI project within your organisation, identify how to access additional support and get others to join you in making improvements. |

MOOC, massive open online course; QI, quality improvement.

The aims of the QI MOOC are to improve learners’ knowledge and understanding of QI approaches and to increase their perceived confidence in participating in QI initiatives. To identify whether the MOOC achieves its aims and learning objectives, the primary research question of the evaluation study is: To what extent does the MOOC improve learners’ knowledge and understanding of QI approaches and increase perceived confidence in participating in QI initiatives? (effectiveness)

The secondary research questions of the evaluation are as follows:

What are the characteristics of the learners taking the MOOC? (reach)

How did learners react to the course? (reaction)

How did the learners learn and how did they engage with other learners? (learning)

What evidence suggests that learners retained knowledge acquired from the course? (maintenance/sustainability)

What evidence suggests that the MOOC increased participation in QI initiatives? (behaviour)

Methods and analysis

MOOC development and delivery

The QI MOOC was developed iteratively through regular face-to-face meetings, emails and conference calls between the course leads/project team (AB, CV and TW). Educators drew on their own clinical and academic practice and coaching, as well as published research in this area. The course is promoted via the FutureLearn© platform, the University of Bath website and the social media accounts (Facebook, Twitter, LinkedIn) of the relevant organisations and of the educators. In June 2019, it was accredited by the CPD Certification Service as part of a wider initiative of the FutureLearn© platform. Details about the MOOC can be found at: https://www.futurelearn.com/courses/quality-improvement.

The MOOC is open to the public via the FutureLearn© platform and asks learners to commit about 3 hours of study per week for 6 weeks. Each week covers different topic areas and objectives (table 1) and is facilitated by the course team. A range of educational formats and strategies is used to engage learners: short lecture-style videos, interview videos, articles with links to additional reading and resources, and multiple-choice knowledge quizzes at the end of each week. The course is designed to be interactive, and learners are encouraged to reflect on their own QI practice and share their thoughts and suggestions with the educators and other learners via an online discussion forum. At the end of each week, one of the course educators records a wrap-up video summarising the week and addressing common queries raised by learners. Learners can purchase a course completion certificate as evidence of participation.

Study design

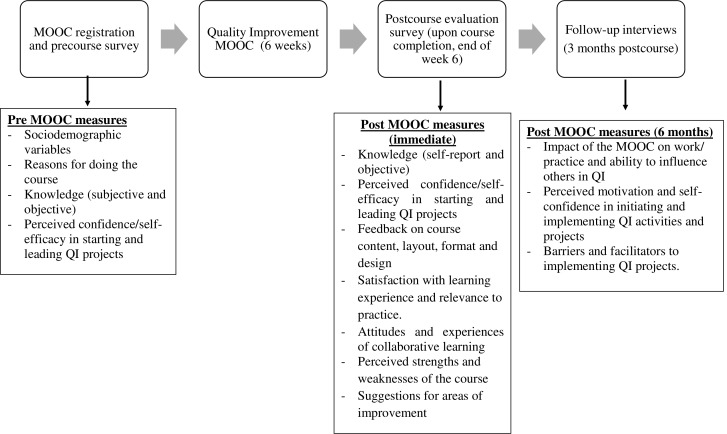

A pre-post design using mixed methods (surveys, semi-structured interviews) will be used to evaluate the impact of the QI MOOC on learners’ knowledge and perceived confidence in engaging in QI activities (figure 1).

Figure 1.

Pre-MOOC and post-MOOC evaluation: flow of study procedure. MOOC, massive open online course.

Drawing on approaches used in previous MOOC evaluation studies,17 18 39 two comprehensive models, RE-AIM and Kirkpatrick, will guide the current study.24–26 Although the two models overlap, their key elements differ slightly. RE-AIM comprises five evaluative dimensions: Reach (participation rate within the target audience and participant characteristics), Efficacy, also termed Effectiveness (short-term impact of the intervention on key outcomes), Adoption (workplaces adopting the intervention), Implementation (extent to which the intervention is implemented in the real world) and Maintenance (extent to which the programme is sustained over time). By contrast, the Kirkpatrick model encompasses four levels of assessment: Reaction (participants’ responses to the intervention), Learning (extent to which participants acquire the intended knowledge and confidence), Behaviour (extent to which knowledge is translated into practice) and Results (overall success of the intervention or training in resolving problems and achieving organisational goals).

For the current study, we selected the RE-AIM and Kirkpatrick dimensions considered most relevant and applicable to evaluating the QI MOOC. Table 2 outlines the data collection methods, timepoints and dimensions of the RE-AIM and Kirkpatrick models used in the current study. The dimensions ‘Adoption’ (RE-AIM), ‘Implementation’ (RE-AIM) and ‘Results’ (Kirkpatrick) will not be used because they tend to focus on the impact of an intervention at the organisational rather than the individual level, which is beyond the scope of this study. Future evaluation work on the QI MOOC will seek to assess its impact at the organisational level; the current study focuses on impact at the participant or individual level.

Table 2.

Evaluation framework methodology based on the RE-AIM and Kirkpatrick models—measures, data collection methods, timeline points

| Evaluation model dimension | Indicators | Corresponding research question | Outcome measures | Data collection methods | Timepoint of assessment |
|---|---|---|---|---|---|
| Effectiveness | Knowledge and perceived confidence in QI | Primary research question: To what extent does the MOOC improve learners’ knowledge and understanding of QI approaches and increase perceived confidence in participating in QI initiatives? | Knowledge assessment: subjective (self-report) and objective | Survey items | Pre-MOOC and post-MOOC (immediate) |
| Reach | Learners’ characteristics | Secondary research question: What are the characteristics of the learners taking the MOOC? | Sociodemographic information: age, country, gender, language, education level, employment | Survey items | Pre-MOOC |
| Reaction | Self-efficacy and motivation | Secondary research question: How did learners react to the course? | Reasons for doing the MOOC; self-efficacy in the learner’s ability to dedicate time to and complete the course | Survey items; qualitative interview data | Pre-MOOC and post-MOOC (immediate) |
| | Satisfaction and relevance | | Satisfaction with the learning experience and relevance to practice; how participants valued the course (strengths and weaknesses, areas for improvement); feedback on course content, layout, format and design | | |
| Learning | Collaborative learning | Secondary research question: How did the learners learn and how did they engage with other learners? | Attitudes and experiences of engaging with others on the course and asking for help; advantages and disadvantages of collaborative learning | Survey items | Pre-MOOC and post-MOOC (immediate and 3-month follow-up) |
| | Higher order learning | | Perceptions of whether higher order learning was achieved during the course: applying new information to new situations, acquiring new knowledge and understanding of QI | Qualitative interview data | |
| | Reflective and integrative learning | | Connecting learning to problems that could be addressed by QI; better understanding of how a QI problem might look from another person’s perspective (eg, a patient’s); learning something that changed the way a concept or idea was understood; connecting ideas from the course to prior knowledge and experience | | |
| | Capability | | The degree to which participants acquire the knowledge and confidence to engage in QI efforts based on their participation in the MOOC | | |
| Maintenance (sustainability) | Long-term effects of the MOOC | Secondary research question: What evidence suggests that learners retained knowledge acquired from the course? | Learners’ confidence in their ability to design, implement and sustain QI activities | Survey items; qualitative interview data | Post-MOOC (immediate and 3-month follow-up) |
| Behaviour | Postcourse practices in the work environment and professional practice | Secondary research question: What evidence suggests that the MOOC increased participation in QI initiatives? | Perceived self-efficacy, motivation and confidence in initiating/implementing QI activities; impact of the MOOC on work/practice and ability to influence others in QI; barriers and facilitators to implementing QI projects | Survey items; qualitative interview data | Post-MOOC (immediate and 3-month follow-up) |

MOOC, massive open online course; QI, quality improvement.

With regard to RE-AIM, the focus will be on assessing three elements at the individual level: reach, effectiveness and maintenance. Reach will be evaluated by examining recruitment and completion rates for the MOOC and by collecting sociodemographic data pre-MOOC to characterise learners. Knowledge (self-report and objective) and perceived confidence in starting and leading QI initiatives will be measured pre-MOOC and post-MOOC to determine the effectiveness of the MOOC. Maintenance (sustainability) will be assessed using postcourse survey data and semi-structured interviews conducted 3 months post-MOOC, to understand the effect of the course over time and participants’ engagement with QI activities beyond course completion, such as the types of QI projects that participants engaged with or led in the workplace. The post-MOOC interviews will also explore perceived facilitators of and barriers to setting up QI projects. The RE-AIM model was chosen because it is concerned with the long-term impact of interventions in real-world settings. This was considered important because we want to examine whether the MOOC equips learners with the knowledge and confidence to participate in, help initiate and perhaps lead a QI project in practice once the course has finished.

Three levels of the Kirkpatrick model will be used to evaluate the MOOC: reaction, learning and behaviour. The postcourse survey data and qualitative interviews (3 months post-MOOC) will explore learners’ motivations for taking the course and their reactions to it, such as their appraisal of the course format, design and structure, their overall learning experience, the course’s strengths and weaknesses, and how it could be improved. For the learning dimension, the survey data and semi-structured interviews will investigate several issues, including participants’ attitudes towards and experiences of engaging with others on the course (collaborative or social learning), whether they felt they had acquired sufficient knowledge about QI to apply in practice (higher order learning), whether they had a better grasp of how to address and tackle QI problems in their work practice (reflective learning) and how they think critically about the process of acquiring new knowledge and confidence to apply in their professional practice (capability). Lastly, participants’ behaviour will be assessed through semi-structured interviews exploring whether participants reported applying their new knowledge to inform others about QI and to engage in QI activities. The Kirkpatrick model has previously been applied in MOOC evaluation studies17 39 and was considered an appropriate tool to guide the current evaluation.

Study participants and recruitment procedure

Figure 1 displays the flow of the study procedure. We propose to start the study with the January 2020 run of the QI MOOC, with follow-up interviews commencing around June 2020 (3 months after MOOC completion). All learners who enrol in the QI MOOC via the FutureLearn© platform will be invited to take part in the evaluation study (online supplementary appendix 1) and will be provided with a participant information sheet describing the study procedures (online supplementary appendix 2). Informed consent will be sought from learners who choose to participate (online supplementary appendix 3). The precourse and postcourse surveys will be integrated into the MOOC (online supplementary appendix 4, postcourse survey). We aim to recruit at least 50 participants, approximately 10% of active learners in recent runs; if more than 50 learners consent, all will be included.


A subset of participants will be invited by email to take part in a semi-structured interview exploring in depth how the MOOC affected their learning and behaviour in practice after they completed the course (online supplementary appendix 5). We aim to recruit and interview around 20 learners, or to continue until no new themes or concepts are observed in the data analysis, that is, until thematic data saturation has been achieved.40 Purposive sampling will be used to recruit a mixture of men and women from different age groups, professional backgrounds, organisations and countries. The QI MOOC is designed for people working in health and social care organisations, such as clinicians, junior doctors/registrars, nurses, allied health professionals, managers, porters and caterers. Learners who took part in previous runs of the QI MOOC reflect this target audience, so the evaluation study sample is likely to reflect these groups as well.
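The saturation criterion described above can be made explicit as a stopping rule. The protocol does not define how many consecutive interviews without new themes count as saturation, so the `run_length=3` default below is an assumption, and `saturation_point` is a hypothetical helper rather than part of the study procedure.

```python
def saturation_point(themes_per_interview, run_length=3):
    """Return the 1-based index of the interview at which saturation is reached.

    Saturation is assumed (hypothetically) to mean `run_length` consecutive
    interviews that contribute no theme not already seen. Returns None if the
    criterion is never met.
    """
    seen = set()
    streak = 0  # consecutive interviews with no new themes
    for index, themes in enumerate(themes_per_interview, start=1):
        new_themes = set(themes) - seen
        seen |= new_themes
        streak = 0 if new_themes else streak + 1
        if streak >= run_length:
            return index
    return None


# Example with invented theme codes for six interviews.
interviews = [
    {"time pressure", "manager support"},
    {"time pressure", "data access"},
    {"peer networks"},
    {"data access"},
    {"time pressure"},
    {"manager support"},
]
print(saturation_point(interviews))
```

Here interviews 4 to 6 add nothing new, so the rule would be met at interview 6; in practice the researchers' judgement, not a fixed count, determines saturation.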


Data collection

Online surveys (pre-MOOC and post-MOOC)

The precourse and postcourse surveys will be integrated into the MOOC online system enabling learners to complete the surveys online once they have consented to the study.

The pre-MOOC survey will collect sociodemographic variables and identify learners’ motivations for taking the course and any prior QI training and experience. Knowledge of QI (self-report and objective) and perceived confidence in designing and leading QI activities will be measured before and after the MOOC to determine the effect of the MOOC on these outcomes. Knowledge about QI will be assessed using a 12-item multiple-choice test measuring core knowledge and understanding of QI that could be acquired from taking the course (online supplementary appendix 6). Each question has five possible answers, one of which is correct. On completion of the MOOC, a postcourse survey (online supplementary appendix 4) with closed and open-ended questions will be administered to investigate participants’ overall reactions to the course (content and design), their satisfaction with the learning experience, their attitudes towards and experiences of engaging with others on the course, capacity building (acquisition of new knowledge and perceived confidence to participate in, and possibly lead, QI projects) and their thoughts on how the course could be improved.
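Scoring of the 12-item knowledge test can be sketched as follows, assuming one keyed answer per item and that unanswered items score zero (the protocol does not state how missing answers are handled). With five options per item, a respondent guessing at random would be expected to score 12 × 1/5 = 2.4, a useful reference point when interpreting baseline scores. The answer key and responses shown are hypothetical.

```python
N_ITEMS = 12    # items in the knowledge test
N_OPTIONS = 5   # answer options per item, one of which is correct

# Expected score from random guessing: 12 items x 1/5 chance each.
CHANCE_SCORE = N_ITEMS / N_OPTIONS


def score_test(responses, answer_key):
    """Return the number of correct answers.

    `responses` and `answer_key` are sequences of option labels (eg, "A" to "E")
    of length N_ITEMS; unanswered items are given as None and score zero
    (an assumption, since handling of missing answers is not specified).
    """
    if len(answer_key) != N_ITEMS or len(responses) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} responses and {N_ITEMS} keyed answers")
    return sum(1 for given, correct in zip(responses, answer_key)
               if given is not None and given == correct)


# Hypothetical answer key and one participant's pre-MOOC and post-MOOC answers.
KEY = list("BDAECABDCEAB")
pre = list("BDCEAABBCDAC")
post = list("BDAECABDCEAC")
print(score_test(pre, KEY), score_test(post, KEY), CHANCE_SCORE)
```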


Table 2 provides an overview of the different measures in accordance with the RE-AIM and Kirkpatrick models, and when they will be assessed (pre-MOOC, post-MOOC or 3 months post-MOOC).

Qualitative interviews

Semi-structured interviews will be conducted 3 months post-MOOC to explore in depth the impact of the MOOC on participants’ learning and behaviour in relation to designing, leading and implementing QI activities, and to identify factors perceived as barriers to or facilitators of implementing QI projects (online supplementary appendix 5). Because the MOOC has a global audience and participants may be located anywhere in the world, interviews will be carried out by telephone or Skype. Interviews are anticipated to last no more than 1 hour. All interviews will be recorded and transcribed verbatim by an independent transcription service.

Data analysis

We will take a mixed-methods approach to analysis. Quantitative data will be analysed using SPSS V.25.0. Descriptive statistics (means and SD for continuous variables; frequencies and percentages for categorical variables) will be generated for sociodemographic variables, attitudes towards collaborative learning and feedback on the QI MOOC. We will test for pre-post changes in knowledge and perceived confidence in participating in QI projects using χ2 tests and paired t-tests, as appropriate. To estimate the change in objective knowledge, we will use a logistic generalised linear mixed model to account for the correlation between an individual’s responses to the same question at different time points. We will use Spearman’s rho to describe the relationship between subjective and objective knowledge.
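For readers who want to see what the paired t-test and Spearman’s rho compute, the sketch below implements both with the Python standard library. It is illustrative only: the analysis itself will be run in SPSS, p-values would additionally require the t distribution with n − 1 degrees of freedom (for example via `scipy.stats.ttest_rel`), and the function names and example data are hypothetical.

```python
import math
import statistics


def paired_t(pre, post):
    """Return the paired t statistic and degrees of freedom for post - pre."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need at least two complete pre/post pairs")
    diffs = [after - before for before, after in zip(pre, post)]
    n = len(diffs)
    sd = statistics.stdev(diffs)
    if sd == 0:
        raise ValueError("all differences are identical; t is undefined")
    return statistics.mean(diffs) / (sd / math.sqrt(n)), n - 1


def average_ranks(values):
    """Rank values 1..n, giving tied values the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1  # convert 0-based positions to 1-based ranks
        for pos in range(start, end + 1):
            ranks[order[pos]] = mean_rank
        start = end + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho computed as the Pearson correlation of average ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences of at least two values")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = statistics.mean(rx), statistics.mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)


# Invented example: confidence ratings (1-5) and objective test scores (0-12).
pre_conf = [2, 3, 2, 4, 3]
post_conf = [4, 4, 3, 5, 4]
self_rated = [2, 3, 2, 4, 5]
test_score = [5, 7, 6, 9, 11]
print(paired_t(pre_conf, post_conf))
print(spearman_rho(self_rated, test_score))
```

Computing rho on average ranks, rather than with the textbook 1 − 6Σd²/(n(n² − 1)) shortcut, keeps the result correct when ratings are tied, which is common with Likert-type confidence items.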

In the postsurvey, we ask whether participants have completed “all”, “some” or “none” of the course.35 For the analysis, participants will be grouped into these categories to identify differences between the groups, and logistic regression will be used to test whether these differences are statistically significant.
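Before fitting the logistic regression, the three completion categories need to be coded as indicator variables. The sketch below assumes “all” as the reference category and a binary outcome called `improved`; both choices, and the helper names, are hypothetical. The resulting indicator columns could then be passed to any logistic regression routine, such as SPSS or `statsmodels` `Logit`.

```python
COMPLETION_LEVELS = ("all", "some", "none")
REFERENCE_LEVEL = "all"  # assumed reference category for the regression


def dummy_code(level):
    """Return 0/1 indicators for each non-reference completion level."""
    if level not in COMPLETION_LEVELS:
        raise ValueError(f"unexpected completion level: {level!r}")
    return {f"completed_{lvl}": int(level == lvl)
            for lvl in COMPLETION_LEVELS if lvl != REFERENCE_LEVEL}


def proportion_by_group(records, outcome):
    """Proportion of records with outcome == 1 in each completion group (None if empty)."""
    counts = {lvl: [0, 0] for lvl in COMPLETION_LEVELS}  # [successes, total]
    for record in records:
        tally = counts[record["completion"]]
        tally[0] += record[outcome]
        tally[1] += 1
    return {lvl: (s / n if n else None) for lvl, (s, n) in counts.items()}


# Invented records; "improved" is a hypothetical binary outcome (eg, knowledge score increased).
records = [
    {"completion": "all", "improved": 1},
    {"completion": "all", "improved": 1},
    {"completion": "some", "improved": 0},
    {"completion": "some", "improved": 1},
]
print([dummy_code(r["completion"]) for r in records])
print(proportion_by_group(records, "improved"))
```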

The interview data will be analysed by two qualitative researchers using the Framework approach, a thematic analysis method involving five stages that deductively uses prior questions drawn from the aims of the study and inductively identifies themes arising from the data.41 The five stages of Framework are (1) familiarisation with the data, in which five selected transcripts will be read independently and themes identified; (2) developing a coding framework, in which a framework of themes and subthemes will be created to code the data and then refined; (3) indexing, in which all transcripts will be coded using the framework; (4) charting, in which the data will be synthesised in a set of thematic matrix charts, with each participant assigned a row and each subtheme a column; and (5) mapping, in which similarities and differences in participants’ experiences will be identified and discussed.
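The charting stage produces a participant-by-subtheme matrix. A minimal sketch of that data structure, assuming that indexing yields (participant ID, subtheme, summary) tuples, is shown below; the subtheme labels, participant IDs and summaries are invented for illustration.

```python
from collections import defaultdict


def build_chart(coded_excerpts, subthemes):
    """Build a Framework chart: one row per participant, one column per subtheme.

    `coded_excerpts` is an iterable of (participant_id, subtheme, summary)
    tuples from the indexing stage. Every row contains every subtheme column,
    with an empty list where nothing was coded for that participant.
    """
    subthemes = list(subthemes)
    chart = defaultdict(lambda: {s: [] for s in subthemes})
    for participant_id, subtheme, summary in coded_excerpts:
        if subtheme not in subthemes:
            raise ValueError(f"{subtheme!r} is not in the coding framework")
        chart[participant_id][subtheme].append(summary)
    return dict(sorted(chart.items()))


# Invented subthemes and coded summaries.
SUBTHEMES = ["barriers", "facilitators", "QI projects started"]
excerpts = [
    ("P02", "barriers", "no protected time"),
    ("P01", "facilitators", "supportive line manager"),
    ("P01", "QI projects started", "PDSA cycle on discharge delays"),
]
for pid, row in build_chart(excerpts, SUBTHEMES).items():
    print(pid, row)
```

Keeping empty cells explicit mirrors the printed matrix charts, where a blank cell is itself informative during the mapping stage.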

Study ethics

During week 1 of the MOOC, all learners will be invited to take part in the study and provided with a participant information sheet and a consent form to read and sign online. Study data will be de-identified by allocating each participant a unique ID so that data remain anonymous and confidential. All research data will be stored securely on University of Bath network drives with security measures in place. A password-protected participant database will be used to store the allocation of participant identification numbers. Only the researchers directly associated with the study will have access to the data. As a token of appreciation for participants’ time, 10 participants who complete both surveys will be randomly chosen to receive a £20 Amazon voucher.
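The de-identification and voucher draw can be sketched as follows. This assumes that email addresses are the identifiers held in the password-protected linkage database and that study IDs are random rather than sequential; the `QIM` prefix and the function names are hypothetical. Passing a fixed `seed` makes the draw reproducible for audit purposes.

```python
import random
import secrets


def assign_study_ids(identifiers, prefix="QIM"):
    """Map each participant identifier (eg, email) to a unique random study ID.

    The returned linkage table is what would be held in the password-protected
    participant database; research datasets would carry only the study ID.
    """
    linkage = {}
    used = set()
    for identifier in dict.fromkeys(identifiers):  # drop duplicates, keep order
        while True:
            study_id = f"{prefix}-{secrets.token_hex(3).upper()}"
            if study_id not in used:
                break
        used.add(study_id)
        linkage[identifier] = study_id
    return linkage


def draw_voucher_winners(eligible_ids, k=10, seed=None):
    """Randomly select up to k study IDs from participants who completed both surveys."""
    pool = sorted(set(eligible_ids))  # sort so a fixed seed gives a reproducible draw
    rng = random.Random(seed)
    return rng.sample(pool, min(k, len(pool)))


links = assign_study_ids(["a@example.org", "b@example.org", "a@example.org"])
print(links)
print(draw_voucher_winners(links.values(), k=10, seed=2020))
```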

Ethics and dissemination

The study will be conducted in accordance with University of Bath’s Code of Good Practice in Research Integrity. Results of this study will be published in peer-reviewed journals, presented at national and international conferences and disseminated through social media.

Patient and public involvement

There were no funds or time allocated for patient and public involvement in the design of the MOOC evaluation study, so we were unable to involve patients or members of the public. Since the course started in 2016, changes have been made to the MOOC in response to feedback from learners. We intend to disseminate the results of the study to learners and will seek public involvement in the dissemination strategy.

Supplementary Material

Acknowledgments

The authors would like to thank Marie Salter and Paul Pinkney from the University of Bath for supporting the development and delivery of the QI MOOC, and FutureLearn for hosting the course.

Footnotes

Twitter: @SianK_Smith, @ProfChristosV

Contributors: SKS-L and CV conceived the QI MOOC evaluation study aims, methods and design. SKS-L wrote the first draft of the manuscript. CV, TW and AB reviewed and commented on the first draft, and SKS-L addressed their feedback and suggestions. All authors approved the final manuscript.

Funding: The authors have not declared a specific grant for this research from any funding agency in the public, commercial or not-for-profit sectors.

Competing interests: Three of the authors (AB, TW and CV) of this paper are Lead Educators of the QI MOOC.

Patient consent for publication: Not required.

Ethics approval: This study was approved by the University of Bath Human Research Ethics Committee (reference: 2958).

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1. UK Department for Business Innovation and Skills (BIS). The maturing of the MOOC: literature review of massive open online courses and other forms of online distance learning. London, UK: Department for Business, Innovation and Skills, 2013.

- 2. FutureLearn. Welcoming one million people to FutureLearn. Milton Keynes, UK: The Open University, 2015. Available: https://about.futurelearn.com/blog/one-million-learners/comment-page-1 [Accessed 3 April 2019].

- 3. Liyanagunawardena TR, Aboshady OA. Massive open online courses: a resource for health education in developing countries. Glob Health Promot 2018;25:74–6. doi:10.1177/1757975916680970

- 4. Aboshady OA, Radwan AE, Eltaweel AR, et al. Perception and use of massive open online courses among medical students in a developing country: multicentre cross-sectional study. BMJ Open 2015;5:e006804. doi:10.1136/bmjopen-2014-006804

- 5. Hendriks RA, de Jong PGM, Admiraal WF, et al. Teaching modes and social-epistemological dimensions in medical massive open online courses: lessons for integration in campus education. Med Teach 2019;41:917–26. doi:10.1080/0142159X.2019.1592140

- 6. Maxwell WD, Fabel PH, Diaz V, et al. Massive open online courses in U.S. healthcare education: practical considerations and lessons learned from implementation. Curr Pharm Teach Learn 2018;10:736–43. doi:10.1016/j.cptl.2018.03.013

- 7. Pickering JD, Henningsohn L, DeRuiter MC, et al. Twelve tips for developing and delivering a massive open online course in medical education. Med Teach 2017;39:691–6. doi:10.1080/0142159X.2017.1322189

- 8. Foley K, Alturkistani A, Carter A, et al. Massive open online courses (MOOC) evaluation methods: protocol for a systematic review. JMIR Res Protoc 2019;8:e12087. doi:10.2196/12087

- 9. Harder B. Are MOOCs the future of medical education? BMJ 2013;346:f2666. doi:10.1136/bmj.f2666

- 10. Vallee A. The MOOCs are a new approach to medical education? La Revue du praticien 2017;67:487–8.

- 11. Liyanagunawardena TR, Williams SA. Massive open online courses on health and medicine: review. J Med Internet Res 2014;16:e191. doi:10.2196/jmir.3439

- 12. Harvey LA, Glinsky JV, Lowe R, et al. A massive open online course for teaching physiotherapy students and physiotherapists about spinal cord injuries. Spinal Cord 2014;52:911–8. doi:10.1038/sc.2014.174

- 13. Hossain MS, Shofiqul Islam M, Glinsky JV, et al. A massive open online course (MOOC) can be used to teach physiotherapy students about spinal cord injuries: a randomised trial. J Physiother 2015;61:21–7. doi:10.1016/j.jphys.2014.09.008

- 14. Goldberg LR, Bell E, King C, et al. Relationship between participants’ level of education and engagement in their completion of the Understanding Dementia Massive Open Online Course. BMC Med Educ 2015;15:60. doi:10.1186/s12909-015-0344-z

- 15. Swinnerton BJ, Morris NP, Hotchkiss S, et al. The integration of an anatomy massive open online course (MOOC) into a medical anatomy curriculum. Anat Sci Educ 2017;10:53–67. doi:10.1002/ase.1625

- 16. Sneddon J, Barlow G, Bradley S, et al. Development and impact of a massive open online course (MOOC) for antimicrobial stewardship. J Antimicrob Chemother 2018;73:1091–7. doi:10.1093/jac/dkx493

- 17. Meinert E, Alturkistani A, Brindley D, et al. Protocol for a mixed-methods evaluation of a massive open online course on real world evidence. BMJ Open 2018;8:e025188. doi:10.1136/bmjopen-2018-025188

- 18. Meinert E, Alturkistani A, Car J, et al. Real-world evidence for postgraduate students and professionals in healthcare: protocol for the design of a blended massive open online course. BMJ Open 2018;8:e025196. doi:10.1136/bmjopen-2018-025196

- 19. Berman AH, Biguet G, Stathakarou N, et al. Virtual patients in a behavioral medicine massive open online course (MOOC): a qualitative and quantitative analysis of participants' perceptions. Acad Psychiatry 2017;41:631–41. doi:10.1007/s40596-017-0706-4

- 20. Hendriks RA, de Jong PGM, Admiraal WF, et al. Instructional design quality in medical massive open online courses for integration into campus education. Med Teach 2019;8:1–8. doi:10.1080/0142159X.2019.1665634

- 21. Hood N, Littlejohn A. MOOC quality: the need for new measures. J Learn Dev 2016;3:28–42.

- 22. Margaryan A, Bianco M, Littlejohn A. Instructional quality of massive open online courses (MOOCs). Comput Educ 2015;80:77–83. doi:10.1016/j.compedu.2014.08.005

- 23. Merrill MD. First principles of instruction. Educ Technol Res Dev 2002;50:43–59. doi:10.1007/BF02505024

- 24. Glasgow RE, et al. Evaluating the impact of health promotion programs: using the RE-AIM framework to form summary measures for decision making involving complex issues. Health Educ Res 2006;21:688–94. doi:10.1093/her/cyl081

- 25. Glasgow RE, Vogt TM, Boles SM. Evaluating the public health impact of health promotion interventions: the RE-AIM framework. Am J Public Health 1999;89:1322–7. doi:10.2105/AJPH.89.9.1322

- 26. Kirkpatrick D. Great ideas revisited: revisiting Kirkpatrick's four-level model. Training and Development 1996;50:54–9.

- 27. Dixon-Woods M. Harveian Oration 2018: improving quality and safety in healthcare. Clin Med 2019;19:47–56. doi:10.7861/clinmedicine.19-1-47

- 28. Jones B, Vaux E, Olsson-Brown A. How to get started in quality improvement. BMJ 2019;10:k5437. doi:10.1136/bmj.k5437

- 29. Batalden PB, Davidoff F. What is "quality improvement" and how can it transform healthcare? Qual Saf Health Care 2007;16:2–3. doi:10.1136/qshc.2006.022046

- 30. The Health Foundation. Quality improvement made simple: what everyone should know about health care quality improvement. London, UK: The Health Foundation, 2013.

- 31. Ham C, Berwick D, Dixon J. Improving quality in the English NHS. London, UK: The King's Fund, 2016.

- 32. Institute of Medicine (US). Crossing the quality chasm: a new health system for the 21st century. Washington (DC), US: Institute of Medicine (US) Committee on Quality of Health Care in America, 2001.

- 33. Ogrinc G, Headrick LA, Mutha S, et al. A framework for teaching medical students and residents about practice-based learning and improvement, synthesized from a literature review. Acad Med 2003;78:748–56. doi:10.1097/00001888-200307000-00019

- 34. Tartaglia KM, Walker C. Effectiveness of a quality improvement curriculum for medical students. Med Educ Online 2015;20:27133. doi:10.3402/meo.v20.27133

- 35. O'Grady N, Jenner M. Are learners learning? (and how do we know?). A snapshot of research into what we know about how FutureLearners learn. UK: FutureLearn, 2018. Available: https://about.futurelearn.com/research-insights/learners-learning-know [Accessed 3 April 2019].

- 36. Armstrong G, Headrick L, Madigosky W, et al. Designing education to improve care. Jt Comm J Qual Patient Saf 2012;38:5–AP2. doi:10.1016/S1553-7250(12)38002-1

- 37. Jones AC, Shipman SA, Ogrinc G. Key characteristics of successful quality improvement curricula in physician education: a realist review. BMJ Qual Saf 2015;24:77–88. doi:10.1136/bmjqs-2014-002846

- 38. O'Leary KJ, Fant AL, Thurk J, et al. Immediate and long-term effects of a team-based quality improvement training programme. BMJ Qual Saf 2019;28:366–73. doi:10.1136/bmjqs-2018-007894

- 39. Lin J, Cantoni L. Assessing the performance of a tourism MOOC using the Kirkpatrick model: a supplier’s point of view. Information and Communication Technologies in Tourism 2017:129–42.

- 40. Saunders B, Sim J, Kingstone T, et al. Saturation in qualitative research: exploring its conceptualization and operationalization. Qual Quant 2018;52:1893–907. doi:10.1007/s11135-017-0574-8

- 41. Ritchie J, Lewis J. Qualitative research practice: a guide for social science students and researchers. London, UK: Sage Publications, 2003.


Supplementary Materials

bmjopen-2019-031973supp001.pdf (38.7KB, pdf)

bmjopen-2019-031973supp002.pdf (78.8KB, pdf)

bmjopen-2019-031973supp003.pdf (34.1KB, pdf)

bmjopen-2019-031973supp004.pdf (108.3KB, pdf)

bmjopen-2019-031973supp005.pdf (33.1KB, pdf)

bmjopen-2019-031973supp006.pdf (67.5KB, pdf)