Abstract

To meet the general requirement for transparency in EFSA's work, all its scientific assessments must consider uncertainty. Assessments must say clearly and unambiguously what sources of uncertainty have been identified and what is their impact on the assessment conclusion. This applies to all EFSA's areas, all types of scientific assessment and all types of uncertainty affecting assessment. This current Opinion describes the principles and methods supporting a concise Guidance Document on Uncertainty in EFSA's Scientific Assessment, published separately. These documents do not prescribe specific methods for uncertainty analysis but rather provide a flexible framework within which different methods may be selected, according to the needs of each assessment. Assessors should systematically identify sources of uncertainty, checking each part of their assessment to minimise the risk of overlooking important uncertainties. Uncertainty may be expressed qualitatively or quantitatively. It is neither necessary nor possible to quantify separately every source of uncertainty affecting an assessment. However, assessors should express in quantitative terms the combined effect of as many as possible of identified sources of uncertainty. The guidance describes practical approaches. Uncertainty analysis should be conducted in a flexible, iterative manner, starting at a level appropriate to the assessment and refining the analysis as far as is needed or possible within the time available. The methods and results of the uncertainty analysis should be reported fully and transparently. Every EFSA Panel and Unit applied the draft Guidance to at least one assessment in their work area during a trial period of one year. Experience gained in this period resulted in improved guidance. The Scientific Committee considers that uncertainty analysis will be unconditional for EFSA Panels and staff and must be embedded into scientific assessment in all areas of EFSA's work.

Keywords: uncertainty analysis, principles, scientific assessment, guidance

Short abstract

This publication is linked to the following EFSA Journal article: http://onlinelibrary.wiley.com/doi/10.2903/j.efsa.2018.5123/full

Summary

EFSA's role is to provide scientific advice on risks and other issues relating to food safety, to inform decision‐making by the relevant authorities. A fundamental principle of EFSA's work is the requirement for transparency in the scientific basis for its advice, including scientific uncertainty. The Scientific Committee considers that all EFSA scientific assessments must include consideration of uncertainties and that application of the Guidance on uncertainty analysis should be unconditional for the European Food Safety Authority (EFSA). Assessments must say clearly and unambiguously what uncertainties have been identified and what is their impact on the overall assessment outcome.

This Opinion presents the principles and methods behind EFSA's Guidance on Uncertainty Analysis in Scientific Assessments, which is published separately. The Guidance and this Opinion should be used together as EFSA's approach to addressing uncertainty. EFSA's earlier guidance on uncertainty in exposure assessment (EFSA, 2006, 2007) continues to be relevant but, where there are differences (e.g. regarding characterisation of overall uncertainty, for the assessment as a whole), this document and the new Guidance (REF GD) take priority.

Uncertainty is defined as referring to all types of limitations in the knowledge available to assessors at the time an assessment is conducted and within the time and resources available for the assessment. The Guidance is applicable to all areas of EFSA and all types of scientific assessment, including risk assessment and all its constituent parts (hazard identification and characterisation, exposure assessment and risk characterisation). ‘Assessor’ is used as a general term for those providing scientific advice, including risk assessment, and ‘decision‐maker’ for the recipients of the scientific advice, including risk managers.

The Guidance does not prescribe specific methods for uncertainty analysis but rather provides a harmonised and flexible framework within which different methods may be selected, according to the needs of each assessment. A range of methods are summarised in this Opinion and described in more detail in its Annexes, together with simple worked examples. The examples were produced during the development of the Opinion, before the Guidance document was drafted, and therefore do not illustrate application of the final Guidance. The final Guidance will be applied in future EFSA outputs, and readers are encouraged to refer to those for more relevant examples.

As a general principle, assessors are responsible for characterising uncertainty, while decision‐makers are responsible for resolving the impact of uncertainty on decisions. Resolving the impact on decisions means deciding whether and in what way decision‐making should take account of the uncertainty. Therefore, assessors need to inform decision‐makers about scientific uncertainty when providing their advice.

In all types of assessment, the primary information on uncertainty needed by decision‐makers is: what is the range of possible answers, and how likely are they? Assessors should also describe the nature and causes of the main sources of uncertainty, for use in communication with stakeholders and the public, and, when needed, to inform targeting of further work to reduce uncertainty.

The time and resources available for scientific assessment vary from days or weeks for urgent requests to months or years for complex opinions. Therefore, the Guidance provides a flexible framework for uncertainty analysis, so that assessors can select methods that are fit for purpose in each case.

Uncertainty may be expressed qualitatively (descriptive expression or ordinal scales) or quantitatively (individual values, bounds, ranges, probabilities or distributions). It is neither necessary nor possible to quantify separately every uncertainty affecting an assessment. However, assessors should aim to express overall uncertainty in quantitative terms to the extent that is scientifically achievable, as is also stated in EFSA Guidance on Transparency and the Codex Working Principles for Risk Analysis. The principal reasons for this are the ambiguity of qualitative expressions, their tendency to imply value judgements outside the remit of assessors, and the fact that many decisions inherently imply quantitative comparisons (e.g. between exposure and hazard) and therefore require quantitative information on uncertainty.

During the trial period of the Guidance, various concerns were raised about quantifying uncertainty, many of them relating to the role of expert judgement in this. Having considered the advantages of quantitative expression, and addressed the concerns, the Scientific Committee concludes that assessors should express in quantitative terms the combined effect of as many as possible of the identified sources of uncertainty, while recognising that how this is reported must be compatible with the requirements of decision‐makers and legislation.

Any sources of uncertainty that assessors are unable to include in their quantitative expression of overall uncertainty, for whatever reason, must be documented qualitatively and reported alongside it, because they will have significant implications for decision‐making.

During the trial period for this Guidance, it was suggested that uncertainty analysis might not be relevant for some types of EFSA scientific assessment. Having considered the suggested examples, the Scientific Committee confirms that the Guidance applies to all EFSA scientific assessments. There are five key reasons for this: scientific conclusions must be based on evidence, which requires consideration of uncertainties affecting the evidence; decision‐makers need to understand the degree of certainty or uncertainty affecting each assessment, as this determines how much they should rely on it when making decisions; EFSA's Founding Regulation states that risk assessments should be undertaken in a transparent manner, which implies transparency about uncertainty and its impact on conclusions; in some areas, risk managers or legislation require an unqualified positive or negative conclusion, but this can be addressed by agreeing appropriate criteria; and concerns about time and resources have been addressed by making the Guidance scalable to any situation.

Key concepts for uncertainty analysis are introduced:

Questions and quantities of interest must be well defined, to avoid ambiguity in the scientific assessment and allow uncertainties to be identified and characterised.

Uncertainty is personal and temporal. The task of uncertainty analysis is to express the uncertainty of the assessors, at the time they conduct the assessment: there is no single ‘true’ uncertainty.

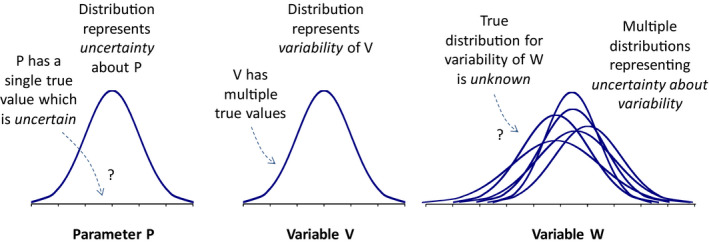

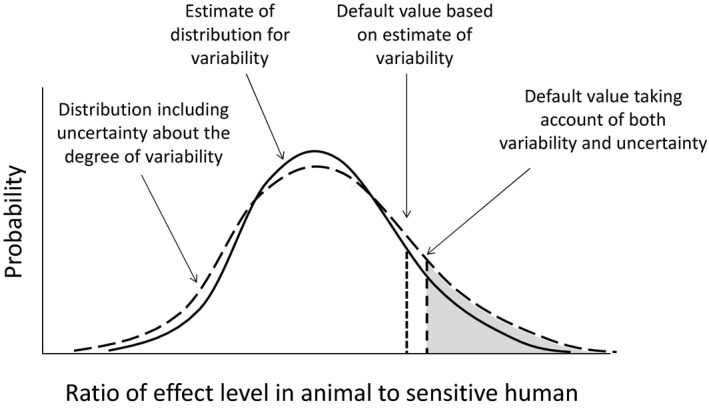

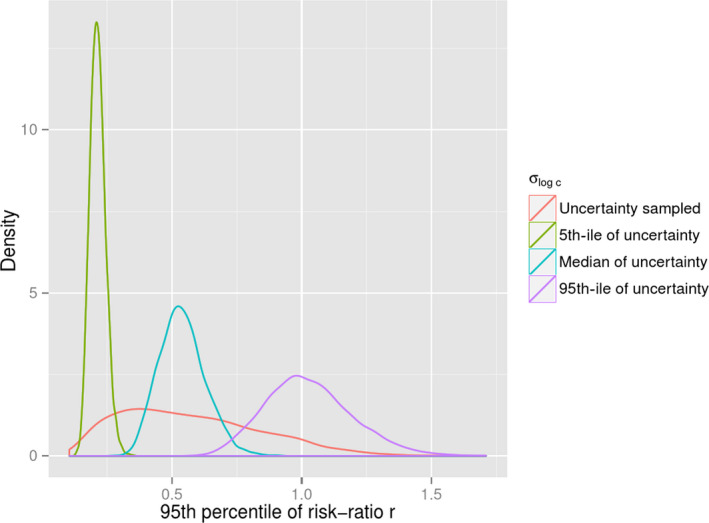

It is important to distinguish uncertainty and variability and analyse them appropriately, because they have differing implications for decisions about options for managing risk and reducing uncertainty.

Dependencies between different sources of uncertainty can greatly affect the overall uncertainty of the assessment outcome, so it is important to identify them and take them into account.

All scientific assessment involves models, which may be qualitative or quantitative, and account must be taken of uncertainties about model structure as well as the evidence that goes into them.

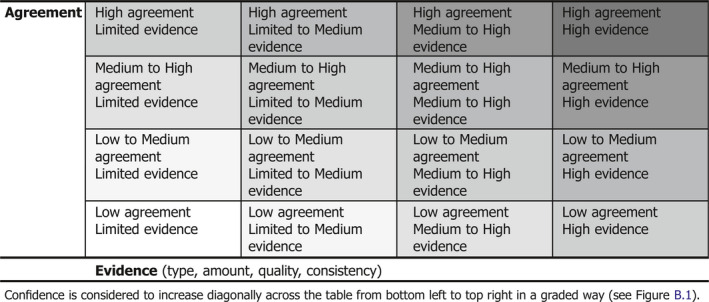

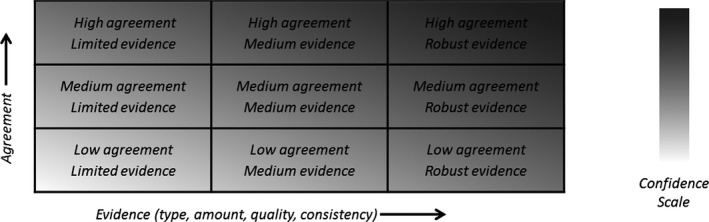

Evidence, weight of evidence, agreement, confidence and conservatism are distinct concepts, related to uncertainty. Measures of evidence and agreement may be useful in assessing uncertainty but are not sufficient alone. Confidence and conservatism are partial measures of uncertainty, and useful if adequately defined.

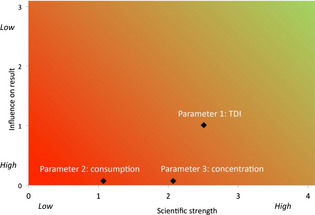

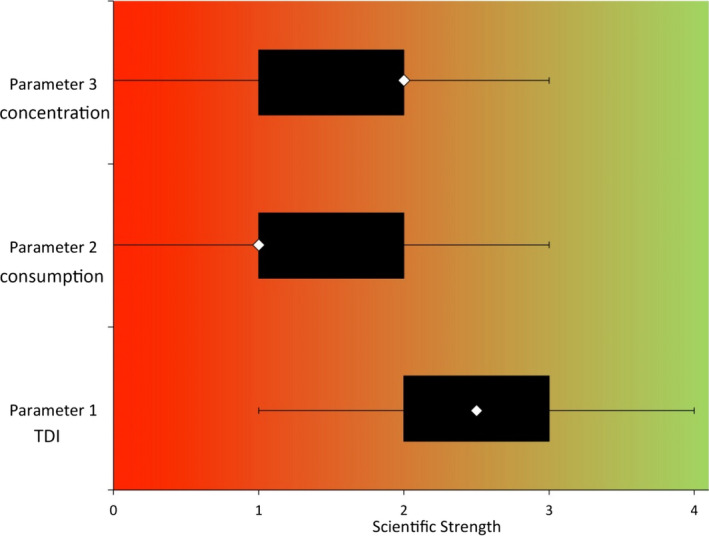

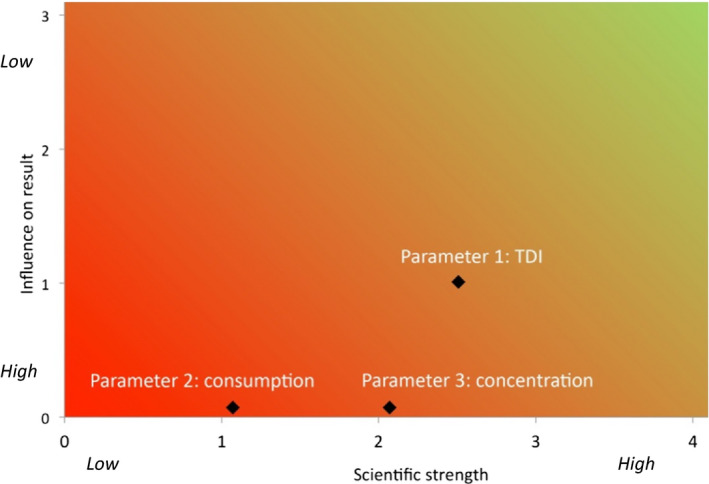

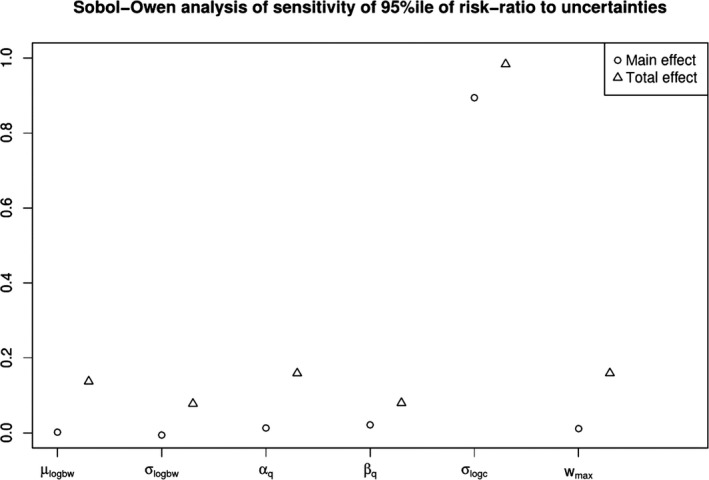

Prioritisation of uncertainties is useful in assessment and decision‐making and can be informed by influence or sensitivity analysis using various methods.

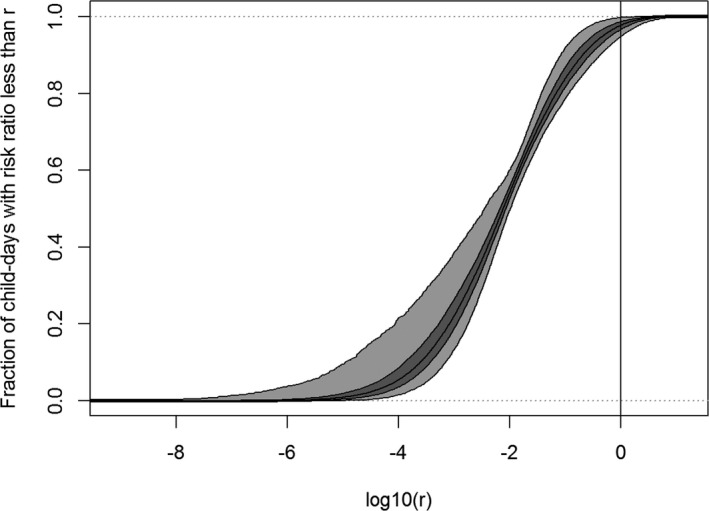

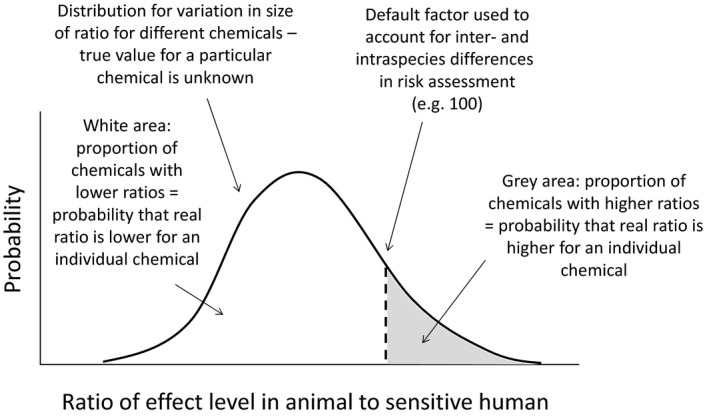

Conservative approaches are useful in many areas of EFSA's work, but the coverage they provide for uncertainty should be quantified; probability bounds analysis may be helpful for this.

Expert judgements are essential in scientific assessment and uncertainty analysis; they should be elicited in a rigorous way and, when appropriate, using formal methodology.

Probability is the preferred measure for expressing uncertainty, as it quantifies the relative likelihood of alternative outcomes, which is what decision‐makers need to know. Uncertainty can be quantified for all well‐defined questions and quantities, using subjective probability, which enables rigorous calculation of their combined impact.

Overall uncertainty is what matters for decision‐making. Uncertainty analysis should characterise the collective impact of all uncertainties identified by the assessors; unknown unknowns cannot be included.

When assessors are unable to quantify some uncertainties, those uncertainties cannot be included in quantitative characterisation of overall uncertainty. The quantitative characterisation is then conditional on assumptions made for the uncertainties that could not be quantified, and it should be made clear that the likelihood of other conditions and outcomes is unknown.

Approaches for uncertainty analysis depend on the type of assessment: four main types are distinguished in the Guidance: standardised assessments (which are especially common for regulated products), case‐specific assessments, urgent assessments and the development or revision of guidance documents.

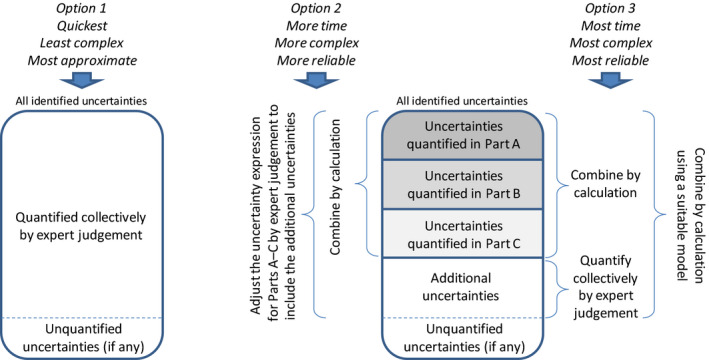

The following main elements of uncertainty analysis are distinguished: dividing the uncertainty analysis into parts, ensuring the questions or quantities of interest are well defined, identifying uncertainties, prioritising uncertainties, characterising uncertainty for parts of the uncertainty analysis, combining uncertainty from different parts of the uncertainty analysis, characterising overall uncertainty and reporting. Most of these are always required, but others depend on the needs of the assessment. Furthermore, the approach to each element varies between assessments. The Guidance starts by identifying the type of assessment in hand and then uses a series of flow charts to describe the sequence of elements that is recommended for each type.

Assessors should be systematic in identifying uncertainties, checking each part of their assessment for different types of uncertainty to minimise the risk of overlooking important uncertainties. Existing frameworks for evidence appraisal are designed to identify uncertainties and should be used where they are suitable for the assessment in hand. All identified uncertainties should be documented when reporting the assessment, either in the main report or in an annex, together with any initial assessment that is made to prioritise them for further analysis.

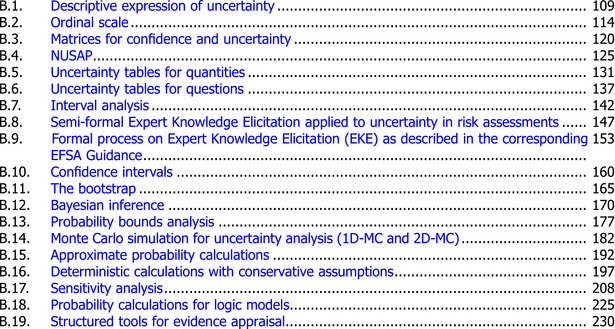

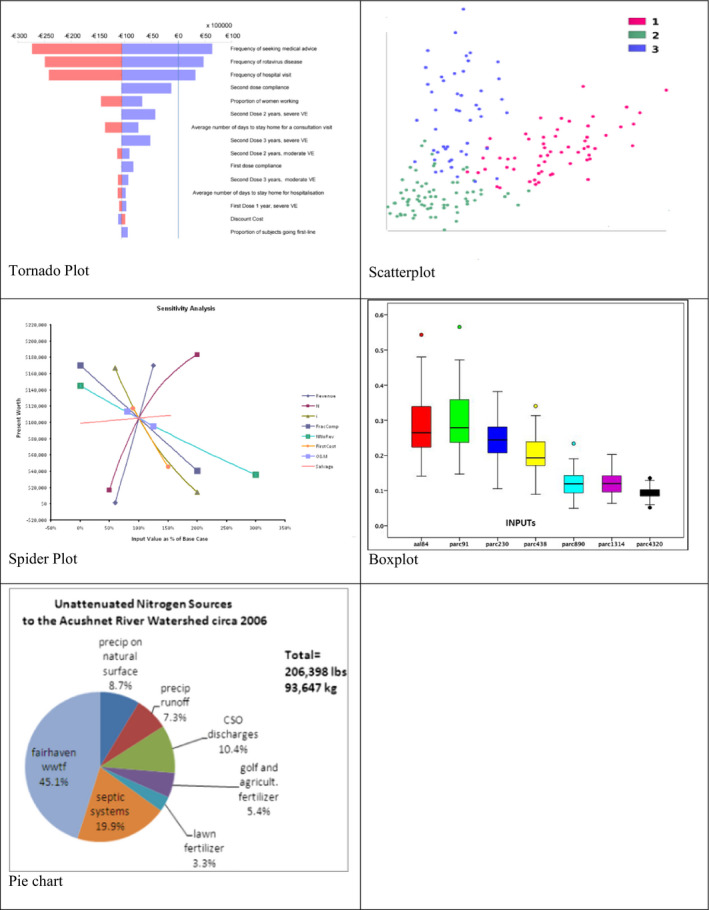

The Guidance describes a selection of qualitative and quantitative methods that can contribute to one or more elements of uncertainty analysis and evaluates their suitability for use in EFSA's assessments. The qualitative methods are:

Descriptive approaches, using narrative phrases or text to describe uncertainties.

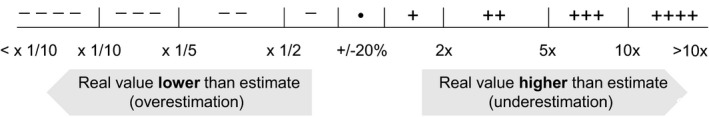

Ordinal scales, characterising uncertainties using an ordered scale of categories with qualitative definitions (e.g. high, medium or low uncertainty).

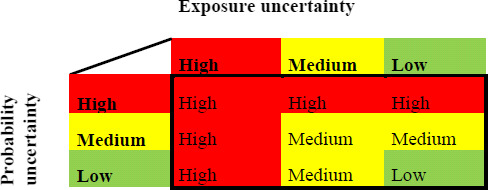

Uncertainty matrices, providing standardised rules for combining two or more ordinal scales describing different aspects or dimensions of uncertainty.

NUSAP method, using a set of ordinal scales to characterise different dimensions of each source of uncertainty, and its influence on the assessment outcome, and plotting these together to indicate which uncertainties contribute most to the uncertainty of the assessment outcome.

Uncertainty tables for quantitative questions, listing sources of uncertainty affecting a quantitative question and assessing their individual and combined impacts on the uncertainty of the assessment outcome on an ordinal scale.

Uncertainty tables for categorical questions, listing lines of evidence contributing to answering a categorical question, identifying their strengths and weaknesses, and expressing the uncertainty of the answer to the question.

Structured tools for evidence appraisal assess risk of bias in individual studies and the overall body of evidence when using data from literature, and can also be applied to studies submitted for regulated products.

The quantitative methods reviewed are:

Quantitative uncertainty tables, similar to the qualitative versions but expressing uncertainty on scales with quantitative definitions.

Interval analysis, computing a range of values for the output of a calculation or quantitative model based on specified ranges for the individual inputs.

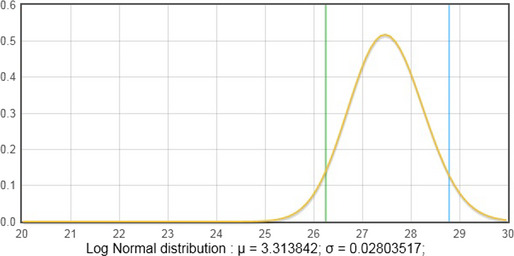

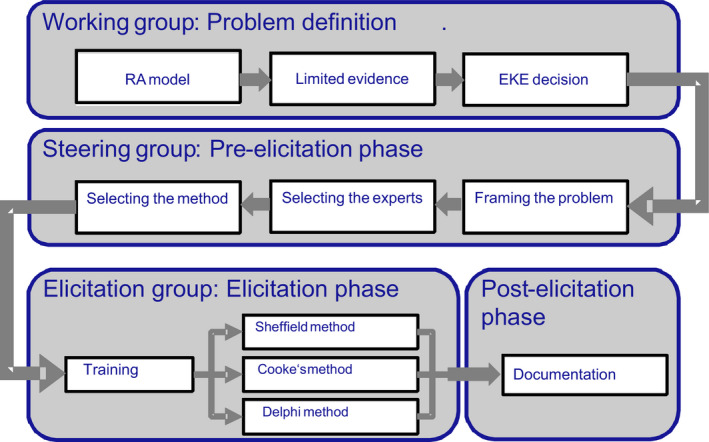

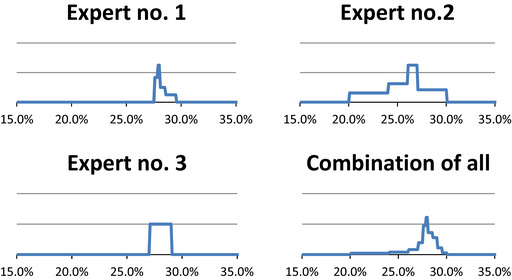

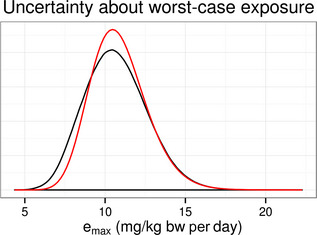

Expert knowledge elicitation (EKE), a collection of formal and informal methods for quantification of expert judgements of uncertainty, about an assessment input or output, using subjective probability.

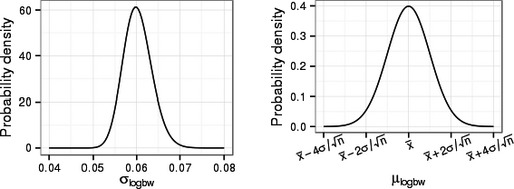

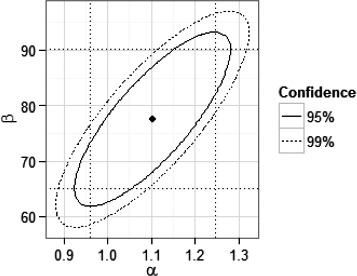

Confidence intervals quantifying uncertainty about parameters in a statistical model based on data.

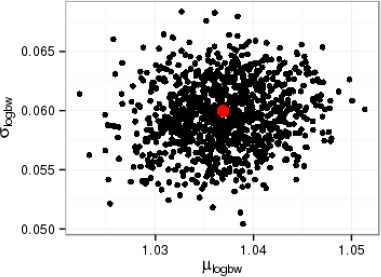

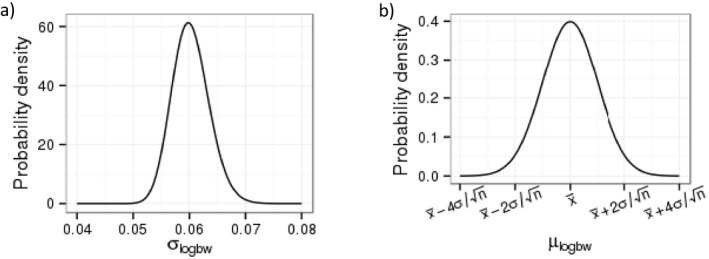

The bootstrap, quantifying uncertainty about parameters in a statistical model on the basis of data.

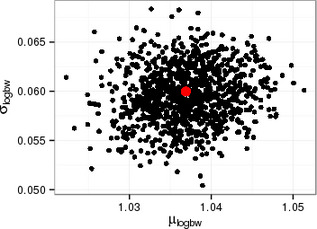

Bayesian inference, quantifying uncertainty about parameters in a statistical model on the basis of data and expert judgements about the values of the parameters.

Probability bounds analysis, a method for combining probability bounds (partial expressions of uncertainty) about inputs in order to deduce a probability bound for the output of a calculation or quantitative model.

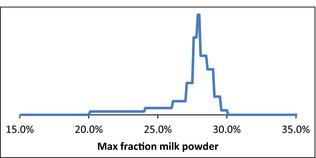

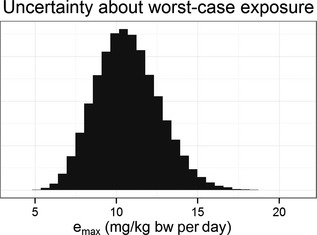

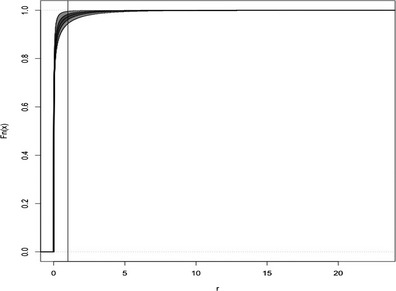

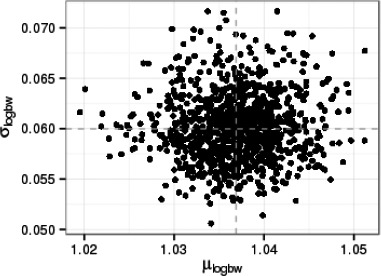

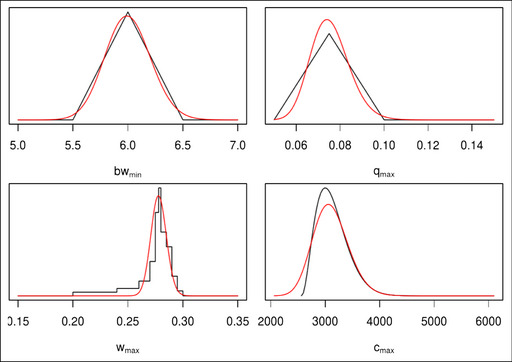

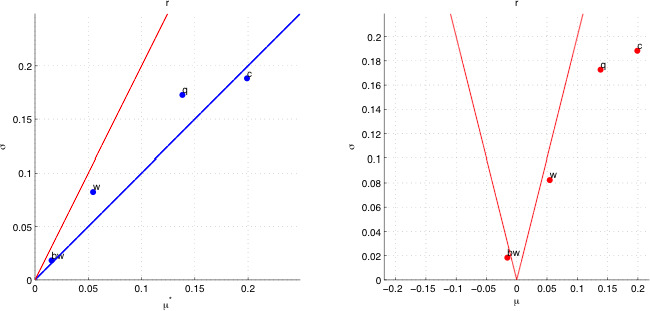

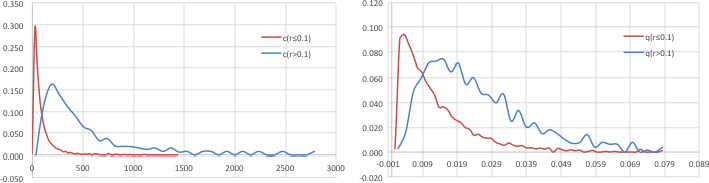

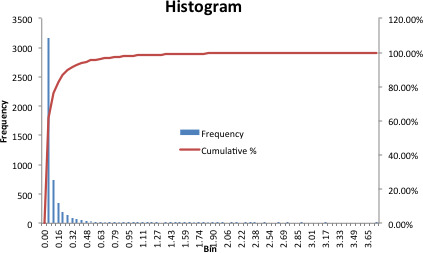

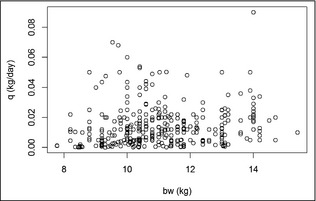

Monte Carlo simulation, taking random samples from probability distributions representing uncertainty and/or variability to: (i) calculate combined uncertainty about the output of a calculation or quantitative model deriving from uncertainties about inputs expressed using probability distributions; (ii) carry out certain kinds of sensitivity analysis.

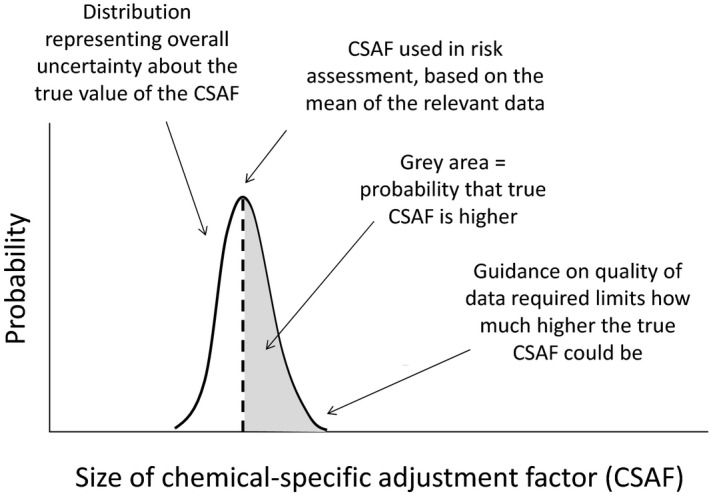

Deterministic calculations with conservative assumptions are a common approach to uncertainty and variability in EFSA assessments. They include default values, assessment factors and decision criteria (‘trigger values’) which are generic and applicable to many assessments, as well as conservative assumptions and adjustments that are specific to particular cases.

Approximate probability calculations replacing probability distributions obtained by EKE or statistical analysis of data with approximations that make probability calculations for combining uncertainties straightforward to carry out using a calculator or spreadsheet.

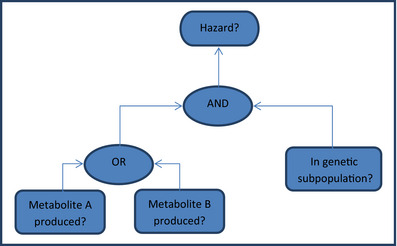

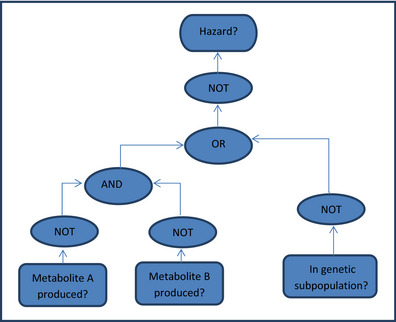

Probability calculations for logic models, quantifying uncertainty about a logical argument comprising a series or network of yes/no questions.

Other quantitative methods described more briefly: uncertainty expressed in terms of possibilities, imprecise probabilities and Bayesian modelling.

Sensitivity Analysis, a suite of methods for assessing sensitivity, of the output (or an intermediate value) of a calculation or quantitative model, to the inputs and to choices made when expressing uncertainty about inputs. It has multiple objectives: (i) to help prioritise uncertainties for quantification: (ii) to help prioritise uncertainties for collecting additional data; (iii) to investigate sensitivity of final output to assumptions made; (iv) to investigate sensitivity of final uncertainty to assumptions made.

All of the methods reviewed have stronger and weaker aspects. Qualitative methods score better on criteria related to simplicity and ease of use but less well on criteria related to technical rigour and meaning of the output, while the reverse tends to apply to quantitative methods.

The final output of uncertainty analysis should be an overall characterisation of uncertainty that takes all identified uncertainties into account. The methods and results of all steps of the uncertainty analysis should be reported fully and transparently, in keeping with EFSA (2012a,b,c) Guidance on Transparency. Wherever statistical methods have been used, reporting of these should follow EFSA (2014a,b) Guidance on Statistical Reporting.

Various arguments have been made both for and against communicating uncertainty to the general public, but there is little empirical evidence to support either view or to define best practice. From EFSA's perspective, communicating scientific uncertainties is of fundamental importance to its core mandate, reaffirming EFSA's role in the Risk Analysis process. Therefore, EFSA has conducted a focus group study and a web survey to test approaches for handling uncertainty in public communications, and is reviewing the literature on this subject. The results of this work are being used to develop a separate guidance document on communication of uncertainty, and to update EFSA's Handbook on Risk Communication.

1. Introduction

‘Open EFSA’ aspires both to improve the overall quality of the available information and data used for its scientific outputs and to comply with normative and societal expectations of openness and transparency (EFSA, 2009, 2014a). In line with this, the European Food Safety Authority (EFSA) is publishing three separate but closely related guidance documents to guide its expert Panels for use in their scientific assessments (EFSA Scientific Committee, 2015). These documents address three key elements of the scientific assessment: the analyses of Uncertainty, Weight of Evidence and Biological Relevance.

The first topic is the analysis of uncertainty. This current opinion provides the scientific principles, background and methods to guide how to identify, characterise, document and explain all types of uncertainty arising within an individual assessment for all areas of EFSA's remit. This is a supporting document for the concise, practical guidance document which is published separately (EFSA Scientific Committee, 2018). Neither document prescribes which specific methods should be used from the toolbox but rather provide a harmonised and flexible framework within which different described qualitative and quantitative methods may be selected according to the needs of each assessment.

The second topic concerns the weight of evidence approach (EFSA Scientific Committee, 2017a) which provides a general framework for considering and documenting the approach used to evaluate and weigh the assembled evidence when answering the main question of each scientific assessment or questions that need to be answered in order to provide, in conjunction, an overall answer. This includes assessing the relevance, reliability and consistency of the evidence. The guidance document further indicates the types of qualitative and quantitative methods that can be used to weigh and integrate evidence and points to where details of the listed individual methods can be found. The weight of evidence approach carries elements of uncertainty analysis that part of uncertainty which is addressed by weight of evidence analysis does not need to be reanalysed in the overall uncertainty analysis, but may be added to.

The third guidance document (EFSA Scientific Committee, 2017b) provides a general framework to addresses the question of biological relevance at various stages of the assessment: the collection, identification and appraisal of relevant data for the specific assessment question to be answered. It identifies generic issues related to biological relevance in the appraisal of pieces of evidence, in particular, and specific criteria to consider when deciding on whether or not an observed effect is biologically relevant, i.e. adverse (or shows a positive health effect). A decision tree is developed to aid the collection, identification and appraisal of relevant data for the specific assessment question to be answered. The reliability of the various pieces of evidence used and how they should be integrated with other pieces of evidence is considered by the weight of evidence guidance document.

EFSA will continue to strengthen links between the three distinct but related topics to ensure the transparency and consistency of its various scientific outputs while keeping them fit for purpose.

1.1. Background and Terms of Reference as provided by EFSA

Background

The EFSA Science Strategy for the period 2012–2016 identifies four strategic objectives: (i) further develop excellence of EFSA's scientific advice, (ii) optimise the use of risk assessment capacity in the EU, (iii) develop and harmonise methodologies and approaches to assess risks associated with the food chain, and (iv) strengthen the scientific evidence for risk assessment and risk monitoring. The first and third of these objectives underline the importance of characterising in a harmonised way the uncertainties underlying in EFSA risk assessments, and communicating these uncertainties and their potential impact on the decisions to be made in a transparent manner.

In December 2006, the EFSA Scientific Committee adopted its opinion related to uncertainties in dietary exposure assessment, recommending a tiered approach to analysing uncertainties (1/qualitative, 2/deterministic, 3/probabilistic) and proposing a tabular format to facilitate qualitative evaluation and communication of uncertainties. At that time, the Scientific Committee ‘strongly encouraged’ EFSA Panels to incorporate the systematic evaluation of uncertainties in their risk assessment and to communicate it clearly in their opinions.

During its inaugural Plenary meeting on 23–24 July 2012, the Scientific Committee set as one of its priorities to continue working on uncertainty and expand the scope of the previously published guidance to cover the whole risk assessment process.

Terms of reference

The European Food Safety Authority requests the Scientific Committee to establish an overarching working group to develop guidance on how to characterise, document and explain uncertainties in risk assessment. The guidance should cover uncertainties related to the various steps of the risk assessment, i.e. hazard identification and characterisation, exposure assessment and risk characterisation. The working group will aim as far as possible at developing a harmonised framework applicable to all relevant working areas of EFSA. The Scientific Committee is requested to demonstrate the applicability of the proposed framework with case studies.

When preparing its guidance, the Scientific Committee is requested to consider the work already done by the EFSA Panels and other organisations, e.g. WHO, OIE.

1.2. Interpretation of Terms of Reference

The Terms of Reference (ToR) require a framework applicable to all relevant working areas of EFSA. As some areas of EFSA conduct types of assessment other than risk assessment, e.g. benefit and efficacy assessments, the Scientific Committee decided to develop guidance applicable to all types of scientific assessment in EFSA.

Therefore, wherever this document refers to scientific assessment, risk assessment is included, and ‘assessors’ is used as a general term including risk assessors. Similarly, wherever this document refers to ‘decision‐making’, risk management is included, and ‘decision‐makers’ should be understood as including risk managers and others involved in the decision‐making process.

In this document, the Scientific Committee reviews the general applicability of principles and methods for uncertainty analysis to EFSA's work, in order to establish a general framework for addressing uncertainty in EFSA. The Scientific Committee's recommendations for practical application of the principles and methods in EFSA's work are set out in a more concise Guidance document, which is published separately (EFSA Scientific Committee, 2018).

1.3. Definitions of uncertainty and uncertainty analysis

Uncertainty is a familiar concept in everyday language, and may be used as a noun to refer to the state of being uncertain, or to something that makes one feel uncertain. The adjective ‘uncertain’ may be used to indicate that something is unknown, not definite or not able to be relied on or, when applied to a person, that they are not completely sure or confident of something (Oxford Dictionaries, 2015). Its meaning in everyday language is generally understood: for example, the weather tomorrow is uncertain, because we are not sure how it will turn out. In science and statistics, we are familiar with concepts such as measurement uncertainty and sampling uncertainty, and that weaknesses in methodological quality of studies used in assessments can be important sources of uncertainty. Uncertainties in how evidence is used and combined in assessment – e.g. model uncertainty, or uncertainty in weighing different lines of evidence in a reasoned argument – are also important sources of uncertainty. General types of uncertainty that are common in EFSA assessments are outlined in Section 8.

In the context of risk assessment, various formal definitions have been offered for the word ‘uncertainty’. For chemical risk assessment, IPCS (2004) defined uncertainty as ‘imperfect knowledge concerning the present or future state of an organism, system, or (sub) population under consideration’. Similarly, EFSA PLH Panel (2011) guidance on environmental risk assessment of plant pests defines uncertainty as ‘inability to determine the true state of affairs of a system’. In EFSA's previous guidance on uncertainties in chemical exposure assessment, uncertainty was described as resulting from limitations in scientific knowledge (EFSA, 2007) while EFSA's BIOHAZ Panel has defined uncertainty as ‘the expression of lack of knowledge that can be reduced by additional data or information’ (EFSA BIOHAZ Panel, 2012). The US National Research Council's Committee on Improving Risk Analysis Approaches defines uncertainty as ‘lack or incompleteness of information’ (NRC, 2009). The EU non‐food scientific committees SCHER, SCENIHR and SCCS (2013) described uncertainty as ‘the expression of inadequate knowledge’. The common theme emerging from these and other definitions is that uncertainty refers to limitations of knowledge. It is also implicit in these definitions that uncertainty relates to the state of knowledge for a particular assessment, conducted at a particular time (the conditional nature of uncertainty is discussed further in Section 5.2).

In this document, uncertainty is used as a general term referring to all types of limitations in available knowledge that affect the range and probability of possible answers to an assessment question. Available knowledge refers here to the knowledge (evidence, data, etc.) available to assessors at the time the assessment is conducted and within the time and resources agreed for the assessment.

The nature of uncertainty and its relationship to variability are discussed in Sections 5.2 and 5.3. There are many types of uncertainty in scientific assessment. Referring to these may be helpful when identifying the sources of uncertainty affecting a particular assessment, and is discussed further in Section 8.

Uncertainty analysis is defined in this document as the process of identifying and characterising uncertainty about questions of interest and/or quantities of interest in a scientific assessment. A question or quantity of interest may be the subject of the assessment as a whole, i.e. that which is required by the ToR for the assessment, or it may be the subject of a subsidiary part of the assessment which contributes to addressing the ToR (e.g. exposure and hazard assessment are subsidiary parts of risk assessment).

1.4. Scope, audience and degree of obligation

The ToR require the provision of guidance on how to characterise, document and explain all types of uncertainty arising in EFSA's scientific assessments. This document and the accompanying Guidance (EFSA Scientific Committee, 2018) are aimed at all those assessors contributing to EFSA assessments and provides a harmonised, but flexible framework that is applicable to all areas of EFSA, all types of scientific assessment, including risk assessment, and all types of uncertainty affecting scientific assessment. These two documents should be used as EFSA's primary guidance on addressing uncertainty. EFSA's earlier guidance on uncertainty in exposure assessment (EFSA, 2006, 2007) continues to be relevant but, where there are differences (e.g. regarding characterisation of overall uncertainty, for the assessment as a whole), this document and the new Guidance (EFSA Scientific Committee, 2018) take priority.

The guidance on uncertainty should be used alongside other cross‐cutting guidance on EFSA's approaches to scientific assessment including, but not limited to, existing guidance on transparency, systematic review, expert knowledge elicitation (EKE), weight‐of‐evidence assessment, biological relevance and statistical reporting (EFSA, 2009, 2010a, 2014a,b; EFSA Scientific Committee, 2017a,b) and also EFSA's Prometheus project (EFSA, 2015a,b,c).

The Scientific Committee is of the view that all EFSA scientific assessments must include consideration of uncertainties. Therefore, application of the guidance document is unconditional for EFSA. For reasons of transparency and in line with EFSA (2007, 2009), assessments must say what sources of uncertainty have been identified and characterise their overall impact on the assessment conclusion. This must be reported clearly and unambiguously, in a form compatible with the requirements of decision‐makers and any legislation applicable to the assessment in hand.

During the trial period for this Guidance, it was suggested that uncertainty analysis might not be relevant for some types of EFSA scientific assessment. Having considered the suggested examples, the Scientific Committee confirms that the Guidance applies to all EFSA scientific assessments. There are five fundamental reasons for this. First, the conclusions of EFSA's scientific assessments must be based on evidence: this requires evaluation of the evidence, which necessarily involves assessment of uncertainties affecting the evidence and of their implications for the conclusions. Second, EFSA's scientific assessments are used, or may be used in the future, to inform risk management and other types of decision‐making by the Commission and/or other parties. Decision‐makers need to understand the degree of certainty or uncertainty affecting each assessment, as this determines how much they should rely on it when making their decisions (this is discussed in more detail in Section 3.1). Third, the EFSA Founding Regulation (EC No 178/2002) states that risk assessments should be undertaken in a transparent manner: this implies a requirement for transparency about scientific uncertainty and its impact on scientific conclusions (EFSA, 2009); this and the two preceding points apply to all types of scientific assessment and conclusions including self‐tasking by EFSA and assessments prepared in response to open questions, such as reviews of the literature. Fourth, although in some areas of EFSA's work risk managers or legislation require an unqualified positive or negative conclusion, this is not incompatible with the Guidance. The conclusions should still be evidence‐based and therefore still require an uncertainty analysis, but they can be expressed in unqualified form if appropriate criteria for this are defined (Section 3.5). Finally, EFSA sometimes receives urgent requests which limit the time available for scientific assessment; however, the Guidance contains specific approaches for this, which are scalable to whatever time is available. This document considers general principles and reviews different approaches and methods which can be used to help assessors to systematically identify, characterise, explain and account for sources of uncertainty at different stages of the assessment process. For brevity, we refer to these processes collectively as ‘uncertainty analysis’. The reader is referred to other sources for technical details on the implementation and use of each method.

The Scientific Committee emphasises that assessors do not have to use or be familiar with every method described in this document. Practical advice on how to select suitable methods for particular assessments is provided in the accompanying Guidance document (EFSA Scientific Committee, 2018).

Uncertainties in decision‐making, and specifically in risk management, are outside the scope of EFSA, as are uncertainties in the framing of the question for scientific assessment. When uncertainties about the meaning of an assessment question are detected, they should be referred to the decision‐makers for clarification, which is likely to be an iterative process requiring discussion between assessors and decision‐makers.

The primary audience for this document and the accompanying Guidance comprises all those contributing to EFSA's scientific assessments. It is anticipated that assessors will use the Guidance document in their day‐to‐day work, and refer to specific sections of the current document when needed. For this reason, some information is repeated in different sections, where cross‐referencing would not suffice. Some sections will be of particular interest to other readers, for example, Sections 3 and 16 are especially relevant for decision‐makers and Section 16 for communications specialists.

2. Approach taken to develop the Guidance

The approach taken to developing the Guidance was as follows. A Working Group was established, comprising members of EFSA's Scientific Committee and its supporting staff, a Panel member or staff member nominated by each area of EFSA's work, some additional experts with experience in uncertainty analysis (identified and invited in accordance with EFSA procedures), and an EFSA communications specialist. Activities carried out by the Scientific Committee and its Working Group included: a survey of sources of uncertainty encountered by different EFSA Panels and Units and their approaches for dealing with them (which were taken into account when reviewing applicable methods); consideration of approaches that deal with uncertainty described in existing guidance documents of EFSA, of other bodies and in the scientific literature; meetings with selected risk managers in the European Commission and communications specialists from EFSA's Advisory Forum; and a public consultation on a Draft of the Guidance Document. These activities informed three main strands of work by the Scientific Committee: development of the harmonised framework and guidance contained in the main sections of this document; development of annex sections focussed on different methods that can be used in uncertainty analysis; and development of illustrative examples using a common case study.

While preparing the Guidance, the Scientific Committee has taken account of existing guidance and related publications by EFSA and other relevant organisations, including (but not limited to) EFSA's guidances on uncertainty in dietary exposure assessment, transparency in risk assessment, selection of default values, probabilistic exposure assessment, expert elicitation and statistical reporting (EFSA, 2006, 2007, 2009, 2012a,b, 2014a,b); the Scientific Committee's opinion on risk terminology (EFSA Scientific Committee, 2012); specific guidance and procedures of different EFSA Panels (e.g. EFSA PLH Panel, 2011); Guidance document on uncertainty analysis in exposure assessment of the German Federal Institute for Risk Assessment (BfR, 2015); opinion on uncertainty in risk assessment of the French Agency for Food, Environmental and Occupational Health & Safety (ANSES, 2016); the European Commission's communication on the precautionary principle (European Commission, 2000); the Opinion of the European Commission's non‐food Scientific Committees on making risk assessment more relevant for decision‐makers (SCHER, SCENIHR, SCCS, 2013); the chapter on uncertainty in the Guidance on Information requirements and safety assessment (ECHA, 2012); the US Environmental Protection Agency's guiding principles for Monte Carlo analysis and risk characterisation handbook (US EPA, 1997, 2000), as well as guidance on science integration for decision making (US EPA, 2012); the US National Research Council publications on science and risk (NRC, 1983, 1996, 2009), the USDA guideline on microbial risk assessment (US DA, 2012); the Codex Working Principles for Risk Analysis (Codex, 2016); the OIE guidance on measurement uncertainty (OIE Validation Guidelines, 2014); the IPCS guidance documents on uncertainty in exposure and hazard characterisation (IPCS, 2004, 2014); the FAO/WHO guidance on microbial hazard characterisation (FAO/WHO, 2009); and the guidance of the Intergovernmental Panel on Climate Change (Mastrandrea et al., 2010).

When evaluating the potential of different methods of uncertainty analysis for use in EFSA's work, the Scientific Committee considered two primary aspects. First, the Scientific Committee identified which of the main elements of uncertainty analysis (introduced in Section 6) each method can contribute to. Second, the Scientific Committee assessed each method against a set of criteria which it established for describing the nature of each method and evaluating the contribution it could make. The criteria used to evaluate the methods were as follows:

Evidence of current acceptance

Expertise needed to conduct

Time needed

Theoretical basis

Degree/extent of subjectivity

Method of propagation

Treatment of uncertainty and variability

Meaning of output

Transparency and reproducibility

Ease of understanding for non‐specialist

Definitions for these criteria are shown in Section 13 where the different methods are reviewed.

A single draft version of the Guidance was published for public consultation in June 2014.1 The document was then revised in the light of comments received and published as a revised draft for testing by EFSA Panels and Units during a trial period. At the end of the trial period, an internal workshop was held in EFSA to review lessons learned and advice on improvements to the draft Guidance. As part of this, it was agreed to produce a much more concise and standalone Guidance document, focussed on providing specific practical advice, and to publish a revised version of the previous draft as an accompanying document, to provide more detailed information to support the application of the Guidance. The current document comprises that detailed material, and the concise Guidance is published separately (EFSA Scientific Committee, 2018). The main factors considered when selecting approaches to include in the Guidance are summarised in Section 7 of the current document.

2.1. Case study

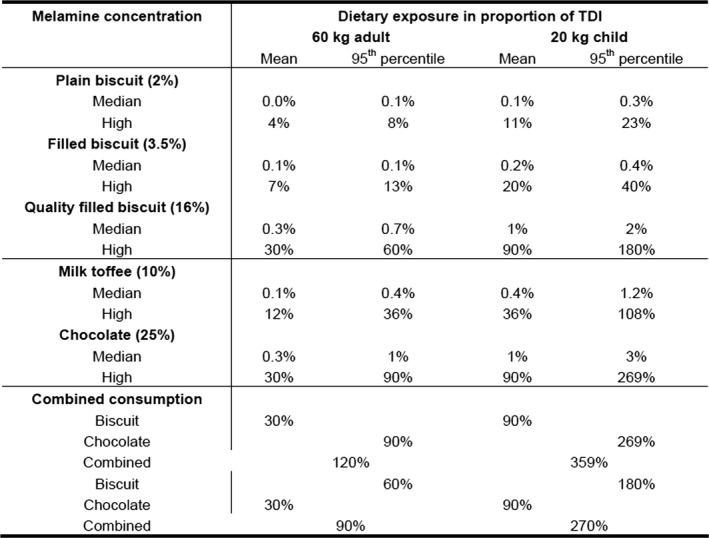

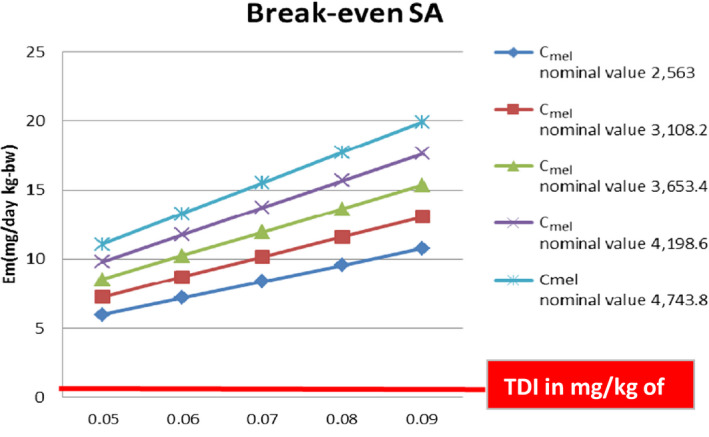

Worked examples are provided in Annexes to this document to illustrate different elements of uncertainty analysis and different methods for addressing them. To increase the coherence of the document a single case study was selected to enable comparison of the different methods, based on an EFSA Statement on melamine that was published in 2008 (EFSA, 2008). While this is an example from chemical risk assessment for human health, the principles and methodologies illustrated by the examples are general and could in principle be applied to any other area of EFSA's work, although the details of implementation would vary.

The EFSA (2008) statement was selected for the case study in this document because it is short, which facilitates extraction of the key information and identification of the sources of uncertainty and makes it accessible for readers who would like more details, and also because it incorporates a range of types of uncertainty.

An introduction to the melamine case study is provided in Annex A, together with examples of output from different methods used in uncertainty analysis. Details of how the example outputs were generated are presented in Annex B, together with short descriptions of each method.

It is emphasised that the case study is provided for the purpose of illustration only, is limited to the information that was available in 2008, and should not be interpreted as contradicting the subsequent full risk assessment of melamine in food and feed (EFSA, 2010b). Furthermore, the examples were conducted only at the level needed to illustrate the principles of the approaches and the general nature of their outputs. They are not representative of the level of consideration that would be needed in a real assessment and must not be interpreted as examples of good practice.

The melamine case study was produced for an earlier version of this document, before the concise Guidance was developed, and therefore does not illustrate the application of the final Guidance. The final Guidance will be applied in future EFSA outputs, and readers are encouraged to refer to those for more relevant examples.

3. Roles of assessors and decision‐makers in addressing uncertainty

Some of the literature that is cited in this section refers to risk assessment, risk assessors and risk managers, but the principles apply equally to other types of scientific assessment and other types of assessors and decision‐makers. Both terms are used in the plural: generally assessment is conducted by a group of experts and multiple parties contribute to decision‐making (e.g. officials and committees at EU and/or national level).

Risk analysis is the general framework for most of EFSA's work including food safety, import risk analysis and pest risk analysis, all of which consider risk analysis as comprising three distinct but closely linked and interacting parts: risk assessment, risk management and risk communication (EFSA Scientific Committee, 2012). Basic principles for addressing uncertainty in risk analysis are stated in the Codex Working Principles for Risk Analysis:

‘Constraints, uncertainties and assumptions having an impact on the risk assessment should be explicitly considered at each step in the risk assessment and documented in a transparent manner’

‘Responsibility for resolving the impact of uncertainty on the risk management decision lies with the risk manager, not the risk assessors’ (Codex, 2016).

These principles apply equally to the treatment of uncertainty in all areas of science and decision‐making. In general, assessors are responsible for characterising uncertainty2 and decision‐makers are responsible for resolving the impact of uncertainty on decisions. Resolving the impact on decisions means deciding whether and in what way decision‐making should be altered to take account of the uncertainty.

This division of roles is rational: assessing scientific uncertainty requires scientific expertise, while resolving the impact of uncertainty on decision‐making involves weighing the scientific assessment against other considerations, such as economics, law and societal values, which require different expertise and are also subject to uncertainty. The weighing of these different considerations is defined in Article 3 of the EU Food Regulation 178/20023 as risk management. The Food Regulation establishes EFSA with responsibility for scientific assessment on food safety, and for communication on risks, while the Commission and Member States are responsible for risk management and for communicating on risk management measures. In more general terms, assessing and communicating about scientific uncertainty is the responsibility of EFSA, while decision‐making and communicating on management measures is the responsibility of others.

Although risk assessment and risk management are conceptually distinct activities (NRC, 1983, p. 7), they should not be isolated – two‐way interaction between them is essential (NRC, 1996, p. 6) and needs to be conducted efficiently. Discussions with risk managers during the preparation of this document identified opportunities for improving this interaction, particularly with regard to specification of the question for assessment and expression of uncertainty in conclusions (see below), and indicated a need for closer interaction in future.

3.1. Information required for decision‐making

Given the division of responsibilities between assessors and decision‐makers, it is important to consider what information decision‐makers need about uncertainty. Scientific assessment is aimed at answering questions about risks and other issues, to inform decisions on how to manage them. Uncertainty refers to limitations in knowledge, which are always present to some degree. This means scientific knowledge about the answer to a decision‐maker's question will be limited, so in general a range of answers will be possible. In principle, therefore, decision‐makers need to know the range of possible answers, so they can consider whether any of them would imply risk of undesirable management consequences (e.g. adverse effects).

Decision‐maker's questions relate to real‐world problems that they have responsibility for managing. Therefore, when the range of possible answers includes undesirable consequences, the decision‐makers need information on how likely they are, so they can weigh options for management action against other relevant considerations (economic, legal, etc.). This includes the option of provisional measures when adverse consequences are possible but uncertain (the precautionary principle, as described in Article 7 of the Food Regulation; see also European Commission, 2000).

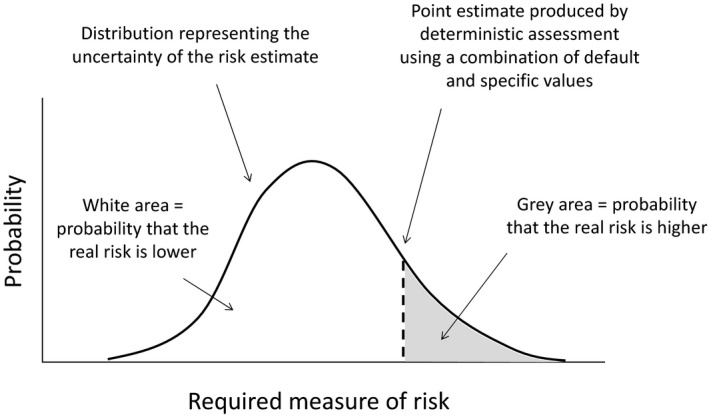

In principle, then, decision‐makers need assessors to provide information on the range and probability of possible answers to questions submitted for scientific assessment. In practice, partial information on this may be sufficient: for example, an approximate probability (see Section 5.10) or appropriate ‘conservative’ assessment (see Section 5.8) may indicate a sufficiently low probability of adverse consequences, without characterising the full distribution of possible consequences. In some cases a range for a quantity of interest may be sufficient, for example, if all values within the range are considered acceptable by the decision‐makers.

For some types of assessment, e.g. for regulated products, decision‐makers need EFSA to provide an unqualified positive or negative conclusion to comply with the requirements of legislation, or of procedures established to implement legislation. In general, the underlying assessment will be subject to at least some uncertainty, as is all scientific assessment. In such cases, therefore, the positive or negative conclusion refers to whether the level of certainty is sufficient for the purpose of decision‐making, i.e. whether the assessment provides ‘practical certainty’ (see Section 3.4).

Information on the magnitude of uncertainty and the main sources of uncertainty is also important to inform decisions about whether it would be worthwhile to invest in obtaining further data or conducting more analysis, with the aim of reducing uncertainty. Information on the relative importance of different sources of uncertainty may also be useful when communicating with stakeholders and the public about the reasons for uncertainty.

Some EFSA work comprises forms of scientific assessment that do not directly address specific risks or benefits. For example, EFSA is sometimes asked to review the state of scientific knowledge in a particular area. Conclusions from such a review may influence the subsequent actions of decision‐makers. Scientific knowledge is never complete, so the conclusions are always uncertain to some degree and other conclusions might be possible. Therefore, again, managers need information about how different the alternative conclusions might be, and how probable they are, as this may have implications for decision‐making.

All EFSA scientific assessments require at least a basic analysis of uncertainty, for the following reasons. Questions are posed to EFSA because the requestor does not know or is uncertain of the answer and that the amount of uncertainty affects decisions or actions they need to take. The requestor seeks scientific advice from EFSA because they anticipate that this may reduce the uncertainty, or at least provide a more expert assessment of it. If the uncertainty of the answer did not matter, then it would not be rational or economically justified for the requestor to pose the question to EFSA – the requestor would simply use their own judgement, or even a random guess. So the fact that the question was asked implies that the amount of uncertainty matters for decision‐making, and it follows that information about uncertainty is a necessary part of EFSA's response. This logic applies regardless of the nature or subject of the question, therefore providing information on uncertainty is relevant in all cases. It follows that uncertainty analysis is needed in all EFSA scientific assessments, though the form and extent of that analysis and the form in which the conclusions are expressed should be adapted to the needs of each case, in consultation with decision‐makers, as is provided for in the Guidance accompanying this document.

3.2. Time and resource constraints

Decision‐makers generally need information within specified limits of resources and time, including the extreme case of urgent situations where advice might be required within weeks, days or even hours. To be fit for purpose, therefore, EFSA's guidance on uncertainty analysis includes options for different levels of resource and different timescales, and methods that can be implemented at different levels of detail/refinement, to fit different timescales and levels of resource. Consideration of uncertainty is always required, even in urgent situations, because reduced time and resource for scientific assessment increases uncertainty and its potential implications for decision‐making.

Decisions on how far to refine the assessment and whether to obtain additional data may be taken by assessors when they fall within the time and resources agreed for the assessment. Actions that require additional time or resources should be decided in consultation between assessors and decision‐makers. Ultimately, it is for decision‐makers to decide when the characterisation of uncertainty is sufficient for decision‐making and when further refinement is needed, taking into account the time and costs involved.

3.3. Questions for assessment by EFSA

Questions for assessment by EFSA may be posed by the European Commission, the European Parliament, and EU Member State or by EFSA itself.4 Many questions to EFSA request assessment of consequences (risks, benefits, etc.) of current policy, conditions or practice. They may also request scientific assessment of consequences in alternative scenarios, e.g. under different risk management options. It is important that the scenarios and consequences of interest are well‐defined (see Section 5.1). This should be achieved through the normal procedures for initiation of EFSA assessments, including agreement and interpretation of the ToR. Occasionally, decision‐makers pose open questions to EFSA, where the scenarios or consequences of interest are not known in advance, e.g. a request to review the state of scientific knowledge on a particular subject. In such cases, assessors should ensure instead that their conclusions refer to well‐defined scenarios and consequences.

3.4. Acceptable level of uncertainty

The Food Regulation and other EU law relating to risks of different types frequently refer to the need to ‘ensure’ protection from adverse effects. The word ‘ensure’ implies a societal requirement for some degree of certainty that adverse effects will not occur, or be managed within acceptable limits. Complete certainty is never possible, however. Deciding how much certainty is required or, equivalently, what level of uncertainty would warrant precautionary action, is the responsibility of decision‐makers, not assessors. This level of certainty could be described as ‘practical certainty’, as it is sufficient for the practical purpose at hand: this concept may be helpful in situations where decision‐makers need an unqualified positive or negative conclusion (see Sections 3.1 and 3.5). It may be helpful if the decision‐makers can specify in advance how much uncertainty is acceptable for a particular question, e.g. about whether a quantity of interest will exceed a given level. This is because the required level of certainty has implications for what outputs should be produced from uncertainty analysis, e.g. what probability levels should be used for confidence intervals. Also, it may reduce the need for the assessors to consult with the decision‐makers during the assessment, when considering how far to refine the assessment. Often, however, the decision‐makers may not be able to specify in advance the level of certainty that is sought or the level of uncertainty that is acceptable, e.g. because this may vary from case to case depending on the costs and benefits involved. Another option is for assessors to provide results for multiple levels of certainty, e.g. confidence intervals with a range of confidence levels, so that decision‐makers can consider at a later stage what level of uncertainty to accept. Alternatively, as stated in Section 3.1 above, partial information on uncertainty may be sufficient for the decision‐makers provided it meets or exceeds their required level of certainty: e.g. an approximate probability (see Section 5.10) or appropriate ‘conservative’ assessment (see Section 5.8).

3.5. Expression of uncertainty in assessment conclusions

In its Opinion on risk terminology, the EFSA Scientific Committee recommended that ‘Scientific Panels should work towards more quantitative expressions of risk and uncertainty whenever possible, i.e. quantitative expression of the probability of the adverse effect and of any quantitative descriptors of that effect (e.g. frequency and duration), or the use of verbal terms with quantitative definitions. The associated uncertainties should always be made clear, to reduce the risk of over‐precise interpretation’ (EFSA Scientific Committee, 2012). The reasons for quantifying uncertainty are discussed in Section 4, together with an overview of different forms of qualitative and quantitative expression. This section considers the implications for interaction between assessors and decision‐makers in relation to the assessment conclusions.

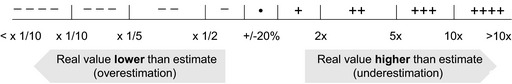

Ranges and probabilities are the natural metric for quantifying uncertainty and can be applied to any well‐defined question or quantity of interest (see Section 5.1). This means that the question for assessment, or at least the eventual conclusion, needs to be well‐defined, in order for its uncertainty to be assessed. For example, in order to say whether an estimate might be an over‐ or under‐estimate, and to what degree, it is necessary to specify what the assessment is required to estimate. Therefore, if this has not been specified precisely in the ToR (see Section 3.1), assessors should provide a series of alternative estimates (e.g. for different percentiles of the population), each with a characterisation of uncertainty, so that the decision‐makers can choose which to act on.

If qualitative terms are used to describe the degree of uncertainty, they should be clearly defined with objective scientific criteria (EFSA Scientific Committee, 2012). Specifically, the definition should identify the quantitative expression of uncertainty associated with the qualitative term as is done, for example, in the approximate probability scale shown in Table 3 (Section 11.3.3).

Table 3.

Summary evaluation of which types of assessment subject (questions or quantities of interest, see Section 5.1) each method can be applied to, and which forms of uncertainty expression the provide (defined in Section 4.1)

| Method | Types of assessment subject | Forms of uncertainty expression provided |

|---|---|---|

| Expert group judgement | Questions and quantities | All |

| Expert knowledge elicitation (EKE) | Questions and quantities | All |

| Descriptive expression | Questions and quantities | Descriptive |

| Ordinal scales | Questions and quantities | Ordinal |

| Matrices | Questions and quantities | Ordinal |

| NUSAP | Questions and quantities | Ordinal |

| Uncertainty table for quantities | Quantities | Ordinal, range or probability bound |

| Uncertainty table for questions | Questions | Ordinal and probability |

| Evidence appraisal tools | Questions and quantities | Descriptive and ordinal |

| Interval Analysis | Quantities | Range |

| Confidence Intervals | Quantities | Range (with confidence level) |

| The Bootstrap | Quantities | Distribution |

| Bayesian inference | Questions and quantities | Distribution/probability |

| Probability calculations for logic models | Questions | Probability |

| Probability bounds analysis | Quantities | Probability bound |

| Monte Carlo | Questions and quantities | Distribution/probability |

| Approximate probability calculations | Quantities | Distribution |

| Conservative assumptions | Quantities | Bound or probability bound |

| Sensitivity analysis | Questions and quantities | Sensitivity of output to input uncertainty |

The Scientific Committee recognises, however, that while the impact of uncertainty must be reported clearly and unambiguously, this must be done in a form that is compatible with the requirements of decision‐makers and any legislation applicable to the assessment in hand (Section 1.4). For some types of assessment, decision‐makers need EFSA to provide an unqualified positive or negative conclusion, for reasons explained in Section 3.1. The positive or negative conclusion does not imply that there is complete certainty, since this is never achieved, but that the level of certainty is sufficient for the purpose of decision‐making (‘practical certainty’, see Section 3.4). In such cases, the assessment conclusion and summary may simply report the positive or negative conclusion but, for transparency, the justification for the conclusion should be documented somewhere, e.g. in the body of the assessment or an annex. In some cases, justification would comprise a quantitative expression of the uncertainty that is present and confirmation that this reaches the level of practical certainty set by, or agreed with, decision‐makers. However, in cases where a standardised procedure has been used, and no non‐standard uncertainties have been identified, this is sufficient to justify practical certainty without further analysis (see Section 7.1.2). In all cases, if the criteria for practical certainty are not met, then either the uncertainty should be expressed quantitatively, or assessors should report that their assessment is inconclusive and that they ‘cannot conclude’ on the question.

Sometimes it may not be possible to quantify uncertainty (Section 5.12). In such cases, assessors must report that the probability of different answers is unknown and avoid using any language that could be interpreted as implying a probability statement (e.g. ‘likely’, ‘unlikely’), as this would be misleading. In addition, as stated previously by the Scientific Committee (EFSA Scientific Committee, 2012b), the assessors should avoid any verbal expressions that have risk management connotations in everyday language, such us ‘negligible’ and ‘concern’. When used without further definition, such expressions imply two simultaneous judgements: a judgement about the probability (or approximate probability) of adverse effects, and a judgement about the acceptability of that probability. The first of these judgements is within the remit of assessors, but the latter is not.

In all cases, it is essential that there should be no incompatibility between the detailed reporting of the uncertainty analysis and the assessment conclusions or summary. In principle, no such incompatibility should occur, because sound scientific conclusions will take account of relevant uncertainties, and therefore, should be compatible with an appropriate analysis of those uncertainties. If, for example, an unqualified positive or negative conclusion is reported, implying practical certainty, the supporting analysis should justify this. If there appears to be any incompatibility, assessors should review and if necessary revise both the uncertainty analysis and the conclusion to ensure that they are compatible with one another and with what the science supports.

The remainder of this document sets out a framework and principles for assessing uncertainty using methods and procedures that address the needs identified above, including the need to distinguish appropriately between risk assessment and risk management, and the requirement for flexibility to operate within varying limitations on timescale and resource so that each individual assessment can be fit for purpose.

4. Qualitative and quantitative approaches to expressing uncertainty

This section considers the role of qualitative and quantitative approaches to expressing uncertainty. The role of qualitative and quantitative approaches in other parts of scientific assessment, and their implications for uncertainty analysis, are discussed in Section 5.14.

4.1. Types of qualitative and quantitative expression

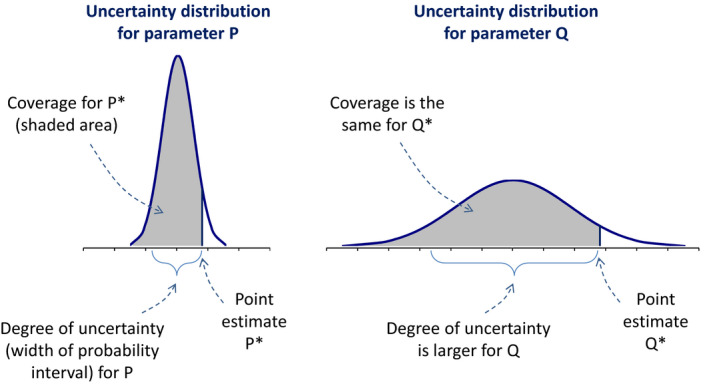

Expression of uncertainty requires two components: expression of the range of possible true answers to a question of interest, or a range of possible true values for a quantity of interest, and some expression of the probabilities of the different answers or values. Quantitative approaches express one or both of these components on a numerical scale. Qualitative approaches express them using words, categories or labels. They may rank the magnitudes of different uncertainties, and are sometimes given numeric labels, but they do not quantify the magnitudes of the uncertainties nor their impact on an assessment conclusion.

It is useful to distinguish descriptive expression and ordinal scales as different categories of qualitative expression: descriptive expression allows free choice of language to characterise uncertainty, while ordinal scales provide a standardised and ordered scale of qualitative expressions facilitating comparison of different uncertainties. It is also useful to distinguish different categories of quantitative expression, which differ in the extent to which they quantify uncertainty. A complete quantitative expression of uncertainty would specify all the answers or values that are considered possible and probabilities for them all. Partial quantitative expression provides only partial information on the probabilities and in some cases partial information on the possibilities (specifying a selection of possible answers or values). Partial quantitative expression requires less information or judgements but may be sufficient for decision‐making in some assessments, whereas other cases may require fuller quantitative expression.

Different types of qualitative and quantitative expression of uncertainty are described in Box 1 below. Note that when the answer to a question is expressed qualitatively uncertainty about it can still be expressed quantitatively, provided the question is well‐defined (see Section 5.1). Methods that can provide the different forms of qualitative and quantitative expression are summarised in Section 11.

Box 1: Differing ways of expressing uncertainty

Qualitative expression

Descriptive expression: Uncertainty described in narrative text or characterised using verbal terms without any quantitative definition.

Ordinal scale: Uncertainty described by ordered categories, where the magnitude of the difference between categories is not quantified.

Quantitative expression

Individual values: Uncertainty partially quantified by specifying some possible values, without specifying what other values are possible or setting upper or lower limits.

Bound: Uncertainty partially quantified by specifying either an upper limit or a lower limit on a quantitative scale, but not both.

Range: Uncertainty partially quantified by specifying both a lower and upper limit on a quantitative scale, without expressing the probabilities of different values within the limits.

Probability: Uncertainty about a binary outcome (including the answer to a yes/no question) fully quantified by specifying the probability or approximate probability of both possible outcomes.

Probability bound: Uncertainty about a non‐variable quantity partially quantified by specifying a bound or range with an accompanying probability or approximate probability.

Distribution: Uncertainty about a non‐variable quantity fully quantified by specifying the probability of all possible values on a quantitative scale.

When using bounds or ranges it is important to specify whether the limits are absolute, i.e. contain all possible values, or contain the ‘true’ value with a specified probability (e.g. 95%), or contain the true value with at least a specified probability (e.g. 95% or more). When an assessment factor (e.g. for species differences in toxicity) is said to be ‘conservative’, this implies that it is a bound that is considered or assumed to have sufficient probability of covering the uncertainty (and, in many cases, variability) which the factor is supposed to address. What level of probability is considered sufficient involves a risk management judgement and should, for transparency, be specified.

As well as differing in the amount of information or judgements they require, the different categories of quantitative expression differ in the information they provide to decision‐makers. Individual values give only examples of possible values, although often accompanied by a qualitative expression of where they lie in the possible range (e.g. ‘conservative’). A bound for a quantity can provide a conservative estimate, while a range provides both a conservative estimate and an indication of the potential for less adverse values, and therefore, the potential benefits of reducing uncertainty. A distribution provides information on the probabilities of all possible values of a quantity: this is useful when decision‐makers need information on the probabilities of multiple values with differing levels of adversity.

Assessments using probability distributions to characterise variability and/or uncertainty are often referred to as ‘probabilistic’. The term ‘deterministic’ is often applied to assessments using individual values without probabilities (e.g. IPCS, 2005, 2014; EFSA, 2007; ECHA, 2008).

The term ‘semi‐quantitative’ is not used in this document. Elsewhere in the literature it is sometimes applied to methods that are, in some sense, intermediate between fully qualitative and fully quantitative approaches. This might be considered to include ordinal scales with qualitative definitions, since the categories have a defined order but the magnitude of differences between categories and their probabilities are not quantified. Sometimes, ‘semi‐quantitative’ is used to describe an assessment that comprises a mixture of qualitative and quantitative approaches.

4.2. The role of quantitative expression in uncertainty analysis

The Codex Working Principles on Risk Analysis (Codex, 2016) state that ‘Expression of uncertainty or variability in risk estimates may be qualitative or quantitative, but should be quantified to the extent that is scientifically achievable’. A similar statement is included in EFSA's (2009) guidance on transparency. Advantages and disadvantages of qualitative and quantitative expression are discussed in the EFSA Scientific Committee (2012) Scientific Committee Opinion on risk terminology, which recommends that EFSA should work towards more quantitative expression of both risk and uncertainty.

The principal reasons for preferring quantitative expressions of uncertainty are as follows:

Qualitative expressions are ambiguous. The same word or phrase can mean different things to different people as has been demonstrated repeatedly (e.g. Theil, 2002; Morgan, 2014). As a result, decision‐makers may misinterpret the assessors’ assessment of uncertainty, which may result in suboptimal decisions. Stakeholders may also misinterpret qualitative expressions of uncertainty, which may result in overconfidence or unnecessary alarm.

Decision‐making often depends on quantitative comparisons, for example, whether a risk exceeds some acceptable level, or whether benefits outweigh costs. Therefore, decision‐makers need to know whether the uncertainty affecting an assessment is large enough to alter the comparison in question, e.g. whether the uncertainties around an estimated exposure of 10 and an estimated safe dose of 20 are large enough that the exposure could in reality exceed the safe dose. This requires uncertainty to be expressed in terms of how different each estimate might be, and how probable that is.

If assessors provide only a single answer or estimate and a qualitative expression of the uncertainty, decision‐makers will have to make their own quantitative interpretation of how different the real answer or value might be. Even if this is not intended or explicit, such a judgement will be implied when the decision is made. Therefore, at least an implicit quantitative judgement is, in effect, unavoidable, and this is better made by assessors, since they are better placed to understand the sources of uncertainty affecting the assessment and judge their effect on its conclusion.

Qualitative expressions often imply, or may be interpreted as implying, judgements about the implications of uncertainty for decision‐making, which are outside the remit of EFSA. For example, ‘low uncertainty’ tends to imply that the uncertainty is too small to influence decision‐making, and ‘no concern’ implies firmly that this is the case. Qualitative terms can be used if they are based on scientific criteria agreed with decision‐makers, so that assessors are not making risk management judgements (see Section 3.5). However, for transparency they need to be accompanied by quantitative expression of uncertainty, to make clear what range and probability of consequences is being accepted.

When different assessors work on the same assessment, e.g. in a Working Group, they cannot reliably understand each other's assessment of uncertainty if it is expressed qualitatively. Assessors may assess uncertainty differently yet agree on a single qualitative expression, because they interpret it differently.

Expressing uncertainties in terms of their quantitative impact on the assessment conclusion will reveal differences of opinion between experts working together on an assessment, enabling a more rigorous discussion and hence improving the quality of the final conclusion.

It has been demonstrated that people often perform poorly at judging combinations of probabilities (Gigerenzer, 2002). This implies they may perform poorly at judging how multiple uncertainties in an assessment combine. It may therefore be more reliable to divide the uncertainty analysis into parts and quantify uncertainty separately for those parts containing important sources of uncertainty, so that they can be combined by calculation (see Section 7.2).

Quantifying uncertainty enables decision‐makers to weigh the probabilities of different consequences against other relevant considerations (e.g. cost, benefit). Unquantified uncertainties cannot be weighed in this way and make decision‐making more difficult (Section 5.13). It is therefore important to quantify the overall impact of as many as possible of the identified uncertainties, and identify any that cannot be quantified. The most direct way to achieve this is to try to quantify the overall impact of all identified uncertainties, as this will reveal any that cannot be quantified.

Many concerns and objections to quantitative expression of uncertainty have been raised by various parties during the public consultation and trial period for this document and in the literature. These are listed in Box 2; many, not all, relate to the role of expert judgement in quantifying uncertainty. The Scientific Committee has considered these concerns carefully and concludes that all of them can be addressed, either by improved explanation of the principles involved or through the use of appropriate methods for obtaining and using quantitative expressions. These are also summarised in Box 2.

Having considered the advantages of quantitative expression, and addressed the concerns, the Scientific Committee concludes that assessors should express in quantitative terms the combined effect of as many as possible of the identified sources of uncertainty, while recognising that how this is reported must be compatible with the requirements of decision‐makers and legislation (Section 3.5). Any sources of uncertainty that assessors are unable to include in their quantitative expression, for whatever reason, must be documented qualitatively and reported alongside it, because they will have significant implications for decision‐making (see Section 5.13). Together, the quantified uncertainty and the description of unquantified uncertainties provide the overall characterisation of uncertainty, and express it as unambiguously as is possible. The role of qualitative approaches in this is discussed in Section 4.3.

This recommended approach is thus consistent with the requirement of the Codex Working Principles for Risk Analysis (Codex, 2016) and the EFSA Guidance on Transparency (EFSA, 2010a,b), which state that uncertainty be ‘quantified to the extent that is scientifically achievable’. However, the phrase ‘scientifically achievable’ requires careful interpretation. It does not mean that uncertainties should be quantified using the most sophisticated scientific methods available (e.g. a fully probabilistic analysis); this would be inefficient in cases where simpler methods of quantification would provide sufficient information on uncertainty for decision‐making. Rather, scientifically achievable should be interpreted as referring to including as many as possible of the identified sources of uncertainty within the quantitative assessment of overall uncertainty, and omitting only those which the assessors are unable to quantify.

The recommended approach does not imply a requirement to quantify ‘unknown unknowns’ or ignorance. These are always potentially present, but cannot be included in assessment, as the assessors are unaware of them (see Section 5.13). The recommended approach refers to the immediate output of the assessment, and does not imply that all communications of that output should also be quantitative. It is recognised that quantitative information may raise issues for communication with stakeholders and the public. These issues and options for addressing them are discussed in Section 16.

Box 2: Common concerns and objections to quantitative expression of uncertainty, and how they are addressed by the approach developed in this document and the accompanying Guidance.

Quantifying uncertainty requires complex computations, or excessive time or resource: most of the options in the Guidance do not require complex computations, and the methods are scalable to any time and resource limitation, including urgent situations.

Quantifying uncertainty requires extensive data: uncertainty can be quantified by expert judgement for any well‐defined question or quantity (Section 5.1), provided there is at least some relevant evidence.

Data are preferable to expert judgement: the Guidance recommends use of relevant data where available (see Section 5.9).

Subjectivity is unscientific: All judgement is subjective, and judgement is a necessary part of all scientific assessment. Even when good data are available, expert judgement is involved in evaluating and analysing them, and when using them in risk assessment.

Subjective judgements are guesswork and speculation: all judgements in EFSA assessments will be based on evidence and reasoning, which will be documented transparently (Section 5.9).

Expert judgement is subject to psychological biases: EFSA's guidance on uncertainty analysis and expert knowledge elicitation use methods designed to counter those biases (EFSA, 2014a; EFSA Scientific Committee, 2018).

Quantitative judgements are over‐precise: EFSA's methods produce judgements that reflect the experts’ uncertainty – if they feel they are over‐precise, they should adjust them accordingly.

Uncertainty is exaggerated: identify your reasons for thinking the uncertainty is exaggerated, and revise your judgements to take them into account.