Abstract

In 2013, EFSA published a comprehensive systematic review of epidemiological studies published from 2006 to 2012 investigating the association between pesticide exposure and many health outcomes. Despite the considerable amount of epidemiological information available, the quality of much of this evidence was rather low, and many limitations are likely to affect the results, so firm conclusions cannot be drawn. Studies that do not meet the ‘recognised standards’ mentioned in Regulation (EC) No 1107/2009 are thus not suited for risk assessment. In this Scientific Opinion, the EFSA Panel on Plant Protection Products and their Residues (PPR Panel) was requested to assess the methodological limitations of pesticide epidemiology studies and found that poor exposure characterisation was the major limitation. Frequent use of case–control studies as opposed to prospective studies was considered another limitation. Inadequate definition of, or deficiencies in, health outcomes need to be avoided, and reporting of findings could be improved in some cases. The PPR Panel proposed recommendations on how to improve the quality and reliability of pesticide epidemiology studies to overcome these limitations and to facilitate their appropriate use in risk assessment. The Panel recommended conducting systematic reviews and, where appropriate, meta‐analyses of pesticide observational studies as a useful methodology to understand the potential hazards of pesticides, exposure scenarios and methods for assessing exposure, exposure–response characterisation and risk characterisation. Finally, the PPR Panel proposed a methodological approach to integrate and weight multiple lines of evidence, including epidemiological data, for pesticide risk assessment. Biological plausibility can contribute to establishing causation.

Keywords: epidemiology, pesticides, risk assessment, quality assessment, evidence synthesis, lines of evidence, weight‐of‐evidence

Summary

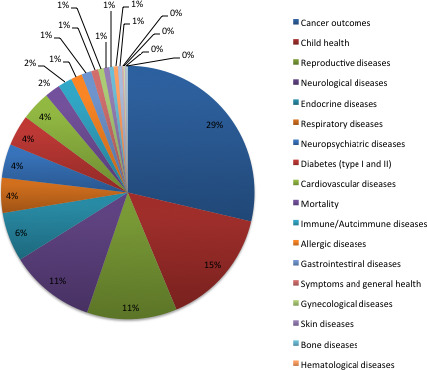

The European Food Safety Authority (EFSA) asked the Panel on Plant Protection Products and their Residues (PPR Panel) to develop a Scientific Opinion on the follow‐up of the findings of the External Scientific Report ‘Literature review of epidemiological studies linking exposure to pesticides and health effects’ (Ntzani et al., 2013). This report was based on a systematic review and meta‐analysis of epidemiological studies published between 2006 and 2012 and summarised the associations found between pesticide exposure and 23 major categories of human health outcomes. The most relevant significant associations were found for liver cancer, breast cancer, stomach cancer, amyotrophic lateral sclerosis, asthma, type II diabetes, childhood leukaemia and Parkinson's disease. While the inherent weaknesses of the epidemiological studies assessed do not allow firm conclusions to be drawn on causal relationships, the systematic review raised a concern about the suitability of regulatory studies to inform on specific and complex human health outcomes.

The PPR Panel developed a Scientific Opinion to address the methodological limitations affecting the quality of epidemiological studies on pesticides. This Scientific Opinion is intended only to assist the peer review process during the renewal of pesticides under Regulation (EC) No 1107/2009, where the evaluation of epidemiological studies, along with clinical cases and poisoning incidents following any kind of human exposure, if available, is a data requirement. Epidemiological data concerning exposures to pesticides in Europe will not be available before first approval of an active substance and so will not be expected to contribute to a draft assessment report (DAR). However, approval may previously have been granted for use of an active substance in another jurisdiction, and epidemiological data from that area may be considered relevant. Regulation (EC) No 1107/2009 requires a search of the scientific peer‐reviewed open literature, which includes existing epidemiological studies. This type of data is therefore better suited to the renewal process of active substances, in line with Regulation (EU) No 1141/2010, which indicates that ‘The dossiers submitted for renewal should include new data relevant to the active substance and new risk assessments’.

In this Opinion, the PPR Panel proposed a methodological approach specific for pesticide active substances to make appropriate use of epidemiological data for risk assessment purposes, and proposed recommendations on how to improve the quality and reliability of epidemiological studies on pesticides. In addition, the PPR Panel discussed and proposed a methodology for the integration of epidemiological evidence with data from experimental toxicology as both lines of evidence can complement each other for an improved pesticide risk assessment process.

First, the Opinion introduces the basic elements of observational epidemiological studies¹ and contrasts them with interventional studies, which are considered to provide the most reliable evidence in epidemiological research because the conditions for causal inference are usually met. The major observational study designs are described, together with the importance of a detailed description of pesticide exposure, the use of validated health outcomes and appropriate statistical analysis to model exposure–health relationships. External and internal study validity are also addressed, to account for the role of chance in the results and to ascertain whether factors other than exposure can distort the associations found. Several types of human data can contribute to the risk assessment process of pesticides, particularly to support hazard identification. Besides formal epidemiological studies, other sources of human data, such as case series, disease registries, poison control centre information, occupational health surveillance data and post‐marketing surveillance programmes, can provide useful information for hazard identification, particularly in the context of acute, specific health effects.

However, many of the existing epidemiological studies on pesticide exposure and health effects suffer from a range of methodological limitations or deficiencies (Terms of Reference (ToR) 1). The Panel notes that studying associations between exposure to pesticides and health outcomes in observational settings among humans is more challenging than in many other disciplines of epidemiology. This complexity lies in some specific characteristics of the field of pesticide epidemiology, such as the large number of active substances on the market (around 480 approved for use in the European Union (EU)), the difficulty of measuring exposure, and the frequent lack of quantitative (and qualitative) data on exposure to individual pesticides. The systematic appraisal of epidemiological evidence carried out in an EFSA external scientific report (Ntzani et al., 2013) identified a number of methodological limitations. Poor exposure characterisation is the major limitation of most existing studies, owing to the lack of direct and detailed exposure assessment for specific pesticides (e.g. use of generic pesticide definitions). Frequent use of case–control studies as opposed to prospective studies is also a limitation. Inadequate definition of, or deficiencies in, health outcomes, deficiencies in statistical analysis and poor quality reporting of research findings were identified as other limitations of some pesticide epidemiological studies. These limitations are to some extent responsible for the heterogeneity or inconsistency of the data, which makes it difficult to draw robust conclusions on causality. Given the small effect sizes for most of the outcomes addressed by Ntzani et al. (2013), bias arising from the study design may account for part of the observed associations.

The PPR Panel also provides a number of refinements (ToR 2) and recommendations (ToR 3) to improve future pesticide epidemiological studies that will benefit the risk assessment. The quality and relevance of epidemiological research can be enhanced by (a) an adequate assessment of exposure, preferentially by using personal exposure monitoring or biomarker concentrations of specific pesticides (or combinations of pesticides) at an individual level, reported in a way that minimises misclassification of exposure and allows for dose–response assessment; (b) a sufficiently valid and reliable outcome assessment (well defined clinical entities or validated surrogates); (c) adequately accounting for potentially confounding variables (including other known exposures affecting the outcomes); (d) conducting and reporting subgroup analyses (e.g. stratification by gender, age, etc.). A number of reporting guidelines and checklists developed specifically for studies on environmental epidemiology are of interest for epidemiological studies assessing pesticide exposures. This is the case for extensions of the modified STROBE (STrengthening the Reporting of OBservational studies in Epidemiology) criteria, among others, which include recommendations on what should be included in an accurate and complete report of an observational study.
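Why minimising exposure misclassification matters can be shown with a small worked example. The sketch below assumes a hypothetical case–control study and an exposure assessment method with an assumed sensitivity of 0.7 and specificity of 0.8, applied equally to cases and controls (non‐differential misclassification). All counts and parameter values are illustrative and are not taken from any pesticide study. Under these assumptions, a true odds ratio (OR) of 2.0 is observed as about 1.38, i.e. biased towards the null.

```python
def odds_ratio(exp_cases, unexp_cases, exp_controls, unexp_controls):
    """Odds ratio from the four cells of a case-control 2x2 table."""
    return (exp_cases * unexp_controls) / (unexp_cases * exp_controls)


def classified_exposed(true_exposed, total, sensitivity, specificity):
    """Expected number classified as exposed by an imperfect exposure measure.

    Truly exposed people are detected with probability `sensitivity`; truly
    unexposed people are wrongly labelled exposed with probability
    1 - `specificity`.
    """
    return sensitivity * true_exposed + (1 - specificity) * (total - true_exposed)


def observed_or(exp_cases, n_cases, exp_controls, n_controls, se, sp):
    """Expected observed OR when cases and controls share the same
    sensitivity/specificity (non-differential misclassification)."""
    obs_cases = classified_exposed(exp_cases, n_cases, se, sp)
    obs_controls = classified_exposed(exp_controls, n_controls, se, sp)
    return odds_ratio(obs_cases, n_cases - obs_cases,
                      obs_controls, n_controls - obs_controls)


# Hypothetical study: 500 cases (200 truly exposed), 500 controls (125 truly exposed)
true_or = odds_ratio(200, 300, 125, 375)
crude_or = observed_or(200, 500, 125, 500, se=0.7, sp=0.8)
print(f"true OR = {true_or:.2f}, observed OR = {crude_or:.2f}")
```

For a binary exposure, non‐differential misclassification of this kind generally pulls estimates towards the null, whereas differential misclassification (e.g. recall bias in case–control studies) can bias them in either direction.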

Exposure assessment can be improved at the individual level (direct and detailed exposure assessment for specific pesticides to provide a reliable dosimeter for the pesticide of concern, which can be supplemented with other direct measures such as biomonitoring). In addition, exposure can be assessed at the population level using registry data that can then be linked to electronic health records. This can provide studies with unprecedented sample sizes and information on exposure and subsequent disease. Geographical information systems (GIS) and small area studies might also serve as an additional way to estimate residential exposures. These more generic exposure assessments have the potential to identify general risk factors and may be important both for informing overall regulatory policies and for identifying matters for further epidemiological research. The development of ‐omic technologies also presents intriguing possibilities for improving exposure assessment through measurement of a wide range of molecules, from xenobiotics and metabolites in biological matrices (metabolomics) to complexes with DNA and proteins (adductomics). Omics have the potential to measure profiles or signatures of the biological response to cumulative exposure to complex chemical mixtures and allow a better understanding of biological pathways. Health outcomes can be refined by using validated biomarkers of effect, that is, quantifiable biochemical, physiological or other changes that are related to the level of exposure, are associated with a health impairment and help to elucidate a mechanistic pathway in the development of a disease.

The incorporation of epidemiological studies into regulatory risk assessment (ToR 4) represents a major challenge for scientists, risk assessors and risk managers. The findings of the different epidemiological studies can be used to assess associations between potential health hazards and adverse health effects, thus contributing to the risk assessment process. Nevertheless, despite the large amount of available data on associations between pesticide exposure and human health outcomes, the impact of such studies on regulatory risk assessment is still limited. Human data can be used at many stages of risk assessment; however, a single (not replicated) epidemiological study, in the absence of other studies on the same pesticide active substance, should not be used for hazard characterisation unless it is of high quality and meets the ‘recognised standards’ mentioned in Regulation (EC) No 1107/2009. As these ‘recognised standards’ are not detailed in the Regulation, a number of recommendations should be considered for optimal design and reporting of epidemiological studies to support regulatory assessment of pesticides. Although further specific guidance would be helpful, its development is beyond the ToR of this Opinion. Evidence synthesis techniques, such as systematic reviews and (where appropriate) meta‐analysis, offer a useful approach. While these tools allow generation of summary data, increased statistical power and more precise risk estimates by combining the results of all individual studies meeting the selection criteria, they cannot overcome methodological flaws or biases of individual studies. Systematic reviews and meta‐analyses of observational studies can have a large impact on risk assessment, as they provide information that strengthens the understanding of the potential hazards of pesticides, exposure scenarios and methods for assessing exposure, exposure–response characterisation and risk characterisation.
Although systematic reviews are also considered a potential tool for answering toxicological questions, their methodology would need to be adapted to the different lines of evidence.
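The gain in precision from combining studies, and its limits, can be illustrated with inverse‐variance fixed‐effect pooling, one standard meta‐analytic technique. The sketch below assumes three hypothetical studies reporting odds ratios with 95% confidence intervals; it recovers a log‐scale standard error from each interval and pools the log odds ratios. The study values are invented for illustration.

```python
import math

Z95 = 1.959964  # two-sided 95% standard normal quantile


def log_or_and_se(or_point, ci_low, ci_high):
    """Recover log(OR) and its standard error from a reported 95% CI."""
    return math.log(or_point), (math.log(ci_high) - math.log(ci_low)) / (2 * Z95)


def fixed_effect(studies):
    """Inverse-variance fixed-effect pooling of (log_or, se) pairs."""
    weights = [1 / se ** 2 for _, se in studies]
    pooled = sum(w * y for w, (y, _) in zip(weights, studies)) / sum(weights)
    pooled_se = math.sqrt(1 / sum(weights))
    return pooled, pooled_se


# Hypothetical studies reporting OR (95% CI); none is individually significant
reported = [(1.30, 0.90, 1.88), (1.20, 0.85, 1.69), (1.40, 0.95, 2.06)]
studies = [log_or_and_se(*r) for r in reported]
y, se = fixed_effect(studies)
low, high = math.exp(y - Z95 * se), math.exp(y + Z95 * se)
print(f"pooled OR = {math.exp(y):.2f} (95% CI {low:.2f}-{high:.2f})")
```

Although none of the hypothetical intervals excludes 1, the pooled interval is narrower than any single one and does. Pooling does not remove bias, however: if every study shares the same exposure misclassification or uncontrolled confounding, the pooled estimate inherits it, only more precisely.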

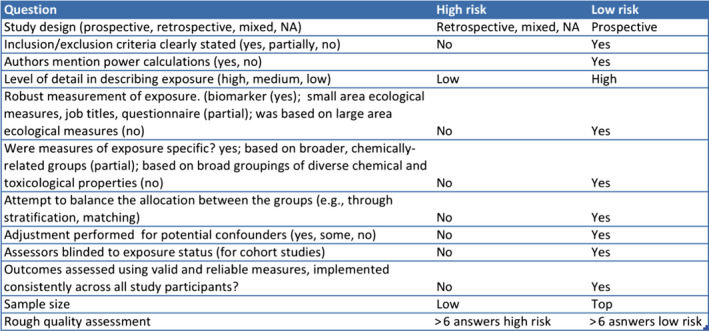

Study evaluation should be performed within a best evidence synthesis framework, as it provides an indication of the nature of the potential biases each specific study may have and an assessment of overall confidence in the epidemiological database. This Opinion reports the study quality parameters to be evaluated in single epidemiological studies and the associated weight (low, medium and high) for each parameter. Three basic categories are proposed as a first tier to organise human data with respect to risk of bias and quality: (a) low risk of bias and high/medium reliability; (b) medium risk of bias and medium reliability; (c) high risk of bias and low reliability because of serious methodological limitations or flaws that reduce the validity of results or make them largely uninterpretable for a potential causal association. These categories are intended to parallel the reliability and relevance rating of each stream of evidence in the EFSA peer review of active substances: acceptable, supplementary and unacceptable. Risk assessment should not be based on the results of epidemiological studies that fail to meet the well‐defined data quality standards needed to satisfy the ‘recognised standards’ mentioned in Regulation (EC) No 1107/2009.
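The three‐tier structure can be expressed as a simple decision rule. The sketch below is a hypothetical illustration only: the parameter names, the weights attached to them and the rule that combines per‐parameter risk‐of‐bias judgements into a tier are assumptions made for this example, not the criteria defined in this Opinion. Only the three categories and their parallel peer‐review ratings come from the text above.

```python
from enum import Enum


class Tier(Enum):
    # The three categories described above, with the parallel peer-review rating
    A = "low risk of bias, high/medium reliability -> acceptable"
    B = "medium risk of bias, medium reliability -> supplementary"
    C = "high risk of bias, low reliability -> unacceptable"


def assign_tier(appraisal):
    """Map per-parameter appraisals to a tier.

    `appraisal` maps a parameter name to (weight, risk), each one of
    "low", "medium" or "high". The decision rule below is a HYPOTHETICAL
    illustration, not the rule defined in the Opinion.
    """
    risks_by_weight = {"low": [], "medium": [], "high": []}
    for weight, risk in appraisal.values():
        risks_by_weight[weight].append(risk)
    # Assumed rule: a high risk of bias in a heavily weighted parameter is a serious flaw
    if "high" in risks_by_weight["high"]:
        return Tier.C
    # Assumed rule: moderate concerns in important parameters downgrade to tier B
    if "medium" in risks_by_weight["high"] or "high" in risks_by_weight["medium"]:
        return Tier.B
    return Tier.A


# Hypothetical appraisal of one study (parameter names and weights are illustrative)
study = {
    "exposure assessment": ("high", "medium"),
    "outcome assessment": ("high", "low"),
    "confounder control": ("medium", "low"),
    "reporting": ("low", "high"),
}
print(assign_tier(study).value)
```

Encoding the rule explicitly, whatever its final content, makes the basis for each study's rating transparent and reproducible across assessors.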

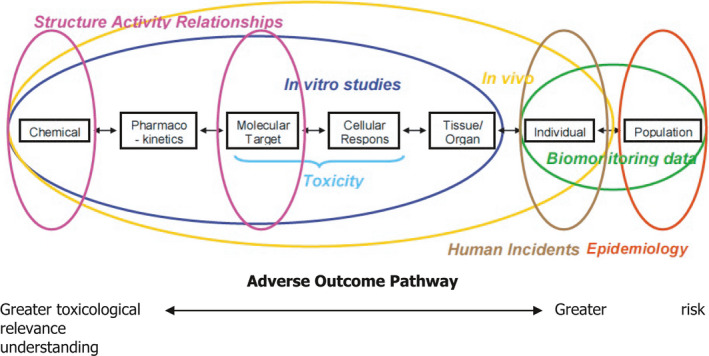

Epidemiological studies provide complementary data that can be integrated with data from in vivo laboratory animal studies, mechanistic in vitro models and ultimately in silico technology for pesticide risk assessment (ToR 4). The combination of all these lines of evidence can contribute to a Weight‐of‐Evidence (WoE) analysis in the characterisation of human health risks with the aim of improving decision‐making. Although the different sets of data can be complementary and confirmatory, and can thus strengthen confidence in one line of evidence through another, each may individually be insufficient and pose challenges for properly characterising human health risks. Hence, the four lines of evidence (epidemiology, animal, in vitro, in silico) make a powerful combination, particularly for chronic health effects of pesticides, which may take decades to become clinically manifest in an exposed human population.

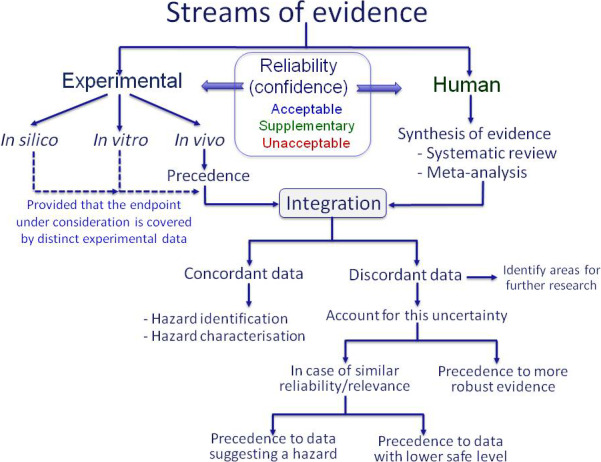

The first consideration is how well the health outcome under consideration is covered by existing toxicological and epidemiological studies on pesticides. When both types of studies are available for a given outcome/endpoint, both should be assessed for strengths and weaknesses before being used for risk assessment. Once the reliability of available human evidence (observational epidemiology and vigilance data), experimental evidence (animal and in vitro data) and non‐testing data (in silico studies) has been evaluated, the next step involves weighting these sources of data. This Opinion proposes an integrated approach in which all lines of evidence are considered in an overall WoE framework to better support the risk assessment. This framework relies on a number of principles indicating when one line should take precedence over another. The concordance or discordance between human and experimental data should be assessed in order to determine which data set should be given precedence. Although the totality of evidence should be assessed, the more reliable data should be given more weight, regardless of whether the data come from human or experimental studies. The more challenging situation is when study results are not concordant. In such cases, the reasons for the difference should be considered, and efforts should be made to develop a better understanding of the biological basis for the contradiction.

Human data on pesticides can help verify the validity of estimations based on extrapolation from the full toxicological database regarding target organs, dose–response relationships and the reversibility of toxic effects, and can provide reassurance on the extrapolation process without directly affecting the definition of reference values. Thus, pesticide epidemiological data can form part of the overall WoE of available data, using modified Bradford Hill criteria as an organisational tool to assess the likelihood of an underlying causal relationship.

1. Introduction

1.1. Regulatory data requirements regarding human health in pesticide risk assessment

Regulatory authorities in developed countries conduct a formal human risk assessment for each registered pesticide based on mandated toxicological studies, done according to specific study protocols, and estimates of likely human exposure.

In the European Union (EU), the procedure for the placing of plant protection products (PPP) on the market is laid down by Regulation (EC) No 1107/2009². Commission Regulations (EU) No 283/2013³ and No 284/2013⁴ set the data requirements for the evaluation and re‐evaluation of active substances and their formulations.

The data requirements regarding mammalian toxicity of the active substance are described in part A of Commission Regulation (EU) No 283/2013 for chemical active substances and in part B for microorganisms, including viruses. With regard to the requirements for pesticide active substances, reference to the use of human data may be found in different chapters of Section 5 related to different end‐points. For instance, data on toxicokinetics and metabolism that include in vitro metabolism studies on human material (microsomes or intact cell systems) belong to Chapter 5.1, which deals with studies of absorption, distribution, metabolism and excretion in mammals; in vitro genotoxicity studies performed on human material are described in Chapter 5.4 on genotoxicity testing; and specific studies such as acetylcholinesterase inhibition in human volunteers are found in Chapter 5.7 on neurotoxicity studies. Chapter 5.8 covers supplementary studies on the active substance, including specific studies such as pharmacological or immunological investigations.

Although the process of pesticide evaluation is mainly based on experimental studies, human data can add relevant information to that process. The requirements relating to human data are mainly found in Chapter 5.9 ‘Medical data’ of Regulation (EU) No 283/2013. This chapter covers medical reports following accidental or occupational exposure or incidents of intentional self‐poisoning, as well as monitoring studies such as surveillance of manufacturing plant personnel, among others. The information may be generated and reported through official reports from national poison control centres as well as epidemiological studies published in the open literature. The Regulation requires that ‘relevant’ information on the effects of human exposure, where available, shall be used to confirm the validity of extrapolations regarding exposure and conclusions with respect to target organs, dose–response relationships, and the reversibility of adverse effects.

Regulation (EC) No 1107/2009 also states that ‘where available, and supported with data on levels and duration of exposure, and conducted in accordance with recognised standards, epidemiological studies are of particular value and must be submitted’. However, there is no obligation for applicants to conduct epidemiological studies specific to the active substance undergoing the approval or renewal process. Rather, according to Regulation (EC) No 1107/2009, applicants submitting dossiers for approval of active substances shall provide ‘scientific peer‐reviewed public available literature […]. This should be on the active substance and its relevant metabolites dealing with side‐effects on health […] and published within the last ten years before the date of submission of the dossier’.

In particular, epidemiological studies on pesticides should be retrieved from the literature according to the EFSA Guidance entitled ‘Submission of scientific‐peer reviewed open literature for the approval of pesticide active substances under Regulation (EC) No 1107/2009’ (EFSA, 2011a), which follows the principles of the Guidance ‘Application of systematic review methodology to food and feed safety assessments to support decision‐making’ (EFSA, 2010a). As indicated in the EFSA Guidance, ‘the process of identifying and selecting scientific peer‐reviewed open literature for active substances, their metabolites, or plant protection products’ is based on a literature review which is systematic in the approach.

In the past, the submission of epidemiological studies, and more generally of human data, by applicants in Europe has sometimes been incomplete and/or has not been performed in compliance with current EFSA Guidance (EFSA, 2011a). This is probably because the mandatory requirement to perform an (epidemiological) literature search according to specific EFSA Guidance is relatively recent, having been introduced, for example, for AIR‐3 substances (Regulation AIR‐3: Reg. (EU) No 844/2012; Guidance Document SANCO/2012/11251 – rev.4).

The integration of epidemiological data with toxicological findings in the peer review process of pesticides in the EU should be encouraged but is still lacking. A recent and controversial example is the evaluation of glyphosate, in which significant efforts were made to include epidemiological studies in the risk assessment, but the conclusion was that these studies provided very limited evidence of an association between glyphosate and health outcomes.

In the case of the peer review of 2,4‐D, most of the epidemiological data were not used in the risk assessment because it was critical to know the impurity profile of the active substance and this information was not available in the publications (as frequently happens in epidemiological studies). Overall, within the European regulatory system, there is no example of a pesticide active substance approval being influenced by epidemiological data.

Now that a literature search including epidemiological studies is mandatory and guidance is in place (EFSA, 2011a), a more consistent approach can facilitate risk assessment. However, no framework has been established for assessing such epidemiological information in the regulatory process. In particular, none of the classical criteria used for the evaluation of these studies (e.g. study design, use of odds ratios and relative risks, potential confounders, multiple comparisons, assessment of causality) is included in the current regulatory framework. It follows that specific criteria or guidance for the appropriate use of epidemiological findings when writing and peer reviewing Draft Assessment Reports (DARs) or Renewal Assessment Reports (RARs) are warranted. The EFSA Stakeholder Workshop (EFSA, 2015a) anticipated that the availability of more robust and methodologically sound studies presenting accurate information on exposure would bolster the regulation of pesticides in the EU.
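As an illustration of two of the classical measures mentioned above, the sketch below computes a relative risk (RR) and an odds ratio (OR), each with a log‐scale (Wald) 95% confidence interval, from a hypothetical cohort 2×2 table. The counts are invented for illustration, and the Wald interval is one common choice among several.

```python
import math

Z95 = 1.959964  # two-sided 95% standard normal quantile


def two_by_two(a, b, c, d):
    """Effect measures for a cohort 2x2 table.

    a: exposed with outcome      b: exposed without outcome
    c: unexposed with outcome    d: unexposed without outcome
    Returns (RR, RR 95% CI, OR, OR 95% CI) using log-scale Wald intervals.
    """
    rr = (a / (a + b)) / (c / (c + d))
    se_log_rr = math.sqrt(1 / a - 1 / (a + b) + 1 / c - 1 / (c + d))
    or_ = (a * d) / (b * c)
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)

    def ci(est, se):
        # Symmetric interval on the log scale, back-transformed to the ratio scale
        return (math.exp(math.log(est) - Z95 * se),
                math.exp(math.log(est) + Z95 * se))

    return rr, ci(rr, se_log_rr), or_, ci(or_, se_log_or)


# Hypothetical cohort: 1,000 exposed and 1,000 unexposed workers
rr, rr_ci, or_, or_ci = two_by_two(a=30, b=970, c=15, d=985)
print(f"RR = {rr:.2f} (95% CI {rr_ci[0]:.2f}-{rr_ci[1]:.2f})")
print(f"OR = {or_:.2f} (95% CI {or_ci[0]:.2f}-{or_ci[1]:.2f})")
```

Because the outcome is rare in this example, the OR (about 2.03) is close to the RR (2.0); with common outcomes the two diverge, so the measure reported by each study should be checked before results are compared or pooled.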

Another potential challenge is synchronisation between the process of renewal of active substances and the output of epidemiological studies. Indeed, the planning, conduct, and analysis of epidemiological studies often require a substantial amount of time, especially where interpretation of data is complex.

1.2. Background and Terms of Reference as provided by the requestor

In 2013, the European Food Safety Authority (EFSA) published an External scientific report ‘Literature review on epidemiological studies linking exposure to pesticides and health effects’ carried out by the University of Ioannina Medical School (Ntzani et al., 2013). The report is based on a systematic review of epidemiological studies published between 2006 and 2012 and summarises the associations between pesticide exposure and any health outcome examined (23 major categories of human health outcomes). In particular, a statistically significant association was observed through fixed‐ and random‐effects meta‐analyses between pesticide exposure and the following health outcomes: liver cancer, breast cancer, stomach cancer, amyotrophic lateral sclerosis, asthma, type II diabetes, childhood leukaemia and Parkinson's disease.
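Fixed‐ and random‐effects meta‐analyses differ in how they treat between‐study heterogeneity. The sketch below implements the DerSimonian–Laird random‐effects estimator, one common choice (the specific estimator used by Ntzani et al. (2013) is not stated here), together with Cochran's Q and the I² statistic. The five log odds ratios and standard errors are hypothetical.

```python
import math


def dersimonian_laird(log_effects, ses):
    """Random-effects pooling (DerSimonian-Laird) with Cochran's Q and I^2."""
    w = [1 / s ** 2 for s in ses]
    fixed = sum(wi * y for wi, y in zip(w, log_effects)) / sum(w)
    q = sum(wi * (y - fixed) ** 2 for wi, y in zip(w, log_effects))
    df = len(log_effects) - 1
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c)  # between-study variance, truncated at zero
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0
    # Random-effects weights add tau^2 to each within-study variance
    w_star = [1 / (s ** 2 + tau2) for s in ses]
    pooled = sum(wi * y for wi, y in zip(w_star, log_effects)) / sum(w_star)
    pooled_se = math.sqrt(1 / sum(w_star))
    return fixed, pooled, pooled_se, tau2, q, i2


# Hypothetical log odds ratios and standard errors from five studies
log_ors = [math.log(x) for x in (0.9, 1.1, 1.8, 2.5, 1.3)]
ses = [0.15, 0.20, 0.18, 0.25, 0.12]
fixed, pooled, se, tau2, q, i2 = dersimonian_laird(log_ors, ses)
print(f"fixed OR = {math.exp(fixed):.2f}, random OR = {math.exp(pooled):.2f}")
print(f"Q = {q:.1f}, I^2 = {100 * i2:.0f}%, tau^2 = {tau2:.3f}")
```

When heterogeneity is large, the random‐effects interval widens to reflect it; a high I² is a prompt to investigate sources of inconsistency, such as differing exposure definitions, rather than a reason to rely on the pooled estimate alone.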

Despite the large number of research articles and analyses (> 6,000) available, the authors of the report could not draw any firm conclusions for the majority of the health outcomes. This observation is in line with previous studies assessing the association between the use of pesticides and the occurrence of adverse human health effects, all of which acknowledge that such epidemiological studies suffer from a number of limitations and large heterogeneity of data. The authors especially noted that broad pesticide definitions in the epidemiological studies limited the value of the results of meta‐analyses. Also, the scope of the report did not allow in‐depth analysis of the associations between pesticide exposure and specific health outcomes. Nonetheless, the report highlights a number of health outcomes where further research is needed to draw firmer conclusions regarding their possible association with pesticide exposures.

Nevertheless, the outcomes of the External scientific report are in line with other similar studies published in Europe⁵,⁶ and raise a number of questions and concerns with regard to pesticide exposure and its associations with human health outcomes. Furthermore, the results of the report open the way for discussion on how to integrate results from epidemiological studies into pesticide risk assessments. This is particularly important for the peer‐review team at EFSA dealing with the evaluation for approval of plant protection products, for which the peer review needs to evaluate epidemiological findings according to Commission Regulation (EU) No 283/2013. The regulation states that applicants must submit ‘relevant’ epidemiological studies, where available.

For the Scientific Opinion, the PPR Panel will discuss the associations between pesticide exposure and human health effects observed in the External scientific report (Ntzani et al., 2013) and how these findings could be interpreted in a regulatory pesticide risk assessment context. Hence, the PPR Panel will systematically assess the epidemiological studies collected in the report by addressing major data gaps and limitations of the studies and provide related recommendations.

The PPR Panel will specifically:

1. collect and review all sources of gaps and limitations, based on (but not necessarily limited to) those identified in the External scientific report in regard to the quality and relevance of the available epidemiological studies.

2. based on the gaps and limitations identified in point 1, propose potential refinements for future epidemiological studies to increase the quality, relevance and reliability of the findings and how they may impact pesticide risk assessment. This may include study design, exposure assessment, data quality and access, diagnostic classification of health outcomes, and statistical analysis.

3. identify areas in which information and/or criteria are insufficient or lacking and propose recommendations for how to conduct pesticide epidemiological studies in order to improve and optimise the application in risk assessment. These recommendations should include harmonisation of exposure assessment (including use of biomonitoring data), vulnerable population subgroups and/or health outcomes of interest (at biochemical, functional, morphological and clinical level) based on the gaps and limitations identified in point 1.

4. discuss how to make appropriate use of epidemiological findings in risk assessment of pesticides during the peer review process of draft assessment reports, e.g. weight‐of‐evidence (WoE) as well as integrating the epidemiological information with data from experimental toxicology, adverse outcome pathways (AOP), mechanisms of action, etc.

The PRAS Unit will consult the Scientific Committee on the consensual approach to EFSA's overarching scientific areas⁷, including the integration of epidemiological studies in risk assessment.

1.3. Interpretation of the Terms of Reference

In the Terms of Reference (ToR), EFSA requested the PPR Panel to write a Scientific Opinion on the follow‐up of the results from the External Scientific Report on a systematic review of epidemiological studies published between 2006 and 2012 linking exposure to pesticides and human health effects (Ntzani et al., 2013). According to Commission Regulation (EU) No 283/2013, the integration of epidemiological data into pesticide risk assessment is important for the peer review process of DARs and RARs of active substances for EU approval and their intended use as plant protection products.

In its interpretation of the terms of reference, the PPR Panel will develop a Scientific Opinion to address the methodological limitations identified in epidemiological studies on pesticides and to make recommendations to the sponsors of such studies on how to improve them in order to facilitate their use for regulatory pesticide risk assessment, particularly for substances in the post‐approval period. The PPR Panel notes that experimental toxicology studies also present limitations related to their methodology and quality of reporting; however, the assessment of these limitations is beyond the ToR of this Opinion.

This Scientific Opinion is intended to assist the peer review process during the renewal of pesticides under Regulation (EC) No 1107/2009, where the evaluation of epidemiological studies, along with clinical cases and poisoning incidents following any kind of human exposure, if available, represents a data requirement. Epidemiological data concerning exposures to pesticides in Europe will not be available before first approval of an active substance (with the exception of incidents occurring during the manufacturing process, which are expected to be very unlikely) and so will not be expected to contribute to a DAR. However, approval may previously have been granted for use of an active substance in another jurisdiction, and epidemiological data from that area may be considered relevant. Regulation (EC) No 1107/2009 requires a search of the scientific peer‐reviewed open literature, which is expected to retrieve existing epidemiological studies. It is therefore recognised that epidemiological studies are more suitable for the renewal process of active substances, also in compliance with the provision of Regulation (EU) No 1141/2010 indicating that ‘The dossiers submitted for renewal should include new data relevant to the active substance and new risk assessments to reflect any changes in data requirements and any changes in scientific or technical knowledge since the active substance was first included in Annex I to Directive 91/414/EEC’.

The PPR Panel will specifically address the following topics:

Review inherent weaknesses affecting the quality of epidemiological studies (including gaps and limitations of the available pesticide epidemiological studies) and their relevance in the context of regulatory pesticide risk assessment. How can these weaknesses be addressed?

What are potential contributions of epidemiological studies that complement classical toxicological studies conducted in laboratory animal species in the area of pesticide risk assessment?

Discuss and propose a methodological approach specific to pesticide active substances on how to make appropriate use of epidemiological studies, focusing on how to address the gaps and limitations identified.

Propose refinements to practice and recommendations for better use of the available epidemiological evidence for risk assessment purposes. Discuss and propose a methodology for the integration of epidemiological information with data from experimental toxicology.

This Scientific Opinion, particularly Sections 2–4, is not intended to address the basics of epidemiology as a science. Readers wishing to explore specific aspects of this science in more depth are encouraged to consult a general epidemiology textbook (e.g. Rothman et al., 2008).

It should be taken into account that this Opinion is focussed only on pesticide epidemiology studies in the EU regulatory context and not from a general scientific perspective. Therefore, the actual limitations and weaknesses of experimental toxicology studies will not be addressed herein.

1.4. Additional information

In order to fully address topics 1–4 above (Section 1.3), attention has been paid to a number of relevant reviews of epidemiological studies and to the experience of other national and international bodies with expertise in epidemiology in general and in applying epidemiology to pesticide risk assessment specifically. These studies are considered in detail in Annex A, drawing on the experience of authors who have contributed constructively to understanding in this area. Annex A also records published studies that have been criticised for their lack of rigour, showing how unhelpful some published studies may be. The lessons learned from such good (and less‐good) practice have been incorporated into the main text by cross‐referring to Annex A. In this way, this Scientific Opinion aims to distil the arguments clearly and communicate them effectively in the main text without overwhelming the reader with all the supporting data, which nevertheless remain accessible.

In addition, Annex B contains a summary of the main findings of a project that EFSA outsourced in 2015 to further investigate the role of human biological monitoring (HBM) in occupational health and safety strategies as a tool for refined exposure assessment in epidemiological studies and to contribute to the evaluation of potential health risks from occupational exposure to pesticides (Bevan et al., 2017).

2. General framework of epidemiological studies on pesticides

This section introduces the basic elements of epidemiological studies on pesticides and contrasts them with other types of studies. For more detail, general epidemiology textbooks are recommended (Rothman et al., 2008; Thomas, 2009).

2.1. Study design

Epidemiology studies the distribution and determinants of health outcomes in human or other target species populations, to ascertain how, when and where diseases occur. This can be done through observational studies and intervention studies (i.e. clinical trials),8 which compare study groups subject to differing exposure to a potential risk factor. Both types of studies are carried out in a natural setting, which is a less controlled environment than laboratories.

Information on cases of disease occurring in a natural setting can also be systematically recorded in the form of case reports or case series of exposed individuals only. Although case series/reports do not compare study groups according to differing exposure, they may provide useful information, particularly on acute effects following high exposures, which makes them potentially relevant for hazard identification.

In randomised clinical trials, the exposure of interest is randomly allocated to subjects by the investigator and, whenever possible, subjects are blinded to their treatment, thereby eliminating potential bias arising from their knowledge of the treatment they receive. Because the investigator actively assigns the exposure, these are called intervention studies. Observational epidemiological studies differ from clinical intervention studies in that the exposure of interest is not randomly assigned to the subjects enrolled, and participants are often not blinded to their exposure. Because the investigator only observes exposures as they occur, these studies are called observational. As a result, randomised clinical trials rank higher in terms of design, as they provide unbiased estimates of average treatment effects.

The lack of random assignment of exposure in observational studies represents a key challenge, as other risk factors that are associated with the occurrence of disease may be unevenly distributed between exposed and non‐exposed subjects. This means that known confounders need to be measured and accounted for. However, there is always the possibility that unmeasured confounders remain unaccounted for, and unknown confounders, by definition, cannot be addressed. Furthermore, in observational studies participants are often unaware of their current or past exposure or may not recall it accurately (e.g. second‐hand smoke, dietary intake or occupational hazards), which may result in biased estimates of exposure if these are based on self‐report. For example, when cancer cases and controls are asked whether they have previously been exposed to a pesticide, the cancer cases may plausibly report their exposure differently from controls, even where past exposures did not differ between the two groups.

Traditionally, designs of observational epidemiological studies are classified as either ecological, cross‐sectional, case–control or cohort studies. This approach is based on the quality of exposure assessment and the ability to assess directionality from exposure to outcome. These differences largely determine the quality of the study (Rothman and Greenland, 1998; Pearce, 2012).

Ecological studies are observational studies in which exposure, outcome or both are measured at group level rather than at individual level, and the correlation between the two is then examined. Most often, exposure is measured at group level, while the use of health registries often allows health outcomes (cancer, mortality) to be extracted at individual level. These studies are often used when direct exposure assessment is difficult to achieve and in cases where large contrasts in exposure are needed (e.g. comparing levels between different countries or occupations). Given the lack of exposure and/or outcome data at individual level, these studies are useful for hypothesis generation, but results generally need to be followed up using more rigorous designs, either in humans or in experimental animals.

In cross‐sectional studies, exposure and health status are assessed at the same time, and prevalence rates (or incidence over a limited recent time) in groups varying in exposure are compared. In such studies, the temporal relationship between exposure and disease cannot be established since the current exposure may not be the relevant time window that leads to development of the disease. The inclusion of prevalent cases is a major drawback of (most) cross‐sectional studies, particularly for chronic long‐term diseases. Cross‐sectional studies may nevertheless be useful for risk assessment if exposure and effect occur more or less simultaneously or if exposure does not change over time.

Case–control studies compare estimates of past exposure among individuals who have already been diagnosed with the outcome of interest (i.e. cases) with those of a control group of subjects from the same population without that outcome. In population‐based incident case–control studies, cases are obtained from a well‐defined population, with controls selected from members of the population who are disease‐free at the time a case is incident. The advantages of case–control studies are that they require smaller sample sizes, less time and fewer resources than prospective studies, and they are often the only viable option when studying rare outcomes such as some types of cancer. In case–control studies, past exposure is most often assessed not by ‘direct’ measurement but through less certain methods, such as recall captured through interviewer‐administered or self‐administered questionnaires, or proxies such as job titles or task histories. Although case–control studies may allow for proper exposure assessment, they are prone to recall bias when estimating exposure. Other challenges include the selection of appropriate controls and the need for appropriate control of confounding.

In cohort studies, the population under investigation consists of individuals who are at risk of developing a specific disease or health outcome at some point in the future. Relevant exposures, confounding factors and health outcomes are assessed at baseline and at later follow‐ups (prospective cohort studies). After an appropriate follow‐up period, the frequency of occurrence of the disease is compared among groups differing in exposure to the previously assessed risk factor of interest. Prospective cohort studies therefore assess exposure to the risk factor and covariates of interest before the health outcome has occurred. Thus, they can provide better evidence for causal associations than the other designs mentioned above. In some cases, cohort studies may be based on estimates of past exposure. Such retrospective exposure assessment is less precise than direct measurement and prone to recall bias. As a result, the quality of evidence from cohort studies varies according to the actual method used to assess exposure and the level of detail with which information on covariates was collected. Cohort studies are particularly useful for the study of relatively common outcomes. If sufficiently large, they can also be used to appropriately address relatively rare exposures and health outcomes. Prospective cohort studies are also essential to study different critical exposure windows; longitudinal birth cohorts that follow children at regular intervals until adulthood are an example. Cohort studies may require a long observation period when outcomes have a long latency prior to onset of disease. Such studies are therefore both complex and expensive to conduct, and they are prone to loss to follow‐up.

2.2. Population and sample size

A key strength of epidemiological studies is that they study diseases in the very population about which conclusions are to be drawn, rather than in a proxy species. However, it will only rarely be possible to study the whole population. Instead, a sample will be drawn from the reference population for the purpose of the study. As a result, the effect size observed in the study sample may differ from that in the reference population if the former does not accurately reflect the latter. Observations made in a non‐representative sample may nevertheless be valid within that sample, but care should then be taken when extrapolating findings to the general population.

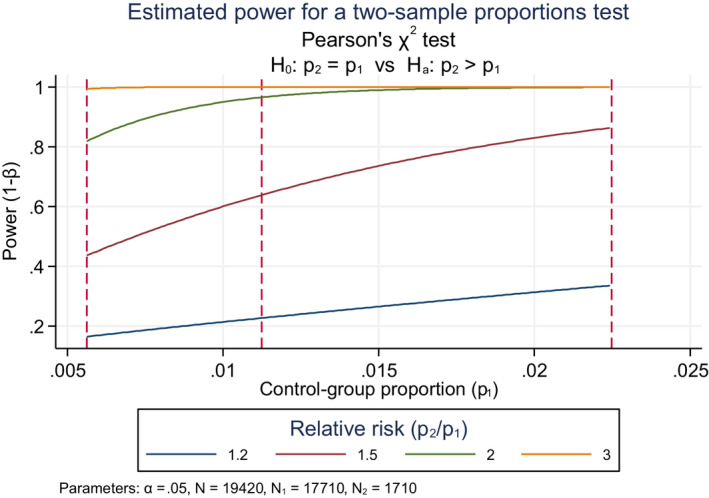

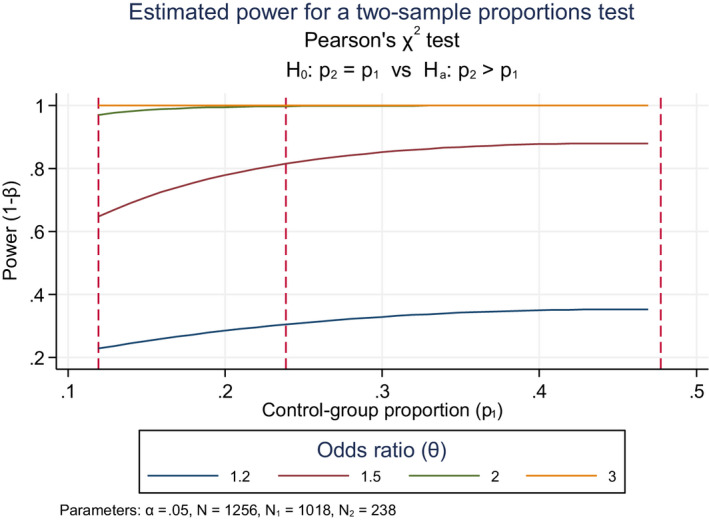

Having decided how to select individuals for the study, it is also necessary to decide the minimum number of participants to be enrolled. The sample size of a study should be large enough to ensure sufficient statistical power. Power (also called sensitivity) is the probability that a study will detect an effect of a given magnitude when that effect actually exists in the target population, and it is conventionally set at 80%. In other words, if the true effect is of the assumed size, there is an 80% probability that the study will detect it and a corresponding 20% probability of missing it (a false negative). Power analysis is often used to calculate the minimum sample size required to detect an effect of a given size with the desired probability. Small samples are also more likely to be unrepresentative. Statistical power is closely related to the inflation of risk estimates, which needs special attention when interpreting statistically significant results from small or underpowered studies (see Annex D).
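To make the link between power and sample size concrete, the sketch below applies the standard normal‐approximation formula for comparing two independent proportions (e.g. disease risk in exposed and unexposed groups) with a two‐sided test. The function name and the scenario (10% baseline risk, relative risk of 1.5) are hypothetical, and a real power analysis would usually also need to account for design features such as confounder adjustment, matching or clustering.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_group(p_unexposed: float, p_exposed: float,
                          alpha: float = 0.05, power: float = 0.80) -> int:
    """Minimum participants per group to detect p_exposed vs p_unexposed.

    Normal-approximation formula for two independent proportions,
    two-sided test at significance level `alpha`.
    """
    z = NormalDist()                      # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)    # about 1.96 for alpha = 0.05
    z_beta = z.inv_cdf(power)             # about 0.84 for 80% power
    p_bar = (p_unexposed + p_exposed) / 2
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p_unexposed * (1 - p_unexposed)
                                 + p_exposed * (1 - p_exposed))) ** 2
    return ceil(numerator / (p_exposed - p_unexposed) ** 2)


# Hypothetical scenario: 10% risk in the unexposed, relative risk of 1.5
n = sample_size_per_group(0.10, 0.15)
print(n, "participants per group")
```

Under these assumptions several hundred participants per group are needed; requiring 90% power or targeting a smaller effect increases that number further.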

Epidemiological studies, like toxicological studies in laboratory animals, are often designed to examine multiple endpoints, unlike clinical trials, which are designed and conducted to test a single hypothesis, e.g. the efficacy of a medical treatment. To put this in context, OECD guidance for laboratory animal toxicology test protocols for pesticides may prescribe a minimum number of animals to be enrolled in each treatment group. This does not guarantee adequate power for each of the many endpoints tested in the same study. It is therefore important to consider the power of a study appropriately when conducting both epidemiological and laboratory studies.

2.3. Exposure

The quality of the exposure measurements influences the ability of a study to correctly ascertain the causal relationship between the (dose of) exposure and a given adverse health outcome.

In toxicological studies in laboratory animals, the ‘treatment regime’, i.e. dose, frequency, duration and route, is well defined beforehand and its implementation can be verified. In a 90‐day oral study, for example, this often allows exposure to be expressed as the external dose administered daily, obtained by multiplying the amount of feed ingested every day by a study animal by the intended (and verified) concentration of the chemical in the feed. In the future, internal exposure will also have to be determined in the pivotal studies.
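The dietary dose calculation described above can be sketched as follows. Dividing the daily intake by body weight, so that the dose is expressed in mg/kg body weight (bw) per day, is common practice but goes beyond what the text states; the function name and the numbers (20 g feed per day, 100 mg/kg feed, 0.4 kg body weight) are purely illustrative.

```python
def daily_dose_mg_per_kg_bw(feed_intake_g_per_day: float,
                            concentration_mg_per_kg_feed: float,
                            body_weight_kg: float) -> float:
    """External oral dose from dietary administration, per kg body weight."""
    feed_intake_kg = feed_intake_g_per_day / 1000               # g -> kg feed
    intake_mg = feed_intake_kg * concentration_mg_per_kg_feed   # mg chemical/day
    return intake_mg / body_weight_kg                           # mg/kg bw per day


# Hypothetical rat: eats 20 g feed/day containing 100 mg/kg and weighs 0.4 kg
dose = daily_dose_mg_per_kg_bw(20, 100, 0.4)
print(f"{dose:.1f} mg/kg bw per day")
```

No equivalent calculation is possible in a human observational setting, where none of these inputs is fixed in advance.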

In the case of pesticides, estimating exposure in a human observational setting is difficult, as the dose, its frequency and duration over time, and the route of exposure are neither controlled nor well known.

Measuring the intensity, frequency and duration of exposure is often necessary to investigate meaningful associations. Exposure may involve a high dose over a relatively short period of time or a prolonged low‐level dose over a period of weeks to years. While the effects of acute, high‐dose pesticide exposure may appear within hours or days, the effects of chronic, low‐dose exposure may not appear until years later. In addition, a disease may require a minimal level of exposure, with its probability increasing with longer exposure.
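One simple way of combining intensity, frequency and duration is a cumulative, intensity‐weighted exposure metric summed over the periods of an individual's exposure history. The sketch below is a hypothetical illustration: the `ExposurePeriod` structure, the relative intensity scale and the example history are assumptions, not a method prescribed in this Opinion. Peak intensity is reported alongside the cumulative total because a single cumulative figure cannot distinguish acute, high‐dose patterns from chronic, low‐dose ones.

```python
from dataclasses import dataclass


@dataclass
class ExposurePeriod:
    """One period of a (hypothetical) individual exposure history."""
    days_per_year: float  # frequency: exposure days per year
    years: float          # duration of the period in years
    intensity: float      # relative intensity score (assumed scale)


def cumulative_exposure(periods: list[ExposurePeriod]) -> float:
    """Intensity-weighted exposure days summed over all periods."""
    return sum(p.days_per_year * p.years * p.intensity for p in periods)


def peak_intensity(periods: list[ExposurePeriod]) -> float:
    """Highest intensity score reached in any period."""
    return max(p.intensity for p in periods)


# Hypothetical history: long low-intensity use, then a shorter high-intensity period
history = [ExposurePeriod(20, 10, 1.0), ExposurePeriod(5, 4, 2.5)]
print(cumulative_exposure(history), peak_intensity(history))
```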

Absorption and metabolism may differ between routes of exposure (dermal, inhalation and oral). While dermal contact and inhalation are often the main routes of exposure in occupational settings, ingestion (food, water) may be the major route of pesticide exposure for the general population. Pharmacokinetic differences among individuals may result in differing systemic or tissue/organ doses even where external or absorbed doses appear similar.

2.4. Health outcomes

The term health outcome refers to a disease state, event, behaviour or condition associated with health that is under investigation. Health outcomes are the clinical events (usually represented as diagnosis codes, e.g. those of the International Classification of Diseases, 10th revision (ICD‐10)) or outcomes (e.g. death) that are the focus of the research. The use of health outcomes requires a well‐defined case definition, a system to report and record the cases and a measure to express the frequency of these events.

A well‐defined case definition is necessary to ensure that cases are consistently diagnosed, regardless of where, when and by whom they were identified, and thus to avoid misclassification. A case definition involves a standard set of criteria, which can be a combination of clinical symptoms/signs, sometimes supplemented by confirmatory diagnostic tests of known sensitivity and specificity. The sensitivity of the whole testing procedure (i.e. the probability that a person with an adverse health condition is correctly diagnosed), together with its specificity (i.e. the probability that a person without the condition is correctly classified as such), must be known to estimate the true prevalence or incidence.
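Why both properties matter can be shown with the Rogan–Gladen estimator, a standard correction (named here as one possible method, not one prescribed by this Opinion) that converts the apparent prevalence observed with an imperfect case‐finding procedure into an estimate of the true prevalence. The input values below are hypothetical.

```python
def rogan_gladen(apparent_prevalence: float, sensitivity: float,
                 specificity: float) -> float:
    """Estimate true prevalence from apparent prevalence (Rogan-Gladen)."""
    youden = sensitivity + specificity - 1
    if youden <= 0:
        raise ValueError("sensitivity + specificity must exceed 1")
    estimate = (apparent_prevalence + specificity - 1) / youden
    # Truncate to [0, 1]: sampling error can push the raw estimate outside it
    return min(max(estimate, 0.0), 1.0)


# Hypothetical: 12% classified as cases, sensitivity 0.90, specificity 0.95
true_prev = rogan_gladen(0.12, 0.90, 0.95)
print(round(true_prev, 4))
```

In this example the corrected prevalence is roughly 8% rather than 12%: when a condition is uncommon, even a small proportion of false positives among the many non‐cases inflates the apparent prevalence considerably.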

The clinical criteria may also involve other characteristics (e.g. age, occupation) that are associated with increased disease risk. At the same time, appropriately measured and defined phenotypes or hard clinical outcomes add validity to the results.

Disease registries contain clinical information on patients' diagnosis, treatment and outcome. These registries periodically update patient information and can thus provide useful data for epidemiological research. Mortality, cancer and other nationwide health registries generally meet the case‐definition requirements and provide (almost) exhaustive data on the incident cases within a population. These health outcomes are recorded and classified in national health statistics databases, which depend on accepted diagnostic criteria that evolve over time and differ from one authority to another. This may complicate attempts to pool data usefully for societal benefit. Registry data present many opportunities for meaningful analysis, but limited data completeness and validity may hinder appropriate inferences. Changes in coding conventions over the lifetime of a database may also affect retrospective database research.

Although the disease status is typically expressed as a dichotomous variable, it may also be measured as an ordinal variable (e.g. severe, moderate, mild or no disease) or as a quantitative variable for example by measuring molecular biomarkers of toxic response in target organs or physiological measures such as blood pressure or serum concentration of lipids or specific proteins.

The completeness of the data capture and its consistency are key contributors to the reliability of the study. Harmonisation of diagnostic criteria, data storage and utility would bring benefits to the quality of epidemiological studies.

A surrogate endpoint is an outcome measure, commonly a laboratory measurement (biomarker of response), used as a substitute for a well‐defined disease endpoint. Such measures are considered to be on the causal pathway for the clinical outcome. In contrast to overt clinical disease, such biological markers of health may allow the detection of subtle, subclinical toxicodynamic processes. For such outcomes, detailed analytical protocols for quantification should be specified to enable comparison or replication across laboratories. The use of adverse outcome pathways (AOPs) can highlight differences in case definitions.

Although surrogate outcomes may offer additional information, the suitability of the surrogate outcome examined needs to be carefully assessed. In particular, the validity of surrogate outcomes may represent a major limitation to their use (la Cour et al., 2010). Surrogate endpoints that have not been validated should thus be avoided.

When the health status is captured in other ways, such as from self‐completed questionnaires or telephone interviews, from local records (medical or administrative databases) or through clinical examination only, these should be validated to demonstrate that they reflect the underlying case definition.

2.5. Statistical analysis and reporting

Detailed reporting of materials, methods and results, together with appropriate statistical analyses, is key to ensuring the quality of epidemiological studies. Regarding statistical analysis, one can distinguish between descriptive statistics and the modelling of the exposure–health outcome relationship.

2.5.1. Descriptive statistics

Descriptive statistics aim to summarise the important characteristics of the study groups, such as exposure measures, health outcomes, possible confounding factors and other relevant factors. The descriptive statistics often include frequency tables and measures of central tendency (e.g. means and medians) and dispersion (e.g. variance and interquartile range) of the parameters or variables studied.
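As a minimal sketch, the summary measures mentioned above can be computed for each study group with Python's standard `statistics` module. The two groups and their urinary metabolite concentrations are invented for illustration.

```python
import statistics


def describe(values: list[float]) -> dict[str, float]:
    """Size, central tendency and dispersion for one study group."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # quartile cut points
    return {
        "n": len(values),
        "mean": statistics.fmean(values),
        "median": statistics.median(values),
        "sd": statistics.stdev(values),   # sample standard deviation
        "iqr": q3 - q1,                   # interquartile range
    }


# Hypothetical urinary metabolite concentrations (ug/L) by group
exposed = [1.2, 0.8, 2.5, 3.1, 1.9, 0.6, 4.2, 1.4]
unexposed = [0.3, 0.5, 0.2, 0.9, 0.4, 0.7, 0.3, 0.6]
for name, group in [("exposed", exposed), ("unexposed", unexposed)]:
    print(name, describe(group))
```

Reporting the median and interquartile range alongside the mean and standard deviation is useful because biomonitoring data are often skewed.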

2.5.2. Modelling exposure–health outcome relationship

Modelling of the exposure–health relationship aims to assess the possible relationship between the exposure and the health outcome under consideration. In particular, it can evaluate how this relationship may depend on dose and mode of exposure and other possible intervening factors.

Statistical tests assess how likely it is that differences at least as large as those observed in a study would have arisen by chance alone if there were no true difference. This is done by summarising the results from individual observations and evaluating whether these summary estimates differ significantly between, e.g. exposed and non‐exposed groups, after taking into consideration random errors in the data.

For dichotomous outcomes, the statistical analysis compares study groups by assessing whether there is a difference in disease frequency between the exposed and control populations. This is usually done using a relative measure. The relative risk (RR) in cohort studies estimates the relative magnitude of an association between exposure and disease, comparing those who are exposed (or have a higher exposure level) with those who are not exposed (or have a lower exposure level). It indicates how much more (or less) likely the exposed group is to develop the disease than those who are not (or less) exposed. An odds ratio (OR), generally the outcome measure in case–control and cross‐sectional studies, represents the ratio of the odds of exposure between cases and controls (or between diseased and non‐diseased individuals in a cross‐sectional study) and is often the relative measure used in statistical testing. Different levels or doses of exposure can be compared in order to see whether there is a dose–response relationship. For continuous outcome measures, the mean or median change in the outcome is often examined across different levels of exposure, for example through analysis of variance or other parametric statistics.
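A minimal sketch of these relative measures for a single 2 × 2 table is given below. The cell labels a–d, the function names and the counts are illustrative assumptions; the confidence interval for the OR uses Woolf's logit method, which is one common choice among several.

```python
from math import exp, log, sqrt

# 2 x 2 table layout assumed here:
#                 disease   no disease
#   exposed          a          b
#   unexposed        c          d


def relative_risk(a: int, b: int, c: int, d: int) -> float:
    """Risk in the exposed divided by risk in the unexposed (cohort data)."""
    return (a / (a + b)) / (c / (c + d))


def odds_ratio_ci(a: int, b: int, c: int, d: int, z: float = 1.96):
    """Odds ratio with an approximate 95% CI (Woolf's logit method)."""
    or_ = (a * d) / (b * c)
    se_log_or = sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = exp(log(or_) - z * se_log_or)
    upper = exp(log(or_) + z * se_log_or)
    return or_, lower, upper


# Hypothetical cohort: 30/100 exposed and 15/100 unexposed develop the disease
rr = relative_risk(30, 70, 15, 85)
or_, lower, upper = odds_ratio_ci(30, 70, 15, 85)
print(f"RR = {rr:.2f}; OR = {or_:.2f} (95% CI {lower:.2f}-{upper:.2f})")
```

Here the RR is 2.0, whereas the OR is about 2.4: because the outcome is common (30% among the exposed), the OR overstates the RR, and the two measures converge only when the outcome is rare. For case–control data, b and d would be controls and the same OR formula applies.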

Whether the statistical analysis shows observed differences to be significant or not significant, both results merit careful reflection (Greenland et al., 2016).

Interpretation of the absence of statistically significant difference. Failure to reject the null hypothesis does not necessarily mean that no association is present because the study may not have sufficient power to detect it. The power depends on the following factors:

sample size: with small sample sizes, a true difference is less likely to reach statistical significance;

variability in individual response or characteristics, whether due to chance or to non‐random factors: the larger the variability, the more difficult it is to demonstrate statistical significance;

effect size, or the magnitude of the true difference between groups: the smaller the effect, the more difficult it is to demonstrate statistical significance.
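The three factors listed above can all be seen in a simple normal‐approximation power formula for comparing two group means with a two‐sided test. This is a sketch under the assumptions of a known, common standard deviation and equal group sizes; the function name and parameter values are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def approx_power(effect_size: float, sd: float, n_per_group: int,
                 alpha: float = 0.05) -> float:
    """Approximate power of a two-sided two-sample z-test."""
    z = NormalDist()
    se = sd * sqrt(2 / n_per_group)      # standard error of the difference
    z_crit = z.inv_cdf(1 - alpha / 2)    # about 1.96 for alpha = 0.05
    shift = abs(effect_size) / se
    # Probability of rejecting H0 in either direction when the effect is real
    return z.cdf(shift - z_crit) + z.cdf(-shift - z_crit)


base = approx_power(effect_size=0.5, sd=1.0, n_per_group=64)
print(round(base, 2))
print(round(approx_power(0.5, 1.0, 32), 2))   # smaller sample size
print(round(approx_power(0.5, 2.0, 64), 2))   # larger variability
print(round(approx_power(0.25, 1.0, 64), 2))  # smaller effect size
```

Halving the sample size, doubling the variability or halving the effect size each reduces power below the conventional 80% reached in the base case.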

Interpretation of statistically significant difference. Statistical significance means that the observed difference is not likely due to chance alone. However, such a result still merits careful consideration.

Biological relevance. Rejection of the null hypothesis does not necessarily mean that the association is biologically meaningful, nor does it mean that the relationship is causal (Skelly, 2011). The key issue is whether the magnitude of the observed difference (or ‘effect size’) is large enough to be considered biologically relevant. Thus, an association that is statistically significant may or may not be biologically relevant, and vice versa. While epidemiological results that are statistically significant may be dismissed as ‘not biologically relevant’, non‐statistically significant results are seldom determined to be ‘biologically relevant’. Increasingly, researchers and regulators are looking beyond statistical significance for evidence of a ‘minimal biologically important difference’ for commonly used outcome measures. Factoring biological relevance into study design and power calculations, and reporting results in terms of biological as well as statistical significance, will become increasingly important for risk assessment (Skelly, 2011). This is the subject of an EFSA Scientific Committee guidance document outlining generic issues and criteria to be taken into account when considering biological relevance (EFSA Scientific Committee, 2017a); a framework is also being developed to consider biological relevance at three main stages of the process of dealing with evidence (EFSA Scientific Committee, 2017b).

Random error. Evaluation of statistical precision involves consideration of random error within the study. Random error is the part of the variation in study results that cannot be predicted because it is attributable to chance. In general, as the number of study participants increases, the precision of the estimate of central tendency (e.g. the mean), often expressed through its standard error, increases, and so does the ability to detect a statistically significant difference if a real difference exists between study groups (i.e. the study's power). However, there is always a possibility, at least in theory, that the results observed are due to chance alone and that no true differences exist between the compared groups (Skelly, 2011). The accepted probability of such a false‐positive conclusion is the significance level, which is very often set at 5%.

Multiple testing. As mentioned previously when discussing sample size, modelling of the exposure–health relationship is in principle hypothesis‐driven, i.e. the study objectives should state beforehand what will be tested. In reality, however, epidemiological studies (and toxicological studies in laboratory animals) often explore a number of different health outcomes in relation to the same exposure. If many statistical tests are conducted at the 5% significance level, about 5% of the tests in which no true effect exists are expected to be statistically significant by chance. Such testing of multiple endpoints (hypotheses) increases the risk of false‐positive results; this can be controlled for by Bonferroni, Šidák or Benjamini–Hochberg corrections or other suitable methods, but such corrections are often omitted. Thus, when researchers carry out many statistical tests on the same set of data, they may conclude that there are real differences where in fact there are none. It is therefore important to regard statistically significant results arising from a large number of tests as preliminary indications that require further validation. The EFSA opinion on statistical significance and biological significance notes that the assumptions derived from a statistical analysis should be related to the study design (EFSA, 2011b).
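The sketch below illustrates both the problem and the corrections named above. `family_wise_error_rate` gives the probability of at least one false positive among `m` independent tests of true null hypotheses, and the three correction functions return which hypotheses are rejected. Bonferroni and Šidák control the family‐wise error rate, whereas Benjamini–Hochberg controls the false discovery rate and is therefore less conservative. The function names and p‐values are invented for illustration.

```python
def family_wise_error_rate(m: int, alpha: float = 0.05) -> float:
    """P(at least one false positive) for m independent true-null tests."""
    return 1 - (1 - alpha) ** m


def bonferroni(p_values: list[float], alpha: float = 0.05) -> list[bool]:
    threshold = alpha / len(p_values)
    return [p <= threshold for p in p_values]


def sidak(p_values: list[float], alpha: float = 0.05) -> list[bool]:
    threshold = 1 - (1 - alpha) ** (1 / len(p_values))
    return [p <= threshold for p in p_values]


def benjamini_hochberg(p_values: list[float], alpha: float = 0.05) -> list[bool]:
    """Step-up procedure: reject the k smallest p-values, where k is the
    largest rank with p_(k) <= k / m * alpha."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    k = 0
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= rank / m * alpha:
            k = rank
    rejected = set(order[:k])
    return [i in rejected for i in range(m)]


# Hypothetical p-values from ten health outcomes tested against one exposure
p = [0.001, 0.008, 0.012, 0.04, 0.20, 0.35, 0.60, 0.75, 0.90, 0.95]
print(round(family_wise_error_rate(20), 2))  # 20 tests, no true effects
print(sum(q <= 0.05 for q in p), sum(bonferroni(p)),
      sum(sidak(p)), sum(benjamini_hochberg(p)))
```

With 20 independent tests of true null hypotheses, the chance of at least one false positive is about 64%. In the invented example, four outcomes are nominally significant, Bonferroni and Šidák retain one and Benjamini–Hochberg retains three.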


Effect size magnification. An additional, lesser‐known source of bias may result from small sample sizes and the consequent low statistical power: ‘effect size magnification’. While it is widely known that small, low‐powered studies can produce false negatives because their power is inadequate to reliably detect a meaningful effect size, it is less well known that such studies can also inflate effect sizes when the estimated effects pass a statistical threshold (e.g. the common p < 0.05 threshold used to judge statistical significance). Effect size magnification is the phenomenon whereby an association ‘discovered’ (i.e. one that has passed a given threshold of statistical significance) in a study with suboptimal power to make that discovery has an observed effect size that is artificially, and systematically, inflated. This is because smaller, low‐powered studies are more affected by random variation among individuals than larger ones. Mathematically, conditional on a result passing some predetermined threshold of statistical significance, the estimated effect size is a biased estimate of the true effect size, with the magnitude of this bias inversely related to the power of the study.

As an example, if a trial were run thousands of times, there would be a broad distribution of observed effect sizes, with smaller trials systematically producing wider variation in observed effect sizes than larger trials, but the median of these estimated effect sizes would be close to the true effect size. However, in a small, low‐powered study, only a small proportion of observed effects will pass a given (high) threshold of statistical significance, and these will be only the ones with the largest effect sizes. Thus, when these smaller, low‐powered studies with greater random variation do find a significance‐triggered association, they are likely to overestimate the size of that effect. This means that statistically significant findings from small studies are biased towards inflated effects. In general, the lower the background (or control or natural) rate, the smaller the effect size of interest and the lower the power of the study, the greater the tendency towards, and magnitude of, inflated effect sizes.
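The thought experiment above can be reproduced with a small simulation. As a simplifying assumption, each hypothetical study's estimate of a mean difference is drawn directly from its normal sampling distribution (known standard deviation), and ‘significance’ means passing a two‐sided z‐test at the 5% level; the true effect, sample sizes and function name are illustrative.

```python
import random
from math import sqrt
from statistics import fmean, median


def simulate_studies(true_effect: float, sd: float, n_per_group: int,
                     n_studies: int = 20_000, seed: int = 1):
    """Return (median of all estimates, mean of 'significant' estimates, power)."""
    rng = random.Random(seed)
    se = sd * sqrt(2 / n_per_group)   # standard error of the mean difference
    critical = 1.96 * se              # two-sided 5% threshold on the estimate
    estimates = [rng.gauss(true_effect, se) for _ in range(n_studies)]
    significant = [e for e in estimates if abs(e) > critical]
    return median(estimates), fmean(significant), len(significant) / n_studies


for n in (20, 500):  # low-powered vs well-powered studies
    med, sig_mean, power = simulate_studies(true_effect=0.2, sd=1.0, n_per_group=n)
    print(f"n={n}: median estimate {med:.2f}, mean 'significant' estimate "
          f"{sig_mean:.2f}, power {power:.2f}")
```

With 20 participants per group, the statistically significant estimates average several times the true effect of 0.2, whereas with 500 per group they lie close to it; the median of all estimates stays near 0.2 in both cases.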

It is important to note, however, that this phenomenon arises only when a ‘pre‐screening’ for statistical significance is performed. The bottom line is that, if the aim is to estimate a given quantity such as an OR or RR, ‘pre‐screening’ a series of effect sizes for statistical significance will yield effect sizes that are systematically biased away from the null (larger than the true effect size). To the extent that regulators, decision‐makers and others act in this way, i.e. look for statistically significant results in what might be considered a sea of comparisons and then use those that cross a given threshold of statistical significance to evaluate and judge the magnitude of the effect, they are likely to form an exaggerated impression of the magnitude of the hypothesised association. Additional details and several effect size simulations are provided in Annex D of this document.

Confounding occurs when the relationship between the exposure and disease is to some extent attributable to the effect of another risk factor, i.e. the confounder. There are several traditionally recognised requirements for a risk factor to actually act as a confounder as described by McNamee (2003) and illustrated below. The factor must:

be a cause of the disease, or a surrogate measure of the cause, in unexposed people; factors satisfying this condition are called ‘risk factors’;

be correlated, positively or negatively, with exposure in the study population independently of the presence of the disease. If the study population is classified into exposed and unexposed groups, this means that the factor has a different distribution (prevalence) in the two groups;

not be an intermediate step in the causal pathway between the exposure and the disease.

Confounding can result in an over‐ or underestimation of the relationship between exposure and disease and occurs because the effects of the two risk factors have not been separated or ‘disentangled’. If strong enough, confounding can even reverse the direction of an apparent association. For instance, because agricultural work involves many different categories of exposure, farmers are likely to be more highly exposed than the general population to a wide array of risk factors, including biological agents (soil organisms, livestock and other farm animals), pollen, dust, sunlight and ozone, amongst others, which may act as potential confounding factors.

A number of procedures are available for controlling confounding, either in the design phase of the study or in the analytical phase. For large studies, control in the design phase is often preferable. In the design phase, the researcher can limit the study population to individuals who share the characteristic that the researcher wishes to control for. This is known as ‘restriction’ and effectively removes potential confounding by that characteristic, which no longer varies within the study population. A second design‐phase method for controlling confounding is ‘matching’, in which the researcher matches individuals on the confounding variable so that it is evenly distributed between the two comparison groups.

At the analysis stage, confounding can be controlled by means of either stratification or statistical modelling. In stratification, the association is measured separately within each stratum of the confounding variable (e.g. males and females, ethnic groups or age groups). When appropriate, the stratum‐specific estimates can then be combined statistically into a common OR, RR or other effect size measure by weighting the estimate from each stratum (e.g. using the Mantel–Haenszel approach). The cost is that each stratum‐specific estimate rests on a smaller sample. Although stratification is relatively easy to perform, it cannot readily deal with several confounders simultaneously, because the number of strata grows quickly and many strata become sparse. In such situations, control can be achieved through statistical modelling (e.g. multiple logistic regression).
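A minimal sketch of the Mantel–Haenszel pooled odds ratio, using the standard weighting of each stratum by its size, is given below. The function name and the stratified counts are hypothetical and chosen for illustration; established implementations exist in statistical packages (for example, `StratifiedTable` in the Python statsmodels library).

```python
def mantel_haenszel_or(strata):
    """Mantel-Haenszel pooled odds ratio.

    strata: iterable of (a, b, c, d) tuples, one per stratum, where
    a = exposed cases, b = exposed controls,
    c = unexposed cases, d = unexposed controls.
    """
    numerator = 0.0
    denominator = 0.0
    for a, b, c, d in strata:
        n = a + b + c + d
        numerator += a * d / n    # each stratum contributes in proportion to 1/n
        denominator += b * c / n
    return numerator / denominator

# Hypothetical strata of a binary confounder
strata = [(20, 10, 80, 80), (20, 100, 10, 100)]
print(round(mantel_haenszel_or(strata), 2))  # 2.0
```

Because both stratum‐specific ORs equal 2.0 here, the pooled estimate is also 2.0. In real data, a single pooled estimate is only meaningful when the stratum‐specific estimates are reasonably homogeneous.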

Whatever approaches are used to control confounding in the design and analysis phases, potential confounders must be carefully considered before any epidemiological study is initiated in the field, because researchers cannot control for a variable that they did not consider in the design or for which they did not collect data.

Epidemiological studies, published or not, are often criticised for ignoring potential confounders that might either falsely implicate or inappropriately negate a given risk factor. Despite these critiques, an argument is rarely presented on the likely size of the bias from such possible confounding. It should be emphasised that, to create a substantial distortion in the risk estimate, a confounder must be both a relatively strong risk factor for the disease and strongly associated with the exposure of interest. It is not sufficient simply to raise the possibility of confounding; one should make a persuasive argument explaining why a risk factor is likely to be a confounder, what its impact might be and how important that impact might be to the interpretation of the findings. It is important to consider the magnitude of the association as measured by the RR, OR, regression coefficient, etc., since strong relative risks are unlikely to be due to unmeasured confounding, while weak associations may be due to residual confounding by variables that the investigator did not measure or control in the analysis (US‐EPA, 2010b).
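The point that only strong confounders produce substantial distortion can be made quantitative with a standard external‐adjustment formula for a single binary confounder. The sketch below assumes that the confounder–disease relative risk is the same in exposed and unexposed people (no effect modification); the scenarios are hypothetical. The returned factor is the ratio of the crude to the confounder‐adjusted RR, so dividing an observed RR by it gives an externally adjusted estimate.

```python
def confounding_bias_factor(rr_cd, p_exposed, p_unexposed):
    """Ratio of crude to confounder-adjusted RR for one binary confounder.

    rr_cd:       relative risk of disease associated with the confounder
    p_exposed:   prevalence of the confounder among the exposed
    p_unexposed: prevalence of the confounder among the unexposed
    Assumes rr_cd is the same in exposed and unexposed people.
    """
    return (p_exposed * (rr_cd - 1) + 1) / (p_unexposed * (rr_cd - 1) + 1)

# Hypothetical scenarios: a modest confounder vs a strong, unevenly distributed one
for rr_cd, p1, p0 in [(2.0, 0.6, 0.4), (10.0, 0.8, 0.1)]:
    print(rr_cd, p1, p0, round(confounding_bias_factor(rr_cd, p1, p0), 2))
# 2.0 0.6 0.4 1.14
# 10.0 0.8 0.1 4.32
```

A confounder that doubles disease risk and differs moderately in prevalence between groups inflates the RR by only about 14%, whereas a strong and very unevenly distributed confounder can more than quadruple it.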

Effect modification. The effects of pesticides, and of other chemicals, on human health can hardly be expected to be identical across all individuals. For example, the effect that a given active substance has on healthy adults may differ from its effect on infants, the elderly or pregnant women. Some subsets of the population are therefore more likely to develop a disease when exposed to a chemical because of an increased sensitivity. The term ‘vulnerable subpopulation’ has been used for such groups; it covers children, pregnant women, the elderly, individuals with a history of serious illness and other subpopulations identified as being subject to special health risks from exposure to environmental chemicals (e.g. because of genetic polymorphisms of drug‐metabolising enzymes, transporters or biological targets). An average effect measure summarises the effect of an exposure over all subpopulations. However, the strength of an association may differ between subpopulations. For example, the association between exposure to chemical A and health outcome B may be stronger in children than in healthy adults, and absent in those wearing protective clothing at the time of exposure or in those of a different genotype. If such heterogeneity is truly present, any single summary measure of the overall association would be deficient and possibly misleading. Heterogeneity is assessed by testing for a statistically significant interaction between the exposure and the modifying factor, i.e. whether the effect differs across subpopulations. In practice, however, such tests require large sample sizes to have adequate power.
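One common large‐sample way to test for effect modification between two subgroups is to compare their log odds ratios with a z‐test, using Woolf's standard errors. The sketch below uses hypothetical counts and illustrates the sample size point made above: the same pair of odds ratios (3.0 vs 1.5) is far from statistically significant in the smaller study, but clearly significant when every cell is ten times larger.

```python
import math

def log_or_and_se(a, b, c, d):
    """Log odds ratio and its Woolf (large-sample) standard error."""
    return math.log(a * d / (b * c)), math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)

def interaction_p_value(table1, table2):
    """Two-sided p-value for H0: equal odds ratios in two independent subgroups.

    Each table is (exposed cases, exposed controls, unexposed cases, unexposed controls).
    """
    l1, se1 = log_or_and_se(*table1)
    l2, se2 = log_or_and_se(*table2)
    z = (l1 - l2) / math.sqrt(se1 ** 2 + se2 ** 2)
    return math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))

# Hypothetical subgroups with OR = 3.0 (subgroup 1) and OR = 1.5 (subgroup 2)
small = interaction_p_value((30, 10, 50, 50), (30, 20, 50, 50))
large = interaction_p_value((300, 100, 500, 500), (300, 200, 500, 500))
print(f"small study p = {small:.3f}, large study p = {large:.5f}")
```

With only two subgroups this is equivalent to testing a product term in a logistic regression model; with several strata, a homogeneity test such as Breslow–Day would be a natural alternative.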

Investigating the effect in subpopulations defined by relevant factors may advance knowledge of how the risk factor of interest affects human health.

2.6. Study validity

Whether or not a statistically significant association is observed between, for example, pesticide exposure and a health outcome, the validity of the study also needs to be evaluated by assessing factors that might distort the true association and/or influence its interpretation. These imperfections relate to systematic sources of error that result in a (systematically) incorrect estimate of the association between exposure and disease. In addition, the results of a single study gain validity when they are replicated in independent investigations conducted in other populations of individuals at risk of developing the disease.

Temporal sequence. Any claim of causation must involve the cause preceding the presumed effect in time. Rothman (2002) considered temporality to be the only criterion that is indispensable for causation, such that lack of temporality rules out causality. While temporality requires the exposure to precede the outcome (effect) in time, the measurement of the exposure is not required to precede the measurement of the outcome. Temporality is more easily established in prospective study designs (i.e. cohort studies) than when exposure is assessed retrospectively (case–control studies) or at the same time as the outcome (cross‐sectional studies). Even in prospective studies, however, the temporal sequence of cause and effect might be difficult to ascertain if a disease develops slowly and its early forms are difficult to detect (Höfler, 2005).

The generalisability of the results from the study population to a broader population should also be considered when assessing study validity. Whereas the random error discussed previously is a problem of precision, driven by sampling variability, bias is a problem of validity. More specifically, bias generally arises from methodological imperfections in study design or analysis that affect whether the correct population parameter is being estimated. The main types of bias are selection bias, information bias (including recall bias and interviewer/observer bias) and confounding. An additional potential source of bias is effect size magnification, which has already been mentioned.

Selection bias is a systematic error that arises from the procedures and methods used to select subjects into the study, from the way that subjects are lost from the study, or from other factors that influence continued participation in the study.

Typically, such a bias occurs in a case–control study when the inclusion (or exclusion) of study subjects on the basis of disease is related to their prior exposure status. One example is when initial publicity or media attention to a suspected association between an exposure and a health outcome leads to preferential diagnosis of exposed compared with unexposed individuals. Selection bias can also occur in cohort studies if the exposed and unexposed groups are not truly comparable, for example when those who are lost from the study (through loss to follow‐up, withdrawal or non‐response) differ, with respect to exposure and outcome, from those who remain. Selection bias can also occur in cross‐sectional studies through selective survival, since only those who have survived are included in the study. These types of bias can generally be dealt with by careful design and conduct of a study (see also Sections 4, 6 and 8).
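The direction and size of selection bias in a case–control study can be expressed through the probabilities with which each of the four exposure–disease groups is selected into the study: the observed OR equals the true OR multiplied by a bias factor built from these probabilities. The selection probabilities below are hypothetical and mimic the publicity scenario described above, in which exposed cases are more likely than unexposed cases to be diagnosed and enrolled while controls are sampled irrespective of exposure.

```python
def selection_bias_factor(s_exp_case, s_exp_ctrl, s_unexp_case, s_unexp_ctrl):
    """Multiplicative bias in the OR caused by unequal selection probabilities
    of exposed/unexposed cases and controls."""
    return (s_exp_case * s_unexp_ctrl) / (s_exp_ctrl * s_unexp_case)

true_or = 1.0  # hypothetical: no real association
observed_or = true_or * selection_bias_factor(
    s_exp_case=0.9, s_exp_ctrl=0.5, s_unexp_case=0.6, s_unexp_ctrl=0.5
)
print(round(observed_or, 2))  # 1.5
```

If all four selection probabilities are equal, or if exposure‐related differences in selection are the same among cases and controls, the bias factor equals 1 and the OR is unaffected.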

The ‘healthy worker effect’ (HWE) is a commonly recognised selection bias specific to occupational epidemiology studies. Because workers need to be fit enough to be employed, they tend to be healthier overall than the general population and can therefore have a more favourable outcome status than a population‐based sample. The HWE can mask or attenuate observed associations relative to the true effect, and can thus give the appearance of lower mortality or morbidity rates among workers exposed to chemicals or other deleterious substances.

Information bias is a systematic error arising from systematic differences between study groups in the way information on exposure or health outcome is obtained, resulting in incorrect information being obtained or measured for one or more of the study variables. Information bias results in misclassification, i.e. incorrect categorisation of exposure or disease status, and thus in potential bias in any resulting epidemiological effect size measure such as an OR or RR.

Misclassification of exposure status can result from imprecise, inadequate or incorrect measurements; from a subject's incorrect self‐report; or from incorrect coding of exposure data.

Misclassification of disease status can arise, for example, from laboratory error, detection bias, incorrect or inconsistent coding of disease status in the database, or incorrect recall. Recall bias is a type of information bias in which the reporting of disease status differs depending on exposure status (or vice versa). It can be a particularly pernicious form of misclassification, at least in case–control studies, since a diseased subject may be more likely than a non‐diseased subject to recall an exposure that occurred at an earlier time. This tends to bias any effect measure away from the null value (of no relation between exposure and disease). Interviewer bias is another kind of information bias, which occurs when interviewers are aware of the exposure status of individuals and probe for answers on disease status differently between exposure groups, whether intentionally or not.

Importantly, misclassification can be ‘differential’ or ‘non‐differential’, and its consequences depend on (i) the probability that a person who is truly exposed (or diseased) is correctly classified as exposed (or diseased), known as ‘sensitivity’, and (ii) the probability that a person who is truly unexposed (or non‐diseased) is correctly classified as such, known as ‘specificity’. Both play a role in determining the existence and possible direction of bias. Misclassification is differential when its extent depends on the values of other variables (e.g. when the sensitivity of exposure classification differs between cases and controls), and non‐differential when it does not depend on the values of other variables.
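In practice, sensitivity and specificity are estimated from a validation substudy in which the routine exposure classification (e.g. a questionnaire) is compared with a more accurate reference measurement. The sketch below uses hypothetical validation counts; computing the two quantities separately for cases and controls shows whether misclassification is plausibly non‐differential. In this made‐up example, cases report true exposure more completely than controls (sensitivity 0.9 vs 0.7), the pattern expected under recall bias.

```python
def sens_spec(true_pos, false_neg, true_neg, false_pos):
    """Sensitivity and specificity of an exposure classification,
    checked against a reference ('gold standard') measurement."""
    sensitivity = true_pos / (true_pos + false_neg)  # truly exposed, classified exposed
    specificity = true_neg / (true_neg + false_pos)  # truly unexposed, classified unexposed
    return sensitivity, specificity

# Hypothetical validation data (questionnaire vs reference), split by disease status
cases = sens_spec(true_pos=45, false_neg=5, true_neg=90, false_pos=10)
controls = sens_spec(true_pos=35, false_neg=15, true_neg=90, false_pos=10)
print(cases, controls)  # (0.9, 0.9) (0.7, 0.9)
```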

From an epidemiological perspective, the bias produced by misclassification, whether differential or non‐differential, depends on the sensitivity and specificity of the methods used to classify exposure (or disease), and its direction can be predicted only under certain (limited) conditions. Where the direction of the bias can be characterised from knowledge of the study methods and analyses, this is useful to the regulatory decision‐maker, who can then judge whether the effect sizes being considered (e.g. OR, RR) are likely to underestimate or overestimate the true effect. It is commonly assumed that non‐differential misclassification always biases estimates towards the null (and thus systematically underestimates the effect size), but this is not necessarily the case. Likewise, the common assumption in epidemiological studies that misclassification is non‐differential (sometimes paired with the assumption that non‐differential misclassification always biases towards the null) is not always justified (e.g. see Jurek et al., 2005).
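The expected effect of exposure misclassification can be worked through by applying sensitivity (Se) and specificity (Sp) to hypothetical true counts, as sketched below. For a binary exposure with Se + Sp > 1 and classification errors independent of other variables, non‐differential misclassification biases the expected OR towards the null; a differential pattern, such as cases recalling exposure better than controls, can instead create a spurious association. These are expected values only: the result observed in any single study can depart from this expectation, and the towards‐the‐null rule does not automatically carry over to exposures with more than two categories.

```python
def observed_counts(exposed, unexposed, se, sp):
    """Expected numbers classified as exposed/unexposed, given true counts and Se/Sp."""
    obs_exposed = se * exposed + (1 - sp) * unexposed
    return obs_exposed, exposed + unexposed - obs_exposed

def odds_ratio(case_exp, case_unexp, ctrl_exp, ctrl_unexp):
    return (case_exp * ctrl_unexp) / (case_unexp * ctrl_exp)

# Scenario 1 (hypothetical): true OR = 3, non-differential (Se = 0.8, Sp = 0.9 for everyone)
cases = observed_counts(100, 100, se=0.8, sp=0.9)
ctrls = observed_counts(50, 150, se=0.8, sp=0.9)
or_nondiff = odds_ratio(*cases, *ctrls)

# Scenario 2 (hypothetical): true OR = 1, cases recall exposure better (Se 0.9 vs 0.7)
cases = observed_counts(50, 150, se=0.9, sp=0.9)
ctrls = observed_counts(50, 150, se=0.7, sp=0.9)
or_diff = odds_ratio(*cases, *ctrls)

print(round(or_nondiff, 2), round(or_diff, 2))  # 2.16 1.29
```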

When unmeasured confounders are thought to affect the results, researchers should conduct sensitivity analyses to estimate the range of their possible impacts and the resulting range of adjusted effect measures (US‐EPA, 2010b). Quantitative sensitivity (or bias) analyses are, however, seldom conducted in epidemiological studies; most researchers instead describe potential biases qualitatively, as a narrative in the discussion section of the paper.
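One widely used quantitative sensitivity analysis for unmeasured confounding, published after the sources cited here, is the E‐value proposed by VanderWeele and Ding (2017). It is the minimum strength of association, on the risk ratio scale, that an unmeasured confounder would need to have with both the exposure and the outcome to fully explain away an observed risk ratio. A minimal sketch for a point estimate follows; the example risk ratios are hypothetical.

```python
import math

def e_value(rr):
    """E-value for a risk ratio point estimate."""
    if rr < 1:
        rr = 1 / rr  # protective estimates: work with the reciprocal
    return rr + math.sqrt(rr * (rr - 1))

for rr in (1.2, 2.0, 5.0):
    print(rr, round(e_value(rr), 2))
# 1.2 1.69
# 2.0 3.41
# 5.0 9.47
```

Consistent with the earlier point about weak associations, an RR of 1.2 could be explained away by a confounder associated with both exposure and outcome by RRs of about 1.7, whereas an RR of 5 would require RRs of about 9.5.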

It is often advisable for the epidemiological investigator to perform sensitivity analyses to estimate the impact of biases such as exposure misclassification or selection bias, or of confounding by known but unmeasured risk factors, and to demonstrate the potential effect that a missing or unaccounted‐for confounder may have on the observed effect sizes (see Lash et al., 2009; Gustafson and McCandless, 2010). Sensitivity analyses should be included in the list of criteria for reviewing epidemiological data for risk assessment purposes.
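As an illustration of the simple, deterministic bias analyses described by Lash et al. (2009), the sketch below back‐corrects observed case–control exposure counts for assumed sensitivity and specificity values, which may differ between cases and controls. All counts and bias parameters are hypothetical. In practice the bias parameters would come from validation studies, and probabilistic versions of the analysis sample them from distributions instead of fixing single values.

```python
def correct_exposed(obs_exposed, total, se, sp):
    """Back-calculate the number truly exposed from classified counts."""
    if se + sp <= 1:
        raise ValueError("correction requires Se + Sp > 1")
    return (obs_exposed - (1 - sp) * total) / (se + sp - 1)

def corrected_or(case_obs_exp, n_cases, ctrl_obs_exp, n_ctrls,
                 se_cases, sp_cases, se_ctrls, sp_ctrls):
    """OR after correcting exposure misclassification separately in cases and controls."""
    a = correct_exposed(case_obs_exp, n_cases, se_cases, sp_cases)
    b = correct_exposed(ctrl_obs_exp, n_ctrls, se_ctrls, sp_ctrls)
    c, d = n_cases - a, n_ctrls - b
    if min(a, b, c, d) <= 0:
        raise ValueError("bias parameters are incompatible with the observed data")
    return (a * d) / (b * c)

# Hypothetical data: 90/200 cases and 55/200 controls classified as exposed
# (observed OR about 2.16), non-differential Se = 0.8 and Sp = 0.9 assumed
print(round(corrected_or(90, 200, 55, 200, 0.8, 0.9, 0.8, 0.9), 2))  # 3.0
```

Repeating the calculation over a plausible range of Se and Sp values, including differential ones, shows how sensitive the conclusion is to the assumed classification accuracy.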

3. Key limitations of the available epidemiological studies on pesticides

3.1. Limitations identified by the authors of the EFSA external scientific report

The EFSA External Scientific Report (Ntzani et al., 2013; summarised in Annex A) identified a large number of epidemiological studies investigating diverse health outcomes. In systematically appraising this evidence, the authors highlighted a number of methodological limitations. Because of these limitations, robust conclusions could not be drawn, but outcomes for which supportive epidemiological evidence existed were highlighted for future investigation. The main limitations identified were (Ntzani et al., 2013):

Lack of prospective studies and frequent use of study designs that are prone to bias (case–control and cross‐sectional studies). In addition, many of the studies assessed appeared to be insufficiently powered.

Lack of detailed exposure assessment, at least compared with many other fields of epidemiology. Information on specific pesticide exposures and co‐exposures was often lacking, and appropriate biomarkers were seldom used. Instead, many studies relied on broad definitions of exposure assessed through questionnaires (often not validated).

Deficiencies in outcome assessment (broad outcome definitions and use of self‐reported outcomes or surrogate outcomes).

Deficiencies in reporting and analysis (interpretation of effect estimates, confounder control and multiple testing).

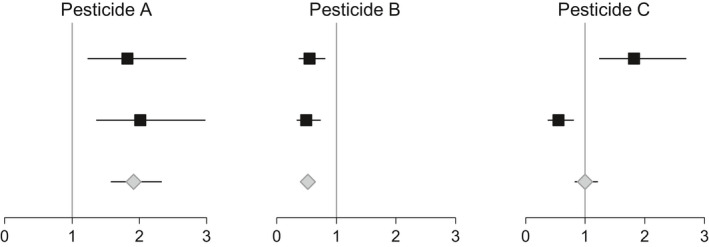

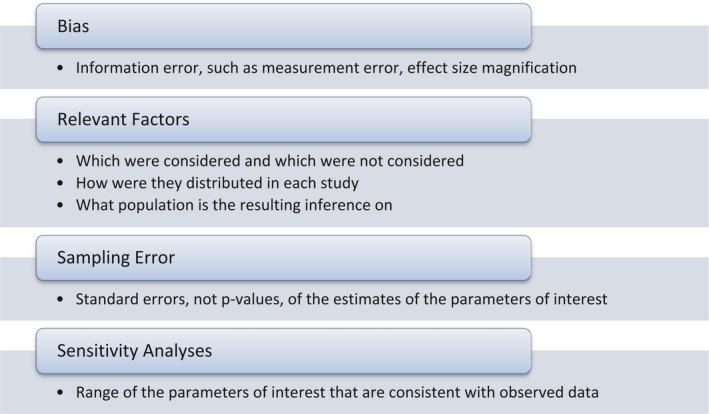

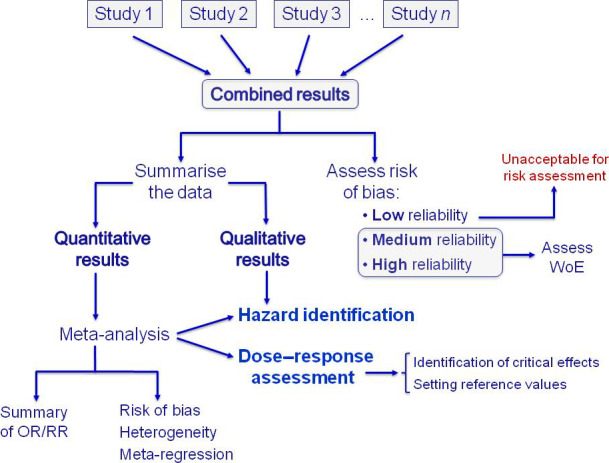

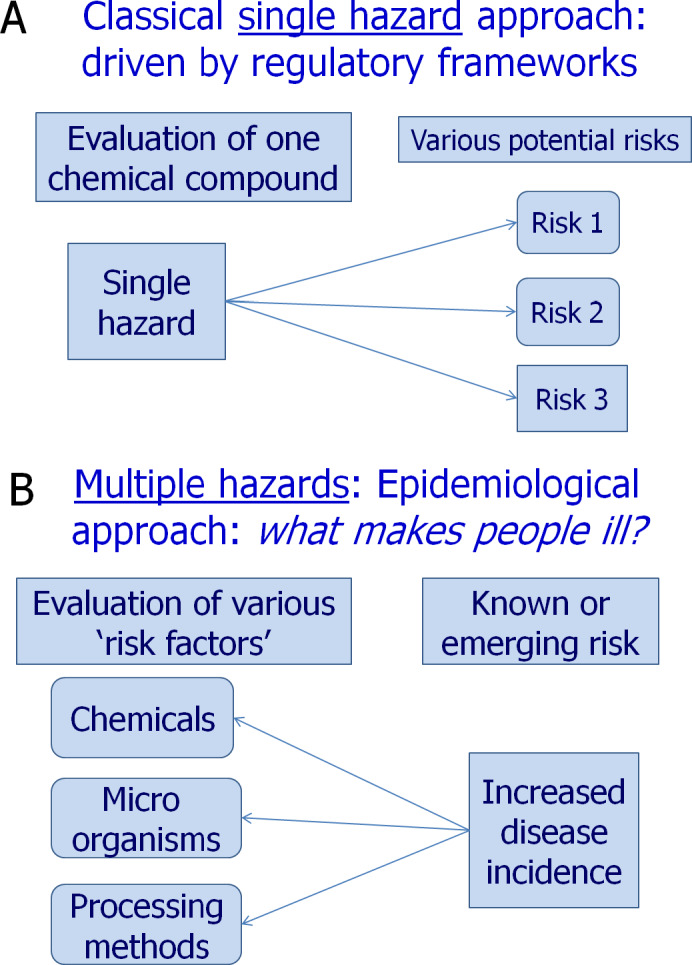

Selective reporting, publication bias and other biases (e.g. conflict of interest).
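The concern that many studies were insufficiently powered can be checked with a standard normal‐approximation power calculation for an unmatched case–control study with equal numbers of cases and controls, sketched below without continuity correction. The exposure prevalence among controls, the target OR and the sample sizes are hypothetical. With 20% of controls exposed, 200 cases and 200 controls give roughly a 40% chance of detecting an OR of 1.5 at the 5% significance level, while 800 per group give roughly 93%.

```python
import math
from statistics import NormalDist

def case_control_power(p0, odds_ratio, n_per_group, alpha=0.05):
    """Approximate power of a two-sided test comparing exposure prevalence
    in cases vs controls (equal group sizes, no continuity correction).

    p0: exposure prevalence among controls (hypothetical input)
    """
    # Exposure prevalence among cases implied by the target OR
    p1 = odds_ratio * p0 / (1 + p0 * (odds_ratio - 1))
    p_bar = (p0 + p1) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    numerator = (abs(p1 - p0) * math.sqrt(n_per_group)
                 - z_alpha * math.sqrt(2 * p_bar * (1 - p_bar)))
    denominator = math.sqrt(p1 * (1 - p1) + p0 * (1 - p0))
    return NormalDist().cdf(numerator / denominator)

for n in (200, 800):
    print(n, round(case_control_power(0.2, 1.5, n), 2))
```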