Abstract

Social processes that change quickly are difficult to study, because they require frequent survey measurement. Weekly, daily, or even hourly measurement may be needed depending on the topic. With more frequent measurement comes the prospect of more complex patterns of missing data. The mechanisms creating the missing data may be varied, ranging from technical issues such as lack of an Internet connection to refusal to complete a requested survey. We examine one approach to mitigating the damage of these missing data – a follow-up or closeout interview that is completed after the frequent measurement. The Relationship Dynamics and Social Life (RDSL) study used this approach. The study asked women weekly about their attitudes and behaviors related to sexual relationships and pregnancy. The surveys were carried out for 130 weeks and concluded with a closeout interview. We explore the patterns of missing data in the RDSL study and then examine associations between the data collected in the closeout survey and key variables collected in the weekly survey. We then assess the extent to which data from the closeout survey are useful in repairing the damage caused by missing data.

Keywords: Longitudinal survey, frequent measurement, survey nonresponse, missing data

1. Introduction

Social science research creates nearly unlimited demand for more data. Studies of social processes that occur over time create particularly high demand for increasingly detailed measures of those processes across time. Longitudinal studies of the same individual across time are the state of the art for research on many different social behaviors, including employment, income and poverty (Bureau of Labor Statistics, 2005; McGonagle et al., 2012; Parent, 2000), education and attainment (Deluca and Rosenbaum, 2001; Dowd and Melguizo, 2008; Fall and Roberts, 2012; Kienzl and Kena, 2006; Pudrovska, 2014), courtship, sex, marriage and childbearing (Brauner-Otto and Geist, 2018; Paik and Woodley, 2012; Resnick et al., 1997; Sassler and Goldscheider, 2004; Thornton et al., 2007), child development and the transition to adulthood (Kendig et al., 2014; Wightman et al., 2017), and alcohol and substance use (Miech et al., 2015; Patrick et al., 2009, 2011). In each of these substantive domains, specific questions about processes over time often demand increasingly frequent and detailed measurement of change. Technological changes, including the broad diffusion of the Internet and smartphones in the US population, have created more opportunities for frequent measurement of such processes. However, these new opportunities bring new threats to the quality of the resulting measures, with potentially significant and wide-ranging consequences for the substantive conclusions drawn from them.

Here we focus on this topic in detail, probing the potential for missing data, generated by nonresponse to even small portions of a frequent measurement, to significantly alter the substantive conclusions we reach from analyses of such data. This potential problem is important because increasingly frequent measures greatly increase the opportunity for each individual to skip one or more measures. These choices may not be random, and the potential consequences of such missing data are poorly understood. As a result, the possibility that missing data alter the substantive conclusions from models based on frequent measurement is a potential threat across all of the substantive domains that may use frequent measures.

We focus careful attention on one substantive example – the social processes of sex and pregnancy – that is especially important for many reasons. First, the dynamics of sex and pregnancy can take place very quickly, often in under an hour, but are rarely measured at that frequency. So, more frequent measurement has the potential to illuminate crucial aspects of these processes (Axinn, Jennings and Couper, 2015). Retrospective reporting on sex and pregnancy can be prone to error, and measurements taken closer in time to the events are less likely to be in error (McAuliffe et al., 2007; Gillmore et al., 2010; Graham et al., 2003; Yawn et al., 1998). Second, the rapid dynamics of sex and pregnancy have long-lasting social consequences that affect the health and wellbeing of both individuals and families (Axinn et al., 1998; Barber et al., 1999; Barber and East, 2009, 2011). In this context, missing data from frequent measurements can lead to incorrect substantive conclusions which may, in turn, lead to policies that are inefficient or even harmful. Third, though we focus on a particular social process, the influence of missing data on our substantive understanding of sex and pregnancy yields important insights into the potential of missing data in other substantive areas to influence conclusions that are also based on frequent measurement. Thus, our detailed examination of this topic provides crucial new insight into the potential scope of this issue across topics.

To create frequent measurements, scientists often employ survey measures that may or may not supplement measurements from smartphones or other mobile devices, such as heart rate or GPS location data. These frequent measurements across time lead to more diverse patterns of nonresponse. Measurements may be missed for a diverse set of reasons – lack of cell phone coverage or Internet access, passive non-compliance such as forgetting to complete a survey, or active resistance. Given that the mechanisms behind the missing data may be varied, accounting for these patterns of nonresponse may be difficult. As with all forms of missing data, this nonresponse can produce biased estimates in some cases. The data lost to nonresponse might differ systematically from the data that are observed. For example, if the nonresponse is caused by a life event that is being measured, such as pregnancy, then the missing data might systematically differ from the observed data.

There are a limited number of methods for dealing with missing data in this situation. On the data collection side, the focus is on developing methods that produce the most complete data possible. However, even the best methods will likely not lead to complete data. Therefore, some post-collection adjustment strategy is usually necessary in order to account for differences between the reported measurements and those that are missed. The strategies are limited largely to weighting and imputation. Both of these strategies are improved when measures that are highly correlated with the missing data are available for these calculations.

In the case of frequent measurement, one potential source of data is a survey that is conducted after the frequent measurements are finished. A follow-up or “closeout” interview of this type may produce data that are predictive of the missing frequent measurements. The value of closeout interview data depends upon the relationships between those data and both the frequent measurement data and nonresponse to the frequent measurements. Hence, the value of these data may vary across variables, analyses, and substantive topics.

2. Missing Data in a Frequent Measurement Context

Missing data can be problematic in all social research, and can be damaging to our understanding of the topic being studied when the missing information is not missing at random. Systematic missing data, where the missingness is correlated with the topic being studied, has high potential to introduce bias into conclusions based on those data (Little and Rubin, 2002). Frequent measurement increases the opportunities for data to be missing and adds complexity to the potential patterns of missing data. Here we conceptualize the potential for missing data to introduce bias in studies that feature frequent measurement, including identifying likely patterns of missing data. These patterns include the potential for individuals to drop out of a frequent measurement study entirely, or to respond intermittently at a pace slower than potential changes in the measured outcomes. The impact of missingness may differ across the various patterns. For example, it may be that intermittent response is simpler to repair than complete dropout.

For simplicity, we will use the term “nonresponders” to refer to persons who exhibit a pattern of missing data – either attrition or intermittent wave nonresponse. For example, dropping out of the study is one pattern of missing data while responding to about a third of the requested surveys across the course of the study is another. We will examine seven patterns of missing data. These patterns are drawn from several sources. Three are drawn from a previously published analysis of nonresponse to the survey (Barber et al., 2016). These definitions are based upon the number of interviews completed, the amount of time between the first and last interviews, and the proportion of interviews that were completed late. An additional definition is based upon our imputation framework (described below) and looks at how many days of the field period do not have any reported data. The last three patterns are based upon a latent class analysis to identify common patterns of responding (Lugtig, 2014).

This study examines the value of the closeout interview data for ameliorating the impact of data that are missing due to attrition or intermittent nonresponse. Using multiple patterns is an important facet of the examination of potential biases due to missing data and understanding of the value of the closeout interview. In particular, understanding the mechanisms for each pattern of missing data is a necessary precursor to developing a remedy – either through post-survey statistical adjustment or through nonresponse reduction in future surveys with a similar design.

We use multiple methods to assess the impact of missing data. First, we examine how responders and nonresponders to the frequent measurement (under several patterns of missing data) differ with respect to both baseline and closeout interview data. Second, we impute missing values using models with and without closeout interview data to see how this changes estimates of means and variances for two key statistics. Third, we estimate multivariate models predicting key outcomes from the frequent measurements to see if the two sets of imputations (i.e. those excluding and including the closeout interview data) yield different estimated coefficients in these models.

3. Data and Methods

a. Sample

The Relationship Dynamics and Social Life (RDSL) study was based on a simple random sample of 1,198 young women, ages 18–19, residing in a county in Michigan. To be eligible, women had to be 18 or 19 and living in the selected county at the time of sampling. We also included women who were temporarily living outside the county to attend school, job training, etc. The sampling frame was the Michigan Department of State driver’s license and Personal Identification Card (PID) database. The sampling method was a cost-based decision because of the relatively sparse distribution of 18 to 19-year-olds in the general population. However, comparison of the driver’s license and PID data by ZIP code to 2000 Census-based projections revealed 96% agreement between the frame count and the projections for this population (authors’ calculations).

The sample (n=1,198) was released in a series of four replicates. Each replicate sample is an independent, random sample drawn from the sampling frame. Prior to each replicate sample release, the frame of licensed drivers and PID card holders was updated to capture newly eligible women (those who had just turned 18) as well as 18- and 19-year-olds who had moved into the sample county during the months since the prior replicate sample release. Near the end of the field period, a two-phase sampling approach (Hansen and Hurwitz, 1946), where a subsample of remaining cases was selected for intensive recruitment, was used. This sampling procedure led to unequal probabilities of selection. These are accounted for in the analyses using design weights. The current study involves a follow-up, “closeout” interview with one of the four replicates. The closeout interview was conducted in a single replicate for cost reasons.
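As a rough illustration of why the two-phase design produces unequal selection probabilities, the sketch below computes a design weight as the inverse of the overall probability of selection. The function name and the probabilities are hypothetical and chosen only for illustration; the actual RDSL sampling fractions are not reported in this section.

```python
def design_weight(p_frame: float, p_subsample: float = 1.0) -> float:
    """Inverse-probability design weight.

    p_frame: probability of selection from the sampling frame (phase 1).
    p_subsample: probability of being retained in the phase-2 subsample;
        1.0 for cases that were never subject to subsampling.
    """
    if not (0 < p_frame <= 1 and 0 < p_subsample <= 1):
        raise ValueError("probabilities must be in (0, 1]")
    return 1.0 / (p_frame * p_subsample)


# Hypothetical values, for illustration only.
w_general = design_weight(0.10)          # resolved during general recruitment
w_intensive = design_weight(0.10, 0.5)   # subsampled for intensive recruitment
```

Under these assumed values, a case retained through a one-in-two subsample carries twice the weight of a case resolved during general recruitment.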

b. Data Collection Procedures: Baseline Interview, Journal, Closeout Interview

The first component of data collection was a baseline face-to-face survey interview conducted between March 2008 and July 2009, assessing sociodemographic characteristics, attitudes, relationship characteristics and history, contraceptive use, and pregnancy history. The length of the recruitment period for each replicate sample release was 18 weeks: 15 weeks of general recruitment effort and a final 3 weeks of intensive recruitment effort. For the final 3 weeks, a subsample of the remaining active cases was selected for intensive recruitment. Over the full sample, 1,003 women completed the baseline interview. For the replicate under examination in this paper, 235 women participated in the baseline interview. Response rates are presented in Table 1.

Table 1.

Sample Size, Response Rates, and Interviews for Full Sample and Replicate Sample

| | | Full Sample | Closeout Interview Replicate |
|---|---|---|---|
| Baseline Interview | Eligible Sample Size | 1,198 | 253 |
| | Completed Interviews | 1,003 | 235 |
| | Weighted Response Rate | 82.4% | 86.0% |
| Closeout Interview | Completed Closeout Interviews | | 215 |
| | Weighted Response Rate | | 91.5% |

One innovative aspect of the RDSL study design was the second component of data collection – dynamic, prospective measurement of pregnancy desires, contraceptive use, and pregnancy, as well as relationship characteristics and behaviors such as commitment and sex, collected in a weekly journal format. The journal interview could be completed online or over the telephone. It was designed to be short, with a median length of about 4 minutes (Barber et al., 2011, 2016). At the conclusion of the baseline interview, respondents were invited to participate in the journal-based survey every week for two and a half years (or 130 weeks).

Of the 1,003 women who completed the baseline interview, 95% participated in the weekly journal by completing at least one journal (N=953); 92% reported regular access to the Internet and usually completed the journal online each week. The remaining 8% called in to the Survey Research Center’s phone lab to complete their weekly journals. In addition, we allowed respondents to switch mode (from Internet to phone and vice versa) at any time, for any duration of one week or more; see Axinn, Gatny and Wagner (2015) for additional detail on the impact of using multiple modes. Respondents were offered an incentive of $1 per weekly journal with $5 bonuses for on-time completion of five journals in a row. Additional details regarding data collection procedures are available in Barber et al. (2016).

Journal response rates were high (Barber et al., 2016). At the conclusion of the study, 84% of baseline survey respondents had participated in the journal study for at least 6 months, 79% for at least 12 months, and 75% for at least 18 months. Over the 130-week period of data collection, the mean number of journals completed by the 235 women was 65.9.

Overall, the journals had good participation, but there are missing elements in the data due to a combination of missed journals and dropping out of the study before the end. When a journal was completed fewer than 14 days after the prior journal, its reference period was adjusted to cover the interval between the prior journal and the current one. In other words, there are no missing data for these journals. Whether the longer recall period (14 days compared to 7 days) reduces the quality of reporting is a separate issue. If the journal was completed 14 or more days after the prior journal, the reference period is the prior week only. Thus, missing data occur when journals are not completed within a two-week interval. We refer to the period between journals as a week, as shorthand, even though it may vary from 5 to 13 days.
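The reference-period rule can be sketched in a few lines. This is a minimal illustration under assumptions that the text does not spell out: the function names are hypothetical, the reference period is taken to include the day of completion, and "the prior week" is treated as the 7 days ending on that day.

```python
from datetime import date, timedelta


def reference_period(prev_journal: date, current_journal: date):
    """Return (start, end) of the days the current journal reports on.

    Assumed rule: a gap under 14 days means the journal covers the whole
    gap; a gap of 14 days or more means it covers only the last 7 days.
    """
    gap = (current_journal - prev_journal).days
    if gap <= 0:
        raise ValueError("current journal must follow the prior journal")
    if gap < 14:
        start = prev_journal + timedelta(days=1)
    else:
        start = current_journal - timedelta(days=6)
    return start, current_journal


def unreported_days(prev_journal: date, current_journal: date) -> int:
    """Days between the two journals that no journal reports on."""
    start, _ = reference_period(prev_journal, current_journal)
    return (start - prev_journal).days - 1


prev = date(2009, 1, 1)
on_time_gap = unreported_days(prev, date(2009, 1, 11))  # 10-day gap
late_gap = unreported_days(prev, date(2009, 1, 21))     # 20-day gap
```

With these conventions a 10-day gap leaves no unreported days, while a 20-day gap leaves 13 days with no report.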

After the journal study was complete, a closeout interview was conducted by telephone as an experiment to determine whether additional information could be collected in this manner that would be useful for dealing with data missing due to nonresponse. For cost reasons this closeout interview was restricted to one of the four replicates. The closeout interview collected updates on attitudes and preferences first measured at baseline, as well as activities that compete with childbearing. Respondent opinions about the journal portion of the study, such as whether the journals were short enough and whether the journal incentive was large enough, were also obtained during the closeout interview.

Of the 235 women who completed the baseline interview, 215 completed the closeout interview (see Table 1). A comparison of the closeout responders (n=215) and nonresponders (n=20) did not reveal significant differences in most of the baseline measures tested (see Appendix A). The lack of differences may be due to a lack of power resulting from the small number of nonresponders, although a small number of nonresponders is itself a desirable outcome. We also examined differences between closeout responders and nonresponders using the journal data (see Appendix B). In general, closeout nonresponders also gave fewer journal reports. This led to less reporting of several characteristics for closeout nonresponders in the journal (ever forgot to use birth control, number of new sexual partners, and number of pregnancy changes).

c. Analyses

Across the 130 weeks of the study, each individual could have a unique pattern of missing data. In order to do statistical analysis, we identified several different definitions of nonresponse that created a binary classification of all cases as either better or worse responders. We use these alternative definitions in order to examine the impact of the closeout data (relative to the baseline and/or journal data) on the ability to detect differences between responders and nonresponders.

The first three definitions are drawn from Barber et al. (2016). Their first definition (Row 1, in Table 2) is based on the number of journals. The cutpoint of fewer than 60 journals was chosen as this would be near the minimum number of journals required for complete reporting (i.e. once every two weeks). The second definition (Row 2) is based upon the length of time between the first and last completed journals. The mean number of days was 775, but the distribution was quite skewed. The 10th percentile was 230 days and the 90th percentile was 919 days. The 730 day cutpoint was selected with anyone less than that length of time being considered a nonresponder. The third definition (Row 3) is based on lateness. Each journal was coded as being late or not, where “late” means more than 14 days after the previous journal. For each participant, the proportion of journals that were late was then calculated. The mean is 35%. The first quartile is 13% while the third quartile is 50%. A participant who had more than 75% of her journals late was categorized as a nonresponder.
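The three journal-based definitions can be expressed as simple rules on a respondent's journal completion dates. The sketch below is illustrative only: the function and key names are hypothetical, and it assumes that the first journal, which has no predecessor, is never counted as late.

```python
from datetime import date, timedelta


def classify_nonresponse(journal_dates):
    """Apply the three journal-based definitions to one respondent.

    journal_dates: completion dates of her journals (at least one).
    Returns a dict of booleans; True means she fits the nonresponse pattern.
    """
    dates = sorted(journal_dates)
    if not dates:
        raise ValueError("at least one journal is required")
    n = len(dates)
    span_days = (dates[-1] - dates[0]).days
    gaps = [(later - earlier).days for earlier, later in zip(dates, dates[1:])]
    # "Late" means more than 14 days after the previous journal.
    prop_late = sum(gap > 14 for gap in gaps) / n
    return {
        "fewer_than_60_journals": n < 60,
        "span_under_730_days": span_days < 730,
        "more_than_75pct_late": prop_late > 0.75,
    }


# Hypothetical respondent who completes a journal every week for 110 weeks.
steady = [date(2008, 4, 1) + timedelta(weeks=i) for i in range(110)]
steady_flags = classify_nonresponse(steady)
```

Because each rule is evaluated separately, one respondent can fit several patterns at once, which is why the definitions are not mutually exclusive.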

Table 2.

Definition of the Patterns of Missing Data

| Definition of Pattern | Fit in Pattern: Yes, n (%) | Fit in Pattern: No, n |
|---|---|---|
| 1. Fewer than 60 journals | 118 (50%) | 117 |
| 2. Days between first and last journal completion fewer than 730 | 57 (24%) | 178 |
| 3. Proportion of journals that are late > 75% | 45 (19%) | 190 |
| 4. Nonresponse rates increase to week 50 but hold steady at response rates between 0.7 and 0.8 | 144 (61%) | 91 |
| 5. Nonresponse rates increase to week 50, hold at about 0.4 | 51 (22%) | 184 |
| 6. High Responders | 28 (12%) | 207 |
| 7. More than 180 days missing | 115 (49%) | 120 |

The next three definitions focus on patterns of response over time. The fourth definition (Row 4) includes those who responded at a relatively high rate throughout the field period (between 70 and 80%). The fifth definition (Row 5) is a group who had relatively low response rates of about 40% throughout the field period. The sixth group (Row 6) is a set of high responders who responded at rates as high as 95% but not below 90% each week. The definitions and counts of the number of cases meeting each definition are listed in Table 2.

A final definition was derived from our imputation approach and was based on the amount of missing data. It relies on examining each day in the field period to see if the panel member responded to journal questions about her behavior and status on that day. This definition (Row 7 in Table 2) is based upon the number of days that were missing from the final grid of weekly response described below. In this grid of 235 respondents with 130 weeks, 28% of the cells were missing data about sexual partners while 40% were missing data on pregnancy status. This includes both missing journals and missing items within journals. If more than 180 days were missing, then the participant was classified as a nonresponder.

The definitions are not mutually exclusive. Each definition is a binary classification. In other words, for each definition, all 235 cases are classified into one of two groups. This approach allows us to assess the impact of different classification schemes on potential differences induced by nonresponse. It is not an exploration, for example, of seven different subgroups in the sample. The latter might be useful, but it addresses a different question, namely whether there are different types of nonresponders. Such an examination, while worthwhile, is probably beyond our capability given our somewhat limited sample size.

These definitions are used to determine whether the closeout interview adds information about differences between responders and nonresponders. We have taken several approaches to this question.

The first method for assessing the value of the closeout interview uses the definitions of nonresponse in Table 2 to compare responders and nonresponders using the baseline and closeout interview data. We look at bivariate associations in order to assess the relative strengths of the baseline data as compared to the closeout interview data as predictors of nonresponse. Calculations for these comparisons incorporate sampling weights and produce design-based variance estimates.

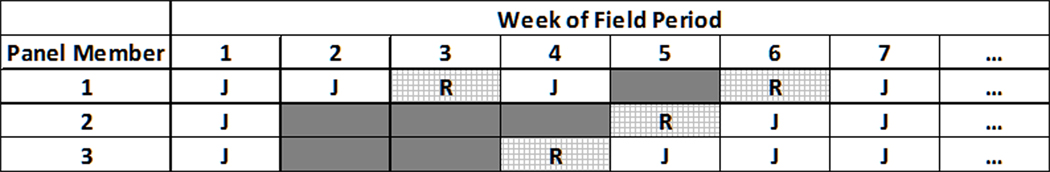

A second step assesses the value of closeout interview data through the use of imputation. The missing journal data were imputed using two different models – the first with only the baseline interview data as covariates in the imputation model and the second with both the baseline and closeout interview data as covariates. This allowed for the comparison of final estimates developed with and without the closeout interview data included in the adjustments for the missing data in the journals. In order to impute the missing journal data, the field period was laid out in 7-day weeks for each respondent. If a key variable was observed in a week or the following week, then the value from the journal was included for that week. If a key variable was not observed in the current week or the following week, then it is missing. This mimics the recall period rule that asks about the last 5 to 13 days depending upon when the previous journal was completed. Figure 1 shows a graphical example of how these patterns were created for three example panel members. The “J”s indicate where a journal was completed. The grey cells are missing data. Several cells contain an “R” with grey cross-hatching – for example, week 6 for panel member 1. In these cases, a journal was completed in the following week (in the example, week 7), and panel members were asked to recall events over the previous 14 days. Given this level of retrospective reporting, the data are considered complete.

Figure 1.

Example Patterns of Missing Data
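The coding used in Figure 1 can be sketched as follows. This is a minimal illustration rather than the code used in the study: the function name and the letter "M" for missing cells are hypothetical, and journals are assumed to have already been assigned to 7-day weeks numbered from 1.

```python
def weekly_grid(journal_weeks, n_weeks=130):
    """Code each week of the field period for one panel member.

    'J' = a journal was completed in that week
    'R' = no journal that week, but one in the following week, so the
          week is covered by retrospective report
    'M' = missing (no journal in that week or the following week)
    """
    completed = set(journal_weeks)
    grid = []
    for week in range(1, n_weeks + 1):
        if week in completed:
            grid.append("J")
        elif week + 1 in completed:
            grid.append("R")
        else:
            grid.append("M")
    return grid


# Hypothetical panel member with journals in weeks 1, 2, 4 and 7.
example = weekly_grid([1, 2, 4, 7], n_weeks=8)
```

In this example week 6 is coded "R" because a journal was completed in week 7, while weeks 5 and 8 are coded "M" and would need to be imputed.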

There was a small amount of item missing data in the baseline and closeout interviews. These data were first imputed using Sequential Regression Multiple Imputation implemented via the IVEware software package (Raghunathan et al., 2001). A single imputed value was generated for each missing value. The resulting complete data were then used in the second set of imputations.

The second set of imputations was generated for the missing journal data. Two variables were imputed – the number of new sexual partners and the number of pregnancies. These are two key measurements taken by the journal and have generated several publications focused on these variables as outcomes (Gatny et al., 2014; Hall et al., 2014; Kusunoki et al., 2016; Miller et al., 2013, 2016, 2017; Paat et al., 2016). We thought interactions between predictors may be important in the case of the number of new sexual partners. Therefore, Classification and Regression Tree (CART) models were used to create the imputations. The imputations for the journal data were conducted in R using the MICE package (van Buuren and Groothuis-Oudshoorn, 2011), using the CART models implemented in MICE. Because pregnancies were relatively rare events, the time period was consolidated from one week to 26 weeks (about six months). If a pregnancy was observed during that time period or if all the weeks were observed and there was no indication of a pregnancy, the data were considered complete. Otherwise, a value was imputed. In this case, logistic regression was used as the basis of the imputation. In order to stabilize estimates of the fraction of missing information (FMI), a large number of imputations (200) were used (Graham et al., 2007; Harel, 2007). Two sets of imputations were created for each of the two variables. One imputation included the journal data and the baseline interview data. The other imputation included the journal, baseline and closeout interview data. The estimates, variances, and the FMI from the two sets of imputations are compared in order to determine the relative value of the closeout interview. The FMI is a measure of the uncertainty due to imputation (Wagner, 2010). It is the proportion of the total variance of the estimate that is due to the variance between imputations. A higher FMI indicates more variability in the imputed values.

All imputation models used in this paper are based on a Missing-At-Random (MAR; Little and Rubin, 2002) assumption. That is, the models are estimated from observed data and then used to predict the missing data. This allows us to test whether the missing data are Not-Missing-At-Random (NMAR) when the closeout interview data are excluded. Whether the data are still NMAR when the closeout data are included is not a focus for this paper, but is certainly possible.
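The FMI described above can be illustrated with the standard combining rules for multiply imputed estimates. The sketch below is not the code used in the study; the function name is hypothetical, and it uses the simple large-sample form of the FMI, which omits the degrees-of-freedom correction found in some software. With 200 imputations the two forms should be close.

```python
from statistics import mean, variance


def pool_rubin(estimates, variances):
    """Pool m completed-data estimates and their sampling variances.

    Returns (pooled estimate, total variance, approximate FMI), where the
    FMI is the share of total variance due to between-imputation variance.
    """
    m = len(estimates)
    if m < 2 or m != len(variances):
        raise ValueError("need matching lists from at least two imputations")
    q_bar = mean(estimates)           # pooled point estimate
    within = mean(variances)          # average within-imputation variance
    between = variance(estimates)     # between-imputation variance (m - 1)
    total = within + (1 + 1 / m) * between
    fmi = (1 + 1 / m) * between / total
    return q_bar, total, fmi


# Toy inputs, for illustration only.
pooled, total_var, fmi = pool_rubin([1.0, 2.0, 3.0], [0.5, 0.5, 0.5])
```

Auxiliary variables that predict the missing values well reduce the spread of the imputed estimates across imputations, which lowers the between-imputation term and therefore the FMI.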

A third comparison uses the two different multiply-imputed datasets to estimate published models predicting, on the one hand, unintended pregnancy and, on the other hand, the number of new sexual partners. As with the comparisons of estimated means, we compare the estimated coefficients, standard errors, and FMI in the two versions of the models.

4. Results

We first examine bivariate associations between nonresponse and survey data obtained in the baseline and closeout interviews. We then examine imputed estimates, both univariate and in multivariate models, where the imputation models either include or exclude the closeout interview data.

a. Bivariate Comparisons

We started from a set of 20 variables drawn from the baseline interview that were frequently used to control for background characteristics in substantive analyses (Barber et al., 2013, 2015; Chang et al., 2015; Gatny et al., 2014; Hall et al., 2014, 2015; Kusunoki et al., 2016; Miller et al., 2013, 2016, 2017). For each variable, we checked whether it was associated with any of the seven different definitions of patterns of missing data presented in the previous section. We selected 17 variables from the closeout interview using the same criterion that these were frequently used as control variables in substantive analyses. We checked these closeout interview variables for associations with the same definitions of nonresponse. In the baseline interview, there are more questions about respondent demographics, including characteristics of their parents or guardians. The closeout interview included more questions about attitudes and behaviors of the respondent. The results of these tests are summarized in Table 3. The details of these comparisons, including details about the questions, are available in Appendix D.

Table 3.

Number of Significant Differences (p<0.05) between Responders and Nonresponders among 20 Comparisons using Baseline Interview Data and 17 Comparisons using Closeout Interview Data

| Definition of Pattern | Number of Significant Differences: Baseline (out of 20) | Number of Significant Differences: Closeout (out of 17) |
|---|---|---|
| 1. Fewer than 60 journals | 11 | 4 |
| 2. Days between first and last journal completion fewer than 730 | 1 | 1 |
| 3. Proportion of journals that are late > 75% | 3 | 0 |
| 4. More than 180 days missing | 12 | 4 |
| 5. Nonresponse rates increase to week 50 but hold steady at response rates between 0.7 and 0.8 | 12 | 5 |
| 6. Nonresponse rates increase to week 50, hold at about 0.4 | 7 | 3 |
| 7. High Responders | 9 | 4 |

From Table 3, it is clear that the definition of nonresponse does matter for these kinds of comparisons. Focusing on the baseline interview data first, under definition 2 (remained in the study fewer than 730 days), there is only one significant difference between responders and nonresponders. It appears that the baseline data are not very useful for predicting early dropout. Under definitions 4 (more than 180 days missing) and 5 (the group that had consistent response rates to the journal of between 70 and 80%), as numbered in Table 3, 12 of the 20 statistics are different between the responders and nonresponders.

Next, we examine differences between responders and nonresponders using the closeout interview data. From Table 3, it is clear that there are fewer differences between responders and nonresponders in the closeout data.

It is also possible to look at each variable from the baseline and closeout interviews and count how many of the definitions of nonresponse it is significantly associated with. This shows how robust the association of each variable is across the different definitions of nonresponse. Table 4 provides counts of the number of significant differences between responders and nonresponders for each baseline and closeout interview variable. The first row (i.e. “Number of Significant Differences”=0) indicates that 3 of the 20 baseline interview variables and 7 of the 17 closeout interview variables were not associated with any of the measures of nonresponse.

Table 4.

Number of Significant Differences (p<0.05) Each of the Baseline and Closeout Interview Variables has with the Seven Definitions of Patterns of Missing Data

| Number of Significant Differences | Number of Variables: Baseline (out of 20) | Number of Variables: Closeout (out of 17) |
|---|---|---|
| 0 | 3 | 7 |
| 1 | 4 | 4 |
| 2 | 3 | 3 |
| 3 | 3 | 2 |
| 4 | 2 | 0 |
| 5 | 2 | 1 |
| 6 | 3 | 0 |
| 7 | 0 | 0 |
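Tables 3 and 4 are two tallies of the same set of tests: one counts significant results per definition of nonresponse, the other counts them per variable. A minimal sketch of that bookkeeping follows; the function name, variable names and p-values are hypothetical and are not taken from the study.

```python
from collections import Counter


def tally_significant(p_values, alpha=0.05):
    """Summarize a variables-by-definitions set of test results.

    p_values: dict mapping a variable name to a list of p-values, one per
        definition of nonresponse, in the same order for every variable.
    Returns (per_definition, histogram): the number of significant
    variables for each definition (as in Table 3), and how many variables
    have 0, 1, 2, ... significant differences (as in Table 4).
    """
    n_defs = len(next(iter(p_values.values())))
    per_definition = [0] * n_defs
    per_variable = {}
    for name, ps in p_values.items():
        if len(ps) != n_defs:
            raise ValueError(f"{name} has the wrong number of p-values")
        flags = [p < alpha for p in ps]
        per_variable[name] = sum(flags)
        for j, flag in enumerate(flags):
            per_definition[j] += flag
    return per_definition, Counter(per_variable.values())


# Hypothetical p-values for three variables under three definitions.
toy = {
    "var_a": [0.01, 0.20, 0.03],
    "var_b": [0.50, 0.60, 0.70],
    "var_c": [0.04, 0.04, 0.04],
}
by_definition, by_count = tally_significant(toy)
```

Both tallies must add up to the same total number of significant tests. The published tables are consistent in this respect: the baseline columns of Tables 3 and 4 each imply 55 significant tests, and the closeout columns each imply 21.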

Looking at the baseline interview variables (see Appendix D for details), several are consistently predictive of the different definitions of patterns of missing data. Focusing on specific closeout interview variables (see Appendix D), three had associations with nonresponse across several of the definitions.

Three conclusions can be drawn from the information in Tables 3 and 4 concerning the baseline interview data. First, the definition of the pattern of missing data does make a difference. That is, these comparisons will vary depending upon the type of nonresponse being discussed. Second, the baseline data are useful predictors of most kinds of nonresponse. We note that these baseline data include variables that should be strongly related to data collected in the journals (Gatny et al., 2014; Miller et al., 2013, 2016, 2017). Third, in bivariate comparisons, although the closeout interview data have fewer associations with nonresponse, 10 of these closeout interview variables do have associations with nonresponse.

b. Means and Variances of Key Statistics using Imputed Data

The closeout interview has fewer associations with outcomes measured by the journal. This may be due to the particular questions that were asked in the closeout interview. However, the closeout interview data may still provide additional information that can improve estimates – either by reducing biases related to selectivity of who responds or by reducing the variance of the estimates.

In our analyses, we used imputation to create the post-survey adjustments. We have two fully imputed estimates – the number of pregnancies and the number of new sexual partners observed during the 130-week journal period. We imputed the values using, on the one hand, only the baseline data and, on the other hand, both the baseline and the closeout interview data. We then compared the estimates, the standard errors of these estimates, and the fraction of missing information under the two approaches. The results are presented in Table 5.

Table 5.

Estimated Mean, Variance, and FMI from Complete-Case Data (Mean Only), Fully-imputed Data based on Baseline Interview Data, and Fully-Imputed Data based on Baseline and Closeout Interview Data

| Variable | Statistic | Unadjusted | Baseline Imputation | Baseline and Closeout Imputation |
|---|---|---|---|---|
| New Sexual Partners Weekly | Mean | 0.028 | 0.027 | 0.026 |
| New Sexual Partners Weekly | Standard Error | 0.00113 | 0.00101 | 0.00097 |
| New Sexual Partners Weekly | FMI | | 0.1754 | 0.1480 |
| Pregnancies Every 26 Weeks | Mean | 0.115 | 0.200 | 0.178 |
| Pregnancies Every 26 Weeks | Standard Error | 0.01496 | 0.01372 | 0.01270 |
| Pregnancies Every 26 Weeks | FMI | | 0.2767 | 0.2324 |

From this table it is apparent that the closeout data are effective at reducing the variance of estimates. This shows up in both the standard errors and the fraction of missing information. The closeout interview data reduce both of these relative to the imputations based only on baseline interview data. The estimates themselves are also somewhat different, especially for the pregnancy variable. In general, the imputations lead to increased estimates of the number of pregnancies. Further, the imputations that include the closeout data result in fewer imputed pregnancies and a somewhat smaller number of new sexual partners. The differences between the three estimates (unadjusted, baseline imputation, and baseline and closeout imputation) are not, however, significant for either of the two variables.
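For readers less familiar with how standard errors and the fraction of missing information (FMI) arise from multiply-imputed data, the following is a minimal sketch of the standard combining rules (Rubin's rules). It is not the authors' code. The function name `pool_rubin` and the five input values are hypothetical and are not RDSL results, and the FMI shown is the simple large-sample version without the degrees-of-freedom adjustment that some software applies.

```python
import math
from statistics import mean, variance


def pool_rubin(estimates, variances):
    """Combine per-imputation estimates and their squared standard errors."""
    m = len(estimates)
    q_bar = mean(estimates)       # pooled point estimate
    w = mean(variances)           # within-imputation variance
    b = variance(estimates)       # between-imputation variance (m - 1 denominator)
    t = w + (1 + 1 / m) * b       # total variance
    fmi = (1 + 1 / m) * b / t     # large-sample fraction of missing information
    return q_bar, math.sqrt(t), fmi


# Hypothetical estimates and variances from five imputed datasets (illustration only).
pooled_estimate, pooled_se, fmi = pool_rubin(
    [0.20, 0.18, 0.22, 0.19, 0.21],
    [0.0002, 0.0002, 0.0002, 0.0002, 0.0002],
)
```

Under these rules, auxiliary variables that predict the missing values well shrink the between-imputation variance, which lowers both the pooled standard error and the FMI. That is the pattern Table 5 shows when the closeout data are added.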

c. Multivariate Models using Imputed Data

In this section, the fully-imputed data are used to assess the additional information obtained from the closeout interview. Two previously-published models are re-estimated using both of the multiply-imputed datasets (that is, the imputations developed with and without the closeout data).

The first model is based upon Hall et al. (2014). This model uses logistic regression to estimate the influence of baseline depression and stress symptoms on women’s risk of unintended pregnancy over one year. Other factors shown to predict poor contraceptive behavior and unintended pregnancy among young women, including low income, having a mother who had a teenage pregnancy, relationship context, and earlier age at first sex, are included in the model (Finer and Henshaw, 2006; Frost and Darroch, 2008; Kusunoki and Upchurch, 2011; Rosenberg et al., 1995). Following Hall et al., all pregnancies are treated as unintended, given that the women are all 18–19 years of age and 98% stated at baseline that they had no intention of becoming pregnant. The model is based upon data from the baseline interview (the predictors) and journal data (the outcome) from the first 12 months of data collection. Table 6 presents the model estimates for the two multiply-imputed datasets. The original paper presents the “adjusted relative risk” (aRR), which can be approximated by the odds ratio when the event is rare. Here, we present the coefficients and standard errors on the logit scale, since the standard errors would be skewed on the scale of the odds ratio. The “Observed Data” column excludes the imputed values. There are 235 respondents included, with an outcome based on summary data from the journal (any pregnancy in the first 52 weeks). The number of journals supplied varied across respondents. The model differs from Hall et al. (2014) in that it is based upon the closeout replicate only, whereas the original paper uses the data from all four replicates (n=940).
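The rare-event approximation mentioned above follows directly from the definitions. The notation is introduced here for illustration and does not appear in the original paper: $p_1$ and $p_0$ denote the probability of the outcome in the exposed and unexposed groups.

```latex
\mathrm{RR} = \frac{p_1}{p_0},
\qquad
\mathrm{OR} = \frac{p_1/(1-p_1)}{p_0/(1-p_0)}
            = \mathrm{RR}\cdot\frac{1-p_0}{1-p_1}
```

When both $p_1$ and $p_0$ are small, the factor $(1-p_0)/(1-p_1)$ is close to 1, so the odds ratio is close to the relative risk. As the outcome becomes more common, the odds ratio moves further from 1 than the relative risk does.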

Table 6.

Untransformed Coefficients from Logistic Regression Predicting any Unintended Pregnancies in the First 12 Months of Data Collection using Data Imputed with and without Closeout Interview Data

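| Parameter | Observed Data: Estimate | Observed Data: SE | Baseline Imputation: Estimate | Baseline Imputation: SE | Baseline Imputation: FMI | Baseline and Closeout Imputation: Estimate | Baseline and Closeout Imputation: SE | Baseline and Closeout Imputation: FMI |

|---|---|---|---|---|---|---|---|---|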

| Depression Symptoms | −0.55 | 0.40 | −0.38 | 0.48 | 0.32 | −0.37 | 0.44 | 0.23 |

| Receiving Public Assistance | 0.77 | 0.39 | 1.14 | 0.48 | 0.33 | 0.75 | 0.43 | 0.19 |

| Mother’s Age at First Birth | 0.99 | 0.36 | 2.14 | 0.46 | 0.32 | 1.14 | 0.40 | 0.21 |

| Cohabitation Status | 0.52 | 0.43 | 0.98 | 0.52 | 0.28 | 0.91 | 0.46 | 0.18 |

| Age at First Sex (< 16) | 0.77 | 0.38 | 0.77 | 0.46 | 0.32 | 1.12 | 0.44 | 0.23 |

NOTE: Bolded coefficients are significantly different from 0 at the p<0.05 level.
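To illustrate why the coefficients in Table 6 are easier to read on the logit scale, the sketch below exponentiates one coefficient and its interval. It assumes a normal-approximation (Wald) 95% interval with z = 1.96. That is a simplification, since inference from multiply-imputed data typically uses a t reference distribution, and the function name `odds_ratio_interval` is hypothetical.

```python
import math


def odds_ratio_interval(coef, se, z=1.96):
    """Exponentiate a logit coefficient and its Wald interval endpoints."""
    lower = math.exp(coef - z * se)
    upper = math.exp(coef + z * se)
    return math.exp(coef), lower, upper


# Receiving Public Assistance, "Observed Data" column of Table 6.
or_hat, lower, upper = odds_ratio_interval(0.77, 0.39)

# Distance from the point estimate to each end of the interval.
gap_below = or_hat - lower
gap_above = upper - or_hat
```

The interval is symmetric around the coefficient on the logit scale, but after exponentiation `gap_above` is larger than `gap_below`, so a single standard error no longer summarizes the uncertainty well.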

There are three things to note from these results. First, the main conclusion of the paper, that symptoms of depression are not a significant predictor of pregnancy, is the same in these models. Second, the conclusions with respect to some of the parameters differ across the two models. Mother’s Age at First Birth is a significant predictor in each model. However, Receiving Public Assistance is significant when the closeout interview data are not included in the imputation models. Cohabitation Status and Age at First Sex <16 are significant when the closeout interview data are used in the imputation models. Third, the standard errors and fraction of missing information are consistently lower when the closeout interview data are used in the imputation models. The closeout interview data do provide additional information.

The next model is based upon Kusunoki et al. (2016). The model uses data from the baseline interview and journal to examine black-white and other sociodemographic differences in the number of new sexual partners. Theoretically-relevant and empirically-tested sociodemographic variables used in previously published RDSL research were included in the model (Gatny et al., 2014; Hall et al., 2014, 2015; Miller et al., 2013, 2016). The model is a Poisson regression for the count of the number of new sexual partners reported in the first year of the RDSL data collection. The model presented here differs from the published model in that the published model uses data from the full study (n=940), while these results are estimated using a single replicate. The “Observed Data” column excludes the imputed values. The outcome, number of new sexual partners, is summarized from the journal data. Again, the number of journals supplied varied across the respondents. Further, one of the predictors in the published model, proportion of weeks in an exclusive partnership, was not available and, hence, not included. Table 7 presents the model estimates.

Table 7.

Untransformed Coefficients from Poisson Regression Predicting the Number of New Sexual Partners in the First 12 Months of Data Collection using Data Imputed with and without Closeout Interview Data

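| Predictor | Observed Data: Coef | Observed Data: SE | Baseline Imputation: Coef | Baseline Imputation: SE | Baseline Imputation: FMI | Baseline and Closeout Imputation: Coef | Baseline and Closeout Imputation: SE | Baseline and Closeout Imputation: FMI |

|---|---|---|---|---|---|---|---|---|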

| Black (ref=white) | 0.01 | 0.14 | −0.01 | 0.13 | 0.12 | 0.05 | 0.13 | 0.10 |

| High Religious Importance | 0.03 | 0.06 | 0.04 | 0.06 | 0.10 | 0.03 | 0.06 | 0.06 |

| Biological mother <20 years old at first birth | −0.01 | 0.11 | 0.00 | 0.11 | 0.15 | 0.08 | 0.11 | 0.12 |

| Biological mother high school graduate or less | −0.47 | 0.21 | −0.46 | 0.20 | 0.13 | −0.41 | 0.21 | 0.12 |

| Grew up with both biological parents | 0.00 | 0.11 | −0.09 | 0.11 | 0.13 | 0.03 | 0.11 | 0.12 |

| Childhood public assistance | −0.22 | 0.12 | −0.32 | 0.12 | 0.15 | −0.26 | 0.12 | 0.15 |

| Receiving public assistance at age 18–19 | −0.12 | 0.14 | −0.01 | 0.14 | 0.15 | −0.09 | 0.14 | 0.14 |

| Enrolled in postsecondary education at age 18–19 | 0.18 | 0.12 | −0.01 | 0.12 | 0.16 | 0.08 | 0.12 | 0.15 |

| Employed at age 18–19 | −0.18 | 0.10 | −0.03 | 0.10 | 0.13 | −0.12 | 0.10 | 0.09 |

| Age at first sex <= 16 years | −0.24 | 0.12 | −0.28 | 0.12 | 0.13 | −0.23 | 0.12 | 0.11 |

| Two or more sex partners by age 18–19 | 0.35 | 0.13 | 0.33 | 0.13 | 0.08 | 0.36 | 0.13 | 0.10 |

| Had sex without birth control by age 18–19 | 0.20 | 0.11 | 0.06 | 0.11 | 0.12 | 0.12 | 0.12 | 0.11 |

| At least one pregnancy by age 18–19 | 0.01 | 0.14 | −0.08 | 0.14 | 0.15 | 0.06 | 0.14 | 0.11 |

| Proportion of weeks in an exclusive partnership | | | | | | | | |

| Intercept | 0.41 | 0.28 | 0.79 | 0.27 | 0.12 | 0.48 | 0.28 | 0.09 |

NOTE: Bolded coefficients are significantly different from 0 at the p<0.05 level. Proportion of weeks in an exclusive partnership in original model was not available for this analysis.

Here, there are three things to note. First, the conclusion of the paper, that there are no black-white differences with respect to the number of new sexual partners, holds in both models. Second, the model estimates are very similar. Only Age at First Sex <= 16 years is significant in the model estimated using data that were imputed without the closeout interview data. Third, the pattern with respect to the standard errors and fraction of missing information is not as pronounced as it was for the models in Table 6. That is, the standard errors are very similar across the two models and the fraction of missing information is not always smaller for the model estimated from the data imputed using the closeout interview. For some of the coefficients, the fraction of missing information is larger for the model estimated from the data imputed using the closeout interview. In this case, the closeout interview does not add as much information.

5. Discussion

In this paper, we focus on the potential for missing data generated by nonresponse to frequent measurement to significantly alter the substantive conclusions we reach from analyses of such data. We create a conceptual framework for the study of this problem, we document the extent of the issue in various measures in the substantive domain of sex and pregnancy, and we investigate both baseline and closeout interviews as potential remedies for errors in substantive conclusions that can be produced by missing data. The investigation demonstrates that such missing data can alter substantive conclusions. In this case, the closeout interview data, while they were associated with nonresponse, did not lead to different conclusions in either descriptive or multivariate analyses. There was some evidence that estimates could be changed with the addition of closeout interview data, but the evidence was rather weak. However, these data do help reduce variance estimates. They also make it possible to give a partial evaluation of whether the missing journal data, conditional on baseline interview data, might be NMAR. In this sense, the closeout interview performs a protective function.

In studies that use frequent measurement, dealing with missing data may be a complex issue. The mechanisms producing the missingness (e.g., forgetting, technical issues, or life changes such as pregnancy) may have varying impact on the bias of estimates. For example, technical failures (e.g., an inability to connect to the Internet) may be fairly random and not related at all to the characteristics being measured. On the other hand, other mechanisms that may cause nonresponse such as becoming pregnant – in this case, one of the key survey outcomes – might lead to biased estimates. In order to address these varying threats to inference, it is important to have additional data that may be useful for improving the inferences. In this paper, we consider the relative value of adding an interview conducted after the frequent measurements have been completed – a “closeout” interview. These data are used to supplement data from the baseline interview and the journal data and give an indication of whether the observed patterns of missing data may be leading to biased inference.
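The contrast between these two kinds of mechanism can be made concrete with a small simulation. This is a sketch under invented assumptions: the outcome rate and the response probabilities are hypothetical and are not estimated from the RDSL data, and the helper `observed_mean` is introduced only for this illustration.

```python
import random

random.seed(0)

N = 20000
TRUE_RATE = 0.20  # hypothetical share of respondents with the outcome

# Simulated binary outcome for each person (1 = outcome occurred).
outcome = [1 if random.random() < TRUE_RATE else 0 for _ in range(N)]


def observed_mean(values, response_prob):
    """Mean among responders; response_prob(y) is the chance of responding."""
    kept = [y for y in values if random.random() < response_prob(y)]
    return sum(kept) / len(kept)


# Mechanism 1: missingness unrelated to the outcome (e.g., a lost connection).
random_missing = observed_mean(outcome, lambda y: 0.7)

# Mechanism 2: people with the outcome respond less often.
outcome_related = observed_mean(outcome, lambda y: 0.4 if y == 1 else 0.8)

true_mean = sum(outcome) / N
```

In this setup `random_missing` stays close to `true_mean`, while `outcome_related` falls well below it. The second situation is the one in which auxiliary data that predict both response and the outcome, such as a closeout interview, have the most to offer.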

We found that the baseline data were useful predictors of nonresponse, under a variety of definitions of patterns of missing data. Family characteristics, baseline sexual and pregnancy experiences, and demographic characteristics of study participants were all useful predictors. The closeout interview asked about lifetime sexual and pregnancy experiences, attitudes about relationships, sex, and pregnancy, and detailed information on all pregnancies. This information was also useful in predicting whether someone would have been a nonresponder under the several different definitions that were used, but had fewer associations than the baseline interview data.

We also evaluated the impact of the closeout interview data by imputing the missing data from the journals. After filling in the missing data, the estimates of two key items were not significantly different; however, the variance of these estimates was reduced when the closeout interview data were included. The estimates of previously published models led to similar results. The estimated coefficients were consistent across the two models. However, the standard errors and related fractions of missing information were lower for the models including the closeout interview data. This result was more pronounced for one of the two models that we estimated.

This study suggests that a closeout interview adds useful information to a study with frequent measurement. This information is useful for making adjustments to account for missing data. The value of this information varies depending upon the specific variables or analyses considered. We found that although the closeout interview data led to reductions in estimated variance of means, they were more valuable for multivariate models that involve complex relationships. The value of data from a closeout interview may also vary across topics. If the factors causing the nonresponse are related to the information to be gathered in the frequent measurements, then a closeout interview is likely to be a valuable tool. An important step for maximizing the value of a closeout interview occurs at the design stage. Survey designers should select survey items that are correlated with both nonresponse and the variables collected via frequent measurement. Further, potential closeout interview variables that are measured with error are likely to have attenuated relationships with key survey variables, so finding constructs that are measured with minimal error may also be useful. For example, specific questions about behaviors might be the most useful covariates. As the number of studies employing frequent measurement grows, additional evidence about the value of a closeout interview from other substantive areas should be collected.

There are several limitations to this study. This study was conducted in one county in Michigan. Although the sample is not nationally representative, Michigan falls around the national median for many of the measures of interest in the study: cohabitation, marriage, age at first birth, completed family size, non-marital childbearing, and teenage childbearing (see Lesthaeghe and Neidert, 2006). The county in Michigan was chosen because of the racial/ethnic and socioeconomic variation of individuals within a single geographic area (i.e. poor African Americans, poor Whites, middle-class African Americans, and middle-class Whites). A geographically concentrated sample also allowed us to minimize costs and maximize investigator involvement.

The RDSL study focused on women ages 18 to 19 because these ages are characterized by high rates of unintended pregnancy, which is the research focus of the RDSL study. This is a specialized population and the results may not extend to other populations. Likewise, we focus on a single substantive domain – processes of sex and pregnancy – which limits our direct understanding of missing data in other domains of frequent measurement. Nevertheless, the strategies we apply to the problem of missing data in frequent measures are quite likely to be valuable across many ages and many substantive domains.

Nonresponse to any of these surveys could affect our understanding of the value of the closeout data. First, selectiveness at the baseline interview limits our ability to describe the population. Second, selective response to the journals is the phenomenon we would like to explain. Finally, nonresponse to the closeout interview can limit our ability to characterize the missing data from the journals. This would be particularly true if the nonrespondents to the journal were also nonrespondents to the closeout interview.

There may also be issues caused by measurement error in the closeout interview variables. As mentioned earlier, such measurement error is likely to attenuate associations with key survey variables. If this were the case, then this would diminish the value of the closeout interview data. Questions with less measurement error might increase the utility of such an interview.

In sum, it appears that the closeout interview adds value beyond the baseline interview for repairing missing data due to nonresponse. If a closeout interview can be completed at a reasonable cost, then this is a useful method for dealing with the complex patterns of missing data that may result from frequent measurement.

As frequent measurement continues to grow in the social sciences, the issues we document here will also grow in importance for the substantive conclusions reached by research using those measures. Studies of social processes that occur over time create particularly high demand for increasingly detailed measures of those processes across time, including studies of employment, income and poverty, education and attainment, courtship, sex, marriage and childbearing, child development and the transition to adulthood, and alcohol and substance use. Technological changes, including the broad diffusion of the Internet and smart phones in the US population, have created more opportunities for frequent measurement in each of these substantive domains. Specific questions about processes over time often demand increasingly frequent and detailed measurement of change over time in all of these areas, raising the possibility that missing data create substantial and wide-ranging consequences for the substantive conclusions based on the analyses of such measures. The strategies we demonstrate here constitute important tools for addressing these issues.

Acknowledgement

This research was supported by a grant from the National Institute on Drug Abuse (R21 DA024186, PI Axinn), two grants from the National Institute of Child Health and Human Development (R01 HD050329, R01 HD050329-S1, PI Barber), and a population center grant from the National Institute of Child Health and Human Development to the University of Michigan’s Population Studies Center (R24 HD041028). The authors gratefully acknowledge the Survey Research Operations unit at the Survey Research Center of the Institute for Social Research for their help with the data collection, particularly Vivienne Outlaw, Sharon Parker, and Meg Stephenson. The authors also gratefully acknowledge the intellectual contributions of the other members of the RDSL project team, Jennifer Barber, Heather Gatny, Steven Heeringa, and Yasamin Kusunoki.

Appendix

Appendix A.

Baseline Interview Estimates for Closeout Interview Respondents (n=215) and Nonrespondents (n=20)

| Variable and Value | Closeout Respondent | Closeout Nonrespondent | ChiSq P-Value |

|---|---|---|---|

| R Church Attendance Several times a week | 16.1 | 14.1 | 0.799 |

| R Age of First Sex (CASI) <=14 | 15.8 | 9.4 | 0.046 |

| R Employed | 51.4 | 51.6 | 0.984 |

| R Money Left Over | 48.1 | 34.3 | 0.196 |

| R Enrolled in School=1 | 73.1 | 56.3 | 0.195 |

| R Ever Had Sex | 73.3 | 85.9 | 0.147 |

| R’s Family Structure Both Bio/Adopt | 45.0 | 28.2 | 0.440 |

| R Hispanic | 8.0 | 4.7 | 0.505 |

| R Religion Not Important | 7.7 | 9.4 | 0.610 |

| R Completed <= 12th Grade | 72.1 | 71.8 | 0.982 |

| R Lives with Parent/Grandparent | 68.5 | 71.8 | 0.753 |

| R Lives with Romantic Partner | 15.9 | 18.8 | 0.747 |

| Parents’ Income <$15k | 16.3 | 41.5 | 0.054 |

| R Has Public Assistance (CASI) | 25.4 | 32.9 | 0.495 |

| R Race White | 68.1 | 46.9 | 0.186 |

| R Ever Had Sex without Birth Control | 43.6 | 51.6 | 0.501 |

| R Age | 18.7 | 18.7 | 0.948 |

| R Lifetime Number of Sexual Partners (CASI) | 3.1 | 6.4 | 0.194 |

Appendix B.

Journal Estimates for Closeout Interview Respondents (n=215) and Nonrespondents (n=20)

| Journal Characteristic | Closeout Respondent | Closeout Nonrespondent | ChiSq P-Value |

|---|---|---|---|

| R Had Any Conflict (Threaten/Push/Hit/Throw) with Partner | 19.7 | 18.0 | 0.888 |

| R Ever Forgot to use Birth Control | 31.4 | 10.9 | 0.030 |

| R Ever Did Not use Birth Control Every Time Had Sex | 58.9 | 50.8 | 0.533 |

| R Had Any Live Birth | 15.7 | 5.5 | 0.109 |

| R Had Any New Partners | 67.4 | 38.3 | 0.045 |

| R Had Any New Reports of Positively Pregnant | 19.3 | 29.0 | 0.470 |

| R Ever Changed Pregnancy Status Between Journal | 30.0 | 29.0 | 0.937 |

| R Had Sex | 83.7 | 78.1 | 0.590 |

| Number of New Sexual Partners Reported over the Journal Field Period | 2.0 | 1.2 | 0.012 |

| Number of Live Births | 0.2 | 0.1 | 0.074 |

| Number of Pregnancy Changes Between Journals | 0.9 | 0.5 | 0.073 |

Table C1.

Closeout Interview Response Rate by Definition of Pattern of Missing Data

| Pattern of Missing Data | Meets Definition: Yes | Meets Definition: No |

|---|---|---|

| 1. Fewer than 60 journals | 83.7 | 99.2 |

| 2. Days between first and last journal completion fewer than 730 | 70.0 | 98.4 |

| 3. Proportion of journals that are late > 75% | 81.1 | 93.8 |

| 4. More than 180 days missing | 83.3 | 99.2 |

| 5. Attrition rates increase to week 50 but hold steady at response rates between 0.7 and 0.8 | 94.0 | 87.2 |

| 6. Attrition rates increase to week 50, hold at about 0.4 | 84.9 | 93.2 |

| 7. High Responders | 100.0 | 90.2 |

Appendix D: Baseline (Table D1) and Closeout (Table D2) Interview Data by Pattern of Missing Data under Seven Different Definitions of Nonresponse.

Table D1.

Comparisons of Nonresponders (NR) and Responders (R) across Seven Patterns of Missing Data using the Baseline Survey Data

| Baseline Characteristic | 1: NR | 1: R | 1: ChiSq P-Value | 2: NR | 2: R | 2: ChiSq P-Value | 3: NR | 3: R | 3: ChiSq P-Value | 4: NR | 4: R | 4: ChiSq P-Value | 5: NR | 5: R | 5: ChiSq P-Value | 6: NR | 6: R | 6: ChiSq P-Value | 7: NR | 7: R | 7: ChiSq P-Value |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Church Attendance Several times a week | 16.1 | 15.7 | 0.647 | 19.7 | 14.6 | 0.681 | 21.0 | 14.7 | 0.486 | 15.6 | 16.1 | 0.597 | 14.7 | 17.7 | 0.263 | 10.9 | 17.3 | 0.420 | 35.7 | 13.3 | 0.005 |

| Categorical Age of First Sex <=14 (CASI) | 18.7 | 11.6 | 0.023 | 13.6 | 15.7 | 0.595 | 16.8 | 14.8 | 0.596 | 19.2 | 11.3 | 0.017 | 6.7 | 28.4 | 0.709 | 27.3 | 11.7 | 0.709 | 21.4 | 14.4 | 0.709 |

| One Previous Pregnancies | 18.5 | 9.9 | 0.008 | 14.8 | 14.1 | 0.591 | 16.8 | 13.7 | 0.053 | 18.1 | 10.5 | 0.010 | 6.7 | 26.0 | 0.507 | 18.2 | 13.1 | 0.079 | 32.1 | 12.0 | 0.044 |

| R Employed | 58.0 | 44.7 | 0.043 | 55.2 | 50.2 | 0.524 | 58.0 | 49.8 | 0.336 | 59.4 | 43.6 | 0.016 | 51.8 | 50.8 | 0.887 | 70.3 | 46.0 | 0.002 | 17.9 | 55.7 | 0.000 |

| Money Left Over | 44.1 | 49.9 | 0.674 | 47.5 | 46.8 | 0.936 | 38.4 | 49.0 | 0.472 | 44.4 | 49.5 | 0.718 | 54.0 | 36.0 | 0.021 | 37.0 | 49.8 | 0.255 | 35.7 | 48.4 | 0.475 |

| R Enrolled in School=1 | 61.8 | 81.8 | 0.001 | 59.6 | 75.6 | 0.038 | 63.7 | 73.6 | 0.229 | 62.5 | 80.6 | 0.002 | 82.4 | 55.0 | 0.000 | 53.9 | 76.7 | 0.005 | 50.0 | 74.4 | 0.022 |

| R Ever Had Sex | 82.9 | 65.6 | 0.003 | 76.5 | 73.7 | 0.676 | 82.6 | 72.4 | 0.154 | 83.3 | 65.6 | 0.002 | 57.8 | 100.0 | 0.675 | 100.0 | 67.0 | 0.675 | 100.0 | 71.1 | 0.675 |

| R’s Family Structure Both Bio/Adopt | 33.4 | 54.0 | 0.016 | 28.4 | 48.6 | 0.057 | 25.8 | 47.8 | 0.097 | 34.2 | 52.7 | 0.041 | 57.4 | 22.1 | 0.000 | 18.8 | 50.7 | 0.000 | 35.7 | 44.6 | 0.268 |

| R Hispanic | 10.4 | 5.0 | 0.107 | 4.9 | 8.7 | 0.284 | 12.6 | 6.6 | 0.244 | 10.7 | 4.8 | 0.087 | 8.0 | 7.3 | 0.825 | 5.5 | 8.4 | 0.428 | 14.3 | 6.9 | 0.286 |

| R Religion Not Important | 7.5 | 8.3 | 0.317 | 6.6 | 8.3 | 0.118 | 13.2 | 6.6 | 0.570 | 7.7 | 8.1 | 0.183 | 6.0 | 10.7 | 0.613 | 7.8 | 7.9 | 0.920 | 17.9 | 6.6 | 0.232 |

| R Completed <= 12th Grade | 76.5 | 67.5 | 0.132 | 76.5 | 70.6 | 0.387 | 78.4 | 70.5 | 0.293 | 75.9 | 68.3 | 0.202 | 64.1 | 84.4 | 0.000 | 78.1 | 70.3 | 0.245 | 100.0 | 68.4 | 0.648 |

| R Lives with Parent/Grandparent | 65.8 | 71.9 | 0.320 | 65.0 | 70.0 | 0.500 | 57.4 | 71.5 | 0.094 | 65.8 | 71.8 | 0.330 | 75.9 | 57.8 | 0.005 | 61.2 | 71.0 | 0.215 | 57.1 | 70.3 | 0.194 |

| R Lives with Romantic Partner | 16.5 | 15.7 | 0.868 | 18.6 | 15.3 | 0.605 | 21.6 | 14.8 | 0.346 | 17.0 | 15.3 | 0.747 | 6.7 | 30.8 | 0.000 | 16.9 | 15.9 | 0.871 | 57.1 | 10.9 | 0.000 |

| Parents’ Income <$15k | 24.7 | 12.5 | 0.075 | 26.5 | 16.0 | 0.097 | 29.2 | 16.6 | 0.013 | 26.2 | 11.3 | 0.043 | 9.1 | 35.1 | 0.000 | 32.0 | 15.4 | 0.065 | 30.4 | 17.2 | 0.144 |

| R has Public Assistance (CASI) | 35.3 | 16.5 | 0.001 | 28.4 | 25.3 | 0.657 | 38.4 | 23.1 | 0.066 | 35.3 | 16.9 | 0.001 | 9.4 | 51.9 | 0.000 | 43.0 | 21.2 | 0.008 | 50.0 | 23.0 | 0.012 |

| R Race White | 56.1 | 76.6 | 0.002 | 58.0 | 69.0 | 0.366 | 46.8 | 70.9 | 0.031 | 55.9 | 76.4 | 0.002 | 75.0 | 52.5 | 0.002 | 44.5 | 72.4 | 0.003 | 92.9 | 62.8 | 0.003 |

| R Ever Had Sex without Birth Control | 52.7 | 35.6 | 0.008 | 51.4 | 41.9 | 0.225 | 55.3 | 41.6 | 0.111 | 52.3 | 36.3 | 0.014 | 26.8 | 71.3 | 0.000 | 66.1 | 38.0 | 0.001 | 75.0 | 40.3 | 0.001 |

| Age | 18.7 | 18.6 | 0.480 | 18.7 | 18.7 | 0.749 | 18.7 | 18.7 | 0.641 | 18.7 | 18.6 | 0.612 | 18.7 | 18.6 | 0.753 | 18.6 | 18.7 | 0.657 | 18.5 | 18.7 | 0.177 |

| R Lifetime Number of Sexual Partners (CASI) | 4.1 | 2.7 | 0.050 | 4.5 | 3.0 | 0.178 | 2.9 | 3.5 | 0.396 | 4.2 | 2.6 | 0.020 | 1.9 | 5.6 | 0.000 | 4.6 | 3.0 | 0.047 | 4.4 | 3.2 | 0.154 |

Table D2.

Comparisons of Nonresponders (NR) and Responders (R) across Seven Patterns of Missing Data using the Closeout Survey Data

| Closeout Characteristic | 1: NR | 1: R | 1: ChiSq P-Value | 2: NR | 2: R | 2: ChiSq P-Value | 3: NR | 3: R | 3: ChiSq P-Value | 4: NR | 4: R | 4: ChiSq P-Value | 5: NR | 5: R | 5: ChiSq P-Value | 6: NR | 6: R | 6: ChiSq P-Value | 7: NR | 7: R | 7: ChiSq P-Value |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Ever had sex | 94.2 | 83.7 | 0.017 | 93.0 | 87.5 | 0.264 | 94.8 | 87.3 | 0.101 | 95.1 | 83.2 | 0.006 | 82.5 | 98.8 | 0.000 | 97.9 | 86.1 | 0.002 | 100.0 | 86.9 | 0.002 |

| Currently Pregnant | 7.2 | 5.0 | 0.516 | 7.6 | 5.7 | 0.684 | 5.6 | 6.2 | 0.897 | 7.4 | 4.9 | 0.473 | 4.3 | 8.5 | 0.246 | 11.2 | 4.6 | 0.191 | 7.1 | 5.9 | 0.811 |

| Ever used BC | 89.4 | 82.2 | 0.132 | 95.3 | 83.3 | 0.009 | 89.6 | 84.7 | 0.393 | 91.1 | 81.0 | 0.032 | 82.0 | 91.7 | 0.035 | 91.4 | 84.1 | 0.150 | 92.9 | 84.5 | 0.146 |

| Ever Pushed, Hit, or Hurt | 2.7 | 4.2 | 0.695 | 6.5 | 2.9 | 0.585 | . | 4.1 | 0.585 | 2.9 | 4.0 | 0.790 | 4.1 | 2.8 | 0.726 | 5.3 | 3.0 | 0.690 | . | 4.1 | 0.690 |

| Age at First Sex | 16.4 | 16.9 | 0.100 | 16.5 | 16.7 | 0.615 | 16.4 | 16.7 | 0.433 | 16.4 | 16.9 | 0.068 | 17.4 | 15.7 | 0.000 | 15.8 | 17.0 | 0.000 | 15.7 | 16.8 | 0.001 |

| Lifetime Number of Sexual Partners | 4.8 | 5.3 | 0.518 | 4.9 | 5.1 | 0.854 | 4.2 | 5.2 | 0.112 | 5.1 | 5.0 | 0.838 | 4.9 | 5.2 | 0.609 | 4.7 | 5.2 | 0.473 | 5.1 | 5.0 | 0.968 |

| CASI Number of Pregnancies | 1.0 | 0.7 | . | 1.1 | 0.8 | . | 1.1 | 0.8 | . | 1.0 | 0.7 | . | 0.4 | 1.5 | . | 1.4 | 0.7 | . | 1.5 | 0.8 | . |

| Age | 21.5 | 21.5 | 0.519 | 21.5 | 21.5 | 0.829 | 21.6 | 21.5 | 0.663 | 21.5 | 21.5 | 0.762 | 21.5 | 21.5 | 0.498 | 21.5 | 21.5 | 0.808 | 21.4 | 21.5 | 0.417 |

| Condom a Sign of Mistrust (1–5) | 3.1 | 3.4 | 0.012 | 3.1 | 3.3 | 0.182 | 3.1 | 3.3 | 0.102 | 3.1 | 3.4 | 0.011 | 3.3 | 3.3 | 0.872 | 3.4 | 3.3 | 0.400 | 3.3 | 3.3 | 0.820 |

| No Sex Before Marriage (1–5) | 2.5 | 2.5 | 0.671 | 2.4 | 2.5 | 0.582 | 2.5 | 2.5 | 0.885 | 2.5 | 2.5 | 0.730 | 2.4 | 2.6 | 0.245 | 2.5 | 2.5 | 0.772 | 3.0 | 2.4 | 0.001 |

| Motherhood is Most Fulfilling (1–5) | 1.8 | 2.3 | 0.000 | 1.8 | 2.1 | 0.068 | 1.9 | 2.1 | 0.267 | 1.8 | 2.3 | 0.000 | 2.2 | 1.8 | 0.003 | 1.8 | 2.1 | 0.052 | 1.9 | 2.1 | 0.359 |

| Children Improve Relationships (1–5) | 3.0 | 2.9 | 0.510 | 3.0 | 3.0 | 0.473 | 3.1 | 2.9 | 0.159 | 3.0 | 2.9 | 0.405 | 2.8 | 3.2 | 0.000 | 3.3 | 2.9 | 0.000 | 3.0 | 3.0 | 0.615 |

| Wait for Perfect Time (1–5) | 2.9 | 2.9 | 0.356 | 2.9 | 2.9 | 0.681 | 3.0 | 2.9 | 0.427 | 2.9 | 2.9 | 0.534 | 2.9 | 2.9 | 0.379 | 2.9 | 2.9 | 0.912 | 3.0 | 2.9 | 0.606 |

| OK to be a Single Mom (1–5) | 2.4 | 2.4 | 0.520 | 2.4 | 2.4 | 0.975 | 2.5 | 2.4 | 0.431 | 2.3 | 2.5 | 0.046 | 2.4 | 2.4 | 0.853 | 2.5 | 2.4 | 0.419 | 2.3 | 2.4 | 0.394 |

| Cohabiting OK Without Plan of Marriage (1–5) | 2.4 | 2.4 | 0.437 | 2.3 | 2.4 | 0.556 | 2.6 | 2.4 | 0.098 | 2.3 | 2.4 | 0.360 | 2.4 | 2.3 | 0.326 | 2.4 | 2.4 | 0.683 | 2.1 | 2.4 | 0.011 |

| Children Cause Worry (1–5) | 2.4 | 2.3 | 0.102 | 2.3 | 2.4 | 0.554 | 2.4 | 2.3 | 0.586 | 2.5 | 2.3 | 0.100 | 2.3 | 2.4 | 0.823 | 2.4 | 2.3 | 0.672 | 2.2 | 2.4 | 0.311 |

| Ideal Number of Children (1–5) | 2.8 | 2.4 | 0.050 | 2.6 | 2.5 | 0.568 | 3.2 | 2.4 | 0.110 | 2.7 | 2.4 | 0.103 | 2.4 | 2.9 | 0.028 | 3.1 | 2.4 | 0.052 | 2.5 | 2.6 | 0.744 |

References

- Axinn WG, Barber J and Thornton A (1998). “The Long-Term Impact of Parents’ Childbearing Decisions on Children’s Self-Esteem.” Demography 35(4): 435–443. [PubMed] [Google Scholar]

- Axinn WG, Gatny H and Wagner J (2015). “Maximizing Data Quality using Mode Switching in Mixed-Device Survey Design: Nonresponse Bias and Models of Demographic Behavior.” Methods, Data, Analyses 9(2): 163–184. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Axinn WG, Jennings EA and Couper MP (2015). “Response of Sensitive Behaviors to Frequent Measurement.” Social Science Research 49: 1–15. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Barber J, Axinn WG and Thornton A (1999). “Unwanted Childbearing, Health, and Mother-Child Relationships.” Journal of Health and Social Behavior 40(3): 231–257. [PubMed] [Google Scholar]

- Barber J and East P (2009). “Home and Parenting Resources Available to Siblings Depending on Their Birth Intention Status.” Child Development 80(3): 921–939. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Barber J and East P (2011). “Children’s Experiences after the Unintended Birth of a Sibling.” Demography 48(1): 101–126. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Barber J, Kusunoki Y and Gatny H (2011). “Design and Implementation of an Online Weekly Survey to Study Unintended Pregnancies.” Vienna Yearbook of Population Research 9: 327–334. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Barber J, Kusunoki Y, Gatny H and Yarger J (2013). “Young Women’s Relationships, Contraception and Unintended Pregnancy in the United States” Pp. 121–140 in Buchanan A and Rotkirch A (eds) Fertility Rates and Population Decline: No Time for Children?. London, Palgrave Macmillan. [Google Scholar]

- Barber J, Kusunoki Y, Gatny H and Schulz P (2016). “Participation in an Intensive Longitudinal Study with Weekly Web Surveys Over 2.5 Years.” Journal of Medical Internet Research 18(6): e105. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Barber J, Yarger J and Gatny H (2015). “Black-White Differences in Attitudes Related to Pregnancy among Young Women.” Demography 52(3): 751–786.

- Brauner-Otto S and Geist C (2018). “Uncertainty, Doubts, and Delays: Economic Circumstances and Childbearing Expectations among Emerging Adults.” Journal of Family and Economic Issues 39(1): 88–102.

- Bureau of Labor Statistics, U. S. Department of Labor (2005). “25 Years of the National Longitudinal Survey - Youth Cohort.” Monthly Labor Review [Special Issue] 128(2).

- Chang T, Davis M, Kusunoki Y, Ela EJ, Hall K and Barber J (2015). “Sexual Behavior and Contraceptive Use among 18 to 19 Year Old Adolescent Women by Weight Status: A Longitudinal Analysis.” Journal of Pediatrics 167(3): 586–593.

- Deluca S and Rosenbaum JE (2001). “Individual Agency and the Life Course: Do Low-SES Students Get Less Long-Term Payoff for Their School Efforts?” Sociological Focus 34(4): 357–376.

- Dowd AC and Melguizo T (2008). “Socioeconomic Stratification of Community College Transfer Access in the 1980s and 1990s: Evidence from HS&B and NELS.” The Review of Higher Education 31(4): 377–400.

- Fall AM and Roberts G (2012). “High School Dropouts: Interactions between Social Context, Self-Perceptions, School Engagement, and Student Dropout.” Journal of Adolescence 35(4): 787–798.

- Finer LB and Henshaw SK (2006). “Disparities in Rates of Unintended Pregnancy in the United States, 1994 and 2001.” Perspectives on Sexual and Reproductive Health 38(2): 90–96.

- Frost JJ and Darroch JE (2008). “Factors Associated with Contraceptive Choice and Inconsistent Method Use, United States, 2004.” Perspectives on Sexual and Reproductive Health 40(2): 94–104.

- Gatny H, Kusunoki Y and Barber J (2014). “Pregnancy Scares and Subsequent Unintended Pregnancy.” Demographic Research 31(40): 1229–1242.

- Graham J, Olchowski A and Gilreath T (2007). “How Many Imputations are Really Needed? Some Practical Clarifications of Multiple Imputation Theory.” Prevention Science 8(3): 206–213.

- Hall KS, Kusunoki Y, Gatny H and Barber J (2014). “The Risk of Unintended Pregnancy among Young Women with Mental Health Symptoms.” Social Science & Medicine 100: 62–71.

- Hall KS, Kusunoki Y, Gatny H and Barber J (2015). “Social Discrimination, Stress, and Risk of Unintended Pregnancy among Young Women.” Journal of Adolescent Health 56(3): 330–337.

- Hansen MH and Hurwitz WN (1946). “The Problem of Non-Response in Sample Surveys.” Journal of the American Statistical Association 41(236): 517–529.

- Harel O (2007). “Inferences on Missing Information under Multiple Imputation and Two-Stage Multiple Imputation.” Statistical Methodology 4(1): 75–89.

- Kendig SM, Mattingly MJ and Bianchi SM (2014). “Childhood Poverty and the Transition to Adulthood.” Family Relations 63(2): 271–286.

- Kienzl G and Kena G (2006). “Economic Outcomes of High School Completers and Noncompleters 8 Years Later.” NCES 2007–019. Washington, D.C., National Center for Education Statistics, U. S. Department of Education.

- Kusunoki Y, Barber J, Ela EJ and Bucek A (2016). “Black-White Differences in Sex and Contraceptive Use among Young Women.” Demography 53(5): 1399–1428.

- Kusunoki Y and Upchurch D (2011). “Contraceptive Method Choice among Youth in the United States: the Importance of Relationship Context.” Demography 48(4): 1451–1472.

- Lesthaeghe RJ and Neidert L (2006). “The Second Demographic Transition in the United States: Exception or Textbook Example?” Population and Development Review 32(4): 669–698.

- Little RJA and Rubin DB (2002). Statistical Analysis with Missing Data. Hoboken, N.J., Wiley.

- McGonagle KA, Schoeni RF, Sastry N and Freedman VA (2012). “The Panel Study of Income Dynamics: Overview, Recent Innovations, and Potential for Life Course Research.” Longitudinal and Life Course Studies 3(2): 268–284.

- Miech R, Johnston L, O’Malley PM, Keyes KM and Heard K (2015). “Prescription Opioids in Adolescence and Future Opioid Misuse.” Pediatrics 136(5): e1169–e1177.

- Miller WB, Barber J and Gatny H (2013). “The Effects of Ambivalent Fertility Desires on Pregnancy Risk in Young Women in the USA.” Population Studies 67(1): 25–38.

- Miller WB, Barber J and Gatny H (2017). “Mediation Models of Pregnancy Desires and Unplanned Pregnancy in Young, Unmarried Women.” Journal of Biosocial Science, 1–21.

- Miller WB, Barber J and Schulz P (2016). “Do Perceptions of their Partners’ Childbearing Desires Affect Young Women’s Pregnancy Risk? Further Study of Ambivalence.” Population Studies 71(1): 101–116.

- Paat YF and Markham CM (2016). “Young Women’s Sexual Involvement in Emerging Adulthood.” Social Work in Health Care 55(8): 559–579.

- Paik A and Woodley V (2012). “Symbols and Investments as Signals: Courtship Behaviors in Adolescent Sexual Relationships.” Rationality and Society 24(1): 3–36.

- Parent D (2000). “Industry-Specific Capital and the Wage Profile: Evidence from the National Longitudinal Survey of Youth and the Panel Study of Income Dynamics.” Journal of Labor Economics 18(2): 306–323.

- Patrick ME and Maggs JL (2009). “Does Drinking Lead to Sex? Daily Alcohol-Sex Behaviors and Expectancies among College Students.” Psychology of Addictive Behaviors 23(3): 472.

- Patrick ME and Schulenberg JE (2011). “How Trajectories of Reasons for Alcohol Use Relate to Trajectories of Binge Drinking: National Panel Data Spanning Late Adolescence to Early Adulthood.” Developmental Psychology 47(2): 311.

- Pudrovska T (2014). “Early-Life Socioeconomic Status and Mortality at Three Life Course Stages: An Increasing Within-Cohort Inequality.” Journal of Health and Social Behavior 55(2): 181–195.

- Raghunathan T, Lepkowski JM, Van Hoewyk J and Solenberger P (2001). “A Multivariate Technique for Multiply Imputing Missing Values Using a Sequence of Regression Models.” Survey Methodology 27(1): 85–95.

- Resnick MD, Bearman PS, Blum RW, Bauman KE, Harris KM, Jones J, Tabor J, Beuhring T, Sieving RE, Shew M, Ireland M, Bearinger LH and Udry JR (1997). “Protecting Adolescents from Harm: Findings from the National Longitudinal Study on Adolescent Health.” JAMA 278(10): 823–832.

- Rosenberg MJ, Waugh MS and Long S (1995). “Unintended Pregnancies and Use, Misuse and Discontinuation of Oral Contraceptives.” Journal of Reproductive Medicine 40(5): 355–360.

- Sassler S and Goldscheider F (2004). “Revisiting Jane Austen’s Theory of Marriage Timing: Changes in Union Formation among American Men in the Late 20th Century.” Journal of Family Issues 25(2): 139–166.

- Thornton A, Axinn WG and Xie Y (2007). Marriage and Cohabitation. Chicago, University of Chicago Press.

- van Buuren S and Groothuis-Oudshoorn K (2011). “mice: Multivariate Imputation by Chained Equations in R.” Journal of Statistical Software 45(3): 1–67. URL https://www.jstatsoft.org/v45/i03/.

- Wagner J (2010). “The Fraction of Missing Information as a Tool for Monitoring the Quality of Survey Data.” Public Opinion Quarterly 74(2): 223–243.

- Wightman P, Schoeni RF, Patrick ME and Schulenberg JE (2017). “Transitioning to Adulthood in the Wake of the Great Recession: Context and Consequences.” Pp. 235–268 in Schoon I and Bynner J (eds) Young People’s Development and the Great Recession: Uncertain Transition. Cambridge, Cambridge University Press.