Significance

The second law states that, on average, no process can reduce the entropy of the universe. Recent progress has strengthened this inequality by quantifying the dissipation associated with various scenarios. Moving a thermodynamic system dissipates energy because the system is pushed out of equilibrium, a cost quantified by thermodynamic friction. In addition, precisely controlling a degree of freedom requires dissipation because it breaks time-reversal symmetry. Considered alone, each of these costs can be made arbitrarily small: the first by moving slowly, the second by moving imprecisely. However, here we show that a self-contained thermodynamic process, which must incur both costs, has a lower energetic bound that cannot be made arbitrarily small, even when all changes happen infinitely slowly.

Keywords: dissipation, nonequilibrium, energy bounds, entropy production, molecular machines

Abstract

How much free energy is irreversibly lost during a thermodynamic process? For deterministic protocols, lower bounds on energy dissipation arise from the thermodynamic friction associated with pushing a system out of equilibrium in finite time. Recent work has also bounded the cost of precisely moving a single degree of freedom. Using stochastic thermodynamics, we compute the total energy cost of an autonomously controlled system by considering both thermodynamic friction and the entropic cost of precisely directing a single control parameter. Our result suggests a challenge to the usual understanding of the adiabatic limit: Here, even infinitely slow protocols are energetically irreversible.

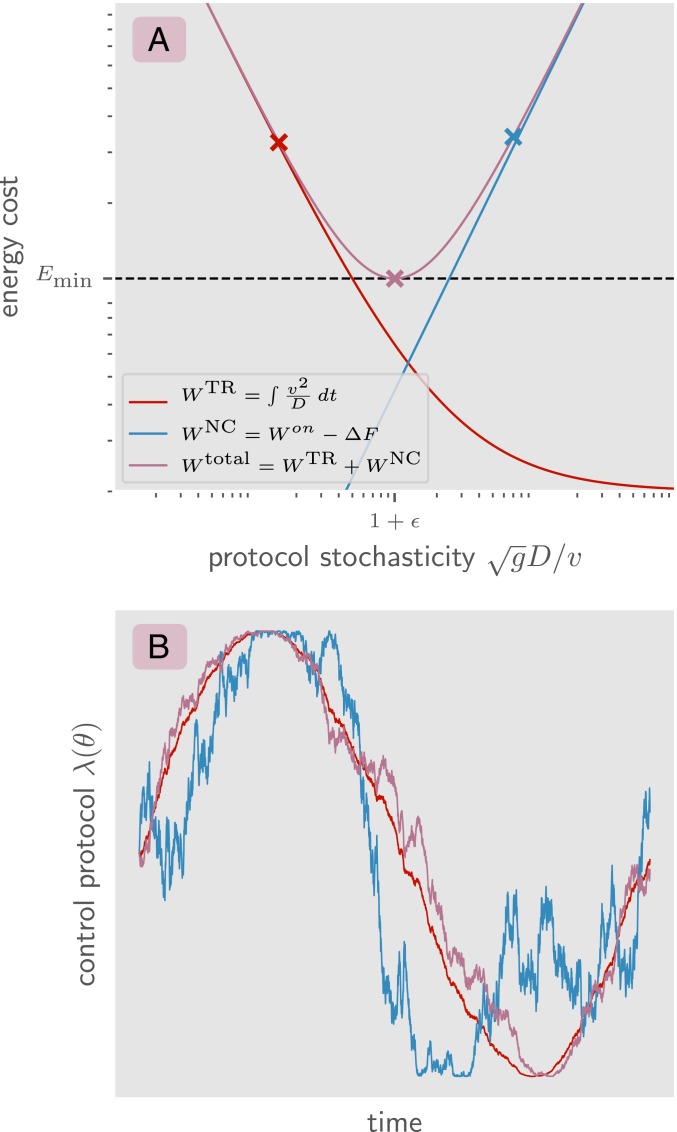

Controlling the state of a thermodynamic system requires interacting with and doing work on its degrees of freedom. Given an initial and a final thermodynamic state, the second law of thermodynamics implies that the average work required to produce the transition between them is at least the difference in their free energies, $\langle W \rangle \geq \Delta F$. Recent work has strengthened this inequality by quantifying two distinct sources of energetic dissipation. First, to transform a system at a finite rate, energy must be expended to overcome thermodynamic friction (1–7). Second, energy must be expended to bias the motion of the control protocol itself in a preferred direction (8–15). Here we consider both of these energetic costs together. We find that while either cost can be made arbitrarily small, they cannot be minimized simultaneously, as illustrated in Fig. 1. This leads to a lower bound on the energetic cost of controlling a thermodynamic system that remains nonzero even in the limit that the process moves infinitely slowly and imprecisely.

Fig. 1.

(A) The contribution to the energy dissipation from the nonconservative work done on the system (blue), the contribution from the cost of directing the control protocol in time (red), and the total energy dissipation (purple). The horizontal axis shows the ratio of the diffusion constant of the control protocol to its speed, which captures the level of stochasticity of the protocol. The marked points in A correspond to different levels of stochasticity in the control protocol; for each mark, B shows a sample trajectory with that stochasticity. The blue mark is a suboptimal protocol that is too stochastic, doing more work on the system than necessary. The red mark is a suboptimal protocol that is too deterministic, spending more energy on reducing noise than necessary. The purple mark is an optimal protocol that minimizes this energetic tradeoff by balancing the two constraints.

Any process that transforms a system in a finite amount of time must push the system out of equilibrium. This requires doing nonconservative work, which causes the irreversible loss of usable energy; a familiar example is the heat dissipated while moving an object through a viscous medium. This dissipative cost, incurred by thermodynamic friction, is the excess of the average work over the free-energy difference, $\langle W \rangle - \Delta F$, which equals $T\,\Delta S_{\mathrm{tot}}$, where $\Delta S_{\mathrm{tot}}$ is the change in the entropy of the system and environment* (16). Recent work has explicitly quantified this form of dissipation, finding an elegant geometric interpretation for the nonconservative work done in a finite-time process (2, 3).

In the above analysis, the energetic cost is identified with $\langle W \rangle$, the average work done on the thermodynamic system by an externally defined, deterministic control protocol $\lambda(t)$. However, an autonomous system that implements its own control protocol must also dissipate energy to move that protocol in a directed manner. Physical systems obey microscopically time-reversible dynamics.† Thus, to bias the motion of the control protocol and break time-reversal symmetry, forward progress must be accompanied by an increase in entropy, which can be accomplished by dissipating energy into a heat bath. This entropy increase makes forward movement more likely than backward movement; without it, there can be no “arrow of time” (9). A classic example is the consumption of ATP by myosin motors to “walk” along actin polymers (17). Without the irreversible consumption of free energy, the system would be constrained to equilibrium dynamics, transitioning backward and forward at the same rate. Recently, there has been considerable work on the tradeoffs between this bias cost and the accuracy and speed of system trajectories (8, 13–15, 18–21), culminating in the development of so-called thermodynamic uncertainty relations (22–24).

Here we consider the total dissipative cost of autonomously controlled thermodynamic systems, including contributions from both thermodynamic friction and entropic bias. In particular, we consider thermodynamic systems with state $x$ and control parameter $\theta$, where the dynamics of $\theta$ are now stochastic because the entropic bias required to run a deterministic path is infinite (6). Additionally, we suppose that the dynamics of $x$ depend on $\theta$ but the dynamics of $\theta$ receive no feedback from $x$. This one-way coupling distinguishes $\theta$ as a physical control parameter rather than simply a degree of freedom in a larger thermodynamic system. We can think of $\theta$ as mediating a coupling between $x$ and different thermodynamic baths, as in ref. 25. This setup is analogous to a control knob that changes the amount of power flowing into a thermodynamic system: the work required to turn the knob‡ is independent of the state of the system.

Examples of this class of processes are found throughout biology in systems that undergo regulated change. In these systems, energy is expended both in running the regulatory mechanism and in instantiating downstream changes (26–28). In muscles, the release of calcium modulates the pulling force by activating myosin heads, which then hydrolyze ATP to produce a step (29, 30). In this case, the bias cost is the energy used to control the amount of calcium released through concentration gradients. Energy is also dissipated through the nonconservative work that the muscles themselves do as they consume ATP to contract. Another example is biological cycles, where energy is used both to coordinate the phase of the cycle and to run downstream processes. Cyclins, which underlie the cell cycle, and KaiABC proteins, which implement circadian oscillations, are kinases that phosphorylate each other to implement a cycle, as well as downstream targets to instantiate the changes that must occur throughout the cycle (13, 28, 31–36). Phosphorylating these downstream targets also consumes energy. In both of these examples, the control degrees of freedom (calcium concentration and cyclin phosphostate) receive minimal feedback from the state of their downstream targets (contraction state of myosin heads and phosphostate of targets).

Our central result is that thermodynamic processes cannot be controlled in an energetically reversible manner. While each of these two energetic costs can be made small in isolation, they cannot be made small together. The cost of thermodynamic friction can be minimized only by moving the system slowly and precisely, while the bias cost can be made small only by moving imprecisely and with large fluctuations. These competing constraints cannot be satisfied at the same time (Fig. 1). We thus suggest that the notion of an energetically reversible control process exists only as an abstraction for when the control itself is considered external to the object under study. A realistic self-contained thermodynamic machine has no reversible transformations, even in the quasistatic limit.

Our work builds on a framework developed in refs. 2 and 3 which considered lower bounds on the energy dissipation arising from a deterministic control protocol moving at finite speed. Those authors found that the dissipation rate is given by

$$\langle \dot{S} \rangle = \dot{\lambda}^{\mu}\,\zeta_{\mu\nu}(\lambda)\,\dot{\lambda}^{\nu}, \tag{1}$$

where $\zeta(\lambda)$ is the Kirkwood friction tensor (7) and where we have replaced the energy dissipation with the equivalent notion of entropy production (16, 37). This rate yields a lower bound on the energy dissipation,

$$\langle \Delta S \rangle \geq \frac{\mathcal{L}^2}{\tau}, \tag{2}$$

where $\mathcal{L}$ is the length of the shortest path in $\lambda$ space between $\lambda_i$ and $\lambda_f$ under the metric $\zeta$ (38) and where $\tau$ is the total time of the protocol. Energetically optimal control protocols are geodesics in the Riemannian thermodynamic space defined by $\zeta$.
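To make Eq. 2 concrete, here is a minimal numerical sketch for a single control parameter. The friction coefficient `zeta(lam) = 1 + 4 lam**2` is a hypothetical choice, not taken from the text, and all integrals are midpoint sums. The sketch compares a protocol that is linear in $\lambda$ with one that moves at constant thermodynamic speed (the 1D geodesic parameterization). Only the latter should come close to saturating $\mathcal{L}^2/\tau$.

```python
import numpy as np

def zeta(lam):
    # Hypothetical 1D friction coefficient zeta(lambda) > 0 (illustrative only).
    return 1.0 + 4.0 * lam**2

def thermodynamic_length(path):
    """Midpoint-rule length of a discretized path: sum of sqrt(zeta) * |d lambda|."""
    mid = 0.5 * (path[1:] + path[:-1])
    return float(np.sum(np.sqrt(zeta(mid)) * np.abs(np.diff(path))))

def dissipation(path, tau):
    """Time integral of the rate in Eq. 1, zeta * (d lambda / dt)^2, with equal time steps."""
    dt = tau / (len(path) - 1)
    mid = 0.5 * (path[1:] + path[:-1])
    return float(np.sum(zeta(mid) * (np.diff(path) / dt) ** 2 * dt))

def constant_speed_path(n_steps, n_fine=20001):
    """Reparameterize lambda in [0, 1] so every time step covers equal thermodynamic length."""
    fine = np.linspace(0.0, 1.0, n_fine)
    mid = 0.5 * (fine[1:] + fine[:-1])
    arc = np.concatenate([[0.0], np.cumsum(np.sqrt(zeta(mid)) * np.diff(fine))])
    return np.interp(np.linspace(0.0, arc[-1], n_steps + 1), arc, fine)

tau, n_steps = 10.0, 2000
linear = np.linspace(0.0, 1.0, n_steps + 1)
geodesic = constant_speed_path(n_steps)
L = thermodynamic_length(linear)
bound = L**2 / tau
print(f"L^2/tau = {bound:.4f}")
print(f"linear protocol:         {dissipation(linear, tau):.4f}")
print(f"constant-speed protocol: {dissipation(geodesic, tau):.4f}")
```

The inequality in Eq. 2 is Cauchy–Schwarz applied to $\int \sqrt{\zeta}\,|\dot\lambda|\,dt$. It becomes an equality exactly when $\sqrt{\zeta}\,|\dot\lambda|$ is constant, which is what `constant_speed_path` enforces.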

Importantly, this result implies that in the quasistatic limit, where the protocol moves infinitely slowly ($\tau \to \infty$), the excess energy cost of transforming the system becomes negligible (Eq. 2). However, in this framework, the breaking of time-reversal symmetry arises from the deterministic trajectory $\lambda(t)$, whose cost is omitted from this energetic bookkeeping.

When we include the cost of breaking time-reversal symmetry in the control protocol, the optimal control protocols are no longer deterministic. Instead, we consider a protocol $\lambda(\theta)$, where $\theta$ is a stochastic variable that moves with net drift velocity $v$ and diffusion constant $D$. Including this bias cost of the control protocol itself, we find that the total rate of energy dissipation in the near-equilibrium limit is given by

| [3] |

where $g$ is the Fisher information metric in the $\theta$ basis (Eq. 10). The first term of Eq. 3 captures the thermodynamic friction associated with pushing the system out of equilibrium. We will see that this term is the natural generalization of Eq. 1 to a stochastic system. The second term captures the dissipation associated with breaking time-reversal symmetry, moving the control protocol forward in time. The third term results from the redundant thermodynamic friction caused by the stochastic system trajectory.
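One way to see where the second and third terms of Eq. 3 can come from is to look at the entropy production carried by the control parameter's own probability current. This is a sketch under stated assumptions, not the derivation in SI Appendix. We assume $k_B T = 1$ and that $\theta$ obeys $d\theta = v\,dt + \sqrt{2D}\,dW$ on a circle, so its marginal steady state $p(\theta)$ is uniform and $p(x,\theta) = p(\theta)\,p(x \mid \theta)$. We also assume that $p(x \mid \theta)$ is close to the equilibrium distribution, whose Fisher information in $\theta$ is $g_{\theta\theta}$.

$$
\begin{aligned}
\frac{J_\theta}{p(x,\theta)} &= \frac{v\,p(x,\theta) - D\,\partial_\theta p(x,\theta)}{p(x,\theta)} = v - D\,\partial_\theta \ln p(x \mid \theta),\\
\dot S_\theta &= \int\!\!\int \frac{J_\theta^2}{D\,p(x,\theta)}\,dx\,d\theta
= \frac{v^2}{D} - 2v\,\big\langle \partial_\theta \ln p(x \mid \theta) \big\rangle + D\,\big\langle (\partial_\theta \ln p(x \mid \theta))^2 \big\rangle\\
&= \frac{v^2}{D} + D\,\big\langle (\partial_\theta \ln p(x \mid \theta))^2 \big\rangle \;\approx\; \frac{v^2}{D} + D\,g_{\theta\theta}.
\end{aligned}
$$

The cross term vanishes because $\int p(x \mid \theta)\,\partial_\theta \ln p(x \mid \theta)\,dx = \partial_\theta \int p(x \mid \theta)\,dx = 0$. The surviving terms have the qualitative roles described above: a bias cost that diverges as $D \to 0$, and a cost that grows with $D$. The first (friction) term of Eq. 3 requires the linear response analysis below and is not reproduced by this sketch.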

From this rate, we show that for a simple system the total energetic cost of moving the system between its initial and final states has a lower bound given by

| [4] |

where and are the lengths traversed in thermodynamic space with metrics and and where is a small correction that disappears as or in the limit of large protocol size.

This result is significant because the first term defies the quasistatic limit, remaining nonzero even for an infinitely slow protocol ($\tau \to \infty$). The result we obtain from the simple system analyzed here suggests a more general principle: Every thermodynamic transformation has a minimal energy requirement that cannot be made arbitrarily small by using a slow control protocol. This energy requirement is determined by the geometry of thermodynamic space.
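The following worked minimization illustrates how a term that does not vanish as $\tau \to \infty$ can appear. It relies on a simplifying assumption that is ours, not the text's. We take the rate of Eq. 3 to be $v^2\zeta_{\theta\theta} + v^2/D + D\,g_{\theta\theta}$ with constant $v$ and $D$, dropping the small correction $\epsilon$. The parameter $\theta$ advances by $\Delta\theta$ over a protocol of duration $\tau = \Delta\theta/v$, and in steady state $\theta$ is uniformly distributed along the path. We write $\mathcal{L}_\zeta = \int \sqrt{\zeta_{\theta\theta}}\,d\theta$ and $\mathcal{L}_g = \int \sqrt{g_{\theta\theta}}\,d\theta$.

$$
\begin{aligned}
\langle \Delta S \rangle &= \frac{\tau}{\Delta\theta}\int \left( v^2 \zeta_{\theta\theta} + \frac{v^2}{D} + D\,g_{\theta\theta} \right) d\theta
= v\!\int \zeta_{\theta\theta}\,d\theta + \frac{v\,\Delta\theta}{D} + \frac{D}{v}\!\int g_{\theta\theta}\,d\theta,\\
\min_{D}\left[\frac{v\,\Delta\theta}{D} + \frac{D}{v}\!\int g_{\theta\theta}\,d\theta\right]
&= 2\sqrt{\Delta\theta \int g_{\theta\theta}\,d\theta} \;\geq\; 2\int \sqrt{g_{\theta\theta}}\,d\theta = 2\mathcal{L}_g,\\
v\!\int \zeta_{\theta\theta}\,d\theta &= \frac{\Delta\theta}{\tau}\int \zeta_{\theta\theta}\,d\theta \;\geq\; \frac{1}{\tau}\left(\int \sqrt{\zeta_{\theta\theta}}\,d\theta\right)^{2} = \frac{\mathcal{L}_\zeta^2}{\tau}.
\end{aligned}
$$

Both inequalities are Cauchy–Schwarz, and they become equalities when the metric components are constant along the path. Together they give $\langle \Delta S \rangle \geq \mathcal{L}_\zeta^2/\tau + 2\mathcal{L}_g$ under these assumptions, so only the friction part vanishes as $\tau \to \infty$. The optimal diffusion constant is $D^* = v\sqrt{\Delta\theta / \int g_{\theta\theta}\,d\theta}$, which reduces to $v/\sqrt{g_{\theta\theta}}$ when $g_{\theta\theta}$ is constant.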

Our result generalizes and clarifies a bound anticipated in ref. 25, which considered coupling a thermodynamic system to a succession of particle reservoirs. Here we explicitly take a continuum limit and consider protocols that move at a finite rate, connecting this bound to others in the literature (3). Our results show that the minimum energy bound is not specific to that class of systems but is instead a fundamental property of thermodynamic machines. In particular, it holds for any thermodynamic system in which a single degree of freedom obeying the microscopic laws of thermodynamics mediates a coupling to a generalized thermodynamic reservoir.

Derivation

Consider a system of particles interacting through an energy of the form

| [5] |

where $x$ denotes the microstate of the system and $\theta$ parameterizes the path of the control functions around a cycle. Here we use Greek letters to index the controlling potentials and their conjugate variables, and Roman letters to index the components of the microstate $x$. We also use natural units and unit temperature, such that $k_B T = 1$.

The control functions are all periodic in $\theta$, allowing the system to reach a steady state in which its cycles are fully self-contained. We suppose that the control parameter $\theta$ is driven stochastically with net drift velocity $v$ and diffusion constant $D$. We describe both the microstate $x$ and $\theta$ using overdamped Langevin equations (37):

| [6] |

The noise terms in Eq. 6 represent thermal noise. The overdamped force on the microstate $x$ due to the interaction energy is given by $-\partial E/\partial x$. As discussed in the Introduction, by control parameter we mean precisely that the corresponding back-reaction term, $-\partial E/\partial \theta$, is absent from the stochastic equation for the control parameter $\theta$; the dynamics of $\theta$ receive no feedback from the state of the system.
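A direct way to see the one-way coupling of Eq. 6 is to integrate a concrete instance with the Euler–Maruyama scheme. This sketch rests on assumptions that are not in the text: unit mobility for $x$, $k_B T = 1$, and the hypothetical energy $E = (k/2)\,|x - \lambda(\theta)|^2$ with $\lambda(\theta) = r(\cos\theta, \sin\theta)$, a circular harmonic trap. The $\theta$ update uses only the drift velocity $v$ and diffusion constant $D$, never $x$.

```python
import numpy as np

def simulate(v, D, k, r, T, dt, n_traj, seed=0):
    """Euler-Maruyama sketch of overdamped Langevin dynamics for (x, theta).

    Hypothetical model (assumed, not from the text): k_B T = 1, unit mobility,
    E(x, theta) = (k/2) |x - lam(theta)|^2, lam(theta) = r (cos theta, sin theta).
    """
    rng = np.random.default_rng(seed)
    n_steps = int(round(T / dt))
    theta = np.zeros(n_traj)
    # Start x in equilibrium around lam(0) = (r, 0); each coordinate has variance 1/k.
    x = np.array([r, 0.0]) + rng.normal(0.0, 1.0 / np.sqrt(k), size=(n_traj, 2))
    for _ in range(n_steps):
        lam = r * np.column_stack([np.cos(theta), np.sin(theta)])
        force = -k * (x - lam)  # -dE/dx: the system feels the control parameter
        x = x + force * dt + np.sqrt(2.0 * dt) * rng.standard_normal((n_traj, 2))
        # No -dE/dtheta term here: theta never receives feedback from x.
        theta = theta + v * dt + np.sqrt(2.0 * D * dt) * rng.standard_normal(n_traj)
    return theta, x

theta, x = simulate(v=0.5, D=0.1, k=10.0, r=1.0, T=4.0, dt=2e-3, n_traj=2000)
print("mean theta:", theta.mean(), "(drift alone predicts v*T = 2.0)")
print("var theta: ", theta.var(), "(diffusion alone predicts 2*D*T = 0.8)")
```

Because $\theta$ is decoupled from $x$, its statistics are those of free drift-diffusion regardless of the trap parameters. The system $x$, by contrast, lags behind and fluctuates around the moving center.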

The Langevin dynamics of the system give rise to probability currents:

| [7] |

Using stochastic thermodynamics, we can derive the average rate of energy dissipation by equivalently calculating the average rate of total entropy production (37):

| [8] |

Because the system is periodic and the Langevin dynamics (Eq. 6) are time independent, the system eventually reaches a steady state described by the joint probability distribution $p(x, \theta)$. To compute the energy dissipation, we first compute the average rate of energy dissipation conditioned on the control parameter being in a known state $\theta$. We then compute the total energy dissipation by averaging over a full cycle in $\theta$. Let $p(x \mid \theta)$ denote the nonequilibrium steady-state probability of finding the system in microstate $x$ given that the control parameter is at $\theta$. Let $\langle \cdot \rangle_{\theta}$ denote an average over this nonequilibrium distribution for fixed $\theta$, and let $\langle \cdot \rangle_{\mathrm{eq}}$ denote an average over the equilibrium Boltzmann weight, proportional to $e^{-E(x,\theta)}$. We find that the rate of entropy production when the system is at $\theta$ is given by (see SI Appendix, Eqs. 4–20 for details of the derivation)

| [9] |

where $\Delta f_{\mu}$ is the deviation of the conjugate force at $\theta$ from its equilibrium value and $g$ is the Fisher information metric in the $\theta$ basis:

| [10] |

The Fisher information metric measures how strongly the distribution changes as the control parameter $\theta$ changes, as quantified by the Kullback–Leibler divergence.
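This Kullback–Leibler reading can be checked numerically. For a small step $d\theta$, $\mathrm{KL}(p_\theta \,\|\, p_{\theta+d\theta}) \approx \tfrac{1}{2} g_{\theta\theta}\,d\theta^2$. The sketch below assumes a hypothetical equilibrium distribution: the Boltzmann weight of a circular harmonic trap, $E = (k/2)\,|x - r(\cos\theta, \sin\theta)|^2$ with $k_B T = 1$, evaluated on a grid. For this Gaussian the Fisher information with respect to the trap center is $k$ times the identity, so in the $\theta$ basis one expects $g_{\theta\theta} = k r^2$.

```python
import numpy as np

def boltzmann_on_grid(theta, k, r, X, Y):
    """Equilibrium probabilities p_eq(x | theta) on grid cells for the hypothetical
    energy E = (k/2)|x - r(cos theta, sin theta)|^2, with k_B T = 1."""
    cx, cy = r * np.cos(theta), r * np.sin(theta)
    w = np.exp(-0.5 * k * ((X - cx) ** 2 + (Y - cy) ** 2))
    return w / w.sum()

def kl_divergence(p, q):
    """Discrete Kullback-Leibler divergence KL(p || q) = sum p log(p / q)."""
    return float(np.sum(p * np.log(p / q)))

k, r, theta0, dtheta = 4.0, 1.5, 0.3, 1e-2
axis = np.linspace(-4.0, 4.0, 401)
X, Y = np.meshgrid(axis, axis)
p = boltzmann_on_grid(theta0, k, r, X, Y)
q = boltzmann_on_grid(theta0 + dtheta, k, r, X, Y)
g_estimate = 2.0 * kl_divergence(p, q) / dtheta**2
print(f"g_thetatheta from KL: {g_estimate:.4f}   k * r^2: {k * r**2:.4f}")
```

The grid spacing (0.02) is small compared with the trap width $1/\sqrt{k} = 0.5$, and the grid extends about five widths past each center. Discretization and truncation errors are therefore far below the $O(d\theta^2)$ correction.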

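So far this is an exact result. In the next section we use linear response to approximate the average deviation of the conjugate forces from equilibrium, assuming that the drift velocity and diffusion constant of the control parameter are small.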

Linear Response for Stochastic Control.

Since we are interested in a lower energetic bound, we consider the low-dissipation regime in which the system is driven near equilibrium. In this regime, we can use linear response to evaluate the average force deviation in Eq. 9, which sets the thermodynamic friction of the system. For a fixed control parameter path, the average linear response over all possible microstate paths is well understood (3). Here we extend this result to an average over all possible microstate and control parameter paths.

If an ensemble of systems undergoes the same deterministic protocol $\lambda(t)$, then at time $t$ the average linear response of the conjugate-force deviation over this ensemble is given by (39)

| [11] |

where is the equilibrium time correlation between the conjugate forces and and where we have written for shorthand.

Assuming that the protocol changes slowly compared with the system relaxation timescale, integrating by parts gives (3)

| [12] |
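In this slow-protocol limit, the friction tensor is the time integral of the equilibrium force autocorrelation, $\zeta_{\mu\nu} = \int_0^\infty \langle \delta f_\mu(0)\,\delta f_\nu(t) \rangle\,dt$ (with $k_B T = 1$, following ref. 3). Here is a minimal sketch for one hypothetical control direction. It uses an overdamped particle with unit mobility in a trap of stiffness $k$, whose conjugate force $f = k(x - \lambda)$ has autocorrelation $k\,e^{-kt}$, so that $\zeta = 1$ exactly. The code estimates the correlation from simulated equilibrium trajectories and integrates it with the trapezoid rule.

```python
import numpy as np

def equilibrium_force_trajectories(k, dt, n_steps, n_traj, seed=1):
    """Equilibrium samples of f = k (x - lam) for a hypothetical 1D overdamped trap
    (unit mobility, k_B T = 1). Uses the exact Ornstein-Uhlenbeck update for y = x - lam,
    so there is no time-step bias in the stationary statistics."""
    rng = np.random.default_rng(seed)
    decay = np.exp(-k * dt)
    kick = np.sqrt((1.0 - decay**2) / k)
    y = rng.normal(0.0, 1.0 / np.sqrt(k), n_traj)  # start in equilibrium
    f = np.empty((n_steps, n_traj))
    for i in range(n_steps):
        f[i] = k * y
        y = decay * y + kick * rng.standard_normal(n_traj)
    return f

def friction_estimate(f, dt, max_lag):
    """Trapezoid-rule integral of the estimated autocorrelation <df(0) df(t)>."""
    df = f - f.mean()
    n = df.shape[0]
    corr = np.array([np.mean(df[: n - lag] * df[lag:]) for lag in range(max_lag + 1)])
    return float(dt * (corr.sum() - 0.5 * (corr[0] + corr[-1])))

k, dt = 2.0, 0.01
f = equilibrium_force_trajectories(k, dt, n_steps=4000, n_traj=200)
zeta_hat = friction_estimate(f, dt, max_lag=400)
print(f"estimated zeta = {zeta_hat:.3f} (exact value for this model: 1)")
```

The correlation window (4 time units) spans eight relaxation times $1/k$, so truncating the integral there introduces a negligible bias. The remaining error is statistical.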

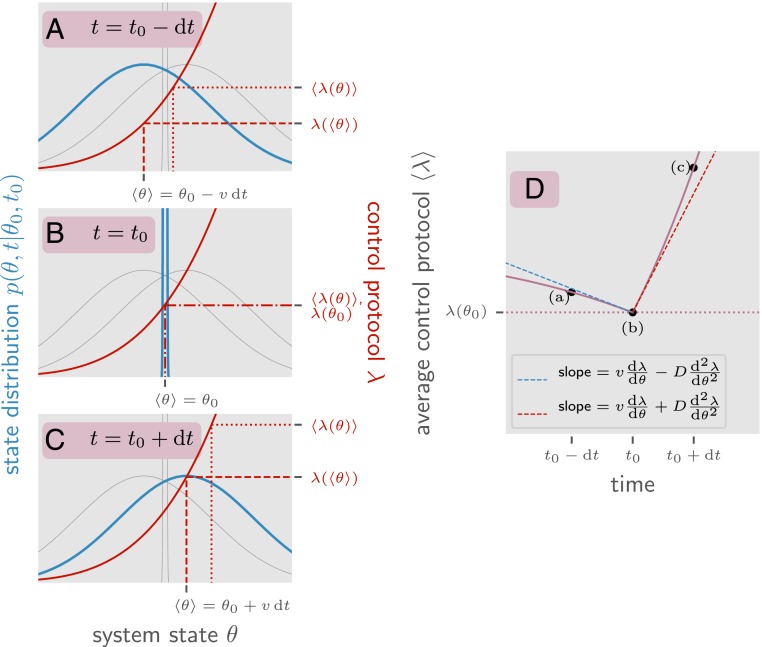

We now extend this result to an ensemble of stochastic protocols that are all located at the same point $\theta_0$ at time $t_0$ (to emphasize that we are referring to a specific point, we have switched from the generic $\theta$ and $t$ to $\theta_0$ and $t_0$). This extension is more involved (see SI Appendix, Eqs. 21–33 for the details). However, the form of the result is manifestly the same: we need only replace $\dot{\lambda}$ with the time derivative of the ensemble average of $\lambda$, where the ensemble is taken over all control parameter trajectories with $\theta(t_0) = \theta_0$. As illustrated in Fig. 2, this quantity is discontinuous at time $t_0$. Its left- and right-sided limits are

| [13] |

which correspond to ensemble velocities under the reverse-Ito and Ito conventions, respectively (40). Here we have dropped terms of quadratic order in the small parameters. Since the time integral in Eq. 11 runs only over times before $t$, the left-sided limit is the relevant one in our calculation, and thus we obtain

| [14] |

To obtain this result we have required that both the control parameter velocity and the diffusion rate be small compared with the inverse of the system's equilibrium relaxation time. Explicitly, we demand

| [15] |

where is related to the length scale associated with the control function . Finally, by using the result given by Eq. 14 in Eq. 9, we obtain our central result, Eq. 3.
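The one-sided limits can be checked with a short Monte Carlo calculation. Following the description of Fig. 2, we assume that over a short time $h$ the control parameter, conditioned on $\theta(t_0) = \theta_0$, is Gaussian with variance $2Dh$. Its center is $\theta_0 + vh$ just after $t_0$ and $\theta_0 - vh$ just before. The control function $\lambda(\theta) = \theta^2$ is a hypothetical convex choice. By Itô's lemma, the right-sided derivative of $\langle\lambda\rangle$ should approach $v\lambda' + D\lambda''$ (Ito). The left-sided derivative should approach $v\lambda' - D\lambda''$ (reverse Ito).

```python
import numpy as np

def one_sided_rates(lam, theta0, v, D, h, n_samples, seed=2):
    """Monte Carlo estimates of d<lam(theta)>/dt just before and just after t0,
    over trajectories with theta(t0) = theta0 (Gaussian short-time propagator assumed).
    Antithetic pairs (z, -z) cancel the leading sampling noise."""
    rng = np.random.default_rng(seed)
    spread = np.sqrt(2.0 * D * h) * rng.standard_normal(n_samples)

    def mean_lam(center):
        return np.mean(0.5 * (lam(center + spread) + lam(center - spread)))

    right = (mean_lam(theta0 + v * h) - lam(theta0)) / h  # t -> t0 from above
    left = (lam(theta0) - mean_lam(theta0 - v * h)) / h   # t -> t0 from below
    return left, right

def lam(theta):
    return theta**2  # hypothetical convex control function: lam' = 2 theta, lam'' = 2

theta0, v, D = 1.0, 0.3, 0.5
left, right = one_sided_rates(lam, theta0, v, D, h=1e-4, n_samples=200_000)
print(f"left  (reverse Ito): {left:.3f}   v*lam' - D*lam'' = {v*2*theta0 - D*2:.3f}")
print(f"right (Ito):         {right:.3f}   v*lam' + D*lam'' = {v*2*theta0 + D*2:.3f}")
```

With these illustrative values $D\lambda'' > v\lambda'$, so the left-sided derivative is negative. Here $\langle\lambda\rangle$ has its minimum at $t_0$, as in the Fig. 2 illustration.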

Fig. 2.

An illustration of why the time derivative of the ensemble average of $\lambda$ is discontinuous. The system state is described by a variable $\theta$ with net drift $v$ and diffusion constant $D$ (explicitly, $d\theta = v\,dt + \sqrt{2D}\,dW$) and a control function $\lambda(\theta)$. Here we look at the ensemble of all possible system trajectories with $\theta(t_0) = \theta_0$ for a fixed point $\theta_0$. In blue is the probability of finding the system at a particular value of $\theta$, conditioned on $\theta(t_0) = \theta_0$, at three different times: immediately before $t_0$, at $t_0$, and directly after $t_0$. Since the value of $\theta$ at $t_0$ is known, the distribution at $t_0$ is a Dirac delta function. The distributions directly after and immediately before $t_0$ are identical Gaussians shifted forward and backward, respectively, by the drift. In red is the value of the control parameter $\lambda$ as a function of $\theta$. The important quantity is $\langle \lambda \rangle$, the value of $\lambda$ at a given time averaged over trajectories in the ensemble. Note that while $\theta$ increases as a function of time and $\lambda$ increases as a function of $\theta$, it is not the case that $\langle \lambda \rangle$ increases as a function of time. This is because of diffusion. After $t_0$, the distribution of $\theta$ diffuses away from $\theta_0$; because $\lambda$ is convex, this causes $\langle \lambda \rangle$ to increase. The same argument applies backward in time: as we move backward from $t_0$, the distribution also diffuses away from $\theta_0$, which again increases $\langle \lambda \rangle$ due to the convexity of $\lambda$. This means that the minimum value of $\langle \lambda \rangle$ occurs at the intermediate time $t_0$, which is the origin of the discontinuity. In purple is a plot of $\langle \lambda \rangle$ over time, averaged over the same ensemble of trajectories. The time derivative has a discontinuity at $t_0$ related to $\partial^2 \lambda / \partial \theta^2$ (the convexity of $\lambda$). The left- and right-sided limits of the time derivative (shown in blue and red) are the derivatives given by the reverse-Ito and Ito conventions for stochastic calculus, respectively (40).

Discussion

The first term in Eq. 3 represents the frictional dissipation arising from pushing the system out of equilibrium. This term generalizes the dissipation rate found for the deterministically controlled coupled system studied in ref. 3. To obtain that result, the authors assumed that the system moves much more slowly than its relaxation timescale, i.e., that the protocol velocity is small compared with the inverse relaxation time. In moving from Eq. 11 to Eq. 14, we make essentially the same assumption, but we also require the analogous condition on the diffusion constant of the control parameter (Eq. 15). This is merely the statement that the random movements of the control parameter cannot be large enough to take us out of the linear response regime. Since we are searching for a lower energetic bound, this condition is certainly satisfied in the limiting behavior. The system of ref. 3 can be seen as a special case in which the diffusion constant $D = 0$ and the bias term $v^2/D$ is ignored.

The other two terms in Eq. 3 are analogous to dissipation terms found in an earlier paper (25) that investigated a discrete system. The first of these, $v^2/D$, is the energy required to break time-reversal symmetry in the control parameter, i.e., the energy required for “constancy” in the control clock (8, 41). The final term can be thought of as the energetic cost of straying from the optimal protocol, a geodesic (38).

Fig. 1A plots the two sources of dissipation that contribute to the total energy cost of a control protocol. These are the nonconservative work done by the control parameter on the system (blue) and the work required to break time-reversal symmetry and direct the movement of the control parameter forward in time (red). The two contributions are minimized at opposite ends of the stochasticity spectrum.

Eq. 3 contains an implicit energetic tradeoff. A control protocol that is very precise (small ratio of diffusion constant to drift velocity; e.g., the red curve in Fig. 1B) may minimize the dissipation due to thermodynamic friction; however, such a protocol pays a high energetic cost for strongly breaking time-reversal symmetry. On the other hand, a control protocol that only weakly breaks time-reversal symmetry (large ratio of diffusion constant to drift velocity; e.g., the blue curve in Fig. 1B) pays a high energetic cost for undergoing suboptimal trajectories and performing redundant thermodynamic transitions. Ref. 3 investigated the energetically optimal control path. This work shows that there is also the question of the energetically optimal “diffusive tuning” between the drift velocity $v$ and diffusion constant $D$ that minimizes this tradeoff (e.g., the purple mark and curve in Fig. 1 A and B). In particular, the optimal control protocol is not deterministic: the optimal diffusion constant is nonzero.
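The tradeoff can be sketched numerically in the spirit of Fig. 1A. The sketch assumes the simplified rate $v^2\zeta_{\theta\theta} + D\,g_{\theta\theta} + v^2/D$ with constant metric components. This is our reading of the three terms of Eq. 3, with the small correction $\epsilon$ dropped. The first two terms are assigned to nonconservative work on the system and the last to directing the control parameter. The values $\zeta_{\theta\theta} = 1$, $g_{\theta\theta} = 9$, $\tau = 50$ are illustrative and not from the text. Under these assumptions the optimum should sit at $D/v = 1/\sqrt{g_{\theta\theta}}$.

```python
import numpy as np

def cost_per_cycle(ratio, tau, zeta_tt, g_tt, cycle=2.0 * np.pi):
    """Per-cycle dissipation versus ratio = D / v for a cycle of length `cycle` in theta.

    Simplified rate (assumption): work on the system v^2 zeta + D g, bias cost v^2 / D.
    Returns (work, bias, total), each multiplied by the cycle time tau.
    """
    v = cycle / tau
    D = ratio * v
    work = tau * (v**2 * zeta_tt + D * g_tt)
    bias = tau * v**2 / D
    return work, bias, work + bias

zeta_tt, g_tt, tau = 1.0, 9.0, 50.0
ratios = np.logspace(-3, 1, 4001)
work, bias, total = cost_per_cycle(ratios, tau, zeta_tt, g_tt)
best = ratios[np.argmin(total)]
print(f"optimal D/v = {best:.4f} (1/sqrt(g) = {1 / np.sqrt(g_tt):.4f})")
print(f"minimum cost per cycle = {total.min():.3f}")
```

Plotting `work`, `bias`, and `total` against `ratios` on a log axis gives curves qualitatively like the blue, red, and purple curves of Fig. 1A. The work term rises with stochasticity while the bias term falls.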

As an example, consider a 2D harmonic oscillator whose center is moved around a circle of radius $r$:

| [16] |

Here is the control function and is the conjugate force. The Fisher information metric is given by , where is the 2D identity matrix and in the basis we have . Using Eq. 3, we find the average dissipation rate:

| [17] |

If we require that the protocol take an average time $\tau$ per cycle, this fixes the net drift velocity $v$. To find the minimum dissipation, we then optimize with respect to the diffusion constant $D$. This yields

| [18] |

where is a small order correction due to the small term. Plugging this in yields a total dissipation per cycle of

| [19] |

where $\mathcal{L}_\zeta$ and $\mathcal{L}_g$ are the thermodynamic lengths of the path under the metrics $\zeta$ and $g$, respectively. In particular, we note that the dissipation remains bounded away from zero in the limit of an infinitely long protocol ($\tau \to \infty$).
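Under the same kind of simplified rate ($\epsilon$ dropped), the $\tau$-dependence of the optimized cost for the circular trap can be tabulated directly. The metric components are assumptions for a hypothetical trap $E = (k/2)\,|x - r(\cos\theta, \sin\theta)|^2$ with $k_B T = 1$ and mobility $\mu$. We take $g_{\theta\theta} = k r^2$ (Fisher information of the Gaussian Boltzmann weight) and $\zeta_{\theta\theta} = r^2/\mu$ (friction of an overdamped harmonic trap). Both are constant along the circle, so under these assumptions the minimized cost per cycle is $\mathcal{L}_\zeta^2/\tau + 2\mathcal{L}_g$, with $\mathcal{L}_g = 2\pi r\sqrt{k}$ and $\mathcal{L}_\zeta = 2\pi r/\sqrt{\mu}$. A brute-force scan over $D$ is included as a check.

```python
import numpy as np

def optimized_cost_per_cycle(tau, k, r, mobility=1.0):
    """Closed-form minimum over D (simplified rate, epsilon dropped) for the
    hypothetical circular trap with constant g_thth = k r^2 and zeta_thth = r^2 / mobility."""
    L_g = 2.0 * np.pi * r * np.sqrt(k)
    L_zeta = 2.0 * np.pi * r / np.sqrt(mobility)
    friction = L_zeta**2 / tau  # vanishes as tau grows
    floor = 2.0 * L_g           # tau-independent term left after optimizing D
    return friction, floor, friction + floor

def numeric_min_over_D(tau, k, r, mobility=1.0):
    """Brute-force check: scan D on a log grid with v fixed at one cycle per tau."""
    v = 2.0 * np.pi / tau
    zeta_tt, g_tt = r**2 / mobility, k * r**2
    D = np.logspace(-6, 4, 20001)
    return float(np.min(tau * (v**2 * zeta_tt + v**2 / D + D * g_tt)))

k, r = 4.0, 1.5
for tau in [1.0, 10.0, 100.0, 1e4]:
    friction, floor, total = optimized_cost_per_cycle(tau, k, r)
    print(f"tau={tau:>8.0f}  friction={friction:8.3f}  floor={floor:7.3f}  "
          f"total={total:8.3f}  grid check={numeric_min_over_D(tau, k, r):8.3f}")

# Protocol duration at which the friction term equals the tau-independent floor.
L_g, L_zeta = 2.0 * np.pi * r * np.sqrt(k), 2.0 * np.pi * r
tau_star = L_zeta**2 / (2.0 * L_g)
print(f"crossover tau* = {tau_star:.3f}")
```

The code does not check the linear-response conditions of Eq. 15. At short $\tau$, where the drift is fast compared with the trap relaxation rate, the tabulated numbers should not be trusted.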

In units where , the Fisher information metric is . Thus the correction to the Sivak and Crooks bound can be neglected whenever the energy scale of the control is greater than the average thermal fluctuation.

The nonvanishing bound scales with , the size of an average fluctuation in the system. Thus it is subextensive and disappears in the macroscopic limit. Its contribution is also dwarfed by thermodynamic friction when the control protocol is fast. Therefore it is expected that this bound should become relevant only in slow microscopic systems.

We also note that this analysis applies only to autonomous thermodynamic machines: those whose control is independent of environmental signals. In cases where the source of time-reversal symmetry breaking occurs externally, e.g., a system reacting to cyclic changes in heat from the Sun, this bound does not necessarily apply, although other bounds likely do. This is because the origin of time-reversal symmetry breaking in such networks is environmental and thus no energy must be expended to drive the system in a particular direction. Such reactive systems would be more appropriately characterized by ref. 4, which likewise addressed the question of optimality and energetic bounds in stochastically controlled systems, only without taking into account the cost of breaking time-reversal symmetry. Those authors found a minimum bound on the energy of control which cannot be made arbitrarily small in the presence of a noisy control protocol. However, their bound is proportional to the magnitude of the control noise and thus can be made arbitrarily small in the limit of noiseless protocols.

This work elucidates additional constraints in the design of optimal thermodynamic machines. In previous studies, it has been found that systems must dissipate more energy to increase the accuracy of their output (22–24, 42). The optimal diffusive tuning found here indicates that below a certain level of accuracy, increasing precision actually decreases the dissipation cost. In addition, the lower dissipation bound indicates that for very slow microscopic thermodynamic transformations, the dissipation cost no longer scales inversely with time. The diminishing energetic returns from increasing the length of control protocols perhaps set a characteristic timescale for optimal microscopic machines without time constraints.

Supplementary Material

Acknowledgments

We thank Nicholas Read and Daniel Seara for useful discussions about our project. This work was supported in part by a Simons Investigator award in Mathematical Model of Living Systems (to B.B.M.) and by NSF Grant DMR-1905621 (to S.J.B. and B.B.M.).

Footnotes

The authors declare no competing interest.

This article is a PNAS Direct Submission.

*Since $F = E - TS$ and the energy of the universe is constant, changes in the total amount of free energy can be identified with changes in the entropy of the universe.

†Although not all physical processes are fully invariant under time reversal, the distinction is not important for our current understanding of how this type of directionality arises in biological and engineered systems.

‡Since the cost of turning a control knob does not scale with the system size, for any macroscopic system one would naturally ignore this cost.

This article contains supporting information online at https://www.pnas.org/lookup/suppl/doi:10.1073/pnas.1915676117/-/DCSupplemental.

References

- 1. Jarzynski C., Nonequilibrium equality for free energy differences. Phys. Rev. Lett. 78, 2690–2693 (1997).

- 2. Sivak D. A., Crooks G. E., Near-equilibrium measurements of nonequilibrium free energy. Phys. Rev. Lett. 108, 150601 (2012).

- 3. Sivak D. A., Crooks G. E., Thermodynamic metrics and optimal paths. Phys. Rev. Lett. 108, 190602 (2012).

- 4. Large S. J., Chetrite R., Sivak D. A., Stochastic control in microscopic nonequilibrium systems. Europhys. Lett. 124, 20001 (2018).

- 5. Burbea J., Rao C. R., Entropy differential metric, distance and divergence measures in probability spaces: A unified approach. J. Multivar. Anal. 12, 575–596 (1982).

- 6. Crooks G. E., Entropy production fluctuation theorem and the nonequilibrium work relation for free energy differences. Phys. Rev. E 60, 2721–2726 (1999).

- 7. Kirkwood J. G., The statistical mechanical theory of transport processes I. General theory. J. Chem. Phys. 14, 180–201 (1946).

- 8. Cao Y., Wang H., Ouyang Q., Tu Y., The free-energy cost of accurate biochemical oscillations. Nat. Phys. 11, 772–778 (2015).

- 9. Feng E. H., Crooks G. E., Length of time’s arrow. Phys. Rev. Lett. 101, 090602 (2008).

- 10. Brown A. I., Sivak D. A., Allocating dissipation across a molecular machine cycle to maximize flux. Proc. Natl. Acad. Sci. U.S.A. 114, 11057–11062 (2017).

- 11. Parrondo J. M. R., Van den Broeck C., Kawai R., Entropy production and the arrow of time. New J. Phys. 11, 073008 (2009).

- 12. Costa M., Goldberger A. L., Peng C. K., Broken asymmetry of the human heartbeat: Loss of time irreversibility in aging and disease. Phys. Rev. Lett. 95, 198102 (2005).

- 13. Zhang D., Cao Y., Ouyang Q., Tu Y., The energy cost and optimal design for synchronization of coupled molecular oscillators. Nat. Phys. 16, 95–100 (2019).

- 14. Barato A. C., Seifert U., Cost and precision of Brownian clocks. Phys. Rev. X 6, 041053 (2016).

- 15. Verley G., Esposito M., Willaert T., Van den Broeck C., The unlikely Carnot efficiency. Nat. Commun. 5, 4721 (2014).

- 16. Seifert U., Entropy production along a stochastic trajectory and an integral fluctuation theorem. Phys. Rev. Lett. 95, 040602 (2005).

- 17. Pollard T. D., Korn E. D., Acanthamoeba myosin I. Isolation from Acanthamoeba castellanii of an enzyme similar to muscle myosin. J. Biol. Chem. 248, 4682–4690 (1973).

- 18. Verley G., Van den Broeck C., Esposito M., Work statistics in stochastically driven systems. New J. Phys. 16, 095001 (2014).

- 19. Verley G., Willaert T., Van den Broeck C., Esposito M., Universal theory of efficiency fluctuations. Phys. Rev. E 90, 052145 (2014).

- 20. Barato A. C., Seifert U., Coherence of biochemical oscillations is bounded by driving force and network topology. Phys. Rev. E 95, 062409 (2017).

- 21. Pietzonka P., Barato A. C., Seifert U., Universal bound on the efficiency of molecular motors. J. Stat. Mech. Theory Exp. 2016, 124004 (2016).

- 22. Gingrich T. R., Horowitz J. M., Perunov N., England J. L., Dissipation bounds all steady-state current fluctuations. Phys. Rev. Lett. 116, 120601 (2016).

- 23. Barato A. C., Seifert U., Thermodynamic uncertainty relation for biomolecular processes. Phys. Rev. Lett. 114, 158101 (2015).

- 24. Horowitz J. M., Gingrich T. R., Proof of the finite-time thermodynamic uncertainty relation for steady-state currents. Phys. Rev. E 96, 020103 (2017).

- 25. Machta B. B., Dissipation bound for thermodynamic control. Phys. Rev. Lett. 115, 260603 (2015).

- 26. Goldbeter A., Berridge M. J., Biochemical Oscillations and Cellular Rhythms: The Molecular Bases of Periodic and Chaotic Behaviour (Cambridge University Press, 1996).

- 27. Pomerening J. R., Sontag E. D., Ferrell J. E., Building a cell cycle oscillator: Hysteresis and bistability in the activation of Cdc2. Nat. Cell Biol. 5, 346–351 (2003).

- 28. Rodenfels J., Neugebauer K. M., Howard J., Heat oscillations driven by the embryonic cell cycle reveal the energetic costs of signaling. Dev. Cell 48, 646–658.e6 (2019).

- 29. Szent-Györgyi A., Calcium regulation of muscle contraction. Biophys. J. 15, 707–723 (1975).

- 30. Eisenberg E., Hill T., Muscle contraction and free energy transduction in biological systems. Science 227, 999–1006 (1985).

- 31. van Zon J. S., Lubensky D. K., Altena P. R. H., ten Wolde P. R., An allosteric model of circadian KaiC phosphorylation. Proc. Natl. Acad. Sci. U.S.A. 104, 7420–7425 (2007).

- 32. Nakajima M., et al., Reconstitution of circadian oscillation of cyanobacterial KaiC phosphorylation in vitro. Science 308, 414–415 (2005).

- 33. Terauchi K., et al., ATPase activity of KaiC determines the basic timing for circadian clock of cyanobacteria. Proc. Natl. Acad. Sci. U.S.A. 104, 16377–16381 (2007).

- 34. Monti M., Lubensky D. K., ten Wolde P. R., Theory of circadian metabolism. arXiv:1805.04538 (11 May 2018).

- 35. Rust M. J., Markson J. S., Lane W. S., Fisher D. S., O’Shea E. K., Ordered phosphorylation governs oscillation of a three-protein circadian clock. Science 318, 809–812 (2007).

- 36. Zwicker D., Lubensky D. K., ten Wolde P. R., Robust circadian clocks from coupled protein-modification and transcription-translation cycles. Proc. Natl. Acad. Sci. U.S.A. 107, 22540–22545 (2010).

- 37. Seifert U., Stochastic thermodynamics, fluctuation theorems and molecular machines. Rep. Prog. Phys. 75, 126001 (2012).

- 38. Crooks G. E., Measuring thermodynamic length. Phys. Rev. Lett. 99, 100602 (2007).

- 39. Zwanzig R., Nonequilibrium Statistical Mechanics (Oxford University Press, Oxford, UK; New York, NY, 2001).

- 40. Gardiner C. W., Handbook of Stochastic Methods for Physics, Chemistry, and the Natural Sciences (Springer Series in Synergetics, Springer-Verlag, Berlin, Germany; New York, NY, ed. 3, 2004).

- 41. Pietzonka P., Seifert U., Universal trade-off between power, efficiency, and constancy in steady-state heat engines. Phys. Rev. Lett. 120, 190602 (2018).

- 42. Lan G., Sartori P., Neumann S., Sourjik V., Tu Y., The energy–speed–accuracy trade-off in sensory adaptation. Nat. Phys. 8, 422–428 (2012).
