Abstract

Introduction:

To fill the void created by insufficient dental terminologies, a multi-institutional workgroup was formed among members of the Consortium for Oral Health Research and Informatics to develop the Dental Diagnostic System (DDS) in 2009. The adoption of dental diagnosis terminologies by providers must be accompanied by rigorous usability and validity assessments to ensure their effectiveness in practice.

Objectives:

The primary objective of this study was to describe the utilization and correct use of the DDS over a 4-y period.

Methods:

Electronic health record data from 2013 to 2016 were collected, and the resulting pairs of diagnostic terms and Current Dental Terminology procedure codes were adjudicated by calibrated dentists. Using these data, we report on the 4-y utilization and validity of the DDS at 5 dental institutions. Utilization refers to the proportion of instances in which diagnoses are documented in a structured format, and validity is defined as the frequency of valid pairs divided by the number of all treatment codes entered.

Results:

Nearly 10 million procedures (n = 9,946,975) were documented at the 5 participating institutions between 2013 and 2016. There was a 1.5-fold increase in the number of unique diagnoses documented during the 4-y period. The utilization and validity proportions of the DDS had statistically significant increases from 2013 to 2016 (P < 0.0001). Academic dental sites were more likely to document diagnoses associated with orthodontic and restorative procedures, while the private dental site was equally likely to document diagnoses associated with all procedures. Overall, the private dental site had significantly higher utilization and validity proportions than the academic dental sites.

Conclusion:

The results demonstrate an improvement in utilization and validity of the DDS terminology over time. These findings also yield insight into the factors that influence the usability, adoption, and validity of dental terminologies, raising the need for more focused training of dental students.

Knowledge Transfer Statement:

Ensuring that providers use standardized methods for documentation of diagnoses represents a challenge within dentistry. The results of this study can be used by clinicians when evaluating the utility of diagnostic terminologies embedded within the electronic health record.

Keywords: dentistry, diagnostic terminology, standardized diagnostic terms, electronic health record, dental education, clinic management

Introduction

The World Health Organization introduced the first edition of the International Classification of Diseases (ICD) at the turn of the 20th century to observe and track information on mortality and its potential causes (Quan et al. 2008). To meet the growing need for evidence-based diagnostic data, the ICD has been updated periodically to reflect advances in the understanding of disease and health, but the inclusion of dental and oral health diagnoses has not received proportionate attention. Besides the ICD, the Systematized Nomenclature of Medicine–Clinical Terms (SNOMED-CT), a more exhaustive clinical terminology that also includes diagnoses, is becoming increasingly prevalent in countries such as the United Kingdom, Spain, and the United States (Cornet and de Keizer 2008; Lee et al. 2014).

Although standardized diagnostic terminologies such as the ICD and SNOMED-CT enjoy appreciable familiarity, provider buy-in, and use within medicine, this is not the case in dentistry (Tokede et al. 2013). Oral and dental health diagnoses have become available in these terminologies only in more recent iterations, which may explain the discrepancy in diagnostic terminology development. In addition, these terminologies have lacked the necessary coverage and appropriate specificity for several dental concepts (Adams 2004; Torres-Urquidy and Schleyer 2006; White et al. 2011). The dental profession responded to this deficiency by creating standardized diagnostic terminologies tailored for use by dentists.

In 2007 the American Dental Association established the Systematized Nomenclature of Dentistry (SNODENT) as a subset of SNOMED-CT, to be used as a dental and oral health vocabulary within the electronic environment and designed to accompany the Current Dental Terminology (CDT). SNODENT is composed of diagnoses, signs, symptoms, and complaints and currently includes about 8,000 concepts (Tokede et al. 2013). Its purpose is to provide standardized concepts describing oral health outcomes with enough clinical specificity that providers can document patient care in a way that supports rigorous analysis within electronic health records (EHRs; American Dental Association 2016). Unlike SNOMED-CT and the ICD, SNODENT has not been well integrated into the culture of dental providers (Goldberg et al. 2005). Potential reasons include the following, among others: diagnoses are not required for billing (unlike in medicine), coverage is inadequate, implementation is challenging (as the terminology contains a large number of concepts), and postcoordination is needed (White et al. 2011; Tokede et al. 2013). Furthermore, SNODENT has not been completed and is not available for use by general practitioners or dental schools. It was also reported that SNODENT requires significant updates to the “content, quality of coding, quality of ontological structure” before it can be integrated into SNOMED-CT (Goldberg et al. 2005).

To fill the apparent void created by insufficient dental terminologies, a multi-institutional workgroup was formed among members of the Consortium for Oral Health Research and Informatics to develop the Dental Diagnostic System (DDS; formerly known as the EZcodes) in 2009 (Stark et al. 2010; Kalenderian et al. 2011). The new terminology comprised 17 categories, 106 subcategories, and 1,714 diagnostic terms—all constructed in strict accordance with best practices (Kalenderian et al. 2011). It maintains the advantage of being updatable and easily mapped to other terminologies (Kalenderian et al. 2011). While the development of this functional dental diagnosis terminology and its adoption by dental care providers are paramount, the continued rigorous assessment of the use and validity of any terminology must be done to ensure its effectiveness in practice.

Conscientious attention has been paid to the evaluation of diagnosis terminologies, but the focus has been on the consistency of coded entries compared with manual review of diagnoses (the gold standard) (Fisher et al. 1992; MacIntyre et al. 1997; Humphries et al. 2000; Quan et al. 2004; Henderson et al. 2006). This form of validation is a key contribution to the auditing process (Henderson et al. 2006), but the methodology cannot examine the clinical legitimacy (accuracy) of provider diagnoses. Knowledge of the known barriers to mainstream diagnostic terminology use (including inadequate content and inconsistent mappings across versions and types; Spackman 2005; Wade and Rosenbloom 2009; White et al. 2011; Lee et al. 2014) has led to a range of assessment methods, such as qualitative evaluations of the size and scope of use through literature identification and classification. Others have described usability (Bakhshi-Raiez et al. 2012). A few studies have described the use and validity of diagnostic terminologies in clinical practice (Cornet and de Keizer 2008; Lee et al. 2014).

In an earlier study, Tokede et al. (2013) developed an approach to estimate utilization and validity with the precursor to the DDS in a single reporting year. Utilization—defined as the proportion of times that diagnostic information was documented in a structured format (i.e., via a DDS concept)—was 12%. Validity—defined as the proportion of diagnostic terms correctly paired with a CDT code—was 60%. The provision of these quantifiable assessments allows for the thorough examination of oral health care and population-level oral health status. In turn, these measures deliver evidence of the need for further clinical research of the diagnosis-treatment link and quality assurance.

In this article, we report on the longitudinal (4 y) utilization and validity of the DDS at 5 US dental institutions (4 dental schools and a large dental service organization) that have adopted this standardized dental diagnostic terminology. Permission to carry out this study was granted by each participating institution’s Institutional Review Board. Furthermore, we explore cultural characteristics (professional development and education) that may influence the use and accuracy of a standardized diagnostic terminology. Our primary objective is to develop comprehensive working estimates of the utilization and validity proportions overall and by site. Our secondary objective is to explore how utilization and validity vary over time and by institution, institution type, and treatment procedure category.

Methods

Institutions had to meet the following inclusion criteria: Consortium for Oral Health Research and Informatics membership, use of an EHR (e.g., axiUm) for documentation of patient care, and adoption of the DDS terminology. Each institution was required to maintain full implementation of any DDS version for the duration of the 4-y study period (2013 to 2016). Academic and private practice leadership (e.g., deans, department chairs, presidents, and CEOs) and clinical champions (e.g., clinical directors, clinicians) from the DDS founding institutions (sites 1, 2, and 3 [described later]) were responsible for engaging all potential users. Each organization was given wide latitude with respect to the implementation process, but some common attributes improved the likelihood of DDS uptake: 1) development of an implementation plan through the construction of an implementation team, 2) assessment of organizational culture and readiness, 3) understanding how the DDS would affect the dental organization, 4) calibration of the DDS interface and workflow, and 5) pilot testing before going live. All sites had a DDS implementation project manager who was the primary driver of the implementation effort. Each site identified a champion within the clinic who was committed to the terminology and could serve in a leadership position to influence others and facilitate implementation of the DDS terminology. Each site had to establish IT support staff responsible for installing and managing the software and hardware (e.g., workstations, wireless tablets, printers, and scanners). Each site also enlisted training and support staff responsible for training new staff on the terminology and its use within the EHR. Vendors provided well-designed interfaces, system upgrades, customer support, machine and software replacements, and aid with interoperability.

Five institutions met the required data threshold across the prespecified observation period. To maintain the anonymity of the study sites, the following nomenclature is used: sites 1 to 5 represent the 5 distinct US dental institutions. “Academic dental” (AD) refers to the dental schools in aggregate; “private dental” (PD) refers to the remaining site. Raw data and descriptive information regarding the distribution and frequency of use were extracted from the EHR via a centrally developed, uniform EHR script and made available to the study sites. All 5 institutions use the same EHR: axiUm (Exan Group, Henry Schein). Each institution arranged periodic training seminars, delivered either as group demonstrations or as hands-on computer-based training for providers. Beyond these initial training sessions, the DDS user guide was made available to all participating health care providers for reference. As a key component of EHR training, providers (students, residents, and faculty) were trained in all aspects of DDS use, including treatment planning and diagnosis entry. Regardless of the initial training, use of the DDS terms remained at the discretion of the providers at most of the participating institutions.

Data cleaning involved the removal of certain repetitive and unstandardized diagnoses and/or treatment information. The goal was to prevent redundancy and to ensure that data from all sites were directly comparable. There were 2 main groups of exclusions:

Step codes: treatment codes that represent intermediate or preparatory steps in the treatment chain. For example, for dental crowns, treatment at some sites was often split into crown prep, impression, and delivery. Inlay/onlay veneer was also often split into financial arrangement, prep, final impression, and so on.

Other: treatment codes that represent site-specific and/or administrative functions. These include local administrative codes, financial billing codes, and non-CDT treatment codes, for example, surgical room fees, office reschedules, notices of diagnosis, and graduate clinic visits. Most excluded codes had an irregular form (e.g., D2740B) that departs from the regular CDT convention (e.g., D0150).
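The rule for irregular codes can be expressed as a simple pattern check. The sketch below assumes that a regular CDT code is the letter D followed by exactly four digits, as in the examples above. A pattern check alone cannot catch step codes that already look regular, so the hypothetical `excluded` parameter stands in for whatever site-specific exclusion lists were used; the study's actual cleaning script is not described in the text.

```python
import re

# Assumption: a regular CDT code is "D" followed by exactly four digits (e.g., D0150).
CDT_PATTERN = re.compile(r"D\d{4}")


def is_regular_cdt(code: str) -> bool:
    """Return True if the code follows the regular CDT convention."""
    return CDT_PATTERN.fullmatch(code.strip().upper()) is not None


def filter_procedures(codes, excluded=frozenset()):
    """Keep regular CDT codes, dropping any listed in a site-specific exclusion set.

    `excluded` is a hypothetical stand-in for per-site lists of step codes and
    administrative codes that happen to look like regular CDT codes.
    """
    return [c for c in codes if is_regular_cdt(c) and c.strip().upper() not in excluded]


raw = ["D0150", "D2740B", "D1110", "RESCHED", "D7140"]
print(filter_procedures(raw))  # ['D0150', 'D1110', 'D7140']
```

Irregular codes such as D2740B and free-text administrative entries fall out automatically, while regular-looking step codes must be named explicitly.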

Statistical Analysis

There were 2 primary outcomes of interest in the assessment of the diagnostic terminology: utilization and validity. Utilization refers to the proportion of instances in which diagnoses are documented in a structured format. For each procedure, it is recorded as a binary variable: “yes” when any diagnosis has been paired with a planned/completed procedure and “no” otherwise. The utilization proportion is calculated by dividing the total frequency of documented diagnostic codes by the number of corresponding treatment codes entered over the same period.
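A minimal sketch of the utilization calculation follows, assuming each procedure record carries its CDT code, an optional DDS diagnosis code, and a reporting year. The `Procedure` record and both function names are hypothetical; the example codes are taken from the pairs reported in the Results, but the records themselves are invented for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class Procedure:
    cdt_code: str                   # treatment code, e.g., "D1110"
    dds_code: Optional[str] = None  # structured DDS diagnosis, or None if not documented
    year: int = 2013


def utilization(procedures) -> float:
    """Proportion of treatment codes that carry a structured DDS diagnosis."""
    if not procedures:
        raise ValueError("no procedures to evaluate")
    documented = sum(1 for p in procedures if p.dds_code is not None)
    return documented / len(procedures)


def utilization_by_year(procedures) -> dict:
    """Utilization computed separately for each reporting year."""
    groups = defaultdict(list)
    for p in procedures:
        groups[p.year].append(p)
    return {year: utilization(group) for year, group in sorted(groups.items())}


# Invented records for illustration only.
records = [
    Procedure("D0470", "976178", 2013),
    Procedure("D1110", None, 2013),
    Procedure("D7140", "473642", 2014),
    Procedure("D1110", "999989", 2014),
]
print(utilization(records))          # 0.75
print(utilization_by_year(records))  # {2013: 0.5, 2014: 1.0}
```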

Validity (accuracy) of the diagnostic terminology was determined by examining every unique pairing of diagnostic term and CDT code. All reviewers assumed that patients received the appropriate procedures, as this is a highly regulated process within the United States and important for billing and reimbursement. Accordingly, we assumed that each recorded treatment (and corresponding CDT code) was correctly performed and reported. Three trained and calibrated dentists determined whether a specific diagnosis could be plausibly matched with its corresponding treatment procedure. This was accomplished through an iterative auditing process in which the 3 evaluators independently rated every diagnosis-CDT pairing as valid or nonvalid and subsequently met to adjudicate their conclusions. During the calibration process, each rater was instructed to make conservative judgments on the diagnosis-treatment (CDT code) pairing; even so, a single diagnosis often had several valid treatments. We did not verify the clinical accuracy of the provider diagnoses themselves. A procedure-diagnosis pair was judged valid only when the raters perceived a clear association between them. As a result, some pairs judged as nonvalid may have been correct in certain clinical scenarios or may reflect a difference in professional judgment. Given the binary nature of the variable, overall validity for an institution was defined as the frequency of valid pairs divided by the number of all treatment codes entered.
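Because every unique diagnosis-CDT pairing was adjudicated once, the adjudication results can be treated as a lookup table and applied to each entered pair. The sketch below assumes that representation; `VALID_PAIRS` is a hypothetical stand-in for the adjudicated table. The Methods define the denominator as all treatment codes entered, while the Results describe validity among utilized (documented) pairs, so the sketch exposes both choices through a hypothetical `among_documented` flag.

```python
def validity(pairs, valid_pairs, among_documented=True) -> float:
    """Share of diagnosis-procedure pairs judged valid by the adjudicators.

    pairs: iterable of (cdt_code, dds_code) tuples; dds_code is None when no
        diagnosis was documented.
    valid_pairs: set of (cdt_code, dds_code) tuples rated valid (hypothetical
        stand-in for the adjudicated lookup table).
    among_documented: if True, the denominator counts only procedures with a
        documented diagnosis; if False, it counts all treatment codes entered.
    """
    pairs = list(pairs)
    n_valid = sum(1 for pair in pairs if pair[1] is not None and pair in valid_pairs)
    if among_documented:
        denominator = sum(1 for _, dds in pairs if dds is not None)
    else:
        denominator = len(pairs)
    if denominator == 0:
        raise ValueError("empty denominator")
    return n_valid / denominator


# Hypothetical adjudication results and entered pairs, for illustration only.
VALID_PAIRS = {("D7140", "473642"), ("D1110", "999989")}
entered = [("D7140", "473642"), ("D1110", "999989"), ("D0470", "473642"), ("D1110", None)]
print(validity(entered, VALID_PAIRS))                          # 2 valid of 3 documented pairs
print(validity(entered, VALID_PAIRS, among_documented=False))  # 0.5
```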

An independent-samples z test for proportions was conducted to determine whether utilization and validity differed significantly between PD and AD sites. A chi-square test for homogeneity of proportions was conducted to test for differences in utilization and validity over time (4 y; 2013 to 2016), by site, and by CDT category. Analysis of proportions (ANOP) was used to determine whether any site had a utilization or validity proportion significantly different from the overall average. All hypothesis tests were conducted at a significance level of 0.05 (α = 0.05). Given the sample sizes, α = 0.05, and a specified power of 80% (1 – β = 0.80), the 2-sample z test for proportions was able to detect a small difference of 0.005 in utilization (Cohen 1977; Faul et al. 2007). All chi-square tests of homogeneity of proportions were able to detect an effect size of <0.02 for utilization and validity, which represents a small effect size, with 80% power (Cohen 1977; Faul et al. 2007). To account for multiple comparisons, we used the Benjamini-Hochberg procedure, which controls the false discovery rate, that is, the expected proportion of false rejections (type I errors) among all rejected hypotheses (Benjamini and Hochberg 1995). All statistical analyses were performed with Stata Statistical Software (release 13; StataCorp).
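The PD-versus-AD comparison and the multiple-comparison adjustment can be sketched in a few lines of standard-library Python. This is an illustrative reimplementation of the textbook pooled two-proportion z test and the Benjamini-Hochberg step-up rule, not the Stata code used in the study; the counts and p-values in the example are hypothetical.

```python
from math import erfc, sqrt


def two_proportion_z_test(x1, n1, x2, n2):
    """Pooled two-sample z test for proportions; returns (z, two-sided p-value)."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        raise ValueError("standard error is zero; the test is undefined")
    z = (p1 - p2) / se
    p_value = erfc(abs(z) / sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


def benjamini_hochberg(p_values, q=0.05):
    """Return a reject flag for each p-value, controlling the FDR at level q."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    # Largest rank k whose ordered p-value satisfies p_(k) <= (k / m) * q.
    cutoff = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * q:
            cutoff = rank
    reject = [False] * m
    for rank, idx in enumerate(order, start=1):
        reject[idx] = rank <= cutoff
    return reject


# Hypothetical counts, not study data: 90/100 vs 80/100 documented diagnoses.
z, p = two_proportion_z_test(90, 100, 80, 100)
print(round(z, 2), p)
print(benjamini_hochberg([0.01, 0.04, 0.03, 0.20]))  # [True, False, False, False]
```

The step-up rule rejects every hypothesis ranked at or below the largest qualifying rank, so a p-value that fails its own threshold can still be rejected if a larger one passes.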

Results

For the participating AD sites, 2,045,075 procedures (296,191 in 2013; 314,332 in 2014; 660,682 in 2015; 772,870 in 2016) were performed between 2013 and 2016. After the removal of step codes, non-CDT treatment codes, local administrative and billing codes, and other codes, 51.8% of the data remained, yielding 1,059,382 comparable procedures among the 4 AD sites over the 4-y period. Among the excluded treatment codes, the majority were step codes. Within the same 4-y period, 7,901,900 procedures (1,712,516 in 2013; 1,976,090 in 2014; 2,062,106 in 2015; 2,151,188 in 2016) were performed at the participating PD site. All data from the PD site were readily comparable, and no step codes, non-CDT treatment codes, local administrative and billing codes, or other treatment codes were removed.

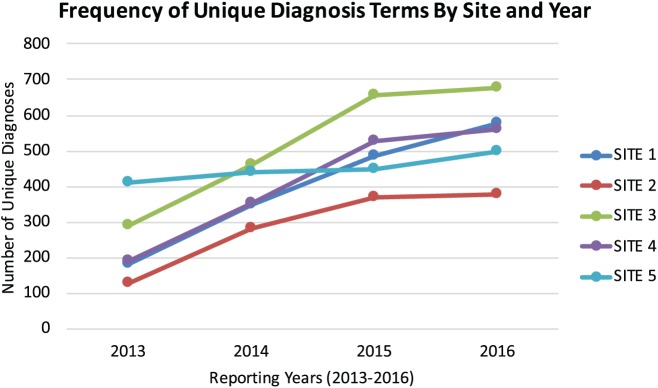

Figure 1 shows that the number of unique diagnosis terms used by each site increased 1.5-fold on average during the 4-y period. The most frequently used valid diagnosis was examination/consultation/follow-up/office visit (DDS code 976178) at sites 1, 2, and 4. No term can be assigned–hygiene visit (DDS code 999989) was most frequent at site 3, and healthy periodontium (DDS code 1816302) was most frequent at site 5. See Appendix Table 1 for an accounting of the top 5 most frequent diagnoses by site. The most frequently documented diagnosis-CDT pairing varied considerably by site:

Figure 1.

Frequency of unique diagnoses by year and dental site.

Site 1: Examination/consultation/follow-up/office visit (DDS code 976178) treated with periodic oral evaluation (D0120)

Site 2: Nonrestorable carious tooth (DDS code 473642) treated with an extraction (D7140)

Site 3: No term can be assigned–hygiene visit (DDS code 999989) treated with prophylaxis—adult (D1110)

Site 4: Examination/consultation/follow-up/office visit (DDS code 976178) treated with diagnostics cast or study models (D0470)

Site 5: Healthy periodontium (DDS code 1816302) treated with periodic oral evaluation (D0120)

See Appendix Table 2 for an accounting of the top 5 most frequent pairs by site.

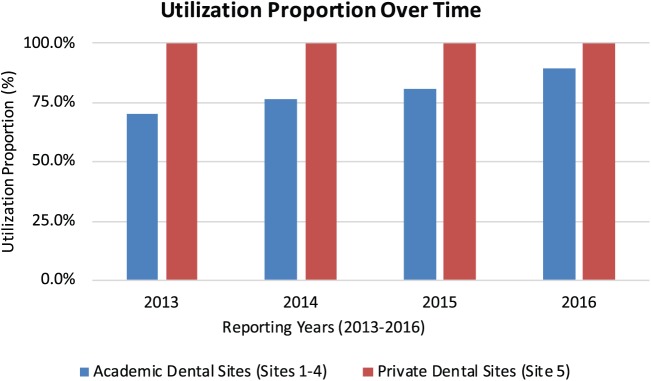

The overall utilization proportion for diagnostic terms among all AD sites was 70.2% (95% CI, 70.0% to 70.5%) in 2013, 76.4% (95% CI, 76.1% to 76.7%) in 2014, 78.8% (95% CI, 78.6% to 78.9%) in 2015, and 89.2% (95% CI, 89.1% to 89.3%) in 2016 (Fig. 2). This represents a statistically significant increase over 4 y (χ2 = 21,910.0, df = 3, P < 0.0001). There were also significant differences in the utilization proportion across AD sites (χ2 = 161,827.5, df = 3, P < 0.0001). Site 3 recorded the highest average utilization proportion (96.7%) and site 1 the lowest (46.2%). By contrast, the utilization proportion for the PD site remained constant at nearly 100.0% throughout the study period. The utilization proportion at the PD site (99.9%) was significantly higher than at the AD sites (80.9%; z = 943.2, P < 0.0001).

Figure 2.

Utilization proportions over time in academic and private dental sites.
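The year-over-year and site-by-site comparisons above use a chi-square test of homogeneity across k proportions. A minimal sketch of the statistic for a 2 × k table (documented vs. not documented, by group) follows; it returns only the statistic and its degrees of freedom. The p-value would come from the chi-square survival function, for example `scipy.stats.chi2.sf(stat, df)`. The counts below are hypothetical, not study data.

```python
def chi_square_homogeneity(successes, totals):
    """Chi-square test of homogeneity for k proportions (a 2 x k table).

    Returns (statistic, degrees_of_freedom). For each group i, the observed
    "yes" and "no" counts are compared with n_i * p and n_i * (1 - p), where p
    is the pooled proportion; the two cells combine to
    (x_i - n_i * p)^2 / (n_i * p * (1 - p)).
    """
    if len(successes) != len(totals) or len(totals) < 2:
        raise ValueError("need matching counts for at least two groups")
    pooled = sum(successes) / sum(totals)
    if pooled in (0.0, 1.0):
        raise ValueError("pooled proportion is 0 or 1; statistic is undefined")
    stat = sum(
        (x - n * pooled) ** 2 / (n * pooled * (1 - pooled))
        for x, n in zip(successes, totals)
    )
    return stat, len(totals) - 1


# Hypothetical counts, not study data.
stat, df = chi_square_homogeneity([30, 50], [100, 100])
print(round(stat, 4), df)  # 8.3333 1
```

With only 2 groups and no continuity correction, the statistic equals the square of the pooled two-proportion z statistic, which is a convenient consistency check between the 2 tests described in the Methods.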

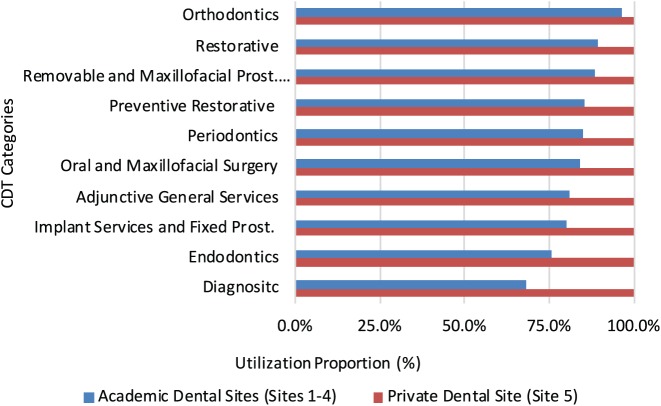

Figure 3 shows the total utilization proportions by CDT code category. Among AD sites, the utilization proportion varied significantly by CDT category (χ2 = 53,686.80, df = 9, P < 0.0001). Diagnoses paired with CDT codes in the orthodontic (D8000 to D8999) category recorded the highest overall utilization proportion (96.3%) over the 4 reporting years, while diagnoses paired with diagnostic (D0000 to D0999) and endodontic (D3000 to D3999) CDT codes had the lowest utilization (68.0% and 75.9%, respectively) among AD sites. The mean utilization proportion for the PD site was 99.9% for all CDT categories, and there were no significant differences over time.

Figure 3.

Utilization proportions by Current Dental Terminology categories in academic and private dental sites.

The ANOP showed that documented diagnoses paired with diagnostic (D0000 to D0999), endodontic (D3000 to D3999), and implant services and fixed prosthodontics (D6000 to D6999) CDT codes were significantly below the overall mean utilization proportion, whereas diagnoses paired with oral and maxillofacial surgery (D7000 to D7999), periodontics (D4000 to D4999), preventive (D1000 to D1999), removable and maxillofacial prosthetics (D5000 to D5999), restorative (D2000 to D2999), and orthodontic (D8000 to D8999) codes were significantly above it. See Appendix Figure 1 for full ANOP results.
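The ANOP comparison of each group against the overall mean can be sketched as follows. Each group proportion is compared with decision limits around the pooled proportion, using the variance of the difference between a group and the pooled mean, p(1 − p)(N − n_i)/(N n_i). Exact analysis-of-means procedures use tabulated critical values that depend on the number of groups; this sketch substitutes a Bonferroni-adjusted normal quantile, which gives limits at least as wide as needed (conservative). It is an approximation, not the procedure the authors ran, and the counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def anop_flags(successes, totals, alpha=0.05):
    """Flag groups whose proportion falls outside approximate ANOM decision limits.

    Assumption: a Bonferroni-adjusted normal critical value replaces the exact
    tabulated ANOM critical value, so the limits are conservative.
    Returns (pooled proportion, list of (group proportion, flag)).
    """
    k = len(totals)
    total_n = sum(totals)
    p_bar = sum(successes) / total_n
    h = NormalDist().inv_cdf(1 - alpha / (2 * k))
    results = []
    for x, n in zip(successes, totals):
        p_i = x / n
        # Standard error of (p_i - p_bar) when p_bar includes group i itself.
        half_width = h * sqrt(p_bar * (1 - p_bar) * (total_n - n) / (total_n * n))
        if p_i > p_bar + half_width:
            flag = "above"
        elif p_i < p_bar - half_width:
            flag = "below"
        else:
            flag = "within"
        results.append((p_i, flag))
    return p_bar, results


# Hypothetical counts for 3 groups, not study data.
p_bar, results = anop_flags([700, 780, 820], [1000, 1000, 1000])
print([flag for _, flag in results])  # ['below', 'within', 'above']
```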

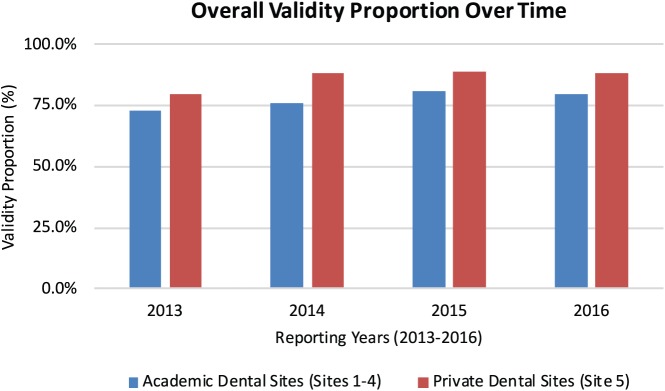

Among the procedures at the AD sites where diagnoses were properly documented (utilized), there were 686,797 procedure-diagnosis pairs for which correct use (validity) was assessed (82,076 records with documented diagnoses in 2013; 103,202 in 2014; 214,771 in 2015; and 286,748 in 2016). Figure 4 shows that the validity proportion for diagnostic term and CDT code pairs was 72.6% (95% CI, 72.3% to 73.1%) in 2013, 75.8% (95% CI, 75.5% to 76.1%) in 2014, 80.8% (95% CI, 80.6% to 81.0%) in 2015, and 79.4% (95% CI, 79.2% to 79.6%) in 2016, representing a statistically significant increase over the 4-y period (χ2 = 1,974.8, df = 3, P < 0.0001). The validity proportions varied significantly by academic site (χ2 = 5,312.5, df = 3, P < 0.0001). The highest validity proportions among the AD sites were recorded by site 3 (96.7%) and site 2 (89.5%), while sites 1 and 4 recorded the lowest (46.3% and 69.8%, respectively).

Figure 4.

Validity proportions over time in academic and private dental sites.

Within the PD site, there were 4,091,234 properly documented pairs (utilized) where the correct use (validity) was assessed (969,058 records with documented diagnoses in 2013; 1,028,766 records in 2014; 1,037,215 in 2015; and 1,056,195 in 2016). The validity proportion increased significantly over the study period (χ2 = 5,000.5, df = 3, P < 0.0001). The validity proportion in the PD site (86.2%) was significantly higher than in the AD sites (77.7%; z = 182.4, P < 0.0001).

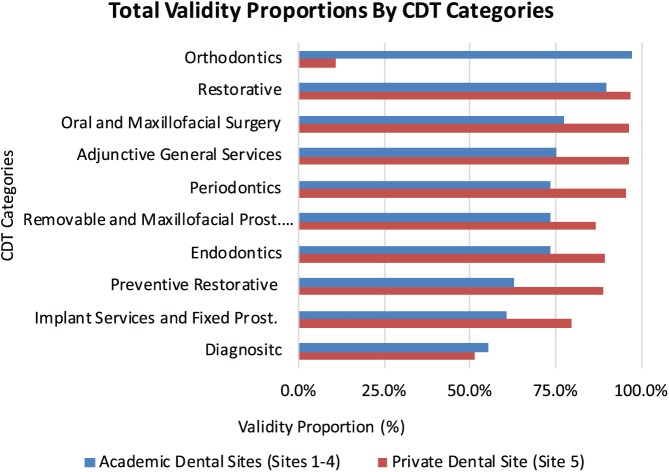

Figure 5 shows the total validity proportions by CDT code category. According to the chi-square test, there were significant differences in the validity proportion across CDT code categories over the 4 y at the AD sites (χ2 = 61,783.1, df = 9, P < 0.0001). Diagnoses paired with CDT codes in the orthodontic (D8000 to D8999) category recorded the highest overall validity proportion (97.2%) over the 4 reporting years, while diagnoses paired with diagnostic (D0000 to D0999) and implant services (D6000 to D6999) CDT codes had the lowest validity (55.3% and 60.8%, respectively). Furthermore, the ANOP showed that documented diagnoses paired with diagnostic (D0000 to D0999), implant services and fixed prosthodontics (D6000 to D6999), and preventive (D1000 to D1999) codes were significantly below the overall mean validity proportion. Diagnoses paired with restorative (D2000 to D2999), endodontics (D3000 to D3999), periodontics (D4000 to D4999), removable and maxillofacial prosthetics (D5000 to D5999), oral and maxillofacial surgery (D7000 to D7999), orthodontic (D8000 to D8999), and adjunctive general services (D9000 to D9999) codes were each significantly higher than the overall mean validity proportion. See Appendix Figure 2 for an accounting of the ANOP.

Figure 5.

Validity proportions by Current Dental Terminology categories in academic and private dental sites.

There were also significant differences in the validity proportion across CDT code categories over the 4 y at the PD site (χ2 = 62,730.7, df = 9, P < 0.0001). Diagnoses paired with CDT codes in the restorative (D2000 to D2999) category recorded the highest overall validity proportion (97.0%) over the 4 reporting years, while diagnoses paired with diagnostic (D0000 to D0999) and implant services (D6000 to D6999) CDT codes had the lowest validity (51.5% and 10.7%, respectively). The ANOP (see Appendix Fig. 3) showed that documented diagnoses paired with diagnostic (D0000 to D0999) and implant services and fixed prosthodontics (D6000 to D6999) codes were significantly below the overall mean validity proportion for the PD site. All other diagnoses, paired with preventive (D1000 to D1999), restorative (D2000 to D2999), endodontics (D3000 to D3999), periodontics (D4000 to D4999), removable and maxillofacial prosthetics (D5000 to D5999), oral and maxillofacial surgery (D7000 to D7999), orthodontic (D8000 to D8999), and adjunctive general services (D9000 to D9999) codes, were significantly above the mean validity proportion.

Discussion

In this article, we document the utilization and validity proportions of a standardized dental diagnostic terminology within an EHR at 4 dental education institutions and a PD group practice over a 4-y period. To our knowledge, this is the first longitudinal study to quantify utilization and validity proportions with observational data taken directly from the clinic. The DDS terminology was intended to help providers document diagnoses properly and consistently, supported by changes in provider culture: the establishment and use of a diagnostic terminology, the full incorporation of the terminology within the EHR, and encouragement from providers in leadership positions regarding the importance of documenting diagnoses at the treatment-planning stage. Each of these soft interventions was intended to reassure providers that they were equipped with the necessary tools to make and record diagnoses before completing treatment procedures.

Diagnostic terms were used approximately 80% of the time within the AD institutions and nearly 100% of the time at the PD site over 4 y. During the 4-y period, the total number of diagnoses entered increased each year, with corresponding annual increases in the number of unique diagnoses entered at the AD and PD sites. This may indicate increased familiarity with the DDS platform and a better understanding of the scope of the terminology. Utilization rose progressively at the AD sites, plausibly because providers were becoming increasingly familiar with enhancements made to the dental software and the terminology structure, which improved the user experience. Furthermore, each dental institution transitioned to mandatory use of the DDS over time to increase utilization. The decision regarding mandatory diagnosing was made at the organizational level (e.g., chair/thought leader at AD sites and CEO of the PD site), while implementation was enacted by quality assurance officers within each clinic. Mandatory use of the DDS occurred at each site: the AD sites had, on average, 3 y of mandatory DDS use, as did the PD site.

The PD site consistently outperformed the AD sites in utilization, for several potential reasons: 1) The diagnosis entry field is “forced” within the dental software at the PD site, which means that a provider cannot enter a treatment plan into the EHR without first documenting the diagnosis. At the AD sites, diagnosis is not a forced entry for all providers. This was likely the predominant reason for the difference in utilization. 2) Training styles differed substantially between the AD sites and the PD site. The PD site implemented the DDS at the same time that it adopted the health care software. Because of this combined adoption, training in EHR use was fused with training in DDS use and vice versa, so users had extensive hands-on training in using standardized diagnostic terminologies. By contrast, the AD sites all adopted EHRs before adopting the DDS. 3) There were demographic differences between the providers at the PD and AD sites that could partially explain the discrepancies in utilization proportions. The AD sites included predoctoral students and residents who were less experienced DDS end users, while the PD site consisted of nonstudent and nonresident providers.

Over the 4 reporting years, the most consistently documented DDS terms were in the caries and periodontics diagnostic categories at both the AD and PD sites, a marked increase relative to similar previous studies (Tokede et al. 2013). Providers at the AD sites were more likely to document diagnoses for orthodontic (D8000 to D8999) and restorative (D2000 to D2999) treatments in our cohort. During the 4 y, the pairings of DDS terms with orthodontic treatments had a utilization proportion of 96.3%, while the utilization proportion for restorative treatments was 89.5%. Providers at the PD site documented diagnoses for nearly every procedure, irrespective of treatment category. These values exceed those found by White et al. (2011) by approximately 9-fold. This discrepancy in utilization could be attributed to the large sample size of our study relative to the smaller sample sizes in these categories in their study. It is also likely due to the larger variety of orthodontics- and periodontics-related diagnoses in the DDS compared with the Z codes used in the study by White et al. Conversely, providers who performed diagnostic, endodontic, implant services, or fixed prosthodontics treatments were less likely to document their diagnoses.

Among the utilized diagnoses, validity proportions were calculated to measure the legitimate coupling of documented diagnoses and their corresponding dental treatments. The overall validity proportions were 77.7% at the AD sites and 86.2% at the PD site. Validity increased over the 4 y, although not monotonically, by approximately 7 percentage points at the AD sites and 8 percentage points at the PD site by the final year. Appreciable increases in validity were recorded by 2 of the 4 AD sites and by the PD site over the 4-y span. Differences in organizational stability may partially explain the variation in validity proportions; in addition, the AD sites have a provider population that remains in flux due to turnover in the student body. Determining the specific reasons for site variation will require more data and a root cause analysis. Among those who used the DDS to diagnose, the completed procedure was more likely to be validly paired if the provider was performing treatments within the orthodontic (D8000 to D8999) and restorative (D2000 to D2999) CDT families at the AD sites and the restorative CDT family at the PD site. Conversely, providers in our cohort were less likely to record a valid DDS-CDT pairing when the completed procedures came from the diagnostic and the implant services and fixed prosthodontics CDT groups at either site type, or from the preventive CDT group at the AD sites.

This study reports on 4 y of use of a dental diagnostic terminology at 5 American dental institutions. During the 4-y reporting period, the utilization and validity proportions rose together, except in the final year, when validity at the AD sites dipped slightly while utilization continued to rise. The results demonstrate an improvement in the utilization and validity of the DDS terminology over time. These findings also yield insight into the factors that influence use and validity, raising the need for more focused training of dental students. The data suggest that utilization and validity proportions are affected by the professional and clinic-led cultural direction and by DDS implementation efforts, as reflected in validity increases of approximately 8 and 7 percentage points at the PD and AD sites, respectively, over the reporting period. Data acquisition and feedback from studies that use observational data from clinical practices can help evaluate terminology uptake and provider performance through patterns of utilization and validity. Our data suggest that the longer a terminology is used, the more likely it is to be understood and well used by end users.

Author Contributions

A. Yansane, contributed to conception, design of the project, and data analysis, drafted and critically revised the manuscript; O. Tokede, J. White, J. Etolue, contributed to conception, design of the project, and data analysis, critically revised the manuscript; L. McClellan, M. Walji, E. Obadan-Udoh, E. Kalenderian, contributed to conception and design of the project, critically revised the manuscript. All authors gave final approval and agree to be accountable for all aspects of the work.

Supplemental Material

Supplemental material, DS_10.1177_2380084418815150 for Utilization and Validity of the Dental Diagnostic System over Time in Academic and Private Practice by A. Yansane, O. Tokede, J. White, J. Etolue, L. McClellan, M. Walji, E. Obadan-Udoh and E. Kalenderian in JDR Clinical & Translational Research

Footnotes

A supplemental appendix to this article is available online.

This research was supported by the National Institute of Dental and Craniofacial Research, National Institutes of Health (grant 1R01DE023061-01A1).

The authors declare no potential conflicts of interest with respect to the authorship and/or publication of this article.

References

- Adams R. 2004. Testimony to the Subcommittee on Standards and Security National Committee on Vital and Health Statistics on Dental Standards Issues. Washington (DC): National Association of Dental Plans.

- American Dental Association. 2016. What is SNODENT? [accessed 2018 November 2]. http://www.ada.org/en/member-center/member-benefits/practice-resources/dental-informatics/snodent.

- Bakhshi-Raiez F, de Keizer N, Cornet R, Dorrepaal M, Dongelmans D, Jaspers MW. 2012. A usability evaluation of a SNOMED CT based compositional interface terminology for intensive care. Int J Med Inform. 81(5):351–362.

- Benjamini Y, Hochberg Y. 1995. Controlling the false discovery rate: a practical and powerful approach to multiple testing. J R Stat Soc Series B Stat Methodol. 57(1):289–300.

- Cohen J. 1977. Statistical power analysis for the behavioral sciences (revised ed.). New York (NY): Academic Press.

- Cornet R, de Keizer N. 2008. Forty years of SNOMED: a literature review. BMC Med Inform Decis Mak. 8 Suppl 1:S2.

- Faul F, Erdfelder E, Lang A-G, Buchner A. 2007. G*Power 3: a flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav Res Methods. 39(2):175–191.

- Fisher ES, Whaley FS, Krushat WM, Malenka DJ, Fleming C, Baron JA, Hsia DC. 1992. The accuracy of Medicare’s hospital claims data: progress has been made, but problems remain. Am J Public Health. 82(2):243–248.

- Goldberg LJ, Ceusters W, Eisner J, Smith B. 2005. The significance of SNODENT. Stud Health Technol Inform. 116:737–742.

- Henderson T, Shepheard J, Sundararajan V. 2006. Quality of diagnosis and procedure coding in ICD-10 administrative data. Med Care. 44(11):1011–1019.

- Humphries KH, Rankin JM, Carere RG, Buller CE, Kiely FM, Spinelli JJ. 2000. Co-morbidity data in outcomes research: are clinical data derived from administrative databases a reliable alternative to chart review? J Clin Epidemiol. 53(4):343–349.

- Kalenderian E, Ramoni RL, White JM, Schoonheim-Klein ME, Stark PC, Kimmes NS, Zeller GG, Willis GP, Walji MF. 2011. The development of a dental diagnostic terminology. J Dent Educ. 75(1):68–76.

- Lee D, de Keizer N, Lau F, Cornet R. 2014. Literature review of SNOMED CT use. J Am Med Inform Assoc. 21(e1):e11–e19.

- MacIntyre CR, Ackland MJ, Chandraraj EJ, Pilla JE. 1997. Accuracy of ICD-9-CM codes in hospital morbidity data, Victoria: implications for public health research. Aust N Z J Public Health. 21(5):477–482.

- Quan H, Li B, Duncan Saunders L, Parsons GA, Nilsson CI, Alibhai A, Ghali WA. 2008. Assessing validity of ICD-9-CM and ICD-10 administrative data in recording clinical conditions in a unique dually coded database. Health Serv Res. 43(4):1424–1441.

- Quan H, Parsons GA, Ghali WA. 2004. Validity of procedure codes in International Classification of Diseases, 9th Revision, Clinical Modification administrative data. Med Care. 42(8):801–809.

- Spackman KA. 2005. Rates of change in a large clinical terminology: three years experience with SNOMED clinical terms. AMIA Annu Symp Proc. 2005:714–718.

- Stark PC, Kalenderian E, White JM, Walji MF, Stewart DC, Kimmes N, Meng TR, Willis GP, DeVries T, Chapman RJ. 2010. Consortium for Oral Health-Related Informatics: improving dental research, education, and treatment. J Dent Educ. 74(10):1051–1065.

- Tokede O, White J, Stark PC, Vaderhobli R, Walji MF, Ramoni R, Schoonheim-Klein M, Kimmes N, Tavares A, Kalenderian E. 2013. Assessing use of a standardized dental diagnostic terminology in an electronic health record. J Dent Educ. 77(1):24–36.

- Torres-Urquidy MH, Schleyer T. 2006. Evaluation of the Systematized Nomenclature of Dentistry (SNODENT) using case reports: preliminary results. AMIA Annu Symp Proc. 2006:1124.

- Wade G, Rosenbloom ST. 2009. The impact of SNOMED CT revisions on a mapped interface terminology: terminology development and implementation issues. J Biomed Inform. 42(3):490–493.

- White JM, Kalenderian E, Stark PC, Ramoni RL, Vaderhobli R, Walji MF. 2011. Evaluating a dental diagnostic terminology in an electronic health record. J Dent Educ. 75(5):605–615.
