Abstract

Photoacoustic (PA) imaging (or optoacoustic imaging) is a novel biomedical imaging method in biological and medical research. This modality performs morphological, functional, and molecular imaging with and without labels in both microscopic and deep tissue imaging domains. A variety of innovations have enhanced 3D PA imaging performance and thus have opened new opportunities in preclinical and clinical imaging. However, 3D visualization of PA images remains a challenge. Several commercially available software packages can visualize the generated 3D PA images, but they are generally expensive, and their features are not optimized for 3D visualization of PA images. Here, we demonstrate a specialized 3D visualization software package, namely 3D Photoacoustic Visualization Studio (3D PHOVIS), specifically targeting photoacoustic data, image, and visualization processes. To support the research environment for visualization and fast processing, we implemented 3D PHOVIS in MATLAB with a graphical user interface and developed multi-core graphics processing unit modules for fast processing. 3D PHOVIS includes the following modules: (1) a mosaic volume generator, (2) a scan converter for optical scanning photoacoustic microscopy, (3) a skin profile estimator and depth encoder, (4) a multiplanar viewer with a navigation map, and (5) a volume renderer with a movie maker. This paper discusses the algorithms present in the software package and demonstrates their functions. In addition, the applicability of this software to ultrasound imaging and optical coherence tomography is also investigated. User manuals and application files for 3D PHOVIS are available for free on the website (www.boa-lab.com).
Core functions of 3D PHOVIS were developed as a result of a summer class at POSTECH, “High-Performance Algorithm in CPU/GPU/DSP, and Computer Architecture.” We believe that 3D PHOVIS provides a unique tool for PA imaging researchers, will expedite the growth of the field, and will attract broad interest in a wide range of studies.

Keywords: Volume mosaic, 3D scan-conversion, Skin detection, Visualization, Rendering, Volume imaging, 3D imaging

1. Introduction

Photoacoustic imaging (PAI, or optoacoustic imaging) is a biomedical imaging technique that detects ultrasound (US) signals generated via light-induced thermal expansion and relaxation of cells within tissues, called the photoacoustic (PA) effect. [1] Based on the fundamental hybrid nature, PAI has two unique competitive differentiators: (1) strong image contrast on the basis of rich intrinsic (e.g., melanin [2], DNA/RNA [3], hemoglobin [[4], [5], [6]], lipid [7], water [8], and others [9]) and extrinsic (e.g., various contrast agents) optical properties, and (2) deep tissue imaging capability in an optical diffusion regime providing high spatial resolution [10]. Due to these unique features, not only biological applications but also medical applications have been investigated in the recent decade [[11], [12], [13], [14], [15]].

Many research activities in the PAI field boost hardware and software performance, develop novel PA derivative techniques, and apply the technology to life science [[16], [17], [18]] and medicine [[19], [20], [21], [22], [23]]. However, research activities that benefit the visualization of 3D PAI data are rarely found. Only a few medical data processing and visualization tools, such as ImageJ (Wayne Rasband, USA), AMIRA (Thermo Fisher Scientific, USA), and VolView (Kitware, USA), are available, and they do not provide visualization tools specifically targeted at PAI. In addition, they are relatively expensive, do not support incorporating newly available visualization techniques, often have insufficient image quality, and cannot be integrated with the most popular data processing tool in academia, i.e., MATLAB (MathWorks, USA).

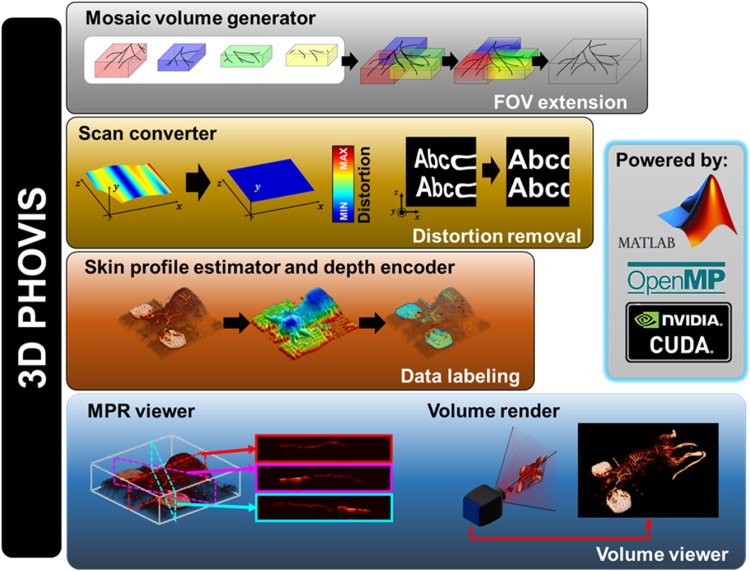

Here, we have developed a novel 3D data/image processing and visualization tool for PAI, called 3D Photoacoustic Visualization Studio (3D PHOVIS). The unique features of 3D PHOVIS are illustrated in Fig. 1. We implemented 3D PHOVIS on the widely used MATLAB platform and leveraged parallel processing technology to provide a real-time interactive work interface. The MATLAB platform allows researchers to incorporate their own newly developed visualization algorithms to improve the image quality further. 3D PHOVIS consists of the following applications: (1) a mosaic volume generator, (2) a scan converter for optical scanning photoacoustic microscopy (PAM), (3) a skin profile estimator and depth encoder, (4) a multiplanar reconstruction (MPR) viewer with a navigation map, and (5) a volume renderer with a movie maker. In addition to PAI, we also explored the use of 3D PHOVIS for other popular biological and medical imaging tools, such as optical coherence tomography (OCT) and US imaging. We expect that this free software package, available on the website (www.boa-lab.com), will broadly impact PAI research in the field of biological and medical imaging.

Fig. 1.

Main features of 3D Photoacoustic Visualization Studio (3D PHOVIS). FOV, field of view; PAM, photoacoustic microscopy; MPR, multiplanar reformation.

2. Design concept

2.1. Goal of development and expected user environments

The goal of our 3D PHOVIS is to provide intuitive volume editing and high-quality volume visualization experiences for all researchers in the field of biomedical imaging. The 3D PHOVIS can handle any data type that can be imported into MATLAB. Detailed data preparation procedures are described in the instruction manual with sample scripts.

2.2. Preparation of test data set

An acoustic-resolution (AR) PAM system (MicroPhotoAcoustics, USA) with a water-immersible micro-electro-mechanical systems (MEMS) scanner (OPTICHO, Republic of Korea) was used to acquire 3D PAM volume data for operational testing. The animal experiments were performed in accordance with regulations and protocols approved by the Institutional Animal Care and Use Committee (IACUC) of Pohang University of Science and Technology (POSTECH) and with the National Institutes of Health Guide for the Care and Use of Laboratory Animals. We performed PAI of a healthy mouse (POSTECH Biotech Center; 15−25 g, 6 weeks old) in a back-coronal view to acquire 3D volumetric PAM data. We first anesthetized the mouse with vaporized isoflurane (1 L/min of oxygen and 0.75 % isoflurane) and then removed its hair with depilatory lotion. During the in vivo imaging experiments, the mouse was placed on an imaging table custom-designed for PAI, and its body temperature was maintained with a heating pad. Ultrasound gel (Ecosonic, SANIPIA, Republic of Korea) was applied to enhance acoustic impedance matching between the mouse body and the water. The optical fluence on the mouse skin surface was 2.3 mJ/cm², which is below the maximum permissible exposure for a single laser pulse (MPESLP) of 20 mJ/cm² at 532 nm. For multiple pulse trains, the maximum permissible exposure for a pulse train (MPEtrain) is 269 mJ/cm², which is calculated for a scanning range of 2.5 mm, an optical beam size of 1.5 mm, an imaging pixel step size of 16.7 μm, and an exposure time of 3.6 ms. For 90 adjacent overlapped laser pulses, the resulting per-pulse maximum permissible exposure is 3.0 mJ/cm², which is above the fluence of 2.3 mJ/cm² used in the imaging experiment [24]. The MPESLP and MPEtrain are governed by the American National Standards Institute (ANSI).
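The per-pulse limit quoted above can be checked with a few lines of arithmetic. The sketch below is only an illustration under two assumptions that are not spelled out in the text: that the number of overlapping pulses is the beam size divided by the step size, and that the pulse-train budget is shared equally among those pulses. All variable names are hypothetical.

```python
# Values quoted in the text.
beam_size_mm = 1.5          # optical beam size
step_size_um = 16.7         # imaging pixel step size
mpe_train_mj_cm2 = 269.0    # MPE for the pulse train
fluence_mj_cm2 = 2.3        # fluence used in the experiment

# Assumption: pulses overlap at one point while the beam footprint passes over it.
overlapping_pulses = round(beam_size_mm * 1000.0 / step_size_um)

# Assumption: the pulse-train budget is divided equally among the overlapping pulses.
per_pulse_limit = mpe_train_mj_cm2 / overlapping_pulses

print(overlapping_pulses)                 # 90
print(round(per_pulse_limit, 2))          # 2.99
print(fluence_mj_cm2 < per_pulse_limit)   # True
```

Under these assumptions the numbers reproduce the 90 pulses and the roughly 3.0 mJ/cm² limit stated in the text.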

To demonstrate the use of 3D PHOVIS for OCT image rendering, we used the OCT data published by Golabbakhsh et al. [25]. For the US image rendering demonstration, we acquired 3D US volume data of a fetus phantom (Model 065-36, CISR Inc., Norfolk, VA) with a mechanically swiveling 3D transducer interfaced to the EUB-6500 ultrasound machine (Hitachi Healthcare America, USA).

2.3. Design of interactive graphical user interface

All graphical user interface (GUI) applications were developed using the 'GUIDE' toolkit provided by MATLAB 2016a. The GUI controllers are custom-developed to give a more intuitive experience than the MATLAB built-in GUI controls. All applications use custom-made slider classes because the default MATLAB slider class does not provide an interactive response while the slider is being moved. A custom-made color map editor class is implemented in every application and can be embedded in a GUI window. In addition, several custom-made interactive graphics classes were developed to facilitate data visualization, such as a navigation cursor, a volume position tool, a Gaussian brush tool, a window/level tool, a transfer function adjustment tool, and a rendering direction setting tool.

2.4. Software structure

Since interpreter-based software is slow, functions written as MATLAB scripts are used only to determine the sequential processes or the interactive behavior of the GUI. If a function requires a two-dimensional loop for its calculation, we use either a MATLAB built-in pre-compiled function or a custom-developed MATLAB executable (MEX) function. All loops in the MEX functions are parallelized with the OpenMP library or with streaming single instruction multiple data extensions (SSE). For functions requiring 3D data manipulation, we implemented parallel thread execution (PTX) kernels based on CUDA general-purpose computing on graphics processing units (GPGPU). The majority of the computation uses single-precision floating-point values for speed. All included functions and class structures are loaded by the main window of 3D PHOVIS. All applications are called from the main window and are associated with their GUIs and built-in features.

3. Results

3.1. Mosaic volume generator

3.1.1. Background

One of the most efficient ways to create wide-field images is to mosaic multiple sequentially acquired images. Many techniques have been developed to create panoramic images in 2D by employing feature extraction algorithms on dense 2D data [26]. However, in many applications, automatic feature extraction and matching algorithms do not always work [27,28]. Thus, commercial 2D viewers usually support manual image alignment interfaces to flexibly position the individual images [27,29,30]. For PAI, it is likewise difficult to apply feature extraction algorithms because the data are sparse and three-dimensional [31]. Thus, a convenient user interface is necessary to precisely align the individual images and create a mosaic 3D volume image. We have prepared a manual volume positioning interface for the volume mosaic process, allowing users to simultaneously observe and assign the 3D positions of volumes in multiple planes.

3.1.2. Algorithm

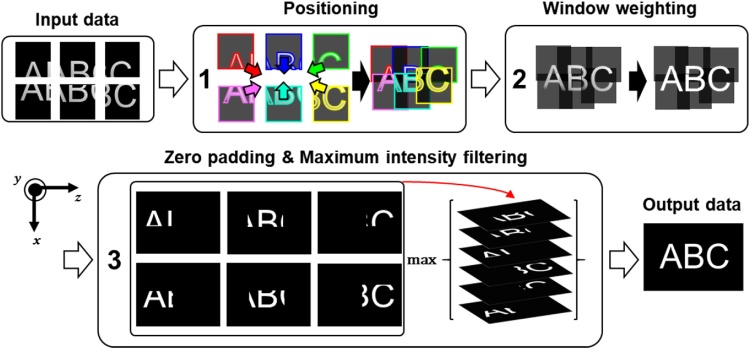

The volume merging algorithm consists of the following three steps: positioning, window weighting, and zero padding with maximum intensity filtering (Fig. 2). Note that all these steps are processed in the 3D domain. The first step in creating a mosaic image is to identify the relative positions of the individual images. In the proposed algorithm, the 3D position of each volumetric image set is determined manually. The difference in the PA signals in the overlapped regions is interpolated with window weighting to eliminate motion/ghost artifacts and compensate for uneven signals [28]. In the final step, zero padding is applied to each volume to match the size of the final volume, and the final volume is generated via maximum value filtering.
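The three steps can be illustrated with a small pure-Python sketch. This is not the 3D PHOVIS implementation: the function names, the raised-cosine window, and the restriction of the weighting to the two lateral axes are assumptions made here for illustration, and the amplitudes are assumed to be non-negative so that a zero-initialized canvas behaves like zero padding.

```python
import math

def taper_window(n):
    """Raised-cosine weights, largest at the tile centre and small (but nonzero) at its edges."""
    if n == 1:
        return [1.0]
    return [0.5 - 0.5 * math.cos(2.0 * math.pi * (i + 0.5) / n) for i in range(n)]

def merge_volumes(volumes, offsets, out_shape):
    """Merge volumes indexed [z][y][x] into one canvas of size out_shape = (nz, ny, nx).

    offsets holds the manually chosen (z0, y0, x0) position of each volume.
    """
    nz, ny, nx = out_shape
    # Zero padding: every volume is implicitly embedded in a zero-filled canvas.
    merged = [[[0.0] * nx for _ in range(ny)] for _ in range(nz)]
    for volume, (z0, y0, x0) in zip(volumes, offsets):
        wy = taper_window(len(volume[0]))
        wx = taper_window(len(volume[0][0]))
        for z, plane in enumerate(volume):
            for y, row in enumerate(plane):
                target = merged[z0 + z][y0 + y]
                for x, value in enumerate(row):
                    weighted = value * wy[y] * wx[x]      # window weighting
                    if weighted > target[x0 + x]:          # maximum value filtering
                        target[x0 + x] = weighted
    return merged

# Two 1 x 1 x 4 tiles of constant amplitude that overlap by two voxels along x.
tile = [[[1.0, 1.0, 1.0, 1.0]]]
mosaic = merge_volumes([tile, tile], [(0, 0, 0), (0, 0, 2)], (1, 1, 6))
```

In the overlap, the low edge weight of one tile is replaced by the higher central weight of the other, which is what suppresses a visible seam in this toy case.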

Fig. 2.

Schematic of a 3D volumetric mosaic process.

3.1.3. Application

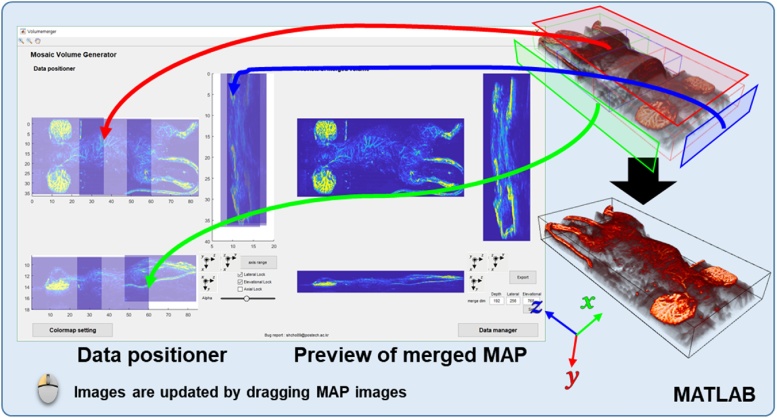

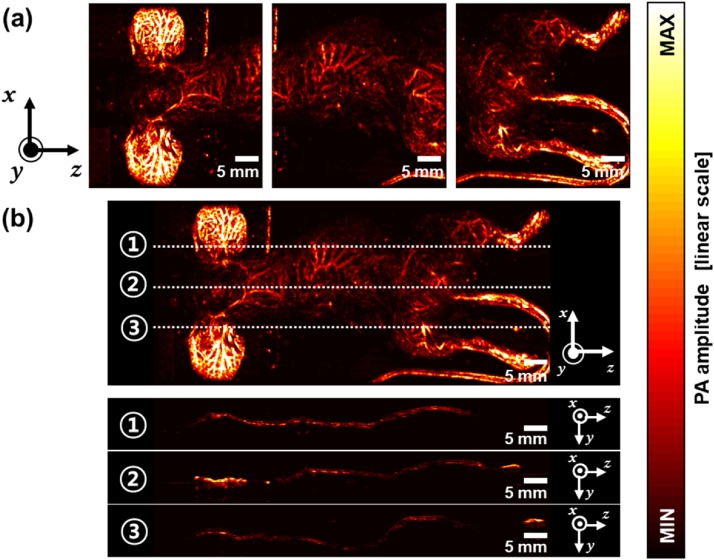

The “Mosaic volume generator” application is shown in Fig. 3 and Supplementary Movie S1. The basic GUI consists of a data positioner, a merged image preview, a color map editor, a data manager, and a window weighting control. In this application, it is important to locate the individual volume data accurately. Thus, the interactive data positioner is designed for intuitive fine-tuning of the volume position. The data positioner has three views, one along each orthogonal axis (i.e., the x, y, and z axes), and allows the user to reposition each volume by dragging the PA maximum amplitude projection (MAP) images. The user can also set the volume location manually by entering an absolute location in the location editor. For fine-tuning the position, the user can take advantage of MATLAB's zoom and pan features. In the data positioner, the PA MAP images are displayed as translucent images so that overlapped areas are visible. A 2D window weighting function is included in the data manager menu to perform the appropriate window weight multiplication. In the merging process, zero padding and maximum intensity filtering are performed automatically, and the resulting MAP image in each orthogonal direction is displayed in the preview menu. After filtering, the merged volume can be exported from the preview menu. Fig. 4 shows the PA MAP mosaic image. Three individual PA MAP images (Fig. 4a) are merged into one seamless PA MAP mosaic image, and the cross-sectional PA B-scan images of the merged volume are shown in Fig. 4b.

Fig. 3.

User interface of the “Mosaic volume generator” application (Supplementary Movie S1).

Fig. 4.

PA MAP mosaic image. (a) Three individual PA MAP input images. (b) PA MAP mosaic image and the depth-resolved PA B-scan images along the lines 1, 2, and 3. PA, photoacoustic; MAP, maximum amplitude projection.

3.2. Scan converter for optical scanning photoacoustic microscopy

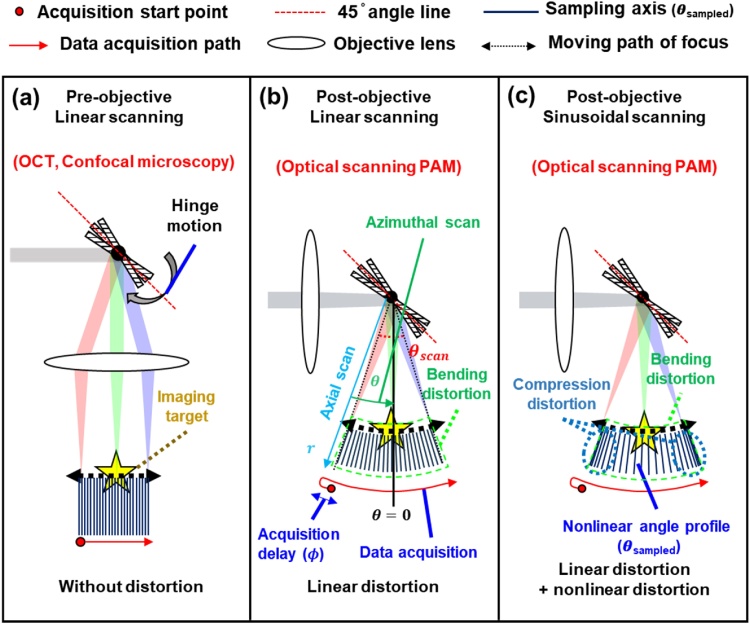

3.2.1. Background

The image distortions that arise from various optical systems and scanning schemes are shown in Fig. 5. PAM images suffer either from linear distortion with linear scanning or from both linear and nonlinear distortions with sinusoidal scanning. The linear bending distortion is induced by the scanning geometry, and the nonlinear compression distortion is caused by the nonlinear movement of the scanning mirror. A pre-objective system that performs linear scanning shows no distortion in the acquired 3D image (Fig. 5a). However, the image topology produced by a post-objective system with linear scanning (as is usual in optical scanning PAM [32]) is slightly deformed by the spherical (2D) or cylindrical (1D) pattern of light focusing on a target (Fig. 5b). The declination of the mirror causes additional distortions such as shear, scaling, and rotational deformation in the acquired images. Because the azimuthal scanning geometry causes the linear distortion, it can be corrected through a linear transformation as follows [33]:

| (1) |

where is a position vector in the Cartesian coordinate system, T is a coordinate conversion matrix, and is a position vector in the cylindrical or spherical coordinate system. Furthermore, to remove the image distortion due to mirror declination, an affine transformation is applied as follows:

| (2) |

where A is the affine transformation matrix. With linear scanning, the 3D linear distortion in optical scanning PAM can be effectively removed in this way. More recently, sinusoidally driven galvanometer or MEMS mirrors have been applied to optical scanning PAM to achieve rapid scanning speeds via resonant motion [24,[34], [35], [36], [37], [38], [39], [40]]. When a post-objective system is used with sinusoidal scanning motion, nonlinear distortion is also induced (Fig. 5c). In this case, the coordinate conversion and affine transformation alone cannot correct the distortion because part of it is nonlinear. Thus, the angle profile should be linearized through the following equation before correcting the distortion:

| (3) |

where θsampled is a sampled angle, θ is a linearized angle, θscan is the scanning range of the scanner, and ϕ is a phase shift caused by acquisition delay. Without linearization, the images are divided into central and peripheral areas [41,42]. Since the difference between sinθ and θ is negligible in the central area, the angular interval between sampling axes can be considered identical there. However, the angular interval becomes dramatically nonlinear in the peripheral regions, resulting in expansion distortion. In the next section, we present a 3D scan converter for cylindrical-coordinate scanning with sinusoidal mirror movement. We designed the 3D image warp transform based on an inverse mapping approach to simultaneously remove linear and nonlinear distortions.
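The idea of linearizing a sinusoidally sampled angle profile by inverse mapping can be sketched as follows. This is a minimal illustration, not Eq. (3) itself: it assumes that the scan lines are acquired at uniform time intervals over one half-period of the mirror motion, that the angle follows (θscan/2)·sin(·), and that the phase shift is small enough for the sweep to stay monotonic. All function and parameter names are hypothetical.

```python
import math

def sampled_angles(n, theta_scan, phase=0.0):
    """Mirror angle of each of n scan lines under the assumed sinusoidal motion."""
    half = theta_scan / 2.0
    return [half * math.sin(0.5 * math.pi * (-1.0 + 2.0 * (k + 0.5) / n) + phase)
            for k in range(n)]

def source_index(theta, n, theta_scan, phase=0.0):
    """Fractional scan-line index at which the mirror pointed at angle theta."""
    ratio = max(-1.0, min(1.0, 2.0 * theta / theta_scan))
    u = (math.asin(ratio) - phase) / (0.5 * math.pi)
    return (u + 1.0) * n / 2.0 - 0.5

def linearize(values, theta_scan, phase=0.0):
    """Resample per-scan-line values onto uniformly spaced angles (inverse mapping)."""
    n = len(values)
    angles = sampled_angles(n, theta_scan, phase)
    first, last = angles[0], angles[-1]
    resampled = []
    for i in range(n):
        theta = first + (last - first) * i / (n - 1)          # uniform output angle
        k = source_index(theta, n, theta_scan, phase)
        k = min(max(k, 0.0), n - 1.0)
        k0 = min(int(k), n - 2)
        frac = k - k0
        # Linear interpolation between the two neighbouring scan lines.
        resampled.append((1.0 - frac) * values[k0] + frac * values[k0 + 1])
    return resampled

# Using the sampled angles themselves as the signal: after linearization the
# resampled values should increase in (nearly) equal steps.
scan_angles = sampled_angles(64, 1.0)
linear_angles = linearize(scan_angles, 1.0)
```

The output angle grid is driven by the destination, and each destination sample looks up where it came from in the acquired data, which is the sense in which the mapping is "inverse".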

Fig. 5.

Image distortion vs various scanning methods.

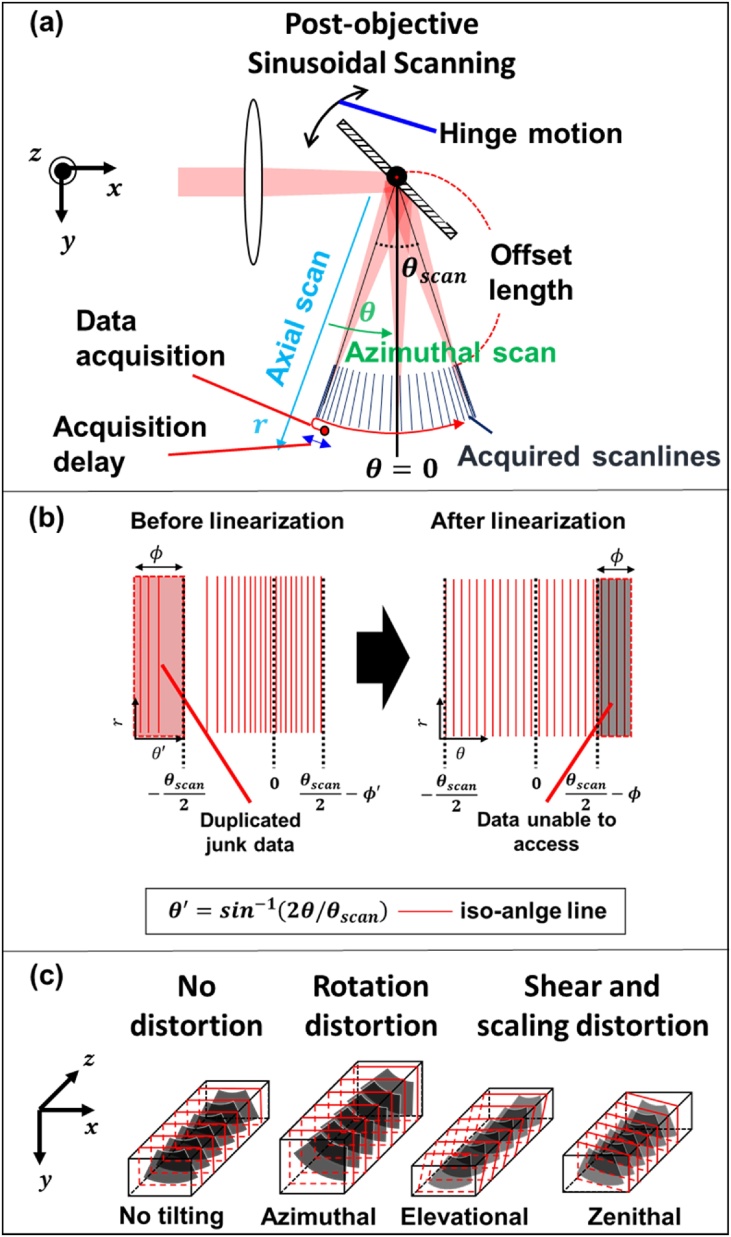

3.2.2. Algorithm

As per the scanning mechanism in optical scanning PAM (Fig. 6a), the azimuth-scanned data should be converted from polar to Cartesian coordinates, taking the declination and nonlinear motion of the mirror into account, to correct the distortion. First, we perform the scan conversion by calculating the inverse representation of Eq. (2) as follows:

| (4) |

Fig. 6.

Principle of the scan conversion algorithm. (a) Scanning geometry and motion of the mirror. (b) Angular distribution of the acquired scanlines and angle profile linearization. (c) Effects of mirror declination in volumetric imaging.

Since the elevational and zenithal tilting of the mirror induces shear deformation and the azimuthal tilting also brings rotational deformation, the affine matrix is represented as follows:

| (5) |

To remove the nonlinear distortion, the nonlinear angular profile between the scan lines is linearized by inverse mapping of the angle data (Fig. 6b) via the following equation:

| (6) |

After the linearization, the 3D warping transformation is performed to remove the distortion in the 3D volume image (Fig. 6c). The 3D scan conversion algorithm then reduces both nonlinear and linear distortions, and is based on the following Equations:

| (7) |

| (8) |

| (9) |

where x (horizontal), y (axial), and z (elevational) represent the location of the voxel to be restored in the Cartesian coordinate system. The acquired data are represented in a distorted cylindrical coordinate system that uses an inverse-sine-scaled angle instead of the true angle. r, θ', and z' are the radial, distorted angular, and elevational positions, respectively. ϕ is the phase shift described in Fig. 6b. δ represents the declination of the mirror in each tilting direction in Fig. 6c.
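The inverse-mapping principle behind the scan conversion can be shown on a single 2D B-scan. The sketch below is a simplification and does not reproduce Eqs. (7)–(9): it assumes the angles have already been linearized, ignores the mirror declination terms, and uses bilinear interpolation. The names `scan_convert`, `r0`, and `dr` are hypothetical.

```python
import math

def scan_convert(polar, theta_scan, r0, dr, xs, ys):
    """Resample polar[a][k] onto a Cartesian grid by inverse mapping.

    polar[a][k] is sample k of scan line a. Scan-line angles are assumed to span
    [-theta_scan/2, +theta_scan/2] uniformly, and sample k lies at radius r0 + k*dr.
    Returns image[j][i] for the position (x=xs[i], y=ys[j]); y is the axial direction.
    """
    n_ang, n_rad = len(polar), len(polar[0])
    image = []
    for y in ys:
        row = []
        for x in xs:
            # For each output pixel, find where it lies in the acquired polar data.
            r = math.hypot(x, y)
            theta = math.atan2(x, y)              # angle measured from the axial direction
            a = (theta / theta_scan + 0.5) * (n_ang - 1)
            k = (r - r0) / dr
            if 0.0 <= a <= n_ang - 1 and 0.0 <= k <= n_rad - 1:
                a0 = min(int(a), n_ang - 2)
                k0 = min(int(k), n_rad - 2)
                fa, fk = a - a0, k - k0
                value = ((1 - fa) * (1 - fk) * polar[a0][k0]
                         + (1 - fa) * fk * polar[a0][k0 + 1]
                         + fa * (1 - fk) * polar[a0 + 1][k0]
                         + fa * fk * polar[a0 + 1][k0 + 1])
            else:
                value = 0.0                        # outside the scanned sector
            row.append(value)
        image.append(row)
    return image

# Test pattern: every sample stores its own radius, so the converted image
# should return the distance of each pixel from the pivot.
r0, dr = 10.0, 0.1
polar = [[r0 + k * dr for k in range(11)] for _ in range(5)]
image = scan_convert(polar, 0.4, r0, dr, xs=[0.0, 5.0], ys=[10.5])
```

Because every output pixel is computed from its own source location, the result has no holes, which is the usual reason for preferring inverse over forward mapping.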

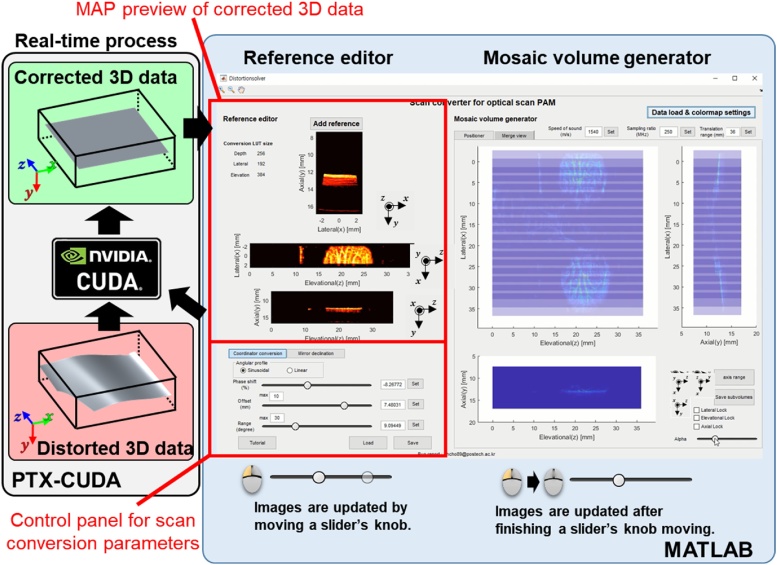

3.2.3. Application

For an intuitive and interactive working environment, the volume scan conversions are carried out using CUDA GPGPU technology. The application accepts binary files with headers as input data. Details of the file header are described in the user manual. To support binary file preparation, 3D PHOVIS provides class functions for adding headers and exporting binary files. Fig. 7 shows a screenshot of the “Scan converter” application, and the detailed process is shown in Supplementary Movie S2. The volume scan conversion is performed in a two-step process. In the first step, the “Reference editor” application uses one data set as the reference volume to visualize the effects of parameter adjustments in real time through a GPU-assisted scan conversion process. The following six parameters are adjusted interactively: (1) offset data radius, (2) scanning range, (3) azimuth tilt, (4) elevation tilt, (5) zenithal tilt, and (6) phase shift of mirror motion. In the second step, in the “Mosaic volume generator,” the user confirms the adjusted parameters by comparing the relationship between different volume data sets. For these data sets, each image is updated after the mouse button is released from the knob of the parameter slider. Each loaded volume is visualized in the “Data positioner” as a semi-transparent MAP image. By observing the overlapped areas, the user can accurately optimize the parameters.

Fig. 7.

User interface of the “Scan converter for optical scanning photoacoustic microscopy” application (Supplementary Movie S2).

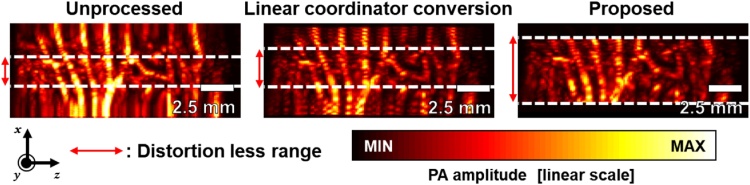

Without motion estimation, the area where θ ≈ sin⁻¹θ is relatively free from distortion. Unlike the simple linear coordinate conversion method, the newly proposed scan conversion method achieves a wider FOV and less distortion (Fig. 8). The effective image length along the lateral direction increases to approximately 5 mm with our nonlinear distortion correction, whereas it is about 2.5 mm with the linear coordinate conversion method and less than 2.5 mm in the unprocessed original image.

Fig. 8.

Comparison of the effective fields of view in photoacoustic maximum amplitude projection images.

3.3. Skin profile estimator and depth encoder

3.3.1. Background

Appropriate segmentation of biological tissues is very important for 3D rendering. The depth-encoded colored PA MAP image is commonly used to show 3D volumetric PA data in 2D projection domains. However, simple depth encoding based on the Cartesian coordinate system induces significant errors in the rendered image because biological skin profiles are irregular. For a successful depth encoding process, estimating the correct skin surface is essential [[43], [44], [45], [46]]. In the next sub-section, we describe a software application that automatically assesses the skin profiles and creates depth-encoded images colored by the true depth below the skin.

3.3.2. Algorithm

Since the PA signals on the skin are not continuous, it is challenging to correctly contour the skin profiles in PA images [45]. To delineate continuous and smooth boundaries, Gaussian blurring [46], cloth simulation [45], and random sample consensus (RANSAC) fitting [43] have been explored. While these methods provide convincing estimates of the skin profiles, they still have limitations. The Gaussian blurring and cloth simulation methods are vulnerable to outlier signals caused by artifacts. The RANSAC approach is robust even if the data contain many outliers, but it is applicable only when an appropriate analytical model exists.

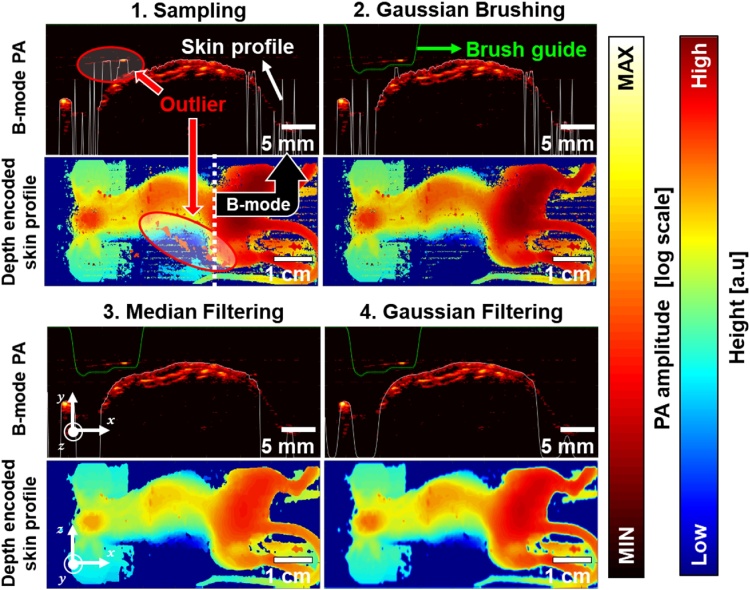

To estimate the skin profiles in the PA images, the rough skin profile is sampled by detecting the first PA signals along time-resolved A-line images. The sampled profile is filtered by median filtering and Gaussian blurring. The reliable skin profile, regardless of outliers, is achieved by manually tweaking the profile estimation process using the Gaussian Brush tool provided with the application. The detailed procedure is illustrated in Fig. 9. The white line indicates the estimated skin profile and the green line represents the brush guide. Typically, the outliers with large areas are excluded by the Gaussian Brushing and the smaller areas are removed by 2D median filtering. Finally, we apply the Gaussian filtering to smooth the profile.
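The automatic part of this procedure (first-signal sampling, median filtering, and Gaussian smoothing) can be sketched on a single B-scan. This is an illustrative reduction to one lateral dimension with hypothetical function names and default parameters; the manual Gaussian brush correction is not modeled, and the fallback for A-lines without any signal is an assumption.

```python
import math
from statistics import median

def first_signal_depth(a_line, threshold):
    """Index of the first sample at or above the threshold along one A-line."""
    for depth, amplitude in enumerate(a_line):
        if amplitude >= threshold:
            return depth
    return len(a_line) - 1   # assumption: no signal means the surface is at the bottom

def median_filter(profile, size):
    """Sliding median with a window truncated at the ends; removes small outliers."""
    half = size // 2
    n = len(profile)
    return [median(profile[max(0, i - half):min(n, i + half + 1)]) for i in range(n)]

def gaussian_smooth(profile, sigma):
    """Normalized Gaussian smoothing with edge replication."""
    radius = max(1, int(3 * sigma))
    taps = range(-radius, radius + 1)
    kernel = [math.exp(-0.5 * (t / sigma) ** 2) for t in taps]
    n = len(profile)
    smoothed = []
    for i in range(n):
        acc = 0.0
        for t, w in zip(taps, kernel):
            acc += w * profile[min(max(i + t, 0), n - 1)]
        smoothed.append(acc / sum(kernel))
    return smoothed

def estimate_skin_profile(b_scan, threshold, median_size=5, sigma=2.0):
    """b_scan[i] is the A-line at lateral position i; returns one depth per A-line."""
    rough = [first_signal_depth(a_line, threshold) for a_line in b_scan]
    return gaussian_smooth(median_filter(rough, median_size), sigma)

# Flat skin at depth 10 with one A-line corrupted by an early artifact at depth 2.
b_scan = [[0.0] * 10 + [1.0] * 6 for _ in range(20)]
b_scan[7][2] = 1.0
profile = estimate_skin_profile(b_scan, threshold=0.5)
```

In this toy case the single outlier is rejected by the median filter before smoothing, so the estimated profile stays flat at depth 10.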

Fig. 9.

Skin profile estimation process in a B-mode photoacoustic (PA) image and estimated depth-encoded skin profile in the 3D PA image.

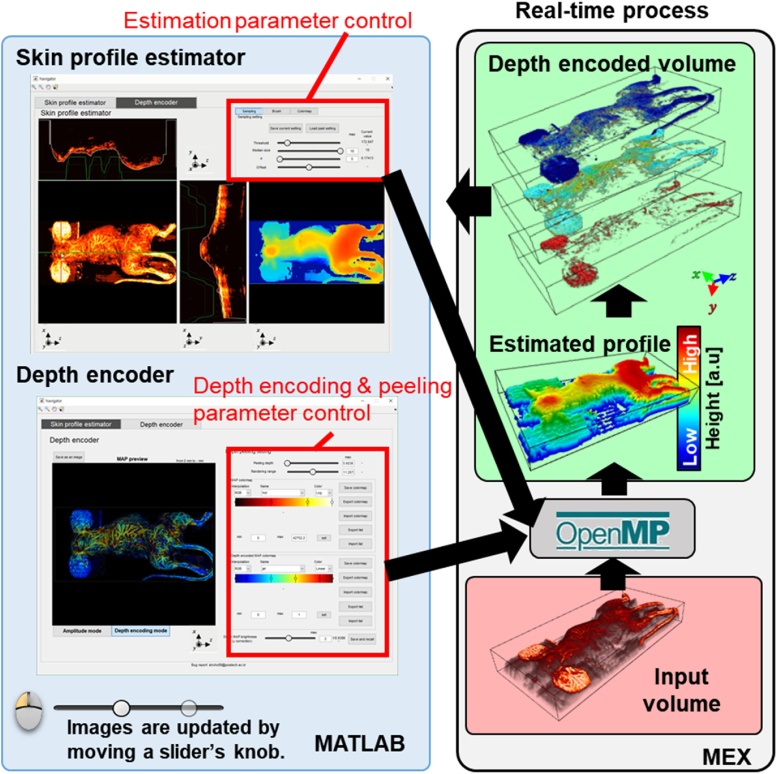

3.3.3. Application

The “Skin profile estimator and depth encoder” application estimates the skin profile and generates the colored depth-encoded image. This application is driven by MEX functions, which are parallelized with OpenMP to process and control the volume data interactively in real time. The GUI for this application is shown in Fig. 10, and the detailed process is shown in Supplementary Movie S3. The first panel is the “Skin profile estimator” panel. The profile estimation is controlled by four sliders. These sliders control the sampling threshold, the 2D square median filter size, the amount of Gaussian blur (via the standard deviation σ), and the overall offset of the estimated surface in the axial direction. The estimated skin profile is visualized as a white line in each orthogonal slice image and projection image in the profile monitor. The Gaussian brush tool is provided to correct incorrect and noisy estimates. The Gaussian brush guides appear as green lines in each orthogonal slice image. The second panel is the “Depth encoder” panel. In this panel, regular and depth-encoded MAP images are displayed in the preview menu. All parameters for MAP rendering (e.g., the surface peel depth, the short-range MAP rendering range, the rendering colors, and the image brightness) are controlled by the depth peeling tool and two color map editors. The depth peeling tool consists of two sliders that determine the depth of the surface peel and the range of rendering. The parameter settings of the peeling tool can be saved and recalled later with the reference manager. The signal brightness and the depth encoding colors of the MAP images are controlled by separate color map editors. For additional brightness adjustment of the depth-encoded images, a γ correction slider is provided. The generated MAP images, volume data, and depth indices can be exported from the Export menu.
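How the surface peel depth and rendering range interact with the estimated skin profile can be sketched as follows. The code is a hypothetical simplification on one B-scan: it measures depth relative to the skin surface, and the hue-based color mapping with a γ exponent merely stands in for the color map editors described above.

```python
import colorsys

def depth_encoded_map(b_scan, skin, peel_depth, render_range):
    """Strongest signal below the skin on each A-line and its depth relative to the skin."""
    amplitudes, depths = [], []
    for a_line, surface in zip(b_scan, skin):
        surface_index = int(round(surface))
        start = min(max(surface_index + peel_depth, 0), len(a_line))   # peel the surface
        stop = min(start + render_range, len(a_line))                  # limit the range
        best_amplitude, best_depth = 0.0, 0
        for z in range(start, stop):
            if a_line[z] > best_amplitude:
                best_amplitude, best_depth = a_line[z], z - surface_index
        amplitudes.append(best_amplitude)
        depths.append(best_depth)
    return amplitudes, depths

def encode_color(amplitude, depth, max_amplitude, max_depth, gamma=1.0):
    """Map depth to hue and amplitude to brightness (illustrative color map only)."""
    hue = 0.66 * min(max(depth / max_depth, 0.0), 1.0)
    value = min(max(amplitude / max_amplitude, 0.0), 1.0) ** gamma
    return colorsys.hsv_to_rgb(hue, 1.0, value)

# Two A-lines whose skin lies at different absolute depths (1 and 3); the vessel
# signal sits 3 samples below the skin in both.
b_scan = [[0, 5, 0, 0, 3, 0, 0, 0],
          [9, 0, 0, 5, 0, 0, 2, 0]]
amplitudes, depths = depth_encoded_map(b_scan, skin=[1, 3], peel_depth=1, render_range=4)
```

Both A-lines report the same relative depth even though the signals lie at different absolute positions, which is the point of encoding depth from the skin surface rather than from the top of the volume.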

Fig. 10.

User interface of the “Skin profile estimator and depth encoder” application (Supplementary Movie S3).

3.4. 3D volume viewer

3.4.1. Background

Many 3D visualization techniques have been developed and applied to visualize 3D PA data, and each has its own pros and cons [47]. Among the various visualization methods, multiplanar reconstruction (MPR) and volume rendering are the most commonly used. MPR generates a 2D image slice along any desired trajectory, and this method is easy and fast. Volume rendering generates a 3D-like image by simulating a virtual camera, a volume subject, and a light source. Since the visibility of the 3D image depends on the rendering algorithm and parameters, it is important to construct a specialized interactive interface for optimizing the rendering algorithm and updating the 3D parameters. To visualize 3D PA data effectively, we developed the "MPR viewer" and the "Volume renderer" with interactive interfaces in the MATLAB environment.

3.4.2. Multiplanar reconstruction (MPR) viewer

3.4.2.1. Algorithm

We implemented the MPR and projection rendering algorithms. Since each MPR slice is a radial slice in the viewing field, each 2D slice is reconstructed by the following linear transformation:

| (10) |

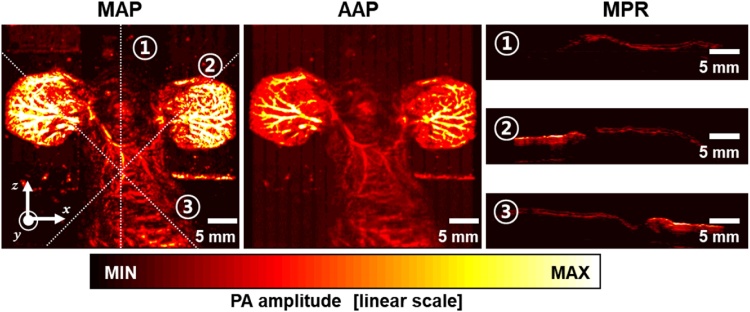

where is the position vector in the cylindrical coordinate system, is the position vector in the Cartesian coordinate system, T is the coordinate transform matrix, and R is the viewpoint rotation matrix. A projection image is provided for navigation. The shear warp factorization algorithm [48], implemented on a multi-core CPU with SSE, is used to render the projection image in an arbitrary viewing direction. For the projection image, a MAP or average amplitude projection (AAP) image can be used. Fig. 11 shows one MAP image, one AAP image, and three MPR slices cut along the lines “1”, “2”, and “3” in the MAP image. Note that the navigation map image plays an important role in selecting the appropriate MPR configuration angle. In Fig. 11, the MAP image represents the distribution of high-amplitude signals, while the AAP image reflects regions of high signal density.
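Extracting an MPR slice along a cursor line in the navigation map can be sketched as below. This is an assumption-laden illustration rather than Eq. (10): the slice is taken as the plane that contains the cursor line and the depth axis, the volume is indexed [z][y][x], and bilinear interpolation is used within each depth plane. The function names and parameters are hypothetical.

```python
import math

def bilinear(plane, x, y):
    """Bilinear interpolation in one depth plane indexed [y][x]; zero outside the plane."""
    ny, nx = len(plane), len(plane[0])
    if not (0.0 <= x <= nx - 1 and 0.0 <= y <= ny - 1):
        return 0.0
    x0 = min(int(x), nx - 2)
    y0 = min(int(y), ny - 2)
    fx, fy = x - x0, y - y0
    top = (1 - fx) * plane[y0][x0] + fx * plane[y0][x0 + 1]
    bottom = (1 - fx) * plane[y0 + 1][x0] + fx * plane[y0 + 1][x0 + 1]
    return (1 - fy) * top + fy * bottom

def mpr_slice(volume, cx, cy, angle_deg, half_length, step=1.0):
    """Slice through (cx, cy) along a line rotated by angle_deg in the x-y plane.

    Returns slice_image[z][s], where s runs along the cursor line.
    """
    angle = math.radians(angle_deg)
    dx, dy = math.cos(angle), math.sin(angle)
    count = int(half_length / step)
    offsets = [s * step for s in range(-count, count + 1)]
    return [[bilinear(plane, cx + s * dx, cy + s * dy) for s in offsets]
            for plane in volume]

# Test volume whose voxel value encodes its own position: x + 10*y + 100*z.
volume = [[[x + 10 * y + 100 * z for x in range(5)] for y in range(5)] for z in range(2)]
slice_x = mpr_slice(volume, 2.0, 2.0, 0.0, 2.0)    # cursor line along x
slice_y = mpr_slice(volume, 2.0, 2.0, 90.0, 2.0)   # cursor line along y
```

Rotating the cursor only changes the in-plane sampling direction, so the two orthogonal MPR views in such a scheme are the same call with angles 90° apart.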

Fig. 11.

Maximum amplitude projection (MAP) image, average amplitude projection (AAP) image, and multiplanar reconstruction (MPR) images extracted along the lines “1”, “2”, and “3” in the MAP image.

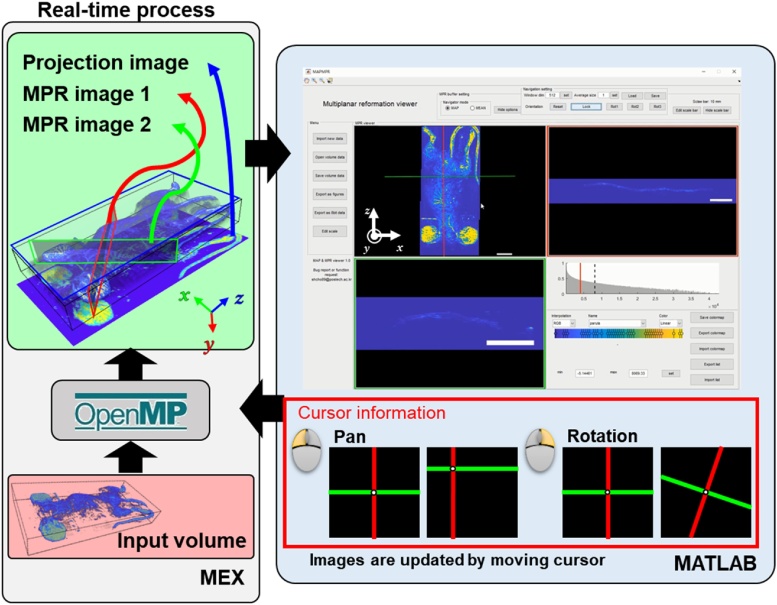

3.4.2.2. Application

The user interface and control method of the MPR viewer application are shown in Fig. 12 and Supplementary Movie S4. The image reconstruction process is accelerated with the OpenMP library through multi-core CPU parallelization. The MEX function reconstructs the MPR and projection images based on the input parameters, and the imaging results are displayed in the GUI. The GUI has three parts: a navigation map, two MPR viewers, and a transfer function editor. The navigation cursor consists of two orthogonal lines with a center point in the projection map image. The position and angle of the cursor and the orthogonal lines are controlled by mouse movement. When the navigation cursor moves, the two MPR slices are updated automatically. The transfer function editor has interactive controls to instantly adjust the dynamic range and color map. The viewing direction in the navigation map can be rotated by right angles about each axis or in an arbitrary direction. The arbitrary rotation is controlled by mouse motion.

Fig. 12.

User interface of the “Multiplanar reformation viewer” application (Supplementary Movie S4). MPR: multiplanar reformation.

3.4.3. Volume renderer with a moviemaker

3.4.3.1. Algorithm

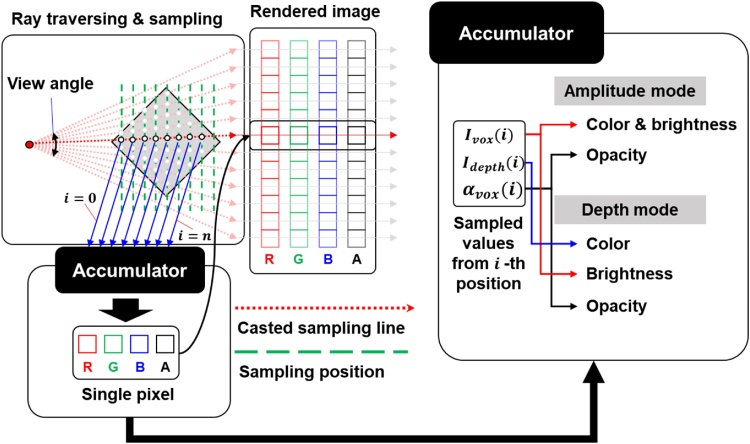

We use a ray casting algorithm, which can provide the best image quality among volume rendering algorithms [49], for the “Volume renderer” application. Because the volume rendering process is more computationally expensive than any other process, CUDA GPGPU acceleration is leveraged to execute the rendering in real time. In volume rendering, it is important to classify the voxel information using opacity and colors [50]. We use color maps to visualize the PA amplitudes in “Amplitude mode” and the PA depth information in “Depth mode.” To classify the visible information, we simultaneously sample the PA amplitude and depth data. The detailed rendering process is shown in Fig. 13; it consists of ray traversing, sampling, and accumulation. The ray traversing process determines the sampling position from the traversing direction and the sampling depth. We sample values from the input data at the calculated sampling position by trilinear interpolation. The accumulation process converts the sampled values to red, green, blue, and opacity values and accumulates them to generate the rendered 2D image. In Fig. 13, Ivox(i) is the sampled value that represents the PA signal amplitude, Idepth(i) is the sampled imaging depth information of the volume, and αvox(i) is the opacity of the voxel data at the i-th sampling position. Ivox(i) determines the voxel’s color and brightness in the “Amplitude mode” and its brightness in the “Depth mode”. Idepth(i) determines the voxel’s color in the “Depth mode”.
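The traversing and sampling stages can be illustrated on the CPU with a short sketch. It is not the CUDA kernel used by the application: it marches a single ray with a fixed step through a volume indexed [z][y][x] and samples it by trilinear interpolation, and the names `trilinear` and `sample_ray` are hypothetical.

```python
import math

def trilinear(volume, x, y, z):
    """Trilinear interpolation in a volume indexed [z][y][x]; zero outside the volume."""
    nz, ny, nx = len(volume), len(volume[0]), len(volume[0][0])
    if not (0.0 <= x <= nx - 1 and 0.0 <= y <= ny - 1 and 0.0 <= z <= nz - 1):
        return 0.0
    x0, y0, z0 = min(int(x), nx - 2), min(int(y), ny - 2), min(int(z), nz - 2)
    fx, fy, fz = x - x0, y - y0, z - z0
    result = 0.0
    for dz in (0, 1):
        for dy in (0, 1):
            for dx in (0, 1):
                # Weight of each of the eight surrounding voxels.
                weight = ((fx if dx else 1.0 - fx)
                          * (fy if dy else 1.0 - fy)
                          * (fz if dz else 1.0 - fz))
                result += weight * volume[z0 + dz][y0 + dy][x0 + dx]
    return result

def sample_ray(volume, origin, direction, n_steps, step=1.0):
    """Sample the volume at equally spaced positions along one ray."""
    norm = math.sqrt(sum(d * d for d in direction))
    ux, uy, uz = (d / norm for d in direction)
    ox, oy, oz = origin
    return [trilinear(volume, ox + i * step * ux, oy + i * step * uy, oz + i * step * uz)
            for i in range(n_steps)]

# Test volume whose voxel value encodes its own position: x + 10*y + 100*z.
volume = [[[x + 10 * y + 100 * z for x in range(3)] for y in range(3)] for z in range(3)]
samples = sample_ray(volume, origin=(0.0, 0.0, 0.0), direction=(0.0, 0.0, 1.0), n_steps=3)
```

In a full renderer one such ray is cast per output pixel, which is why the workload maps naturally onto one GPU thread per pixel.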

Fig. 13.

Principle of the volume ray casting process. Ivox, the sampled PA signal amplitude value; Idepth, the sampled PA imaging depth value; and αvox, the opacity of the sample.

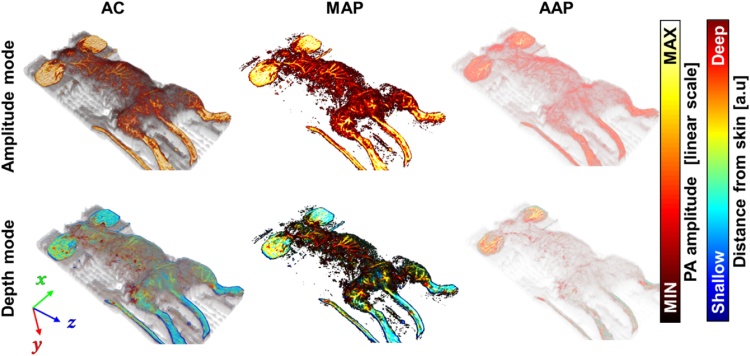

Fig. 14 shows the rendering examples and corresponding algorithms in the “Amplitude mode” and “Depth mode.” For the accumulation process, alpha composition (AC), MAP, and AAP methods are implemented. To render a single pixel, AC accumulates the sampled voxels with partial transparency [51], MAP finds the maximum value among the sampled voxels, and AAP returns the average value of the sampled voxels along the corresponding ray. We used the depth index information generated by the “Skin profile estimator and depth encoder” application.
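The three accumulation rules can be compared on the samples of a single ray. The sketch below uses the common front-to-back form of alpha compositing with early termination; whether 3D PHOVIS composites front-to-back or back-to-front is not stated in the text, so that choice and the function names are assumptions.

```python
def alpha_composite(samples, opacities, cutoff=0.999):
    """Front-to-back compositing of scalar samples with per-sample opacity."""
    color, alpha = 0.0, 0.0
    for value, opacity in zip(samples, opacities):
        color += (1.0 - alpha) * opacity * value   # contribution seen through what is in front
        alpha += (1.0 - alpha) * opacity           # accumulated opacity
        if alpha >= cutoff:                        # the ray is effectively opaque
            break
    return color

def maximum_projection(samples):
    """MAP: the largest sample along the ray."""
    return max(samples)

def average_projection(samples):
    """AAP: the mean of the samples along the ray."""
    return sum(samples) / len(samples)

ray_samples = [0.2, 1.0, 0.4]
ray_opacities = [0.5, 0.5, 0.5]
ac_value = alpha_composite(ray_samples, ray_opacities)
map_value = maximum_projection(ray_samples)
aap_value = average_projection(ray_samples)
```

For these samples AC yields 0.4, MAP yields 1.0, and AAP yields about 0.53: AC attenuates the bright second sample by what lies in front of it, MAP keeps only that sample, and AAP dilutes it with its neighbors.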

Fig. 14.

Photoacoustic amplitude and depth images processed through the AC, MAP, and AAP algorithms. AC: alpha composition, MAP: maximum amplitude projection, AAP: average amplitude projection.

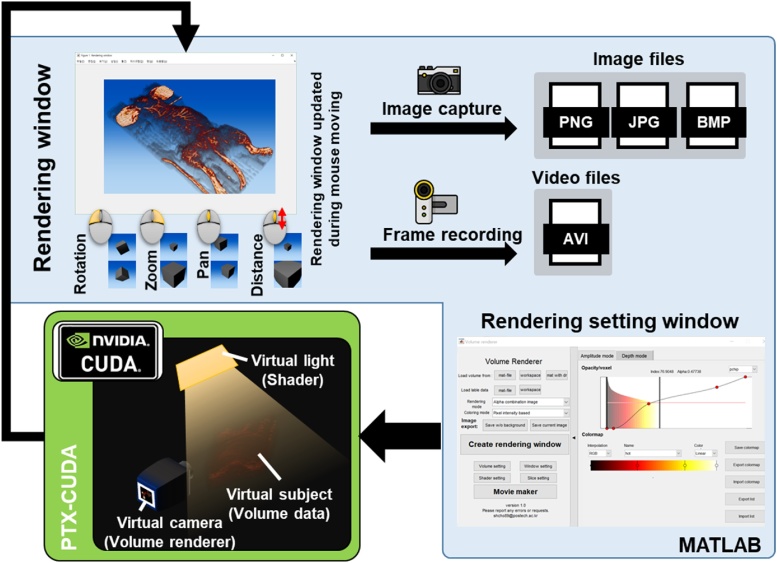

3.4.3.2. Application

The volume rendering application specializes in interactive, real-time, high-quality volume rendering and automatic motion frame generation. CUDA GPGPU acceleration technology is utilized for real-time volume rendering. Fig. 15 and Supplementary Movie S5 show the user interface of the “Volume renderer” application. To visualize the volume data, the CUDA function generates a volume-rendered image corresponding to the input data, and the resulting image is then displayed in the rendering window. The view orientation and perspective of an image can be fully adjusted in the rendering window with mouse control, whereas other software systems typically use a fixed distance between the camera and the volume or support only non-perspective projection. The rendering results can be captured as an image file, or multiple image frames, which are generated by the automatic frame generator, can be recorded as a video file. Example videos created by this application are available in Supplementary Movies S6.

Fig. 15.

User interface of the “Volume renderer” application (Supplementary Movie S5). Example videos created by this application are available in Supplementary Movies S6–11.

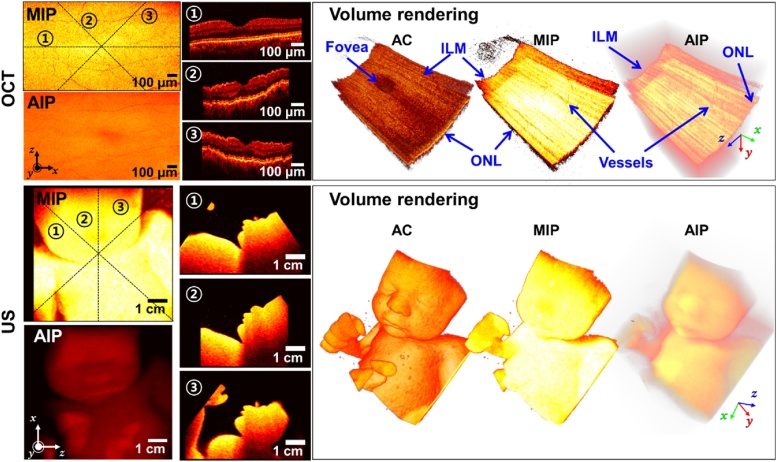

We also explored the feasibility of our volume renderer for 3D OCT and US imaging. Fig. 16 shows the 2D projection, MPR images, and 3D volume-rendered OCT and US images. The volume-rendered movies are shown in Supplementary Movies S7-8. The OCT data published by Golabbakhsh et al. [25] were used for OCT image rendering, and we acquired the 3D US volume data of a fetus phantom (Model 065-36, CIRS Inc., Norfolk, VA) with a mechanically swiveling 3D transducer interfaced to the EUB-6500 ultrasound machine (Hitachi Healthcare America, USA). The OCT and US maximum intensity projection (MIP, equivalent to MAP) and average intensity projection (AIP, equivalent to AAP) images are based on optical and acoustic scattering, respectively. These examples suggest that our volume renderer is flexible enough for use with various 3D volumetric imaging modalities.

Fig. 16.

OCT and US MIP and AIP images, the corresponding MPR images cut along the lines “1”, “2”, and “3” in the MIP images from the “MPR viewer”, and 3D volume-rendered images processed by the AC, MIP, and AIP algorithms. The 3D volume-rendered OCT and US images are shown in Supplementary Movies S10–11. AC: alpha composition, AIP: average intensity projection, MIP: maximum intensity projection, OCT: optical coherence tomography, US: ultrasound, ILM: inner limiting membrane, ONL: outer nuclear layer.

4. System requirements

Software kits and user manuals are available on the Bio Optics and Acoustics Laboratory (BOA-Lab) website (www.boa-lab.com). All features are designed to work on 64-bit Windows platforms. All MEX functions have been compiled with a 64-bit compiler, so this software kit does not work with 32-bit MATLAB. MATLAB R2016a or later is recommended for normal operation. The Parallel Computing Toolbox and the Image Processing Toolbox must be installed with MATLAB. Our software kit requires a screen resolution of 1920 × 1080 or higher for normal use; at lower resolutions, the application windows do not display images appropriately on the screen. The scan converter and volume renderer applications require a CUDA-capable graphics card. We further recommend using a modern multi-core processor. 3D PHOVIS was developed and tested on workstations with an Intel i5-4670 processor and an NVIDIA GTX 750 Ti graphics card.

5. Discussion and conclusion

A variety of innovations have enhanced 3D PA imaging performance, but ours is the first effort to enhance the visualization of PA images, which is critical for the analysis of results. Here, we demonstrated a specialized 3D visualization software package, 3D PHOVIS, specifically targeting photoacoustic data, image, and visualization processes. We implemented 3D PHOVIS in MATLAB with a graphical user interface and developed multi-core graphics processing unit modules for fast processing. The five main components of 3D PHOVIS, namely the mosaic volume generator, scan converter, skin profile estimator and depth encoder, MPR viewer, and volume renderer, each have unique advantages. The “Mosaic volume generator” gives users the flexibility to intuitively and manually manipulate data volumes for better registration. Our maximum filtering method does not cause any signal loss or distortion, unlike other commonly used blending methods. Precise manual positioning and window weighting further minimize data discrepancy for mosaic imaging [24]. The scan conversion algorithm proposed here corrects the distortion of nonlinear mirror scanning in PAM. The “Skin profile estimator and depth encoder” application provides a convenient depth peeling feature that allows us to visualize the unique characteristics of biological tissues based on depth information. The Gaussian brush tool in the “Skin profile estimator” can effectively correct false estimates caused by noise or artifacts. The intuitive slicing position control in the “MPR viewer” application is beneficial for analyzing PA images and other 3D images such as US and OCT images. In the “Volume renderer” application, the three volume rendering algorithms (AC, MAP, and AAP) each reveal distinct characteristics of the volume data. As shown in Fig. 14, AC produces the most stereoscopic image, MAP highlights strong signals with a high contrast ratio, and AAP represents the signal density in the viewing direction.
Because we implemented 3D PHOVIS on the widely used MATLAB platform, researchers can incorporate their own newly developed visualization algorithms to improve the image quality further. 3D PHOVIS can directly import any type of data from the MATLAB workspace. In addition, we demonstrated the applicability of this software to ultrasound imaging and optical coherence tomography. User manuals and application files for 3D PHOVIS are available for free on the website (www.boa-lab.com). We believe 3D PHOVIS provides a unique tool for PA imaging researchers, will expedite the growth of the field, and will attract broad interest in a wide range of studies. In addition, our software has several advantages over commercially available packages: it is free, its applications are optimized for PA imaging, and it is based on MATLAB, a commonly used research platform.

There are some limitations to 3D PHOVIS, which can be overcome in the near future. The proposed scan conversion algorithm only works for 1-axis cylindrical scanning; different algorithms exist for 2-axis mirror scanning [35] and other scanning mechanisms, and these can be incorporated into 3D PHOVIS. Some modules are operator dependent, such as the “Mosaic volume generator” and “Skin profile estimator and depth encoder” applications. Future research can automate these applications and incorporate them into 3D PHOVIS for other researchers to use. In addition, since all the features and class methods of 3D PHOVIS are designed for GUI input, they are not well suited to scripted use without the GUI. More sophistication can be added to the GUI control. Nevertheless, we strongly believe the proposed 3D visualization tool is very valuable for high-quality research activities in the field of biological and biomedical imaging.

Declaration of Competing Interest

Chulhong Kim has financial interests in OPTICHO, which, however, did not support this work.

Acknowledgments

This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (No. NRF-2019R1A2C2006269).

Biographies

Seonghee Cho completed his B.H.S in Dental Laboratory Science and Engineering and B.E in Biomedical Engineering at Korea University. He is now a Ph.D. candidate in the School of Interdisciplinary Bioscience and Bioengineering at POSTECH. His research interests include ultrasound and photoacoustic imaging and high performance computing.

Jin-woo Baik completed his B.S in Electrical Engineering at POSTECH, and now he is a Ph.D. candidate in the Creative IT Engineering Department at POSTECH. His research interests include photoacoustic microscopy and deep learning.

Ravi Managuli received the Ph.D. degree in electrical engineering from the University of Washington, Seattle, in 2000. He is a Research Scientist at Hitachi Healthcare America, Seattle, WA, and is also an Affiliate Faculty Member at the University of Washington, Seattle. His research interests are in systems, algorithms, and applications for medical imaging, digital signal processing, image processing, computer architecture, AI, and real-time multimedia applications.

Dr. Chulhong Kim studied for his Ph.D. degree and completed his postdoctoral training at Washington University in St. Louis, St. Louis, Missouri. He currently holds the Mueunjae Chaired Professorship and is an Associate Professor of Creative IT Engineering at Pohang University of Science and Technology in the Republic of Korea. He was the recipient of the 2017 IEEE EMBS Early Career Achievement Award, the 2017 Korean Academy of Science and Technology Young Scientist Award, the 2016 Nightingale Award from IFMBE, and the 2017 KOSOMBE Young Investigator Award for “Contributions to multi-scale photoacoustic imaging from super-resolution atomic force photoactivated microscopy for research to systems for clinical applications.”

Footnotes

Supplementary material related to this article can be found, in the online version, at doi:https://doi.org/10.1016/j.pacs.2020.100168.

Contributor Information

Seonghee Cho, Email: shcho89@postech.ac.kr.

Jinwoo Baik, Email: bjwpp@postech.ac.kr.

Ravi Managuli, Email: ravim@uw.edu, ravim@u.washington.edu.

Chulhong Kim, Email: chulhong@postech.edu.


References

- 1. Wang L.V., Yao J. A practical guide to photoacoustic tomography in the life sciences. Nat. Methods. 2016;13(8):627–638. doi: 10.1038/nmeth.3925.

- 2. Moore M.J., El-Rass S., Xiao Y., Wang Y., Wen X.-Y., Kolios M.C. Simultaneous ultra-high frequency photoacoustic microscopy and photoacoustic radiometry of zebrafish larvae in vivo. Photoacoustics. 2018;12:14–21. doi: 10.1016/j.pacs.2018.08.004.

- 3. Wong T.T.W., Zhang R., Zhang C., Hsu H.C., Maslov K.I., Wang L., Shi J., Chen R., Shung K.K., Zhou Q., Wang L.V. Label-free automated three-dimensional imaging of whole organs by microtomy-assisted photoacoustic microscopy. Nat. Commun. 2017;8(1):1386. doi: 10.1038/s41467-017-01649-3.

- 4. Arthuis C.J., Novell A., Raes F., Escoffre J.M., Lerondel S., Le Pape A., Bouakaz A., Perrotin F. Real-time monitoring of placental oxygenation during maternal hypoxia and hyperoxygenation using photoacoustic imaging. PLoS One. 2017;12(1) doi: 10.1371/journal.pone.0169850.

- 5. Kim J., Kim J.Y., Jeon S., Baik J.W., Cho S.H., Kim C. Super-resolution localization photoacoustic microscopy using intrinsic red blood cells as contrast absorbers. Light Sci. Appl. 2019;8(1):1–11. doi: 10.1038/s41377-019-0220-4.

- 6. Park B., Lee H., Jeon S., Ahn J., Kim H.H., Kim C. Reflection‐mode switchable subwavelength Bessel‐beam and Gaussian‐beam photoacoustic microscopy in vivo. J. Biophotonics. 2019;12(2) doi: 10.1002/jbio.201800215.

- 7. Liang S., Lashkari B., Choi S.S.S., Ntziachristos V., Mandelis A. The application of frequency-domain photoacoustics to temperature-dependent measurements of the Grüneisen parameter in lipids. Photoacoustics. 2018;11:56–64. doi: 10.1016/j.pacs.2018.07.005.

- 8. Xu Z., Li C., Wang L.V. Photoacoustic tomography of water in phantoms and tissue. J. Biomed. Opt. 2010;15(3) doi: 10.1117/1.3443793.

- 9. Shi J., Wong T.T., He Y., Li L., Zhang R., Yung C.S., Hwang J., Maslov K., Wang L.V. High-resolution, high-contrast mid-infrared imaging of fresh biological samples with ultraviolet-localized photoacoustic microscopy. Nat. Photonics. 2019:1. doi: 10.1038/s41566-019-0441-3.

- 10. Jeon M., Kim J., Kim C. Multiplane spectroscopic whole-body photoacoustic imaging of small animals in vivo. Med. Biol. Eng. Comput. 2016;54(2–3):283–294. doi: 10.1007/s11517-014-1182-6.

- 11. Choi W., Park E.Y., Jeon S., Kim C. Clinical photoacoustic imaging platforms. Biomed. Eng. Lett. 2018;8(2):139–155. doi: 10.1007/s13534-018-0062-7.

- 12. Shiina T., Toi M., Yagi T. Development and clinical translation of photoacoustic mammography. Biomed. Eng. Lett. 2018;8(2):157–165. doi: 10.1007/s13534-018-0070-7.

- 13. Upputuri P.K., Pramanik M. Fast photoacoustic imaging systems using pulsed laser diodes: a review. Biomed. Eng. Lett. 2018;8(2):167–181. doi: 10.1007/s13534-018-0060-9.

- 14. Jeon S., Park E.-Y., Choi W., Managuli R., Jong Lee K., Kim C. Real-time delay-multiply-and-sum beamforming with coherence factor for in vivo clinical photoacoustic imaging of humans. Photoacoustics. 2019;15 doi: 10.1016/j.pacs.2019.100136.

- 15. Liu C., Liao J., Chen L., Chen J., Ding R., Gong X., Cui C., Pang Z., Zheng W., Song L. The integrated high-resolution reflection-mode photoacoustic and fluorescence confocal microscopy. Photoacoustics. 2019;14:12–18. doi: 10.1016/j.pacs.2019.02.001.

- 16. Liu W., Yao J. Photoacoustic microscopy: principles and biomedical applications. Biomed. Eng. Lett. 2018;8(2):203–213. doi: 10.1007/s13534-018-0067-2.

- 17. Lee S., Kwon O., Jeon M., Song J., Shin S., Kim H., Jo M., Rim T., Doh J., Kim S. Super-resolution visible photoactivated atomic force microscopy. Light Sci. Appl. 2017;6(11) doi: 10.1038/lsa.2017.80.

- 18. Cai X., Zhang Y., Li L., Choi S.-W., MacEwan M.R., Yao J., Kim C., Xia Y., Wang L.V. Investigation of neovascularization in three-dimensional porous scaffolds in vivo by a combination of multiscale photoacoustic microscopy and optical coherence tomography. Tissue Eng. Part C: Methods. 2012;19(3):196–204. doi: 10.1089/ten.tec.2012.0326.

- 19. Jung H., Park S., Gunassekaran G.R., Jeon M., Cho Y.-E., Baek M.-C., Park J.Y., Shim G., Oh Y.-K., Kim I.-S. A peptide probe enables photoacoustic-guided imaging and drug delivery to lung tumors in K-rasLA2 mutant mice. Cancer Res. 2019 doi: 10.1158/0008-5472.CAN-18-3089. canres.3089.2018.

- 20. Choi W., Kim C. Toward in vivo translation of super-resolution localization photoacoustic computed tomography using liquid-state dyed droplets. Light Sci. Appl. 2019;8 doi: 10.1038/s41377-019-0171-9.

- 21. Bi R., Dinish U., Goh C.C., Imai T., Moothanchery M., Li X., Kim J.Y., Jeon S., Pu Y., Kim C. In vivo label‐free functional photoacoustic monitoring of ischemic reperfusion. J. Biophotonics. 2019:e201800454. doi: 10.1002/jbio.201800454.

- 22. Shin M.H., Park E.Y., Han S., Jung H.S., Keum D.H., Lee G.H., Kim T., Kim C., Kim K.S., Yun S.H. Multimodal cancer theranosis using hyaluronate‐conjugated molybdenum disulfide. Adv. Healthc. Mater. 2019;8(1) doi: 10.1002/adhm.201801036.

- 23. Lee D., Beack S., Yoo J., Kim S.K., Lee C., Kwon W., Hahn S.K., Kim C. In vivo photoacoustic imaging of livers using biodegradable hyaluronic acid‐conjugated silica nanoparticles. Adv. Funct. Mater. 2018;28(22)

- 24. Baik J.W., Kim J.Y., Cho S., Choi S., Kim J., Kim C. Super wide-field photoacoustic microscopy of animals and humans in vivo. IEEE Trans. Med. Imaging. 2019 doi: 10.1109/TMI.2019.2938518.

- 25. Golabbakhsh M., Rabbani H. Vessel-based registration of fundus and optical coherence tomography projection images of retina using a quadratic registration model. IET Image Process. 2013;7(8):768–776.

- 26. Pravenaa S., Menaka R. A methodical review on image stitching and video stitching techniques. Int. J. Appl. Eng. Res. Dev. 2016;11(5):3442–3448.

- 27. Mills A., Dudek G. Image stitching with dynamic elements. Image Vis. Comput. 2009;27(10):1593–1602.

- 28. Szeliski R. Springer Science & Business Media; 2010. Computer Vision: Algorithms and Applications.

- 29. Gledhill D., Tian G.Y., Taylor D., Clarke D. Panoramic imaging—a review. Comput. Graph. 2003;27(3):435–445.

- 30. Jain P.M., Shandliya V. A review paper on various approaches for image mosaicing. Int. J. Computat. Eng. Res. 2013;3(4):106–109.

- 31. Liu C., Ma J., Ma Y., Huang J. Retinal image registration via feature-guided Gaussian mixture model. J. Opt. Soc. Am. A Opt. Image Sci. Vis. 2016;33(7):1267–1276. doi: 10.1364/JOSAA.33.001267.

- 32. Xie J., Huang S., Duan Z., Shi Y., Wen S. Correction of the image distortion for laser galvanometric scanning system. Opt. Laser Technol. 2005;37(4):305–311.

- 33. Zhuang B., Shamdasani V., Sikdar S., Managuli R., Kim Y. Real-time 3-d ultrasound scan conversion using a multicore processor. IEEE Trans. Inf. Technol. Biomed. 2009;13(4):571–574. doi: 10.1109/TITB.2008.2010856.

- 34. Duma V.-F., Lee K.-s., Meemon P., Rolland J.P. Experimental investigations of the scanning functions of galvanometer-based scanners with applications in OCT. Appl. Opt. 2011;50(29):5735–5749. doi: 10.1364/AO.50.005735.

- 35. Kim J.Y., Lee C., Park K., Lim G., Kim C. Fast optical-resolution photoacoustic microscopy using a 2-axis water-proofing MEMS scanner. Sci. Rep. 2015;5:7932. doi: 10.1038/srep07932.

- 36. Lee C., Kim J.Y., Kim C. Recent progress on photoacoustic imaging enhanced with microelectromechanical systems (MEMS) technologies. Micromachines (Basel) 2018;9(11) doi: 10.3390/mi9110584.

- 37. Kim S., Lee C., Kim J.Y., Kim J., Lim G., Kim C. Two-axis polydimethylsiloxane-based electromagnetic microelectromechanical system scanning mirror for optical coherence tomography. J. Biomed. Opt. 2016;21(10) doi: 10.1117/1.JBO.21.10.106001.

- 38. Kim J.Y., Lee C., Park K., Han S., Kim C. High-speed and high-SNR photoacoustic microscopy based on a galvanometer mirror in non-conducting liquid. Sci. Rep. 2016;6:34803. doi: 10.1038/srep34803.

- 39. Park K., Kim J.Y., Lee C., Jeon S., Lim G., Kim C. Handheld photoacoustic microscopy probe. Sci. Rep. 2017;7(1):13359. doi: 10.1038/s41598-017-13224-3.

- 40. Jeon S., Kim J., Lee D., Woo B.J., Kim C. Review on practical photoacoustic microscopy. Photoacoustics. 2019 doi: 10.1016/j.pacs.2019.100141.

- 41. Yoo H.W., Ito S., Schitter G. High speed laser scanning microscopy by iterative learning control of a galvanometer scanner. Control Eng. Pract. 2016;50:12–21.

- 42. Arrasmith C.L., Dickensheets D.L., Mahadevan-Jansen A. MEMS-based handheld confocal microscope for in-vivo skin imaging. Opt. Express. 2010;18(4):3805–3819. doi: 10.1364/OE.18.003805.

- 43. Jeon S., Song H.B., Kim J., Lee B.J., Managuli R., Kim J.H., Kim J.H., Kim C. In vivo photoacoustic imaging of anterior ocular vasculature: a random sample consensus approach. Sci. Rep. 2017;7(1):4318. doi: 10.1038/s41598-017-04334-z.

- 44. Sekiguchi H., Yoshikawa A., Matsumoto Y., Asao Y., Yagi T., Togashi K., Toi M. Body surface detection method for photoacoustic image data using cloth-simulation technique, photons plus ultrasound: imaging and sensing 2018. Int. Soc. Opt. Photonics. 2018:1049459.

- 45. Matsumoto Y., Asao Y., Yoshikawa A., Sekiguchi H., Takada M., Furu M., Saito S., Kataoka M., Abe H., Yagi T. Label-free photoacoustic imaging of human palmar vessels: a structural morphological analysis. Sci. Rep. 2018;8(1):786. doi: 10.1038/s41598-018-19161-z.

- 46. Zhang H.F., Maslov K., Wang L.V. Automatic algorithm for skin profile detection in photoacoustic microscopy. J. Biomed. Opt. 2009;14(2) doi: 10.1117/1.3122362.

- 47. Zhang Q., Eagleson R., Peters T.M. Volume visualization: a technical overview with a focus on medical applications. J. Digit. Imaging. 2011;24(4):640–664. doi: 10.1007/s10278-010-9321-6.

- 48. Lacroute P., Levoy M. Fast volume rendering using a shear-warp factorization of the viewing transformation. Proceedings of the 21st Annual Conference on Computer Graphics and Interactive Techniques; ACM; 1994. pp. 451–458.

- 49. Weiskopf D. Springer; 2007. GPU-Based Interactive Visualization Techniques.

- 50. Kniss J., Kindlmann G., Hansen C. Multidimensional transfer functions for interactive volume rendering. IEEE Trans. Vis. Comput. Graph. 2002;8(3):270–285.

- 51. Porter T., Duff T. Compositing digital images. ACM Siggraph Computer Graphics; ACM; 1984. pp. 253–259.
