Significance

Our main message is that the popular method of low-dimensional embeddings provably cannot capture important properties of real-world complex networks. A widely used algorithmic technique for modeling these networks is to construct a low-dimensional Euclidean embedding of the vertices of the network, where proximity of vertices is interpreted as the likelihood of an edge. Contrary to common wisdom, we argue that such graph embeddings do not capture salient properties of complex networks. We mathematically prove that low-dimensional embeddings cannot generate graphs with both low average degree and large clustering coefficients, which have been widely established to be empirically true for real-world networks. This establishes that popular low-dimensional embedding methods fail to capture significant structural aspects of real-world complex networks.

Keywords: graph embeddings, graph representations, low-dimensional embeddings, low-rank representations, singular value decomposition

Abstract

The study of complex networks is a significant development in modern science, and has enriched the social sciences, biology, physics, and computer science. Models and algorithms for such networks are pervasive in our society, and impact human behavior via social networks, search engines, and recommender systems, to name a few. A widely used algorithmic technique for modeling such complex networks is to construct a low-dimensional Euclidean embedding of the vertices of the network, where proximity of vertices is interpreted as the likelihood of an edge. Contrary to the common view, we argue that such graph embeddings do not capture salient properties of complex networks. The two properties we focus on are low degree and large clustering coefficients, which have been widely established to be empirically true for real-world networks. We mathematically prove that any embedding (that uses dot products to measure similarity) that can successfully create these two properties must have a rank that is nearly linear in the number of vertices. Among other implications, this establishes that popular embedding techniques such as singular value decomposition and node2vec fail to capture significant structural aspects of real-world complex networks. Furthermore, we empirically study a number of different embedding techniques based on dot product, and show that they all fail to capture the triangle structure.

Complex networks (or graphs) are a fundamental object of study in modern science, across domains as diverse as the social sciences, biology, physics, computer science, and engineering (1–3). Designing good models for these networks is a crucial area of research, and also affects society at large, given the role of online social networks in modern human interaction (4–6). Complex networks are massive, high-dimensional, discrete objects, and are challenging to work with in a modeling context. A common method of dealing with this challenge is to construct a low-dimensional Euclidean embedding that tries to capture the structure of the network (see ref. 7 for a recent survey). Formally, we think of the $n$ vertices as vectors $\vec{v}_1, \vec{v}_2, \ldots, \vec{v}_n \in \mathbb{R}^d$, where $d$ is typically constant (or very slowly growing in $n$). The likelihood of an edge $(i, j)$ is proportional to (usually a nonnegative monotone function in) $\vec{v}_i \cdot \vec{v}_j$ (8, 9). This gives a graph distribution that the observed network is assumed to be generated from.

The most important method to get such embeddings is the singular value decomposition (SVD) or other matrix factorizations of the adjacency matrix (8). Recently, there has also been an explosion of interest in using methods from deep neural networks to learn such graph embeddings (9–12) (refer to ref. 7 for more references). Regardless of the specific method, a key goal in building an embedding is to keep the dimension small—while trying to preserve the network structure—as the embeddings are used in a variety of downstream modeling tasks such as graph clustering, nearest-neighbor search, and link prediction (13). Yet a fundamental question remains unanswered: To what extent do such low-dimensional embeddings actually capture the structure of a complex network?

These models are often justified by treating the (few) dimensions as “interests” of individuals, and using similarity of interests (dot product) to form edges. Contrary to the dominant view, we argue that low-dimensional embeddings are not good representations of complex networks. We demonstrate mathematically and empirically that they lose local structure, one of the hallmarks of complex networks. This runs counter to the ubiquitous use of SVD in data analysis. The weaknesses of SVD have been empirically observed in recommendation tasks (14–16), and our result provides a mathematical validation of these findings.

Let us define the setting formally. Consider a set of vectors $\vec{v}_1, \vec{v}_2, \ldots, \vec{v}_n \in \mathbb{R}^d$ (denoted by the $d \times n$ matrix $V$) used to represent the $n$ vertices in a network. Let $\mathcal{G}_V$ denote the following distribution of graphs over the vertex set $[n]$. For each index pair $i, j$, independently insert (undirected) edge $(i, j)$ with probability $\max(0, \min(\vec{v}_i \cdot \vec{v}_j, 1))$. (If $\vec{v}_i \cdot \vec{v}_j$ is negative, $(i, j)$ is never inserted. If $\vec{v}_i \cdot \vec{v}_j \geq 1$, $(i, j)$ is always inserted.) We will refer to this model as the “embedding” of a graph $G$, and focus on this formulation in our theoretical results. This is a standard model in the literature, and subsumes the classic Stochastic Block Model (17) and Random Dot Product Model (18, 19). There are alternate models that use different functions of the dot product for the edge probability, which are discussed in Alternate Models. Matrix factorization is a popular method to obtain such a vector representation: The original adjacency matrix $A$ is “factorized” as $V^T V$, where the columns of $V$ are $\vec{v}_1, \vec{v}_2, \ldots, \vec{v}_n$.
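A minimal sketch of sampling one graph from $\mathcal{G}_V$, assuming NumPy is available. The function names and the random example embedding are ours (hypothetical), not the authors' code; the sketch simply applies the truncated dot product to every unordered pair and flips one independent coin per pair.

```python
import numpy as np

def tdp_edge_probabilities(V):
    """Edge probabilities max(0, min(v_i . v_j, 1)) for the columns of V (shape d x n)."""
    gram = V.T @ V                      # n x n matrix of dot products
    probs = np.clip(gram, 0.0, 1.0)     # negatives become 0, values above 1 become 1
    np.fill_diagonal(probs, 0.0)        # no self-loops in the sampled graph
    return probs

def sample_graph(V, rng):
    """Draw one graph from G_V; returns a symmetric 0/1 adjacency matrix."""
    probs = tdp_edge_probabilities(V)
    n = probs.shape[0]
    coins = rng.random((n, n))
    upper = np.triu(coins < probs, k=1)  # one independent coin per unordered pair i < j
    return (upper | upper.T).astype(int)

rng = np.random.default_rng(0)
V = rng.normal(scale=0.3, size=(4, 50))  # hypothetical 4-dimensional embedding of 50 vertices
A = sample_graph(V, rng)
```

Sampling only the upper triangle and mirroring it keeps the graph undirected while preserving independence across pairs.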

Two hallmarks of real-world graphs are 1) sparsity, where the average degree is typically constant with respect to , and 2) triangle density, where there are many triangles incident to low-degree vertices (5, 20–22). The large number of triangles is considered a local manifestation of community structure. Triangle counts have a rich history in the analysis and algorithmics of complex networks. Concretely, we measure these properties simultaneously as follows.

Definition 1. For parameters $c$ and $\Delta$, a graph $G$ with $n$ vertices has a $(c, \Delta)$-triangle foundation if there are at least $\Delta n$ triangles contained among vertices of degree at most $c$. Formally, let $S_c$ be the set of vertices of degree at most $c$. Then, the number of triangles in the graph induced by $S_c$ is at least $\Delta n$.
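To make Definition 1 concrete, here is a minimal pure-Python sketch that computes the largest $\Delta$ certified by a given degree threshold $c$, for a graph given as an edge list. The function name is hypothetical. Degrees are measured in the whole graph, and $\Delta$ is normalized by the total number of vertices $n$, not by $|S_c|$.

```python
from itertools import combinations

def triangle_foundation_delta(n, edges, c):
    """Return (#triangles among vertices of degree <= c) / n, i.e. Delta in Definition 1."""
    adj = {v: set() for v in range(n)}
    for u, v in edges:
        if u != v:
            adj[u].add(v)
            adj[v].add(u)
    low = {v for v in range(n) if len(adj[v]) <= c}   # S_c, degrees taken in the full graph
    triangles = 0
    for u in low:
        # count each triangle once, from its smallest vertex u
        nbrs = sorted(w for w in adj[u] if w in low and w > u)
        for v, w in combinations(nbrs, 2):
            if w in adj[v]:
                triangles += 1
    return triangles / n

# A triangle {0,1,2} plus a 4-clique {3,4,5,6}.
edges = [(0, 1), (1, 2), (0, 2), (3, 4), (3, 5), (4, 5), (3, 6), (4, 6), (5, 6)]
print(triangle_foundation_delta(7, edges, 2), triangle_foundation_delta(7, edges, 3))
```

With $c = 2$ only the isolated triangle survives, while $c = 3$ keeps all five triangles.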

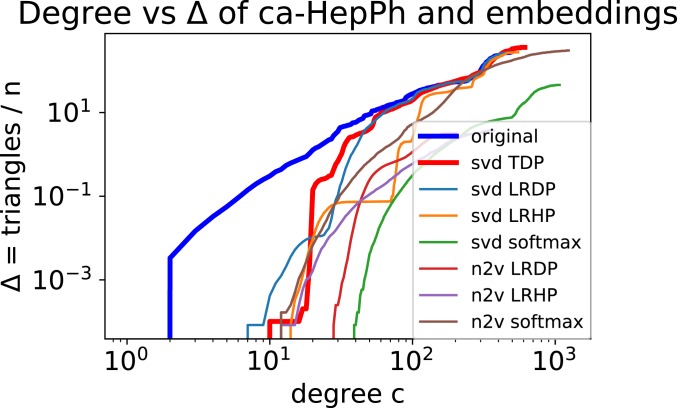

Typically, we think of both $c$ and $\Delta$ as constants. We emphasize that $n$ is the total number of vertices in $G$, not the number of vertices in $S_c$ (as defined above). Refer to the real-world graphs in Table 1. In Fig. 1, we plot the value of $c$ vs. $\Delta$. (Specifically, the $y$ axis is the number of triangles divided by $n$.) This is obtained by simply counting the number of triangles contained in the set of vertices of degree at most $c$. Observe that, for all graphs, small constant values of $c$ already yield a sizable constant value of $\Delta$.

Table 1.

Datasets used

| Dataset name | Network type | Number of nodes | Number of edges |
| --- | --- | --- | --- |
| Facebook (29) | Social network | 4,000 | 88,000 |
| cit-HepPh (31, 32) | Citation | 34,000 | 420,000 |
| String_hs (30) | PPI | 19,000 | 5.6 million |
| ca-HepPh (29) | Coauthorship | 12,000 | 120,000 |

All numbers are rounded to one decimal point of precision. PPI, protein–protein interaction.

Fig. 1.

Plots of degree $c$ vs. $\Delta$: For a High Energy Physics coauthorship network, we plot $c$ versus the total number of triangles only involving vertices of degree at most $c$. We divide the latter by the total number of vertices $n$, so it corresponds to $\Delta$, as in Definition 1. We plot these both for the original graph (in thick blue) and for a variety of embeddings (explained in Alternate Models). For each embedding, we plot the maximum $\Delta$ in a set of 100 samples from a 100-dimensional embedding. The embedding analyzed by our main theorem (TDP) is given in thick red. Observe how the embeddings generate graphs with very few triangles among low-degree vertices. The gap in $\Delta$ for low degree is two to three orders of magnitude. The other lines correspond to alternate embeddings, using the node2vec vectors and/or different functions of the dot product.

Our main result is that any embedding of graphs that generates graphs with $(c, \Delta)$-triangle foundations, with constant $c$ and $\Delta$, must have near-linear rank. This contradicts the belief that low-dimensional embeddings capture the structure of real-world complex networks.

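Theorem 1. Fix $c > 4$, $\Delta > 0$. Suppose the expected number of triangles in $G \sim \mathcal{G}_V$ that only involve vertices of expected degree at most $c$ is at least $\Delta n$. Then, the rank of $V$ is at least $\min(1, \mathrm{poly}(\Delta/c)) \cdot n/\lg^2 n$.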

Equivalently, graphs generated from low-dimensional embeddings cannot contain many triangles only on low-degree vertices. We point out an important implication of this theorem for Stochastic Block Models. In this model, each vertex is modeled as a vector in $[0, 1]^k$, where the $i$th entry indicates the likelihood of being in the $i$th community. The probability of an edge is exactly the dot product. In community detection applications, $k$ is thought of as a constant, or at least as much smaller than $n$. On the contrary, Theorem 1 implies that $k$ must be nearly linear in $n$ to accurately model the low-degree triangle behavior.

Empirical Validation

We empirically validate the theory on a collection of complex networks detailed in Table 1. For each real-world graph, we compute a 100-dimensional embedding through SVD (basically, the top 100 singular vectors of the adjacency matrix). We generate 100 samples of graphs from these embeddings, and compute their $c$ vs. $\Delta$ plot. This is plotted with the true $c$ vs. $\Delta$ plot. (To account for statistical variation, we plot the maximum value of $\Delta$ observed in the samples, over all graphs. The variation observed was negligible.) Fig. 1 shows such a plot for a physics coauthorship network. More results are given in SI Appendix.

Note that this plot is significantly off the mark at low degrees for the embedding. Around the lowest degree, the value of $\Delta$ (for the graphs generated by the embedding) is two to three orders of magnitude smaller than the original value. This demonstrates that the local triangle structure is destroyed around low-degree vertices. Interestingly, the total number of triangles is preserved well, as shown toward the right side of each plot. Thus, a nuanced view of the triangle distribution, as given in Definition 1, is required to see the shortcomings of low-dimensional embeddings.

Alternate Models

We note that several other functions of dot product have been proposed in the literature, such as the softmax function (10, 12) and linear models of the dot product (7). Theorem 1 does not have direct implications for such models, but our empirical validation holds for them as well. The embedding in Theorem 1 uses the truncated dot product (TDP) function to model edge probabilities. We construct other embeddings that compute edge probabilities using machine learning models with the dot product and Hadamard product as features. This subsumes linear models as given in ref. 7. Indeed, the TDP can be smoothly approximated as a logistic function. We also consider (scaled) softmax functions, as in ref. 10, and standard machine learning models [Logistic Regression on the Dot Product (LRDP) and Logistic Regression on the Hadamard Product (LRHP)]. (Details about these models are given in Alternate Graph Models.)

For each of these models (softmax, LRDP, and LRHP), we perform the same experiment described above. Fig. 1 also shows the plots for these other models. Observe that none of them capture the low-degree triangle structure, and their $\Delta$ values are all two to three orders of magnitude lower than the original.

In addition (to the extent possible), we compute vector embeddings from a recent deep learning-based method [node2vec (12)]. We again use all of the edge probability models discussed above, and perform an identical experiment (in Fig. 1, these are denoted by “n2v”). Again, we observe that the low-degree triangle behavior is not captured by these deep learned embeddings.

Broader Context

The use of geometric embeddings for graph analysis has a rich history, arguably going back to spectral clustering (23). In recent years, the Stochastic Block Model has become quite popular in the statistics and algorithms community (17), and the Random Dot Product Graph model is a generalization of this notion [refer to recent surveys (19, 24)]. As mentioned earlier, Theorem 1 brings into question the standard uses of these methods to model social networks. The use of vectors to represent vertices is sometimes referred to as latent space models, where geometric proximity models the likelihood of an edge. Although dot products are widely used, we note that some classic latent space approaches use Euclidean distance (as opposed to dot product) to model edge probabilities (25), and this may avoid the lower bound of Theorem 1. Beyond graph analysis, the method of Latent Semantic Indexing also falls in the setting of Theorem 1, wherein we have a low-dimensional embedding of “objects” (like documents), and similarity is measured by dot product (https://en.wikipedia.org/wiki/Latent_semantic_analysis).

High-Level Description of the Proof

In this section, we sketch the proof of Theorem 1. The sketch provides sufficient detail for a reader who wants to understand the reasoning behind our result, but is not concerned with technical details. We will make the simplifying assumption that all $\vec{v}_i$ have the same length $L$. We note that this setting is interesting in its own right, since it is often the case, in practice, that all vectors are nonnegative and normalized. In this case, we get a stronger rank lower bound that is linear in $n$. Dealing with Varying Lengths provides intuition on how we can remove this assumption. The full details of the proof are given in Proof of Theorem 1.

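First, we lower-bound $L$. By Cauchy–Schwarz, $\vec{v}_i \cdot \vec{v}_j \le L^2$. Let $X_{i,j}$ be the indicator random variable for the edge $(i, j)$ being present. Observe that all $X_{i,j}$ are independent, and $\mathbb{E}[X_{i,j}] = \max(0, \min(\vec{v}_i \cdot \vec{v}_j, 1)) \le L^2$.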

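The expected number of triangles in $G \sim \mathcal{G}_V$ is

$$\mathbb{E}[\#\text{triangles}] = \frac{1}{6} \sum_{\substack{i, j, k \\ \text{distinct}}} \mathbb{E}[X_{i,j} X_{j,k} X_{i,k}] = \frac{1}{6} \sum_{\substack{i, j, k \\ \text{distinct}}} \mathbb{E}[X_{i,j}]\, \mathbb{E}[X_{j,k}]\, \mathbb{E}[X_{i,k}] \tag{1}$$

$$\le L^2 \sum_{i, j, k} \mathbb{E}[X_{i,j}]\, \mathbb{E}[X_{j,k}] \tag{2}$$

$$= L^2 \sum_{j} \Big( \sum_{i} \mathbb{E}[X_{i,j}] \Big)^2. \tag{3}$$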

Note that $\sum_i \mathbb{E}[X_{i,j}]$ is at most the expected degree of $j$, which is at most $c$. (Technically, the term $\mathbb{E}[X_{j,j}]$ creates a self-loop, so the correct upper bound is $c + 1$. For the sake of cleaner expressions, we omit the additive $+1$ in this sketch.)

The expected number of triangles is at least $\Delta n$. Plugging these bounds in,

$$\Delta n \le L^2 c^2 n \implies L \ge \sqrt{\Delta}/c. \tag{4}$$

Thus, the vectors have a length of at least $\sqrt{\Delta}/c$. Now, we lower-bound the rank of $V$. It will be convenient to deal with the Gram matrix $M = V^T V$, which has the same rank as $V$. Observe that $M_{i,j} = \vec{v}_i \cdot \vec{v}_j \le L^2$. We will use the following lemma, stated first by Swanepoel (26), but which has appeared in numerous forms previously.

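Lemma 1 (Rank lemma). Consider any square matrix $M \in \mathbb{R}^{n \times n}$. Then

$$\mathrm{rank}(M) \ge \frac{\left| \sum_i M_{i,i} \right|^2}{\sum_i \sum_j |M_{i,j}|^2}.$$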
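The rank lemma is easy to sanity-check numerically. The sketch below (assuming NumPy; the function name and the random test vectors are hypothetical) evaluates the ratio for a Gram matrix $M = V^T V$ and compares it with the true rank, which is at most the dimension $d$.

```python
import numpy as np

def rank_lemma_bound(M):
    """Lower bound |trace(M)|^2 / sum_{i,j} |M_ij|^2 on rank(M), as in the rank lemma."""
    return abs(np.trace(M)) ** 2 / np.sum(np.abs(M) ** 2)

rng = np.random.default_rng(0)
d, n = 5, 40
V = rng.normal(size=(d, n))      # hypothetical vectors; their Gram matrix has rank at most d
M = V.T @ V                      # Gram matrix, same rank as V
bound = rank_lemma_bound(M)
rank = np.linalg.matrix_rank(M)
print(f"rank = {rank}, rank-lemma bound = {bound:.2f}")
```

The bound is tight for the identity matrix, where the trace squared is $n^2$ and the squared Frobenius norm is $n$.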

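Note that $M_{i,i} = \|\vec{v}_i\|_2^2 = L^2$, so the numerator $\left| \sum_i M_{i,i} \right|^2 = n^2 L^4$. The denominator requires more work. We split it into two terms.

$$\sum_{i,j} M_{i,j}^2 = \sum_{i,j:\, M_{i,j} \le 1} M_{i,j}^2 + \sum_{i,j:\, M_{i,j} > 1} M_{i,j}^2. \tag{5}$$

To keep the sketch simple, we treat all dot products as nonnegative (the full proof handles negative entries). Then every term of the first sum satisfies $M_{i,j}^2 \le M_{i,j} = \mathbb{E}[X_{i,j}]$, so, exactly as in the bound on Eq. 3 (again omitting self-loop terms), the first sum is at most $cn$.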

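If, for $i \neq j$, $M_{i,j} > 1$, then $(i, j)$ is an edge with probability 1. Since every vertex has expected degree at most $c$, there can be at most $cn$ such (ordered) pairs. Adding the at most $n$ diagonal entries, there are at most $(c + 1)n$ pairs such that $M_{i,j} > 1$. Since every entry is at most $L^2$, $\sum_{i,j:\, M_{i,j} > 1} M_{i,j}^2 \le (c + 1) n L^4$. Overall, we upper-bound the denominator in the rank lemma by $cn + (c + 1) n L^4$.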

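We plug these bounds into the rank lemma. We use the fact that $x \mapsto x^{-4}$ is decreasing for positive $x$, and that $L \ge \sqrt{\Delta}/c$ (so $L^{-4} \le c^4/\Delta^2$):

$$\mathrm{rank}(V) = \mathrm{rank}(M) \ge \frac{n^2 L^4}{cn + (c + 1) n L^4} = \frac{n}{c L^{-4} + c + 1} \ge \frac{n}{c^5/\Delta^2 + c + 1}.$$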
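As a small numerical illustration of the equal-length bound, the sketch below evaluates $n^2 L^4 / (cn + (c+1) n L^4)$ at the smallest length allowed by Eq. 4. The function name is hypothetical; the point is only that, for constant $c$ and $\Delta$, the bound grows linearly in $n$, although the constant $1/(c^5/\Delta^2 + c + 1)$ can be small.

```python
def equal_length_rank_bound(n, c, delta):
    """Sketch bound rank(V) >= n / (c^5 / Delta^2 + c + 1) for vectors of equal length."""
    L4 = (delta ** 0.5 / c) ** 4            # smallest L^4 allowed by Eq. 4: L >= sqrt(Delta) / c
    return n * L4 / (c + (c + 1) * L4)      # equals n^2 L^4 / (c n + (c + 1) n L^4)

for n in (10**4, 10**5, 10**6):
    print(n, equal_length_rank_bound(n, c=10, delta=1.0))
```

Because $x / (c + (c+1)x)$ is increasing in $x = L^4$, plugging in the smallest admissible $L^4$ indeed gives a valid lower bound.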

Dealing with Varying Lengths.

The math behind Eq. 4 still holds with the right approximations. Intuitively, the existence of at least $\Delta n$ triangles implies that a sufficiently large number of vectors have a length of at least $\sqrt{\Delta}/c$. On the other hand, these long vectors need to be “sufficiently far away” to ensure that the vertex degrees remain low. There are many such long vectors, and they can only be far away when their dimension/rank is sufficiently high.

The rank lemma is the main technical tool that formalizes this intuition. When vectors are of varying length, the primary obstacle is the presence of extremely long vectors that create triangles. The numerator in the rank lemma sums $M_{i,i} = \|\vec{v}_i\|_2^2$, the squared lengths of the vectors. A small set of extremely long vectors could dominate the sum, increasing the numerator. In that case, we do not get a meaningful rank bound.

But, because the vectors inhabit low-dimensional space, the long vectors from different clusters interact with each other. We prove a “packing” lemma (Lemma 5) showing that there must be many large positive dot products among a set of extremely long vectors. Thus, many of the corresponding vertices have large degree, and triangles incident to these vertices do not contribute to low-degree triangles. Operationally, the main proof uses the packing lemma to show that there are few long vectors. These can be removed without affecting the low-degree structure. One can then perform a binning (or “rounding”) of the lengths of the remaining vectors, to implement the proof described in the above section.

Proof of Theorem 1

For convenience, we restate the setting. Consider a set of vectors $\vec{v}_1, \vec{v}_2, \ldots, \vec{v}_n \in \mathbb{R}^d$ that represent the vertices of a social network. We will also use the matrix $V \in \mathbb{R}^{d \times n}$ for these vectors, where each column is one of the $\vec{v}_i$. Abusing notation, we will use $V$ to represent both the set of vectors and the matrix. We will refer to the vertices by their index in $[n]$.

Let $\mathcal{G}_V$ denote the following distribution of graphs over the vertex set $[n]$. For each index pair $i, j$, independently insert (undirected) edge $(i, j)$ with probability $\max(0, \min(\vec{v}_i \cdot \vec{v}_j, 1))$.

The Basic Tools.

We now state some results that will be used in the final proof. Lemma 2 is an existing result. For all other statements, the proofs are provided in SI Appendix.

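Lemma 2. [Rank lemma (26)] Consider any square matrix $M \in \mathbb{R}^{n \times n}$. Then

$$\mathrm{rank}(M) \ge \frac{\left| \sum_i M_{i,i} \right|^2}{\sum_i \sum_j |M_{i,j}|^2}.$$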

Lemma 3. Consider a set of vectors in .

Recall that an independent set is a collection of vertices that induce no edge.

Lemma 4. Any graph with $h$ vertices and maximum degree $b$ has an independent set of size at least $h/(b + 1)$.
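Lemma 4 is the classical greedy bound: each chosen vertex rules out itself and at most $b$ neighbors, so at least $h/(b+1)$ vertices get chosen. A minimal pure-Python sketch of that greedy procedure (the function name is hypothetical, and this is the textbook argument rather than code from the paper):

```python
def greedy_independent_set(n, edges):
    """Greedy independent set; with maximum degree b it has size >= n / (b + 1)."""
    adj = [set() for _ in range(n)]
    for u, v in edges:
        adj[u].add(v)
        adj[v].add(u)
    chosen, blocked = [], set()
    for v in range(n):
        if v not in blocked:
            chosen.append(v)     # v has no neighbor among the chosen vertices
            blocked.add(v)
            blocked |= adj[v]    # each pick blocks at most b + 1 vertices
    return chosen

cycle = [(i, (i + 1) % 7) for i in range(7)]   # 7-cycle, maximum degree 2
print(greedy_independent_set(7, cycle))
```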

Proposition 1. Consider the distribution $\mathcal{G}_V$. Let $D_i$ denote the degree of vertex $i \in [n]$. Then $\mathbb{E}[D_i^2] \le \mathbb{E}[D_i] + \mathbb{E}[D_i]^2$.
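Since $D_i$ is a sum of independent Bernoulli variables, $\mathbb{E}[D_i^2] = \mathrm{Var}(D_i) + \mathbb{E}[D_i]^2$ and $\mathrm{Var}(D_i) = \sum_j p_j(1 - p_j) \le \mathbb{E}[D_i]$, which gives the inequality. The sketch below (NumPy assumed; the edge probabilities are a hypothetical example) computes the exact second moment this way.

```python
import numpy as np

def degree_second_moment(p):
    """Exact E[D^2] for D = sum of independent Bernoulli(p_j): Var(D) + E[D]^2."""
    p = np.asarray(p, dtype=float)
    mean = p.sum()
    var = np.sum(p * (1 - p))    # variance of a sum of independent Bernoullis
    return var + mean ** 2

p = [0.9, 0.5, 0.25, 0.1]        # hypothetical edge probabilities incident to one vertex
mean = sum(p)
print(degree_second_moment(p), mean + mean ** 2)
```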

A key component of dealing with arbitrary-length vectors is the following dot product lemma. This is inspired by results of Alon (27) and Tao (28), who get a stronger lower bound of for absolute values of the dot products.

Lemma 5. Consider any set of unit vectors in . There exists some such that .

The Main Argument.

We prove the theorem by contradiction. We assume that the expected number of triangles contained in the set of vertices of expected degree at most $c$ is at least $\Delta n$. We remind the reader that $n$ is the total number of vertices. For convenience, we simply remove the vectors corresponding to vertices with expected degree greater than $c$, and focus on the graph distribution generated by the matrix of the remaining vectors. The expected number of triangles in this distribution is still at least $\Delta n$.

The overall proof can be thought of in three parts.

Part 1, remove extremely long vectors: Our final aim is to use the rank lemma (Lemma 2) to lower bound the rank of . The first problem we encounter is that extremely long vectors can dominate the expressions in the rank lemma, and we do not get useful bounds. We show that the number of such long vectors is extremely small, and they can be removed without affecting too many triangles. In addition, we can also remove extremely small vectors, since they cannot participate in many triangles.

Part 2, find a “core” of sufficiently long vectors that contains enough triangles: The previous step gets a “cleaned” set of vectors. Now, we bucket these vectors by length. We show that there is a large bucket, with vectors that are sufficiently long, such that there are enough triangles contained in this bucket.

Part 3, apply the rank lemma to the “core”: We now focus on this core of vectors, where the rank lemma can be applied. At this stage, the mathematics shown in High-Level Description of the Proof can be carried out almost directly.

Now for the formal proof. For the sake of contradiction, we assume that (for some sufficiently small constant ).

Part 1: Removing extremely long (and extremely short) vectors

We begin by showing that there cannot be many long vectors in .

Lemma 6. There are, at most, vectors of length at least .

Proof. Let be the set of “long” vectors, those with a length of at least . Let us prove by contradiction, and so assume there are more than long vectors. Consider a graph , where vectors () are connected by an edge if . We choose the bound to ensure that all edges in are edges in .

Formally, for any edge in , . So is an edge with probability 1 in . The degree of any vertex in is, at most, . By Lemma 4, contains an independent set of a size of at least . Consider an arbitrary sequence of (normalized) vectors in . Applying Lemma 5 to this sequence, we deduce the existence of in () such that . Then, the edge should be present in , contradicting the fact that is an independent set.

Denote by the set of all vectors in with length in the range .

Proposition 2. The expected degree of every vertex in is, at most, , and the expected number of triangles in is at least .

Proof. Since removal of vectors can only decrease the degree, the expected degree of every vertex in is, naturally, at most, . It remains to bound the expected number of triangles in . By removing vectors in , we potentially lose some triangles. Let us categorize them into those that involve at least one “long” vector (length ) and those that involve at least one “short” vector (length ) but no long vector.

We start with the first type. By Lemma 6, there are, at most, long vectors. For any vertex, the expected number of triangles incident to that vertex is at most the expected square of its degree. By Proposition 1, the expected squared degree is at most $c + c^2$. Thus, the expected total number of triangles of the first type is, at most, .

Consider any triple of vectors where is short and neither of the others are long. The probability that this triple forms a triangle is, at most,

Summing over all such triples, the expected number of such triangles is, at most, 4.

Thus, the expected number of triangles in is at least .

Part 2: Finding core of sufficiently long vectors with enough triangles

For any integer , let be the set of vectors . Observe that the form a partition of . Since all lengths in are in the range , there are, at most, nonempty . Let be the set of indices such that . Furthermore, let be .

Proposition 3. The expected number of triangles in is at least .

Proof. The total number of vectors in is, at most, . By Proposition 1 and linearity of expectation, the expected sum of squares of degrees of all vectors in is, at most, . Since the expected number of triangles in is at least (Proposition 2) and the expected number of triangles incident to vectors in is, at most, , the expected number of triangles in is at least .

We now come to an important proposition. Because the expected number of triangles in is large, we can prove that must contain vectors of at least constant length.

Proposition 4. .

Proof. Suppose not. Then every vector in has a length of, at most, . By Cauchy–Schwarz, for every pair , . Let denote the set of vector indices in (this corresponds to the vertices in ). For any two vertices , let be the indicator random variable for edge being present. The expected number of triangles incident to vertex in is

Observe that is, at most, . Furthermore, (recall that is the degree of vertex ). By Proposition 1, this is, at most, . The expected number of triangles in is, at most, . This contradicts Proposition 3.

Part 3: Applying the rank lemma to the core

We are ready to apply the rank bound of Lemma 2 to prove the final result. The following lemma contradicts our initial bound on the rank , completing the proof. We will omit some details in the following proof, and provide a full proof in SI Appendix.

Lemma 7. .

Proof. It is convenient to denote the index set of be . Let be the Gram matrix ; so, for , . By Lemma 2, . Note that is , which is at least for . Let us denote by , so all vectors in have a length of, at most, . By Cauchy–Schwarz, all entries in are, at most, .

We lower-bound the numerator.

A series of technical calculations is needed to upper-bound the denominator of the rank lemma. These details are provided in SI Appendix. The main upshot is that we can prove .

Crucially, by Proposition 4, . Thus, . Combining all of the bounds (and setting ),

Details of Empirical Results

Data Availability.

The datasets used are summarized in Table 1. We present here four publicly available datasets from different domains. The ca-HepPh is a coauthorship network, Facebook is a social network, and cit-HepPh is a citation network, all obtained from the SNAP graph database (29). The String_hs dataset is a protein–protein interaction network obtained from ref. 30. (The citations provide the link to obtain the corresponding datasets.)

We first describe the primary experiment, used to validate Theorem 1 on the SVD embedding. We generated a $k$-dimensional embedding for various values of $k$ using the SVD. Let $G$ be a graph with the (symmetric) adjacency matrix $A$, with eigendecomposition $A = \Psi \Lambda \Psi^T$. Let $\Lambda_k$ be the $k \times k$ diagonal matrix with the $k$ largest magnitude eigenvalues of $A$ along the diagonal. Let $\Psi_k$ be the $n \times k$ matrix with the corresponding eigenvectors as columns. We compute the matrix $A_k = \Psi_k \Lambda_k \Psi_k^T$ and refer to this as the spectral embedding of $G$. This is the standard principal components analysis (PCA) approach.

From the spectral embedding, we generate a graph from $A_k$ by considering every pair of vertices $(i, j)$ and generating a uniform random value in $[0, 1]$. If the $(i, j)$ entry of $A_k$ is greater than the random value generated, the edge is added to the graph. Otherwise, the edge is not present. This is the same as taking $A_k$, setting all negative values to 0 and all values $>1$ to 1, and performing Bernoulli trials for each edge with the resulting probabilities. In all of the figures, this is referred to as the “SVD TDP” embedding.
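A minimal sketch of this procedure, assuming NumPy: keep the $k$ eigenpairs of largest magnitude, rebuild $A_k$, and add an edge whenever the entry exceeds a fresh uniform value. The function names are hypothetical, and this is an illustration of the described steps rather than the authors' experimental code.

```python
import numpy as np

def spectral_embedding(A, k):
    """A_k = Psi_k Lambda_k Psi_k^T, keeping the k eigenvalues of largest magnitude."""
    eigvals, eigvecs = np.linalg.eigh(A)            # A is symmetric
    top = np.argsort(-np.abs(eigvals))[:k]          # indices of the k largest |eigenvalues|
    return eigvecs[:, top] @ np.diag(eigvals[top]) @ eigvecs[:, top].T

def sample_svd_tdp(A_k, rng):
    """One Bernoulli trial per vertex pair; negative entries never fire, entries >= 1 always do."""
    n = A_k.shape[0]
    coins = rng.random((n, n))                      # uniform values in [0, 1)
    upper = np.triu(A_k > coins, k=1)               # edge iff the entry exceeds the random value
    return (upper | upper.T).astype(int)
```

Comparing each entry with a uniform value is equivalent to clipping $A_k$ to $[0, 1]$ first, which is why no explicit clipping step appears.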

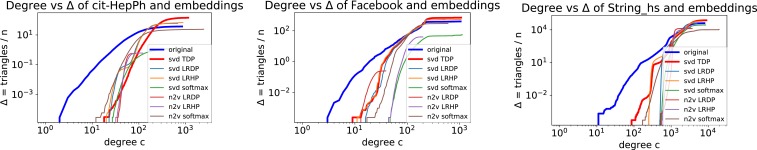

Triangle Distributions.

To generate Figs. 1 and 2, we calculated the number of triangles incident to vertices of different degrees in both the original graphs and the graphs generated from the embeddings. Each of the plots shows the number of triangles in the graph on the vertical axis and the degrees of vertices on the horizontal axis. Each curve corresponds to some graph, and each point $(c, t)$ in a given curve shows that the graph contains $t$ triangles if we remove all vertices with a degree greater than $c$. We then generate 100 random samples from the 100-dimensional embedding, as given by SVD (described above). For each value of $c$, we plot the maximum value of $\Delta$ over all of the samples. This is to ensure that our results are not affected by statistical variation (which was quite minimal).
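The curve computation can be sketched with dense matrices (NumPy assumed; function names hypothetical, and suitable only for small graphs): for each threshold $c$, restrict to vertices of degree at most $c$ in the full graph, count triangles as $\mathrm{trace}(B^3)/6$, divide by $n$, and take the pointwise maximum over samples.

```python
import numpy as np

def delta_curve(adj, cs):
    """Delta(c) = (#triangles among vertices of degree <= c) / n for each threshold c."""
    n = adj.shape[0]
    deg = adj.sum(axis=1)
    out = []
    for c in cs:
        keep = deg <= c                              # S_c, degrees taken in the full graph
        B = adj[np.ix_(keep, keep)].astype(float)    # induced subgraph on S_c
        out.append(np.trace(B @ B @ B) / 6 / n)      # trace(B^3) counts each triangle 6 times
    return np.array(out)

def max_delta_over_samples(samples, cs):
    """Pointwise maximum of the Delta curves of several sampled graphs."""
    return np.max([delta_curve(a, cs) for a in samples], axis=0)
```

For large sparse networks, one would count triangles with a sparse method instead of dense matrix cubes.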

Fig. 2.

Plots of degree $c$ vs. $\Delta$: For each network, we plot $c$ versus the total number of triangles only involving vertices of degree at most $c$. We divide the latter by the number of vertices, so it corresponds to $\Delta$, as in the main definition. In each plot, we plot these for both the original graph and the maximum $\Delta$ in a set of 100 samples from a 100-dimensional embedding. Observe how the embeddings generate graphs with very few triangles among low-degree vertices. The gap in $\Delta$ for low degree is two to three orders of magnitude in all instances.

Alternate Graph Models.

We consider three other functions of the dot product, to construct graph distributions from the vector embeddings. Details on parameter settings and the procedure used for the optimization are given in SI Appendix.

LRDP.

We consider the probability of an edge $(i, j)$ to be a logistic function of the dot product $\vec{v}_i \cdot \vec{v}_j$, with tunable parameters. Observe that the range of this function is $(0, 1)$, and hence it can be interpreted as a probability. We tune these parameters to fit the expected number of edges to the true number of edges. Then, we proceed as in the TDP experiment. We note that the TDP can be approximated by a logistic function, and thus the LRDP embedding is a “closer fit” to the graph than the TDP embedding.
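The exact parameterization and optimization procedure are given in SI Appendix; the sketch below is only an assumption-laden illustration. It assumes a logistic form $1/(1 + e^{-(a x + b)})$ with a fixed slope $a$ (our choice) and tunes the offset $b$ by bisection so that the expected edge count matches a target. All names are hypothetical; NumPy is assumed.

```python
import numpy as np

def lrdp_probabilities(dots, a, b):
    """Assumed logistic form 1 / (1 + exp(-(a * dot + b)))."""
    return 1.0 / (1.0 + np.exp(-(a * dots + b)))

def fit_offset_to_edge_count(dots, num_edges, a=1.0, lo=-50.0, hi=50.0, iters=100):
    """Bisection on b so that the expected number of edges equals num_edges."""
    iu = np.triu_indices(dots.shape[0], k=1)        # unordered pairs i < j
    pair_dots = dots[iu]
    for _ in range(iters):
        mid = (lo + hi) / 2
        expected = lrdp_probabilities(pair_dots, a, mid).sum()
        if expected < num_edges:
            lo = mid    # too few expected edges: raise the offset
        else:
            hi = mid
    return (lo + hi) / 2
```

Bisection works here because the expected edge count is strictly increasing in $b$; the target must lie strictly between 0 and the number of vertex pairs.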

LRHP.

This is inspired by linear models used on low-dimensional embeddings (7). Define the Hadamard product $\vec{v}_i \circ \vec{v}_j$ to be the $d$-dimensional vector whose $r$th coordinate is the product of the $r$th coordinates of $\vec{v}_i$ and $\vec{v}_j$. We now fit a logistic function over linear functions of (the coordinates of) $\vec{v}_i \circ \vec{v}_j$. This is a significantly richer model than the previous model, which uses a fixed linear function (the sum). Again, we tune parameters to match the number of edges.
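The relation between the two models can be seen directly: the dot product is the sum of the Hadamard coordinates, so LRHP with all weights equal to $a$ recovers a logistic function of $a\,\vec{v}_i \cdot \vec{v}_j + b$. The sketch below (NumPy assumed; names and the weight/offset parameterization are hypothetical) shows this special case.

```python
import numpy as np

def hadamard_features(V, i, j):
    """Coordinates of the Hadamard product v_i ∘ v_j (elementwise product of columns i, j)."""
    return V[:, i] * V[:, j]

def lrhp_probability(V, i, j, w, b):
    """Logistic function of the linear function w · (v_i ∘ v_j) + b."""
    z = hadamard_features(V, i, j) @ w + b
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(1)
V = rng.normal(size=(6, 4))                          # hypothetical 6-dimensional vectors
p = lrhp_probability(V, 0, 1, 2.0 * np.ones(6), -0.5)
```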

Softmax.

This is inspired by low-dimensional embeddings for random walk matrices (10, 12). The idea is to make the probability of edge $(i, j)$ proportional to the softmax $\exp(\vec{v}_i \cdot \vec{v}_j) / \sum_{k \in [n]} \exp(\vec{v}_i \cdot \vec{v}_k)$. This tends to push edge formation even for slightly higher dot products, and one might imagine this helps triangle formation. We set the proportionality constant separately for each vertex to ensure that the expected degree is the true degree. The resulting probability matrix is technically directed (not symmetric), so we symmetrize the matrix.
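A minimal sketch of the softmax model, assuming NumPy. Each row is a softmax over the other vertices, scaled so that vertex $i$'s expected out-degree equals a target degree. How the authors symmetrize is not specified here, so averaging $P$ with $P^T$ and clipping to $[0, 1]$ are our assumptions, as are the function name and example data.

```python
import numpy as np

def softmax_edge_probabilities(V, target_degrees):
    """Row-wise softmax of dot products, scaled per vertex, then symmetrized (assumed averaging)."""
    dots = V.T @ V
    np.fill_diagonal(dots, -np.inf)                  # exclude self-loops from the softmax
    dots -= dots.max(axis=1, keepdims=True)          # numerical stabilization before exp
    weights = np.exp(dots)
    soft = weights / weights.sum(axis=1, keepdims=True)
    P = soft * np.asarray(target_degrees, dtype=float)[:, None]  # row i sums to target_degrees[i]
    P = (P + P.T) / 2                                # assumption: symmetrize by averaging
    return np.clip(P, 0.0, 1.0)                      # assumption: cap probabilities at 1

rng = np.random.default_rng(2)
V = rng.normal(scale=0.1, size=(3, 20))              # hypothetical embedding of 20 vertices
P = softmax_edge_probabilities(V, np.full(20, 2.0))
```

Averaging preserves the total expected number of edges (before clipping), though individual expected degrees can shift slightly.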

node2vec experiments.

We also applied node2vec, a recent deep learning-based graph embedding method (12), to generate vector representations of the vertices. We use default parameters to run node2vec. (More details are provided in SI Appendix.) The node2vec algorithm tries to model the random walk matrix associated with a graph, not the raw adjacency matrix. The dot products between the output vectors are used to model the random walk probability of going from $i$ to $j$, rather than the presence of an edge $(i, j)$. It does not make sense to apply the TDP function to these dot products, since this will generate (in expectation) only $n$ edges (one for each vertex). We apply the LRDP or LRHP functions, which use the node2vec vectors as inputs to a machine learning model that predicts edges.

In Figs. 1 and 2, we show results for all of the datasets. We note that, for all datasets and all embeddings, the models fail to capture the low-degree triangle behavior.

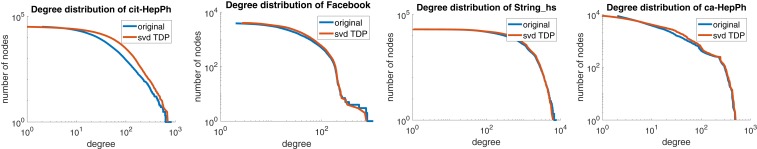

Degree Distributions.

We observe that the low-dimensional embeddings obtained from SVD and TDP can capture the degree distribution accurately. In Fig. 3, we plot the degree distribution (in log–log scale) of the original graph with the expected degree distribution of the embedding. For each vertex $i$, we can compute its expected degree by the sum $\sum_j p_{ij}$, where $p_{ij}$ is the probability of the edge $(i, j)$. In all cases, the expected degree distribution is close to the true degree distribution, even for lower-degree vertices. The embedding successfully captures the “first-order” connections (degrees), but not the higher-order connections (triangles). We believe that this reinforces the need to look at the triangle structure to discover the weaknesses of low-dimensional embeddings.
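A minimal sketch of the expected-degree computation, assuming NumPy and a precomputed matrix of edge probabilities $p_{ij}$. Rounding expected degrees to integers before counting is our assumption for building a histogram; the function names are hypothetical.

```python
import numpy as np

def expected_degrees(P):
    """Expected degree of each vertex: sum over j != i of p_ij."""
    P = np.array(P, dtype=float)
    np.fill_diagonal(P, 0.0)
    return P.sum(axis=1)

def degree_histogram(degrees):
    """Number of vertices per (rounded) degree value; rounding is an assumption."""
    values, counts = np.unique(np.rint(degrees).astype(int), return_counts=True)
    return dict(zip(values.tolist(), counts.tolist()))

P = [[0, 0.5, 0.5], [0.5, 0, 1.0], [0.5, 1.0, 0]]   # hypothetical edge probabilities
deg = expected_degrees(P)
```

The histogram of the original graph can be computed the same way from its 0/1 adjacency matrix and plotted against this one on log–log axes.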

Fig. 3.

Plots of degree distributions: For each network, we plot the true degree distribution vs. the expected degree distribution of a 100-dimensional embedding. Observe how the embedding does capture the degree distribution quite accurately at all scales.

Supplementary Material

Acknowledgments

C.S. acknowledges the support of NSF Awards CCF-1740850 and CCF-1813165, and ARO Award W911NF1910294.

Footnotes

The authors declare no competing interest.

This article is a PNAS Direct Submission. M.E.J.N. is a guest editor invited by the Editorial Board.

This article contains supporting information online at https://www.pnas.org/lookup/suppl/doi:10.1073/pnas.1911030117/-/DCSupplemental.

References

1. Wasserman S., Faust K., Social Network Analysis: Methods and Applications (Cambridge University Press, 1994).

2. Newman M. E. J., The structure and function of complex networks. SIAM Rev. 45, 167–256 (2003).

3. Easley D., Kleinberg J., Networks, Crowds, and Markets: Reasoning about a Highly Connected World (Cambridge University Press, 2010).

4. Barabási A. L., Albert R., Emergence of scaling in random networks. Science 286, 509–512 (1999).

5. Watts D., Strogatz S., Collective dynamics of ‘small-world’ networks. Nature 393, 440–442 (1998).

6. Chakrabarti D., Faloutsos C., Graph mining: Laws, generators, and algorithms. ACM Comput. Surv. 38, 2 (2006).

7. Hamilton W. L., Ying R., Leskovec J., “Inductive representation learning on large graphs” in Neural Information Processing Systems, NIPS’17, Guyon I., von Luxburg U., Bengio S., Wallach H. M., Fergus R., Vishwanathan S. V. N., Garnett R., Eds. (Curran Associates Inc., 2017), pp. 1025–1035.

8. Ahmed A., Shervashidze N., Narayanamurthy S., Josifovski V., Smola A. J., “Distributed large-scale natural graph factorization” in Conference on World Wide Web, Almeida V. A. F., Glaser H., Baeza-Yates R., Moon S. B., Eds. (ACM, 2013), pp. 37–48.

9. Cao S., Lu W., Xu Q., “Deep neural networks for learning graph representations” in AAAI Conference on Artificial Intelligence, Schuurmans D., Wellman M. P., Eds. (Association for the Advancement of Artificial Intelligence, 2016), pp. 1145–1152.

10. Perozzi B., Al-Rfou R., Skiena S., “DeepWalk: Online learning of social representations” in SIGKDD Conference on Knowledge Discovery and Data Mining, S. A. M., Perlich C., Leskovec J., Wang W., Ghani R., Eds. (Association for Computing Machinery, 2014), pp. 701–710.

11. Tang J., et al., “LINE: Large-scale information network embedding” in Conference on World Wide Web, Gangemi A., Leonardi S., Panconesi A., Eds. (ACM, 2015), pp. 1067–1077.

12. Grover A., Leskovec J., “node2vec: Scalable feature learning for networks” in SIGKDD Conference on Knowledge Discovery and Data Mining, Krishnapuram B., Shah M., Smola A. J., Aggarwal C. C., Shen D., Rastogi R., Eds. (Association for Computing Machinery, 2016), pp. 855–864.

13. @twittereng, Embeddings@twitter. https://blog.twitter.com/engineering/en_us/topics/insights/2018/embeddingsattwitter.html.

14. Bahmani B., Chowdhury A., Goel A., Fast incremental and personalized PageRank. Proc. VLDB Endowment 4, 173–184 (2010).

15. Gupta P., et al., “WTF: The Who to Follow service at Twitter” in Conference on World Wide Web, Gangemi A., Leonardi S., Panconesi A., Eds. (ACM, 2013), pp. 505–514.

16. Kloumann I. M., Ugander J., Kleinberg J., Block models and personalized PageRank. Proc. Natl. Acad. Sci. U.S.A. 114, 33–38 (2017).

17. Holland P. W., Laskey K., Leinhardt S., Stochastic blockmodels: First steps. Soc. Networks 5, 109–137 (1983).

18. Young S. J., Scheinerman E. R., “Random dot product graph models for social networks” in Algorithms and Models for the Web-Graph, Bonato A., Chung F. R. K., Eds. (Springer, 2007), pp. 138–149.

19. Athreya A., et al., Statistical inference on random dot product graphs: A survey. J. Mach. Learn. Res. 18, 1–92 (2018).

20. Sala A., et al., “Measurement-calibrated graph models for social network experiments” in Conference on World Wide Web, Rappa M., Jones P., Freire J., Chakrabarti S., Eds. (ACM, 2010), pp. 861–870.

21. Seshadhri C., Kolda T. G., Pinar A., Community structure and scale-free collections of Erdős–Rényi graphs. Phys. Rev. E 85, 056109 (2012).

22. Durak N., Pinar A., Kolda T. G., Seshadhri C., “Degree relations of triangles in real-world networks and graph models” in Conference on Information and Knowledge Management (CIKM), Chen X.-w., Lebanon G., Wang H., Zaki M. J., Eds. (ACM, 2012), pp. 1712–1716.

23. Fiedler M., Algebraic connectivity of graphs. Czech. Math. J. 23, 298–305 (1973).

24. Abbe E., Community detection and stochastic block models: Recent developments. J. Mach. Learn. Res. 18, 1–86 (2018).

25. Hoff P. D., Raftery A. E., Handcock M. S., Latent space approaches to social network analysis. J. Am. Stat. Assoc. 97, 1090–1098 (2002).

26. Swanepoel K., The rank lemma. https://konradswanepoel.wordpress.com/2014/03/04/the-rank-lemma/.

27. Alon N., Problems and results in extremal combinatorics, part I. Discrete Math. 273, 31–53 (2003).

28. Tao T., A cheap version of the Kabatjanskii-Levenstein bound for almost orthogonal vectors. https://terrytao.wordpress.com/2013/07/18/a-cheap-version-of-the-kabatjanskii-levenstein-bound-for-almost-orthogonal-vectors/.

29. Leskovec J., Stanford Network Analysis Project. http://snap.stanford.edu/. Accessed 1 November 2019.

30. STRING Consortium, STRING database. http://version10.string-db.org/. Accessed 1 November 2019.

31. Tang J., Zhang J., Yao L., Li J., Zhang L., Su Z., Citation network dataset. https://aminer.org/citation. Accessed 1 November 2019.

32. Yao L., Li J., Zhang L., Su Z., “ArnetMiner: Extraction and mining of academic social networks” in Proceedings of the 14th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Li Y., Liu B., Sarawagi S., Eds. (ACM, 2008), pp. 990–998.


Data Availability Statement

The datasets used are summarized in Table 1. We present here four publicly available datasets from different domains: ca-HepPh is a coauthorship network, Facebook is a social network, and cit-HepPh is a citation network, all obtained from the SNAP graph database (29); String_hs is a protein–protein interaction network obtained from ref. 30. (The citations provide the links to obtain the corresponding datasets.)

We first describe the primary experiment, used to validate Theorem 1 on the SVD embedding. We generated a $d$-dimensional embedding for various values of $d$ using the SVD. Let $G$ be a graph with the (symmetric) $n \times n$ adjacency matrix $A$, with eigendecomposition $A = \Psi \Lambda \Psi^T$. Let $\Lambda_d$ be the $d \times d$ diagonal matrix with the $d$ largest-magnitude eigenvalues of $A$ along the diagonal. Let $\Psi_d$ be the $n \times d$ matrix with the corresponding eigenvectors as columns. We compute the matrix $A_d = \Psi_d \Lambda_d \Psi_d^T$ and refer to this as the spectral embedding of $G$. This is the standard principal components analysis (PCA) approach.
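The truncated eigendecomposition described above can be sketched in NumPy as follows. This is a minimal dense-matrix sketch under our own assumptions: the function name `spectral_embedding` is hypothetical, and `numpy.linalg.eigh` computes the full eigendecomposition, which is only practical for small graphs.

```python
import numpy as np

def spectral_embedding(A, d):
    """Return A_d = Psi_d Lambda_d Psi_d^T for a symmetric matrix A.

    Lambda_d holds the d eigenvalues of A with the largest magnitude and
    Psi_d holds the corresponding orthonormal eigenvectors as columns.
    """
    A = np.asarray(A, dtype=float)
    eigvals, eigvecs = np.linalg.eigh(A)            # A = Psi Lambda Psi^T
    top = np.argsort(np.abs(eigvals))[::-1][:d]     # indices of the d largest |lambda|
    Lambda_d = np.diag(eigvals[top])                # d x d diagonal matrix
    Psi_d = eigvecs[:, top]                         # n x d matrix of eigenvectors
    return Psi_d @ Lambda_d @ Psi_d.T

# Toy example: the triangle K3 has eigenvalues 2, -1, -1.
K3 = np.ones((3, 3)) - np.eye(3)
A_1 = spectral_embedding(K3, 1)   # rank 1: every entry is 2/3
A_3 = spectral_embedding(K3, 3)   # full rank: recovers K3 up to rounding
```

For large sparse adjacency matrices, a partial solver such as `scipy.sparse.linalg.eigsh` with `k=d` and `which='LM'` (largest magnitude) is one possible substitute for the full `eigh` call.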

From the spectral embedding, we generate a graph from $A_d$ by considering every pair of vertices $(i, j)$ and generating a uniformly random value in $[0, 1]$. If the $(i, j)$ entry of $A_d$ is greater than the random value generated, the edge $(i, j)$ is added to the graph; otherwise, the edge is not present. This is equivalent to taking $A_d$, setting all negative entries to 0 and all entries greater than 1 to 1, and performing an independent Bernoulli trial for each edge with the resulting probability. In all of the figures, this is referred to as the “SVD TDP” embedding.
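The sampling step above can be sketched as follows, assuming `A_d` is a dense symmetric NumPy array such as the output of a truncated eigendecomposition. The function names `sample_tdp_graph` and `count_triangles` are hypothetical. The triangle count uses the standard identity that a simple undirected graph with adjacency matrix $G$ has $\operatorname{tr}(G^3)/6$ triangles, which is a convenient way to inspect the triangle structure of a sampled graph.

```python
import numpy as np

def sample_tdp_graph(A_d, seed=None):
    """Sample a simple undirected graph from the (unclipped) matrix A_d.

    For each pair i < j we draw u uniformly from [0, 1) and keep the edge
    (i, j) iff A_d[i, j] > u. Entries <= 0 never produce an edge and entries
    >= 1 always do, so this equals clipping A_d to [0, 1] and running one
    Bernoulli trial per pair.
    """
    A_d = np.asarray(A_d, dtype=float)
    n = A_d.shape[0]
    rng = np.random.default_rng(seed)
    U = rng.random((n, n))                  # one uniform value per ordered pair
    upper = np.triu(A_d > U, k=1)           # decide each unordered pair once, no self-loops
    return (upper | upper.T).astype(int)    # symmetric 0/1 adjacency matrix

def count_triangles(G):
    """Number of triangles in a simple undirected graph: trace(G^3) / 6."""
    G = np.asarray(G, dtype=np.int64)
    return int(np.trace(G @ G @ G)) // 6

# Every entry of this matrix exceeds 1, so each pair gets probability 1 and
# the sample is always the complete graph K4, which has 4 triangles.
G = sample_tdp_graph(np.full((4, 4), 2.0), seed=0)
print(count_triangles(G))   # 4
```

Only the upper triangle of the random matrix is used, so each unordered pair is decided exactly once and the result is symmetric by construction.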