Abstract

Online social networks provide users with unprecedented opportunities to engage with diverse opinions. At the same time, they enable confirmation bias on large scales by empowering individuals to self-select narratives they want to be exposed to. A precise understanding of such tradeoffs is still largely missing. We introduce a social learning model where most participants in a network update their beliefs unbiasedly based on new information, while a minority of participants reject information that is incongruent with their preexisting beliefs. This simple mechanism generates permanent opinion polarization and cascade dynamics, and accounts for the aforementioned tradeoff between confirmation bias and social connectivity through analytic results. We investigate the model’s predictions empirically using US county-level data on the impact of Internet access on the formation of beliefs about global warming. We conclude by discussing policy implications of our model, highlighting the downsides of debunking and suggesting alternative strategies to counter misinformation.

Subject terms: Complex networks, Computational science

Introduction

We currently live in a paradoxical stage of the information age. The more we gain access to unprecedented amounts of knowledge thanks to digital technologies, the less our societies seem capable of discerning what is true from what is false, even in the presence of overwhelming evidence in support of a particular position. For example, large segments of our societies do not believe in the reality of climate change1 or believe in the relationship between vaccinations and autism2.

As recent studies indicate, over two-thirds of US adults get information from online and social media, with the proportion growing annually3,4. Hence, the impact such media have in shaping societal narratives cannot be overstated. Online media empower their users to choose the news sources they want to be exposed to. This, in turn, makes it easier to restrict exposure only to narratives that are congruent with pre-established viewpoints5–8, and this positive feedback mechanism is further exacerbated by the widespread use of personalized news algorithms9. In other words, confirmation bias10,11 is enabled at unprecedented scales12.

Another major impact of digital technologies has been the increase in connectivity fostered by the growth of online social networks, which plays a double-edged role. On the one hand, it can compound the effects of confirmation bias, as users are likely to re-transmit the same information they are selectively exposed to, leading to fragmented societies that break down into online “echo chambers” where the same opinions keep being bounced around12,13. On the other hand, it also translates into a potentially increased heterogeneity of the information and viewpoints users are exposed to14,15.

Online social networks can therefore both improve and restrict the diversity of information individuals engage with, and their net effect is still very much debated. Empirical research is still in its infancy, with evidence for both positive and negative effects being found15–17. The theoretical literature is lagging somewhat further behind. While there exists a plethora of models related to information diffusion and opinion formation in social networks, a sound theoretical framework that accounts for the emergence of the phenomena relevant to modern information consumption (rather than explicitly introducing them ad hoc) is still largely lacking.

In bounded confidence models18–20 agents only interact with others sharing similar opinions, and thus are characterized by a form of confirmation bias. In such models polarization is a natural outcome assuming agents are narrow enough in their choice of interaction partners21. However, these models tend to lack behavioural micro-foundations and a clear mechanism to link information diffusion to opinion formation, making it hard to draw conclusions about learning and accuracy amongst agents.

Social learning models22–24 provide a broader, empirically grounded, and analytically tractable framework to understand information aggregation25. Their main drawback, however, is that by design they tend to produce long run population consensus, hence fail to account for any form of opinion heterogeneity or polarization26. Polarization can be generated by introducing “stubborn” agents that remain fully attached to their initial opinions rather than interacting and learning from their neighbors27,28, a mechanism reminiscent of confirmation bias. However, the conditions under which polarization occurs are very strict, as populations converge towards consensus as soon as stubborn agents accept even a negligible fraction of influence from their neighbors26. A similar phenomenon is explored in the social physics literature where it is referred to as networks with “zealots”, which similarly impede consensus, such as in Ref. 29. A key distinction in the model we introduce in the following is that all agents are free to vary their opinions over time, resulting in cascade dynamics that separate consensus and polarization regimes.

Overall, while it is clear from the literature that some notion of “bias” in networks is a key requirement to reproduce realistic dynamics of opinion formation, it is still difficult to provide a unified framework that can account for information aggregation, polarization and learning. The purpose of the present paper is to develop a framework that naturally captures the effect of large-scale confirmation bias on social learning, and to examine how it can drastically change the way a networked, decentralized, society processes information. We are able to provide analytic results at all scales of the model. At the macroscopic scale, we determine under what conditions the model ends up in a polarised state or cascades towards a consensus. At the mesoscopic scale, we are able to provide an intuitive characterization of the trade-off between bias and connectivity in the context of such dynamics, and explain the role echo chambers play in such outcomes. At the microscopic scale, we are able to study the full distribution of each agent’s available information and subsequent accuracy, and demonstrate that small amounts of bias can have positive effects on learning by preserving information heterogeneity. Our model unveils a stylized yet rich phenomenology which, as we shall discuss in our final remarks, has substantial correspondence with the available empirical evidence.

Results

Social learning and confirmation bias

We consider a model of a social network described by a graph $G = (V, E)$ consisting of a set of agents $V$ (where $|V| = n$) and the edges between them, $E \subseteq V \times V$. Each agent seeks to learn the unobservable ground truth about a binary statement $X$ such as, e.g., “global warming is/is not happening” or “gun control does/does not reduce crime”. The value $X = +1$ represents the statement’s true value, whose negation is $X = -1$.

Following standard social learning frameworks25, at the beginning of time ($t = 0$), each agent ($i \in V$) independently receives an initial signal $s_i = \pm 1$, which is informative of the underlying state, i.e. $p = P(s_i = +1 \mid X = +1) > 1/2$. Signals can be thought of as news, stories, quotations, etc., that support or detract from the ground truth. The model evolves in discrete time steps, and at each time step all agents synchronously share with their neighbors the full set of signals they have accrued up to that point. For example, the time $t = 1$ information set of an agent $i$ with two neighbors $j$ and $\ell$ will be $s_i(t = 1) = \{s_i, s_j, s_\ell\}$, their time $t = 2$ set will be $s_i(t = 2) = \{s_i, s_i, s_i, s_j, s_j, s_\ell, s_\ell, s_{d=2}\}$, where $s_{d=2}$ denotes the set of all signals incoming from nodes at distance $d = 2$ (i.e., $j$’s and $\ell$’s neighbors), and so on. Furthermore, we define the following:

$$x_i(t) = \frac{N_i^{+}(t)}{N_i^{+}(t) + N_i^{-}(t)} \tag{1}$$

where $N_i^{+}(t)$ and $N_i^{-}(t)$ denote, respectively, the number of positive and negative signals accrued by $i$ up to time $t$. We refer to this quantity as an agent’s signal mix, and we straightforwardly generalize it to any set of agents $C \subseteq V$, i.e., we indicate the fraction of positive signals in their pooled information sets at time $t$ as $x_C(t)$. The list of all agents’ signal mixes at time $t$ is vectorised as $x(t)$.

Each agent forms a posterior belief of the likelihood of the ground truth given their information sets using Bayes’ rule. This is done under a bounded rationality assumption, as the agents fail to accurately model the statistical dependence between the signals they receive, substituting it with a naive updating rule that assumes all signals in their information sets to be independent ($P(X \mid s_i(t)) = P(s_i(t) \mid X) P(X) / P(s_i(t))$, where $P(s_i(t) \mid X)$ is computed as a factorization over probabilities associated to individual signals, i.e. $P(s_i(t) \mid X) = \prod_c P(s_i(t)_c \mid X)$, where $s_i(t)_c$ denotes the cth component of the vector), which is a standard assumption of social learning models25. Under such a framework (and uniform priors), the best guess an agent can make at any time over the statement $X$ given their information set is precisely equal to their orientation $y_i(t)$, where $y_i(t) = +1$ for $x_i(t) > 1/2$ and $y_i(t) = -1$ for $x_i(t) < 1/2$ (without loss of generality, in the following we shall choose network structures that rule out the possibility of $x_i(t) = 1/2$ taking place). The orientations of all agents at time $t$ are vectorized as $y(t)$; the fraction of positively oriented agents in a group of nodes $C \subseteq V$ is denoted as $y_C^{+}(t)$.

The polarization $z_C(t) = \min\left(y_C^{+}(t),\, 1 - y_C^{+}(t)\right)$ of the group $C$ is then defined as the fraction of agents in that group that have the minority orientation. Note that polarization equals zero when there is full consensus and all agents are either positively or negatively oriented. It is maximized when exactly half of the group holds each orientation.
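The three quantities defined so far (signal mix, orientation and polarization) can be illustrated with a minimal sketch. The function names below are hypothetical and not taken from the paper; signals are assumed to be encoded as +1 and -1, and a signal mix of exactly 1/2 is treated as an error because the text rules that case out.

```python
def signal_mix(signals):
    """Fraction of positive signals in an information set of +1/-1 entries."""
    positives = sum(1 for s in signals if s == 1)
    return positives / len(signals)


def orientation(mix):
    """Return +1 if the signal mix is above 1/2 and -1 if it is below."""
    if mix == 0.5:
        # The text chooses network structures that exclude this tie.
        raise ValueError("a signal mix of exactly 1/2 is excluded by assumption")
    return 1 if mix > 0.5 else -1


def polarization(orientations):
    """Fraction of agents in the group holding the minority orientation."""
    positive_share = sum(1 for y in orientations if y == 1) / len(orientations)
    return min(positive_share, 1.0 - positive_share)


# Four agents with small, made-up information sets.
info_sets = [[1, 1, -1], [1, -1, -1], [1, 1, 1], [-1, -1, 1]]
mixes = [signal_mix(s) for s in info_sets]
orientations = [orientation(m) for m in mixes]
print(mixes, orientations, polarization(orientations))
```

Polarization computed this way is bounded between 0 (full consensus) and 1/2 (an even split), matching the verbal definition above.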

It is useful to think of $x(t)$, $y(t)$ and $z_V(t)$ as respectively representing the pool of available signals, the conclusions agents draw on the basis of the available signals, and a summary measure of the heterogeneity of agents’ conclusions. In the context of news diffusion, for example, they would represent the availability of news of each type across agents, the resulting agents’ opinions on some topic, and the extent to which those opinions have converged to a consensus.

We distinguish between two kinds of agents in the model: unbiased agents and biased agents. Both kinds of agents share signals and update their posterior beliefs through Bayes’ rule, as described above. However, they differ in how they acquire incoming signals. Unbiased agents accept the set of signals provided by their neighbors without any distortion. Biased agents, on the other hand, follow a model of confirmation bias11,30 and are able to distort the information sets they accrue. We denote the two sets of agents as $U$ and $B$, respectively.

To describe the behaviour of these biased agents we use a slight variation of the confirmation bias model introduced by Rabin and Schrag31. We refer to an incoming signal $s$ as congruent to $i$ if it is aligned with $i$’s current orientation, i.e. if $s = y_i(t)$, and incongruent if $s = -y_i(t)$. When biased agents are presented with incongruent signals, they reject them with a fixed probability $q$ and replace them with a congruent signal, which they add to their information set and propagate to their neighbors. We refer to $q$ as the confirmation bias parameter. Denote the set of positively (negatively) oriented biased agents at time $t$ as $B^{+}(t)$ ($B^{-}(t)$), and the corresponding fraction of positively oriented biased agents as $y_B^{+}(t) = |B^{+}(t)| / |B|$. Note that this is an important departure from “stubborn agent” models, as such biased agents are subject to a non-zero influence from their neighbors, and they can change their beliefs over time as they aggregate information.

An intuitive interpretation of what this mechanism is intended to model is as follows: biased agents are empowered to reject incoming signals they disagree with, and instead refer to preferred sources of information to find signals that are congruent with their existing viewpoint (see Fig. 1). This mechanism models both active behaviour, where agents deliberately choose to ignore or contort information that contradicts their beliefs (mirroring the “backfire effect” evidenced both in psychological experiments32,33 and in online social network behaviour34), and passive behaviour, where personalized news algorithms filter out incongruent information and select other information which coheres with the agents’ beliefs15.
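The rejection-and-replacement rule for biased agents can be sketched as follows. This is an illustrative reading of the mechanism described above, not the authors' code: the function name `filter_signals` is hypothetical, signals and orientations are assumed to be encoded as +1/-1, and the random generator is passed in explicitly so that runs are reproducible.

```python
import random


def filter_signals(incoming, orientation, q, rng):
    """Return the signals a biased agent stores and later propagates.

    Congruent signals are kept as they are. Each incongruent signal is
    rejected with probability q and replaced by a congruent one;
    otherwise it is accepted unchanged.
    """
    stored = []
    for s in incoming:
        if s != orientation and rng.random() < q:
            stored.append(orientation)  # rejected and replaced
        else:
            stored.append(s)  # accepted without distortion
    return stored


rng = random.Random(42)
incoming = [1, -1, -1, 1, -1, -1]
print(filter_signals(incoming, orientation=1, q=0.5, rng=rng))
```

Setting `q = 0` recovers an unbiased agent, while `q = 1` produces an agent that only ever stores congruent signals.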

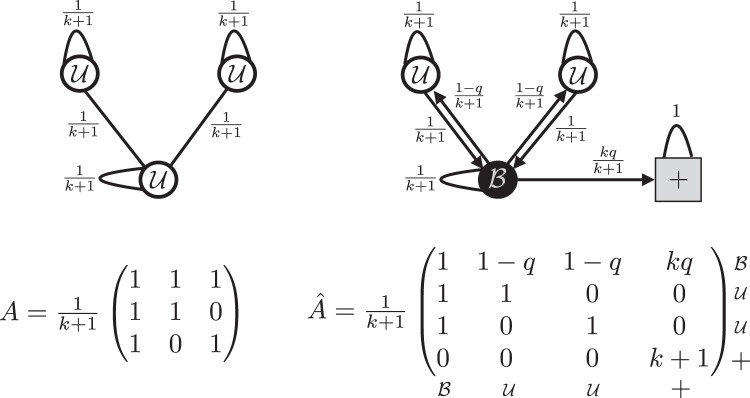

Figure 1.

Sketch of an unbiased (left) and biased (right) network with three nodes. Below each sketch is the update matrix of the corresponding (deterministic) DeGroot model. The network on the right shows that the stochastic “signal distortion” behaviour can be approximated with a biased agent () reducing the weight it places on each of its neighbors to /, and placing the remaining weight on an external positively oriented “ghost” node. When a biased agent changes orientation, it switches such an edge to an external negatively oriented node instead.

In the following, we shall denote the fraction of biased agents in a network as $f$. We shall refer to networks where $f = 0$ as unbiased networks, and to networks where $f > 0$ as biased networks.

For the bulk of the analytic results in the paper, we assume that the social network is an undirected $k$-regular network. The motivation for this is two-fold. Firstly, empirical research35 suggests that for online social networks such as Facebook (where social connections are symmetric), heterogeneous network features such as hubs do not play a disproportionately significant role in the diffusion of information. Intuitively, while social networks themselves might be highly heterogeneous, the network of information transmission is a lot more restricted, as individuals tend to discuss topics with a small group such as immediate friends and family. Secondly, utilizing a simple $k$-regular network allows for considerable analytical tractability. However, one can show that our main results can be easily extended to hold under a variety of network topologies characterized by degree heterogeneity.

The assumption of regular network structure (coupled with the aforementioned synchronous belief update dynamics) allows the information sets of all agents to grow at the same rate, and as a result the evolution of the signal mix can be mapped to a DeGroot averaging process for unbiased networks36: , where is an matrix with entries / for each pair of connected nodes.
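A minimal sketch of the DeGroot averaging process on a regular network is given below. Several choices here are assumptions made for illustration rather than details confirmed by the text: the network is a ring lattice in which each node links to its k/2 nearest nodes on each side, and each agent gives equal weight to its own signal mix and to each neighbor's mix. The function names are hypothetical.

```python
import random


def ring_neighbors(n, k):
    """Neighbor lists of a ring lattice: each node links to its k/2
    nearest nodes on each side (k is assumed to be even)."""
    half = k // 2
    return [[(i + d) % n for d in range(-half, half + 1) if d != 0]
            for i in range(n)]


def degroot_step(x, neighbors):
    """One synchronous update: every agent averages its own signal mix
    with those of its neighbors (equal weights, an assumption)."""
    return [(x[i] + sum(x[j] for j in nbrs)) / (len(nbrs) + 1)
            for i, nbrs in enumerate(neighbors)]


def run_degroot(x0, neighbors, steps):
    """Iterate the averaging update for a fixed number of steps."""
    x = list(x0)
    for _ in range(steps):
        x = degroot_step(x, neighbors)
    return x


rng = random.Random(1)
p = 0.6  # probability that an initial signal is positive
neighbors = ring_neighbors(20, 4)
x0 = [1.0 if rng.random() < p else 0.0 for _ in range(20)]
x_final = run_degroot(x0, neighbors, steps=500)
print(sum(x0) / len(x0), min(x_final), max(x_final))
```

Because the update weights in this sketch are symmetric, the population average is preserved at every step, so all agents converge to the average of the initial signal mixes.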

For biased networks, one can demonstrate (see Section S1 of the Supplementary Materials) that the above confirmation bias mechanics can be reproduced by introducing a positive and a negative “ghost” node, which maintain respective signal mixes of 1 and 0. Biased agents sample each signal from their orientation-aligned ghost node with probability $q$, and from their neighborhood with probability $1 - q$.

Furthermore, while the process is stochastic, we also show that it converges to a deterministic process with a simple update matrix described as follows. In Section S3 of the Supplementary Materials we discuss in detail the correspondence and convergence between the stochastic and deterministic processes. Biased agents down-weight their connections to neighbors by a factor and place the remaining fraction / of their outgoing weight on the corresponding ghost node. With these positions the updating process now simply reads , where is an asymmetric matrix whose entries in its upper-left block are as those in , except / when . The positive (negative) ghost node corresponds to node () of the augmented matrix , and we shall label it as () for convenience, i.e., we shall have / (/) for () and . Similarly, denotes an augmented signal mix vector where , and .

The time dependence of the update matrix is due to the fact that whenever a biased agent switches orientation its links to the ghost nodes change. This happens whenever the agent’s signal mix (see Eq. (1)) goes from below to above 1/2 or vice versa, due to an overwhelming amount of incongruent incoming signals from its neighbors. For example, when switching from being positively to negatively oriented, a biased agent moves the weight it placed on the positive ghost node onto the negative ghost node. In the following, all quantities pertaining to biased networks will be denoted with a hat (ˆ) symbol.

We provide a sketch of the above mapping in Fig. 1 (for the sake of simplicity we present an arbitrary small network instead of a strictly -regular one). There is an appealing intuition to this interpretation: biased agents have a “preferred” information source they sample from in lieu of incongruent information provided from their peers. If their beliefs change, their preferred information source can change.
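The deterministic ghost-node approximation can be sketched as follows. The code rests on assumptions that are not confirmed by the text: the network is a ring lattice, peer averaging gives equal weight to an agent's own mix and to each neighbor's mix, and a biased agent places a total weight q on its orientation-aligned ghost node (signal mix 1 for the positive ghost, 0 for the negative one) and 1 - q on the peer average. All names are hypothetical.

```python
def ring_neighbors(n, k):
    """Ring lattice: each node links to its k/2 nearest nodes per side."""
    half = k // 2
    return [[(i + d) % n for d in range(-half, half + 1) if d != 0]
            for i in range(n)]


def biased_step(x, neighbors, biased, q):
    """One synchronous update of the ghost-node approximation."""
    new_x = []
    for i, nbrs in enumerate(neighbors):
        peer_avg = (x[i] + sum(x[j] for j in nbrs)) / (len(nbrs) + 1)
        if i in biased:
            # The ghost node is chosen by the agent's current orientation,
            # so it switches whenever the signal mix crosses 1/2.
            ghost = 1.0 if x[i] > 0.5 else 0.0
            new_x.append((1.0 - q) * peer_avg + q * ghost)
        else:
            new_x.append(peer_avg)
    return new_x


def run_biased(x0, neighbors, biased, q, steps):
    """Iterate the biased update for a fixed number of steps."""
    x = list(x0)
    for _ in range(steps):
        x = biased_step(x, neighbors, biased, q)
    return x


neighbors = ring_neighbors(12, 2)
x0 = [1.0, 0.0, 1.0, 1.0, 0.0, 1.0, 0.0, 1.0, 1.0, 0.0, 1.0, 1.0]
x_final = run_biased(x0, neighbors, biased={0, 6}, q=0.6, steps=2000)
print([round(v, 3) for v in x_final])
```

In this sketch a biased agent is not stubborn: its ghost link follows its current orientation, so sufficiently strong incongruent input from its neighbors can still flip it.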

Our main focus will be on the long-run properties of the dynamics introduced above. In this respect, it is important to establish whether the agents actually reach an equilibrium over their signal mixes and orientations, or whether they continue to oscillate. It is straightforward to demonstrate that unbiased networks always converge to a limiting steady state for their signal mixes and orientations, which follows directly from the correspondence between such networks and DeGroot models23. The convergence of biased networks is much less trivial to prove due to the non-linear dynamics introduced by the confirmation bias mechanics. It can be shown however that convergence holds under fairly general conditions, and in Section S2 of the Supplementary Materials we demonstrate such convergence under a number of network topologies.

Building on this, we are able to establish the following results on -regular networks of any size (in the following, and throughout the rest of the paper, we shall denote the steady state value of a variable as ).

For biased -regular networks, denote as the time after which biased agents cease switching their orientation. Define as the steady state fraction of positively oriented biased agents. Then the following holds (see Section S3 of the Supplementary Materials).

The signal mix vector converges to some for both biased and unbiased networks, where is a steady-state matrix of influence weights which can be computed explicitly (see Section S3 of the Supplementary Materials).

Unbiased networks achieve consensus, and converge to influence weights of $1/n$ for all pairs $(i, j)$. This ensures that, for all $i \in V$, $x_i^{*} = \bar{x}(0)$, where $\bar{x}(0)$ is the initial average signal mix.

Biased networks where achieve consensus, and converge to influence weights for all pairs , and for all .

Biased networks where do not achieve consensus, and converge to influence weights for all , and for all .

From the above, we can conclude that while unbiased networks efficiently aggregate the information available to them at , the outcome of the information aggregation process in biased networks ends up being entirely determined by the long-run orientations of biased agents. We shall devote the following sections to examine the consequences of the model in greater detail through mean field approximations coupled with numerical verifications on finite networks.

Cascades and consensus

We begin by studying the signal mix of unbiased agents in biased networks () to provide a like for like comparison with the fully unbiased networks. In the context of the diffusion of news, the global signal mix can be thought of as a model of the long term balance of news of different types that survive following the diffusion dynamics.

For unbiased networks, it is demonstrated in Ref. 37 that $\bar{x}_V^{*} = \bar{x}(0)$. That is, the steady state signal mix in unbiased networks precisely reflects the original, unbiased informative signals injected into the network. Determining the steady state signal mix of biased networks entails considering the interactions between three subpopulations: the unbiased agents $U$, positively biased agents $B^{+}$, and negatively biased agents $B^{-}$. One can show (see Section S4 of the Supplementary Materials) that this can be approximated as:

| 2 |

Let us now consider the situation under which , i.e. where the initial orientation of each biased agent does not change, and is therefore equal to the initial signal it receives. Intuitively, this will occur for large which allows for biased agents to reject the majority of incongruent signals they receive (shortly we demonstrate in fact this generally occurs for /). Given Eq. (2), we can therefore calculate the steady state signal mix of any subset of agents based on our knowledge of the distribution of the initial signals.

In this scenario, the average signal mix of unbiased agents is determined by the initial proportion of positively oriented biased agents $y_B^{+}(0)$, which is the mean of $fn$ i.i.d. Bernoulli variables with probability $p$ (which, we recall, denotes the probability of an initially assigned signal being informative). One can compare this to unbiased networks ($f = 0$), where the long run average signal mix is $\bar{x}(0)$, and is hence the mean of $n$ i.i.d. Bernoulli variables with probability $p$. Applying the central limit theorem we see that injecting a fraction $f$ of biased agents therefore amplifies the variance of the long run global signal mix by a factor of $1/f$ with respect to the unbiased case:

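$$\bar{x}_V^{*} \sim \mathcal{N}\!\left(p,\ \frac{p(1-p)}{n}\right) \quad \longrightarrow \quad \hat{\bar{x}}_U^{*} \sim \mathcal{N}\!\left(p,\ \frac{p(1-p)}{fn}\right) \tag{3}$$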

This means that the “wisdom of unbiased crowds” is effectively undone by small biased populations, and the unbiased network’s variability is recovered for $f \to 1$, and not for $f \to 0$, as one might intuitively expect.
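The variance amplification can be checked with a small Monte Carlo sketch. It does not simulate the network dynamics; it only compares the two sample means named in the argument above, namely the mean of n Bernoulli draws (unbiased case) and the mean of f·n Bernoulli draws (the initial share of positively oriented biased agents). The parameter values and function name are illustrative assumptions.

```python
import random
import statistics


def variance_of_sample_mean(num_agents, p, trials, rng):
    """Empirical variance, across trials, of the mean of num_agents
    i.i.d. Bernoulli(p) draws."""
    means = []
    for _ in range(trials):
        positives = sum(1 for _ in range(num_agents) if rng.random() < p)
        means.append(positives / num_agents)
    return statistics.pvariance(means)


rng = random.Random(7)
n, f, p, trials = 500, 0.2, 0.6, 4000

var_unbiased = variance_of_sample_mean(n, p, trials, rng)
var_biased = variance_of_sample_mean(int(f * n), p, trials, rng)

# The ratio should be close to 1/f, up to sampling noise.
print(var_biased / var_unbiased, 1 / f)
```

With these values the long-run signal mix is driven by 100 draws rather than 500, which is why a small biased minority makes the outcome so much noisier.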

Consider now the general case where biased agents can, in principle, switch orientation a few times before settling on their steady state orientation. Using mean-field methods one can determine the general conditions under which a cascade in these orientation changes can be expected (see Section S4 of the Supplementary Materials), but here we only provide some intuition. The lower $q$ is, the easier it is for an initial majority camp of biased agents to convert the minority camp of biased agents. As the conversion of the minority camp begins, it triggers a domino effect: newly converted biased agents add to the critical mass of the majority camp, which is then able to overwhelm the minority orientation.

This mechanism allows us to derive analytic curves in the parameter space to approximate the steady state outcome of the unbiased agent population’s average signal mix based on the orientations of the biased agents at time :

| 4 |

The above result is sketched in Fig. 2, and we have verified that it matches numerical simulations even for heterogeneous networks. For $q > 1/2$ biased agents can convert at least half of the incongruent signals they receive to their preferred type, meaning that biased agents of either orientation cannot be eradicated from the network, which preserves signals of both types in the steady state. For sufficiently small values of $q$, on the other hand, small variations in the initial biased population translate to completely opposite consensus outcomes, and, paradoxically, only by increasing the confirmation bias $q$ does the model tend back to a balance of signals that resembles the initially available information.

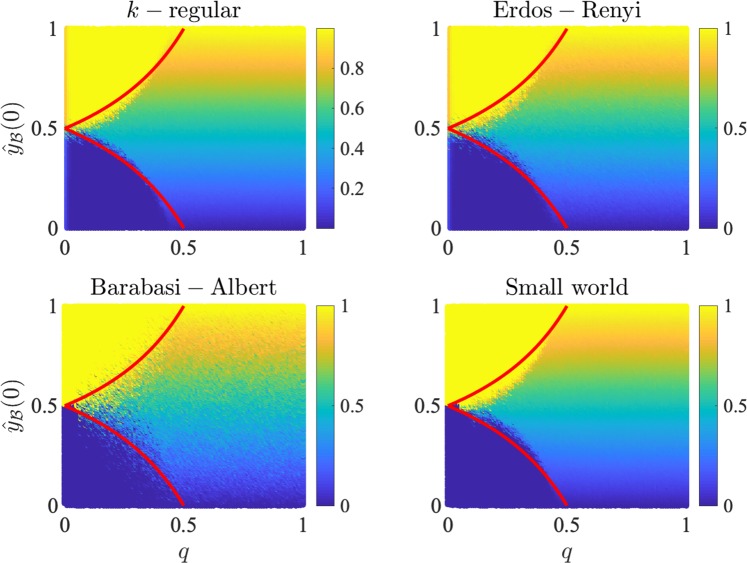

Figure 2.

Average steady state signal mix of unbiased agents () as a function of the time fraction of positively oriented biased agents () and confirmation bias . The color gradient denotes the average long run signal mix for unbiased agents from to . The top-left and bottom-left regions are characterized, respectively, by a global signal mix of 1 and 0 respectively, and are separated by a discontinuous transition from a region characterized by a steady state that maintains a mixed set of signals. That is, in the top-left region almost all negative signals have been removed from the network, leaving almost entirely positive signals in circulation (and vice versa for the bottom-left region). In the remaining region, signal mixes of both types survive in the long run, and the balance between positive and negative signals reflects the fraction of positively oriented biased agents. The lower falls, the easier it is to tip the network into a total assimilation of a single signal type. Results are shown for simulations on -regular (top left), Erdős-Rényi (top right), Barabasi-Albert (bottom left) and Small-world (bottom right) networks. Analytic predictions (given by Eq. (4)) are denoted by solid red lines. The parameters used in the simulations were , , (which corresponds to an average degree in all cases), .

Putting the above results together, we note that biased networks with small $f$ and $q$ are, surprisingly, the most unstable. Indeed, such networks sit on a knife-edge between two extremes where one signal type flourishes and the other is totally censored. In this context, the model indicates that confirmation bias helps preserve a degree of information heterogeneity, which, in turn, ensures that alternative viewpoints and information are not eradicated. In subsequent sections we consider a normative interpretation of this effect in the context of accuracy and learning.

Polarization, echo chambers and the bias-connectivity trade-off

So far we have derived the statistical properties of the average steady state signal mix across all unbiased agents. We now aim to establish how these signals are distributed across individual agents. Throughout the following, assume the global steady state signal mix has been determined.

In the limit of large and , for is normally distributed with mean and variance that can be approximated as follows (see Section S4 of the Supplementary Materials):

| 5 |

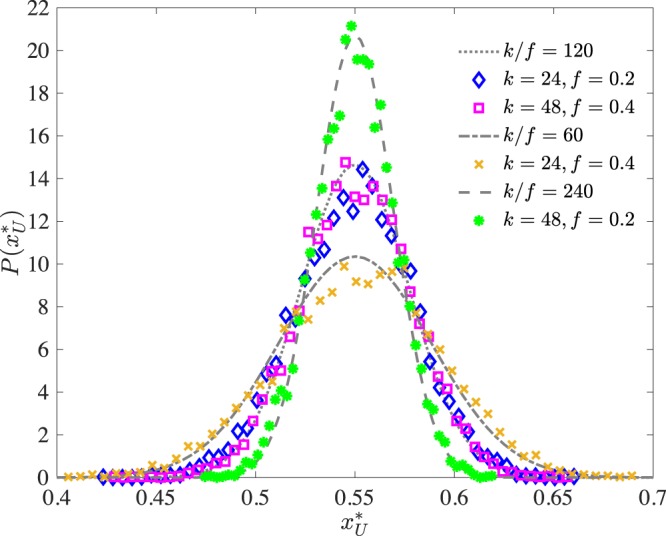

and this result is quite accurate even when compared with simulations for small and , as demonstrated in Fig. 3.

Figure 3.

Distribution of individual unbiased agents’ steady state signal mixes (points) vs analytic predictions (dashed lines). As discussed in the main text, the model predicts such distribution to be a Gaussian (for both large and ) with mean equal to and variance given by Eq. (5). is kept fixed to demonstrate the effect of varying and . As shown in the case for , and trade off, and scaling both by the same constant results in the same distribution. The parameters used in the simulations were , , .

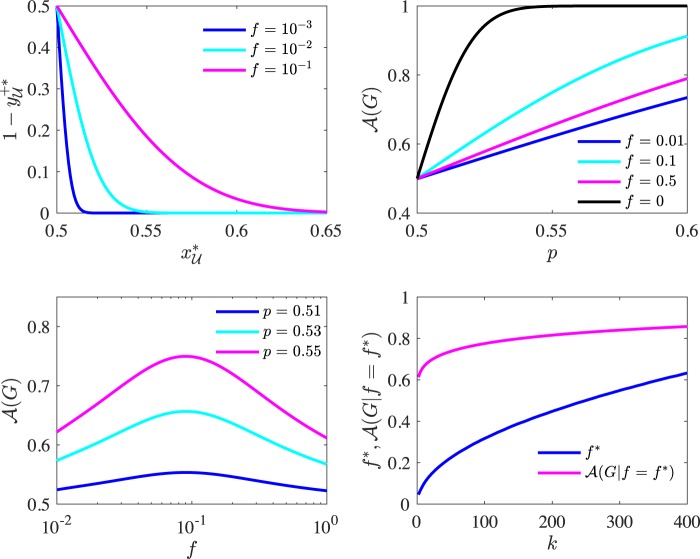

This result further shows that the presence of biased agents is effectively responsible for the polarization of unbiased agents in the steady state. Indeed, both a larger biased population and higher confirmation bias (i.e. higher $f$ or $q$, respectively) result in an increased variance and steady state polarization, since a larger variance implies larger numbers of agents displaying the minority orientation. This is illustrated in the top left panel of Fig. 4.

Figure 4.

(Top left panel) Polarization of the unbiased agent population as a function of the average signal mix in the steady state calculated as /2, with given by Eq. (5) . (Top right panel) Expected accuracy (Eq. (6)) as a function of the initial signals’ informativeness . (Bottom left panel) Expected accuracy (Eq. (6)) as a function of the fraction of biased agents. (Bottom right panel) Behaviour of the accuracy-maximizing value and of the corresponding accuracy as functions of . In the first three panels the model’s parameters are , , , while the parameters in the last panel are , , . In all cases we assume without loss of generality.

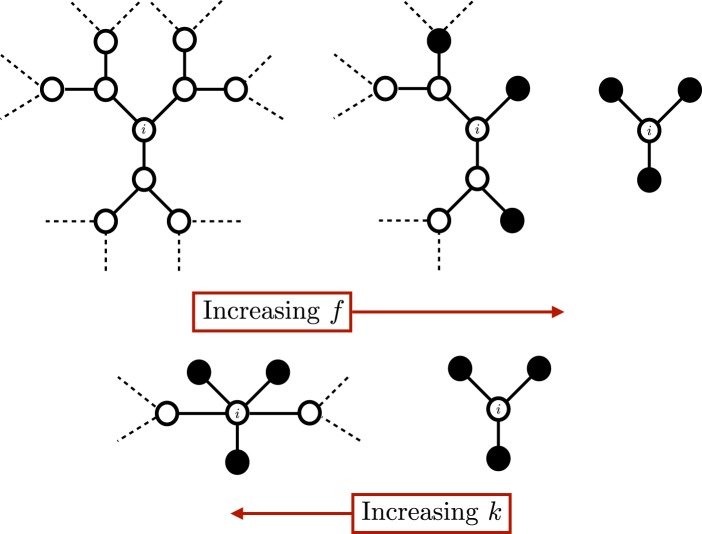

On the other hand, a larger degree $k$ counteracts this effect by creating more paths to transport unbiased information. It is worth pointing out that the variance in Eq. (5) does not decay with $n$, showing that steady state polarization persists even in the large $n$ limit. We refer to this as the bias-connectivity trade-off, and the intuition behind this result is illustrated in Fig. 5.

Figure 5.

For illustration of the bias-connectivity trade-off, consider a Cayley -tree structure and let . When , each unbiased node has 3 independent sources of novel information, one from each branch associated with a neighbor. As increases, unbiased neighbors are steadily replaced with biased agents that act as gatekeepers and restrict the flow of novel information from their branches, resulting in an equivalence with fewer branches overall. Increasing social connectivity bypasses biased neighbors and enables the discovery of novel information. This can also be interpreted as agents being encased in “echo chambers” as bias grows, and circumventing these chambers as connectivity increases.

Further intuition for this result can be found at the mesoscopic level of agent clusters, where we see the emergence of natural “echo chambers” in the model. We define an echo chamber as a subset of unbiased agents such that: , where denotes the neighborhood of agent . In other words, an echo chamber is a set of connected unbiased agents such that all nodes are either connected to other nodes in the echo chamber or to biased agents. Therefore, biased agents form the echo chamber’s boundary, which we refer to as . Echo chambers in our model represent groups of unbiased agents that are completely surrounded by biased agents who effectively modulate the information that can flow in and out of these groups.
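The verbal definition of an echo chamber can be turned into a small graph routine. The sketch below takes one possible reading of it, stated here as an assumption: an echo chamber is a connected component of the subgraph induced by unbiased agents, and its boundary is the set of biased agents adjacent to that component. Whether the largest such component should count as a chamber is left open. The function name and the adjacency-list format are hypothetical.

```python
from collections import deque


def echo_chambers(neighbors, biased):
    """Return a list of (members, boundary) pairs.

    members: a connected set of unbiased agents whose neighbors are all
             either in the same set or biased.
    boundary: the biased agents adjacent to that set.
    """
    seen = set()
    chambers = []
    for start in range(len(neighbors)):
        if start in biased or start in seen:
            continue
        members, boundary = set(), set()
        queue = deque([start])
        seen.add(start)
        while queue:  # breadth-first search over unbiased agents only
            node = queue.popleft()
            members.add(node)
            for nb in neighbors[node]:
                if nb in biased:
                    boundary.add(nb)
                elif nb not in seen:
                    seen.add(nb)
                    queue.append(nb)
        chambers.append((members, boundary))
    return chambers


# Ring of 8 agents (degree 2) with biased agents at positions 0 and 4.
ring = [[(i - 1) % 8, (i + 1) % 8] for i in range(8)]
for members, boundary in echo_chambers(ring, biased={0, 4}):
    print(sorted(members), sorted(boundary))
```

On a sparse ring two biased agents are enough to split the unbiased population into separate chambers, whereas adding links that bypass them would merge the chambers, in line with the percolation argument.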

Echo chambers allow us to examine the qualitative effect of confirmation bias () and connectivity . Let us label the fraction of unbiased agents enclosed in an echo chamber as . Leveraging some simple results from percolation theory38 we can show that increases with and decreases with , as the creation of more pathways that bypass biased agents effectively breaks up echo chambers. Furthermore, the equilibrium signal mix of unbiased agents inside echo chambers is well approximated by a weighted average between the signal mix of the biased agents surrounding them () and the signal mix of the whole population: . The confirmation bias parameter therefore determines the “permeability” of echo chambers to the information flow from the broader network. Hence, unbiased agents enclosed in echo chambers are likely to be exceedingly affected by the views of the small set of biased agents surrounding them, and, as such, to hold information sets that are unrepresentative of the information available to the broader network. In doing so, we can envision these echo chambers as effective “building blocks” of the overall polarization observed in the network.

Accuracy, efficiency and learning

Up until now, we have not attempted to make any normative interpretations of the ground truth . In the following, we shall refer to unbiased agents whose steady state orientation is positive (negative) as accurate (inaccurate) agents, and we shall define the overall accuracy of a network as the expected fraction of accurate agents in the steady state. This allows us to investigate how biased and unbiased networks respond to changes in the reliability of the available information, which ultimately depends on the prevalence of positive or negative signals (modulated by the parameter ), which, loosely speaking, can be interpreted as “real” and “fake” news.

The accuracy of unbiased networks obtains a neat closed form that can be approximated as /2 (see37). For , we compute the expected accuracy as the expected fraction of accurate agents with respect to a certain global signal mix. This reads:

| 6 |

where is the distribution of the average signal mix across unbiased agents (see Eq. (3)) (we take the simplifying case of , but this can easily be extended to the case for using Eq. (4)), and where we have used the previously mentioned Gaussian approximation for the distribution of individual signal mixes (whose variance is given by Eq. (5)).

The top right panel in Fig. 4 contrasts biased and unbiased networks, and shows how the former remain very inefficient in aggregating information compared to the latter, even as the reliability of the signals ($p$) improves. However, accuracy in biased networks is non-monotonic with respect to $f$. As shown in the bottom left panel in Fig. 4, accuracy reaches a maximum at an optimal value $f^{*}$ (see Section S5 of the Supplementary Materials for a comparison with numerical simulations). Intuitively, this is because for small values of $f$, as already discussed, the model can converge to the very inaccurate views of a small set of biased agents. As $f$ grows, the views of the two biased camps tend to cancel each other out, and the signal set will match more closely the balance of the original distribution of signals (Eq. (3)). However, large values of $f$ also lead to increased polarization (Eq. (5)), where accurate and inaccurate agents coexist. The trade-off between balance and polarization is optimised at $f^{*}$.

It is also interesting to note that, as shown in the bottom right panel of Fig. 4, the optimal fraction of biased agents and the corresponding maximum accuracy both increase monotonically with the degree. This indicates that as networks are better connected, they can absorb a greater degree of confirmation bias without affecting accuracy.

Internet access, confirmation bias, and social learning

We now seek to test some of the model’s predictions against real world data. Clearly, a full validation of the model will require an experimental setup, but a simple test case on existing data can demonstrate the utility of the framework in disambiguating the competing effects of bias and connectivity. We employ the model to investigate the effect of online media in the process of opinion formation using survey data. Empirical literature on this phenomenon has been mixed, with different analyses reaching completely opposite conclusions, e.g., showing that Internet access increases39, decreases40 and has no effect41 on opinion polarization. In Section S6 of the Supplementary Materials we briefly review how our model can help better understand some of the inconsistencies between these results.

Our position is that the effect of Internet access can be split into the effect it has on social connectivity and social discussion () and the residual effect it has on enabling active and passive confirmation bias behaviours (). As per Eq. (5), assuming the majority of the population accurately learns the ground truth (1/2), increases in social discussion should improve consensus around the truth and reduce the fraction of inaccurate agents. However, when controlling for the improvement in social connectivity, we should expect an increase in Internet access to have the opposite effect.

We utilise data from the Yale Programme on Climate Change Communication42, which provides state and county level survey data on opinions on global warming, as well as information about the propensity to discuss climate change with friends and family, which proxies for connectivity . We combine this with FCC reports on county level broadband internet penetration, which proxies for after controlling for the considerable effect this has on social connectivity. We also account for a range of covariates (income, age, education, etc.) and make use of an instrumental variable approach to account for simultaneous causality. We then attempt to predict the fraction of each county’s population that correctly learns that “global warming is happening” (see Section S6 of the Supplementary Materials for details on assumptions and results).
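To make the instrumental variable step concrete, the following Python sketch implements a generic two-stage least squares (2SLS) estimator and applies it to synthetic data. It is not the specification used in Section S6 of the Supplementary Materials: the variable names (`broadband`, `discussion`, `instrument`, `outcome`), the data-generating process, and the coefficient values are all invented for illustration.

```python
import numpy as np


def two_stage_least_squares(y, endogenous, controls, instruments):
    """Generic 2SLS. Returns coefficients ordered as
    [intercept, controls..., endogenous...]."""
    y = np.asarray(y, dtype=float)
    n = y.shape[0]
    const = np.ones((n, 1))
    controls = np.asarray(controls, dtype=float).reshape(n, -1)
    endogenous = np.asarray(endogenous, dtype=float).reshape(n, -1)
    instruments = np.asarray(instruments, dtype=float).reshape(n, -1)

    X = np.hstack([const, controls, endogenous])   # structural regressors
    Z = np.hstack([const, controls, instruments])  # exogenous variables
    # First stage: project the structural regressors onto the exogenous ones.
    X_hat = Z @ np.linalg.lstsq(Z, X, rcond=None)[0]
    # Second stage: regress the outcome on the fitted regressors.
    beta = np.linalg.lstsq(X_hat, y, rcond=None)[0]
    return beta


def synthetic_counties(n=20000, seed=0):
    """Synthetic data with an unobserved confounder (purely illustrative)."""
    rng = np.random.default_rng(seed)
    instrument = rng.normal(size=n)
    confounder = rng.normal(size=n)   # affects both broadband and the outcome
    discussion = rng.normal(size=n)   # hypothetical stand-in for a connectivity proxy
    broadband = 0.8 * instrument + confounder + rng.normal(scale=0.5, size=n)
    outcome = (1.0 + 2.0 * discussion - 0.5 * broadband
               + confounder + rng.normal(scale=0.5, size=n))
    return outcome, broadband, discussion, instrument


outcome, broadband, discussion, instrument = synthetic_counties()
beta = two_stage_least_squares(outcome, broadband, discussion, instrument)
print("intercept, discussion, broadband:", np.round(beta, 2))
```

Because the synthetic confounder raises both `broadband` and `outcome`, an ordinary least squares fit would overstate the broadband coefficient, whereas the instrumented estimate should land close to the value used to generate the data.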

As predicted by our model, we find the accurate fraction of the population to have statistically significant positive relationships with , and a negative relationship with . We find our model accounts for of the variance in the data. This indicates that, after controlling for the improvements in social connectivity, Internet access does indeed increase polarization and reduce a population’s ability to learn accurately. While simple, this analysis illustrates the value of our model: by explicitly accounting for the separate effects of large-scale online communication (confirmation bias and connectivity), it can shed light on the mixed empirical results currently available in the literature. In Section S6 of the Supplementary Materials we explore this further by reviewing some of these empirical results and showing how our model provides useful further interpretations of available findings.

It should be emphasized that this result is merely an initial exploration of how our model can yield testable predictions for empirical data, as opposed to a detailed effort to understand the effect of Internet access on global warming beliefs. Having said that, the initial results are encouraging, and we hope the clarity of the analytic results of our model paves the way for testing variations of the idea of biased information aggregation in a range of outcomes and settings.

Discussion

We introduced a model of social learning in networked societies where only a fraction of the agents update beliefs unbiasedly based on the arrival of new information. The model only provides a stylized representation of the real-world complexity underpinning the propagation of information and the ensuing opinion formation process. Its value lies in the transparency of the assumptions made, and in the fact that it allows us to “unpack” blanket terms such as social media and Internet penetration by assigning specific parameters to their different facets, such as connectivity () and the level of confirmation bias it enables in a society (). This, in turn, yields quantitative testable predictions that help shed light on the mixed results that the empirical literature has so far collected on the effects online media have in shaping societal debates.

Our model indicates the possibility that the “narratives” (information sets) biased societies generate can be entirely determined by the composition of their sub-populations of biased reasoners. This is reminiscent of the over-representation in public discourse of issues that are often supported by small but dedicated minorities, such as GMO opposition43, and of the domination of political news sharing on Facebook by heavily partisan users15; it also resonates with recent experimental results showing that committed minorities can overturn established social conventions44. The model indicates that societies that contain only small minorities of biased individuals () may be much more prone to producing long run narratives that deviate significantly from their initially available information set (see Eq. (3)) than societies where the vast majority of the agents actively propagate biases. This resonates, for example, with Gallup survey data about vaccine beliefs in the US population, where only of respondents report their belief in the relationship between vaccines and autism, but more than report being unsure about it and almost report having heard about the disadvantages of vaccinations45. Similarly, the model suggests that mild levels of confirmation bias () may prove to be the most damaging in this regard, as they cause societies to live on a knife-edge where small fluctuations in the information set initially available to the biased agent population can completely censor information signals from opposing viewpoints (see Fig. 2). All in all, the model suggests that a lack of confirmation bias can make it much easier for small biased minorities to hijack and dictate public discourse.

The model suggests that as the prevalence of biased agents grows, the available balance of information improves and society is more likely to maintain a long term narrative that is representative of all the information available. On the other hand, it suggests that such societies may grow more polarised. When we examine the net effect of this trade-off between bias and polarization through an ensemble approach, our model suggests that the expected accuracy of a society may initially improve with the growth of confirmation bias, then reach a maximum at a value before marginal returns to confirmation bias become negative, i.e. confirmation bias has an “optimal” intermediate value. The model suggests that this value and its corresponding accuracy should increase monotonically with a society’s connectivity, meaning that more densely connected societies can support a greater number of biased reasoners (and healthy debate between biased camps) before partitioning into echo chambers and suffering from polarization.

Acknowledgements

We thank Daniel Fricke and Simone Righi for helpful comments. G.L. acknowledges support from an EPSRC Early Career Fellowship in Digital Economy (Grant No. EP/N006062/1). P.V. acknowledges support from the UKRI Future Leaders Fellowship Grant MR/S03174X/1.

Author contributions

O.S., R.E.S., P.V. and G.L. designed research; O.S. and G.L. performed research and analyzed data; O.S., R.E.S., P.V. and G.L. wrote the paper.

Data availability

The data used to test the model’s predictions on global warming beliefs were gathered from the Yale Programme on Climate Change Communication 2016 Opinion Maps42, which provides state and county level survey data on opinions on global warming, as well as behaviours such as the propensity to discuss climate change with friends and family. These data were combined with FCC 2016 county level data on residential high speed Internet access (www.fcc.gov/general/form-477-county-data-internet-access-services). A supplemental source was the data aggregated by the Joint Economic Committee’s Social Capital Project (www.jec.senate.gov/public/index.cfm/republicans/socialcapitalproject), a government initiative aiming to measure social capital at a county level by aggregating a combination of state and county level data from sources such as the American Community Survey, the Current Population Survey, and the IRS.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information is available for this paper at 10.1038/s41598-020-62085-w.

References

- 1. McCright AM, Dunlap RE. The politicization of climate change and polarization in the american public’s views of global warming, 2001–2010. The Sociological Quarterly. 2011;52:155–194. doi: 10.1111/j.1533-8525.2011.01198.x.

- 2. Horne Z, Powell D, Hummel JE, Holyoak KJ. Countering antivaccination attitudes. Proceedings of the National Academy of Sciences. 2015;112:10321–10324. doi: 10.1073/pnas.1504019112.

- 3. Levy, D., Newman, N., Fletcher, R., Kalogeropoulos, A. & Nielsen, R. K. Reuters institute digital news report 2014. Report of the Reuters Institute for the Study of Journalism. Available online: http://reutersinstitute.politics.ox.ac.uk/publication/digital-news-report-2014 (2014).

- 4. Gottfried, J. & Shearer, E. News Use Across Social Media Platforms 2016 (Pew Research Center, 2016).

- 5. Mitchell, A. & Weisel, R. Political polarization & media habits: From fox news to facebook, how liberals, and conservatives keep up with politics. Pew Research Center (2014).

- 6. Nikolov D, Oliveira DF, Flammini A, Menczer F. Measuring online social bubbles. PeerJ Computer Science. 2015;1:e38. doi: 10.7717/peerj-cs.38.

- 7. Conover, M. D. et al. Political polarization on twitter. In Fifth international AAAI conference on weblogs and social media (2011).

- 8. Schmidt AL, et al. Anatomy of news consumption on facebook. Proceedings of the National Academy of Sciences. 2017;114:3035–3039. doi: 10.1073/pnas.1617052114.

- 9. Hannak, A. et al. Measuring personalization of web search. In Proceedings of the 22nd international conference on World Wide Web, 527–538 (ACM, 2013).

- 10. Jonas E, Schulz-Hardt S, Frey D, Thelen N. Confirmation bias in sequential information search after preliminary decisions: an expansion of dissonance theoretical research on selective exposure to information. Journal of personality and social psychology. 2001;80:557. doi: 10.1037/0022-3514.80.4.557.

- 11. Nickerson RS. Confirmation bias: A ubiquitous phenomenon in many guises. Review of general psychology. 1998;2:175–220. doi: 10.1037/1089-2680.2.2.175.

- 12. DelVicario M, et al. The spreading of misinformation online. Proceedings of the National Academy of Sciences. 2016;113:554–559. doi: 10.1073/pnas.1517441113.

- 13. An J, Quercia D, Cha M, Gummadi K, Crowcroft J. Sharing political news: the balancing act of intimacy and socialization in selective exposure. EPJ Data Science. 2014;3:12. doi: 10.1140/epjds/s13688-014-0012-2.

- 14. Messing S, Westwood SJ. Selective exposure in the age of social media: Endorsements trump partisan source affiliation when selecting news online. Communication research. 2014;41:1042–1063. doi: 10.1177/0093650212466406.

- 15. Bakshy E, Messing S, Adamic LA. Exposure to ideologically diverse news and opinion on facebook. Science. 2015;348:1130–1132. doi: 10.1126/science.aaa1160.

- 16. Flaxman S, Goel S, Rao JM. Filter bubbles, echo chambers, and online news consumption. Public opinion quarterly. 2016;80:298–320. doi: 10.1093/poq/nfw006.

- 17. Goel S, Mason W, Watts DJ. Real and perceived attitude agreement in social networks. Journal of personality and social psychology. 2010;99:611. doi: 10.1037/a0020697.

- 18. Hegselmann, R. et al. Opinion dynamics and bounded confidence models, analysis, and simulation. Journal of artificial societies and social simulation 5 (2002).

- 19. DelVicario M, Scala A, Caldarelli G, Stanley HE, Quattrociocchi W. Modeling confirmation bias and polarization. Scientific reports. 2017;7:40391. doi: 10.1038/srep40391.

- 20. Quattrociocchi W, Caldarelli G, Scala A. Opinion dynamics on interacting networks: media competition and social influence. Scientific reports. 2014;4:4938. doi: 10.1038/srep04938.

- 21. Lorenz J. Continuous opinion dynamics under bounded confidence: A survey. International Journal of Modern Physics C. 2007;18:1819–1838. doi: 10.1142/S0129183107011789.

- 22. DeMarzo PM, Vayanos D, Zwiebel J. Persuasion bias, social influence, and unidimensional opinions. The Quarterly journal of economics. 2003;118:909–968. doi: 10.1162/00335530360698469.

- 23. Golub B, Jackson MO. Naive learning in social networks and the wisdom of crowds. American Economic Journal: Microeconomics. 2010;2:112–49. doi: 10.1257/mic.2.1.112.

- 24. Acemoglu D, Ozdaglar A. Opinion dynamics and learning in social networks. Dynamic Games and Applications. 2011;1:3–49. doi: 10.1007/s13235-010-0004-1.

- 25. Mobius M, Rosenblat T. Social learning in economics. Annu. Rev. Econ. 2014;6:827–847. doi: 10.1146/annurev-economics-120213-012609.

- 26. Golub, B. & Sadler, E. Learning in social networks (2017).

- 27. Acemoğlu D, Como G, Fagnani F, Ozdaglar A. Opinion fluctuations and disagreement in social networks. Mathematics of Operations Research. 2013;38:1–27. doi: 10.1287/moor.1120.0570.

- 28. Acemoglu D, Ozdaglar A, ParandehGheibi A. Spread of (mis) information in social networks. Games and Economic Behavior. 2010;70:194–227. doi: 10.1016/j.geb.2010.01.005.

- 29. Mobilia, M., Peterson, A. & Redner, S. On the role of zealotry in the voter model. Journal of Statistical Mechanics: Theory and Experiment (2007).

- 30. Kunda Z. The case for motivated reasoning. Psychological bulletin. 1990;108:480. doi: 10.1037/0033-2909.108.3.480.

- 31. Rabin M, Schrag JL. First impressions matter: A model of confirmatory bias. The Quarterly Journal of Economics. 1999;114:37–82. doi: 10.1162/003355399555945.

- 32. Redlawsk DP. Hot cognition or cool consideration? Testing the effects of motivated reasoning on political decision making. The Journal of Politics. 2002;64:1021–1044. doi: 10.1111/1468-2508.00161.

- 33. Nyhan B, Reifler J. When corrections fail: The persistence of political misperceptions. Political Behavior. 2010;32:303–330. doi: 10.1007/s11109-010-9112-2.

- 34. Zollo F, et al. Debunking in a world of tribes. PloS one. 2017;12:e0181821. doi: 10.1371/journal.pone.0181821.

- 35. Bessi, A. et al. Viral misinformation: The role of homophily and polarization. In WWW (Companion Volume), 355–356 (2015).

- 36. DeGroot MH. Reaching a consensus. Journal of the American Statistical Association. 1974;69:118–121. doi: 10.1080/01621459.1974.10480137.

- 37. Livan G, Marsili M. What do leaders know? Entropy. 2013;15:3031–3044. doi: 10.3390/e15083031.

- 38. Christensen, K. Percolation theory (course notes). Tech. Rep. (2002).

- 39. Lelkes Y, Sood G, Iyengar S. The hostile audience: The effect of access to broadband internet on partisan affect. American Journal of Political Science. 2017;61:5–20. doi: 10.1111/ajps.12237.

- 40. Barberá, P. How social media reduces mass political polarization. evidence from germany, spain, and the us. Job Market Paper, New York University 46 (2014).

- 41. Boxell L, Gentzkow M, Shapiro JM. Greater internet use is not associated with faster growth in political polarization among us demographic groups. Proceedings of the National Academy of Sciences. 2017;114:10612–10617. doi: 10.1073/pnas.1706588114.

- 42. Howe PD, Mildenberger M, Marlon JR, Leiserowitz A. Geographic variation in opinions on climate change at state and local scales in the usa. Nature Climate Change. 2015;5:596. doi: 10.1038/nclimate2583.

- 43. Blancke S, Van Breusegem F, De Jaeger G, Braeckman J, Van Montagu M. Fatal attraction: the intuitive appeal of gmo opposition. Trends in plant science. 2015;20:414–418. doi: 10.1016/j.tplants.2015.03.011.

- 44. Centola D, Becker J, Brackbill D, Baronchelli A. Experimental evidence for tipping points in social convention. Science. 2018;360:1116–1119. doi: 10.1126/science.aas8827.

- 45. Newport, F. In us, percentage saying vaccines are vital dips slightly. Gallup (2015).
