Colonoscopy remains the preeminent standard for colorectal cancer (CRC) screening around the world because it can both detect adenomatous or pre-cancerous lesions and remove them intra-procedurally [1]. However, when the endoscopist fails to detect a lesion, the chance that the lesion progresses to CRC increases. Ultimately, colonoscopy remains operator dependent: variations in technique, training, and diligence all contribute to the wide range in exam quality among colonoscopists [1]. It is classically understood that a polyp may be missed during the exam for one of two complementary reasons: (1) it never appeared in the field of view, or (2) it appeared in the field of view but was not recognized by the endoscopist. Recently, advances in artificial intelligence and machine learning, specifically deep learning, have led to the development of numerous computer-aided detection (CADe) systems that assist endoscopists during the colonoscopic exam in an effort to improve adenoma detection rates [2–5]. Nearly all currently published systems address reason (2): they use machine learning as a “second observer” that can alert the endoscopist to a polyp in real-time. However, these systems still rely on the endoscopist to adequately inspect the entire surface area of the colon and to maneuver a potential polyp into the field of view. CADe systems that enhance detection of flat or small lesions rest on the assumption that the endoscopist adequately visualizes the colonic mucosa [6]. We hypothesize that a CADe system that addresses the underlying cause of missed polyps, a poor-quality colonoscopy without full visual inspection of the colonic surface area, could provide objective metrics by which to judge the quality of the exam itself.
We use quality indicators, termed here quality of exam (QoE) metrics, such as the total percentage of colonic surface area visualized, colon preparation, clarity of the endoscopic view, and colon distension [10]. We employ an artificial intelligence model to calculate each QoE metric in real-time, providing feedback to the endoscopist during the procedure. To date, no artificial intelligence-based system has been reported that calculates the percentage of surface area seen in conjunction with a series of other quality metrics to provide real-time, easily interpretable feedback.

DESCRIPTION OF TECHNOLOGY

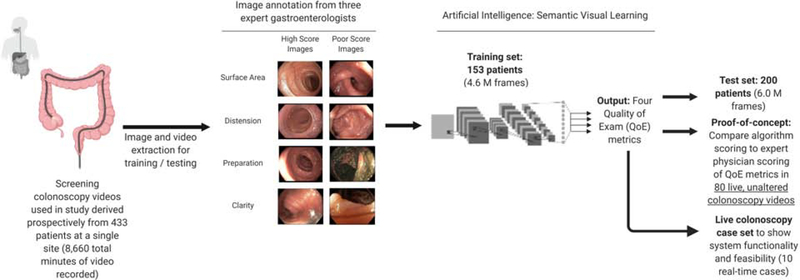

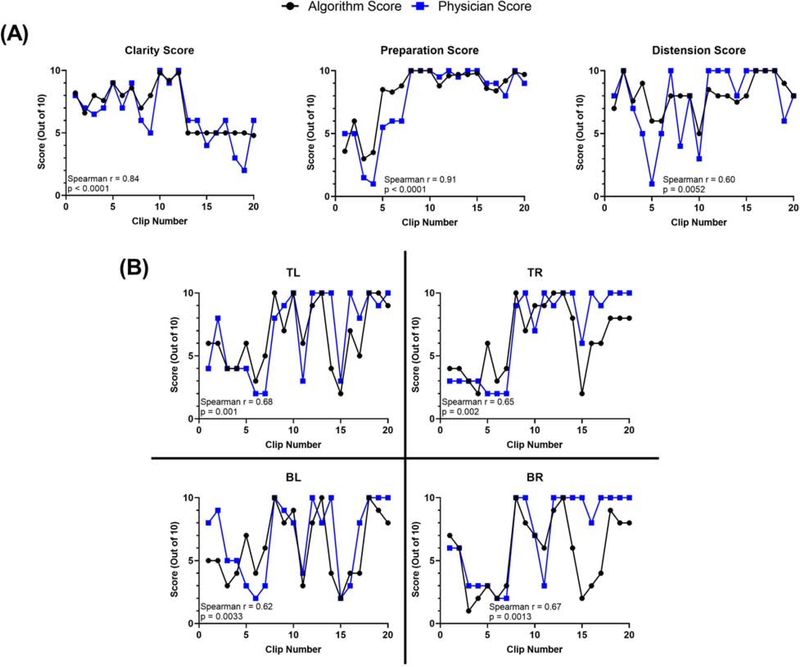

In this proof-of-concept study, we aim to (1) develop and test a novel artificial intelligence-based system that provides real-time feedback to the endoscopist using four QoE metrics, and (2) develop a readily interpretable interface that the endoscopist can view during the procedure. The study workflow is shown in Figure 1. Patients attending a single site (Allegheny Health Network McCandless) for a screening colonoscopy were recruited, and their colonoscopy recordings were included in the study for model training, testing, or subsequent benchmarking. Recordings were collected prospectively from January 2014 to January 2016 from 433 patients (total duration 8660 minutes). Endoscopic videos were stored unaltered and were reviewed and annotated independently by three expert gastroenterologists (combined 15 years of experience, each with > 10,000 colonoscopies performed) with extensive experience in the endoscopic assessment of the colon. Our system measures the following QoE metrics, all of which have previously been described as important quality indicators of a thorough colonoscopy [7–8]: (1) visible surface area, (2) opened / distended colon, (3) preparation conditions, and (4) clarity of the current view. Training and test sets were generated at the patient level, with videos and frames from 153 patients in the training set and 200 patients in the test set. For algorithm training, still images were generated from the colonoscopy videos at a sampling rate of 25 frames per second. A full description of the training and testing process can be found in the Supplementary Methods section. After training and testing the system on datasets of 4.6 million and 6.0 million independent frames, respectively, we functionally benchmarked the algorithm by correlating the system’s scoring of the four QoE metrics against the scoring from an expert endoscopist (adenoma detection rate > 50%, total number of colonoscopies performed > 10,000 cases).
We further demonstrated real-time assessment and feedback capabilities in a series of live case studies. We report strong correlations between the scoring of the system and endoscopist, showing that this system warrants further testing and has the potential to be used clinically to help endoscopists improve their colonoscopic exams (Figure 2).
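
Generating training and test sets by patient means that every frame from a given patient falls on one side of the split, so consecutive, near-identical frames from one video cannot appear in both sets. The idea can be sketched in Python as follows. This is only an illustration: the helper name `split_by_patient`, the `(patient_id, frame_id)` record layout, and the random assignment of patients are assumptions, not the procedure documented in the Supplementary Methods.

```python
import random
from collections import defaultdict


def split_by_patient(frame_records, n_train, n_test, seed=0):
    """Split (patient_id, frame_id) records so no patient spans both sets.

    Hypothetical helper: the record layout and the random assignment of
    patients are assumptions made for illustration only.
    """
    frames_by_patient = defaultdict(list)
    for patient_id, frame_id in frame_records:
        frames_by_patient[patient_id].append(frame_id)

    patients = sorted(frames_by_patient)  # fixed order before shuffling
    if n_train + n_test > len(patients):
        raise ValueError("not enough patients for the requested split")
    random.Random(seed).shuffle(patients)

    train_patients = patients[:n_train]
    test_patients = patients[n_train:n_train + n_test]

    def collect(patient_ids):
        # Gather every frame that belongs to the selected patients.
        return [(p, f) for p in patient_ids for f in frames_by_patient[p]]

    return collect(train_patients), collect(test_patients)


# Toy data: 5 patients with 3 frames each (the study used 153 and 200 patients).
records = [(f"patient-{p}", f"frame-{i}") for p in range(5) for i in range(3)]
train, test = split_by_patient(records, n_train=2, n_test=3)
train_ids = {p for p, _ in train}
test_ids = {p for p, _ in test}
print(len(train), len(test), train_ids.isdisjoint(test_ids))  # 6 9 True
```

Splitting on frames instead of patients would be simpler, but it would let frames from the same video land in both sets and inflate the apparent test performance.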

Figure 1: Schematic representation of our algorithm design and study workflow.

Figure 2: To assess how the algorithm scored against an endoscopist not originally involved in the annotation of the images, we correlated algorithm and endoscopist scoring across 80 unaltered colonoscopy videos. Our system showed strong correlations with physician scoring, as evaluated using Spearman correlation. (A) Correlation results for the QoE metrics: clarity score, preparation score, and colon distension score. (B) Correlation results for each quadrant for surface area visualized. Key: TL = top left quadrant of colonoscope; TR = top right quadrant of colonoscope; BL = bottom left quadrant of colonoscope; BR = bottom right quadrant of colonoscope. Note that the algorithm scoring of surface area was divided by 10.
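
Spearman correlation compares rank orderings rather than raw values, so rescaling one set of scores by a positive constant (such as the division by 10 noted in the caption) leaves the coefficient unchanged. The sketch below illustrates this using only the Python standard library. The scores are made up for the example and are not data from the study, and the function names are illustrative.

```python
import math


def average_ranks(values):
    """Return 1-based ranks, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(var_x * var_y)


def spearman(x, y):
    """Spearman correlation: Pearson correlation of the tie-averaged ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    return pearson(average_ranks(x), average_ranks(y))


# Made-up scores for six videos (assumed scales; not data from the study).
algorithm_scores = [82.0, 45.0, 67.0, 90.0, 30.0, 67.0]
physician_scores = [8, 5, 6, 9, 4, 7]
rho_raw = spearman(algorithm_scores, physician_scores)
rho_scaled = spearman([s / 10 for s in algorithm_scores], physician_scores)
print(rho_raw == rho_scaled)  # True: rescaling does not change the ranks
```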

VIDEO DESCRIPTION

An important consideration for CADe systems is an easily discernible display, one that can be readily viewed and processed by the endoscopist during the procedure. In an effort to create a system that an endoscopist could feasibly use while operating the scope in real-time, each QoE metric was scored and displayed in the interface screen that is positioned near the actual endoscope feed. This display calculates the QoE metrics over each segment, allowing the endoscopist to view their inspection performance and adequacy of the view in real-time. We demonstrate real-time assessment and feedback capabilities during multiple live colonoscopy cases; live proof-of-concept renderings of this system providing real-time feedback in our developer view under different colonoscopy conditions can be found in the Supplemental Video.
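
One way to picture a display that reports QoE metrics over each segment is a running per-segment summary that is updated as frame-level scores arrive. The sketch below is a minimal illustration under stated assumptions: the class name `SegmentQoE`, the metric keys, the segment label, the 0 to 1 score scale, and the choice of a simple mean are all hypothetical, and the source does not specify how the system aggregates scores.

```python
from collections import defaultdict

# Metric keys are illustrative names for the four QoE metrics in the text.
METRICS = ("surface_area", "distension", "preparation", "clarity")


class SegmentQoE:
    """Running per-segment means of frame-level QoE scores (hypothetical)."""

    def __init__(self):
        self._sums = defaultdict(lambda: {m: 0.0 for m in METRICS})
        self._counts = defaultdict(int)

    def update(self, segment, frame_scores):
        """Add one frame's scores; frame_scores needs a value per metric."""
        missing = [m for m in METRICS if m not in frame_scores]
        if missing:
            raise ValueError(f"missing metrics: {missing}")
        sums = self._sums[segment]
        for metric in METRICS:
            sums[metric] += frame_scores[metric]
        self._counts[segment] += 1

    def summary(self, segment):
        """Return the mean of each metric over the frames seen so far."""
        count = self._counts.get(segment, 0)
        if count == 0:
            raise KeyError(f"no frames recorded for segment {segment!r}")
        return {m: self._sums[segment][m] / count for m in METRICS}


# Two made-up frames from one segment (scores assumed to lie in [0, 1]).
tracker = SegmentQoE()
tracker.update("cecum", {"surface_area": 0.5, "distension": 1.0,
                         "preparation": 1.0, "clarity": 0.5})
tracker.update("cecum", {"surface_area": 1.0, "distension": 1.0,
                         "preparation": 0.5, "clarity": 0.5})
print(tracker.summary("cecum"))
```

Keeping only sums and counts means each update costs constant time per frame, which suits a display that has to refresh during the procedure.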

TAKE HOME MESSAGE

The proposed system, the first of its kind, uses artificial intelligence to provide real-time feedback to endoscopists about the quality of the colonoscopy exam. Because operator variability in colonoscopy is extensive, this system has the potential to quantify an endoscopist’s performance and ultimately increase adenoma detection rates through a higher quality exam. We surmise that if the exam itself is of poor quality, a polyp detection system is rendered less effective, because it still relies on the endoscopist’s technique for full colonic visualization. This system would aid the endoscopist in assessing performance, allowing them to re-intervene on areas that may have been missed or poorly visualized because of their own technique or inadequate bowel conditions. The system not only allows endoscopists to ensure, using objective metrics, that patients receive a high-quality exam, but can also be used to train young endoscopists.

ACKNOWLEDGEMENTS

Research reported in this publication was supported by the National Institute of General Medical Sciences (NIGMS) of the National Institutes of Health (NIH) under Award Number T32GM008208 to NC. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. The authors would like to thank the other funding agencies (Disruptive Health Technology Institute & Innovation Works) for their generous support of this work.

Funding: Disruptive Health Technology Institute (DHTI) Grant from Carnegie Mellon University & Highmark Health to ST, Innovation Works Grant from Carnegie Mellon University to ST, and the National Institutes of Health T32GM008208 to NC.

Footnotes

Conflicts of Interest: The authors report no conflicts of interest.

Writing Assistance: No writing assistance was used for this manuscript.

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

REFERENCES

1. Corley DA, et al. New England Journal of Medicine 2014;370:1298–306.

2. Wang P, et al. Nature Biomedical Engineering 2018;2:741.

3. Byrne MF, et al. Gut 2019;68:94–100.

4. Urban G, et al. Gastroenterology 2018;155:1069–78.

5. Misawa M, et al. Gastroenterology 2018;154:2027–9.

6. Rees CJ, Koo S. Nature Reviews Gastroenterology & Hepatology 2019:1.

7. Rex DK, et al. The American Journal of Gastroenterology 2006;101:873.

8. Allen JI. Current Gastroenterology Reports 2015;17:10.
