Introduction

The neglected tropical diseases (NTDs) thrive mainly among the poorest populations of the world. The World Health Organization (WHO) has set ambitious targets for eliminating much of the burden of these diseases (and, where possible, their transmission) by 2020 [1], and new targets for 2030 are currently being set [2]. Substantial international investment, notably through the London Declaration on NTDs (2012), has been made to prevent the morbidity and premature mortality associated with these diseases through global programmes for their control and elimination.

The NTD Modelling Consortium [3] is an international effort to improve the health of the poorest populations in the world through the development and application of mathematical (including statistical and geographical) models for NTD transmission and control.

Although policy and intervention planning for disease control have been supported by mathematical models [4–6], our general experience is that modelling-based evidence remains less readily accepted by decision-making bodies than expert opinion or evidence from empirical research studies. To increase the impact of modelling, we (1) conducted a review of the literature on (health-related) modelling principles and standards, (2) developed recommendations on what policy-driven modelling should communicate to guide NTD programmes, and (3) presented these recommendations to the wider NTD Modelling Consortium.

The principles were formulated as a guide to the areas in which modellers should communicate the quality and relevance of their work to stakeholders. They are not guidance on communicating models to other modellers, nor on how to conduct modelling. By putting these principles into practice, we hope that modelling will be of greater use to policy and decision makers in the field of NTD control, and possibly beyond.

Examples of successes in modelling for policy in the field of NTDs

We first wish to recognise some successful examples of relationships between NTD programmes and modellers. The motivation for principled communication, as we propose it, is to deliver a similarly positive impact consistently over time and across different NTDs. Onchocerciasis (a filarial disease caused by infection with Onchocerca volvulus and transmitted by blackfly vectors of the genus Simulium) probably provides the best example of impactful modelling, with its long history of using evidence, mostly from the ONCHOSIM and EPIONCHO transmission models [7], to support decision-making within ongoing multicountry control initiatives (Table 1).

Table 1. Onchocerciasis modelling and policy impact.

| Specific public health challenge | How modelling addressed the challenge |
|---|---|
| What is the minimal duration of the OCP necessary to mitigate the risk of recrudescence after cessation of interventions? | ONCHOSIM guided the duration of vector control operations in the OCP and investigated the combined impact of vector control and ivermectin treatment in reducing programme duration (1997) [5]. |
| What is the feasibility of reaching elimination of onchocerciasis transmission based on ivermectin distribution as the sole intervention (i.e., in the absence of vector control)? | ONCHOSIM informed the Conceptual and Operational Framework of Onchocerciasis Elimination with Ivermectin Treatment launched by the APOC (2010) [8], and EPIONCHO and ONCHOSIM were fitted to data from proof-of-principle elimination studies in foci of Mali and Senegal (2017) [9]. |
| Areas where onchocerciasis and loiasis are coendemic present challenges for ivermectin treatment because of the risk of SAEs in individuals with high Loa loa burden. | Environmental risk modelling helped to guide distribution of ivermectin by mapping the risk of L. loa coendemicity in Cameroon (2007) [10]. Geostatistical mapping, based on RAPLOA data in 11 countries, informed where extra precautionary methods or alternative strategies are needed to minimise SAE risk (2011) [11]. |
| Annual ivermectin distribution may not be sufficient to achieve elimination in foci with high baseline (precontrol) endemicity. | EPIONCHO and ONCHOSIM supported the shift to 6-monthly ivermectin treatment in highly endemic foci in Africa (2014) [12, 13]. |
| At the closure of the APOC in 2015, there was a need to delineate current and alternative/complementary intervention tools to reach elimination at the continental level. | EPIONCHO and ONCHOSIM supported the deliberations and the final APOC report on Strategic Options and Alternative Treatment Strategies for Accelerating Onchocerciasis Elimination in Africa (2015) [6]. |
| Drug discovery and clinical trial design and analysis are essential for optimising alternative treatment strategies based on the use of macrofilaricides (drugs that kill adult O. volvulus). | Modelling facilitated analysis of clinical trials and informed drug discovery and development by the A∙WOL Consortium (2015–2017) [14, 15]. |

Abbreviations: APOC, African Programme for Onchocerciasis Control; A∙WOL, Anti-Wolbachia; OCP, Onchocerciasis Control Programme in West Africa; RAPLOA, Rapid Assessment of Prevalence of Loiasis; SAE, severe adverse event

Since the NTD Modelling Consortium started in 2015, there have been several other examples of impactful modelling, which can be grouped into three major scales of operation: (1) developing WHO guidelines (e.g., for triple-drug therapy with ivermectin, diethylcarbamazine, and albendazole against lymphatic filariasis [16, 17]); (2) informing funding decisions on new intervention tools (e.g., the development of a schistosomiasis vaccine [18]); and (3) guiding within-country targeting of control (e.g., local vector control for human African trypanosomiasis in the Democratic Republic of the Congo [19, 20] and Chad [21]).

Methods

Literature review

Our review aimed to inform the present synthesis of principles for the consortium. We evaluated published guidelines for good practice in health-related modelling through a review and qualitative synthesis, following a systematised approach. We searched the EQUATOR Network Library for Health Research Reporting and PubMed with terms targeting guidance and good practice for mathematical modelling in the area of human health. The PubMed search applied the systematic[sb] filter with the title-and-abstract terms (guideline* OR guidance OR reporting OR checklist OR ((best OR good) AND practice*)) AND model* NOT animal, combined with any one of a set of common modelling terms occurring in the full text. The full search strategy is described in S1 Appendix (Literature review search strategy and Search strategy and selection criteria). The results were then expanded with references included in recent systematic and rapid reviews [22, 23]. Succinct statements were extracted for analysis, excluding elaborative text, by copying and pasting text from PDF files into standardised study extraction spreadsheets.

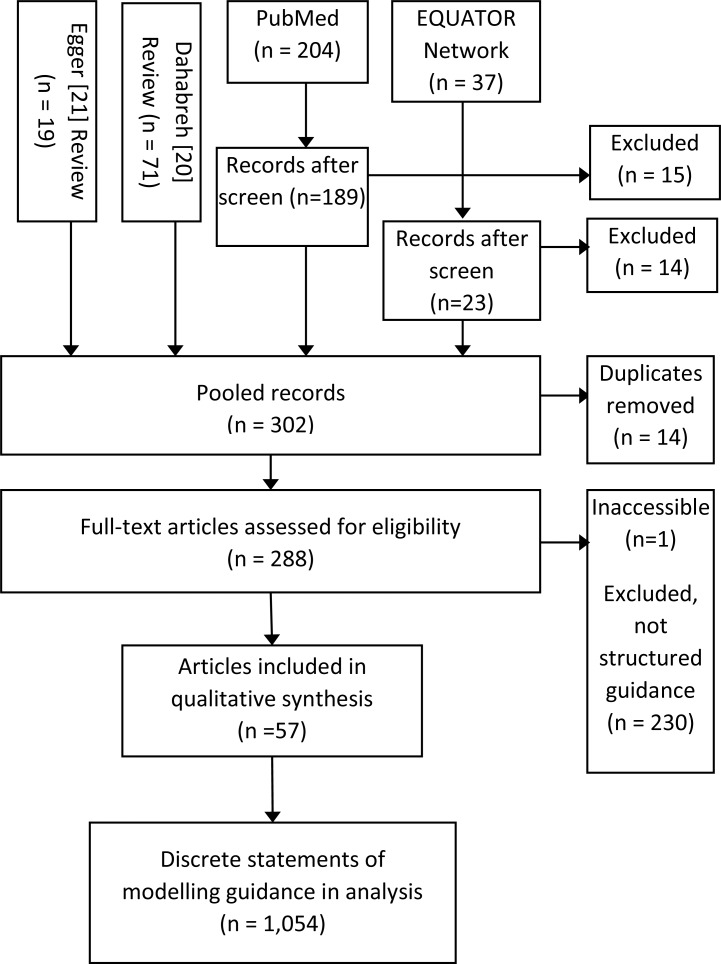

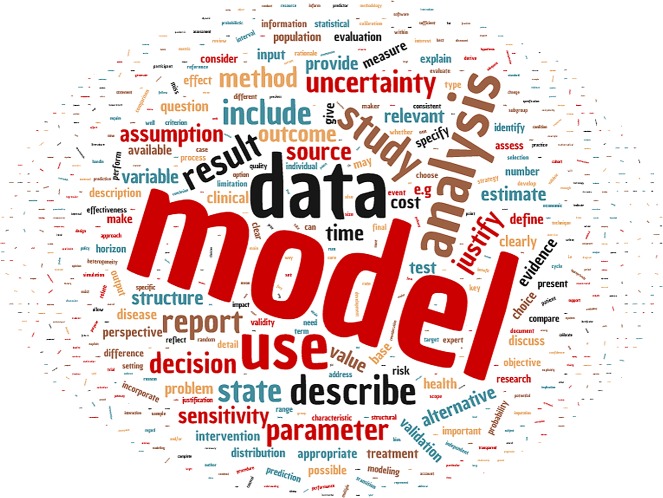

We identified 288 studies relevant to modelling practice, of which 57 were included [24–80] (Fig 1). See S1 Appendix (Table 1) for characteristics of included studies. Studies in the form of reviews and guidelines were eligible for consideration, and those discussing modelling conduct or reporting in the abstract or title were included. Studies were excluded if guidance to modellers was not presented in a list or table to facilitate inspection; however, exclusions were most frequently due to the absence of guidance to modellers rather than to guidance not being provided in a structured format. Altogether, the included studies contained 1,054 succinct statements of modelling guidance, all of which entered the qualitative synthesis. The contents of the data set are summarised visually (Fig 2) and as a table of word occurrence counts in S1 Appendix (Table 2).

Fig 1. Study selection.

Fig 2. Word cloud of the 1,054 modelling guidance statements.

Relative word frequencies are represented by size of the font.
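Fig 2 scales font size by relative word frequency. As a rough illustration of how such counts can be produced, the Python sketch below tallies words across guidance statements and maps each count to a font size. The stop-word list, the linear size scaling, the `word_counts` and `font_sizes` helpers, and the three example statements are all hypothetical; the tool actually used to draw Fig 2 is not described here.

```python
import re
from collections import Counter

# Hypothetical stop-word list; the list used for Fig 2 (if any) is not stated.
STOPWORDS = {"a", "an", "and", "be", "do", "for", "in", "of", "should", "the", "to"}


def word_counts(statements: list[str]) -> Counter:
    """Count lower-cased words across all statements, ignoring stop words."""
    counts: Counter = Counter()
    for statement in statements:
        for word in re.findall(r"[a-z]+", statement.lower()):
            if word not in STOPWORDS:
                counts[word] += 1
    return counts


def font_sizes(counts: Counter, min_pt: float = 10.0, max_pt: float = 48.0) -> dict[str, float]:
    """Scale font size linearly with each word's frequency relative to the most frequent word."""
    top = max(counts.values())
    return {word: min_pt + (max_pt - min_pt) * n / top for word, n in counts.items()}


# Three invented statements standing in for the 1,054 extracted ones.
statements = [
    "Do an uncertainty analysis.",
    "Report the uncertainty in model predictions.",
    "Describe the data used to calibrate the model.",
]
counts = word_counts(statements)
sizes = font_sizes(counts)
print(counts.most_common(3))
```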

Table 2. PRIME-NTD summary table.

| Principle | What has been done to satisfy the principle? | Where in the manuscript is this described? |
|---|---|---|
| 1. Stakeholder engagement | | |
| 2. Complete model documentation | | |
| 3. Complete description of data used | | |
| 4. Communicating uncertainty | | |
| 5. Testable model outcomes | | |

Scoring the guidance statements

Authors coded the data set individually (MRB, TCP, WAS, SJdV) and jointly (M-GB, JIDH, MW), producing five independently coded sets of data (S1 Table). Modelling guideline statements were coded with the following ordinal scale of importance scores: 1, not applicable; 2, not necessary; 3, important; 4, extremely important; and 5, obvious (i.e., merely restates principles regarded as universally agreed upon; see S1 Table).

In coding the data set, we saw that many of the 1,054 statements rephrased the same concepts (e.g., ‘do an uncertainty analysis’). Statements similar in meaning could be given different scores simply because they were phrased differently (S1 Appendix, Interrater reliability).

We rank-sorted statements by score to identify those we collectively considered extremely important. A group of 46 statements consistently received a score of 4 (extremely important) from at least four of the authors. These 46 top-ranked statements were evaluated for content and, through discussion, gradually categorised into five major themes (S2 Table). For each theme, we formulated a single principle that distilled the statements grouped under it. The original text of each statement was preserved up to this final stage of our synthesis. Preliminary formulations of the principles were discussed with a subset of the larger NTD Modelling Consortium group at a meeting in New Orleans (28 October 2018) and further refined for presentation at the consortium Technical Meeting in Oxford (20 March 2019).
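The selection rule above can be sketched in a few lines of Python. This is a hypothetical illustration rather than the authors' analysis script: it assumes each statement carries one score per coded set (five in total), ranks statements by how many sets gave the score 4 (extremely important), and keeps those with at least four such scores. The statement identifiers and scores in the example are invented.

```python
from typing import Mapping, Sequence

# Ordinal scale used when coding each guidance statement (as described in the text).
SCALE = {1: "not applicable", 2: "not necessary", 3: "important",
         4: "extremely important", 5: "obvious"}
EXTREMELY_IMPORTANT = 4


def select_top_statements(scores: Mapping[str, Sequence[int]], min_votes: int = 4) -> list[str]:
    """Rank statements by the number of coded sets that scored them 4 and keep
    those with at least `min_votes` such scores (ties keep their input order)."""
    votes = {}
    for sid, coded in scores.items():
        if any(s not in SCALE for s in coded):
            raise ValueError(f"{sid}: score outside the 1-5 scale")
        votes[sid] = sum(1 for s in coded if s == EXTREMELY_IMPORTANT)
    ranked = sorted(votes, key=votes.__getitem__, reverse=True)  # stable sort
    return [sid for sid in ranked if votes[sid] >= min_votes]


# Invented scores from five coded sets for four hypothetical statements.
scores = {
    "S1": [4, 4, 4, 4, 3],
    "S2": [4, 4, 4, 4, 4],
    "S3": [3, 4, 5, 4, 4],
    "S4": [4, 4, 2, 4, 4],
}
print(select_top_statements(scores))
```

Counting 4s, rather than summing scores, matters here because the scale is not monotone in importance: a 5 means "obvious", not "more important than extremely important".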

Five of the 46 top-ranked guidance statements did not fit well into the categories we settled upon for the principles. From these, we formed two philosophies that reflect some of our ideals; they are presented in the Discussion.

Results

Consortium principles

The five principles (Box 1) are the result of our distillation and synthesis of the guidance on good modelling practice found in the literature. Their adoption as consortium principles followed about 2 years of engagement with the consortium membership. See the section Principles in practice for how adherence might be demonstrated.

Box 1: The five principles of the NTD Modelling Consortium

1. Don't do it alone. Engage stakeholders throughout, from the formulation of questions to the discussions on the implications of the findings.

2. Reproducibility is key! Prepare and make available (preferably as open-source material) complete technical documentation of all model code, mathematical formulas, and assumptions and their justification, allowing others to reproduce the model.

3. Data play a critical role in any scientific modelling exercise. All data used for model quantification, calibration, goodness of fit, or validation should be described in sufficient detail to allow the reader to assess the type and quality of these analyses. When referencing data, apply Principle 2.

4. Communicating uncertainty is a hallmark of good modelling practice. Perform a sensitivity analysis of all key parameters and, for each paper reporting model predictions, include an uncertainty assessment of those model outputs within the paper.

5. Model outcomes should be articulated in the form of testable hypotheses. This allows comparison with other models and future events as part of the ongoing cycle of model improvement.

Principle 1: Stakeholder engagement

Policy makers and other stakeholders should be involved early and throughout the process of developing a model. Stakeholder engagement helps to strike the right balance between what decision makers and practitioners want and what modellers should and can provide, ensuring that realistic policy options are analysed and that proposed strategies for disease control are culturally or socially acceptable. Distilling what modelling needs to provide takes time and requires dialogue. Stakeholders are essential to ensure that the best available knowledge and evidence are used in model design, calibration, and validation. Finally, stakeholders are essential to interpret, translate, and integrate the implications of the findings.

Including stakeholders as authors of publications is important, and this includes stakeholders who are themselves modellers. Lack of trust in modelling studies partly reflects the limited representation of modelling expertise from NTD-affected countries. The modelling community needs to support more local development of modelling capacity and make sure that local technical capacity is genuinely engaged in discussions. NTD science is increasingly changing for the better in this respect, but modelling has further to go.

Building confidence in a model’s usefulness is a gradual process [81]. For this reason, we suggest that modelling studies involve stakeholders early, ideally from the planning phase [82]. We believe that models considered to be jointly owned by modellers and stakeholders have a higher chance of becoming impactful for policy. Of course, some stakeholders may at times not wish to involve modelling teams, perhaps because of differences in perspective or even conflicts of interest, but stakeholder involvement in model development should remain a primary goal.

Principle 2: Complete model documentation

An analysis should be described in sufficient detail for others to be able to implement it and reproduce the results [83]. Striving for this degree of clarity and transparency is good for reproducibility [84, 85] and also motivates changes in conduct that raise quality [86, 87]. A protocol often used to document agent-based models has been successful in raising their rigour [88]. Releasing code as open-source software is only the first step of documentation. Deterministic and stochastic models need to present the equations, diagrams, and event tables that describe their behaviour. Agent-based models require particular attention to completeness, making clear which events can happen to heterogeneous individuals and according to which probability distributions.
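To make "event table" concrete, the following Python sketch specifies a hypothetical stochastic SIS model (not one of the consortium's models) entirely through a two-row event table, each row giving an event, its rate, and the change it makes to the state, and simulates it exactly with Gillespie's direct method. The parameter values, population size, and function names are illustrative assumptions.

```python
import random
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Event:
    """One row of an event table: what happens, how fast, and how the state changes."""
    name: str
    rate: Callable[[int, int], float]  # rate as a function of the state (S, I)
    change: tuple[int, int]            # (change in S, change in I)


def sis_event_table(beta: float, gamma: float, n: int) -> list[Event]:
    """Event table of a hypothetical SIS model in a closed population of size n."""
    return [
        Event("infection", lambda s, i: beta * s * i / n, (-1, +1)),
        Event("recovery", lambda s, i: gamma * i, (+1, -1)),
    ]


def gillespie(events: list[Event], s0: int, i0: int, t_max: float,
              seed: int) -> list[tuple[float, int, int]]:
    """Simulate the event table exactly with Gillespie's direct method."""
    rng = random.Random(seed)
    t, s, i = 0.0, s0, i0
    path = [(t, s, i)]
    while True:
        rates = [event.rate(s, i) for event in events]
        total = sum(rates)
        if total == 0.0:                 # no event possible, e.g. infection died out
            break
        t += rng.expovariate(total)      # exponential waiting time to the next event
        if t > t_max:
            break
        event = rng.choices(events, weights=rates, k=1)[0]  # pick event in proportion to rate
        s, i = s + event.change[0], i + event.change[1]
        path.append((t, s, i))
    return path


# Illustrative run: 100 people, 5 initially infected, fixed seed for reproducibility.
path = gillespie(sis_event_table(beta=0.3, gamma=0.1, n=100), s0=95, i0=5,
                 t_max=50.0, seed=42)
print(f"{len(path) - 1} events; final state (t, S, I) = {path[-1]}")
```

Because the simulator only reads the table, documenting the table (events, rates, state changes) together with the random seed is enough for another group to reproduce a run.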

Information (data and code) generated in modelling should be maintained according to common good software practices [89, 90] to ensure longevity [91], ideally on data-sharing platforms [92]. The funding and resources needed for this maintenance could be considered when planning projects. In the context of computational practice, it has been argued that ‘…decision makers who use results from codes should begin requiring extensive, well documented verification and validation activities from code developers’ [90]. The goal here is not perfection but thoughtful practice. Academic groups can transfer practical experience [89], so good computational practice also belongs in our discourse. We invite stakeholders to ask each other, and to ask modellers, which quality controls are protecting the integrity of the modelling work.

Principle 3: Complete description of data used

It should be clear how empirical data and evidence were used (or not used) for model calibration, goodness-of-fit assessment, and partial validation (partial because models are typically used to predict policy outcomes for which sufficient empirical data are not always available). The data sets used should be clearly described to allow readers to assess their quality and informativeness for specific model assumptions, and the relevant context of data collection should be communicated along with model results. Descriptions of the data sets used are central to building confidence in the various assumptions of the model design. Model assumptions may be justified by support from data, but even when key assumptions gain acceptance on the basis of data, they must be re-examined against multiple data sets. If the assumptions are valid, they should continue to be supported by new data sets over time, a process that may also prompt further dialogue on data requirements, before the model is used to predict new scenarios. New information may dictate alterations to a model.

Calibration and validation are crucial for determining how well the model has been specified and parameterised, and they guide the identification of key processes that must be included to capture phenomena revealed by fitting the model to retrospective data and/or by forecasting. Principle 3 helps us to assess parametric assumptions and model analyses, which may be limited by the quality of input data, and to identify data gaps and/or essential processes that may lead to reformulation of structural assumptions.
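A minimal Python sketch of the calibration and partial-validation steps, under stated assumptions: a hypothetical one-parameter model in which prevalence declines exponentially, synthetic observations invented for illustration, a grid search minimising the sum of squared errors on the earlier years, and root-mean-square error (RMSE) on held-back later years as a simple goodness-of-fit measure. Real transmission models have many more parameters and are often calibrated with likelihood-based or Bayesian methods instead.

```python
import math


def prevalence(t: float, p0: float, k: float) -> float:
    """Hypothetical toy model: prevalence declines exponentially at rate k per year."""
    return p0 * math.exp(-k * t)


def sse(k: float, data: list[tuple[float, float]], p0: float) -> float:
    """Sum of squared differences between modelled and observed prevalence."""
    return sum((prevalence(t, p0, k) - observed) ** 2 for t, observed in data)


def calibrate(data: list[tuple[float, float]], p0: float, grid: list[float]) -> float:
    """Return the grid value of k that minimises the sum of squared errors."""
    return min(grid, key=lambda k: sse(k, data, p0))


def rmse(k: float, data: list[tuple[float, float]], p0: float) -> float:
    """Root-mean-square error, used here as a simple goodness-of-fit measure."""
    return math.sqrt(sse(k, data, p0) / len(data))


# Synthetic (year, prevalence) observations invented for illustration.
observations = [(0, 0.40), (1, 0.30), (2, 0.22), (3, 0.16), (4, 0.12), (5, 0.09)]
calibration_set = observations[:4]  # used to fit k
validation_set = observations[4:]   # held back for (partial) validation
p0 = observations[0][1]
k_hat = calibrate(calibration_set, p0, grid=[i / 1000 for i in range(1, 1001)])
print(f"k = {k_hat:.3f}; fit RMSE = {rmse(k_hat, calibration_set, p0):.4f}; "
      f"validation RMSE = {rmse(k_hat, validation_set, p0):.4f}")
```

Reporting which observations went into fitting and which were held back is exactly the kind of data description the principle asks for.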

Principle 4: Communicating uncertainty

Robust decisions are those likely to succeed in the face of uncertain future events. Arguably, one of the most useful contributions of a model is to estimate how much uncertainty the future may hold, so that decisions can reasonably balance cost against risk. Stakeholders might therefore expect to receive a clear presentation of uncertainty relative to the decision problem. Sources of uncertainty can broadly be classed as fitted parameters, data inputs, model structure, and stochasticity.

‘As with experimental results, the key to successfully reporting a mathematical model is to provide an honest appraisal and representation of uncertainty in the model’s prediction, parameters, and (where appropriate) in the structure of the model itself’ [93]. A sensitivity analysis shows which parameters (or combinations of parameters) matter most for the outcome of interest, thereby indicating the parameters for which proper quantification based on high-quality data is most essential. Using realistic assessments of uncertainty in parameter values and structural assumptions (i.e., parametric and structural uncertainty), a so-called robustness or uncertainty analysis should then demonstrate how overall uncertainty affects the model outcome.
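The distinction between sensitivity and uncertainty analysis can be illustrated with a Python sketch. It assumes a hypothetical outcome (years until an exponentially declining prevalence falls to 1%), baseline values and plausible ranges for two parameters that are invented for the example, and independent uniform sampling. The one-at-a-time sensitivity analysis moves each parameter across its range while holding the other at baseline; the uncertainty analysis samples both jointly and reports a median with a 95% interval.

```python
import math
import random
import statistics


def years_to_threshold(p0: float, k: float, threshold: float = 0.01) -> float:
    """Hypothetical toy outcome: years until prevalence p0 * exp(-k t) falls to `threshold`."""
    return math.log(p0 / threshold) / k


BASELINE = {"p0": 0.4, "k": 0.3}               # assumed baseline values
RANGES = {"p0": (0.3, 0.5), "k": (0.2, 0.4)}   # assumed plausible ranges


def one_at_a_time(baseline: dict, ranges: dict) -> dict:
    """Sensitivity: swing in the outcome when each parameter alone spans its range."""
    swings = {}
    for name, (low, high) in ranges.items():
        at_low = years_to_threshold(**{**baseline, name: low})
        at_high = years_to_threshold(**{**baseline, name: high})
        swings[name] = abs(at_high - at_low)
    return swings


def uncertainty_interval(ranges: dict, n_draws: int = 10_000, seed: int = 1):
    """Uncertainty: sample all parameters jointly and summarise the outcome distribution."""
    rng = random.Random(seed)
    outcomes = [
        years_to_threshold(**{name: rng.uniform(low, high) for name, (low, high) in ranges.items()})
        for _ in range(n_draws)
    ]
    cuts = statistics.quantiles(outcomes, n=40)  # cut points at 2.5%, 5%, ..., 97.5%
    return statistics.median(outcomes), cuts[0], cuts[-1]


swings = one_at_a_time(BASELINE, RANGES)
median, lower, upper = uncertainty_interval(RANGES)
print(swings)
print(f"median {median:.1f} years (95% interval {lower:.1f} to {upper:.1f})")
```

In this toy case the sensitivity analysis flags the decline rate `k` as the parameter most worth quantifying with high-quality data. One-at-a-time swings miss interactions between parameters; global methods such as variance-based indices address that at greater computational cost.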

A consortium (such as those that exist for HIV, malaria, and NTDs, among others) is a good forum for understanding structural uncertainties across multiple modelling groups, including reducing the overall level of uncertainty through ensembles [94] or other means of combining models. Openness about assumptions can further help in assessing the impact of poorly understood sources of uncertainty on outcomes; for example, parameters described as ‘fixed’ (i.e., set to an assumed value) may need assessment, as may assumptions about the fundamental processes underlying patterns in the data. Modellers should be fully transparent about how uncertainty was estimated, and stakeholders should not accept a projection without uncertainty bounds.

Principle 5: Testable model outcomes

Specific challenges to the use of forecasting arise in a policy context. Nevertheless, prediction and falsification are of central importance in science [95, 96]. The life span of a model is typically long, and over time the same model may be applied to different policy questions, so model validation becomes an ongoing process. Models are often used to predict future trends in infection and to draw conclusions on specific policy questions in the absence of data. However, data may become available later and should then be used to further validate the model, leading to a better model and more confidence in its predictions. Moreover, when possible, forecasts may be made for a range of scenarios beyond those for which data will be collected, as data collection programmes may be expanded. Modelling studies that aim to define a threshold or the most cost-effective strategy should also present the expected future trends in situations where this threshold or strategy would actually be applied. These trends can then be compared with future data, so that proposed thresholds or strategies can be reevaluated if necessary, or the context in which they apply can be better defined and understood.
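Stating a forecast as an interval fixed in advance turns it into a testable hypothesis. The Python sketch below is a hypothetical illustration: the `IntervalForecast` class, the districts, the year, and all numbers are invented. Each forecast is checked against data collected later, and the share of intervals that contain the observed value (their empirical coverage) can be compared with the nominal level.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IntervalForecast:
    """A testable prediction: the outcome in `setting` at `year` lies in [lower, upper]."""
    setting: str
    year: int
    lower: float
    upper: float
    level: float = 0.95  # nominal probability that the interval contains the outcome

    def is_consistent_with(self, observed: float) -> bool:
        return self.lower <= observed <= self.upper


def empirical_coverage(forecasts: list[IntervalForecast],
                       observations: dict[tuple[str, int], float]) -> float:
    """Share of forecasts whose interval contains the later observation."""
    hits = [f.is_consistent_with(observations[(f.setting, f.year)]) for f in forecasts]
    return sum(hits) / len(hits)


# Hypothetical prevalence forecasts made in advance, and data collected later.
forecasts = [
    IntervalForecast("district A", 2025, 0.01, 0.05),
    IntervalForecast("district B", 2025, 0.00, 0.02),
    IntervalForecast("district C", 2025, 0.03, 0.08),
    IntervalForecast("district D", 2025, 0.02, 0.06),
]
observed = {
    ("district A", 2025): 0.03,
    ("district B", 2025): 0.04,
    ("district C", 2025): 0.05,
    ("district D", 2025): 0.02,
}
print(empirical_coverage(forecasts, observed))
```

A single miss does not falsify a 95% interval, but coverage well below the nominal level across many settings is evidence that the model, or its uncertainty estimates, need revisiting.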

Model comparison, one of the main activities of the NTD Modelling Consortium [97], requires multiple independent modelling groups working on the same disease to explain collaboratively any differences between their model results. Agreement on a weighting method that allows for an ensemble [98, 99], or some other way of placing results in a coherent framework, supports clear interpretation of all results. Model comparisons are generally best done in a masked manner, with data partitioned into a training set and a test set. With a sufficient sample size, probabilistic forecasts evaluated with proper scoring rules [100] permit objective and falsifiable forecast comparisons. Models cannot be truly ‘validated’ for a future scenario outside their training conditions, but an open and transparent collection of models that have survived efforts at prospective testing can provide more confidence in their prospective policy analyses. Forecasting is attracting increasing interest outside NTDs, as shown by the Centers for Disease Control and Prevention (CDC) sponsorship of an annual forecasting contest for United States influenza-like illness data [101, 102]. Guidelines for structured model comparisons were recently proposed to improve the quality of information available for policy decisions [103]. Stakeholders can help build trust in objective comparison exercises by promoting the right incentives for inclusive comparisons.
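As an illustration of proper scoring, the Python sketch below computes the logarithmic score of binned probabilistic forecasts on a held-out test set. The log score is a strictly proper scoring rule: a forecaster maximises its expected score by reporting its true predictive probabilities. The bins, the two models' forecasts, and the test observations are hypothetical, and the code assumes each forecast's probabilities sum to 1.

```python
import math


def log_score(bin_edges: list[float], probs: list[float], observed: float) -> float:
    """Logarithmic score of a binned probabilistic forecast: the log of the
    probability given to the bin that contains the observed value (higher is better)."""
    for low, high, p in zip(bin_edges[:-1], bin_edges[1:], probs):
        if low <= observed < high:
            return math.log(p) if p > 0 else float("-inf")
    raise ValueError("observed value lies outside the forecast bins")


def mean_log_score(bin_edges: list[float], forecasts: list[list[float]],
                   test_set: list[float]) -> float:
    """Average log score over held-out (test-set) observations."""
    scores = [log_score(bin_edges, probs, y) for probs, y in zip(forecasts, test_set)]
    return sum(scores) / len(scores)


# Hypothetical prevalence bins and forecasts from two models for three test observations.
bins = [0.00, 0.05, 0.10, 0.15, 0.20]
test_set = [0.07, 0.08, 0.12]
model_a = [[0.1, 0.6, 0.2, 0.1]] * 3      # sharper forecast
model_b = [[0.25, 0.25, 0.25, 0.25]] * 3  # uniform forecast
print(mean_log_score(bins, model_a, test_set), mean_log_score(bins, model_b, test_set))
```

Averaged over enough test observations, a higher mean log score identifies the better forecaster. A zero probability on the observed bin gives a score of minus infinity, which is why some forecast evaluations truncate the score at a minimum value.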

Finally, we conjecture two additional benefits of objective testing that might be communicated: (1) helping to avoid the danger of excessive agreement and ‘groupthink’ (a failure to challenge conventional wisdom with a truly searching inquisition), and (2) helping to avoid bias.

Principles in practice: Policy-relevant items for reporting models in epidemiology of neglected tropical diseases summary table

How can these five principles be upheld in practice? The principles are alive and well when we regularly engage each other on demonstrations of the principles, express them in our publications, and demonstrate them in our relationships with stakeholders. The principles identify broad themes that modellers should consider when reporting and communicating their research findings. We do this to support our stakeholders in making successful use of modelling evidence.

We offer a summary table as a simple tool for recording how each principle was fulfilled, or what challenges were encountered. We call it the Policy-Relevant Items for Reporting Models in Epidemiology of Neglected Tropical Diseases (PRIME-NTD) Summary Table (Table 2 and S1 Appendix). It is a means to promote engagement with the principles and to improve the accessibility, communication, and reporting of modelling results. We promise to show our stakeholders how we have demonstrated the principles, in a summary table included with presentations and publications on policy questions. More broadly, we recommend that modellers follow a similar approach when making results available for policy matters. Stakeholders are then invited to verify that the principles have in fact been applied in the modelling studies.
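The PRIME-NTD summary table has a fixed shape (five principles, two free-text columns), so it is easy to generate. The Python sketch below renders it as a Markdown table from a mapping of principle numbers to entries. The helper `prime_ntd_table` and the example entries are hypothetical and not an official consortium tool; blank cells are kept deliberately so that unaddressed principles remain visible.

```python
from typing import Mapping, Optional

PRINCIPLES = (
    "1. Stakeholder engagement",
    "2. Complete model documentation",
    "3. Complete description of data used",
    "4. Communicating uncertainty",
    "5. Testable model outcomes",
)
HEADER = ("Principle", "What has been done to satisfy the principle?",
          "Where in the manuscript is this described?")


def prime_ntd_table(entries: Optional[Mapping[int, tuple[str, str]]] = None) -> str:
    """Render a PRIME-NTD summary table as Markdown. `entries` maps a principle
    number (1-5) to a (what was done, where it is described) pair."""
    entries = entries or {}
    unknown = set(entries) - set(range(1, len(PRINCIPLES) + 1))
    if unknown:
        raise ValueError(f"unknown principle numbers: {sorted(unknown)}")
    lines = ["| " + " | ".join(HEADER) + " |", "|---|---|---|"]
    for number, principle in enumerate(PRINCIPLES, start=1):
        done, where = entries.get(number, ("", ""))  # blank if not yet addressed
        lines.append(f"| {principle} | {done} | {where} |")
    return "\n".join(lines)


# Hypothetical entries for two of the five principles.
table = prime_ntd_table({
    1: ("Scenarios co-designed with programme managers", "Methods"),
    4: ("Sensitivity analysis of all key parameters", "Results; S2 Appendix"),
})
print(table)
```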

Discussion

Although many guidelines on modelling are already available [22, 23, 64], they are often not implemented in practice. As part of an overall commitment to evidence-based decision-making, we have reaffirmed existing recommendations regarding reproducibility, fidelity to data, and accurate communication of uncertainty. We also found it important to extend existing recommendations to emphasise stakeholder involvement (Principle 1) and predictive testing [43, 104] (Principle 5). Stakeholder involvement can bring epidemiological expertise, analytic relevance, and ultimately richer data. Striving for predictive testing by providing forward projections can give a sharper test of a model than fitting to existing data alone, and it reflects a commitment to hypothesis testing and the scientific method. What makes our contribution notable is that we are adopting the guidance ourselves and committing to our stakeholders that we are accountable for demonstrating our principles throughout our engagement with them.

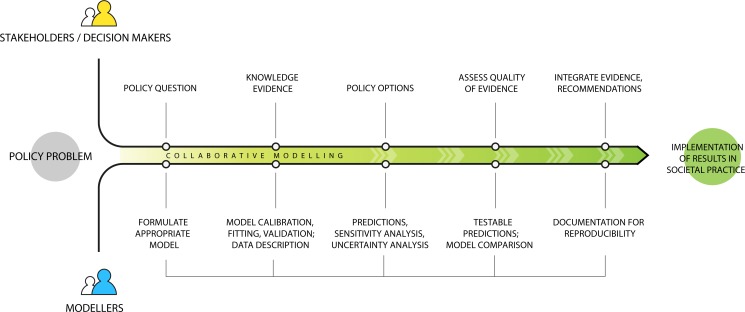

Dialogue with stakeholders can help to improve the quality and responsiveness of quantitative efforts to assess and inform health policy [105]. From the formulation of questions through to results, and potentially to implementation, the timing and nature of feedback should follow an agreed plan for engagement rather than being left to chance or whim. Fig 3 shows an example of a collaborative process.

Fig 3. Illustration of a collaborative process between modellers and stakeholders/decision makers.

Each group brings something to the table at different time points. Modelling results with eventual impact are usually obtained only through collaboration. Although the process is depicted as linear, in practice each node may connect back to stakeholders and modellers for continuous dialogue and discussion; policy implementation can also be reevaluated in light of evidence.

From the review, we also arrived at two philosophies that reflect some of our ideals. The first is that modelling is an ongoing process, i.e., models should never be regarded as complete or immutable. They should be repeatedly updated, and sometimes abandoned and replaced, as new evidence or analyses become available to inform their structure or inputs. The second is that the NTD Modelling Consortium strives for a mechanistic formulation of models whenever possible. This means incorporating into the models the processes underlying transmission, together with realistic operational contexts, so that outcomes are measured in the same way as a control or research programme measures them. Moreover, we believe that the needs of public health and policy favour a mechanistic approach, which permits testing counterfactual scenarios and helps in communicating with lay, nonmathematical stakeholders.

Depending on one’s perspective, it may be a limitation of our study that key stakeholders outside our consortium are not coauthors of this piece. By design, this work represents our consortium’s understanding of what stakeholders have been asking us to do over the course of ongoing engagements. A further limitation of our review and qualitative synthesis is that modelling fields outside health were not searched, although they often relate well to the modelling of diseases. The review was designed to cover thoroughly the concepts appearing in modelling guidance; it is not a comprehensive collection of all guidance issued. We abstracted some potential indicators of future practice, such as having a statement of adherence (S1 Appendix, Table 2), but we did not attempt to assess the use of guidelines after their publication.

Guidelines for evidence synthesis allow unbiased integration of evidence in high-stakes, controversial settings [106]. Our study enhances the communication required to evaluate models properly, complementing recent WHO initiatives on decision-making frameworks inclusive of mathematical models [23, 107], qualitative systematic reviews [108], and operational research [109]. These frameworks extend the Grading of Recommendations Assessment, Development and Evaluation (GRADE) approach [110]. Expert groups such as the WHO Initiative for Vaccine Research sometimes evaluate models to support evidence synthesis, but there is as yet no standard way to integrate modelling into WHO guideline development, as there is for clinical evidence [111]. One motivation for extending existing guidelines is that the risk of bias in models [23, 31, 112] cannot be assessed well using the approaches developed for empirical studies.

The need for guidelines has been well established [113], which has led to accepted and practised standards for health research [114]. In this review, we found that only four [30, 38, 41, 45] of the 57 guideline proposals had recognisable statements of commitment to their recommendations, such that the authors or others were actively encouraged to follow them. There may be more adherents, but initial commitment is a striking indicator consistent with the utilisation of modelling guidance [37]. Other successfully established modelling guidelines exist, for example, for describing agent-based models in theoretical ecology [115]. In this example, the authors later reviewed studies applying their guidelines [88] and updated them, on the basis of ongoing discussions with those who had adopted them, to improve clarity and avoid redundancy. A subtle outcome of our own work was that the process of synthesis was itself important for the authors. Ongoing discussion throughout the synthesis was shaped by our intent to adopt the principles, which gave us a better understanding of how they might be practised and of any potential barriers that might be encountered in doing so.

In conclusion, we believe that by distilling the five principles of the NTD Modelling Consortium for policy-relevant work, and communicating our adherence to them, we will improve as modellers over time and enjoy more effective partnerships in the meantime. We ask our stakeholders to hold us to our promise. We also believe that the impact of applied modelling in other fields may benefit from doing the same.

Supporting information

PRIME-NTD, Policy-Relevant Items for Reporting Models in Epidemiology of Neglected Tropical Diseases.

(DOCX)

(XLSX)

(XLSX)

Acknowledgments

For helpful comments on the manuscript, we thank Roy M. Anderson, Simon Brooker, Ronald E. Crump, Peter J. Diggle, T. Déirdre Hollingsworth, Matt J. Keeling, Thomas Lietman, Graham F. Medley, Simon E. F. Spencer, and Jaspreet Toor. For discussions on the practical use of the principles (during the 2019 Technical Meeting of the NTD Modelling Consortium, 18–20 March 2019, University of Oxford), we are grateful to collaborators Benjamin Amoah, David J. Blok, Lloyd A. C. Chapman, Nakul Chitnis, Ronald E. Crump, Emma L. Davis, Peter J. Diggle, Louise Dyson, Claudio Fronterre, T. Déirdre Hollingsworth, Klodeta Kura, Veronica Malizia, Graham F. Medley, Joaquin M. Prada, Kat S. Rock, Jaspreet Toor, Panayiota Touloupou, Andreia Vasconcelos, and Xia Wang-Steverding. The NTD Modelling Consortium website is located at https://www.ntdmodelling.org/about/who-we-are. T. Déirdre Hollingsworth (Big Data Institute, University of Oxford) leads the NTD Modelling Consortium.

Funding Statement

M-GB, JIDH, TCP, WAS, MW, and SJV gratefully acknowledge funding of the NTD Modelling Consortium by the Bill and Melinda Gates Foundation (OPP1184344). M-GB and JIDH gratefully acknowledge joint Centre funding from the UK Medical Research Council and the Department for International Development (grant no. MR/R015600/1). MRB gratefully acknowledges the support of a Bill and Melinda Gates Foundation consultancy (#52577). The funders had no role in study design, data extraction and analysis, decision to publish, or preparation of the manuscript.

References

- 1. World Health Organization/Department of Control of Neglected Tropical Diseases. Integrating neglected tropical diseases into global health and development: Fourth WHO Report on Neglected Tropical Diseases. Geneva: World Health Organization; 2017 [cited 2019 Nov 18]. Available from: https://www.who.int/neglected_diseases/resources/9789241565448/en/.

- 2. World Health Organization. WHO launches global consultations for a new Roadmap on neglected tropical diseases. Geneva: World Health Organization; 2019 [cited 2019 Nov 18]. Available from: https://www.who.int/neglected_diseases/news/WHO-launches-global-consultations-for-new-NTD-Roadmap/en/.

- 3.ntdmodelling.org [Internet]. Oxford: NTD Modelling Consortium; 2020 Mar 13 [cited 2020 Mar 25]. Available from: http://www.ntdmodelling.org.

- 4.Plaisier AP, Alley ES, van Oortmarssen GJ, Boatin BA, Habbema JD. Required duration of combined annual ivermectin treatment and vector control in the Onchocerciasis Control Programme in west Africa. Bull World Health Organ. 1997;75(3):237–45. Epub 1997/01/01. [PMC free article] [PubMed] [Google Scholar]

- 5.Winnen M, Plaisier AP, Alley ES, Nagelkerke NJ, van Oortmarssen G, Boatin BA, et al. Can ivermectin mass treatments eliminate onchocerciasis in Africa? Bull World Health Organ. 2002;80(5):384–91. Epub 2002/06/22. [PMC free article] [PubMed] [Google Scholar]

- 6.African Programme for Onchocerciasis Control. Report of the consultative meetings on Strategic Options and Alternative Treatment Strategies for Accelerating Onchocerciasis Elimination in Africa. WHO/MG/15.20. Geneva: World Health Organization; 2015. [cited 2019 Nov 18]. Available from: https://www.who.int/apoc/ATS_Report_2015.12.pdf. [Google Scholar]

- 7.Basáñez MG, Walker M, Turner HC, Coffeng LE, de Vlas SJ, Stolk WA. River blindness: Mathematical models for control and elimination. Adv Parasitol. 2016; 94:247–341. Epub 2016/10/07. 10.1016/bs.apar.2016.08.003 . [DOI] [PubMed] [Google Scholar]

- 8.African Programme for Onchocerciasis Control. Conceptual and operational framework of onchocerciasis elimination with ivermectin treatment. WHO/APOC/MG/10.1. Geneva: World Health Organization; 2010. [cited 2019 Nov 18]. Available from: https://www.who.int/apoc/oncho_elimination_report_english.pdf. [Google Scholar]

- 9.Walker M, Stolk WA, Dixon MA, Bottomley C, Diawara L, Traoré MO, et al. Modelling the elimination of river blindness using long-term epidemiological and programmatic data from Mali and Senegal. Epidemics. 2017. March;18:4–15. 10.1016/j.epidem.2017.02.005 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Diggle PJ, Thomson MC, Christensen OF, Rowlingson B, Obsomer V, Gardon J, et al. Spatial modelling and the prediction of Loa loa risk: decision making under uncertainty. Ann Trop Med Parasitol. 2007;101(6):499–509. Epub 2007/08/25. 10.1179/136485913X13789813917463 . [DOI] [PubMed] [Google Scholar]

- 11. Zouré HG, Wanji S, Noma M, Amazigo UV, Diggle PJ, Tekle AH, et al. The geographic distribution of Loa loa in Africa: results of large-scale implementation of the Rapid Assessment Procedure for Loiasis (RAPLOA). PLoS Negl Trop Dis. 2011;5(6):e1210. Epub 2011/07/09. doi: 10.1371/journal.pntd.0001210.

- 12. Turner HC, Walker M, Churcher TS, Osei-Atweneboana MY, Biritwum NK, Hopkins A, et al. Reaching the London Declaration on Neglected Tropical Diseases goals for onchocerciasis: an economic evaluation of increasing the frequency of ivermectin treatment in Africa. Clin Infect Dis. 2014;59(7):923–32. Epub 2014/06/20. doi: 10.1093/cid/ciu467.

- 13. Coffeng LE, Stolk WA, Hoerauf A, Habbema D, Bakker R, Hopkins AD, et al. Elimination of African onchocerciasis: modeling the impact of increasing the frequency of ivermectin mass treatment. PLoS ONE. 2014;9(12):e115886. Epub 2014/12/30. doi: 10.1371/journal.pone.0115886.

- 14. Walker M, Specht S, Churcher TS, Hoerauf A, Taylor MJ, Basáñez MG. Therapeutic efficacy and macrofilaricidal activity of doxycycline for the treatment of river blindness. Clin Infect Dis. 2015;60(8):1199–207. Epub 2014/12/30. doi: 10.1093/cid/ciu1152.

- 15. Aljayyoussi G, Tyrer HE, Ford L, Sjoberg H, Pionnier N, Waterhouse D, et al. Short-course, high-dose rifampicin achieves Wolbachia depletion predictive of curative outcomes in preclinical models of lymphatic filariasis and onchocerciasis. Sci Rep. 2017;7(1):210. Epub 2017/03/18. doi: 10.1038/s41598-017-00322-5.

- 16. Irvine MA, Stolk WA, Smith ME, Subramanian S, Singh BK, Weil GJ, et al. Effectiveness of a triple-drug regimen for global elimination of lymphatic filariasis: a modelling study. Lancet Infect Dis. 2017;17(4):451–8. Epub 2016/12/26. doi: 10.1016/S1473-3099(16)30467-4.

- 17. World Health Organization. Guideline: alternative mass drug administration regimens to eliminate lymphatic filariasis. WHO/HTM/NTD/PCT/2017.07. Geneva: World Health Organization; 2017 [cited 2019 Nov 18]. Available from: https://apps.who.int/iris/handle/10665/259381.

- 18. Stylianou A, Hadjichrysanthou C, Truscott JE, Anderson RM. Developing a mathematical model for the evaluation of the potential impact of a partially efficacious vaccine on the transmission dynamics of Schistosoma mansoni in human communities. Parasit Vectors. 2017;10(1):294. Epub 2017/06/19. doi: 10.1186/s13071-017-2227-0.

- 19. Rock KS, Torr SJ, Lumbala C, Keeling MJ. Predicting the impact of intervention strategies for sleeping sickness in two high-endemicity health zones of the Democratic Republic of Congo. PLoS Negl Trop Dis. 2017;11(1):e0005162. Epub 2017/01/06. doi: 10.1371/journal.pntd.0005162.

- 20. Rock KS, Torr SJ, Lumbala C, Keeling MJ. Quantitative evaluation of the strategy to eliminate human African trypanosomiasis in the Democratic Republic of Congo. Parasit Vectors. 2015;8:532. Epub 2015/10/23. doi: 10.1186/s13071-015-1131-8.

- 21. Mahamat MH, Peka M, Rayaisse JB, Rock KS, Toko MA, Darnas J, et al. Adding tsetse control to medical activities contributes to decreasing transmission of sleeping sickness in the Mandoul focus (Chad). PLoS Negl Trop Dis. 2017;11(7):e0005792. Epub 2017/07/28. doi: 10.1371/journal.pntd.0005792.

- 22. Dahabreh IJ, Chan JA, Earley A, Moorthy D, Avendano EE, Trikalinos TA, et al. Modeling and simulation in the context of health technology assessment: review of existing guidance, future research needs, and validity assessment. AHRQ Methods for Effective Health Care. Report No. 16(17)-EHC020-EF. Rockville, MD: Agency for Healthcare Research and Quality; 2017 [cited 2019 Nov 18]. Available from: https://www.ncbi.nlm.nih.gov/books/NBK424024/pdf/Bookshelf_NBK424024.pdf.

- 23. Egger M, Johnson L, Althaus C, Schoni A, Salanti G, Low N, et al. Developing WHO guidelines: time to formally include evidence from mathematical modelling studies. F1000Research. 2017;6:1584. Epub 2018/03/22. doi: 10.12688/f1000research.12367.2.

- 24. Abuelezam NN, Rough K, Seage GR 3rd. Individual-based simulation models of HIV transmission: reporting quality and recommendations. PLoS ONE. 2013;8(9):e75624. Epub 2013/10/08. doi: 10.1371/journal.pone.0075624.

- 25. Baldwin SA, Larson MJ. An introduction to using Bayesian linear regression with clinical data. Behav Res Ther. 2017;98:58–75. Epub 2017/01/14. doi: 10.1016/j.brat.2016.12.016.

- 26. Bennett C, Manuel DG. Reporting guidelines for modelling studies. BMC Med Res Methodol. 2012;12:168. Epub 2012/11/09. doi: 10.1186/1471-2288-12-168.

- 27. Bilcke J, Beutels P, Brisson M, Jit M. Accounting for methodological, structural, and parameter uncertainty in decision-analytic models: a practical guide. Med Decis Making. 2011;31(4):675–92. Epub 2011/06/10. doi: 10.1177/0272989X11409240.

- 28. Briggs AH, Weinstein MC, Fenwick EA, Karnon J, Sculpher MJ, Paltiel AD. Model parameter estimation and uncertainty: a report of the ISPOR-SMDM Modeling Good Research Practices Task Force—6. Value Health. 2012;15(6):835–42. Epub 2012/09/25. doi: 10.1016/j.jval.2012.04.014.

- 29. Burke DL, Billingham LJ, Girling AJ, Riley RD. Meta-analysis of randomized phase II trials to inform subsequent phase III decisions. Trials. 2014;15:346. Epub 2014/09/05. doi: 10.1186/1745-6215-15-346.

- 30. CADTH. Guidelines for the economic evaluation of health technologies: Canada. 4th ed. Ottawa: Canadian Agency for Drugs and Technologies in Health; 2017 [cited 2019 Nov 18]. Available from: https://www.cadth.ca/sites/default/files/pdf/guidelines_for_the_economic_evaluation_of_health_technologies_canada_4th_ed.pdf.

- 31. Caro JJ, Eddy DM, Kan H, Kaltz C, Patel B, Eldessouki R, et al. Questionnaire to assess relevance and credibility of modeling studies for informing health care decision making: an ISPOR-AMCP-NPC Good Practice Task Force report. Value Health. 2014;17(2):174–82. Epub 2014/03/19. doi: 10.1016/j.jval.2014.01.003.

- 32. Carrasco LR, Jit M, Chen MI, Lee VJ, Milne GJ, Cook AR. Trends in parameterization, economics and host behaviour in influenza pandemic modelling: a review and reporting protocol. Emerg Themes Epidemiol. 2013;10(1):3. Epub 2013/05/09. doi: 10.1186/1742-7622-10-3.

- 33. Chilcott J, Tappenden P, Rawdin A, Johnson M, Kaltenthaler E, Paisley S, et al. Avoiding and identifying errors in health technology assessment models: qualitative study and methodological review. Health Technol Assess. 2010;14(25):iii–iv, ix–xii, 1–107. Epub 2010/05/27. doi: 10.3310/hta14250.

- 34. Chiou CF, Hay JW, Wallace JF, Bloom BS, Neumann PJ, Sullivan SD, et al. Development and validation of a grading system for the quality of cost-effectiveness studies. Med Care. 2003;41(1):32–44. Epub 2003/01/25. doi: 10.1097/00005650-200301000-00007.

- 35. Cleemput I, van Wilder P, Huybrechts M, Vrijens F. Belgian methodological guidelines for pharmacoeconomic evaluations: toward standardization of drug reimbursement requests. Value Health. 2009;12(4):441–9. Epub 2009/11/11. doi: 10.1111/j.1524-4733.2008.00469.x.

- 36. Clemens K, Townsend R, Luscombe F, Mauskopf J, Osterhaus J, Bobula J. Methodological and conduct principles for pharmacoeconomic research. Pharmaceutical Research and Manufacturers of America. Pharmacoeconomics. 1995;8(2):169–74. Epub 1995/07/07. doi: 10.2165/00019053-199508020-00008.

- 37. Collins GS, Reitsma JB, Altman DG, Moons KG. Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD): the TRIPOD statement. BMJ. 2015;350:g7594. Epub 2015/01/09. doi: 10.1136/bmj.g7594.

- 38. Dahabreh IJ, Trikalinos TA, Balk EM, Wong JB. Recommendations for the conduct and reporting of modeling and simulation studies in health technology assessment. Ann Intern Med. 2016;165(8):575–81. Epub 2016/10/18. doi: 10.7326/M16-0161.

- 39. Detsky AS. Guidelines for economic analysis of pharmaceutical products: a draft document for Ontario and Canada. Pharmacoeconomics. 1993;3(5):354–61. Epub 1993/04/08. doi: 10.2165/00019053-199303050-00003.

- 40. Drummond M, Brandt A, Luce B, Rovira J. Standardizing methodologies for economic evaluation in health care. Practice, problems, and potential. Int J Technol Assess Health Care. 1993;9(1):26–36. Epub 1993/01/01. doi: 10.1017/s0266462300003007.

- 41. Drummond MF, Jefferson TO. Guidelines for authors and peer reviewers of economic submissions to the BMJ. The BMJ Economic Evaluation Working Party. BMJ. 1996;313(7052):275–83. Epub 1996/08/03. doi: 10.1136/bmj.313.7052.275.

- 42. Dykstra K, Mehrotra N, Tornoe CW, Kastrissios H, Patel B, Al-Huniti N, et al. Reporting guidelines for population pharmacokinetic analyses. J Clin Pharmacol. 2015;55(8):875–87. Epub 2015/07/08. doi: 10.1002/jcph.532.

- 43. Eddy DM, Hollingworth W, Caro JJ, Tsevat J, McDonald KM, Wong JB. Model transparency and validation: a report of the ISPOR-SMDM Modeling Good Research Practices Task Force—7. Value Health. 2012;15(6):843–50. Epub 2012/09/25. doi: 10.1016/j.jval.2012.04.012.

- 44. Evers S, Goossens M, de Vet H, van Tulder M, Ament A. Criteria list for assessment of methodological quality of economic evaluations: Consensus on Health Economic Criteria. Int J Technol Assess Health Care. 2005;21(2):240–5. Epub 2005/06/01.

- 45. Fry RN, Avey SG, Sullivan SD. The Academy of Managed Care Pharmacy format for formulary submissions: an evolving standard—a Foundation for Managed Care Pharmacy Task Force report. Value Health. 2003;6(5):505–21. Epub 2003/11/25. doi: 10.1046/j.1524-4733.2003.65327.x.

- 46. Goldhaber-Fiebert JD, Stout NK, Goldie SJ. Empirically evaluating decision-analytic models. Value Health. 2010;13(5):667–74. Epub 2010/03/17. doi: 10.1111/j.1524-4733.2010.00698.x.

- 47. Husereau D, Drummond M, Petrou S, Carswell C, Moher D, Greenberg D, et al. Consolidated health economic evaluation reporting standards (CHEERS) statement. BMJ. 2013;346:f1049. Epub 2013/03/27. doi: 10.1136/bmj.f1049.

- 48. Jackson DL. Reporting results of latent growth modeling and multilevel modeling analyses: some recommendations for rehabilitation psychology. Rehabil Psychol. 2010;55(3):272–85. doi: 10.1037/a0020462.

- 49. Janssens AC, Ioannidis JP, van Duijn CM, Little J, Khoury MJ. Strengthening the reporting of genetic risk prediction studies: the GRIPS statement. PLoS Med. 2011;8(3):e1000420. Epub 2011/03/23. doi: 10.1371/journal.pmed.1000420.

- 50. Kalil AC, Mattei J, Florescu DF, Sun J, Kalil RS. Recommendations for the assessment and reporting of multivariable logistic regression in transplantation literature. Am J Transplant. 2010;10(7):1686–94. Epub 2010/07/21. doi: 10.1111/j.1600-6143.2010.03141.x.

- 51. Karnon J, Goyder E, Tappenden P, McPhie S, Towers I, Brazier J, et al. A review and critique of modelling in prioritising and designing screening programmes. Health Technol Assess. 2007;11(52):iii–iv, ix–xi, 1–145. Epub 2007/11/23. doi: 10.3310/hta11520.

- 52. Karnon J, Stahl J, Brennan A, Caro JJ, Mar J, Moller J. Modeling using discrete event simulation: a report of the ISPOR-SMDM Modeling Good Research Practices Task Force—4. Value Health. 2012;15(6):821–7. Epub 2012/09/25. doi: 10.1016/j.jval.2012.04.013.

- 53. Kerr KF, Meisner A, Thiessen-Philbrook H, Coca SG, Parikh CR. RiGoR: reporting guidelines to address common sources of bias in risk model development. Biomark Res. 2015;3(1):2. Epub 2015/02/03. doi: 10.1186/s40364-014-0027-7.

- 54. Kostoulas P, Nielsen SS, Branscum AJ, Johnson WO, Dendukuri N, Dhand NK, et al. STARD-BLCM: standards for the reporting of diagnostic accuracy studies that use Bayesian latent class models. Prev Vet Med. 2017;138:37–47. doi: 10.1016/j.prevetmed.2017.01.006.

- 55. Liberati A, Sheldon TA, Banta HD. EUR-ASSESS project subgroup report on methodology: methodological guidance for the conduct of health technology assessment. Int J Technol Assess Health Care. 1997;13(2):186–219. Epub 1997/04/01. doi: 10.1017/s0266462300010369.

- 56. Lopez-Bastida J, Oliva J, Antonanzas F, Garcia-Altes A, Gisbert R, Mar J, et al. Spanish recommendations on economic evaluation of health technologies. Eur J Health Econ. 2010;11(5):513–20. Epub 2010/04/21. doi: 10.1007/s10198-010-0244-4.

- 57. Luo W, Phung D, Tran T, Gupta S, Rana S, Karmakar C, et al. Guidelines for developing and reporting machine learning predictive models in biomedical research: a multidisciplinary view. J Med Internet Res. 2016;18(12):e323. Epub 2016/12/18. doi: 10.2196/jmir.5870.

- 58. McCabe C, Dixon S. Testing the validity of cost-effectiveness models. Pharmacoeconomics. 2000;17(5):501–13. Epub 2000/09/08. doi: 10.2165/00019053-200017050-00007.

- 59. Nuijten MJC, Pronk MH, Brorens MJA, Hekster YA, Lockefeer JHM, de Smet PAGM, et al. Reporting format for economic evaluation part II: focus on modelling studies. Pharmacoeconomics. 1998;14(3):259–68. doi: 10.2165/00019053-199814030-00003.

- 60. Olson BM, Armstrong EP, Grizzle AJ, Nichter MA. Industry's perception of presenting pharmacoeconomic models to managed care organizations. J Manag Care Pharm. Epub 2003/11/14. doi: 10.18553/jmcp.2003.9.2.159.

- 61. Philips Z, Bojke L, Sculpher M, Claxton K, Golder S. Good practice guidelines for decision-analytic modelling in health technology assessment: a review and consolidation of quality assessment. Pharmacoeconomics. 2006;24(4):355–71. Epub 2006/04/12. doi: 10.2165/00019053-200624040-00006.

- 62. Pitman R, Fisman D, Zaric GS, Postma M, Kretzschmar M, Edmunds J, et al. Dynamic transmission modeling: a report of the ISPOR-SMDM Modeling Good Research Practices Task Force—5. Value Health. 2012;15(6):828–34. Epub 2012/09/25. doi: 10.1016/j.jval.2012.06.011.

- 63. Poldrack RA, Fletcher PC, Henson RN, Worsley KJ, Brett M, Nichols TE. Guidelines for reporting an fMRI study. Neuroimage. 2008;40(2):409–14. Epub 2008/01/15. doi: 10.1016/j.neuroimage.2007.11.048.

- 64. Ramos MC, Barton P, Jowett S, Sutton AJ. A systematic review of research guidelines in decision-analytic modeling. Value Health. 2015;18(4):512–29. Epub 2015/06/21. doi: 10.1016/j.jval.2014.12.014.

- 65. Roberts M, Russell LB, Paltiel AD, Chambers M, McEwan P, Krahn M. Conceptualizing a model: a report of the ISPOR-SMDM Modeling Good Research Practices Task Force-2. Med Decis Making. 2012;32(5):678–89. Epub 2012/09/20. doi: 10.1177/0272989X12454941.

- 66. Rodrigues G, Lock M, D'Souza D, Yu E, Van Dyk J. Prediction of radiation pneumonitis by dose—volume histogram parameters in lung cancer—a systematic review. Radiother Oncol. 2004;71(2):127–38. Epub 2004/04/28. doi: 10.1016/j.radonc.2004.02.015.

- 67. Schreiber JB. Latent class analysis: an example for reporting results. Res Social Adm Pharm. 2017;13(6):1196–201. doi: 10.1016/j.sapharm.2016.11.011.

- 68. Sculpher MJ, Pang FS, Manca A, Drummond MF, Golder S, Urdahl H, et al. Generalisability in economic evaluation studies in healthcare: a review and case studies. Health Technol Assess. 2004;8(49):iii–iv, 1–192. Epub 2004/11/17. doi: 10.3310/hta8490.

- 69. Siebert U, Alagoz O, Bayoumi AM, Jahn B, Owens DK, Cohen DJ, et al. State-transition modeling: a report of the ISPOR-SMDM Modeling Good Research Practices Task Force—3. Value Health. 2012;15(6):812–20. Epub 2012/09/25. doi: 10.1016/j.jval.2012.06.014.

- 70. Soto J. Health economic evaluations using decision analytic modeling: principles and practices—utilization of a checklist to their development and appraisal. Int J Technol Assess Health Care. 2002;18(1):94–111. Epub 2002/05/20.

- 71. Spiegel BM, Targownik LE, Kanwal F, Derosa V, Dulai GS, Gralnek IM, et al. The quality of published health economic analyses in digestive diseases: a systematic review and quantitative appraisal. Gastroenterology. 2004;127(2):403–11. Epub 2004/08/10. doi: 10.1053/j.gastro.2004.04.020.

- 72. Sterne JA, White IR, Carlin JB, Spratt M, Royston P, Kenward MG, et al. Multiple imputation for missing data in epidemiological and clinical research: potential and pitfalls. BMJ. 2009;338:b2393. Epub 2009/07/01. doi: 10.1136/bmj.b2393.

- 73. Stevens GA, Alkema L, Black RE, Boerma JT, Collins GS, Ezzati M, et al. Guidelines for Accurate and Transparent Health Estimates Reporting: the GATHER statement. Lancet. 2016;388(10062):e19–e23. doi: 10.1016/S0140-6736(16)30388-9.

- 74. Stout NK, Knudsen AB, Kong CY, McMahon PM, Gazelle GS. Calibration methods used in cancer simulation models and suggested reporting guidelines. Pharmacoeconomics. 2009;27(7):533–45. Epub 2009/08/12. doi: 10.2165/11314830-000000000-00000.

- 75. Subramanian J, Simon R. Gene expression-based prognostic signatures in lung cancer: ready for clinical use? J Natl Cancer Inst. 2010;102(7):464–74. Epub 2010/03/18. doi: 10.1093/jnci/djq025.

- 76. Ultsch B, Damm O, Beutels P, Bilcke J, Bruggenjurgen B, Gerber-Grote A, et al. Methods for health economic evaluation of vaccines and immunization decision frameworks: a consensus framework from a European vaccine economics community. Pharmacoeconomics. 2016;34(3):227–44. Epub 2015/10/20. doi: 10.1007/s40273-015-0335-2.

- 77. Ungar WJ, Santos MT. The Pediatric Quality Appraisal Questionnaire: an instrument for evaluation of the pediatric health economics literature. Value Health. 2003;6(5):584–94. Epub 2003/11/25. doi: 10.1046/j.1524-4733.2003.65253.x.

- 78. van de Schoot R, Sijbrandij M, Winter SD, Depaoli S, Vermunt JK. The GRoLTS-Checklist: guidelines for reporting on latent trajectory studies. Struct Equ Modeling. 2016;24(3):451–67. doi: 10.1080/10705511.2016.1247646.

- 79. Vegter S, Boersma C, Rozenbaum M, Wilffert B, Navis G, Postma MJ. Pharmacoeconomic evaluations of pharmacogenetic and genomic screening programmes: a systematic review on content and adherence to guidelines. Pharmacoeconomics. 2008;26(7):569–87. Epub 2008/06/20. doi: 10.2165/00019053-200826070-00005.

- 80. Weinstein MC, O'Brien B, Hornberger J, Jackson J, Johannesson M, McCabe C, et al. Principles of good practice for decision analytic modeling in health-care evaluation: report of the ISPOR Task Force on Good Research Practices—Modeling Studies. Value Health. 2003;6(1):9–17. Epub 2003/01/22. doi: 10.1046/j.1524-4733.2003.00234.x.

- 81. Barlas Y. Formal aspects of model validity and validation in system dynamics. Syst Dyn Rev. 1996;12(3):183–210.

- 82. Rachow A, Ivanova O, Wallis R, Charalambous S, Jani I, Bhatt N, et al. TB sequel: incidence, pathogenesis and risk factors of long-term medical and social sequelae of pulmonary TB–a study protocol. BMC Pulm Med. 2019;19(1):4. doi: 10.1186/s12890-018-0777-3.

- 83. Stark P. Before reproducibility must come preproducibility. Nature. 2018;557(7707):613. doi: 10.1038/d41586-018-05256-0.

- 84. Chen X, Dallmeier-Tiessen S, Dasler R, Feger S, Fokianos P, Gonzalez JB, et al. Open is not enough. Nat Phys. 2019;15:113–9. doi: 10.1038/s41567-018-0342-2.

- 85. Bunge M. Philosophy of Science: Volume 1, From Problem to Theory. Revised ed. New York: Taylor & Francis; 2017.

- 86. Schulz KF, Moher D, Altman DG. Ambiguities and confusions between reporting and conduct. In: Moher D, Altman DG, Schulz KF, Simera I, Wager E, editors. Guidelines for Reporting Health Research: A User's Manual. Hoboken, NJ: John Wiley & Sons, Ltd; 2014. p. 41–7.

- 87. Editorial. Checklist checked. Nature. 2018 Apr 19;556:273–4.

- 88. Grimm V, Berger U, DeAngelis DL, Polhill JG, Giske J, Railsback SF. The ODD protocol: a review and first update. Ecol Modell. 2010;221(23):2760–8. doi: 10.1016/j.ecolmodel.2010.08.019.

- 89. Crusoe MR, Brown CT. Walking the talk: adopting and adapting sustainable scientific software development processes in a small biology lab. J Open Res Softw. 2016;4(1). Epub 2016/12/13. doi: 10.5334/jors.35.

- 90. Oberkampf WL, Trucano TG, Hirsch C. Verification, validation, and predictive capability in computational engineering and physics. Appl Mech Rev. 2004;57(5):345–84. doi: 10.1115/1.1767847.

- 91. Merson L, Gaye O, Guerin PJ. Avoiding data dumpsters—toward equitable and useful data sharing. N Engl J Med. 2016;374(25):2414–5. Epub 2016/05/11. doi: 10.1056/NEJMp1605148.

- 92. figshare.com [Internet]. Figshare; [cited 2019 Nov 18]. Available from: https://figshare.com.

- 93. Kirk PDW, Babtie AC, Stumpf MPH. Systems biology (un)certainties. Science. 2015;350(6259):386. doi: 10.1126/science.aac9505.

- 94. Smith ME, Singh BK, Irvine MA, Stolk WA, Subramanian S, Hollingsworth TD, et al. Predicting lymphatic filariasis transmission and elimination dynamics using a multi-model ensemble framework. Epidemics. 2017;18(Supplement C):16–28. doi: 10.1016/j.epidem.2017.02.006.

- 95. Feynman RP, Leighton R, Sands M. The Feynman Lectures on Physics. Vol. II: Mainly Electromagnetism and Matter. New Millennium ed. New York: Basic Books; 2014.

- 96. Popper K. The Logic of Scientific Discovery. Taylor & Francis e-Library; 2005 [cited 2019 Nov 18]. Available from: http://strangebeautiful.com/other-texts/popper-logic-scientific-discovery.pdf.

- 97. Hollingsworth TD, Medley GF. Learning from multi-model comparisons: collaboration leads to insights, but limitations remain. Epidemics. 2017;18:1–3. Epub 2017/03/11. doi: 10.1016/j.epidem.2017.02.014.

- 98. Ruiz D, Brun C, Connor SJ, Omumbo JA, Lyon B, Thomson MC. Testing a multi-malaria-model ensemble against 30 years of data in the Kenyan highlands. Malar J. 2014;13:206. Epub 2014/06/03. doi: 10.1186/1475-2875-13-206.

- 99. Hastie T, Tibshirani R, Friedman J. The Elements of Statistical Learning: Data Mining, Inference, and Prediction. 2nd ed. New York: Springer-Verlag; 2009.

- 100. Bröcker J, Smith LA. Scoring probabilistic forecasts: the importance of being proper. Weather Forecast. 2007;22(2):382–8. doi: 10.1175/waf966.1.

- 101. Biggerstaff M, Alper D, Dredze M, Fox S, Fung IC-H, Hickmann KS, et al. Results from the Centers for Disease Control and Prevention's Predict the 2013–2014 Influenza Season Challenge. BMC Infect Dis. 2016;16(1):357. Epub 2016/07/22. doi: 10.1186/s12879-016-1669-x.

- 102. Biggerstaff M, Johansson M, Alper D, Brooks LC, Chakraborty P, Farrow DC, et al. Results from the second year of a collaborative effort to forecast influenza seasons in the United States. Epidemics. 2018;24:26–33. Epub 2018/02/24. doi: 10.1016/j.epidem.2018.02.003.

- 103. den Boon S, Jit M, Brisson M, Medley G, Beutels P, White R, et al. Guidelines for multi-model comparisons of the impact of infectious disease interventions. BMC Med. 2019;17(1):163. doi: 10.1186/s12916-019-1403-9.

- 104. Caro JJ, Briggs AH, Siebert U, Kuntz KM. Modeling good research practices—overview: a report of the ISPOR-SMDM Modeling Good Research Practices Task Force—1. Value Health. 2012;15(6):796–803. Epub 2012/09/25. doi: 10.1016/j.jval.2012.06.012.

- 105. Whitty CJ. What makes an academic paper useful for health policy? BMC Med. 2015;13:301. Epub 2015/12/18. doi: 10.1186/s12916-015-0544-8.

- 106. Donnelly CA, Boyd I, Campbell P, Craig C, Vallance P, Walport M, et al. Four principles to make evidence synthesis more useful for policy. Nature. 2018;558(7710):361–4. Epub 2018/06/22. doi: 10.1038/d41586-018-05414-4.

- 107. World Health Organization. Consultation on the development of guidance on how to incorporate the results of modelling into WHO guidelines, Geneva, Switzerland, 27–29 April 2016: meeting report. WHO/HIS/IER/REK/2017.2. Geneva: World Health Organization; 2016 [cited 2019 Nov 18]. Available from: https://apps.who.int/iris/handle/10665/258987.

- 108. Lewin S, Booth A, Glenton C, Munthe-Kaas H, Rashidian A, Wainwright M, et al. Applying GRADE-CERQual to qualitative evidence synthesis findings: introduction to the series. Implement Sci. 2018;13(Suppl 1):2. Epub 2018/01/25. doi: 10.1186/s13012-017-0688-3.

- 109. Hales S, Lesher-Trevino A, Ford N, Maher D, Ramsay A, Tran N. Reporting guidelines for implementation and operational research. Bull World Health Organ. 2016;94(1):58–64. Epub 2016/01/16. doi: 10.2471/BLT.15.167585.

- 110. Guyatt GH, Oxman AD, Vist GE, Kunz R, Falck-Ytter Y, Alonso-Coello P, et al. GRADE: an emerging consensus on rating quality of evidence and strength of recommendations. BMJ. 2008;336(7650):924–6. Epub 2008/04/26. doi: 10.1136/bmj.39489.470347.AD.

- 111. World Health Organization Strategic Advisory Group of Experts on Immunization. Guidance for the development of evidence-based vaccination-related recommendations. Geneva: World Health Organization; 2017 [cited 2019 Nov 18]. Available from: http://www.who.int/immunization/sage/Guidelines_development_recommendations.pdf.

- 112. Lewin S, Bosch-Capblanch X, Oliver S, Akl EA, Vist GE, Lavis JN, et al. Guidance for evidence-informed policies about health systems: assessing how much confidence to place in the research evidence. PLoS Med. 2012;9(3):e1001187. Epub 2012/03/27. doi: 10.1371/journal.pmed.1001187.

- 113. Altman DG, Simera I. A history of the evolution of guidelines for reporting medical research: the long road to the EQUATOR Network. J R Soc Med. 2016;109(2):67–77. Epub 2016/02/18. doi: 10.1177/0141076815625599.

- 114. Moher D, Altman DG, Schulz KF, Simera I, Wager E, editors. Guidelines for Reporting Health Research: A User's Manual. Hoboken, NJ: Wiley Blackwell; 2014.

- 115. Grimm V, Berger U, Bastiansen F, Eliassen S, Ginot V, Giske J, et al. A standard protocol for describing individual-based and agent-based models. Ecol Modell. 2006;198(1):115–26. doi: 10.1016/j.ecolmodel.2006.04.023.


Supplementary Materials

PRIME-NTD, Policy-Relevant Items for Reporting Models in Epidemiology of Neglected Tropical Diseases (DOCX).

(XLSX)

(XLSX)