Abstract

Convolutional analysis operator learning (CAOL) enables the unsupervised training of (hierarchical) convolutional sparsifying operators or autoencoders from large datasets. One can use many training images for CAOL, but a precise understanding of the impact of doing so has remained an open question. This paper presents a series of results that lend insight into the impact of dataset size on the filter update in CAOL. The first result is a general deterministic bound on errors in the estimated filters, and is followed by a bound on the expected errors as the number of training samples increases. The second result provides a high probability analogue. The bounds depend on properties of the training data, and we investigate their empirical values with real data. Taken together, these results provide evidence for the potential benefit of using more training data in CAOL.

I. Introduction

Learning convolutional operators from large datasets is a growing trend in signal/image processing, computer vision, machine learning, and artificial intelligence. The convolutional approach resolves the large memory demands of patch-based operator learning and enables unsupervised operator learning from “big data,” i.e., many high-dimensional signals. See [1], [2] and references therein. Examples include convolutional dictionary learning [2], [3] and convolutional analysis operator learning (CAOL) [1], [4]. CAOL trains an autoencoding CNN in an unsupervised manner, and is useful for training multi-layer CNNs from many training images [1]. In particular, the block proximal gradient method using a majorizer [1], [2] leads to rapidly converging and memory-efficient CAOL [1]. However, a theoretical understanding of the impact of using many training images in CAOL has remained an open question.

This paper presents new insights on this topic. Our first main result provides a deterministic bound on filter estimation error, and is followed by a bound on the expected error when “model mismatch” has zero mean. (See Theorem 1 and Corollary 2, respectively.) The expected error bound depends on the training data, and we provide empirical evidence of its decrease with an increase in training samples. Our second main result provides a high probability bound that explicitly decreases with increasingly many i.i.d. training samples. The bound improves when model mismatch and samples are uncorrelated. (See Theorem 3.) Additional empirical findings provide evidence that the correlation can indeed be small in practice. Put together, our findings provide new insight into how using many samples can improve CAOL, underscoring the benefits of the low memory usage of CAOL.

II. Background and Preliminaries

A. CAOL with orthogonality constraints

CAOL seeks a set of filters that “best” sparsify a set of training images $\{x_l \in \mathbb{C}^N : l = 1, \ldots, L\}$ by solving the optimization problem [1, §II-A] (see Appendix for notation):

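$$\min_{\{d_k\}, \{z_{l,k}\}} \; \sum_{l=1}^{L} \sum_{k=1}^{K} \frac{1}{2} \big\| d_k \circledast x_l - z_{l,k} \big\|_2^2 + \alpha \|z_{l,k}\|_0 \quad \text{subject to} \quad DD^H = \frac{1}{R} I, \tag{P0}$$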

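where $\circledast$ denotes convolution, $\{d_k \in \mathbb{C}^R : k = 1, \ldots, K\}$ is a set of $K \ge R$ convolutional kernels, $\{z_{l,k} \in \mathbb{C}^N : l = 1, \ldots, L,\ k = 1, \ldots, K\}$ is a set of sparse codes, $\alpha > 0$ is a regularization parameter controlling the sparsity of the features $\{z_{l,k}\}$, and $\|\cdot\|_0$ denotes the $\ell_0$-quasi-norm. We group the K filters into a matrix: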

$$D := \begin{bmatrix} d_1 & \cdots & d_K \end{bmatrix} \in \mathbb{C}^{R \times K}. \tag{1}$$

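The orthogonality condition in (P0) enforces 1) a tight-frame condition on the filters, i.e., $\sum_{k=1}^{K} \|d_k \circledast x\|_2^2 = \|x\|_2^2$, $\forall x$ [1, Prop. 2.1]; and 2) filter diversity when $R = K$, since $DD^H = \frac{1}{R} I$ implies $D^H D = \frac{1}{R} I$, so each pair of filters is incoherent, i.e., $|\langle d_k, d_{k'} \rangle|^2 = 0$, $\forall k \neq k'$. One often solves (P0) iteratively by alternating between optimizing D (filter update) and optimizing $\{z_{l,k} : \forall l, k\}$ (sparse code update) [1]. That is, at the ith iteration, $D^{(i+1)}$ is obtained by minimizing (P0) over D with the sparse codes fixed at $\{z_{l,k}^{(i)}\}$, and then $\{z_{l,k}^{(i+1)}\}$ is obtained by minimizing (P0) over the sparse codes with the filters fixed at $D^{(i+1)}$.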
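As a quick numerical sanity check of the two properties above, the following sketch (our own, not code from [1]) builds a random real-valued filter matrix with $DD^T = \frac{1}{R} I$. It then verifies the tight-frame identity and, for $R = K$, the mutual orthogonality of the filters. Several choices here are assumptions: the data are real, so $(\cdot)^H$ becomes a transpose; circular convolution is computed with NumPy's FFT; and the helpers `circ_conv` and `orthogonal_filters` are hypothetical names.

```python
import numpy as np

def circ_conv(d: np.ndarray, x: np.ndarray) -> np.ndarray:
    """Circular convolution d ⊛ x of a length-R filter with a length-N signal."""
    N = x.shape[0]
    # Zero-pad d to length N, multiply spectra, and return to the signal domain.
    return np.real(np.fft.ifft(np.fft.fft(x) * np.fft.fft(d, n=N)))

def orthogonal_filters(R: int, K: int, rng: np.random.Generator) -> np.ndarray:
    """Random R x K filter matrix D with D D^T = I / R (requires K >= R)."""
    Q, _ = np.linalg.qr(rng.standard_normal((K, R)))  # K x R, orthonormal columns
    return Q.T / np.sqrt(R)

rng = np.random.default_rng(0)
N, R, K = 64, 9, 12
D = orthogonal_filters(R, K, rng)
x = rng.standard_normal(N)

# Tight frame: sum_k ||d_k ⊛ x||_2^2 equals ||x||_2^2.
energy = sum(np.sum(circ_conv(D[:, k], x) ** 2) for k in range(K))
print(np.isclose(energy, np.sum(x ** 2)))  # True

# Filter diversity when R = K: D^T D = I / R, so distinct filters are orthogonal.
D_sq = orthogonal_filters(R, R, rng)
print(np.allclose(D_sq.T @ D_sq, np.eye(R) / R))  # True
```

The tight-frame identity holds for any x, because $\sum_k \|d_k \circledast x\|_2^2 = \operatorname{tr}(\Psi^T \Psi D D^T)$ and each of the R circular shifts of x has the same energy.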

B. Filter update in a matrix form

The key to our analysis lies in rewriting the filter update for (P0) in matrix form, to which we apply matrix perturbation bounds and concentration inequalities. Observe first that

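$$\begin{bmatrix} d_1 \circledast x_l & \cdots & d_K \circledast x_l \end{bmatrix} = \Psi_l D, \qquad \Psi_l := \begin{bmatrix} x_l & S x_l & \cdots & S^{R-1} x_l \end{bmatrix} \in \mathbb{C}^{N \times R}, \tag{2}$$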

where $S \in \{0,1\}^{N \times N}$ is the circular shift operator and $(\cdot)^n$ denotes the matrix product of n copies of its argument (with $S^0 = I$). We consider a circular boundary condition to simplify the presentation of $\{\Psi_l\}$ in (2), but the entire analysis holds for general boundary conditions with only minor modifications of $\{\Psi_l\}$, as done in [1, §IV-A]. Using (2), the filter update of (P0) is rewritten as
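The identity (2) can be checked numerically. The sketch below is a minimal illustration under our own assumptions: real signals, zero-based filter taps, and $(d \circledast x)[n] = \sum_r d[r]\, x[(n - r) \bmod N]$. The function `psi_matrix` is a hypothetical helper, not an API from [1]. It builds $\Psi_l$ column by column from circular shifts and compares $\Psi_l D$ with K FFT-based circular convolutions.

```python
import numpy as np

def psi_matrix(x: np.ndarray, R: int) -> np.ndarray:
    """N x R matrix [x, S x, ..., S^(R-1) x], with S the circular shift."""
    # np.roll(x, r)[n] == x[(n - r) mod N], i.e., the r-fold shift S^r x.
    return np.stack([np.roll(x, r) for r in range(R)], axis=1)

def circ_conv(d: np.ndarray, x: np.ndarray) -> np.ndarray:
    """(d ⊛ x)[n] = sum_r d[r] x[(n - r) mod N], computed via the FFT."""
    N = x.shape[0]
    return np.real(np.fft.ifft(np.fft.fft(x) * np.fft.fft(d, n=N)))

rng = np.random.default_rng(1)
N, R, K = 32, 5, 7
x_l = rng.standard_normal(N)
D = rng.standard_normal((R, K))  # columns d_1, ..., d_K (any D works here)

Psi_l = psi_matrix(x_l, R)
conv_outputs = np.stack([circ_conv(D[:, k], x_l) for k in range(K)], axis=1)
print(Psi_l.shape)                           # (32, 5)
print(np.allclose(conv_outputs, Psi_l @ D))  # True
```

Because $\Psi_l$ depends only on $x_l$, it can be formed once per training image and reused for all K filters and all iterations. For large N, one would more likely apply it implicitly through FFTs than store it.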

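$$D^\star = \arg\min_{D} \; \sum_{l=1}^{L} \frac{1}{2} \big\| \Psi_l D - Z_l \big\|_F^2 \quad \text{subject to} \quad DD^H = \frac{1}{R} I, \tag{P1}$$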

where $Z_l := \begin{bmatrix} z_{l,1} & \cdots & z_{l,K} \end{bmatrix} \in \mathbb{C}^{N \times K}$ contains all the current sparse code estimates for the lth sample, and we drop the iteration superscripts $(\cdot)^{(i)}$ throughout. The next section uses this form to characterize the filter update solution D⋆.
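To make the alternating scheme concrete, here is a small sketch of CAOL on 1-D signals, written for illustration rather than taken from [1]. It makes three assumptions. First, the data are real-valued. Second, the filter update uses the closed-form polar-factor solution of (P1) derived in Section V. Third, the sparse code update uses elementwise hard thresholding at $\sqrt{2\alpha}$, which is the exact minimizer of $\frac{1}{2}(a - z)^2 + \alpha \cdot [z \neq 0]$. All function names are hypothetical. Because each block update is an exact minimizer, the recorded objective of (P0) should never increase.

```python
import numpy as np

def psi_matrix(x, R):
    """N x R matrix of circular shifts [x, S x, ..., S^(R-1) x]."""
    return np.stack([np.roll(x, r) for r in range(R)], axis=1)

def hard_threshold(A, alpha):
    """Elementwise minimizer of 0.5 * (a - z)^2 + alpha * [z != 0]."""
    return np.where(np.abs(A) > np.sqrt(2.0 * alpha), A, 0.0)

def filter_update(Psis, Zs, R):
    """Solve (P1): D = Q(sum_l Psi_l^T Z_l) / sqrt(R), with Q the polar factor."""
    A = sum(P.T @ Z for P, Z in zip(Psis, Zs))
    W, _, Vt = np.linalg.svd(A, full_matrices=False)
    return (W @ Vt) / np.sqrt(R)

def objective(D, Psis, Zs, alpha):
    """Objective of (P0) written with the matrices of (2)."""
    return sum(0.5 * np.linalg.norm(P @ D - Z) ** 2 + alpha * np.count_nonzero(Z)
               for P, Z in zip(Psis, Zs))

def caol(xs, R, K, alpha, iters, rng):
    Psis = [psi_matrix(x, R) for x in xs]
    Q, _ = np.linalg.qr(rng.standard_normal((K, R)))
    D = Q.T / np.sqrt(R)                                  # feasible start: D D^T = I / R
    Zs = [hard_threshold(P @ D, alpha) for P in Psis]
    history = [objective(D, Psis, Zs, alpha)]
    for _ in range(iters):
        D = filter_update(Psis, Zs, R)                    # filter update
        history.append(objective(D, Psis, Zs, alpha))
        Zs = [hard_threshold(P @ D, alpha) for P in Psis]  # sparse code update
        history.append(objective(D, Psis, Zs, alpha))
    return D, Zs, history

rng = np.random.default_rng(2)
xs = [np.cumsum(rng.standard_normal(128)) for _ in range(8)]  # toy signals
xs = [x - x.mean() for x in xs]                               # mean subtraction
D, Zs, history = caol(xs, R=7, K=7, alpha=0.05, iters=20, rng=rng)
print(np.allclose(D @ D.T, np.eye(7) / 7))  # True: constraint of (P0) holds
```

This toy loop stores every $\Psi_l$ explicitly. That is fine for short 1-D signals, but it is not the memory-efficient accelerated method of [1].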

III. Main Results: Dependence of CAOL on Training Data

The main results in this section illustrate how training with many samples can reduce errors in the filter D⋆ from (P1), and characterize this reduction in terms of properties of the training data. Throughout, we model the current sparse code estimates as

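$$Z_l = Z_{\mathrm{true},l} + E_l, \qquad Z_{\mathrm{true},l} := \Psi_l D_{\mathrm{true}}, \qquad l = 1, \ldots, L, \tag{3}$$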

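where $D_{\mathrm{true}} \in \mathbb{C}^{R \times K}$ is formed from optimal (orthogonal) filters analogously to (1), i.e., $D_{\mathrm{true}} D_{\mathrm{true}}^H = \frac{1}{R} I$, and $E_l \in \mathbb{C}^{N \times K}$ captures model mismatch in the current sparse codes, e.g., due to the current iterate being far from convergence or being trapped in local minima.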

The following theorem provides a deterministic characterization.

Theorem 1.

Suppose that both matrices

$$\sum_{l=1}^{L} \Psi_l^H Z_l \quad \text{and} \quad \sum_{l=1}^{L} \Psi_l^H Z_{\mathrm{true},l} \tag{4}$$

are full row rank, where {Ψl, Zl, Ztrue,l : l = 1, …, L} are defined in (2)–(3). Then, the solution D⋆ to (P1) has error with respect to Dtrue bounded as

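$$\big\| D^\star - D_{\mathrm{true}} \big\|_F \le \frac{2 \, \big\| \sum_{l=1}^{L} \Psi_l^H E_l \big\|_F}{\lambda_{\min}\big( \sum_{l=1}^{L} \Psi_l^H \Psi_l \big)}, \tag{5}$$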

where λmin(·) denotes the smallest eigenvalue of its argument.
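The deterministic bound can be probed on synthetic data. The sketch below is a minimal illustration under our own assumptions: real Gaussian signals, a random $D_{\mathrm{true}}$ with $D_{\mathrm{true}} D_{\mathrm{true}}^T = \frac{1}{R} I$, and Gaussian mismatch $E_l$ at an arbitrary noise level. It forms $Z_l$ by the model (3) and computes D⋆ as the scaled polar factor of $\sum_l \Psi_l^T Z_l$, the closed form derived in Section V. It then compares $\|D^\star - D_{\mathrm{true}}\|_F$ with $2\|\sum_l \Psi_l^T E_l\|_F / \lambda_{\min}(\sum_l \Psi_l^T \Psi_l)$, which is our reading of the right-hand side of (5). The helper names are hypothetical.

```python
import numpy as np

def psi_matrix(x, R):
    """N x R matrix of circular shifts [x, S x, ..., S^(R-1) x]."""
    return np.stack([np.roll(x, r) for r in range(R)], axis=1)

def polar_factor(A):
    """Q(A) = W V^T from the thin SVD A = W diag(s) V^T."""
    W, _, Vt = np.linalg.svd(A, full_matrices=False)
    return W @ Vt

rng = np.random.default_rng(8)
N, R, K, L, noise = 64, 5, 8, 10, 0.1
Q0, _ = np.linalg.qr(rng.standard_normal((K, R)))
D_true = Q0.T / np.sqrt(R)                       # D_true D_true^T = I / R

Psis = [psi_matrix(rng.standard_normal(N), R) for _ in range(L)]
Es = [noise * rng.standard_normal((N, K)) for _ in range(L)]
Zs = [P @ D_true + E for P, E in zip(Psis, Es)]  # sparse-code model (3)

# Filter update (P1) via the scaled polar factor.
D_star = polar_factor(sum(P.T @ Z for P, Z in zip(Psis, Zs))) / np.sqrt(R)

error = np.linalg.norm(D_star - D_true)          # Frobenius norm
numer = 2 * np.linalg.norm(sum(P.T @ E for P, E in zip(Psis, Es)))
denom = np.linalg.eigvalsh(sum(P.T @ P for P in Psis))[0]
bound = numer / denom
print(error <= bound, round(error, 4), round(bound, 4))
```

With `noise` fixed, the numerator grows roughly like $\sqrt{L}$ while the denominator grows roughly like L. Increasing `L` therefore typically tightens `bound`.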

The full row rank condition on (4) ensures that the estimated filters D⋆ and the true filters Dtrue are unique, and it further guarantees that the denominator of (5) is strictly positive. When the model mismatches E1, …, EL are independent and mean zero, we obtain the following expected error bound:

Corollary 2.

Under the assumptions of Theorem 1, suppose that $E_1, \ldots, E_L$ are independent, zero-mean random matrices. Then,

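$$\mathbb{E}\Big[ \big\| D^\star - D_{\mathrm{true}} \big\|_F^2 \Big] \le 4 \, \varepsilon^2 \rho^2, \tag{6}$$

where $\mathbb{E}[\cdot]$ denotes the expectation,

$$\varepsilon^2 := \max_{l = 1, \ldots, L} \mathbb{E}\big[ \|E_l\|_F^2 \big], \qquad \rho^2 := \frac{\sum_{l=1}^{L} \lambda_{\max}\big( \Psi_l^H \Psi_l \big)}{\lambda_{\min}^2\big( \sum_{l=1}^{L} \Psi_l^H \Psi_l \big)}, \tag{7}$$

$\lambda_{\max}(\cdot)$ denotes the largest eigenvalue of its argument, and the expectation is taken over the model mismatch.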

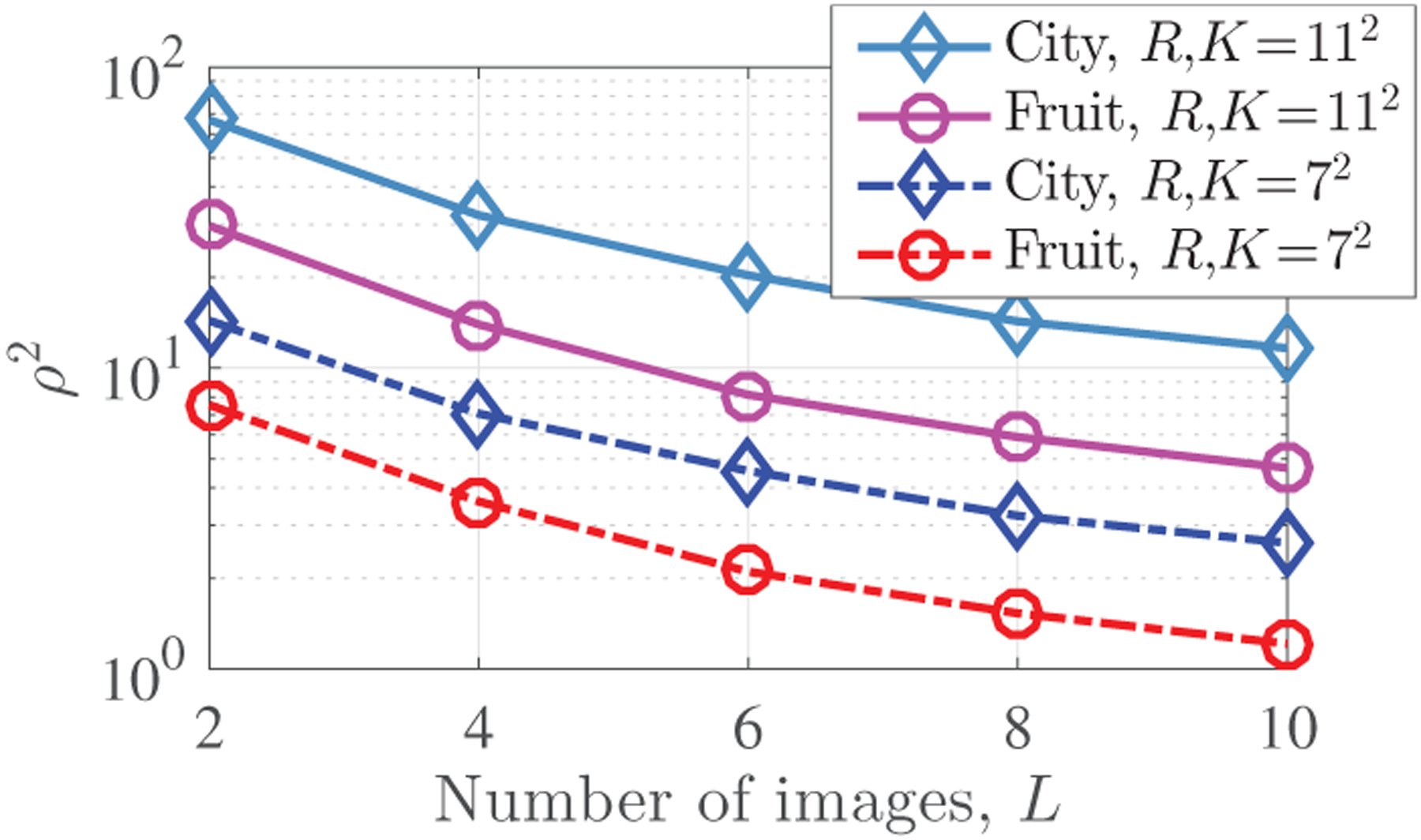

Given fixed K and R, it is natural to expect that the mismatch energy $\max_l \mathbb{E}\|E_l\|_F^2$ is bounded by some constant independent of L, so the expected error bound in (6) largely depends on $\rho^2$ in (7). When the training samples are i.i.d., one may further expect $\sum_{l} \Psi_l^H \Psi_l$ to concentrate around its expectation, roughly resulting in $\rho^2 \propto 1/L$, with a proportionality constant that depends on R and the statistics of the training data. Fig. 1 illustrates $\rho^2$ for various image datasets, providing empirical evidence of this decrease in real data.
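The $1/L$ behavior of $\rho^2$ can also be illustrated on synthetic data. The sketch below assumes $\rho^2 = \sum_l \lambda_{\max}(\Psi_l^H \Psi_l) / \lambda_{\min}^2(\sum_l \Psi_l^H \Psi_l)$, the form consistent with the proof in Section VI. It uses i.i.d. Gaussian signals rather than the image datasets of Fig. 1, so its numbers are not comparable to the figure. `rho_squared` is a hypothetical helper.

```python
import numpy as np

def psi_matrix(x, R):
    """N x R matrix of circular shifts [x, S x, ..., S^(R-1) x]."""
    return np.stack([np.roll(x, r) for r in range(R)], axis=1)

def rho_squared(xs, R):
    """sum_l lambda_max(Psi_l^T Psi_l) / lambda_min(sum_l Psi_l^T Psi_l)^2."""
    grams = [P.T @ P for P in (psi_matrix(x, R) for x in xs)]
    numer = sum(np.linalg.eigvalsh(G)[-1] for G in grams)  # eigvalsh sorts ascending
    denom = np.linalg.eigvalsh(sum(grams))[0] ** 2
    return numer / denom

rng = np.random.default_rng(3)
N, R, L_max = 64, 5, 64
xs_all = [rng.standard_normal(N) for _ in range(L_max)]  # i.i.d. training signals

rho = {L: rho_squared(xs_all[:L], R) for L in (1, 4, 16, 64)}
for L, value in rho.items():
    print(L, value, L * value)  # L * rho^2 should stay roughly constant
```

For white Gaussian signals, $\mathbb{E}[\Psi^T \Psi] = N I$, so the product $L \rho^2$ settles near a constant of order $1/N$. Correlated image data would change that constant, not the trend.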

Fig. 1.

Empirical values of $\rho^2$ in (7) show a decrease with L for different datasets and filter dimensions. (The fruit and city datasets, with $L = 10$ and $N = 10^4$, were preprocessed with contrast enhancement and mean subtraction; see details of datasets and experiments in [1], [2] and references therein. For $L < 10$, the results are averaged over 50 datasets randomly selected from the full datasets.) Under the assumptions of Corollary 2, the decrease in this quantity leads to a better expected error bound in (6). Without preprocessing, the quantity $\rho^2$ increases by a factor of around $10^3$.

Our second theorem provides a probabilistic error bound via concentration inequalities, given i.i.d. training sample and model mismatch pairs $(x_1, E_1), \ldots, (x_L, E_L)$.¹ It removes the zero-mean assumption on the model mismatches $\{E_l : \forall l\}$ made in Corollary 2, which can be strong, e.g., if the training data are not preprocessed to have zero mean.

Theorem 3.

Suppose that the training sample and model mismatch pairs $(x_1, E_1), \ldots, (x_L, E_L)$ are i.i.d. copies of a random pair $(x, E)$, where x and E are almost surely bounded, i.e.,

| (8) |

and the matrices in (4) are almost surely full row rank. Then, for any , the solution D⋆ to (P1) has error with respect to Dtrue bounded as

| (9) |

with probability at least

| (10) |

where and Ψ is constructed from x as in (2).

Taking δ sufficiently small, the high probability error bound (9) is primarily driven by

| (11) |

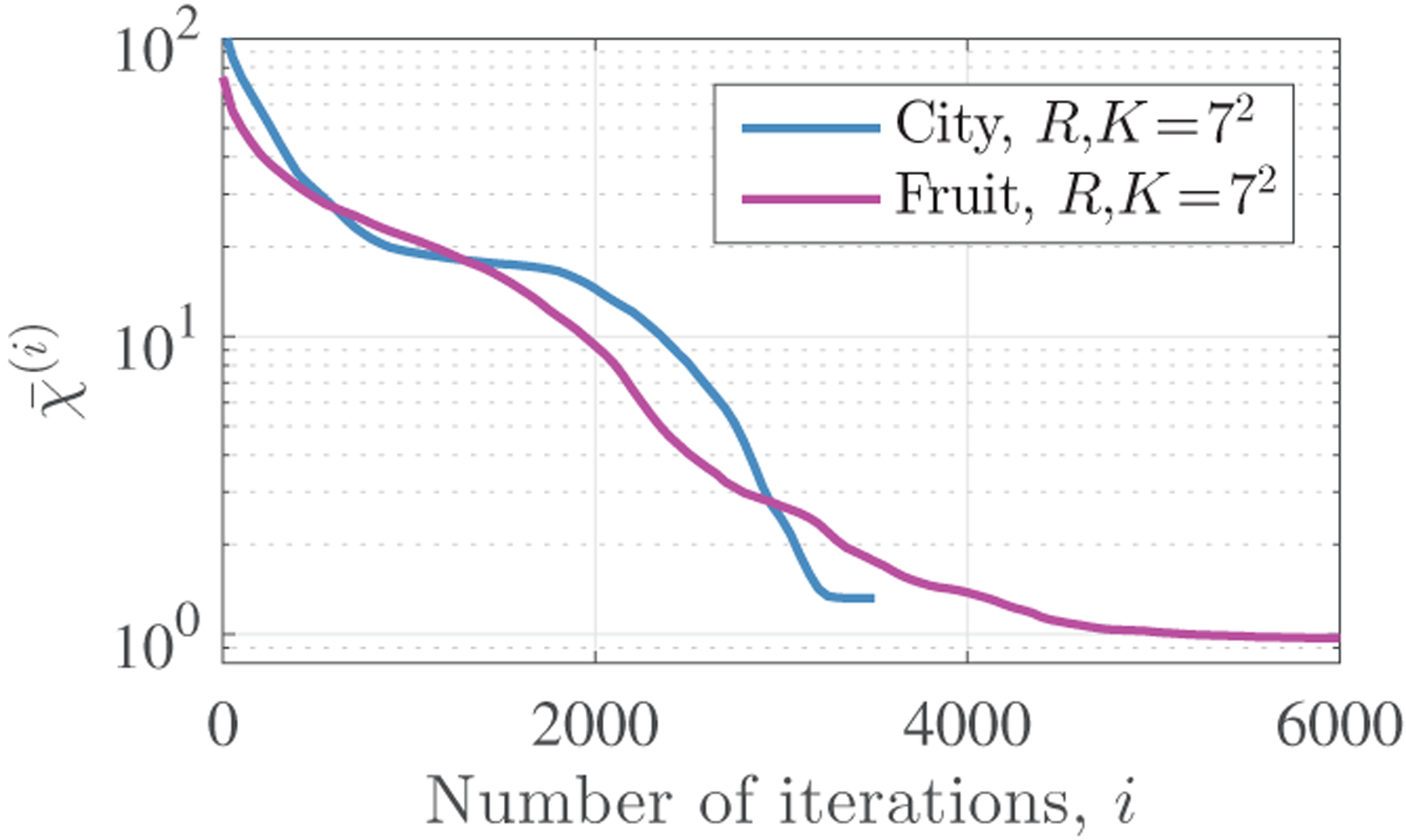

where one term is analogous to ρ in (7), and the other captures how correlated the model mismatch is with the training samples. As the number L of training samples increases, the ρ-like term decreases, whereas the correlation term is constant with respect to L and provides a floor for the bound. Fig. 2 illustrates the correlation term for CAOL iterates from different image datasets, and provides empirical evidence that it can indeed be small in real data. If the model mismatch is sufficiently uncorrelated with the training samples, i.e., the correlation term is practically zero, then only the ρ-like term remains, and it decreases with L. Namely, if the model mismatch is entirely uncorrelated with the training samples, then using many samples drives the error bound to (effectively) zero.
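The exact expressions in (11) are not reproduced here, so the following sketch only illustrates the qualitative floor-versus-decay mechanism. It tracks the numerator of (5) normalized by L, $\frac{1}{L}\|\sum_l \Psi_l^T E_l\|_F$, under our own assumptions: i.i.d. standard Gaussian signals, and a hypothetical mismatch model $E_l = \beta \Psi_l B + W_l$ with a fixed matrix B, a correlation strength β, and independent Gaussian noise $W_l$. Under this model, $\mathbb{E}[\Psi^T E] = \beta N B$, so the normalized numerator approaches the floor $\beta N \|B\|_F$, and it decays toward zero when $\beta = 0$.

```python
import numpy as np

def psi_matrix(x, R):
    """N x R matrix of circular shifts [x, S x, ..., S^(R-1) x]."""
    return np.stack([np.roll(x, r) for r in range(R)], axis=1)

def normalized_numerator(L, beta, B, rng, N=64, noise=1.0):
    """(1/L) * ||sum_l Psi_l^T E_l||_F with E_l = beta * Psi_l B + noise * W_l."""
    R, K = B.shape
    total = np.zeros((R, K))
    for _ in range(L):
        Psi = psi_matrix(rng.standard_normal(N), R)
        E = beta * Psi @ B + noise * rng.standard_normal((N, K))
        total += Psi.T @ E
    return np.linalg.norm(total) / L

rng = np.random.default_rng(4)
N, R, K, beta = 64, 4, 6, 0.05
B = rng.standard_normal((R, K))
floor = beta * N * np.linalg.norm(B)  # ||E[Psi^T E]||_F, since E[Psi^T Psi] = N I

for L in (4, 40, 400):
    uncorr = normalized_numerator(L, 0.0, B, rng, N)
    corr = normalized_numerator(L, beta, B, rng, N)
    print(L, round(uncorr, 3), round(corr, 3), round(floor, 3))
```

The uncorrelated column shrinks roughly like $1/\sqrt{L}$, while the correlated column levels off near `floor`. This mirrors, in a simplified setting, the roles the text assigns to the two terms of (11).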

Fig. 2.

Empirical estimate of the model-mismatch correlation term in (11) across iterations of the alternating optimization algorithm [1] that solves CAOL (P0) with $\alpha = 10^{-3}$. (The fruit and city datasets, with $L = 10$ and $N = 10^4$, were preprocessed with contrast enhancement and mean subtraction; see details of datasets and experiments in [1], [2] and references therein. The model mismatches at the ith iteration were calculated every 50 iterations based on (3), using the converged filters as $D_{\mathrm{true}}$.) Observe that the correlation term generally decreases over iterations. When it is small, the high probability error bound (9) in Theorem 3 depends primarily on the other term in (11), which is analogous to ρ in (7).

IV. Related Works

Sample complexity [7] and synthesis (or reconstruction) error [8] have been studied in the context of synthesis operator learning (e.g., dictionary learning [9]); see the cited papers and references therein. A similar understanding for (C)AOL has however remained largely open; existing works focus primarily on establishing (C)AOL models and their algorithmic challenges [1], [10]–[13]. The authors in [14] studied sample complexity for a patch-based AOL method, but the form of their model differs from that of ours (P0). Specifically, they consider the following AOL problem: , where f (·) is a sparsity promoting function (e.g., a smooth approximation of the ℓ0-quasi-norm [14]), g(·) is a regularizer or constraint for the filter matrix D, and is a set of training patches (not images).

V. Proof of Theorem 1

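Rewriting (P1) shows that D⋆ is a solution of the (scaled) orthogonal Procrustes problem [1, §S.VII]:

$$D^\star \in \arg\min_{D} \big\| \Psi^H Z - D \big\|_F^2 \quad \text{subject to} \quad DD^H = \frac{1}{R} I, \tag{12}$$

where $\Psi \in \mathbb{C}^{NL \times R}$ arises by stacking $\Psi_1, \ldots, \Psi_L$ vertically and $Z \in \mathbb{C}^{NL \times K}$ arises likewise from $Z_1, \ldots, Z_L$, so that $\Psi^H Z = \sum_l \Psi_l^H Z_l$. Similarly, since $\Psi_l D_{\mathrm{true}} = Z_{\mathrm{true},l}$ as in (3), $D_{\mathrm{true}}$ is a solution of the analogous (scaled) orthogonal Procrustes problem

$$D_{\mathrm{true}} \in \arg\min_{D} \big\| \Psi^H Z_{\mathrm{true}} - D \big\|_F^2 \quad \text{subject to} \quad DD^H = \frac{1}{R} I, \tag{13}$$

where $Z_{\mathrm{true}} \in \mathbb{C}^{NL \times K}$ arises by stacking $Z_{\mathrm{true},1}, \ldots, Z_{\mathrm{true},L}$ vertically.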
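One way to see why (P1) and (12) share minimizers is to expand both objectives on the feasible set $DD^H = \frac{1}{R} I$, using the stacked matrices Ψ and Z. This short derivation is our own expansion, included only as a reading aid:

$$
\begin{aligned}
\sum_{l=1}^{L} \tfrac{1}{2} \| \Psi_l D - Z_l \|_F^2
&= \tfrac{1}{2} \operatorname{tr}(\Psi D D^H \Psi^H) - \operatorname{Re} \operatorname{tr}(D^H \Psi^H Z) + \tfrac{1}{2} \|Z\|_F^2
= \tfrac{1}{2R} \|\Psi\|_F^2 - \operatorname{Re} \operatorname{tr}(D^H \Psi^H Z) + \tfrac{1}{2} \|Z\|_F^2, \\
\| \Psi^H Z - D \|_F^2
&= \|\Psi^H Z\|_F^2 - 2 \operatorname{Re} \operatorname{tr}(D^H \Psi^H Z) + \operatorname{tr}(D D^H)
= \|\Psi^H Z\|_F^2 - 2 \operatorname{Re} \operatorname{tr}(D^H \Psi^H Z) + 1.
\end{aligned}
$$

On the feasible set, both objectives equal $-\operatorname{Re} \operatorname{tr}(D^H \Psi^H Z)$ up to additive constants and a positive factor. Both problems therefore reduce to maximizing $\operatorname{Re} \operatorname{tr}(D^H \Psi^H Z)$. The same argument with $Z_{\mathrm{true}}$ in place of Z gives (13).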

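By assumption, both $\sum_{l} \Psi_l^H Z_l$ and $\sum_{l} \Psi_l^H Z_{\mathrm{true},l}$ are full row rank, so (12) and (13) have unique solutions given by the (scaled) polar factors

$$D^\star = \frac{1}{\sqrt{R}} \, Q\Big( \sum_{l=1}^{L} \Psi_l^H Z_l \Big), \qquad D_{\mathrm{true}} = \frac{1}{\sqrt{R}} \, Q\Big( \sum_{l=1}^{L} \Psi_l^H Z_{\mathrm{true},l} \Big), \tag{14}$$

where $Q(\cdot)$ denotes the polar factor of its argument, which can be computed as $Q(A) = W V^H$ from the (thin) singular value decomposition $A = W \Sigma V^H$.

Thus we have

$$
\begin{aligned}
\| D^\star - D_{\mathrm{true}} \|_F
&= \frac{1}{\sqrt{R}} \Big\| Q\Big( \sum_{l} \Psi_l^H Z_l \Big) - Q\Big( \sum_{l} \Psi_l^H Z_{\mathrm{true},l} \Big) \Big\|_F \\
&\le \frac{2}{\sqrt{R}} \cdot \frac{\big\| \sum_{l} \Psi_l^H E_l \big\|_F}{\sigma_R\big( \sum_{l} \Psi_l^H Z_l \big) + \sigma_R\big( \sum_{l} \Psi_l^H Z_{\mathrm{true},l} \big)}
\le \frac{2}{\sqrt{R}} \cdot \frac{\big\| \sum_{l} \Psi_l^H E_l \big\|_F}{\sigma_R\big( \sum_{l} \Psi_l^H Z_{\mathrm{true},l} \big)},
\end{aligned} \tag{15}
$$

where we used $Z_l - Z_{\mathrm{true},l} = E_l$ from (3), and $\sigma_r(\cdot)$ denotes the rth largest singular value of its argument. The first inequality holds by the perturbation bound in [15, Thm. 3], and the second holds since $\sigma_R(\sum_{l} \Psi_l^H Z_l) \ge 0$. Recalling that $Z_{\mathrm{true},l} = \Psi_l D_{\mathrm{true}}$ and writing $G := \sum_{l} \Psi_l^H \Psi_l$, we rewrite the denominator of (15) as

$$\sigma_R\big( G D_{\mathrm{true}} \big) = \sqrt{\lambda_{\min}\big( G D_{\mathrm{true}} D_{\mathrm{true}}^H G \big)} = \sqrt{\tfrac{1}{R} \lambda_{\min}\big( G^2 \big)} = \frac{1}{\sqrt{R}} \, \lambda_{\min}\Big( \sum_{l=1}^{L} \Psi_l^H \Psi_l \Big), \tag{16}$$

where the second equality holds because $D_{\mathrm{true}} D_{\mathrm{true}}^H = \frac{1}{R} I$, and the last holds because G is positive semidefinite. Substituting (16) into (15) yields (5).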
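The chain of equalities in (16) relies only on $D_{\mathrm{true}} D_{\mathrm{true}}^H = \frac{1}{R} I$. The following minimal sketch checks it numerically, assuming real Gaussian signals and a random $D_{\mathrm{true}}$ with orthonormal rows scaled by $1/\sqrt{R}$ (both our own choices). It compares the singular values of $G D_{\mathrm{true}}$ with the eigenvalues of G divided by $\sqrt{R}$.

```python
import numpy as np

def psi_matrix(x, R):
    """N x R matrix of circular shifts [x, S x, ..., S^(R-1) x]."""
    return np.stack([np.roll(x, r) for r in range(R)], axis=1)

rng = np.random.default_rng(5)
N, R, K, L = 48, 6, 10, 7
Q0, _ = np.linalg.qr(rng.standard_normal((K, R)))
D_true = Q0.T / np.sqrt(R)  # D_true D_true^T = I / R

# G = sum_l Psi_l^T Psi_l for L random training signals.
G = sum(P.T @ P for P in (psi_matrix(rng.standard_normal(N), R) for _ in range(L)))

svals = np.linalg.svd(G @ D_true, compute_uv=False)  # R singular values, descending
eigs = np.linalg.eigvalsh(G)                         # R eigenvalues, ascending
sigma_R = svals.min()
lam_min = eigs[0]
print(np.isclose(sigma_R, lam_min / np.sqrt(R)))                 # True
print(np.allclose(np.sort(svals), np.sort(eigs) / np.sqrt(R)))   # True
```

All singular values of $G D_{\mathrm{true}}$ match the scaled eigenvalues, not just the smallest. This is because $G D_{\mathrm{true}} D_{\mathrm{true}}^T G = G^2 / R$.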

VI. Proof of Corollary 2

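Squaring (5) and taking the expectation over the model mismatch amounts to taking the expectation of the squared numerator of the upper bound in (5), since the denominator depends only on the training data:

$$
\begin{aligned}
\mathbb{E}\Big\| \sum_{l=1}^{L} \Psi_l^H E_l \Big\|_F^2
&= \sum_{l=1}^{L} \mathbb{E}\big\| \Psi_l^H E_l \big\|_F^2
= \sum_{l=1}^{L} \sum_{k=1}^{K} \mathbb{E}\big[ e_{l,k}^H \Psi_l \Psi_l^H e_{l,k} \big] \\
&\le \sum_{l=1}^{L} \lambda_{\max}\big( \Psi_l \Psi_l^H \big) \, \mathbb{E}\|E_l\|_F^2
\le \Big( \max_{l} \mathbb{E}\|E_l\|_F^2 \Big) \sum_{l=1}^{L} \lambda_{\max}\big( \Psi_l \Psi_l^H \big),
\end{aligned} \tag{17}
$$

where $e_{l,k}$ denotes the kth column of $E_l$. The first equality holds because $E_l$ is zero-mean and independent over l, so all cross terms vanish. The second equality follows by expanding the Frobenius norm and then applying linearity of the trace and expectation. The first inequality holds since $v^H M v \le \lambda_{\max}(M) \|v\|_2^2$ for any vector v and Hermitian matrix M. The last inequality bounds each $\mathbb{E}\|E_l\|_F^2$ by its maximum over l. Rewriting (17) using the identity $\lambda_{\max}(\Psi_l \Psi_l^H) = \lambda_{\max}(\Psi_l^H \Psi_l)$ and dividing by $\lambda_{\min}^2(\sum_l \Psi_l^H \Psi_l)$ yields the result (6).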
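Two facts used in (17) can be checked by Monte Carlo: the cross terms vanish for independent zero-mean mismatches, and $\lambda_{\max}(\Psi_l \Psi_l^H) = \lambda_{\max}(\Psi_l^H \Psi_l)$. The sketch below assumes, for illustration only, fixed real Gaussian signals and mismatches $E_l$ with i.i.d. $\mathcal{N}(0, s^2)$ entries. For this case, $\mathbb{E}\|\sum_l \Psi_l^T E_l\|_F^2 = s^2 K \sum_l \|\Psi_l\|_F^2$ exactly. The sketch compares that value, a Monte Carlo estimate, and the upper bound $(\max_l \mathbb{E}\|E_l\|_F^2) \sum_l \lambda_{\max}(\Psi_l^T \Psi_l)$.

```python
import numpy as np

def psi_matrix(x, R):
    """N x R matrix of circular shifts [x, S x, ..., S^(R-1) x]."""
    return np.stack([np.roll(x, r) for r in range(R)], axis=1)

rng = np.random.default_rng(6)
N, R, K, L, s, trials = 32, 4, 6, 5, 0.3, 2000
Psis = [psi_matrix(rng.standard_normal(N), R) for _ in range(L)]  # fixed training data

# Monte Carlo estimate of E ||sum_l Psi_l^T E_l||_F^2 over the zero-mean mismatch.
samples = [np.linalg.norm(sum(P.T @ (s * rng.standard_normal((N, K))) for P in Psis)) ** 2
           for _ in range(trials)]
mc = np.mean(samples)

exact = s**2 * K * sum(np.linalg.norm(P) ** 2 for P in Psis)  # cross terms vanish
max_mismatch = N * K * s**2                                   # E ||E_l||_F^2 for every l
bound = max_mismatch * sum(np.linalg.eigvalsh(P.T @ P)[-1] for P in Psis)

# lambda_max(Psi Psi^T) (N x N) equals lambda_max(Psi^T Psi) (R x R).
same_lmax = all(np.isclose(np.linalg.eigvalsh(P @ P.T)[-1], np.linalg.eigvalsh(P.T @ P)[-1])
                for P in Psis)
print(round(mc, 2), round(exact, 2), round(bound, 2), same_lmax)
```

The gap between `exact` and `bound` comes from the two inequalities in (17). In this isotropic-noise example, that gap is at least a factor of about N/R.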

VII. Proof of Theorem 3

We derive two high probability bounds, one each for the numerator and denominator of (5). Then, the bound (9) with probability (10) follows by combining the two via a union bound. Before we begin, note that (8) implies that almost surely; our proofs use this inequality multiple times.

A. Upper bound for numerator

Observe first that

| (18) |

where for l = 1, …, L. We next bound via the vector Bernstein inequality [16, Cor. 8.44]. Note that ξ1, …, ξL are i.i.d. with (by construction). Furthermore, ξl is almost surely bounded as

Thus the vector Bernstein inequality [16, Cor. 8.44] yields that for any t > 0,

| (19) |

with probability at least

| (20) |

We obtained (19) by the following simplification:

where the third equality holds by . We obtained (20) by the following simplifications:

Applying (19) and (20) with to the square of (18) yields

| (21) |

with probability at least .

B. Lower bound for denominator

Observe that , where , so Weyl’s inequality [17] yields

| (22) |

and it remains to bound . We do so by using the Matrix Bernstein inequality [16, Cor. 8.15].

Note that Λ1, …, ΛL are i.i.d. (since x1, …, xL are i.i.d.) and . Furthermore, Λl is almost surely bounded as

Thus, the Matrix Bernstein inequality [16, Cor. 8.15] yields that for any t > 0,

| (23) |

where we use the following simplification:

Applying (23) with t = 2γ2RLδ to the square of (22) yields

| (24) |

with probability at least .
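The denominator argument in this subsection rests on $\sum_l \Psi_l^H \Psi_l$ concentrating around its expectation. As an illustration that is not part of the proof, assume x has i.i.d. standard normal entries and $R < N$. Then $\mathbb{E}[\Psi^T \Psi] = N I_R$, because $\mathbb{E}[x^T S^m x] = \operatorname{tr}(S^m) = 0$ for $0 < |m| < N$. The sketch measures the normalized spectral-norm deviation $\frac{1}{L}\|\sum_l \Psi_l^T \Psi_l - L N I\|_2$. It also checks the Weyl-type lower bound $\lambda_{\min}(\sum_l \Psi_l^T \Psi_l) \ge L N - \|\sum_l \Psi_l^T \Psi_l - L N I\|_2$. `gram_sum` is a hypothetical helper.

```python
import numpy as np

def psi_matrix(x, R):
    """N x R matrix of circular shifts [x, S x, ..., S^(R-1) x]."""
    return np.stack([np.roll(x, r) for r in range(R)], axis=1)

def gram_sum(L, N, R, rng):
    """sum_l Psi_l^T Psi_l for L i.i.d. standard normal signals."""
    total = np.zeros((R, R))
    for _ in range(L):
        P = psi_matrix(rng.standard_normal(N), R)
        total += P.T @ P
    return total

rng = np.random.default_rng(7)
N, R = 64, 4
results = {}
for L in (4, 40, 400):
    G = gram_sum(L, N, R, rng)
    deviation = np.linalg.norm(G - L * N * np.eye(R), 2)  # spectral norm of centered sum
    weyl_ok = np.linalg.eigvalsh(G)[0] >= L * N - deviation - 1e-9
    results[L] = (deviation / L, weyl_ok)
    print(L, round(deviation / L, 3), weyl_ok)
```

The normalized deviation shrinks roughly like $1/\sqrt{L}$, which is the behavior a matrix Bernstein bound quantifies. The Weyl check holds for every L by construction.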

C. Combined bound

Combining the bounds (21) and (24) via a union bound yields (9) with probability at least

| (25) |

which is greater than or equal to (10).

Acknowledgments

This work is supported in part by the Keck Foundation and NIH grant U01 EB018753. BA is supported by NSERC grant 611675.

Footnotes

¹ We follow the natural convention in sample size analyses of assuming that {xl : ∀l} are i.i.d. samples from an underlying training distribution; see the references cited in Section IV and [5], [6] for other examples. Model mismatches {El : ∀l} also become i.i.d. across samples at all iterations of CAOL, if “fresh” training samples are used for each update, e.g., as can be done when solving (P1) via mini-batch stochastic optimization.

Contributor Information

Ben Adcock, Department of Mathematics, Simon Fraser University, Burnaby, BC V5A 1S6 Canada.

Jeffrey A. Fessler, Department of Electrical Engineering and Computer Science, The University of Michigan, Ann Arbor, MI 48109 USA.

REFERENCES

- [1] Chun IY and Fessler JA, “Convolutional analysis operator learning: Acceleration and convergence,” submitted, January 2018. [Online]. Available: http://arxiv.org/abs/1802.05584

- [2] Chun IY and Fessler JA, “Convolutional dictionary learning: Acceleration and convergence,” IEEE Trans. Image Process., vol. 27, no. 4, pp. 1697–1712, April 2018.

- [3] Chun IY and Fessler JA, “Convergent convolutional dictionary learning using adaptive contrast enhancement (CDL-ACE): Application of CDL to image denoising,” in Proc. Sampling Theory and Appl. (SampTA), Tallinn, Estonia, July 2017, pp. 460–464.

- [4] Chun IY and Fessler JA, “Convolutional analysis operator learning: Application to sparse-view CT,” in Proc. Asilomar Conf. on Signals, Syst., and Comput., Pacific Grove, CA, October 2018, pp. 1631–1635.

- [5] Hastie T, Tibshirani R, and Friedman J, The elements of statistical learning: Data mining, inference, and prediction, ser. Springer series in statistics. New York, NY: Springer, 2009.

- [6] Mohri M, Rostamizadeh A, and Talwalkar A, Foundations of machine learning. Cambridge, MA: MIT Press, 2018.

- [7] Shakeri Z, Sarwate AD, and Bajwa WU, “Sample complexity bounds for dictionary learning from vector- and tensor-valued data,” in Information Theoretic Methods in Data Science, Rodrigues M and Eldar Y, Eds. Cambridge, UK: Cambridge University Press, 2019, ch. 5.

- [8] Singh S, Poczos B, and Ma J, “Minimax reconstruction risk of convolutional sparse dictionary learning,” in Proc. Int. Conf. on Artif. Int. and Stat., ser. Proc. Mach. Learn. Res., vol. 84, Playa Blanca, Lanzarote, Canary Islands, April 2018, pp. 1327–1336.

- [9] Aharon M, Elad M, and Bruckstein A, “K-SVD: An algorithm for designing overcomplete dictionaries for sparse representation,” IEEE Trans. Signal Process., vol. 54, no. 11, pp. 4311–4322, November 2006.

- [10] Yaghoobi M, Nam S, Gribonval R, and Davies ME, “Constrained overcomplete analysis operator learning for cosparse signal modelling,” IEEE Trans. Signal Process., vol. 61, no. 9, pp. 2341–2355, March 2013.

- [11] Hawe S, Kleinsteuber M, and Diepold K, “Analysis operator learning and its application to image reconstruction,” IEEE Trans. Image Process., vol. 22, no. 6, pp. 2138–2150, June 2013.

- [12] Cai J-F, Ji H, Shen Z, and Ye G-B, “Data-driven tight frame construction and image denoising,” Appl. Comput. Harmon. Anal., vol. 37, no. 1, pp. 89–105, October 2014.

- [13] Ravishankar S and Bresler Y, “ℓ0 sparsifying transform learning with efficient optimal updates and convergence guarantees,” IEEE Trans. Sig. Process., vol. 63, no. 9, pp. 2389–2404, May 2015.

- [14] Seibert M, Wörmann J, Gribonval R, and Kleinsteuber M, “Learning co-sparse analysis operators with separable structures,” IEEE Trans. Signal Process., vol. 64, no. 1, pp. 120–130, January 2016.

- [15] Li R-C, “New perturbation bounds for the unitary polar factor,” SIAM J. Matrix Anal. Appl., vol. 16, no. 1, pp. 327–332, January 1995.

- [16] Foucart S and Rauhut H, A mathematical introduction to compressive sensing. New York, NY: Springer, 2013.

- [17] Weyl H, “Das asymptotische verteilungsgesetz der eigenwerte linearer partieller differentialgleichungen (mit einer anwendung auf die theorie der hohlraumstrahlung),” Mathematische Annalen, vol. 71, no. 4, pp. 441–479, December 1912.