Abstract

For visually guided navigation, the use of environmental cues is essential. In particular, detecting local boundaries that limit locomotion and estimating their location is crucial. In a series of three fMRI experiments with human participants (both female and male), we investigated whether navigational distance is coded in the human visual cortex. We used virtual-reality software to systematically manipulate the distance from the viewer's perspective to different types of boundaries. Using multivoxel pattern classification with a linear support vector machine, we found that the occipital place area (OPA) is sensitive to the navigational distance restricted by a transparent glass wall. Further, the OPA was sensitive only to a non-crossable boundary, suggesting the importance of a boundary's functional constraint. Together, we propose that the OPA is a perceptual source of external environmental features relevant for navigation.

SIGNIFICANCE STATEMENT One of the major goals in cognitive neuroscience has been to understand the nature of visual scene representation in the human ventral visual cortex. An aspect of scene perception that has been overlooked despite its ecological importance is the analysis of space for navigation. One critical computation for navigation is coding the distance to environmental boundaries that limit a navigator's movements. This paper reports the first empirical evidence for coding of navigational distance in the human visual cortex and its striking sensitivity to the functional constraint of environmental boundaries. This finding links the present work to previous neurological and behavioral studies that emphasized the distance to boundaries as a crucial geometric property for the reorientation behavior of children and other animal species.

Keywords: fMRI, navigation, navigational distance, occipital place area, OPA, scene

Introduction

Visual information plays a critical role in spatial navigation. For example, when navigators are in an unfamiliar environment without an internal map of the space, they must rely on external visual cues to establish a sense of direction and guide their movements. How does the human visual system extract and encode navigationally relevant visual cues from a scene?

A coherent set of recent evidence suggests that a scene-selective region in the human brain, the occipital place area (OPA; Dilks et al., 2013; Grill-Spector, 2003), is crucially engaged in processing navigation-related information about the immediate environment. For example, the OPA was sensitive to mirror-reversal of scenes (Dilks et al., 2011), which does not change the category or identity of a scene but does change its significance for navigation, because mirror-reversal flips the orientation of the path. A systematic investigation using multivoxel pattern analysis showed that the OPA codes the direction of navigable paths, as determined by the position of doors or the spatial arrangement of boundaries (“path affordance”; Bonner and Epstein, 2017). The OPA also showed sensitivity to changes in the viewer's distance to scenes (Persichetti and Dilks, 2016), and a voxelwise encoding model of 3D scene structure (e.g., the distance and orientation of surfaces) explained the most variance in OPA activity (Lescroart and Gallant, 2019). Henriksson et al. (2019) further showed that multivoxel patterns in the OPA represented the spatial layout of scenes invariant to changes in texture, whereas the parahippocampal place area (PPA; Epstein and Kanwisher, 1998) represented texture more specifically than layout, suggesting a distinctive role for the OPA in representing local environmental geometry. Altogether, converging evidence suggests that the OPA is crucial for representing three-dimensional boundary structures in visual scenes.

However, questions remain about the role of the OPA in representing the navigational information carried by visual boundaries. Which boundary structures matter to a navigator, and does the OPA represent navigational cues that are functionally important? The OPA may be sensitive to the perceptual aspect of boundaries (e.g., walls whose vertical structure is visually distinct from horizontal surfaces) or to their functional aspect, such as the limits they impose on a navigator's movements (e.g., walls blocking the path). Earlier researchers proposed that boundaries might be defined in terms of their functional affordance (Kosslyn et al., 1974; Muller and Kubie, 1987). On this view, any environmental structure that imposes a functional constraint can be considered a boundary, not only visible tall walls. If so, it makes ecological sense for the visual system that represents boundaries and distance to have developed sensitivity not only to the presence of a functional boundary but also to how far that boundary is from the observer (hereinafter referred to as the navigational distance). Functional constraints in an environment can come from multiple objects (proximal or distal) and can be graded and subjective, depending on an observer's physical abilities. For the purpose of this paper, we focus on one type of functional boundary that has a significant impact on the navigational path and affordances in space: structures that block an observer from moving forward in an environment.

Despite its importance, the neural correlate of navigational distance has not been explicitly tested. Imagine an environment with a glass wall in front of you that you can see through but cannot cross. Will the OPA be sensitive to the navigational distance to the glass wall, over and above the visible distance to the back wall? Here, we directly test this ecologically important question: whether and how the OPA represents navigational distance in scenes. We hypothesized that if the OPA encodes the functional aspect of boundaries, it will be sensitive to the presence of structures blocking movement (“navigational boundary”) and will represent the distance to such navigational boundaries (“navigational distance”). We systematically manipulated the navigational distance by artificially generating scenes with transparent glass walls as navigational boundaries.

Materials and Methods

Participants

Experiment 1

Eighteen participants (6 male, 12 female; 1 left-handed; ages 19–31) were recruited from the Johns Hopkins University (JHU) community with financial compensation. Three participants were excluded from the analysis: one because his regions of interest (ROIs) could not be localized, and two because of excessive head motion. All had normal or corrected-to-normal vision. Written informed consent was obtained, and the study protocol was approved by the Institutional Review Board of the JHU School of Medicine.

Experiment 2

Fifteen participants (6 male, 9 female; 2 left-handed; ages 18–26) were recruited from the JHU community with financial compensation. All had normal or corrected-to-normal vision. Written informed consent was obtained, and the study protocol was approved by the Institutional Review Board of the JHU School of Medicine.

Experiment 3

Fourteen participants (6 male, 8 female; 3 left-handed; ages 18–29) were recruited from the JHU community with financial compensation. All had normal or corrected-to-normal vision. Written informed consent was obtained, and the study protocol was approved by the Institutional Review Board of the JHU School of Medicine.

Experimental Design

Stimuli

For all three experiments, the stimuli were images captured from artificially rendered indoor environments, which were constructed using virtual-reality software (Unreal Engine 4, Epic Games). In the scanner, the stimuli were presented at a resolution of 600 × 465 pixels (13.8° × 10.7° visual angle) with an Epson PowerLite 7350 projector (type: XGA, brightness: 1600 ANSI lumens).
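The two reported visual angles can be checked against each other. The following is a minimal sketch, assuming a flat projection screen viewed head-on with square pixels; because the viewing distance and screen size are not reported, the code works only with their ratio. The horizontal angle fixes the viewing distance in units of pixels, and the vertical angle follows from it.

```python
import math

def distance_in_pixels(width_px, width_deg):
    """Viewing distance, in pixel pitches, implied by a full-width visual angle
    (assumes a flat screen viewed head-on with square pixels)."""
    return width_px / (2.0 * math.tan(math.radians(width_deg) / 2.0))

def visual_angle_deg(size_px, dist_px):
    """Full visual angle subtended by size_px when viewed from dist_px pixels away."""
    return math.degrees(2.0 * math.atan(size_px / (2.0 * dist_px)))

# Values reported in the Stimuli section.
d = distance_in_pixels(600, 13.8)
vertical = visual_angle_deg(465, d)
print(f"implied distance: {d:.0f} px; implied vertical angle: {vertical:.1f} deg")
```

Under these assumptions the implied vertical angle is about 10.7°, in line with the reported value.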

The environments were identical in spatial layout but differed in the surface textures of the floor and walls. Each environment used a unique pair of textures (one for the floor and one for the walls); the ceiling texture was identical across all environments. In Experiment 1, 24 unique environments (http://web.yonsei.ac.kr/parklab/IMAGE_SETS.html) were created separately for each distance level (i.e., Near, Middle, and Far). Critically, we manipulated the navigational boundary (i.e., the glass wall) within each environment by adding it to or removing it from the same sets of environments. Thus, any texture differences across distance levels were identical for the No-Glass-Wall and Glass-Wall conditions. In Experiments 2 and 3, we generated 36 unique environments (http://web.yonsei.ac.kr/parklab/IMAGE_SETS.html) and used the same set of environments to create the stimuli for all distance levels.

Stimuli validation with behavioral ratings

To validate distance perception for our stimuli, we separately recruited JHU undergraduate students who did not participate in the fMRI experiments; they were compensated with course credit.

In Experiment 1, the stimuli were presented one at a time in random order, and participants were asked, “How far would you be able to walk forward?” Participants estimated the perceived navigational distance (in feet or meters) and typed their answers using a keyboard. When there was no glass wall (No-Glass-Wall), the perceived navigational distance differed significantly across Near, Middle, and Far (one-way repeated-measures ANOVA: F(2,10) = 23.4, p < 0.01). Paired t tests revealed a stepwise increase between distance levels (Near vs Middle: t(10) = −5.08, p < 0.01; Middle vs Far: t(10) = 4.29, p < 0.01). In contrast, when a glass wall was present at the same location across distance levels (Glass-Wall), the perceived navigational distance was about the same across Near, Middle, and Far (one-way repeated-measures ANOVA: F(2,10) = 1.1, p = 0.35). These results suggest that the presence of a transparent glass wall substantially changed the perceived navigational distance.
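For reference, the F statistic of a one-way repeated-measures ANOVA with n participants and k conditions has (k − 1, (k − 1)(n − 1)) degrees of freedom. The sketch below computes it from a participants × conditions table without any sphericity correction. It is not the authors' analysis code, and the ratings in the usage example are hypothetical.

```python
def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA (no sphericity correction).

    data: one row per participant, one column per condition (e.g., Near, Middle, Far).
    Returns (F, df_conditions, df_error).
    """
    n = len(data)      # participants
    k = len(data[0])   # conditions
    grand = sum(sum(row) for row in data) / (n * k)
    cond_means = [sum(row[j] for row in data) / n for j in range(k)]
    subj_means = [sum(row) / k for row in data]

    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    # Between-participant variability is removed from the error term.
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_error = ss_total - ss_cond - ss_subj  # condition x participant residual

    df_cond = k - 1
    df_error = (k - 1) * (n - 1)
    F = (ss_cond / df_cond) / (ss_error / df_error)
    return F, df_cond, df_error


# Hypothetical distance estimates (arbitrary units) from three participants.
ratings = [
    [1, 2, 3],
    [2, 3, 5],
    [1, 3, 4],
]
F, df1, df2 = rm_anova_oneway(ratings)
print(f"F({df1},{df2}) = {F:.2f}")
```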

In Experiments 2 and 3, participants answered two questions. The first was “Would you be able to reach the back wall of the room?”, to which participants responded by pressing either a “Yes” or a “No” button. This question tested whether the boundary was perceived as non-crossable. As predicted, almost all participants (15/16 in Experiment 2, 17/18 in Experiment 3) answered “No (cannot reach the back wall)” for the glass wall. In contrast, 17 of 18 participants in Experiment 3 answered “Yes (can reach the back wall)” for the Curtain condition, confirming that the curtain was perceived as an easily crossable environmental feature, whereas the glass wall was perceived as a non-crossable boundary.

The second question was “How far would you be able to walk forward?” In Experiment 2, when the glass wall was located at the same position (Constant-Glass-Wall), the perceived navigational distance was about the same across Near, Middle, and Far (one-way repeated-measures ANOVA: F(2,14) = 0.91, p = 0.42). In contrast, when the location of the glass wall varied while the visible distance was kept the same (Varying-Glass-Wall), the perceived navigational (Navig) distance differed significantly across Near-Navig, Middle-Navig, and Far-Navig (one-way repeated-measures ANOVA: F(2,14) = 100.34, p < 0.01). Further, paired t tests revealed a stepwise increase in perceived navigational distance between Near- and Middle-Navig (t(14) = −10.92, p < 0.01) and between Middle- and Far-Navig (t(14) = −6.54, p < 0.01). These results indicate that the location of the navigational boundary effectively manipulated the perceived navigational distance. In Experiment 3, the navigational distance estimates for Glass-Wall and No-Glass-Wall replicated the findings of the previous experiments (Glass-Wall: F(2,17) = 2.25, p = 0.12; No-Glass-Wall: F(2,17) = 10.79, p < 0.01). Most importantly, in the Curtain condition, the perceived navigational distance differed significantly across Near, Middle, and Far (one-way repeated-measures ANOVA: F(2,17) = 11.01, p < 0.01), suggesting that the curtain did not limit the navigational distance as the glass wall did.

fMRI main experimental runs

Experiment 1 consisted of 18 runs [each 4.17 min, 125 repetition times (TRs)]. In each block, 12 images from one of the six conditions were presented over 12 s, and every block was followed by an 8 s fixation period. Each condition was presented in two blocks per run. Block order was pseudorandomized as follows. We first randomized the order of conditions within a mini-epoch, defined as one block of every condition. In Experiment 1, for example, there were six conditions, each presented in two blocks per run, so the order for each run was obtained by independently randomizing the six conditions within each of two epochs and concatenating them. When concatenating, we ensured that the same condition never appeared in two successive blocks. Within a block, each image was presented for 600 ms, followed by a 400 ms blank screen. Stimulus presentation and the experiment program were produced and controlled with MATLAB and Psychophysics Toolbox (Brainard, 1997; Pelli, 1997). Participants performed a one-back repetition detection task, pressing a button whenever an image repeated, while attending to how far they could walk forward if they were inside the presented environment. The specific instruction given to participants was “Imagine that you are in the room and think about how far you would be able to walk forward. While doing that, if the same image repeats in a row, press a button.”
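The block-ordering constraint can be illustrated with a short sketch. It is written in Python rather than the MATLAB/Psychophysics Toolbox code the authors used, and its rejection step (reshuffling a new mini-epoch until its first condition differs from the last block of the previous one) is one possible way to enforce the no-successive-repeat rule, not necessarily the authors'. The condition labels are hypothetical.

```python
import random

def make_block_order(conditions, n_epochs, rng):
    """Concatenate independently shuffled mini-epochs (one block of every
    condition each) so that no condition fills two successive blocks."""
    order = []
    for _ in range(n_epochs):
        while True:
            epoch = list(conditions)
            rng.shuffle(epoch)
            # Within an epoch each condition occurs once, so a repeat can only
            # arise at the junction with the previous epoch; reshuffle if it does.
            if not order or epoch[0] != order[-1]:
                break
        order.extend(epoch)
    return order

# Hypothetical labels for the six Experiment 1 conditions.
CONDITIONS = [f"{wall}-{dist}" for wall in ("NoGlass", "Glass")
              for dist in ("Near", "Middle", "Far")]
order = make_block_order(CONDITIONS, n_epochs=2, rng=random.Random(0))
print(order)
```

Shuffling whole mini-epochs guarantees that each condition appears equally often in every half of the run, which a fully random sequence with the same constraint would not.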

Experiment 2 consisted of eight runs (each 6.83 min, 205 TRs). Twelve images from one of the five conditions were presented in blocks of 12 s each, and an 8 s fixation period followed each block. Each condition was presented in four blocks per run. Block order was randomized for each run following the same procedure and constraint as in Experiment 1. Each image was presented for 600 ms, followed by a 400 ms blank screen. Stimulus presentation and the experiment program were produced and controlled with MATLAB and Psychophysics Toolbox (Brainard, 1997; Pelli, 1997). Participants performed a one-back repetition detection task, pressing a button whenever an image repeated. Participants were encouraged to immerse themselves in each environment while viewing the stimuli but, unlike in Experiment 1, were not explicitly asked to perform any navigation-related task (e.g., imagining walking or estimating distance). The specific instruction given to participants was “Pay attention to each image and try to imagine that you are in that room. While doing that, if the same image repeats in a row, press a button.”

Experiment 3 consisted of 10 runs (each 6.17 min, 185 TRs). Twelve images from one of the nine conditions were presented in 12 s blocks, each followed by an 8 s fixation period. Each condition was presented in two blocks per run. Block order was randomized for each run following the same procedure and constraint as in the previous two experiments. Each image was presented for 600 ms, followed by a 400 ms blank screen. Stimulus presentation and the experiment program were produced and controlled with MATLAB and Psychophysics Toolbox (Brainard, 1997; Pelli, 1997). Participants performed a one-back repetition detection task, pressing a button whenever an image repeated. There was no explicit navigation or distance-estimation task, but participants were encouraged to imagine that they were inside each environment. The specific instruction given to participants was “Pay attention to each image and try to imagine that you are in that room. While doing that, if the same image repeats in a row, press a button.”
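As a consistency check on the reported run lengths, the sketch below multiplies out the block structure: each block lasts 12 × (600 + 400) ms = 12 s and is followed by 8 s of fixation, and each run lasts (number of TRs) × 2 s. In all three experiments the blocks fill 10 s less than the run length. The text does not say how that remaining time was used; an initial or final fixation period would be one possibility, but that is an assumption.

```python
TR_S = 2.0                         # repetition time (s), from the acquisition parameters
BLOCK_S = 12 * (600 + 400) / 1000  # 12 images x (600 ms image + 400 ms blank) = 12 s
FIX_S = 8.0                        # fixation after every block (s)

# experiment: (conditions, blocks per condition per run, reported TRs per run)
DESIGNS = {"Exp1": (6, 2, 125), "Exp2": (5, 4, 205), "Exp3": (9, 2, 185)}

def run_budget(n_conditions, blocks_per_condition, n_trs):
    """Return (blocks, block+fixation time, run time, unexplained time), in seconds."""
    n_blocks = n_conditions * blocks_per_condition
    blocks_time = n_blocks * (BLOCK_S + FIX_S)
    run_time = n_trs * TR_S
    return n_blocks, blocks_time, run_time, run_time - blocks_time

for name, design in DESIGNS.items():
    n_blocks, blocks_time, run_time, rest = run_budget(*design)
    print(f"{name}: {n_blocks} blocks, {blocks_time:.0f} s in blocks, "
          f"{run_time:.0f} s per run ({run_time / 60:.2f} min), {rest:.0f} s unaccounted")
```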

Localizer runs

For each of the three experiments, two functional localizer runs were performed independently of the main experimental runs. In a localizer run, participants viewed blocks of faces, scenes, objects, and scrambled objects. Each localizer run (7.1 min, 213 TRs) contained four blocks of each of these four image conditions, with 20 images per block. In each trial, a stimulus was presented for 600 ms, followed by a 200 ms blank screen. Participants performed a one-back repetition detection task.

fMRI data acquisition

The fMRI data were acquired with a 3-T Philips fMRI scanner with a 32-channel phased-array head coil at the F. M. Kirby Research Center for Functional Brain Imaging at JHU. Structural T1-weighted images were acquired by magnetization-prepared rapid-acquisition gradient echo with 1 × 1 × 1 mm voxels. Functional images were acquired with a gradient-echo echoplanar T2* sequence [2.5 × 2.5 × 2.5 mm voxels; TR 2 s; TE 30 ms; flip angle = 70°; 36 axial 2.5 mm slices (0.5 mm gap), acquired parallel to the anterior commissure–posterior commissure (ACPC) line]. All three experiments followed the same scanning protocol.

Statistical analyses

Preprocessing

The fMRI data were analyzed with Brain Voyager QX software (Brain Innovation). Preprocessing included slice scan-time correction, linear trend removal, and 3-D motion correction. No spatial or temporal smoothing was performed, and the data were analyzed in individual ACPC space. The same preprocessing pipeline was used for all three experiments.

Regions of interest

We defined three scene-selective areas, the OPA, the PPA, and the retrosplenial complex (RSC), to examine their separate roles in processing navigational distance in scenes. Recent fMRI literature suggests distinct yet complementary roles for these regions. The PPA responds to various aspects of scenes, such as spatial geometry (Epstein and Kanwisher, 1998; Henderson et al., 2011; Kravitz et al., 2011; Park et al., 2011), semantic categories (Walther et al., 2009, 2011), large objects (Konkle and Oliva, 2012; Konkle and Caramazza, 2013), and textures (Cant and Xu, 2012, 2015; Park and Park, 2017). Compared with the PPA, the RSC is more sensitive to the familiarity of scenes (Sugiura et al., 2005; Epstein et al., 2007), survey knowledge about environments (Wolbers and Büchel, 2005), and heading direction (Aguirre and D'Esposito, 1999; Marchette et al., 2014; Silson et al., 2019). The OPA, our target ROI, represents distance and boundary information in navigationally relevant scenes (Bonner and Epstein, 2017; Persichetti and Dilks, 2016; Henriksson et al., 2019). We therefore examined all three scene-selective regions to test their unique roles in representing navigational distance in scenes.

The ROIs were defined using the same procedure in all three experiments, based on two independent functional localizer runs per experiment. ROI localization proceeded in two steps. First, we identified ROIs in each participant using a condition contrast (Scenes – Faces; Epstein and Kanwisher, 1998) implemented in a general linear model, and we used the single contiguous cluster of voxels that passed the threshold (p < 0.0001). The OPA was defined as the cluster near the transverse occipital sulcus, the PPA as the cluster between the posterior parahippocampal gyrus and the lingual gyrus, and the RSC as the cluster near the posterior cingulate cortex.

Second, we refined each ROI in each participant by intersecting it with a group-based anatomical constraint derived from a database of localizer data from a large number of participants (N = 70; Bainbridge et al., 2017). The database contained individually defined ROIs obtained with the same contrast and threshold as in the current study. The group-based constraint was generated in Talairach space and then transformed into each participant's ACPC space. This second step ensured that all ROIs had consistent anatomical locations across participants. ROIs were first identified separately for the left and right hemispheres, and we tested for hemispheric differences with a two-way ANOVA (factors: Condition and Hemisphere), examining whether there was a significant interaction. No ROI showed a hemispheric difference in any experiment: Experiment 1 (OPA: F(1,52) = 0.11, p = 0.74; PPA: F(1,56) = 2.44, p = 0.12; RSC: F(1,44) = 0.8, p = 0.38), Experiment 2 (OPA: F(1,56) = 0.86, p = 0.36; PPA: F(1,56) = 0.67, p = 0.42; RSC: F(1,48) = 0.59, p = 0.45), and Experiment 3 (OPA: F(2,78) = 0.1, p = 0.9; PPA: F(2,78) = 0.34, p = 0.72; RSC: F(2,78) = 0.71, p = 0.5). We therefore merged the left and right ROIs into bilateral ROIs.
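Once all masks are in the same (individual ACPC) space, the ROI refinement and hemisphere merging reduce to set operations. The following is a minimal sketch that represents each mask as a set of hypothetical voxel coordinates; it illustrates the logic only and is not the BrainVoyager QX procedure the authors used.

```python
def refine_roi(localizer_cluster, group_constraint):
    """Keep only the voxels of a participant's localizer cluster that also lie
    inside the group-based anatomical constraint (both in the same space)."""
    return localizer_cluster & group_constraint

def merge_hemispheres(left_roi, right_roi):
    """Combine left and right ROIs into one bilateral ROI."""
    return left_roi | right_roi

# Hypothetical voxel coordinates (x, y, z) in one participant's ACPC space.
left_cluster = {(-30, -85, 5), (-31, -85, 5), (-32, -86, 6)}
left_constraint = {(-30, -85, 5), (-31, -85, 5)}
right_cluster = {(32, -84, 4), (33, -84, 4)}
right_constraint = {(32, -84, 4), (33, -84, 4), (34, -83, 4)}

bilateral_opa = merge_hemispheres(
    refine_roi(left_cluster, left_constraint),
    refine_roi(right_cluster, right_constraint),
)
print(sorted(bilateral_opa))
```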

In addition, the early visual cortex (EVC) was defined using the same database (N = 70; Bainbridge et al., 2017) that was used to generate the group-based anatomical constraint. EVC ROIs in the database had been anatomically defined for each subject in a standardized (Talairach) space. From these individual EVCs, we created a probabilistic map, thresholded it at 60%, and defined the surviving voxels as the group EVC. To define the EVC for participants in the current study, the group EVC was transformed into each participant's ACPC space. Finally, an experimenter visually inspected the ACPC-transformed EVC of each participant.
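A minimal sketch of the probabilistic-map step follows. It assumes that each subject's EVC mask is a set of voxel coordinates in a shared (Talairach) space, and it reads “thresholded at 60%” as keeping voxels present in at least 60% of subjects; the text does not state whether the cutoff is inclusive. The masks are hypothetical.

```python
from collections import Counter

def probability_map(subject_masks):
    """Fraction of subjects whose mask contains each voxel."""
    counts = Counter(voxel for mask in subject_masks for voxel in set(mask))
    n = len(subject_masks)
    return {voxel: c / n for voxel, c in counts.items()}

def threshold_map(prob_map, threshold=0.60):
    """Voxels whose probability reaches the threshold (inclusive, by assumption)."""
    return {voxel for voxel, p in prob_map.items() if p >= threshold}

# Five hypothetical subject masks in a common space.
masks = [
    {(0, -90, 0), (1, -90, 0), (2, -90, 0)},
    {(0, -90, 0), (1, -90, 0)},
    {(0, -90, 0), (1, -90, 0)},
    {(0, -90, 0), (2, -90, 0)},
    {(0, -90, 0)},
]
group_evc = threshold_map(probability_map(masks))
print(sorted(group_evc))
```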

Multivariate analysis: pattern classification

For multivoxel pattern analysis (MVPA), activity patterns were extracted across the voxels of an ROI for each block of the six conditions. The MRI signal intensity of each voxel within an ROI was transformed into z scores across time points within each run, and the activity of each voxel in each block was labeled with the corresponding condition. A 4 s (2 TR) offset was added to the 12 s (6 TR) block to account for the hemodynamic delay of the blood oxygen level-dependent response. The values at these time points were averaged to generate, for each block, a pattern across the voxels of the ROI.
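The pattern-extraction step can be sketched as follows, under stated assumptions. The z-scoring uses the population standard deviation, and the 2-TR offset is interpreted as shifting the 6-TR averaging window 2 TRs later; an alternative reading would extend the window to 8 TRs. The function names, block onset, and data are hypothetical.

```python
from statistics import mean, pstdev

def zscore_run(timecourse):
    """z-score one voxel's time course within a run (assumes it is not constant)."""
    mu = mean(timecourse)
    sd = pstdev(timecourse)
    return [(x - mu) / sd for x in timecourse]

def block_pattern(run_data, onset_tr, block_trs=6, lag_trs=2):
    """Average each voxel's z-scored signal over one block, with the window
    shifted by lag_trs to allow for the hemodynamic delay (an assumption).

    run_data: list of voxel time courses (one list per voxel) for a single run.
    Returns one value per voxel, i.e., the block's multivoxel pattern.
    """
    window = range(onset_tr + lag_trs, onset_tr + lag_trs + block_trs)
    patterns = []
    for tc in run_data:
        z = zscore_run(tc)
        patterns.append(mean(z[t] for t in window))
    return patterns

# Hypothetical run: two voxels, 20 TRs; a block starts at TR 8.
run = [
    [0] * 10 + [1] * 10,
    [1] * 10 + [0] * 10,
]
print(block_pattern(run, onset_tr=8))
```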

Using the multivoxel patterns extracted from each ROI, we trained a linear support vector machine (SVM; LIBSVM: http://sourceforge.net/projects/svm) classifier and tested whether it could assign the correct condition label to the activation patterns. For each participant, classification was performed separately for the Glass-Wall and No-Glass-Wall conditions; in both cases, the classifier was trained to discriminate among the three visible distance levels (Near, Middle, and Far). We used leave-one-out cross-validation: one block was held out of the training set, and the classifier was then tested on whether it could assign the correct label to that held-out block. This was repeated until every block had been held out once. Because there were three possible labels (Near, Middle, or Far), chance-level accuracy was one-third. The average classification accuracy across all tested blocks was used as the dependent measure for each participant. The same analysis steps were used for pattern classification in all three experiments.
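The leave-one-block-out scheme can be sketched as follows. To keep the example self-contained, a nearest-centroid classifier stands in for the linear SVM (LIBSVM) the authors used. Because it is a different and simpler classifier, only the cross-validation logic mirrors the method described above. The patterns are hypothetical.

```python
import math

CHANCE = 1 / 3  # three labels: Near, Middle, Far

def nearest_centroid(train, pattern):
    """Stand-in classifier (not an SVM): predict the label whose mean training
    pattern is closest in Euclidean distance."""
    grouped = {}
    for label, p in train:
        grouped.setdefault(label, []).append(p)
    best_label, best_dist = None, math.inf
    for label, patterns in grouped.items():
        centroid = [sum(vals) / len(vals) for vals in zip(*patterns)]
        d = math.dist(centroid, pattern)
        if d < best_dist:
            best_label, best_dist = label, d
    return best_label

def leave_one_block_out(blocks, classify=nearest_centroid):
    """blocks: list of (label, pattern). Each block is held out once, the classifier
    is trained on the remaining blocks, and the held-out label is predicted."""
    correct = 0
    for i, (label, pattern) in enumerate(blocks):
        train = blocks[:i] + blocks[i + 1:]
        correct += classify(train, pattern) == label
    return correct / len(blocks)

# Hypothetical two-voxel patterns, two blocks per distance level.
blocks = [
    ("Near", [0.9, 0.1]), ("Near", [1.1, -0.1]),
    ("Middle", [0.0, 1.0]), ("Middle", [0.2, 0.8]),
    ("Far", [-1.0, 0.0]), ("Far", [-0.8, 0.2]),
]
acc = leave_one_block_out(blocks)
print(f"accuracy = {acc:.2f} (chance = {CHANCE:.2f})")
```

Passing the classifier as a parameter keeps the cross-validation loop independent of the model, so an SVM could be substituted without changing the loop.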

To compare SVM classification performance across ROIs, we used a linear mixed-effects model, which accounts for the unequal numbers of subjects across ROIs and for variance across subjects. Specifically, we modeled classification accuracy with the R package lme4 (Bates et al., 2015), with ROI, Condition, and their interaction (ROI × Condition) as fixed effects and random intercepts for Subjects. To obtain p values, we used the lmerTest package, which applies the Satterthwaite approximation for degrees of freedom (Satterthwaite, 1941; Kuznetsova et al., 2017).
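Written out, the model described above corresponds to the equation below for subject s, ROI r, and condition c. In lme4's formula syntax, it would be written `accuracy ~ ROI * Condition + (1 | Subject)`. The notation is ours, and the text does not state the contrast coding of the categorical factors.

```latex
\mathrm{Acc}_{src} = \beta_0 + \alpha_r + \gamma_c + (\alpha\gamma)_{rc} + u_s + \varepsilon_{src},
\qquad u_s \sim \mathcal{N}(0, \sigma_u^2), \quad \varepsilon_{src} \sim \mathcal{N}(0, \sigma^2)
```

Here the ROI × Condition interaction terms (αγ)_rc carry the test reported in Results, and the random intercept u_s absorbs each subject's overall accuracy level.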

Results

Coding of navigational distance limited by a boundary

In Experiment 1, we directly tested whether the OPA codes the navigational distance in a visual scene. To obtain realistic yet controlled visual stimuli, we generated artificial indoor environments using virtual-reality software (Unreal Engine 4, Epic Games). All environments had a flat, straight floor that provided a clear path from the viewer's standpoint, and they were rendered with the same width and height, differing only in the distance to the back wall. Critically, to change the navigational distance while minimizing perceptual differences, we used a transparent glass wall as a boundary that limits one's movement in a local environment. For example, if a glass wall stands between the viewer and the back wall, the viewer can walk only up to the glass wall, although the back wall is still visible. The glass wall thus causes minimal perceptual change and leaves the space beyond it visible, while substantially changing how far one can move in the environment.

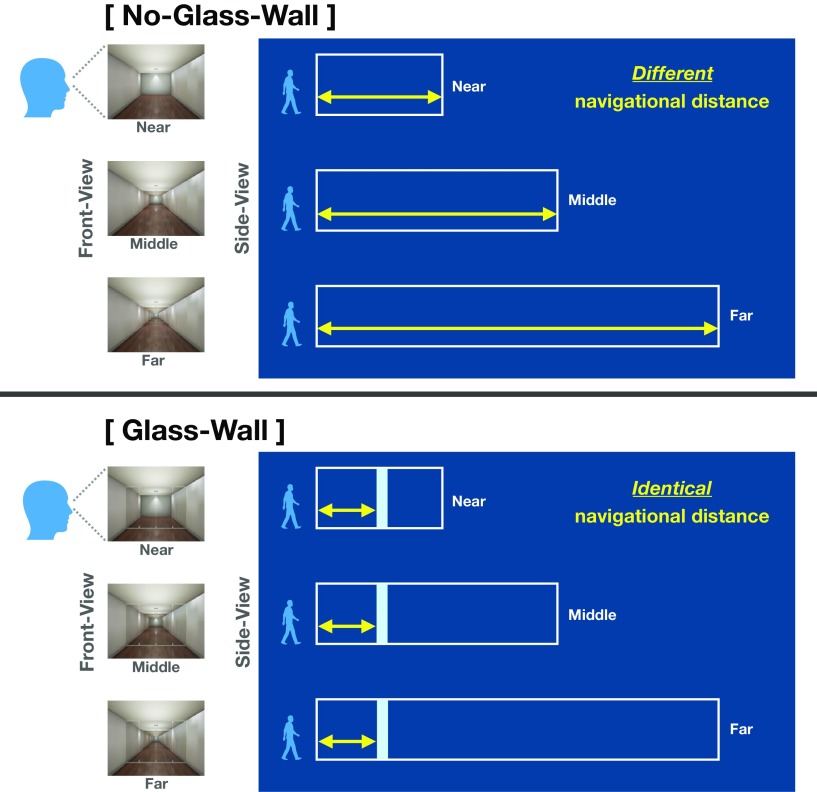

There were six stimulus conditions defined by two factors. The first factor, visible distance, had three levels (Near, Middle, Far), defined as the distance from the standpoint to the always-visible back wall. The second factor, navigational boundary, had two levels (Glass-Wall, No-Glass-Wall). When there was no glass wall, the navigational distance was determined by the location of the back wall, because the viewer would be able to walk up to it (No-Glass-Wall conditions; Fig. 1, top). When there was a glass wall, the navigational distance was instead determined by the location of the glass wall, because the viewer would not be able to walk past it. Critically, we kept the glass wall at the same location across Near, Middle, and Far, which made the navigational distance identical regardless of visible distance (Glass-Wall conditions; Fig. 1, bottom).
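A few lines of code make the logic of the 2 × 3 design explicit. The sketch below uses hypothetical distances in arbitrary units because the room dimensions are not reported. It also assumes that the glass wall lies nearer to the viewer than even the Near back wall, as its fixed position across levels requires. For each boundary condition, it counts the distinct navigational distances: three without the glass wall and one with it.

```python
# Hypothetical distances (arbitrary units) from the standpoint.
BACK_WALL = {"Near": 2.0, "Middle": 4.0, "Far": 6.0}  # visible distance levels
GLASS_WALL = 1.5  # hypothetical fixed glass-wall position, nearer than every back wall

def navigational_distance(level, glass_wall_present):
    """How far the viewer could walk: to the glass wall if present, else to the back wall."""
    back = BACK_WALL[level]
    return min(GLASS_WALL, back) if glass_wall_present else back

for present, name in ((False, "No-Glass-Wall"), (True, "Glass-Wall")):
    distances = [navigational_distance(level, present) for level in BACK_WALL]
    print(name, distances, "distinct navigational distances:", len(set(distances)))
```

A region that codes navigational distance should therefore support above-chance classification only in the No-Glass-Wall condition.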

Figure 1.

Experiment 1 stimuli and schematic illustration of conditions. There were six conditions, varying in three visible distance levels (Near, Middle, and Far) and two navigational boundary levels (No-Glass-Wall and Glass-Wall). Left, The front-view column shows an example stimulus from each visible distance condition, as presented to participants in the scanner. Right, The side-view panel illustrates the schematic structure of the environment in each condition. A white box represents each environment (e.g., a room), and a light blue rectangle in the Glass-Wall condition represents a transparent glass wall. A yellow arrow represents the navigational distance within each environment. Importantly, the navigational distance in the No-Glass-Wall condition differed across visible distance levels, whereas the navigational distance in the Glass-Wall condition was the same across all visible distance levels. We validated our navigational distance manipulation in a separate set of participants with a behavioral experiment.

In Experiment 1, 18 participants (12 female) viewed blocks of images from each of the six conditions while being scanned in a 3T Philips fMRI scanner. Participants performed a one-back repetition detection task, pressing a button whenever an image repeated, while attending to how far they could walk forward if they were inside the presented environment. Each ROI (PPA, RSC, and OPA) was functionally defined using independent localizers (Scenes – Faces, p < 0.0001, uncorrected, then intersected with a group mask; see Materials and Methods). A linear SVM classifier was trained on multivoxel activation patterns from each ROI to test whether the patterns could correctly discriminate between conditions. For each participant, classification was performed separately for the Glass-Wall and No-Glass-Wall conditions; in both, the classifier discriminated among the three visible distance levels (Near, Middle, and Far) using leave-one-out cross-validation (see Materials and Methods). With this approach, we made two complementary predictions. If a brain region represents navigational distance, its multivoxel patterns should be distinguishable when the navigational distance differs (No-Glass-Wall). At the same time, its multivoxel patterns should not be distinguishable when the navigational distance is equal (Glass-Wall). Evidence for coding of navigational distance requires both predictions to be fulfilled.

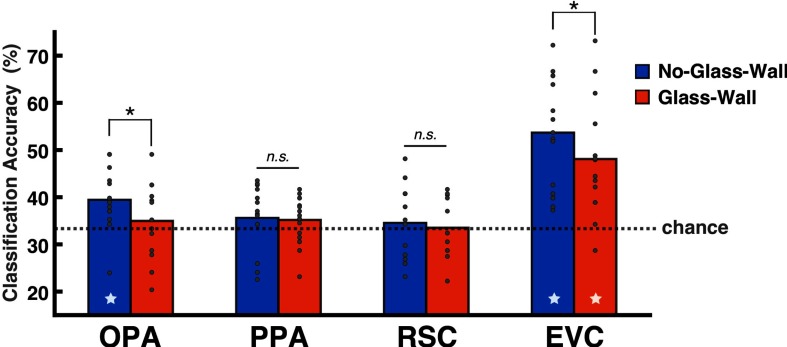

In the OPA, the accuracy of three-way SVM classification across visible distance levels (Near, Middle, Far) was significantly above chance in the No-Glass-Wall condition (mean: 39.50%, t(13) = 3.45, p < 0.01) but not in the Glass-Wall condition (mean: 34.9%, t(13) = 0.75, p = 0.47; Fig. 2). In other words, OPA patterns distinguished the three navigational distances in the No-Glass-Wall condition but were indistinguishable when a transparent glass wall was added to equate the navigational distance in the Glass-Wall condition. A paired t test between the No-Glass-Wall and Glass-Wall conditions was also significant (t(13) = −2.24, p < 0.05), suggesting that OPA patterns are modulated by the navigational distance present in scenes. Further, an analysis of the confusion matrix suggested that the above-chance classification accuracy in the OPA was not driven by a single distance level.
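The group-level tests reported here compare per-participant accuracies with chance (a one-sample t test against 1/3) and compare the two conditions within participants (a paired t test). The following is a minimal sketch of the two t statistics using hypothetical accuracies. Obtaining p values also requires the t distribution; `scipy.stats.ttest_1samp` and `scipy.stats.ttest_rel` compute them, but they are omitted here to keep the block dependency-free. The sign of the paired statistic depends on the order of the two conditions.

```python
from math import sqrt
from statistics import mean, stdev

CHANCE = 1 / 3

def one_sample_t(values, mu):
    """t statistic and degrees of freedom for H0: population mean == mu."""
    n = len(values)
    se = stdev(values) / sqrt(n)  # sample SD (n - 1 denominator)
    return (mean(values) - mu) / se, n - 1

def paired_t(a, b):
    """Paired t statistic for a - b (same participants in both conditions)."""
    return one_sample_t([x - y for x, y in zip(a, b)], 0.0)

# Hypothetical per-participant accuracies (proportions), not the study's data.
no_glass = [0.40, 0.45, 0.50]
glass = [0.30, 0.33, 0.36]
print(one_sample_t(no_glass, CHANCE))
print(paired_t(glass, no_glass))
```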

Figure 2.

The SVM classification results in Experiment 1. The y-axis shows the mean classification accuracy (%), and the dotted line marks the chance level of the three-way classification (33.3%). Bars represent mean accuracy, and gray dots represent individual subject data. A star at the bottom of a bar indicates above-chance classification accuracy. Because statistical tests showed no hemispheric difference in any ROI, we report results from bilaterally merged ROIs. Among the three scene-selective regions, only the OPA showed above-chance classification across Near, Middle, and Far in the No-Glass-Wall condition (p < 0.01). Further, an analysis of the confusion matrix suggested that this above-chance classification accuracy was not driven by a single distance level. The EVC showed significant classification accuracy for both the No-Glass-Wall (p < 0.01) and Glass-Wall conditions (p < 0.01). *P < 0.05; n.s. indicates not significant.

Among the scene-selective regions, evidence for coding of navigational distance was found specifically in the OPA. Neither the PPA nor the RSC showed above-chance classification in either condition, and neither differed between the No-Glass-Wall and Glass-Wall conditions: PPA (No-Glass-Wall: mean 35.62%, t(14) = 1.31, p = 0.21; Glass-Wall: 35.17%, t(14) = 1.39, p = 0.19; no difference between conditions, t(14) = −0.17, p = 0.87), and RSC (No-Glass-Wall: 34.55%, t(11) = 0.53, p = 0.61; Glass-Wall: 33.52%, t(11) = 0.1, p = 0.92; no difference between conditions, t(11) = −0.39, p = 0.71). These results suggest that the OPA is specifically involved in coding navigational distance, in addition to being sensitive to the presence of a navigational boundary (i.e., a glass wall).

To test whether the OPA results were driven by low-level visual features, we also examined the EVC (for its localization, see Materials and Methods). Like the OPA, the EVC showed above-chance classification accuracy in the No-Glass-Wall condition (mean: 53.69%, t(14) = 6.7, p < 0.01). Unlike the OPA, however, it also showed above-chance accuracy in the Glass-Wall condition, where the navigational distance was held constant (mean: 48.09%, t(14) = 4.75, p < 0.01). This result suggests that EVC patterns reflect low-level visual differences across conditions rather than coding of navigational distance. Nevertheless, on the basis of the following statistical test, we refrain from concluding from Experiment 1 that the OPA's representation is distinct from that of the EVC. We modeled SVM classification accuracy with a linear mixed-effects model (lme4; Bates et al., 2015), with ROI, Condition, and ROI × Condition (interaction) as fixed effects and random intercepts for Subjects. This model revealed no significant ROI × Condition interaction in Experiment 1 (F(1,40.32) = 0.049, p = 0.83).

The only visual difference between the No-Glass-Wall and Glass-Wall conditions was the presence of a transparent glass wall. Despite this subtle visual difference, the neural patterns of the OPA were significantly modulated by the presence of the glass wall: when the glass wall was added to a scene, the OPA patterns were no longer distinguishable across Near, Middle, and Far. However, it remains unclear what drove the nonsignificant classification in the Glass-Wall condition. There are at least two possible explanations. One possibility is that the OPA is sensitive to the presence of a navigational boundary (i.e., a glass wall) but not to the distance to it. In this case, the OPA detects the glass wall without coding its specific location in a scene, and classification of the three Glass-Wall scenes fails because they all contain a glass wall. If so, the OPA patterns should not depend on whether the location of the glass wall is the same or different across scenes. Another possibility is that the OPA actually codes the navigational distance to the glass wall, and the nonsignificant classification in the Glass-Wall condition reflects the equal navigational distance shared by the Glass-Wall scenes in Experiment 1. If so, the OPA should differentiate the three scenes with the glass wall when the location of the glass wall changes across scenes. In Experiment 2, we directly tease apart these two possibilities by changing the position of the glass wall across scenes.

Sensitivity to different amounts of navigational distance to boundaries within a scene

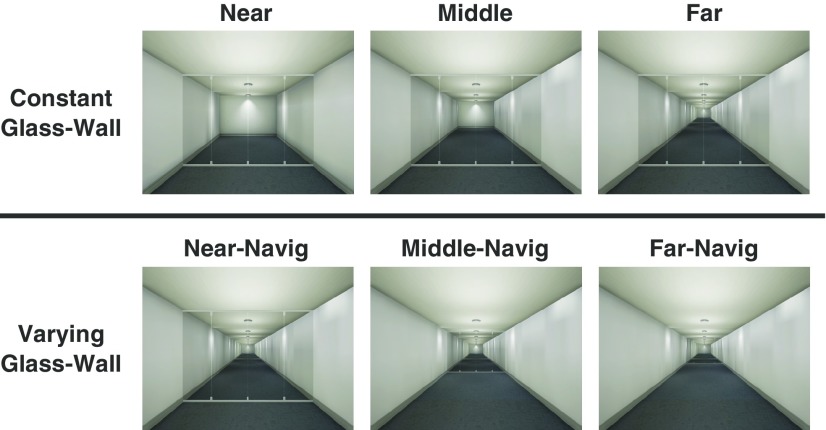

Experiment 2 tested whether the OPA is sensitive to different amounts of navigational distance to a boundary (i.e., the glass wall). If the OPA truly codes navigational distance, then multivoxel patterns in the OPA should be sensitive to changes in navigational distance induced by different locations of the boundary. To test this, the navigational distance was manipulated by changing only the glass wall position while keeping the visible distance the same (Varying-Glass-Wall conditions; Fig. 3, bottom row). In contrast, equal navigational distance was maintained by keeping the glass wall position the same while varying the visible distance (Constant-Glass-Wall conditions; Fig. 3, top row); these conditions are identical to the Glass-Wall condition of Experiment 1.

Figure 3.

Experiment 2 Stimuli. There were five conditions in total. Note that the Far condition of the Constant-Glass-Wall set is identical to the Near-Navig condition of the Varying-Glass-Wall set; this condition is shown twice in this figure for illustration purposes only. In the Constant-Glass-Wall condition, there were three levels of visible distance (Near, Middle, Far) with the same navigational distance. In the Varying-Glass-Wall condition, the navigational distance varied across three levels (Near-Navig, Middle-Navig, Far-Navig) while the visible distance was kept the same. We validated our navigational distance manipulation with a separate set of participants in a behavioral experiment. The SVM classification was tested separately for the Constant-Glass-Wall and Varying-Glass-Wall conditions (separated by a horizontal line).

Fifteen new participants (9 female) viewed blocks of images from each condition in a 3T Philips fMRI scanner. In Experiment 2, participants simply detected a repeated image while passively viewing scenes (i.e., a one-back task), whereas in Experiment 1 participants were asked to attend to how far they could walk forward if they were inside the presented environment. As a preview, results were consistent across Experiments 1 and 2 despite the difference in instructions, suggesting that the coding of navigational distance does not critically depend on the specific task; this is in line with the previously suggested automatic and rapid coding of navigational affordances in the OPA (Bonner and Epstein, 2017; Henriksson et al., 2019).

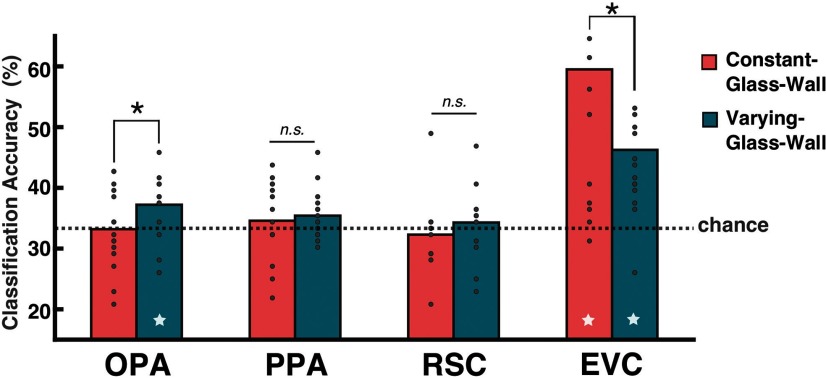

For the Varying-Glass-Wall condition, the SVM classification was performed across Near-Navig, Middle-Navig, and Far-Navig, which differ only in navigational distance. For the Constant-Glass-Wall condition, the classification was performed across Near, Middle, and Far, which differ only in visible distance. The three-way SVM classification showed that the OPA could successfully classify scenes when they differed in navigational distance (Varying-Glass-Wall condition), but not when they differed only in visible distance (Constant-Glass-Wall condition). The OPA's classification accuracy was at chance in the Constant-Glass-Wall condition (mean: 33.19%, t(14) = −0.08, p = 0.94), replicating Experiment 1. Importantly, the classification accuracy in the Varying-Glass-Wall condition was significantly above chance (mean: 37.22%, t(14) = 2.91, p < 0.05) and significantly different from that in the Constant-Glass-Wall condition (t(14) = −2.28, p < 0.05; Fig. 4). These results suggest that the OPA is sensitive to differences in navigational distance determined by the position of a navigational boundary, and they rule out the alternative possibility that the OPA represents the mere presence of a boundary.
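The cross-validation scheme is not spelled out in this section; a common choice for block-design MVPA is leave-one-run-out cross-validation, which the sketch below assumes. To stay stdlib-only, the linear SVM is trained with the Pegasos stochastic sub-gradient method in a one-vs-rest scheme. This solver, the regularization value, and all function names are assumptions for illustration, not the authors' toolbox. The returned confusion matrix is the kind of table used to check that above-chance accuracy is not carried by a single distance level.

```python
import random


def dot(w, x):
    """Inner product of two equal-length vectors."""
    return sum(wi * xi for wi, xi in zip(w, x))


def train_pegasos(X, y, lam=0.01, iters=3000, seed=0):
    """Binary linear SVM (labels +1/-1) fit with Pegasos stochastic sub-gradient steps.

    A constant 1.0 is appended to each pattern so the last weight acts as a bias
    (for simplicity, the bias is regularized like the other weights).
    """
    rng = random.Random(seed)
    Xb = [list(x) + [1.0] for x in X]
    w = [0.0] * len(Xb[0])
    for t in range(1, iters + 1):
        i = rng.randrange(len(Xb))
        eta = 1.0 / (lam * t)                     # decreasing step size
        violated = y[i] * dot(w, Xb[i]) < 1.0     # is the hinge loss active?
        w = [(1.0 - eta * lam) * wj for wj in w]  # shrink step: L2 regularization
        if violated:
            w = [wj + eta * y[i] * xj for wj, xj in zip(w, Xb[i])]
    return w


def train_one_vs_rest(X, labels, classes):
    """One binary SVM per class (that class vs. the other two)."""
    return {c: train_pegasos(X, [1 if lab == c else -1 for lab in labels])
            for c in classes}


def predict(models, x):
    """Assign the class whose SVM gives the largest decision value."""
    xb = list(x) + [1.0]
    return max(models, key=lambda c: dot(models[c], xb))


def leave_one_run_out(patterns, labels, runs, classes):
    """Hold out each run in turn, train on the rest, and tally the predictions.

    Returns (accuracy, confusion), where confusion[true][predicted] is a count.
    """
    confusion = {t: {p: 0 for p in classes} for t in classes}
    for held_out in sorted(set(runs)):
        train = [i for i, r in enumerate(runs) if r != held_out]
        test = [i for i, r in enumerate(runs) if r == held_out]
        models = train_one_vs_rest([patterns[i] for i in train],
                                   [labels[i] for i in train], classes)
        for i in test:
            confusion[labels[i]][predict(models, patterns[i])] += 1
    correct = sum(confusion[c][c] for c in classes)
    total = sum(sum(row.values()) for row in confusion.values())
    return correct / total, confusion
```

With real data, `patterns` would hold one voxel vector per block for a single ROI, `labels` the distance level of each block (e.g., "Near-Navig", "Middle-Navig", "Far-Navig"), and `runs` the scanner run of each block; the classification would be run separately for each condition set, and per-subject accuracies would then be tested against chance.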

Figure 4.

The SVM classification results in Experiment 2. The y-axis shows the mean classification accuracy (%), and the dotted line marks the chance level of the three-way classification (33.3%). The bar graph represents the mean accuracy, and gray dots on top of the bar represent individual subject data. The star at the bottom of a bar indicates above-chance classification accuracy. The OPA showed above-chance classification for the Varying-Glass-Wall condition (p < 0.05), where the navigational distance differed, but chance-level classification for the Constant-Glass-Wall condition, where the navigational distance was identical. The EVC showed significant classification accuracy for the Varying-Glass-Wall condition (p < 0.01) but, unlike the OPA, also for the Constant-Glass-Wall condition (p < 0.01), where the navigational distance was kept the same. *P < 0.05; n.s. indicates not significant.

Moreover, consistent with Experiment 1, the other scene-selective areas did not show any significant modulation by navigational distance: PPA (Constant-Glass-Wall: mean 34.58%, t(14) = 0.75, p = 0.47; Varying-Glass-Wall: 35.42%, t(14) = 1.91, p = 0.08; no significant difference between conditions, t(14) = −0.54, p = 0.6) and RSC (Constant-Glass-Wall: mean 32.29%, t(12) = −0.6, p = 0.56; Varying-Glass-Wall: 34.29%, t(12) = 0.56, p = 0.58; no significant difference between conditions, t(12) = −0.98, p = 0.34). Also consistent with Experiment 1, the EVC showed above-chance classification accuracy in both the Constant-Glass-Wall (mean: 59.51%, t(14) = 5.05, p < 0.01) and Varying-Glass-Wall conditions (mean: 46.25%, t(14) = 4.29, p < 0.01). We used a linear mixed-effects model (R package lme4; Bates et al., 2015) to test whether the OPA and EVC differed (i.e., modeling classification accuracy with ROI and Condition as fixed effects and Subject as a random intercept) and found a significant ROI × Condition interaction (F(1,42) = 9.735, p < 0.01). Unlike the OPA, the EVC classified scenes regardless of whether their navigational distance differed, suggesting that above-chance classification in the EVC does not reflect navigational distance. Together, the results of Experiment 2 further support the idea that coding navigational distance is a distinctive role of the OPA rather than part of general scene processing.

Sensitivity to the functional constraint of boundaries

In Experiments 1 and 2, the glass wall played a critical role in modulating the OPA response patterns: multivoxel patterns in the OPA could be differentiated only when the navigational distance determined by the glass wall differed across conditions. One open question, though, is which property of the glass wall the OPA responds to. We have argued that the glass wall is an important navigational boundary because it limits how far one can navigate. However, this functional constraint may not be the critical feature modulating the OPA patterns; for example, the presence of an additional boundary feature itself may have driven the observed effects. Although Experiment 2 showed that the OPA is sensitive to the location of the glass wall, it remains unclear whether the OPA represents the functional constraint of the glass wall. In other words, does it actually matter to the OPA that the glass wall cannot be crossed?

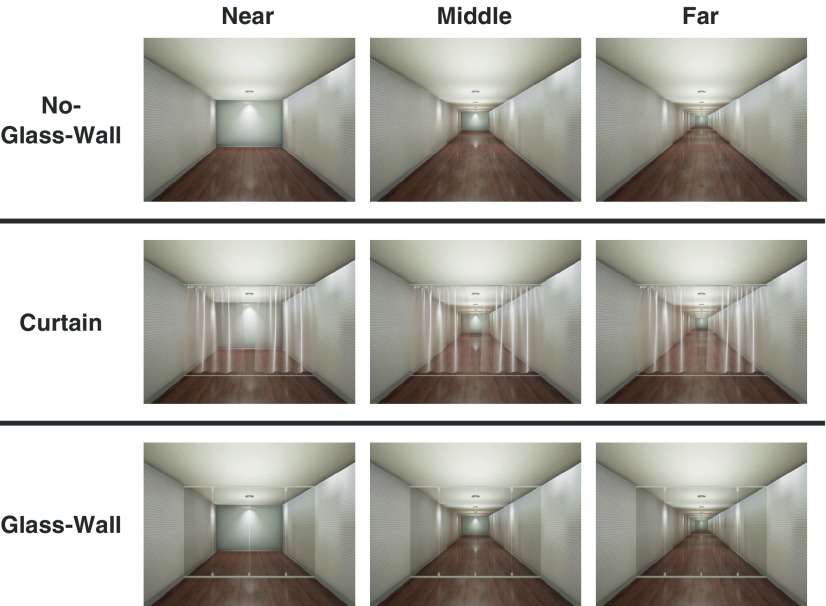

To test this in Experiment 3, we created a boundary that is easily crossable: a transparent curtain. The curtain differs from the glass wall in that it can be easily crossed, but it is similar to the glass wall in that it adds local boundary features to a scene while leaving the space beyond it (e.g., the back wall of the room) visible. To validate our navigational distance manipulation, we ran a behavioral experiment with a separate set of participants, who estimated how far they would be able to walk forward in each scene. The behavioral results confirmed that participants perceived the curtain as crossable and that, unlike the glass wall, the curtain did not significantly affect navigational distance estimates. To investigate how a boundary without a functional constraint affects the neural coding of navigational distance in a scene, we added a Curtain condition to the experimental paradigm of Experiment 1 (Fig. 5). All conditions (No-Glass-Wall, Glass-Wall, and Curtain) varied in visible distance (i.e., Near, Middle, and Far). As in Experiment 1, the navigational distance differed across visible distance levels in the No-Glass-Wall condition, but not in the Glass-Wall condition. The critical question was whether the curtain, a crossable boundary, would restrict the navigational distance in a scene. If the presence of any local boundary matters, we expect a pattern in the Curtain condition similar to that in the Glass-Wall condition. If, however, the functional constraint that a boundary imposes matters, we expect a pattern in the Curtain condition similar to that in the No-Glass-Wall condition. Fourteen new participants (8 female) were recruited and scanned while viewing images in blocks from nine conditions. As in Experiment 2, participants performed a one-back repetition detection task without any navigation-related task.
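The logic of the three boundary conditions can be made explicit with a toy model. It assumes, in line with the behavioral validation described above, that navigational distance is the distance to the nearest boundary that cannot be crossed; the alternative account, in which any local boundary matters, is included as a flag. All distances are hypothetical, in arbitrary units, and the names are illustrative rather than taken from the study's stimulus software.

```python
from dataclasses import dataclass


@dataclass
class Boundary:
    name: str
    distance: float   # distance from the viewer along the walking direction (arbitrary units)
    crossable: bool   # can the viewer walk through it?


def navigational_distance(boundaries, any_boundary_blocks=False):
    """How far the viewer could walk forward: distance to the nearest blocking boundary.

    With any_boundary_blocks=True, every boundary blocks (the "mere presence" account).
    """
    blocking = [b.distance for b in boundaries if any_boundary_blocks or not b.crossable]
    return min(blocking) if blocking else float("inf")


# Hypothetical layout (not the actual virtual-room dimensions).
BACK_WALL = {"Near": 4.0, "Middle": 6.0, "Far": 8.0}  # visible-distance levels
LOCAL_BOUNDARY_AT = 3.0                               # fixed glass-wall / curtain position


def scene(level, local=None):
    """Boundaries in one scene: always a back wall, optionally a glass wall or a curtain."""
    bounds = [Boundary("back wall", BACK_WALL[level], crossable=False)]
    if local == "glass wall":
        bounds.append(Boundary("glass wall", LOCAL_BOUNDARY_AT, crossable=False))
    elif local == "curtain":
        bounds.append(Boundary("curtain", LOCAL_BOUNDARY_AT, crossable=True))
    return bounds


def predicted_outcome(local, any_boundary_blocks=False):
    """Predicted three-way classification if a region codes navigational distance."""
    distances = {navigational_distance(scene(level, local), any_boundary_blocks)
                 for level in BACK_WALL}
    return "above chance" if len(distances) > 1 else "chance"


for condition in (None, "glass wall", "curtain"):
    print(condition or "no glass wall", "->", predicted_outcome(condition))
```

Under the functional-constraint account this prints "above chance" for the No-Glass-Wall and Curtain conditions and "chance" for the Glass-Wall condition; calling `predicted_outcome("curtain", any_boundary_blocks=True)` instead gives "chance", the prediction of the mere-presence account.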

Figure 5.

Experiment 3 Stimuli. There were nine conditions, which varied in three visible distance levels (Near, Middle, and Far; columns) and three navigational boundary levels (No-Glass-Wall, Curtain, and Glass-Wall; rows). The No-Glass-Wall and Glass-Wall conditions were identical to those in Experiment 1. In the Curtain condition, a transparent curtain was placed in place of the glass wall. The location of the curtain was identical across all visible distance levels. We validated our navigational distance manipulation with a separate set of participants in a behavioral experiment. The SVM classifier was trained and tested to classify the three visible distance levels (Near, Middle, and Far), separately for the No-Glass-Wall, Curtain, and Glass-Wall conditions (separated by a horizontal line).

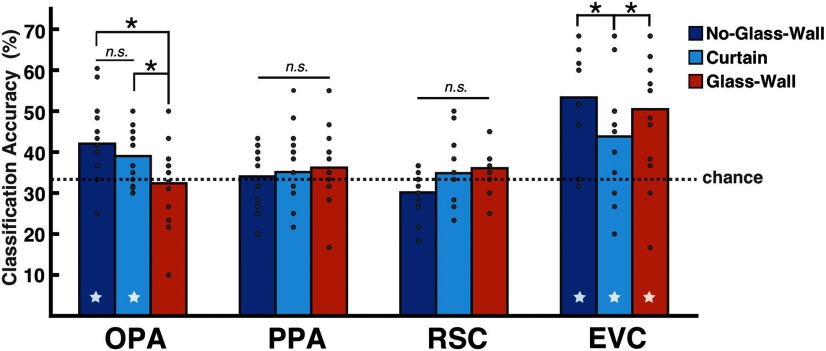

The SVM classification was performed for each boundary condition across the three levels of visible distance: Near, Middle, and Far. In the OPA, the No-Glass-Wall and Glass-Wall conditions replicated the results of Experiment 1 (Fig. 6). As in the previous experiments, the classification accuracy was significantly above chance in the No-Glass-Wall condition (mean: 42.05%, t(13) = 3.3, p < 0.01), at chance level in the Glass-Wall condition (mean: 32.38%, t(13) = −0.36, p = 0.72), and the difference between these two accuracies was statistically significant (t(13) = −3.29, p < 0.01).

Figure 6.

The SVM classification results in Experiment 3. The y-axis shows the mean classification accuracy (%), and the dotted line marks the chance level of the three-way classification (33.3%). The bar graph represents the mean accuracy, and gray dots on top of the bar represent individual subject data. The star at the bottom of a bar indicates above-chance classification accuracy. The results for the No-Glass-Wall (p < 0.01) and Glass-Wall conditions were replicated in all ROIs. Critically, the OPA showed above-chance classification for the Curtain condition (p < 0.01), suggesting that the functional constraint of a boundary is crucial for the OPA. Consistent with the previous experiments, the other ROIs were not sensitive to navigational distance; the PPA and RSC showed chance-level classification in all conditions, whereas the EVC showed above-chance classification in all conditions (all ps < 0.05). *P < 0.05; n.s. indicates not significant.

Critically, we found that a crossable boundary (curtain) did not affect the OPA patterns the way the non-crossable boundary (glass wall) did. As in the No-Glass-Wall condition, classification accuracy was significantly above chance in the Curtain condition (mean: 39.02%, t(13) = 3.36, p < 0.01; Fig. 6), indicating that the OPA patterns were distinguishable across Near, Middle, and Far regardless of whether a curtain was present in the scene. Moreover, classification performance in the Curtain condition was significantly higher than in the Glass-Wall condition (t(13) = −2.19, p < 0.05) but not statistically different from the No-Glass-Wall condition (t(13) = 1.01, p = 0.33), confirming that the OPA responded to the Curtain condition similarly to the No-Glass-Wall condition rather than the Glass-Wall condition. Together, these results show that a boundary feature that does not functionally restrict navigational distance has essentially no effect on the neural patterns of the OPA. This finding corroborates our claim that the OPA codes for the navigational distance in a scene and further highlights the importance of the functional constraint that a boundary imposes in this computation.
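Comparisons between conditions within the same participants (e.g., Curtain vs. Glass-Wall, reported as t(13) for 14 participants) are presumably paired t-tests, which reduce to a one-sample test of the per-subject differences against zero. The sketch below assumes both accuracy lists are in the same subject order and computes only the statistic and its degrees of freedom; the p-value then follows from Student's t distribution with n − 1 df. The sign of t depends on the order of subtraction, which may be why a higher Curtain accuracy is reported with a negative t.

```python
import math


def paired_t(cond_a, cond_b):
    """Paired t statistic and df for two conditions measured in the same subjects.

    Equivalent to a one-sample t-test of the differences (a - b) against 0.
    """
    if len(cond_a) != len(cond_b):
        raise ValueError("paired samples must have the same length")
    diffs = [a - b for a, b in zip(cond_a, cond_b)]
    n = len(diffs)
    mean = sum(diffs) / n
    sd = math.sqrt(sum((d - mean) ** 2 for d in diffs) / (n - 1))  # sample SD of differences
    return mean / (sd / math.sqrt(n)), n - 1


# Hypothetical per-subject accuracies (proportions), not data from the study.
glass_wall = [0.30, 0.33, 0.35]
curtain = [0.40, 0.45, 0.50]
t, df = paired_t(glass_wall, curtain)  # negative t: Glass-Wall minus Curtain
```

Swapping the arguments flips the sign of t but leaves its magnitude and df unchanged.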

The other scene-selective areas did not show any sensitivity to the functional constraint of a boundary or to navigational distance. The PPA and RSC both showed chance-level classification accuracy in all conditions: PPA (No-Glass-Wall: mean 34.05%, t(13) = 0.37, p = 0.72; Glass-Wall: 36.19%, t(13) = 1.17, p = 0.26; Curtain: 35.12%, t(13) = 0.85, p = 0.41) and RSC (No-Glass-Wall: 30.83%, t(10) = −1.76, p = 0.11; Glass-Wall: 36.07%, t(10) = 1.55, p = 0.15; Curtain: 35.12%, t(10) = 0.55, p = 0.6). In contrast, the EVC showed above-chance classification accuracy in all conditions (No-Glass-Wall: 53.55%, t(13) = 5.43, p < 0.01; Glass-Wall: 50.48%, t(13) = 4.18, p < 0.01; Curtain: 43.81%, t(13) = 2.92, p < 0.05). When we compared the classification accuracy of the EVC with that of the OPA using a mixed-effects model (R package lme4; Bates et al., 2015), we found a significant interaction between ROI (EVC, OPA) and Condition (Glass-Wall, Curtain, No-Glass-Wall; F(2,65) = 4.0, p < 0.05). Together, these results suggest that the EVC is not sensitive to the functional constraint of a boundary and that its above-chance classification in the Curtain condition is unlikely to be driven by navigational distance.

Discussion

The current study examined the nature of neural coding for navigational distance in visual scenes. In Experiment 1, we showed that neural patterns in the OPA are sensitive to the egocentric distance to a boundary that limits navigational distance. Experiment 2 demonstrated that the OPA is sensitive to the specific location of a boundary that determines navigational distance, rather than to the mere presence of the boundary in a scene. Experiment 3 directly tested the nature of the navigational distance representation by asking whether the functional constraint on navigation imposed by a boundary plays a critical role. A boundary that does not block movement, such as a curtain, was treated as if there were no boundary, similar to the No-Glass-Wall condition.

Across three fMRI experiments, we showed that the OPA codes for navigational distance in visual scenes as determined by a functionally limiting local boundary. To our knowledge, this is the first empirical evidence demonstrating that the OPA is sensitive to navigational distance.

Boundary and navigational path representation

The OPA consistently showed a neural representation of navigational distance that was distinct from that of the other scene-selective areas. How might the OPA develop its sensitivity to functionally constraining boundaries in a scene?

One possible explanation is based on the intrinsic retinotopic bias of the OPA. In contrast to the PPA, which shows a bias for the upper visual field, the OPA shows a bias for the lower visual field (Silson et al., 2015; Arcaro and Livingstone, 2017). A recent study using a deep convolutional neural network showed that the majority of explained variance in the OPA's neural patterns could be attributed to the lower part of the image (Bonner and Epstein, 2018). Consistently, Henriksson et al. (2019) tested the relative contribution of each layout-defining component (back wall, ceiling, floor, and the left and right walls) and showed that the back wall and the floor contributed more to the explained variance of the OPA. Together, these results highlight the importance of visual information in the lower part of the image, i.e., the floor, where boundaries or obstacles often appear. Thus, the OPA's bias for the lower visual field may have facilitated its sensitivity to functional boundaries (e.g., a glass wall) or obstacles on a path.

Beyond the simple detection of obstacles, distance estimation also often relies on the lower part of a scene, because distance is measured from the viewer's current foot position, which usually corresponds to the bottom center of the scene view. The results of the current study add to many recent fMRI findings that highlight the role of the OPA in representing environmental features important for navigation (Julian et al., 2016; Bonner and Epstein, 2017; Groen et al., 2018), as well as to a broader literature highlighting the boundary as one of the most fundamental spatial features of scene space (Cheng, 1986; Gallistel, 1990; Cheng and Newcombe, 2005; Lee and Spelke, 2010; Cheng et al., 2013; Lee, 2017). In this study, we demonstrate that the OPA's representation of a boundary is constrained by the functional relevance of the boundary structure to a navigator.
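The geometric reason the lower part of a view carries distance information can be sketched for an idealized observer standing on a flat floor: a floor point at distance d is seen at an angle α below the horizon, with tan α = h / d, where h is eye height. Nearer floor points therefore project lower in the visual field, and the point directly at the feet lies at the bottom of the view. The 1.6 m eye height is an arbitrary assumption, and this textbook ground-plane relation is an illustration, not a model of the OPA.

```python
import math


def declination_deg(eye_height, distance):
    """Angle below the horizon (degrees) at which a floor point `distance` ahead is seen."""
    return math.degrees(math.atan2(eye_height, distance))


def floor_distance(eye_height, declination):
    """Inverse mapping: floor distance from the angle below the horizon (degrees)."""
    return eye_height / math.tan(math.radians(declination))


EYE_HEIGHT = 1.6  # metres; an arbitrary, hypothetical eye height
for d in (1.0, 2.0, 4.0, 8.0):
    print(f"floor point {d:.0f} m ahead -> {declination_deg(EYE_HEIGHT, d):.1f} deg below horizon")
```

Because the angle shrinks as distance grows, equal steps in distance produce ever smaller angular steps, so near distances are resolved most finely in the lower visual field.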

Functional constraint representation

The navigational distance representation in the OPA was modulated only by a boundary with the functional constraint (e.g., a glass wall) but not by a boundary without the functional constraint on navigation (e.g., a curtain). These results suggest that the OPA can represent the functional value of an environmental structure.

How does the OPA obtain such information? Although the current study did not focus on this question, it is worth speculating about at least two broad possibilities. First, OPA neurons may become “tuned” to navigationally important perceptual features through learning. For example, as a child navigates through environments, the child learns that a glass wall is impassable. After many repetitions of similar experiences, OPA neurons may develop sensitivity to the visual features of navigationally relevant boundaries. Another possibility is that the OPA receives online feedback from semantic areas about the functional value of the current stimuli. In this case, the OPA patterns may be susceptible to the context in which a stimulus is presented or interpreted. Future work should explore the role of high-level knowledge in boundary representation in the OPA.

Another interesting question is whether the OPA represents the functional value of navigational boundaries in a binary or a more graded manner. We operationalized the functional constraint in this study as binary (i.e., passable or impassable), but real-world boundaries impose more fine-grained limits on movement. For example, a boundary such as a half wall or a barrier may not completely block a viewer's movement but may hinder it to a certain degree. Would the OPA be sensitive to such intermediate levels of functional limitation as well? Future attempts to quantify and parameterize the functional limits of different boundaries will provide valuable insight into how the OPA represents the functional value of boundaries.

Navigational distance representation

How might the OPA represent navigational distance information? One possible interpretation is that the observed effects are driven not purely by a distance representation but by other aspects of scenes, such as the volume of navigable space or the surface area of the navigable ground plane. Although these factors naturally covary in real environments, we acknowledge this as a potential limitation of the current study. A future study that systematically controls each geometric property could address this issue.
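Why these properties are hard to separate can be shown for an idealized box-shaped room, assuming for illustration only that the width w and ceiling height h are fixed and only the navigable depth d changes:

```latex
A = w\,d, \qquad V = w\,h\,d
\qquad\Longrightarrow\qquad
\frac{A}{d} = w, \qquad \frac{V}{d} = w\,h
```

Because both ratios are constant, the navigable floor area A and volume V scale in exact proportion to d, so across distance levels the three quantities are perfectly correlated and a classifier cannot tell which one it tracks. Dissociating them would require, for example, varying w at a fixed d, which changes A and V while leaving the navigational distance unchanged.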

Another possible interpretation is based on the OPA's anatomical location, which partly overlaps with several retinotopically defined areas such as V3B, V7, LO2, and part of V3A (Nasr et al., 2011; Silson et al., 2016). Because scenes are a highly heterogeneous stimulus category, the scene selectivity of the OPA may have developed from the region's ability to process diverse features through its overlap with multiple retinotopic maps (Silson et al., 2016). It is plausible that the OPA uses such retinotopic information to compute egocentric distance in a scene. In fact, retinotopic areas overlapping with the OPA, such as V3A and V7, represent position-in-depth information (Finlayson et al., 2017). Consistently, a recent study reported that the functionally defined OPA is sensitive to depth information: near and far depths elicited higher univariate responses in the OPA than middle depth, and the OPA showed an even stronger response when stimuli were presented in both near and far depth planes, suggesting a bimodal preference for depth (Nag et al., 2019). Although these results are relevant to our findings, there are several important differences. First, depth information was provided in fundamentally different ways: we used monocular pictorial cues such as perspective and texture gradients, whereas Nag et al. (2019) used binocular disparity with red/green anaglyph glasses. Second, whereas depth sensitivity was shown as stronger univariate responses of the OPA to near and far depth planes in Nag et al. (2019), we did not find any significant univariate difference across Near, Middle, and Far navigational distances. Given that we were nonetheless able to differentiate the Near and Far navigational distances from the multivoxel patterns, it is possible that neurons in the OPA represent distance/depth information as a population, which would not necessarily lead to different univariate responses (Hatfield et al., 2016; Park and Park, 2017).
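How a population code can be decodable without a univariate difference is easy to show with a toy example using hypothetical numbers (not data from this study): two six-voxel patterns with the same ROI-average response but opposite spatial arrangements. A correlation-based nearest-template rule, used here as a simple stand-in for a pattern classifier, still assigns a new noisy measurement to the correct distance level.

```python
import statistics


def pearson(x, y):
    """Pearson correlation between two voxel patterns."""
    mx, my = statistics.mean(x), statistics.mean(y)
    num = sum((a - mx) * (b - my) for a, b in zip(x, y))
    den = (sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y)) ** 0.5
    return num / den


# Hypothetical 6-voxel ROI responses (arbitrary units) to two distance levels.
near = [1.0, 3.0, 1.0, 3.0, 1.0, 3.0]
far = [3.0, 1.0, 3.0, 1.0, 3.0, 1.0]

# Univariate view: the ROI-average response is identical for both levels.
print(statistics.mean(near), statistics.mean(far))

# Multivariate view: a new noisy "near" measurement matches the near template better.
probe = [1.2, 2.9, 0.8, 3.1, 1.1, 2.8]
decoded = "near" if pearson(probe, near) > pearson(probe, far) else "far"
print(decoded)
```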

It is important to note that the navigational distance representation in the OPA is not a mere reflection of low-level inputs. In the current study, there were two measurable sources of depth information: the distance to a local boundary (e.g., a glass wall) and the distance to a distal boundary (e.g., the back wall, which is the visible boundary of the room). Nevertheless, the OPA was sensitive only to the distance to the local boundary. This selective sensitivity to a local boundary might be related to the OPA's connection to the visually guided navigation network (the dorsal occipito-premotor pathway). When navigating an immediate environment, a local boundary has more critical value than a distal boundary because of its proximity to the navigator. In other words, estimating the distance to the back wall, which lies in space already blocked by the glass wall, would not be as relevant as estimating the distance to the glass wall.

Thus, the OPA might use low-level inputs (e.g., pictorial depth cues) to compute navigational distance, while its sensitivity to a given distance is multiplied by the navigational value of the corresponding boundary. This combination of low-level depth cues and navigational relevance to the viewer would make the OPA sensitive only to the distances to boundaries that are directly relevant to navigation.
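One way to formalize this multiplicative weighting is sketched below. The symbols are hypothetical, and the binary value term mirrors the passable/impassable operationalization used in this study. For each visible boundary b at a pictorially estimated distance d_b, the coded signal would be

```latex
s_b = v_b \, f(d_b),
\qquad
v_b =
\begin{cases}
1 & \text{if } b \text{ is the nearest non-crossable boundary ahead of the viewer},\\
0 & \text{otherwise,}
\end{cases}
```

where f is some monotonic code for distance. In the Glass-Wall scenes, only the glass wall receives v_b = 1, and its distance is constant across Near, Middle, and Far, so the coded signal does not vary. In the Curtain and No-Glass-Wall scenes, the crossable curtain receives v_b = 0 and the back wall receives v_b = 1, so the signal follows the back-wall distance. A graded v_b between 0 and 1 would be one way to express partially limiting boundaries such as half walls.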

Conclusion

The current study showed that the OPA codes for the navigational distance to environmental boundaries that limit one's movements. We propose that the OPA acts as a perceptual source of navigationally relevant information in visual environments, which supports navigation in a local environment as well as the formation of a cognitive map.

Footnotes

This work was supported by a National Eye Institute Grant (R01EY026042), National Research Foundation of Korea Grant (funded by MSIP-2019028919), and Yonsei University Future-leading Research Initiative (2019-22-0217) to S.P. We thank the F.M. Kirby Research Center for Functional Brain Imaging in the Kennedy Krieger Institute, Johns Hopkins University, 21205.

The authors declare no competing financial interests.

References

- Arcaro MJ, Livingstone MS (2017) Retinotopic organization of scene areas in macaque inferior temporal cortex. J Neurosci 37:7373–7389. 10.1523/JNEUROSCI.0569-17.2017 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Aguirre GK, D'Esposito M (1999) Topographical disorientation: a synthesis and taxonomy. Brain 122:1613–1628. 10.1093/brain/122.9.1613 [DOI] [PubMed] [Google Scholar]

- Bainbridge WA, Dilks DD, Oliva A (2017) Memorability: a stimulus-driven perceptual neural signature distinctive from memory. Neuroimage 149:141–152. 10.1016/j.neuroimage.2017.01.063 [DOI] [PubMed] [Google Scholar]

- Bates D, Machler M, Bolker B, Walker S (2015) Fitting linear mixed-effects models using lme4. Journal of Statistical Software 67:1–48. [Google Scholar]

- Bonner MF, Epstein RA (2017) Coding of navigational affordances in the human visual system. Proc Natl Acad Sci U S A 114:4793–4798. 10.1073/pnas.1618228114 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bonner MF, Epstein RA (2018) Computational mechanisms underlying cortical responses to the affordance properties of visual scenes. PLoS computational biology 14:e1006111. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brainard D. (1997) The Psychophysics Toolbox. Spat Vis 10:433–436. 10.1163/156856897X00357 [DOI] [PubMed] [Google Scholar]

- Cant JS, Xu Y (2012) Object ensemble processing in human anterior-medial ventral visual cortex. J Neurosci 32:7685–7700. 10.1523/JNEUROSCI.3325-11.2012 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cant JS, Xu Y (2015) The impact of density and ratio on object-ensemble representation in human anterior-medial ventral visual cortex. Cereb Cortex 25:4226–4239. 10.1093/cercor/bhu145 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cheng K. (1986) A purely geometric module in the rat's spatial representation. Cognition 23:149–178. 10.1016/0010-0277(86)90041-7 [DOI] [PubMed] [Google Scholar]

- Cheng K, Huttenlocher J, Newcombe NS (2013) 25 years of research on the use of geometry in spatial reorientation: a current theoretical perspective. Psychon Bull Rev 20:1033–1054. 10.3758/s13423-013-0416-1 [DOI] [PubMed] [Google Scholar]

- Cheng K, Newcombe NS (2005) Is there a geometric module for spatial orientation? Squaring theory and evidence. Psychon Bull Rev 12:1–23. 10.3758/BF03196346 [DOI] [PubMed] [Google Scholar]

- Dilks DD, Julian JB, Kubilius J, Spelke ES, Kanwisher N (2011) Mirror-image sensitivity and invariance in object and scene processing pathways. J Neurosci 31:11305–11312. 10.1523/JNEUROSCI.1935-11.2011 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dilks DD, Julian JB, Paunov AM, Kanwisher N (2013) The occipital place area is causally and selectively involved in scene perception. J Neurosci 33:1331–1336. 10.1523/JNEUROSCI.4081-12.2013 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Epstein R, Higgins JS, Jablonski K, Feiler AM (2007) Visual scene processing in familiar and unfamiliar environments. J Neurophysiol 97:3670–3683. 10.1152/jn.00003.2007 [DOI] [PubMed] [Google Scholar]

- Epstein R, Kanwisher N (1998) A cortical representation of the local visual environment. Nature 392:598–601. 10.1038/33402 [DOI] [PubMed] [Google Scholar]

- Finlayson NJ, Zhang X, Golomb JD (2017) Differential patterns of 2D location versus depth decoding along the visual hierarchy. Neuroimage 147:507–516. 10.1016/j.neuroimage.2016.12.039 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gallistel CR. (1990) The organization of learning. Cambridge, MA: MIT. [Google Scholar]

- Grill-Spector K. (2003) The neural basis of object perception. Curr Opin Neurobiol 13:159–166. 10.1016/S0959-4388(03)00040-0 [DOI] [PubMed] [Google Scholar]

- Groen II, Greene MR, Baldassano C, Fei-Fei L, Beck DM, Baker CI (2018) Distinct contributions of functional and deep neural network features to representational similarity of scenes in human brain and behavior. eLife 7:e32962 10.7554/eLife.32962 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hatfield M, McCloskey M, Park S (2016) Neural representation of object orientation: a dissociation between MVPA and repetition suppression. Neuroimage 139:136–148. 10.1016/j.neuroimage.2016.05.052 [DOI] [PubMed] [Google Scholar]

- Henderson JM, Zhu DC, Larson CL (2011) Functions of parahippocampal place area and retrosplenial cortex in real-world scene analysis: an fMRI study. Vis Cogn 19:910–927. 10.1080/13506285.2011.596852 [DOI] [Google Scholar]

- Henriksson L, Mur M, Kriegeskorte N (2019) Rapid invariant encoding of scene layout in human OPA. Neuron 103:161–171.e3. 10.1016/j.neuron.2019.04.014 [DOI] [PubMed] [Google Scholar]

- Julian JB, Ryan J, Hamilton RH, Epstein RA (2016) The occipital place area is causally involved in representing environmental boundaries during navigation. Curr Biol 26:1104–1109. 10.1016/j.cub.2016.02.066 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Konkle T, Caramazza A (2013) Tripartite organization of the ventral stream by animacy and object size. J Neurosci 33:10235–10242. 10.1523/JNEUROSCI.0983-13.2013 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Konkle T, Oliva A (2012) A real-world size organization of object responses in occipitotemporal cortex. Neuron 74:1114–1124. 10.1016/j.neuron.2012.04.036 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kosslyn SM, Pick HL, Fariello GR (1974) Cognitive maps in children and men. Child Dev 45:707–716. [PubMed] [Google Scholar]

- Kravitz DJ, Peng CS, Baker CI (2011) Real-world scene representations in high-level visual cortex: it9s the spaces more than the places. J Neurosci 31:7322–7333. 10.1523/JNEUROSCI.4588-10.2011 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kuznetsova A, Brockhoff PB, Christensen RH (2017) lmerTest package: tests in linear mixed effects models. J Stat Soft 82:13 10.18637/jss.v082.i13 [DOI] [Google Scholar]

- Lee SA. (2017) The boundary-based view of spatial cognition: a synthesis. Cur Opin Behav Sci 16:58–65. 10.1016/j.cobeha.2017.03.006 [DOI] [Google Scholar]

- Lee SA, Spelke ES (2010) Two systems of spatial representation underlying navigation. Exp Brain Res 206:179–188. 10.1007/s00221-010-2349-5 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lescroart MD, Gallant JL (2019) Human scene-selective areas represent 3D configurations of surfaces. Neuron 101:178–192. 10.1016/j.neuron.2018.11.004 [DOI] [PubMed] [Google Scholar]

- Marchette SA, Vass LK, Ryan J, Epstein RA (2014) Anchoring the neural compass: coding of local spatial reference frames in human medial parietal lobe. Nat Neurosci 17:1598–1606. 10.1038/nn.3834

- Muller RU, Kubie JL (1987) The effects of changes in the environment on the spatial firing of hippocampal complex-spike cells. J Neurosci 7:1951–1968. 10.1523/JNEUROSCI.07-07-01951.1987

- Nag S, Berman D, Golomb JD (2019) Category-selective areas in human visual cortex exhibit preferences for stimulus depth. Neuroimage 196:289–301. 10.1016/j.neuroimage.2019.04.025

- Nasr S, Liu N, Devaney KJ, Yue X, Rajimehr R, Ungerleider LG, Tootell RB (2011) Scene-selective cortical regions in human and nonhuman primates. J Neurosci 31:13771–13785. 10.1523/JNEUROSCI.2792-11.2011

- Park J, Park S (2017) Conjoint representation of texture ensemble and location in the parahippocampal place area. J Neurophysiol 117:1595–1607. 10.1152/jn.00338.2016

- Park S, Brady TF, Greene MR, Oliva A (2011) Disentangling scene content from spatial boundary: complementary roles for the parahippocampal place area and lateral occipital complex in representing real-world scenes. J Neurosci 31:1333–1340. 10.1523/JNEUROSCI.3885-10.2011

- Pelli D (1997) The VideoToolbox software for visual psychophysics: transforming numbers into movies. Spat Vis 10:437–442. 10.1163/156856897X00366

- Persichetti AS, Dilks DD (2016) Perceived egocentric distance sensitivity and invariance across scene-selective cortex. Cortex 77:155–163. 10.1016/j.cortex.2016.02.006

- Satterthwaite FE (1941) Synthesis of variance. Psychometrika 6:309–316. 10.1007/BF02288586

- Silson EH, Chan AWY, Reynolds RC, Kravitz DJ, Baker CI (2015) A retinotopic basis for the division of high-level scene processing between lateral and ventral human occipitotemporal cortex. J Neurosci 35:11921–11935. 10.1523/JNEUROSCI.0137-15.2015

- Silson EH, Gilmore AW, Kalinowski SE, Steel A, Kidder A, Martin A, Baker CI (2019) A posterior–anterior distinction between scene perception and scene construction in human medial parietal cortex. J Neurosci 39:705–717. 10.1523/JNEUROSCI.1219-18.2018

- Silson EH, Steel AD, Baker CI (2016) Scene-selectivity and retinotopy in medial parietal cortex. Front Hum Neurosci 10:412. 10.3389/fnhum.2016.00412

- Sugiura M, Shah NJ, Zilles K, Fink GR (2005) Cortical representations of personally familiar objects and places: functional organization of the human posterior cingulate cortex. J Cogn Neurosci 17:183–198. 10.1162/0898929053124956

- Walther DB, Caddigan E, Fei-Fei L, Beck DM (2009) Natural scene categories revealed in distributed patterns of activity in the human brain. J Neurosci 29:10573–10581. 10.1523/JNEUROSCI.0559-09.2009

- Walther DB, Chai B, Caddigan E, Beck DM, Fei-Fei L (2011) Simple line drawings suffice for functional MRI decoding of natural scene categories. Proc Natl Acad Sci U S A 108:9661–9666. 10.1073/pnas.1015666108

- Wolbers T, Büchel C (2005) Dissociable retrosplenial and hippocampal contributions to successful formation of survey representations. J Neurosci 25:3333–3340. 10.1523/JNEUROSCI.4705-04.2005